Abstract

Background:

The Health Literacy Questionnaire (HLQ) is a multidimensional generic questionnaire developed to capture a wide range of health literacy needs. There is a need for validation evidence for the Norwegian version of the HLQ (N-HLQ).

Objective:

The present study tested an initial version of the Norwegian HLQ by exploring its utility and construct validity among a group of nursing students.

Methods:

A pre-test survey was conducted among participants (N = 18) who were asked to consider every item in the N-HLQ (44 items across nine scales). The N-HLQ was then administered to 368 respondents. To assess whether the nine scales could be consistently identified and extracted, a series of 13 factor analyses (principal component analysis [PCA] with oblimin rotation) specifying a nine-factor solution was performed, each on a randomly drawn 50% subsample obtained by bootstrapping. Correlations were then estimated between the nine factors obtained in each of the 13 nine-factor PCAs and the scale scores computed with the scoring syntaxes provided by the authors of the original HLQ.

Key Results:

The pre-test survey did not result in the need to rephrase any items. The internal consistency of the nine HLQ scales was high, with Cronbach's alpha ranging from 0.72 to 0.81. The original HLQ scales were best reproduced for the dimensions “1. feeling understood and supported by health care providers,” “2. having sufficient information to manage my health,” “3. actively managing my health,” and “5. appraisal of health information.” For the dimensions “7. navigating in the health care system” and “8. ability to find good health information,” a rather high degree of overlap was found, as indicated by relatively small differences between the mean highest and mean next-highest correlations.

Conclusions:

Despite some possible overlap between dimensions 7 and 8, the N-HLQ appeared relatively robust. Thus, this study's results contribute to the validation evidence base for the N-HLQ in Norwegian populations. [HLRP: Health Literacy Research and Practice. 2020;4(4):e190–e199.]

Plain Language Summary:

This study tested the Norwegian version of the Health Literacy Questionnaire. The questionnaire (44 items across nine scales) was completed by 368 nursing students. Despite some overlap between scale 7 (“navigating in the health care system”) and scale 8 (“ability to find good health information”), the questionnaire appears to be a good measure of health literacy in the Norwegian population.

People are increasingly given responsibility for their own health, and, consequently, health literacy (HL) has become a topic of growing interest. Research has demonstrated associations between low functional HL (i.e., health-related reading and numeracy ability) and poor health-related outcomes, such as increased hospital admissions and readmissions (Baker et al., 2002; Chen et al., 2013; Cho et al., 2008; Scott et al., 2002), less participation in preventive activities (Adams et al., 2013; Thomson & Hoffman-Goetz, 2012; von Wagner et al., 2007), poorer self-management of chronic conditions (Aung et al., 2012; Gazmararian et al., 2003; Williams et al., 1998), poorer disease outcomes (Peterson et al., 2011; Schillinger et al., 2002; Yamashita & Kart, 2011), lower functional status (Wolf et al., 2005), and increased mortality (Bostock & Steptoe, 2012; Peterson et al., 2009; Sudore et al., 2006). More recently, studies using more dynamic and multidimensional measures of HL have also found associations with screening behavior, diabetes control, and patients' perceptions of quality of life (Jovanić et al., 2018; O'Hara et al., 2018; Olesen et al., 2017).

HL has been defined in numerous ways; however, it is now commonly understood as a multidimensional concept comprising a range of cognitive, affective, social, and personal skills and attributes. According to Nutbeam (2008), HL comprises three levels, progressing from basic skills in reading and writing (functional HL), to the ability to derive meaning from different forms of communication and apply new information to changing situations (interactive HL), and finally to the ability to achieve policy and organizational changes (critical HL).

Although research suggests consistent associations between HL and health outcomes, a major shortcoming of such findings is that the data have frequently been derived from suboptimal instruments (Jordan et al., 2011). Jordan et al. (2011) highlighted limitations in the general conceptualization of HL, coupled with the weak psychometric properties of the instruments used, and argued that HL has not been consistently measured, making it difficult to interpret and compare HL at the individual and population levels.

Until recently, most instruments measuring HL have been unidimensional, focusing on health-related numeracy and reading skills (Berkman et al., 2010). For example, frequently used instruments include the Rapid Estimate of Adult Literacy in Medicine, which focuses on word recognition (Davis et al., 1993), and the Test of Functional Health Literacy (Parker et al., 1995), which tests health-related reading and numeracy skills. Similarly, the Newest Vital Sign is a commonly used instrument that tests a respondent's understanding of a food label (Weiss et al., 2005). A need for more comprehensive assessment tools has therefore been recognized (Altin et al., 2014), and in the past 5 to 6 years multidimensional assessment tools have been developed, such as the European Health Literacy Survey Questionnaire (HLS-EU-Q) (Sørensen et al., 2013) and the Health Literacy Questionnaire (HLQ) (Osborne et al., 2013), yielding a more multifaceted picture of HL. Although the HLS-EU-Q has been developed and tested in the Norwegian population (Finbråten et al., 2017; Sørensen et al., 2013), there is a need for additional multidimensional HL instruments in the Norwegian setting, such as the HLQ. The HLS-EU-Q was specifically designed to compare populations using three dimensions, whereas the advantage of the nine-dimension HLQ is that it can provide a nuanced evaluation of education programs and derive HL profiles that might, in turn, facilitate intervention development and service improvement (Batterham et al., 2014). The HLQ has been translated into more than 15 languages. It has undergone validity testing in Germany, Denmark, France, and Slovakia, and both the original and the translated versions have shown strong psychometric properties (Debussche et al., 2018; Elsworth et al., 2016; Kolarčik et al., 2015; Maindal et al., 2016; Nolte et al., 2017).
In the last couple of years, the HLQ has also been used in Norwegian settings (Larsen et al., 2018; Stømer et al., 2019). Therefore, it is important to explore the utility and construct validity of the Norwegian version of the HLQ.

There is growing acceptance of the view that the validation of self-report instruments should be seen as the accumulation and evaluation of sources of validity evidence (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014; Kane, 2013; Zumbo & Chan, 2014). According to Hawkins et al. (2018), rather than relying on factor analysis of psychometric properties alone, the validation of the HLQ should be based on a network of empirical evidence that supports the intended interpretation and use of HLQ scores. Within this framework, an initial validation in a student sample would add to the body of validity evidence, especially for younger populations. Hence, the current study aims to implement and test an initial version of the Norwegian HLQ, exploring its utility in the field and testing its construct validity among nursing students.

Method

The Health Literacy Questionnaire

The HLQ is based on the World Health Organization's (WHO) definition of HL as the “cognitive and social skills which determine the motivation and ability of people to access, understand and use information in ways which promote and maintain good health” (Nutbeam, 1998, p. 13). The three levels of HL described in Nutbeam's (2008) theoretical model (i.e., functional, interactive, and critical HL) are incorporated within and across the HLQ domains, making it possible to capture respondents' capability at each of these levels (Osborne et al., 2013). This link between Nutbeam's schema and the HLQ emerged from a validity-driven item-writing process based on citizens' lived experiences (Osborne et al., 2013), as illustrated in Table 1.

Table 1.

Linkage Between Nutbeam's Schema of Health Literacy and the Health Literacy Questionnaire

| Nutbeam's schema | Broad matching Health Literacy Questionnaire domainsᵃ |
|---|---|
| Basic/functional health literacy: sufficient basic skills in reading and writing to be able to function effectively in everyday situations | 2. Having sufficient information to manage my health; 8. Ability to find good-quality health information; 9. Understanding health information well enough to know what to do |
| Communicative/interactive health literacy: more advanced cognitive and literacy skills that, together with social skills, can be used to actively participate in everyday activities, to extract information and derive meaning from different forms of communication, and to apply new information to changing circumstances | 1. Feeling understood and supported by health care providers; 3. Actively managing my health; 4. Social support for health; 6. Ability to actively engage with health care providers; 7. Navigating the health system; 8. Ability to find good-quality health information |
| Critical literacy: more advanced cognitive skills that, together with social skills, can be applied to critically analyze information and to use this information to exert greater control over life events and situations | 3. Actively managing my health; 4. Social support for health; 5. Appraisal of health information |

Note.

Within each Health Literacy Questionnaire scale there are some elements of the three levels of Nutbeam's schema, so overlap is expected. Reprinted (permission is not required) under the Creative Commons Attribution License (CC BY) from Osborne et al., 2013.

The development of the HLQ involved two concept mapping workshops with 27 participants: a patient group (N = 12) and a group of health care professionals and researchers (N = 15). Following the concept mapping process developed by Trochim et al. (1994), a structured brainstorming process was initiated. The workshop participants were presented with seeding statements based on the WHO definition of HL, such as “thinking broadly about your experiences in trying to look after your health, what abilities does a person need to have to get, understand, and use health information to make informed decisions about their health?” The process confirmed that health literacy encompasses a broad range of concepts (Buchbinder et al., 2011; Jordan et al., 2011).

The next step of the development process included interviews with community members and patients and extensive validity testing in a construction sample (N = 634) and a confirmation sample (N = 405) in Australia (Osborne et al., 2013). The HLQ consists of 44 items across nine domains of health literacy. The first five scales, constituting part 1 of the HLQ, are scored on a 4-point Likert-type response scale (strongly disagree, disagree, agree, strongly agree). The last four scales, constituting part 2, are scored on a 5-point response scale on which respondents rate how difficult it is for them to undertake a task (cannot do, usually difficult, sometimes difficult, usually easy, always easy). The HLQ scales are listed in Table 3. The 44 items are published in the original HLQ validation paper (Osborne et al., 2013).
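The two response formats can be made concrete with a small coding table. The Python sketch below maps each verbal option to the numeric codes implied by the score ranges used in this study (1 to 4 for part 1, 1 to 5 for part 2). The names `PART1_CODES`, `PART2_CODES`, and `code_response` are hypothetical illustrations, not part of the official HLQ scoring materials, and the sketch assumes English response labels.

```python
# Hypothetical coding tables for the two HLQ response formats.
# Part 1 (scales 1-5): 4-point agreement scale, coded 1-4.
PART1_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "agree": 3,
    "strongly agree": 4,
}

# Part 2 (scales 6-9): 5-point difficulty scale, coded 1-5.
PART2_CODES = {
    "cannot do": 1,
    "usually difficult": 2,
    "sometimes difficult": 3,
    "usually easy": 4,
    "always easy": 5,
}


def code_response(scale_number: int, label: str) -> int:
    """Return the numeric code for a verbal response on HLQ scale 1-9."""
    if not 1 <= scale_number <= 9:
        raise ValueError(f"HLQ scale number must be 1-9, got {scale_number}")
    # Scales 1-5 use the part 1 format; scales 6-9 use the part 2 format.
    codes = PART1_CODES if scale_number <= 5 else PART2_CODES
    return codes[label.strip().lower()]


if __name__ == "__main__":
    print(code_response(3, "Agree"))         # part 1 item -> 3
    print(code_response(8, "usually easy"))  # part 2 item -> 4
```

Keeping the two formats in separate tables means an unrecognized label (for example, a part 1 label used on a part 2 scale) raises a `KeyError` instead of being silently coded.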

Table 2.

Sample Background Characteristics (N = 368)

| Characteristic | n (%) |
|---|---|
| Age (years) | |
| Range | 18–64 |
| Mean | 24 |
| SD | 7.1 |
| Gender (female) | 307 (86) |
| Speaking Norwegian when growing up | 282 (83) |
| Living with someone | 254 (76) |
| Studying at a university in western Norway | 221 (61) |
| Studying at a university in the capital | 147 (39) |
| Long-term health problem or disease | 60 (18) |

Translation process. The translation and cultural adaptation of the HLQ into Norwegian followed a standardized protocol provided by the authors of the HLQ (Hawkins et al., 2020). Forward translation was guided by comprehensive item intent descriptions, and the items were then reviewed for cultural appropriateness and measurement (linguistic) equivalence. Blind back-translation was also undertaken. The meaning of every nuance in the final translation was verified with one of the authors of the original HLQ (Osborne et al., 2013) through two consensus conferences and written reports.

We performed a web-survey pre-test to capture how Norwegian respondents understood and experienced the wording of the translated items. Participants were recruited by convenience sampling; the 18 participants (10 women, 8 men) all lived in western Norway and ranged in age from 30 to 80 years. Participants were given access to the web-based HLQ after giving their consent to participate. After completing the questionnaire, they were asked to consider whether each item was (1) difficult to answer, (2) unclear, (3) contained difficult words, or (4) upsetting. Participants were also asked to provide comments or suggest alternative words or terms in a free-text field at the end of the survey.

Setting. The structural validity of the HLQ was tested in a convenience sample collected in 2016 at two universities in Norway: one in the capital, which has the largest number of nursing students in the country, and the other in western Norway, the third most densely populated urban area in Norway. The invited population was nursing students in the first semester of the bachelor's degree program. In 2016, the two universities had 302 and 570 first-semester nursing students, respectively, and the grade point average required for admission to the two nursing programs was 4.6 and 4.7 (grading range: 2 to 6).

Permission to conduct the study was obtained from both universities. Information about the study, including the purpose, scope, content, confidentiality, voluntary nature of participation, and the ability to withdraw from the study at any time, was provided to students in both oral and written formats.

A web-based questionnaire was made available on the students' official learning platform. Students who agreed to participate answered the questionnaire on campus after a lecture. The link to the questionnaire was open for about 40 to 60 minutes and was closed after the last student had finished. Because the questionnaire could only be answered on campus, no personal internet protocol (IP) addresses were collected or stored. No information about personal or health issues was collected; thus, according to Norwegian law, the study did not require formal ethical approval from the Norwegian National Ethics Committee.

Respondents. At the capital university, participants were recruited after lectures for three different student groups; of the 181 nursing students attending these lectures, 147 agreed to participate. At the university in western Norway, data were collected after a lecture for the whole group of first-semester nursing students; of the 250 students invited, 221 agreed to participate. Thus, of the 872 first-semester nursing students at the two universities, 432 were invited to participate and 368 agreed (85% response rate).

Respondents' ages ranged from 18 to 64 years (mean, 24 years; SD, 7.1). Most respondents were women (86%), and most were living with someone (76%). Furthermore, 83% had spoken Norwegian when growing up, and 18% reported having a long-term health problem or disease. Participants' background characteristics are summarized in Table 2.

Analyses

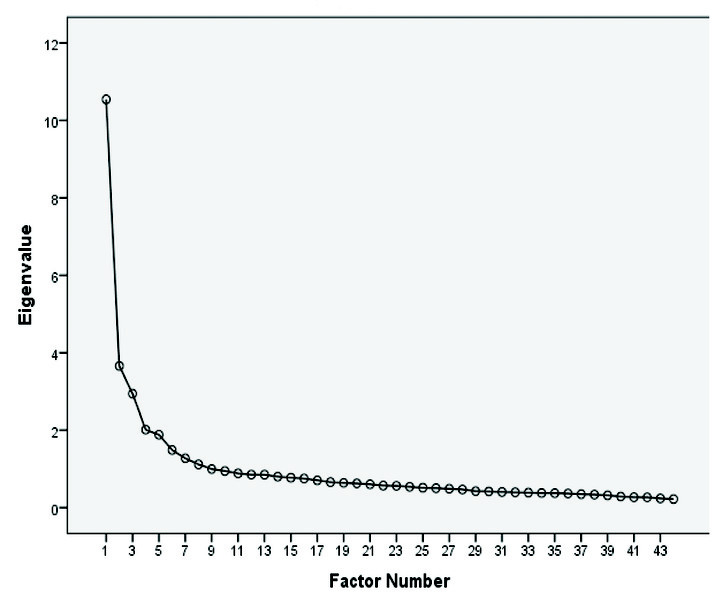

HLQ scale scores were calculated in SPSS Statistics version 22 by summing the item scores and dividing by the number of items in each scale, so that scores on the first five scales range from 1 to 4 and scores on the remaining four range from 1 to 5 (Osborne et al., 2013). The internal consistency of each predefined HLQ scale was assessed using Cronbach's alpha. We initially performed exploratory and confirmatory factor analyses. Scree plots from the exploratory factor analyses gave no clear indication of the number of dimensions to extract (Figure 1), and a confirmatory factor analysis of the nine dimensions yielded an unsatisfactory fit.
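The scoring rule and the reliability coefficient described above can be sketched in a few lines of Python. This is an illustrative reimplementation of the standard Cronbach's alpha formula using only the standard library, not the SPSS procedure or the official HLQ scoring syntax used in the study; the function names and the demo responses are invented.

```python
from statistics import variance


def scale_score(item_scores: list[float]) -> float:
    """Scale score as described above: sum of item scores / number of items."""
    return sum(item_scores) / len(item_scores)


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for one scale.

    `responses` has one row per respondent and one column per item:
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    items = list(zip(*responses))  # transpose rows -> item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Invented answers from five respondents to a four-item part 1 scale (codes 1-4)
demo = [
    [3, 3, 4, 3],
    [2, 2, 2, 3],
    [4, 4, 4, 4],
    [3, 2, 3, 3],
    [1, 2, 2, 1],
]
print([scale_score(row) for row in demo])  # -> [3.25, 2.25, 4.0, 2.75, 1.5]
print(round(cronbach_alpha(demo), 3))      # -> 0.938
```

Because alpha is a ratio of variances, using sample (n − 1) variances throughout, as `statistics.variance` does, gives the same result as using population variances throughout.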

Figure 1.

Scree plot for the 44-item Health Literacy Questionnaire.

To explore whether a subset of the nine dimensions proposed by Osborne et al. (2013) could be consistently identified and extracted, a series of 13 factor analyses (principal component analysis [PCA] with oblimin rotation) specifying a nine-factor solution was performed, each on a randomly drawn 50% subsample of the data obtained by bootstrapping. Bootstrapping was chosen to examine how stable the PCA results were when the analysis was repeated on randomly drawn subsamples; in this study, the analysis was repeated 13 times. Bootstrapping is considered a valuable resampling method because it can generate a large number of resamples from the original sample, enabling analyses to be repeated and summary statistics to be computed across them (Costello & Osborne, 2005). Its advantages are that it does not require normally distributed data, even with a limited sample size, and that it allows researchers to move beyond traditional parameter estimates to estimate the variability of other statistics, such as structure and pattern coefficients (Lu et al., 2014; Zientek & Thompson, 2007).
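A minimal sketch of the resampling step follows, assuming that each of the 13 “50% samples” is a random half of the 368 respondents drawn without replacement (the text does not state whether draws were made with or without replacement). The function name `draw_half_samples` and the seed value are hypothetical. The PCA with oblimin rotation that would then be run on each subsample is not reproduced here, because it requires a dedicated factor-analysis package.

```python
import random


def draw_half_samples(n_respondents: int, n_rounds: int = 13,
                      seed: int = 2016) -> list[list[int]]:
    """Draw `n_rounds` random 50% subsamples of respondent indices.

    Within a round, indices are drawn without replacement; rounds are
    independent, so the same respondent can appear in several subsamples.
    """
    rng = random.Random(seed)  # fixed (hypothetical) seed for reproducibility
    half = n_respondents // 2
    return [sorted(rng.sample(range(n_respondents), half))
            for _ in range(n_rounds)]


subsamples = draw_half_samples(368)
print(len(subsamples), [len(s) for s in subsamples][:3])  # -> 13 [184, 184, 184]
```

Fixing the seed makes the 13 subsamples reproducible, which helps when factor solutions from different rounds need to be compared afterwards.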

To compare results across the 13 rounds of factor analysis, the factor scores from each round were saved as separate variables. Correlations between the nine factor scores obtained in each of the 13 nine-factor PCAs and the a priori scales provided by Osborne et al. (2013) could then be estimated, allowing us to assess which of the nine proposed scales could be retrieved with some consistency in our sample.
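The matching step can be illustrated as follows. The text does not specify the exact matching rule, so this Python sketch assumes that, within one PCA round, each a priori scale is matched to the factor whose scores correlate most strongly with the scale scores (in absolute value, since the sign of a principal component is arbitrary), and that the next-highest correlation is recorded alongside it. The helpers `pearson` and `best_two_matches` are hypothetical, and the demo data are invented.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def best_two_matches(scale_scores: list[float],
                     factor_scores: list[list[float]]) -> tuple[int, float, float]:
    """Correlate one a priori scale with every factor from one PCA round.

    Returns (index of best-matching factor, highest |r|, next-highest |r|).
    """
    rs = [abs(pearson(scale_scores, f)) for f in factor_scores]
    order = sorted(range(len(rs)), key=lambda i: rs[i], reverse=True)
    return order[0], rs[order[0]], rs[order[1]]


# Toy check: one scale and three candidate factors (invented data)
scale = [1, 2, 3, 4, 5]
factors = [[5, 4, 3, 2, 1], [1, 3, 2, 5, 4], [2, 2, 2, 2, 3]]
print(best_two_matches(scale, factors))  # -> (0, 1.0, 0.8)
```

Repeating this for every scale in every round and averaging the highest and next-highest values across the 13 rounds gives summary figures of the kind reported in Table 3.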

Results

In the web-survey pre-test, participants reported that the following items appeared unclear: item 7, “When I see new information about health, I check up on whether it is true or not” (n = 3); item 8, “I have at least one health care provider I can discuss my health problems with” (n = 4); item 11, “If I need help, I have plenty of people I can rely on” (n = 1); and item 22, “I can rely on at least one healthcare provider” (n = 1). Participants suggested no concrete alternatives for these items, and none reported that any item was “difficult to answer,” had “difficult words,” or was “upsetting.” Alternative wordings raised during subsequent in-depth discussions were judged to risk shifting the meaning of the Norwegian version away from the original English version; therefore, no items were rephrased.

Scalability/Internal Consistency

Overall, the internal consistency of the nine HLQ scales was high, with Cronbach's alpha ranging from 0.72 to 0.81 (Table 3). The highest values were found for “1. Feeling understood and supported by health care providers” (0.81), “3. Actively managing my health” (0.81), and “6. Ability to actively engage with health care providers” (0.81), and the lowest for “9. Understanding health information well enough to know what to do” (0.72) and “8. Ability to find good health information” (0.72). Thus, internal consistency was satisfactory for all subscales.

Table 3.

Internal Consistency of the Nine Health Literacy Questionnaire Scales (Cronbach's Alpha), Mean Scores, and Correlations Between the Nine Factor Scores and the Predefined Scale Scores (N = 368)

| Health Literacy Questionnaire scale | Cronbach's alpha | Mean (SD) | Highest correlation, mean (SD × 1,000) | Next-highest correlation, mean (SD × 1,000) | Difference between the two means |
|---|---|---|---|---|---|
| Part 1 scales (possible scores 1–4) | | | | | |
| 1. Feeling understood and supported by health care providers (4 items) | .81 | 2.96 (0.64) | .936 (11.4) | .413 (58.6) | .523 |
| 2. Having sufficient information to manage my health (4 items) | .76 | 2.96 (0.47) | .904 (64.2) | .346 (74.5) | .558 |
| 3. Actively managing my health (4 items) | .81 | 2.86 (0.51) | .950 (30.5) | .328 (81) | .622 |
| 4. Social support for health (4 items) | .78 | 3.13 (0.51) | .889 (42.3) | .477 (28.2) | .412 |
| 5. Appraisal of health information (5 items) | .80 | 2.78 (0.49) | .906 (26.4) | .368 (64.6) | .538 |
| Part 2 scales (possible scores 1–5) | | | | | |
| 6. Ability to actively engage with health care providers (5 items) | .81 | 3.56 (0.59) | .899 (40.4) | .451 (82.1) | .448 |
| 7. Navigating in the health care system (6 items) | .75 | 3.50 (0.56) | .772 (51.4) | .546 (90.8) | .226 |
| 8. Ability to find good health information (5 items) | .72 | 3.69 (0.50) | .686 (60.3) | .529 (58.8) | .157 |
| 9. Understanding health information well enough to know what to do (5 items) | .72 | 3.71 (0.50) | .860 (71.4) | .470 (55) | .390 |

Dimensional Structure

For each of the 13 nine-factor PCAs performed on randomly selected subsamples, the factors most closely reflecting the original nine scales were identified, allowing us to correlate the factor scores with the scores on the a priori scales. A high correlation indicates that the PCA closely reproduced the corresponding original scale, supporting the a priori nine-dimensional structure. Consistently high correlations between the a priori scale scores and the factor scores indicate a good fit, and a large difference between the highest and the next-highest correlation in each of the 13 trials provides further evidence that the factors obtained are distinct. The mean highest correlations between the nine factor scores and the HLQ scale scores ranged from 0.686 to 0.950, and the differences between the mean highest and mean next-highest correlations ranged from 0.157 to 0.622 (Table 3). The original HLQ scales were best reproduced for “1. Feeling understood and supported by health care providers,” “2. Having sufficient information to manage my health,” “3. Actively managing my health,” and “5. Appraisal of health information.” The scales “7. Navigating in the health care system” and “8. Ability to find good health information” showed a rather high degree of overlap, as indicated by relatively small differences between their mean highest and mean next-highest correlations.
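The differences in the last column of Table 3, and the ordering of scales they imply, can be recomputed directly from the published means. The Python snippet below performs only this arithmetic; it does not re-run the factor analyses, and the dictionary layout is an illustrative choice.

```python
# Mean highest and mean next-highest correlations across the 13 PCA rounds,
# copied from Table 3: scale number -> (highest, next highest)
table3 = {
    1: (0.936, 0.413),
    2: (0.904, 0.346),
    3: (0.950, 0.328),
    4: (0.889, 0.477),
    5: (0.906, 0.368),
    6: (0.899, 0.451),
    7: (0.772, 0.546),
    8: (0.686, 0.529),
    9: (0.860, 0.470),
}

# A larger gap means the factor is more clearly distinct from its nearest rival.
gaps = {scale: round(hi - nxt, 3) for scale, (hi, nxt) in table3.items()}
ranking = sorted(gaps, key=gaps.get, reverse=True)

for scale in ranking:
    print(f"scale {scale}: gap = {gaps[scale]:.3f}")
```

Sorting by the gap places scales 3, 2, 5, and 1 first and scales 7 and 8 last, which matches the interpretation given above.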

Discussion

The purpose of this study was to gain initial insights into the construct validity of the N-HLQ. Our validity testing indicated that the questionnaire appears relatively robust: the items were acceptable to respondents, and the scales showed good overall internal consistency. The nine factors extracted were relatively distinct, making it possible, in principle, to compare scale scores across populations.

According to our results, scales “7. Navigating the health care system” and “8. Ability to find good health information” in part 2 of the N-HLQ were the hardest to recapture. This finding is consistent with previous HLQ validation reports. An overlap of scales 7 to 9 was reported in the Danish HLQ validation study (Maindal et al., 2016), and strong associations among scales 7 to 9 were noted in the German HLQ validation study (Nolte et al., 2017) as well as for the original English version (Osborne et al., 2013). In the present study, scale “9. Understanding health information well enough to know what to do” was the next weakest factor after scales 7 and 8. The inter-factor correlations between these scales might be characterized as medium-sized: the correlations between scales 7 and 8, 7 and 9, and 8 and 9 were 0.59, 0.56, and 0.70, respectively. Stronger associations between these factors were found in the German validation study (characterized as large inter-factor correlations; Nolte et al., 2017). The authors of the Danish validation study (Maindal et al., 2016) argued that the overlap among scales 7 to 9 might reflect a higher-order factor or causal linkages among the scales. Scales 8 and 9 focus on the ability to locate (scale 8) and appraise (scale 9) health information, whereas scale 7 (health system navigation) may be seen as a closely linked outcome of these abilities (Maindal et al., 2016). Our data support these previous findings.

Study Limitations

One limitation of the current study is that it is based on a relatively young and homogeneous group of participants with limited experience of health services. Furthermore, the participants were bachelor's degree nursing students, and the health focus of their studies might affect their perceptions of health care and their responses. However, using students to validate self-report instruments is regarded as an acceptable strategy in the social, behavioral, and health sciences (van Ballegooijen et al., 2016). To reduce the influence of the participants' educational background, we included only first-semester students, who were at the beginning of their education. We also support the view of Hawkins et al. (2018) that the validation of the HLQ should be based on a range of validity evidence; the current study thus adds to this evidence, particularly for younger populations. However, because the HLQ is designed to be used across populations, further work with a wider range of respondents, including in community health, hospital, and home care settings, is needed.

Compared with test-based tools such as the Rapid Estimate of Adult Literacy in Medicine (Davis et al., 1993) and the Test of Functional Health Literacy (Parker et al., 1995), which yield more objective outcomes, self-report instruments such as the HLQ may carry a greater risk of biased measurement (Chang et al., 2019). Biased self-reported estimates can occur for various reasons, including gender differences (Ruiz-Cantero et al., 2007; Weber et al., 2019). However, items showing gender bias were eliminated during the development of the HLQ (Osborne et al., 2013). Furthermore, several previous HLQ studies have examined whether gender introduces bias and concluded that it was not a threat to the results (Aaby et al., 2017; Friis et al., 2016).

Because of cultural differences, translating and adapting questionnaires about health and health care can be challenging (Acquadro et al., 2008; Epstein et al., 2015). Literature reviews have not yet identified a gold standard procedure for such processes; however, the inclusion of an expert panel and back-translation appear to be essential elements (Epstein et al., 2015). The translation and adaptation process in our study followed the process recommended by the authors of the original questionnaire, which includes both of these steps (Hawkins et al., 2018). The value of including an expert panel with a clinical health background should be highlighted. In line with the German validation study, the translation process included a discussion of some of the health terms in the questionnaire, such as “health care providers” (Nolte et al., 2017). Whereas the authors of the German validation study changed this term to “doctors and therapists,” the consensus for the Norwegian HLQ was to choose a term close to the original. The pre-test in this study did not indicate that respondents had any difficulty understanding the chosen term.

Although the pre-test indicated some uncertainty about four of the items, in-depth discussions did not result in any revisions. Balancing linguistic and conceptual equivalence with the original questionnaire is not a straightforward process (Chang et al., 1999). The translation team followed a structured translation procedure that included several extensive discussions of the items, during which both cultural and linguistic aspects were considered. We therefore considered the final version appropriate.

At this stage, there is scant evidence of the predictive validity of the HLQ. As a first step, however, it is important to generate evidence of the HLQ's performance in cross-sectional studies and various populations. As several studies have shown (Debussche et al., 2018; Kolarčik et al., 2015; Maindal et al., 2016; Nolte et al., 2017), the HLQ has acceptable to strong psychometric properties in diverse settings, and future research should examine whether the questionnaire predicts important outcomes such as improved access to services, better use of medicines, increased uptake of preventive health behaviors, and other health-related behaviors.

Conclusion

This study aimed to acquire initial insights into the construct validity of the Norwegian adaptation of the HLQ. Despite some possible overlap between two of the scales in part 2 of the questionnaire, the N-HLQ appears relatively robust and might serve as a good foundation for valid measurement in Norwegian populations. However, these findings cannot be generalized to all populations. Future research should include a wider range of respondents, including those in community health, hospital, and home care settings.

References

- Aaby, A., Friis, K., Christensen, B., Rowlands, G., & Maindal, H. T. (2017). Health literacy is associated with health behaviour and self-reported health: A large population-based study in individuals with cardiovascular disease. European Journal of Preventive Cardiology, 24(17), 1880–1888. https://doi.org/10.1177/2047487317729538

- Acquadro, C., Conway, K., Hareendran, A., & Aaronson, N. (2008). Literature review of methods to translate health-related quality of life questionnaires for use in multinational clinical trials. Value in Health, 11(3), 509–521. https://doi.org/10.1111/j.1524-4733.2007.00292.x

- Adams, R. J., Piantadosi, C., Ettridge, K., Miller, C., Wilson, C., Tucker, G., & Hill, C. L. (2013). Functional health literacy mediates the relationship between socio-economic status, perceptions and lifestyle behaviors related to cancer risk in an Australian population. Patient Education and Counseling, 91, 206–212. https://doi.org/10.1016/j.pec.2012.12.001

- Altin, S. V., Finke, I., Kautz-Freimuth, S., & Stock, S. (2014). The evolution of health literacy assessment tools: A systematic review. BMC Public Health, 14, 1207. https://doi.org/10.1186/1471-2458-14-1207

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (2014). Standards for educational and psychological testing. American Educational Research Association.

- Aung M. N. Lorga T. Srikrajang J. Promtingkran N. Kreuangchai S. Tonpanya W. Vivarakanon P. Jaiin P. Praipaksin N. Payaprom A. (2012). Assessing awareness and knowledge of hypertension in an at-risk population in the Karen ethnic rural community, Thasongyang, Thailand. International Journal of General Medicine, 5, 553–561 10.2147/IJGM.S29406 PMID: [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baker D. W. Gazmararian J. A. Williams M. V. Scott T. Parker R. M. Green D. Peel J. (2002). Functional health literacy and the risk of hospital admission among Medicare managed care enrollees. American Journal of Public Health, 92, 1278–1283. https://doi.org/10.2105/AJPH.92.8.1278 PMID: 12144984

- Batterham R. W. Buchbinder R. Beauchamp A. Dodson S. Elsworth G. R. Osborne R. H. (2014). The OPtimising HEalth LIterAcy (OPHELIA) process: Study protocol for using health literacy profiling and community engagement to create and implement health reform. BMC Public Health, 14, 694. https://doi.org/10.1186/1471-2458-14-694

- Berkman N. D. Davis T. C. McCormack L. (2010). Health literacy: What is it? Journal of Health Communication, 15(2 Suppl. 2), 9–19. https://doi.org/10.1080/10810730.2010.499985

- Bostock S. Steptoe A. (2012). Association between low functional health literacy and mortality in older adults: Longitudinal cohort study. BMJ (Clinical Research Edition), 344, e1602. https://doi.org/10.1136/bmj.e1602

- Buchbinder R. Batterham R. Ciciriello S. Newman S. Horgan B. Ueffing E. Osborne R. H. (2011). Health literacy: What is it and why is it important to measure? The Journal of Rheumatology, 38(8), 1791–1797. https://doi.org/10.3899/jrheum.110406

- Chang A. M. Chau J. P. Holroyd E. (1999). Translation of questionnaires and issues of equivalence. Journal of Advanced Nursing, 29, 316–322. https://doi.org/10.1046/j.1365-2648.1999.00891.x

- Chang E. M. Gillespie E. F. Shaverdian N. (2019). Truthfulness in patient-reported outcomes: Factors affecting patients' responses and impact on data quality. Patient Related Outcome Measures, 10, 171–186. https://doi.org/10.2147/PROM.S178344

- Chen J. Z. Hsu H. C. Tung H. J. Pan L. Y. (2013). Effects of health literacy to self-efficacy and preventive care utilization among older adults. Geriatrics & Gerontology International, 13, 70–76. https://doi.org/10.1111/j.1447-0594.2012.00862.x

- Cho Y. I. Lee S.-Y. D. Arozullah A. M. Crittenden K. S. (2008). Effects of health literacy on health status and health service utilization amongst the elderly. Social Science & Medicine, 66(8), 1809–1816. https://doi.org/10.1016/j.socscimed.2008.01.003

- Costello A. B. Osborne J. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research & Evaluation, 10(7), 1–9. https://doi.org/10.7275/jyj1-4868

- Davis T. C. Long S. W. Jackson R. H. Mayeaux E. J. George R. B. Murphy P. W. Crouch M. A. (1993). Rapid estimate of adult literacy in medicine: A shortened screening instrument. Family Medicine, 25(6), 391–395.

- Debussche X. Lenclume V. Balcou-Debussche M. Alakian D. Sokolowsky C. Ballet D. Elsworth G. R. Osborne R. H. Huiart L. (2018). Characterisation of health literacy strengths and weaknesses among people at metabolic and cardiovascular risk: Validity testing of the Health Literacy Questionnaire. SAGE Open Medicine. https://journals.sagepub.com/doi/10.1177/2050312118801250

- Elsworth G. R. Beauchamp A. Osborne R. H. (2016). Measuring health literacy in community agencies: A Bayesian study of the factor structure and measurement invariance of the health literacy questionnaire (HLQ). BMC Health Services Research, 16, 508. https://doi.org/10.1186/s12913-016-1754-2

- Epstein J. Santo R. M. Guillemin F. (2015). A review of guidelines for cross-cultural adaptation of questionnaires could not bring out a consensus. Journal of Clinical Epidemiology, 68(4), 435–441. https://doi.org/10.1016/j.jclinepi.2014.11.021

- Finbråten H. S. Pettersen K. S. Wilde-Larsson B. Nordström G. Trollvik A. Guttersrud Ø. (2017). Validating the European Health Literacy Survey Questionnaire in people with type 2 diabetes: Latent trait analyses applying multidimensional Rasch modelling and confirmatory factor analysis. Journal of Advanced Nursing, 73, 2730–2744. https://doi.org/10.1111/jan.13342 PMID: 28543754

- Friis K. Vind B. D. Simmons R. K. Maindal H. T. (2016). The relationship between health literacy and health behaviour in people with diabetes: A Danish population-based study. Journal of Diabetes Research, 2016, 7823130. https://doi.org/10.1155/2016/7823130

- Gazmararian J. A. Williams M. V. Peel J. Baker D. W. (2003). Health literacy and knowledge of chronic disease. Patient Education and Counseling, 51, 267–275. https://doi.org/10.1016/S0738-3991(02)00239-2

- Hawkins M. Elsworth G. R. Osborne R. H. (2018). Application of validity theory and methodology to patient-reported outcome measures (PROMs): Building an argument for validity. Quality of Life Research: An International Journal of Quality of Life Aspects of Treatment, Care and Rehabilitation, 27, 1695–1710. https://doi.org/10.1007/s11136-018-1815-6

- Hawkins M. Cheng C. Elsworth G. R. Osborne R. H. (2020). Translation method is validity evidence for construct equivalence: Analysis of secondary data routinely collected during translations of the Health Literacy Questionnaire (HLQ). BMC Medical Research Methodology, 20(1), 130. https://doi.org/10.1186/s12874-020-00962-8

- Jordan J. E. Osborne R. H. Buchbinder R. (2011). Critical appraisal of health literacy indices revealed variable underlying constructs, narrow content and psychometric weaknesses. Journal of Clinical Epidemiology, 64, 366–379. https://doi.org/10.1016/j.jclinepi.2010.04.005

- Jovanić M. Zdravković M. Stanisavljević D. Jović Vraneš A. (2018). Exploring the importance of health literacy for the quality of life in patients with heart failure. International Journal of Environmental Research and Public Health, 15(8), 1761. https://doi.org/10.3390/ijerph15081761

- Kane M. (2013). The argument-based approach to validation. School Psychology Review, 42(4), 448–457.

- Kolarčik P. Čepová E. Madarasová Gecková A. Tavel P. Osborne R. H. (2015). Validation of Slovak version of Health Literacy Questionnaire. European Journal of Public Health, 25(5, Suppl. 3), 592–604. 10.1093/eurpub/ckv176.15125527526

- Larsen M. H. Staalesen Y. Borge C. R. Osborne R. H. Andersen M. H. Wahl A. K. (2018). Health literacy: A new piece of the puzzle in psoriasis care? Results from a cross-sectional study. British Journal of Dermatology, 180(6), 1506–1516. https://doi.org/10.1111/bjd.17595

- Lu W. Miao J. McKyer E. L. J. (2014). A primer on bootstrap factor analysis as applied to health studies research. American Journal of Health Education, 45(4), 199–204. https://doi.org/10.1080/19325037.2014.916639

- Maindal H. T. Kayser L. Norgaard O. Bo A. Elsworth G. R. Osborne R. H. (2016). Cultural adaptation and validation of the Health Literacy Questionnaire (HLQ): Robust nine-dimension Danish language confirmatory factor model. SpringerPlus, 5(1), 1232. https://doi.org/10.1186/s40064-016-2887-9

- Nolte S. Osborne R. H. Dwinger S. Elsworth G. R. Conrad M. L. Rose M. Zill J. M. (2017). German translation, cultural adaptation, and validation of the Health Literacy Questionnaire (HLQ). PLoS One, 12(2), e0172340. https://doi.org/10.1371/journal.pone.0172340

- Nutbeam D. (1998). Health promotion glossary. Health Promotion International, 13(4), 349–364. https://doi.org/10.1093/heapro/13.4.349

- Nutbeam D. (2008). The evolving concept of health literacy. Social Science & Medicine, 67, 2072–2078. https://doi.org/10.1016/j.socscimed.2008.09.050

- O'Hara J. McPhee C. Dodson S. Cooper A. Wildey C. Hawkins M. Beauchamp A. (2018). Barriers to breast cancer screening among diverse cultural groups in Melbourne, Australia. International Journal of Environmental Research and Public Health, 15(8), E1677. https://doi.org/10.3390/ijerph15081677

- Olesen K. F Reynheim A. L. Joensen L. Ridderstråle M. Kayser L. Maindal H. T. Willaing I. (2017). Higher health literacy is associated with better glycemic control in adults with type 1 diabetes: A cohort study among 1399 Danes. BMJ Open Diabetes Research & Care, 5, e000437. https://doi.org/10.1136/bmjdrc-2017-000437

- Osborne R. H. Batterham R. W. Elsworth G. R. Hawkins M. Buchbinder R. (2013). The grounded psychometric development and initial validation of the Health Literacy Questionnaire (HLQ). BMC Public Health, 13, 658. https://doi.org/10.1186/1471-2458-13-658

- Parker R. M. Baker D. W. Williams M. V. Nurss J. R. (1995). The test of functional health literacy in adults: A new instrument for measuring patients' literacy skills. Journal of General Internal Medicine, 10(10), 537–541. https://doi.org/10.1007/BF02640361

- Peterson P. N. Shetterly S. M. Clarke C. L. Allen L. A. Matlock D. D. Magid D. J. Masoudi F. A. (2009). Low health literacy is associated with increased risk of mortality in patients with heart failure. Circulation, 120(Suppl. 18), 749.

- Peterson P. N. Shetterly S. M. Clarke C. L. Bekelman D. B. Chan P. S. Allen L. A. Masoudi F. A. (2011). Health literacy and outcomes among patients with heart failure. Journal of the American Medical Association, 305, 1695–1701. https://doi.org/10.1001/jama.2011.512

- Ruiz-Cantero M. T. Vives-Cases C. Artazcoz L. Delgado A. García Calvente M. M. Miqueo C. Valls C. (2007). A framework to analyse gender bias in epidemiological research. Journal of Epidemiology and Community Health, 61(Suppl. 2), ii46–ii53. https://doi.org/10.1136/jech.2007.062034

- Schillinger D. Grumbach K. Piette J. Wang F. Osmond D. Daher C. Bindman A. B. (2002). Association of health literacy with diabetes outcomes. Journal of the American Medical Association, 288, 475–482. https://doi.org/10.1001/jama.288.4.475

- Scott T. L. Gazmararian J. A. Williams M. V. Baker D. W. (2002). Health literacy and preventive health care use among Medicare enrollees in a managed care organization. Medical Care, 40(5), 395–404. https://doi.org/10.1097/00005650-200205000-00005

- Sørensen K. Van den Broucke S. Pelikan J. M. Fullam J. Doyle G. Slonska Z. HLS-EU Consortium. (2013). Measuring health literacy in populations: Illuminating the design and development process of the European Health Literacy Survey Questionnaire (HLS-EU-Q). BMC Public Health, 13(1), 948. https://doi.org/10.1186/1471-2458-13-948

- Stømer U. E. Gøransson L. G. Wahl A. K. Urstad K. H. (2019). A cross-sectional study of health literacy in patients with chronic kidney disease: Associations with demographic and clinical variables. Nursing Open, 6(4), 1481–1490. https://doi.org/10.1002/nop2.350

- Sudore R. L. Yaffe K. Satterfield S. Harris T. B. Mehta K. M. Simonsick E. M. Schillinger D. (2006). Limited literacy and mortality in the elderly: The health, aging, and body composition study. Journal of General Internal Medicine, 21, 806–812. https://doi.org/10.1111/j.1525-1497.2006.00539.x

- Thomson M. D. Hoffman-Goetz L. (2012). Application of the health literacy framework to diet-related cancer prevention conversations of older immigrant women to Canada. Health Promotion International, 27, 33–44. https://doi.org/10.1093/heapro/dar019

- Trochim W. M. Cook J. A. Setze R. J. (1994). Using concept mapping to develop a conceptual framework of staff's views of a supported employment program for individuals with severe mental illness. Journal of Consulting and Clinical Psychology, 62(4), 766–775. https://doi.org/10.1037/0022-006X.62.4.766

- van Ballegooijen W. Riper H. Cuijpers P. van Oppen P. Smit J. H. (2016). Validation of online psychometric instruments for common mental health disorders: A systematic review. BMC Psychiatry, 16, 45. https://doi.org/10.1186/s12888-016-0735-7

- von Wagner C. Knight K. Steptoe A. Wardle J. (2007). Functional health literacy and health-promoting behaviour in a national sample of British adults. Journal of Epidemiology and Community Health, 61, 1086–1090. https://doi.org/10.1136/jech.2006.053967

- Weber A. M. Cislaghi B. Meausoone V. Abdalla S. Mejía-Guevara I. Loftus P. the Gender Equality, Norms and Health Steering Committee. (2019). Gender norms and health: Insights from global survey data. Lancet, 393(10189), 2455–2468. https://doi.org/10.1016/S0140-6736(19)30765-2

- Weiss B. D. Mays M. Z. Martz W. Castro K. M. DeWalt D. A. Pignone M. P. Hale F. A. (2005). Quick assessment of literacy in primary care: The newest vital sign. Annals of Family Medicine, 3(6), 514–522. https://doi.org/10.1370/afm.405

- Williams M. V. Baker D. W. Parker R. M. Nurss J. R. (1998). Relationship of functional health literacy to patients' knowledge of their chronic disease. A study of patients with hypertension and diabetes. Archives of Internal Medicine, 158, 166–172. https://doi.org/10.1001/archinte.158.2.166

- Wolf M. S. Gazmararian J. A. Baker D. W. (2005). Health literacy and functional health status among older adults. Archives of Internal Medicine, 165, 1946–1952. https://doi.org/10.1001/archinte.165.17.1946

- Yamashita T. Kart C. S. (2011). Is diabetes-specific health literacy associated with diabetes-related outcomes in older adults? Journal of Diabetes, 3, 138–146. https://doi.org/10.1111/j.1753-0407.2011.00112.x

- Zientek L. R. Thompson B. (2007). Applying the bootstrap to the multivariate case: Bootstrap component/factor analysis. Behavior Research Methods, 39, 318–325. https://doi.org/10.3758/BF03193163

- Zumbo B. D. Chan E. K. (Eds.). (2014). Validity and validation in social, behavioral, and health sciences (Social Indicators Research Series, Vol. 54). Springer. https://doi.org/10.1007/978-3-319-07794-9