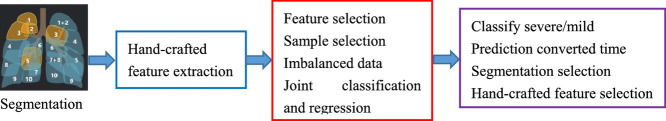

Graphical abstract

Keywords: Coronavirus disease, CT Scan data, Feature selection, Sample selection, Imbalance classification

Abstract

With the rapid worldwide spread of Coronavirus disease (COVID-19), it is of great importance to conduct early diagnosis of COVID-19 and to predict the time at which patients may convert to the severe stage, in order to design effective treatment plans and reduce clinicians’ workloads. In this study, we propose a joint classification and regression method that determines whether a patient will develop severe symptoms later (formulated as a classification task) and, if so, predicts the conversion time (formulated as a regression task). To do this, the proposed method takes into account 1) the weight for each sample to reduce the outliers’ influence and explore the problem of imbalance classification, and 2) the weight for each feature via a sparsity regularization term to remove the redundant features of the high-dimensional data and learn the shared information across two tasks, i.e., the classification and the regression. To our knowledge, this study is the first work to jointly predict the disease progression and the conversion time, which could help clinicians to deal with potentially severe cases in time or even save patients’ lives. Experimental analysis was conducted on a real data set from two hospitals with 408 chest computed tomography (CT) scans. Results show that our method achieves the best classification (e.g., 85.91% accuracy) and regression (e.g., a correlation coefficient of 0.462) performance among all comparison methods. Moreover, our proposed method yields 76.97% accuracy for predicting the severe cases, a correlation coefficient of 0.524, and a difference of 0.55 days for the conversion time.

1. Introduction

Coronavirus disease (COVID-19) is an infectious disease caused by the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), and has resulted in more than 1.3 million confirmed cases and about 75k deaths worldwide as of April 7, 2020 (JHU, 2020). Due to its rapid spread, the number of infections keeps increasing, with a high fatality rate (e.g., up to 5.7%). Statistical models and machine learning methods have been developed to analyze the transmission dynamics and conduct early diagnosis of COVID-19 (del Rio, Malani, 2020, Li, Guan, Wu, Wang, Zhou, Tong, Ren, Leung, Lau, Wong, et al., 2020). For example, (Wu et al., 2020) employed the susceptible-exposed-infectious-recovered model to estimate the number of COVID-19 cases in Wuhan based on the number of COVID-19 cases exported from Wuhan to other cities in China.

The increasing number of confirmed COVID-19 cases results in a shortage of clinicians and an increase in the clinicians’ workloads. Many laboratory techniques have been used by clinicians to confirm suspected COVID-19 cases (Jung et al., 2020), including real-time reverse transcription polymerase chain reaction (RT-PCR) (Corman, Bleicker, Brünink, Drosten, Zambon, 2020, Ai, Yang, Hou, Zhan, Chen, Lv, Tao, Sun, Xia, 2020), non-PCR tests (e.g., isothermal nucleic acid amplification technology (Craw and Balachandran, 2012)), non-contrast chest computed tomography (CT) and radiographs (Lee et al., 2020), and so on. It is well known that manual identification of COVID-19 is time-consuming and increases the infection risk of the clinicians (Kong and Agarwal, 2020). Moreover, laboratory tests are usually not available for all suspected cases due to the limited supply of test kits (Kong, Agarwal, 2020, Ng, Lee, Yang, Yang, Li, Wang, Lui, Lo, Leung, Khong, et al., 2020). Also, RT-PCR has been widely used to confirm COVID-19, but often suffers from low sensitivity (Chaganti et al., 2020). As a good alternative, artificial intelligence techniques applied to the available data from laboratory tests have been playing important roles in the confirmation and follow-up of COVID-19 cases. For example, (Alom et al., 2020) employed the inception residual recurrent convolutional neural network (CNN) and transfer learning on X-ray and CT scan images to detect COVID-19 and segment the infected regions of COVID-19. (Ozkaya et al., 2020) first applied CNN to fuse and rank deep features for the early detection of COVID-19, and then used support vector machine (SVM) to conduct binary classification using the obtained deep features.

Imaging data plays an important role in the diagnosis of all kinds of pneumonia diseases including COVID-19 (Shi et al., 2020a), so CT has been widely used to help monitor imaging changes and measure the disease severity, for example in China (Zhao, Zhong, Xie, Yu, Liu, 2020, Chaganti, Balachandran, Chabin, Cohen, Flohr, Georgescu, Grenier, Grbic, Liu, Mellot, Murray, Nicolaou, Parker, Re, Sanelli, Sauter, Xu, Yoo, Ziebandt, Comaniciu). For example, (Chaganti et al., 2020) designed an automated system to quantify the abnormal tomographic patterns that appear in COVID-19 and (Li et al., 2020a) employed CNNs with the imaging features of radiographs and CT images for identifying COVID-19.

In this work, we investigate a new early diagnosis method to predict whether the mild confirmed cases (i.e., non-severe cases) of COVID-19 would develop severe symptoms in the later time and estimate the time interval. However, it is challenging due to many issues, such as small infected lesions in the chest CT scan at the early stage, appearances similar to other pneumonia, the data set with high-dimensional features and small-sized samples, and imbalanced group distribution.

First, the infected lesions in the chest CT scan at the early stage are usually small and their appearances are quite similar to those of other types of pneumonia. Given the early stage of COVID-19 with minor imaging signs, it is difficult to predict its future progression status. Conventional severity assessment methods can easily distinguish a severe sign of the image from the mild sign, since the changes of CT data are correlated with the disease severity, e.g., the lung involvement and abnormalities increase while the symptoms become severe. However, the infected volume of the non-severe COVID-19 cases is usually mild. For example, (Guan et al., 2020) showed that 84.4% of non-severe patients had mild symptoms and more than 95% of severe cases had severe symptoms on CT changes. On the other hand, clinicians have little prior knowledge about whether or when non-severe cases convert to severe cases, so early diagnosis and conversion time prediction could reduce the clinicians’ workloads or even save patients’ lives.

Second, the collected data set usually has a small number of samples (i.e., small-sized samples) and high-dimensional features. For various reasons, such as data protection, data security, and the scenario of acute infectious diseases, only a small number of subjects are available for early diagnosis of COVID-19. It is difficult to build an effective artificial intelligence model from such limited samples or subjects. Moreover, high-dimensional features are often extracted from each imaging data in order to capture the comprehensive changes of the disease. Hence, both scenarios often result in over-fitting and the curse of dimensionality (Hu, Zhu, Zhu, Gan, 2020, Zhu, Suk, Shen, 2014).

Third, the class or group distribution of the data set is generally imbalanced. In particular, the number of severe cases is much smaller than the number of non-severe cases, e.g., about 20% reported. Such a scenario poses a challenge for most classification methods because they were designed under the assumption that each class has an equal number of samples (Adeli et al., 2020). As a result, previous classification techniques yield poor predictive performance, especially for the minority class (Adeli et al., 2020, Zhu, Zhu, Zheng, 2020).

In this work, we propose a novel joint regression and classification method to identify the severe COVID-19 cases from the non-severe cases and predict the conversion time from a non-severe case to the severe case in a unified framework. Specifically, we employ the logistic regression for the binary classification task and the linear regression for the regression task. Moreover, we employ an ℓ2,1-norm regularization term on both the classification coefficient matrix and the regression coefficient matrix to consider the correlation between the two tasks as well as select the useful features for disease diagnosis and conversion time prediction. We further design a novel method to automatically learn the weight of each sample, so that the important samples have large weights and the unimportant samples have small or even zero weights. Moreover, the samples with zero weights in each class are excluded from the construction of the joint classification and regression model, and thus the problem of imbalance classification can be solved.

Different from previous literature, the contributions of our proposed method are listed as follows.

First, our method considers the issues, including imbalance classification, feature selection, and sample weight, in the same framework. Moreover, our method takes into account the correlation between the classification task and the regression task. In the literature, few studies have focused on exploring the above issues simultaneously. For example, some studies separately conduct feature selection (Zhu et al., 2017b) and sample weight (Hu, Zhu, Zhu, Gan, 2020, Zhu, Yang, Zhang, Zhang, 2019), while (Zhu et al., 2014) proposed to conduct joint classification and regression. Recently, a few studies simultaneously conduct feature selection and sample selection (Adeli et al., 2020, Hu, Zhu, Zhu, Gan, 2020).

Second, a few machine learning methods were proposed to conduct the diagnosis of COVID-19 disease. For example, (Tang et al., 2020) employed random forest to detect the severe cases from the confirmed cases based on the CT scan data. (Shi et al., 2020a) conducted the same task by a two-step strategy, i.e., automatically categorizing all subjects into groups followed by random forests in each group for classification. However, previous literature did not take into account any of the above issues. Recently, deep learning techniques (Alom, Rahman, Nasrin, Taha, Asari, Ozkaya, Ozturk, Barstugan, Li, Qin, Xu, Yin, Wang, Kong, Bai, Lu, Fang, Song, et al., 2020) have been employed to conduct early diagnosis of COVID-19, but they lack interpretability. To our knowledge, this is the first study to simultaneously detect the severe cases and predict the conversion time. This has wide applications, because severe cases could endanger patients’ lives and correct prediction of the conversion time allows clinicians to take care of the patients early or even save the patients’ lives.

2. Related work

AI technologies have widely been applied in the study of COVID-19 disease with the medical imaging data including X-ray and Computed Tomography (CT), in the applications of segmentation (Fan, Zhou, Ji, Zhou, Chen, Fu, Shen, Shao, 2020, Xie, Jacobs, Charbonnier, van Ginneken, 2020) and diagnosis (Kang, Xia, Yan, Wan, Shi, Yuan, Jiang, Wu, Sui, Zhang, et al., 2020, Ouyang, Huo, Xia, Shan, Liu, Mo, Yan, Ding, Yang, Song, et al., 2020). In this study, we give a brief review of the COVID-19 diagnosis and prognosis.

In the past few months, a large number of computer-aided diagnosis methods have been designed for the COVID-19 diagnosis. For example, (Chen et al., 2020) and (Bhandari et al., 2020) employed the logistic regression model to conduct COVID-19 disease diagnosis. (Hassanien et al., 2020) proposed to first use the multi-level threshold method to pre-process the images of COVID-19 patients, and then utilize SVM to distinguish the COVID-19 patients from normal cases. (Shaban et al., 2020) proposed a hybrid feature selection method to find informative features from chest CT images before using the kNN classifier to conduct COVID-19 disease diagnosis. Considering that deep learning methods often outperform traditional machine learning methods in real applications, (Song et al., 2020) proposed a CT diagnosis system based on the ResNet model to identify COVID-19 patients from healthy people. (Fan et al., 2020) proposed to first employ a deep segmentation network to automatically identify infected regions in chest CT scans, and then conduct the COVID-19 diagnosis in a semi-supervised way. (Xie et al., 2020b) designed two diverse relational U-networks to segment pulmonary lobes with CT images to output the high-level convolution features for COVID-19 diagnosis.

Considering the fact that mild patients may quickly deteriorate into the severe/critical stage, it is crucial to predict the development of patients’ disease. To do this, machine learning methods have widely been proposed to construct prediction models to evaluate patients’ conditions. For example, (Yan et al., 2020) employed the XGBoost classifier to select informative features first and then predict the survival rates as well as the maximum number of days for severe patients. (Chakraborty and Ghosh, 2020) proposed to forecast the number of daily confirmed COVID-19 patients from different countries and (Salgotra et al., 2020) designed a gene expression programming-based method to predict the number of confirmed COVID-19 patients. (Petropoulos and Makridakis, 2020) employed the exponential smoothing method to forecast the confirmed COVID-19 cases and (Xie et al., 2020a) proposed a multivariable logistic regression model to predict the inpatient mortality of the COVID-19 patients. In addition, deep learning methods have also been investigated to design prediction models. For example, (Arora et al., 2020) employed the recurrent neural network and the long short-term memory (LSTM) network to predict the number of confirmed COVID-19 cases in India. (Ibrahim et al., 2020) proposed an LSTM model to forecast the number of infected COVID-19 cases.

The literature demonstrated that each task can provide complementary information to other tasks in multi-task learning (Zhu et al., 2014), so frameworks integrating the classification task with the regression task have been designed to conduct diagnosis analysis as well as forecast the disease trend in the future. For example, (Wang et al., 2020) proposed a fully deep learning method on the original chest CT images to conduct the COVID-19 diagnosis and the prognostic analysis. (Bai et al., 2020) proposed an LSTM model to identify the severe patients and predict the patients with potential malignant progression. However, to the best of our knowledge, this work is the first study to simultaneously distinguish the severe cases from the mild cases and predict the conversion time from the mild cases to the severe cases.

3. Materials and image preprocessing

This study investigated the chest CT images of 408 confirmed COVID-19 patients. The demographic information is summarized in Table 1. If a patient has multiple scans over time, the first scan is used. All CT images were provided by Shanghai Public Health Clinical Center and Sichuan University West China Hospital. Informed consent was waived and all private information of patients was anonymized. Moreover, the ethics committees of these two institutes approved the protocol of this study.

Table 1.

Demographic information of all subjects. The numbers in parentheses denote the number of subjects in each class. It is noteworthy that 52 cases converted to severe after 5.64 days on average and 34 cases were severe at admission.

| | Severe cases (86) | Non-severe cases (322) |
|---|---|---|
| Female/male | 35/51 | 160/162 |
| Age | 55.43 ± 16.35 | 49.30 ± 15.70 |

All patients were confirmed by the national centers for disease control (CDC) based on the positive new Coronavirus nucleic acid antibody. Moreover, patients with large motion artifacts or pre-existing lung cancer conditions on the CT scans were excluded from this study.

3.1. Image acquisition parameters

All patients underwent thin-section CT scans on scanners including SCENARIA 64 from Hitachi, Brilliance 64 from Philips, and uCT 528 from United Imaging. The CT protocol is listed as follows: kV: 120, slice thickness: 1-1.5 mm, and breath hold at full inspiration. More details about both the image acquisition and the image pre-processing can be found in (Shan, Gao, Wang, Shi, Shi, Han, Xue, Shen, Shi, 2020, Shi, Xia, Shan, Wu, Wei, Yuan, Jiang, Gao, Sui, Shen, 2020). Moreover, we used the mediastinal window (with window width 350 Hounsfield units (HU) and window level 40 HU) and the lung window (with window width 1200 HU and window level -600 HU) for reading analysis.

3.2. Image pre-processing

We utilized the disease characteristics, i.e., infection locations and spreading patterns, to extract handcrafted features of each COVID-19 chest CT image. To do this, we used the COVID-19 chest CT analysis tool developed by Shanghai United Imaging Intelligence Co. Ltd., and followed the literature (Shan et al., 2020) to calculate the quantitative features.

First, the COVID-19 chest CT analysis tool uses a deep learning method named VB-net to automatically segment infected lung regions and lung fields bilaterally. The infected lung regions were mainly related to manifestations of pneumonia, such as mosaic sign, ground glass opacification, lesion-related signs, and interlobular septal thickening.

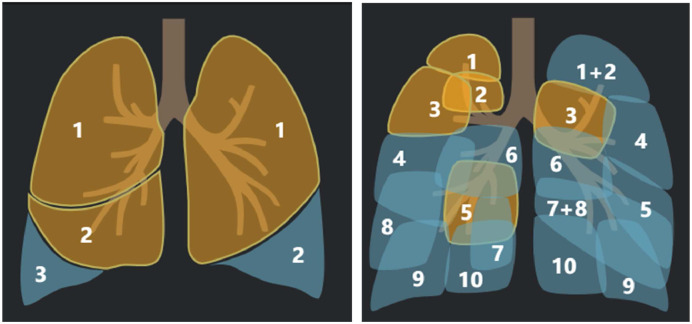

Second, after the segmentation process, the lung fields included the left lung and the right lung, 5 lung lobes, and 18 pulmonary segments, as shown in Fig. 1. Specifically, the left lung included the superior lobe and the inferior lobe, while the right lung included the superior lobe, the middle lobe, and the inferior lobe. Moreover, the left lung has 8 pulmonary segments and the right lung has 10 pulmonary segments. As a result, we had 26 regions of interest (ROIs) for each CT image.

Fig. 1.

The visualization of the segments of a chest CT scan image, i.e., 5 lung lobes (left) and 18 pulmonary segments.

Third, we partitioned each segment into five parts based on the HU ranges, i.e., HU [-∞,-700], HU [-700,-500], HU [-500,-200], HU [-200,50], and HU [50,∞]. Specifically, HU [-∞,-700] indicates the parts with the HU range between -∞ and -700, HU [-700,-500] indicates the parts with the HU range between -700 and -500, HU [-500,-200] indicates the parts with the HU range between -500 and -200, HU [-200,50] indicates the parts with the HU range between -200 and 50, and HU [50,∞] indicates the parts with the HU range between 50 and ∞. As a result, each CT image was partitioned into 130 parts (i.e., 26 × 5).
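The partition by HU range can be illustrated with a minimal sketch. The function names, the half-open interval convention, and the toy voxel values below are assumptions made for illustration; they are not taken from the analysis tool used in this study.

```python
from bisect import bisect_right

# Interior boundaries of the five HU ranges listed in the text.
HU_EDGES = [-700, -500, -200, 50]
N_ROIS = 26                      # ROIs per CT image, as stated in the text
N_RANGES = len(HU_EDGES) + 1     # five HU ranges


def hu_range_index(hu):
    """Return 0..4 for the HU range that a voxel value falls into.

    Assumption: each range is closed on the left and open on the right;
    the text does not say how boundary values are assigned.
    """
    return bisect_right(HU_EDGES, hu)


def count_voxels_per_range(voxels):
    """Count the voxels of one ROI in each of the five HU ranges."""
    counts = [0] * N_RANGES
    for hu in voxels:
        counts[hu_range_index(hu)] += 1
    return counts


# Toy ROI with made-up HU values.
roi_voxels = [-900, -650, -640, -300, 0, 120]
print(count_voxels_per_range(roi_voxels))
print(N_ROIS * N_RANGES)  # number of parts per CT image
```

Applying the same binning to each of the 26 ROIs gives the 130 parts from which the handcrafted features are computed.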

In this study, we extracted three kinds of handcrafted features from each part, i.e., density feature, volume feature, and mass feature. Specifically, we obtained the volume feature as the total volume of infected region and the density feature by calculating the averaged HU value within the infected region. We further followed (Song et al., 2014) to define the mass feature to simultaneously reflect the volume and density of subsolid nodule because the mass feature has been demonstrated to have potentially superior reproducibility to 3D volumetry, i.e., .

Finally, each CT image is represented by 390-dimension handcrafted features in this study.

4. Method

In this paper, we denote matrices, vectors, and scalars, respectively, as boldface uppercase letters, boldface lowercase letters, and normal italic letters. Specifically, we denote a matrix as $\mathbf{X} = [x_{ij}]$. The i-th row and j-th column of $\mathbf{X}$ are denoted as $\mathbf{x}^i$ and $\mathbf{x}_j$, respectively. We further denote the Frobenius norm and the ℓ2,1-norm of a matrix $\mathbf{X}$ as $\|\mathbf{X}\|_F = \sqrt{\sum_i \|\mathbf{x}^i\|_2^2}$ and $\|\mathbf{X}\|_{2,1} = \sum_i \|\mathbf{x}^i\|_2$, respectively. We also denote the transpose operator, the trace operator, and the inverse of a matrix $\mathbf{X}$ as $\mathbf{X}^T$, $\mathrm{tr}(\mathbf{X})$, and $\mathbf{X}^{-1}$, respectively.
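To make the two matrix norms concrete, the following sketch computes them with NumPy, assuming the usual definitions: the Frobenius norm is the square root of the sum of squared entries, and the ℓ2,1-norm is the sum of the Euclidean norms of the rows. The function names and the toy matrix are illustrative only.

```python
import numpy as np


def frobenius_norm(X):
    """Square root of the sum of squared entries."""
    return np.sqrt(np.sum(X ** 2))


def l21_norm(X):
    """Sum over rows of the Euclidean norm of each row."""
    return np.sum(np.sqrt(np.sum(X ** 2, axis=1)))


X = np.array([[3.0, 4.0],
              [0.0, 0.0],
              [1.0, 0.0]])
print(frobenius_norm(X))  # sqrt(9 + 16 + 1) = sqrt(26)
print(l21_norm(X))        # 5 + 0 + 1 = 6
```

Because the ℓ2,1-norm sums row norms without squaring them, penalizing it tends to drive entire rows to zero, which is why it is commonly used for selecting features shared across tasks.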

4.1. Sparse logistic regression

In the classification problem, given the feature matrix including n samples () and their corresponding labels the logistic regression is employed to distinguish the severe cases (i.e., ) from the confirmed COVID-19 cases (i.e., ). Specifically, by denoting as the coefficient vector, the logistic loss function is defined as

| (1) |

where λ 1 is a tuning parameter, and the ℓ2-norm regularization term on the coefficient vector w is used to control the complexity of the logistic regression. Eq. (1) conducts the classification task without taking into account the issues, such as feature selection, sample weight, and imbalance classification.

First, in real applications, clinicians have prior knowledge on the regions of the CT scan data which are possibly related to the disease, but we cannot only extract the features from these regions because they may cooperate with other regions to influence the disease. As a result, we extract the features from all imaging data to obtain the high-dimensional data, which captures the comprehensive changes of confirmed COVID-19 cases but increases the storage and computation costs as well as easily results in the curse of dimensionality (Zhu, Zhu, Zheng, 2020, Shen, Zhu, Zheng, Zhu, 2020). To address this issue, we design machine learning models to automatically recognize the features related to the disease by taking into account the correlation among the features. Specifically, we replace the squared ℓ2-norm on the coefficient vector w by the ℓ1-norm (i.e., ‖w‖1, the sum of the absolute values of the elements of w), which outputs sparse elements so that the corresponding features (i.e., the rows in X) are not involved in the classification task, i.e.,

| (2) |

where λ 2 is a tuning parameter.
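As an illustration of how an ℓ1 penalty produces sparse coefficients, the sketch below fits an ℓ1-regularized logistic regression by proximal gradient descent. This is a stand-in solver chosen for brevity, not the optimization used in this paper. The label coding in {-1, +1}, the samples-as-rows layout, the averaging of the loss, and all names and hyperparameter values are assumptions.

```python
import numpy as np


def soft_threshold(w, t):
    """Proximal operator of t * ||w||_1 (shrinks each entry towards zero)."""
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)


def l1_logistic_regression(X, y, lam, step=0.1, n_iter=500):
    """Minimize mean logistic loss + lam * ||w||_1 by proximal gradient.

    X: (n_samples, n_features); y: labels in {-1, +1} (assumed coding).
    """
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_iter):
        margins = y * (X @ w)
        # Gradient of mean(log(1 + exp(-margin))) with respect to w.
        grad = -(X.T @ (y / (1.0 + np.exp(margins)))) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w


# Synthetic data in which only features 0 and 1 carry label information.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = np.where(X[:, 0] - X[:, 1] > 0, 1.0, -1.0)
w = l1_logistic_regression(X, y, lam=0.05)
print(np.round(w, 3))
```

The soft-thresholding step is what sets small coefficients exactly to zero; features whose coefficients are zero do not contribute to the prediction.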

4.2. Balanced and sparse logistic regression

In binary classification, the issue of imbalance classification easily biases the classification results towards the majority class, i.e., outputting high false negatives. In the literature, both re-sampling methods and cost-sensitive learning methods (Zhu et al., 2019) have been used to solve the issue of imbalance classification.

Recently, robust loss functions has been widely designed to reduce the influences of outliers by taking into account the sample weight in robust statistics (Zhu et al., 2020b). Specifically, robust loss functions use a weight vector to automatically output small weights to the samples with large estimation errors and large weights to the samples with small estimation errors. As a consequence, the samples with large estimation errors are regarded as outliers and their influences are reduced. In the literature, a number of robust loss functions have been developed, including function, Cauchy function, and Geman–McClure estimator, and so on (Hu, Zhu, Zhu, Gan, 2020, Zhu, Gan, Lu, Li, Zhang, 2020). However, the robust loss function was not designed to explore the issue of the imbalance classification.

In this paper, motivated by the robust loss functions assigning weights to the samples, we propose a new method to assign a weight to each sample as well as to solve the problem of imbalance classification. Considering that different samples have different contributions to the construction of the classification model, our method expects to assign large weights to the important samples and small weights to the unimportant samples. Moreover, with regard to the problem of imbalance classification, our method expects to set different numbers of zero weights to different classes so that there is a balance of the sample number between the positive class and the negative class. To do this, we employ an ℓ0-norm constraint on the weight vector α to have the following loss function:

| (3) |

where indicates the weight set of all negative samples and indicates the weight set of all positive samples. The constraint ‘’ indicates that the number of non-zero elements in the negative class is . Specifically, after receiving the estimation value for each sample, i.e., we first sort the estimation values of all samples in the same class with an increase order, and then keep the original weights to the weights of the negative samples with the smallest estimation values and 0 to the weights of the left negative samples. In this way, either the negative samples or the positive samples with zero weights will not be involved the process of the classification model.
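The per-class selection step described above can be sketched as follows. This is a minimal illustration under stated assumptions: the label coding (+1 positive, -1 negative), the function name, and the toy values are hypothetical, and the per-sample estimation values are taken as given.

```python
import numpy as np


def select_samples_per_class(losses, labels, weights, k_neg, k_pos):
    """Zero the weights of all but the k lowest-loss samples in each class.

    losses:  per-sample estimation values (smaller means better fitted).
    labels:  +1 for positive samples, -1 for negative samples (assumed coding).
    weights: current sample weights; kept unchanged for the selected samples.
    """
    new_weights = np.zeros_like(weights, dtype=float)
    for label, k in ((-1, k_neg), (1, k_pos)):
        idx = np.flatnonzero(labels == label)
        # Indices of this class sorted by increasing loss; keep the first k.
        keep = idx[np.argsort(losses[idx], kind="stable")[:k]]
        new_weights[keep] = weights[keep]
    return new_weights


losses = np.array([0.2, 1.5, 0.1, 0.9, 0.3, 2.0])
labels = np.array([-1, -1, -1, -1, 1, 1])
weights = np.ones(6)
print(select_samples_per_class(losses, labels, weights, k_neg=2, k_pos=2))
```

With equal values of `k_neg` and `k_pos`, the four negative and two positive toy samples are reduced to two per class, which is how the constraint balances the classes while discarding the worst-fitted samples.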

Our method in Eq. (3) has at least two advantages, i.e., automatically selecting important samples (i.e., reducing the influence of the outliers) to learn the classification model and adjusting the number of selected samples for each class by tuning the values of and (e.g., ) to solve the imbalance classification problem. In particular, our method in Eq. (3) employs the ℓ0-norm constraint for each class to output exactly predefined non-zero elements. On the contrary, self-paced learning uses the ℓ1-norm constraint for all samples or other robust loss functions (Zhu, Zhu, Zheng, 2020, Hu, Zhu, Zhu, Gan, 2020) to estimate the sample weight without guaranteeing the exact number of non-zero elements. As a result, compared to self-paced learning only considering the sample weight to reduce the influence of outliers, our method takes into account the sample weight to remove outliers not to involve the process of the model construction as well as solve the problem of imbalance classification.

4.3. Joint logistic regression and linear regression

Besides distinguishing the severe cases from the non-severe cases, predicting the time at which a non-severe case converts to a severe case is also important because it may be related to the patients’ lives. To do this, a naive solution is to separately conduct a classification task to diagnose the severe cases and a regression task to predict the conversion time. Obviously, the separate strategy ignores the correlation between the two tasks. In this paper, by regarding the prediction of conversion time as a regression task, we define a ridge regression to linearly characterize the correlation between the feature matrix X and the vector of the conversion time by

| (4) |

where is the coefficient vector for the regression task and λ 3 is a tuning parameter.

Similar to the classification task in Eq. (3), the regression task in Eq. (4) still needs to consider the issues, such as feature selection, sample weight, and imbalance classification. Moreover, in this study, we conduct joint classification and regression (i.e., multi-task learning) by simultaneously considering a classification task and a regression task in the same framework. We expect that each task could obtain information from another task so that the model effectiveness of each of them can be improved by the shared information. Specifically, we employ the ℓ2,1-norm regularization term with respect to both the variable w and the variable v to obtain the following objective function:

| (5) |

where is the sample weight vector for the regression task and . γ and λ are tuning parameters. ‖[w, v]‖2,1 indicates that the selected features are obtained by the classification and regression model. Moreover, the selected features are their shared or common information benefiting each of them (Evgeniou and Pontil, 2004).

Eq. (5) needs a tuning parameter γ to control the magnitude or importance trade-off between the two tasks. However, parameter tuning is time-consuming and needs prior knowledge. In this work, we use a square root operator on the second term of Eq. (5) to automatically obtain their weights. It is noteworthy that we keep the parameter λ to be tuned because it controls the sparsity of the term ‖[w, v]‖2,1 and the sparsity will change with the data distribution (Evgeniou, Pontil, 2004, Zhu, Li, Zhang, Xu, Yu, Wang, 2017). Hence, the final objective function of our proposed joint classification and regression method is:

| (6) |

To solve the optimization problem in Eq. (6), i.e., optimizing the variables v and β, we compute the derivatives of the square root in Eq. (6) and obtain the following formulation

The value of γ in Eq. (7b) is automatically obtained without the tuning process and can be regarded as the weight of the tasks. Specifically, if the prediction error is small, the value of γ is large, i.e., the regression task is more important than the classification task. Hence, the optimization of the value of γ automatically balances the contributions of the two tasks. As a result, the optimization of Eq. (6) is changed to optimize Eq. (7a).
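One way to see why a square root yields an automatic task weight is the chain rule: the gradient of √f(v) equals (1 / (2√f(v))) times the gradient of f(v), so the factor 1 / (2√f) plays the role of a weight that grows as the error f shrinks. The sketch below checks this numerically. It assumes that the regression term is a weighted squared error with samples stored as rows; the exact form of the term in Eq. (6) and of γ in Eq. (7b) may differ, and all names are illustrative.

```python
import numpy as np


def weighted_sq_error(v, X, z, beta):
    """f(v) = sum_i beta_i * (z_i - x_i^T v)^2, with samples as rows of X."""
    r = z - X @ v
    return np.sum(beta * r ** 2)


def grad_weighted_sq_error(v, X, z, beta):
    """Gradient of f with respect to v."""
    r = z - X @ v
    return -2.0 * X.T @ (beta * r)


rng = np.random.default_rng(0)
X = rng.normal(size=(20, 4))
z = rng.normal(size=20)
beta = np.ones(20)
v = rng.normal(size=4)

f = weighted_sq_error(v, X, z, beta)
gamma = 1.0 / (2.0 * np.sqrt(f))  # chain-rule factor; larger when the error is smaller

# Central finite-difference gradient of sqrt(f) for comparison.
eps = 1e-6
num_grad = np.array([
    (np.sqrt(weighted_sq_error(v + eps * e, X, z, beta))
     - np.sqrt(weighted_sq_error(v - eps * e, X, z, beta))) / (2 * eps)
    for e in np.eye(4)
])
analytic_grad = gamma * grad_weighted_sq_error(v, X, z, beta)
print(np.allclose(num_grad, analytic_grad, atol=1e-5))
```

Holding γ fixed at its current value turns the square-root term into an ordinary weighted squared error, which is consistent with the alternating scheme described in the text.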

4.4. Optimization

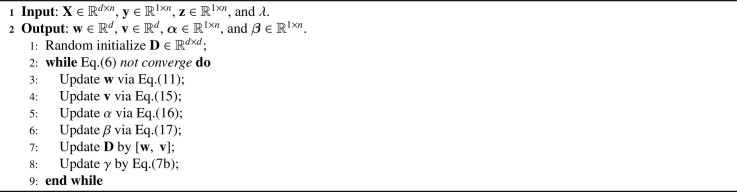

In this paper, we employ the alternating optimization strategy (Bezdek and Hathaway, 2003) to optimize the variables w, α, v, and β in Eq. (7a). We list the pseudo-code of our optimization method in Algorithm 1 and report the details as follows.

Algorithm 1.

The pseudo-code of our optimization method.

(i) Update w by fixing α, β and v

After other variables are fixed, the objective function with respect to w in Eq. (7a) becomes

| (8) |

where is a diagonal matrix and the value of its i-th diagonal element is . To solve the imbalance classification problem, we set to obtain 2k samples for the training process. Hence, Eq. (8) becomes

| (9) |

By setting and Eq. (9) becomes

| (10) |

In this paper, we employ Newton’s method (Liu and Nocedal, 1989) to minimize Eq. (10) by the following update rules

| (11) |

where and are defined as

| (12) |
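A Newton update for a sample-weighted logistic loss with a fixed diagonal quadratic regularizer can be sketched as below. This is a generic illustration and not a transcription of Eqs. (10)-(12): the objective written in the docstring, the label coding in {-1, +1}, the samples-as-rows layout, and all names and values are assumptions, and no line search is included.

```python
import numpy as np


def newton_weighted_logistic(X, y, alpha, q_diag, lam, n_iter=20):
    """Newton iterations for
        sum_i alpha_i * log(1 + exp(-y_i * x_i^T w)) + lam * sum_j q_j * w_j^2.

    X: (n_samples, n_features); y in {-1, +1}; alpha: sample weights;
    q_diag: positive diagonal of the reweighting matrix, held fixed here.
    """
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        m = y * (X @ w)
        s = 1.0 / (1.0 + np.exp(m))            # sigmoid(-m)
        grad = -X.T @ (alpha * y * s) + 2.0 * lam * q_diag * w
        curv = alpha * s * (1.0 - s)           # per-sample curvature
        hess = X.T @ (X * curv[:, None]) + 2.0 * lam * np.diag(q_diag)
        w = w - np.linalg.solve(hess, grad)    # Newton step
    return w


# Noisy synthetic labels so that the classes are not perfectly separable.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = np.where(X[:, 0] + rng.normal(size=100) > 0, 1.0, -1.0)
alpha = np.ones(100)
q_diag = np.ones(3)
w = newton_weighted_logistic(X, y, alpha, q_diag, lam=1.0)
print(np.round(w, 3))
```

Samples whose weight in `alpha` is zero contribute nothing to the gradient or the Hessian, so they are effectively removed from the fit.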

(ii) Update v by fixing α, β and w

The objective function with respect to v in Eq. (7a) is

| (13) |

By letting and we have

| (14) |

Eq. (14) has a closed-form solution, i.e.,

| (15) |
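A closed-form solution of this kind can be illustrated with a weighted ridge problem. The sketch below assumes the objective shown in the docstring (sample weights β, a task weight γ, and a fixed diagonal regularizer), with samples stored as rows; it is not a transcription of Eq. (15), and the names are illustrative.

```python
import numpy as np


def weighted_ridge_closed_form(X, z, beta, q_diag, gamma, lam):
    """Minimizer of
        gamma * sum_i beta_i * (z_i - x_i^T v)^2 + lam * sum_j q_j * v_j^2.

    Setting the gradient to zero gives the linear system
        (gamma * X^T B X + lam * Q) v = gamma * X^T B z,
    where B = diag(beta) and Q = diag(q_diag).
    """
    XtB = X.T * beta                       # X^T diag(beta), shape (d, n)
    lhs = gamma * (XtB @ X) + lam * np.diag(q_diag)
    rhs = gamma * (XtB @ z)
    return np.linalg.solve(lhs, rhs)


rng = np.random.default_rng(1)
X = rng.normal(size=(30, 4))
z = rng.normal(size=30)
beta = np.ones(30)
beta[:10] = 0.0                            # first ten samples are excluded
q_diag = np.ones(4)
v = weighted_ridge_closed_form(X, z, beta, q_diag, gamma=0.5, lam=0.1)
print(np.round(v, 3))
```

Solving the linear system with `np.linalg.solve` avoids forming an explicit matrix inverse, which is usually preferable numerically.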

(iii) Update α and β by fixing v and w

By denoting the estimation value of the classification task for the i-th sample as (), we sort the values () with an increase order for each class to denote the weight set of k negative samples with the smallest estimation values as (where if [i] < [j]) and the weight set of k positive samples with the smallest estimation values as (where if [i] < [j]), and then we have

| (16) |

where is the index of the original order before the sorting and is the index of the increase order after the sorting. [i]⋈j indicates that the j-th index in the original order is matched with the [i]-th index in the increase order.

By denoting the estimation value of the regression task for the i-th sample as (), we sort the values () with an increase order for each class to denote the weight set of k negative samples with the smallest estimation values as (where if [i] < [j]) and the weight set of k positive samples with the smallest estimation values as (where if [i] < [j]), and then we have

| (17) |

4.5. Convergence analysis

Algorithm 1 involves optimizing five variables (i.e., w, α, v, β, and γ). By denoting w t, α t, v t, β t, and γt, respectively, as the t-th iteration results of w, α, v, β, and γ, we denote the objective function value of the t-th iteration of Eq. (6) as J(w t, α t, v t, β t, γt).

By fixing α t, v t, β t, and γt, we employ Newton’s method to optimize w. Since Newton’s method converges, we have

| (18) |

The optimizations of the variables (i.e., α, v, β, and γ) have closed-form solutions, so we have

| (19) |

| (20) |

| (21) |

| (22) |

By integrating the inequalities, i.e., Eqs. (18)-(22), we obtain

| (23) |

According to Eq. (23), the objective function values in Eq. (6) gradually decrease with the increase of iterations until Algorithm 1 converges. Hence, the convergence proof of Algorithm 1 to optimize Eq. (6) is completed.

5. Experiments

We experimentally evaluated our method, compared to state-of-the-art classification and regression methods, on a real COVID-19 data set with the chest CT scan data, in terms of binary classification and regression performance.

5.1. Experimental setting

We selected SVM and Ridge regression, respectively, as the baseline methods for the classification task and the regression task. Other comparison methods include ℓ1-SVM (L1SVM) (Chang and Lin, 2011), random forest (Liaw and Wiener, 2002), Sample-Feature Selection (SFS) (Adeli et al., 2020), ℓ1-SVR (L1SVR) (Chang and Lin, 2011), Least Absolute Shrinkage and Selection Operator (Lasso) (Tibshirani, 1996), Matrix-Similarity Feature Selection (MSFS) (Zhu et al., 2014), and three deep learning methods (i.e., Hyperface (Ranjan et al., 2017), COVID-CAPS (Afshar et al., 2020), and CNN combined with extreme learning machine (CNNE) (Duan et al., 2018)). We summarize the details of all comparison methods in Table 2. It is noteworthy that random forest can be used for feature selection, sample selection, and imbalance classification. However, in this study, we only used random forest to consider the problem of imbalance classification.

Table 2.

Summary of all methods. The first and second blocks list the classification methods and the regression methods, respectively, and the third block lists all multi-task methods. FS: feature selection, SW: sample weight, IMB: imbalance classification, CLASS: classification, REG: regression.

| Methods | FS | SW | IMB | CLASS | REG |

|---|---|---|---|---|---|

| SVM (Chang and Lin, 2011) | √ | ||||

| L1SVM (Chang and Lin, 2011) | √ | √ | |||

| Random forest (Liaw and Wiener, 2002) | √ | √ | |||

| SFS (Adeli et al., 2020) | √ | √ | √ | √ | |

| Ridge regression | √ | ||||

| L1SVR (Chang and Lin, 2011) | √ | √ | |||

| Lasso (Tibshirani, 1996) | √ | √ | |||

| Random forest (Liaw and Wiener, 2002) | √ | √ | √ | ||

| MSFS (Zhu et al., 2014) | √ | √ | √ | ||

| Hyperface (Ranjan et al., 2017) | √ | √ | |||

| COVID-CAPS (Afshar et al., 2020) | √ | √ | √ | ||

| CNNE (Duan et al., 2018) | √ | √ | |||

| Proposed | √ | √ | √ | √ | √ |

In our experiments, we repeated the 5-fold cross-validation scheme 20 times for all methods and report the average results as the final results. For model selection, we tuned λ in Eq. (6) over the values listed in Table 5 and kept the parameter that handles imbalance classification fixed for our method. For the comparison methods, we followed the literature to set their parameters so that they output their best results.
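The repeated cross-validation protocol can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `repeated_stratified_kfold`, the random seed, and the use of stratification (keeping the class ratio in every fold) are assumptions that the text does not state.

```python
import random


def repeated_stratified_kfold(labels, n_splits=5, n_repeats=20, seed=0):
    """Yield (repeat, fold, train_idx, test_idx) for repeated stratified k-fold CV."""
    rng = random.Random(seed)
    # Group sample indices by class so that every fold keeps the class ratio.
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    for repeat in range(n_repeats):
        folds = [[] for _ in range(n_splits)]
        for members in by_class.values():
            shuffled = members[:]
            rng.shuffle(shuffled)
            # Deal the shuffled indices of this class round-robin into the folds.
            for pos, idx in enumerate(shuffled):
                folds[pos % n_splits].append(idx)
        for k in range(n_splits):
            test_idx = sorted(folds[k])
            train_idx = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
            yield repeat, k, train_idx, test_idx


# Class counts of the data set: 322 non-severe (0) and 86 severe (1) cases.
labels = [0] * 322 + [1] * 86
splits = list(repeated_stratified_kfold(labels))
print(len(splits))  # 5 folds x 20 repeats
```

Each of the resulting train/test splits would be used to fit and evaluate every method once, and the reported numbers are the averages over all splits.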

The evaluation metrics include accuracy, specificity, sensitivity, and area under the ROC curve (AUC) for the classification task, as well as correlation coefficient (CC) and root mean square error (RMSE) for the regression task.
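For reference, the metrics listed above can be computed as in the following sketch. It assumes that the severe class is coded as 1, that CC denotes the Pearson correlation coefficient, and that AUC is computed from pairwise rankings; the function names are illustrative only.

```python
import math


def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity and specificity, with the severe class coded as 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # recall on the severe class
        "specificity": tn / (tn + fp),  # recall on the non-severe class
    }


def auc(y_true, scores):
    """Fraction of (severe, non-severe) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def correlation_coefficient(y_true, y_pred):
    """Pearson correlation between true and predicted conversion times."""
    mean_t = sum(y_true) / len(y_true)
    mean_p = sum(y_pred) / len(y_pred)
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


def rmse(y_true, y_pred):
    """Root mean square error between true and predicted conversion times."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Toy labels, scores and conversion times (made up for illustration).
cls = classification_metrics([1, 1, 0, 0, 0], [1, 0, 0, 0, 1])
ranking = auc([1, 1, 0, 0, 0], [0.9, 0.4, 0.3, 0.2, 0.6])
cc = correlation_coefficient([3.0, 5.0, 8.0], [4.0, 5.0, 7.0])
err = rmse([3.0, 5.0, 8.0], [4.0, 5.0, 7.0])
```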

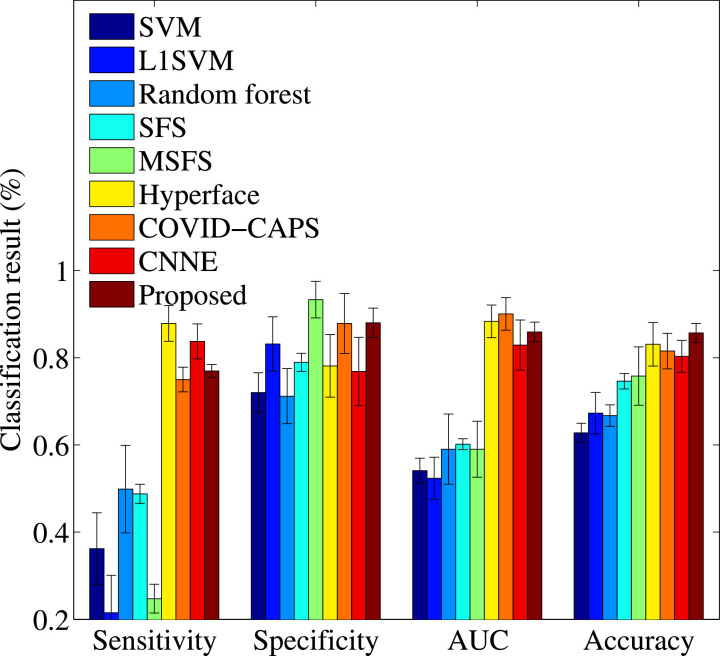

5.2. Classification results

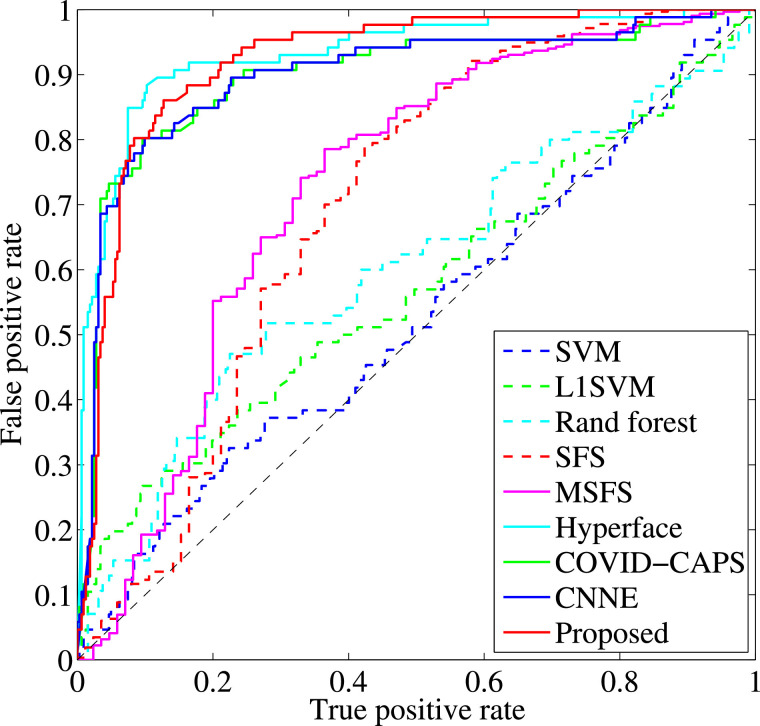

We report the classification performance and the Receiver Operating Characteristic (ROC) curves of all methods in Fig. 2 and Fig. 3, respectively. We also report the classification performance of our proposed method using single-task learning and multi-task learning in Table 3. Based on the results, we summarize our observations as follows.

Fig. 2.

Classification results of all methods.

Fig. 3.

ROC curves of all methods.

Table 3.

Classification results (%) of three methods. Proposed w/o Regression denotes the model in Eq. (3).

| Methods | SFS (Adeli et al., 2020) | Proposed w/o Regression | Proposed |
|---|---|---|---|
| Accuracy | 78.18 ± 3.71 | 83.25 ± 2.44 | 85.69 ± 2.20 |
| Sensitivity | 50.65 ± 6.33 | 70.73 ± 3.36 | 76.97 ± 3.36 |
| Specificity | 86.31 ± 2.69 | 86.60 ± 3.45 | 88.02 ± 1.45 |
| AUC | 73.88 ± 6.66 | 81.74 ± 3.30 | 85.91 ± 2.27 |

First, the proposed method achieved the best classification performance, followed by Hyperface, COVID-CAPS, CNNE, MSFS, SFS, L1SVM, random forest, and SVM. Specifically, averaged over all four evaluation metrics, our proposed method improves by 32.80% over the worst comparison method (i.e., SVM) and by 2.61% over the best comparison method (i.e., Hyperface). This is because our method addresses several issues within the same framework: feature selection removes the redundant features, sample weighting reduces the influence of outliers, imbalance classification reduces the number of false negatives, and joint classification and regression exploits the shared information between the two tasks to improve the effectiveness of each of them.

Second, it is important to conduct dimensionality reduction (including feature selection) when analyzing high-dimensional data, which easily suffer from the curse of dimensionality (Shen et al., 2020). Many studies in the literature showed that classification models trained on high-dimensional data yield low performance (Zhu et al., 2014), and Fig. 2 confirms this. In our experiments, SVM is the only method that does not consider the issue of high-dimensional data, and it achieves the worst classification performance. More specifically, averaged over all evaluation metrics, the best comparison method (i.e., Hyperface) improves by 26.32% and the weakest of the remaining comparison methods (i.e., random forest) improves by 4.52%, compared to the baseline SVM.

Third, a joint classification and regression framework is useful for detecting the severe cases among mild confirmed cases. As shown in Table 3 and Fig. 2, our proposed method, which conducts joint classification and regression, achieves better classification performance than the single-task classification methods, e.g., random forest, L1SVM, SFS, and SVM. Moreover, MSFS, Hyperface, COVID-CAPS, and our method are all joint models, and our method outperforms the other joint models since it takes into account one more constraint. In particular, we conducted single-task classification using Eq. (3), i.e., our proposed method without the regression task (Proposed w/o Regression in Table 3). Proposed w/o Regression outperforms SFS even though both of them take into account the same three constraints, i.e., feature selection, sample weight, and imbalance classification, and the full joint model improves on Proposed w/o Regression for all four metrics.

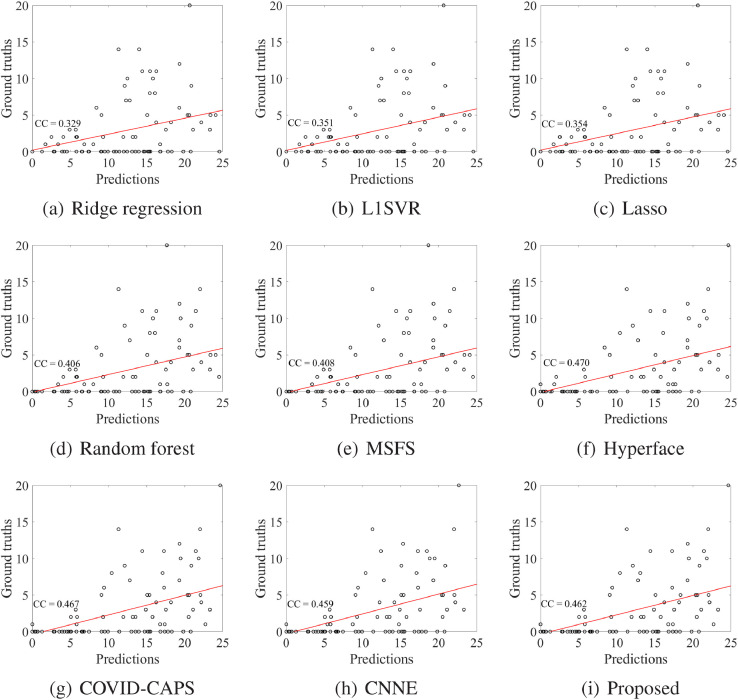

5.3. Regression results

We evaluated the regression performance through the prediction of the conversion time from the non-severe stage to the severe stage. We report the regression results (i.e., correlation coefficient (CC) and RMSE) of all methods in Table 4 and the scatter plots of all methods in Fig. 4.

Table 4.

Regression results of all methods.

| Methods | CC | RMSE |
|---|---|---|
| Ridge regression | 0.329 ± 0.158 | 20.02 ± 9.724 |
| L1SVR (Chang and Lin, 2011) | 0.351 ± 0.085 | 10.49 ± 2.072 |
| Lasso (Tibshirani, 1996) | 0.354 ± 0.165 | 9.92 ± 9.571 |
| Random forest (Liaw and Wiener, 2002) | 0.406 ± 0.188 | 13.22 ± 6.762 |
| MSFS (Zhu et al., 2014) | 0.408 ± 0.092 | 9.29 ± 1.104 |
| Hyperface (Ranjan et al., 2017) | 0.470 ± 0.026 | 7.58 ± 0.719 |
| COVID-CAPS (Afshar et al., 2020) | 0.467 ± 0.023 | 7.89 ± 0.451 |
| CNNE (Duan et al., 2018) | 0.459 ± 0.033 | 8.61 ± 0.084 |
| Proposed | 0.462 ± 0.056 | 7.35 ± 1.087 |

Fig. 4.

Scatter plots and the corresponding correlation coefficients (CCs) of all methods for predicting the severe cases.

First, the regression performance of the methods without dimensionality reduction (e.g., Ridge regression) is worse than that of the methods with dimensionality reduction, e.g., Lasso, L1SVR, MSFS, Hyperface, COVID-CAPS, CNNE, and our proposed method. Moreover, our method outperforms the traditional machine learning methods, i.e., Ridge regression, L1SVR, Lasso, random forest, and MSFS, in both metrics, and it achieves the lowest RMSE (i.e., 7.35) among all methods. However, two deep learning methods (i.e., Hyperface and COVID-CAPS) are slightly better than our proposed method in terms of correlation coefficient (i.e., 0.470 and 0.467 vs. 0.462).

Second, similar to the classification results, the regression results show the advantages of the four considerations, i.e., feature selection, sample weight, imbalance classification, and joint classification and regression. In particular, our proposed method, which takes all four considerations into account, improves the correlation coefficient by 0.054 and reduces the RMSE by 1.940, compared to MSFS, which takes only two considerations into account, i.e., feature selection and joint classification and regression.
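The two gaps relative to MSFS follow directly from the mean values in the MSFS and Proposed rows of Table 4. As a quick check:

```latex
\begin{aligned}
\Delta\mathrm{CC}   &= 0.462 - 0.408 = 0.054,\\
\Delta\mathrm{RMSE} &= 9.29 - 7.35 = 1.94.
\end{aligned}
```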

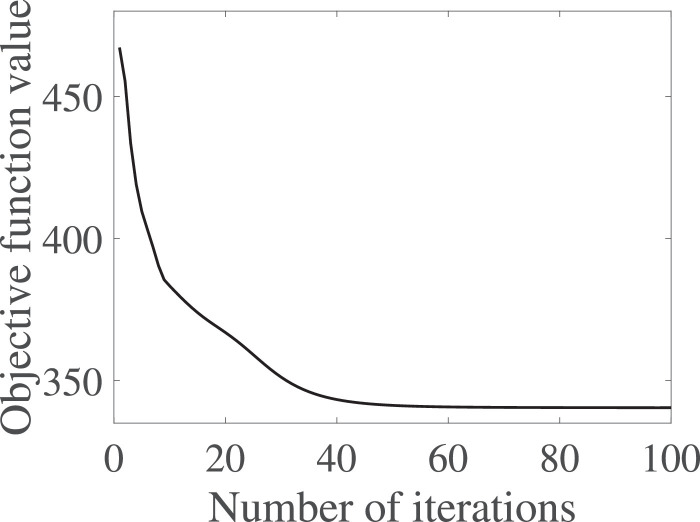

5.4. Ablation analysis

In this section, we evaluate our proposed joint model in three respects, i.e., the effectiveness of each part of the joint model, the sensitivity to the parameter λ in Eq. (6), and the convergence of Algorithm 1. To this end, we report the results of our proposed method conducting only the classification task (i.e., Proposed w/o Regression in Eq. (3)) in Table 3 and the results of our proposed method conducting only the regression task (i.e., Lasso) in Table 4. We also list the variations of all evaluation metrics of our proposed method with different values of λ in Table 5 and the variations of the objective function values of Eq. (6) in Fig. 5.

Table 5.

The results of all evaluation metrics with different values of λ.

| λ | Accuracy | Sensitivity | Specificity | AUC | CC | RMSE |
|---|---|---|---|---|---|---|
| 0.001 | 81.59 ± 1.79 | 75.92 ± 2.09 | 83.11 ± 2.19 | 82.99 ± 3.43 | 0.423 ± 0.026 | 9.58 ± 0.719 |
| 0.01 | 83.57 ± 2.21 | 78.31 ± 2.31 | 84.98 ± 1.91 | 83.28 ± 4.14 | 0.451 ± 0.053 | 8.88 ± 1.217 |
| 0.1 | 82.15 ± 2.08 | 73.16 ± 2.83 | 84.56 ± 2.89 | 83.21 ± 3.55 | 0.449 ± 0.043 | 8.11 ± 1.191 |
| 1 | 84.57 ± 3.25 | 77.11 ± 3.06 | 86.75 ± 2.85 | 84.65 ± 3.74 | 0.428 ± 0.039 | 9.35 ± 1.084 |
| 10 | 85.69 ± 2.20 | 76.97 ± 3.36 | 88.02 ± 1.45 | 85.91 ± 2.27 | 0.462 ± 0.056 | 7.35 ± 1.087 |
| 100 | 84.21 ± 2.74 | 77.12 ± 3.50 | 86.11 ± 3.06 | 83.65 ± 4.52 | 0.448 ± 0.071 | 7.68 ± 1.464 |
| 1000 | 83.44 ± 3.74 | 78.53 ± 3.55 | 84.55 ± 2.80 | 83.78 ± 3.82 | 0.439 ± 0.081 | 8.49 ± 1.641 |

Fig. 5.

The variations of the objective function values of Eq. (6) at different iterations.

Based on Tables 3 and 4, each single-task variant of our joint model, which considers either the classification task or the regression task only, outperforms some comparison methods. For example, Proposed w/o Regression is better than SFS, which was designed to conduct the classification task only, and Lasso outperforms two classic regression methods, i.e., Ridge regression and L1SVR. This demonstrates that the components of our joint model work well even when they conduct one task only.

Table 5 shows that the proposed method is sensitive to the parameter setting since different values of λ lead to different performance. This indicates the importance of tuning the parameter for our proposed method, for which the cross-validation scheme is a good choice. For example, with the 5-fold cross-validation scheme, λ = 10 yields the best accuracy, specificity, AUC, CC, and RMSE among all candidate values.
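The choice of λ can be read off Table 5 mechanically, as in the sketch below. The numbers are the mean values copied from Table 5. The helper `best_lambda` is illustrative, and in practice the selection would be made on validation folds rather than on reported averages.

```python
# Mean results per candidate value of lambda, copied from Table 5.
# Columns: accuracy, sensitivity, specificity, AUC (all in %), CC, RMSE.
METRICS = ("accuracy", "sensitivity", "specificity", "auc", "cc", "rmse")
TABLE5 = {
    0.001: (81.59, 75.92, 83.11, 82.99, 0.423, 9.58),
    0.01: (83.57, 78.31, 84.98, 83.28, 0.451, 8.88),
    0.1: (82.15, 73.16, 84.56, 83.21, 0.449, 8.11),
    1: (84.57, 77.11, 86.75, 84.65, 0.428, 9.35),
    10: (85.69, 76.97, 88.02, 85.91, 0.462, 7.35),
    100: (84.21, 77.12, 86.11, 83.65, 0.448, 7.68),
    1000: (83.44, 78.53, 84.55, 83.78, 0.439, 8.49),
}


def best_lambda(metric):
    """Return the candidate lambda with the best mean value of `metric`."""
    col = METRICS.index(metric)
    # RMSE is an error (lower is better); every other metric is a score.
    pick = min if metric == "rmse" else max
    return pick(TABLE5, key=lambda lam: TABLE5[lam][col])


best = {metric: best_lambda(metric) for metric in METRICS}
print(best)
```

On these averages, λ = 10 is selected for every metric except sensitivity, for which λ = 1000 has the highest mean.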

We proposed Algorithm 1 to optimize our objective function in Eq. (6) and theoretically proved its convergence. We also verified the convergence experimentally by investigating the variations of the objective function values of Eq. (6) over the iterations. We report the results in Fig. 5, where the stopping criterion of Algorithm 1 is defined on obj(t), the objective function value of Eq. (6) at the t-th iteration. We have two observations from Fig. 5. On the one hand, Algorithm 1 sharply reduced the objective function value in the first several iterations and then became stable. On the other hand, Algorithm 1 converged within tens of iterations. Hence, Algorithm 1 converges fast when solving our objective function in Eq. (6).
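The behaviour described here, a sharp initial drop followed by a plateau, is typical of alternating optimization with an objective-based stopping rule. The sketch below illustrates it on a toy two-variable convex objective that merely stands in for Eq. (6). The relative-change rule and the tolerance of 1e-5 are assumptions and are not taken from Algorithm 1.

```python
def objective(a, b):
    """Toy convex objective standing in for Eq. (6); its minimum is 3 at (0, -1)."""
    return (a - 1) ** 2 + (b + 2) ** 2 + (a - b) ** 2


def alternating_minimization(a=5.0, b=5.0, tol=1e-5, max_iter=100):
    """Update one block at a time and stop when the objective stalls."""
    history = [objective(a, b)]
    for _ in range(max_iter):
        a = (1 + b) / 2  # exact minimizer over a with b fixed
        b = (a - 2) / 2  # exact minimizer over b with a fixed
        history.append(objective(a, b))
        prev, curr = history[-2], history[-1]
        # Assumed stopping rule: relative decrease of the objective below tol.
        if abs(prev - curr) <= tol * abs(prev):
            break
    return a, b, history


a, b, history = alternating_minimization()
for t, value in enumerate(history):
    print(t, round(value, 6))
```

Because each block update is an exact minimizer, the recorded objective values never increase, which mirrors the argument of the convergence proof.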

6. Discussion

6.1. Imbalance classification

In the classification task, our method addresses four issues, i.e., feature selection, sample weight, imbalance classification, and joint classification and regression. As a result, our method outperforms all comparison methods, each of which focuses on only part of the four issues. Moreover, our solution for each issue is shown to be reasonable and feasible. An interesting question is which issue dominates the COVID-19 analysis with chest CT scan data. There is no theoretical answer. However, based on our experimental results, the problem of imbalance classification should be the first issue to be considered, for the following reasons.

First, it is necessary to take into account the problem of imbalance classification. In our experiments, random forest outperforms L1SVM (i.e., improving by 6.84% on average over all evaluation metrics) because random forest considers the problem of imbalance classification whereas L1SVM only takes into account the issue of high-dimensional data. Moreover, the only difference between SFS and MSFS is that SFS considers the problem of imbalance classification and MSFS conducts a joint classification and regression. As a result, SFS slightly outperforms MSFS, i.e., by 1.26% on average over all evaluation metrics.

Second, in Fig. 2, the sensitivities of the methods that ignore the imbalance (e.g., SVM, L1SVM, and MSFS) are low, i.e., 23.86%, 26.73%, and 47.45%, respectively. The reason is that their classifiers can simply assign the label of the majority class to the samples of the minority class and still output high accuracy, i.e., 67.35%, 75.26%, and 86.31%, respectively. On the contrary, the methods that consider the issue of imbalance classification (e.g., random forest, SFS, and our Proposed w/o Regression) output higher sensitivities, i.e., 49.61%, 50.65%, and 70.73%, respectively.
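The effect can be made concrete with the class counts of our data set (322 non-severe vs. 86 severe cases). The following sketch scores a hypothetical degenerate classifier that labels every patient as non-severe. It is an illustration only, not one of the compared methods.

```python
# Class counts of the data set: 322 non-severe (label 0) and 86 severe (label 1) cases.
y_true = [0] * 322 + [1] * 86
# A degenerate classifier that assigns every patient to the majority class.
y_pred = [0] * len(y_true)

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

accuracy = (tp + tn) / len(y_true)
sensitivity = tp / (tp + fn)
specificity = tn / (tn + fp)
print(f"accuracy={accuracy:.4f} sensitivity={sensitivity:.4f} specificity={specificity:.4f}")
```

Such a classifier reaches an accuracy of about 78.9% while missing every severe case, which is why sensitivity, rather than accuracy alone, has to be monitored on imbalanced data.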

6.2. Top selected regions

In this section, we list the top selected features (i.e., the chest regions) of our proposed method in Table 6, which could help clinicians improve the efficiency and the effectiveness of the disease diagnosis. To do this, we first counted how many times each feature was selected across 100 experiments, i.e., repeating the 5-fold cross-validation scheme 20 times, and then reported the top 22 selected features (i.e., regions), each of which was selected at least 90 out of 100 times. We list our observations as follows.

Table 6.

Region distribution over different HU ranges for the top selected regions.

| HU ranges | left lung (6) | right lung (16) |
|---|---|---|
| | 0 | 2 |
| | 1 | 8 |
| | 2 | 5 |
| | 1 | 0 |
| [50, ∞] | 2 | 1 |

First, most selected features (i.e., 17 out of 22) fall in the HU range corresponding to the regions of ground glass opacity, which has been shown to be related to the severity of COVID-19 (Tang et al., 2020). Second, more regions are selected in the right lung than in the left lung, i.e., 16 vs. 6. A possible reason is that the virus might infect the regions in the right lung more easily (Shi et al., 2020b). Third, we extracted three kinds of handcrafted features from each part, i.e., the density features, the mass features, and the volume features, where the mass feature is related to both the density feature and the volume feature. Based on the results, our method selected 4 and 7 density features from the left lung and the right lung, respectively, and 2 and 6 mass features from the left lung and the right lung, respectively. However, our method selected only 3 volume features, all from the right lung. Hence, we conclude that the density feature is the most important in our experiments (11 selected features), followed by the mass feature (8) and the volume feature (3).
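The selection-frequency procedure described at the start of this section can be sketched as follows. The feature names and the toy selections are made up for illustration. Only the protocol (100 runs, threshold of 90) comes from the text.

```python
from collections import Counter


def stable_features(selected_per_run, min_count):
    """Return features selected in at least `min_count` runs, most frequent first."""
    counts = Counter()
    for selected in selected_per_run:
        counts.update(set(selected))  # count each feature at most once per run
    stable = [name for name, count in counts.items() if count >= min_count]
    return sorted(stable, key=lambda name: (-counts[name], name)), counts


# Toy selections for 100 runs (20 repetitions x 5 folds); feature names are made up.
runs = []
for run in range(100):
    selected = ["right_lung_density"]         # selected in every run
    if run < 95:
        selected.append("left_lung_mass")     # selected in 95 runs
    if run % 2 == 0:
        selected.append("right_lung_volume")  # selected in 50 runs
    runs.append(selected)

top_features, counts = stable_features(runs, min_count=90)
print(top_features)
```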

6.3. Importance of prediction and time estimation of severe cases

To our knowledge, this study is the first work to simultaneously predict whether COVID-19 patients will develop severe symptoms and estimate the conversion time, using the chest CT scan data.

First, our method obtains higher sensitivity (i.e., 76.97%) than (Tang et al., 2020) (i.e., 74.5%). That is, our method identifies the severe cases more accurately than (Tang et al., 2020). This could be attributed to the facts that 1) our method designs a novel solution for the problem of imbalance classification, and 2) the regression information in our proposed joint model improves the classification performance.

Second, as shown in Fig. 4, the correlation coefficient (i.e., 0.524) between our predictions and the corresponding ground truths for the severe cases is larger than the value (i.e., 0.462) in Table 4, which measures the correlation between our predictions and the corresponding ground truths for all subjects. Moreover, our proposed method yields the averaged conversion time from all non-severe cases to the severe stage (i.e., 4.59 ± 0.223 days, which has 0.55 days difference from the ground truth of the conversion time, i.e., 5.64 ± 4.30 days), with the least estimation error (i.e., 6.01 ± 1.22), compared to all comparison methods. A possible reason is that the classification information improves the effectiveness of the regression task in our proposed joint model. Hence, these results imply that our proposed method is good at predicting the conversion time from the non-severe stage to the severe stage. In real applications, correctly classifying the severe cases is more important than correctly classifying the non-severe cases because the former could reduce the clinicians' workloads. In particular, the correct prediction of the conversion time could help clinicians design effective treatment plans for the potential severe cases in time or even save the patients' lives.

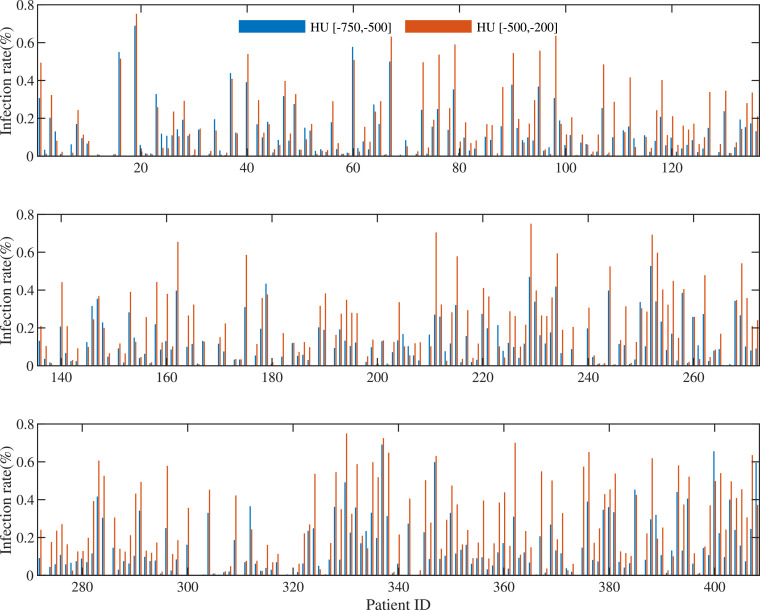

6.4. Limitations

Recently, many studies focused on the early diagnosis of COVID-19. For example, (Shi et al., 2020a) conducted binary classification on 1658 confirmed cases and 1027 community acquired pneumonia cases to achieve 90.7% of sensitivity and 87.9% of accuracy. (Tang et al., 2020) focused on distinguishing 121 non-severe cases from 55 severe cases to yield 74.5% of sensitivity and 87.5% of accuracy. Compared to (Tang et al., 2020; Shi et al., 2020a), this study only yielded an accuracy of 85.91%, which seems lower than that reported in previous work. However, the task that this paper tries to solve is different, as we predict whether a patient with mild symptoms will develop severe symptoms. This results in the problem of imbalance classification since only a small portion of patients convert to the severe stage based on the prevalence rate. First, our data set is imbalanced, i.e., 86 severe cases vs. 322 non-severe cases, which makes it difficult to construct effective classification models. Second, the difference of infected volumes between the severe cases and the non-severe cases is small, as shown in Fig. 6, while the corresponding difference is distinct in (Tang et al., 2020), so that the latter can conduct classification more easily. With the increase of the available data of severe cases, the accuracy of our method could be further improved. In our future work, we plan to generate new samples for the minority class to lessen the problem of imbalance classification, as well as design new deep transfer learning methods using other data sources (e.g., X-ray data) to solve the issue of small-sized sample and high-dimensional features.

Fig. 6.

Ratios of infected volumes in the HU ranges of [-700, -500] and [-500, -200], where patient IDs 1–322 are non-severe cases and patient IDs 323–408 are severe cases.

This study only focused on binary classification, i.e., severe cases vs. non-severe cases. In our future work, we plan to conduct multi-class classification on four types of COVID-19 diagnosis, i.e., mild, common, severe, and critical.

7. Conclusion

In this paper, we proposed a new method to jointly conduct disease identification and conversion time prediction, by taking into account several issues, i.e., high-dimensional data, small-sized sample, outlier influence, and imbalance classification. To do this, we designed a sparsity regularization term to conduct feature selection and learn the shared information between the two tasks, and proposed a new method to take into account the sample weight and the issue of imbalance classification. Finally, experimental results on a real data set of CT data showed that, compared to the comparison methods, our proposed method achieved the best performance for detecting the severe cases among the non-severe cases and for predicting the conversion time from the mild confirmed case to the severe case.

In the future, we can use registration techniques (Luan et al., 2008; Fan et al., 2007; Xue et al., 2006) to align the longitudinal images of the same patients, which would allow more accurate measurement of local infection changes and thereby help patient management.

CRediT authorship contribution statement

Xiaofeng Zhu: Conceptualization, Methodology, Software, Writing - original draft. Bin Song: Validation, Resources, Data curation. Feng Shi: Methodology, Investigation, Writing - original draft. Yanbo Chen: Software, Validation, Investigation. Rongyao Hu: Formal analysis, Investigation, Methodology, Writing - original draft. Jiangzhang Gan: Methodology, Software, Writing - review & editing. Wenhai Zhang: Data curation, Visualization. Man Li: Data curation, Visualization. Liye Wang: Resources, Data curation, Visualization. Yaozong Gao: Methodology, Writing - review & editing. Fei Shan: Resources, Data curation, Supervision, Writing - review & editing. Dinggang Shen: Writing - review & editing, Supervision, Project administration.

Declaration of Competing Interest

F.S., Y.C., W.Z., M.L., L.W., Y.G., and D.S. are employees of Shanghai United Imaging Intelligence Co., Ltd. The company has no role in designing and performing the surveillances and analyzing and interpreting the data. All other authors report no conflicts of interest relevant to this article.

Acknowledgement

This work was supported in part by the National Key Research and Development Program of China under Grants 2018AAA0102200 and 2018YFC0116400, the National Natural Science Foundation of China under Grant 61876046, the Sichuan Science and Technology Program under Grants 2018GZDZX0032 and 2019YFG0535, and the Novel Coronavirus Special Research Foundation of the Shanghai Municipal Science and Technology Commission under Grant 20441900600.

References

- Adeli E., Li X., Kwon D., Zhang Y., Pohl K. Logistic regression confined by cardinality-constrained sample and feature selection. IEEE Trans. Pattern Anal. Mach. Intell. 2020;42(7):1713–1728. doi: 10.1109/TPAMI.2019.2901688.

- Afshar P., Heidarian S., Naderkhani F., Oikonomou A., Plataniotis K.N., Mohammadi A. Covid-caps: a capsule network-based framework for identification of covid-19 cases from x-ray images. arXiv:2004.02696. 2020 doi: 10.1016/j.patrec.2020.09.010.

- Ai T., Yang Z., Hou H., Zhan C., Chen C., Lv W., Tao Q., Sun Z., Xia L. Correlation of chest ct and RT-PCR testing in coronavirus disease 2019 (covid-19) in china: a report of 1014 cases. Radiology. 2020:200642. doi: 10.1148/radiol.2020200642.

- Alom, M. Z., Rahman, M. M. S., Nasrin, M. S., Taha, T. M., Asari, V. K., 2020. Covid_mtnet: Covid-19 detection with multi-task deep learning approaches. arXiv:2004.03747.

- Arora P., Kumar H., Panigrahi B.K. Prediction and analysis of covid-19 positive cases using deep learning models: a descriptive case study of india. Chaos, Solitons & Fractals. 2020:110017. doi: 10.1016/j.chaos.2020.110017.

- Bai, X., Fang, C., Zhou, Y., Bai, S., Liu, Z., Xia, L., Chen, Q., Xu, Y., Xia, T., Gong, S., et al., 2020. Predicting covid-19 malignant progression with ai techniques.

- Bezdek J.C., Hathaway R.J. Convergence of alternating optimization. Neural, Parallel & Scientific Computations. 2003;11:351–368.

- Bhandari S., Shaktawat A.S., Tak A., Patel B., Shukla J., Singhal S., Gupta K., Gupta J., Kakkar S., Dube A., et al. Logistic regression analysis to predict mortality risk in covid-19 patients from routine hematologic parameters. Ibnosina Journal of Medicine and Biomedical Sciences. 2020;12:123.

- Chaganti, S., Balachandran, A., Chabin, G., Cohen, S., Flohr, T., Georgescu, B., Grenier, P., Grbic, S., Liu, S., Mellot, F., Murray, N., Nicolaou, S., Parker, W., Re, T., Sanelli, P., Sauter, A. W., Xu, Z., Yoo, Y., Ziebandt, V., Comaniciu, D., 2020. Quantification of tomographic patterns associated with covid-19 from chest ct. arXiv:2004.01279.

- Chakraborty T., Ghosh I. Real-time forecasts and risk assessment of novel coronavirus (covid-19) cases: a data-driven analysis. Chaos, Solitons & Fractals. 2020:109850. doi: 10.1016/j.chaos.2020.109850.

- Chang C.C., Lin C.J. Libsvm: a library for support vector machines. ACM transactions on intelligent systems and technology (TIST) 2011;2:1–27.

- Chen X., Tang Y., Mo Y., Li S., Lin D., Yang Z., Yang Z., Sun H., Qiu J., Liao Y., et al. A diagnostic model for coronavirus disease 2019 (covid-19) based on radiological semantic and clinical features: a multi-center study. Eur Radiol. 2020:1. doi: 10.1007/s00330-020-06829-2.

- Corman V., Bleicker T., Brünink S., Drosten C., Zambon M. Diagnostic detection of 2019-NCOV by real-time RT-PCR. World Health Organization, Jan. 2020;17.

- Craw P., Balachandran W. Isothermal nucleic acid amplification technologies for point-of-care diagnostics: a critical review. Lab Chip. 2012;12:2469–2486. doi: 10.1039/c2lc40100b.

- Duan M., Li K., Yang C., Li K. A hybrid deep learning cnn–elm for age and gender classification. Neurocomputing. 2018;275:448–461.

- Evgeniou T., Pontil M. SIGKDD. 2004. Regularized multi–task learning; pp. 109–117.

- Fan D.P., Zhou T., Ji G.P., Zhou Y., Chen G., Fu H., Shen J., Shao L. Inf-net: automatic covid-19 lung infection segmentation from CT images. IEEE Trans Med Imaging. 2020 doi: 10.1109/TMI.2020.2996645.

- Fan Y., Rao H., Hurt H., Giannetta J., Korczykowski M., Shera D., Avants B.B., Gee J.C., Wang J., Shen D. Multivariate examination of brain abnormality using both structural and functional MRI. Neuroimage. 2007;36:1189–1199. doi: 10.1016/j.neuroimage.2007.04.009.

- Guan W.j., Ni Z.y., Hu Y., Liang W.h., Ou C.q., He J.x., Liu L., Shan H., Lei C.l., Hui D.S., et al. Clinical characteristics of coronavirus disease 2019 in china. N. Engl. J. Med. 2020 doi: 10.1056/NEJMoa2002032.

- Hassanien A.E., Mahdy L.N., Ezzat K.A., Elmousalami H.H., Ella H.A. Automatic x-ray covid-19 lung image classification system based on multi-level thresholding and support vector machine. medRxiv. 2020.

- Hu R., Zhu X., Zhu Y., Gan J. Robust svm with adaptive graph learning. World Wide Web. 2020;23:1945–1968.

- Ibrahim M.R., Haworth J., Lipani A., Aslam N., Cheng T., Christie N. Variational-lstm autoencoder to forecast the spread of coronavirus across the globe. medRxiv. 2020 doi: 10.1371/journal.pone.0246120.

- JHU, 2020. Coronavirus covid-19 global cases by the center for systems science and engineering (CSSE) at johns Hopkins university. https://gisanddata.maps.arcgis.com/apps/opsdashboard/index.html#/bda7594740fd40299423467b48e9ecf6.

- Jung S.m., Akhmetzhanov A.R., Hayashi K., Linton N.M., Yang Y., Yuan B., Kobayashi T., Kinoshita R., Nishiura H. Real-time estimation of the risk of death from novel coronavirus (covid-19) infection: inference using exported cases. J Clin Med. 2020;9:523. doi: 10.3390/jcm9020523.

- Kang H., Xia L., Yan F., Wan Z., Shi F., Yuan H., Jiang H., Wu D., Sui H., Zhang C., et al. Diagnosis of coronavirus disease 2019 (covid-19) with structured latent multi-view representation learning. IEEE Trans Med Imaging. 2020;39(8):2606–2614. doi: 10.1109/TMI.2020.2992546.

- Kong W., Agarwal P.P. Chest imaging appearance of covid-19 infection. Radiology: Cardiothoracic Imaging. 2020;2:e200028. doi: 10.1148/ryct.2020200028.

- Lee E.Y., Ng M.Y., Khong P.L. Covid-19 pneumonia: what has CT taught us? The Lancet Infectious Diseases. 2020;20:384–385. doi: 10.1016/S1473-3099(20)30134-1.

- Li L., Qin L., Xu Z., Yin Y., Wang X., Kong B., Bai J., Lu Y., Fang Z., Song Q., et al. Artificial intelligence distinguishes covid-19 from community acquired pneumonia on chest ct. Radiology. 2020:200905. doi: 10.1148/radiol.2020200905.

- Li Q., Guan X., Wu P., Wang X., Zhou L., Tong Y., Ren R., Leung K.S., Lau E.H., Wong J.Y., et al. Early transmission dynamics in wuhan, china, of novel coronavirus–infected pneumonia. N. Engl. J. Med. 2020 doi: 10.1056/NEJMoa2001316.

- Liaw A., Wiener M., et al. Classification and regression by randomforest. R news. 2002;2:18–22.

- Liu D.C., Nocedal J. On the limited memory bfgs method for large scale optimization. Math Program. 1989;45:503–528.

- Luan H., Qi F., Xue Z., Chen L., Shen D. Multimodality image registration by maximization of quantitative–qualitative measure of mutual information. Pattern Recognit. 2008;41:285–298.

- Ng M.Y., Lee E.Y., Yang J., Yang F., Li X., Wang H., Lui M.M.s., Lo C.S.Y., Leung B., Khong P.L., et al. Imaging profile of the covid-19 infection: radiologic findings and literature review. Radiology: Cardiothoracic Imaging. 2020;2:e200034. doi: 10.1148/ryct.2020200034.

- Ouyang X., Huo J., Xia L., Shan F., Liu J., Mo Z., Yan F., Ding Z., Yang Q., Song B., et al. Dual-sampling attention network for diagnosis of covid-19 from community acquired pneumonia. IEEE Trans Med Imaging. 2020;39(8):2595–2605. doi: 10.1109/TMI.2020.2995508.

- Ozkaya, U., Ozturk, S., Barstugan, M., 2020. Coronavirus (covid-19) classification using deep features fusion and ranking technique. arXiv:2004.03698.

- Petropoulos F., Makridakis S. Forecasting the novel coronavirus covid-19. PLoS ONE. 2020;15:e0231236. doi: 10.1371/journal.pone.0231236.

- Ranjan R., Patel V.M., Chellappa R. Hyperface: a deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Trans Pattern Anal Mach Intell. 2017;41:121–135. doi: 10.1109/TPAMI.2017.2781233.

- del Rio C., Malani P.N. Covid-19 new insights on a rapidly changing epidemic. JAMA. 2020 doi: 10.1001/jama.2020.3072.

- Salgotra R., Gandomi M., Gandomi A.H. Time series analysis and forecast of the covid-19 pandemic in india using genetic programming. Chaos, Solitons & Fractals. 2020:109945. doi: 10.1016/j.chaos.2020.109945.

- Shaban W.M., Rabie A.H., Saleh A.I., Abo-Elsoud M. A new covid-19 patients detection strategy (cpds) based on hybrid feature selection and enhanced knn classifier. Knowl Based Syst. 2020:106270. doi: 10.1016/j.knosys.2020.106270.

- Shan F., Gao Y., Wang J., Shi W., Shi N., Han M., Xue Z., Shen D., Shi Y. Lung infection quantification of covid-19 in ct images with deep learning. arXiv:2003.04655. 2020.

- Shen H.T., Zhu Y., Zheng W., Zhu X. Half-quadratic minimization for unsupervised feature selection on incomplete data. IEEE transactions on neural networks and learning systems. 2020 doi: 10.1109/TNNLS.2020.3009632.

- Shi F., Xia L., Shan F., Wu D., Wei Y., Yuan H., Jiang H., Gao Y., Sui H., Shen D. Large-scale screening of covid-19 from community acquired pneumonia using infection size-aware classification. arXiv:2003.09860. 2020 doi: 10.1088/1361-6560/abe838. [DOI] [PubMed] [Google Scholar]

- Shi H., Han X., Jiang N., Cao Y., Alwalid O., Gu J., Fan Y., Zheng C. Radiological findings from 81 patients with covid-19 pneumonia in Wuhan, China: a descriptive study. The Lancet Infectious Diseases. 2020 doi: 10.1016/S1473-3099(20)30086-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Song Y., Zheng S., Li L., Zhang X., Zhang X., Huang Z., Chen J., Zhao H., Jie Y., Wang R., et al. Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. medRxiv. 2020. doi: 10.1109/TCBB.2021.3065361.

- Song Y.S., Park C.M., Park S.J., Lee S.M., Jeon Y.K., Goo J.M. Volume and mass doubling times of persistent pulmonary subsolid nodules detected in patients without known malignancy. Radiology. 2014;273:276–284. doi: 10.1148/radiol.14132324.

- Tang Z., Zhao W., Xie X., Zhong Z., Shi F., Liu J., Shen D. Severity assessment of coronavirus disease 2019 (COVID-19) using quantitative features from chest CT images. arXiv:2003.11988. 2020.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological). 1996;58:267–288.

- Wang S., Zha Y., Li W., Wu Q., Li X., Niu M., Wang M., Qiu X., Li H., Yu H., et al. A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis. European Respiratory Journal. 2020. doi: 10.1183/13993003.00775-2020.

- Wu J.T., Leung K., Leung G.M. Nowcasting and forecasting the potential domestic and international spread of the 2019-nCoV outbreak originating in Wuhan, China: a modelling study. The Lancet. 2020;395:689–697. doi: 10.1016/S0140-6736(20)30260-9.

- Xie J., Hungerford D., Chen H., Abrams S.T., Li S., Wang G., Wang Y., Kang H., Bonnett L., Zheng R., et al. Development and external validation of a prognostic multivariable model on admission for hospitalized patients with COVID-19. 2020a.

- Xie W., Jacobs C., Charbonnier J.P., van Ginneken B. Relational modeling for robust and efficient pulmonary lobe segmentation in CT scans. IEEE Trans Med Imaging. 2020. doi: 10.1109/TMI.2020.2995108.

- Xue Z., Shen D., Davatzikos C. Classic: consistent longitudinal alignment and segmentation for serial image computing. Neuroimage. 2006;30:388–399. doi: 10.1016/j.neuroimage.2005.09.054.

- Yan L., Zhang H.T., Goncalves J., Xiao Y., Wang M., Guo Y., Sun C., Tang X., Jin L., Zhang M., et al. A machine learning-based model for survival prediction in patients with severe COVID-19 infection. medRxiv. 2020;24(10):2798–2805. doi: 10.1109/JBHI.2020.3019505.

- Zhao W., Zhong Z., Xie X., Yu Q., Liu J. Relation between chest CT findings and clinical conditions of coronavirus disease (COVID-19) pneumonia: a multicenter study. American Journal of Roentgenology. 2020:1–6. doi: 10.2214/AJR.20.22976.

- Zhu X., Gan J., Lu G., Li J., Zhang S. Spectral clustering via half-quadratic optimization. World Wide Web. 2020;23:1969–1988.

- Zhu X., Li X., Zhang S., Xu Z., Yu L., Wang C. Graph PCA hashing for similarity search. IEEE Trans Multimedia. 2017;19:2033–2044.

- Zhu X., Suk H.I., Shen D. A novel matrix-similarity based loss function for joint regression and classification in AD diagnosis. Neuroimage. 2014;100:91–105. doi: 10.1016/j.neuroimage.2014.05.078.

- Zhu X., Suk H.I., Wang L., Lee S.W., Shen D., Initiative A.D.N., et al. A novel relational regularization feature selection method for joint regression and classification in AD diagnosis. Med Image Anal. 2017;38:205–214. doi: 10.1016/j.media.2015.10.008.

- Zhu X., Yang J., Zhang C., Zhang S. Efficient utilization of missing data in cost-sensitive learning. IEEE Transactions on Knowledge and Data Engineering. 2019. doi: 10.1109/TKDE.2019.2956530.

- Zhu X., Zhu Y., Zheng W. Spectral rotation for deep one-step clustering. Pattern Recognit. 2020;105:107175.