Abstract

Introduction

Online open book assessment has been a common alternative to traditional invigilated tests and examinations during the COVID-19 pandemic. However, its unsupervised nature makes cheating easier, which raises an academic integrity concern. The purpose of this study was to evaluate the integrity of two online open book assessments with different formats (1. tightly time restricted: 50 min for the mid-semester assessment; 2. take home: any 4 h within a 24-h window for the end of semester assessment) implemented in a radiologic pathology unit of a Bachelor of Science (Medical Radiation Science) course during the pandemic.

Methods

This was a retrospective study involving a review and analysis of existing information related to the integrity of the two radiologic pathology assessments. Three integrity evaluation approaches were employed. The first approach was to review all the Turnitin plagiarism detection software reports using the ‘seven-words-in-a-row’ criterion to identify any potential collusion. The second approach was to search for highly irrelevant assessment answers during marking to detect other types of cheating. Examples of highly irrelevant answers included those not addressing the question requirements and those stating patients' clinical information not found in the given patient histories. The third approach was a statistical analysis of the assessment scores using descriptive and inferential statistics to identify any abnormal patterns that might suggest cheating had occurred. An example of an abnormal pattern was high assessment scores. The descriptive statistics used were minimum, maximum, range, first quartile, median, third quartile, interquartile range, mean, standard deviation, and fail and full mark rates. A t-test was employed to compare mean scores between the two assessments in 2020, between the two assessments in 2019, between the mid-semester assessments in 2019 and 2020, and between the end of semester assessments in 2019 and 2020. A p-value of less than 0.05 was considered statistically significant.

Results

No evidence of cheating was found in any of the Turnitin reports or assessment answers. The mean scores of the end of semester assessments in 2019 (88.2%) and 2020 (90.9%) were similar (p = 0.098). However, the mean score of the online open book mid-semester assessment in 2020 (62.8%) was statistically significantly lower than that of the traditional invigilated mid-semester assessment in 2019 (71.8%) with p < 0.0001.

Conclusion

This study shows that the online open book assessments in the tightly time restricted and take home formats used in the radiologic pathology unit did not have any academic integrity issues. Apparently, the strict assessment time limit played an important role in maintaining their integrity.

Keywords: Academic integrity, Authenticity, Cheating, COVID-19, Online open book assessment, Radiologic pathology

Résumé

Introduction

L’évaluation à livres ouverts en ligne est une solution de rechange courante aux tests et examens sous surveillance traditionnels durant la pandémie de COVID-19. Cependant, l'absence de supervision rend la tricherie plus facile, ce qui constitue une source de préoccupation d'intégrité. Cette étude vise à évaluer l'intégrité de deux évaluations à livres ouverts en ligne sous différents formats (1. Restriction de temps serrée – 50 min pour un examen de mi-session, et 2. Examen à la maison – toute période de 4 heures dans une fenêtre de 24 heures pour l'examen de fin de session) dans une unité de pathologie radiologique d'un cours de baccalauréat en sciences (Sciences de la radiation médicale) durant la pandémie.

Méthodologie

Il s'agit d'une étude rétrospective comportant l'examen et l'analyse de l'information existante à l’égard de l'intégrité de deux évaluations en pathologie radiologique. Trois approches d’évaluation de l'intégrité ont été utilisées. La première consistait à évaluer tous les rapports Turnitin à l'aide du critère « sept mots en ligne » afin d'identifier toute collusion possible. La deuxième approche consistait à rechercher les réponses fortement non pertinentes à l’évaluation durant la notation afin de reconnaître les autres formes de tricherie. Les exemples de réponses fortement non pertinentes comprennent celles qui ne répondent pas aux exigences de la question et qui donnent des renseignements cliniques sur le patient qui n'apparaissent pas dans les antécédents fournis pour le patient. La troisième approche faisait appel à une analyse de la notation utilisant les statistiques descriptives et déductives afin d'identifier les modèles anormaux susceptibles d'indiquer une tricherie. Un exemple de modèle anormal est une note d’évaluation élevée. Les statistiques descriptives utilisées étaient les notes minimum et maximum, le premier quartile, la médiane, le troisième quartile, l’écart interquartile, la moyenne, l’écart-type, l’échec et le taux d’étudiants ayant obtenu la note parfaite. Un test T a été utilisé pour comparer les notes moyennes entre les deux évaluations de cette année (2020), entre les deux évaluations de l'année dernière (2019), entre les deux évaluations de mi-session (2020 et 2019), et entre l’évaluation de fin de session de cette année et celle de l'an dernier. Une valeur p inférieure à 0,05 était jugée statistiquement significative.

Résultats

Aucune évidence de tricherie n'a été constatée dans les rapports Turnitin et les réponses aux évaluations. Les notes moyennes de l’évaluation de fin de session de 2019 (88,2%) et 2020 (90,9%) étaient similaires (p = 0,098). Cependant, la note moyenne de l’évaluation de mi-session à livres ouverts en 2020 (62,8%) était plus basse de façon statistiquement significative à celle de l’évaluation sous surveillance traditionnelle de mi-session de 2019 (71,8%) avec p < 0,0001.

Conclusion

Cette étude montre que l'utilisation de l’évaluation à livres ouverts en format de durée restreinte et d'examen à la maison pour l'unité de pathologie radiologique n'a pas entraîné de problèmes d'intégrité académique. Apparemment, la durée strictement limitée de l’évaluation a joué un rôle important dans le maintien de l'intégrité.

Introduction

Online open book assessment (test/examination) is not a new assessment method in higher education. It has been used in many online undergraduate and postgraduate courses for years. Students are allowed to use any reference resources to answer questions during the assessment, without supervision.1 It can be conducted in either a tightly time restricted (e.g. 2–3 h for completion) or take home (e.g. 24–48 h) format.2 Due to COVID-19 restrictions on gathering and movement for infection control, it has become one of the common assessment methods used to replace traditional invigilated assessments.3, 4, 5 The online open book assessment is used to gauge individual students’ development of knowledge against academic and/or professional standards. This is to ensure that they possess adequate capabilities to meet the requirements of their own study and/or future work. If they obtain assistance from others to complete their assessments, this is considered cheating because the submitted work does not represent their own knowledge achievement. In any academic setting, students must demonstrate honesty, which is known as academic integrity. The unsupervised nature of the online open book assessment makes cheating easier. For example, students can approach contract cheating websites, and paid or unpaid third parties (e.g. classmates, friends, relatives, etc.) for assistance in completing the assessment tasks, breaching the academic integrity requirement.4, 5, 6, 7, 8

To meet the academic integrity requirement, various universities provide online open book assessment design guidelines to assist their staff in developing this type of assessment appropriately. One common suggestion is to use long questions that require application of knowledge or reflection on personal experience, and to avoid factual recall questions (e.g. multiple choice and short questions, etc.). For example, a long question that requires students to tailor their answer to a specific scenario (e.g. solving a particular problem, etc.) or to demonstrate their achievement of learning objectives based on their own experience is recommended. Also, limiting the assessment time can reduce the chance for them to seek assistance from other parties.2, 8, 9 However, a recent large Australian study involving 14,086 students and 1,147 teaching staff has shown that students are more likely to cheat in assessments that require knowledge application and have a short completion time (e.g. 3–7 days). This is because of the increased difficulty and pressure associated with the time constraint.6 That research evidence seems to contradict the aforementioned universities’ suggestions to a certain extent.

At the author's institution, as at other universities worldwide,3, 4, 5 traditional invigilated assessments were not allowed during the pandemic (first half of 2020). Every unit (subject) coordinator needed to rush to convert the original invigilated assessments to other suitable alternatives. The most common alternative was the online open book assessment. This is because it would be unnecessary to change the original assessment questions if they were in line with the universities' online open book assessment design guidelines, addressing the tight time constraint.2, 4, 8, 9 The author was the coordinator of a radiologic pathology unit (Medical Radiation Pathology 2) for third year medical imaging and radiation therapy students of an Australian Bachelor of Science (Medical Radiation Science) course. Originally, this unit had three traditional invigilated assessments: a 50-min mid-semester test, a 50-min end of semester test, and a 2-h final examination. To meet the unit learning outcomes and the registering body's (Medical Radiation Practice Board of Australia [MRPBA]) professional capability requirements,10 each of the two tests had six long essay questions, which required the students to interpret six cases with patients' clinical histories and multimodality medical images (including general radiography, fluoroscopy, mammography, ultrasound, computed tomography, magnetic resonance imaging and nuclear medicine), and construct written, informed opinions about medically significant findings in a timely fashion. The final examination had five long, 19 short, and 12 multiple choice questions.

To mitigate COVID-19 impacts, it was decided to remove the final examination from this unit since the first and the second halves of the unit content were covered by the mid-semester and the end of semester assessments, respectively. It was unnecessary to have one more assessment to evaluate the students' capabilities. Also, due to the authentic nature of the two tests,10, 11 no change was made to the original papers for their online delivery except for the end of semester assessment duration. This change was necessary because the university required any online assessment administered after May 1, 2020 to have a duration of at least 4 h and to be open for a minimum period of 24 h. This arrangement allowed the students to handle any potential technical problems, such as internet interruptions encountered during the assessment. The end of semester assessment duration became 4 h and it was open for 24 h for the students to download the online paper and submit the completed work to a Turnitin dropbox (Turnitin, CA, USA) for cheating detection and marking. Both online assessments had the same number of questions (six long essay questions), answer requirements and marks allocated to each question, but covered different body systems. These settings were also the same as those of the previous year's traditional invigilated 50-min mid-semester and end of semester assessments. The levels of difficulty of the 2019 and 2020 assessments were similar, although the questions were different.

Although recent research findings show that assessments with knowledge application and short completion time requirements facilitate cheating,6 these arrangements were recommended by different universities for maintaining the integrity of the online open book assessment.2, 8, 9 It is therefore worthwhile to investigate any impact of the assessment duration on the integrity of an online open book assessment with the knowledge application requirement. The purpose of this study was to evaluate the integrity of the two online open book assessments with different time limits implemented in a radiologic pathology unit of a Bachelor of Science (Medical Radiation Science) course during the pandemic. It is expected that this study's outcomes can provide further insights into the appropriate time limit for avoiding cheating in the online open book assessment, which has become a common assessment method in response to the pandemic.3, 4, 8

Methods

This was a retrospective study involving a review and analysis of existing information related to the academic integrity of the online open book mid-semester and end of semester assessments of the radiologic pathology unit coordinated by the author. Forty-eight students were enrolled in this radiologic pathology unit in 2020. Thirty (63%) students were female and 18 (38%) were male. Their mean age was 23 years, ranging from 20 to 40 years. The institutional review board approved this study and granted a waiver of consent on August 4, 2020 (approval number HRE2020-0432). The mid-semester assessment in April 2020 was tightly time restricted with a duration of 50 min. The end of semester assessment in June 2020 allowed the students to spend a maximum of 4 h completing the assessment at any time within the 24-h window. The assessment papers were available for the students to download from the university's learning management system (Blackboard Learn, DC, USA) within the specific timeframes. The timeframes for the mid-semester and the end of semester assessments were 50 min and 24 h, respectively. The students were required to use their own computers to enter their answers into the assessment paper documents and submit the completed papers to the Turnitin dropboxes available on Blackboard within the timeframes. No technical strategy was implemented to prevent the students from spending more than 4 h on the end of semester assessment. However, they were informed that submitting the assessment paper was an implied declaration that no more than 4 h had been used to complete it. The end of semester assessment could therefore be considered a take home assessment.2 Although technical strategies such as the Blackboard Test function could have been used to enforce the assessment time restriction, the author's institution discouraged its staff from using them in order to prevent Blackboard server overload.

Turnitin is a text matching system able to highlight any text within an electronic document that matches text in existing publications and in works previously submitted by other individuals, such as students, for detecting any potential collusion.12 Turnitin is not capable of detecting other cheating types involving contract cheating websites, or the use of students' friends or relatives. However, according to recent research findings,13 experienced markers can differentiate between genuine student works and those completed by third parties most (96%) of the time. Marking of the two assessments involved the unit coordinator (the author) and a co-teacher. Each had 18 years of teaching and marking experience in higher education. In the past, the author was an undergraduate medical imaging course coordinator and the co-teacher was a consultant radiologist. Currently, the author is a member of his university's central and faculty student discipline panels and an inquiry officer for academic misconduct incidents. The co-teacher is a professor in medical imaging. The unit coordinator was responsible for marking all questions in the two assessments except two questions set by the co-teacher. The co-teacher marked his own questions and double marked a few randomly selected papers from the two assessments for post-marking moderation. Other types of cheating not detected by Turnitin should be identified through this rigorous marking process.

Three approaches were employed to evaluate the integrity of the two assessments. The first approach was to review all answer texts highlighted in each assessment's Turnitin report. If a highlighted text contained seven or more consecutive words expressing an idea that matched those in another student's submitted paper, and the idea could have been expressed in a different way, this would indicate potential collusion based on the ‘seven-words-in-a-row’ criterion. However, when the seven or more consecutive words were technical terms, this was not considered collusion. An example of this was ‘T1 and T2 weighted magnetic resonance images’.12, 14, 15 As per the author's university academic integrity guidelines,16 no ‘safe’ level of the Turnitin similarity score was set for collusion detection in this study. This is because the similarity score is generated by dividing the number of highlighted words by the total number of words in a document. Even a paper with a similarity score of 1% may have seven or more consecutive words matching another person's work when the total number of words is not small.
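The word-run check and the similarity score arithmetic described above can be illustrated with a minimal Python sketch. It is not Turnitin's algorithm, and it is not the procedure followed in this study, where the reports were reviewed manually. The function names, the tokenisation rule and the two sample answers are assumptions made for illustration. The sketch also leaves out the two judgement steps of the criterion: whether the idea could have been expressed differently, and whether the run consists only of technical terms.

```python
import re

def word_runs(text, n=7):
    """Return the set of all runs of n consecutive words in text (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def shared_runs(answer_a, answer_b, n=7):
    """Runs of n consecutive words that appear in both answers."""
    return word_runs(answer_a, n) & word_runs(answer_b, n)

def similarity_score(highlighted_words, total_words):
    """Similarity score (%) as described in the text: highlighted / total words."""
    return 100.0 * highlighted_words / total_words

# Hypothetical answers, invented for this example only.
a = "The liver shows a large hypodense lesion in the right lobe."
b = "I think the liver shows a large hypodense lesion near the dome."
matches = shared_runs(a, b)
flagged = len(matches) > 0  # a candidate for manual review, not proof of collusion

# A 700-word paper containing a single 7-word match still scores only 1%.
score = similarity_score(7, 700)
```

The last line shows why no ‘safe’ similarity threshold was used: a very low overall score can coexist with a run of seven matching words.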

The second approach was to search for highly irrelevant assessment answers during marking to detect other cheating types not covered by Turnitin. The literature has indicated that assessment assistance provided by contract cheating websites, and by students' friends and relatives, tends to be unable to address the specific assessment requirements, leading to highly irrelevant answers.5, 13, 17 Each question of the assessments required the students to determine the imaging modalities and techniques, such as contrast phases, for all given images with justification. Also, they needed to identify abnormalities shown on the given images and suggest a diagnosis based on both the imaging findings and the given patients’ clinical information (e.g. blood test results, etc.). The presence of a highly irrelevant answer would be an indication of potential cheating.

The third approach was a statistical analysis of the assessment scores using descriptive and inferential statistics to identify any abnormal patterns, such as high assessment scores, that might suggest that cheating had occurred. The descriptive statistics used were minimum, maximum, range, first quartile, median, third quartile, interquartile range, mean, standard deviation (SD), and fail and full mark rates. A t-test was employed to compare mean scores between the two assessments in 2020, between the two assessments in 2019, between the mid-semester assessments in 2019 and 2020, and between the end of semester assessments in 2019 and 2020.4, 17, 18, 19 Forty-seven students were enrolled in this radiologic pathology unit in 2019. GraphPad InStat 3.06 (GraphPad Software Inc, CA, USA) was used for the statistical analysis. A p-value of less than 0.05 was considered statistically significant.
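As an illustration of the descriptive statistics listed above, the following Python sketch computes them with the standard library. The study itself used GraphPad InStat, so this is only an approximation of that workflow: the function name and the sample scores are hypothetical, a fail is assumed to mean a score below the 50% passing score, and quartile conventions differ between software packages, so quartile values may not match InStat's exactly.

```python
import statistics

def describe_scores(scores, pass_mark=50.0, full_mark=100.0):
    """Summarise percentage scores with the descriptive statistics listed in the text."""
    q1, median, q3 = statistics.quantiles(scores, n=4)  # default 'exclusive' method
    n = len(scores)
    return {
        "min": min(scores),
        "max": max(scores),
        "range": max(scores) - min(scores),
        "q1": q1,
        "median": median,
        "q3": q3,
        "iqr": q3 - q1,
        "mean": statistics.mean(scores),
        "sd": statistics.stdev(scores),  # sample standard deviation
        # Assumption: a fail is any score below the pass mark.
        "fail_rate": 100.0 * sum(s < pass_mark for s in scores) / n,
        "full_mark_rate": 100.0 * sum(s >= full_mark for s in scores) / n,
    }

# Hypothetical scores (%), invented for illustration; not the study data.
summary = describe_scores([45, 55, 60, 62, 70, 75, 80, 100])
```

With eight hypothetical students, one score below 50% and one score of 100% each give a rate of 12.5%.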

Results

All Turnitin reports for the two assessments showed that no student's answer text contained seven or more consecutive words expressing an idea that matched those in existing publications or works submitted by other individuals, suggesting no collusion. However, the overall Turnitin similarity scores were high (mid-semester assessment: 52%–90%; end of semester assessment: 30%–80%). These scores were attributable to the common text within the cover pages and questions of the assessment papers. No highly irrelevant assessment answer was identified during marking or post-marking moderation. Nonetheless, some students did not answer some parts of the questions and/or provided incorrect (but still relevant) answers. These phenomena were more common in the mid-semester assessment.

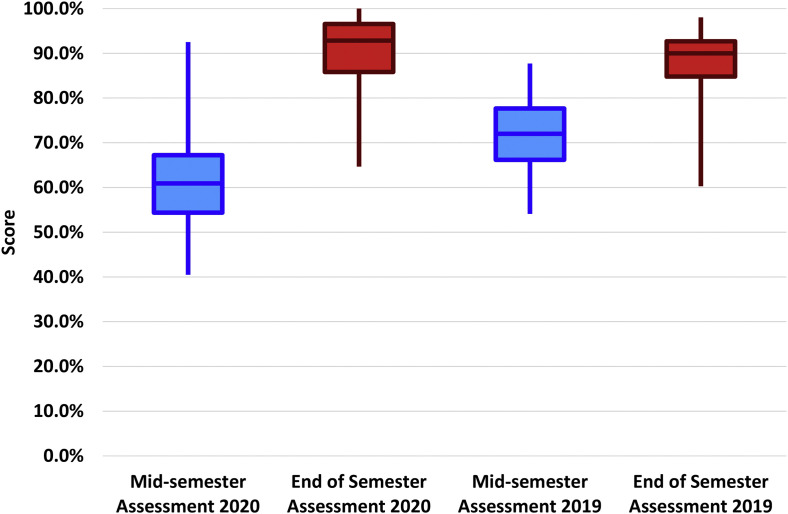

Table 1 and Fig. 1 illustrate the current and previous years’ mid-semester and end of semester assessment score statistics. For both 2019 and 2020, the end of semester assessment scores were higher than the mid-semester ones, and the mean differences were statistically significant (p < 0.0001). The mean scores of the end of semester assessments in 2019 (88.2%) and 2020 (90.9%) were similar (p = 0.098). These findings indicate no abnormal pattern to suggest that any cheating occurred in the online open book end of semester assessment.1 However, the mean score of the online open book mid-semester assessment in 2020 (62.8%) was statistically significantly lower than that of the traditional invigilated mid-semester assessment in 2019 (71.8%) with p < 0.0001. Other statistics of the online open book mid-semester assessment scores also show noticeable differences (including a lower minimum and a higher maximum leading to a wider range and a greater SD, and a non-zero fail rate) when compared with those of the traditional invigilated mid-semester assessment. The statistically significantly lower mean score, the smaller minimum, and the non-zero fail rate can be considered findings suggesting that no cheating happened in the online open book mid-semester assessment.18, 19 For the online open book end of semester assessment, a non-zero full mark rate (8.3%) is noted. In contrast, no student failed or obtained full marks in either of the two traditional invigilated assessments.

Table 1.

Mid-semester and End of Semester Assessment Score Statistics 2019 and 2020.

| Assessment (Year) | Assessment | Mean Score (%) | SD (%) | Fail Rate (%) | Full Mark Rate (%) |
|---|---|---|---|---|---|
| Online Open Book (2020), N = 48 | Mid-semester | 62.8 | 11.5 | 6.3 | 0.0 |
| | End of Semester | 90.9 | 8.2 | 0.0 | 8.3 |
| Traditional Invigilated (2019), N = 47 | Mid-semester | 71.8 | 8.7 | 0.0 | 0.0 |
| | End of Semester | 88.2 | 7.6 | 0.0 | 0.0 |

Max, maximum; Min, minimum; N, sample size; SD, standard deviation.

Assessment passing score: 50%.

P-values of mean score comparisons by t-test: <0.0001 (2 assessments in 2020); <0.0001 (2 assessments in 2019); <0.0001 (2 mid-semester assessments); 0.098 (2 end of semester assessments).
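The between-year comparisons can be checked approximately from the summary values in Table 1. The sketch below assumes an unpaired, equal-variance (Student) t-test; the paper does not state which t-test variant GraphPad InStat was set to use, so this is an assumption, and the function name is hypothetical. The within-year comparisons involve the same students and may have been paired, so they cannot be reproduced from summary values alone.

```python
import math

def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Unpaired, equal-variance (Student) t statistic and degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean1 - mean2) / se, df

# Summary values copied from Table 1 (2020 first, 2019 second).
t_mid, df_mid = pooled_t_from_summary(62.8, 11.5, 48, 71.8, 8.7, 47)
t_end, df_end = pooled_t_from_summary(90.9, 8.2, 48, 88.2, 7.6, 47)

print(f"mid-semester:    t = {t_mid:.2f}, df = {df_mid}")
print(f"end of semester: t = {t_end:.2f}, df = {df_end}")
```

Under these assumptions the mid-semester comparison gives a t statistic of about -4.3 and the end of semester comparison about 1.7, both with 93 degrees of freedom. The two-sided 5% critical value for 93 degrees of freedom is roughly 1.99, so only the mid-semester difference exceeds it, which is consistent with the reported p-values of <0.0001 and 0.098.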

Fig. 1.

A box plot of the mid-semester and end of semester assessment scores in 2019 and 2020. Assessment passing score: 50%.

Discussion

Due to the university's constantly changing assessment policy in response to the COVID-19 pandemic, the formats of the online open book mid-semester and end of semester assessments implemented in the radiologic pathology unit were different. The former was tightly time restricted (50 min) while the latter was take home (4 h to complete the assessment at any time within the 24-h window). Although the recent large Australian survey study with 14,086 students and 1,147 teaching staff6 and a personal view article4 indicate that assessments with knowledge application and short completion time requirements facilitate cheating, this study's results, based on real student data, suggest that no cheating occurred in the two assessments. The apparent contradiction may be due to the assessment time limit.5

A previous study has shown that a minimum of 6 h is required for a contract cheating provider to complete an assessment for a student.7 This implies it should be difficult for a student to ask a contract cheating provider to complete the online open book mid-semester assessment within 50 min. Also, it is suggested that the appropriate time for prepared students to complete a discussion question within an online open book assessment should be about 15–30 min.9 On this basis, the appropriate time limit for each assessment in the radiologic pathology unit would be 1.5–3 h for the six questions. This indicates it was not easy, even for prepared students, to complete all mid-semester assessment questions. Hence, it was challenging for any student to use other cheating approaches, such as collusion and asking friends and relatives for assistance, in this assessment.5 This study's findings of higher overall Turnitin similarity scores for the mid-semester assessment, ranging between 52% and 90% (attributable to shorter answers relative to the common text within the assessment cover page and questions), and more missing answers for some question parts were also in line with this suggestion.
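The time arithmetic behind this argument can be laid out in a short Python sketch. The figures come from the text (six questions, 15–30 min suggested per question, 50-min and 4-h assessments); the function and variable names are hypothetical.

```python
def minutes_per_question(total_minutes, n_questions):
    """Average time available per question."""
    return total_minutes / n_questions

N_QUESTIONS = 6                         # long essay questions per assessment
SUGGESTED_MIN, SUGGESTED_MAX = 15, 30   # suggested minutes per discussion question (ref. 9)

# Suggested total duration for six questions, in hours: (1.5, 3.0)
suggested_total_hours = (N_QUESTIONS * SUGGESTED_MIN / 60,
                         N_QUESTIONS * SUGGESTED_MAX / 60)

mid_semester = minutes_per_question(50, N_QUESTIONS)      # 50-min assessment
end_semester = minutes_per_question(4 * 60, N_QUESTIONS)  # 4-h assessment
```

The 50-min format leaves a little over 8 min per question, below the suggested 15-min minimum, while the 4-h format allows 40 min per question, above the suggested 30-min maximum.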

In contrast, it might have been feasible for the students to use the aforementioned cheating approaches in the end of semester assessment. The students could download the assessment paper immediately after its release and ask someone to assist in completing it within the 24-h window, although no cheating was found in this assessment.7, 9 There were a number of potential factors discouraging the students from cheating in this assessment. For example, the students were aware that Turnitin was used and was able to detect cheating.17 Also, the markers were experienced teaching staff familiar with cheating detection and with the students' academic abilities.13, 17, 20 At the beginning of the course, the students were required to complete the university's mandatory academic integrity program.21 They learnt from this program that cheating is unethical behaviour and may have serious consequences. For example, contract cheating providers can blackmail them.4, 13, 20, 21, 22 Furthermore, they might have had concerns about the quality of work completed within 24 h by cheating providers.7 Besides, they might not have known of cheating approaches for the online open book assessment that were hard to detect.20 This is because the assessment method was relatively new to them. Many students did not even know how to prepare appropriately for the assessment.4

The statistically significantly lower mean score, the smaller minimum score and the non-zero fail rate of the 2020 mid-semester assessment might be due to the students' unfamiliarity with this assessment method (Table 1 and Fig. 1). Although its maximum score was higher than that of the corresponding assessment in 2019, the higher maximum score might be attributable to a student with more experience, higher intelligence and/or better literacy skills (an outlier).1, 4, 5 It seems the students' mid-semester assessment experience contributed to the improved end of semester assessment results in 2019 and 2020 (Table 1 and Fig. 1). No statistically significant mean difference was found between the two end of semester assessments, which is in line with the findings of previous studies.1 The non-zero full mark rate of the 2020 end of semester assessment can be attributed to its longer duration (Table 1). This allowed some students to fully utilise the available reference resources and write comprehensive answers.1, 4, 5 Nevertheless, this longer duration apparently affected its authenticity. 
An authentic assessment should be able to evaluate students' capability to complete real world tasks.11 According to the MRPBA, all registered Australian medical radiation practitioners are required to provide informed opinions about medically significant findings to appropriate medical personnel in a timely manner.10 This requirement is particularly important in medical emergency situations, which demand practitioners' immediate responses and do not allow a search for resources.4 As noted in a study about final year student radiographers' image interpretation performance, the average time to interpret one image was 33.25 s.23 The original assessment time limit, 50 min, appears more appropriate for evaluating the students' ability to meet the MRPBA requirement.10 However, guidelines for supporting students in preparing for the online open book assessment should be provided in the future.1

This study had two main limitations. First, this was a retrospective study rather than a randomised controlled trial, which would be able to provide more rigorous findings.24 However, a retrospective study can better reflect the real situations during the COVID-19 pandemic and is complementary to a randomised controlled trial.4, 25 Second, the study's results were based on only one unit (subject) in one Australian undergraduate medical radiation science course, although this setting is common in medical radiation science education research. To ensure rigor, multiple approaches were used to evaluate the integrity of the two online open book assessments.26

Conclusion

This study shows that the tightly time restricted (50 min) and the take home (4 h within the 24-h window) online open book assessments, which were implemented in the Medical Radiation Pathology 2 unit (subject) of the Bachelor of Science (Medical Radiation Science) course during the COVID-19 pandemic, did not have any academic integrity issues. Apparently, the strict assessment time limits played an important role in maintaining their integrity, although other factors (e.g. the use of Turnitin, the implementation of the academic integrity program, the involvement of experienced markers, the reputations and capabilities of cheating providers, etc.) might also have contributed. As a longer assessment duration increases the chance of cheating and negatively affects the authenticity of the radiologic pathology assessment, the appropriate online open book assessment duration should be the same as that of the original invigilated one. If this is not allowed, alternatives such as an online viva voce through Blackboard Collaborate Ultra should be considered to replace the online open book assessment.

Caution is necessary when transferring this study's findings to other settings. To rigorously study the impact of assessment duration on the integrity of the online open book assessment, a randomised controlled trial should be conducted. This should involve random assignment of a greater number of students to groups with different time limits for completing the same assessment paper under invigilated (control groups) and online open book (experimental groups) conditions. Also, more subjects and courses should be covered in future studies.

Footnotes

Contributor: The author contributed to the conception or design of the work, the acquisition, analysis, or interpretation of the data. The author was involved in drafting and commenting on the paper and has approved the final version.

Funding: This study did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Competing interests: The author has completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declares: no financial relationships with any organizations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

Ethical approval: The institutional review board approved this study and granted the waiver of consent on August 4, 2020 (approval number: HRE2020-0432).

References

- 1. Rakes G.C. Open book testing in online learning environments. J Interact Online Learn. 2008;7(1):1–9.

- 2. Deakin University. Ensuring academic integrity and assessment security with redesigned online delivery. 2020. Available at: http://wordpress-ms.deakin.edu.au/dteach/wp-content/uploads/sites/103/2020/03/DigitalExamsAssessmentGuide1.pdf?_ga=2.238469376.1226538136.1593148790-2108778828.1593059977

- 3. The University of Adelaide. COVID-19 updates: assessment and grades. 2020. Available at: https://www.adelaide.edu.au/covid-19/student-information/assessment-and-grades#will-exams-be-held

- 4. Jervis C.G., Brown L.R. The prospects of sitting “end of year” open book exams in the light of COVID-19: a medical student’s perspective. Med Teach. 2020;42(7):830–831. doi: 10.1080/0142159X.2020.1766668.

- 5. Macquarie University. Online exam delivery tips: the online open-book examination. 2020. Available at: https://teche.mq.edu.au/2020/04/online-exam-delivery-tips-the-online-open-book-examination/

- 6. Bretag T., Harper R., Burton M. Contract cheating and assessment design: exploring the relationship. Assess Eval High Educ. 2019;44(5):676–691.

- 7. Sutherland-Smith W., Dullaghan K. You don't always get what you pay for: user experiences of engaging with contract cheating sites. Assess Eval High Educ. 2019;44(8):1148–1162.

- 8. University of New South Wales. Why do online open-book exams deserve a place in your course? 2020. Available at: https://www.education.unsw.edu.au/news/why-do-online-open-book-exams-deserve-a-place-in-your-course

- 9. The Pennsylvania State University. Online assessment. 2020. Available at: https://sites.psu.edu/onlineassessment/analyze-evidence/

- 10. Medical Radiation Practice Board of Australia. Professional capabilities for medical radiation practitioners. 2020. Available at: https://www.medicalradiationpracticeboard.gov.au/documents/default.aspx?record=WD19%2f29238&dbid=AP&chksum=qSaH9FIsI%2ble99APBZNqIQ%3d%3d

- 11. Villarroel V., Bloxham S., Bruna D., Bruna C., Herrera-Seda C. Authentic assessment: creating a blueprint for course design. Assess Eval High Educ. 2018;43(5):840–854.

- 12. Turnitin. Advanced similarity report settings. 2020. Available at: https://help.turnitin.com/feedback-studio/turnitin-website/instructor/assignment-management/advanced-similarity-report-settings.htm

- 13. Dawson P., Sutherland-Smith W. Can markers detect contract cheating? Results from a pilot study. Assess Eval High Educ. 2018;43(2):286–293.

- 14. Duddu V. Plagiarism: avoid it like the plague. Telangana J Psychiatry. 2018;4(1):3–5.

- 15. Maxel O.J.M. Plagiarism: the cancer of East African university education. J Educ Pract. 2013;4(17):137–143.

- 16. Curtin University. Academic integrity: guide for students. 2019. Available at: https://students.curtin.edu.au/wp-content/uploads/sites/6/2019/11/AI_student_guide5.11.2019.pdf

- 17.Harper R., Bretag T., Rundle K. Detecting contract cheating: examining the role of assessment type. High Educ Res Dev. 2020 doi: 10.1080/07294360.2020.1724899. [DOI] [Google Scholar]

- 18.Bloemers W., Oud A., van Dam K. Cheating on unproctored internet intelligence tests: strategies and effects. Person Assess Dec. 2016;2(1):21–29. [Google Scholar]

- 19.Chen M. Detect multiple choice exam cheating pattern by applying multivariate statistics. Proceedings of the International Conference on Industrial Engineering and Operations Management; 2017 Oct 25-26; Bogota, Colombia. p. 173-181. 2017. http://ieomsociety.org/bogota2017/papers/38.pdf Available at:

- 20.Dawson P., Sutherland-Smith W. Can training improve marker accuracy at detecting contract cheating? A multi-disciplinary pre-post study. Assess Eval High Educ. 2019;44(5):715–725. [Google Scholar]

- 21.Yorke J., Sefcik L., Veeran-Colton T. Contract cheating and blackmail: a risky business? Stud High Educ. 2020 doi: 10.1080/03075079.2020.1730313. [DOI] [Google Scholar]

- 22.Curtin University Academic integrity program (AIP) 2020. https://students.curtin.edu.au/essentials/rights/academic-integrity/aip/ Available at:

- 23.Whitaker S., Cox W.A.S. An investigation to ascertain whether or not time pressure influences the accuracy of final year student radiographers in abnormality detection when interpreting conventional appendicular trauma radiographs: a pilot study. Radiography. 2020;26(3):e140–e145. doi: 10.1016/j.radi.2019.12.010. [DOI] [PubMed] [Google Scholar]

- 24.Styles B., Torgerson C. Randomised controlled trials (RCTs) in education research - methodological debates, questions, challenges. Educ Res. 2018;60(3):255–264. [Google Scholar]

- 25.Kim H., Lee S., Kim J.H. Real-world evidence versus randomized controlled trial: clinical research based on electronic medical records. J Korean Med Sci. 2018;33(34):e213. doi: 10.3346/jkms.2018.33.e213. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Ng C.K.C. Evidence-based education in radiography. In: Brown T., Williams B., editors. Evidence-based Education in the Health Professions. Radcliffe Publishing Ltd; London: 2015. pp. 448–468. [Google Scholar]