Abstract

Background

Secondary analysis of health administrative databases is indispensable to enriching our understanding of health trajectories, health care utilization, and real-world risks and benefits of drugs among large populations.

Objectives

This systematic review aimed at assessing evidence about the validity of algorithms for the identification of individuals suffering from nonarthritic chronic noncancer pain (CNCP) in administrative databases.

Methods

Studies reporting measures of diagnostic accuracy of such algorithms and published in English or French were searched in the Medline, Embase, CINAHL, AgeLine, PsycINFO, and Abstracts in Social Gerontology electronic databases without any dates of coverage restrictions up to March 1, 2018. Reference lists of included studies were also screened for additional publications.

Results

Only six studies focused on commonly studied CNCP conditions and were included in the review. Some algorithms showed a ≥60% combination of sensitivity and specificity values (back pain disorders in general, fibromyalgia, low back pain, migraine, neck/back problems studied together). Only algorithms designed to identify fibromyalgia cases reached a ≥80% combination (without replication of findings in other studies/databases).

Conclusions

In summary, the present investigation informs us about the limited amount of literature available to guide and support the use of administrative databases as valid sources of data for research on CNCP. Considering the added value of such data sources, the important research gaps identified in this innovative review provide important directions for future research. The review protocol was registered with PROSPERO (CRD42018086402).

Keywords: Chronic Pain, Algorithms, Diagnostic Codes, Validity, Accuracy, Sensitivity, Specificity, Administrative Databases, Claims, Back Pain, Neck Pain, Neuropathic Pain, Complex Regional Pain Syndrome, Fibromyalgia, Headache, Migraine

Introduction

Health administrative databases are commonly used to conduct epidemiologic, pharmacoepidemiologic, and pharmacoeconomic research and are indispensable to enriching our understanding of health trajectories, health care utilization, and real-world risks and benefits of drugs among large populations [1–5]. However, the validity of studies conducted with these data sources relies greatly on the accuracy of the diagnostic information used to create or characterize cohorts of patients suffering from particular health disorders [6–11]. In fact, many administrative databases used for research purposes are by-products of physician billing claims, in which diagnostic codes are entered, for example, according to the International Classification of Diseases (ICD codes) [12, 13]. Such codes are sometimes misclassified, and it has been shown that their validity varies according to the characteristics of patients, clinical conditions, health care encounters, physicians, and their billing practices [14, 15]. Moreover, the validity of a given algorithm may vary from one data source to another due to variability of database completeness across jurisdictions (e.g., remuneration methods, diagnostic code types and specificity, number of diagnostic fields) [6]. Using validated algorithms to identify and characterize specific patient populations is thus a priority in order to reduce bias in administrative database studies [10, 11].

In the field of chronic noncancer pain (CNCP), longitudinal population-based studies representative of the real-world clinical context are clearly needed [16–18]. For example, such studies are important complements to randomized clinical trials that study the benefits and risks of pain pharmacotherapy (e.g., larger sample sizes, possibility to study long-term effects, greater external validity). This is especially important in a context where CNCP treatment is characterized by off-label prescribing, polypharmacy, and multimorbidity [19–25], profiles that are often not represented in clinical trials. Using health administrative data for such purposes represents an attractive and efficient strategy. A large number of studies have used various ICD coding algorithms to identify individuals suffering from CNCP or to adjust for the presence of CNCP as a comorbidity [12, 26–52]. However, the validity of these algorithms has not always been established before their use. For instance, a previous systematic review of algorithms used to identify various health conditions in US and Canadian administrative databases suggested that only 17.5% of studies report on the validation of the algorithms used [15].

Considering that CNCP is commonly under-reported, underdiagnosed, and under-recognized in primary care settings [18, 53–57], possibly resulting in an increased likelihood of diagnostic code misclassification in administrative databases, we wonder if such data sources are valid for research on CNCP. To the best of our knowledge, evidence about the validity of case-finding algorithms for commonly studied CNCP conditions such as back pain or neuropathic pain has never been compiled and synthesized. The objective of this systematic review was to document validation studies of algorithms for the identification of individuals suffering from nonarthritic CNCP using health administrative databases.

Methods

This study is among a series of systematic reviews of validated methods for identifying various chronic diseases using health care administrative data that have been conducted by the Quebec SUPPORT Unit (Support for People and Patient-Oriented Research and Trials) as part of its mandate to implement strategies to facilitate access to and use of health research data. This work was conducted according to the Preferred Reporting Items for Systematic Review and Meta-Analysis protocols (PRISMA-P) 2015 statement [58] and recommendations specific to reviews of test accuracy [59]. The study was registered in the PROSPERO international database of systematic reviews (CRD42018086402) and can be accessed at: https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=86402.

Eligibility Criteria

To be included in the review, studies had to be original investigations reporting on the validity of algorithms for the identification of CNCP cases in administrative/claims data (studies about the quality of computerized medical records were excluded). Peer-reviewed journal articles and reports published in English or in French were eligible, and validation studies could be conducted against various types of reference standards (e.g., disease-specific registries, medical chart review, patient self-report) and about various types of codes (e.g., ICD-9, ICD-10, ICD-10CM, ICD-10CA, non-ICD codes). Commonly studied CNCP conditions such as back pain, neck pain, neuropathic pain, complex regional pain syndrome, fibromyalgia, headache, and migraine [60] were considered. In past years, several systematic reviews have been published about the validity of algorithms for the identification of individuals suffering from rheumatoid arthritis, osteoarthritis, systemic lupus erythematosus (SLE), and other rheumatic conditions such as lupus nephritis, polymyalgia rheumatica, ankylosing spondylitis, Sjögren syndrome, and vasculitis [61–64], and algorithms with acceptable diagnostic accuracy measures were reported. Therefore, retrieved studies focusing solely on these conditions were excluded from the present systematic review. As fibromyalgia is sometimes but not always classified as a rheumatic condition [65], it was included in our review. Neurodegenerative disorders (e.g., amyotrophic lateral sclerosis and other motor neuron diseases, multiple sclerosis, Parkinson’s disease, or Guillain-Barré syndrome) or abdominal painful conditions (e.g., irritable bowel syndrome, ulcerative colitis, Crohn’s disease, colonic ischemia, gastro-oesophageal reflux disease, primary sclerosing cholangitis) are not systematically considered CNCP [60, 66]. Articles about such conditions were therefore excluded (a posteriori).
If a study reported on the validity of many health conditions, only algorithms related to nonrheumatic commonly studied CNCP conditions were reviewed.

Information Sources and Search Strategy

Studies were identified on March 1, 2018, by searching the following computerized databases without any dates of coverage restrictions: Medline (EBSCO; PubMed for the past two years), Embase (Ovid), CINAHL (EBSCO), AgeLine (EBSCO), PsycINFO (EBSCO), and Abstracts in Social Gerontology (EBSCO). The search strategy was developed in collaboration with experienced medical librarians, a pain epidemiologist, and a primary care physician and included several synonyms for 1) commonly studied CNCP conditions, 2) validation studies, and 3) administrative databases (Supplementary Data). Different types of CNCP conditions, defined by the International Association for the Study of Pain (IASP) Task Force for the Classification of Chronic Pain, were represented in our search strategy [60]. All citations were entered in the citation management software Zotero, and duplicates were removed.

Study Selection

Using the Rayyan web application, the whole screening and selection process was achieved by two independent trained reviewers who met and resolved disagreements with a third party if needed. First, titles and abstracts of all citations retrieved from electronic databases were screened with the aim of identifying articles fitting the prespecified eligibility criteria. All abstracts identified by the reviewers in the abstract screening phase were then assessed in full text for inclusion. The reference list of studies included in the present review was also scanned for potential nonretrieved original investigations (snowball citation searching). At the end of the process, the final list of pain conditions and articles included in the review was validated by a pain epidemiologist and a primary care physician, who were not previously involved in the study selection process.

Data Collection Process

Using a pilot-tested standardized extraction form, data collection was achieved by one reviewer and then validated by a second reviewer (who resolved disagreements with a third party if needed). A tool containing detailed definitions of each piece of information/variable to be extracted was used by the reviewers to better standardize data collection. In the context of our study, obtaining/confirming data from investigators was not needed.

Data Items

For each study meeting the selection criteria, the following information was retrieved: authors, title of the study, state/province and country where it was conducted, administrative database to be validated, reference standard used, study population characteristics and size, and CNCP conditions under study. Moreover, each algorithm was described in detail, including its content, types of codes used, and inclusion of pharmacy claims data in the algorithm. For each algorithm, the following measures of diagnostic accuracy and their respective 95% confidence intervals (95%CI) were extracted when available: 1) sensitivity (SEN): probability that a patient is identified as a CNCP case in the administrative database given the presence of CNCP according to the reference standard, 2) specificity (SP): probability that a patient is not identified as a CNCP case in the administrative database given the absence of CNCP according to the reference standard, 3) positive predictive value (PPV): probability of suffering from CNCP according to the reference standard given that the patient is identified as a CNCP case in the administrative database, 4) negative predictive value (NPV): probability of not suffering from CNCP according to the reference standard given that the patient is not identified as a CNCP case in the administrative database, and 5) kappa coefficient: degree of agreement between the administrative database and the reference standard corrected for chance. When available, measures of diagnostic accuracy were extracted according to sex and age subgroups. All of these statistics ranged between 0 and 1 (0–100%), with higher values indicating better validity/accuracy of an algorithm [67]. To the best of our knowledge, there is no consensus regarding specific cutoffs indicating what can be considered poor vs high SEN and SP values. We chose 60% and 80% arbitrary cutoffs to ease our interpretation.
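The five measures defined above can all be derived from a single 2 × 2 cross-classification of the algorithm result against the reference standard. The following is a minimal sketch, not taken from any of the reviewed studies: the class name `TwoByTwo`, the function name `accuracy_measures`, and the counts in the usage example are hypothetical, and all denominators are assumed to be nonzero.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Hypothetical 2 x 2 table: algorithm result vs. reference standard."""
    tp: int  # identified as a case by the algorithm, case per reference standard
    fp: int  # identified as a case by the algorithm, non-case per reference standard
    fn: int  # not identified by the algorithm, case per reference standard
    tn: int  # not identified by the algorithm, non-case per reference standard


def accuracy_measures(t: TwoByTwo) -> dict:
    """Return SEN, SP, PPV, NPV, and Cohen's kappa as proportions."""
    n = t.tp + t.fp + t.fn + t.tn
    sen = t.tp / (t.tp + t.fn)
    sp = t.tn / (t.tn + t.fp)
    ppv = t.tp / (t.tp + t.fp)
    npv = t.tn / (t.tn + t.fn)
    # Kappa: observed agreement corrected for the agreement expected by chance.
    p_obs = (t.tp + t.tn) / n
    p_exp = ((t.tp + t.fp) * (t.tp + t.fn) + (t.fn + t.tn) * (t.fp + t.tn)) / n**2
    kappa = (p_obs - p_exp) / (1 - p_exp)
    return {"SEN": sen, "SP": sp, "PPV": ppv, "NPV": npv, "kappa": kappa}


# Usage with made-up counts (200 patients, 60 true cases).
measures = accuracy_measures(TwoByTwo(tp=40, fp=10, fn=20, tn=130))
```

With these made-up counts, SEN is 40/60, SP is 130/140, PPV is 40/50, and NPV is 130/150, which illustrates how an algorithm can combine modest SEN with high SP.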

Risk of Bias

The type of reference standards used in the validity studies was the main aspect considered in terms of quality of reviewed studies. In fact, the assessment of an algorithm’s validity is based on the premise that the reference standard against which it is tested represents the truth—which is not always the case. Although retrospective medical chart review is a widely applicable research methodology, such routinely collected data were not originally intended for research purposes and may be lacking in quality (misclassification and missing information) [68, 69]. One can hypothesize that the quality of clinical information about pain contained in medical charts can vary from one setting to another (primary care vs tertiary care pain clinic). As for self-reported diagnoses, their validity is not always optimal and is variable across chronic health conditions and patient populations [70–78]. Clinician-confirmed diagnoses collected in the context of disease-specific registries were thus considered a high-quality reference standard in comparison with primary care medical chart review or patient self-report. The reporting of key measures of diagnostic accuracy (SEN, SP, PPV, NPV) and external validity of results (e.g., algorithms validated in several databases) were also considered when looking at the quality of reviewed studies.

Synthesis of Results

The results of included studies were described narratively and combined in tables in order to describe 1) the characteristics of the various studies and 2) the algorithms and measures of diagnostic accuracy reported for each. The quantity and quality of available evidence were also depicted in a summary table.

Results

Study Selection

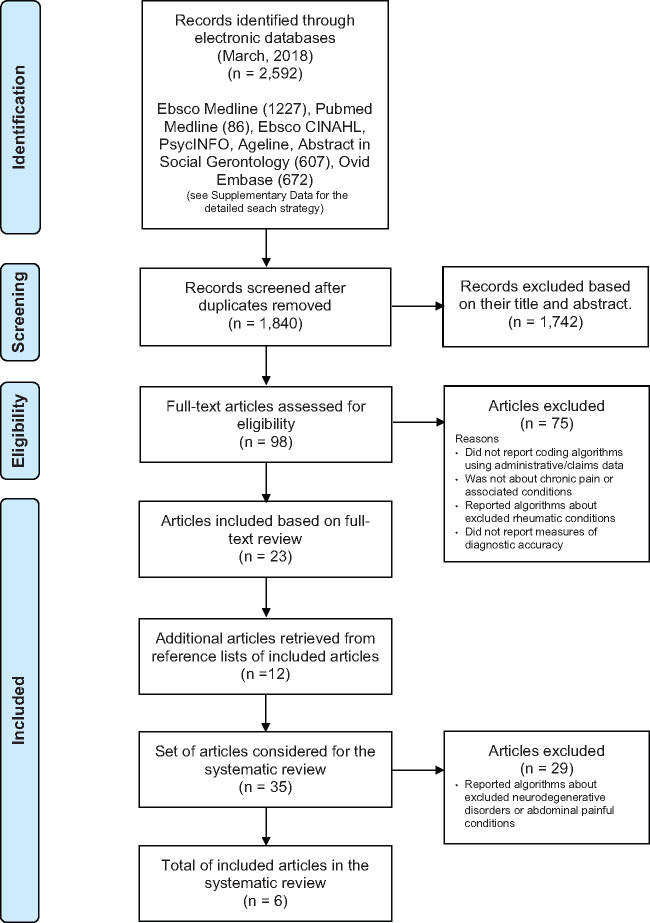

The study selection flow diagram is shown in Figure 1. A total of 1,840 articles were identified, but most were excluded based on their title and abstract. From the remaining 98 full-text articles, 75 did not meet the selection criteria. Combining the remaining articles retrieved from the electronic search (N = 23) and those identified through their reference lists (N = 12), a total of 35 journal articles were considered. After the exclusion of 29 articles about neurodegenerative disorders or abdominal painful conditions [79–107], only six studies were found to report on the validity of algorithms for identification of commonly studied CNCP: back disorders in general [108], complex regional pain syndrome [108], fibromyalgia [108–110], headache/migraine studied together [111], low back pain [108], migraine [111, 112], neck/back problems studied together [108], painful diabetic peripheral neuropathy [113], and painful neuropathic disorders in general [108].

Figure 1.

Study selection flow diagram.

Included Studies

Characteristics of the included studies are presented in Table 1 (in alphabetical order according to the last name of the first author). Two studies (33.3%) were published in the five years preceding the electronic search. Studies were all conducted using US (3/6 = 50%) or Canadian administrative databases (3/6 = 50%). Back or neck pain algorithms were only tested in a Canadian context. A given study could focus on more than one CNCP condition and test more than one algorithm for a given CNCP condition.

Table 1.

Characteristics of the studies included in the systematic review (N = 6)

| Authors | Title of the Study | Country | Administrative Database | Reference Standard Used for the Validation Study | Study Population | Chronic Pain Conditions Studied |
|---|---|---|---|---|---|---|
| 1. Hartsfield et al. 2008 | Painful diabetic peripheral neuropathy in a managed care setting: Patient identification, prevalence estimates, and pharmacy utilization patterns | Colorado, United States | Kaiser Permanente Colorado Diabetes Registry | Medical chart review | Patients aged 18 years and over (N = 300) | Painful diabetic peripheral neuropathy |
| 2. Katz et al. 1997 | Sensitivity and positive predictive value of Medicare Part B physician claims for rheumatologic diagnoses and procedures | Massachusetts, Colorado, Virginia, United States | Medicare Part B physician claims | Medical chart review (rheumatologists in separate practices) | Patients (N = 342) | Fibromyalgia (and rheumatic conditions excluded from the present review) |
| 3. Kolodner 2004 | Pharmacy and medical claims data identified migraine sufferers with high specificity but modest sensitivity | United States | Health plan enrollment and medical group files (southeastern Michigan) | Patient self-report (telephone interviews) | Patients aged 18–55 years (N = 8,579) | Headache, migraine (and other chronic conditions excluded from the present review) |
| 4. Lacasse et al. 2015 | Is the Quebec provincial administrative database a valid source for research on chronic non-cancer pain? | Quebec, Canada | Quebec Universal Health Insurance Database | Quebec Pain Registry (tertiary care provincial registry) | Patients (N = 561) | Neck and back problems, back disorders, lower back pain, complex regional pain syndrome, fibromyalgia, painful neuropathic disorders |
| 5. Marrie et al. 2012 | The incidence and prevalence of fibromyalgia are higher in multiple sclerosis than the general population: A population-based study | Manitoba, Canada | Manitoba Universal Health Insurance Database | Medical chart review | Patients (N = 430) | Fibromyalgia |
| 6. Marrie et al. 2013 | The utility of administrative data for surveillance of comorbidity in multiple sclerosis: A validation study | Manitoba, Canada | Manitoba Universal Health Insurance Database | Medical chart review | Patients (N = 430) | Migraine (and other chronic conditions excluded from the present review) |

Tested Algorithms

For the purpose of this review, each algorithm was assigned a unique identification number (ID). The detailed description of algorithms and measures of diagnostic accuracy reported for each are presented in the Supplementary Data (in alphabetical order according to the CNCP condition studied). Overall, the six included studies reported measures of diagnostic accuracy for 99 algorithms designed to identify CNCP cases in administrative/claims data. Reported accuracy measures were accompanied by their respective 95% CIs in the great majority of cases (96/99 algorithms). Only one study reported measures of diagnostic accuracy across males and females [111].

Tested algorithms designed to identify commonly studied CNCP conditions focused on back disorders in general (N = 2; ID: 1 and 2), complex regional pain syndrome (N = 4; ID: 3 to 6), fibromyalgia (N = 34; ID: 7 to 40), headache/migraine studied together (N = 12; ID: 41 to 52), low back pain (N = 3; ID: 53 to 55), migraine (N = 38; ID: 56 to 93), neck/back problems studied together (N = 2; ID: 94 and 95), painful diabetic peripheral neuropathy (N = 2; ID: 96 and 97), and painful neuropathic disorders in general (N = 2; ID: 98 and 99). Such algorithms were diverse in terms of the number of health care encounters and time window considered. For all of the CNCP conditions mentioned above, pharmacy claims data were not considered in the algorithms, except for headache and/or migraine. The four key measures of diagnostic accuracy (SEN, SP, PPV, NPV) were reported for the great majority of algorithms.
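To make the notions of "number of health care encounters" and "time window" concrete, the sketch below shows one generic way a case-finding rule of this kind could be applied to a patient's claims. It is an assumption-laden illustration rather than any of the reviewed algorithms: the function name `is_case`, the placeholder codes `"X01"` and `"X02"`, and the example claims are all hypothetical.

```python
from datetime import date


def is_case(claims, codes, min_encounters, window_days):
    """Flag a patient as a case if at least `min_encounters` claims carrying
    one of `codes` fall within any span of `window_days` days.

    `claims` is a list of (service_date, diagnostic_code) tuples.
    """
    dates = sorted(d for d, code in claims if code in codes)
    # Slide over the sorted dates: compare the first and last claim of each
    # run of `min_encounters` consecutive qualifying claims.
    for i in range(len(dates) - min_encounters + 1):
        if (dates[i + min_encounters - 1] - dates[i]).days <= window_days:
            return True
    return False


# Hypothetical patient with two qualifying claims 100 days apart.
patient_claims = [
    (date(2015, 1, 10), "X01"),
    (date(2015, 3, 2), "Z99"),   # unrelated code, ignored
    (date(2015, 4, 20), "X02"),
]
flag_one_year = is_case(patient_claims, {"X01", "X02"}, min_encounters=2, window_days=365)
flag_one_month = is_case(patient_claims, {"X01", "X02"}, min_encounters=2, window_days=30)
```

In this example the same patient is flagged under a one-year window but not under a 30-day window, which is the kind of design choice that distinguishes the algorithms compared in the review.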

As shown in the Supplementary Data, several algorithms designed to identify patients suffering from CNCP showed a ≥60% combination of SEN and SP values (≤40% chances of false negatives and false positives): back disorders in general (ID: 2), fibromyalgia (ID: 17 to 19, 23 to 40), low back pain (ID: 55), migraine (ID: 60), and neck/back problems studied together (ID: 95). Algorithms designed to identify patients suffering from complex regional pain syndrome, headache/migraine studied together, painful diabetic peripheral neuropathy, and painful neuropathic disorders in general did not reach the 60% SEN and SP cutoff. Only algorithms designed to identify fibromyalgia cases and tested in one study reached an ≥80% combination of SEN and SP values (ID: 17–19, 23–25, 30, 31, 36, 37) [110]. A summary of the quantity and quality of the available literature is presented in Table 2. The most frequently used reference standard was medical chart review (4/6 = 66.7%), followed by disease-specific patient registries (1/6 = 16.7%) and patient self-report (1/6 = 16.7%).
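The "combination" cutoffs used above require SEN and SP to both meet the threshold, so the lower of the two values determines the category. A minimal sketch of that rule follows; the function name `combined_level` and the example values are hypothetical and are not results from the reviewed studies.

```python
def combined_level(sen, sp):
    """Classify an algorithm by the arbitrary 60% and 80% cutoffs.

    `sen` and `sp` are proportions between 0 and 1. Both must reach a
    cutoff for the combination to reach it, so only the minimum matters.
    """
    lowest = min(sen, sp)
    if lowest >= 0.80:
        return ">=80%"
    if lowest >= 0.60:
        return ">=60%"
    return "<60%"


# Made-up examples: high SP cannot compensate for low SEN.
levels = [combined_level(0.85, 0.90), combined_level(0.65, 0.95), combined_level(0.30, 0.99)]
```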

Table 2.

Summary of the available literature about algorithms for identification of commonly studied CNCP

| Classic/Commonly Studied CNCP | Multiple Different Algorithms Were Tested | Quality of Reference Standards Used | Key Measures of Diagnostic Accuracy Were Calculated for Tested Algorithms | Diversity of Administrative Databases in Which Algorithms Were Tested | At Least 1 Algorithm Showed ≥60% Combination of SEN and SP | Same Algorithm Showed ≥60% Combination of SEN and SP in >1 Database | At Least 1 Algorithm Showed ≥80% Combination of SEN and SP |
|---|---|---|---|---|---|---|---|
| Back disorders in general | – (N = 2) | Pain-specific patient registry | Yes | – (N = 1) | Yes | No | |
| Complex regional pain syndrome | – (N = 4) | Pain-specific patient registry | Yes | – (N = 1) | No | No | |
| Fibromyalgia | ++ (N = 34) | Pain-specific patient registry and medical chart review | Yes (except for 2 algorithms) | + (N = 3) | Yes | No | Yes |
| Headache/migraine studied together | + (N = 12) | Patient self-report | Yes | – (N = 1) | No | No | |
| Low back pain | – (N = 3) | Pain-specific patient registry | Yes | – (N = 1) | Yes | No | |
| Migraine | ++ (N = 38) | Patient self-report + medical chart review | Yes | ± (N = 2) | Yes | No | No |
| Neck/back problems studied together | – (N = 2) | Pain-specific patient registry | Yes | – (N = 1) | Yes | No | |
| Painful diabetic peripheral neuropathy | – (N = 2) | Medical chart review | Yes | – (N = 1) | No | No | |
| Painful neuropathic disorders in general | – (N = 2) | Pain-specific patient registry | Yes | – (N = 1) | No | No | |
| Other important CNCP conditions (e.g., chronic postsurgical pain, chronic post-traumatic pain, phantom limb pain) | | | | | | | |

In the table, shaded cells = not applicable.

SEN = sensitivity; SP = specificity.

Discussion

To our knowledge, this study is the first attempt to synthesize evidence about the validity of algorithms to identify individuals suffering from nonarthritic CNCP in health administrative databases. Based on our results, a very limited amount of literature is available to support the use of administrative databases as valid sources of data for research on CNCP. However, our results yield a number of key findings, identify research gaps, and support several recommendations for future research:

Key Finding 1

Few studies (N = 6) examined the validity of algorithms to identify individuals suffering from commonly studied nonrheumatic CNCP conditions in administrative databases [108–113]. This finding is surprising and could be explained by many factors, including 1) the presence of a publication bias (only studies with results that are statistically or clinically significant are published [114]), 2) the lack of awareness that using validated algorithms is a priority to reduce bias in database studies [10, 11], and 3) the lack of awareness of the value of observational research using administrative data in the community of pain researchers. Considering the added value of such data sources in pain research, new CNCP case-finding algorithms should be developed, and well-designed validation studies should be conducted and published.

Key Finding 2

All studies were conducted in US or Canadian administrative databases. This was not a surprising result considering that they are internationally recognized health databases and have long been used by epidemiologists, pharmacoepidemiologists, and health economists [1, 3, 115, 116]. It is also consistent with other systematic reviews about the validity of algorithms for the identification of individuals suffering from osteoarthritis (100% conducted in the United States or Canada) [63] or rheumatic diseases in general (83% of included studies were conducted in the United States or Canada) [64]. This finding could imply that little is known about the quality of claims databases for pain research in many other countries. We, however, have to keep in mind that studies about the quality of computerized medical records were not included in the present review and that such databases are extensively used for research purposes outside of North America (e.g., UK’s General Practice Research Database [GPRD]) [117]. The fact that back or neck pain algorithms were not tested in US databases was surprising considering the significant burden of these conditions [118].

Key Finding 3

For many CNCP conditions, very few different algorithms were tested for accuracy (≤4 algorithms for each of the following conditions: back disorders in general, complex regional pain syndrome, low back pain, neck/back problems studied together, painful diabetic peripheral neuropathy, or painful neuropathic disorders in general).

Key Finding 4

Pharmacy claims data were not included in the commonly studied CNCP algorithms, except for headache and/or migraine. This was expected as, contrary to conditions such as arthritis where prescription claims are often used in case-finding algorithms [61], many pharmacological treatments used for CNCP are not specific to a particular type of syndrome (e.g., opioids, nonsteroidal anti-inflammatory drugs) or not specific to pain management (e.g., antidepressants, anticonvulsants, cannabinoids). However, it would be important to test and publish about the contribution of adding pharmacy claims to the equation, no matter if the results are positive or not. Key findings 3 and 4 could be explained by selective reporting and publication of results. Our findings emphasize the need for more studies aiming to develop, test, refine, and publish case-finding algorithms.

Key Finding 5

The diversity of administrative databases in which case-finding algorithms were tested is limited. In fact, none of the specific combinations of codes, time windows, and number of health care encounters was tested in more than one database. As the validity of a given algorithm could vary from one data source to another because of the variability of database completeness across jurisdictions [6], this constitutes an important limitation of available evidence.

Key Finding 6

Some algorithms designed to identify patients suffering from CNCP showed a ≥60% combination of SEN and SP values and could be useful (back disorders in general, fibromyalgia, low back pain, migraine, neck/back problems studied together). When selecting the optimal CNCP algorithm for an administrative database study, researchers should, however, assess the relative importance of SEN, SP, PPV, and NPV and prioritize the accuracy measure that is most relevant to their research question [119]. Misclassification of CNCP cases can significantly impact a study’s internal validity. For example, it could affect researchers’ capacity to correctly control for confounding, a constant challenge in observational designs [3]. Also, it is necessary to select algorithms with very high SEN for prevalence studies, as this approach minimizes the number of false negatives [119, 120]. On the other hand, if the goal is to select a CNCP cohort for upcoming studies, one might favor an algorithm that minimizes false positives, accepting the risk of missing some cases and the resulting loss of external validity.
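One reason the choice among accuracy measures matters is that, for fixed SEN and SP, the predictive values depend on how common the condition is in the population to which the algorithm is applied. The sketch below applies Bayes' theorem to illustrate this; the function names and the numerical values are hypothetical and do not come from the reviewed studies.

```python
def ppv(sen, sp, prevalence):
    """Positive predictive value from SEN, SP, and prevalence (all proportions)."""
    true_pos = sen * prevalence
    false_pos = (1 - sp) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sen, sp, prevalence):
    """Negative predictive value from SEN, SP, and prevalence (all proportions)."""
    true_neg = sp * (1 - prevalence)
    false_neg = (1 - sen) * prevalence
    return true_neg / (true_neg + false_neg)


# Same hypothetical algorithm (SEN = SP = 0.80) in two settings.
ppv_high_prevalence = ppv(0.80, 0.80, 0.50)  # e.g., a specialized clinic sample
ppv_low_prevalence = ppv(0.80, 0.80, 0.10)   # e.g., a general population sample
```

Under these assumed values, the PPV drops from 0.80 at 50% prevalence to roughly 0.31 at 10% prevalence, so an algorithm validated in a high-prevalence sample may identify a much less pure cohort when applied to a general population database.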

Key Finding 7

Only some algorithms designed to identify fibromyalgia cases reached ≥80% combination of SEN and SP values (ID: 17–19, 23–25, 30, 31, 36, 37). They were, however, tested in only one study/database and validated against medical records [110]. Possible explanations for the absence of comparable positive results in the two other studies that tested fibromyalgia algorithms are 1) the shorter case identification time windows (one year or less in the Lacasse et al. and Katz et al. studies [108, 109] vs two to five years for algorithms showing a ≥80% combination of SEN and SP values in the Marrie et al. study [110]) and 2) the reference standards used (of lower quality in the Marrie et al. study vs the Lacasse et al. study). In the future, time and resources should be invested to achieve replication of findings before concluding on the validity of an algorithm.

Key Finding 8

Algorithms designed to identify patients suffering from complex regional pain syndrome, headache/migraine studied together, painful diabetic peripheral neuropathy, and painful neuropathic disorders in general did not reach acceptable SEN and SP cutoffs. This finding can perhaps be explained by the fact that CNCP is commonly under-reported, underdiagnosed, and under-recognized in primary care settings [18, 53–57]. The challenges surrounding the diagnosis of neuropathic pain [121] and the fact that only one diagnostic code per medical visit is recorded in some administrative databases could thus explain the lack of diagnostic accuracy [108].

Key Finding 9

The quality of the reference standard used in the studies aimed at the validation of algorithms to identify individuals suffering from commonly studied CNCP conditions was variable, but many used medical chart review. When choosing a validated CNCP algorithm, researchers should be aware of the potential impact of the reference standard’s quality on the estimation of accuracy measures.

Key Finding 10

Measures of diagnostic accuracy of few algorithms were presented across males and females (only in one study of headache and/or migraine). Growing attention is given to the importance of considering sex and gender in health research [122–125]. Considering the relevance of these health determinants to the pain experience [126, 127], such subgroup stratification of validity results should be achieved when possible.

Key Finding 11

According to the available literature, the diagnostic accuracy of case-finding algorithms for CNCP (all types considered) or specific conditions such as chronic postsurgical pain, chronic post-traumatic pain, or phantom limb pain has never been investigated. An earlier study showed that as few as 0–0.36% of patients who were enrolled in a chronic pain registry had an ICD-9 pain code (307.8, 338, 338.2, or 338.4) recorded in administrative databases [108]. In another study, an algorithm combining pain-related ICD-9 codes, opioid medication, and pain scores was shown to be valid for the identification of individuals suffering from chronic pain in primary care electronic records [128]. Although this algorithm was applied to Canadian health insurance databases [129], its validity in such administrative claims was never evaluated. Once again, this emphasizes the need for studies aimed at the development/validation of new case-finding algorithms, including ways to identify patients no matter what type of CNCP they are suffering from.

Study Limitations

Despite the development of a thorough search strategy, about one-third of studies were retrieved through reference lists of articles included in the present review. This demonstrates the difficulty of identifying studies about chronic pain case-finding algorithms from electronic searches and a possible lack of consistent terminology in the scientific literature. Further studies should examine this issue and develop recommendations to that effect. Resource constraints led us to exclude a review of gray literature and an assessment of the quality of reporting of included studies, for example, using an appraisal tool such as the Quality Assessment of Diagnostic Accuracy Studies (QUADAS) tool [130]. However, important quality components of validation studies of case-finding algorithms were considered in the interpretation of our results, such as the quality of the reference standard used for validation, the reporting of all key measures of diagnostic accuracy, and the external validity of studies. As stated, our search strategy was designed to capture studies about commonly studied CNCP conditions. Another limitation of our paper is the exclusion of studies about rheumatic conditions (because systematic reviews reporting acceptable diagnostic accuracy measures were already available [61–64]). In fact, many recent studies have been published about the validity of arthritis case-finding algorithms [131–138].

Conclusions

A small number of algorithms with fair diagnostic accuracy are available to identify patients suffering from commonly studied CNCP conditions such as back and neck pain, fibromyalgia, and migraine. However, their diagnostic accuracy should always be interpreted in light of the intended purpose and the absence of evidence regarding the replicability of findings across studies and databases. According to the available literature, several CNCP conditions have no validated algorithm (existing algorithms show poor diagnostic accuracy, or none has been developed or tested at all). The present investigation highlights the limited amount of literature available to support the use of administrative databases as valid sources of data for research on CNCP. Considering the added value of such data sources, the above-mentioned research gaps provide important directions for future research. It should be noted that linking administrative databases with other data sources containing valid pain-related data (e.g., survey data, patient registries) is a valuable option until more evidence is gained in the field.

Acknowledgments

We would like to thank medical librarians Ms. Kathy Rose, Ms. Josée Toulouse, and Ms. Nathalie Rheault, who helped with the development of the electronic search strategies. Special thanks to Ms. Carol-Ann Fortin and Ms. Kassandra Gamache, who contributed to study selection and data extraction, and to Dr. Martin Fortin, who validated the content of search strategies. We would also like to thank Mr. Mohamed Walid Mardhy and Ms. Hermine Lore Nguena Nguefack, who helped with the classification of CNCP conditions and with the finalization of the manuscript, respectively.

Members of the TORSADE Cohort Working Group: Gillian Bartlett, Lucie Blais, David Buckeridge, Manon Choinière, Catherine Hudon, Anaïs Lacasse, Benoit Lamarche, Alexandre Lebel, Amélie Quesnel-Vallée, Pasquale Roberge, Alain Vanasse, Valérie Émond, Sonia Jean, Marie-Pascale Pomey, Mike Benigeri, Anne-Marie Cloutier, Marc Dorais, Josiane Courteau, Mireille Courteau, Stéphanie Plante, Annie Giguère, Isabelle Leroux, Denis Roy, Jaime Borja, André Néron, Jean-François Ethier, Roxanne Dault, Marc-Antoine Côté-Marcil, Pier Tremblay, Sonia Quirion, Jacques Rheaume, François Dubé.

Supplementary Data

Supplementary data are available at Pain Medicine online.

Funding sources: This study was supported by the Quebec SUPPORT Unit (Support for People and Patient-Oriented Research and Trials), an initiative funded by the Canadian Institutes of Health Research (CIHR), the Ministère de la Santé et des Services Sociaux du Québec, and the Fonds de Recherche du Québec Santé. These three funders were not involved in the design, the data collection or interpretation, or the publication of the review.

Conflicts of interest: The authors declare no conflicts of interest and no financial interests related to this study.

References

- 1. Schneeweiss S, Avorn J. A review of uses of health care utilization databases for epidemiologic research on therapeutics. J Clin Epidemiol 2005;58(4):323–37.

- 2. Tamblyn R, Lavoie G, Petrella L, Monette J. The use of prescription claims databases in pharmacoepidemiological research: The accuracy and comprehensiveness of the prescription claims database in Quebec. J Clin Epidemiol 1995;48(8):999–1009.

- 3. Strom BL, Kimmel SE, Hennessy S. Pharmacoepidemiology. 5th ed. Sussex: Wiley-Blackwell; 2012.

- 4. Bernatsky S, Lix L, O’donnell S, Lacaille D. Consensus statements for the use of administrative health data in rheumatic disease research and surveillance. J Rheumatol 2013;40(1):66–73.

- 5. Hashimoto RE, Brodt ED, Skelly AC, Dettori JR. Administrative database studies: Goldmine or goose chase? Evid Based Spine Care J 2014;5(2):74–6.

- 6. Lix LM, Walker R, Quan H, Nesdole R, Yang J, Chen G. Features of physician services databases in Canada. Chronic Dis Inj Can 2012;32(4):186–93.

- 7. Wilchesky M, Tamblyn RM, Huang A. Validation of diagnostic codes within medical services claims. J Clin Epidemiol 2004;57(2):131–41.

- 8. Rawson NS, D'Arcy C. Assessing the validity of diagnostic information in administrative health care utilization data: Experience in Saskatchewan. Pharmacoepidemiol Drug Saf 1998;7(6):389–98.

- 9. Terris DD, Litaker DG, Koroukian SM. Health state information derived from secondary databases is affected by multiple sources of bias. J Clin Epidemiol 2007;60(7):734–41.

- 10. Benchimol EI, Manuel DG, To T, Griffiths AM, Rabeneck L, Guttmann A. Development and use of reporting guidelines for assessing the quality of validation studies of health administrative data. J Clin Epidemiol 2011;64(8):821–9.

- 11. De Coster C, Quan H, Finlayson A, et al. Identifying priorities in methodological research using ICD-9-CM and ICD-10 administrative data: Report from an international consortium. BMC Health Serv Res 2006;6(1):77.

- 12. WHO. International Classification of Diseases, Ninth Revision. Geneva: World Health Organization; 1978.

- 13. WHO. International Classification of Diseases, Tenth Revision. Geneva: World Health Organization; 1990.

- 14. Cadieux G, Buckeridge DL, Jacques A, Libman M, Dendukuri N, Tamblyn R. Patient, physician, encounter, and billing characteristics predict the accuracy of syndromic surveillance case definitions. BMC Public Health 2012;12(166):1–12.

- 15. McPheeters ML, Sathe NA, Jerome RN, Carnahan RM. Methods for systematic reviews of administrative database studies capturing health outcomes of interest. Vaccine 2013;31(Suppl 10):K2–6.

- 16. Rowbotham MC, Gilron I, Glazer C, et al. Can pragmatic trials help us better understand chronic pain and improve treatment? Pain 2013;154(5):643–6.

- 17. Bellows BK, Kuo KL, Biltaji E, et al. Real-world evidence in pain research: A review of data sources. J Pain Palliat Care Pharmacother 2014;28(3):294–304.

- 18. Kingma EM, Rosmalen JG. The power of longitudinal population-based studies for investigating the etiology of chronic widespread pain. Pain 2012;153(12):2305–6.

- 19. Berlach DM, Shir Y, Ware MA. Experience with the synthetic cannabinoid nabilone in chronic noncancer pain. Pain Med 2006;7(1):25–9.

- 20. Fitzcharles MA, Shir Y. Management of chronic pain in the rheumatic diseases with insights for the clinician. Ther Adv Musculoskelet Dis 2011;3(4):179–90.

- 21. Giladi H, Choiniere M, Fitzcharles MA, Ware MA, Tan X, Shir Y. Pregabalin for chronic pain: Does one medication fit all? Curr Med Res Opin 2015;31(7):1403–11.

- 22. Eguale T, Buckeridge DL, Winslade NE, Benedetti A, Hanley JA, Tamblyn R. Drug, patient, and physician characteristics associated with off-label prescribing in primary care. Arch Intern Med 2012;172(10):781–8.

- 23. Fitzcharles MA, Ste-Marie PA, Goldenberg DL, et al. 2012 Canadian Guidelines for the diagnosis and management of fibromyalgia syndrome: Executive summary. Pain Res Manag 2013;18(3):119–26.

- 24. Attal N, Cruccu G, Baron R, et al. EFNS guidelines on the pharmacological treatment of neuropathic pain: 2010 revision. Eur J Neurol 2010;17(9):1113–e88.

- 25. Menditto E, Gimeno Miguel A, Moreno Juste A, et al. Patterns of multimorbidity and polypharmacy in young and adult population: Systematic associations among chronic diseases and drugs using factor analysis. PLoS One 2019;14(2):e0210701.

- 26. Berger A, Dukes EM, Oster G. Clinical characteristics and economic costs of patients with painful neuropathic disorders. J Pain 2004;5(3):143–9.

- 27. Cherkin DC, Deyo RA, Volinn E, Loeser JD. Use of the International Classification of Diseases (ICD-9-CM) to identify hospitalizations for mechanical low back problems in administrative databases. Spine (Phila Pa 1976) 1992;17(7):817–25.

- 28. Einstadter D, Kent DL, Fihn SD, Deyo RA. Variation in the rate of cervical spine surgery in Washington State. Med Care 1993;31(8):711–8.

- 29. Lachaine J, Beauchemin C, Landry PA. Clinical and economic characteristics of patients with fibromyalgia syndrome. Clin J Pain 2010;26(4):284–90.

- 30. Lachaine J, Gordon A, Choiniere M, Collet JP, Dion D, Tarride JE. Painful neuropathic disorders: An analysis of the Regie de l'Assurance Maladie du Quebec database. Pain Res Manag 2007;12(1):31–7.

- 31. Lavis JN, Malter A, Anderson GM, et al. Trends in hospital use for mechanical neck and back problems in Ontario and the United States: Discretionary care in different health care systems. CMAJ 1998;158(1):29–36.

- 32. Moritz S, Liu MF, Rickhi B, Xu TJ, Paccagnan P, Quan H. Reduced health resource use after acupuncture for low-back pain. J Altern Complement Med 2011;17(11):1015–9.

- 33. Power JD, Perruccio AV, Desmeules M, Lagace C, Badley EM. Ambulatory physician care for musculoskeletal disorders in Canada. J Rheumatol 2006;33(1):133–9.

- 34. Taylor VM, Anderson GM, McNeney B, et al. Hospitalizations for back and neck problems: A comparison between the province of Ontario and Washington State. Health Serv Res 1998;33(4 Pt 1):929–45.

- 35. Cole JA, Rothman KJ, Cabral HJ, Zhang Y, Farraye FA. Migraine, fibromyalgia, and depression among people with IBS: A prevalence study. BMC Gastroenterol 2006;6(1):26.

- 36. Beaudet N, Courteau J, Sarret P, Vanasse A. Prevalence of claims-based recurrent low back pain in a Canadian population: A secondary analysis of an administrative database. BMC Musculoskelet Disord 2013;14(151):1–8.

- 37. Beaudet N, Courteau J, Sarret P, Vanasse A. Improving the selection of true incident cases of low back pain by screening retrospective administrative data. Eur J Pain 2014;18(7):923–31.

- 38. Dworkin RH, Malone DC, Panarites CJ, Armstrong EP, Pham SV. Impact of postherpetic neuralgia and painful diabetic peripheral neuropathy on health care costs. J Pain 2010;11(4):360–8.

- 39. Kopec JA, Rahman MM, Berthelot JM, et al. Descriptive epidemiology of osteoarthritis in British Columbia, Canada. J Rheumatol 2007;34(2):386–93.

- 40. Rahme E, Hunsche E, Toubouti Y, Chabot I. Retrospective analysis of utilization patterns and cost implications of coxibs among seniors in Quebec, Canada: What is the potential impact of the withdrawal of rofecoxib? Arthritis Rheum 2006;55(1):27–34.

- 41. Tarride JE, Haq M, O'Reilly DJ, et al. The excess burden of osteoarthritis in the province of Ontario, Canada. Arthritis Rheum 2012;64(4):1153–61.

- 42. Muzina DJ, Chen W, Bowlin SJ. A large pharmacy claims-based descriptive analysis of patients with migraine and associated pharmacologic treatment patterns. Neuropsychiatr Dis Treat 2011;7:663–72.

- 43. Bigal ME, Kolodner KB, Lafata JE, Leotta C, Lipton RB. Patterns of medical diagnosis and treatment of migraine and probable migraine in a health plan. Cephalalgia 2006;26(1):43–9.

- 44. Bernstein CN, Wajda A, Svenson LW, et al. The epidemiology of inflammatory bowel disease in Canada: A population-based study. Am J Gastroenterol 2006;101(7):1559–68.

- 45. Barnabe C, Faris PD, Quan H. Canadian pregnancy outcomes in rheumatoid arthritis and systemic lupus erythematosus. Int J Rheumatol 2011;345727:1–6.

- 46. Bernatsky S, Renoux C, Suissa S. Demyelinating events in rheumatoid arthritis after drug exposures. Ann Rheum Dis 2010;69(9):1691–3.

- 47. Ehrmann Feldman D, Bernatsky S, Abrahamowicz M, et al. Consultation with an arthritis specialist for children with suspected juvenile rheumatoid arthritis: A population-based study. Arch Pediatr Adolesc Med 2008;162(6):538–43.

- 48. Ehrmann Feldman D, Bernatsky S, Houde M. The incidence of juvenile rheumatoid arthritis in Quebec: A population data-based study. Pediatr Rheumatol Online J 2009;7(20):1–4.

- 49. Suissa S, Hudson M, Ernst P. Leflunomide use and the risk of interstitial lung disease in rheumatoid arthritis. Arthritis Rheum 2006;54(5):1435–9.

- 50. Widdifield J, Bernatsky S, Paterson JM, et al. Quality care in seniors with new-onset rheumatoid arthritis: A Canadian perspective. Arthritis Care Res (Hoboken) 2011;63(1):53–7.

- 51. Tarride JE, Haq M, Nakhai-Pour HR, et al. The excess burden of rheumatoid arthritis in Ontario, Canada. Clin Exp Rheumatol 2013;31(1):18–24.

- 52. Pasricha SV, Tadrous M, Khuu W, et al. Clinical indications associated with opioid initiation for pain management in Ontario, Canada: A population-based cohort study. Pain 2018;159(8):1562–8.

- 53. Zuccaro SM, Vellucci R, Sarzi-Puttini P, Cherubino P, Labianca R, Fornasari D. Barriers to pain management: Focus on opioid therapy. Clin Drug Investig 2012;32(Suppl 1):11–9.

- 54. MacDonald NE, Flegel K, Hebert PC, Stanbrook MB. Better management of chronic pain care for all. CMAJ 2011;183(16):1815.

- 55. Sessle BJ. The pain crisis: What it is and what can be done. Pain Res Treat 2012;2012(703947):1–6.

- 56. Lalonde L, Choiniere M, Martin E, et al. Priority interventions to improve the management of chronic non-cancer pain in primary care: A participatory research of the ACCORD program. J Pain Res 2015;8:203–15.

- 57. Kress HG, Aldington D, Alon E, et al. A holistic approach to chronic pain management that involves all stakeholders: Change is needed. Curr Med Res Opin 2015;31(9):1743–54.

- 58. Moher D, Shamseer L, Clarke M, et al. Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) 2015 statement. Syst Rev 2015;4(1):1–9.

- 59. McInnes MDF, Moher D, Thombs BD, et al. Preferred Reporting Items for a Systematic Review and Meta-Analysis of Diagnostic Test Accuracy studies: The PRISMA-DTA statement. JAMA 2018;319(4):388–96.

- 60. Treede RD, Rief W, Barke A, et al. A classification of chronic pain for ICD-11. Pain 2015;156(6):1003–7.

- 61. Chung CP, Rohan P, Krishnaswami S, McPheeters ML. A systematic review of validated methods for identifying patients with rheumatoid arthritis using administrative or claims data. Vaccine 2013;31(Suppl 10):K41–61.

- 62. Moores KG, Sathe NA. A systematic review of validated methods for identifying systemic lupus erythematosus (SLE) using administrative or claims data. Vaccine 2013;31(Suppl 10):K62–73.

- 63. Shrestha S, Dave AJ, Losina E, Katz JN. Diagnostic accuracy of administrative data algorithms in the diagnosis of osteoarthritis: A systematic review. BMC Med Inform Decis Mak 2016;16(82):1–12.

- 64. Widdifield J, Labrecque J, Lix L, et al. Systematic review and critical appraisal of validation studies to identify rheumatic diseases in health administrative databases. Arthritis Care Res (Hoboken) 2013;65(9):1490–503.

- 65. Fitzcharles MA, Shir Y, Ablin JN, et al. Classification and clinical diagnosis of fibromyalgia syndrome: Recommendations of recent evidence-based interdisciplinary guidelines. Evid Based Complement Alternat Med 2013;2013:1–9.

- 66. Dworkin RH, Bruehl S, Fillingim RB, Loeser JD, Terman GW, Turk DC. Multidimensional diagnostic criteria for chronic pain: Introduction to the ACTTION-American Pain Society Pain Taxonomy (AAPT). J Pain 2016;17(9 Suppl):T1–9.

- 67. Streiner DL, Norman GR, Cairney J. Health Measurement Scales: A Practical Guide to Their Development and Use. 5th ed. New York: Oxford University Press; 2015.

- 68. Worster A, Haines T. Advanced statistics: Understanding medical record review (MRR) studies. Acad Emerg Med 2004;11(2):187–92.

- 69. Vassar M, Holzmann M. The retrospective chart review: Important methodological considerations. J Educ Eval Health Prof 2013;10(12):1–7.

- 70. Jamrozik E, Hyde Z, Alfonso H, et al. Validity of self-reported versus hospital-coded diagnosis of stroke: A cross-sectional and longitudinal study. Cerebrovasc Dis 2014;37(4):256–62.

- 71. de Menezes TN, Oliveira EC, de Sousa Fischer MA. Validity and concordance between self-reported and clinical diagnosis of hypertension among elderly residents in northeastern Brazil. Am J Hypertens 2014;27(2):215–21.

- 72. Sanchez-Villegas A, Schlatter J, Ortuno F, et al. Validity of a self-reported diagnosis of depression among participants in a cohort study using the Structured Clinical Interview for DSM-IV (SCID-I). BMC Psychiatry 2008;8(43):1–8.

- 73. Foltynie T, Matthews FE, Ishihara L, Brayne C. The frequency and validity of self-reported diagnosis of Parkinson's Disease in the UK elderly: MRC CFAS cohort. BMC Neurol 2006;6(29):1–7.

- 74. Svensson A, Lindberg M, Meding B, Sundberg K, Stenberg B. Self-reported hand eczema: Symptom-based reports do not increase the validity of diagnosis. Br J Dermatol 2002;147(2):281–4.

- 75. Kvien TK, Glennas A, Knudsrod OG, Smedstad LM. The validity of self-reported diagnosis of rheumatoid arthritis: Results from a population survey followed by clinical examinations. J Rheumatol 1996;23(11):1866–71.

- 76. Pastorino S, Richards M, Hardy R, et al. Validation of self-reported diagnosis of diabetes in the 1946 British birth cohort. Prim Care Diabetes 2015;9(5):397–400.

- 77. Formica MK, McAlindon TE, Lash TL, Demissie S, Rosenberg L. Validity of self-reported rheumatoid arthritis in a large cohort: Results from the Black Women's Health Study. Arthritis Care Res (Hoboken) 2010;62(2):235–41.

- 78. Lenderink AF, Zoer I, van der Molen HF, Spreeuwers D, Frings-Dresen MH, van Dijk FJ. Review on the validity of self-report to assess work-related diseases. Int Arch Occup Environ Health 2012;85(3):229–51.

- 79. Baldacci F, Policardo L, Rossi S, et al. Reliability of administrative data for the identification of Parkinson’s disease cohorts. Neurol Sci 2015;36(5):783–6.

- 80. Beghi E, Logroscino G, Micheli A, et al. Validity of hospital discharge diagnoses for the assessment of the prevalence and incidence of amyotrophic lateral sclerosis. Amyotroph Lateral Scler Other Motor Neuron Disord 2001;2(2):99–104.

- 81. Benatar M, Wuu J, Usher S, Ward K. Preparing for a U.S. National ALS Registry: Lessons from a pilot project in the State of Georgia. Amyotrophic Lateral Scler 2011;12(2):130–5.

- 82. Bernstein CN, Blanchard JF, Rawsthorne P, Wajda A. Epidemiology of Crohn's disease and ulcerative colitis in a Central Canadian Province: A population-based study. Am J Epidemiol 1999;149(10):916–24.

- 83. Bezzini D, Policardo L, Meucci G, et al. Prevalence of multiple sclerosis in Tuscany (Central Italy): A study based on validated administrative data. Neuroepidemiology 2016;46(1):37–42.

- 84. Bogliun G, Beghi E. Validity of hospital discharge diagnoses for public health surveillance of the Guillain-Barrè syndrome. Neurol Sci 2002;23(3):113–7.

- 85. Chastek BJ, Oleen-Burkey M, Lopez-Bresnahan MV. Medical chart validation of an algorithm for identifying multiple sclerosis relapse in healthcare claims. J Med Econ 2010;13(4):618–25.

- 86. Chiò A, Ciccone G, Calvo A, et al. Validity of hospital morbidity records for amyotrophic lateral sclerosis: A population-based study. J Clin Epidemiol 2002;55(7):723–7.

- 87. Colais P, Agabiti N, Davoli M, et al. Identifying relapses in multiple sclerosis patients through administrative data: A validation study in the Lazio Region, Italy. Neuroepidemiology 2017;48(3–4):171–8.

- 88. Culpepper WJ II, Ehrmantraut M, Wallin MT, Flannery K, Bradham DD. Veterans Health Administration multiple sclerosis surveillance registry: The problem of case-finding from administrative databases. J Rehabil Res Dev 2006;43(1):17–23.

- 89. Di Domenicantonio R, Cappai G, Arcà M, et al. Occurrence of inflammatory bowel disease in central Italy: A study based on health information systems. Dig Liver Dis 2014;46(9):777–82.

- 90. Goff SL, Feld A, Andrade SE, et al. Administrative data used to identify patients with irritable bowel syndrome. J Clin Epidemiol 2008;61(6):617–21.

- 91. Harrold LR, Andrade SE, Eisner M, et al. Identification of patients with Churg-Strauss syndrome (CSS) using automated data. Pharmacoepidemiol Drug Saf 2004;13(10):661–7.

- 92. Kaye WE, Sanchez M, Wu J. Feasibility of creating a National ALS Registry using administrative data in the United States. Amyotroph Lateral Scler Frontotemporal Degener 2014;15(5–6):433–9.

- 93. Kioumourtzoglou M-A, Seals RM, Himmerslev L, Gredal O, Hansen J, Weisskopf MG. Comparison of diagnoses of amyotrophic lateral sclerosis by use of death certificates and hospital discharge data in the Danish population. Amyotroph Lateral Scler Frontotemporal Degener 2015;16(3–4):224–9.

- 94. Legorreta AP, Ricci J-F, Markowitz M, Jhingran P. Patients diagnosed with irritable bowel syndrome. Dis Manage Health Outcomes 2002;10(11):715–22.

- 95. Lix LM, Yogendran MS, Shaw SY, Targownick LE, Jones J, Bataineh O. Comparing administrative and survey data for ascertaining cases of irritable bowel syndrome: A population-based investigation. BMC Health Serv Res 2010;10(31):1–9.

- 96. Lowe A-M, Roy P-O, B-Poulin M, et al. Epidemiology of Crohn's disease in Québec, Canada. Inflamm Bowel Dis 2009;15(3):429–35.

- 97. Marrie RA, Fisk JD, Stadnyk KJ, et al. Performance of administrative case definitions for comorbidity in multiple sclerosis in Manitoba and Nova Scotia. Chronic Dis Inj Can 2014;34(2–3):145–53.

- 98. Marrie RA, Fisk JD, Stadnyk KJ, et al. The incidence and prevalence of multiple sclerosis in Nova Scotia, Canada. Can J Neurol Sci 2013;40(6):824–31.

- 99. Marriott JJ, Chen H, Fransoo R, Marrie RA. Validation of an algorithm to detect severe MS relapses in administrative health databases. Mult Scler Relat Disord 2018;19:134–9.

- 100. Molodecky NA, Myers RP, Barkema HW, Quan H, Kaplan GG. Validity of administrative data for the diagnosis of primary sclerosing cholangitis: A population-based study. Liver Int 2011;31(5):712–20.

- 101. Ofman JJ, Ryu S, Borenstein J, et al. Identifying patients with gastroesophageal reflux disease in a managed care organization. Am J Health Syst Pharm 2001;58(17):1607–13.

- 102. Pisa FE, Verriello L, Deroma L, et al. The accuracy of discharge diagnosis coding for amyotrophic lateral sclerosis in a large teaching hospital. Eur J Epidemiol 2009;24(10):635–40.

- 103. Sands BE, Duh M-S, Cali C, et al. Algorithms to identify colonic ischemia, complications of constipation and irritable bowel syndrome in medical claims data: Development and validation. Pharmacoepidemiol Drug Saf 2006;15(1):47–56.

- 104. Stickler DE, Royer JA, Hardin JW. Accuracy and usefulness of ICD-10 death certificate coding for the identification of patients with ALS: Results from the South Carolina ALS Surveillance Pilot Project. Amyotrophic Lateral Scler 2012;13(1):69–73.

- 105. Vasta R, Boumédiene F, Couratier P, et al. Validity of medico-administrative data related to amyotrophic lateral sclerosis in France: A population-based study. Amyotrophic Lateral Scler Frontotemporal Degener 2017;18(1–2):24–31.

- 106. Widdifield J, Ivers NM, Young J, et al. Development and validation of an administrative data algorithm to estimate the disease burden and epidemiology of multiple sclerosis in Ontario, Canada. Mult Scler 2015;21(8):1045–54.

- 107. Marrie RA, Yu N, Blanchard J, Leung S, Elliott L. The rising prevalence and changing age distribution of multiple sclerosis in Manitoba. Neurology 2010;74(6):465–71.

- 108. Lacasse A, Ware MA, Dorais M, Lanctôt H, Choinière M. Is the Quebec provincial administrative database a valid source for research on chronic non-cancer pain? Pharmacoepidemiol Drug Saf 2015;24(9):980–90.

- 109. Katz JN, Barrett J, Liang MH, et al. Sensitivity and positive predictive value of Medicare Part B physician claims for rheumatologic diagnoses and procedures. Arthritis Rheum 1997;40(9):1594–600.

- 110. Marrie RA, Yu BN, Leung S, et al. The incidence and prevalence of fibromyalgia are higher in multiple sclerosis than the general population: A population-based study. Mult Scler Relat Disord 2012;1(4):162–7.

- 111. Kolodner K, Lipton RB, Lafata JE, et al. Pharmacy and medical claims data identified migraine sufferers with high specificity but modest sensitivity. J Clin Epidemiol 2004;57(9):962–72.

- 112. Marrie RA, Yu BN, Leung S, et al. The utility of administrative data for surveillance of comorbidity in multiple sclerosis: A validation study. Neuroepidemiology 2013;40(2):85–92.

- 113. Hartsfield CL, Korner EJ, Ellis JL, Raebel MA, Merenich J, Brandenburg N. Painful diabetic peripheral neuropathy in a managed care setting: Patient identification, prevalence estimates, and pharmacy utilization patterns. Popul Health Manag 2008;11(6):317–28.

- 114. Dalton JE, Bolen SD, Mascha EJ. Publication bias: The elephant in the review. Anesth Analg 2016;123(4):812–3.

- 115. Cadarette SM, Wong L. An introduction to health care administrative data. Can J Hosp Pharm 2015;68(3):232–7.

- 116. Hinds A, Lix LM, Smith M, Quan H, Sanmartin C. Quality of administrative health databases in Canada: A scoping review. Can J Public Health 2016;107(1):e56–61.

- 117. Herrett E, Thomas SL, Schoonen WM, Smeeth L, Hall AJ. Validation and validity of diagnoses in the general practice research database: A systematic review. Br J Clin Pharmacol 2010;69(1):4–14.

- 118. GBD 2017 Disease and Injury Incidence and Prevalence Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990-2017: A systematic analysis for the Global Burden of Disease Study 2017. Lancet 2018;392(10159):1789–858.

- 119. Chubak J, Pocobelli G, Weiss NS. Tradeoffs between accuracy measures for electronic health care data algorithms. J Clin Epidemiol 2012;65(3):343–9.e2.

- 120. Pekkanen J, Pearce N. Defining asthma in epidemiological studies. Eur Respir J 1999;14(4):951–7.

- 121. Haanpaa ML, Backonja MM, Bennett MI, et al. Assessment of neuropathic pain in primary care. Am J Med 2009;122(10 Suppl):S13–21. [DOI] [PubMed] [Google Scholar]

- 122. Johnson JL, Greaves L, Repta R.. Better science with sex and gender: Facilitating the use of a sex and gender-based analysis in health research. Int J Equity Health 2009;8(14):1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 123. Day S, Mason R, Tannenbaum C, Rochon PA.. Essential metrics for assessing sex & gender integration in health research proposals involving human participants. PLoS One 2017;12(8):e0182812. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 124. McGregor AJ, Hasnain M, Sandberg K, Morrison MF, Berlin M, Trott J.. How to study the impact of sex and gender in medical research: A review of resources. Biol Sex Differ 2016;7(46):61–72. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 125. Pilote L, Humphries KH.. Incorporating sex and gender in cardiovascular research: The time has come. Can J Cardiol 2014;30(7):699–702. [DOI] [PubMed] [Google Scholar]

- 126. Boerner KE, Chambers CT, Gahagan J, Keogh E, Fillingim RB, Mogil JS.. Conceptual complexity of gender and its relevance to pain. Pain 2018;159(11):2137–41. [DOI] [PubMed] [Google Scholar]

- 127. Fillingim RB, King CD, Ribeiro-Dasilva MC, Rahim-Williams B, Riley JL 3rd. Sex, gender, and pain: A review of recent clinical and experimental findings. J Pain 2009;10(5):447–85. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 128. Tian TY, Zlateva I, Anderson DR.. Using electronic health records data to identify patients with chronic pain in a primary care setting. J Am Med Inform Assoc 2013;20(e2):e275–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 129. Tonelli M, Wiebe N, Fortin M, et al. Methods for identifying 30 chronic conditions: Application to administrative data. BMC Med Inform Decis Mak 2016;15(31):1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 130. Whiting PF, Rutjes AW, Westwood ME, et al. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med 2011;155(8):529–36. [DOI] [PubMed] [Google Scholar]

- 131. Otsa K, Talli S, Harding P, et al. Administrative database as a source for assessment of systemic lupus erythematosus prevalence: Estonian experience. BMC Rheumatol 2019;3(26):1–6.

- 132. Slim ZF, Soares de Moura C, Bernatsky S, Rahme E. Identifying rheumatoid arthritis cases within the Quebec Health Administrative Database. J Rheumatol 2019;46(12):1570–6.

- 133. Park HR, Im S, Kim H, et al. Validation of algorithms to identify knee osteoarthritis patients in the claims database. Int J Rheum Dis 2019;22(5):890–6.

- 134. Linauskas A, Overvad K, Johansen MB, Stengaard-Pedersen K, de Thurah A. Positive predictive value of first-time rheumatoid arthritis diagnoses and their serological subtypes in the Danish National Patient Registry. Clin Epidemiol 2018;10:1709–20.

- 135. Curtis JR, Xie F, Chen L, Greenberg JD, Zhang J. Evaluation of a methodologic approach to define an inception cohort of rheumatoid arthritis patients using administrative data. Arthritis Care Res (Hoboken) 2018;70(10):1541–5.

- 136. Shiff NJ, Oen K, Rabbani R, Lix LM. Validation of administrative case ascertainment algorithms for chronic childhood arthritis in Manitoba, Canada. Rheumatol Int 2017;37(9):1575–84.

- 137. Hanly JG, Thompson K, Skedgel C. The use of administrative health care databases to identify patients with rheumatoid arthritis. Open Access Rheumatol 2015;7:69–75.

- 138. Kroeker K, Widdifield J, Muthukumarana S, Jiang D, Lix LM. Model-based methods for case definitions from administrative health data: Application to rheumatoid arthritis. BMJ Open 2017;7(6):e016173.
