Abstract.

Purpose: Accurate segmentation of treatment planning computed tomography (CT) images is important for radiation therapy (RT) planning. However, low soft tissue contrast in CT makes the segmentation task challenging. We propose a two-step hierarchical convolutional neural network (CNN) segmentation strategy to automatically segment multiple organs from CT.

Approach: The first step generates a coarse segmentation from which organ-specific regions of interest (ROIs) are produced. The second step produces detailed segmentation of each organ. The ROIs are generated using UNet, which automatically identifies the area of each organ and improves computational efficiency by eliminating irrelevant background information. For the fine segmentation step, we combined UNet with a generative adversarial network. The generator is designed as a UNet that is trained to segment organ structures and the discriminator is a fully convolutional network, which distinguishes whether the segmentation is real or generator-predicted, thus improving the segmentation accuracy. We validated the proposed method on male pelvic and head and neck (H&N) CTs used for RT planning of prostate and H&N cancer, respectively. For the pelvic structure segmentation, the network was trained to segment the prostate, bladder, and rectum. For H&N, the network was trained to segment the parotid glands (PG) and submandibular glands (SMG).

Results: The trained segmentation networks were tested on 15 pelvic and 20 H&N independent datasets. The H&N segmentation network was also tested on a public domain dataset () and showed similar performance. The average dice similarity coefficients () of pelvic structures are (prostate), (bladder), (rectum), and H&N structures are (PG) and (SMG). The segmentation for each CT takes on average.

Conclusions: Experimental results demonstrate that the proposed method can produce fast, accurate, and reproducible segmentation of multiple organs of different sizes and shapes, and indicate its potential applicability to different disease sites.

Keywords: deep learning, segmentation, hierarchical convolutional neural network, radiotherapy

1. Introduction

Radiation therapy (RT), in which high-energy radiation is used to kill cancer cells, is widely used for treating cancer patients. The efficacy of RT depends on accurate delivery of the therapeutic radiation dose to the target while sparing adjacent healthy tissues, for which accurate segmentation of the target tumor and organs at risk (OARs) is critical. Manual contouring by radiation oncologists is still considered the gold standard in current clinical practice, but it is very time consuming and the quality of the segmentation varies depending on the physician’s knowledge and experience. Computed tomography (CT) is used as the reference image for radiotherapy planning as it offers the electron density information needed for dose calculation. However, poor soft-tissue contrast in CT images makes the contouring process challenging, leading to large inter- and intraobserver contouring variability.1–4

Automatic organ segmentation has been an active research area for the last few decades. Among existing automatic segmentation methods, state-of-the-art methods include (but are not limited to) atlas-based, model-based, and learning-based methods. In atlas-based methods, atlas images are registered to the image to be segmented followed by atlas label propagation to the target image to get the final segmentation. Since a single atlas cannot perfectly fit every patient, use of multiple atlases has become a standard baseline for atlas-based segmentation.5–9 Although multi-atlas-based segmentation has been widely adopted with state-of-the-art segmentation quality, it requires a significant amount of time as it involves multiple registrations between the atlas and the target volumes. Model-based segmentation utilizes a deformable model and/or a priori knowledge of the target such as shape, intensity, and texture to constrain the segmentation process.10–15 It is often used in combination with another method, e.g., multi-atlas-based segmentation, to further improve the segmentation quality. Such hybrid approaches have been applied to the delineation of head and neck (H&N) structures on CT images, showing promising results.10,16–18 These model-based methods require fine-tuning parameters for every structure to be segmented and are sensitive to structure and image quality variations. Learning-based methods train a classifier or regressor from a pool of training images. Then the segmentation is generated by predicting the likelihood map.19,20 Conventional learning-based approaches require hand-crafted feature extraction and the segmentation quality significantly depends upon the extracted features.

In the last several years, deep learning-based automatic segmentation has demonstrated its potential in accurate and consistent organ segmentation. In particular, convolutional neural network (CNN) became state-of-the-art in solving challenging image classification and segmentation problems due to its capability of extracting deep image features.21–23 CNN architecture consists of several hidden convolutional layers followed by an activation function and pooling layers that enable automatic feature learning to accomplish classification and segmentation tasks. CNN-based segmentation approaches have been widely applied to both normal organ and tumor segmentation problems,23,24 significantly improving the segmentation performance over other state-of-the-art methods. The introduction of a fully convolutional network (FCN) especially enabled image segmentation with arbitrary image sizes through efficient and robust learning and inference.25 One of the most successful FCN approaches in medical image segmentation is UNet, an FCN with skip connection and capability of extracting contextual features from contracting layers and structural information from expansion layers.26 UNet and its variants have shown very promising results in automatic medical image segmentation.27–33

CNN-based automatic segmentation approaches have been widely used for multiple organ segmentations in CT images. Roth et al.34 proposed a multi-level CNN model for pancreas segmentation. Wang et al.32 developed an FCN combined with dilated convolution and deep supervision for prostate segmentation. Men et al.35 used a deep dilated CNN to segment the clinical target volume and pelvic OARs. Kazemifar et al.31 proposed a CNN-based segmentation of CT male pelvic organs using two-dimensional (2-D) UNet. Balagopal et al.36 proposed a cascaded multi-channel 2-D and three-dimensional (3-D) UNet with aggregated residual networks to segment male pelvic CT images. Wang et al.33 used UNet with boundary-sensitive information to segment male pelvic structures. Dong et al.37 proposed a UNet combined with generative adversarial network (GAN)38 to segment multiple organs in thorax CT. Recently, CNN has been used for organ segmentation in magnetic resonance imaging (MRI),28,30,39–42 achieving promising results by exploiting the excellent soft tissue contrast in MRI, which improves identification of organ boundaries. However, considering that CT is used as the reference image for RT planning, automatic segmentation of CT images is highly desired despite being more challenging than segmenting magnetic resonance (MR) images.

CNN-based models have also shown promising results in segmenting H&N structures. Existing patch-based networks to segment H&N anatomical structures used 2-D/3-D local patches in a sliding window to identify OARs, which are unable to capture global features.43–46 These patch-based methods also require pre- and postprocessing steps with additional parameter tuning. Chan et al.47 proposed a cascaded CNN for single- and multi-task learning through transfer learning for H&N anatomy segmentation using a limited number of training samples. Hänsch et al.48 explored the potential of 2-D, 2-D ensemble, and 3-D UNet-based models in parotid gland segmentation. Zhu et al.49 proposed a UNet-based model with residual blocks for the segmentation of OARs in H&N where the data imbalance problem is addressed using a combination of dice and focal loss functions. Tong et al.50 presented a CNN model with GAN and shape constraint to segment H&N structures in MR and CT images.

Although many CNN-based approaches require a preprocessing step that reshapes or crops input images to a proper size and resolution for the segmentation network and/or try to segment multiple organs simultaneously, several groups investigated hierarchical or multi-level CNN segmentation approaches to automatically segment target organ(s) of interest. This strategy has been used to segment the pancreas,34,51,52 esophagus,53 and also multiple organs in the abdomen,54 H&N,55 and male pelvic region36 in CT images, demonstrating improved efficiency and segmentation performance by localizing the target region and letting the segmentation network focus on the local region around the target organ to be segmented.

In this paper, we propose a hierarchical coarse-to-fine volumetric segmentation of CT where a coarse segmentation is produced using a multi-class 3-D UNet to determine organ-specific regions of interest (ROIs) and fine segmentation is performed utilizing GAN with a 3-D UNet as generator and FCN as discriminator. By learning to distinguish between real and generated segmentations, the GAN constrains the generator toward realistic segmentations and thus globally improves segmentation accuracy. Unlike many existing hierarchical/multi-level approaches that are based on 2-D/2.5-D images34,53 and/or patches with sliding or tiling strategies,34,51,52,54 our network is fully 3-D-based: it processes the input 3-D CT volume and outputs the associated multiple organ segmentations. Our method is similar to those of Balagopal et al.36 (2-D localization network + modified 3-D UNet segmentation network) and Wang et al.55 (3-D UNet bounding box network with sliding + 3-D UNet segmentation network), which first create bounding boxes for the organs to be segmented and then segment the cropped images using a (modified) UNet. In contrast, our approach creates organ-specific ROIs by 3-D multi-class segmentation, and the fine segmentation is performed using a 3-D UNet constrained by GAN, thus producing improved segmentation as reported in our experiments and results. Our initial approach and preliminary results on male pelvic CT segmentation were reported in a conference paper.56 Here, we extend our approach to two different anatomical sites, male pelvic and H&N CT images, and train and test the proposed networks on a much larger cohort of data. The proposed approach showed robust, state-of-the-art performance on both sites, demonstrating its generalizability to multiple sites and organs. H&N in particular involves many OARs and therefore requires significant contouring effort, so it can benefit from the proposed method. We present the complete methodology and extensive validation results using larger (internal and external) datasets in this paper.

2. Datasets and Preprocessing

We trained and tested the proposed hierarchical CNN to segment multiple organs in CT images of two different disease sites, the male pelvic and H&N regions. Deidentified CT image data and associated contours drawn by the attending radiation oncologists were obtained from the patients’ RT plan records under the approval of the institutional review board.

2.1. Male Pelvic CT

We obtained 290 pelvic CT images from prostate cancer patients who were treated by either external-beam RT (EBRT) or brachytherapy. Each patient had CT images of the pelvic region and manual contouring of the prostate, bladder, and rectum drawn by the attending radiation oncologist. We used 275 datasets for training and the remaining 15 for testing. We augmented the training datasets to 1100 by applying random shifting, rotation, and flipping. The CT images have isotropic in-plane pixel sizes ranging from 1.17 to 1.36 mm and through-plane slice spacing of 3 mm. The sizes of the CT images range from .

In these prostate cancer patients’ CT images, there are fiducial markers (for EBRT cases) or brachytherapy seeds (for brachytherapy cases) implanted within the gland, creating very high-intensity values. We preprocessed the CT images to remove such fiducials and seeds by automatically identifying them through thresholding and replacing their intensity values with the mean intensity of the neighboring gland voxels.
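The thresholding-and-replacement step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name, the threshold of 2000, and the one-voxel neighborhood radius are all assumptions, and plain nested lists indexed as `[z][y][x]` are used only to keep the sketch dependency-free.

```python
def remove_bright_markers(volume, threshold=2000.0, radius=1):
    """Replace voxels above `threshold` with the mean of nearby voxels below it.

    `threshold` and `radius` are hypothetical values chosen for illustration.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    cleaned = [[row[:] for row in sl] for sl in volume]  # copy, input is untouched
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if volume[z][y][x] <= threshold:
                    continue
                # Collect neighbors that are not themselves marker voxels.
                neighbors = [
                    volume[k][j][i]
                    for k in range(max(0, z - radius), min(nz, z + radius + 1))
                    for j in range(max(0, y - radius), min(ny, y + radius + 1))
                    for i in range(max(0, x - radius), min(nx, x + radius + 1))
                    if volume[k][j][i] <= threshold
                ]
                if neighbors:
                    cleaned[z][y][x] = sum(neighbors) / len(neighbors)
    return cleaned
```

A marker voxel whose whole neighborhood is also above the threshold is left unchanged here; a larger radius or a second pass would be needed for large seed clusters.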

For coarse segmentation network training, we downsampled the original CT image to voxel resolution with an image size of . The coarse segmentation network produced a rough segmentation of the multiple organs of interest, i.e., prostate, bladder, and rectum, from which we extracted the ROI for each organ. The original CT was then cropped for each ROI, yielding cropped CT images of , , and for the prostate, bladder, and rectum, respectively, with a voxel size of for the successive fine segmentations. Notice that we did not use the original CT resolution due to varying in-plane resolutions and chose these ROIs and voxel sizes to sufficiently cover each organ, considering the graphics processing unit (GPU) memory for processing 3-D volume.

2.2. H&N CT

The performance of the proposed method was further evaluated for salivary glands segmentation in H&N CT. We collected 220 CT images from H&N cancer patients treated by EBRT. We used 200 datasets for training and 20 datasets for testing. The parotid glands (PG) and submandibular glands (SMG) were contoured by the attending radiation oncologists during the routine RT planning. The CT images had with an isotropic in-plane pixel size of 0.94 to 1.36 mm and through-plane slice spacing of 3 to 3.13 mm.

Similar to the pelvic cases, we downsampled the original H&N CT to resolution with the volume dimension of for the coarse segmentation network training. We augmented the training data by shifting, rotation, and flipping, yielding 800 training datasets. The coarse segmentation network produced a rough segmentation of the salivary glands from which we extracted the ROIs of the left and right side of PG and SMG. The original CT images were then cropped using the ROIs to produce a PG ROI image of and SMG ROI image of . Since salivary glands are much smaller than the pelvic organs, we were able to process the fine segmentation at the original CT resolution. Also, given the symmetric nature of both PG and SMG, we merged the flipped left PG and SMG with the right PG and SMG and trained only two networks for the fine segmentation: one for PG and the other for SMG. Since the coarse segmentation network segments left and right glands separately, laterality is known and can be restored after the fine segmentation.
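The left-right merging and the later restoration of laterality can be illustrated with a short sketch. It assumes that the last index of a `[z][y][x]` volume runs along the patient's left-right axis; the function names and the `"left"`/`"right"` strings are hypothetical.

```python
def flip_left_right(volume):
    """Mirror a [z][y][x] volume along x (assumed to be the left-right axis)."""
    return [[row[::-1] for row in sl] for sl in volume]


def to_right_sided(roi, side):
    """Present every gland ROI to the network as if it were a right-side gland."""
    return flip_left_right(roi) if side == "left" else roi


def restore_laterality(mask, side):
    """Undo the mirroring on the predicted mask so it matches the original CT."""
    return flip_left_right(mask) if side == "left" else mask
```

Because mirroring is its own inverse, applying `restore_laterality` to the output for a left gland returns the mask to the orientation of the original image.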

We also tested the network, trained on our local data as described above, on the Public Domain Database for Computational Anatomy (PDDCA) version 1.4.1.57 This dataset includes 48 H&N CT images, among which 38 datasets have manual segmentations of both left and right PG and SMG. These 38 CT images had with a pixel size of 0.88 to 1.27 mm and through-plane slice spacing of 2 to 3 mm.

3. Methods

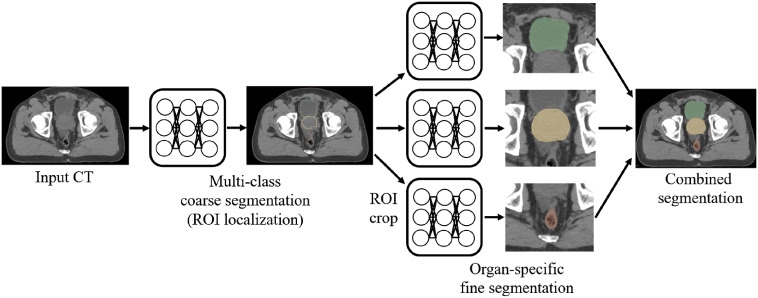

The proposed hierarchical CNN segmentation approach consists of two steps: coarse and fine segmentations as shown in Fig. 1. The coarse segmentation generates organ-specific ROIs that are used to crop the input CT for each organ. The fine segmentation then processes the cropped ROI volume to produce high-quality segmentation of each organ. Finally, the segmented organ masks are merged to form a multi-organ segmentation of the patient. The following sections describe each step in detail.

Fig. 1.

Workflow of the proposed hierarchical segmentation method.
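The final merging step of the workflow can be sketched as below, assuming each fine segmentation returns a binary mask together with the corner index of its ROI in the full volume. The function name, the tuple layout, and the rule that the first organ written keeps a contested voxel are assumptions made for illustration; masks produced at a resampled voxel size would first need to be resampled back to the CT grid.

```python
def merge_organ_masks(shape, organ_results):
    """Paste per-organ ROI masks into one multi-organ label map.

    shape: (nz, ny, nx) of the full CT volume.
    organ_results: list of (label, (z0, y0, x0), mask), where mask is a
    [z][y][x] binary ROI mask and (z0, y0, x0) is its corner in the volume.
    """
    nz, ny, nx = shape
    label_map = [[[0] * nx for _ in range(ny)] for _ in range(nz)]
    for label, (z0, y0, x0), mask in organ_results:
        for dz, sl in enumerate(mask):
            for dy, row in enumerate(sl):
                for dx, value in enumerate(row):
                    z, y, x = z0 + dz, y0 + dy, x0 + dx
                    inside = 0 <= z < nz and 0 <= y < ny and 0 <= x < nx
                    # Voxels already claimed by an earlier organ are kept.
                    if value and inside and label_map[z][y][x] == 0:
                        label_map[z][y][x] = label
    return label_map
```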

3.1. Coarse Segmentation

In the coarse segmentation step, multi-label segmentation is performed on the downsampled CT by a multi-class 3-D UNet. Organ-specific ROIs are extracted from the computed labels, each containing the corresponding organ of interest. The original CT volume contains a large background that carries contextual information irrelevant to the organs to be segmented. Including such background does not help the segmentation of each organ; rather, it burdens the feature space and increases computational complexity. The computed ROIs allow us to crop the original CT to smaller volumes that compactly include the organs to be segmented, and therefore to reduce computational complexity while improving the segmentation performance. Note that the coarse segmentation network is trained to segment all the organs of interest together instead of segmenting them separately. CT images are downsampled to a lower resolution to further save computational resources and time, as only coarse-segmented labels are required in this step.

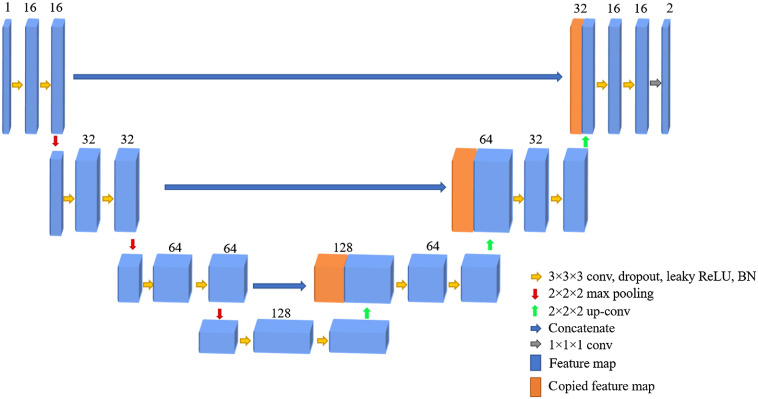

The modified 3-D UNet architecture used for coarse segmentation consists of contraction and expansion paths each with four layers. Each layer of the contraction path is composed of two convolutions ( conv), followed by a leaky rectified linear unit (ReLU) activation function,58 and pooling. In each layer, batch normalization (BN) is added to speed up the learning process by reducing sensitivity to parameter initialization59 and dropout to prevent overfitting.60 The number of feature maps in the first layer is 16, which doubles at each successive layer. The expansion path has a similar architecture to the contraction path except that in each layer it has a up-convolution ( up-conv) that halves the number of feature maps. The last convolution () layer maps the output features to the desired number of labels. Skip connections are used to transfer features extracted from the early contraction path to the expansion path.
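As a small worked example of the feature-map schedule described above (16 in the first layer, doubling over four contraction layers), the following sketch lists the channel counts. The function names are hypothetical, and how the halving is arranged across the four expansion layers is not specified here, so only the per-step rule is shown.

```python
def encoder_feature_maps(first_layer=16, num_layers=4):
    """Feature maps per contraction layer: start at `first_layer`, double each layer."""
    return [first_layer * 2 ** level for level in range(num_layers)]


def after_up_conv(feature_maps):
    """An up-convolution in the expansion path halves the number of feature maps."""
    return feature_maps // 2


print(encoder_feature_maps())  # [16, 32, 64, 128]
```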

Once this first network is trained, it produces a coarse segmentation map of the organs of interest, which is then used to automatically extract ROIs for every organ. Based on the coarse segmentation, the centroid of each organ is calculated, and the ROI of each organ is then cropped from the original image such that the centroid of the segmented organ is the center of the cropped ROI. The size of each ROI was chosen to be large enough to cover the organ and sufficient surrounding background context.
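The centroid-based ROI extraction can be sketched as follows. This is an illustrative version under stated assumptions: volumes are nested lists indexed `[z][y][x]`, the centroid is assumed to be already expressed in the index space of the volume being cropped (a centroid found on the downsampled grid would first be scaled to the original grid), and shifting the box to stay inside the image is a choice made here, not a detail given in the text.

```python
import math


def organ_centroid(label_map, label):
    """Mean (z, y, x) index of all voxels equal to `label`, or None if absent."""
    sums, count = [0, 0, 0], 0
    for z, sl in enumerate(label_map):
        for y, row in enumerate(sl):
            for x, value in enumerate(row):
                if value == label:
                    sums[0] += z
                    sums[1] += y
                    sums[2] += x
                    count += 1
    return tuple(s / count for s in sums) if count else None


def crop_roi(volume, center, size):
    """Crop a box of `size` centered on `center`, shifted to stay inside the volume.

    Returns the cropped ROI and its corner index (z0, y0, x0).
    """
    dims = (len(volume), len(volume[0]), len(volume[0][0]))
    starts = []
    for c, s, n in zip(center, size, dims):
        if s > n:
            raise ValueError("ROI is larger than the volume")
        start = math.floor(c - (s - 1) / 2 + 0.5)  # nearest index to the ideal corner
        starts.append(min(max(start, 0), n - s))   # clamp to the image bounds
    z0, y0, x0 = starts
    sz, sy, sx = size
    roi = [[row[x0:x0 + sx] for row in sl[y0:y0 + sy]] for sl in volume[z0:z0 + sz]]
    return roi, (z0, y0, x0)
```

Returning the corner index alongside the ROI makes it straightforward to place the fine segmentation back into the full volume afterwards.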

3.2. Fine Segmentation

The fine segmentation network takes the organ-specific cropped CT images obtained from the coarse segmentation as input and segments each organ of interest. Organ-specific fine segmentation CNNs are designed using 3-D UNet and GAN as shown in Fig. 2. The GAN consists of generator and discriminator networks, which compete with each other to produce an accurate segmentation. A modified 3-D UNet is used as the generator and trained using the cropped CT images and manual segmentations. As shown in Fig. 3, this 3-D UNet has an architecture similar to that described for the coarse segmentation network. The generator produces the predicted label of the organ of interest. The discriminator, on the other hand, is a standard FCN composed of four strided convolutions, each with dropout and BN, followed by a fully connected layer (Fig. 4). The discriminator is trained using manually contoured labels to determine whether the generator-predicted labels are real or fake.

Fig. 2.

The UNet-GAN architecture for fine segmentation.

Fig. 3.

Generator network.

Fig. 4.

Discriminator network.

For GAN, the generator network G and the discriminator network D are trained simultaneously. The objective of G is to learn the distribution of the dataset, taking as input a variable sampled from a uniform or Gaussian distribution. The purpose of D is to classify whether an image comes from the training dataset or from G. To define the cost function of the GAN, let a and b denote labels for fake (generator-produced labels) and real data (labels from training data), respectively. Then the cost functions for D and G are defined using a least squares loss function61 as follows:

| (1) |

| (2) |

To maximize the similarity between the generator-produced and the ground truth segmentations, we also compute a weighted dice loss defined as

| (3) |

where for each class , is the class weight, is the binary label at each pixel , and is the predicted binary label. In case of multi-class coarse segmentation, in Eq. (3) represents the class label for each organ to be segmented. For the fine segmentation, as only one class needs to be predicted. The final objective function for the generator is defined as the sum of the least squares generator loss and the weighted dice loss as follows:

| (4) |

where λ ∈ [0,1].
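To make the structure of these losses concrete, the sketch below implements one common form of each term. It is a hedged illustration rather than the authors' exact formulation: the fake and real target labels of 0 and 1, the factor of one half, the particular weighted dice expression, and the way λ scales the dice term are all assumptions, and every function name is hypothetical.

```python
def lsgan_discriminator_loss(d_real, d_fake, fake_label=0.0, real_label=1.0):
    """Least squares loss for D: outputs on real data are pulled toward
    `real_label`, outputs on generator-produced data toward `fake_label`."""
    real_term = sum((d - real_label) ** 2 for d in d_real) / len(d_real)
    fake_term = sum((d - fake_label) ** 2 for d in d_fake) / len(d_fake)
    return 0.5 * (real_term + fake_term)


def lsgan_generator_loss(d_fake, real_label=1.0):
    """Least squares loss for G: D's outputs on generated data should look real."""
    return 0.5 * sum((d - real_label) ** 2 for d in d_fake) / len(d_fake)


def weighted_dice_loss(truth, pred, weights, eps=1e-6):
    """One minus the weighted mean Dice over classes.

    truth, pred: one flat list of per-voxel values per class.
    weights: one class weight per class.
    """
    total = 0.0
    for g, p, w in zip(truth, pred, weights):
        overlap = sum(gi * pi for gi, pi in zip(g, p))
        total += w * (2.0 * overlap + eps) / (sum(g) + sum(p) + eps)
    return 1.0 - total / sum(weights)


def generator_objective(d_fake, truth, pred, weights, lam=0.5):
    """Adversarial term plus a dice term scaled by lam in [0, 1] (assumed form)."""
    return lsgan_generator_loss(d_fake) + lam * weighted_dice_loss(truth, pred, weights)
```

With a single class and a prediction identical to the ground truth, the dice term is zero and the objective reduces to the adversarial term alone.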

3.3. Network Implementation

We implemented the proposed CNNs using Tensorflow62 and used the following settings for both coarse and fine segmentation networks. The initial learning rate was set to . We used an Adam optimizer, a stochastic gradient descent-based optimizer that adaptively estimates the lower order moments and automatically adjusts step size during optimization.63 Dropout rate was 0.25 and the mini-batch size was 16. We trained and tested our network on a workstation with an Intel Xeon processor with 32 GB RAM and NVIDIA GeForce GTX TITAN X GPU with 12 GB memory.
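For readers unfamiliar with Adam, a single update for one scalar parameter can be sketched as follows. The learning rate and the decay and epsilon constants are commonly used defaults assumed for illustration, not the settings used in this work.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update. m and v are running estimates of the first and second
    moments of the gradient; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly `lr` regardless of the gradient's magnitude; this is the adaptive step size referred to above.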

4. Experiments and Results

4.1. Network Training and Computation Time

For pelvic images, training for the coarse and fine segmentation networks took 15 and 32 h, respectively. In the testing phase, coarse segmentation took 3 s and fine segmentation for each organ took 4 s. For the H&N images, training of the coarse and fine segmentation networks took 15 and 28 h, respectively. The computation time for coarse segmentation was 3 s and fine segmentation for each organ took 4 s.

4.2. Evaluation Criteria

We quantitatively assessed the automatic segmentation quality using six metrics in comparison to the ground truth segmentation.64 To measure the degree of overlap between the automatic and ground truth segmentations, we computed the dice similarity coefficient (DSC). To compare the distances between the surfaces of the automatic and ground truth segmentations, we computed mean surface distance (MSD) and 95% Hausdorff distance (HD95), i.e., the 95th percentile of the distances between the surface points of the automatic and ground truth segmentations. Instead of maximum HD, HD95 was computed to discard the impact of a small subset of inaccurate segmentation while evaluating the overall segmentation quality. Finally, to measure the accurately segmented portion among the automatic segmentation, we computed positive predictive value (PPV) and sensitivity (SEN), defined as the volume of overlap between the ground truth and automatic segmentations divided by the volume of the automatic segmentation and by the volume of the ground truth segmentation, respectively.
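The overlap and surface metrics can be sketched in a few lines. The version below is a simplified illustration under stated assumptions: segmentations are given as sets of voxel coordinates, surfaces as lists of points in millimeters, distances from both directions are pooled before taking the mean and the 95th percentile, and a nearest-rank percentile is used. Other conventions for HD95 exist, and the function names are hypothetical.

```python
import math


def overlap_metrics(truth, auto):
    """Return (DSC, PPV, SEN) for two non-empty sets of voxel coordinates."""
    tp = len(truth & auto)                      # voxels in both segmentations
    dsc = 2.0 * tp / (len(truth) + len(auto))
    ppv = tp / len(auto)                        # fraction of automatic that is correct
    sen = tp / len(truth)                       # fraction of ground truth recovered
    return dsc, ppv, sen


def _nearest_distances(src, dst):
    """Distance from each point in src to its closest point in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def surface_distances(truth_surface, auto_surface):
    """Return (MSD, HD95) from two lists of surface points given in mm."""
    d = sorted(_nearest_distances(truth_surface, auto_surface)
               + _nearest_distances(auto_surface, truth_surface))
    msd = sum(d) / len(d)
    rank = max(0, math.ceil(0.95 * len(d)) - 1)  # nearest-rank 95th percentile
    return msd, d[rank]
```

The brute-force nearest-point search is quadratic in the number of surface points; a distance transform or spatial index would be preferable for full-resolution volumes.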

4.3. Pelvic Segmentation

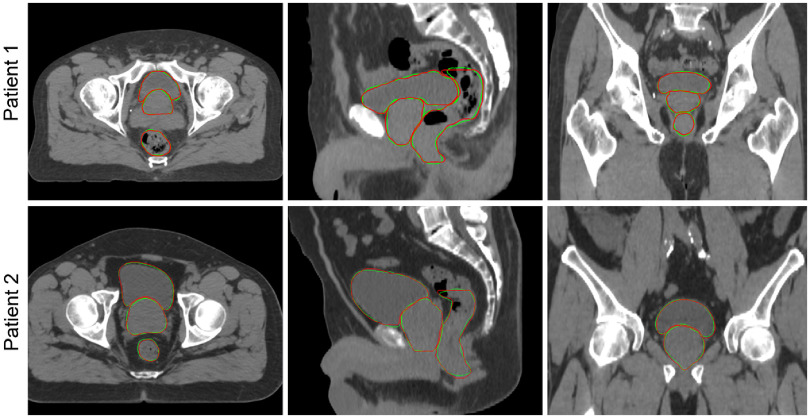

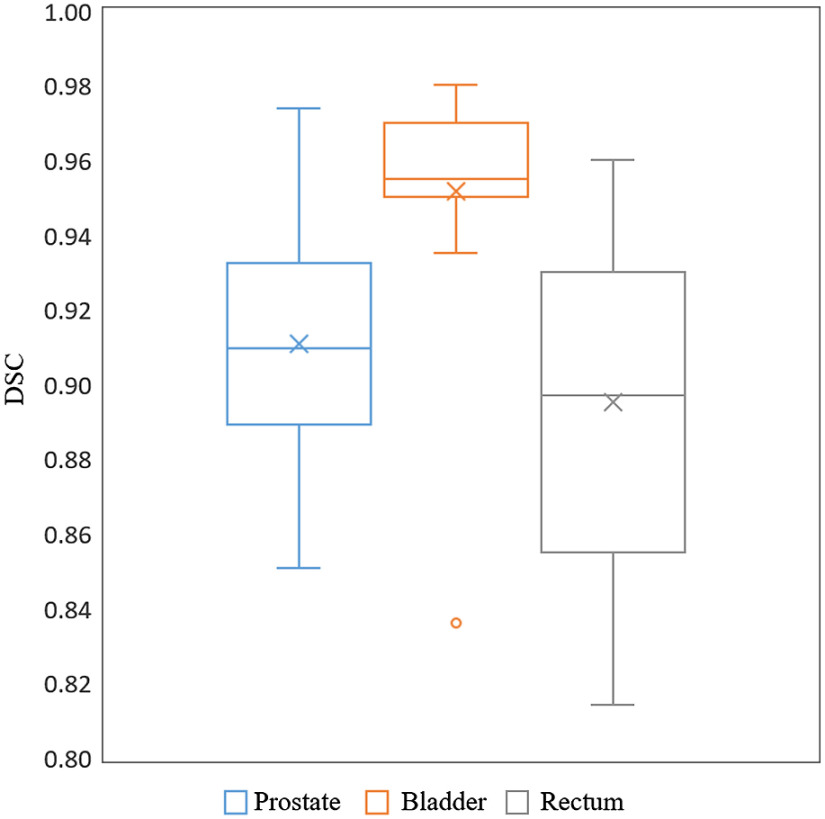

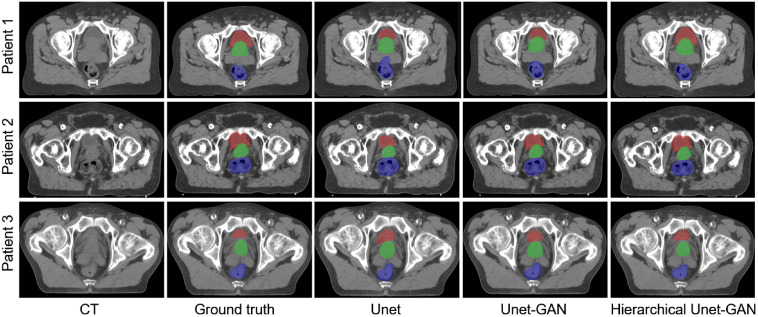

Example segmentations of two test cases are shown in Fig. 5 and the quantitative segmentation accuracy of all 15 test cases in terms of DSC is shown in Fig. 6. Our training datasets included full, half, and empty bladders so that the trained network was capable of segmenting them correctly as shown in Fig. 5. It should be noted that we used contours drawn by the attending radiation oncologist for the patients’ RT planning to train and test the proposed network. There was slight variation among physicians regarding where to stop contouring the rectum superiorly (rectum-sigmoid boundary), which caused a slightly reduced autosegmentation performance for the rectum. To reflect the real clinical scenario, we did not attempt to modify the rectum contour from what was defined by the attending radiation oncologist. Notice that the network produced segmentations similar to the physician’s manual segmentations.

Fig. 5.

Example segmentations of the prostate, bladder, and rectum shown in axial, sagittal, and coronal planes. Each row represents a different patient. Note that the bladder shapes are significantly different between these two cases. Green, automatic segmentation and red, manual segmentation.

Fig. 6.

Box and whisker plots for DSC of the three segmented pelvic organs. The boxes show 25th and 75th percentiles and the centerline inside each box indicates the median value. “×” marks indicate mean values over 15 cases.

To demonstrate the benefit of incorporating GAN and performing organ-specific fine segmentation in the proposed hierarchical approach, we compared the proposed hierarchical UNet-GAN segmentation with two other CNN approaches: (1) multi-class UNet and (2) multi-class UNet-GAN, where both networks were trained to segment the prostate, bladder, and rectum simultaneously. Both of the multi-class CNNs were trained using 275 CT images with augmentation where the ROI of dimensions was cropped from original CT with a voxel size of . The cropped ROI includes the prostate, bladder, rectum, and enough background context.

Quantitative and qualitative comparisons are reported in Table 1 and shown in Fig. 7, respectively. In general, multi-class UNet achieved a reasonable segmentation performance as reported in the prior studies.19,56,65 We observed that erroneously segmented regions in the multi-class UNet were often improved when GAN was incorporated as shown in Fig. 7. We believe that GAN constrained the network to produce segmentations of which shapes vary within a reasonable range of variation and are close to human experts’ manual segmentations. The proposed hierarchical UNet-GAN achieved superior performance to the multi-class UNet and UNet-GAN approaches with an overall DSC () of , and for the prostate, bladder and rectum, respectively. Although the number of test cases was small, we performed Wilcoxon signed rank test for the DSC scores to assess the statistical significance of the performance difference between the proposed hierarchical UNet-GAN and multi-class UNet/UNet-GAN (Table 1). It was observed that there were significant improvements in prostate and bladder segmentations with , (vs UNet/UNet-GAN), respectively, whereas the difference was not statistically significant for the rectum segmentation (). The proposed method also achieved lower MSD and HD95 than the other two methods for all three structures. Note that MSD is less than 1.8 mm for all structures, which can be considered excellent segmentation performance given the original CT image resolution ( on average). The main reason for the superior performance of the proposed method to the other methods is twofold. First, the fine segmentation focuses only on a specific organ confined within a compact ROI that contains enough contextual information around the target organ while excluding unrelated (or very weakly related) background information outside the ROI. 
Second, the incorporation of GAN enables adversarial training, which further strengthens the segmentation network to produce accurate segmentation by penalizing segmentation with irregular shapes that are inconsistent with the experts’ manual segmentation.
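The paired significance test used above can be illustrated with a small, dependency-free sketch of the two-sided exact Wilcoxon signed rank test. It drops zero differences and assigns average ranks to ties, which are common conventions assumed here; the function name is hypothetical, and in practice a statistics library would normally be used.

```python
def wilcoxon_signed_rank(x, y):
    """Two-sided exact Wilcoxon signed rank test for paired samples.

    Returns (statistic, p_value), where the statistic is the smaller of the
    positive and negative rank sums.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]   # drop zero differences
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks2 = [0] * len(diffs)                         # ranks doubled so ties stay integer
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks2[order[k]] = i + j + 2              # twice the average of ranks i+1..j+1
        i = j + 1
    w_plus2 = sum(r for r, d in zip(ranks2, diffs) if d > 0)
    total2 = sum(ranks2)
    stat2 = min(w_plus2, total2 - w_plus2)
    # Null distribution: every rank carries a + or - sign with probability 1/2.
    # counts[s] is the number of sign patterns whose positive (doubled) rank sum is s.
    counts = [1] + [0] * total2
    for r in ranks2:
        for s in range(total2, r - 1, -1):
            counts[s] += counts[s - r]
    tail = sum(counts[: stat2 + 1])
    p_value = min(1.0, 2.0 * tail / 2 ** len(diffs))
    return stat2 / 2.0, p_value
```

For example, with five pairs in which the first method is better every time, the statistic is 0 and the two-sided p-value is 2/32 = 0.0625, the smallest value attainable with five pairs; this illustrates why small test sets limit the achievable significance.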

Table 1.

Quantitative comparison of pelvic CT segmentation performance of different methods (mean ± SD).

| Metrics | Method | Prostate | Bladder | Rectum |
|---|---|---|---|---|
| DSC | Hierarchical UNet-GAN | | | |
| | UNet-GAN | () | () | () |
| | UNet | () | () | () |
| MSD (mm) | Hierarchical UNet-GAN | | | |
| | UNet-GAN | | | |
| | UNet | | | |
| HD95 (mm) | Hierarchical UNet-GAN | | | |
| | UNet-GAN | | | |
| | UNet | | | |
| PPV | Hierarchical UNet-GAN | | | |
| | UNet-GAN | | | |
| | UNet | | | |
| SEN | Hierarchical UNet-GAN | | | |
| | UNet-GAN | | | |
| | UNet | | | |

Note: p-values were computed between UNet-GAN/UNet and hierarchical UNet-GAN through Wilcoxon signed rank test. is considered statistically significant.

Fig. 7.

Examples of segmentations for (green) prostate, (red) bladder, and (blue) rectum using three different approaches. Each row shows an axial view of a different patient.

Table 2 shows quantitative comparison between the proposed method and existing state-of-the-art methods. Although these methods used different datasets, this comparison allows us to assess the performance of the proposed methods in (indirect) comparison to other methods. In terms of DSC, our method outperformed both model-based and regression forest-based machine learning methods.15,19,20 In comparison to three CNN-based approaches,31,33,36 our method showed the best performance overall, outperforming all three for the prostate segmentation while being comparable or slightly better for the bladder and rectum segmentations.

Table 2.

Pelvic CT segmentation performance comparison with other state-of-the-art methods (DSC, ).

4.4. H&N Segmentation

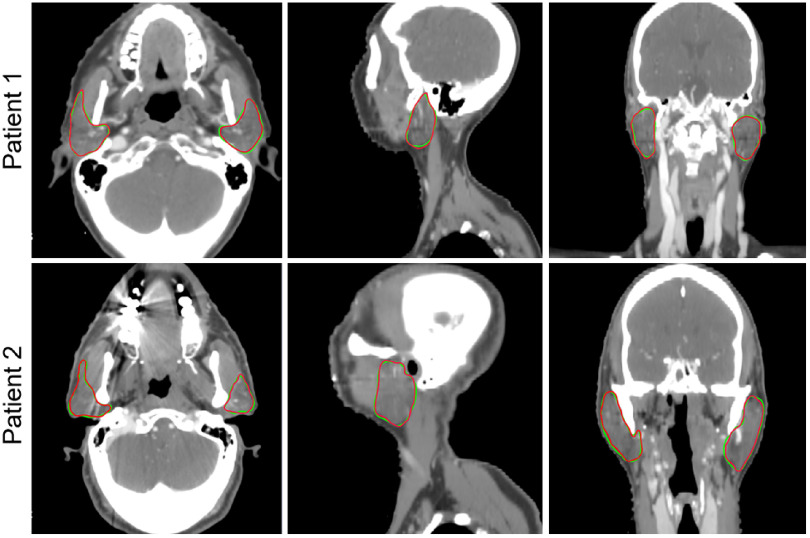

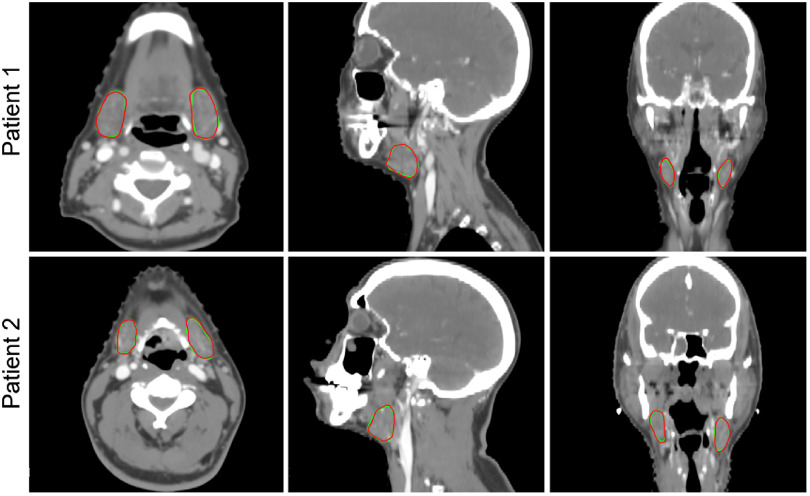

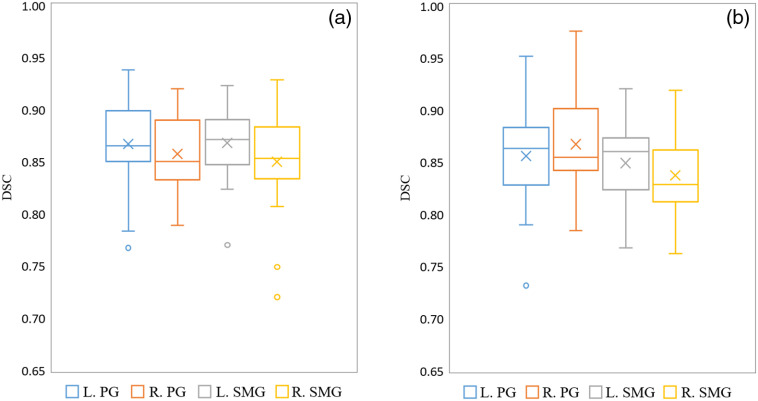

We segmented PG and SMG for 20 H&N CT test datasets. Two example cases are shown in Figs. 8 and 9. The distribution of DSC over 20 cases is shown in Fig. 10(a). As in the pelvic cases, the performance of the proposed method is compared with multi-class UNet and multi-class UNet-GAN segmentations, and the quantitative comparison is reported in Table 3. It is observed that incorporating GAN into UNet improves the segmentation performance, and the proposed hierarchical UNet-GAN achieves better segmentation performance compared to the other two methods. These performance improvements are consistent with the trend in the pelvic cases and demonstrate the benefit of incorporating GAN and utilizing a hierarchical approach. The average DSCs for PG and SMG are 0.87 and 0.86, respectively. We also performed Wilcoxon signed rank test for the DSC scores to assess the statistical significance of the performance difference between the proposed hierarchical UNet-GAN and multi-class UNet/UNet-GAN (Table 3). It was observed that there were significant improvements in both glands with and (versus UNet/UNet-GAN) for the PG and SMG (left–right combined), respectively.

Fig. 8.

Examples of PG segmentations. Each row shows axial, sagittal, and coronal views of a different patient. Green, automatic segmentation and red, manual segmentation.

Fig. 9.

Examples of SMG segmentations. Each row shows axial, sagittal, and coronal views of a different patient. Green, automatic segmentation and red, manual segmentation.

Fig. 10.

Box and whisker plots for DSC of PG and SMG segmentations: (a) internal datasets with 20 cases and (b) PDDCA dataset with 38 cases. The boxes show 25th and 75th percentiles and the centerline inside each box indicates the median value. “×” marks indicate mean values.

Table 3.

Quantitative comparison of H&N CT segmentation performance of different methods (mean ± SD).

| Metrics | Method | Left PG | Right PG | Left SMG | Right SMG |
|---|---|---|---|---|---|
| DSC | Hierarchical UNet-GAN | | | | |
| | UNet-GAN | () | () | () | () |
| | UNet | () | () | () | () |
| MSD (mm) | Hierarchical UNet-GAN | | | | |
| | UNet-GAN | | | | |
| | UNet | | | | |
| HD95 (mm) | Hierarchical UNet-GAN | | | | |
| | UNet-GAN | | | | |
| | UNet | | | | |
| PPV | Hierarchical UNet-GAN | | | | |
| | UNet-GAN | | | | |
| | UNet | | | | |
| SEN | Hierarchical UNet-GAN | | | | |
| | UNet-GAN | | | | |
| | UNet | | | | |

Note: p-values were computed between UNet-GAN/UNet and hierarchical UNet-GAN through Wilcoxon signed rank test. is considered statistically significant.

To assess the generalizability of the proposed method, we applied our trained network to the 38 H&N CTs in the PDDCA dataset that have both PG and SMG segmentations. Although trained on a different dataset, the proposed hierarchical UNet-GAN was able to achieve similar segmentation performance for both PG and SMG. The distribution of DSC over 38 cases is shown in Fig. 10(b) and the quantitative performance is reported in Table 4.

Table 4.

Quantitative results on PDDCA dataset (mean ± SD).

| | Left PG | Right PG | Left SMG | Right SMG |
|---|---|---|---|---|
| DSC | | | | |
| MSD (mm) | | | | |
| HD95 (mm) | | | | |
| PPV | | | | |
| SEN | | | | |

We also compared the proposed hierarchical UNet-GAN with seven existing state-of-the-art methods as shown in Table 5. These methods are based on multi-atlas,7 deformable model using landmarks,10 hierarchical vertex regression to learn shape and appearance,66 patch-based CNN,44 convolutional dense-net with shape constraint and GAN,50 two-stage 3-D UNets (3-D UNet bounding box network with sliding + 3-D UNet segmentation network),55 and 3-D UNet with residual blocks.49 It should be noted that some of these methods50,66,67 used the PDDCA dataset to evaluate segmentation performance. Evaluation using the PDDCA dataset allows a direct and fair comparison among the competing methods. As reported in Table 5, the proposed method outperformed these state-of-the-art methods in terms of DSC. The only exception is the method using 3-D UNet with residual blocks,49 which achieved slightly better DSC (average of left and right is 0.875) for PG compared to the proposed method (average of left and right is 0.865). However, the proposed method achieved the best performance for SMG segmentation among all the methods compared.

Table 5.

H&N CT segmentation performance comparison with other state-of-the-art methods (DSC).

5. Discussion

In this paper, we proposed a hierarchical coarse-to-fine segmentation network to automatically segment multiple organs from CT images for RT planning. In the coarse segmentation stage, a less time-consuming multi-class coarse segmentation of multiple organs of interest is performed using whole CT images. This multi-class segmentation is used to localize the ROI of each organ, which is subsequently used in the fine segmentation stage. This organ localization network helps to remove less important background and improve the efficiency of the fine segmentation by constraining the segmentation within the specific region of the organ. The fine segmentation network is designed with a modified 3-D UNet combined with GAN. GAN performs adversarial training by distinguishing between ground truth and predicted segmentations. The combined dice and GAN loss guides the training process to better handle inconsistent and irregular shapes, thus producing more accurate segmentations. Segmentation comparisons with other variations of UNet reported in Tables 1 and 3 show the contribution of GAN-based adversarial training integrated with UNet.
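The ROI localization step described above can be illustrated with a minimal sketch that derives a padded bounding box for one organ from a coarse multi-class label map and crops the CT accordingly. The function name `organ_roi`, the label values, and the margin of 2 voxels are hypothetical choices for illustration; the ROI sizes and margins actually used in this work are not restated here.

```python
import numpy as np

def organ_roi(coarse_labels, organ_label, margin=2):
    """Bounding box of one organ in a coarse label map, padded by `margin`
    voxels and clipped to the volume. Returns None if the organ is absent."""
    idx = np.argwhere(coarse_labels == organ_label)
    if idx.size == 0:
        return None
    lo = np.maximum(idx.min(axis=0) - margin, 0)
    hi = np.minimum(idx.max(axis=0) + 1 + margin, coarse_labels.shape)
    return tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))

# Toy coarse segmentation with two organs (labels 1 and 2 are assumptions).
coarse = np.zeros((20, 20, 20), dtype=np.uint8)
coarse[5:9, 6:12, 10:14] = 1
coarse[12:16, 3:7, 2:6] = 2

ct = np.random.default_rng(0).normal(size=coarse.shape)  # stand-in for a CT volume
roi = organ_roi(coarse, organ_label=1, margin=2)
patch = ct[roi]             # sub-volume passed to the fine segmentation step
print(patch.shape)          # (8, 10, 8)
```

Cropping in this way is what lets the fine network see only the neighborhood of a single organ; the margin trades robustness to coarse-stage errors against ROI size.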

In multi-class segmentation, class imbalance is a common problem in which small structures are prone to being underrepresented compared to larger structures.68 In the proposed method, we perform single-class segmentation of each organ instead of multi-class segmentation, which potentially improves the quality of each organ's segmentation regardless of its size. Tables 1 and 3 show that the single-class segmentation always outperforms one-step multi-class segmentation. Another advantage of the single-class segmentation network is that the network training can utilize all available data even if some labels are missing, whereas the multi-class segmentation network typically requires a complete set of labels for all structures unless additional constraints to handle missing labels are incorporated.23 However, one limitation of the single-class segmentation is that it requires more networks to be trained, one for each organ. In the proposed method, each organ at the fine segmentation step has a much smaller ROI than the combined ROI for multi-class segmentation, which enables much more efficient network training and faster execution.
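To make the two points above concrete (size imbalance between structures, and the ability to train per-organ networks when some contours are missing), here is a minimal sketch that splits a multi-class label map into per-organ binary targets and reports each organ's voxel fraction. The label values and helper names are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical label convention for a pelvic case; 0 is background.
LABELS = {"prostate": 1, "bladder": 2, "rectum": 3}

def binary_targets(label_map, present):
    """One binary mask per organ, skipping organs without a contour."""
    return {name: (label_map == value)
            for name, value in LABELS.items() if name in present}

def voxel_fractions(label_map):
    """Fraction of the volume occupied by each organ."""
    total = label_map.size
    return {name: np.count_nonzero(label_map == value) / total
            for name, value in LABELS.items()}

label_map = np.zeros((10, 10, 10), dtype=np.uint8)
label_map[1:3, 1:3, 1:3] = 1     # small structure: 8 voxels
label_map[4:9, 4:9, 4:9] = 2     # large structure: 125 voxels
# The rectum is not contoured in this toy case.

targets = binary_targets(label_map, present={"prostate", "bladder"})
fractions = voxel_fractions(label_map)
print(sorted(targets))           # ['bladder', 'prostate']
print(fractions["prostate"], fractions["bladder"])  # 0.008 0.125
```

In this toy case the case can still contribute to the prostate and bladder networks even though the rectum label is absent, whereas a multi-class target would be incomplete.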

The proposed method was validated on two disease sites: the pelvic and H&N regions. Validation on multiple disease sites indicates that the proposed method is versatile and generalizes to organs of diverse shape and size.

Automatic segmentation of pelvic organs is a crucial step for the effective treatment of prostate cancer using RT. Over the past years, several prostate segmentation methods have been proposed using atlas-based, model-based, and, most recently, deep-learning-based approaches.19,20,31,33,36,69 We have compared our proposed method with these state-of-the-art methods and shown that the proposed method outperforms them with reliable, accurate, and reproducible organ segmentation performance.

The proposed hierarchical UNet-GAN method was also employed to segment salivary glands in H&N CT images. PG and SMG are responsible for producing saliva. Excessive irradiation of these organs may cause side effects such as xerostomia, which may lead to late complications including poor dental hygiene, oral infections, sleep disturbances, and difficulty in swallowing.70 Therefore, these glands are routinely contoured for effective RT planning to minimize RT-induced toxicities. The segmentation results of PG and SMG using our proposed method show promising outcomes when compared with the performance of existing methods. This segmentation performance is comparable to that of human experts, considering the significant interobserver variability between experts’ manual segmentations of H&N structures.4,71

Performing a fair comparison with different segmentation methods is difficult, as they are often designed and optimized for a specific problem as well as tuned and tested on a specific dataset. Reimplementing these methods or utilizing available open-source tools may allow us to directly compare their performance on the same data, but this may lead to an unfair comparison because the given parameters may not be optimal for other data. A slight change of parameters may also cause a significant change in performance. Instead, we used the publicly available PDDCA dataset that was used in the MICCAI 2015 H&N autosegmentation grand challenge.57 Such a validation using independent public datasets provides a frame of reference to compare results from different competing segmentation methods. Quantitative results on the PDDCA dataset, as reported in Table 4, show performance consistent with that on the internal dataset, demonstrating the generalizability of our network to other datasets. Furthermore, indirect comparison to other state-of-the-art segmentation methods shows the excellent performance of the proposed method relative to existing methods.

We used 275 prostate and 200 H&N data sets to train our networks. These data sets were augmented by rotation, translation, and lateral flipping to yield 1100 prostate and 800 H&N training data sets. These numbers are comparable to or exceed the number of training sets used in other CNN-based segmentation approaches in the literature31,33,36,44,49,50,55 and allowed us to train our networks to produce the reported promising performance. We observed that the segmentation performance degraded when we reduced the number of training data sets. Given the limited set of available data, we had to maximize the training data, leaving a small number of testing data sets. Although the numbers of test cases (15 prostate and 58 [20 internal and 38 external] H&N cases) are also comparable to other studies, extended testing on a much larger cohort of test cases may be needed to perform a rigorous statistical analysis.
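The reported counts correspond to a fourfold increase (1100/275 = 4 and 800/200 = 4). One reading, which is an assumption here, is that each case contributes the original plus one rotated, one translated, and one flipped copy. The sketch below illustrates only lateral flipping and integer-voxel translation applied identically to an image and its label map; rotation is omitted because it requires interpolation, and the axis convention, shift values, and fill choices are hypothetical.

```python
import numpy as np

def lateral_flip(volume, axis=2):
    """Mirror a volume along the axis assumed to be left-right."""
    return np.flip(volume, axis=axis)

def translate(volume, shift, fill=0):
    """Shift a volume by integer voxel offsets; exposed voxels get `fill`."""
    out = np.full_like(volume, fill)
    src, dst = [], []
    for s, n in zip(shift, volume.shape):
        if s >= 0:
            src.append(slice(0, max(n - s, 0)))
            dst.append(slice(s, n))
        else:
            src.append(slice(-s, n))
            dst.append(slice(0, max(n + s, 0)))
    out[tuple(dst)] = volume[tuple(src)]
    return out

def augment(image, label, shift=(0, 1, -1)):
    """Return the original pair plus a flipped and a translated copy."""
    return [
        (image, label),
        (lateral_flip(image), lateral_flip(label)),
        (translate(image, shift, fill=image.min()), translate(label, shift)),
    ]

# Toy image/label pair standing in for a CT volume and its contour mask.
image = np.arange(24, dtype=np.float32).reshape(2, 3, 4)
label = (image > 11).astype(np.uint8)
pairs = augment(image, label)
shifted = pairs[2][0]

factor_prostate = 1100 // 275    # augmentation factor implied by the counts
factor_hn = 800 // 200
print(len(pairs), factor_prostate, factor_hn)   # 3 4 4
```

The key design point is that every geometric transform is applied with the same parameters to the image and to its label map, so that the contours stay aligned with the anatomy.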

In this paper, we have presented segmentation results for a limited number of organs in each disease site. In addition to the prostate, bladder, and rectum, our method can be extended to include more structures such as the bowel, femoral head, seminal vesicle, and sigmoid that are commonly contoured for prostate RT planning. For H&N, including other structures such as the brain, brainstem, eyes, optic nerve, optic chiasm, mandible, pituitary gland, thyroid, and larynx is our next step toward more efficient H&N RT planning. We are confident that the current network can be readily used to segment these organs without much modification.

Finally, we have used CT images to train and test the proposed method in this study. The same network can be used with other image modalities such as MRI, and may lead to similar or higher accuracy depending on the organ visibility in those image modalities.

6. Conclusion

This paper presented an end-to-end, CNN-based automatic multi-organ segmentation method for CT images using a hierarchical UNet-GAN with automatic ROI localization of the organs to be segmented. The automatic ROI extraction improved both computational efficiency and segmentation accuracy by allowing the fine segmentation network to focus only on the region of each organ. The fine segmentation of each organ is performed using UNet-GAN, in which the generator and discriminator compete with each other to improve segmentation accuracy. To avoid the class imbalance problem of multi-class segmentation, we performed single-class training, which improved segmentation accuracy over multi-class segmentation. We performed extensive experimental validation using clinical data and showed that the proposed method outperformed other state-of-the-art methods. The proposed method can potentially improve the efficiency of RT planning for cancer treatment by reducing the burden of tedious manual contouring.

Acknowledgments

This work was supported by the National Cancer Institute (NCI), National Institutes of Health (NIH) under Grant No. R01CA151395.

Biographies

Sharmin Sultana is a postdoctoral researcher in the Department of Radiation Oncology at Johns Hopkins University. She received her BS and MS degrees in computer science and engineering from the University of Dhaka in 2009 and 2011, respectively, and her PhD in computational modeling and simulation engineering from Old Dominion University in 2017. Her current research interests include medical image analysis, computer vision, and deep learning.

Adam Robinson is a research assistant in the Department of Radiation Oncology at Johns Hopkins University. He received his BS degree in physics and mathematics from the University of Maryland Baltimore County in 2012, and his MS degree in applied and computational mathematics in 2016. His current research interests include medical image analysis and machine learning applied to radiation therapy.

Daniel Y. Song serves as a professor in the Department of Radiation Oncology at Johns Hopkins University. His research focus is on technological innovations for improving the practice of prostate brachytherapy, as well as the conduct of clinical trials in innovative methods of radiotherapy for prostate cancer and other genitourinary malignancies. He performed some of the original research testing the feasibility of hydrogel spacers and establishing their benefit in reducing dose to the rectum.

Junghoon Lee is an associate professor in the Department of Radiation Oncology at Johns Hopkins University. He received his BS in electrical engineering and MS in biomedical engineering in 1997 and 1999, respectively, from Seoul National University, Republic of Korea, and his PhD in electrical and computer engineering from Purdue University in 2006. His research interests are in image processing and computer vision with applications to medical imaging problems.

Disclosures

No conflicts of interest to report.

Contributor Information

Sharmin Sultana, Email: ssultan5@jhmi.edu.

Adam Robinson, Email: arobin60@jhu.edu.

Daniel Y. Song, Email: dsong2@jhmi.edu.

Junghoon Lee, Email: junghoon@jhu.edu.

References

- 1. Steenbergen P., et al., “Prostate tumor delineation using multiparametric magnetic resonance imaging: inter-observer variability and pathology validation,” Radiother. Oncol. 115(2), 186–190 (2015). 10.1016/j.radonc.2015.04.012

- 2. Fiorino C., et al., “Intra-and inter-observer variability in contouring prostate and seminal vesicles: implications for conformal treatment planning,” Radiother. Oncol. 47(3), 285–292 (1998). 10.1016/S0167-8140(98)00021-8

- 3. Lee W. R., et al., “Interobserver variability leads to significant differences in quantifiers of prostate implant adequacy,” Int. J. Radiat. Oncol. Biol. Phys. 54(2), 457–461 (2002). 10.1016/S0360-3016(02)02950-4

- 4. Nelms B. E., et al., “Variations in the contouring of organs at risk: test case from a patient with oropharyngeal cancer,” Int. J. Radiat. Oncol. Biol. Phys. 82(1), 368–378 (2012). 10.1016/j.ijrobp.2010.10.019

- 5. Sjöberg C., et al., “Clinical evaluation of multi-atlas based segmentation of lymph node regions in head and neck and prostate cancer patients,” Radiat. Oncol. 8(1), 229 (2013). 10.1186/1748-717X-8-229

- 6. Acosta O., et al., “Evaluation of multi-atlas-based segmentation of CT scans in prostate cancer radiotherapy,” in IEEE Int. Symp. Biomed. Imaging: From Nano to Macro, pp. 1966–1969 (2011). 10.1109/ISBI.2011.5872795

- 7. Han X., et al., “Atlas-based auto-segmentation of head and neck CT images,” Lect. Notes Comput. Sci. 5242, 434–441 (2008). 10.1007/978-3-540-85990-1_52

- 8. Wang Y., et al., “A quality control model that uses PTV-rectal distances to predict the lowest achievable rectum dose, improves IMRT planning for patients with prostate cancer,” Radiother. Oncol. 107(3), 352–357 (2013). 10.1016/j.radonc.2013.05.032

- 9. Asman A. J., Landman B. A., “Non-local statistical label fusion for multi-atlas segmentation,” Med. Image Anal. 17(2), 194–208 (2013). 10.1016/j.media.2012.10.002

- 10. Qazi A. A., et al., “Auto-segmentation of normal and target structures in head and neck CT images: a feature-driven model-based approach,” Med. Phys. 38(11), 6160–6170 (2011). 10.1118/1.3654160

- 11. Huang J., et al., “An improved level set method for vertebra CT image segmentation,” Biomed. Eng. Online 12(Suppl 1), S1–16 (2013). 10.1186/1475-925X-12-S1-S1

- 12. Qian X., et al., “An active contour model for medical image segmentation with application to brain CT image,” Med. Phys. 40(2), 21911 (2013). 10.1118/1.4774359

- 13. Kaus M. R., McNutt T., Pekar V., “Automated 3D and 4D organ delineation for radiation therapy planning in the pelvic area,” Proc. SPIE 5370, 346–356 (2004). 10.1117/12.534822

- 14. Costa M. J., et al., “Automatic segmentation of bladder and prostate using coupled 3D deformable models,” Lect. Notes Comput. Sci. 4791, 252–260 (2007). 10.1007/978-3-540-75757-3_31

- 15. Martinez F., et al., “Segmentation of pelvic structures for planning CT using a geometrical shape model tuned by a multi-scale edge detector,” Phys. Med. Biol. 59(6), 1471 (2014). 10.1088/0031-9155/59/6/1471

- 16. Chen A., et al., “Combining registration and active shape models for the automatic segmentation of the lymph node regions in head and neck CT images,” Med. Phys. 37(12), 6338–6346 (2010). 10.1118/1.3515459

- 17. Gorthi S., et al., “Segmentation of head and neck lymph node regions for radiotherapy planning using active contour-based atlas registration,” IEEE J. Sel. Top. Signal Process. 3(1), 135–147 (2009). 10.1109/JSTSP.2008.2011104

- 18. Pekar V., et al., “Head and neck auto-segmentation challenge: segmentation of the parotid glands,” in Med. Image Comput. and Comput. Assist. Intervention (MICCAI), pp. 273–280 (2010).

- 19. Gao Y., et al., “Accurate segmentation of CT male pelvic organs via regression-based deformable models and multi-task random forests,” IEEE Trans. Med. Imaging 35(6), 1532–1543 (2016). 10.1109/TMI.2016.2519264

- 20. Shao Y., et al., “Locally-constrained boundary regression for segmentation of prostate and rectum in the planning CT images,” Med. Image Anal. 26(1), 345–356 (2015). 10.1016/j.media.2015.06.007

- 21. Shen D., Wu G., Suk H.-I., “Deep learning in medical image analysis,” Annu. Rev. Biomed. Eng. 19, 221–248 (2017). 10.1146/annurev-bioeng-071516-044442

- 22. Rawat W., Wang Z., “Deep convolutional neural networks for image classification: A comprehensive review,” Neural Comput. 29(9), 2352–2449 (2017). 10.1162/neco_a_00990

- 23. Tajbakhsh N., et al., “Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation,” Med. Image Anal. 63, 101693 (2020). 10.1016/j.media.2020.101693

- 24. Litjens G., et al., “A survey on deep learning in medical image analysis,” Med. Image Anal. 42, 60–88 (2017). 10.1016/j.media.2017.07.005

- 25. Long J., Shelhamer E., Darrell T., “Fully convolutional networks for semantic segmentation,” in Proc. IEEE Conf. Comput. Vision and Pattern Recognit., pp. 3431–3440 (2015). 10.1109/CVPR.2015.7298965

- 26. Ronneberger O., Fischer P., Brox T., “U-net: convolutional networks for biomedical image segmentation,” Lect. Notes Comput. Sci. 9351, 234–241 (2015). 10.1007/978-3-319-24574-4_28

- 27. Çiçek Ö., et al., “3D U-Net: learning dense volumetric segmentation from sparse annotation,” Lect. Notes Comput. Sci. 9901, 424–432 (2016). 10.1007/978-3-319-46723-8_49

- 28. Milletari F., Navab N., Ahmadi S.-A., “V-net: fully convolutional neural networks for volumetric medical image segmentation,” in Fourth Int. Conf. 3D Vision (3DV), pp. 565–571 (2016).

- 29. Li X., et al., “H-DenseUNet: hybrid densely connected UNet for liver and tumor segmentation from CT volumes,” IEEE Trans. Med. Imaging 37(12), 2663–2674 (2018). 10.1109/TMI.2018.2845918

- 30. Zhu Q., et al., “Deeply-supervised CNN for prostate segmentation,” in Int. Joint Conf. Neural Networks (IJCNN), pp. 178–184 (2017).

- 31. Kazemifar S., et al., “Segmentation of the prostate and organs at risk in male pelvic CT images using deep learning,” Biomed. Phys. Eng. Express 4(5), 55003 (2018). 10.1088/2057-1976/aad100

- 32. Wang B., et al., “Automated prostate segmentation of volumetric CT images using 3D deeply supervised dilated FCN,” Proc. SPIE 10949, 109492S (2019). 10.1117/12.2512547

- 33. Wang S., et al., “CT male pelvic organ segmentation using fully convolutional networks with boundary sensitive representation,” Med. Image Anal. 54, 168–178 (2019). 10.1016/j.media.2019.03.003

- 34. Roth H. R., et al., “Deeporgan: multi-level deep convolutional networks for automated pancreas segmentation,” Lect. Notes Comput. Sci. 9349, 556–564 (2015). 10.1007/978-3-319-24553-9_68

- 35. Men K., Dai J., Li Y., “Automatic segmentation of the clinical target volume and organs at risk in the planning CT for rectal cancer using deep dilated convolutional neural networks,” Med. Phys. 44(12), 6377–6389 (2017). 10.1002/mp.12602

- 36. Balagopal A., et al., “Fully automated organ segmentation in male pelvic CT images,” Phys. Med. Biol. 63(24), 245015 (2018). 10.1088/1361-6560/aaf11c

- 37. Dong X., et al., “Automatic multiorgan segmentation in thorax CT images using U-net-GAN,” Med. Phys. 46(5), 2157–2168 (2019). 10.1002/mp.13458

- 38. Goodfellow I., “NIPS 2016 tutorial: generative adversarial networks,” arXiv:1701.00160 (2016).

- 39. Tian Z., et al., “PSNet: prostate segmentation on MRI based on a convolutional neural network,” J. Med. Imaging 5(2), 021208 (2018). 10.1117/1.JMI.5.2.021208

- 40. To M. N. N., et al., “Deep dense multi-path neural network for prostate segmentation in magnetic resonance imaging,” Int. J. Comput. Assist. Radiol. Surg. 13(11), 1687–1696 (2018). 10.1007/s11548-018-1841-4

- 41. Elguindi S., et al., “Deep learning-based auto-segmentation of targets and organs-at-risk for magnetic resonance imaging only planning of prostate radiotherapy,” Phys. Imaging Radiat. Oncol. 12, 80–86 (2019). 10.1016/j.phro.2019.11.006

- 42. Savenije M. H. F., et al., “Clinical implementation of MRI-based organs-at-risk auto-segmentation with convolutional networks for prostate radiotherapy,” Radiat. Oncol. 15, 1–12 (2020). 10.1186/s13014-020-01528-0

- 43. Fritscher K., et al., “Deep neural networks for fast segmentation of 3D medical images,” Lect. Notes Comput. Sci. 9901, 158–165 (2016). 10.1007/978-3-319-46723-8_19

- 44. Ibragimov B., Xing L., “Segmentation of organs-at-risks in head and neck CT images using convolutional neural networks,” Med. Phys. 44(2), 547–557 (2017). 10.1002/mp.12045

- 45. Močnik D., et al., “Segmentation of parotid glands from registered CT and MR images,” Phys. Med. 52, 33–41 (2018). 10.1016/j.ejmp.2018.06.012

- 46. Ren X., et al., “Interleaved 3D-CNNs for joint segmentation of small-volume structures in head and neck CT images,” Med. Phys. 45(5), 2063–2075 (2018). 10.1002/mp.12837

- 47. Chan J. W., et al., “A convolutional neural network algorithm for automatic segmentation of head and neck organs at risk using deep lifelong learning,” Med. Phys. 46(5), 2204–2213 (2019). 10.1002/mp.13495

- 48. Hänsch A., et al., “Comparison of different deep learning approaches for parotid gland segmentation from CT images,” Proc. SPIE 10575, 1057519 (2018). 10.1117/12.2292962

- 49. Zhu W., et al., “AnatomyNet: deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy,” Med. Phys. 46(2), 576–589 (2019). 10.1002/mp.13300

- 50. Tong N., et al., “Shape constrained fully convolutional DenseNet with adversarial training for multiorgan segmentation on head and neck CT and low-field MR images,” Med. Phys. 46(6), 2669–2682 (2019). 10.1002/mp.13553

- 51. Zhu Z., et al., “A 3D coarse-to-fine framework for volumetric medical image segmentation,” in Int. Conf. 3D Vision (3DV), pp. 682–690 (2018).

- 52. Zhu Z., et al., “Multi-scale coarse-to-fine segmentation for screening pancreatic ductal adenocarcinoma,” Lect. Notes Comput. Sci. 11769, 3–12 (2019). 10.1007/978-3-030-32226-7_1

- 53. Trullo R., et al., “Fully automated esophagus segmentation with a hierarchical deep learning approach,” in IEEE Int. Conf. Signal and Image Process. Appl. (ICSIPA), pp. 503–506 (2017).

- 54. Roth H. R., et al., “Hierarchical 3D fully convolutional networks for multi-organ segmentation,” arXiv:1704.06382 (2017).

- 55. Wang Y., et al., “Organ at risk segmentation in head and neck CT images using a two-stage segmentation framework based on 3D U-Net,” IEEE Access 7, 144591–144602 (2019). 10.1109/ACCESS.2019.2944958

- 56. Sultana S., et al., “CNN-based hierarchical coarse-to-fine segmentation of pelvic CT images for prostate cancer radiotherapy,” Proc. SPIE 11315, 113151I (2020). 10.1117/12.2549979

- 57. Raudaschl P. F., et al., “Evaluation of segmentation methods on head and neck CT: auto-segmentation challenge 2015,” Med. Phys. 44(5), 2020–2036 (2017). 10.1002/mp.12197

- 58. Xu B., et al., “Empirical evaluation of rectified activations in convolutional network,” arXiv:1505.00853 (2015).

- 59. Ioffe S., Szegedy C., “Batch normalization: accelerating deep network training by reducing internal covariate shift,” arXiv:1502.03167 (2015).

- 60. Srivastava N., et al., “Dropout: a simple way to prevent neural networks from overfitting,” J. Mach. Learn. Res. 15(1), 1929–1958 (2014).

- 61. Mao X., et al., “Least squares generative adversarial networks,” in Proc. IEEE Int. Conf. Comput. Vision, pp. 2794–2802 (2017).

- 62. Abadi M., et al., “Tensorflow: large-scale machine learning on heterogeneous distributed systems,” arXiv:1603.04467 (2016).

- 63. Kingma D. P., Ba J., “Adam: a method for stochastic optimization,” arXiv:1412.6980 (2014).

- 64. Taha A. A., Hanbury A., “Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool,” BMC Med. Imaging 15(1), 29 (2015). 10.1186/s12880-015-0068-x

- 65. Sultana S., Song D. Y., Lee J., “Deformable registration of PET/CT and ultrasound for disease-targeted focal prostate brachytherapy,” J. Med. Imaging 6(3), 035003 (2019). 10.1117/1.JMI.6.3.035003

- 66. Wang Z., et al., “Hierarchical vertex regression-based segmentation of head and neck CT images for radiotherapy planning,” IEEE Trans. Image Process. 27(2), 923–937 (2017). 10.1109/TIP.2017.2768621

- 67. Han X., et al., “Automatic segmentation of head and neck CT images by GPU-accelerated multi-atlas fusion,” in Proc. Head Neck Auto-Segment. Challenge Workshop, p. 219 (2009).

- 68. Wachinger C., Reuter M., Klein T., “DeepNAT: deep convolutional neural network for segmenting neuroanatomy,” Neuroimage 170, 434–445 (2018). 10.1016/j.neuroimage.2017.02.035

- 69. Acosta O., et al., “Multi-atlas-based segmentation of pelvic structures from CT scans for planning in prostate cancer radiotherapy,” in Abdomen and Thoracic Imaging, El-Baz A. S., Saba L., Suri J., Eds., pp. 623–656, Springer, Boston, Massachusetts (2014).

- 70. Dirix P., Nuyts S., “Evidence-based organ-sparing radiotherapy in head and neck cancer,” Lancet Oncol. 11(1), 85–91 (2010). 10.1016/S1470-2045(09)70231-1

- 71. Brouwer C. L., et al., “3D variation in delineation of head and neck organs at risk,” Radiat. Oncol. 7(1), 32 (2012). 10.1186/1748-717X-7-32