Abstract

Background:

Artificial intelligence is attracting growing interest as a means of improving diagnostic accuracy and the quality of patient care. A deep learning neural network (DLNN) approach was applied to patients with brain stroke (BS) to predict and classify the outcome from risk factors.

Materials and Methods:

A total of 332 patients with BS (mean age: 77.4 [standard deviation: 10.4] years; 50.6% male) from Imam Khomeini Hospital, Ardabil, Iran, participated in this prospective study during 2008–2018. Data were gathered from the available documents of the BS registry. BS was diagnosed based on computerized tomography scans and magnetic resonance imaging. The DLNN strategy was applied to predict the effects of the main risk factors on mortality. Model quality was measured by diagnostic indices.

Results:

Across the 81 selected models, accuracy, sensitivity, and specificity ranged over 90.5%–99.7%, 83.8%–100%, and 89.8%–99.5%, respectively. Based on the optimal model (hyperbolic tangent activation function with minimum–maximum hidden units of 10–20, max epochs of 400, momentum of 0.5, and learning rate of 0.1), the most important predictors of BS mortality were time interval after 10 years (accuracy = 92.2%), age category (75.6%), history of hyperlipoproteinemia (66.9%), and education level (66.9%). The other independent variables were of moderate importance (66.6%); these included sex, employment status, residential place, smoking habits, history of heart disease, cerebrovascular accident type, blood pressure, diabetes, oral contraceptive pill use, and physical activity.

Conclusion:

Effective prevention is the best means of reducing the BS burden. The DLNN strategy performed well in predicting BS mortality from the main risk factors, with excellent diagnostic accuracy. Moreover, the time interval after 10 years, age, history of hyperlipoproteinemia, and education level were the most important predictors of BS mortality.

Keywords: Brain stroke, data mining, deep learning, predicting, risk factors

INTRODUCTION

Brain stroke (BS) is a leading cause of death and permanent disability worldwide.[1] In Iran, it is the second leading cause of death, and more than half of patients with BS die within 8 years.[2] The risk of developing BS is reported to double per decade.[3] According to the WHO,[4] nearly 15 million people suffer a BS worldwide each year, and approximately 13 million BS cases result from high blood pressure. European countries record an average of 650,000 deaths caused by BS annually. These figures highlight the importance of BS prognosis.

Modifiable risk factors among patients with BS are a principal public health concern. The most potent risk factors for BS are hypertension,[5,6,7] a history of hyperlipoproteinemia, and diabetes.[7] Heart disease also increases the risk of cardiovascular and cerebrovascular diseases (such as ischemic BS).[7] Neurological weakness and death rates are significantly higher in patients who also have diabetes.[7] Furthermore, active and passive smoking have been identified as main risk factors for BS;[8,9] passive smoking increases the risk of overall BS by 30%.[10] Detecting BS risk factors may allow for rapid and possibly more effective BS prevention.

To speed up identification and prevention and to reduce the costs of the BS burden, this study employed the deep learning neural network (DLNN) method using the potent risk factors. The DLNN method is a layered approach to processing information and making decisions. By using more layers in the hidden part of the model than classical neural network (NN) methods, a DLNN may provide greater accuracy and precision.[11] The DLNN is a predictive structure that can represent complicated functions and complex relationships among data. Flexibility and nonlinearity are other main features of this tool.[12,13,14] This research aims to develop a DLNN-based prediction model for the main risk factors of patients with BS by finding the optimal DLNN based on sensitivity, specificity, accuracy, and the area under the receiver operating characteristic (ROC) curve (AUC).

MATERIALS AND METHODS

Study design and procedure

In this prospective longitudinal study, data were collected from the BS registry of the Imam Khomeini Hospital, Ardabil, Iran. A total of 332 patients were entered into the 10-year follow-up of the study (2008–2018). All patients with BS were coded according to the International Classification of Diseases, 10th Revision (ICD-10), based on computerized tomography (CT) scans and magnetic resonance imaging. Follow-up time was measured from the date of hospitalization for acute BS until death or the end of follow-up, whichever came first.
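The follow-up rule stated above (hospitalization date until death or end of follow-up, whichever comes first) can be sketched in a few lines. This is only an illustration: the administrative end date `STUDY_END`, the function name `follow_up`, and the conversion of days to months are assumptions, since the source gives only the study years.

```python
from datetime import date
from typing import Optional, Tuple

# Assumption: the source states only "2008-2018", so this cut-off date is hypothetical.
STUDY_END = date(2018, 12, 31)

def follow_up(admission: date, death: Optional[date],
              study_end: date = STUDY_END) -> Tuple[float, int]:
    """Return (follow-up time in months, event flag).

    The event flag is 1 when death is observed before the end of follow-up
    and 0 when the observation is censored.
    """
    if death is not None and death <= study_end:
        end, event = death, 1
    else:
        end, event = study_end, 0
    months = (end - admission).days / 30.4375  # mean month length (365.25 / 12)
    return months, event

# A patient who died 30 days after admission, and one still alive at study end.
died = follow_up(date(2010, 1, 1), date(2010, 1, 31))
censored = follow_up(date(2017, 12, 31), None)
```

Patients without a recorded death contribute follow-up time up to the cut-off and are flagged as censored, which is the form of input a log-rank test expects.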

Main variables and measures

For all patients, the following demographic and clinical variables were taken from hospital records and used in the analysis as input variables: age category at diagnosis (1: ≤58; 2: 59–68; 3: 69–75; 4: ≥76), sex (1: male; 2: female), employment status (1: employed; 2: unemployed), place of residence (1: urban; 2: rural), education level (1: ≤diploma; 2: academic), smoking (1: yes; 2: no), former smoking (1: yes; 2: no), waterpipe smoking (1: yes; 2: no), history of heart disease (1: yes; 2: no), diabetes (1: yes; 2: no), oral contraceptive pill use (1: yes; 2: no), physical activity (1: yes; 2: no), cerebrovascular accident type (1: ischemic; 2: hemorrhagic), history of blood pressure (1: yes; 2: no), history of hyperlipoproteinemia (1: yes; 2: no), and history of myocardial infarction (1: yes; 2: no).
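As an illustration of the coding scheme, the sketch below maps an age in years to the four age categories using the cut points reported in Table 1 (≤58, 59–68, 69–75, 76+), and a yes/no answer to the 1/2 coding used for the binary variables. The function names are hypothetical and are not taken from the registry software.

```python
def age_category(age_years: int) -> int:
    """Map age at diagnosis to the category codes 1-4 (cut points from Table 1)."""
    if age_years <= 58:
        return 1
    if age_years <= 68:
        return 2
    if age_years <= 75:
        return 3
    return 4

def yes_no_code(answer: bool) -> int:
    """Code a binary risk factor as 1 (yes) or 2 (no), as in the variable list."""
    return 1 if answer else 2

# Hypothetical patient: 70 years old, smoker, no diabetes.
record = {
    "age_category": age_category(70),
    "smoking": yes_no_code(True),
    "diabetes": yes_no_code(False),
}
```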

Ethical considerations

The study protocol was approved by the Institutional Review Board of Tabriz University of Medical Sciences (ethics code: IR.TBZMED.REC.1398.667). The privacy of participants was preserved, and all participants completed and signed the informed consent form.

Statistical analysis

Statistical analysis was performed with STATISTICA (ver. 13) (StatSoft, Tulsa, USA). Data were expressed as mean (standard deviation) for normally distributed numeric variables, median (min–max) for non-normally distributed numeric variables, and frequency (percent) for categorical variables. The DLNN model was applied to model the relationship between the event and the independent variables. The basic DLNN model includes three parts: an input layer, hidden layers, and an output layer. The input layer consists of the independent variables.[14]

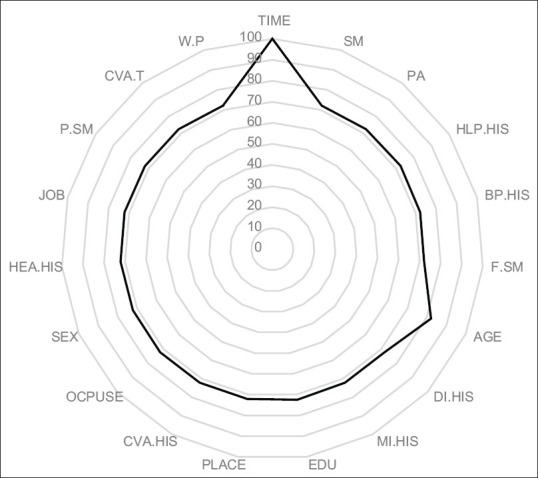
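The three-part structure described above (input layer, hidden layers, output layer) can be sketched as a forward pass. The following is a minimal illustration in plain Python, not the STATISTICA implementation used in this study. The layer sizes, the random weights, and the sigmoid output unit are assumptions chosen only to make the example runnable.

```python
import math
import random

def sigmoid(z):
    """Logistic function, used here for the single output unit (an assumption)."""
    return 1.0 / (1.0 + math.exp(-z))

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer (illustrative)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(x, weights, biases, activation):
    """One layer: activation(W x + b), computed unit by unit."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def forward(x, layers):
    """Pass the input vector through every layer in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

rng = random.Random(0)
N_INPUTS, N_HIDDEN = 16, 10  # hypothetical sizes for illustration
layers = [
    (*init_layer(N_INPUTS, N_HIDDEN, rng), math.tanh),  # hidden layer 1
    (*init_layer(N_HIDDEN, N_HIDDEN, rng), math.tanh),  # hidden layer 2
    (*init_layer(N_HIDDEN, 1, rng), sigmoid),           # output layer
]

patient = [1, 2] * 8                 # 16 inputs coded 1/2 (made-up values)
risk = forward(patient, layers)[0]   # untrained network, so the value is arbitrary
```

With untrained weights the output has no clinical meaning. Training would adjust the weights over a number of epochs using a learning rate and momentum, which are the settings varied in this study.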

First, several settings for epoch, momentum, learning rate, and hidden layer size were assessed (1533 different scenarios in total). Second, 81 models were selected and compared in detail using the diagnostic indices. Finally, one optimal model was chosen, and the input variables were entered into the model. The optimal DLNN model was presented as a radar plot using Microsoft Excel (Microsoft Corporation Rosa, California, USA).
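Enumerating such scenarios amounts to taking the Cartesian product of the candidate settings. The sketch below shows the idea with value lists drawn from the settings that appear in Table 3. The exact grid behind the 1533 scenarios is not reported, so these lists are assumptions and the resulting count differs from the study's.

```python
from itertools import product

# Candidate values are illustrative; the full grid used in the study is not reported.
ACTIVATIONS = ("tanh", "sigmoid", "rectilinear")
HIDDEN_UNITS = (10, 20, 30, 40, 50)
MAX_EPOCHS = (100, 200, 300, 400, 500, 1000)
MOMENTA = (0.0, 0.1, 0.5, 0.9)
LEARNING_RATES = (0.01, 0.05, 0.1)

def scenarios():
    """Yield one settings dictionary per combination of the candidate values."""
    for act, hidden, epochs, momentum, rate in product(
            ACTIVATIONS, HIDDEN_UNITS, MAX_EPOCHS, MOMENTA, LEARNING_RATES):
        yield {
            "activation": act,
            "hidden_units": hidden,
            "max_epochs": epochs,
            "momentum": momentum,
            "learning_rate": rate,
        }

grid = list(scenarios())
n_scenarios = len(grid)  # 3 * 5 * 6 * 4 * 3 combinations
```

Each dictionary would then be passed to a training routine and the resulting model scored by the diagnostic indices.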

In the hidden layers, the activation functions were hyperbolic tangent (tanh), sigmoid, and rectified linear (rectilinear). The sample was randomly split into three parts: 70% for training, 15% for testing, and 15% for validation. Diagnostic indices, including sensitivity, specificity, positive predictive value, negative predictive value, accuracy, and the area under the receiver operating characteristic (ROC) curve, along with their 95% confidence intervals (CIs), were used to measure the quality and fit of every model.
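The diagnostic indices follow directly from the counts of a 2×2 confusion matrix. The sketch below computes them and adds an exact (Clopper-Pearson) binomial confidence interval found by bisection. The source does not state which interval method was used, so the choice of the exact interval is an assumption, although the intervals in Table 3 appear consistent with it. The counts in the example are hypothetical.

```python
import math

def diagnostic_indices(tp, fp, fn, tn):
    """Sensitivity, specificity, PPV, NPV, and accuracy from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }

def _binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

def _bisect(g, lo=0.0, hi=1.0, iters=60):
    """Root of an increasing function g on [lo, hi]."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def exact_ci(successes, n, alpha=0.05):
    """Clopper-Pearson interval for a proportion (assumed method, see lead-in)."""
    if successes == 0:
        lower = 0.0
    else:  # lower bound solves P(X >= successes | p) = alpha / 2
        lower = _bisect(lambda p: (1 - _binom_cdf(successes - 1, n, p)) - alpha / 2)
    if successes == n:
        upper = 1.0
    else:  # upper bound solves P(X <= successes | p) = alpha / 2
        upper = _bisect(lambda p: alpha / 2 - _binom_cdf(successes, n, p))
    return lower, upper

# Hypothetical counts: 99 patients who died and 197 who survived.
indices = diagnostic_indices(tp=98, fp=1, fn=1, tn=196)
low, high = exact_ci(99, 99)  # all 99 deaths detected
```

When every case is classified correctly, the lower bound has the closed form (α/2)^(1/n). For n = 99 this is about 0.963, which agrees with the "100 (96.3-100)" entries in Table 3.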

RESULTS

Of 480 enrolled patients, 332 were eligible to participate in this study, and about 32 (13%) observations were censored within the 10 years of follow-up. The median follow-up time was 81.3 (min = 0.0, max = 163.3) months. About 26.7%, 23.3%, and 50% of the participants were aged 58 years or younger, 59–68 years, and 69 years or older, respectively. About 56% of the participants were female, 70% were unemployed, and 61% were urban inhabitants [Table 1]. Furthermore, 81% of the cases were not active smokers, 59% had a history of blood pressure, and 93% had no history of myocardial infarction [Table 2].

Table 1.

Demographic characteristics of the study participants and the results of log-rank test

| Characteristics | Death from BS, frequency (%) | Incidence rate (per 10,000) (95% CI) | P |
|---|---|---|---|
| Age category (years) | | | |
| ≤58 | 88 (26.67) | 27 (19-39) | Referent |
| 59-68 | 77 (23.33) | 65 (47-89) | 0.003 |
| 69-75 | 102 (30.91) | 153 (123-190) | <0.001 |
| 76+ | 63 (19.01) | 204 (155-270) | <0.001 |
| Sex | | | |
| Female | 111 (56) | 67 (54-82) | Referent |
| Male | 88 (44) | 100 (83-120) | 0.016 |
| Employment status | | | |
| Employed | 59 (30) | 70 (55-91) | Referent |
| Unemployed | 140 (70) | 88 (74-103) | 0.175 |
| Education level | | | |
| ≤Diploma | 322 (97) | 83 (72-96) | Referent |
| Academic | 10 (3) | 46 (17-124) | 0.299 |
| Place of residence | | | |
| Urban | 201 (61) | 73 (61-87) | Referent |
| Rural | 131 (39) | 98 (79-122) | 0.114 |

P-value based on pairwise log-rank test as compared to the reference category (adjusted for multiple comparisons). Significant P-values are shown in bold font. CI=Confidence interval; BS=Brain stroke

Table 2.

Clinical profile of study participants and the results of log-rank test

| Risk factors | Frequency (%) | Incidence rate (per 10,000) (95% CI) | P |
|---|---|---|---|
| Physical activity | | | |
| Yes | 46 (14) | 56 (37-85) | 0.041 |
| No | 284 (86) | 86 (74-100) | Referent |
| Smoking | | | |
| Yes | 64 (19) | 79 (57-110) | 0.925 |
| No | 267 (81) | 82 (71-96) | Referent |
| History of cerebrovascular accident | | | |
| Yes | 80 (24) | 86 (51-94) | 0.295 |
| No | 252 (76) | 69 (74-100) | Referent |
| History of myocardial infarction | | | |
| Yes | 24 (7) | 58 (34-100) | 0.237 |
| No | 308 (93) | 84 (73-97) | Referent |
| History of blood pressure | | | |
| Yes | 196 (59) | 96 (81-115) | 0.025 |
| No | 135 (41) | 64 (51-81) | Referent |
| Heart disease | | | |
| Yes | 85 (26) | 101 (78-131) | 0.124 |
| No | 245 (74) | 75 (64-89) | Referent |
| History of diabetes | | | |
| Yes | 59 (18) | 114 (84-154) | 0.0722 |
| No | 270 (82) | 76 (65-89) | Referent |
| History of hyperlipoproteinemia | | | |
| Yes | 61 (18) | 55 (38-79) | 0.025 |
| No | 269 (82) | 89 (76-103) | Referent |
| Cerebrovascular type | | | |
| Ischemic | 66 (20) | 118 (88-158) | 0.018 |
| Hemorrhagic | 257 (80) | 75 (64-88) | Referent |
| Oral contraceptive pill use | | | |
| Yes | 60 (36) | 28 (18-43) | <0.001 |
| No | 105 (64) | 114 (90-144) | Referent |

P-value based on pairwise log-rank test as compared to the reference category (adjusted for multiple comparisons). Significant P-values are shown in bold font. CI=Confidence interval

The log-rank test showed that a history of blood pressure (P = 0.025) was associated with a higher risk of mortality, and that a history of hyperlipoproteinemia (P = 0.025) and oral contraceptive pill use (P < 0.001) were also significantly associated with mortality. In addition, male sex (P = 0.016), older age (P < 0.001), ischemic cerebrovascular accident type (P = 0.018), and no physical activity (P = 0.041) were associated with a higher risk of mortality [Tables 1 and 2].
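For readers unfamiliar with the log-rank test, the sketch below implements the standard two-group version in plain Python. It is not the STATISTICA routine used in this study and does not apply the adjustment for multiple comparisons mentioned in the table footnotes. The data in the example are invented.

```python
import math

def logrank_test(times, events, groups):
    """Two-group log-rank test.

    times  : follow-up time for each subject
    events : 1 if death was observed, 0 if censored
    groups : 0 or 1 for each subject
    Returns (chi-square statistic, P value on 1 degree of freedom).
    """
    event_times = sorted({t for t, e in zip(times, events) if e == 1})
    observed_minus_expected = 0.0
    variance = 0.0
    for t in event_times:
        at_risk = [0, 0]  # subjects still under observation at time t
        deaths = [0, 0]   # deaths occurring exactly at time t
        for ti, ei, gi in zip(times, events, groups):
            if ti >= t:
                at_risk[gi] += 1
                if ti == t and ei == 1:
                    deaths[gi] += 1
        n, d = sum(at_risk), sum(deaths)
        expected_1 = d * at_risk[1] / n  # expected deaths in group 1 under H0
        observed_minus_expected += deaths[1] - expected_1
        if n > 1:  # hypergeometric variance of the deaths in group 1
            variance += d * (at_risk[0] / n) * (at_risk[1] / n) * (n - d) / (n - 1)
    if variance == 0:
        return 0.0, 1.0
    chi2 = observed_minus_expected ** 2 / variance
    p_value = math.erfc(math.sqrt(chi2 / 2))  # chi-square upper tail, 1 df
    return chi2, p_value

# Invented example: group 0 dies early, group 1 dies late.
chi2, p = logrank_test(times=[1, 2, 3, 10, 11, 12],
                       events=[1, 1, 1, 1, 1, 1],
                       groups=[0, 0, 0, 1, 1, 1])
```

At each death time the test compares the deaths observed in one group with the number expected if both groups shared the same hazard. Censored patients count as at risk until their last follow-up time.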

Results of deep learning neural network-based modeling

Encouragingly, across the 81 DLNNs the sensitivity ranged from 83.8% to 100% and the specificity from 89.8% to 99.5%. The positive predictive value ranged from 82.4% to 99%, the negative predictive value from 92.1% to 100%, and the accuracy from 90.5% to 99.7%. Table 3 lists the 81 DLNN settings with their diagnostic indices. The optimal model with the highest accuracy was “81: tanh.10.400.5.1,” whose properties were as follows: minimum–maximum hidden units of 10–20, max epochs of 400, momentum of 0.5, and learning rate of 0.1. The accuracy of the tanh, rectified linear, and sigmoid activation functions was estimated at 99.5%, 99.3%, and 94.3%, respectively. The DLNN with a tanh activation function was therefore considered the optimal model.

Table 3.

Results of comparing 81 selected deep learning neural network models

| Model | SE (95% CI) | SP (95% CI) | PPV (95% CI) | NPV (95% CI) | ROC area (95% CI) | Ac (%) |
|---|---|---|---|---|---|---|

| 1: rect.10.1000.5.1 | 100 (96.3-100) | 99 (96.4-99.9) | 98 (93-99.8) | 100 (98.1-100) | 0.99 (0.98-1.0) | 99.3 |

| 2: rect.30.1000.5.1 | 99 (94.5-100) | 99.5 (97.2-100) | 99 (94.5-100) | 99.5 (97.2-100) | 0.99 (0.98-1.0) | 99.3 |

| 3: rect.50.200.9.1 | 94.9 (88.6-98.3) | 94.4 (90.2-97.2) | 89.5 (82-94.7) | 97.4 (94-99.1) | 0.95 (0.92-0.97) | 94.5 |

| 4: rect.50.300.9.1 | 97 (91.4-99.4) | 96.4 (92.8-98.6) | 93.2 (86.5-97.2) | 98.4 (95.5-99.7) | 0.97 (0.95-0.99) | 96.6 |

| 5: rect.50.400.9.1 | 98 (92.9-99.8) | 97 (93.5-98.9) | 94.2 (87.8-97.8) | 99 (96.3-99.9) | 0.98 (0.96-0.99) | 97.3 |

| 6: rect.50.500.9.1 | 100 (96.3-100) | 97.5 (94.2-99.2) | 95.2 (89.1-98.4) | 100 (98.1-100) | 0.99 (0.98-1.0) | 98.3 |

| 7: rect.50.500.9.05 | 96 (90-98.9) | 96.4 (92.8-98.6) | 93.1 (86.4-97.2) | 97.9 (94.8-99.4) | 0.96 (0.94-0.99) | 96.3 |

| 8: rec.50.1000.0.9.05 | 100 (96.3-100) | 97.5 (94.2-99.2) | 95.2 (89.1-98.4) | 100 (98.1-100) | 0.99 (0.98-1.0) | 98.3 |

| 9: tanh.10.400.1.1 | 98 (92.9-99.8) | 94.9 (90.9-97.5) | 90.7 (83.5-95.4) | 98.9 (96.2-99.9) | 0.97 (0.94-0.99) | 96.0 |

| 10: tanh.10.300.5.1 | 99 (94.5-100) | 97 (93.5-98.9) | 94.2 (87.9-97.9) | 99.5 (97.1-100) | 0.98 (0.96-1.0) | 96.7 |

| 11: tanh.10.200.5.1 | 99 (94.5-100) | 92.9 (88.4-96.1) | 87.5 (79.9-93) | 99.5 (97-100) | 0.96 (0.94-0.98) | 99.4 |

| 12: tanh.10.100.5.1 | 88.9 (81-94.3) | 90.9 (85.9-94.5) | 83 (74.5-89.6) | 94.2 (89.9-97.1) | 0.90 (0.86-0.94) | 90.2 |

| 13: tanh.10.200.9.1 | 94.9 (88.6-98.3) | 93.9 (89.6-96.8) | 88.7 (81.1-94) | 97.4 (94-99.1) | 0.94 (0.92-0.97) | 94.3 |

| 14: tanh.10.300.9.1 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 15: tanh.10.400.9.1 | 94.9 (88.6-98.3) | 94.4 (90.2-97.2) | 89.5 (82-94.7) | 97.4 (94-99.1) | 0.95 (0.92-0.97) | 94.6 |

| 16: tanh.10.500.9.1 | 94.9 (88.6-98.3) | 94.9 (90.9-97.5) | 90.4 (83-95.3) | 97.4 (94-99.1) | 0.95 (0.92-0.98) | 94.9 |

| 17: tanh.10.1000.9.1 | 99 (94.5-100) | 99.5 (97.2-100) | 99 (94.5-100) | 99.5 (97.2-100) | 0.99 (0.98-1.0) | 99.3 |

| 18: tanh.10.1000.9.05 | 93.9 (87.3-97.7) | 95.4 (91.5-97.9) | 91.2 (83.9-95.9) | 96.9 (93.4-98.9) | 0.95 (0.92-0.98) | 94.9 |

| 19: tanh.10.500.9.05 | 94.9 (88.6-98.3) | 93.9 (89.6-96.8) | 88.7 (81.1-94) | 97.4 (94-99.1) | 0.94 (0.92-0.97) | 94.6 |

| 20: tanh.10.400.0.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.3 |

| 21: tanh.10.300.05.1 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 94.9 |

| 22: tanh.10.1000.0.05 | 94.9 (88.6-98.3) | 95.9 (92.2-98.2) | 92.2 (85.1-96.6) | 97.4 (94.1-99.2) | 0.95 (0.93-0.98) | 94.6 |

| 23: tanh.10.500.0.05 | 89.9 (82.2-95) | 90.4 (85.3-94.1) | 82.4 (73.9-89.1) | 94.7 (90.4-97.4) | 0.90 (0.87-0.94) | 90.2 |

| 24: tanh.10.400.0.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 25: tanh.10.400.0.05 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 94.9 |

| 26: tanh.10.400.0.05 | 89.9 (82.2-95) | 90.4 (85.3-94.1) | 82.4 (73.9-89.1) | 94.7 (90.4-97.4) | 0.90 (0.87-0.94) | 90.2 |

| 27: tanh.10.1000.5.01 | 91.9 (84.7-96.4) | 90.4 (85.3-94.1) | 82.7 (74.3-89.3) | 95.7 (91.7-98.1) | 0.91 (0.88-0.95) | 90.9 |

| 28: tanh.10.1000.1.05 | 94.9 (88.6-98.3) | 94.4 (90.2-97.2) | 89.5 (82-94.7) | 97.4 (94-99.1) | 0.95 (0.92-0.97) | 94.9 |

| 29: tanh.10.500.1.05 | 96 (90-98.9) | 93.4 (89-96.4) | 88 (80.3-93.4) | 97.9 (94.6-99.4) | 0.95 (0.92-0.97) | 94.3 |

| 30: tanh.10.400.1.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 31: tanh.10.300.1.05 | 96 (90-98.9) | 93.4 (89-96.4) | 88 (80.3-93.4) | 97.9 (94.6-99.4) | 0.95 (0.92-0.97) | 94.3 |

| 32: tanh.20.1000.1.01 | 96 (90-98.9) | 93.4 (89-96.4) | 88 (80.3-93.4) | 97.9 (94.6-99.4) | 0.95 (0.92-0.97) | 94.3 |

| 33: tanh.20.1000.1.05 | 94.9 (88.6-98.3) | 96.4 (92.8-98.6) | 93.1 (86.2-97.2) | 97.4 (94.1-99.2) | 0.96 (0.93-0.98) | 95.9 |

| 34: tanh.20.500.1.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 35: tanh.20.400.1.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 36: tanh.20.300.1.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 37: tanh.20.200.1.05 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 93.9 |

| 38: tanh.20.1000.5.01 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 93.9 |

| 39: tanh.20.1000.5.05 | 94.9 (88.6-98.3) | 95.9 (92.2-98.2) | 92.2 (85.1-96.6) | 97.4 (94.1-99.2) | 0.95 (0.93-0.98) | 94.6 |

| 40: tanh.20.500.5.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 41: tanh.20.400.5.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 42: tanh.20.300.5.05 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 43: tanh.20.200.5.05 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 94.9 |

| 44: tanh.20.100.1.1 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 93.9 |

| 45: tanh.20.200.1.1 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 46: tanh.20.300.1.1 | 93.9 (87.3-97.7) | 93.9 (89.6-96.8) | 88.6 (80.9-94) | 96.9 (93.3-98.8) | 0.94 (0.91-0.97) | 93.9 |

| 47: tanh.20.400.1.1 | 96 (90-98.9) | 95.4 (91.5-97.9) | 91.3 (84.2-96) | 97.9 (94.8-99.4) | 0.96 (0.93-0.98) | 95.6 |

| 48: tanh.20.500.1.1 | 94.9 (88.6-98.3) | 96.4 (92.8-98.6) | 93.1 (86.2-97.2) | 97.4 (94.1-99.2) | 0.96 (0.93-0.98) | 95.9 |

| 49: tanh.20.1000.1.1 | 99 (94.5-100) | 99.5 (97.2-100) | 99 (94.5-100) | 99.5 (97.2-100) | 0.99 (0.98-1.0) | 99.3 |

| 50: tanh.20.1000.5.1 | 99 (94.5-100) | 99.5 (97.2-100) | 99 (94.5-100) | 99.5 (97.2-100) | 0.99 (0.98-1.0) | 99.3 |

| 51: tanh.20.500.5.1 | 94.9 (88.6-98.3) | 95.9 (92.2-98.2) | 92.2 (85.1-96.6) | 97.4 (94.1-99.2) | 0.95 (0.93-0.98) | 94.6 |

| 52: tanh.20.400.5.1 | 96 (90-98.9) | 95.4 (91.5-97.9) | 91.3 (84.2-96) | 97.9 (94.8-99.4) | 0.96 (0.93-0.98) | 95.6 |

| 53: tanh.20.300.5.1 | 94.9 (88.6-98.3) | 94.4 (90.2-97.2) | 89.5 (82-94.7) | 97.4 (94-99.1) | 0.95 (0.92-0.97) | 94.6 |

| 54: tanh.20.200.5.1 | 94.9 (88.6-98.3) | 94.4 (90.2-97.2) | 89.5 (82-94.7) | 97.4 (94-99.1) | 0.95 (0.92-0.97) | 94.6 |

| 55: tanh.20.100.5.1 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 93.9 |

| 56: tanh.20.100.9.1 | 91.9 (84.7-96.4) | 89.8 (84.8-93.7) | 82 (73.6-88.6) | 95.7 (91.7-98.1) | 0.91 (0.88-0.94) | 90.5 |

| 57: tanh.20.200.9.1 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 58: tanh.20.300.9.1 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 59: tanh.20.400.9.1 | 96 (90-98.9) | 95.4 (91.5-97.9) | 91.3 (84.2-96) | 97.9 (94.8-99.4) | 0.96 (0.93-0.98) | 95.6 |

| 60: tanh.20.500.9.1 | 94.9 (88.6-98.3) | 95.9 (92.2-98.2) | 92.2 (85.1-96.6) | 97.4 (94.1-99.2) | 0.95 (0.93-0.98) | 94.6 |

| 61: rect.50.1000.9.01 | 90.9 (83.4-95.8) | 91.9 (87.1-95.3) | 84.9 (76.6-91.1) | 95.3 (91.2-97.8) | 0.91 (0.88-0.95) | 91.6 |

| 62: rect.20.1000.9.01 | 94.9 (88.6-98.3) | 95.9 (92.2-98.2) | 92.2 (85.1-96.6) | 97.4 (94.1-99.2) | 0.95 (0.93-0.98) | 94.6 |

| 63: rect.20.1000.9.05 | 94.9 (88.6-98.3) | 95.9 (92.2-98.2) | 92.2 (85.1-96.6) | 97.4 (94.1-99.2) | 0.95 (0.93-0.98) | 94.6 |

| 64: rect.50.1000.9.05 | 100 (96.3-100) | 97.5 (94.2-99.2) | 95.2 (89.1-98.4) | 100 (98.1-100) | 0.99 (0.98-1.0) | 98.3 |

| 65: rect.50.500.9.05 | 94.9 (88.6-98.3) | 96.4 (92.8-98.6) | 93.1 (86.2-97.2) | 97.4 (94.1-99.2) | 0.96 (0.93-0.98) | 96.3 |

| 66: rect.50.400.9.05 | 96 (90-98.9) | 95.4 (91.5-97.9) | 91.3 (84.2-96) | 97.9 (94.8-99.4) | 0.96 (0.93-0.98) | 95.6 |

| 67: rect.50.200.9.05 | 83.8 (75.1-90.5) | 94.4 (90.2-97.2) | 88.3 (80-94) | 92.1 (87.5-95.4) | 0.89 (0.85-0.93) | 90.9 |

| 68: rect.50.200.9.1 | 94.9 (88.6-98.3) | 94.4 (90.2-97.2) | 89.5 (82-94.7) | 97.4 (94-99.1) | 0.95 (0.92-0.97) | 94.6 |

| 69: rect.50.300.9.1 | 97 (91.4-99.4) | 96.4 (92.8-98.6) | 93.2 (86.5-97.2) | 98.4 (95.5-99.7) | 0.97 (0.95-0.99) | 96.6 |

| 70: rect.50.400.9.1 | 98 (92.9-99.8) | 97 (93.5-98.9) | 94.2 (87.8-97.8) | 99 (96.3-99.9) | 0.98 (0.96-0.99) | 97.3 |

| 71: sig.30.500.0.3 | 94.9 (88.6-98.3) | 93.9 (89.6-96.8) | 88.7 (81.1-94) | 97.4 (94-99.1) | 0.94 (0.92-0.97) | 94.3 |

| 72: sig.30.300.9.5 | 94.9 (88.6-98.3) | 93.9 (89.6-96.8) | 88.7 (81.1-94) | 97.4 (94-99.1) | 0.94 (0.92-0.97) | 94.3 |

| 73: sig.20.300.5.4 | 92.9 (86-97.1) | 91.4 (86.5-94.9) | 84.4 (76.2-90.6) | 96.3 (92.4-98.5) | 0.92 (0.89-0.95) | 91.9 |

| 74: sig.20.300.5.5 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 93.9 |

| 75: sig.20.400.5.5 | 94.9 (88.6-98.3) | 93.9 (89.6-96.8) | 88.7 (81.1-94) | 97.4 (94-99.1) | 0.94 (0.92-0.97) | 94.3 |

| 76: sig.20.400.1.5 | 94.9 (88.6-98.3) | 93.9 (89.6-96.8) | 88.7 (81.1-94) | 97.4 (94-99.1) | 0.94 (0.92-0.97) | 94.3 |

| 77: sig.20.400.1.3 | 92.9 (86-97.1) | 91.4 (86.5-94.9) | 84.4 (76.2-90.6) | 96.3 (92.4-98.5) | 0.92 (0.89-0.95) | 91.9 |

| 78: sig.40.500.1.5 | 96 (90-98.9) | 93.9 (89.6-96.8) | 88.8 (81.2-94.1) | 97.9 (94.7-99.4) | 0.95 (0.92-0.98) | 94.6 |

| 79: sig.40.200.2.5 | 94.9 (88.6-98.3) | 92.9 (88.4-96.1) | 87 (79.2-92.7) | 97.3 (93.9-99.1) | 0.94 (0.91-0.97) | 93.6 |

| 80: sig.30.400.9.3 | 94.9 (88.6-98.3) | 93.4 (89-96.4) | 87.9 (80.1-93.4) | 97.4 (93.9-99.1) | 0.94 (0.91-0.97) | 93.9 |

| 81: tanh.10.400.5.1 | 98 (92.9-99.8) | 94.9 (90.9-97.5) | 90.7 (83.5-95.4) | 98.9 (96.2-99.9) | 0.97 (0.94-0.99) | 99.5 |

The names of the models refer to the characteristic of the model from left: Activation function, hidden layer size, epoch, momentum, learning rate. SE=Sensitivity; SP=Specificity; PPV=Positive predictive value; NPV=Negative predictive value; ROC=Receiver operating characteristics; Ac=Accuracy; CI=Confidence interval
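The naming convention can be decoded mechanically. The sketch below splits a model name into its five fields and reads the last two as decimal fractions, so that "5" becomes 0.5 and "05" becomes 0.05. That reading follows the description of model 81 in the text and is assumed to hold for the other rows. The class and function names are hypothetical. Names that do not have exactly five fields, such as "rec.50.1000.0.9.05" in row 8, are rejected rather than guessed at.

```python
from typing import NamedTuple

class ModelSetting(NamedTuple):
    activation: str
    hidden_units: int
    max_epochs: int
    momentum: float
    learning_rate: float

def parse_model_name(name: str) -> ModelSetting:
    """Decode 'activation.hidden.epochs.momentum.rate', e.g. 'tanh.10.400.5.1'."""
    fields = name.strip().split(".")
    if len(fields) != 5:
        raise ValueError(f"expected 5 dot-separated fields, got {len(fields)}: {name!r}")
    activation, hidden, epochs, momentum, rate = fields
    return ModelSetting(
        activation=activation.lower(),
        hidden_units=int(hidden),
        max_epochs=int(epochs),
        momentum=float("0." + momentum),    # assumed: digits after the decimal point
        learning_rate=float("0." + rate),   # assumed: digits after the decimal point
    )

optimal = parse_model_name("tanh.10.400.5.1")
```

For the optimal model this yields 10 hidden units, 400 epochs, a momentum of 0.5, and a learning rate of 0.1, matching the settings reported in the text.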

Based on the results from the optimal model, the predictive effect of the risk factors on mortality fell into two categories. The radar plot [Figure 1] shows the accuracy for the most important predictors of BS mortality. The most important predictors were time interval after 10 years (92.2% accuracy), age category (75.6%), history of hyperlipoproteinemia (66.9%), and education level (66.9%). The other independent variables were of moderate importance, with 66.6% accuracy.

Figure 1.

Radar plot comparing variable importance based on the optimal model. Normalized importance of each independent variable for predicting BS mortality. AGE = Age category; MI.HIS = History of myocardial infarction; JOB = Employment status; PLACE = Place of residence; EDU = Education level; CVA.HIS = History of cerebrovascular accident; W.P = Waterpipe smoking; F.SM = Former smoking; P.SM = Passive smoking; SM = Smoking; HEA.HIS = History of heart disease; OCPUSE = Oral contraceptive pill use; PA = Physical activity; BP.HIS = History of blood pressure; HLP.HIS = History of hyperlipoproteinemia; DI.HIS = Diabetes; CVA.T = Cerebrovascular accident type

DISCUSSION

To investigate the main predictors of survival in patients with BS, we used the DLNN technique, which performed well in predicting BS mortality from the main risk factors, with excellent diagnostic accuracy (up to 99.7%, 100%, and 99.5% for accuracy, sensitivity, and specificity, respectively). DLNN appears able to analyze data more quickly and possibly with higher precision, in addition to its transformational potential for health care. The multilayered structure of deep learning makes it possible to perform classification tasks such as identifying subtle abnormalities in medical imaging, clustering cases with similar characteristics, or highlighting associations between symptoms and outcomes within data. In addition, the DLNN strategy does not require data preprocessing, and the system takes care of many self-filtering and self-normalization tasks.[11,15] To investigate the behavior of the accuracy and error rate of the models, they were run under different settings; increasing the number of hidden layers, epochs, or learning rate improved the accuracy indices.

Consistent with our approach, researchers are using DLNN and various data mining techniques for the diagnosis of many illnesses such as heart disease,[16] diabetes,[17] BS,[18] and cancers.[19] In a study that used a DLNN model for acute ischemic BS treatment,[20] the proposed DLNN outperformed a regression model. In another study, which aimed to predict final lesion volume, the DLNN performed significantly better than the generalized linear model.[21] In a further study,[22] three classification algorithms, including DLNN, were applied to predict BS outcomes based on patients' demographic information, with accuracy and the AUC used as the evaluation indicators.[22] DLNN therefore plays a vital role in the prediction of diseases in the medical and health sciences.[11]

The main objective of the study was to model the most relevant risk factors for predicting BS using the DLNN approach. The results from the optimal model revealed that time interval, age category, history of hyperlipoproteinemia, and education level were among the main predictors of death. Other studies have reported that these risk factors increase the rate of BS incidents.[23,24,25] One study showed that among people aged over 75 years, the incidence of hemorrhagic BS rose by nearly 80%.[26] In addition, men have a higher death incidence than women (P < 0.001).[27] Another study showed that moderate- to heavy-intensity physical activity was associated with a lower risk of ischemic BS (adjusted hazard ratio: 0.65, 95% confidence interval: 0.44–0.98),[28] whereas the findings of the current study did not support this. These discrepancies may reflect differences in methodologies, target populations, and interfering factors.

Strengths and limitations

A strength of this study is the use of DLNN to derive rules and detect complex relationships with higher accuracy; unlike classical statistical methods, it is assumption independent. In addition, compared to machine learning, DLNN can measure the accuracy of its answers on its own owing to its multilayered structure.

However, the current study has some limitations. First, some responses may be inaccurate because the data were provided not by the patients themselves but by those who accompanied them. Second, the history of comorbidities was self-reported. Third, overfitting is a concern: the model's performance is strong in the training set but might be weaker in other datasets. Fourth, the “black box” character of the DLNN strategy is another restriction: while it can approximate any function, inspecting its mechanism may not offer any meaningful insight into the structure of the function being approximated. Methods therefore need to be developed comprehensively and precisely for practical application. In light of these points, we recommend applying the DLNN with a greater number of variables and larger sample sizes.

CONCLUSION

The DLNN strategy performed well in predicting BS mortality from the main risk factors, with excellent diagnostic accuracy. Based on the results of the optimal model, the most important predictors of BS mortality were time interval after 10 years, age category, history of hyperlipoproteinemia, and education level. The other independent variables were of moderate importance. A BS can be destructive to individuals and their society, so efficient BS prevention remains the best means of reducing the BS burden, despite considerable improvements in the treatment of patients. Determining BS risk factors makes early and possibly more effective prevention possible.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

REFERENCES

- 1. Cheon S, Kim J, Lim J. The use of deep learning to predict stroke patient mortality. Int J Environ Res Public Health. 2019;16:1–12. doi: 10.3390/ijerph16111876.

- 2. Kim HC, Choi DP, Ahn SV, Nam CM, Suh I. Six-year survival and causes of death among stroke patients in Korea. Neuroepidemiology. 2009;32:94–100. doi: 10.1159/000177034.

- 3. Centers for Disease Control and Prevention. [Last accessed on 2020 Apr 11]. Available from: https://www.cdc.gov/

- 4. World Health Organization. [Last accessed on 2020 Apr 11]. Available from: https://www.who.int/

- 5. Pastore D, Pacifici F, Capuani B, Palmirotta R, Dong C, Coppola A, et al. Sex-genetic interaction in the risk for cerebrovascular disease. Curr Med Chem. 2017;24:2687–99. doi: 10.2174/0929867324666170417100318.

- 6. Bailey RR. Promoting physical activity and nutrition in people with stroke. Am J Occup Ther. 2017;71:7105360010p1–5. doi: 10.5014/ajot.2017.021378.

- 7. Assarzadegan F, Tabesh H, Shoghli A, Ghafoori Yazdi M, Tabesh H, Daneshpajooh P, et al. Relation of stroke risk factors with specific stroke subtypes and territories. Iran J Public Health. 2015;44:1387–94.

- 8. Hatleberg CI, Ryom L, Kamara D, de Wit S, Law M, Phillips A, et al. Predictors of ischemic and hemorrhagic strokes among people living with HIV: The D:A:D international prospective multicohort study. EClinicalMedicine. 2019;13:91–100. doi: 10.1016/j.eclinm.2019.07.008.

- 9. Xu Z, Li Y, Tang S, Huang X, Chen T. Current use of oral contraceptives and the risk of first-ever ischemic stroke: A meta-analysis of observational studies. Thromb Res. 2015;136:52–60. doi: 10.1016/j.thromres.2015.04.021.

- 10. Lee PN, Forey BA. Environmental tobacco smoke exposure and risk of stroke in nonsmokers: A review with meta-analysis. J Stroke Cerebrovasc Dis. 2006;15:190–201. doi: 10.1016/j.jstrokecerebrovasdis.2006.05.002.

- 11. Ghatak A. Deep Learning with R. Singapore: Springer Singapore; 2019. p. 1–245.

- 12. Bengio Y, Courville A, Vincent P. Representation learning: A review and new perspectives. IEEE Trans Pattern Anal Mach Intell. 2013;35:1798–828. doi: 10.1109/TPAMI.2013.50.

- 13. Patel AB, Nguyen T, Baraniuk RG. A probabilistic framework for deep learning. In: Advances in Neural Information Processing Systems. NIPS'16: Proceedings of the 30th International Conference on Neural Information Processing Systems; December 2016. p. 2558–66.

- 14. Schmidhuber J. Deep learning in neural networks: An overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003.

- 15. Kutkina O, Feuerriegel S. Deep Learning in R: R-bloggers. 2016. p. 1. Available from: https://www.rblog.uni-freiburg.de/2017/02/07/deep-learning-in-r/

- 16. Das R, Turkoglu I, Sengur A. Effective diagnosis of heart disease through neural networks ensembles. Expert Syst Appl. 2009;36:7675–80. doi: 10.1016/j.cmpb.2008.09.005.

- 17. Iqbal K, Sohail Asghar DA. Hiding sensitive XML association rules with supervised learning technique. Intelligent Information Management. 2011;3:219–29. doi: 10.4236/iim.2011.36027.

- 18. Panzarasa S, Quaglini S, Sacchi L, Cavallini A, Micieli G, Stefanelli M. Data mining techniques for analyzing stroke care processes. Stud Health Technol Inform. 2010;160:939–43.

- 19. Li L, Tang H, Wu Z, Gong J, Gruidl M, Zou J, et al. Data mining techniques for cancer detection using serum proteomic profiling. Artif Intell Med. 2004;32:71–83. doi: 10.1016/j.artmed.2004.03.006.

- 20. Stier N, Vincent N, Liebeskind D, Scalzo F. Deep learning of tissue fate features in acute ischemic stroke. Proceedings (IEEE Int Conf Bioinformatics Biomed). 2015;2015:1316–21. doi: 10.1109/BIBM.2015.7359869.

- 21. Nielsen A, Hansen MB, Tietze A, Mouridsen K. Prediction of tissue outcome and assessment of treatment effect in acute ischemic stroke using deep learning. Stroke. 2018;49:1394–401. doi: 10.1161/STROKEAHA.117.019740.

- 22. Kansadub T, Thammaboosadee T, Supaporn Kiattisin CJ. Stroke risk prediction model based on demographic data. In: 2015 8th Biomedical Engineering International Conference (BMEiCON). IEEE; p. 1–3. doi: 10.1109/BMEiCON.2015.7399556.

- 23. Fekadu G, Chelkeba L, Kebede A. Retraction Note: Risk factors, clinical presentations and predictors of stroke among adult patients admitted to stroke unit of Jimma university medical center, South west Ethiopia: Prospective observational study. BMC Neurol. 2019;19:327. doi: 10.1186/s12883-019-1564-3.

- 24. EBSCOhost 137139786 Predictors for stroke mortality. A Comparison of the Oslo-Study 1972/73 and the Oslo II-Study in. 2000

- 25. Nouh AM, McCormick L, Modak J, Fortunato G, Staff I. High mortality among 30-day readmission after stroke: Predictors and etiologies of readmission. Front Neurol. 2017;8:632. doi: 10.3389/fneur.2017.00632.

- 26. Béjot Y, Cordonnier C, Durier J, Aboa-Eboulé C, Rouaud O, Giroud M. Intracerebral haemorrhage profiles are changing: Results from the Dijon population-based study. Brain. 2013;136:658–64. doi: 10.1093/brain/aws349.

- 27. Giroud M, Delpont B, Daubail B, Blanc C, Durier J, Giroud M, et al. Temporal trends in sex differences with regard to stroke incidence: The Dijon stroke registry (1987-2012). Stroke. 2017;48:846–9. doi: 10.1161/STROKEAHA.116.015913.

- 28. Willey JZ, Moon YP, Paik MC, Boden-Albala B, Sacco RL, Elkind MS. Physical activity and risk of ischemic stroke in the Northern Manhattan Study. Neurology. 2009;73:1774–9. doi: 10.1212/WNL.0b013e3181c34b58.