Abstract

Background:

Coronavirus disease 2019 (COVID-19) is an emerging infectious disease and a global health crisis. Although real-time reverse transcription polymerase chain reaction (RT-PCR) is the most widely used laboratory method for detecting COVID-19 in respiratory specimens, it suffers from several major drawbacks, such as long turnaround time, a high false-negative rate, and limited availability. Therefore, automated detection of COVID-19 is required.

Objective:

This study aimed to use automated deep convolutional neural network-based pre-trained transfer models to detect COVID-19 infection in chest X-rays.

Material and Methods:

In this retrospective study, we applied the Visual Geometry Group (VGG)-16, VGG-19, MobileNet, and InceptionResNetV2 pre-trained models to detect COVID-19 infection in chest X-ray images (348 training images out of 545 in total).

Results:

Our proposed models were trained and tested on a previously prepared dataset. All of the proposed models achieved an accuracy greater than 90.0%. The pre-trained MobileNet model provided the highest performance for automated COVID-19 classification, with 99.1% accuracy, compared with the other three models. The areas under the receiver operating characteristic (ROC) curve (AUC) of the VGG16, VGG19, MobileNet, and InceptionResNetV2 models were 0.92, 0.91, 0.99, and 0.97, respectively.

Conclusion:

All of the proposed models performed binary classification for COVID-19 diagnosis with an accuracy above 90.0%. Our data indicate that MobileNet can be considered a promising model for detecting COVID-19 cases. In the future, adding more COVID-19 chest X-ray samples to the training dataset may further increase the accuracy and robustness of the proposed models.

Keywords: COVID-19, Transfer Learning, X-ray Images, Deep Learning, Convolutional Neural Network, Machine Learning

Introduction

At present, coronavirus disease 2019 (COVID-19) is an emerging infectious disease and a global health crisis. The causative virus was first identified in Wuhan, China, in December 2019 [ 1 ]. As of 28 July 2020 (10:46 GMT), 16,672,569 COVID-19 cases had been reported worldwide, with 657,265 deaths and 10,263,092 recoveries [ 2 ]. In severe cases, COVID-19 causes acute respiratory distress syndrome (ARDS), pneumonia, and respiratory failure. The highly pathogenic virus mainly infects the lower respiratory tract and activates dendritic and epithelial cells, leading to the expression of pro-inflammatory cytokines that cause pneumonia and ARDS, which can be fatal [ 3 ].

Real-time reverse transcription polymerase chain reaction (RT-PCR) is the most widely used laboratory method for detecting COVID-19 in respiratory specimens, such as nasopharyngeal or oropharyngeal swabs [ 4 ]. RT-PCR is a sensitive method for diagnosing COVID-19, but it suffers from several major drawbacks, including long turnaround time, a high false-negative rate, and limited availability [ 5 - 7 ]. To overcome these drawbacks, medical imaging techniques such as chest X-ray and chest computed tomography (CT) can be used as alternative tools to detect and diagnose COVID-19 [ 8 , 9 ]. Radiologists consider chest X-ray imaging, rather than CT, as the primary radiographic examination for detecting infection caused by COVID-19 [ 10 ], owing to the wide availability of X-ray machines in most hospitals, the lower ionizing radiation dose, and the lower cost of X-ray machines compared with CT scanners. Hence, in the present study, we preferred chest X-ray images over CT scans. Chest X-ray images can reveal the radiological signatures of COVID-19 infection; however, the images must be analyzed and interpreted by an expert radiologist, which is time-consuming and prone to error [ 11 ]. Therefore, automated detection of COVID-19 from chest X-ray images is required.

To date, several studies have used deep learning-based methods to automate the analysis of radiological images [ 12 ]. Deep learning-based methods have previously been used to diagnose tuberculosis from chest X-ray images [ 13 ]. With these methods, the weights of a network can be initialized and trained on a large dataset and then fine-tuned on a small dataset [ 14 ]. Given the limited data available for COVID-19, such pre-trained neural networks can be used to diagnose COVID-19. However, few studies have applied these approaches to chest X-ray images so far [ 15 ]. To this end, the present study aimed to use automated deep convolutional neural network-based pre-trained transfer models to detect and diagnose COVID-19 infection in chest X-rays.

Material and Methods

This study was designed as a retrospective study.

Deep Transfer Learning

Transfer learning is a machine learning technique that reuses a model pre-trained on one problem for a new, related problem [ 16 ]. In the analysis of medical data, one of the major challenges for healthcare researchers is the limited size of available datasets [ 7 ]. In addition, deep learning models require large amounts of training data, and labeling these data is costly and time-consuming [ 7 ]. Transfer learning makes it possible to train models with smaller datasets and at a lower computational cost. Over the last decade, deep learning algorithms and convolutional neural networks (CNNs) have led to many breakthroughs in fields such as industry, agriculture, and medical diagnosis [ 17 - 19 ]. The CNN architecture aims to mimic the human visual cortex [ 11 ]. A CNN has three main types of layers: convolutional, pooling, and fully connected layers [ 20 ]. Features are learned by the convolutional and pooling layers, whereas the fully connected layer performs the classification [ 20 ].

Herein, four well-known pre-trained CNN models were applied to detect infection in chest X-rays and classify the images into two groups, normal or COVID-19: 1- VGG16, 2- VGG-19, 3- MobileNet, and 4- InceptionResNetV2. The VGG architectures were designed by the Visual Geometry Group at Oxford University [ 21 ]. VGG-16 consists of 13 convolutional layers and 3 fully connected layers, whereas VGG-19 consists of 16 convolutional layers and 3 fully connected layers [ 21 ]; VGG-19 is therefore a deeper architecture than VGG-16. The MobileNet architecture was proposed by Howard et al. in 2017 [ 22 ]. It is a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks. A depthwise separable convolution consists of two layers: 1- a depthwise convolution, which applies a single filter to each input channel, and 2- a pointwise (1 × 1) convolution, which combines the outputs across channels [ 22 ]. In 2016, Szegedy et al. proposed InceptionResNetV2, a hybrid architecture that combines inception blocks with residual connections [ 23 ]. The residual connections help prevent the degradation problem associated with very deep networks and thereby speed up training [ 23 ]. InceptionResNetV2 is 164 layers deep, and we used it to classify X-ray images as normal or COVID-19.
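The layer counts above, and the saving that motivates MobileNet's depthwise separable convolutions, can be checked with simple arithmetic. The sketch below is our own illustration rather than code from [ 21 ] or [ 22 ]: the VGG configurations are written as plain Python lists (integers are 3 × 3 convolution output channels, "M" marks a max-pooling layer), the VGG parameter counts include biases but cover only the convolutional part, and the channel sizes in the separable-convolution comparison are arbitrary examples.

```python
"""Layer bookkeeping for VGG and for MobileNet-style separable convolutions."""

# Convolutional stacks of VGG-16 (configuration "D") and VGG-19 ("E"):
# integers are 3x3 convolution output channels, "M" is a 2x2 max-pooling layer.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]
NUM_FC_LAYERS = 3  # both variants end with three fully connected layers


def count_conv_layers(cfg):
    """Number of convolutional layers in a VGG configuration list."""
    return sum(1 for item in cfg if item != "M")


def vgg_conv_params(cfg, in_channels=3, kernel=3):
    """Weights plus biases of the convolutional layers (pooling has none)."""
    total = 0
    for item in cfg:
        if item == "M":
            continue
        total += kernel * kernel * in_channels * item + item
        in_channels = item
    return total


def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k standard convolution (biases omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k convolution (one filter per input channel) followed by
    a 1 x 1 pointwise convolution that mixes channels (biases omitted)."""
    return k * k * c_in + c_in * c_out


for name, cfg in (("VGG-16", VGG16_CFG), ("VGG-19", VGG19_CFG)):
    n_conv = count_conv_layers(cfg)
    print(f"{name}: {n_conv} conv + {NUM_FC_LAYERS} FC layers, "
          f"{vgg_conv_params(cfg):,} convolutional parameters")

k, c_in, c_out = 3, 32, 64  # arbitrary example sizes
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
# Howard et al. [22] give the reduction factor as 1/N + 1/D_K^2
print(f"standard: {std}, separable: {sep}, ratio: {sep / std:.4f}, "
      f"1/N + 1/D_K^2: {1 / c_out + 1 / k ** 2:.4f}")
```

Running it reports 13 and 16 convolutional layers for VGG-16 and VGG-19, and shows that for a 3 × 3 kernel with 32 input and 64 output channels the separable form needs about 13% of the weights of a standard convolution.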

X-ray image dataset

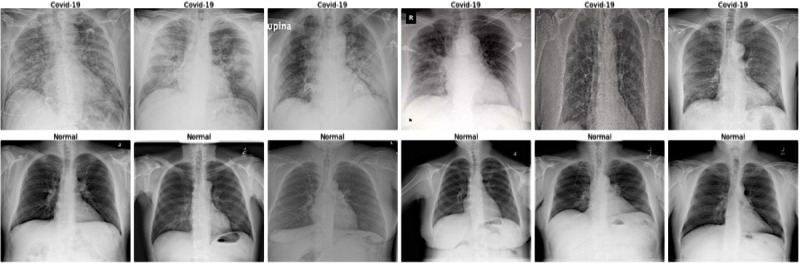

In the present study, an open-source dataset was used. COVID-19 chest X-ray images are available in a GitHub repository (https://github.com/ieee8023/covid-chestxray-dataset) prepared by Cohen et al. [ 24 ]. The repository is an open dataset of COVID-19 cases containing both X-ray images and CT scans, and new images are regularly added. In this study, we used chest X-ray images to classify COVID-19. At the time of preparing this study, the dataset contained about 181 COVID-19 chest X-ray images. As shown in Table 1, the training set comprised 348 chest X-ray images (236 negative and 112 positive for COVID-19). In addition, 55 negative and 33 positive X-ray images formed the validation set, and 73 negative and 36 positive images were used for testing. Figure 1 shows some examples of chest X-ray images from the dataset.

Table 1.

Summary of the input dataset used for the proposed models

| Category (total images) | Training data | Validation data | Testing data |
|---|---|---|---|
| COVID-19 (181) | 112 | 33 | 36 |
| Normal (364) | 236 | 55 | 73 |
| Total (545) | 348 | 88 | 109 |

Figure 1.

Examples of chest X-ray images from the dataset with related labels.
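Table 1 implies a fixed, per-class holdout split. The text does not state how images were assigned to the three subsets, so the sketch below assumes a random, class-stratified assignment; the function name, the seed, and the synthetic label list are hypothetical, and only the subset sizes come from Table 1.

```python
import numpy as np

# Per-class subset sizes from Table 1: (training, validation, testing)
SPLIT_COUNTS = {"COVID-19": (112, 33, 36), "Normal": (236, 55, 73)}


def holdout_split(labels, split_counts, seed=0):
    """Shuffle the indices of each class and cut them into
    train/validation/test partitions of the requested sizes."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    parts = {"train": [], "val": [], "test": []}
    for cls, (n_tr, n_va, n_te) in split_counts.items():
        idx = np.flatnonzero(labels == cls)
        if idx.size != n_tr + n_va + n_te:
            raise ValueError(f"{cls}: expected {n_tr + n_va + n_te} images, got {idx.size}")
        idx = rng.permutation(idx)
        parts["train"].extend(idx[:n_tr])
        parts["val"].extend(idx[n_tr:n_tr + n_va])
        parts["test"].extend(idx[n_tr + n_va:])
    return {name: np.sort(np.array(ix, dtype=int)) for name, ix in parts.items()}


# Hypothetical label list with the class totals of Table 1 (181 + 364)
labels = ["COVID-19"] * 181 + ["Normal"] * 364
splits = holdout_split(labels, SPLIT_COUNTS)
print({name: len(ix) for name, ix in splits.items()})
```

Stratifying by class keeps the COVID-19/normal ratio of each subset fixed, which matters for a small, imbalanced dataset like this one.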

Data pre-processing

Owing to the lack of uniformity in the dataset and the varying sizes of the X-ray images, we rescaled all chest X-ray images. Because the number of samples in the dataset is limited, data augmentation techniques were applied to mitigate this problem; image augmentation can also improve the performance of the classification model. In this study, the data augmentation parameters were a rotation range of 20, a zoom range of 0.05, a width shift range of 0.1, a height shift range of 0.1, a shear range of 0.05, horizontal and vertical flipping, and the "nearest" fill mode.
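The augmentation parameters listed above correspond closely to the arguments of the Keras `ImageDataGenerator` class. The sketch below assumes that mapping (the text does not name the library), with rotation and shear ranges interpreted in degrees as Keras does. `ImageDataGenerator` is deprecated in recent Keras releases, and its geometric transforms require SciPy. The random batch stands in for real images, and augmentation would normally be applied to the training set only.

```python
import numpy as np
import tensorflow as tf

# Augmentation settings from the text, expressed as (assumed) Keras arguments
train_augmenter = tf.keras.preprocessing.image.ImageDataGenerator(
    rotation_range=20,        # random rotation in [-20, 20] degrees
    zoom_range=0.05,          # random zoom factor in [0.95, 1.05]
    width_shift_range=0.1,    # horizontal shift up to 10% of the width
    height_shift_range=0.1,   # vertical shift up to 10% of the height
    shear_range=0.05,         # shear angle in degrees
    horizontal_flip=True,
    vertical_flip=True,
    fill_mode="nearest",      # fill new pixels with the nearest edge value
)

# Dummy batch standing in for preprocessed chest X-rays scaled to [0, 1]
x = np.random.default_rng(0).random((4, 224, 224, 3)).astype("float32")
y = np.eye(2, dtype="float32")[[0, 1, 0, 1]]  # one-hot labels
x_aug, y_aug = next(train_augmenter.flow(x, y, batch_size=4, shuffle=False))
print(x_aug.shape, y_aug.shape)
```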

Proposed model

As stated earlier, the publicly available GitHub dataset of COVID-19 chest X-ray images was used in our study. Because the images were obtained from multiple hospitals, their resolutions differ; hence, we rescaled the images and normalized pixel values to the range between zero and one. Because we used a CNN-based method, the model is not affected by adverse effects of the data compression applied in the present study.
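The rescaling and normalization step can be sketched with NumPy alone. The text does not give the target size or the interpolation method, so the 224 × 224 size, the nearest-neighbour resize, the division by 255 (which assumes 8-bit images), and the function names are all assumptions; in practice an image library such as Pillow or OpenCV with bilinear interpolation would be a common choice.

```python
import numpy as np


def resize_nearest(image, size):
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array to (rows, cols)."""
    rows, cols = size
    h, w = image.shape[:2]
    # map each output pixel centre back to the nearest source pixel
    r_idx = np.minimum((np.arange(rows) + 0.5) * h / rows, h - 1).astype(int)
    c_idx = np.minimum((np.arange(cols) + 0.5) * w / cols, w - 1).astype(int)
    return image[r_idx[:, None], c_idx[None, :]]


def preprocess(image, size=(224, 224)):
    """Resize to a common shape and scale 8-bit intensities to [0, 1]."""
    resized = resize_nearest(np.asarray(image), size)
    return resized.astype(np.float32) / 255.0


# Usage with a synthetic 8-bit image standing in for a real radiograph
xray = (np.arange(1024 * 888) % 256).astype(np.uint8).reshape(1024, 888)
x = preprocess(xray)
print(x.shape, x.dtype, float(x.min()), float(x.max()))
```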

In this study, a CNN-based model was used to detect COVID-19 from chest X-ray images. We used four pre-trained CNN models: VGG16, VGG19, MobileNet, and InceptionResNetV2. We do not describe these models in detail because many previous studies have described their parameters. In brief, these architectures consist of convolutional, pooling, flattening, and fully connected layers. The models (i.e., VGG16, VGG19, MobileNet, and InceptionResNetV2) were used for feature extraction. A transfer learning head consisting of five layers was then trained and applied on the COVID-19 dataset; these five layers constitute the main part of the model. In other words, we built a new fully connected head comprising the following layers: AveragePooling2D, Flatten, Dense, Dropout, and a final two-unit Dense layer with softmax activation (which, for two classes, is equivalent to a sigmoid) that predicts the probability distribution over the classes. The AveragePooling2D layer is the first layer and performs average pooling with a pool size of (4, 4). A Flatten layer then reshapes the 2-dimensional (2D) feature maps into a vector that can be fed into a fully connected classifier. The following Dense layer transforms this vector, reducing it from 512 to 64 elements. Next, dropout with a rate of 0.5 randomly ignores 50% of the neurons during training to improve generalization. Finally, the last Dense layer reduces the 64-element vector to 2 elements, so that the model performs two-class (binary) classification.
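A minimal Keras sketch of this five-layer head on top of a VGG16 backbone follows. Several details are assumptions not stated in the text: the 224 × 224 × 3 input size (which makes the flattened vector 512 long, matching the description above), freezing the backbone, and ReLU activation in the 64-unit Dense layer. The function and layer names are hypothetical. Other backbones can be swapped in via `tf.keras.applications.MobileNet` or `tf.keras.applications.InceptionResNetV2`, in which case the flattened length follows the backbone's channel count.

```python
import tensorflow as tf


def build_transfer_model(weights="imagenet", input_shape=(224, 224, 3)):
    """Pre-trained VGG16 feature extractor followed by the five-layer head:
    AveragePooling2D -> Flatten -> Dense(64) -> Dropout(0.5) -> Dense(2, softmax)."""
    base = tf.keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=input_shape)
    base.trainable = False  # assumption: only the new head is trained

    layers = tf.keras.layers
    x = layers.AveragePooling2D(pool_size=(4, 4), name="head_avgpool")(base.output)
    x = layers.Flatten(name="head_flatten")(x)  # 7x7x512 -> 1x1x512 -> 512 values
    x = layers.Dense(64, activation="relu", name="head_dense")(x)  # ReLU assumed
    x = layers.Dropout(0.5, name="head_dropout")(x)  # drops 50% of units in training
    outputs = layers.Dense(2, activation="softmax", name="head_output")(x)
    return tf.keras.Model(inputs=base.input, outputs=outputs)


# Usage: weights=None builds an untrained network without downloading weights;
# the default weights="imagenet" loads the pre-trained backbone.
model = build_transfer_model(weights=None)
print(model.get_layer("head_flatten").output.shape)  # (None, 512)
```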

Training phase

In the present study, a transfer learning approach was adopted to assess and compare the performance of the CNN architectures described here. Because radiologists must first distinguish COVID-19 chest X-rays from normal images, we chose a CNN design that distinguishes COVID-19 cases from healthy individuals.

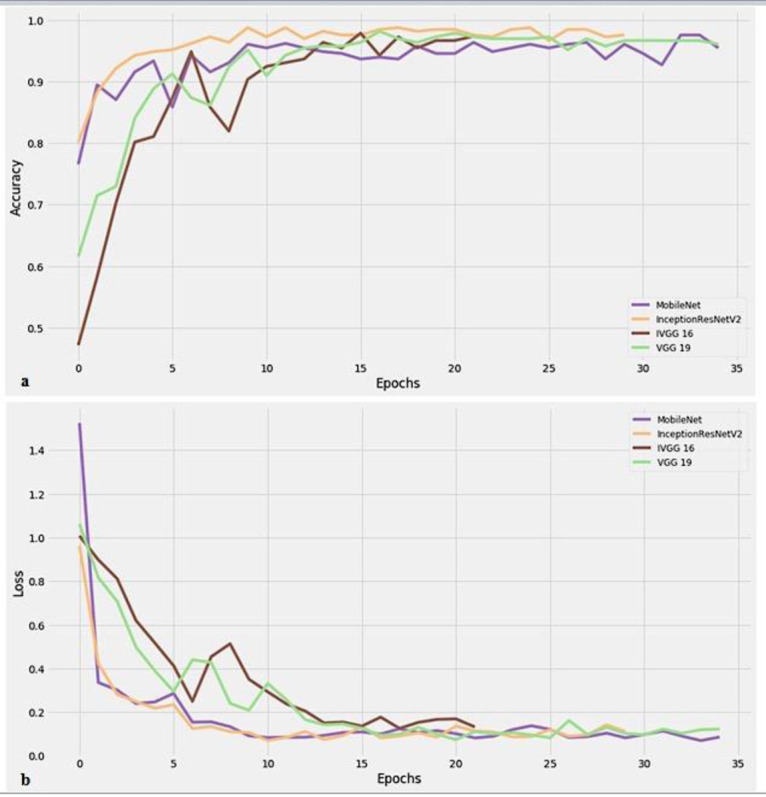

The networks were trained using the binary cross-entropy loss function and the Adam optimizer, with a learning rate of 0.0001, a batch size of 15, and a maximum of 100 epochs. Other parameters and functions used in the training phase are described earlier in the Materials and Methods section. As mentioned above, we applied data augmentation to improve training efficiency and prevent overfitting. The neural networks were implemented in Python and run on an NVIDIA GeForce GTX GPU with 8 GB of memory and 32 GB of RAM. We used the holdout method, the simplest type of cross-validation, to assess the performance of our binary classification models. The training accuracy and loss curves of each transfer learning model are shown in Figure 2 (a) and (b), respectively. Although the number of epochs was set to 100, all models reached stability within 28 to 30 epochs because we used a callback, a powerful tool for customizing the behavior of models during training.
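The training configuration can be sketched in Keras as follows. The loss, optimizer, learning rate, batch size, and epoch limit come from the text; the text does not name the callback, so using `EarlyStopping` on the validation loss, the patience value, and the function name are assumptions.

```python
import tensorflow as tf


def compile_and_train(model, x_train, y_train, x_val, y_val,
                      epochs=100, batch_size=15, patience=10):
    """Train with binary cross-entropy and Adam (learning rate 1e-4); an
    EarlyStopping callback (assumed; patience is hypothetical) halts training
    once the validation loss stops improving."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    early_stop = tf.keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=patience, restore_best_weights=True)
    return model.fit(
        x_train, y_train,
        validation_data=(x_val, y_val),  # holdout validation set
        batch_size=batch_size,
        epochs=epochs,
        callbacks=[early_stop],
        verbose=0,
    )
```

With data augmentation, `x_train` and `y_train` would typically be replaced by an iterator from `ImageDataGenerator.flow(..., batch_size=15)`, and `batch_size` would then be omitted from `fit`. Early stopping with `restore_best_weights=True` explains how training can end well before the 100-epoch limit while keeping the best validation-loss weights.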

Figure 2.

Training curve of accuracy (a) and loss (b) for the proposed models.

Figure 3.

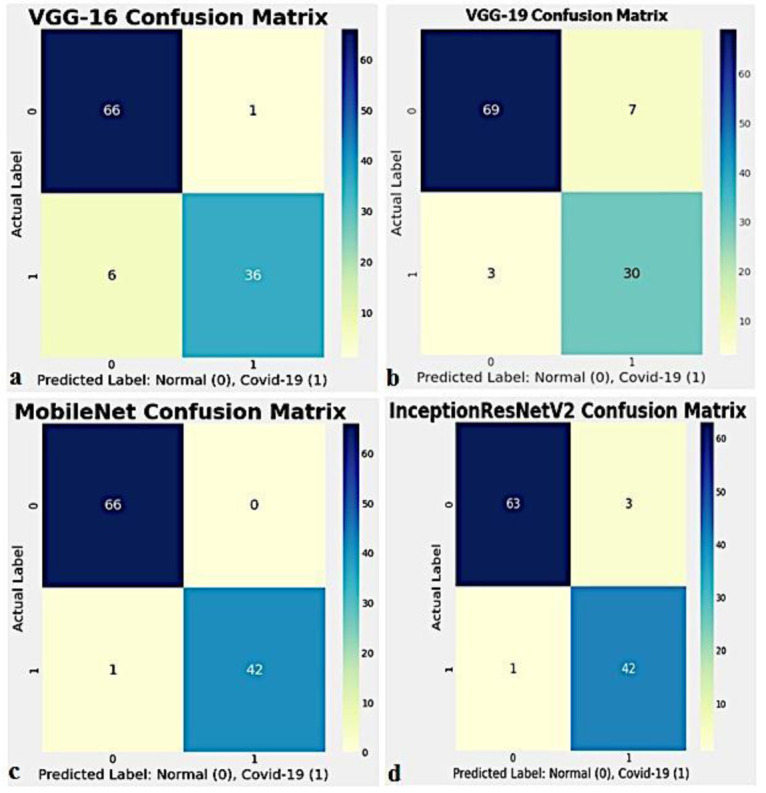

Confusion matrices of the proposed models, (a) Visual Geometry Group (VGG)-16, (b) VGG-19, (c) MobileNet, and (d) InceptionResNetV2.

Results

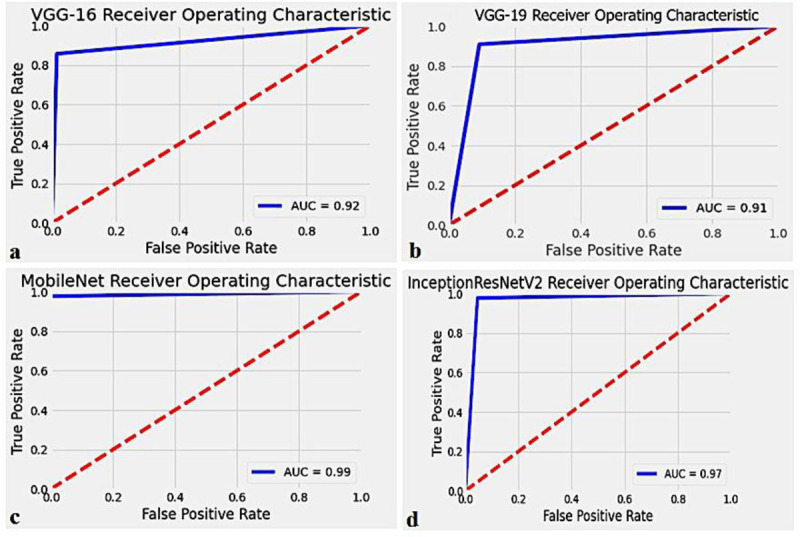

We calculated the confusion matrix and the area under the receiver operating characteristic (ROC) curve (AUC) to evaluate the performance of each transfer learning model.

A confusion matrix is a table with two rows and two columns that reports four primary quantities: false positives (FP), false negatives (FN), true positives (TP), and true negatives (TN). Figure 3 shows the confusion matrix of each transfer learning model for the binary task of distinguishing COVID-19 chest X-rays from healthy X-rays. As shown in Figure 3, the MobileNet model has the best classification performance. Four performance metrics, namely accuracy, precision, recall, and F-measure (F1-score), were used to evaluate each transfer learning model; these are among the most common evaluation metrics in machine learning. Table 2 summarizes the accuracy, precision, recall, and F1-score of each model, computed as follows: Accuracy = (TP + TN)/(TP + TN + FP + FN); Precision = TP/(TP + FP); Recall = TP/(TP + FN); F1-score = 2 × Precision × Recall/(Precision + Recall).
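Accuracy, precision, recall, and F1-score follow from the four confusion-matrix counts using their standard definitions. In the sketch below, COVID-19 is treated as the positive class, the function names are our own, and the counts in the example are hypothetical: they only match the size of our test set (36 COVID-19 and 73 normal images) and are not the results reported in Table 2.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN) for binary labels; `positive` marks COVID-19."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall (sensitivity) and F1-score from counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for a 109-image test set (36 COVID-19, 73 normal)
metrics = classification_metrics(tp=30, fp=3, fn=6, tn=70)
print({name: round(value, 3) for name, value in metrics.items()})
```

Because the F1-score is the harmonic mean of precision and recall, it stays low unless both are high, which is why it complements accuracy on an imbalanced test set.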

Table 2.

Performance of each convolutional neural network (CNN)-based pre-trained transfer model on the testing data

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| VGG-16 | 93.6 | 97.0 | 86.0 | 91.0 |
| VGG-19 | 90.8 | 81.0 | 91.0 | 86.0 |
| MobileNet | 99.1 | 100.0 | 98.0 | 99.0 |
| InceptionResNetV2 | 96.8 | 93.0 | 98.0 | 95.0 |

As shown in Table 2, all proposed models achieved an accuracy greater than 90.0%. The pre-trained MobileNet model provided the highest performance for automated COVID-19 classification, with 99.1% accuracy, compared with the other three models. The F1-score, the harmonic mean of precision and recall, is a measure of a test's accuracy. As shown in Table 2, the MobileNet model also achieved the highest F1-score, 99.0%.

The ROC curve is a 2D plot of the true positive rate (sensitivity) against the false positive rate (1 − specificity). It therefore represents the trade-off between sensitivity and specificity [ 25 ]. Herein, the ROC curve of each transfer learning model, with the true positive rate on the y-axis and the false positive rate on the x-axis, is plotted in Figure 4. We also calculated the AUC, an effective summary of the accuracy of the ROC curve produced by each model. The AUC measures how well a model discriminates between the COVID-19 and healthy groups. As displayed in Figure 4, the AUC values of the VGG-16, VGG-19, MobileNet, and InceptionResNetV2 models were 0.92, 0.91, 0.99, and 0.97, respectively. In the field of medical diagnosis, these values are considered "excellent".
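An ROC curve and its AUC can be computed from a model's predicted COVID-19 probabilities by sweeping the decision threshold. The NumPy sketch below is illustrative: the function names and scores are hypothetical. It sorts the scores in decreasing order, accumulates true and false positives at each distinct threshold, and integrates the curve with the trapezoidal rule. Library routines such as scikit-learn's `roc_curve` and `roc_auc_score` compute the same quantities.

```python
import numpy as np


def roc_points(y_true, scores):
    """False/true positive rates for every distinct threshold, highest first."""
    y = np.asarray(y_true, dtype=int)
    s = np.asarray(scores, dtype=float)
    n_pos, n_neg = int(y.sum()), int(y.size - y.sum())
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC analysis needs both positive and negative cases")
    order = np.argsort(-s, kind="mergesort")
    y, s = y[order], s[order]
    # last position of each block of tied scores = one threshold
    cut = np.r_[np.flatnonzero(np.diff(s)), y.size - 1]
    tps = np.cumsum(y)[cut]
    fps = (cut + 1) - tps
    fpr = np.r_[0.0, fps / n_neg]  # x-axis: 1 - specificity
    tpr = np.r_[0.0, tps / n_pos]  # y-axis: sensitivity
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


# Hypothetical labels (1 = COVID-19) and predicted probabilities
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
fpr, tpr = roc_points(y_true, scores)
print(auc_trapezoid(fpr, tpr))  # 0.75
```

The AUC equals the probability that a randomly chosen COVID-19 image receives a higher score than a randomly chosen normal one; in the example, 3 of the 4 positive/negative pairs are ranked correctly.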

Figure 4.

Receiver operating characteristics (ROC) curve for the proposed models, (a) Visual Geometry Group (VGG)-16, (b) VGG-19, (c) MobileNet, and (d) InceptionResNetV2.

Discussion

In this study, we applied four pre-trained deep CNN models, VGG-16, VGG-19, MobileNet, and InceptionResNetV2, to discriminate COVID-19 cases from chest X-ray images. VGG-16, VGG-19, MobileNet, and InceptionResNetV2 achieved overall accuracies of 93.6%, 90.8%, 99.1%, and 96.8%, respectively, for binary classification. In addition, the precision (positive predictive value) and recall (sensitivity) for COVID-19 cases are encouraging. A high recall is particularly encouraging because it corresponds to few FN cases. This matters because the most important purpose of the present study is for the models to miss as few COVID-19 cases as possible. VGG-16 and MobileNet achieved the highest precision values, 97.0% and 100%, respectively. Furthermore, the MobileNet and InceptionResNetV2 models achieved the same recall of 98.0%, as shown in Table 2.

Table 3 summarizes recent studies on the automated detection of COVID-19 from chest X-ray and CT images. As shown in Table 3, the results achieved by our proposed models are comparable or superior to those of previous similar studies. Several research groups have attempted to develop automated models to diagnose COVID-19 accurately. Hemdan et al. proposed the COVIDX-Net model to detect COVID-19 cases from chest X-ray images [ 26 ]. Their model achieved an accuracy of 90.0% using 25 COVID-19-positive and 25 healthy chest X-rays. In another study, a residual deep architecture called COVID-Net was designed for COVID-19 diagnosis; it achieved an accuracy of 92.4% using medical images obtained from various open-access datasets [ 27 ]. Apostolopoulos and Mpesiana used transfer learning to analyze 224 confirmed COVID-19, 700 pneumonia, and 504 normal images [ 28 ]. Their best model (VGG-19) achieved an accuracy of 98.75% for binary classification. Wang et al. obtained a classification accuracy of 82.9% with a modified Inception (M-Inception) deep model on 195 COVID-19 and 258 healthy CT images [ 29 ].

Table 3.

Summary of recent studies on automated COVID-19 detection

| Study | Architecture | Image type | COVID-19 (n) | Healthy (n) | 2-class accuracy (%) |
|---|---|---|---|---|---|
| Hemdan et al. [26] | COVIDX-Net | X-ray | 25 | 25 | 90.0 |
| Wang and Wong [27] | COVID-Net (residual architecture) | X-ray | 53 | 8066 | 92.4 |
| Narin et al. [7] | ResNet-50 | X-ray | 50 | 50 | 98.0 |
| Narin et al. [7] | InceptionV3 | X-ray | 50 | 50 | 97.0 |
| Sethy and Behera [6] | ResNet-50 | X-ray | 25 | 25 | 95.38 |
| Apostolopoulos and Mpesiana [28] | VGG-19 | X-ray | 224 | 504 | 98.75 |
| Apostolopoulos and Mpesiana [28] | Xception | X-ray | 224 | 504 | 85.57 |
| Wang et al. [29] | M-Inception | CT | 195 | 258 | 82.9 |
| Zheng et al. [30] | UNet + 3D Deep Network | CT | 313 | 229 | 90.8 |
| Present study | VGG-16 | X-ray | 181 | 364 | 93.6 |
| Present study | VGG-19 | X-ray | 181 | 364 | 90.8 |
| Present study | MobileNet | X-ray | 181 | 364 | 99.1 |
| Present study | InceptionResNetV2 | X-ray | 181 | 364 | 96.8 |

It is well established that wearing cloth face coverings, social distancing, and rigorous testing, along with other preventive measures, can reduce the spread of COVID-19. Antibody tests and RT-PCR are currently the two standard methods for detecting COVID-19 worldwide. The antibody test is an indirect method of diagnosing COVID-19 infection and is very slow. In contrast, RT-PCR is relatively fast and can diagnose COVID-19 in around 4-6 hours. However, RT-PCR testing has several limitations, such as limited availability, high cost, and kit shortages. Moreover, given the magnitude of the COVID-19 pandemic worldwide, RT-PCR is not fast enough.

The aforementioned limitations may be mitigated by our proposed pre-trained deep CNN models, in particular MobileNet. The proposed models can detect a COVID-19-positive case in less than 2 seconds. Our models achieved accuracies above 90% with the limited patient data available to us. Furthermore, the MobileNet and InceptionResNetV2 models achieved a 98% true positive rate. Overall, our proposed models achieved promising results in detecting COVID-19 from chest X-ray images compared with recent state-of-the-art methods. These data suggest that deep learning may play an important role in fighting the COVID-19 pandemic in the near future. Nevertheless, our models must be validated by adding more patient data to the training dataset. The proposed chest X-ray-based models aim to improve COVID-19 detection and may significantly reduce clinician workload.

Conclusion

In this study, we presented four pre-trained deep CNN models, namely VGG16, VGG19, MobileNet, and InceptionResNetV2, which were used for transfer learning to detect and classify COVID-19 from chest radiographs. All proposed models performed binary classification for COVID-19 diagnosis with an accuracy above 90.0%. The MobileNet model achieved the highest classification performance for automated COVID-19 detection, with 99.1% accuracy, among the four models. Our data indicate that MobileNet can be considered a promising model for detecting COVID-19 cases and may be helpful for medical diagnosis in radiology departments. A limitation of our study is the small number of COVID-19 chest X-ray images. In the future, adding more COVID-19 chest X-ray samples to the training dataset may further increase the accuracy and robustness of the proposed models.

Footnotes

Conflict of Interest: None

References

1. Shan F, Gao Y, Wang J, Shi W, Shi N, Han M, et al. Lung infection quantification of covid-19 in ct images with deep learning. ArXiv. 2020.

2. Worldometer. COVID-19 Coronavirus Pandemic. [Cited 2020 July 28]. 2020. Available from: https://www.worldometers.info/coronavirus/

3. Yuki K, Fujiogi M, Koutsogiannaki S. COVID-19 pathophysiology: A review. Clin Immunol. 2020;215:108427. doi: 10.1016/j.clim.2020.108427.

4. Wang W, Xu Y, Gao R, Lu R, Han K, Wu G, et al. Detection of SARS-CoV-2 in Different Types of Clinical Specimens. JAMA. 2020;323(18):1843–4. doi: 10.1001/jama.2020.3786.

5. Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, et al. Correlation of Chest CT and RT-PCR Testing for Coronavirus Disease 2019 (COVID-19) in China: A Report of 1014 Cases. Radiology. 2020;296(2):E32–40. doi: 10.1148/radiol.2020200642.

6. Sethy PK, Behera SK. Detection of Coronavirus Disease (COVID-19) Based on Deep Features. Preprints. 2020. doi: 10.20944/preprints202003.0300.v1.

7. Narin A, Kaya C, Pamuk Z. Automatic detection of coronavirus disease (covid-19) using x-ray images and deep convolutional neural networks. ArXiv. 2020. doi: 10.1007/s10044-021-00984-y.

8. Zu ZY, Jiang MD, Xu PP, Chen W, Ni QQ, Lu GM, et al. Coronavirus Disease 2019 (COVID-19): A Perspective from China. Radiology. 2020;296(2):E15–25. doi: 10.1148/radiol.2020200490.

9. Kanne JP, Little BP, Chung JH, Elicker BM, Ketai LH. Essentials for Radiologists on COVID-19: An Update-Radiology Scientific Expert Panel. Radiology. 2020;296(2):E113–4. doi: 10.1148/radiol.2020200527.

10. Basavegowda HS, Dagnew G. Deep learning approach for microarray cancer data classification. CAAI Trans Intell Technol. 2020;5(1):22–33. doi: 10.1049/trit.2019.0028.

11. Majeed T, Rashid R, Ali D, Asaad A. Problems of Deploying CNN Transfer Learning to Detect COVID-19 from Chest X-rays. MedRxiv. 2020. doi: 10.1007/s13246-020-00934-8.

12. Kaur M, Singh D. Fusion of medical images using deep belief networks. Cluster Comput. 2020;23:1439–53. doi: 10.1007/s10586-019-02999-x.

13. Qi G, Wang H, Haner M, Weng C, Chen S, Zhu Z. Convolutional neural network based detection and judgement of environmental obstacle in vehicle operation. CAAI Trans Intell Technol. 2019;4(2):80–91. doi: 10.1049/trit.2018.1045.

14. Shukla PK, Sharma P, Rawat P, Samar J, Moriwal R, et al. Efficient prediction of drug–drug interaction using deep learning models. IET Syst Biol. 2020;14:211–6. doi: 10.1049/iet-syb.2019.0116.

15. Sarker L, Islam MM, Hannan T. COVID-DenseNet: A Deep Learning Architecture to Detect COVID-19 from Chest Radiology Images. Preprints. 2020. doi: 10.20944/preprints202005.0151.v1.

16. Hussain M, Bird JJ, Faria DR. A Study on CNN Transfer Learning for Image Classification. In: Advances in Computational Intelligence Systems. Springer International Publishing; 2018. p. 191–202.

17. Rahimzadeh M, Attar A. Introduction of a new Dataset and Method for Detecting and Counting the Pistachios based on Deep Learning. ArXiv. 2020.

18. Lih OS, Jahmunah V, San TR, Ciaccio EJ, Yamakawa T, Tanabe M, et al. Comprehensive electrocardiographic diagnosis based on deep learning. Artif Intell Med. 2020;103:101789. doi: 10.1016/j.artmed.2019.101789.

19. Dekhtiar J, Durupt A, Bricogne M, Eynard B, Rowson H, Kiritsis D. Deep learning for big data applications in CAD and PLM – Research review, opportunities and case study. Comput Ind. 2018;100:227–43. doi: 10.1016/j.compind.2018.04.005.

20. Guo T, Dong J, Li H, Gao Y, editors. Simple convolutional neural network on image classification. 2nd International Conference on Big Data Analysis (ICBDA); Beijing, China: IEEE; 2017.

21. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. ArXiv. 2014.

22. Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. ArXiv. 2017.

23. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. Conference on Computer Vision and Pattern Recognition (CVPR); Boston, MA, USA: IEEE; 2015. p. 1–9.

24. Cohen JP, Morrison P, Dao L, Roth K, Duong TQ, Ghassemi M. Covid-19 image data collection: Prospective predictions are the future. ArXiv. 2020.

25. Streiner DL, Cairney J. What's under the ROC? An introduction to receiver operating characteristics curves. Can J Psychiatry. 2007;52(2):121–8. doi: 10.1177/070674370705200210.

26. Hemdan EE-D, Shouman MA, Karar ME. Covidx-net: A framework of deep learning classifiers to diagnose covid-19 in x-ray images. ArXiv. 2020.

27. Wang L, Wong A. COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-Ray Images. ArXiv. 2020. doi: 10.1038/s41598-020-76550-z.

28. Apostolopoulos ID, Mpesiana TA. Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 2020;43:635–40. doi: 10.1007/s13246-020-00865-4.

29. Wang S, Kang B, Ma J, Zeng X, Xiao M, Guo J, et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). MedRxiv. 2020. doi: 10.1101/2020.02.14.20023028.

30. Zheng C, Deng X, Fu Q, Zhou Q, Feng J, Ma H, et al. Deep Learning-based Detection for COVID-19 from Chest CT using Weak Label. MedRxiv. 2020. doi: 10.1101/2020.03.12.20027185.