Abstract

t-distributed Stochastic Neighbor Embedding (t-SNE), a clustering and visualization method proposed by van der Maaten & Hinton in 2008, has rapidly become a standard tool in a number of natural sciences. Despite its overwhelming success, there is a distinct lack of mathematical foundations, and the inner workings of the algorithm are not well understood. The purpose of this paper is to prove that t-SNE is able to recover well-separated clusters; more precisely, we prove that t-SNE in the ‘early exaggeration’ phase, an optimization technique proposed by van der Maaten & Hinton (2008) and van der Maaten (2014), can be rigorously analyzed. As a byproduct, the proof suggests novel ways of setting the exaggeration parameter α and the step size h. Numerical examples illustrate the effectiveness of these rules: in particular, the quality of the embedding of topological structures (e.g. the swiss roll) improves. We also discuss a connection to spectral clustering methods.

Keywords: t-SNE, dimensionality reduction, spectral clustering, convergence rates, theoretical guarantees

1. Introduction and main result.

The analysis of large, high dimensional datasets is ubiquitous in an increasing number of fields and vital to their progress. Traditional approaches to data analysis and visualization often fail in the high dimensional setting, and it is common to perform dimensionality reduction in order to make data analysis tractable. t-distributed Stochastic Neighbor Embedding (t-SNE), introduced by van der Maaten and Hinton (2008), is an impressively effective non-linear dimensionality reduction technique that has recently found enormous popularity in several fields. It is most commonly used to produce a two-dimensional embedding of high dimensional data with the goal of simplifying the identification of clusters. Despite its tremendous empirical success, the theory underlying t-SNE remains poorly understood. The only theoretical paper at this point is Shaham and Steinerberger (2017), which shows that the structure of the loss functional of SNE (a precursor to t-SNE) implies that global minimizers separate clusters in a quantitative sense.

1.1. A case study.

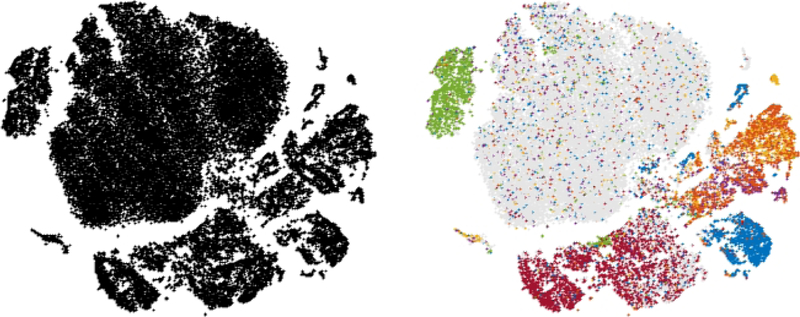

As an unsupervised learning method, t-SNE is commonly used to visualize high dimensional data and provide crucial intuition in settings where ground truth is unknown. The analysis of single cell RNA sequencing (scRNA-seq) data, where t-SNE has become an integral part of the standard analysis pipeline, provides a relevant example of its usage. Figure 1.1 shows (left) the output of running t-SNE on the 30 largest principal components of the normalized expression matrix of 49300 retinal cells taken from Macosko et al. (2015). In the output on the right, cells are colored by which of 12 cell type marker genes was most expressed (with grey signifying that none of the marker genes were expressed). This example is well suited to showcase both the tremendous impact of t-SNE in the medical sciences and the inherent difficulties of interpreting its output when ground truth is unknown: how many clusters are in the original space, and do they correspond one-to-one to clusters in the t-SNE plot? Do the clusters (e.g. the largest cluster, which does not express any marker genes) have substructure that is not apparent in this visualization? Different pre-processing steps yield different embeddings; how stable are the clusters? All these questions are of the utmost importance and underline the need for a better theoretical understanding.

Figure 1.1:

t-SNE output (left) and colored by some known ground truth (right).

1.2. Early Exaggeration.

t-SNE (described in greater detail in §3) minimizes the Kullback-Leibler divergence between a Gaussian distribution modeling distances between points in the high dimensional input space and a Student t-distribution modeling distances between corresponding points in a low dimensional embedding. Given a d-dimensional input dataset $\mathcal{X} = \{x_1, \dots, x_n\} \subset \mathbb{R}^d$, t-SNE computes an s-dimensional embedding of the points in $\mathcal{X}$, denoted by $\mathcal{Y} = \{y_1, \dots, y_n\} \subset \mathbb{R}^s$, where s ≪ d and most commonly s = 2 or 3. The main idea is to define a set of affinities pij on $\mathcal{X}$ as well as a set of affinities qij in the embedding, and then to minimize the Kullback-Leibler divergence between these two distributions,

$$ C(\mathcal{Y}) = \mathrm{KL}(P \,\|\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}, $$
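which gives rise to a gradient descent method (with step size h > 0) via

$$ y_i(t+1) = y_i(t) - h \frac{\partial C}{\partial y_i} = y_i(t) + 4h \sum_{j \neq i} (p_{ij} - q_{ij})\, q_{ij} Z \,(y_j(t) - y_i(t)), \qquad q_{ij} = \frac{(1 + \|y_i - y_j\|^2)^{-1}}{Z}, $$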
where $Z = \sum_{k \neq l} (1 + \|y_k - y_l\|^2)^{-1}$. One difficulty is that convergence slows down as the number of points n increases: the algorithm requires many more iterations to converge. However, the original paper van der Maaten and Hinton (2008) already proposes a number of ways in which convergence can be accelerated.

A less obvious way to improve the optimization, which we call ‘early exaggeration’, is to multiply all of the pij’s by, for example, 4, in the initial stages of the optimization. […] In all the visualizations presented in this paper and in the supporting material, we used exactly the same optimization procedure. We used the early exaggeration method with an exaggeration of 4 for the first 50 iterations (note that early exaggeration is not included in the pseudocode in Algorithm 1). (from: van der Maaten and Hinton (2008))

It is easy to test empirically that this renormalization indeed improves the clustering and is effective. It has become completely standard and is hard-coded into the very widely used standard implementation available online, as described by van der Maaten (2014):

During the first 250 learning iterations, we multiplied all pij–values by a user-defined constant α > 1. […] this trick enables t-SNE to find a better global structure in the early stages of the optimization by creating very tight clusters of points that can easily move around in the embedding space. In preliminary experiments, we found that this trick becomes increasingly important to obtain good embeddings when the data set size increases [Emphasis GL & SS], as it becomes harder for the optimization to find a good global structure when there are more points in the embedding because there is less space for clusters to move around. In our experiments, we fix α = 12 (by contrast, van der Maaten and Hinton (2008) used α = 4). (from: van der Maaten (2014))

As it turns out, this simple optimization trick can be rigorously analyzed. As a byproduct of our analysis, we see that the convergence of non-accelerated t-SNE slows down as the number of points n increases and that the number of iterations required grows at least linearly in n. The implementation available online counteracts this problem by various methods: (1) the early exaggeration factor α, (2) a large step size (h = 200) in the gradient descent

$$ y_i(t+1) = y_i(t) - h \frac{\partial C}{\partial y_i}, $$
and by (3) optimization techniques such as momentum. We only deal with the t-SNE algorithm, the early exaggeration factor α and the step-size h; one of the main points of our paper is that a suitable parameter selection of α and h makes it possible to guarantee fast convergence without additional optimization techniques.

1.3. Summary of Main Results.

We will now state our main results at an informal level; all the statements can be made precise (this is done in §3.2) and will be rigorously proven.

1. Canonical parameters and exponential convergence.

There is a canonical setting of the parameters α, h for which the algorithm applied to clustered data provably converges at an exponential rate without the use of other optimization techniques (such as momentum). This setting is

$$ \alpha \sim \frac{n}{10} \qquad \text{and} \qquad h \sim 1. $$

These parameters lead to exponential convergence of all embedded clusters to small balls (whose diameter depends on how well $\mathcal{X}$ is clustered). Generally, the speed of convergence is exponential with an exponential factor κ

The restriction αh ≤ n is not artificial: simple experiments show that the algorithm generally fails (no longer converges) as soon as αh ≥ 2n.

2. Spectral clustering.

The t-SNE algorithm, in this regime, behaves like a spectral clustering algorithm (defined in §4.1); moreover, this algorithm can be written down explicitly. This allows for (1) the use of theory from spectral clustering to rigorously analyze t-SNE and (2) a fast implementation that can perform the early exaggeration phase in a fraction of the time necessary to run t-SNE (in this regime). It also poses the challenge of understanding whether t-SNE behaves qualitatively differently for the standard parameters α ~ 12, h ~ 200 or whether it behaves more or less identically (and thus like a spectral method).

3. Disjoint clusters.

It is not guaranteed that the embedded clusters in $\mathcal{Y}$ are disjoint; but given a random initialization, it is extremely unlikely that two distinct clusters will converge to the same center. Furthermore, if $\mathcal{X}$ is well-clustered, the diameter of the clusters can be made even smaller by decreasing the step size h and further increasing α, as long as the product satisfies αh ~ n/10. Increasing α will resolve overlapping clusters, as long as they have different centers. In particular, the number of disjoint clusters in $\mathcal{Y}$ is a lower bound on the number of clusters in $\mathcal{X}$ (and, generically, the numbers coincide). Finally, we note that since the appearance of the preprint of this manuscript, Arora et al. (2018) have built upon our analytic framework to show that clusters will effectively be disjoint, under a slightly different set of assumptions.

4. Independence of initialization.

All these results are independent of the initialization of $\mathcal{Y}$ as long as it is contained in a sufficiently small ball.

An immediate implication of (3) is the following: if we are given some clustered data $\mathcal{X}$ and see that the embedding of t-SNE for large values of α (and small values of h) produces k clusters, then there are at least k clusters in $\mathcal{X}$ (and, generically, exactly k). The results guarantee that all clusters in $\mathcal{X}$ are eventually mapped to small balls, which can be made arbitrarily small. When parameters are chosen optimally, this result therefore provides a justification for the way t-SNE is commonly used in, say, biomedical research. However, we emphasize that this result only applies to data which is well-clustered, as made precise in §3.2.

1.4. Approximating Spectral Clustering.

The fact that t-SNE approximates a spectral clustering method for α ~ n/10, h ~ 1 raises a fascinating question: does t-SNE, in its early exaggeration phase, perform better with the classical parameter choices α ~ 12, h ~ 200 than it does with α ~ n/10, h ~ 1? If yes, then its inner workings may give rise to improved spectral methods. If no, then it would be advantageous to use α ~ n/10, h ~ 1; since this is essentially a spectral method, it may then be preferable (and much faster) to initialize the second phase of t-SNE with the output of a more advanced spectral method instead. We discuss some experiments in this direction in §4.1 and believe this question to be worthy of further investigation. Moreover, we describe a visualization technique in the style of t-SNE for spectral clustering tools (see §4.2).

1.5. Organization.

The organization of this paper is as follows: we first illustrate our main points with some numerical examples in §2. Section 3 establishes notation and gives a formal statement of our main result, Section 4 derives a connection between t-SNE and spectral clustering, Section 5 discusses a certain type of discrete dynamical system on a finite number of points and establishes a crucial estimate, and Section 6 gives a proof of the main result.

2. Numerical examples.

This section discusses a number of numerical examples to illustrate our main points.

2.1. Lines and Swiss roll.

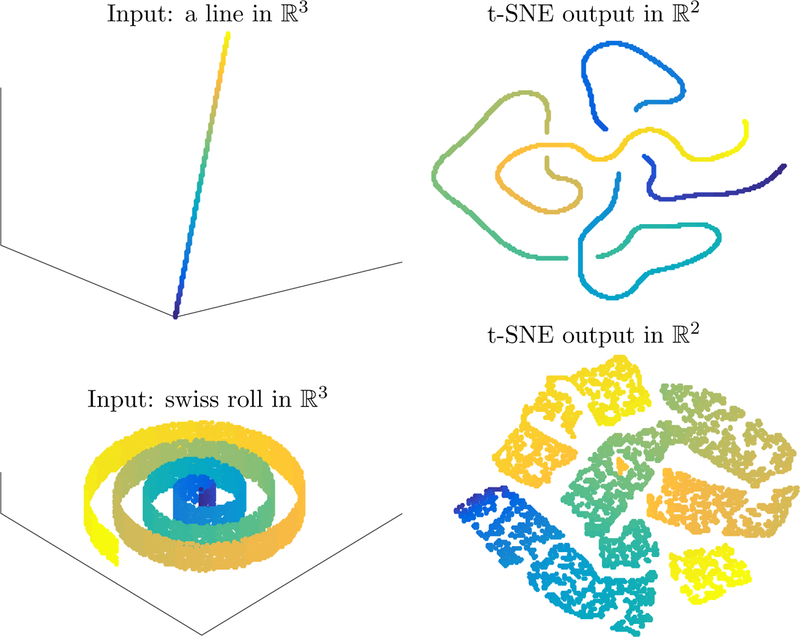

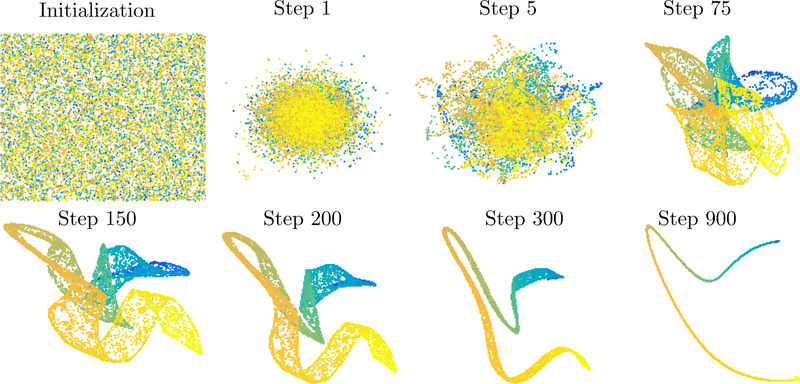

It is well known that t-SNE does not successfully embed the swiss roll; however, the random initialization causes difficulties even on simpler data: Figure 2.1 shows the t-SNE embedding (using the Matlab implementation of van der Maaten (2014) with default parameters) of a simple line.

Figure 2.1:

Classical t-SNE embeddings of a line and the swiss roll.

The random initialization causes, after the initial contraction in the early exaggeration phase, a topological interlocking that cannot be resolved later. The example is even more striking for the swiss roll, where the random initialization leads to ‘knots’ that t-SNE cannot untie. In stark contrast, the parameter selection

$$ \alpha \sim \frac{n}{10}, \qquad h \sim 1 $$
allows for a more effective early exaggeration phase that clearly recovers the line from random initial data and even contracts the swiss roll to a correctly ordered line (that would then expand in the second phase of the algorithm).

The successful embedding of these examples when α and h are chosen optimally is consistent with our claim that in this regime, the early exaggeration phase of t-SNE acts like a spectral method, many of which also correctly embed these manifolds.

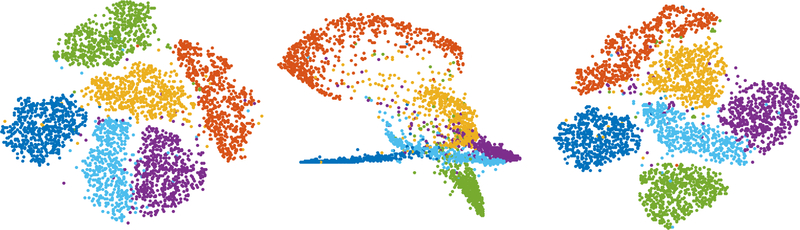

2.2. Real-life data.

Finally, we show the impact of the parameter selection α ~ n/10, h ~ 1 in a real-life example. Figure 2.4 shows classical out-of-the-box t-SNE (left) on 10000 randomly subsampled handwritten digits (0–5) from the MNIST data set, the outcome of the early exaggeration phase of t-SNE with parameters α ~ n/10, h ~ 1 (middle), and the final outcome after the second phase of t-SNE has been initialized with the data shown in the middle (right). We see that the early exaggeration phase already does essentially all of the clustering, and the second phase rearranges the clusters. This behavior is typical: the clustering and global organization occur in the early exaggeration phase, when the points can move most easily, and during the remaining iterations the clusters often expand to fill a larger area, revealing more of the within-cluster variability that is often of interest. As evident in this figure, the second phase often improves the separation between clusters: when α = 1 the repulsive forces are no longer negligible, and the clusters repel one another.

Figure 2.4:

A real-life example: classical t-SNE (left), t-SNE with our proposed parameter selection both after the early exaggeration phase (middle) and final output (right).

We believe this example again hints at one of the fundamental questions that arises from the work in this paper: is the initial clustering done by standard t-SNE comparable to the initial clustering with the new parameter selection? If so, then the fact that the new parameter selection emulates a spectral clustering method (see §4) certainly suggests the option of initializing with other clustering methods as opposed to random initialization. Moreover, it would hint at the danger of using a spectral clustering method and t-SNE as a dual verification of clustering.

3. t-SNE: Notation and the Main result.

This section starts with a complete discussion of t-distributed Stochastic Neighbor Embedding, partly for the convenience of the reader and partly to establish terminology and notation, and then describes the main result.

3.1. t-SNE.

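We denote the d-dimensional input dataset by $\mathcal{X} = \{x_1, \dots, x_n\} \subset \mathbb{R}^d$; t-SNE computes an s-dimensional embedding of the points in $\mathcal{X}$, denoted by $\mathcal{Y} = \{y_1, \dots, y_n\} \subset \mathbb{R}^s$, where s ≪ d and most commonly s = 2 or 3. The joint probability pij measuring the similarity between xi and xj is computed as

$$ p_{ij} = \frac{p_{j|i} + p_{i|j}}{2n}, \qquad p_{j|i} = \frac{\exp(-\|x_i - x_j\|^2 / 2\sigma_i^2)}{\sum_{k \neq i} \exp(-\|x_i - x_k\|^2 / 2\sigma_i^2)}, \qquad p_{ii} = 0. $$
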
The bandwidth σi of the Gaussian kernel is often chosen such that the perplexity of Pi matches a user-defined value, where Pi is the conditional distribution across all data points given xi. We will never deal with these issues: we will assume that the pij are given and that they correspond to a well-clustered set (in a precise sense defined below). In particular, we will not assume that they have been obtained using a Gaussian kernel. The similarity between points yi and yj in the low dimensional embedding is defined as

$$ q_{ij} = \frac{(1 + \|y_i - y_j\|^2)^{-1}}{\sum_{k \neq l} (1 + \|y_k - y_l\|^2)^{-1}}. $$

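The input affinities above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the bandwidths σi are taken as given (the perplexity calibration is deliberately omitted), the computation is dense and O(n²), and the helper name `input_affinities` is hypothetical.

```python
import numpy as np


def input_affinities(X, sigma):
    """Symmetrized t-SNE input affinities p_ij for data X (n x d).

    sigma holds one Gaussian bandwidth per point; choosing sigma from a
    target perplexity is assumed to have happened elsewhere.
    """
    n = X.shape[0]
    sq_dist = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    logits = -sq_dist / (2.0 * sigma[:, None] ** 2)
    np.fill_diagonal(logits, -np.inf)                  # exclude k = i from the sums
    logits -= logits.max(axis=1, keepdims=True)        # stabilize the exponentials
    cond = np.exp(logits)
    cond /= cond.sum(axis=1, keepdims=True)            # row i holds p_{j|i}
    return (cond + cond.T) / (2.0 * n)                 # p_ij = (p_{j|i} + p_{i|j}) / (2n)
```

The result is symmetric, has zero diagonal and sums to 1, which are the only properties of the pij used by the gradient formulas that follow.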
t-SNE finds the points {y1,…,yn} which minimize the Kullback-Leibler divergence between the joint distribution P of points in the input space and the joint distribution Q of points in the embedding space:

$$ C = \mathrm{KL}(P \,\|\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}. $$

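The points are initialized randomly, and the cost function is minimized using gradient descent. The gradient is derived in Appendix A of van der Maaten and Hinton (2008):

$$ \frac{\partial C}{\partial y_i} = 4 \sum_{j \neq i} (p_{ij} - q_{ij})\, q_{ij} Z \,(y_i - y_j), $$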
where Z is a global normalization constant

$$ Z = \sum_{k \neq l} (1 + \|y_k - y_l\|^2)^{-1}. $$

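A direct NumPy transcription of the cost and its gradient may help pin down the conventions (sum over ordered pairs i ≠ j, the factor 4, and the identity qij Z = (1 + ‖yi − yj‖²)⁻¹). It is a sketch for small n, assuming a dense symmetric P with zero diagonal that sums to 1; `kl_and_gradient` is a hypothetical helper name, not part of any t-SNE library.

```python
import numpy as np


def kl_and_gradient(P, Y):
    """Return KL(P || Q) and dC/dY for a symmetric P (zero diagonal, entries
    summing to 1) and an n x s embedding Y; dense O(n^2) computation."""
    diff = Y[:, None, :] - Y[None, :, :]               # diff[i, j] = y_i - y_j
    W = 1.0 / (1.0 + np.sum(diff ** 2, axis=-1))       # W_ij = (1 + |y_i - y_j|^2)^-1 = q_ij Z
    np.fill_diagonal(W, 0.0)
    Z = W.sum()                                        # Z = sum_{k != l} (1 + |y_k - y_l|^2)^-1
    Q = W / Z
    mask = P > 0                                       # terms with p_ij = 0 contribute nothing
    kl = np.sum(P[mask] * np.log(P[mask] / Q[mask]))
    M = (P - Q) * W                                    # (p_ij - q_ij) q_ij Z
    grad = 4.0 * np.einsum("ij,ijk->ik", M, diff)      # 4 sum_j M_ij (y_i - y_j)
    return kl, grad
```

A central finite-difference check of `grad` against `kl` is a cheap way to confirm that the factor 4 and the signs are consistent.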
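As in van der Maaten (2014), we split the gradient into two parts:

$$ \frac{\partial C}{\partial y_i} = 4\,(F_{\mathrm{attr}} + F_{\mathrm{rep}}), \qquad F_{\mathrm{attr}} = \sum_{j \neq i} p_{ij} q_{ij} Z \,(y_i - y_j), \qquad F_{\mathrm{rep}} = -\sum_{j \neq i} q_{ij}^2 Z \,(y_i - y_j). $$
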
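Since we are interested in the minimization of the functional, we naturally step in the direction of the negative gradient:

$$ y_i(t+1) = y_i(t) + 4h \sum_{j \neq i} p_{ij} q_{ij} Z \,(y_j(t) - y_i(t)) - 4h \sum_{j \neq i} q_{ij}^2 Z \,(y_j(t) - y_i(t)). $$
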
Each of these two terms has a very natural interpretation: the first term is usually called the attractive term, while the second term describes a repulsive force (see, for example, van der Maaten and Hinton (2008) for this terminology). The reason is simple: the first term moves yi towards a weighted average of the other yj. The weights are large if the underlying points are close in the input space and small if they are far apart. The second term has the opposite sign and thus exerts the opposite effect; however, the degree of repulsion depends solely on the closeness of points in the embedding space. Put differently, the first term attracts points that are meant to be together based on distances in the original space, and the second term pushes points apart when they get too close in the embedding space, regardless of whether they are meant to be close to each other or not. One would hope that the attractive forces win out over the repulsion for points that are meant to be close to each other and lose out for points that are meant to be far apart; this is one of the common interpretations of the underlying mechanism. Early exaggeration introduces the coefficient α > 1 and corresponds to the gradient descent method

$$ y_i(t+1) = y_i(t) + 4\alpha h \sum_{j \neq i} p_{ij} q_{ij} Z \,(y_j(t) - y_i(t)) - 4h \sum_{j \neq i} q_{ij}^2 Z \,(y_j(t) - y_i(t)) $$
and a small step-size h > 0 leads to the expression

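The exaggerated iteration can be sketched as plain gradient descent, without the momentum and adaptive gains of the standard implementation. This is an illustrative sketch under assumptions: P is dense and symmetric, the factor 4 from the gradient is kept explicit, and `early_exaggeration` is a hypothetical name.

```python
import numpy as np


def early_exaggeration(P, Y0, alpha, h, n_iter):
    """Plain gradient descent on the exaggerated t-SNE objective (no momentum, no gains):
    y_i <- y_i + 4*alpha*h*sum_j p_ij q_ij Z (y_j - y_i) - 4*h*sum_j q_ij^2 Z (y_j - y_i).
    """
    Y = np.array(Y0, dtype=float)
    for _ in range(n_iter):
        diff = Y[:, None, :] - Y[None, :, :]               # diff[i, j] = y_i - y_j
        W = 1.0 / (1.0 + np.sum(diff ** 2, axis=-1))       # W_ij = q_ij Z
        np.fill_diagonal(W, 0.0)
        Q = W / W.sum()
        M = alpha * P * W - Q * W                          # attractive minus repulsive weights
        # 4h * sum_j M_ij (y_j - y_i) is the step along the negative gradient
        Y = Y + 4.0 * h * (M @ Y - M.sum(axis=1)[:, None] * Y)
    return Y
```

With two perfectly separated clusters, α = n/10, h = 1 and an initialization in [−0.01, 0.01]², each cluster in this sketch contracts towards a single point while the repulsion slowly pushes the two clusters apart.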
3.2. Main result.

This section gives our main result. We emphasize that the method of proof is rather flexible and that it is not difficult to obtain variations of the result under slightly different assumptions. Our result is formally stated for a set of points {x1,…,xn} and a set of mutual affinities pij. We will not assume that the pij are obtained using the standard t-SNE normalizations but work in full generality using a set of three assumptions. We note, and explain below, that for standard t-SNE the second assumption holds until the number of points exceeds, roughly, n ~ 20000, and the third assumption holds by design. The first assumption encapsulates our notion of clustered data.

1. $\mathcal{X}$ is clustered.

We proceed by giving a very versatile definition of what it means to be a cluster; it is also applicable to things that clearly are not clusters, but in those cases the error bound in the Theorem will not convey any information. Formally, we assume that there exists $k \in \mathbb{N}$ (the number of clusters) and a map π: {1,…,n} → {1,2,…,k} assigning each point to one of the k clusters such that the following property holds: if π(i) = π(j), then

The constant 10 is fairly arbitrary in the sense that any absolute constant will do (though a larger constant slows down the speed of convergence); we chose 10 to avoid an overly complicated exposition. Observe that |π−1(π(i))| is merely the size of the cluster in which i and j lie. For fixed 1 ≤ i ≤ n, we will furthermore abbreviate summations over clusters as

The condition on pij above implies that

This assumption ensures that elements are at least somewhat connected within their cluster; that is, we lower bound the affinity of each point to the other points in its cluster. We emphasize that it is a rather weak assumption since we do not simultaneously demand that the sum over all other elements be small. Indeed, one of the leading error terms in the Theorem is

so if the data is strongly clustered and the pij have been obtained in one of the usual ways, then the optimal choice of π will be exactly the one that minimizes this quantity uniformly over all i and will then correspond to the original clustering; however, any other map π: {1,…,n} → {1,2,…,k} is equally admissible but is likely to result in a large (trivial, uninformative) error bound. In particular, our assumptions on what it means to be clustered are so weak that a given data set does not necessarily have a unique decomposition into different clusters, and this is mirrored in the main result that does not imply cluster separation (we refer to Arora et al. (2018) for such a statement under different assumptions).
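The quantity discussed above, the affinity of each point to points outside its assigned cluster, is easy to evaluate for a candidate map π. The sketch below is only an illustration: it computes within-cluster and cross-cluster affinity sums per point, with π given as a hypothetical integer array `labels`, and it does not reproduce the constants of the Theorem.

```python
import numpy as np


def cluster_affinity_split(P, labels):
    """Per point i: (sum of p_ij over j != i with the same label,
    sum of p_ij over j with a different label)."""
    labels = np.asarray(labels)
    same = labels[:, None] == labels[None, :]
    np.fill_diagonal(same, False)                      # exclude j = i
    within = np.where(same, P, 0.0).sum(axis=1)
    other = labels[:, None] != labels[None, :]
    across = np.where(other, P, 0.0).sum(axis=1)
    return within, across
```

Comparing `across.max()` for two candidate labelings mirrors the point made above: the labeling matching the true clusters makes the cross-cluster affinity small, while an arbitrary labeling is still admissible but yields a large, uninformative bound.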

2. Parameter choice.

Our second assumption ensures that the effective step size of the gradient descent, a quantity determined by the step size h and the exaggeration parameter α through the product αh, is within a reasonable regime. An assumption along these lines is clearly necessary for general gradient descent techniques to avoid overshooting (i.e. stepping past a local minimum and moving too far in the other direction). More precisely, we assume that α and h are chosen such that, for some 1 ≤ i ≤ n,

The main result is applicable to a single cluster (i.e. it is possible to guarantee that a single cluster converges even if the rest does not), and it can be applied to exactly those clusters satisfying this inequality. It is easy to see, both in the proof and in numerical experiments, that the upper bound is a necessary condition for the early exaggeration phase of t-SNE to work (more precisely, the upper bound 1 is necessary, but we need a little bit of leeway in another part of the argument). We observe that condition (1) implies that α ~ n/10 and h ~ 1 is admissible; however, other parameter choices (e.g. α ~ 10n, h ~ 1/100) are equally valid. As the number of points grows, the lower bound is violated: our main result can easily be extended to cover that case, but the factor κ with which exponential convergence occurs then approaches 1, and convergence, while technically exponential, becomes slow. In particular, an analysis of how this condition acts in the proof motivates an accurate parameter selection rule.

Guideline.

The best convergence rate for the cluster containing yi is attained when

is the best selection to ensure that all clusters converge.

We also observe that in the setting where the parameters are not chosen optimally (e.g. convergence rate κ ~ 1), momentum can be useful to accelerate convergence. However, for t-SNE there are currently no rigorous results in that direction.

3. Localized initialization.

The initialization satisfies $\mathcal{Y} \subset [-0.01, 0.01]^2$. This assumption is not crucial and could easily be modified at the cost of changing some other constants. The proof suggests that initializing at smaller scales might be beneficial on the level of constants.

We can now state our main result.

Theorem 3.1 (Main Result).

Under Assumptions (1)–(3), the diameter of the embedded cluster {yj: 1 ≤ j ≤ n ∧ π(j) = π(i)} decays exponentially (at a universal rate) until it satisfies, for some universal c > 0,

Remarks.

The Theorem can be applied to a single cluster; in particular, some clusters may contract to tiny balls while others do not contract at all.

- Since αh ~ n, we see that the bound is only nontrivial if, for some small constant c2 > 0,

Otherwise, it merely tells us that the elements of the cluster are contained in a ball of radius ~ 1 (as are all the other points): this is not an artifact of the proof but clearly necessary, since if the affinity to other clusters is large, the data is not well-clustered. Generally, for well-clustered data, we would expect that sum to be very close to 0, which would yield a leading error term of ch/n. The constant c seems to be roughly of order c ~ 10 for well-clustered data and slightly larger for data with worse clustering properties (in particular, for the classical t-SNE parameter selection, it would slowly increase with the number of points n). We believe this estimate to be too conservative and expect the true value to be of a smaller order of magnitude; this question will be pursued in future work.

The proof of the main result is rather versatile and should easily adapt to a variety of other settings that might be of interest. This versatility is partly due to the connection of the argument to rather fundamental ideas in partial differential equations; indeed, the argument may be interpreted as a maximum principle for a discrete parabolic operator acting on vector-valued data (i.e. points in space). This interpretation is what led us to establish the connection to spectral clustering, which we now discuss.

4. A Connection to Spectral Clustering.

The purpose of this section is to note a connection to spectral clustering (Laplacian eigenmaps, see Belkin and Niyogi (2003)); the fact that the main terms coincide has previously been noted by Carreira-Perpinan (2010).

4.1. Approximating spectral clustering.

We give a quantitative description: it is possible to take the limit α → ∞, h → 0 (scaled so that α · h = const), and in that limit one obtains a simple spectral clustering method. We re-introduce notation and assume again that $\mathcal{X} = \{x_1, \dots, x_n\} \subset \mathbb{R}^d$ is given. We assume pij is some collection of affinities scaled in such a way that for xi, xj in the same cluster π(i) = π(j)

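We observe that this scaling is slightly different from the one above: it is obtained by absorbing the αh ~ n term (together with the constant 4 from the gradient) into the affinities. At the same time, h → 0 implies that the repulsion term containing the qij does not exert any force. Moreover, as long as all points remain in a small ball, $q_{ij} Z = (1 + \|y_i - y_j\|^2)^{-1} \approx 1$. This implies that, in the limit, the remaining term in the gradient descent method is given by

$$ y_i(t+1) = y_i(t) + \sum_{j \neq i} p_{ij} \,(y_j(t) - y_i(t)). $$
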
This, however, can be interpreted as a Markov chain with suitably chosen transition probabilities. It may be unusual, at first, to see this equation since the yi(t) are vectors in $\mathbb{R}^s$; however, the equations for different coordinates separate, which allows for a reduction to the familiar form. All the canonical results from spectral clustering apply: the asymptotic behavior is governed by the largest non-trivial eigenvalue(s), which are either 1 (in the case of perfectly separated clusters) or very close to 1, and the convergence speed depends on the spectral gap. Note, in particular, that for generic data the eigenvector associated with the largest eigenvalue is constant; however, since the algorithm is only run for a small number of steps, we essentially recover the dominant eigenvectors, and the second phase of t-SNE then re-expands the clusters.
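The spectral picture can be checked on perfectly separated clusters. In this sketch the rescaled affinities are assumed symmetric with row sums at most 1, and `transition_matrix` and `block_affinities` are hypothetical helpers; the transition matrix has rows summing to 1 and exactly one unit eigenvalue per cluster.

```python
import numpy as np


def transition_matrix(P):
    """A = I + P - diag(P 1), i.e. A_ij = p_ij (i != j) and A_ii = 1 - sum_{j != i} p_ij."""
    P = np.array(P, dtype=float)
    np.fill_diagonal(P, 0.0)
    return np.eye(P.shape[0]) + P - np.diag(P.sum(axis=1))


def block_affinities(sizes, row_sum=0.5):
    """Hypothetical perfectly separated clusters: equal weights inside each block, zero across."""
    n = sum(sizes)
    P = np.zeros((n, n))
    start = 0
    for m in sizes:
        P[start:start + m, start:start + m] = row_sum / (m - 1)
        start += m
    np.fill_diagonal(P, 0.0)
    return P


A = transition_matrix(block_affinities([3, 4, 5]))
eigenvalues = np.linalg.eigvalsh(A)                    # A is symmetric because P is symmetric
n_unit = int(np.sum(np.isclose(eigenvalues, 1.0)))
print(n_unit)                                          # 3: one unit eigenvalue per cluster
```

Adding small cross-cluster affinities would turn all but one of these unit eigenvalues into eigenvalues slightly below 1, which is the "very close to 1" case described above.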

4.2. Visualizing spectral clustering.

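The connection also allows us to go in the other direction and discuss a particular visualization technique for spectral methods that shows emerging clusters as points in $\mathbb{R}^2$ (or higher dimensions, which is not essential here). The transition matrix of the Markov chain is given by

$$ A_{ij} = \begin{cases} p_{ij} & \text{if } i \neq j, \\ 1 - \sum_{k \neq i} p_{ik} & \text{if } i = j, \end{cases} \qquad \text{so that } y(t+1) = A\, y(t). $$
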
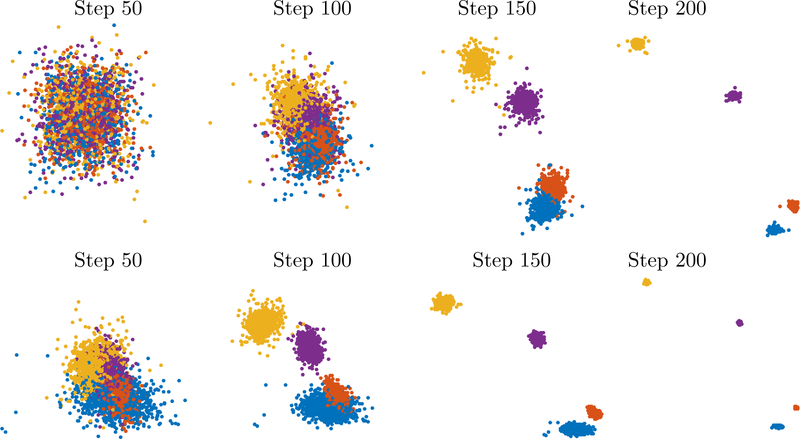

The large-time behavior of y(t) = A^t y(0) is essentially determined by the spectrum of A close to 1. Moreover, in the case of perfect clustering with pij = 0 whenever xi and xj are in different clusters, there are exactly k eigenvalues equal to 1, and y(t) converges to a limit that is constant on each cluster. Let us now assume that the goal is visualization in $\mathbb{R}^2$. We let $y_1(0), \dots, y_n(0) \in \mathbb{R}^2$ be points that we assume are i.i.d. random variables drawn from, say, the uniform distribution on $[-0.01, 0.01]^2$. We propose to visualize the point set after t iterations as follows: collect these n initial vectors in an n × 2 matrix y and interpret the n rows of A^t y as coordinates in $\mathbb{R}^2$. This creates t-SNE-style visualizations for spectral methods (see Fig. 4.1 and Fig. 4.2, lower rows).
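The visualization procedure just described can be sketched as below. The helper name `spectral_visualization`, the fixed random seed, and the use of repeated matrix products (rather than an eigendecomposition) are our own illustrative choices.

```python
import numpy as np


def spectral_visualization(P, t, seed=0):
    """Rows of A^t y(0), where y(0) is an n x 2 matrix of i.i.d. uniform points in [-0.01, 0.01]^2."""
    P = np.array(P, dtype=float)
    np.fill_diagonal(P, 0.0)
    n = P.shape[0]
    A = np.eye(n) + P - np.diag(P.sum(axis=1))         # transition matrix of the Markov chain
    rng = np.random.default_rng(seed)
    Y = rng.uniform(-0.01, 0.01, size=(n, 2))          # initial positions y_1(0), ..., y_n(0)
    for _ in range(t):
        Y = A @ Y                                      # one step of y(t+1) = A y(t)
    return Y                                           # row i: position of point i after t steps
```

For perfectly separated clusters the rows belonging to one cluster collapse onto a single point, and different clusters end up at (generically) different points, which is what makes the plot readable as a clustering.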

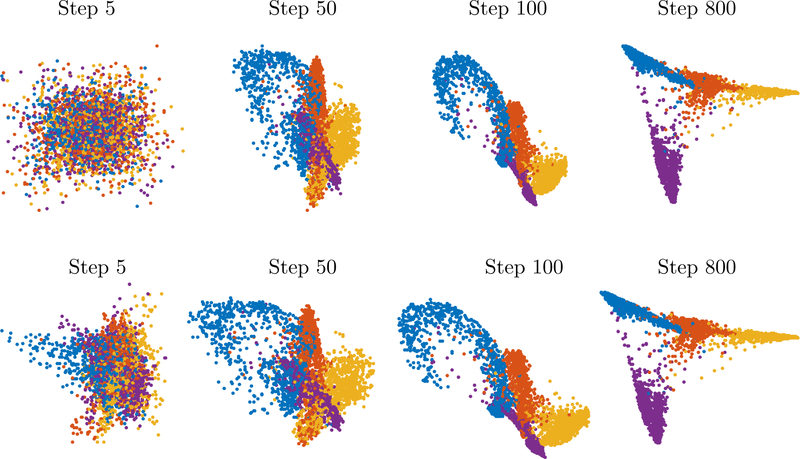

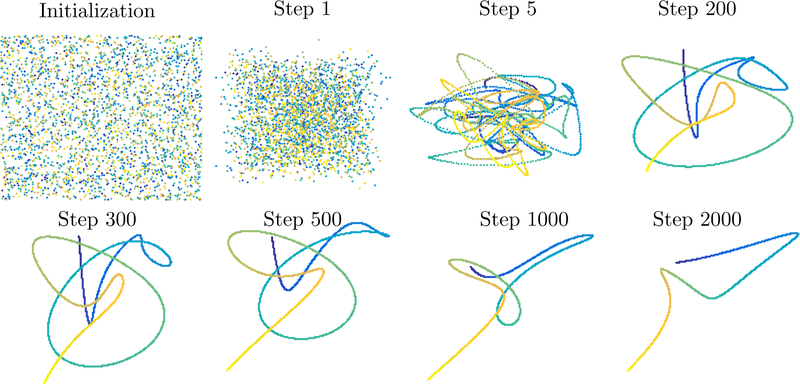

Figure 4.1:

Early exaggeration via t-SNE with α = n/10, h = 1 (top) and visualization of iterations of the spectral method (bottom) on same initialization.

Figure 4.2:

Early exaggeration via t-SNE with α ~ n/3, h = 1 (top, parameter selection via guideline) and iterations of the spectral method (bottom).

Examples.

An example of this method is shown in Figure 4.1. The example consists of 40000 points sampled from four very narrow Gaussians, so the data are highly clustered. We used a perplexity of 30 to create the pij and used α = n/10, h = 1 in the implementation of t-SNE. The second row in Figure 4.1 shows the projection onto the eigenvectors of A corresponding to the 50 largest eigenvalues. The t-SNE computation took roughly 7 minutes vs. 1 minute for the spectral decomposition; note, however, that once the spectral decomposition has been computed, the result after any number of iterations can be obtained at a cost that does not grow with the number of iterations (one only has to raise the eigenvalues to the corresponding power). Another example, run on 4 digits from MNIST, is given in Figure 4.2; again, both methods coincide. This demonstrates that our derivation of the approximating spectral method was accurate. At the same time, it prompts us to restate the fundamental question.
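The remark that, once the spectral decomposition is available, iterating only requires raising the eigenvalues to a power can be illustrated as follows. This sketch assumes P (and hence A) is symmetric so that `numpy.linalg.eigh` applies; `iterate_via_eigh` is a hypothetical name, and a non-symmetric A would need a general eigendecomposition instead.

```python
import numpy as np


def iterate_via_eigh(A, Y0, t):
    """A^t Y0 for a symmetric A, using A = V diag(lam) V^T so that A^t = V diag(lam**t) V^T."""
    lam, V = np.linalg.eigh(A)
    return V @ (lam[:, None] ** t * (V.T @ Y0))
```

After the one-time eigendecomposition, each further choice of t costs only two products with V, independent of t, whereas repeated multiplication by A costs t matrix products.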

Open problem.

Is the clustering behavior of the early exaggeration phase of t-SNE with α = 12, h = 200 (and possibly optimization techniques such as momentum) essentially qualitatively equivalent to the behavior of t-SNE with our parameter choice α = n/10,h = 1?

If this were indeed the case, then the early exaggeration phase of t-SNE would be simply a spectral clustering method in disguise; if not, then it would be very valuable to understand under which circumstances its performance is superior to spectral clustering and whether its underlying mechanisms could be used to boost spectral methods. We reiterate that we believe this to be a very interesting problem.

5. Ingredients for the Proof: Discretized Dynamical Systems.

This section introduces a type of discrete dynamical system on sets of points in $\mathbb{R}^s$ and describes its asymptotic behavior. The result could potentially be interpreted as an analysis of a spectral method that is robust to small error terms, but the argument is simple enough for us to keep it entirely self-contained. Our original guiding picture was that of the maximum principle in the theory of parabolic partial differential equations.

5.1. A discrete dynamical system.

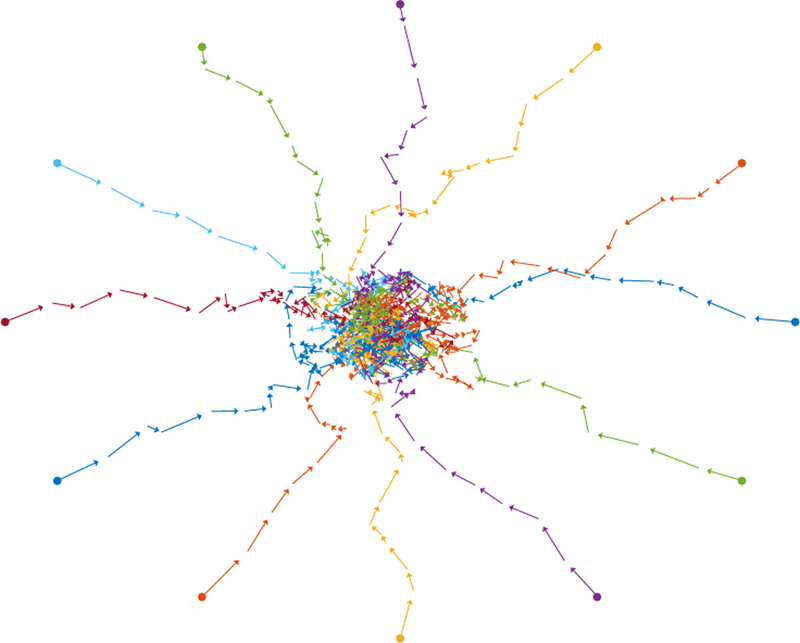

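Let $z_1(0), z_2(0), \dots, z_n(0) \in \mathbb{R}^s$ be given. We use them as initial values for a time-discrete dynamical system that is defined via

$$ z_i(t+1) = z_i(t) + \sum_{j \neq i} a_{i,j,t} \,(z_j(t) - z_i(t)) + \varepsilon_i(t). $$

At this stage, if the points are in general position and n ≥ s, basic linear algebra implies that this system can undergo an almost arbitrary evolution as long as one is free to choose the coefficients ai,j,t.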

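We will henceforth assume that these parameters satisfy the following three conditions.

- There is a uniform lower bound on the coefficients: for all t ≥ 0 and all i ≠ j,
  $$ a_{i,j,t} \geq \delta > 0. $$

- There is a uniform upper bound on the coefficients: for all t ≥ 0 and all i,
  $$ \sum_{j \neq i} a_{i,j,t} \leq 1. $$

- There is a uniform upper bound on the error term: for all t ≥ 0 and all i,
  $$ \|\varepsilon_i(t)\| \leq \varepsilon. $$
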
A typical example of such a dynamical system is shown in the accompanying figure: we start with twelve points on the unit circle and then iterate the system for some random choices of ai,j,t and random εi(t). The points first move towards each other until they are close and the error term starts being on the same scale as the forces of attraction. The points then move around randomly (all the while staying close to each other). We will make this intuitive picture precise below. The main result of this section is that all the points in this dynamical system are eventually contained in a ball whose size only depends on n, δ and ε. We start by showing that the convex hull of the points is stable. We use B(0, ε) to denote the ball of radius ε centered at the origin, A + B = {a + b: a ∈ A ∧ b ∈ B}, and conv A for the convex hull of A.
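A simulation in the spirit of the twelve-point example can be sketched as follows. The distributions are illustrative assumptions, not the choices behind the figure: ai,j,t is drawn uniformly from [δ, 1/(n − 1)], which enforces both ai,j,t ≥ δ and ∑_{j≠i} ai,j,t ≤ 1, and εi(t) has a random direction and norm at most ε. The helper names `simulate` and `diameter` are hypothetical.

```python
import numpy as np


def diameter(Z):
    """Largest pairwise Euclidean distance among the rows of Z."""
    return np.max(np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=-1))


def simulate(n=12, delta=0.02, eps=1e-3, steps=200, seed=0):
    """Iterate z_i(t+1) = z_i(t) + sum_j a_ijt (z_j(t) - z_i(t)) + eps_i(t) in R^2."""
    assert delta <= 1.0 / (n - 1)                      # needed so that sum_j a_ijt <= 1
    rng = np.random.default_rng(seed)
    angles = 2.0 * np.pi * np.arange(n) / n
    Z = np.column_stack([np.cos(angles), np.sin(angles)])   # n points on the unit circle
    diams = [diameter(Z)]
    for _ in range(steps):
        a = rng.uniform(delta, 1.0 / (n - 1), size=(n, n))  # a_ijt >= delta
        np.fill_diagonal(a, 0.0)
        E = rng.normal(size=(n, 2))                          # random directions ...
        E *= eps * rng.uniform(size=(n, 1)) / np.linalg.norm(E, axis=1, keepdims=True)
        Z = Z + a @ Z - a.sum(axis=1)[:, None] * Z + E       # ... with norms at most eps
        diams.append(diameter(Z))
    return Z, np.array(diams)
```

In this sketch the recorded diameters should fall from 2 to a level set by ε, after which the points keep moving randomly while staying close together.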

Lemma 5.1 (Stability of the convex hull).

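With the assumptions above, we have

$$ \operatorname{conv}\{z_1(t+1), \dots, z_n(t+1)\} \subset \operatorname{conv}\{z_1(t), \dots, z_n(t)\} + B(0, \varepsilon). $$
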
Proof.

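This argument is simple. We note that

$$ z_i(t+1) - \varepsilon_i(t) = \Big(1 - \sum_{j \neq i} a_{i,j,t}\Big) z_i(t) + \sum_{j \neq i} a_{i,j,t}\, z_j(t). $$

By assumption, the coefficients on the right-hand side are nonnegative and sum to 1,

$$ a_{i,j,t} \geq 0 \qquad \text{and} \qquad 1 - \sum_{j \neq i} a_{i,j,t} \geq 0, $$
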
and this implies zi(t + 1) − εi(t) ∈ conv{z1(t),z2(t),…,zn(t)}; since ∥εi(t)∥ ≤ ε, the claim follows. ∎

Lemma 5.2 (Contraction inequality).

With the notation above, if the diameter is large

then

One particularly important consequence is the following: the diameter shrinks at an exponential rate (1 − nδ/20)^t to a size of ~ ε/(nδ). Of course, this convergence is particularly fast whenever nδ ~ 1. It is easy to see, for example by taking n = 2 points on the real line, that this is the optimal scale for the result to hold.
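The optimality claim can be checked with a short worked example (our own illustration, not taken from the argument below): two points on the real line with the smallest admissible coefficients and errors that push them apart.

$$
\begin{aligned}
&z_1(t) < z_2(t), \qquad a_{1,2,t} = a_{2,1,t} = \delta \le \tfrac12, \qquad \varepsilon_1(t) = -\varepsilon, \qquad \varepsilon_2(t) = +\varepsilon, \\
&d(t) := z_2(t) - z_1(t) \quad \Longrightarrow \quad d(t+1) = (1 - 2\delta)\, d(t) + 2\varepsilon, \\
&d(t) - \frac{\varepsilon}{\delta} = (1 - 2\delta)^t \Big( d(0) - \frac{\varepsilon}{\delta} \Big).
\end{aligned}
$$

All three assumptions hold, the distance contracts at the rate 1 − nδ, and d(t) converges to ε/δ = 2ε/(nδ); if d(0) ≥ ε/δ it never drops below that value. Hence no general bound of smaller order than ε/(nδ) is possible.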

Proof.

The method of proof is as follows: we project the set of points onto an arbitrary line (say, the x-axis, by taking only the first coordinate of each point) and show that the one-dimensional projections contract exponentially quickly. This then implies the desired statement. Let $\pi_x: \mathbb{R}^s \to \mathbb{R}$ be such a projection. We abbreviate the diameter of the projection as

$$ \operatorname{diam} = \max_{1 \le i \le n} \pi_x z_i(t) - \min_{1 \le i \le n} \pi_x z_i(t). $$

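We may assume w.l.o.g. (after a translation, which does not affect the dynamical system or any diameter) that $\{\pi_x z_1(t), \pi_x z_2(t), \dots, \pi_x z_n(t)\} \subset [0, \operatorname{diam}]$. We then subdivide the interval into two regions
$$I_1 = \Big[0, \tfrac{\operatorname{diam}}{2}\Big] \qquad \text{and} \qquad I_2 = \Big(\tfrac{\operatorname{diam}}{2}, \operatorname{diam}\Big]$$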

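and denote the number of points in each interval by $i_1, i_2$. Clearly, $i_1 + i_2 = n$, and therefore either $i_1 \geq n/2$ or $i_2 \geq n/2$. We assume w.l.o.g. that the first case holds. Projections are linear, thus
$$\pi_x z_i(t+1) = \pi_x z_i(t) + \sum_{j \neq i} a_{i,j,t} \big(\pi_x z_j(t) - \pi_x z_i(t)\big) + \pi_x \varepsilon_i(t).$$

We abbreviate
$$A_i = \sum_{j \neq i} a_{i,j,t} \in [0, 1]$$

and write
$$\pi_x z_i(t+1) = (1 - A_i)\, \pi_x z_i(t) + \sum_{j \neq i} a_{i,j,t}\, \pi_x z_j(t) + \pi_x \varepsilon_i(t).$$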


Moreover, using the lower bound ai,j,t ≥ δ

Then, however,

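which shows that $\pi_x z_i(t+1) \in [-\varepsilon, \operatorname{diam}(1 - n\delta/4) + \varepsilon]$. This implies
$$\operatorname{diam}\{\pi_x z_1(t+1), \dots, \pi_x z_n(t+1)\} \leq \Big(1 - \frac{n\delta}{4}\Big) \operatorname{diam} + 2\varepsilon.$$

If the diameter is indeed disproportionately large,
$$\operatorname{diam} \geq \frac{10\,\varepsilon}{n\delta},$$

then this can be rearranged as
$$2\varepsilon \leq \frac{n\delta}{5} \operatorname{diam},$$

and therefore
$$\operatorname{diam}\{\pi_x z_1(t+1), \dots, \pi_x z_n(t+1)\} \leq \Big(1 - \frac{n\delta}{4} + \frac{n\delta}{5}\Big) \operatorname{diam} = \Big(1 - \frac{n\delta}{20}\Big) \operatorname{diam}.$$

Since this is true in every projection, and the diameter of a finite point set is the largest diameter among its projections onto lines, it also holds for the diameter of the original set. ∎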
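The contraction inequality can also be probed numerically. The following Monte Carlo sketch assumes planar points, coefficients with $a_{i,j,t} \geq \delta$ and row sums at most 1/2, and errors of Euclidean norm at most $\varepsilon$; the sampling scheme and the name `check_contraction` are our own. It counts the trials in which the diameter is at least $10\varepsilon/(n\delta)$ but does not shrink by the factor $1 - n\delta/20$. A random search like this can only fail to find counterexamples; it does not replace the proof.

```python
import numpy as np

def diameter(z):
    """Largest pairwise Euclidean distance in the point set z (shape n x 2)."""
    diff = z[:, None, :] - z[None, :, :]
    return float(np.sqrt((diff ** 2).sum(axis=-1)).max())

def check_contraction(n=8, delta=0.03, eps=1e-3, trials=2000, seed=0):
    """Monte Carlo test of the contraction inequality for one step.
    Returns (trials satisfying the hypothesis, trials violating the conclusion)."""
    rng = np.random.default_rng(seed)
    threshold = 10 * eps / (n * delta)     # hypothesis: diam >= 10 eps / (n delta)
    rate = 1 - n * delta / 20              # conclusion: diam shrinks by this factor
    slack = 0.5 - (n - 1) * delta          # keeps every row sum of a at most 1/2
    assert slack >= 0, "need (n - 1) * delta <= 1/2"
    checked = failures = 0
    for _ in range(trials):
        z = rng.uniform(0.5, 5.0) * threshold * rng.normal(size=(n, 2))
        extra = rng.random((n, n))
        np.fill_diagonal(extra, 0.0)
        extra *= slack * rng.random((n, 1)) / extra.sum(axis=1, keepdims=True)
        a = delta + extra                  # off-diagonal entries are >= delta
        np.fill_diagonal(a, 0.0)
        e = rng.normal(size=(n, 2))
        e *= eps * rng.random((n, 1)) / np.linalg.norm(e, axis=1, keepdims=True)
        z_next = z + a @ z - a.sum(axis=1, keepdims=True) * z + e
        d_now, d_next = diameter(z), diameter(z_next)
        if d_now >= threshold:
            checked += 1
            failures += d_next > rate * d_now + 1e-12
    return checked, failures

print(check_contraction())  # second entry: 0 failures
```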

Remark.

The argument could be slightly improved: in its current form, it effectively treats the error as if $\|\varepsilon_i(t)\|_{\ell^\infty} = \varepsilon$, while we assume $\|\varepsilon_i(t)\| \leq \varepsilon$. This, together with the other usual optimizations, should yield an improved constant. The condition on $\delta$ could also be weakened (at the cost of losing constants). In particular, in Assumption (1) of our main result it would be sufficient to assume that, for every $1 \leq i \leq n$,

that are in the same cluster $\pi(z_i) = \pi(z_j)$. By adapting the proof, the constant $(1/2 + \varepsilon)$ could be reduced further; however, this inevitably lowers the provable bound on the exponential decay rate. This is not an artifact of the method: the algorithm itself converges more slowly in this setting.

6. Proof of the Main Result.

The rough outline of the argument is as follows. We initialize all points inside $[-0.01, 0.01]^2$. We rewrite the gradient descent method acting on one particular embedded cluster as a dynamical system of the type studied above, with an error term. The error term contains the $q_{ij}$, which depend on distances between points from different clusters; these are difficult to control, especially if the points are far apart. Our strategy is therefore as follows: we show that the $q_{ij}$ are all under control as long as all points are contained in $[-0.02, 0.02]^2$. We then use stability of the convex hull to guarantee that all embedded points remain within $[-0.02, 0.02]^2$ for at least $\ell$ iterations, and show that this time scale suffices for the cluster to contract.
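The decomposition into a main term and an error term can be made concrete. The sketch below assumes the standard t-SNE gradient of van der Maaten and Hinton (2008), $\partial C/\partial y_i = 4\sum_j (p_{ij} - q_{ij}) q_{ij} Z (y_i - y_j)$, with $p_{ij}$ replaced by $\alpha p_{ij}$ during early exaggeration; whether the factor 4 is kept or absorbed into the step size $h$ is a convention, and the names `q_and_Z`, `pull` and `exaggerated_step` are hypothetical. One gradient-descent step is split into the within-cluster attraction, whose coefficients play the role of $a_{i,j,t}$, and a remainder collecting cross-cluster attraction and all repulsion, which plays the role of the error term.

```python
import numpy as np

def q_and_Z(y):
    """t-SNE output affinities: Z = sum_{k != l} (1 + |y_k - y_l|^2)^{-1} and
    q_ij = (1 + |y_i - y_j|^2)^{-1} / Z, with q_ii = 0."""
    sq = ((y[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    kernel = 1.0 / (1.0 + sq)
    np.fill_diagonal(kernel, 0.0)
    Z = kernel.sum()
    return kernel / Z, Z

def pull(c, y):
    """Return sum_j c_ij (y_j - y_i) for each i (c must have a zero diagonal)."""
    return c @ y - c.sum(axis=1, keepdims=True) * y

def exaggerated_step(y, p, labels, alpha, h):
    """One step y_i <- y_i + 4h sum_j (alpha p_ij - q_ij) q_ij Z (y_j - y_i),
    split into the within-cluster attraction and the remaining terms.
    Returns (new positions, main-term coefficients, error term)."""
    q, Z = q_and_Z(y)
    same = labels[:, None] == labels[None, :]
    a = 4 * h * alpha * p * q * Z * same              # coefficients of the main term
    rest = 4 * h * (alpha * p * ~same - q) * q * Z    # cross-cluster attraction and repulsion
    main, error = pull(a, y), pull(rest, y)
    return y + main + error, a, error

# Illustrative input: two clusters of three points, symmetric p summing to 1.
rng = np.random.default_rng(0)
labels = np.array([0, 0, 0, 1, 1, 1])
m = rng.random((6, 6))
p = m + m.T
np.fill_diagonal(p, 0.0)
p /= p.sum()
y = rng.uniform(-0.01, 0.01, size=(6, 2))   # initialization inside [-0.01, 0.01]^2
y_new, a, error = exaggerated_step(y, p, labels, alpha=12.0, h=1.0)
```

The values $\alpha = 12$ and $h = 1$ are arbitrary. Because both $p$ and $q$ are symmetric, the step leaves the centroid of the embedding unchanged.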

Proof.

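We start by showing that the $q_{ij}$ are comparable as long as the point set is contained in a small region of space. Now let $\{y_1, y_2, \dots, y_n\} \subset [-0.02, 0.02]^2$ and recall the definitions
$$q_{ij} = \frac{(1 + \|y_i - y_j\|^2)^{-1}}{Z}, \qquad Z = \sum_{k \neq l} (1 + \|y_k - y_l\|^2)^{-1}.$$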

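Then it is easy to see that $0 \leq \|y_i - y_j\| \leq 0.06$ (which holds for all pairs, since the diameter of $[-0.02, 0.02]^2$ is $0.04\sqrt{2} < 0.06$) implies
$$0.99 \leq Z q_{ij} = \frac{1}{1 + \|y_i - y_j\|^2} \leq 1 \qquad \text{and hence} \qquad 0.99\, n(n-1) \leq Z \leq n(n-1).$$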
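Since $Z q_{ij} = (1 + \|y_i - y_j\|^2)^{-1}$ depends only on the pairwise distance, the comparability of the $q_{ij}$ is easy to check numerically. The sketch below draws points uniformly from $[-0.02, 0.02]^2$ (an arbitrary test configuration of our choosing; `normalized_affinities` is a hypothetical helper) and confirms that every off-diagonal value $Z q_{ij}$ lies in $[1/(1 + 0.06^2), 1]$, which is above 0.996, and that $Z$ lies between $0.99\, n(n-1)$ and $n(n-1)$.

```python
import numpy as np

def normalized_affinities(y):
    """Matrix of Z * q_ij = (1 + |y_i - y_j|^2)^{-1}, with zeros on the diagonal."""
    sq = ((y[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    zq = 1.0 / (1.0 + sq)
    np.fill_diagonal(zq, 0.0)
    return zq

rng = np.random.default_rng(0)
n = 200
y = rng.uniform(-0.02, 0.02, size=(n, 2))   # hypothetical test configuration
zq = normalized_affinities(y)
off_diagonal = zq[~np.eye(n, dtype=bool)]
Z = zq.sum()                                 # normalization constant of t-SNE
q = zq / Z                                   # the affinities q_ij themselves
lower = 1.0 / (1.0 + 0.06 ** 2)              # bound for pairs at distance <= 0.06
print(off_diagonal.min() >= lower, 0.99 * n * (n - 1) <= Z <= n * (n - 1))  # True True
```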

We will now restrict ourselves to a small embedded cluster {yi: π(i) fixed} and rewrite the gradient descent method as

where the first sum yields the main contribution and the other two sums are treated as a small error. Applying our results for dynamical systems of this type requires us to verify their conditions. We start with the conditions on the coefficients. Clearly,

which is admissible whenever $\alpha h \sim n$. As for the upper bound, it is easy to see that

It remains to bound the size of the error term, for which we use the triangle inequality

and, similarly for the second term,


This tells us that the norm of the error term is bounded by

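It remains to check that the time scales fit together. By stability of the convex hull, each iteration enlarges the convex hull of the points by at most $\varepsilon$, so points initialized in $[-0.01, 0.01]^2$ remain in $[-0.02, 0.02]^2$ for at least $\ell \geq 0.01/\varepsilon$ iterations; this is the number of iterations for which the assumption is justified. At the same time, the contraction inequality implies that in that time the cluster shrinks to size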

where the last inequality follows from the elementary inequality

Remarks.

The proof is flexible in several places. By demanding that the initialization be contained in a sufficiently small ball, one can force the quantity $Zq_{ij}$ to be arbitrarily close to 1. We also emphasize that we did not optimize constants: additional fine-tuning in various places would yield better constants, at the cost of a more involved argument, which is why we decided against it. The use of the triangle inequality in bounding the error terms is another part of the proof that deserves attention: if the clusters are spread out, we would expect the repulsive forces to act from all directions and to lead to additional cancellation. If it were indeed the case that the $q_{ij}$ do not play a significant role in the clustering that occurs in the early exaggeration phase, this would be an additional reason for the strong similarity to the outcome of the spectral method. It could be of interest to study mean-field-type approximations to gain a better understanding of this phenomenon.

Figure 2.2:

Early exaggeration phase of t-SNE on a line with α = 20n, h = 0.05.

Figure 2.3:

Early exaggeration phase of t-SNE on a swiss roll with α = n, h = 1.

Figure 5.1:

A typical evolution of a dynamical system of this type.

7. Acknowledgements.

The authors thank Laurens van der Maaten for valuable feedback on this work. GCL was supported by NIH grant #1R01HG008383–01A1 (PI: Yuval Kluger) and U.S. NIH MSTP Training Grant T32GM007205. SS was partially supported by #INO1500038 (Institute of New Economic Thinking).

Footnotes

After the appearance of the preprint of this manuscript, Arora et al. (2018) used our analytic framework to obtain results of a similar flavor. We describe how their work relates to our result in §1.3; Arora et al. (2018) can be consulted for more details.

References.

- Arora S, Hu W, Kothari PK. An analysis of the t-SNE algorithm for data visualization. Preprint, arXiv:1803.01768, 2018.

- Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation, 15(6):1373–1396, 2003.

- Carreira-Perpiñán MA. The elastic embedding algorithm for dimensionality reduction. 27th International Conference on Machine Learning (ICML 2010), pp. 167–174, 2010.

- van der Maaten L. Accelerating t-SNE using tree-based algorithms. Journal of Machine Learning Research, 15(1):3221–3245, 2014.

- van der Maaten L, Hinton G. Visualizing data using t-SNE. Journal of Machine Learning Research, 9(Nov):2579–2605, 2008.

- Macosko EZ, Basu A, Satija R, Nemesh J, Shekhar K, Goldman M, Tirosh I, Bialas AR, Kamitaki N, Martersteck EM, et al. Highly parallel genome-wide expression profiling of individual cells using nanoliter droplets. Cell, 161(5):1202–1214, 2015.

- Shaham U, Steinerberger S. Stochastic Neighbor Embedding separates well-separated clusters. Preprint, arXiv:1702.02670, 2017.