Abstract

The identification of profiled cancer-related genes plays an essential role in cancer diagnosis and treatment. Based on literature research, the classification of genetic mutations continues to be done manually nowadays. Manual classification of genetic mutations is pathologist-dependent, subjective, and time-consuming. To improve the accuracy of clinical interpretation, scientists have proposed computational-based approaches for automatic analysis of mutations with the advent of next-generation sequencing technologies. Nevertheless, some challenges, such as multiple classifications, the complexity of texts, redundant descriptions, and inconsistent interpretation, have limited the development of algorithms. To overcome these difficulties, we have adapted a deep learning method named Bidirectional Encoder Representations from Transformers (BERT) to classify genetic mutations based on text evidence from an annotated database. During the training, three challenging features such as the extreme length of texts, biased data presentation, and high repeatability were addressed. Finally, the BERT+abstract demonstrates satisfactory results with 0.80 logarithmic loss, 0.6837 recall, and 0.705 F-measure. It is feasible for BERT to classify the genomic mutation text within literature-based datasets. Consequently, BERT is a practical tool for facilitating and significantly speeding up cancer research towards tumor progression, diagnosis, and the design of more precise and effective treatments.

1. Introduction

Nowadays, genomic, transcriptomic, and epigenomic studies have been benefited from the development of inexpensive next-generation sequencing technologies, which play essential roles in exploring tumor biology [1–3]. Tumors usually possess heterogeneities, and the genomic profiling of tumors normally contains various types of genetic mutations [4–7]. However, only a small proportion of mutation genes are involved in boosting tumor growth, whereas most of them are neutral and irrelevant to tumor progression [8, 9]. Characterization and identification of cancer driver genes are important a in clinical trials to reveal tumor pathogenesis and facilitate diagnosis, prognosis, and personalized therapy [10–13]. Despite the importance of gene classification, the following analysis is challenging due to the significant amount of manual work for interpretating genomics, which is time-consuming, laborious, and subjective. With the increasing availability of electronic unstructured and semistructured data sources, automatically categorizing documents has emerged as a potential tool for information organization. Machine learning (ML), as a promising optimization tool, has been widely used in credit scoring, fraud detection, retailers, market segmentation, manufacturing, education, and healthcare [14–18]. Hence, using ML to analyze clinical contextual data automatically is favorable [19–21]. For example, in 1986, Swanson first discovered the undiscovered links in a large number of scientific literature [22]. Also, Marcotte et al. used Naive Bayesian classification to classify the literature focusing on protein-protein interaction [23].

Despite the achievements traditional ML methods have made, potential drawbacks such as low accuracy exist when they are applied on clinical text classification. In 2018, Google proposed that the BERT method achieved state-of-the-art results in 11 projects, including text classification [24]. Descriptions about clinical research acadamic papers show high similarities , which blurs the classification boundary, increases the inconsistancy, and lows the accuracy. Consequently, the advanced ML methods, such as Light Gradient Boosting Machine (LightGBM), has been proposed to enable gene multiclassification based on complex literature [25]. Nevertheless, these methods are limited by complex calculations when applied to large-scale datasets, particularly for genomic-related literature datasets that contain millions, or billions, of annotated training examples [26, 27]. In addition, the performances of ML are dependent on feature extraction that requires professional knowledge and long-term processing [28–31].

To overcome these difficulties, deep learning (DL) has emerged to handle large-scale and complex datasets since its performance increases with the enlargement of datasets [32–34]. For example, the convolutional neural networks (CNN) [35], recurrent neural networks (RNN) [36], and their combination [37] have been applied to the sentence classification successfully. Also, In 2018, Google proposed that the BERT method achieved state-of-the-art results in 11 projects, including text classification.

Hence, we fine-tune the BERT model to classify mutation effects (9 classes) using an expert-annotated oncology knowledge base. Our BERT method is developed based on the original BERT model and is capable of obtaining different syntactic and semantic information. Three main characters of training datasets including extreme length of text entry, data imbalance, and repetitive description are engineered during training challenges. We propose three truncation methods including abstract+head, head only, and head+tail to deal with extreme length of text entry and repetitive description. Besides, data imbalance is relieved by negative sampling. Overall, we improve the BERT method to classify complex clinical texts, and obtain 0.8074 logarithmic loss, 0.6837 recall, and 0.705 F-measure scores.

2. Problem Statement

The treatment of cancer is closely related to the identification of mutant genes [38]. At present, clinicians need review and classify each mutant gene manually according to the evidence in text-based clinical literature, which is a complicated, time-consuming, and error-prone method [39–42]. To solve this problem, Memorial Sloan Kettering Cancer Center (MSKCC) has provided an expert-annotated precision oncology knowledge base with thousands of mutations manually annotated by world-class researchers and oncologists for studying gene classification using computer-based method [43]. On top of that, we design an artificial intelligence algorithm to automatically and accurately classify mutations for avoiding mistakes caused by manual classification, and provide further help for cancer treatments.

In recent years, with the rise of artificial intelligence, natural language processing, which uses linguistics, computers, mathematics, and other scientific methods to communicate between human beings and computers, has developed rapidly [44–46]. Among them, text classification is one of the most basic and critical tasks in natural language processing [47]. Text classification is the process of associating a given text within one or more categories according to characteristics of texts (content or attributes) under a predefined classification system [48–50]. The process of text classification mainly includes three steps. Firstly, the text is preprocessed, then the vector representation of the text is extracted. Finally, the classifier is trained to classify the text [48]. Text classification can be divided into single-label text classification and multilabel text classification according to the number of labels to which the text belongs. The single-label text refers to each text belonging to only one category, while multilabel text refers to each text belonging to one or more categories [51–53]. The calculation formula for text classification can be defined as follows:

| (1) |

In the formula, the collection D = {d1, d2, ⋯dn} refers to the set of texts classified, where the ith classified text is represented by di, and n is the number of classified texts. The collection C = {c1, c2, ⋯, cm} is a collection of predefined classification categories, where the jth category is represented by cj, and m is the number of predefined categories. F is a function representing a mapping relationship.

Currently, the most common methods for text classification are statistical ML and DL-based methods. Statistical ML methods usually preprocess texts in the first place, then manually extract high-dimensional sparse features. Consequently, they use statistical ML algorithms to obtain classification results. In 1998, Joachims first employed support for vector machine (SVM) in text classification and achieved favorable results [54]. In the following research, many methods based on statistical ML are used in text classification, including Naïve Bayes classifier [55], K-nearest Neighbor method (KNN) [56], decision tree [57], boosting [58], and LightGBM [59]. Among them, LightGBM is widely used in classification problems due to its fast speed, low memory consumption, and relatively high accuracy [60]. Although LightGBM gets good classification results in some scenes, research related to this approach runs basically into bottleneck due to its strong dependence on the effectiveness of features. Also, it is time-consuming and labor-intensive during feature extraction process.

Although the traditional statistical ML models can classify texts faster than the manual method, they require manual feature extraction, which leads to a large amount of labor cost and is difficult to obtain effective features [61–63]. On the other hand, the DL methods are superior to traditional statistical ML methods in terms of text feature expression and automatic acquisition of feature expression capabilities, thus eliminating complex manual feature engineering processes and reducing possible application costs [64]. As we all know, large-scale pretraining language models have become a new driving force for various natural language processing tasks [65]. For example, BERT models can significantly improve model performance by fine-tuning downstream tasks. Google first proposed the BERT model, and it completely subverted the logic of training word vectors before training specific tasks in natural language processing [24]. Methods of fine-tuning the BERT model, such as extended text preprocessing and layer adjustment, have been proved to improve the results substantially [66]. Wu et al. proposed a conditional BERT method, which can enhance the text classification ability of original BERT method by predicting the conditions of masked words [67]. To sum up, it is feasible to employ the fine-tuned model based on the original BERT to classify genetic mutations.

Hence, we propose an improved BERT model with high classification accuracy after analyzing the MSKCC mutation gene interpretation database thoroughly. We believe this method can be successfully applied to genetic mutation classification. The main contributions of our work are summarized as follows:

The text description of the individual sample shows considered lengths. There are differences in text lengths between different categories of samples. Some categories contain shorter words, while others contain miscellaneous descriptions. Generally, texts in a dataset range from hundreds to thousands of words in length. However, the lengths of the gene mutation in this paper are much longer than usual. We use the BERT method to truncate texts and extract valuable information in the texts using different methods, thus avoiding adverse impacts of excessive differences in text lengths on the results.

There is a deviation of total gene number in all categories. Individual genes are unevenly distributed in different categories. Some genes belong to five or more groups, while others only present in two categories. To solve the vast differences in the number of samples between different categories in the dataset, we choose an undersampled data processing method to balance the data deviations between different categories.

The whole dataset has a high repeated description. Different examples belong to different categories share the same text entry. Some categories show a high correlation, which may lead to low accuracy. To solve this problem, we improve the BERT model and splice the last three layers of the initial model, which increases the accuracy of the model and reduces the running time.

To a certain extent, we illustrate the effectiveness of using DL in the classification of genetic clinical texts. As the data set increases, the DL model represented by BERT will learn the characteristics of the sample better to achieve exceptional results. In the future, DL models will have better performances on similar tasks.

3. Materials and Methods

3.1. Description of Datasets

MSKCC sponsored the training and test datasets in this study for method development and validation. For the past several years, world-class experts have created a clinical evidence annotated precision oncology knowledge database. The annotations contain information about which genes are oncology clinically actionable. We sum up three characteristics of the MSKCC datasets mentioned below:

Textual descriptions of individual samples exhibit considerable lengths. The text lengths among different classes show variabilities. Some of the classes contain shorter words while other classes contain redundant descriptions.

The overall gene numbers presented among the whole classes show biases. The distribution of individual genes in different classes is unequal. Some genes belong to five classes or more, and some of the genes only fit in two classes.

High repetitive descriptions exist in the whole datasets. Different samples belong to different classes that share the same text entry. Classes demonstrate high correlations.

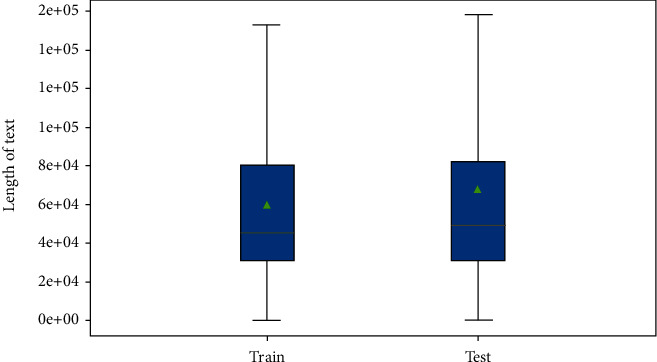

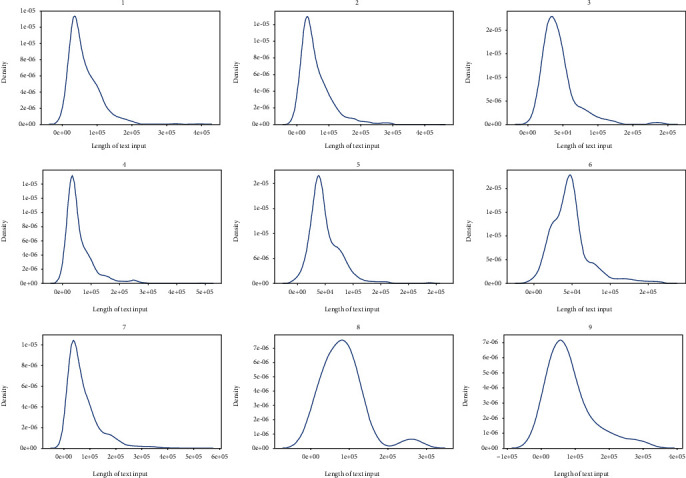

3.1.1. Length of Entry Text

It is reasonable to analyze the length of the entry text as a prior task for textual-based classification. We find that extremely long descriptions with massive irrelevant information are correlated with samples (Figure 1). We plot the distribution of text lengths (Figure 2), and our datasets contain more counted words than the normal classification datasets in reviews [68]. Consequently, we examine the distribution of text lengths among different target classes to better understand the uniformity of datasets. Variabilities are demonstrated among different classes (Figure 3). Comparing the density of the length distributions, we divide the classes into three groups. Classes 3, 5, and 6 contain the shortest counted words; classes 1, 2, 4, and 7 exhibite medium counted words; and classes 8 and 9 show the most counts. Overall, two features that increase the task difficulty are attracted: considerable lengths of words and the unequal text length distribution among different classes.

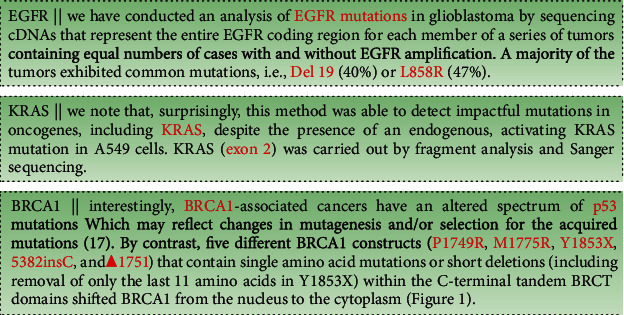

Figure 1.

The cut-off document views of the datasets.

Figure 2.

Distribution of the text entry lengths.

Figure 3.

Distribution of the text entry lengths among different classes.

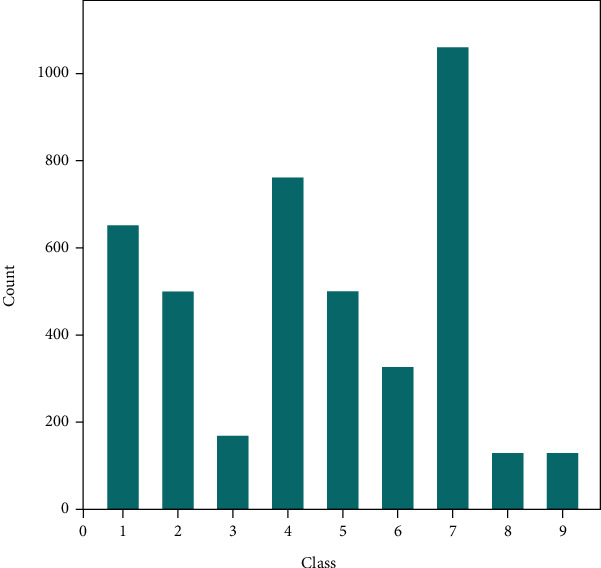

3.1.2. Analysis of the Data Distribution

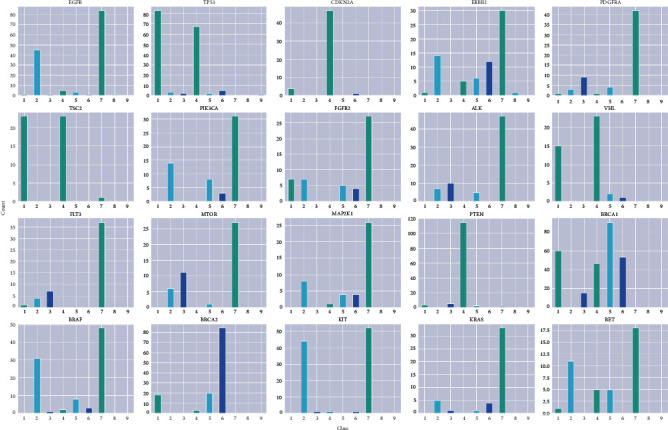

Analyzing the composition of datasets can help us construct algorithms at an early stage. We sum up the frequency of genes among 9 classes (Figure 4). The 9 classes correspond to mutation effects but are annotated using numbers instead of real textural information to avoid artificial labeling, thus improving the reliability of our algorithms during the training. The true information of these labels is listed in Table 1. The distribution of genes among 9 classes exhibited bias. Genes in class 7 are significantly higher than genes in classes 3, 8, and 9.

Figure 4.

Distribution of the number of genes among 9 classes.

Table 1.

Class information corresponds to the annotated number.

| Annotated number | Class information |

|---|---|

| 1 | Likely loss of function |

| 2 | Likely gain of function |

| 3 | Neutral |

| 4 | Loss of function |

| 5 | Likely neutral |

| 6 | Inconclusive |

| 7 | Gain of function |

| 8 | Likely switch of function |

| 9 | Switch of function |

We also examin the interactions among different features within target classes. To reduce calculations, we select the top 20 gene types to illustrate the interrelations instead of the whole gene types (Table 2). Selected genes are sorted by classes (Figure 5). The distribution of genes demonstrate huge variabilities among different classes. We find that classes 8 and 9 contain almost none of these genes, and class 3 contain a few of these genes. These distribution biases are in accordance with our previous gene frequency summary based on the whole gene types. Similarly, the trends in classes 1, 2, 4, and 7 correspond to our previous results. These comparable results indicate that the whole datasets are highly associated with selected genes. Consequently, discriminatory differences among classes can impede the feature learning performances of our algorithms and low the accuracy of the text classification.

Table 2.

List of top 20 genes in the datasets.

| Rank | Gene name | Rank | Gene name |

|---|---|---|---|

| 1 | EGFR | 11 | FLT3 |

| 2 | TP53 | 12 | MTOR |

| 3 | CDKN2A | 13 | MAP2K1 |

| 4 | ERBB2 | 14 | PTEN |

| 5 | PDGFRA | 15 | BRCA1 |

| 6 | TSC2 | 16 | BRAF |

| 7 | PIK3CA | 17 | BRCA2 |

| 8 | FGFR2 | 18 | KIT |

| 9 | ALK | 19 | KRAS |

| 10 | VHL | 20 | RET |

Figure 5.

Distribution of genes among classes.

We further explore the distribution of individual genes within classes, which demonstrates inequitable distributions. For instance, genes such as CDKN2A, PTEN, and TSC2 only present in a limited number of classes (lesser than three). In contrast, BRCA1, ERBB2, FGFR2, and RET are possessed in the majority of classes. Compared with genes only present in a few groups, genes that spread among classes are generally difficult to classify because elaborate texture descriptions can blur the classification standard. Hence, the accuracy of classifications is dependent on the gene compositions. Commonly, genes distributed in lesser classes can show more satisfactory results.

3.1.3. Characteristics of the Datasets

Using typical genes as samples, we find that these typical genes presented in classes demonstrated variabilities. To better recognize these biases and complete potential influences behind them, we conduct a statistical analysis of the whole datasets from the text entry aspects. We find that different samples share the same text entries after extracting common words. The highly repetitive descriptions increase the difficulties of classification, especially when samples in different classes share the same sketches. The worst scenario is the fact that samples belong to different classes that have the same name, but other clue information is missing. For example, five possible mutations of gene BRCA1, the mutation P1749R, M1775R, Y1853X, 5382insC, and Δ1751, may belong to different classes, but their descriptions are close, even in the same sentence. Similarly, two mutations of EGFR, such as Del 19 and L858R, also show in pairs (Figure 1). Hence, we can assume that it is tough to categorize the samples into correct classes by relying on the name of mutations with limited or without other valuable information.

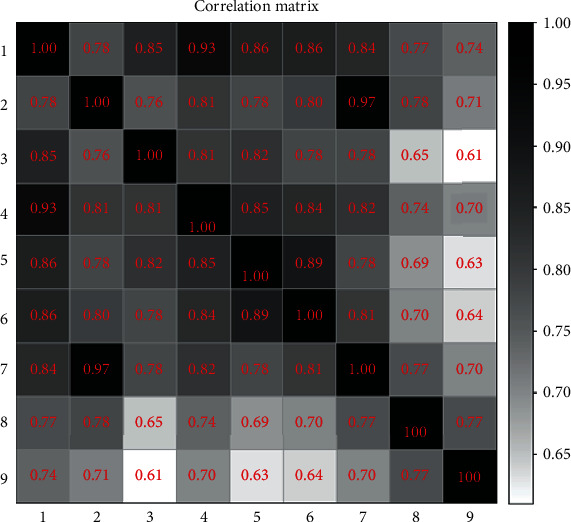

Also, class-dependent word similarities are evaluated using full word lists (Figure 6). Correlation coefficients exhibited high connections (higher than 60%) between classes. Among them, classes 2 and 7 and classes 1 and 4 demonstrate extremely high correlations with 97% and 93% coefficients, respectively. Therefore, we think substantial work needs to be done to clarify samples that share similar descriptions in high correlative classes. Besides, we can not expect high accuracy when classifying samples with these properties.

Figure 6.

Confusion matrix analysis of the similarity of the texts in different classes.

3.2. BERT

Compared with traditional ML methods, DL demonstrates better performances in text feature expression and automatically obtains feature expression capabilities, thus removing the complicated manual feature engineering process and decreasing its application cost. BERT is a new language representation model based on DL, which was released by the AI team of Google company in October 2018. The BERT model is divided into two parts: pretraining and fine-tuning.

3.2.1. Pretraining of Modified BERT Model

In the pretraining process, a large-scale unlabeled text corpus is used to complete the deep vector representation of text content in the deep bidirectional neural network through an unsupervised training method, thus forming the corresponding text pretraining model. Google has trained two pretrained models. One is the BERT-base model, which includes 12 transformers, 12 self-attention heads, and 768 hidden sizes. The other is the BERT-large model, which contains 24 transformers, 16 self-attention heads, and 1024 hidden sizes. Parameters of BERT-base methods are loaded into the downstream BERT classification model so that our model parameters can be fine-tuned based on these pretrained models, which significantly reduces the convergence time of the model and increases the accuracy of the model. During the pretraining process, BERT randomly masks out, replaces some words, and predicts these missing or replaced words through the remaining ones. The transformer must maintain a distributed representation of each input token. The transformer is likely to remember the word masked without this masking and predicting procedure.

3.2.2. Fine-Tuning of Modified BERT Model

Since the generalization ability of the pretrained model is powerful, the BERT pretrained model can be applied to various downstream tasks after fine-tuning the parameters of the pretrained model. For example, it is possible to meet the needs of a text classification task by adding pooling, full connect, and Softmax function to the output layer sequence of fine-tuned BERT model. The fine-tuning process requires much lesser training resources compared to the pretraining process. The method of fine-tuning BERT model, such as truncation and layer adjustment, has been proved to be capable of improving the result [18]. It implements the process of unsupervised learning through the mask, thereby predicting the vocabulary that will appear in the sentence and understanding the specific meaning of the sentence according to the context.

3.3. Evaluation Equation

This paper evaluates the performances of the model using several evaluation indicators: Logloss, recall (REC), precision (PRE), F1 score, receiver operating characteristic (ROC) curve, and confusion matrix. True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN) can be used to calculate some of the indicators mentioned above. TP is the number of categories that are correctly predicted. TN is the number of categories that are correctly predicted as another class. FP is the number of categories that are wrongly predicted. FN is the number of categories that are wrongly predicted as another class.

In multiclassification tasks, Logloss is one of the most common loss functions, where the predicted input is a probability value distribution between 0 and 1, and it can be defined as follows:

| (2) |

where M is the number of samples and N is the number of classifications. ymn is the predicted result of classification, such as 0 and 1. p (ymn) is the predicted probability of ymn.

PRE defines the proportion of genes identified correctly belonging to this type of mutation:

| (3) |

REC calculates the proportion of genes identified correctly belonging to this type of mutation in all this type of gene:

| (4) |

F1 score takes into account the factors of PRE and REC. F1 is the standard metric for this task. It combines precision and recall. Macro-F1 is a parameter index that can best reflect the effectiveness and stability of the model:

| (5) |

The ROC curve is created by plotting the TP against the FP at various threshold settings.

The confusion matrix is a specific table capable of visualizing the performance of an algorithm. Individual rows of the matrix represent the predicted gene classses, while each column represents the genes in the actual classes.

4. Experiments

For easier comparison with other methods, our training process uses the GPU of the server in the lab for training. There are 3136 training sets and 553 verification sets in total. The Python language is selected as the programming language in this experiment. The experiment is completed on Tensorflow's open-source framework and BERT-base. We use the parameters on BERT-base trained by Google through a large number of corpus on Wikipedia as pretraining parameters to accelerate the convergence speed and reduce the convergence difficulty. Our experimental parameters are batch size 128, learning rate 3e − 5, and warmup period 0.06; the whole experiment runs for 30 cycles; the maximum sequence length of BERT input is 512; and the optimizer is Adam optimizer, while other model parameters remain unchanged.

4.1. Experiment Procedure

The BERT model can automatically complete the process of converting each word in the text into a one-dimensional vector by querying the word vector table and inputting it in the model. The input of the model contains three sections: the token embeddings, the segmentation embeddings, and position embeddings.

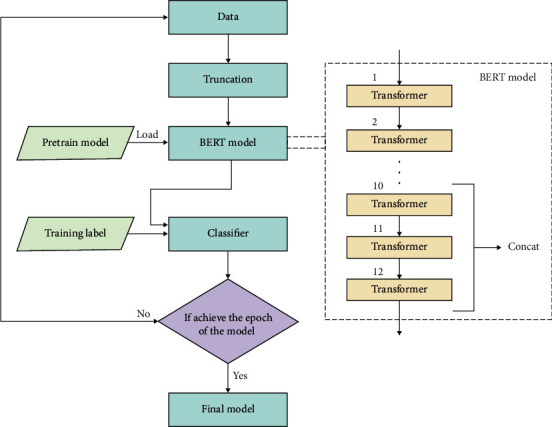

Because BERT is a pretraining model with high generalization ability, the output layer of BERT can be externally connected with corresponding layers to complete downstream tasks. For example, in this experiment, the processed data is substituted into the BERT model for training, and the output layer will connect Softmax function for classification tasks (Figure 7).

Figure 7.

Scheme of the training.

BERT is an unsupervised model that uses whether the sentences are related to each other as labels and masks some words to make the masked words as labels, thus avoiding the tedious process of manually labeling data. Generally, the data in the dataset are not balanced. Take the samples in the 7th and 8th categories of the dataset as an example. The difference between their numbers even reaches more than 10 times. In this case, the default classification method makes classifiers pay too much attention to the category with a larger number of samples, thus making the generalization ability of the model weak and unable to obtain satisfactory results. Therefore, we use random sampling to eliminate the imbalance between data and extract only a part of samples from the category within a larger number of samples to balance the sample number differences between classes.

Simultaneously, because the length of the gene text in the dataset is greater than 512 tokens, which is the longest length that can be retained by BERT, we need to use the truncation method to intercept part of the information in the text. We take three ways to solve this problem. The head only truncation method intercepts the first 512 tokens (at most) as input, the head+tail method intercepts part of the head and part of the tail to form 512 tokens (at most) as input, and the abstract+head method sorts the gene text according to importance, then select the most important 512 tokens (at most) as input.

Finally, the processed data are substituted into the BERT model for training. Numerous previous works have shown that fine-tuning a pretrained model which has been trained with a large amount of corpus can significantly improve the classification result. As BERT can learn different contents in different layers, stitching some of the layers together can make the model get richer information, thereby improving the accuracy of the model, so the last three layers in the BERT model are concatenated. Max pooling, fully connected, and Softmax function are added after the concatenated output layer to realize the classification of gene text to improve the classification accuracy of the model.

4.2. Experiment Results and Discussions

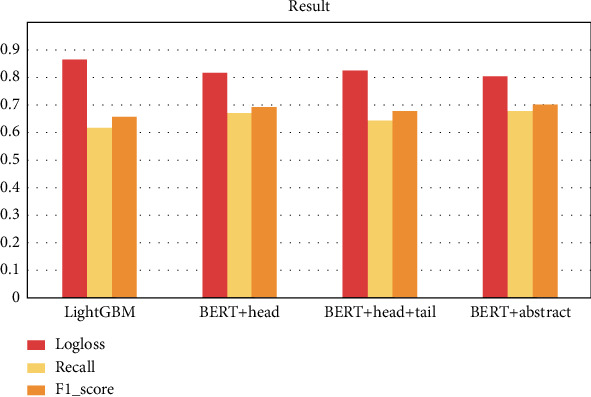

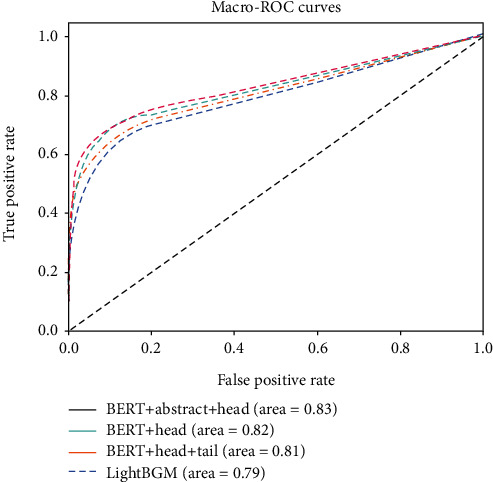

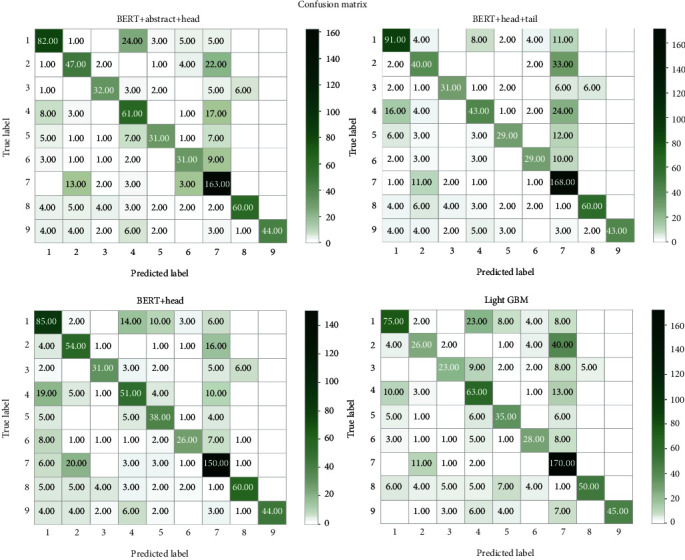

It can be seen from the figure that compared with the LightGBM method, the BERT methods using three types of truncation have higher ACC, REC, and F1 score. The confusion matrix shows our classification situation in a visual way (Figure 6). The red numbers are nonzero values. It can be observed that type 1 is easy to be confused with types 4 and 5. There are more machine judgment errors of texts between type 7 and type 2. Overall, the classification of data-lacking types 8 and 9 is more complicated than other types, possibly because there are fewer samples of types 8 and 9, and these two types have fewer intersections with other types of mutation. The lack of intersection leads to difficulties in distinguishing types 8 and 9 from different types of mutations. The ROC curve can evaluate the accuracy of the model prediction.

The performance and ranking of the entries for the proposed four methods are shown in Figure 8. All methods share the same setting of hyperparameters for an unbiased comparison. Overall, deep learning-based algorithms (BERT) perform slightly better than machine learning-based methods (LightGBM). Among the three models using BERT, the BERT+abstract truncation method has the best performance as a single model with 0.8074 logarithmic loss and 0.6837 recall. The 0.705 F-measure score is limited by the extreme shortage of training data. Better performance should be obtained when it is applied to large-scale datasets.

Figure 8.

Evaluation of four methods.

Besides, the ROC curves of the other three methods are below the ROC curve of the BERT+abstract (Figure 9). The ROC curves for the BERT+abstract, the BERT+head, the BERT+head+tail, and the LightGBM with the highest and lowest AUCs are also shown in Figure 9. Compared results indicate better performance of the BERT+abstract since the AUC assesses the algorithm's inherent validity using an effective and combined measure of sensitivity and specificity. The accuracy of predicted results is highly dependent on the datasets. The performances of our model are limited by the size of available datasets in our case. However, the capabilities of deep networks can be improved using expanded data. Our proposed model is a proof-of-concept, and we believe it is applicable when applied on large-scale datasets.

Figure 9.

ROC curves of the proposed methods.

Moreover, we compare confusion matrix tables using predict classes versus true classes among different methods (Figure 10). The confusion matrix table is an error matrix which can be used to evaluate the performance of the algorithm. In summary, individual classes of genes are predicted precisely using the BERT+abstract method, corresponding with results of Logloss and F1 measurements.

Figure 10.

Confusion matrix tables of proposed four methods.

It can be observed that class 1 is easy to be confused with classes 4 and 5. Furthermore, there are more machine judgment errors of texts between type 7 and type 2. These phenomena can be easily attributed to the similarity of texts among these classes as we previously described. Also, it is apparent that classifying classes 8 and 9 is complicated. The computer may misjudge mutation texts with real labels of 8 or 9 as other types but hardly underestimate other types of mutation texts as type 8 or 9 since there are fewer samples in classes 8 and 9. The shortage of samples in classes 8 and 9 also fails to provide sufficient data to distinguish themselves from other classes since there are no intersections. Contrastingly, the classification of class 7 is easier due to the abundant samples. Therefore, the abundance of data plays essential roles in improving the efficiency of classification.

5. Conclusion

In this study, we propose a deep learning algorithm to identify genomic information within texture-based literature abstracts. Aiming to address the classification problem in an extremely long, imbalanced, and repetitive dataset, we test four methods, including LightGBM and three different truncation BERT methods. By analyzing their Logloss, recall, F1 score, ROC curve, and AUC scores, we notice that the abstract+head truncation BERT method has superior results than other algorithms in all indicators.

In this study, our BERT method is limited due to the shortage of datasets, and its performance can be improved dramatically with the size of datasets increasing. Moreover, our approach will be potentially applied on diagnosing and treating more than 120,000 patients every year around the world based on the announcement of the MSKCC, which will provide our opportunity to enhance our methods further when large-scale datasets are available. We believe BERT is a promising tool for accelerating tumor genomic-related research and facilitating tumor diagnosis and treatments. Besides, this text-based classifier algorithm demonstrated high universality, and it is applicable not only in tumor-specific research but also in other types of diseases and in other nonacademic areas.

Acknowledgments

This work has been supported by the National Key Research and Development Program No.2018YFB2100100, Data-Driven Software Engineering innovation team of Yunnan province of China No.2017HC012, Postdoctoral Science Foundation of China No.2020M673312, Innovation and Entrepreneurship training projects for College Students of Yunnan University No.20201067307, Postdoctoral Science Foundation of Yunnan Province, Project of the Yunnan Provincial Department of Education scientific research fund No. 2019J0010, and DongLu Young and Middle-aged backbone Teachers Project of Yunnan University.

Contributor Information

Zhaogang Yang, Email: zhaogang.yang@utsouthwestern.edu.

Na Zhao, Email: zhaonayx@126.com.

Data Availability

All data generated or analyzed during this study are included in this published article.

Conflicts of Interest

The authors declare that they have no competing interests.

Authors' Contributions

N Zhao and ZG Yang conceived and designed the experiments. YH Su, HX Xiang, HT Xie, and Y Yu analyzed and extracted data. YH Su and HT Xie constructed the figures. YH Su, HT Xie, and ZG Yang participated in table construction. All authors participated in the writing, reading, and revising of the manuscript and approved the final version of the manuscript.

References

- 1.Metzker M. L. Sequencing technologies — the next generation. Nature Reviews Genetics. 2010;11(1):31–46. doi: 10.1038/nrg2626. [DOI] [PubMed] [Google Scholar]

- 2.Yang Z., Shi J., Xie J., et al. Large-scale generation of functional mRNA-encapsulating exosomes via cellular nanoporation. Nature Biomedical Engineering. 2020;4(1):69–83. doi: 10.1038/s41551-019-0485-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Masseroli M., Canakoglu A., Pinoli P., et al. Processing of big heterogeneous genomic datasets for tertiary analysis of next generation sequencing data. Bioinformatics. 2019;35(5):729–736. doi: 10.1093/bioinformatics/bty688. [DOI] [PubMed] [Google Scholar]

- 4.Stratton M. R., Campbell P. J., Futreal P. A. The cancer genome. Nature. 2009;458(7239):719–724. doi: 10.1038/nature07943. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Stephens P. J., The Oslo Breast Cancer Consortium (OSBREAC), Tarpey P. S., et al. The landscape of cancer genes and mutational processes in breast cancer. Nature. 2012;486(7403):400–404. doi: 10.1038/nature11017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Ma C., Jiang F., Ma Y., Wang J., Li H., Zhang J. Isolation and detection technologies of extracellular vesicles and application on cancer diagnostic. Dose-response : a publication of International Hormesis Society. 2019;17(4):155932581989100–1559325819891004. doi: 10.1177/1559325819891004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Walters N., Nguyen L. T. H., Zhang J., Shankaran A., Reátegui E. Extracellular vesicles as mediators ofin vitroneutrophil swarming on a large-scale microparticle array. Lab on a Chip. 2019;19(17):2874–2884. doi: 10.1039/C9LC00483A. [DOI] [PubMed] [Google Scholar]

- 8.Bailey M. H., Tokheim C., Porta-Pardo E., et al. Comprehensive characterization of cancer driver genes and mutations. Cell. 2018;173:371–385.e18. doi: 10.1016/j.cell.2018.02.060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Pon J. R., Marra M. A. Driver and passenger mutations in cancer. Annual Review of Pathology: Mechanisms of Disease. 2015;10(1):25–50. doi: 10.1146/annurev-pathol-012414-040312. [DOI] [PubMed] [Google Scholar]

- 10.Youn A., Simon R. Identifying cancer driver genes in tumor genome sequencing studies. Bioinformatics. 2011;27(2):175–181. doi: 10.1093/bioinformatics/btq630. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Tamborero D., Gonzalez-Perez A., Perez-Llamas C., et al. Comprehensive identification of mutational cancer driver genes across 12 tumor types. Scientific Reports. 2013;3(1):p. 2650. doi: 10.1038/srep02650. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Vogelstein B., Papadopoulos N., Velculescu V. E., Zhou S., Diaz L. A., Kinzler K. W. Cancer genome landscapes. Science. 2013;339(6127):1546–1558. doi: 10.1126/science.1235122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Liu Y., Ma Y., Zhang J., Yuan Y., Wang J. Exosomes: a novel therapeutic agent for cartilage and bone tissue regeneration. Dose Response. 2019;17(4, article 1559325819892702) doi: 10.1177/1559325819892702. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Tsai C.-F., Wu J.-W. Using neural network ensembles for bankruptcy prediction and credit scoring. Expert Systems with Applications. 2008;34(4):2639–2649. doi: 10.1016/j.eswa.2007.05.019. [DOI] [Google Scholar]

- 15.Norgeot B., Glicksberg B. S., Butte A. J. A call for deep-learning healthcare. Nature Medicine. 2019;25(1):14–15. doi: 10.1038/s41591-018-0320-3. [DOI] [PubMed] [Google Scholar]

- 16.Sun Z.-L., Choi T.-M., Au K.-F., Yu Y. Sales forecasting using extreme learning machine with applications in fashion retailing. Decision Support Systems. 2008;46(1):411–419. doi: 10.1016/j.dss.2008.07.009. [DOI] [Google Scholar]

- 17.Shen C., Nguyen D., Zhou Z., Jiang S. B., Dong B., Jia X. An introduction to deep learning in medical physics: advantages, potential, and challenges. Physics in Medicine & Biology. 2020;65(5):p. 05TR01. doi: 10.1088/1361-6560/ab6f51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wang R., Weng Y., Zhou Z., Chen L., Hao H., Wang J. Multi-objective ensemble deep learning using electronic health records to predict outcomes after lung cancer radiotherapy. Physics in Medicine & Biology. 2019;64(24):p. 245005. doi: 10.1088/1361-6560/ab555e. [DOI] [PubMed] [Google Scholar]

- 19.Wu H., Toti G., Morley K. I., et al. SemEHR: a general-purpose semantic search system to surface semantic data from clinical notes for tailored care, trial recruitment, and clinical research. Journal of the American Medical Informatics Association. 2018;25(5):530–537. doi: 10.1093/jamia/ocx160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Gulden C., Kirchner M., Schüttler C., et al. Extractive summarization of clinical trial descriptions. International Journal of Medical Informatics. 2019;129:114–121. doi: 10.1016/j.ijmedinf.2019.05.019. [DOI] [PubMed] [Google Scholar]

- 21.Li S., Xu P., Li B., et al. Predicting lung nodule malignancies by combining deep convolutional neural network and handcrafted features. Physics in Medicine & Biology. 2019;64(17):p. 175012. doi: 10.1088/1361-6560/ab326a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Swanson D. R. Fish oil, Raynaud's syndrome, and undiscovered public knowledge. Perspectives in Biology and Medicine. 1986;30(1):7–18. doi: 10.1353/pbm.1986.0087. [DOI] [PubMed] [Google Scholar]

- 23.Marcotte E. M., Xenarios I., Eisenberg D. Mining literature for protein-protein interactions. Bioinformatics. 2001;17(4):359–363. doi: 10.1093/bioinformatics/17.4.359. [DOI] [PubMed] [Google Scholar]

- 24.Jacob Devlin M.-W. C., Lee K., Toutanova K. BERT: pre-training of deep bidirectional transformers for language understanding. 2019. https://arxiv.org/abs/1810.04805.

- 25.Wang D., Zhang Y., Zhao Y. Association for Computing Machinery. Proceedings of the 2017 International Conference on Computational Biology and Bioinformatics; 2017; Newark, NJ, USA. pp. 7–11. [Google Scholar]

- 26.Oytam Y., Sobhanmanesh F., Duesing K., Bowden J. C., Osmond-McLeod M., Ross J. Risk-conscious correction of batch effects: maximising information extraction from high-throughput genomic datasets. BMC Bioinformatics. 2016;17(1):p. 332. doi: 10.1186/s12859-016-1212-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Woerner A. E., Cox M. P., Hammer M. F. Recombination-filtered genomic datasets by information maximization. Bioinformatics. 2007;23(14):1851–1853. doi: 10.1093/bioinformatics/btm253. [DOI] [PubMed] [Google Scholar]

- 28.Raschka S., Mirjalili V. Python machine learning: machine learning and deep learning with Python, scikit-learn, and TensorFlow 2. Packt Publishing Ltd; 2019. [Google Scholar]

- 29.Li L., Ruan H., Liu C., et al. Machine-learning reprogrammable metasurface imager. Nature Communications. 2019;10:1–8. doi: 10.1038/s41467-019-09103-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Zhang X.-D. A Matrix Algebra Approach to Artificial Intelligence. Springer; 2020. [Google Scholar]

- 31.Rajkomar A., Dean J., Kohane I. Machine learning in medicine. New England Journal of Medicine. 2019;380(14):1347–1358. doi: 10.1056/NEJMra1814259. [DOI] [PubMed] [Google Scholar]

- 32.Messac A., Chen X. EMBC 2019 Speakers. 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2019; Berlin, Germany. pp. 2182–2185. [DOI] [PubMed] [Google Scholar]

- 33.Tian Z., Yen A., Zhou Z., Shen C., Albuquerque K., Hrycushko B. A machine-learning–based prediction model of fistula formation after interstitial brachytherapy for locally advanced gynecological malignancies. Brachytherapy. 2019;18(4):530–538. doi: 10.1016/j.brachy.2019.04.004. [DOI] [PubMed] [Google Scholar]

- 34.Al-Ayyoub M., Nuseir A., Alsmearat K., Jararweh Y., Gupta B. Deep learning for Arabic NLP: a survey. Journal of Computational Science. 2018;26:522–531. doi: 10.1016/j.jocs.2017.11.011. [DOI] [Google Scholar]

- 35.Kim Y. Convolutional neural networks for sentence classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP); 2014; Doha, Qatar. pp. 1746–1751. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Lee J. Y., Dernoncourt F. Sequential short-text classification with recurrent and convolutional neural networks. Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies; 2016; San Diego, California. pp. 515–520. [Google Scholar]

- 37.Shiou Tian Hsu C. M., Jones P., Samatova N. A hybrid CNN-RNN alignment model for phrase-aware sentence classification. Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics; 2017; Valencia, Spain. pp. 443–449. [Google Scholar]

- 38.Stenson P. D., Mort M., Ball E. V., et al. The human gene mutation database: 2008 update. Genome Medicine. 2009;1:1–6. doi: 10.1186/gm13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Stenson P. D., Ball E. V., Mort M., et al. Human gene mutation database (HGMD®): 2003 update. Human Mutation. 2003;21(6):577–581. doi: 10.1002/humu.10212. [DOI] [PubMed] [Google Scholar]

- 40.Stenson P. D., Mort M., Ball E. V., Shaw K., Phillips A. D., Cooper D. N. The Human Gene Mutation Database: building a comprehensive mutation repository for clinical and molecular genetics, diagnostic testing and personalized genomic medicine. Human Genetics. 2014;133(1):1–9. doi: 10.1007/s00439-013-1358-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Prelich G. J. G. Gene overexpression: uses, mechanisms, and interpretation. Genetics. 2012;190:841–854. doi: 10.1534/genetics.111.136911. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Gertych A., Swiderska-Chadaj Z., Ma Z., et al. Convolutional neural networks can accurately distinguish four histologic growth patterns of lung adenocarcinoma in digital slides. Scientific Reports. 2019;9, article 1483 doi: 10.1038/s41598-018-37638-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.(MSKCC) M. S. K. C. C. http://www.mskcc.org/

- 44.Indurkhya N., Damerau F. J. Handbook of Natural Language Processing. CRC Press; 2010. [Google Scholar]

- 45.Bird S., Klein E., Loper E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit. O'Reilly Media, Inc.; 2009. [Google Scholar]

- 46.Manning C., Schutze H. Foundations of Statistical Natural Language Processing. MIT press; 1999. [Google Scholar]

- 47.Kao A., Poteet S. R. Natural Language Processing and Text Mining. Springer Science & Business Media; 2007. [Google Scholar]

- 48.Moschitti A., Basili R. European Conference on Information Retrieval. Springer; 2007. [Google Scholar]

- 49.Hartmann J., Huppertz J., Schamp C., Heitmann M. Comparing Automated Text Classification Methods. International Journal of Research in Marketing. 2019;36(1):20–38. doi: 10.1016/j.ijresmar.2018.09.009. [DOI] [Google Scholar]

- 50.Deng X., Li Y., Weng J., Zhang J. Feature selection for text classification: a review. Multimedia Tools and Applications. 2019;78(3):3797–3816. doi: 10.1007/s11042-018-6083-5. [DOI] [Google Scholar]

- 51.Amazal H., Ramdani M., Kissi M. International Conference on Smart Applications and Data Analysis. Springer; 2020. [Google Scholar]

- 52.Chen W., Liu X., Guo D., Lu M. International Conference on Data Mining and Big Data. Springer; 2018. [Google Scholar]

- 53.Einea O., Elnagar A., Al Debsi R. Sanad: single-label Arabic news articles dataset for automatic text categorization. Data in Brief. 2019;25, article 104076 doi: 10.1016/j.dib.2019.104076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Joachims T. European Conference on Machine Learning. Springer; 2005. [Google Scholar]

- 55.Chen J., Huang H., Tian S., Qu Y. Feature selection for text classification with naïve Bayes. Expert Systems with Applications. 2009;36(3):5432–5435. doi: 10.1016/j.eswa.2008.06.054. [DOI] [Google Scholar]

- 56.Suguna N., Thanushkodi K. An improved k-nearest neighbor classification using genetic algorithm. International Journal of Computer Science Issues. 2010;7:18–21. [Google Scholar]

- 57.Pranckevičius T., Marcinkevičius V. Comparison of naive bayes, random forest, decision tree, support vector machines, and logistic regression classifiers for text reviews classification. Baltic Journal of Modern Computing. 2017;5:p. 221. [Google Scholar]

- 58.Bloehdorn S., Hotho A. International Workshop on Knowledge Discovery on the Web. Springer; 2004. [Google Scholar]

- 59.Zhang X. S., Chen D., Zhu Y., et al. A multi-view ensemble classification model for clinically actionable genetic mutations. 2018. https://arxiv.org/abs/1806.09737.

- 60.Al Daoud E. Comparison between XGBoost, LightGBM and CatBoost using a home credit dataset. International Journal of Computer and Information Engineering. 2019;13:6–10. [Google Scholar]

- 61.McKinney B. A., Reif D. M., Ritchie M. D., Moore J. H. Machine learning for detecting gene-gene interactions. Applied Bioinformatics. 2006;5:77–88. doi: 10.2165/00822942-200605020-00002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Cleophas T. J., Zwinderman A. H., Cleophas-Allers H. I. Machine learning in medicine. Springer; 2013. [Google Scholar]

- 63.Makridakis S., Spiliotis E., Assimakopoulos V. Statistical and machine learning forecasting methods: concerns and ways forward. PLoS One. 2018;13, article e0194889 doi: 10.1371/journal.pone.0194889. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Schmidhuber J. Deep learning in neural networks: an overview. Neural Networks. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003. [DOI] [PubMed] [Google Scholar]

- 65.Otter D. W., Medina J. R., Kalita J. K. A survey of the usages of deep learning for natural language processing. IEEE Transactions on Neural Networks and Learning Systems. 2020:1–21. doi: 10.1109/TNNLS.2020.2979670. [DOI] [PubMed] [Google Scholar]

- 66.Wu X., Lv S., Zang L., Han J., Hu S. International Conference on Computational Science. Springer; pp. 84–95. [Google Scholar]

- 67.Sun C., Qiu X., Xu Y., Huang X. China National Conference on Chinese Computational Linguistics. Springer; 2019. How to fine-tune BERT for text classification? [Google Scholar]

- 68.Giannakopoulos G., Mavridi P., Paliouras G., Papadakis G., Tserpes K. Article 13 (Association for Computing Machinery). Proceedings of the 2nd International Conference on Web Intelligence, Mining and Semantics; 2012; Craiova, Romania. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All data generated or analyzed during this study are included in this published article.