Abstract

Rationale and Objectives

Artificial intelligence (AI) has rapidly emerged as a field poised to affect nearly every aspect of medicine, especially radiology. A PubMed search for the terms “artificial intelligence radiology” demonstrates an exponential increase in publications on this topic in recent years. Despite these impending changes, medical education designed for future radiologists has only recently begun. We present our institution's efforts to address this problem as a model for a successful introductory curriculum in artificial intelligence in radiology, titled AI-RADS.

Materials and Methods

The course was based on a sequence of foundational algorithms in AI; these algorithms were presented as logical extensions of each other and were introduced through familiar examples (spam filters, movie recommendations, etc.). Since most trainees enter residency without computational backgrounds, secondary lessons, such as pixel mathematics, were integrated into this progression. Didactic sessions were reinforced with a concurrent journal club highlighting the algorithm discussed in the previous lecture. To circumvent often intimidating technical descriptions, study guides for these papers were produced. Questionnaires were administered before and after each lecture to assess confidence in the material. Surveys were also submitted at each journal club assessing learner preparedness and appropriateness of the article.

Results

The course received a 9.8/10 rating from residents for overall satisfaction. With the exception of the final lecture, there were significant increases in learner confidence in reading journal articles on AI after each lecture. Residents demonstrated significant increases in perceived understanding of foundational concepts in artificial intelligence across all mastery questions for every lecture.

Conclusion

The success of our institution's pilot AI-RADS course demonstrates a workable model of including AI in resident education.

Key Words: Artificial intelligence, Education, Residency training, Machine learning, Radiology

Abbreviations: AI, Artificial Intelligence; ML, Machine Learning

Introduction

Artificial intelligence has rapidly emerged as a field poised to affect nearly every aspect of medicine, especially radiology.1, 2, 3 A PubMed search for the terms “artificial intelligence radiology” demonstrates an exponential increase in publications on this topic in recent years. Additionally, radiologists, radiology residents, and medical students have increasingly recognized the need for a basic understanding of artificial intelligence.4, 5, 6 Despite these impending changes, medical education designed for future radiologists has only recently begun.7, 8, 9

A number of resources have emerged that attempt to address this concern for radiology professionals at varying levels of ability.10, 11, 12, 13, 14, 15, 16 While these resources are tailored to imaging applications, as of this writing we have found few examples of formal integration into residency training.

We present our institution's efforts to address this problem as a model for a successful introductory curriculum in artificial intelligence in radiology, titled AI-RADS. Our pilot course was created with the goal of imparting an intuitive understanding of the strengths and limitations of machine learning techniques, along with an intellectual framework to critically evaluate scientific literature on the subject.

Methods

This integrated artificial intelligence curriculum (AI-RADS) was designed for learners at any level of computational proficiency, assuming only an understanding of basic statistics. Each lecture covered one algorithm in artificial intelligence along with supporting fundamental concepts in computer science. Algorithms were introduced as a string of observations surrounding common problems modern computing has attempted to solve and were first presented through familiar anchoring examples such as spam filters, movie recommendations, and fraud detection (Fig. 1). The lectures built on each other's concepts sequentially and were presented as logical extensions of one another (Fig. 2). Algorithms were selected based on how commonly they are employed or as introductions to more complex models, acting as an intellectual foundation for data representation. No prework was expected for didactic sessions.

Figure 1.

Sample lecture slide. Sample lecture slide from AI-RADS Lecture 3: K-Nearest Neighbor.

Figure 2.

Curriculum. Overall AI-RADS course objectives.

Journal Club

Didactic sessions were reinforced with active discussion by a concurrent journal club highlighting the algorithm discussed in the previous lecture (Appendix A). To circumvent the often verbose and intimidating technical descriptions, study guides for these papers were produced to define unfamiliar terms and dissect complicated mathematical expressions into simple terms. Learners were expected to have familiarized themselves with the paper and to have read over the study guide prior to journal club.

Pacing

The pilot AI-RADS course was incorporated as a regular part of resident didactic sessions following faculty approval from the resident education committee. Lectures were integrated into the preexisting schedule whenever a 1-hour timeslot was available. Lectures 5, 6, and 7 were held at the end of the workday, when residents were excused from clinical duties 1 hour early to attend. Lectures were held once per month for a total of 7 months; each 2-hour journal club was held 2 weeks after its corresponding lecture. By scheduling the course this way, residents had substantial artificial intelligence exposure every other week. This pacing was designed to promote retention while not overwhelming the learners.

After AI-RADS, learners should be able to:

1) Describe foundational algorithms in artificial intelligence, their intellectual underpinning, and their applications to clinical radiology.
2) Proficiently read journal articles on artificial intelligence in radiology.
3) Identify potential weaknesses in artificial intelligence algorithmic design, database features, and performance reports.
4) Identify areas where artificial intelligence techniques can be used to address problems.
5) Describe different ways information can be abstractly represented and exploited.
6) Demonstrate a fluency in common “buzzwords” in artificial intelligence.

Assessment

Surveys were administered before and after lectures to assess both lecture quality and learner confidence in the key concepts. Each survey contained four content questions (see Appendix B for the question list), for which attendees rated their ability to describe each topic on a scale from 1 to 5. In both prelecture and postlecture surveys, attendees were also asked to rate their degree of comfort in reading medical literature centered on the algorithm. Concurrent journal clubs were held within 2 weeks of each didactic session; participants completed questionnaires following each discussion, rating their perceived understanding of the paper as well as their confidence in reading a different paper that utilizes the same computational technique.

All lectures and journal club study guides were written and delivered by the medical student fellow in radiology. Course demonstrations along with all figures attached were rendered using the Python 3 online shell, Jupyter. Content was reviewed by author SH, a professor of computer science who specializes in artificial intelligence. Survey information was analyzed using the statistical analysis package SciPy version 1.2.3. Wilcoxon signed-rank tests were used to compare pre- and postsurvey results.
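As a minimal sketch of the kind of paired comparison described above, the following uses `scipy.stats.wilcoxon` on two matched lists of ratings. The ratings are hypothetical values invented for illustration and are not the study's data; the variable names are likewise assumptions, and the authors' actual analysis script is not reproduced here.

```python
from scipy.stats import wilcoxon

# Hypothetical paired confidence ratings (1-5 scale) from ten learners.
# These numbers are illustrative only and are NOT the study's survey data.
pre_lecture = [2, 1, 3, 2, 2, 3, 1, 2, 2, 3]
post_lecture = [4, 3, 4, 4, 3, 5, 3, 4, 3, 4]

# The signed-rank test ranks the absolute paired differences and compares
# the rank sums of the positive and negative differences (two-sided here).
statistic, p_value = wilcoxon(pre_lecture, post_lecture)

print(f"W = {statistic}, p = {p_value:.4f}")
```

Because the test works on ranks of paired differences rather than on the raw values, it does not assume normally distributed ratings, which is one reason it suits small samples of ordinal survey responses.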

Results

The results are provided in Figures 3 and 4 and Tables 1 and 2.

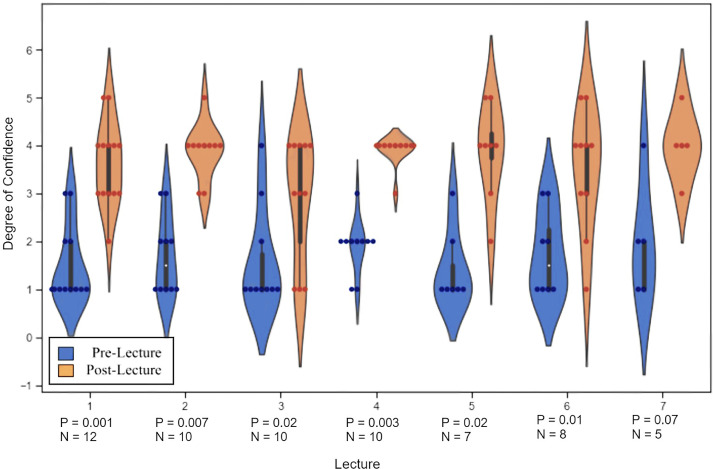

Figure 3.

Degree of confidence in reading an AI in radiology journal article. Learners were asked before and after each lecture their degree of comfort (1 = not confident, 5 = extremely confident) reading scientific literature utilizing the algorithm described in each lecture. The violin plot above shows the individual responses (dots). The shape of each plot corresponds to the relative distribution of responses in each category, where width correlates with number of responses. The corresponding table displays the results of the Wilcoxon signed-rank test comparing pre- and postlecture data.

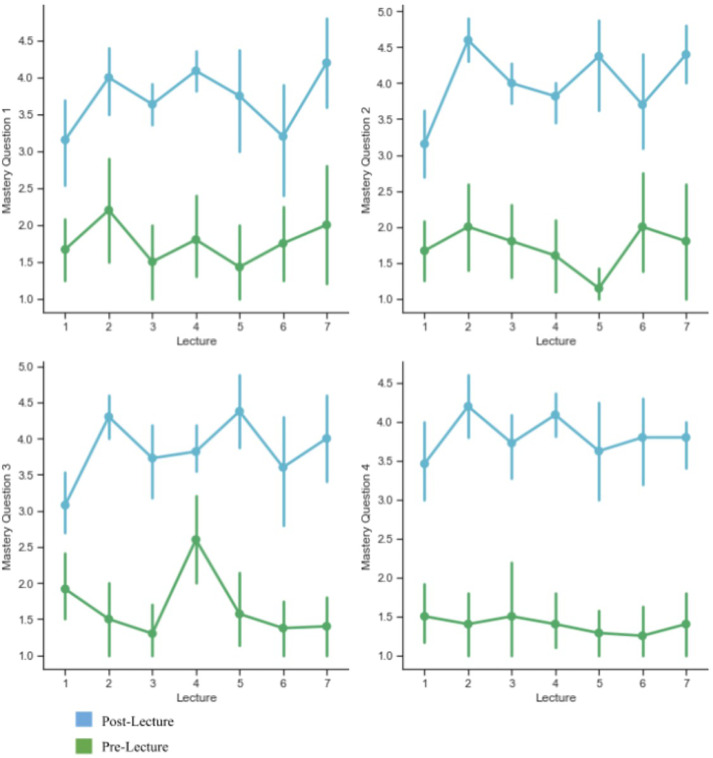

Figure 4.

Pre- and postlecture content mastery questions. Each pre- and postlecture survey contained four questions highlighting key lecture concepts that were mapped to learning objectives. Learners rated their confidence in their ability to describe these concepts on a scale from 1 to 5. There is a statistically significant difference between all pre- and postlecture question results (p < 0.04) by Wilcoxon signed-rank test.

Table 1.

Average Measures of Satisfaction for Each Lecture

| Measure | Lecture 1 | Lecture 2 | Lecture 3 | Lecture 4 | Lecture 5 | Lecture 6 | Lecture 7 |
|---|---|---|---|---|---|---|---|
| Interest in AI (1 = not interested, 5 = highly interested) | 4.1 | 4.3 | 4.0 | 4.5 | 4.3 | 4.1 | 4.0 |
| Content depth (1 = too little, 3 = just right, 5 = too much) | 3.0 | 3.1 | 3.25 | 3.3 | 3.4 | 3.4 | 3.2 |
| Quality of examples (1 = poor, 5 = excellent) | 4.6 | 4.7 | 4 | 4.3 | 4 | 3.9 | 4.7 |
| Number of responses | 12 | 10 | 10 | 10 | 7 | 8 | 5 |

As a measurement of satisfaction, learners were asked to report their interest in AI, the content depth of the lecture (3 = just right), and the quality of the examples used. Number of responses corresponds to the number of residents who arrived on time to receive the survey link.

Table 2.

Journal Club

| Journal Club | 1 | 2 | 3 | 5 | 6 |
|---|---|---|---|---|---|
| Preparation (1 = no prep, 5 = extensive prep) | 3.3 | 2 | 3.7 | 2.5 | 1.6 |
| Interest in Clinical Topic (1 = no interest, 5 = extremely interested) | 4.5 | 3.5 | 4.3 | 3.7 | 3.3 |
| Appropriateness of Paper (1 = inappropriate for my level, 5 = perfect) | 4.0 | 3.5 | 4.3 | 3.5 | 3.5 |
| Confidence in Ability to Explain this Article (1 = not confident at all, 5 = extremely confident) | 3.6 | 4 | 4 | 3.5 | 3.1 |
| Confidence in Ability to Read Similar Articles (1 = not confident at all, 5 = extremely confident) | 3.8 | 4 | 4 | 4.2 | 3.3 |
| How useful was the Study Guide? (1 = not useful, 5 = extremely useful) | 4.6 | 3.5 | 4.7 | 4.3 | 4.0 |

Note: Lecture 4 did not have an accompanying journal club, as the algorithm is not typically used in modern practice. The Lecture 7 journal club was canceled due to COVID-19.

Discussion

AI-RADS was well-received amongst trainees at our institution. From our metrics of quality, trainees overwhelmingly feel that the content depth of the AI-RADS lecture series is ideal, and the examples used are helpful vehicles to understand key concepts in artificial intelligence. Exit surveys demonstrated a high degree of learner satisfaction, with an aggregate rating of 9.8/10. Resident interest in artificial intelligence has remained at a stable high, suggesting that this course has not deterred learners from the field.

With the exception of Lecture 7, resident confidence in their ability to read an artificial intelligence-related journal article in radiology increased significantly after each lecture (Fig. 3). Only five residents were able to attend Lecture 7, likely contributing to its borderline results. Parametric tests, such as Student's t-test, yielded significant p-values in all lectures; however, given the low sample size, the Wilcoxon signed-rank test was felt to be more appropriate, though less statistically powerful. Anecdotally, discussions during these sessions have been robust, and the questions residents ask suggest a deeper understanding of the underlying computational methods.

With the content-related questions, learners’ perceived ability to explain these concepts showed a marked increase from before to after each didactic session (Fig. 4). In lieu of formal assessment, these results demonstrate an increased understanding of core principles of artificial intelligence. This, in conjunction with perceived confidence in reading new articles related to the algorithm discussed, strongly implies an increased sense of comfort when dealing with techniques in artificial intelligence. Future iterations of this course may entail anonymous content-specific multiple-choice quizzes to further evaluate concept mastery.

Journal club was initially successful but demonstrated a progressive decline in learner preparation, which was evident in later discussions. Residents indicated that added clinical responsibilities and lack of free time were the main factors contributing to the lack of engagement. In written commentary, residents stated that the journal club was very helpful in solidifying the concepts presented in lecture and that, despite limited preparation, they found the conversations illuminating. Many proposals have been made regarding how journal clubs will be managed when this course is redeployed, including resident-led paper presentations with faculty support, take-home assignments focusing on specific articles, or integration of paper discussion into the didactic session.

Limitations of the course include heterogeneity of learner attendance, usually as a result of the clinical duties of certain rotations, scheduling, and resident burnout. It is important to note the likely contribution of lecture scheduling to resident attendance. For the final three lectures, there was no availability in the normal didactic timeslot, requiring residents to be dismissed early from clinical duties to attend an end-of-day session. This was suboptimal and likely contributed to the relatively lower turnout. Additionally, this may have influenced overall comprehension, as the last three lectures are conceptually highly interrelated. Transitioning to an online platform would ideally circumvent these issues, as learners would be able to complete modules at their own pace. However, this may come at the cost of large group discussion opportunities. An interactive learn-to-code session was proposed, with preexisting datasets and skeleton code available so that learners could try to implement the algorithms they learned. While surveyed resident interest was high, many voiced concerns over time constraints with an already heavy schedule. As such, these sessions were felt to be more appropriate for future iterations, once the basic curriculum has been completely incorporated. Lastly, while analysis by trainee demographics (such as sex, PGY, etc.) would add resolution to specific learner needs and satisfaction, the size of our program would effectively eliminate feedback anonymity for some learners. For this reason, demographic information was not collected, to encourage trainee candor in their responses.

To ensure longevity and sustainability of this course as a unique hallmark of our institution's residency training program, the department is working on establishing online infrastructure to permanently house this resource. Future plans entail publishing all materials and freely sharing this educational series for all interested learners. These videos will be uploaded to the following YouTube channel as they become available: https://rb.gy/ychu2k

As artificial intelligence continues to reshape the world of medicine, it will become imperative that physicians are familiar with fundamental algorithms and techniques in artificial intelligence. This will become an essential skill for interpreting medical literature, assessing potential clinical software augmentations, formulating research questions, and purchasing equipment. By having an intuitive foundation of machine learning based around fundamental algorithms, learners will likely be better equipped to understand strengths and weaknesses of various techniques and be empowered to make more informed decisions.

In summary, residency programs are only beginning to employ basic computing concepts in their training, a skill that will become essential for the radiologists of tomorrow; proficiency in artificial intelligence will be a required skill in the near future of imaging services. We present our institution's efforts to address this problem as a model of a successful introductory curriculum in the applications of artificial intelligence in radiology.

Footnotes

Funding sources: This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Appendix A. Journal Club Articles

Do, B. H., Langlotz, C., & Beaulieu, C. F. (2017). Bone Tumor Diagnosis Using a Naïve Bayesian Model of Demographic and Radiographic Features. Journal of Digital Imaging, 30(5), 640–647. https://doi.org/10.1007/s10278-017-0001-7

Emblem, K. E., Pinho, M. C., Zöllner, F. G., Due-Tonnessen, P., Hald, J. K., Schad, L. R., Meling, T. R., Rapalino, O., & Bjornerud, A. (2015). A Generic Support Vector Machine Model for Preoperative Glioma Survival Associations. Radiology, 275(1), 228–234. https://doi.org/10.1148/radiol.14140770

Jensen, C., Carl, J., Boesen, L., Langkilde, N. C., & Østergaard, L. R. (2019). Assessment of prostate cancer prognostic Gleason grade group using zonal-specific features extracted from biparametric MRI using a KNN classifier. Journal of Applied Clinical Medical Physics, 20(2), 146–153. https://doi.org/10.1002/acm2.12542

Srinivasan, A., Galbán, C. J., Johnson, T. D., Chenevert, T. L., Ross, B. D., & Mukherji, S. K. (2010). Utility of the K-Means Clustering Algorithm in Differentiating Apparent Diffusion Coefficient Values of Benign and Malignant Neck Pathologies. American Journal of Neuroradiology, 31(4), 736–740. https://doi.org/10.3174/ajnr.A1901

Zhang, B., Tian, J., Pei, S., Chen, Y., He, X., Dong, Y., Zhang, L., Mo, X., Huang, W., Cong, S., & Zhang, S. (2019). Machine Learning–Assisted System for Thyroid Nodule Diagnosis. Thyroid, 29(6), 858–867. https://doi.org/10.1089/thy.2018.0380

Appendix B. Content Questions

| Lecture | Question 1 | Question 2 | Question 3 | Question 4 |
|---|---|---|---|---|
| 1 | I can confidently describe the difference between artificial intelligence, machine learning, and neural networks. | I am comfortable describing conditional probability. | I am comfortable describing Bayesian statistics. | I can describe how Bayesian statistics can be used in an artificial intelligence algorithm to classify an item of interest. |
| 2 | I can confidently describe the difference between supervised and unsupervised machine learning. | I can describe how images are represented numerically. | I can describe k-means segmentation. | I can describe how k-means clustering can be used in an artificial intelligence algorithm to cluster items of interest. |
| 3 | I can confidently describe the difference between k-means and k-nearest neighbor. | I can describe different ways mathematical distance can be measured. | I can describe the curse of dimensionality. | I can describe how k-nearest neighbor can be used to classify images. |
| 4 | I can confidently describe what is meant by “data purity.” | I can describe how ensemble classifiers (sum greater than the individual parts) work. | I can describe the curse of dimensionality. | I can describe how random forest can be used in an artificial intelligence algorithm to classify data. |
| 5 | I can confidently describe what is meant by “linear classifier.” | I can explain the term “hyperplane.” | I can describe what is meant by “kerneling.” | I can describe how the perceptron algorithm classifies information. |
| 6 | I can confidently describe what is meant by “linear classifier.” | I can identify multiple different kinds of machine error. | I can describe how calculus can be used to improve machine learning algorithms. | I can describe how the support vector machine algorithm can be used to classify information. |
| 7 | I can confidently describe the basic idea behind neural networks. | I can identify how neural network architecture draws inspiration from biological systems. | I can describe the process of convoluting and pooling images. | I can describe how a neural network classifies an image. |

Note: Question 3 is repeated in Lectures 3 and 4, and Question 1 is repeated in Lectures 5 and 6. This is because these concepts were reintroduced to better explain the next algorithm in the series. These topics were among the major themes in AI-RADS and are typically difficult for learners to understand, hence their reintroduction and expansion.

References

- 1. Boland G.W.L., Guimaraes A.S., Mueller P.R. The radiologist's conundrum: benefits and costs of increasing CT capacity and utilization. Eur Radiol. 2009;19:9–11. doi: 10.1007/s00330-008-1159-7.

- 2. Fitzgerald R. Error in radiology. Clin Radiol. 2001;56:938–946. doi: 10.1053/crad.2001.0858.

- 3. Hosny A., Parmar C., Quackenbush J. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 4. Ooi S.K.G., Soon A.Y.Q., Fook-Chong S. Attitudes toward artificial intelligence in radiology with learner needs assessment within radiology residency programmes: a national multi-programme survey. Singapore Med J. 2019. doi: 10.11622/smedj.2019141.

- 5. Pinto dos Santos D., Giese D., Brodehl D. Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol. 2019;29:1640–1646. doi: 10.1007/s00330-018-5601-1.

- 6. Waymel Q., Badr S., Demondion X. Impact of the rise of artificial intelligence in radiology: what do radiologists think? Diagn Interv Imaging. 2019;100:327–336. doi: 10.1016/j.diii.2019.03.015.

- 7. Kolachalama V.B., Garg P.S. Machine learning and medical education. Npj Digital Med. 2018;1:54. doi: 10.1038/s41746-018-0061-1.

- 8. McDonald R.J., Schwartz K.M., Eckel L.J. The effects of changes in utilization and technological advancements of cross-sectional imaging on radiologist workload. Acad Radiol. 2015;22:1191–1198. doi: 10.1016/j.acra.2015.05.007.

- 9. Paranjape K., Schinkel M., Nannan Panday R. Introducing artificial intelligence training in medical education. JMIR Med Educ. 2019;5:e16048. doi: 10.2196/16048.

- 10. Aerts H.J.W.L. Data science in radiology: a path forward. Clin Cancer Res. 2018;24:532–534. doi: 10.1158/1078-0432.CCR-17-2804.

- 11. Chartrand G., Cheng P.M., Vorontsov E. Deep learning: a primer for radiologists. Radiographics. 2017;37:2113–2131. doi: 10.1148/rg.2017170077.

- 12. Duong M.T., Rauschecker A.M., Rudie J.D. Artificial intelligence for precision education in radiology. Br J Radiol. 2019;92. doi: 10.1259/bjr.20190389.

- 13. Pesapane F., Codari M., Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2:35. doi: 10.1186/s41747-018-0061-6.

- 14. Richardson M.L., Garwood E.R., Lee Y. Noninterpretive uses of artificial intelligence in radiology. Acad Radiol. 2020. doi: 10.1016/j.acra.2020.01.012.

- 15. SFR-IA Group, CERF, and French Radiology Community. Artificial intelligence and medical imaging 2018: French radiology community white paper. Diagn Interv Imaging. 2018;99:727–742. doi: 10.1016/j.diii.2018.10.003.

- 16. Tang A., Tam R., Cadrin-Chénevert A. Canadian Association of Radiologists white paper on artificial intelligence in radiology. Can Assoc Radiol J. 2018;69:120–135. doi: 10.1016/j.carj.2018.02.002.