Abstract

The Conceptor network is a new reservoir computing (RC) framework. In addition to easy training and global convergence, it can learn new classes of input patterns online without complete re-learning from all the training data. Conventionally, the connection topology and weights of the RC hidden layer (reservoir) are initialized randomly and are not fine-tuned after initialization. However, it has been demonstrated that the reservoir connections play an important role in the computational performance of RC. Therefore, in this paper, we optimize the Conceptor's reservoir connections and propose a phase space reconstruction (PSR)-based reservoir generation method. We tested the generation method on a time series prediction task, and the experimental results showed that the proposed PSR-based method can improve the prediction accuracy of Conceptor networks. Further, we compared the PSR-based Conceptor with Conceptor networks of two other typical reservoir topologies (randomly connected and cortex-like connected) and found that the prediction accuracy of all three declined nonlinearly with increasing storage load, while our proposed PSR-based method achieved the best accuracy under the different storage loads.

Keywords: Conceptor, Reservoir computing, Phase space reconstruction, Time series prediction

Introduction

Unlike traditional recurrent neural networks (RNNs), such as Long Short-Term Memory (LSTM), which are trained with the error backpropagation through time (BPTT) algorithm, reservoir computing (RC), another type of RNN, uses only simple linear regression to train its output weights, while the other connection weights (such as input weights and hidden weights) are fixed once initialized. Owing to their high accuracy, fast training, and global convergence (Ding et al. 2005), typical RC models such as the echo state network (ESN) (Jaeger and Haas 2004) and the liquid state machine (LSM) (Maass et al. 2002) have been widely applied to time series prediction (Li et al. 2015; Ma et al. 2009; Jaeger et al. 2007; Li et al. 2016; Wang et al. 2019; Hu et al. 2020), pattern classification (Skowronski and Harris 2007; M E et al. 2009; Hu et al. 2015; Zhang et al. 2019), and anomaly detection (Chen et al. 2018; Mohammadpoory et al. 2019).

The core component of the RC framework, the reservoir layer, which is generally initialized to be sparsely connected, large-scale and fixed-weight, plays a crucial role in the performance of RC. Jaeger et al. (2007) demonstrated that, besides the connection weights, the structure of the reservoir also has a non-negligible influence on the computational performance of RC. Thus, RC models with differently designed reservoir topologies have been proposed in recent years, such as reservoirs with small-world topology (Deng and Zhang 2007), multi-clustered topology (Zhang et al. 2017), cortex-like topology (Li et al. 2015; Malagarriga et al. 2019) and modular topology (Ma and Chen 2013). On the other hand, Zhang and Small (2006) proposed a method for transforming a pseudoperiodic time series into a complex network by representing each cycle of the pseudoperiodic series as a basic node. Yang and Yang (2008) built RC networks by using the correlation matrices of the time series input. Gao and Jin (2009) proposed a method for generating RC networks from time series based on phase space reconstruction.

Recently, another RC computing framework, namely the Conceptor network, has been proposed by Jaeger (2014). Conceptor networks can store multiple input patterns in a reservoir layer, filter the corresponding states using a “Conceptor” module, and finally reconstruct the input signals to achieve high classification accuracy. A significant advantage of the Conceptor network is that it can load new classes of input patterns online, which is a tough challenge for most conventional classifiers based on supervised training. Owing to this attractive feature, Conceptor networks have been studied for problems such as image classification (Hu et al. 2015; Qian and Zhang 2018; Qian et al. 2018), natural language processing (Liu et al. 2018), and time series classification (Wang et al. 2016; Xu et al. 2019).

In this paper, we propose a novel Conceptor network whose reservoir is generated by the time series phase space reconstruction (PSR) method. Signal reconstruction of a mix of two irrational-period sines is employed as the network performance test. After appropriate threshold values for the PSR method were obtained, an experiment comparing network performance on this task confirmed the advantages of the proposed reservoir over an ordinary random reservoir, demonstrating the effectiveness of the proposed reservoir generation method. Furthermore, to better understand the PSR method, the effects of memory load on Conceptor network performance are discussed.

The rest of this paper is organized as follows. The “Basic principles of conceptor network” section briefly introduces the preliminaries of the Conceptor network. The “Proposed method” section presents our reservoir generation method based on phase space reconstruction. In the “Experimental design and results” section, we first initialize the Conceptor reservoir by using four time series datasets, and then examine the reconstruction of the mix of two irrational-period sines to analyze the performance of the generated Conceptor network. The “Conclusions” section concludes the paper.

Basic principles of conceptor network

The Conceptor network is a type of recurrent neural network (RNN), as shown in Fig. 1, which is driven by several dynamic input patterns $p^j$. Suppose the hidden layer (reservoir) of the Conceptor consists of N recurrently connected neurons. If the network is driven by a pattern $p$, the reservoir activation constitutes a high-dimensional state space of $x \in \mathbb{R}^N$. Let $W^{in}$ and $W^{out}$ be the input weights and output weights, respectively, and let $W$ be the hidden weights. The update rule at time step $n$ for the reservoir state can then be given by

$$x(n+1) = f\left(W x(n) + W^{in} p(n+1) + b\right) \tag{1}$$

where $f$ is a nonlinear activation function, usually selected as the tanh function, and b is a randomly initialized bias. As in other reservoir computing models, such as ESN and LSM, once the input weights $W^{in}$, the hidden weights $W$ and the bias b are randomly initialized, they are kept fixed. Only the output weights $W^{out}$ are trained, by linear regression, to reconstruct the input.
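As a minimal sketch of update rule (1) and of the linear-regression readout, the following code assumes the standard form x(n+1) = tanh(W x(n) + W_in p(n+1) + b) from Jaeger (2014). The function names, the spectral radius, the connection density, the bias scale and the ridge parameter are illustrative assumptions, not settings taken from this paper.

```python
import numpy as np

def init_reservoir(n_neurons, n_inputs, spectral_radius=0.9, density=0.1, seed=0):
    """Random sparse reservoir (assumed defaults, not the paper's settings)."""
    rng = np.random.default_rng(seed)
    mask = rng.random((n_neurons, n_neurons)) < density
    W = rng.standard_normal((n_neurons, n_neurons)) * mask
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    if radius > 0:
        W *= spectral_radius / radius          # rescale to the target spectral radius
    W_in = rng.standard_normal((n_neurons, n_inputs))
    b = 0.2 * rng.standard_normal(n_neurons)
    return W, W_in, b

def run_reservoir(W, W_in, b, pattern, washout=0):
    """Drive the reservoir with a pattern and collect states after the washout."""
    pattern = np.asarray(pattern, dtype=float).reshape(len(pattern), -1)
    x = np.zeros(W.shape[0])
    states = []
    for n, p in enumerate(pattern):
        x = np.tanh(W @ x + W_in @ p + b)      # assumed form of update rule (1)
        if n >= washout:
            states.append(x)
    return np.array(states)

def train_readout(states, targets, ridge=1e-4):
    """Ridge regression for the output weights; only these weights are trained."""
    X = np.asarray(states, dtype=float)
    Y = np.asarray(targets, dtype=float).reshape(len(targets), -1)
    gram = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y).T    # shape: (n_outputs, n_neurons)
```

With these helpers, a pattern is reconstructed as `states @ W_out.T`, where `W_out = train_readout(states, pattern[washout:])`.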

Fig. 1. Conceptor network structure

Denote the Conceptor matrix , its diagonal elements are the principal components of the reservoir state x of an input pattern p. For a set of reservoir states , design the loss function

| 2 |

where is a regularization term.

We can apply the stochastic gradient descent (SGD) algorithm to obtain the optimal to minimize the value of (2):

| 3 |

Thus, we can update the Conceptor core in an online fashion

| 4 |

where denotes the update rate.
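The following sketch illustrates how a Conceptor matrix can be obtained in closed form and by online stochastic gradient descent. It assumes the formulation of Jaeger (2014): the loss is the mean of ||x - Cx||^2 plus a regularization term alpha^-2 ||C||^2 (Frobenius norm), the closed-form minimizer is C = R (R + alpha^-2 I)^-1 with R the state correlation matrix, and one gradient step is C <- C + lambda ((x - Cx) x^T - alpha^-2 C). The names `aperture` (alpha) and `rate` (lambda) are assumptions used for illustration.

```python
import numpy as np

def conceptor_closed_form(states, aperture=10.0):
    """Closed-form minimizer C = R (R + aperture^-2 I)^-1 (assumed loss, see lead-in)."""
    X = np.asarray(states, dtype=float)
    R = X.T @ X / X.shape[0]                      # state correlation matrix
    return R @ np.linalg.inv(R + aperture ** -2 * np.eye(R.shape[0]))

def conceptor_online(states, aperture=10.0, rate=0.01, epochs=1):
    """Stochastic gradient descent on the same loss, one state at a time."""
    X = np.asarray(states, dtype=float)
    n = X.shape[1]
    C = np.zeros((n, n))
    for _ in range(epochs):
        for x in X:
            # gradient step: pull C x towards x, shrink C by the regularizer
            C += rate * (np.outer(x - C @ x, x) - aperture ** -2 * C)
    return C
```

The online variant only needs the current state, which is what makes incremental updating possible; the closed form is a convenient reference when all states are available at once.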

Proposed method

PSR-based conceptor reservoir generation

In this paper, we propose a time series prediction method based on the PSR of complex networks. The time series is divided into fixed-length sequences, which are mapped to reservoir nodes. Whether two nodes are connected is determined by the correlation coefficient between them: when the coefficient is greater than a certain threshold, a connection between the two nodes is established; otherwise, it is not. For a time series , with t being the sampling interval, and M being the sample length, its phase space vector can be calculated by using the coordinate delay embedding algorithm:

| 5 |

where N is the reservoir dimension, i.e. the number of recurrent-connected neurons, as defined before. is the time delay, . H is the number of total time series vectors to be reconstructed, .
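A minimal sketch of coordinate delay embedding is given below. It uses the textbook construction, in which each phase space vector collects `dim` samples spaced `delay` steps apart; the function and argument names are hypothetical, and the exact indexing of Eq. (5) may differ.

```python
import numpy as np

def delay_embed(series, dim, delay):
    """Return the matrix whose i-th row is [s(i), s(i+delay), ..., s(i+(dim-1)*delay)]."""
    s = np.asarray(series, dtype=float)
    n_vectors = len(s) - (dim - 1) * delay       # number of reconstructible vectors
    if n_vectors <= 0:
        raise ValueError("series too short for this dim and delay")
    idx = np.arange(n_vectors)[:, None] + delay * np.arange(dim)[None, :]
    return s[idx]
```

For a series of length 10 with `dim=3` and `delay=2`, this yields 6 vectors, the first being the samples at positions 0, 2 and 4.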

We use the C-C method to determine the time delay . Its general idea is to subdivide a single time series into t sequences, and then calculate S(m, M, r, t)

| 6 |

where

, , denotes the Heaviside function. The common settings of m and r are , and where is the standard deviation of the time series. The time series’ embedding dimension m is determined by using the false nearest neighbors method.
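The statistic S(m, M, r, t) can be sketched as follows, assuming the usual C-C construction: the series is split into t disjoint subseries, and for each one the correlation integral at embedding dimension m is compared with the m-th power of the correlation integral at dimension 1. The maximum norm and the helper names are assumptions made for this illustration.

```python
import numpy as np

def correlation_integral(vectors, r):
    """Fraction of vector pairs within distance r (max norm): mean of Heaviside(r - d)."""
    V = np.asarray(vectors, dtype=float)
    d = np.max(np.abs(V[:, None, :] - V[None, :, :]), axis=-1)
    upper = np.triu_indices(len(V), k=1)          # each unordered pair once
    return float(np.mean(d[upper] <= r))

def cc_statistic(series, m, r, t):
    """S(m, M, r, t): average over the t subseries of C(m) - C(1)**m."""
    x = np.asarray(series, dtype=float)
    total = 0.0
    for s in range(t):
        sub = x[s::t]                             # subseries with stride t
        n_vec = len(sub) - (m - 1)
        # neighbours in the subseries are t steps apart in the original series
        emb = np.stack([sub[k:k + n_vec] for k in range(m)], axis=1)
        c_m = correlation_integral(emb, r)
        c_1 = correlation_integral(sub[:n_vec, None], r)
        total += c_m - c_1 ** m
    return total / t
```

For independent samples the statistic stays close to zero, while a strongly correlated series gives clearly positive values; scanning t and locating where the curve first reaches a local minimum gives a candidate time delay.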

After reconstructing the phase space of time series, in order to build the corresponding network model, each vector element in the phase space can be regarded as a node, and their mutual distance in the phase space can be used to determine whether there is a connection between the nodes. Given two vector points and , the phase space distance is defined as

| 7 |

where is the nth element of .

Thus, we can establish the network using the following approach: a connection between nodes i and j exists if , and it is kept as a directed connection (from i to j), with the connection weight set as . If , the nodes are not connected, which means .
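The thresholding step can be sketched as below. This is only an illustration under stated assumptions: the Euclidean distance stands in for the phase space distance of Eq. (7), two nodes are connected when their distance does not exceed the threshold, and the matrix is binary and symmetric, so the direction and weight rule described above are not reproduced. All names are hypothetical.

```python
import numpy as np

def psr_adjacency(vectors, threshold):
    """Binary adjacency matrix from pairwise phase space distances (assumed Euclidean)."""
    V = np.asarray(vectors, dtype=float)
    diff = V[:, None, :] - V[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    A = (dist <= threshold).astype(float)         # connect sufficiently close nodes
    np.fill_diagonal(A, 0.0)                      # no self-connections
    return A, dist

def density(A):
    """Fraction of possible off-diagonal connections that are present."""
    n = A.shape[0]
    return A.sum() / (n * (n - 1))
```

Under this assumed rule, a larger threshold admits more node pairs and therefore produces a denser reservoir.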

Memory management

Once the Conceptor reservoir is generated, we should fine-tune its reservoir memory state to store the pattern features of the input data. The memory management mechanism of the Conceptor reservoir is as follows:

- Gradually input data to the Conceptor: If patterns have already been stored in the reservoir, a new pattern can be stored without disturbing the previously stored patterns, even without knowing which patterns were previously stored.

- Measure the remaining memory capacity of the reservoir, that is, the capability of the Conceptor reservoir to store further new patterns.

- Reduce redundancy: If a new data pattern has some similarity to an already stored pattern, load only the characteristics that differ from the existing patterns into the reservoir memory. Then update the reservoir state set by

where denotes the distance matrix of reservoir weights. In a non-progressive training model, regularized linear regression can be used to calculate by minimizing:

| 8 |

where K is the number of stored patterns and L is the length of the training samples. Then update the reservoir by

| 9 |

Combine the distance matrix with corresponding to the stored pattern , and reconstruct the reservoir state by the ith pattern.

Input training data of new pattern to Conceptor, and then calculate .

If two training patterns are the same, i.e., , then let , which means the Conceptor reservoir does not need to be updated to store the duplicate. If is similar to but not equal to , the reservoir can reduce its information redundancy.

The key to the memory management of the Conceptor reservoir is to track whether the memory storage space has been occupied by an input pattern. If patterns have been stored, the occupied memory storage space is marked by , while the unoccupied space is denoted by the complement .
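Tracking occupied and free memory space can be sketched with the Boolean operations on Conceptors defined by Jaeger (2014), which are assumed here: NOT C = I - C, and C OR B = (R + Q)(R + Q + I)^-1 with R = C (I - C)^-1 and Q = B (I - B)^-1. The `quota` helper (mean eigenvalue of a Conceptor) is likewise taken from that framework as a measure of used capacity. The code requires all singular values of the inputs to be below 1, which holds for Conceptors computed with a finite aperture.

```python
import numpy as np

def conceptor_not(C):
    """Complement: the memory space not claimed by C."""
    return np.eye(C.shape[0]) - C

def conceptor_or(C, B):
    """Union of the memory space claimed by C and B (assumes I - C, I - B invertible)."""
    I = np.eye(C.shape[0])
    R = C @ np.linalg.inv(I - C)                  # correlation-like matrix behind C
    Q = B @ np.linalg.inv(I - B)
    S = R + Q
    return S @ np.linalg.inv(S + I)

def quota(C):
    """Share of reservoir memory in use, between 0 and 1."""
    return np.trace(C) / C.shape[0]
```

In an incremental loading loop, the occupied space would be accumulated as `A = conceptor_or(A, C_new)` after each pattern, and `quota(conceptor_not(A))` would indicate the remaining capacity.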

Let the training data of input pattern be . The incremental memory storing algorithm is as shown in Algorithm 1.

Experimental design and results

Conceptor network generation

We use four different types of time series to generate the reservoir of Conceptor network by using PSR method. The four time series are:

- Lorenz chaotic system:

| 10 |

where , and .

- Mackey-Glass chaotic system:

| 11 |

where produces a mildly chaotic behavior.

- Brownian motion system, representing a self-similar system model.

- Gaussian white noise.

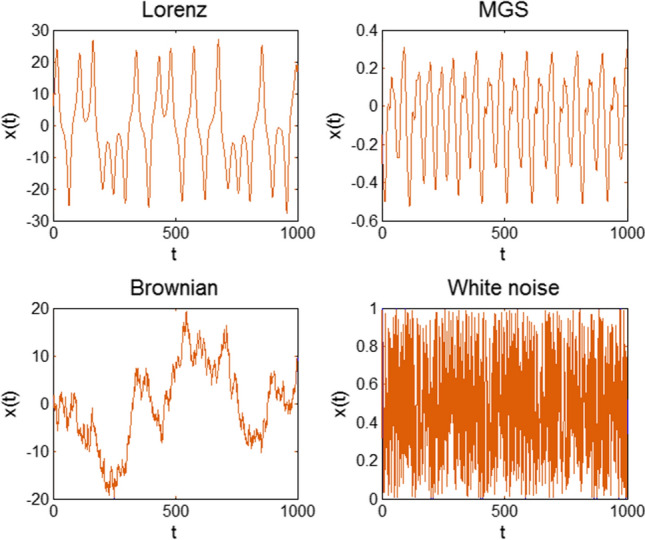

Figure 2 shows some samples of the four time series. By using the PSR method, we can build the Conceptor reservoir network based on these four datasets.

Fig. 2. The four types of time series used for phase space reconstruction

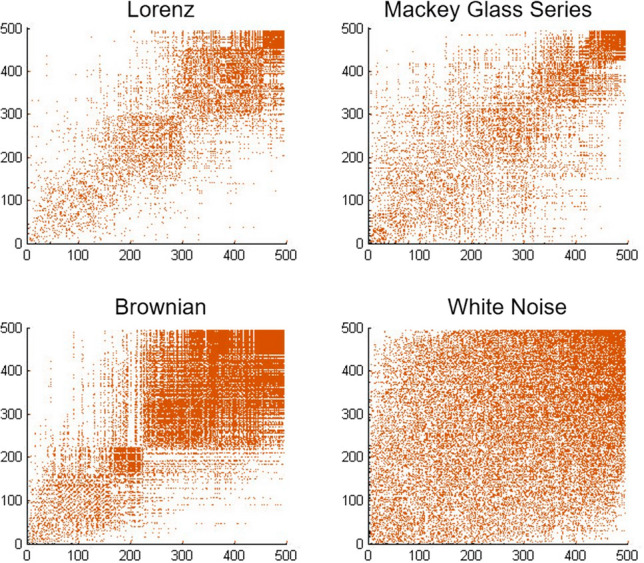

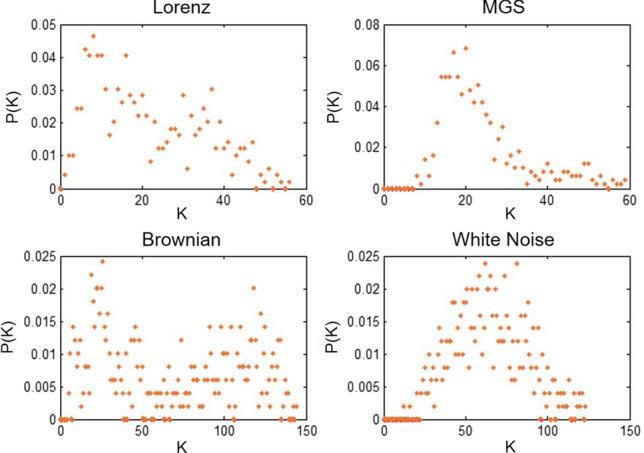

Figure 3 presents the scatter diagram of the adjacency matrix of the Conceptor reservoir. A black point indicates a connection between the two nodes whose index numbers are its X and Y coordinates. It can be seen that the reservoir networks obtained from the chaotic systems (Lorenz and Mackey-Glass series) and from the affine system (Brownian motion) exhibit a clustering structure, while the network obtained from the white noise sequence does not form a cluster structure. Observing the node degree distributions (Fig. 4), we find that the network constructed from white noise has a Gaussian distribution, and the network constructed from the chaotic system presents a Weibull distribution (like an exponential distribution, which has a large value of information entropy). The affine system exhibits a multi-spike distribution. The network obtained from the Lorenz system has a more obvious clustering structure, and its degree distribution also shows a Weibull-like distribution.

Fig. 3. The reservoir connection topologies of Conceptor generated by the four time series according to phase space reconstruction

Fig. 4. The neuronal degree distributions of the generated Conceptor reservoirs

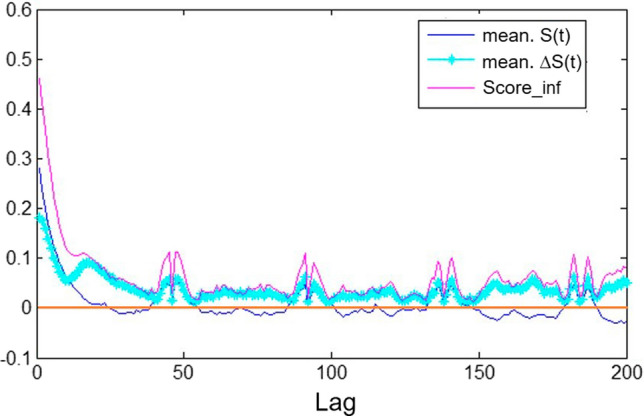

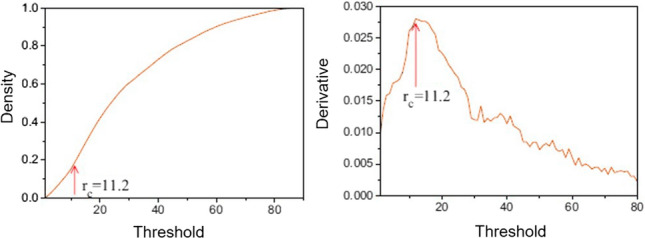

Taking the Lorenz chaotic system as an example, we calculate the parameters of the reconstructed phase space. We construct a Lorenz PSR network with a scale of ; its time delay can be obtained according to the C-C method. As shown in Fig. 5, is the value on the X axis at which the thick blue line reaches its local minimum, . Figure 6 describes the response curve of the connection density of the reservoir network to the threshold . The threshold at which the growth rate of the network density is maximal is taken as the ideal value, where .
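Selecting the threshold at the point of fastest density growth can be sketched as follows. The sketch assumes that two nodes are connected when their phase space distance does not exceed the threshold, and it reports the midpoint of the candidate interval with the steepest density increase; the function name and the candidate grid are hypothetical.

```python
import numpy as np

def pick_threshold(dist, candidates):
    """Return the threshold where connection density grows fastest, plus the density curve."""
    c = np.asarray(candidates, dtype=float)
    n = dist.shape[0]
    off_diag = dist[~np.eye(n, dtype=bool)]       # ignore self-distances
    dens = np.array([(off_diag <= t).mean() for t in c])
    growth = np.diff(dens) / np.diff(c)           # density growth rate per interval
    k = int(np.argmax(growth))
    return 0.5 * (c[k] + c[k + 1]), dens
```

The density curve returned alongside the threshold corresponds to the kind of response curve plotted against the threshold in Fig. 6.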

Fig. 5. The time delay calculated according to the C-C method. (Color figure online)

Fig. 6. The response of the network density to the threshold

Performance comparison with other conceptors

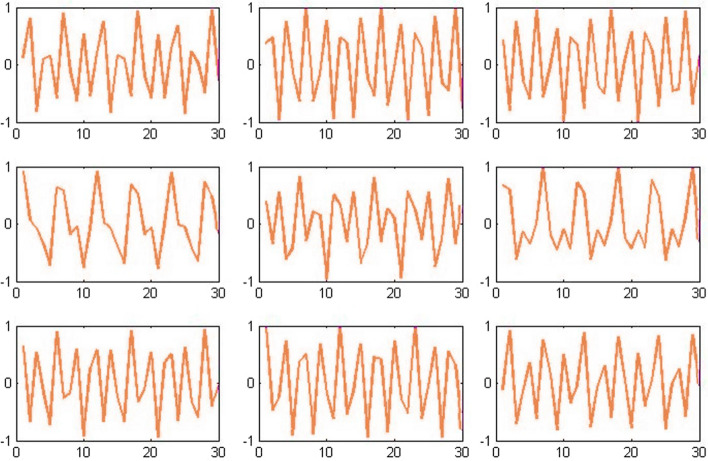

To verify the effectiveness of our proposed PSR-based Conceptor, we compare it with two other typical networks, i.e., the random Conceptor network and the cortex Conceptor network. We use a time series composed of two sinusoids with different periods as the input pattern to test the performance of the Conceptor network. The periods of these two sine sequences are and , respectively, and their phase angles and amplitudes are random, but the sum of their amplitudes is fixed at 1. In Fig. 7, we show nine different randomly generated test time series. Table 1 shows the Conceptor parameters used in the experiments.
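A test pattern of this kind can be generated as in the sketch below. The two periods are passed in as arguments because their values are not restated here; the values used in the usage note are placeholders chosen only for illustration, and the uniform sampling of amplitude and phase is likewise an assumption.

```python
import numpy as np

def mixed_sine_pattern(length, period1, period2, rng):
    """Sum of two sines with random phases and random amplitudes that add up to 1."""
    a1 = rng.uniform(0.0, 1.0)
    a2 = 1.0 - a1                                  # amplitudes sum to 1
    ph1, ph2 = rng.uniform(0.0, 2.0 * np.pi, size=2)
    n = np.arange(length)
    return (a1 * np.sin(2.0 * np.pi * n / period1 + ph1)
            + a2 * np.sin(2.0 * np.pi * n / period2 + ph2))
```

For example, `mixed_sine_pattern(1000, np.sqrt(30), np.sqrt(30) / 2, np.random.default_rng(0))` produces one pattern with hypothetical irrational periods; calling it repeatedly with the same generator yields a set of distinct patterns.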

Fig. 7. Mixed irrational periodic sine signal

Table 1. Parameter settings in the experiments

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| K | 20 | N | 200 |
|  | 100 |  | 100 |
|  | 30 |  | 10000 |
|  | 0.01 |  | 0.01 |

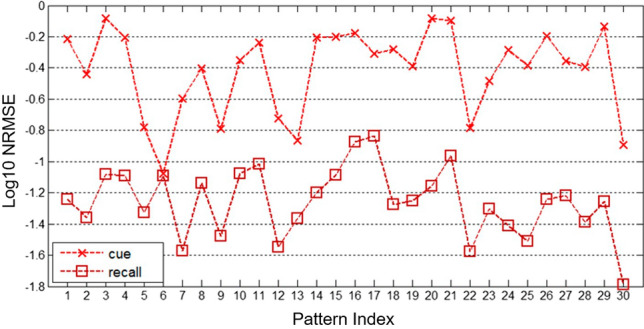

Figure 11 shows the prediction accuracy (indicated by NRMSE, as defined by (12)) comparison between these three Conceptors.

| 12 |

where is the network output during the testing phase; is the desired output during the testing phase and is the variance of the desired output. The standard deviation of NRMSE () is defined as follows:

| 13 |

where denotes the NRMSE average of the T independent trials.
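The error measures can be sketched as below, assuming the common definition in which the root of the mean squared error is normalized by the variance of the desired output, as the description of (12) suggests. The use of the population standard deviation across trials is an assumption; (13) may use a different normalization.

```python
import numpy as np

def nrmse(output, target):
    """Normalized root mean square error between network output and desired output."""
    y = np.asarray(output, dtype=float)
    d = np.asarray(target, dtype=float)
    return np.sqrt(np.mean((y - d) ** 2) / np.var(d))

def nrmse_stats(values):
    """Mean and standard deviation (population form, assumed) of NRMSE over trials."""
    v = np.asarray(values, dtype=float)
    return v.mean(), v.std()
```

Under this definition, a perfect reconstruction gives 0 and a constant prediction equal to the target mean gives 1, which provides a useful scale for reading the reported errors.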

Fig. 11. The reconstruction NRMSE with and of PSR-based Conceptor

Prediction accuracy

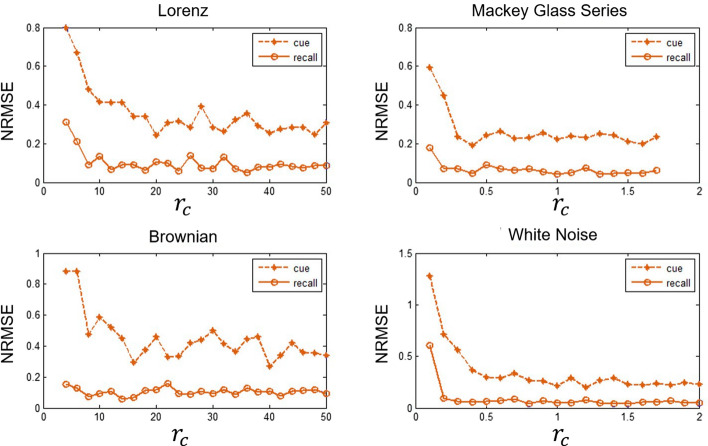

As the prediction accuracy comparison presented in Fig. 8 shows, Conceptors with different reservoir structures exhibit different computational performance.

Fig. 8. Effects of threshold on reconstruction accuracy for all reservoirs

Figure 6 shows the effects of the PSR threshold on the connection density of the Conceptor reservoir. For further study, Fig. 8 presents the NRMSE comparison between the Conceptors reconstructed from the four types of time series with different . We can see that the NRMSEs are stable when is larger than 15 for the Lorenz time series, 0.3 for the Mackey-Glass series, 12 for the Brownian time series and 0.5 for white noise.

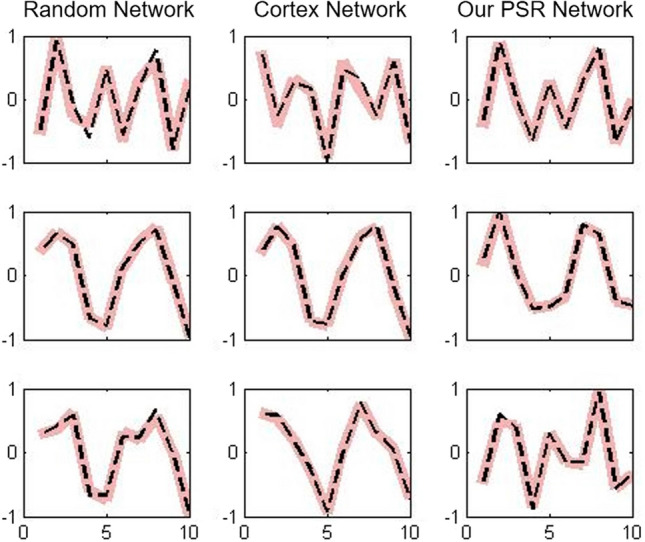

In Fig. 9 we show the reconstruction results of the first three input patterns for our proposed PSR-based Conceptor and the two other networks under comparison.

Fig. 9. The reconstruction results of time series by Conceptor

The black dotted line represents the input training pattern and the pink bold line is the reconstructed pattern. Intuitively, we can see that the proposed PSR-based network and the random and cortex Conceptor networks used for comparison are all able to reconstruct the input patterns well.

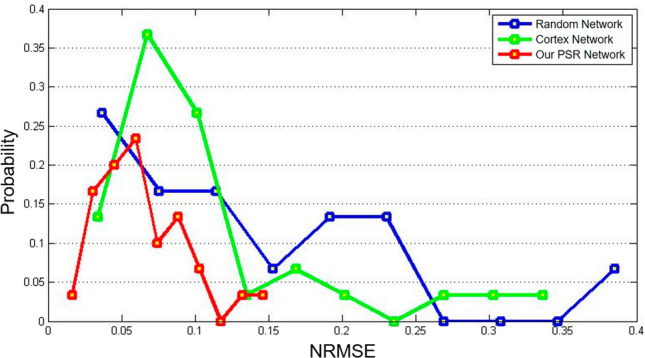

To better understand the reconstruction ability of , we calculate the reconstruction NRMSE of 30 input patterns for each Conceptor reservoir; the result is given in Fig. 10. It can be seen that the reconstruction errors (NRMSE) of the cortex network (green line) and our PSR-based network (red line) are generally smaller than those of the random network (blue line), that is, the error distribution is more concentrated where the NRMSE is smaller. Further, the error distribution of our PSR-based network is the best. Table 2 gives the mean and standard deviation of the NRMSE of the three networks. It shows that the random network has the largest average NRMSE and the largest error fluctuation, while the PSR-based network has the smallest average NRMSE and the smallest error fluctuation. This demonstrates that our proposed PSR-based Conceptor network has the strongest reconstruction capability of the three models.

Fig. 10. The NRMSE distribution of our PSR-based and other two Conceptor networks. (Color figure online)

Table 2. Mean and standard deviation of NRMSE for the three types of Conceptors

| Network | Avg. NRMSE | Std. |
|---|---|---|
| Random Net. | 0.130 | 0.0934 |
| Cortex Net. | 0.110 | 0.0776 |
| PSR-based Net. | 0.062 | 0.0309 |

Memory load effects on recall accuracy

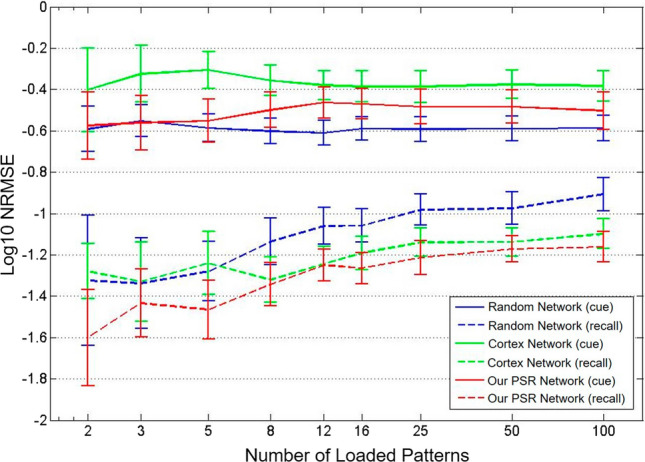

The prediction accuracy of time series reflects the computing performance of Conceptor, and is affected by the memory load of reservoir (Figs. 11, 12).

Fig. 12. Effects of memory load on recall accuracy

To further study the relationship between recall accuracy and memory load, and to determine the reservoir memory capacity, we gradually increased the number of input patterns and observed how the prediction error changed as the memory load grew. The experiment proceeds as follows:

1) Build a Conceptor reservoir model;

2) In each independent trial, first load K input patterns into the Conceptor reservoir. Note that K is gradually increased from trial to trial (from to ). The change trend is obvious when the number of input patterns is small, whereas for a large number the memory storage space is close to saturation and the trend is weak, so let 100], respectively.

3) After the data patterns are loaded, repeat the above reconstruction step. Calculate the reconstruction error (NRMSE) for the first 10 loaded patterns.

4) Repeat steps 2) and 3) five times, with newly created patterns, using the same reservoir.

The results are shown in Figs. 11 and 12. We observe that:

For all memory load situations and Conceptor networks, the reconstruction error (NRMSE) obtained with is smaller than that with , which means that from to the reconstruction accuracy of all Conceptors improves.

The reconstruction error of all reservoirs shows an upward trend. The more data patterns are loaded into the reservoir, the greater the memory load and the worse the reconstruction accuracy.

Comparing the random network, the cortex network and our PSR-based network, we find that when K is small, the accuracy of the cortex network is similar to that of the random network, but it is better than the random network when the memory load is relatively large. The PSR-based network has the highest reconstruction accuracy compared to both the conventional random network and the cortex network.

Conclusions

This paper proposed a Conceptor reservoir generation method based on phase space reconstruction (PSR), which was investigated using a test involving the reconstruction of a mix of two irrational-period sines. The four PSR reservoirs had improved computational capability over the conventional random reservoir, and the improvement in reconstruction precision and in its standard deviation was largest for the Lorenz PSR reservoir. Experiments also revealed that reconstruction precision declined nonlinearly as the memory load increased, and that the proposed Lorenz PSR-based reservoir maintained its advantage across different memory loads. The generated reservoir can improve the computational accuracy of Conceptor networks, and the experimental results support the effectiveness of our proposed PSR-based Conceptor generation algorithm. Further analysis showed that the precision of PSR-based reservoirs improves as the threshold increases, which is explained by the increase in reservoir network density. Because the neuronal connections of our PSR-based Conceptor reservoir are dynamically updated when a new data pattern is input, the computational complexity has an important impact on reservoir generation; future work can therefore focus on reducing the complexity of our PSR-based method.

Compliance with ethical standards

Conflict of interest

The authors declare that they have no conflict of interest.

References

- Chen Q, Zhang A, Huang T, He Q, Song Y. Imbalanced dataset-based echo state networks for anomaly detection. Neural Comput Appl. 2018. doi: 10.1007/s00521-018-3747-z.

- Deng Z, Zhang Y. Collective behavior of a small-world recurrent neural system with scale-free distribution. IEEE Transactions on Neural Networks. 2007:1364–1375.

- Ding H, Pei W, He Z. A multiple objective optimization based echo state network tree and application to intrusion detection. IEEE Int Workshop VLSI Des Video Technol. 2005;52:443–446.

- Gao Z, Jin N. Complex network from time series based on phase space reconstruction. Chaos. 2009;19(3):033137. doi: 10.1063/1.3227736.

- Hu H, Wang L, Lv SX. Forecasting energy consumption and wind power generation using deep echo state network. Renew Energy. 2020;154:598–613. doi: 10.1016/j.renene.2020.03.042.

- Hu Y, Ishwarya M, Kiong LC. Classify images with conceptor network. 2015. cs.CV, arXiv:1506.00815.

- Jaeger H. Controlling recurrent neural networks by conceptors. Technical Report. 2014.

- Jaeger H, Haas H. Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science. 2004;304:78–80. doi: 10.1126/science.1091277.

- Jaeger H, Lukosevicius M, Popovici D, Siewert U. Optimization and applications of echo state networks with leaky integrator neurons. Neural Netw. 2007;20(3):335–352. doi: 10.1016/j.neunet.2007.04.016.

- Li X, Chen Q, Xue F. Bursting dynamics remarkably improve the performance of neural networks on liquid computing. Cogn Neurodyn. 2016;10:415–421. doi: 10.1007/s11571-016-9387-z.

- Li X, Zhong L, Xue F, Zhang A. A priori data-driven multi-clustered reservoir generation algorithm for echo state network. PLoS ONE. 2015;10(4):e0120750. doi: 10.1371/journal.pone.0120750.

- Liu T, Sedoc J, Ungar L. Correcting the common discourse bias in linear representation of sentences using conceptors. Proc BioCreative/OHNLP Challenge. 2018;2018:250–256.

- M E, L A, J, L. Reservoir computing for static pattern recognition. In: European symposium on artificial neural network. 2009. pp 245–250. doi: 10.1002/0470848944.hsa115.

- Ma Q, Chen W. Modular state space of echo state network. Neurocomputing. 2013;122:406–417. doi: 10.1016/j.neucom.2013.06.012.

- Ma Q, Zheng Q, Peng H, Qin J. Chaotic time series prediction based on fuzzy boundary modular neural networks. Acta Physica Sinica. 2009;58(3):1410. doi: 10.1109/ICMLC.2007.4370752.

- Maass W, Natschläger T, Markram H. Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 2002;14(11):2531–2560. doi: 10.1162/089976602760407955.

- Malagarriga D, Pons AJ, Villa AEP. Complex temporal patterns processing by a neural mass model of a cortical column. Cogn Neurodyn. 2019;13:379–392. doi: 10.1007/s11571-019-09531-2.

- Mohammadpoory Z, Nasrolahzadeh M, Mahmoodian N, Sayyah M, Haddadnia J. Complex network based models of ECoG signals for detection of induced epileptic seizures in rats. Cogn Neurodyn. 2019. doi: 10.1007/s11571-019-09527-y.

- Qian G, Zhang L. A simple feedforward convolutional conceptor neural network for classification. Appl Soft Comput. 2018;70:1034–1041. doi: 10.1016/j.asoc.2017.08.016.

- Qian G, Zhang L, Zhang Q. End-to-end training algorithm for conceptor-based neural networks. Electron Lett. 2018;54(15):924–926. doi: 10.1049/el.2018.0033.

- Skowronski MD, Harris JG. Automatic speech recognition using a predictive echo state network classifier. Neural Netw. 2007;20:414–423. doi: 10.1016/j.neunet.2007.04.006.

- Wang L, Wang Z, Liu S. An effective multivariate time series classification approach using echo state network and adaptive differential evolution algorithm. Expert Syst Appl. 2016;43:237–249. doi: 10.1016/j.eswa.2015.08.055.

- Wang Z, Zeng YR, Wang S, Wang L. Optimizing echo state network with backtracking search optimization algorithm for time series forecasting. Eng Appl Artif Intell. 2019;81:117–132. doi: 10.1016/j.engappai.2019.02.009.

- Xu Z, Zhong L, Zhang A. Phase space reconstruction-based conceptor network for time series prediction. IEEE Access. 2019;7:163172–163179. doi: 10.1109/ACCESS.2019.2952365.

- Yang Y, Yang H. Complex network-based time series analysis. Phys A. 2008;387:1381–1386. doi: 10.1016/j.physa.2007.10.055.

- Zhang A, Zhu W, Liu M. Self-organizing reservoir computing based on spiking-timing dependent plasticity and intrinsic plasticity mechanisms. In: Chinese automation congress (CAC). IEEE; 2017. pp 6189–6193. doi: 10.1109/CAC.2017.8243892. http://ieeexplore.ieee.org/document/8243892/

- Zhang A, Zhu W, Li J. Spiking echo state convolutional neural network for robust time series classification. IEEE Access. 2019;7:4927–4935. doi: 10.1109/ACCESS.2018.2887354.

- Zhang J, Small M. Complex network from pseudoperiodic time series: topology versus dynamics. Phys Rev Lett. 2006;96:238701. doi: 10.1103/PhysRevLett.96.238701.