Abstract

Timely monitoring and precise estimation of the leaf chlorophyll content of maize are crucial for agricultural practices. Scale effects are important because calculated vegetation indices (VI) are central to quantitative remote sensing. In this study, the scale effects were investigated by analyzing the linear relationships between VI calculated from red–green–blue (RGB) images acquired by an unmanned aerial vehicle (UAV) and ground leaf chlorophyll contents of maize measured using SPAD-502. The scale impacts were assessed by applying different flight altitudes, and the highest coefficient of determination (R2) reached 0.85. We found that the VI from images acquired at a flight altitude of 50 m were better suited to estimating the leaf chlorophyll contents using the DJI UAV platform with this specific camera (5472 × 3648 pixels). Moreover, three machine-learning (ML) methods, namely backpropagation neural network (BP), support vector machine (SVM), and random forest (RF), were applied for grid-based chlorophyll content estimation based on the common VI. The average root mean square errors (RMSE) of the chlorophyll content estimations were 3.85, 3.11, and 2.90 for BP, SVM, and RF, respectively. Similarly, the average mean absolute errors (MAE) were 2.947, 2.460, and 2.389 for BP, SVM, and RF, respectively. Thus, the ML methods had relatively high precision in chlorophyll content estimation using VI; in particular, RF had lower average errors than BP and SVM. Our findings suggest that ML methods combined with RGB images from this camera acquired at a flight altitude of 50 m (spatial resolution 0.018 m) are well suited to estimating leaf chlorophyll content in agriculture.

Keywords: scale effects, maize, UAV/UAS, SPAD, chlorophyll contents, HSV, machine learning

1. Introduction

Maize (Zea mays L.) is a global staple crop that accounts for more than 34% of global cereal production, and demand for it is constantly increasing with the growth of the global population and the impending economic pressures of the coming decades [1,2,3]. China contributes 17% of global maize production with less than 9% of arable cropland, while also having to consider environmental and ecological protection [4,5,6,7]. Climate change, such as increasing temperature and abnormal precipitation, has both directly and indirectly influenced the growth and development of maize, which will inevitably result in the reduction or stagnation of yields [8,9,10,11]. Thus, timely monitoring of the growth condition of maize and taking adaptive measures are essential for guaranteeing agricultural production and ensuring national food security. Chlorophyll is the most important pigment in plant photosynthesis and reflects the strength of crop photosynthesis, nutritional status, and physiology [12,13,14]. Therefore, chlorophyll content can be used for assessing, monitoring, and evaluating the growth status of crops. Measurements made with SPAD-502 are highly precise and agree closely with the results of chemical tests, so SPAD-502 readings can be used as a proxy for the chlorophyll content of vegetation [15,16]. Consequently, calculating and estimating chlorophyll contents at the field scale are prerequisites for monitoring crop growth and strengthening decision-support systems for specific agronomic practices (e.g., fertilization, irrigation, weeding, ploughing, and harvest) [17,18,19].

Commonly, there are three approaches for measuring the chlorophyll contents of vegetation at the field scale: destructive sampling (DS), simulation models (SM), and remote sensing (RS). The DS method measures the chlorophyll contents of crops directly and precisely through experiments, but it is destructive, labor-intensive, time-consuming, and inefficient. Thus, it can hardly be applied to a relatively large area, and the number of sampling points is limited. The SM method simulates the whole growing process of crops, including the status of crop variables such as chlorophyll content, but it relies on high-resolution input data such as weather, soil, and management practices that are difficult to obtain [20,21,22]. Alternatively, RS has been successfully applied in many related fields such as image classification and change detection. In addition, advanced RS techniques such as unmanned aerial vehicle remote sensing (UAV-RS) can acquire field observation data at a fine (centimeter-level) spatial resolution. Unlike traditional satellite remote sensing (SRS), which is commonly limited by its spatial and spectral resolutions and long revisit cycle, UAV-RS can provide images at adequate spatial and temporal resolutions and is less constrained by weather conditions [23,24,25]. UAVs are also important for agricultural and ecological applications because they can be easily deployed and can dynamically monitor crops in detail during critical growth stages such as flowering, heading, and maturity. Sensors mounted on a UAV can therefore fill the gap between high spatial resolution and a short revisit cycle [26]. Thus, UAV-RS combined with field data collection is an effective way to acquire data covering the complete growth of crops at high spatial and temporal resolution in less time.

In the red–green–blue (RGB) color space, each pixel is defined by the combination of its R, G, and B bands [27]. Vegetation indices (VI) calculated from RGB images have been used to monitor the leaf chlorophyll content of crops for several decades [28,29,30,31]. Thus, cost-effective RGB cameras onboard UAVs are well suited to monitoring growth conditions using visible-band vegetation indices in agricultural and ecological applications [32,33]. Rocio Ballesteros et al. monitored the biomass of onion using RGB images acquired from a UAV platform [34]. Alessandro Matese et al. assessed intra-vineyard variability by characterizing vine vigor using high spatial resolution RGB images [35]. Dong-Wook Kim et al. modeled and tested the growth status of Chinese cabbage with UAV-based RGB images [36]. RGB and multispectral images from a UAV were used together to detect Gramineae weeds in rice fields [37]. Jnaneshwar et al. described the workflow for monitoring plant health and crop stress and for guiding management using a multispectral 3D imaging system mounted on a UAV [38]. Zarco-Tejada et al. combined a helicopter-based UAV with a six-band multispectral camera (MCA-6, Tetracam, Inc., Chatsworth, CA, USA); the images acquired with this system were first calibrated using a linear regression method and then used to extract a series of vegetation indices for agricultural parameter estimation [39]. Jacopo et al. performed a flight mission at a site-specific vineyard with a Tetracam ADC-lite camera (Tetracam, Inc., Gainesville, FL, USA), and ground measurements from a FieldSpec Pro spectroradiometer (ASD Inc., Boulder, CO, USA) were used for radiometric calibration [40]. Miao et al. assessed the potential of hyperspectral remote sensing images acquired with an AISA-Eagle VNIR hyperspectral imaging sensor (SPECIM, Spectral Imaging, Ltd., Oulu, Finland) by building multiple regression models between the image bands and values measured using SPAD-502; the image bands explained 68–93% and 84–95% of the variability in SPAD readings in the corn-soybean and corn-corn rotation fields, respectively [41]. Wang et al. estimated leaf biochemical parameters in mangrove forest using hyperspectral data [42]. Images from multispectral and hyperspectral cameras have advantages in agricultural applications; however, these cameras are relatively expensive compared with RGB cameras [43].

To date, only a few studies have evaluated chlorophyll contents using RGB images acquired from a UAV platform, and thus the ability and performance of RGB images in predicting chlorophyll contents remain largely unexplored. High-resolution images contain enough information for information mining, but there is no guarantee that the information extracted from every pixel is reasonable and true. Images acquired from different altitudes have different spatial resolutions. Thus, the resolution should match the ground samples, and the flight altitude, which determines the image resolution, should be optimized to achieve a better data fit [44]. The scale impacts of RGB images acquired under different imaging conditions, such as different flight altitudes, have rarely been evaluated. Meanwhile, RGB cameras are cheaper and easier to deploy than multispectral cameras. In addition, previous statistical approaches were mostly traditional linear regression models, which do not learn the underlying data distribution [15]. Such models are mostly localized, and this issue can be overcome using more advanced machine-learning (ML) techniques such as the backpropagation neural network (BP), support vector machine (SVM), and random forest (RF). ML has been successfully applied in many domains, including image pre-processing, image classification, pattern recognition, yield prediction, and regression. BP, SVM, and RF have been used in many applications because they perform well on regression problems; in particular, SVM has been reported to achieve the highest precision in previous studies [45,46].

In this study, we address the following objectives: (1) investigating the scale effects using UAV RGB images acquired from different flight altitudes at the early growth stage of maize; (2) evaluating the performance of the hue–saturation–value (HSV) color system compared with the RGB color system in applications such as information extraction using vegetation indices; and (3) estimating chlorophyll contents using ML methods with RGB images from different growth stages of maize.

2. Materials and Methods

2.1. Study Area

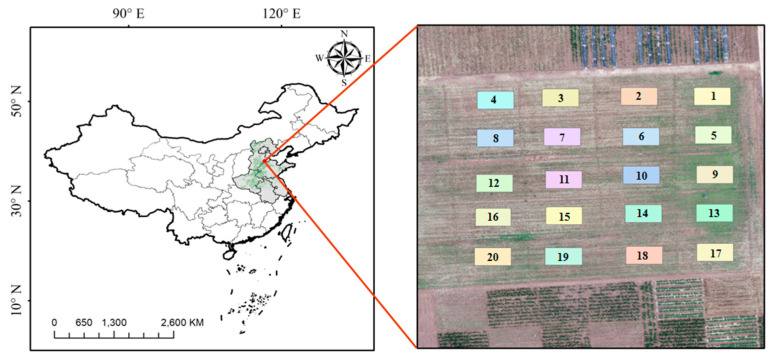

The fertilizer treatment experiment on maize was conducted at the Nanpi Eco-Agricultural Experimental Station (NEES) (38.00°N, 116.40°E), which is managed by the Chinese Academy of Sciences (CAS) (Figure 1). The NEES is located in Hebei Province on the North China Plain (NCP), a major national grain production area for summer maize and winter wheat. Maize generally grows from mid-June to early October each year. There were 20 plots in total in this area, and each plot was treated with a different amount of the commonly used nitrogenous, phosphate, and potassium fertilizers (Table A1). The study area lies in the semi-humid monsoon climate zone, and the annual average temperature and annual average precipitation are 12.3 °C and 480 mm, respectively. The soil of this region belongs to the cinnamon soil subgroup. The parent material of this soil is deep and uniform, and the profile is A-AB-BK. The A layer (0–20 cm) is desalinated Chao soil with some saline soil. The AB layer (20–48 cm) is sandy coarse loam with a granular structure, and the BK layer (48–100 cm) is clay loam with weak adhesion. The soil at NEES is typical of the water-salt salinization found in this region.

Figure 1.

Nanpi Eco-Agricultural Experimental Station (NEES) and overview of the long-term experimental fields.

2.2. Data Collection and Pre-Processing

2.2.1. UAV Data Collection and Pre-Processing

The UAV flights covering all 20 plots at NEES were carried out between 11:00 and 11:30 AM on 8 July, 18 August, 1 September, and 16 September 2019. The flights on 8 July covered five different altitudes: 25 m, 50 m, 75 m, 100 m, and 125 m. The flight altitudes on 18 August, 1 September, and 16 September were all set to 50 m. The DJI Phantom 4 Pro V2.0 was used as the UAV platform for data collection; its maximum ascent and descent speeds were 6 and 4 m per second, respectively. The horizontal and vertical accuracy ranges were ±0.1 m and ±0.3 m (with vision positioning), and the electronic shutter speed was 1/8000 s. The platform had an endurance of up to 30 min, and the camera data storage capacity was approximately six hours. The RGB camera had a focal length of 8.8 mm and a 20.7 megapixel (5472 × 3648 pixels) CMOS sensor.

Before the flight missions, four ground control points (GCPs) were marked at prominent positions on the ground using white paint, and their precise locations were measured using a real-time kinematic (RTK) S86T system. To acquire RGB images from different flight altitudes, the commercial software Altizure (V4.7.0.196) was used for flight control, with 85% forward overlap and 75% side overlap for all flight missions. Each flight mission covered the whole experimental field, including the four GCPs. Because light conditions are crucial for image capture and image processing in quantitative remote sensing, sunny days were selected for data collection to minimize the impacts of variable solar illumination and cloud. To assess the scale impacts, the data were collected on a single day within one hour to exclude the impacts of different solar radiation and angles. In addition, to better assess the scale impacts arising from different flight altitudes, a supplementary experiment was conducted on 16 July 2020 using the same set of flight altitudes as on 8 July 2019. The RGB images acquired from different flight altitudes and on different dates were copied from the storage card mounted on the UAV. Standard processing was carried out with the GCPs in Pix4Dmapper, a photogrammetry software suite for drone mapping [47,48,49].

2.2.2. Chlorophyll Field Measurements Data

The chlorophyll contents in each plot were measured on the ground using SPAD-502 under a standard procedure. The relative leaf chlorophyll content was determined by measuring the light transmittance of the leaf at two wavelengths: 650 nm and 940 nm. The values measured using SPAD-502 are closely correlated with the chlorophyll content of plant leaves, so the trend of chlorophyll content can be inferred from the measured values. To reduce errors and make the measurements more reliable, a five-point sampling method was used: the four corners and the center of each plot were each measured three times. The chlorophyll content of each plot was obtained by averaging the 15 (5 × 3 = 15) readings. Since there were 20 plots in total, each flight was associated with 20 plot-averaged chlorophyll values. The SPAD-502 measurements were carried out on 8 July, 18 August, 1 September, and 16 September, shortly after the flight missions.
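The five-point averaging described above can be sketched as follows. This is a minimal illustration only: the function name, the position labels, and the readings are hypothetical and are not data from this study.

```python
from statistics import mean

def plot_mean_spad(readings):
    """Average the SPAD readings of one plot.

    readings: dict mapping a sampling position (four corners and the
    center) to its list of three repeated SPAD readings.
    """
    values = [v for reps in readings.values() for v in reps]
    if len(values) != 15:
        raise ValueError("expected 5 positions x 3 repetitions = 15 readings")
    return mean(values)

# Hypothetical readings for a single plot (not measured values).
example_plot = {
    "corner_nw": [40.0, 41.0, 42.0],
    "corner_ne": [42.0, 43.0, 44.0],
    "corner_sw": [38.0, 39.0, 40.0],
    "corner_se": [41.0, 42.0, 43.0],
    "center": [43.0, 44.0, 45.0],
}
plot_spad = plot_mean_spad(example_plot)
```

Repeating this for all 20 plots yields the 20 plot-level values that are paired with each flight.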

2.3. Methods

2.3.1. Scale Effects Using Vegetation Index Methods

The mosaic images acquired at different altitudes on 8 July 2019 were displayed in ENVI 5.3 and their spatial resolutions were calculated. The spatial resolutions for flight altitudes of 25, 50, 75, 100, and 125 m were 0.006, 0.018, 0.021, 0.028, and 0.034 m, respectively. Using the 50 m mosaic image in ENVI 5.3, regions of interest (ROIs) covering the center and four corners of each plot were drawn and exported (Figure 1). The same ROIs were used to extract the subsample images of each plot from all mosaic images, including the time series (8 July, 18 August, 1 September, 16 September) and the different flight altitudes (25, 50, 75, 100, 125 m). Thus, there were 20 subsample images for each flight altitude and each date. A total of 18 indices that had been applied in previous studies were evaluated (Table 1). Before calculating the indices, the R, G, and B bands were normalized as follows:

| R = r/(r + g + b), G = g/(r + g + b), B = b/(r + g + b) | (1) |

where r, g, and b represented the original digital number (DN) of the RGB images. Thus, the R, G and B represented the normalized DN that can be used for calculating vegetation index and quantitative remote sensing analyses.
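Equation (1) can be illustrated with a short Python sketch for a single pixel. The function name is hypothetical, and returning zeros for a completely black pixel is an assumption made here to avoid division by zero; the original processing was carried out in other software.

```python
def normalize_rgb(r, g, b):
    """Normalize raw digital numbers (DN) following Equation (1)."""
    total = r + g + b
    if total == 0:
        # Assumption: a black pixel is mapped to zeros.
        return (0.0, 0.0, 0.0)
    return (r / total, g / total, b / total)

# Hypothetical DN values for one pixel.
R, G, B = normalize_rgb(60, 120, 20)

# The same surface imaged 1.5 times brighter gives the same result.
R_bright, G_bright, B_bright = normalize_rgb(90, 180, 30)
```

Because each band is divided by the sum of the three bands, a uniform change in brightness that scales r, g, and b by the same factor leaves R, G, and B unchanged.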

Table 1.

The VI evaluated in this study. R, G, and B indicate the normalized red, green, and blue bands, respectively. Note: α is a constant equal to 0.667.

| Index | Name | Equation | Reference |
|---|---|---|---|
| E1 | EXG | | [50,51] |
| E2 | EXR | | [52] |
| E3 | VDVI | | [53,54,55,56] |
| E4 | EXGR | | [57] |
| E5 | NGRDI | | [55,58] |
| E6 | NGBDI | | [54,59] |
| E7 | CIVE | | [60,61] |
| E8 | CRRI | | [62,63,64] |
| E9 | VEG | | [65,66] |
| E10 | COM | | [67,68] |
| E11 | RGRI | | [69,70] |
| E12 | VARI | | [71,72] |
| E13 | EXB | | [67,73] |
| E14 | MGRVI | | [74,75] |
| E15 | WI | | [72,76] |
| E16 | IKAW | | [58,77] |
| E17 | GBDI | G − B | [52,78] |
| E18 | RGBVI | (G × G − B × R)/(G × G + B × R) | [79,80,81] |
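As an illustration of how such indices are computed from the normalized bands, the sketch below implements GBDI and RGBVI as given in Table 1. The EXG, EXR, and NGRDI formulas are the forms commonly reported in the literature and are assumptions here, since they may differ in detail from the definitions used in this study. All function names are hypothetical.

```python
def gbdi(R, G, B):
    """E17, as given in Table 1."""
    return G - B

def rgbvi(R, G, B):
    """E18, as given in Table 1."""
    return (G * G - B * R) / (G * G + B * R)

def exg(R, G, B):
    """Excess green; commonly reported form (assumption)."""
    return 2 * G - R - B

def exr(R, G, B):
    """Excess red; commonly reported form (assumption)."""
    return 1.4 * R - G

def ngrdi(R, G, B):
    """Normalized green-red difference; commonly reported form (assumption)."""
    return (G - R) / (G + R)

# Hypothetical normalized pixel dominated by green.
pixel = (0.3, 0.6, 0.1)
indices = {f.__name__: f(*pixel) for f in (gbdi, rgbvi, exg, exr, ngrdi)}
```

In practice each index would be evaluated for every pixel of a subsample image and then summarized per plot.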

The HSV color system converts the red, green, and blue values of RGB images into hue, saturation, and value. Hue ranges from 0 to 1 and corresponds to the color’s position on a color wheel. As hue increases from 0 to 1, the color transitions from red to orange, yellow, green, cyan, blue, magenta, and finally back to red. Saturation represents the amount of hue, or departure from neutral: 0 indicates a neutral shade, whereas 1 indicates maximum saturation. The value component is the maximum among the RGB components of a specific color.
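The conversion can be illustrated with Python's standard `colorsys` module, which uses the same 0 to 1 convention for hue, saturation, and value. This is only a sketch of the color model; the pixel values are hypothetical, and the study itself did not necessarily use this library.

```python
import colorsys

# Hypothetical green-dominated pixel with components scaled to 0-1.
r, g, b = 0.2, 0.6, 0.1
h, s, v = colorsys.rgb_to_hsv(r, g, b)

# Hue positions of the pure primaries on the color wheel.
hue_red = colorsys.rgb_to_hsv(1.0, 0.0, 0.0)[0]
hue_green = colorsys.rgb_to_hsv(0.0, 1.0, 0.0)[0]
hue_blue = colorsys.rgb_to_hsv(0.0, 0.0, 1.0)[0]
```

Here the value component equals the largest of the three inputs, and the hue of the example pixel lies close to that of pure green (one third of the way around the wheel).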

The scale impacts were mainly due to the different resolutions of the images, and in this study we focused only on the scale impacts of different resolutions arising from different flight altitudes. To assess the scale impacts, the vegetation indices in Table 1 were calculated using the RGB images acquired from different flight altitudes. Three approaches were conducted and compared, taking into account the elimination of background effects, such as disturbance from soil, and the color space system. For the first approach, the subsample images of the 20 plots were used directly to build linear regression models with the chlorophyll contents measured using SPAD-502 in each plot, and images from different flight altitudes and different dates were assessed separately using the regression function in Matlab 2019b. The R2 was obtained for each vegetation index and compared across flight altitudes. The results of the first approach showed considerable irregularity, mainly due to background effects such as soil and other occlusions. For the second approach, the EXG-EXR method was applied to extract only green pixels and to reduce background disturbance such as soil [52,82]. In this way, the pixels of the subsample images were classified as green or non-green. The subsample images were then transformed into binary images in which green pixels were assigned a DN of 1 and background pixels a DN of 0. Thus, only the pixels with a value of 1, corresponding to green, were used to build linear regression models with the measured chlorophyll contents in each plot. For the third approach, the subsample images were first classified into green and non-green pixels as in the second approach, and the classified images (only green pixels) in the RGB color space were transformed into the HSV color space, which is an alternative representation of the RGB color space [83,84]. The HSV model was designed to align with the way human vision perceives color-making attributes: the colors of each hue are arranged in a radial slice around a central axis of neutral colors, which range from black at the bottom to white at the top [85,86]. The subsample images in the RGB color space were converted into the HSV color space, and the binary images were used to extract the VI from the green pixels. Thus, the images in the HSV color space without background effects were used to build linear regression models with the measured chlorophyll contents in each plot using images from the five flight altitudes. These three approaches were used to systematically evaluate the effects of background such as soil and to assess the performance of the RGB and HSV color spaces.
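The green-pixel masking used in the second and third approaches can be sketched as follows. The sketch assumes the commonly reported forms EXG = 2G − R − B and EXR = 1.4R − G and a zero threshold on their difference; these choices, the function names, and the pixel values are assumptions for illustration and are not taken from the original processing chain.

```python
def is_green(R, G, B, threshold=0.0):
    """Classify a normalized pixel as vegetation when EXG - EXR exceeds
    the threshold (zero threshold is an assumption)."""
    exg = 2 * G - R - B
    exr = 1.4 * R - G
    return (exg - exr) > threshold

def mean_index_over_green(pixels, index_fn):
    """Average an index over the green pixels of one subsample image.

    Returns None when no pixel is classified as green.
    """
    values = [index_fn(R, G, B) for (R, G, B) in pixels if is_green(R, G, B)]
    return sum(values) / len(values) if values else None

# Hypothetical normalized pixels: one vegetation-like, one soil-like.
pixels = [(0.30, 0.60, 0.10), (0.45, 0.35, 0.20)]
binary_mask = [1 if is_green(*p) else 0 for p in pixels]
plot_gbdi = mean_index_over_green(pixels, lambda R, G, B: G - B)
```

The resulting plot-level index, computed only from pixels with a mask value of 1, is what would then be regressed against the SPAD value of that plot.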

2.3.2. Estimating the Chlorophyll Contents Using Machine-Learning Techniques

To predict the chlorophyll contents precisely, three ML methods (BP, SVM, and RF) were used to build non-linear relationships. The independent variables were the 18 vegetation indices and the dependent variable was the chlorophyll content in each plot. For all ML models, 70% of the samples were used to build the models and the remaining 30% were used for validation. Moreover, ten-fold cross-validation was adopted to assess model validity. The results of the different ML models were obtained and compared with each other. Furthermore, all samples were used to build non-linear relationships to predict the chlorophyll content of each pixel in all subsample images, so that the chlorophyll contents at the site scale could be obtained. To assess model performance and evaluate prediction accuracy, the coefficient of determination (R2), root mean square error (RMSE), and mean absolute error (MAE) between the observed and predicted chlorophyll contents were calculated. The equations are defined as follows:

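| $R^2 = \dfrac{\left[\sum_{i=1}^{n}(M_i-\bar{M})(P_i-\bar{P})\right]^2}{\sum_{i=1}^{n}(M_i-\bar{M})^2\,\sum_{i=1}^{n}(P_i-\bar{P})^2}$ | (2) |

| $\mathrm{RMSE} = \sqrt{\dfrac{1}{n}\sum_{i=1}^{n}(P_i-M_i)^2}$ | (3) |

| $\mathrm{MAE} = \dfrac{1}{n}\sum_{i=1}^{n}\lvert P_i-M_i\rvert$ | (4) |

In the equations, R2 is the coefficient of determination, n represents the total number of samples, Mi represents the true values, and Pi represents the predicted values. $\bar{M}$ and $\bar{P}$ represent the averages of M and P, respectively.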
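The three metrics can be sketched in Python as follows. Because the definitions involve the averages of both M and P, R2 is taken here to be the squared Pearson correlation between measured and predicted values; this interpretation is an assumption, and the function names and sample values are hypothetical.

```python
import math

def r_squared(measured, predicted):
    """Squared Pearson correlation between measured and predicted values."""
    n = len(measured)
    m_bar = sum(measured) / n
    p_bar = sum(predicted) / n
    cov = sum((m - m_bar) * (p - p_bar) for m, p in zip(measured, predicted))
    var_m = sum((m - m_bar) ** 2 for m in measured)
    var_p = sum((p - p_bar) ** 2 for p in predicted)
    return cov ** 2 / (var_m * var_p)

def rmse(measured, predicted):
    """Root mean square error."""
    n = len(measured)
    return math.sqrt(sum((p - m) ** 2 for m, p in zip(measured, predicted)) / n)

def mae(measured, predicted):
    """Mean absolute error."""
    n = len(measured)
    return sum(abs(p - m) for m, p in zip(measured, predicted)) / n

# Hypothetical SPAD values, not data from this study.
measured = [40.0, 42.0, 44.0, 46.0]
predicted = [41.0, 41.0, 45.0, 47.0]
scores = (r_squared(measured, predicted),
          rmse(measured, predicted),
          mae(measured, predicted))
```

RMSE and MAE are expressed in SPAD units, whereas R2 is dimensionless, so the two kinds of metric answer different questions about model quality.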

3. Results

3.1. The Results of Scale Impacts Using Images from Different Flight Altitudes

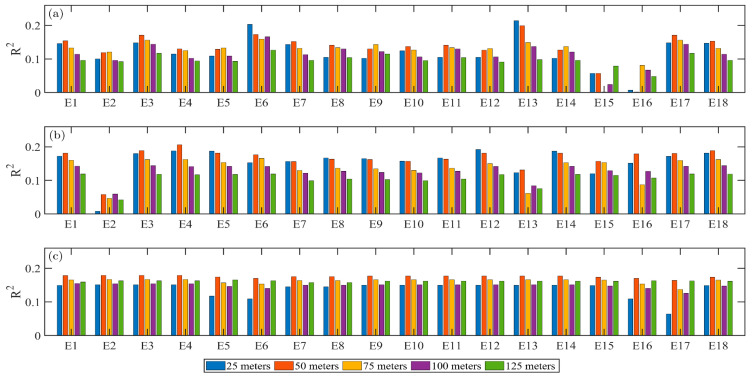

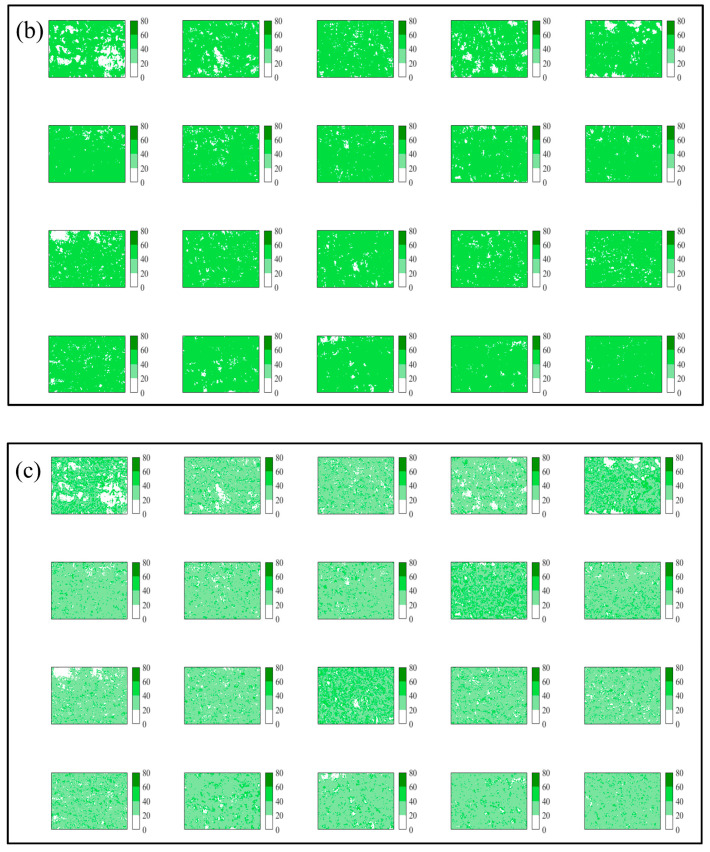

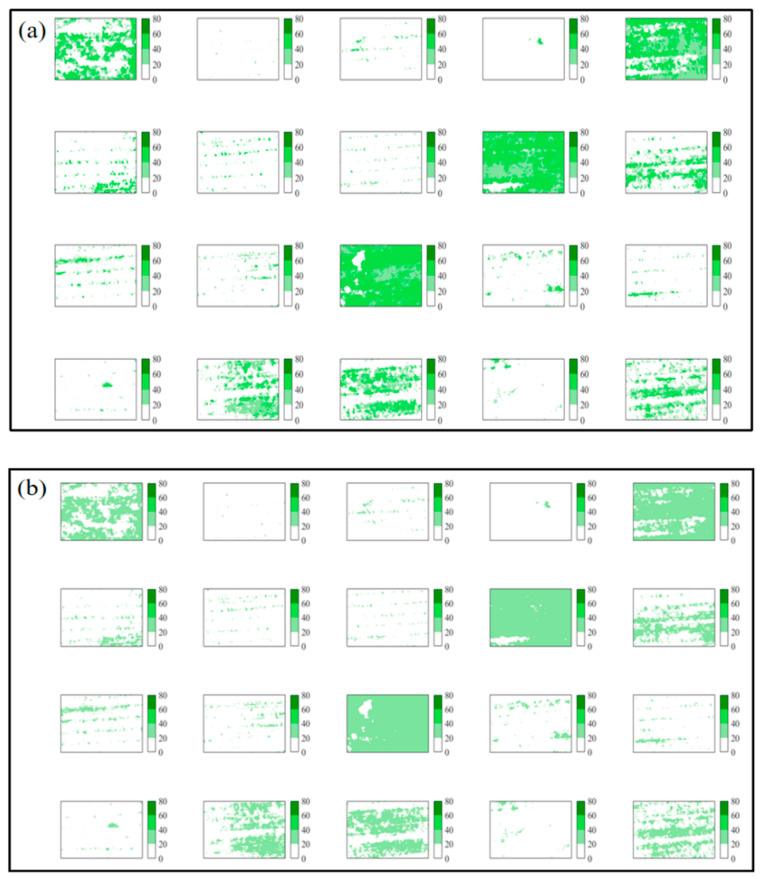

The 18 VI were used to build linear relationships with the chlorophyll contents in each plot for the different flight altitudes (Table A2, Table A3, Table A4). For the data acquired on 8 July 2019, the crop binary map at a flight altitude of 50 m obtained using the EXG-EXR method is shown in Figure A1. The R2 between VI and SPAD values for images acquired from different flight altitudes on 8 July 2019 were obtained and compared (Figure 2). E6 and E13 yielded higher R2 than the other indices (Figure 2a). The percentage of VI whose R2 increased from 25 to 50 m and then gradually decreased from 50 to 125 m was 44% for the first approach and 50% for the second approach (Figure 2a,b). Thus, the second approach was better than the first, and the increased R2 indicated that eliminating background effects improved the accuracy to some extent. The results in Figure 2b exclude background disturbance such as soil. When the HSV color space was applied, the percentage of VI whose R2 increased from 25 to 50 m rose to 100% (Figure 2c), which implied that images in the HSV color space were better for information extraction than those in the traditional RGB color space, especially for estimating chlorophyll contents.

Figure 2.

Histogram of coefficient of determination (R2) between index and chlorophyll contents using E1–18 (Table 1) using, (a) RGB color space with background; (b) RGB color space with only green pixels; (c) HSV color space with only green pixels.

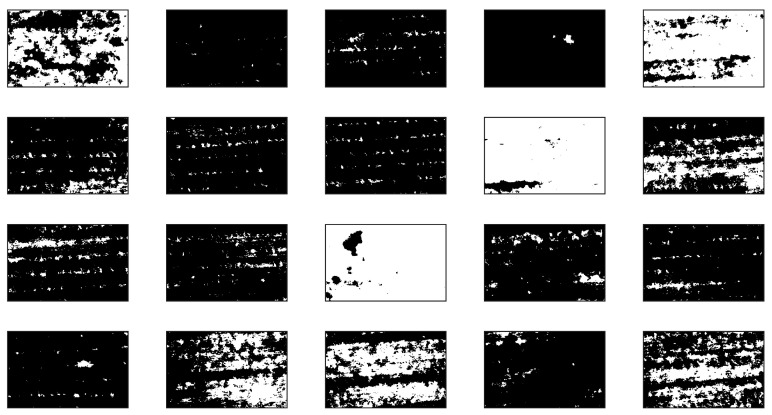

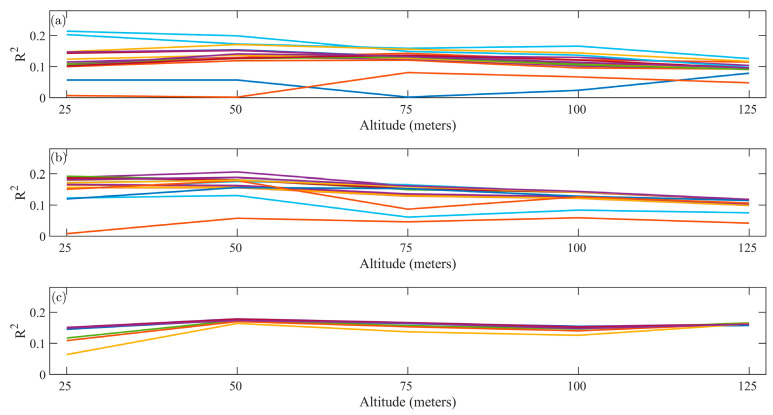

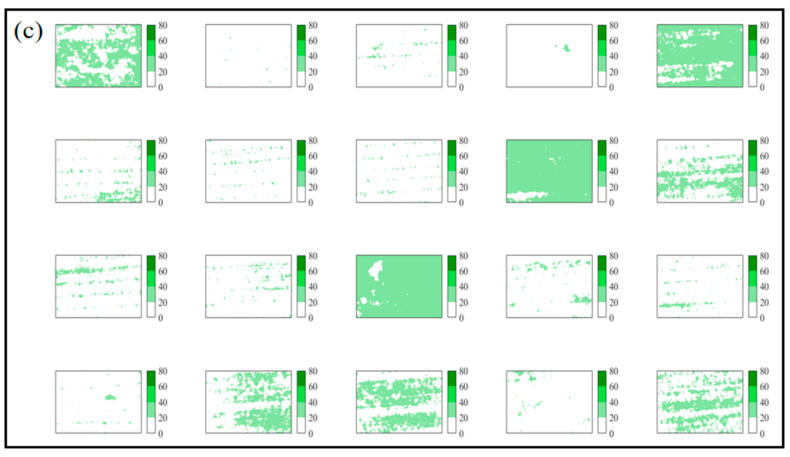

The polyline plots for the five flight altitudes also indicated that the second approach was better than the first (Figure A2). Even though some R2 values from the first approach were larger than those from the second, background such as soil masked the real vegetation signal because the images were acquired at the early growth stage of maize. The increase in the percentage of R2 with the second approach was evident. Also, the results in Figure 2c demonstrated that HSV performed much better than RGB: the R2 increased markedly from 25 to 50 m and then decreased when the HSV color space was applied for regression. Thus, the spatial resolution of images acquired at a flight altitude of 50 m best matched the ground sampling resolution and was least affected by the scale impacts. The HSV color space may have greater potential for quantitative remote sensing analysis than the common RGB color space.

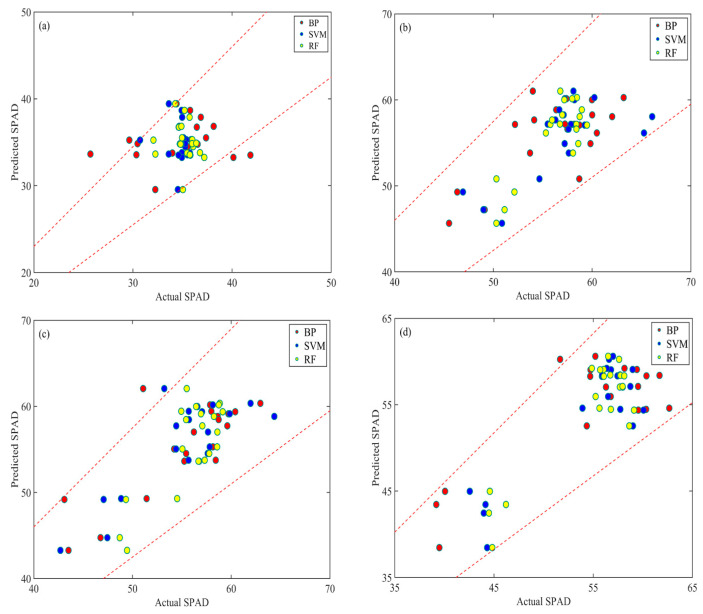

Figure 3.

Chlorophyll content predictions using ML methods. The red, blue, and yellow points are the chlorophyll contents predicted using BP, SVM, and RF, respectively. (a–d) represent the results using images acquired on 8 July, 18 August, 1 September, and 16 September 2019, respectively.

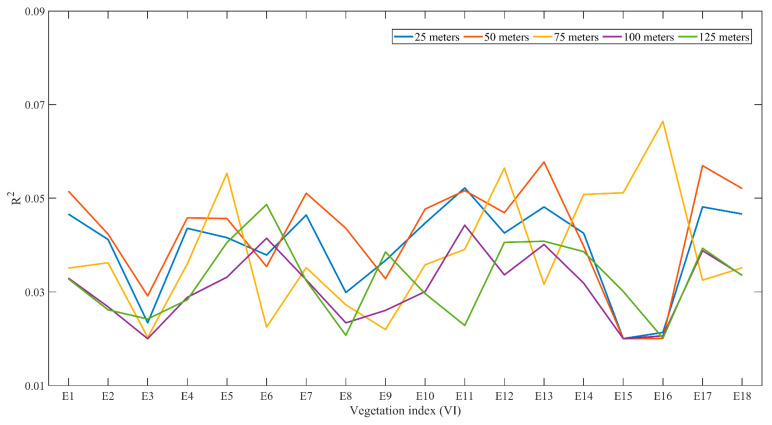

For the experiment conducted on 16 July 2020, all VI were again used to build linear regression relationships with the values measured by SPAD-502. The results again showed that the images acquired at a flight altitude of 50 m were the least influenced by the scale impacts: the R2 at 50 m were the highest compared with those at the other flight altitudes (Figure A3). The average R2 values were 0.040, 0.043, 0.038, 0.031, and 0.033 for 25, 50, 75, 100, and 125 m, respectively.
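The comparison of the average R2 values reported for 16 July 2020 can be reproduced with a few lines of Python. The variable names are hypothetical; the numbers are those given in the text above.

```python
# Average R2 per flight altitude (m) for the 16 July 2020 experiment.
mean_r2_by_altitude = {25: 0.040, 50: 0.043, 75: 0.038, 100: 0.031, 125: 0.033}

# Altitude with the highest average R2.
best_altitude = max(mean_r2_by_altitude, key=mean_r2_by_altitude.get)

# Altitudes ordered from highest to lowest average R2.
ranking = sorted(mean_r2_by_altitude, key=mean_r2_by_altitude.get, reverse=True)
```

Note that the differences between altitudes are small in absolute terms for this date, so the ranking indicates a tendency rather than a large effect.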

3.2. Performance of Machine-Learning Methods and Chlorophyll Contents Prediction

Since the images acquired at a flight altitude of 50 m were least affected by the scale impacts, the following analysis used the images acquired at 50 m. The R2 between chlorophyll contents and VI calculated using RGB images acquired on 8 July, 18 August, 1 September, and 16 September 2019 were obtained (Table 2). These dates represent different growth stages of maize, and the R2 between the VI and chlorophyll contents generally increased with the growth of maize. The linear relationship between chlorophyll contents and E5 reached an R2 of 0.845 on 16 September, and the highest value in Table 2 was 0.855 (E15). Thus, the VI extracted from RGB images had great potential for chlorophyll content estimation.

Table 2.

The calculated R2 between VI and chlorophyll contents using images acquired on different dates. Note: the date is the day on which the images were acquired, and E1–E18 correspond to the VI in Table 1.

| Date | E1 | E2 | E3 | E4 | E5 | E6 | E7 | E8 | E9 |
|---|---|---|---|---|---|---|---|---|---|
| 8 July | 0.182 | 0.139 | 0.181 | 0.169 | 0.185 | 0.177 | 0.178 | 0.181 | 0.178 |
| 18 August | 0.240 | 0.499 | 0.210 | 0.091 | 0.514 | 0.362 | 0.040 | 0.506 | 0.530 |
| 1 September | 0.273 | 0.648 | 0.228 | 0.487 | 0.629 | 0.291 | 0.001 | 0.581 | 0.606 |
| 16 September | 0.471 | 0.832 | 0.462 | 0.722 | 0.845 | 0.342 | 0.047 | 0.825 | 0.842 |

| Date | E10 | E11 | E12 | E13 | E14 | E15 | E16 | E17 | E18 |
|---|---|---|---|---|---|---|---|---|---|
| 8 July | 0.179 | 0.181 | 0.186 | 0.170 | 0.185 | 0.170 | 0.202 | 0.182 | 0.182 |
| 18 August | 0.010 | 0.506 | 0.733 | 0.365 | 0.493 | 0.729 | 0.804 | 0.001 | 0.211 |
| 1 September | 0.003 | 0.581 | 0.671 | 0.400 | 0.622 | 0.591 | 0.674 | 0.263 | 0.314 |
| 16 September | 0.103 | 0.825 | 0.751 | 0.450 | 0.849 | 0.855 | 0.838 | 0.481 | 0.534 |

The RGB images acquired on 8 July, 18 August, 1 September, and 16 September 2019 were used to extract subsample images using the ROIs of each plot. The VI were then obtained for each plot, and the ML methods were applied to relate the VI to the chlorophyll contents from ground measurement. All models were trained using 70% of the samples and validated using the remaining 30%. The predicted and actual chlorophyll contents were plotted with ±15% error lines, and most of the points fell within the error lines, which indicated that the accuracy of the ML predictions was relatively high (Figure 3). The points outside the error lines were all values predicted using BP, which indicated that SVM and RF performed better than BP.
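A 70%/30% split and a ten-fold partition of the kind described in Section 2.3.2 can be sketched with the standard library alone. The function names, the random seed, and the choice of 20 samples (one per plot) are assumptions for illustration; the study does not state how the split was randomized.

```python
import random

def train_test_split_indices(n_samples, train_fraction=0.7, seed=0):
    """Shuffle sample indices and split them into training and validation sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_fraction)
    return indices[:n_train], indices[n_train:]

def k_fold_indices(n_samples, k=10, seed=0):
    """Return k (train, validation) index pairs with disjoint validation folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    pairs = []
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        pairs.append((train, folds[i]))
    return pairs

# Assumption: one sample per plot, i.e., 20 samples per date.
train_idx, valid_idx = train_test_split_indices(20)
folds = k_fold_indices(20, k=10)
```

With 20 samples, this gives 14 training and 6 validation samples for the single split, and validation folds of two samples each for ten-fold cross-validation, which is worth keeping in mind when interpreting validation metrics from such small sets.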

The detailed assessment of the actual and predicted chlorophyll contents, including R2, RMSE, and MAE for each model and each date, is shown in Table 3. The averages of R2, RMSE, and MAE across the three models were 0.001, 2.996, and 2.316 for 8 July; 0.337, 3.216, and 2.553 for 18 August; 0.549, 3.357, and 2.642 for 1 September; and 0.668, 3.579, and 2.882 for 16 September, respectively. The R2 increased as the season progressed, and the highest values were obtained on 16 September. The RMSE and MAE of all models on all dates were less than 5; thus the ML models were effective for chlorophyll content prediction.

Table 3.

The detailed results of R2, RMSE, and MAE for different dates using ML methods.

| Metric | Model | 8 July | 18 August | 1 September | 16 September |
|---|---|---|---|---|---|
| R2 | BP | 0.001 | 0.454 | 0.595 | 0.703 |
| R2 | SVM | 0.001 | 0.332 | 0.587 | 0.702 |
| R2 | RF | 0.001 | 0.227 | 0.465 | 0.599 |
| RMSE | BP | 3.868 | 3.533 | 3.411 | 4.600 |
| RMSE | SVM | 2.500 | 3.575 | 3.328 | 3.043 |
| RMSE | RF | 2.622 | 2.541 | 3.333 | 3.095 |
| MAE | BP | 2.973 | 2.765 | 2.347 | 3.701 |
| MAE | SVM | 1.802 | 2.757 | 2.844 | 2.438 |
| MAE | RF | 2.174 | 2.138 | 2.736 | 2.509 |
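The per-model averages quoted in the abstract can be checked directly against the RMSE and MAE values in Table 3. The short sketch below only averages the tabulated numbers over the four dates; the variable names are hypothetical.

```python
# RMSE and MAE per model for 8 July, 18 August, 1 September, 16 September (Table 3).
rmse_table = {
    "BP": [3.868, 3.533, 3.411, 4.600],
    "SVM": [2.500, 3.575, 3.328, 3.043],
    "RF": [2.622, 2.541, 3.333, 3.095],
}
mae_table = {
    "BP": [2.973, 2.765, 2.347, 3.701],
    "SVM": [1.802, 2.757, 2.844, 2.438],
    "RF": [2.174, 2.138, 2.736, 2.509],
}

mean_rmse = {model: sum(v) / len(v) for model, v in rmse_table.items()}
mean_mae = {model: sum(v) / len(v) for model, v in mae_table.items()}

# Model with the lowest average error for each metric.
lowest_rmse_model = min(mean_rmse, key=mean_rmse.get)
lowest_mae_model = min(mean_mae, key=mean_mae.get)
```

The averages come out at approximately 3.85, 3.11, and 2.90 (RMSE) and 2.947, 2.460, and 2.389 (MAE) for BP, SVM, and RF, consistent with the values reported in the abstract, with RF lowest on both error metrics.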

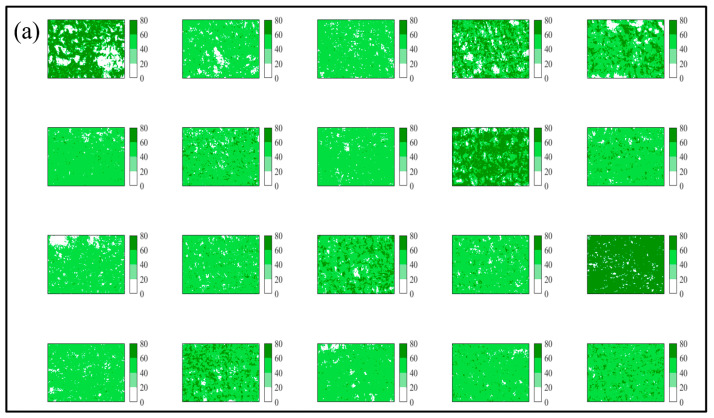

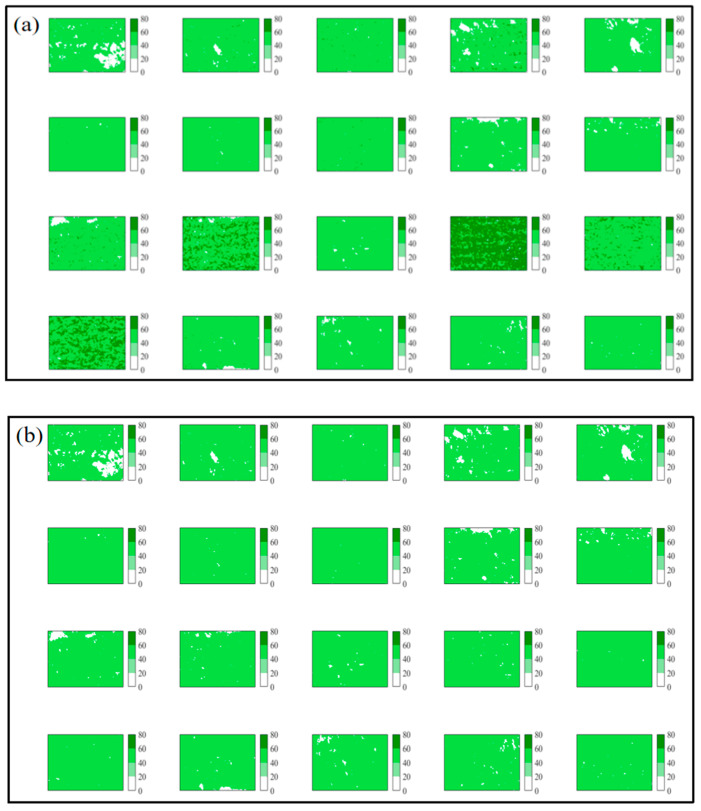

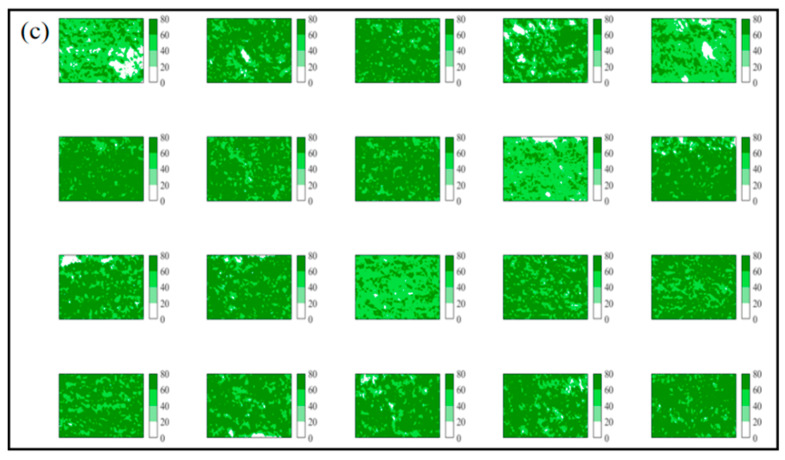

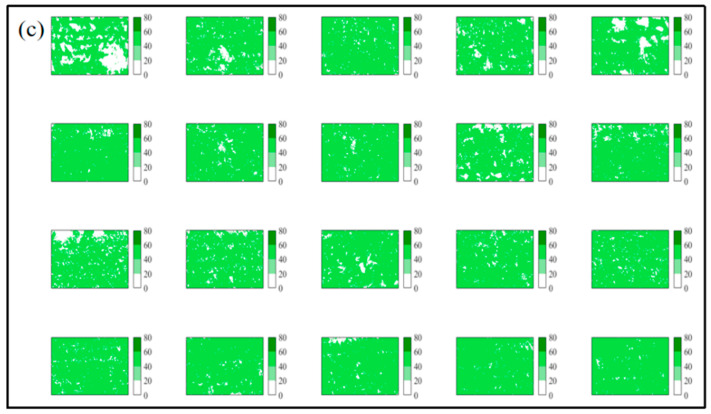

Since the ML models had been evaluated and performed well, they were rebuilt using all samples for BP, SVM, and RF, respectively. All samples were used to build the models and to predict the chlorophyll contents from all the VI calculated from the RGB images acquired on 16 September 2019 (Figure 4). The chlorophyll contents predicted using BP, SVM, and RF for 8 July, 18 August, and 1 September are shown in Figure A4, Figure A5, and Figure A6, respectively.

Figure 4.

The predicted chlorophyll contents values by BP, SVM, and RF models using images acquired on 16 September 2019. Note: (a–c) represented the predicted chlorophyll contents using BP, SVM and RF, respectively.

4. Discussion

4.1. Limitations in Assessing the Scale Impacts

In this study, three approaches were applied to assess the scale effects of different flight altitudes, taking into account background disturbance (soil and grass) and the color space system. In accordance with previous studies, the second approach showed more regularity, and the precision improved because the applied method successfully classified and extracted the green pixels [52,82]. However, this approach can hardly exclude the interference of background completely; in other words, the extracted green pixels did not consist purely of green crop vegetation. Thus, uncertainty from the soil background remained and may have influenced the reliability of the results to some extent. Meanwhile, the disturbance of grass can hardly be excluded because grass also appears as green pixels in the images. The bidirectional reflectance distribution function (BRDF), which has also been used for modeling light trapping in solar cells, is commonly used to correct for different angles of solar radiation, and BRDF effects are commonly assessed in quantitative remote sensing. Since all images in this study were acquired almost strictly at nadir and under the same imaging conditions, the BRDF was not investigated in this study and its impacts were assumed to be negligible. We focused on assessing the scale impacts of different resolutions arising from different flight altitudes [87,88]. Without considering the BRDF, the RGB images from a flight altitude of 50 m better fit the ground samples.

Lighting conditions are crucial factors in quantitative remote sensing. In this study, the impacts of lighting conditions were limited by two measures taken before the assessment of scale impacts. First, the total flight time was kept within 30 min, so the weather and lighting conditions were the same for the images acquired at the different flight altitudes. Thus, the only difference was the flight altitude, which was what we wanted to assess. Second, we converted the original DN values into normalized RGB values to reduce the impacts of different lighting conditions. The scale impacts of different flight altitudes were geometric in nature, and the influence of different lighting conditions was limited. Therefore, we have good reason to believe that the disturbance from lighting conditions during image acquisition was reduced to a minimum, although slight impacts remain even with a strictly controlled flight duration and normalized RGB values. The situation was different for the estimation of chlorophyll contents using the combined multi-vegetation index (VI) and ML, because those images were acquired on different days and the lighting conditions on each day were crucial. The weather should be sunny, which is the basic requirement for both satellite and UAV quantitative remote sensing. If the weather conditions differ between days of data acquisition, the impacts will be obvious. Thus, we suggest that the weather be stable when assessing scale impacts and sunny when acquiring data for assessing the growth condition of maize.
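The effect of the normalization step can be shown with a short sketch. It assumes the common chromatic-coordinate form r = R/(R + G + B), and likewise for g and b; the function name `normalize_rgb` and the DN values are hypothetical.

```python
import numpy as np


def normalize_rgb(dn):
    """Convert DN values (..., 3) into normalized chromatic coordinates."""
    dn = np.asarray(dn, dtype=np.float64)
    total = dn.sum(axis=-1, keepdims=True)
    safe_total = np.where(total == 0, 1.0, total)  # guard against black pixels
    return dn / safe_total


# The same surface under two illumination levels (uniform 1.5x brightening).
pixel = np.array([60.0, 120.0, 40.0])
brighter = 1.5 * pixel

norm_a = normalize_rgb(pixel)
norm_b = normalize_rgb(brighter)
```

A uniform change in brightness cancels in the ratio, so both pixels map to the same normalized values. Changes in the color of the illumination do not cancel in this way, which is one reason slight lighting impacts can remain.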

The detailed parameters of the DJI UAV platform were introduced in Section 2.2.1. The spatial resolutions were 0.006, 0.018, 0.021, 0.028, and 0.034 m for flight altitudes of 25, 50, 75, 100, and 125 m, respectively. The images acquired at the flight altitude of 50 m with this specific DJI platform were least influenced by the scale impacts, which means that the spatial resolution of 0.018 m best matched the ground sampling of chlorophyll contents measured using SPAD-502. Thus, a spatial resolution of 0.018 m is recommended for estimating chlorophyll contents in combination with ground measurements using SPAD-502. Since the camera mounted on the DJI platform has a resolution of 5472 × 3648 pixels, the flight altitude should be higher than 50 m for cameras with higher resolutions and lower for cameras with lower resolutions. The findings of this study may be helpful in future related agricultural and ecological studies such as monitoring the growth and predicting the yield of maize.
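The link between camera resolution and the recommended altitude can be illustrated with the nominal ground sampling distance (GSD) relation for a nadir-looking frame camera, GSD = (sensor width × altitude) / (focal length × image width in pixels). This is a minimal sketch and not the procedure used in this study. The sensor width (13.2 mm) and focal length (8.8 mm) are hypothetical values chosen for illustration; only the image width of 5472 pixels comes from the text. The nominal GSD need not equal the orthomosaic resolutions reported above.

```python
def ground_sampling_distance(altitude_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Nominal GSD in metres per pixel for a nadir image."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def altitude_for_gsd(target_gsd_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Flight altitude in metres that yields the target nominal GSD."""
    return target_gsd_m * focal_length_mm * image_width_px / sensor_width_mm


# Hypothetical optics (assumed, not from the paper); image width from the text.
SENSOR_WIDTH_MM = 13.2
FOCAL_LENGTH_MM = 8.8
IMAGE_WIDTH_PX = 5472
TARGET_GSD_M = 0.018

base_altitude = altitude_for_gsd(TARGET_GSD_M, SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX)

# A camera with twice as many pixels across the same sensor and lens
# reaches the same GSD from twice the altitude.
double_altitude = altitude_for_gsd(TARGET_GSD_M, SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, 2 * IMAGE_WIDTH_PX)
```

The scaling holds only when sensor size and focal length are unchanged. A camera that differs in those parameters as well would need the full relation, not a simple pixel-count rule.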

4.2. Machine-Learning-Based Chlorophyll Content Estimation

ML methods are widely used for regression and classification in RS domains because they can capture the dynamic relationships between variables and the input-output mapping. In this study, BP, SVM, and RF were used to predict chlorophyll contents from the VI calculated from RGB images acquired at the flight altitude of 50 m, which were least influenced by scale impacts. With 70% of the ground samples used for modeling and the remaining 30% for validation, the ML models performed well, and most of the predicted chlorophyll contents were within the expected ±15 error lines. However, some of the values predicted by BP fell outside the error lines, indicating relatively large errors. The SVM and RF models performed better than the BP model. This is because the BP algorithm uses a steepest gradient descent method, which may converge to a local minimum [89,90]. BP may also over-learn, resulting in overfitting [91,92]. The SVM and RF models can balance the errors and obtain robust and reliable results [93,94]. Thus, the SVM and RF models are suggested for agricultural yield predictions.

With the development of more advanced ML techniques such as deep learning (DL), regression and classification problems can be solved efficiently. DL methods can build deep models with more layers of complex, fully connected units for regression. Among the various DL methods, the convolutional neural network (CNN) is among the most commonly used in image processing. Thus, DL variants should be considered for agricultural and ecological applications (regression and prediction).
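The 70/30 evaluation protocol can be sketched as follows. This is an illustration under stated assumptions, not the study's implementation: scikit-learn's `MLPRegressor`, `SVR`, and `RandomForestRegressor` stand in for BP, SVM, and RF, the hyperparameters are arbitrary, and the feature matrix and chlorophyll values are synthetic.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(42)

# Synthetic stand-in: 200 samples, 6 vegetation indices, SPAD-like target.
X = rng.uniform(0.0, 1.0, size=(200, 6))
y = 35.0 + 20.0 * X[:, 0] - 10.0 * X[:, 1] + rng.normal(0.0, 2.0, size=200)

# 70% of samples for modeling, 30% for validation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

models = {
    "BP": make_pipeline(
        StandardScaler(),
        MLPRegressor(hidden_layer_sizes=(16,), max_iter=2000, random_state=42),
    ),
    "SVM": make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10.0)),
    "RF": RandomForestRegressor(n_estimators=200, random_state=42),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    scores[name] = {
        "RMSE": float(np.sqrt(mean_squared_error(y_test, pred))),
        "MAE": float(mean_absolute_error(y_test, pred)),
    }
```

The resulting `scores` dictionary holds one RMSE and one MAE per model. On synthetic data the ranking of the three models carries no meaning for the field results.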

5. Conclusions

In this study, the scale impacts were first assessed using UAV RGB images acquired from five different flight altitudes and chlorophyll contents measured by SPAD-502. Three approaches were proposed by considering the effects of the background and the color space system, and linear regressions between the VI and the chlorophyll contents of each plot were then conducted. We found that the scale impacts were smallest for images acquired at the flight altitude of 50 m (spatial resolution 0.018 m) using the DJI UAV platform with this specific camera (5472 × 3648 pixels). Also, the HSV color space performed better than the traditional RGB color space and can be used for information extraction. Three commonly used ML methods were adopted to conduct the pixel-based chlorophyll content prediction at different growth stages of maize, and SVM and RF performed better than BP. We have provided a complete solution for predicting chlorophyll contents by combining UAV-RS and ML, and we recommend integrating ML methods (SVM and RF) with UAV-based RGB images (acquired from 50 m for this DJI platform) for chlorophyll content predictions in agricultural and ecological applications.

Acknowledgments

We appreciate the revisions and suggestions from Xiuliang Jin of the Institute of Crop Sciences, Chinese Academy of Agricultural Sciences.

Appendix A

Table A1.

The spatial distribution of fertilizer across the 20 plots during the growth of maize. NPK denotes different combinations of fertilizers, where N represents nitrogenous fertilizer, P represents phosphate fertilizer, and K represents potassium fertilizer. The number after each letter represents the actual multiple of fertilizer applied, and the numbers in brackets are the plot numbers corresponding to the plots shown in Figure A1.

| | Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|---|
| Row 1 | N2+straw (4) | N3P3K1 (3) | N3P1K1 (2) | N1P1K2 (1) |
| Row 2 | N2+Organic fertilizer (8) | N3P2K1 (7) | N3P3K2 (6) | N1P1K1 (5) |
| Row 3 | N3+straw (12) | N4P3K1 (11) | N2P2K2 (10) | N1P2K1 (9) |
| Row 4 | N3+Organic fertilizer (16) | N4P2K1 (15) | N2P1K1 (14) | N1P3K1 (13) |
| Row 5 | N4P2K2 (20) | N4P1K1 (19) | N2P2K1 (18) | N2P3K1 (17) |

Table A2.

The R2 between vegetation indices calculated from RGB and chlorophyll contents using the images with background.

| RGB Index with Background | 25 m | 50 m | 75 m | 100 m | 125 m |
|---|---|---|---|---|---|
| index1 | 0.146 | 0.154 | 0.133 | 0.114 | 0.096 |
| index2 | 0.100 | 0.119 | 0.121 | 0.096 | 0.092 |
| index3 | 0.148 | 0.171 | 0.156 | 0.144 | 0.117 |
| index4 | 0.115 | 0.130 | 0.125 | 0.102 | 0.094 |
| index5 | 0.109 | 0.129 | 0.133 | 0.109 | 0.093 |
| index6 | 0.203 | 0.173 | 0.159 | 0.166 | 0.126 |
| index7 | 0.143 | 0.152 | 0.132 | 0.113 | 0.096 |
| index8 | 0.105 | 0.141 | 0.134 | 0.130 | 0.104 |
| index9 | 0.102 | 0.130 | 0.143 | 0.122 | 0.115 |
| index10 | 0.124 | 0.137 | 0.127 | 0.106 | 0.095 |
| index11 | 0.105 | 0.141 | 0.134 | 0.130 | 0.104 |
| index12 | 0.105 | 0.126 | 0.131 | 0.106 | 0.091 |
| index13 | 0.214 | 0.199 | 0.149 | 0.137 | 0.098 |
| index14 | 0.102 | 0.127 | 0.137 | 0.121 | 0.096 |
| index15 | 0.057 | 0.057 | 0.002 | 0.024 | 0.079 |
| index16 | 0.007 | 0.002 | 0.081 | 0.067 | 0.048 |
| index17 | 0.148 | 0.171 | 0.156 | 0.144 | 0.117 |
| index18 | 0.147 | 0.153 | 0.132 | 0.114 | 0.096 |
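Each cell in these tables is the coefficient of determination of a simple linear regression between one index and the measured chlorophyll contents at one flight altitude. A minimal sketch of that calculation is given below; the function name `linear_r2` and the sample values are hypothetical and do not reproduce any entry of the tables.

```python
import numpy as np


def linear_r2(vi, chl):
    """R2 of the least-squares line chl = slope * vi + intercept."""
    vi = np.asarray(vi, dtype=np.float64)
    chl = np.asarray(chl, dtype=np.float64)
    slope, intercept = np.polyfit(vi, chl, 1)   # degree-1 least-squares fit
    fitted = slope * vi + intercept
    ss_res = np.sum((chl - fitted) ** 2)        # residual sum of squares
    ss_tot = np.sum((chl - chl.mean()) ** 2)    # total sum of squares
    return 1.0 - ss_res / ss_tot


# Hypothetical plot-level values: one index and SPAD-like readings.
vi_values = np.array([0.10, 0.15, 0.22, 0.30, 0.35, 0.41])
chl_values = np.array([38.0, 41.5, 40.2, 47.0, 45.1, 50.3])
r2 = linear_r2(vi_values, chl_values)
```

For a single predictor, this value equals the squared Pearson correlation between the index and the chlorophyll readings.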

Table A3.

The R2 between vegetation indices calculated from RGB and chlorophyll contents using the images without background.

| RGB Index without Background | 25 m | 50 m | 75 m | 100 m | 125 m |
|---|---|---|---|---|---|
| index1 | 0.172 | 0.181 | 0.160 | 0.142 | 0.119 |
| index2 | 0.008 | 0.058 | 0.046 | 0.059 | 0.042 |
| index3 | 0.180 | 0.189 | 0.162 | 0.144 | 0.118 |
| index4 | 0.188 | 0.206 | 0.162 | 0.141 | 0.116 |
| index5 | 0.187 | 0.181 | 0.153 | 0.142 | 0.118 |
| index6 | 0.153 | 0.176 | 0.166 | 0.141 | 0.119 |
| index7 | 0.157 | 0.156 | 0.129 | 0.122 | 0.099 |
| index8 | 0.166 | 0.163 | 0.136 | 0.127 | 0.104 |
| index9 | 0.165 | 0.162 | 0.134 | 0.124 | 0.102 |
| index10 | 0.157 | 0.157 | 0.130 | 0.122 | 0.099 |
| index11 | 0.166 | 0.163 | 0.136 | 0.127 | 0.104 |
| index12 | 0.192 | 0.181 | 0.150 | 0.142 | 0.117 |
| index13 | 0.123 | 0.131 | 0.062 | 0.084 | 0.075 |
| index14 | 0.187 | 0.181 | 0.153 | 0.142 | 0.118 |
| index15 | 0.120 | 0.157 | 0.153 | 0.129 | 0.115 |
| index16 | 0.151 | 0.179 | 0.087 | 0.127 | 0.107 |
| index17 | 0.172 | 0.180 | 0.159 | 0.142 | 0.119 |
| index18 | 0.181 | 0.189 | 0.162 | 0.144 | 0.118 |

Table A4.

The R2 between vegetation indices calculated from HSV and chlorophyll contents using the images without background.

| HSV Index without Background | 25 m | 50 m | 75 m | 100 m | 125 m |
|---|---|---|---|---|---|
| index1 | 0.149 | 0.179 | 0.166 | 0.155 | 0.159 |
| index2 | 0.151 | 0.179 | 0.167 | 0.154 | 0.163 |
| index3 | 0.151 | 0.179 | 0.167 | 0.154 | 0.163 |
| index4 | 0.151 | 0.179 | 0.167 | 0.154 | 0.163 |
| index5 | 0.117 | 0.174 | 0.157 | 0.146 | 0.166 |
| index6 | 0.109 | 0.170 | 0.153 | 0.140 | 0.163 |
| index7 | 0.145 | 0.175 | 0.164 | 0.150 | 0.158 |
| index8 | 0.145 | 0.175 | 0.164 | 0.150 | 0.158 |
| index9 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index10 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index11 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index12 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index13 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index14 | 0.150 | 0.177 | 0.166 | 0.151 | 0.162 |
| index15 | 0.149 | 0.174 | 0.165 | 0.148 | 0.162 |
| index16 | 0.109 | 0.170 | 0.153 | 0.140 | 0.163 |
| index17 | 0.064 | 0.164 | 0.137 | 0.126 | 0.163 |
| index18 | 0.149 | 0.174 | 0.165 | 0.148 | 0.162 |
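The HSV-based indices rely on converting each RGB pixel into hue, saturation, and value. The following minimal sketch uses the `colorsys` module of the Python standard library and assumes 8-bit DN values; the function name `rgb_dn_to_hsv` and the pixel values are hypothetical, and the conversion routine used in this study is not specified here.

```python
import colorsys


def rgb_dn_to_hsv(r, g, b, max_dn=255.0):
    """Convert DN values to (hue, saturation, value), each in [0, 1]."""
    return colorsys.rgb_to_hsv(r / max_dn, g / max_dn, b / max_dn)


# The same green surface at two brightness levels (hypothetical DN values).
h_bright, s_bright, v_bright = rgb_dn_to_hsv(40, 180, 40)
h_dark, s_dark, v_dark = rgb_dn_to_hsv(20, 90, 20)
```

In this example the hue and saturation are identical for the two pixels and only the value channel differs. This separation of color from brightness is one plausible reason the HSV indices vary less across conditions.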

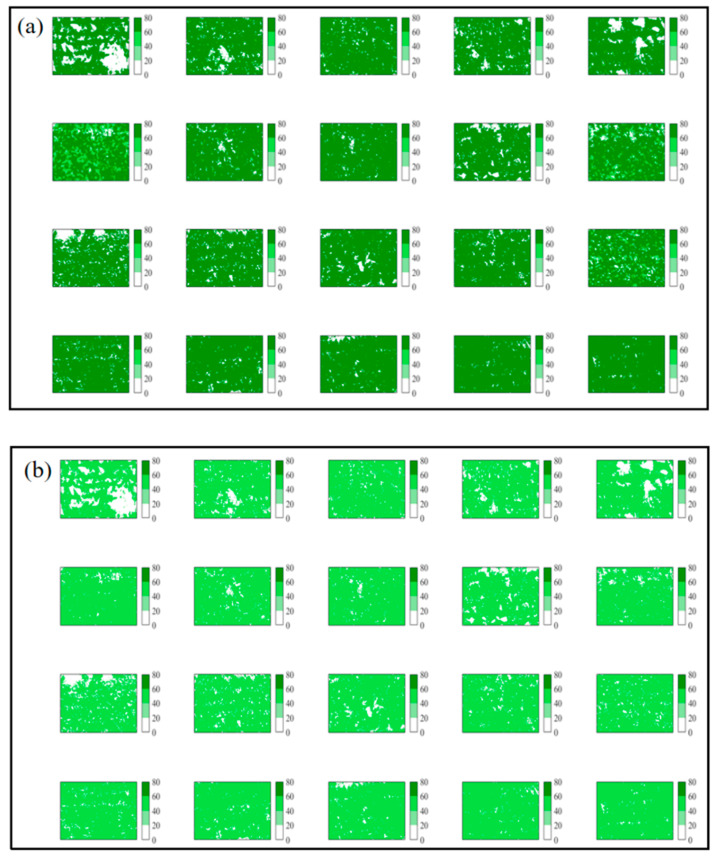

Figure A1.

Crop binary map at a flight altitude of 50 m using the EXR-EXG method.

Figure A2.

Line plots of R2 between each index (E1–E18) and chlorophyll contents. (a) RGB color space system with background; (b) RGB color space system without background; (c) HSV color space system without background.

Figure A3.

The linear regression results between vegetation index and measured values using SPAD-502.

Figure A4.

The chlorophyll contents predicted by the BP, SVM, and RF models using images acquired on 8 July 2019. (a–c) show the predicted chlorophyll contents using BP, SVM, and RF, respectively.

Figure A5.

The chlorophyll contents predicted by the BP, SVM, and RF models using images acquired on 18 August 2019. (a–c) show the predicted chlorophyll contents using BP, SVM, and RF, respectively.

Figure A6.

The chlorophyll contents predicted by the BP, SVM, and RF models using images acquired on 1 September 2019. (a–c) show the predicted chlorophyll contents using BP, SVM, and RF, respectively.

Author Contributions

Conceptualization, Y.G. and G.Y.; methodology, Y.G., H.W., and J.S.; software, Y.G. and S.C., validation, Y.G. and H.S.; formal analysis, Y.G. and H.S.; investigation, Y.G. and H.W.; resources, Y.G., Y.F. and H.S.; data curation, Y.F. and H.W.; writing—original draft preparation, Y.G. and G.Y.; writing—review and editing, Y.G., Y.F., H.S., G.Y., J.S., H.W., J.W.; visualization, Y.G. and H.W.; supervision, Y.F.; project administration, Y.F.; funding acquisition, Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the General Program of the National Natural Science Foundation of China (Grant No. 31770516), the National Key Research and Development Program of China (2017YFA06036001), the 111 Project (B18006), and the Fundamental Research Funds for the Central Universities (2018EYT05). The study was also financially supported by the Ministry of Ecology and Environment of the People’s Republic of China through the project "Study on regional governance upon eco-environment based on major function zones: Research on eco-environment regional supervision system".

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. FAO. IFAD. UNICEF. WFP. WHO. Building Climate Resilience for Food Security and Nutrition. FAO; Rome, Italy: 2019. The state of food security and nutrition in the world 2017.

- 2. Lobell D.B., Field C.B. Global scale climate–crop yield relationships and the impacts of recent warming. Environ. Res. Lett. 2007;2:014002. doi: 10.1088/1748-9326/2/1/014002.

- 3. Cole M.B., Augustin M.A., Robertson M.J., Manners J.M. The science of food security. NPJ Sci. Food. 2018;2:1–8. doi: 10.1038/s41538-018-0021-9.

- 4. Wei X., Declan C., Erda L., Yinlong X., Hui J., Jinhe J., Ian H., Yan L. Future cereal production in China: The interaction of climate change, water availability and socio-economic scenarios. Glob. Environ. Chang. 2009;19:34–44. doi: 10.1016/j.gloenvcha.2008.10.006.

- 5. Lv S., Yang X., Lin X., Liu Z., Zhao J., Li K., Mu C., Chen X., Chen F., Mi G. Yield gap simulations using ten maize cultivars commonly planted in Northeast China during the past five decades. Agric. For. Meteorol. 2015;205:1–10. doi: 10.1016/j.agrformet.2015.02.008.

- 6. Liu Z., Yang X., Hubbard K.G., Lin X. Maize potential yields and yield gaps in the changing climate of northeast China. Glob. Chang. Biol. 2012;18:3441–3454. doi: 10.1111/j.1365-2486.2012.02774.x.

- 7. Huang M., Wang J., Wang B., Liu D.L., Yu Q., He D., Wang N., Pan X. Optimizing sowing window and cultivar choice can boost China’s maize yield under 1.5 °C and 2 °C global warming. Environ. Res. Lett. 2020;15:024015. doi: 10.1088/1748-9326/ab66ca.

- 8. Kar G., Verma H.N. Phenology based irrigation scheduling and determination of crop coefficient of winter maize in rice fallow of eastern India. Agric. Water Manag. 2005;75:169–183. doi: 10.1016/j.agwat.2005.01.002.

- 9. Tao F., Zhang S., Zhang Z., Rötter R.P. Temporal and spatial changes of maize yield potentials and yield gaps in the past three decades in China. Agric. Ecosyst. Environ. 2015;208:12–20. doi: 10.1016/j.agee.2015.04.020.

- 10. Liu Z., Yang X., Lin X., Hubbard K.G., Lv S., Wang J. Maize yield gaps caused by non-controllable, agronomic, and socioeconomic factors in a changing climate of Northeast China. Sci. Total Environ. 2016;541:756–764. doi: 10.1016/j.scitotenv.2015.08.145.

- 11. Tao F., Zhang S., Zhang Z., Rötter R.P. Maize growing duration was prolonged across China in the past three decades under the combined effects of temperature, agronomic management, and cultivar shift. Glob. Chang. Biol. 2014;20:3686–3699. doi: 10.1111/gcb.12684.

- 12. Baker N.R., Rosenqvist E. Applications of chlorophyll fluorescence can improve crop production strategies: An examination of future possibilities. J. Exp. Bot. 2004;55:1607–1621. doi: 10.1093/jxb/erh196.

- 13. Haboudane D., Miller J.R., Tremblay N., Zarco-Tejada P.J., Dextraze L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002;81:416–426. doi: 10.1016/S0034-4257(02)00018-4.

- 14. Wood C., Reeves D., Himelrick D. Relationships between Chlorophyll Meter Readings and Leaf Chlorophyll Concentration, N Status, and Crop Yield: A Review. [(accessed on 8 September 2020)]; Available online: https://www.agronomysociety.org.nz/uploads/94803/files/1993_1._Chlorophyll_relationships_-_a_review.pdf.

- 15. Uddling J., Gelang-Alfredsson J., Piikki K., Pleijel H. Evaluating the relationship between leaf chlorophyll concentration and SPAD-502 chlorophyll meter readings. Photosynth. Res. 2007;91:37–46. doi: 10.1007/s11120-006-9077-5.

- 16. Markwell J., Osterman J.C., Mitchell J.L. Calibration of the Minolta SPAD-502 leaf chlorophyll meter. Photosynth. Res. 1995;46:467–472. doi: 10.1007/BF00032301.

- 17. Villa F., Bronzi D., Bellisai S., Boso G., Shehata A.B., Scarcella C., Tosi A., Zappa F., Tisa S., Durini D. Electro-Optical Remote Sensing, Photonic Technologies, and Applications VI. International Society for Optics and Photonics; Bellingham, WA, USA: 2012. SPAD imagers for remote sensing at the single-photon level; p. 85420G.

- 18. Zhengjun Q., Haiyan S., Yong H., Hui F. Variation rules of the nitrogen content of the oilseed rape at growth stage using SPAD and visible-NIR. Trans. Chin. Soc. Agric. Eng. 2007;23:150–154.

- 19. Wang Y.-W., Dunn B.L., Arnall D.B., Mao P.-S. Use of an active canopy sensor and SPAD chlorophyll meter to quantify geranium nitrogen status. HortScience. 2012;47:45–50. doi: 10.21273/HORTSCI.47.1.45.

- 20. Hawkins T.S., Gardiner E.S., Comer G.S. Modeling the relationship between extractable chlorophyll and SPAD-502 readings for endangered plant species research. J. Nat. Conserv. 2009;17:123–127. doi: 10.1016/j.jnc.2008.12.007.

- 21. Giustolisi G., Mita R., Palumbo G. Verilog-A modeling of SPAD statistical phenomena; Proceedings of the 2011 IEEE International Symposium of Circuits and Systems (ISCAS); Rio de Janeiro, Brazil. 15−18 May 2011; pp. 773–776.

- 22. Aragon B., Johansen K., Parkes S., Malbeteau Y., Al-Mashharawi S., Al-Amoudi T., Andrade C.F., Turner D., Lucieer A., McCabe M.F. A Calibration Procedure for Field and UAV-Based Uncooled Thermal Infrared Instruments. Sensors. 2020;20:3316. doi: 10.3390/s20113316.

- 23. Guo Y., Senthilnath J., Wu W., Zhang X., Zeng Z., Huang H. Radiometric calibration for multispectral camera of different imaging conditions mounted on a UAV platform. Sustainability. 2019;11:978. doi: 10.3390/su11040978.

- 24. Bilal D.K., Unel M., Yildiz M., Koc B. Realtime Localization and Estimation of Loads on Aircraft Wings from Depth Images. Sensors. 2020;20:3405. doi: 10.3390/s20123405.

- 25. Guo Y., Guo J., Liu C., Xiong H., Chai L., He D. Precision Landing Test and Simulation of the Agricultural UAV on Apron. Sensors. 2020;20:3369. doi: 10.3390/s20123369.

- 26. Senthilnath J., Dokania A., Kandukuri M., Ramesh K.N., Anand G., Omkar S.N. Detection of tomatoes using spectral-spatial methods in remotely sensed RGB images captured by UAV. Biosyst. Eng. 2016;146:16–32. doi: 10.1016/j.biosystemseng.2015.12.003.

- 27. Riccardi M., Mele G., Pulvento C., Lavini A., d’Andria R., Jacobsen S.-E. Non-destructive evaluation of chlorophyll content in quinoa and amaranth leaves by simple and multiple regression analysis of RGB image components. Photosynth. Res. 2014;120:263–272. doi: 10.1007/s11120-014-9970-2.

- 28. Lee H.-C. Introduction to Color Imaging Science. Cambridge University Press; Cambridge, UK: 2005.

- 29. Niu Y., Zhang L., Zhang H., Han W., Peng X. Estimating above-ground biomass of maize using features derived from UAV-based RGB imagery. Remote Sens. 2019;11:1261. doi: 10.3390/rs11111261.

- 30. Kefauver S.C., Vicente R., Vergara-Díaz O., Fernandez-Gallego J.A., Kerfal S., Lopez A., Melichar J.P., Serret Molins M.D., Araus J.L. Comparative UAV and field phenotyping to assess yield and nitrogen use efficiency in hybrid and conventional barley. Front. Plant Sci. 2017;8:1733. doi: 10.3389/fpls.2017.01733.

- 31. Senthilnath J., Kandukuri M., Dokania A., Ramesh K. Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 2017;140:8–24. doi: 10.1016/j.compag.2017.05.027.

- 32. Ashapure A., Jung J., Chang A., Oh S., Maeda M., Landivar J. A Comparative Study of RGB and Multispectral Sensor-Based Cotton Canopy Cover Modelling Using Multi-Temporal UAS Data. Remote Sens. 2019;11:2757. doi: 10.3390/rs11232757.

- 33. Mazzia V., Comba L., Khaliq A., Chiaberge M., Gay P. UAV and Machine Learning Based Refinement of a Satellite-Driven Vegetation Index for Precision Agriculture. Sensors. 2020;20:2530. doi: 10.3390/s20092530.

- 34. Ballesteros R., Ortega J.F., Hernandez D., Moreno M.A. Onion biomass monitoring using UAV-based RGB imaging. Precis. Agric. 2018;19:840–857. doi: 10.1007/s11119-018-9560-y.

- 35. Matese A., Gennaro S.D. Practical Applications of a Multisensor UAV Platform Based on Multispectral, Thermal and RGB High Resolution Images in Precision Viticulture. Agriculture. 2018;8:116. doi: 10.3390/agriculture8070116.

- 36. Dong-Wook K., Yun H., Sang-Jin J., Young-Seok K., Suk-Gu K., Won L., Hak-Jin K. Modeling and Testing of Growth Status for Chinese Cabbage and White Radish with UAV-Based RGB Imagery. Remote Sens. 2018;10:563.

- 37. Barrero O., Perdomo S.A. RGB and multispectral UAV image fusion for Gramineae weed detection in rice fields. Precis. Agric. 2018;19:809–822. doi: 10.1007/s11119-017-9558-x.

- 38. Das J., Cross G., Qu C., Makineni A., Tokekar P., Mulgaonkar Y., Kumar V. Devices, systems, and methods for automated monitoring enabling precision agriculture; Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE); Gothenburg, Sweden. 24–28 August 2015; pp. 462–469.

- 39. Berni J.A., Zarco-Tejada P.J., Suárez L., Fereres E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009;47:722–738. doi: 10.1109/TGRS.2008.2010457.

- 40. Primicerio J., Gennaro S.F.D., Fiorillo E., Genesio L., Vaccari F.P. A flexible unmanned aerial vehicle for precision agriculture. Precis. Agric. 2012;13:517–523. doi: 10.1007/s11119-012-9257-6.

- 41. Miao Y., Mulla D.J., Randall G.W., Vetsch J.A., Vintila R. Predicting chlorophyll meter readings with aerial hyperspectral remote sensing for in-season site-specific nitrogen management of corn. Precis. Agric. 2007;7:635–641.

- 42. Wang J., Xu Y., Wu G. The integration of species information and soil properties for hyperspectral estimation of leaf biochemical parameters in mangrove forest. Ecol. Indic. 2020;115:106467. doi: 10.1016/j.ecolind.2020.106467.

- 43. Jin X., Zarco-Tejada P., Schmidhalter U., Reynolds M.P., Hawkesford M.J., Varshney R.K., Yang T., Nie C., Li Z., Ming B. High-throughput estimation of crop traits: A review of ground and aerial phenotyping platforms. IEEE Geosci. Remote Sens. Mag. 2020;20:1–32. doi: 10.1109/MGRS.2020.2998816.

- 44. Jin X., Kumar L., Li Z., Feng H., Xu X., Yang G., Wang J. A review of data assimilation of remote sensing and crop models. Eur. J. Agron. 2018;92:141–152. doi: 10.1016/j.eja.2017.11.002.

- 45. Lillicrap T.P., Santoro A., Marris L., Akerman C.J., Hinton G. Backpropagation and the brain. Nat. Rev. Neurosci. 2020;21:335–346. doi: 10.1038/s41583-020-0277-3.

- 46. Kosson A., Chiley V., Venigalla A., Hestness J., Köster U. Pipelined Backpropagation at Scale: Training Large Models without Batches. arXiv. 2020. arXiv:2003.11666.

- 47. Pix4D SA. Pix4Dmapper 4.1 User Manual. Pix4d SA; Lausanne, Switzerland: 2017.

- 48. Da Silva D.C., Toonstra G.W.A., Souza H.L.S., Pereira T.Á.J. Qualidade de ortomosaicos de imagens de VANT processados com os softwares APS, PIX4D e PHOTOSCAN. V Simpósio Brasileiro de Ciências Geodésicas e Tecnologias da Geoinformação Recife-PE. 2014;1:12–14.

- 49. Barbasiewicz A., Widerski T., Daliga K. E3S Web of Conferences. EDP Sciences; Les Ulis, France: 2018. The Analysis of the Accuracy of Spatial Models Using Photogrammetric Software: Agisoft Photoscan and Pix4D; p. 12.

- 50. Woebbecke D.M., Meyer G.E., Von Bargen K., Mortensen D. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE. 1995;38:259–269. doi: 10.13031/2013.27838.

- 51. Zhang W., Wang Z., Wu H., Song X., Liao J. Study on the Monitoring of Karst Plateau Vegetation with UAV Aerial Photographs and Remote Sensing Images. IOP Conf. Ser. Earth Environ. Sci. 2019;384:012188. doi: 10.1088/1755-1315/384/1/012188.

- 52. Meyer G.E., Neto J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008;63:282–293. doi: 10.1016/j.compag.2008.03.009.

- 53. Hakala T., Suomalainen J., Peltoniemi J.I. Acquisition of bidirectional reflectance factor dataset using a micro unmanned aerial vehicle and a consumer camera. Remote Sens. 2010;2:819–832. doi: 10.3390/rs2030819.

- 54. Xiaoqin W., Miaomiao W., Shaoqiang W., Yundong W. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015;31:5.

- 55. Wan L., Li Y., Cen H., Zhu J., Yin W., Wu W., Zhu H., Sun D., Zhou W., He Y. Combining UAV-based vegetation indices and image classification to estimate flower number in oilseed rape. Remote Sens. 2018;10:1484. doi: 10.3390/rs10091484.

- 56. Neto J.C. Ph.D. Thesis. The University of Nebraska-Lincoln; Lincoln, NE, USA: 2004. A Combined Statistical-Soft Computing Approach for Classification and Mapping Weed Species in Minimum-Tillage Systems.

- 57. Gitelson A.A., Kaufman Y.J., Stark R., Rundquist D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002;80:76–87. doi: 10.1016/S0034-4257(01)00289-9.

- 58. Zhang X., Zhang F., Qi Y., Deng L., Wang X., Yang S. New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV). Int. J. Appl. Earth Obs. Geoinf. 2019;78:215–226. doi: 10.1016/j.jag.2019.01.001.

- 59. Beniaich A., Naves Silva M.L., Avalos F.A.P., Menezes M.D., Candido B.M. Determination of vegetation cover index under different soil management systems of cover plants by using an unmanned aerial vehicle with an onboard digital photographic camera. Semin. Cienc. Agrar. 2019;40:49–66. doi: 10.5433/1679-0359.2019v40n1p49.

- 60. Ponti, Moacir P. Segmentation of Low-Cost Remote Sensing Images Combining Vegetation Indices and Mean Shift. IEEE Geosci. Remote Sens. Lett. 2013;10:67–70. doi: 10.1109/LGRS.2012.2193113.

- 61.Jordan C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology. 1969;50:663–666. doi: 10.2307/1936256. [DOI] [Google Scholar]

- 62.Joao T., Joao G., Bruno M., Joao H. Indicator-based assessment of post-fire recovery dynamics using satellite NDVI time-series. Ecol. Indic. 2018;89:199–212. doi: 10.1016/j.ecolind.2018.02.008. [DOI] [Google Scholar]

- 63.Jayaraman V., Srivastava S.K., Kumaran Raju D., Rao U.R. Total solution approach using IRS-1C and IRS-P3 data. IEEE Trans. Geoence Remote Sens. 2000;38:587–604. doi: 10.1109/36.823953. [DOI] [Google Scholar]

- 64.Hague T., Tillett N., Wheeler H. Automated crop and weed monitoring in widely spaced cereals. Precis. Agric. 2006;7:21–32. doi: 10.1007/s11119-005-6787-1. [DOI] [Google Scholar]

- 65.Rondeaux G., Steven M., Baret F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996;55:95–107. doi: 10.1016/0034-4257(95)00186-7. [DOI] [Google Scholar]

- 66.Guijarro M., Pajares G., Riomoros I., Herrera P., Burgos-Artizzu X., Ribeiro A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011;75:75–83. doi: 10.1016/j.compag.2010.09.013. [DOI] [Google Scholar]

- 67.Huete A.R. A modified soil adjusted vegetation index. Remote Sens. Envrion. 2015;48:119–126. [Google Scholar]

- 68.Pádua L., Vanko J., Hruška J., Adão T., Sousa J.J., Peres E., Morais R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017;38:2349–2391. doi: 10.1080/01431161.2017.1297548. [DOI] [Google Scholar]

- 69.Verrelst J., Schaepman M.E., Koetz B., Kneubühler M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008;112:2341–2353. doi: 10.1016/j.rse.2007.11.001. [DOI] [Google Scholar]

- 70.Hashimoto N., Saito Y., Maki M., Homma K. Simulation of Reflectance and Vegetation Indices for Unmanned Aerial Vehicle (UAV) Monitoring of Paddy Fields. Remote Sens. 2019;11:2119. doi: 10.3390/rs11182119. [DOI] [Google Scholar]

- 71.Gitelson A.A., Viña A., Arkebauer T.J., Rundquist D.C., Keydan G., Leavitt B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003;30:1248. doi: 10.1029/2002GL016450. [DOI] [Google Scholar]

- 72.Saberioon M.M., Gholizadeh A. Novel approach for estimating nitrogen content in paddy fields using low altitude remote sensing system. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016;41:1011–1015. doi: 10.5194/isprsarchives-XLI-B1-1011-2016. [DOI] [Google Scholar]

- 73.Bendig J., Yu K., Aasen H., Bolten A., Bennertz S., Broscheit J., Gnyp M.L., Bareth G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015;39:79–87. doi: 10.1016/j.jag.2015.02.012. [DOI] [Google Scholar]

- 74.Yeom J., Jung J., Chang A., Ashapure A., Landivar J. Comparison of Vegetation Indices Derived from UAV Data for Differentiation of Tillage Effects in Agriculture. Remote Sens. 2019;11:1548. doi: 10.3390/rs11131548. [DOI] [Google Scholar]

- 75.Suzuki R., Tanaka S., Yasunari T. Relationships between meridional profiles of satellite-derived vegetation index (NDVI) and climate over Siberia. Int. J. Climatol. 2015;20:955–967. doi: 10.1002/1097-0088(200007)20:9<955::AID-JOC512>3.0.CO;2-1. [DOI] [Google Scholar]

- 76.Ballesteros R., Ortega J.F., Hernandez D., Del Campo A., Moreno M.A. Combined use of agro-climatic and very high-resolution remote sensing information for crop monitoring. Int. J. Appl. Earth Obs. Geoinf. 2018;72:66–75. doi: 10.1016/j.jag.2018.05.019. [DOI] [Google Scholar]

- 77.Henry C., Martina E., Juan M., José-Fernán M. Efficient Forest Fire Detection Index for Application in Unmanned Aerial Systems (UASs) Sensors. 2016;16:893. doi: 10.3390/s16060893. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Lussem U., Bolten A., Gnyp M., Jasper J., Bareth G. Evaluation of RGB-based vegetation indices from UAV imagery to estimate forage yield in grassland. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018;42:1215–1219. doi: 10.5194/isprs-archives-XLII-3-1215-2018. [DOI] [Google Scholar]

- 79. Possoch M., Bieker S., Hoffmeister D., Bolten A., Bareth G. Multi-Temporal Crop Surface Models Combined with the RGB Vegetation Index from UAV-Based Images for Forage Monitoring in Grassland. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016;41:991–998. doi: 10.5194/isprsarchives-XLI-B1-991-2016.

- 80. Bareth G., Bolten A., Gnyp M.L., Reusch S., Jasper J. Comparison of Uncalibrated RGBVI with Spectrometer-Based NDVI Derived from UAV Sensing Systems on Field Scale. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016;41:837–843. doi: 10.5194/isprsarchives-XLI-B8-837-2016.

- 81. Jin X., Liu S., Baret F., Hemerlé M., Comar A. Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 2017;198:105–114. doi: 10.1016/j.rse.2017.06.007.

- 82. Cantrell K., Erenas M., de Orbe-Payá I., Capitán-Vallvey L. Use of the hue parameter of the hue, saturation, value color space as a quantitative analytical parameter for bitonal optical sensors. Anal. Chem. 2010;82:531–542. doi: 10.1021/ac901753c.

- 83. Tu T.-M., Huang P.S., Hung C.-L., Chang C.-P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004;1:309–312. doi: 10.1109/LGRS.2004.834804.

- 84. Choi M. A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 2006;44:1672–1682. doi: 10.1109/TGRS.2006.869923.

- 85. Kandi S.G. Automatic defect detection and grading of single-color fruits using HSV (hue, saturation, value) color space. J. Life Sci. 2010;4:39–45.

- 86. Grenzdörffer G., Niemeyer F. UAV based BRDF-measurements of agricultural surfaces with pfiffikus. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011;38:229–234. doi: 10.5194/isprsarchives-XXXVIII-1-C22-229-2011.

- 87. Cui L., Jiao Z., Dong Y., Sun M., Zhang X., Yin S., Ding A., Chang Y., Guo J., Xie R. Estimating Forest Canopy Height Using MODIS BRDF Data Emphasizing Typical-Angle Reflectances. Remote Sens. 2019;11:2239. doi: 10.3390/rs11192239.

- 88. Tu J.V. Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J. Clin. Epidemiol. 1996;49:1225–1231. doi: 10.1016/S0895-4356(96)00002-9.

- 89. Kruschke J.K., Movellan J.R. Benefits of gain: Speeded learning and minimal hidden layers in back-propagation networks. IEEE Trans. Syst. Man Cybern. 1991;21:273–280. doi: 10.1109/21.101159.

- 90. Lawrence S., Giles C.L. Overfitting and neural networks: Conjugate gradient and backpropagation; Proceedings of the IEEE-INNS-ENNS International Joint Conference on Neural Networks (IJCNN 2000); Como, Italy. 27 July 2000; pp. 114–119.

- 91. Karystinos G.N., Pados D.A. On overfitting, generalization, and randomly expanded training sets. IEEE Trans. Neural Netw. 2000;11:1050–1057. doi: 10.1109/72.870038.

- 92. Chang C.-C., Lin C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011;2:1–27. doi: 10.1145/1961189.1961199.

- 93. Maulik U., Chakraborty D. Learning with transductive SVM for semisupervised pixel classification of remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2013;77:66–78. doi: 10.1016/j.isprsjprs.2012.12.003.

- 94. Belgiu M., Drăguţ L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016;114:24–31. doi: 10.1016/j.isprsjprs.2016.01.011.