Abstract

The era of big data has witnessed an increasing availability of multiple data sources for statistical analyses. We consider estimation of causal effects combining big main data with unmeasured confounders and smaller validation data with supplementary information on these confounders. Under the unconfoundedness assumption with completely observed confounders, the smaller validation data allow for constructing consistent estimators for causal effects, but the big main data can only give error-prone estimators in general. However, by leveraging the information in the big main data in a principled way, we can improve the estimation efficiencies yet preserve the consistencies of the initial estimators based solely on the validation data. Our framework applies to asymptotically normal estimators, including the commonly used regression imputation, weighting, and matching estimators, and does not require a correct specification of the model relating the unmeasured confounders to the observed variables. We also propose appropriate bootstrap procedures, which make our method straightforward to implement using software routines for existing estimators. Supplementary materials for this article are available online.

Keywords: Calibration, Causal inference, Inverse probability weighting, Missing confounder, Two-phase sampling

1. Introduction

Unmeasured confounding is an important and common problem in observational studies. Many methods have been proposed to deal with unmeasured confounding in causal inference, such as sensitivity analyses (e.g., Rosenbaum and Rubin 1983a) and instrumental variable approaches (e.g., Angrist, Imbens, and Rubin 1996). However, sensitivity analyses cannot provide point estimates, and valid instrumental variables are often difficult to find in practice. We consider the setting where external validation data provide additional information on unmeasured confounders. More precisely, the study includes a large main dataset that represents the population of interest but lacks some confounders, and a smaller validation dataset with additional information about these confounders.

Our framework covers two common types of studies. First, we have a large main dataset and then collect more information on unmeasured confounders for a subset of units, for example, using a two-phase sampling design (Neyman 1938; Cochran 2007; Wang et al. 2009). Second, we have a smaller but carefully designed validation dataset with rich covariates and then link it to a larger main dataset with fewer covariates. The second type of data is now ubiquitous. In the era of big data, extremely large datasets have become available for research purposes, such as electronic health records, claims databases, disease data registries, and census data, to name a few (e.g., Imbens and Lancaster 1994; Schneeweiss et al. 2005; Chatterjee et al. 2016). Although these datasets might not contain the full confounder information that guarantees consistent causal effect estimation, they can be useful for improving the efficiency of statistical analyses.

In causal inference, Stürmer et al. (2005) proposed a propensity score calibration method for the setting in which the main data contain the outcome and an error-prone propensity score based on partial confounders, and the validation data supplement a gold standard propensity score based on all confounders. Stürmer et al. (2005) then applied a regression calibration technique to correct for the measurement error from the error-prone propensity score. This approach does not require the validation data to contain the outcome variable. However, this approach relies on the surrogacy property entailing that the outcome variable is conditionally independent of the error-prone propensity score given the gold standard propensity score and treatment. This surrogacy property is difficult to justify in practice, and its violations can lead to substantial biases (Stürmer et al. 2007; Lunt et al. 2012). Under the Bayesian framework, McCandless, Richardson, and Best (2012) specified a full parametric model of the joint distribution for the main and validation data, and treated the gold standard propensity score as a missing variable in the main data. Antonelli, Zigler, and Dominici (2017) combined the ideas of Bayesian model averaging, confounder selection, and missing data imputation into a single framework in this context. Enders et al. (2018) used simulations to show that multiple imputation is more robust than two-phase logistic regression against misspecification of imputation models. Lin and Chen (2014) developed a two-stage calibration method, which summarizes the confounding information through propensity scores and combines the results from the main and validation data. Their two-stage calibration focuses on the regression context with a correctly specified outcome model. Unfortunately, regression parameters, especially in the logistic regression model used by Lin and Chen (2014), may not be the causal parameters of interest in general (Freedman 2008).

In this article, we propose a general framework to estimate causal effects in the setting where the big main data have unmeasured confounders, but the smaller external validation data provide supplementary information on these confounders. Under the assumption of ignorable treatment assignment, causal effects can be identified from the validation data and estimated by standard estimators, such as regression imputation, (augmented) inverse probability weighting (Horvitz and Thompson 1952; Rosenbaum and Rubin 1983b; Robins, Rotnitzky, and Zhao 1994; Bang and Robins 2005; Cao, Tsiatis, and Davidian 2009), and matching (e.g., Rubin 1973; Rosenbaum 1989; Heckman, Ichimura, and Todd 1997; Hirano, Imbens, and Ridder 2003; Hansen 2004; Rubin 2006; Abadie and Imbens 2006; Stuart 2010; Abadie and Imbens 2016). However, these estimators based solely on the validation data may not be efficient. We leverage the correlation between the initial estimator from the validation data and the error-prone estimator from the main data to improve the efficiency over the initial estimator. This idea is similar to the two-stage calibration in Lin and Chen (2014); however, their method focuses only on regression parameters and requires the validation data to be a simple random sample from the main data. Alternatively, the empirical likelihood is also an attractive approach to combine multiple data sources (Chen and Sitter 1999; Qin 2000; Chen, Sitter, and Wu 2002; Chen, Leung, and Qin 2003). However, the empirical likelihood approach needs sophisticated programming, and its computation can be heavy when data become large. Our method is simple in practice, because we only need to compute commonly used estimators that can be easily implemented by existing software routines.
Moreover, Lin and Chen (2014) and the empirical likelihood approach can only deal with regular and asymptotically linear (RAL) estimators often formulated by moment conditions, but our framework can also deal with non-RAL estimators, such as matching estimators. We also propose a unified bootstrap procedure based on resampling the linear expansions of the estimators, which is simple to implement and works for both RAL and matching estimators.

Furthermore, we relax the assumption that the validation data are a random sample from the study population of interest. We also link the proposed method to existing methods for missing data, viewing the additional confounders as missing values for units outside of the validation data. In contrast to most existing methods in the missing data literature, the proposed method does not need to specify the missing data model relating the unmeasured confounders with the observed variables.

For simplicity of exposition, we use “IID” for “independent and identically distributed,” 1(·) for the indicator function, $A^{\otimes 2} = AA^\top$ for a vector or matrix A, “plim” for the probability limit of a random sequence, and $a_n \cong b_n$ for two random sequences satisfying $n^{1/2}(a_n - b_n) \to 0$ in probability, with n being the generic sample size. We relegate all regularity conditions for asymptotic analyses to the online supplementary material.

2. Basic Setup

2.1. Notation: Causal Effect and Two Data Sources

Following Neyman (1923) and Rubin (1974), we use the potential outcomes framework to define causal effects. Suppose that the treatment is a binary variable A ∈ {0,1}, with 0 and 1 being the labels for control and active treatments, respectively. For each level of treatment a ∈ {0,1}, we assume that there exists a potential outcome Y(a), representing the outcome had the subject, possibly contrary to the fact, been given treatment a. The observed outcome is Y = Y(A) = AY(1) + (1 − A)Y(0). Let (X, U) be a vector of pretreatment covariates, where X is observed for all units but U may not be observed for some units.

Although we can extend our discussion to multiple data sources, for simplicity of exposition, we first consider a study with two data sources. The validation data $\mathcal{S}_2$ have observations $\{(A_j, X_j, U_j, Y_j) : j \in \mathcal{S}_2\}$ with sample size $n_2 = |\mathcal{S}_2|$. The main data $\mathcal{S}_1$ have observations $\{(A_i, X_i, Y_i) : i \in \mathcal{S}_1\}$ with sample size $n_1 = |\mathcal{S}_1|$. In our formulation, we consider the case with $\mathcal{S}_2 \subset \mathcal{S}_1$, and let $n_2/n_1 \to \rho \in [0, 1)$. If one has two separate main and validation datasets, the main dataset in our context combines these two datasets. Although the main dataset is larger, that is, $n_1 > n_2$, it does not contain full information on the important covariates U. Under a superpopulation model, we assume that $\{A_i, X_i, U_i, Y_i(0), Y_i(1) : i \in \mathcal{S}_1\}$ are IID, and therefore the observations in $\mathcal{S}_1$ are also IID. The following assumption links the main and validation data.

Assumption 1.

The index set $\mathcal{S}_2$ of the validation data, of size $n_2$, is a simple random sample from $\mathcal{S}_1$.

Under Assumption 1, $\{A_j, X_j, U_j, Y_j(0), Y_j(1) : j \in \mathcal{S}_2\}$ and the observations $\{(A_j, X_j, U_j, Y_j) : j \in \mathcal{S}_2\}$ of the validation data are also IID. In Section 7, we shall relax Assumption 1 to allow $\mathcal{S}_2$ to be a general probability sample from $\mathcal{S}_1$; for now, Assumption 1 makes the presentation simpler.

Example 1.

A two-phase sampling design is one example that results in this data structure. In a study, some variables (e.g., A, X, and Y) may be relatively cheap to obtain, whereas others (e.g., U) are more expensive. A two-phase sampling design (Neyman 1938; Cochran 2007; Wang et al. 2009) can reduce the cost of the study: in the first phase, the easy-to-obtain variables are measured for all units, and in the second phase, the additional expensive variables are measured for a selected validation sample.

Example 2.

Another example is highly relevant in the era of big data: one links small data with full information on (A, X, U, Y) to external big data with only (A, X, Y). Chatterjee et al. (2016) recently considered this scenario for parametric regression analyses.

Without loss of generality, we first consider the average causal effect (ACE)

$$\tau = E\{Y(1) - Y(0)\}, \tag{1}$$

and will discuss extensions to other causal estimands in Section 4.1. Because of the IID assumption, we drop the indices i and j in the expectations in (1) and later equations.

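In what follows, we define the conditional means of the outcome as

$$\mu_a(X, U) = E(Y \mid A = a, X, U), \qquad \mu_a(X) = E(Y \mid A = a, X),$$

the conditional variances of the outcome as

$$\sigma_a^2(X, U) = \mathrm{var}(Y \mid A = a, X, U), \qquad \sigma_a^2(X) = \mathrm{var}(Y \mid A = a, X),$$

and the conditional probabilities of the treatment as

$$e(X, U) = P(A = 1 \mid X, U), \qquad e(X) = P(A = 1 \mid X),$$

for a = 0, 1.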

2.2. Identification and Model Assumptions

A fundamental problem in causal inference is that we can observe at most one potential outcome for a unit. Following Rosenbaum and Rubin (1983b), we make the following assumptions to identify causal effects.

Assumption 2 (Ignorability).

$Y(a) \perp\!\!\!\perp A \mid (X, U)$, for a = 0 and 1.

Under Assumption 2, the treatment assignment is ignorable in the validation data $\mathcal{S}_2$ given (X, U). However, in the main data $\mathcal{S}_1$, the treatment assignment is only “latent” ignorable given X and the latent variable U (Frangakis and Rubin 1999; Jin and Rubin 2008).

Moreover, we require adequate overlap between the treatment and control covariate distributions, quantified by the following assumption on the propensity score e(X, U).

Assumption 3 (Overlap).

There exist constants $c_1$ and $c_2$ such that, with probability 1, $0 < c_1 \le e(X, U) \le c_2 < 1$.

Under Assumptions 2 and 3, P{A = 1 | X, U, Y(1)} = P{A = 1 | X, U, Y(0)} = e(X, U), and E{Y(a) | X, U} = E{Y(a) | A = a, X, U} = μa(X, U). The ACE τ can then be estimated through regression imputation, inverse probability weighting (IPW), augmented inverse probability weighting (AIPW), or matching. See Rosenbaum (2002), Imbens (2004), and Rubin (2006) for surveys of these estimators.

In practice, the outcome distribution and the propensity score are often unknown and therefore need to be modeled and estimated.

Assumption 4 (Outcome model).

The parametric model $\mu_a(X, U; \beta_a)$ is a correct specification for $\mu_a(X, U)$, for a = 0, 1; that is, $\mu_a(X, U) = \mu_a(X, U; \beta_a^*)$, where $\beta_a^*$ is the true model parameter, for a = 0, 1.

Assumption 5 (Propensity score model).

The parametric model $e(X, U; \alpha)$ is a correct specification for $e(X, U)$; that is, $e(X, U) = e(X, U; \alpha^*)$, where $\alpha^*$ is the true model parameter.

The consistency of different estimators requires different model assumptions.

3. Methodology and Important Estimators

3.1. Review of Commonly Used Estimators Based on Validation Data

The validation data $\{(A_j, X_j, U_j, Y_j) : j \in \mathcal{S}_2\}$ contain observations of all confounders (X, U). Therefore, under Assumptions 2 and 3, τ is identifiable and can be estimated solely from the validation data by some commonly used estimator, denoted by $\hat\tau_2$. Although the main data do not contain the full confounding information, we leverage the information on the variables (A, X, Y) that the main data share with the validation data to improve the efficiency of $\hat\tau_2$. Before presenting the general theory, we first review important estimators that are widely used in practice.

Let $\mu_a(X, U; \beta_a)$ be a working model for $\mu_a(X, U)$, for a = 0, 1, and $e(X, U; \alpha)$ be a working model for $e(X, U)$. We construct estimators $\hat\beta_a$ and $\hat\alpha$ based on $\mathcal{S}_2$, with probability limits $\beta_a^*$ and $\alpha^*$, respectively. Under Assumption 4, $\mu_a(X, U; \beta_a^*) = \mu_a(X, U)$, and under Assumption 5, $e(X, U; \alpha^*) = e(X, U)$.

Example 3 (Regression imputation).

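The regression imputation estimator is $\hat\tau_{2,\mathrm{reg}} = \hat\mu_{2,\mathrm{reg}}(1) - \hat\mu_{2,\mathrm{reg}}(0)$, where

$$\hat\mu_{2,\mathrm{reg}}(a) = n_2^{-1} \sum_{j \in \mathcal{S}_2} \mu_a(X_j, U_j; \hat\beta_a), \qquad a = 0, 1.$$

The estimator $\hat\tau_{2,\mathrm{reg}}$ is consistent for τ under Assumption 4.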
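As an illustration of the regression imputation estimator, the sketch below assumes linear working models $\mu_a(W; \beta_a) = (1, W^\top)\beta_a$ fitted by least squares within each treatment arm. The data-generating process (true ACE equal to 3, confounding through both X and U) and all function names are hypothetical. The same routine is also run with U dropped, which mimics the kind of error-prone estimator used later; it should be visibly biased.

```python
import numpy as np


def fit_ols(Z, y):
    """Least-squares coefficients for y regressed on Z (Z includes an intercept)."""
    coef, *_ = np.linalg.lstsq(Z, y, rcond=None)
    return coef


def regression_imputation(A, W, Y):
    """Regression imputation estimate of the ACE.

    A: (n,) 0/1 treatment; W: (n, p) covariates, e.g. W = (X, U) in the
    validation data; Y: (n,) outcome. Assumes (for this sketch) linear working
    models mu_a(W; beta_a) = [1, W] @ beta_a, fitted separately in each arm.
    """
    Z = np.column_stack([np.ones(len(Y)), W])
    beta1 = fit_ols(Z[A == 1], Y[A == 1])
    beta0 = fit_ols(Z[A == 0], Y[A == 0])
    # Predict both potential outcomes for every unit and average the difference.
    return np.mean(Z @ beta1) - np.mean(Z @ beta0)


# Hypothetical data: treatment depends on X and U, and the true ACE is 3.
rng = np.random.default_rng(0)
n = 5000
X, U = rng.normal(size=n), rng.normal(size=n)
A = rng.binomial(1, 1 / (1 + np.exp(-(X + U))))
Y = X + 2 * U + 3 * A + rng.normal(size=n)

tau_reg = regression_imputation(A, np.column_stack([X, U]), Y)
tau_reg_ep = regression_imputation(A, X[:, None], Y)  # error-prone: U omitted
```

Because U raises both the treatment probability and the outcome, omitting it pushes the error-prone estimate well above 3, while the full-covariate estimate stays close to 3.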

Example 4 (Inverse probability weighting).

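The IPW estimator is $\hat\tau_{2,\mathrm{ipw}} = \hat\mu_{2,\mathrm{ipw}}(1) - \hat\mu_{2,\mathrm{ipw}}(0)$, where

$$\hat\mu_{2,\mathrm{ipw}}(1) = n_2^{-1} \sum_{j \in \mathcal{S}_2} \frac{A_j Y_j}{e(X_j, U_j; \hat\alpha)}, \qquad \hat\mu_{2,\mathrm{ipw}}(0) = n_2^{-1} \sum_{j \in \mathcal{S}_2} \frac{(1 - A_j) Y_j}{1 - e(X_j, U_j; \hat\alpha)}.$$

The estimator $\hat\tau_{2,\mathrm{ipw}}$ is consistent for τ under Assumption 5.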
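A minimal sketch of the Horvitz-Thompson-type IPW estimator, assuming a logistic working propensity score model fitted by maximum likelihood with Newton-Raphson. For the logit link, the score function $S(A, X, U; \alpha)$ given later in Section 3.3 reduces to $(A - e)$ times the design vector, which is what the update uses. The simulated data and helper names are hypothetical.

```python
import numpy as np


def fit_logistic(Z, A, n_iter=25):
    """Logistic-regression MLE by Newton-Raphson; Z includes an intercept column.

    For the logit link the score is Z * (A - e) and the information is
    Z' diag(e(1 - e)) Z.
    """
    alpha = np.zeros(Z.shape[1])
    for _ in range(n_iter):
        e = 1 / (1 + np.exp(-Z @ alpha))
        score = Z.T @ (A - e)
        info = Z.T @ (Z * (e * (1 - e))[:, None])
        alpha = alpha + np.linalg.solve(info, score)
    return alpha


def ipw(A, W, Y):
    """Horvitz-Thompson-type IPW estimate of the ACE with an estimated score."""
    Z = np.column_stack([np.ones(len(Y)), W])
    e = 1 / (1 + np.exp(-Z @ fit_logistic(Z, A)))
    mu1 = np.mean(A * Y / e)
    mu0 = np.mean((1 - A) * Y / (1 - e))
    return mu1 - mu0


# Hypothetical data with a correctly specified logistic propensity score; ACE = 3.
rng = np.random.default_rng(1)
n = 20000
X, U = rng.normal(size=n), rng.normal(size=n)
A = rng.binomial(1, 1 / (1 + np.exp(-(0.5 * X + 0.5 * U))))
Y = X + 2 * U + 3 * A + rng.normal(size=n)

tau_ipw = ipw(A, np.column_stack([X, U]), Y)
```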

This Horvitz-Thompson-type estimator has large variability and is often inferior to the Hájek-type estimator (Hájek 1971), which normalizes the weights to sum to one within each treatment arm. We do not present the Hájek-type estimator because the AIPW estimator below improves on it. The AIPW estimator employs both the propensity score and the outcome models.

Example 5 (Augmented inverse probability weighting).

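Define the residual outcome as $\hat R_j = Y_j - \mu_1(X_j, U_j; \hat\beta_1)$ for treated units and $\hat R_j = Y_j - \mu_0(X_j, U_j; \hat\beta_0)$ for control units. The AIPW estimator is $\hat\tau_{2,\mathrm{aipw}} = \hat\mu_{2,\mathrm{aipw}}(1) - \hat\mu_{2,\mathrm{aipw}}(0)$, where

$$\hat\mu_{2,\mathrm{aipw}}(1) = n_2^{-1} \sum_{j \in \mathcal{S}_2} \left\{ \frac{A_j \hat R_j}{e(X_j, U_j; \hat\alpha)} + \mu_1(X_j, U_j; \hat\beta_1) \right\}, \qquad \hat\mu_{2,\mathrm{aipw}}(0) = n_2^{-1} \sum_{j \in \mathcal{S}_2} \left\{ \frac{(1 - A_j) \hat R_j}{1 - e(X_j, U_j; \hat\alpha)} + \mu_0(X_j, U_j; \hat\beta_0) \right\}. \tag{2}$$

The estimator $\hat\tau_{2,\mathrm{aipw}}$ is doubly robust in the sense that it is consistent if either Assumption 4 or 5 holds. Moreover, it is locally efficient if both Assumptions 4 and 5 hold (Bang and Robins 2005; Tsiatis 2006; Cao, Tsiatis, and Davidian 2009).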
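The sketch below implements the AIPW estimator with separate covariate sets for the propensity score and outcome models, so that double robustness can be checked numerically: with a correct logistic propensity score model and an outcome model that deliberately omits U, the estimate should still be close to the true ACE. Linear outcome models, logistic propensity scores, the simulated data, and the function names are assumptions of this sketch.

```python
import numpy as np


def design(W):
    """Prepend an intercept column."""
    return np.column_stack([np.ones(W.shape[0]), W])


def fit_logistic(Z, A, n_iter=25):
    """Logistic-regression MLE by Newton-Raphson."""
    alpha = np.zeros(Z.shape[1])
    for _ in range(n_iter):
        e = 1 / (1 + np.exp(-Z @ alpha))
        alpha += np.linalg.solve(Z.T @ (Z * (e * (1 - e))[:, None]), Z.T @ (A - e))
    return alpha


def aipw(A, W_ps, W_out, Y):
    """AIPW estimate of the ACE; W_ps and W_out are the covariates of the
    working propensity score and outcome models, which may differ."""
    Zp, Zo = design(W_ps), design(W_out)
    e = 1 / (1 + np.exp(-Zp @ fit_logistic(Zp, A)))
    m = {}
    for a in (0, 1):
        beta, *_ = np.linalg.lstsq(Zo[A == a], Y[A == a], rcond=None)
        m[a] = Zo @ beta                            # fitted mu_a at every unit
    R = Y - np.where(A == 1, m[1], m[0])            # residual outcome R_j
    mu1 = np.mean(A * R / e + m[1])
    mu0 = np.mean((1 - A) * R / (1 - e) + m[0])
    return mu1 - mu0


# Hypothetical data; the true ACE is 3 and the logistic model in (X, U) is correct.
rng = np.random.default_rng(2)
n = 20000
X, U = rng.normal(size=n), rng.normal(size=n)
A = rng.binomial(1, 1 / (1 + np.exp(-(0.5 * X + 0.5 * U))))
Y = X + 2 * U + 3 * A + rng.normal(size=n)
XU = np.column_stack([X, U])

tau_aipw = aipw(A, XU, XU, Y)                       # both models correct
tau_aipw_bad_out = aipw(A, XU, X[:, None], Y)       # outcome model omits U
```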

Matching estimators are also widely used in practice. To fix ideas, we consider matching with replacement, with the number of matches fixed at M. Matching estimators hinge on imputing the missing potential outcome for each unit. To be precise, for unit j, the potential outcome under $A_j$ is the observed outcome $Y_j$; the (counterfactual) potential outcome under $1 - A_j$ is not observed but can be imputed by the average of the observed outcomes of the M nearest units with treatment $1 - A_j$. Let these matched units for unit j be indexed by $\mathcal{J}_{d,V}(j)$, where the subscripts d and V denote the dataset $\mathcal{S}_d$ and the matching variable V (e.g., V = (X, U)), respectively. For concreteness, we use the Euclidean distance to determine neighbors; the discussion applies to other distances (Abadie and Imbens 2006). Let $K_{d,V}(j) = \sum_{l \in \mathcal{S}_d} 1\{j \in \mathcal{J}_{d,V}(l)\}$ be the number of times that unit j is used as a match based on the matching variable V in $\mathcal{S}_d$.

Example 6 (Matching).

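Define the imputed potential outcomes as

$$\hat Y_j(a) = \begin{cases} Y_j, & \text{if } A_j = a, \\ M^{-1} \sum_{k \in \mathcal{J}_{2,(X,U)}(j)} Y_k, & \text{if } A_j = 1 - a, \end{cases} \qquad a = 0, 1.$$

Then the matching estimator of τ is

$$\hat\tau_{2,\mathrm{mat}} = n_2^{-1} \sum_{j \in \mathcal{S}_2} \{\hat Y_j(1) - \hat Y_j(0)\}.$$

Abadie and Imbens (2006) obtained the decomposition

$$\hat\tau_{2,\mathrm{mat}} - \tau = \{\bar\tau(X, U) - \tau\} + B_1 + B_2,$$

where $\bar\tau(X, U) = n_2^{-1} \sum_{j \in \mathcal{S}_2} \{\mu_1(X_j, U_j) - \mu_0(X_j, U_j)\}$,

$$B_1 = n_2^{-1} \sum_{j \in \mathcal{S}_2} (2A_j - 1) \left\{1 + \frac{K_{2,(X,U)}(j)}{M}\right\} \{Y_j - \mu_{A_j}(X_j, U_j)\},$$

$$B_2 = n_2^{-1} \sum_{j \in \mathcal{S}_2} (2A_j - 1) \frac{1}{M} \sum_{k \in \mathcal{J}_{2,(X,U)}(j)} \{\mu_{1-A_j}(X_j, U_j) - \mu_{1-A_j}(X_k, U_k)\}. \tag{3}$$

The difference $\mu_{1-A_j}(X_j, U_j) - \mu_{1-A_j}(X_k, U_k)$ in (3) accounts for the matching discrepancy, and therefore $B_2$ contributes to the asymptotic bias of the matching estimator. Abadie and Imbens (2006) showed that matching estimators have nonnegligible biases when the dimension of V is greater than one. Let $\hat\mu_a(X, U)$ be an estimator for $\mu_a(X, U)$, obtained either parametrically, for example, by linear regression, or nonparametrically, for a = 0, 1. Abadie and Imbens (2006) proposed the bias-corrected matching estimator

$$\hat\tau_{2,\mathrm{bcmat}} = \hat\tau_{2,\mathrm{mat}} - \hat B_2,$$

where $\hat B_2$ is an estimator for $B_2$ obtained by replacing $\mu_a(X, U)$ with $\hat\mu_a(X, U)$.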
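The matching and bias-correction steps of Example 6 can be sketched as follows. Assumptions of this sketch: a brute-force Euclidean nearest-neighbor search (quadratic in n, fine for a few thousand units), ties broken by index order, and linear-regression estimates $\hat\mu_a$ for the bias correction. The function names and simulated data are hypothetical. The returned counts are the $K(j)$ defined above.

```python
import numpy as np


def nn_match(V, A, M):
    """Indices of the M nearest units (Euclidean) in the opposite arm, with replacement."""
    J = np.empty((len(A), M), dtype=int)
    for j in range(len(A)):
        pool = np.flatnonzero(A != A[j])
        dist2 = np.sum((V[pool] - V[j]) ** 2, axis=1)
        J[j] = pool[np.argsort(dist2, kind="stable")[:M]]
    return J


def matching_ace(A, V, Y, M=1):
    """Return (tau_mat, tau_bcmat, K) for matching on V with M matches."""
    n = len(A)
    J = nn_match(V, A, M)
    Y_imp = Y[J].mean(axis=1)                  # average outcome of the M matches
    Y1 = np.where(A == 1, Y, Y_imp)            # imputed Y_j(1)
    Y0 = np.where(A == 0, Y, Y_imp)            # imputed Y_j(0)
    tau_mat = np.mean(Y1 - Y0)
    K = np.bincount(J.ravel(), minlength=n)    # K(j): times unit j is used as a match
    # Bias correction with linear-regression estimates of mu_a (an assumption).
    Z = np.column_stack([np.ones(n), V])
    mu_hat = np.empty((2, n))
    for a in (0, 1):
        beta, *_ = np.linalg.lstsq(Z[A == a], Y[A == a], rcond=None)
        mu_hat[a] = Z @ beta
    other = 1 - A                                          # arm being imputed
    at_self = mu_hat[other, np.arange(n)]                  # mu_{1-A_j}(V_j)
    at_matches = mu_hat[other[:, None], J].mean(axis=1)    # average of mu_{1-A_j}(V_k)
    B2_hat = np.mean((2 * A - 1) * (at_self - at_matches))
    return tau_mat, tau_mat - B2_hat, K


rng = np.random.default_rng(3)
n = 2000
X, U = rng.normal(size=n), rng.normal(size=n)
A = rng.binomial(1, 1 / (1 + np.exp(-(0.5 * X + 0.5 * U))))
Y = X + 2 * U + 3 * A + rng.normal(size=n)     # hypothetical data, ACE = 3
tau_mat, tau_bcmat, K = matching_ace(A, np.column_stack([X, U]), Y, M=1)
```

Every unit contributes exactly M matches, so the counts sum to nM; this is a quick consistency check on the bookkeeping.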

3.2. A General Strategy

We give a general strategy for efficient estimation of the ACE by utilizing both the main and validation data. In Sections 3.3 and 3.4, we will provide examples to elucidate the proposed strategy with specific estimators.

Although the estimators based on the validation data $\mathcal{S}_2$ are consistent for τ under certain regularity conditions, they are inefficient because they do not use the main data $\mathcal{S}_1$. However, the main data do not contain the important confounders U; if we naively apply the estimators in Examples 3–6 with U omitted, the resulting estimators can be inconsistent for τ and are thus error-prone in general. Moreover, for robustness, we do not want to impose additional modeling assumptions linking U and (A, X, Y).

Our strategy is straightforward: we apply the same error-prone procedure to both the main and validation data. The key insight is that the difference of the two error-prone estimates is consistent for 0 and, because it is correlated with the initial estimator $\hat\tau_2$, can be used to improve the efficiency of $\hat\tau_2$. Let $\hat\tau_{1,\mathrm{ep}}$ be an error-prone estimator of τ from the main data $\mathcal{S}_1$, which converges to some constant $\tau_{\mathrm{ep}}$, not necessarily equal to τ. Applying the same method to the validation data $\mathcal{S}_2$, we obtain another error-prone estimator $\hat\tau_{2,\mathrm{ep}}$. More generally, we can take $\tau_{\mathrm{ep}}$ to be an L-dimensional vector of parameters identifiable from the joint distribution of (A, X, Y), and $\hat\tau_{1,\mathrm{ep}}$ and $\hat\tau_{2,\mathrm{ep}}$ to be the corresponding estimators from the main and validation data, respectively. For example, $\hat\tau_{1,\mathrm{ep}}$ can contain estimators of τ using different methods based on $\mathcal{S}_1$.

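We consider a class of estimators satisfying

$$n_2^{1/2} \begin{pmatrix} \hat\tau_2 - \tau \\ \hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}} \end{pmatrix} \to \mathcal{N}\left\{ \begin{pmatrix} 0 \\ 0 \end{pmatrix}, \begin{pmatrix} v_2 & \Gamma^\top \\ \Gamma & V \end{pmatrix} \right\} \tag{4}$$

in distribution, as $n_2 \to \infty$, which is general enough to include all the estimators reviewed in Examples 3–6. Heuristically, if (4) holds exactly rather than asymptotically, multivariate normal theory gives the conditional distribution

$$n_2^{1/2}(\hat\tau_2 - \tau) \mid n_2^{1/2}(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}}) \sim \mathcal{N}\left\{ \Gamma^\top V^{-1} n_2^{1/2}(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}}),\; v_2 - \Gamma^\top V^{-1} \Gamma \right\}.$$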

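Let $\hat v_2$, $\hat\Gamma$, and $\hat V$ be consistent estimators for $v_2$, Γ, and V. We set $\hat\tau_2 - \tau$ equal to its estimated conditional mean $\hat\Gamma^\top \hat V^{-1}(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}})$, leading to an estimating equation for τ:

$$\hat\tau_2 - \tau = \hat\Gamma^\top \hat V^{-1}(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}}).$$

Solving this equation for τ, we obtain the estimator

$$\hat\tau = \hat\tau_2 - \hat\Gamma^\top \hat V^{-1}(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}}). \tag{5}$$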

Proposition 1.

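Under Assumption 1 and certain regularity conditions, if (4) holds, then $\hat\tau$ is consistent for τ, and

$$n_2^{1/2}(\hat\tau - \tau) \to \mathcal{N}(0,\; v_2 - \Gamma^\top V^{-1} \Gamma) \tag{6}$$

in distribution, as $n_2 \to \infty$. Given a nonzero Γ, the asymptotic variance, $v_2 - \Gamma^\top V^{-1} \Gamma$, is smaller than the asymptotic variance of $\hat\tau_2$, $v_2$.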
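The variance reduction in Proposition 1 can be checked by a small Monte Carlo experiment. The toy setting is an assumption chosen so that everything is available in closed form: the "initial estimator" is the validation-sample mean of a per-unit quantity Z, and the error-prone estimators are means of a correlated quantity W computed on the validation and main data. With var(Z) = 2, cov(Z, W) = 1, and var(W) = 1, the components in (4) are $v_2 = 2$, $\Gamma = V = 1 - \rho$, so the theoretical variance of the combined estimator is $\{2 - (1 - \rho)\}/n_2$ versus $2/n_2$ for the initial one.

```python
import numpy as np

rng = np.random.default_rng(4)
reps, n1, n2 = 1000, 2000, 200
rho = n2 / n1

# Toy per-unit quantities (assumption): var(Z) = 2, cov(Z, W) = 1, var(W) = 1.
# Units are IID, so the first n2 columns form a simple random sample of S1.
W = rng.normal(size=(reps, n1))
Z = W + rng.normal(size=(reps, n1))

tau2 = Z[:, :n2].mean(axis=1)                         # initial estimator
diff = W[:, :n2].mean(axis=1) - W.mean(axis=1)        # tau_2,ep - tau_1,ep

# Plug-in estimates of Gamma and V (Theorem 1 form with L = 1).
Zc = Z[:, :n2] - Z[:, :n2].mean(axis=1, keepdims=True)
Wc = W[:, :n2] - W[:, :n2].mean(axis=1, keepdims=True)
Gamma_hat = (1 - rho) * (Zc * Wc).sum(axis=1) / (n2 - 1)
V_hat = (1 - rho) * W.var(axis=1, ddof=1)
tau_hat = tau2 - Gamma_hat / V_hat * diff             # estimator (5)

mc_var_tau2 = tau2.var()
mc_var_tau_hat = tau_hat.var()
theory_tau_hat = (2 - (1 - rho)) / n2                 # (v2 - Gamma^2 / V) / n2
```

The Monte Carlo variance of the combined estimator should sit near the theoretical value, roughly 55% of the variance of the initial estimator here.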

The consistency of $\hat\tau$ does not require any component of $\hat\tau_{1,\mathrm{ep}}$ and $\hat\tau_{2,\mathrm{ep}}$ to be consistent for τ; that is, these estimators can be error-prone. The requirement on the error-prone estimators is minimal: they only need to be consistent for the same (finite) parameters. Under Assumption 1, $\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}}$ is consistent for a vector of zeros, as $n_2 \to \infty$.

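We can estimate the variance of $\hat\tau$ by

$$\widehat{\mathrm{var}}(\hat\tau) = n_2^{-1}\left(\hat v_2 - \hat\Gamma^\top \hat V^{-1} \hat\Gamma\right). \tag{7}$$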
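Once the five ingredients are available, estimator (5) and variance estimate (7) are a few lines of linear algebra. The sketch below, with a hypothetical function name and made-up scalar inputs, solves a linear system rather than forming $\hat V^{-1}$ explicitly; it accepts an L-dimensional error-prone vector as well as a scalar.

```python
import numpy as np


def combine(tau2, tau2_ep, tau1_ep, v2, Gamma, V, n2):
    """Estimator (5) and its variance estimate (7).

    tau2: initial estimate from the validation data (scalar);
    tau2_ep, tau1_ep: error-prone estimates from S2 and S1 (scalars or L-vectors);
    v2, Gamma (L,), V (L, L): consistent estimates of the components in (4).
    """
    diff = np.atleast_1d(np.asarray(tau2_ep, float) - np.asarray(tau1_ep, float))
    Gamma = np.atleast_1d(np.asarray(Gamma, float))
    V = np.atleast_2d(np.asarray(V, float))
    w = np.linalg.solve(V, Gamma)              # V^{-1} Gamma
    tau_hat = tau2 - w @ diff
    var_hat = (v2 - Gamma @ w) / n2
    return float(tau_hat), float(var_hat)


# Made-up scalar inputs (L = 1): V^{-1} Gamma = 2, so the correction is 2 * 0.2.
tau_hat, var_hat = combine(tau2=2.0, tau2_ep=1.5, tau1_ep=1.3,
                           v2=4.0, Gamma=1.0, V=0.5, n2=100)
# tau_hat is approximately 1.6 and var_hat approximately 0.02
```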

Remark 1.

We construct the error-prone estimators $\hat\tau_{1,\mathrm{ep}}$ and $\hat\tau_{2,\mathrm{ep}}$ based on $\mathcal{S}_1$ and $\mathcal{S}_2$, respectively. Another intuitive way is to construct them based on $\mathcal{S}_1 \setminus \mathcal{S}_2$ and $\mathcal{S}_2$, respectively. In general, we can construct the error-prone estimators based on different subsets of $\mathcal{S}_1$ and $\mathcal{S}_2$ as long as their difference converges in probability to zero. We show in the supplementary material that our construction maximizes the variance reduction for $\hat\tau$, given the procedure used for the error-prone estimators.

Remark 2.

We can view (5) as the best consistent estimator of τ among all linear combinations $\hat\tau_2 + \lambda^\top(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}})$, $\lambda \in \mathbb{R}^L$, in the sense that (5) achieves the minimal asymptotic variance among this class of consistent estimators. Similar ideas appeared in design-optimal regression estimation in survey sampling (Deville and Särndal 1992; Fuller 2009), regression analyses (Chen and Chen 2000; Chen 2002; Wang and Wang 2015), improved prediction in high-dimensional datasets (Boonstra, Taylor, and Mukherjee 2012), and meta-analysis (Collaboration 2009). In the supplementary material, we show that the proposed estimator in (5) is the best estimator of τ among the class of estimators $\{\hat\tau_g = g(\hat\tau_2, \hat\tau_{2,\mathrm{ep}}, \hat\tau_{1,\mathrm{ep}}) : g(x, y, z) \text{ is a smooth function of } (x, y, z) \text{ and } \hat\tau_g \text{ is consistent for } \tau\}$, in the sense that (5) achieves the minimal asymptotic variance among this class.

Remark 3.

The choice of the error-prone estimators affects the efficiency of $\hat\tau$. From (6), for a given $\hat\tau_2$, to improve its efficiency with a one-dimensional error-prone estimator, we would like the difference $\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}}$ to have a large covariance Γ with $\hat\tau_2$ relative to its variance V. In principle, increasing the dimension of the error-prone estimator would not decrease the asymptotic efficiency gain, as shown in the supplementary material. However, it would also increase the complexity of implementation and may harm the finite-sample properties. To balance this tradeoff, we suggest choosing the error-prone estimator to be of the same type as the initial estimator $\hat\tau_2$. For example, if $\hat\tau_2$ is an AIPW estimator, we can choose the error-prone estimator to be an AIPW estimator that does not use U, with a possibly misspecified propensity score model. The simulation in Section 5 confirms that this choice is reasonable.
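For a one-dimensional error-prone estimator (L = 1), the asymptotic variance in (6) can be rewritten in terms of the asymptotic correlation $r = \Gamma/(v_2 V)^{1/2}$ between $n_2^{1/2}(\hat\tau_2 - \tau)$ and $n_2^{1/2}(\hat\tau_{2,\mathrm{ep}} - \hat\tau_{1,\mathrm{ep}})$, which makes the role of Γ and V explicit:

$$v_2 - \frac{\Gamma^2}{V} = v_2\left(1 - \frac{\Gamma^2}{v_2 V}\right) = v_2 (1 - r^2).$$

The proportional variance reduction is therefore $r^2$. For RAL estimators, plugging in the expressions for Γ and V from Theorem 1 gives $r^2 = (1 - \rho)\,\mathrm{corr}^2\{\psi_{\mathrm{ep}}(A, X, Y), \psi_2(A, X, U, Y)\}$, so the gain grows both with the correlation of the influence functions and with the relative size of the main data.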

To close this subsection, we comment on the existing literature and the advantages of our strategy. The proposed estimator in (5) utilizes both the main and validation data and improves the efficiency of the estimator based solely on the validation data. In economics, Imbens and Lancaster (1994) proposed to use the generalized method of moments (Hansen 1982) for using the main data which provide moments of the marginal distribution of some economic variables. In survey sampling, calibration is a standard technique to integrate auxiliary information in estimation or handle nonresponse; see, for example, Chen and Chen (2000), Wu and Sitter (2001), Kott (2006), Chang and Kott (2008), and Kim, Kwon, and Paik (2016). An important issue is how to specify optimal calibration equations; see, for example, Deville and Särndal (1992), Robins, Rotnitzky, and Zhao (1994), Wu and Sitter (2001), and Lumley, Shaw, and Dai (2011). Other researchers developed constrained empirical likelihood methods to calibrate auxiliary information from the main data; see, for example, Chen and Sitter (1999), Qin (2000), Chen, Sitter, and Wu (2002), and Chen, Leung, and Qin (2003).

Compared to these methods, the proposed framework is attractive because it is simple to implement, requiring only standard software routines for existing methods, and because it can deal with estimators that cannot be derived from moment conditions, such as matching estimators. Moreover, our framework does not require a correct model specification of the relationship between the unmeasured covariates U and the measured variables (A, X, Y).

3.3. Regular Asymptotically Linear (RAL) Estimators

We first elucidate the proposed method with RAL estimators.

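From the validation data, we consider the case when $\hat\tau_2$ is RAL; that is, it can be asymptotically approximated by an average of IID random variables with mean 0:

$$\hat\tau_2 - \tau \cong n_2^{-1} \sum_{j \in \mathcal{S}_2} \psi_2(A_j, X_j, U_j, Y_j), \tag{8}$$

where the $\psi_2(A_j, X_j, U_j, Y_j)$ are IID with mean 0. The random variable $\psi_2(A, X, U, Y)$ is called the influence function of $\hat\tau_2$, with $E\{\psi_2(A, X, U, Y)\} = 0$ and $E\{\psi_2^2(A, X, U, Y)\} < \infty$ (e.g., Bickel et al. 1993). Regarding regularity conditions, see, for example, Newey (1990).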

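Let $e(X; \alpha_{\mathrm{ep}})$ be an error-prone propensity score model for $e(X)$, and $\mu_a(X; \beta_{a,\mathrm{ep}})$ be an error-prone outcome regression model for $\mu_a(X)$, for a = 0, 1. The corresponding error-prone estimators of the ACE can be obtained from the main data $\mathcal{S}_1$ and the validation data $\mathcal{S}_2$. We consider the case when $\hat\tau_{d,\mathrm{ep}}$ is RAL:

$$\hat\tau_{d,\mathrm{ep}} - \tau_{\mathrm{ep}} \cong n_d^{-1} \sum_{i \in \mathcal{S}_d} \psi_{\mathrm{ep}}(A_i, X_i, Y_i), \qquad d = 1, 2, \tag{9}$$

where the $\psi_{\mathrm{ep}}(A_i, X_i, Y_i)$ are IID with mean 0.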

Theorem 1.

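Under certain regularity conditions, (4) holds for the RAL estimators (8) and (9), where $v_2 = \mathrm{var}\{\psi_2(A, X, U, Y)\}$, $\Gamma = (1 - \rho)\,\mathrm{cov}\{\psi_{\mathrm{ep}}(A, X, Y), \psi_2(A, X, U, Y)\}$, and $V = (1 - \rho)\,\mathrm{var}\{\psi_{\mathrm{ep}}(A, X, Y)\}$, with ρ the limit of $n_2/n_1$.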

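To derive $\hat v_2$, $\hat\Gamma$, and $\hat V$ for RAL estimators, let $\hat\psi_2(A_j, X_j, U_j, Y_j)$ and $\hat\psi_{d,\mathrm{ep}}(A_i, X_i, Y_i)$ be estimators of $\psi_2(A_j, X_j, U_j, Y_j)$ and $\psi_{\mathrm{ep}}(A_i, X_i, Y_i)$ obtained by replacing E(·) with the empirical measure and unknown parameters with their corresponding estimators. Note that the subscript d in $\hat\psi_{d,\mathrm{ep}}$ indicates that it is obtained based on $\mathcal{S}_d$. Then, we can estimate $v_2$, Γ, and V by

$$\hat v_2 = n_2^{-1} \sum_{j \in \mathcal{S}_2} \hat\psi_2^2(A_j, X_j, U_j, Y_j), \qquad \hat\Gamma = \left(1 - \frac{n_2}{n_1}\right) n_2^{-1} \sum_{j \in \mathcal{S}_2} \hat\psi_{2,\mathrm{ep}}(A_j, X_j, Y_j)\, \hat\psi_2(A_j, X_j, U_j, Y_j),$$

$$\hat V = \left(1 - \frac{n_2}{n_1}\right) n_1^{-1} \sum_{i \in \mathcal{S}_1} \hat\psi_{1,\mathrm{ep}}^{\otimes 2}(A_i, X_i, Y_i).$$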

Finally, we obtain the estimator $\hat\tau$ and its variance estimator by (5) and (7), respectively.
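The plug-in step of Theorem 1 can be sketched as follows, assuming the estimated influence-function values have already been computed (for example, from the formulas in the supplementary material). The function name and array layout are hypothetical. The sketch centres each column before forming moments; since estimated influence functions have empirical mean close to zero, this is asymptotically equivalent to the uncentred sums.

```python
import numpy as np


def ral_components(psi2, psi_ep_s2, psi_ep_s1):
    """Plug-in estimates of (v2, Gamma, V) in Theorem 1.

    psi2:      (n2,)   estimated psi_2 at the validation units;
    psi_ep_s2: (n2, L) estimated psi_ep at the validation units (fitted on S2);
    psi_ep_s1: (n1, L) estimated psi_ep at the main-data units (fitted on S1).
    """
    n2, n1 = len(psi2), psi_ep_s1.shape[0]
    shrink = 1 - n2 / n1                        # estimates 1 - rho
    p2 = psi2 - psi2.mean()
    e2 = psi_ep_s2 - psi_ep_s2.mean(axis=0)
    e1 = psi_ep_s1 - psi_ep_s1.mean(axis=0)
    v2 = np.mean(p2 ** 2)
    Gamma = shrink * (e2.T @ p2) / n2           # shape (L,)
    V = shrink * (e1.T @ e1) / n1               # shape (L, L)
    return v2, Gamma, V


# Toy influence-function values (assumption), with S2 = the first n2 units.
rng = np.random.default_rng(5)
n1, n2 = 1000, 100
psi_ep = rng.normal(size=(n1, 1))
psi2 = 2 * psi_ep[:n2, 0] + rng.normal(size=n2)
v2, Gamma, V = ral_components(psi2, psi_ep[:n2], psi_ep)
```

The outputs can be passed directly to estimator (5) and variance estimate (7).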

Commonly used RAL estimators include the regression imputation and (augmented) inverse probability weighting estimators. Because the influence functions for $\hat\tau_{2,\mathrm{reg}}$ and $\hat\tau_{2,\mathrm{ipw}}$ are standard, we present their details in the supplementary material. Below, we state only the influence function for $\hat\tau_{2,\mathrm{aipw}}$.

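For the outcome model, let $S_a(A, X, U, Y; \beta_a)$ be the estimating function for $\beta_a$, for example,

$$S_a(A, X, U, Y; \beta_a) = 1(A = a)\, \frac{\partial \mu_a(X, U; \beta_a)}{\partial \beta_a} \{Y - \mu_a(X, U; \beta_a)\},$$

for a = 0, 1, which is a standard choice for the conditional mean model. For the propensity score model, let $S(A, X, U; \alpha)$ be the estimating function for α, for example,

$$S(A, X, U; \alpha) = \frac{A - e(X, U; \alpha)}{e(X, U; \alpha)\{1 - e(X, U; \alpha)\}}\, \frac{\partial e(X, U; \alpha)}{\partial \alpha},$$

which is the score function from the likelihood of a binary response model. Moreover, let

$$\mathcal{I}(\alpha) = -E\left\{ \frac{\partial S(A, X, U; \alpha)}{\partial \alpha^\top} \right\}$$

be the Fisher information matrix for α in the propensity score model. In addition, let $\hat\beta_a$ and $\hat\alpha$ be the estimators solving the corresponding empirical estimating equations based on $\mathcal{S}_2$, with probability limits $\beta_a^*$ and $\alpha^*$, respectively.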

Lemma 1 (Augmented inverse probability weighting).

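For simplicity, denote $e = e(X, U; \alpha^*)$ and $\mu_a = \mu_a(X, U; \beta_a^*)$, for a = 0, 1. Under Assumption 4 or 5, $\hat\tau_{2,\mathrm{aipw}}$ has the influence function

$$\psi_{2,\mathrm{aipw}} = \frac{A(Y - \mu_1)}{e} + \mu_1 - \frac{(1 - A)(Y - \mu_0)}{1 - e} - \mu_0 - \tau$$

$$\qquad + H_\alpha^\top\, \mathcal{I}(\alpha^*)^{-1} S(A, X, U; \alpha^*) \tag{10}$$

$$\qquad + \sum_{a = 0, 1} H_{\beta_a}^\top \left[ -E\left\{ \frac{\partial S_a(A, X, U, Y; \beta_a^*)}{\partial \beta_a^\top} \right\} \right]^{-1} S_a(A, X, U, Y; \beta_a^*), \tag{11}$$

where

$$H_\alpha = -E\left\{ \frac{A(Y - \mu_1)}{e^2} \frac{\partial e}{\partial \alpha} + \frac{(1 - A)(Y - \mu_0)}{(1 - e)^2} \frac{\partial e}{\partial \alpha} \right\}, \quad H_{\beta_1} = E\left\{ \left(1 - \frac{A}{e}\right) \frac{\partial \mu_1}{\partial \beta_1} \right\}, \quad H_{\beta_0} = -E\left\{ \left(1 - \frac{1 - A}{1 - e}\right) \frac{\partial \mu_0}{\partial \beta_0} \right\}.$$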

Lemma 1 follows from standard asymptotic theory, but, as far as we know, it has not appeared in the literature. Lunceford and Davidian (2004) suggested a variance formula for the AIPW estimator that omits the terms (10) and (11); this formula, however, works only when both Assumptions 4 and 5 hold. If either assumption fails, the resulting variance estimator is inconsistent, as shown by simulation in Funk et al. (2011). The correction terms in (10) and (11) make the variance estimator doubly robust: the variance estimator for the AIPW estimator is consistent if either Assumption 4 or 5 holds, not necessarily both.

For error-prone estimators, we can obtain the influence functions similarly. The subtlety is that both the propensity score and outcome models can be misspecified. For simplicity of the presentation, we defer the exact formulas to the online supplementary material.

3.4. Matching Estimators

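We then elucidate the proposed method with non-RAL estimators. An important class of non-RAL estimators for the ACE is the class of matching estimators, which are not regular because their functional forms are not smooth owing to the fixed number of matches (Abadie and Imbens 2008). Continuing with Example 6, Abadie and Imbens (2006) expressed the bias-corrected matching estimator in a linear form as

$$\hat\tau_{2,\mathrm{bcmat}} - \tau \cong n_2^{-1} \sum_{j \in \mathcal{S}_2} \psi_{2,\mathrm{mat}}(j), \tag{12}$$

where

$$\psi_{2,\mathrm{mat}}(j) = \mu_1(X_j, U_j) - \mu_0(X_j, U_j) - \tau + (2A_j - 1)\left\{1 + \frac{K_{2,(X,U)}(j)}{M}\right\}\{Y_j - \mu_{A_j}(X_j, U_j)\}. \tag{13}$$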

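Similarly, the error-prone bias-corrected matching estimator $\hat\tau_{d,\mathrm{ep}}$, which matches on X only, has a linear form

$$\hat\tau_{d,\mathrm{ep}} - \tau_{\mathrm{ep}} \cong n_d^{-1} \sum_{i \in \mathcal{S}_d} \psi_{d,\mathrm{ep}}(i), \qquad d = 1, 2, \tag{14}$$

where

$$\psi_{d,\mathrm{ep}}(i) = \mu_1(X_i) - \mu_0(X_i) - \tau_{\mathrm{ep}} + (2A_i - 1)\left\{1 + \frac{K_{d,X}(i)}{M}\right\}\{Y_i - \mu_{A_i}(X_i)\}. \tag{15}$$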
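Given the match counts and values of the conditional means, the linear terms in (13) and (15) are elementwise computations. The sketch below, with hypothetical argument names, evaluates them for one dataset; in practice one substitutes estimates of $\mu_a$ and the point estimate for τ (or $\tau_{\mathrm{ep}}$) to obtain the estimated linear terms, which are the inputs to the bootstrap in Section 3.5.

```python
import numpy as np


def matching_linear_terms(A, Y, K, mu0, mu1, tau, M):
    """Evaluate psi(j) = mu1_j - mu0_j - tau + (2A_j - 1)(1 + K_j/M)(Y_j - mu_{A_j, j}).

    A, Y, K, mu0, mu1 are arrays over the units of one dataset; mu0 and mu1 hold
    mu_a evaluated at each unit's matching variable ((X, U) for (13), X for (15)).
    """
    A = np.asarray(A)
    mu_obs = np.where(A == 1, mu1, mu0)          # mu_{A_j} at unit j
    return mu1 - mu0 - tau + (2 * A - 1) * (1 + np.asarray(K) / M) * (Y - mu_obs)


# Two made-up units: a treated unit never used as a match, and a control used twice.
psi = matching_linear_terms(A=np.array([1, 0]), Y=np.array([5.0, 1.0]),
                            K=np.array([0, 2]), mu0=np.array([1.0, 0.5]),
                            mu1=np.array([4.0, 2.0]), tau=2.5, M=1)
# psi equals [1.5, -2.5]
```

The control unit's residual is weighted by $1 + K/M = 3$ because it stands in for the missing outcomes of the two treated units that matched to it.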

Theorem 2.

Under certain regularity conditions, (4) holds for the matching estimators (12) and (14), where

The existence of the probability limits in Theorem 2 is guaranteed by the regularity conditions specified in the supplementary material (cf. Abadie and Imbens 2006).

To estimate (v2, Γ, V) in Theorem 2, we need to estimate the conditional mean and variance functions of the outcome given covariates. Following Abadie and Imbens (2006), we can estimate these functions via matching units with the same treatment level. We will discuss an alternative bootstrap strategy in the next subsection.

3.5. Bootstrap Variance Estimation

The asymptotic results in Theorems 1 and 2 allow for variance estimation of $\hat\tau$. In addition, we consider the bootstrap for variance estimation, which is simpler to implement and often has better finite-sample performance (Otsu and Rai 2016). This is particularly important for matching estimators because the analytic variance formulas involve nonparametric estimation of the conditional variances $\sigma_a^2(X, U)$ and $\sigma_a^2(X)$.

There are two approaches to obtaining bootstrap observations: resampling (a) the original observations or (b) the asymptotically linear terms of the proposed estimator. For RAL estimators, bootstrapping the original observations yields valid variance estimators (Efron and Tibshirani 1986; Shao and Tu 2012). However, for matching estimators, Abadie and Imbens (2008) showed that, owing to the lack of smoothness of their functional form, the bootstrap based on approach (a) is not valid for variance estimation. This is mainly because approach (a) cannot preserve the distribution of the number of times that each unit is used as a match. As a remedy, Otsu and Rai (2016) proposed to construct bootstrap counterparts of the matching estimator by resampling based on approach (b).

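To unify the notation, let $\psi_2(j)$ denote $\psi_2(A_j, X_j, U_j, Y_j)$ for RAL estimators and $\psi_{2,\mathrm{mat}}(j)$ for matching estimators; similarly, let $\psi_{d,\mathrm{ep}}(i)$ denote $\psi_{\mathrm{ep}}(A_i, X_i, Y_i)$ for RAL estimators and the linear term in (15) for matching estimators. Let $\hat\psi_2(j)$ and $\hat\psi_{d,\mathrm{ep}}(i)$ be their estimated versions obtained by replacing the population quantities with the estimated quantities (d = 1, 2). Following Otsu and Rai (2016), for b = 1, ..., B, we construct the bootstrap replicates for the proposed estimator as follows: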

Step 1. Sample $n_1$ units from $\mathcal{S}_1$ with replacement as $\mathcal{S}_1^{*(b)}$, treating the sampled units with observed U as the bootstrap validation data $\mathcal{S}_2^{*(b)}$, of size $n_2^{*(b)}$.

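Step 2. Compute the bootstrap replicates of $\hat\tau_2$, $\hat\tau_{2,\mathrm{ep}}$, and $\hat\tau_{1,\mathrm{ep}}$ as

$$\hat\tau_2^{*(b)} = \hat\tau_2 + \frac{1}{n_2^{*(b)}} \sum_{j \in \mathcal{S}_2^{*(b)}} \hat\psi_2(j), \qquad \hat\tau_{2,\mathrm{ep}}^{*(b)} = \hat\tau_{2,\mathrm{ep}} + \frac{1}{n_2^{*(b)}} \sum_{j \in \mathcal{S}_2^{*(b)}} \hat\psi_{2,\mathrm{ep}}(j), \qquad \hat\tau_{1,\mathrm{ep}}^{*(b)} = \hat\tau_{1,\mathrm{ep}} + \frac{1}{n_1} \sum_{i \in \mathcal{S}_1^{*(b)}} \hat\psi_{1,\mathrm{ep}}(i),$$

where sums over bootstrap samples count repeated units with their multiplicity.

Based on the bootstrap replicates, we estimate Γ, V, and $v_2$ by

$$\hat\Gamma = n_2\, \widehat{\mathrm{cov}}^*\left(\hat\tau_{2,\mathrm{ep}}^{*(b)} - \hat\tau_{1,\mathrm{ep}}^{*(b)},\; \hat\tau_2^{*(b)}\right), \tag{16}$$

$$\hat V = n_2\, \widehat{\mathrm{var}}^*\left(\hat\tau_{2,\mathrm{ep}}^{*(b)} - \hat\tau_{1,\mathrm{ep}}^{*(b)}\right), \tag{17}$$

$$\hat v_2 = n_2\, \widehat{\mathrm{var}}^*\left(\hat\tau_2^{*(b)}\right), \tag{18}$$

where $\widehat{\mathrm{cov}}^*$ and $\widehat{\mathrm{var}}^*$ denote the sample covariance and variance over the B bootstrap replicates.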

Finally, we estimate the variance of $\hat\tau$ by (7), that is, $\widehat{\mathrm{var}}(\hat\tau) = n_2^{-1}(\hat v_2 - \hat\Gamma^\top \hat V^{-1} \hat\Gamma)$.
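A sketch of the linear-term bootstrap (Steps 1 and 2 and the (co)variance estimates), assuming the estimated linear terms are already available and that the main data are ordered so that the first $n_2$ units form the validation data (a convention of this sketch, not of the method). The point estimates themselves are left out of the replicates, since adding a constant does not change a variance or covariance. If $n_2/n_1$ is small, some resamples may contain no validation units; the scheme of Remark 4 avoids this.

```python
import numpy as np


def linear_bootstrap(psi2, psi_ep_s2, psi_ep_s1, B=2000, seed=0):
    """Return (v2_hat, Gamma_hat, V_hat) from B bootstrap replicates of the linear terms.

    psi2: (n2,), psi_ep_s2: (n2, L), psi_ep_s1: (n1, L); units 0..n2-1 form S2.
    """
    rng = np.random.default_rng(seed)
    n2, n1 = len(psi2), psi_ep_s1.shape[0]
    t2 = np.empty(B)
    d = np.empty((B, psi_ep_s1.shape[1]))
    for b in range(B):
        idx = rng.integers(0, n1, size=n1)      # Step 1: resample S1 with replacement
        val = idx[idx < n2]                     # resampled units with U observed
        t2[b] = psi2[val].mean()                # Step 2: replicate of tau_2 (centred)
        d[b] = psi_ep_s2[val].mean(axis=0) - psi_ep_s1[idx].mean(axis=0)
    v2 = n2 * t2.var(ddof=1)
    Gamma = n2 * ((d - d.mean(axis=0)).T @ (t2 - t2.mean())) / (B - 1)
    V = n2 * np.atleast_2d(np.cov(d, rowvar=False))
    return v2, Gamma, V


# Toy linear terms (assumption): var(psi2) = 2, cov = 1, var(psi_ep) = 1, rho = 0.2,
# so the targets are v2 = 2, Gamma = 0.8, V = 0.8.
rng = np.random.default_rng(6)
n1, n2 = 2000, 400
psi_ep = rng.normal(size=(n1, 1))
psi2 = psi_ep[:n2, 0] + rng.normal(size=n2)
v2, Gamma, V = linear_bootstrap(psi2, psi_ep[:n2], psi_ep)
```

The bootstrap reproduces the $(1 - \rho)$ factors of Theorem 1 automatically, because the main-data replicate shares the resampled validation units.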

Theorem 3.

Under certain regularity conditions, $\hat v_2$, $\hat\Gamma$, and $\hat V$ in (16)–(18) are consistent for $v_2$, Γ, and V, respectively.

Remark 4.

If the ratio of $n_2$ to $n_1$ is small, the above bootstrap approach may be unstable, because some bootstrap validation data are likely to contain only a few or even zero observations. In this case, we use an alternative bootstrap approach: we sample $n_2$ units from $\mathcal{S}_2$ with replacement as $\mathcal{S}_2^{*}$, sample $n_1 - n_2$ units from $\mathcal{S}_1 \setminus \mathcal{S}_2$ with replacement and combine them with $\mathcal{S}_2^{*}$ as $\mathcal{S}_1^{*}$, and obtain the proposed estimators based on $\mathcal{S}_1^{*}$ and $\mathcal{S}_2^{*}$. This approach guarantees that the bootstrap validation data contain $n_2$ observations.
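The resampling scheme of this remark only changes how the bootstrap indices are drawn. A minimal sketch, again assuming (as a convention of the sketch) that units $0, \ldots, n_2 - 1$ of the main data form the validation data; the function name is hypothetical.

```python
import numpy as np


def stratified_bootstrap_indices(n1, n2, rng):
    """Remark 4 resampling: n2 draws from S2 and n1 - n2 draws from S1 minus S2.

    Assumes units 0..n2-1 form S2; the first n2 returned indices form S2*.
    """
    boot_s2 = rng.integers(0, n2, size=n2)              # S2*, always n2 units
    boot_rest = rng.integers(n2, n1, size=n1 - n2)      # draws from S1 minus S2
    return np.concatenate([boot_s2, boot_rest])         # S1* = S2* plus the rest


idx = stratified_bootstrap_indices(n1=1000, n2=20, rng=np.random.default_rng(7))
```

These indices can replace the unrestricted draw in Step 1; the rest of the procedure is unchanged.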

Remark 5.

It is worthwhile to comment on a computational issue. When the main data are very large, the bootstrap can be computationally demanding if we follow Steps 1 and 2 above. In this case, we can use subsampling (Politis, Romano, and Wolf 1999) or the Bag of Little Bootstraps (Kleiner et al. 2014) to reduce the computational burden. More interestingly, when $n_2/n_1 \to \rho = 0$, that is, when the validation data are a small fraction of the main data, Γ and V reduce to $\mathrm{cov}\{\psi_{\mathrm{ep}}(A, X, Y), \psi_2(A, X, U, Y)\}$ and $\mathrm{var}\{\psi_{\mathrm{ep}}(A, X, Y)\}$ for RAL estimators, respectively. That is, when the main data are substantially larger, we can ignore the uncertainty in $\hat\tau_{1,\mathrm{ep}}$ and treat it as a constant, a regime recently considered by Chatterjee et al. (2016). In this case, we need only bootstrap the validation data, which is computationally simpler.

4. Extensions

4.1. Other Causal Estimands

Our strategy extends to a wide class of causal estimands, as long as (4) holds. For example, we can consider average causal effects over a subpopulation (Crump et al. 2006; Li, Morgan, and Zaslavsky 2016), including the average causal effect on the treated.

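We can also consider nonlinear causal estimands. For example, for a binary outcome, the log of the causal risk ratio is

$$\log \mathrm{CRR} = \log \frac{E\{Y(1)\}}{E\{Y(0)\}},$$

and the log of the causal odds ratio is

$$\log \mathrm{COR} = \log \frac{E\{Y(1)\} / [1 - E\{Y(1)\}]}{E\{Y(0)\} / [1 - E\{Y(0)\}]}.$$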

We give a brief discussion of the log CRR as an illustration. The key insight is that, under Assumptions 2 and 3, we can estimate E{Y(a)} with commonly used estimators from $\mathcal{S}_2$, denoted by $\hat\mu_2(a)$, for a = 0, 1. We can then estimate the log CRR by $\log\{\hat\mu_2(1)/\hat\mu_2(0)\}$. Similarly, we can obtain error-prone estimators of the log CRR from both $\mathcal{S}_1$ and $\mathcal{S}_2$ using only the covariates X. By a Taylor expansion, we can linearize these estimators and establish a result similar to (4), which serves as the basis for constructing an improved estimator of the log CRR.
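As a sketch of the linearization step, suppose (an assumption made for illustration) that each validation-data estimator of $m_a = E\{Y(a)\}$ is RAL with influence function $\psi_{2,a}$, and that $m_0, m_1 > 0$; the notation $m_a$ and $\psi_{2,a}$ is introduced only here. A first-order Taylor expansion of the logarithm around $(m_1, m_0)$ gives

$$\log \frac{\hat m_2(1)}{\hat m_2(0)} - \log \frac{m_1}{m_0} \cong \frac{\hat m_2(1) - m_1}{m_1} - \frac{\hat m_2(0) - m_0}{m_0} \cong n_2^{-1} \sum_{j \in \mathcal{S}_2} \left\{ \frac{\psi_{2,1}(j)}{m_1} - \frac{\psi_{2,0}(j)}{m_0} \right\},$$

so the log CRR estimator is RAL with influence function $\psi_{2,1}/m_1 - \psi_{2,0}/m_0$. Applying the same expansion to the error-prone estimators from $\mathcal{S}_1$ and $\mathcal{S}_2$ yields linear forms of the type (9), after which Theorem 1 and estimator (5) apply unchanged.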

4.2. Design Issue: Optimal Sample Size Allocation

As a design issue, we consider planning a study that yields the data structure in Example 1 in Section 2. The goal is to find the optimal design, specifically the sample allocation, that minimizes the variance of the proposed estimator subject to a cost constraint, as in classical two-phase sampling (Cochran 2007).

Suppose that it costs $C_1$ to collect (A, X, Y) for each unit, and $C_2$ to collect U for each unit. Thus, the total cost of the study is

$$C = C_1 n_1 + C_2 n_2. \tag{19}$$

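The variance of the proposed estimator is of the form

$$\frac{v_2 - v_{2,ep}}{n_2} + \frac{v_{2,ep}}{n_1}, \qquad (20)$$

where $v_{2,ep}$ is the part of $v_2$ explained by the error-prone estimators; for example, for RAL estimators, $v_{2,ep}$ is the variance of the projection of the influence function of $\hat\tau_2$ onto the linear space spanned by the influence functions of the error-prone estimators. Minimizing (20) with respect to $n_1$ and $n_2$ subject to the constraint (19) yields the optimal $n_1^*$ and $n_2^*$, which satisfy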

$$\frac{n_2^*}{n_1^*} = \left(\frac{C_1}{C_2}\cdot\frac{1-R^2}{R^2}\right)^{1/2}, \qquad (21)$$

where $R^2$ is the squared multiple correlation coefficient between the initial estimator and the error-prone estimators, which measures their association. We derive (21) using Lagrange multipliers and relegate the details to the supplementary material. Not surprisingly, (21) shows that the ratio of the validation sample size to the main sample size is proportional to $(C_1/C_2)^{1/2}$; that is, each sample size is inversely proportional to the square root of its per-unit cost. In addition, (21) shows that a larger validation sample is more desirable when the association between the initial estimator and the error-prone estimator is weak.

4.3. Multiple Data Sources

We have considered the setting with two data sources, and we can easily extend the theory to the setting with multiple data sources $\mathcal{O}_1,\ldots,\mathcal{O}_K$, where $\mathcal{O}_1,\ldots,\mathcal{O}_{K-1}$ contain partial covariate information, and the validation data, $\mathcal{O}_K$, contain full information for (A, X, U, Y). For example, for $d = 1,\ldots,K-1$, $\mathcal{O}_d$ contains (A, X, Y) together with a subset of the components of U. Each dataset $\mathcal{O}_d$ has size $n_d$, for d = 1,...,K. This type of data structure arises from multi-phase sampling as an extension of Example 1, or from multiple sources of “big data” as an extension of Example 2.

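Let $\hat\tau_K$ be the initial estimator for τ from the validation data $\mathcal{O}_K$, and let $\hat\tau_{ep,d}$ be the error-prone estimator for τ from $\mathcal{O}_d$. Let $\hat\tau_{ep,K,d}$ be the estimator obtained by applying the same error-prone estimator used for $\mathcal{O}_d$ to $\mathcal{O}_K$, so that $\hat\tau_{ep,K,d} - \hat\tau_{ep,d}$ is consistent for 0, for $d = 1,\ldots,K-1$. Assume that

$$n_K^{1/2}\begin{pmatrix}\hat\tau_K - \tau\\ \hat{\boldsymbol\tau}_{ep}\end{pmatrix} \to N\left\{\begin{pmatrix}0\\ \mathbf{0}\end{pmatrix}, \begin{pmatrix} v_K & \Gamma^{\mathrm T}\\ \Gamma & V\end{pmatrix}\right\}$$

in distribution, as $n_K\to\infty$, where $\hat{\boldsymbol\tau}_{ep} = (\hat\tau_{ep,K,1}-\hat\tau_{ep,1},\ldots,\hat\tau_{ep,K,K-1}-\hat\tau_{ep,K-1})^{\mathrm T}$. If Γ and V have consistent estimators $\hat\Gamma$ and $\hat V$, respectively, then, extending the proposed method in Section 3, we can use

$$\hat\tau = \hat\tau_K - \hat\Gamma^{\mathrm T}\hat V^{-1}\hat{\boldsymbol\tau}_{ep}$$

to estimate τ. The estimator $\hat\tau$ is consistent for τ with asymptotic variance $n_K^{-1}(v_K - \Gamma^{\mathrm T}V^{-1}\Gamma)$, which is smaller than the asymptotic variance of $\hat\tau_K$, $n_K^{-1}v_K$, if Γ is nonzero. Similar to the reasoning in Remark 3, using more data sources improves the asymptotic estimation efficiency for τ.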

5. Simulation

In this section, we conduct a simulation study to evaluate the finite sample performance of the proposed estimators. In our data generating model, the covariates are and , where . The potential outcomes are , and , where are independent. Therefore, the true value of the ACE is τ = E(5Ui). The treatment indicator Ai follows Bernoulli with . The main data consist of n1 units, and the validation data consist of n2 units randomly selected from the main data.

The initial estimators are the regression imputation, IPW, AIPW, and matching estimators applied solely to the validation data, labeled “reg2,” “IPW2,” “AIPW2,” and “mat2,” respectively. To distinguish the estimators constructed from different error-prone methods, we name each proposed estimator by combining two labels: “method,2” indicates the initial estimator applied to the validation data, and “methods” indicates the error-prone estimator(s) used to improve the efficiency of the initial estimator. For example, one proposed estimator uses the regression imputation estimator as the initial estimator and the IPW estimator as the error-prone estimator. We compare the proposed estimators with the initial estimators in terms of the percentage reduction in mean squared error, defined as 100 × {MSE(initial) − MSE(proposed)}/MSE(initial). To demonstrate the robustness of the proposed estimator against misspecification of the imputation model, we also consider the multiple imputation (MI, Rubin 1987) estimator, labeled “mi,” which imputes U from a regression model of U given (A, X, Y). We implement MI using the “mice” package in R with m = 10.

Based on a point estimate $\hat\tau$ and a variance estimate $\widehat{\mathrm{var}}(\hat\tau)$ obtained by the asymptotic variance formula or the bootstrap method described in Section 3.5, we construct a Wald-type 95% confidence interval $\hat\tau \pm z_{0.975}\{\widehat{\mathrm{var}}(\hat\tau)\}^{1/2}$, where $z_{0.975}$ is the 97.5% quantile of the standard normal distribution. We further compare the variance estimators in terms of the empirical coverage rates of these intervals.
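The three summary measures used in the simulation (Wald interval, empirical coverage, and percentage MSE reduction) can be computed as below. This is an illustrative sketch; the function names are hypothetical, and the inputs are assumed to be arrays of Monte Carlo estimates and standard errors.

```python
from statistics import NormalDist

import numpy as np

Z_975 = NormalDist().inv_cdf(0.975)   # 97.5% standard normal quantile, about 1.96


def wald_ci(est, se):
    """Wald-type 95% interval est +/- z_{0.975} * se."""
    return est - Z_975 * se, est + Z_975 * se


def coverage_rate(ests, ses, truth):
    """Fraction of Monte Carlo Wald intervals that contain the true value."""
    ests = np.asarray(ests, dtype=float)
    ses = np.asarray(ses, dtype=float)
    lower, upper = ests - Z_975 * ses, ests + Z_975 * ses
    return float(np.mean((lower <= truth) & (truth <= upper)))


def pct_mse_reduction(initial_ests, proposed_ests, truth):
    """100 * (MSE_initial - MSE_proposed) / MSE_initial over Monte Carlo replicates."""
    mse_init = np.mean((np.asarray(initial_ests, dtype=float) - truth) ** 2)
    mse_prop = np.mean((np.asarray(proposed_ests, dtype=float) - truth) ** 2)
    return float(100.0 * (mse_init - mse_prop) / mse_init)
```

As a check, `wald_ci(0.0155, 0.0023)` gives about (0.0110, 0.0200), close to the first proposed-estimator interval reported in Table 1; the small difference reflects rounding of the displayed estimate and standard error.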

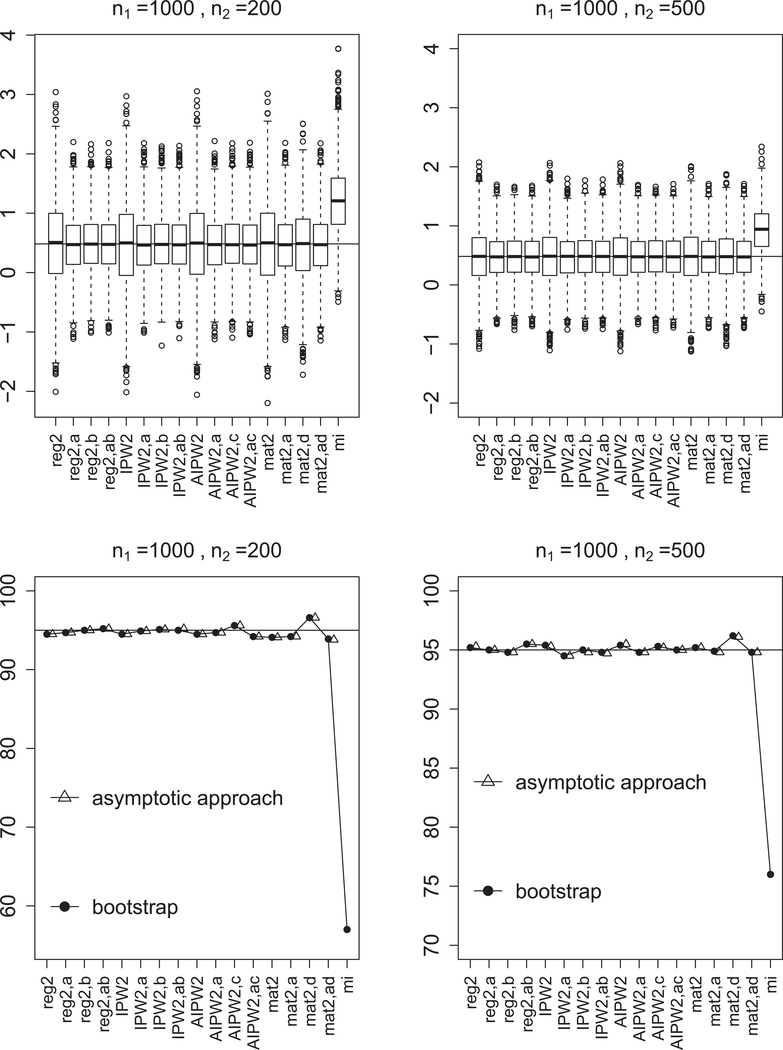

Figure 1 shows the simulation results over 2000 Monte Carlo samples for (n1, n2) = (1000, 200) and (n1, n2) = (1000, 500). The multiple imputation estimator is biased because the imputation model is misspecified. In all scenarios, the proposed estimators are approximately unbiased and improve upon the initial estimators. Using an error-prone estimator of the same type as the initial estimator achieves a substantial efficiency gain, and incorporating additional error-prone estimators yields only a marginal further gain. For practical simplicity, we therefore recommend using the error-prone estimator of the same type as the initial estimator. Confidence intervals constructed from both the asymptotic variance formula and the bootstrap method work well, in the sense that their empirical coverage rates are close to the nominal level. In our settings, the matching estimator has the smallest efficiency gain among all types of estimators.

Figure 1.

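Simulation results of point estimates (top panels) and coverage rates (bottom panels): the subscripts “a,” “b,” “c,” and “d” stand for the methods “reg,” “IPW,” “AIPW,” and “mat,” respectively; “reg2” is the initial regression imputation estimator, “reg2,method” is the proposed estimator that improves “reg2” using the error-prone estimator(s) “method,” other notation is defined similarly, and “mi” is the multiple imputation estimator.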

6. Application

We present an analysis to evaluate the effect of chronic obstructive pulmonary disease (COPD) on the development of herpes zoster (HZ). COPD is a chronic inflammatory lung disease that obstructs airflow from the lungs; it can cause systemic inflammation and dysregulate a patient’s immune function. The hypothesis is that people with COPD are at increased risk of developing HZ. Yang et al. (2011) found a positive association between COPD and the development of HZ; however, they did not control for important confounders of the relationship between COPD and HZ, for example, cigarette smoking and alcohol consumption.

We analyze the main data from the 2005 Longitudinal Health Insurance Database (LHID, Yang et al. 2011) and the validation data from the 2005 National Health Interview Survey conducted by the National Health Research Institute and the Bureau of Health Promotion in Taiwan (Lin and Chen 2014). The 2005 LHID consist of 42,430 subjects followed from the date of cohort entry on January 1, 2004, until the development of HZ or December 31, 2006, whichever came first. Among those, 8,486 subjects had COPD, denoted by A = 1, and 33,944 subjects did not, denoted by A = 0. The outcome Y was the development of HZ during follow-up (1 if HZ developed and 0 otherwise). The observed prevalence of HZ among COPD and non-COPD subjects is 3.7% and 2.2%, respectively, in the main data, and 2.5% and 0.8%, respectively, in the validation data.

The confounders X available from the main data were age, sex, diabetes mellitus, hypertension, coronary artery disease, chronic liver disease, autoimmune disease, and cancer. However, important confounders U, including cigarette smoking and alcohol consumption, were not available. The validation data use the same inclusion criteria as the main study and consist of 1,148 subjects who were comparable to the subjects in the main data. Among those, 244 subjects were diagnosed with COPD, and 904 subjects were not. In addition to all variables available from the main data, cigarette smoking and alcohol consumption were measured. In our formulation, the main data combine the LHID data and the validation data. Table 4 in Lin and Chen (2014) shows summary statistics on demographic characteristics and comorbid disorders for COPD and non-COPD subjects in the main and validation data. Because the common covariates have comparable distributions in the main and validation data, it is reasonable to assume that the validation sample is a simple random sample from the main data. Moreover, the distribution of alcohol consumption does not differ significantly between COPD and non-COPD subjects in the validation data. However, COPD subjects tended to have higher cumulative smoking rates than non-COPD subjects in the validation data.

We obtain the initial estimators using only the validation data and the proposed estimators using both datasets. As suggested by the simulation in Section 5, we use an error-prone estimator of the same type as the initial estimator. Following Stürmer et al. (2005) and Lin and Chen (2014), we use the propensity score to accommodate the high-dimensional confounders. Specifically, we fit logistic regression models for the propensity score e(X, U; α) and the error-prone propensity score based on and , respectively. We fit logistic regression models for the outcome mean function μa(X, U) based on a linear predictor , and for based on a linear predictor , for a = 0, 1.

We first estimate the ACE τ. Table 1 shows the results for the average effect of COPD on the development of HZ. The point estimates of the proposed estimators differ little from those of the corresponding initial estimators, but their estimated standard errors are much smaller. As a result, all 95% confidence intervals based on the initial estimators include 0, whereas the 95% confidence intervals based on the proposed estimators exclude 0, except for the matching estimator. Consistent with the simulation in Section 5, the variance reduction from using the main data is smallest for the matching estimator. Based on these results, COPD increases the risk of developing HZ by about 1.55 percentage points on average.

Table 1.

Point estimate, bootstrapped standard error, and 95% Wald-type confidence interval (initial estimators use the validation data only; proposed estimators use both datasets)

| Estimator | Initial Est | Initial SE | Initial 95% CI | Proposed Est | Proposed SE | Proposed 95% CI |
|---|---|---|---|---|---|---|
| Regression imputation | 0.0178 | 0.0112 | (−0.0047, 0.0402) | 0.0155 | 0.0023 | (0.0109, 0.0200) |
| IPW | 0.0175 | 0.0111 | (−0.0048, 0.0398) | 0.0155 | 0.0024 | (0.0108, 0.0202) |
| AIPW | 0.0179 | 0.0111 | (−0.0044, 0.0402) | 0.0156 | 0.0024 | (0.0109, 0.0203) |
| Matching | 0.0077 | 0.0092 | (−0.0106, 0.021) | 0.0079 | 0.0053 | (−0.0027, 0.0184) |

We also estimate the log of the causal risk ratio of HZ with COPD. The 95% confidence interval based on the initial IPW estimate from the validation data is (0.02, 2.18). In contrast, the 95% confidence interval based on the proposed estimate using the error-prone IPW estimators is (0.41, 0.72), so the proposed estimate is much more precise than the initial IPW estimate.

7. Relaxing Assumption 1

In previous sections, we invoked Assumption 1 that $\mathcal{O}_2$ is a random sample from $\mathcal{O}_1$. We now relax this assumption and link our framework to existing methods for missing data. Let $I_i$ be the indicator of selecting unit i into the validation data, that is, $I_i = 1$ if $i \in \mathcal{O}_2$ and $I_i = 0$ if $i \notin \mathcal{O}_2$. Equivalently, $I_i$ indicates whether $U_i$ is observed. Under Assumption 1, $I \perp\!\!\!\perp (A, X, U, Y)$; that is, U is missing completely at random. We now relax this to $I \perp\!\!\!\perp U \mid (A, X, Y)$; that is, U is missing at random. In this case, the selection of $\mathcal{O}_2$ from $\mathcal{O}_1$ can follow a probability design that depends on (A, X, Y), which is common in observational studies, for example, an outcome-dependent two-phase sampling design (Breslow, McNeney, and Wellner 2003; Wang et al. 2009).

We assume that each unit in the main data is subjected to an independent Bernoulli trial which determines whether the unit is selected into the validation data. For simplicity, we further assume that the inclusion probability is known as in two-phase sampling. Otherwise, we need to fit a model for the missing data indicator I given (A, X, Y). We summarize the above in the following assumption.

Assumption 6.

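$(A_i, X_i, U_i, Y_i, I_i)$, $i \in \mathcal{O}_1$, are IID with $P(I_i = 1 \mid A_i, X_i, U_i, Y_i) = \pi(A_i, X_i, Y_i)$; that is, $\mathcal{O}_2$ is selected from $\mathcal{O}_1$ with a known inclusion probability $\pi(A_i, X_i, Y_i)$.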

In what follows, we use π for π(A, X, Y) and πj for π(Aj, Xj, Yj) for shorthand. Because of Assumption 6, we drop the indices i and j in the expectations, covariances, and variances, which are taken with respect to both the sampling and superpopulation models.
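To see why the design weights matter under Assumption 6, consider a toy outcome-dependent design in which each unit is selected by an independent Bernoulli trial with a known probability depending only on Y. This sketch (hypothetical model and function name) compares the naive mean of U over the selected units with the Hájek-type weighted mean using weights $1/\pi$; only the latter targets E(U).

```python
import numpy as np


def hajek_demo(n=200_000, seed=1):
    """Naive vs Hajek-weighted mean of U under outcome-dependent Bernoulli sampling."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=n)                  # true E(U) = 0
    y = u + rng.normal(size=n)
    pi = np.where(y > 0, 0.5, 0.1)          # known inclusion probability, depends on Y only
    in_val = rng.random(n) < pi             # independent Bernoulli trial for each unit
    w = 1.0 / pi[in_val]
    naive = u[in_val].mean()                # ignores the sampling design
    hajek = np.sum(w * u[in_val]) / np.sum(w)
    return naive, hajek
```

Because units with Y > 0 (and hence larger U) are oversampled, the naive mean is pulled upward (to roughly 0.38 in expectation under this model), while the Hájek mean stays close to 0.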

7.1. RAL Estimators

To illustrate RAL estimators, we focus on the AIPW estimator of the ACE τ, because the regression imputation and inverse probability weighting estimators are its special cases. Let and solve the weighted estimating equations and , and let and satisfy and . Under suitable regularity conditions, and in probability, for a = 0, 1. Let the initial estimator for τ be the Hájek-type estimator (Hájek 1971):

| (22) |

where has the same form as (2). Under regularity conditions, Assumption 4 or 5, and Assumption 6, we show in the supplementary material that

| (23) |

where is given by (11). Because are IID with mean 0, is consistent for τ.

Similarly, let and solve the weighted estimating equations and , and let and satisfy and . Under suitable regularity conditions, in probability, for a = 0, 1 and d = 1, 2. Let the error-prone estimators be

| (24) |

where has the same form as (S8) in the supplementary material. Following a similar derivation for (23), we have

| (25) |

where is given by (S9) in the supplementary material. Because both and are IID with mean 0, are consistent for τep.

Theorem 4.

Under certain regularity conditions, (4) holds for the Hájek-type estimators (22) and (24), where .

Similar to Section 3.3, we can construct a consistent variance estimator for by replacing the variances and covariance in Theorem 4 with their sample analogs.

7.2. Matching Estimators

Recall that is the index set of matches for unit l based on data and the matching variable V, which can be (X, U) or X. Define otherwise. Now, we denote as the weighted number of times that unit j is used as a match. If πj is a constant for all j ∈ , then reduces to the number of times that unit j is used as a match defined in Section 3.1, which justifies using the same notation as before.

Let the initial matching estimator for τ be the Hájek-type estimator:

Let a bias-corrected matching estimator be

| (26) |

where

We show in the supplementary material that

| (27) |

where is defined in (13) with the new definition of .

Similarly, we obtain error-prone matching estimators and express them as

| (28) |

where is defined in (15) with the new definition of .

From the above decompositions, is consistent for τ, and is consistent for 0.

Theorem 5.

Under certain regularity conditions, (4) holds for the estimators (26) and , where ,

We can construct variance estimators based on the formulas in Theorem 5. However, this again involves estimating the conditional variances and . We recommend using the bootstrap variance estimator in the next subsection.

7.3. A Bootstrap Variance Estimation Procedure

The asymptotic linear forms (23), (25), (27), and (28) are useful for the bootstrap variance estimation. For b = 1,...,B, we construct the bootstrap replicates as follows:

Step 1. Sample n1 units from $\mathcal{O}_1$ with replacement to form the bootstrap sample $\mathcal{O}_1^{*(b)}$.

Step 2. Compute the bootstrap replicates of and as

where are the estimated versions of from d = 1, 2).

We estimate Γ, V, and v2 by (16)–(18) based on the above bootstrap replicates, and we estimate var($\hat\tau$) by substituting these estimates into (7).

Theorem 6.

Under certain regularity conditions, the bootstrap estimators of Γ, V, and v2 are consistent for Γ, V, and v2, respectively.

For RAL estimators, we can also use the classical nonparametric bootstrap, which resamples the IID observations and repeats the analysis as for the original data. The above bootstrap procedure, which resamples the linear forms, is particularly useful for the matching estimator, for which the classical nonparametric bootstrap is not valid in general.
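A sketch of the linear-form bootstrap follows. It assumes, as a simplification we have not taken verbatim from (23), (25), (27), and (28), that each estimator minus its limit is approximately an average over the n1 main-data units: $(I_i/\pi_i)\phi_{2,i}$ for the initial estimator, and $(I_i/\pi_i)\phi_{ep,2,i} - \phi_{ep,1,i}$ for the error-prone contrast. The per-unit terms `phi2`, `phi_ep2`, `phi_ep1` are hypothetical inputs standing in for the estimated summands. Each replicate only resamples and averages these terms, so the matching step is never rerun.

```python
import numpy as np


def linear_form_bootstrap(phi2, phi_ep2, phi_ep1, in_val, pi, n_boot=500, seed=0):
    """Bootstrap assumed linear forms instead of re-running the estimators.

    Assumed (hypothetical) shapes, each averaged over the n1 main-data units:
      tau2 - tau           ~ mean of (I / pi) * phi2
      tau_ep2 - tau_ep1    ~ mean of (I / pi) * phi_ep2 - phi_ep1
    phi2 and phi_ep2 only matter where in_val is True (they may be NaN elsewhere).
    """
    rng = np.random.default_rng(seed)
    n1 = len(phi_ep1)
    w = np.where(in_val, 1.0 / pi, 0.0)                  # I / pi
    term2 = np.where(in_val, w * phi2, 0.0)
    term_diff = np.where(in_val, w * phi_ep2, 0.0) - phi_ep1
    reps = np.empty((n_boot, 2))
    for b in range(n_boot):
        idx = rng.integers(0, n1, size=n1)               # Step 1: resample n1 main-data units
        reps[b, 0] = term2[idx].mean()                   # Step 2: replicate for tau2 (up to a shift)
        reps[b, 1] = term_diff[idx].mean()               # and for tau_ep2 - tau_ep1
    cov = np.cov(reps, rowvar=False)
    v2, gamma, big_v = cov[0, 0], cov[0, 1], cov[1, 1]
    return v2, gamma, big_v, v2 - gamma**2 / big_v
```

The last returned value is the bootstrap-scale variance of the combined estimator, $v_2 - \Gamma^2/V$ in the scalar case.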

7.4. Connection With Missing Data

As a final remark, we express the proposed estimator in a linear form that has appeared in the missing data literature.

Proposition 2.

Under certain regularity conditions and Assumption 6, has an asymptotic linear form

| (29) |

where is for RAL estimators and for the matching estimator, and a similar definition applies to . Under Assumption 1, πi ≡ ρ.

Expression (29) is within a class of estimators in the missing data literature with the form

| (30) |

where π = E(I | A, X, U, Y), s(A, X, U, Y) satisfies E{s(A, X, U, Y)} = 0, and s(A, X, U, Y) and K(A, X, Y) are square integrable. Given s, the optimal choice of K is $K_{\rm opt}(A, X, Y) = E\{s(A, X, U, Y) \mid A, X, Y\}$, which minimizes the asymptotic variance of (30) (Robins, Rotnitzky, and Zhao 1994; Wang et al. 2009). However, computing $K_{\rm opt}(A, X, Y)$ requires a correct specification of the conditional distribution of U given (A, X, Y). In our approach, instead of specifying this model, we specify the error-prone estimators and use an estimator that is consistent for zero to improve the efficiency of the initial estimator. This is more attractive and closer to empirical practice than computing $K_{\rm opt}(A, X, Y)$, because practitioners only need to apply their favorite estimators to the main and validation data using existing software. See also Chen and Chen (2000) for a similar discussion in the regression context under Assumption 1.
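For comparison, the missing-data route can be sketched for the simplest target, E(U), using the familiar augmented inverse-probability-weighted form $n^{-1}\sum_i\{(I_i/\pi_i)U_i - (I_i/\pi_i - 1)K(A_i, X_i, Y_i)\}$ (Robins, Rotnitzky, and Zhao 1994). This is an illustration under stated assumptions, not the proposed estimator: the data-generating model and function names are hypothetical, and K(Y) is a working linear regression of U on Y, fitted by weighted least squares on the validation units. Any K keeps the estimator consistent, but a K close to E(U | Y) reduces its variance.

```python
import numpy as np


def augmented_mean_u(u, y, in_val, pi):
    """IPW and augmented-IPW estimators of E(U) when U is observed only in the validation data."""
    ratio = np.where(in_val, 1.0 / pi, 0.0)            # I / pi
    u_obs = np.where(in_val, u, 0.0)                   # U enters only where it is observed
    # Hypothetical working model K(Y): weighted least-squares fit of U on (1, Y)
    sw = np.sqrt(1.0 / pi[in_val])
    design = np.column_stack([np.ones(in_val.sum()), y[in_val]])
    coef, *_ = np.linalg.lstsq(design * sw[:, None], u[in_val] * sw, rcond=None)
    k = coef[0] + coef[1] * y                          # K evaluated for every main-data unit
    ipw = np.mean(ratio * u_obs)                       # corresponds to K = 0
    aipw = np.mean(ratio * u_obs - (ratio - 1.0) * k)
    return ipw, aipw


def simulate_once(rng, n=2000):
    u = rng.normal(size=n)                             # true E(U) = 0
    y = u + rng.normal(size=n)
    pi = np.where(y > 0, 0.5, 0.1)                     # known, outcome-dependent
    in_val = rng.random(n) < pi
    return augmented_mean_u(u, y, in_val, pi)
```

Repeating `simulate_once` many times shows both estimators centered near 0, with the augmented version having a visibly smaller Monte Carlo variance under this model.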

8. Discussion

Depending on their roles in statistical inference, there are two types of big data: one with large sample sizes and the other with richer covariates. In our discussion, the main observational data have a larger sample size, and the validation observational data have more covariates. Although some counterexamples exist (Pearl 2009, 2010; Ding and Miratrix 2015; Ding, VanderWeele, and Robins 2017), it is generally more reliable to draw causal inference from the validation data, because they measure more of the confounders. The proposed strategy is applicable even when the number of covariates in the validation data is high. In this case, we can take the initial estimator to be a double machine learning estimator (Chernozhukov et al. 2018), which uses flexible machine learning methods to estimate the regression and propensity score functions while retaining the property in (4). Our framework yields more efficient estimators of the causal effects by further combining information in the main data, without imposing any parametric models for the partially observed covariates. Coupled with the bootstrap, our estimators require only software implementations of standard estimators, and thus are attractive for practitioners who want to combine multiple observational data sources.

The key insight is to leverage an estimator of zero to improve the efficiency of the initial estimator. If a certain feature of the data distribution is transportable across datasets (Bareinboim and Pearl 2016), we can construct a consistent estimator of zero. We have shown that if the validation data are a simple random sample from the main data, the distribution of (A, X, Y) is transportable from the validation data to the main data. We then construct a consistent estimator of zero by taking the difference between the estimators based on (A, X, Y) from the two datasets. In the presence of heterogeneity between the two data sources, transportability of the whole distribution of (A, X, Y) can be a stringent requirement. However, if we are willing to assume that the conditional distribution of Y given (A, X) is transportable, we can instead take the error-prone estimators to be the regression coefficients of Y on (A, X) from the two datasets. As suggested by one of the reviewers, if subgroups of the two samples are comparable, we can construct the error-prone estimators based on these subgroups. More generally, the construction of the error-prone estimators can be adapted to different transportability assumptions based on subject matter knowledge.

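In the worst case, the heterogeneity between the two samples is intrinsic, and we cannot construct two error-prone estimators with the same probability limit. We can still conduct a sensitivity analysis combining the two data sources. Instead of (4), we assume

$$n_2^{1/2}\begin{pmatrix}\hat\tau_2 - \tau\\ \hat\tau_{ep,2} - \hat\tau_{ep,1} - \delta\end{pmatrix} \to N\left\{\begin{pmatrix}0\\ 0\end{pmatrix}, \begin{pmatrix}v_2 & \Gamma^{\mathrm T}\\ \Gamma & V\end{pmatrix}\right\}, \qquad (31)$$

where δ is the sensitivity parameter, quantifying the systematic difference between the probability limits of $\hat\tau_{ep,2}$ and $\hat\tau_{ep,1}$. The adjusted estimator becomes $\hat\tau(\delta) = \hat\tau_2 - \hat\Gamma^{\mathrm T}\hat V^{-1}(\hat\tau_{ep,2} - \hat\tau_{ep,1} - \delta)$. By varying δ over a plausible range, an investigator can assess the extent to which heterogeneity between the two data sources may alter causal inferences.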

Supplementary Material

Acknowledgments

We thank the editor, the associate editor, and four anonymous reviewers for suggestions which improved the article significantly. We are grateful to Professor Yi-Hau Chen for providing the data and offering help and advice in interpreting the data. Drs. Lo-Hua Yuan and Xinran Li offered helpful comments. Dr. Yang is partially supported by the National Science Foundation grant DMS 1811245, National Cancer Institute grant P01 CA142538, and Oak Ridge Associated Universities. Dr. Ding is partially supported by the National Science Foundation grant DMS 1713152.

Footnotes

Supplementary materials

The online supplementary material contains technical details and proofs. The R package “Integrative CI,” which implements the proposed estimators, is available at https://github.com/shuyang1987/IntegrativeCI.

References

- Abadie A, and Imbens GW (2006), “Large Sample Properties of Matching Estimators for Average Treatment Effects,” Econometrica, 74, 235–267.

- Abadie A, and Imbens GW (2008), “On the Failure of the Bootstrap for Matching Estimators,” Econometrica, 76, 1537–1557.

- Abadie A, and Imbens GW (2016), “Matching on the Estimated Propensity Score,” Econometrica, 84, 781–807.

- Angrist JD, Imbens GW, and Rubin DB (1996), “Identification of Causal Effects Using Instrumental Variables,” Journal of the American Statistical Association, 91, 444–455.

- Antonelli J, Zigler C, and Dominici F (2017), “Guided Bayesian Imputation to Adjust for Confounding When Combining Heterogeneous Data Sources in Comparative Effectiveness Research,” Biostatistics, 18, 553–568.

- Bang H, and Robins JM (2005), “Doubly Robust Estimation in Missing Data and Causal Inference Models,” Biometrics, 61, 962–973.

- Bareinboim E, and Pearl J (2016), “Causal Inference and the Data-Fusion Problem,” Proceedings of the National Academy of Sciences, 113, 7345–7352.

- Bickel PJ, Klaassen C, Ritov Y, and Wellner J (1993), Efficient and Adaptive Estimation for Semiparametric Models, Baltimore: Johns Hopkins University Press.

- Boonstra PS, Taylor JM, and Mukherjee B (2012), “Incorporating Auxiliary Information for Improved Prediction in High-Dimensional Datasets: An Ensemble of Shrinkage Approaches,” Biostatistics, 14, 259–272.

- Breslow N, McNeney B, and Wellner JA (2003), “Large Sample Theory for Semiparametric Regression Models With Two-Phase, Outcome Dependent Sampling,” The Annals of Statistics, 31, 1110–1139.

- Cao W, Tsiatis AA, and Davidian M (2009), “Improving Efficiency and Robustness of the Doubly Robust Estimator for a Population Mean With Incomplete Data,” Biometrika, 96, 723–734.

- Chang T, and Kott PS (2008), “Using Calibration Weighting to Adjust for Nonresponse Under a Plausible Model,” Biometrika, 95, 555–571.

- Chatterjee N, Chen YH, Maas P, and Carroll RJ (2016), “Constrained Maximum Likelihood Estimation for Model Calibration Using Summary-Level Information From External Big Data Sources,” Journal of the American Statistical Association, 111, 107–117.

- Chen J, and Sitter R (1999), “A Pseudo Empirical Likelihood Approach to the Effective Use of Auxiliary Information in Complex Surveys,” Statistica Sinica, 9, 385–406.

- Chen J, Sitter R, and Wu C (2002), “Using Empirical Likelihood Methods to Obtain Range Restricted Weights in Regression Estimators for Surveys,” Biometrika, 89, 230–237.

- Chen SX, Leung DHY, and Qin J (2003), “Information Recovery in a Study With Surrogate Endpoints,” Journal of the American Statistical Association, 98, 1052–1062.

- Chen YH (2002), “Cox Regression in Cohort Studies With Validation Sampling,” Journal of the Royal Statistical Society, Series B, 64, 51–62.

- Chen YH, and Chen H (2000), “A Unified Approach to Regression Analysis Under Double-Sampling Designs,” Journal of the Royal Statistical Society, Series B, 62, 449–460.

- Chernozhukov V, Chetverikov D, Demirer M, Duflo E, Hansen C, Newey W, and Robins J (2018), “Double/Debiased Machine Learning for Treatment and Structural Parameters,” The Econometrics Journal, 21, C1–C68.

- Cochran WG (2007), Sampling Techniques (3rd ed.), New York: Wiley.

- Crump R, Hotz VJ, Imbens G, and Mitnik O (2006), “Moving the Goalposts: Addressing Limited Overlap in the Estimation of Average Treatment Effects by Changing the Estimand,” Technical Report 330, Cambridge, MA: National Bureau of Economic Research. Available at http://www.nber.org/papers/T0330.

- Deville J-C, and Särndal C-E (1992), “Calibration Estimators in Survey Sampling,” Journal of the American Statistical Association, 87, 376–382.

- Ding P, and Miratrix LW (2015), “To Adjust or Not to Adjust? Sensitivity Analysis of M-Bias and Butterfly-Bias,” Journal of Causal Inference, 3, 41–57.

- Ding P, VanderWeele T, and Robins J (2017), “Instrumental Variables as Bias Amplifiers With General Outcome and Confounding,” Biometrika, 104, 291–302.

- Efron B, and Tibshirani R (1986), “Bootstrap Methods for Standard Errors, Confidence Intervals, and Other Measures of Statistical Accuracy,” Statistical Science, 1, 54–75.

- Enders D, Kollhorst B, Engel S, Linder R, and Pigeot I (2018), “Comparison of Multiple Imputation and Two-Phase Logistic Regression to Analyse Two-Phase Case-Control Studies With Rich Phase 1: A Simulation Study,” Journal of Statistical Computation and Simulation, 88, 2201–2214.

- Fibrinogen Studies Collaboration (2009), “Systematically Missing Confounders in Individual Participant Data Meta-Analysis of Observational Cohort Studies,” Statistics in Medicine, 28, 1218–1237.

- Frangakis CE, and Rubin DB (1999), “Addressing Complications of Intention-to-Treat Analysis in the Combined Presence of All-or-None Treatment-Noncompliance and Subsequent Missing Outcomes,” Biometrika, 86, 365–379.

- Freedman DA (2008), “Randomization Does Not Justify Logistic Regression,” Statistical Science, 23, 237–249.

- Fuller WA (2009), Sampling Statistics, Hoboken, NJ: Wiley.

- Funk MJ, Westreich D, Wiesen C, Stürmer T, Brookhart MA, and Davidian M (2011), “Doubly Robust Estimation of Causal Effects,” American Journal of Epidemiology, 173, 761–767.

- Hájek J (1971), Comment on “An Essay on the Logical Foundations of Survey Sampling, Part One,” by D. Basu, in Foundations of Survey Sampling, eds. Godambe VP and Sprott DA, Toronto: Holt, Rinehart, and Winston, p. 236.

- Hansen BB (2004), “Full Matching in an Observational Study of Coaching for the SAT,” Journal of the American Statistical Association, 99, 609–618.

- Hansen LP (1982), “Large Sample Properties of Generalized Method of Moments Estimators,” Econometrica, 50, 1029–1054.

- Heckman JJ, Ichimura H, and Todd PE (1997), “Matching as an Econometric Evaluation Estimator: Evidence From Evaluating a Job Training Programme,” The Review of Economic Studies, 64, 605–654.

- Hirano K, Imbens GW, and Ridder G (2003), “Efficient Estimation of Average Treatment Effects Using the Estimated Propensity Score,” Econometrica, 71, 1161–1189.

- Horvitz DG, and Thompson DJ (1952), “A Generalization of Sampling Without Replacement From a Finite Universe,” Journal of the American Statistical Association, 47, 663–685.

- Imbens GW (2004), “Nonparametric Estimation of Average Treatment Effects Under Exogeneity: A Review,” The Review of Economics and Statistics, 86, 4–29. [3] [Google Scholar]

- Imbens GW, and Lancaster T (1994), “Combining Micro and Macro Data in Microeconometric Models,” The Review of Economic Studies, 61,655–680. [1,5] [Google Scholar]

- Jin H, and Rubin DB (2008), “Principal Stratification for Causal Inference With Extended Partial Compliance,” Journal of American Statistical Association, 103, 101–111. [3] [Google Scholar]

- Kim JK, Kwon Y and Paik MC (2016), “Calibrated Propensity Score Method for Survey Nonresponse in Cluster Sampling,” Biometrika, 103,461–473. [5] [DOI] [PubMed] [Google Scholar]

- Kleiner A, Talwalkar A, Sarkar P, and Jordan MI (2014), “A Scalable Bootstrap for Massive Data,” Journal of Royal Statistical Society, Series B, 76, 795–816. [7] [Google Scholar]

- Kott PS (2006), “Using Calibration Weighting to Adjust for Nonresponse and Coverage Errors,” Survey Methodology, 32, 133–142. [5] [Google Scholar]

- Li F, Morgan KL, and Zaslavsky AM (2016), “Balancing Covariates Via Propensity Score Weighting,” Journal of American Statistical Association, 113,390–400. [7] [Google Scholar]

- Lin HW, and Chen YH (2014), “Adjustment for Missing Confounders in Studies Based on Observational Databases: 2-stage Calibration Combining Propensity Scores From Primary and Validation Data,” American Journal of Epidemiology, 180,308–317. [1,2,9] [DOI] [PubMed] [Google Scholar]

- Lumley T, Shaw PA, and Dai JY (2011), “Connections Between Survey Calibration Estimators and Semiparametric Models for Incomplete Data,” International Statistical Review, 79, 200–220. [5] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lunceford JK, and Davidian M (2004), “Stratification and Weighting Via the Propensity Score in Estimation of Causal Treatment Effects: A Comparative Study” Statistical Medicine, 23, 2937–2960. [6] [DOI] [PubMed] [Google Scholar]

- Lunt M, Glynn RJ, Rothman KJ, Avorn J, and Stürmer T (2012), “Propensity Score Calibration in the Absence of Surrogacy,” American Journal of Epidemiology, 175, 1294–1302. [1] [DOI] [PMC free article] [PubMed] [Google Scholar]

- McCandless LC, Richardson S, and Best N (2012), “Adjustment for Missing Confounders Using External Validation Data and Propensity Scores,” Journal of American Statistical Association, 107, 40–51. [1] [Google Scholar]

- Newey WK (1990), “Semiparametric Efficiency Bounds,” Journal of Applied Econometrics, 5, 99–135. [5] [Google Scholar]

- Neyman J (1923), Sur les applications de la thar des probabilities aux experiences Agaricales: Essay de principle. English translation of excerpts by Dabrowska, D. and Speed, T., Statistical Science, 5, 465–472. [2] [Google Scholar]

- Neyman J (1938), “Contribution to the Theory of Sampling Human Populations,” Journal of American Statistical Association, 33, 101–116. [1,2] [Google Scholar]

- Otsu T, and Rai Y (2016), “Bootstrap Inference of Matching Estimators for Average Treatment Effects,” Journal of American Statistical Association, 112, 1720–1732. [7] [Google Scholar]

- Pearl J (2009), “Letter to the Editor: Remarks on the Method of Propensity Score,” Statistics in Medicine, 28, 1420–1423. [13] [DOI] [PubMed] [Google Scholar]

- Pearl J (2010), “On a Class of Bias-amplifying Variables That Endanger Effect Estimates,” in The Twenty-Sixth Conference on Uncertainty in Artificial Intelligence, eds. Grunwald P and Spirtes P, Corvallis, OR: Association for Uncertainty in Artificial Intelligence, pp. 425–432. [13] [Google Scholar]

- Politis DN, Romano JP, and Wolf M (1999), Subsampling, New York: Springer-Verlag; [7] [Google Scholar]

- Qin J (2000), “Combining Parametric and Empirical Likelihoods,” Biometrika, 87, 484–490. [2,5] [Google Scholar]

- Robins JM, Rotnitzky A, and Zhao LP (1994), “Estimation of Regression Coefficients When Some Regressors Are Not Always Observed,” Journal of American Statistical Association, 89, 846–866. [2,5,13] [Google Scholar]

- Rosenbaum PR (1989), “Optimal Matching for Observational Studies,” Journal of American Statistical Association, 84, 1024–1032. [2] [Google Scholar]

- Rosenbaum PR (2002), Studies Observational (2nd ed), New York: Springer; [3] [Google Scholar]

- Rosenbaum PR, and Rubin DB (1983a), “Assessing Sensitivity to an Unobserved Binary Covariate in an Observational Study With Binary Outcome,” Journal of Royal Statistical Society, Series B, 45, 212–218. [1] [Google Scholar]

- Rosenbaum PR, and Rubin DB (1983b), “The Central Role of the Propensity Score in Observational Studies for Causal Effects,” Biometrika, 70, 41–55.

- Rubin DB (1973), “Matching to Remove Bias in Observational Studies,” Biometrics, 29, 159–183.

- Rubin DB (1974), “Estimating Causal Effects of Treatments in Randomized and Nonrandomized Studies,” Journal of Educational Psychology, 66, 688–701.

- Rubin DB (1987), Multiple Imputation for Nonresponse in Surveys, New York: Wiley.

- Rubin DB (2006), Matched Sampling for Causal Effects, New York: Cambridge University Press.

- Schneeweiss S, Glynn RJ, Tsai EH, Avorn J, and Solomon DH (2005), “Adjusting for Unmeasured Confounders in Pharmacoepidemiologic Claims Data Using External Information: The Example of Cox2 Inhibitors and Myocardial Infarction,” Epidemiology, 16, 17–24.

- Shao J, and Tu D (2012), The Jackknife and Bootstrap, New York: Springer.

- Stuart EA (2010), “Matching Methods for Causal Inference: A Review and a Look Forward,” Statistical Science, 25, 1–21.

- Stürmer T, Schneeweiss S, Avorn J, and Glynn RJ (2005), “Adjusting Effect Estimates for Unmeasured Confounding With Validation Data Using Propensity Score Calibration,” American Journal of Epidemiology, 162, 279–289.

- Stürmer T, Schneeweiss S, Rothman KJ, Avorn J, and Glynn RJ (2007), “Performance of Propensity Score Calibration: A Simulation Study,” American Journal of Epidemiology, 165, 1110–1118.

- Tsiatis A (2006), Semiparametric Theory and Missing Data, New York: Springer.

- Wang W, Scharfstein D, Tan Z, and MacKenzie EJ (2009), “Causal Inference in Outcome-Dependent Two-Phase Sampling Designs,” Journal of the Royal Statistical Society, Series B, 71, 947–969.

- Wang X, and Wang Q (2015), “Semiparametric Linear Transformation Model With Differential Measurement Error and Validation Sampling,” Journal of Multivariate Analysis, 141, 67–80.

- Wu C, and Sitter RR (2001), “A Model-Calibration Approach to Using Complete Auxiliary Information From Survey Data,” Journal of the American Statistical Association, 96, 185–193.

- Yang YW, Chen YH, Wang KH, Wang CY, and Lin HW (2011), “Risk of Herpes Zoster Among Patients With Chronic Obstructive Pulmonary Disease: A Population-Based Study,” Canadian Medical Association Journal, 183, 275–280.
