1. Introduction

Incorporating a treatment for chronic pain into clinical practice requires critical evaluation of the treatment’s effects based largely on the data submitted to support the approved product labeling, supplemented by publications in the literature. In turn, evaluating the evidence for a particular treatment requires understanding the trial designs (e.g., explanatory trials examining whether a treatment has an effect under carefully controlled conditions, and pragmatic trials examining whether a treatment has an effect in a general clinical population) [100] and the methods for presenting data regarding treatment efficacy and safety [69]. When trial designs and reporting methods differ between trials, this can complicate the interpretation of a treatment’s clinical effect and the ability to compare results between different treatments for chronic pain and across diverse populations. Under the auspices of the Analgesic, Anesthetic, and Addiction Clinical Trial Translations, Innovations, Opportunities, and Networks (ACTTION; http://www.acttion.org/) public-private partnership with the U.S. Food and Drug Administration (FDA), the Initiative on Methods, Measurement, and Pain Assessment in Clinical Trials (IMMPACT; http://www.immpact.org/) convened a meeting in 2011 to review methods of analyzing and reporting the results of randomized clinical trials (RCTs) of pain treatments and the limitations of each approach. The focus of this meeting was to develop recommendations for improving the understanding and interpretation of RCTs of pain treatments by clinicians and other stakeholders who have limited methodologic or statistical expertise. Topics were selected to be consistent with research that has identified causes of clinical misinterpretations of RCTs, such as misunderstandings of trial summary statistics and of what defines a clinically meaningful difference [2, 79, 98, 123]. Additional topics were considered during the drafting of this article [13, 66, 69, 70]. 
This article begins by highlighting explanatory and pragmatic approaches to research. Frequently used methods for describing the benefits and risks of treatments for chronic pain are then considered, including their advantages and limitations. This is followed by a brief discussion of selected methods for generating an integrated summary of a treatment’s benefit-risk profile.

2. Methods

IMMPACT organized a meeting to discuss and reach consensus on approaches that facilitate interpretation of analgesic RCTs. International representatives from academia, regulatory and other governmental agencies, industry, and a pain patient advocacy group participated in this meeting and are authors of this manuscript. ACTTION’s policy for IMMPACT meetings is to invite all members of the ACTTION Executive and Steering Committees. ACTTION strives to include on these committees individuals from across the world who represent diverse stakeholders and have expertise or involvement in clinical trial methods. This list of invitees is supplemented by inviting individuals with particular expertise in the topics to be discussed at the specific IMMPACT meeting. Background lectures were presented by co-authors of this manuscript to facilitate discussion. Topics included: (1) what clinicians want to learn from the results of analgesic RCTs (MCR), (2) responder analyses, cumulative distribution functions, and other approaches to enhancing clinicians’ interpretation (JTF), (3) meta-analyses, numbers needed to treat (NNT), and Cochrane systematic reviews and other synthesized evidence regarding healthcare interventions (CE), and (4) interpreting responder analyses and NNTs (available on the IMMPACT website, http://www.immpact.org/meetings/Immpact14/participants14.html). During the meeting, participants considered the content to include in the manuscript and the advantages and limitations of various methods used to present RCT results. Multiple revisions to preliminary drafts of this article were made until consensus was achieved among all authors.

3. Explanatory vs. pragmatic trials

When interpreting pain treatment trial results, it is important to consider not only the specific findings and how they are presented by the study authors and sponsors, but also whether the research is designed to answer an explanatory question (i.e., is the treatment efficacious within a carefully controlled study?) or a pragmatic question (i.e., is the treatment effective under real-world conditions?); see Table 1 for terms and definitions [42, 100, 111]. To answer an explanatory question about a treatment’s efficacy and safety (i.e., what causal inferences can be drawn about the treatment’s analgesic effect and adverse event profile), the “gold standard” research design is an adequately powered, double-blind, placebo-controlled RCT [24, 53, 92]. RCTs of pain treatments are internally valid to the extent that they are rigorously controlled to minimize effects on outcomes that are not caused by the study treatment. Trials should be designed, and participants selected, to maximize the probability of observing a treatment effect if one exists (e.g., by including enough patients to have adequate power to detect a clinically meaningful difference between the treatment and control groups, and by minimizing concomitant pain treatments, comorbid pain conditions, rescue medication, and variability in the pain experience). Identifying a treatment effect if one exists can be accomplished by having strict eligibility criteria, keeping patients and study staff blinded to the protocol (e.g., randomization criteria, treatment group assignments, study start point, analysis time point for the primary efficacy endpoint) when possible, limiting concurrent treatments, performing power calculations with the best available estimates of required parameters (e.g., standard deviation of the outcome variable), measuring outcomes that are meaningful and interpretable in the population studied, and randomizing patients to ensure unbiased assignment to treatment. 
Explanatory trials are conducted to establish a treatment’s efficacy and safety under “ideal” conditions that may not necessarily generalize to the wider population of individuals with a particular chronic pain condition who are treated in clinical practice [39, 41].
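The power calculation mentioned above can be sketched with the standard normal-approximation formula for comparing two means, n per arm = 2(z<sub>1-α/2</sub> + z<sub>1-β</sub>)²σ²/δ², where δ is the between-group difference to be detected and σ is the assumed standard deviation of the outcome. The function name and the numbers in the usage line (a 1-point NRS difference and an SD of 2.5 points) are hypothetical assumptions for illustration only; calculations based on the t distribution, or on dedicated software, will give slightly larger sample sizes.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Approximate participants per arm for a two-sided comparison of two means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 * sd**2 / delta**2.
    delta: between-group difference to detect (e.g., NRS points).
    sd: assumed common standard deviation of the outcome (a planning guess).
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided type I error
    z_beta = z.inv_cdf(power)  # quantile corresponding to 1 - type II error
    return ceil(2 * (z_alpha + z_beta) ** 2 * sd**2 / delta**2)


# Hypothetical planning values: detect a 1-point difference, SD 2.5, 90% power.
print(n_per_group(delta=1.0, sd=2.5))  # 132 per arm
```

Because n scales with (σ/δ)², an optimistic guess for the standard deviation, or a larger assumed difference, can leave a trial substantially underpowered.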

Table 1.

Key terms

| Term | Explanation and example |

|---|---|

| Absolute risk reduction (ARR) | The treatment group difference in the percentages of participants experiencing an event (e.g., the difference in the percentages of “responders” between the treatment and placebo arms). |

| Analysis of means | Statistically analyzing the between-group difference in mean outcome. |

| Between-group difference | The difference in mean outcome between two groups. Example: The mean of the change between baseline and week 12 on a 0-10 NRS for participants in the active treatment group minus the same quantity for participants in the placebo group. |

| Between-group minimal clinically meaningful difference | The between-group difference in an RCT (i.e., amount of additional pain reduction in the treatment group beyond that observed in the comparator group) that is meaningful to patients or other stakeholders. There is no universally accepted difference between treatment and comparator that is considered to be clinically important. |

| Confidence intervals (CIs) | At a given level of confidence (e.g., 95%), the range of possible values that are expected to contain the true treatment effect. For example, if the RCT were replicated a large number of times, 95% of the 95% CIs from the RCTs would contain the true treatment effect |

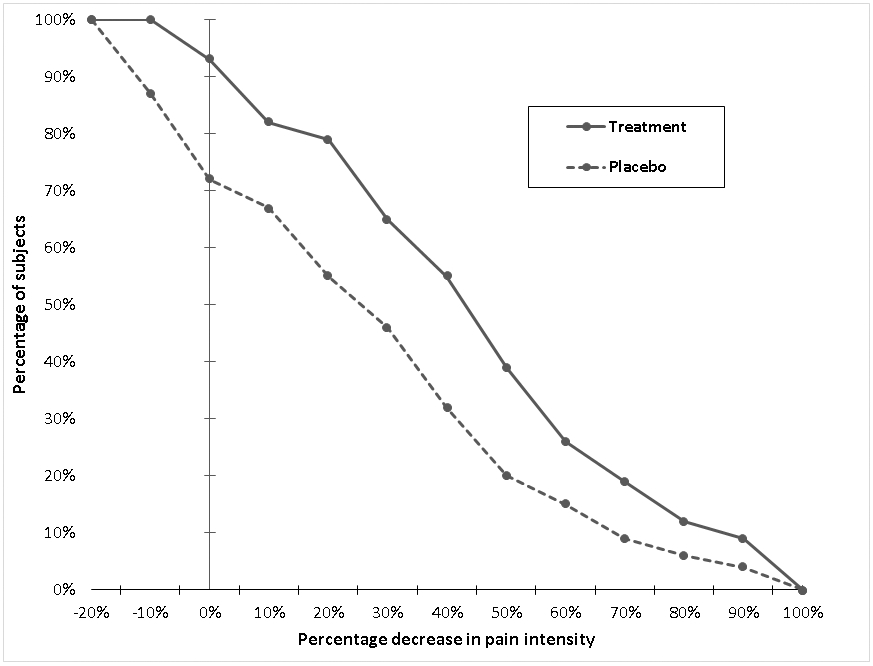

| Cumulative distribution function (CDF) | Plots of the percentage of “responders” in each study arm across the range of possible responses (see Figure 2). |

| Duration of effect | The length of the treatment benefit. |

| Explanatory trials | Trials designed to test whether the treatment is efficacious in more highly controlled settings (e.g., in a relatively homogeneous population). |

| Number needed to harm (NNH) | Identical to NNT, except NNH evaluates percentages of patients with harms. |

| Number needed to treat (NNT) | The reciprocal of the treatment group difference in the percentages of participants experiencing an event, calculated as 1/ARR. This number can be used, for example, to indicate the number of patients who would need to be treated to find 1 more “responder” in the treatment arm than in the comparator arm. |

| Power | Probability of rejecting the null hypothesis of no treatment effect when the treatment actually has an effect of a specified magnitude; calculated as 1 – Prob(Type II error). |

| Pragmatic trials | Trials designed to test whether a treatment that has been shown to have analgesic efficacy is effective in more real-world settings (e.g., in a heterogeneous population, concomitant medications allowed). |

| Primary outcome | The prespecified measure on which the effect of the treatment is being evaluated. |

| Relative risk (RR) | The ratio of the participants experiencing an event in the treatment arm to that in the placebo arm (e.g., the percentage of “responders” in the treatment arm divided by the percentage of “responders” in the placebo arm). |

| “Responder” analysis | A comparison between treatment groups of the percentage of “responders” (i.e., the individuals who have had a certain percentage improvement in pain intensity from baseline to end-of-study). |

| Treatment risks | All adverse events (AEs) associated with a treatment as identified by subject symptom reports and clinician-observed signs. |

| Type I error | Probability of rejecting the null hypothesis of no treatment effect (e.g., no treatment group difference in outcome) when the treatment actually has no effect; typically set at α = 0.05. |

| Type II error | Probability of failing to reject the null hypothesis of no treatment effect when the treatment actually has an effect of a specified magnitude; typically set at β = 0.10 – 0.20. |

| Within-group difference | The mean change within one treatment group between baseline and a defined follow-up time period. Example: The mean of the change between baseline and week 12 on a 0-10 NRS for participants in the placebo group. |

| Within-patient minimal clinically meaningful change | The within-person change in pain intensity that is meaningful to the individual. Typically considered to be ≥ 10-20% improvement on the 0-10 NRS, with > 30% improvement on the 0-10 NRS considered moderate improvement, though baseline levels of pain can affect this percentage. |

Notes: NRS – numerical rating scale; RCT – randomized clinical trial
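The ARR, RR, and NNT defined in Table 1 can all be computed from the responder counts in each arm. The sketch below is illustrative: the function name and the counts in the usage example (60 of 150 “responders” on treatment vs. 30 of 150 on placebo) are hypothetical, and rounding the NNT up to the next whole patient is a common convention rather than a requirement stated in this article.

```python
from math import ceil


def responder_metrics(resp_trt: int, n_trt: int, resp_ctl: int, n_ctl: int) -> dict:
    """ARR, RR, and NNT from responder counts in a treatment and a control arm."""
    p_trt = resp_trt / n_trt  # proportion of "responders" on treatment
    p_ctl = resp_ctl / n_ctl  # proportion of "responders" on control
    arr = p_trt - p_ctl  # absolute difference in responder proportions (ARR)
    rr = p_trt / p_ctl if p_ctl > 0 else float("inf")  # relative risk (RR)
    if arr > 0:
        nnt_exact = 1 / arr
        # Round up to a whole patient; the tiny tolerance stops floating-point
        # noise (e.g., 10.000000000000002) from adding a spurious extra patient.
        nnt = ceil(nnt_exact - 1e-9)
    else:
        # No benefit over control: NNT is undefined here (for harms, compute the NNH).
        nnt_exact = nnt = float("inf")
    return {"arr": arr, "rr": rr, "nnt_exact": nnt_exact, "nnt": nnt}


# Hypothetical trial: 40% vs. 20% responders -> ARR 0.20, RR 2.0, NNT 5.
print(responder_metrics(60, 150, 30, 150))
```

The same function gives an NNH if the counts are participants with a given adverse event instead of “responders.” Note that an RR of 2.0 can correspond to very different NNTs depending on the placebo responder rate, which is one reason to report absolute as well as relative measures.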

Once a treatment has been shown to be efficacious and safe for a specific chronic pain condition within the setting of carefully controlled clinical trials, it is also important to determine whether the treatment is effective in the broader population of individuals with that condition in clinical practice; in other words, evaluating external validity. Evaluating the relative effectiveness compared to other standard treatments may help establish the clinical application of the treatment. Pragmatic trials are designed to evaluate the treatment in a more heterogeneous sample, as may be the case within a clinical patient population in which effect modifiers (e.g., comorbid pain conditions, various concurrent treatments, variability in the pain experience) are more likely to play a role [39, 41]. Such trials may yield a more generalizable estimate of the treatment effect and therefore may be more externally valid, but can be less informative with respect to understanding the potential impact of treatment due to the variability permitted in trial conduct. Identifying factors that might alter the treatment effect in pragmatic trials may enhance the ability to understand the clinical utility of a treatment; however, such investigations are often limited by insufficient sample sizes.

Explanatory and pragmatic trials each contribute distinct information to the evidence available regarding pain treatments. Explanatory trials address whether treatments have health benefits or risks, whereas pragmatic trials explore the bounds within which beneficial analgesic outcomes can be observed in study populations. Establishing whether treatments are efficacious and the broader circumstances under which they work are each important for clinical decision-making [100]. The tradeoffs between precision and external validity in explanatory versus pragmatic trials must be considered when interpreting the results of these two complementary classes of trials. In some cases, study designs that blend these two approaches may accelerate the speed with which pain treatments can be translated into clinical practice [97, 120].

4. Determining treatment benefit

4.1. Hypothesis testing, confidence intervals, and P-values

To adequately evaluate the evidence of efficacy provided by a statistically significant treatment group difference in the primary analysis, several issues must be addressed. Foremost among these are whether the analysis that tested the primary hypothesis of the trial was pre-specified (i.e., decided upon before analyzing the trial data), and whether the problem of multiplicity (i.e., performing multiple analyses), if applicable, was addressed in a satisfactory manner to prevent inflation of the probability of a type I error (see Table 1 for definitions of terms). Additional important considerations include whether there were any flaws or potential for bias in study planning, design, execution, and analysis of the trial data and whether the results for important secondary outcomes were consistent with the primary analysis [60, 94]. It is also important to evaluate the extent to which the results of the RCT suggest that the treatment provides a clinically important benefit, as discussed below.
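The inflation of the type I error probability under multiplicity can be made concrete with a short calculation. This sketch assumes that the k tests are independent and that every null hypothesis is true; the function names are hypothetical, and the Bonferroni correction shown is only one of several (relatively conservative) adjustment methods, not necessarily the one a given trial should use.

```python
def familywise_error_rate(alpha: float, k: int) -> float:
    """Probability of at least one false-positive result among k independent
    tests, each performed at significance level alpha, when all nulls are true."""
    return 1 - (1 - alpha) ** k


def bonferroni_level(alpha: float, k: int) -> float:
    """Per-test significance level that keeps the familywise error rate <= alpha."""
    return alpha / k


# Five unadjusted tests at 0.05: roughly a 23% chance of at least one false positive.
print(round(familywise_error_rate(0.05, 5), 3))  # 0.226
# Bonferroni-adjusted (0.01 per test): familywise rate falls just below 0.05.
print(round(familywise_error_rate(bonferroni_level(0.05, 5), 5), 3))  # 0.049
```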

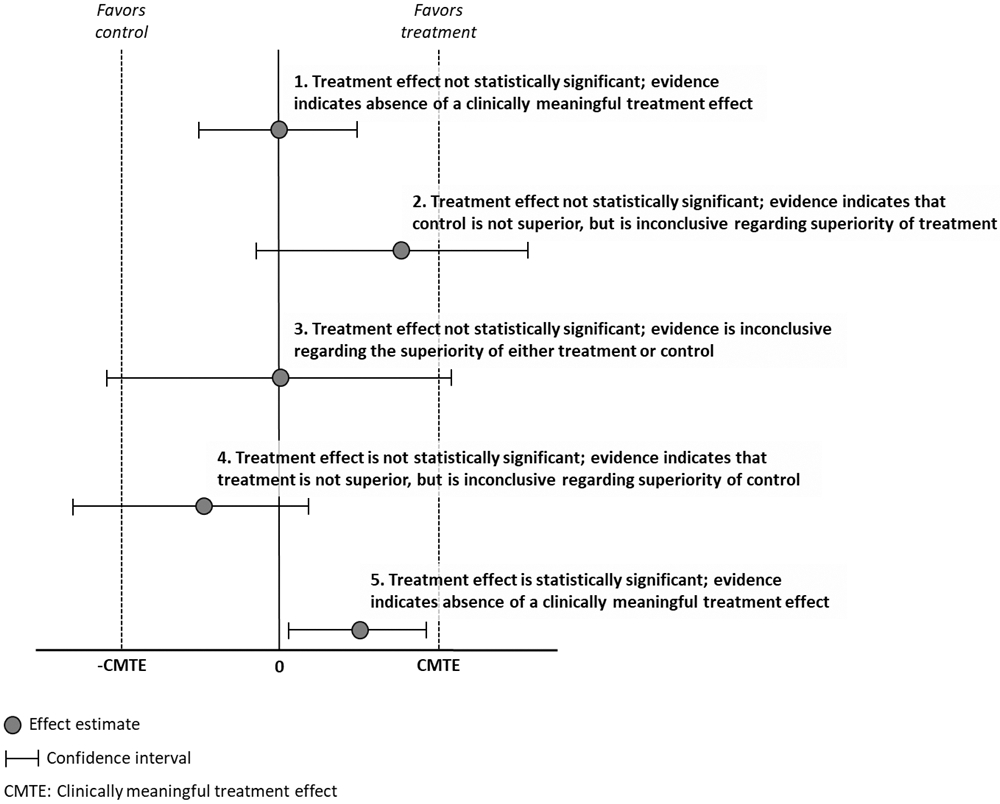

When the primary analysis of an RCT indicates that the difference between treatment groups is not statistically significant, one possibility is that there was a type II error, that is, the treatment is truly efficacious, but the RCT failed to identify the treatment benefit. This can happen for a variety of reasons, including a sample size too small to detect a treatment effect of minimal clinical importance, or problematic study design or execution that resulted in poor quality data and inadequate assay sensitivity [25]. However, assuming that there was sound study design and execution [93], it is important to decide whether the results should be interpreted as the absence of a clinically meaningful treatment effect or whether they should be considered inconclusive. A useful aid to making this decision is to assess the confidence interval (CI) for the treatment effect (see Figure 1). Specifically, if the CI for the treatment effect does not contain values that would be considered clinically meaningful treatment effects, then the trial results can be interpreted as evidence for the absence of a clinically meaningful treatment effect. Example 1 in Figure 1 is a trial in which the primary analysis did not yield a statistically significant result and the estimated magnitude of the treatment effect (i.e., the range of values between the lower and upper confidence limits) was not clinically meaningful. In contrast, Example 5 shows a trial in which the treatment effect was found to be non-zero according to the hypothesis test, but the estimated magnitude of the treatment effect was not clinically meaningful [44]. If the CI includes values of the treatment effect considered clinically meaningful, however, the results of a trial in which the treatment effect was not statistically significant would be considered inconclusive (see Examples 2 and 3 in Figure 1). 
In Example 4, there is evidence for the absence of a beneficial treatment effect, but the evidence regarding superiority of the control is inconclusive. Of course, such results may provide the basis for further study to examine the treatment’s hypothesized efficacy [48]. Although for many years biostatisticians have recommended this approach to interpreting results of clinical trials in which the treatment effect was not statistically significant, a recent systematic review found that proper interpretation of CIs occurs infrequently in general medical journals [44].

Figure 1.

Interpretation of confidence intervals

The importance of examining CIs when interpreting pain RCTs can be seen in trials with a “negative” result. For example, pregabalin is recommended for the treatment of painful diabetic peripheral neuropathy (pDPN) by international treatment guidelines and is approved for the treatment of neuropathic pain in both the US and Europe [7]. However, certain trials in individuals with pDPN have not demonstrated statistically significant separation between pregabalin and placebo on pain outcomes, and such trials are often interpreted as negative. For example, a study conducted by Raskin and colleagues found a treatment effect (pregabalin – placebo) of −0.32 (95% CI, −0.74 to 0.09) on the primary outcome variable (i.e., change in pain intensity) [96]. Based on this result, the authors concluded that the study was negative, citing “the negative primary analysis” in the Discussion. However, the CI in this study suggests that the trial was inconclusive rather than negative, because it included values consistent with what could be considered a clinically meaningful decrease in pain intensity for pDPN associated with pregabalin (i.e., a decrease of more than 0.50 points) [23].
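The decision rule applied to the Raskin trial, and illustrated in Figure 1, can be written as a small function. This is a simplified sketch: the function name and the labels are hypothetical, it assumes the effect is expressed as treatment minus control with negative values favoring treatment, and it ignores the other considerations discussed above (prespecification, multiplicity, bias). The 0.50-point threshold in the usage line is the pDPN value cited above; other conditions would need their own justified threshold.

```python
def interpret_ci(lower: float, upper: float, mcid: float) -> str:
    """Classify a CI for (treatment - control) change in pain, where negative favors treatment.

    mcid: smallest between-group decrease regarded as clinically meaningful (a positive number).
    """
    if upper < 0:  # whole CI favors treatment: statistically significant benefit
        if lower < -mcid:
            return "significant; includes clinically meaningful benefit"
        return "significant; excludes clinically meaningful benefit"
    if lower > 0:  # whole CI favors control
        return "significant; favors control"
    # CI contains zero: not statistically significant
    if lower < -mcid:
        return "inconclusive"  # clinically meaningful benefit cannot be ruled out
    return "no clinically meaningful benefit"


# Raskin et al. trial: effect -0.32 (95% CI -0.74 to 0.09), threshold 0.50 points.
print(interpret_ci(-0.74, 0.09, mcid=0.50))  # inconclusive
```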

Systematic reviews of RCTs in the general medical literature [12] and for pharmacologic and invasive treatments for pain [45] have also shown that erroneous or misleading interpretations of treatment effects that are not statistically significant are quite common. For example, authors often suggest that two interventions are equivalent when an RCT fails to show that one treatment is superior to another. This is not an appropriate conclusion when a trial has been designed to test superiority rather than equivalence, as is the case for most RCTs of pain treatments. Conclusions of equivalence require that the treatment effect fall within prespecified margins of equivalence that are clinically justified [78, 95]. Additionally, common examples of misleading “spin” in the interpretation of RCTs with disappointing results in the primary analyses include emphasizing statistically significant results of secondary analyses, solely focusing on improvements from baseline in the active treatment group rather than differences between the active group and the placebo or comparison group, or highlighting the upper bound of the CI for the treatment group difference to suggest a meaningful positive effect. It is important for the reader to attend to the primary question that the trial was designed to answer and not be misled by secondary outcomes or analyses that only report effects within a treatment group.
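The difference between “not significantly different” and “equivalent” can be shown with two checks on the same CI. This sketch is an assumption-laden illustration: the function names, the CIs, and the ±0.5-point margin are hypothetical, and it relies on the standard correspondence between the two one-sided tests (TOST) procedure at α = 0.05 and a 90% (not 95%) two-sided CI lying entirely within the equivalence margins.

```python
def superiority_significant(lower: float, upper: float) -> bool:
    """True if the CI for the between-group difference excludes zero."""
    return not (lower <= 0 <= upper)


def shows_equivalence(lower: float, upper: float, margin: float) -> bool:
    """True if the whole CI lies inside the prespecified margins (-margin, +margin).

    For a TOST at alpha = 0.05, pass the limits of a 90% CI.
    """
    return -margin < lower and upper < margin


# Hypothetical wide CI: not significant, yet far from demonstrating equivalence.
print(superiority_significant(-0.9, 0.3), shows_equivalence(-0.9, 0.3, margin=0.5))  # False False
# Hypothetical narrow CI: not significant and within the margins.
print(superiority_significant(-0.2, 0.3), shows_equivalence(-0.2, 0.3, margin=0.5))  # False True
```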

The use of p-values in the context of scientific reporting has come under intense scrutiny in recent years due to their misuse and misinterpretation. For example, a common misinterpretation of a p-value is that it is the probability that the null hypothesis (of, say, no effect of treatment) is true. In fact, the p-value is the probability, under an assumed statistical model and if the null hypothesis were true, of observing a treatment effect at least as large as that observed in the trial. Also, the interpretation of a trial result as “proof” that a treatment is effective if it is statistically significant (e.g., p < 0.05) is flawed, as is the interpretation that a treatment is ineffective if the result is not statistically significant (e.g., p > 0.05). Indeed, the sole use of strict dichotomies with respect to p-values to judge whether or not a treatment is effective is highly problematic. For example, the level of evidence with respect to the existence of a treatment effect is certainly not qualitatively different when p = 0.0499 and when p = 0.0501. Another major problem with the misuse of p-values in clinical trials is the failure to account for multiplicity when several hypotheses are being tested, particularly with respect to secondary analyses (e.g., secondary outcome variables, multiple group comparisons, and subgroup analyses).
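The point about p = 0.0499 versus p = 0.0501 can be checked by converting each p-value back to the test statistic that produced it. This sketch assumes a two-sided z-test with a standard normal null distribution; the helper names are hypothetical. The two z statistics differ only in the third decimal place (about 1.961 versus 1.959), so the underlying evidence is essentially the same even though one falls on each side of 0.05.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, SD 1


def two_sided_p(z: float) -> float:
    """Two-sided p-value: probability, under the null, of a |z| at least this large."""
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def z_from_two_sided_p(p: float) -> float:
    """Absolute z statistic that produces a given two-sided p-value."""
    return STD_NORMAL.inv_cdf(1 - p / 2)


for p in (0.0499, 0.0501):
    print(p, round(z_from_two_sided_p(p), 4))
```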

Although p-values provide some indication of how compatible the trial data are with a given null hypothesis, they do not convey any information regarding the clinical meaningfulness of the treatment effect. With a large enough sample size or low variability in outcomes, an observed treatment effect that is clinically insignificant can be associated with a statistically significant result [121]. Conversely, as discussed above, a trial with a small sample size or high variability in outcomes can yield a large estimated treatment effect that is not statistically significant. Understanding the potential clinical importance of a treatment effect requires more information, such as the estimated magnitude of the effect and the associated CI around that effect.
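The dependence of statistical significance on sample size can be illustrated by holding a small between-group difference fixed and varying the number of participants. The sketch assumes a z-test with a known common SD and equal arm sizes; the function name, the 0.2-point difference, and the SD of 2.0 NRS points are hypothetical values chosen for illustration. The same 0.2-point difference gives p well above 0.05 with 50 participants per arm and p below 0.01 with 2000 per arm.

```python
from statistics import NormalDist


def p_for_mean_difference(delta: float, sd: float, n_per_arm: int) -> float:
    """Two-sided z-test p-value for an observed between-group mean difference,
    assuming a known common SD and equal arm sizes."""
    se = sd * (2 / n_per_arm) ** 0.5  # standard error of the difference in means
    z = delta / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same 0.2-point difference on a 0-10 NRS (SD 2.0) at increasing sample sizes.
for n in (50, 500, 2000):
    print(n, round(p_for_mean_difference(0.2, 2.0, n), 4))
```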

Useful discussions of the issues surrounding p-values and their interpretation can be found in a recently published series of articles [121, 122] and the references therein. In the spirit of improved reporting, the CONSORT 2010 checklist recommends reporting the effect size estimate and some measure of the precision of that estimate [99]. In addition, many journals are moving toward reporting the effect size, the associated confidence interval, and the exact P-value rather than P < 0.05 [11, 50, 72], with at least one journal requiring that only effect size estimates and 95% CIs (with no P-values) be reported when researchers do not have a prespecified method to adjust for multiple analyses [50].

4.2. What is a clinically important benefit?

Statistically significant evidence of a treatment’s efficacy in a clinical trial is insufficient to indicate that the magnitude of the treatment effect is clinically important. For example, if the sample size is sufficiently large, very small group differences may be “statistically significant” even though they are clinically irrelevant. Evaluations of clinical importance must distinguish between determining whether the improvements experienced by individual patients are important to them and determining whether the group differences between treatments in an RCT are clinically important. A third type of evaluation involves determining whether the benefits of a treatment are meaningful to society (e.g., reducing healthcare costs or increasing worker productivity), which is an important consideration but one that is beyond the scope of this article.

4.2.1. Clinical importance of improvements in individual patients

Determining the magnitude of pain reduction that is meaningful to patients with acute or chronic pain has been an important focus of pain research. Results of this research indicate that a reduction in pain intensity of 10-20% corresponds to a “minimally important” improvement on a patient global impression of change (PGIC) scale, a ≥ 30% reduction corresponds to what patients would consider a “moderately important” improvement in pain intensity, and reductions of approximately 50% or more can be considered “substantial” improvements in pain intensity for individuals with acute and chronic pain [15, 27]. However, the importance of such decreases could differ depending on the patient’s baseline pain intensity. For example, a decrease in pain from 8 to 6 on a 0-10 NRS, which can be considered a reduction from severe to moderate pain, might be more important to a patient than a reduction from 3 to 1, both of which are mild levels of pain. On the other hand, as Hanley et al. [49] have shown, individuals with higher pain intensity at baseline require a greater reduction in pain intensity for it to be considered a meaningful decrease. It is also possible for two individuals to experience approximately the same percentage reduction in pain intensity, but for individual A, the pain decreases from 5 to 3 on the 0-10 NRS, whereas for individual B, the pain decreases from 8 to 5. Although treatment leads both individuals to experience a 38-40% reduction in pain intensity, individual A may judge the current level of pain to be acceptable, whereas individual B may experience the reduced level of pain as unsatisfactory [109]. Nevertheless, percentage reduction in pain is generally considered a more useful approach than absolute change on an NRS for determining whether a patient has had a meaningful improvement [27, 36, 89, 90].
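The percentage reductions in the examples above can be reproduced directly. In this sketch the function names are hypothetical, and the category cutpoints follow the benchmarks summarized in this section; the 10% floor for “minimal” is an assumption, because the text cites a 10-20% range rather than a single value. The output shows both limitations discussed above: individuals A and B (5 to 3 and 8 to 5) fall in the same category despite different end-of-treatment pain, and a 3-to-1 change within the mild range is labeled “substantial” while 8 to 6 is not.

```python
def percent_reduction(baseline: float, follow_up: float) -> float:
    """Percentage reduction in pain intensity from baseline (positive = improvement)."""
    if baseline <= 0:
        raise ValueError("baseline must be > 0 to compute a percentage change")
    return 100 * (baseline - follow_up) / baseline


def improvement_category(pct: float) -> str:
    """Map a percentage reduction to the benchmarks summarized in the text.
    The 10% floor for 'minimal' is an assumption (the text cites 10-20%)."""
    if pct >= 50:
        return "substantial"
    if pct >= 30:
        return "moderate"
    if pct >= 10:
        return "minimal"
    return "below minimal"


# Individuals A and B, plus the 8-to-6 and 3-to-1 examples, on a 0-10 NRS.
for baseline, follow_up in [(5, 3), (8, 5), (8, 6), (3, 1)]:
    pct = percent_reduction(baseline, follow_up)
    print(baseline, follow_up, round(pct, 1), improvement_category(pct))
```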

Decreases in pain intensity, however, do not necessarily correspond to the magnitudes of overall improvement preferred by patients [38, 46]. For example, a clinically important reduction in pain intensity could be accompanied by considerable adverse effects, with health-related quality of life unimproved or even worsened as a result; conversely, treatment might be associated with a modest decrease in pain but substantial improvements in sleep, mood, and function that taken together would be considered a major benefit by the patient.

4.2.2. Clinical importance of group differences in a clinical trial

The determination of the level of improvement patients consider clinically important is very often confused with evaluation of the group differences between an active and a control treatment. Thresholds for meaningful within-patient change (e.g., a reduction of 2 points on a 0-10 pain intensity NRS) should not be confused with the evaluation of what constitutes a meaningful difference between treatment groups. The determination of the clinical importance of group differences in RCTs depends on a constellation of factors, including: (1) the magnitude of the group difference observed in the trial and its associated CI; (2) the broader context of the disease being treated, including whether other treatments are available; (3) adverse events associated with the treatment; and (4) an overall evaluation of the benefit-risk profile, ideally as assessed by patients, clinicians, researchers, statisticians, and other stakeholders [20, 23, 47, 71, 98]. For example, a reduction in pain intensity of at least 2 points on a 0-10 NRS could be used to define a clinically meaningful improvement for an individual patient, but the difference in mean change from baseline between an active treatment and placebo does not necessarily need to be ≥ 2 points in order for the effect of the treatment to be considered clinically important. The interpretation of meaningful change depends on whether it is being considered at the group level (where smaller between-group differences in changes from baseline may be interpreted as important) or at the individual level, where thresholds for meaningful change are typically based on input from patients regarding what they consider important [10].

A number of factors can be considered when evaluating the clinical importance of group differences in an RCT (see Table 2). The first consideration is that there must be a statistically significant difference between the groups, which is a necessary but not sufficient criterion. In addition, the group difference (e.g., as assessed by the standardized effect size) with respect to the primary outcome variable can be compared with the effects associated with other treatments that are considered to have clinically important benefits. If the treatment effect in an RCT of a new treatment is comparable to, or greater than, the effects seen with established therapies, then the improvement is likely to be clinically important, although studies confirming this finding would be necessary. If the treatment effect found with the new treatment is substantially smaller than what has been found for existing therapies, then it becomes essential to evaluate whether there are any other characteristics of the new treatment that might compensate for the modest treatment effect on the primary outcome variable and make the overall benefit clinically important. Other characteristics to consider include safety and tolerability, results for secondary efficacy outcomes including physical and emotional functioning, limitations of existing treatments, and the other factors listed in Table 2. Importantly, cross-study comparisons of different treatments may not reflect what would occur if the different treatments were compared within the same study.
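One common standardized effect size for comparing a treatment effect with those of other therapies is Cohen’s d, the between-group difference in means divided by the pooled standard deviation. The summary statistics in the usage line are hypothetical and the function name is illustrative; with changes in pain scored as follow-up minus baseline, a negative value favors the treatment. As noted above, such cross-study comparisons still carry the caveat that trials differ in populations and methods.

```python
def standardized_effect_size(mean_t: float, sd_t: float, n_t: int,
                             mean_c: float, sd_c: float, n_c: int) -> float:
    """Cohen's d: difference in group means divided by the pooled standard deviation."""
    pooled_var = ((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2)
    return (mean_t - mean_c) / pooled_var**0.5


# Hypothetical trial: mean change -2.0 (SD 2.2) on treatment vs. -1.2 (SD 2.0) on placebo.
d = standardized_effect_size(-2.0, 2.2, 100, -1.2, 2.0, 100)
print(round(d, 2))  # -0.38
```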

Table 2.

Major factors to consider in determining the clinical importance of group differences (adapted from Dworkin et al. [23])

4.3. Placebo response and placebo effect

The placebo effect, which arises in part from expectations of treatment benefit, can contribute to the observed treatment benefit [33]. The placebo effect has neurobiological and physiological mechanisms that are activated by situational effects, interpersonal interactions, verbal suggestion, conditioning processes that include prior experiences with treatments, and other nonspecific effects [17, 33]. This differs from the placebo group response, which captures all changes that occur for patients when an inactive substance is administered, including regression to the mean, disease natural history, and the mechanisms associated with the placebo effect [33]. In pain RCTs, placebo group responses appear to have increased over time, perhaps because of increasing placebo effects, which makes it more difficult to identify efficacious treatments [26, 51, 113, 119]. Some have recommended studies that identify and characterize participants’ expectations, either by conducting a three-arm trial in which one arm receives no intervention or by assessing participant expectations directly [17, 119].
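Regression to the mean, one component of the placebo group response, can be demonstrated with a small simulation in which nothing at all is administered. This is a deliberately simplified, hypothetical model: each simulated patient has a stable “true” pain level, each rating adds independent measurement noise, ratings are not bounded to 0-10, and enrollment requires a baseline rating of at least 6. Under these assumptions the enrolled group shows an average decrease in pain at follow-up purely because of selection on a noisy baseline; without the entry criterion, the average change is close to zero.

```python
import random
from statistics import fmean


def mean_change_without_treatment(n=20000, pop_mean=5.5, between_sd=1.5,
                                  noise_sd=1.0, entry_cutoff=6.0, seed=1):
    """Mean (follow-up - baseline) pain change with no treatment and no placebo effect.

    Hypothetical model: rating = stable true pain + independent measurement noise;
    only patients whose baseline rating reaches entry_cutoff are enrolled.
    """
    rng = random.Random(seed)
    changes = []
    for _ in range(n):
        true_pain = rng.gauss(pop_mean, between_sd)  # patient's stable pain level
        baseline = true_pain + rng.gauss(0.0, noise_sd)  # noisy screening rating
        if baseline < entry_cutoff:
            continue  # screened out: below the minimum-pain entry criterion
        follow_up = true_pain + rng.gauss(0.0, noise_sd)  # nothing has changed
        changes.append(follow_up - baseline)
    return fmean(changes)


print(round(mean_change_without_treatment(), 2))  # negative: apparent "improvement"
print(round(mean_change_without_treatment(entry_cutoff=float("-inf")), 2))  # near 0
```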

4.4. Analysis of means

In pain clinical trials, the mean change in the primary outcome measure (i.e., the prespecified measure on which a difference between the treatment and the control group is expected) from the baseline pretreatment period to a designated time point after initiation of treatment for each treatment arm is typically reported (i.e., within-group change). Within-group changes indicate the average change (or no change) that is observed during the course of the study for participants in each treatment arm. However, formal analyses of within-group change do not address the true objective of the RCT – evaluating whether the difference between treatment arms over the course of the study is statistically and clinically significant. In some instances, researchers will employ analysis of means testing such as a t-test or analysis of variance (ANOVA) to compare the mean within-group changes between treatment arms. An analysis strategy that makes more efficient use of the baseline information is analysis of covariance, for which the statistical model includes treatment group and the baseline value of the outcome variable as independent variables. This strategy yields a more precise estimate of the treatment effects than the simple group comparison of mean within-group changes from baseline since the latter strategy incorporates the baseline value in a very limited way (i.e., only in the definition of the outcome variable) [102, 104]. Studies that only report the statistical significance of within-group changes for each treatment arm without statistical comparisons between the groups fail to demonstrate that a treatment provides any benefit beyond a placebo (or other comparator), as changes from baseline can be due to many factors other than the treatment effect (e.g., regression to the mean, symptom fluctuations, contextual influences). 
It is necessary to show that the changes from baseline are greater for one treatment group than the other in order to demonstrate a benefit of the treatment being studied.
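The difference between comparing mean changes from baseline and using analysis of covariance can be seen in how each estimator uses the baseline values. In a model with treatment group and baseline as predictors (and a common slope), the least-squares treatment effect equals the difference in follow-up means minus the pooled within-group slope times the difference in baseline means; the change-score comparison is the special case that fixes this slope at 1. The function names and the data in the usage example are hypothetical: in this small sample, the true slope is 0.5 and the arms happen to differ at baseline, so the two estimates differ. Across repeated randomizations both estimators are unbiased, but ANCOVA is generally more precise, which is the advantage described above.

```python
from statistics import fmean


def change_score_effect(base_t, post_t, base_c, post_c):
    """Difference in mean change from baseline (treatment minus control)."""
    return (fmean(post_t) - fmean(base_t)) - (fmean(post_c) - fmean(base_c))


def ancova_effect(base_t, post_t, base_c, post_c):
    """Baseline-adjusted treatment effect from post = b0 + b1*treatment + b2*baseline.

    b2 is the pooled within-group regression slope of post on baseline; the
    returned b1 is the follow-up difference adjusted for baseline imbalance.
    """
    def centered_sums(x, y):
        mx, my = fmean(x), fmean(y)
        sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
        sxx = sum((a - mx) ** 2 for a in x)
        return sxy, sxx

    sxy_t, sxx_t = centered_sums(base_t, post_t)
    sxy_c, sxx_c = centered_sums(base_c, post_c)
    slope = (sxy_t + sxy_c) / (sxx_t + sxx_c)  # pooled within-group slope (b2)
    return (fmean(post_t) - fmean(post_c)) - slope * (fmean(base_t) - fmean(base_c))


# Hypothetical data built so that follow-up = 0.5*baseline + 1, minus 1.5 on treatment.
base_t, post_t = [6, 7, 8, 9], [2.5, 3.0, 3.5, 4.0]
base_c, post_c = [6, 8, 9, 11], [4.0, 5.0, 5.5, 6.5]
print(ancova_effect(base_t, post_t, base_c, post_c))        # -1.5 (recovers the built-in effect)
print(change_score_effect(base_t, post_t, base_c, post_c))  # -1.0
```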

When incorporating a treatment into a clinical setting, it is important to recognize that comparisons of group means indicate what is happening on average across all participants, and as is the case for all analyses used in trial designs other than multi-period cross-over studies, they are not informative about the responses of an individual patient [27, 68, 101]. For example, although an RCT may show that patients who receive a specific treatment report greater analgesic benefit, on average, than those who receive placebo, patients in the treatment and placebo arms may experience improvement, no change, or even increases in pain. Of course, while this point has often been made about group means, it applies to any group-level estimand, such as a proportion (e.g., of “responders”; see Section 4.5 below).

4.5. Responder analyses

An alternative way to analyze RCT data is to compare the treatment groups with respect to the percentage of patients whose improvements meet a pre-defined threshold. Common examples are categories of severity, such as mild, moderate, or severe pain, or categories of change, such as the percentage of patients reporting ≥30% or ≥50% reductions in pain intensity. There is a lack of consensus regarding the pros and cons of responder analyses and of the related metric, the number needed to treat (NNT). Presenting responder analyses can simplify the interpretation of trial results, allowing for a straightforward comparison of the proportion of patients in each treatment arm who experienced a pre-defined level of improvement on the outcome of interest [34]. However, responder analyses require an understanding of what is clinically meaningful to different stakeholders in order to define what constitutes a “response.” In other words, how much within-patient improvement in pain intensity is necessary for patients, clinicians, or other stakeholders to regard the pain reduction as clinically meaningful? As described in Section 4.2.1 above, empirical evidence suggests that a reduction of 10-20% on the 0-10 NRS for pain intensity is associated with minimal improvement, a reduction of ≥30% is needed before patients report moderate change in pain intensity, and a ≥50% reduction is considered substantial improvement [27, 49].
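The responder classification described above can be sketched in a few lines of Python. This is an illustration only: the baseline and end-of-treatment NRS scores below are hypothetical, and percent reduction is assumed to be computed as (baseline - follow-up) / baseline, which requires a baseline score above zero.

```python
def percent_reduction(baseline, follow_up):
    """Percent reduction in pain intensity from baseline (positive = improvement)."""
    if baseline <= 0:
        raise ValueError("baseline must be > 0 to define a percent reduction")
    return 100.0 * (baseline - follow_up) / baseline


def responder_rate(pairs, threshold):
    """Proportion of (baseline, follow_up) pairs whose reduction is >= threshold percent."""
    flags = [percent_reduction(b, f) >= threshold for b, f in pairs]
    return sum(flags) / len(flags)


# Hypothetical (baseline, end-of-treatment) 0-10 NRS scores for two small arms.
treatment = [(8, 4), (7, 5), (6, 3), (9, 8), (5, 5)]
placebo = [(8, 6), (7, 6), (6, 5), (9, 6), (5, 4)]

for threshold in (30, 50):
    print(threshold, responder_rate(treatment, threshold), responder_rate(placebo, threshold))
```

Note that the patient going from 7 to 5 (a 28.6% reduction) is a “non-responder” at the 30% threshold, which previews the information-loss problem discussed next.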

The use of the term “responder” can be erroneously interpreted as referring to a stable characteristic of the participant, implying that the participant will respond to the treatment regardless of context. In fact, multiple randomized exposures to both the treatment and a control are necessary to determine whether or not a patient responds to the treatment [21, 105]. An important limitation of using proportions in responder analyses is the loss in statistical power associated with dichotomizing continuous data into categorical data (i.e., “responder” vs. “non-responder”). The reduction in power occurs because dichotomization sacrifices information: for example, a patient with a 29% reduction in pain intensity would not be considered a “responder”, whereas a patient with a 31% reduction would be, even though their pain reductions are nearly identical and the patient with the 29% reduction may consider that reduction meaningful [4, 103, 108].
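The power cost of dichotomization can be illustrated with a small Monte Carlo sketch (assuming NumPy is installed). All settings are hypothetical assumptions chosen for illustration: percent reductions are drawn from normal distributions with means of 20% (placebo) and 30% (treatment) and a standard deviation of 30 points, 100 participants per arm, and large-sample two-sided z-tests at the 0.05 level. The normal model is a simplification that even allows reductions above 100%.

```python
import numpy as np


def simulate_power(n_per_arm=100, reps=2000, mean_pbo=20.0, mean_trt=30.0,
                   sd=30.0, threshold=30.0, z_crit=1.96, seed=1):
    """Estimate power of a continuous vs. a dichotomized (responder) analysis."""
    rng = np.random.default_rng(seed)
    # Rows are simulated trials; columns are participants' percent reductions.
    pbo = rng.normal(mean_pbo, sd, size=(reps, n_per_arm))
    trt = rng.normal(mean_trt, sd, size=(reps, n_per_arm))

    # Continuous analysis: two-sample z-test on mean percent reduction.
    diff = trt.mean(axis=1) - pbo.mean(axis=1)
    se = np.sqrt((trt.var(axis=1, ddof=1) + pbo.var(axis=1, ddof=1)) / n_per_arm)
    power_continuous = np.mean(np.abs(diff / se) > z_crit)

    # Dichotomized analysis: two-proportion z-test on "responder" rates.
    p_trt = (trt >= threshold).mean(axis=1)
    p_pbo = (pbo >= threshold).mean(axis=1)
    p_pool = (p_trt + p_pbo) / 2
    se_p = np.sqrt(p_pool * (1 - p_pool) * 2 / n_per_arm)
    power_dichotomized = np.mean(np.abs((p_trt - p_pbo) / se_p) > z_crit)

    return float(power_continuous), float(power_dichotomized)


print(simulate_power())
```

With these settings the continuous comparison should reject the null hypothesis noticeably more often than the responder comparison, even though both analyze the same simulated participants.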

4.6. Cumulative distribution functions (CDFs)

A challenge of responder analyses, discussed above, is that investigators must specify the decrease in pain intensity required for study participants to be categorized as “responders”. Moreover, reporting only the percentage of RCT participants who achieved one or more distinct levels of reduction in pain intensity does not provide complete information about the trial. When contemplating use of a treatment in a clinical setting, clinicians may want to know the percentages of participants in each group who experienced different levels of reduction in pain intensity (e.g., 20% or 75% reduction). Farrar and colleagues proposed an alternative method of reporting RCT results, cumulative distribution functions (CDFs), which display as a continuous curve the percentage of participants in each treatment arm who achieved each level of response across the entire range of possible responses (see Figure 2 for an example) [35]. The main advantage of this display is that it provides a visual representation of the treatment group differences in the percentage of “responders” at every level of “response,” across the full range of improvement. In this way, readers can apply their own definitions of a meaningful improvement when interpreting the results. Such multi-tiered information, in conjunction with analysis of means, can be valuable in establishing whether the treatment benefit is clinically meaningful to patients [23, 114]. Presenting CDFs allows the reader to identify the percentages of participants who achieved each level of “response” and how those percentages differed between treatment groups. In addition, the vertical distance between the CDF curves at any “response” threshold is the absolute risk reduction (ARR) at that threshold, which can then be used to calculate the NNT (see below).
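The curves in such a display can be computed directly from each participant's percent reduction. The Python sketch below uses hypothetical data; strictly speaking, plotting the proportion achieving *at least* each reduction gives the complement of the empirical CDF, which is the form usually shown for pain outcomes. The difference between the arms at each threshold is the ARR for that “responder” definition.

```python
def responder_curve(reductions, thresholds):
    """Proportion of participants whose percent reduction is >= each threshold."""
    n = len(reductions)
    return [sum(r >= t for r in reductions) / n for t in thresholds]


def arr_curve(treatment, placebo, thresholds):
    """Between-arm difference in responder proportions (ARR) at each threshold."""
    trt_curve = responder_curve(treatment, thresholds)
    pbo_curve = responder_curve(placebo, thresholds)
    return [t - p for t, p in zip(trt_curve, pbo_curve)]


# Hypothetical percent reductions in pain intensity (negative = pain worsened).
treatment = [0, 20, 35, 60, 80]
placebo = [-10, 0, 10, 35, 40]
thresholds = list(range(0, 101, 10))  # evaluate the curve every 10 percentage points

for t, arr in zip(thresholds, arr_curve(treatment, placebo, thresholds)):
    print(f">= {t:3d}% reduction: ARR = {arr:+.2f}")
```

Plotting `responder_curve` for each arm against `thresholds` would reproduce the kind of figure described above; a reader can then read off the ARR at whichever threshold they consider meaningful.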

Figure 2.

Cumulative distribution function (CDF) example

CDFs are a useful descriptive tool that can visually depict the data from an RCT. As with any RCT analysis, it is important that the problem of missing data is addressed before computing the CDF. Often, researchers presume that anyone who drops out of an RCT is a “non-responder”. However, that is not necessarily the case, particularly when the reason for dropout is unrelated to treatment. Methods for accommodating missing data are briefly addressed in Section 7 below.
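A short Python sketch with hypothetical data shows how much the handling of dropouts alone can move a responder rate. Both rules shown here are simplistic and are included only for illustration: counting dropouts as non-responders assumes every dropout failed, while analyzing completers only assumes dropouts resemble completers. Neither is one of the principled methods discussed in Section 7.

```python
def responder_rate_with_dropouts(reductions, threshold, dropouts_as_nonresponders=True):
    """Responder rate when some outcomes are missing.

    reductions: percent reductions, with None marking a participant who dropped out.
    dropouts_as_nonresponders=True keeps dropouts in the denominator as failures;
    False analyzes completers only.
    """
    observed = [r for r in reductions if r is not None]
    if not observed:
        raise ValueError("no observed outcomes")
    responders = sum(r >= threshold for r in observed)
    denominator = len(reductions) if dropouts_as_nonresponders else len(observed)
    return responders / denominator


arm = [50, 40, None, 10, None]  # hypothetical arm with 2 dropouts out of 5
print(responder_rate_with_dropouts(arm, 30, True))   # dropouts counted as failures
print(responder_rate_with_dropouts(arm, 30, False))  # completers only
```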

4.7. Number needed to treat (NNT), number needed to harm (NNH), and relative risk (RR)

The NNT is a value that summarizes a treatment group comparison with respect to the incidence of some event (e.g., “response”) between a study’s treatment arms [74, 82]. The NNT is calculated as 1/ARR, where ARR is the absolute risk reduction. The ARR reflects the difference between treatment groups in the proportions of participants experiencing an event (e.g., the difference in the proportions of “responders” between the treatment and placebo arms). The NNT indicates the expected number of people who would need to take the treatment for there to be 1 additional “responder” beyond the number of “responders” in the placebo or other comparator arm [5, 66]. It can be calculated from the difference between the two CDF curves at a particular “response” threshold.

As is the case for responder analyses, the NNT is designed to simplify RCT interpretation and to permit comparisons across studies of different treatments for comparable diagnoses. For example, consider a hypothetical 16-week RCT comparing an analgesic treatment and placebo in which “responders” are those individuals who report a reduction in pain intensity ≥30% from baseline to end of treatment. If the results of the RCT indicate that 40% of the participants in the analgesic treatment arm and 20% in the placebo arm are “responders”, the ARR would be 0.40 – 0.20 = 0.20, and the NNT would be 1 / (0.40 – 0.20) = 5. The interpretation would be that if 5 individuals took the treatment for 16 weeks, 2 would be expected to meet the “response” criterion (i.e., 5 × 0.40), whereas if 5 individuals took the placebo for 16 weeks, 1 would be expected to meet the “response” criterion (i.e., 5 × 0.20); there would therefore be 1 additional “responder” among the patients receiving the active treatment versus those administered placebo [66]. The number needed to harm (NNH) is calculated in the same way using the incidence of adverse events (AEs) or other safety outcomes in the treatment and comparator arms over a given time period [5, 28, 82]. The NNH can be misleading, however, particularly if it aggregates AEs of varying severity and seriousness (e.g., if mild dry mouth and death are equally weighted when counting AEs). Thus, NNH calculations are much less frequently found in RCT reporting than NNT calculations.
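The arithmetic in the worked example can be reproduced with a small Python sketch. The function names are hypothetical helpers introduced here for illustration; the 40% and 20% responder rates come from the example above, while the adverse-event rates used for the NNH call (30% vs. 10%) are invented for illustration. The sign of the returned value indicates direction: positive means more events in the treatment arm.

```python
def absolute_risk_reduction(p_treatment, p_control):
    """Difference in event proportions, treatment minus control."""
    return p_treatment - p_control


def number_needed(p_treatment, p_control):
    """1 / ARR: an NNT when the event is beneficial (e.g., 'response'),
    an NNH when the event is harmful (e.g., an adverse event)."""
    arr = absolute_risk_reduction(p_treatment, p_control)
    if arr == 0:
        return float("inf")  # no between-arm difference in event incidence
    return 1.0 / arr


def expected_events(n_patients, p_event):
    """Expected number of patients with the event among n_patients."""
    return n_patients * p_event


nnt = number_needed(0.40, 0.20)  # "responders": 40% active vs. 20% placebo
print(round(nnt, 6))
print(expected_events(5, 0.40), expected_events(5, 0.20))  # active vs. placebo, per 5 patients
print(round(number_needed(0.30, 0.10), 6))  # hypothetical AE rates -> NNH
```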

Relative risk (RR) is another way to summarize the comparative incidence of “response” between two treatment arms in a study, providing information about the likelihood of achieving a “response” [1, 52]. The RR is calculated by dividing the percentage of patients who are “responders” in the analgesic treatment arm by the percentage of “responders” in the placebo arm [1, 52]. Using the same example of an RCT in which 40% of the analgesic treatment arm participants and 20% of the placebo arm participants were “responders”, the RR would be 40% / 20% = 2.0, indicating that participants in the analgesic treatment arm were twice as likely to be a “responder.” Because the word “risk” usually connotes harm, relative risks may be easier to interpret when expressed as risks of nonresponse (i.e., (100% − 40%)/(100% − 20%) = 0.75), indicating that the RR of nonresponse is 0.75, rather than that the RR of response is 2.0. An RR of 1 indicates that there is no difference between the two treatment groups [92]. The ARR may be more informative to clinicians and patients than the RR, however, in that it describes the difference in the incidence of the event of interest between the two treatment groups (e.g., percentages of “responders”: 0.40 – 0.20 = 0.20, a difference of 20 percentage points in event incidence), rather than the relative probability of “response” in the treatment group compared with the placebo group (e.g., 2 times more likely to meet the “response” criterion) [1]. An RR of 2.0 could reflect very different ARRs, such as 20% (40% – 20%) or 5% (10% – 5%). Relative risks presented without the corresponding absolute risks are a common cause of confusion, because they can make the benefit or risk for an individual patient appear exaggerated.
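The contrast between relative and absolute measures can be checked with a few lines of Python. The helper names are hypothetical; the proportions are those used in the text (40% vs. 20%, and 10% vs. 5%).

```python
def relative_risk(p_treatment, p_control):
    """Ratio of event proportions (treatment / control)."""
    if p_control == 0:
        raise ValueError("RR is undefined when the control proportion is 0")
    return p_treatment / p_control


def rr_nonresponse(p_treatment, p_control):
    """Relative risk of NOT meeting the response criterion."""
    return (1 - p_treatment) / (1 - p_control)


def risk_difference(p_treatment, p_control):
    """Absolute difference in event proportions (the ARR in the text)."""
    return p_treatment - p_control


for p_trt, p_ctl in [(0.40, 0.20), (0.10, 0.05)]:
    print(f"RR = {relative_risk(p_trt, p_ctl):.2f}, "
          f"RR of nonresponse = {rr_nonresponse(p_trt, p_ctl):.3f}, "
          f"ARR = {risk_difference(p_trt, p_ctl):.2f}")
```

Both scenarios print the same RR of 2.00, while the ARR falls from 0.20 to 0.05, which is exactly why an RR reported without absolute risks can mislead.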

The limitations of the NNT mirror those of responder analyses, given that the NNT is based on categorizing study patients according to whether they met a pre-defined threshold of improvement from baseline. Additionally, the NNT is frequently misinterpreted as indicating the number of individuals who need to be treated in order for 1 patient to be a “responder” [66], rather than the number of patients who would need to take the treatment for there to be 1 additional “responder” beyond the number of “responders” that would occur in the placebo arm. Clinically, this distinction is important. Using the example above, the NNT of 5 does not mean that we would expect 1 person to be a “responder” among 5 patients taking the active treatment, but rather that there would be 1 more “responder” among 5 patients receiving the active treatment than among 5 patients taking placebo. It is also important to recognize that an NNT calculated using one threshold should not be directly compared with an NNT calculated using a different threshold (e.g., an NNT based on a ≥30% reduction in pain intensity as the definition of “responder” has a different interpretation than an NNT based on a ≥50% reduction) [35, 108].

There is a lack of consensus among the authors regarding whether NNTs contribute to the interpretation of RCTs and the extent to which they are incorrectly interpreted. As an example, “consider a trial comparing paracetamol to a placebo for treating tension headache. After 2 hours, 50% of people treated with the placebo are pain-free, as are 60% of those who were treated with paracetamol. The difference is 10% and the NNT is 10. However, if paracetamol works for 100% of participants in 60% of the times they are treated, it will give the same NNT as if it works for 60% of the participants 100% of the time. A high NNT should not be taken to imply that a drug works really well for a specific, narrow subset of people. It could simply mean that a drug is just not that effective across all individuals” (pp. 620-1) [106]. For additional reading on the limitations of NNTs, see also [66, 79].

4.8. Time to effect and duration of effect

Beyond identifying the effect of a treatment, data on the temporal course of that effect can provide information regarding the time to onset of a beneficial effect and how long the beneficial effect lasts. These details provide clinicians and patients with a more comprehensive understanding of a treatment’s overall clinical effect [22]. Although presenting a treatment’s time course data may be valuable for interpreting treatment effect, there is no standardized method to assess time to effect or duration of effect. One possibility, for example, is to graphically present each treatment arm’s mean pain intensity and variability for each week of the RCT, allowing the reader to interpret the time course. It may also be informative to present data indicating when the mean pain intensity in the treatment arm first demonstrates a statistically significant or clinically meaningful separation from the placebo arm, although the timing of statistical separation depends on sample size as well as on the true time to onset. Additionally, data regarding time to a clinically relevant event (e.g., minimal pain, discontinuation of treatment) in each treatment arm can be used to assess the time to onset of a beneficial effect. In acute pain RCTs, for example, researchers may use the double stopwatch method to capture the onset of first pain relief, as well as the onset of meaningful pain relief [73]. Clinicians might also want data on the proportion of “responders” at each assessment period, separated by treatment arm. Reports of RCTs may use different methods to present data on a treatment’s time course, making it difficult to compare the temporal course of various treatments. As yet, such time course reporting has not been frequently used in chronic pain RCTs. A further limitation is that few studies are conducted to examine the long-term durability of a treatment’s effect. Frequently, the only available data on the duration of a treatment’s effect come from RCTs that are 12 to 16 weeks in length (and sometimes shorter; see [16, 64, 83], showing that the double-blind period of opioid analgesic trials is frequently 6 weeks or less); such data cannot speak to the effect of the treatment when used over an extended period of time, as might be expected in many chronic pain conditions.
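For time-to-event outcomes such as time to discontinuation, one standard option (not named in the text above, and chosen here as an assumption) is the Kaplan-Meier estimator, which handles participants who leave follow-up before the event occurs (censoring). A minimal pure-Python sketch with hypothetical data follows; for a beneficial event such as reaching minimal pain, 1 - S(t) is the estimated cumulative proportion of participants who have reached it by time t.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier estimate S(t) at each distinct event time.

    times:  follow-up time per participant (e.g., days, hypothetical units)
    events: 1 if the event occurred at that time, 0 if the participant was censored
    Participants censored at time t are counted as still at risk at t.
    """
    if len(times) != len(events):
        raise ValueError("times and events must have the same length")
    survival = 1.0
    curve = []
    for t in sorted(set(times)):
        at_risk = sum(1 for x in times if x >= t)
        n_events = sum(1 for x, e in zip(times, events) if x == t and e == 1)
        if n_events > 0:
            survival *= 1.0 - n_events / at_risk
            curve.append((t, survival))
    return curve


# Hypothetical arm: days to discontinuation, with censoring at end of follow-up.
times = [1, 2, 2, 3, 4]
events = [1, 1, 0, 1, 0]
for t, s in kaplan_meier(times, events):
    print(f"day {t}: S = {s:.2f}")
```

Computing one curve per treatment arm and plotting them together would give readers the kind of time course comparison discussed above; formal comparison between arms (e.g., a log-rank test) is beyond this sketch.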

One approach that may provide information about the duration of analgesic effect is an adaptation of the randomized withdrawal design. In randomized withdrawal studies, all patients initially receive active treatment and are then randomized to stay on active treatment or receive placebo [84]. At the end of the treatment period, individuals in both the treatment and placebo arms who meet some pre-defined threshold (e.g., ≥30% reduction in pain intensity) could be followed to determine the duration of the treatment effect in a double-blind long-term efficacy study [75].

5. Treatment risks

A complete description of a treatment’s clinical effect requires not only reporting efficacy results, but safety (i.e., AEs identified through patient symptoms or clinically assessed signs) as well. AEs provide important information regarding the tolerability and safety of a treatment, and can have implications for patients’ perceptions of the effectiveness of the treatment. For example, adverse treatment effects have been shown to be associated with increased reports of pain interference beyond the effect of pain intensity itself [77], suggesting that it is not a treatment’s analgesic benefit alone that affects pain outcomes. The CONSORT group has published guidance for comprehensive and transparent reporting of AEs occurring in an RCT [58] (see also [43]). It is important to recognize that AEs are not all equivalent, in that the seriousness and severity of the AEs affect when a clinician might opt to introduce the treatment. For AEs that are more serious or severe, the treatment might only be considered when all other treatment options have been exhausted and the patient is debilitated by their symptoms. For AEs that are mild or moderate in severity, the treatment might be discussed with a patient at an earlier stage in the context of balancing the treatment’s benefits and side effects.

In addition to documenting the types and numbers of AEs occurring in an RCT, it is essential for reports of RCTs to describe the methodology for acquiring data regarding AEs. For example, with passive capture, AEs may be collected solely when study participants self-disclose without any prompting or in response to a question such as, “Have you had any problems since the last visit?” With active capture, investigators might use a checklist of potential AEs and ask participants whether they have experienced each one. Research has shown that passive capture can result in underestimation of the harms experienced by study participants [6, 9, 67], although active capture has the potential to overestimate the number of harms observed. The methods used to capture AEs should be prespecified and described in articles reporting RCTs so the adequacy of the methods can be evaluated. Other critical details regarding potential treatment harms that should be reported are: (1) the number and nature of the specific AEs that were identified and reported throughout the trial, (2) the severity of the AEs (i.e., mild, moderate, severe), (3) definitions of each severity grade, (4) occurrence of serious AEs (SAEs; e.g., hospitalization or death) [115], (5) whether or not the AEs were considered to be plausibly associated with the treatment (i.e., adverse reactions), and (6) whether standardized coding methods (e.g., MedDRA; http://www.meddra.org) were used to classify AEs (e.g., “feeling nauseated”, “feeling queasy”) into meaningful categories (e.g., nausea). These characteristics of a treatment’s risks are particularly important because they have implications for comparisons across trials. It is also necessary to consider the length of the RCT, given that some harms may emerge only after long-term use and may therefore not be detected in 12- to 16-week trials.

Systematic reviews of pain trials have shown that investigators do not always report all study AEs [54, 107, 124]. Instead, subsets of AEs are frequently reported (e.g., “common” AEs, or AEs for which treatment group differences in incidence were statistically significant). Such truncated AE reporting is likely due in part to a desire to briefly summarize study AEs, as well as to meet limitations imposed by journal publishers. One drawback of reporting subsets of AEs is that rare AEs with possible serious clinical implications may not be adequately disclosed. Solely reporting the AEs that demonstrate a statistically significant difference between treatment arms can be misleading because the RCT may not have been sufficiently large to detect differences in the incidence of certain AEs between treatment arms [3, 56, 57, 63, 112]. Alternatively, apparently statistically significant differences in AEs between treatment arms may be false positives that arise from multiple statistical tests without any adjustments for multiplicity [40]. Adequate AE reporting, therefore, involves reporting the denominator for all AE data and reporting both the number of events and the number of study participants who experience each AE that causes study withdrawal, as well as the moderate, severe, and serious AEs that occurred during the study [58, 107]. Additional AE detail could then be made available in online journal supplements [107].
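The reporting elements above (coded categories, number of events, number of participants, and the denominator) can be tabulated as in this Python sketch. The verbatim-to-category map is a hypothetical stand-in: a real trial would code terms with a standardized dictionary such as MedDRA, which is not reproduced here, and the AE records and arm sizes are invented for illustration.

```python
from collections import defaultdict

# Hypothetical verbatim-term-to-category map (a stand-in for MedDRA coding).
CATEGORY = {
    "feeling nauseated": "nausea",
    "feeling queasy": "nausea",
    "dizzy": "dizziness",
}


def tabulate_aes(ae_records, n_randomized):
    """Count events and affected participants per (arm, category), with denominators.

    ae_records:   (participant_id, arm, verbatim_term) tuples, one per AE event
    n_randomized: dict mapping arm -> number of participants (the denominator)
    """
    events = defaultdict(int)
    participants = defaultdict(set)
    for pid, arm, verbatim in ae_records:
        term = verbatim.lower()
        category = CATEGORY.get(term, term)  # uncoded terms are kept as-is
        events[(arm, category)] += 1
        participants[(arm, category)].add(pid)

    table = {}
    for (arm, category), n_events in events.items():
        n_people = len(participants[(arm, category)])
        table[(arm, category)] = {
            "events": n_events,
            "participants": n_people,
            "denominator": n_randomized[arm],
            "percent": 100.0 * n_people / n_randomized[arm],
        }
    return table


records = [
    (1, "active", "feeling nauseated"),
    (1, "active", "Feeling queasy"),  # same participant, second nausea event
    (2, "active", "dizzy"),
    (3, "placebo", "feeling queasy"),
]
for key, row in sorted(tabulate_aes(records, {"active": 10, "placebo": 10}).items()):
    print(key, row)
```

Keeping events and participants separate matters: here participant 1 contributes two nausea events but only one affected participant.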

6. Summarizing and integrating treatment benefits and risks

Interpreting the overall effect of a treatment necessitates considering the treatment’s benefits alongside its risks. Benefit-risk evaluations can be used to guide individual treatment decisions by patients and their clinicians, and can also be made at the societal level as a basis for regulatory approvals, reimbursement decisions, and medical policies. However, it can be practically difficult to weigh the benefits against the risks. Developing a standardized method to integrate treatment benefits and risks in order to provide an easily interpretable metric that represents the treatment’s benefit-risk profile is a complicated endeavor and currently no single, well-accepted method exists. Furthermore, determining whether treatment benefits outweigh the risks may require information about the medical history and preferences of the individual patient who will receive the treatment. Despite these challenges, there are several approaches to synthesizing the body of evidence across RCTs regarding a treatment’s effects that are important to highlight.

Aggregating data on a treatment’s overall benefits and risks can be done through a systematic review, meta-analysis, or integrated benefit-risk methods. Conducting a systematic review to identify all research published on a specific analgesic treatment, combining the efficacy data and the AE data across all trials, and analyzing those data is an effective way of consolidating the available evidence. There are limitations to meta-analyses, however. One concern is that the quality of a meta-analysis depends upon the quality of the research that goes into it. Poorly designed and executed studies are likely to be biased in a variety of ways (e.g., selection bias, performance bias), which affects the validity of their results, and this risk of bias should be accounted for in the meta-analysis [52]. Meta-analyses also require a method to aggregate trial outcomes that may not have been assessed in the same manner (e.g., continuous outcomes, time to effect outcomes). Furthermore, meta-analyses may be no better than post-marketing safety surveillance at identifying rare events that have not been previously identified [81]. Meta-analyses also tend to include only published data. Given a well-known reporting bias whereby study results that fail to show a treatment effect are less likely to be published, meta-analyses can be biased toward demonstrating a treatment effect that is larger than the true treatment effect [52, 80]. Despite these limitations, meta-analyses can provide relatively complete information regarding a treatment’s benefit and risk profile, which can help to inform clinical practice. Cochrane has developed comprehensive guidance regarding the conduct of systematic reviews and meta-analyses for treatment interventions [52], including the adoption of the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach. GRADE is designed to evaluate the risk of bias in individual studies, and to provide a rating of confidence in the overall estimate of any effect and the likelihood that the estimate could be changed by additional data [8].
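As a minimal illustration of how trial results are combined, the Python sketch below implements inverse-variance (fixed-effect) pooling, one common way to aggregate a continuous outcome such as a mean difference in NRS pain. This is an assumed example technique, not a method prescribed by the text. Note what it does not do: it makes no allowance for risk of bias, between-study heterogeneity (which random-effects models address), or publication bias, so the limitations listed above still apply to its output.

```python
import math


def fixed_effect_pool(estimates, standard_errors):
    """Inverse-variance weighted (fixed-effect) pooled estimate and its standard error."""
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("need matching, non-empty lists")
    weights = [1.0 / se**2 for se in standard_errors]  # more precise trials weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    return pooled, math.sqrt(1.0 / total)


# Hypothetical mean differences (active minus placebo, NRS points) from three trials.
pooled, se = fixed_effect_pool([-0.8, -1.2, -0.5], [0.30, 0.40, 0.25])
print(f"pooled = {pooled:.2f}, 95% CI = ({pooled - 1.96 * se:.2f}, {pooled + 1.96 * se:.2f})")
```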

In the past decade, several initiatives have focused on advancing the methodology for integrating the assessment of a therapy’s benefit and risk into a single framework. Integrated benefit-risk frameworks may be qualitative, quantitative, or include both qualitative and quantitative components [86]. Qualitative frameworks include visual displays (i.e., tables, figures) that list key benefit and risk attributes [86]. One example of a qualitative benefit-risk framework is the FDA’s Benefit-Risk Integrated Assessment [116]. This structured framework uses a tabular format that allows reviewers to synthesize the evidence on the therapeutic context (i.e., analysis of the condition and current treatment options) and the evidence on the product’s benefits, risks, and risk management strategies that was weighed in their decision-making. The European Medicines Agency (EMA) has issued a guidance document on benefit-risk assessment that does not recommend a specific quantitative methodology but is open to considering such approaches on a case-by-case basis [29].

Another method to integrate and summarize a treatment’s benefit-risk assessment that includes both qualitative and quantitative components is the Benefit Risk Action Team (BRAT) framework [18]. The important features of this approach include identifying the research context (e.g., condition being treated, treatment comparator) and the essential benefits and risks across studies, and then presenting the data regarding those benefits and risks in a way that can be easily understood (e.g., plots of differences in benefits and risks between treatment groups; see [18] for an example). This may assist clinicians in interpreting the overall benefit-risk profile, which can be integrated with their clinical expertise when treating patients. A more recent review identified 49 different methodologies to conduct quantitative and systematic benefit-risk assessment of medications [85]. These methods range from descriptive to more quantitative. The problem, objectives, alternatives, consequences, trade-offs, uncertainty, risk and linked decisions framework (PrOACT-URL) provides another method to descriptively report the risks associated with a treatment [85] (see http://protectbenefitrisk.eu/PrOACT-URL.html for examples). Other more quantitative methods include multi-criteria decision analysis (MCDA) that compares treatment options based on weighted benefit and risk criteria [76, 88], stochastic multicriteria acceptability analysis (SMAA; derived from MCDA) [85], benefit-risk ratio (BRR) that reflects the treatment risks divided by the benefits [85], stated-choice surveys of willingness to accept risks [61] or discrete choice experiments [85], and health outcomes modeling using the quality-adjusted life-year (QALY) [19].
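To show how the weighting step in MCDA drives conclusions, here is a minimal linear additive (weighted-sum) sketch in Python. The criteria, plausible ranges, performance values, and weights are all hypothetical, and real MCDA applications involve careful elicitation of value functions and weights that this sketch omits.

```python
def mcda_score(performance, weights, ranges):
    """Weighted-sum MCDA score on a 0-1 scale for one treatment.

    performance: criterion -> observed value
    weights:     criterion -> non-negative weight (normalized here to sum to 1)
    ranges:      criterion -> (worst, best) plausible values; 'worst' may be
                 numerically larger than 'best' when lower values are better
    """
    total_weight = sum(weights.values())
    score = 0.0
    for criterion, weight in weights.items():
        worst, best = ranges[criterion]
        value = (performance[criterion] - worst) / (best - worst)  # linear value function
        value = min(max(value, 0.0), 1.0)  # clip to the 0-1 value scale
        score += (weight / total_weight) * value
    return score


ranges = {"responder_rate": (0.0, 1.0), "ae_discontinuation": (0.30, 0.0)}
drug = {"responder_rate": 0.40, "ae_discontinuation": 0.15}
placebo = {"responder_rate": 0.20, "ae_discontinuation": 0.05}

for weights in ({"responder_rate": 0.6, "ae_discontinuation": 0.4},
                {"responder_rate": 0.8, "ae_discontinuation": 0.2}):
    print(weights, round(mcda_score(drug, weights, ranges), 3),
          round(mcda_score(placebo, weights, ranges), 3))
```

With the first set of weights placebo scores slightly higher; with the second the active drug does. The same trial data can therefore support different benefit-risk conclusions depending on whose preferences set the weights.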

Typical benefit-risk analyses involve separate intervention comparisons for each efficacy, safety, and quality-of-life outcome. Outcome-specific effects are tabulated and combined (systematically or unsystematically) in benefit-risk analyses so that such analyses can describe the totality of effects on patients. However, such approaches do not incorporate associations between outcomes of interest, fail to summarize the cumulative effect of different outcomes on individual patients, and suffer from competing risk challenges when interpreting individual outcomes. In addition, because efficacy and safety analyses are conducted on different subsets of participants, the population to which these benefit-risk analyses apply is unclear. New benefit-risk methodologies continue to be developed, such as the desirability of outcome ranking (DOOR) and partial credit, which attempt to address the limitations of prior methods by ranking various study outcomes using predetermined criteria [30-32].
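A common way to summarize a DOOR analysis is to estimate the probability that a randomly selected participant from the treatment arm has a more desirable overall outcome than a randomly selected participant from the control arm, with ties counted as one half. The Python sketch below assumes each participant has already been assigned an ordinal DOOR category (higher = more desirable) using predetermined criteria; the category values are hypothetical, and the sketch is not a reproduction of the methods in [30-32].

```python
def door_probability(treatment_ranks, control_ranks):
    """Estimated P(treatment outcome more desirable than control), ties counted as 1/2.

    Ranks are ordinal DOOR categories where a higher value = more desirable outcome.
    Every treatment participant is compared with every control participant.
    """
    if not treatment_ranks or not control_ranks:
        raise ValueError("both arms need at least one participant")
    wins = ties = 0
    for t in treatment_ranks:
        for c in control_ranks:
            if t > c:
                wins += 1
            elif t == c:
                ties += 1
    return (wins + 0.5 * ties) / (len(treatment_ranks) * len(control_ranks))


# Hypothetical DOOR categories (e.g., 1 = worst ... 4 = benefit without harm).
print(door_probability([4, 3, 3, 2, 1], [3, 2, 2, 1, 1]))
```

A value of 0.5 indicates no difference between arms; values above 0.5 favor the treatment arm.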

7. Select Areas of Advancement for Clinical Trials

In 2010, the National Research Council (NRC) produced a report on The Prevention and Treatment of Missing Data in Clinical Trials [87], and in 2019 an addendum to the ICH E9 guidance on Statistical Principles for Clinical Trials [55] was released, leading to a major shift in how clinical trialists think about trial design and analysis. The NRC report drew attention to the significant limitations of existing simplistic methods for dealing with missing data, such as carrying forward the last (or baseline) observation. The report also emphasized the use of more principled methods, such as direct likelihood methods (e.g., mixed model repeated measures, or MMRM), multiple imputation, or generalized estimating equations. Because any method for dealing with missing data is based on untestable assumptions, the NRC report and the ICH E9 addendum further emphasized the importance of performing sensitivity analyses (i.e., analyses that make different assumptions concerning the distribution of the missing values given the observed data) to determine the degree to which inferences about the treatment effect depend on these assumptions.
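The two simplistic single-imputation rules named above can be sketched in Python to show how strongly the imputation assumption alone determines the endpoint for a participant who drops out. The weekly pain scores are hypothetical. This sketch is for illustration only; it is not a substitute for the principled approaches (MMRM, multiple imputation, generalized estimating equations) that the NRC report recommends.

```python
def impute(series, method):
    """Fill missing visits with LOCF (last observation) or BOCF (baseline observation).

    series: pain scores by visit, index 0 = baseline, None = missing.
    """
    if method not in ("LOCF", "BOCF"):
        raise ValueError("method must be 'LOCF' or 'BOCF'")
    if not series or series[0] is None:
        raise ValueError("baseline (index 0) must be observed")
    baseline = series[0]
    last_observed = baseline
    filled = []
    for value in series:
        if value is None:
            filled.append(last_observed if method == "LOCF" else baseline)
        else:
            filled.append(value)
            last_observed = value
    return filled


def change_at_end(series):
    """Change from baseline to the final visit (negative = less pain)."""
    return series[-1] - series[0]


dropout = [7, 5, 4, None, None]  # hypothetical participant who leaves after visit 2
for method in ("LOCF", "BOCF"):
    filled = impute(dropout, method)
    print(method, filled, change_at_end(filled))
```

The same participant contributes a 3-point improvement under LOCF and no improvement under BOCF, which illustrates why sensitivity analyses under different assumptions are emphasized.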

The ICH E9 addendum discusses the importance of precisely formulating the estimand(s) of interest based on the study objectives [55]. The estimand consists of (1) the patient population of interest, (2) the outcome variable, (3) how post-randomization events (intercurrent events) will be handled, and (4) the population-level summary for the outcome variable. In particular, careful thought needs to be given to how intercurrent events are handled in formulating the estimand. The most common intercurrent events include dropout, discontinuation of the study intervention, use of prohibited medications and other protocol violations, and use of rescue medication [14]. The choice of an estimand depends on the characteristics of the treatment (e.g., disease modifying vs. symptom control), the setting of treatment use (e.g., the ability to monitor outcome over time), and the choice of the control group [55]. The estimand has a major influence on the study design, the data to be collected, and how the data should be analyzed [14].
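Because an estimand is a structured specification, it can help to write it down as a data structure. The Python sketch below records the four components listed above; the intercurrent-event strategy names are those of the ICH E9(R1) addendum, while the class names and the chronic pain example values are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Strategy(Enum):
    """Strategies for handling intercurrent events named in the ICH E9(R1) addendum."""
    TREATMENT_POLICY = "treatment policy"
    HYPOTHETICAL = "hypothetical"
    COMPOSITE = "composite variable"
    WHILE_ON_TREATMENT = "while on treatment"
    PRINCIPAL_STRATUM = "principal stratum"


@dataclass(frozen=True)
class Estimand:
    population: str                           # (1) patient population of interest
    variable: str                             # (2) outcome variable
    intercurrent_events: Dict[str, Strategy]  # (3) intercurrent event -> handling strategy
    summary_measure: str                      # (4) population-level summary

    def describe(self) -> str:
        events = "; ".join(f"{e}: {s.value}" for e, s in self.intercurrent_events.items())
        return (f"Population: {self.population}\n"
                f"Variable: {self.variable}\n"
                f"Intercurrent events: {events}\n"
                f"Summary: {self.summary_measure}")


# Hypothetical estimand for a 12-week chronic pain trial.
pain_estimand = Estimand(
    population="adults with chronic low back pain",
    variable="change in weekly mean 0-10 NRS pain from baseline to week 12",
    intercurrent_events={
        "discontinuation of study drug": Strategy.TREATMENT_POLICY,
        "use of rescue medication": Strategy.HYPOTHETICAL,
    },
    summary_measure="difference in means between treatment arms",
)
print(pain_estimand.describe())
```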

The vast majority of clinical trials in pain have used standard parallel group or cross-over designs. Some advances in trial design that are beginning to be used in the pain field include cross-over trials with multiple periods, enrichment, methods to reduce the amount of improvement in placebo groups, adaptive designs, and master protocols. A summary of these trial designs is provided in Table 3.

Table 3.

Innovative clinical trial designs that can be considered in studies of pain treatments

| Design | Description |
|---|---|
| Multi-period cross-over trials | Cross-over trials with multiple periods (e.g., 2 active treatment periods, 2 placebo or comparator periods) allow for determination of the extent to which the effect of a treatment relative to placebo varies among patients [21] |
| Enrichment clinical trials | Clinical trials in which patients are selected based on a given characteristic that is expected to increase the likelihood of detecting a treatment effect [118], for example: |
| Designs that might reduce placebo group improvement and increase assay sensitivity | |
| Adaptive designs | Clinical trial designs that prospectively plan for modifications to the design based on the available evidence from the trial obtained at interim analyses without compromising the integrity or validity of the trial [117], for example: |
| Master protocol | A protocol containing multiple sub-studies that examine combinations of treatments, patient types, or diseases to increase the efficiency of drug development [110, 125]: |

8. Research Agenda

Improving the interpretation of trial data in clinical or public health decision-making research would make an important contribution in a number of related areas. First, models of shared decision making that include data from meta-analyses of trials could usefully be developed for pain treatments, including a focus on the values and preferences of both patients and clinicians. Second, when asked how data should be presented, people often prefer that complexity be reduced as much as possible. A recent survey of clinicians across 8 Western countries found that clinicians differentially understand various approaches to presenting treatment effects. Methods that employ dichotomized continuous outcomes (e.g., risk reduction) were considered by clinicians to be the most accessible, although the percentage of clinicians who correctly interpreted these methods was still below 50% [62]. Efforts to extend these findings to evaluate the information stakeholders want from RCTs, and how to educate stakeholders on the judicious use of both continuous and dichotomized data and the limitations of these data, would be valuable. Additionally, experimental research comparing different communication strategies and their effects on decision making and clinical outcomes would help us to understand the potential risks of these communication strategies.

9. Conclusions

Interpreting RCTs and their implications for clinical practice can be complicated due to the variety of methods that researchers use to report their findings. Becoming familiar with typical reporting approaches, as well as their strengths and limitations (see Table 4 for considerations regarding efficacy reporting methods), may assist clinicians in understanding a treatment’s observed benefits and risks and how those effects might translate to a clinical setting.

Table 4.

Considerations for reporting and interpreting efficacy outcomes from chronic pain clinical trials

| Efficacy reporting method |
|---|
| Analysis of means |
| Responder analyses |
| Number needed to treat (NNT), number needed to harm (NNH), relative risk (RR) |
| Cumulative distribution functions (CDFs) |
| Within-patient minimally clinically important difference |
| Time to effect and duration of effect |

|

Acknowledgments

We thank Valorie Thompson and Andrea Speckin for their assistance in organizing the meeting on which this article is based. We acknowledge Stephen Senn for his participation in the IMMPACT meeting on which this manuscript is based, as well as his contributions to this manuscript. We further acknowledge Stephen Morley for his participation in the IMMPACT meeting; he passed away before publication.

Footnotes

Disclosures

The views expressed in this article are those of the authors, some of whom were, or currently are, employees of pharmaceutical, consulting, or contract research companies and may have financial conflicts of interest related to the issues discussed in this article. At the time of the meeting on which this article is based, several authors were employed by pharmaceutical companies and others had received consulting fees or honoraria from one or more pharmaceutical or device companies. Authors of this article who attended the IMMPACT meeting and were not employed by industry or government at the time of the meeting received travel stipends, hotel accommodations, and meals during the meeting provided by ACTTION. ACTTION has received research contracts, grants, or other revenue from the FDA, multiple pharmaceutical and device companies, philanthropy, and other sources. Preparation of background literature reviews and the article was supported by ACTTION. No funding from any other source was received for the meeting, nor for the literature reviews and article preparation. No official endorsement by the FDA, US National Institutes of Health, or the pharmaceutical and device companies that have provided unrestricted grants to support the activities of ACTTION should be inferred.

SMS has received in the past 36 months a research grant from the Richard W. and Mae Stone Goode Foundation. RHD has received in the past 5 years research grants and contracts from the US Food and Drug Administration and the US National Institutes of Health, and compensation for serving on advisory boards or consulting on clinical trial methods from Abide, Acadia, Adynxx, Analgesic Solutions, Aptinyx, Aquinox, Asahi Kasei, Astellas, AstraZeneca, Biogen, Biohaven, Boston Scientific, Braeburn, Celgene, Centrexion, Chromocell, Clexio, Concert, Coronado, Daiichi Sankyo, Decibel, Dong-A, Editas, Eli Lilly, Eupraxia, Glenmark, Grace, Hope, Hydra, Immune, Johnson & Johnson, Lotus Clinical Research, Mainstay, Medavante, Merck, Neumentum, Neurana, NeuroBo, Novaremed, Novartis, NSGene, Olatec, Periphagen, Pfizer, Phosphagenics, Quark, Reckitt Benckiser, Regenacy (also equity), Relmada, Sanifit, Scilex, Semnur, Sollis, Spinifex, Syntrix, Teva, Thar, Theranexus, Trevena, Vertex, and Vizuri. In the past 36 months DCT has received research grants and contracts from the US Food and Drug Administration and the US National Institutes of Health, and compensation for consulting on clinical trials and patient preferences from AccelRx, Eli Lilly, Flexion, GlaxoSmithKline, and Pfizer. MPM has been supported in the past 36 months by research grants from NIH, FDA, NYSTEM, SMA Foundation, Cure SMA, Friedreich’s Ataxia Research Alliance, Muscular Dystrophy Association, ALS Association, and PTC Therapeutics, has received compensation for consulting from Neuropore Therapies, Inc. and Voyager Therapeutics, and has served on Data and Safety Monitoring Boards (DSMBs) for NIH, Novartis Pharmaceuticals Corporation, AstraZeneca, Eli Lilly and Company, aTyr Pharma, Inc., Catabasis Pharmaceuticals, Inc., Vaccinex, Inc., Cynapsus Therapeutics, Voyager Therapeutics, and Prilenia Therapeutics Development, Ltd. 
CE does not have any financial conflicts of interest specifically related to the issues discussed in this article. JTF has received research grants and contracts from the US Food and Drug Administration and the National Institutes of Health, consulting fees from Analgesic Solutions, Aptinyx, Biogen, Campbell Alliance, Daiichi Sankyo, DepoMed, Evadera, Janssen, Lilly, Novartis, Vertex, and Pfizer, and has provided DSMB services to NIH-NIA and Cara Therapeutics. MCR reports compensated participation in scientific advisory boards for Site One Therapeutics and CODA Biotherapeutics. ZB was a salaried employee of Alexion Pharmaceuticals at the time of the meeting. LBB was a salaried employee of the U.S. Food and Drug Administration at the time of the meeting, and in the past 36 months, she has received compensation for consulting on clinical trial outcomes from Aclaris, Afyx, Alnylam, Bellerophon, Biogen, Brickell, Jazz, Leo, Plethora, Scynexis, Therapeutics MD, and Zynerba. LG does not have any financial conflicts of interest specifically related to the issues discussed in this article. SSE reports service on data monitoring committees for AstraZeneca, Merck, BMS, GeneOne, and Marinus; consulting for Johnson & Johnson, Shionogi, Biomarin, Alkermes, InsMed, Innovative Science Solutions, AbbVie, PTCBio, and Amgen; and an invited lecture for Sanofi. 
SRE reports compensation from Takeda / Millennium, Pfizer, Roche, Novartis, Achaogen, Huntington's Study Group, ACTTION, Genentech, Amgen, GSK, American Statistical Association, FDA, Osaka University, National Cerebral and Cardiovascular Center of Japan, NIH, Society for Clinical Trials, Statistical Communications in Infectious Diseases (DeGruyter), AstraZeneca, Teva, Austrian Breast & Colorectal Cancer Study Group (ABCSG)/Breast International Group (BIG) and the Alliance Foundation Trials (AFT), Zeiss, Dexcom, American Society for Microbiology, Taylor and Francis, Claret Medical, Vir, Arrevus, Five Prime, Shire, Alexion, Gilead, Spark, Clinical Trials Transformation Initiative, Nuvelution, Tracon, Deming Conference, Antimicrobial Resistance and Stewardship Conference, World Antimicrobial Congress, WAVE, Advantagene, Braeburn, Cardinal Health, Lipocine, and Microbiotix, and grants from NIAID/NIH outside the submitted work. SI was a salaried employee of Eli Lilly and Company at the time of this meeting. MPJ has received in the past 36 months research grants from the US National Institutes of Health, the Department of Education, the Administration of Community Living, the Patient-Centered Outcomes Institute, the National Multiple Sclerosis Society, the International Association for the Study of Pain, and the Washington State Spinal Injury Consortium, and compensation for consulting from Goalistics. RJ is a salaried employee of Pfizer and holds Pfizer stock. CK does not have any financial conflicts of interest specifically related to the issues discussed in this article. NPK is an employee of Analgesic Solutions, a research and consulting firm with numerous clients in the pharmaceutical and medical device industry. JPK was a salaried employee of Acadia Pharmaceuticals at the time of the meeting. EAK was a salaried employee of Collegium, and held Collegium stock, at the time of the meeting. 
DL is a salaried employee of Scilex Pharmaceuticals, formerly known as Semnur Pharmaceuticals. JDM has had advisory board or consulting agreements with the following entities over the past decade: advisory boards for Clexio Biosciences, Esteve Pharmaceuticals, Flexion Therapeutics, Quark Pharmaceuticals, Quartet Medicine, Collegium Pharmaceutical, Biogen, Novartis, Aptinyx, Nektar, Allergan, Grünenthal, Eli Lilly and Company, Depomed, Janssen Pharmaceuticals, Teva, KemPharm, Abbott Laboratories, Plasma Surgical, Chromocell, Convergence Pharmaceuticals, Inspirion, Pfizer, Daiichi Sankyo, and Trevena; consulting for Trigemina, Editas Medicine, and Plasma Surgical; and service on data safety monitoring boards for Novartis and Allergan. PJM has received research grants, consulting fees, and speaker fees from Abbvie, Amgen, Boehringer Ingelheim, BristolMyersSquibb, Celgene, Eli Lilly, Galapagos, Gilead, GlaxoSmithKline, Janssen, Merck, Novartis, Pfizer, SUN Pharma, and UCB. KVP has received in the past 36 months research grants and contracts from the US Food and Drug Administration and US National Institutes of Health. SNR has received research grants from US National Institutes of Health and Medtronic Inc., and has served as a consultant for Aptinyx, Allergan, Grunenthal, and Insys Therapeutics. IS was a salaried employee of Gruenenthal GmbH at the time of the meeting. LT is an employee of Pfizer and holds Pfizer stock. JT was a salaried employee of NeurogesX, Inc. at the time of the meeting and is a partner and managing director of the Aquila Consulting Group LLC. ADW in the past 36 months has received an investigator-initiated grant from Collegium Pharmaceuticals, and has consulted for Pfizer and Analgesic Solutions. 
HDW was a salaried employee of the University of Washington at the time of the meeting, is currently a salaried employee of the pharmaceutical company Boehringer Ingelheim, and within the past 36 months was a salaried employee of the scientific consulting firm Evidera.

References

- 1. Alhazzani W, Walter SD, Jaeschke R, Cook DJ, Guyatt G. Does Treatment Lower Risk? Understanding the Results. In: Guyatt G, Rennie D, Meade MO, Cook DJ, editors. Users’ Guides to the Medical Literature: A Manual for Evidence-Based Clinical Practice, 3rd ed. New York, NY: McGraw-Hill Education, 2015.

- 2. Altman DG, Bland JM. Improving doctors’ understanding of statistics. Journal of the Royal Statistical Society: Series A (Statistics in Society) 1991;154:223–248.

- 3. Altman DG, Bland JM. Statistics notes: Absence of evidence is not evidence of absence. BMJ 1995;311:485.

- 4. Altman DG, Royston P. The cost of dichotomising continuous variables. BMJ 2006;332:1080.

- 5. Andrade C. The numbers needed to treat and harm (NNT, NNH) statistics: what they tell us and what they do not. J Clin Psychiatry 2015;76:e330–3.

- 6. Atkinson TM, Rogak LJ, Heon N, Ryan SJ, Shaw M, Stark LP, Bennett AV, Basch E, Li Y. Exploring differences in adverse symptom event grading thresholds between clinicians and patients in the clinical trial setting. J Cancer Res Clin Oncol 2017;143:735–743.

- 7. Azmi S, ElHadd KT, Nelson A, Chapman A, Bowling FL, Perumbalath A, Lim J, Marshall A, Malik RA, Alam U. Pregabalin in the Management of Painful Diabetic Neuropathy: A Narrative Review. Diabetes Ther 2019;10:35–56.

- 8. Balshem H, Helfand M, Schunemann HJ, Oxman AD, Kunz R, Brozek J, Vist GE, Falck-Ytter Y, Meerpohl J, Norris S, Guyatt GH. GRADE guidelines: 3. Rating the quality of evidence. J Clin Epidemiol 2011;64:401–6.

- 9. Basch E. The missing voice of patients in drug-safety reporting. N Engl J Med 2010;362:865–9.

- 10. Beaton DE, Bombardier C, Katz JN, Wright JG, Wells G, Boers M, Strand V, Shea B. Looking for important change/differences in studies of responsiveness. OMERACT MCID Working Group. Outcome Measures in Rheumatology. Minimal Clinically Important Difference. J Rheumatol 2001;28:400–5.

- 11. British Medical Journal. BMJ Guidance for Authors. 2018. Available from: https://www.bmj.com/sites/default/files/attachments/resources/2018/05/BMJ-InstructionsForAuthors-2018.pdf, accessed 1/17/2020.

- 12. Boutron I, Dutton S, Ravaud P, Altman DG. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA 2010;303:2058–64.

- 13. Busse JW, Bartlett SJ, Dougados M, Johnston BC, Guyatt GH, Kirwan JR, Kwoh K, Maxwell LJ, Moore A, Singh JA, Stevens R, Strand V, Suarez-Almazor ME, Tugwell P, Wells GA. Optimal Strategies for Reporting Pain in Clinical Trials and Systematic Reviews: Recommendations from an OMERACT 12 Workshop. J Rheumatol 2015;42:1962–1970.

- 14. Cai X GJ, He H, Turk DC, Dworkin RH, McDermott MP. Estimands and Missing Data in Clinical Trials of Chronic Pain Treatments: Advances in Design and Analysis. Under review.

- 15. Cepeda MS, Africano JM, Polo R, Alcala R, Carr DB. What decline in pain intensity is meaningful to patients with acute pain? Pain 2003;105:151–7.

- 16. Chou R, Clark E, Helfand M. Comparative efficacy and safety of long-acting oral opioids for chronic non-cancer pain: a systematic review. J Pain Symptom Manage 2003;26:1026–48.

- 17. Colloca L. The Placebo Effect in Pain Therapies. Annu Rev Pharmacol Toxicol 2019;59:191–211.

- 18. Coplan PM, Noel RA, Levitan BS, Ferguson J, Mussen F. Development of a framework for enhancing the transparency, reproducibility and communication of the benefit-risk balance of medicines. Clin Pharmacol Ther 2011;89:312–5.

- 19. Cross JT, Veenstra DL, Gardner JS, Garrison LP Jr. Can modeling of health outcomes facilitate regulatory decision making? The benefit-risk tradeoff for rosiglitazone in 1999 vs. 2007. Clin Pharmacol Ther 2011;89:429–36.

- 20. Dworkin JD, McKeown A, Farrar JT, Gilron I, Hunsinger M, Kerns RD, McDermott MP, Rappaport BA, Turk DC, Dworkin RH, Gewandter JS. Deficiencies in reporting of statistical methodology in recent randomized trials of nonpharmacologic pain treatments: ACTTION systematic review. J Clin Epidemiol 2016;72:56–65.

- 21. Dworkin RH, McDermott MP, Farrar JT, O’Connor AB, Senn S. Interpreting patient treatment response in analgesic clinical trials: implications for genotyping, phenotyping, and personalized pain treatment. Pain 2014;155:457–60.

- 22. Dworkin RH, Turk DC, Farrar JT, Haythornthwaite JA, Jensen MP, Katz NP, Kerns RD, Stucki G, Allen RR, Bellamy N, Carr DB, Chandler J, Cowan P, Dionne R, Galer BS, Hertz S, Jadad AR, Kramer LD, Manning DC, Martin S, McCormick CG, McDermott MP, McGrath P, Quessy S, Rappaport BA, Robbins W, Robinson JP, Rothman M, Royal MA, Simon L, Stauffer JW, Stein W, Tollett J, Wernicke J, Witter J, IMMPACT. Core outcome measures for chronic pain clinical trials: IMMPACT recommendations. Pain 2005;113:9–19.

- 23. Dworkin RH, Turk DC, McDermott MP, Peirce-Sandner S, Burke LB, Cowan P, Farrar JT, Hertz S, Raja SN, Rappaport BA, Rauschkolb C, Sampaio C. Interpreting the clinical importance of group differences in chronic pain clinical trials: IMMPACT recommendations. Pain 2009;146:238–44.