Abstract

Artificial intelligence (AI) in healthcare is the use of computer algorithms to analyze complex medical data, detect associations, and provide diagnostic support. AI and deep learning (DL) find obvious applications in fields such as ophthalmology, where huge amounts of image-based data need to be analyzed but the outcomes of image recognition are reasonably well defined. AI and DL have found important roles in the early screening and detection of conditions such as diabetic retinopathy (DR), age-related macular degeneration (ARMD), retinopathy of prematurity (ROP), glaucoma, and other ocular disorders. They have made successful inroads in early screening and diagnosis and appear promising, with the advantages of high screening accuracy, consistency, and scalability. AI algorithms need equally skilled manpower: trained optometrists or ophthalmologists (annotators) who provide accurate ground truth for the training images. The basis of diagnoses made by AI algorithms is mechanical, and some amount of human intervention is necessary for further interpretation. This review was conducted after tracing the history of AI in ophthalmology across multiple research databases. It aims to summarise the journey of AI in ophthalmology so far, with a close look at most of the crucial studies conducted, and to highlight the potential impact of AI in ophthalmology, its pitfalls, and how to use it to the maximum benefit of ophthalmologists, healthcare systems, and patients alike.

Keywords: Age-related macular degeneration, anterior-segment diseases, artificial intelligence, cataract, deep learning, diabetic retinopathy, glaucoma, machine learning, ophthalmology, retinopathy of prematurity

Since a handful of scientists coined the term at the Dartmouth workshop in 1956, artificial intelligence (AI) has been a locus of innovation in the scientific world.[1] With its capabilities and potential gradually being unearthed by scientists, AI is becoming a game-changer in the contemporary scenario. Medicine and healthcare are the latest advocates of AI's revolutionary potential, and image recognition and analysis seem to be among its strongest fortes.[2,3]

Although the definition of AI has evolved over time, at present it refers to machine learning (ML) and its notable subset, deep learning (DL).[1,4,5]

ML refers to a paradigm that relies on data, instead of explicit instructions, to teach a computer how to perform a specific task. Such tasks are best understood as creating a mapping function between an input and an output. In healthcare, inputs are typically images or 3D volumes taken from a patient with a specific modality (retinal camera, optical coherence tomography [OCT], X-rays, and other imaging modalities), with the output being the diagnosis of a specific condition. Some typical applications are chat-bots, oncology, pathology, and rare diseases.

Algorithms are applied to a database of inputs and desired outputs representative of the problem (the “training set”). The outcome is a statistical model that generalizes the mapping to any given case of the same nature as the training set. This is done through an error minimization process, often iterative in nature, during which a complex model of relationships transforming the input into the optimal output is “learnt” from the training set.

Artificial neural networks (ANNs), a set of ML algorithms, have achieved state-of-the-art performance in a wide range of problems. Their fundamental building block is an artificial neuron, which consists of simple mathematical functions transforming inputs into an output. These neurons are stacked beside and on top of each other to form layers, loosely mimicking the way the human brain works. Neurons rely on weights to compute their output. Training a neural network consists of deriving the optimal set of weights through a process called “backpropagation,” which involves multiple iterations through a very large training set.
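To make the idea of a neuron and its weights concrete, the following is a minimal sketch rather than any published system. It trains a single artificial neuron on a made-up toy problem (logical AND). With only one neuron, the chain rule that backpropagation applies layer by layer reduces to a single gradient step; the function names, learning rate, and number of epochs are illustrative assumptions.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(weights, bias, inputs):
    """Weighted sum of the inputs followed by a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train_neuron(samples, labels, learning_rate=0.5, epochs=2000):
    """Fit one neuron by repeated gradient steps on the cross-entropy error."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            prediction = neuron_output(weights, bias, inputs)
            # For a sigmoid neuron with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (prediction - target).
            error = prediction - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Toy, made-up training set: the label is 1 only when both inputs are 1.
toy_samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
toy_labels = [0, 0, 0, 1]

weights, bias = train_neuron(toy_samples, toy_labels)
predictions = [round(neuron_output(weights, bias, s)) for s in toy_samples]
print(predictions)
```

A real network stacks many such neurons in layers and propagates the error backwards through each layer in turn, but the weight update follows the same pattern.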

While the algorithmic fundamentals of ANNs date back to the 1960s, their potential only started to emerge in the last decade.[6] Thanks to more powerful hardware, it is now possible to train neural networks with an unprecedented number of neurons. The rise of these large neural networks, termed “deep learning” (DL), has been a game-changer in many applications. In ophthalmology, DL has allowed ML algorithms to reach accuracies acceptable for large-scale field deployment.

Methods

This review was conceived after extensive online research in databases such as PubMed, Web of Science, Embase, and Cochrane, using the keywords AI, ML, DL, ophthalmology, diabetic retinopathy (DR), age-related macular degeneration (ARMD), retinopathy of prematurity (ROP), anterior-segment diseases, cataract, and glaucoma. A chronological history of AI and its adaptation in healthcare, especially ophthalmology, was mapped, and the subsequent advances in different fields of ophthalmology were documented.

Artificial Intelligence in Ophthalmology: Opportunities and Potential in Different Ophthalmological Conditions

AI finds obvious applications in ophthalmology, where the data to be analyzed are complex and the number of patients is huge, yet the outcomes are simple and well defined. There are various approaches to using AI systems to automatically detect lesions in images of the eye.

DL has shown robust performance in medical image analysis because it constantly refines, weights, and compares details in the images as part of the learning process, accommodating as much information as possible.[7,8] The most common way to apply DL to images (fundus or visual field images) or volumes (OCT scans) is through convolutional neural networks (CNNs), which take image pixels or volume voxels (the 3D equivalent of a pixel) as input.[3,9,10,11,12] During training, a CNN analyzes a labelled training set of expert-graded images and adjusts itself through repetition and self-correction until its output matches that of the human grader. The optimised AI algorithm is then ready to provide diagnostic support on previously unseen fundus images.
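The core operation of a CNN can be sketched in a few lines. The example below is only an illustration on a made-up 4×4 grayscale patch. It slides a small kernel over the pixels and sums the products at each position, which is the cross-correlation that deep learning libraries conventionally call a convolution. The kernel here is hand-picked for clarity, whereas in a trained CNN the kernel values are weights learnt from the labelled images.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (valid positions only) and sum the products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Made-up 4x4 grayscale patch: dark left half, bright right half.
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(patch, edge_kernel)
print(feature_map)
```

The resulting feature map is non-zero only in the column where the dark-to-bright edge lies, which is how a convolutional layer localizes a pattern within an image.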

The most common conditions for which the utility of AI has been demonstrated include:

Diabetic retinopathy (DR)

Age-related macular degeneration (ARMD)

Retinopathy of prematurity (ROP)

Glaucoma, cataracts, and other anterior segment diseases.

The quality of an AI algorithm vastly depends on the dataset used to train and validate it. Beyond the absolute number of images, it is crucial to gather a fair amount of data for each of the different desired outcomes. In medical applications, data distributions are often heavily skewed, since healthy cases are almost always more prevalent than pathological cases. The most advanced stage of the pathology is often the one with the least available data. If the trained algorithm is intended to be used on different imaging device models, it is also important to use datasets representative of the differences of output between them, such as field of view or a characteristic color tint. The datasets should also be gathered following daily practice protocols for exclusion criteria or for assessment of acceptable quality. A possible strategy consists of implementing a quality detection algorithm as an integral part of the AI system. Furthermore, possible variations in the images due to ethnicity, age, gender, and so on should also be fairly represented in the datasets.
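One common way to compensate for such skewed distributions during training is to weight each class inversely to its frequency. The sketch below is an assumption for illustration, not a method described in the studies reviewed here, and the label counts are made up.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by total / (number of classes * class count)."""
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {cls: total / (n_classes * count) for cls, count in counts.items()}


# Made-up label distribution: healthy cases dominate, advanced disease is rare.
labels = (["no_dr"] * 80 + ["mild"] * 12
          + ["moderate"] * 6 + ["proliferative"] * 2)

class_weights = inverse_frequency_weights(labels)
for cls, weight in sorted(class_weights.items(), key=lambda item: item[1]):
    print(f"{cls}: {weight:.2f}")
```

Errors on the rarest class then count for more during training, which partly offsets the scarcity of advanced-stage images. It does not replace the need to gather more such data.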

Validation of an AI algorithm can be either prospective or retrospective. Retrospective validation can be done by carving out a subset of the dataset for that purpose. This is, however, the weakest option, as it only validates the algorithm against the characteristics and biases of the dataset used. A better alternative is an independent validation dataset gathered in a different context. Prospective validation is the most comprehensive approach: it validates the entire system in conjunction with the capturing process and the deployment workflow.

Further analysis of the cases in which the AI algorithm failed to provide a definite answer can yield additional insights. It might lead to the diagnosis of a different pathology that the algorithm had not previously accounted for.
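The simplest retrospective option, carving a validation subset out of the dataset, can be sketched as follows. Holding out whole patients rather than individual images is an assumption added here, so that two images of the same patient never end up on both sides of the split. The record layout and identifiers are hypothetical.

```python
import random


def split_by_patient(records, validation_fraction=0.2, seed=0):
    """Hold out whole patients so that no patient appears in both subsets."""
    patient_ids = sorted({record["patient_id"] for record in records})
    rng = random.Random(seed)
    rng.shuffle(patient_ids)
    n_validation = max(1, round(len(patient_ids) * validation_fraction))
    validation_ids = set(patient_ids[:n_validation])
    training = [r for r in records if r["patient_id"] not in validation_ids]
    validation = [r for r in records if r["patient_id"] in validation_ids]
    return training, validation


# Made-up records: 10 patients with one image per eye.
records = [
    {"patient_id": f"P{p:02d}", "image": f"P{p:02d}_{eye}.png"}
    for p in range(10)
    for eye in ("left", "right")
]

training, validation = split_by_patient(records)
print(len(training), len(validation))
```

Even with a clean split, this only tests the algorithm against the biases of its own dataset, which is why an independent dataset or a prospective study remains preferable.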

DR and AI

DR has evolved to be a hotspot for AI. With more than 400 million people with diabetes worldwide, DR is touted to be one of the leading causes of preventable blindness.[10] The overall prevalence of any DR among the global population is as high as 34.6%, with ~10% vision-threatening DR (VTDR).[11] In India, one out of five people with diabetes has some form of retinopathy. DR being a global health burden, tele-retinal screening programs and retinal screening programs employing DL-based imaging scans using fundus photography or multimodal imaging have immense potential and are being studied in various trials by ophthalmologists. Several reported studies have implemented DL algorithms for diagnosis of microaneurysms, hemorrhages, hard exudates, cotton-wool spots, and neovascularization among people with DR. Some of these algorithms borrow other ML techniques on top of ANNs, such as morphological component analysis (MCA), lattice neural network with dendritic processing (LNNDP), and k-nearest neighbour (kNN).[2,12]

A potential benefit of AI-enhanced diagnosis in DR detection is the sheer increase in the number of patients screened at primary care clinics. This allows for early detection of diabetic eye disease that might otherwise have gone undetected because the patient did not independently visit an ophthalmologist to be screened for DR.

DL algorithms for DR detection have recently been reported to have higher sensitivity (~97%) than manual grading by ophthalmologists (~83%),[4] although more peer-reviewed clinical studies are needed before it can be claimed that AI reads images better than an ophthalmologist.
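Sensitivity and specificity, the two figures quoted throughout this review, are computed by comparing the algorithm's output with a reference grade for each case. The sketch below shows the arithmetic on made-up data; the function and variable names are illustrative.

```python
def sensitivity_specificity(predictions, references):
    """Compare binary AI outputs with reference grades (True = disease present)."""
    pairs = list(zip(predictions, references))
    true_pos = sum(1 for p, r in pairs if p and r)
    true_neg = sum(1 for p, r in pairs if not p and not r)
    false_pos = sum(1 for p, r in pairs if p and not r)
    false_neg = sum(1 for p, r in pairs if not p and r)
    sensitivity = true_pos / (true_pos + false_neg)  # diseased cases caught
    specificity = true_neg / (true_neg + false_pos)  # healthy cases cleared
    return sensitivity, specificity


# Made-up example: 4 diseased and 6 healthy cases according to the graders.
references = [True, True, True, True,
              False, False, False, False, False, False]
predictions = [True, True, True, False,
               False, False, False, False, True, False]

sensitivity, specificity = sensitivity_specificity(predictions, references)
print(f"sensitivity={sensitivity:.2f}, specificity={specificity:.2f}")
```

Both figures depend on the quality of the reference grades, which is why the choice of reference standard matters when comparing studies.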

Multiple recent studies have established the potential of AI and automated screening systems in the early screening and detection of referable DR [Table 1]. EyeArt™ by Eyenuk used 40,542 images from 5084 patient encounters obtained from the EyePACS telescreening system to train AI algorithms to screen for DR, achieving 90% sensitivity at 63.2% specificity and detecting the presence of microaneurysms with a sensitivity of 100%.[13] In 2017, Tufail et al. evaluated the sensitivity and specificity of EyeArt and Retmarker, two automated DR image assessment systems (ARIAS). The sensitivity point estimates for EyeArt were 94.7% for any DR, 93.8% for referable retinopathy/RDR (graded by humans as either ungradable, maculopathy/diabetic macular edema, preproliferative, or proliferative DR), and 99.6% for proliferative DR (PDR). Those for Retmarker were 73.0% for any retinopathy, 85.0% for RDR, and 97.9% for PDR.[14]

Table 1.

A review of the performance of various artificial intelligence algorithms validated in prospective as well as retrospective studies in the detection of referable diabetic retinopathy (RDR) using fundus images

| Study (Authors) | Type of Study | Camera/AI Algorithm | Dataset | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|
| Rajalakshmi et al.[18] | Retrospective | Remidio, Fundus on Phone (FOP)/EyeArt | Internally generated dataset | 99.3 | 66.8 |
| Abràmoff et al.[19] | Retrospective | Topcon TRC NW6 nonmydriatic fundus camera/IDx-DR X2 | MESSIDOR-2 | 96.8 | 87 |
| Gulshan et al.[16] | Retrospective | Topcon TRC NW6 nonmydriatic camera/Inception-V3 | MESSIDOR-2 | 87 | 98.5 |
| Gulshan et al.[16] | Retrospective | | EyePACS-1 | 90.3 | 98.1 |
| Ting et al.[17] | Retrospective | FundusVue, Canon, Topcon, and Carl Zeiss/VGG-19 | SiDRP 14-15 | 90.5 | 91.6 |
| | | | Guangdong | 98.7 | 81.6 |
| | | | SIMES | 97.1 | 82.0 |
| | | | SINDI | 99.3 | 73.3 |
| | | | SCES | 100 | 76.3 |
| | | | BES | 94.4 | 88.5 |
| | | | AFEDS | 98.8 | 86.5 |
| | | | RVEEH | 98.9 | 92.2 |
| | | | Mexican | 91.8 | 84.8 |
| | | | CUHK | 99.3 | 83.1 |
| | | | HKU | 100 | 81.3 |
| Ramachandran et al.[27] | Retrospective | Canon CR-2 Plus Digital Nonmydriatic Retinal Camera (Canon Inc., Melville, New York, USA)/Visiona | ODEMS | 84.6 | 79.7 |
| Ramachandran et al.[27] | Retrospective | Canon CR-2 Plus Digital Nonmydriatic Retinal Camera (Canon Inc., Melville, New York, USA)/Visiona | Messidor | 96 | 90 |
| Natarajan et al.[20] | Prospective | Remidio Nonmydriatic Fundus on Phone (NM FOP 10)/Medios AI | Internal dataset generated | 100 | 88.4 |
| Sosale et al.[28] | Prospective | Remidio Nonmydriatic Fundus on Phone (NM FOP 10)/Medios AI | Internal dataset generated | 98.8 | 86.7 |

Furthermore, Google Health has reported creating a dataset of 128,000 images, which its scientists used to train a DL network for DR.[4]

Google's AI system (automated retinal disease assessment, ARDA) was evaluated in two test runs on fundus photographs from patients whose DR had already been diagnosed by expert physicians (the EyePACS-1 and MESSIDOR-2 data sets). At the high-sensitivity operating point, the sensitivity was 97.5% and 96.1% on the two sets; at the high-specificity operating point, the specificity was 98.1% and 98.5%. Google has partnered with Aravind Eye Care System and Sankara Nethralaya in India to integrate its AI system as part of its global DR care initiative.[15,16]

Another study evaluating the sensitivity and specificity of DL algorithms in automated detection of DR from fundus photographs defined referable diabetic retinopathy (RDR) as moderate or worse DR, referable diabetic macular edema (DME), or both. It used two datasets: the EyePACS-1 data set, consisting of 9963 images from 4997 patients, and the MESSIDOR-2 data set, consisting of 1748 images from 874 patients. The prevalence of RDR was 7.8% and 14.6%, respectively. At the first operating cut point, chosen for high specificity, the sensitivity and specificity for EyePACS-1 were 90.3% and 98.1%, respectively; for MESSIDOR-2, the sensitivity was 87.0% and the specificity was 98.5%. At the second cut point, chosen for high sensitivity, the sensitivity and specificity were 97.5% and 93.4% for EyePACS-1 and 96.1% and 93.9% for MESSIDOR-2.[16]
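The practical effect of choosing one cut point over the other can be illustrated by combining the reported sensitivity and specificity with the reported prevalence through Bayes' rule. The predictive values computed below are not reported in the text; they are a worked illustration derived from the EyePACS-1 figures above, and the function name is hypothetical.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Bayes' rule for positive and negative predictive value."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)  # P(disease | positive result)
    npv = true_neg / (true_neg + false_neg)  # P(no disease | negative result)
    return ppv, npv


# Figures quoted in the text for EyePACS-1 (RDR prevalence 7.8%).
high_specificity_point = predictive_values(0.903, 0.981, 0.078)
high_sensitivity_point = predictive_values(0.975, 0.934, 0.078)

for name, (ppv, npv) in [("high specificity", high_specificity_point),
                         ("high sensitivity", high_sensitivity_point)]:
    print(f"{name}: PPV={ppv:.3f}, NPV={npv:.3f}")
```

Under these assumptions, the high-specificity cut point gives a positive predictive value of roughly 0.80, against roughly 0.56 for the high-sensitivity cut point. In a low-prevalence screening population, the more sensitive setting therefore misses fewer cases at the cost of more false referrals.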

In a multiethnic study conducted by Asian researchers, a DL system (DLS) was trained on a dataset consisting of images for DR (76,370 images), possible glaucoma (125,189 images), and AMD (72,610 images), and its performance was evaluated for detecting DR (using 112,648 images), possible glaucoma (71,896 images), and AMD (35,948 images). In total, 494,661 retinal images were used, obtained by digital retinal photography as part of the Singapore National Diabetic Retinopathy Screening Program (SIDRP) and verified by a team of trained professional graders.[17]

In the primary validation dataset, consisting of 71,896 images from 14,880 patients, the DLS had a sensitivity of 90.5% and a specificity of 91.6% for detecting RDR, and 100% sensitivity and 91.1% specificity for vision-threatening DR (VTDR), when compared with the assessments of professional graders.[17]

The first published study of AI-based automated detection of DR with smartphone-based fundus images was from India.[18] A retrospective analysis of Remidio Fundus on Phone (FOP) mydriatic smartphone-based retinal images with the EyeArt AI software showed a sensitivity of 95.8% for detection of DR of any severity and over 99% sensitivity for detection of RDR as well as sight-threatening DR (STDR)/VTDR.[18]

In 2018, IDx-DR became the first AI device to receive USFDA approval for DR screening. The retinal images captured by a Topcon NW400 camera are uploaded to the IDx-DR software server. The software interprets the retinal images and returns one of two outputs: i) “More than mild DR detected: referred to eye care professional” or ii) “negative for more than mild DR; rescreen in 12 months.” A multicenter trial of the device in more than 900 adults with diabetes revealed a sensitivity and specificity of 87.3% and 89.5%, respectively. More AI algorithms from multiple ophthalmic researchers and private companies are in the pipeline awaiting regulatory approval.

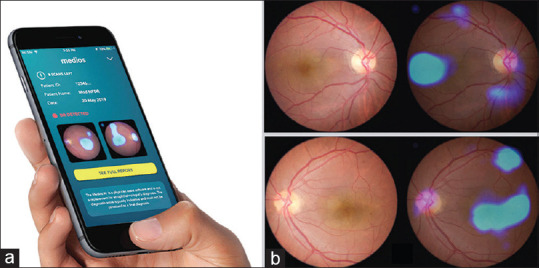

There have been attempts to address two key challenges in implementing large-scale screening models, namely, affordable imaging systems and AI algorithms that can be used in minimal-infrastructure contexts where access to the internet may be difficult. A recent study evaluated the performance of Medios AI, an offline AI algorithm that runs on a smartphone, in detecting RDR on images taken with Remidio's Fundus on Phone (FOP NM-10), a smartphone-based imaging system. This study analyzed images of 231 people with diabetes visiting various dispensaries under the municipality of Greater Mumbai [Fig. 1].[20] The offline AI algorithm showed high accuracy, with sensitivity and specificity of 100% and 88.4%, respectively, for RDR, and of 85.2% and 92%, respectively, for any grade of DR, when compared with manual reports generated by trained ophthalmologists.[20]

Figure 1.

(a) Interface for the inbuilt, automated, offline AI-algorithm, Medios-AI integrated into fundus on phone (FOP) to provide instant DR diagnosis. (b) Sample report generated showing heat maps highlighting DR lesions

ARMD and AI

ARMD is a chronic, degenerative condition of the retina and the most common cause of visual impairment in the elderly. It is characterized by drusen, retinal pigment changes, choroidal neovascularization, hemorrhage, exudation, and even geographic atrophy.[10] It is broadly classified as dry or wet ARMD. Ting et al. showed that their DLS had a sensitivity of 93.2%, a specificity of 88.7%, and an AUC of 0.931 for detection of referable ARMD based on multiethnic fundus images.[17]
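The AUC (area under the receiver operating characteristic curve) summarizes performance across all possible cut points. It can be read as the probability that a randomly chosen diseased case receives a higher score than a randomly chosen healthy one. The sketch below computes it directly from that definition on made-up scores; it illustrates the metric and is not the evaluation code of any study cited here.

```python
def auc_from_scores(scores, labels):
    """Fraction of (positive, negative) pairs in which the positive scores higher."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for pos in positives:
        for neg in negatives:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Made-up algorithm scores: four diseased (1) and four healthy (0) cases.
scores = [0.95, 0.80, 0.60, 0.40, 0.70, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

auc = auc_from_scores(scores, labels)
print(auc)
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of diseased from healthy cases.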

With the promising results from DL interpretation of fundus images, efforts have turned towards the use of DL in OCT analysis, given the role of OCT in the management of retinal disorders. DL analysis of OCT for morphological variations in the scan and for detection of intraretinal fluid, subretinal fluid, and neovascularization has started showing promise. DL systems are being effectively used to identify anatomic OCT-based features, aiding in early diagnosis of retinal pathology and in predicting outcomes of treatment.[19,21] The sensitivity of such methods varies between 87% and 100%, with very high accuracy. Hwang et al. used a dataset of 35,900 labelled OCT images obtained from patients with age-related macular degeneration (AMD) to train CNNs to perform AMD diagnosis. The accuracy was generally higher than 90% when compared with diagnosis by retina specialists, and the treatment recommendations provided by DL were also comparable to those of retina specialists [Table 2].[21,22]

Table 2.

A review of the performance of various Artificial Intelligence algorithms tested for detection of Age-related Macular Degeneration (ARMD)

| Study (Authors)/Image Used | AI Algorithm/Dataset | AI Utility | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| Burlina et al.[29]/Fundus images | DCNN-A WS/National Institutes of Health AREDS | Detecting the presence of AMD from the dataset and differentiating from normal images | 88.4 | 94.1 |
| | DCNN-U WS | | 73.5 | 91.8 |
| | DCNN-A NSG | | 87.2 | 93.4 |
| | DCNN-U NSG | | 73.8 | 92.1 |
| | DCNN-A NS | | 85.7 | 93.4 |
| | DCNN-U NS | | 72.8 | 91.5 |
| Lee et al.[30]/OCT Images | Modified VGG16/Heidelberg Spectralis (Heidelberg Engineering, Heidelberg, Germany) imaging database | Detecting the presence of AMD from the dataset and differentiating from normal images | 92.6 | 93.7 |
| Treder et al.[31] | DCNN (using open-source deep learning framework TensorFlow™ (Google Inc., Mountain View, CA, USA))/ImageNet | Detecting the presence of AMD from the dataset and differentiating from normal images | 100 | 92 |
| Sengupta et al.[32]/OCT Images | Transfer Learning/Privately generated dataset with 51140 normal, 8617 drusens, 37206 CNV, 11349 DME images | Differentiating AMD/DME images from the dataset consisting of all conditions causing treatable blindness | 97.8 | 97.4 |
| Sengupta et al.[32]/Fundus Images | DCNN/AREDS | | 66.34 | 88.95 |
| | DCNN/Tsukazaki Hospital database | | 100 | 97.31 |
| | CNN/Kasturba Medical College database | | 96.43 | 93.45 |
| Hwang et al.[22]/OCT Images | VGG16/Internally generated database with 35,900 images | Identify Normal images without AMD | 99.07 | 99.54 |
| | | Identify Dry AMD | 83.99 | 99.34 |
| | | Identify Inactive Wet AMD | 96.07 | 90.40 |
| | | Identify Active Wet AMD | 86.47 | 99.05 |
| | Inception V3 | Identify Normal images without AMD | 99.38 | 99.70 |
| | | Identify Dry AMD | 85.64 | 99.57 |
| | | Identify Inactive Wet AMD | 97.11 | 91.82 |
| | | Identify Active Wet AMD | 88.53 | 98.99 |
| | ResNet50 | Identify Normal images without AMD | 99.17 | 99.80 |
| | | Identify Dry AMD | 81.20 | 99.45 |
| | | Identify Inactive Wet AMD | 95.35 | 90.24 |
| | | Identify Active Wet AMD | 87.19 | 97.84 |

ROP and AI

ROP is a leading cause of childhood blindness all over the world but it can be treated effectively with early diagnosis and timely treatment. Blindness can be prevented if ROP with plus disease or retinopathy in zone one stage 3 even without plus disease is treated on time. Infants with pre-plus disease require close and repeated observation. The barriers to ROP screening are significant inter-examiner variability in diagnosis and only a few trained examiners to screen for ROP. Repeated observations and testing require huge manpower and energy and this is where AI could make a huge impact in improving the efficacy of ROP treatment.[23]

Researchers at Massachusetts General Hospital and OHSU have been working, respectively, on combining two existing AI models to create an algorithm and on developing the reference standards to train it. When compared with the analysis by trained ophthalmologists, the algorithm was found to be more accurate (91%) than the experts (82%).[23,24]

Other studies have reported the automatic identification of ROP through algorithms that focussed on two-level classification (plus or not plus disease). Some of these were based on tortuosity and dilation features of arteries and veins and achieved an accuracy of 95%, which is comparable to the diagnosis made by experts [Table 3].[2,24] In 2018, Brown et al. reported the results of a fully automated DL system, informatics in ROP (i-ROP), that could diagnose plus disease with an AUROC of 0.98. The i-ROP system has created a severity score for ROP that appears to be promising for ROP treatment monitoring.[25]

Table 3.

A review of the performance of various artificial intelligence algorithms tested for detection of Retinopathy of Prematurity (ROP)

| Study (Authors) | Image | AI Algorithm/Dataset | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| Worrall et al.[33] | Fundus images | Bayesian CNN (per image)/Canada | 82.5 | 98.3 |
| | | Bayesian CNN (per exam) | 95.4 | 94.7 |
| Zhang et al.[34] | Wide-angle retinal images | AlexNet/Private dataset with 420 365 wide-angle retina images | 72.9 | 78.7 |
| | | VGG-16 | 98.7 | 97.8 |
| | | GoogleNet | 96.8 | 98.2 |

AI in glaucoma, cataracts and other anterior segment diseases

Cataract and glaucoma are very common diseases in ophthalmology. Cataracts lead to clouding of the lens, whereas glaucoma damages the optic nerve, causing irreversible blindness.[10] Although such conditions are irreversible, their progression can be slowed significantly by early diagnosis and appropriate treatment.

Slit-lamp images have been fed into CNN algorithms to evaluate the severity of nuclear cataracts. On further iteration and validation, their accuracy was found to be 70% against clinical grading. Significant progress has also been made in the identification of pediatric cataracts, with exceptional accuracy and sensitivity achieved in the classification of the lens and its density.[10] ML algorithms such as radial basis functions or support-vector machines have improved lens implant power selection prior to cataract surgery. They have been useful in anterior segment analysis, such as corneal topography scans and intraocular lens power predictions.[4]

Glaucoma detection primarily depends on the intraocular pressure, the thickness of the retinal nerve fibre layer (RNFL), the optic nerve, and visual field examination. Researchers have devised an algorithm to classify the optic disc in open-angle glaucoma from OCT images, with a reported accuracy of 87.8%.[10] ML algorithms that identify glaucoma in its early stages by assessing the cup-disc ratio in fundus images or the thickness of the RNFL in OCT images have reported accuracies ranging between 63.7% and 93.1%, depending on the input images [Table 4]. Ting et al. showed that their DLS had a sensitivity and specificity of 96.4% and 87.2%, respectively, and an AUC of 0.942 for glaucoma detection based on fundus images.[17]

Table 4.

A review of the performance of various artificial intelligence algorithms tested for detection of Glaucoma

| Study (Authors) | Image | AI Algorithm/Dataset | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| Sengupta et al.[32] | Fundus image | DENet/SECS, SINDI | 70.67, 37.53 | |
| | | Inception V3/Private database with 48000+ images | 95.6 | 92 |
| | | MB-NN/Private database | 92.33 | 90.9 |
| | OCT Images | MCDN/Private database | 88.89 | 89.63 |
| Yousefi et al.[35] | OCT Images | The algorithm developed combining Bayesian net, Lazy K Star, Meta classification using regression, Meta ensemble selection, alternating decision tree (AD tree), random forest tree, and simple classification and regression tree (CART)/Privately generated from University of California at San Diego (UCSD)-based diagnostic innovations in glaucoma study (DIGS) and the African Descent and Glaucoma Evaluation Study (ADAGES), assessed RNFL thickness | 80.0 | 73.0 |

Potential pitfalls

There are a few potential pitfalls that one needs to weigh before being prompted to blindly trust the AI-based decisions and diagnoses in ophthalmology.[7]

AI algorithms would need equally skilled manpower to capture clear and coherent images to be fed as input images. Curated data sets that are robust become a must for proper deep learning by the AI systems

AI algorithms require a reliable single output for each input. On the contrary, intergrader variability is high when diagnosing retinal conditions. This gives rise to a paradox: the AI should be more reliable than humans while learning from data labelled by humans. This needs to be overcome by involving multiple graders and arbitrators which can be lengthy and expensive

High computational costs and in-depth training experiences are needed for developing AI algorithms; hence, one might only bear such investments when it comes to conditions with higher morbidity and mortality rates but not so much for rare diseases

The basis of identification and diagnoses made by AI algorithms is mechanical, and some amount of human intervention is always necessary for detecting each and every feature or variation of a disease

AI may miss findings it's not looking for, which a trained human grader may not, giving patients a false sense of security

A wide range of complex algorithms is necessary to execute AI operations, and designing these algorithms is itself complicated; a slight error in programming could lead to disproportionate damage

One of the challenges in use of AI in ophthalmology is the limited availability of large data for the rare ocular diseases as well as in very common conditions like cataract where imaging is not done as a part of routine medical practice

The “Black Box” mode of learning where what goes on inside a neural network or ML algorithm remains unclear, despite familiar inputs and outputs; complete transparency is needed for taking accountability for treatment decisions for patients[26]

An ML algorithm is only reliable on a population that closely matches the one it learnt from; whenever there is a slight change in the input data, a whole new set of learning algorithms needs to be programmed to maintain the same accuracy

The difficult attribution of liability in case of errors and malfunctions of AI systems in screening and healthcare.
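The use of multiple graders and arbitrators mentioned above can be sketched as a simple consensus rule: accept a grade when a strict majority of graders agree, and otherwise defer to an adjudicator. This is a minimal illustration with hypothetical grade labels and function names, not the protocol of any specific study.

```python
from collections import Counter


def consensus_grade(grades, adjudicator=None):
    """Return the strict-majority grade, or defer to an adjudicator if none exists."""
    counts = Counter(grades)
    top_grade, top_count = counts.most_common(1)[0]
    if top_count > len(grades) / 2:
        return top_grade
    if adjudicator is None:
        return None  # unresolved: the image still needs arbitration
    return adjudicator(grades)


# Made-up grades from independent graders for three images.
agreed = consensus_grade(["RDR", "RDR", "no RDR"])
unresolved = consensus_grade(["RDR", "no RDR"])
# Hypothetical senior grader who reviews the image and settles the tie.
arbitrated = consensus_grade(["RDR", "no RDR"], adjudicator=lambda grades: "RDR")

print(agreed, unresolved, arbitrated)
```

Every image that falls through to the adjudicator adds grading time and cost, which is the trade-off described in the list above.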

Legal aspects of AI

The development and implementation of AI algorithms involve huge datasets, and hence several legal issues need to be addressed, including regulation, privacy, tort law, and intellectual property law. An AI system consists of a complex set of mathematical rules whose inner workings are beyond human comprehension. Generalized principles on how to deal with AI are difficult to formulate, as there are different forms of application for different purposes. This requires governments, industry players, research institutions, and other stakeholders to draft dedicated AI ethics principles and policies covering fairness, safety, reliability, privacy, security, inclusiveness, accountability, and transparency. Similarly, as the development, validation, and clinical implementation of most AI algorithms involve huge datasets, important questions arise about consent, privacy, and security against the misuse of data. Product liability, in case the algorithm commits an error in diagnosis or misses a diagnosis, is another aspect that lawmakers need to consider when dealing with AI-based products or solutions.[36]

What lies in the future?

Newer applications are being discussed among the ophthalmic fraternity. These include research on implementing AI and ML algorithms in automated grading of cataracts, managing pediatric conditions such as refractive errors and congenital cataracts, detecting strabismus, predicting future high myopia, and diagnosing reading disability. Studies have also reported automatic detection of leukocoria in children from recreational smartphone or digital camera photographs, implying another potential application of AI. Applications in ocular oncology using multispectral imaging and ML have also been tested recently. Newer AI algorithms are now measuring inner and outer retinal layer thicknesses to predict the risk of Alzheimer's disease.[37] Moreover, with the use of AI in multimodal imaging, i.e., combining fundus images with OCT and OCT angiography images, it might be possible to detect multiple retinal diseases more accurately in a single pass. Such an algorithm would be invaluable in the differential screening of DR, AMD, glaucoma, and other retinal disorders simultaneously, along with their severity. Further studies and validations are required to assess the application of these algorithms in clinical settings, to ensure that such AI-assisted, automated screening and diagnosis effectively minimize doctors' burden and add value at ophthalmology clinics.[38]

Conclusion

The deployment of AI in ophthalmology is augmenting diagnostic imaging, which may soon lead to real-time deployment in telemedicine screening programs. Recent real-time studies have shown the feasibility of using AI-assisted automated detection systems in ophthalmology, especially in the detection of DR. The advantages of the use of AI in ophthalmology far outweigh its limitations. When used wisely and cautiously, with proper tracking and reporting, AI can provide output that helps to increase adherence to and compliance with screening and treatment regimens. Robust deep learning algorithms are evolving rapidly and are likely to be integrated into regular eye-care services soon. One should always remember that AI provides the best results only when augmented by a skilled human workforce.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

References

1. Schmidt-Erfurth U, Sadeghipour A, Gerendas BS, Waldstein SM, Bogunović H. Artificial intelligence in retina. Prog Retin Eye Res. 2018;67:1–29. doi: 10.1016/j.preteyeres.2018.07.004.

2. Lu W, Tong Y, Yu Y, Xing Y, Chen C, Shen Y. Applications of artificial intelligence in ophthalmology: General overview. J Ophthalmol. 2018;2018:5278196. doi: 10.1155/2018/5278196.

3. Lee A, Taylor P, Kalpathy-Cramer J, Tufail A. Machine learning has arrived! Ophthalmology. 2017;124:1726–8. doi: 10.1016/j.ophtha.2017.08.046.

4. Krause J, Gulshan V, Rahimy E, Karth P, Widner K, Corrado GS, et al. Grader variability and the importance of reference standards for evaluating machine learning models for diabetic retinopathy. Ophthalmology. 2018;125:1264–72. doi: 10.1016/j.ophtha.2018.01.034.

5. Rahimy E. Deep learning applications in ophthalmology. Curr Opin Ophthalmol. 2018;29:254–60. doi: 10.1097/ICU.0000000000000470.

6. Schmidhuber J. Deep learning in neural networks: An overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003.

7. Samek W, Wiegand T, Müller KR. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv:1708.08296 [cs, stat]. 2017. [Last accessed on 2020 Feb 04]. Available from: http://arxiv.org/abs/1708.08296

8. Abràmoff MD, Folk JC, Han DP, Walker JD, Williams DF, Russell SR, et al. Automated analysis of retinal images for detection of referable diabetic retinopathy. JAMA Ophthalmol. 2013;131:351–7. doi: 10.1001/jamaophthalmol.2013.1743.

9. Abràmoff MD, Garvin MK, Sonka M. Retinal imaging and image analysis. IEEE Rev Biomed Eng. 2010;3:169–208. doi: 10.1109/RBME.2010.2084567.

10. Du XL, Li WB, Hu BJ. Application of artificial intelligence in ophthalmology. Int J Ophthalmol. 2018;11:1555–61. doi: 10.18240/ijo.2018.09.21.

11. Yau JW, Rogers SL, Kawasaki R, Lamoureux EL, Kowalski JW, Bek T, et al. Meta-Analysis for eye disease (META-EYE) Study group. Global prevalence and major risk factors of diabetic retinopathy. Diabetes Care. 2012;35:556–64. doi: 10.2337/dc11-1909.

12. Suzuki K, Zhang J, Xu J. Massive-training artificial neural network coupled with laplacian-eigenfunction-based dimensionality reduction for computer-aided detection of polyps in CT colonography. IEEE Trans Med Imaging. 2010;29:1907–17. doi: 10.1109/TMI.2010.2053213.

13. Bhaskaranand M, Ramachandra C, Bhat S, Cuadros J, Nittala MG, Sadda S, et al. Automated diabetic retinopathy screening and monitoring using retinal fundus image analysis. J Diabetes Sci Technol. 2016;10:254–61. doi: 10.1177/1932296816628546.

14. Tufail A, Rudisill C, Egan C, Kapetanakis VV, Salas-Vega S, Owen CG, et al. Automated diabetic retinopathy image assessment software: Diagnostic accuracy and cost-effectiveness compared with human graders. Ophthalmology. 2017;124:343–51. doi: 10.1016/j.ophtha.2016.11.014.

15. Raman R, Srinivasan S, Virmani S, Sivaprasad S, Rao C, Rajalakshmi R. Fundus photograph-based deep learning algorithms in detecting diabetic retinopathy. Eye. 2019;33:97–109. doi: 10.1038/s41433-018-0269-y.

16. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–10. doi: 10.1001/jama.2016.17216.

17. Ting DS, Cheung CY, Lim G, Tan GS, Quang ND, Gan A, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–23. doi: 10.1001/jama.2017.18152.

18. Rajalakshmi R, Subashini R, Anjana RM, Mohan V. Automated diabetic retinopathy detection in smartphone-based fundus photography using artificial intelligence. Eye. 2018;32:1138–44. doi: 10.1038/s41433-018-0064-9.

19. Abràmoff MD, Lou Y, Erginay A, Clarida W, Amelon R, Folk JC, et al. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Invest Ophthalmol Vis Sci. 2016;57:5200–6. doi: 10.1167/iovs.16-19964.

20. Natarajan S, Jain A, Krishnan R, Rogye A, Sivaprasad S. Diagnostic accuracy of community-based diabetic retinopathy screening with an offline artificial intelligence system on a smartphone. JAMA Ophthalmol. 2019. doi: 10.1001/jamaophthalmol.2019.2923.

21. Schlegl T, Waldstein SM, Bogunovic H, Endstraßer F, Sadeghipour A, Philip AM, et al. Fully automated detection and quantification of macular fluid in OCT using deep learning. Ophthalmology. 2018;125:549–58. doi: 10.1016/j.ophtha.2017.10.031.

22. Hwang DK, Hsu CC, Chang KJ, Chao D, Sun CH, Jheng YC, et al. Artificial intelligence-based decision-making for age-related macular degeneration. Theranostics. 2019;9:232–45. doi: 10.7150/thno.28447.

23. Campbell JP, Ataer-Cansizoglu E, Bolon-Canedo V, Bozkurt A, Erdogmus D, Cramer JK, et al. Expert diagnosis of plus disease in retinopathy of prematurity from computer-based image analysis. JAMA Ophthalmol. 2016;134:651–7. doi: 10.1001/jamaophthalmol.2016.0611.

24. Gelman R, Jiang L, Du YE, Martinez-Perez ME, Flynn JT, Chiang MF. Plus disease in retinopathy of prematurity: Pilot study of computer-based and expert diagnosis. J AAPOS. 2007;11:532–40. doi: 10.1016/j.jaapos.2007.09.005.

25. Brown JM, Campbell JP, Beers A, Chang K, Ostmo S, Chan RVP, et al. Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol. 2018;136:803–10. doi: 10.1001/jamaophthalmol.2018.1934.

26. Bathaee Y. The artificial intelligence black box and the failure of intent and causation. Harvard J Law Technol. 2018;31:50.

27. Ramachandran N, Hong SC, Sime MJ, Wilson GA. Diabetic retinopathy screening using deep neural network. Clin Exp Ophthalmol. 2018;46:412–6. doi: 10.1111/ceo.13056.

28. Sosale AR. Screening for diabetic retinopathy: Is the use of artificial intelligence and cost-effective fundus imaging the answer? Int J Diabetes Dev Ctries. 2019;39:1–3.

29. Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 2017;135:1170–6. doi: 10.1001/jamaophthalmol.2017.3782.

30. Lee CS, Baughman DM, Lee AY. Deep learning is effective for classifying normal versus age-related macular degeneration OCT images. Ophthalmol Retina. 2017;1:322–7. doi: 10.1016/j.oret.2016.12.009.

31. Treder M, Lauermann JL, Eter N. Automated detection of exudative age-related macular degeneration in spectral domain optical coherence tomography using deep learning. Graefes Arch Clin Exp Ophthalmol. 2018;256:259–65. doi: 10.1007/s00417-017-3850-3.

32. Sengupta S, Singh A, Leopold HA, Lakshminarayanan V. Ophthalmic diagnosis and deep learning - A survey. arXiv:1812.07101. 2018. [Last accessed on 2019 Sep 19]. Available from: http://arxiv.org/abs/1812.07101

33. Worrall DE, Wilson CM, Brostow GJ. Automated retinopathy of prematurity case detection with convolutional neural networks. In: Worrall DE, Wilson CM, Brostow GJ, editors. LABELS 2016/DLMIA 2016, LNCS 10008. Springer International Publishing; 2016. pp. 68–76.

34. Zhang Y, Wang L, Wu Z, Zeng J, Chen Y, Tian R, et al. Development of an automated screening system for retinopathy of prematurity using a deep neural network for wide-angle retinal images. IEEE Access. 2019;7:10232–41.

35. Yousefi S, Goldbaum MH, Balasubramanian M, Jung TP, Weinreb RN, Medeiros FA, et al. Glaucoma progression detection using structural retinal nerve fiber layer measurements and functional visual field points. IEEE Trans Biomed Eng. 2014;61:1143–54. doi: 10.1109/TBME.2013.2295605.

36. Padhy SK, Takkar B, Chawla R, Kumar A. Artificial intelligence in diabetic retinopathy: A natural step to the future. Indian J Ophthalmol. 2019;67:1004–9. doi: 10.4103/ijo.IJO_1989_18.

37. Balyen L, Peto T. Promising artificial intelligence-machine learning-deep learning algorithms in ophthalmology. Asia Pac J Ophthalmol. 2019;8:264–72. doi: 10.22608/APO.2018479.

38. Davis AJ, Kuriakose A. Role of artificial intelligence and machine learning in ophthalmology. Kerala J Ophthalmol. 2019;31:150–60.