Keywords: amygdala, cognitive neuroscience, face perception, facial expressions, NHP fMRI, social cognition

Abstract

When we move the features of our face, or turn our head, we communicate changes in our internal state to the people around us. How this information is encoded and used by an observer's brain is poorly understood. We investigated this issue using a functional MRI adaptation paradigm in awake male macaques. Among face-selective patches of the superior temporal sulcus (STS), we found a double dissociation of areas processing facial expression and those processing head orientation. The face-selective patches in the STS fundus were most sensitive to facial expression, as was the amygdala, whereas those on the lower, lateral edge of the sulcus were most sensitive to head orientation. The results of this study reveal a new dimension of functional organization, with face-selective patches segregating within the STS. The findings thus force a rethinking of the role of the face-processing system in representing subject-directed actions and supporting social cognition.

SIGNIFICANCE STATEMENT When we are interacting with another person, we make inferences about their emotional state based on visual signals. For example, when a person's facial expression changes, we are given information about their feelings. While primates are thought to have specialized cortical mechanisms for analyzing the identity of faces, less is known about how these mechanisms unpack transient signals, like expression, that can change from one moment to the next. Here, using an fMRI adaptation paradigm, we demonstrate that while the identity of a face is held constant, there are separate mechanisms in the macaque brain for processing transient changes in the face's expression and orientation. These findings shed new light on the function of the face-processing system during social exchanges.

Introduction

Our sensory systems have evolved to detect changes in our environment. In the social domain, this is manifested in the capacity to visually monitor the behavior of others. In primates, facial behavior is of particular importance, with changing attributes such as expressions and head turning superimposed on more permanent attributes, such as identity. The reading of changeable facial attributes provides useful information about the internal state of a social agent (Tomkins and McCarter, 1964; Ekman et al., 1972). For example, humans are able to detect and respond to very subtle movements of facial muscles that convey complex thoughts and intentions (Ekman, 1992; Adolphs et al., 1998; Krumhuber et al., 2007; Oosterhof and Todorov, 2009). The speed and ease by which we perceive these changeable aspects of facial behavior belie the inherent difficulty of the visual operations involved.

In both human and nonhuman primates, the detailed visual analysis of face stimuli has been attributed to a network of regions in the ventral visual stream that can be localized using functional magnetic resonance imaging (fMRI; Kanwisher et al., 1997; Logothetis et al., 1999; Tsao et al., 2003). Current models that explain how this network accomplishes face perception are cast in terms of distributed areas with some specialization (Bruce and Young, 1986; Haxby et al., 2000; Freiwald et al., 2016; Grill-Spector et al., 2017). For example, in the macaque brain, both imaging and single-unit studies have indicated that face-selective patches in the fundus of the STS are tuned to facial expressions (Hasselmo et al., 1989; Hadj-Bouziane et al., 2008). But do these patches also process other changeable facial attributes?

There is some reason to believe that facial expression and head orientation would be processed separately. For example, changes in head orientation involve rigid movements of the whole head providing an observer with different views of the face, whereas changes in expression involve nonrigid, internal movements of facial features. The challenges associated with tolerating changes in the retinal image resulting from head rotations also pertain to the physical structure of nonface objects, and, thus, changes in head orientation might tap into more general neural circuitry. On the other hand, facial expressions are specific to faces, as a category of visual objects, and link to the interpretation of affect and emotion in structures such as the amygdala (Rolls, 1984; Gothard et al., 2007). To fully appreciate how the primate brain reads a face, it is important to conceptually dissociate the reading of these and other facial signals.

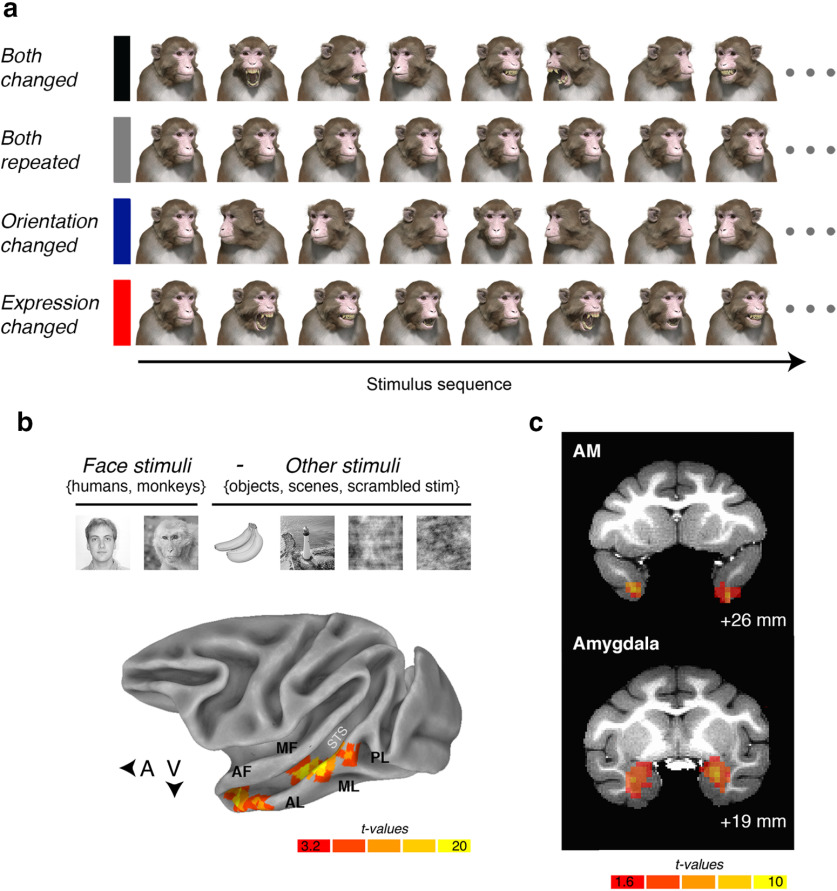

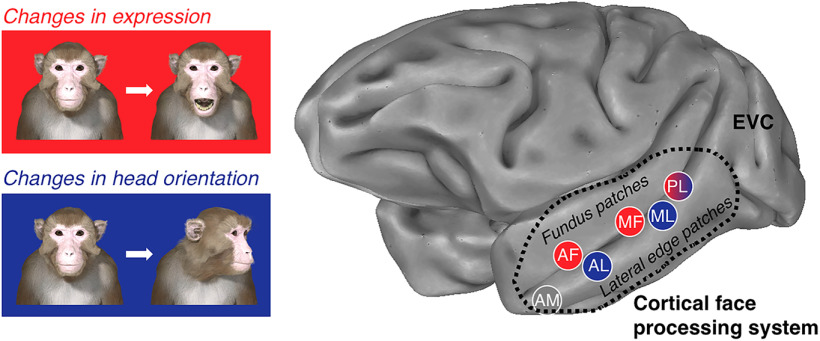

To address this question, we used a three-dimensional avatar of a macaque face based on data from a computed tomography scan (Fig. 1a; Murphy and Leopold, 2019). The use of this avatar gave us independent control over both the expression and the head orientation of the stimulus, while keeping its identity constant. Using many renderings of the avatar, we then applied a functional MRI adaptation paradigm to define the regions of the brain that are selective for, respectively, facial expression and head orientation. The logic behind fMRI adaptation, described in detail previously (Grill-Spector and Malach, 2001; Kourtzi and Kanwisher, 2001), can be summarized as follows: if a neural population is sensitive to a visual feature, then when that feature is presented repeatedly, the fMRI signal in the region will decrease (i.e., “adapt”) compared with when that feature is not repeated (i.e., “release from adaptation”). In the past, fMRI adaptation has been used to determine functional specialization in the human brain. For example, presenting a sequence of faces that differs either in their identity or their expression to human subjects leads to a differential release (i.e., a strengthening) of the fMRI signal in regions sensitive to those attributes (Winston et al., 2004; Loffler et al., 2005; Williams et al., 2007; Fox et al., 2009). Therefore, for the current purposes, fMRI adaptation is an ideal approach for mapping regions of the macaque face-processing system that are differentially sensitive to facial expression and head orientation.

Figure 1.

The design for the functional MRI adaptation experiment and the localization of the face patches. a, Illustrative example of the stimulus order in each of the four conditions presented in rows. b, Top, The contrast used to localize the face-selective patches in the macaque IT cortex. Bottom, Lateral view of a partially inflated macaque cortex with the data from one subject (subject K) projected onto the surface. The voxelwise statistical threshold was set at p = 3 × 10⁻¹¹ (false discovery rate). c, Top, A coronal slice ∼26 mm anterior to the interaural line in subject K's native space. T-map indicates the anatomic location of area AM in subject K. Bottom, A coronal slice ∼19 mm anterior to the interaural line in subject K's native space. Cortical results were masked for illustrative purposes; only voxels inside the anatomic boundary of the amygdala are visible.

Materials and Methods

Subjects

We tested three male rhesus macaques (Macaca mulatta; age range, 7–9 years; weight range at time of testing, 9.2–11.5 kg). We kept the sample size to the smallest number possible that would still allow for scientific inference. Previous reports of similar fMRI experiments on this species have shown that a sample size of three is sufficient (Hadj-Bouziane et al., 2012; Zhu et al., 2013; Russ and Leopold, 2015; Liu et al., 2017). All three subjects were acquired from the same primate breeding facility in the United States, where they had social group histories as well as group housing experience until their transfer to the National Institute of Mental Health (NIMH) for quarantine at the ages of 4–5 years. After that, they were housed in a large colony room with auditory and visual contact with other conspecifics.

Each subject was surgically implanted with a headpost under sterile conditions using isoflurane anesthesia. After recovery, the subjects were slowly acclimated to the experimental procedure. First, they were trained to sit calmly in a plastic restraint chair and fixate a small (0.5–0.7° of visual angle) red central dot for long durations (∼8 min). Fixation within a circular window (radius, 2° of visual angle) centered over the fixation dot resulted in juice delivery. The length of fixation that was required for juice delivery during training and scan sessions varied randomly throughout the length of a run. The average time between rewards was typically 2 s (±300 ms) but varied depending on the behavior of the subject. All procedures were performed in accordance with the Guide for the Care and Use of Laboratory Animals (49) and were approved by the National Institute of Mental Health Animal Care and Use Committee.

Data acquisition

Before each scanning session, an exogenous contrast agent [monocrystalline iron oxide nanocolloid (MION)] was injected into the femoral vein to increase the signal-to-noise ratio (Vanduffel et al., 2001; Taubert et al., 2015a,b). MION doses were determined independently for each subject (∼8–10 mg/kg).

Structural and functional data were acquired in a 4.7 T, 60 cm vertical scanner (BioSpec, Bruker) equipped with a Bruker S380 gradient coil. Subjects viewed the visual stimuli projected onto a screen above their head through a mirror positioned in front of their eyes. We collected whole-brain images with a four-channel transmit and receive radio frequency coil system (Rapid MR International). A low-resolution anatomic scan was also acquired in the same session to serve as an anatomic reference [modified driven equilibrium Fourier transform (MDEFT) sequence; voxel size: 1.5 × 0.5 × 0.5 mm; FOV: 96 × 48 mm; matrix size: 192 × 96; echo time (TE): 3.95 ms; repetition time (TR): 11.25 ms]. Functional echoplanar imaging (EPI) scans were collected as 42 sagittal slices with an in-plane resolution of 1.5 × 1.5 mm and a slice thickness of 1.5 mm. The TR was 2.2 s, and the TE was 16 ms (FOV: 96 × 54 mm; matrix size: 64 × 36; flip angle, 75°). Eye position was recorded using an MR-compatible infrared camera (MRC Systems) fed into MATLAB version R2018b (MathWorks) via a DATApixx hub (VPixx Technologies).

Localization data

While the subjects were awake and fixating, we presented images (30/category) of six different object categories (human faces, monkey faces, scenes, objects, phase-scrambled human faces, and phase-scrambled monkey faces). Stimuli were cropped images presented on a square canvas that was 12° of visual angle in height. All six categories were presented in each run in a standard on/off block design (12 blocks in total). Each block lasted for 16.5 s. During a “stimulus on” block, 15 images were presented one at a time for 900 ms and were followed by a 200 ms interstimulus interval (ISI). We removed any run from the analysis where the monkey did not fixate within a 4° window for >60% of the time.

Face-selective regions were identified in all three subjects using the following contrast: activations evoked by (human faces + monkey faces) > activations evoked by (scenes + objects + phase scrambled human faces + phase scrambled monkey faces) (Fig. 1b). We targeted face patches known as AL, AF, ML, MF, and PL because these patches were easily identifiable bilaterally in all three subjects (Fig. 1c). Area AM, on the ventral surface of the anterior inferior temporal (IT) cortex, was easily visible in the localizer data in five of six hemispheres; thus, subject F only contributed the left hemisphere to the analysis of area AM. The previously described face-selective areas in the frontal lobe (in ventrolateral and orbitofrontal cortex) were not present in all subjects and/or both hemispheres, most likely because of the placement of the coils and signal coverage. For this reason, we excluded these regions from further analysis.

To validate the region of interest (ROI)-based analytical approach, we calculated the amount of variance explained by the four adaptation and release conditions for each face-selective region. For the purposes of this analysis, object-selective voxels were also defined for each subject using a contrast between the activation evoked by the object condition in the independent localizer experiment compared with those evoked by all other visual stimuli. Object-selective voxels represent regions of IT cortex where the magnitude of the fMRI signal was not expected to vary systematically across the experimental conditions. For all three subjects, the average R² value for face-selective voxels (subject K, mean = 0.06; subject F, mean = 0.07; subject J, mean = 0.19) was higher than the average R² value for the object-selective voxels (subject K, mean = 0.03; subject F, mean = 0.02; subject J, mean = 0.08). Also, a greater proportion of face-selective voxels had an R² value that was ≥0.05 (subject K, 51.68%; subject F, 57.4%; subject J, 95%) than object-selective voxels (subject K, 25.44%; subject F, 17.09%; subject J, 58%). Collectively, these observations indicate that the effects of adaptation were greater for face-selective voxels than for neighboring object-selective voxels in IT cortex.

To distinguish the six cortical face-selective ROIs, we drew spheres with a 3 mm radius around the peak activations in the localizer data. This has proven to be an effective method of isolating separate face-selective regions (Dubois et al., 2015) that is not dependent on setting an arbitrary statistical threshold. To define the amygdala ROI, we drew spherical regions of interest centered on the peak activations (using the same contrast as the cortical face-selective ROIs; i.e., faces > nonface categories) within the anatomic boundary of the amygdala in the left and right hemispheres. To define early visual cortex (EVC), we drew spherical regions of interest centered on the peak activations resulting from the contrast between the two scrambled conditions and baseline activity (peak activations identified after applying the statistical threshold, p = 3 × 10⁻¹³).
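The spherical ROI definition can be illustrated with a minimal sketch. It assumes the sphere is drawn on the 1.5 mm isotropic functional grid (the text does not say which grid was used), and the function names and the peak coordinates are hypothetical; this is not the authors' implementation.

```python
from itertools import product


def sphere_offsets(radius_mm=3.0, voxel_mm=1.5):
    """Return integer voxel offsets whose centers lie within radius_mm of a peak voxel."""
    reach = int(radius_mm // voxel_mm)  # furthest offset worth testing along each axis
    offsets = []
    for i, j, k in product(range(-reach, reach + 1), repeat=3):
        dist_mm = voxel_mm * (i * i + j * j + k * k) ** 0.5
        if dist_mm <= radius_mm:
            offsets.append((i, j, k))
    return offsets


def roi_voxels(peak, offsets):
    """Shift the offsets so that the sphere is centered on the peak voxel index."""
    px, py, pz = peak
    return [(px + i, py + j, pz + k) for i, j, k in offsets]


offsets = sphere_offsets()
roi = roi_voxels(peak=(30, 20, 15), offsets=offsets)  # hypothetical peak location
```

Because every ROI built this way has the same size regardless of how strongly the region responds, no statistical threshold is needed to set its extent.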

Experimental stimuli and design

The factorial design included the following two conditions: (1) a condition where both the expression and head orientation of the avatar continually changed (termed “both changed”); and (2) a condition where the expression and head orientation of the avatar repeated (termed “both repeated”). We reasoned that if a face patch is sensitive to changeable attributes in a face while identity persists, then the magnitude of the response when both signals changed would be greater than when both signals repeated. We also included the following two cross-adaptation conditions: (1) the expression changed, while head orientation repeated (termed “expression changed”); and (2) the expression repeated, while the head orientation changed (termed “orientation changed”). These two cross-adaptation conditions were of particular interest because if a face-selective region were sensitive to the local movements among features that define changes in expression, regardless of head orientation, then the fMRI signal would be greater in the expression-changed condition than in the orientation-changed condition. Alternatively, if a face-selective region were sensitive to changes in the direction a head is turned, while tolerating changes in expression, then the fMRI response would be greater in the orientation-changed condition than in the expression-changed condition.

Figure 1a illustrates examples of the rhesus macaque face avatar used for the experiment (Murphy and Leopold, 2019). The avatar offered the ability to vary facial expression (five levels: lip smack, neutral, open mouth yawn, open mouth threat, and fear grin) and head orientation [five levels: −60° (right), −30° (right), 0° (direct), +30° (left), and +60° (left)] independently of each other while keeping the identity constant. We generated all 25 unique faces under eight different lighting conditions (i.e., a virtual lamp was rotated around the face, casting different shadows) to introduce low-level variations in the stimulus set. We also presented the stimuli at two different retinal sizes (height, 12° or 15° of visual angle). Together, these combinations resulted in 400 uniquely rendered avatar images (five expressions × five orientations × eight lightings × two visual angles), all of which portrayed the same individual. Critical to our approach here, all four experimental conditions involved presenting the subjects with the same 400 rendered images of the macaque avatar. The only difference between the conditions in terms of visual input was how the 400 images were sorted into blocks.
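As a quick check on the stimulus count, the full set can be enumerated as the Cartesian product of the four manipulated factors. The dictionary keys and the lighting indices are placeholder labels introduced here for illustration.

```python
from itertools import product

EXPRESSIONS = ["lip smack", "neutral", "open mouth yawn", "open mouth threat", "fear grin"]
ORIENTATIONS_DEG = [-60, -30, 0, 30, 60]
LIGHTINGS = range(8)      # index of the virtual lamp position (placeholder labels)
HEIGHTS_DEG = [12, 15]    # retinal size in degrees of visual angle

# One entry per rendered image of the avatar
stimuli = [
    {"expression": e, "orientation_deg": o, "lighting": light, "height_deg": h}
    for e, o, light, h in product(EXPRESSIONS, ORIENTATIONS_DEG, LIGHTINGS, HEIGHTS_DEG)
]

# The 25 unique faces are the expression x orientation combinations
unique_faces = {(s["expression"], s["orientation_deg"]) for s in stimuli}
```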

The fMRI adaptation experiment took advantage of a high-powered on/off block design. Each of the four experimental conditions was composed of 25 unique blocks; these were divided equally across five runs. The allocation of blocks across runs was carefully counterbalanced to ensure that each run was approximately equal in terms of the number of arousing stimuli. For example, in the both-repeated condition, each of the five runs had a block representing each level of expression and head orientation. In the orientation-changed condition, however, the same 400 rendered images of the macaque avatar were organized across blocks according to expression (i.e., each run had a lip smack, neutral, yawn, threat, and fear grin block) but not head orientation because orientation changed with each presentation of the avatar. In the expression-changed condition, the opposite was true: stimuli were organized by head orientation (i.e., −60°, −30°, 0°, +30°, +60°), but expression changed with each presentation of the avatar. In fact, the only condition where the blocks were not organized according to stimulus expression and/or head orientation was the both-changed condition. Even so, the stimuli were pseudorandomized so that each run had the same number of stimuli drawn from each level of expression and head orientation.
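The way the same 400 images are re-sorted into blocks for each condition can be sketched as follows. This is a simplified illustration: a plain shuffle stands in for the authors' pseudorandomization, so it does not guarantee that the changing attribute differs on every consecutive presentation, and it does not reproduce the counterbalancing of blocks across runs. All names are hypothetical.

```python
import random
from collections import defaultdict
from itertools import product

EXPRESSIONS = ["lip smack", "neutral", "yawn", "threat", "fear grin"]
ORIENTATIONS = [-60, -30, 0, 30, 60]
# Each image is (expression, orientation, lighting index, height in degrees)
IMAGES = list(product(EXPRESSIONS, ORIENTATIONS, range(8), (12, 15)))
BLOCK_SIZE = 16


def chunk(images, rng):
    """Shuffle a group of images and cut it into blocks of BLOCK_SIZE."""
    images = list(images)
    rng.shuffle(images)
    return [images[i:i + BLOCK_SIZE] for i in range(0, len(images), BLOCK_SIZE)]


def make_blocks(condition, seed=0):
    """Sort the 400 images into 25 blocks according to the adaptation condition."""
    rng = random.Random(seed)
    if condition == "both_changed":
        return chunk(IMAGES, rng)  # no attribute is held constant within a block
    # The attribute(s) held constant within a block define the grouping key
    key = {
        "both_repeated": lambda im: (im[0], im[1]),
        "orientation_changed": lambda im: im[0],  # expression repeats
        "expression_changed": lambda im: im[1],   # orientation repeats
    }[condition]
    groups = defaultdict(list)
    for im in IMAGES:
        groups[key(im)].append(im)
    blocks = []
    for members in groups.values():
        blocks.extend(chunk(members, rng))
    return blocks
```

Every condition yields 25 blocks of 16 images drawn from the identical image set, so the conditions differ only in which attribute is constant within a block.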

Every run began with two dummy pulses, and then 4.4 s of fixation before the onset of the experiment. After this initial fixation period, the subjects were presented with the 16 stimuli in the first block, one at a time. Stimulus presentation was 500 ms with an ISI of 600 ms. Therefore, each block lasted for 17.6 s. A complete run was composed of five stimulation blocks and five interleaved fixation blocks. The purpose of fixation blocks was to allow the hemodynamic response to return to baseline. Fixation blocks had the same duration as stimulation blocks (i.e., 17.6 s). Therefore, each run took just over 3 min (i.e., 180.4 s), during which we collected 82 volumes of data.
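The timing figures above follow from simple arithmetic, reproduced here in integer milliseconds to avoid rounding issues. The constant names are introduced for illustration; the TR of 2.2 s is taken from the data acquisition section.

```python
STIM_MS = 500               # stimulus presentation
ISI_MS = 600                # interstimulus interval
STIMULI_PER_BLOCK = 16
STIM_BLOCKS = 5
FIX_BLOCKS = 5
INITIAL_FIXATION_MS = 4400
TR_MS = 2200

block_ms = STIMULI_PER_BLOCK * (STIM_MS + ISI_MS)
run_ms = INITIAL_FIXATION_MS + (STIM_BLOCKS + FIX_BLOCKS) * block_ms
volumes, remainder_ms = divmod(run_ms, TR_MS)

print(block_ms / 1000, run_ms / 1000, volumes)  # 17.6 180.4 82
```

The run length divides evenly by the TR, which is why exactly 82 volumes are collected per run.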

The runs were completed in a unique order for each subject. Just as in the localizer task, the task during a run was to fixate the central red dot. The subjects had to fixate within the fixation window for at least 60% of the run time for the data to be included in the analysis. Subject K completed 55 runs over four test sessions. Three of these were excluded because the total percentage of fixation time was too low. This left 52 valid runs in the analysis with an average fixation time of 89.6%. Subject F completed 78 runs in total across four test sessions. No runs were removed based on the fixation criterion, and the average fixation time was 92.4%. Subject J completed 54 runs across two test sessions; 2 runs were removed from the analysis. Subject J had an average fixation time of 84% across valid runs.

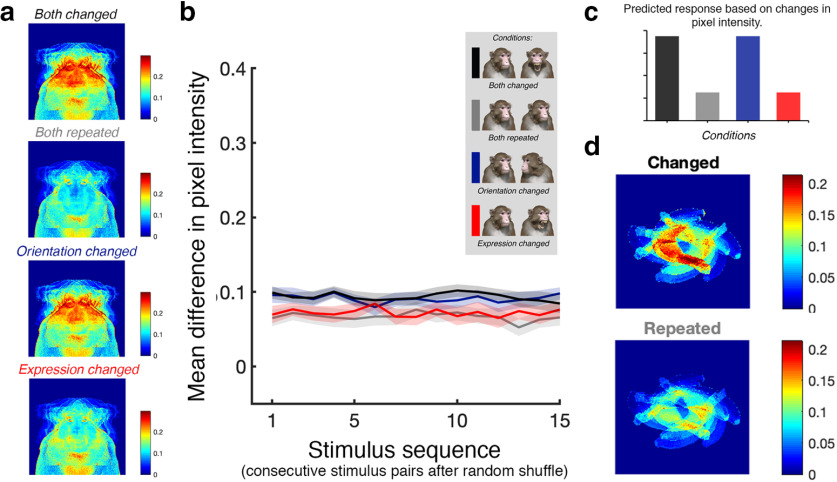

Image analysis and face specificity experiment

In the main experiment, changing the expression of a face meant manipulating the internal features of the avatar (e.g., the presence of teeth and the location of the brow relative to the eyes), whereas changing the head orientation meant rotating the avatar in depth and thus manipulating the external contour of the avatar. Therefore, it was likely that the different sequences of stimuli across conditions generated different amounts of variance in terms of pixel intensity. To quantify this variance, we first randomized the stimuli within each block (there were 25 unique blocks/condition) and measured the average of the absolute pixel intensity differences between each pair of consecutive stimuli. The average differences at the level of the pixel are provided in Figure 2a. Further, for each condition, the average difference in pixel intensity across consecutive stimulus pairs was computed (Fig. 2b). The results of this analysis indicate that if a region of interest were sensitive to stimulus change (rather than facial attributes per se), it would respond more to the both-changed and orientation-changed conditions when compared with the both-repeated and expression-changed conditions (Fig. 2c). Since this is the same pattern of results expected for regions of interest with an increased sensitivity to head orientation, we designed a second adaptation experiment, hereafter referred to as the face specificity experiment, using a three-dimensional nonface stimulus: a banana. The purpose of this experiment was to determine whether changes in the orientation of a banana release the fMRI signal in the face patches in the same way as changes in the orientation of a head. In other words, the results would confirm whether the face patches have a general sensitivity to rigid movement or whether they are specifically tuned to rigid movements of the head.
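The pixel-level measure described above can be sketched as the mean absolute intensity difference between consecutive images in a block. The toy images and function names below are hypothetical, and images are represented as flattened lists of grayscale values for simplicity; the authors' own scripts may differ.

```python
from statistics import fmean


def mean_abs_diff(img_a, img_b):
    """Mean absolute intensity difference between two equally sized grayscale images."""
    if len(img_a) != len(img_b):
        raise ValueError("images must have the same number of pixels")
    return fmean(abs(a - b) for a, b in zip(img_a, img_b))


def sequence_change(block):
    """Mean absolute difference for each pair of consecutive images in a block."""
    return [mean_abs_diff(a, b) for a, b in zip(block, block[1:])]


# Toy 2 x 2 images flattened to lists (hypothetical intensities, 0-255)
repeated_block = [[10, 10, 200, 200], [10, 12, 200, 198], [10, 10, 200, 200]]
changed_block = [[10, 10, 200, 200], [200, 200, 10, 10], [10, 200, 10, 200]]

repeated_change = fmean(sequence_change(repeated_block))
changed_change = fmean(sequence_change(changed_block))
```

A region driven purely by image change would be expected to track this quantity, which is the confound the face specificity experiment was designed to address.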

Figure 2.

The impact of image sequence on changes in pixel intensity. a, Mean change in intensity for every pixel across conditions in the main adaptation experiment. Top-to-bottom, Both-changed, both-repeated, orientation-changed, and expression-changed conditions. b, Mean difference in pixel intensity as a function of stimulus pair in a random sequence. Lines represent different adaptation conditions. Black and blue lines, Mean difference in the both-changed and orientation-changed conditions, respectively; gray and red lines, mean difference in the both-repeated and expression-changed conditions, respectively. c, The predicted pattern of activation across adaptation conditions in regions of interest that are sensitive to image change. d, Mean change in intensity for every pixel across conditions in the face specificity adaptation experiment. Top, The banana-changed condition; bottom, the banana-repeated condition.

We rotated the banana in space to generate five levels of orientation. As with the face avatar, we used eight different lighting conditions and two retinal sizes to introduce low-level variation into the stimulus set. Thus, in total there were 80 unique banana stimuli (five orientations × eight lightings × two visual angles) presented in two experimental conditions. In the banana-changed condition, the stimuli were sorted into five unique blocks (16 stimuli/block) at random. In the banana-repeated condition, the stimuli were sorted into five unique blocks (16 stimuli/block) based on orientation. Other experimental details, including the timing parameters, were identical to those used in the main adaptation experiment. We confirmed that average pixel intensity changes were greater for the banana-changed condition compared with the banana-repeated condition, as was the case in the main experiment (Fig. 2d).

Because of subject availability, we were only able to test two of the original subjects (subjects F and K), and data collection took place >3 months after the completion of the main adaptation experiment. The subjects' task during a run was to fixate the central red dot, and the same fixation criterion (i.e., fixation successfully maintained for >60% of the run) was used to determine which runs were included in the final analysis. Subject K completed 27 runs over three sessions, of which 4 runs were excluded because they did not meet the fixation criterion, leaving 23 valid runs in the analysis with an average fixation time of 94.3%. Subject F completed 26 runs across three sessions, of which only 2 runs were removed from the analysis on the basis of the fixation criterion, resulting in an average fixation performance of 86.62% during the included runs.

Statistical analyses

To facilitate cortical surface alignments, we acquired high-resolution T1-weighted whole-brain anatomic scans in a 4.7 T Bruker scanner with an MDEFT sequence. Imaging parameters were as follows: voxel size: 0.5 × 0.5 × 0.5 mm; TE: 4.9 ms; TR: 13.6 ms; and flip angle: 14°.

All EPI data were analyzed using AFNI software (http://afni.nimh.nih.gov/afni; Cox, 1996). Raw images were first converted from Bruker into AFNI data file format. The data collected in each session were first corrected for static magnetic field inhomogeneities using the PLACE algorithm (Xiang and Ye, 2007). The time series data were then slice time corrected and realigned to the last volume of the last run. All the data for a given subject were registered to the corresponding high-resolution template for that subject, allowing for the combination of data across multiple sessions. The first two volumes of data in each EPI sequence were disregarded. The volume-registered data were then despiked and spatially smoothed with a 3 mm Gaussian kernel.

We fitted a general linear model by ordinary least-squares regression in which the four regressors of interest (both repeated, both changed, orientation changed, and expression changed) were convolved with the hemodynamic response function for MION exposure (executed using the AFNI function “3dDeconvolve” with “MIONN” as the response function). The regressors of no interest included in the model were six motion regressors (movement parameters obtained from the volume registration) and AFNI baseline estimates and signal drifts (linear and quadratic). Thus, each voxel had four parameter estimates corresponding to the four regressors of interest.
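The structure of such a regression can be illustrated with a small NumPy sketch on synthetic data. It is not a substitute for 3dDeconvolve: the response kernel is a toy exponential rather than AFNI's MION model (and ignores the negative sign of the MION signal), motion regressors are omitted, drift terms are fitted globally rather than per run, and one run per condition is simply concatenated. All names and β values are hypothetical.

```python
import numpy as np

TR_S = 2.2
VOLS_PER_RUN = 82
VOLS_PER_BLOCK = 8  # 17.6 s block / 2.2 s TR
CONDITIONS = ["both_repeated", "both_changed", "orientation_changed", "expression_changed"]


def run_boxcar():
    """1 during the five stimulation blocks of a run, 0 during fixation."""
    box = np.zeros(VOLS_PER_RUN)
    for b in range(5):
        start = 2 + b * 2 * VOLS_PER_BLOCK  # 2 volumes of initial fixation
        box[start:start + VOLS_PER_BLOCK] = 1.0
    return box


def toy_response_kernel(n_vols=12, tau_s=8.0):
    """Placeholder impulse response (assumption, not the MION model)."""
    t = np.arange(n_vols) * TR_S
    kernel = np.exp(-t / tau_s)
    return kernel / kernel.sum()


def design_matrix():
    """Four condition regressors plus constant, linear, and quadratic drift terms."""
    n = VOLS_PER_RUN * len(CONDITIONS)
    convolved = np.convolve(run_boxcar(), toy_response_kernel())[:VOLS_PER_RUN]
    columns = []
    for i in range(len(CONDITIONS)):
        reg = np.zeros(n)
        reg[i * VOLS_PER_RUN:(i + 1) * VOLS_PER_RUN] = convolved
        columns.append(reg)
    t = np.linspace(-1.0, 1.0, n)
    columns += [np.ones(n), t, t ** 2]
    return np.column_stack(columns)


X = design_matrix()
true_beta = np.array([0.5, 1.5, 1.2, 0.8, 100.0, 0.3, -0.2])  # synthetic values
y = X @ true_beta                                  # noise-free synthetic voxel
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)   # ordinary least squares
```

The first four entries of `beta_hat` correspond to the four parameter estimates per voxel described in the text.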

When pooling data across subjects for Figure 3, we wanted to ensure that the visualizations were not influenced by differences across subjects in terms of coil placement or small numbers of voxels with extreme β-coefficients, and, thus, we normalized the data within each ROI using the min-max method. For the same reasons, when we compared data across regions in Figure 3, we generated adaptation index values based on β-coefficients. All statistical comparisons between the ROIs were performed using custom scripts written in MATLAB version R2018b (MathWorks). The data from the face specificity experiment were preprocessed in the same way and convolved using the same AFNI function (i.e., 3dDeconvolve). In this experiment, however, there were only two regressors of interest (banana changed and banana repeated). Parameter estimates for every voxel analyzed as part of this study are available in Extended Data Figure 3-1.
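Min-max normalization rescales a set of values to the range 0 to 1. A minimal sketch follows; the β values are hypothetical, and the exact set of values pooled within each ROI is not specified in the text.

```python
def min_max_normalize(values):
    """Rescale values linearly so that the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max normalization is undefined when all values are equal")
    return [(v - lo) / (hi - lo) for v in values]


roi_betas = [0.4, 1.2, 0.9, 2.0]  # hypothetical β-coefficients from one ROI
normalized = min_max_normalize(roi_betas)
```

Because the output depends only on the relative position of each value between the extremes, overall gain differences between subjects (for example, from coil placement) do not carry over into the pooled plot.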

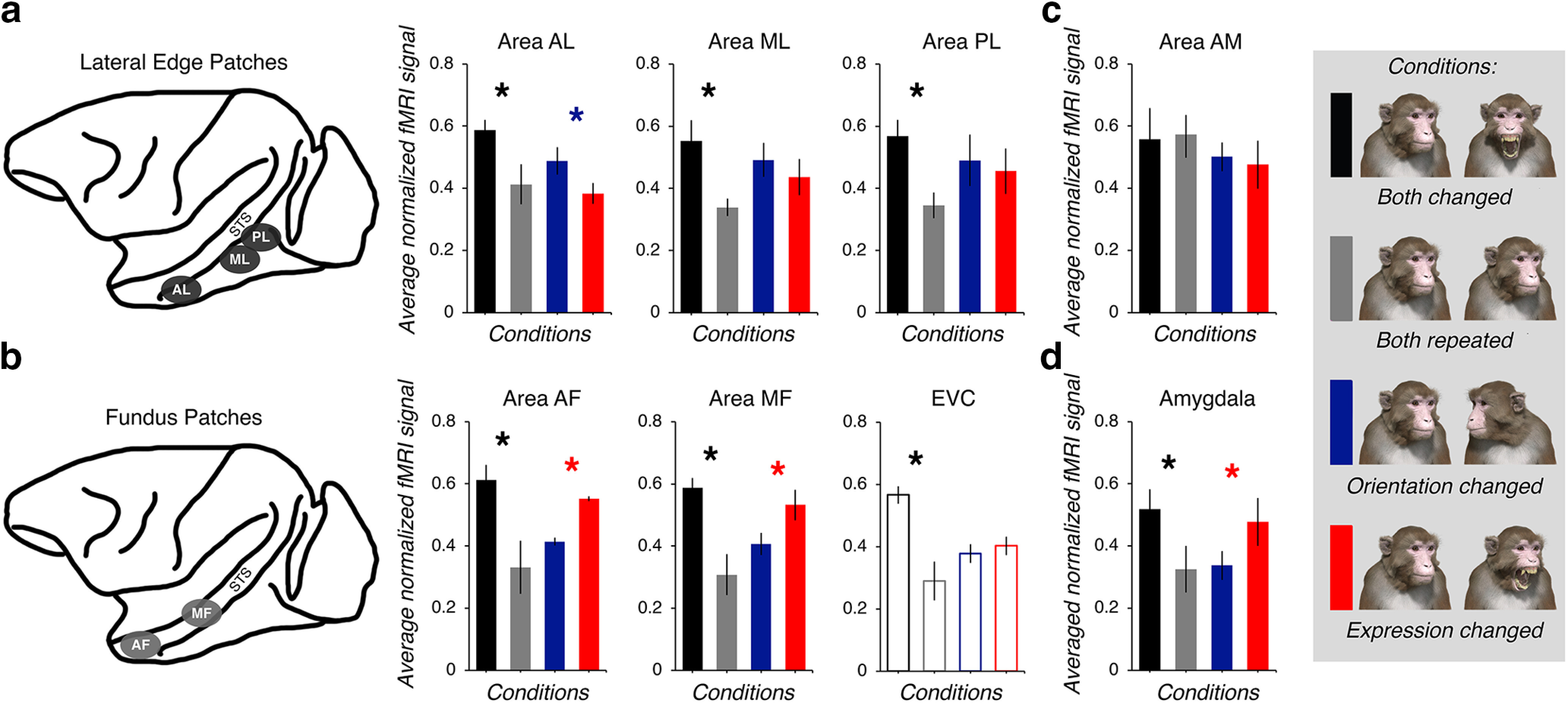

Figure 3.

The functional dissociation between the lateral edge patches and fundus patches. a, The average effect of adaptation on the lateral edge patches displayed as three bar graphs (from left to right; areas AL, ML, and PL). The color of each bar reflects the condition (the legend is positioned on the far right). A black asterisk indicates that there was a significant difference between the both-changed and both-repeated conditions in a direction consistent with adaptation to changeable attributes found for all three subjects. A blue asterisk indicates that, in all three subjects, there was a significant difference between the orientation-changed and expression-changed conditions in a direction consistent with a greater sensitivity to head orientation than expression. In contrast, a red asterisk indicates that, in all three subjects, there was a significant difference between the orientation-changed and expression-changed conditions, but the direction of the difference is consistent with a greater sensitivity to expression than head orientation. Error bars reflect ±1 SEM. b, The average effects of adaptation on the fundus patches and EVC. The EVC bars are not filled in, to reflect the fact that this region is not face selective. Otherwise, same conventions as in a. c, The average effects of adaptation on area AM on the ventral surface of the brain. Same conventions as in a. d, The average effects of adaptation on the amygdala. Same conventions as in a. All data are available as an extended dataset (Extended Data Fig. 3-1).

Results

The main effects of adaptation in the macaque brain

When we averaged the normalized data across subjects, we found a clear dissociation between the lateral edge patches (Fig. 3a) and the fundus patches (Fig. 3b). The fMRI signal across the four adaptation conditions was evaluated separately for each subject using a mixed-design 4 × 8 ANOVA with adaptation condition as the repeated factor and ROI as the group factor (with the Greenhouse–Geisser correction for violations of sphericity for the repeated factors). The analysis of the data for subject K revealed a significant main effect of adaptation condition (F(2.488,1159.263) = 483.4, p < 0.001, ηp² = 0.51) and ROI (F(7,466) = 37.05, p < 0.001, ηp² = 0.36), and a significant interaction effect between adaptation condition and ROI (F(17.414,1159.263) = 18.1, p < 0.001, ηp² = 0.22). The same pattern of results was also observed for subject F (condition: F(1.976,869.443) = 148.43, p < 0.001, ηp² = 0.25; ROI: F(7,440) = 164.79, p < 0.001, ηp² = 0.72; condition × ROI: F(13.832,869.443) = 19.12, p < 0.001, ηp² = 0.23) and subject J (condition: F(2.701,1263.891) = 264.45, p < 0.001, ηp² = 0.36; ROI: F(7,468) = 92.93, p < 0.001, ηp² = 0.32; condition × ROI: F(18.904,1263.891) = 39.74, p < 0.001, ηp² = 0.37).

Extended Data Figure 3-1. Raw parameter estimates for voxels included in the overall analysis (delimited by both subject and region of interest).

Following the significant interaction effects, we performed paired t tests on the data from each subject to determine whether, as predicted, the fMRI signal was greater in any of the eight ROIs when both the expression and head orientation of the avatar changed compared with when both the expression and head orientation of the avatar repeated. These were adjusted for multiple comparisons using the Bonferroni rule (α/16; Table 1). The results indicated that the fMRI response from seven of the eight ROIs was reliably released from adaptation when facial attributes (i.e., the expression and head orientation) changed. Area AM was the only exception; an adaptation effect was only induced in one subject (Table 1).
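The Bonferroni rule above can be expressed as a short check. The sketch assumes a familywise α of 0.05, which the text does not state (it gives only α/16), and it assumes that a comparison counts as evidence of adaptation only when the difference is also in the predicted direction (changed > repeated). The input values are hypothetical.

```python
ALPHA = 0.05         # assumed familywise alpha; the text gives only "α/16"
N_COMPARISONS = 16
threshold = ALPHA / N_COMPARISONS  # per-comparison criterion


def shows_adaptation(changed_minus_repeated, p_value):
    """Significant after Bonferroni correction and in the predicted direction."""
    return p_value < threshold and changed_minus_repeated > 0


# Hypothetical (difference, p value) pairs
examples = {
    "strong release": (0.40, 0.0004),
    "not significant": (0.05, 0.02),
    "wrong direction": (-0.12, 0.001),
}
flags = {name: shows_adaptation(diff, p) for name, (diff, p) in examples.items()}
```

Under these assumptions the per-comparison criterion is 0.003125, so a p value of 0.02 would not survive correction, and a significant difference in the opposite direction would not be counted as adaptation.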

Table 1.

Difference between the both-changed and both-repeated conditions as a function of ROI

| Subject | AL: changed – repeated | AL: observed p value | AL: Sig | ML: changed – repeated | ML: observed p value | ML: Sig | PL: changed – repeated | PL: observed p value | PL: Sig | AM: changed – repeated | AM: observed p value | AM: Sig |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Subject K | 1.218 | <0.001 | * | 1.110 | <0.001 | * | 1.690 | <0.001 | * | 0.031 | 0.734 | |
| Subject F | 0.623 | <0.001 | * | 0.538 | <0.001 | * | 0.409 | <0.001 | * | 0.044 | 0.253 | |
| Subject J | 0.230 | <0.001 | * | 0.379 | <0.001 | * | 0.342 | <0.001 | * | −0.120 | 0.001 | |

| Subject | AF: changed – repeated | AF: observed p value | AF: Sig | MF: changed – repeated | MF: observed p value | MF: Sig | EVC: changed – repeated | EVC: observed p value | EVC: Sig | Amyg: changed – repeated | Amyg: observed p value | Amyg: Sig |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Subject K | 1.213 | <0.001 | * | 1.583 | <0.001 | * | 1.139 | <0.001 | * | 0.957 | <0.001 | * |
| Subject F | 0.446 | <0.001 | * | 0.559 | <0.001 | * | 1.340 | <0.001 | * | 0.405 | <0.001 | * |
| Subject J | 0.240 | <0.001 | * | 0.495 | <0.001 | * | 0.114 | <0.001 | * | 0.230 | <0.001 | * |

Sig, Significance.

*Evidence of adaptation to changeable aspects of the face of the avatar.
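The Bonferroni adjustment described above can be illustrated with a minimal sketch. The family-wise α of 0.05 is an assumption (the text gives only the divisor, α/16), the function name `bonferroni_flags` is hypothetical, and the p values are placeholders rather than values from this study.

```python
def bonferroni_flags(p_values, alpha=0.05, n_comparisons=16):
    """Return the adjusted threshold and one True/False flag per p value.

    alpha=0.05 is an assumed family-wise error rate; the divisor of 16
    follows the alpha/16 rule quoted in the text.
    """
    threshold = alpha / n_comparisons
    flags = [p < threshold for p in p_values]
    return threshold, flags


# Placeholder p values for illustration only (not data from the study).
example_p_values = [0.0004, 0.734, 0.002, 0.253]
threshold, flags = bonferroni_flags(example_p_values)
```

With these assumptions, a contrast is only flagged when its observed p value falls below 0.05/16, which is a stricter criterion than the uncorrected 0.05.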

The planned comparisons between the orientation-changed and expression-changed conditions revealed a double dissociation among the face-selective ROIs. We found that areas AF and MF, both located in the fundus of the STS, showed greater activation when expression changed relative to when orientation changed (Fig. 3b, Table 2), consistent with these areas being sensitive to changes in expression across different head orientations. This was also the case in the amygdala (Fig. 3d, Table 2). In contrast, the fMRI responses from areas AL, ML, and PL were markedly different. In all three subjects, area AL showed greater activation when orientation changed than when expression changed (Fig. 3a), consistent with this area being more sensitive to head turns across different expressions. Although the same pattern of activation was observed in areas ML and PL, it was only significant in one subject (Table 2). There was no evidence that area AM responded systematically to these two conditions (i.e., none of the contrasts comparing the orientation-changed and expression-changed conditions were significant; Fig. 3c, Table 2). In the EVC region of interest, there was also no evidence of a difference between the orientation-changed and expression-changed conditions (Fig. 3b, Table 2). To interpret the response of EVC to these large complex visual stimuli, more would need to be understood about the impact of surround suppression during adaptation paradigms in primary visual cortex where receptive field sizes are smaller than in IT cortex (Larsson et al., 2016; Vogels, 2016). Thus, we excluded the EVC region of interest from further analyses.

Table 2.

Difference between the expression-changed and orientation-changed conditions as a function of ROI

| | Area AL | | | Area ML | | | Area PL | | | Area AM | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Expression − orientation | Observed p value | Sig | Expression − orientation | Observed p value | Sig | Expression − orientation | Observed p value | Sig | Expression − orientation | Observed p value | Sig |
| Subject K | −0.352 | <0.001 | * | −0.039 | 0.472 | | −0.107 | 0.040 | | 0.026 | 0.626 | |
| Subject F | −0.409 | <0.001 | * | −0.377 | <0.001 | * | −0.133 | <0.001 | * | 0.012 | 0.826 | |
| Subject J | −0.359 | <0.001 | * | −0.088 | 0.020 | | −0.067 | 0.072 | | 0.090 | 0.016 | |
| | Area AF | | | Area MF | | | EVC | | | Amyg | | |
| | Expression − orientation | Observed p value | Sig | Expression − orientation | Observed p value | Sig | Expression − orientation | Observed p value | Sig | Expression − orientation | Observed p value | Sig |
| Subject K | 0.330 | <0.001 | * | 0.568 | <0.001 | * | 0.085 | 0.214 | | 0.441 | <0.001 | * |
| Subject F | 0.301 | <0.001 | * | 0.173 | <0.001 | * | 0.003 | 0.965 | | 0.349 | <0.001 | * |
| Subject J | 0.272 | <0.001 | * | 0.520 | <0.001 | * | 0.064 | 0.085 | | 0.359 | <0.001 | * |

Sig, Significance.

*Negative difference: more sensitive to changes in head orientation than changes in facial expression.

*Positive difference: more sensitive to changes in expression than changes in head orientation.

The impact of head orientation and facial expression

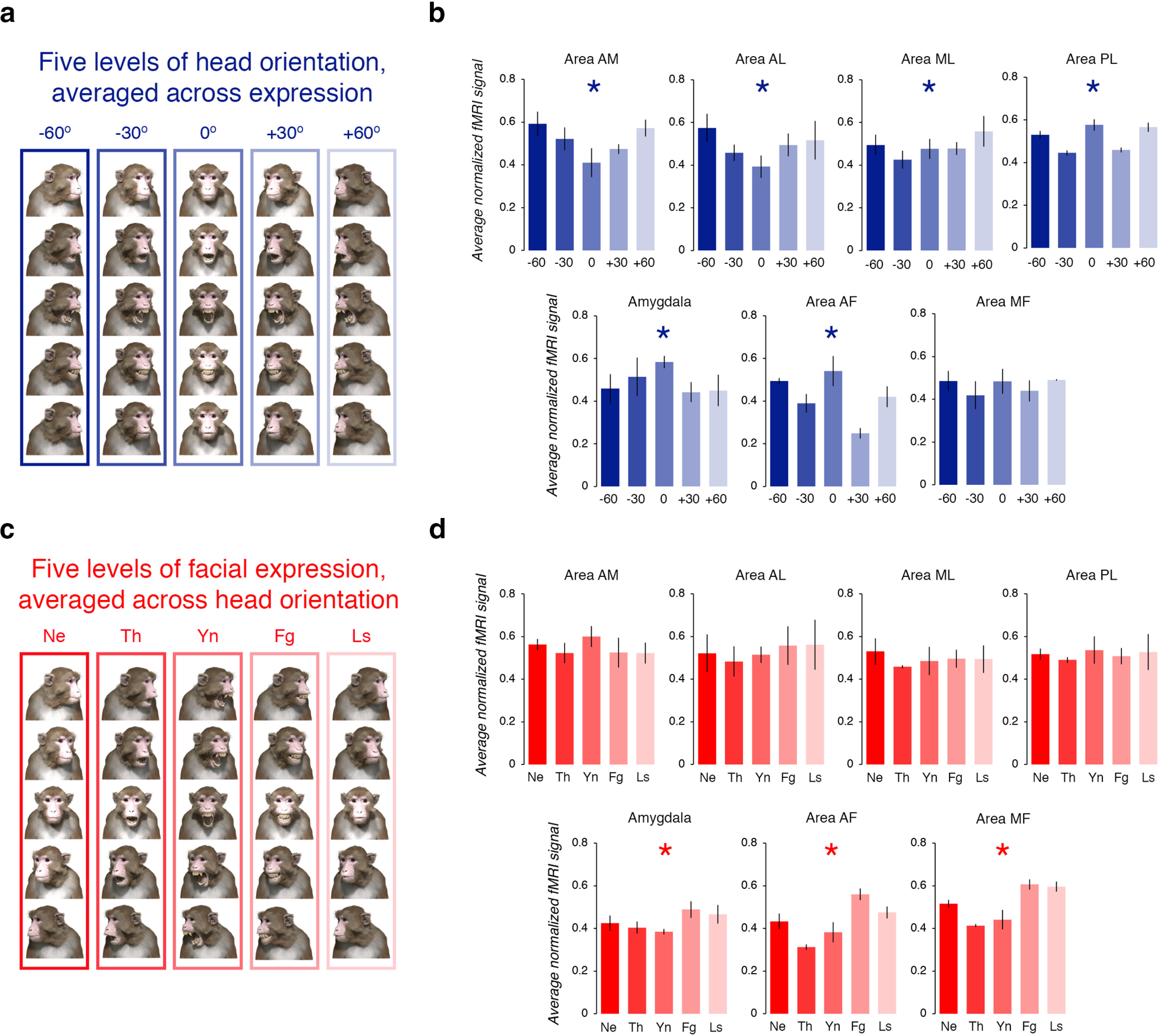

To determine whether the ROIs were tuned to head orientation, we reexamined the activity in response to the both-repeated condition. Each run in this condition was composed of five stimulus blocks, one block for each of the five levels of head orientation (−60°, −30°, 0°, +30°, +60°; Fig. 4a). Thus, we convolved the hemodynamic response function for MION exposure using the levels of head orientation as the five regressors of interest. The regressors of no interest were the baseline estimates, and the six movement parameters obtained from the volume registration using AFNI. The average fMRI signal across all five head orientation conditions is provided for each of the seven ROIs in Figure 4b.
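As a rough illustration of how one regressor per head orientation can be built, the sketch below convolves a block (boxcar) time course with an impulse response. The decaying exponential kernel is only a placeholder and is not the MION response function used in the study; the run length, block length, and block onsets are likewise hypothetical.

```python
import math


def boxcar(n_volumes, onset, duration):
    """1.0 while the block is presented, 0.0 otherwise (one value per volume)."""
    return [1.0 if onset <= t < onset + duration else 0.0 for t in range(n_volumes)]


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for t, s in enumerate(signal):
        if s == 0.0:
            continue
        for lag, k in enumerate(kernel):
            if t + lag < len(out):
                out[t + lag] += s * k
    return out


# Placeholder impulse response (assumption): decaying exponential scaled to sum to 1.
raw_kernel = [math.exp(-lag / 4.0) for lag in range(12)]
kernel = [k / sum(raw_kernel) for k in raw_kernel]

# Hypothetical run layout: 60 volumes, one 8-volume block per head orientation.
orientations = [-60, -30, 0, 30, 60]
n_volumes, block_length = 60, 8
regressors = {}
for i, angle in enumerate(orientations):
    onset = 5 + 11 * i
    regressors[angle] = convolve(boxcar(n_volumes, onset, block_length), kernel)
```

Each entry of `regressors` would then serve as one regressor of interest in the general linear model, alongside the regressors of no interest.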

Figure 4.

The impact of head orientation and facial expression on the fMRI signal in the both-repeated condition. a, Examples of the visual stimuli in each of the five levels of head orientation. b, Normalized fMRI signal, averaged across subjects, with separate bar graphs for each region of interest (top row, from left to right, areas AM, AL, ML, and PL; bottom row, from left to right, amygdala, area AF and area MF). The color of each bar reflects the condition (see a for legend). Blue asterisk indicates that, for all three subjects, there was a significant difference in the response to the zero orientation (0°) condition compared with the response in all other orientation conditions. Error bars reflect ± SEM. c, Examples of the visual stimuli in each of the five levels of facial expression. d, Normalized fMRI signal, averaged across subjects, with separate bar graphs for each region of interest (top row, from left to right, areas AM, AL, ML, and PL; bottom row, from left to right, amygdala, area AF, and area MF). The color of each bar reflects the condition (see c for legend). Red asterisk indicates that, for all three subjects, there was a significant difference in the response to the Ne condition compared with the response to all other expression conditions. Error bars reflect ± SEM.

To test whether the fMRI signal differed across the head orientation conditions, we evaluated the data of each subject using a mixed-design 5 × 7 ANOVA with head orientation as the repeated factor and ROI as the between factor. We used the Greenhouse–Geisser correction for violations of sphericity. These analyses revealed that, for all three subjects, there was a significant interaction between head orientation and ROI (subject K: F(3.517,1452.69) = 25.001, p < 0.001, ηp2 = 0.27; subject F: F(19.668,1258.778) = 22.15, p < 0.001, ηp2 = 0.26; subject J: F(19.972,1358.109) = 22.15, p < 0.001, ηp2 = 0.37), suggesting that the response across the head orientation conditions depended on the ROI. As a follow-up test, for each subject we compared the response evoked by the four conditions where the head was rotated away from zero (i.e., −60°, −30°, +30°, and +60°) to the response evoked by the one condition where the head was frontward facing (i.e., 0°) using a custom linear contrast. This contrast was significant across all subjects in six of the seven ROIs (all p values <0.001; Fig. 4b). These results indicate that, unlike the other ROIs, area MF (subject K, p = 0.05; subject F, p = 0.09; subject J, p = 0.07) did not respond differently to the face of the avatar when its head was rotated away from the direct, frontward-facing position.
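A linear contrast of this kind can be sketched as a weighted sum of condition means. The weights below (+1/4 for each rotated orientation, −1 for the frontal view) are one plausible choice and an assumption, since the exact weights are not reported; the condition means are placeholders.

```python
def linear_contrast(condition_means, weights):
    """Weighted sum of condition means; the weights must sum to zero."""
    if abs(sum(weights)) > 1e-9:
        raise ValueError("contrast weights must sum to zero")
    return sum(w * m for w, m in zip(weights, condition_means))


# Condition order: -60, -30, 0, +30, +60 degrees.
# Assumed weights: the four rotated views against the frontal (0 degree) view.
weights = [0.25, 0.25, -1.0, 0.25, 0.25]

# Placeholder condition means for one ROI (not data from the study).
example_means = [1.2, 1.1, 0.8, 1.0, 1.3]
contrast_value = linear_contrast(example_means, weights)
```

A positive value indicates a larger mean response to the rotated views than to the frontal view; the significance test on that value is not shown here.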

The both-repeated runs were also composed of five blocks that varied in terms of the facial expression of the avatar [neutral (Ne), threat (Th), yawn (Yn), fear grin (Fg), and lip smack (Ls); Fig. 4c]. Thus, we repeated the analytical procedure described above for head orientation, except this time we convolved the hemodynamic response function for MION exposure with the five levels of facial expression. The fMRI signal across the expression conditions was evaluated for each subject using a mixed-design 5 × 7 ANOVA with facial expression as the repeated factor and ROI as the between factor. Once again, the Greenhouse–Geisser correction was used to adjust for violations of sphericity. These analyses revealed that, for all three subjects, there was a significant interaction between facial expression and ROI (subject K: F(18.836,1296.566) = 9.59, p < 0.001, ηp2 = 0.12; subject F: F(20.554,1314.82) = 25.88, p < 0.001, ηp2 = 0.29; subject J: F(20.883,1420.039) = 45.54, p < 0.001, ηp2 = 0.4), suggesting that the response across the five levels of facial expression differed depending on the ROI. We compared the response evoked by the four conditions where the face of the avatar expressed emotion (i.e., threat, yawn, fear grin, and lip smack) to the response evoked by the neutral face condition using a custom linear contrast. This contrast was significant across all three subjects for only three of the seven ROIs (Fig. 4d). Overall, this analysis revealed that only the fundus patches (areas AF and MF; all p values <0.01) and the amygdala (all p values <0.001) responded differently to the face of the avatar when it expressed emotion compared with when it expressed no emotion; we note that these regions responded the most to the fearful and submissive expressions (Fig. 4d).
In contrast, we found no evidence that the lateral edge face patches (i.e., areas AL, ML, PL; all p values >0.06) or area AM (all p values >0.1) responded differently to the face of the avatar when it expressed emotion compared with when it expressed no emotion. These results are consistent with the notion that the fundus face patches and the amygdala are specialized for processing changes in facial expressions.

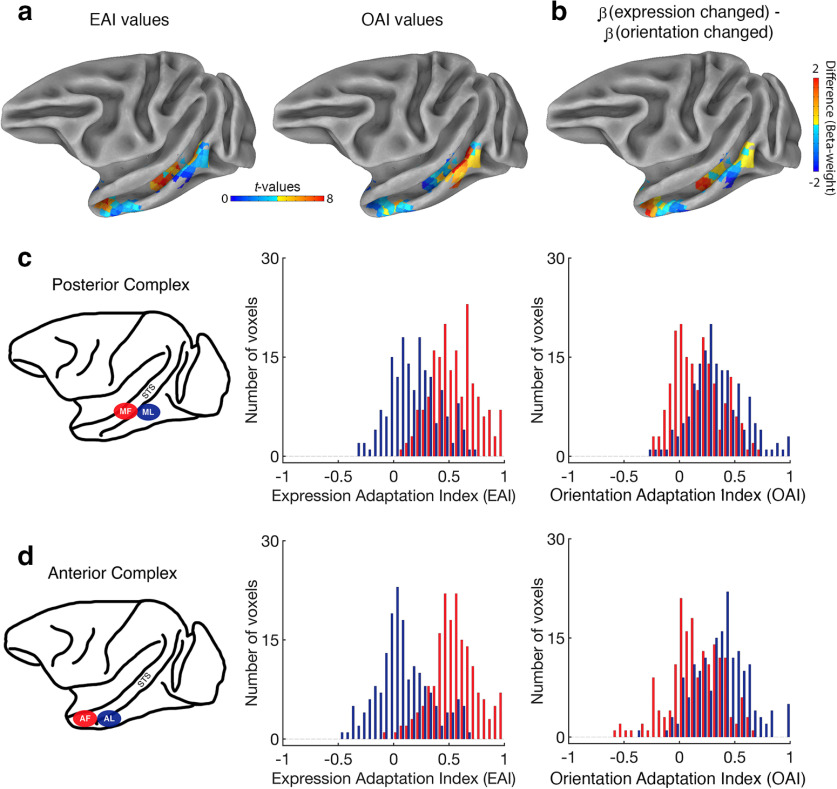

Double dissociation within the macaque STS

To compare the response profile of neighboring patches, we computed both an expression adaptation index (EAI) and a head orientation adaptation index (OAI) for each voxel. The EAI values were calculated by subtracting the sum of the two conditions where expression was repeated (both repeated and orientation changed) from the sum of the two conditions where expression varied (both changed and expression changed). Thus, a voxel with an EAI value significantly greater than zero responded more in the conditions where expression changed than in the conditions where expression repeated. Likewise, OAI values were calculated as follows: (both changed + orientation changed) − (both repeated + expression changed). Voxels with OAI values significantly greater than zero responded more in the conditions where head orientation changed than in the conditions where head orientation repeated. The advantage here is twofold: (1) both EAI and OAI values are calculated using the exact same data (i.e., all four conditions are entered into both formulas); and (2) the available power is maximized because we use all four conditions to construct each value. Figure 5a shows the EAI and OAI values for all face-selective voxels projected onto a partially inflated cortical surface (NIH macaque template; one hemisphere only; Fig. 5b, difference).
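The two index definitions above translate directly into code. The following sketch computes both indices for a single voxel from its four condition estimates; the function name and the example values are hypothetical.

```python
def adaptation_indices(both_repeated, expression_changed, orientation_changed, both_changed):
    """Return (EAI, OAI) for one voxel from its four condition estimates."""
    # EAI: conditions where expression varied minus conditions where it repeated.
    eai = (both_changed + expression_changed) - (both_repeated + orientation_changed)
    # OAI: conditions where orientation varied minus conditions where it repeated.
    oai = (both_changed + orientation_changed) - (both_repeated + expression_changed)
    return eai, oai


# Placeholder condition estimates for one voxel (not data from the study).
eai, oai = adaptation_indices(
    both_repeated=0.5,
    expression_changed=2.0,
    orientation_changed=1.0,
    both_changed=3.0,
)
```

Because all four conditions enter both formulas, the two indices differ only in which single-change condition is added and which is subtracted.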

Figure 5.

Adaptation index values confirm functional dissociation in STS. a, Lateral view of subject K's data projected onto the NMT cortical surface (Seidlitz et al., 2018) for illustrative purposes (left hemisphere only). Left, EAI values for all voxels that met the “face-selective” criterion. Other voxels are masked out. Right, OAI values for all voxels that met the face-selective criterion. b, For each face-selective voxel, the β-coefficient for the orientation-changed condition was subtracted from the β-coefficient for the expression-changed condition. Results were projected onto the partially inflated cortical surface. c, The distribution of normalized EAI (left) and normalized OAI (right) values in the posterior STS face patches, pooled across subjects. Voxels from area ML are in blue and voxels from area MF are in red. d, The distribution of normalized EAI and OAI values in the anterior STS face patches, pooled across subjects. Voxels from area AL are in blue, and voxels from area AF are in red.

We normalized the index values within each subject by dividing every value in each ROI by the maximum value observed in that region. This transformation means that index values typically range between −1 and 1; an index value of 0 indicated that the sum of the different conditions (i.e., release from fMRI adaptation) was equal to the sum of the same conditions (i.e., fMRI adaptation). Next, we pooled the data across all three subjects and plotted the distribution of normalized adaptation index values in the two face patches located next to each other in the middle of the STS (MF and ML; Fig. 5c). Given the small sample size (N = 3), we analyzed the data using Mann–Whitney U tests (two-tailed) because they are robust to violations of normality. When we analyzed the EAI values, we found that these values were higher, on average, in area MF (mean = 0.55, minimum = 0.09, maximum = 1) than in area ML (mean = 0.18, minimum = −0.32, maximum = 0.71; Mann–Whitney U test, two-tailed, p < 0.05). In contrast, when we analyzed the OAI values, we found that these values were higher, on average, in area ML (mean = 0.34, minimum = −0.27, maximum = 1) than in area MF (mean = 0.18, minimum = −0.24, maximum = 0.72; Mann–Whitney U test, two-tailed, p < 0.05). These findings indicate that areas MF and ML, despite anatomic proximity, are differentially sensitive to changes in expression and head orientation.
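The normalization and the between-patch comparison can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the maximum in each ROI is assumed to be positive, the two-tailed p value uses a normal approximation without continuity or tie correction, and the index values are placeholders.

```python
import math


def normalize_by_max(values):
    """Divide every index value in an ROI by the maximum observed in that ROI."""
    peak = max(values)
    if peak <= 0:
        raise ValueError("this sketch assumes a positive maximum")
    return [v / peak for v in values]


def mann_whitney_u(x, y):
    """Return (U for x, two-tailed p from a normal approximation)."""
    # U counts the pairs in which the x value exceeds the y value (ties count 0.5).
    u = 0.0
    for a in x:
        for b in y:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    n1, n2 = len(x), len(y)
    mean_u = n1 * n2 / 2.0
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (u - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-tailed, no tie correction
    return u, p


# Placeholder raw index values for two neighboring patches (not study data).
mf_norm = normalize_by_max([0.2, 0.9, 1.1, 1.6, 2.0])
ml_norm = normalize_by_max([-0.3, 0.1, 0.4, 0.5, 0.7])
u_stat, p_value = mann_whitney_u(mf_norm, ml_norm)
```

For real data, an established statistics package with exact or tie-corrected p values would be preferable to this approximation.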

We also examined the pooled distribution of normalized adaptation index values in the two face patches that are positioned next to each other in the anterior region of STS (AF and AL; Fig. 5d). When we analyzed the EAI values, we found that these values were higher, on average, in area AF (mean = 0.55, minimum = −0.09, maximum = 1) than in area AL (mean = 0.09, minimum = −0.49, maximum = 0.68; Mann–Whitney U test, two-tailed, p < 0.05). When we analyzed the OAI values, however, we found that they were higher, on average, in area AL (mean = 0.15, minimum = −0.38, maximum = 1) than in area AF (mean = 0.15, maximum = 0.66, minimum = −0.56; Mann–Whitney U test, two-tailed, p < 0.05). In sum, these results demonstrate that areas MF and AF were more engaged by changes in expression than ML and AL, respectively. Conversely, areas ML and AL were more engaged by changes in head orientation than MF and AF, respectively. Individual subject data are plotted in the supplementary information.

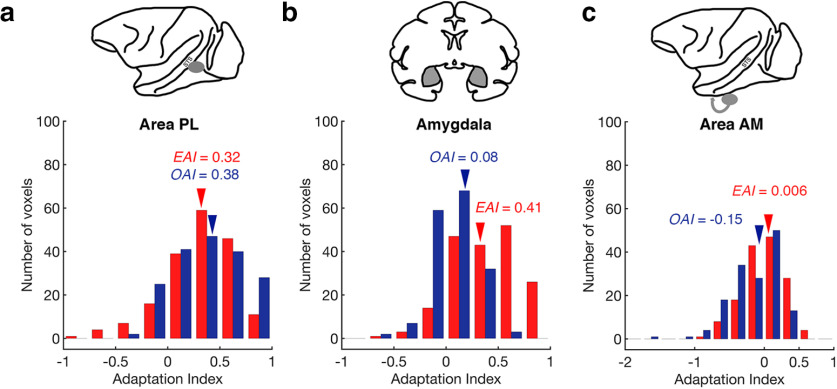

Area PL is posterior to the middle STS region containing areas ML and MF, while still located on the lower lateral edge of STS. In this region, we found that there was no difference between the EAI values (mean = 0.32, maximum = 0.95, minimum = −0.79) and the OAI values (mean = 0.38, maximum = 1, minimum = −0.29; Wilcoxon signed-rank test, two-tailed, p = 0.18; Fig. 6a). We note that EAI values in area PL were significantly higher than those in area ML (Mann–Whitney U test, two-tailed, p < 0.05) and lower than those in area MF (Mann–Whitney U test, two-tailed, p < 0.05), whereas OAI values in area PL were no different from those values in area ML (Mann–Whitney U test, two-tailed, p = 2.65) and higher than those values in area MF (Mann–Whitney U test, two-tailed, p < 0.05). In sum, these analyses confirm that area PL was equally sensitive to both expression and head orientation, a response pattern that distinguishes area PL from both area ML and area MF.

Figure 6.

Other face-selective regions of interest. a, The distribution of normalized adaptation indices for expression (EAI) and head orientation (OAI) values from area PL. Voxels are pooled across subjects. Red bars correspond to EAI values, and blue bars correspond to OAI values. Mean values are marked with an arrow. An index value of 0 would indicate no adaptation effect. Negative index values are difficult to interpret as they reflect an increased fMRI signal when a stimulus property repeated relative to when it changed. b, The distribution of normalized EAI and OAI values from the amygdala. Voxels are pooled across subjects. Same conventions as in a. c, The distribution of normalized EAI and OAI values from area AM. Voxels are pooled across subjects. Same conventions as in a.

Adaptation index values in the amygdala

We also investigated the distribution of EAI and OAI values in the amygdala (Fig. 6b). We found that the EAI values (mean = 0.40, maximum = 1, minimum = −0.61) were much higher than the OAI values (mean = 0.08, maximum = 0.53, minimum = −0.53; Wilcoxon signed-rank test, two-tailed, p < 0.05). Further, when comparing the fMRI signal in the amygdala to the fundus patches, we found that EAI values were reliably lower in the amygdala compared with either area MF (Mann–Whitney U test, two-tailed, p < 0.05) or area AF (Mann–Whitney U test, two-tailed, p < 0.05). OAI values were also reliably lower in the amygdala compared with either area MF (Mann–Whitney U test, two-tailed, p < 0.05) or area AF (Mann–Whitney U test, two-tailed, p < 0.05).

Adaptation index values in area AM

Area AM is a face patch typically found on the ventral surface of the brain (i.e., outside of the STS) in anterior temporal cortex. In recent studies, area AM has been linked to orientation-invariant representations of individual faces but as yet, it has not been tested with manipulations of facial expression. Even before normalization, the observed OAI values for voxels in area AM were already distinct. For all three subjects, the largest adaptation index values were below zero. Thus, normalizing to the maximum yielded numbers outside the typical range (i.e., several OAI values were found to be below −1). Nonetheless, for the sake of comparison, we plotted the distribution of normalized adaptation index values in Figure 6c. We found that the distribution of EAI values (mean = 0.006, maximum = 0.7, minimum = −0.83) had an average value that was higher than the distribution of OAI values (mean = −0.15, maximum = 0.43, minimum = −1.66; Wilcoxon signed-rank test, two-tailed, p < 0.005). We also found that 47% of the voxels in area AM had EAI values <0 (i.e., no expression adaptation) and 69% of the voxels in area AM had OAI values <0 (i.e., no head orientation adaptation).

Face specificity experiment

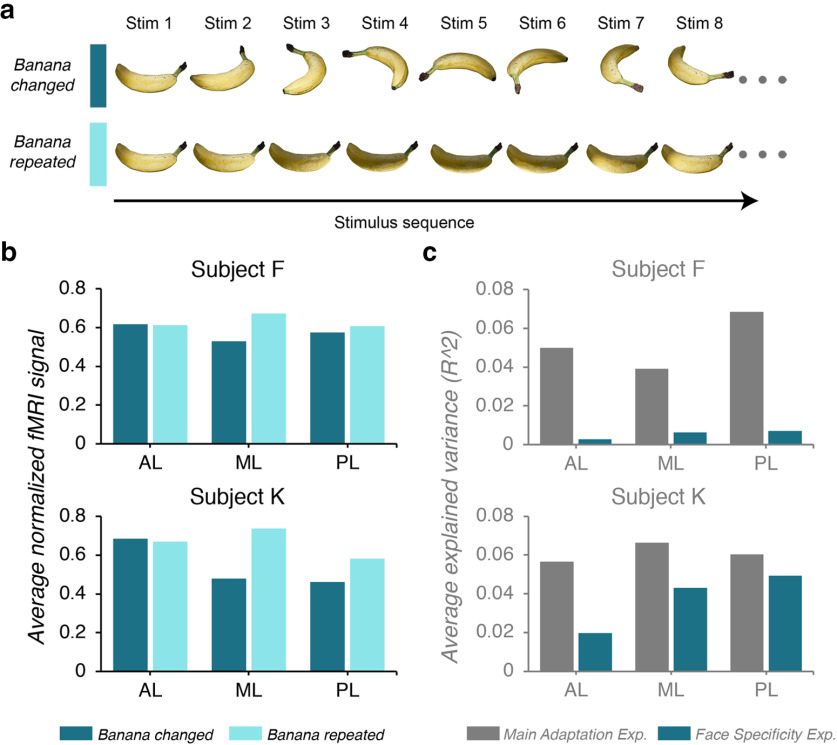

After a pixel-based analysis of the avatar stimuli revealed that there was an alternative explanation for the pattern of results observed in areas AL, ML, and PL (i.e., the lateral edge patches), we ran a second experiment on two subjects. We tested the subjects with images of a three-dimensionally rendered banana: in the banana-changed condition, we changed the orientation of the banana; and in the banana-repeated condition, we held the orientation of the banana constant (Fig. 7a). If the regions of interest are sensitive to image changes, then we expect to see a greater fMRI response in the banana-changed condition compared with the banana-repeated condition. The results for the three ROIs are provided in Figure 7b.

Figure 7.

The face specificity experiment. a, Illustrative example of the stimulus order in the two conditions (banana changed and banana repeated) presented in rows. b, The average normalized response of the lateral edge face patches (from left to right; areas AL, ML, and PL). The individual subject data are plotted separately (top, subject F; bottom, subject K). The color of each bar reflects the condition. c, Bar graph showing the difference in explained variance between the main adaptation experiment (gray bars) and the face specificity experiment (green bars) for each ROI (from left to right; areas AL, ML, and PL). Again, the individual subject data are plotted separately (top, subject F; bottom, subject K).

The fMRI signal across both conditions was evaluated separately for each subject using a mixed-design 2 × 3 ANOVA with condition as the repeated factor and ROI as the group factor (with the Greenhouse–Geisser correction for violations of sphericity for the repeated factors). For subject K, we found significant main effects of both condition (F(1.811,4852.211) = 470.53, p < 0.001, ηp2 = 0.15) and ROI (F(7,2679) = 48.63, p < 0.001, ηp2 = 0.11). Additionally, there was a significant interaction effect (F(12.678,4852.211) = 21.7, p < 0.001, ηp2 = 0.05). When we analyzed the data from subject F, we found evidence that the main effects were consistent across the two subjects (condition: F(1,171) = 25.75, p < 0.001, ηp2 = 0.13; ROI: F(2,171) = 8.89, p < 0.001, ηp2 = 0.09; condition * ROI: F(2,171) = 14.88, p < 0.001, ηp2 = 0.15).

We used paired t tests (two-tailed) to examine the difference between the two experimental conditions for each face patch. The Bonferroni rule was used to adjust for multiple comparisons within each subject (α = 0.05/3). Although for some regions, we did find significant differences in the fMRI response to the two conditions (subject K, areas ML and PL; subject F, area ML; all p values <0.001), the direction of these differences is difficult to interpret (i.e., banana repeated > banana changed). An enhanced response to a repeated stimulus has been argued to reflect reduced surround suppression or an inherited response from an earlier visual area with smaller receptive fields (Krekelberg et al., 2006; Sawamura et al., 2006; Larsson et al., 2016; Vogels, 2016). In this case, another contributing factor might have been the use of a nonpreferred visual stimulus (i.e., an artifact of testing face-selective regions with a banana stimulus).

To determine whether the main adaptation experiment explained more variance within the regions of interest than the second experiment (the face specificity experiment), we calculated the average R2 value for areas AL, ML, and PL (Fig. 7c). As one might have expected, in both subjects we observed larger R2 values when we tested the face-selective regions with the face of the avatar (mean ± SD: subject F: AL, 0.05 ± 0.04; ML, 0.04 ± 0.03; PL, 0.07 ± 0.04; subject K: AL, 0.06 ± 0.04; ML, 0.07 ± 0.05; PL, 0.06 ± 0.04) than when we tested the same face-selective regions with a three-dimensional banana (mean ± SD; subject F: AL, 0.003 ± 0.003; ML, 0.006 ± 0.01; PL, 0.007 ± 0.01; subject K: AL, 0.02 ± 0.03; ML, 0.04 ± 0.04; PL, 0.04 ± 0.04). These results indicate that the three-dimensional banana did not drive activity in the face-selective ROIs in the same way as the three-dimensional avatar face. Although it is not known how the use of a nonpreferred stimulus would contribute to repetition enhancement, it is possible that area ML passively inherited a response from another visual area (e.g., V1–V4) because it was not otherwise engaged by a preferred stimulus. More research is needed to understand the circumstances under which a visual region would respond more when a stimulus is repeated compared with when it is changed.
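For reference, an explained-variance comparison of this kind can be sketched as below. The text does not specify how R2 was obtained from the model fit, so the standard definition (one minus the ratio of residual to total sum of squares) is assumed here, and the time courses are placeholders.

```python
def r_squared(observed, fitted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_observed = sum(observed) / len(observed)
    ss_total = sum((y - mean_observed) ** 2 for y in observed)
    ss_residual = sum((y - f) ** 2 for y, f in zip(observed, fitted))
    return 1.0 - ss_residual / ss_total


def mean_r_squared(voxel_pairs):
    """Average R2 across voxels, given (observed, fitted) time-course pairs."""
    values = [r_squared(obs, fit) for obs, fit in voxel_pairs]
    return sum(values) / len(values)


# Placeholder time courses for two voxels in one ROI (not study data).
example_voxels = [
    ([1.0, 2.0, 3.0, 4.0], [1.5, 1.5, 3.5, 3.5]),
    ([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 4.0]),
]
roi_mean_r2 = mean_r_squared(example_voxels)
```

Comparing this per-ROI average between the two experiments gives the kind of summary plotted in Figure 7c.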

Discussion

A large body of electrophysiological and fMRI results has suggested that human and nonhuman primates have specialized cortical machinery for the visual analysis of faces. This provides us with a starting point for understanding how primates are able to read different facial signals during social interactions. Figure 8 summarizes the main finding of the fMRI adaptation experiment: the discovery that different changeable attributes of a face recruit separate nodes within the macaque IT cortex. Our results demonstrated that areas AF and MF were more engaged by changes in expression than by changes in head orientation. Conversely, patches on the lower lateral edge of the STS were more engaged by changes in head orientation than by changes in expression. Further, when we compared neighboring regions of interest more directly, the analysis indicated that area AF was more sensitive to changes in expression than area AL and that area MF was more sensitive to changes in expression than area ML (Fig. 5). For head orientation adaptation, the inverse was true; areas AL and ML were found to be more sensitive to changes in head orientation than were areas AF and MF, respectively. This organization along the medial–lateral axis has not been described before, perhaps because of our unique experimental design. Here, we manipulated two sources of changeable information available in the face of a macaque avatar, positioned at fixation, without altering identity cues.

Figure 8.

The functional organization of the cortical face-processing system in the macaque brain. Interacting with conspecifics face to face is something that social primates do on a regular basis. The face-processing system in the macaque brain has specialized regions for processing different sources of changeable information, such as expression and head orientation, while the identity of the conspecific remains the same from one moment to the next.

The role of the STS and the amygdala in monitoring emotion

We found that the fundus face patches and the amygdala are sensitive to changes in facial expression. This joint characteristic reflects the connectivity between the core face-processing system in the macaque STS and the amygdala (Amaral and Price, 1984; Moeller et al., 2008; Schwiedrzik et al., 2015; Grimaldi et al., 2016). The amygdala has been implicated in the assessment of valence and social salience (Hadj-Bouziane et al., 2008, 2012; Zhang and Li, 2018; Taubert et al., 2018b), with neurons that respond differentially to faces conveying different facial expressions (Rolls, 1984; Gothard et al., 2007; Hoffman et al., 2007). Previous research has also linked the fundus region of the STS in the macaque brain to dynamic signals (Furl et al., 2012; Freiwald et al., 2016). However, the functional contribution of these brain regions to face perception, and to social cognition more broadly, remains only partially understood. Here, we found that the fundus face patches and the amygdala shared an increased sensitivity to visual cues indicating that the expression of the face of the avatar changed. Moreover, these regions also shared a reduced sensitivity to visual cues indicating that the orientation of the head of the avatar changed. These findings are consistent with the functional segregation of the spatially distinct face patches inside the STS, with the fundus face patches communicating with the amygdala to support the recognition of facial expressions and social inference (Freiwald et al., 2016; Sliwa and Freiwald, 2017; Taubert et al., 2019).

The sensitivity of the lateral edge patches to changeable facial signals

In contrast to the fundus face patches, some of the face patches on the lower lateral edge of the STS were more engaged by changes in the orientation of the head of the avatar than expression. In studies of face-selective neurons, while neurons within the combined MF/ML region have been found to be view selective (i.e., each neuron prefers a specific head orientation), this dependence is reduced in area AL and further still in area AM, where the representations for facial identity are robust to changes in head orientation (Freiwald and Tsao, 2010; Dubois et al., 2015; Meyers et al., 2015; Chang and Tsao, 2017). However, because this hierarchy is thought to play an important role in conspecific recognition, it was difficult to predict how these brain regions would respond when the identity of a conspecific did not change. We found that areas ML and AL on the lateral edge of the macaque STS responded to changes in head orientation, while identity persisted, with higher OAI values than their counterparts in the fundus of the STS (Fig. 5c,d). This was especially true for area AL, where we found a significant release from adaptation when head orientation changed but not when expression changed (Fig. 3a). Studies of neural connectivity have reported tight connections between the lateral edge face patches (Grimaldi et al., 2016; Premereur et al., 2016). For example, the acute stimulation of area ML activated areas PL, AL, and AM but not areas AF or MF (Premereur et al., 2016), indicating that there are at least two parallel processing pipelines within the cortical face-processing network (Fisher and Freiwald, 2015; Freiwald et al., 2016; Zhang et al., 2020). We note that we cannot rule out the possibility that the lateral edge patches are sensitive to low-level visual attributes, more broadly, because rotations of the head result in greater changes at the image level than expression changes (Fig. 2b). 
Even so, the results of the current study provide further evidence that the lateral edge face patches carry out perceptual operations that are independent from those conducted in the fundus patches.

The role of the PL and AM face patches

The response of area PL is notably different from that of the other STS patches. Although we found evidence of a general release (both changed > both repeated), the results indicate that area PL is equally sensitive to both expression and orientation changes (Fig. 6a). This finding can be interpreted as evidence that area PL is activated by the complex features of a face (Issa and DiCarlo, 2012), and that this activation is invariant to the image distortions imposed by changes in retinal size and lighting conditions (Taubert et al., 2018a). However, while tolerant of changes in low-level properties that do not change the interpretation of the facial signals from a social perspective, the data also suggest that area PL is sensitive to high-level changes in facial structure. Based on this finding, it seems likely that area PL acts as a hub connecting EVC and motion-sensitive areas, such as MT, to both the fundus patches and the lateral edge patches in IT cortex. That said, given the temporal resolution of fMRI, we cannot speculate on the direction of the information flow (feedforward vs feedback).

Notably, not all of our face-selective ROIs exhibited a general release from adaptation. Although a comparison between EAI and OAI values suggested greater sensitivity to changes in facial expressions than head orientation, we found no evidence that the fMRI signal in area AM was released from fMRI adaptation when both expression and orientation were changed (both changed = both repeated; Fig. 3c). Neuronal responses in area AM, located on the ventral surface of the brain, have been shown to encode the differences between individual faces (Chang and Tsao, 2017). Thus, our results indicating a lack of fMRI adaptation can be interpreted by extension; because the same identity was presented repeatedly throughout the experiment, the fMRI signal adapted in all four conditions (Fig. 3c). According to this scenario, the neural representations of facial identity built in area AM are not only robust to changes in head orientation but also facial expression. However, the assertion that neural representations in area AM are tolerant of facial expression requires further investigation.

Conclusion

Detecting meaningful transient changes in facial structure throughout the length of a social interaction between ourselves and another social agent represents a considerable challenge for the primate visual system, and yet we do so effortlessly (Taubert et al., 2016). In this study, we reasoned that by holding the identity of a face constant throughout a sequence, and by allowing expression and head orientation to vary, we mimicked the sensory input a subject might receive during a dyadic exchange with a conspecific. The results uncovered two parallel processing streams, segregating within the macaque STS with markedly different responses to changes in facial expression and head orientation. It follows that a similar cortical segregation may exist within the human face-processing system to support our ability to read the transient facial signals that occur during a face-to-face conversation with another person.

Footnotes

This research was supported by the Intramural Research Program of the National Institute of Mental Health (NIMH; Grants ZIA-MH-002918 to L.G.U. and ZIC-MH002899 to D.A.L.). We thank the Neurophysiology Imaging Facility Core (NIMH, National Institute of Neurological Disorders and Stroke, National Eye Institute) for functional and anatomical MRI scanning, with special thanks to David Yu, Charles Zhu, and Frank Ye for technical assistance.

The authors declare no competing financial interests.

References

- Adolphs R, Tranel D, Damasio AR (1998) The human amygdala in social judgment. Nature 393:470–474. doi:10.1038/30982

- Amaral DG, Price JL (1984) Amygdalo-cortical projections in the monkey (Macaca fascicularis). J Comp Neurol 230:465–496. doi:10.1002/cne.902300402

- Bruce V, Young A (1986) Understanding face recognition. Br J Psychol 77:305–327. doi:10.1111/j.2044-8295.1986.tb02199.x

- Chang L, Tsao DY (2017) The code for facial identity in the primate brain. Cell 169:1013–1028.e14. doi:10.1016/j.cell.2017.05.011

- Cox RW (1996) AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173. doi:10.1006/cbmr.1996.0014

- Dubois J, de Berker AO, Tsao DY (2015) Single-unit recordings in the macaque face patch system reveal limitations of fMRI MVPA. J Neurosci 35:2791–2802. doi:10.1523/JNEUROSCI.4037-14.2015

- Ekman P (1992) An argument for basic emotions. Cogn Emot 6:169–200. doi:10.1080/02699939208411068

- Ekman P, Friesen WV, Ellsworth P (1972) Emotion in the human face: guidelines for research and an integration of findings. Oxford: Pergamon.

- Fox CJ, Moon SY, Iaria G, Barton JJ (2009) The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. Neuroimage 44:569–580. doi:10.1016/j.neuroimage.2008.09.011

- Fisher C, Freiwald WA (2015) Contrasting specializations for facial motion within the macaque face-processing system. Curr Biol 25:261–266. doi:10.1016/j.cub.2014.11.038

- Freiwald W, Duchaine B, Yovel G (2016) Face processing systems: from neurons to real-world social perception. Annu Rev Neurosci 39:325–346. doi:10.1146/annurev-neuro-070815-013934

- Freiwald WA, Tsao DY (2010) Functional compartmentalization and viewpoint generalization within the macaque face-processing system. Science 330:845–851. doi:10.1126/science.1194908

- Furl N, Hadj-Bouziane F, Liu N, Averbeck BB, Ungerleider LG (2012) Dynamic and static facial expressions decoded from motion-sensitive areas in the macaque monkey. J Neurosci 32:15952–15962. doi:10.1523/JNEUROSCI.1992-12.2012

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG (2007) Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol 97:1671–1683. doi:10.1152/jn.00714.2006

- Grill-Spector K, Malach R (2001) fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol (Amst) 107:293–321. doi:10.1016/S0001-6918(01)00019-1

- Grill-Spector K, Weiner KS, Kay K, Gomez J (2017) The functional neuroanatomy of human face perception. Annu Rev Vis Sci 3:167–196. doi:10.1146/annurev-vision-102016-061214

- Grimaldi P, Saleem KS, Tsao D (2016) Anatomical connections of the functionally defined “face patches” in the macaque monkey. Neuron 90:1325–1342. doi:10.1016/j.neuron.2016.05.009

- Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, Tootell RB (2008) Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proc Natl Acad Sci U S A 105:5591–5596. doi:10.1073/pnas.0800489105

- Hadj-Bouziane F, Liu N, Bell AH, Gothard KM, Luh WM, Tootell RB, Murray EA, Ungerleider LG (2012) Amygdala lesions disrupt modulation of functional MRI activity evoked by facial expression in the monkey inferior temporal cortex. Proc Natl Acad Sci U S A 109:E3640–E3648. doi:10.1073/pnas.1218406109

- Hasselmo ME, Rolls ET, Baylis GC (1989) The role of expression and identity in the face-selective responses of neurons in the temporal visual cortex of the monkey. Behav Brain Res 32:203–218. doi:10.1016/s0166-4328(89)80054-3

- Haxby JV, Hoffman EA, Gobbini MI (2000) The distributed human neural system for face perception. Trends Cogn Sci (Regul Ed) 4:223–233. doi:10.1016/s1364-6613(00)01482-0

- Hoffman KL, Gothard KM, Schmid MC, Logothetis NK (2007) Facial-expression and gaze-selective responses in the monkey amygdala. Curr Biol 17:766–772. doi:10.1016/j.cub.2007.03.040

- Issa EB, DiCarlo JJ (2012) Precedence of the eye region in neural processing of faces. J Neurosci 32:16666–16682. doi:10.1523/JNEUROSCI.2391-12.2012

- Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17:4302–4311. doi:10.1523/JNEUROSCI.17-11-04302.1997

- Kourtzi Z, Kanwisher N (2001) Representation of perceived object shape by the human lateral occipital complex. Science 293:1506–1509. doi:10.1126/science.1061133

- Krekelberg B, Boynton GM, van Wezel RJ (2006) Adaptation: from single cells to BOLD signals. Trends Neurosci 29:250–256. doi:10.1016/j.tins.2006.02.008

- Krumhuber E, Manstead AS, Cosker D, Marshall D, Rosin PL, Kappas A (2007) Facial dynamics as indicators of trustworthiness and cooperative behavior. Emotion 7:730–735. doi:10.1037/1528-3542.7.4.730

- Larsson J, Solomon SG, Kohn A (2016) fMRI adaptation revisited. Cortex 80:154–160. doi:10.1016/j.cortex.2015.10.026

- Liu N, Hadj-Bouziane F, Moran R, Ungerleider LG, Ishai A (2017) Facial expressions evoke differential neural coupling in macaques. Cereb Cortex 27:1524–1531. doi:10.1093/cercor/bhv345

- Loffler G, Yourganov G, Wilkinson F, Wilson HR (2005) fMRI evidence for the neural representation of faces. Nat Neurosci 8:1386–1390. doi:10.1038/nn1538

- Logothetis NK, Guggenberger H, Peled S, Pauls J (1999) Functional imaging of the monkey brain. Nat Neurosci 2:555–562. doi:10.1038/9210

- Meyers EM, Borzello M, Freiwald WA, Tsao D (2015) Intelligent information loss: the coding of facial identity, head pose, and non-face information in the macaque face patch system. J Neurosci 35:7069–7081. doi:10.1523/JNEUROSCI.3086-14.2015

- Moeller S, Freiwald WA, Tsao DY (2008) Patches with links: a unified system for processing faces in the macaque temporal lobe. Science 320:1355–1359. doi:10.1126/science.1157436

- Murphy AP, Leopold DA (2019) A parameterized digital 3D model of the Rhesus macaque face for investigating the visual processing of social cues. J Neurosci Methods 324:108309. doi:10.1016/j.jneumeth.2019.06.001

- Oosterhof NN, Todorov A (2009) Shared perceptual basis of emotional expressions and trustworthiness impressions from faces. Emotion 9:128–133. doi:10.1037/a0014520

- Premereur E, Taubert J, Janssen P, Vogels R, Vanduffel W (2016) Effective connectivity reveals largely independent parallel networks of face and body patches. Curr Biol 26:3269–3279. doi:10.1016/j.cub.2016.09.059

- Rolls ET (1984) Neurons in the cortex of the temporal lobe and in the amygdala of the monkey with responses selective for faces. Hum Neurobiol 3:209–222.

- Russ BE, Leopold DA (2015) Functional MRI mapping of dynamic visual features during natural viewing in the macaque. Neuroimage 109:84–94. doi:10.1016/j.neuroimage.2015.01.012

- Sawamura H, Orban GA, Vogels R (2006) Selectivity of neuronal adaptation does not match response selectivity: a single-cell study of the fMRI adaptation paradigm. Neuron 49:307–318. doi:10.1016/j.neuron.2005.11.028

- Schwiedrzik CM, Zarco W, Everling S, Freiwald WA (2015) Face patch resting state networks link face processing to social cognition. PLoS Biol 13:e1002245. doi:10.1371/journal.pbio.1002245

- Seidlitz J, Sponheim C, Glen D, Ye FQ, Saleem KS, Leopold DA, Ungerleider L, Messinger A (2018) A population MRI brain template and analysis tools for the macaque. Neuroimage 170:121–131. doi:10.1016/j.neuroimage.2017.04.063

- Sliwa J, Freiwald WA (2017) A dedicated network for social interaction processing in the primate brain. Science 356:745–749. doi:10.1126/science.aam6383

- Taubert J, Van Belle G, Vanduffel W, Rossion B, Vogels R (2015a) Neural correlate of the Thatcher face illusion in a monkey face-selective patch. J Neurosci 35:9872–9878. doi:10.1523/JNEUROSCI.0446-15.2015

- Taubert J, Van Belle G, Vanduffel W, Rossion B, Vogels R (2015b) The effect of face inversion for neurons inside and outside fMRI-defined face-selective cortical regions. J Neurophysiol 113:1644–1655. doi:10.1152/jn.00700.2014

- Taubert J, Alais D, Burr D (2016) Different coding strategies for the perception of stable and changeable facial attributes. Sci Rep 6:32239. doi:10.1038/srep32239

- Taubert J, Van Belle G, Vogels R, Rossion B (2018a) The impact of stimulus size and orientation on individual face coding in monkey face-selective cortex. Sci Rep 8:10339. doi:10.1038/s41598-018-28144-z

- Taubert J, Flessert M, Wardle SG, Basile BM, Murphy AP, Murray EA, Ungerleider LG (2018b) Amygdala lesions eliminate viewing preferences for faces in rhesus monkeys. Proc Natl Acad Sci U S A 115:8043–8048. doi:10.1073/pnas.1807245115

- Taubert J, Flessert M, Liu N, Ungerleider LG (2019) Intranasal oxytocin selectively modulates the behavior of rhesus monkeys in an expression matching task. Sci Rep 9:15187. doi:10.1038/s41598-019-51422-3

- Tsao DY, Freiwald WA, Knutsen TA, Mandeville JB, Tootell RB (2003) Faces and objects in macaque cerebral cortex. Nat Neurosci 6:989–995. doi:10.1038/nn1111

- Tomkins SS, McCarter R (1964) What and where are the primary affects? Some evidence for a theory. Percept Mot Skills 18:119–158. doi:10.2466/pms.1964.18.1.119

- Vanduffel W, Fize D, Mandeville JB, Nelissen K, Van Hecke P, Rosen BR, Tootell RB, Orban GA (2001) Visual motion processing investigated using contrast agent-enhanced fMRI in awake behaving monkeys. Neuron 32:565–577. doi:10.1016/s0896-6273(01)00502-5

- Vogels R (2016) Sources of adaptation of inferior temporal cortical responses. Cortex 80:185–195. doi:10.1016/j.cortex.2015.08.024

- Williams MA, Berberovic N, Mattingley JB (2007) Abnormal fMRI adaptation to unfamiliar faces in a case of developmental prosopamnesia. Curr Biol 17:1259–1264. doi:10.1016/j.cub.2007.06.042

- Winston JS, Henson RNA, Fine-Goulden MR, Dolan RJ (2004) fMRI-adaptation reveals dissociable neural representations of identity and expression in face perception. J Neurophysiol 92:1830–1839. doi:10.1152/jn.00155.2004

- Xiang Q-S, Ye FQ (2007) Correction for geometric distortion and N/2 ghosting in EPI by phase labeling for additional coordinate encoding (PLACE). Magn Reson Med 57:731–741. doi:10.1002/mrm.21187

- Zhang H, Japee S, Stacy A, Flessert M, Ungerleider LG (2020) Anterior superior temporal sulcus is specialized for non-rigid facial motion in both monkeys and humans. Neuroimage 218:116878. doi:10.1016/j.neuroimage.2020.116878

- Zhang X, Li B (2018) Population coding of valence in the basolateral amygdala. Nat Commun 9:5195. doi:10.1038/s41467-018-07679-9

- Zhu Q, Nelissen K, Van den Stock J, De Winter FL, Pauwels K, de Gelder B, Vanduffel W, Vandenbulcke M (2013) Dissimilar processing of emotional facial expressions in human and monkey temporal cortex. Neuroimage 66:402–411. doi:10.1016/j.neuroimage.2012.10.083

Supplementary Materials

Figure 3-1. Raw parameter estimates for voxels included in the overall analysis (delimited by both subject and region of interest). TXT file (54.7 KB).