Abstract

Behavior is essential for understanding infant learning and development. Although behavior is transient and ephemeral, we have the technology to make it tangible and enduring. Video uniquely captures and preserves the details of behavior and the surrounding context. By sharing videos for documentation and data reuse, we can exploit the tremendous opportunities provided by infancy research and overcome the important challenges in studying behavior. The Datavyu video coding software and Databrary digital video library provide tools and infrastructure for mining and sharing the richness of video. This article is based on my Presidential Address to the International Congress on Infant Studies in New Orleans, May 22, 2016 (Video 1 at https://www.databrary.org/volume/955/slot/39352/-?asset=190106). Given that the article describes the power of video for understanding behavior, I use video clips rather than static images to illustrate most of my points, and the videos are shared on the Databrary library.

1 |. DISCOVERING INFANTS

Infancy researchers have the best job in the world. Like all scientists, we get to discover things. But as infancy researchers, we also get to watch our participants discover things—how infants display their first social smiles, say their first words, and take their first walking steps (Video 2 at https://www.databrary.org/volume/955/slot/39376/-?asset=190428). Infants’ discoveries, combined with the antecedents and sequelae of their new skills, give researchers the opportunity to unearth the origins of basic psychological functions. We can track the early development of perception, action, cognition, emotion, language, and so on, and we can investigate similarities between early and mature forms.

Moreover, infancy provides a special opportunity to discover how development works. The uniquely rapid and dramatic changes in infancy enhance our potential to discover critical processes of development. Every context is open to inquiry. We can observe infant development in controlled laboratory studies or in the natural everyday environment. We can describe consistent patterns across groups, differences within groups, and the idiosyncratic pathways of individual babies. We can identify processes that are exquisitely sensitive to environmental stimulation and processes that are relatively impervious to outside influences. We can delve into our domain of choice, and we can track cascades of development from one domain to another.

Our job is remarkable in part because there is so much left to discover. Infancy research offers the exhilarating thrill of fantastic or unexpected phenomena and a sort of parental wonder about the extraordinary accomplishments of ordinary infant development. Indeed, after a century of notable progress, infancy research is still a wild, untamed frontier with vast, uncharted territories.

2 |. BEHAVIOR IS THE KEY TO DISCOVERY

Behavior cuts our path through the wilderness. After more than a century of scientific work, nearly everything we know and most of what we have yet to learn about infant development stems from infants’ behavior.

2.1 |. Behavior as means and ends

Behavior is our primary means for studying infants. In contrast to older children and adults, infants cannot use language to tell us what they are perceiving, thinking, or feeling. Infants communicate only by doing. So, we must infer the inner workings of infants’ minds by observing their behaviors (Adolph & Berger, 2006). The field has devised dozens of paradigms to access infants’ invisible mental activities based on their observable behaviors. Most studies rely on looking behaviors—how long infants gaze at a target, how frequently they switch gaze among targets, where they direct their gaze, and so on. Based on patterns of looking, we infer mental functions such as interest, surprise, prediction, statistical learning, visual discrimination, cross-modal mapping, categorical knowledge, linguistic understanding, short-term memory, and attribution of intention (for reviews, see Aslin, 2007; Oakes, 2012; Tafreshi, Thompson, & Racine, 2014).

Looking-based paradigms, however, require infants to sit stationary in front of a display. When infants are free to move, we can capitalize on a larger range of behaviors. Thus, we infer affiliation, attachment, desire, and fear based on infants’ facial expressions, vocalizations, and whether they avoid or seek proximity to specific people, places, and things (LoBue & Adolph, 2019; Saarni, Campos, Camras, & Witherington, 2006). We infer moral reasoning based on whether they reach for “good” characters over “bad” ones (Hamlin, 2014). We infer planning, inhibition, and means-ends reasoning based on whether they succeed or bungle a task, repeat or alter their response, and perform single actions or multiple actions in sequence (Chen & Siegler, 2000; Diamond, 2006; Munakata, McClelland, Johnson, & Siegler, 1997).

Relatedly, for those of us who aim to understand the process of development, behavior is a prime candidate. The process of development can only be studied by observing a developing system (Thelen, 1992). Development is an abstraction, so empirical studies must focus on the development of something. A long history of infancy researchers has used behavioral development—rather than mental development—as a window into change processes because behavior is directly observable (Gesell, 1933; McGraw, 1935). The particular behaviors—however interesting in their own right—serve as a model system for understanding developmental change (Adolph & Berger, 2006; Adolph, Hoch, & Cole, 2018; Adolph & Robinson, 2013, 2015; Adolph, Robinson, Young, & Gill-Alvarez, 2008; Lee, Cole, Golenia, & Adolph, 2018; Tamis-LeMonda, Kuchirko, Luo, & Escobar, 2017; Thelen & Ulrich, 1991).

Moreover, for many infancy researchers, behavior is not merely a means. It is an end-product worthy of study. The aim is to understand the developmental mechanisms that turn infants’ meaningless babbles into meaningful words (Goldstein & Schwade, 2008), sensorimotor play into pretend play (Tamis-LeMonda & Bornstein, 1996), and toddling steps into forays through the environment (Adolph et al., 2012; Adolph, Vereijken, & Shrout, 2003; Cole, Robinson, & Adolph, 2016; Hoch, O’Grady, & Adolph, 2018). In such cases, behavior takes center stage, and the hidden workings of infants’ minds are of secondary interest.

Regardless, for babies, behavior is the bottom line. Diagnoses of infants’ psychological health or disability are grounded in behavior (Zeanah, 2018). Physiological and neural responses to stimuli (heart rate, cortisol level, pupillometry, neural imaging, etc.) take their meaning from behavioral correlates (Aslin, Shukla, & Emberson, 2015). Formal models of infant learning and development prove their worth based on whether they can predict infants’ behavior (Dupoux, 2018; Munakata, 2006).

2.2 |. Behavior is more powerful than you may think

Given the central role of behavior in the study of infants, it is puzzling that behavior is so often discounted by researchers, funding agencies, policymakers, and the popular press. Perhaps skeptics must be disabused of the misguided notion that behavioral measures are less “scientific” than physiological or neural measures. In fact, infancy researchers boast an impressive suite of sophisticated technologies to record the details of behavior with remarkable spatial and temporal precision (e.g., eye tracking, motion tracking, video), and many technologies have been available for much of the last century (Gesell, 1946; McGraw, 1935). Similarly, we have a tremendous array of analytic techniques to quantify the unfolding of behavior in time and space (Bakeman & Quera, 2011). The “decade of the brain” pales against a century of behavior.

Perhaps naysayers mistakenly believe that infants’ behavioral repertoire is limited. It is not. Researchers’ widespread reliance on simple looking-time behavior as a window into infants’ mental life is due more to tradition and inertia than to a lack of viable infant responses. Any behavior has the potential to reveal internal states and the processes of development (e.g., Adolph et al., 2018). And infants emit an enormous variety and frequency of behaviors in addition to looking. In 20 s of play with a rattle, an average 5-month-old emits 360 behaviors across 17 body parts including the eyes, head, and hands (Gesell, 1935). In an hour of free play with a caregiver, the average toddler spends 30 min interacting with objects, takes 2,400 steps, travels the distance of 8 American football fields, and carries objects on one-third of the walking bouts (Adolph et al., 2012; Heiman, Cole, Lee, & Adolph, 2019; Karasik, Tamis-LeMonda, & Adolph, 2011).

Perhaps doubters assume that behavior is less reliable or less valid than a verbal report. Their doubts can be allayed. As with nonhuman animals, many infant behaviors are sufficiently reliable to allow robust conclusions about a single baby—including estimations of psychophysical functions and operant response curves (Adolph, 1997; Franchak & Adolph, 2014; Held, Birch, & Gwiazda, 1980; Hulsebus, 1974; Rovee-Collier & Gekoski, 1979; Teller, 1979). And even if infants could talk or follow detailed instructions as older participants do, behavioral measures may be preferable. Often, older children and adults do not know what they are perceiving or thinking and cannot or would not express what they are feeling. Even here, behavior may provide a more direct and valid index of mental life.

3 |. WE HAVE THE TOOLS TO REVEAL BEHAVIORAL DEVELOPMENT

The impermanence of behavior, however, does pose significant research challenges (Adolph, 2016). Often, events of interest persist only for brief periods of time. Behavior happens, and then it is gone. Infants’ looks, smiles, babbles, and steps appear in one moment and disappear in the next. Moreover, visual access to behavior can be challenging. Babies’ head turns obscure our view of their looks or smiles; their bodies block our view of their actions on objects. No worries, the transience of behavior can be easily overcome with the appropriate tools.

3.1 |. The trace of behavior

Behavior is ephemeral, but it can leave a permanent, objective trace. Happily, we need not rely on forensics—post hoc analyses of strewn blocks, dirty diapers, or crumbs on a plate—or the testimony of potentially biased, forgetful eyewitnesses such as parents or experimenters. Rather, we can capture the trace of behavior in real time while it is happening. We can transform the intangibles of behavior into something with permanent shape and form, now open to scientific scrutiny at our own pace and place. Desktop eye trackers record precisely where infants look at a scene with the duration of each gaze and saccade preserved in serial order; instrumented floors record where, when, and how forcefully infants step; motion trackers record the minutiae of infants’ body movements over space and time; inertial sensors, accelerometers, and pedometers record the temporal distribution and amplitude of infants’ physical activity; and LENA (lena.org) audio recorders collect vocalizations and ambient noise over the waking day (Figure 1a).

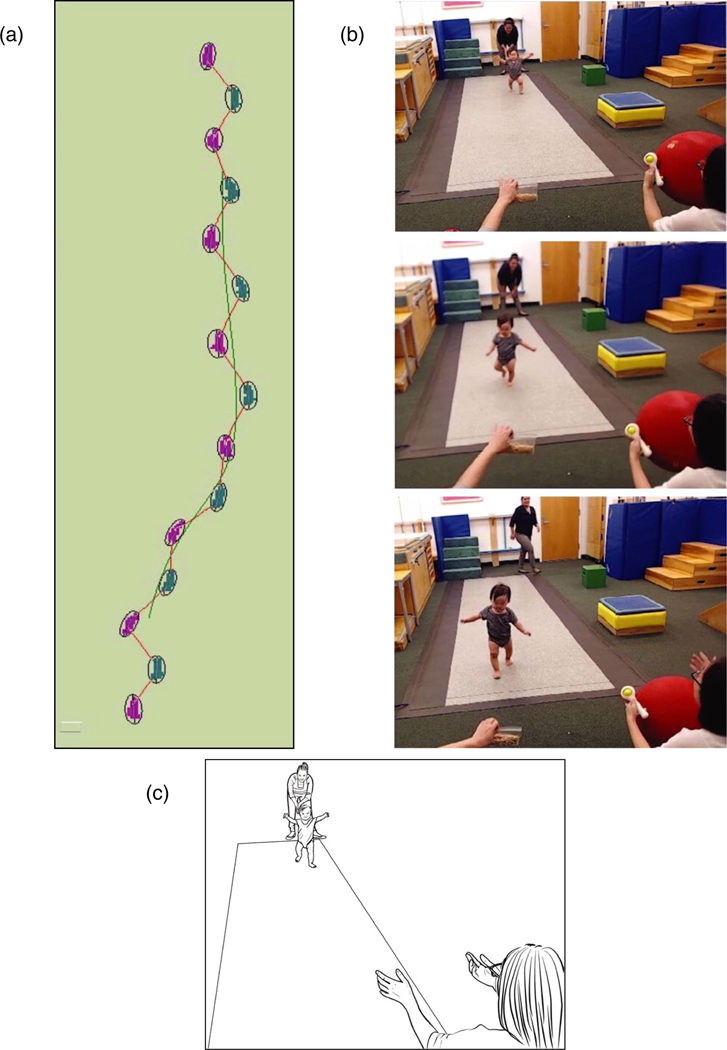

FIGURE 1.

Capturing a trace of behavior. (a) Trace from instrumented floor. (b) Serial photographs of infant’s behavior. (c) Line drawing derived from first photograph. See Video 3 at https://www.databrary.org/volume/955/slot/39377/-?asset=190438

Nonetheless, important pieces to the puzzle are always missing from the processed data (numbers in a spreadsheet, trace on a page) produced by eye trackers, motion trackers, and the like (see Figure 1a). Processed data, like the musical score of a song, fail to capture essential aspects of the live performance. To do so, we need video (see Video 3 at https://www.databrary.org/volume/955/slot/39377/-?asset=190438).

3.2 |. Video captures behavior in context

Only cinematic recordings—originally film, and now video—can capture the richness and complexity of behavior and the subtle details of the surrounding context (Adolph, 2016; Adolph, Gilmore, & Kennedy, 2017; Gilmore & Adolph, 2017). Only video provides the sights, sounds, and impressions available to a human observer. In fact, video clarifies and enriches the behavioral traces from eye trackers and motion trackers, and from physiological, neural, and audio recordings by revealing the whole behavioral event in the physical and social context in which it occurred (Video 4 at https://www.databrary.org/volume/955/slot/39378/-?asset=190452). Typically, the moving image is accompanied by a sound track, so that we can both see and hear behavior unfold. The camera is objective, and it never tires or becomes distracted. It sees things—such as manual gestures—that live observers might otherwise miss (Congdon, Novack, & Goldin-Meadow, 2016). With the right camera views, behavior can be captured in its entirety and preserved indefinitely. Third-person camera views reveal what participants are doing and where the events are happening—that is, what an outside (uninvolved) observer would see and hear. First-person camera views (from a head camera or head-mounted eye tracker) reveal events from the participant’s perspective. Multiple camera views can be combined to mitigate data loss from occlusion or limited vantage point (Video 5 at https://www.databrary.org/volume/955/slot/39379/-?asset=190574).

Compared with other recording technologies, video is inexpensive, readily accessible, and easy to use. A century ago, when film was the only cinematic medium, only the most highly funded, best equipped, well-staffed laboratories had the capacity for cinematic recording (Curtis, 2011; Gesell, 1952; Halverson, 1928; Thelen & Adolph, 1992). Now, inexpensive, convenient video solutions are available to every researcher, clinician, and parent through video cameras, smart phones, and webcams. Tiny wearable cameras are available for mountain bikers, police officers, spies, and, of course, infants (Video 6 at https://www.databrary.org/volume/955/slot/39380/-?asset=190429).

Video provides an exceptionally powerful medium for behavioral research because it allows us to manipulate time and space (Beebe, 2014; Gesell, 1935, 1946; Goldman, Pea, Barron, & Derry, 2006). We can control the speed and direction of playback, slow down or accelerate the behaviors forward and backward, loop a single event to watch it repeatedly, and jump from one selected moment to another to reveal the detailed secrets of the behaviors. We can zoom in to focus on a particular region of the video and zoom out to see the entire scene. By retaining the timing, sequence, and spatial relations among behaviors and context, video captures the durations of events, the sequence and overlaps among events (e.g., gestures that continue after the spoken utterance has ended), the nesting of smaller events inside of larger ones (facial expression nested within movement of the whole body), and briefer events as part of lengthier ones (the first step in a sequence of steps). We can decompose the video into a series of frames, capture the essential moments as still images, and pare away the extraneous clutter in line drawings (Figure 1c, Video 3 at https://www.databrary.org/volume/955/slot/39377/-?asset=190438). In these ways, video allows behavior to be dissected and analyzed, just as scientists do for a cadaver, a brain slice, or a cluster of cells spread on a glass slide. In Gesell’s (1946) words, video can make the anatomy of behavior as “tangible as tissue” (p. 471). Moreover, just as the physical act of dissection aids in understanding the anatomy of a body, the physical act of manipulating the video (by turning the crank on a film projector, manipulating the dial on a VCR, pressing the buttons on a media player) provides visceral feedback to help researchers to comprehend the events (Beebe, 2014; Gesell, 1946).

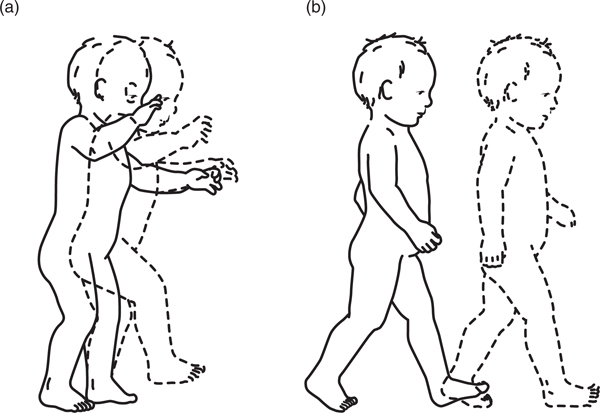

Video analysis also represents a powerful way to study development because it allows richly informed comparisons of real-time behavior across days, weeks, months, and years. By giving behavior a tangible form, video allows the growth of infants’ behavior to be measured and quantified, just as scientists do for the growth of infants’ bodies and the changing structure of their brains. As exemplified by the series of walking behaviors in Video 2 at https://www.databrary.org/volume/955/slot/39376/-?asset=190428, video overcomes chronological age by allowing us to compare different time points in immediate proximity (Curtis, 2011; Gesell, 1935, 1946). A behavior unfolding in real time, as illustrated by the dashed to solid lines in Figure 2, can simultaneously be seen unfolding in developmental time as illustrated by the juxtaposition between left and right panels in Figure 2 (McGraw, 1945). Unfortunately, many research videos are never analyzed and serve only as a backup for live coding of an event.

FIGURE 2.

Development of walking. Dashed lines denote real time. Panels (a) and (b) denote developmental time. (a) Infants’ first steps. (b) Proficient infant gait

3.3 |. Video analysis with Datavyu

To make video recordings maximally useful and efficient, we need tools to explore them and to crystallize our observations. In some cases, computer-vision and machine-learning analyses can automatically identify objects and events in video, so that human coders are not necessary (Ossmy, Gilmore, & Adolph, 2020). But in most cases, only human eyes and minds currently have the ability to identify the behaviors of most interest to researchers.

Coding videos can be unnecessarily laborious. Mouse clicking a media player and jotting notes into makeshift spreadsheets are as outdated as hand cranks and stopwatches from the film era. Better tools are available from academic and commercial sources. The tool I know best and use in my own research is Datavyu (datavyu.org)—free, academic, computerized, video-coding software that supports video analysis (Adolph, 2015; Adolph et al., 2017). The software is open source and supported by free online and live expert help. Datavyu allows researchers to quickly and efficiently sift through the sea of behaviors and contexts, sort behaviors into user-defined categories, time-lock annotations to the video, and export time-locked codes for statistical analysis.

Datavyu capitalizes on the unique properties of video to explore behavior and accelerate discovery. Earlier incarnations as MacSHAPA and OpenSHAPA (Sanderson et al., 1994) innovated the use of fingertip control over video playback to manipulate the spatiotemporal properties of behavior. Thus, with a few finger presses on a computer numpad, coders can play video forward and backward at varying speeds to reveal events of interest, jog frame by frame to determine when behaviors began and ended, freeze frames to dissect behavior into its component parts, zoom in and out to focus on details or the larger context, temporarily lock into regions of interest to guide their attention, loop the video to rewatch events, and jump instantly to designated events. Researchers can control video playback in multiple camera views simultaneously.

With Datavyu, coders can freely annotate the videos with comments or systematically tag portions of the video for events and behaviors of interest. Researchers can define their own categorical and qualitative codes, create user-defined quick keys to facilitate fast coding, and transcribe speech globally or at the utterance level. A powerful, flexible scripting language allows automatic manipulation of spreadsheets (to add, combine, alter, or delete codes), error-checking of entries, and calculations of interobserver reliability. With scripts, coders can build on their work in each subsequent coding pass (e.g., automatically view every infant object interaction previously coded to subsequently code caregiver’s speech), automatically time-sample events at user-defined intervals, and import other data streams (e.g., physiological or imaging data) into the Datavyu spreadsheet to juxtapose the processed data with the video. Each code and utterance is precisely time-locked to the appropriate video frames. The Datavyu spreadsheet temporally aligns codes so researchers can visualize how behaviors are sequenced, nested, and interleaved (Video 7 at https://www.databrary.org/volume/955/slot/39381/-?asset=19043). The time-locking allows researchers to return instantly to the behaviors and to export the data according to their unique specifications for statistical analysis. Behaviors can then be quantified as rates or durations (e.g., utterances per minute, average period of silence between utterances), sequences and contingencies (whether utterances follow short or long silences by a conversational partner), categories of behaviors (utterance type), or analyzed qualitatively (e.g., with ethnographic descriptions).
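To make the quantification step concrete, the following is a minimal Python sketch of how exported, time-locked codes might be turned into a rate, an average silence between utterances, and an interobserver reliability score. It is not Datavyu's scripting API or export format: the `Code` record, its millisecond fields, and the function names are hypothetical, and Cohen's kappa is only one common choice of reliability statistic.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Code:
    """One time-locked annotation (hypothetical export format, times in ms)."""
    onset_ms: int
    offset_ms: int
    label: str


def rate_per_minute(codes, session_ms):
    """Number of coded events per minute of session time."""
    return len(codes) / (session_ms / 60_000)


def mean_gap_ms(codes):
    """Average silence between the end of one event and the start of the next."""
    ordered = sorted(codes, key=lambda c: c.onset_ms)
    # Overlapping events count as a gap of zero rather than a negative gap.
    gaps = [max(0, nxt.onset_ms - cur.offset_ms)
            for cur, nxt in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps) if gaps else 0.0


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders' labels for the same events."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same non-empty set of events")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:  # both coders used a single identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Illustrative data only: three utterances in a one-minute session.
utterances = [Code(0, 1000, "u"), Code(3000, 4000, "u"), Code(9000, 10000, "u")]
print(rate_per_minute(utterances, 60_000))  # utterances per minute
print(mean_gap_ms(utterances))              # mean silence between utterances
print(cohens_kappa(["w", "w", "f", "w"], ["w", "f", "f", "w"]))
```

Because every code keeps its onset and offset, the same records could also feed sequence or contingency analyses without returning to the video.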

4 |. VIDEO SHARING CAN OVERCOME THE CHALLENGES OF INFANCY RESEARCH

Of course, infancy research is not all marvelous wonders. Behavioral research has notorious challenges, and some challenges are exacerbated by the demands of working with infants. Video sharing can ease the difficulties because video is a remarkably effective form of documentation and a treasure trove for data reuse (Adolph, 2016; Adolph et al., 2017; Gilmore & Adolph, 2017).

4.1 |. Lack of transparency and reproducibility

Research methods and findings are not transparent. They cannot be because the words and static images in journal articles cannot portray procedures or phenomena in sufficient detail for the study to be replicated (Gilmore & Adolph, 2017). We write things like, “infants wore an ultra-light, wireless, head-mounted eye tracker” (Franchak, Kretch, Soska, & Adolph, 2011, p. 1741), or “parents completed the MacArthur-Bates Communicative Development Inventory” (Newman, Rowe, & Ratner, 2016, p. 1164). But the material in a typical methods section of a journal article does not explain how to get the head-mounted eye tracker on the baby (it is hard enough to put a hat on a baby) or how to administer the MCDI (e.g., how did researchers instruct parents about what counts as a baby “knowing” a “word”?). Without sufficient guidance from prior work, new investigators must reinvent the procedures for themselves. Results sections claim that infants: were “surprised or puzzled” while watching unexpected events (Baillargeon, Spelke, & Wasserman, 1985, p. 204); “seriously tried to sit in dollhouse chairs” (DeLoache, Uttal, & Rosengren, 2004, p. 1027); “avoided” the apparent drop-off on a visual cliff (Witherington, Campos, Anderson, Lejeune, & Seah, 2005); or “plunged headlong down impossibly steep hills” (Adolph, 1997, p. 65). But words and pictures cannot fully represent the phenomena, so readers must imagine what happened because they cannot see it for themselves. Because so much of behavioral science is imagined rather than seen, research findings may be misinterpreted or overinterpreted.

We regularly use photographs or line drawings that foreground the essential features of procedures and displays (Figure 1b,c). Yet, we all implicitly acknowledge the importance of contextual background factors (lighting and ambient sound, furniture and toys, wall decorations and floor coverings, experimenter clothing and demeanor, etc.). At the same time, we do not know which contextual factors matter (Van Bavel, Mende-Siedlecki, Brady, & Reinero, 2016; Nosek, 2014). Every infancy researcher adopts superstitious laboratory lore that seems too odd, obvious, or trivial to include in a methods section (no thick beards on male experimenters, professional attire on the experimenters, use “infant-directed speech” when talking to babies, etc.). The subtleties of our procedures are like art forms, passed down from mentor to mentee (Peterson, 2016).

Mind you, researchers do not willfully withhold critical information. Rather, the format of traditional journal articles does not allow for complete disclosure. When I was a new assistant professor, one action editor told me that if scientists can describe how to clone Dolly the sheep in a few paragraphs, surely I could cut my methods section down to a page or two. More to the point, outside the standard descriptions in traditional reports, we do not know what information is relevant—the experimenter’s clean-shaven face and professional attire, the inviting laboratory furniture and bright walls, or the researcher’s use of infant-directed speech. Lack of transparency about methods and findings renders many studies irreproducible, and therefore reduces confidence in the research enterprise (Gilmore & Adolph, 2017; Harris, 2017; Open Science Collaboration, 2015).

Video sharing can help. Regardless of whether video is a primary source of raw data, video documentation of procedures, displays, and findings can overcome the barriers to transparency imposed by the deficiencies of words and pictures (Adolph et al., 2017; Gilmore & Adolph, 2017; Gilmore, Kennedy, & Adolph, 2018); see, for example, video documentation in the ManyBabies project (https://osf.io/6e2sw/). Video captures more of the detail and nuance than a brief verbal description, photograph, or diagram, and thus provides a clearer basis for replication and training purposes, ensures greater fidelity to protocols, and presents more cogent illustrations for teaching. If you want to know how to put a head-mounted eye tracker on a baby, watch a video of someone doing it (Video 8 at https://www.databrary.org/volume/955/slot/39382/-?asset=190431). If you want to see how an expert team administered the MCDI, watch them do it (Video 9 at https://www.databrary.org/volume/955/slot/39383/-?asset=190454). If you do not believe that children spontaneously sit in doll chairs or plunge down impossibly steep slopes, you can see it for yourself (Video 10 at https://www.databrary.org/volume/955/slot/39384/-?asset=190432 and Video 11 at https://www.databrary.org/volume/955/slot/39385/-?asset=190572). If you assume that the word “avoid” means that infants shy away from the precipice (Adolph, Kretch, & LoBue, 2014), or the word “surprise” implies more than looking behaviors (Scherer, Zentner, & Stern, 2004), watch the videos.

Rather than relying on pictures with arrows and numbered panels to represent change over space and time, we can use video to show the actual changes over space and time (e.g., Johnson, Slemmer, & Amso, 2004) (Video 12 at https://www.databrary.org/volume/955/slot/39386/-?asset=190434). Compare what you can glean about a display, a procedure, or a research finding from words, line drawings, photographs, or a few seconds of video (Figure 1, Video 3 at https://nyu.databrary.org/volume/955/slot/39377/-). Put another way, if a picture is worth a thousand words, a video is worth a thousand pictures.

Moreover, video can capture the subtleties of procedures, displays, and phenomena without our explicit knowledge of which factors are meaningful. Video documentation—even one video of a “perfect” session with a “perfect” participant—can expose the typically hidden moderators that affect behavior and consequently affect the reproducibility of our studies. Indeed, if researchers uniformly shared videos that document procedures, displays, and findings, the importance of various factors could be tested empirically by comparing the videos (Adolph et al., 2017).

4.2 |. Lost data (Provenance and preservation)

Data loss threatens the integrity of the research enterprise. We lose our raw data, we lose our processed data, and we lose track of what we did to process the data.

Behavioral data, like all data, have a history. Every step of the workflow (the data provenance) affects the findings, and data processing is a critical part of the workflow. After we record infants doing something, we must process the raw data to turn the recordings into analyzable numbers. But processed data are uninterpretable without detailed documentation of the data provenance. Whether captured as a trace on a page (as in Figure 1a) or as flat-file data (numbers in spreadsheets), researchers cannot make much of processed data without knowing how the data were collected and processed, and what exactly the tracings or numbers represent. Many repositories for processed data (neural imaging, genomics, physiological data, etc.) are data graveyards. Things go in, but nothing comes out because lack of provenance renders the data uninterpretable and useless to others.

Eye-tracking data, for example, are meaningless without information about the make of the eye tracker, screen size and distance from the infant, duration and content of the display, calibration details, recording rate, designated regions of interest, gaze calculation, and so on (Oakes, 2012). Moreover, with commercial technologies such as Tobii eye trackers and LENA audio recorders, much of the processing goes on under the hood (e.g., Morgante, Zolfaghari, & Johnson, 2012). Because the processing is proprietary, researchers do not have ready access, so aspects of the data provenance are lost. Likewise, a coding spreadsheet filled with numbers and letters is largely uninterpretable, even when accompanied by the researcher’s codebook. Video coding manuals typically refer to tasks and behaviors with quirky, laboratory-specific labels that make the codes unusable by others. I tried to reuse codes from an old study in my laboratory, for example, but our coding manual stated only that “w = windsurfing,” “d = drunken walk,” and “h = hunchback” (Berger, Adolph, & Lobo, 2005). The descriptors were wonderful, but utterly opaque. “W = walk” and “f = fall” are equally useless. What behaviors qualify as walking steps or falls? Moreover, consider the countless decisions coders must make to score their research videos. Was that behavior a “walking step” or was the baby merely losing balance? Was the vocalization a “babble” or a “word”? With Datavyu, “under the hood” processing can be open source rather than proprietary, but we typically lose the connections between the behaviors in the videos and the codes in the spreadsheet, so again the provenance is lost. Preregistration of workflows (Nosek et al., 2015), scripts for analysis pipelines, and version control are not yet in widespread use, and are not panaceas.
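As one illustration of how the eye-tracking details listed above might travel with the processed data, here is a minimal Python sketch of a provenance record that reports which details are still undocumented. The class name, field names, and units are hypothetical choices for illustration; they are not a Databrary, Tobii, or Datavyu schema, and a real laboratory would likely need more fields.

```python
from dataclasses import dataclass, fields
from typing import List, Optional, Tuple


@dataclass
class EyeTrackingProvenance:
    """Hypothetical record of details needed to interpret eye-tracking data."""
    tracker_make: Optional[str] = None
    screen_size_cm: Optional[Tuple[float, float]] = None  # (width, height)
    viewing_distance_cm: Optional[float] = None
    display_duration_s: Optional[float] = None
    display_content: Optional[str] = None
    calibration_details: Optional[str] = None
    recording_rate_hz: Optional[float] = None
    regions_of_interest: Optional[str] = None
    gaze_calculation: Optional[str] = None


def missing_fields(record: EyeTrackingProvenance) -> List[str]:
    """Names of provenance details that have not been documented yet."""
    return [f.name for f in fields(record) if getattr(record, f.name) is None]


# Illustrative values only; "ExampleTracker" is a placeholder, not a real device.
record = EyeTrackingProvenance(tracker_make="ExampleTracker", recording_rate_hz=60.0)
print(missing_fields(record))
```

A check of this kind does not restore provenance hidden inside proprietary processing, but it can flag gaps before the data are archived or shared.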

Video sharing can help. Raw video data circumvent many issues of data provenance. Whereas processed data require extensive documentation to be interpretable, video is largely self-documenting (Adolph, 2016). Most of what researchers need to interpret video data is in the video. Simply watching a research video provides vast amounts of information about who the participants were, where they were, what they were doing, and how the data were collected. Frame rate and particulars about the camera lens are recoverable from the video. Details about the provenance are often unnecessary (make of the camera, zoom in the lens, etc.). It does not matter that I collected free play videos to study walking if another researcher is interested in infant-caregiver interactions. Moreover, a small amount of metadata (e.g., child’s age and sex, test date and location, identity of the research team) goes a long way. A good example is Gordon’s (2004) videos of Piraha adults solving number problems and Piraha children during everyday activities (Video 13 at https://www.databrary.org/volume/955/slot/39387/-?asset=190435). The Piraha have no words for numbers, so their ages are truly unknown. Nonetheless, it is easy to discern that adults were engaged in simple number-matching tasks and often failed to match to sample, and that young infants engaged in surprising activities (to Western eyes) like playing with sharp knives in full view of caregivers. Thus, shared videos of behavior can be reused by other researchers without extensive documentation of the data provenance.

Unfortunately, raw data and many parts of the data provenance get lost from the public record, and often from our own laboratory records, so the path from raw data to research findings cannot be tracked or validated. All of us forget things, and idiosyncratic terminology, spotty record-keeping, and undocumented data management practices are the norm (Nosek, 2014, 2017). Our flat-file data, coding manuals, and analysis files get buried on hard drives that only a long-gone graduate student can find. Old video files molder on DVDs and defunct computers in a corner. For older researchers like me, videotapes decay in boxes labeled with incomprehensible study name acronyms. Without a permanent record of the data provenance, our research histories will disappear, as will the history of our science, leaving only our journal articles as isolated islands of untraceable claims.

Indeed, the history of infancy research is in danger of disappearing. Gesell (1946), one of the first researchers to explicitly recognize the power of cinematography to capture infant behavior, meticulously catalogued several hundred thousand feet of film recordings with accompanying file cards that described each participant and session. But most of Gesell’s films have decayed into vinegary brown ribbons, and the file cards have crumbled into dust. The same fate befell McGraw’s (1935) famous films of twins Johnny and Jimmy. And what happened to the cinematic recordings of infants in Tronick et al.’s (1978) “still-face” experiments, Ainsworth et al.’s (1978) “strange situation,” Gibson & Walk’s (1960) “visual cliff,” and Harlow & Suomi’s (1970) “pit of despair”?

Sharing raw video data can help to preserve our research history because the demands for provenance are minimal. Moreover, if we share video files along with spreadsheets of coded data, we can see every link between coders’ decisions and the actual behaviors (Video 7 at https://www.databrary.org/volume/955/slot/39381/-?asset=190430).
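
To make the link between coders' decisions and the recorded behavior concrete, here is a minimal Python sketch. It assumes a hypothetical coding spreadsheet in which each row stores a code plus onset and offset times in milliseconds. The field names, the 30 frames-per-second default, and the helper `frames_for_event` are illustrative assumptions, not the Datavyu file format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedEvent:
    """One row of a hypothetical coding spreadsheet."""
    code: str        # coder's label, e.g. "walking step" or "fall"
    onset_ms: int    # time in the video when the behavior starts
    offset_ms: int   # time in the video when the behavior ends


def frames_for_event(event: CodedEvent, fps: float = 30.0) -> range:
    """Return the video frame indices that a coded event covers."""
    if event.offset_ms < event.onset_ms:
        raise ValueError("offset precedes onset")
    first = int(event.onset_ms * fps // 1000)
    last = int(event.offset_ms * fps // 1000)
    return range(first, last + 1)


# Hypothetical example rows; the values are made up for illustration.
events = [
    CodedEvent("walking step", onset_ms=1000, offset_ms=1500),
    CodedEvent("fall", onset_ms=2000, offset_ms=2100),
]

for event in events:
    frames = frames_for_event(event)
    print(event.code, "covers frames", frames.start, "to", frames.stop - 1)
```

Because every code carries its own time stamps, a reader of the spreadsheet can jump to the exact stretch of video behind each decision and judge whether the behavior really was a "walking step" or a "fall."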

4.3 |. Limited samples, limited time, and wasted resources

Data collection is hugely inefficient and expensive because the resources of a single laboratory—time, money, and availability of participants—are limited. Researchers rely largely on samples of convenience because recruitment is so difficult. It is a chore to identify families to contact, parents do not answer their phones or emails, they cancel because of sick babies and inclement weather, they drop out of longitudinal studies, and many scheduled laboratory sessions are unrepentant no-shows. Data collection for a single cross-sectional study can take years. It is impractical, perhaps impossible, for a single laboratory to generate a large, diverse sample.

Sharing research videos allows us to increase the diversity of our samples, grow our sample sizes, increase our age range, and speed the process of data collection. As evidenced by several thousand publications based on shared transcripts in the CHILDES language repository, pooling data across children, ages, populations, and languages allows researchers to analyze datasets large and diverse enough to answer important questions about development (MacWhinney, 2000). Moreover, CHILDES is routinely used for teaching and for student projects—research that would be impossible in the limited time frame of a typical course or honors thesis.

Infant data are precious, but underused. Many procedures are short in duration because infants’ attention and compliance are limited. The average looking-time study, for example, lasts only a few minutes, and yields only a few costly data points per baby. Attrition rates can exceed 50% of the recruited sample because infants cry, cling, or fall asleep. Pilot data survive only until the next incarnation of the procedure. “Real” data die when the study is published. The life expectancy of laborious longitudinal and cultural studies ends when the funding runs out or the researcher loses interest.

Video sharing can recoup some of the costs of data collection by giving the data new life. Raw research video is typically so rich that the original investigator cannot fully exploit its potential. Other investigators can reuse shared videos to test related hypotheses or to ask questions beyond the scope of the original study. Researchers can mine videos to collect pilot data or to demonstrate feasibility for a grant proposal. Moreover, data reuse increases the efficiency of funding by avoiding duplicate data collection (Piwowar & Chapman, 2010).

Indeed, if we shared all our videos—including data that did not contribute to the final study—we would not waste hard-earned access to infant behavior (Adolph, 2016). Videos need not represent our best work or infants’ best behaviors, because all behavior is good behavior. Our successes and mistakes documented on video will serve as illustrations and teaching tools for other researchers. Data that go unused for one study (e.g., data from pilot subjects, data from infants of the wrong age, data from infants who failed to complete the task) could be used in subsequent studies to address different questions. One researcher’s offline behaviors, filler tasks, and subsidiary tasks can become another researcher’s primary data. One laboratory’s throw-away videos of crying, clinging, and sleeping infants can be invaluable for researchers who study vocal acoustics, temperament and attachment, or changes in arousal and behavioral state.

4.4 |. Siloed expertise

Traditionally, we study the remarkable changes in infancy from our own domain of expertise. Knowledge is siloed. Language researchers study language development, and emotion researchers study emotional development. No single researcher has the wherewithal to be a developmental jack-of-all-trades, so each of us studies what we know with the methods and tools of our particular domain. Researchers in my motor development laboratory, for example, focus on infants’ movements. They often watch videos with the sound off because they lack expertise in language, emotion, and social interaction. Whereas other researchers see perceptual or cognitive variables when they watch videos of infants in looking-time paradigms, my students see postural development.

Consistent with theories of core knowledge, developmental primitives, and modularity of mind, studies of infant development typically follow the traditional divisions among research domains (Dominici et al., 2011; Fodor, 1983; Spelke, 2000; Spelke & Kinzler, 2007). But the alternative is also possible. Consistent with developmental systems views, domains of development may interact, and changes in one domain may cascade into other domains (Bronfenbrenner, 2009; Bronfenbrenner & Morris, 2006; Lerner, 2006; Masten & Cicchetti, 2010; Oakes & Rakison, 2019). However, we cannot resolve theoretical issues about interactions among domains based on the limited expertise of a single investigator.

Video sharing allows us to capitalize on the diverse expertise of our colleagues. If we make video a central part of the research enterprise and share our data, we can build on the prior coding passes of our colleagues. Possibly language and emotion are irrelevant to infants’ locomotor exploration, but maybe not. Perhaps postural control is unrelated to infants’ behavior in looking-time studies, but what if it is not? Regardless, we cannot get a complete picture of development by doing it alone. The wealth of analytic possibilities could be exploited if we shared our videos and capitalized on the expertise of others. We would make more rapid progress if we built on earlier efforts by analyzing videos in ways unimagined by the original researcher. The scientific contribution of a particular dataset need not depend on the private activities of one research laboratory, but instead could benefit from the imagination of many researchers with different expertise and viewpoints (Adolph, 2016; Adolph et al., 2017).

5 |. WE HAVE THE TOOLS TO SHARE BEHAVIORAL DEVELOPMENT

To enable widespread video sharing for documentation and data reuse, we need infrastructure and tools. The infrastructure must include the policies, technology, and staff to share, manage, store, and control access to research videos, exemplar video clips, displays, and associated metadata. The tools must make all of it easy for users. Enter Databrary.

5.1 |. Video sharing with Databrary

Databrary (databrary.org) is a digital Web-based video library, the world’s only research repository specialized for sharing video. The infrastructure is housed at New York University and sustained by grants from federal agencies and foundations (www.databrary.org/about.html). The policies are informed by ethics experts, IRB officials, grants/contracts administrators, and legal counsel. The tools are free, open source, and supported online and live. Databrary created unique solutions for the problems of sharing identifiable data, managing data to make it useable, and building a community of researchers committed to video sharing.

Video contains personally identifiable information. Participants’ faces are visible, their voices are audible, and their names may be spoken aloud. Videos may contain information about participants’ families, homes, classrooms, or neighborhoods. Blurring faces, voices, and contexts devalues the videos for reuse. Thus, Databrary’s two-pronged policy framework (www.databrary.org/access/policies/) ensures participants’ privacy while providing open and ethical access (Gilmore & Adolph, in press; Gilmore et al., 2018).

First, Databrary requires researchers to obtain participants’ permission to share their data, including raw video files, video excerpts or photographs, and metadata (e.g., birthdate, test date, location, ethnicity/race, disability status). Whereas consent to participate in a study must be obtained before the session, permission to share can be obtained at the end of the session so that participants know what was recorded (Video 14 at https://www.databrary.org/volume/955/slot/39388/-?asset=190436). Obtaining participants’ permission to share at the time of the session should become standard practice because without it, the data will remain cloistered unless researchers invest enormous effort to track down participants years later. Researchers can use Databrary’s permission template (www.databrary.org/resources/templates) or create their own. Obtaining permission to share does not obligate researchers to share, and Databrary can store all the files, including videos from participants who do not wish to share with researchers outside the original team.

Second, the Databrary policy framework restricts access to a community of researchers under the oversight of their institutions. As formalized by a data access agreement with the researcher’s institution (www.databrary.org/resources/agreement), authorized investigators are primarily faculty members at colleges, universities, and medical schools who have certified research ethics training and who are eligible to conduct independent research at their institution. Basically, Databrary extends the zone of trust from the original investigator’s laboratory to the entire Databrary community. Authorized investigators agree to respect participants’ wishes about sharing; treat other researchers’ data with the same high standards as they do for their own; and be responsible for their students’ use of other researchers’ data. The access agreement allows authorized investigators and their affiliates to freely browse the library and download videos and excerpts for learning and teaching; investigators can upload videos for sharing and reuse videos for their own research studies with approval from their institution’s IRB/ethics board.

Data management is another sticky problem. For shared data to be maximally useful, it must be curated (tagged with searchable terms and systematically labeled and organized). Otherwise, researchers cannot easily search through a repository to find the data they want (searching for uncurated data is like rummaging through other people’s stuff at a garage sale). Some repositories (e.g., Open Science Framework, osf.io, and Dataverse, dataverse.org) store uncurated data, where each researcher decides whether and how to label and organize their files. Databrary discourages the garage-sale approach. Other repositories (e.g., ICPSR, icpsr.umich.edu/icpsrweb/, and CHILDES, childes.talkbank.org) curate researchers’ data for them. This approach takes time (months to years before data are shared) and relies on communication between the researcher and curator to configure standardized data structures, tags, and labels. Databrary offers curation assistance to investigators with existing datasets.

Alternatively, researchers can curate their own data according to specified standards. However, researchers typically do not think about curation until after their data are collected and processed, and the final manuscript is submitted or in press. After-the-fact curation is tedious, time-consuming, and unrewarding for researchers, so despite their best intentions, most do not ever share their data.

Databrary offers an important variant of the self-curation option. Instead of organizing data after the study is complete, Databrary encourages “active curation,” where researchers upload and organize their data as it is collected (Gilmore & Adolph, 2019; Gilmore et al., 2018). Rather than storing videos locally, researchers store their data on Databrary and use a flexible, modifiable spreadsheet to tag information about each participant, the context of data collection, and whether the data were included in the study. The only metadata strictly required are participants’ preferences about sharing. With this active-curation framework, the cost to researchers is equivalent to current laboratory practices of storing a copy of the video and entering the metadata into a spreadsheet. Moreover, with active curation, Databrary acts as each researcher’s laboratory file server and cloud storage, enabling Web-based sharing among members of the protocol and ensuring secure backup. The videos are automatically transcoded into preservable formats, and the original copies are also stored.
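
The active-curation spreadsheet described above can be sketched in Python as follows. This is an illustration under stated assumptions: the field names, the sharing-level labels, and the `missing_sharing_preference` helper are hypothetical and do not reflect Databrary's actual schema or API. The only rule taken from the text is that the participant's sharing preference is the one strictly required piece of metadata.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical labels for a participant's sharing preference.
SHARING_LEVELS = {"research team only", "databrary community", "public"}


@dataclass
class SessionRecord:
    """One row of a hypothetical active-curation spreadsheet."""
    session_id: str
    sharing_preference: Optional[str] = None   # the only required field
    age_months: Optional[float] = None         # optional participant info
    test_date: Optional[str] = None            # optional context info
    included_in_study: Optional[bool] = None   # optional inclusion flag


def missing_sharing_preference(records: List[SessionRecord]) -> List[str]:
    """Return IDs of sessions whose sharing preference is absent or unrecognized."""
    return [
        record.session_id
        for record in records
        if record.sharing_preference not in SHARING_LEVELS
    ]


# Hypothetical rows entered as each session is collected.
records = [
    SessionRecord("s01", "databrary community", age_months=12.0),
    SessionRecord("s02"),                       # preference not yet entered
    SessionRecord("s03", "unsure", included_in_study=False),
]

print(missing_sharing_preference(records))
```

Running a check like this at the end of each testing day would flag sessions that still need a recorded preference, which is the kind of small, ongoing effort that active curation substitutes for after-the-fact cleanup.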

Sharing is easy. When investigators are ready to share (perhaps after the study is submitted for publication), they need only click a button. They can decide whether to share data only with their research team, with specific colleagues, with the entire Databrary community, or with the general public. Open sharing is the fastest way to accelerate science. Open sharing with the Databrary community means that users need not contact the original investigator to request access, and they need not collaborate with the original researcher. In fact, open sharing means that researchers we might not know, might not like, and who might not appreciate our work can access our data, praise it, critique it, build on it, and reuse it. Researchers need not worry about getting credit for sharing because each dataset has a permanent DOI and links to published papers and funding.

Video sharing is catching on, and the Databrary community is growing. Between 2014, when the Databrary website went live, and the publication of this piece in May 2020, Databrary authorized 1,145+ investigators and 545+ of their affiliates from 569+ institutions around the globe, and ingested 54,500+ hours of video.

5.2 |. The PLAY project

To jumpstart video reuse, 65 researchers across the United States and Canada are collaborating on the PLAY (Play & Learning Across a Year) project (www.play-project.org). By leveraging the joint expertise of the research team, and by capitalizing on Databrary and Datavyu to exploit the power of video, PLAY researchers will collect, transcribe, code, share, and use a video corpus of infant and mother naturalistic activity in the home. The overall goal is to catalyze discovery about infant development, and to test hypotheses about interactions among domains of development and cascades across real-time behaviors, developmental time, and environments. PLAY will focus on the crucial period from 12 to 24 months of age when infants show remarkable advances in language, object interaction, locomotion, and emotion regulation.

Together, PLAY researchers will create the first cross-domain, large-scale, fully transcribed, coded, and curated video corpus of human behavior, collected with a common protocol and coded with common criteria jointly developed by the researchers (see Video 7 at https://nyu.databrary.org/volume/955/slot/39381/-?asset=190430). The corpus will consist of videos of 1,000+ infant–mother dyads (12-, 18-, and 24-month-olds) from 30 diverse geographic sites in urban, suburban, and rural areas, with varied SES and education levels, and among English- and Spanish-speaking families. Videos will be transcribed and coded for infant and mother communicative acts, gestures, object interactions, locomotion, and emotion. The corpus will be augmented with video home tours and questionnaire data on infant language, temperament, locomotion/fall history, gender identity and socialization, home environment, media use, family and infant health, and demographics.

Moreover, PLAY will advance new ways to use video as documentation to ensure scientific transparency and reproducibility. The entire protocol and code definitions are documented at https://www.play-project.org/coding.html with exemplar video clips to illustrate text-based descriptions. The corpus and all tools will be openly shared with the Databrary community.

6 |. CONCLUSION: IT IS NEVER TOO LATE FOR BEHAVIOR

I suffer from habitual lateness. I am late to weddings. I am late to funerals. I am late to meetings. And, to my great embarrassment, I am late with nearly every invited paper, including this one.

Albeit belated (I gave my presidential address in 2016), my message is still timely: Infant behavior is so rich, so complex, and so beautiful that we will never run out of new and exciting things to discover. By making behavior as “tangible as tissue,” video can lead to new insights into the causes and consequences of infant learning and development. Moreover, if we capture infant behavior on video, store the recordings in preservable formats in secure repositories, and openly share the recordings with other researchers, then the behaviors we study will have life beyond our journal articles and grant reports. Behavior, unlike the researchers who study it, never gets old.

ACKNOWLEDGEMENTS

Work on this article was supported by NICHD grants R01-HD033486, R01-HD086034, R01-HD094830, and DARPA-N66001–19-2–4035. I declare no conflicts of interest with regard to the funding sources for this work. I am grateful to Eleanor Gibson and Esther Thelen for teaching me to appreciate behavior, Brian MacWhinney for inspiring me to share behavior, three decades of students, colleagues, and mentors for helping me to understand behavior, and countless infants for providing so much beautiful behavior to study. I am blessed that Rick Gilmore and Catherine Tamis-LeMonda share my passion for video and the responsibility for Databrary, Datavyu, and PLAY. I thank Mark Blumberg, Rick Gilmore, Lily Gordon, Justine Hoch, Vanessa LoBue, Jennifer Rachwani, Catherine Tamis-LeMonda, and Melody Xu for reading earlier drafts of this article, and Minxin Chen, Justine Hoch, Jennifer Rachwani, and Melody Xu for their help with figures and videos.

Funding information

Eunice Kennedy Shriver National Institute of Child Health and Human Development, Grant/Award Number: R01-HD033486, R01-HD086034 and R01-HD094830;

Defense Advanced Research Projects Agency, Grant/Award Number: N66001–19-2–4035

REFERENCES

- Adolph KE (2015). Best practices for coding behavioral data from video. Retrieved from http://datavyu.org/user-guide/best-practices.html

- Adolph KE (2016). Video as data: From transient behavior to tangible recording. APS Observer, 29, 23–25.

- Adolph KE, & Berger SE (2006). Motor development. In Kuhn D, & Siegler RS (Eds.), Handbook of child psychology: Vol. 2 Cognition, perception, and language (6th ed., Vol. 2 Cognitive Processes, pp. 161–213). New York: Wiley.

- Adolph KE (1997). Learning in the development of infant locomotion. Monographs of the Society for Research in Child Development, 62(3), 1–140. 10.2307/1166199

- Adolph KE, Cole WG, Komati M, Garciaguirre JS, Badaly D, Lingeman JM, … Sotsky RB (2012). How do you learn to walk? Thousands of steps and dozens of falls per day. Psychological Science, 23, 1387–1394.

- Adolph KE, Gilmore RO, & Kennedy JL (2017). Video data and documentation will improve psychological science. Psychological Science Agenda, http://science/about/psa/2017/2010/index.aspx

- Adolph KE, Hoch JE, & Cole WG (2018). Development (of walking): 15 suggestions. Trends in Cognitive Sciences, 22, 699–711. 10.1016/j.tics.2018.05.010

- Adolph KE, Kretch KS, & LoBue V (2014). Fear of heights in infants? Current Directions in Psychological Science, 23, 60–66. 10.1177/0963721413498895

- Adolph KE, & Robinson SR (2013). The road to walking: What learning to walk tells us about development. In Zelazo P (Ed.), Oxford handbook of developmental psychology (pp. 403–443). New York: Oxford University Press.

- Adolph KE, & Robinson SR (2015). Motor development. In Liben L, & Muller U (Eds.), Handbook of child psychology and developmental science (7th ed., Vol. 2 Cognitive Processes, pp. 113–157). New York: Wiley.

- Adolph KE, Robinson SR, Young JW, & Gill-Alvarez F. (2008). What is the shape of developmental change? Psychological Review, 115, 527–543. 10.1037/0033-295X.115.3.527

- Adolph KE, Vereijken B, & Shrout PE (2003). What changes in infant walking and why. Child Development, 74, 474–497. 10.1111/1467-8624.7402011

- Ainsworth MDS, Blehar MC, Waters E, & Wall S. (1978). Patterns of attachment: A psychological study of the Strange Situation. Hillsdale, NJ: Erlbaum.

- Aslin RN (2007). What’s in a look? Developmental Science, 10, 48–53. 10.1111/j.1467-7687.2007.00563.x

- Aslin RN, Shukla M, & Emberson LL (2015). Hemodynamic correlates of cognition in human infants. Annual Review of Psychology, 66, 349–379. 10.1146/annurev-psych-010213-115108

- Baillargeon R, Spelke ES, & Wasserman S. (1985). Object permanence in five-month-old infants. Cognition, 20, 191–208. 10.1016/0010-0277(85)90008-3

- Bakeman R, & Quera V. (2011). Sequential analysis and observation methods for the behavioral sciences. New York, NY: Cambridge University Press.

- Beebe B. (2014). My journey in infant research and psychoanalysis: Microanalysis, a social microscope. Psychoanalytic Psychology, 31, 4–25. 10.1037/a0035575

- Berger SE, Adolph KE, & Lobo SA (2005). Out of the toolbox: Toddlers differentiate wobbly and wooden handrails. Child Development, 76, 1294–1307. 10.1111/j.1467-8624.2005.00851.x

- Bronfenbrenner U. (2009). The ecology of human development. Cambridge, MA: Harvard University Press.

- Bronfenbrenner U, & Morris PA (2006). The bioecological model of human development. In Lerner R, & Damon W (Eds.), Handbook of child psychology: Vol. 1 Theoretical models of human development (6th ed., Vol. 1 Theoretical Models of Human Development, pp. 793–828). New York, NY: Wiley.

- Chen Z, & Siegler RS (2000). Across the great divide: Bridging the gap between understanding of toddlers’ and older children’s thinking. Monographs of the Society for Research in Child Development, 65(2), 1–96.

- Cole WG, Robinson SR, & Adolph KE (2016). Bouts of steps: The organization of infant exploration. Developmental Psychobiology, 58, 341–354. 10.1002/dev.21374

- Congdon EL, Novack MA, & Goldin-Meadow S. (2016). Gesture in experimental studies: How videotape technology can advance psychological theory. Organizational Research Methods, 21, 489–499. 10.1177/1094428116654548

- Curtis S. (2011). “Tangible as tissue”: Arnold Gesell, infant behavior, and film analysis. Science in Context, 24, 417–442. 10.1017/S0269889711000172

- DeLoache JS, Uttal DH, & Rosengren KS (2004). Scale errors offer evidence for a perception-action dissociation early in life. Science, 304(5673), 1027–1029.

- Diamond A. (2006). The early development of executive functions. In Bialystok E, & Craik FIM (Eds.), Lifespan cognition: Mechanisms of change (pp. 70–95). New York, NY: Oxford University Press.

- Dominici N, Ivanenko YP, Cappellini G, d’Avella A, Mondi V, Cicchese M, … Lacquaniti F. (2011). Locomotor primitives in newborn babies and their development. Science, 334, 997–999. 10.1126/science.1210617

- Dupoux E. (2018). Cognitive science in the era of artificial intelligence: A roadmap for reverse-engineering the infant language-learner. Cognition, 173, 43–59. 10.1016/j.cognition.2017.11.008

- Fodor J. (1983). Modularity of mind. Cambridge, MA: MIT Press.

- Franchak JM, & Adolph KE (2014). Affordances as probabilistic functions: Implications for development, perception, and decisions for action. Ecological Psychology, 26, 109–124. 10.1080/10407413.2014.874923

- Franchak JM, Kretch KS, Soska KC, & Adolph KE (2011). Head-mounted eye tracking: A new method to describe infant looking. Child Development, 82, 1738–1750. 10.1111/j.1467-8624.2011.01670.x

- Gesell A. (1933). Maturation and the patterning of behavior. In Murchison C (Ed.), Handbook of child psychology, Vol. 1, 2nd ed. (pp. 209–235). Worcester, MA: Clark University Press.

- Gesell A. (1935). Cinemanalysis: A method of behavior study. Journal of Genetic Psychology, 47, 3–16.

- Gesell A. (1946). Cinematography and the study of child development. The American Naturalist, 80, 470–475. 10.1086/281463

- Gesell A. (1952). Arnold Gesell. In Boring EG, Werner H, Langfeld HS, & Yerkes RM (Eds.), A history of psychology in autobiography, Vol. 4 (pp. 123–142). Worcester, MA: Clark University Press.

- Gibson EJ, & Walk RD (1960). The “visual cliff”. Scientific American, 202, 64–71. 10.1038/scientificamerican0460-64

- Gilmore RO, & Adolph KE (2019). Open sharing of research video: Breaking down the boundaries of the research team. In Hall KL, Vogel AL, & Croyle RT (Eds.), Strategies for team science success: Handbook of evidence-based principles for cross-disciplinary science and practical lessons learned from health researchers (pp. 575–583). Cham: Springer.

- Gilmore RO, & Adolph KE (2017). Video can make behavioral science more reproducible. Nature Human Behaviour, 1, 0128.

- Gilmore RO, Kennedy JL, & Adolph KE (2018). Practical solutions for sharing data and materials from psychological research. Advances in Methods and Practices in Psychological Science, 1, 121–130. 10.1177/2515245917746500

- Goldman R, Pea R, Barron B, & Derry SJ (Eds.) (2006). Video research in the learning sciences. Mahwah, NJ: Erlbaum.

- Goldstein MH, & Schwade JA (2008). Babbling facilitates rapid phonological learning. Psychological Science, 19, 5.

- Gordon P. (2004). Numerical cognition without words: Evidence from Amazonia. Science, 306, 496–499. 10.1126/science.1094492

- Halverson HM (1928). The Yale Psycho-Clinic Photographic Observatory. The American Journal of Psychology, 40, 126–129. 10.2307/1415316

- Hamlin JK (2014). The conceptual and empirical case for social evaluation in infancy. Human Development, 57, 250–258. 10.1159/000365120

- Harlow HF, & Suomi SJ (1970). Induced psychopathology in monkeys. Engineering and Science, 33, 8–14.

- Harris R. (2017). Rigor mortis: How sloppy science creates worthless cures, crushes hopes, and wastes billions. New York, NY: Basic Books.

- Heiman CM, Cole WG, Lee DK, & Adolph KE (2019). Object interaction and walking: Integration of old and new skills in infant development. Infancy, 24, 547–569. 10.1111/infa.12289

- Held R, Birch E, & Gwiazda J. (1980). Stereoacuity of human infants. Proceedings of the National Academy of Sciences, 77, 5572–5574. 10.1073/pnas.77.9.5572

- Hoch JE, O’Grady SM, & Adolph KE (2018). It’s the journey, not the destination: Locomotor exploration in infants. Developmental Science, 22, e12740.

- Hulsebus RC (1974). Operant conditioning of infant behavior: A review. Advances in Child Development and Behavior, 8, 111–158.

- Johnson SP, Slemmer JA, & Amso D. (2004). Where infants look determines how they see: Eye movements and object perception performance in 3-month-olds. Infancy, 6, 185–201. 10.1207/s15327078in0602_3

- Karasik LB, Tamis-LeMonda CS, & Adolph KE (2011). Transition from crawling to walking and infants’ actions with objects and people. Child Development, 82, 1199–1209. 10.1111/j.1467-8624.2011.01595.x

- Lee DK, Cole WG, Golenia L, & Adolph KE (2018). The cost of simplifying complex developmental phenomena: A new perspective on learning to walk. Developmental Science, 21, e12615. 10.1111/desc.12615

- Lerner RM (2006). Developmental science, developmental systems, and contemporary theories of human development. In Siegler RS, Lerner RM, & Damon W (Eds.), Handbook of child psychology. Vol. 1. Theoretical models of human development (6th ed., pp. 1–17). Hoboken: John Wiley & Sons Inc.

- LoBue V, & Adolph KE (2019). Fear in infancy: Lessons from snakes, spiders, heights, and strangers. Developmental Psychology, 55, 1889–1907.

- MacWhinney B. (2000). The CHILDES Project: Tools for analyzing talk, 3rd ed. Mahwah, NJ: Erlbaum.

- Masten AS, & Cicchetti D. (2010). Developmental cascades. Development and Psychopathology, 22, 491–495. 10.1017/S0954579410000222

- McGraw MB (1935). Growth: A study of Johnny and Jimmy. New York, NY: Appleton-Century Crofts.

- McGraw MB (1945). The neuromuscular maturation of the human infant. New York, NY: Columbia University Press.

- Morgante JD, Zolfaghari R, & Johnson SP (2012). A critical test of temporal and spatial accuracy of the Tobii T60XL eye tracker. Infancy, 17, 9–32. 10.1111/j.1532-7078.2011.00089.x

- Munakata Y. (2006). Information processing approaches to development. In Kuhn D, & Siegler RS (Eds.), Handbook of child psychology: Vol. 2 Cognition, perception, and language (6th ed., Vol. 2 Cognitive Processes, pp. 426–463). New York, NY: Wiley.

- Munakata Y, McClelland JL, Johnson MH, & Siegler RS (1997). Rethinking infant knowledge: Toward an adaptive process account of successes and failures in object permanence tasks. Psychological Review, 104, 686–713. 10.1037/0033-295X.104.4.686

- Newman RS, Rowe ML, & Ratner NB (2016). Input and uptake at 7 months predicts toddler vocabulary: The role of child-directed speech and infant processing skills in language development. Journal of Child Language, 43, 1158–1173. 10.1017/S0305000915000446

- Nosek BA (2014). Improving my lab: My science with the Open Science Framework. APS Observer, 27(3). Retrieved from.

- Nosek BA (2017). Why are we working so hard to open up science? A personal story. https://cos.io/blog/why-are-we-working-so-hard-open-science-personal-story/

- Nosek BA, Alter G, Banks GC, Borsboom D, Bowman SD, Breckler SJ, … Yarkoni T. (2015). Promoting an open research culture. Science, 348, 1422–1425. 10.1126/science.aab2374

- Oakes LM (2012). Advances in eye tracking in infancy research. Infancy, 17, 1–8. 10.1111/j.1532-7078.2011.00101.x

- Oakes LM, & Rakison DH (2019). Developmental cascades: Building the infant mind. New York, NY: Oxford University Press.

- Open Science Collaboration (2015). Estimating the reproducibility of psychological science. Science, 349, aac4716.

- Ossmy O, Gilmore RO, & Adolph KE (2020). AutoViDev: A computer-vision framework to enhance and accelerate research in human development. In Arai K, & Kapoor S (Eds.), Advances in computer vision: CVC 2019. Advances in Intelligent Systems and Computing (Vol. 944, pp. 147–156). Cham, Switzerland: Springer.

- Peterson D. (2016). The baby factory: Difficult research objects, disciplinary standards, and the production of statistical significance. Socius: Sociological Research for a Dynamic World, 2, 1–10.

- Piwowar HA, & Chapman WW (2010). Public sharing of research datasets: A pilot study of associations. Journal of Informetrics, 4, 148–156. 10.1016/j.joi.2009.11.010

- Rovee-Collier CK, & Gekoski M. (1979). The economics of infancy: A review of conjugate reinforcement. In Reese HW, & Lipsitt LP (Eds.), Advances in child development and behavior (Vol. 13, pp. 195–255). New York, NY: Academic Press.

- Saarni C, Campos JJ, Camras LA, & Witherington D. (2006). Emotional development: Action, communication, and understanding. In Eisenberg N (Ed.), Handbook of child psychology. Vol. 3. Social, emotional, and personality development (6th ed., pp. 226–299). New York, NY: John Wiley & Sons.

- Sanderson PM, Scott JJP, Johnston T, Mainzer J, Watanabe LM, & James JM (1994). MacSHAPA and the enterprise of Exploratory Sequential Data Analysis (ESDA). International Journal of Human-Computer Studies, 41, 633–681. 10.1006/ijhc.1994.1077

- Scherer KR, Zentner MR, & Stern D. (2004). Beyond surprise: The puzzle of infants’ expressive reactions to expectancy violation. Emotion, 4, 389–402. 10.1037/1528-3542.4.4.389

- Spelke ES (2000). Core knowledge. American Psychologist, 55, 1233–1243. 10.1037/0003-066X.55.11.1233

- Spelke ES, & Kinzler KD (2007). Core knowledge. Developmental Science, 10, 89–96. https://doi.org/10.1111/j.1467-7687.2007.00569.x

- Tafreshi D, Thompson JJ, & Racine TP (2014). An analysis of the conceptual foundations of the infant preferential looking paradigm. Human Development, 57, 222–240. 10.1159/000363487

- Tamis-LeMonda CS, & Bornstein MH (1996). Variation in children’s exploratory, nonsymbolic, and symbolic play: An explanatory multidimensional framework. In Rovee-Collier CK, & Lipsitt LP (Eds.), Advances in infancy research (Vol. 10, pp. 37–78). Westport, CT: Ablex.

- Tamis-LeMonda CS, Kuchirko Y, Luo R, Escobar K, & Bornstein MH (2017). Power in methods: Language to infants in structured and naturalistic contexts. Developmental Science, 20, e12456. 10.1111/desc.12456

- Teller DY (1979). The forced-choice preferential looking procedure: A psychophysical technique for use with human infants. Infant Behavior and Development, 2, 135–153. 10.1016/S0163-6383(79)80016-8

- Thelen E. (1992). Development as a dynamic system. Current Directions in Psychological Science, 1, 189–193. 10.1111/1467-8721.ep10770402

- Thelen E, & Adolph KE (1992). Arnold L. Gesell: The paradox of nature and nurture. Developmental Psychology, 28, 368–380. 10.1037/0012-1649.28.3.368

- Thelen E, Ulrich BD, & Wolff PH (1991). Hidden skills: A dynamic systems analysis of treadmill stepping during the first year. Monographs of the Society for Research in Child Development, 56(1), 1–103. 10.2307/1166099

- Tronick E, Als H, Adamson L, Wise S, & Brazelton TB (1978). Infant’s response to entrapment between contradictory messages in face-to-face interaction. Journal of the American Academy of Child and Adolescent Psychiatry, 17, 1–13.

- Van Bavel JJ, Mende-Siedlecki P, Brady WJ, & Reinero DA (2016). Contextual sensitivity in scientific reproducibility. Proceedings of the National Academy of Sciences, 113, 6454–6459. 10.1073/pnas.1521897113

- Witherington DC, Campos JJ, Anderson DI, Lejeune L, & Seah E. (2005). Avoidance of heights on the visual cliff in newly walking infants. Infancy, 7, 285–298. 10.1207/s15327078in0703_4

- Zeanah CH (Ed.). (2018). Handbook of infant mental health (4th ed.). New York, NY: Guilford.

Associated Data
