Abstract

Mental health apps offer unique opportunities for self-management of mental health and well-being in mobile, cost-effective ways. There is an abundance of apps available to consumers, but selecting a useful one presents a challenge. Most available apps are not supported by empirical evidence and thus consumers have access to a range of unreviewed apps, the benefits of which are not known or supported. While user ratings exist, and are likely to be considered by consumers when selecting an app, they do not actually yield information on app suitability. A possible alternative way for consumers to choose an app would be to use an app review platform. A number of attempts have been made to construct such a platform, and this paper introduces PsyberGuide, which offers a step towards providing objective and actionable information for publicly available mental health apps.

Keywords: mental health, mobile apps, mHealth, dissemination, technology

The Rise of mHealth

Smartphones are increasing in prevalence and use, and mobile health (“mHealth”) apps have tremendous potential to facilitate and enhance mental health care. Apps can support individuals in managing their own mental health and be used in clinical care settings to augment existing treatment processes. Most apps range in cost from free to a few dollars, can be downloaded by anyone with a smartphone device, and can be used privately and “on the go,” overcoming barriers to traditional mental health treatments such as cost, access, and stigma. Recent estimates suggest that approximately 259,000 mHealth apps are available (Research2guidance, 2016), with mental health apps comprising about one third of disease-specific apps (Aitken & Lyle, 2015). Consumers appear quite interested in mental health apps. Community samples and psychiatric patients hold positive attitudes towards the use of apps to aid self-management of mental health (Proudfoot, 2013; Torous et al., 2014). Furthermore, clinicians report they would use and recommend apps if issues of security, privacy, and interoperability could be overcome (Schueller, Washburn, & Price, 2016).

Despite the enthusiasm of consumers and clinicians for mental health apps, more questions than answers abound: Do they really work? How do I find good ones? How can I actually use these things to improve my life or my practice? We aim to provide some answers to these questions by providing a brief overview of the empirical support for mental health apps, some resources for finding suitable apps, and examples of exemplary apps. We conclude with suggestions for people and clinicians looking to maximize the benefit that could be obtained from mental health apps. Our goal is to help empower readers to find useful resources and better understand best practices for use of mental health apps.

Do They Really Work? High Availability But Low Evidence Base

Considerable evidence is building that mental health apps are effective for a range of mental health conditions, including depression (Firth, Torous, Nicholas, Carney, Pratap, et al., 2017), anxiety (Firth, Torous, Nicholas, Carney, Rosenbaum, et al., 2017), bipolar disorder, and schizophrenia (Ben-Zeev et al., in press). However, very few apps have rigorous evidence demonstrating their efficacy; most studies use weak control conditions such as waitlist or no-treatment controls. Of the apps that do have direct efficacy data, few are actually available to consumers, having been developed for research purposes alone.

In a recent review of anxiety apps (Sucala et al., 2017), only 2 of 52 apps (3.8%) had feasibility and efficacy data from an RCT. Sixty-seven percent of the apps lacked the advisory support of a health care professional in their development. Worse still, many apps do not even incorporate evidence-based strategies. In a review of apps targeting symptoms of worry and anxiety, over three-fourths of the apps (280/361) did not contain content consistent with any of seven identified evidence-based strategies for generalized anxiety disorder: assessment/self-monitoring, psychoeducation, progressive/applied relaxation, exposure, cognitive restructuring, stimulus control, and acceptance/mindfulness (Kertz et al., 2017). This absence of appropriate strategies likely reflects a lack of clinical expertise during app development. Apps for bipolar disorder (BD) show a similar pattern (Nicholas, Larsen, Proudfoot, & Christensen, 2015). Alarmingly, 6 of the 82 apps in Nicholas and colleagues’ review contained incorrect information, ranging from incorrect differentiation of BD to critically wrong self-management advice (e.g., “take a shot of hard liquor” before bed during a manic episode). Thus, the app marketplace demonstrates “high availability but low evidence base” (Leigh & Flatt, 2015), meaning that consumers often use unreviewed, unsupported apps in conjunction with, or even in lieu of, mental health care.

It is unlikely that the discrepancy between the number of available and evaluated mental health apps will be solved by RCTs alone. The time required to conduct an RCT and publish results does not align with the rapid development cycle of apps. Trials often have extensive eligibility criteria, which slows recruitment and decreases generalizability. More pragmatic trials of apps freely available in app stores often have low rates of participant engagement, complicating the interpretation of findings (e.g., Anguera, Jordan, Castaneda, Gazzaley, & Areán, 2016; Arean et al., 2016). With a bewildering abundance of apps available, clinicians and consumers will continue to face challenges in discerning which apps are the most effective, usable, engaging, or safe; how do we separate the good from the bad?

How Do I Find Good Apps?

A common strategy to find an app is to search the app marketplaces (i.e., the Apple iTunes or Google Play store) and to download the most popular or highest-rated product. People value ratings and peer reviews; highly rated apps tend to be downloaded more frequently than those with lower ratings (Nicholas et al., 2015). For health apps, however, consumer ratings do not seem to reflect clinical usefulness. User ratings show only moderate correlations with objective app quality rating scales (e.g., MARS, which will be discussed below; Stoyanov et al., 2015). People who leave app ratings are a biased, self-selected sample and might represent users with a particularly negative or positive experience to share. Furthermore, people who rate apps might not have engaged with an app long enough to explore its full functionality, and they likely lack the expertise needed to comment on aspects such as the inclusion of evidence-based strategies. App developers can leave ratings for their own apps or pay others to do so, and there is no way to distinguish genuine consumer ratings from these other ratings (BinDhim, Hawkey, & Trevena, 2015).

Apps are also found through word of mouth, lists of “top” mental health apps, or advertisements. Patients, especially those receiving mental health care, are more likely to download and use an app if their provider recommends it (Aitken & Lyle, 2015). Therefore, increasing providers’ knowledge about apps may be an important pathway to increase uptake. While stigma might prevent a consumer from asking their personal networks for recommendations about mental health apps, providers can use their professional networks to solicit advice (e.g., listservs, publications, trainings and workshops, or professional meetings). However, just because an app is recommended by a professional does not mean it is “good.” Resources specifically developed to screen, identify, and assess mental health apps could substantially help both consumers and clinicians identify quality offerings. In general, such efforts can be divided into two categories: app rating guidelines, which can be used by those considering apps, and app rating platforms, which function as clearinghouses for apps. We provide specific examples of both in Table 1, including the features of each.

Table 1.

Features of App Rating Platforms and Guidelines

| Name | Scope | Features | Status | # of Rated Products |
|---|---|---|---|---|
| PsyberGuide | App rating platform | | Active | 115 |
| ADAA | App rating platform | | Active | 19 |
| MindTools | App rating platform | Rates apps on Enlight | Active | 78 |
| ORCHA | App rating platform | | Active | 173 |
| mHAD | App rating platform | | Not yet launched (Rathner, 2017) | |
| Beacon | App rating platform | | Suspended | |
| Happtique | App rating platform | | Suspended | |
| The Toolbox | App rating platform | | Not publicly available; evaluated in published RCT (Bidargaddi et al., 2017) | |
| American Psychiatric Association | App rating guidelines | | Active | |
| Enlight | App rating guidelines | Quality assessments: | Active | |
| Mobile App Rating Scale (MARS) | App rating guidelines | | | |

App Rating Guidelines

App rating guidelines outline which characteristics to consider when assessing a mobile mental health app, often along with clear criteria defining what constitutes a quality app with respect to those characteristics. The American Psychiatric Association, for example, has proposed a five-stage app rating model that includes (1) gathering background information; (2) determining risk, privacy, and security; (3) evaluating evidence; (4) assessing ease of use; and (5) considering interoperability. These stages are hierarchical: if an app is judged insufficient at one stage, there is no need to consider it any further.
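The hierarchical logic of the APA model can be sketched in a few lines of Python. This is an illustrative sketch only: the stage names follow the text above, while the judgment at each stage is a clinical decision made by a human rater, which the code simply takes as a True/False input. Treating a stage with no recorded judgment as insufficient is an assumption of this sketch, not part of the APA model.

```python
# Illustrative sketch of the APA model's hierarchical screening logic.
# Stage names come from the text; each stage's pass/fail judgment is
# assumed to be made by a human rater and passed in as a bool.
STAGES = [
    "background information",
    "risk, privacy, and security",
    "evidence",
    "ease of use",
    "interoperability",
]


def evaluate_app(stage_results: dict) -> tuple:
    """Apply the stages in order, stopping at the first insufficient one.

    stage_results maps a stage name to True (acceptable) or False
    (insufficient). A missing stage is treated as insufficient
    (an assumption of this sketch).
    Returns (passed_all_stages, stages_actually_reviewed).
    """
    reviewed = []
    for stage in STAGES:
        reviewed.append(stage)
        if not stage_results.get(stage, False):
            # Hierarchical rule: later stages need not be considered.
            return False, reviewed
    return True, reviewed


# Hypothetical app that fails on privacy: its evidence is never examined.
print(evaluate_app({
    "background information": True,
    "risk, privacy, and security": False,
    "evidence": True,
}))
# (False, ['background information', 'risk, privacy, and security'])
```

Stopping early mirrors the rationale of the model: an app with unacceptable privacy practices should not be recommended however strong its evidence base.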

The most widely used rating system for mental health apps is the Mobile App Rating Scale (MARS), which provides an objective, multidimensional rating of health app quality and usability (Stoyanov et al., 2015). The MARS was developed by extracting quality indicators from different fields, including human-computer interaction and mHealth. The resultant scale provides a total mean score representing overall app quality and four subscales: engagement, functionality, aesthetics, and information. Raters also provide a subjective quality score, based on their own impression of the app, including its usability and perceived effectiveness. The MARS demonstrates good levels of internal consistency and interrater reliability and has been used in several publications to understand a variety of different mental health apps—for example, mindfulness (Mani, Kavanagh, Hides, & Stoyanov, 2015) and depression (Stoyanov et al., 2015).
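As a rough sketch of how the MARS total is formed, the overall app quality score is the mean of the four subscale means, each subscale being the mean of its items rated on a 5-point scale; the separate subjective quality rating is not part of this total. The item ratings below are hypothetical, and the function accepts however many items a subscale has, so it does not encode the official item list.

```python
from statistics import mean

SUBSCALES = ("engagement", "functionality", "aesthetics", "information")


def mars_scores(item_ratings: dict) -> dict:
    """Return each subscale mean plus the overall app quality mean.

    item_ratings maps a subscale name to a list of 1-5 item ratings.
    Items rated "not applicable" should be left out of the list.
    """
    scores = {}
    for name in SUBSCALES:
        ratings = item_ratings[name]
        if not ratings or any(not 1 <= r <= 5 for r in ratings):
            raise ValueError(f"{name}: need at least one rating between 1 and 5")
        scores[name] = mean(ratings)
    # Overall quality is the mean of the four subscale means,
    # not the mean of all items pooled together.
    scores["overall"] = mean(scores[name] for name in SUBSCALES)
    return scores


# Hypothetical ratings for one app.
example = mars_scores({
    "engagement": [4, 4, 3, 5, 4],
    "functionality": [5, 5, 4, 4],
    "aesthetics": [4, 4, 4],
    "information": [4, 3, 4, 4, 5],
})
print(example["overall"])  # 4.125
```

Averaging subscale means, rather than pooling items, keeps a subscale with many items (such as information) from dominating the total.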

Recently, the Enlight rating guidelines were developed through a systematic review of existing app rating methods (Baumel, Faber, Mathur, Kane, & Muench, 2017). The result is a comprehensive battery of quality assessments and checklists. The quality assessment section consists of 25 items divided into six core constructs: usability, visual design, user engagement, content, therapeutic persuasiveness, and therapeutic alliance. Each construct is rated on Likert-type scales with detailed anchors to standardize the review process. After rating the six core constructs, raters complete a general subjective evaluation covering the likelihood that the app could achieve its intended clinical aim, whether it properly balances difficulty of use against motivation, and overall satisfaction. Checklists are simpler than quality assessments and only require identifying specific aspects related to product use, including credibility, evidence base, privacy explanation, and basic security.

Consensus among these varied guidelines is that multidimensional rating systems are needed, rather than single numerical ratings such as the “star” ratings found in app stores. Multidimensional ratings are useful for consumers and clinicians because different characteristics matter more for different people or purposes; for example, people with low technological literacy might need an especially user-friendly app. However, rating guidelines do not necessarily simplify the process of finding a mental health app: they still require significant effort from users, who must identify candidate products and then review each one. This limitation has led to the rise of app rating platforms, which identify and rate apps and provide clear information about them.
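To illustrate why a multidimensional profile is more useful than a single star rating, the sketch below ranks apps by a weighted average whose weights reflect one user's priorities. The app names, dimension scores, and weights are all hypothetical, and weighted averaging is just one simple way a user or clinician might combine dimensions; none of the guidelines above prescribes it.

```python
def rank_apps(profiles: dict, weights: dict) -> list:
    """Rank apps by a weighted average of their dimension scores.

    profiles: app name -> {dimension: score}
    weights:  dimension -> non-negative importance for this user
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")

    def weighted_score(app: str) -> float:
        return sum(profiles[app][dim] * w for dim, w in weights.items()) / total

    return sorted(profiles, key=weighted_score, reverse=True)


# Hypothetical apps with different strengths.
profiles = {
    "App A": {"engagement": 4.5, "functionality": 3.0},
    "App B": {"engagement": 2.5, "functionality": 4.0},
}
print(rank_apps(profiles, {"engagement": 1, "functionality": 1}))  # ['App A', 'App B']
# A user with low technological literacy may weight ease of use more heavily.
print(rank_apps(profiles, {"engagement": 1, "functionality": 3}))  # ['App B', 'App A']
```

The same two apps swap places depending on whose priorities are applied, which is information a single aggregate score cannot convey.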

App Rating Platforms

App rating platforms act as clearinghouses for mobile apps in a particular domain. Several app rating platforms exist, including a few focused specifically on mental health apps. These include the for-profit Organization for the Review of Care & Health Applications (ORCHA), the Anxiety and Depression Association of America’s (ADAA) mental health app ratings, the Mobile Health App Database (mHAD) Germany, MindTools, PsyberGuide, and the Toolbox (see Table 1 for details). The National Health Service’s app library and Head to Health Australia also provide resources to help consumers identify mental health apps but do not provide ratings. A pioneering platform in this space was Beacon, which provided users with a directory of eHealth resources beginning in 2009 (Christensen et al., 2010). It was suspended in 2016, however, with fewer than 40 mental health apps reviewed on the site. We focus on PsyberGuide as it is currently the most active and comprehensive platform in the United States and, as a nonprofit, is able to provide unbiased reviews.

PsyberGuide: A Web Platform for App Ratings

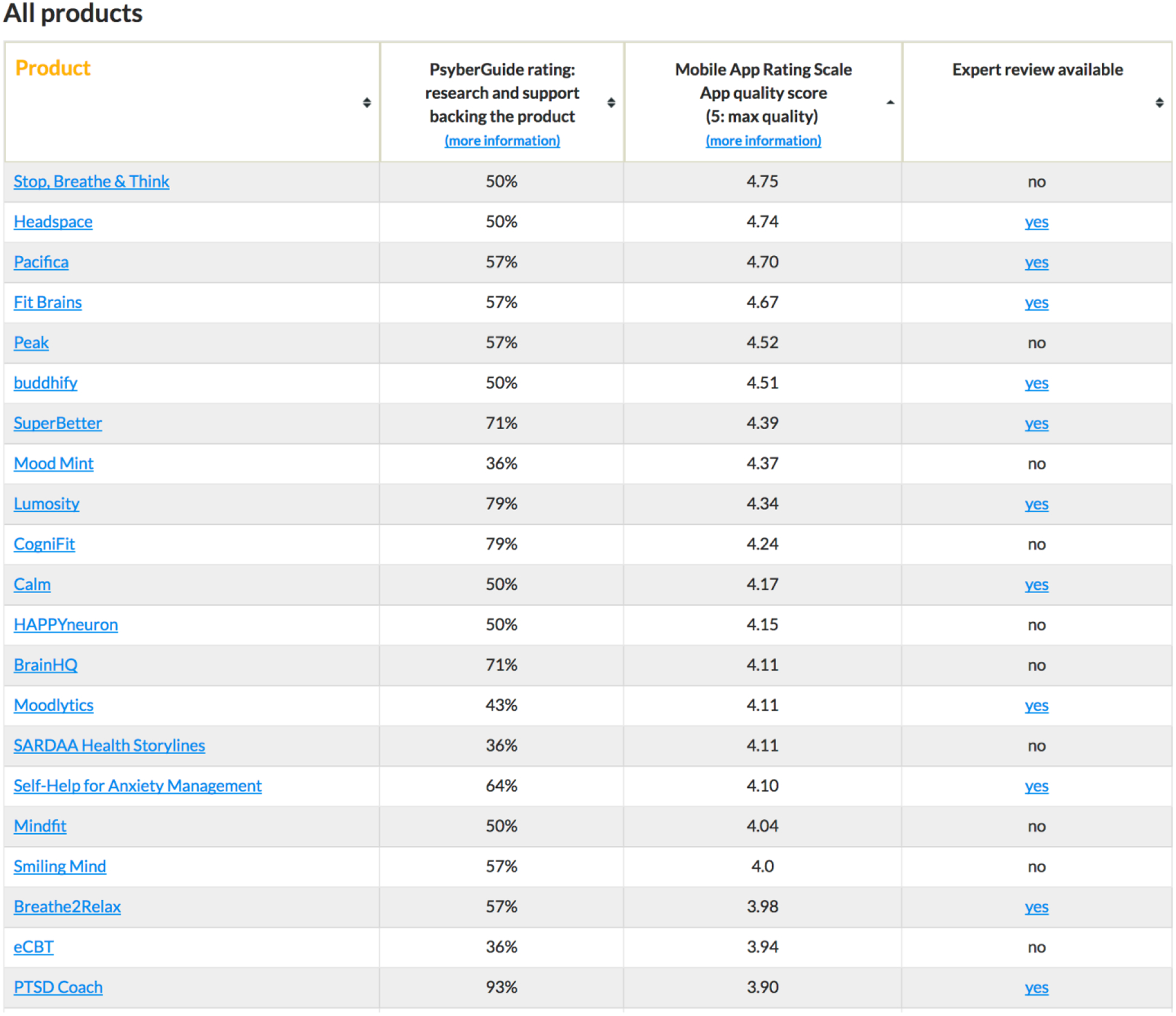

PsyberGuide (https://psyberguide.org) is a nonprofit initiative that maintains an online consumer guide for digital mental health products, with a particular emphasis on smartphone apps. Apps are reviewed through four independent rating systems: PsyberGuide ratings, MARS scores, expert reviews, and transparency checklists (see Figure 1 for an example screenshot of how information is presented on the website). PsyberGuide ratings are a metric of “credibility,” indicating the extent of empirical research and support for a product. There are six subscales. The “research base” subscale gives an overview of the research support for the product and the quality of that research; “research support” rates the quality of the funding source for any supporting research; and “proposed intervention” assesses the specificity of the change strategies within the app. The remaining three subscales rate product advisory support, the number of available consumer ratings, and software support. Ratings are combined into a percentage score, and additional descriptive information is provided, including whether apps are designed to be used in consultation with a mental health professional. The PsyberGuide rating thus provides basic consumer information on each app as well as a comprehensive overview of supporting empirical evidence.
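The text states only that the six subscale ratings are combined into a percentage score. The sketch below shows one plausible way to do that, summing earned points and dividing by the maximum possible. The maximum points per subscale and the example ratings are hypothetical placeholders; the actual PsyberGuide rubric and weighting may differ.

```python
# HYPOTHETICAL maximum points per subscale; the real PsyberGuide
# rubric may use different values and weighting.
MAX_POINTS = {
    "research_base": 3,
    "research_support": 2,
    "proposed_intervention": 2,
    "advisory_support": 1,
    "consumer_ratings": 1,
    "software_support": 1,
}


def credibility_percent(points: dict) -> float:
    """Combine subscale points into a 0-100 credibility percentage.

    Subscales missing from `points` count as zero points.
    """
    unknown = set(points) - set(MAX_POINTS)
    if unknown:
        raise ValueError(f"unknown subscales: {sorted(unknown)}")
    earned = 0
    for name, max_pts in MAX_POINTS.items():
        value = points.get(name, 0)
        if not 0 <= value <= max_pts:
            raise ValueError(f"{name} must be between 0 and {max_pts}")
        earned += value
    return round(100 * earned / sum(MAX_POINTS.values()), 1)


# Hypothetical app: strong research base, no software support.
print(credibility_percent({
    "research_base": 3, "research_support": 1, "proposed_intervention": 2,
    "advisory_support": 1, "consumer_ratings": 1, "software_support": 0,
}))  # 80.0
```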

Figure 1.

Presentation of app ratings on PsyberGuide website.

Over half the apps on PsyberGuide also have MARS scores (as described earlier). Expert reviews are narrative in format and are completed by doctoral-level individuals with expertise relevant to the app they are reviewing (details of which can be found on the website). These reviews give a broad overview of the pros and cons of each app, in addition to the reviewer’s own recommendations. Thirty percent of products on the PsyberGuide website have an expert review. Importantly, these reviews cover a range of apps: apps with an expert review have an average PsyberGuide rating of 59% (range 14–93%) and an average MARS rating of 3.79 (range 2.51–4.74). Thus, expert reviews can round out information that structured, numerical rating systems might miss and can help show users how and why to use particular apps.

A recent addition is rating apps on transparency, that is, the accessibility of information on an app’s privacy and security practices. Privacy and security are foremost concerns of clinicians and end users of mental health apps (Dennison, Morrison, Conway, & Yardley, 2013; Schueller et al., 2016). Transparency ratings fall into three categories (acceptable, questionable, or unacceptable) based on the presence or absence of must-haves, should-haves, and nice-to-haves, and are modeled on the American Psychiatric Association and Enlight concepts of privacy and security.
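The text gives the three transparency categories and the three tiers of criteria but not the exact decision rule. A minimal sketch, assuming a simple rule (any missing must-have makes an app unacceptable; all must-haves but a missing should-have makes it questionable; otherwise acceptable), might look like this. The criterion names are hypothetical examples, and in this sketch nice-to-haves are only counted and do not change the category.

```python
# HYPOTHETICAL criteria for illustration; PsyberGuide's actual
# checklist items and decision rule may differ.
MUST_HAVES = frozenset({"privacy policy available", "data collected described"})
SHOULD_HAVES = frozenset({"data sharing described", "data deletion described"})
NICE_TO_HAVES = frozenset({"security measures described"})


def transparency_rating(features_present: frozenset) -> tuple:
    """Return (category, number_of_nice_to_haves_met) for one app."""
    if not MUST_HAVES <= features_present:
        category = "unacceptable"   # at least one must-have is missing
    elif not SHOULD_HAVES <= features_present:
        category = "questionable"   # must-haves met, a should-have missing
    else:
        category = "acceptable"
    return category, len(NICE_TO_HAVES & features_present)


print(transparency_rating(MUST_HAVES))  # ('questionable', 0)
```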

Currently, there are over 100 products reviewed on the PsyberGuide website, with an average PsyberGuide rating of 51% (range 0–93%). Just over half of these apps also have MARS ratings; the average MARS rating is 3.54 (range 1.63–4.75). Among the apps listed on PsyberGuide, PsyberGuide and MARS ratings are not significantly correlated, r(63) = .22, p = .08, which is not surprising given that the two ratings tap different constructs. Both rating scales show little relationship with app store ratings; thus, PsyberGuide provides information that cannot be obtained from the app stores alone.
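The reported statistic can be checked from r and its degrees of freedom alone, using the standard conversion t = r·√(df / (1 − r²)) and a two-tailed test against Student's t distribution. The sketch below uses only the Python standard library, integrating the t density with Simpson's rule; with the raw ratings in hand, `scipy.stats.pearsonr` would be the usual tool instead.

```python
import math


def t_pdf(t: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + t * t / df) ** (-(df + 1) / 2)


def correlation_p_value(r: float, df: int, steps: int = 2000) -> tuple:
    """Return (t, two-tailed p) for Pearson's r with df = n - 2."""
    if abs(r) >= 1:
        raise ValueError("|r| must be less than 1")
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # Simpson's rule for the area under the density from 0 to t
    # (steps must be even).
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    # The distribution is symmetric, so both tails together are 1 - 2 * area.
    return t, 1 - 2 * area


t, p = correlation_p_value(0.22, 63)
print(f"t = {t:.2f}, p = {p:.3f}")
```

For r = .22 with 63 degrees of freedom, t is about 1.79 and p is roughly .08, above the conventional .05 threshold; this is why such a result is described as a nonsignificant, rather than absent, correlation.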

Exemplary Apps

It is worth noting that PsyberGuide does not aim to rate only the best products, and inclusion on PsyberGuide is not intended as an endorsement. Instead, PsyberGuide’s goal is to provide information about a range of products so that consumers can make more informed decisions about using an app. Twelve apps on the site have PsyberGuide ratings above 75%, with the highest rating going to PTSD Coach (93%). PTSD Coach has been evaluated in several research studies, supporting its feasibility for use in veteran populations, both as an adjunct to traditional treatment (Possemato, Kuhn, Johnson, Hoffman, & Brooks, 2017) and for self-management (Miner et al., 2016). A recent randomized controlled trial of PTSD Coach remains one of the most rigorous evaluations of an app-based treatment for mental health and showed benefits in PTSD symptoms compared to a waitlist condition (Kuhn et al., 2017).

Six products have MARS ratings above 4.5; most of these are either meditation and mindfulness apps (Stop, Breathe, & Think; Headspace; Buddhify) or brain training apps (Fit Brains; Peak). It is not surprising that these apps offer a more refined user experience, given that such products have been extremely popular and have thus received more attention from industry and technology developers. The highest MARS rating on the PsyberGuide website belongs to Stop, Breathe, & Think. Another app rated highly on the MARS is Pacifica, which contains lessons and activities based on cognitive-behavioral therapy skills and includes a back end that allows data to be reviewed when the app is used in conjunction with a provider. Although these products have not been subjected to direct empirical evaluation, positive ratings by independent reviewers suggest they could be helpful. Such apps might be useful adjuncts to traditional treatment, as they can reinforce skills and techniques commonly used by providers of evidence-based practices.

How Can I Actually Use Apps to Improve My Life or My Practice?

We highlight three potential roles for apps within mental health service delivery: (a) as self-help, stand-alone tools that can aid self-management of mental health symptoms, referred to here as unsupported apps; (b) in conjunction with a professional coach or therapist, referred to as supported apps (offerings such as Ginger.io, Lantern, and Joyable are current examples); and (c) in the context of traditional treatment services, as a digital adjunct to improve the efficacy or efficiency of care, which has been called blended care.

Unsupported apps are less costly than supported or blended options and have fewer access barriers (e.g., setting up a time to chat with a provider), but they have also been found to be less effective than options with some form of human involvement (Schueller, Tomasino, & Mohr, 2017), which often introduces an element of accountability. Supported apps are a new but growing offering in consumer mental health, and many companies are beginning to expand beyond direct-to-consumer models to provide behavioral health services to businesses or insurance companies. Supporters in such programs are very familiar with the app and can help troubleshoot, track progress, and provide feedback. However, they are not necessarily trained professionals, and it is unclear whether they consistently provide evidence-based practices. Consumers interested in such products should research who is providing care, at least in terms of professional standing. Blended care incorporates relevant apps into treatment from a licensed professional, which increases accountability for app use and allows the professional to monitor that the app is used appropriately. However, such professionals may be less familiar with mental health apps and how to use them in their practice. A few apps, like Pacifica, have features that enable providers to access app data directly. More commonly, users will have to present and summarize their data for providers themselves. As such, we encourage providers interested in using apps to treat them like other homework assignments: review the work done in them regularly, and reinforce how the apps align with treatment goals such as skill practice, symptom tracking, or psychoeducational material.

Limitations

It is clear that neither app stores nor scientific evidence alone is sufficient to help people identify high-quality mental health apps. PsyberGuide is a more comprehensive and informative resource than app store reviews, but it is not available at the point of download, which is a likely window of opportunity to influence a person’s decision to download and use an app. Furthermore, PsyberGuide must still deal with versioning and updates, both to the apps themselves and to the research literature.

Future Directions and Conclusions

Despite these limitations, resources like PsyberGuide are a step towards providing objective and actionable information for publicly available mental health apps. They serve as a useful starting point for people and clinicians to find information about apps that could be used either within or outside treatment contexts. Ultimately, such platforms could also inform the development of guidelines and regulations regarding mental health apps and their use in practice. If such guidelines are disseminated, mHealth app developers may be influenced early in design and could develop apps with these guidelines and a review process in mind (Powell, Landman, & Bates, 2014). Barring significant changes in app store policies or federal regulations, the proliferation of mental health apps is unlikely to slow. Thus, users and providers need resources like PsyberGuide to help them sort through available options and identify effective and usable products.

Highlights.

- Apps are a useful tool in self-management of mental health and well-being
- Most publicly available mental health apps have no direct scientific support
- User ratings are an indication of app popularity but not clinical usefulness
- Consumers and clinicians need additional ways to determine what “good” apps are
- App rating platforms (for example, PsyberGuide) may be a way to address this

Acknowledgments

PsyberGuide is a proprietary project of, and is funded by, One Mind. The operation of PsyberGuide is managed by Stephen M. Schueller of Northwestern University, who is the PI of a grant from One Mind to Northwestern. Dr. Schueller is supported by a career development award (K08MH102336) and is an investigator with the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (5R25MH08091607) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI).

Disclosure Statement. Both authors receive funding from One Mind, of which PsyberGuide is a proprietary project. Dr. Stephen M. Schueller is the Executive Director of PsyberGuide. This manuscript has been reviewed by an affiliate of the One Mind Institute.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Aitken M, & Lyle J (2015). Patient adoption of mHealth: Use, evidence and remaining barriers to mainstream acceptance. Parsippany, NJ: IMS Institute for Healthcare Informatics.

- Anguera JA, Jordan JT, Castaneda D, Gazzaley A, & Areán PA (2016). Conducting a fully mobile and randomised clinical trial for depression: Access, engagement and expense. BMJ Innovations, 2(1), 14–21. doi:10.1136/bmjinnov-2015-000098

- Arean PA, Hallgren KA, Jordan JT, Gazzaley A, Atkins DC, Heagerty PJ, & Anguera JA (2016). The use and effectiveness of mobile apps for depression: Results from a fully remote clinical trial. Journal of Medical Internet Research, 18(12). doi:10.2196/jmir.6482

- Baumel A, Faber K, Mathur N, Kane JM, & Muench F (2017). Enlight: A comprehensive quality and therapeutic potential evaluation tool for mobile and web-based eHealth interventions. Journal of Medical Internet Research, 19(3), e82. doi:10.2196/jmir.7270

- Ben-Zeev D, Brian RM, Jonathan G, Razzano L, Pashka N, Carpenter-Song EA, Drake RE, & Scherer EA (in press). Randomized controlled trial of mHealth vs. clinic-based interventions for serious mental illness: Patient engagement, satisfaction, and clinical outcomes. Schizophrenia Bulletin.

- BinDhim NF, Hawkey A, & Trevena L (2015). A systematic review of quality assessment methods for smartphone health apps. Telemedicine and e-Health, 21(2), 97–104. doi:10.1089/tmj.2014.0088

- Christensen H, Murray K, Calear AL, Bennett K, Bennett A, & Griffiths KM (2010). Beacon: A web portal to high-quality mental health websites for use by health professionals and the public. The Medical Journal of Australia, 192(11), S40–44.

- Dennison L, Morrison L, Conway G, & Yardley L (2013). Opportunities and challenges for smartphone applications in supporting health behavior change: Qualitative study. Journal of Medical Internet Research, 15(4), e86. doi:10.2196/jmir.2583

- Firth J, Torous J, Nicholas J, Carney R, Pratap A, Rosenbaum S, & Sarris J (2017). The efficacy of smartphone-based mental health interventions for depressive symptoms: A meta-analysis of randomized controlled trials. World Psychiatry, 16(3), 287–298. doi:10.1002/wps.20472

- Firth J, Torous J, Nicholas J, Carney R, Rosenbaum S, & Sarris J (2017). Can smartphone mental health interventions reduce symptoms of anxiety? A meta-analysis of randomized controlled trials. Journal of Affective Disorders, 218, 15–22. doi:10.1016/j.jad.2017.04.046

- Kertz SJ, Kelly JM, Stevens KT, Schrock M, & Danitz SB (2017). A review of free iPhone applications designed to target anxiety and worry. Journal of Technology in Behavioral Science, 1–10. doi:10.1007/s41347-016-0006-y

- Kuhn E, Kanuri N, Hoffman JE, Garvert DW, Ruzek JI, & Taylor CB (2017). A randomized controlled trial of a smartphone app for posttraumatic stress disorder symptoms. Journal of Consulting and Clinical Psychology, 85(3), 267–273. doi:10.1037/ccp0000163

- Leigh S, & Flatt S (2015). App-based psychological interventions: Friend or foe? Evidence-Based Mental Health, ebmental-2015-102203. doi:10.1136/eb-2015-102203

- Mani M, Kavanagh DJ, Hides L, & Stoyanov SR (2015). Review and evaluation of mindfulness-based iPhone apps. JMIR mHealth and uHealth, 3(3). doi:10.2196/mhealth.4328

- Nicholas J, Larsen ME, Proudfoot J, & Christensen H (2015). Mobile apps for bipolar disorder: A systematic review of features and content quality. Journal of Medical Internet Research, 17(8), e198. doi:10.2196/jmir.4581

- Possemato K, Kuhn E, Johnson EM, Hoffman JE, & Brooks E (2017). Development and refinement of a clinician intervention to facilitate primary care patient use of the PTSD Coach app. Translational Behavioral Medicine, 7(1), 116–126. doi:10.1007/s13142-016-0393-9

- Powell AC, Landman AB, & Bates DW (2014). In search of a few good apps. JAMA, 311(18), 1851–1852. doi:10.1001/jama.2014.2564

- Proudfoot J (2013). The future is in our hands: The role of mobile phones in the prevention and management of mental disorders. The Australian and New Zealand Journal of Psychiatry, 47(2), 111–113. doi:10.1177/0004867412471441

- Rathner E (2017). Implementation of the mobile health app database (mHAD). Workshop presented at the 9th Scientific Meeting of the International Society for Research on Internet Interventions, Berlin, Germany.

- Research2guidance (2016). mHealth app development economics 2016: The current status and trends of the mHealth app market. Accessed at http://research2guidance.com/r2g/r2gmHealth-App-Developer-Economics-2016.pdf

- Schueller SM, Tomasino KN, & Mohr DC (2017). Integrating human support into behavioral intervention technologies: The efficiency model of support. Clinical Psychology: Science and Practice, 24(1), 27–45. doi:10.1111/cpsp.12173

- Schueller SM, Washburn JJ, & Price M (2016). Exploring mental health providers’ interest in using web and mobile-based tools in their practices. Internet Interventions, 4, 145–151. doi:10.1016/j.invent.2016.06.004

- Stoyanov SR, Hides L, Kavanagh DJ, Zelenko O, Tjondronegoro D, & Mani M (2015). Mobile App Rating Scale: A new tool for assessing the quality of health mobile apps. JMIR mHealth and uHealth, 3(1), e27. doi:10.2196/mhealth.3422

- Sucala M, Cuijpers P, Muench F, Cardoș R, Soflau R, Dobrean A, … David D (2017). Anxiety: There is an app for that. A systematic review of anxiety apps. Depression and Anxiety, 34(6), 518–525. doi:10.1002/da.22654

- Torous J, Chan SR, Tan SY-M, Behrens J, Mathew I, Conrad EJ, … Keshavan M (2014). Patient smartphone ownership and interest in mobile apps to monitor symptoms of mental health conditions: A survey in four geographically distinct psychiatric clinics. JMIR Mental Health, 1(1), e5. doi:10.2196/mental.4004