Abstract

Background

High throughput microfluidic protocols in single cell RNA sequencing (scRNA-seq) collect mRNA counts from up to one million individual cells in a single experiment; this enables high resolution studies of rare cell types and cell development pathways. Determining small sets of genetic markers that can identify specific cell populations is thus one of the major objectives of computational analysis of mRNA counts data. Many tools have been developed for marker selection on single cell data; most of them, however, are based on complex statistical models and handle the multi-class case in an ad-hoc manner.

Results

We introduce RankCorr, a fast method with strong mathematical underpinnings that performs multi-class marker selection in an informed manner. RankCorr proceeds by ranking the mRNA counts data before linearly separating the ranked data using a small number of genes. The step of ranking is intuitively natural for scRNA-seq data and provides a non-parametric method for analyzing count data. In addition, we present several performance measures for evaluating the quality of a set of markers when there is no known ground truth. Using these metrics, we compare the performance of RankCorr to a variety of other marker selection methods on an assortment of experimental and synthetic data sets that range in size from several thousand to one million cells.

Conclusions

According to the metrics introduced in this work, RankCorr is consistently one of the best-performing marker selection methods on scRNA-seq data. Most methods show similar overall performance, however; thus, the speed of the algorithm is the most important consideration for large data sets (and comparing the markers selected by several methods can be fruitful). RankCorr is fast enough to easily handle the largest data sets and, as such, it is a useful tool to add into computational pipelines when dealing with high throughput scRNA-seq data. RankCorr software is available for download at https://github.com/ahsv/RankCorr with extensive documentation.

Keywords: Single cell RNA-seq, Marker selection, Machine learning, Data analysis, Algorithms, Benchmarking

Background

In recent years, single cell RNA sequencing (scRNA-seq) has made it possible to characterize cellular diversity by determining detailed gene expression profiles of specific cell types and states ([1, 2]). Furthermore, mRNA data can now be collected from more than one million cells in one experiment due to the development of high throughput microfluidic sequencing protocols [3]. The incorporation of unique molecular identifier (UMI) technology additionally makes it possible to process these raw sequencing data into integer valued read counts (instead of the “counts per million fragments” types of rates that were used in bulk sequencing [1]). Thus, modern scRNA-seq experiments produce massive amounts of integer valued counts data.

These scRNA-seq data exhibit high variance and are sparse (often, approximately 90% of the reads are 0 [4]) for both biological (e.g. transcriptional bursting) and technical (e.g. 3’ bias in UMI based sequencing protocols) reasons. Those characteristics, in combination with the integer valued quality of the counts and the high dimensionality of the data (often, 20,000 genes show nonzero expression levels in an experiment), are such that scRNA-seq data do not match many of the models that underlie common data analysis techniques. For this reason, many specialized tools have been developed to answer biological questions with scRNA-seq data.

One such biological question that has generated a significant amount of study in the scRNA-seq literature is the problem of finding marker genes. From a biological perspective, we loosely define marker genes as genes that can be used to identify a given group of cells and distinguish those cells from all other cells or from other specific groups of cells. Usually, these are genes that show higher (or lower) levels of expression in the group of interest compared to the rest of the cell population; this provides simple ways to visualize the cell types and to test for the given cell types in experiments. In practice, certain genes are more desirable markers than others; for example, marker genes that encode surface proteins allow for the physical isolation of cell types via fluorescence-activated cell sorting (FACS).

A multitude of tools for finding marker genes are present in the (sc)RNA-seq literature. These tools often inherently define marker genes to be genes that are differentially expressed between two cell populations. That is, in order to find the genes that are useful for separating two populations of cells, a statistical test is applied to each gene in the data set to determine if the distributions of gene expression are different between the two populations: the genes with the most significance are selected as marker genes. The commonly-used analysis tools scanpy [5] and Seurat [6] implement differential expression methods as their default marker selection techniques; see also [7] for a survey of differential expression methods.

Marker selection has also received extensive study in the computer science literature, where it is known as feature selection. Given a data set, the goal of feature selection is to determine a (small) subset of the variables (genes) in that data set that are the most “relevant.” In this case, the relevance of a set of variables is defined by some external evaluation function - different feature selection algorithms use a variety of approaches to optimize different relevance functions.

There are generally two main classes of feature selection algorithms: greedy algorithms that select features one-by-one, computing a score at each step to determine the next marker to select (for example, forward- or backward-stepwise selection, see Section 3.3 of [8]; mutual information based methods, see e.g. [9]; and other greedy methods e.g. [10]), and slower algorithms that are based on solving some regularized convex optimization problem (for example LASSO [11], Elastic Nets [12], and other related methods [13]).

A major drawback of many existing feature selection and differential expression algorithms is that they are not designed to handle data that contain more than two cell types. Using a differential expression method, for example, one strategy is to pick a fixed number (e.g., 10) of the statistically most significant genes for each cell type; there may be overlap in the genes selected for different cell types. This strategy does not take into account the fact that some cell types are more difficult to characterize than others, however: one cell type may require more than 10 markers to separate from the other cells, while a different cell type may be separated with only one marker. Setting a significance threshold for the statistical test does not solve this problem; a cell type that is easy to separate from other cells will often exhibit several high significance markers, while a cell type that is difficult to separate might not exhibit any high significance markers.

In this work, we introduce RANKCORR, a feature selection algorithm that addresses the problem of multi-class marker selection on massive data sets in a novel manner¹. RANKCORR is motivated by the algorithm introduced in [14]; here, we present a fast method for solving the optimization problem from [15] that is at the core of the algorithm from [14]. As a result, RANKCORR runs quickly: it uses computational resources commensurate with several fast and light simple statistical techniques and it can run on data sets that contain over one million cells. In addition, a key step of the RANKCORR method is ranking the scRNA-seq data: this provides a non-parametric way of considering the counts and eliminates the need to normalize the data. Moreover, RANKCORR is a one-vs-all method that selects markers for each cluster based on one input parameter. Instead of providing a score for every gene in each cluster and requiring the user to manually trim these lists down, RANKCORR selects an informative number of markers for each cluster. In the general case, different numbers of markers will be selected for different clusters. The union of the markers selected for all clusters provides a set of markers that is informative about the entire clustering. Unlike the method of choosing a significance threshold with a differential expression method, cell types that are more difficult to separate from others will generally contribute more markers to the final set. Thus, RANKCORR represents a step towards principled multi-class marker selection when compared to the procedures that are common in most existing methods.

We test the performance of RANKCORR when it is applied to a collection of four experimental UMI counts data sets and an ensemble of synthetic data sets. These data sets contain up to one million cells and include examples of well-differentiated cell types as well as cell differentiation trajectories. Moreover, each data set comes equipped with a cell type classification; we consider classifications that are biologically motivated as well as clusters that are algorithmically created. We refer to the original data source references for their detailed descriptions of clustering and labeling procedures. See Table 1 for a summary of the data sets; full descriptions of the experimental data sets and synthetic data sets can be found in the Methods.

Table 1.

Data sets considered in this work. The ’genes’ column lists the number of non-zero genes detected in the data set. The peripheral blood mononuclear cell (PBMC) data set from [2] appears twice in this table: ZHENGFILT contains a subset of the full data set ZHENGFULL. See the experimental data description in the Methods for more information. ZHENGSIM is a collection of simulated data sets created with the Splatter R package; see the description of generating synthetic data in the Methods for more information.

| Data set | Cells | Genes | Description | Ground truth clusters | Ref. |
|---|---|---|---|---|---|
| Zeisel | 3005 | 4999 | mouse neurons | 9 (well-separated clusters) | [24] |
| Paul | 2730 | 3451 | mouse myeloid progenitor cells | 19 (differentiation trajectory) | [25] |
| ZhengFull | 68579 | 20387 | human PBMCs | 11 (some clusters overlap) | [2] |
| ZhengFilt | 5000 | | | | |
| 10x Mouse | 1.3 million | 24015 | mouse neurons | 39 (algorithmically generated) | [3] |
| ZhengSim | 5000 | varies | simulated from human CD19+ B cells | 2 | using [26] |

Using these data sets, we compare RANKCORR to a diverse set of feature selection methods. We consider feature selection algorithms from the computer science literature, the statistical tests used by default in the Seurat [6] and scanpy [5] packages, and several more complex statistical differential expression methods from the scRNA-seq literature. Refer to Table 2 and the marker selection methods section for a detailed list.

Table 2.

Differential expression methods tested in this paper. The “Data sets” column lists the data sets that each method is tested on in this work. The top block contains the methods that are presented in this work; implementations of these methods can be found in the repository linked in the data availability disclosure. The second block of methods consists of general statistical tests. The third block consists of methods that were designed specifically for scRNA-seq data. The fourth block consists of standard machine learning methods; Log. Reg. stands for logistic regression. We also consider selecting markers randomly without replacement. See the marker selection methods description in the Methods for more information

| Method | Data sets | Package | Version | Ref |
|---|---|---|---|---|
| RankCorr | All | custom implementation | | |
| Spa | Zeisel, Paul | custom implementation | | [14] |
| t-test | All | scanpy | 1.3.7; see text | |
| Wilcoxon | All | scanpy | 1.3.7; see text | |
| edgeR | Zeisel, Paul, ZhengFilt | edgeR, rpy2 v2.9.4 | 3.24.1 | [27] |
| MAST | Zeisel, Paul, ZhengFilt | MAST, rpy2 v2.9.4 | 1.8.1 | [28] |
| scVI | Zeisel, Paul | Source from GitHub | 0.2.4 | [29] |
| Elastic Nets | Zeisel, Paul | scikit-learn [30] | 0.20.0 | [12] |
| Log. Reg. | All | scanpy | 1.3.7; see text | [31] |
| Random selection | Zeisel, Paul, ZhengFilt, ZhengFull | | | |

There is currently no definitive ground truth set of markers for any experimental scRNA-seq data set. Known markers for cell types have usually been determined from bulk samples, and treating these as ground truth markers neglects the individual cell resolution of single cell sequencing. Moreover, we argue that the set of known markers is incomplete and that other genes could be used as effectively as (or more effectively than) known markers for many cell types. Indeed, finding new, better markers for rare cell types is one of the coveted promises of single cell sequencing.

Since one goal of marker selection is to discover heretofore unknown markers, we cannot easily evaluate the efficacy of a marker selection algorithm by testing to see if the algorithm recovers a set of previously known markers on experimental scRNA-seq data sets. For this reason we evaluate the quality of the selected markers by measuring how much information the selected markers provide about the given clustering. In this work, we propose several metrics that attempt to quantify this idea.

According to these evaluation metrics, all of the algorithms considered in this manuscript produce reasonable markers, in the sense that they all perform significantly better than choosing genes uniformly at random. In addition, RANKCORR tends to perform well in comparison to the other methods, especially when selecting small numbers of markers. That said, there are generally only small differences between the different marker selection algorithms, and the “best” marker selection method depends on the data set being examined and the evaluation metric in question. It is thus impossible to conclude that any method always selects better markers than any of the others.

The major factors that differentiate the methods examined in this work are the computational resources (both memory and time) that the methods require. Since the algorithms show similar overall quality, researchers should prefer marker selection methods that are fast and light over high complexity algorithms. RANKCORR is one of the fastest and lightest algorithms considered in this text, competitive with simple statistical tests. Thus, as a fast and efficient marker selection method that takes a further step towards understanding the multi-class case, RANKCORR is a useful tool to add into computational toolboxes.

Related work: towards a precise definition of marker genes

In the preceding discussion, we implicitly defined three types of “marker genes”:

- biological markers, i.e. genes whose expression can be used in a laboratory setting to distinguish the cells in one population from the other cells (or from other cell subpopulations);
- genes that are differentially expressed between one cell population and the other cells (or another cell subpopulation);
- and genes that are chosen according to a feature selection algorithm that statistically/mathematically characterizes the relevance of genes to the cell populations in some way (e.g. by minimizing a loss function).

Although we will use these ideas fairly interchangeably throughout this manuscript (referring, for example, to “the markers selected by the algorithm”), it is important to keep in mind the differences between them. For instance, a differentially expressed gene that shows low expression is not a particularly useful biological marker. Indeed, it would be difficult to use a low expression gene to visualize the differences between cell types and inefficient to purify cell populations based on a low expression gene with a FACS sorter.

Several recent marker selection tools start to bridge the gap between differentially expressed genes and biological markers. For example, [16] incorporates a high expression requirement in a heuristic mathematical definition of marker genes, and [17] utilizes a test for differential expression that is robust to small differences between population means. For a given cell type and candidate marker gene, the test used in [17] also incorporates both a lower bound on the number of cells that must express the candidate marker within the cell type and an upper bound on the number of cells that can express a marker outside of the cell type. See the discussion of marker selection methods in the Methods for some further information.

In any case, it is worthwhile to establish a more precise biological definition of a marker gene in order to provide a solid theoretical framework for marker selection. For example, one biological definition of markers requires the cells to be grouped before markers can be determined; this assumes that markers are inherently associated with known cell types or states. This approach is influenced by the computational pipeline that many researchers are currently following (clustering followed by marker selection, see e.g. [18, 19]) and is the approach we consider in this manuscript. An alternative is to define markers as genes that naturally separate the cells into groups in some nice way; the discovered groups would then be classified into different cell types (i.e. allow marker selection to guide clustering). Another recent method [20] defines markers in terms of their overall importance to a clustering, eschewing the notion of markers for specific cell types. Their framework also incorporates finding markers for hierarchical cell type classifications (instead of “flat” clustering). We leave full considerations of rigorous definitions for future work.

Notation and definitions

Let $\mathbb{R}$ denote the set of real numbers, $\mathbb{Z}$ denote the set of integers, and $\mathbb{N}$ denote the set of natural numbers.

Consider an scRNA-seq experiment that collects gene expression information from $n$ cells, and assume that $p$ different mRNAs are detected during the experiment. After processing, for each cell that is sequenced, a vector $x \in \mathbb{R}^p$ is obtained: $x_j$ represents the number of copies of a specific mRNA that were observed during the sequencing procedure. When all $n$ cells are sequenced, this results in $n$ vectors in $\mathbb{R}^p$, which we arrange into a data matrix $X \in \mathbb{R}^{n \times p}$. The entry $X_{i,j}$ represents the number of counts of gene $j$ in cell $i$. Note that this is the transpose of the data matrix that is common in the scRNA-seq literature.

Let $[n] = \{1, \dots, n\}$. For a matrix $X$, let $X_i$ denote column $i$ of $X$. Given a vector $x \in \mathbb{R}^n$, let $\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i$ denote the average of the elements of $x$ and let $\sigma(x)$ represent the standard deviation of the elements in $x$. We use the notation $\|x\|_p$ to represent the $p$-norm of the vector $x$. For example, $\|x\|_2$ is the standard Euclidean norm of $x$ and $\|x\|_1 = \sum_{i} |x_i|$. The notation $\|x\|_0$ represents the number of nonzero elements in $x$.

Ranking scRNA-seq data

The first step of RANKCORR is to rank the entries of an scRNA-seq counts matrix X. In this section, we make the notion of ranking precise, and we establish some intuition as to why the rank transformation produces intelligible results on scRNA-seq UMI counts data. A more formal analysis of the behavior of the rank transformation on scRNA-seq data is possible; this analysis will appear in an upcoming work.

Consider a vector $x \in \mathbb{R}^n$. For a given index $i$ with $1 \le i \le n$, let $S_i(x) = \{\ell \in [n] : x_\ell < x_i\}$ and $E_i(x) = \{\ell \in [n] : x_\ell = x_i\}$ (note that $i \in E_i(x)$). We have that $|S_i(x)|$ is the number of elements of $x$ that are strictly smaller than $x_i$ and $|E_i(x)|$ is the number of elements of $x$ that are equal to $x_i$ (including $x_i$ itself).

Definition 1

The rank transformation $\Phi : \mathbb{R}^n \to \mathbb{R}^n$ is defined by

$$\Phi(x)_i = |S_i(x)| + \frac{|E_i(x)| + 1}{2}. \tag{1}$$

Note that $\Phi(x)_i$ is the index of $x_i$ in an ordered version of $x$ (i.e. it is the rank of $x_i$ in $x$). If multiple elements in $x$ are equal, we assign their ranks to be the average of the ranks that would be assigned to those elements (that is, for fixed $i$, all elements $x_j$ for $j \in E_i(x)$ will be assigned the same rank).

Example 1

Let $n = 5$, and consider the point $x = (17, 17, 4, 308, 17)$. Then $\Phi(x) = (3, 3, 1, 5, 3)$. This value is the same as the rank transformation applied to any point $x \in \mathbb{R}^5$ with $x_3 < x_1 = x_2 = x_5 < x_4$.
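The following is a minimal sketch of the rank transformation in plain Python, reading the definition as the average-rank formula $|S_i(x)| + (|E_i(x)| + 1)/2$, which reproduces Example 1. The function name `rank_transform` is chosen here for illustration and is not taken from the RANKCORR software.

```python
def rank_transform(x):
    """Average-rank transformation: |S_i| + (|E_i| + 1) / 2 for every entry of x."""
    ranks = []
    for xi in x:
        n_smaller = sum(1 for v in x if v < xi)  # |S_i(x)|: entries strictly below xi
        n_equal = sum(1 for v in x if v == xi)   # |E_i(x)|: entries tied with xi (counts xi)
        ranks.append(n_smaller + (n_equal + 1) / 2)
    return ranks


# The point from Example 1: the three tied 17s share the average of ranks 2, 3, 4.
print(rank_transform([17, 17, 4, 308, 17]))  # [3.0, 3.0, 1.0, 5.0, 3.0]
```

This direct translation takes quadratic time in the number of cells. For real data, a sort-based routine such as `scipy.stats.rankdata` with `method="average"` computes the same ranks far more efficiently.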

Ranking is commonly used in non-parametric statistical tests - ranking scRNA-seq data allows for statistical tests to be performed on the data without specific assumptions about the underlying distribution for the counts. This is important, since models for the counts distribution are continually evolving. As the measurement technology develops, different statistical models become more (or less) appropriate.

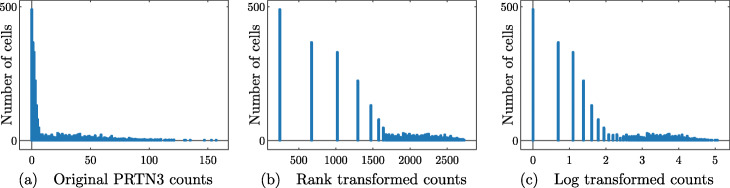

In addition, the rank transformation seems to be especially suited to UMI counts data, which are sparse and have a high dynamic range. When analyzing the expression of a fixed gene $g$ across a population of cells, it is intuitive to separate the cells that are observed to express $g$ from the cells that are not. Among the cells that express $g$, it is important to distinguish between low expression of $g$ and high expression of $g$. The actual counts of $g$ in cells with high expression (say a count of 500 vs a count of 1000) are often not especially important. Under the rank transformation, the largest count will be brought adjacent to the second-largest - no gap will be preserved. On the other hand, since there are many entries that are 0, the gap between no expression (a count of 0) and some expression will be significantly expanded (in Eq. (1), the set $E_i(x)$ will be large for any $i$ such that $x_i = 0$). See Fig. 1 for a visualization of these ideas on experimental scRNA-seq data.

Fig. 1.

Counts of gene PRTN3 in bone marrow cells in the PAUL data set (See the Methods). Each point corresponds to a cell; the horizontal axis shows the number of reads and the vertical axis shows the number of cells with a fixed number of reads. No library size or cell size normalization has been carried out in these pictures. Note that the tail of the log transformed data is subjectively longer, while the gap between zero counts and nonzero counts appears larger in the rank transformed data

Stratifying gene expression in this way is intuitively useful for determining the genes that are important in identifying cell types: a gene that shows expression in many cells of a given cell type can be used to separate that cell type from all of the others and thus is a useful marker gene. Thus, by enforcing a large separation between expression and no expression (when compared to the separation between low expression and high expression), it will be easier to identify markers. For these reasons, and since the rank transformation has shown promise in other scRNA-seq tools (for example NODES [21]) we use the rank transform in the RANKCORR marker selection algorithm.

A final note is that a connection can be made between the rank transformation and the log normalization that is commonly performed in the scRNA-seq literature. Often, the counts matrix $X$ is normalized by taking $X_{ij} \mapsto \log(X_{ij} + 1)$. This is a nonlinear transformation that helps to reduce the gaps between the largest entries of $X$ while leaving the entries that were originally 0 unchanged (and preserving much of the gap between “no expression” and “some expression”). With this in mind, the rank transformation can be viewed as a more aggressive log transformation.
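To make the comparison concrete, the sketch below applies both transformations to a made-up sparse count vector (90 zeros and 10 nonzero counts; these values are invented for illustration and are not taken from any data set in this work). It compares the gap between a count of 0 and a count of 1 with the gap between counts of 500 and 1000.

```python
import math


def rank_transform(x):
    """Average ranks: |S_i| + (|E_i| + 1) / 2, so tied entries share one rank."""
    return [sum(v < xi for v in x) + (sum(v == xi for v in x) + 1) / 2 for xi in x]


# Hypothetical expression of one gene across 100 cells: 90% zeros, long right tail.
counts = [0] * 90 + [1, 2, 3, 5, 8, 20, 50, 100, 500, 1000]
logged = [math.log(c + 1) for c in counts]
ranked = rank_transform(counts)


def gap(values, low, high):
    """Distance between the transformed values of cells with raw counts low and high."""
    return values[counts.index(high)] - values[counts.index(low)]


log_gaps = (gap(logged, 0, 1), gap(logged, 500, 1000))
rank_gaps = (gap(ranked, 0, 1), gap(ranked, 500, 1000))
print(log_gaps)   # both gaps are about 0.69
print(rank_gaps)  # (45.5, 1.0)
```

In this toy vector the log transformation treats the step from 0 to 1 and the step from 500 to 1000 almost identically, while the rank transformation makes the first step 45.5 ranks wide and the second step a single rank.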

Results

Our results are of two types: (i) algorithmic performance guarantees for RANKCORR and (ii) empirical performance of RANKCORR and its comparison algorithms on scRNA-seq data sets (both benchmark and simulated).

RANKCORR: algorithmic performance guarantees

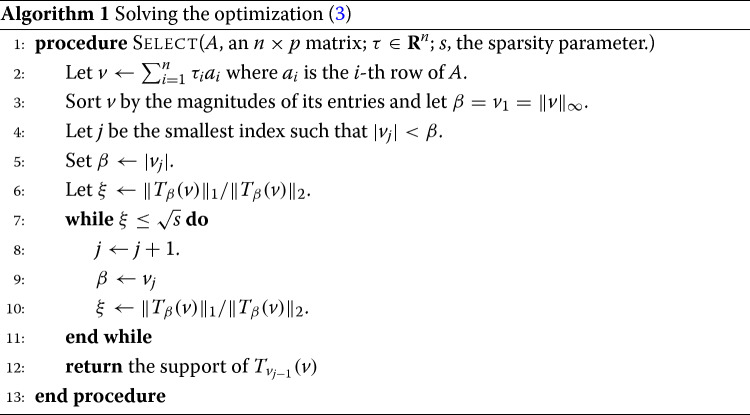

RANKCORR is based on the ideas presented in [14]. It is a fast algorithm that chooses an informative number of genes for each cluster by first ranking the scRNA-seq data, and then splitting the clusters in the ranked data with sparse separating hyperplanes that pass through the origin.

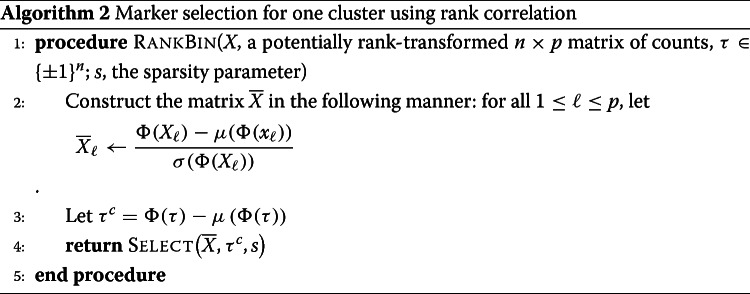

A full description of the RankCorr algorithm is found in the Methods. We provide an outline here so as to explain why it is such an efficient algorithm as compared to alternatives. RANKCORR requires three inputs:

- An scRNA-seq counts matrix $X \in \mathbb{R}^{n \times p}$ ($n$ cells, $p$ genes). The rank transformation provides a non-parametric normalization of the counts data, and thus there is no need to normalize the counts data before starting marker selection.
- A vector of labels $y \in \mathbb{Z}^n$ that defines a grouping of the cells ($y_i = k$ means that cell $i$ belongs to group $k$). We think of $y$ as separating the cells into distinct cell types or cell states, but in general the groups defined by $y$ could consist of any arbitrary (non-overlapping) subsets of cells.
- A parameter $s$ that indirectly controls the number of markers to select. For a fixed input $X$, increasing $s$ will produce more markers.

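Before selecting markers, RANKCORR ranks and standardizes the input data $X$ to create the matrix $\overline{X}$ defined by

$$\overline{X}_j = \frac{\Phi(X_j) - \overline{\Phi(X_j)}}{\sigma(\Phi(X_j))}, \tag{2}$$

where $\Phi$ is the rank transformation, as defined in (1), and $X_j$ denotes the $j$-th column of $X$². Markers are then selected for the clustering defined by $y$ in a one-vs-all manner. For a single group $k \in y$, define $\tau \in \{\pm 1\}^n$ such that $\tau_i = +1$ if $y_i = k$ (that is, if cell $i$ is in group $k$) and $\tau_i = -1$ otherwise; we refer to $\tau$ as the cluster indicator vector for the group $k$. To select markers for group $k$, RANKCORR constructs the vector $v = \sum_{i=1}^{n} \tau_i \overline{X}^i$. Following this, using the input parameter $s$, the algorithm determines the vector $\hat{\omega}$ as the solution to the optimization problem (3):

$$\hat{\omega} = \operatorname*{arg\,max}_{\omega} \sum_{i=1}^{n} \tau_i \langle \overline{X}^i, \omega \rangle \quad \text{subject to} \quad \|\omega\|_2 \le 1, \ \|\omega\|_1 \le \sqrt{s}, \tag{3}$$

where $\overline{X}^i$ denotes the $i$-th row of $\overline{X}$. RANKCORR returns the genes that have nonzero support in $\hat{\omega}$ as the markers for group $k$.

Note that the output of (3) can be viewed as the normal vector to a hyperplane that passes through the origin and attempts to split the cells in group $k$ from the cells that aren’t in group $k$. For example, if cell $i$ is in group $k$ (i.e. $y_i = k$), then the term $\tau_i \langle \overline{X}^i, \hat{\omega} \rangle$ is positive exactly when $\langle \overline{X}^i, \hat{\omega} \rangle > 0$. Thus, the objective function in (3) increases when more cells from group $k$ are on the same side of the hyperplane with normal vector $\hat{\omega}$.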

The optimization (3) was originally introduced in [15] in the context of sparse signal recovery and was adapted to the context of feature selection in a biological setting in [14]. The speed of RANKCORR is due to a fast algorithm (presented in the Methods) that allows us to quickly jump to the solution of the optimization (3) without the use of specialized optimization software.
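As a rough illustration of the pipeline for a single group, the sketch below ranks and standardizes each gene, forms the score vector $v$ with entries $v_j = \sum_i \tau_i \overline{X}_{ij}$, and then solves a problem of the form used in [15]: maximize $\langle v, \omega \rangle$ subject to $\|\omega\|_2 \le 1$ and $\|\omega\|_1 \le \sqrt{s}$. Several things here are assumptions rather than the authors' implementation: that (3) has exactly this form, the use of the population standard deviation, the toy counts, and all function names. The solver uses a generic fact about this kind of problem (the maximizer is a soft-thresholded, renormalized copy of $v$) together with plain bisection on the threshold; it is not the fast algorithm presented in the Methods.

```python
import math


def rank_transform(x):
    """Average ranks: |S_i| + (|E_i| + 1) / 2."""
    return [sum(v < xi for v in x) + (sum(v == xi for v in x) + 1) / 2 for xi in x]


def standardize(x):
    """Center x and scale by its population standard deviation (assumed convention)."""
    mean = sum(x) / len(x)
    sd = math.sqrt(sum((xi - mean) ** 2 for xi in x) / len(x))
    return [(xi - mean) / sd if sd > 0 else 0.0 for xi in x]


def soft_threshold(v, lam):
    """Shrink every entry of v toward zero by lam, keeping its sign."""
    return [math.copysign(max(abs(vj) - lam, 0.0), vj) for vj in v]


def l1_l2_ratio(w):
    l2 = math.sqrt(sum(wj * wj for wj in w))
    return sum(abs(wj) for wj in w) / l2 if l2 > 0 else 0.0


def solve_problem_3(v, s, iters=100):
    """Maximize <v, w> subject to ||w||_2 <= 1 and ||w||_1 <= sqrt(s), assuming s >= 1."""
    target = math.sqrt(s)
    if l1_l2_ratio(v) <= target:
        lam = 0.0  # l1 constraint inactive: the answer is v / ||v||_2
    else:
        lo, hi = 0.0, max(abs(vj) for vj in v)
        for _ in range(iters):  # bisection: the l1/l2 ratio shrinks as lam grows
            mid = (lo + hi) / 2
            if l1_l2_ratio(soft_threshold(v, mid)) > target:
                lo = mid
            else:
                hi = mid
        lam = hi
    w = soft_threshold(v, lam)
    norm = math.sqrt(sum(wj * wj for wj in w))
    return [wj / norm for wj in w] if norm > 0 else w


# Toy counts: one list per gene, six cells; the first three cells form group k.
genes = [
    [5, 7, 9, 0, 0, 0],  # expressed only inside the group
    [0, 1, 0, 3, 4, 2],  # expressed mostly outside the group
    [1, 0, 2, 0, 1, 3],  # weakly related to the grouping
    [0, 0, 1, 0, 2, 0],  # weakly related to the grouping
]
tau = [1, 1, 1, -1, -1, -1]  # cluster indicator vector for group k

columns = [standardize(rank_transform(g)) for g in genes]
v = [sum(t * c for t, c in zip(tau, col)) for col in columns]

for s in (1, 2, 4):
    w = solve_problem_3(v, s)
    print(s, [j for j, wj in enumerate(w) if wj != 0])  # indices of selected genes
```

Because each standardized column has mean zero, $v_j$ is proportional to the correlation between the ranked gene and $\tau$, so the genes that survive the threshold are those with the largest scores in magnitude. A negative weight indicates a gene that is expressed less inside the group than outside it.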

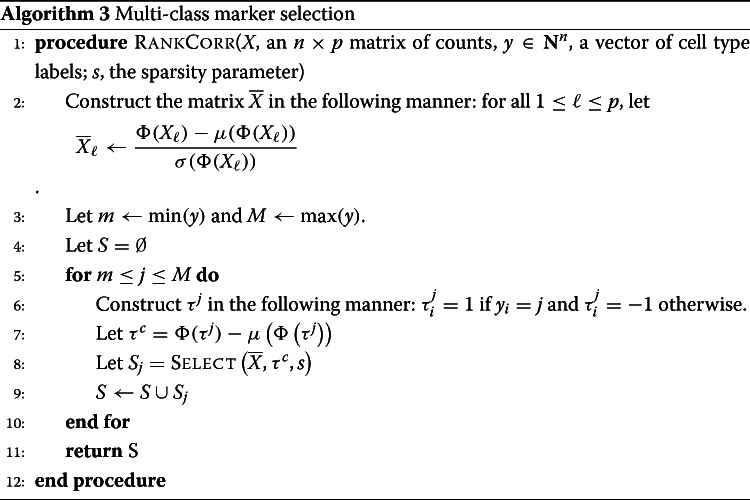

RANKCORR handles multi-class marker selection in a non-trivial one-vs-all manner

To extend RANKCORR to the multi-class scenario, we select markers for each group of cells defined by y. In particular, the value of the parameter s is fixed and the optimization (3) is run for each group of cells using the fixed value of s. To obtain a collection of markers for the clustering defined by y as a whole, RANKCORR returns the union of the selected markers from all of the groups. Alternatively, the markers can be kept separate to provide genes that can identify the individual groups.

The effect of fixing s across groups is complex. In the full description of RankCorr, we show that, for a fixed cluster with cluster indicator vector τ, the markers that RANKCORR selects are the genes that have the highest (in magnitude) Spearman correlation with τ. That is, similar to a differential expression method, RANKCORR can be thought of as generating lists of gene scores (one for each group) that are used to select the proper markers - instead of p-values, the scores considered by RANKCORR are magnitudes of specific Spearman correlations.

The parameter s does not directly control the number of high-correlation markers that are selected, however, and fixing s results in different numbers of markers for each cluster. Thus, the set of markers selected by RANKCORR is different from the set obtained by choosing a constant number of top scoring genes for each cluster. Additionally, fixing s is not equivalent to picking a correlation threshold ρ and selecting all genes that exhibit a Spearman correlation greater than ρ with any cluster indicator vector. Determining a precise characterization of the numbers of markers selected for each cluster is left for future work.
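The sketch below illustrates this behavior on two made-up score vectors that stand in for the Spearman correlations of eight genes with two cluster indicator vectors. It assumes the constrained problem has the form: maximize $\langle v, \omega \rangle$ subject to $\|\omega\|_2 \le 1$ and $\|\omega\|_1 \le \sqrt{s}$, whose solution keeps exactly the genes with $|v_j|$ above a data-dependent threshold. The threshold is found with plain bisection, which is not the fast algorithm from the Methods, and the scores, group names, and function names are hypothetical.

```python
import math


def l1_l2_ratio(v, lam):
    """Ratio ||w||_1 / ||w||_2 for w = v soft-thresholded at lam (0.0 if w is zero)."""
    w = [max(abs(vj) - lam, 0.0) for vj in v]
    l2 = math.sqrt(sum(wj * wj for wj in w))
    return sum(w) / l2 if l2 > 0 else 0.0


def implied_threshold(v, s, iters=100):
    """Smallest lam whose soft-thresholded v satisfies ||w||_1 <= sqrt(s) * ||w||_2."""
    target = math.sqrt(s)
    if l1_l2_ratio(v, 0.0) <= target:
        return 0.0
    lo, hi = 0.0, max(abs(vj) for vj in v)
    for _ in range(iters):
        mid = (lo + hi) / 2
        if l1_l2_ratio(v, mid) > target:
            lo = mid
        else:
            hi = mid
    return hi


def select_markers(v, s):
    """Genes whose score magnitude exceeds the threshold implied by s."""
    lam = implied_threshold(v, s)
    return [j for j, vj in enumerate(v) if abs(vj) > lam]


# Hypothetical per-gene scores for two groups (not derived from any real data set).
scores = {
    "A": [0.40, -0.38, 0.35, 0.05, 0.02, 0.01, 0.00, 0.01],
    "B": [0.02, 0.05, 0.10, 0.90, 0.20, 0.15, 0.01, 0.00],
}
s = 2  # the same value of s is used for every group

thresholds = {g: implied_threshold(v, s) for g, v in scores.items()}
markers = {g: select_markers(v, s) for g, v in scores.items()}
union = sorted(set().union(*markers.values()))

print(thresholds)  # the implied cutoff differs between the groups
print(markers)     # {'A': [0, 1, 2], 'B': [1, 2, 3, 4, 5]}
print(union)       # [0, 1, 2, 3, 4, 5]
```

With the same $s$, group A keeps three genes and group B keeps five, and the implied cutoffs differ by more than an order of magnitude. Neither a fixed number of top genes per group nor a single correlation threshold would reproduce both lists.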

A key result is that RANKCORR contains a new method of merging lists of scores that is based on an algorithm with known performance guarantees [15]. Whether or not this one-vs-all method is an improvement over common heuristics for merging lists requires further exploration. There is some evidence, collected using simple synthetic test data, that selecting a constant number of genes with the top Spearman correlation scores for each cluster results in comparable performance to RANKCORR. This has not been studied in the context of experimental data, however, and does not lead to any appreciable time savings over RANKCORR. It is also possible that the merging method used by RANKCORR could be adapted to work with p-values and provide an alternative method of merging the lists that are produced by differential expression methods. Thus we focus on RANKCORR as it is currently presented.

Empirical performance of RANKCORR

In the remainder of the Results, we present evidence that RANKCORR selects markers that are generally similar in quality to (or better than) the markers that are selected by other commonly used marker selection methods. Moreover, RANKCORR runs quickly, and only requires computational resources comparable to those required to run simple statistical tests. Thus, RANKCORR is a useful marker selection tool for researchers to add to their computational libraries.

We evaluated the performance of RANKCORR on four experimental data sets and a collection of synthetic data sets. These data sets are listed in Table 1; see the experimental data and synthetic data descriptions in the Methods for more information (including further details about the ground truth clusters considered in this analysis). We compare RANKCORR to the marker selection methods listed in the leftmost column of Table 2. The Wilcoxon method is the default marker selection technique in the Seurat [6] package, while the scanpy [5] package defaults to the version of the t-test that we include here. See the marker selection methods section for more implementation details.

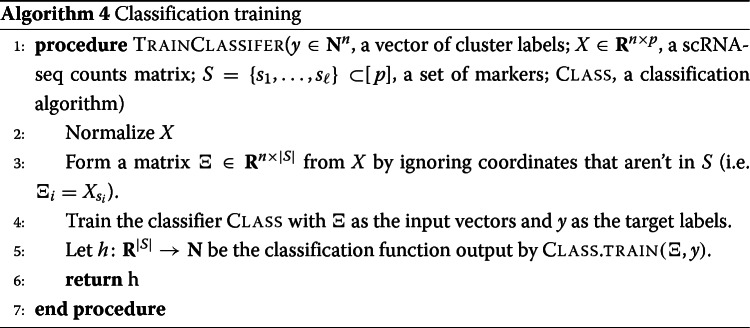

Evaluation of marker sets when ground truth markers are not known

To interpret, evaluate, and simply to present our results, we must quantify how much information a set of selected markers provides about a given clustering when ground truth markers are not known for certain (e.g., when selecting markers on an experimental data set). We propose two general procedures to accomplish this and present results using these:

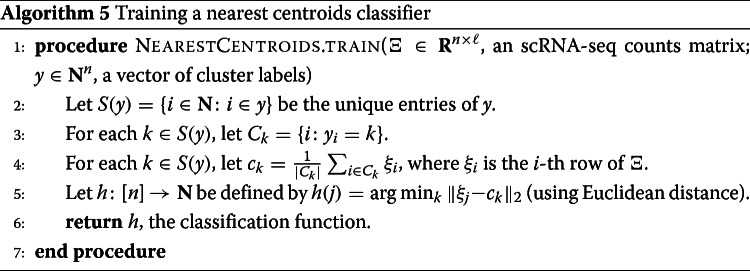

- Supervised classification: train a classifier on the data contained in the selected markers using the ground truth clustering as the target output.
- Unsupervised clustering: cluster the cells using the information in the set of selected markers without reference to the ground truth clustering.

In this study, we implemented algorithms that accomplish each general procedure. For each algorithm, we considered several different evaluation metrics. A summary of these algorithms and marker set evaluation metrics is found in Table 3, along with the abbreviations that we will use to refer to the metrics.

Table 3.

Evaluation metrics for marker sets on experimental data. The “Average precision” metric is a weighted average of precision over the clusters. The Matthews correlation coefficient is a summary statistic that incorporates all information from the confusion matrix. See [22] for more information about the classification metrics and [23] for more information about the clustering metrics

| General procedure | Methods | Metrics |
|---|---|---|
| Supervised classification | Nearest centroid classifier (NCC); Random forests classifier (RFC) | Classification error (1 - accuracy) |
| | | Average precision |
| | | Matthews correlation coefficient |
| Unsupervised clustering | Louvain clustering | Adjusted Rand index (ARI) |
| | | Adjusted mutual information (AMI) |
| | | Fowlkes-Mallows score (FMS) |

The three supervised classification metrics (error rate, precision, and Matthews correlation coefficient) generally provide similar information. Thus, for most selected marker sets, we present results from five of the metrics (NCC classification error, RFC classification error, ARI, AMI, and FMS). The precision and Matthews correlation coefficient data can be found in Additional file 1. See the marker evaluation metrics in the Methods for further details about these metrics.
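As a rough sketch, the six metrics in Table 3 can be computed from label vectors with scikit-learn. The helper names below are hypothetical, and using `average="weighted"` for the "Average precision" metric is an assumption based on its description as a weighted average of precision over the clusters.

```python
import numpy as np
from sklearn import metrics


def classification_metrics(y_true, y_pred):
    """Supervised metrics: compare predicted cluster labels with the ground truth."""
    return {
        "error": 1.0 - metrics.accuracy_score(y_true, y_pred),
        # Assumption: per-cluster precision weighted by cluster size.
        "avg_precision": metrics.precision_score(
            y_true, y_pred, average="weighted", zero_division=0
        ),
        "mcc": metrics.matthews_corrcoef(y_true, y_pred),
    }


def clustering_metrics(y_true, y_cluster):
    """Unsupervised metrics: invariant to how the cluster labels are named."""
    return {
        "ari": metrics.adjusted_rand_score(y_true, y_cluster),
        "ami": metrics.adjusted_mutual_info_score(y_true, y_cluster),
        "fms": metrics.fowlkes_mallows_score(y_true, y_cluster),
    }


# Toy labels (hypothetical): one of nine cells is misclassified.
y_true = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 1, 2, 2, 2])
clf_scores = classification_metrics(y_true, y_pred)

# The same partition with different label names is a perfect clustering.
y_cluster = np.array([5, 5, 5, 3, 3, 3, 9, 9, 9])
clu_scores = clustering_metrics(y_true, y_cluster)
print(clf_scores, clu_scores)
```

The classification metrics require the predicted labels to use the same names as the ground truth, while the clustering metrics only compare the two partitions.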

It is important to note that these metrics represent some summary statistical information about the selected markers - they do not capture the full information contained in a set of genes. Results on synthetic data suggest that the metrics are informative but not fine-grained enough to capture all differences between methods. Therefore, we would advise considering these metrics as “tests” for marker selection methods; that is, these metrics should mostly be used to identify marker selection methods that don’t perform well.

We compute each of the metrics using 5-fold cross-validation in order to reduce overfitting (see Section 7.10 of [8]). The timing information reported in the following sections represents the time needed to select markers on one fold.
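The cross-validation loop can be sketched as follows: markers are selected on the training cells of each fold, and the classifier is scored on the held-out cells using only those markers. The selector `select_markers` is a hypothetical stand-in (largest one-vs-rest mean difference), not one of the methods compared here, and the use of stratified folds is an assumption.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold
from sklearn.neighbors import NearestCentroid


def select_markers(X, y, n_per_cluster):
    """Hypothetical stand-in selector: largest one-vs-rest mean difference."""
    markers = set()
    for c in np.unique(y):
        diff = X[y == c].mean(axis=0) - X[y != c].mean(axis=0)
        markers.update(np.argsort(-diff)[:n_per_cluster].tolist())
    return np.array(sorted(markers))


def cv_ncc_error(X, y, n_per_cluster, n_splits=5, seed=0):
    """Average NCC classification error over folds, selecting markers per fold."""
    folds = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    errors = []
    for train, test in folds.split(X, y):
        # Markers are chosen from training cells only, so test cells stay unseen.
        markers = select_markers(X[train], y[train], n_per_cluster)
        clf = NearestCentroid().fit(X[train][:, markers], y[train])
        errors.append(1.0 - clf.score(X[test][:, markers], y[test]))
    return float(np.mean(errors))


# Toy data (hypothetical): 3 clusters of 100 cells, 3 elevated genes per cluster.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(3), 100)
X = rng.poisson(1.0, size=(300, 40)).astype(float)
for c in range(3):
    X[y == c, 3 * c:3 * c + 3] += rng.poisson(7.0, size=(100, 3))

err = cv_ncc_error(X, y, n_per_cluster=3)
print(f"5-fold NCC error: {err:.3f}")
```

Selecting markers inside the loop keeps the test cells out of the selection step, which is the point of using cross-validation to limit overfitting.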

Evaluating RANKCORR on experimental data

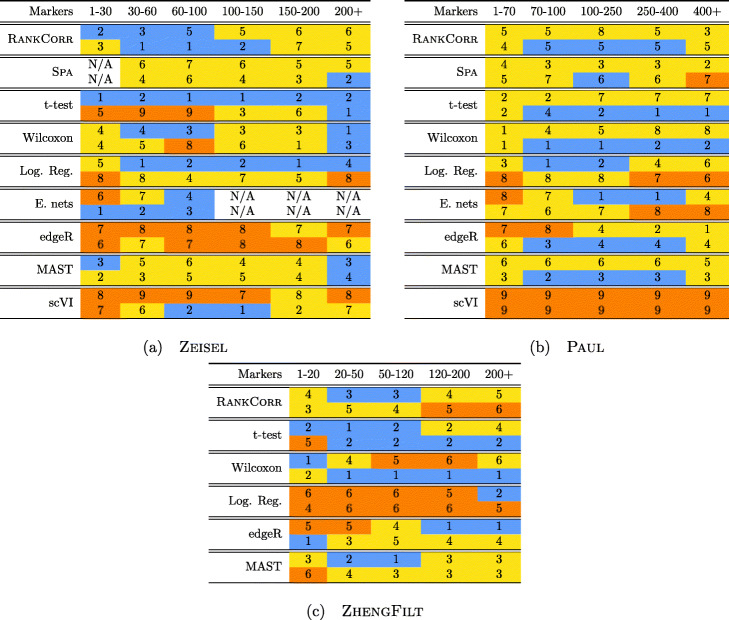

Performance summaries of the methods on the ZEISEL, PAUL, and ZHENGFILT data sets are presented in Fig. 2. In this figure, the colors of the boxes indicate relative performance: a blue box indicates performance that is better than the majority of the other methods, a yellow box indicates median performance, and an orange box indicates performance that is worse than the other methods. The coloring in the figures is based on Figs. 4, 5, 6, 7, 8, 9 and 10; the numbers of markers in the bins (in the top row) are selected to emphasize features found in these plots. The first row for each method represents the classification metrics and the second row represents the clustering metrics.

Fig. 2.

Performance of the marker selection methods on the (a) ZEISEL, (b) PAUL, and (c) ZHENGFILT data sets as the number of selected markers is varied. There are two rows for each method; the first row for each method represents the classification metrics and the second row represents the clustering metrics. Blue indicates better performance than the other methods; orange indicates notably worse performance than the other methods. The marker bins are chosen to emphasize certain features in Figs. 4, 5, 6, 7, 8, 9 and 10; these figures present the values of the evaluation metrics for the different data sets. The values in the boxes correspond to a ranking of the methods, with 1 being the best method in the marker range. The classification and clustering results are ranked separately. Further notes: (a) All of the methods perform well on the ZEISEL data set - an orange box here does not indicate poor performance, but rather that other methods outperformed the orange one. (b) Many of the methods showed nearly identical performance according to the classification metrics; thus, this table contains many yellow boxes

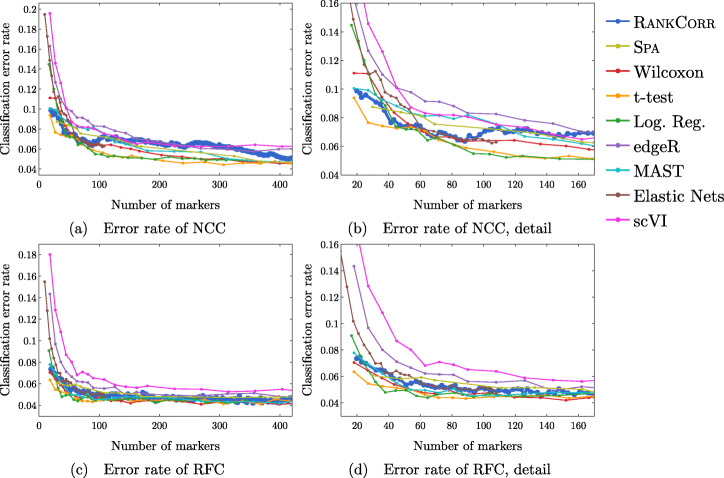

Fig. 4.

Error rate of both the nearest centroids classifier (NCC; (a) and (b)) and the random forests classifier (RFC; (c) and (d)) on the Zeisel data set. Figure (b) (respectively (d)) is a detailed image of the error rate of the different methods using the NCC (respectively RFC) when smaller numbers of markers are selected

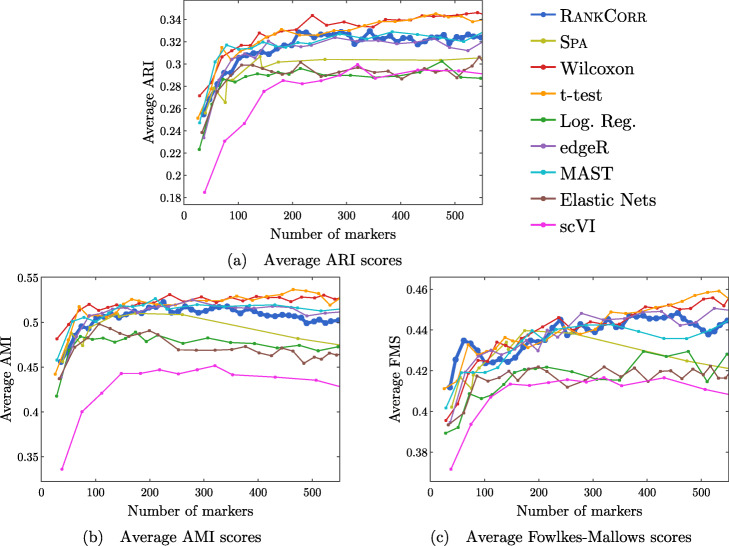

Fig. 5.

Clustering performance metrics vs total number of markers selected for marker selection methods on the ZEISEL data set. The ARI score is shown in (a), the AMI score is shown in (b), and the Fowlkes-Mallows score is shown in (c). The clustering is carried out using 5-fold cross validation and scores are averaged across folds

Fig. 6.

Error rates of both the nearest centroids classifier (NCC; (a) and (b)) and the random forests classifier (RFC; (c) and (d)) on the Paul data set. Figure (b) (respectively (d)) is a detailed image of the error rate of the different methods using the NCC (respectively RFC) when smaller numbers of markers are selected. Figure (b) details up to 220 total markers to make clear how similar the methods perform when small numbers of markers are selected. Figure (d) examines up to 350 total markers to detail the performance of the methods when small numbers of markers are selected as well as get an idea for the increasing behavior and noisy nature of the curves

Fig. 7.

Clustering performance metrics vs total number of markers selected for marker selection methods on the PAUL data set. The ARI score is shown in (a), the AMI score is shown in (b), and the Fowlkes-Mallows score is shown in (c). The clustering is carried out using 5-fold cross validation and scores are averaged across folds

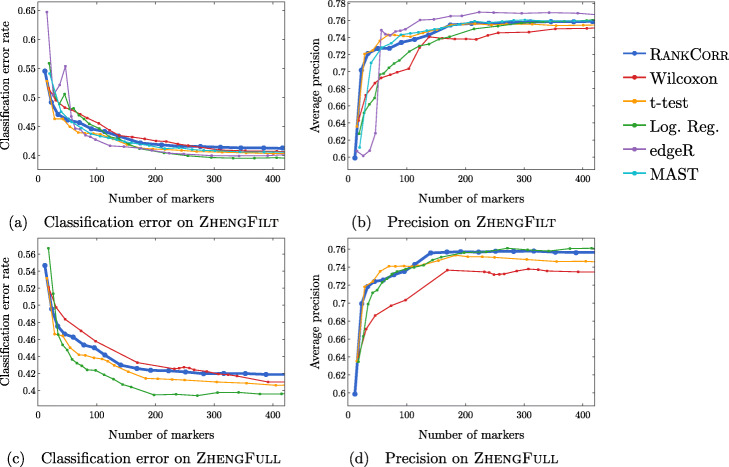

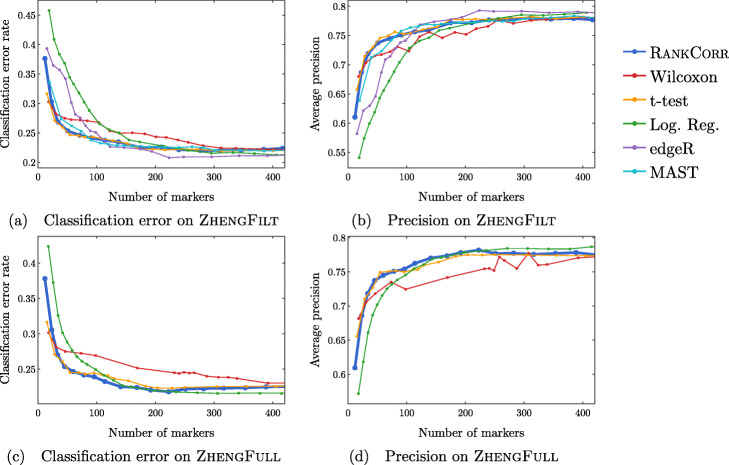

Fig. 8.

Accuracy and precision of the nearest centroids classifier on the ZHENG data sets using the bulk labels. The top row corresponds to the ZHENGFILT data set and the bottom row corresponds to the ZHENGFULL data set

Fig. 9.

Accuracy and precision of the random forests classifier on the ZHENG data sets using the bulk labels. The top row corresponds to the ZHENGFILT data set and the bottom row corresponds to the ZHENGFULL data set

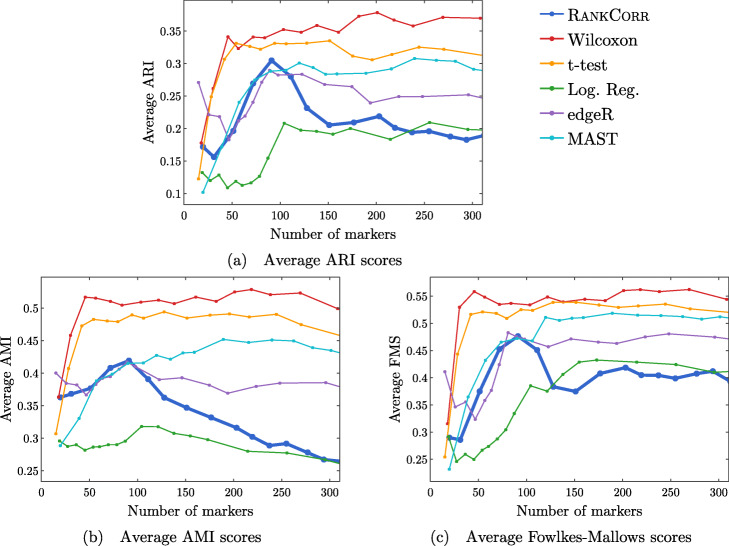

Fig. 10.

Clustering metrics on the ZHENGFILT data set

The values in the columns in Fig. 2 correspond to a heuristic ranking of the methods, with 1 the optimal method (on average) in the indicated range of markers; see the Methods for a full description of how the ranking is calculated. The classification metrics and clustering metrics are ranked separately (so that each column contains two full rankings of the methods; e.g. in Fig. 2b, every column contains the numbers 1 to 9 twice). Since these numbers are ranks, they do not capture the magnitude of the gaps in performance between methods. For example, if two methods differ by one rank (e.g. the method ranked 2 vs the method ranked 3), there could be a large gap in performance between the two methods. The colors of the cells are meant to capture the larger differences between (tiers of) methods, and methods with the same color in a column perform comparably (regardless of the difference in rank). For example, in some cases, the top four methods (ranked 1 through 4) are similar to each other and clearly better than the others, so they will be colored blue. In other cases, no method will appear significantly better than the others, so none of the boxes will be colored blue.
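The point that ranks hide the size of performance gaps can be illustrated with a small sketch. The error rates below are invented for illustration only (they are not results from this study), and this is not the heuristic ranking procedure described in the Methods.

```python
import numpy as np
from scipy.stats import rankdata

# Hypothetical error rates for three methods (illustrative values only).
error_rates = {"method A": 0.050, "method B": 0.052, "method C": 0.150}
names = list(error_rates)
values = np.array([error_rates[name] for name in names])

# Rank 1 is the lowest error; ranks are evenly spaced by construction.
ranks = rankdata(values, method="min").astype(int)

# Gaps between consecutive methods in sorted order are far from evenly spaced.
gaps = np.diff(np.sort(values))

for name, rank, value in zip(names, ranks, values):
    print(f"{name}: rank {rank}, error {value:.3f}")
print("gaps between consecutive ranks:", np.round(gaps, 3))
```

Here methods A and B would plausibly share a color tier, while C would not, even though each pair of neighbors differs by exactly one rank.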

The ZHENGFULL and 10XMOUSE data sets were too large for the majority of the methods to handle; thus, data were only collected for the RANKCORR, t-test, logistic regression, and Wilcoxon methods (the fastest methods) on these data sets. We include the 10XMOUSE data specifically as a stress test to determine the methods that can handle the largest data sets. It is impressive that these methods are able to run on such a large data set in a reasonable amount of time. The performance characteristics of the methods on these data sets are found later in this section.

Overall, the different methods select sets of markers that are of similar quality: the performance of any “optimal” method is usually not much better than several of its competitors. For example, the true differences in performance between the yellow and blue boxes in Fig. 2 are often quite small. In addition, there is no method that consistently selects the best markers. The optimal method depends on the choice of data set, the evaluation metric, and the number of markers that are selected.

That said, the RANKCORR method tends to perform well on these data sets: it is generally competitive with the best methods in terms of performance. In particular, it excels when selecting fewer than 100 markers on all three data sets according to both the clustering and classification metrics.

Since the algorithms generally exhibit similar performances under the metrics considered in this work, efficient algorithms have a significant advantage. The computational resources (total computer time and memory) required to select markers on the experimental data sets are presented in Fig. 3. The fastest and lightest methods are RANKCORR, the t-test, and Wilcoxon: notably, RANKCORR is nearly as fast and light as the two very simple statistical methods (the t-test and Wilcoxon). It is thus these three methods that show a clear advantage over the other methods for working with experimental data. Logistic regression also runs quickly on the smaller data sets but does not scale as well as the three methods mentioned above and significantly slows down on the larger data sets. In addition, logistic regression shows inconsistent performance and is often one of the worst performers when selecting small numbers of markers. The other methods are significantly slower or require large computational resources compared to the size of the data set: see Fig. 3 for further discussion.

Fig. 3.

Computational resources used by the marker selection methods. In both figures, the data set size is the number of entries in the data matrix X: it is given by n×p, the number of cells times the number of genes. The data sets that we consider in this work are indicated in the figures. The total computation time required to select markers on one fold (CPU time, calculated as number of processors used multiplied by the time taken for marker selection) is shown in (a); the total memory required during these trials is shown in (b). Elastic nets scales poorly in (a), so it is only run on PAUL and ZEISEL. Both edgeR and MAST are limited by memory on ZHENGFILT (see (b)); this prevents their application to the larger data sets. scVI also requires a GPU while it is running; this prevents us from testing it on the larger data sets. RANKCORR, the t-test, Wilcoxon, and logistic regression all use 8 GB to run on ZHENGFULL and 80 GB to run on 10XMOUSE. See the marker selection methods for more details
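The two quantities defined in this caption are simple products, sketched below. The function names and the example numbers are hypothetical and are not measurements from this study.

```python
def data_set_size(n_cells, n_genes):
    """Number of entries in the data matrix X (n x p)."""
    return n_cells * n_genes


def cpu_time(n_processors, wall_time):
    """Total computation time: processors used multiplied by elapsed time."""
    return n_processors * wall_time


# Hypothetical example: 3000 cells by 5000 genes, 2 hours on 4 processors.
size = data_set_size(3000, 5000)
total_hours = cpu_time(4, 2.0)
print(size, total_hours)
```

Reporting CPU time rather than elapsed time keeps methods that use different numbers of processors comparable.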

These results support the idea that RANKCORR is a worthwhile marker selection method to consider (alongside other fast methods) when analyzing massive UMI data sets. In the rest of this section, we give performance results on each specific data set.

The marker selection methods perform well on the ZEISEL data set

The classification error rates of the nearest centroid and random forests classifiers on the ZEISEL data set are presented in Fig. 4. The error rates are very low: it requires only 100 markers (an average of 11 markers per cluster) to reach an error rate lower than 5% for most methods using the RFC. The ARI, AMI, and FM scores, reported in Fig. 5, are also high (good) for all methods. Only a small number of markers were selected by elastic nets on the ZEISEL data set; thus, the elastic nets curves end before the others. Figure 2a contains a summary of the data presented in Figs. 4 and 5. The computational resources required by the methods are presented in Fig. 3, where it is clear that the RANKCORR, t-test, Wilcoxon, and logistic regression methods all run quickly on the ZEISEL data set and require few resources in comparison to the other methods.

The ground truth clustering that we consider on the ZEISEL data set is biologically motivated and contains nine clusters that are generally well separated (they represent distinct cell types) [24]. Most of the methods tested here produce markers that provide a significant amount of information about this ground truth clustering; these results thus represent a biological verification of the marker selection methods. That is, in this ideal biological scenario (a data set with highly discrete cell types) the (mathematically or statistically defined) markers that are chosen by the methods are biologically informative and can be used as real (biological) markers.

This suggests that, when selecting markers on a data set that is well clustered, it is useful to examine several marker selection algorithms to get different perspectives on which genes are most important. Marker selection algorithms that can run using only small amounts of resources, such as RANKCORR (see Fig. 3), thus have an advantage over the other methods.

In addition to this, RANKCORR is the only method that shows high performance when selecting fewer than 100 markers in both the clustering and classification metrics. Most researchers will be looking for small numbers of markers for their data sets; thus RANKCORR stands out as a promising method on the ZEISEL data set. Note also that RANKCORR generally outperforms SPA in the clustering metrics and is competitive with SPA in the classification metrics: RANKCORR is both faster than SPA and selects a generally more informative set of markers than SPA on the ZEISEL data set. Therefore, the performance on the ZEISEL data set is evidence for the fact that RANKCORR is a useful adaptation of SPA [14] for sparse UMI counts scRNA-seq data.

Finally, these data illustrate how the different evaluation metrics provide different statistical snapshots of the information contained in a set of markers. For example, logistic regression performs significantly worse than all of the other methods when large numbers of markers are selected according to the ARI and FMS plots. In the supervised classification trials, however, the logistic regression method performs competitively with the other methods. When no information about the ground truth clustering is provided, the performance of logistic regression on the ZEISEL data set drops considerably. Despite the good results in the supervised classification plots (which could be due to quickly selecting a small number of useful markers), it is reasonable to conclude that logistic regression selects many uninformative genes (in comparison to the other methods) as more markers are selected. It is best to think of the metrics as tests that can identify the marker selection methods that don’t perform well.

Marker selection algorithms struggle with the cell types defined along the cell differentiation trajectory in the PAUL data set

The PAUL data set consists of bone marrow cells and contains 19 clusters [25]. The clusters lie along a cell differentiation trajectory; therefore, it is reasonable that it would be difficult to separate the clusters or to accurately reproduce the clustering into discrete cell types. The PAUL data set thus represents an adversarial example for these marker selection algorithms.

Figure 6 shows the performance of marker selection algorithms on the PAUL data set as evaluated by the supervised classification metrics. It is not surprising to see relatively high classification error rates: the rates are always larger than 30% for the NCC and reach a minimum of around 27% with the RFC. Since there are 19 clusters, this is still much better than classifying the cells at random. The dependence of the ARI, AMI, and FM clustering scores on the number of markers selected is plotted for the different marker selection algorithms in Fig. 7. The values of the scores are all in low to medium ranges for all marker selection algorithms.
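To put these error rates in context, the sketch below computes the error expected from guessing a cluster uniformly at random. Reading "classifying the cells at random" as uniform guessing is an assumption; a baseline that always predicts the largest cluster would give a different value that depends on the cluster sizes.

```python
import numpy as np


def random_guess_error(n_clusters):
    """Expected error when each cell is assigned one of k labels uniformly at random."""
    return 1.0 - 1.0 / n_clusters


# With 19 clusters, a uniform guess is wrong about 95% of the time.
baseline_19 = random_guess_error(19)

# Quick simulation check with arbitrary (hypothetical) true labels.
rng = np.random.default_rng(0)
y_true = rng.integers(0, 19, size=100_000)
y_guess = rng.integers(0, 19, size=100_000)
simulated = float(np.mean(y_true != y_guess))

print(f"analytic: {baseline_19:.3f}, simulated: {simulated:.3f}")
```

Error rates of 27% to 30% are therefore far below the uniform-guess baseline, even though they are high compared to the ZEISEL results.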

All of the scores produced by all of the methods on the PAUL data set are significantly worse than the metrics on the ZEISEL data set; however, the methods perform considerably better than markers selected uniformly at random (see Additional file 1, Figures 5–7 for this comparison). This is sensible, since it should intuitively be difficult to reproduce a discrete clustering that has been assigned along a continuous path. The notion of discrete cell types does not fit well with a cell differentiation trajectory; the poor score levels reflect the necessity to come up with a better mathematical description of a trajectory for the purposes of marker selection.

The ARI values are especially low on the PAUL data set, and the methods consistently produce lower ARI values than AMI values. This is a change from the ZEISEL data set, where the ARI scores were higher than the AMI scores (and the FMSs were the highest of all). The aspects of the data sets that change the relative ordering of the metrics are unclear; it must be the data sets that influence this change, however, since the change persists across the marker selection algorithms. Designing a metric for benchmarking marker selection algorithms is itself a difficult task, and the optimal metric to consider could depend on the data set in question.

A summary of the relative performance of the marker selection algorithms on the PAUL data set is presented in Fig. 2b. All of the marker selection methods perform quite similarly on the PAUL data set. The RANKCORR algorithm is one of only three methods that always performs nearly optimally under every metric examined here; the others are the t-test and MAST. In addition, RANKCORR always performs well when selecting small numbers of markers, and shows exceptional performance in this regime under the Fowlkes-Mallows clustering metric. Combined with the facts that RANKCORR is fast to run and requires low computational resources, this shows that RANKCORR is a useful marker selection method to add to computational pipelines.

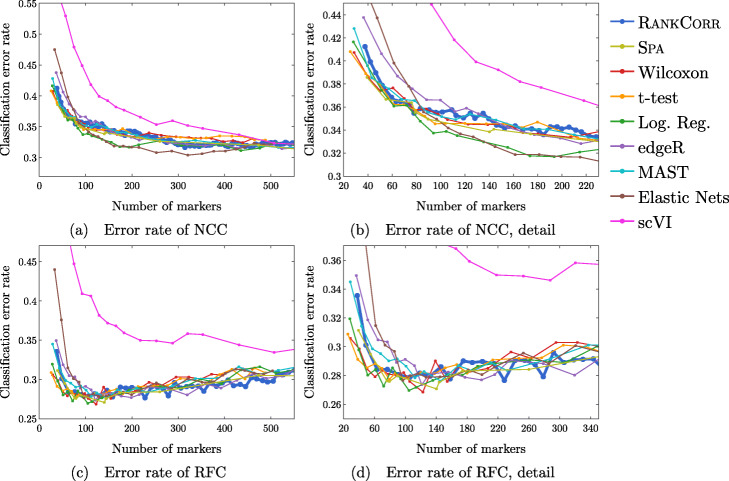

Results on the ZHENGFULL and ZHENGFILT data sets

Here, we examine the data set consisting of 68k peripheral blood mononuclear cells (PBMCs) from [2]; it contains data from more than 30 times the number of cells in either the PAUL or ZEISEL data sets. This is more representative of the sizes of the data sets that we are interested in working with. The ground truth clustering that we consider is the labeling obtained in [2] by correlation with bulk profiles (biologically motivated “bulk labels”). There are 11 cell types in this clustering. See the section describing the data sets (in the Methods) for more information.

The ZHENG data sets contain some distinct clusters (e.g. B cells), as well as some clusters that are highly overlapping (e.g. different types of T cells). There are no specific cell differentiation trajectories (that we are aware of), but the overlapping clusters provide a challenge for the marker selection methods. Thus, we expect to see performance benchmarks between those of PAUL and ZEISEL.

We mostly focus on ZHENGFILT, a version of the data set that is filtered to only include the information from the top 5000 most variable genes. We also consider the performance of the most efficient algorithms (RANKCORR, logistic regression, Wilcoxon, and the t-test) on ZHENGFULL, the data set containing all of the genes, to check for any differences. Extrapolating from Fig. 3, it would be infeasible to run the other methods on ZHENGFULL. Here we also begin to see that logistic regression scales worse than the other methods: it is already becoming slow and computationally heavy on “only” 68 thousand cells.

Figure 8 focuses on the performance of the methods when the NCC is used for classification; corresponding data using the RFC are found in Fig. 9. Unlike the PAUL and ZEISEL data sets, the precision curves are slightly different on some occasions, and thus they are presented here. In particular, the precision of these methods is significantly higher than their accuracy. Neither the classification accuracy nor the precision changes by very much when we filter from the full gene set (Figs. 8c,d and 9c,d) to the 5000 most variable genes (Figs. 8a,b and 9a,b). In general, this filtering very slightly increases both the accuracy and precision of the t-test, Wilcoxon, and RANKCORR methods, while it worsens the performance of the logistic regression method. This suggests that enough marker genes are kept by this variable gene filtering process to maintain accurate marker selection.

Overall, the classification error rates according to the NCC for these data are quite high, and don’t level off (to a minimum value of approximately 40%) until around 200 markers are selected; this corresponds to an average of around 18 unique markers per cluster. For very small numbers of markers selected, the classification error rates obtained from the NCC are quite high (around 55%).

The error rates using the RFC are decreased significantly compared to the NCC, and level off to approximately 22% when large numbers of markers are selected. The error again does not completely level off until around 200 total markers are selected, but there is a steeper initial descent. This steep initial descent in error rates could appear as the large groups of cells are separated from each other (e.g. B cells from T cells), and the slower improvement from 100 to 200 total markers selected could be the methods fine-tuning the more difficult clusters (e.g. Regulatory T from Helper T). The error rates are between those observed in PAUL and ZEISEL. On the other hand, the error rates for the NCC classifier are much higher than expected.

We focus on the ZHENGFILT data set for the clustering metrics. This is due to the fact that the classification metrics are changed only slightly between ZHENGFILT and ZHENGFULL as well as the fact that Louvain clustering on the large ZHENG data set is itself time and resource intensive. The clustering metrics on the ZHENGFILT data set are presented in Fig. 10. All three scores are generally quite low, though they are again mostly much higher than random marker selection. The performance of random marker selection can be found in Additional file 1, Figure 12.

A summary of the performance of the marker selection algorithms on the ZHENGFILT data set is presented in Fig. 2c. Apart from the t-test, the methods show inconsistent performance when comparing the clustering metrics to the classification metrics. For example, the edgeR method exhibits the top performance on the ZHENGFILT data set after more than 50-100 unique markers are selected according to the classification metrics. The classification metrics show edgeR as one of the worst methods when choosing fewer than 50 unique markers, however. This is in direct contradiction to the clustering metrics, where edgeR is always the best method for the smallest (∼20) total numbers of markers selected, and it then shows performance in the middle of the other methods as larger numbers of markers are selected.

It is possible that changing the number of nearest neighbors considered in the Louvain clustering would produce more consistent data. Although the clustering metrics did not appear to change significantly when altering the number of nearest neighbors on the previous data sets (see the Louvain parameter selection information in the Methods), the ZHENGFILT data set is much larger than those previous data sets; the larger number of cells may necessitate the use of information from more nearest neighbors to recreate the full clustering structure.

It is also possible that the bulk labels that are used for the ground truth are difficult to reproduce through the Louvain algorithm. We generated a clustering that visually looked like the bulk labels via the Louvain algorithm (it appears in Additional file 1, Figure 18); the ARI, AMI, and FMS values for the generated Louvain clustering compared to the bulk labels are in the ranges produced by the Wilcoxon and t-test methods (not larger than the scores here). In addition, the top ARI and AMI scores (produced by the Wilcoxon and the t-test methods) are comparable to (or only slightly better than) the scores on the PAUL data set (Fig. 7). This runs counter to our expectations: the PAUL data set contains a cell differentiation trajectory, with no real clusters that are easy to separate out, while the ZHENG data sets contain several clusters that are well separated. It is possible that the bulk labels produce clusters that are more mixed than it appears in a UMAP plot.

In any case, the disparity between the different types of scores further emphasizes the fact that the classification and clustering metrics provide different ways of looking at the information contained in a selected set of markers. Methods that perform well according to both types of metrics should be preferred.

Following this logic, the t-test produces the overall best results on the ZHENGFILT data set. It performs well under the classification metrics, especially for small numbers of total markers selected. In addition, it is consistently competitive with the best method (Wilcoxon) according to the clustering metrics.

Nonetheless, on the whole, RANKCORR performs approximately as well as the t-test, especially when selecting smaller numbers of markers. In particular, RANKCORR shows nearly optimal performance on the ZHENGFILT data set under the classification metrics. It performs poorly according to the clustering metrics when selecting more than 120 total markers, though it is still competitive with logistic regression in this domain. Still, the good performance when selecting fewer than 120 markers supports the notion that RANKCORR is a useful analytical resource for researchers to consider.

Marker selection on the 1 million cell 10XMOUSE data set

We consider the 10XMOUSE data set: it consists of 1.3 million mouse neurons generated using 10x protocols [3]. The “ground truth” clustering that we examine in this case was algorithmically generated without any biological verification or interpretation (see the section about data sets in the Methods). We include this data set as a stress test for the methods and therefore we do not perform any variable gene selection before running the marker selection algorithms (to keep the data set as large as possible). We also only consider the four fastest and lightest methods (RANKCORR, the t-test, Wilcoxon, and logistic regression) as these are the only methods considered in this work that could possibly produce results in a reasonable amount of time on this data set.

The classification error rates of the four methods according to the NCC classifier show behavior similar to the other data sets that we have examined in this manuscript: starting out relatively high when selecting a small number of markers, then rapidly decreasing for a short period until becoming nearly constant as a larger number of markers are selected. See Additional file 1, Figure 13 for the NCC curves.

The error rates of the methods approach approximately 25% as the number of markers selected increases. There are 39 clusters in the “ground truth” clustering that we examine here - thus, the error rates produced by all of the methods are much lower than the error rate expected from random classification. In Additional file 1, Figure 13, we see that the logistic regression method performs the best overall, and that RANKCORR consistently shows the highest error rate. The largest difference between the RANKCORR curve and the logistic regression curve is only around 3%, however. In addition, as mentioned above, logistic regression is the slowest method by far on this data set - extra accuracy is not worth much if the method is not able to finish running.

Because a biologically motivated or interpreted clustering may be quite different from the clustering used here and because the classification error rate does not capture the full information in a set of markers (and thus similar error rates are not necessarily an accurate indication of the relative performance of methods), it is only possible to conclude that all four methods examined here show similar performance on the 10XMOUSE data set. The RANKCORR method produces useful markers, runs in a competitive amount of time, and takes a step towards selecting a smart set of markers for each cluster (rather than the same number of markers per cluster). It is impressive that these methods are able to run on such a massive data set.

The implementation of the RFC in scikit-learn was quite slow on the large 10XMOUSE data set, and thus we do not compare the methods via the RFC. From the smaller data sets, we might expect that the random forest classifier produces curves that are shaped similarly to the ones in Figure 13 in Additional file 1 but are shifted down to a lower error rate. This is indeed what we see for the RANKCORR method: a comparison of the RFC and the NCC is shown in Additional file 1, Figure 14. Each point on this RFC curve took over 3 hours on 10 processors to generate; the largest point took over 15 hours. The Louvain clustering method was also too slow to compute any clustering error rates for the markers selected here. This is a situation where the marker selection algorithms are faster than almost all of the evaluation metrics (emphasizing the continued need for good marker set evaluation metrics).

Comparison of marker selection methods on synthetic data

We have evaluated RANKCORR on synthetic data sets that are designed to look like experimental scRNA-seq data. In each synthetic data set that we consider, there is a known ground truth set of markers, and all genes that are not markers are statistically identical across the cell populations. Thus, we can present the actual precision of the marker selection methods as well as ROC curves. Precision is an especially important metric for marker selection - it is desirable for an algorithm to select genes that truly separate the two cell populations (rather than genes that are statistically identical across the two populations). The values of precision, TPR, and FPR are computed without cross-validation, since the entire set (of genes) in each data set is test data - there is no training to be done. We additionally examine the classification error metric that was introduced in Table 3. We still use 5-fold cross-validation to compute this metric.
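When the true markers are known, precision, TPR, and FPR follow directly from counting selected genes against the ground truth. The sketch below uses a hypothetical helper `marker_selection_rates` and an invented toy ranking; sweeping the number of selected genes k over a ranked list gives the points of a precision curve or ROC curve.

```python
def marker_selection_rates(selected, true_markers, n_genes):
    """Return (precision, TPR, FPR) for a set of selected genes.

    Genes are treated as the items being classified: a selected true marker is a
    true positive, and a selected non-marker is a false positive.
    """
    selected, true_markers = set(selected), set(true_markers)
    tp = len(selected & true_markers)
    fp = len(selected) - tp
    fn = len(true_markers) - tp
    tn = n_genes - tp - fp - fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return precision, tpr, fpr


# Toy example (hypothetical): 20 genes, genes 0-3 are the true markers.
n_genes = 20
true_markers = {0, 1, 2, 3}
ranking = [0, 1, 7, 2, 9]  # genes in the order a method would select them

# One (precision, TPR, FPR) triple for each k = 1, ..., 5 selected genes.
curve = [
    marker_selection_rates(ranking[:k], true_markers, n_genes)
    for k in range(1, len(ranking) + 1)
]
for k, (precision, tpr, fpr) in enumerate(curve, start=1):
    print(f"k={k}: precision={precision:.3f}, TPR={tpr:.3f}, FPR={fpr:.4f}")
```

No train/test split is involved because nothing is fitted; every gene is simply checked against the known marker set.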

Since the speed of a marker selection algorithm has been observed as an important factor for use on experimental data, we compare RANKCORR only to the fastest methods: the t-test, Wilcoxon, and logistic regression.

See the synthetic data generation subsection in the Methods for a full description of the data generation process. See also Fig. 11 for an outline of the design. In short, we generate 20 different synthetic data sets; each simulated data set consists of 5000 cells that are split into two groups, and 10% of the genes are differentially expressed between these groups.

Fig. 11.

Set up of the simulated data. We consider 3 conditions: all genes used for simulation, filtering after simulation, and filtering before simulation. On the left side of this diagram, we produce 10 data sets by using all genes in simulation, and 10 more by filtering down to the 5000 most variable genes after simulation. These “filtering after simulation” data sets contain a subset of the information from the “all genes used for simulation” data sets. On the right hand side, we produce 10 data sets by filtering down to the 5000 most variable genes before simulation

For the purposes of computational efficiency, many data analysis pipelines reduce input data to a subset of the most variable genes before selecting markers. Thus, we examine synthetic data sets that are filtered down to the 5000 most variable genes in addition to unfiltered data sets. In 10 samples, we filter before simulating (and simulate 5000 genes); in the other 10 samples, we simulate without filtering (and simulate as many nonzero genes as there were in the input data, usually around 12000 genes). From each data set that was simulated without filtering, we produce another data set by filtering down to the 5000 most variable genes. This results in three simulation conditions (all genes used for simulation, filtering before simulation, and filtering after simulation) and a total of 30 data sets. See Fig. 11.
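A filtering step of this kind can be sketched as keeping the columns of the count matrix with the largest variance. Ranking by raw variance is an assumption made for illustration; the variable gene selection used in practice (for example, dispersion-based selection in common scRNA-seq toolkits) may rank genes differently. The function name `filter_most_variable` is hypothetical.

```python
import numpy as np


def filter_most_variable(X, n_top):
    """Keep the n_top genes (columns) with the largest variance across cells."""
    variances = X.var(axis=0)
    # Stable sort so that genes with equal variance keep their original order.
    order = np.argsort(-variances, kind="stable")
    keep = np.sort(order[:n_top])
    return X[:, keep], keep


# Toy data (hypothetical): Poisson genes, whose variance equals their mean.
rng = np.random.default_rng(0)
X = rng.poisson(lam=[0.1, 5.0, 0.2, 10.0, 1.0], size=(500, 5))

X_filtered, kept = filter_most_variable(X, n_top=2)
print(kept, X_filtered.shape)
```

In the "filtering after simulation" condition, the same kind of filter is applied to an already simulated data set, so the result is a subset of its genes.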

Apart from the t-test data, each curve presented in this section represents the average across all 10 simulated data sets that are relevant to the curve. For the t-test, one of the trials in each simulation condition produced genes with tied p-values. This resulted in situations where it was impossible to select the top k genes in a stable manner; thus, these data sets were ignored and the t-test precision, TPR, and FPR curves each represent the average of the 9 data sets that are relevant to the curve.
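One way to detect the tie problem described above is to check whether the p-values on either side of the top-k cutoff coincide. The following Python sketch shows such a check; the function name and the example p-values are hypothetical and are not part of any of the tested implementations.

```python
def top_k_is_stable(p_values, k):
    """True if the k smallest p-values are separated from the rest.

    If the k-th and (k+1)-th smallest p-values are equal, the choice of
    the top k genes depends on an arbitrary tie-break.
    """
    ordered = sorted(p_values)
    if k <= 0 or k >= len(ordered):
        return True  # nothing (or everything) is selected, so no ambiguity
    return ordered[k - 1] != ordered[k]


# Hypothetical p-values with a three-way tie at the smallest value.
p_values = [0.001, 0.0, 0.0, 0.2, 0.0, 0.05]
stable_at_2 = top_k_is_stable(p_values, 2)  # cutoff falls inside the tie
stable_at_3 = top_k_is_stable(p_values, 3)  # cutoff falls just after the tie
```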

The differentially expressed genes are chosen randomly; thus many of them show low expression levels (often expressed in fewer than 10 cells) and are difficult to detect. In general, marker selection methods should not select genes with very low expression levels (since these genes are not particularly useful as markers when all cell types have large enough populations). Thus, we do not present information about the recall here.

Simulated data illuminates the precise performance characteristics of marker selection methods

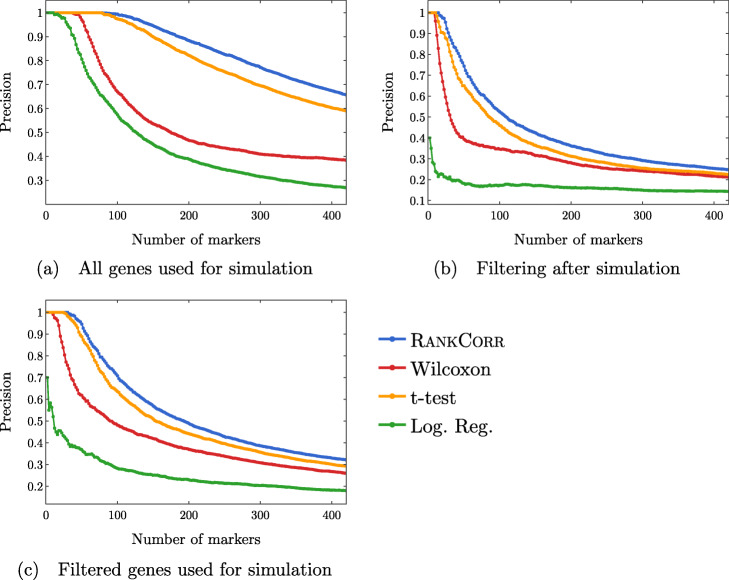

In Fig. 12, we examine the precision of the marker selection algorithms for the first 400 unique genes selected. It is promising to see that RANKCORR produces the highest precision in marker selection across all of the simulation conditions. The t-test is second, the Wilcoxon method is third, and logistic regression consistently exhibits the lowest precision.

Fig. 12.

Precision of the marker selection methods versus the number of markers selected for the first 400 markers selected. Each sub-figure corresponds to a simulation condition and the four lines correspond to the different marker selection algorithms. The RANKCORR method consistently shows the highest precision across all three simulation conditions

Examining Fig. 12 more closely, we see that the methods generally start off with high precision that decreases as more markers are selected (each data set contains more than 400 differentially expressed genes). In both of the filtered simulation conditions, all of the methods get close to a precision of 0.1 or 0.2 when 400 markers are selected, and all of the curves are still decreasing at this point (a precision of 0.1 corresponds to random gene selection on these data sets). There are around 2000 differentially expressed genes in the unfiltered simulation condition, so the fact that the precision drops significantly when selecting up to 400 markers indicates proportionally similar behavior to the filtered data sets.
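The random-selection baseline quoted above follows from a short expectation calculation. The symbols are introduced here for illustration only: suppose a data set contains G genes, of which D are differentially expressed, and that k genes are drawn uniformly at random without replacement. The number X of true markers among the k selected genes is then hypergeometric, and

```latex
\mathbb{E}[\text{precision}]
  = \frac{\mathbb{E}[X]}{k}
  = \frac{1}{k} \cdot \frac{kD}{G}
  = \frac{D}{G}.
```

The expected precision of random selection therefore equals the fraction of differentially expressed genes regardless of k, which gives 0.1 when 10% of the genes are differentially expressed.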

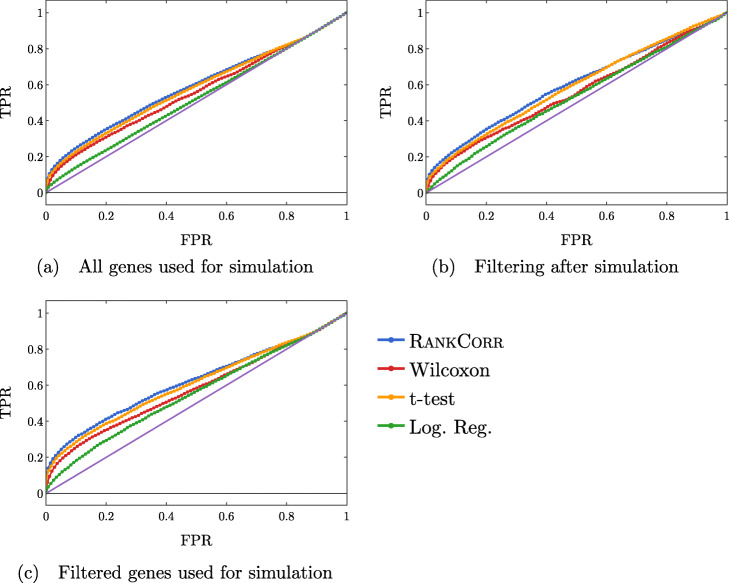

The ROC curves in Fig. 13 also reflect this behavior: the curves increase (above the diagonal) quite rapidly for a short period of time, but then remain close to the diagonal overall.

Fig. 13.

ROC curves. Each sub-figure corresponds to a simulation condition and the four lines correspond to the different marker selection algorithms. The solid (purple) line is the diagonal TPR = FPR
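For readers who wish to reproduce curves of this kind, the following Python sketch traces an ROC curve by selecting genes one at a time from a ranking. It assumes that the ranking covers every gene in the data set and that both markers and non-markers are present; the function names and gene labels are hypothetical.

```python
def roc_points(ranked_genes, true_markers):
    """(FPR, TPR) after each successive gene in the ranking is selected.

    Assumes ranked_genes lists every gene exactly once and contains
    at least one marker and at least one non-marker.
    """
    n_pos = len(true_markers)
    n_neg = len(ranked_genes) - n_pos
    tp = fp = 0
    points = [(0.0, 0.0)]
    for gene in ranked_genes:
        if gene in true_markers:
            tp += 1
        else:
            fp += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def area_under_curve(points):
    """Trapezoidal area under a piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Toy ranking (hypothetical): 8 genes, 4 of which are true markers.
ranking = ["g1", "g2", "g7", "g3", "g5", "g4", "g6", "g8"]
markers = {"g1", "g2", "g3", "g4"}
curve = roc_points(ranking, markers)
auc = area_under_curve(curve)
```

A curve that hugs the diagonal TPR = FPR has an area close to 0.5, which corresponds to random selection.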

These data are somewhat expected: in the simulations that come from all of the genes, many of the “differentially expressed” genes show low levels of expression. Thus, we would expect that the ROC curves should end up close to the diagonal as intermediate to large numbers of total markers are selected (since finding these low expression markers should be close to random selection). The filtered data sets could have solved this problem; however, the filtering method used here (see the full synthetic data description in the Methods) preserves the relative proportions of low- and high-expression genes and (possibly for this reason) does not affect the ROC curves very much.

Another explanation for these difficulties could be the differential expression parameters used in the Splat simulation. With these default parameters, the gene means for some of the “differentially expressed” genes are only slightly different between the two clusters (for specifics, see the synthetic data discussion in the Methods). Thus, although the simulation may label these genes as differentially expressed, detecting the differential expression by any method will be very difficult. This underscores the differences between biological markers and differentially expressed genes: these differentially expressed genes would not be good practical biological markers, as it would be very difficult to tell two clusters apart based on the expression levels of these types of genes without collecting a lot of data.

Regardless, both of these plots support the notion that the methods are able to easily identify a small set of differentially expressed genes from the synthetic data but then rapidly start to have difficulties as more genes are selected. In addition, the RANKCORR method consistently shows the highest value of precision and TPR.

Inconsistent results are obtained when these simulated genes are filtered by dispersion

Comparing Fig. 12a-c across the simulation conditions, we see that the highest precision for each of the marker selection methods is obtained by using all genes for simulation, without any filtering. It is difficult to explain why filtering genes by dispersion (the filtering method that we use here; see the synthetic data discussion in the Methods) after simulating produces lower precision scores than not filtering. Since the t-test (for example) works by choosing genes based on a p-value score, and the genetic information is not changed by the filtering process (p-values would be the same in both the unfiltered and filtered after simulation data sets), it must be the case that many of the differentially expressed genes are removed from the data set when we filter after simulation. The highly variable genes selected by the filtering method used here are not required to have high expression; thus, there is no obvious reason that many differentially expressed genes should be filtered out.
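The argument above can be made concrete with a toy example in Python. It assumes, as stated for the t-test, that the score of each gene depends only on that gene's own counts, so that removing genes leaves the relative order of the surviving genes unchanged. The gene names and the filter are hypothetical.

```python
def precision_at_k(ranked_genes, true_markers, k):
    """Fraction of the first k ranked genes that are true markers."""
    top = ranked_genes[:k]
    return sum(g in true_markers for g in top) / len(top)


# Genes ordered by a per-gene score (e.g. a p-value) on the unfiltered data.
ranking = ["m1", "m2", "m3", "n1", "m4", "n2", "n3", "n4"]
markers = {"m1", "m2", "m3", "m4"}

# Hypothetical filter that happens to discard two of the true markers.
kept = {"m1", "n1", "m4", "n2", "n3", "n4"}
filtered_ranking = [g for g in ranking if g in kept]  # order is unchanged

before = precision_at_k(ranking, markers, k=3)
after = precision_at_k(filtered_ranking, markers, k=3)
```

Because the surviving genes keep their order, the precision at a fixed k can fall only when true markers near the top of the ranking are removed by the filter.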

Note that a similar effect is not observed in the ZHENG data sets (see Figs. 8 and 9), suggesting that this inconsistency is an artifact of the simulation methods used here. Simulating scRNA-seq data is itself a difficult task; see also [32] for a further discussion of the difficulties involved in simulating scRNA-seq data (and a tool that can help to expose these types of issues). Nonetheless, filtering genes is quite a heuristic process, and there is still more work to be done in fully understanding how this filtering impacts real scRNA-seq data. At the very least, it is clear that the process of filtering genes by dispersion does not commute with the simulation methods used here, since filtering before simulation shows higher precision than filtering after simulation.

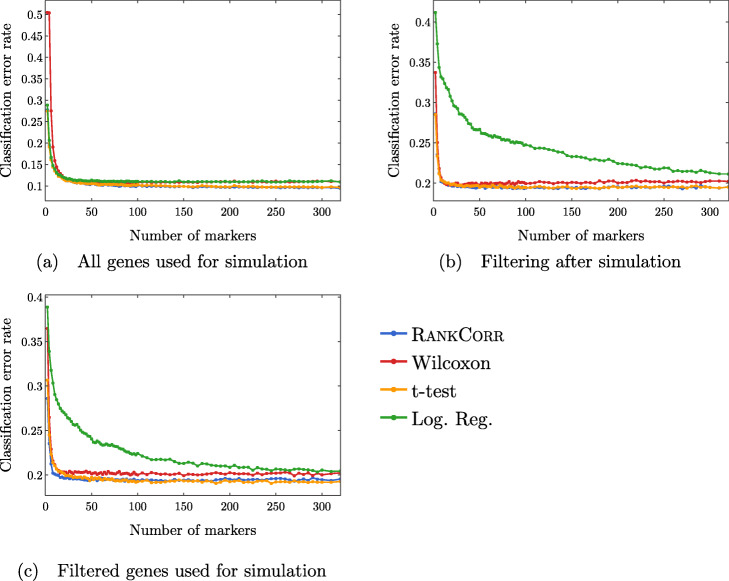

The classification error rate is an informative but coarse metric

Finally, we examine the classification error rate of the methods applied to the synthetic data in Fig. 14. It is interesting to note that, with only two clusters, we still misclassify a minimum of around 10% of cells. This suggests that the simulated data are not well separated - the differential expression introduced in the synthetic data is not strong enough to easily separate the two clusters. Moreover, apart from the curves corresponding to the logistic regression method, all of the curves look to be fairly constant after a small number of markers have been selected (approximately 50 for the simulations based on all genes and approximately 30 for the simulations based on filtered data). This further supports the discussion from above - the methods start by quickly choosing a small number of good markers; after this, the genes that are selected do not provide significantly more information about the clustering.

Fig. 14.

Clustering error rates using the Random Forest classifier for the first 500 markers chosen by each method. The sub-figures correspond to different simulation conditions. The RANKCORR algorithm consistently produces the smallest values of the clustering error rate

Note that the methods that show higher precision in Fig. 12 also show a lower classification error in Fig. 14. Similarly, logistic regression shows poor precision levels on the filtered data sets and also appears significantly worse than the other methods in the classification error rate curves. Thus, according to these experiments, the classification error rate seems to be a coarse but reasonable measure of how well a set of markers describes the data set. In this example, if one method performs worse than another method according to the classification error rate curves (Fig. 14), then the same relationship holds in the precision curves (Fig. 12). Some large differences in precision are eliminated in the classification error rate curves, however, and thus the classification error rate should be considered with a grain of salt. That is, the classification error rate is informative, but it does not provide a full statistical picture of how the methods are actually performing.
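The classification error rate discussed above can be sketched in Python as a cross-validated misclassification rate computed on the selected markers only. To keep the example self-contained, a simple nearest-centroid rule is swapped in for the Random Forest classifier used in Fig. 14; the function name, the fold assignment, and the toy data are assumptions made for illustration.

```python
import random


def nearest_centroid_error(X, y, n_folds=5, seed=0):
    """Cross-validated misclassification rate on the selected genes.

    X: list of cells, each a list of expression values for the markers.
    y: list of cluster labels, one per cell.
    A nearest-centroid rule stands in for the Random Forest classifier.
    """
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::n_folds] for i in range(n_folds)]
    errors = 0
    for test in folds:
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        # Compute one centroid per label from the training cells only.
        centroids = {}
        for label in set(y[i] for i in train):
            members = [X[i] for i in train if y[i] == label]
            centroids[label] = [sum(col) / len(members)
                                for col in zip(*members)]
        # Assign each held-out cell to the closest centroid.
        for i in test:
            predicted = min(
                centroids,
                key=lambda c: sum((a - b) ** 2
                                  for a, b in zip(X[i], centroids[c])),
            )
            errors += predicted != y[i]
    return errors / len(X)


# Toy data (hypothetical): two well separated clusters on two marker genes.
X = [[0, 1], [1, 0], [0, 0], [1, 1], [0, 2],
     [9, 8], [8, 9], [9, 9], [8, 8], [9, 7]]
y = [0] * 5 + [1] * 5
error_rate = nearest_centroid_error(X, y)
```

Evaluating this rate on the first k markers of each method, for increasing k, yields curves of the kind shown in Fig. 14, although the absolute values will depend on the classifier that is used.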

Discussion

The difficulties of benchmarking and the importance of simulated data

Benchmarking marker selection algorithms on scRNA-seq data is inherently a difficult task. The lack of a ground truth set of markers requires us to devise performance evaluation metrics that will illuminate the information contained in a selected set of genes. We have examined several natural evaluation metrics in this work; these metrics sometimes produce conflicting results, however. Our experiments make it clear that these metrics provide different ways to view the information contained in a set of genes rather than capturing the full picture provided by a set of markers.

Having a ground truth set of markers available makes the evaluation of marker selection algorithms much more explicit. In the synthetic data here, for example, it becomes apparent that the methods rapidly select a small set of markers that provides a lot of information about the clustering, and then select further genes at close to chance level. This type of behavior can only be revealed by a study with a known ground truth.

On the other hand, simulating scRNA-seq data is itself a difficult problem. The simulated data that we consider in this work behaves strangely when we filter it by selecting highly variable genes. In particular, the filtering process considered here seems to remove many of the useful differentially expressed genes in the simulated data. This type of behavior was not observed in the ZHENGFILT experimental data set, where working only with high variance genes had little impact on the marker set evaluation metrics. Better simulation methods, and mathematical results formalizing the quality of simulated data, are extremely important future projects. See [32, 33] for some work towards these goals.

The relationship between marker selection and the process of defining cell types

The marker selection framework considered in this work is quite narrow. It is focused on discrete cell types, and (as shown in the PAUL data set) does not handle cell differentiation trajectory patterns very well. Moreover, we assume that the genetic information that we supply to a marker selection algorithm consists of cells that are already partitioned into cell types. This is consistent with the data processing pipeline that many researchers currently follow (cluster the scRNA-seq data with an algorithm, then find markers for the clusters that are produced [18, 19]); it seems more reasonable to allow for marker selection to help guide the process of finding and defining cell types, however.

For example, future marker selection methods could find markers that are useful for identifying certain regions of the transcriptome space (in an unsupervised or semi-supervised manner). This would allow for clarity along a cell differentiation pathway - at any point on the trajectory, a researcher could view the markers that identify the nearby area, and to what degree each marker identifies the area. Thus, cell types (or differentiation pathways) could be suggested based on marker genes. These cell types might themselves reveal more informative markers, creating an iterative process: let the markers guide the clustering and vice versa. Such a method is known as an embedded feature selection method in the computer science literature; adapting an embedded feature selection method to scRNA-seq data is left for future consideration.

Conclusions

Across a wide variety of data sets (large and small; data sets containing cell differentiation trajectories; data sets with well separated clusters; biologically defined clusters; algorithmically defined clusters) and looking at many different performance metrics, it is impossible (and even inappropriate) to say that any of the methods tested selects better markers than all of the others. Indeed, the marker selection method that was “best” depended on the data set as well as the evaluation metric in question, and the difference in performance between the “best” marker selection algorithm and the “worst” was often quite small.

Thus, the major factors that differentiate the methods examined in this work are the computational resources (both physical and temporal) that the methods require. Since the algorithms show similar overall quality, researchers should prefer marker selection methods that are fast and light.

In addition to this, as technology advances, the trend is towards the generation of larger and larger data sets. High throughput sequencing protocols are becoming more efficient and cheaper, and other statistical and computational methods are improved when many samples are collected. Through imputation and smoothing methods (see e.g. [34–36]), a detailed description of the transcriptome space can be revealed even when low numbers of reads are collected in individual cells. Thus, the speed of a marker selection algorithm will only become more important.

The RANKCORR, Wilcoxon, t-test, and logistic regression methods run the fastest of all of the methods considered in this work. They run considerably faster and/or lighter than any of the complex statistical methods that have been designed specifically for scRNA-seq data. Logistic regression does not scale particularly well with the data set size, however, and it requires an amount of resources that is not competitive with the other three methods on the largest data sets. Moreover, logistic regression exhibits poor performance on several of the data sets considered in this work, especially when selecting small numbers of markers. Thus, as a general guideline, RANKCORR, Wilcoxon, and the t-test are the optimal marker selection algorithms examined in this work for the analysis of large, sparse UMI counts data. This recommendation is further bolstered by the fact that these three algorithms tend to perform well in the experiments that we have considered here, especially when selecting lower numbers of markers.