Abstract

Coronavirus disease 2019 (COVID-19) is a rapidly spreading disease caused by severe acute respiratory syndrome Coronavirus 2 (SARS-CoV-2). Detecting COVID-19 with artificial intelligence techniques, especially deep learning, can help identify the virus at an early stage. This increases patients' chances of a fast recovery worldwide and relieves pressure on healthcare systems around the world. In this research, classical data augmentation techniques along with Conditional Generative Adversarial Nets (CGAN), combined with deep transfer learning models, are presented for COVID-19 detection in chest CT scan images. The main motivation of this research is the limited number of benchmark datasets for COVID-19, especially of chest CT images. The main idea is to collect all COVID-19 images available at the time of writing and to use classical data augmentation along with CGAN to generate more images that help in detecting COVID-19. In this study, five deep convolutional neural network-based models (AlexNet, VGGNet16, VGGNet19, GoogleNet, and ResNet50) were selected to detect Coronavirus-infected patients from digital chest CT images. Classical data augmentation along with CGAN improves classification performance in all selected deep transfer models except VGGNet19. The results show that ResNet50 is the most appropriate deep learning model for detecting COVID-19 from the limited chest CT dataset when classical data augmentation is used, with a testing accuracy of 82.91%, sensitivity of 77.66%, and specificity of 87.62%.

Keywords: COVID-19, SARS-CoV-2, Deep transfer learning, CGAN

Introduction

At the end of February 2003, a severe acute respiratory syndrome (SARS) virus originating in Guangdong province spread through the Chinese population. The virus was named SARS-CoV and confirmed as a member of the beta Coronavirus subgroup [1]. In 2019, a novel Coronavirus emerged in Wuhan, China, killing hundreds and infecting thousands of people within a few weeks of the start of the epidemic. The World Health Organization (WHO) named the virus the 2019 novel Coronavirus (2019-nCoV); it can cause respiratory disease and severe pneumonia [2]. In February 2020, the International Committee on Taxonomy of Viruses (ICTV) named the virus severe acute respiratory syndrome Coronavirus 2 (SARS-CoV-2), and WHO named the disease Coronavirus disease 2019 (COVID-19) [3–5]. Coronaviruses are classified into four genera: alpha (α), beta (β), gamma (γ), and delta (δ) Coronaviruses, and 2019-nCoV was reported to be a member of the β genus. The SARS epidemic affected 26 countries and resulted in more than 8000 cases in 2003. At the time of writing, the SARS-CoV-2 epidemic has infected more than 1.5 million people across 150 countries, with a death rate of about 4%. SARS-CoV-2 transmits more readily than SARS-CoV, possibly because the receptor-binding domain (RBD) of its spike (S) protein enhances its transmission [6].

In 2012, Middle East Respiratory Syndrome (MERS), an illness caused by a Coronavirus, was reported in Saudi Arabia. SARS and MERS are Betacoronaviruses (β-CoVs or Beta-CoVs) that were transmitted to people from civet cats and Arabian camels, respectively [7, 8]. The sale of wild animals may be the source of Coronavirus infection. The discovery of multiple lineages of pangolin Coronaviruses and their similarity to SARS-CoV-2 suggests that pangolins should be considered possible hosts of novel Coronaviruses, and WHO has recommended reducing the risk of animal-to-human transmission in wild animal markets [9]. Person-to-person transmission has been reported outside China, for example in Italy [10], the USA [11], Germany [12], Vietnam [13], and Nepal [14]. By 11 April 2020, SARS-CoV-2 had caused more than 1.7 million confirmed cases, including 400,000 recoveries and 100,000 deaths. Figure 1 shows statistics on recovered and death cases of COVID-19 in some countries [15].

Fig. 1.

Statistics of COVID-19 in some countries

Deep transfer learning (DTL) is a deep learning technique that reuses a deep learning model (a network loosely inspired by the neurons of the brain) already trained on one task as the starting point for another [16, 17]. DTL is quickly becoming a key technique in image/video classification and detection. In medicine, DTL helps systems achieve better results, cover a wider range of diseases, and support practical real-time disease detection from medical images [18–20]. In 2012, Ciregan et al. [21] and Krizhevsky et al. [22] showed how convolutional neural networks (CNN/ConvNet) running on graphics processing units (GPUs) can improve image classification; CNNs have since improved results on many image benchmarks, such as MNIST [23], Chinese characters [24], NORB (jittered, cluttered) [25], traffic signs [26], large-scale ImageNet [27], Arabic digit recognition [28], and Arabic handwritten character recognition [29]. In the following years, further advances in CNNs continued to decrease the error rate in image/video classification competitions. Many models used for DTL have been introduced, such as AlexNet [22], VGGNet [30], GoogleNet [31], ResNet [32], Xception [33], DenseNet [34], and Inception-V3 [35].

This section surveys recent scientific papers that apply deep learning to the classification of medical chest computed tomography (CT) and X-ray images [36]. Christe et al. [37] introduced a deep learning-based computer-aided detection method that detected idiopathic pulmonary fibrosis with accuracy similar to that of a human reader. The system automatically classified CT images into 4 radiological diagnostic categories and achieved an F-score (the harmonic mean of precision and recall) of 80%. In [38], the authors introduced a system for the automated classification of interstitial lung abnormality patterns in CT images. The system was an ensemble of deep convolutional neural networks (DCNNs) that captures more features by combining architectures of different dimensionality. The ensemble achieved a sensitivity of 91.41% and a specificity of 98.18%.

In this paper, we introduce DTL models to classify a limited set of COVID-19 chest CT scan digital images. To provide the DCNNs with enough chest CT images for training, we enlarged the dataset using classical data augmentation and a CGAN that generates additional CT images. Each DTL model then classifies the images into two classes (COVID/NonCOVID). The proposed DTL models were evaluated on the COVID-19 CT scan image dataset. The contributions of this research are as follows: (1) The introduced DTL models have an end-to-end structure without classical feature extraction and selection methods. (2) We show that classical data augmentation and a conditional generative adversarial network (CGAN) are effective techniques for generating CT images. (3) Chest CT images are shown to be a useful tool for classifying COVID-19. (4) The DTL models yield promising accuracy on the limited COVID-19 dataset. The rest of the paper is organized as follows. Section 2 discusses the dataset used in our research. Section 3 introduces the proposed models, while Sect. 4 presents the achieved outcomes and their discussion. Finally, Sect. 5 provides conclusions and directions for further research.

Dataset

The COVID-19 CT scan digital image dataset [39] used in this research was created by Zhao et al. (https://github.com/UCSD-AI4H/COVID-CT). The authors collected 760 preprints about COVID-19 from bioRxiv (https://www.biorxiv.org) and medRxiv (https://www.medrxiv.org), posted from January 19 to March 25, 2020, that report COVID-19 patient cases with CT images. The dataset is organized into 3 folders (train, validation, and test), each with a subfolder per image category (COVID/NonCOVID). It contains 742 CT images in 2 categories (COVID/NonCOVID); the number of images in each class is presented in Table 1. Table 1 also shows how the proposed method increases the number of COVID-19 CT images using augmentation and CGAN. Figure 2 shows samples of the CT images used in this research.

Table 1.

Number of images for each class in COVID-19 CT dataset

| Dataset | Train COVID | Train NonCOVID | Validation COVID | Validation NonCOVID | Test COVID | Test NonCOVID |
|---|---|---|---|---|---|---|
| COVID-19 | 191 | 234 | 60 | 58 | 94 | 105 |
| COVID-19 + Aug | 2292 | 2808 | 720 | 696 | 94 | 105 |
| COVID-19 + CGAN | 2191 | 2234 | 210 | 208 | 94 | 105 |
| COVID-19 + Aug + CGAN | 4292 | 4808 | 870 | 846 | 94 | 105 |
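The augmented counts in Table 1 follow a simple arithmetic pattern, which the sketch below reproduces. The two factors are not stated in the paper; they are inferred from the table itself and should be read as assumptions: classical augmentation appears to yield 12 images per original image (for example, 2292 = 12 × 191), and the CGAN appears to add 2000 synthetic images per class to the training set and 150 per class to the validation set. The test set is never augmented.

```python
# Reproduce the rows of Table 1 from the original per-class counts.
# AUG_FACTOR and CGAN_EXTRA are inferred from the table, not stated by the authors.
BASE = {"train": (191, 234), "val": (60, 58), "test": (94, 105)}  # (COVID, NonCOVID)
AUG_FACTOR = 12                                      # e.g. 2292 / 191 == 12
CGAN_EXTRA = {"train": 2000, "val": 150, "test": 0}  # synthetic images added per class


def table1_row(aug: bool, cgan: bool) -> list:
    """Counts in Table 1 column order: train, validation, test; COVID before NonCOVID."""
    row = []
    for split in ("train", "val", "test"):
        for count in BASE[split]:
            if aug and split != "test":   # augmentation multiplies train/val images only
                count *= AUG_FACTOR
            if cgan:                      # CGAN adds a fixed number of synthetic images
                count += CGAN_EXTRA[split]
            row.append(count)
    return row
```

For example, `table1_row(True, True)` gives `[4292, 4808, 870, 846, 94, 105]`, the last row of Table 1. The same arithmetic shows that the CGAN training set (2191 + 2234 = 4425 images) is about 10.4 times the original 425 training images.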

Fig. 2.

Samples of the COVID/NonCOVID CT images used in this research

Proposed model

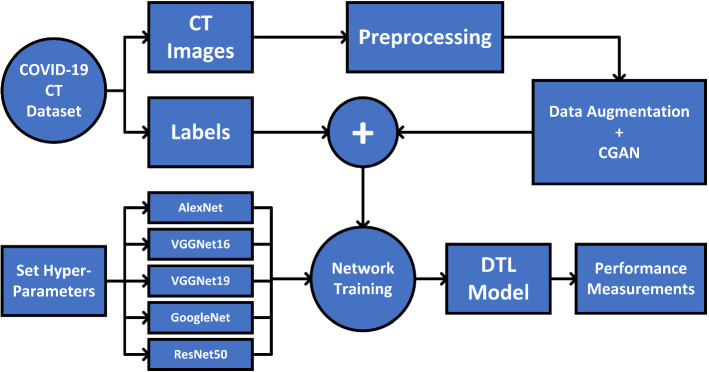

The proposed architecture consists of two main components: the first is data augmentation using classical data augmentation techniques along with CGAN, and the second is the DTL model, as shown in Fig. 3. The classical data augmentation and CGAN are used in the preprocessing phase, while the DTL models are used in the classification and performance measurement phase.

Fig. 3.

The proposed architecture of the classical data augmentation along with CGAN and DTL models

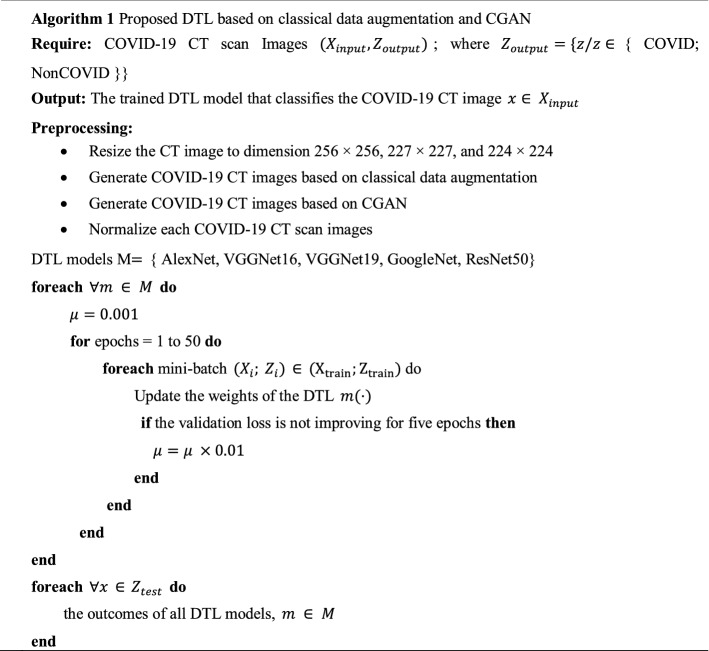

Algorithm 1

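Algorithm 1 presents the proposed model in detail. Let $\mathcal{T} = \{\text{AlexNet}, \text{VGGNet16}, \text{VGGNet19}, \text{GoogleNet}, \text{ResNet50}\}$ be the set of DTL models. Each DTL model is fine-tuned on the COVID-19 CT image dataset $(X, Y)$, where $X = \{x_1, \dots, x_N\}$ is the set of $N$ input images, each of size 256 × 256, 227 × 227, or 224 × 224 (depending on the model), and $Y = \{y_1, \dots, y_N\}$, with $y_i \in \{\text{COVID}, \text{NonCOVID}\}$, contains the corresponding labels. The learning rate $\eta$ is the hyper-parameter that controls the weight updates of the DTL models. The dataset is divided into a training set $(X_{\text{train}}, Y_{\text{train}})$, a validation set $(X_{\text{val}}, Y_{\text{val}})$, and a test set $(X_{\text{test}}, Y_{\text{test}})$. The training data is then divided into mini-batches $(X_i, Y_i) \subset (X_{\text{train}}, Y_{\text{train}})$, each of size $n$, and the DCNN model is iteratively optimized (fine-tuned) to reduce the empirical loss in Eq. (1):

$$\mathcal{L}(w, X_i) = \frac{1}{n} \sum_{x \in X_i,\, y \in Y_i} c\big(\mathcal{M}(x, w), y\big) \tag{1}$$

where $\mathcal{M}(x, w)$ is the DCNN model that predicts the class $y$ for input $x$ given the weights $w$, and $c(\cdot)$ is the per-sample classification loss.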
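The mini-batch loop of Algorithm 1 and the empirical loss can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' code: a hypothetical logistic-regression `toy_model` stands in for the DCNN, cross-entropy is assumed as the per-sample loss, and the last mini-batch may be smaller than $n$, so the average is taken over the actual batch length.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# A sample is (feature vector x, label y); y = 1 for COVID, 0 for NonCOVID.
Sample = Tuple[List[float], int]


def make_minibatches(data: Sequence[Sample], n: int, seed: int = 0) -> List[List[Sample]]:
    """Shuffle the training set and split it into mini-batches of size n (last may be smaller)."""
    items = list(data)
    random.Random(seed).shuffle(items)
    return [items[i:i + n] for i in range(0, len(items), n)]


def cross_entropy(p_covid: float, y: int, eps: float = 1e-12) -> float:
    """Assumed per-sample loss c: negative log-probability assigned to the true class."""
    p_true = p_covid if y == 1 else 1.0 - p_covid
    return -math.log(max(p_true, eps))


def toy_model(x: Sequence[float], w: Sequence[float]) -> float:
    """Hypothetical stand-in for the DCNN: logistic regression returning P(COVID | x, w)."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def empirical_loss(model: Callable[[Sequence[float], Sequence[float]], float],
                   w: Sequence[float], batch: Sequence[Sample]) -> float:
    """Eq. (1): average per-sample loss of model(x, w) over one mini-batch."""
    return sum(cross_entropy(model(x, w), y) for x, y in batch) / len(batch)
```

With 10 samples and `n = 4`, the split yields batches of 4, 4, and 2 samples; with all-zero weights every prediction is 0.5, so the loss of any batch equals ln 2 ≈ 0.693.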

Deep transfer learning

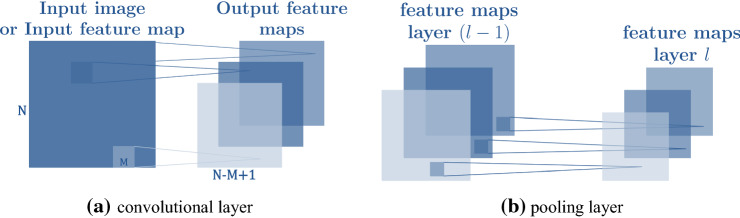

DTL is one of the most successful ways of reusing deep convolutional neural network models for image/video classification. A single DTL model contains many convolutional and pooling layers: earlier layers extract simple features from the image/video, and deeper layers extract more complex features.

Let layer $\ell$ be a convolutional layer. Suppose that we have an $N \times N$ square layer of neurons followed by the convolutional layer. If we use an $m \times m$ filter (mask) $\omega$, the convolutional layer output will be of size $(N - m + 1) \times (N - m + 1)$, and applying $k$ such masks produces $k$ feature maps, as illustrated in Fig. 4. The convolutional layer acts as a feature extractor that grabs features of the inputs, such as edges, lines, and corners in the image. The pre-nonlinearity input $x^{\ell}_{ij}$ to unit $(i, j)$ of layer $\ell$ is computed as in Eq. (2):

$$x^{\ell}_{ij} = \sum_{a=0}^{m-1} \sum_{b=0}^{m-1} \omega_{ab}\, y^{\ell-1}_{(i+a)(j+b)} + B_{ij} \tag{2}$$

where $B$ is a bias matrix and $\omega$ is the mask of size $m \times m$. The convolutional layer then applies its activation function, as in Eq. (3):

$$y^{\ell}_{ij} = \sigma\big(x^{\ell}_{ij}\big) \tag{3}$$

where $\sigma(\cdot)$ is the nonlinearity, a function applied to introduce nonlinearity into the DTL model; common choices include tanh, sigmoid, and rectified linear units (ReLU). In our method, we use ReLU, given in Eq. (4), as the activation function because it speeds up training:

$$\sigma(x) = \max(0, x) \tag{4}$$

Fig. 4.

Illustration of the convolutional and pooling layers that produce feature maps
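The convolution, bias, and ReLU steps described above can be sketched in pure Python for a single-channel input. The sketch assumes stride 1, no padding, and one scalar bias per feature map (a constant bias matrix); `conv2d_valid` and `conv_layer` are hypothetical helper names, not part of any library. As in most CNN libraries, the mask is not flipped, which matches the $\omega_{ab}\, y_{(i+a)(j+b)}$ indexing.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(y_prev: Matrix, mask: Matrix, bias: float = 0.0) -> Matrix:
    """x_ij = sum_a sum_b mask[a][b] * y_prev[i+a][j+b] + bias.

    Stride 1 and no padding: an N x N input and an m x m mask give an
    (N - m + 1) x (N - m + 1) output. The mask is not flipped (cross-correlation).
    """
    n, m = len(y_prev), len(mask)
    size = n - m + 1
    return [[sum(mask[a][b] * y_prev[i + a][j + b] for a in range(m) for b in range(m)) + bias
             for j in range(size)]
            for i in range(size)]


def relu(x: float) -> float:
    """ReLU nonlinearity: max(0, x)."""
    return max(0.0, x)


def conv_layer(y_prev: Matrix, masks: List[Matrix], biases: List[float]) -> List[Matrix]:
    """Apply each of the k masks, then ReLU elementwise, giving k feature maps."""
    return [[[relu(v) for v in row] for row in conv2d_valid(y_prev, mask, b)]
            for mask, b in zip(masks, biases)]
```

A 5 × 5 input with a 3 × 3 mask gives a 3 × 3 map (5 − 3 + 1 = 3), and passing two masks to `conv_layer` gives two feature maps.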

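The loss function is the criterion for the training process. Our loss function in Eq. (5) is defined as the sum of the binary cross-entropy loss and the box regression loss:

$$L(p, u, t^u, v) = L_{\text{cls}}(p, u) + [u \geq 1]\, L_{\text{loc}}(t^u, v) \tag{5}$$

where $p$ denotes the predicted class score, while $t^u$ and $v$ denote the predicted and ground-truth bounding boxes, respectively. The indicator $[u \geq 1]$ means that only non-background boxes are considered (a box is background if $u = 0$). The loss function thus contains two parts, the classification loss $L_{\text{cls}}$ and the bounding box regression loss $L_{\text{loc}}$, given in Eqs. (6–8):

$$L_{\text{cls}}(p, u) = -\log p_u \tag{6}$$

and

$$L_{\text{loc}}(t^u, v) = \sum_{i \in \{x, y, w, h\}} \text{smooth}_{L_1}\big(t^u_i - v_i\big) \tag{7}$$

where

$$\text{smooth}_{L_1}(x) = \begin{cases} 0.5\, x^2 & \text{if } |x| < 1 \\ |x| - 0.5 & \text{otherwise} \end{cases} \tag{8}$$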
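The combined loss can be sketched as follows, assuming it takes the Fast R-CNN-style form described above: a cross-entropy term $-\log p_u$ for the true class $u$, plus a smooth-L1 box term summed over the $(x, y, w, h)$ offsets that is counted only when $u \geq 1$. The function names are hypothetical and the sketch is meant to illustrate the formulas, not to reproduce the authors' training code.

```python
import math
from typing import Sequence


def smooth_l1(x: float) -> float:
    """Smooth L1: 0.5 * x**2 if |x| < 1, otherwise |x| - 0.5."""
    return 0.5 * x * x if abs(x) < 1.0 else abs(x) - 0.5


def cls_loss(p: Sequence[float], u: int) -> float:
    """Classification loss: -log of the predicted probability of the true class u."""
    return -math.log(p[u])


def loc_loss(t_u: Sequence[float], v: Sequence[float]) -> float:
    """Box loss: smooth L1 summed over the (x, y, w, h) differences of predicted and true box."""
    return sum(smooth_l1(ti - vi) for ti, vi in zip(t_u, v))


def total_loss(p: Sequence[float], u: int, t_u: Sequence[float], v: Sequence[float]) -> float:
    """Classification loss plus box loss; the box term counts only for u >= 1 (non-background)."""
    box_term = loc_loss(t_u, v) if u >= 1 else 0.0
    return cls_loss(p, u) + box_term
```

Smooth L1 behaves quadratically for small errors and linearly for large ones, so a single badly predicted box coordinate does not dominate the gradient.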

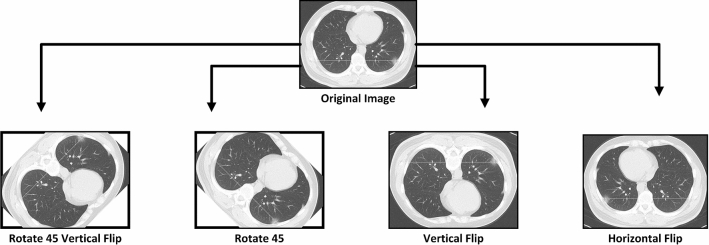

Data augmentation

The main idea behind this research is to perform transfer learning with augmented COVID-19 CT images. The amount of training and validation data is a very important factor in the performance of the proposed transfer learning models. The most popular classical data augmentation method is to apply combinations of affine image transformations [40]. Several classical data augmentation methods, such as rotation, shifting, flipping, zooming, other transformations, and noise addition, were applied to the original dataset. Figure 5 shows examples of augmented COVID-19 CT images. The achieved performance is discussed in the Experimental results section.
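Several of the listed operations can be sketched on a grayscale image stored as a list of pixel rows. This is an illustration only: the paper does not give its augmentation parameters, so the shift of one pixel, the noise level of 0.01, and the helper names are hypothetical, zooming is omitted, and the sketch does not reproduce the exact number of augmented images per original.

```python
import random
from typing import List

Image = List[List[float]]  # a grayscale CT slice as rows of pixel intensities


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left-right."""
    return [row[::-1] for row in img]


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def shift_right(img: Image, k: int, fill: float = 0.0) -> Image:
    """Translate the image k pixels to the right, filling vacated columns with `fill`."""
    width = len(img[0])
    k = min(k, width)
    return [[fill] * k + row[:width - k] for row in img]


def add_noise(img: Image, sigma: float, seed: int = 0) -> Image:
    """Add zero-mean Gaussian noise with standard deviation sigma to every pixel."""
    rng = random.Random(seed)
    return [[px + rng.gauss(0.0, sigma) for px in row] for row in img]


def augment(img: Image, seed: int = 0) -> List[Image]:
    """Return several augmented copies of one image (an illustrative choice of operations)."""
    return [flip_horizontal(img), rotate90(img), shift_right(img, 1), add_noise(img, 0.01, seed)]
```

Each helper returns a new image and leaves the input untouched, so the original CT slice can be kept alongside its augmented copies.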

Fig. 5.

Augmentation methods applied to increase the limited number of COVID-19 CT scan images

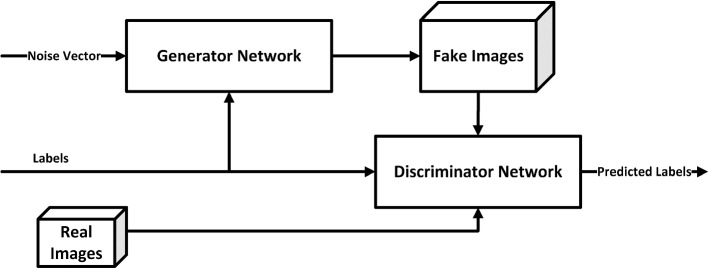

Conditional generative adversarial network

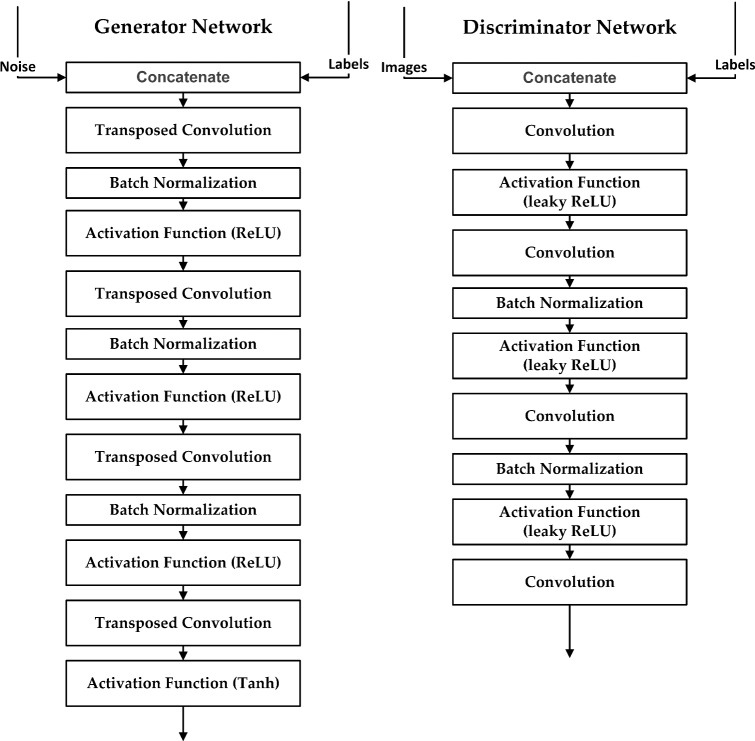

A CGAN is a type of GAN that uses class labels during training. It consists of two networks, a generator network and a discriminator network, both conditioned on the class label, as shown in Fig. 6. In this paper, the generator network consists of 4 transposed convolutional layers, 3 ReLU layers, 3 batch normalization layers, and a tanh layer at the end of the model, while the discriminator network consists of 4 convolutional layers, 3 leaky ReLU layers, and 2 batch normalization layers. All convolutional and transposed convolutional layers use the same filter size of 5 × 5 pixels, with 20, 10, and 5 filters in the layers of the generator network and 5, 10, 20, and 40 filters in the layers of the discriminator network. Figure 7 presents the structure and the sequence of layers of the proposed CGAN network. Figure 8 shows the training of our CGAN model (right) and some generated CT images (left).
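The conditioning idea can be illustrated without a deep learning framework. The sketch below is a simplified illustration rather than the authors' network: it shows how a class label can be attached to the generator's noise input as a one-hot vector (one common conditioning scheme), and the adversarial losses derived from the standard CGAN objective $\min_G \max_D \; \mathbb{E}[\log D(x \mid y)] + \mathbb{E}[\log(1 - D(G(z \mid y) \mid y))]$. Discriminator outputs are passed in as plain probabilities; the noise dimension and the helper names are hypothetical.

```python
import math
import random
from typing import List


def one_hot(label: int, num_classes: int = 2) -> List[float]:
    """Encode a class label (0 = NonCOVID, 1 = COVID) as a one-hot vector."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def generator_input(label: int, z_dim: int, seed: int = 0) -> List[float]:
    """Condition the generator by concatenating Gaussian noise z with the one-hot label y."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
    return z + one_hot(label)


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """Negated CGAN value: -(mean log D(x|y) + mean log(1 - D(G(z|y)|y))); D minimizes this."""
    real = sum(math.log(p) for p in d_real) / len(d_real)
    fake = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real + fake)


def generator_loss(d_fake: List[float]) -> float:
    """Non-saturating generator loss -mean log D(G(z|y)|y), a common practical variant."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)
```

Because the label is part of the generator input, choosing `label=1` at sampling time asks the trained generator for a synthetic COVID image, which is how class-specific images such as those in Fig. 9 can be produced.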

Fig. 6.

Conditional generative adversarial network model

Fig. 7.

The structure of the proposed CGAN network

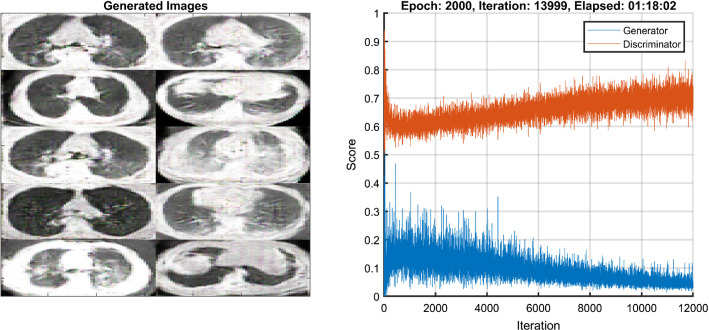

Fig. 8.

CGAN training and samples of the generated image

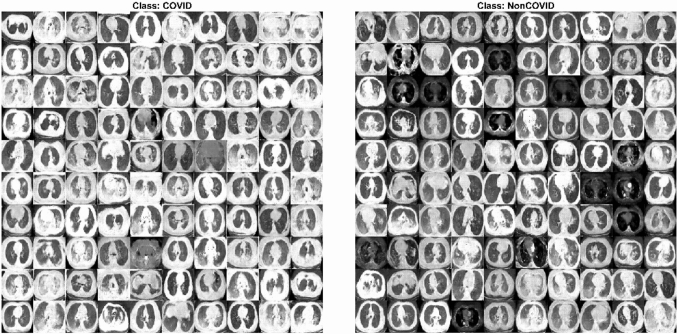

The CGAN network helped reduce the overfitting caused by the limited number of CT images in the COVID-19 dataset. Figure 9 presents samples of the CGAN output for the COVID-19 class. Moreover, the CGAN increased the number of training images to about 10 times the original number: after the CGAN was applied, the dataset reached 4425 images in the training set and 418 in the validation set for the 2 classes. This helps achieve better testing accuracy and performance metrics, which are discussed in the Experimental results section.

Fig. 9.

Samples of COVID-19 CT images generated by the CGAN model

Experimental results

The proposed models were trained on a high-end graphics processing unit (GPU), an NVIDIA RTX 2070 with 2304 CUDA cores, using the CUDA Deep Neural Network library (cuDNN) for GPU acceleration, with TensorFlow and MATLAB as the back-end deep learning libraries. The proposed model was tested under four scenarios: the first tests the DTL models on the original COVID-19 CT dataset, the second with classical data augmentation, the third with CGAN, and the fourth with both classical data augmentation and CGAN. All scenarios include the two classes (COVID/NonCOVID), and every scenario consists of a validation phase and a testing phase. Table 2 lists the configuration of the DTL models.

Table 2.

Configuration of DTL models

| Model | Layers | Batch size | Momentum | Epochs | Learning rate | Optimizer |
|---|---|---|---|---|---|---|
| AlexNet | 8 | 32 | 0.9 | 50 | 0.001 | Adam |
| VGGNet16 | 16 | 32 | 0.9 | 50 | 0.001 | Adam |
| VGGNet19 | 19 | 32 | 0.9 | 50 | 0.001 | Adam |
| GoogleNet | 22 | 32 | 0.9 | 50 | 0.001 | Adam |
| ResNet50 | 50 | 32 | 0.9 | 50 | 0.001 | Adam |

Table 2 shows that the five DTL models use an initial learning rate ($\eta$) of 0.001 and 50 epochs. The mini-batch size is set to 32, and early stopping halts training if the validation accuracy does not improve for 5 epochs. Adam [41] is chosen as the optimizer that updates the weight parameters; it combines root mean square propagation (RMSprop) with stochastic gradient descent (SGD) with momentum. To avoid overfitting, we use the dropout method [42] as well as the early stopping technique [43] to select the most appropriate training iteration.
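The optimizer and stopping rule described above can be sketched for a flat list of weights. This follows the standard Adam update of Kingma and Ba with the learning rate of 0.001 from Table 2. Reading the table's momentum of 0.9 as Adam's first-moment decay $\beta_1$ is an assumption, as are $\beta_2 = 0.999$ and $\epsilon = 10^{-8}$ (the usual defaults, not values reported in the paper). `early_stop_epoch` is a hypothetical helper that encodes the 5-epoch patience.

```python
import math
from typing import List, Tuple


def adam_step(w: List[float], g: List[float], m: List[float], v: List[float], t: int,
              lr: float = 0.001, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update at step t (t starts at 1).

    m is the momentum-style running mean of gradients; v is the RMSprop-style running
    mean of squared gradients. Both are bias-corrected before the weight update.
    """
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
    m_hat = [mi / (1 - beta1 ** t) for mi in m]
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    w = [wi - lr * mh / (math.sqrt(vh) + eps) for wi, mh, vh in zip(w, m_hat, v_hat)]
    return w, m, v


def early_stop_epoch(val_acc: List[float], patience: int = 5) -> int:
    """Index of the epoch at which training stops: after `patience` consecutive epochs
    without a new best validation accuracy, or the last epoch if that never happens."""
    best, wait = -math.inf, 0
    for epoch, acc in enumerate(val_acc):
        if acc > best:
            best, wait = acc, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_acc) - 1
```

A property of this update is that the first step moves each weight by about the learning rate regardless of the gradient's scale: a gradient of 2 and a gradient of −50 both change the weight by roughly 0.001.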

Verification and testing accuracy measurement

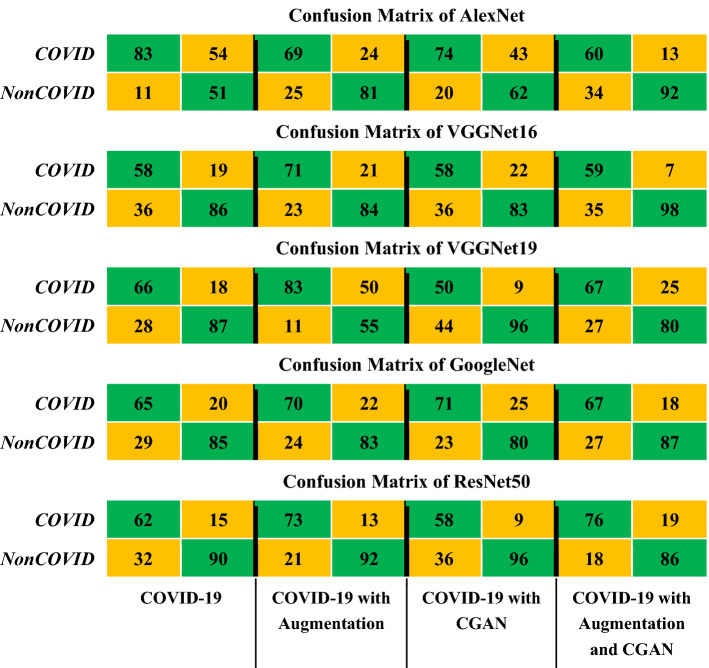

Testing accuracy is one of the main measures of the performance of a DTL model. The confusion matrix gives further insight into the achieved testing accuracy; the DTL confusion matrices for the two classes under the different scenarios are illustrated in Fig. 10. The first DTL model investigated is AlexNet, under the four scenarios shown in Fig. 10. Table 3 shows that the highest testing accuracy of AlexNet is 76.4%, obtained when the COVID-19 CT dataset is enlarged with classical data augmentation along with CGAN. The second DTL model investigated is VGGNet16, whose highest testing accuracy is 78.9%, also obtained with classical data augmentation along with CGAN. The third DTL model investigated is VGGNet19, whose highest testing accuracy is 76.9%, obtained when the COVID-19 CT dataset is not augmented. The fourth DTL model investigated is GoogleNet, whose highest testing accuracy is 77.4%, again obtained with classical data augmentation along with CGAN.

Fig. 10.

DTL Confusion matrices for two classes with different scenarios

Table 3.

DTL testing accuracy for the four scenarios

| Dataset | AlexNet (%) | VGGNet16 (%) | VGGNet19 (%) | GoogleNet (%) | ResNet50 (%) |
|---|---|---|---|---|---|
| COVID-19 | 67.34 | 72.36 | 76.88 | 75.38 | 76.38 |
| COVID-19 with augmentation | 75.38 | 77.89 | 69.35 | 76.88 | 82.91 |
| COVID-19 with CGAN | 68.34 | 70.85 | 73.37 | 75.88 | 77.39 |
| COVID-19 with aug and CGAN | 76.38 | 78.89 | 73.87 | 77.39 | 81.41 |
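The per-model comparison can be read directly off Table 3. The sketch below uses only the values in the table: it picks each model's best scenario and lists the models for which classical data augmentation along with CGAN beats the original dataset. VGGNet19 is the one exception.

```python
# Testing accuracy (%) copied from Table 3; scenario order follows the table rows.
SCENARIOS = ["COVID-19", "with augmentation", "with CGAN", "with aug and CGAN"]
TABLE3 = {
    "AlexNet":   [67.34, 75.38, 68.34, 76.38],
    "VGGNet16":  [72.36, 77.89, 70.85, 78.89],
    "VGGNet19":  [76.88, 69.35, 73.37, 73.87],
    "GoogleNet": [75.38, 76.88, 75.88, 77.39],
    "ResNet50":  [76.38, 82.91, 77.39, 81.41],
}


def best_scenario(model: str) -> str:
    """Scenario with the highest testing accuracy for one model."""
    acc = TABLE3[model]
    return SCENARIOS[max(range(len(acc)), key=acc.__getitem__)]


def improved_by_aug_and_cgan() -> list:
    """Models whose accuracy with aug + CGAN exceeds their accuracy on the original data."""
    return [m for m, acc in TABLE3.items() if acc[3] > acc[0]]
```

Running `best_scenario("ResNet50")` returns `"with augmentation"`, the 82.91% configuration reported in the abstract.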

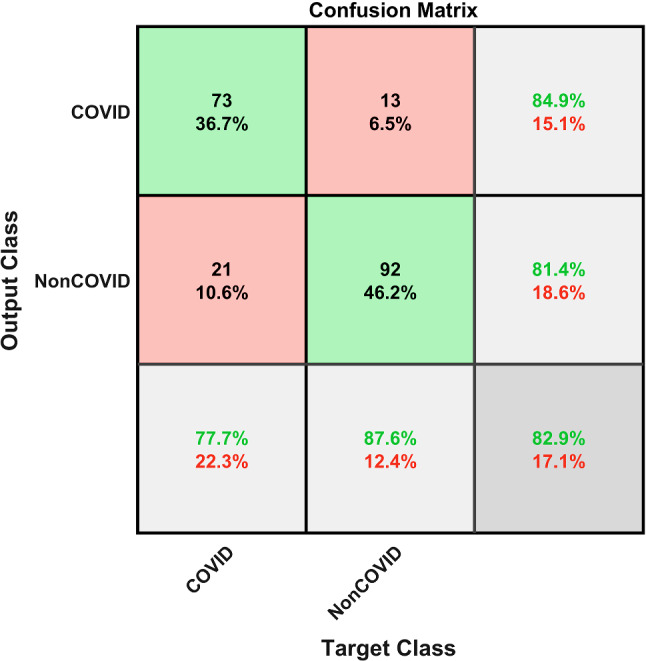

The final DTL model investigated is ResNet50, whose highest testing accuracy is 82.9%, obtained when the COVID-19 CT dataset is enlarged with classical data augmentation, as shown in Fig. 11. Table 3 summarizes the testing accuracy of the five DTL models for the two classes under the four scenarios; ResNet50 achieves the highest accuracy overall (82.9%). A plausible explanation is the depth of ResNet50 rather than its number of parameters: ResNet50 has fewer parameters than VGGNet16 (roughly 25 million versus 138 million), but it has 50 layers, whereas VGGNet16 and GoogleNet have only 16 and 22 layers, respectively.

Fig. 11.

Confusion matrix of highest accuracy for ResNet50 in COVID-19 with classical data augmentation

Performance evaluation and discussion

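To quantitatively evaluate the performance of the proposed models, further performance metrics are investigated. The most common performance measures in the field of deep learning are sensitivity, specificity, precision, accuracy, and F1 score [44]; they are given in Eqs. (9)–(13).

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{9}$$

where TP (true positives) is the number of instances of the class under observation that are correctly labeled, FP (false positives) is the number of instances of the other class that are misclassified as the class under observation, TN (true negatives) is the number of instances of the other class that are correctly labeled, and FN (false negatives) is the number of instances of the class under observation that are misclassified as the other class.

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{10}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{11}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{12}$$

$$F1\ \text{score} = 2 \times \frac{\text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}} \tag{13}$$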
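These metrics translate directly into code. In the sketch below, the confusion-matrix counts for ResNet50 with classical augmentation are not read from Fig. 11; they are the counts implied by the reported rates on the 94 COVID and 105 NonCOVID test images (73/94 = 77.66% sensitivity and 92/105 = 87.62% specificity), and they reproduce the 82.91% accuracy in Table 3. COVID is taken as the class under observation.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # COVID images labeled COVID
    fn: int  # COVID images labeled NonCOVID
    tn: int  # NonCOVID images labeled NonCOVID
    fp: int  # NonCOVID images labeled COVID


def metrics(c: Confusion) -> dict:
    """Sensitivity, specificity, precision, accuracy, F1 score, and balanced accuracy."""
    sensitivity = c.tp / (c.tp + c.fn)
    specificity = c.tn / (c.tn + c.fp)
    precision = c.tp / (c.tp + c.fp)
    accuracy = (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    balanced = (sensitivity + specificity) / 2
    return {"sensitivity": sensitivity, "specificity": specificity, "precision": precision,
            "accuracy": accuracy, "f1": f1, "balanced_accuracy": balanced}


# Counts implied (not read from Fig. 11) by ResNet50's reported rates with classical
# augmentation on the 94 COVID / 105 NonCOVID test images.
resnet50_aug = Confusion(tp=73, fn=21, tn=92, fp=13)
```

Under the same assumption, the implied precision is about 84.9% (73/86) and the F1 score about 81.1% (146/180); the text does not tabulate these two values.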

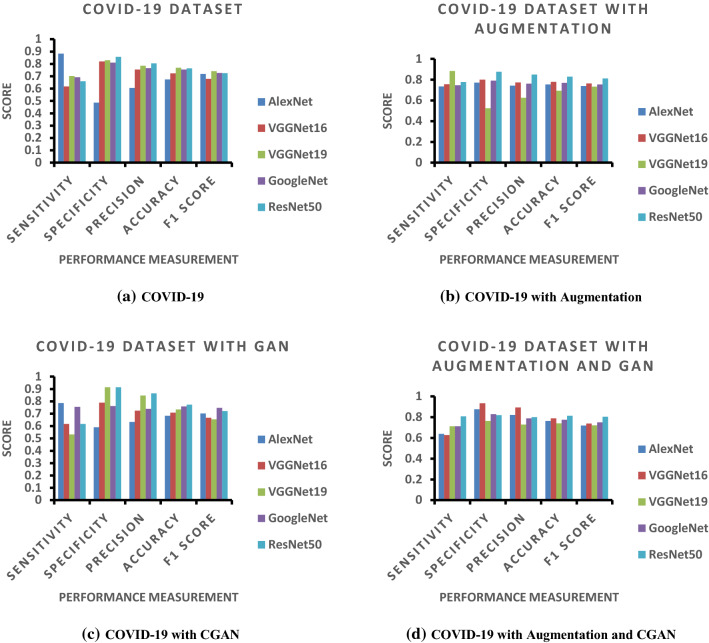

Figure 12 presents the performance metrics of the DTL models for the different scenarios on the COVID-19 CT dataset. The highest sensitivity, 88.3% (Table 4), is achieved by AlexNet in scenario 1 (COVID-19 only) and by VGGNet19 in scenario 2 (COVID-19 with augmentation). Sensitivity refers to a test's ability to correctly identify patients whose CT scans show COVID-19: for a CT-based test used to detect COVID-19, the detection rate (sensitivity) is the proportion of people with COVID-19 who test positive. A negative result from a test with a high detection rate is therefore useful for ruling out COVID-19.

Fig. 12.

Performance measurements for COVID-19 CT in four scenarios

Table 4.

Testing sensitivity for the four scenarios

| Dataset | AlexNet (%) | VGGNet16 (%) | VGGNet19 (%) | GoogleNet (%) | ResNet50 (%) |
|---|---|---|---|---|---|
| COVID-19 | 88.30 | 61.70 | 70.21 | 69.15 | 65.96 |
| COVID-19 with augmentation | 73.40 | 75.53 | 88.30 | 74.47 | 77.66 |
| COVID-19 with CGAN | 78.72 | 61.70 | 53.19 | 75.53 | 61.70 |
| COVID-19 with aug and CGAN | 63.83 | 62.77 | 71.28 | 71.28 | 80.85 |

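Specificity, reported in Table 5, is the proportion of people without COVID-19 who test negative, so a test with high specificity reliably identifies people who do not have COVID-19. Sensitivity and specificity can be summarized by a single quantity, the balanced accuracy (Table 6), defined as the mean of the two measures in Eq. (14):

$$\text{Balanced accuracy} = \frac{\text{Sensitivity} + \text{Specificity}}{2} \tag{14}$$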

Table 5.

Testing specificity for the four scenarios

| Dataset | AlexNet (%) | VGGNet16 (%) | VGGNet19 (%) | GoogleNet (%) | ResNet50 (%) |
|---|---|---|---|---|---|
| COVID-19 | 48.57 | 81.90 | 82.86 | 80.95 | 85.71 |
| COVID-19 with augmentation | 77.14 | 80.00 | 52.38 | 79.05 | 87.62 |
| COVID-19 with CGAN | 59.05 | 79.05 | 91.43 | 76.19 | 91.43 |
| COVID-19 with aug and CGAN | 87.62 | 93.33 | 76.19 | 82.86 | 81.90 |

The highest specificity is 93.33% (VGGNet16, COVID-19 with aug and CGAN).

Table 6.

Testing balanced accuracy for the four scenarios

| Dataset | AlexNet (%) | VGGNet16 (%) | VGGNet19 (%) | GoogleNet (%) | ResNet50 (%) |
|---|---|---|---|---|---|
| COVID-19 | 68.44 | 71.80 | 76.54 | 75.05 | 75.84 |
| COVID-19 with augmentation | 75.27 | 77.77 | 70.34 | 76.76 | 82.64 |
| COVID-19 with CGAN | 68.89 | 70.38 | 72.31 | 75.86 | 76.57 |
| COVID-19 with aug and CGAN | 75.73 | 78.05 | 73.74 | 77.07 | 81.38 |

The three highest balanced accuracies are 82.64% and 81.38% (ResNet50) and 78.05% (VGGNet16).

The balanced accuracy ranges from 0 to 1 (0% to 100% in Table 6), where 0 and 1 indicate the worst and the best classifier, respectively.
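Because balanced accuracy is a simple mean, Table 6 can be cross-checked against Tables 4 and 5. The sketch below uses only values copied from the three tables and recomputes every cell; all recomputed values agree with Table 6 to within rounding (0.005 percentage points).

```python
# Tables 4 (sensitivity), 5 (specificity), and 6 (balanced accuracy), in %.
# Rows: COVID-19, with augmentation, with CGAN, with aug and CGAN.
# Columns: AlexNet, VGGNet16, VGGNet19, GoogleNet, ResNet50.
SENS = [[88.30, 61.70, 70.21, 69.15, 65.96],
        [73.40, 75.53, 88.30, 74.47, 77.66],
        [78.72, 61.70, 53.19, 75.53, 61.70],
        [63.83, 62.77, 71.28, 71.28, 80.85]]
SPEC = [[48.57, 81.90, 82.86, 80.95, 85.71],
        [77.14, 80.00, 52.38, 79.05, 87.62],
        [59.05, 79.05, 91.43, 76.19, 91.43],
        [87.62, 93.33, 76.19, 82.86, 81.90]]
BAL_REPORTED = [[68.44, 71.80, 76.54, 75.05, 75.84],
                [75.27, 77.77, 70.34, 76.76, 82.64],
                [68.89, 70.38, 72.31, 75.86, 76.57],
                [75.73, 78.05, 73.74, 77.07, 81.38]]


def balanced_accuracy(sensitivity: float, specificity: float) -> float:
    """Mean of sensitivity and specificity."""
    return (sensitivity + specificity) / 2


def max_deviation() -> float:
    """Largest gap between the recomputed balanced accuracy and the value in Table 6."""
    return max(abs(balanced_accuracy(se, sp) - bal)
               for row_se, row_sp, row_bal in zip(SENS, SPEC, BAL_REPORTED)
               for se, sp, bal in zip(row_se, row_sp, row_bal))
```

The check also makes the trade-off visible: VGGNet19 with augmentation has the joint-highest sensitivity (88.30%) but one of the lowest balanced accuracies (70.34%) because its specificity is only 52.38%.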

As shown in Table 6, ResNet50 is the best classifier for detecting COVID-19 in the CT dataset, with the highest balanced accuracy obtained with classical data augmentation (82.64%), followed by classical data augmentation along with CGAN (81.38%). Classical data augmentation along with CGAN improves classification performance in four of the five deep transfer models (AlexNet, VGGNet16, GoogleNet, and ResNet50), but not in VGGNet19. The main remaining bottleneck is the limited size of the COVID-19 CT database; we expect the performance of the deep transfer models to improve further as more data are collected. Although we have achieved promising accuracy rates, the proposed model needs to be tested on larger-scale datasets that include more varied COVID-19 CT images, both to increase the testing accuracy and to extend the model to other medical applications. As future work, we plan to classify COVID-19 using a neutrosophic approach [45] and deep learning.

Conclusion and future works

Since late 2019, the 2019 novel Coronavirus has infected over a million people and killed thousands within a few months of the start of the epidemic. In this paper, classical data augmentation along with CGAN and deep transfer learning is presented for COVID-19 detection in a limited set of chest CT scan images. The collected dataset contains 742 CT images with two types of labels (COVID/NonCOVID). Classical data augmentation and CGAN helped enlarge the CT dataset and reduce overfitting. Five deep transfer learning models (AlexNet, VGGNet16, VGGNet19, GoogleNet, and ResNet50) were investigated. Combining classical data augmentation and CGAN with deep transfer learning improved the testing accuracy of four of the five models, and the models were further evaluated with sensitivity, specificity, precision, accuracy, and F1 score. The results show that ResNet50 is the best deep learning model for detecting COVID-19 from the chest CT dataset. A limitation of this work is that only five deep learning models were tried; other models might achieve higher performance. As future work, we plan to study COVID-19 detection further using other machine learning and deep learning approaches.

Funding

This research received no external funding.

Compliance with ethical standards

Conflict of interest

The authors declare no conflict of interest.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Mohamed Loey, Email: mloey@fci.bu.edu.eg.

Gunasekaran Manogaran, Email: gmanogaran@ucdavis.edu.

Nour Eldeen M. Khalifa, Email: nourmahmoud@cu.edu.eg

References

- 1. Chang L, Yan Y, Wang L. Coronavirus disease 2019: coronaviruses and blood safety. Transfus Med Rev. 2020. doi: 10.1016/j.tmrv.2020.02.003

- 2. Singhal T. A review of coronavirus disease-2019 (COVID-19). Indian J Pediatr. 2020;87(4):281–286. doi: 10.1007/s12098-020-03263-6

- 3. Lai C-C, Shih T-P, Ko W-C, Tang H-J, Hsueh P-R. Severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) and coronavirus disease-2019 (COVID-19): the epidemic and the challenges. Int J Antimicrob Agents. 2020;55(3):105924. doi: 10.1016/j.ijantimicag.2020.105924

- 4. Li J, et al. Game consumption and the 2019 novel coronavirus. Lancet Infect Dis. 2020;20(3):275–276. doi: 10.1016/S1473-3099(20)30063-3

- 5. Sharfstein JM, Becker SJ, Mello MM. Diagnostic testing for the novel coronavirus. JAMA. 2020. doi: 10.1001/jama.2020.3864

- 6. Shereen MA, Khan S, Kazmi A, Bashir N, Siddique R. COVID-19 infection: origin, transmission, and characteristics of human coronaviruses. J Adv Res. 2020;24:91–98. doi: 10.1016/j.jare.2020.03.005

- 7. Rabi FA, Al Zoubi MS, Kasasbeh GA, Salameh DM, Al-Nasser AD. SARS-CoV-2 and coronavirus disease 2019: what we know so far. Pathogens. 2020;9(3):231. doi: 10.3390/pathogens9030231

- 8. York A. Novel coronavirus takes flight from bats? Nat Rev Microbiol. 2020;18(4):191. doi: 10.1038/s41579-020-0336-9

- 9. Lam TT-Y, et al. Identifying SARS-CoV-2 related coronaviruses in Malayan pangolins. Nature. 2020. doi: 10.1038/s41586-020-2169-0

- 10. Giovanetti M, Benvenuto D, Angeletti S, Ciccozzi M. The first two cases of 2019-nCoV in Italy: where they come from? J Med Virol. 2020;92(5):518–521. doi: 10.1002/jmv.25699

- 11. Holshue ML, et al. First case of 2019 novel coronavirus in the United States. N Engl J Med. 2020;382(10):929–936. doi: 10.1056/NEJMoa2001191

- 12. Rothe C, et al. Transmission of 2019-nCoV infection from an asymptomatic contact in Germany. N Engl J Med. 2020;382(10):970–971. doi: 10.1056/NEJMc2001468

- 13. Phan LT, et al. Importation and human-to-human transmission of a novel coronavirus in Vietnam. N Engl J Med. 2020;382(9):872–874. doi: 10.1056/NEJMc2001272

- 14. Bastola A, et al. The first 2019 novel coronavirus case in Nepal. Lancet Infect Dis. 2020;20(3):279–280. doi: 10.1016/S1473-3099(20)30067-0

- 15. Coronavirus (COVID-19) map. https://www.google.com/covid19-map/. Accessed 26 Apr 2020

- 16. Rong D, Xie L, Ying Y. Computer vision detection of foreign objects in walnuts using deep learning. Comput Electron Agric. 2019;162:1001–1010. doi: 10.1016/j.compag.2019.05.019

- 17. Loey M, ElSawy A, Afify M (2020) Deep learning in plant diseases detection for agricultural crops: a survey. In: International journal of service science, management, engineering, and technology (IJSSMET). www.igi-global.com/article/deep-learning-in-plant-diseases-detection-for-agricultural-crops/248499. Accessed 11 Apr 2020

- 18. Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z Med Phys. 2019;29(2):102–127. doi: 10.1016/j.zemedi.2018.11.002

- 19. Maier A, Syben C, Lasser T, Riess C. A gentle introduction to deep learning in medical image processing. Z Med Phys. 2019;29(2):86–101. doi: 10.1016/j.zemedi.2018.12.003

- 20. Loey M, Naman MR, Zayed HH (2020) A survey on blood image diseases detection using deep learning. In: International journal of service science, management, engineering, and technology (IJSSMET). www.igi-global.com/article/a-survey-on-blood-image-diseases-detection-using-deep-learning/256653. Accessed 17 Jun 2020

- 21. Ciregan D, Meier U, Schmidhuber J (2012) Multi-column deep neural networks for image classification. In: 2012 IEEE conference on computer vision and pattern recognition, pp 3642–3649. doi: 10.1109/CVPR.2012.6248110

- 22. Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Advances in neural information processing systems, pp 1097–1105

- 23. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–2324. doi: 10.1109/5.726791

- 24. Yin F, Wang Q, Zhang X, Liu C (2013) ICDAR 2013 Chinese handwriting recognition competition. In: 2013 12th international conference on document analysis and recognition, pp 1464–1470. doi: 10.1109/icdar.2013.218

- 25. LeCun Y, Huang FJ, Bottou L (2004) Learning methods for generic object recognition with invariance to pose and lighting. In: Proceedings of the 2004 IEEE computer society conference on computer vision and pattern recognition (CVPR 2004), vol 2, p II-104. doi: 10.1109/cvpr.2004.1315150

- 26. Stallkamp J, Schlipsing M, Salmen J, Igel C (2011) The German traffic sign recognition benchmark: a multi-class classification competition. In: The 2011 international joint conference on neural networks, pp 1453–1460. doi: 10.1109/IJCNN.2011.6033395

- 27. Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition, pp 248–255. doi: 10.1109/CVPR.2009.5206848

- 28. El-Sawy A, EL-Bakry H, Loey M (2017) CNN for handwritten Arabic digits recognition based on LeNet-5. In: Proceedings of the international conference on advanced intelligent systems and informatics 2016, pp 566–575

- 29. El-Sawy A, Loey M, EL-Bakry H (2017) Arabic handwritten characters recognition using convolutional neural network. WSEAS Trans Comp Res 5:11–19. http://www.wseas.org/multimedia/journals/computerresearch/2017/a045818-075.php. Accessed 1 Apr 2020

- 30. Liu S, Deng W (2015) Very deep convolutional neural network based image classification using small training sample size. In: 2015 3rd IAPR Asian conference on pattern recognition (ACPR), pp 730–734. doi: 10.1109/acpr.2015.7486599

- 31. Szegedy C, et al (2015) Going deeper with convolutions. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR), pp 1–9. doi: 10.1109/CVPR.2015.7298594

- 32. He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 770–778. doi: 10.1109/CVPR.2016.90

- 33. Chollet F (2017) Xception: deep learning with depthwise separable convolutions. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR), pp 1800–1807. doi: 10.1109/CVPR.2017.195

- 34. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR), pp 2261–2269. doi: 10.1109/cvpr.2017.243

- 35. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2818–2826

- 36. Loey M, Smarandache F, Khalifa NEM. Within the lack of chest COVID-19 X-ray dataset: a novel detection model based on GAN and deep transfer learning. Symmetry. 2020. doi: 10.3390/sym12040651

- 37. Christe A, et al. Computer-aided diagnosis of pulmonary fibrosis using deep learning and CT images. Invest Radiol. 2019;54(10):627–632. doi: 10.1097/RLI.0000000000000574

- 38. Bermejo-Peláez D, Ash SY, Washko GR, San José Estépar R, Ledesma-Carbayo MJ. Classification of interstitial lung abnormality patterns with an ensemble of deep convolutional neural networks. Sci Rep. 2020. doi: 10.1038/s41598-019-56989-5

- 39. Zhao J, Zhang Y, He X, Xie P (2020) COVID-CT-Dataset: a CT scan dataset about COVID-19. arXiv:2003.13865 [cs, eess, stat]. http://arxiv.org/abs/2003.13865. Accessed 9 Apr 2020

- 40. Mikołajczyk A, Grochowski M (2018) Data augmentation for improving deep learning in image classification problem. In: 2018 international interdisciplinary PhD workshop (IIPhDW), pp 117–122. doi: 10.1109/iiphdw.2018.8388338

- 41. Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. http://arxiv.org/abs/1412.6980

- 42. Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014;15(56):1929–1958

- 43. Caruana R, Lawrence S, Giles L (2000) Overfitting in neural nets: backpropagation, conjugate gradient, and early stopping. In: Proceedings of the 13th international conference on neural information processing systems, pp 381–387

- 44. Goutte C, Gaussier E (2010) A probabilistic interpretation of precision, recall and F-score, with implication for evaluation

- 45. Smarandache F (2019) Neutrosophic set is a generalization of intuitionistic fuzzy set, inconsistent intuitionistic fuzzy set (Picture Fuzzy Set, Ternary Fuzzy Set), Pythagorean Fuzzy Set, q-Rung Orthopair Fuzzy Set, Spherical Fuzzy Set, etc. arXiv:1911.07333 [math]. http://arxiv.org/abs/1911.07333. Accessed 13 Apr 2020