Supplemental Digital Content is available in the text.

Introduction:

Inpatient electrolyte testing rates vary significantly across pediatric hospitals. Despite evidence that unnecessary testing exists, providers still struggle with reducing electrolyte laboratory testing. We aimed to reduce serum electrolyte testing among pediatric inpatients by 20% across 5 sites within 6 months.

Methods:

A national quality improvement collaborative evaluated standardized interventions for reducing inpatient serum electrolyte testing at 5 large tertiary and quaternary children’s hospitals. The outcome measure was the rate of electrolyte laboratory tests per 10 patient-days. The interventions were adapted from a previous single-site improvement project and included cost card reminders, automated laboratory plans via electronic medical record, structured rounds discussions, and continued education. The collaborative utilized weekly conference calls to discuss Plan, Do, Study, Act cycles, and barriers to implementation efforts.

Results:

The study included 17,149 patient-days across 5 hospitals. The baseline preintervention electrolyte laboratory testing rate mean was 4.82 laboratory tests per 10 patient-days. Postimplementation, special cause variation in testing rates shifted the mean to 4.19 laboratory tests per 10 patient-days, a 13% reduction. There was a wide variation in preintervention electrolyte testing rates and the effectiveness of interventions between the hospitals participating in the collaborative.

Conclusions:

This multisite improvement collaborative was able to rapidly disseminate and implement value improvement interventions leading to a reduction in electrolyte testing; however, we did not meet our goal of 20% testing reduction across all sites. Quality improvement collaboratives must consider variation in context when adapting previously successful single-center interventions to a wide variety of sites.

INTRODUCTION

The cost of healthcare is rising at an unsustainable rate,1,2 and up to 34% of this spending can be classified as waste.3 An estimated $75.7–101.2 billion of this wasted spending can be attributed to low-value care, much of which falls under unnecessary laboratory or diagnostic testing.4,5

Routine electrolyte tests ordered to monitor a patient’s status often do not change medical management.6,7 Overtesting, defined as excessive diagnostic testing with little chance of providing a net benefit to patients, contributes to overall cost burden and can have harmful effects on patients and families due to increased pain and trauma, risk of anemia, and sleep disruptions.8–11 Additionally, overtesting increases the opportunity for false positives or other abnormalities lacking clinical significance.12 Spurious results can lead to a cascade effect, resulting in additional unnecessary testing, increased workload for hospital staff, increased cost, and risk of harm for both staff and patients.1,13,14 Therefore, it is essential for healthcare providers to examine current routine laboratory testing practices.

Despite recommendations and general guidance on appropriate laboratory monitoring from multiple organizations, differences in electrolyte testing rates for common pediatric diagnoses are as high as 80% across children’s hospitals.15–19 Previous quality improvement (QI) projects have been effective in reducing inpatient laboratory testing.20,21 Recently, a large academic children’s hospital successfully decreased electrolyte testing by 35% through QI methods.20

Learning how to implement interventions while rapidly adapting to site-specific contexts will be imperative for the continued dissemination of QI work to additional institutions. With this in mind, the Children’s Hospital Association partnered with 5 pediatric hospitals to initiate a multicenter QI collaborative to reduce the number of unnecessary electrolyte laboratory tests in the inpatient setting using previously tested interventions. We aimed to decrease the number of serum electrolyte tests obtained for children admitted to a pediatric hospital medicine service across the collaborative by 20% within 6 months.

METHODS

Context and Setting

We conducted a multisite QI project at 5 urban tertiary and quaternary children’s hospitals, focusing on hospital medicine services. Hospitals were geographically diverse and differed in the average daily census, the size of inpatient rounding teams, the ratio of resident to attending physicians, and the composition of subspecialty patients admitted to the hospitalist service (Table 1). Each hospital had training programs for medical students, interns, residents, and fellows, and had an established QI infrastructure.

Table 1.

Site-specific Characteristics

| Site | Composition of Teams | Number of Inpatient Ward Teams | Subspecialties Admitted to PHM Service | Nighttime Staffing by PHM Attendings | Average Daily Patient Census |
|---|---|---|---|---|---|
| A | Attending, 1 upper level resident, 2 interns, 1 APRN most of the time | ≥4 resident teams | Most subspecialties admitted to PHM | | ≤60 |
| B | Attending, 1 senior resident, 3 interns, 2–3 medical students, 1 PA student, 1 pharmacist | ≥4 resident teams; ≥1 nonresident team | Most subspecialties have their own admitting service | | >60 |
| C | Attending ± fellow, 1 senior resident, 3 interns, 1–2 medical students | ≥4 resident teams; ≥1 nonresident team | Most subspecialties have their own admitting service | No in-house coverage overnight; attending on-call from home | >60 |
| D | Attending ± fellow, 1 senior resident, 3 interns, 1–3 medical students | 2 resident teams; ≥1 nonresident team | Most subspecialties admitted to PHM | | ≤60 |
| E | Attending ± fellow, 2 senior residents, 2–3 interns, 3–4 medical students, 1 pharmacist | 2 resident teams | Most subspecialties have their own admitting service | No in-house coverage overnight; attending on-call from home | ≤60 |

APRN, advanced practice registered nurse; PA, physician assistant; PHM, Pediatric Hospital Medicine.

Preparing for Interventions

The collaborative identified participants via e-mail from the Pediatric Hospital Medicine Fellowship Directors listserv. There was no financial cost or incentive to participate. Participating sites identified 1 or 2 team leaders to perform data collection, implement a series of predetermined interventions, and participate in weekly phone calls for 6 months from January to June 2019.

Weekly conference calls allowed consistent collaboration and communication as well as rapid adaptations to interventions across sites. Early calls introduced background for the project, data collection methods, and interpretation of proposed interventions. After that, calls included discussion around Plan, Do, Study, Act (PDSA) cycles highlighting successes, barriers, and variation in experiences. From this discussion, teams were able to modify and adapt interventions and run PDSA cycles to meet their hospitals’ and teams’ needs. Calls also included a review of aggregate collaborative data and site-specific data. These discussions encouraged an all-teach, all-learn approach to bolster the efforts of sites struggling with implementing interventions.

This study was not considered human subjects research and was therefore exempted from review by each site’s Institutional Review Board.

Interventions

In this project, the collaborative utilized interventions based on those implemented in a single-center study by Tchou et al.20 Sites adapted interventions to meet the specific needs of teams and hospitals—recognizing that contextualizing interventions would be necessary for each site to effect meaningful change. The collaborative implemented 1 intervention per month, allowing 1–2 weeks for each team to perform PDSA cycles at their hospital before fully rolling out the intervention to their entire hospital medicine team (Table 2).

Table 2.

Monthly Intervention Descriptions

| Month | Intervention | Description |
|---|---|---|
| February | Cost reference cards | CHA provided general cost cards based on PHIS data. If site-specific cost data available, site-specific cost cards were created by individual sites |
| March | Standardized laboratory plan | Template describing type of test, date of test, and reason for testing created for the electronic medical record (Epic or Cerner depending on the site); each site customized to fit their EHR |
| April | Structured rounds discussion | Utilized the template from standardized laboratory plan in EHR along with the cost reference cards to incorporate discussion points on rounds |
| May | Sustain and re-emphasize previous interventions | Utilized the cost reference cards, laboratory plans in notes, and discussion points on rounds |
| June | High-value care education and daily laboratory orders on transfer patients | Incorporated education on high-value care for residents and medical students; re-evaluated laboratory orders on patients transferred to the hospital medicine service |

CHA, Children’s Hospital Association; PHIS, Pediatric Health Information System.

Cost Cards

We created cost reference cards using a national estimate of the cost per laboratory panel and individual laboratory test from the Children’s Hospital Association’s Pediatric Health Information System database, an administrative record containing inpatient billing data from more than 40 freestanding children’s hospitals in the United States (See Figure 1, Supplemental Digital Content 1, which describes the cost reference card created by CHA, http://links.lww.com/PQ9/A212). Three sites with access to site-specific cost data adapted the cost reference cards for this intervention.

Implementation of the cost cards varied across sites. For example, some teams printed and laminated pocket-sized cards to distribute to all house staff, whereas others posted large versions in every workroom and small versions on computers. All sites distributed reminder e-mails about the cost cards at regular intervals.

Standardized Laboratory Plan in Inpatient Notes

All sites received a structured template for daily laboratory plan documentation that included the laboratory test name, the date and time of planned collection, and the rationale for the order (See Figure 2, Supplemental Digital Content 2, which describes a representative template for standardized laboratory plan for notes, http://links.lww.com/PQ9/A213). Although all sites used the same elements from the template, implementation varied based on differences in electronic health record (EHR) systems. Templates were created as a “dot phrase” that could be manually added to daily notes, or the daily note templates themselves were modified to include a section that automatically populated. Regardless of the method, all sites frequently reminded team members to utilize the template via electronic and in-person communication.

Structured Rounds Discussion

A structured discussion of the laboratory testing plan for each patient was incorporated into daily presentations on inpatient rounds, mirroring the standardized laboratory plan structure in the daily note. Medical students, residents, and attending physicians were encouraged to engage in structured discussions during family-centered rounding and when reviewing patients together at later times, such as afternoon or overnight. Most sites sent reminders to include laboratory discussions on rounds to attending and resident physicians at the start of inpatient rotations.

High-value Care Education and Reducing Laboratory Tests for Transferred Patients

In May, the collaborative chose to focus on sustaining the previous interventions and reduction efforts (Table 2). This decision followed discussion within the collaborative after several sites reported challenges with implementing previous interventions, such as time delays or difficulty with adapting the EHR.

A survey was administered to residents and attending physicians to assess attitudes toward inpatient laboratory testing and high-value care. After reviewing survey results, sites selected 1 of 2 possible final interventions. Flexibility in the last intervention allowed sites to act on the survey responses and feedback, focusing on the most impactful and meaningful interventions for their hospital.

Four sites chose to reinforce high-value care education and the previous 3 interventions. One site decided to focus on reducing daily laboratory orders on patients transferred to the hospitalist service from another service within the hospital. This intervention asked residents to review the standing orders placed by the transferring service and then discuss the necessity of current laboratory orders with an attending. The sites that chose high-value care education emphasized didactics and real-time clinical education on how to practice high-value care, with particular attention given to patient laboratory testing indications.

Study of the Intervention

During the first month of the collaborative, baseline preintervention data were retrospectively collected for December 2018 and January 2019. The intervention period began in February 2019 and ended on June 1, 2019, during which the collaborative collected and analyzed data after interventions. This timeline allowed the collaborative to wrap up the project and review all data by the end of June 2019. The eligible study population included any patient admitted to the hospital medicine service at participating sites. Due to the variability in the structure of hospital medicine services, individual sites adapted this definition as needed to best represent this population in their local context (Table 1).

To ensure timely data collection and reduce personnel burden, the collaborative permitted diverse data collection methods. Each site chose to obtain data either through manual chart review or from an automated EHR report. Most sites used manual chart review and sampled 35 patient charts per week, a predetermined number that was feasible for those sites to maintain across the 6-month collaborative. This sampling technique yielded over 140 patient-days per month, which provided adequate power to detect changes using statistical process control (SPC) tools. One site utilized an automated EHR report that included all patients admitted to the hospital medicine service each week.

Data collected included the number of both panel and individual electrolyte tests. We defined electrolyte panel tests as laboratory panels that contained electrolytes. The composition and availability of laboratory panels qualifying as electrolyte panels varied slightly by site, but overall corresponded to basic metabolic, comprehensive metabolic, total parenteral nutrition, and extended capillary blood gas panels. Each panel counted as 1 test for our study, regardless of the composition of the panel. We chose this method to reduce the data collection burden and to allow for site-specific differences between panels. We defined individual electrolyte tests as individual analyses of sodium, potassium, chloride, bicarbonate, calcium (ionized or total), magnesium, or phosphorus. Each of these individual electrolytes counted as 1 test when ordered independently or ordered separately from a panel test. We did not include glucose as an individual electrolyte test for this study.
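The counting rule described above can be illustrated with a short sketch. The order labels, the `PANEL_NAMES` and `INDIVIDUAL_NAMES` sets, and the `count_electrolyte_tests` helper are hypothetical names chosen for illustration; actual panel names and data formats varied by site and EHR.

```python
# Hypothetical order labels; real panel names varied by site and EHR.
PANEL_NAMES = {"BMP", "CMP", "TPN panel", "extended capillary blood gas"}
INDIVIDUAL_NAMES = {
    "sodium", "potassium", "chloride", "bicarbonate",
    "calcium", "ionized calcium", "magnesium", "phosphorus",
}

def count_electrolyte_tests(orders):
    """Return (panel_count, individual_count) for a list of order names.

    Each panel counts as 1 test regardless of its components, each
    separately ordered electrolyte counts as 1 test, and any other
    order (for example glucose) is ignored.
    """
    panels = sum(1 for order in orders if order in PANEL_NAMES)
    individuals = sum(1 for order in orders if order in INDIVIDUAL_NAMES)
    return panels, individuals

# Illustrative orders only (not study data).
orders = ["BMP", "sodium", "glucose", "magnesium", "CMP"]
panels, individuals = count_electrolyte_tests(orders)
total = panels + individuals  # contributes to the total electrolyte test count
print(panels, individuals, total)
```

Counting each panel once, whatever its contents, keeps the tally comparable across sites whose panels differ in composition.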

After the conclusion of the study, the Model for Understanding Success in Quality (MUSIQ) tool was distributed broadly within the hospital medicine division and pediatric residency programs at each site to understand better the impact of QI context on the observed results.22 Responses were collected from August through September 2019. Incomplete surveys were not included in the scoring. Responses from the survey provided additional context about the propensity for QI success, which was the primary reason for utilizing this survey within the collaborative.

Measures

Our primary outcome measure, total electrolyte tests per 10 patient-days, included both individual and panel electrolyte tests. Secondary outcome measures included individual electrolyte tests per 10 patient-days and electrolyte panel tests per 10 patient-days. We chose 10 patient-days as the denominator to create a measure generally greater than one. Individual and panel electrolyte tests were studied separately to determine whether interventions had a preferential impact on either measure.

The collaborative did not collect formal process measures throughout the study; however, the process was monitored qualitatively through discussion on weekly calls, with periodic and as needed individual site audits to determine the utilization of interventions, and via surveys sent to all hospital medicine attending and resident physicians at each site for feedback. Additionally, the collaborative did not collect balancing measures, which lessened the data collection burden for a large-scale collaborative project. Leaders of the collaborative considered ethical and patient safety issues regarding the lack of balancing measures. Given that our interventions focused on increasing and improving discussions of value rather than mandating decreased testing in specific clinical situations, providers ultimately retained clinical decision-making power. Thus, we determined the design of this study to be safe and ethical.

All data were de-identified and sent to the Children’s Hospital Association monthly and aggregated for further analysis.

Analysis

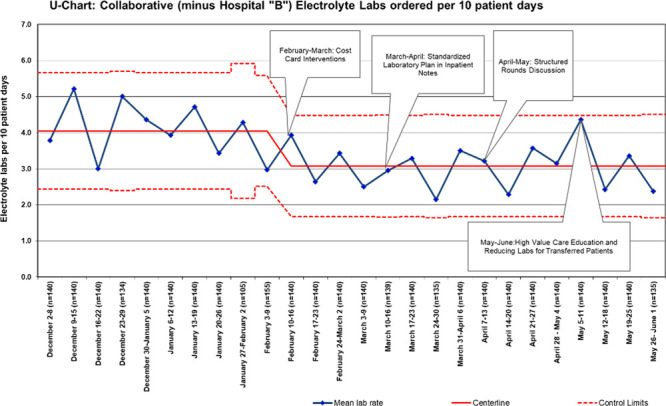

We evaluated measures using statistical process control U-charts.23–25 Special cause was determined by established rules. A subanalysis of data without Hospital B, which contributed a majority of our data, was performed to understand whether the oversampled data affected the direction and scale of the overall aggregate results. Descriptive statistics were used to analyze surveys, and comparisons were made between responses from attending and nonattending physicians.
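For readers unfamiliar with U-charts, the sketch below shows the standard construction: the centerline is the pooled rate, and each subgroup has its own 3-sigma limits that widen as its number of patient-days shrinks. This is a generic illustration, not the collaborative's actual analysis code. The function names, the example data, and the default run length of 8 are assumptions, and in practice the centerline is usually computed from the baseline period rather than from all points pooled together.

```python
import math

def u_chart(counts, patient_days, per=10):
    """Centerline and 3-sigma limits for tests per `per` patient-days."""
    units = [days / per for days in patient_days]  # area of opportunity
    center = sum(counts) / sum(units)              # pooled rate (centerline)
    rows = []
    for count, n in zip(counts, units):
        sigma = math.sqrt(center / n)
        rows.append({
            "rate": count / n,
            "lcl": max(0.0, center - 3 * sigma),   # a rate cannot be negative
            "ucl": center + 3 * sigma,
        })
    return center, rows

def outside_limits(rows):
    """Indices of subgroups whose rate falls outside the control limits."""
    return [i for i, r in enumerate(rows)
            if r["rate"] < r["lcl"] or r["rate"] > r["ucl"]]

def has_run_below(rows, center, length=8):
    """True if `length` consecutive rates fall below the centerline."""
    run = 0
    for r in rows:
        run = run + 1 if r["rate"] < center else 0
        if run >= length:
            return True
    return False

# Illustrative weekly data only (not study data).
counts = [70, 66, 72, 30]
patient_days = [145, 140, 150, 148]
center, rows = u_chart(counts, patient_days)
print(round(center, 2), outside_limits(rows))
```

Because the limits depend on each subgroup's patient-days, a site contributing many more patient-days produces tighter limits, which is one reason a subanalysis without the largest contributor can be informative.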

Data from the MUSIQ tool were analyzed using the scoring methodology provided. Scoring for the MUSIQ tool was classified into ranges, with higher scores indicating the project has a higher chance of success due to a supportive environment with fewer contextual barriers. The lowest range was 24–49, indicating that the “project should not continue as is; consider deploying resources to other improvement activities,” whereas the highest scoring range was 120–198, corresponding to the “project has a reasonable chance of success.”22
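The banding described above can be sketched as a simple lookup. The text states only the lowest range (up to 49) and the start of the highest range (120); the cut-point of 80 between the two middle categories is an assumption based on the published MUSIQ scoring guide and should be checked against the tool itself. The function name and the shortened category labels are illustrative.

```python
def musiq_category(total_score):
    """Map a total MUSIQ score to a category label (illustrative sketch)."""
    if total_score >= 120:
        return "reasonable chance of success"
    if total_score >= 80:  # assumed cut-point, not stated in the text
        return "could be successful, but possible contextual barriers"
    if total_score >= 50:
        return "serious contextual issues; not set up for success"
    return "should not continue as is"

# Illustrative scores only.
for score in (30, 65, 100, 125):
    print(score, musiq_category(score))
```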

RESULTS

Overall Collaborative Results

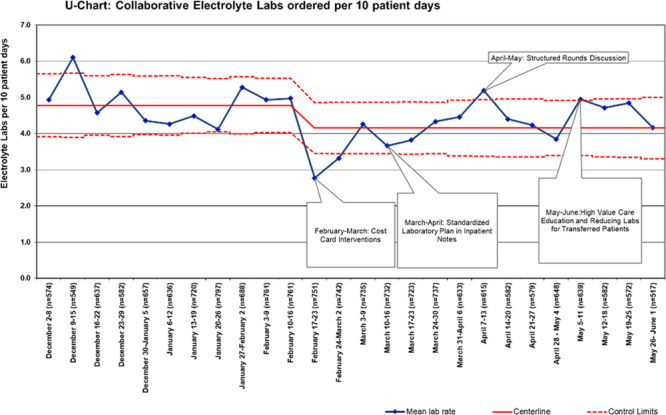

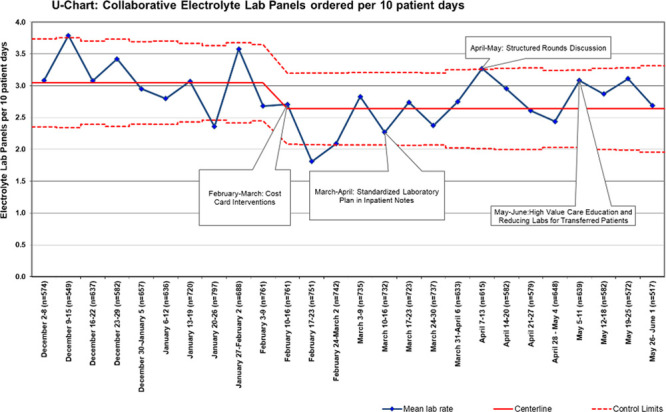

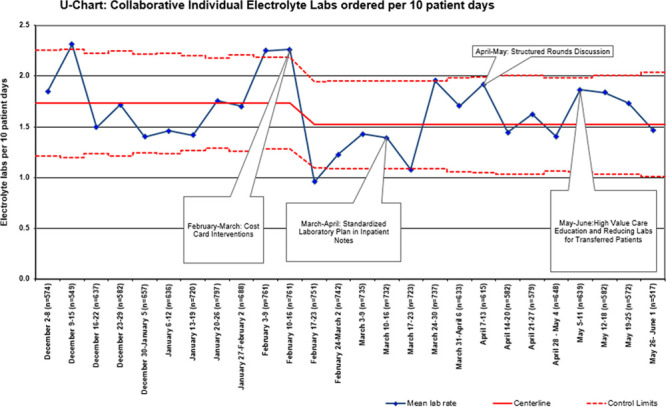

Complete data from each site were available for the baseline and intervention study periods and included 17,149 patient-days across 5 hospitals. The baseline mean was 4.8 total electrolyte tests ordered per 10 patient-days. After intervention implementation, there was a special cause variation as measured by points outside of the control limits. Overall, electrolyte testing was reduced by 13% from a mean of 4.8 to 4.2 tests per 10 patient-days (Fig. 1). Electrolyte panel testing was reduced by 13% from 3.1 to 2.7 tests per 10 patient-days (Fig. 2), and individual electrolyte testing was reduced by 10% from 1.7 to 1.5 tests per 10 patient-days (Fig. 3). When Hospital B was removed from the dataset, a similar special cause variation was observed with points outside the control limits and nine consecutive points below the centerline. Overall, electrolyte testing for the remaining 4 hospitals was reduced by 25% from a mean of 4.07 to 3.07 tests per 10 patient-days (Fig. 4).
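The percent reductions reported above follow from the baseline and postintervention means. The short sketch below reproduces the arithmetic using the means given in the abstract (4.82 and 4.19) and in the subanalysis (4.07 and 3.07); the function name is an illustrative assumption.

```python
def percent_reduction(baseline, post):
    """Relative reduction from baseline, expressed as a percentage."""
    return 100 * (baseline - post) / baseline

overall = percent_reduction(4.82, 4.19)    # all 5 hospitals
without_b = percent_reduction(4.07, 3.07)  # subanalysis without Hospital B
target_rate = 4.82 * (1 - 0.20)            # rate needed to meet the 20% aim

print(round(overall, 1), round(without_b, 1), round(target_rate, 2))
```

The overall result rounds to the reported 13%, the subanalysis to the reported 25%, and the 20% aim would have required an aggregate mean of roughly 3.86 tests per 10 patient-days.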

Fig. 1.

Aggregated individual and panel electrolyte laboratory tests per 10 patient-days. Each site within the collaborative was able to roll out interventions at some point during a month-long period, allowing time to address any site-specific barriers and to test small-scale PDSA cycles first; some sites utilized site-specific cost cards.

Fig. 2.

Aggregated electrolyte laboratory panels per 10 patient-days. Each site within the collaborative was able to roll out interventions at some point during a month-long period, allowing time to address any site-specific barriers and to test small-scale PDSA cycles first; some sites utilized site-specific cost cards.

Fig. 3.

Aggregated individual electrolyte laboratory tests per 10 patient-days. Each site within the collaborative was able to roll out interventions at some point during a month-long period, allowing time to address any site-specific barriers and to test small-scale PDSA cycles first; some sites utilized site-specific cost cards.

Fig. 4.

Aggregated individual and panel electrolyte laboratory tests per 10 patient-days for the collaborative without Hospital “B” data. Each site within the collaborative was able to roll out interventions at some point during a month-long period, allowing time to address any site-specific barriers and to test small-scale PDSA cycles first; some sites utilized site-specific cost cards.

Individual Site Results

Site-specific baseline mean total electrolyte testing varied, ranging from 3.1 to 5.7 tests per 10 patient-days (See Figures 3–7, Supplemental Digital Content 3, which describes individual site charts, Hospital “A” aggregated individual and panel electrolyte laboratory tests per 10 patient-days; individual site charts, Hospital “B” aggregated individual and panel electrolyte laboratory tests per 10 patient-days; individual site charts, Hospital “C” aggregated individual and panel electrolyte laboratory tests per 10 patient-days; individual site charts, Hospital “D” aggregated individual and panel electrolyte laboratory tests per 10 patient-days; and individual site charts, Hospital “E” aggregated individual and panel electrolyte laboratory tests per 10 patient-days, http://links.lww.com/PQ9/A214). At baseline, weekly total electrolyte test rates across sites ranged from 1.1 to 9.4 laboratory tests per 10 patient-days.

After interventions, weekly total electrolyte test rates ranged from 0 to 7.4 laboratory tests per 10 patient-days. The response to interventions varied across sites. Three sites had a reduction in total electrolyte testing rates that met special cause variation rules. Two of these sites had special cause variation for total testing rates after the first intervention and then had special cause increases later in the study. The third site showed special cause variation after the third intervention. For the 2 sites without special cause variation, overall variation decreased from a baseline testing rate range of 1.1–7.4 total electrolyte tests per 10 patient-days to an ending range of 1.1–4.5 electrolyte tests per 10 patient-days. Overall site-specific mean total electrolyte testing was reduced by a range of 0.0%–31.9%; individual electrolyte testing was reduced by 0.0%–45.8%, and panel electrolyte testing was reduced by 0.0%–26.5%.

MUSIQ Survey Results

Of 71 respondents to the MUSIQ survey, 51 (71.8%) completed all survey questions. Of these complete surveys, most respondents were attending physicians (n = 33; 65%), followed by residents (n = 12; 23%), interns (n = 3; 6%), and other (n = 3; 6%). Overall, the total mean score was 121.5, with a median score of 122.5, which was in MUSIQ’s highest scoring category of having a “reasonable chance of success.” For individual responses, 35 responses fell into this highest category. Fifteen responses indicated the project “could be successful, but possible contextual barriers were present.” One response indicated the project had “serious contextual issues and is not set up for success.”

DISCUSSION

The collaborative reduced overall electrolyte laboratory testing by 13% across participating sites, including a reduction in both individual (10%) and panel (13%) electrolyte testing. Although the collaborative did not meet the original goal of a 20% reduction, our work provides early encouraging results to suggest that an intervention bundle that was previously successful at one institution can be brought to scale quickly, with particular attention to how interventions need to be adapted to site-specific contexts. This critical learning about how institutions can help one another in implementing high-value QI projects may support future efforts to more broadly mitigate the burden of unnecessary diagnostic testing.

We noted wide differences in baseline rates of electrolyte testing across our collaborative. This variation may indicate differences in patient populations by site or site-level cultural differences in the frequency of electrolyte testing, as noted in prior studies.19,26 There was a concern that improvement would be challenging for sites starting with a lower baseline mean due to less potential to achieve a special cause variation through further reductions; however, these lower baseline utilization sites still reduced mean testing rates. This finding aligns well with previous work demonstrating that reduction in electrolyte testing was possible even with lower baseline rates of testing than in our study.20 Although the appropriate rate of electrolyte testing has been hard to define in many clinical scenarios,27 the results of this study support the notion that appropriate testing rates may be lower than most sites currently utilize.

The specific electrolyte test clinicians utilized preferentially (individual versus panel testing) also varied significantly across sites. After our interventions highlighting the cost differential between individual tests and electrolyte panels on the cost cards, the expectation was that individual electrolyte testing rates would increase, and panel testing would decrease. For example, a physician team needing only the sodium level on a patient could order this individual laboratory test instead of a full basic metabolic panel. This practice would decrease costs while avoiding a cascade effect from obtaining more testing than needed.12,14 The reduction in individual electrolyte tests across the collaborative suggests that the high-value care focus of interventions may have promoted decreasing total laboratory orders preferentially over shifting to more focused ordering.

Through reflection, both as the project progressed and in an ex-post manner,28 this collaborative learned many valuable lessons that can be applied to future multisite QI initiatives. One of these lessons is the importance of understanding site context and its potential impact at the beginning of a project on later results.28,29 The interventions associated with a special cause variation differed by site. Some showed improvement after the standardized note template, and one achieved special cause variation after initiation of the structured rounds discussion. This observation suggests site-specific contextual factors likely contributed to variation in individual success.

The degree to which laboratory testing was reduced also varied substantially between the sites, with the reduction in rates of testing varying from 0.0% to 31.9% for all laboratory testing. Similar variability in improvement has been previously described in other large-scale QI collaborative projects.30–32 In our collaborative, sites varied in several factors highlighted in Table 1. Local operational and demographic factors can significantly affect intervention success.33 For example, smaller groups may have achieved faster buy-in and momentum with fewer stakeholders to reach. Variation in the structure of the day, amount of attending physician contact with the team, and the perception of who makes the medical decisions regarding laboratory testing may also have influenced how uniformly interventions could be established across the collaborative.

Another important contextual factor affecting intervention success is the underlying QI or change culture. In our collaborative, several MUSIQ scores indicated possible contextual barriers to QI success. Each institution faced different barriers in adapting the interventions to its site at some point during the project, as revealed during informal audits of intervention utilization and as discussed on weekly conference calls. One example was the implementation of the standardized note template. All sites struggled to integrate the template into the EHR, and one site was unable to get an automated template created during the month that this intervention was highlighted. Overall, understanding differences in context across sites, through interviews and tools such as the MUSIQ survey, can be beneficial in the early planning stages of a QI collaborative.

There were many benefits of working in a multicenter QI collaborative. Ideas and perspectives from different-sized institutions across the country were shared rapidly. The commitment to weekly calls supported relatively quick PDSA cycles and week-by-week learning about the common challenges and successes experienced by each institution. In sharing how implementation efforts were unfolding, we had the opportunity to quickly adapt what worked well for other sites to our efforts and address barriers to successful implementation.

Finally, all sites found it challenging to sustain changes. Contextualizing process changes and navigating interventions that affect behavior and culture changes take time, effort, and resources.34 If given more time to implement interventions aimed at the underlying culture of laboratory testing and high-value care, more sustained change may have occurred in this collaborative. Regardless of project duration, it is essential to discuss a plan for sustaining progress and interventions in early collaborative planning.

This work has several limitations. First, to reduce the data requirements of participating sites, the collaborative did not track balancing or process measures. Although we are not aware of harm from electrolyte reduction, we do not have data to support that assertion. Second, the collaborative allowed for diverse data collection methods. Ultimately, only 1 site was able to leverage EHR resources to obtain data on all admitted patients, whereas the remainder of the collaborative used manual chart review. This difference resulted in one site contributing the majority of our data. During weekly conference calls, data were reviewed with and without this site’s data to understand the overall effect on our aggregated results. We reported final results for all sites to stay true to our original intention with the collaborative. However, we believe that if all sites had the means to extract data for all patients on the hospital medicine service, we might have had more ability to detect change and remove potential bias associated with manual chart review. Third, the study timeframe was not long enough to show sustained change over time following the implementation of the last interventions, a critical component of QI. Furthermore, the relatively short timeframe may have overlooked seasonal variation in the patient population and associated variation in laboratory test ordering patterns. Fourth, because we administered the survey after our study was complete, we did not collect MUSIQ scores by site. These data can be useful for differentiating site-specific context, and collecting MUSIQ scores in a site-specific manner should be considered for similar collaborative projects in the future. Finally, although our sites are diverse, they are all academic children’s hospitals, which may limit generalizability to the community setting.

Overall, the experience of our collaborative highlights lessons for approaching a multisite project with diversity in hospital size, patient populations, EHR systems, and informatics support capabilities. The members of a collaborative should address contextual barriers before the project starts, including a discussion of intervention timeframes, potential support resources, and future barriers to success. Alongside discussion of the particulars of a project such as this, collaborative projects could use the MUSIQ survey to assess how prepared each site is for QI success and whether there are items to be addressed before the project begins. This structured approach to understanding variation in context may better prepare future multisite collaborative projects.

DISCLOSURE

The authors have no financial interest to declare in relation to the content of this article.

ACKNOWLEDGMENTS

We thank Samir Shah, MD, MSCE, for his guidance and mentorship in this project. We also thank the Children’s Hospital Association, and Matt Hall, PhD, Troy Richardson, PhD, and Carla Hronek, RN, PhD, for their assistance in this improvement project. Last, we thank the participants in the Children’s Hospital Association Multisite Collaborative to Reduce Unnecessary Inpatient Serum Electrolyte Testing: Vivian Lee, MD, Rebecca Cantu, MD, MPH, Steven McKee, MD, Brittney Harris, MD, Stephanie Scheffler, DO, Charalene Fisher, MD, Brittany Slagle, DO, Sara Sanders, MD, Christopher Edwards, MD, Rebecca Latch, MD, Andrea Hadley, MD, Tony Smith, BS, MLT.

Footnotes

Published online October 26, 2020

Accepted for a platform presentation at the Pediatric Academic Societies (PAS) meeting in Philadelphia, Pa., on May 5, 2020 (canceled due to the COVID-19 pandemic).

Supplemental digital content is available for this article. Clickable URL citations appear in the text.

To cite: Coe M, Gruhler H, Schefft M, Williford D, Burger B, Crain E, Mihalek AJ, Santos M, Cotter JM, Trowbridge G, Kessenich J, Nolan M, Tchou M; On behalf of the Children’s Hospital Association Multisite Collaborative to Reduce Unnecessary Inpatient Serum Electrolyte Testing. Learning from Each Other: A Multisite Collaborative to Reduce Electrolyte Testing. Pediatr Qual Saf 2020;6:e351.

References

- 1. Bui AL, Dieleman JL, Hamavid H, et al. Spending on children’s personal health care in the United States, 1996-2013. JAMA Pediatr. 2017; 171:181–189.

- 2. Fieldston ES. Pediatrics and the dollar sign: charges, costs, and striving towards value. Acad Pediatr. 2012; 12:365–366.

- 3. Berwick DM, Hackbarth AD. Eliminating waste in US health care. J Am Med Assoc. 2012; 307:1513–1516.

- 4. Shrank WH, Rogstad TL, Parekh N. Waste in the US health care system: estimated costs and potential for savings. J Am Med Assoc. 2019; 322:1501–1509.

- 5. Chua K-P, Schwartz AL, Volerman A, et al. Use of low-value pediatric services among the commercially insured. Pediatrics. 2016; 138:e20161809.

- 6. Narayanan S, Scalici P. Serum magnesium levels in pediatric inpatients: a study in laboratory overuse. Hosp Pediatr. 2015; 5:9–17.

- 7. Ridout KK, Kole J, Fitzgerald KL, et al. Daily laboratory monitoring is of poor health care value in adolescents acutely hospitalized for eating disorders. J Adolesc Health. 2016; 59:104–109.

- 8. Aydin D, Şahiner NC, Çiftçi EK. Comparison of the effectiveness of three different methods in decreasing pain during venipuncture in children: ball squeezing, balloon inflating and distraction cards. J Clin Nurs. 2016; 25:2328–2335.

- 9. Porter FL, Grunau RE, Anand KJ. Long-term effects of pain in infants. J Dev Behav Pediatr. 1999; 20:253–261.

- 10. Thavendiranathan P, Bagai A, Ebidia A, et al. Do blood tests cause anemia in hospitalized patients? The effect of diagnostic phlebotomy on hemoglobin and hematocrit levels. J Gen Intern Med. 2005; 20:520–524.

- 11. Linder LA, Christian BJ. Nighttime sleep characteristics of hospitalized school-age children with cancer. J Spec Pediatr Nurs. 2013; 18:13–24.

- 12. Houben PHH, Winkens RAG, van der Weijden T, et al. Reasons for ordering laboratory tests and relationship with frequency of abnormal results. Scand J Prim Health Care. 2010; 28:18–23.

- 13. Deyo RA. Cascade effects of medical technology. Annu Rev Public Health. 2002; 23:23–44.

- 14. Mold JW, Stein HF. The cascade effect in the clinical care of patients. N Engl J Med. 1986; 314:512–514.

- 15. Dhingra N, Diepart M, Dziekan G, et al. WHO Guidelines on Drawing Blood: Best Practices in Phlebotomy. Geneva, Switzerland: World Health Organization Press; 2010.

- 16. Society of Hospital Medicine. Choosing Wisely. Available at: https://www.choosingwisely.org/societies/society-of-hospital-medicine-adult/. Updated February 21, 2013. Accessed December 18, 2019.

- 17. AABB. Choosing Wisely. Available at: http://www.choosingwisely.org/clinician-lists/american-association-blood-banks-serial-blood-counts-on-clinically-stable-patients/. Updated April 24, 2014. Accessed December 18, 2019.

- 18. Critical Care Societies Collaborative. Choosing Wisely. Available at: http://www.choosingwisely.org/societies/critical-care-societies-collaborative-critical-care/. Updated January 28, 2014. Accessed December 18, 2019.

- 19. Tchou MJ, Hall M, Shah SS, et al. Patterns of electrolyte testing at children’s hospitals for common inpatient diagnoses. Pediatrics. 2019; 13:144.

- 20. Tchou MJ, Girdwood ST, Wormser B, et al. Reducing electrolyte testing in hospitalized children by using quality improvement methods. Pediatrics. 2018; 141:e20173187.

- 21. Shinwa M, Bossert A, Chen I, et al. “THINK” before you order: multidisciplinary initiative to reduce unnecessary lab testing. J Healthc Qual. 2018; 41:165–171.

- 22. Kaplan HC, Provost LP, Froehle CM, et al. The model for understanding success in quality (MUSIQ): building a theory of context in healthcare quality improvement. BMJ Qual Saf. 2012; 21:13–20.

- 23. Perla RJ, Provost LP, Murray SK. The run chart: a simple analytical tool for learning from variation in healthcare processes. BMJ Qual Saf. 2011; 20:46–51.

- 24. Benneyan JC, Lloyd RC, Plsek PE. Statistical process control as a tool for research and healthcare improvement. Qual Saf Health Care. 2003; 12:458–464.

- 25. Brady PW, Tchou MJ, Ambroggio L, et al. Quality improvement feature series article 2: displaying and analyzing quality improvement data. J Pediatric Infect Dis Soc. 2018; 7:100–103.

- 26. Lind CH, Hall M, Arnold DH, et al. Variation in diagnostic testing and hospitalization rates in children with acute gastroenteritis. Hosp Pediatr. 2016; 6:714–721.

- 27. Feld LG, Neuspiel DR, Foster BA, et al. Clinical Practice Guideline: Maintenance Intravenous Fluids in Children. 2018; 142. Itasca, IL: American Academy of Pediatrics. Available at: www.aappublications.org/news. Accessed December 30, 2019.

- 28. Dixon-Woods M, Bosk CL, Aveling EL, et al. Explaining Michigan: developing an ex post theory of a quality improvement program. Milbank Q. 2011; 89:167–205.

- 29. McDonald KM. Considering context in quality improvement interventions and implementation: concepts, frameworks, and application. Acad Pediatr. 2013; 13(6 suppl):S45–S53.

- 30. Biondi EA, McCulloh R, Staggs VS, et al. Reducing variability in the infant sepsis evaluation (REVISE): a national quality initiative. Pediatrics. 2019; 144:e20182201.

- 31. Parikh K, Biondi E, Nazif J, et al. A multicenter collaborative to improve care of community acquired pneumonia in hospitalized children. Pediatrics. 2017; 139:e20161411.

- 32. Stephens TJ, Peden CJ, Pearse RM, et al; EPOCH Trial Group. Improving care at scale: process evaluation of a multi-component quality improvement intervention to reduce mortality after emergency abdominal surgery (EPOCH trial). Implement Sci. 2018; 13:148.

- 33. Nilsen P, Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv Res. 2019; 19:189.

- 34. McDonald KM, Sundaram V, Bravata DM, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol. 7: Care Coordination). Rockville (MD): Agency for Healthcare Research and Quality (US); 2007 Jun. (Technical Reviews, No. 9.7.) Available at: https://www.ncbi.nlm.nih.gov/books/NBK44015/
