Abstract

With the development of computer technology, many machine learning algorithms have been applied to the field of biology, forming the discipline of bioinformatics. Protein function prediction is a classic research topic in this subject area. Though many scholars have made achievements in identifying protein by different algorithms, they often extract a large number of feature types and use very complex classification methods to obtain little improvement in the classification effect, and this process is very time-consuming. In this research, we attempt to utilize as few features as possible to classify vesicular transportation proteins and to simultaneously obtain a comparative satisfactory classification result. We adopt CTDC which is a submethod of the method of composition, transition, and distribution (CTD) to extract only 39 features from each sequence, and LibSVM is used as the classification method. We use the SMOTE method to deal with the problem of dataset imbalance. There are 11619 protein sequences in our dataset. We selected 4428 sequences to train our classification model and selected other 1832 sequences from our dataset to test the classification effect and finally achieved an accuracy of 71.77%. After dimension reduction by MRMD, the accuracy is 72.16%.

1. Introduction

Protein, regarded as the material basis of life and the caretaker of life activities [1], participates in all the functions of maintaining individual survival, including catalyzing specific biochemical reactions and participating in immune response. The protein diversity is increased by alternative splicing and posttranslation modifications [2, 3]. Hence, the topic of protein function prediction came into being around the time of the birth of bioinformatics [4–11]. In view of the different functions of protein, there are various kinds to be classified [12–17]. Many scholars are devoted to the classification of different functions of an enzyme [18–23], and some apply themselves to the recognition of whether a protein sequence is an effecter protein. In this thesis, we attempt to determine if a protein is a vesicular transport protein.

Substances with small molecular weight, such as water or ions, will directly pass through the cell membrane by free diffusion or through the ion channels embedded in the cell membrane. However, macromolecular materials like proteins cannot directly pass through the cell membrane. In the process of transportation in and out of the cell, they are first surrounded by a layer of membrane generated by cell-forming vesicles and then through the fusion or rupture of vesicles with the cell membrane or various organelle membranes to complete material transportation. This process is called vesicular transport. The key role to facilitate this process is vesicular transporter, which is a kind of ubiquitous protein in the cell membrane and organelle membrane. When macromolecular materials are to be transported across the membrane, a specific vesicle transport will concentrate them or supervise the specific organelles to produce different vesicle structures to carry or to wrap the materials to be transmitted. Vesicle transport activity occurs widely between cells or within cells, such as the transmission of neurotransmitters between nerve cells and the operation of the immune system, which is essential for maintaining life. In the field of biology, there have been many advanced studies on cell vesicle transport, and the research areas are also diverse. For example, Rothman et al. [24] studied the problem about the transport of proteins in Golgi matrix, the composition, and structure of Golgi-coated vesicles. Liu et al. [25] concentrated on research about the effect vesicular transporter that plays in synaptic transmission and neurodegeneration. Similarly, many human diseases are also related to the abnormal action of vesicular transport in cells. Brain dopamine-serotonin vesicular transport disease, which can cause movement disorder in infancy, is closely related to vascular monoamine transporter 2 (VMAT2) [26]. In addition, many similar examples are constantly discovered. Increasingly, more diseases are associated with gene mutations, which are responsible for the vesicular transport function.

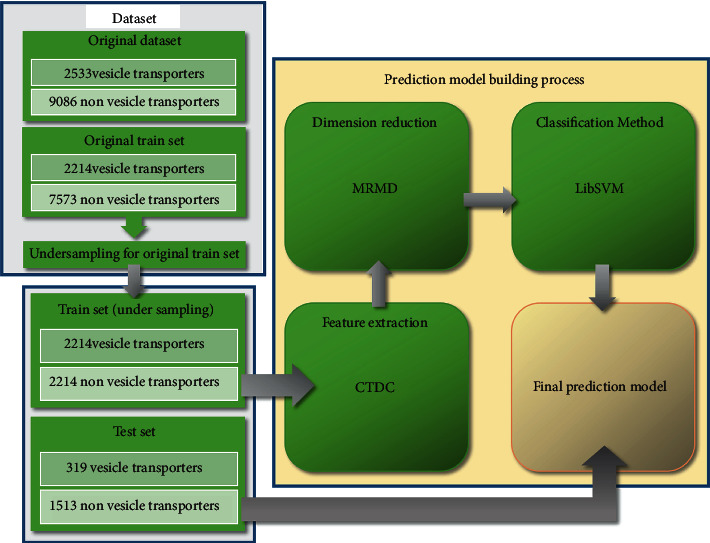

With the development of this field, an increasing number of vesicular transport proteins and other proteins have been found. There is growing desire for rapid identification of vesicular transporters, which is difficult to meet with biological technology. This type of research requires bioinformatics scholars to use machine learning and other computational methods to process and to analyze massive protein sequences. Thus far, the research on using computational methods to identify vesicle transporters is scant. In 2019, Le et al. [27] used PSSM matrix to store sequence features and convolutional neural network (CNN) to determine whether the sequence is a SNARE protein, which is a kind of vesicular transporter. In the same year, these authors used a classifier called GRU based on CNN to identify vesicular transporters. However, for the identification of protein, DNA and RNA, the process to deal with these problems is similar. In recent years, the two steps of the process, feature extraction and classification, have become increasingly complex, and this is also true in the field of identifying vesicular transporters. Meanwhile, we try as much as possible to use a simpler way of feature extraction and classification, to ensure a better classification effect. Finally, we use the composition descriptor in the composition, transition, and distribution (CTDC) and LibSVM as the methods of feature extraction and classification, which are widely used by many scholars. It is a novel idea about our research that the feature dimension of our final prediction process is reduced to 29. Our flowchart is shown in Figure 1.

Figure 1.

Flowchart of identifying vesicular transporters.

2. Materials and Methods

2.1. Dataset

Our data come from the previous research of Le et al. These data have been processed by BLAST to ensure that the similarity between any two sequences is less than 30%. In addition, we use random undersampling in order to balance the number of positive and negative samples in the training set. Finally, there are 4428 sequences in the final training set and 1832 sequences in the test set. Table 1 details the composition of the dataset.

Table 1.

The dataset used in this study.

| Original | Original train set | Train set | Test set | |

|---|---|---|---|---|

| Vesicular transport | 2533 | 2214 | 2214 | 319 |

| Nonvesicular transport | 9086 | 7573 | 2214 | 1513 |

2.2. Method to Feature Extraction

Feature extraction is very important for constructing a predictor [28–37]. We use the CTDC method in iLearn toolkit to extract features of protein sequences. Developed from iFeatures, iLearn is a comprehensive toolkit based on Python, which was designed by Chen et al. that can be downloaded at http://ilearn.erc.monash.edu. As a powerful platform, it not only integrates a series of feature extraction and analysis methods but provides many machine learning algorithms for classification. CTDC is the first part of the CTD feature method in iLearn based on the first of three descriptors.

CTD is a classic sequence feature extraction method that was first proposed by Dubchak et al. [38] in 1995. It consists of three descriptors: composition (C), transition (T), and distribution (D). Composition refers to the ratio of the number of single amino acids with specific properties (or several small amino acid sequence fragments with certain physical and chemical properties) in the whole sequence [39]. Composition can be expressed with the following formula:

| (1) |

where x represents amino acids with specific groups or sequence fragments with special physical and chemical properties, and a, b, and c represent different kinds of groups. N represents the total length of the sequence. The second descriptor represents the ratio of two closely adjacent groups to the total sequence calculated as

| (2) |

In Eq. (2), xy and yx denote two closely adjacent groups. The third descriptor, distribution, represents the general spreading state of special groups in the whole sequence. From the first amino acid of the sequence, calculate the proportion of an amino acid carrying a specific group in five subchains for all the amino acids in a sequence. These five chains contain the first, 25%, 50%, 75%, and 100% special amino acid from the first amino acid of the sequence.

After our experiment, the features extracted from transition and distribution contributed little to the classification effect, so we only select the features extracted from composition. In iLearn, there are 13 kinds of physicochemical properties adopted, and each physicochemical property has three kinds of amino acid combination patterns. The concrete meaning of these properties comes from the research results of Tomii and Kanehisa [40] in 1996.

2.3. Method for Classification

We use LibSVM method based on Weka. LibSVM is a library about the support vector machine (SVM) developed by Professor Lin et al. in 2001. It has been widely used in bioinformatics [41–54]. It has the advantages of being a small program that is flexible, with less inputting parameters, is open source to expand easily, and thus has become the most widely used SVM Library in China. This library tool can be accessed at https://www.csie.ntu.edu.tw/~cjlin/. Weka is a free and noncommercial mining platform, which has a series of functional modules that basically meet various needs in data analysis, such as a variety of different classification and regression algorithms and performing cross validation during classification, automatically. LibSVM classification has been supported since Weka version 3.5.

SVM is a kind of generalized linear classifier that relies on supervised learning [55–60]. The key to classification is to form a hyperplane in multidimensional feature space through algorithm calculation, which can approximate separate positive and negative samples; it can be expressed mathematically as

| (3) |

In (3), X is a vector composed of coordinate values of any point on the hyperplane in each dimension and ω is a vector that we need to calculate. In addition, in order to make the sum of the distance between the positive and negative sample set and the hyperplane reach the farthest, we need to construct two planes parallel to the hyperplane as the interval boundary to distinguish the sample classification. However, in most cases, positive and negative samples cannot be completely divided on both sides of a plane, so generally we will allow some samples to be divided incorrectly, which we called soft interval. Finally, the problem is simplified to formula (4).

| (4) |

where ξi represents a relaxation variable for each sample point, and C is the penalty parameter that needs to be set manually according to the actual situation. The Lagrange function corresponding to formula (5) can be shown as

| (5) |

where parameters αi and βi are Lagrange multipliers. At the same time, to solve the problem conveniently, we need to use the technique about Lagrangian duality and set the kernel function. The dual problem to Lagrange function is represented as

| (6) |

In this experiment, the radial basis function (RBF) is adopted as the kernel function, which is also the default setting in LibSVM. Two parameters, the cost (c) and gamma (g), need to be determined before building the classification model by using Weka. The parameter c is called the penalty coefficient. The higher the value of c is, the easier it is to over fit. And g is a parameter of RBF function after it is selected as kernel which affects the speed of process of training and prediction. There is no universally recognized best method for parameter selection, and the common method is to let c and g take values within a certain range, and then set different c and g in the process of training set data classification. Finally, use cross validation to get the classification accuracy verified by the training set in this groups c and g, and select the group with the best classification result by comparison [61]. It is a complicated process, but in LibSVM toolkit, the parameter optimization is automated, and it no longer needs to be manually adjusted. We use the program, grid.py in the LibSVM tool folder to get the optimal parameters.

2.4. MRMD for Dimensionality Reduction

The max-relevance-max-distance (MRMD) is a dimensionality reduction algorithm, which was developed by Zou et al. [62, 63] in 2015 that can be downloaded at https://github.com/heshida01/mrmd/tree/master/mrmdjar. It is based on a series of distance functions to judge the feature independence. The process of dimensionality reduction consists of three steps. First, the contribution of each feature to classification is evaluated and then the contribution is quantified. Second, sort the features according to their contribution to the classification. Third, select different numbers of features in order to classify and then record the results. For example, select the first feature the first time, select the first two features the second time, etc., until the number of selected features reaches the maximum; that is, all features are selected, and the classification test stops. By comparing the results of these classification tests, the best group is selected, and the features selected in this group are retained and regarded as the result of dimension reduction.

MRMD algorithm analyzes the contribution of each feature to the prediction process mainly through two aspects, max relevance and max distance. Max relevance (MR) is used to calculate the Pearson correlation coefficient between features and samples to quantify the correlation between features and case classes. As shown in Formula (7), Pearson correlation coefficient is equal to the covariance divided by the product of their respective standard deviations.

| (7) |

The vectors X and Y are composed of the ith feature from the sequence and the class label to which these sequences belong. Max distance (MD) is used to analyze the redundancy between features. Specifically, we calculate the three indexes between features:

| (8) |

In (8), these indexes are called Euclidean distance, Cosine similarity, and Tanimoto coefficient. The value of MD is obtained by comprehensive consideration of these three indexes.

Finally, the value of the contribution of the classification of each feature is obtained by adding the values of MR and MD in a certain proportion.

2.5. Evaluation of Classification Results

We adopt cross validation (CV) to evaluate the experimental results objectively. It is a classic, analytical method for judging the performance of a prediction model [64–78]. The core idea is to take out most of the samples in a given dataset to build a classification model, to leave a small part of the samples, to use the newly established model for prediction, and to calculate the forecast errors of these small samples and to record their sum of squares. This process continues until all samples are predicted once and only once. There are three common CV methods: hold-out method, K-fold cross validation (K-CV), and leave-one-out cross validation (LOO-CV). We take the second approach, K-CV.

In K-fold cross validation, the initial data are divided into k groups of subdatasets. A group of independent subdatasets are retained as the validation model data, and other k-1 subdatasets are used for training. In this way, we can get k models and take each prediction result of the classifier into account. In general, the operation is to take the average value of each index of every classification time from k models. The value of K can be set according to the actual situation, and here we set its value to 5. After 5-fold cross validation set in Weka, in order to evaluate the results of classification, some indexes are often used [79–85]. The metrics we use are recall, precision, MCC, and accuracy, and their corresponding formulas are as follows:

| (9) |

For the convenience of description, we use “positive” to represent vesicular transporters and “negative” to represent nonvesicular transporters. In (7), the letter T means true (correct). The letter N means false (incorrect). P is the positive sample, and N represents the negative sample. For example, TP means that the positive samples are correctly identified.

3. Results and Discussion

After optimizing the parameters of the dataset having the whole 39 dimensional features extracted by CTDC, we first implement classification without dimension reduction operation. By using the parameter optimization function in LibSVM, we can automatically find the most suitable c and g; finally, the value of c is 2048 and g is 0.5. The classification accuracy of train set is 66.84% by Weka. For a total of 4428 samples, 653 positive samples and 815 negative samples are misclassified. For test set, the accuracy reaches 71.77%. For 1832 samples, there are 94 positive samples and 416 negative samples that are misclassified.

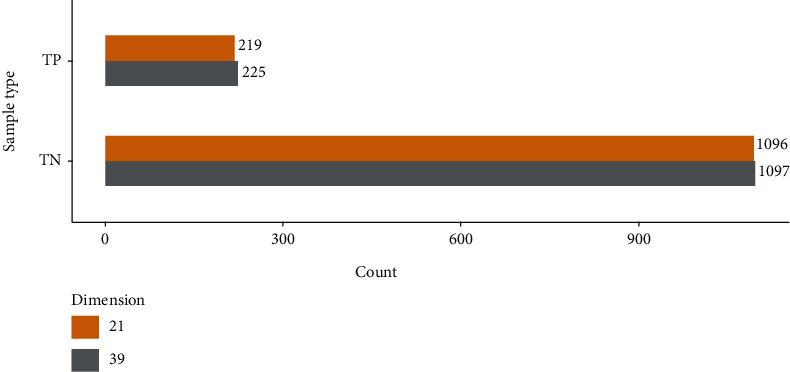

Simultaneously, we also test the datasets, which are processed by dimension reduction to judge the effect of MRMD method dimension reduction on classification results. First, we use MRMD for training set. After dimension reduction, the sample space dimension is reduced from 39 to 21. Second, we leave the feature of the test set selected by MRMD in training set and delete the others. Then, we do the same operation for the reduced dimension dataset. The optimal parameters c and g are 128 and 2, respectively. The classified accuracy of training set is 66.96% and test set is 72.16%. In train set, 656 positive samples and 807 negative samples are misclassified. In test set, 94 positive samples and 416 negative samples are misclassified.

To show more vividly the number of samples that have not been dimensionally reduced and have been correctly predicted, we have drawn Figure 2. TP represents the vesicle transporters predicted correctly, and TN is regarded as the nonvesicular transporters predicted incorrectly. From Figure 2, we can clearly see that the number of correctly predicted samples after dimensionality reduction is basically the same as that without dimensionality reduction. However, from another point of view, although this technique cannot classify more samples, correctly, it eliminates some features that do not contribute much to the classification and reduces the complexity of the classification process.

Figure 2.

Number of samples correctly predicted.

Of course, if it is unreasonable and incomplete to judge the prediction effect only by the accuracy rate, we need to know other indicators about the classification results to evaluate the result more objectively. For this reason, we list the four indexes, recall, precision, accuracy, and MCC, in the performance of classification of reduced dimension and not reduced dimension and create Table 2 to represent it.

Table 2.

Comparison of classification results.

| Recall | Precision | Accuracy | MCC | |

|---|---|---|---|---|

| 39 characteristics | 0.718 | 68.65% | 71.77% | 0.327 |

| 21 characteristics | 0.722 | 70.53% | 72.16% | 0.342 |

In Table 2, it is obvious that the prediction results using the 21 features after dimensionality reduction have not decreased. This proves that MRMD has no negative effect on the prediction. In addition, because MRMD calculates the contribution of each feature to classification and sorts them in the process of dimensionality reduction, we can understand which features have great differences between vesicular transporters and nonvesicular transporters. For example, according to the calculation of MRMD, the 32nd feature, called charge. G2, is ranked first after dimensionality reduction, which indicates that this feature has the greatest difference between positive and negative samples. The 13th feature, the hydrophobicity_CASG920101.G1, is in second place, which means that the degree of difference between two categories is second only to the 32nd feature and so on. The specific meaning of these characteristics can be found in chapter 2.2 of Tomii et al.: they represent different states of physical and chemical properties, such as hydrophobicity, normalized van der Waals volume, polarization, and polarizability. This partly explains whether a protein becomes a vesicle transporter because some amino acid combinations in their sequences appear physical and possess chemical properties that other proteins do not. Certainly, these are not the only factors that determine protein function.

4. Conclusion

At present, in protein classification, scholars often extract a large number of features or the classification methods used are very complex. In our research, we used CTDC feature extraction combined with MRMD feature screening and dimensionality reduction. It is worth mentioning that the MRMD adopted to reduce the dimension, which not only reduces the number of features used in classification, but also has no negative interference to the prediction effect. Finally, we used only 21 features to complete the prediction of vesicle transporters and achieved a satisfactory result. The accuracy of our prediction method is 66% for training set by 5-fold cross validation and 72% for test set after dimension reduction. Compared with the widely used convolution neural network (CNN) or deep neural network (DNN), although it will obtain higher accuracy, there are also problems of over fitting and poor interpretability of classification process. The operation process of these methods cannot be explained, and each parameter in the classifier is adjusted by negative feedback according to the actual and theoretical results. The prediction process relies on the mutual accumulation of input and output of a series of individual neurons. It is difficult to say whether the result is related to the specific amino acid arrangement or some specific groups. However, for these classical characteristics, the sequence feature often means that there are some rules in the arrangement of amino acids in the sequence. It may be helpful for scholars to judge whether an unknown protein is a vesicular transporter. Through our study, the difference degree of each feature between positive and negative samples differs according to the calculation of MRMD. The features, like charge. G2 and hydrophobicity_Casg920101.G1, ranked first and second, respectively, and indicate that these physicochemical properties play a key role in the recognition of vesicle transporters. Moreover, the best classification results can be obtained by selecting the first 21 features, which also indicates that the content of amino acid combinations of the remaining 18 features represented between vesicular transporter and nonvesicular transporter is not significantly different. The difference in the content of these groups with specific physicochemical properties also helps to explain why proteins exhibit specific functions.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (grant numbers 61971117 and 61901103) and in part by the Natural Science Foundation of Heilongjiang Province (grant number LH2019F002).

Data Availability

Experimental data can be obtained from https://github.com/taozhy/identifying-vesicle-transport-proteins or ask the author directly by email: 1765145064@qq.com.

Conflicts of Interest

The authors have declared no competing interests.

Authors' Contributions

Yuming Zhao and Yanjuan Li conceived and designed the project. Zhiyu Tao and Benzhi Dong conducted experiments and analyzed the data. Zhiyu Tao and Yanjuan Li wrote the paper. Zhixia Teng and Yuming Zhao revised the manuscript. All authors read and approved the final manuscript. Zhiyu Tao and Yanjuan Li equally contributed equally to this work.

References

- 1.Tang Y.-J., Pang Y.-H., Liu B. IDP-Seq2Seq: identification of intrinsically disordered regions based on sequence to sequence learning. Bioinformatics. 2020 doi: 10.1093/bioinformatics/btaa667. [DOI] [PubMed] [Google Scholar]

- 2.Xu Y., Zhao W., Olson S. D., Prabhakara K. S., Zhou X. Alternative splicing links histone modifications to stem cell fate decision. Genome Biology. 2018;19(1) doi: 10.1186/s13059-018-1512-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Xu Y., Wang Y., Luo J., Zhao W., Zhou X. Deep learning of the splicing (epi) genetic code reveals a novel candidate mechanism linking histone modifications to ESC fate decision. Nucleic Acids Research. 2017;45(21):12100–12112. doi: 10.1093/nar/gkx870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Capellini T. D., Vaccari G., Ferretti E., et al. Scapula development is governed by genetic interactions of Pbx1 with its family members and with Emx2 via their cooperative control of Alx1. Development. 2010;137(15):2559–2569. doi: 10.1242/dev.048819. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Shao J., Yan K., Liu B. FoldRec-C2C: protein fold recognition by combining cluster-to-cluster model and protein similarity network. Briefings in bioinformatics. 2020 doi: 10.1093/bib/bbaa144. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Xu L., Liang G., Shi S., Liao C. SeqSVM: a sequence-based support vector machine method for identifying antioxidant proteins. International Journal of Molecular Sciences. 2018;19(6) doi: 10.3390/ijms19061773. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Yu L., Xu F., Gao L. Predict new therapeutic drugs for hepatocellular carcinoma based on gene mutation and expression. Frontiers in Bioengineering and Biotechnology. 2020;8 doi: 10.3389/fbioe.2020.00008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Yang Q., Wang Y., Zhang Y., et al. NOREVA: enhanced normalization and evaluation of time-course and multi-class metabolomic data. Nucleic Acids Research. 2020;48(W1):W436–W448. doi: 10.1093/nar/gkaa258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Yin J., Sun W., Li F., et al. VARIDT 1.0: variability of drug transporter database. Nucleic Acids Research. 2020;48(D1):D1042–D1050. doi: 10.1093/nar/gkz779. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Wang Y., Zhang S., Li F., et al. Therapeutic target database 2020: enriched resource for facilitating research and early development of targeted therapeutics. Nucleic Acids Research. 2020;48(D1):D1031–D1041. doi: 10.1093/nar/gkz981. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wang G., Luo X., Wang J., et al. MeDReaders: a database for transcription factors that bind to methylated DNA. Nucleic Acids Research. 2018;46(D1):D146–D151. doi: 10.1093/nar/gkx1096. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Liu M. L., Su W., Guan Z. X., et al. An overview on predicting protein subchloroplast localization by using machine learning methods. Current Protein & Peptide Science. 2020;21 doi: 10.2174/1389203721666200117153412. [DOI] [PubMed] [Google Scholar]

- 13.Li S. H., Zhang J., Zhao Y. W., et al. iPhoPred: a predictor for identifying phosphorylation sites in human protein. IEEE Access. 2019;7:177517–177528. doi: 10.1109/ACCESS.2019.2953951. [DOI] [Google Scholar]

- 14.Yu L., Yao S., Gao L., Zha Y. Conserved disease modules extracted from multilayer heterogeneous disease and gene networks for understanding disease mechanisms and predicting disease treatments. Frontiers in Genetics. 2019;9 doi: 10.3389/fgene.2018.00745. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Li Y. H., Li X. X., Hong J. J., et al. Clinical trials, progression-speed differentiating features and swiftness rule of the innovative targets of first-in-class drugs. Briefings in Bioinformatics. 2020;21(2):649–662. doi: 10.1093/bib/bby130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Tang J., Fu J., Wang Y., et al. ANPELA: analysis and performance assessment of the label-free quantification workflow for metaproteomic studies. Briefings in Bioinformatics. 2020;21(2):621–636. doi: 10.1093/bib/bby127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Yang Q., Li B., Tang J., et al. Consistent gene signature of schizophrenia identified by a novel feature selection strategy from comprehensive sets of transcriptomic data. Briefings in Bioinformatics. 2020;21(3):1058–1068. doi: 10.1093/bib/bbz049. [DOI] [PubMed] [Google Scholar]

- 18.Tang J., Wang Y., Fu J., et al. A critical assessment of the feature selection methods used for biomarker discovery in current metaproteomics studies. Briefings in Bioinformatics. 2020;21(4):1378–1390. doi: 10.1093/bib/bbz061. [DOI] [PubMed] [Google Scholar]

- 19.Xu L., Liang G., Chen B., Tan X., Xiang H., Liao C. A computational method for the identification of endolysins and autolysins. Protein & Peptide Letters. 2020;27(4):329–336. doi: 10.2174/0929866526666191002104735. [DOI] [PubMed] [Google Scholar]

- 20.Meng C., Guo F., Zou Q. CWLy-SVM: a support vector machine-based tool for identifying cell wall lytic enzymes. Computational Biology and Chemistry. 2020;87, article 107304 doi: 10.1016/j.compbiolchem.2020.107304. [DOI] [PubMed] [Google Scholar]

- 21.Zou Q., Chen W., Huang Y., Liu X., Jiang Y. Identifying multi-functional enzyme by hierarchical multi-label classifier. Journal of Computational and Theoretical Nanoscience. 2013;10(4):1038–1043. doi: 10.1166/jctn.2013.2804. [DOI] [Google Scholar]

- 22.Hong J., Luo Y., Zhang Y., et al. Protein functional annotation of simultaneously improved stability, accuracy and false discovery rate achieved by a sequence-based deep learning. Briefings in Bioinformatics. 2020;21(4):1437–1447. doi: 10.1093/bib/bbz081. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Li F., Zhou Y., Zhang X., et al. SSizer: determining the sample sufficiency for comparative biological study. Journal of Molecular Biology. 2020;432(11):3411–3421. doi: 10.1016/j.jmb.2020.01.027. [DOI] [PubMed] [Google Scholar]

- 24.Rothman J. E., Orci L. Movement of proteins through the Golgi stack: a molecular dissection of vesicular transport. The FASEB Journal. 1990;4(5):1460–1468. doi: 10.1096/fasebj.4.5.2407590. [DOI] [PubMed] [Google Scholar]

- 25.Liu and Y., Edwards R. H. The role of vesicular transport proteins in synaptic transmission and neural degeneration. Annual Review of Neuroscience. 1997;20(1):125–156. doi: 10.1146/annurev.neuro.20.1.125. [DOI] [PubMed] [Google Scholar]

- 26.Rilstone J. J., Alkhater R. A., Minassian B. A. Brain dopamine–serotonin vesicular transport disease and its treatment. New England Journal of Medicine. 2013;368(6):543–550. doi: 10.1056/NEJMoa1207281. [DOI] [PubMed] [Google Scholar]

- 27.Le N. Q. K., Yapp E. K. Y., Nagasundaram N., Chua M. C. H., Yeh H.-Y. Computational identification of vesicular transport proteins from sequences using deep gated recurrent units architecture. Computational and Structural Biotechnology Journal. 2019;17:1245–1254. doi: 10.1016/j.csbj.2019.09.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Liu B., Gao X., Zhang H. BioSeq-Analysis2.0: an updated platform for analyzing DNA, RNA, and protein sequences at sequence level and residue level based on machine learning approaches. Nucleic Acids Research. 2019;47(20) doi: 10.1093/nar/gkz740. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Yan K., Wen J., Xu Y., Liu B. Protein fold recognition based on auto-weighted multi-view graph embedding learning model. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2020 doi: 10.1109/TCBB.2020.2991268. [DOI] [PubMed] [Google Scholar]

- 30.Yang W., Zhu X. J., Huang J., Ding H., Lin H. A brief survey of machine learning methods in protein sub-Golgi localization. Current Bioinformatics. 2019;14(3):234–240. doi: 10.2174/1574893613666181113131415. [DOI] [Google Scholar]

- 31.Chen W., Feng P., Nie F. iATP: a sequence based method for identifying anti-tubercular peptides. Medicinal Chemistry. 2020;16(5) doi: 10.2174/1573406415666191002152441. [DOI] [PubMed] [Google Scholar]

- 32.Yang Q. X., Wang Y. X., Li F. C., et al. Identification of the gene signature reflecting schizophrenia's etiology by constructing artificial intelligence-based method of enhanced reproducibility. CNS Neuroscience & Therapeutics. 2019;25(9):1054–1063. doi: 10.1111/cns.13196. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Tang J., Fu J., Wang Y., et al. Simultaneous improvement in the precision, accuracy, and robustness of label-free proteome quantification by optimizing data manipulation chains. Molecular & Cellular Proteomics. 2019;18(8):1683–1699. doi: 10.1074/mcp.RA118.001169. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Basith S., Manavalan B., Shin T. H., Lee G. iGHBP: computational identification of growth hormone binding proteins from sequences using extremely randomised tree. Computational and Structural Biotechnology Journal. 2018;16:412–420. doi: 10.1016/j.csbj.2018.10.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Manavalan B., Basith S., Shin T. H., Wei L., Lee G. mAHTPred: a sequence-based meta-predictor for improving the prediction of anti-hypertensive peptides using effective feature representation. Bioinformatics. 2019;35(16):2757–2765. doi: 10.1093/bioinformatics/bty1047. [DOI] [PubMed] [Google Scholar]

- 36.Manavalan B., Shin T. H., Kim M. O., Lee G. AIPpred: sequence-based prediction of anti-inflammatory peptides using random forest. Frontiers in Pharmacology. 2018;9 doi: 10.3389/fphar.2018.00276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Zhao X., Jiao Q., Li H., et al. ECFS-DEA: an ensemble classifier-based feature selection for differential expression analysis on expression profiles. BMC Bioinformatics. 2020;21(1) doi: 10.1186/s12859-020-3388-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Dubchak I., Muchnik I., Holbrook S. R., Kim S. H. Prediction of protein folding class using global description of amino acid sequence. Proceedings of the National Academy of Sciences of the United States of America. 1995;92(19):8700–8704. doi: 10.1073/pnas.92.19.8700. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Tan J. X., Li S. H., Zhang Z. M., et al. Identification of hormone binding proteins based on machine learning methods. Mathematical Biosciences and Engineering. 2019;16(4):2466–2480. doi: 10.3934/mbe.2019123. [DOI] [PubMed] [Google Scholar]

- 40.Tomii K., Kanehisa M. Analysis of amino acid indices and mutation matrices for sequence comparison and structure prediction of proteins. Protein Engineering, Design and Selection. 1996;9(1):27–36. doi: 10.1093/protein/9.1.27. [DOI] [PubMed] [Google Scholar]

- 41.Liu B., Li K. iPromoter-2L2.0: identifying promoters and their types by combining smoothing cutting window algorithm and sequence-based features. Molecular Therapy-Nucleic Acids. 2019;18:80–87. doi: 10.1016/j.omtn.2019.08.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Zhu X. J., Feng C. Q., Lai H. Y., Chen W., Hao L. Predicting protein structural classes for low-similarity sequences by evaluating different features. Knowledge-Based Systems. 2019;163:787–793. doi: 10.1016/j.knosys.2018.10.007. [DOI] [Google Scholar]

- 43.Yang H., Yang W., Dao F. Y., et al. A comparison and assessment of computational method for identifying recombination hotspots in Saccharomyces cerevisiae. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz123. [DOI] [PubMed] [Google Scholar]

- 44.Chen W., Feng P., Liu T., Jin D. Recent advances in machine learning methods for predicting heat shock proteins. Current Drug Metabolism. 2019;20(3):224–228. doi: 10.2174/1389200219666181031105916. [DOI] [PubMed] [Google Scholar]

- 45.Xu L., Liang G., Liao C., Chen G. D., Chang C. C. An efficient classifier for Alzheimer’s disease genes identification. Molecules. 2018;23(12) doi: 10.3390/molecules23123140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Wang Y., Shi F., Cao L., et al. Morphological segmentation analysis and texture-based support vector machines classification on mice liver fibrosis microscopic images. Current Bioinformatics. 2019;14(4):282–294. doi: 10.2174/1574893614666190304125221. [DOI] [Google Scholar]

- 47.Yu L., Gao L. Human pathway-based disease network. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2019;16(4):1240–1249. doi: 10.1109/TCBB.2017.2774802. [DOI] [PubMed] [Google Scholar]

- 48.Li B., Tang J., Yang Q., et al. NOREVA: normalization and evaluation of MS-based metabolomics data. Nucleic Acids Research. 2017;45(W1):W162–W170. doi: 10.1093/nar/gkx449. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Li Y. H., Yu C. Y., Li X. X., et al. Therapeutic target database update 2018: enriched resource for facilitating bench-to-clinic research of targeted therapeutics. Nucleic Acids Research. 2018;46(D1):D1121–D1127. doi: 10.1093/nar/gkx1076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Basith S., Manavalan B., Shin T. H., Lee G. SDM6A: a web-based integrative machine-learning framework for predicting 6mA sites in the rice genome. Molecular Therapy-Nucleic Acids. 2019;18:131–141. doi: 10.1016/j.omtn.2019.08.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Manavalan B., Basith S., Shin T. H., Wei L., Lee G. Meta-4mCpred: a sequence-based meta-predictor for accurate DNA 4mC site prediction using effective feature representation. Molecular Therapy-Nucleic Acids. 2019;16:733–744. doi: 10.1016/j.omtn.2019.04.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Manavalan B., Shin T. H., Lee G. PVP-SVM: sequence-based prediction of phage virion proteins using a support vector machine. Frontiers in Microbiology. 2018;9 doi: 10.3389/fmicb.2018.00476. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Jiang Q. H., Wang G., Jin S., Li Y., Wang Y. Predicting human microRNA-disease associations based on support vector machine. International Journal of Data Mining and Bioinformatics. 2013;8(3):282–293. doi: 10.1504/IJDMB.2013.056078. [DOI] [PubMed] [Google Scholar]

- 54.Zhao Y., Wang F., Juan L. MicroRNA promoter identification in arabidopsis using multiple histone markers. BioMed Research International. 2015;2015:10. doi: 10.1155/2015/861402.861402 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Wei L., Xing P., Shi G., Ji Z., Zou Q. Fast prediction of methylation sites using sequence-based feature selection technique. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2019;16(4):1264–1273. doi: 10.1109/TCBB.2017.2670558. [DOI] [PubMed] [Google Scholar]

- 56.Liu B. BioSeq-Analysis: a platform for DNA, RNA, and protein sequence analysis based on machine learning approaches. Briefings in Bioinformatics. 2019;20(4):1280–1294. doi: 10.1093/bib/bbx165. [DOI] [PubMed] [Google Scholar]

- 57.Yu L., Zhao J., Gao L. Predicting potential drugs for breast cancer based on miRNA and tissue specificity. International Journal of Biological Sciences. 2018;14(8):971–982. doi: 10.7150/ijbs.23350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Huo Y., Xin L., Kang C., Wang M., Ma Q., Yu B. SGL-SVM: a novel method for tumor classification via support vector machine with sparse group Lasso. Journal of Theoretical Biology. 2020;486, article 110098 doi: 10.1016/j.jtbi.2019.110098. [DOI] [PubMed] [Google Scholar]

- 59.Xue W., Yang F., Wang P., et al. What contributes to serotonin-norepinephrine reuptake inhibitors’ dual-targeting mechanism? The key role of transmembrane domain 6 in human serotonin and norepinephrine transporters revealed by molecular dynamics simulation. ACS Chemical Neuroscience. 2018;9(5):1128–1140. doi: 10.1021/acschemneuro.7b00490. [DOI] [PubMed] [Google Scholar]

- 60.Fu J., Tang J., Wang Y., et al. Discovery of the consistently well-performed analysis chain for SWATH-MS based pharmacoproteomic quantification. Frontiers in Pharmacology. 2018;9 doi: 10.3389/fphar.2018.00681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Liu B., Zhu Y., Yan K. Fold-LTR-TCP: protein fold recognition based on triadic closure principle. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz139. [DOI] [PubMed] [Google Scholar]

- 62.Zou Q., Zeng J., Cao L., Ji R., Part 2 A novel features ranking metric with application to scalable visual and bioinformatics data classification. Neurocomputing. 2016;173:346–354. doi: 10.1016/j.neucom.2014.12.123. [DOI] [Google Scholar]

- 63.Niu M., Zhang J., Li Y., et al. CirRNAPL: a web server for the identification of circRNA based on extreme learning machine. Computational and Structural Biotechnology Journal. 2020;18:834–842. doi: 10.1016/j.csbj.2020.03.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Wang H., Ding Y., Tang J., Guo F. Identification of membrane protein types via multivariate information fusion with Hilbert-Schmidt independence criterion. Neurocomputing. 2020;383:257–269. doi: 10.1016/j.neucom.2019.11.103. [DOI] [Google Scholar]

- 65.Shen Y., Tang J., Guo F. Identification of protein subcellular localization via integrating evolutionary and physicochemical information into Chou’s general PseAAC. Journal of Theoretical Biology. 2019;462:230–239. doi: 10.1016/j.jtbi.2018.11.012. [DOI] [PubMed] [Google Scholar]

- 66.Su R., Liu X., Wei L. MinE-RFE: determine the optimal subset from RFE by minimizing the subset-accuracy-defined energy. Briefings in Bioinformatics. 2020;21(2):687–698. doi: 10.1093/bib/bbz021. [DOI] [PubMed] [Google Scholar]

- 67.Liu B., Li C., Yan K. DeepSVM-fold: protein fold recognition by combining support vector machines and pairwise sequence similarity scores generated by deep learning networks. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz098. [DOI] [PubMed] [Google Scholar]

- 68.Yan K., Fang X., Xu Y., Liu B. Protein fold recognition based on multi-view modeling. Bioinformatics. 2019;35(17):2982–2990. doi: 10.1093/bioinformatics/btz040. [DOI] [PubMed] [Google Scholar]

- 69.Liu B., Zhu Y. ProtDec-LTR3.0: protein remote homology detection by incorporating profile-based features into Learning to Rank. IEEE Access. 2019;7:102499–102507. doi: 10.1109/ACCESS.2019.2929363. [DOI] [Google Scholar]

- 70.Lv H., Dao F. Y., Zhang D., et al. iDNA-MS: an integrated computational tool for detecting DNA modification sites in multiple genomes. iScience. 2020;23(4, article 100991) doi: 10.1016/j.isci.2020.100991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Liu K., Chen W. iMRM:a platform for simultaneously identifying multiple kinds of RNA modifications. Bioinformatics. 2020;36(11):3336–3342. doi: 10.1093/bioinformatics/btaa155. [DOI] [PubMed] [Google Scholar]

- 72.Dou L., Li X., Ding H., Xu L., Xiang H. Is there any sequence feature in the RNA pseudouridine modification prediction problem? Molecular Therapy-Nucleic Acids. 2020;19:293–303. doi: 10.1016/j.omtn.2019.11.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Xu L., Liang G., Liao C., Chen G. D., Chang C. C. k-Skip-n-gram-RF: a random forest based method for Alzheimer’s disease protein identification. Frontiers in Genetics. 2019;10 doi: 10.3389/fgene.2019.00033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Basith S., Manavalan B., Hwan Shin T., Lee G. Machine intelligence in peptide therapeutics: a next-generation tool for rapid disease screening. Medicinal Research Reviews. 2020;40(4):1276–1314. doi: 10.1002/med.21658. [DOI] [PubMed] [Google Scholar]

- 75.Boopathi V., Subramaniyam S., Malik A., Lee G., Manavalan B., Yang D. C. mACPpred: a support vector machine-based meta-predictor for identification of anticancer peptides. International Journal of Molecular Sciences. 2019;20(8) doi: 10.3390/ijms20081964. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Hasan M., Manavalan B., Khatun S., Kurata H. i4mC-ROSE, a bioinformatics tool for the identification of DNA N4-methylcytosine sites in the Rosaceae genome. International Journal of Biological Macromolecules. 2020;157:752–758. doi: 10.1016/j.ijbiomac.2019.12.009. [DOI] [PubMed] [Google Scholar]

- 77.Wang G., Wang Y., Teng M., Zhang D., Li L., Liu Y. Signal transducers and activators of transcription-1 (STAT1) regulates microRNA transcription in interferon γ-stimulated HeLa cells. PLoS One. 2010;5(7, article e11794) doi: 10.1371/journal.pone.0011794. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Wang G., Wang Y., Feng W., et al. Transcription factor and microRNA regulation in androgen-dependent and -independent prostate cancer cells. BMC Genomics. 2008;9(Supplement 2) doi: 10.1186/1471-2164-9-S2-S22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Deng Y., Xu X., Qiu Y., Xia J., Zhang W., Liu S. A multimodal deep learning framework for predicting drug-drug interaction events. Bioinformatics. 2020 doi: 10.1093/bioinformatics/btaa501. [DOI] [PubMed] [Google Scholar]

- 80.Zhang W., Li Z., Guo W., Yang W., Huang F. A fast linear neighborhood similarity-based network link inference method to predict microRNA-disease associations. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2019:p. 1. doi: 10.1109/TCBB.2019.2931546. [DOI] [PubMed] [Google Scholar]

- 81.Zhang W., Jing K., Huang F., et al. SFLLN: a sparse feature learning ensemble method with linear neighborhood regularization for predicting drug–drug interactions. Information Sciences. 2019;497:189–201. doi: 10.1016/j.ins.2019.05.017. [DOI] [Google Scholar]

- 82.Zhang W., Yue X., Tang G., Wu W., Huang F., Zhang X. SFPEL-LPI: sequence-based feature projection ensemble learning for predicting LncRNA-protein interactions. PLoS Computational Biology. 2018;14(12, article e1006616) doi: 10.1371/journal.pcbi.1006616. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Zhao Y., Wang F., Chen S., Wan J., Wang G. Methods of microRNA promoter prediction and transcription factor mediated regulatory network. BioMed Research International. 2017;2017:8. doi: 10.1155/2017/7049406.7049406 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Cheng L., Wang P., Tian R., et al. LncRNA2Target v2.0: a comprehensive database for target genes of lncRNAs in human and mouse. Nucleic Acids Research. 2019;47(D1):D140–D144. doi: 10.1093/nar/gky1051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Wang J., Chen S., Dong L., Wang G. CHTKC: a robust and efficient k-mer counting algorithm based on a lock-free chaining hash table. Briefings in Bioinformatics. 2020 doi: 10.1093/bib/bbaa063. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Experimental data can be obtained from https://github.com/taozhy/identifying-vesicle-transport-proteins or ask the author directly by email: 1765145064@qq.com.