Abstract

There is a pressing need to redesign health professions education and integrate an interprofessional and systems approach into training. At the core of interprofessional education is creating training synergies across healthcare professions and equipping learners with the collaborative skills required for today’s complex health care environment. Educators are increasingly experimenting with new interprofessional education (IPE) models, but best practices for translating IPE into interprofessional practice (IPP) and team-based care are not well defined.

Our study explores current IPE models to identify emerging trends in strategies reported in published studies. We report key characteristics of 83 studies describing IPE activities that were published between 2005 and 2010 and used qualitative, quantitative, or mixed method research approaches. We found a wide array of IPE models and educational components. Although most studies reported outcomes in student learning about professional roles, team communication, and general satisfaction with IPE activities, our review identified inconsistencies and shortcomings in how IPE activities are conceptualized, implemented, assessed, and reported. Clearer specification of minimal reporting requirements would help in developing and testing IPE models that can inform and facilitate successful translation of IPE best practices into academic and clinical practice arenas.

Keywords: Interprofessional Education, Literature Review, Health Professional Students

Introduction

Effective communication across multiple healthcare disciplines and professions is critical to ensure the delivery of safe and efficient care (Greiner & Knebel (Eds.), 2003). Yet, many healthcare professionals enter practice without sufficient training in interprofessional care and coordination (Barr, 2002). Interprofessional education (IPE) transcends uni-professional and siloed approaches to health professions education. It emphasizes interactive learning with and from members of other professions to improve professional practice and care delivery (Barr, 2002). The recent landmark Lancet report, “Health professionals for a new century: Transforming education to strengthen health systems in an interdependent world”, highlights the importance of the timing, duration and relevance of IPE in promoting behavior changes among individual health professionals and in responding to the pressing needs of our increasingly complex and interdependent health care systems and populations (Frenk, et al., 2010).

Over the years, a number of systematic and general scoping reviews of the IPE literature have contributed to our current IPE knowledge base. These reviews have largely addressed three main topics. The first involves the conceptual basis for IPE and the development of related competencies, such as mutual respect, role knowledge and clarification, patient-centered care, and IP team communication (D’Amour, Ferrada-Videla, San Martin Rodriguez, Beaulieu, 2005; Choi & Pak, 2006; Interprofessional Education Collaborative Expert Panel, 2011; Canadian Interprofessional Health Collaborative (CIHC), 2010; Reeves, et al., 2011). The second addresses strengthening research methods for demonstrating effective teamwork and communication as facilitating factors of IPE (Zwarenstein, et al., 1999; Zwarenstein, et al., 2000; Reeves, et al., 2009; Barr, Freeth, Hammick, Koppel, Reeves, 2006; Remington, Foulk, Williams, 2006; Hammick, Freeth, Koppel, Reeves, Barr, 2007; Reeves, et al., 2010; Ireland, Gibbs, West, 2008; Payler, Meyer, Humphris, 2008; Lapkin, Levett-Jones, Gilligan, 2011). Most notably, Lapkin et al. (2011) provide an in-depth analysis of nine selected randomized controlled and longitudinal interprofessional education studies and a descriptive assessment of the research quality of those studies. The third focuses on developing sustainable models for IPE implementation that can be mainstreamed into health professions curricula and clinical practice (Freeth & Reeves, 2004; Choi & Pak, 2007; Choi & Pak, 2008; Mitchell, et al., 2006; Barr, et al., 2006; Barr & Ross, 2006; Reeves, et al., 2011; Reeves, Goldman, Burton, Sawatzky-Girling, 2010). Reviews reported by Reeves et al. (2010, 2011) provide the most up-to-date survey of IPE participants, interventions, and outcomes.

Our literature review builds upon the foundational work conducted by IPE researchers. Our contribution is distinctive in three respects. First, we report a large-scale review that exclusively targets IPE issues involving pre-licensure health professions trainees. This focus was necessitated by the Macy Foundation grant our team received for developing and pilot testing innovative IPE models targeting medical, nursing, and pharmacy students. Second, our review included quantitative, qualitative, and mixed method studies published between 2005 and 2010, to avoid duplicating reviews that covered prior years; it therefore represents an up-to-date review of this five-year period. Third, our review extends the work of Reeves et al. (2010, 2011) and addresses a wide range of IPE aspects, including curriculum content and structure, mode of facilitating interprofessional interactions, faculty recruitment and retention, faculty skill-building, and institutional leadership and financial support. Our overall purpose was twofold: 1) to detail the rich diversity of approaches to IPE targeting health professions students and 2) to identify areas for strengthening future IPE research. We believe our review significantly contributes to the evolving and expanding knowledge base of IPE strategies, outcomes, and research methods.

This review was undertaken by an interprofessional research team at the University of Washington (UW) Schools of Nursing, Medicine, and Pharmacy, in collaboration with a medical education qualitative researcher at the University of Ottawa. Funding from the Josiah Macy, Jr. and the Hearst Foundations supported this review as part of a larger IPE grant project.

Methods

Study Inclusion Criteria

This literature review includes reports of qualitative, quantitative, and mixed method educational intervention studies published in peer-reviewed journals between 2005 and 2010. We focused on this time interval in order to capture the most recent trends in IPE and to build upon the existing reviews reported in the literature. Studies were required to meet all three of the following criteria: 1) an interprofessional mix of pre-licensure health professions students as the target learner group, 2) an educational program or intervention with an emphasis on IPE skills and/or competencies, and 3) assessment or evaluation data that demonstrated IPE effectiveness on learning outcomes or that documented educational experiences of health professions students.

Study Identification

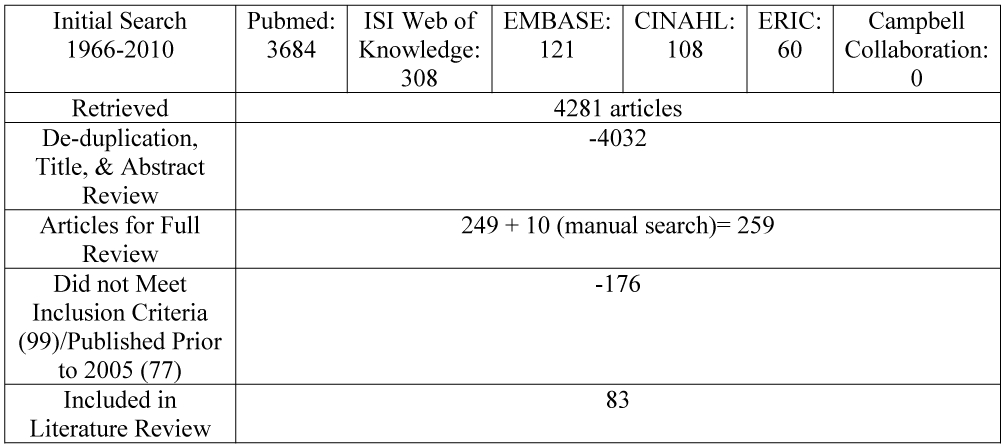

The following databases were searched for English-language studies: PubMed, CINAHL (Cumulative Index to Nursing and Allied Health Literature), ERIC (Education Resources Information Center), ISI Web of Knowledge (Thomson Reuters), EMBASE (Excerpta Medica Database), and the Campbell Collaboration. We designed search strategies in consultation with a senior health sciences research librarian. Boolean searches (combining terms with ‘AND’ and ‘OR’) used the following terms: inter-, multi-, professional, discipline, education, and health professions students (see Web Appendix for General Search Strategy and Example). This search was augmented by manually searching the reference lists of the reviewed articles. Three research team members discussed and applied inclusion/exclusion criteria to screen potential articles in an iterative process. One team member was trained to review articles, and that work was verified by the other two team members. When questions arose, the team discussed them iteratively until consensus was reached. A total of 83 articles were included in the final pool for full review. The literature search and article identification flow are shown in Figure 1.

Figure 1:

Literature Search and Article Identification Process

Data Extraction and Synthesis

Our interprofessional research team developed a data abstraction tool for documenting key elements of the design, content, and evaluation of the IPE programs and models reported in the reviewed studies. The tool's 49 items were classified into the following categories: 1) study profile, 2) characteristics of the educational intervention/program described, 3) type and number of students, 4) faculty roles and responsibilities, 5) assessment methods, 6) outcome measures, and 7) other information (e.g. administrative model, barriers, leadership and financial supports). Five team members pilot tested the tool and refined it through an iterative process using an evolving coding guide (see the Web Appendix for a copy of the Online Data Extraction Form and Coding Guide: http://collaborate.uw.edu/resources-and-publications/ipe-resources/appendices-table-of-content.html). The final data extraction form was delivered via Catalyst, a UW Web survey tool, to facilitate work by multiple coders.

Inter-rater reliability (IRR) across coders was established through the following protocol. First, two trained coders (L.C., E.M.) reviewed the articles. Second, a random sample of 30% of articles was reviewed by paired coders drawn from the larger coding team. We attained an IRR of 87.6% across 26 articles (range: 79% to 93%). Upon completion of article coding, extracted data were downloaded from the online data extraction tool and analyzed using SPSS (Statistical Package for the Social Sciences) 19.0. We report descriptive statistics for quantitative data; qualitative content was analyzed for themes and frequencies.
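The IRR protocol reports a single percentage with a per-article range but does not state the formula used. A minimal sketch, assuming the figure is simple percent agreement over the items of the data extraction form, averaged across the 26 double-coded articles (the symbols $A_j$, $a_j$, $m_j$, and $\bar{A}$ are introduced here for illustration only):

$$
A_j = \frac{a_j}{m_j} \times 100\%, \qquad \bar{A} = \frac{1}{26}\sum_{j=1}^{26} A_j
$$

Here $a_j$ is the number of items on which the paired coders of article $j$ recorded the same value and $m_j$ is the number of items both coders completed for that article. Under this reading, 87.6% would correspond to $\bar{A}$ and 79% to 93% to the range of $A_j$; for example, if two coders completed all 49 items and agreed on 43, then $A_j = 43/49 \approx 87.8\%$. Percent agreement does not correct for agreement expected by chance, as Cohen's kappa does.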

Results

Study Profiles

The largest share of studies originated in the United States (n=34, 41.0%), followed by Canada (n=17, 20.5%) and the UK/Ireland (n=12, 14.5%) (see Table 1 for complete country list). Academic settings (n=44, 53.0%) were the most common educational setting, followed by combined academic and other settings (n=27, 32.5%), such as inpatient, outpatient/ambulatory, simulation center, patient’s home, or community settings. Fewer than 20% of studies (n=15, 18.1%) were based on multi-institutional collaborations. Only eight studies (9.6%) randomized students to educational interventions (see Web Appendix for References to Specific Findings).

Table 1:

Setting of included studies

| Study Setting (n=83) | No. | % |
|---|---|---|
| Country | | |
| United States | 34 | 41.0 |
| Canada | 17 | 20.5 |
| UK/Ireland | 12 | 14.5 |
| Setting | | |
| Academic Setting | 44 | 53.0 |
| Academic + Other Settings | 27 | 32.5 |
| Non-Academic Setting | 12 | 14.5 |

Description of Students and Faculty

Over 20,000 students were involved in IPE activities across the reviewed studies (mean per study: 267; range: 7 to 4,099) (Table 2). While most studies included students from either two (n=35, 42.2%) or three professions (n=28, 33.7%), 20 of 83 studies (24.1%) involved four or more professions. Of the studies involving two professions, 24 of 35 (68.6%) included nursing students and one other profession, with medical students being the most common peers for nursing students (n=13, 37.0%). Student demographic information (e.g. age, gender) was reported in 40 studies (48.2%). The academic level of students participating in the IPE program (e.g. sophomore, first-year resident) was not consistently reported.

Table 2:

Description of students and faculty

| Description of Students & Faculty (n=83) | No. | % |
|---|---|---|
| Professions Represented | | |
| Two Professions | 35 | 42.2 |
| Medical + Nursing | 13 | 37.0 |
| Nursing + non-Medical | 11 | 31.4 |
| Medical + non-Nursing | 10 | 28.6 |
| No Medical or Nursing | 1 | 2.9 |
| Three Professions | 28 | 33.7 |
| Four Professions or More | 20 | 24.1 |
| Faculty | | |
| Faculty Development Offered | 15 | 18.1 |
| Recruitment Strategies Reported | 9 | 10.8 |
| Retention Incentives Reported | 3 | 3.6 |

The rows from Medical + Nursing to No Medical or Nursing are subgroups of the two-profession studies; their percentages are calculated out of those 35 studies.

With regard to IPE faculty, most studies (n=68, 81.9%) did not report how faculty obtained their IPE skills, particularly in leading or facilitating IPE activities. Many studies (n=35, 42.2%) reported having faculty from three or more professions involved in designing educational programs. However, few studies documented strategies for preparing (n=15, 18.1%), recruiting (n=9, 10.8%), or retaining (n=3, 3.6%) IPE faculty.

Educational Programs/Interventions

Educational programs and interventions varied greatly across the studies (Table 3). Approximately half of the studies (n=39, 47.0%) reported employing learning theories or conceptual frameworks to guide their IPE programs, but how these theories and frameworks shaped the development of IPE models was less clearly described. Adult learning theory and the contact hypothesis (the theory that interpersonal contact is the most effective way to increase intergroup understanding and reduce prejudice) were the most frequently cited. Yet only three studies (3.6%) explicitly named the contact hypothesis as a guiding framework for developing their interventions. This suggests a missing link between theory and practice in the IPE studies we reviewed.

Table 3:

Characteristics of educational interventions

| Educational Interventions (n=83) | No. | % |
|---|---|---|
| Length of Intervention Implementation | | |
| Less Than 1 Year | 20 | 24.1 |
| 1-5 Years | 39 | 47.0 |
| 6-10 Years | 9 | 10.8 |
| Over 10 Years | 4 | 4.8 |
| Frequency of Course | | |
| One Time Activity | 48 | 57.8 |
| Multiple Times During Year | 17 | 20.5 |
| Occurring Annually | 8 | 9.6 |
| Duration of Educational Intervention | | |
| Less than 6 hours | 15 | 18.1 |
| > 6 hours; < 1 week | 14 | 16.9 |
| 1-8 Weeks | 19 | 22.9 |
| One Quarter/Semester | 14 | 16.9 |
| One Year | 5 | 6.0 |
| Educational Strategies | | |
| Small Group Discussion | 48 | 57.8 |
| Patient Case Analysis | 40 | 48.2 |
| Large Group Lecture | 31 | 36.1 |
| Clinical Teaching/Direct Patient Interaction | 29 | 34.9 |
| Reflective Exercises | 29 | 34.9 |
| Intervention Reported as Offered for Credit | 24 | 28.9 |
| Simulation | 22 | 26.5 |
| Community-based Projects | 14 | 16.9 |
| E-Learning | 13 | 15.7 |
| Shadowing | 12 | 14.5 |
| Written Assignments | 11 | 13.3 |
| Workshops | 9 | 10.8 |
| Other (e.g. interviews, joint lab sessions, patient/family visits) | 13 | 15.7 |
| Developers of Intervention | | |
| Faculty only | 52 | 62.7 |
| Faculty + Students | 2 | 2.4 |
| Faculty + Other Health Professionals | 5 | 6.0 |
| Faculty + Patients/Families/Community (no students) | 2 | 2.4 |
| Mixed (multiple combinations of above) | 9 | 10.8 |
| Other/Unclear | 10 | 12.0 |

Most studies (n=59, 71.1%) reported that their IPE activities had been in place for five years or less, and 13 studies (15.7%) reported implementation for more than five years (see Web Appendix for References to Specific Findings). Eleven studies (13.3%) did not report how long their IPE activities had been implemented. IPE activities offered to students tended to be one-time events (n=48, 57.8%), such as a workshop or simulation training in team communication. Only a few studies described IPE activities offered on an annual basis (n=8, 9.6%) (see Web Appendix for References to Specific Findings). The duration of each IPE activity ranged from less than six hours (n=15, 18.1%) to one year or longer (n=5, 6.0%), with one to eight weeks being the most common duration (n=19, 22.9%) (see Web Appendix for References to Specific Findings).

The reviewed studies reported multiple interprofessional educational strategies and formats. Small group discussion was the predominant format (n=48, 57.8%), followed by case or problem-based learning (n=40, 48.2%), large group lectures (n=31, 36.1%), reflective exercises (n=29, 34.9%), clinical teaching or direct interaction with patients (n=29, 34.9%), simulation (n=22, 26.5%), and community-based projects (n=14, 16.9%). These categories overlapped: most case or problem-based learning involved small group discussion, but not all small group discussion involved case or problem-based learning. Less commonly reported strategies included e-learning for IPE delivery (n=13, 15.7%) and shadowing of clinical providers or students from other professions (n=12, 14.5%) (see Web Appendix for References to Specific Findings).

Fewer than one in three studies (n=24, 28.9%) reported that students received formal credit for completing the IPE course. Approximately half of the studies (n=39, 47.0%) did not explicitly state whether student participation was mandatory. Among studies that reported the type of requirement, attendance was mandatory in 9 studies (10.8% of all studies) and voluntary in 25 studies (30.1%). A few studies (n=10, 12.0%) included a mixed group of students with different participation requirements (e.g. participation was mandatory for medical students but elective for pharmacy students). While faculty developed the vast majority of educational programs (n=70 of 80, 87.5%), sixteen studies (20.0%) reported contributions by students or by patients and families (see Table 3; see Web Appendix for References to Specific Findings).

Outcomes and Assessment Methods

All studies reported learning outcomes, with most (n=67, 80.7%) reporting more than one. The most common outcomes were students’ attitudes towards IPE (n=64, 77.1%), followed by gains in knowledge of IPE competencies or clinical systems (n=33, 39.8%), student satisfaction with IPE courses (n=30, 36.1%), and team skills, for example in resuscitation, leadership, and communication (n=25, 30.1%) (Table 4). Knowledge outcomes tended to target students’ understanding of professional roles, collaborative approaches, clinical/patient care content, care models, quality improvement, patient safety, and cultural competence. Patient-oriented outcomes, such as health care utilization and clinical outcomes among diabetic patients, were rarely examined (n=6, 7.2%) (see Web Appendix for References to Specific Findings). Students’ attitudinal or behavioral changes were also rarely assessed longitudinally.

Table 4:

Outcomes and assessment methods

| Outcomes and Assessment Methods (n=83) | No. | % |
|---|---|---|
| Outcomes | | |
| Attitudes (e.g. towards IPE, towards other professions) | 64 | 77.1 |
| Knowledge (quality, roles) | 33 | 39.8 |
| Satisfaction | 30 | 36.1 |
| Skills (e.g. resuscitation skills) | 25 | 30.1 |
| Patient-Oriented Outcomes | 6 | 7.2 |
| Other | 30 | 36.1 |
| Number of Outcomes Included | | |
| 1 Outcome | 15 | 18.1 |
| 2 Combined Outcomes | 39 | 47.0 |
| 3 or More Combined Outcomes | 28 | 33.7 |
| Assessment Methods | | |
| Attitude/Perception/Satisfaction Survey | 63 | 75.9 |
| Interview/Focus Group/Debrief | 37 | 44.6 |
| Program Evaluation/Feedback | 37 | 44.6 |
| Knowledge Test | 15 | 18.1 |
| Skill Performance Ratings | 8 | 9.6 |
| Other | 14 | 16.9 |
| Reliability Reported | 26 | 31.3 |
| Validity Reported | 18 | 21.7 |

Among assessment tools, surveys were most commonly used (n=63, 75.9%), followed by qualitative methods such as interviews, focus groups, and debriefings (n=37, 44.6%), and knowledge tests (n=15, 18.1%). In addition to student self-assessments, 37 studies (44.6%) incorporated program evaluations and eight (9.6%) developed and implemented observational ratings for assessing students’ skills (e.g. communication, teamwork) (see Web Appendix for References to Specific Findings). Approximately one-third of the studies (n=26, 31.3%) provided evidence of reliability and eighteen (21.7%) reported validity of the assessment instruments. Few tools were validated in-house; investigators predominantly used instruments with established validity.

Factors Limiting and Facilitating Success

Sixty-five studies (78.3%) cited barriers to IPE implementation (Table 5). Scheduling was the most frequently reported barrier (n=39, 47.0%), followed by difficulty in matching students of compatible levels (e.g. differing levels of skill, background, experience, and clinical knowledge during clinical simulations) (n=15, 18.1%). Investigators also noted limited faculty and staff time (n=12, 14.5%), insufficient funding (n=10, 12.0%), and inadequate administrative support (n=6, 7.2%) as barriers. Among studies that reported administrative support as a key facilitating factor for success in IPE, financial support (e.g. institutional or grant support) (n=32, 38.6%), staff support (n=12, 14.5%), and leadership buy-in (n=6, 9.8%) were most frequently cited.

Table 5:

Barriers and administrative supports

| Barriers and Administrative Supports (n=83) | No. | % |
|---|---|---|
| Barriers to Implementation | | |
| Scheduling | 39 | 47.0 |
| Learner Level Compatibility | 15 | 18.1 |
| Preparation Time Required | 12 | 14.5 |
| Funding | 10 | 12.0 |
| Outcomes Measurement | 7 | 8.4 |
| Negative interdisciplinary interactions/hierarchies | 7 | 8.4 |
| Administrative Support | 6 | 7.2 |
| Faculty Not Prepared for Role | 4 | 4.8 |
| Administrative Supports Reported as Facilitators | | |
| Financial support (institutional, grant, combination or others) | 32 | 38.6 |
| Staff support | 12 | 14.5 |
| Leadership buy-in | 6 | 9.8 |
| Dedicated office space | 1 | 1.2 |
| Technology support | 2 | 2.4 |

Discussion

In this paper, we described a cross-sectional review of IPE activities based on 83 studies published between 2005 and 2010. The diversity of educational approaches we reported points to great potential both for strengthening the IPE evidence base and for widely disseminating effective educational interventions. Focusing on the pre-licensure health professions population and on studies using qualitative, quantitative, and mixed methods approaches, we report a wide variety of IPE programs implemented throughout the course of educational programs, timed from when students first enter a health professions program to culminating activities near graduation. This range of timings indicates that health professions schools are attempting to expose students to IPE activities throughout their educational process.

One notable finding from our review is that interprofessional faculty members are not the sole developers of IPE activities. In 20% of the studies that reported who developed the intervention, students, patients, and families served as co-developers of the educational processes, bringing in the perspectives of multiple IPE stakeholders. Small group discussion and problem-based learning were by far the most frequently described educational formats; both are more conducive to student-to-student interaction and team building than didactic lectures. Clinical teaching, direct patient interactions, and community-based projects represented other educational formats. With the increasing adoption of simulation-based learning in health sciences education, we believe this method will become an integral educational modality in advancing IPE. While most studies did not report the reliability and/or validity of evaluation instruments, those that did predominantly used instruments with established psychometric rigor. Over time, the use of common instruments across IPE programs would enable programmatic comparisons and meta-analyses within the field of IPE.

In addition to innovative IPE approaches, this review revealed areas for methodological improvement in IPE research. In particular, the lack of detail and the heterogeneity of outcome measures in IPE studies may limit the ability to draw conclusions about preferred educational strategies or best practices. Consistent with previously published IPE research, we identified four main themes in the current pre-licensure IPE literature: 1) many reported IPE programs are not guided by theoretical or conceptual frameworks; 2) inconsistent reporting of descriptive details of study settings, population samples, and outcomes weakens opportunities for comparison; 3) few studies conducted longitudinal follow-up of IPE outcomes; and 4) minimal attention to faculty development threatens successful replication.

First, the absence of an explicit theoretical framework suggests a disconnect between educational theories and their application to practice. This presents an important opportunity to improve rigor in future IPE interventions and to advance IPE theory. Echoing the review by Reeves et al. (2011), we encourage the reporting of the theoretical and conceptual underpinnings of IPE programs. More detailed information about conceptual frameworks enables assessment of the fit among research objectives, teaching methods, and outcomes across studies.

The second major gap in the IPE literature relates to inconsistencies in the descriptive details of study settings, populations, and outcomes. Previous reviews have identified this inconsistency as an ongoing weakness of the IPE literature (Lapkin et al., 2011; Reeves et al., 2011). More specifically, we found that many studies provided inadequate descriptions of study components, including implementation strategies, participants, and/or outcome measures. This suggests that previous IPE researchers’ recommendations have not yet been fully embraced by the IPE research community. Detailed descriptions of key research components would better inform future IPE scholars in their exploration of cutting-edge research questions.

Third, most interventions were single-event activities that had been implemented for five years or less. This pattern limits the development of effective strategies for targeting long-term behavior change, as well as the potential to positively affect patient outcomes. Longer-term interventions and longitudinal follow-up of learning outcomes are needed to identify enduring outcomes that may lead to practice changes. As highlighted in Reeves et al. (2010), an increasing number of studies are reporting longitudinal effects of IPE and using more robust study designs. However, these studies remain a minority in the published IPE literature. Sustained growth in this area requires investment in centers that have stable investigators, faculty, and funding committed to long-term evaluation of IPE.

Fourth, we identified a lack of reporting on systematic training to prepare faculty to assume an IPE role. Faculty development has been regarded as an essential component of teaching or facilitating IPE competencies (Hammick et al., 2007; Reeves et al., 2012; Silver & Leslie, 2009; Steinert, 2005). Without targeted IPE training, faculty members are not adequately prepared to develop IPE programs or to facilitate discussions with students who bring multiple perspectives from different health professions. Implementing faculty development training for IPE will require significant administrative investment and collaboration within and between health professional schools. Several studies reported innovative approaches to building an IPE faculty pool that included cultural advisors (Kairuz & Shaw, 2005), education specialists, or theologians (Medves, et al., 2008). Similarly, a few studies included patients, families, and students in the content development team, which we highlight as an innovative strategy (see Web Appendix for References to Specific Findings).

Our study has several limitations. We developed a data extraction instrument and coding criteria within our interprofessional, multi-institutional research team, but did not consult external experts. We addressed this limitation by reviewing relevant literature to identify published coding schemes, but we may have missed important IPE dimensions in our review process. As a result, some of our criteria may not correspond to definitions used in recently published IPE competencies and guidelines. Another limitation is the lack of a formal quality assessment of included articles. Our primary goal was to capture a broad IPE knowledge base by including a wide array of reported interprofessional education activities. We believe our approach complements Cochrane-style reviews that apply more stringent quality standards. We recognize that a quality assessment of studies could have revealed useful patterns in the key results we report, as was the case in the recent systematic review by Lapkin et al. (2011).

Our review may have also been limited by the number and diversity of authors who coded the studies. To address this, we provided coder training (see Coding Guide in Web Appendix) and built in overlapping reviews of sampled articles for generating inter-rater reliability. Our reported inter-rater reliability of 87.6%, while an acceptable standard, could have been higher with a smaller group of reviewers from similar specialty domains.

Recommendations

As described above, and as identified in previous reviews, greater consistency in how IPE activities are reported in the literature is needed to better understand existing IPE models and to provide a stronger theoretical basis for future IPE implementation. We therefore encourage the use of structured reporting guidelines and/or an accompanying technical paper to increase the comparability and replicability of future IPE interventions. Based on examples of formal reporting of research studies (Ireland, Gibb, and West, 2008), the authors have developed a template for researchers to follow when reporting IPE interventions. The structured reporting template, termed RIPE (Replicability of Interprofessional Education), is provided in the Web Appendix. We believe that use of the RIPE template will help foster comparison across studies, support theory building, and promote the development of best practices.

We also recommend including technical papers on IPE as Web appendices so that authors can communicate detailed aspects of IPE research studies. Already adopted as compulsory for publication in health systems cost-effectiveness research, technical papers are a valuable means of describing comprehensive study components. The IPE technical paper, as attached in the RIPE template, would outline the information necessary to replicate an IPE study design by including the following descriptions: 1) theoretical framework, 2) stated objectives of the intervention, 3) development and design of the intervention, 4) voluntary/required nature of the IPE intervention, 5) level and numbers of students and health profession(s), 6) frequency and duration of the IPE intervention, 7) teaching strategies, 8) faculty development, 9) validation of the tools used to assess/measure outcomes, 10) cost and resource utilization to implement the intervention, 11) institutional leadership support, 12) barriers/facilitators of implementation, and 13) community partnerships.

Our review describes recent findings from a broad realistic survey of the state of IPE research within the pre-licensure health professions literature. We echo the past recommendations by other IPE researchers and call for a greater consistency in reporting IPE models. This is one important way to contribute to the evolving and expanding IPE knowledge base of strategies, methods, and outcomes and ultimately, to prepare competent interprofessional trainees.

Declaration of Interest:

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the article. This work was supported by grants from the Josiah Macy Jr. Foundation (Grant # B08-05) and the Hearst Foundation.

Footnotes

Summary tables describing interventions and outcomes as well as complete references for all included articles are available via the Web Appendix at the following link: http://collaborate.uw.edu/resources-and-publications/ipe-resources/appendices-table-of-content.html

References

- Barr H (2002). Interprofessional Education: Today, yesterday, and tomorrow. The Learning and Teaching Support Network for Health Sciences & Practice from the UK Centre for the Advancement of Interprofessional Education. Retrieved 11/10/2010 from: http://www.hsaparchive.org.uk/doc/mp/01-03_hughbarr.pdf/view

- Barr H, Freeth D, Hammick M, Koppel I, Reeves S (2006). The evidence base and recommendations for interprofessional education in health and social care. Journal of Interprofessional Care, 20(1), 75–78. DOI: 10.1080/13561820600556182.

- Barr H, Ross F (2006). Mainstreaming interprofessional education in the United Kingdom: A position paper. Journal of Interprofessional Care, 20(2), 96–104. DOI: 10.1080/13561820600

- Canadian Interprofessional Health Collaborative (2010). A national interprofessional competency framework. Retrieved 11/10/2010 from www.cihc.ca

- Choi BC, Pak AWP (2006). Multidisciplinarity, interdisciplinarity, and transdisciplinarity in health research, services, education, and policy: 1. Definitions, objectives, and evidence of effectiveness. Clinical and Investigative Medicine, 29(6), 351–364. PMID: 17330451

- Choi BC, Pak AWP (2007). Multidisciplinarity, interdisciplinarity, and transdisciplinarity in health research, services, education, and policy: 2. Promotors, barriers, and strategies of enhancement. Clinical and Investigative Medicine, 30(6), E224–E232. PMID: 18053389

- Choi BC, Pak AWP (2008). Multidisciplinarity, interdisciplinarity, and transdisciplinarity in health research, services, education, and policy: 3. Discipline, inter-discipline distance, and selection of discipline. Clinical and Investigative Medicine, 31(1), E41–E48. PMID: 18312747

- D’Amour D, Ferrada-Videla M, San Martin Rodriguez L, Beaulieu MD (2005). The conceptual basis for interprofessional collaboration: Core concepts and theoretical frameworks. Journal of Interprofessional Care, Supplement 1, 116–131. DOI: 10.1080/13561820500082529.

- Freeth D, Reeves S (2004). Learning to work together: Using the presage, process, product (3P) model to highlight decisions and possibilities. Journal of Interprofessional Care, 18(1), 43–56. DOI: 10.1080/13561820310001608221.

- Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, Fineberg H, Garcia P, Ke Y, Kelley P, Kistnasamy B, Meleis A, Naylor D, Pablo-Mendez A, Reddy S, Scrimshaw S, Sepulveda J, Serwadda D, Zurayk (2010). Health professionals for a new century: Transforming education to strengthen health systems in an interdependent world. The Lancet, 376, 1923–1958. DOI: 10.1016/S0140-6736(10)61854-5.

- Greiner AC, Knebel E (Eds.) (2003). Health professions education: A bridge to quality. Committee on the Health Professions Education Summit. ISBN: 0-51578309-51578-1. Retrieved from: http://www.nap.edu/catalog/10681.html

- Hammick M, Freeth D, Koppel I, Reeves S, Barr H (2007). The best evidence systematic review of interprofessional education: BEME Guide No. 9. Medical Teacher, 29, 735–751. DOI: 10.1080/0142590701632576.

- Interprofessional Education Collaborative Expert Panel (2011). Core competencies for interprofessional collaborative practice: Report of an expert panel. Washington, D.C.: Interprofessional Education Collaborative. Retrieved from: www.aacn.nche.edu/education/pdf/IPECReport.pdf

- Ireland J, Gibb S, West B (2008). Interprofessional education: Reviewing the evidence. British Journal of Midwifery, 16(7), 446–453. Retrieved from: http://www.intermid.co.uk/cgi-bin/go.pl/library/abstract.html?uid=30462

- Kairuz T, Shaw J (2005). Undergraduate inter-professional learning involving pharmacy, nursing, and medical students: The Maori health week initiative. Pharmacy Education, 5, 255–259. DOI: 10.1080/1560221050037637

- Lapkin S, Levett-Jones T, Gilligan C (2011). A systematic review of the effectiveness of interprofessional education in health professional programs. Nurse Education Today, 1–13. DOI: 10.1016/j.nedt.2011.11.006

- Medves J, Paterson M, Chapman CY, Young JH, Bowes N, Hobbes B (2008). A new interprofessional course preparing learners for life in rural communities. Rural and Remote Health, 8, 1–8. PMID: 18302494.

- Mitchell PH, Belza B, Schaad DC, Robins LS, Gianola FJ, Odegard PS, Kartin D, Ballweg RA (2006). Working across the boundaries of health professions disciplines in education, research and service: The University of Washington experience. Academic Medicine, 81(10), 891–896. DOI: 10.1097/01.ACM.000238078.93713.a6

- Payler J, Meyer E, Humphris D (2008). Pedagogy for interprofessional education—what do we know and how can we evaluate it? Learning in Health and Social Care, 7(2), 64–78. DOI: 10.1111/j.1473-6861.2008.00175.x

- Reeves S, Goldman J, Burton A, Sawatzky-Girling B (2010). Synthesis of systematic review evidence of interprofessional education. Journal of Allied Health, 39(3 Pt 2), 198–203. Retrieved from: http://www.ingentaconnect.com.offcampus.lib.washington.edu/content/asahp/jah/2010/00000039/A00103s1/art00005

- Reeves S, Goldman J, Gilbert J, Tepper J, Silver I, Suter E, Zwarenstein M (2011). A scoping review to improve conceptual clarity of interprofessional interventions. Journal of Interprofessional Care, 25, 167–174. DOI: 10.3109/13561820.2010.529960.

- Reeves S, Tassone M, Parker K, Wagner S, Simmons B (2012). Interprofessional education: An overview of key developments in the past three decades. Work, 41, 233–245. DOI: 10.3233/WOR-2012-1298.

- Reeves S, Zwarenstein M, Goldman J, Barr H, Freeth D, Koppel I, Hammick M (2010). The effectiveness of interprofessional education: Key findings from a new systematic review. Journal of Interprofessional Care, early online, 1–12. DOI: 10.3109/13561820903163405.

- Reeves S, Zwarenstein M, Goldman J, Barr H, Freeth D, Hammick M, Koppel I (2009). Interprofessional education: Effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews, Issue 1, Art. No.: CD002213. DOI: 10.1002/14651858.CD002213.pub2.

- Remington TL, Foulk MA, Williams BC (2006). Evaluation of evidence for interprofessional education. American Journal of Pharmaceutical Education, 70(2), Article 66. DOI: 10.5688/aj700366.

- Silver IL, Leslie K (2009). Faculty development for continuing interprofessional education and collaborative practice. Journal of Continuing Education in the Health Professions, 29(3), 172–177. DOI: 10.1002/chp.20032.

- Steinert Y (2005). Learning together to teach together: Interprofessional education and faculty development. Journal of Interprofessional Care, Supplement 1, 60–75. DOI: 10.1080/13561820500081778.

- Zwarenstein M, Atkins J, Barr H, Hammick M, Koppel I, Reeves S (1999). A systematic review of interprofessional education. Journal of Interprofessional Care, 13(4), 417–424. DOI: 10.3109/13561829909010386.

- Zwarenstein M, Reeves S, Barr H, Hammick M, Koppel I, Atkins J (2000). Interprofessional education: Effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews, Issue 3, Art. No.: CD002213. DOI: 10.1002/14651858.CD002213.
