Abstract

This work presents a machine learning approach for the computer vision-based recognition of materials inside vessels in the chemistry lab and other settings. In addition, we release a data set associated with the training of the model for further model development. The task to learn is finding the region, boundaries, and category for each material phase and vessel in an image. Handling materials inside mostly transparent containers is the main activity performed by human and robotic chemists in the laboratory. Visual recognition of vessels and their contents is essential for performing this task. Modern machine-vision methods learn recognition tasks by using data sets containing a large number of annotated images. This work presents the Vector-LabPics data set, which consists of 2187 images of materials within mostly transparent vessels in a chemistry lab and other general settings. The images are annotated for both the vessels and the individual material phases inside them, and each instance is assigned one or more classes (liquid, solid, foam, suspension, powder, ...). The fill level, labels, corks, and parts of the vessel are also annotated. Several convolutional nets for semantic and instance segmentation were trained on this data set. The trained neural networks achieved good accuracy in detecting and segmenting vessels and material phases, and in classifying liquids and solids, but relatively low accuracy in segmenting multiphase systems such as phase-separating liquids.

Short abstract

A computer vision system for recognizing materials and vessels in the chemistry lab. The system is based on the new LabPics image data set and convolutional neural nets for image segmentation.

Introduction

Experimental chemistry consists largely of the handling of materials in vessels.1 Whether it involves moving and mixing liquids, dissolving or precipitating solids, or extraction and distillation, these manipulations almost always consist of handling materials within transparent containers and depend heavily on visual recognition.1 For chemists in the lab, it is crucial not only to be able to identify the vessel and the fill level of the material inside it but also to be able to accurately identify the region and phase boundaries of each individual material phase as well as its type (liquid, solid, foam, suspension, powder, etc.). For example, when a chemist is trying to create a reaction in a solution, it is important to ensure that all materials have been fully dissolved into a single liquid phase. A chemist attempting to separate the components of a mixture will often use phase separation for liquid–liquid extraction or selective precipitation; these and many other tasks depend heavily on the visual recognition of materials in vessels.1−6 Creating a machine-vision system that can achieve this is essential for developing robotic lab systems that can perform the full range of operations used for chemical synthesis.7−14 The main challenge in creating an image recognition system that can achieve this is that material phases can have a wide range of textures and shapes, and these may vary significantly even for the same type of material. 
Classical computer vision algorithms have hitherto mostly relied on edges or colors in order to identify objects and materials.15−24 While these methods can achieve good results in simple conditions and controlled environments, they fail in complex real-world scenarios.17−24 In recent years, convolutional neural nets (CNNs) have revolutionized the field of computer vision, leading to the development of a wide range of new applications from self-driving cars to medical imaging.25 When CNNs are trained with large numbers of examples of a specific task, they can achieve almost human-level recognition of objects and scenes under challenging conditions.25,26 Training such methods effectively requires a large number of annotated examples.27,28 For our purpose, this means a large number of images of materials in vessels, where the region and the type of each individual material and vessel are annotated.29 This work presents a new data set dedicated to materials and vessels with a focus on chemistry lab experiments. The data set, called Vector-LabPics, contains 2187 images of chemical experiments with materials within mostly transparent vessels in various laboratory settings and in everyday conditions such as beverage handling. Each image in the data set has an annotation of the region of each material phase and its type. In addition, the regions of each vessel and of its labels, parts, and corks are marked. Three different neural nets were trained on this data set: a Mask R-CNN30 and a generator-evaluator-selector (GES) net31 for instance-aware segmentation,32 which involves finding the region and boundaries of each material phase and vessel in the image, and a fully convolutional neural net (FCN)33 for semantic segmentation, which involves splitting the image into regions based on their class.34

The Vector-LabPics Data Set

Creating a large annotated data set is a crucial part of training a deep neural net for a specific task. For deep learning applications, large data sets dedicated to specific tasks such as ImageNet27 and COCO32 act as a basis on which all methods in a given field are trained and evaluated. An important aspect of a data set for image recognition is the diversity of the images, which should reflect as many different scenarios as possible. The more diverse the data set, the more likely it is that a neural net trained on it will be able to recognize new scenarios that were not part of the data set. The goal for the Vector-LabPics data set and the method described here is to work under as wide a range of conditions as possible. Some of the most important sources of images for this data set are YouTube, Instagram, and Twitter channels dedicated to chemistry experiments; the list of contributors who enabled this work is given in the Acknowledgments. Another source is images taken by the authors in various everyday settings. In total, the data set contains 2187 annotated images. The annotation was done manually using the VGG image annotator (VIA).35 Each individual vessel and material phase was annotated, as were the labels, corks, and other parts of the vessels (valves, etc.). Each instance segment received one or more classes from those shown in Table 1. The data set has two representations. The nonexclusive representation is based on overlapping instances (Figure 1a). In this mode, different segments can overlap; for example, when a solid phase is immersed in a liquid phase, the solid and liquid phases will overlap (Figure 1a). In case of overlap, a priority (front/back) was assigned to each segment in the overlap region. For example, if a solid is immersed in a liquid, priority will be given to the solid (Figure 1a).

Table 1. Results for the Semantic Segmentation Net.

| class | IOU | precision | recall | N eval (a) | N train (b) |
|---|---|---|---|---|---|
| vessel | 0.93 | 0.96 | 0.97 | 497 | 1669 |
| filled | 0.85 | 0.92 | 0.92 | 497 | 1660 |
| liquid | 0.81 | 0.89 | 0.90 | 452 | 1419 |
| solid | 0.65 | 0.82 | 0.75 | 108 | 512 |
| suspension | 0.46 | 0.68 | 0.59 | 132 | 519 |
| foam | 0.26 | 0.47 | 0.37 | 31 | 283 |
| powder | 0.18 | 0.28 | 0.35 | 46 | 269 |
| granular | 0.26 | 0.72 | 0.29 | 21 | 74 |
| gel | 0.00 | 0.00 | 0.00 | 1 | 49 |
| vapor | 0.00 | 0.00 | 0.00 | 4 | 29 |
| large chunks (solid) | 0.00 | 0.00 | 0.00 | 7 | 38 |
| cork | 0.20 | 0.33 | 0.35 | 15 | 329 |
| label | 0.07 | 0.09 | 0.33 | 12 | 227 |
| vessel parts | 0.15 | 0.23 | 0.31 | 112 | 536 |

(a) The number of images in the evaluation set that contain the class.

(b) The number of training images that contain the class.

Figure 1.

(a) Instance segmentation with overlapping segments. In the case of overlap, the overlapping region is marked as either the front (green) or back (red). (b) Nonoverlapping (simple) instance segmentation: each pixel can belong to only one segment. Each segment can have several classes.

The data set also contains a simple version with nonoverlapping instances. In this case, each pixel can correspond to only one vessel instance and one material instance. Where instances overlap, the front (higher-priority) instance is used in the overlapping region and the back instance is ignored (Figure 1b). In addition, each pixel can be assigned one label/part instance (Figure 2). Altogether, the simple representation has three channels: (i) the vessel instance; (ii) the material instance; and (iii) the label/cork/vessel part instance. Instances from the same channel cannot overlap: two material instances may not overlap, but a material instance and a vessel instance may. Another approach is a semantic representation, in which each pixel is assigned several classes but no instance (Figure 3). More precisely, each class has a binary map containing all the pixels in the image that belong to this class. This semantic representation is not instance-aware and does not allow us to separate different instances of the same class, such as adjacent vessels or phase-separating liquids (Figure 3). The LabPics data set is available at https://zenodo.org/record/3697452.
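The relation between the overlapping, simple, and semantic representations can be illustrated with a short NumPy sketch. It flattens the overlapping instance masks of one channel into a nonoverlapping label map by painting them from back to front, and builds the per-class binary maps of the semantic representation. The inputs are assumed to be boolean masks plus a numeric priority where a larger value means "front"; the function names are hypothetical, and this is not the data set's own loading code.

```python
import numpy as np


def exclusive_instance_map(masks, priorities):
    """Flatten overlapping instance masks of one channel into a label map.

    masks: non-empty list of boolean (H, W) arrays, one per instance.
    priorities: one number per instance; a larger value means "in front".
    Returns an int32 map: 0 for background, k + 1 for instance k.
    """
    label_map = np.zeros(masks[0].shape, dtype=np.int32)
    # Paint back to front: higher-priority instances are painted later and
    # overwrite, so in an overlap region only the front instance survives.
    for k in sorted(range(len(masks)), key=lambda i: priorities[i]):
        label_map[masks[k]] = k + 1
    return label_map


def semantic_maps(masks, class_sets):
    """Return one boolean map per class: the union of all instances with that class."""
    maps = {}
    for mask, classes in zip(masks, class_sets):
        for cls in classes:
            maps[cls] = maps.get(cls, np.zeros(mask.shape, dtype=bool)) | mask
    return maps


# Example: a solid (front) immersed in a liquid (back).
liquid = np.zeros((6, 4), dtype=bool)
liquid[2:] = True
solid = np.zeros((6, 4), dtype=bool)
solid[4:, 1:3] = True
label_map = exclusive_instance_map([liquid, solid], priorities=[0, 1])
```

Because `semantic_maps` takes a union over instances, two adjacent liquid phases end up in the same `liquid` map, which is the loss of instance identity described above.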

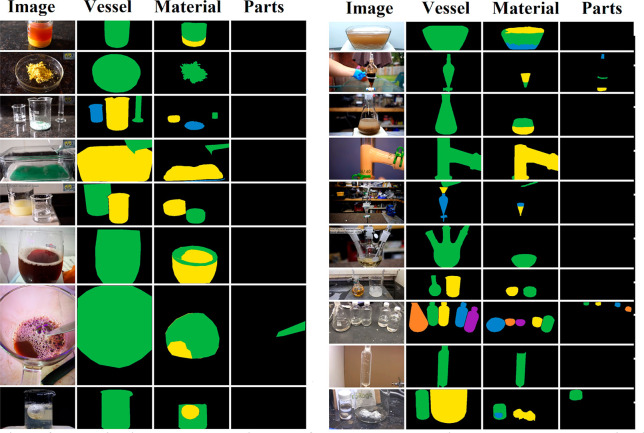

Figure 2.

Exclusive instance segmentation map from the Vector-LabPics data set. The segmentation is composed of three channels: the vessel, the material phases, and the vessel parts. Segments from the same channel cannot overlap.

Figure 3.

Examples of semantic segmentation maps from the Vector-LabPics data set. Each class has a binary segmentation map that covers all the pixels belonging to the class. Not all classes are shown. Images were taken from the Nile Red YouTube channel.

Results and Discussion

Semantic and Instance Segmentation

Finding the region and class of each vessel and material phase in the image can be done using either semantic or instance segmentation. Instance-aware segmentation involves splitting the image into regions corresponding to different objects or material phases.30,31,43 This method can detect and separate phases of materials of the same class, such as phase-separating liquids or adjacent vessels (Figure 2). In addition, the segmentation and classification stages in this method can be separated, allowing for the segmentation of unfamiliar materials (i.e., material classes not in the training set). Two types of convolutional nets were studied for instance-aware segmentation: a Mask R-CNN30 and a GES net.31 Mask R-CNN is the leading method for instance segmentation according to almost all the major benchmarks.31 The GES net is another method for both instance and panoptic segmentation and is designed to work in a hierarchical manner. Another approach is semantic segmentation,33 which predicts for each class a binary map of the region in the image corresponding to that class (Figure 3). The main limitation of this class-based segmentation method is that it cannot separate different phases or object instances from the same class, such as phase-separating liquids or adjacent vessels (Figure 3). In addition, if the class of the material is not clear, or if it did not appear in the training set, the net will not be able to segment the material region. The only advantage of the semantic segmentation approach is that neural nets for such tasks are much easier and faster to train and run. For this task, we use the standard fully convolutional neural net (FCN) with the pyramid scene parsing (PSP) architecture.36 The code and trained models for the nets are available as Supporting Information.

Hierarchical versus Single-Step Segmentation

The recognition of materials in vessels can be performed either by finding the vessels and materials in a single step (Figure 4a) or hierarchically, by first finding the vessel using one system and then the materials inside the vessel using a second system (Figure 4b).2,19,29,37 The single-step approach was applied using Mask R-CNN and FCN, each of which finds both vessels and materials simultaneously (Figure 4a). The alternative hierarchical image segmentation approach involves three steps (Figure 4b): (1) finding the general region of all vessels using an FCN for semantic segmentation; (2) splitting the vessel region (found in Step 1) into individual vessel instances using a GES net for vessel instance segmentation; and (3) splitting each vessel region (found in Step 2) into specific material phases using another GES net for material instance segmentation (Figure 4b).
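A minimal sketch of how the three hierarchical steps compose, with the three nets passed in as callables. The callables `vessel_fcn`, `vessel_ges`, and `material_ges` and their signatures are hypothetical stand-ins for the trained nets; only the data flow (vessel region, then vessel instances, each used as the ROI for material segmentation) follows the text.

```python
import numpy as np


def hierarchical_segmentation(image, vessel_fcn, vessel_ges, material_ges):
    """Run the three-step hierarchy and return [(vessel_mask, materials), ...].

    vessel_fcn(image) -> bool map of all vessel pixels (step 1)
    vessel_ges(image, roi) -> list of bool vessel masks (step 2)
    material_ges(image, roi) -> list of (bool mask, class set) (step 3)
    """
    vessel_region = vessel_fcn(image)  # step 1: semantic vessel region
    results = []
    for vessel_mask in vessel_ges(image, vessel_region):  # step 2
        vessel_mask = vessel_mask & vessel_region  # instance must lie in the region
        # Step 3: the vessel mask is the ROI for material segmentation.
        materials = [(m & vessel_mask, classes)
                     for m, classes in material_ges(image, vessel_mask)]
        results.append((vessel_mask, materials))
    return results
```

Passing the nets as arguments keeps the sketch independent of any particular framework; in practice each callable would wrap a trained model.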

Figure 4.

(a) Single-step segmentation using Mask R-CNN to find both the vessel and material instances simultaneously. (b) Hierarchical segmentation, in which an FCN finds the general region of the vessels in the image, and this region is then transferred to a GES net for vessel instance detection. Each vessel instance segment is transferred to a second GES net for the segmentation of the material instance.

Evaluation Metrics

In this work, we employed standard metrics for evaluating segmentation quality. The intersection over union (IOU) is the main metric for the evaluation of semantic segmentation and is calculated separately for each class.33 The intersection is the number of pixels that belong to the class according to both the net prediction and the ground truth (GT), while the union is the number of pixels that belong to the class according to either the net prediction or the GT. IOU is the intersection divided by the union. The recall is the intersection divided by the number of pixels belonging to the class according to the GT annotation, and the precision is the intersection divided by the number of pixels belonging to the class according to the net prediction. For instance-aware segmentation, we use the standard panoptic quality (PQ) metric.38 PQ combines recognition quality (RQ) and segmentation quality (SQ), where a segment is defined as the region of an individual object instance in the image. RQ measures the detection rate of instances and is given by

$$\mathrm{RQ} = \frac{TP}{TP + \frac{1}{2}FP + \frac{1}{2}FN}$$

where TP (true positive) is the number of predicted segments that match a ground truth segment; FN (false negative) is the number of segments in the GT annotation that do not match any of the predicted segments; and FP (false positive) is the number of predicted segments with no matched segment in the GT annotation. A predicted and a GT segment match when they have the same class and an IOU greater than 0.5. SQ is simply the average IOU of matching segments, and PQ is calculated as PQ = RQ × SQ.
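The pixel metrics and the PQ definition above can be checked with a small NumPy sketch. It computes IOU, precision, and recall for one class from boolean maps, and PQ, RQ, and SQ for the segments of one class in a single image using the IOU > 0.5 matching rule. Function names are illustrative; accumulating TP, FP, FN, and matched IOUs over all images before dividing is an assumption about data set-level scoring, not a detail stated here.

```python
import numpy as np


def pixel_metrics(pred, gt):
    """IOU, precision, and recall of one class from two boolean maps."""
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    n_pred, n_gt = pred.sum(), gt.sum()
    nan = float("nan")
    iou = inter / union if union else nan
    precision = inter / n_pred if n_pred else nan
    recall = inter / n_gt if n_gt else nan
    return float(iou), float(precision), float(recall)


def mask_iou(a, b):
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0


def panoptic_quality(pred_masks, gt_masks):
    """PQ, RQ, SQ for the predicted and GT segments of one class in one image."""
    used, matched_ious = set(), []
    for g in gt_masks:
        for j, p in enumerate(pred_masks):
            v = mask_iou(p, g)
            if j not in used and v > 0.5:  # match requires IOU > 0.5
                used.add(j)
                matched_ious.append(v)
                break
    tp = len(matched_ious)
    fn = len(gt_masks) - tp  # GT segments left unmatched
    fp = len(pred_masks) - tp  # predictions left unmatched
    denom = tp + 0.5 * fp + 0.5 * fn
    if denom == 0:
        return float("nan"), float("nan"), float("nan")
    rq = tp / denom
    sq = sum(matched_ious) / tp if tp else float("nan")
    pq = rq * sq if tp else 0.0
    return pq, rq, sq
```

With IOU > 0.5 and nonoverlapping segments, each GT segment can match at most one prediction, which is why a simple first-match loop suffices.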

Class-Agnostic PQ Metric

The standard PQ metric is calculated by considering only those segments that were correctly classified. This means that if a predicted segment overlaps with a ground truth segment but has a different class, the two are considered mismatched. The problem with this approach is that it does not measure the accuracy of segmentation independently of classification: a net that predicts the segment region perfectly but with the wrong class will have a PQ value of zero. One way to overcome this problem is to pretend that all segments have the same class; in this case, the PQ will depend only on the region of the predicted segment. However, given the class imbalance, this increases the weight of the more common classes and does not accurately measure the segmentation accuracy across all classes. To measure class-agnostic segmentation in a way that represents the different classes equally, we use a modified PQ metric. The PQ, RQ, and SQ values for the class-agnostic method are calculated as in the standard case, while the definitions of TP, FP, and FN are modified. The TP for a given class is the number of GT instances of this class that match predicted instances with IOU > 0.5 (regardless of the predicted instance class). The FN for a given class is the number of GT instances of this class that do not match any predicted segment (regardless of the predicted segment class). If an instance has more than one class, it is counted for each class separately. The FP for a given class is the fraction of GT segments that belong to this class multiplied by the total number of class-agnostic FP segments. The total number of class-agnostic FP segments is the number of predicted segments that do not match any ground truth segment regardless of class (matching means IOU > 0.5 between GT and predicted segments, regardless of class).
For example, if 20% of the GT instances belong to the solid class, and there are 1200 predicted segments that do not match any GT segments, the FP for the solid class would be 1200 × 0.2 = 240. In other words, to avoid using the predicted class for the FP calculation, we split the total FP among all classes according to the class ratio in the GT annotation.
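The class-agnostic variant can be sketched in two steps: match segments by overlap alone within each image, then pool per class over the evaluation set and distribute the unmatched predictions in proportion to each class's share of GT segments. The example in the test reproduces the worked case above (20% solid, 1200 unmatched predictions, 240 solid FP). The input format and function names are assumptions for illustration, not the authors' evaluation code.

```python
import numpy as np


def mask_iou(a, b):
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0


def match_class_agnostic(pred_masks, gt_masks):
    """Match segments in one image by overlap only (IOU > 0.5), ignoring classes.

    Returns the matched IOU (or None) for every GT segment and the number of
    predicted segments that matched no GT segment.
    """
    used, gt_ious = set(), []
    for g in gt_masks:
        hit = None
        for j, p in enumerate(pred_masks):
            if j not in used and mask_iou(p, g) > 0.5:
                hit = j
                break
        if hit is None:
            gt_ious.append(None)
        else:
            used.add(hit)
            gt_ious.append(mask_iou(pred_masks[hit], g))
    return gt_ious, len(pred_masks) - len(used)


def class_agnostic_pq(images):
    """images: list of (gt_class_sets, gt_ious, n_unmatched_pred), one per image.

    TP/FN per class come from the GT segments carrying that class (a segment
    with several classes counts for each). The pooled class-agnostic FP count
    is split among classes in proportion to their share of GT segments.
    """
    class_sets = [s for sets, _, _ in images for s in sets]
    total_fp = sum(n for _, _, n in images)
    results = {}
    for cls in sorted(set().union(*class_sets)):
        ious = [v for sets, gt_ious, _ in images
                for s, v in zip(sets, gt_ious) if cls in s]
        hits = [v for v in ious if v is not None]
        tp, fn = len(hits), len(ious) - len(hits)
        fp = total_fp * len(ious) / len(class_sets)  # FP share by GT class ratio
        rq = tp / (tp + 0.5 * fp + 0.5 * fn)
        sq = sum(hits) / tp if tp else float("nan")
        results[cls] = {"PQ": rq * sq if tp else 0.0, "RQ": rq, "SQ": sq, "FP": fp}
    return results
```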

Semantic Segmentation Results

The results of the semantic segmentation net are shown in Table 1 and Figure 5. The net achieved good accuracy (IOU > 0.8, Table 1) for segmentation of the vessel, fill, and liquid regions in the image, and medium accuracy for solid segmentation (IOU = 0.65). As can be seen from Figure 5, these results are consistent across a wide range of materials, vessels, angles, and environments, suggesting that the net was able to achieve a high level of generalization when learning to recognize these classes. For the remaining subclasses, the net achieved low accuracy (IOU < 0.5, Table 1). The more common subclasses, such as suspension, foam, and powder, were recognized in some cases, while the rarer subclasses (gel, vapor) were completely missed (Table 1). It should be noted that some of these subclasses have very few occurrences in the evaluation set, so their statistics are unreliable. However, the low accuracy is consistent across all of the subclasses. This can be attributed to the small number of training examples for these subclasses, as well as the high visual similarity between different subclasses.

Figure 5.

Results of semantic segmentation. The prediction (Pred) results are marked in red, while the ground truth (GT) annotations are marked in green. Not all classes are shown. Images were taken from the Nile Red YouTube channel.

Instance Segmentation Results

The results of instance-aware segmentation are shown in Table 2 and Figure 6. Table 2 shows that the nets achieve good performance in terms of recognition and segmentation for most types of materials in the class-agnostic case (PQ > 0.5). This is true even for relatively rare classes such as vapor and granular phases, implying that the net is able to generalize the recognition and segmentation process so that it does not depend on the specific type of material. The nets achieve low performance in the recognition of foams and large chunks of solid, which usually consist of small instances with wide variability in shape. For class-dependent PQ, the main classes of vessels, liquids, and solids were detected and classified with fair accuracy (PQ > 0.4, Table 2). However, almost all subclasses gave low PQ values; the only subclass that was classified with reasonable quality was suspension, which had a relatively large number of training examples (Table 2). For multiphase systems, which contain more than one separate material phase in the same vessel, the quality of recognition was significantly lower than for single-phase systems (Table 2). In multiphase systems, there is a tendency to miss one of the phases; for a solid immersed in a liquid, the solid tends to be missed (Figure 6). One reason for this is that phase boundaries between materials and air tend to be easier to see than those between a liquid and another material. Another reason is that instances in multiphase systems tend to be smaller than instances in single-phase systems. The quality of recognition strongly depends on the segment size: the larger the segment, the higher the quality (Table 2). This is true for both single-phase and multiphase systems (Table 2).
Table 2 also shows that the hierarchical segmentation approach (using the GES net) gave better results than single-step segmentation using Mask R-CNN, although at the cost of a much longer running time: around 3 s per image, compared to 0.2 s for the single-step approach.

Table 2. Instance Segmentation per Class. GES = hierarchical segmentation (GES net); Mask R-CNN = single-step segmentation (Mask R-CNN); agn. = class agnostic (a); dep. = class dependent (b). PQ, RQ, and SQ are given in %; n/a marks an SQ value that is undefined because no segments were matched. The last two columns give the number of instances with the class in the test and training sets.

| class | GES agn. PQ | GES agn. RQ | GES agn. SQ | GES dep. PQ | GES dep. RQ | GES dep. SQ | Mask R-CNN agn. PQ | Mask R-CNN agn. RQ | Mask R-CNN agn. SQ | Mask R-CNN dep. PQ | Mask R-CNN dep. RQ | Mask R-CNN dep. SQ | test instances | train instances |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| vessel | 84 | 93 | 91 | 84 | 93 | 91 | 76 | 85 | 89 | 76 | 85 | 89 | 629 | 2696 |
| liquid | 56 | 69 | 81 | 54 | 67 | 81 | 45 | 54 | 82 | 42 | 51 | 82 | 658 | 2347 |
| solid | 48 | 57 | 83 | 44 | 52 | 85 | 31 | 38 | 80 | 13 | 15 | 84 | 100 | 758 |
| suspension | 63 | 74 | 84 | 43 | 50 | 87 | 52 | 61 | 85 | 10 | 12 | 82 | 136 | 708 |
| foam | 24 | 28 | 86 | 17 | 19 | 88 | 14 | 18 | 80 | 3 | 4 | 69 | 28 | 317 |
| powder | 40 | 48 | 83 | 30 | 36 | 83 | 27 | 35 | 77 | 13 | 15 | 84 | 42 | 344 |
| granular | 77 | 90 | 86 | 16 | 17 | 91 | 38 | 47 | 81 | 8 | 9 | 93 | 21 | 97 |
| large chunks | 23 | 37 | 62 | 0 | 0 | n/a | 27 | 34 | 80 | 0 | 0 | n/a | 7 | 44 |
| vapor | 71 | 81 | 88 | 62 | 67 | 93 | 67 | 75 | 88 | 0 | 0 | n/a | 4 | 39 |
| gel | 84 | 95 | 89 | 0 | 0 | n/a | 0 | 0 | n/a | 0 | 0 | n/a | 1 | 115 |
| mean all subclasses | 54 | 65 | 82 | 24 | 27 | 88 | 32 | 38 | 82 | 5 | 6 | 82 | | |
| single phase (c) | 70 | 82 | 86 | 64 | 74 | 87 | 57 | 66 | 86 | 46 | 54 | 86 | 377 | 1551 |
| multiphase (d) | 39 | 53 | 74 | 35 | 47 | 76 | 30 | 40 | 76 | 20 | 27 | 75 | 440 | 1810 |
| For Instance Size Larger than 5000 Pixels | | | | | | | | | | | | | | |
| single phase (c) | 75 | 86 | 87 | 68 | 78 | 88 | 60 | 69 | 87 | 50 | 57 | 87 | 323 | 1286 |
| multiphase (d) | 47 | 63 | 75 | 44 | 57 | 77 | 37 | 48 | 77 | 26 | 34 | 76 | 289 | 1440 |
| For Instance Size Larger than 10 000 Pixels | | | | | | | | | | | | | | |
| single phase (c) | 77 | 87 | 88 | 70 | 79 | 89 | 62 | 71 | 87 | 50 | 58 | 87 | 269 | 1126 |
| multiphase (d) | 51 | 68 | 75 | 45 | 59 | 76 | 41 | 54 | 76 | 30 | 40 | 75 | 206 | 1240 |

(a) Matching between GT and predicted segments depends only on segment overlap and not on class.

(b) Standard metrics, i.e., matching GT and predicted segments must have the same class.

(c) Single-phase system: only one material phase in a given vessel.

(d) Multiphase system: more than one separate material phase in the vessel.

Figure 6.

Ground truth and predicted results for instance segmentation. Images from the Nile Red YouTube channel.

Results on Videos

The nets were demonstrated by running them on videos of processes such as phase separation, precipitation, freezing, melting, and foaming. The annotated videos are available at https://zenodo.org/record/3697693 and on YouTube. These videos show that the nets can detect processes such as precipitation, freezing, and melting through the appearance of new phases such as suspensions, solids, and liquids. Processes such as phase separation and pouring can also be detected through the appearance of new phases and the change in the liquid level.
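These observations could be turned into simple event rules on top of per-frame semantic maps. The sketch below is a hypothetical post-processing step, not something described in this work: it flags the frame at which a class first covers at least an assumed fraction of the image (for example, a suspension appearing during precipitation) and reports the topmost liquid row as a crude fill-level signal. The threshold and function names are illustrative assumptions.

```python
import numpy as np


def phase_events(frame_maps, min_fraction=0.01):
    """Report (frame index, class) whenever a class becomes visible.

    frame_maps: list with one dict per frame, mapping class name -> bool map.
    A class counts as visible when it covers at least min_fraction of the frame.
    """
    events, visible = [], set()
    for t, maps in enumerate(frame_maps):
        now = {cls for cls, m in maps.items() if m.mean() >= min_fraction}
        if t > 0:  # classes present in the first frame are not "new"
            events.extend((t, cls) for cls in sorted(now - visible))
        visible = now
    return events


def liquid_level(liquid_mask):
    """Topmost image row that contains liquid (smaller = higher), or None."""
    rows = np.nonzero(liquid_mask.any(axis=1))[0]
    return int(rows[0]) if rows.size else None
```

Tracking `liquid_level` over frames would give a rough signal for pouring, under the assumption of a fixed camera and an upright vessel.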

Conclusion

In this paper, we introduce a set of new computer vision methods tailored to chemical matter and the Vector-LabPics data set. These were designed for the recognition and segmentation of materials and vessels in images, with an emphasis on a chemistry lab environment. Several convolutional neural nets were trained on this data set. The nets achieve good accuracy for the segmentation and classification of vessels as well as liquid and solid materials in a wide range of systems. However, the nets' ability to classify materials into more fine-grained material subclasses such as suspension, powder, and foam was relatively low. In addition, the segmentation of materials in multiphase systems, such as phase-separating liquids, had limited accuracy. The major limitation on increasing the accuracy of the nets is the relatively small size of the data set. Major data sets for image segmentation such as COCO32 and Mapillary consist of tens of thousands of images, while the Vector-LabPics data set contains only 2187 images thus far. We also prioritized the creation of a general system that operates under a broad range of conditions over a system with higher accuracy that works only under a narrow set of conditions. Clearly, achieving high accuracy under general conditions will require a significantly larger data set. Alternatively, it is well established that a net that gives medium accuracy in a general setting can achieve a high level of accuracy under specific conditions by fine-tuning it on a small set of images containing these conditions.

Future Directions and Applications

Increasing the data set size is the obvious approach for improving accuracy. Thanks to the diverse community of chemists who share photos and videos of their experiments, collecting images is easier than ever. However, achieving a full visual understanding of chemical systems and lab environments clearly demands more detailed annotation than human annotators can supply (3D and depth maps, for example). Training using CGI and simulation data is a promising approach for overcoming these limitations.39 A major obstacle for this approach is the lack of research on generating photorealistic CGI images of chemical systems. The approach presented here can easily be applied to UV and IR images, which can add another layer of information to the visible-light images. In addition, many applications of computer vision, such as image captioning,40 image querying, and video annotation,41 can be used in this field. From the application perspective, integration with robotic systems for autonomous materials discovery represents an obvious long-term goal. Near-term applications include monitoring experiments for undesirable processes such as phase separation, overflowing, drying, and precipitation, which can ruin a reaction and create a safety hazard when dealing with flammable or toxic reagents. A major aspect of chemistry lab work is finding the right set of solvents to dissolve all components of a reaction in a single liquid phase, or the right solvents to precipitate and selectively separate a component of a mixture. All of these problems can benefit from the method described here.

Methods

Training and Evaluation Sets

The Vector-LabPics data set was split into training and evaluation sets by selecting 497 images for the evaluation set and leaving 1691 images for the training set. The images for both sets were taken from completely different sources so that there would be no overlap in terms of the conditions, settings, or locations between the evaluation and training images. This was done in order to ensure that the results from the neural net for the evaluation set will represent the accuracy that is likely to be achieved for an image taken in a completely unfamiliar setting.
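Keeping evaluation sources disjoint from training sources amounts to a grouped split. The text does not say how sources were assigned to each set, so the sketch below assumes a simple greedy rule: shuffle the sources (for example, channels or photographers) and move whole sources into the evaluation set until it reaches a target size. The `image_sources` mapping and the greedy rule are hypothetical.

```python
import random
from collections import defaultdict


def split_by_source(image_sources, n_eval_target, seed=0):
    """Split images so that no source contributes to both sets.

    image_sources: dict image id -> source id (e.g., a channel name).
    Whole sources are moved to the evaluation set, in random order, until it
    holds at least n_eval_target images; the remaining sources form the
    training set.
    """
    by_source = defaultdict(list)
    for image_id, source in image_sources.items():
        by_source[source].append(image_id)
    sources = sorted(by_source)  # deterministic order before shuffling
    random.Random(seed).shuffle(sources)
    train_ids, eval_ids = [], []
    for source in sources:
        target = eval_ids if len(eval_ids) < n_eval_target else train_ids
        target.extend(by_source[source])
    return sorted(train_ids), sorted(eval_ids)
```

Because whole sources are moved, the evaluation set usually overshoots the target slightly; this is the price of guaranteeing that no setting appears in both sets.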

Training with Additional Data Sets

Training on related tasks is a way to increase the robustness and accuracy of a net. Reasoning about liquids for tasks like pouring and volume estimation by robots has been explored for problems relating to robotic kitchens and can be viewed as a related problem.2−6 However, the available data sets for these tasks consist of only a single liquid in opaque vessels in a narrow set of conditions and are also missing the annotation needed for the semantic and instance segmentation tasks used here. Containers in everyday settings appear in several general data sets, although the content of these vessels is not annotated. The COCO data set32 is the largest and most general image segmentation data set and contains several subclasses of vessels, such as cups, jars, and bottles. We speculated that training with Vector-LabPics and related vessel classes from the COCO data set could improve the accuracy of our nets. The nets were cotrained with subclasses of vessels from the COCO panoptic data set and gave the same accuracy as the nets trained on Vector-LabPics alone, implying that the addition of new data did not improve or degrade the performance. However, it should be noted that, for most images in the Vector-LabPics data set, the vessel is the main or only object in the image. A net cotrained on the COCO data set has an advantage in more complex environments where it is necessary to separate vessels from various other objects in the image. In addition, due to the small size of the data set, significant augmentation was used, including deforming, blurring, cropping, and decoloring.
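The augmentations listed above can be illustrated with a NumPy sketch. It applies the same random crop and a random anisotropic stretch (a simple stand-in for the unspecified deformation) to the image and its label map, and applies blurring and decoloring to the image only. The crop fraction, stretch range, 3 × 3 box blur, and 50% probabilities are assumptions, not the settings used for training.

```python
import numpy as np


def augment(image, labels, rng, crop_frac=0.8, max_stretch=0.2, p=0.5):
    """Randomly crop, stretch, blur, and decolor an image with its label map.

    image: (H, W, 3) float array in [0, 1]; labels: (H, W) integer map.
    Geometric steps are applied to both arrays; photometric steps to the image only.
    """
    h, w = labels.shape
    # 1. Random crop, same window for image and labels.
    ch, cw = max(1, int(h * crop_frac)), max(1, int(w * crop_frac))
    y0 = rng.integers(0, h - ch + 1)
    x0 = rng.integers(0, w - cw + 1)
    image = image[y0:y0 + ch, x0:x0 + cw]
    labels = labels[y0:y0 + ch, x0:x0 + cw]
    # 2. "Deform": random anisotropic stretch with nearest-neighbour sampling,
    #    so label values are copied, never interpolated.
    sy, sx = 1.0 + rng.uniform(-max_stretch, max_stretch, size=2)
    nh, nw = max(1, int(round(ch * sy))), max(1, int(round(cw * sx)))
    rows = np.minimum((np.arange(nh) / sy).astype(int), ch - 1)
    cols = np.minimum((np.arange(nw) / sx).astype(int), cw - 1)
    image = image[rows][:, cols]
    labels = labels[rows][:, cols]
    # 3. Blur: 3 x 3 box filter (image only).
    if rng.random() < p:
        padded = np.pad(image, ((1, 1), (1, 1), (0, 0)), mode="edge")
        image = sum(padded[dy:dy + nh, dx:dx + nw]
                    for dy in range(3) for dx in range(3)) / 9.0
    # 4. Decolor: replace RGB with its per-pixel mean (image only).
    if rng.random() < p:
        image = np.repeat(image.mean(axis=2, keepdims=True), 3, axis=2)
    return image, labels
```

Nearest-neighbour sampling is used for the geometric step so that the label map keeps valid integer class or instance IDs.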

Semantic Segmentation Using FCN

The semantic segmentation task involves finding the class for each pixel in the image (Figure 3). The standard approach is the fully convolutional neural net (FCN).33 We have implemented this approach using the PSP architecture.36,44 Most semantic segmentation tasks involve finding a single exclusive class for each pixel; however, in the case of Vector-LabPics, a single pixel may belong to several different classes simultaneously (Figure 3). The multiclass prediction was achieved by predicting an independent binary map for each class. For each pixel, this map predicts whether or not it belongs to the specific class (Figure 3). The training loss for this net was the sum of the losses of all of the classes’ predictions. Other than this, the training process and architecture were those of the standard approach used in previous works.33,36
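The multiclass prediction and summed loss can be written compactly. The sketch below assumes binary cross-entropy on logits for each class map (the text states only that the per-class losses are summed, not which loss is used) and thresholds each map independently, so a single pixel can be vessel, filled, and liquid at the same time.

```python
import numpy as np


def multilabel_bce_loss(logits, targets):
    """Sum over classes of the mean per-pixel binary cross-entropy.

    logits, targets: (C, H, W) arrays; targets hold 0/1 for each class map.
    Uses BCE(x, t) = softplus(x) - t * x, a numerically stable form.
    """
    per_pixel = np.logaddexp(0.0, logits) - targets * logits
    per_class = per_pixel.reshape(per_pixel.shape[0], -1).mean(axis=1)
    return float(per_class.sum())


def predict_classes(logits):
    """Independent decision per class: sigmoid(logit) > 0.5, i.e. logit > 0."""
    return logits > 0.0
```

Using independent sigmoids instead of a softmax over classes is what allows the overlapping class maps of Vector-LabPics; a softmax would force exactly one class per pixel.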

Hierarchical Instance Segmentation Using a Unified GES Net

The generator-evaluator approach31 to image segmentation is based on two main modules: (1) a generator that proposes various regions corresponding to different segments of vessels or materials in the image; and (2) an evaluator that estimates how well each proposed segment matches the real region in the image and selects the best segments to be merged into the final segmentation map. Although previous studies have used a modular approach with different nets for the generator and evaluator,31 this work uses a single unified net for both, i.e., one net that outputs the segment region (generator), its confidence score (evaluator), and its class (Figure 7). In this case, the generator is a convolutional net that, given a point in the image, finds the segment containing that point (Figure 7).31,42,43 Picking different points in the image leads the net to predict different segments; this can happen even when the points lie in the same segment. Another input to the net is the region of interest (ROI) mask (Figure 7), which limits the region of the image in which the output segment may be found;29,42 hence, the output segment must be contained within the ROI mask. In addition to the output segment and its class, the GES net also predicts a confidence score for this segment, which estimates how well the prediction fits the real segment in the image in terms of the intersection over union (IOU). The net was run by picking several random points inside the ROI and selecting the output segments with the highest scores (Figure 7a,b). Two different nets for vessel segmentation and material segmentation were trained separately and used hierarchically (Figure 4b). The region of all vessels was first found using the FCN for semantic segmentation and was then transferred to a GES net for vessel instance segmentation (as an ROI input) to identify the regions of individual vessels (Figure 7a).
The region of each vessel was transferred (as an ROI mask) to another network that finds the region and class of each material phase inside the vessel (Figure 7b).
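The inference procedure described above (random pointer points inside the ROI, scoring each predicted segment, keeping the best segments, and the vessel-then-material hierarchy) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `predict_fn`, `vessel_fn`, and `material_fn` are hypothetical stand-ins for the trained GES nets, and the number of points, the 0.5 score threshold, and the rule that skips proposals mostly covered by already accepted segments are all assumptions.

```python
import numpy as np


def ges_segment(image, roi, predict_fn, n_points=20, min_score=0.5, rng=None):
    """Greedy generator-evaluator-selector loop (illustrative sketch).

    roi: boolean (H, W) mask limiting where segments may be found.
    predict_fn(image, roi, point) -> (mask, score) is a hypothetical stand-in
    for the unified GES net: it returns a boolean mask for the segment that
    contains `point` and a predicted IOU score for that mask.
    Returns an int map: 0 = unassigned, 1..K = accepted segments.
    """
    rng = np.random.default_rng() if rng is None else rng
    seg_map = np.zeros(roi.shape, dtype=np.int32)
    ys, xs = np.nonzero(roi)
    if len(ys) == 0:
        return seg_map
    # Generator: pick random pointer points inside the ROI.
    picks = rng.choice(len(ys), size=min(n_points, len(ys)), replace=False)
    proposals = []
    for i in picks:
        mask, score = predict_fn(image, roi, (ys[i], xs[i]))
        # The output segment must stay inside the ROI.
        proposals.append((score, np.asarray(mask, dtype=bool) & roi))
    # Evaluator/selector: consider the highest-scoring proposals first.
    proposals.sort(key=lambda p: p[0], reverse=True)
    label = 0
    for score, mask in proposals:
        if score < min_score:
            break
        free = mask & (seg_map == 0)
        # Assumed rule: skip proposals that mostly repeat an accepted segment.
        if not free.any() or free.sum() < 0.5 * mask.sum():
            continue
        label += 1
        seg_map[free] = label
    return seg_map


def hierarchical_segmentation(image, vessel_region, vessel_fn, material_fn, rng=None):
    """Vessel instances inside the FCN vessel region, then materials per vessel."""
    vessels = ges_segment(image, vessel_region, vessel_fn, rng=rng)
    materials = [
        ges_segment(image, vessels == v, material_fn, rng=rng)
        for v in range(1, int(vessels.max()) + 1)
    ]
    return vessels, materials
```

Passing each vessel mask (`vessels == v`) as the ROI of the material step is what makes the pipeline hierarchical: material phases can only be found inside the vessel that contains them.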

Figure 7.

(a, b) GES net for vessel and material instance segmentation. The net receives an image, an ROI mask, and a pointer point in the image, and outputs the mask of the instance that contains the point, restricted to the ROI, for (a) the vessel and (b) the material. The net also outputs a confidence score, which estimates how well the predicted region matches the real region in terms of the IOU. For materials (b), the net also predicts the material class. The predictions with the highest scores are merged into the final segmentation map (a, b). (c) Unified GES net architecture.
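The confidence score is defined relative to the IOU between the predicted and real regions. A minimal sketch of that quantity follows. The squared-error `confidence_loss` is an assumption added for illustration (the text does not state how the evaluator output is trained), as is the convention that two empty masks score 0.

```python
import numpy as np


def iou(pred_mask, true_mask):
    """Intersection over union (IOU) of two boolean masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 0.0  # assumption: two empty masks score 0
    return float(np.logical_and(pred, true).sum() / union)


def confidence_loss(predicted_score, pred_mask, true_mask):
    """Assumed training loss: squared error between predicted score and true IOU."""
    return (predicted_score - iou(pred_mask, true_mask)) ** 2
```

For example, two 4 × 4 masks that each cover two rows and share one row have an IOU of 4/12 = 1/3.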

Single-Step Instance Segmentation Using Mask R-CNN

The Mask R-CNN model was used to predict both the vessel and the material instances in a single step.30 Following previous work, the model uses ResNet as a backbone,44 followed by a region proposal network (RPN) that provides a list of candidate instances. For each candidate, the box head predicts the instance bounding box and class. In addition, instance masks are generated for both vessel and material classes. Because the mask loss considers only the prediction that corresponds to the class label, there is no competition between classes, which enables the model to correctly predict highly overlapping masks. This is especially important in our case, because most material instances are contained inside a vessel, so vessel and material masks overlap almost completely.

Since an instance in the Vector-LabPics data set can belong to multiple subclasses, the original Mask R-CNN is modified to handle multilabel classification by adding a subclass predictor. This predictor takes the same ROI feature vector generated by the box head and uses a single fully connected layer to output the label power set as the multilabel subclass prediction. The subclass loss is a binary cross-entropy loss.

Because Mask R-CNN is designed for instance segmentation, its outputs must be merged into a panoptic segmentation map. Two separate panoptic segmentation maps are created, one for materials and one for vessels. For each map, low-confidence proposed instances are first removed, and all remaining instances are then overlaid on the map. Where masks overlap, the mask with the higher confidence covers the one with the lower confidence.
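The class-specific mask loss is why overlapping vessel and material masks do not compete. A minimal NumPy sketch for a single ROI, assuming one mask channel per class as in Mask R-CNN, is shown below; the function name and array layout are illustrative, not taken from the authors' code.

```python
import numpy as np


def class_specific_mask_loss(mask_logits, gt_mask, gt_class):
    """Mask R-CNN-style mask loss for one ROI (illustrative sketch).

    mask_logits: (num_classes, H, W) raw scores, one mask channel per class.
    gt_mask:     (H, W) binary ground-truth mask.
    gt_class:    index of the instance's class label.
    Only the channel of the ground-truth class enters the loss, and each pixel
    uses an independent sigmoid, so classes do not compete with one another.
    """
    logits = mask_logits[gt_class]
    y = np.asarray(gt_mask, dtype=np.float64)
    # Numerically stable binary cross-entropy computed from logits.
    loss = np.maximum(logits, 0) - logits * y + np.log1p(np.exp(-np.abs(logits)))
    return float(loss.mean())
```

Changing the logits of any other class channel leaves this loss unchanged, so a pixel can belong to both a vessel mask and a material mask without penalty.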
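A minimal sketch of a multilabel subclass predictor with a binary cross-entropy loss is given below. It assumes one independent logit and sigmoid per subclass (a binary-relevance reading of the single fully connected layer and the binary cross-entropy loss); this is a swapped-in simplification, not an implementation of the label power set wording above. The function names, the 0.5 threshold, and the example subclass names are hypothetical.

```python
import numpy as np


def subclass_head(roi_features, weight, bias):
    """Single fully connected layer: one independent logit per subclass."""
    return roi_features @ weight + bias  # (N, D) @ (D, S) + (S,) -> (N, S)


def multilabel_bce(logits, targets):
    """Binary cross-entropy averaged over subclasses and instances."""
    t = np.asarray(targets, dtype=np.float64)
    loss = np.maximum(logits, 0) - logits * t + np.log1p(np.exp(-np.abs(logits)))
    return float(loss.mean())


def predict_subclasses(logits, names, threshold=0.5):
    """Return, per instance, every subclass whose sigmoid probability exceeds the threshold."""
    probs = 1.0 / (1.0 + np.exp(-logits))
    return [[n for n, p in zip(names, row) if p > threshold] for row in probs]
```

Because each subclass is scored independently, a single instance can be labeled, for example, both "liquid" and "suspension".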
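Merging Mask R-CNN instances into a panoptic map, as described above, might look like the sketch below, run once for the vessel map and once for the material map. The `merge_instances` name and the 0.5 confidence threshold are hypothetical. Painting masks in order of increasing confidence is one simple way to make the higher-confidence mask cover the lower one.

```python
import numpy as np


def merge_instances(masks, scores, shape, min_score=0.5):
    """Overlay instance masks into a single panoptic map (illustrative sketch).

    Instances below `min_score` are dropped. The rest are painted in order of
    increasing confidence, so where masks overlap the higher-confidence mask
    ends up on top. Returns an int map: 0 = background, i + 1 = instance i.
    """
    panoptic = np.zeros(shape, dtype=np.int32)
    keep = [i for i, s in enumerate(scores) if s >= min_score]
    for i in sorted(keep, key=lambda k: scores[k]):
        panoptic[np.asarray(masks[i], dtype=bool)] = i + 1
    return panoptic
```

For example, with three masks scored 0.9, 0.6, and 0.2, the third is discarded and the first overwrites the second wherever they overlap.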

Acknowledgments

We thank the reviewers for their useful suggestions, which helped improve this work. We also thank the sources of the images used to create this data set; without them, this work would not have been possible. These sources include Nessa Carson (@SuperScienceGrl, Twitter), Chemical and Engineering Science chemistry in pictures, and the YouTube channels dedicated to chemistry experiments NurdRage, NileRed, DougsLab, ChemPlayer, and Koen2All. Additional image sources include the Instagram channels chemistrylover (Joana Kulizic), Chemistry.shz (Dr. Shakerizadeh-shirazi), MinistryOfChemistry, Chemistry And Me, ChemistryLifeStyle, vacuum_distillation, and Organic_Chemistry_Lab. We acknowledge the Defense Advanced Research Projects Agency (DARPA) under the Accelerated Molecular Discovery Program, Cooperative Agreement HR00111920027, dated August 1, 2019. The content of the information presented in this work does not necessarily reflect the position or the policy of the Government. A.A.-G. thanks Anders G. Frøseth for his generous support.

Supporting Information Available

The Supporting Information is available free of charge at https://pubs.acs.org/doi/10.1021/acscentsci.0c00460.

Additional details on net training, code, and trained models (PDF)

Videos of chemical processes annotated by the nets, and code (ZIP)

The Vector-LabPics data set is available from these URLs: https://www.cs.toronto.edu/chemselfies/, https://zenodo.org/record/3697452. The code and trained models for all nets used in this work are available from these repositories: https://github.com/aspuru-guzik-group/Computer-vision-for-the-chemistry-lab, https://zenodo.org/record/3697767

Author Contributions

S.E. and H.X. contributed equally.

The authors declare no competing financial interest.


References

- Zubrick J. W. The organic chem lab survival manual: a student’s guide to techniques; John Wiley & Sons, 2016.

- Mottaghi R.; Schenck C.; Fox D.; Farhadi A. See the glass half full: Reasoning about liquid containers, their volume and content. Proceedings of the IEEE International Conference on Computer Vision 2017, 1889–1898. 10.1109/ICCV.2017.207.

- Kennedy M.; Queen K.; Thakur D.; Daniilidis K.; Kumar V. Precise dispensing of liquids using visual feedback. 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2017, 1260–1266. 10.1109/IROS.2017.8202301.

- Schenck C.; Fox D. Detection and tracking of liquids with fully convolutional networks. arXiv preprint, arXiv:1606.06266, 2016. https://arxiv.org/abs/1606.06266.

- Wu T.-Y.; Lin J.-T.; Wang T.-H.; Hu C.-W.; Niebles J. C.; Sun M. Liquid pouring monitoring via rich sensory inputs. Proceedings of the European Conference on Computer Vision (ECCV) 2018, 11215, 352–369. 10.1007/978-3-030-01252-6_21.

- Schenck C.; Fox D. Perceiving and reasoning about liquids using fully convolutional networks. International Journal of Robotics Research 2018, 37 (4–5), 452–471. 10.1177/0278364917734052.

- Ley S. V.; Ingham R. J.; O’Brien M.; Browne D. L. Camera-enabled techniques for organic synthesis. Beilstein J. Org. Chem. 2013, 9 (1), 1051–1072. 10.3762/bjoc.9.118.

- Steiner S.; Wolf J.; Glatzel S.; Andreou A.; Granda J. M.; Keenan G.; Hinkley T.; Aragon-Camarasa G.; Kitson P. J.; Angelone D.; Cronin L.; et al. Organic synthesis in a modular robotic system driven by a chemical programming language. Science 2019, 363 (6423), eaav2211. 10.1126/science.aav2211.

- Coley C. W.; Thomas D. A.; Lummiss J. A. M.; Jaworski J. N.; Breen C. P.; Schultz V.; Hart T.; Fishman J. S.; Rogers L.; Gao H.; et al. A robotic platform for flow synthesis of organic compounds informed by AI planning. Science 2019, 365 (6453), eaax1566. 10.1126/science.aax1566.

- Ley S. V.; Fitzpatrick D. E.; Ingham R. J.; Myers R. M. Organic synthesis: march of the machines. Angew. Chem., Int. Ed. 2015, 54 (11), 3449–3464. 10.1002/anie.201410744.

- Häse F.; Roch L. M.; Aspuru-Guzik A. Next-generation experimentation with self-driving laboratories. Trends in Chemistry 2019, 1, 282. 10.1016/j.trechm.2019.02.007.

- Daponte J. A.; Guo Y.; Ruck R. T.; Hein J. E. Using an automated monitoring platform for investigations of biphasic reactions. ACS Catal. 2019, 9 (12), 11484–11491. 10.1021/acscatal.9b03953.

- Ren F.; Ward L.; Williams T.; Laws K. J.; Wolverton C.; Hattrick-Simpers J.; Mehta A. Accelerated discovery of metallic glasses through iteration of machine learning and high-throughput experiments. Science Advances 2018, 4 (4), eaaq1566. 10.1126/sciadv.aaq1566.

- Li Z.; Najeeb M. A.; Alves L.; Sherman A. Z.; Shekar V.; Cruz Parrilla P.; et al. Robot-Accelerated Perovskite Investigation and Discovery (RAPID): 1. Inverse Temperature Crystallization. Chem. Mater. 2020, 32, 5650. 10.1021/acs.chemmater.0c01153.

- Boykov Y.; Kolmogorov V. An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. IEEE Transactions on Pattern Analysis & Machine Intelligence 2004, 26, 1124–1137. 10.1109/TPAMI.2004.60.

- Canny J. A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence 1986, PAMI-8, 679–698. 10.1109/TPAMI.1986.4767851.

- Yuan W.; Li D. Measurement of liquid interface based on vision. Fifth World Congress on Intelligent Control and Automation (IEEE Cat. No. 04EX788) 2004, 4, 3709–3713. 10.1109/WCICA.2004.1343291.

- Pithadiya K. J.; Modi C. K.; Chauhan J. D. Comparison of optimal edge detection algorithms for liquid level inspection in bottles. 2009 Second International Conference on Emerging Trends in Engineering & Technology 2009, 447–452. 10.1109/ICETET.2009.55.

- Eppel S.; Kachman T. Computer vision-based recognition of liquid surfaces and phase boundaries in transparent vessels, with emphasis on chemistry applications. arXiv preprint, arXiv:1404.7174, 2014. https://arxiv.org/abs/1404.7174.

- O’Brien M.; Koos P.; Browne D. L.; Ley S. V. A prototype continuous flow liquid–liquid extraction system using open-source technology. Org. Biomol. Chem. 2012, 10 (35), 7031–7036. 10.1039/c2ob25912e.

- Liu Q.; Chu B.; Peng J.; Tang S. A visual measurement of water content of crude oil based on image grayscale accumulated value difference. Sensors 2019, 19 (13), 2963. 10.3390/s19132963.

- Wang T.-H.; Lu M.-C.; Hsu C.-C.; Chen C.-C.; Tan J.-D. Liquid-level measurement using a single digital camera. Measurement 2009, 42 (4), 604–610. 10.1016/j.measurement.2008.10.006.

- Eppel S. Tracing liquid level and material boundaries in transparent vessels using the graph cut computer vision approach. arXiv preprint, arXiv:1602.00177, 2016. https://arxiv.org/abs/1602.00177.

- Zepel T.; Lai V.; Yunker L. P. E.; Hein J. E. Automated liquid-level monitoring and control using computer vision. ChemRxiv preprint, 2020. 10.26434/chemrxiv.12798143.v1.

- McKinney S. M.; Sieniek M.; Godbole V.; Godwin J.; Antropova N.; Ashrafian H.; Back T.; Chesus M.; Corrado G. C.; Darzi A.; et al. International evaluation of an AI system for breast cancer screening. Nature 2020, 577 (7788), 89–94. 10.1038/s41586-019-1799-6.

- Krizhevsky A.; Sutskever I.; Hinton G. E. ImageNet classification with deep convolutional neural networks. NIPS: Advances in Neural Information Processing Systems 2012, 1097–1105.

- Deng J.; Dong W.; Socher R.; Li L.-J.; Li K.; Fei-Fei L. ImageNet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition 2009, 248–255. 10.1109/CVPR.2009.5206848.

- Lin T.-Y.; Maire M.; Belongie S.; Hays J.; Perona P.; Ramanan D.; Dollár P.; Zitnick C. L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer, 2014; pp 740–755.

- Eppel S. Setting an attention region for convolutional neural networks using region selective features, for recognition of materials within glass vessels. arXiv preprint, arXiv:1708.08711, 2017. https://arxiv.org/abs/1708.08711.

- He K.; Gkioxari G.; Dollár P.; Girshick R. Mask R-CNN. Proceedings of the IEEE International Conference on Computer Vision 2017, 2980–2988. 10.1109/ICCV.2017.322.

- Eppel S.; Aspuru-Guzik A. Generator evaluator-selector net: a modular approach for panoptic segmentation. arXiv preprint, arXiv:1908.09108, 2019. https://arxiv.org/abs/1908.09108.

- Lin T.-Y.; Maire M.; Belongie S.; Hays J.; Perona P.; Ramanan D.; Dollár P.; Zitnick C. L. Microsoft COCO: Common objects in context. ECCV 2014, 8693, 740. 10.1007/978-3-319-10602-1_48.

- Long J.; Shelhamer E.; Darrell T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015, 3431–3440. 10.1109/CVPR.2015.7298965.

- Caesar H.; Uijlings J.; Ferrari V. COCO-Stuff: Thing and stuff classes in context. CVPR 2018, 1209. 10.1109/CVPR.2018.00132.

- Dutta A.; Zisserman A. The VIA annotation software for images, audio and video. In Proceedings of the 27th ACM International Conference on Multimedia, MM ’19; ACM: New York, 2019.

- Zhao H.; Shi J.; Qi X.; Wang X.; Jia J. Pyramid scene parsing network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, 6230. 10.1109/CVPR.2017.660.

- Men K.; Geng H.; Cheng C.; Zhong H.; Huang M.; Fan Y.; Plastaras J. P.; Lin A.; Xiao Y. More accurate and efficient segmentation of organs-at-risk in radiotherapy with convolutional neural networks cascades. Med. Phys. 2018, 46 (1), 286–292. 10.1002/mp.13296.

- Kirillov A.; He K.; Girshick R.; Rother C.; Dollár P. Panoptic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, 9396–9405. 10.1109/CVPR.2019.00963.

- Sajjan S. S.; Moore M.; Pan M.; Nagaraja G.; Lee J.; Zeng A.; Song S. ClearGrasp: 3D shape estimation of transparent objects for manipulation. arXiv preprint, arXiv:1910.02550, 2019. https://arxiv.org/abs/1910.02550.

- Otter D. W.; Medina J. R.; Kalita J. K. A survey of the usages of deep learning for natural language processing. IEEE Transactions on Neural Networks and Learning Systems 2020, 1–21. 10.1109/TNNLS.2020.2979670.

- Kim D.; Woo S.; Lee J.-Y.; Kweon I. S. Video panoptic segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, 9856–9865. 10.1109/CVPR42600.2020.00988.

- Eppel S. Class-independent sequential full image segmentation, using a convolutional net that finds a segment within an attention region, given a pointer pixel within this segment. arXiv preprint, arXiv:1902.07810, 2019. https://arxiv.org/abs/1902.07810.

- Sofiiuk K.; Barinova O.; Konushin A. AdaptIS: Adaptive instance selection network. arXiv preprint, arXiv:1909.07829, 2019. https://arxiv.org/abs/1909.07829.

- He K.; Zhang X.; Ren S.; Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, 770–778. 10.1109/CVPR.2016.90.
