Abstract

We report a novel generalized optical measurement system and computational approach to determine and correct aberrations in optical systems. The system consists of a computational imaging method capable of reconstructing an optical system’s pupil function by adapting overlapped Fourier coding to an incoherent imaging modality. It recovers the high-resolution image latent in an aberrated image via deconvolution. The deconvolution is made robust to noise by using coded apertures to capture images. We term this method coded-aperture-based correction of aberration obtained from overlapped Fourier coding and blur estimation (CACAO-FB). It is well-suited for various imaging scenarios where aberration is present and where providing a spatially coherent illumination is very challenging or impossible. We report the demonstration of CACAO-FB with a variety of samples including an in vivo imaging experiment on the eye of a rhesus macaque to correct for its inherent aberration in the rendered retinal images. CACAO-FB ultimately allows for an aberrated imaging system to achieve diffraction-limited performance over a wide field of view by casting optical design complexity to computational algorithms in post-processing.

1. INTRODUCTION

A perfect aberration-free optical lens simply does not exist in reality. As such, every optical imaging system constructed from a finite number of optical surfaces will exhibit some degree of aberration. This simple fact underpins the extraordinary amount of effort that has gone into the design of optical imaging systems. In broad terms, optical imaging system design is a complex process by which specialized optical elements and their spatial relationships are chosen to minimize aberrations and provide an acceptable image resolution over a desired field of view (FOV) [1]. The more optical surfaces available to the designer, the more the aberrations can be minimized. However, this approach of minimizing aberrations through physical system improvements has reached a point of diminishing returns in modern optics. Microscope objectives with 15 optical elements have become commercially available in recent years [2], but it is unlikely that an order of magnitude more optical surfaces will fit within the confines of an objective in the foreseeable future. Moreover, this strategy is never expected to eliminate aberrations completely. In other words, any optical system’s spatial bandwidth product (SBP), which scales as the product of the system’s FOV and inverse resolution, can be expected to remain bounded by the residual aberrations in the system.

The issue of aberrations is, unsurprisingly, more pronounced in simpler optical systems with few optical surfaces. The eye is a very good example of such a system. While it does a fair job of conveying external scenes onto our retinal layer, its optical quality is actually quite poor. When a clinician desires a high-resolution image of the retinal layer itself for diagnostic purposes, the aberrations of the eye’s lens and cornea must somehow be corrected or compensated for. The prevalent approach is currently adaptive optics (AO) [3,4], which is in effect a sophisticated way of physically correcting aberrations: complex physical optical elements are used to compensate for the aberrations of the lens and cornea. AO forms a guide star on the retina and uses a wavefront sensor (e.g., a Shack–Hartmann sensor) and a compensation device (e.g., a deformable mirror) to correct the aberrations affecting the guide star, and thereby those affecting a small surrounding region that experiences similar aberrations. This region is known as the isoplanatic patch [5], and its size depends on the severity of the aberrations. To image an area larger than the isoplanatic patch, AO needs to be raster-scanned [6]. Since AO correction is fast (e.g., <500 ms [7]), images of multiple isoplanatic patches can still be obtained quickly. However, an AO system can be complicated, as it requires an active feedback loop between the wavefront measurement device and the compensation device, and it needs a separate guide star for the correction process [8].

Fourier ptychography (FP) circumvents the challenges of adding more optical elements to improve an optical system’s performance by recasting the problem of increasing the system’s SBP as a computational problem that can be solved after the image data have been acquired. Rather than striving to obtain the highest-quality images possible through an imaging system, FP acquires a controlled set of low-SBP images, computationally determines the system’s aberration characteristics, and reconstitutes a high-SBP, aberration-corrected image from the original controlled image set [9–15]. FP shares its roots with ptychography [16–18] and structured illumination microscopy (SIM) [19–21], which numerically expand the SBP of the imaging system in the spatial and spatial frequency domains, respectively, by capturing multiple images under distinct illumination patterns and computationally synthesizing them into a higher-SBP image. FP can also be viewed as a close relative of synthetic aperture imaging [22–24]. In a standard FP microscope, images of the target are collected through a low-numerical-aperture (NA) objective while the target is illuminated by a series of angularly varied planar or quasi-planar waves. Viewed in the spatial frequency domain, each image represents a disc of information whose offset from the origin is determined by the illumination angle. As in synthetic aperture imaging, we then stitch the data from the collected series together in the spatial frequency domain. Unlike synthetic aperture imaging, however, we do not have direct knowledge of the phase relationships between the individual image data sets. In FP, we employ phase retrieval and the partial information overlap among the images to converge on the correct phase relationships during the stitching process [9].
At the end of the process, the reconstituted information in the spatial frequency domain can be Fourier transformed to generate a higher-resolution image of the target that retains the original FOV set by the objective. It has been demonstrated that a sub-routine can be woven into the primary FP algorithm to dynamically determine the pupil function of the imaging system [11]. In fact, the majority of existing FP algorithms incorporate some version of this aberration determination function to find and subsequently remove the aberrations from the processed image [25–32]. This sub-discipline of FP has matured to the point that it is even possible to use a very crude lens to obtain high-quality images typically associated with sophisticated imaging systems [33]. This drives home the fact that correcting aberrations computationally is a viable alternative to physical correction.
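As a small numerical illustration of the spatial-frequency picture of FP described above, the sketch below computes where one tilted plane-wave illumination places the captured passband disc and the effective NA reached once discs out to the largest tilt are stitched together (the objective NA plus the sine of the largest illumination angle). It assumes a thin sample and the paraxial model; the function names and example values are hypothetical, and the sign of the offset depends on the Fourier convention. Neighbouring discs share information, as FP requires, only when their centre spacing is smaller than twice the disc radius.

```python
import math

def fp_passband(theta_x, theta_y, wavelength, na_obj):
    """Centre and radius (in cycles per unit length) of the spectral disc captured
    under one plane-wave tilt. Angles are in radians; the sign of the centre
    offset depends on the Fourier-transform convention."""
    centre = (math.sin(theta_x) / wavelength, math.sin(theta_y) / wavelength)
    radius = na_obj / wavelength          # coherent passband of the objective
    return centre, radius

def synthetic_na(na_obj, theta_max):
    """Effective NA once discs out to the largest tilt have been stitched."""
    return na_obj + math.sin(theta_max)

# Hypothetical example: NA 0.1 objective, 0.52 um light, tilt with sin(theta) = 0.3
centre, radius = fp_passband(math.asin(0.3), 0.0, 0.52, 0.1)
print(centre, radius, synthetic_na(0.1, math.asin(0.3)))
```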

The primary objective of this paper is to report a novel generalized optical measurement system and computational approach to determine and correct aberrations in optical systems. This computational approach is coupled to a general optical scheme designed to efficiently collect the type of images it requires. Currently, FP’s ability to determine and correct aberration is limited to optical setups with a well-defined, spatially coherent field on the sample plane [9,11,34–42]. We developed a computational imaging method capable of reconstructing an optical system’s pupil function by adapting FP’s alternating projections, as used in overlapped Fourier coding [10], to an incoherent imaging modality, which overcomes the spatial coherence requirement of FP’s original pupil function recovery procedure. The method then recovers the high-resolution image latent in an aberrated image via deconvolution. The deconvolution is made robust to noise by using coded apertures to capture images [43]. We term this method coded-aperture-based correction of aberration obtained from overlapped Fourier coding and blur estimation (CACAO-FB). It is well suited for various imaging scenarios where aberration is present and where providing spatially coherent illumination is very challenging or impossible. CACAO-FB ultimately allows an aberrated imaging system to achieve diffraction-limited performance over a wide FOV by shifting optical design complexity to computational algorithms in post-processing.

The removal of spatial coherence constraints is vitally important in allowing us to apply computational aberration correction to a broader number of imaging scenarios. These scenarios include: (1) optical systems where the illumination on a sample is provided via a medium with unknown index variations; (2) optical systems where space is so confined that it is not feasible to employ optical propagation to create quasi-planar optical fields; (3) optical systems where the optical field at the sample plane is spatially incoherent by nature (e.g., fluorescence emission).

CACAO-FB is substantially different from other recent efforts aimed at aberration compensation. Broadly speaking, these efforts can be divided into two major categories: blind and heuristic aberration recovery. Blind recovery minimizes a cost function, typically an image sharpness metric or a maximum-likelihood function, over a search space, usually the coefficient space of the orthonormal Zernike basis [44–49], to arrive at the optimal aberration function. However, blind recovery is prone to converging to local minima, and it requires the aberrated sample image to be a complex field, because blind aberration recovery from an intensity-only sample image is extremely sensitive to noise [45] for all but simple aberrations such as camera motion blur or defocus blur [50]. Heuristic recovery algorithms rely on several assumptions, such as that the captured complex-field sample image has a diffuse Fourier spectrum, so that each sub-region in the Fourier domain encodes the local aberrated wavefront information [51–54]. Thus, heuristic methods are limited to specific types of samples, and their performance is highly sample dependent.

CACAO-FB is capable of robust aberration recovery in a generalized and broadly applicable format. In Section 2, we describe the principle of CACAO-FB. In Section 3, we report the demonstration of CACAO-FB with a crude lens and an eye model as imaging systems of interest. In Section 4, we demonstrate the potential of using CACAO-FB for retinal imaging in an in vivo experiment on a rhesus macaque’s eye and discuss the challenges it must still address to become a viable alternative to AO retinal imagers. We summarize our findings and discuss future directions in Section 5.

2. PRINCIPLE OF CACAO-FB

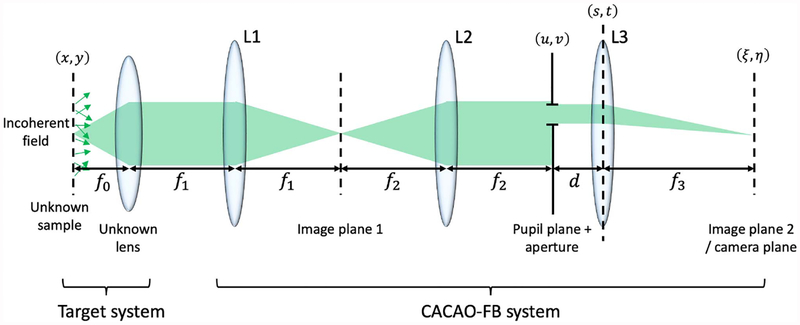

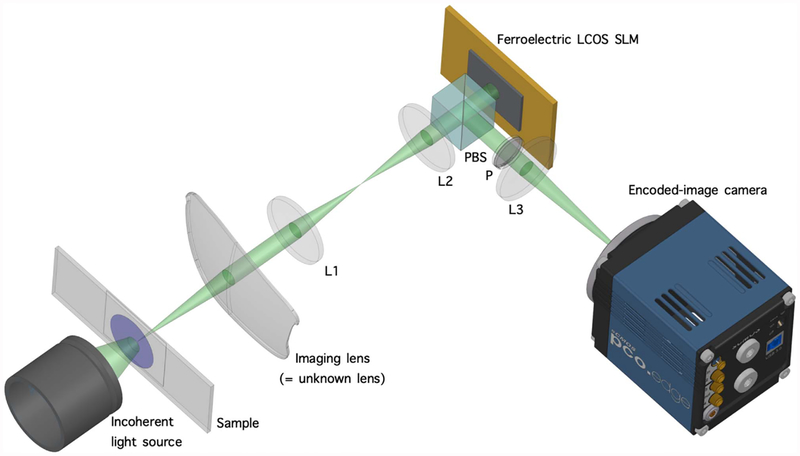

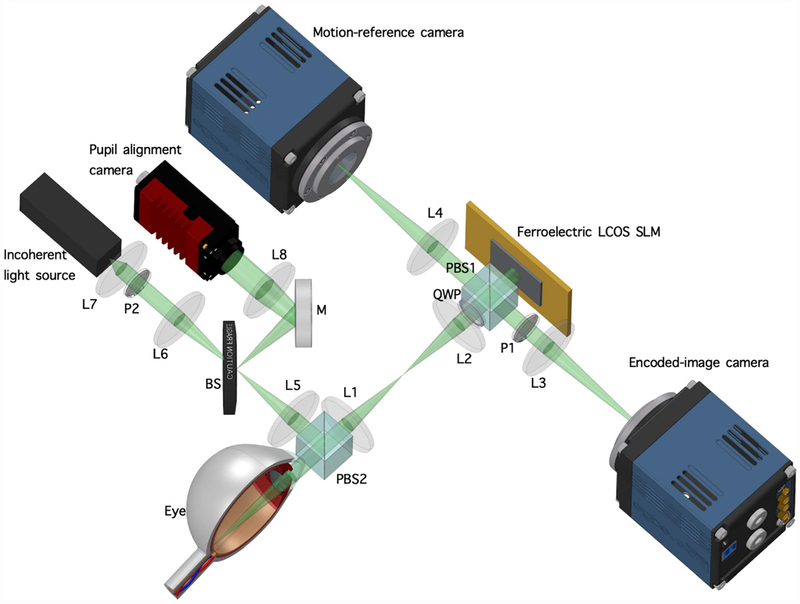

To best understand the overall operation of CACAO-FB, we start by examining the optical scheme (see Fig. 1). Suppose we start with an unknown optical system of interest (the target system). This target system consists of a lens (the unknown lens) placed approximately one focal length in front of a target sample (the unknown sample). The sample is illuminated incoherently. For simplicity in this thought experiment, we consider illumination in transmission mode. The CACAO-FB system collects light from the target system using relay lenses L1, L2, and L3, together with an aperture mask that can be modulated into different patterns. The mask sits in the pupil plane, with coordinates (u, v), which is conjugate to the target system’s pupil. Our objective is to resolve the sample at high resolution. It should be clear from this description that our ability to achieve this objective is confounded by the presence of the unknown lens and its unknown aberrations. A good example of such a system is the eye: the retinal layer is the unknown sample, and the lens and cornea can be represented by the unknown lens.

Fig. 1.

Optical architecture of CACAO-FB. The CACAO-FB system consists of three tube lenses (L1, L2, and L3) to relay the image from the target system for analysis. The target system consists of an unknown lens and an unknown sample with spatially incoherent field. The CACAO-FB system has access to the conjugate plane of the target system’s pupil, which can be arbitrarily modulated with binary patterns using a spatial light modulator. The images captured by the CACAO-FB system are intensity only. f0, f1, f2, and f3 are the focal lengths of the unknown lens, L1, L2, and L3, respectively. d is an arbitrary distance smaller than f3.

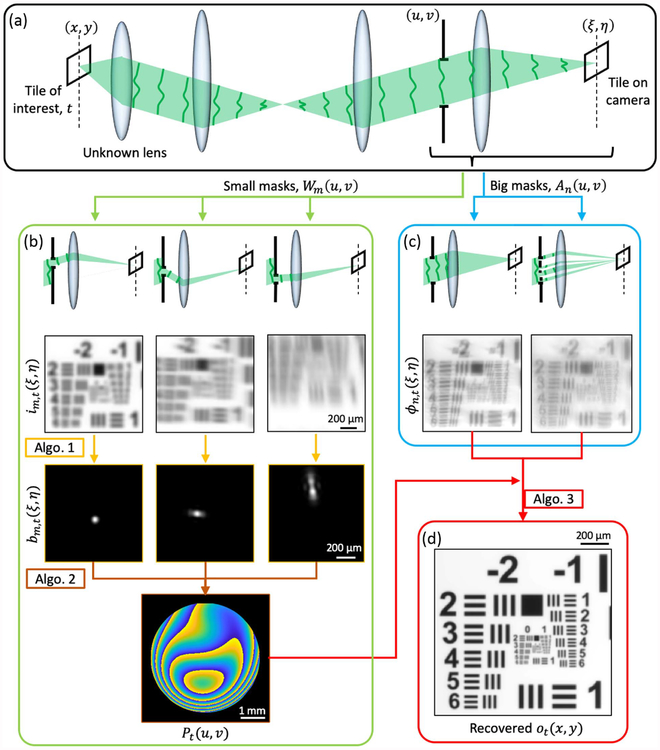

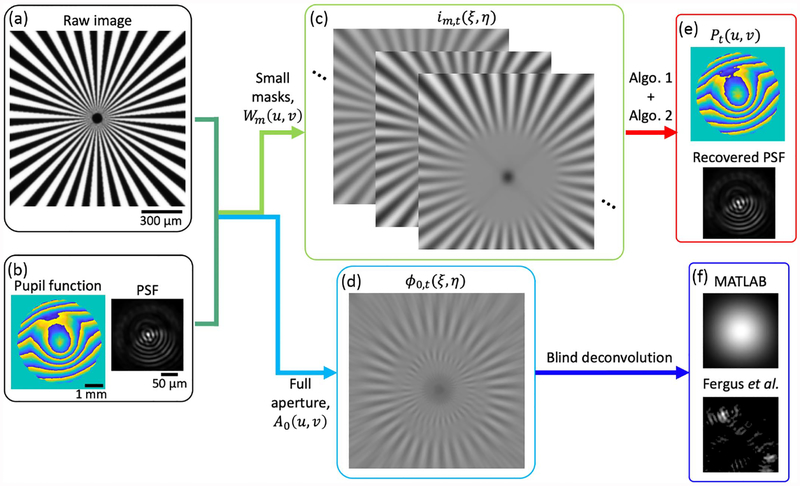

From this thought experiment, we can see that, to accomplish our objective, we first need to determine the aberration characteristics of the unknown lens and then use this information to remove the aberration effects from the final rendered image. CACAO-FB does this with three computational components that operate in sequence: 1) local aberration recovery with blur estimation; 2) full aberration recovery with an FP-based alternating projections algorithm; and 3) latent image recovery by deconvolution with coded apertures. The first two steps determine the target system’s aberrations, and the third step generates an aberration-corrected image. This pipeline is summarized in Fig. 2. The sample plane, with coordinates (x, y), is divided into small tiles within which the aberration can be assumed to be spatially invariant, and CACAO-FB processes each corresponding tile on its image plane, with coordinates (ξ, η), to recover a high-resolution image of the sample tile. In the following analysis, we focus on one tile t. CACAO-FB begins by capturing a series of images with varying mask patterns in its pupil plane, with coordinates (u, v). The patterns are of two kinds: a set of small circular apertures Wm(u, v), which collectively span the pupil of the unknown lens, and a set of big apertures An(u, v), which comprises coded apertures and a full circular aperture, all with diameters equal to that of the unknown lens’s pupil. The indices m and n run from 1 to the total number of small and big apertures, respectively. The images captured with Wm(u, v) are labeled im,t(ξ, η), and they encode the local aberration of the unknown lens’s pupil function in their point spread functions (PSFs). The blur estimation algorithm (Section 2.B) extracts these PSFs, bm,t(ξ, η).
Each bm,t(ξ, η) is the intensity of the Fourier transform of the pupil function filtered by Wm(u, v), and these intensities can be synthesized into the full pupil function Pt(u, v) with an FP-based alternating projections algorithm (Section 2.C). The images captured with An(u, v), labeled ϕn,t(ξ, η), are then processed with the reconstructed pupil function and the known mask patterns to generate the latent, aberration-free image of the sample, ot(x, y) (Section 2.D).
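The tiling step can be sketched as follows, assuming a square, non-overlapping grid whose tile size is chosen small enough for each tile to be isoplanatic. The function name and tile size are hypothetical, and practical implementations may prefer overlapping tiles that are blended after processing; each tile would then be passed independently through the three algorithms.

```python
import numpy as np

def split_into_tiles(image, tile):
    """Split a 2-D image into non-overlapping tile x tile patches.

    Returns a dict mapping the tile index t = (row, col) to a view of that patch.
    Pixels in an incomplete last row/column of tiles are dropped in this sketch.
    """
    h, w = image.shape
    tiles = {}
    for r in range(0, h - tile + 1, tile):
        for c in range(0, w - tile + 1, tile):
            tiles[(r // tile, c // tile)] = image[r:r + tile, c:c + tile]
    return tiles

frame = np.arange(100 * 100, dtype=float).reshape(100, 100)
tiles = split_into_tiles(frame, 50)      # four 50 x 50 tiles
```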

Fig. 2.

Outline of the CACAO-FB pipeline. (a) The captured images are broken into small tiles of isoplanatic patches (i.e., aberration is spatially invariant within each tile). (b) Data acquisition and post-processing for estimating the pupil function Pt(u, v). Limited-aperture images im,t(ξ, η) are captured with small masks Wm(u, v) applied at the pupil plane. Local PSFs bm,t(ξ, η) are determined by the blur estimation procedure, Algorithm 1. These PSFs are synthesized into the full-aperture pupil function Pt(u, v) with the Fourier-ptychography-based alternating projections algorithm, Algorithm 2. (c) Data acquisition with big masks An(u, v) at the pupil plane. (d) The recovered Pt(u, v) from (b) and the big-aperture images ϕn,t(ξ, η) from (c) are used for deconvolution (Algorithm 3) to recover the latent aberration-free intensity distribution of the sample ot(x, y).

The next four sub-sections will explain the mathematical model of the image acquisition process and the three imaging algorithmic components in detail.

A. Image Acquisition Principle of CACAO-FB System

We consider a point on the unknown sample s(x, y) and how it propagates to the camera plane to be imaged. On the sample plane, a unit amplitude point source at (x0, y0) can be described by

$$U_0(x, y; x_0, y_0) = \delta(x - x_0, y - y_0), \tag{1}$$

where U0 (x, y; x0, y0) is the complex field of the point on the sample plane, and δ (x − x0, y − y0) is the Dirac delta function describing the point located at (x0, y0).

We then use Fresnel propagation to propagate it to the unknown lens’s plane and apply the phase delay caused by the unknown lens, assuming an idealized thin lens with the estimated focal length f0 [Eqs. (S2) and (S3) of Supplement 1]. Any discrepancy from the ideal is incorporated into the pupil function P(u, v; x0, y0), which is usually a circular bandpass filter with a uniform modulus and some phase modulation. Thus, the field right after passing through the unknown lens is

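$$U_1(u, v; x_0, y_0) = C_F(x_0, y_0)\, P(u, v; x_0, y_0)\, \exp\!\left[-\frac{i 2\pi}{\lambda f_0}\left(x_0 u + y_0 v\right)\right], \tag{2}$$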

where λ is the wavelength of the field, and (u, v) are the coordinates of both the plane immediately after the unknown lens and the CACAO-FB system’s aperture plane, as these planes are conjugate to each other. Thus, we refer to the aperture plane as the pupil plane. The spatially varying nature of a lens’s aberration is captured by the pupil function’s dependence on (x0, y0). We divide our sample into small tiles of isoplanatic patches (e.g., t = 1, 2, 3,…) and confine our analysis to one tiled region t on the sample plane that contains (x0, y0) and other points in its vicinity, such that the spatially varying aberration can be assumed to be constant in the analysis that follows [i.e., P(u, v; x0, y0) = Pt(u, v)]. This is a common strategy for processing spatially variant aberration in wide-FOV imaging [55,56]. We can see from Eq. (2) that the field emerging from the unknown lens is essentially its pupil function with an additional phase-gradient term defined by the point source’s location on the sample plane.

At the pupil plane, a user-defined aperture mask M(u, v) is applied to produce

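$$U_2(u, v; x_0, y_0) = M(u, v)\, P_t(u, v)\, \exp\!\left[-\frac{i 2\pi}{\lambda f_0}\left(x_0 u + y_0 v\right)\right], \tag{3}$$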

where we dropped the constant factor CF(x0, y0). After further propagation to the camera plane [Eqs. (S5)–(S9) of Supplement 1], we obtain the intensity pattern iPSF,t(ξ, η) that describes the mapping of a point on the sample to the camera plane:

$$i_{\mathrm{PSF},t}(\xi, \eta; x_0, y_0) = h_{M,t}(\xi - x_0, \eta - y_0), \qquad h_{M,t}(\xi, \eta) = \left|\mathcal{F}\{M(u, v)\, P_t(u, v)\}(\xi, \eta)\right|^2, \tag{4}$$

where hM,t(ξ, η) is the intensity of the PSF of the combined system in Fig. 1 for a given aperture mask M(u, v) within the isoplanatic patch t, and F{·} denotes the Fourier transform. We observe from Eq. (4) that PSFs for different point source locations are related to each other by simple lateral shifts, such that an image it(ξ, η) of an unknown sample function st(x, y) within the isoplanatic patch, captured by this system, can be represented by

$$i_t(\xi, \eta) = o_t(\xi, \eta) * h_{M,t}(\xi, \eta), \tag{5}$$

where ot(ξ, η) is the intensity of st(ξ, η), * is the convolution operator, and we ignore the coordinate scaling for simplicity. This equation shows that the image captured by the detector is a convolution of the sample’s intensity with a PSF associated with the sub-region of the pupil function selected by an arbitrary mask at the pupil plane. This insight allows us to capture images of the sample under the influence of PSFs that originate from different sub-regions of the pupil. We use aperture masks of various shapes and sizes, which fall into two categories: small masks and big masks. Small masks sample small regions of the pupil function for reconstructing the pupil function, as described in detail in the following sections. Big masks include a mask corresponding to the full pupil and several coded apertures that encode the pupil function to assist latent image recovery by deconvolution. To avoid confusion, we label the mth small mask, its associated PSF in isoplanatic patch t, and the image captured with it as Wm(u, v), bm,t(ξ, η), and im,t(ξ, η), respectively. The nth big mask (coded aperture or full aperture), its associated PSF, and the image captured with it are labeled An(u, v), hn,t(ξ, η), and ϕn,t(ξ, η), respectively.
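The forward model of Eqs. (4) and (5) can be simulated in a few lines. The sketch below assumes square arrays with the zero pupil frequency at the array centre, ignores coordinate scaling (as in the text), normalises each PSF to unit sum, and uses FFT-based circular convolution; the helper names and the example pupil are hypothetical.

```python
import numpy as np

def circular_mask(n, radius_px, centre=(0.0, 0.0)):
    """Binary disc on an n x n grid; (0, 0) is the array centre, centre = (cx, cy)."""
    y, x = np.mgrid[:n, :n] - n // 2
    return ((x - centre[0]) ** 2 + (y - centre[1]) ** 2 <= radius_px ** 2).astype(float)

def masked_psf(pupil, mask):
    """Intensity PSF |F{M P}|^2 of the masked pupil, normalised to unit sum (Eq. 4)."""
    field = np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(mask * pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

def image_through_mask(obj, pupil, mask):
    """Incoherent image i = o * h (Eq. 5), using FFT-based circular convolution."""
    h = masked_psf(pupil, mask)
    otf = np.fft.fft2(np.fft.ifftshift(h))   # move the PSF centre to [0, 0] first
    return np.real(np.fft.ifft2(np.fft.fft2(obj) * otf))

# Usage with hypothetical values: a weakly aberrated pupil and one off-centre small mask
n = 64
yy, xx = np.mgrid[:n, :n] - n // 2
pupil = circular_mask(n, 20) * np.exp(1j * 0.01 * xx ** 2)
small = circular_mask(n, 6, centre=(8, 0))
obj = np.random.default_rng(0).uniform(size=(n, n))
image = image_through_mask(obj, pupil, small)
```

Because each PSF is normalised, the image conserves the total object intensity; imaging a single bright pixel returns the PSF itself.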

The CACAO-FB system captures the im,t(ξ, η)s and ϕn,t(ξ, η)s during data acquisition, and these data are passed to post-processing algorithms to recover ot(ξ, η), the underlying aberration-free image of the sample. The algorithm pipeline begins with the blur estimation algorithm applied to the im,t(ξ, η)s, as described below. All of the following simulations use one full aperture and four coded apertures An(u, v) with a diameter of 4.5 mm; 64 small masks Wm(u, v) with a diameter of 1 mm; an unknown lens, L1, and L2 with focal lengths f0 = f1 = f2 = 100 mm; a tube lens (L3) with a focal length of f3 = 200 mm; an image sensor with a pixel size of 6.5 μm (3.25 μm effective pixel size); and spatially incoherent illumination with a wavelength of 520 nm.
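The simulation parameters above can be cross-checked with a few lines of paraxial arithmetic. The sketch assumes that the unknown lens with L1, and L2 with L3, act as two cascaded 4f relays, so that the sample-to-camera magnification is (f1/f0)(f3/f2), and that the NA is the pupil radius divided by f0 with the masks imaged 1:1 onto the pupil. Under these assumptions the stated 3.25 μm effective pixel size is recovered.

```python
# Simulation parameters quoted in the text (lengths in mm unless marked _um)
f0 = f1 = f2 = 100.0
f3 = 200.0
pupil_diameter = 4.5
small_mask_diameter = 1.0
wavelength_um = 0.52
camera_pixel_um = 6.5

# Assumption: (unknown lens, L1) and (L2, L3) form two cascaded 4f relays
magnification = (f1 / f0) * (f3 / f2)
effective_pixel_um = camera_pixel_um / magnification

# Assumption: paraxial NA = pupil radius / f0 (masks imaged 1:1 onto the pupil)
na_full = (pupil_diameter / 2) / f0
na_small = (small_mask_diameter / 2) / f0

# Incoherent cutoff frequency 2 NA / lambda -> finest resolvable period lambda / (2 NA)
period_full_um = wavelength_um / (2 * na_full)
period_small_um = wavelength_um / (2 * na_small)

print(magnification, effective_pixel_um, round(period_full_um, 2), round(period_small_um, 2))
```

The finest resolvable period is then about 11.6 μm for the full 4.5 mm pupil and 52 μm for a single 1 mm small mask, so under these assumptions the 3.25 μm effective pixel samples the full-aperture band above the Nyquist rate.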

B. Local Aberration Recovery with Blur Estimation

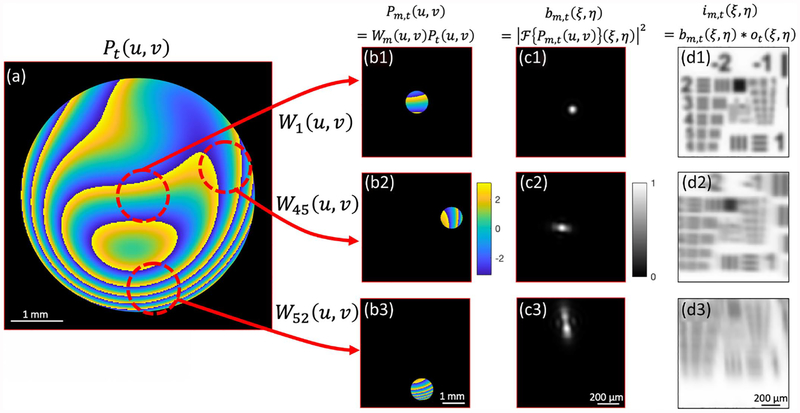

The blur function bm,t(ξ, η) associated with the small mask Wm(u, v) applied to the pupil Pt(u, v) is also referred to as the local PSF, and it contains valuable information about the target system’s pupil function that we wish to recover. Wm(u, v) is made small enough that the region of Pt(u, v) it selects has a local phase map resembling a linear phase gradient, as shown in Fig. 3(b1). In such a case, the associated bm,t(ξ, η) approximates a diffraction-limited spot whose spatial shift is given by the phase gradient. When Wm(u, v) is applied to other regions of Pt(u, v) that contain more severe aberrations, bm,t(ξ, η) may deviate from a spot, as shown in Figs. 3(b2) and 3(b3). In general, the aberration at or near the center of an imaging lens is minimal, and it becomes severe near the edge of the aperture because the lens’s design poorly approximates the parabolic shape away from the optical axis [57]. Thus, the image captured with the center mask, i1,t(ξ, η), is mostly aberration free, with its PSF defined by the diffraction-limited spot associated with the mask’s aperture size. The other im,t(ξ, η)s have the same frequency band limit as i1,t(ξ, η) but are under the influence of additional aberration encapsulated by their local PSFs bm,t(ξ, η).
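The statement that a linear phase gradient across a small mask merely shifts the local PSF can be checked numerically. The sketch below, with a hypothetical grid and mask size, compares the PSF of a flat masked pupil with that of the same mask carrying a linear phase of k cycles across the grid. Under the discrete Fourier transform the PSF is displaced by exactly k pixels without changing shape; the direction depends on the FFT sign convention.

```python
import numpy as np

n = 128
y, x = np.mgrid[:n, :n] - n // 2
small_mask = (x ** 2 + y ** 2 <= 8 ** 2).astype(float)   # hypothetical small mask, 8 px radius

def local_psf(phase):
    """|F{W exp(i*phase)}|^2 for the small mask W, with the PSF centred in the array."""
    field = np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(small_mask * np.exp(1j * phase))))
    return np.abs(field) ** 2

k = 5                                    # hypothetical tilt: k cycles of phase across the grid
tilt = 2 * np.pi * k * x / n             # linear phase gradient along x
psf_flat = local_psf(np.zeros((n, n)))
psf_tilt = local_psf(tilt)

peak_flat = np.unravel_index(np.argmax(psf_flat), psf_flat.shape)
peak_tilt = np.unravel_index(np.argmax(psf_tilt), psf_tilt.shape)
# The tilted PSF is the flat one displaced by k pixels along x (columns); with NumPy's
# ifft sign convention the displacement is towards smaller column indices.
```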

Fig. 3.

Simulating image acquisition with different small masks at the pupil plane. (a) The full pupil function masked by the lens’s NA-limited aperture. Differently masked regions of the pupil, (b1)–(b3), give rise to different blur kernels, (c1)–(c3), which allow us to capture images of the sample under the influence of different PSFs. Only the phase is plotted for Pt(u, v) and Pm,t(u, v)s, and their apertures are marked by the black boundaries. W1(u, v), W45(u, v), and W52(u, v) are three small masks from a spiral-out scanning sequence.

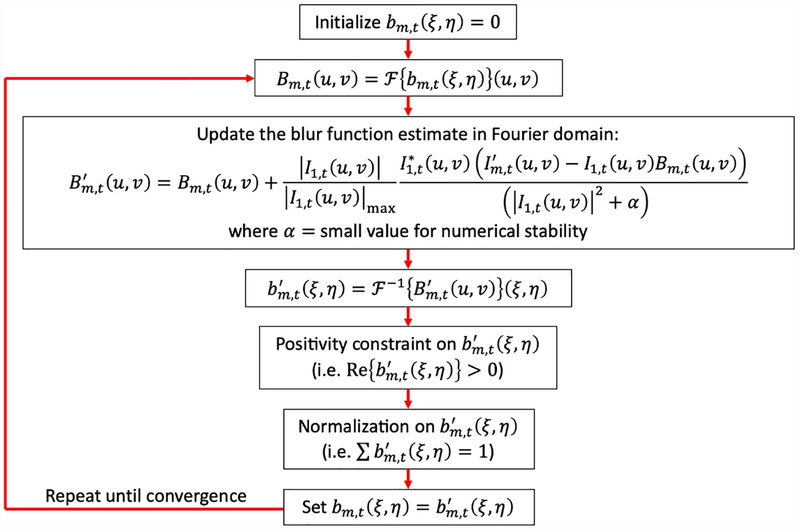

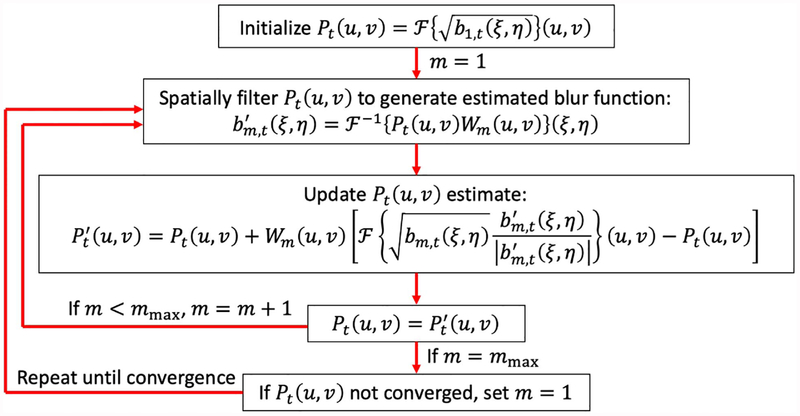

We adopt an image-pair-based blur estimation algorithm widely used in computational photography to determine bm,t(ξ, η). In this algorithm, one image of the pair is assumed to be blur free while the other is blurred [58,59]. The blur kernel is estimated by an iterative PSF estimation method, namely iterative Tikhonov deconvolution [60] in the Fourier domain, adopting the update scheme of Yuan’s blur estimation algorithm [58] and setting the step size proportional to |I1,t(u, v)|/|I1,t(u, v)|max for robustness to noise [61], where I1,t(u, v) is the Fourier spectrum of i1,t(ξ, η). The blur estimation process is described as Algorithm 1 in Fig. 4.
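Because the flowchart of Algorithm 1 is not reproduced here, the following is only a minimal sketch of an image-pair blur estimator in the same spirit, with i1,t as the blur-free reference and im,t as the blurred image: Tikhonov-regularised fixed-point updates in the Fourier domain with a per-frequency step proportional to |I1,t(u, v)|/|I1,t(u, v)|max, followed by non-negativity and unit-sum constraints on the PSF. The exact update rule of Yuan’s algorithm, the regularisation constant gamma, the iteration count, the initial guess, and the constraints are assumptions, not the authors’ settings.

```python
import numpy as np

def estimate_local_psf(i_ref, i_blur, n_iter=1000, gamma=1e-6):
    """Estimate a PSF b with i_blur ~= b * i_ref (circular convolution).

    Minimal sketch: Tikhonov-regularised fixed-point updates in the Fourier domain,
    with a per-frequency step weight |I_ref| / max|I_ref|, then projection of b
    onto non-negative, unit-sum PSFs (assumed constraints).
    """
    I_ref = np.fft.fft2(i_ref)
    I_blur = np.fft.fft2(i_blur)
    mag = np.abs(I_ref)
    weight = mag / mag.max()                    # step size proportional to |I_ref| / |I_ref|_max
    delta = gamma * mag.max() ** 2              # Tikhonov constant (hypothetical value)
    b = np.zeros(i_ref.shape)
    b[0, 0] = 1.0                               # initial guess: delta PSF at the origin
    for _ in range(n_iter):
        B = np.fft.fft2(b)
        residual = I_blur - I_ref * B
        B = B + weight * np.conj(I_ref) * residual / (mag ** 2 + delta)
        b = np.real(np.fft.ifft2(B))
        b = np.clip(b, 0.0, None)               # PSF intensity cannot be negative
        b /= b.sum()                            # PSF conserves energy (unit sum)
    return np.fft.fftshift(b)                   # put the PSF origin at the array centre

# Usage on a synthetic image pair: a shifted Gaussian blur applied to a random scene
rng = np.random.default_rng(0)
n = 64
scene = rng.uniform(size=(n, n))
yy, xx = np.mgrid[:n, :n] - n // 2
b_true = np.exp(-((yy - 3) ** 2 + (xx + 2) ** 2) / (2 * 1.5 ** 2))
b_true /= b_true.sum()
blurred = np.real(np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(np.fft.ifftshift(b_true))))
b_est = estimate_local_psf(scene, blurred)
```

The per-frequency weight makes frequencies with little reference energy, where noise would dominate the ratio Im,t/I1,t, change only slowly, which is the robustness argument given in the text.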

Fig. 4.

Flowchart of Algorithm 1: blur estimation algorithm for determining local PSFs from images captured with small apertures Wm(u, v).

The recovered bm,t(ξ, η)s are the intensities of the Fourier transforms of the different masked pupil regions. They can be synthesized into the full pupil function Pt(u, v) using an FP-based alternating projections algorithm, as described in the following section.

C. Full Aberration Recovery with Fourier-Ptychography-based Alternating Projections Algorithm

FP uses the alternating projections algorithm to synthesize a sample’s Fourier spectrum from a series of intensity images of the sample captured by scanning an aperture on its Fourier spectrum [9,10]. In our implementation, the full pupil’s complex field Pt(u, v) is the desired Fourier spectrum to be synthesized, and the local PSFs bm,t(ξ, η)s are the aperture-scanned intensity images to be used for FP-based alternating projections, as shown in the bottom half of Fig. 2(b). Therefore, reconstructing the pupil function from a series of local PSFs’ intensity information in our algorithm is completely analogous to reconstructing the complex spatial spectrum of a sample from a series of its low-passed images. The FP-based alternating projections algorithm is Algorithm 2, and it is described in Fig. 5.
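Algorithm 2 is only summarized as a flowchart, so the sketch below shows a generic FP-style alternating projections update applied to the pupil, assuming the local PSFs follow the same |F{Wm Pt}|² convention as the forward model. For each small mask, the current pupil estimate is masked and transformed to the PSF plane, its modulus is replaced by the square root of the measured bm,t(ξ, η) while its phase is kept, and the result is transformed back to overwrite the pupil estimate inside Wm(u, v). The uniform-modulus pupil constraint (following the description of the pupil in Section 2.A), the energy rescaling, the flat initial guess, the raster mask order in the usage example, and the helper names are assumptions of this sketch.

```python
import numpy as np

def to_psf_field(pupil_region):
    """Coherent PSF field of a centred pupil array (same convention as |F{M P}|^2)."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(pupil_region)))

def to_pupil(field):
    """Exact inverse of to_psf_field."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(field)))

def recover_pupil(psfs, masks, support, n_iter=30, pupil0=None):
    """FP-style alternating projections for the pupil (minimal sketch).

    psfs    : measured local PSF intensities b_m (centred arrays, any normalisation)
    masks   : binary small masks W_m, in acquisition (e.g. spiral-out) order
    support : binary full-pupil aperture; the estimate is zeroed outside it
    pupil0  : initial guess (default: the aperture with flat phase)
    """
    P = support.astype(complex) if pupil0 is None else pupil0.astype(complex)
    for _ in range(n_iter):
        for b, W in zip(psfs, masks):
            psi = to_psf_field(W * P)
            # energy a unit-modulus pupil would put through W (Parseval for ifft2),
            # used to rescale unit-sum PSFs coming from blur estimation
            target = np.sum(W * support) / W.size
            amp = np.sqrt(b * target / max(np.sum(b), 1e-30))
            psi = amp * np.exp(1j * np.angle(psi))       # measured modulus, current phase
            P = (1 - W) * P + W * to_pupil(psi)          # overwrite inside W_m only
        P = support * np.exp(1j * np.angle(P))           # uniform-modulus pupil (assumption)
    return P

# Usage with a hypothetical aberrated pupil and overlapping small masks (raster order here)
n = 64
y, x = np.mgrid[:n, :n] - n // 2
support = (x ** 2 + y ** 2 <= 16 ** 2).astype(float)
P_true = support * np.exp(1j * (0.02 * x * y + 0.004 * (x ** 2 + y ** 2)))
masks = [support * (((x - cx) ** 2 + (y - cy) ** 2) <= 5 ** 2)
         for cy in range(-12, 13, 4) for cx in range(-12, 13, 4)]
psfs = [np.abs(to_psf_field(W * P_true)) ** 2 for W in masks]
P_est = recover_pupil(psfs, masks, support)
```

Since only PSF intensities are measured, the recovered pupil is defined at best up to a global constant phase; the true pupil is a fixed point of the update.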

Fig. 5.

Flowchart of Algorithm 2: Fourier-ptychography-based alternating projections algorithm for reconstructing the unknown lens’s pupil function Pt(u, v).

The FP-based alternating projections algorithm requires the apertures scanned during image acquisition to overlap by at least 30% [62] for successful phase retrieval. Thus, the bm,t(ξ, η)s used to update the pupil in Algorithm 2 are ordered in a spiral-out pattern, each with an associated aperture Wm(u, v) that overlaps the previous one’s aperture by 40% of its area. The influence of the overlap on the reconstruction is illustrated in Fig. S1 of Supplement 1. For the simulated pupil diameter of 4.5 mm, 64 Wm(u, v)s of 1 mm diameter span the pupil with 40% overlap.
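The 40% overlap criterion translates into a centre-to-centre spacing between consecutive masks. Assuming the overlap is measured as the area shared by two equal circular masks relative to the area of one mask, the standard circle–circle intersection formula and a bisection give that spacing; the function names are hypothetical.

```python
import math

def overlap_fraction(d, s):
    """Shared area of two equal circles (diameter d, centre spacing s), as a fraction of one circle."""
    r = d / 2
    if s >= d:
        return 0.0
    lens = 2 * r ** 2 * math.acos(s / (2 * r)) - (s / 2) * math.sqrt(4 * r ** 2 - s ** 2)
    return lens / (math.pi * r ** 2)

def spacing_for_overlap(d, target, tol=1e-12):
    """Centre spacing at which overlap_fraction(d, s) equals target (bisection)."""
    lo, hi = 0.0, d            # overlap_fraction decreases from 1 at s = 0 to 0 at s = d
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if overlap_fraction(d, mid) > target:
            lo = mid           # still too much overlap: move the masks further apart
        else:
            hi = mid
    return (lo + hi) / 2

spacing_mm = spacing_for_overlap(1.0, 0.40)   # 1 mm masks, 40% overlap by area
```

For the 1 mm masks used here, this gives a spacing of roughly 0.49 mm between consecutive mask centres.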

We simulate the image acquisition by an aberrated imaging system and our pupil function reconstruction process in Fig. 6. Algorithms 1 and 2 estimate the local PSFs from the 64 images captured with the small masks Wm(u, v) [Fig. 6(c)] and successfully reconstruct the complex pupil function Pt(u, v) [Fig. 6(e)]. The squared modulus of the Fourier transform of Pt(u, v) gives the PSF of the aberrated imaging system. On a MacBook Pro with a 2.5 GHz Intel Core i7 and 16 GB of RAM, Algorithm 1 takes 2 min and Algorithm 2 takes 20 s to operate on the 64 images (1000 by 1000 pixels) taken with Wm(u, v). To gauge our method’s performance against other computational blur estimation methods, we attempt PSF reconstruction with two blind deconvolution algorithms. One is MATLAB’s deconvblind, a standard blind deconvolution algorithm based on the accelerated, damped Richardson–Lucy algorithm, and the other is the state-of-the-art blind blur kernel recovery method by Fergus et al., based on a variational Bayesian approach [63,64]. Both operate on a single blurred image [Fig. 6(d)] to simultaneously extract the blur function and the latent image [Fig. 6(f)]. For our purpose, we compare the reconstructed PSFs to gauge performance. As shown in Fig. 6(f), the blur kernels reconstructed by MATLAB and by Fergus et al.’s method both show poor fidelity to the true PSF. This demonstrates the effectiveness of our algorithm pipeline in reconstructing a complicated PSF that these blind deconvolution methods fail to recover. The absolute limit of our aberration reconstruction method, assuming an unlimited photon budget, is essentially determined by the number of pixels inside the defined full aperture. However, in real-life settings with a limited photon budget and a dynamic sample, the smallest subaperture we can use to segment the full aperture is determined by the allowable exposure time and the shot-noise-limited condition of the camera.
One has to consider both the number of photons required for the signal to overcome the camera noise and the exposure time permissible for capturing a static image of the sample.

Fig. 6.

Simulation of our pupil function recovery procedure and a comparison with blind deconvolution algorithms. (a) The Siemens star pattern used in the simulation. (b) The system’s pupil function and the associated PSF. (c) A series of images im,t(ξ, η)s captured with small masks Wm(u, v) applied to the pupil function. (d) An image captured with the full-pupil-sized mask An(u, v) on the pupil function, which simulates the general imaging scenario of an aberrated imaging system. (e) The system’s pupil function and PSF recovered by our procedure. They show high fidelity to the original functions in (b). (f) Blur functions recovered by MATLAB’s and Fergus et al.’s blind deconvolution algorithms, respectively. Both are poor reconstructions compared with the recovered PSF in (e).

D. Latent Image Recovery by Deconvolution with Coded Apertures

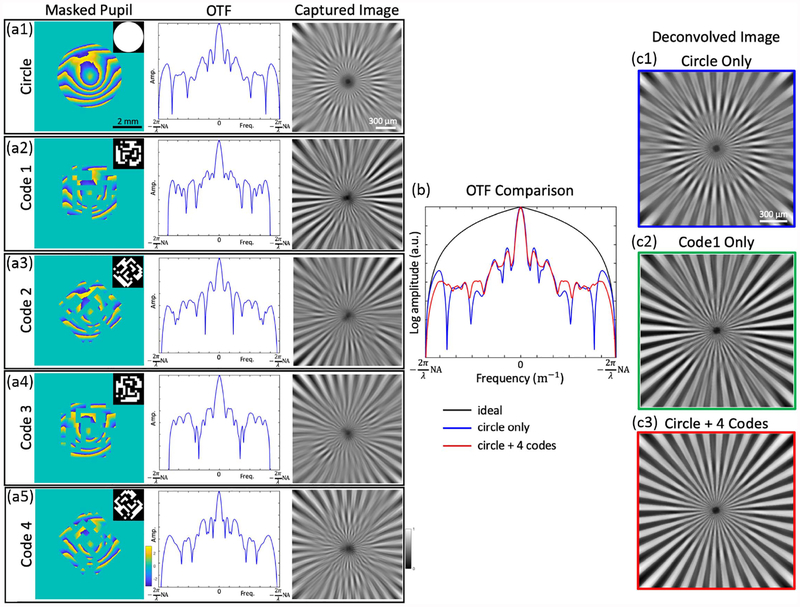

With the knowledge of the pupil function obtained from Algorithms 1 and 2, it is possible to recover ot(x, y) from the aberrated image ϕt(ξ, η) taken with the full pupil aperture. In the Fourier domain, the image’s spectrum is represented as Φt(u, v) = Ht(u, v)Ot(u, v), where Ht(u, v) and Ot(u, v) are the spatial spectrum of ht(ξ, η) and ot(x, y), respectively. Ht(u, v) is also called the optical transfer function (OTF) of the optical system and, by Fourier relation, is an auto-correlation of the pupil function Pt(u, v). In the presence of severe aberrations, the OTF may have values at or close to zero for many spatial frequency regions within the bandpass, as shown in Fig. 7. These are due to the phase gradients with opposite slopes found in an aberrated pupil function, which may produce values at or close to zero in the auto-correlation process. Thus, the division of Φt(u, v) by Ht(u, v) during deconvolution will amplify noise at these spatial frequency regions since the information there has been lost in the image acquisition process. This is an ill-posed inverse problem.
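The statement that the OTF is the autocorrelation of the pupil, and that aberrations can drive it towards zero inside the passband, can be illustrated with a short simulation. The sketch assumes a hypothetical pupil of radius 16 pixels on a 128 x 128 grid and two waves of defocus as a stand-in for a severe aberration, and counts passband frequencies (within 85% of the incoherent cutoff) where the normalised OTF modulus falls below 0.02. The aberration-free pupil has no such frequencies, while the defocused one does.

```python
import numpy as np

def otf_from_pupil(pupil):
    """Normalised OTF, F{|F^{-1}{P}|^2}: the autocorrelation of the pupil, with H(0) = 1."""
    psf = np.abs(np.fft.ifft2(np.fft.ifftshift(pupil))) ** 2
    otf = np.fft.fft2(psf)
    return otf / otf[0, 0]

def count_near_nulls(H, inside, threshold=0.02):
    """Number of in-band frequencies where |H| falls below the threshold."""
    return int(np.sum(np.abs(H[inside]) < threshold))

n, R = 128, 16                                   # grid size and pupil radius in pixels
y, x = np.mgrid[:n, :n] - n // 2
aperture = (x ** 2 + y ** 2 <= R ** 2).astype(float)
defocus = 2 * np.pi * 2.0 * (x ** 2 + y ** 2) / R ** 2   # hypothetical: 2 waves at the pupil edge
H_ideal = otf_from_pupil(aperture)
H_aberr = otf_from_pupil(aperture * np.exp(1j * defocus))

f = np.fft.fftfreq(n) * n                        # frequency offsets in pupil pixels (FFT order)
fy, fx = np.meshgrid(f, f, indexing="ij")
inside = np.hypot(fx, fy) <= 0.85 * 2 * R        # stay within 85% of the cutoff 2R
print(count_near_nulls(H_ideal, inside), count_near_nulls(H_aberr, inside))
```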

Fig. 7.

Simulation that demonstrates the benefit of coded-aperture-based deconvolution. (a1)–(a5) Masked pupil functions obtained by masking the same pupil function with the full circular aperture and coded apertures under different rotation angles (0°, 45°, 90°, 135°), their associated OTFs along one spatial frequency axis, and captured images. Each coded aperture is able to shift the null regions of the OTF to different locations. (b) Comparison between the OTF of a circular-aperture-masked pupil function and the summed OTFs of the circular- and coded-aperture-masked pupil functions. Null regions in the frequency spectrum are mitigated in the summed OTF, which allows all the frequency content of the sample within the band limit to be captured with the imaging system. The OTF of an ideal pupil function is also plotted. (c1) Deconvolved image with only a circular aperture shows poor recovery with artifacts corresponding to the missing frequency contents in the OTF’s null regions. (c2) A recovered image using one coded aperture only. Reconstruction is better than (c1) but still has some artifacts. (c3) A recovered image using circular and multiple coded apertures is free of artifacts since it does not have missing frequency contents.

There are several deconvolution methods that attempt to address the ill-posed problem by using a regularizer [60] or a priori knowledge of the sample, such as by assuming sparsity in its total variation [65,66]. However, due to their inherent assumptions, these methods work well only on a limited range of samples, and the parameters defining the a priori knowledge need to be manually tuned to produce successful results. Fundamentally, these methods have no information in the spatial frequency regions where the OTF is zero, and the a priori knowledge attempts to fill in the missing gaps. Wavefront coding using a phase mask in the Fourier plane has been demonstrated to remove the null regions in the OTF such that a subsequent deconvolution by the pre-calibrated PSF can recover the latent image [67–70]. We adopt a similar method, the coded aperture proposed by Zhou and Nayar [43], which uses an amplitude mask in the Fourier domain to achieve the same goal. Since an amplitude-modulating SLM is already in the optical system, an amplitude mask is preferred over a phase mask. Combined with the knowledge of the pupil function reconstructed by Algorithms 1 and 2, no a priori knowledge is required to recover the latent image via deconvolution. A coded aperture designed by Zhou and Nayar, placed at the pupil plane with a defocus aberration, can generate a PSF whose OTF has no zero values within its NA-limited bandpass. This coded aperture is generated by a genetic algorithm that searches for a binary mask pattern that maximizes the modulus of its OTF across spatial frequencies. The optimum pattern depends on the amount of noise in the imaging condition. We choose the pattern shown in Fig. 7 since it performs well across various noise levels [43].

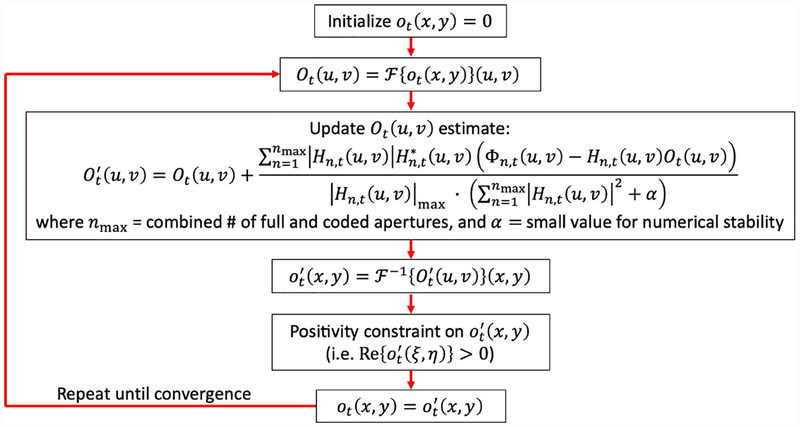

The pupil function in our imaging scenario does not consist of defocus alone, as the imaging lenses have severe aberrations. Our pupil function can therefore have an asymmetric phase profile, unlike the rotationally symmetric bullseye phase profile of defocus. Consequently, rotating the coded aperture generates PSFs with different spatial frequency distributions, changing the PSF’s shape rather than merely rotating it. In the data capturing procedure, we therefore capture a series of images with a sequence of larger masks An(u, v), consisting of four coded apertures and a standard circular aperture at the pupil plane, as represented in Fig. 2(c). This ensures that we obtain all spatial frequency information within the NA-limited bandpass. The PSF associated with each An(u, v) applied to Pt(u, v) is obtained as $h_{n,t}(\xi,\eta) = \left|\mathcal{F}^{-1}\{A_n(u,v)\,P_t(u,v)\}\right|^2$, and its OTF as $H_{n,t}(u,v) = \mathcal{F}\{h_{n,t}(\xi,\eta)\}$. With the measured full- and coded-aperture images ϕn,t(ξ, η) and the knowledge of the OTFs, we perform a combined deconvolution using iterative Tikhonov regularization, similar to Algorithm 1, to recover the object’s intensity distribution ot(x, y), as described by Algorithm 3 in Fig. 8 and represented in Fig. 2(d).
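The PSF and OTF of each masked pupil can be evaluated with FFTs as h = |F^-1{A_n P_t}|^2 and H = F{h}. The sketch below assumes a hypothetical pupil with defocus plus a coma-like term (so its phase is not rotationally symmetric) and a hypothetical random binary pattern standing in for the Zhou–Nayar aperture. It rotates the mask only in 90° steps with `np.rot90`, because the 45° and 135° rotations used in the experiments would require interpolation. It prints how the weakest OTF value inside a central frequency disc changes from mask to mask, and how summing |H|^2 over all masks lifts that minimum.

```python
import numpy as np


def masked_psf_otf(mask, pupil):
    """h = |F^-1{A P}|^2 (normalized to unit sum) and H = F{h}, with DC at index (0, 0)."""
    field = np.fft.ifftshift(mask * pupil)          # pupil centre -> index (0, 0)
    psf = np.abs(np.fft.ifft2(field)) ** 2
    psf /= psf.sum()
    otf = np.fft.fft2(psf)                          # otf[0, 0] == psf.sum() == 1
    return psf, otf


n, radius = 65, 12                                  # odd grid: rot90 keeps the centre fixed
yy, xx = np.mgrid[0:n, 0:n] - n // 2
xn, yn = xx / radius, yy / radius
r2 = xn ** 2 + yn ** 2
support = r2 <= 1.0
# Hypothetical aberration: 0.5 wave defocus + 0.4 wave coma-like term, converted to radians.
phase = 2 * np.pi * (0.5 * r2 + 0.4 * (3 * r2 - 2) * xn)
pupil = support * np.exp(1j * phase)

rng = np.random.default_rng(1)
coded = (rng.random((n, n)) < 0.6) & support       # hypothetical coded aperture
masks = [support] + [np.rot90(coded, k) for k in range(4)]
names = ["circular", "coded 0 deg", "coded 90 deg", "coded 180 deg", "coded 270 deg"]

f = np.fft.fftfreq(n) * n                           # frequency index with DC at 0
inside = (f[:, None] ** 2 + f[None, :] ** 2) <= (1.6 * radius) ** 2
otfs = [masked_psf_otf(m.astype(float), pupil)[1] for m in masks]
for name, H in zip(names, otfs):
    print(f"{name}: min |H| in disc = {np.abs(H[inside]).min():.2e}")
combined = sum(np.abs(H) ** 2 for H in otfs)
print(f"sum of |H|^2 over all masks: min in disc = {combined[inside].min():.2e}")
```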

Fig. 8.

Flowchart of Algorithm 3: iterative Tikhonov regularization for recovering the latent sample image ot(x, y) from the aberrated images.

A simulated deconvolution procedure with the coded aperture on a Siemens star pattern is shown in Fig. 7. The combined OTF of a circular aperture and the coded aperture at four rotation angles eliminates the null regions found in the circular-aperture-only OTF and thus produces a superior deconvolution result. The deconvolution performance at each spatial frequency correlates with the modulus of the combined OTF. We observe that a signal-to-noise ratio (SNR) of at least 40 in the initial aberrated image produces a deconvolution result with minimal artifacts. On a MacBook Pro with a 2.5 GHz Intel Core i7 and 16 GB of RAM, Algorithm 3 takes 2 s to process the five images (1000 × 1000 pixels) taken with An(u, v) (one full aperture and four coded apertures) and generate the deconvolution result.
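Algorithm 3 itself is iterative. As a rough illustration of why combining several OTFs helps, the sketch below uses the standard closed-form (non-iterative) multi-image Tikhonov solution, Ô = Σ_n H_n* Φ_n / (Σ_n |H_n|² + γ). The closed form, the function names, and the regularization weight γ are assumptions for illustration, not the paper’s Algorithm 3. The toy demo blurs a random object with two box PSFs of different widths, whose OTF nulls fall at different frequencies.

```python
import numpy as np


def tikhonov_multi(images, otfs, gamma=1e-3):
    """Closed-form multi-image Tikhonov deconvolution.

    Minimizes sum_n ||H_n O - Phi_n||^2 + gamma ||O||^2 in the Fourier domain,
    where Phi_n = FFT(images[n]) and otfs[n] = H_n (DC at index (0, 0)).
    """
    num = np.zeros(otfs[0].shape, dtype=complex)
    den = np.full(otfs[0].shape, gamma, dtype=float)
    for img, H in zip(images, otfs):
        num += np.conj(H) * np.fft.fft2(img)
        den += np.abs(H) ** 2          # zeros of one OTF are filled in by the others
    return np.real(np.fft.ifft2(num / den))


def box_otf(shape, width):
    """OTF of a width x width box blur; where its zeros fall depends on the width."""
    psf = np.zeros(shape)
    psf[:width, :width] = 1.0 / width ** 2
    return np.fft.fft2(psf)


rng = np.random.default_rng(0)
obj = rng.random((64, 64))
otfs = [box_otf(obj.shape, 4), box_otf(obj.shape, 5)]
blurred = [np.real(np.fft.ifft2(np.fft.fft2(obj) * H)) for H in otfs]

single = tikhonov_multi(blurred[:1], otfs[:1])
combined = tikhonov_multi(blurred, otfs)
for name, est in [("single", single), ("combined", combined)]:
    print(name, "RMS error:", np.sqrt(np.mean((est - obj) ** 2)))
```

With noisy data, γ trades noise amplification against resolution; the iterative scheme in the paper plays the same role.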

3. EXPERIMENTAL DEMONSTRATION ON ARTIFICIAL SAMPLES

A. Demonstration of CACAO-FB on a Crude Lens

The CACAO-FB prototype system’s setup is simple, as shown in Fig. 9. It consists of a pair of 2 inch, f = 100 mm achromatic doublets (AC508-100-A from Thorlabs) that relay the surface of the imaging lens of interest to the surface of a ferroelectric liquid-crystal-on-silicon (LCOS) spatial light modulator (SLM) (SXGA-3DM-HB from 4DD). A polarizing beam splitter (PBS) (PBS251 from Thorlabs) is placed in front of the SLM to enable its binary modulation. A polarizer (LPVISE100-A from Thorlabs) is placed after the PBS to further filter the polarized light, compensating for the PBS’s low extinction ratio in reflection. An f = 200 mm tube lens (TTL200-A from Thorlabs) Fourier transforms the modulated light and images it onto an sCMOS camera (PCOedge 5.5 CL from PCO). To determine the orientation of the SLM with respect to the Fourier space in our computational process, we use a phase-only target, such as a microbead sample, illuminated by a collimated laser source to perform overlapped-Fourier-coding phase retrieval [10]. With the correct orientation, the reconstructed complex field has the expected amplitude and phase. The imaging system under test is placed in front of the CACAO-FB system at the first relay lens’s focal length. This imaging system consists of a crude lens and the sample it is supposed to image. The crude lens in our experiment is a +6D trial lens (26 mm diameter, f = 130 mm) from an inexpensive trial lens set (TOWOO TW-104 TRIAL LENS SET). A resolution target is placed closer to the lens than its focal length to introduce more aberration into the system. The sample is flood-illuminated by a monochromatic LED light source (520 nm, UHP-Microscope-LED-520 from Prizmatix) filtered with a 10 nm bandpass filter.

Fig. 9.

Experimental setup for imaging a sample with a crude lens (i.e., an unknown lens). The sample is illuminated by a monochromatic LED (520 nm), and the lens’s surface is imaged onto the SLM by a 1∶1 lens relay. The portion of light modulated by the SLM is reflected by the PBS and further filtered by a polarizer to account for the PBS’s low extinction ratio in reflection (1:20). The pupil-modulated image of the sample is captured on the sCMOS camera. L, lens; P, polarizer; PBS, polarizing beam splitter.

The relayed lens surface is modulated with various binary patterns by the SLM. The SLM displays a full aperture (5.5 mm diameter); a coded aperture rotated at 0°, 45°, 90°, and 135°, with its maximum diameter matching the full aperture; and a series of limited apertures (1 mm diameter) shifted to different positions in a spiraling-out pattern within the full aperture. The camera’s exposure is triggered by the SLM for synchronization. A separate trigger signal that starts the camera’s capture sequence is provided by a data acquisition board (NI ELVIS II from National Instruments), which the user controls from MATLAB. Multiple images for each SLM aperture are captured and summed to increase their signal-to-noise ratio (SNR). The full-aperture image has SNR = 51, and the aperture-scanned images have SNRs approximately proportional to the square root of their relative aperture areas. The SNR is estimated from the mean and variance of the pixel values in a uniformly bright patch of the image.
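A minimal sketch of this SNR estimate follows, assuming the SNR is taken as the patch mean divided by the patch standard deviation (the square root of the variance); the patch location and the synthetic frame are hypothetical. The second helper applies the stated square-root-of-area scaling: for circular apertures the area ratio is the squared diameter ratio, so the SNR scales with the diameter ratio.

```python
import numpy as np


def patch_snr(image, rows, cols):
    """SNR of a uniformly bright patch: mean / standard deviation (assumed definition)."""
    patch = np.asarray(image, dtype=float)[rows, cols]
    return patch.mean() / patch.std(ddof=1)


def scaled_snr(snr_full, d_sub_mm, d_full_mm):
    """SNR ~ sqrt(area ratio), i.e. the diameter ratio for circular apertures."""
    return snr_full * d_sub_mm / d_full_mm


rng = np.random.default_rng(0)
frame = rng.normal(loc=100.0, scale=2.0, size=(512, 512))   # synthetic flat field, true SNR 50
print(patch_snr(frame, slice(100, 300), slice(100, 300)))
print(scaled_snr(51, 1.0, 5.5))    # expected SNR of a 1 mm limited-aperture image
```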

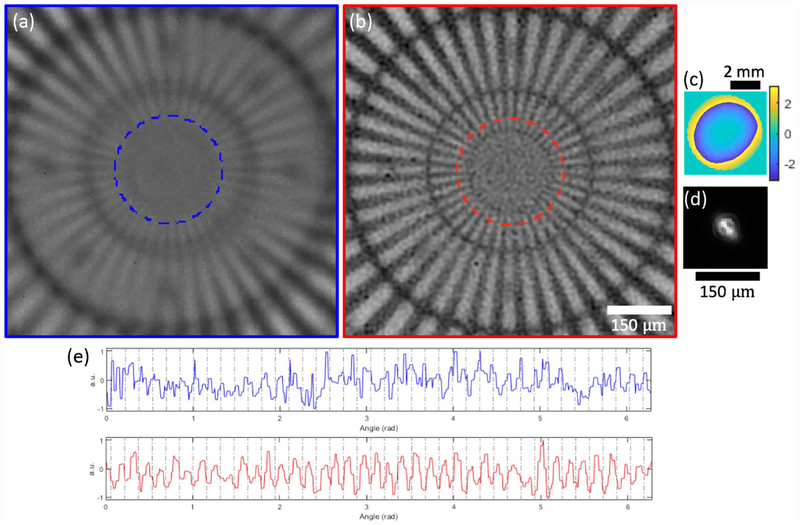

To quantify the resolution performance of CACAO-FB, we image a Siemens star pattern with the crude +6D lens. The pattern consists of 40 line pairs radiating from the center, so the periodicity along a circle increases with its radius. The smallest circle along which the periodic structure is barely resolvable determines the resolution limit of the optical system [71]. For a focal length of 130 mm, an aperture diameter of 5.5 mm (NA ≈ 2.75 mm/130 mm ≈ 0.021), and an illumination wavelength of 520 nm, the expected resolution lies between λ/NA = 24.6 μm (coherent) and λ/(2NA) = 12.3 μm (incoherent) periodicity, defined by the spatial frequency cutoffs of the coherent and incoherent transfer functions, respectively. As shown in Fig. 10, CACAO-FB resolves features down to 19.6 μm periodicity, which is within the expected resolution range.
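The quoted limits follow from NA ≈ (D/2)/f. The short sketch below reproduces them (24.6 μm and 12.3 μm) and, as an added illustration, converts a period into the radius at which it occurs on a 40-line-pair Siemens star (circumference divided by 40). The small-angle approximation and the function names are assumptions.

```python
import math


def resolution_limits(focal_mm, aperture_mm, wavelength_um):
    """Return (NA, coherent period lambda/NA, incoherent period lambda/(2 NA)) in micrometres."""
    na = (aperture_mm / 2) / focal_mm        # small-angle approximation, sin(theta) ~ r / f
    return na, wavelength_um / na, wavelength_um / (2 * na)


def siemens_radius_um(period_um, n_line_pairs=40):
    """Radius at which a Siemens star with n_line_pairs spokes has the given period."""
    return n_line_pairs * period_um / (2 * math.pi)


na, coh, incoh = resolution_limits(130.0, 5.5, 0.520)
print(f"NA = {na:.4f}, coherent = {coh:.1f} um, incoherent = {incoh:.1f} um")
print(f"19.6 um period occurs at r = {siemens_radius_um(19.6):.0f} um")
```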

Fig. 10.

Resolution performance measured by imaging a Siemens star target. (a) A crude lens has optical aberration that prevents resolving the Siemens star’s features. (b) CACAO-FB is able to computationally remove the aberration and resolve a 19.6 μm periodicity feature size, which lies between the coherent and incoherent resolution limits given by the focal length of 130 mm, the aperture diameter of 5.5 mm, and the illumination wavelength of 520 nm. (c) Pupil function recovered by CACAO-FB and used for removing the aberration. (d) The PSF associated with the pupil function. (e) Intensity values along the circular traces in (a) and (b) that correspond to the minimum resolvable feature size of 19.6 μm periodicity. The Siemens star’s spokes are not visible in the raw image’s trace, whereas 40 cycles are clearly resolvable in the deconvolved result’s trace.

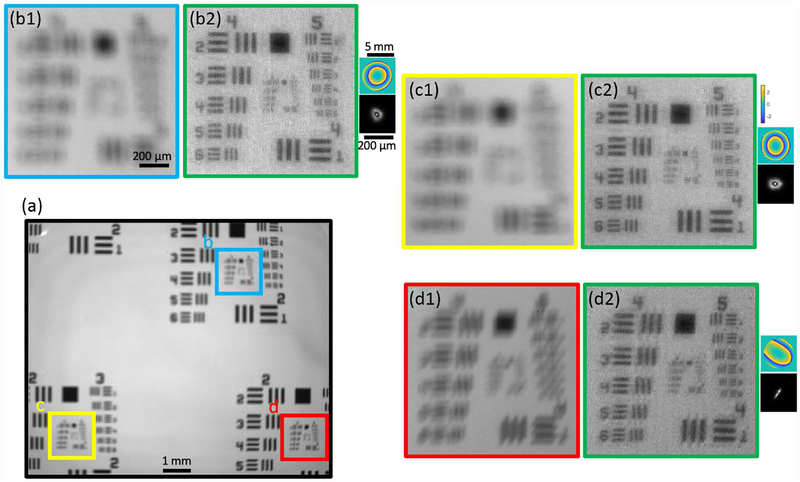

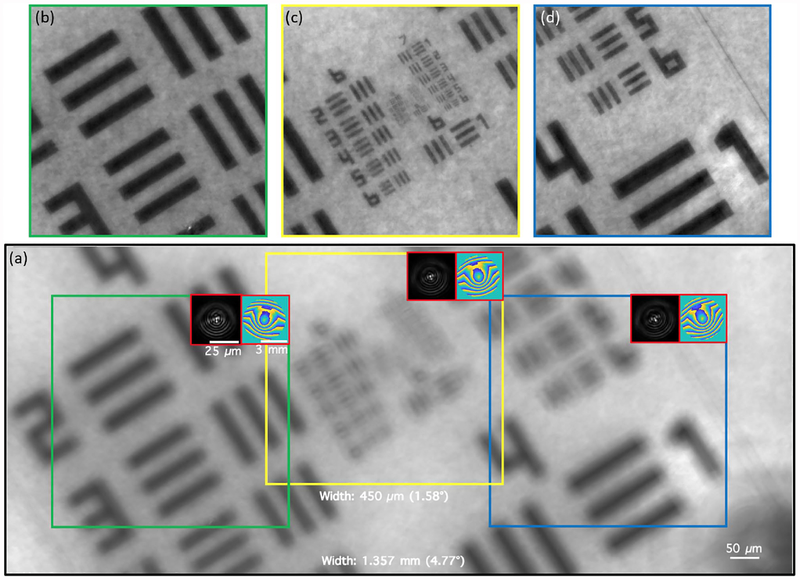

The +6D lens is expected to have poor imaging performance that varies across its FOV, since it is an inexpensive single-element lens. We image a sample slide containing an array of USAF targets and select three tiled regions, each containing a USAF target pattern, to demonstrate CACAO-FB’s ability to address spatially variant aberration in its latent image recovery. As shown in Fig. 11, the recovered PSFs in the three regions are drastically different, demonstrating the spatially variant nature of the aberration. Deconvolving each region with its corresponding PSF successfully recovers the latent image. The expected resolution limits calculated above correspond to a range between Group 5 Element 3 (24.8 μm periodicity) and Group 6 Element 3 (12.4 μm periodicity). Features down to Group 5 Element 5 (19.68 μm periodicity) are resolved after deconvolution, as shown in Fig. 11, which closely matches the resolution determined with the Siemens star pattern.
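The USAF periods quoted above follow from the standard USAF 1951 relation, resolution in line pairs per mm = 2^(group + (element − 1)/6). A small helper (hypothetical name) that reproduces them:

```python
def usaf_period_um(group, element):
    """Line-pair period of a USAF 1951 element: 1 / 2**(group + (element - 1) / 6) mm."""
    line_pairs_per_mm = 2 ** (group + (element - 1) / 6)
    return 1000.0 / line_pairs_per_mm


for g, e in [(5, 3), (5, 5), (6, 3)]:
    print(f"Group {g} Element {e}: {usaf_period_um(g, e):.2f} um")
```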

Fig. 11.

Spatially varying aberration compensation result on a grid of USAF targets. (a) The full FOV captured by our camera with the full 5.5 mm circular aperture displayed on the SLM. Each small region denoted by (b), (c), and (d) has a different aberration map, as indicated by the varying pupil functions and PSFs. Spatially varying aberration is adequately compensated in post-processing, as shown by the deconvolution results (b2), (c2), and (d2).

B. Demonstration of CACAO-FB on an Eye Model

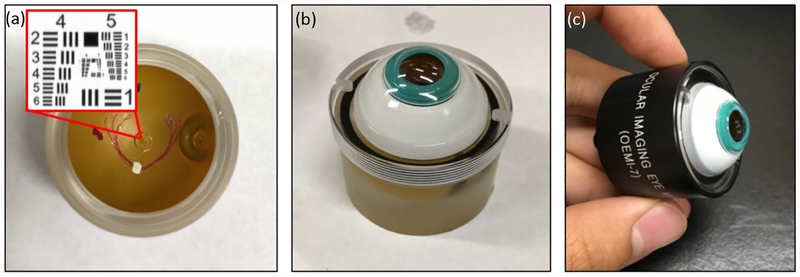

We use an eye model from Ocular Instruments to simulate an in vivo retinal imaging experiment. We embed a cut-out USAF resolution target (2015a USAF from Ready Optics) on the model’s retina and fill the model’s chamber with deionized water, as shown in Fig. 12. We adjust our CACAO-FB system as shown in Fig. 13 to accommodate this different imaging scenario. First, the system has to illuminate the retina in a reflection geometry via the same optical path used for imaging. A polarizing beam splitter (PBS) provides the illumination such that the specular reflection from the eye’s cornea, which mostly maintains the s polarization from the PBS, is filtered out of the imaging optical path. The light scattered from the retina is depolarized and can partially transmit through the PBS. The light source is a fiber-coupled laser diode (520 nm) (NUGM01T from DTR’s Laser Shop), which is made spatially incoherent by propagating through a 111 m long, 600 μm core diameter multimode fiber (FP600URT from Thorlabs), following the method in Ref. [72]. The laser diode is triggered so that it is on only during camera exposure. Images are captured at 50 Hz, ensuring that the flashing illumination’s frequency lies outside the range that can cause photosensitive epilepsy in humans (i.e., between 15 and 20 Hz [73]). We add a pupil camera that images the eye’s pupil with fiducial marks for aligning it with our SLM. Finally, a motion-reference camera (MRC), whose optical path is identical to that of the encoded-image camera (EIC) except for the SLM pupil modulation, is added to the system to account for an in vivo eye’s motion between image frames. The split of light between the MRC and EIC can be controlled with the PBS and a quarter-wave plate before the SLM.

Fig. 12.

Eye model with a USAF target embedded on the retinal plane. (a) A cut-out glass piece of a USAF target is attached to the retina of the eye model. The lid simulates the cornea and also houses a lens element behind it. (b) The model is filled with water with no air bubbles in its optical path. (c) The water-filled model is secured by screwing it into its case.

Fig. 13.

Experimental setup for imaging an eye model and an in vivo eye. Illumination is provided by a fiber-coupled laser diode (520 nm), and the eye’s pupil is imaged onto the SLM by a 1∶1 lens relay. The sample is slightly defocused from the focal length of the crude lens to add aberration to the system. The pupil alignment camera provides fiducial marks that help the user align the pupil on the SLM. PBS2 helps remove corneal reflection. The motion-reference camera is synchronized with the encoded-image camera to capture images not modulated by the SLM. BS, beam splitter; L, lens; M, mirror; P, polarizer; PBS, polarizing beam splitter; QWP, quarter-wave plate.

In Fig. 14, the recovered images show severe spatially varying aberration in the eye model but, nonetheless, good deconvolution performance throughout the FOV. The tile size is set to the largest tile that still produces a visually aberration-free image. The full aperture in this scenario had a 4.5 mm diameter, and its associated aberrated image had an SNR of 126.

Fig. 14.

CACAO-FB result of imaging the USAF target in the eye model. (a) Raw image (2560 × 1080 pixels) averaged over 12 frames captured with the full circular aperture at 4.5 mm. The pupil function and PSF in each boxed region show the spatially varying aberration. (b)–(d) Deconvolution results show sharp features of the USAF target. The uneven background is from the rough surface of the eye model’s retina.

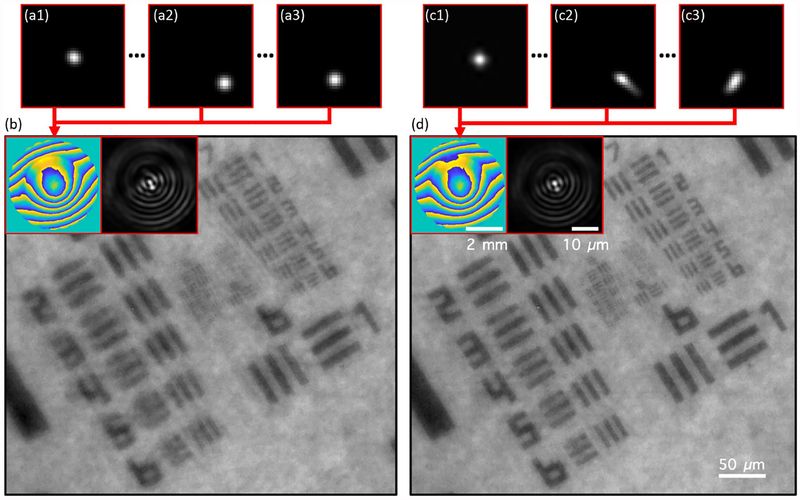

In this imaging scenario, the blur kernels of the limited-aperture images had a significant impact on the deconvolution result, as shown in Fig. 15. The aberration of the eye model was so severe that the retrieved blur kernels of the limited-aperture images had distinct shapes in addition to lateral shifts. We obtain a much better deconvolution result with the pupil reconstructed by taking the blur kernels’ shapes into account than with one that ignores them. The latter is analogous to the Shack–Hartmann wavefront sensing method, which identifies only the centroid of each blur kernel to estimate the aberration. This demonstrates the importance of the blur kernel estimation step in our algorithm and distinguishes our aberration reconstruction from other wavefront sensing methods.
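The difference between a centroid-only (Shack–Hartmann-like) description and a full blur kernel can be seen by computing limited-aperture kernels directly as |F^-1{W P}|^2. The sketch below assumes a circular sub-aperture window W and a hypothetical, strongly aberrated pupil P (defocus plus astigmatism). For each window it reports the kernel’s centroid, which is what an SH sensor would measure, and its RMS width, shape information that the centroid alone discards. This is an illustration under those assumptions, not the blur estimation algorithm used in CACAO-FB.

```python
import numpy as np


def centered_coords(n):
    """Integer pixel coordinates with (0, 0) at the array centre."""
    yy, xx = np.mgrid[0:n, 0:n] - n // 2
    return yy, xx


def subaperture_kernel(pupil, center, radius):
    """Intensity blur kernel |F^-1{W P}|^2 for a circular window W at `center` (pixels)."""
    yy, xx = centered_coords(pupil.shape[0])
    window = (yy - center[0]) ** 2 + (xx - center[1]) ** 2 <= radius ** 2
    field = np.fft.ifftshift(window * pupil)
    kernel = np.fft.fftshift(np.abs(np.fft.ifft2(field)) ** 2)
    return kernel / kernel.sum()


def centroid_and_width(kernel):
    """Centroid (what an SH sensor uses) and RMS radius (shape information)."""
    yy, xx = centered_coords(kernel.shape[0])
    cy, cx = (yy * kernel).sum(), (xx * kernel).sum()
    width = np.sqrt((((yy - cy) ** 2 + (xx - cx) ** 2) * kernel).sum())
    return (cy, cx), width


n, R = 129, 40
yy, xx = centered_coords(n)
r2 = (yy ** 2 + xx ** 2) / R ** 2
# Hypothetical strongly aberrated pupil: 3 waves of defocus plus 1 wave of astigmatism.
phase = 2 * np.pi * (3.0 * r2 + 1.0 * (xx ** 2 - yy ** 2) / R ** 2)
pupil = (r2 <= 1.0) * np.exp(1j * phase)
for center in [(0, 0), (0, 25), (25, 0)]:
    (cy, cx), w = centroid_and_width(subaperture_kernel(pupil, center, radius=12))
    print(f"window at {center}: centroid = ({cy:.2f}, {cx:.2f}) px, RMS width = {w:.2f} px")
```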

Fig. 15.

Importance of determining the masked pupil kernel shape for successful deconvolution. (a1)–(a3) Limited PSFs determined only by considering their centroids. (b) Recovered aberration and deconvolution result obtained with the centroid-only limited PSFs. Some features of the USAF target are distorted. (c1)–(c3) Limited PSFs determined with the blur estimation algorithm. (d) Recovered aberration and deconvolution result obtained with the blur-estimated limited PSFs. No distortions are present in the image, and more features of the USAF target are resolved.

4. ADAPTING CACAO-FB TO AN IN VIVO EXPERIMENT ON THE EYE OF A RHESUS MACAQUE

The same setup as in Section 3.B is used for the in vivo experiment on a rhesus macaque’s eye. The animal is anesthetized with 8–10 mg/kg ketamine and 0.02 mg/kg Dexdomitor IM. Two drops of tropicamide (0.5%–1%) are placed on the eye to dilate the pupil. To keep the eye open for imaging, a sanitized speculum is placed between the eyelids. A topical anesthetic (proparacaine 0.5%) is applied to the eye to prevent irritation from the speculum placement. A rigid gas-permeable lens is placed on the eye to keep the cornea moist throughout imaging. The light intensity on the retina is kept below 50 mW/cm² in accordance with the ANSI-recommended safe light dosage.

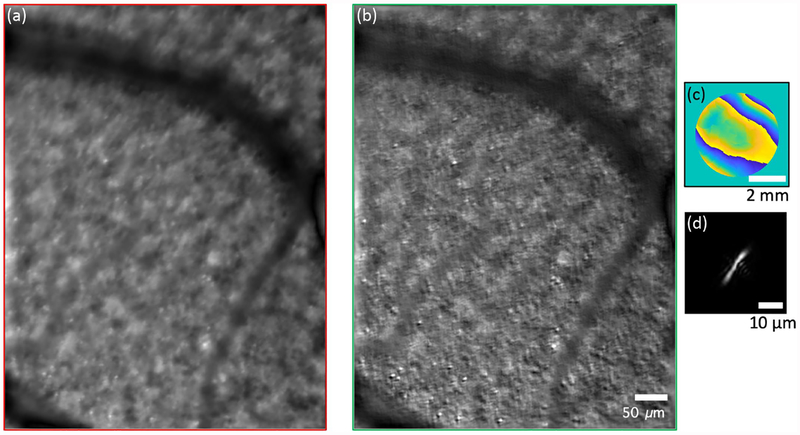

Due to the safety limitation on the illumination power, the captured images of the retina have low SNR (e.g., the full-aperture image has SNR = 7.5). We increase the SNR by capturing multiple redundant frames [213 frames for the An(u, v)s and 4 frames for the Wm(u, v)s] and summing them (Fig. S3 of Supplement 1). Thus, a long sequence of images (~45 s) has to be captured, and these images with weak retinal signals have to be registered for motion prior to CACAO-FB, since the eye has residual motion even under anesthesia. Because of the long averaging window, high-temporal-frequency aberrations are washed out, but we still expect to be able to resolve the photoreceptors, albeit at lower contrast [7]. Motion registration includes both translation and rotation, and these operations must not apply any spatial filter that may alter the images’ spatial frequency spectra. Rotation is performed with fast discrete sinc interpolation [74], which consists of a series of Fourier transform operations that can be accelerated by GPU programming. The frames from the motion-reference camera are used for the registration process (Fig. S2 of Supplement 1 and Visualization 1). A center region with half the dimensions of the full frame is selected from one of the frames as the registration template. The normalized cross-correlation (NCC) between the template and each frame [75] is computed for every rotation angle (−1.5 to 1.5 deg in 0.0015 deg steps). For each frame, the rotation angle and lateral shift that maximize the NCC, at pixel resolution, are the motion registration parameters for that frame and for the corresponding encoded-image camera frame. The registration parameters for all frames are applied to the images of the encoded-image camera, and the images are grouped by aperture and summed (Fig. S3 of Supplement 1).
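The registration steps described above can be sketched as follows. Assumptions: rotations use the classic three-shear decomposition, with each shear done as a 1-D Fourier phase ramp (periodic sinc interpolation, in the spirit of [74] but not necessarily the same algorithm); image borders wrap periodically; and the NCC search is brute force over integer offsets using `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later), which is practical only for small frames. All function names are hypothetical, and the returned angle is the rotation that, applied to the frame, best matches the template.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def shear_x(img, a):
    """Shift row y by a * (y - centre) pixels using a Fourier (sinc-interpolating) phase ramp."""
    ny, nx = img.shape
    y = np.arange(ny) - ny // 2
    fx = np.fft.fftfreq(nx)[None, :]
    ramp = np.exp(-2j * np.pi * fx * (a * y)[:, None])
    return np.real(np.fft.ifft(np.fft.fft(img, axis=1) * ramp, axis=1))


def rotate_three_shear(img, theta_rad):
    """Rotation as x-shear, y-shear, x-shear; no spatial low-pass filtering is applied."""
    a, b = -np.tan(theta_rad / 2), np.sin(theta_rad)
    out = shear_x(img, a)
    out = shear_x(out.T, b).T          # y-shear via transposition
    return shear_x(out, a)


def ncc_map(frame, template):
    """Zero-mean normalized cross-correlation of `template` at every valid offset."""
    win = sliding_window_view(frame, template.shape)
    t = template - template.mean()
    w = win - win.mean(axis=(-2, -1), keepdims=True)
    num = (w * t).sum(axis=(-2, -1))
    den = np.sqrt((w ** 2).sum(axis=(-2, -1)) * (t ** 2).sum())
    return num / np.maximum(den, 1e-12)


def register(frame, reference, angles_deg):
    """Return (angle_deg, dy, dx) maximizing NCC against the central half of `reference`."""
    H, W = reference.shape
    hh, ww = H // 2, W // 2
    r0, c0 = (H - hh) // 2, (W - ww) // 2
    template = reference[r0:r0 + hh, c0:c0 + ww]
    best = (-np.inf, 0.0, 0, 0)
    for ang in angles_deg:
        score = ncc_map(rotate_three_shear(frame, np.deg2rad(ang)), template)
        i, j = np.unravel_index(np.argmax(score), score.shape)
        if score[i, j] > best[0]:
            best = (score[i, j], ang, i - r0, j - c0)
    return best[1:]


rng = np.random.default_rng(0)
reference = rng.random((64, 64))
moved = np.roll(reference, (3, -2), axis=(0, 1))
print(register(moved, reference, np.arange(-1.5, 1.51, 0.5)))
```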

The deconvolution result is shown in Fig. 16. Although the full 2560 × 2160 pixel sensor is used to capture raw images, the resultant averaged images are only 1262 × 1614 pixels after motion registration. The input full-aperture image had SNR = 109. Photoreceptors are much better resolved after aberration removal. We expect photoreceptors to be evenly spread across the entire visible region, but we observe well-resolved photoreceptors mostly in the brighter regions. This may be due to the lower SNR in the darker regions, which leads to a poorer deconvolution result. We cannot capture more frames of the retina to further increase the SNR because the animal’s gaze drifts over time and the originally visible patch of the retina shifts out of our system’s FOV. Furthermore, non-uniform specular reflections from the retina add noise to part of the captured data, leading to suboptimal latent image recovery by the CACAO-FB algorithm pipeline.
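Assuming the noise in successive registered frames is independent (an assumption; residual misregistration and fixed-pattern noise would break it), summing N frames raises the SNR by √N. With the per-frame full-aperture SNR of 7.5 and the 213 summed frames, this gives 7.5·√213 ≈ 109, consistent with the input SNR reported above. The hypothetical helpers below also estimate how many frames this model needs to reach the SNR of about 40 that the simulation found sufficient for minimal artifacts.

```python
import math


def summed_snr(single_frame_snr, n_frames):
    """SNR after summing n frames with independent noise: grows as sqrt(n)."""
    return single_frame_snr * math.sqrt(n_frames)


def frames_needed(single_frame_snr, target_snr):
    """Smallest number of frames whose sum reaches target_snr under the same model."""
    return math.ceil((target_snr / single_frame_snr) ** 2)


print(f"{summed_snr(7.5, 213):.1f}")   # per-frame SNR 7.5, 213 summed frames
print(frames_needed(7.5, 40))          # frames needed for an SNR of about 40
```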

Fig. 16.

CACAO-FB result from imaging an in vivo eye of a rhesus macaque. (a) Raw image averaged over 213 frames captured with 4.5 mm full circular aperture. (b) Deconvolution result using the (c) pupil function reconstructed by CACAO-FB procedure. (d) PSF associated with the pupil function.

5. DISCUSSION

We developed a novel method to characterize the aberration of an imaging system and recover an aberration-free latent image of the underlying sample in post-processing. It does not require the coherent illumination needed by other computational aberration-compensating imaging methods. Nor does it need the separate wavefront detection and correction devices found in many conventional adaptive optics systems, since the hardware complexity is offloaded to software, which can harness ever-increasing computational power. Because it is based on incoherent imaging, it avoids the sensitivity to phase fluctuations and incident angles associated with coherent imaging, and it can characterize an integrated optical system whose sample plane is accessible only through the target system’s lens. Our demonstrations of CACAO-FB on suboptimal lenses in bench-top and in vivo experiments show its viability in a broad range of imaging scenarios. Its simple hardware setup is also a key advantage over other aberration-correction methods and may facilitate its wide adoption.

More severe aberration can be addressed readily by shrinking the scanned aperture size on the SLM so that the aberration within each windowed pupil function remains low order. This comes at the expense of the acquisition speed as more images need to be captured to cover the same pupil diameter.
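The trade-off above can be made concrete with a small, hypothetical scan planner. The grid step (half the limited-aperture diameter), the requirement that each limited aperture lie fully inside the full aperture, and the ring-by-ring ordering used to approximate a spiral-out sequence are all assumptions, not the exact pattern used in the experiments. Halving the limited-aperture diameter multiplies the number of captures several-fold, while each capture also collects only about a quarter as much light.

```python
import math


def spiral_positions(full_diam_mm, sub_diam_mm, step_mm):
    """Centres of limited apertures on a square grid, ordered from the centre outward.

    Only apertures lying entirely inside the full aperture are kept (assumption).
    """
    r_max = (full_diam_mm - sub_diam_mm) / 2
    n = int(r_max // step_mm)
    pts = [(i * step_mm, j * step_mm)
           for i in range(-n, n + 1) for j in range(-n, n + 1)
           if math.hypot(i * step_mm, j * step_mm) <= r_max + 1e-9]
    # Sort by radius, then by polar angle, to visit rings outward (a spiral-like order).
    pts.sort(key=lambda p: (round(math.hypot(*p), 9), math.atan2(p[1], p[0]) % (2 * math.pi)))
    return pts


for sub, step in [(1.0, 0.5), (0.5, 0.25)]:
    pts = spiral_positions(5.5, sub, step)
    frac = (sub / 5.5) ** 2                 # fraction of full-aperture light per capture
    print(f"{sub} mm aperture, {step} mm step: {len(pts)} captures, {frac:.1%} of the light each")
```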

If the masks on the pupil are shrunk further and do not overlap, this pupil-masking process becomes Shack–Hartmann (SH) sensing. This illustrates the key advantages of our scheme over an SH sensor: using larger masks requires fewer image acquisitions and increases the images’ SNR. A larger mask on an aberrated pupil no longer encodes a simple shifted spot in the spatial domain, as would be the case for an SH sensor, but rather a blur kernel, as shown in Fig. 3. Therefore, reconstructing the blur kernels of the limited-aperture images is critical for CACAO-FB’s performance, as demonstrated in Fig. 15.

Using an aperture mask in the Fourier plane discards a significant amount of photons in the image acquisition process. One possible way to improve the photon efficiency of our system would be to use a phase mask instead of an amplitude mask to code the Fourier plane as has been demonstrated in Ref. [70] to remove nulls in the OTF of an aberrated imaging system.

Although the recovered retinal image in Section 4 is not on par with what a typical AO retinal imager can achieve, it serves as a proof of concept for using CACAO-FB to correct aberrations in a general optical system. Several challenges in imaging an in vivo eye can be addressed in future work to make CACAO-FB a viable alternative to AO retinal imagers. First, increasing the SNR by averaging multiple retinal images of the rhesus macaque’s eye in vivo is challenging because its gaze continues to drift even under general anesthesia. Only a finite number of frames can be captured before the original patch of retina shifts out of our system’s FOV. Imaging a human subject would be less susceptible to this issue, as an operator can instruct the subject to fixate on a target and keep the same patch of the retina within the system’s FOV, as done in Ref. [7]. The small lateral shifts between captured frames due to the eye’s saccades can be digitally registered prior to averaging. Using a wavelength invisible to the eye would allow the subject to maintain their gaze throughout an extended acquisition time. Second, non-uniform specular reflections from the retinal layer corrupt the captured images. The flood illumination provided on the retina through the pupil does not have sufficient angular coverage to even out the specular reflections, so some images captured with a small aperture contained specular reflections while others did not. A flood illumination with a wider divergence angle can mitigate this problem.

By contrast, our method can readily be applied to static targets, such as wide-FOV imaging of fluorescent samples under sample- and system-induced aberrations, because fluorescent signals are inherently spatially incoherent and the CACAO-FB principle therefore applies directly to the imaging process. With its simple optical setup, we believe CACAO-FB can be easily incorporated into many existing imaging systems to compensate for the limitations of physical optical design.

Supplementary Material

Acknowledgment.

We thank Amir Hariri for assisting with the experiment; Soo-Young Kim, Dierck Hillmann, Martha Neuringer, Laurie Renner, Michael Andrews and Mark Pennesi for being of tremendous help over email regarding the experimental setup and in vivo eye imaging; and Mooseok Jang, Haowen Ruan, Edward Haojiang Zhou, Joshua Brake, Michelle Cua, Hangwen Lu, and Yujia Huang for helpful discussions.

Funding. National Institutes of Health (NIH) (R21 EY026228A).

Footnotes

See Supplement 1 for supporting content.

REFERENCES

- 1. Lohmann AW, Dorsch RG, Mendlovic D, Zalevsky Z, and Ferreira C, “Space-bandwidth product of optical signals and systems,” J. Opt. Soc. Am. A 13, 470–473 (1996).

- 2. McConnell G, Trägårdh J, Amor R, Dempster J, Reid E, and Amos WB, “A novel optical microscope for imaging large embryos and tissue volumes with sub-cellular resolution throughout,” eLife 5, e18659 (2016).

- 3. Godara P, Dubis A, Roorda A, Duncan J, and Carroll J, “Adaptive optics retinal imaging: Emerging clinical applications,” Optom. Vis. Sci. 87, 930–941 (2010).

- 4. Williams D, “Imaging single cells in the living retina,” Vis. Res. 51, 1379–1396 (2011).

- 5. Fried DL, “Anisoplanatism in adaptive optics,” J. Opt. Soc. Am. 72, 52–61 (1982).

- 6. Booth MJ, “Adaptive optical microscopy: the ongoing quest for a perfect image,” Light Sci. Appl. 3, e165 (2014).

- 7. Hofer H, Chen L, Yoon GY, Singer B, Yamauchi Y, and Williams DR, “Improvement in retinal image quality with dynamic correction of the eye’s aberrations,” Opt. Express 8, 631–643 (2001).

- 8. Marcos S, Werner JS, Burns SA, Merigan WH, Artal P, Atchison DA, Hampson KM, Legras R, Lundstrom L, Yoon G, Carroll J, Choi SS, Doble N, Dubis AM, Dubra A, Elsner A, Jonnal R, Miller DT, Paques M, Smithson HE, Young LK, Zhang Y, Campbell M, Hunter J, Metha A, Palczewska G, Schallek J, and Sincich LC, “Vision science and adaptive optics, the state of the field,” Vis. Res. 132, 3–33 (2017).

- 9. Zheng G, Horstmeyer R, and Yang C, “Wide-field, high-resolution Fourier ptychographic microscopy,” Nat. Photonics 7, 739–745 (2013).

- 10. Horstmeyer R, Ou X, Chung J, Zheng G, and Yang C, “Overlapped Fourier coding for optical aberration removal,” Opt. Express 22, 24062–24080 (2014).

- 11. Ou X, Zheng G, and Yang C, “Embedded pupil function recovery for Fourier ptychographic microscopy,” Opt. Express 22, 4960–4972 (2014).

- 12. Ou X, Horstmeyer R, Yang C, and Zheng G, “Quantitative phase imaging via Fourier ptychographic microscopy,” Opt. Lett. 38, 4845–4848 (2013).

- 13. Bian Z, Dong S, and Zheng G, “Adaptive system correction for robust Fourier ptychographic imaging,” Opt. Express 21, 32400–32410 (2013).

- 14. Bian L, Suo J, Chung J, Ou X, Yang C, Chen F, and Dai Q, “Fourier ptychographic reconstruction using Poisson maximum likelihood and truncated Wirtinger gradient,” Sci. Rep. 6, 27384 (2016).

- 15. Bian L, Suo J, Zheng G, Guo K, Chen F, and Dai Q, “Fourier ptychographic reconstruction using Wirtinger flow optimization,” Opt. Express 23, 4856–4866 (2015).

- 16. Rodenburg JM and Bates RHT, “The theory of super-resolution electron microscopy via Wigner-distribution deconvolution,” Philos. Trans. R. Soc. A 339, 521–553 (1992).

- 17. Faulkner HML and Rodenburg JM, “Movable aperture lensless transmission microscopy: a novel phase retrieval algorithm,” Phys. Rev. Lett. 93, 023903 (2004).

- 18. Pan A and Yao B, “Three-dimensional space optimization for near-field ptychography,” Opt. Express 27, 5433–5446 (2019).

- 19. Gustafsson MGL, “Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy,” J. Microsc. 198, 82–87 (2000).

- 20. Gustafsson MGL, “Nonlinear structured-illumination microscopy: wide-field fluorescence imaging with theoretically unlimited resolution,” Proc. Natl. Acad. Sci. USA 102, 13081–13086 (2005).

- 21. Qian J, Dang S, Wang Z, Zhou X, Dan D, Yao B, Tong Y, Yang H, Lu Y, Chen Y, Yang X, Bai M, and Lei M, “Large-scale 3D imaging of insects with natural color,” Opt. Express 27, 4845–4857 (2019).

- 22. Turpin TM, Gesell LH, Lapides J, and Price CH, “Theory of the synthetic aperture microscope,” Proc. SPIE 2566, 230–240 (1995).

- 23. Di J, Zhao J, Jiang H, Zhang P, Fan Q, and Sun W, “High resolution digital holographic microscopy with a wide field of view based on a synthetic aperture technique and use of linear CCD scanning,” Appl. Opt. 47, 5654–5659 (2008).

- 24. Hillman TR, Gutzler T, Alexandrov SA, and Sampson DD, “High-resolution, wide-field object reconstruction with synthetic aperture Fourier holographic optical microscopy,” Opt. Express 17, 7873–7892 (2009).

- 25. Yeh LH, Dong J, Zhong J, Tian L, and Chen M, “Experimental robustness of Fourier ptychography phase retrieval algorithms,” Opt. Express 23, 33214–33240 (2015).

- 26. Bian L, Suo J, Situ G, Zheng G, Chen F, and Dai Q, “Content adaptive illumination for Fourier ptychography,” Opt. Lett. 39, 6648–6651 (2014).

- 27. Sun J, Chen Q, Zhang Y, and Zuo C, “Efficient positional misalignment correction method for Fourier ptychographic microscopy,” Biomed. Opt. Express 7, 1336–1350 (2016).

- 28. Zhang Y, Jiang W, Tian L, Waller L, and Dai Q, “Self-learning based Fourier ptychographic microscopy,” Opt. Express 23, 18471–18486 (2015).

- 29. Li P, Batey DJ, Edo TB, and Rodenburg JM, “Separation of three-dimensional scattering effects in tilt-series Fourier ptychography,” Ultramicroscopy 158, 1–7 (2015).

- 30. Horstmeyer R, Chung J, Ou X, Zheng G, and Yang C, “Diffraction tomography with Fourier ptychography,” Optica 3, 827–835 (2016).

- 31. Pan A, Zhang Y, Wen K, Zhou M, Min J, Lei M, and Yao B, “Subwavelength resolution Fourier ptychography with hemispherical digital condensers,” Opt. Express 26, 23119–23131 (2018).

- 32. Pan A, Zhang Y, Zhao T, Wang Z, Dan D, Lei M, and Yao B, “System calibration method for Fourier ptychographic microscopy,” J. Biomed. Opt. 22, 096005 (2017).

- 33. Kamal T, Yang L, and Lee WM, “In situ retrieval and correction of aberrations in moldless lenses using Fourier ptychography,” Opt. Express 26, 2708–2719 (2018).

- 34. Chung J, Kim J, Ou X, Horstmeyer R, and Yang C, “Wide field-of-view fluorescence image deconvolution with aberration-estimation from Fourier ptychography,” Biomed. Opt. Express 7, 352–368 (2016).

- 35. Tian L, Li X, Ramchandran K, and Waller L, “Multiplexed coded illumination for Fourier Ptychography with an LED array microscope,” Biomed. Opt. Express 5, 2376–2389 (2014).

- 36. Chung J, Lu H, Ou X, Zhou H, and Yang C, “Wide-field Fourier ptychographic microscopy using laser illumination source,” Biomed. Opt. Express 7, 4787–4802 (2016).

- 37. Tian L and Waller L, “3D intensity and phase imaging from light field measurements in an LED array microscope,” Optica 2, 104–111 (2015).

- 38. Tian L, Liu Z, Yeh L-H, Chen M, Zhong J, and Waller L, “Computational illumination for high-speed in vitro Fourier ptychographic microscopy,” Optica 2, 904–911 (2015).

- 39. Williams A, Chung J, Ou X, Zheng G, Rawal S, Ao Z, Datar R, Yang C, and Cote R, “Fourier ptychographic microscopy for filtration-based circulating tumor cell enumeration and analysis,” J. Biomed. Opt. 19, 066007 (2014).

- 40. Dong S, Guo K, Nanda P, Shiradkar R, and Zheng G, “FPscope: a field-portable high-resolution microscope using a cellphone lens,” Biomed. Opt. Express 5, 3305–3310 (2014).

- 41. Sun J, Zuo C, Zhang L, and Chen Q, “Resolution-enhanced Fourier ptychographic microscopy based on high-numerical-aperture illuminations,” Sci. Rep. 7, 1187 (2017).

- 42. Kuang C, Ma Y, Zhou R, Lee J, Barbastathis G, Dasari RR, Yaqoob Z, and So PTC, “Digital micromirror device-based laser-illumination Fourier ptychographic microscopy,” Opt. Express 23, 26999–27010 (2015).

- 43. Zhou C and Nayar S, “What are good apertures for defocus deblurring?” in IEEE International Conference on Computational Photography (IEEE, 2009), pp. 1–8.

- 44. Fienup JR and Miller JJ, “Aberration correction by maximizing generalized sharpness metrics,” J. Opt. Soc. Am. A 20, 609–620 (2003).

- 45. Hillmann D, Spahr H, Hain C, Sudkamp H, Franke G, Pfäffle C, Winter C, and Hüttmann G, “Aberration-free volumetric high-speed imaging of in vivo retina,” Sci. Rep 6, 35209 (2016).

- 46. Soulez F, Denis L, Tourneur Y, and Thiébaut E, “Blind deconvolution of 3D data in wide field fluorescence microscopy,” in 9th IEEE International Symposium on Biomedical Imaging (ISBI) (IEEE, 2012), pp. 1735–1738.

- 47. Thiébaut E and Conan J-M, “Strict a priori constraints for maximum-likelihood blind deconvolution,” J. Opt. Soc. Am. A 12, 485–492 (1995).

- 48. Adie S, Graf B, Ahmad A, Carney S, and Boppart S, “Computational adaptive optics for broadband optical interferometric tomography of biological tissue,” Proc. Natl. Acad. Sci. USA 109, 7175–7180 (2012).

- 49. Shemonski N, South F, Liu Y-Z, Adie S, Carney S, and Boppart S, “Computational high-resolution optical imaging of the living human retina,” Nat. Photonics 9, 440–443 (2015).

- 50. Kundur D and Hatzinakos D, “Blind image deconvolution,” IEEE Signal Process. Mag 13(3), 43–64 (1996).

- 51. Kumar A, Drexler W, and Leitgeb RA, “Subaperture correlation based digital adaptive optics for full field optical coherence tomography,” Opt. Express 21, 10850–10866 (2013).

- 52. Kumar A, Fechtig D, Wurster L, Ginner L, Salas M, Pircher M, and Leitgeb R, “Noniterative digital aberration correction for cellular resolution retinal optical coherence tomography in vivo,” Optica 4, 924–931 (2017).

- 53. Ginner L, Schmoll T, Kumar A, Salas M, Pricoupenko N, Wurster L, and Leitgeb R, “Holographic line field en-face OCT with digital adaptive optics in the retina in vivo,” Biomed. Opt. Express 9, 472–485 (2018).

- 54. Gunjala G, Sherwin S, Shanker A, and Waller L, “Aberration recovery by imaging a weak diffuser,” Opt. Express 26, 21054–21068 (2018).

- 55. Zheng G, Ou X, Horstmeyer R, and Yang C, “Characterization of spatially varying aberrations for wide field-of-view microscopy,” Opt. Express 21, 15131–15143 (2013).

- 56. Gunturk BK and Li X, Image Restoration: Fundamentals and Advances (CRC Press, 2012), Vol. 7.

- 57. Goodman J, Introduction to Fourier Optics (McGraw-Hill, 2008).

- 58. Yuan L, Sun J, Quan L, and Shum H-Y, “Image deblurring with blurred/noisy image pairs,” ACM Trans. Graph 26, 1 (2007).

- 59. Lim SH and Silverstein DA, “Method for deblurring an image,” U.S. patent 8,654,201 (February 18, 2014).

- 60. Neumaier A, “Solving ill-conditioned and singular linear systems: a tutorial on regularization,” SIAM Rev 40, 636–666 (1998).

- 61. Rodenburg JM and Faulkner HML, “A phase retrieval algorithm for shifting illumination,” Appl. Phys. Lett 85, 4795–4797 (2004).

- 62. Sun J, Chen Q, Zhang Y, and Zuo C, “Sampling criteria for Fourier ptychographic microscopy in object space and frequency space,” Opt. Express 24, 15765–15781 (2016).

- 63. Fergus R, Singh B, Hertzmann A, Roweis ST, and Freeman WT, “Removing camera shake from a single photograph,” ACM Trans. Graph 25, 787–794 (2006).

- 64. Levin A, Weiss Y, Durand F, and Freeman WT, “Understanding blind deconvolution algorithms,” IEEE Trans. Pattern Anal. Mach. Intell 33, 2354–2367 (2011).

- 65. Bioucas-Dias JM, Figueiredo MAT, and Oliveira JP, “Total variation-based image deconvolution: a majorization-minimization approach,” in IEEE International Conference on Acoustics Speech and Signal Processing (IEEE, 2006), pp. 861–864.

- 66. Levin A, Fergus R, Durand F, and Freeman WT, “Image and depth from a conventional camera with a coded aperture,” ACM Trans. Graph 26, 70 (2007).

- 67. Dowski E and Cathey TW, “Extended depth of field through wavefront coding,” Appl. Opt 34, 1859–1866 (1995).

- 68. Kubala K, Dowski ER, and Cathey WT, “Reducing complexity in computational imaging systems,” Opt. Express 11, 2102–2108 (2003).

- 69. Muyo G and Harvey AR, “Wavefront coding for athermalization of infrared imaging systems,” Proc. SPIE 5612, 227–235 (2004).

- 70. Muyo G, Singh A, Andersson M, Huckridge D, Wood A, and Harvey AR, “Infrared imaging with a wavefront-coded singlet lens,” Opt. Express 17, 21118–21123 (2009).

- 71. Horstmeyer R, Heintzmann R, Popescu G, Waller L, and Yang C, “Standardizing the resolution claims for coherent microscopy,” Nat. Photonics 10, 68–71 (2016).

- 72. Rha J, Jonnal RS, Thorn KE, Qu J, Zhang Y, and Miller DT, “Adaptive optics flood-illumination camera for high speed retinal imaging,” Opt. Express 14, 4552–4569 (2006).

- 73. Martins da Silva A and Leal B, “Photosensitivity and epilepsy: current concepts and perspectives-a narrative review,” Seizure 50, 209–218 (2017).

- 74. Yaroslavsky L, Theoretical Foundations of Digital Imaging Using MATLAB (CRC Press, 2013).

- 75. Wade AR and Fitzke FW, “A fast, robust pattern recognition system for low light level image registration and its application to retinal imaging,” Opt. Express 3, 190–197 (1998).
