Significance

All through human civilization, optimization has played a major role, from aerodynamics to airline scheduling, delivery routing, and telecommunications decoding. Optimization is receiving increasing attention, since it is central to today’s artificial intelligence. All of these optimization problems are among the hardest for human or machine to solve. It has been overlooked that physics itself does optimization in the normal evolution of dynamical systems, such as seeking out the minimum energy state. We show that among such physics principles, the idea of minimum power dissipation, also called the Principle of Minimum Entropy Generation, appears to be the most useful, since it can be readily implemented in electrical or optical circuits.

Keywords: hardware accelerators, physical optimization, Ising solvers

Abstract

Optimization is a major part of human effort. Although optimization is mathematical, it is also built into physics. For example, physics has the Principle of Least Action; the Principle of Minimum Power Dissipation, also called Minimum Entropy Generation; and the Variational Principle. Physics also has Physical Annealing, which, of course, preceded computational Simulated Annealing. Physics has the Adiabatic Principle, which, in its quantum form, is called Quantum Annealing. Thus, physical machines can solve the mathematical problem of optimization, including constraints. Binary constraints can be built into the physical optimization. In that case, the machines are digital in the same sense that a flip–flop is digital. A wide variety of machines have had recent success at optimizing the Ising magnetic energy. We demonstrate in this paper that almost all those machines perform optimization according to the Principle of Minimum Power Dissipation as put forth by Onsager. Further, we show that this optimization is in fact equivalent to Lagrange multiplier optimization for constrained problems. We find that the physical gain coefficients that drive those systems actually play the role of the corresponding Lagrange multipliers.

Optimization is ubiquitous in today’s world. Everyday applications of optimization range from aerodynamic design of vehicles and physical stress optimization of bridges to airline crew scheduling and delivery truck routing. Furthermore, optimization is also indispensable in machine learning, reinforcement learning, computer vision, and speech processing. Given the prevalence of massive datasets and computations today, there has been a surge of activity in the design of hardware accelerators for neural-network training and inference (1).

We ask whether physics can address optimization. There are a number of physical principles that drive dynamical systems toward an extremum. These are the Principle of Least Action; the Principle of Minimum Power Dissipation (also called Minimum Entropy Generation); the Variational Principle; Physical Annealing, which preceded computational Simulated Annealing; and the Adiabatic Principle (which, in its quantum form, is called Quantum Annealing).

In due course, we may learn how to use each of these principles to perform optimization. Let us consider the Principle of Minimum Power Dissipation in dissipative physical systems, such as resistive electrical circuits. It was shown by Onsager (2) that the equations of linear systems, like resistor networks, can be re-expressed as the minimization principle of a power dissipation function for currents in various branches of the resistor network. By re-expressing a merit function in terms of power dissipation, the circuit itself will find the minimum of the merit function, or minimum power dissipation. Optimization is generally accompanied by constraints. For example, the constraint might be that the final answers are restricted to $\pm 1$. Such a digitally constrained optimization produces answers compatible with any digital computer.

A series of physics-based Ising solvers have been created in the physics and engineering community. The Ising challenge is to find the minimum energy configuration of a large set of magnets. This is very hard even when the magnets are restricted to only two orientations, North Pole up or down (3). Our main insights in this paper are that most of these Ising solvers use hardware based on the Principle of Minimum Power Dissipation and that almost all of them implement the well-known Lagrange multipliers method for constrained optimization.

An early work was by Yamamoto and coworkers in ref. 4, and this was followed by further work from their group (5–8) and other groups (9–15). These entropy-generating machines range from coupled optical parametric oscillators to resistor–inductor–capacitor electrical circuits, coupled exciton–polaritons, and silicon photonic-coupler arrays. These types of machines have the advantage that they solve digital problems orders of magnitude faster, and in a more energy-efficient manner, than conventional digital chips that are limited by latency and the energy cost (8).

Within the framework of these dissipative machines, constraints can be readily included. In effect, these machines perform constrained optimization equivalent to the technique of Lagrange multipliers. We illustrate this connection by surveying seven published physically distinct machines and showing that each minimizes power dissipation in its own way, subject to constraints; in fact, they perform Lagrange multiplier optimization.

In effect, physical machines perform local steepest descent in the power-dissipation rate. They can become stuck in local optima. At the very least, they perform a rapid search for local optima, thus reducing the search space for the global optimum. These machines can also be adapted to advanced techniques for approaching a global optimum.

At this point, we note that there are several other streams of work on physical optimization in the literature that we shall not be dealing with in this paper. These works include a variety of Lagrange-like continuous-time solvers (16, 17), Memcomputing methods (18), Reservoir Computing (19, 20), adiabatic solvers using Kerr nonlinear oscillators (21), and probabilistic bit logic (22). A brief discussion of adiabatic Kerr oscillator systems (21) is presented in SI Appendix, section 4.

The paper is organized as follows. In Section 1, we recognize that physics performs optimization through its various principles. Then, we concentrate on the Principle of Minimum Power Dissipation. In Section 2, we give an overview of the minimum power-dissipation optimization solvers in the literature and show how they incorporate constraints. Section 3 has a quick tutorial on the method of Lagrange multipliers. Section 4 studies five published solvers in detail and shows that they all follow some form of Lagrange multiplier dynamics. In Section 5, we look at those published physics-based solvers that are less obviously connected to Lagrange multipliers. Section 6 presents the applications of these solvers to perform linear regression in statistics. Finally, in Section 7, we conclude and discuss the consequences of this ability to implement physics-based Lagrange multiplier optimization for areas such as machine learning.

1. Optimization in Physics

We survey the minimization principles of physics and the important optimization algorithms derived from them. The aim is to design physical optimization machines that converge to the global optimum, or a good local optimum, irrespective of the initial point for the search.

1.A. The Principle of Least Action.

The Principle of Least Action is the most fundamental principle in physics. Newton’s Laws of Mechanics, Maxwell’s Equations of Electromagnetism, Schrödinger’s Equation in Quantum Mechanics, and Quantum Field Theory can all be interpreted as minimizing a quantity called Action. For the special case of light propagation, this reduces to the Principle of Least Time, as shown in Fig. 1.

Fig. 1.

The Principle of Least Time, a subset of the Principle of Least Action. The actual path that light takes to travel from point A to point B is the one that takes the least time to traverse. Recording the correct path entails a small energy cost consistent with the Landauer Limit.

A conservative system without friction or losses evolves according to the Principle of Least Action. The fundamental equations of physics are reversible. A consequence of this reversibility is the Liouville Theorem, which states that volumes in phase space are left unchanged as the system evolves.

By contrast, in both a computer and an optimization solver, the goal is to have a specific solution that occupies a smaller zone in the search space than the initial state, incurring an entropy cost first specified by Landauer and Bennett. Thus, some degree of irreversibility, or energy cost, is needed; in the Landauer–Bennett analysis, this cost is set by the number of digits in the answer. An algorithm has to be designed and programmed into the reversible system to effect the reduction in entropy needed to solve the optimization problem.
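The Landauer–Bennett cost can be made concrete with a rough number. The commonly quoted lower bound is $k_B T \ln 2$ of dissipated energy per bit of entropy removed, so an answer of $n$ binary digits costs at least $n\,k_B T \ln 2$. The short Python sketch below evaluates this bound. The function name `landauer_energy`, the 300 K temperature, and the 1000-spin example are our own illustrative choices.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of k_B


def landauer_energy(bits: int, temperature_k: float) -> float:
    """Lower bound (J) on the energy dissipated to fix `bits` binary digits at temperature_k."""
    return bits * BOLTZMANN_J_PER_K * temperature_k * math.log(2)


per_bit = landauer_energy(1, 300.0)          # about 2.87e-21 J
per_answer = landauer_energy(1000, 300.0)    # a hypothetical 1000-spin answer, about 2.87e-18 J
print(f"{per_bit:.3e} J per bit, {per_answer:.3e} J per 1000-bit answer")
```

At room temperature the bound is about 2.9 × 10⁻²¹ J per bit of the answer.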

The reduction in entropy implies an energy cost but not necessarily a requirement for continuous power dissipation. We look forward to computer science breakthroughs that would allow the Principle of Least Action to address unsolved problems. An alternative approach to computing would involve physical systems that continuously dissipate power, aiding in the contraction of phase space toward a final solution. This brings us to the Principle of Least Power Dissipation.

1.B. The Principle of Least Power Dissipation.

If we consider systems that continuously dissipate power, we are led to a second optimization principle in physics, the Principle of Least Entropy Generation or Least Power Dissipation. This principle states that any physical system will evolve into a steady-state configuration that minimizes the rate of power dissipation given the constraints (such as fixed thermodynamic forces, voltage sources, or input power) that are imposed on the system. An early version of this statement is provided by Onsager in his celebrated papers on the reciprocal relations (2). This was followed by further foundational work on this principle by Prigogine (23) and de Groot (24). This principle is readily seen in action in electrical circuits and is illustrated in Fig. 2. We shall frequently use this principle, as formulated by Onsager, in the rest of the paper.

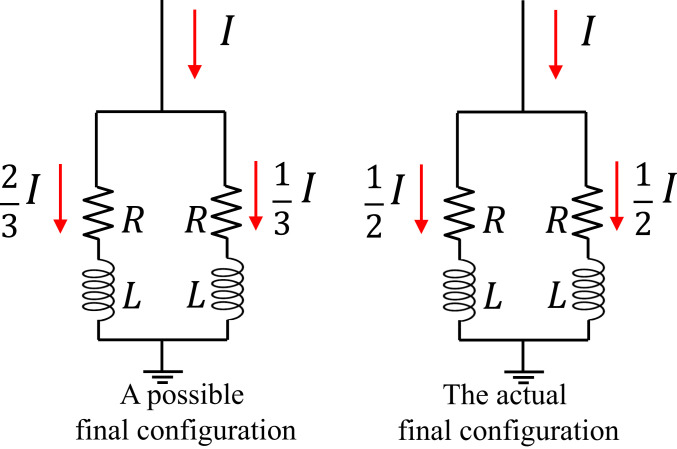

Fig. 2.

The Principle of Least Power Dissipation. In a parallel connection, the current distributes itself in a manner that minimizes the power dissipation, subject to the constraint of a fixed total input current.
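For the parallel connection of Fig. 2, the minimum-dissipation current distribution can be worked out with a single Lagrange multiplier. Minimizing $\sum_k R_k I_k^2$ subject to $\sum_k I_k = I$ gives $2R_kI_k = \lambda$. Each branch therefore carries $I_k \propto 1/R_k$, which is the ordinary current-divider rule for a linear resistor network. The Python sketch below checks this; the resistor values and helper names are hypothetical.

```python
def min_dissipation_currents(resistances, total_current):
    """Branch currents minimizing sum(R_k * I_k**2) subject to sum(I_k) == total_current.

    Stationarity of sum(R_k I_k^2) - lam * (sum(I_k) - I) gives 2 R_k I_k = lam.
    """
    total_conductance = sum(1.0 / r for r in resistances)
    lam = 2.0 * total_current / total_conductance   # value that enforces the current constraint
    currents = [lam / (2.0 * r) for r in resistances]
    return currents, lam


def dissipated_power(resistances, currents):
    return sum(r * i * i for r, i in zip(resistances, currents))


resistances = [1.0, 2.0, 4.0]   # ohms (hypothetical values)
currents, lam = min_dissipation_currents(resistances, 7.0)
print(currents, lam, dissipated_power(resistances, currents))
# Each branch carries I_k proportional to 1/R_k, and R_k * I_k equals lam / 2 for every k.
```

Here $\lambda/2$ equals the common voltage across the parallel branches. This is a first hint that a Lagrange multiplier can carry a physical meaning in such circuits.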

1.C. Physical Annealing; Energy Minimization.

This technique is widely used in materials science and metallurgy and involves the slow cooling of a system starting from a high temperature. As the cooling proceeds, the system tries to maintain thermodynamic equilibrium by reorganizing itself into the lowest energy minimum in its phase space. Energy fluctuations due to finite temperatures help the system escape from local optima, as shown in Fig. 3. In theory, this procedure reaches the global optimum as the temperature approaches zero, but the temperature has to be lowered prohibitively slowly for this to happen.

Fig. 3.

Physical Annealing involves the slow cooling down of a system. The system performs gradient descent in configuration space with occasional jumps activated by finite temperature. If the cooling is done slowly enough, the system ends up in the ground state of configuration space.
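The computational counterpart of Fig. 3 is simulated annealing. The Python code below is a minimal sketch of it. It assumes an Ising energy $E(s) = \sum_{i<j} J_{ij} s_i s_j$, single-spin Metropolis moves, and a geometric cooling schedule; these choices and the function names are ours and hypothetical. The code anneals a small random instance and compares the result with exhaustive search.

```python
import itertools
import math
import random


def ising_energy(spins, couplings):
    """E(s) = sum over pairs (i, j), i < j, of J_ij * s_i * s_j."""
    return sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())


def anneal(n, couplings, t_start=5.0, t_end=0.05, sweeps=2000, seed=0):
    """Metropolis simulated annealing; returns the lowest (energy, spins) visited."""
    rng = random.Random(seed)
    neighbors = [[] for _ in range(n)]
    for (a, b), j in couplings.items():
        neighbors[a].append((b, j))
        neighbors[b].append((a, j))
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    energy = ising_energy(spins, couplings)
    best_energy, best_spins = energy, spins[:]
    for sweep in range(sweeps):
        # Geometric cooling from t_start to t_end over the run.
        t = t_start * (t_end / t_start) ** (sweep / (sweeps - 1))
        for i in range(n):
            # Energy change caused by flipping spin i.
            delta = -2 * spins[i] * sum(j * spins[k] for k, j in neighbors[i])
            # Always accept downhill moves; accept uphill moves with Boltzmann probability.
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                spins[i] = -spins[i]
                energy += delta
                if energy < best_energy:
                    best_energy, best_spins = energy, spins[:]
    return best_energy, best_spins


def brute_force_energy(n, couplings):
    return min(ising_energy(s, couplings) for s in itertools.product((-1, 1), repeat=n))


# Hypothetical six-spin instance with random +/-1 couplings on every pair.
n = 6
rng = random.Random(1)
couplings = {(a, b): rng.choice((-1.0, 1.0)) for a in range(n) for b in range(a + 1, n)}
best_energy, best_spins = anneal(n, couplings)
print(best_energy, brute_force_energy(n, couplings))
```

For six spins exhaustive search is trivial. The point is only to show the cooling schedule and the temperature-activated acceptance of uphill moves, which let the search escape local optima.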

1.D. Adiabatic Method.

The Adiabatic Method, illustrated in Fig. 4, involves the slow transformation of a system from initial conditions that are easily constructed to final conditions that capture the difficult problem at hand.

Fig. 4.

A system initialized in the ground state of a simple Hamiltonian continues to stay in the ground state as long as the Hamiltonian is changed slowly enough.

More specifically, to solve the Ising problem, one initializes the system of spins in the ground state of a simple Hamiltonian and then transforms this Hamiltonian into the Ising problem by slowly varying some system parameters. If the parameters are varied slowly enough, the system stays in the instantaneous ground state throughout and the problem gets solved. In a quantum mechanical system, this is sometimes called “quantum annealing.” Several proposals and demonstrations, including the well-known D-Wave machine (25), utilize this algorithm.

The required rate of variation of the Hamiltonian parameters is determined by the minimum energy gap between the instantaneous ground state and the first excited state encountered as we move from the initial Hamiltonian to the final one. The smaller the gap, the more slowly the variation must be performed to solve the problem successfully. It has been shown that the gap can become exponentially small in the worst case, implying that this algorithm takes exponential time in the worst case for nondeterministic polynomial time (NP)-hard problems.
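The gap that sets the adiabatic speed limit can be computed directly for a toy problem. The NumPy sketch below interpolates $H(s) = (1-s)H_0 + sH_P$ between a transverse-field Hamiltonian $H_0 = -\sum_i \sigma^x_i$ and a three-spin Ising Hamiltonian $H_P$. It records the gap between the two lowest eigenvalues along the way. The following are illustrative assumptions:

- the ferromagnetic chain;
- the small longitudinal field on spin 0, which breaks the up/down degeneracy that would otherwise close the gap at $s = 1$;
- the function names.

A commonly quoted adiabatic condition requires a total sweep time that grows roughly as $1/\Delta_{\min}^2$.

```python
import numpy as np

SX = np.array([[0.0, 1.0], [1.0, 0.0]])   # Pauli x
SZ = np.array([[1.0, 0.0], [0.0, -1.0]])  # Pauli z


def site_op(op, site, n):
    """Embed a single-spin operator acting on `site` into the 2**n-dimensional space."""
    out = np.array([[1.0]])
    for k in range(n):
        out = np.kron(out, op if k == site else np.eye(2))
    return out


def gap_profile(n, couplings, fields, steps=201):
    """Gap between the two lowest levels of H(s) = (1 - s) H0 + s HP for s in [0, 1]."""
    h0 = -sum(site_op(SX, i, n) for i in range(n))   # ground state: all spins along +x
    hp = sum(j * site_op(SZ, a, n) @ site_op(SZ, b, n) for (a, b), j in couplings.items())
    hp = hp + sum(h * site_op(SZ, i, n) for i, h in fields.items())
    gaps = []
    for s in np.linspace(0.0, 1.0, steps):
        levels = np.linalg.eigvalsh((1.0 - s) * h0 + s * hp)   # ascending order
        gaps.append(levels[1] - levels[0])
    return np.array(gaps)


# Hypothetical 3-spin ferromagnetic chain; a field on spin 0 selects the all-up ground state.
gaps = gap_profile(3, couplings={(0, 1): -1.0, (1, 2): -1.0}, fields={0: -0.5})
print(f"gap at s=0: {gaps[0]:.3f}, at s=1: {gaps[-1]:.3f}, minimum: {gaps.min():.3f}")
```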

1.E. Minimum Power Dissipation in Multioscillator Arrays.

Multioscillator Arrays subject to Parametric Gain were introduced in refs. 4 and 5 for solving Ising problems. This approach can be regarded as a special case of the Principle of Minimum Power Dissipation, which always requires an input power constraint to avoid the null solution. In this case, gain acts as a constraint for minimum power dissipation, and the oscillator array must arrange itself to dissipate the least power subject to that constraint. If the oscillator array is bistable, this problem becomes analogous to the magnetic Ising problem. This mechanism will be the main point of Section 2.

2. Coupled Multioscillator Array Ising Solvers

The motivation for “Coupled Multioscillator Array Ising Solvers” is best explained using concepts from laser physics. As a laser is slowly turned on, spontaneous emission from the laser-gain medium couples into the various cavity modes and begins to be amplified. The different cavity modes have different loss coefficients due to their differing spatial profiles. As the laser pump/gain increases, the least-loss cavity mode grows faster than the others, and the gain is clamped by saturation. This picture can be incomplete, since further nonlinear evolution among all of the modes can occur.

Coupled Multioscillator Array Ising machines try to map the power losses of the optimization machine to the magnetic energies of the Ising problem. If the mapping is correct, the lowest power configuration will match the energetic ground state of the Ising problem. This is illustrated in Fig. 5. The system evolves toward a state of minimum power dissipation, or minimum entropy generation, subject to the constraint of gain being present.

Fig. 5.

A lossy multioscillator system is provided with gain. The x axis is a list of all of the available modes in the system, whereas the y axis plots the loss coefficient of each mode. Gain is provided to the system and is gradually increased. As in single-mode lasers, the lowest loss mode, illustrated by the blue dot, grows exponentially, saturating the gain. Above the threshold, we can expect further nonlinear evolution among the modes so as to minimize power dissipation.

The archetypal solver in this class consists of a network of interconnected oscillators driven by phase-dependent parametric gain. Parametric gain amplifies only the cosine quadrature and causes the electric field to lie along the Real Axis in the complex plane. The phase of the electric field (0 or $\pi$) can be used to represent spin in the Ising problem. The resistive interconnections between the oscillators are designed to favor ferromagnetic or antiferromagnetic “spin–spin” interactions by the Principle of Minimum Power Dissipation, subject to parametric (phase-dependent) gain as the power input.

The gain input is very important to the Principle of Minimum Power Dissipation. If there were no power input, all of the currents and voltages would be zero, and the minimum power dissipated would be zero. In the case of the Coupled Multioscillator circuit, the power input is produced through a gain mechanism, or a gain module. The constraint could be the voltage input to the gain module. However, if the gain is too small, it does not exceed the corresponding circuit losses, and the currents and voltages remain near zero. If the pump gain is then gradually ramped up, the oscillatory mode requiring the least threshold gain begins oscillating. Upon reaching the threshold gain, a nontrivial current distribution of the Coupled Multioscillator circuit will emerge. As the gain exceeds the required threshold, there will be further nonlinear evolution among the modes so as to minimize power dissipation. The final-state “spin” configuration, dissipating the lowest power, is reported as the desired optimum.
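In the linear regime below saturation, the threshold behavior can be read off an eigenvalue problem. Assume uniform loss $\gamma$, a single shared gain $g$, and linear dissipative couplings $J_{ij}$, so that $dx/dt = (g-\gamma)x - Jx$. Then each eigenvector of $J$ with eigenvalue $\mu_k$ grows at rate $g - \gamma - \mu_k$. The first mode to reach threshold is the eigenvector with the smallest $\mu_k$, at $g_{\mathrm{th}} = \gamma + \mu_{\min}$. The NumPy sketch below checks this, using a hypothetical four-oscillator ring and function names of our choosing. It also shows a gain slightly above threshold selecting that mode from random initial noise.

```python
import numpy as np


def threshold_mode(coupling, loss):
    """Threshold gain and first mode to oscillate for dx/dt = (g - loss) x - coupling @ x."""
    mu, vecs = np.linalg.eigh(coupling)   # eigenvalues in ascending order
    return loss + mu[0], vecs[:, 0]


def evolve_linear(coupling, loss, gain, x0, dt=0.01, steps=3000):
    """Forward-Euler integration of the linear (pre-saturation) dynamics."""
    x = x0.copy()
    for _ in range(steps):
        x = x + dt * ((gain - loss) * x - coupling @ x)
    return x


# Hypothetical ring of four oscillators; positive J_ij penalizes in-phase neighbours.
n = 4
ring = np.zeros((n, n))
for i in range(n):
    ring[i, (i + 1) % n] = ring[(i + 1) % n, i] = 1.0
loss = 3.0   # chosen so that loss*I + J is positive definite: every mode is lossy without gain

g_th, mode = threshold_mode(ring, loss)
x = evolve_linear(ring, loss, gain=g_th + 0.2, x0=np.random.default_rng(0).normal(size=n))
alignment = abs(x @ mode) / np.linalg.norm(x)
print(f"threshold gain {g_th:.3f}, mode {np.round(mode, 3)}, alignment {alignment:.6f}")
```

The selected mode minimizes $x^T J x$ over vectors of fixed length, which is a continuous relaxation of the Ising problem. Pushing the amplitudes toward $\pm 1$ is left to the nonlinear evolution above threshold.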

With Minimum Power Dissipation, as with most optimization schemes, it is difficult to guarantee a global optimum.

In optimization, each constraint contributes a Lagrange multiplier. We will show that the gains of the oscillators are the Lagrange multipliers of the constrained system. In Section 3, we provide a brief tutorial on Lagrange multiplier optimization.

3. Lagrange Multiplier Optimization Tutorial

The method of Lagrange multipliers is a very well-known procedure for solving constrained optimization problems in which the optimal point $x^*$ in multidimensional space locally optimizes the merit function $f(x)$ subject to the constraint $g(x) = 0$. The optimal point has the property that the slope of the merit function is zero as infinitesimal steps are taken away from $x^*$, as taught in calculus. However, these deviations are restricted to the constraint curve, as shown in Fig. 6. The isocontours of the function $f(x)$ increase until they are limited by, and just touch, the constraint curve $g(x) = 0$ at the point $x^*$.

Fig. 6.

Maximization of the function $f(x)$ subject to the constraint $g(x) = 0$. At the constrained local optimum, the gradients of $f$ and $g$, namely $\nabla f(x)$ and $\nabla g(x)$, are parallel.

At the point of touching, $x^*$, the gradients of $f$ and $g$ are parallel to each other:

$$\nabla f(x^*) = \lambda\,\nabla g(x^*) \tag{1}$$

The proportionality constant $\lambda$ is called the Lagrange multiplier corresponding to the constraint $g(x) = 0$.
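As a worked instance of Eq. 1 (an example of our own choosing), maximize $f(x, y) = x + y$ on the unit circle $g(x, y) = x^2 + y^2 - 1 = 0$. Requiring $\nabla f = \lambda \nabla g$ gives

$$
(1,\,1) = \lambda\,(2x,\,2y) \;\Rightarrow\; x = y = \frac{1}{2\lambda},
\qquad
x^2 + y^2 = \frac{1}{2\lambda^2} = 1 \;\Rightarrow\; \lambda = \pm\frac{1}{\sqrt{2}} .
$$

The root $\lambda = +1/\sqrt{2}$ gives $x^* = y^* = 1/\sqrt{2}$ and the constrained maximum $f = \sqrt{2}$, where the isocontour $x + y = \sqrt{2}$ just touches the circle. The root $\lambda = -1/\sqrt{2}$ gives the constrained minimum $f = -\sqrt{2}$.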

When we have multiple constraints $g_1, \ldots, g_p$, we expand Eq. 1 as follows:

$$\nabla f(x^*) = \sum_{i=1}^{p} \lambda_i\,\nabla g_i(x^*) \tag{2}$$

where the gradient vector $\nabla$ represents $n$ equations, accompanied by the $p$ constraint equations $g_i(x) = 0$, resulting in $n + p$ equations. These equations solve for the $n$ components in the vector $x^*$ and the $p$ unknown Lagrange multipliers $\lambda_i$. That would be $n + p$ equations for $n + p$ unknowns.

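Motivated by Eq. 2, we introduce a Lagrange function $L(x, \lambda)$ defined as follows:

$$L(x, \lambda) = f(x) - \sum_{i=1}^{p} \lambda_i\, g_i(x) \tag{3}$$

Setting $\nabla_x L = 0$ recovers Eq. 2, while $\partial L / \partial \lambda_i = 0$ recovers the constraints $g_i(x) = 0$. The stationary point of $L$ can be found by gradient descent or other methods, solving for $x^*$ and the $\lambda_i$. The theory of Lagrange multipliers, and the popular “Augmented Lagrange Method of Multipliers” algorithm used to solve for a locally optimal $x^*$, are discussed in great detail in refs. 26 and 27. A gist of the main points is presented in SI Appendix, sections 1–3.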
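One simple way to locate the stationary point of a Lagrange function is to descend in $x$ while ascending in the multipliers. This scheme is often called gradient descent–ascent, or the Arrow–Hurwicz method. It is not the augmented Lagrangian method of refs. 26 and 27. The Python sketch below applies it to a toy problem of our choosing: minimize $f = x^2 + y^2$ subject to $g = x + y - 1 = 0$, with the Lagrange function written as $L = f - \lambda g$. The step size and iteration count are assumptions.

```python
def gradient_descent_ascent(eta=0.05, steps=4000):
    """Stationary point of L = f - lam * g for f = x**2 + y**2 and g = x + y - 1.

    The primal variables (x, y) step along -dL/d(x, y); the multiplier steps along +dL/dlam.
    """
    x, y, lam = 0.0, 0.0, 0.0
    for _ in range(steps):
        dl_dx = 2.0 * x - lam
        dl_dy = 2.0 * y - lam
        dl_dlam = -(x + y - 1.0)   # equals -g(x, y)
        # Simultaneous update: all three use the values from the previous iteration.
        x, y, lam = x - eta * dl_dx, y - eta * dl_dy, lam + eta * dl_dlam
    return x, y, lam


x, y, lam = gradient_descent_ascent()
print(f"x = {x:.6f}, y = {y:.6f}, lambda = {lam:.6f}")
```

The iterates converge to $x = y = 1/2$ and $\lambda = 1$, where $\nabla f = (1, 1) = \lambda \nabla g$. The multiplier update moves uphill in $L$. This is because the constrained optimum is a saddle point of $L$ rather than a minimum; descending in all three variables would drive this example away from the solution.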

For the case of the Ising problem, the objective function is given by $f(\mu) = \sum_{i,j} J_{ij}\, \mu_i \cdot \mu_j$, where $f(\mu)$ is the magnetic Ising Energy and $\mu_i$ is the $i$th magnetic moment vector. For the optimization method presented in this paper, we need a circuit or other physical system whose power dissipation is also $f(x) = \sum_{i,j} J_{ij}\, x_i x_j$. Now, however, $f$ is power dissipation, not energy; $x_i$ is a variable that represents voltage, current, or electric field; and the $J_{ij}$ are not magnetic energies but rather dissipative coupling elements. The correspondence is between magnetic spins quantized along the z axis, $\mu_{iz} = \pm 1$, and the circuit variable $x_i = \pm 1$.

While “energy” and “power dissipation” are measured in different units, we nonetheless need to establish a correspondence between them. For every optimization problem, the challenge is to find a physical system whose power-dissipation function represents the desired equivalent optimization function.

If the Ising problem has $n$ spins, there are also $n$ constraints, one for each of the spins. A sufficient constraint is $x_i^2 - 1 = 0$. More complicated nonlinear constraints can be envisioned, but $x_i^2 - 1$ could represent the first two terms in a more complicated constraint Taylor expansion.

Therefore, a sufficient Lagrange function for the Ising problem, with digital constraints, is given by

$$L(x, \lambda) = \sum_{i,j} J_{ij}\, x_i x_j - \sum_{i=1}^{n} \lambda_i \left(x_i^2 - 1\right)$$

where $\lambda_i$ is the Lagrange multiplier associated with the corresponding constraint. We shall see in Section 4 that most analog algorithms that have been proposed for the Ising problem in the literature actually tend to optimize some version of the above Lagrange function.
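The Lagrange function also yields a simple optimality check. Write it as $L(x, \lambda) = \sum_{i,j} J_{ij} x_i x_j - \sum_i \lambda_i (x_i^2 - 1)$, with symmetric $J$ and zero diagonal. Any $x \in \{-1, +1\}^n$ is then a stationary point when $\lambda_i = x_i (Jx)_i$. Suppose, in addition, that $J - \mathrm{diag}(\lambda)$ is positive semidefinite. Then $L(\cdot, \lambda)$ is convex, so $x$ minimizes it globally. Because $L$ equals the Ising objective on the constraint set, $x$ is a global Ising optimum. This is a standard sufficient (not necessary) condition, not a result from the works surveyed here. The NumPy sketch below checks it on a hypothetical four-spin antiferromagnetic ring, using helper names of our choosing.

```python
import itertools

import numpy as np


def stationary_multipliers(J, x):
    """lam_i = x_i (J x)_i makes grad_x L = 2 J x - 2 lam * x vanish when every x_i = +/-1."""
    return x * (J @ x)


def certified_global_min(J, x, tol=1e-9):
    """Sufficient test: J - diag(lam) positive semidefinite => x is a global Ising minimum."""
    lam = stationary_multipliers(J, x)
    return bool(np.linalg.eigvalsh(J - np.diag(lam)).min() >= -tol)


def brute_force_min(J):
    n = J.shape[0]
    return min(float(np.dot(s, J @ np.array(s))) for s in itertools.product((-1.0, 1.0), repeat=n))


# Hypothetical 4-spin ring with positive (antiferromagnetic) couplings, f(x) = x^T J x.
n = 4
J = np.zeros((n, n))
for i in range(n):
    J[i, (i + 1) % n] = J[(i + 1) % n, i] = 1.0
alternating = np.array([1.0, -1.0, 1.0, -1.0])
aligned = np.ones(n)
print(certified_global_min(J, alternating), certified_global_min(J, aligned))
print(alternating @ J @ alternating, brute_force_min(J))
```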

4. The Physical Ising Solvers

We now discuss some physical methods proposed in the literature and show how each scheme implements the method of Lagrange multipliers. They all obtain good performance on the Gset benchmark problem set (28), and many of them demonstrate better performance than the heuristic algorithm, Breakout Local Search (29). The main result of our work is the realization that the gains used in all these physical methods are in fact Lagrange multipliers.

We label the available physical solvers in the literature as follows: Optical Parametric Oscillators [4.A], Coupled Radio Oscillators on the Real Axis [4.B], Coupled Laser Cavities Using Multicore Fibers [4.C], Coupled Radio Oscillators on the Unit Circle [4.D], and Coupled Polariton Condensates [4.E]. In Section 5, we discuss schemes that might be variants of minimum power dissipation: Iterative Analog Matrix Multipliers [5.A] and Leleu Mathematical Ising Solver [5.B]. In SI Appendix, section 4, we discuss “Adiabatic Coupled Radio Oscillators” (21), which seems unconnected with minimum power dissipation.

Optical Parametric Oscillators, Coupled Radio Oscillators on the Real Axis, and Coupled Radio Oscillators on the Unit Circle use only one gain for all of the oscillators, which is equivalent to imposing only one constraint, while Coupled Laser Cavities Using Multicore Fibers, Coupled Polariton Condensates, and Iterative Analog Matrix Multipliers use different gains for each spin and correctly capture the constraints of the Ising problem.

4.A. Optical Parametric Oscillators.

4.A.1. Overview.

An early optical machine for solving the Ising problem was presented by Yamamoto and coworkers (4, 30). Their system consists of several pulses of light circulating in an optical-fiber loop, with the phase of each light pulse representing an Ising spin. In parametric oscillators, gain occurs at half the pump frequency. If the gain overcomes the intrinsic losses of the fiber, the optical pulse builds up. Parametric amplification provides phase-dependent gain. It restricts the oscillatory phase to the Real Axis of the complex plane. This leads to bistability along the positive or negative real axis, allowing the optical pulses to mimic the bistability of magnets.

In the Ising problem, there is magnetic coupling between spins. The corresponding coupling between optical pulses is achieved by specified interactions between the pulses. In Yamamoto and coworkers’ approach (30), one pulse is first plucked out by an optical gate and amplitude modulated by the proper connection weight specified in the Ising Hamiltonian. It is then reinjected and superposed onto another optical pulse, producing constructive or destructive interference, representing ferromagnetic or antiferromagnetic coupling.

By providing saturation to the pulse amplitudes, the optical pulses will finally settle down, each to one of the two bistable states. We will find that the pulse-amplitude configuration evolves exactly according to the Principle of Minimum Power Dissipation. If the magnetic dipole solutions in the Ising problem are constrained to $\pm 1$, then each constraint is associated with a Lagrange multiplier. Surprisingly, we find that each Lagrange multiplier turns out to be equal to the gain or loss associated with the corresponding oscillator.

4.A.2. Lagrange multipliers as gain coefficients.

Yamamoto and coworkers (5) analyze their parametric oscillator system using slowly varying coupled wave equations for the circulating optical modes. We now show that the coupled wave equation approach reduces to an extremum of their system “power dissipation.” The coupled-wave equation for the slowly varying amplitude $x_i$ of the in-phase electric field cosine component of the $i$th optical pulse (representing magnetic spin $\mu_{iz}$ in an Ising system) is as follows:

$$\frac{dx_i}{dt} = -\sum_{j \neq i} J_{ij}\, x_j - \gamma_i x_i + g_i x_i \tag{4}$$

where the weights $J_{ij}$ are the dissipative coupling rate constants. (The $J_{ij}$ arise from constructive and destructive interference and can be positive or negative; they are set by the corresponding weights in the binary Ising problem.) $g_i$ represents the parametric gain (1/sec) supplied to the $i$th pulse, and $\gamma_i$ is the corresponding loss (1/sec). We shall henceforth use normalized, dimensionless $x_i$ in the rest of the paper. The normalization electric field is that which produces an energy of 1/2 joule in the normalization volume, while for voltages, the normalization voltage is that which produces an energy of 1/2 joule in the linear capacitor. For clarity of discussion, we dropped the cubic terms in Eq. 4 that Yamamoto and coworkers (5) originally had. A discussion of these terms is given in SI Appendix, section 3.


Owing to the nature of parametric amplification, the quadrature sine components of the electric fields die out rapidly. The normalized power dissipation $h(x)$ (in watts divided by one joule), including the negative dissipation associated with gain, can be written as:

$$h(x) = \sum_{i \neq j} J_{ij}\, x_i x_j + \sum_i \gamma_i x_i^2 - \sum_i g_i x_i^2 \tag{5}$$

where the electric field cosine amplitudes $x_i$ are rendered dimensionless. If we minimize the power dissipation without invoking any constraints, that is, with $g_i = 0$, the amplitudes simply go to zero.

If the gain is large enough, some of the amplitudes might go to infinity. To avoid this, we employ the constraint functions $x_i^2 - 1 = 0$, which enforce a digital outcome. We add these constraint functions, each multiplied by its Lagrange multiplier, to the power dissipation. This yields the Lagrange function $L(x, g)$, in units of watts divided by one joule:

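$$L(x, g) = \sum_{i \neq j} J_{ij}\, x_i x_j + \sum_i \gamma_i x_i^2 - \sum_i g_i \left(x_i^2 - 1\right) \tag{6}$$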

The unconstrained Eq. 5 and the constrained Eq. 6 differ only in the $-1$ added to $x_i^2$ in the gain term. This term effectively constrains the amplitudes and prevents them from diverging to $\pm\infty$. Eq. 6 has the form of the Lagrange function given at the end of Section 3. Surprisingly, the gains $g_i$ emerge to play the role of Lagrange multipliers. This means that each mode, labeled by the subscript $i$, must adjust to a particular gain $g_i$ such that power dissipation is minimized. Minimization of the Lagrange function (Eq. 6) provides the final steady state of the system dynamics. In fact, the right-hand side of Eq. 4 is proportional to the negative gradient of Eq. 6 with respect to $x_i$, demonstrating that the dynamical system performs gradient descent on the Lagrange function. If the circuit or optical system is designed to dissipate power in a mathematical form that matches the Ising magnetic energy, then the system will seek out a local optimum of the Ising energy.
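The gradient-descent statement can be checked numerically. We assume the linearized coupled-wave model $dx_i/dt = -\sum_j J_{ij}x_j - \gamma_i x_i + g_i x_i$ with symmetric $J$, which is our reading of Eq. 4 with the cubic terms dropped. We also assume the dissipation $h(x) = x^T J x + \sum_i (\gamma_i - g_i) x_i^2$. Under these assumptions, the right-hand side equals $-\tfrac{1}{2}\nabla h$, and the mode energy $\tfrac12\sum_i x_i^2$ decreases at exactly the rate $h$. Since the $-1$ in Eq. 6 only adds a constant, $\nabla_x L = \nabla h$ as well. The Python sketch below verifies both relations by finite differences, using randomly drawn parameters and helper names of our choosing.

```python
import numpy as np


def coupled_wave_rhs(x, J, loss, gain):
    """Linearized coupled-wave model: dx_i/dt = -sum_j J_ij x_j - loss_i x_i + gain_i x_i."""
    return -J @ x - loss * x + gain * x


def net_dissipation(x, J, loss, gain):
    """h(x) = x^T J x + sum_i (loss_i - gain_i) x_i^2, in watts per joule."""
    return x @ J @ x + np.sum((loss - gain) * x**2)


def central_difference_grad(fun, x, eps=1e-6):
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps
        grad[i] = (fun(x + step) - fun(x - step)) / (2.0 * eps)
    return grad


# Randomly drawn, hypothetical parameters for five oscillators.
rng = np.random.default_rng(0)
a = rng.normal(size=(5, 5))
J = (a + a.T) / 2.0
np.fill_diagonal(J, 0.0)
loss = rng.uniform(0.5, 1.0, size=5)
gain = rng.uniform(0.0, 1.5, size=5)
x = rng.normal(size=5)

grad_h = central_difference_grad(lambda v: net_dissipation(v, J, loss, gain), x)
rhs = coupled_wave_rhs(x, J, loss, gain)
print(np.max(np.abs(rhs + 0.5 * grad_h)))          # close to zero: rhs = -(1/2) grad h
print(x @ rhs + net_dissipation(x, J, loss, gain))  # close to zero: d/dt (sum x_i^2 / 2) = -h
```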

Such a physical system, constrained to $x_i = \pm 1$, is digital in the same sense as a flip–flop circuit. Unlike in a von Neumann computer, however, the inputs are resistor weights for power dissipation. Moreover, a physical system can evolve directly, without the need for shuttling information back and forth as in a von Neumann computer, providing faster answers. Without the communications overhead but with the higher operation speed, the energy dissipated to arrive at the final answer will be less, despite the circuit being required to generate entropy during its evolution toward the final state.

To achieve minimum power dissipation, the amplitudes $x_i$ and the Lagrange multipliers $g_i$ must all be simultaneously optimized using the Lagrange function, as discussed in Section 4.E. While a circuit will evolve toward optimal amplitudes $x_i$, the gains $g_i$ must arise from a separate active circuit. Ideally, the power dissipation of the active circuit that controls the Lagrange multiplier gains would be included with that of the main circuit. A more common method is to provide gain that follows a heuristic rule. For example, Yamamoto and coworkers (5) follow a heuristic rule in which the gain is ramped up linearly in time. It is not yet clear whether this heuristic approach to gain evolution will be as effective as the complete Lagrange method of Section 4.E. That method lumps together all main-circuit and feedback components and minimizes the total power dissipation.
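To see how a heuristic gain schedule plays out, the NumPy sketch below integrates a linear coupled-wave model with one shared gain. The gain is ramped linearly and then held. A saturating $-x_i^3$ term stands in for the cubic terms dropped from Eq. 4. All of the following are hypothetical choices, not the parameters of ref. 5:

- the six-oscillator ring with positive (antiferromagnetic) dissipative couplings;
- the loss;
- the ramp and hold times;
- the Euler step.

```python
import numpy as np


def ring_couplings(n):
    """Positive (antiferromagnetic) dissipative coupling between ring neighbours."""
    J = np.zeros((n, n))
    for i in range(n):
        J[i, (i + 1) % n] = J[(i + 1) % n, i] = 1.0
    return J


def ramped_gain_run(J, loss=3.0, g_final=2.0, t_ramp=20.0, t_hold=30.0, dt=0.01, seed=0):
    """Euler integration with one shared gain: linear ramp to g_final, then hold."""
    rng = np.random.default_rng(seed)
    x = 1e-3 * rng.normal(size=J.shape[0])   # small initial noise
    n_ramp = int(round(t_ramp / dt))
    n_total = n_ramp + int(round(t_hold / dt))
    for step in range(n_total):
        gain = g_final * min(step / n_ramp, 1.0)
        # Linear coupled-wave terms plus an assumed cubic saturation -x_i**3.
        x = x + dt * (-J @ x - loss * x + gain * x - x**3)
    return x


x = ramped_gain_run(ring_couplings(6))
spins = np.sign(x)
print(np.round(x, 4), spins)
```

The amplitudes settle at $\pm 1$ with alternating signs, which is the ground state of this unfrustrated ring. On frustrated instances the same schedule can end in a local optimum, which is why the choice of gain dynamics matters.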

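We conclude this subsection by noting that the Lagrange function, Eq. 6, corresponds to the following merit function (the normalized power dissipation $f(x)$, in watts divided by one joule) and constraints:

$$f(x) = \sum_{i \neq j} J_{ij}\, x_i x_j + \sum_i \gamma_i x_i^2, \qquad x_i^2 - 1 = 0 \quad (i = 1, \ldots, n)$$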

4.B. Coupled Radio Oscillators on the Real Axis.

4.B.1. Overview.

A coupled inductor–capacitor (LC) oscillator system with parametric amplification was analyzed in the circuit simulator SPICE by Xiao (9). This is analogous to the optical Yamamoto system, but this system consists of a network of radio frequency LC oscillators coupled to one another through resistive connections. The LC oscillators contain linear inductors but nonlinear capacitors, which provide the parametric gain. The parallel or cross-connect resistive connections between the oscillators are designed to implement the ferromagnetic or antiferromagnetic couplings between magnetic dipole moments, as shown in Fig. 7. The phase of each oscillator’s voltage amplitude, 0 or $\pi$, determines the sign of the corresponding magnetic dipole moment.

Fig. 7.

Coupled LC oscillator circuit for two coupled magnets. The oscillation of the LC oscillators represents the magnetic moments, while the parallel or antiparallel cross-connections represent ferromagnetic or antiferromagnetic coupling, respectively. The nonlinear capacitors are pumped by a voltage at frequency $2\omega_0$, providing parametric gain at $\omega_0$.

The nonlinear capacitors are pumped by a voltage at frequency $2\omega_0$, where $\omega_0$ is the natural frequency of the LC oscillators. Second harmonic pumping leads to parametric amplification in the oscillators. As in the optical case, parametric amplification induces gain in the Real Axis quadrature and imposes phase bistability on the oscillators.

Ideally, an active circuit would control the Lagrange multiplier gains, and the gain control circuit would have its power dissipation included with the main circuit. A more common approach is to provide gain that follows a heuristic rule. Xiao (9) linearly ramps up the gain, as in Optical Parametric Oscillators. Again, as in the previous case, a mechanism is needed to prevent the parametric gain from producing infinite-amplitude signals. Zener diodes are used to restrict the amplitudes to finite saturation values. With the diodes in place, the circuit settles into a voltage phase configuration, 0 or $\pi$, that minimizes net power dissipation for a given pump gain.

4.B.2. Lagrange function and Lagrange multipliers.

The evolution of the oscillator capacitor voltages was derived from Kirchhoff’s laws by Xiao (9). Applying the slowly varying amplitude approximation to the cosine component of these voltages produces the following equation for the $i$th oscillator:

| [7] |

where the are the peak voltage amplitudes; is the resistance of the coupling resistors; the cross-couplings are assigned values  ; is the linear part of the capacitance in each oscillator; is the number of oscillators; is the natural frequency of the oscillators; the parametric gain constant , where is the capacitance modulation at the second harmonic; and the decay constant . In this simplified model, all decay constants are taken as equal, and, moreover, each oscillator experiences exactly the same parametric gain , conditions that can be relaxed if needed.


We note that Eq. 7 performs gradient descent on the net power-dissipation function:

$$h(A) = (n-1)\kappa\sum_i A_i^2 - \kappa\sum_{i\ne j}J_{ij}A_iA_j - \gamma\sum_i A_i^2 \tag{8}$$

where h, L, f are the power-dissipation functions in watts divided by one joule. This is very similar to Section 4.A. The first two terms on the right-hand side together represent the dissipative losses in the coupling resistors, while the third term is the negative of the gain provided to the system of oscillators.
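The claim that Eq. 7 performs gradient descent on the net power dissipation can be checked with finite differences. The sketch below assumes the three-term form $h(A) = (n-1)\kappa\sum_i A_i^2 - \kappa\sum_{i\ne j}J_{ij}A_iA_j - \gamma\sum_i A_i^2$ (coupling losses minus gain) with symmetric $\pm1$ couplings; under that assumption the right-hand side of Eq. 7 equals $-\tfrac12\,\partial h/\partial A_i$. All helper names are hypothetical.

```python
import random


def h(A, J, kappa, gamma):
    """Assumed net power-dissipation function: coupling losses minus parametric gain."""
    n = len(A)
    loss = (n - 1) * kappa * sum(a * a for a in A)
    coupling = kappa * sum(J[i][j] * A[i] * A[j] for i in range(n) for j in range(n) if i != j)
    gain = gamma * sum(a * a for a in A)
    return loss - coupling - gain


def rhs_eq7(A, J, kappa, gamma):
    """Right-hand side of the assumed Eq. 7."""
    n = len(A)
    return [-(n - 1) * kappa * A[i]
            + kappa * sum(J[i][j] * A[j] for j in range(n) if j != i)
            + gamma * A[i] for i in range(n)]


def numerical_grad(f, A, eps=1e-6):
    """Central-difference gradient of f at A."""
    g = []
    for i in range(len(A)):
        up, dn = A[:], A[:]
        up[i] += eps
        dn[i] -= eps
        g.append((f(up) - f(dn)) / (2 * eps))
    return g


def max_mismatch(n=5, kappa=0.7, gamma=0.3, seed=1):
    """Largest |rhs_i + 0.5 * dh/dA_i| on a random instance (should be ~0)."""
    rng = random.Random(seed)
    J = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            J[i][j] = J[j][i] = rng.choice([-1, 1])  # symmetric +/-1 couplings
    A = [rng.uniform(-1, 1) for _ in range(n)]
    grad = numerical_grad(lambda x: h(x, J, kappa, gamma), A)
    return max(abs(r + 0.5 * g) for r, g in zip(rhs_eq7(A, J, kappa, gamma), grad))


print(max_mismatch())
```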

Next, we obtain the following Lagrange function through the same replacement that we performed in Section 4.A, namely replacing $A_i^2$ with $A_i^2 - 1$ in the gain term:

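$$L(A,\gamma) = (n-1)\kappa\sum_i A_i^2 - \kappa\sum_{i\ne j}J_{ij}A_iA_j - \gamma\sum_i\left(A_i^2 - 1\right) \tag{9}$$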

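where the $A_i$ are normalized to the voltage that produces an energy of 1/2 joule on the capacitor $C_0$. The above Lagrange function corresponds to Lagrange multiplier optimization using the following merit function and constraints:

$$f(A) = (n-1)\kappa\sum_i A_i^2 - \kappa\sum_{i\ne j}J_{ij}A_iA_j, \qquad g_i(A) = 1 - A_i^2 = 0.$$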

Again, we see that the gain coefficient $\gamma$ is the Lagrange multiplier of the constraints $A_i^2 = 1$.

4.B.3. Time dynamics and iterative optimization of the Lagrange function.

Although the extremum of Eq. 9 represents the final evolved state of the physical system and hence the optimization outcome, it is instructive to examine the time evolution toward the optimal state. In this subsection we show that iterative optimization of the Lagrange function in time reproduces the slowly varying dynamics of the circuit. Each iteration is assumed to take time $\Delta t$. In each iteration, the voltage amplitude $A_i$ takes a step antiparallel to the gradient of the Lagrange function:

$$A_i(t+\Delta t) - A_i(t) = -\eta\,\Delta t\,\frac{\partial L(A,\gamma)}{\partial A_i} \tag{10}$$

where the minus sign on the right-hand side drives the system toward minimum power dissipation. The proportionality constant $\eta$ controls the size of each iterative step; it also calibrates the dimensional units between power dissipation and voltage amplitude. (Since $A_i$ is a voltage amplitude, $\eta$ has units of reciprocal capacitance.) Converting Eq. 10 to continuous time,

$$\frac{dA_i}{dt} = -\eta\,\frac{\partial}{\partial A_i}\Big[f(A) + \sum_j \gamma_j\,g_j(A)\Big] \tag{11}$$

where the $\gamma_j$ play the role of Lagrange multipliers (here all equal to $\gamma$), and the $g_j$ are the constraints. Substituting $L$ from Eq. 9 into Eq. 11, we get

$$\frac{dA_i}{dt} = -2\eta\Big[(n-1)\kappa A_i - \kappa\sum_{j\ne i}J_{ij}A_j - \gamma A_i\Big] \tag{12}$$

The constant $\eta$, together with any numerical factor from differentiating the quadratic terms, can be absorbed into the units of time to reproduce Eq. 7, the slowly varying amplitude approximation for the coupled radio oscillators. Thus, in this case and many of the others (except Section 4.E), the slowly varying time dynamics can be reproduced from iterative optimization steps on the Lagrange function.
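The passage from the discrete step of Eq. 10 to continuous time can be illustrated by shrinking $\Delta t$. The sketch below assumes the Lagrange function $L = (n-1)\kappa\sum_i A_i^2 - \kappa\sum_{i\ne j}J_{ij}A_iA_j - \gamma\sum_i(A_i^2-1)$, takes the step constant $\eta = 1/2$ so that the resulting flow has the same time units as Eq. 7, and compares coarse iterations with a very fine one. The parameter values and the small frustrated three-spin instance are arbitrary illustrations.

```python
def grad_L(A, J, kappa, gamma):
    """Gradient of the assumed L; the constant from the constraint term drops out."""
    n = len(A)
    return [
        2 * (n - 1) * kappa * A[i]
        - 2 * kappa * sum(J[i][j] * A[j] for j in range(n) if j != i)
        - 2 * gamma * A[i]
        for i in range(n)
    ]


def iterate(A0, J, kappa, gamma, eta, dt, t_end):
    """Repeat the Eq. 10 step A <- A - eta*dt*grad L until time t_end."""
    A = list(A0)
    for _ in range(int(round(t_end / dt))):
        g = grad_L(A, J, kappa, gamma)
        A = [a - eta * dt * gi for a, gi in zip(A, g)]
    return A


def step_errors(dts=(0.1, 0.05, 0.025), dt_ref=1e-4):
    """Max deviation of coarse iterations from a very fine one at t = 1."""
    J = [[0, 1, -1], [1, 0, 1], [-1, 1, 0]]
    A0 = [0.3, -0.1, 0.2]
    args = dict(J=J, kappa=0.5, gamma=0.2, eta=0.5, t_end=1.0)  # 2*eta = 1
    ref = iterate(A0, dt=dt_ref, **args)
    return [max(abs(a - r) for a, r in zip(iterate(A0, dt=dt, **args), ref)) for dt in dts]


print(step_errors())
```

The deviation roughly halves each time $\Delta t$ is halved, the first-order convergence expected of an explicit (Euler) step, so the iteration approaches the continuous dynamics as $\Delta t \to 0$.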

4.C. Coupled Laser Cavities Using Multicore Fibers.

4.C.1. Overview.

The Ising solver designed by Babaeian et al. (10) makes use of coupled laser modes in a multicore optical fiber. Polarized light in each core of the optical fiber represents one magnetic moment in the Ising problem, so the number of cores equals the number of magnets in the given Ising instance. The right-hand and left-hand circular polarizations of the laser light in each core represent the two polarities (up and down) of the corresponding magnet. The mutual coherence of the various cores is maintained by injecting seed light from a master laser.

The coupling between the fiber cores is achieved through amplitude mixing of the laser modes by Spatial Light Modulators at one end of the multicore fiber (10). These Spatial Light Modulators couple light amplitude from the $j$th core to the $i$th core according to the prescribed connection weight $J_{ij}$.

4.C.2. Equations and comparison with Lagrange multipliers.

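As in prior physical examples, the dynamics can be expressed using slowly varying equations for the two polarization modes of the $i$th core, $E_{Li}$ and $E_{Ri}$, where the two electric-field amplitudes are temporally in phase and positive real but have different polarizations. They are

$$\frac{dE_{Li}}{dt} = -\alpha_i E_{Li} + \gamma_i E_{Li} + \sum_j J_{ij}E_{Lj},$$

$$\frac{dE_{Ri}}{dt} = -\alpha_i E_{Ri} + \gamma_i E_{Ri} + \sum_j J_{ij}E_{Rj},$$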

where $\alpha_i$ is the decay rate in the $i$th core, and $\gamma_i$ is the gain in the $i$th core. The third term on the right-hand side represents the coupling between the $i$th and $j$th cores that is provided by the Spatial Light Modulators. Babaeian et al. next define the degree of polarization as $\mu_i = E_{Li} - E_{Ri}$. Subtracting the two equations above, we obtain the following evolution equation for $\mu_i$:

$$\frac{d\mu_i}{dt} = -\alpha_i\mu_i + \gamma_i\mu_i + \sum_j J_{ij}\mu_j \tag{13}$$

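where the electric fields are dimensionless and normalized as in Section 4.A. The power dissipation is proportional to $\sum_i \alpha_i\,(E_{Li}^2 + E_{Ri}^2)$. However, this can also be written $\tfrac{1}{2}\sum_i \alpha_i\,[(E_{Li} + E_{Ri})^2 + (E_{Li} - E_{Ri})^2]$. The sum $E_{Li} + E_{Ri}$ can be regarded as relatively constant as energy switches back and forth between right and left circular polarization. Then, power dissipation would be most influenced by quadratic terms in $\mu_i$:

$$h(\mu) = \sum_i \alpha_i\mu_i^2 - \sum_{i,j}J_{ij}\mu_i\mu_j - \sum_i\gamma_i\mu_i^2.$$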

As before, we add the digital constraints $\mu_i^2 = 1$, where $\mu_i = \pm 1$ represents fully left or right circular polarization, and obtain the Lagrange function:

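$$L(\mu,\gamma) = \sum_i \alpha_i\mu_i^2 - \sum_{i,j}J_{ij}\mu_i\mu_j - \sum_i\gamma_i\left(\mu_i^2 - 1\right) \tag{14}$$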

Once again, the gains $\gamma_i$ play the role of Lagrange multipliers. Thus, a minimization of the power dissipation, subject to the optical gain $\gamma_i$, solves the Ising problem defined by the same couplings $J_{ij}$. In fact, the right-hand side of Eq. 13 is proportional to the negative gradient of Eq. 14, demonstrating that the dynamical system performs gradient descent on the Lagrange function.
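Because the two polarization equations are linear and share the same coefficients, their difference obeys the same law, which is the step that yields the $\mu_i$ equation. The sketch below assumes same-polarization coupling through a symmetric matrix $J_{ij}$ (an assumption about the Spatial Light Modulator mixing, not taken from ref. 10), evolves $E_{Li}$, $E_{Ri}$, and $\mu_i$ side by side, and also checks the loss identity $E_L^2 + E_R^2 = \tfrac12[(E_L+E_R)^2 + (E_L-E_R)^2]$. Parameter values are arbitrary.

```python
def euler_step(v, alpha, gamma, J, dt):
    """One Euler step of dv_i/dt = -alpha_i*v_i + gamma_i*v_i + sum_j J_ij*v_j."""
    n = len(v)
    return [
        v[i] + dt * (-alpha[i] * v[i] + gamma[i] * v[i] + sum(J[i][j] * v[j] for j in range(n)))
        for i in range(n)
    ]


def run(EL, ER, alpha, gamma, J, dt=1e-3, steps=2000):
    """Evolve both polarizations and, separately, mu = E_L - E_R with the same linear law."""
    mu = [l - r for l, r in zip(EL, ER)]
    for _ in range(steps):
        EL = euler_step(EL, alpha, gamma, J, dt)
        ER = euler_step(ER, alpha, gamma, J, dt)
        mu = euler_step(mu, alpha, gamma, J, dt)
    return EL, ER, mu


J = [[0.0, 0.3, -0.2], [0.3, 0.0, 0.1], [-0.2, 0.1, 0.0]]
EL, ER, mu = run([0.9, 0.2, 0.6], [0.1, 0.7, 0.5], alpha=[1.0] * 3, gamma=[0.8] * 3, J=J)
mismatch = max(abs(m - (l - r)) for m, l, r in zip(mu, EL, ER))

# Loss identity: E_L^2 + E_R^2 = [(E_L + E_R)^2 + (E_L - E_R)^2] / 2
identity_gap = max(abs((l * l + r * r) - 0.5 * ((l + r) ** 2 + (l - r) ** 2)) for l, r in zip(EL, ER))
print(mismatch, identity_gap)
```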

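The merit and constraint functions in the Lagrange function above are

$$f(\mu) = \sum_i \alpha_i\mu_i^2 - \sum_{i,j}J_{ij}\mu_i\mu_j, \qquad g_i(\mu) = 1 - \mu_i^2 = 0.$$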

4.D. Coupled Electrical Oscillators on the Unit Circle.

4.D.1. Overview.

We now consider a network of nonlinear, amplitude-stable electrical oscillators designed by Wang and Roychowdhury (11) to represent an Ising system for which we seek a digital solution, with each dipole along the $z$ axis in the magnetic dipole space. Wang and Roychowdhury provide a dissipative system of LC oscillators with clamped oscillation amplitude and with oscillation phase $\phi_i = 0$ or $\pi$ revealing the preferred magnetic dipole orientation $\mu_i = \pm 1$. It is noteworthy that Roychowdhury goes beyond Ising machines and, in ref. 31, constructs general digital logic gates using these amplitude-stable oscillators.

In their construction, Wang and Roychowdhury (11) use nonlinear elements that behave like negative resistances at low voltage amplitudes but as saturating resistances at high voltage amplitudes. This produces amplitude-stable oscillators. In addition, Wang and Roychowdhury (11) provide a second harmonic pump and use a form of parametric amplification (referred to as subharmonic injection locking in ref. 11) to obtain bistability with respect to phase.

With the amplitudes essentially clamped, it is the readout of these phase shifts, 0 or $\pi$, that provides the magnetic dipole orientation $\mu_i = \pm 1$. One key difference between this system and Yamamoto's system is that the latter had fast phase dynamics and slow amplitude dynamics, while Roychowdhury's system has the reverse.

4.D.2. Equations and comparison with Lagrange multipliers.

Wang and Roychowdhury (11) derived the dynamics of their amplitude-stable oscillator network using perturbation concepts developed in ref. 32. While a circuit diagram is not shown, ref. 11 invokes the following dynamical equation for the phases of their electrical oscillators:

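$$\frac{d\phi_i}{dt} = -\frac{1}{R_c}\sum_{j\ne i}J_{ij}\sin(\phi_i - \phi_j) - \lambda_i\sin(2\phi_i) \tag{15}$$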

where $R_c$ is a coupling resistance in their system, $\phi_i$ is the phase of the $i$th oscillator, and the $\lambda_i$ are decay parameters that dictate how fast the phase angles settle toward their steady-state values.

We now show that Eq. 15 can be reproduced by iteratively minimizing the power dissipation in their system. The power dissipated in a resistor $R$ is $(\Delta V)^2/R$, where $\Delta V$ is the voltage difference across it. Since $V_i$ and $V_j$ are sinusoidal, the power dissipation consists of constant terms and a cross-term of the form

$$P_R = -\frac{1}{R_c}\sum_{i<j}J_{ij}\cos(\phi_i - \phi_j),$$

where $P_R$ is the power dissipated in the resistors. Parallel or antiparallel magnetic dipole orientation is represented by $\phi_i - \phi_j = 0$ or $\pi$, respectively. We may choose an origin for angle space at $\phi = 0$, which implies $\phi_i = 0$ or $\pi$. This can be implemented as the constraint

$$g_i(\phi_i) = \sin^2\phi_i = 0.$$

Combining the power dissipated in the resistors with the constraint functions $g_i$, we obtain a Lagrange function:

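$$L(\phi,\lambda) = -\frac{1}{R_c}\sum_{i<j}J_{ij}\cos(\phi_i - \phi_j) + \sum_i\lambda_i\sin^2\phi_i \tag{16}$$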

where $\lambda_i$ is the Lagrange multiplier corresponding to the phase-angle constraint, and $J_{ij}/R_c$ are resistive coupling rate constants. The right-hand side of Eq. 15 is the negative gradient of Eq. 16, demonstrating that the dynamical system performs gradient descent on the Lagrange function.
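The phase dynamics can be sketched directly. This minimal example assumes the form $d\phi_i/dt = -(1/R_c)\sum_{j\ne i}J_{ij}\sin(\phi_i-\phi_j) - \lambda\sin 2\phi_i$ with unit coupling resistance, a common multiplier $\lambda$ held fixed, and explicit Euler integration from random phases (all hypothetical choices, not the circuit of ref. 11); each spin is read out as the sign of $\cos\phi_i$. Keeping $\lambda < 1/R_c$ makes the in-phase state of an antiferromagnetic pair a saddle rather than a marginal point, so the descent does not stall there.

```python
import math
import random


def oim_sketch(J, lam=0.3, Rc=1.0, dt=0.01, steps=5000, seed=0):
    """Gradient descent on L = -(1/Rc) sum_{i<j} J_ij cos(phi_i - phi_j) + lam * sum_i sin^2(phi_i)."""
    n = len(J)
    rng = random.Random(seed)
    phi = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    for _ in range(steps):
        dphi = [
            -(1 / Rc) * sum(J[i][j] * math.sin(phi[i] - phi[j]) for j in range(n) if j != i)
            - lam * math.sin(2 * phi[i])  # second-harmonic term pulls phases to 0 or pi
            for i in range(n)
        ]
        phi = [p + dt * d for p, d in zip(phi, dphi)]
    return [round(math.cos(p)) for p in phi]  # cos(phi) = +1 or -1 -> spin


print(oim_sketch([[0, -1], [-1, 0]]))  # antiferromagnetic pair
print(oim_sketch([[0, 1], [1, 0]]))    # ferromagnetic pair
```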

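The Lagrange function above is isomorphic to the general form in Section 3. The effective merit function and constraints in this correspondence are

$$f(\phi) = -\frac{1}{R_c}\sum_{i<j}J_{ij}\cos(\phi_i - \phi_j), \qquad g_i(\phi_i) = \sin^2\phi_i = 0.$$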

4.E. Coupled Polariton Condensates.

4.E.1. Overview.

Kalinin and Berloff (12) proposed a system of coupled polariton condensates to minimize the XY Hamiltonian. The XY Hamiltonian is a two-dimensional version of the Ising Hamiltonian and is given by

$$H_{XY} = -\sum_{i<j}J_{ij}\,\boldsymbol{\mu}_i\cdot\boldsymbol{\mu}_j,$$

where $\boldsymbol{\mu}_i$ represents the magnetic moment vector of the $i$th spin, restricted to the XY plane of spin space.
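For comparison with the Ising case, the XY energy can be evaluated directly from the spin angles. The sketch below assumes the sign convention $H_{XY} = -\sum_{i<j}J_{ij}\,\boldsymbol{\mu}_i\cdot\boldsymbol{\mu}_j$ with unit planar moments $\boldsymbol{\mu}_i = (\cos\theta_i, \sin\theta_i)$. On an antiferromagnetic triangle, the 120° configuration reaches a lower energy than any collinear (Ising-like) arrangement, which is why the XY problem is genuinely continuous.

```python
import math


def xy_energy(theta, J):
    """H = -sum_{i<j} J_ij cos(theta_i - theta_j) for unit planar spins at angles theta."""
    n = len(theta)
    return -sum(J[i][j] * math.cos(theta[i] - theta[j])
                for i in range(n) for j in range(i + 1, n))


def xy_energy_vectors(theta, J):
    """Same energy written with explicit X and Y components of each moment."""
    mu = [(math.cos(t), math.sin(t)) for t in theta]
    n = len(theta)
    return -sum(J[i][j] * (mu[i][0] * mu[j][0] + mu[i][1] * mu[j][1])
                for i in range(n) for j in range(i + 1, n))


J_af = [[0, -1, -1], [-1, 0, -1], [-1, -1, 0]]  # antiferromagnetic triangle
print(xy_energy([0, 2 * math.pi / 3, 4 * math.pi / 3], J_af))  # 120-degree state
print(xy_energy([0, math.pi, 0], J_af))                        # best collinear state
```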

Kalinin and Berloff (12) pump a grid of coupled semiconductor microcavities with laser beams and observe the formation of strongly coupled exciton–photon states called polaritons. For our purposes, the polaritonic nomenclature is irrelevant: these are simply coupled electromagnetic cavities that operate by the Principle of Minimum Power Dissipation, as in the previous cases. The complex electromagnetic amplitude in the $i$th microcavity can be written $\psi_i = a_i + j\,b_i$, where $a_i$ and $b_i$ represent the cosine and sine quadrature components of $\psi_i$, and $j$ is the unit imaginary. $a_i$ is mapped to the X-component of the magnetic dipole vector, and $b_i$ to the Y-component. The electromagnetic microcavity system settles into a state of minimum power dissipation as the laser pump and optical gain are ramped up to compensate for the intrinsic cavity losses. The phase angles of the final electromagnetic modes in the complex plane are then reported as the corresponding magnetic moment angles in the XY plane.

Since the electromagnetic cavities experience phase-independent gain, this system does not seek phase bistability. Instead, we search for the magnetic dipole vector angles in the XY plane that minimize the corresponding XY magnetic energy.

4.E.2. Lagrange function and Lagrange multipliers.

Ref. 12 uses "Ginzburg–Landau" equations to analyze the system, resulting in equations for the complex amplitudes $\psi_i$ of the polariton wavefunctions. However, the $\psi_i$ are actually the complex electric-field amplitudes (dimensionless and normalized as in Section 4.A) of the $i$th cavity. The electric-field amplitudes satisfy the slowly varying amplitude equation:

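$$\frac{d\psi_i}{dt} = (\gamma_i - \alpha)\,\psi_i - \beta|\psi_i|^2\psi_i - jU|\psi_i|^2\psi_i + \sum_{k\ne i}J_{ik}\psi_k \tag{17}$$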

where $\gamma_i$ is optical gain, $\alpha$ is linear optical loss, $\beta$ is nonlinear attenuation, $U$ is nonlinear phase shift, and the $J_{ik}$ are dissipative coupling rate constants (the summation index is $k$ because $j$ denotes the unit imaginary). We note that both the amplitudes and the phases of the electromagnetic modes are coupled to each other and evolve on comparable timescales. This contrasts with ref. 11, where the main dynamics were in the phase (the amplitude was fast and almost fixed), and, conversely, with ref. 9, where the dynamics were in the amplitude (the phase was fast and almost fixed).

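We show next that the method of ref. 12 is essentially the method of Lagrange multipliers with an added "rotation." The power-dissipation rate is

$$h(\psi) = \alpha\sum_i|\psi_i|^2 + \frac{\beta}{2}\sum_i|\psi_i|^4 - \sum_{i\ne k}J_{ik}\,\mathrm{Re}(\psi_i^*\psi_k) - \sum_i\gamma_i|\psi_i|^2.$$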

If we add a saturation constraint, $|\psi_i|^2 = 1$, then by analogy to the previous sections, $\gamma_i$ is reinterpreted as a Lagrange multiplier:

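$$L(\psi,\gamma) = \alpha\sum_i|\psi_i|^2 + \frac{\beta}{2}\sum_i|\psi_i|^4 - \sum_{i\ne k}J_{ik}\,\mathrm{Re}(\psi_i^*\psi_k) - \sum_i\gamma_i\left(|\psi_i|^2 - 1\right) \tag{18}$$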

where $L$ is the Lagrange function and h, L, f are the normalized power-dissipation functions (in watts divided by one joule). Thus, the scheme of coupled polaritonic resonators operates to find the state of minimum power dissipation in steady state, similar to the previous cases.

Dynamical Eq. 17 performs gradient descent on the Lagrange function Eq. 18 in conjunction with a rotation about the origin, $-jU|\psi_i|^2\psi_i$. This rotation term is not captured by the Lagrange multiplier interpretation. It could, however, be useful in developing more sophisticated algorithms than the method of Lagrange multipliers; we return to this prospect in Section 5.B, which treats a system with a more general "rotation" term.
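That the $U$ term is a pure rotation, invisible to the Lagrange-multiplier picture, can be checked numerically: $-jU|\psi_i|^2\psi_i$ contributes nothing to $d|\psi_i|^2/dt$. The sketch below assumes a single uncoupled cavity with hypothetical gain, loss, and saturation values, applies the dissipative part as an Euler step and the rotation as an exact phase factor, and confirms that $|\psi|$ follows the same trajectory with or without $U$. Between coupled cavities the rotation can still alter relative phases, which is why it lies outside the Lagrange function.

```python
import cmath
import random


def rotation_term(psi, U):
    """Nonlinear phase-rotation term -j*U*|psi|^2*psi (Python writes the unit imaginary as 1j)."""
    return -1j * U * abs(psi) ** 2 * psi


def modulus_rate(psi, dpsi):
    """Contribution of dpsi/dt to d|psi|^2/dt, i.e. 2*Re(conj(psi)*dpsi)."""
    return 2 * (psi.conjugate() * dpsi).real


def single_cavity(psi, gain, alpha, beta, U, dt=1e-3, steps=20000):
    """Uncoupled cavity: dissipative Euler step, then an exact rotation about the origin."""
    for _ in range(steps):
        psi = psi * (1 + dt * (gain - alpha - beta * abs(psi) ** 2))  # gain, loss, saturation
        psi = psi * cmath.exp(-1j * U * abs(psi) ** 2 * dt)          # rotation: modulus unchanged
    return psi


rng = random.Random(0)
samples = [complex(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(5)]
print(max(abs(modulus_rate(p, rotation_term(p, 2.5))) for p in samples))
r0 = abs(single_cavity(0.1 + 0.05j, 1.0, 0.5, 1.0, U=0.0))
r3 = abs(single_cavity(0.1 + 0.05j, 1.0, 0.5, 1.0, U=3.0))
print(r0, r3)  # both approach sqrt((gain - alpha) / beta)
```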

4.E.3. Iterative evolution of Lagrange multipliers.

In the method of Lagrange multipliers, the Lagrange function Eq. 18 is used to optimize not only the electric-field amplitudes $\psi_i$ but also the Lagrange multipliers $\gamma_i$. The papers of the previous sections used simple heuristics to adjust their gains or decay constants, which we have shown to be Lagrange multipliers. Kalinin and Berloff (12) employ the Lagrange function itself to adjust the gains, as in the complete Lagrange method discussed next.

We introduce the full method of Lagrange multipliers by briefly shifting back to the notation of Section 3. The full Lagrange method finds the optimal $x$ and $\lambda$ by performing gradient descent of $L$ in $x$ and gradient ascent of $L$ in $\lambda$. Ascent in $\lambda$, rather than descent, more strictly penalizes deviations from the constraint. This leads to the iterations

$$x_i(t+\Delta t) = x_i(t) - \eta\,\Delta t\,\frac{\partial L(x,\lambda)}{\partial x_i} \tag{19}$$

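$$\lambda_i(t+\Delta t) = \lambda_i(t) + \eta'\,\Delta t\,\frac{\partial L(x,\lambda)}{\partial \lambda_i} \tag{20}$$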

where $\eta\,\Delta t$ and $\eta'\,\Delta t$ are suitably chosen step sizes.

With our identification of the Lagrange multipliers with the gains $\gamma_i$, we substitute the Lagrange function Eq. 18 into the second iterative equation and take the limit $\Delta t \to 0$. We obtain the following dynamical equation for the gains $\gamma_i$:

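$$\frac{d\gamma_i}{dt} = \eta'\,\frac{\partial L}{\partial\gamma_i} = \eta'\left(1 - |\psi_i|^2\right) \tag{21}$$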

This iterative evolution of the Lagrange multipliers is indeed what Kalinin and Berloff (12) employ in their coupled polariton system.

To Eq. 21, we must add the iterative evolution of the field variables $\psi_i$:

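$$\frac{d\psi_i}{dt} = -\eta\,\frac{\partial L}{\partial\psi_i^*} = \eta\Big[(\gamma_i - \alpha)\,\psi_i - \beta|\psi_i|^2\psi_i + \sum_{k\ne i}J_{ik}\psi_k\Big] \tag{22}$$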

Eqs. 21 and 22 represent the full iterative evolution, whereas in some of the earlier subsections the gain $\gamma_i$ was assigned a heuristic time dependence.
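The descent-ascent pair can be sketched for real amplitudes. This minimal example assumes real $x_i$ in place of $\psi_i$, sets $U = 0$, keeps the gain, linear-loss, cubic-saturation, and coupling terms listed for Eq. 17, and uses hypothetical values for $\alpha$, $\beta$, and the multiplier step $\eta'$ (written `eta_g`). The cubic term matters: for a single spin without it, descent in $x$ and ascent in $\gamma$ trace closed orbits instead of settling, whereas with it the fixed point $x_i^2 = 1$ is damped.

```python
import random


def primal_dual(J, alpha=1.0, beta=1.0, eta_g=0.5, dt=0.01, steps=20000, seed=0):
    """Euler integration of descent in x and ascent in the gains (Lagrange multipliers)."""
    n = len(J)
    rng = random.Random(seed)
    x = [rng.uniform(-0.01, 0.01) for _ in range(n)]
    g = [0.0] * n  # gains start at zero
    for _ in range(steps):
        dx = [(g[i] - alpha) * x[i] - beta * x[i] ** 3
              + sum(J[i][j] * x[j] for j in range(n) if j != i) for i in range(n)]
        dg = [eta_g * (1 - x[i] ** 2) for i in range(n)]  # ascent: dL/dgamma_i = 1 - x_i^2
        x = [xi + dt * d for xi, d in zip(x, dx)]
        g = [gi + dt * d for gi, d in zip(g, dg)]
    return x, g


ring = [[0] * 4 for _ in range(4)]
for i in range(4):
    ring[i][(i + 1) % 4] = ring[(i + 1) % 4][i] = -1  # antiferromagnetic 4-ring
print(primal_dual([[0, -1], [-1, 0]]))
print(primal_dual(ring))
```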

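We conclude this subsection by splitting the Lagrange function into the effective merit function $f$ and the constraint functions $g_i$:

$$f(\psi) = \alpha\sum_i|\psi_i|^2 + \frac{\beta}{2}\sum_i|\psi_i|^4 - \sum_{i\ne k}J_{ik}\,\mathrm{Re}(\psi_i^*\psi_k), \qquad g_i(\psi) = 1 - |\psi_i|^2 = 0.$$

The extra "phase rotation" $U$ is not captured by this interpretation.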

4.F. General Conclusions from Coupled Multioscillator Array Ising Solvers.

1) Physical systems minimize the power-dissipation rate subject to input constraints of voltage, amplitude, gain, etc. 2) These systems actually perform Lagrange multiplier optimization, with the gain $\gamma_i$ playing the role of the multiplier for the $i$th digital constraint. 3) Under the digital constraint, amplitudes $\pm 1$ or phases $0$ or $\pi$, power-dissipation minimization schemes are actually binary, similar to a flip–flop. 4) In many of the studied cases, the system time dependence follows gradient descent on the power-dissipation function as the system approaches a power-dissipation minimum. In one of the cases (Section 4.E), there was a rotation superimposed on this gradient descent.

5. Other Methods in the Literature

We now look at other methods in the literature that do not explicitly implement the method of Lagrange multipliers but nevertheless end up with dynamics that resemble it to varying extents. All of these methods offer operation regimes where the dynamics is not analogous to Lagrange multiplier optimization, and we believe it is an interesting avenue of future work to study the capabilities of these regimes.

5.A. Iterative Analog Matrix Multipliers.

Soljacic and coworkers (13) developed an iterative procedure consisting of repeated matrix multiplication to solve the Ising problem. Their algorithm was implemented on a photonic circuit that utilized on-chip optical matrix multiplication units composed of Mach–Zehnder interferometers, which were first introduced for matrix algebra by Zeilinger and coworkers in ref. 33. Soljacic and coworkers (13) showed that their algorithm performs optimization on an effective merit function, which we show in SI Appendix, section 5, to be a Lagrange function.

We use our insights from the previous sections to implement a simplified iterative optimization using an optical matrix multiplier. A block diagram of such a scheme is shown in Fig. 8. Let the configuration of magnetic moments in the Ising problem be represented as a vector of electric-field amplitudes, $\mathbf{E} = (E_1, \ldots, E_n)$, of spatially separated optical modes. Each mode-field amplitude represents the value of one magnetic moment. In each iteration, the optical modes are fed into the optical circuit, which performs matrix multiplication, and the resulting output optical modes are then fed back to the optical circuit input for the next iteration. Optical gain or some other type of gain sustains the successive iterations.

Fig. 8.

An optical circuit performing iterative multiplications converges on a solution of the Ising problem. Optical pulses are fed as input from the left-hand side at the beginning of each iteration, pass through the matrix multiplication unit, and are passed back from the outputs to the inputs for the next iteration. Distributed optical gain sustains the iterations.

We wish to design the matrix multiplication unit such that it has the following power-dissipation function:

The Lagrange function, including a binary constraint $E_i^2 = 1$, is given by

| [23] |

where the $\alpha_{ij}$ are the dissipative loss rate constants associated with electric-field interference between optical modes in the Mach–Zehnder interferometers, and $\gamma_i$ is the optical gain.

The iterative multiplicative procedure that evolves the electric fields toward the minimum of the Lagrange function Eq. 23 is given by

$$E_i(t+\Delta t) - E_i(t) = -\eta\,\Delta t\,\frac{\partial L(\mathbf{E},\gamma)}{\partial E_i},$$

where $\eta$ is a constant step size with the appropriate units, and each iteration takes a step in $E_i$ proportional to the negative gradient of the Lagrange function. (Here $\partial/\partial E_i$ represents differentiation with respect to the two quadratures.) Simplifying and collecting all terms evaluated at time $t$ on one side, we get

| [24] |

where $\delta_{ij}$ is the Kronecker delta (1 only if $i = j$). The Mach–Zehnder interferometers should be tuned to the matrix on the right-hand side of Eq. 24. Thus, we have an iterative matrix multiplier scheme that minimizes the Lagrange function of the Ising problem. In effect, a lump of dissipative optical circuitry, compensated by optical gain, will, in a series of iterations, settle into a solution of the Ising problem.
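Under stated assumptions, one gradient step of this kind is literally a matrix-vector product. The sketch assumes real fields and the Lagrange function $-\sum_{i,j}J_{ij}E_iE_j + \alpha\sum_iE_i^2 - \gamma\sum_i(E_i^2-1)$ with common $\alpha$ and $\gamma$ (not necessarily the form of Eq. 23), so a step of size $\eta\,\Delta t$ multiplies $\mathbf{E}$ by $M = [1 + 2\eta\Delta t(\gamma-\alpha)]I + 2\eta\Delta t\,J$. Renormalizing the total power after each pass is a hypothetical stand-in for gain saturation. Without the noise and thresholding that ref. 13 adds, this reduces to power iteration, so it settles on the sign pattern of the leading eigenvector of $J$.

```python
import math
import random


def step_matrix(J, alpha, gamma, step):
    """M = [1 + 2*step*(gamma - alpha)] I + 2*step*J: one gradient step written as a matrix."""
    n = len(J)
    return [[(1 + 2 * step * (gamma - alpha)) * (i == j) + 2 * step * J[i][j]
             for j in range(n)] for i in range(n)]


def optical_iterations(J, alpha=1.0, gamma=1.0, step=0.1, passes=200, seed=0):
    n = len(J)
    M = step_matrix(J, alpha, gamma, step)
    rng = random.Random(seed)
    E = [rng.uniform(-1, 1) for _ in range(n)]
    for _ in range(passes):
        E = [sum(M[i][j] * E[j] for j in range(n)) for i in range(n)]  # one pass through the mesh
        norm = math.sqrt(sum(v * v for v in E))
        E = [v / norm for v in E]  # hypothetical stand-in for saturable gain
    return [1 if v >= 0 else -1 for v in E]


ring = [[0] * 4 for _ in range(4)]
for i in range(4):
    ring[i][(i + 1) % 4] = ring[(i + 1) % 4][i] = -1  # antiferromagnetic 4-ring
print(optical_iterations(ring))
```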

The simple system above differs from that of Soljacic and coworkers (13) in that their method has added noise and nonlinear thresholding in each iteration. A detailed description of their approach is presented in SI Appendix, section 5.

5.B. Leleu Mathematical Ising Solver.

Leleu et al. (8) proposed a modified version of Yamamoto's Ising machine (5) that closely resembles the Lagrange method while incorporating important new features. To understand the similarities and differences between Leleu's method and that of Lagrange multipliers, we recall the Lagrange function for the Ising problem that we encountered in Section 4:

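$$L(x,\gamma) = -\sum_{i,j}J_{ij}x_ix_j + \sum_i\alpha_ix_i^2 - \sum_i\gamma_i\left(x_i^2 - 1\right) \tag{25}$$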

In the above, the $x_i$ are the optimization variables, $J$ is the interaction matrix, $\gamma_i$ is the gain provided to the $i$th variable, and $\alpha_i$ is the loss experienced by the $i$th variable. To find a local optimum that satisfies the constraints, one performs gradient descent on the Lagrange function in the $x$ variables and gradient ascent in the $\gamma$ variables, as discussed in Section 4.E, Eqs. 19 and 20. Substituting Eq. 25 into them and taking the limit $\Delta t \to 0$, we get

$$\frac{dx_i}{dt} = 2\eta\Big[-\alpha_ix_i + \gamma_ix_i + \sum_j J_{ij}x_j\Big] \tag{26}$$

$$\frac{d\gamma_i}{dt} = \eta'\left(1 - x_i^2\right) \tag{27}$$

On the other hand, Leleu et al. (8) propose the following system:

$$\frac{dx_i}{dt} = -\alpha x_i + \gamma x_i + e_i\sum_j J_{ij}x_j \tag{28}$$

$$\frac{de_i}{dt} = -\beta\,e_i\left(x_i^2 - a\right) \tag{29}$$

where the $x_i$ are the optimization variables, $\alpha$ is the loss experienced by each variable, $\gamma$ is a common gain supplied to each variable, $\beta$ is a positive parameter, and the $e_i$ are error coefficients that capture how far each $x_i$ is from its saturation amplitude, set by the target $x_i^2 = a$. Leleu et al. also had cubic terms in $x_i$ in ref. 8, and a discussion of these terms is given in SI Appendix, section 3.

It is clear that there are significant similarities between Leleu’s system and the Lagrange multiplier system. The optimization variables in both systems experience linear losses and gains and have interaction terms that capture the Ising interaction. Both systems have auxiliary variables that are varied according to how far away each degree of freedom is from its preferred saturation amplitude. However, the similarities end here.
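A compact simulation helps contrast Leleu's error-coefficient dynamics with the Lagrange descent-ascent. The sketch below includes the cubic term $-x_i^3$ of ref. 8 (which the text defers to SI Appendix), uses hypothetical values $\alpha = 1$, $\gamma = 0.9$, $\beta = 0.5$, $a = 1$, and records the lowest Ising energy of the sign pattern of $x$ visited along the trajectory, since the error-coefficient dynamics need not settle to a fixed point. It is an illustration, not the reference implementation of ref. 8.

```python
import random


def ising_energy(s, J):
    """Ising energy -sum_{i<j} J_ij s_i s_j of a +/-1 configuration."""
    n = len(s)
    return -sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))


def leleu_sketch(J, alpha=1.0, gamma=0.9, beta=0.5, a=1.0, dt=0.01, steps=5000, seed=0):
    n = len(J)
    rng = random.Random(seed)
    x = [rng.uniform(-0.1, 0.1) for _ in range(n)]
    e = [1.0] * n  # error coefficients start at 1
    best = float("inf")
    for _ in range(steps):
        dx = [(-alpha + gamma) * x[i] - x[i] ** 3
              + e[i] * sum(J[i][j] * x[j] for j in range(n) if j != i)  # e_i scales the field felt by i
              for i in range(n)]
        de = [-beta * e[i] * (x[i] ** 2 - a) for i in range(n)]  # multiplicative, unlike Eq. 27
        x = [xi + dt * d for xi, d in zip(x, dx)]
        e = [ei + dt * d for ei, d in zip(e, de)]
        s = [1 if xi >= 0 else -1 for xi in x]
        best = min(best, ising_energy(s, J))
    return best, x, e


ring = [[0] * 4 for _ in range(4)]
for i in range(4):
    ring[i][(i + 1) % 4] = ring[(i + 1) % 4][i] = -1  # antiferromagnetic 4-ring
print(leleu_sketch(ring)[0])
```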

A major differentiation in Leleu's system is that $e_i$ multiplies the Ising interaction felt by the $i$th variable, resulting in $e_iJ_{ij}$. The complementary coefficient is $e_jJ_{ji}$. Consequently, Leleu's equations implement asymmetric interactions, $e_iJ_{ij} \ne e_jJ_{ji}$, between vector components $x_i$ and $x_j$. The inclusion of asymmetry appears to be important, since Leleu's system achieves excellent performance on the Gset problem set, as demonstrated in ref. 8.

We obtain some intuition about this system by splitting the asymmetric term into a symmetric and an antisymmetric part. This follows from the fact that any matrix $M$ can be written as the sum of a symmetric matrix, $(M + M^{T})/2$, and an antisymmetric matrix, $(M - M^{T})/2$. The symmetric part leads to gradient descent dynamics similar to all of the systems in The Physical Ising Solvers. The antisymmetric part causes an energy-conserving "rotary" motion in the vector space of $x$.
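The symmetric/antisymmetric split can be made concrete for a Leleu-style coupling matrix $M_{ij} = e_iJ_{ij}$. The sketch assumes random symmetric $\pm1$ couplings and hypothetical positive error coefficients; it checks that $M = S + A$ and that $\mathbf{x}^{T}A\,\mathbf{x} = 0$ for any $\mathbf{x}$, so the antisymmetric part contributes nothing to $d|\mathbf{x}|^2/dt$ and only rotates $\mathbf{x}$.

```python
import random


def split(M):
    """Return the symmetric part (M + M^T)/2 and antisymmetric part (M - M^T)/2."""
    n = len(M)
    S = [[(M[i][j] + M[j][i]) / 2 for j in range(n)] for i in range(n)]
    A = [[(M[i][j] - M[j][i]) / 2 for j in range(n)] for i in range(n)]
    return S, A


def quad(x, M):
    """Quadratic form x^T M x."""
    n = len(x)
    return sum(x[i] * M[i][j] * x[j] for i in range(n) for j in range(n))


rng = random.Random(2)
n = 4
J = [[0] * n for _ in range(n)]
for i in range(n):
    for j in range(i + 1, n):
        J[i][j] = J[j][i] = rng.choice([-1, 1])
e = [rng.uniform(0.5, 2.0) for _ in range(n)]                # hypothetical error coefficients
M = [[e[i] * J[i][j] for j in range(n)] for i in range(n)]   # effective coupling e_i * J_ij
S, A = split(M)
x = [rng.uniform(-1, 1) for _ in range(n)]
print(quad(x, A))  # ~0: the antisymmetric part does no work on |x|^2
```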

The secret of Leleu et al.’s (8) improved performance seems to lie in this antisymmetric part. The dynamical freedom associated with asymmetry might provide a fruitful future research direction in optimization and deserves further study to ascertain its power.

6. Applications in Linear Algebra and Statistics

We have seen that minimum power-dissipation solvers can address the Ising problem and similar problems like the traveling salesman problem. In this section, we provide yet another application of minimum power-dissipation solvers to an optimization problem that appears frequently in statistics, namely curve fitting. In particular, we note that linear least-squares regression, that is, linear curve fitting with a quadratic merit function, resembles the Ising problem. In fact, the electrical circuit example we presented in Section 4.B can be applied to linear regression, and we present such a circuit in this section. Our circuit provides a digital answer but requires a series of binary resistance values, that is, $R_0, 2R_0, 4R_0, \ldots$, to represent arbitrary binary statistical input observations.

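The objective of linear least-squares regression is to fit a linear function to a given set of data $\{(\mathbf{x}_i, y_i)\}_{i=1}^{m}$. The $\mathbf{x}_i$ are input vectors of dimension $n$, while the $y_i$ are the observed outputs that we want our regression to capture. The linear function being fit is of the form $y = \mathbf{w}\cdot\mathbf{x}$, where $\mathbf{x}$ is a feature vector of length $n$ and $\mathbf{w}$ is a vector of unknown weights. The vector $\mathbf{w}$ is calculated by minimizing the sum of the squared errors it causes when used on an actual dataset:

$$\sum_{i=1}^{m}\left(y_i - \mathbf{w}\cdot\mathbf{x}_i\right)^2 = \sum_{j,k}w_jw_k\sum_i x_{ij}x_{ik} - 2\sum_j w_j\sum_i y_ix_{ij} + \sum_i y_i^2,$$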

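where $x_{ij}$ is the $j$th component of the vector $\mathbf{x}_i$. This functional form is identical to the Ising Hamiltonian: writing the Ising energy as $-\sum_{j,k}J_{jk}w_jw_k - \sum_j h_jw_j$, we may construct an Ising circuit with $J_{jk} = -\sum_i x_{ij}x_{ik}$, with the weights $w_j$ acting like the unknown magnetic moments. There is also an effective magnetic field in the problem, $h_j = 2\sum_i y_ix_{ij}$. In matrix form, with $X$ the matrix whose rows are the $\mathbf{x}_i$, these are $J = -X^{T}X$ and $\mathbf{h} = 2X^{T}\mathbf{y}$. A simple circuit that solves this problem for $n = 2$ (each instance has two features) is provided in Fig. 9. This circuit provides weights to 2-bit precision.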
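The mapping from squared error to Ising form can be verified numerically. The sketch below builds $J = -X^{T}X$ and $\mathbf{h} = 2X^{T}\mathbf{y}$ from random data (hypothetical values) and checks that the sum of squared errors equals $-\sum_{j,k}J_{jk}w_jw_k - \sum_j h_jw_j + \sum_i y_i^2$ for arbitrary weights; the constant $\sum_i y_i^2$ does not affect the optimum.

```python
import random


def ising_terms(X, y):
    """Couplings J = -X^T X and fields h = 2 X^T y of the regression energy."""
    n = len(X[0])
    J = [[-sum(r[j] * r[k] for r in X) for k in range(n)] for j in range(n)]
    h = [2 * sum(yi * r[j] for r, yi in zip(X, y)) for j in range(n)]
    return J, h


def sse(w, X, y):
    """Sum of squared errors of the linear model y = w . x."""
    return sum((yi - sum(wj * xj for wj, xj in zip(w, r))) ** 2 for r, yi in zip(X, y))


def ising_form(w, J, h, y):
    """-sum_{j,k} J_jk w_j w_k - sum_j h_j w_j + sum_i y_i^2."""
    n = len(w)
    quad = -sum(J[j][k] * w[j] * w[k] for j in range(n) for k in range(n))
    return quad - sum(hj * wj for hj, wj in zip(h, w)) + sum(yi * yi for yi in y)


rng = random.Random(0)
X = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(6)]
y = [rng.uniform(-2, 2) for _ in range(6)]
J, h = ising_terms(X, y)
w = [0.7, -1.3]
print(sse(w, X, y), ising_form(w, J, h, y))
```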

Fig. 9.

A 2-bit linear regression circuit to find the best two curve-fitting weights, $w_1$ and $w_2$, using the Principle of Minimum Power Dissipation.

The oscillators on the left-hand side of Fig. 9 represent the $2^0$ and $2^1$ bits of the first weight $w_1$, while the oscillators on the other side represent the second weight $w_2$.
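One way the two oscillators per weight could combine into a 2-bit value is $w_j = 2s_{j,1} + s_{j,0}$ with spins $s \in \{-1, +1\}$, giving $w_j \in \{-3,-1,1,3\}$; this encoding is an assumption for illustration, since the figure does not fix an offset or scale. Substituting it keeps the energy quadratic in the spins, with bit-level couplings $2^{b+c}J_{jk}$. The sketch brute-forces the spin ground state of a tiny instance whose exact least-squares solution is representable; the function `two_bit_fit` is hypothetical.

```python
from itertools import product


def ising_terms(X, y):
    """Couplings J = -X^T X and fields h = 2 X^T y of the regression energy."""
    n = len(X[0])
    J = [[-sum(r[j] * r[k] for r in X) for k in range(n)] for j in range(n)]
    h = [2 * sum(yi * r[j] for r, yi in zip(X, y)) for j in range(n)]
    return J, h


def two_bit_fit(X, y, bits=2):
    """Brute-force the spin state minimizing the bit-expanded Ising energy, then decode weights."""
    J, h = ising_terms(X, y)
    idx = [(j, b) for j in range(len(J)) for b in range(bits)]  # one oscillator per (weight, bit)
    Jp = [[J[j][k] * 2 ** (b + c) for (k, c) in idx] for (j, b) in idx]
    hp = [h[j] * 2 ** b for (j, b) in idx]
    m = len(idx)

    def energy(s):
        return (-sum(Jp[p][q] * s[p] * s[q] for p in range(m) for q in range(m))
                - sum(hp[p] * s[p] for p in range(m)))

    s = min(product((-1, 1), repeat=m), key=energy)
    return [sum(2 ** b * s[p] for p, (jj, b) in enumerate(idx) if jj == j) for j in range(len(J))]


print(two_bit_fit([[1, 0], [0, 1], [1, 1]], [3, -1, 2]))  # [3, -1]
```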

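The cross-resistance $R_{jk}$ needed to represent the coupling $J_{jk}$ between the $j$th and $k$th oscillators is formed from parallel-connected, binary-weighted resistors, whose conductances add:

$$\frac{1}{R_{jk}} = \frac{s_2}{R_0} + \frac{s_1}{2R_0} + \frac{s_0}{4R_0} = \frac{1}{4R_0}\sum_{b=0}^{2}s_b\,2^{b},$$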

where $R_0$, $2R_0$, $4R_0$ is a binary hierarchy of resistances based on a reference resistor $R_0$, and $s_b \in \{0, 1\}$ are the bits of $|J_{jk}|$, with $|J_{jk}| \propto \sum_b s_b\,2^b$. This represents $|J_{jk}|$ to 3-bit precision using resistors that span a dynamic range of $4R_0/R_0 = 4$. Further, the sign of the coupling is set by whether the resistors are parallel-connected or cross-connected. In operation, the resistors would be externally programmed to the correct binary values, with many more bits than 3-bit precision, as given by the matrix product $X^{T}X$.
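The binary resistor hierarchy can be sketched as a small programming routine. Assumptions, all hypothetical: $|J_{jk}| \le 1$ is mapped to the nearest of 8 levels, bit $b$ switches in a resistor $2^{2-b}R_0$ so that conductances add in binary weights, and the sign of $J_{jk}$ only selects parallel versus cross wiring. The function `program_coupling` and the value $R_0 = 1\ \text{k}\Omega$ are illustrative.

```python
def program_coupling(J, R0=1000.0, bits=3):
    """Return (connection, code, enabled resistors, effective resistance) for a coupling J in [-1, 1]."""
    connection = "parallel" if J >= 0 else "cross"  # sign is set by wiring, not by resistance
    levels = 2 ** bits - 1
    code = int(abs(J) * levels + 0.5)               # nearest 3-bit magnitude
    # Bit b has weight 2**b and switches in resistor 2**(bits-1-b) * R0,
    # so the parallel conductances add up to code / (2**(bits-1) * R0).
    enabled = [2 ** (bits - 1 - b) * R0 for b in range(bits) if (code >> b) & 1]
    G = sum(1.0 / R for R in enabled)
    R_eff = 1.0 / G if G > 0 else float("inf")
    return connection, code, enabled, R_eff


for J in (1.0, 0.5, -3 / 7, 0.0):
    print(J, program_coupling(J))
```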

We have just solved the regression problem of the form $X\mathbf{w} \approx \mathbf{y}$, where the matrix $X$ and vector $\mathbf{y}$ were known measurements and the corresponding best weight vector $\mathbf{w}$ for fitting was the unknown. We conclude by noting that the same procedure can be adopted to solve linear systems of equations of the form $A\mathbf{w} = \mathbf{b}$.

7. Discussion and Conclusion

Physics obeys a number of optimization principles such as the Principle of Least Action, the Principle of Minimum Power Dissipation (also called Minimum Entropy Generation), the Variational Principle, Physical Annealing, and the Adiabatic Principle (which, in its quantum form, is called Quantum Annealing).

Optimization is important in diverse fields, ranging from scheduling and routing in operations research to protein folding in biology, portfolio optimization in finance, and energy minimization in physics. In this article, we made the observation that physics has optimization principles at its heart and that they can be exploited to design fast, low-power digital solvers that avoid the limits of standard computational paradigms. Nature thus provides us with a means to solve optimization problems in all of these areas, as well as in engineering, artificial intelligence, machine learning (backpropagation), Control Theory, and reinforcement learning.

We reviewed seven physical machines that purported to solve the Ising problem and found that six of the seven were performing Lagrange multiplier optimization; further, they also obey the Principle of Minimum Power Dissipation (always subject to a power-input constraint). This means that, by appropriate choice of parameter values, these physical solvers can be used to perform Lagrange multiplier optimization orders of magnitude faster and with lower power than conventional digital computers. This performance advantage can be utilized for optimization in machine-learning applications where energy and time considerations are critical.

The following questions arise: What are the action items? What is the most promising near-term application? All of the hardware approaches seem to work comparably well. The easiest to implement would be the electrical oscillator circuits, although the optical oscillator arrays can be compact and very fast. Electrically, there would be two integrated circuits: the oscillator array and the array of connecting resistors, which would need to be reprogrammed for different problems. The action item could be to design the first chip consisting of about 1,000 oscillators and a second chip that would consist of the appropriate coupling resistor array for a specific optimization problem. The resistors should be in an addressable binary hierarchy so that any desired resistance value can be programmed in by switches, to within the accuracy set by the number of bits. It is possible to imagine solving a new Ising problem every millisecond by reprogramming the resistor chip.

On the software side, a compiler would need to be developed to go from an unsolved optimization problem to the resistor array that matches that desired goal. If the merit function were mildly nonlinear, we believe that the Principle of Minimum Power Dissipation would still hold, but there has been less background science justifying that claim.

With regard to the most promising near-term application, it might be in Control Theory or in reinforcement learning in self-driving vehicles, where rapid answers are required, at modest power dissipation.

The act of computation can be regarded as a search among many possible answers. Eventually, the circuit converges to a final correct configuration. Thus the initial conditions may include a huge phase-space volume of possible solutions, ultimately transitioning into a final configuration representing a small- or modest-sized binary number. This type of computing implies a substantial entropy reduction. This led to Landauer's admonition that computation costs $Nk\ln 2$ of entropy decrease and $NkT\ln 2$ of energy, for a final answer with $N$ binary digits.

By the Second Law of Thermodynamics, such an entropy reduction must be accompanied by an entropy increase elsewhere. In Landauer's viewpoint, the energy and entropy limit of computing was associated with the final act of writing out the answer in bits, assuming the rest of the computer was reversible. In practice, technology consumes $10^4$ times more than the Landauer limit, owing to the insensitivity of the transistors operating at 1 V, when they could be operating at 10 mV.
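The Landauer figures quoted above are easy to evaluate. The sketch uses the exact SI value of Boltzmann's constant and an assumed temperature of 300 K, and it assumes that switching energy scales as $CV^2$, which is how a $10^4$ penalty follows from operating at 1 V rather than 10 mV.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def landauer_energy(n_bits, T=300.0):
    """Minimum energy N k T ln 2 for an N-bit answer at temperature T (kelvin)."""
    return n_bits * K_B * T * math.log(2)


def cv2_ratio(v_high, v_low):
    """Ratio of switching energies under CV^2 scaling at two supply voltages."""
    return (v_high / v_low) ** 2


print(landauer_energy(1))     # about 2.87e-21 J per bit at 300 K
print(landauer_energy(1000))  # a 1,000-bit answer
print(cv2_ratio(1.0, 0.01))   # 1 V versus 10 mV
```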

In the continuously dissipative circuits we have described here, the energy consumed would be infinite if we waited long enough for the system to reach the final optimal state. If we terminate the powering of our optimizer systems after they reach the desired final-state answer, the energy consumed becomes finite. By operating at voltages well below 1 V and by powering off after the desired answer is achieved, our continuously dissipating Lagrange optimizers could actually be closer to the Landauer limit than a conventional computer.

A controversial point relates to the quality of solutions that are obtained for NP-hard problems. The physical systems we are proposing evolve by steepest descent toward a local optimum, not a global optimum. Nonetheless, many of the authors of the seven physical systems presented here have claimed to find better local optima than their competitors, due to special adjustments in their methods. Undoubtedly, some improvements are possible, but none of the seven papers reviewed here claims to always find the one global optimum, which would be NP-hard (34).

We have shown that a number of physical systems that perform optimization are acting through the Principle of Minimum Power Dissipation, although other physics principles could also fulfill this goal. As the systems evolve toward an extremum, they perform Lagrange function optimization where the Lagrange multipliers are given by the gain or loss coefficients that keep the machine running. Thus, nature provides us with a series of physical optimization machines that are much faster and possibly more energy-efficient than conventional computers.

Supplementary Material

Acknowledgments

We gratefully acknowledge useful discussions with Dr. Ryan Hamerly, Dr. Tianshi Wang, and Prof. Jaijeet Roychowdhury. The work of S.K.V., T.P.X., and E.Y. was supported by the NSF through the Center for Energy Efficient Electronics Science under Award ECCS-0939514 and the Office of Naval Research under Grant N00014-14-1-0505.

Footnotes

The authors declare no competing interest.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2015192117/-/DCSupplemental.

Data Availability.

All study data are included in the article and SI Appendix.

References

- 1.Shen Y., et al. , Deep learning with coherent nanophotonic circuits. Nat. Photonics 11, 441–446 (2017). [Google Scholar]

- 2.Onsager L., Reciprocal relations in irreversible processes. II. Phys. Rev. 38, 2265–2279 (1931). [Google Scholar]

- 3.Lucas A., Ising formulations of many NP problems. Front. Phys. 2, 5 (2014). [Google Scholar]

- 4.Utsunomiya S., Takata K., Yamamoto Y., Mapping of Ising models onto injection-locked laser systems. Opt. Express 19, 18091–18108 (2011). [DOI] [PubMed] [Google Scholar]

- 5.Haribara Y., Utsunomiya S., Yamamoto Y., Computational principle and performance evaluation of coherent Ising machine based on degenerate optical parametric oscillator network. Entropy 18, 151 (2016). [Google Scholar]

- 6.Inagaki T., et al. , Large-scale Ising spin network based on degenerate optical parametric oscillators. Nat. Photonics 10, 415–419 (2016). [DOI] [PubMed] [Google Scholar]

- 7.Inagaki T., et al. , A coherent Ising machine for 2000-node optimization problems. Science 354, 603–606 (2016). [DOI] [PubMed] [Google Scholar]

- 8.Leleu T., Yamamoto Y., McMahon P. L., Aihara K., Destabilization of local minima in analog spin systems by correction of amplitude heterogeneity. Phys. Rev. Lett. 122, 040607 (2019). [DOI] [PubMed] [Google Scholar]

- 9.Xiao T. P., “Optoelectronics for refrigeration and analog circuits for combinatorial optimization,” PhD thesis, Department of Electrical Engineering and Computer Sciences, University of California, Berkeley, CA (2019).

- 10.Babaeian M., et al. , A single shot coherent Ising machine based on a network of injection-locked multicore fiber lasers. Nat. Commun. 10, 3516 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wang T., Roychowdhury J., “OIM: Oscillator-based Ising machines for solving combinatorial optimisation problems” in Unconventional Computation and Natural Computation, McQuillan I., Seki S., Eds. (Lecture Notes in Computer Science, Springer International Publishing, Cham, Switzerland, 2019), vol. 11493, pp. 232–256. [Google Scholar]

- 12. Kalinin K. P., Berloff N. G., Global optimization of spin Hamiltonians with gain-dissipative systems. Sci. Rep. 8, 17791 (2018).

- 13. Roques-Carmes C., et al., Heuristic recurrent algorithms for photonic Ising machines. Nat. Commun. 11, 249 (2020).

- 14. Mahler S., Goh M. L., Tradonsky C., Friesem A. A., Davidson N., Improved phase locking of laser arrays with nonlinear coupling. Phys. Rev. Lett. 124, 133901 (2020).

- 15. Pierangeli D., Marcucci G., Conti C., Large-scale photonic Ising machine by spatial light modulation. Phys. Rev. Lett. 122, 213902 (2019).

- 16. Ercsey-Ravasz M., Toroczkai Z., Optimization hardness as transient chaos in an analog approach to constraint satisfaction. Nat. Phys. 7, 966–970 (2011).

- 17. Molnár B., Molnár F., Varga M., Toroczkai Z., Ercsey-Ravasz M., A continuous-time MaxSAT solver with high analog performance. Nat. Commun. 9, 4864 (2018).

- 18. Traversa F. L., Di Ventra M., Polynomial-time solution of prime factorization and NP-complete problems with digital memcomputing machines. Chaos 27, 023107 (2017).

- 19. Maass W., Natschläger T., Markram H., Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560 (2002).

- 20. Tanaka G., et al., Recent advances in physical reservoir computing: A review. Neural Netw. 115, 100–123 (2019).

- 21. Goto H., Tatsumura K., Dixon A. R., Combinatorial optimization by simulating adiabatic bifurcations in nonlinear Hamiltonian systems. Sci. Adv. 5, eaav2372 (2019).

- 22. Borders W. A., et al., Integer factorization using stochastic magnetic tunnel junctions. Nature 573, 390–393 (2019).

- 23. Prigogine I., Etude Thermodynamique des Phénomènes irréversibles (Editions Desoer, Liège, 1947), chap. V.

- 24. de Groot S., Thermodynamics of Irreversible Processes (Interscience Publishers, New York, NY, 1951), chap. X.

- 25. Dickson N. G., et al., Thermally assisted quantum annealing of a 16-qubit problem. Nat. Commun. 4, 1903 (2013).

- 26. Boyd S., Vandenberghe L., Convex Optimization (Cambridge University Press, 2004).

- 27. Bertsekas D., Nonlinear Programming (Athena Scientific, 1999).

- 28. Index of /~yyye/yyye/Gset. https://web.stanford.edu/~yyye/yyye/Gset/. Accessed 21 September 2020.

- 29. Benlic U., Hao J. K., Breakout local search for the max-cut problem. Eng. Appl. Artif. Intell. 26, 1162–1173 (2013).

- 30. McMahon P. L., et al., A fully programmable 100-spin coherent Ising machine with all-to-all connections. Science 354, 614–617 (2016).

- 31. Roychowdhury J., Boolean computation using self-sustaining nonlinear oscillators. Proc. IEEE 103, 1958–1969 (2015).

- 32. Demir A., Mehrotra A., Roychowdhury J., Phase noise in oscillators: A unifying theory and numerical methods for characterization. IEEE Trans. Circuits Syst. I: Fundam. Theory Appl. 47, 655–674 (2000).

- 33. Reck M., Zeilinger A., Bernstein H. J., Bertani P., Experimental realization of any discrete unitary operator. Phys. Rev. Lett. 73, 58–61 (1994).

- 34. Karp R. M., “Reducibility among combinatorial problems” in Complexity of Computer Computations, Miller R. E., Thatcher J. W., Eds. (Springer, 1972), pp. 85–103.

Data Availability Statement

All study data are included in the article and SI Appendix.