Abstract

Context.

Observer variability in digital microscopy and the effect of computer-aided digital microscopy are underexamined areas in need of further research, considering the increasing use and future role of digital imaging in pathology. A reduction in observer variability using computer aids could enhance the statistical power of studies designed to determine the utility of new biomarkers and accelerate their incorporation in clinical practice.

Objectives.

To quantify interobserver and intraobserver variability in immunohistochemical analysis of HER2/neu with digital microscopy and computer-aided digital microscopy, and to test the hypothesis that observer agreement in the quantitative assessment of HER2/neu immunohistochemical expression is increased with the use of computer-aided microscopy.

Design.

A set of 335 digital microscopy images, extracted from 64 breast cancer tissue slides stained with a HER2 antibody, was read by 14 observers in 2 reading modes: the unaided mode and the computer-aided mode. In the unaided mode, HER2 images were displayed on a calibrated color monitor with no other information, whereas in the computer-aided mode, observers were shown a HER2 image along with a corresponding feature plot showing computer-extracted values of membrane staining intensity and membrane completeness for the particular image under examination and, at the same time, mean feature values of the different HER2 categories. In both modes, observers were asked to provide a continuous score of HER2 expression.

Results.

Agreement analysis performed on the output of the study showed significant improvement in both interobserver and intraobserver agreement when the computer-aided reading mode was used to evaluate preselected image fields.

Conclusion.

The role of computer-aided digital microscopy in reducing observer variability in immunohistochemistry is promising.

Immunohistochemistry (IHC) has evolved during the past 40 years to become central to both diagnostic pathology and clinical research.1 Advances in molecular biology leading to the discovery of numerous molecular markers (or biomarkers), coupled with the manufacture of the corresponding specific antibodies, have expanded the role of IHC not only to aid diagnosis but also to predict the behavior of certain tumors and their response to treatment. One such example is the biomarker ERBB2 (commonly known as HER2/neu or HER2) for breast cancer, which is primarily used to identify likely responders to trastuzumab (Herceptin, Genentech Inc, San Francisco, California) therapy. Five international, prospective, randomized clinical trials have demonstrated that adjuvant trastuzumab reduces the risk of recurrence and mortality by one-half and one-third, respectively, in patients with early stage breast cancer.2 The evaluation of HER2 expression with IHC involves the visual examination of cell membrane staining in paraffin-embedded tissue slides with a light microscope (optical microscopy) and overall slide classification in categories of 0, 1+, 2+, and 3+, corresponding to no, weak, moderate, and strong staining. According to the American Society of Clinical Oncology/College of American Pathologists guidelines,2 cases scored as 3+ are recommended for trastuzumab therapy, whereas 2+ cases are subject to further testing with fluorescence in situ hybridization. The evaluation of HER2 and other biomarkers with optical microscopy inspection of IHC-stained tissue specimens is widely used because it is relatively cheap and is suited for paraffin-embedded tissue, including archival material that is typically needed for outcome studies with long follow-up times. Even though the use of light microscopy for viewing IHC slides still predominates, there is growing evidence of pathology following the path of digital radiology during the last decade in embracing digital technologies.3-10

The field of digital microscopy, both for clinical practice and the development of new biomarkers, has been growing rapidly, driven by the need for high throughput and recent developments in microscopy imaging, such as automated whole-slide scanning systems. Digital microscopy has a number of possible applications,11 including interlaboratory consultations of the same slide, teaching, telepathology (including timely support to remote locations), and image analysis for computer-aided assessment. The use of computer-aided assessment was suggested as a way to decrease interobserver and intraobserver variability in the immunohistochemical interpretation of biomarkers.12-15 Immunohistochemistry was shown to suffer from interobserver and interlaboratory variability,16-19 which may hinder the clinical utility of biomarkers, as well as the statistical power of clinical trials for biomarker discovery. Even if a biomarker has demonstrated prognostic or predictive value, the potential for its widespread clinical use is greatly diminished if the biomarker measurements cannot be relied on for making clinical management decisions in the case of individual patients. In the case of HER2, the visual evaluation of criteria, such as intensity and uniformity of staining, is a subjective process that can affect the accuracy of IHC assessment and contribute to interobserver variability. Several studies have reported significant observer variability in the IHC analysis of HER2,20,21 supporting the need for quantitative methods to improve accuracy and reproducibility. 
The College of American Pathologists/American Society of Clinical Oncology guidelines recommend image analysis as an effective tool for achieving consistent interpretation of IHC HER2 staining, provided that a pathologist confirms the result.2 Computer-aided, digital microscopy systems, involving the digitization of stained tissue and its analysis with image algorithms, could produce a true continuous score of IHC expression and perform reproducible and quantitative analysis of biomarker expression in a practical way. Moreover, they have the potential to reveal relationships between markers that may not be discernible to the pathologist because of his or her inability to distinguish between fine levels of color,15,22 provided that such color information is not limited by the components of the digital microscopy system.

A number of computer-aided immunohistochemistry systems for different biomarkers have been reported in the literature. Several studies have reported on the use of image analysis algorithms to quantify HER2 expression23-29 as well as the use of IHC analysis of other biomarkers, including estrogen receptors for breast cancer,30 BCL2 for melanoma,31 and p53 proliferating cell nuclear antigen for endometrial tissue.32 In addition to research studies, a number of commercial systems are currently available for the evaluation of IHC, as reviewed by Cregger et al.33 Such systems have been used to assess IHC expression.34-36 The above studies incorporating image analysis were most often compared for concordance to light microscopy or fluorescence in situ hybridization. However, there is a lack of studies focusing on interobserver and intraobserver variability in the interpretation of IHC with digital microscopy or with computer-aided digital microscopy. In the study by Bloom et al,36 observers selected areas of HER2 slides for which the automated scores provided by a commercial image-based system were averaged to produce an IHC score for that slide. With that approach, interobserver agreement in HER2 immunohistochemical scoring was improved compared with light microscopy-based evaluation. However, that study36 (and other studies, to the best of our knowledge) did not examine observer variability in the interpretation of IHC with digital microscopy, that is, comparing the scores of multiple observers reading the same HER2 images on the same monitor. Observer variability in digital microscopy and the effect of computer-aided digital microscopy are underexamined areas in need of further research, particularly considering the increasing use and future role of digital pathology.

In this article, we present results from a randomized observer study to quantify interobserver and intraobserver variability in the immunohistochemical analysis of HER2 with digital microscopy and to assess any possible benefit of computer-aided microscopy in reducing such variability. A set of digital microscopy images extracted from breast cancer tissue slides stained with a HER2 antibody was read in a random order by 14 observers in 2 reading modes: the unaided mode and the computer-aided mode. In the unaided mode, HER2 images were displayed on a calibrated color monitor with no other information, whereas in the computer-aided mode, HER2 images were displayed on the same calibrated color monitor along with a corresponding feature plot showing computer-extracted values of membrane staining intensity and membrane completeness for the particular image under examination and, at the same time, the mean feature values of the different HER2 categories. In both modes, observers were asked to provide a continuous score of HER2 expression of the image. Here, we present the design, implementation, and results of our observer study to quantify interobserver and intraobserver variability in the interpretation of HER2 staining with digital and computer-aided digital microscopy.

MATERIALS AND METHODS

Observer Study Design

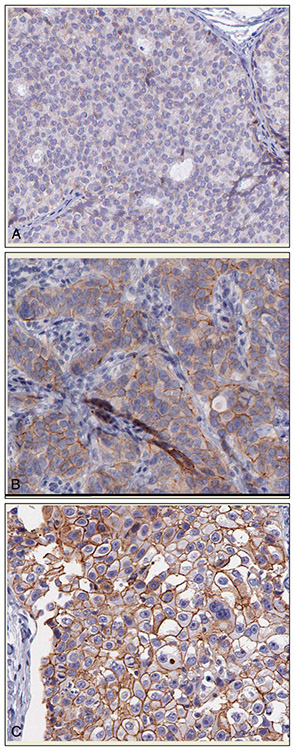

A set of 335 digital microscopy images extracted from 64 breast cancer tissue slides stained with a HER2 antibody was used in this study. All tissue slides were scanned using the Aperio Scanscope T2 Whole Slide Imager (Aperio Technologies, Vista, California) employing ×20 objectives. For each of the resulting whole digital slides, a number of images were extracted to cover the whole area of epithelial cells. These images were selected by the principal investigator (M.A.G.) after being trained by the expert pathologist on a different set of images to identify regions of epithelial cells. Each image was saved in a color TIFF format with 8 bits and a size of 646 by 816 pixels. Examples of images for the 1+, 2+, and 3+ HER2 categories are shown in Figure 1 (A through C, respectively). Details regarding slice preparation and digitization are presented in the “Slice Preparation and Image Acquisition” section below.

Figure 1.

Examples of digital immunohistochemical images of HER2-stained, breast cancer tissue samples used in the study. The images were acquired from a 1+ slide (A), a 2+ slide (B), and a 3+ slide (C). Image size for this illustration was reduced from actual size (original magnification ×20).

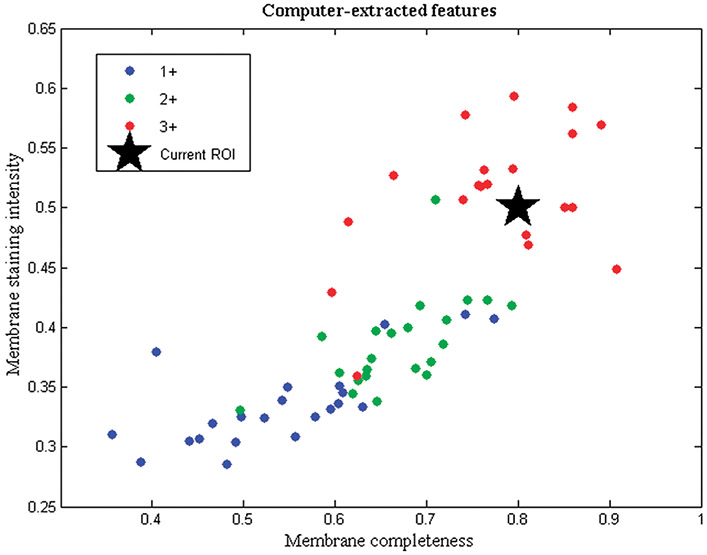

Before the observer study, each image was processed with a previously developed automated algorithm29 that extracted mean values of membrane staining intensity and membrane completeness for each image. These 2 features are the most important features in the interpretation of HER2 as seen in clinical guidelines for its evaluation.2 Based on the 2 computer-extracted values, a feature plot was created for each image. As can be seen in the example of Figure 2, the plot had axes of membrane staining intensity (vertical axis) and membrane completeness (horizontal axis) and showed the computer-extracted values of the 2 features for the particular image under examination (star). At the same time, common to all feature plots, the mean feature values of HER2 slides (averaged for all images of each slide) were shown with color-coding, based on the 1+, 2+, or 3+ archive score of the slide, to illustrate the range of these features. To show the feature range from as many slides as possible and because of the limited number of slides available, mean values from the 64 slides were shown in the plot; however, observers were not aware of the origin of the mean feature values. The objective of using this computer aid in the form of the feature plot was to provide the observer with image-specific information of membrane completeness and membrane staining intensity in feature space and, at the same time, show the range of these feature values for each HER2 category. Our primary hypothesis was that this additional information could improve the agreement between observers and the consistency within them in deciding their quantitative HER2 score for a particular image.
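The reference points in the feature plot are per-slide means of the per-image features. A minimal sketch of that averaging step is given below; the function name and the `(slide_id, completeness, intensity)` tuple layout are hypothetical and are not taken from the algorithm used in the study.

```python
from collections import defaultdict
from statistics import mean


def slide_mean_features(image_features):
    """Average per-image features into one point per slide.

    image_features: iterable of (slide_id, completeness, intensity) tuples,
    one tuple per image. This layout is an assumption for illustration.
    Returns {slide_id: (mean_completeness, mean_intensity)}.
    """
    by_slide = defaultdict(list)
    for slide_id, completeness, intensity in image_features:
        by_slide[slide_id].append((completeness, intensity))
    return {
        slide_id: (mean(c for c, _ in feats), mean(i for _, i in feats))
        for slide_id, feats in by_slide.items()
    }


# Two images from slide "S1" and one from slide "S2" (made-up values).
example = [("S1", 0.2, 0.3), ("S1", 0.4, 0.5), ("S2", 0.8, 0.9)]
slide_points = slide_mean_features(example)
```

Each resulting point would then be plotted with the color of the slide's archive category, with the image under examination overlaid as the star.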

Figure 2.

Feature plot shown concurrently with HER2 images in the computer-aided mode of the observer study. The plot shows membrane completeness and membrane staining intensity values of a testing image (star prompt) relative to the values of slides in 1+, 2+, and 3+ categories. Abbreviation: ROI, region of interest, refers to the image shown.

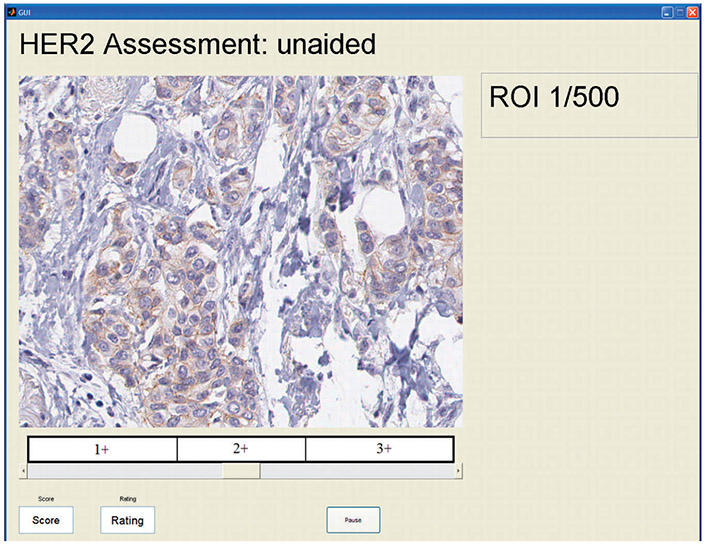

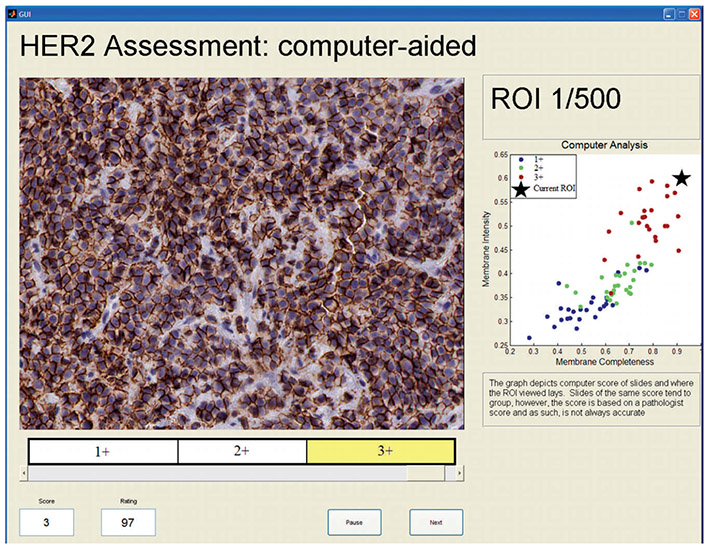

To test this hypothesis, we conducted an observer study in which multiple observers read the HER2 images in 2 modes: the unaided mode and the computer-aided mode. In the unaided mode, HER2 images were displayed with no other information, and the observer was asked to select a value for the HER2 expression of the image in the range between 1 and 100, using a moving slider as shown in Figure 3. The quantitative score was shown in a box while moving the slider, along with information on the corresponding category (1+, 2+, or 3+) in which the slider was located. As can be seen in Figure 3, the 100-point scale was split evenly into thirds and landmarks were provided to relate the 100-point scale to the familiar categories of HER2 expression. Another reason for showing these cut points was to minimize the risk of observers using different ranges of the 100-point scale for different expression categories. Equally spaced cut points were shown for simplicity. In the computer-aided mode, observers were shown a HER2 image along with its corresponding feature plot, as shown in Figure 4, and were again asked to select a value for the HER2 expression of the image in the range between 1 and 100 with the same moving slider. Each observer read all images in each of 2 sessions, at least 1 month apart from each other to reduce the chance of image recall. In the first session, images were randomly shown in either the unaided or the computer-aided reading mode. In the second session, the same images were read in the other reading mode (computer-aided or unaided), so that all images in the set were read in both modes. The order in which the images were shown was randomized on both days and for each observer.
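The mapping from slider position to HER2 category can be sketched as follows. The text states only that the scale was split evenly into thirds; the exact cut points used here (33 and 66) and the function name are assumptions.

```python
def her2_category(score):
    """Map a 1-100 slider score to a HER2 category.

    Assumed cut points: 1-33 -> 1+, 34-66 -> 2+, 67-100 -> 3+.
    The study interface may have placed the boundaries differently.
    """
    if not 1 <= score <= 100:
        raise ValueError("score must lie in the range 1 to 100")
    if score <= 33:
        return "1+"
    if score <= 66:
        return "2+"
    return "3+"
```

Fixing the cut points in one place means that every observer's continuous score is converted to a category in the same way.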

Figure 3.

A screenshot of the graphic user interface used in the study showing an image being read in the unaided reading mode. Abbreviation: ROI, region of interest, refers to the image shown (HER2 stain, original magnification ×20).

Figure 4.

A screenshot of the graphic user interface used in the study showing an image being read in the aided reading mode. Abbreviation: ROI, region of interest, refers to the image shown (HER2 stain, original magnification ×20).

The study design enabled both interobserver and intraobserver agreement analysis in the following way. A subset of 241 images was used to examine interobserver variability. Images used in the interobserver analysis were shown to the observers in 2 sessions and alternating reading modes as described above. Additionally, 2 other subsets were used to examine intraobserver variability. The first set contained 47 images that were read in the unaided mode in both reading sessions; the second contained 47 different images that were read in the computer-aided mode in both sessions.
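The two-session design can be illustrated with a short scheduling sketch. It assumes that the three subsets are supplied by the caller and that the first-session mode of each interobserver image is drawn at random for each observer; the function and argument names are hypothetical.

```python
import random


def build_sessions(inter_ids, intra_unaided_ids, intra_aided_ids, seed):
    """Return two shuffled reading lists of (image_id, mode) for one observer.

    inter_ids: images read once in each mode (interobserver analysis).
    intra_unaided_ids / intra_aided_ids: images read in the same mode in
    both sessions (intraobserver analysis).
    """
    rng = random.Random(seed)
    session1, session2 = [], []
    for image_id in inter_ids:
        first = rng.choice(["unaided", "aided"])
        second = "aided" if first == "unaided" else "unaided"
        session1.append((image_id, first))
        session2.append((image_id, second))
    for image_id in intra_unaided_ids:
        session1.append((image_id, "unaided"))
        session2.append((image_id, "unaided"))
    for image_id in intra_aided_ids:
        session1.append((image_id, "aided"))
        session2.append((image_id, "aided"))
    # Presentation order is randomized independently in each session.
    rng.shuffle(session1)
    rng.shuffle(session2)
    return session1, session2


# 241 + 47 + 47 = 335 images, as in the study; the integer ids are placeholders.
s1, s2 = build_sessions(range(241), range(241, 288), range(288, 335), seed=1)
```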

Observer Group and Training

A total of 14 observers participated in this study. Observers consisted of 2 groups, a pathologist group of 7 and a novice group, also of 7 members. The pathologist group ranged in training and experience, consisting of 5 board-certified pathologists (postboard experience ranging from 2–12 years) and 2 residents (1 had completed second year, 1 had completed third year). The novice group consisted of researchers in the field of medical imaging who had no clinical or medical training before the study.

Before initiating the observer study, each observer was subjected to a 3-step training procedure. In the first step, observers had a one-on-one training session with the principal investigator of the study. The session used a PowerPoint (Microsoft, Redmond, Washington) presentation that included (1) the objectives of the study, (2) the guidelines used in HER2 interpretation, (3) training images of each HER2 category (30 images that were not included in the observer study were shown, 10 in each category) along with comments on the HER2 expression of each image, (4) a brief description of the algorithm used to extract the quantitative features of membrane staining intensity and membrane completeness and its performance, (5) an explanation of the feature plot used in the computer-aided mode, (6) examples of images in the unaided and computer-aided modes, and (7) instructions for their participation in the study. Instructions to the reviewers were the following: (1) for each session (335 images), read the images in 4 parts of about 83 images each, and take a short break after each part; (2) consult both the image and the feature plot (in the computer-aided reading mode) before scoring; and (3) try to use the whole range of the scoring slider. The last instruction was given to the observers so that the resulting score would better approximate a continuous value. Observers were instructed that even images of the same category, such as 3+, might not have the same expression and should be scored based on the degree of membrane staining intensity and completeness.

The second step of the observer training consisted of a feedback session where observers read 30 training images (not included in the set of 335 or the PowerPoint training) that were preread by an expert pathologist in breast disease. After an observer had scored a training image, a prompt would appear on the screen if the observer's score differed significantly from the expert pathologist’s score. The prompt would indicate in which direction (lower or higher) the observer score was different from the expert’s score. The observer would then score the image again. A difference of 15 in the 1 to 100 slider scale was used to determine whether the observer’s score was close to the expert’s score. This value was selected as a reasonable trade-off between achieving reasonably good agreement with the expert and not requiring complete agreement (keeping in mind that uncertainty exists even in expert scoring).
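The feedback rule of this training step can be sketched as below. Whether a difference of exactly 15 counted as close is not stated; the sketch assumes that it did, and the function name is hypothetical.

```python
def feedback_prompt(observer_score, expert_score, tolerance=15):
    """Return None if the observer is close to the expert, else a direction.

    "lower" means the observer's score is lower than the expert's score,
    "higher" means it is higher. Assumption: a difference equal to the
    tolerance is still treated as close.
    """
    difference = observer_score - expert_score
    if abs(difference) <= tolerance:
        return None
    return "lower" if difference < 0 else "higher"
```

For example, an observer score of 30 against an expert score of 60 would trigger a "lower" prompt, after which the observer rescores the image.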

The final training step was a practice run with 15 training images (again not included in the set of 335 or the PowerPoint training) where observers completed a mini session identical in design to the main study to accustom themselves to the interface and the use of the 2 reading modes.

Slice Preparation and Image Acquisition

The microscopy images used in the observer study were extracted from 77 formalin-fixed, paraffin-embedded breast cancer tissue slides acquired from the archives of the Department of Pathology, University of California at Irvine. The following procedure was used to produce the slides. The breast cancer tissue specimens were sectioned onto positively charged slides and deparaffinized. Antigen retrieval was carried out with Dako Target Retrieval Solution (Dako, Carpinteria, California) pH 6 in a pressure cooker for 5 minutes. Application of primary antibody and detection system was performed on a Dako Autostainer Plus automated immunostainer. The HER2 polyclonal antibody (Dako) was used at a dilution of 1:500. Negative controls were performed with substitution of a rabbit immunoglobulin for the primary antibody.

Manual evaluation of HER2 staining in categories 1+, 2+ or 3+ (with increasing expression) was provided by a surgical pathologist with experience in breast pathology, applying the grading system of the Dako HercepTest. Briefly, incomplete, faint membrane staining in >10% of cells in a section was designated 1+, and moderate or strong complete membrane staining in >10% of cancer cells was designated 2+ or 3+, respectively. The IHC scoring of this data set was performed before the recent College of American Pathologists/American Society of Clinical Oncology guidelines2 (which call for report on staining from >30% of cells instead of 10%) were published. The distribution of the slide scores was as follows: 26 (34%) were scored 1+, 27 (35%) were scored 2+, and 24 (31%) were scored 3+. Thirteen slides (4 with a score of 1+, 5 with a score of 2+ and 4 with a score of 3+) were randomly selected from each category to provide the training images, whereas the remaining 64 slides (22 with a score of 1+, 22 with a score of 2+, and 20 with a score of 3+) provided the 335 images used in the main study. As a result, 112 images were selected from 1+ slides, 114 images from 2+ slides, and 109 images from 3+ slides. No slides with 0 staining were used in our study partly because only a few such cases were available at the point of data acquisition and also because the focus of the study was on the variability of staining quantification and not on detecting stained cases.

Display Specifications and Calibration

The observer study was conducted on a single color monitor to avoid any bias from the use of different monitors that might have different properties or calibration. The monitor used was a 3-million-pixel (1536 × 2048) liquid crystal device (RadiForce R31, EIZO, Ishikawa, Japan), with a digital video interface, digital input, running at 60 Hz. The display pixel pitch was 207 μm, and the screen diagonal was 52.9 cm. The display device has support for 10-bit color palettes. However, even though calibration measurements were done with a color (filtered) tri-stimulus probe, the device was calibrated using the manufacturer’s calibration software (RadiCS, EIZO, Ishikawa, Japan) in a grayscale mode to comply with a Digital Imaging and Communications in Medicine (DICOM, Rosslyn, Virginia) grayscale standard display function, which implies equal signals sent to each of the 3 color subpixels (<10% deviation from the target contrast per just-noticeable difference). This calibration technique is typical of color displays that are used in grayscale and color modes. Any other color management scheme has the potential to affect the grayscale response and, therefore, compliance to the DICOM standard. The minimum and maximum luminances were 0.9 candelas (cd)/m2 and 300 cd/m2, respectively. The readings were performed in a laboratory with black surfaces for controlled ambient reflections and under an illuminance range at the face of the monitor between 10 and 30 lux. The interface used in this study was programmed in MATLAB (MathWorks, Natick, Massachusetts).
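The stated display geometry can be cross-checked with simple arithmetic. The sketch below assumes a 1536 × 2048 pixel matrix, which is what a 3-million-pixel panel with the stated pixel pitch and diagonal implies; the variable names are illustrative.

```python
import math

cols, rows = 1536, 2048          # assumed pixel matrix of the 3-megapixel panel
pitch_mm = 0.207                 # stated pixel pitch of 207 micrometers

total_pixels = cols * rows                      # 3145728, about 3 million
diagonal_px = math.hypot(cols, rows)            # diagonal length in pixels
diagonal_cm = diagonal_px * pitch_mm / 10       # close to the stated 52.9 cm
```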

Statistical Analysis

The scores collected from each observer were in the form of an integer in the range 1 to 100. At the same time, the scores could be placed in a corresponding category (1+, 2+, 3+) because the slider used by the observers also indicated the category, as shown in Figure 3. Observer data were analyzed in both continuous and categorical forms.

Interobserver variability was analyzed using 2 commonly used, group-concordance measures, namely the Kendall coefficient of concordance and the intraclass correlation coefficient (ICC). These measures of concordance quantify how often 2 readers order a pair of cases in the same order (Kendall coefficient) or how consistent quantitative measurements made by different observers are with each other when measuring the same quantity (ICC). Kendall coefficient of concordance37 (also known as the Kendall W) is a rank-correlation, nonparametric test that is suitable to multiple observers and is related to the dispersion of individual mean ranks from the average mean rank. Kendall W is suited to both continuous and categorical data and ranges from 0 (no agreement within a group) to 1 (perfect agreement within a group). The presence of ties in the observer rankings can affect the relative values of the Kendall W, so we used Kendall Wt, a test that includes a correction element for ties.38 A possible drawback of rank-based measures, such as Kendall, is that they do not take into account the absolute magnitude of disagreement. As a complementary measure, we also analyzed observer data with the ICC metric as described in a manuscript by MacLennan.39 The ICC is commonly used for the assessment of interrater agreement for quantitative measurements of multiple observers measuring the same quantity.40 Its main difference from rank correlation-based metrics, such as Kendall W, is that in the ICC, the data are centered and scaled using a pooled mean and standard deviation, whereas in rank-based correlation, each variable is centered and scaled by its own mean and standard deviation.
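A minimal sketch of a tie-corrected Kendall coefficient of concordance is given below. It uses the standard textbook correction, W = 12S / (m²(n³ − n) − mΣT), where S is the sum of squared deviations of the rank sums, m the number of observers, n the number of cases, and T = Σ(t³ − t) over each observer's tie groups. This may differ in detail from the implementation used in the study, and the function names are hypothetical.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kendall_w_ties(scores):
    """Tie-corrected Kendall W; scores[observer][case] holds one score."""
    m, n = len(scores), len(scores[0])
    rank_rows = [average_ranks(row) for row in scores]
    rank_sums = [sum(row[c] for row in rank_rows) for c in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((rs - mean_rank_sum) ** 2 for rs in rank_sums)
    # Tie term: sum of (t^3 - t) over every tie group of every observer.
    tie_term = sum(t ** 3 - t for row in scores for t in Counter(row).values())
    denominator = m ** 2 * (n ** 3 - n) - m * tie_term
    if denominator == 0:
        raise ValueError("W is undefined when every observer ties all cases")
    return 12 * s / denominator
```

With the correction, observers who produce identical rankings that contain ties still reach W = 1, which the uncorrected formula would understate.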

The agreement between pathologists and novices was assessed with pairwise analysis using the well-known Kendall τb measure, which measures rank association by calculating the difference in the fraction of concordant and discordant pairs while correcting for ties.41 For example, consider a pair of slides, 1 and 2; 2 observers, A and B, are concordant when both rank slide 1 as more diseased than slide 2, or when both rank slide 2 as more diseased than slide 1. The observers are discordant when reader A ranks slide 1 as more diseased than slide 2, whereas reader B ranks slide 2 as more diseased than slide 1 (and vice versa). The range of Kendall τb is −1 to 1, where 1 indicates the readers are always concordant (perfect agreement), −1 indicates they are always discordant (perfect disagreement), and 0 indicates no agreement.
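The concordant and discordant pair counting described above can be written out directly. The sketch below uses the standard definition τb = (C − D) / sqrt((n0 − n1)(n0 − n2)), where n0 is the number of case pairs and n1, n2 are the numbers of pairs tied in each reader's scores; it is an illustration, not the MATLAB code used in the study.

```python
import math
from collections import Counter
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall tau-b between two readers' scores for the same cases."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long score lists with >= 2 cases")
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        product = (x1 - x2) * (y1 - y2)
        if product > 0:
            concordant += 1      # both readers order the pair the same way
        elif product < 0:
            discordant += 1      # the readers order the pair oppositely
        # product == 0: the pair is tied for at least one reader
    n0 = len(x) * (len(x) - 1) // 2
    tied_x = sum(t * (t - 1) // 2 for t in Counter(x).values())
    tied_y = sum(t * (t - 1) // 2 for t in Counter(y).values())
    denominator = math.sqrt((n0 - tied_x) * (n0 - tied_y))
    if denominator == 0:
        raise ValueError("tau-b is undefined when a reader ties every case")
    return (concordant - discordant) / denominator
```

The quadratic pair loop is adequate for a few hundred images per reader pair; larger sets would call for a sort-based algorithm.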

Confidence intervals for mean Kendall τb values, as well as for the difference in these values between unaided and computer-aided reading modes, were calculated with bootstrap analysis using the procedures described in the Appendix. Our bootstrap analyses accounted for both observer and case variability.
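The Appendix procedure is not reproduced in this section. As a generic illustration only, a percentile bootstrap that resamples both observers and cases with replacement could look like the sketch below; the function names, the toy statistic, and the percentile rule are assumptions.

```python
import random


def two_way_bootstrap_ci(scores, statistic, n_boot=1000, alpha=0.05, seed=0):
    """Percentile CI for statistic(scores), resampling observers and cases.

    scores[observer][case] holds one score. Note: a replicate may contain
    the same observer more than once; an agreement statistic would need to
    skip such duplicate pairs, which this sketch does not do.
    """
    rng = random.Random(seed)
    n_obs, n_cases = len(scores), len(scores[0])
    estimates = []
    for _ in range(n_boot):
        obs_idx = [rng.randrange(n_obs) for _ in range(n_obs)]
        case_idx = [rng.randrange(n_cases) for _ in range(n_cases)]
        resampled = [[scores[o][c] for c in case_idx] for o in obs_idx]
        estimates.append(statistic(resampled))
    estimates.sort()
    lo_idx = int(round((alpha / 2) * (n_boot - 1)))
    hi_idx = int(round((1 - alpha / 2) * (n_boot - 1)))
    return estimates[lo_idx], estimates[hi_idx]


def mean_score_range(scores):
    """Toy disagreement statistic: mean over cases of (max - min) score."""
    n_cases = len(scores[0])
    spread = [
        max(row[c] for row in scores) - min(row[c] for row in scores)
        for c in range(n_cases)
    ]
    return sum(spread) / n_cases


toy_scores = [[10, 50, 90], [20, 40, 80], [15, 60, 70]]  # made-up data
ci_low, ci_high = two_way_bootstrap_ci(toy_scores, mean_score_range, n_boot=200)
```

A confidence interval for the difference between reading modes would apply the same resampled observer and case indices to both modes and bootstrap the difference of the two statistics.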

Similarly, intraobserver agreement was assessed using Kendall τb, because it consisted of pairwise analysis only. For all analyses, comparisons were made between the unaided and computer-aided reading modes. Additionally, separate analyses were conducted for the pathologist, the novice, and the overall group of observers. Kendall Wt and τb statistics were implemented in MATLAB (MathWorks), whereas ICC analysis was performed using SPSS (SPSS, Chicago, Illinois).
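The specific ICC form computed in SPSS is not stated here. As an illustration, the sketch below computes one common variant, the two-way random-effects, absolute-agreement, single-measure ICC, from the usual ANOVA mean squares; the choice of variant and the function name are assumptions.

```python
def icc_two_way_random(scores):
    """ICC (two-way random, absolute agreement, single measure).

    scores[observer][case] holds one score; requires at least 2 observers
    and 2 cases. The ICC variant is an assumption, not the study's choice.
    """
    k, n = len(scores), len(scores[0])          # k observers, n cases
    grand = sum(sum(row) for row in scores) / (n * k)
    case_means = [sum(row[c] for row in scores) / k for c in range(n)]
    observer_means = [sum(row) / n for row in scores]
    ss_cases = k * sum((cm - grand) ** 2 for cm in case_means)
    ss_observers = n * sum((om - grand) ** 2 for om in observer_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_cases - ss_observers
    ms_cases = ss_cases / (n - 1)
    ms_observers = ss_observers / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_cases - ms_error) / (
        ms_cases + (k - 1) * ms_error + k * (ms_observers - ms_error) / n
    )
```

Unlike the rank-based measures, this quantity penalizes a systematic offset between observers, because the observer mean square enters the denominator.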

RESULTS

Comparisons were made between the unaided (conventional) and computer-aided assessment of HER2 expression using both the continuous and categorical form of the observers’ output. Categorical data convey information related to the familiar HER2 categories used in clinical practice for drug therapy management. On the other hand, information (and statistical power) is lost when data are placed in such a small number of categories; immunohistochemical expression is a surrogate measure of protein expression, a continuous variable. Using the continuous form allows studies to determine the optimal strategy for categorizing data (for example, a high threshold could be used on a screening population or a low threshold could be used on a high-risk population). The continuous form can also act as a reference standard for training image-analysis algorithms. For the aforementioned reasons, the output of this study was analyzed using both the continuous and categorical types of data.

Tables 1 and 2 summarize the results of interobserver agreement using group concordance analysis on both the continuous and categorical forms of data. Table 1 tabulates results using Kendall coefficient of concordance along with confidence intervals constructed from 1000 bootstrap samples. Similarly, Table 2 tabulates results using the ICC metric. It can be seen from the results that group concordance with either metric is significantly increased in the computer-aided mode (all results are statistically significant). This result is valid for the overall group (pathologists and novices) as well as for the pathologist and novice groups separately. Moreover, agreement is improved for both continuous and categorical forms of data. It is noteworthy that the confidence intervals for both metrics seem to be somewhat smaller for the continuous data, a result that supports the assertion that some information is lost when data are binned.

Table 1.

Results of Group Agreement Analysis Using Kendall Coefficient of Concordance (Kw)<sup>a</sup>

| Observer Group | Continuous Data, Unaided, Kw (95% CI) | Continuous Data, Computer-Aided, Kw (95% CI) | Categoric Data, Unaided, Kw (95% CI) | Categoric Data, Computer-Aided, Kw (95% CI) |
|---|---|---|---|---|
| Overall | 0.82 (0.78–0.85) | 0.92 (0.90–0.93) | 0.76 (0.72–0.80) | 0.86 (0.84–0.88) |
| Pathologists | 0.82 (0.79–0.85) | 0.91 (0.90–0.93) | 0.77 (0.74–0.80) | 0.86 (0.84–0.89) |
| Novices | 0.84 (0.81–0.87) | 0.94 (0.92–0.95) | 0.79 (0.75–0.82) | 0.88 (0.86–0.91) |

Abbreviation: CI, confidence interval.

<sup>a</sup> Numbers in parentheses indicate the 95% CI constructed from 100 bootstrap samples. All differences in agreement between unaided and computer-aided are statistically significant.

Table 2.

Results of Group Agreement Analysis Using the Intraclass Correlation Coefficient (ICC)<sup>a</sup>

| Observer Group | Continuous Data, Unaided, ICC (95% CI) | Continuous Data, Computer-Aided, ICC (95% CI) | Categoric Data, Unaided, ICC (95% CI) | Categoric Data, Computer-Aided, ICC (95% CI) |
|---|---|---|---|---|
| Overall | 0.81 (0.78–0.84) | 0.92 (0.91–0.93) | 0.72 (0.68–0.76) | 0.83 (0.80–0.86) |
| Pathologists | 0.80 (0.76–0.83) | 0.91 (0.89–0.92) | 0.71 (0.66–0.75) | 0.82 (0.79–0.85) |
| Novices | 0.83 (0.80–0.86) | 0.93 (0.92–0.94) | 0.74 (0.70–0.78) | 0.85 (0.82–0.87) |

Abbreviation: CI, confidence interval.

<sup>a</sup> Numbers in parentheses indicate the 95% CI constructed from 100 bootstrap samples. All differences in agreement between unaided and computer-aided are statistically significant.

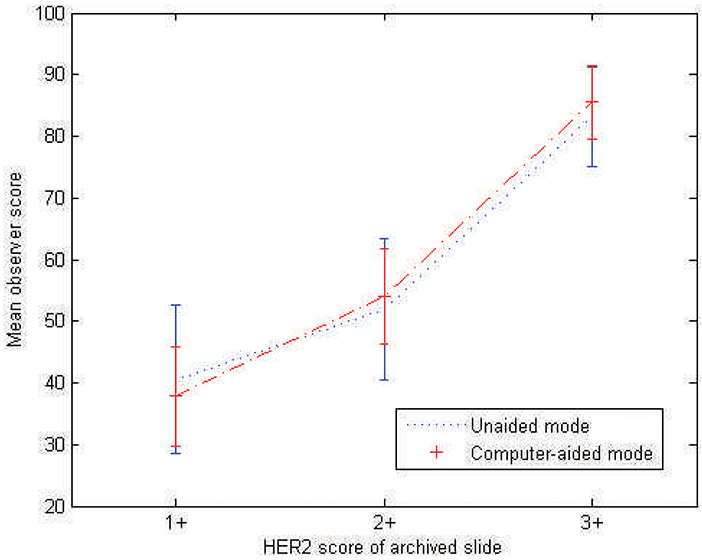

To examine differences within observers, specific to HER2 categories, the mean and standard deviation of observers’ scores were calculated for each category and plotted in Figure 5. As can be seen in the plot, there was more variation and overlap among the scores for images extracted from slides archived as 1+ and 2+, whereas there was reduced variation for 3+ slides and reduced overlap between 2+ and 3+. The plot also shows that using the computer-aided reading mode decreased the variation in scoring for all categories. In other analyses, it was found that from the top 10% of images (24 images) with the most variation in observer scoring (measured by standard deviation of observers’ scores), 45.8% (n = 11) were images from 1+ slides, 54.2% (n = 13) were images from 2+ slides, and no images (n = 0) were extracted from 3+ slides. On the other hand, from the bottom 10% of images (least variation), 4.2% (n = 1) were images from 1+ slides, 16.7% (n = 4) were images from 2+ slides, and 79.1% (n = 19) were extracted from 3+ slides. As expected, observer variability was higher for 1+ and 2+ cases.
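The breakdown of the most variable images by archive category can be sketched as follows. The sketch assumes the sample standard deviation and rounds the 10% cut to the nearest whole image (24 of 241); the function name and input layout are hypothetical.

```python
from collections import Counter
from statistics import stdev


def most_variable_breakdown(scores_by_image, category_by_image, fraction=0.10):
    """Percentage of each archive category among the most variable images.

    scores_by_image: {image_id: [one score per observer]}
    category_by_image: {image_id: "1+" | "2+" | "3+"}
    """
    ranked = sorted(
        scores_by_image,
        key=lambda image_id: stdev(scores_by_image[image_id]),
        reverse=True,
    )
    n_top = round(fraction * len(ranked))
    counts = Counter(category_by_image[image_id] for image_id in ranked[:n_top])
    return {category: 100 * count / n_top for category, count in counts.items()}
```

Sorting in ascending order instead would give the corresponding breakdown for the images with the least variation.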

Figure 5.

Mean observer scores in the range of 1 to 100 for the unaided and computer-aided reading modes. Error bars show 1 standard deviation.

Table 3 tabulates results of interobserver agreement using pairwise analysis on both the continuous and categorical forms of data. The table tabulates the average Kendall τb values among observers and the difference in those values between unaided and computer-aided assessment, along with confidence intervals on those measures constructed from 1000 bootstrap samples. Pairwise analysis allows intergroup comparisons to be made between pathologists and novices. Agreement among observers in each group is also included in the table, for comparison purposes using the same agreement measure. Results show that agreement between the novice group and pathologist group is increased when computer-aided assessment is used. These results indicate the potential for computer-aided assessment to have a role in bridging the gap between experienced and inexperienced pathology observers, such as intern clinical residents. Moreover, agreement between novices and pathologists in the unaided mode is similar to the agreement among pathologists, validating our observer training methodology described in the “Observer Group and Training” section. Such a training methodology, which included a reading session with feedback on agreement with the scores provided by an experienced pathologist in breast disease, could be used as a teaching tool for pathology residents.

Table 3.

Results of Pairwise Agreement Analysis Using Kendall τbᵃ

Values are interreader (pairwise) agreement, Kendall τb (95% CI), using continuous and categoric data.

| Reader Group | Continuous Data: Unaided | Continuous Data: Computer-Aided | Continuous Data: Difference | Categoric Data: Unaided | Categoric Data: Computer-Aided | Categoric Data: Difference |
|---|---|---|---|---|---|---|
| Overall | 0.62 (0.56–0.67) | 0.76 (0.71–0.81) | 0.14 (0.10–0.19) | 0.70 (0.64–0.76) | 0.82 (0.76–0.87) | 0.12 (0.06–0.17) |
| Pathologists | 0.61 (0.53–0.67) | 0.75 (0.66–0.81) | 0.14 (0.08–0.20) | 0.69 (0.61–0.76) | 0.80 (0.72–0.89) | 0.11 (0.03–0.19) |
| Novices | 0.63 (0.57–0.69) | 0.78 (0.72–0.83) | 0.15 (0.09–0.21) | 0.71 (0.63–0.77) | 0.83 (0.77–0.89) | 0.12 (0.06–0.19) |
| Pathologists versus novices | 0.62 (0.57–0.66) | 0.76 (0.71–0.81) | 0.14 (0.11–0.19) | 0.70 (0.65–0.75) | 0.82 (0.76–0.86) | 0.12 (0.06–0.16) |

Abbreviation: CI, confidence interval.

ᵃ Numbers in parentheses indicate the 95% CI constructed from 100 bootstrap samples. All differences in agreement between unaided and computer-aided are statistically significant.

Results of the analysis of intraobserver agreement are shown in Table 4. The results show a statistically significant improvement in overall agreement using both the continuous and categorical forms of data, indicating the potential of computer-aided assessment to increase consistency within observers. The improvement is not statistically significant for the pathologists’ group, which could be attributed to the smaller sample size; note that 241 cases were used for interobserver analysis, whereas 47 cases each were used for intraobserver analysis for the unaided and computer-aided modes. The smaller sample size is also the likely reason that the confidence intervals in the intraobserver agreement analysis are wider than those for interobserver analysis. It is interesting to note that, for the expert pathologist in breast disease, the intrareader agreement as measured by the Kendall τb was 0.75 with the unaided mode and 0.82 with the computer-aided mode.

Table 4.

Results of Intrareader Agreement Analysis Using Kendall τbᵃ

Values are intrareader agreement, Kendall τb (95% CI), using continuous and categoric data.

| Reader Group | Continuous Data: Unaided | Continuous Data: Computer-Aided | Continuous Data: Difference | Categoric Data: Unaided | Categoric Data: Computer-Aided | Categoric Data: Difference |
|---|---|---|---|---|---|---|
| Overall | 0.71 (0.64 to 0.76) | 0.81 (0.75 to 0.85) | **0.10 (0.02 to 0.17)** | 0.74 (0.66 to 0.80) | 0.84 (0.77 to 0.89) | **0.10 (0 to 0.19)** |
| Pathologists | 0.72 (0.64 to 0.79) | 0.80 (0.72 to 0.86) | 0.08 (−0.01 to 0.18) | 0.73 (0.63 to 0.82) | 0.85 (0.76 to 0.92) | 0.12 (−0.01 to 0.25) |
| Novices | 0.70 (0.60 to 0.77) | 0.81 (0.73 to 0.87) | **0.11 (0.01 to 0.23)** | 0.75 (0.62 to 0.84) | 0.82 (0.75 to 0.89) | 0.07 (−0.04 to 0.20) |

Abbreviation: CI, confidence interval.

ᵃ Numbers in parentheses indicate the 95% CI constructed from 100 bootstrap samples. Statistically significant differences in agreement are shown in bold.

In addition to agreement analysis, a relative bias analysis was performed to examine whether there was a systematic difference in observer scores between the 2 reading modes. The results tabulated in Table 5 show that the differences in scores for both modes, as well as among the different observer types, are not statistically significant, with very little uncertainty in the difference. This indicates that the use of computer-aided microscopy does not introduce a reading bias.
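A relative bias check of this kind can be sketched as a bootstrap on the paired difference in scores between the two reading modes. The code below is an illustrative assumption, not the procedure used in the study: the function names, the sign convention (computer-aided minus unaided), the resampling of images, and the toy scores are all hypothetical.

```python
import random
from statistics import mean


def paired_mean_difference(unaided, aided):
    """Mean per-image difference in score, computer-aided minus unaided (assumed convention)."""
    return mean(a - u for u, a in zip(unaided, aided))


def bootstrap_difference_ci(unaided, aided, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the paired mean difference, resampling images."""
    rng = random.Random(seed)
    n = len(unaided)
    diffs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(mean(aided[i] - unaided[i] for i in idx))
    diffs.sort()
    lo = diffs[int(alpha / 2 * n_boot)]
    hi = diffs[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lo, hi


# Toy mean scores per image in each mode (invented for illustration, not study data).
toy_unaided = [20, 35, 50, 62, 75, 88, 41, 57]
toy_aided = [22, 33, 51, 60, 77, 87, 43, 55]

point = paired_mean_difference(toy_unaided, toy_aided)
lo, hi = bootstrap_difference_ci(toy_unaided, toy_aided, n_boot=500)
```

Under this sketch, an interval that contains zero would be read as no evidence of a systematic shift in scores between the two modes.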

Table 5.

Differences in Observers’ Scores Using the Unaided and Computer-Aided Reading Modesᵃ

Values are mean observer scores, No. (95% CI), using continuous and categoric data.

| Reader Group | Continuous Data: Unaided | Continuous Data: Computer-Aided | Continuous Data: Difference | Categoric Data: Unaided | Categoric Data: Computer-Aided | Categoric Data: Difference |
|---|---|---|---|---|---|---|
| Overall | 58.4 (55.0 to 61.9) | 59.0 (55.6 to 62.5) | 0.6 (−1.8 to 0.5) | 2.2 (2.1 to 2.3) | 2.2 (2.1 to 2.3) | 0 (−0.05 to 0.02) |
| Pathologists | 59.9 (56.3 to 63.7) | 60.6 (57.2 to 64.1) | 0.7 (−2.1 to 0.8) | 2.3 (2.2 to 2.3) | 2.3 (2.2 to 2.4) | 0 (−0.04 to 0.04) |
| Novices | 56.9 (53.1 to 60.6) | 57.5 (54.0 to 61.0) | 0.6 (−2.1 to 1.2) | 2.1 (2.0 to 2.2) | 2.2 (2.1 to 2.3) | 0.02 (−0.07 to 0.03) |

Abbreviation: CI, confidence interval.

ᵃ Numbers in parentheses indicate the 95% CI constructed from 100 bootstrap samples. No differences in scores are statistically significant.

COMMENT

In summary, our study showed significant improvement in both interobserver and intraobserver agreement when computer-aided assessment of HER2 staining was used. The result held for pathologists with a range of clinical experience as well as for novices trained to read digital microscopy images of HER2 immunohistochemistry. A possible reason for this improvement is that the computer-extracted features prompt the observer to take a second look at an image and take into account areas that were previously underexamined. As in mammography, computer aids in histopathology can mitigate the effects of satisfaction of search42 (where diagnostic information is missed after other information is found in a different area of a medical image) and oversight due to fatigue. Even though observers performed limited searches in this study, as discussed below, information from the computer aid that pointed to a score significantly different from that of the observer could prompt the observer to examine the staining of more cells.

The finding that novices demonstrated consistency at the same level as pathologists is of interest because it suggests that after proper selection of fields to evaluate and sufficient training, certain nonmedical personnel might be suitable for consistently evaluating staining.

An obvious limitation in our study is that regions of interest were used in the observer study, as opposed to whole slide images, which would better mimic the clinical task of integrating multiple fields into a composite score. The study did not address the consistency with which this is accomplished by pathologists with and without computer assistance. However, by focusing on regions of interest, we were able to examine observer variability in color quantification and minimize the effect of other sources of variability, such as the selection of regions and the combination of information from different areas or fields of view of a whole slide. A different observer study would have to be designed for the task of identifying sources of variability in the selection of regions of interest and possibly examining methodologies for reducing such variability.

The results of this study may not be generalizable to situations in which a similar training program is not provided or in which the examination environment differs from the controlled conditions used in the study. In addition, results may be different if a different algorithm is used for computer-aided analysis. A different algorithm might introduce different biases and/or provide different outputs that might affect how observers use the computer-aided mode. Moreover, “novices” in our study consisted of researchers in the field of medical imaging, who may not be representative of the population at large, despite their lack of formal medical training. Finally, the study is limited in that computer-aided interpretations were not compared with assessment using a conventional light microscope.

Future work includes the development of an adaptive method incorporating automated parameter optimization so that computer-aided assessment can be applied to different biomarkers. The method used to derive the features that describe HER2 staining, as used in this observer study, relied on manually extracted training pixels from cell membrane areas, making it impractical to apply to different biomarkers, which may have different color properties. An adaptive method would be retrained using IHC data from a particular biomarker, so that its parameters would be optimized for the properties of that biomarker, such as the range of hue and saturation of the colors present. Computer-aided assessment would then be robust to variability in IHC data due to the use of different antibodies, fixation conditions, or digital acquisition. Moreover, such a method could also be applied to the analysis of tissue microarrays for large-scale analysis. Other areas of future research include the development of methodologies for processing the whole slide by incorporating optimal strategies for combining scores from different areas of a slide. Such methodologies could improve reproducibility and consistency because the evaluation of different areas of a slide by different observers and the method used for combining these evaluations contribute to variability in the interpretation of biomarkers.43 Finally, we have undertaken a project to characterize color as it propagates through the digital microscopy imaging and display chain. The results of that project will provide useful information related to color management in digital pathology.

In summary, we have conducted an observer study with digital microscopy to examine the benefit of computer-aided assessment in the interpretation of IHC. The results of the observer study reported in this manuscript demonstrate that computer-aided assessment of preselected image fields can decrease both interobserver and intraobserver variability in the assessment of HER2 immunohistochemical expression. Reducing variability in the interpretation of IHC could enhance the statistical power of clinical trials designed to determine the utility of new biomarkers and accelerate their incorporation in clinical practice.

Acknowledgments

We are grateful to Weijie Chen, PhD; Sinchita Roy Chowduri, MD; Liliana Ilic, PhD; Lisa Kinnard, PhD; Dai Li, MD, PhD; Kant Matsuda, MD; Takikita Mikiko, MD; Sophie Paquerault, PhD; Max Robinowitz, MD; Annie Saha, MS; Diksha Sharma, MS; and Rongping Zeng, PhD, for their participation in this study. We would also like to thank the reviewers for their insightful comments.

Footnotes

The authors have no relevant financial interest in the products or companies described in this article.

Disclaimer: The mention of commercial products, their sources, or their use in connection with material reported herein is not to be construed as either an actual or implied endorsement of such products by the Department of Health and Human Services.

Contributor Information

Marios A. Gavrielides, Division of Imaging and Applied Mathematics, Office of Science and Engineering Laboratories, Center for Devices and Radiological Health, US Food and Drug Administration, Silver Spring, Maryland.

Brandon D. Gallas, Division of Imaging and Applied Mathematics, Office of Science and Engineering Laboratories, Center for Devices and Radiological Health, US Food and Drug Administration, Silver Spring, Maryland.

Petra Lenz, Laboratory of Pathology, Center for Cancer Research, National Cancer Institute, National Institutes of Health, Bethesda, Maryland.

Aldo Badano, Division of Imaging and Applied Mathematics, Office of Science and Engineering Laboratories, Center for Devices and Radiological Health, US Food and Drug Administration, Silver Spring, Maryland.

Stephen M. Hewitt, Laboratory of Pathology, Center for Cancer Research, National Cancer Institute, National Institutes of Health, Bethesda, Maryland.

References

- 1. Taylor CR, Cote RJ. Immunomicroscopy, a Diagnostic Tool for the Surgical Pathologist. 3rd ed. Philadelphia, PA: Saunders Elsevier; 2006.

- 2. Wolff AC, Hammond ME, Schwartz JN, et al. American Society of Clinical Oncology/College of American Pathologists guideline recommendations for human epidermal growth factor receptor 2 testing in breast cancer. J Clin Oncol. 2007;25(1):118–145.

- 3. Weinstein RS. Innovations in medical imaging and virtual microscopy. Hum Pathol. 2005;36(4):317–319.

- 4. Furness PN. The use of digital images in pathology. J Pathol. 1997;183(3):253–263.

- 5. Yagi Y, Gilbertson JR. Digital imaging in pathology: the case for standardization. J Telemed Telecare. 2005;11(3):109–116.

- 6. Lundin M, Lundin J, Isola J. Virtual microscopy. J Clin Pathol. 2004;57(12):1250–1251.

- 7. Horii SC. Whole-slide imaging in pathology: the potential impact on PACS. In: Horii SC, Andriole KP, eds. Proceedings of the SPIE, Medical Imaging: PACS and Imaging Informatics. San Diego, CA: SPIE; 2007:65160B1.

- 8. McCullough B, Ying X, Monticello T, Bonnefoi M. Digital microscopy imaging and new approaches in toxicologic pathology. Toxicol Pathol. 2004;32(suppl 2):49–58.

- 9. Helin HO, Lundin ME, Laakso M, Lundin J, Helin HJ, Isola J. Virtual microscopy in prostate histopathology: simultaneous viewing of biopsies stained sequentially with hematoxylin and eosin, and α-methylacyl-coenzyme A racemase/p63 immunohistochemistry. J Urol. 2006;175(2):495–499.

- 10. Molnar B, Berczi L, Diczhazy C, et al. Digital slide and virtual microscopy based routine and telepathology evaluation of routine gastrointestinal biopsy specimens. J Clin Pathol. 2003;56(6):433–438.

- 11. Gu J, Ogilvie RW. Virtual Microscopy and Virtual Slides in Teaching, Diagnosis, and Research. Boca Raton, FL: CRC Press; 2005.

- 12. Seidal T, Balaton AJ, Battifora H. Interpretation and quantification of immunostains. Am J Surg Pathol. 2001;25(9):1204–1207.

- 13. Braunschweig T, Chung J-Y, Hewitt SM. Perspectives in tissue microarrays. Comb Chem High Throughput Screen. 2004;7(6):575–585.

- 14. Braunschweig T, Chung J-Y, Hewitt SM. Tissue microarrays: bridging the gap between research and the clinic. Expert Rev Proteomics. 2005;2(3):325–336.

- 15. Camp RL, Chung GG, Rimm DL. Automated subcellular localization and quantification of protein expression in tissue microarrays. Nat Med. 2002;8(11):1323–1327.

- 16. Mengel M, von Wasielewski R, Wiese B, Rudiger T, Muller-Hermelink HK, Kreipe H. Inter-laboratory and inter-observer reproducibility of immunohistochemical assessment of the Ki-67 labelling index in a large multi-centre trial. J Pathol. 2002;198(3):292–299.

- 17. Lacroix-Triki M, Mathoulin-Pelissier S, Ghnassia J, et al. High interobserver agreement in immunohistochemical evaluation of HER-2/neu expression in breast cancer: a multicentre GEFPICS study. Eur J Cancer. 2006;42(17):2946–2953.

- 18. Biesterfeld S, Veuskens U, Schmitz F-J, Amo-Takyi B, Bocking A. Interobserver reproducibility of immunocytochemical estrogen- and progesterone receptor status assessment in breast cancer. Anticancer Res. 1996;16(5A):2497–2500.

- 19. Thomson TA, Hayes MM, Spinelli JJ, et al. HER2/neu in breast cancer: interobserver variability and performance of immunohistochemistry with 4 antibodies compared to fluorescent in situ hybridization. Mod Pathol. 2001;14(11):1079–1086.

- 20. Rhodes A, Jasani B, Anderson E, Dodson AR, Balaton AJ. Evaluation of HER-2/neu immunohistochemical assay sensitivity and scoring on formalin-fixed and paraffin-processed cell lines and breast tumors: a comparative study involving results from laboratories in 21 countries. Am J Clin Pathol. 2002;118(3):408–417.

- 21. Hsu C-Y, Ho DM-T, Yang C-F, Lai C-R, Yu I-T, Chiang H. Interobserver reproducibility of Her-2/neu protein overexpression in invasive breast carcinoma using the Dako HercepTest. Am J Clin Pathol. 2002;118(5):693–698.

- 22. Camp RL, Dolled-Filhart M, King BL, Rimm DL. Quantitative analysis of breast cancer tissue microarrays shows that both high and normal levels of HER2 expression are associated with poor outcome. Cancer Res. 2003;63(7):1445–1448.

- 23. Hall BH, Ianosi-Irimie M, Javidian P, Chen W, Ganesan S, Foran DJ. Computer-assisted assessment of the human epidermal growth factor receptor 2 immunohistochemical assay in imaged histologic sections using a membrane isolation algorithm and quantitative analysis of positive controls. BMC Med Imaging. 2008;8(11).

- 24. Joshi AS, Sharangpani GM, Porter K, et al. Semi-automated imaging system to quantitate Her-2/neu membrane receptor immunoreactivity in human breast cancer. Cytometry A. 2007;71(5):273–285.

- 25. Skaland I, Ovestad I, Janssen EAM, et al. Comparing subjective and digital image analysis HER2/neu expression scores with conventional and modified FISH scores in breast cancer. J Clin Pathol. 2008;61(1):68–71.

- 26. Lehr H-A, Jacobs TW, Yaziji H, Schnitt SJ, Gown AM. Quantitative evaluation of HER-2/neu status in breast cancer by fluorescence in situ hybridization and by immunohistochemistry with image analysis. Am J Clin Pathol. 2001;115(6):814–822.

- 27. Matkowskyj KA, Schonfeld D, Benya RV. Quantitative immunohistochemistry by measuring cumulative signal strength using commercially available software Photoshop and MATLAB. J Histochem Cytochem. 2000;48(2):303–311.

- 28. Hatanaka Y, Hashizume K, Kamihara Y, et al. Quantitative immunohistochemical evaluation of HER2/neu expression with HercepTest™ in breast carcinoma by image analysis. Pathol Int. 2001;51(1):33–36.

- 29. Masmoudi H, Hewitt SM, Petrick N, Myers KJ, Gavrielides MA. Automated quantitative assessment of HER-2/neu immunohistochemical expression in breast cancer. IEEE Trans Med Imaging. 2009;28(6):916–925.

- 30. Mofidi R, Walsh R, Ridgway PF, et al. Objective measurement of breast cancer oestrogen receptor status through digital image analysis. Eur J Surg Oncol. 2003;29(1):20–24.

- 31. DiVito KA, Berger AJ, Camp RL, Dolled-Filhart M, Rimm DL, Kluger HM. Automated quantitative analysis of tissue microarrays reveals an association between high Bcl-2 expression and improved outcome in melanoma. Cancer Res. 2004;64(23):8773–8777.

- 32. Elhafey AS, Papadimitriou JC, El-Hakim MS, El-Said AI, Ghannam BB, Silverberg SG. Computerized image analysis of p53 and proliferating cell nuclear antigen expression in benign, hyperplastic, and malignant endometrium. Arch Pathol Lab Med. 2001;125(7):872–879.

- 33. Cregger M, Berger AJ, Rimm DL. Immunohistochemistry and quantitative analysis of protein expression. Arch Pathol Lab Med. 2006;130(7):1026–1030.

- 34. Wang S, Saboorian H, Frenkel EP, et al. Automated cellular imaging system (ACIS)-assisted quantitation of immunohistochemical assay achieves high accuracy in comparison with fluorescence in situ hybridization assay as the standard. Am J Clin Pathol. 2001;116(4):495–503.

- 35. Ciampa A, Xu B, Ayata G, et al. HER-2 status in breast cancer, correlation of gene amplification by FISH with immunohistochemistry expression using advanced cellular imaging system. Appl Immunohistochem Mol Morphol. 2006;14(2):132–137.

- 36. Bloom K, Harrington D. Enhanced accuracy and reliability of HER-2/neu immunohistochemical scoring using digital microscopy. Am J Clin Pathol. 2004;121(5):620–630.

- 37. Kendall MG, Smith BB. The problem of m rankings. Ann Math Statist. 1939;10(3):275–287.

- 38. von Eye A, Mun EY. Analyzing Rater Agreement: Manifest Variable Methods. Mahwah, NJ: Lawrence Erlbaum Associates Inc; 2005.

- 39. MacLennan RN. Interrater reliability with SPSS for Windows 5.0. Am Stat. 1993;47(4):292–296.

- 40. Shrout PE, Fleiss JL. Intraclass correlations: uses in assessing rater reliability. Psychol Bull. 1979;86(2):420–428.

- 41. Kendall M. Rank Correlation Methods. London, England: Charles Griffin and Co Ltd; 1948.

- 42. Berbaum KS, Franken EA Jr, Dorfman DD, et al. Satisfaction of search in diagnostic radiology. Invest Radiol. 1990;25(2):133–140.

- 43. van Diest PJ, van Dam P, Henzen-Logmans SC, et al. A scoring system for immunohistochemical staining: consensus report of the task force for basic research of the EORTC-GCCG. J Clin Pathol. 1997;50(10):801–804.
