Abstract

Objective:

Recent advances in light-sheet fluorescence microscopy (LSFM) enable 3-dimensional (3-D) imaging of cardiac architecture and mechanics in toto. However, segmentation of the cardiac trabecular network to quantify cardiac injury remains a challenge.

Methods:

We employed the “subspace approximation with augmented kernels (Saak) transform” for accurate and efficient quantification of the light-sheet image stacks following chemotherapy treatment. We established a machine learning framework with augmented kernels based on the Karhunen-Loeve Transform (KLT) to preserve linearity and reversibility of rectification.

Results:

The Saak transform-based machine learning enhances computational efficiency and obviates the iterative optimization of a cost function needed for neural networks, minimizing the number of training datasets for segmentation in our scenario. The integration of forward and inverse Saak transforms can also serve as a light-weight module to filter adversarial perturbations and reconstruct estimated images, salvaging robustness of existing classification methods. The accuracy and robustness of the Saak transform are evident following the tests of Dice similarity coefficients and various adversarial perturbation algorithms, respectively. The addition of edge detection further allows for quantifying the surface area to volume ratio (SVR) of the myocardium in response to chemotherapy-induced cardiac remodeling.

Conclusion:

The combination of Saak transform, random forest, and edge detection augments segmentation efficiency by 20-fold as compared to manual processing.

Significance:

This new methodology establishes a robust framework for post-imaging processing of light-sheet data and creates a data-driven machine learning approach for automated quantification of cardiac ultra-structure.

Keywords: Biomedical optical imaging, machine learning, cardiology, principal component analysis

I. Introduction

Light-sheet fluorescence microscopy (LSFM) is instrumental in advancing the field of developmental biology and tissue regeneration [1–4]. LSFM systems have the capacity to investigate cardiac ultra-structure and function [5–14], providing the moving boundary conditions for computational fluid dynamics [15, 16] and the specific labeling of the trabecular network for interactive virtual reality [17, 18]. However, efficient and robust structural segmentation of cardiac trabeculation remains a post-imaging challenge [19–23]. Manual segmentation of cardiac images in zebrafish and mice remains the gold standard for ground truth despite being a labor-intensive and error-prone method. Existing automatic methods, including adaptive binarization, clustering, Voronoi-based segmentation, and watershed, have remained limited [24]. For instance, adaptive histogram thresholding provides a semi-automated computational approach to perform image segmentation; however, the output is degraded by variability in the background-to-noise ratio from the standard image processing algorithms [23].

Machine learning strategies, including neural networks [25–33], have been an integral part of biomedical research [34, 35] and clinical medicine [36–38]. The implementation of regions with convolutional neural network (R-CNN) provides the foundation for object detection and segmentation [29]. The fully convolutional network (FCN) also enables accurate and efficient image segmentation, replacing the fully connected layers with deconvolution-based upsampling and shared convolution computation [30]. The deep neural network (DNN) further allows for boundary extraction based on a pixel classifier in the electron microscopy images [26], and similar advanced neural networks facilitate the classification and segmentation of MRI images [28, 32].

However, these methods require a large volume of high-quality annotated training data, which is not readily available for the vast majority of LSFM-acquired images of the cardiac trabecular network. Despite being an effective deduction method, a convolutional neural network (CNN) is considered to be a weak inference method to capture semantic information. In short, the limited availability of well-established ground truth and the weak inference capability motivated us to develop the subspace approximation with augmented kernels (Saak) transform, whereby we integrated the Karhunen-Loeve Transform (KLT) with the random forest classifier to reduce the need for a high volume of annotated LSFM training data.

We established a Saak transform to preserve the linearity and reversibility of the rectified linear unit (ReLU), and we applied the Saak transform to extract the transform kernels for principal components [39]. We paired KLT and augmented kernels with a random forest classifier to use the data efficiently for feature extraction and classification in LSFM image segmentation. We further integrated our Saak transform-based machine learning with LSFM imaging and edge detection of structural change in the myocardium to define the surface area to volume ratio (SVR) in response to chemotherapy-induced injury. In addition, both forward and inverse transforms are available in our Saak transform method, enabling the reconstruction of estimated images with guaranteed authenticity after filtering out adversarial perturbations, and mitigating a threat to which neural networks are prone [40–44]. While current adversarial attack methods are specifically designed for CNN, we are able to parse out the dominant data by using the Saak transform. This suggests that in the realm of image segmentation, the Saak transform is also a potential approach to overcome adversarial perturbation in tandem with other machine learning methods. Overall, the Saak transform is a high-throughput method to demonstrate precise and robust image segmentation of the complicated cardiac trabecular network, offering both deductive and inference capacities to perform segmentation following cardiac injury.

II. Methods

A. Fundamentals of Saak Transform

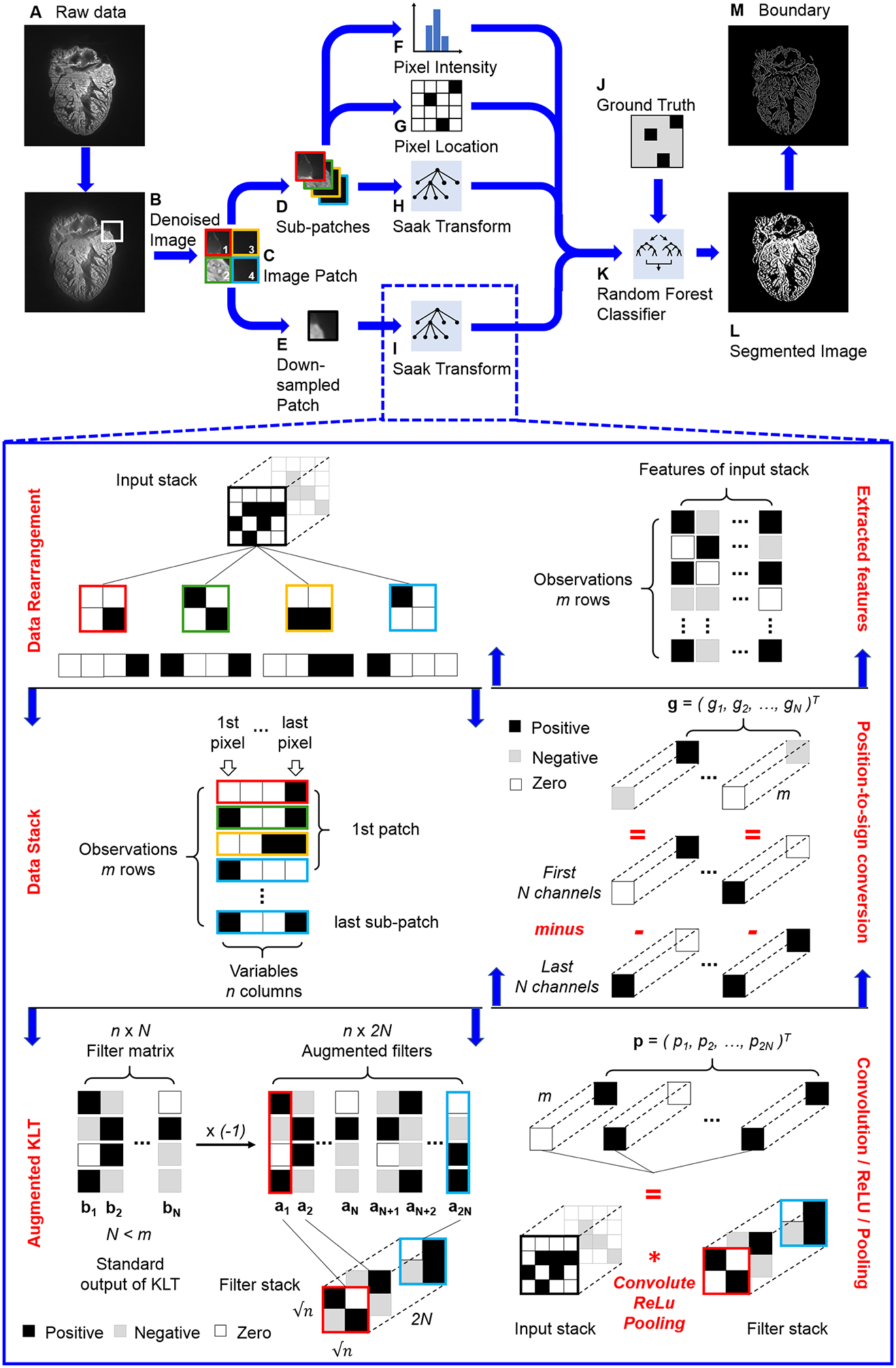

The overall Saak transform-based machine learning incorporates transform kernels for feature extraction and random forest for classification to generate a segmented image (Figure 1). In brief, we defined $\mathbf{f} \in \mathbb{R}^N$ as the input, where $N$ represents the overall dimension. The KLT basis functions are denoted by $\mathbf{b}_k$, $k = 1, 2, \ldots, N$ (Figure 1I), satisfying the orthonormal condition as follows:

$$\mathbf{b}_i^T \mathbf{b}_j = \delta_{i,j} \tag{1}$$

where $0 < i, j \leq N$ and $\delta_{i,j}$ is the Kronecker delta function. A general approach after convolution in neural networks is to rectify the negative output, which introduces the non-linearity. However, we generated augmented kernels to preserve all the convolutional output, that is, the linearity of the transformation. These augmented kernels enable us to comprehensively exploit image patterns for the feature extraction, defined as follows (Figure 1I, augmented output of KLT):

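$$\mathbf{a}_k = \mathbf{b}_k, \qquad \mathbf{a}_{k+N} = -\mathbf{b}_k, \qquad k = 1, 2, \ldots, N \tag{2}$$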

where $\mathbf{a}_k$ is the original KLT kernel while $\mathbf{a}_{k+N}$ is the augmented transform kernel. The integration of $\mathbf{a}_k$ and $\mathbf{a}_{k+N}$ allows for maintaining all the outputs during ReLU by assigning different signs. The input, $\mathbf{f}$, is then projected onto the augmented basis to yield a projection vector $\mathbf{p}$, followed by ReLU and max pooling, and defined as follows:

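$$\mathbf{p} = (p_1, p_2, \ldots, p_{2N})^T \tag{3}$$

where $p_k = \max(0, \mathbf{a}_k^T \mathbf{f})$ for $k = 1, 2, \ldots, 2N$. Since $\mathbf{a}_{k+N}$ is opposite to $\mathbf{a}_k$, we are able to convert the position to sign by subtracting the output of the augmented kernel $\mathbf{a}_{k+N}$ from that of the KLT kernel $\mathbf{a}_k$ to generate Saak coefficients: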

$$\mathbf{g} = (g_1, g_2, \ldots, g_N)^T \tag{4}$$

where $g_k$ is defined as follows:

$$g_k = p_k - p_{k+N}, \qquad k = 1, 2, \ldots, N \tag{5}$$

Fig 1.

Pipeline of Saak transform-based machine learning. (A) The raw image is denoised by (B) variational stationary noise remover, and (C) split into patches. Patches are further reduced into (D) sub-patches and (E) down-sampled patches. (H and I) These two types of patches are passed to the Saak transform, which operates by performing an augmented Karhunen-Loeve Transform (KLT) operation, followed by the convolution, rectified linear unit (ReLU), and max pooling in a quad-tree structure. The Saak coefficients (H and I) are packaged with (F) the pixel intensity, (G) the location, and (J) the ground truth segmentation results to train (K) a random forest classifier. (L) The trained one-pass feedforward machine learning algorithm has the capacity to segment images automatically, and (M) the segmented images are further processed by edge detection.

Thus, we completed a forward Saak transform by projecting the input, $\mathbf{f}$, onto transform kernels, $\mathbf{a}_k$ and $\mathbf{a}_{k+N}$, to generate Saak coefficients, $g_k$, $k = 1, 2, \ldots, N$, as features of the input. Conversely, we are also able to reconstruct an estimate of the input $\mathbf{f}$ after collecting Saak coefficients and converting the sign to position:

$$\hat{\mathbf{f}} = \sum_{k=1}^{M} p_k \mathbf{a}_k \tag{6}$$

where $M \leq 2N$. This process is known as the inverse Saak transform, and we have provided a detailed mathematical analysis of the Saak transform elsewhere [39].
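As a minimal single-stage sketch of the forward and inverse transforms described above, the following NumPy code derives a KLT basis, augments it with sign-reversed kernels, applies ReLU, and performs the position-to-sign conversion. The function names (`klt_kernels`, `forward_saak`, `inverse_saak`) are hypothetical, max pooling and the multi-stage quad-tree are omitted, and this is an illustration under those assumptions rather than the implementation used in this study.

```python
import numpy as np

def klt_kernels(X):
    """Return an orthonormal KLT basis (columns b_k) from observations X (rows)."""
    Xc = X - X.mean(axis=0)
    cov = Xc.T @ Xc / max(len(X) - 1, 1)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]            # largest variance first
    return eigvecs[:, order]

def forward_saak(f, B):
    """Project f onto augmented kernels, apply ReLU, convert position to sign."""
    A = np.hstack([B, -B])                       # a_k = b_k, a_{k+N} = -b_k
    p = np.maximum(A.T @ f, 0.0)                 # ReLU keeps one of each +/- pair
    n = B.shape[1]
    g = p[:n] - p[n:]                            # Saak coefficients g_k
    return p, g

def inverse_saak(g, B):
    """Convert sign back to position and reconstruct the estimate of f."""
    p = np.concatenate([np.maximum(g, 0.0), np.maximum(-g, 0.0)])
    A = np.hstack([B, -B])
    return A @ p                                 # sum over k of p_k * a_k
```

Because each kernel and its sign-reversed counterpart are both retained, ReLU discards no information in this sketch, and the inverse recovers the input exactly when all coefficients are kept.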

B. Non-overlapping Saak Transform

We pre-processed raw LSFM images by using variational stationary noise remover (VSNR) to minimize the striping artifacts (Figures 1A, B) [45, 46].

Following the application of the denoising algorithm, the images of 1024 × 1024 pixels were decomposed into non-overlapping patches of 16 × 16 pixels (4096 patches per image, Figure 1C). An averaging window of 4 × 4 pixels further divided these patches into sub-patches (65536 per image, Figure 1D) and down-sampled patches (4096 per image, Figure 1E), respectively. The final numbers of patches, sub-patches and down-sampled patches are determined by the product of the number of training images and 4096, 65536 and 4096, respectively. The non-overlapping Saak transform operates on a quad-tree structure that splits the input into quadrants until reaching leaf nodes of 2 × 2 pixel squares (Inset of Figure 1I). We used the following 4 groups of parameters for feature extraction: pixel intensity (Figure 1F), pixel location (Figure 1G), Saak coefficients for sub-patches (Figure 1H), and Saak coefficients for down-sampled patches (Figure 1I). Our algorithm passed these parameters to a random forest classifier (Figure 1K) for training with annotated data (Figure 1J). The algorithm also allows a different classifier to be selected depending on the application. The combination of feature extraction and classification created a one-pass feedforward machine learning algorithm. Following the training, unannotated images were passed to the algorithm to generate a segmented result (Figure 1L) and a boundary image (Figure 1M).
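The patch counts above can be reproduced with a short NumPy sketch. It assumes that sub-patches are the 4 × 4 tiles of each 16 × 16 patch and that down-sampled patches are the 4 × 4 arrays of tile means; the text does not spell out these details, and the function names are hypothetical.

```python
import numpy as np

def split_blocks(img, size):
    """Split a 2-D image into non-overlapping size x size blocks (row-major order)."""
    h, w = img.shape
    assert h % size == 0 and w % size == 0
    blocks = img.reshape(h // size, size, w // size, size).swapaxes(1, 2)
    return blocks.reshape(-1, size, size)

def decompose(img, patch=16, window=4):
    """Return patches, sub-patches and down-sampled patches of one image."""
    patches = split_blocks(img, patch)                      # e.g. 4096 x 16 x 16
    n, k = len(patches), patch // window
    tiles = patches.reshape(n, k, window, k, window)
    sub_patches = tiles.swapaxes(2, 3).reshape(-1, window, window)
    down_sampled = tiles.mean(axis=(2, 4))                  # window-averaged patch
    return patches, sub_patches, down_sampled
```

For a 1024 × 1024 image this yields 4096 patches, 65536 sub-patches and 4096 down-sampled patches, matching the per-image counts stated above.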

All of the down-sampled patches and sub-patches were passed to the Saak transform for feature extraction. The input of the Saak transform was generally a 3-D image stack such as a sub-patch or down-sampled patch stack. We first extracted blocks by sequentially sliding the 2 × 2 window over the 2-D patches, and reshaped each block to a 1-D array (Figure 1I, Data rearrangement). All the arrays were then stacked along the column as a new 2-D matrix in which rows represent observations while columns are variables (Figure 1I, Data stack). We performed KLT on the matrix to extract the transform kernels $\mathbf{b}_k$, $k = 1, 2, \ldots, N$, and reversed the sign of kernels to generate augmented kernels $\mathbf{a}_k$ and $\mathbf{a}_{k+N}$ (Figure 1I, Augmented KLT). All the kernels were reshaped back to 2 × 2 pixel squares as filters to convolve with the input stack, followed by ReLU and max pooling (Figure 1I, Convolution / ReLU / Pooling). We acquired coefficients from $p_1$ to $p_{2N}$, and subtracted $p_{N+1}, \ldots, p_{2N}$ from $p_1, \ldots, p_N$ to generate Saak coefficients, $g_1, \ldots, g_N$, correspondingly. This step reduces the dimension of the final output and converts the position to sign (Figure 1I, Position-to-sign conversion). For instance, if $p_1$ is $(3, 0)^T$ and $p_{N+1}$ is $(0, 2)^T$, then $g_1$ is $(3, -2)^T$. We rearranged all coefficients to finalize the features as the input of the classifier (Figure 1I, Extracted features).
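The data rearrangement and the position-to-sign conversion can be illustrated as follows. This sketch assumes the 2 × 2 window moves without overlap (a stride of 2), which is one reading of the quad-tree description; the helper names are hypothetical.

```python
import numpy as np

def blocks_to_rows(stack, size=2):
    """Rearrange every non-overlapping size x size block of a patch stack into a row."""
    n, h, w = stack.shape
    tiles = stack.reshape(n, h // size, size, w // size, size).swapaxes(2, 3)
    return tiles.reshape(-1, size * size)    # rows: observations, columns: variables

def position_to_sign(p):
    """Subtract p_{N+1..2N} from p_{1..N} to obtain the Saak coefficients g_1..g_N."""
    n = p.shape[0] // 2
    return p[:n] - p[n:]

# Worked example from the text with N = 1: p_1 = (3, 0)^T and p_{N+1} = (0, 2)^T
p = np.array([[3.0, 0.0],
              [0.0, 2.0]])
g = position_to_sign(p)                      # g_1 = (3, -2)^T
```

The matrix returned by `blocks_to_rows` has the layout required for KLT, with one 2 × 2 block per row and one pixel position per column.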

An energy threshold between 0 and 1 (selected as 0.97 for this experiment) can be applied to maintain the minimum accuracy of representation while truncating terms from the Saak representation for runtime efficiency. We further used an F-test to select at most 8 closely related features as the input for the random forest classifier, which mitigates overfitting in the context of a small training data size. The accuracy of the Saak representation was verified with the analysis of variance (ANOVA) to complete the feature extraction process.
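The two selection steps can be sketched in NumPy as below. The energy criterion is taken here to be the cumulative share of KLT eigenvalues, and the F-test is taken to be a one-way ANOVA F statistic computed per feature against the class labels; both are assumptions about details the text leaves open, and the function names are hypothetical.

```python
import numpy as np

def components_for_energy(eigvals, threshold=0.97):
    """Smallest number of leading components whose energy share reaches the threshold."""
    ev = np.sort(np.asarray(eigvals, dtype=float))[::-1]
    cumulative = np.cumsum(ev) / ev.sum()
    count = int(np.searchsorted(cumulative, threshold - 1e-12)) + 1
    return min(count, len(ev))

def anova_f(X, y):
    """One-way ANOVA F statistic of every feature (column of X) against labels y."""
    classes = np.unique(y)
    grand = X.mean(axis=0)
    ss_between = sum((y == c).sum() * (X[y == c].mean(axis=0) - grand) ** 2
                     for c in classes)
    ss_within = sum(((X[y == c] - X[y == c].mean(axis=0)) ** 2).sum(axis=0)
                    for c in classes)
    df_between, df_within = len(classes) - 1, len(y) - len(classes)
    return (ss_between / df_between) / (ss_within / df_within)

def top_features(X, y, k=8):
    """Indices of the k features with the largest F statistic, best first."""
    return np.argsort(anova_f(X, y))[::-1][:k]
```

For example, eigenvalues of 8.0, 1.5, 0.3 and 0.2 give cumulative energy shares of 0.80, 0.95, 0.98 and 1.00, so a threshold of 0.97 retains three components.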

C. Overlapping Saak Transform

In addition to the non-overlapping Saak transform, we generated overlapping patches for feature extraction as well. The main difference between the two methods is the overlapping area among different patches. Following denoising, the Saak algorithm decomposed the image of 1024 × 1024 pixels into overlapping patches of 27 × 27 pixels with a stride of 1 (1048576 patches per image, Figure 1C). A 9 × 9 pixel averaging filter divided patches into sub-patches (9437184 per image, Figure 1D) and down-sampled patches (1048576 per image, Figure 1E), respectively. The final numbers of patches, sub-patches and down-sampled patches are determined by the product of the number of training images and 1048576, 9437184 and 1048576, respectively. All of the patches and sub-patches were passed to the Saak transform operating on a quad-tree structure that split the input into quadrants until reaching leaf nodes of 3 × 3 pixel squares.
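The stated count of 1048576 patches equals 1024 × 1024, that is, one patch per pixel, which implies that the image border is padded before the stride-1 window is applied. The sketch below assumes reflect padding (the padding mode is not given in the text) and uses hypothetical function names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def overlapping_patches(img, patch=27):
    """One patch centred on every pixel (stride 1), with reflect padding at the border."""
    half = patch // 2
    padded = np.pad(img, half, mode="reflect")
    # Read-only view of shape (H, W, patch, patch); no pixel data is copied.
    return sliding_window_view(padded, (patch, patch))

def patch_counts(side=1024, patch=27, window=9):
    """Per-image numbers of patches, sub-patches and down-sampled patches."""
    n_patches = side * side
    n_sub = n_patches * (patch // window) ** 2
    return n_patches, n_sub, n_patches
```

With the default arguments, `patch_counts()` returns 1048576, 9437184 and 1048576, since each 27 × 27 patch contains nine 9 × 9 sub-patches.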

Performing KLT on the original image of 1024 × 1024 pixels is computationally expensive, and therefore we decomposed it into multiple lower-dimensional patches and sub-patches. The dimensions of patches and sub-patches in the Saak transform are application-dependent and adjustable, and the parameters were experimentally optimized. The patches of 16 × 16 pixels and 27 × 27 pixels in the non-overlapping and overlapping methods, respectively, allow us to investigate the global pattern of adult zebrafish hearts and improve the computational efficiency. We are also working on another unsupervised method to determine the parameters and balance the trade-off between efficiency and image size [47].

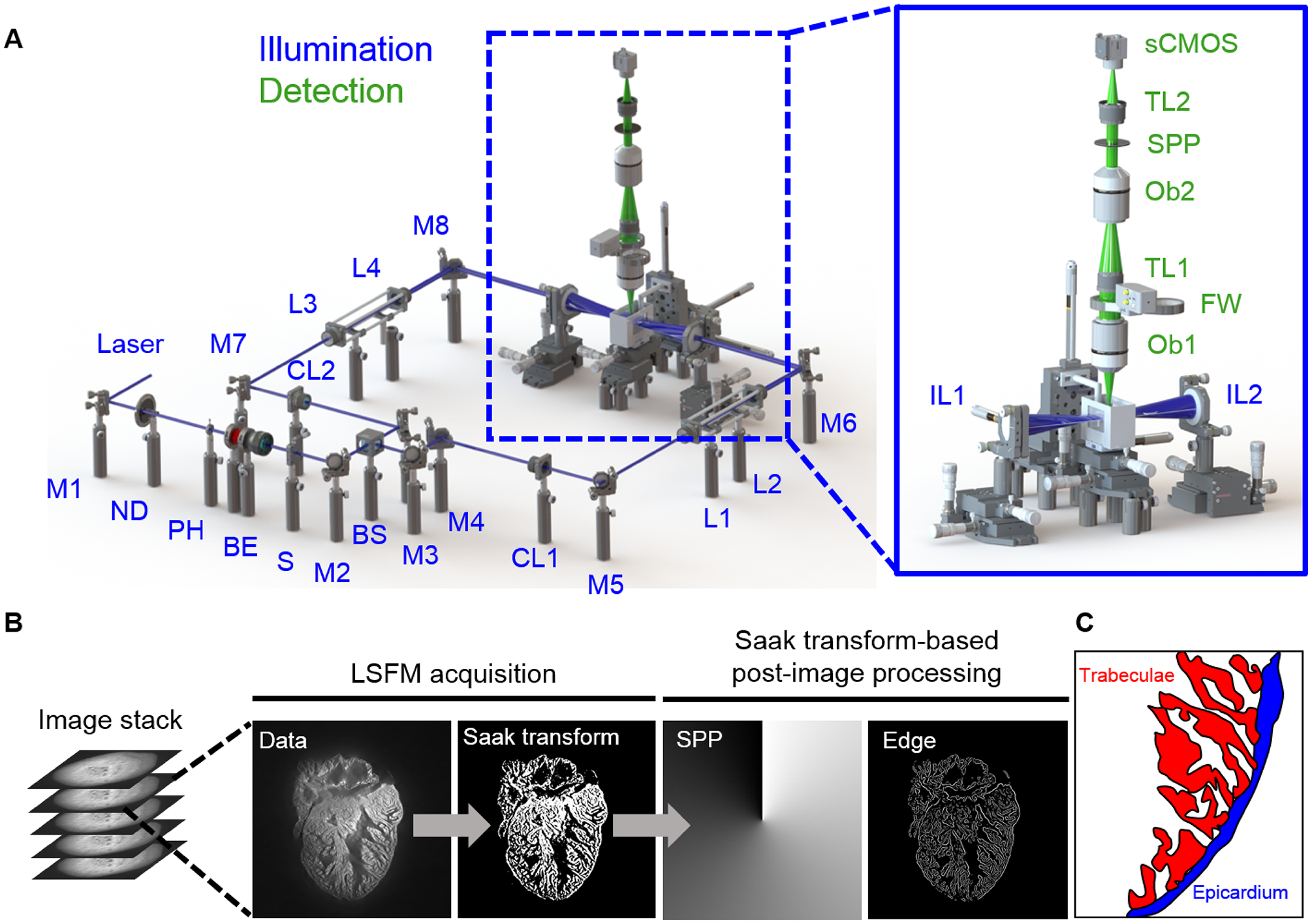

D. Light-sheet Microscope Design

Our custom-built LSFM system was designed to image the changes in the trabecular network in the zebrafish model of cardiac injury and regeneration, enabling visualization of an intact heart as large as 0.8 × 0.8 × 1.2 mm³ without mechanical slicing. The lateral and axial resolutions of our LSFM system, adapted from previous reports [23, 48], are 2.8 μm and 4.5 μm, respectively (Figure 2). Fluorescence was orthogonally captured through an objective lens (Ob1) and a tube lens (TL1) to minimize photobleaching (Inset in Figure 2A). An sCMOS camera (Flash 4.0, Hamamatsu, Japan) was used to capture 1024 × 1024 pixels per image at 100 frames per second. Images from all depths were evenly sampled for training and testing purposes. Following precise alignment with the LSFM system, a fully automated image acquisition was implemented via a customized LabVIEW program. We performed the forward and inverse Fourier transforms in MATLAB (MathWorks, Inc.) to create a virtual spiral phase plate (SPP) for automatic edge detection [49, 50]. The current hardware system is also adaptable for inserting a real SPP along with optical components (Ob2 and TL2) as a high-pass filter for edge detection. For the Saak transform in this work, we chose to perform the edge detection and trim the boundary of cardiac trabeculae (Figure 2C) in MATLAB following LSFM imaging (Figure 2B).
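A virtual spiral phase plate of the kind described above can be sketched as a Fourier-domain filter: the image spectrum is multiplied by $\exp(i\theta)$, where $\theta$ is the azimuthal angle in the frequency plane, and the magnitude of the inverse transform highlights edges. The code below is a NumPy illustration of this general technique with a hypothetical function name; it is not the MATLAB routine used in this study, whose exact filter parameters are not given here.

```python
import numpy as np

def spiral_phase_edges(img):
    """Edge map from a virtual spiral phase plate applied in the Fourier domain."""
    h, w = img.shape
    v = np.fft.fftfreq(h)[:, None]            # vertical spatial frequencies
    u = np.fft.fftfreq(w)[None, :]            # horizontal spatial frequencies
    phase = np.exp(1j * np.arctan2(v, u))     # spiral phase exp(i * theta)
    phase[0, 0] = 0.0                         # angle undefined at zero frequency
    return np.abs(np.fft.ifft2(np.fft.fft2(img) * phase))

# Usage on a synthetic binary disk: the response concentrates on the boundary.
yy, xx = np.mgrid[:64, :64]
disk = ((yy - 32) ** 2 + (xx - 32) ** 2 <= 16 ** 2).astype(float)
edges = spiral_phase_edges(disk)
```

Uniform regions produce a near-zero response because the filter removes the zero-frequency term and its spatial kernel is antisymmetric, which is why the operation behaves as an isotropic edge detector.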

Fig 2.

LSFM data acquisition and post image processing. (A) In the illumination unit (blue), the laser is reflected by a mirror (M) to a neutral density filter (ND), adjustable pinhole (PH), beam expander (BE), and slit (S). The beam is 1) split by a beam splitter (BS), 2) converted to a light sheet by the cylindrical lens (CL), and 3) tuned by relay lenses (L). Illumination lenses (IL) project the light-sheet across the heart. The detection unit (green) consists of two objective lenses (Ob) and two tube lenses (TL) to focus the image on the sCMOS camera. The filter wheel (FW) and optional spiral phase plate (SPP) are used to further process the image. (B) The raw image stack acquired by LSFM is segmented through Saak transform-based machine learning, followed by the edge detection and boundary trim. (C) A schematic highlights the cardiac trabecular network (red) in the adult zebrafish ventricle (blue).

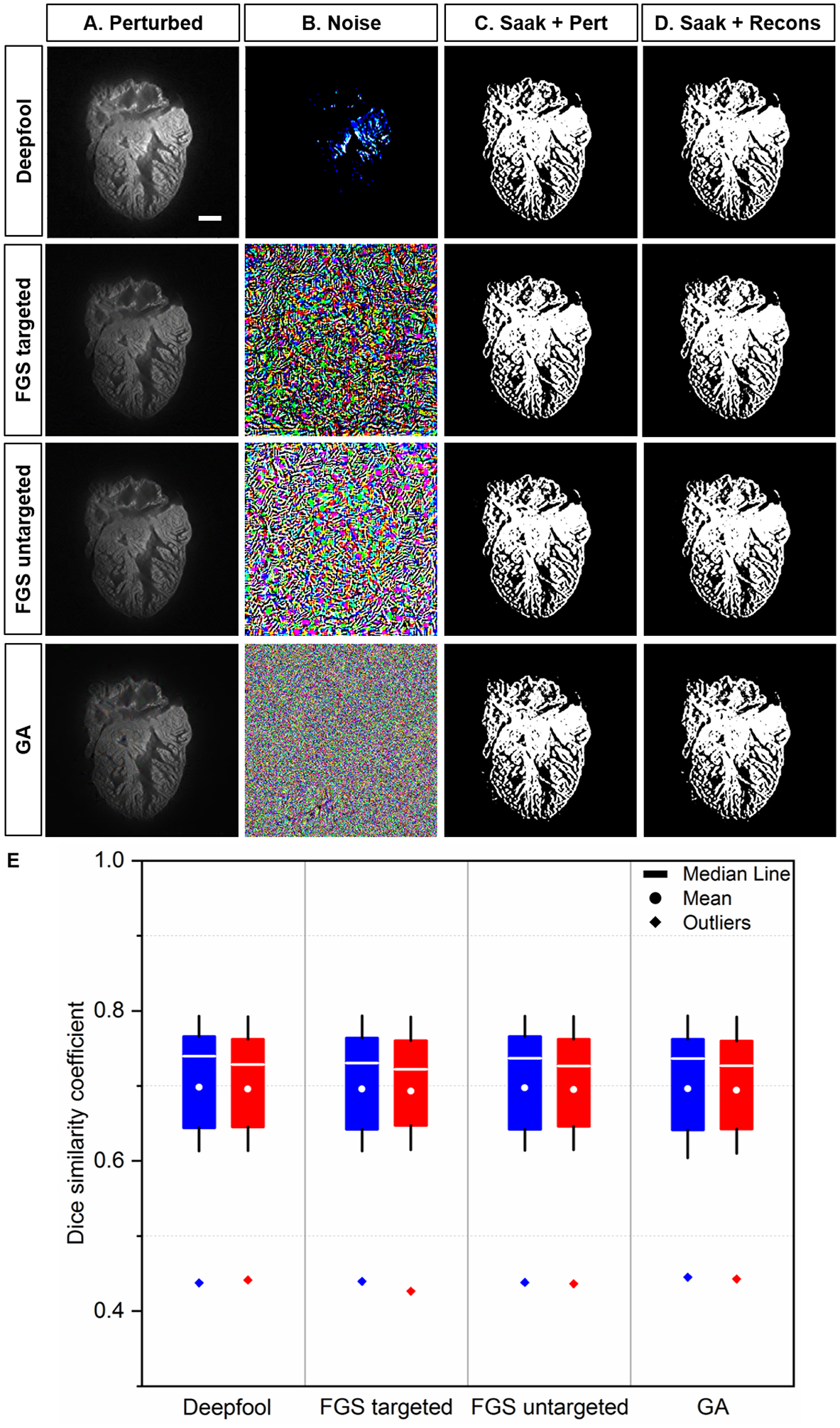

E. Generation of Adversarial Perturbation

We implemented well-established perturbation methods, including Deepfool [51], fast gradient sign (FGS) [52] and gradient ascent (GA) methods [53], to generate adversarially perturbed images. Adversarial perturbation is designed to challenge the robustness of classification by neural networks. We included both targeted and untargeted attacks in the FGS method [52]. In comparison to FGS and GA methods, Deepfool leads to a smaller perturbation. We used random forest as the classifier, and compared the segmentation output between the perturbed and reconstructed images.
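To illustrate the difference between untargeted and targeted FGS attacks, the following sketch applies both to a toy logistic classifier. The classifier, its weights, and the function names are hypothetical and chosen only to keep the gradient analytic; the attacks in this study follow the cited references and are not reproduced here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(x, y, w, b):
    """Binary cross-entropy of a logistic classifier on one sample."""
    p = sigmoid(w @ x + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def fgs_untargeted(x, y, w, b, eps):
    """Untargeted FGS: step along the sign of the loss gradient for the true label."""
    grad_x = (sigmoid(w @ x + b) - y) * w
    return x + eps * np.sign(grad_x)

def fgs_targeted(x, y_target, w, b, eps):
    """Targeted FGS: step against the sign of the loss gradient for the target label."""
    grad_x = (sigmoid(w @ x + b) - y_target) * w
    return x - eps * np.sign(grad_x)

# Toy example: every input component moves by exactly eps.
w, b = np.array([1.0, -2.0, 0.5]), 0.1
x, y = np.array([0.2, -0.1, 0.4]), 1
x_untargeted = fgs_untargeted(x, y, w, b, eps=0.1)
x_targeted = fgs_targeted(x, 0, w, b, eps=0.1)
```

The untargeted step increases the loss for the true label, whereas the targeted step decreases the loss for the chosen target label; in both cases the perturbation is bounded by `eps` in the maximum norm.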

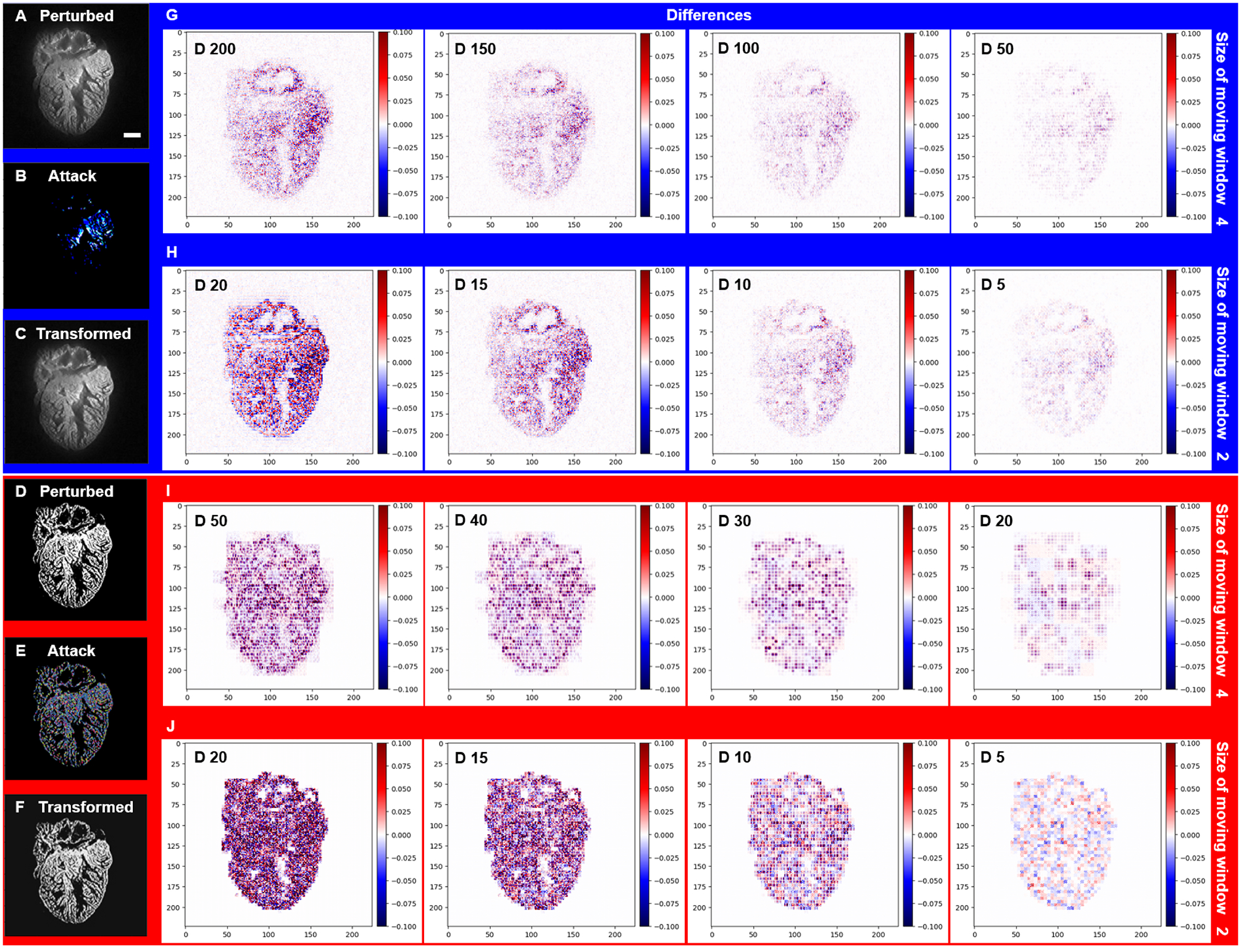

F. Saak Coefficients Filtering

Clean and adversarial images shared similar distributions in the low-frequency Saak coefficients despite variability in the high-frequency coefficients [44]. To reduce adversarial perturbations, we sought to truncate the high-frequency Saak coefficients as a filtering strategy, while the low-frequency Saak coefficients were maintained to reflect the continuous surface or slowly varying contour in the segmentation process. Following the truncation of high-frequency Saak coefficients generated by the forward Saak transform, we used the rest of the coefficients to reconstruct the image based on the inverse Saak transform (Figure 7). Two types of moving windows were applied: 4 × 4 and 2 × 2 pixels. In the grayscale image, we truncated 200 (73% of total), 150 (55%), 100 (37%) and 50 (18%) coefficients by using a 4 × 4 moving window, and we also truncated 20 (95% of total), 15 (71%), 10 (48%) and 5 (24%) coefficients by using a 2 × 2 moving window. In addition, we performed the same procedure in the binary image, and filtered out 50 (18% of total), 40 (15%), 30 (11%) and 20 (7%) coefficients by using the 4 × 4 moving window, along with 20 (95% of total), 15 (71%), 10 (48%) and 5 (24%) coefficients by using the 2 × 2 moving window. All of the retained Saak coefficients were applied in the inverse Saak transform to reconstruct the images.
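The filtering strategy can be illustrated with a simplified single-stage stand-in, in which the trailing (lowest-variance) KLT coefficients play the role of the high-frequency Saak coefficients. This mapping, the single-stage setting, and the function name are assumptions made for illustration; the multi-stage Saak transform used in this study is described in [39].

```python
import numpy as np

def truncate_and_reconstruct(blocks, n_drop):
    """Zero the n_drop trailing KLT coefficients of each block, then invert."""
    mean = blocks.mean(axis=0)
    centered = blocks - mean
    cov = centered.T @ centered / max(len(blocks) - 1, 1)
    eigvals, eigvecs = np.linalg.eigh(cov)
    B = eigvecs[:, np.argsort(eigvals)[::-1]]   # leading component first
    coeffs = centered @ B                        # forward transform
    if n_drop > 0:
        coeffs[:, -n_drop:] = 0.0                # truncate trailing coefficients
    return coeffs @ B.T + mean                   # inverse transform
```

With `n_drop = 0` the reconstruction is exact, and the reconstruction error grows as more coefficients are removed, which is the trade-off explored by the different truncation levels above.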

Fig 7.

Saak transform-based segmentation following perturbed and reconstructed images. (A) Perturbed images were generated by introducing the original images to (B) adversarial perturbations from Deepfool, targeted and untargeted fast gradient sign (FGS), and gradient ascent (GA) methods, respectively. (C-D) Non-overlapping Saak transform plus random forest were performed to segment the cardiac trabecular network. (E) The differences in DSC between perturbed and reconstructed images were not statistically significant (p > 0.05, n = 12 testing images for each perturbation). Blue: perturbed; red: reconstructed. Scale bar: 200 μm.

G. Assessing Changes in Cardiac Trabecular Network Following Chemotherapy-Induced Injury

All animal studies were performed in compliance with the IACUC protocol approved by the UCLA Office of Animal Research. Experiments were conducted in adult 3-month old zebrafish (Danio rerio). A one-time 5 μL injection of doxorubicin (Sigma) at a dose of 20 μg/g of body weight by intraperitoneal route (Nanofil 10 μL syringe, 34 gauge beveled needle, World Precision Instruments) [54] or of a control vehicle (Hank’s Balanced Salt Solution) was performed at day 0 (n=5 for doxorubicin, and n=5 for control). Following microsurgical isolation at day 30 post injection, hearts were placed in a phosphate buffered saline 1x solution to remove retained blood, paraformaldehyde 4% for fixation, agarose 1.5% for embedding, sequential ethanol steps (40 – 60 – 80 – 100%) for dehydration and BABB (with a BA:BB ratio of 1:2) for lipid removal and refractive index matching. Ex vivo imaging of autofluorescence was performed using a custom-built LSFM system [23].

H. Preparation of the Ground Truth

The image stack was acquired with a 2 μm step size yielding a resolution at 1.625 μm × 1.625 μm × 2 μm and an image size at 1024 × 1024 pixels. We manually annotated the myocardium with binary labels in Amira 6.1 (FEI, Berlin, Germany). Pixels that represented myocardium were flagged with a value of 1, while the cavities and spaces were flagged with a value of 0. The binary segmented images served as either training data for the Saak transform algorithm or validation sets to verify the accuracy of the Saak transform-based image segmentation.

I. Validation

The performance of the segmentation algorithm was validated by calculating the Dice similarity coefficient (DSC),

$$\mathrm{DSC} = \frac{2\,|X \cap Y|}{|X| + |Y|} \tag{7}$$

where X represents the ground truth segmented myocardial region, and Y the Saak transform or U-Net segmented myocardial region. We used an open source repository of the 24-convolutional layer U-Net [55, 56] and adjusted the input parameters for our image size of 1024 × 1024 pixels. This U-Net structure is defined without pre-trained weights for image segmentation. The algorithm was tested with varying numbers of training images.
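For binary masks, the DSC can be computed directly from pixel counts, as in the following NumPy sketch. The function name is hypothetical, and the value returned for two empty masks is a convention assumed here rather than one stated in the text.

```python
import numpy as np

def dice_similarity(x, y):
    """Dice similarity coefficient between two binary masks of equal shape."""
    x = np.asarray(x, dtype=bool)
    y = np.asarray(y, dtype=bool)
    total = x.sum() + y.sum()
    if total == 0:
        return 1.0                     # assumed convention: two empty masks agree
    return 2.0 * np.logical_and(x, y).sum() / total

# Two myocardium pixels in the ground truth, one of them recovered:
ground_truth = np.array([[1, 1], [0, 0]])
prediction = np.array([[1, 0], [0, 0]])
dsc = dice_similarity(ground_truth, prediction)   # 2 * 1 / (2 + 1)
```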

J. Statistical Analysis

All values were represented as mean ± standard deviation. A two-sample t-test was used to compare the means between two datasets, followed by a two-sample F-test for variance and a Kolmogorov-Smirnov test for normality. All the comparable groups under each condition were subject to normal distribution and equal variance. In the box plot, the box was defined by the 25th and 75th percentiles, and the whiskers were defined by the 5th and 95th percentiles. Median, mean and outliers were indicated by a line, a dot, and diamonds, respectively. A p-value of < 0.05 was considered statistically significant.
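The summary quantities named above can be computed as in the sketch below. It assumes the sample (n − 1) standard deviation and the equal-variance (pooled) form of the two-sample t statistic; converting the statistics to p-values requires the t and F distributions from a statistics library and is omitted. The function names are hypothetical.

```python
import numpy as np

def summarize(values):
    """Mean, sample SD, and the percentiles used for the box and whiskers."""
    v = np.asarray(values, dtype=float)
    return {
        "mean": v.mean(),
        "sd": v.std(ddof=1),
        "box": np.percentile(v, [25, 50, 75]),
        "whiskers": np.percentile(v, [5, 95]),
    }

def pooled_t_statistic(a, b):
    """Two-sample t statistic under the equal-variance assumption."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var * (1.0 / na + 1.0 / nb))

def variance_ratio(a, b):
    """F statistic for comparing the variances of two samples."""
    return np.var(a, ddof=1) / np.var(b, ddof=1)
```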

K. Code Availability

All of the Saak transform code was written and tested in Python 3.6. The code generated and/or analyzed during the current study is available from the corresponding author.

L. Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author.

III. Results

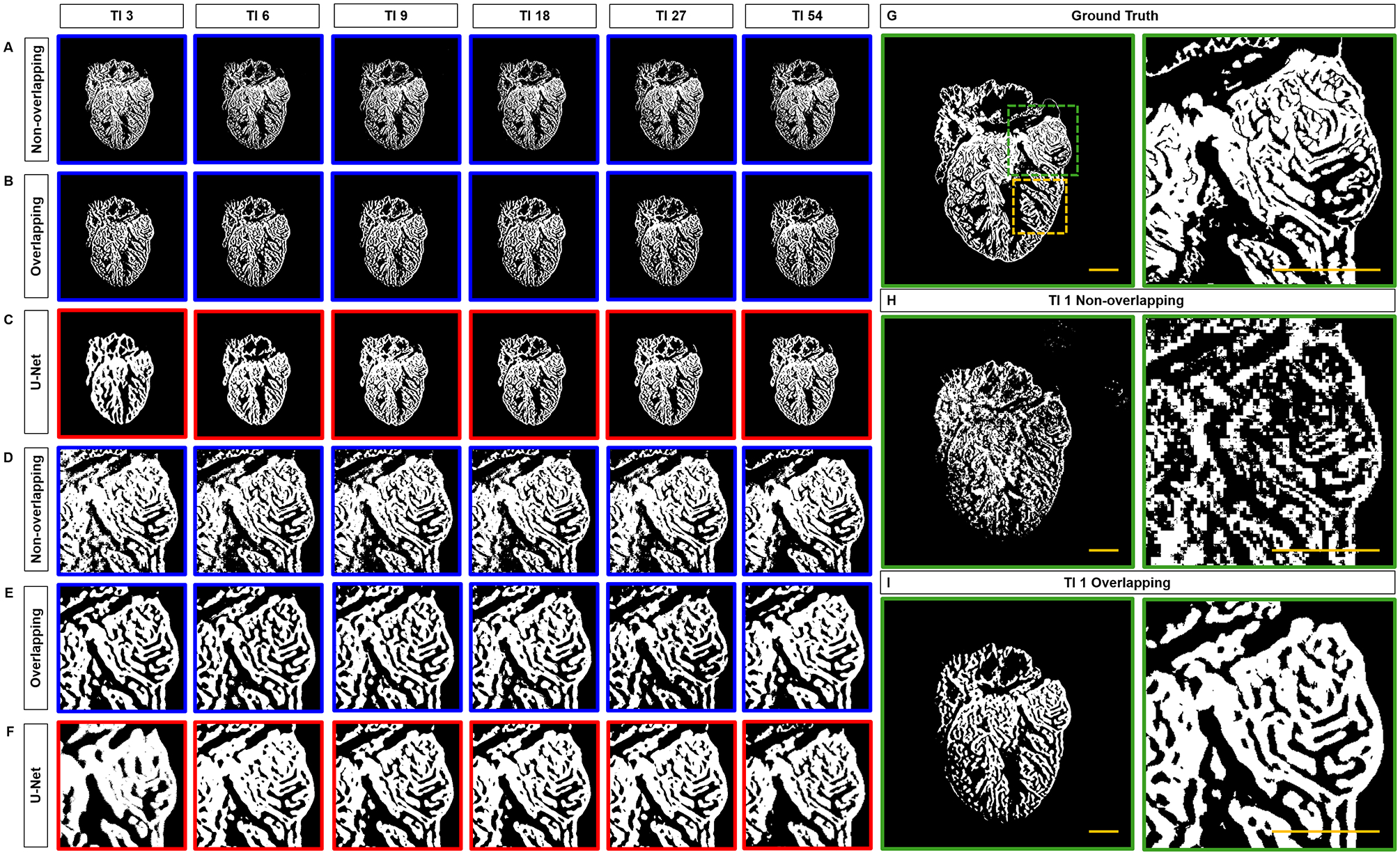

A. Qualitative Comparison of the Segmented Trabeculae

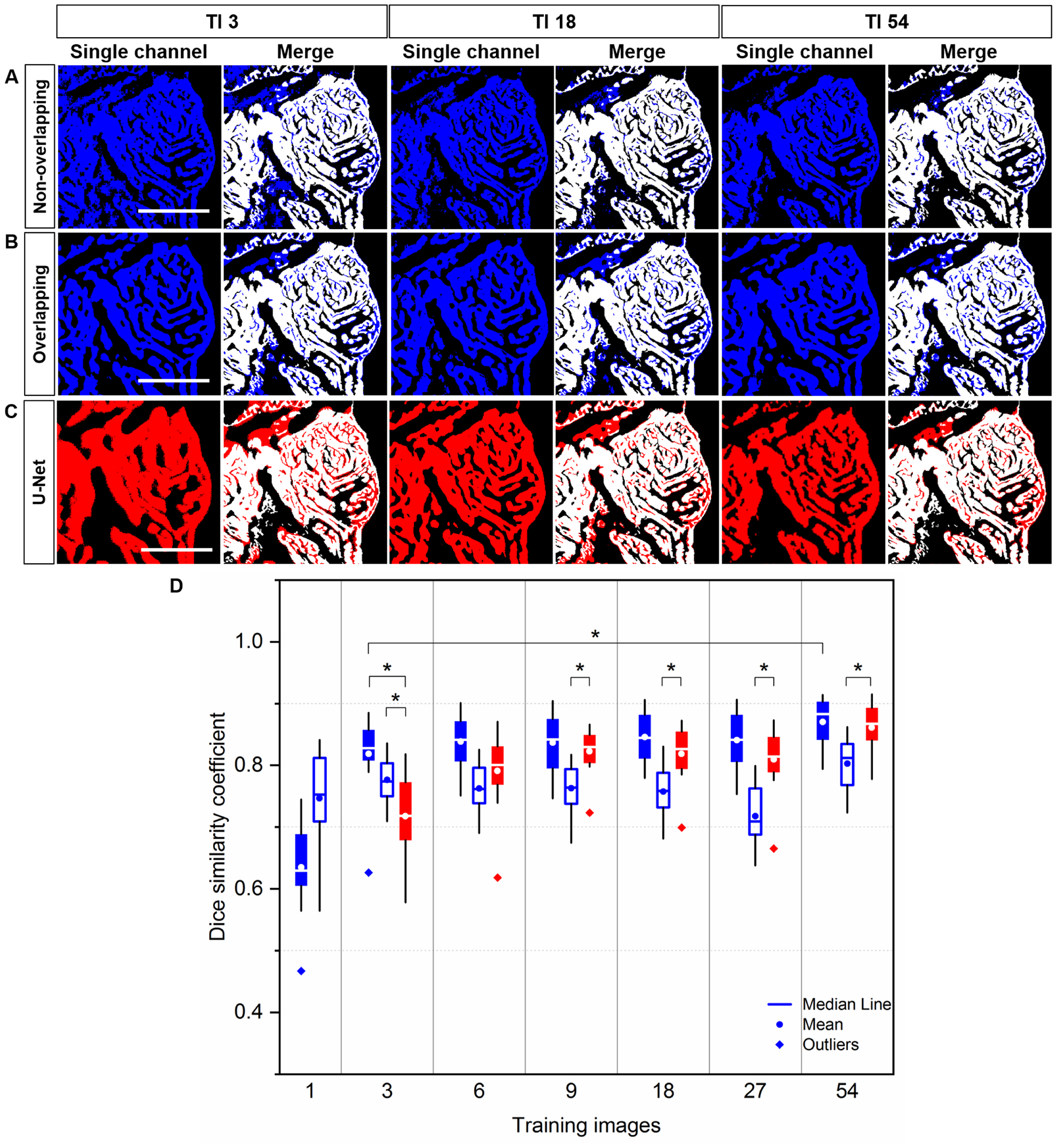

We compared the non-overlapping and overlapping Saak transforms with the well-recognized U-Net. We implemented both non-overlapping and overlapping patches to generate transform kernels and the corresponding Saak coefficients by training with 1, 3, 6, 9, 18, 27 and 54 images (Figures 3A–B). Using the same number of training images (TI), the Saak transform captured the trabecular network in greater detail compared to U-Net (Figure 3C). By comparing segmentation accuracy from 3 to 54 training images (Figures 3D–F), we qualitatively assessed the identical region of ventricle (dashed green squares in Figure 3G) to demonstrate that the Saak transform is superior with regard to segmentation of the interconnected trabecular network. We revealed the differences between the non-overlapping- and overlapping-patches Saak algorithms by comparing them with the manually annotated ground truth under identical training conditions (Figure 3G). The non-overlapping-patches method was sensitive to high-resolution image components, revealing the detailed trabecular formation at the cost of a noisy background, whereas the overlapping-patches method segmented a smooth and interconnected trabecular formation with lower resolution but less noise. We further highlighted the distinct results between the non-overlapping and overlapping methods by testing the Saak transform with a single training image (TI 1) (Figures 3H–I). With the reduced volume of training images needed to segment cardiac structure, Saak transform-based machine learning outperformed the well-established U-Net from a data-efficiency standpoint. However, the non-overlapping method yielded pixelated trabecular structures when provided with only 1 training image, whereas the overlapping method captured more detail in the ventricular trabecular formation. For these reasons, we employed at least 3 training images to balance the trade-off between segmentation accuracy and training efficiency in the ensuing results.

Fig 3.

Comparison of segmentation results. (A) Non-overlapping and (B) overlapping patches to generate Saak coefficients. (C) Under the same 3, 6, 9, 18, 27 and 54 training images, the trabecular network is better demarcated by Saak transform than by U-Net. (D-F) Comparison of trabecular network in the region of interest (dashed square in G) further supports the capacity of Saak transform to reveal the detailed trabecular network. (H-I) The results based on 1 training image are compared to the (G) ground truth. TI: training image. Scale bar: 200 μm.

B. Quantitative Comparison of the Segmented Trabeculae

We assessed the accuracy by comparing the correctly segmented regions of ventricle among 1) the non-overlapping Saak transform (Figure 4A, blue top channel), 2) overlapping Saak transform (Figure 4B, blue middle channel), and 3) U-Net (Figure 4C, red bottom channel) in relation to the manually annotated ground truth (Figures 4A–C, white merge). The Dice similarity coefficient (DSC) was calculated to quantify the performance of segmentation following various numbers of training images. We compared twelve testing images with the corresponding ground truth under each training condition (1, 3, 6, 9, 18, 27 and 54 training images) for the statistical analysis of DSC. Based on 3 training images, we arrived at a mean DSC of 0.82 ± 0.06 for non-overlapping Saak transform and 0.72 ± 0.07 for U-Net (p = 0.001) (Figure 4D). The accuracy of segmentation following 3 training images for the non-overlapping method is comparable to that of 18 and 27 images, but significantly different from that of 54 images (0.87 ± 0.04) for the same method (p = 0.03); that is, the mean DSC value ranges from 0.80 to 0.90 for the non-overlapping Saak transform, but the segmentation accuracy improves as the number of training images increases. Increasing the number of training images for U-Net improves the accuracy and approaches that of the non-overlapping Saak transform. Similar to the non-overlapping method, the overlapping Saak transform outperforms U-Net when provided with 3 training images, as demonstrated by a DSC value of 0.78 ± 0.04 (p = 0.02 for overlapping vs. U-Net). However, U-Net surpasses overlapping Saak transform, starting from 9 to 18 to 54 training images, yielding p-values from 0.001 to 0.003 to 0.004, respectively. These findings corroborate the previous qualitative comparison (Figures 4A–C) between Saak transform-based machine learning and U-Net, supporting that the segmentation accuracy and training efficiency of Saak transform outperform iterative optimization in neural networks in the context of a small training dataset.

Fig 4.

Quantitative comparison of segmentation: Saak transform vs. U-Net. (A-C) Saak transform (in blue) vs. U-Net (in red) are compared following 3, 18 and 54 training images. These segmentation results were merged with the manually annotated image (in white) for quantitative comparison. (D) Following 3 training images, the values of Dice similarity coefficient (DSC) support that both non-overlapping (solid blue) and overlapping (hollow blue) Saak transforms outperform U-Net (red). Starting from 9 to 18 to 27 to 54 training images, non-overlapping Saak transform and U-Net perform similarly, while overlapping Saak transform underperforms. Twelve testing images were included for each method under identical training conditions. Solid blue: non-overlapping; hollow blue: overlapping; red: U-Net. Scale bar: 200 μm.

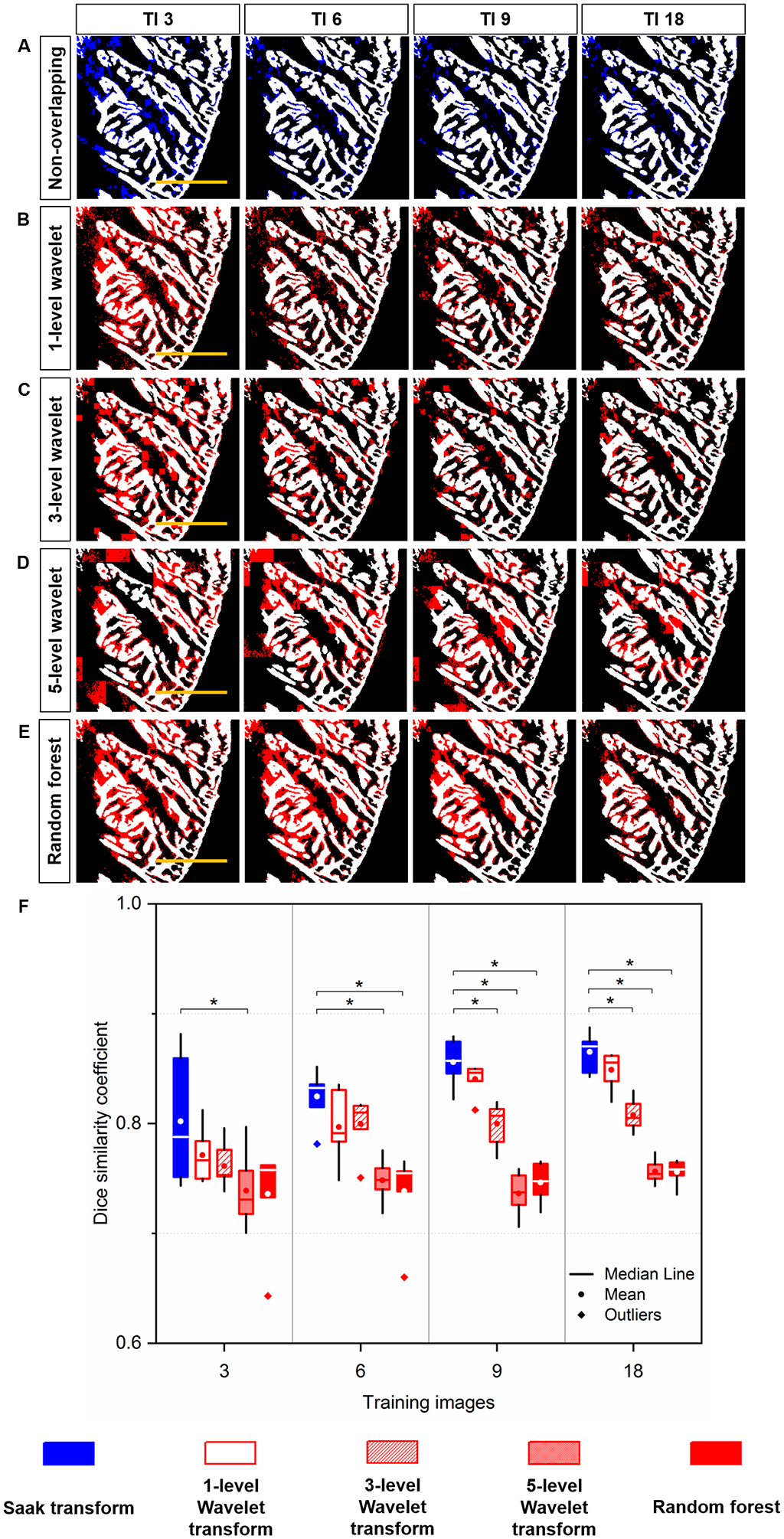

We further demonstrated that Saak coefficients contributed to the improvement of segmentation accuracy with a small training dataset (Figure 5, identical region to the dashed yellow square in Figure 3G). We replaced Saak transform (Figure 5A) with 1-, 3-, and 5-level Haar Wavelet transform (Figures 5B–D) to extract approximate, horizontal, vertical and diagonal coefficients as features, and trained the random forest classifier with the new features starting from 3 to 18 training images. In comparison to multi-level Haar Wavelet transform, the Saak transform-based method provides comparable results to 1-level Wavelet transform under all training conditions, and outperforms 3- and 5-level Wavelet transforms starting from 9 and 3 training images, respectively. Using six testing images, we arrived at a mean DSC from 0.80 ± 0.05 to 0.87 ± 0.02 for Saak transform under 3, 6, 9 and 18 training images (Figure 5F), demonstrating a statistically significant difference in contrast to 3- and 5-level Haar Wavelet transforms (p < 0.05). In this context, Saak transform allows us to obviate the selection of a mother wavelet and preserve the reversibility of ReLU in the machine learning process. We further performed a vanilla random forest to validate the role of Saak coefficients in the small training dataset. Without the aid of Saak coefficients, the results from the random forest are insensitive to the increasing number of training images per group (Figures 5E–F), yielding a statistically significant difference under 6, 9 and 18 training images in comparison to the original Saak transform. The different results between Saak transform and random forest indicate the positive contribution of Saak coefficients to the classification accuracy.
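For reference, a 1-level 2-D Haar decomposition into approximate, horizontal, vertical and diagonal coefficients can be written in a few lines of NumPy. This sketch uses the orthonormal scaling and one common naming of the detail bands (conventions differ between libraries); it is an illustration and not the wavelet implementation used for the comparison above.

```python
import numpy as np

def haar2d_level1(img):
    """One-level 2-D Haar decomposition of an image with even side lengths."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]    # top-left, top-right of each 2 x 2 block
    c, d = img[1::2, 0::2], img[1::2, 1::2]    # bottom-left, bottom-right
    approx = (a + b + c + d) / 2.0
    horizontal = (a + b - c - d) / 2.0         # difference between rows
    vertical = (a - b + c - d) / 2.0           # difference between columns
    diagonal = (a - b - c + d) / 2.0
    return approx, horizontal, vertical, diagonal

def inverse_haar2d_level1(approx, horizontal, vertical, diagonal):
    """Invert haar2d_level1 exactly."""
    h, w = approx.shape
    img = np.empty((2 * h, 2 * w))
    img[0::2, 0::2] = (approx + horizontal + vertical + diagonal) / 2.0
    img[0::2, 1::2] = (approx + horizontal - vertical - diagonal) / 2.0
    img[1::2, 0::2] = (approx - horizontal + vertical - diagonal) / 2.0
    img[1::2, 1::2] = (approx - horizontal - vertical + diagonal) / 2.0
    return img
```

Multi-level decompositions repeat the same step on the approximate band, so a 3- or 5-level transform describes progressively coarser structure.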

Fig 5.

Quantitative comparison of Segmentation: (A) Saak transform (in blue) vs. (B-D) Wavelet transform (in red) and (E) Random forest (in red) following 3, 6, 9 and 18 training images. These segmentation results were merged with the manually annotated image (in white) for quantitative comparison. (F) Saak transform-based method (random forest in combination with Saak coefficients) outperforms 3- and 5-level Haar Wavelet transforms (random forest in combination with Wavelet coefficients) starting from 9 and 3 training images, respectively. Starting from 6 training images, Saak transform-based method surpasses random forest, indicating the statistically significant difference (p < 0.05, n = 6 testing images for each condition). Scale bar: 200 μm.

C. Forward and Inverse Saak Transforms to Filter Adversarial Perturbations

An inherent capability of the Saak transform is to distinguish the most significant contributions to the variance in a dataset. This enables the Saak transform to theoretically improve the robustness of image segmentation if the covariance matrices are consistent. As an exercise, we used established adversarial attack methods to demonstrate the Saak transform’s capacity to separate significant image data from artificial noise, followed by the reconstruction with the rest of the Saak coefficients. Using the well-established Deepfool attack, we added perturbation to both the grayscale (Figures 6A–C) and binary images (Figures 6D–F). Saak transform provides various moving windows, including 4 × 4 and 2 × 2 pixels, to extract coefficients to detect the perturbation deeply involved in the ventricular structure (Figures 6B and E). We truncated the high-frequency coefficients at different percentages as previously reported [44]. By applying the 4 × 4 moving window, we filtered out 200 (73% of total), 150 (55%), 100 (37%) and 50 (18%) coefficients from the grayscale image (Figure 6G), and 50 (18% of total), 40 (15%), 30 (11%) and 20 (7%) coefficients from the binary image (Figure 6I). By applying the 2 × 2 moving window, we repeated the procedure to reconstruct the image based on the remaining Saak coefficients (Figures 6H and J), including the case in which 95% of coefficients were removed (D stands for the number of coefficients: 20). Thus, the forward and inverse Saak transforms preserve robustness and enhance the authenticity of image reconstruction in the presence of adversarial perturbation.
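The reported percentages are mutually consistent if the total number of coefficients is 273 for the 4 × 4 moving window and 21 for the 2 × 2 moving window. These totals are inferred here from the stated percentages and are not given explicitly in the text; the short check below makes the arithmetic explicit, using a hypothetical helper name.

```python
def truncated_percentages(total, removed):
    """Percentage of coefficients removed, rounded to the nearest integer."""
    return [round(100 * d / total) for d in removed]

TOTAL_4X4 = 273   # inferred from the reported percentages, not stated in the text
TOTAL_2X2 = 21    # inferred from the reported percentages, not stated in the text

grayscale_4x4 = truncated_percentages(TOTAL_4X4, [200, 150, 100, 50])
binary_4x4 = truncated_percentages(TOTAL_4X4, [50, 40, 30, 20])
both_2x2 = truncated_percentages(TOTAL_2X2, [20, 15, 10, 5])
```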

Fig 6.

Forward and inverse Saak transforms to filter the adversarial perturbations from LSFM images. (A and D) Adversarially perturbed images were generated by Deepfool. (B and E) Perturbations were added to both grayscale and binary images. (C and F) Transformed images were reconstructed following truncation of the high-frequency coefficients. (G) By applying the 4 × 4 moving window, 200 (73% of total), 150 (55%), 100 (37%) and 50 (18%) coefficients (spectral dimensions) were filtered out from the grayscale image, respectively, and (I) 50 (18% of total), 40 (15%), 30 (11%) and 20 (7%) coefficients were filtered out from the binary image, respectively. (H and J) By applying the 2 × 2 moving window, 20 (95% of total), 15 (71%), 10 (48%) and 5 (24%) coefficients were filtered out from both the grayscale and binary images, yielding the representative differences from the binary image. The pseudo-color represents the intensity of the specified adversarial perturbation. D stands for the number of Saak coefficients. Scale bar: 200 μm.

D. Saak Transform-based Segmentation of Perturbed and Reconstructed Images

The unique reversibility of the Saak transform enables additional image processing that may, in theory, augment image segmentation methods. To establish the robustness of image segmentation, we implemented the Saak transform as a module for the random forest. Four images were perturbed by the Deepfool, targeted FGS, untargeted FGS and GA methods, respectively (Figure 7A), and they were visually similar to the previous ground truth (Figure 1B). Next, adversarial noise was artificially added to these images (Figure 7B). We applied the non-overlapping Saak transform to segment both the perturbed (Figure 7C) and reconstructed images (Figure 7D), and we conducted a paired t-test following the individual perturbations (Figure 7E). We demonstrate that the low-frequency Saak coefficients are robust to multiple adversarial perturbations to the trabecular network, and the segmentation output reveals no statistically significant difference between the perturbed (blue) and reconstructed (red) segmentation (p = 0.95, 0.95, 0.96, and 0.96, twelve testing images for each perturbation). In this context, the Saak transform can be used as a light-weight module for feature extraction in conjunction with an existing machine learning-based classifier, improving the robustness and authenticity of segmented images.
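The comparison between perturbed and reconstructed segmentations can be sketched as follows, assuming that each segmentation is scored against the ground truth with the dice similarity coefficient (DSC) and that the two score lists are then compared with a paired t-test. The helper names `dice` and `paired_t` are hypothetical. The sketch returns only the t statistic and the degrees of freedom; the p-value would follow from the Student t distribution in a statistics package.

```python
import numpy as np

def dice(seg, truth):
    """Dice similarity coefficient between two binary masks: 2|A ∩ B| / (|A| + |B|)."""
    seg = np.asarray(seg, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = seg.sum() + truth.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(seg, truth).sum() / total

def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for two matched score lists."""
    d = np.asarray(scores_a, dtype=float) - np.asarray(scores_b, dtype=float)
    n = d.size
    if n < 2:
        raise ValueError("need at least two paired observations")
    sd = d.std(ddof=1)  # sample standard deviation of the paired differences
    if sd == 0:
        raise ValueError("all paired differences are identical; t is undefined")
    return d.mean() / (sd / np.sqrt(n)), n - 1
```

In use, `scores_a` would hold the DSC of each perturbed segmentation and `scores_b` the DSC of the matching reconstructed segmentation, in the same image order.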

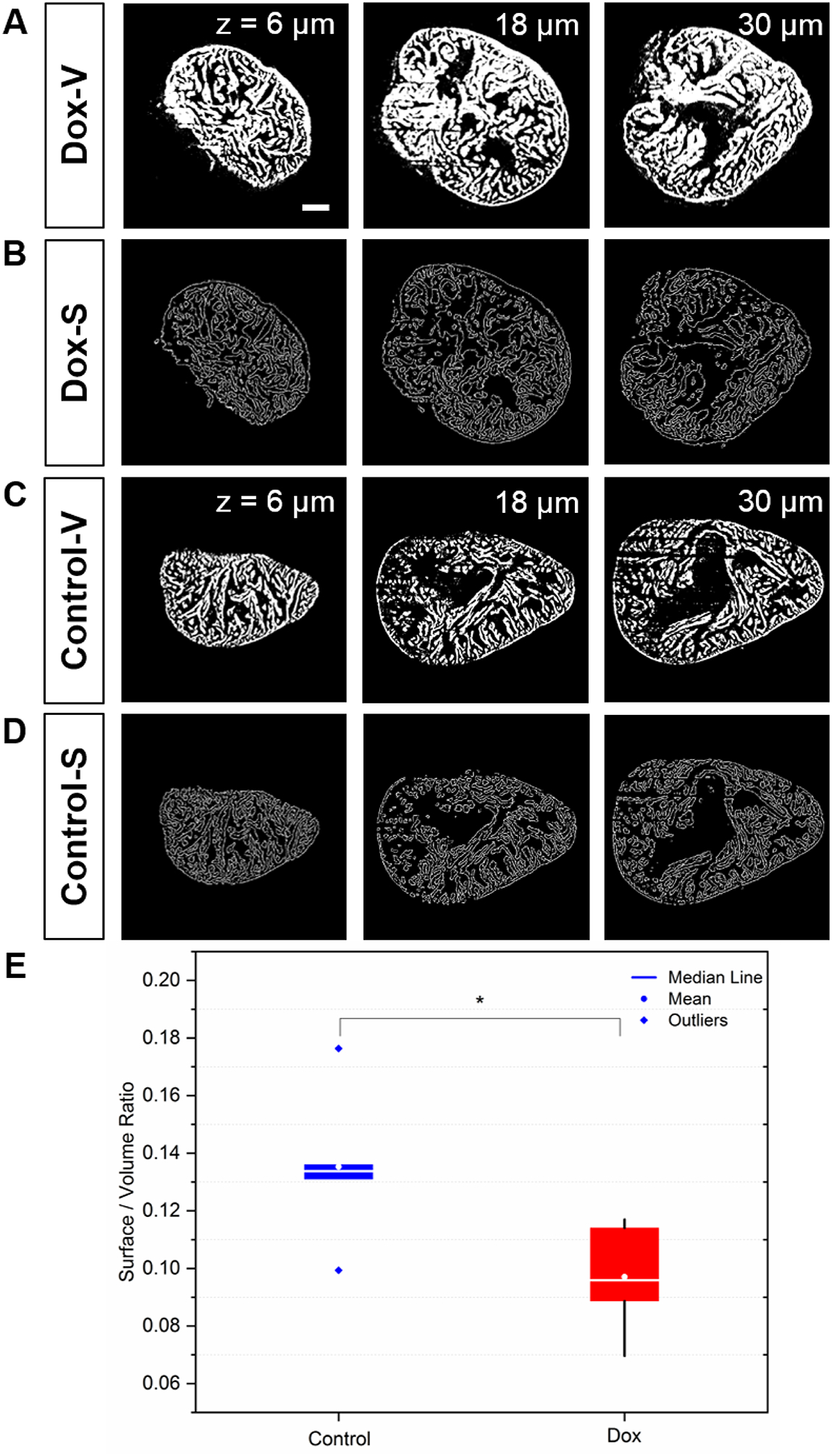

E. Integrating Edge Detection to Assess Chemotherapy-Induced Changes in Trabeculation

To assess chemotherapy-induced cardiac injury, we integrated Saak transform-based machine learning and edge detection for efficient segmentation. The Saak transform provides the fundamental basis to segment the 3-D endocardium and epicardium, enhancing the efficiency by 20-fold as compared to manual segmentation. About 20 hours are required for a well-trained expert to segment a single 2-D image pixel by pixel (Figure 8A), whereas only 1 hour is needed for the Saak transform to complete both training and testing. In light of the complicated cardiac trabecular network in the adult zebrafish, we demonstrate the application of SVR as an indicator to assess the extent of the trabecular network. We used 118 images in total to train and test the Saak transform-based machine learning method, and performed the non-overlapping Saak transform in 5 pairs (doxorubicin treatment vs. control) to assess chemotherapy-induced changes in trabeculation. Our statistical analysis of the SVR was performed based on 100 images from 10 zebrafish (5 doxorubicin vs. 5 control), and each group contained 10 2-D images. The epicardium and endocardium were classified as white pixels, and the ventricular cavity was in black, similar to the background. The white pixel counts were defined as the volume of cardiac structure (Figures 8A and C). We extracted the contour from the binary images, and trimmed the contour line with a single-pixel filter (Figures 8B and D). The white pixels along the contour were defined as the surface area in 3-D. We summed all of the surface areas and volumes, respectively, to compute SVR for the treatment vs. control groups. In response to chemotherapy-induced cardiac injury, the SVR was significantly reduced (doxorubicin: SVR = 0.10 ± 0.02, n = 5; control: SVR = 0.14 ± 0.03, n = 5; p = 0.03). This finding was consistent with the previously reported reduction in cardiac trabeculation in response to chemotherapy-induced myocardial injury [13, 23].
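The SVR computation described above can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' code: the text does not name the edge detector, so the one-pixel contour is approximated here as the foreground pixels removed by a 4-connected binary erosion, and the function names (`erode4`, `contour`, `svr`) are hypothetical.

```python
import numpy as np

def erode4(mask):
    """4-connected binary erosion; pixels outside the image count as background."""
    p = np.pad(mask, 1, constant_values=False)
    return (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
            & p[1:-1, :-2] & p[1:-1, 2:])

def contour(mask):
    """One-pixel-wide boundary: foreground pixels with at least one background neighbor."""
    mask = np.asarray(mask, dtype=bool)
    return mask & ~erode4(mask)

def svr(masks):
    """Surface-area-to-volume ratio over a stack of binary 2-D segmentations.

    Surface = total contour pixels; volume = total white (tissue) pixels,
    each summed over all slices before taking the ratio.
    """
    masks = [np.asarray(m, dtype=bool) for m in masks]
    surface = sum(int(contour(m).sum()) for m in masks)
    volume = sum(int(m.sum()) for m in masks)
    if volume == 0:
        raise ValueError("no foreground pixels in the stack")
    return surface / volume
```

For example, a solid 5 × 5 square has 25 foreground pixels, 16 of which lie on its boundary, so `svr` gives 16/25 = 0.64. A more trabeculated shape of the same area has more boundary pixels and therefore a higher SVR.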

Fig 8.

The surface area to volume ratio (SVR) in response to doxorubicin treatment vs. control. (A) Saak transform-generated endo- and myocardial segmentation. (B) Surface contour provides a quantitative insight into cardiac remodeling in response to chemotherapy-induced heart failure. (C-D) The wild-type zebrafish were used as the control. Columns from left to right indicate different imaging depth at 6, 18, and 30 μm. (E) The reduction in SVR value is statistically significant following doxorubicin treatment (p = 0.03 vs. control, n=5 for treatment and control). Scale bar: 200 μm.

IV. DISCUSSION

The advances in light-sheet imaging usher in the capacity for multi-scale cardiac imaging with high lateral and axial resolution, providing a platform for supervised machine learning methods to perform high-throughput image segmentation and computational analyses. However, manual segmentation of cardiac images is labor-intensive and error-prone. The main advantage of Saak transform-based machine learning is to reduce the demand for high-quality annotated data that are not readily available in most LSFM imaging applications, thus allowing for a wide range of post-processing techniques in image segmentation. By partitioning the task into a feature extraction module and a classification module, the Saak transform has the capacity to reduce the amount of annotated training data needed to segment the adult zebrafish hearts while remaining robust against perturbation.

The Saak transform-based machine learning encompasses 2 key steps: 1) performing KLT on the input stack to extract transform kernels, that is, building the optimal linear subspace approximation with orthonormal bases; and 2) augmenting each transform kernel with its negative and converting the position to sign, that is, eliminating the rectification loss. Unlike CNN-based machine learning methods, the Saak transform for feature extraction is based on linear algebra and statistics, and therefore provides mathematically interpretable results. Specifically, the Saak transform determines the coefficients through augmented KLT kernels in a one-pass feedforward manner, maximizing computational efficiency and obviating the need to determine and optimize filter weights via training data and backpropagation. While a single training image is insufficient for U-Net to converge, the Saak transform-based machine learning method is able to initiate image segmentation under the same conditions. We found that the Saak transform performs more accurate segmentation of LSFM structures than U-Net (at TI3, p = 0.001 for non-overlapping vs. U-Net, and p = 0.02 for overlapping vs. U-Net). The discriminant power of Saak coefficients is built on augmented kernels for pattern recognition and segmentation, and annotated images are only used in the kernel determination module instead of the feature extraction module. Thus, the segmentation accuracy is largely decoupled from the number of training datasets, suggesting that the Saak transform has both deductive and inference capacities. However, the potential pitfall of a small training dataset is also captured (Figure 5F) when we used different training images. The final segmentation output varied, and DSC values ranged from 0.74 to 0.88 when we replaced all 3 training images, underscoring the importance of diversity among the training images.
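The two steps above, KLT kernel extraction followed by kernel augmentation, can be illustrated with a minimal single-stage sketch. It is a simplification under stated assumptions rather than the published pipeline: the mean is removed in place of a dedicated DC kernel, only one stage is shown, and the names `fit_klt_kernels`, `saak_forward` and `saak_inverse` are hypothetical.

```python
import numpy as np

def fit_klt_kernels(x):
    """Step 1: KLT on flattened patches x of shape (n_samples, dim).

    Returns the sample mean and an orthonormal kernel matrix (dim, dim)
    whose columns are sorted by decreasing variance.
    """
    mean = x.mean(axis=0)
    xc = x - mean
    cov = xc.T @ xc / len(xc)
    eigvals, eigvecs = np.linalg.eigh(cov)   # ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return mean, eigvecs[:, order]

def saak_forward(x, mean, kernels):
    """Step 2: project onto each kernel and its negative, then apply ReLU.

    The sign of every projection is now encoded by which half of the
    output is non-zero, so rectification discards no information.
    """
    proj = (x - mean) @ kernels
    augmented = np.concatenate([proj, -proj], axis=1)
    return np.maximum(augmented, 0.0)

def saak_inverse(coeffs, mean, kernels):
    """Recover signed projections from the rectified pairs and invert the KLT."""
    d = kernels.shape[1]
    proj = coeffs[:, :d] - coeffs[:, d:]     # relu(p) - relu(-p) == p
    return proj @ kernels.T + mean
```

Because the kernels are orthonormal and each rectified pair preserves the signed projection, `saak_inverse(saak_forward(x, mean, kernels), mean, kernels)` returns `x` up to floating-point error, with no iterative weight optimization involved.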

The inherent linearity and reversibility of the Saak transform allow for both forward and inverse processes to distinguish the principal components in images. Using the LSFM-based cardiac images, we have demonstrated this ability against adversarial perturbations, suggesting that the Saak transform is a light-weight module to overcome adversarial perturbation prior to image segmentation while preserving the capability to reconstruct the estimated images. The Saak transform complements spatial smoothing techniques to mitigate the adversarial effects without interfering with the classification performance on raw images. In contrast to the unclear reversibility of CNN methods, the inverse Saak transform is well-defined. We are able to reconstruct the estimated images following the removal of high-frequency Saak coefficients that might be adversarially perturbed. The transparency of the Saak transform pipeline provides reproducibility to analyze breakdown points for numerous applications. Together, these features make the Saak transform well suited for high-throughput image segmentation.

We integrated the Saak transform with light-sheet imaging to demonstrate its computational efficiency and reversibility with limited ground truth, creating a robust and data-driven framework for insights into chemotherapy-induced changes in cardiac structure and mechanics. Previously, we needed to perform manual or semi-automated segmentation to reconstruct LSFM architectures for computational fluid dynamics [15, 16] and interactive virtual reality [17, 18]. The addition of edge detection to the Saak transform enables the calculation of surface area in relation to the volume of myocardium. The extent of the trabecular network as measured by SVR was significantly attenuated following chemotherapy-induced myocardial injury. Conventionally, total heart volumes were measured by bounding the manually segmented results and filling the endocardial cavities, while endocardial cavity volumes were calculated as the difference between the total heart volumes and segmented myocardial volumes [23]. However, assessing endocardial cavity volume is a labor-intensive task. Based on the Saak transform and edge detection, we utilized SVR to quantify trabeculation in the 3-D image stacks (10 2-D images) with a 20-fold improvement in efficiency as compared to our previous method. This approach allows for further quantification of 3-D hypertrabeculation and ventricular remodeling in response to ventricular injury and regeneration.

While our current Saak transform provides 2-D image segmentation, we will adopt an accelerated parallel computation flow for 3-D objects as well. We will further incorporate disease information into the current classifier for the inspection of heart failure. Advances in imaging technologies proceed in parallel with the development and application of the Saak transform. We have recently reported sub-voxel light-sheet microscopy to provide high-throughput volumetric imaging of mesoscale specimens at cellular resolution [57]. Integrating this method with the Saak transform would further enhance spatiotemporal resolution and image contrast with a large field-of-view. The Saak transform would also be applicable to other imaging modalities, such as confocal microscopy, MRI and CT, for the post-processing of imaging datasets.

V. CONCLUSION

Elucidating tissue injury and repair would accelerate the fields of developmental biology and regenerative medicine. We integrate Saak transform-based machine learning with LSFM imaging to assess cardiac architecture in a zebrafish model in response to chemotherapy-induced injury, requiring only a minimal training dataset for efficient and accurate image segmentation. The Saak transform-based LSFM, in conjunction with edge detection, allows for both quantitative and qualitative insights into cardiac ultra-structure and organ morphogenesis. Integrating the augmented kernels with a random forest classifier captures human inference capacity to enhance the accuracy of classification. Overall, our platform establishes the basis for quantitative computation of high-throughput microscopy images to advance imaging and computational analysis for both fundamental and translational research.

Acknowledgment

The authors declare no conflict of interest. All authors thank Mr. Ryan P. O’Donnell for proofreading the manuscript, and Dr. Xiaolei Xu at Mayo Clinic for providing the adult zebrafish model of chemotherapy-induced injury.

This study was supported by the NIH HL118650 (TKH), HL083015 (TKH), HL111437 (TKH), HL129727 (TKH), I01 BX004356 (TKH), K99 HL148493 (YD) and AHA 18CDA34110338 (YD).

Contributor Information

Yichen Ding, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Varun Gudapati, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Ruiyuan Lin, Ming-Hsieh Department of Electrical Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Yanan Fei, Ming-Hsieh Department of Electrical Engineering, University of Southern California, Los Angeles, CA 90089 USA.

René R Sevag Packard, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Sibo Song, Ming-Hsieh Department of Electrical Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Chih-Chiang Chang, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Kyung In Baek, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Zhaoqiang Wang, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Mehrdad Roustaei, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

Dengfeng Kuang, Tianjin Key Laboratory of Optoelectronic Sensor and Sensing Network Technology, and Institute of Modern Optics, Nankai University, Tianjin 300350, China.

C.-C. Jay Kuo, Ming-Hsieh Department of Electrical Engineering, University of Southern California, Los Angeles, CA 90089 USA.

Tzung K. Hsiai, Henry Samueli School of Engineering and David Geffen School of Medicine, University of California, Los Angeles, CA 90095 USA.

References

- [1] Huisken J and Stainier DY, “Selective plane illumination microscopy techniques in developmental biology,” Development, vol. 136, pp. 1963–1975, 2009.

- [2] De Vos WH, et al., “Invited Review Article: Advanced light microscopy for biological space research,” Rev. Sci. Instr, vol. 85, p. 101101, 2014.

- [3] Power RM and Huisken J, “A guide to light-sheet fluorescence microscopy for multiscale imaging,” Nat. Meth, vol. 14, pp. 360–373, 2017.

- [4] Ding Y, et al., “Multi-scale light-sheet for rapid imaging of cardiopulmonary system,” JCI Insight, vol. 3, p. e121396, 2018.

- [5] Dodt H-U, et al., “Ultramicroscopy: three-dimensional visualization of neuronal networks in the whole mouse brain,” Nat. Meth, vol. 4, pp. 331–336, 2007.

- [6] Amat F, et al., “Fast, accurate reconstruction of cell lineages from large-scale fluorescence microscopy data,” Nat. Meth, vol. 11, pp. 951–958, 2014.

- [7] Mickoleit M, et al., “High-resolution reconstruction of the beating zebrafish heart,” Nat. Meth, vol. 11, pp. 919–922, 2014.

- [8] Fei P, et al., “Cardiac Light-Sheet Fluorescent Microscopy for Multi-Scale and Rapid Imaging of Architecture and Function,” Sci. Rep, vol. 6, p. 22489, 2016.

- [9] Lee J, et al., “4-Dimensional light-sheet microscopy to elucidate shear stress modulation of cardiac trabeculation,” J. Clin. Invest, vol. 126, pp. 1679–1690, 2016.

- [10] Ding Y, et al., “Light-sheet fluorescence imaging to localize cardiac lineage and protein distribution,” Sci. Rep, vol. 7, p. 42209, 2017.

- [11] Baek KI, et al., “Advanced microscopy to elucidate cardiovascular injury and regeneration: 4D light-sheet imaging,” Progr. Biophys. Mol. Biol, vol. 138, pp. 105–115, 2018.

- [12] Ding Y, et al., “Light-sheet Fluorescence Microscopy for the Study of the Murine Heart,” J. Vis. Exp, p. e57769, 2018.

- [13] Chen J, et al., “Displacement analysis of myocardial mechanical deformation (DIAMOND) reveals segmental susceptibility to doxorubicin-induced injury and regeneration,” JCI Insight, vol. 4, p. e125362, 2019.

- [14] Hsu JJ, et al., “Contractile and hemodynamic forces coordinate Notch1b-mediated outflow tract valve formation,” JCI Insight, vol. 4, p. e124460, 2019.

- [15] Vedula V, et al., “A method to quantify mechanobiologic forces during zebrafish cardiac development using 4-D light sheet imaging and computational modeling,” PLoS Comput. Biol, vol. 13, p. e1005828, 2017.

- [16] Lee J, et al., “Spatial and temporal variations in hemodynamic forces initiate cardiac trabeculation,” JCI Insight, vol. 3, p. e96672, 2018.

- [17] Ding Y, et al., “Integrating light-sheet imaging with virtual reality to recapitulate developmental cardiac mechanics,” JCI Insight, vol. 2, p. e97180, 2017.

- [18] Abiri A, et al., “Simulating Developmental Cardiac Morphology in Virtual Reality Using a Deformable Image Registration Approach,” Ann. Biomed. Eng, vol. 46, pp. 2177–2188, 2018.

- [19] Li G, et al., “3D cell nuclei segmentation based on gradient flow tracking,” BMC Cell Biol, vol. 8, p. 40, 2007.

- [20] Amat F, et al., “Fast and robust optical flow for time-lapse microscopy using super-voxels,” Bioinformatics, vol. 29, pp. 373–380, 2013.

- [21] Schiegg M, et al., “Graphical model for joint segmentation and tracking of multiple dividing cells,” Bioinformatics, vol. 31, pp. 948–956, 2014.

- [22] Strnad P, et al., “Inverted light-sheet microscope for imaging mouse pre-implantation development,” Nat. Meth, vol. 13, pp. 139–142, 2016.

- [23] Packard RRS, et al., “Automated Segmentation of Light-Sheet Fluorescent Imaging to Characterize Experimental Doxorubicin-Induced Cardiac Injury and Repair,” Sci. Rep, vol. 7, p. 8603, 2017.

- [24] Krämer P, et al., “Comparison of segmentation algorithms for the zebrafish heart in fluorescent microscopy images,” in Intl. Symp. Vis. Comp, 2009, pp. 1041–1050.

- [25] Lawrence S, et al., “Face recognition: A convolutional neural-network approach,” in IEEE T. Neur. Networks, 1997, pp. 98–113.

- [26] Ciresan D, et al., “Deep Neural Networks Segment Neuronal Membranes in Electron Microscopy Images,” in Adv. Neur. Inform. Proc. Syst, 2012, pp. 2843–2851.

- [27] Krizhevsky A, et al., “Imagenet classification with deep convolutional neural networks,” in Adv. Neur. Inform. Proc. Syst, 2012, pp. 1097–1105.

- [28] Prasoon A, et al., “Deep feature learning for knee cartilage segmentation using a triplanar convolutional neural network,” in Intl. Conf. Med. Image Comp. Comp. Assis. Interv, 2013, pp. 246–253.

- [29] Girshick R, et al., “Rich feature hierarchies for accurate object detection and semantic segmentation,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn, 2014, pp. 580–587.

- [30] Long J, et al., “Fully convolutional networks for semantic segmentation,” in Proc. IEEE Conf. Comp. Vis. Patt. Recog, 2015, pp. 3431–3440.

- [31] Zheng S, et al., “Conditional random fields as recurrent neural networks,” in Proc. IEEE Intl. Conf. Comp. Vision, 2015, pp. 1529–1537.

- [32] Havaei M, et al., “Brain tumor segmentation with deep neural networks,” Med. Imag. Anal, vol. 35, pp. 18–31, 2017.

- [33] Chen L-C, et al., “Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs,” IEEE T. Patt. Anal. Mach. Intell, vol. 40, pp. 834–848, 2018.

- [34] Orringer DA, et al., “Rapid intraoperative histology of unprocessed surgical specimens via fibre-laser-based stimulated Raman scattering microscopy,” Nat. Biomed. Eng, vol. 1, p. 0027, 2017.

- [35] Savastano LE, et al., “Multimodal laser-based angioscopy for structural, chemical and biological imaging of atherosclerosis,” Nat. Biomed. Eng, vol. 1, p. 0023, 2017.

- [36] Esteva A, et al., “A guide to deep learning in healthcare,” Nat. Med, vol. 25, pp. 24–29, 2019.

- [37] He J, et al., “The practical implementation of artificial intelligence technologies in medicine,” Nat. Med, vol. 25, pp. 30–36, 2019.

- [38] Topol EJ, “High-performance medicine: the convergence of human and artificial intelligence,” Nat. Med, vol. 25, pp. 44–56, 2019.

- [39] Kuo C-CJ and Chen Y, “On data-driven Saak transform,” J. Vis. Commun. Image Repr, vol. 50, pp. 237–246, 2018.

- [40] Szegedy C, et al., “Intriguing properties of neural networks,” arXiv preprint arXiv:1312.6199, 2013.

- [41] Kurakin A, et al., “Adversarial examples in the physical world,” arXiv preprint arXiv:1607.02533, 2016.

- [42] Kurakin A, et al., “Adversarial machine learning at scale,” arXiv preprint arXiv:1611.01236, 2016.

- [43] Liu Y, et al., “Delving into transferable adversarial examples and black-box attacks,” arXiv preprint arXiv:1611.02770, 2016.

- [44] Song S, et al., “Defense Against Adversarial Attacks with Saak Transform,” arXiv preprint arXiv:1808.01785, 2018.

- [45] Fehrenbach J, et al., “Variational algorithms to remove stationary noise: applications to microscopy imaging,” IEEE T. Image Proc, vol. 21, pp. 4420–4430, 2012.

- [46] Fehrenbach J and Weiss P, “Processing stationary noise: Model and parameter selection in variational methods,” SIAM J. Imag. Sci, vol. 7, pp. 613–640, 2014.

- [47] Chen Y and Kuo C-CJ, “PixelHop: A Successive Subspace Learning (SSL) Method for Object Recognition,” J. Vis. Commun. Image Repr, p. 102749, 2020.

- [48] Ding Y, et al., “Light-sheet imaging to elucidate cardiovascular injury and repair,” Curr. Cardiol. Rep, vol. 20, p. 35, 2018.

- [49] Ritsch-Marte M, “Orbital angular momentum light in microscopy,” Philos. Trans. A Math. Phys. Eng. Sci, vol. 375, p. 20150437, 2017.

- [50] Qiu X, et al., “Spiral phase contrast imaging in nonlinear optics: seeing phase objects using invisible illumination,” Optica, vol. 5, pp. 208–212, 2018.

- [51] Moosavi-Dezfooli S-M, et al., “Deepfool: a simple and accurate method to fool deep neural networks,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn, 2016, pp. 2574–2582.

- [52] Goodfellow IJ, et al., “Explaining and harnessing adversarial examples,” arXiv preprint arXiv:1412.6572, 2014.

- [53] Nguyen A, et al., “Deep neural networks are easily fooled: High confidence predictions for unrecognizable images,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn, 2015, pp. 427–436.

- [54] Ding Y, et al., “Haploinsufficiency of target of rapamycin attenuates cardiomyopathies in adult zebrafish,” Circ. Res, vol. 109, pp. 658–669, 2011.

- [55] Ronneberger O, et al., “U-Net: Convolutional Networks for Biomedical Image Segmentation,” arXiv preprint arXiv:1505.04597, 2015.

- [56] Zhixuhao, “Implementation of deep learning framework - Unet, using Keras,” GitHub, https://github.com/zhixuhao/unet, 2017.

- [57] Fei P, et al., “Subvoxel light-sheet microscopy for high-resolution high-throughput volumetric imaging of large biomedical specimens,” Adv. Photon, vol. 1, p. 016002, 2019.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author.