Abstract

Employers and insurers are experimenting with benefit strategies that encourage patients to switch to lower-price providers. One increasingly popular strategy is to financially reward patients who receive care from such providers. We evaluated the impact of a rewards program implemented in 2017 by twenty-nine employers with 269,875 eligible employees and dependents. For 131 elective services, patients who received care from a designated lower-price provider received a check ranging from $25 to $500, depending on the provider’s price and service. In the first twelve months of the program we found a 2.1 percent reduction in prices paid for services targeted by the rewards program. The reductions in price resulted in savings of $2.3 million, or roughly $8 per person, per year. These effects were concentrated in magnetic resonance imaging and ultrasound, and we observed no price reduction for surgical procedures.

The combination of wide variation in health care prices within communities and the lack of an association between price and quality1–3 creates an opportunity: Shifting patients to lower-price providers and facilities could drive substantial savings without hurting the quality of care. How to accomplish this has been unclear. Giving patients access to provider prices through price transparency tools has had limited success in encouraging patients to switch to lower-price providers.4–6 While high-deductible health plans reduce the use of care, these plans have not led to any substantive price shopping.7–9 Reference pricing programs have been successful in encouraging patients to switch to lower-price providers,10–14 but few employers have implemented such programs because of concerns about effectively communicating the complex design to employees and the high financial penalties employees may face if they go to a higher-price provider.15,16

In this context, employers are increasingly turning to financial rewards programs.17 In these programs, if a patient receives care from a lower-price provider, the patient is sent a check for $25–$500, depending on the cost of the service and the specific provider the patient selects. This structure is appealing to employers because, compared to alternative programs such as high-deductible health plans or reference pricing, it encourages patients to price shop without exposing them to increased out-of-pocket spending. It is also easier for employers to implement financial “carrots” instead of “sticks.” Rewards programs have generated broad interest among both employers and health plans.18

Despite these programs’ popularity, little is known about their impact. We assessed the impact of a rewards program implemented by twenty-nine employers in January 2017. Over the period January 2015–December 2017, we compared prices, choice of providers, and utilization among employees exposed to the program with the same outcomes among employees of employers that had not yet adopted it.

Study Data And Methods

MEMBER REWARDS PROGRAM

Health Care Service Corporation (HCSC) is the fourth-largest health plan in the United States and is the licensee of the Blue Cross Blue Shield Association in Illinois, Montana, New Mexico, Oklahoma, and Texas. In January 2017 HCSC introduced a program called Member Rewards, which was designed by another company (Vitals SmartShopper). This program builds on HCSC’s existing price shopping support tools, which consisted of a telephone-based advice service and an online price transparency tool. These tools were available to all enrollees, in both the intervention and comparison populations, throughout the study period. Under the rewards program, an employee who receives one of 135 elective reward-eligible services (for example, magnetic resonance imaging [MRI], computed tomography [CT] scans, and knee replacement surgeries; a full list is in the online appendix)19 can receive a check of $25–$500, depending on the specific service and the relative price of the provider (the lower the price, the larger the reward).

To be eligible for reward incentives, patients must call the rewards advice line or use the price transparency tool before receiving care. Vitals SmartShopper uses a proprietary methodology to identify reward-designated providers, based on factors such as a provider’s relative price within the community and the need to ensure that patients have access to a reward-designated provider within a reasonable distance.

The rewards program was offered as an optional add-on benefit only for enrollees in preferred provider organization (PPO) plans. Employers pay for the program separately, and it is layered on top of the existing benefit design. The program does not change other forms of patient cost sharing (for example, copayments, coinsurance, and deductibles). From the first to the second year of the program, the number of people eligible for it increased by nearly 500,000—a 171 percent increase.

INTERVENTION AND COMPARISON POPULATIONS

The program was launched in January 2017 with thirty-five self-insured employers. Six employers were excluded from our analysis because they were not part of HCSC for the entirety of 2015 or 2016. The intervention population therefore consisted of enrollees who received benefits from the twenty-nine remaining employers.

The comparison population was composed of employees (and their dependents) of forty-five self-insured employers that introduced the rewards program in 2018. Because these employers were also receptive to the program, they are likely more comparable to the intervention employers than employers that never implemented it would be.

While both intervention and comparison employers may have offered other types of plans (for example, point-of-service plans), our analyses focused only on PPO enrollees, as those were the enrollees in the program. These PPO plans could include a high deductible. We excluded employees and their dependents older than age sixty-five. We excluded observations from counties with fewer than 100 enrollees and included only counties with employees from both intervention and comparison employers. We required all enrollees to have been in the same insurance plan for at least six months in any calendar year. Our study sample for 2017 included 269,875 enrollees in the intervention population and 284,889 enrollees in the comparison population.

REWARD-ELIGIBLE SERVICES

There were 135 services for which patients were eligible to receive reward incentives. We excluded 4 low-volume services that did not have observations in all three years of the study period. We grouped the remaining 131 services into CT scans, MRIs, ultrasounds, other imaging procedures, endoscopies, minor surgical procedures (for example, breast biopsy), moderate surgical procedures (for example, arthroscopy), and major surgical procedures (for example, knee and hip replacements). We did not further categorize the surgical procedures by type (for example, orthopedic or breast) because of low volumes. Appendix exhibit 1 presents the categorization of each of the 131 services.19

OUTCOMES

Our primary outcome was the average transaction price—the allowed amount paid for services, which consists of total payments made by the employer and the patient. To address skewness, we log-transformed prices. Our secondary outcomes were the fraction of services obtained from lower-price providers and utilization rates of the reward-eligible services. In our main analyses we conducted an “intention-to-treat” analysis that focused on all enrollees offered the program. Our analysis thus estimated the impact for an employer or health plan that introduced the program.

To describe trends in prices across all 131 services, we created a price index. We measured monthly prices for each of the services in both the intervention and comparison populations. We weighted each of these prices using the utilization rates at the levels observed for the intervention and comparison populations in 2016.
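A minimal sketch of a fixed-weight price index of this kind follows. It assumes a Laspeyres-style construction in which each service's mean monthly price is weighted by that service's 2016 volume, with one set of 2016 weights applied to both populations; the function name, tuple layout, and the handling of months in which a service has no claims are illustrative assumptions, not the authors' code.

```python
from collections import defaultdict

def weighted_price_index(claims, base_volume):
    """Fixed-weight monthly price index (hypothetical helper, not the authors' code).

    claims: iterable of (service, month, price) tuples for one population.
    base_volume: dict mapping service -> 2016 service volume, used as fixed weights.
    Returns a dict mapping month -> weighted average of that month's mean service prices.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for service, month, price in claims:
        totals[(month, service)] += price
        counts[(month, service)] += 1

    index = {}
    for month in sorted({m for m, _ in totals}):
        num = den = 0.0
        for service, weight in base_volume.items():
            key = (month, service)
            if key in counts:
                # Services with no claims this month are skipped and the weights renormalized
                num += weight * totals[key] / counts[key]
                den += weight
        index[month] = num / den if den else float("nan")
    return index


claims = [("MRI", "2017-01", 1000.0), ("MRI", "2017-01", 800.0),
          ("Ultrasound", "2017-01", 300.0)]
weights_2016 = {"MRI": 1, "Ultrasound": 3}
print(weighted_price_index(claims, weights_2016))  # {'2017-01': 450.0}
```

Holding the weights at their 2016 levels means that month-to-month movements in the index reflect price changes rather than shifts in the mix of services.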

One of our secondary outcomes was the fraction of services obtained from lower-price providers. Because we did not have access to a list of providers eligible for reward incentives, we defined lower-price providers as those charging prices at or below the point in the price distribution for each service when patients began receiving reward incentives. (This point was approximately the thirty-fifth percentile within each geographic market.)
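The classification step might look like the sketch below. It assumes a 35th-percentile cutoff computed over claims within each market and service cell; the text gives the cutoff only as approximately this point, and whether the distribution is taken over claims or over providers is an assumption here. The dictionary keys and market labels are hypothetical.

```python
import statistics
from collections import defaultdict

def flag_lower_price(claims, pct=35):
    """Flag claims priced at or below the pct-th percentile of their (market, service) cell.

    claims: list of dicts with 'market', 'service', and 'price' keys (hypothetical schema).
    Returns a list of booleans aligned with claims.
    """
    cells = defaultdict(list)
    for c in claims:
        cells[(c["market"], c["service"])].append(c["price"])

    cutoffs = {}
    for key, prices in cells.items():
        if len(prices) < 2:
            cutoffs[key] = prices[0]  # one observed price is trivially its own cutoff
        else:
            # quantiles(n=100) returns 99 cut points; element pct - 1 is the pct-th percentile
            cutoffs[key] = statistics.quantiles(prices, n=100, method="inclusive")[pct - 1]
    return [c["price"] <= cutoffs[(c["market"], c["service"])] for c in claims]


claims = [{"market": "market_A", "service": "MRI", "price": p} for p in (100, 200, 300, 400, 500)]
print(flag_lower_price(claims))  # [True, True, False, False, False]
```

Computing the cutoff separately for each market and service keeps a low-cost market from making every local provider look "lower price" relative to a pooled national distribution.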

Use of reward-eligible services was our other secondary outcome. The impact of a rewards program on utilization is not clear a priori. The reward incentives (which effectively lower out-of-pocket spending) may make patients more likely to receive elective services. At the same time, the rewards program could reduce utilization if, while using the price shopping support tools, patients learn how much they will pay out of pocket in total. To test the impact of the rewards program on utilization, we measured the proportion of patients who received any reward-eligible service in each calendar year and the proportion of patients who received a reward-eligible service within each category of service (for example, MRI or ultrasound). We also examined changes in total spending on reward-eligible services. We measured spending by calculating the log-transformed total amount spent by each patient on reward-eligible services in each calendar year.

We measured engagement with the rewards program by comparing the proportion of enrollees in the intervention and comparison populations who used the price shopping support tools to search for a similar service at any point in 2017 before they received the respective service. We also identified the share of services in the intervention program that resulted in a reward incentive payment and the average payment amount.

ANALYSES

We used difference-in-differences regression models to assess the impact of the rewards program. These models controlled for patients’ demographic characteristics (age and sex) and high-deductible health plan enrollment. We used dummy variables (that is, fixed effects) to control for service code, time trends, and employer. A full description of the regression models is in the appendix.19 As a sensitivity test, we iteratively added controls to test how the inclusion of different variables affected the results. As shown in the appendix,19 adding these control variables did not meaningfully change the results.
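A minimal sketch of a two-way fixed-effects difference-in-differences regression on log prices follows, fit to synthetic, noise-free data. It includes employer, year, and service dummies but omits the demographic and high-deductible plan controls, the quarter effects, and the standard errors of the full specification described in the appendix; the function name and data layout are hypothetical. Because the outcome is log price, the treatment coefficient b is converted to a percent change as 100 × (exp(b) − 1).

```python
import numpy as np

def did_log_price(log_price, employer, year, service, treated_employers, post_year=2017):
    """Two-way fixed-effects difference-in-differences on log prices (illustrative sketch).

    log_price: log allowed amount for each service observation.
    employer, year, service: labels used as fixed effects (dummy variables).
    treated_employers: set of employers that adopted rewards in post_year.
    Returns the percent price change implied by the treatment coefficient.
    """
    y = np.asarray(log_price, dtype=float)
    employer, year, service = np.asarray(employer), np.asarray(year), np.asarray(service)

    # Treatment indicator: intervention employer in the post-implementation period
    treated = np.isin(employer, list(treated_employers)) & (year >= post_year)
    cols = [treated.astype(float), np.ones_like(y)]

    # One dummy per level of each fixed effect, omitting the first level of each
    for labels in (employer, year, service):
        for level in np.unique(labels)[1:]:
            cols.append((labels == level).astype(float))

    beta, *_ = np.linalg.lstsq(np.column_stack(cols), y, rcond=None)
    return 100 * (np.exp(beta[0]) - 1)  # convert log-point coefficient to percent


# Synthetic, noise-free data with a built-in 2.1 percent price reduction
rows = []
for emp, e_fx in {"A": 0.10, "B": 0.0, "C": -0.05}.items():
    for yr, y_fx in {2015: 0.0, 2016: 0.02, 2017: 0.05}.items():
        for svc, s_fx in {"MRI": 6.9, "US": 5.6}.items():
            effect = np.log(1 - 0.021) if (emp == "A" and yr >= 2017) else 0.0
            rows.append((e_fx + y_fx + s_fx + effect, emp, yr, svc))
y, emp, yr, svc = zip(*rows)
print(round(did_log_price(y, emp, yr, svc, {"A"}), 2))  # -2.1
```

The employer dummies absorb fixed differences between intervention and comparison employers and the year dummies absorb common price growth, so only the interaction of "intervention employer" and "2017" identifies the program effect.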

The use of these models requires the assumption that trends in the intervention and comparison populations would have been similar in the absence of the implementation of rewards. To test the validity of this assumption, we examined the unadjusted and regression-adjusted trends in the pre-rewards period (2015–16). There was only a nonsignificant 0.2 percent difference in prices between the intervention and comparison populations in the pre-implementation period.
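One simple, descriptive version of a pre-trend check fits a linear trend to each population's log price index over the twenty-four pre-implementation months and compares the slopes. This is a sketch that assumes monthly index values are already available (the index levels below are hypothetical); it is not the authors' regression-adjusted test and reports no standard errors.

```python
import numpy as np

def pretrend_slope_gap(months, treat_index, comp_index):
    """Difference between the linear monthly trends of two price series (log scale).

    A gap near zero is consistent with parallel pre-period trends; this is a
    descriptive check only and does not compute standard errors.
    """
    t = np.asarray(months, dtype=float)
    slope_treat = np.polyfit(t, np.log(treat_index), 1)[0]  # polyfit returns highest degree first
    slope_comp = np.polyfit(t, np.log(comp_index), 1)[0]
    return slope_treat - slope_comp


months = np.arange(24)                     # January 2015 to December 2016
treat = 100 * np.exp(0.002 * months)       # hypothetical index levels
comp = 95 * np.exp(0.002 * months)
print(abs(pretrend_slope_gap(months, treat, comp)) < 1e-9)  # True
```

Working on the log scale means a constant level difference between the two series (here 100 versus 95) does not register as a trend difference, which matches the parallel-trends assumption for a model of log prices.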

To address the concern that market- or service-specific trends could be biasing our results, we conducted a sensitivity analysis in which we included interactions between Metropolitan Statistical Area (MSA)-year and procedure-year indicators. As discussed in the appendix,19 adding these control variables did not meaningfully influence the results.

Given that they had the same insurer and same PPO insurance product, the intervention and comparison populations had similar provider networks and thus paid similar transaction prices at the same providers. In a sensitivity analysis we tested for differential provider price changes. We did not find any evidence that providers changed their prices for people in the intervention population but not for the comparison population (appendix exhibit 6).19

LIMITATIONS

This study had several key limitations. First, we used quasi-experimental approaches to estimate the impact of the rewards program, since exposure to the program was not randomized.

Second, our findings could have been biased if benefit design changes were introduced in the intervention population at the same time as the rewards program.

Third, for several categories of surgical procedures we observed differential pre-implementation trends, which made it difficult to assess post-implementation savings for these services. Given the small magnitude of many of the effects we observed, even subtle differences in pre-implementation trends could have biased our findings.

Fourth, across the twenty-nine employers, we observed modest engagement in the rewards program, as measured by the fraction of services for which enrollees received a reward. Health plans and employers that more successfully engaged enrollees might find more robust savings.

Fifth, while we accounted for reward incentive payments, our savings estimates did not account for the administrative costs of implementing and communicating information about the program to members.

Finally, we examined the impacts of only the first year of the program; the impacts could increase over time if more patients became familiar and comfortable with the program. On the other hand, the novelty of the program may have increased engagement in the short term, and in future years we could see less of an impact.

Study Results

INTERVENTION AND COMPARISON POPULATIONS

The number of enrollees increased modestly for the intervention population during the study period. The average age, sex mix, and risk scores were similar and stable over time in both populations (exhibit 1). In the comparison population, the fraction of people in a high-deductible health plan increased by 3.6 percentage points over the study period, while in the intervention population it dropped by 0.6 percentage points.

EXHIBIT 1.

Comparison of intervention and comparison populations in the study of rewards programs for use of lower-price providers, 2015–17

| Characteristic | Intervention, 2015 | Intervention, 2016 | Intervention, 2017 | Comparison, 2015 | Comparison, 2016 | Comparison, 2017 |
|---|---|---|---|---|---|---|
| Enrollees | 240,095 | 274,025 | 269,875 | 276,585 | 282,164 | 284,889 |
| Employers | 29 | 29 | 29 | 45 | 45 | 45 |
| Average months enrolled | 11.4 | 11.4 | 11.5 | 11.4 | 11.5 | 11.5 |
| Average age (years) | 33.5 | 33.4 | 33.6 | 33.1 | 33.0 | 33.1 |
| Male (%) | 47.4 | 47.2 | 47.0 | 50.4 | 50.4 | 50.4 |
| Average risk score | 1.06 | 1.03 | 1.03 | 0.97 | 0.98 | 0.99 |
| Enrolled in HDHP (%) | 28.0 | 27.4 | 27.4 | 26.8 | 28.4 | 30.4 |

SOURCE Authors’ analysis of medical claims data for 2015–17 from Health Care Service Corporation employers. NOTES Risk scores were calculated using diagnostic case groups developed by Verscend Technologies. A higher risk score is consistent with greater risk of increased spending. The intervention and comparison populations are defined in the text. HDHP is high-deductible health plan.

VARIATION IN PRICES AND ENGAGEMENT WITH REWARDS PROGRAM

In the year prior to the implementation of rewards, there was considerable variation across the study sample in prices for reward-eligible services. In the intervention population the seventy-fifth-percentile price of $1,532 was about two and a half times the twenty-fifth-percentile price of $616 (exhibit 2). For the most expensive category of services, major surgical procedures, the seventy-fifth-percentile price of $35,277 was 67 percent higher than the twenty-fifth-percentile price of $21,107. The full distribution of the within-market ratios of the seventy-fifth- and twenty-fifth-percentile prices is presented in appendix exhibit 2.19 On average, for the same reward-eligible procedure in the same geographic market, the seventy-fifth-percentile price was 3.48 times the twenty-fifth-percentile price. Mean patient cost sharing ranged from $23 for mammograms to $1,158 for major surgical procedures (exhibit 2).
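The within-market dispersion measure can be sketched as follows: compute the 75th/25th percentile price ratio within each market and service cell, then average across cells. This sketch assumes an unweighted mean across cells and an inclusive (linear-interpolation) percentile definition; the text does not state either choice, and the tuple layout and market labels are hypothetical.

```python
import statistics
from collections import defaultdict

def mean_p75_p25_ratio(claims):
    """Unweighted mean, across (market, service) cells, of the 75th/25th percentile price ratio.

    claims: iterable of (market, service, price) tuples (hypothetical schema).
    Cells with fewer than two prices are skipped because their quartiles are undefined.
    """
    cells = defaultdict(list)
    for market, service, price in claims:
        cells[(market, service)].append(price)
    ratios = []
    for prices in cells.values():
        if len(prices) >= 2:
            q1, _, q3 = statistics.quantiles(prices, n=4, method="inclusive")
            ratios.append(q3 / q1)
    return statistics.mean(ratios)


claims = [("market_A", "MRI", p) for p in (100, 200, 300, 400, 500)]
claims += [("market_B", "MRI", p) for p in (50, 150, 250, 350, 450)]
print(round(mean_p75_p25_ratio(claims), 3))  # 2.167
```

Computing ratios within cells before averaging is why this figure (3.48) can differ from the pooled ratio in exhibit 2 (1,532/616, or about 2.5), which mixes prices across markets.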

EXHIBIT 2.

Engagement with rewards program by intervention and comparison populations

| Service category | Mean price, intervention ($) | 25th percentile price, intervention ($) | 75th percentile price, intervention ($) | Mean patient cost sharing ($) | Used price shopping tool, intervention (%) | Used price shopping tool, comparison (%) | Services resulting in reward, intervention (%) | Average reward, intervention ($) |
|---|---|---|---|---|---|---|---|---|
| All services | 1,090 | 616 | 1,532 | 224 | 8.2 | 1.4 | 1.9 | 114 |
| IMAGING SERVICES | | | | | | | | |
| CT scan | 996 | 264 | 1,508 | 291 | 6.9 | 0.9 | 1.6 | 113 |
| Mammogram | 211 | 147 | 264 | 23 | 5.2 | 0.7 | 1.0 | 42 |
| MRI | 992 | 481 | 1,577 | 281 | 18.9 | 2.6 | 5.8 | 113 |
| Ultrasound | 270 | 123 | 366 | 125 | 3.3 | 0.9 | 0.4 | 41 |
| Other imaging | 594 | 324 | 817 | 68 | 3.2 | 0.4 | 0.8 | 85 |
| INVASIVE PROCEDURES | | | | | | | | |
| Endoscopy | 1,244 | 772 | 1,524 | 210 | 10.2 | 2.1 | 1.9 | 172 |
| Minor procedures | 1,224 | 545 | 1,774 | 357 | 3.0 | 0.8 | 0.6 | 101 |
| Moderate procedures | 2,824 | 1,735 | 3,931 | 565 | 8.3 | 2.6 | 1.5 | 132 |
| Major procedures | 26,409 | 21,107 | 35,277 | 1,158 | 15.4 | 6.2 | 1.7 | 408 |

SOURCE Authors’ analysis of medical claims data for 2015–17 from Health Care Service Corporation employers. NOTES Prices are in 2016 dollars. A full list of services included in each category is in the appendix (see note 19 in text). Price percentiles were calculated across all markets. Market-specific ratios are in appendix exhibit 2. The intervention and comparison populations are defined in the text. CT is computed tomography. MRI is magnetic resonance imaging.

Across all reward-eligible services performed in the twelve months after the program was introduced, 8.2 percent of patients in the intervention population used a price shopping support tool, compared to 1.4 percent of patients in the comparison population. In the intervention population, 1.9 percent of services resulted in a reward payment. Thus, 23.2 percent of the patients who used a price shopping support tool, on average, received a reward payment for going to a lower-price provider. Engagement in the program was highest for MRIs (18.9 percent were associated with the use of a price shopping support tool, of which 31 percent were associated with a reward). The mean reward incentive payment was $114, ranging from $408 for major surgical procedures to $41 for ultrasounds (exhibit 2).
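The conversion rates in this paragraph follow from dividing the share of services that earned a reward by the share preceded by use of a price shopping support tool. This small sketch assumes, as the eligibility rules state, that every rewarded service was preceded by tool use; the function name is hypothetical.

```python
def reward_conversion(pct_used_tool, pct_rewarded):
    """Share of tool-assisted services that earned a reward.

    Assumes every rewarded service was preceded by tool use, as the eligibility rules require.
    """
    return pct_rewarded / pct_used_tool


print(round(100 * reward_conversion(8.2, 1.9), 1))   # all services: 23.2
print(round(100 * reward_conversion(18.9, 5.8)))     # MRI: 31
```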

IMPACT OF THE REWARDS PROGRAM ON PRICES

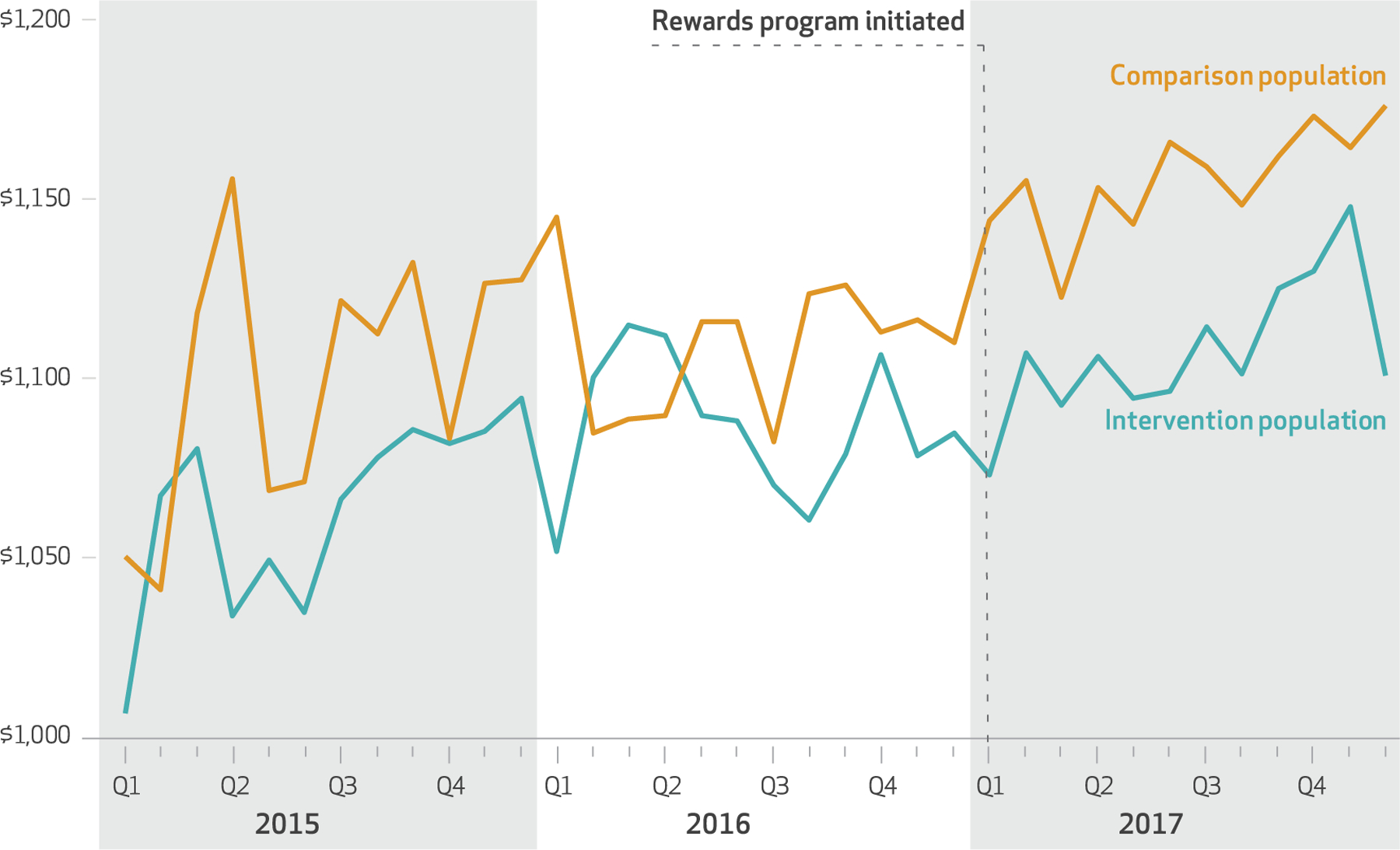

While there was considerable month-to-month variation, in the two-year pre-intervention period the trends were similar between the intervention and comparison populations (exhibit 3). Relative to the pre-implementation trends, the average prices in the 2017 post-implementation period increased by 4.6 percent for the comparison population and 3.0 percent for the intervention population, a difference of 1.6 percentage points.

EXHIBIT 3. Price index for reward-eligible services in intervention and comparison populations, 2015–17.

SOURCE Authors’ analysis of medical claims data for 2015–17 from Health Care Service Corporation employers. NOTES Averages are weighted by 2016 service volume for both populations. The intervention and comparison populations are defined in the text.

In our multivariate difference-in-differences analyses, the implementation of the rewards program was associated with a 2.1 percent reduction in the average price per service across all eligible services (exhibit 4). The decrease in prices was greatest for MRIs (4.7 percent), ultrasounds (2.5 percent), and mammograms (1.7 percent). We did not find any significant change in prices for CT scans, other types of imaging (for example, bone density and positron emission tomography scans), endoscopy, or surgical procedures.

EXHIBIT 4.

Associations of enrollment in rewards program and prices, use of lower-price providers, and utilization

| Service category | Price (percent difference) | Fraction of services performed by lower-price providers (percentage-point difference) | Likelihood of receiving a reward-eligible service (percentage-point difference) |
|---|---|---|---|
| All services | −2.1*** | 1.3*** | −0.26** |
| IMAGING SERVICES | | | |
| CT scan | −1.4 | 0.9 | −0.03 |
| Mammogram | −1.7** | −1.4** | −0.16** |
| MRI | −4.7*** | 3.4*** | −0.01 |
| Ultrasound | −2.5** | 2.8*** | −0.07 |
| Other imaging | 1.0 | 0.1 | −0.05 |
| INVASIVE PROCEDURES | | | |
| Endoscopy | −1.0 | 1.6 | 0.06 |
| Minor procedures | 0.03 | −1.6 | −0.04 |
| Moderate procedures | 1.5 | −3.8 | −0.01 |
| Major procedures | −2.2 | 1.6 | −0.04 |

SOURCE Authors’ analysis of medical claims data for 2015–17 from Health Care Service Corporation employers. NOTES The exhibit shows the results of a difference-in-differences analysis that compared prices, use of lower-price providers, and utilization in the first year of the rewards program (2017) versus in the two prior years (2015–16), with trends in the comparison population controlled for. The models also used fixed effects to control for year, quarter, individual service, and employer. A full list of services included in each category is in the appendix (see note 19 in text). CT is computed tomography. MRI is magnetic resonance imaging.

** p < 0.05

*** p < 0.01

For all services, prices in the intervention and comparison populations did not differ significantly before the rewards program was introduced (appendix exhibits 4 and 5).19 Following the program’s introduction, prices for the intervention group decreased in the first two quarters of 2017. As shown in the quarterly regression-adjusted trends in appendix exhibit 4,19 this reduction was less clear in the latter two quarters of the year.

IMPACT OF THE PROGRAM ON USE OF LOWER-PRICE PROVIDERS

In the multivariate difference-in-differences analyses, the implementation of the rewards program was associated with a 1.3-percentage-point increase in the probability of receiving care from a lower-price provider (exhibit 4). Based on the baseline market share of 36 percent for lower-price providers, this increase translates into a relative increase of 3.6 percent in their market share. This change was driven by a 3.4-percentage-point increase in the use of lower-price MRI providers and a 2.8-percentage-point increase in the use of lower-price providers for ultrasounds.
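The step from an absolute change in percentage points to a relative change is a division by the baseline rate. This sketch (function name hypothetical) reproduces the 3.6 percent figure above and, applied to the 0.3-percentage-point utilization change and 17.6 percent baseline reported in the next subsection, the 1.7 percent relative reduction.

```python
def relative_change(pp_change, baseline_pct):
    """Convert an absolute change in percentage points into a percent change relative to baseline."""
    return 100 * pp_change / baseline_pct


print(round(relative_change(1.3, 36.0), 1))    # lower-price market share: 3.6
print(round(relative_change(-0.3, 17.6), 1))   # any reward-eligible service: -1.7
```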

IMPACT OF THE PROGRAM ON USE OF REWARD-ELIGIBLE SERVICES

Across all services, we found a 0.3-percentage-point reduction in the fraction of people in the intervention population who used a reward-eligible service. Relative to the baseline utilization rate of 17.6 percent, this translates to a 1.7 percent relative reduction in the likelihood of using a service eligible for a reward. When we incorporated both the price and utilization changes, we estimated a 5.2 percent reduction in annual spending on reward-eligible services (appendix exhibit 4).19

In 2017, $108.7 million was spent on reward-eligible services in the intervention population. Thus, the 2.1 percent reduction in the average price per service implies savings of $2.3 million per year. The intervention employers spent $204,000 on reward incentives. Reward-eligible services accounted for 12.4 percent of total medical spending, so the savings represent 0.3 percent of total medical spending for the intervention employers. This translates roughly into savings of $8 per enrollee per year. When we included changes in utilization, we observed a 5.2 percent reduction in medical spending on reward-eligible services (appendix exhibit 7).19
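The arithmetic in this paragraph can be reproduced from the reported inputs. The text does not say whether the roughly $8 per enrollee figure is computed before or after subtracting the $204,000 in reward payments, or which enrollment count is the denominator; this sketch assumes the 2017 intervention enrollment of 269,875 and shows both variants (about $8.46 gross and $7.70 net), both in the neighborhood of the reported figure.

```python
# Inputs reported in the text (2017, intervention population)
spend_eligible = 108.7e6    # spending on reward-eligible services ($)
price_effect = 0.021        # difference-in-differences price reduction
reward_payments = 204_000   # reward checks paid by intervention employers ($)
eligible_share = 0.124      # reward-eligible share of total medical spending
enrollees = 269_875         # intervention enrollees in 2017 (assumed denominator)

gross_savings = spend_eligible * price_effect       # about $2.28 million
share_of_total = price_effect * eligible_share      # about 0.26 percent of total spending
per_enrollee_gross = gross_savings / enrollees      # about $8.46
# Assumption: netting out reward payments before dividing by enrollment
per_enrollee_net = (gross_savings - reward_payments) / enrollees  # about $7.70

print(f"${gross_savings / 1e6:.1f}M, {100 * share_of_total:.1f}%, "
      f"${per_enrollee_gross:.2f} gross, ${per_enrollee_net:.2f} net")
```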

Discussion

Wide variation in health care prices for the same service presents an opportunity to reduce health care spending if more care can be shifted to lower-price providers. One way to motivate patients to use such providers is simply to pay them to do so. This study examined the impact of a new program that used financial rewards to encourage patients to switch to lower-price providers. In the first twelve months of the rewards program we observed a 2.1 percent relative reduction in prices across all services eligible for the program. This effect was most evident for MRIs, for which prices fell by 4.7 percent. Although engagement with the program was limited, scaling the price reduction for each service by the share of patients who shopped implies that patients who did shop saved 78 percent for ultrasounds, 33 percent for mammograms, and 25 percent for MRIs. Savings this large are perhaps not surprising, given the wide variation in prices for these services.
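The shopper-level savings figures can be approximated by dividing each service's price reduction (exhibit 4) by the share of intervention-population services for which a price shopping support tool was used (exhibit 2), analogous to rescaling an intention-to-treat effect by the take-up rate. This minimal sketch assumes all of the price effect accrues to shoppers. With the rounded exhibit values it gives 25 percent for MRIs and 33 percent for mammograms, matching the text, and about 76 percent for ultrasounds versus 78 percent in the text, a gap that presumably reflects rounding of the published inputs.

```python
def implied_savings_among_shoppers(price_reduction_pct, share_shopping_pct):
    """Percent saved by patients who shopped, if the whole population-level price
    reduction came from them and non-shoppers' prices were unaffected."""
    return 100 * price_reduction_pct / share_shopping_pct


# (price reduction %, share of services preceded by tool use %) from exhibits 4 and 2
inputs = {"MRI": (4.7, 18.9), "Mammogram": (1.7, 5.2), "Ultrasound": (2.5, 3.3)}
for name, (dp, shop) in inputs.items():
    print(name, round(implied_savings_among_shoppers(dp, shop)))
# MRI 25, Mammogram 33, Ultrasound 76
```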

The impact on prices varied substantially by service, with the largest reductions for imaging services such as MRIs and ultrasounds (though not for CT scans) and little impact on surgical procedures. There are several potential explanations for this variation across types of services. To receive a reward, patients may need to receive care from a provider different from the one their physician initially recommended.20 Patients may feel more comfortable asking their physician for a new referral for an imaging service than for an invasive procedure. The complexity of switching also differs: For a surgical procedure, switching providers is particularly complex, as it requires identifying a lower-price provider and potentially attending another preoperative visit. Quality considerations may also influence patients’ reactions to rewards. Patients may view imaging services more as commodities and therefore be more willing to switch, while they may be more worried about the quality of lower-price surgeons. Finally, the availability of lower-price providers may play a role. For example, there are many independent MRI providers but relatively fewer independent CT scan providers.

We also found a 0.3-percentage-point reduction in the share of enrollees who received any reward-eligible service. Patients in the intervention population still paid their usual out-of-pocket costs, and on average a patient’s out-of-pocket expense was much higher than the reward amount. Therefore, this reduction in utilization may reflect patients’ using the price shopping tools, becoming more aware of these out-of-pocket liabilities, and deciding not to get care from any provider. Whether this reduction in care is beneficial or harmful depends on whether it falls mainly on low- or high-value care.21,22 This reduction in utilization is quite small and needs to be confirmed in future work.

Employers appear to be more interested in rewards programs than in reference pricing programs. The appeal of rewards programs for employers is clear: They are less likely to cause financial hardship or create controversy among employees. However, rewards programs may be less effective in reducing spending. Reference pricing programs save roughly 15 percent per procedure,13 while we estimated savings of 2.1 percent for this rewards program. This suggests that the “stick” of reference pricing has a greater impact on spending than does the “carrot” approach of rewards.


Acknowledgments

This research was supported by a grant from the Laura and John Arnold Foundation. The views presented here are those of the authors and not necessarily those of the Laura and John Arnold Foundation, its directors, officers, or staff. Christopher Whaley has received funding from the California Public Employees’ Retirement System, National Cancer Institute (NCI), and National Institute for Health Care Management (NIHCM) Foundation. Lan Vu and Leanne Metcalfe are employees of the Health Care Service Corporation. Neeraj Sood has received funding from the National Institute on Aging and NIHCM Foundation. Michael Chernew reported having equity in V-BID Health, Virta Health, and Paladin Healthcare Capital. He sits on the boards of advisers for the Commonwealth Fund, Paladin Healthcare Capital, National Institute for Health Care Management, Archway Health, MITRE Corporation Fellows Program, and Congressional Budget Office. He has received payment for consultancy from Pharmaceutical Research and Manufacturers of America, Merck, McKinsey, Anthem, Milliman, American Hospital Association, Janssen Pharmaceuticals, Navigant, Precision Health Economics, and John Freedman Healthcare. He has received speaking honoraria from Healthcare Research Analysts, AcademyHealth, America’s Health Insurance Plans, DuPage Medical Group, Ontario Hospital Association, Deutsche Bank, Madinah Institute for Leadership and Entrepreneurship, Health Information and Management Systems Society, GI Round Table, Medaxiom, DxConference, NEJM Catalyst, Fidelity Brokerage Service, CBA Speakers Bureau, ACO, and Applied Policy LLC. He has received payment for editorial services from American Medical Association, Elsevier, and Intellisphere. He has research funding from the Laura and John Arnold Foundation, Peterson Center on Healthcare, Robert Wood Johnson Foundation, Commonwealth Fund, and Centers for Medicare and Medicaid Services. 
Ateev Mehrotra has received funding from the Commonwealth Fund, Blue Shield of California, California Health Care Foundation, Donaghue Foundation, Centers for Medicare and Medicaid Services, and NCI.

Contributor Information

Christopher M. Whaley, RAND Corporation in Santa Monica, California.

Lan Vu, Health Care Service Corporation in Dallas, Texas.

Neeraj Sood, Sol Price School of Public Policy and faculty at the Leonard D. Schaeffer Center for Health Policy and Economics, both at the University of Southern California, in Los Angeles.

Michael E. Chernew, Leonard D. Schaeffer Professor of Health Care Policy and director of the Healthcare Markets and Regulation (HMR) Lab in the Department of Health Care Policy at Harvard Medical School, in Boston, Massachusetts.

Leanne Metcalfe, Health Care Service Corporation in Dallas.

Ateev Mehrotra, Department of Health Care Policy, Harvard Medical School.

NOTES

1. Hussey PS, Wertheimer S, Mehrotra A. The association between health care quality and cost: a systematic review. Ann Intern Med. 2013;158(1):27–34.

2. Roberts ET, Mehrotra A, McWilliams JM. High-price and low-price physician practices do not differ significantly on care quality or efficiency. Health Aff (Millwood). 2017;36(5):855–64.

3. Whaley C. The association between provider price and complication rates for outpatient surgical services. J Gen Intern Med. 2018;33(8):1352–8.

4. Whaley C, Schneider Chafen J, Pinkard S, Kellerman G, Bravata D, Kocher B, et al. Association between availability of health service prices and payments for these services. JAMA. 2014;312(16):1670–6.

5. Desai S, Hatfield L, Hicks AL, Chernew ME, Mehrotra A. Association between availability of a price transparency tool and outpatient spending. JAMA. 2016;315(17):1874–81.

6. Desai S, Hatfield LA, Hicks AL, Sinaiko AD, Chernew ME, Cowling D, et al. Offering a price transparency tool did not reduce overall spending among California public employees and retirees. Health Aff (Millwood). 2017;36(8):1401–7.

7. Haviland AM, Eisenberg MD, Mehrotra A, Huckfeldt PJ, Sood N. Do “consumer-directed” health plans bend the cost curve over time? J Health Econ. 2016;46:33–51.

8. Eisenberg MD, Haviland AM, Mehrotra A, Huckfeldt PJ, Sood N. The long term effects of “consumer-directed” health plans on preventive care use. J Health Econ. 2017;55:61–75.

9. Brot-Goldberg ZC, Chandra A, Handel BR, Kolstad JT. What does a deductible do? The impact of cost-sharing on health care prices, quantities, and spending dynamics. Q J Econ. 2017;132(3):1261–318.

10. Robinson JC, Brown TT. Increases in consumer cost sharing redirect patient volumes and reduce hospital prices for orthopedic surgery. Health Aff (Millwood). 2013;32(8):1392–7.

11. Robinson JC, Brown T, Whaley C. Reference-based benefit design changes consumers’ choices and employers’ payments for ambulatory surgery. Health Aff (Millwood). 2015;34(3):415–22.

12. Robinson JC, Brown TT, Whaley C, Finlayson E. Association of reference payment for colonoscopy with consumer choices, insurer spending, and procedural complications. JAMA Intern Med. 2015;175(11):1783–9.

13. Robinson JC, Brown TT, Whaley C. Reference pricing changes the “choice architecture” of health care for consumers. Health Aff (Millwood). 2017;36(3):524–30.

14. Whaley CM, Guo C, Brown TT. The moral hazard effects of consumer responses to targeted cost-sharing. J Health Econ. 2017;56:201–21.

15. Sinaiko AD, Shehnaz A, Mehrotra A. Why aren’t more employers implementing reference-based pricing benefit design? Am J Manag Care. Forthcoming 2019.

16. Brown TT, Guo C, Whaley C. Reference-based benefits for colonoscopy and arthroscopy: large differences in patient payments across procedures but similar behavioral responses. Med Care Res Rev. 2018 Aug 13 [Epub ahead of print].

17. Rosenberg T. Shopping for health care: a fledgling craft. New York Times Opinionator [blog on the Internet]. 2016 Apr 12 [cited 2019 Jan 9]. Available from: https://opinionator.blogs.nytimes.com/2016/04/12/shopping-for-healthcare-a-fledgling-craft/

18. Appleby J. Need a medical procedure? Pick the right provider and get cash back. Kaiser Health News [serial on the Internet]. 2018 Mar 5 [cited 2019 Jan 16]. Available from: https://khn.org/news/need-a-medical-procedure-pick-the-right-provider-and-get-cash-back/

19. To access the appendix, click on the Details tab of the article online.

20. Chernew M, Cooper Z, Larsen-Hallock E, Morton FS. Are health care services shoppable? Evidence from the consumption of lower-limb MRI scans [Internet]. Cambridge (MA): National Bureau of Economic Research; [revised 2019 Jan; cited 2019 Jan 16]. (NBER Working Paper No. 24869). Available for download (fee required) from: https://www.nber.org/papers/w24869

21. Colla CH, Morden NE, Sequist TD, Schpero WL, Rosenthal MB. Choosing wisely: prevalence and correlates of low-value health care services in the United States. J Gen Intern Med. 2015;30(2):221–8.

22. Reid RO, Rabideau B, Sood N. Impact of consumer-directed health plans on low-value healthcare. Am J Manag Care. 2017;23(12):741–8.
