Abstract

Animal cognition research often involves small and idiosyncratic samples. This can constrain the generalizability and replicability of a study’s results and prevent meaningful comparisons between samples. However, there is little consensus about what makes a strong replication or comparison in animal research. We apply a resampling definition of replication to answer these questions in Part 1 of this article, and, in Part 2, we focus on the problem of representativeness in animal research. Through a case study and a simulation study, we highlight how and when representativeness may be an issue in animal behavior and cognition research and show how representativeness problems can be viewed through the lenses of (i) replicability, (ii) generalizability and external validity, (iii) pseudoreplication, and (iv) theory testing. Next, we discuss when and how researchers can improve their ability to learn from small-sample research through (i) increasing heterogeneity in experimental design, (ii) increasing homogeneity in experimental design, and (iii) statistically modeling variation. Finally, we describe how the strongest solutions will vary depending on the goals and resources of individual research programs and discuss some barriers to implementing them.

Keywords: Animal cognition, Comparison, Experimental design, Generalizability, Replication, Representativeness, Sampling

Animal cognition research often involves small samples. In order to make general claims about a group’s or species’ behavior, researchers assume that their samples are representative enough of the group or species of interest. However, this assumption is rarely tested, and the literature is populated by claims that are produced by single laboratories, testing the same animals, at single time points and in closely related experimental designs. This could lead to overgeneralized findings that are difficult to replicate (Baker, 2016; Henrich et al., 2010; Würbel, 2000; Yarkoni, 2019), but equally, it could be an effective strategy to maximize scientific progress in resource-limited fields (Craig & Abramson, 2018; Davies & Gray, 2015; Mook, 1983; Schank & Koehnle, 2009; Smith & Little, 2018). To explore this issue, this article shows how concerns about replicability, representativeness, comparison, theory testing, and pseudoreplication are all related through the lens of sampling. To design the best experiments, researchers should consider all five in relation to their sampling plans. Part 1 of this article focuses on sampling and replication, and answers the following questions:

What is a replication in animal behavior and cognition research?

What is the relationship between replication and theory testing?

What makes a species-fair comparison?

Part 2 of the article then focuses on representativeness and asks how concerned researchers should be with the problem of non-representative sampling in animal research. We explore this issue through a re‐analysis of existing data on animal ‘self-control’ and a simulation study. The simulation study shows that, for some between-group or between-species comparisons, poorly representative samples could lead to false positive rates closer to 50% than 5%, the rate conventionally cited when authors use p < .05 to define statistical significance. Finally, we end the article with a discussion of how researchers might assess, mitigate, and account for the problem of representativeness in comparative cognition.

Part 1 – Claims, Samples and Replications

What Are Replications in Animal Research?

A study is labeled a replication because it is the same in some regards to a previous experiment. For example, a replication study may repeat the same experimental protocol as a previous study, except use a new sample of animals. However, it is not possible to perform exactly the same study twice, and because of this, any replication study can also be reframed in terms of a test of generalization. Even if the same experimenters perform the same experiment on the same group of animals, the replication experiment is still a test of generalization across time.

However, while truly identical replications are impossible, this does not mean the concept of replication is obsolete, or redundant with generalizability. When performing replications, scientists are not usually interested in what philosophers call absolute identity, but in what they call relative identity (Geach, 1973; Lewis, 1993; Noonan & Curtis, 2004; Quine, 1950). They are not interested in whether a feature of a replication is exactly the same as in an original study; rather, they are interested in whether that feature can be considered the same, or as coming from the same population, relative to a given theory. Ideally, a theory or claim would specify what can and cannot be considered as coming from the same population, i.e., identify its boundary conditions (e.g., Simons et al., 2017), and thus what a valid test of it would sample from. For example, consider the Rescorla-Wagner model, which specifies that gains in associative strength are proportional to the prediction error (Rescorla & Wagner, 1972). From the perspective of the Rescorla-Wagner model, it does not matter whether the hypothesis is tested with a sample of rats, mice, pigeons, or monkeys. Provided that a valid conditioning procedure is followed, all of these species are within the boundary conditions of the Rescorla-Wagner model, and an original study making a general claim about the Rescorla-Wagner model by testing rats could therefore be replicated in pigeons or in monkeys; the Rescorla-Wagner model makes no distinction. Indeed, the most robust tests of the Rescorla-Wagner model would sample from across all of the species that the model applies to, rather than just a single species.

Recently, resampling definitions of replication have been developed (Asendorpf et al., 2013; Machery, 2020). These may offer the most effective definition of replication for animal cognition research. When researchers test a claim, they sample from populations of experimental units (most often animals), settings, treatments, and measurements (Gómez et al., 2010). For example, when testing the claim that chimpanzees will explore a mark on their forehead when exposed to a mirror, researchers sample from the population of chimpanzees available for research, from various settings (laboratories, zoos, wild), with a variety of possible treatments (different size mirrors, different types of marks, etc.), and many different possible measurements (e.g., an ethogram of self-directed actions). The resampling definition of replication states that a replication study is a study that resamples from the same populations of experimental units, treatments, measurements, and settings that an original study could have sampled from, relative to the claim being tested (Machery, 2020; Nosek & Errington, 2020). This is outlined in Table 1, which is adapted from Machery (2020).

Table 1. A Resampling Account of Replication (Adapted from Machery, 2020).

| An experiment samples from: | A replication resamples from: |
|---|---|
| A population of experimental units, e.g., a population of a species in captivity | The same population of experimental units |
| A population of treatments, e.g., experimental conditions | The same population of treatments |
| A population of measurements, e.g., definitions of success on a trial | The same population of measurements |
| A population of settings, e.g., sites and times | The same population of settings |

According to the resampling approach, a complete replication resamples from the same populations of experimental units, treatments, measurements, and settings as an original study, relative to the theory or claim in question. However, an experiment could also replicate some features of an original study but not others (Machery, 2020). This would create an explicit test of generalizability, probing whether the claim or theory can be applied successfully outside of some of its pre-specified boundary conditions. For example, a researcher would be able to test whether theories built on work with captive monkeys generalize to their wild counterparts by resampling from the same populations of treatments and measurements, but sampling from a different population of settings (captive versus wild).
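The distinction between a complete replication and a test of generalizability can be sketched in a few lines of Python. This is only an illustration: the `Study` class, the `compare_studies` function, and the population labels are hypothetical names introduced here, and the labels must be assigned by the researcher at the coarseness of the claim being tested (two studies of different macaque species would both be labelled "monkeys" relative to a claim about monkeys in general).

```python
from dataclasses import dataclass

# The four kinds of population named in Table 1.
DIMENSIONS = ("units", "treatments", "measurements", "settings")


@dataclass(frozen=True)
class Study:
    """Population labels for one study, assigned relative to a claim (hypothetical)."""
    units: str
    treatments: str
    measurements: str
    settings: str


def compare_studies(original: Study, followup: Study) -> dict:
    """Report which populations the follow-up resampled and which it changed."""
    changed = [d for d in DIMENSIONS
               if getattr(original, d) != getattr(followup, d)]
    kind = "complete replication" if not changed else "test of generalizability"
    return {"kind": kind, "changed": changed}


# Illustrative labels only: the captive-versus-wild example from the text.
captive = Study("monkeys", "novel object tasks", "interaction time", "captive")
wild = Study("monkeys", "novel object tasks", "interaction time", "wild")

print(compare_studies(captive, wild))
# {'kind': 'test of generalizability', 'changed': ['settings']}
```

The sketch makes one design point explicit: whether two studies count as "the same" is decided when the labels are assigned, which is exactly where the claim-dependence of replication enters.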

To see how the resampling definition can be applied in animal cognition research, we now discuss a partial or “conceptual” replication of a study investigating aging in monkeys (Almeling et al., 2016; Bliss-Moreau & Baxter, 2019). This is a useful example as, like most experiments in animal cognition, Bliss-Moreau and Baxter’s study is not a close replication of the previous study; it was neither conducted in identical laboratory settings nor even in the same model species.

Case Study: Do Nonhuman Primates Lose Interest in the Non-Social World with Age?

In 2016, Almeling and colleagues examined the relationship between the age of monkeys and their interest in the social and non-social environment. They tested 116 Barbary macaques housed in a large (20 ha) outdoor park in France. Across three non-social novel object interest tasks, Almeling et al. reported that older Barbary macaques interacted less with objects compared to younger Barbary macaques (N = 88 in these tasks). From this, they made the general claim that nonhuman primates lose interest in the non-social world with age. Bliss-Moreau and Baxter (2019) replicated one of the object conditions of Almeling et al. in a larger sample of 243 rhesus macaques. However, these rhesus macaques were housed in indoor cages either alone or with a social pair mate, in contrast to the free‐roaming Barbary macaques. Bliss-Moreau and Baxter labeled their study as a “conceptual” replication because they tested a different species in a markedly different setting and used a different, albeit conceptually similar, food-baited apparatus. However, relative to the claim that monkeys, in general, display a loss of interest to non-social stimuli with age, the populations sampled by Bliss-Moreau and Baxter do seem to come from the same overall populations that Almeling et al.’s claim specifies, i.e., both are tests of the claim that interest in the non-social environment declines during aging in monkeys.

Bliss-Moreau and Baxter reported no statistically significant effect of age on exploration across the first two minutes, which they interpreted as contrary to the results of Almeling et al. (2016) and challenging “the notion that interest in the ‘non-social world’ declines with age in macaque monkeys, generally” (Bliss-Moreau & Baxter, 2019, p. 6). This claim seems reasonable: both Almeling et al. and Bliss-Moreau and Baxter sampled from within the experimental units, setting, treatment, and measurement populations implicitly specified by the claim that interest in the non-social world declines with age in macaque monkeys, and so our confidence in the claim overall should decrease, following the negative replication results. But can we really say that Bliss-Moreau and Baxter’s experiment replicated Almeling et al.’s? This question is difficult, because replications exist on many levels (across experimental units, settings, treatments, and measurements) and are theory or claim dependent. Moreover, most experiments in animal behaviour and cognition do not make a single isolated claim. For example, the following theoretical claims could reasonably be inferred from the Almeling et al. paper:

1) Socially living Barbary macaques lose interest in the non-social environment with age
2) Barbary macaques lose interest in the non-social environment with age
3) Socially living monkeys lose interest in the non-social environment with age
4) Monkeys lose interest in the non-social environment with age

When asking how Bliss-Moreau and Baxter’s (2019) study is a replication of Almeling et al.’s (2016), we should consider not just how the studies relate to each other, but how they relate to each claim we are assessing. Ultimately, the goal of a replication study is usually to test a scientific claim, rather than just to match a previous study’s methods (Nosek & Errington, 2020). Therefore, when interpreting the results of replication studies, researchers should focus on how relevant and diagnostic the data from each study are to the claim(s) in question, rather than just how similar they are. The main strength of the resampling definition of replication (that a replication study resamples from the same populations that an original study could have sampled from, relative to the claim being tested) is that it forces researchers analyzing replication studies to consider exactly what is being tested and how effective the test is, rather than focusing unnecessarily on absolute similarity.

One barrier to identifying and testing claims is that many theories and claims in animal cognition are verbal and vague. This makes it difficult to derive risky predictions of the theories, because their vagueness affords them the flexibility to accommodate nearly any result (Roberts & Pashler, 2000). This could be remedied by formally modelling theories and hypotheses (Farrell & Lewandowsky, 2010; Guest & Martin, 2020), and some suggest these models are key to making progress in understanding animal minds (Allen, 2014), or in understanding what comparative cognition can achieve as a science (Farrar & Ostojić, 2019). These models can be informed by known mechanisms driving animal behavior, such as associative learning (Heyes & Dickinson, 1990; Lind, 2018; Lind et al., 2019), but these need not be preferred to, or even contradict, non-associative models (Bausman & Halina, 2018; Mercado, 2016; Smith et al., 2016). Just like any other scientific tool, formal models need critique from a variety of perspectives; but the benefit of these models is that they facilitate such critique, in comparison with verbal theories that can avoid it.

Species-Fair Comparisons

The resampling account not only offers a theoretical framework for replications, generalizations, and theory testing in animal cognition research, but it also offers a framework for analyzing between-species comparisons. Between-species comparisons are just tests of the generalizability of an effect across species, and like any other test of generalization, they can be reframed in terms of replication, too. Comparing an effect between a group of chimpanzees and a group of bonobos is the same as testing if the effect generalizes from chimpanzees to bonobos, or replicating a study from chimpanzees in bonobos, and both of these are entailed by a coarser study of whether great apes (chimpanzees, bonobos and orangutans) show the effect in question. Whether the study in question is best described as a comparison, replication or a test of a claim is somewhat moot — it is all three at the same time, relative to claims of different coarseness.

However, there are clearly times when researchers may wish to focus on comparative claims, and this requires sampling from different populations of experimental units, e.g., different breeds, groups, or species of animals (with the caveat that these could be seen as coming from the same population relative to broader claims). For an ideal comparison between two groups of animals, researchers would sample from different populations of experimental units, and the same populations of treatments, measurements, and settings. Again, “same” here does not mean identical, but the same relative to the claim and experimental unit at hand. For example, consider a researcher who wants to compare the relative response of dolphins (e.g., Hill et al., 2016) to familiar and unfamiliar humans with that of elephants (e.g., Polla et al., 2018). Clearly, the researcher must sample from different populations of experimental units [dolphins, elephants], and a different population of settings [aquatic, non-aquatic]. However, even though the settings are different in absolute terms, they are the same relative to the experimental unit; the dolphins are tested in water, the elephants on land, and this makes the comparison more valid (Clark et al., 2019; Leavens et al., 2019; Tomasello & Call, 2008), or a ‘species-fair’ comparison (Boesch, 2007; Brosnan et al., 2013; Eaton et al., 2018; Tomasello & Call, 2008).

Part 2 – The Problem of Representativeness in Animal Research

A sampling perspective sheds light on why many results in animal research may struggle to replicate. Animal experiments often sample a small number of animals at a single site, using a single apparatus and measurement technique. However, from these small samples come general claims about animal behavior, creating a mismatch between the statistical model and the theoretical claim (Yarkoni, 2019). The statistical model will usually allow generalization to the population that the experimental units were randomly sampled from, for example the population of animals at a given site (although even then they may not be randomly sampled; see Schubiger et al., 2019), but any inferences to the wider population of interest will be overconfident, unless the population of interest can be justified as the individual animal (Smith & Little, 2018). This is an unavoidable consequence of working with difficult-to-reach populations (Lange, 2019), but it should be accounted for when building theories. This is important as many aspects of animal behavior vary across samples, for example due to experimenter effects (Beran, 2012; Bohlen et al., 2014; Cibulski et al., 2014; Pfungst, 2018; Sorge et al., 2014), genetic variation (Fawcett et al., 2014; Johnson et al., 2015; MacLean et al., 2019), housing conditions (Farmer et al., 2019; Hemmer et al., 2019; Würbel, 2001), diets (Davidson et al., 2018; Höttges et al., 2019), and learning/developmental histories (Skinner, 1976).

Situating the Problem of Representativeness

The problem of representativeness has been discussed from several different angles across scientific literatures that are, unfortunately, poorly connected and use different terminologies. However, these discussions share a similar underlying concern: that researchers’ claims are poorly matched by their sampling strategies and statistical models.

Replicability

First, a lack of representative sampling causes low replicability or results reproducibility (not to be confused with computational reproducibility, e.g., see Culina, van den Berg et al., 2020; Minocher et al., 2020): because of small and non‐representative samples of experimental units, settings, treatments, and measurements, sampling variation will mean that laboratories will struggle to replicate or reproduce the results of previous experiments. This argument has featured heavily in rodent phenotyping studies (Crabbe et al., 1999; Kafkafi et al., 2017, 2018; Lewejohann et al., 2006; Richter et al., 2009, 2010, 2011; Wahlsten et al., 2003; Würbel, 2000, 2002).

Generalizability and External Validity

Second, a lack of representative sampling causes problems of generalizability or external validity: researchers’ claims will not often generalize to novel but related settings (Yarkoni, 2019).

Pseudoreplication

Third, the lack of representative sampling in animal research is usually due to non-random sampling from the population of interest. This leads to pseudoreplication (Hurlbert, 1984; Lazic, 2010) if this non‐random sampling is not accounted for in the statistical models, and the consequence is that uncertainty intervals will be overly narrow, and the results will struggle to replicate in new samples – or generalize to them.

Theory Testing

Fourth, the lack of representative sampling produces weak tests of a theory or claim (Baribault et al., 2018): a test that probes only a small sample space of a theory’s predictions provides less opportunity for weaknesses in the theory or claim to be found, compared to a test that covers most of the relevant sample space.

The Difficulty of Identifying Differences Between Groups and Species

That animal behavior differs across space and time makes it difficult to know whether observed species or group differences in behavior reflect real species or group differences, or whether they are due to the host of other factors that vary between sites. In reality, the observed differences between two groups will be the sum of the real group differences in behavior that are of interest and all other factors that influence animal behavior and vary between sites. Quantitative between-species and between-group comparisons are therefore nearly always confounded by site-specific differences in factors that are not the focus of interest. Lazic (2016) commented on such a scenario in an introductory textbook for laboratory biology: “To make valid inferences, one would need to assume that the effects of [site] are zero. Moreover, as this assumption cannot be checked, the researcher can only hope that [site] effects are absent. Such a design should be avoided” (Lazic, 2016, p. 68).

One may object to this and argue that, while there are many variables that differ between sites but go unmeasured, the net sum of these effects should be close to zero across sites, i.e., they will cancel each other out. However, this would only be the case if there were many variables with small effects that were randomly assigned to each site, and this is not what happens. On the contrary, laboratories or sites differ markedly from each other on a range of variables with large effects (e.g., housing conditions, learning experiences). It is often recognized that animal laboratories are poorly positioned to generate representative data of the species in the wild (Boesch, 2020; Calisi & Bentley, 2009), but what if they are also poorly positioned to generate representative data of the species in laboratories? Taken to the extreme, there may be a laboratory that is testing a sample that is more representative of a species other than its own; for example, a sample of lemurs that have parrot-like self-control, or a sample of hand-reared wolves that behave more like dolphins when presented with a novel object. To highlight the difficulties of making between-group or between-species inferences across sites, we now consider a case study of between-species comparisons made using the cylinder task, and then present a simulation study of how sampling affects comparisons in animal research.

Case Study: Between Species Comparisons and the Cylinder Task

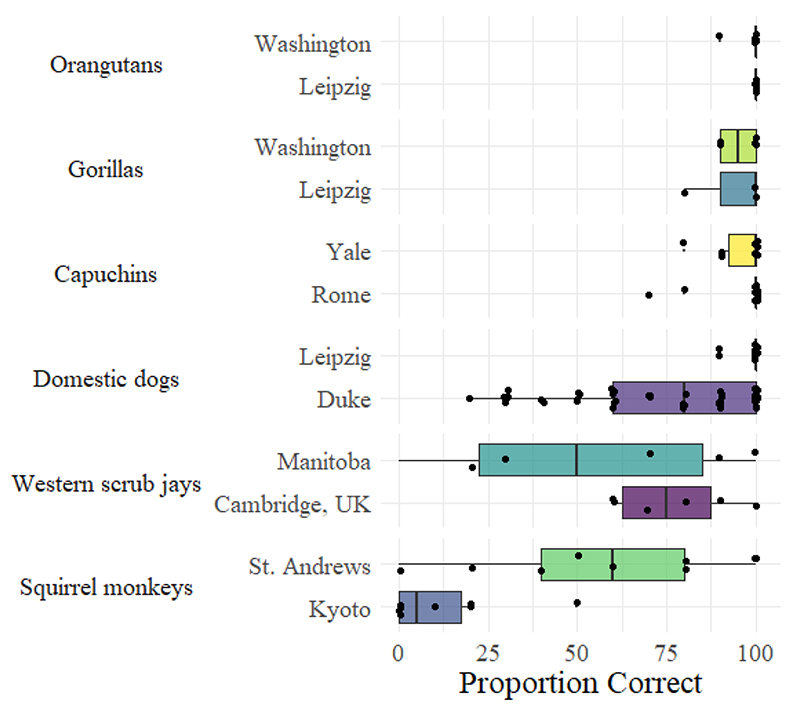

For this case study, we used data from MacLean et al. (2014) to probe the stability of a measurement of behavioral inhibition when taking new samples of experimental units at new sites. MacLean et al.’s (2014) large-scale study tested the performance of 36 species across 43 sites on two tasks aimed at measuring self-control (which instead measured one form of behavioral inhibition; Beran, 2015): the A-not-B task and the cylinder task. The cylinder task was given to 32 species across 38 sites. In this task, animals are familiarized with retrieving a piece of food from the center of an opaque cylinder. After retrieving the food from the opaque cylinder in 4 out of 5 consecutive training trials, the animals proceed to testing. In testing, the animal is presented with a transparent cylinder with food in the center. In order to successfully retrieve the food, the animal needs to inhibit an initial drive to reach directly for the food, which would cause it to collide with the transparent cylinder, and instead detour to the cylinder ends to access the food. Each animal was given 10 trials, and an overall score between 0% (no animals succeeded on any trial) and 100% (all animals succeeded on every trial) was computed for each species. Five species (orangutans, gorillas, capuchin monkeys, squirrel monkeys and domestic dogs) were tested across two sites. Figure 1 displays the between-site variation for these species, and also includes data from an additional species, the Western scrub-jay, that was tested both in the original experiment and a couple of years later at a new site (Stow et al., 2018).

Figure 1. Species Differences Between Sites in the Cylinder Task.

Note. All data from MacLean et al. (2014), except the Manitoba scrub-jay data, which are from Stow et al. (2018).

For the species not performing near ceiling (scrub-jays, squirrel monkeys and domestic dogs), the variability is striking. For squirrel monkeys, the median score in Kyoto was 5%, compared with 60% in St Andrews. No individual in Kyoto performed above the median in St Andrews, and this demonstrates how some between-site differences that cannot be attributed to species identity can have large influences on behavior. To highlight the issues this can pose for inference, consider what would happen if the animals from Kyoto were not squirrel monkeys, but Tonkean macaques. Then, the difference in performance compared to the St Andrews squirrel monkeys would likely be interpreted as a species difference – “Tonkean macaques are worse at behavioral inhibition than squirrel monkeys,” could be the title of a paper reporting these results. In fact, the substantial difference in behavior between species tested at different sites need not imply meaningful species differences at all. If we took new samples for all species that MacLean et al. tested, it is possible a completely different ranking of animals would be produced. MacLean et al.’s (2014) overall model gains credibility, however, because of the use of phylogenetic models (and also including data from the A-not-B task, another test of behavioral inhibition). Incorporating phylogeny and estimating phylogenetic signal when making comparisons, provided there are enough data, little bias, and sufficient model checks, can lead to large increases in statistical power (Freckleton, 2009; see MacLean et al., 2012 also for an overview of other benefits of comparative phylogenetic models). However, any individual site comparison of non-ceiling cylinder task performance between species, either within the MacLean et al. study, or from other published research, is likely too uncertain to produce meaningful estimates at the species level, and this can lead researchers astray when making inferences from individual results. Table 2 presents some statements from studies that followed MacLean et al.’s procedures using a single species at a single site, along with the species’ cylinder task “score.”

Table 2. Results and Claims from Four Species Tested on the Cylinder Task.

| Study | Group | Score | Claim |
|---|---|---|---|
| Ferreira et al., 2020 | High ranging chickens | 24% | “High rangers had the worst performance of all species tested thus far” (p. 3) |
| Ferreira et al., 2020 | Low ranging chickens | 40% | |
| Isaksson et al., 2018 | Great tits | 80% | “The average performance of our great tits was 80%, higher than most animals that have been tested and almost in level with the performance in corvids and apes.” (p. 1, abstract) |
| Langbein, 2018 | Goats | 63% | “The results indicated that goats showed motor self-regulation at a level comparable to or better than that of many of the bird and mammal species tested to date.” (p. 1, abstract) |
| Lucon-Xiccato et al., 2017 | Guppies | 58% | “A performance fully comparable to that observed in most birds and mammals” (p. 1, abstract) |

These numerical comparisons are factually correct, but what do they mean? The worst performing chickens actually scored higher than the Kyoto squirrel monkeys, and if we sampled another population of great tits it is possible that their performance would regress close to the mean value of all species. Assigning a species a single score following a single test on a small sample of animals from a single site with a single apparatus, and then comparing this number between species, offers no means of error control and hides the uncertainty in the estimates. Several of the inferences are reasonable; for example, we may genuinely believe that chickens will perform poorly on behavioral inhibition tasks, but this is primarily constrained by our (arbitrary) prior beliefs. For potentially more surprising results, such as the high score of great tits, our beliefs are not so constraining, yet neither are the data.

Moreover, and counter-intuitively, the best estimate of great tit performance on the cylinder task is not the 80% reported by Isaksson et al. (2018), even though these were the only known data collected with great tits on this task. Rather, the best estimate would utilize the information we have about similar animals (other birds of a similar size/socio-ecology/phylogeny), which would shrink our estimate of great tit performance closer to the mean value for, as an example, all Passeriformes tested to date. Interestingly, during the revision process of this article, two further datasets of great tit performance on the cylinder task became available. In contrast to the 80% reported by Isaksson et al., and in line with our prediction of regression to the mean, Troisi et al. (2020) recorded a score of 38%, and a sample of 35 tested by Coomes et al. (2020) scored 41%. Moreover, in a pilot to one of these studies using a larger tube, a sample of great tits scored 0%, suggesting that the size of the tube can heavily modulate individuals’ performance (G. L. Davidson, personal communication, Jan., 2021).

How, then, can we make better inferences from single site samples of data? We could attempt to get a better estimate at this single site; for example, by testing great tits on a wide range of tube apparatuses. Alternatively, we can also use the data from other species to inform our great tit estimate. Because the behavior of different animals will often be correlated, for example as a function of phylogenetic distance, we should allow data from similar species to guide each other’s estimates. Ideally, a phylogenetic model would be constructed that incorporates information on the phylogenetic distance between species and a model of the trait’s evolution (McElreath, 2016). Other relevant predictor variables, such as body size, tube size, or body size/tube size ratio, could also be added into these models, or they could be investigated in separate meta-regression models. For many animal cognition questions, such models will be difficult to generate, but the general principle holds: when a surprisingly high or surprisingly low estimate of a species’ behavior is produced, and most data from similar species are less extreme, it is likely that the new estimate is over- or underestimated. Returning to the cylinder task, it is clear that non-ceiling results are not very informative about animal cognition if we do not know whether the results from any given sample are stable across space or time, even before considering issues of construct validity (Beran, 2015; Kabadayi et al., 2017, 2018).
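The general principle of shrinking an extreme single-site score towards the mean of similar species can be illustrated with a precision-weighted average, which is the posterior mean under a simple normal-normal model. This is a deliberately minimal sketch and not the phylogenetic model described above: the function name `shrunken_estimate` is introduced here for illustration, and apart from the 80% great tit score, all numbers are hypothetical. The standard error is assumed to reflect between-site variation as well as within-sample noise.

```python
def shrunken_estimate(observed, observed_se, group_mean, between_sd):
    """Precision-weighted average of a single-site score and a group mean.

    The noisier the single-site score (large observed_se) relative to the
    spread of true scores among similar species (between_sd), the more the
    estimate is pulled towards group_mean.
    """
    w_obs = 1.0 / observed_se ** 2      # precision of the single-site score
    w_group = 1.0 / between_sd ** 2     # precision of the group-level prior
    return (w_obs * observed + w_group * group_mean) / (w_obs + w_group)


# 80% is the great tit score reported in the text; the standard error,
# group mean, and between-species SD are hypothetical illustration values.
estimate = shrunken_estimate(observed=80.0, observed_se=15.0,
                             group_mean=50.0, between_sd=15.0)
print(f"Shrunken estimate: {estimate:.1f}%")  # Shrunken estimate: 65.0%
```

With equal precisions the estimate lands halfway between the observed score and the group mean; a more uncertain single-site score would be pulled further towards the group mean, and a very precise one would barely move.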

Simulation Study

To illustrate how between-site variation (a proxy for the sum of setting, treatment and measurement variation) can lead to elevated false positive rates and results that struggle to replicate, we now present a short simulation study of a replication and a comparison in comparative cognition. The simulation and visualizations were performed in R 4.0.2 (R Core Team, 2020), using the packages tidyverse (Wickham et al., 2019), extrafont 0.17 (Chang, 2014) and scales 1.1.1 (Wickham & Seidel, 2020). The code is available at: https://github.com/BGFarrar/Replications-Comparisons-and-Sampling. This section can be skipped if the reader is already comfortable with the topic. We simulated a hypothetical within-species replication between two groups of chimpanzees, and a hypothetical between-species replication/comparison between a group of chimpanzees and a group of bonobos. We simulated 100 hypothetical sites of chimpanzees, and 100 hypothetical sites of bonobos, with 100 animals at each site. The behavior of animals within a site was correlated, such that animals sampled from the same site, on average, had more similar behaviors than animals sampled from different sites. At each site, we ‘measured’ each animal’s behavior to produce a neophobia and a self-control score for each. For both the replication simulation and the comparison simulation, four parameters were used to simulate each animal’s behavior: a population grand mean, β0; a by-location random intercept, L0; a by-subject random intercept, S0; and a by-individual residual error term, e_ls. Subject was nested within location, such that all subjects at the same location had the same location effect. Data were simulated using the following formula, where Y_ls is the score of subject s at location l:

$$Y_{ls} = \beta_0 + L_{0l} + S_{0s} + e_{ls}$$

For the replication simulation, 10,000 chimpanzees were simulated with the following settings:

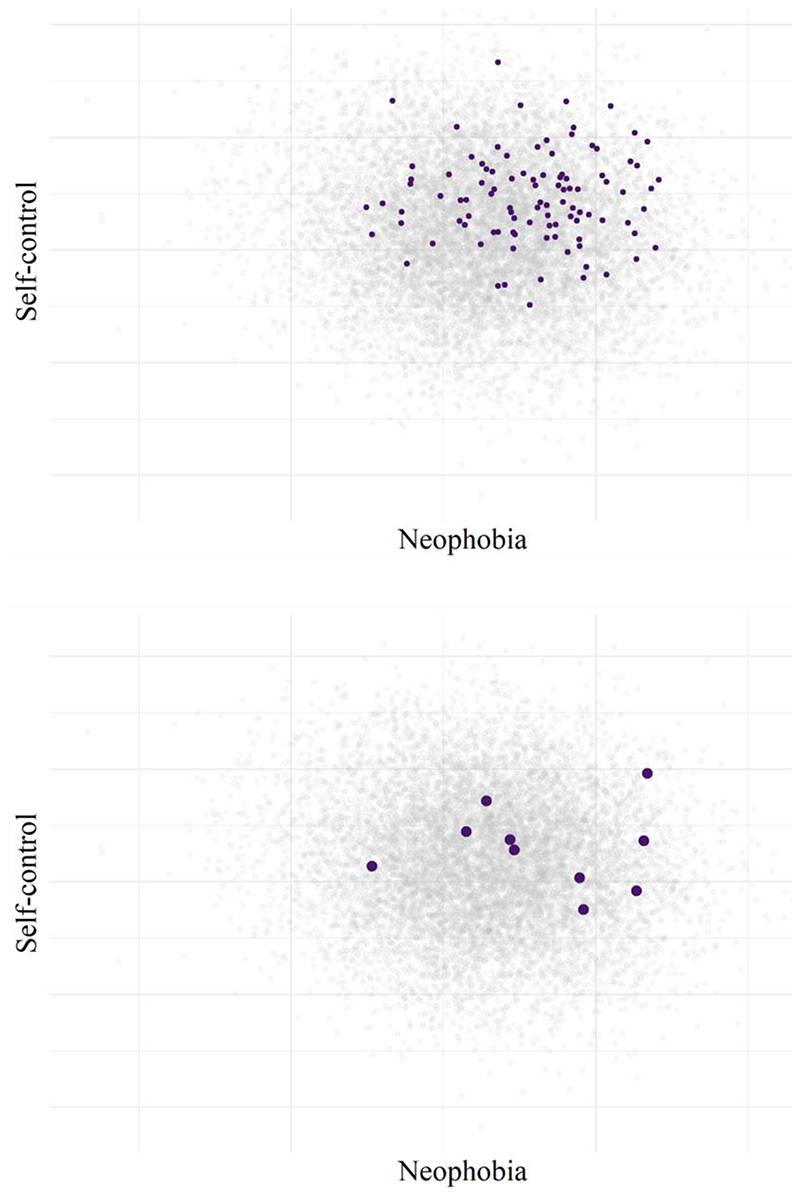

The panels of Figure 2 display the behavior of all 10,000 chimpanzees (100 animals × 100 sites) in grey. Next, we randomly selected one site to be our first sample. The upper panel of Figure 2 highlights all 100 chimpanzees from this site. However, in reality we would not usually have access to, or test, 100 animals at a site; instead, the typical sample size in primate cognition research is around 7 (Many Primates, Altschul, Beran, Bohn, Caspar et al., 2019). Therefore, we randomly selected 10 animals from this site, which are highlighted in the lower panel of Figure 2.
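The sampling steps described above can be sketched in a few lines. This is a minimal Python illustration rather than the authors' R code, and every numeric setting below (grand mean and the three standard deviations) is a hypothetical placeholder, since the article's own parameter values are not reproduced here. Only the structure (100 sites, 100 animals per site, a sample of 10 from one site) follows the text.

```python
import random
import statistics

# Hypothetical parameter values, chosen only for illustration.
GRAND_MEAN = 50.0    # population grand mean (beta_0)
SD_SITE = 10.0       # SD of the by-location random intercept
SD_SUBJECT = 8.0     # SD of the by-subject random intercept
SD_RESIDUAL = 6.0    # SD of the residual error term
N_SITES = 100
N_PER_SITE = 100


def simulate_population(rng):
    """Return a list of sites; each site is a list of simulated scores."""
    population = []
    for _ in range(N_SITES):
        # One location effect, shared by every animal at the site.
        site_effect = rng.gauss(0.0, SD_SITE)
        scores = [
            GRAND_MEAN
            + site_effect
            + rng.gauss(0.0, SD_SUBJECT)
            + rng.gauss(0.0, SD_RESIDUAL)
            for _ in range(N_PER_SITE)
        ]
        population.append(scores)
    return population


rng = random.Random(1)
population = simulate_population(rng)

site = rng.choice(population)   # one site selected at random
sample = rng.sample(site, 10)   # 10 of the 100 animals at that site

all_scores = [score for site_scores in population for score in site_scores]
print("population mean:", round(statistics.mean(all_scores), 2))
print("site mean:      ", round(statistics.mean(site), 2))
print("sample mean:    ", round(statistics.mean(sample), 2))
```

Comparing the three printed means shows how far a single-site sample can sit from the population mean even when it tracks its own site closely.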

Figure 2. The Behavior-Space of a Simulated Population of 10,000 Chimpanzees (grey dots in both panels).

Note. In purple, the Upper Panel shows 100 hypothetical chimpanzees sampled from a single site, and the Lower Panel shows just 10 of these chimpanzees.

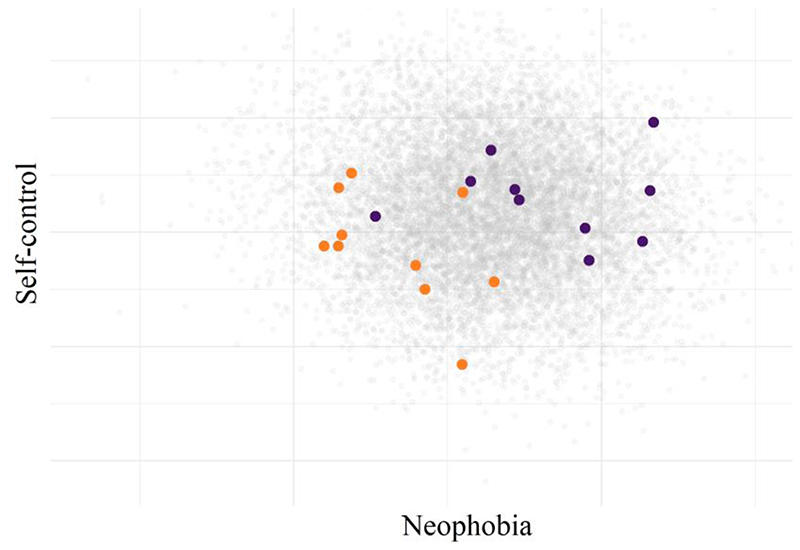

To create a replication study, we repeated this process, taking another random sample of 10 chimpanzees from a different site. This sample is plotted in Figure 3 alongside our first sample, creating a within-species (or experimental unit) replication, which could also be framed as a between-site comparison, or a test of generalizability across sites.

Figure 3. A Hypothetical Within-Species Replication, or Between-Site Comparison.

Note. Purple points represent the same chimpanzees sampled from the first site (Figure 2), and orange points represent a second sample of chimpanzees.

The second sample of chimpanzees, in orange, had smaller neophobia and larger self-control scores than the first sample, in purple. Both differences were statistically significant in two-sided Welch’s t-tests (neophobia: p < .001; self-control: p = .04). This reflects the real differences between the two sites, which were simulated at 28% for neophobia and 14% for self-control. Our samples of just 10 animals captured these differences relatively accurately, estimating the group differences as 31% for neophobia and 14% for self-control. While our two samples provided good estimates of the true between-site differences, they were poorly representative of the overall population of chimpanzees. Site 1 (purple) overestimated neophobia by 14% and self-control by 3%, whereas Site 2 underestimated neophobia by 17% and self-control by 11%.
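For readers who want to see what a two-sided Welch's t-test computes, the following is a minimal standard-library Python sketch (not the authors' R code). The two score lists are hypothetical values typed in for illustration. The p-value is obtained by numerically integrating the Student t density with Simpson's rule, which is an implementation choice made here to avoid external dependencies.

```python
import math
import statistics


def t_upper_tail(t, df, steps=2000):
    """P(T > t) for t >= 0, by Simpson's rule on the Student t density."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))

    def pdf(x):
        return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))

    h = t / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(t)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    area = total * h / 3  # integral of the density from 0 to t
    return max(0.0, 0.5 - area)


def welch_t_test(a, b):
    """Return (t, df, two-sided p) for Welch's unequal-variance t-test."""
    se2_a = statistics.variance(a) / len(a)
    se2_b = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    p = 2 * t_upper_tail(abs(t), df)
    return t, df, p


# Hypothetical neophobia scores for 10 animals at each of two sites.
site_1 = [62, 58, 71, 66, 60, 64, 69, 57, 63, 65]
site_2 = [48, 52, 45, 55, 50, 47, 53, 49, 51, 46]

t_stat, df, p_value = welch_t_test(site_1, site_2)
print(f"t = {t_stat:.2f}, df = {df:.1f}, p = {p_value:.4g}")
```

Note that the test only asks whether these two samples differ. It carries no information about how far either site sits from the wider population.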

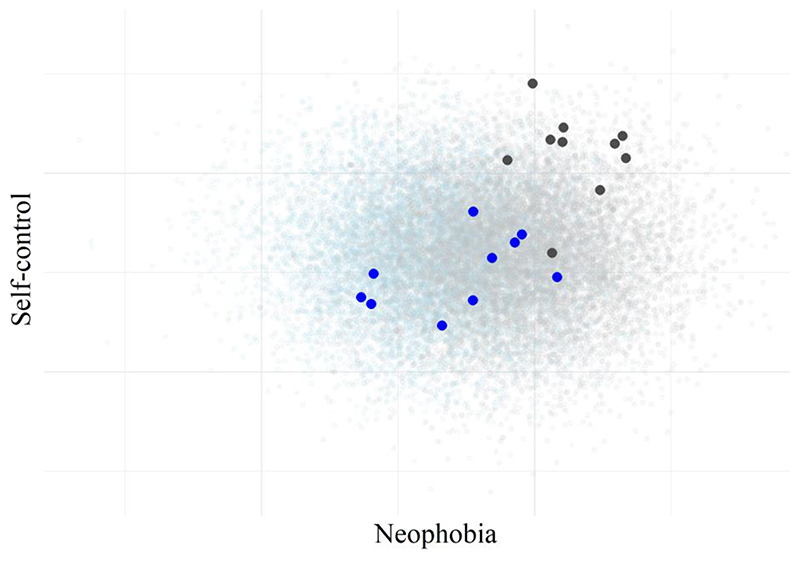

Having simulated a within-species replication, we proceeded to simulate a typical between-species comparison. To achieve this, we again randomly sampled from a set of 100 sites with 100 animals each, but this time of bonobos. All of the parameters determining bonobo behavior were kept the same as for the chimpanzees, except that we set the bonobo neophobia scores to be, on average, just under one standard deviation higher than the chimpanzee neophobia scores. Specifically, the species difference was set to be 1.5 times larger than the between-site standard deviation.
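In symbols, and reading "1.5 times larger than" as a multiplier of 1.5, the species offset can be sketched as below. Here σ_L denotes the between-site standard deviation, and the superscripted grand means are notation assumed for this illustration.

```latex
\beta_{0}^{\text{bonobo}} - \beta_{0}^{\text{chimpanzee}} = 1.5\,\sigma_{L}
```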

The decision to make bonobos more neophobic than chimpanzees was arbitrary, and most empirical data support the opposite conclusion (e.g., Forss et al., 2019). The average self-control scores were kept the same between species. Just as with the replication, we simulated all 10,000 chimpanzees and bonobos, and selected a site at random from which we sampled 10 chimpanzees, and a random site from which we sampled 10 bonobos. Figure 4 shows the results: the entire population of 10,000 chimpanzees in grey circles and 10,000 bonobos in blue circles, with our samples highlighted.

Figure 4. A Comparison Between Hypothetical Samples of Chimpanzees and Bonobos.

Note. Populations of 10,000 chimpanzees (light blue) and 10,000 bonobos (gray) sampled from 100 simulated sites. Samples of 10 chimpanzees and 10 bonobos from a single site are overlaid for chimpanzees (blue) and bonobos (dark grey).

Our samples in Figure 4 captured the direction of the population difference in neophobia scores, which were statistically significantly larger in the bonobo sample than in the chimpanzee sample (p < .001). However, the magnitude of this effect was overestimated by 41%. For self-control, where no population difference was simulated, our samples produced a statistically significant difference between chimpanzees and bonobos (p < .001), incorrectly estimating a species difference of over 40%. This highlights how, even when a statistically significant difference is observed between species at different sites, it does not mean that the difference should be attributed to species identity alone. To explore this further, we investigated how often our comparison would return a statistically significant difference between the neophobia scores and self-control scores of our chimpanzee and bonobo samples. Because our simulation specified that there were no true differences between the species in self-control, this can provide our base rate of false positive results, under the assumption that statistically significant results would be taken as evidence for a species difference. We simulated 100,000 comparisons between samples of 10 chimpanzees and 10 bonobos, each taken from a new site.

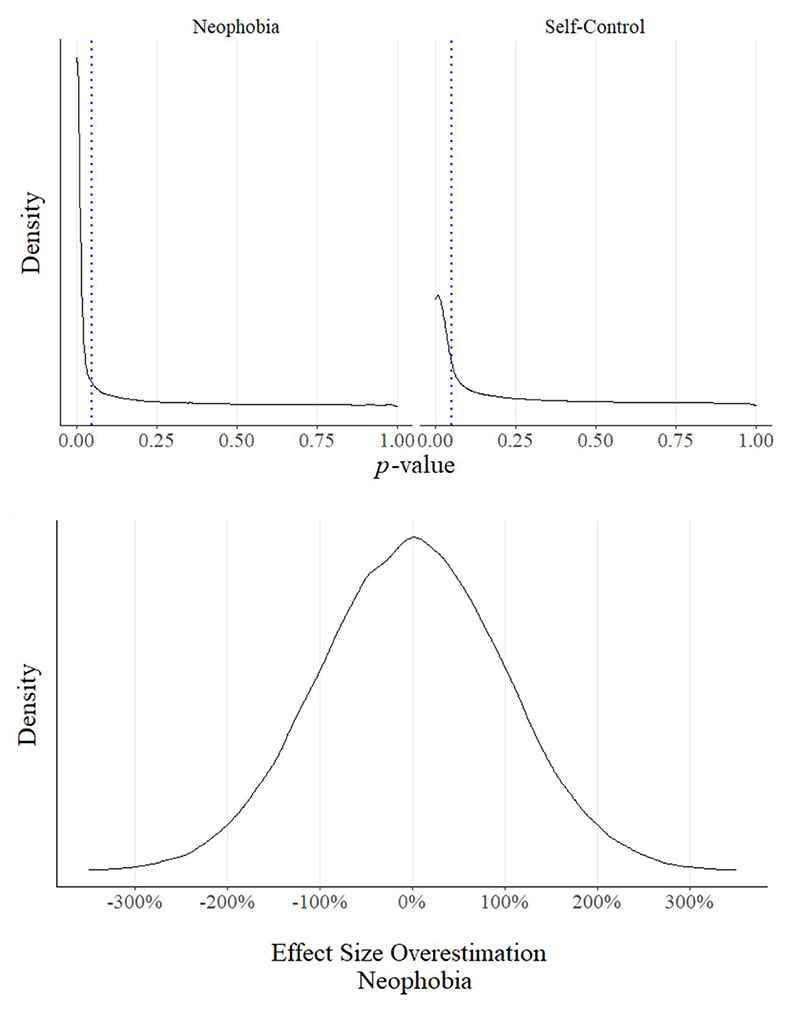

Across the 100,000 simulated comparisons, our small sample design detected a true difference between chimpanzees and bonobos in neophobia 66% of the time with alpha = .05, which looks quite promising. However, the 100,000 simulations also detected a difference between the chimpanzees and bonobos on the self-control measure 49% of the time, even though no species difference was specified for this measure. Figure 5 (upper panel) plots the p-value distributions of the two comparisons, and the similarity between these distributions shows that observing a statistically significant difference between two samples, even if p < .05, is not necessarily strong evidence of an overall species difference. Figure 5 (lower panel) displays the degree of over- and underestimation of the neophobia effect size across all samples. Strikingly, in 32% of comparisons, the effect size was overestimated or underestimated by over 100%.
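The logic of this repeated-comparison simulation can be sketched as follows. This is a simplified Python illustration under stated assumptions, not the authors' R code: the variance settings are hypothetical, the subject and residual terms are merged into a single within-site SD, each sample draws a fresh site effect directly instead of sampling from a stored population, and only 2,000 comparisons are run to keep it quick. The resulting rates will therefore not match the article's 66% and 49% exactly.

```python
import math
import random
import statistics

# Hypothetical settings, chosen only for illustration.
SD_SITE = 1.0                  # between-site SD
SD_WITHIN = 1.0                # within-site SD (subject + residual combined)
SPECIES_DIFF = 1.5 * SD_SITE   # true neophobia difference between species
N_ANIMALS = 10
N_COMPARISONS = 2000           # the article used 100,000


def t_upper_tail(t, df, steps=400):
    """P(T > t) for t >= 0, by Simpson's rule on the Student t density."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    h = t / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        x = i * h
        total += weight * math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))
    return max(0.0, 0.5 - total * h / 3)


def welch_p(a, b):
    """Two-sided p-value of Welch's t-test."""
    se2_a = statistics.variance(a) / len(a)
    se2_b = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return 2 * t_upper_tail(abs(t), df)


def site_sample(rng, species_mean):
    """Ten animals from one newly drawn site of a species."""
    site_effect = rng.gauss(0.0, SD_SITE)
    return [species_mean + site_effect + rng.gauss(0.0, SD_WITHIN)
            for _ in range(N_ANIMALS)]


def significant_rate(rng, true_diff, alpha=0.05):
    """Share of single-site comparisons with p < alpha."""
    hits = 0
    for _ in range(N_COMPARISONS):
        chimpanzees = site_sample(rng, 0.0)
        bonobos = site_sample(rng, true_diff)
        if welch_p(chimpanzees, bonobos) < alpha:
            hits += 1
    return hits / N_COMPARISONS


rng = random.Random(2021)
rate_true = significant_rate(rng, SPECIES_DIFF)   # real species difference
rate_null = significant_rate(rng, 0.0)            # no species difference
print(f"significant with a true difference: {rate_true:.2f}")
print(f"significant with no difference:     {rate_null:.2f}")
```

Because the t-test only accounts for within-sample variation, the between-site variance inflates the rate of significant results in the no-difference case well beyond the nominal alpha.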

Figure 5. p-value Distributions and Effect Size Overestimation from Two Simulated Comparisons.

Note. Upper panel: p-value density distributions of two-sample t-tests from 100,000 comparisons between 10 hypothetical chimpanzees and 10 hypothetical bonobos, sampled at different sites. The simulation included between-site variation, and a species difference in neophobia, but not self-control. Lower panel: The density distribution of effect size overestimation for the 100,000 comparisons of neophobia behavior. No data are shown for self-control because the simulated difference was 0, so the % overestimation could not be calculated.

Strong and Weak Comparisons

Non-representative sampling leads to weak comparisons, and these comparisons are particularly troublesome when:

1) There is a large ratio of within-species variation to between-species variation (MacLean et al., 2012), and absolute species differences are small. Such a scenario will mean the direction and magnitude of differences between samples will be volatile.

2) Experimental units are not tested across samples of the same relative settings, measurements, and treatments, and because of this, measurement techniques systematically differ between research programs; for example, when a single population of experimental units is repeatedly sampled, or the same researchers and research groups perform most of the research, with the same experimental designs (Clark et al., 2019; Ioannidis, 2012a; Leavens et al., 2019). This could lead to differences between samples that are highly replicable within narrow boundary conditions, but that are a consequence of specific local features (often confounders) rather than general species differences.

In contrast, strong between-group comparisons should fulfill the following three criteria:

1) The results are consistent within experimental units across times, experimenters, treatments, and measurements within the claims’ boundary conditions.

2) The samples of experimental units being compared are tested from within the same relative populations of settings, treatments, and measurements relative to the claim.

3) The between-group differences can be replicated when resampling from the target populations of experimental units.

Improving Sampling in Animal Research

There are several methods researchers can use to assess and model the effects of biased sampling on the reliability and generalizability of their research findings, which we have divided into experimental design and statistical methods.

Experimental Design

Increasing Heterogeneity

Increasing heterogeneity is a direct method of increasing the representativeness of a sample to a target population. By sampling more diversely from within the populations specified by a theory or claim, researchers can better estimate the population parameters of interest (Milcu et al., 2018; Voelkl et al., 2018, 2020; von Kortzfleisch et al., 2020). This could involve sampling from multiple sites, such as in large collaborative studies (Crabbe et al., 1999; Culina, Adriaensen et al., 2020; Many Primates, Altschul, Beran, Bohn, Call et al., 2019), but also using multiple different experimenters and varying the conditions and treatments within sites (Baribault et al., 2018; Richter et al., 2010; Würbel, 2002). As an example, Rössler et al. (2020) compared the ability of a sample of wild-caught Goffin’s cockatoos and a sample of laboratory-housed Goffin’s cockatoos to physically manipulate an apparatus to access a reward. However, rather than presenting the cockatoos with a single apparatus, they were tested in an area with a total of 20 apparatuses. Because Rössler et al. sampled from a diverse range of treatments, we can be confident that, at least for these samples of cockatoos, the results are robust across variations in treatment. An ideal experiment might generate diverse samples across all feasible factors (sites, treatments, experiments, times of day, measurements, etc.), which will increase the replicability and generalizability of the results (Würbel, 2000); however, this approach is high-cost (Davies & Gray, 2015; Mook, 1983; Schank & Koehnle, 2009).
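The benefit of spreading a fixed number of animals over several sites can be illustrated with a small simulation. This is a hedged Python sketch with hypothetical variance settings and a simplified model (a site effect plus a single within-site term); it compares how far the study mean typically falls from the population mean when 10 animals come from one site versus two animals from each of five sites.

```python
import math
import random
import statistics

# Hypothetical values, chosen only for illustration.
TRUE_MEAN = 0.0
SD_SITE = 1.0      # between-site SD
SD_WITHIN = 1.0    # within-site SD (subject and residual variation combined)
N_ANIMALS = 10     # total animals tested in either design
N_STUDIES = 5000


def study_mean(rng, n_sites):
    """Mean of N_ANIMALS animals spread evenly over n_sites random sites."""
    per_site = N_ANIMALS // n_sites
    scores = []
    for _ in range(n_sites):
        site_effect = rng.gauss(0.0, SD_SITE)
        scores += [TRUE_MEAN + site_effect + rng.gauss(0.0, SD_WITHIN)
                   for _ in range(per_site)]
    return statistics.mean(scores)


def rmse(rng, n_sites):
    """Root-mean-square error of the study mean across simulated studies."""
    squared_errors = [(study_mean(rng, n_sites) - TRUE_MEAN) ** 2
                      for _ in range(N_STUDIES)]
    return math.sqrt(statistics.mean(squared_errors))


rng = random.Random(7)
rmse_single = rmse(rng, n_sites=1)   # 10 animals at one site
rmse_multi = rmse(rng, n_sites=5)    # 2 animals at each of 5 sites
print(f"RMSE, one site:   {rmse_single:.2f}")
print(f"RMSE, five sites: {rmse_multi:.2f}")
```

Under this simplified model the variance of the study mean is the between-site variance divided by the number of sites, plus the within-site variance divided by the number of animals, so adding sites shrinks the first term while adding animals at one site does not.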

Increasing Homogeneity and Control

In contrast to increasing heterogeneity, a lower-cost approach is to increase standardization and control. For example, performing experiments with blinded experimenters only is more homogeneous than performing experiments with a mixture of blinded and non-blinded experimenters. From the re-sampling perspective, blinded and unblinded experimenters come from different populations, and most theories in comparative cognition make predictions that are independent of experimenter bias (i.e., do not predict that experimenter effects are essential for their predictions to be true). Similarly, homogeneity can be useful when a theory is most effectively tested within a subset of the populations that it might apply to. For example, animals are often trained before being tested when researchers attempt to isolate individual psychological mechanisms, such as learning. Such researchers are not usually interested in measuring variability due to neophobia or novel-object exploration, and so animals are familiarized with and trained on the task set-up before being tested to avoid including this “noise” in the dataset. The training pulls all individuals towards their theoretical maximum, increasing statistical power and the relevance of the collected data to the theory in question (Schank & Koehnle, 2009; Smith & Little, 2018), and this can increase the validity of between-group comparisons when the groups have markedly different learning histories (Leavens et al., 2019).

Statistical Approaches: Multilevel Models, Phylogenetic Models, and Being Cautious

The variation in experimental units, settings and measurements can be modeled statistically, using multilevel models (e.g., DeBruine & Barr, 2019; McElreath, 2016), and these should include phylogenetic information for multi-species datasets (Cinar et al., 2020; Davies et al., 2020; Freckleton, 2009; MacLean et al., 2012; Stone et al., 2011). Perhaps most useful is these models’ ability to pool information across species and shrink extreme species estimates towards the mean response for a given clade, but they also have the benefit of more closely aligning research fields with evolutionary theory (MacLean et al., 2012; Vonk & Shackelford, 2012). However, generating appropriate multilevel or evolution-informed models of animal behavior is a complex task, which will require a decent amount of data and knowledge about how traits may have been selected. Often, these data and this knowledge will not be available.
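The shrinkage behavior described above can be illustrated with a deliberately simple partial-pooling calculation. This Python sketch is not a full multilevel or phylogenetic model: it assumes known within-species and between-species standard deviations, treats all species as equally related, and uses the unweighted mean of the species means as the clade mean. The species names, means, and sample sizes are all hypothetical.

```python
import statistics


def partial_pool(species_means, n_per_species, sd_within, sd_between):
    """Shrink each species mean towards the clade mean.

    The weight on a species' own mean is
    sd_between^2 / (sd_between^2 + sd_within^2 / n),
    so small samples are pulled further towards the clade mean.
    """
    clade_mean = statistics.mean(species_means.values())
    pooled = {}
    for name, mean in species_means.items():
        sampling_var = sd_within ** 2 / n_per_species[name]
        weight = sd_between ** 2 / (sd_between ** 2 + sampling_var)
        pooled[name] = weight * mean + (1 - weight) * clade_mean
    return pooled


# Hypothetical proportions of correct responses on a task.
species_means = {"species_a": 0.80, "species_b": 0.45,
                 "species_c": 0.40, "species_d": 0.35}
n_per_species = {"species_a": 8, "species_b": 20,
                 "species_c": 20, "species_d": 20}

pooled = partial_pool(species_means, n_per_species,
                      sd_within=0.3, sd_between=0.1)
for name in species_means:
    print(f"{name}: raw {species_means[name]:.2f} -> pooled {pooled[name]:.2f}")
```

The extreme estimate from the smallest sample moves the most, which mirrors the caution about surprising single-sample results.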

When researchers cannot introduce or model variation in their designs, they are faced with a dilemma. Uncertainty intervals will be too narrow with respect to the researcher’s populations of interest, but the researcher has no direct means of estimating by how much. One solution is for researchers to artificially increase the uncertainty in their statistical estimates (Kafkafi et al., 2017; Yarkoni, 2019), and this could be informed by data on the ratio of between-site to between-species variance from similar multi-site studies; however, this introduces a trade-off between statistical power and false positive discovery rates. In general, researchers should be cautious when interpreting extreme results observed from single samples, such as the 80% great tit performance on the cylinder task we saw earlier, which regressed to around 40% upon resampling.
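One simple way to widen an interval along these lines is to add an assumed between-site variance to the squared standard error of a two-group difference. The Python sketch below illustrates that idea only; it is not the specific procedure proposed in the cited papers. The observed difference, its standard error, and the between-site SD are hypothetical numbers, and a normal approximation (z = 1.96) is used for the interval.

```python
import math


def adjusted_interval(diff, se_diff, sd_between_site, z=1.96):
    """Interval for a two-group difference with between-site variance added.

    Each group comes from a single site, so each contributes one
    site effect: Var(diff) = se_diff^2 + 2 * sd_between_site^2.
    """
    se_adjusted = math.sqrt(se_diff ** 2 + 2 * sd_between_site ** 2)
    return se_adjusted, (diff - z * se_adjusted, diff + z * se_adjusted)


# Hypothetical single-site comparison.
diff, se_diff = 0.40, 0.10

se_naive, ci_naive = adjusted_interval(diff, se_diff, sd_between_site=0.0)
se_adj, ci_adj = adjusted_interval(diff, se_diff, sd_between_site=0.20)

print(f"naive:    SE = {se_naive:.2f}, 95% CI = ({ci_naive[0]:.2f}, {ci_naive[1]:.2f})")
print(f"adjusted: SE = {se_adj:.2f}, 95% CI = ({ci_adj[0]:.2f}, {ci_adj[1]:.2f})")
```

The wider interval makes the trade-off concrete: fewer false positives when site effects are real, at the cost of reduced power to detect genuine differences.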

Barriers

Concerns about replicability and representativeness have surfaced often in animal behavior and cognition research, at a variety of levels (Beach, 1950; Beran, 2012; Bitterman, 1960; Boesch, 2012, 2020; Brosnan et al., 2013; Clark et al., 2019; Dacey, 2020; Eaton et al., 2018; Farrar et al., 2020; Janmaat, 2019; Leavens et al., 2019; Schubiger et al., 2019; Stevens, 2017; Szabó et al., 2017; van Wilgenburg & Elgar, 2013; Vonk, 2019). However, it is unclear whether any real progress has been made towards understanding the prevalence and consequences of low representativeness in these fields, and we suggest that there are four main reasons why, which are theoretical, practical, motivational, and educational (see also Farrar & Ostojić, 2020).

First, theoretically, researchers may believe that their samples are representative of their target populations, or that if they are not, this does not heavily impact the validity of their results. Such a position may be justifiable, for example when i) relatively independent animals can be sampled by the same team (e.g., with dog research, or serially captured and released samples), ii) animals are highly trained (Leavens et al., 2019; Skinner, 1956; Smith & Little, 2018), iii) the research concerns unique case studies, or iv) heterogenization is used. However, if researchers do justify the generalizability of their findings theoretically, then these arguments should be made explicitly within papers (Simons et al., 2017), be solicited by editors and reviewers (Webster & Rutz, 2020), or be provided as commentaries on entire research programmes. These justifications will be strongest when they employ a sampling approach to experimental design, and do not excessively focus on the experimental unit over other levels of sampling variance (Farrar & Ostojić, 2020).

Second, researchers may not practically have access to the resources needed to test the representativeness of their samples. They may only have one sample, and other laboratories with access to the same species might not exist. This is a problem – it may not be possible to study hard-to-reach samples in a reliable or replicable manner (Lange, 2019; Leonelli, 2018). However, researchers with such samples can take steps to ensure that their results are as robust as possible, and that an appropriate amount of uncertainty is disclosed, through the experimental and statistical techniques we have mentioned in this article, and so practical constraints do not inherently bar researchers from addressing issues of representativeness.

Third, researchers may lack the motivation or incentives to test the representativeness of their samples, and the stability of their results across experimental units, settings, treatments, and measurements. If the scientific incentive and funding structure selects for compelling narratives, oversold findings, and ground-breaking results (Higginson & Munafò, 2016; Ioannidis, 2012b; Smaldino & McElreath, 2016) over rigor, self-correction and understanding, the comparative researcher who attempts to replicate their findings across experimental units and settings may be disadvantaged in terms of common scientific metrics (citations and publications). Addressing these incentive problems is a large task which requires action at the level of the individual (Yarkoni, 2018), organization (Nosek et al., 2012) and society (Amann, 2003; Lazebnik, 2018). Encouragingly, there appears to be a desire to perform more replication studies, in some fields. Fraser et al. (2020), for example, surveyed 439 ecologists, and found that researchers thought replications are very important, reflect a “crucial” use of resources, and should be published by all journals.

Fourth, researchers may be unaware of, or may not have had access to, the education needed to effectively consider and model sampling variability in their studies. Statistical misconceptions (Goodman, 2008; Hoekstra et al., 2014) and mis-practice (Hoekstra et al., 2012; Nieuwenhuis et al., 2011) are prevalent, present in textbooks (Price et al., 2020), and are perhaps only more likely with the increasing complexity of the statistical procedures and software that are available (Forstmeier & Schielzeth, 2011; Schielzeth & Forstmeier, 2009; Silk et al., 2020). At the same time, many university programmes may lack teaching on replication-related topics (TARG Meta-Research Group, 2020), there are no requirements for researchers to continue their education following formal qualifications (i.e., post PhD), and consideration of the replicability or generalizability of findings has not been well integrated with much of the publishing system (Neuliep & Crandall, 1990, 1993; Webster & Rutz, 2020).

These four barriers will be effectively combatted by top-down measures, such as funding bodies and institutions signing initiatives like the San Francisco Declaration on Research Assessment (DORA), and providing contracts and the job security needed to promote researchers’ scientific development over output metrics. However, bottom-up initiatives from within animal behavior research could also be effective and are at least under researchers’ direct control (Yarkoni, 2018). Individuals can address each of the four barriers above, and help others to do so, by i) discussing how their sampling plans relate to their research aims, and describing what these research aims are, ii) discussing the ethical and practical constraints on diversifying their sampling plans if this is desirable, and considering changes to research designs and generating collaborations if the benefits could outweigh the costs, iii) examining their own motivations when performing science and publishing research findings, and iv) actively pursuing further education in research design and statistical analyses.

Conclusions

In this article, we applied a resampling definition of replication to animal cognition, and we explored the consequences of small and non-independent (poorly representative) samples in animal behavior and cognition research. Limited sampling is likely a large constraint on the replicability and generalizability of research findings, and it has particularly concerning implications for the accuracy of between-group or between-species inferences. Comparative researchers should be especially concerned about a lack of representativeness of their samples when there is a large ratio of within-species variation to between-species variation, and when the same researchers, animals, and research methods are used repeatedly. Finally, we discussed how researchers can use techniques such as heterogenization, homogenization, and statistical modeling to improve the replicability and representativeness of their results, and considered the theoretical, practical, motivational, and educational factors that might prevent a full assessment of reliability and representativeness in the field.

Acknowledgements

We would like to thank Marta Halina, Ljerka Ostojić and Piero Amodio for helpful comments on an earlier version of the manuscript, and the editor, an anonymous reviewer and Alfredo Sánchez-Tójar for helpful suggestions and critiques throughout the review process. BGF was supported by the University of Cambridge BBSRC Doctoral Training Programme (BB/M011194/1).

References

- Allen C. Models, mechanisms, and animal minds. The Southern Journal of Philosophy. 2014;52:75–97. doi: 10.1111/sjp.12072.

- Almeling L, Hammerschmidt K, Sennhenn-Reulen H, Freund AM, Fischer J. Motivational shifts in aging monkeys and the origins of social selectivity. Current Biology. 2016;26:1744–1749. doi: 10.1016/j.cub.2016.04.066.

- Amann R. A Sovietological view of modern Britain. The Political Quarterly. 2003;74:468–480. doi: 10.1111/1467-923X.00558.

- Asendorpf JB, Conner M, De Fruyt F, De Houwer J, Denissen JJA, Fiedler K, Fiedler S, Funder DC, Kliegl R, Nosek BA, Perugini M, et al. Recommendations for increasing replicability in psychology. European Journal of Personality. 2013;27:108–119. doi: 10.1002/per.1919.

- Baker M. 1,500 scientists lift the lid on reproducibility. Nature News. 2016;533:452. doi: 10.1038/533452a.

- Baribault B, Donkin C, Little DR, Trueblood JS, Oravecz Z, van Ravenzwaaij D, White CN, De Boeck P, Vandekerckhove J. Metastudies for robust tests of theory. Proceedings of the National Academy of Sciences. 2018;115:2607–2612. doi: 10.1073/pnas.1708285114.

- Bausman W, Halina M. Not null enough: Pseudo-null hypotheses in community ecology and comparative psychology. Biology and Philosophy. 2018;33:30. doi: 10.1007/s10539-018-9640-4.

- Beach FA. The Snark was a Boojum. American Psychologist. 1950;5:115–124. doi: 10.1037/h0056510.

- Beran MJ. Did you ever hear the one about the horse that could count? Frontiers in Psychology. 2012;3. doi: 10.3389/fpsyg.2012.00357.

- Beran MJ. The comparative science of “self-control”: What are we talking about? Frontiers in Psychology. 2015;6. doi: 10.3389/fpsyg.2015.00051.

- Bitterman ME. Toward a comparative psychology of learning. American Psychologist. 1960;15:704–712. doi: 10.1037/h0048359.

- Bliss-Moreau E, Baxter MG. Interest in non-social novel stimuli as a function of age in rhesus monkeys. Royal Society Open Science. 2019;6:182237. doi: 10.1098/rsos.182237.

- Boesch C. What makes us human (Homo sapiens)? The challenge of cognitive cross-species comparison. Journal of Comparative Psychology. 2007;121:227–240. doi: 10.1037/0735-7036.121.3.227.

- Boesch C. The ecology and evolution of social behavior and cognition in primates. In: Vonk J, Shackelford TK, editors. The Oxford handbook of comparative evolutionary psychology. Oxford University Press; 2012.

- Boesch C. Listening to the appeal from the wild. Animal Behavior and Cognition. 2020;7:257–263. doi: 10.26451/abc.07.02.15.2020.

- Bohlen M, Hayes ER, Bohlen B, Bailoo J, Crabbe JC, Wahlsten D. Experimenter effects on behavioral test scores of eight inbred mouse strains under the influence of ethanol. Behavioural Brain Research. 2014;272:46–54. doi: 10.1016/j.bbr.2014.06.017.

- Brosnan SF, Beran MJ, Parrish AE, Price SA, Wilson BJ. Comparative approaches to studying strategy: Towards an evolutionary account of primate decision making. Evolutionary Psychology. 2013;11:147470491301100320. doi: 10.1177/147470491301100309.

- Calisi RM, Bentley GE. Lab and field experiments: Are they the same animal? Hormones and Behavior. 2009;56:1–10. doi: 10.1016/j.yhbeh.2009.02.010.

- Chang W. extrafont: Tools for using fonts. 2014 (R package version 0.17) [Computer software]. https://CRAN.R-project.org/package=extrafont.

- Cibulski L, Wascher CAF, Weiß BM, Kotrschal K. Familiarity with the experimenter influences the performance of common ravens (Corvus corax) and carrion crows (Corvus corone corone) in cognitive tasks. Behavioural Processes. 2014;103:129–137. doi: 10.1016/j.beproc.2013.11.013.

- Cinar O, Nakagawa S, Viechtbauer W. Phylogenetic multilevel meta-analysis: A simulation study on the importance of modeling the phylogeny. EcoEvoRxiv. 2020. doi: 10.32942/osf.io/su4zv.

- Clark H, Elsherif MM, Leavens DA. Ontogeny vs. phylogeny in primate/canid comparisons: A meta-analysis of the object choice task. Neuroscience & Biobehavioral Reviews. 2019;105:178–189. doi: 10.1016/j.neubiorev.2019.06.001.

- Coomes JR, Davidson GL, Reichert MS, Kulahci IG, Troisi CA, Quinn JL. Inhibitory control, personality, and manipulated ecological conditions influence foraging plasticity in the great tit. BioRxiv. 2020:2020.12.16.423008. doi: 10.1101/2020.12.16.423008.

- Crabbe JC, Wahlsten D, Dudek BC. Genetics of mouse behavior: Interactions with laboratory environment. Science. 1999;284:1670–1672. doi: 10.1126/science.284.5420.1670.

- Craig DPA, Abramson CI. Ordinal pattern analysis in comparative psychology—A flexible alternative to null hypothesis significance testing using an observation oriented modeling paradigm. International Journal of Comparative Psychology. 2018;31 https://escholarship.org/uc/item/08w0c08s. [Google Scholar]

- Culina A, Adriaensen F, Bailey LD, Burgess MD, Charmantier A, Cole EF, Eeva T, Matthysen E, Nater CR, Sheldon BC, Sæther B-E, et al. Connected data landscape of long-term ecological studies: The SPI-Birds data hub. EcoEvoRxiv. 2020. doi: 10.32942/osf.io/6gea7.

- Culina A, van den Berg I, Evans S, Sánchez-Tójar A. Low availability of code in ecology: A call for urgent action. PLOS Biology. 2020;18:e3000763. doi: 10.1371/journal.pbio.3000763.

- Dacey M. Anecdotal experiments: Evaluating evidence with few animals. PhilSci-Archive. 2020. http://philsci-archive.pitt.edu/17683/

- Davidson GL, Cooke AC, Johnson CN, Quinn JL. The gut microbiome as a driver of individual variation in cognition and functional behaviour. Philosophical Transactions of the Royal Society B: Biological Sciences. 2018;373:20170286. doi: 10.1098/rstb.2017.0286.

- Davies AD, Lewis Z, Dougherty LR. A meta-analysis of factors influencing the strength of mate-choice copying in animals. Behavioral Ecology. 2020;31:1279–1290. doi: 10.1093/beheco/araa064.

- Davies GM, Gray A. Don’t let spurious accusations of pseudoreplication limit our ability to learn from natural experiments (and other messy kinds of ecological monitoring). Ecology and Evolution. 2015;5:5295–5304. doi: 10.1002/ece3.1782.

- DeBruine LM, Barr DJ. Understanding mixed effects models through data simulation. PsyArXiv. 2019. doi: 10.31234/osf.io/xp5cy.

- Eaton T, Hutton R, Leete J, Lieb J, Robeson A, Vonk J. Bottoms-up! Rejecting top-down human-centered approaches in comparative psychology. International Journal of Comparative Psychology. 2018;31. https://escholarship.org/uc/item/11t5q9wt.

- Farmer HL, Murphy G, Newbolt J. Change in stingray behaviour and social networks in response to the scheduling of husbandry events. Journal of Zoo and Aquarium Research. 2019;7:203–209. doi: 10.19227/jzar.v7i4.441.

- Farrar BG, Boeckle M, Clayton NS. Replications in comparative cognition: What should we expect and how can we improve. Animal Behavior and Cognition. 2020;7:1–22. doi: 10.26451/abc.07.01.02.2020.

- Farrar BG, Ostojić L. The illusion of science in comparative cognition. PsyArXiv. 2019. doi: 10.31234/osf.io/hduyx.

- Farrar BG, Ostojić L. It’s not just the animals that are STRANGE. Learning & Behavior. 2020. doi: 10.3758/s13420-020-00442-5.

- Farrell S, Lewandowsky S. Computational models as aids to better reasoning in psychology. Current Directions in Psychological Science. 2010;19:329–335. doi: 10.1177/0963721410386677.

- Fawcett GL, Dettmer AM, Kay D, Raveendran M, Higley JD, Ryan ND, Cameron JL, Rogers J. Quantitative genetics of response to novelty and other stimuli by infant rhesus macaques (Macaca mulatta) across three behavioral assessments. International Journal of Primatology. 2014;35:325–339. doi: 10.1007/s10764-014-9750-z.

- Ferreira VHB, Reiter L, Germain K, Calandreau L, Guesdon V. Uninhibited chickens: Ranging behaviour impacts motor self-regulation in free-range broiler chickens (Gallus gallus domesticus). Biology Letters. 2020;16:20190721. doi: 10.1098/rsbl.2019.0721.

- Forss SIF, Motes-Rodrigo A, Hrubesch C, Tennie C. Differences in novel food response between Pongo and Pan. American Journal of Primatology. 2019;81:e22945. doi: 10.1002/ajp.22945.

- Forstmeier W, Schielzeth H. Cryptic multiple hypotheses testing in linear models: Overestimated effect sizes and the winner’s curse. Behavioral Ecology and Sociobiology. 2011;65:47–55. doi: 10.1007/s00265-010-1038-5.

- Fraser H, Barnett A, Parker TH, Fidler F. The role of replication studies in ecology. Ecology and Evolution. 2020;10:5197–5207. doi: 10.1002/ece3.6330.

- Freckleton RP. The seven deadly sins of comparative analysis. Journal of Evolutionary Biology. 2009;22:1367–1375. doi: 10.1111/j.1420-9101.2009.01757.x.

- Geach PT. Ontological relativity and relative identity. In: Munitz MK, editor. Logic and ontology. New York University Press; 1973. pp. 287–302.

- Gómez OS, Juristo N, Vegas S. Replications types in experimental disciplines. Proceedings of the 2010 ACM-IEEE International Symposium on Empirical Software Engineering and Measurement; 2010. pp. 1–10.

- Goodman S. A dirty dozen: Twelve P-value misconceptions. Seminars in Hematology. 2008;45:135–140. doi: 10.1053/j.seminhematol.2008.04.003.

- Guest O, Martin AE. How computational modeling can force theory building in psychological science. PsyArXiv. 2020 doi: 10.31234/osf.io/rybh9. [DOI] [PubMed] [Google Scholar]

- Hemmer BM, Parrish AE, Wise TB, Davis M, Branham M, Martin DE, Templer VL. Social vs. nonsocial housing differentially affects perseverative behavior in ratsRatus norvegicus . Animal Behavior and Cognition. 2019;6:168–178. doi: 10.26451/abc.06.03.02.2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henrich J, Heine SJ, Norenzayan A. Most people are not WEIRD. Nature. 2010;466:29–29. doi: 10.1038/466029a. [DOI] [PubMed] [Google Scholar]

- Heyes C, Dickinson A. The intentionality of animal action. Mind & Language. 1990;5:87–103. doi: 10.1111/j.1468-0017.1990.tb00154.x. [DOI] [Google Scholar]

- Higginson AD, Munafò MR. Current incentives for scientists lead to underpowered studies with erroneous conclusions. PLOS Biology. 2016;14:e2000995. doi: 10.1371/journal.pbio.2000995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hill HM, Yeater D, Gallup S, Guarino S, Lacy S, Dees T, Kuczaj S. Responses to familiar and unfamiliar humans by belugas (Delphinapterus leucas), bottlenose dolphins (Tursiops truncatus), & Pacific white-sided dolphins (Lagenorhynchus obliquidens): A replication and extension. International Journal of Comparative Psychology. 2016;29 https://escholarship.org/uc/item/48j4v1s8. [Google Scholar]

- Hoekstra R, Kiers HAL, Johnson A. Are assumptions of well-known statistical techniques checked, and why (not)? Frontiers in Psychology. 2012;3 doi: 10.3389/fpsyg.2012.00137. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hoekstra R, Morey RD, Rouder JN, Wagenmakers E-J. Robust misinterpretation of confidence intervals. Psychonomic Bulletin & Review. 2014;21:1157–1164. doi: 10.3758/s13423-013-0572-3. [DOI] [PubMed] [Google Scholar]

- Höttges N, Hjelm M, Hård T, Laska M. How does feeding regime affect behaviour and activity in captive African lions (Panthera leo)? Journal of Zoo and Aquarium Research. 2019;7:117–125. doi: 10.19227/jzar.v7i3.392. [DOI] [Google Scholar]

- Hurlbert SH. Pseudoreplication and the design of ecological field experiments. Ecological Monographs. 1984;54:187–211. doi: 10.2307/1942661. [DOI] [Google Scholar]

- Ioannidis JPA. Scientific inbreeding and same-team replication: Type D personality as an example. Journal of Psychosomatic Research. 2012a;73:408–410. doi: 10.1016/j.jpsychores.2012.09.014. [DOI] [PubMed] [Google Scholar]

- Ioannidis JPA. Why science is not necessarily self-correcting. Perspectives on Psychological Science. 2012b;7:645–654. doi: 10.1177/1745691612464056. [DOI] [PubMed] [Google Scholar]

- Isaksson E, Utku Urhan A, Brodin A. High level of self-control ability in a small passerine bird. Behavioral Ecology and Sociobiology. 2018;72:118. doi: 10.1007/s00265-018-2529-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Janmaat KRL. What animals do not do or fail to find: A novel observational approach for studying cognition in the wild. Evolutionary Anthropology: Issues, News, and Reviews. 2019;28:303–320. doi: 10.1002/evan.21794. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson Z, Brent L, Alvarenga JC, Comuzzie AG, Shelledy W, Ramirez S, Cox L, Mahaney MC, Huang Y-Y, Mann JJ, Kaplan JR, et al. Genetic influences on response to novel objects and dimensions of personality in Papio baboons. Behavior Genetics. 2015;45:215–227. doi: 10.1007/s10519-014-9702-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kabadayi C, Bobrowicz K, Osvath M. The detour paradigm in animal cognition. Animal Cognition. 2018;21:21–35. doi: 10.1007/s10071-017-1152-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kabadayi C, Krasheninnikova A, O’Neill L, van de Weijer J, Osvath M, von Bayern AMP. Are parrots poor at motor self-regulation or is the cylinder task poor at measuring it? Animal Cognition. 2017;20:1137–1146. doi: 10.1007/s10071-017-1131-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kafkafi N, Agassi J, Chesler EJ, Crabbe JC, Crusio WE, Eilam D, Gerlai R, Golani I, Gomez-Marin A, Heller R, Iraqi F, et al. Reproducibility and replicability of rodent phenotyping in preclinical studies. Neuroscience & Biobehavioral Reviews. 2018;87:218–232. doi: 10.1016/j.neubiorev.2018.01.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kafkafi N, Golani I, Jaljuli I, Morgan H, Sarig T, Würbel H, Yaacoby S, Benjamini Y. Addressing reproducibility in single-laboratory phenotyping experiments. Nature Methods. 2017;14:462–464. doi: 10.1038/nmeth.4259. [DOI] [PubMed] [Google Scholar]

- Langbein J. Motor self-regulation in goats (Capra aegagrus hircus) in a detour-reaching task. PeerJ. 2018;6:e5139. doi: 10.7717/peerj.5139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lange F. Are difficult-to-study populations too difficult to study in a reliable way? European Psychologist. 2019;25(1):41–50. doi: 10.1027/1016-9040/a000384. [DOI] [Google Scholar]

- Lazebnik Y. Who is Dr. Frankenstein? Or, what Professor Hayek and his friends have done to science. Organisms Journal of Biological Sciences. 2018;2 doi: 10.13133/2532-58764AHEAD1. [DOI] [Google Scholar]

- Lazic SE. The problem of pseudoreplication in neuroscientific studies: Is it affecting your analysis? BMC Neuroscience. 2010;11:5. doi: 10.1186/1471-2202-11-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lazic SE. Experimental design for laboratory biologists: Maximising information and improving reproducibility. 1st. Cambridge University Press; 2016. [DOI] [Google Scholar]

- Leavens DA, Bard KA, Hopkins WD. The mismeasure of ape social cognition. Animal Cognition. 2019;22:487–504. doi: 10.1007/s10071-017-1119-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonelli S. Re-thinking reproducibility as a criterion for research quality. PhilSci-Archive. 2018 http://philsci-archive.pitt.edu/14352/ [Google Scholar]

- Lewejohann L, Reinhard C, Schrewe A, Brandewiede J, Haemisch A, Görtz N, Schachner M, Sachser N. Environmental bias? Effects of housing conditions, laboratory environment and experimenter on behavioral tests. Genes, Brain and Behavior. 2006;5:64–72. doi: 10.1111/j.1601-183X.2005.00140.x. [DOI] [PubMed] [Google Scholar]

- Lewis DK. Many, but almost one. In: Campbell K, Bacon J, Reinhardt L, editors. Ontology, causality and mind: Essays on the philosophy of D. M. Armstrong. Cambridge University Press; 1993. pp. 23–38. [Google Scholar]

- Lind J. What can associative learning do for planning? Royal Society Open Science. 2018;5:180778. doi: 10.1098/rsos.180778. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lind J, Ghirlanda S, Enquist M. Social learning through associative processes: A computational theory. Royal Society Open Science. 2019;6:181777. doi: 10.1098/rsos.181777. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lucon-Xiccato T, Gatto E, Bisazza A. Fish perform like mammals and birds in inhibitory motor control tasks. Scientific Reports. 2017;7:1–8. doi: 10.1038/s41598-017-13447-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Machery E. What is a replication? Philosophy of Science. 2020;87:709701. doi: 10.1086/709701. [DOI] [Google Scholar]

- MacLean EL, Hare B, Nunn CL, Addessi E, Amici F, Anderson RC, Aureli F, Baker JM, Bania AE, Barnard AM, Boogert NJ, et al. The evolution of self-control. Proceedings of the National Academy of Sciences of the United States of America. 2014;111:E2140–2148. doi: 10.1073/pnas.1323533111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacLean EL, Matthews LJ, Hare BA, Nunn CL, Anderson RC, Aureli F, Brannon EM, Call J, Drea CM, Emery NJ, Haun DBM, et al. How does cognition evolve? Phylogenetic comparative psychology. Animal Cognition. 2012;15:223–238. doi: 10.1007/s10071-011-0448-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacLean EL, Snyder-Mackler N, vonHoldt BM, Serpell JA. Highly heritable and functionally relevant breed differences in dog behaviour. Proceedings of the Royal Society B: Biological Sciences. 2019;286:20190716. doi: 10.1098/rspb.2019.0716. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Many Primates, Altschul D, Beran MJ, Bohn M, Caspar K, Fichtel C, Försterling M, Grebe N, Hernandez-Aguilar RA, Kwok SC, Rodrigo AM, et al. Collaborative open science as a way to reproducibility and new insights in primate cognition research. Japanese Psychological Review. 2019;62:205–220. [Google Scholar]

- Many Primates, Altschul DM, Beran MJ, Bohn M, Call J, DeTroy S, Duguid SJ, Egelkamp CL, Fichtel C, Fischer J, Flessert M, et al. Establishing an infrastructure for collaboration in primate cognition research. PLOS ONE. 2019;14:e0223675. doi: 10.1371/journal.pone.0223675. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McElreath R. Statistical rethinking: A Bayesian course with examples in R and Stan. CRC Press/Taylor & Francis Group; 2016. [Google Scholar]

- Mercado E. Commentary: Interpretations without justification: A general argument against Morgan’s Canon. Frontiers in Psychology. 2016;7 doi: 10.3389/fpsyg.2016.00452. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Milcu A, Puga-Freitas R, Ellison AM, Blouin M, Scheu S, Freschet GT, Rose L, Barot S, Cesarz S, Eisenhauer N, Girin T, et al. Genotypic variability enhances the reproducibility of an ecological study. Nature Ecology & Evolution. 2018;2:279–287. doi: 10.1038/s41559-017-0434-x. [DOI] [PubMed] [Google Scholar]

- Minocher R, Atmaca S, Bavero C, Beheim B. Reproducibility of social learning research declines exponentially over 63 years of publication. PsyArXiv. 2020 doi: 10.31234/osf.io/4nzc7. [DOI] [Google Scholar]

- Mook DG. In defense of external invalidity. American Psychologist. 1983;38:379–387. doi: 10.1037/0003-066X.38.4.379. [DOI] [Google Scholar]

- Neuliep JW, Crandall R. Editorial bias against replication research. Journal of Social Behavior & Personality. 1990;5:85–90. [Google Scholar]

- Neuliep JW, Crandall R. Reviewer bias against replication research. Journal of Social Behavior & Personality. 1993;8:21–29. [Google Scholar]

- Nieuwenhuis S, Forstmann BU, Wagenmakers E-J. Erroneous analyses of interactions in neuroscience: A problem of significance. Nature Neuroscience. 2011;14:1105–1107. doi: 10.1038/nn.2886. [DOI] [PubMed] [Google Scholar]

- Noonan H, Curtis B. Identity. In: Zalta EN, editor. The Stanford Encyclopedia of Philosophy. Summer 2018. (Original work published 2004). https://plato.stanford.edu/archives/sum2018/entries/identity/ [Google Scholar]

- Nosek BA, Errington TM. What is replication? PLOS Biology. 2020;18:e3000691. doi: 10.1371/journal.pbio.3000691. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nosek BA, Spies JR, Motyl M. Scientific utopia: II. Restructuring incentives and practices to promote truth over publishability. Perspectives on Psychological Science. 2012;7:615–631. doi: 10.1177/1745691612459058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pfungst O. Clever Hans (the horse of Mr. Von Osten): A contribution to experimental animal and human psychology. 1st. Rahn CL, translator. Holt; 2018. (Original work published 1911). [Google Scholar]

- Polla EJ, Grueter CC, Smith CL. Asian elephants (Elephas maximus) discriminate between familiar and unfamiliar human visual and olfactory cues. Animal Behavior and Cognition. 2018;5:279–291. doi: 10.26451/abc.05.03.03.2018. [DOI] [Google Scholar]

- Price R, Bethune R, Massey L. Problem with p values: Why p values do not tell you if your treatment is likely to work. Postgraduate Medical Journal. 2020;96:1–3. doi: 10.1136/postgradmedj-2019-137079. [DOI] [PubMed] [Google Scholar]

- Quine WV. Identity, ostension, and hypostasis. The Journal of Philosophy. 1950;47:621–633. doi: 10.2307/2021795. [DOI] [Google Scholar]

- R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing; 2020. [Computer software]. https://www.R-project.org/ [Google Scholar]

- Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical conditioning II: Current research and theory. 2nd. Appleton Century Crofts; 1972. pp. 64–99. [Google Scholar]

- Richter SH, Garner JP, Auer C, Kunert J, Würbel H. Systematic variation improves reproducibility of animal experiments. Nature Methods. 2010;7:167–168. doi: 10.1038/nmeth0310-167. [DOI] [PubMed] [Google Scholar]

- Richter SH, Garner JP, Würbel H. Environmental standardization: Cure or cause of poor reproducibility in animal experiments? Nature Methods. 2009;6:257–261. doi: 10.1038/nmeth.1312. [DOI] [PubMed] [Google Scholar]

- Richter SH, Garner JP, Zipser B, Lewejohann L, Sachser N, Touma C, Schindler B, Chourbaji S, Brandwein C, Gass P, van Stipdonk N, et al. Effect of population heterogenization on the reproducibility of mouse behavior: A multi-laboratory study. PLOS ONE. 2011;6:e16461. doi: 10.1371/journal.pone.0016461. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roberts S, Pashler H. How persuasive is a good fit? A comment on theory testing. Psychological Review. 2000;107:358–367. doi: 10.1037/0033-295X.107.2.358. [DOI] [PubMed] [Google Scholar]

- Rössler T, Mioduszewska B, O’Hara M, Huber L, Prawiradilaga DM, Auersperg AMI. Using an Innovation Arena to compare wild-caught and laboratory Goffin’s cockatoos. Scientific Reports. 2020;10:8681. doi: 10.1038/s41598-020-65223-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schank JC, Koehnle TJ. Pseudoreplication is a pseudoproblem. Journal of Comparative Psychology. 2009;123:421–433. doi: 10.1037/a0013579. [DOI] [PubMed] [Google Scholar]

- Schielzeth H, Forstmeier W. Conclusions beyond support: Overconfident estimates in mixed models. Behavioral Ecology. 2009;20:416–420. doi: 10.1093/beheco/arn145. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schubiger MN, Kissling A, Burkart JM. Does opportunistic testing bias cognitive performance in primates? Learning from drop-outs. PLOS ONE. 2019;14:e0213727. doi: 10.1371/journal.pone.0213727. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Silk MJ, Harrison XA, Hodgson DJ. Perils and pitfalls of mixed-effects regression models in biology. PeerJ. 2020;8:e9522. doi: 10.7717/peerj.9522. [DOI] [Google Scholar]

- Simons DJ, Shoda Y, Lindsay DS. Constraints on generality (COG): A proposed addition to all empirical papers. Perspectives on Psychological Science. 2017;12:1123–1128. doi: 10.1177/1745691617708630. [DOI] [PubMed] [Google Scholar]