Abstract

The typical control of myoelectric interfaces, whether in laboratory settings or real-life prosthetic applications, largely relies on visual feedback because proprioceptive signals from the controlling muscles are either not available or very noisy. We conducted a set of experiments to test whether artificial proprioceptive feedback, delivered non-invasively to another limb, can improve control of a two-dimensional myoelectrically controlled computer interface. In these experiments, participants were required to reach a target with a visual cursor that was controlled by electromyogram signals recorded from muscles of the left hand, while they were provided with additional proprioceptive feedback on their right arm by moving it with a robotic manipulandum. Provision of additional artificial proprioceptive feedback improved the angular accuracy of their movements when compared to using visual feedback alone but did not increase the overall accuracy quantified with the average distance between the cursor and the target. The advantages conferred by proprioception were present only when the proprioceptive feedback had similar orientation to the visual feedback in the task space and not when it was mirrored, demonstrating the importance of congruency in feedback modalities for multi-sensory integration. Our results reveal the ability of the human motor system to learn new inter-limb sensory-motor associations; the motor system can utilize task-related sensory feedback, even when it is available on a limb distinct from the one being actuated. In addition, the proposed task structure provides a flexible test paradigm by which the effectiveness of various sensory feedback and multi-sensory integration for myoelectric prosthesis control can be evaluated.

Index Terms: Electromyogram signal, proprioceptive feedback, sensorimotor integration

I. Introduction

Myoelectric interfaces use the electrical activity of muscles (electromyogram (EMG)) to control computers or electrically actuated devices, such as prosthetic limbs [1]. During the operation of myoelectric interfaces the user typically relies on visual information as the main source of feedback about the state of the device. While there have been several attempts to deliver sensory feedback about the state of the interface through grip force feedback via vibro-tactile stimulation [2], vibro-tactile, mechano-tactile or electro-tactile stimulation [3]–[5], and feedback of the prosthetic joint angle or position through cutaneous stimuli [7],[8], it is not yet clear whether provision of other sensory signals in addition to vision would augment control of myoelectric interfaces. This is because, conventionally, the effectiveness of these sensory signals is quantified when vision is withheld. The aim of this paper is to develop a simple paradigm by which 1) the usefulness of the added feedback modality delivered to an intact body organ can be examined in different visual feedback conditions and 2) the importance of the congruency between different feedback modalities can be quantified.

Only limited attention has been given to the provision of positional cues as feedback via proprioception; that is “the perception of joint and body movement as well as position of the body, or body segments, in space” [9]. Proprioceptive feedback provided mechanically to the arm using an exoskeleton has been shown to improve monkeys’ performance in a brain-machine interface task [10]. In a study with able-bodied humans [11], subjects controlled the motion of a virtual finger to grasp a virtual object via a grasping force input measured at the thumb. The grasping force controlled proprioceptive feedback that was felt at the index finger. It was shown that additional proprioceptive feedback could improve control of a visual representation of the grasp, albeit only for small target sizes.

Recently in [12], it was shown that the use of proprioceptive feedback via an exoskeleton in a non-invasive brain-computer interface experiment can enhance sensorimotor de-synchronization of the brain rhythms. However, for users of myoelectric interfaces (e.g. myoelectric prostheses), the traumatic event of amputation can impair peripheral sensorimotor connections at the site of injury. Hence, delivering biologically accurate proprioceptive feedback to amputees through the controlling limb has only been possible using invasive electrodes [13] and sophisticated electronics [14] positioned at more proximal sites.

Here we examined an alternative strategy, delivering proprioception to a limb different from the one that controls the myoelectric task, and investigated whether a sensorimotor association can be learnt with such a strategy. A similar concept was previously employed in a simple one-dimensional task by Wheeler et al. [8]. However, since provision of artificial proprioception to the controlling limb was not their primary purpose, they did not evaluate its usefulness in the absence or presence of visual feedback.

We developed a myoelectric interface task in which the participants controlled the position of a computer cursor by myoelectric activity associated with small isometric contractions of the muscles in their left hand and arm. Subjects received artificial proprioceptive feedback (PF) to their right arm that was moved passively by a robotic manipulandum.

The interaction between different sensory modalities has long been a subject of research [15], [16]. In contrast to visual information that is encoded in the extrinsic coordinates, proprioceptive information is encoded in a body-centered coordinate system [17], [18]. Therefore, for artificial proprioceptive feedback to be useful in a visually instructed task - such as the proposed setup - the proprioceptive information must be transformed and integrated with the visual information. We examined whether such multi-sensory integration across limbs can be learnt and the extent to which this depends on the spatial congruency between the proprioceptive and visual feedback.

In Section II, we present the hardware for delivery of the artificial proprioception, the methods of recording and analysis of the EMG signals and, finally, the details of our three experimental protocols. Results of the experiments are reported in Section III, before we discuss their significance and conclude in Section IV.

II. Method

A. Subjects

40 healthy right-handed subjects took part in three experiments: 21 in Experiment 1 and 19 in both Experiments 2 and 3. The latter two were run in close succession. All subjects gave their informed written consent before participation. The study was approved by the local ethics committee at Newcastle University.

B. Artificial proprioception

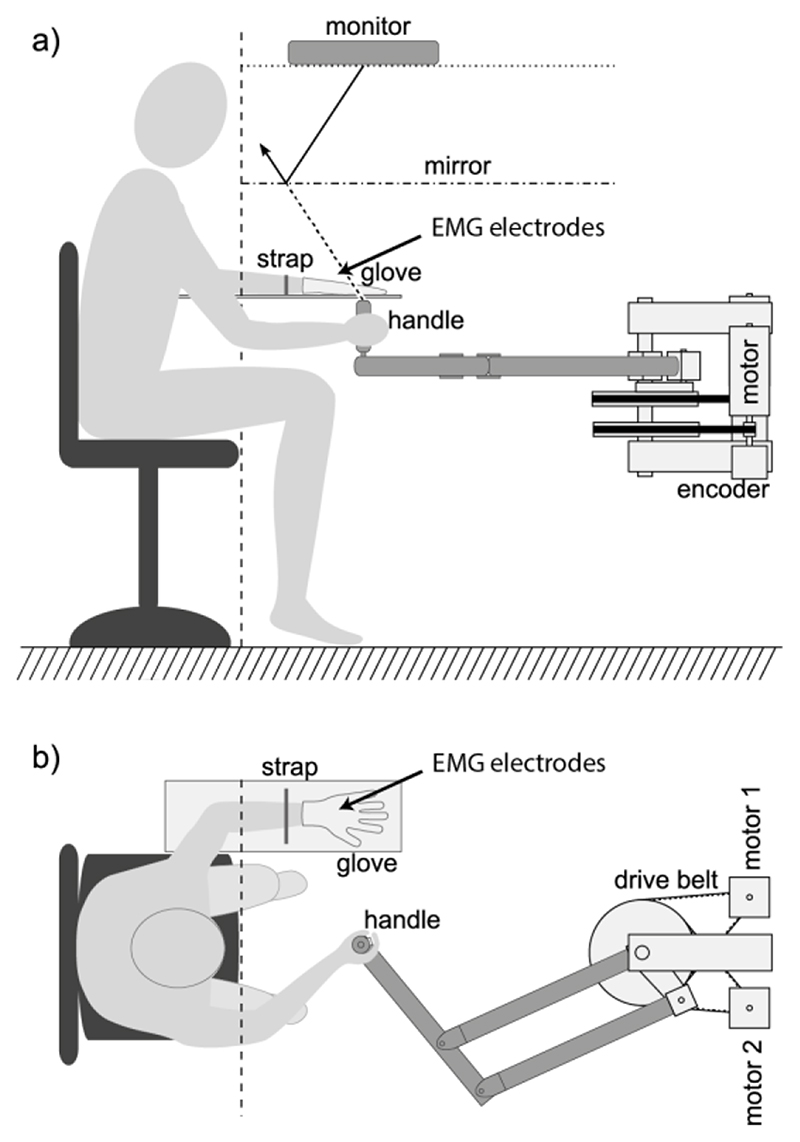

We used an active manipulandum to provide artificial proprioceptive feedback about the cursor to subjects’ right arms, by guiding their hands along a movement trajectory that was controlled by myoelectric activity measured on their other, left hand and arm. A schematic view of the setup can be seen in Fig. 1. The robotic device was constructed in-house, similar to the vBot setup described in [19]. It consisted of a parallelogram arm, powered by two motors via drive belts that adjusted the angles of the two arm links. Angular positions were monitored through incremental encoders on each drive axis. A rotating handle was mounted onto the end of the arm, housing a button that had to be pushed with the index finger while holding the handle in order to supply power to the motors.

Fig. 1. Schematic view of the experimental setup.

(a) Side view: motorized manipulandum guiding the non-controlling, right hand along the cursor trajectory. Cursor and targets were projected from a monitor mirror system such that the visual cursor appears in the same plane as the handle of the manipulandum. (b) Top view: subjects controlled the task through isometric contractions in their left hand, immobilized on a horizontal arm rest, while the right hand was guided in the horizontal plane, congruent with cursor position.

We immobilized subjects’ controlling left hands and arms on an armrest with a modified glove and a Velcro strap. The glove was glued to a board that was mounted on the armrest (Fig. 1). The armrest was mounted high enough to allow for unobstructed movement of the handle. Subjects observed the contents of a computer monitor, mounted on top, through a semi-transparent mirror so that they perceived a virtual horizontal display at the same height as the tip of the handle they were holding (Fig. 1a). During experiments, lights were switched off so that subjects did not receive visual feedback of their arm and could view only the computer display.

In some experimental conditions, we made the manipulandum closely follow a visual cursor moving in the virtual plane using a standard proportional-integral-derivative (PID) controller, with the cursor visible at the position of the handle. The coefficients of the PID controller were first determined using the Ziegler–Nichols method and then fine-tuned manually to avoid strong vibrations in the motion feedback, which could perturb proprioception by introducing sensory noise. Fast, low-amplitude variability in the cursor position could otherwise superimpose a vibratory component onto the overall trajectory of the manipulandum and degrade the proprioceptive feedback. We therefore reduced control stiffness so that the controller tracked only the low-frequency movements relevant to the task, empirically decreasing the integral gain to obtain smoother trajectories at the expense of some stiffness, tracking accuracy and match between the movements of cursor and manipulandum. Table I lists correlation coefficients, temporal lags and average distance between cursor and handle positions.
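The tracking scheme described above can be illustrated with a minimal discrete-time PID sketch for one axis of the handle. This is not the authors' controller: the class name, the gains and the 1 ms time step are hypothetical placeholders, whereas the actual gains were obtained by Ziegler–Nichols tuning followed by manual adjustment.

```python
class PID:
    """Discrete-time PID controller for a single axis (illustrative only)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0      # accumulated error over time
        self.prev_error = 0.0    # error at the previous update

    def update(self, cursor_pos, handle_pos):
        """Return the drive command that moves the handle towards the cursor."""
        error = cursor_pos - handle_pos
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical gains and time step, chosen only to make the example run.
pid_x = PID(kp=2.0, ki=0.1, kd=0.05, dt=0.001)
command = pid_x.update(cursor_pos=1.0, handle_pos=0.0)
```

In such a scheme, lowering `ki` weakens the correction of accumulated tracking error, which is the kind of accuracy-for-smoothness trade-off described in the text.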

Table I. Accuracy of manipulandum.

| PF+VF (PF) | 1D targets, movement phase | 1D targets, hold phase | 2D targets, movement phase | 2D targets, hold phase |
|---|---|---|---|---|
| rx | 0.93 (0.95) | 0.72 (0.69) | 0.74 (0.74) | 0.77 (0.75) |
| ry | 0.93 (0.95) | 0.72 (0.68) | 0.96 (0.95) | 0.67 (0.66) |
| lag (ms) | 0 (0) | 6 (6) | 0 (0) | 17 (13) |
| d (cm) | 1.3 (1.2) | 1.9 (1.6) | 1.6 (1.5) | 2.7 (2.3) |

Accuracy of manipulandum matching the cursor position in PF+VF condition or the hypothetical cursor position in PF condition (in brackets). rx/ry: correlation coefficients of trajectories in horizontal and vertical direction, respectively; average over trials. lag (ms): median time lag between the visual cursor and the manipulandum (lag of maximum cross covariance). d (cm): average distance between cursor and manipulandum.
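The lag measure in Table I (lag of maximum cross covariance) can be sketched as follows. This is an illustrative stdlib-only implementation under stated assumptions, not the analysis code used in the study: the function name, the `max_lag` search range and the toy signals are hypothetical, and the result is in samples, to be converted to milliseconds with the sampling interval.

```python
def lag_of_max_cross_covariance(cursor, handle, max_lag):
    """Lag (in samples) at which the handle trace best matches the cursor trace.

    A positive result means the handle trails the cursor by that many samples.
    """
    n = len(cursor)
    mean_c = sum(cursor) / n
    mean_h = sum(handle) / n
    best_lag, best_cov = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        cov = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:  # only use overlapping samples
                cov += (cursor[i] - mean_c) * (handle[j] - mean_h)
        if cov > best_cov:
            best_cov, best_lag = cov, lag
    return best_lag


# Toy example: the handle reproduces the cursor pulse two samples later.
cursor_trace = [0, 0, 1, 0, 0, 0, 0]
handle_trace = [0, 0, 0, 0, 1, 0, 0]
lag_samples = lag_of_max_cross_covariance(cursor_trace, handle_trace, max_lag=3)
```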

Importantly, movement of the handle did not influence cursor position so that the experimental task could not be affected at all by subjects’ right arm movements. Nevertheless, subjects were strongly discouraged from moving or resisting the manipulandum actively.

C. Electromyography Recordings

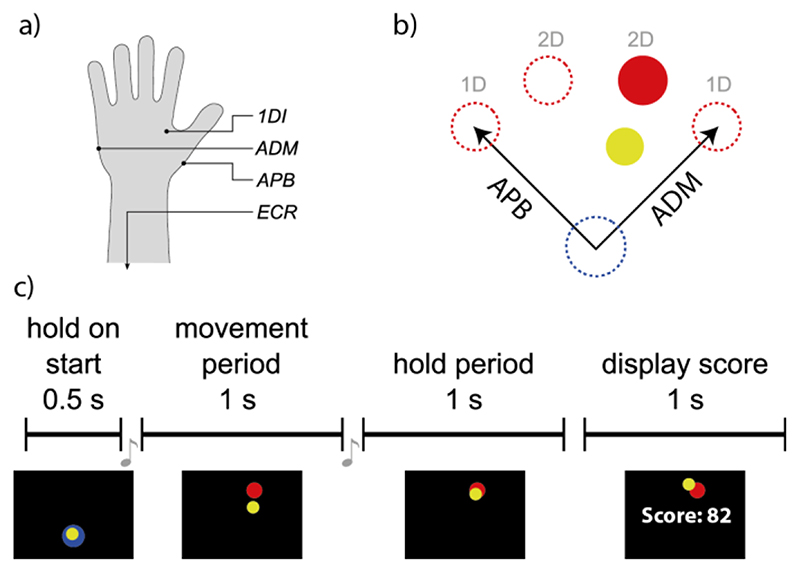

We recorded the EMG signal from the muscles of the left hand and forearm as indicated in Fig. 2a. For Experiment 1, the EMGs were recorded from abductor pollicis brevis (APB) and abductor digiti minimi (ADM). For Experiments 2 and 3, we recorded additional signals from the first dorsal interosseus (1DI) and extensor carpi radialis (ECR) muscles. APB, ADM and 1DI are intrinsic hand muscles, abducting thumb, little finger and index finger, respectively; ECR is located in the forearm and extends the hand at wrist level. Adhesive gel electrodes (Bio-logic, Natus Medical Inc., USA) were positioned over the belly of the muscle and an adjacent knuckle in the case of the intrinsic hand muscles, or on two positions along the muscle in the case of ECR. Myoelectric signals were amplified by a NeuroLog system (NL844/NL820A, Digitimer, UK) with the gain adjusted between 100 and 5000, band-pass filtered between 30 Hz and 1 kHz and subsequently digitized and transmitted to a PC at 2500 samples per second (NI USB-6229 BNC, National Instruments, USA). A Python-based graphical user interface was developed to implement data acquisition.

Fig. 2. Experimental layout.

(a) EMGs were recorded from muscles in the left hand and forearm; (b) task structure; (c) Trial structure. Subjects performed a virtual 4-target center-out task by controlling a cursor (yellow) from a starting zone (blue) to one of four target positions (red) with activations of two different muscles (Experiments 1 and 2: APB and ADM; Experiment 3: 1DI and ECR). Lateral targets required only one-dimensional control while the two central targets could only be reached with contributions from both muscles.

Before the start of an experiment, we recorded the signal offset as well as the amplitude of the measured signal, during rest and during comfortable contraction, for each EMG channel separately. To determine comfortable contraction levels, subjects were instructed to contract each muscle at a level that could be comfortably maintained and repeated many times without fatigue. In our previous studies with similar myoelectric interfaces [20]–[22], this level corresponded to an activity between 10% and 20% of the maximum voluntary contraction. Any signal offset was subtracted from each channel as the first pre-processing stage. Instantaneous activation levels of recorded muscles y were estimated by smoothing (with a rectangular window) the preceding 750 ms of rectified EMG, both during online processing and during the assessment of activation levels in the calibration data. This smoothing slows the movement of the cursor. However, probably because the estimate was updated continuously and movements in our task were relatively slow, we found that subjects could adapt to it quickly, as in the previous work of Radhakrishnan et al. (2008) [21], where the smoothing window was 800 ms in one experimental condition. The subjects did not report that the delay impeded their performance of the task.

The same procedure was repeated to calculate the rest yr and the comfortable contraction yc levels.

During the experiments, a normalized muscle activation level yn was computed for every channel independently by dividing the instantaneous level y by the level of comfortable contraction yc after resting levels yr were subtracted from either:

$$y_n = \frac{y - y_r}{y_c - y_r} \qquad (1)$$
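The pre-processing chain described above (offset removal, rectification, smoothing of the preceding 750 ms with a rectangular window at 2500 samples per second, then normalization by the rest and comfortable-contraction levels) might be sketched as follows. This is a minimal illustration and not the authors' Python software; the function names and the toy data are hypothetical.

```python
def activation_level(samples, offset=0.0, fs=2500, window_s=0.75):
    """Mean of the rectified, offset-corrected EMG over the preceding window.

    `samples` must be non-empty; if fewer samples than one full window are
    available, all of them are used.
    """
    n = int(round(fs * window_s))      # 1875 samples for 750 ms at 2500 Hz
    recent = samples[-n:]              # most recent n samples
    return sum(abs(s - offset) for s in recent) / len(recent)


def normalize(y, y_rest, y_comfort):
    """Normalized activation: 0 at rest, 1 at comfortable contraction."""
    return (y - y_rest) / (y_comfort - y_rest)


# Toy example: a signal alternating between +1 and -1 has a rectified mean of 1.
y = activation_level([1.0, -1.0] * 1000)
yn = normalize(y, y_rest=0.2, y_comfort=1.0)
```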

D. Experimental protocols

The experiment consisted of a myoelectric-controlled center-out task with four circular targets (⌀2.4 cm) at 45°, 75°, 105° and 135° on a quarter circle of 8.6 cm radius around the circular starting zone (⌀3.6 cm) in the lower part of the workspace (Fig. 2b). The position of a yellow cursor (⌀1.8 cm) was determined by the activation levels of the two controlling muscles. Contraction of a muscle moved the cursor along that muscle’s direction of action (DoA), in proportion to the online estimate of its normalized activation level, whereas relaxation brought the cursor back to the starting position (see Equation 2). The two DoA vectors pointed out from the starting point in the 45° and 135° directions, as shown in Fig. 2b. The arrangement of DoAs was designed to be unintuitive, that is, the DoAs did not correspond to the movements the respective muscle would produce in the hand during natural movement. We deliberately avoided intuitive DoAs in our experiments to slow down the learning process and better observe improvements and sensorimotor integration over time.

The four target positions were divided into two groups: 1D targets, represented by the lateral positions (45° and 135°), and 2D targets which included the two central targets (75° and 105°). For movements to 1D targets, activation of the muscle with a DoA perpendicular to target direction was ignored, which resulted in a simpler, one-dimensional control scheme. For 2D targets, two-dimensional cursor position x was determined by the vector sum of both DoA vectors, scaled by the normalized activation level yn of their respective muscle:

$$\mathbf{x} = \sum_{i=1}^{2} y_{n,i}\,\mathbf{DoA}_i \qquad (2)$$
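The mapping from normalized activations to cursor position can be sketched as below. The sketch assumes unit-length DoA vectors, a hypothetical `gain` that converts activation into workspace units, and a position expressed relative to the starting point; none of these scaling details are given in the text. For 1D targets, the activation of the muscle perpendicular to the target direction would simply be set to zero.

```python
import math


def doa_vector(angle_deg):
    """Unit vector pointing in the given direction (degrees, counter-clockwise)."""
    a = math.radians(angle_deg)
    return (math.cos(a), math.sin(a))


def cursor_position(yn_a, yn_b, doa_a_deg=135.0, doa_b_deg=45.0, gain=1.0):
    """Vector sum of both DoA vectors, each scaled by its muscle's activation.

    The position is relative to the starting point; `gain` is a hypothetical
    scale factor from normalized activation to workspace units.
    """
    ax, ay = doa_vector(doa_a_deg)
    bx, by = doa_vector(doa_b_deg)
    return (gain * (yn_a * ax + yn_b * bx), gain * (yn_a * ay + yn_b * by))


# Equal activation of both muscles moves the cursor straight up (90 degrees).
x, y = cursor_position(1.0, 1.0)
```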

Each trial consisted of four distinct phases, outlined in Fig. 2c. At the beginning, a blue circle in the lower workspace indicated the starting zone. The experiment continued only after the yellow cursor was held continuously within the starting zone for 0.5 s. An auditory signal (250 ms long at 660 Hz) marked the beginning of a movement period during which one of the four targets was shown instead of the starting zone. During this period of 1 s, subjects were asked to move the cursor to the newly presented target and try to maintain the cursor inside the target during the ensuing hold period, marked by another auditory cue (250 ms long at 880 Hz), for one more second. A performance-related score was calculated and presented to the participants at the end of each trial. The score reflected the percentage of time the cursor overlapped, even partially, with the target circle during the hold period. With a screen refresh rate of 75 Hz, the 1 s hold period comprised N = 75 screen updates, and the software counted the number of updates n (out of N) in which

$$\lVert P_{cursor} - P_{target} \rVert_2 < r_{cursor} + r_{target} \qquad (3)$$

where $\lVert\cdot\rVert_2$ denotes the Euclidean distance and $r_{cursor}$ and $r_{target}$ are the radii of the cursor and target circles. The score in each trial was $100 \cdot n/N$. The last cursor position of the hold period, together with the target, was still visible on screen during presentation of the performance score, even in conditions that withheld visual feedback of the cursor during movement. Recording, online processing, experimental control and the user interface were handled by Python-based software, developed for these and similar experiments.
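The per-trial score can be illustrated with a short sketch. It assumes that "overlap, even partially" means the distance between the circle centers is smaller than the sum of the two radii (0.9 cm for the cursor and 1.2 cm for the target, from the stated diameters); the function name and the example positions are hypothetical.

```python
import math


def trial_score(cursor_positions, target, r_cursor=0.9, r_target=1.2):
    """Percentage of screen updates in which the cursor and target circles overlap.

    `cursor_positions` holds one (x, y) cursor center per screen update of the
    hold period; all lengths are in cm.
    """
    n = sum(
        1 for p in cursor_positions
        if math.dist(p, target) < r_cursor + r_target
    )
    return 100.0 * n / len(cursor_positions)


# Two of the four sampled positions lie within 2.1 cm of the target center.
score = trial_score([(0.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 2.2)], (0.0, 0.0))
```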

Experiment 1

Experiment 1 consisted of 480 trials, divided into two parts: a familiarization phase of 120 trials during which subjects received visual and artificial proprioceptive feedback (VF+PF condition) and a test phase of 360 trials with half of the trials running in the VF+PF condition. The remaining trials were equally divided between conditions of only PF, without a visible cursor (PF condition), only visual feedback (VF condition) or neither kind of sensory feedback (noFB condition). During the test phase, the conditions appeared in a pseudorandom order so that in each set of 24 consecutive trials, each of the feedback conditions PF, VF and noFB was presented in combination with each of the four targets exactly once, while in the same set of trials, condition VF+PF was combined with each target three times. Cursor position was controlled by muscles APB (DoA: 135°, up left) and ADM (DoA: 45°, up right), as illustrated in Fig. 2a. All subjects in this experiment were naïve to the concepts of myoelectric control as well as PF.
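The block structure of the test phase can be sketched as follows. The sketch assumes a plain shuffle within each set of 24 trials, since the text does not specify how the pseudorandom order was generated; the function names and the seed are hypothetical.

```python
import random

TARGETS = (45, 75, 105, 135)  # target directions in degrees


def make_block(rng):
    """One set of 24 trials: VF+PF three times per target, others once per target."""
    block = [("VF+PF", t) for t in TARGETS for _ in range(3)]
    block += [(cond, t) for cond in ("PF", "VF", "noFB") for t in TARGETS]
    rng.shuffle(block)  # assumed randomization: simple shuffle within the set
    return block


def make_test_phase(n_blocks=15, seed=0):
    """Concatenate shuffled blocks; 15 blocks of 24 give the 360 test trials."""
    rng = random.Random(seed)
    return [trial for _ in range(n_blocks) for trial in make_block(rng)]


schedule = make_test_phase()
```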

Experiment 2

Experiment 2 consisted of 240 trials. During the first 120 trials, i.e. the familiarization phase, subjects received only visual feedback (VF condition). The test phase was equivalent to that of Experiment 1, with half of the trials running in the VF+PF condition, but consisted of only 120 trials. DoAs and controlling muscles were the same as in Experiment 1. This experiment was carried out with a new group of volunteers who had not participated in Experiment 1 and had therefore not experienced PF before. They received their first experience of PF at the beginning of the test phase.

Experiment 3

Experiment 3 followed immediately after Experiment 2 with the same participants. To reduce the effect of prior training, two previously unused muscles, 1DI and ECR, were used for cursor control in the new experiment instead of APB and ADM. Experiment 3 consisted of 240 trials including a familiarization phase of 120 trials. The experimental conditions reflected those of Experiment 1 with the critical difference that during the PF and VF+PF conditions, PF was not congruent with the cursor movement, but mirrored at the vertical midline so that the manipulandum guided the participant’s right hand to the left when the cursor moved to the right and vice versa.

E. Performance Metrics

In order to evaluate overall task performance and to track learning, we calculated the Euclidean distance between the centers of cursor position Pcursor and target position Ptarget and averaged this over the duration of the hold period in each trial. We refer to this measure as ‘target mismatch’, an error measure, normalized so that a value of 1.0 reflects the radius between starting point Pstart and the quarter-circle of the targets, whereas values close to zero indicate accurate matching of the target with little error:

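$$\text{target mismatch} = \frac{\left\langle \lVert P_{cursor} - P_{target} \rVert_2 \right\rangle_{hold}}{\lVert P_{target} - P_{start} \rVert_2} \qquad (4)$$

where $\langle\cdot\rangle_{hold}$ denotes the average over the hold period.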

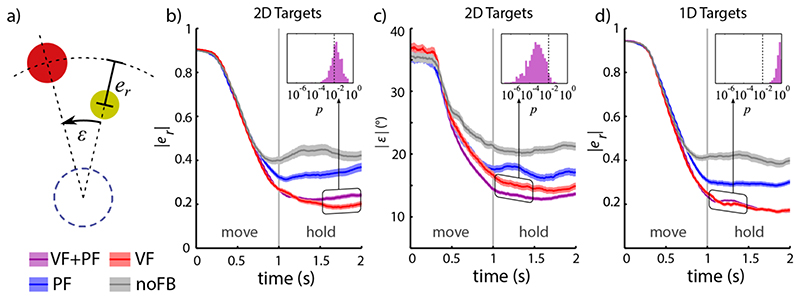

We further distinguished between the distance the cursor travelled and its direction from the starting point compared to target distance and direction, respectively, by converting cursor position to polar coordinates with the starting point as the origin. We defined absolute radial error |er|, indicating a mismatch in the magnitude of muscle contraction, and absolute angular error |ε|, reflecting errors in the relation between the activities of two muscles, illustrated in Fig. 3a. For the peripheral 1D targets, where control had only a single degree of freedom, no angular errors existed and radial errors were identical to the target mismatch measure.
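The performance metrics defined in this section can be sketched in a few lines. This is an illustration under stated assumptions rather than the analysis code of the study: the function names are hypothetical, the radial error is returned in the same units as the input positions (the text does not state whether it was normalized), and the angular error is returned in degrees.

```python
import math


def target_mismatch(hold_positions, target, start):
    """Mean cursor-target distance over the hold period, normalized so that
    1.0 equals the distance between the starting point and the target."""
    mean_dist = sum(math.dist(p, target) for p in hold_positions) / len(hold_positions)
    return mean_dist / math.dist(target, start)


def polar_errors(cursor, target, start):
    """Absolute radial error and absolute angular error (degrees) of the cursor,
    using polar coordinates with the starting point as the origin."""
    cx, cy = cursor[0] - start[0], cursor[1] - start[1]
    tx, ty = target[0] - start[0], target[1] - start[1]
    radial = abs(math.hypot(cx, cy) - math.hypot(tx, ty))
    angle = math.degrees(math.atan2(cy, cx) - math.atan2(ty, tx))
    angle = (angle + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return radial, abs(angle)


# A cursor resting at the starting point gives a target mismatch of 1.0.
start, target = (0.0, 0.0), (0.0, 8.6)
mismatch = target_mismatch([start], target, start)
```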

Fig. 3. Average time-course of cursor trajectories over movement and hold period in Experiment 1.

(a) Mismatch between cursor and 2D targets was separated into radial errors er and angular errors ε. (b)-(d) Mean values over trials 121-480 from all subjects (coloured lines) ± 1 standard error (bands) are presented for different feedback conditions: VF+PF (purple), PF (blue), VF (red) and noFB (grey). (b) Absolute radial errors for 2D targets. Inset shows the spread of p-values from 500 independent t-tests for differences between VF+PF (randomized sub-sample, cf. text) and VF in the time window 1.5 - 2.0 s; the dotted line represents p = 0.01. (c) Absolute angular errors for 2D targets (in degrees). Inset: spread of p-values for test VF+PF vs. VF, 1.0 - 1.5 s. (d) Absolute radial errors for 1D targets (1D movements do not allow for angular errors). Inset: spread of p-values for test VF+PF vs. VF, 1.0 - 1.5 s.

F. Statistical Analysis

In several cases we compared two groups of samples and tested for significant differences in their means, using Student’s t-test for unpaired samples. When a family of comparisons was made, significance levels were adjusted to yield a family-wise error rate < 0.05 (Bonferroni correction). Before the t-tests, the normality of the data points was ascertained with a Shapiro–Wilk test.

III. Results

Our analysis focused on the subjects’ ability to learn the task in the presence and absence of visual feedback. To avoid a bias that could be introduced by non-learning subjects, we excluded from analysis those who could not gain viable control over the task. As a common criterion for the exclusion of subjects, we based this decision on trials 121 to 240, which had comparable conditions in all three experiments. Subjects were considered non-learners if the average target mismatch (Eq. 4) of all trials with visual feedback (conditions VF+PF and VF) was greater than 0.8. Thus, two subjects were excluded from Experiment 1, three from Experiment 2 and one from Experiment 3. Preliminary results of this work were published in [23].

A. Experiment 1

Within-trial dynamics

We examined task-related errors in the test phase of Experiment 1 as they evolved over movement and hold period. To separate specific features of myoelectric control, we distinguished between radial and angular errors (Fig. 3). On a grand average, errors were stationary over the time of the hold period, but displayed some notable differences in dynamics between feedback conditions. Differences between feedback conditions were found and compared within three separate time windows: the late movement period (0.5-1.0 s after target appearance), the early hold phase (1.0-1.5 s) and the late hold phase (1.5-2.0 s). Within the early movement period (0-0.5 s after target appearance), comprising reaction time and initial muscle activation before feedback correction, no significant differences occurred between conditions (Fig. 3b-d).

We used a t-test to determine whether the time-averaged differences between cursor trajectories of two conditions within a time window were significant. To calculate these differential measures, we paired up trials from the same subject, to the same target and from within the same 24-trial time frame of the experiment. Since the VF+PF condition had three times more trials than the other conditions, only one matching VF+PF trial out of every three was randomly selected for a paired t-test. This random selection was repeated 500 times independently. Absolute angular errors for 2D targets decreased by ~5% in the VF+PF condition compared to the VF-only condition during 1.5 – 2.0 s into the trial (Fig. 3c). However, this improvement was not observed in absolute radial errors. Insets in Fig. 3b-d show the distribution of p-values for t-tests between VF+PF and VF conditions. A complete overview of all significant differences between feedback conditions is given in Fig. S1 (Supplementary Material).
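The repeated random sub-sampling described above might look like the following sketch. It assumes the data have already been arranged so that each VF trial is matched with the three VF+PF trials from the same subject, target and 24-trial set; the function names, the seed and the toy error values are hypothetical. Each of the 500 draws yields one list of paired differences, on which a paired t-test would then be run.

```python
import random


def subsample_differences(vfpf_triplets, vf_values, rng):
    """One random draw: pick one of the three matching VF+PF trials per VF trial
    and return the paired differences (VF+PF minus VF)."""
    return [rng.choice(triplet) - vf for triplet, vf in zip(vfpf_triplets, vf_values)]


def repeated_subsamples(vfpf_triplets, vf_values, n_repeats=500, seed=0):
    """Repeat the random selection independently n_repeats times."""
    rng = random.Random(seed)
    return [
        subsample_differences(vfpf_triplets, vf_values, rng)
        for _ in range(n_repeats)
    ]


# Toy errors (arbitrary units) for two matched trial groups.
triplets = [(10.0, 12.0, 11.0), (8.0, 9.0, 7.0)]
vf = [13.0, 9.0]
draws = repeated_subsamples(triplets, vf)
```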

Overall task performance

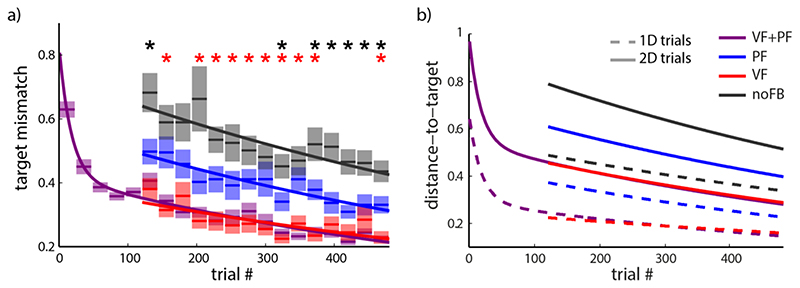

To evaluate overall task performance, we calculated average target mismatch during the hold phase over a series of time windows to produce learning curves. Trials from all learning subjects were pooled and averaged over time frames corresponding to 24 trials, with trials from different conditions within that period kept separate. Figure 4a reflects improvements of task performance in Experiment 1. Parametric fits are overlaid as solid lines. The temporal evolution of target mismatch in conditions PF, VF and noFB, which were only encountered in the test phase, could be well approximated by a single exponential fit (fit significance p < 0.05 for all fits, with varying r²), whereas the more rapid initial learning phase in the VF+PF condition was accounted for by a double exponential function.

Fig. 4. Learning of myoelectric-control in Experiment 1.

(a) Target mismatch during the hold period, averaged over trials of the same condition within each set of 24 consecutive trials in the experiment. Data is pooled over all subjects. Semi-transparent boxes show ± standard error of the mean with mean indicated by solid lines in the middle. Overlaid are exponential fits for conditions PF (blue), VF (red) and noFB (black) and a double exponential fit for condition VF+PF (purple), which was the only condition during the initial learning phase. Paired t-tests were run between the PF trials and the VF or noFB trials. Red and black asterisks indicate significantly lower target mismatch in VF or significantly higher values in noFB condition, respectively, when compared to the PF condition. (b) Learning of myoelectric-control in Experiment 1, separated for 1D (dashed lines) and 2D trials (solid lines).

Figure 4 suggests that the presence of visual feedback allowed subjects to match the target during the hold period, irrespective of whether additional proprioceptive feedback was supplied (VF+PF condition) or not (VF condition). For this measure, no improvement over VF could be found in the VF+PF condition (multiple paired t-tests, Bonferroni corrected, p > 0.05).

A significantly lower average target mismatch in the PF vs. the noFB condition (Fig. 4a, black asterisks) and a significantly higher average vs. the VF condition (red asterisks) were confirmed by applying a series of paired t-tests over short stretches of 24 trials, represented by the averages shown in Fig. 4a, comprising one trial to each target for each of the three conditions (PF, VF and noFB) of each subject. Trials of different conditions were paired for the same target of the same subject. We used a Bonferroni correction for multiple comparisons (multiple t-tests with post-hoc analysis), testing for a family-wise error rate smaller than 0.05 (corresponding to p < 0.0033 for each single test). Therefore, task performance with PF as the only source of sensory feedback (PF) was consistently better than without feedback (noFB), but weaker than in the VF or VF+PF condition. These differences were maintained throughout the course of learning.
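The per-test threshold quoted above follows from dividing the family-wise error rate by the number of comparisons. The sketch below assumes one comparison per set of 24 trials in the 360-trial test phase (15 tests), an assumption that is consistent with the quoted threshold but not stated explicitly in the text. It also shows the paired t statistic; obtaining the p-value additionally requires the t distribution with n − 1 degrees of freedom, which is omitted here. Function names and toy data are hypothetical.

```python
import math
import statistics


def bonferroni_alpha(family_alpha, n_tests):
    """Per-test significance level that keeps the family-wise error rate bounded."""
    return family_alpha / n_tests


def paired_t_statistic(a, b):
    """t statistic of a paired t-test on matched samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error


n_sets = 360 // 24                       # assumed number of comparisons: 15
alpha = bonferroni_alpha(0.05, n_sets)   # approximately 0.0033
t = paired_t_statistic([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 1.0, 1.0])
```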

Control errors for movements to the peripheral 1D targets (Fig. 4b) were lower than for the substantially more difficult case of two-dimensional control (2D targets). However, the relations between different feedback conditions were independent from target positions or dimensions.

Similar learning curves could be obtained using the score, presented to subjects at the end of each trial, as a performance metric (Fig. S2, Supplementary Material). However, although this measure was provided to the subject during the experiments as a simple and intuitive performance indicator, it is not as sensitive as the target mismatch index in distinguishing between the four feedback conditions. The main reason for this shortcoming is that the score introduces floor and ceiling effects, i.e. a cut-off at scores of 0 and 100, beyond which no further distinction of performance is possible.

B. Experiment 2

With a new group of subjects, we tested whether subjects in Experiment 1 showed higher performance in the early PF-alone trials because they had an innate mapping between visual and proprioceptive feedback, or because they had acquired this mapping by experiencing both types of feedback simultaneously during the initial familiarization block (VF+PF condition). Therefore, in Experiment 2, condition VF+PF in the familiarization phase of Experiment 1 was replaced with condition VF only, withholding any experience of PF until the onset of the test phase in trials 121-240.

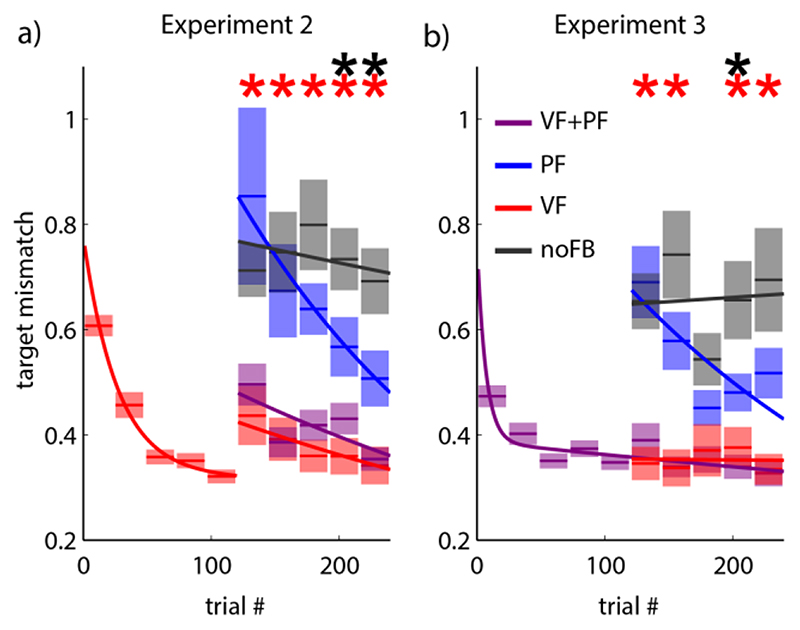

While average target mismatch in VF+PF condition was still not significantly different from VF condition at the same stage of learning (multiple paired t-tests, Bonferroni corrected, p > 0.05), performance in PF condition equaled that of the noFB condition at the beginning of the test phase and only became significantly better in later trials (Fig. 5a) (multiple paired t-tests, Bonferroni corrected, p < 0.05, black asterisks). This indicates that artificial PF needs significant prior experience to be used as a source of feedback associated with the task, and that this association was in fact formed during the familiarization phase in Experiment 1. The learning curve for the VF condition shows a step towards higher control errors at the beginning of the test phase, when PF was first introduced as a new sensory modality to be processed. The second part of the curve in Fig. 5a was therefore fitted with a separate exponential function to accommodate this sudden change.

Fig. 5. Experiments 2 and 3.

(a) Experiment 2: familiarization phase with VF condition (instead of VF+PF) followed by test sessions of VF, PF, VF+PF and noFB. (b) Experiment 3: artificial proprioceptive feedback mirrored at vertical midline. Paired t-tests were run between PF trials and VF or noFB trials within a set. Red and black asterisks indicate significantly lower target mismatch in VF or significantly higher values in noFB condition, respectively, with a family-wise error rate ≤ 0.05 (Bonferroni correction).

C. Experiment 3

With Experiment 3, we tested whether the integration of artificial PF into sensorimotor control was enabled by the fact that vision and artificial proprioception provided congruent feedback, or whether more arbitrary relations – specifically, with proprioception as a mirror image of vision – could be learned equally well. Therefore, in contrast to Experiment 1, we designed the handle movement providing artificial PF in Experiment 3 to be mirrored at the vertical midline.

The subjects started this experiment with a familiarization phase with both VF and PF. During the familiarization phase, very rapid initial learning could be observed (Fig. 5b). This is not unexpected, since subjects were no longer naïve to myoelectric control after participating in Experiment 2. However, since a different set of EMGs was used, specifics of the control had to be trained anew.

Next, when one or both feedback modalities were removed, control errors were high for both the PF and noFB conditions in the early trials of the test phase, indicating that integration of incongruent artificial PF into myoelectric control had been delayed. The red asterisks in Fig. 5b indicate statistically significant differences between the PF and VF conditions. In later trials, accuracy in the PF condition improved to significantly outperform the noFB condition (black asterisk; multiple paired t-tests with a family-wise error rate ≤ 0.05, Bonferroni corrected), demonstrating that subjects could learn to use the mirrored feedback, but only when the absence of visual feedback made its use necessary for performance of the task.
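The statistical comparison used throughout can be sketched as follows. This is a minimal illustration of a paired t statistic and a Bonferroni decision rule; the function names are hypothetical, and the p-values are assumed to come from a separate routine that evaluates the t distribution.

```python
import math


def paired_t_statistic(condition_a, condition_b):
    """Return (t, degrees_of_freedom) for paired samples.

    The statistic is the mean of the per-subject differences divided by
    the standard error of that mean.
    """
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    mean = sum(diffs) / n
    variance = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(variance / n), n - 1


def bonferroni_significant(p_values, family_alpha=0.05):
    """Flag tests that survive a Bonferroni correction.

    Each of the m tests is compared against family_alpha / m, which keeps
    the family-wise error rate at or below family_alpha.
    """
    threshold = family_alpha / len(p_values)
    return [p <= threshold for p in p_values]
```

With one test per set of trials, the per-test threshold shrinks as the number of sets grows, which is why only the larger differences in Fig. 5 carry an asterisk.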

IV. Concluding Remarks

Our findings demonstrate that artificial proprioceptive feedback, supplied to the contralateral arm, improves myoelectric control in the absence of visual feedback. When visual feedback was available, overall performance was unaffected by the additional feedback, despite a small but significant reduction in errors in movement direction. This observation is compatible with previous reports that proprioceptive information is more effective for estimating direction than distance [18], [24]. This selective advantage of added artificial proprioceptive feedback emerged early in the movement, but diminished towards the end of the hold period. We speculate that both visual and proprioceptive feedback contribute during movement, whereas visual feedback, which allows for direct matching of visual cursor and target, takes precedence over proprioception during the hold period. Direction errors must be controlled early to avoid large corrections later in the movement; thus, artificial proprioceptive feedback may have a significant impact during this period. By contrast, errors in distance are only relevant towards the end of the movement and during the hold phase, when vision may be more advantageous than proprioception.
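The distinction between direction and distance errors can be made concrete with a short sketch. The function names and the use of a common start position are illustrative assumptions; the exact definitions used in the analysis may differ.

```python
import math


def direction_error_deg(start, cursor, target):
    """Signed angle in degrees from the start-to-target direction to the
    start-to-cursor direction. Returns 0.0 if either vector has zero length.
    """
    cx, cy = cursor[0] - start[0], cursor[1] - start[1]
    tx, ty = target[0] - start[0], target[1] - start[1]
    cross = tx * cy - ty * cx
    dot = tx * cx + ty * cy
    return math.degrees(math.atan2(cross, dot))


def distance_error(cursor, target):
    """Euclidean distance between the cursor and the target."""
    return math.hypot(cursor[0] - target[0], cursor[1] - target[1])
```

A cursor that overshoots the target along the correct heading has zero direction error but a non-zero distance error, which is how the two measures can dissociate across feedback conditions.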

Based on studies of limb position drift during repetitive movements without visual feedback, Brown et al. [26] concluded that separate controllers exist for limb position and movement. According to this hypothesis, position control relies more heavily on vision, while proprioception effectively informs movement control, such that the direction and length of individual movements remain constant as the drift of hand position accumulates. The differential impact of artificial proprioceptive feedback on movement and hold periods in our task adds further support to the notion that proprioceptive feedback may be more important for movement control than for position control.

There were two reasons for choosing muscles of the hand instead of the forearm. First, we were interested in quantifying the capability of intrinsic hand muscles in controlling myoelectric interfaces because, in the long term, these results could contribute to the design of biomimetic and abstract controllers for partial hand prostheses [20]–[22], [25]. As we discussed in [20], we avoided the intuitive DoAs to slow down the learning process and better observe improvements over time. In prosthetic applications the choice of control signals may be restricted because of amputation, precluding intuitive control. These cases are, to some degree, better emulated by a non-intuitive design such as ours. Previously, we showed that muscles of the forearm and hand show very similar tuning activity patterns when controlling a myoelectric interface [20]. Therefore, had we carried out Experiment 1 with the muscles of the left arm, it is likely that we would have observed very similar results.

Our second rationale was that controlling a myoelectric interface with the left hand muscles while receiving artificial feedback on the contralateral arm not only allows testing for distributed sensorimotor integration but also imposes a second level of abstraction in the feedback; that is, proprioceptive information about the activity of hand muscles is relayed back to the brain via the arm. Importantly, we showed that in this myoelectric interface design, proprioceptive feedback from the controlling muscles (here on the left hand) is very limited [21],[22].

In the current study, the EMG signal was used to control the position of the cursor. This design was consistent with our previous work on myoelectric-controlled interfaces and helped with the interpretation of the results [20]–[22]. Further studies are required to determine whether other states, such as cursor velocity or acceleration, are better controllable through EMG. We detailed the robot performance characteristics in Table I to highlight the technical constraints of our experiment and setup. We did not observe any performance effects that we could relate directly to the robot performance, but we cannot state with certainty that better robot performance would not improve the results.
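To illustrate the distinction between position and velocity control, the sketch below maps a set of normalized muscle activations to a two-dimensional cursor. The linear summation along per-muscle directions of action, the gain, the time step and all names are assumptions made for illustration and do not reproduce the mapping used in the experiments.

```python
import math


def net_drive(activations, doa_degrees):
    """Sum normalized muscle activations along their directions of action."""
    x = sum(a * math.cos(math.radians(d)) for a, d in zip(activations, doa_degrees))
    y = sum(a * math.sin(math.radians(d)) for a, d in zip(activations, doa_degrees))
    return (x, y)


def position_control(activations, doa_degrees, gain=1.0):
    """Position control: the cursor sits wherever the current drive points,
    so relaxing all muscles returns it to the origin."""
    x, y = net_drive(activations, doa_degrees)
    return (gain * x, gain * y)


def velocity_control(cursor, activations, doa_degrees, dt, gain=1.0):
    """Velocity control: the drive is integrated over the time step dt,
    so relaxing all muscles leaves the cursor where it is."""
    vx, vy = net_drive(activations, doa_degrees)
    return (cursor[0] + gain * vx * dt, cursor[1] + gain * vy * dt)
```

Under position control a target must be held by sustained contraction, whereas under velocity control it is held by relaxing, so the two schemes place different demands on feedback during the hold period.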

Another important factor to consider is the time lag between the cursor (output of the motor system) and the robot position (sensory input) and its effect on sensorimotor integration. However, the investigation of delay-related effects was beyond the scope of the current work. In the current study we opted to keep the time lag between the cursor and the robot position as small as possible (Table I).

In our first experiment, following an initial familiarization phase in which artificial PF was supplied together with visual information (VF+PF), proprioception improved control as soon as visual feedback was withheld (test phase, PF condition). However, this did not occur if the familiarization phase consisted solely of visual feedback trials (Experiment 2). This is in agreement with the finding of Mon-Williams et al. [16], who showed that when vision is available, subjects perceiving limb position trust the visual feedback more than what they feel through their touch sensory system. Together, these results imply that sensory integration of the proprioceptive modality occurred implicitly during the familiarization phase, even though this additional feedback did not measurably impact task success when visual feedback was available.

In Experiment 2, we tested whether the higher performance in early PF-alone trials in Experiment 1 arose because subjects had an inherent mapping between congruent visual and proprioceptive feedback or because they acquired this mapping by experiencing the two feedback modalities simultaneously during the initial familiarization block. Results showed that if the familiarization block contained only VF trials, performance in the early PF-alone trials was as low as that in noFB trials. This finding strongly supports the notion of multi-sensory integration during the familiarization block when the two feedback modalities are presented simultaneously. The sudden deterioration in task-related performance in the VF condition after introducing three new feedback conditions (VF+PF, PF and noFB) at the beginning of the test phase may have resulted because the relative weightings of the visual and proprioceptive feedback modalities must be updated in the motor program for all conditions in parallel and within a short space of time. A potential mechanism to adjust the weights could be to decrease the weighting of visual feedback and incrementally increase the weighting of the proprioceptive feedback. This approach would consequently lead to performance degradation in the VF-only condition. An alternative, simpler explanation could be that the performance drop in the VF-only condition is the result of a general increase in the computational load required to integrate the additional feedback modality into myoelectric control. Further work will be required to determine how multi-sensory integration and learning evolve in unfamiliar and abstract tasks such as the one we proposed in this article.
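The re-weighting mechanism suggested above can be expressed as a toy model. The linear combination, the fixed step size, the lower bound on the visual weight and the function names are all assumptions chosen for illustration; they are not derived from the data.

```python
def combined_estimate(visual_xy, proprio_xy, w_visual):
    """Weighted average of two position estimates; the weights sum to 1."""
    w_proprio = 1.0 - w_visual
    return tuple(w_visual * v + w_proprio * p
                 for v, p in zip(visual_xy, proprio_xy))


def shift_weight_towards_proprioception(w_visual, step=0.05, floor=0.5):
    """Decrease the visual weight by one increment, never below `floor`."""
    return max(floor, w_visual - step)
```

In such a scheme, any weight moved towards proprioception is taken from vision, so a condition in which only vision is informative would be expected to suffer while the weights are being adjusted.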

In Experiment 3, we tested whether this implicit integration of artificial proprioception into sensorimotor control required congruent visual and proprioceptive information. We found that, unlike in the case of congruent feedback, proprioceptive feedback was not incorporated implicitly into the control strategy despite its provision with visual feedback during the familiarization phase. This is consistent with the finding of Pipereit et al. [28] that proprioception is not used for sensorimotor adaptation when observed motor errors conflict with vision. However, even incongruent proprioceptive feedback could improve performance in the absence of visual feedback. This was only seen late in the test phase, after training on this specific condition (PF condition). Therefore, we believe that proprioceptive information is weighted less during the learning process when it contradicts, or at least when it does not fully agree with, visual feedback.

Control of a dexterous hand prosthesis in an unpredictable environment will benefit from the provision of fast, reliable and potentially multi-modal sensory feedback [29], [30]. Non-visual feedback modalities can aid myoelectric control when vision is unavailable, and may help the prosthesis become incorporated into the wearer’s body image [31]. For the foreseeable future, the only way to deliver proprioceptive feedback of the posture or position of a prosthesis is through intact sensory pathways. Previous efforts have focused on substituting other sensory modalities, such as non-invasive tactile stimulation [2],[3], or on invasive targeted sensory re-innervation [32]. Our results suggest that an alternative strategy may be to substitute the proprioceptive faculties of another limb. In either case, finding appropriate targets for effective proprioceptive feedback remains a challenge, because the artificial sense must not hinder the normal function of intact pathways. Nevertheless, the flexibility of the motor system to incorporate proprioceptive information from other limbs presents opportunities to improve the usability of prostheses by exploiting the range of alternative sensory channels available to amputees [33]. The proposed paradigm, in its current design, may not be useful in real-life prosthetic control applications, since it may not be practical to provide proprioceptive feedback to the healthy arm. Our motivation is ultimately to provide the proprioception corresponding to a prosthesis to one of the fingers of the healthy arm (possibly the little finger, which is used least).

The proposed task structure can provide a flexible paradigm by which the effectiveness of multi-sensory integration in different feedback conditions can be evaluated.

Biographies

Tobias Pistohl was born in Munich, Germany, in 1975. He received a diploma degree in biology from University of Freiburg, Germany, in 2004 and earned his Ph.D. degree from University of Freiburg in 2010, where he continued to work for a brief period as a research associate. In 2011, he joined the Institute of Neuroscience, Newcastle University, UK as a research associate, where he stayed until 2013. In 2014 he took on his current position as lab manager at University of Freiburg in a research group for Neurobiology and Neurotechnology. His research interests include brain-machine interfaces, myoelectric interfaces, control of movements and prosthetic control.

Deepak Joshi received the B. Tech degree in instrumentation engineering and the M. Tech degree in instrumentation and control engineering from India in 2004 and 2006, respectively. He received the Ph.D. degree in biomedical engineering from the Indian Institute of Technology (IIT) Delhi. He was a visiting scholar at the Institute of Neuroscience (ION), Newcastle University, and a research engineer at the National University of Singapore (NUS), before joining Graphic Era University, Dehradun, India. Currently, he is on leave from the university to pursue postdoctoral research at the University of Oregon, USA. His research areas include neural-machine interfaces, machine learning, biomedical instrumentation, and signal processing.

Gowrishankar Ganesh (M’08) received his Bachelor of Engineering (first-class, Hons.) degree from the Delhi College of Engineering, India, in 2002 and his Master of Engineering from the National University of Singapore, in 2005, both in Mechanical Engineering. He received his Ph.D. in Bioengineering from Imperial College London, U.K., in 2010.

He worked as a Researcher in robotics and human motor control at the Advanced Telecommunication Research (ATR), Kyoto, Japan, from 2004 and through his PhD. Following his PhD, he joined the National Institute of Information and Communications Technology (NICT) as a Specialist Researcher in robot-human interactions, where he remained until December 2013. Since January 2014, he has been a Senior Researcher at the Centre National de la Recherche Scientifique (CNRS), and is currently located at the CNRS-AIST joint robotics lab (JRL) in Tsukuba, Japan. He is a visiting researcher at the Centre for Information and Neural Networks (CINET) in Osaka, ATR in Kyoto and the Laboratoire d’Informatique, de Robotique et de Microélectronique de Montpellier (LIRMM) in Montpellier. His research interests include human sensori-motor control and learning, robot control, social neuroscience and robot-human interactions.

Andrew Jackson received the MPhys degree in physics from the University of Oxford, Oxford, U.K., in 1998 and the Ph.D. degree in neuroscience from University College, London U.K., in 2002. He is currently a Wellcome Trust Research Career Development Fellow at the Institute of Neuroscience, Newcastle University, U.K. His scientific interests include the neural mechanisms of motor control, cortical plasticity and spinal cord physiology. This basic research informs the development of neural prosthetics technology to restore motor function to the injured nervous system.

Dr. Jackson is a graduate member of the Institute of Physics and a member of the Society for Neuroscience.

Kianoush Nazarpour (S’05–M’08–SM’14) received the Ph.D. degree in electrical engineering from Cardiff University, Cardiff, U.K., in 2008. Between 2007 and 2012, he held two postdoctoral researcher posts in Birmingham and Newcastle universities. In 2012, he joined Touch Bionics, U.K. as a Senior Algorithm Engineer.

He is currently a lecturer at the School of Electrical and Electronics Engineering, Newcastle University, U.K. His research includes development of signal analysis methods and electronic technologies to enable sensorimotor restoration after amputation or injury to the spinal cord.

Dr. Nazarpour is an Editor of the Medical Engineering & Physics journal. He received the Best Paper award at the 3rd International Brain-Computer Interface (BCI) Conference (Graz, 2006), and the David Douglas award (2006), UK, for his work on joint space-time-frequency analysis of electroencephalogram signals.

Contributor Information

Tobias Pistohl, Institute of Neuroscience, Newcastle University, UK. He is now with Bernstein Center Freiburg, University of Freiburg.

Deepak Joshi, Department of Electrical and Electronics Engineering, Graphic Era University, Dehradun - 248002, India.

Gowrishankar Ganesh, CNRS-AIST JRL (Joint Robotics Laboratory), UMI3218/CRT, Intelligent Systems Research Institute, Tsukuba, Japan-305-8568 and the Centre for Information and Neural Networks (CINET-NICT), Osaka, Japan-5650871.

Andrew Jackson, Institute of Neuroscience, Newcastle University, Newcastle upon Tyne, NE2 4HH, UK.

Kianoush Nazarpour, School of Electrical and Electronic Engineering and the Institute of Neuroscience, Newcastle University.

References

- [1] Farina D, Jiang N, Rehbaum H, Holobar A, Graimann B, Dietl H, Aszmann O. The extraction of neural information from the surface EMG for the control of upper-limb prostheses: Emerging avenues and challenges. IEEE Trans Neural Syst Rehabil Eng. 2014;22(4):797–809. doi: 10.1109/TNSRE.2014.2305111.

- [2] Cipriani C, Antfolk C, Balkenius C, Rosen B, Lundborg G, Carrozza MC, Sebelius F. A novel concept for a prosthetic hand with a bidirectional interface: a feasibility study. IEEE Trans Biomed Eng. 2007;56:2739–2743. doi: 10.1109/TBME.2009.2031242.

- [3] Antfolk C, D’Alonzo M, Controzzi M, Lundborg G, Rosén B, Sebelius F, Cipriani C. Artificial redirection of sensation from prosthetic fingers to the phantom hand map on transradial amputees: vibrotactile versus mechanotactile sensory feedback discrimination. IEEE Trans Neural Sys Rehab Eng. 2013;21(1):112–120. doi: 10.1109/TNSRE.2012.2217989.

- [4] Witteveen HJB, Droog EA, Rietman JS, Veltink PH. Vibro- and electrotactile user feedback on hand opening for myoelectric forearm prostheses. IEEE Trans Biomed Eng. 2012;59:2219–2226. doi: 10.1109/TBME.2012.2200678.

- [5] Witteveen HJB, Luft F, Rietman JS, Veltink PH. Stiffness feedback for myoelectric forearm prostheses using vibrotactile stimulation. IEEE Trans Neural Syst Rehabil Eng. 2014;22(1):53–61. doi: 10.1109/TNSRE.2013.2267394.

- [6] Thorp EB, Larson E, Stepp CE. Combined auditory and vibrotactile feedback for human–machine-interface control. IEEE Trans Neural Syst Rehabil Eng. 2014;22(1):62–68. doi: 10.1109/TNSRE.2013.2273177.

- [7] Mann RW. Efferent and afferent control of an electromyographic, proportional-rate, force sensing artificial elbow with cutaneous display of joint angle. Proc Institution of Mechanical Engineers. 1968;183:86–92.

- [8] Wheeler J, Bark K, Savall J, Cutkosky M. Investigation of rotational skin stretch for proprioceptive feedback with application to myoelectric systems. IEEE Trans Neural Syst Rehabil Eng. 2010;18:58–66. doi: 10.1109/TNSRE.2009.2039602.

- [9] Sherrington CS. The Integrative Action of the Nervous System. New York: C. Scribner’s sons; 1906.

- [10] Suminski AJ, Tkach DC, Fagg AH, Hatsopolous N. Incorporating feedback from multiple sensory modalities enhances brain-machine interface control. J Neurosci. 2010;30:16777–16787. doi: 10.1523/JNEUROSCI.3967-10.2010.

- [11] Blank A, Okamura AM, Kuchenbecker KJ. Identifying the role of proprioception in upper-limb prosthesis control: studies on targeted motion. ACM Trans Applied Perception. 2010;7(3) 15.

- [12] Ramos-Murguialday A, Schürholz M, Caggiano V, Wildgruber M, Caria A, Maria Hammer E, Halder S, Birbaumer N. Proprioceptive feedback and brain computer interface (BCI) based neuroprostheses. PLoS One. 2012 doi: 10.1371/journal.pone.0047048.

- [13] Dhillon GS, Horch KW. Direct neural sensory feedback and control of a prosthetic arm. IEEE Trans Neural Syst Rehabil Eng. 2005;13(4):468–472. doi: 10.1109/TNSRE.2005.856072.

- [14] Williams I, Constandinou TG. An energy-efficient, dynamic voltage scaling neural stimulator for a proprioceptive prosthesis. IEEE Trans Biomedical Circuits and Systems. 2013;7:129–139. doi: 10.1109/TBCAS.2013.2256906.

- [15] Harris CS. Adaptation to displaced vision: visual, motor, or proprioceptive change? Science. 1963;140:812–813. doi: 10.1126/science.140.3568.812.

- [16] Mon-Williams SM, Wann JP, Jenkinson M, Rushton K. Synaesthesia in the normal limb. Proc Biol Sci. 1997;264:1007–1010. doi: 10.1098/rspb.1997.0139.

- [17] Tillery SI, Flanders M, Soechting JF. A coordinate system for the synthesis of visual and kinesthetic information. J Neurosci. 1991;11:770–778. doi: 10.1523/JNEUROSCI.11-03-00770.1991.

- [18] Soechting JF, Flanders M. Sensorimotor representations for pointing to targets in three-dimensional space. J Neurophysiol. 1989;62:582–594. doi: 10.1152/jn.1989.62.2.582.

- [19] Howard IS, Ingram JN, Wolpert DM. A modular planar robotic manipulandum with end-point torque control. J Neurosci Methods. 2009;181:199–211. doi: 10.1016/j.jneumeth.2009.05.005.

- [20] Pistohl T, Cipriani C, Jackson A, Nazarpour K. Abstract and proportional myoelectric control for multi-fingered hand prostheses. Annals of Biomedical Eng. 2013;41(12):2687–2698. doi: 10.1007/s10439-013-0876-5.

- [21] Radhakrishnan SM, Baker SN, Jackson A. Learning a novel myoelectric-controlled interface task. J Neurophysiol. 2008;100:2397–2408. doi: 10.1152/jn.90614.2008.

- [22] Nazarpour K, Barnard A, Jackson A. Flexible cortical control of task-specific muscle synergies. J Neurosci. 2012;32:12349–12360. doi: 10.1523/JNEUROSCI.5481-11.2012.

- [23] Pistohl T, Joshi D, Gowrishankar G, Jackson A, Nazarpour K. Artificial proprioception for myoelectric control; Proc IEEE EMBC; 2013. pp. 1595–1598.

- [24] Scheidt RA, Conditt MA, Secco EL, Mussa-Ivaldi FA. Interaction of visual and proprioceptive feedback during adaptation of human reaching movements. J Neurophysiol. 2005;93:3200–3213. doi: 10.1152/jn.00947.2004.

- [25] Antuvan CW, Ison M, Artemiadis P. Embedded human control of robots using myoelectric interfaces. IEEE Trans Neural Syst Rehabil Eng. 2014;22(4):820–827. doi: 10.1109/TNSRE.2014.2302212.

- [26] Brown LE, Rosenbaum DA, Sainburg RL. Limb position drift: implications for control of posture and movement. J Neurophysiol. 2003;90:3105–3118. doi: 10.1152/jn.00013.2003.

- [27] Cipriani C, Segil JL, Birdwell JA, ff. Weir RF. Dexterous control of a prosthetic hand using fine-wire intramuscular electrodes in targeted extrinsic muscles. IEEE Trans Neural Syst Rehabil Eng. 2014;22(4):828–836. doi: 10.1109/TNSRE.2014.2301234.

- [28] Pipereit K, Bock O, Vercher JL. The contribution of proprioceptive feedback to sensorimotor adaptation. Exp Brain Res. 2006;174:45–52. doi: 10.1007/s00221-006-0417-7.

- [29] Saunders I, Vijaykumar S. The role of feed-forward and feedback processes for closed-loop prosthesis control. J Neuroeng Rehabil. 2011;8:60. doi: 10.1186/1743-0003-8-60.

- [30] Makin JG, Fellows MR, Sabes PN. Learning Multisensory Integration and Coordinate Transformation via Density Estimation. PLoS Computational Biology. 2013 doi: 10.1371/journal.pcbi.1003035.

- [31] Marasco PD, Kim K, Colgate JE, Peshkin MA, Kuiken TA. Robotic touch shifts perception of embodiment to a prosthesis in targeted reinnervation amputees. Brain. 2011;134:747–758. doi: 10.1093/brain/awq361.

- [32] Hebert JS, Olson JL, Morhart MJ, Dawson MR, Marasco PD, Kuiken TA, Chan KM. Novel targeted sensory reinnervation technique to restore functional hand sensation after transhumeral amputation. IEEE Trans Neural Syst Rehabil Eng. 2014;22(4):765–773. doi: 10.1109/TNSRE.2013.2294907.

- [33] Panarese A, Edin BB, Vecchi F, Carrozza MC, Johansson RS. Humans can integrate force feedback to toes in their sensorimotor control of a robotic hand. IEEE Trans Neural Syst Rehabil Eng. 2009;17:560–567. doi: 10.1109/TNSRE.2009.2021689.
