Abstract

Endomicroscopy is an emerging imaging modality that facilitates the acquisition of in vivo, in situ optical biopsies, assisting diagnostic and potentially therapeutic interventions. While there is a diverse and constantly expanding range of commercial and experimental optical biopsy platforms available, fibre-bundle endomicroscopy is currently the most widely used platform and is approved for clinical use in a range of clinical indications. Miniaturised, flexible fibre-bundles, guided through the working channel of endoscopes, needles and catheters, enable high-resolution imaging across a variety of organ systems. Yet, the nature of image acquisition through a fibre-bundle gives rise to several inherent characteristics and limitations necessitating novel and effective image pre- and post-processing algorithms, ranging from image formation, enhancement and mosaicing to pathology detection and quantification. This paper introduces the underlying technology and most prevalent clinical applications of fibre-bundle endomicroscopy, and provides a comprehensive, up-to-date review of relevant image reconstruction, analysis and understanding/inference methodologies. Furthermore, current limitations as well as future challenges and opportunities in fibre-bundle endomicroscopy computing are identified and discussed.

Keywords: Fibre bundle endomicroscopy, confocal laser endomicroscopy, imaging, image restoration, image analysis, image understanding

1. Introduction

The emergence of miniaturised optical-fibre based endoscopes has enabled real-time imaging, at cellular resolution, of tissues that were previously inaccessible through conventional endoscopy. Fibre-bundle endomicroscopy (FBEμ), the most prevalent endomicroscopy platform, has been clinically deployed for the acquisition of in vivo, in situ optical biopsies in a wide and ever-increasing range of organ systems predominantly in the gastrointestinal, urological and the respiratory tracts. Customarily, a coherent fibre bundle is guided through the working channel of an endoscope (or a needle) to a region of interest and intra-venous or topical dyes are employed to augment tissue fluorescence, enhancing the emitted signal of the imaged structure. Endomicroscopy has the potential to assist diagnostic and interventional procedures by aiding targeted sampling and increasing diagnostic yield and ultimately reducing the need for histopathological tissue biopsies and any associated delays. To date, the most widespread use of FBEμ (along with fluorescent dyes, such as fluorescein) is in the gastro-intestinal (GI) tract (Fugazza et al., 2016; Wallace and Fockens, 2009; Wang et al., 2015). In particular, FBEμ has been employed in the upper GI tract (East et al., 2016) to detect (i) structural changes in the oesophagus mucosa associated with squamous cell carcinoma and Barrett’s oesophagus, and (ii) polyps and neoplastic lesions as well as gastritis and metaplastic lesions in the stomach and duodenum. In the lower GI tract, fibre-endomicroscopy has been utilised to (i) detect colonic neoplasia (Su et al., 2013) and malignancy in colorectal polyps (Abu Dayyeh et al., 2015), as well as to (ii) assess the activity and relapse potential of Inflammatory Bowel Disease (IBD) (Rasmussen et al., 2015; Salvatori et al., 2012).

In pulmonology, the auto-fluorescence (at 488 nm) generated through the abundance of elastin and collagen has enabled the exploration of the distal pulmonary tract as well as the assessment of the respiratory bronchioles and alveolar gas exchanging units of the distal lung without the need for exogenous contrast agents. Clinical studies have demonstrated the ability of FBEμ to image a range of pathologies including (i) changes in cellularity in the alveolar space as an indicator of acute lung cellular rejection following lung transplantation (Yserbyt et al., 2014), (ii) cross-sectional and level of fluorescence changes in the alveolar structure in emphysema (Newton et al., 2012; Yserbyt et al., 2017), and (iii) elastic fibre distortion (Yserbyt et al., 2013) and neoplastic changes in epithelial cells (Fuchs et al., 2013; Thiberville et al., 2007, 2009) in bronchial mucosa.

Other clinical applications of FBEμ include (but are not limited to) imaging (i) structural epithelial changes observable in bladder neoplasia (Sonn et al., 2009) as well as upper tract urothelial carcinoma (Chen and Liao, 2014), (ii) pancreatobiliary strictures as well as pancreatic cystic lesions (catheter and/or needle based endomicroscopy), detecting potential malignancy (Karia and Kahaleh, 2016; Smith et al., 2012), (iii) the oropharyngeal cavity, differentiating between healthy epithelium, squamous epithelium and squamous cell carcinoma (Abbaci et al., 2014), and (iv) brain tumours (surgical access), such as glioblastoma, providing immediate histological assessment of the brain-to-neoplasm interface and hence improving tumour resection (Mooney et al., 2014; Pavlov et al., 2016; Zehri et al., 2014). Furthermore, there has been an effort to develop molecularly targeted fluorescent probes, such as peptides (Burggraaf et al., 2015; Hsiung et al., 2008; Staderini et al., 2017), antibodies (Pan et al., 2014) and nanoparticles (Bharali et al., 2005), that can bind and amplify fluorescence in the presence of specific types of tumours (Hsiung et al., 2008; Khondee and Wang, 2013; Pan et al., 2014), inflammation (Avlonitis et al., 2013), bacteria (Akram et al., 2015b) and fibrogenesis (Aslam et al., 2015). Such fluorescent probes will give rise to molecular FBEμ, enhancing the imaging and diagnostic capabilities of the technology and significantly augmenting its utility.

The proliferation of probe-based confocal laser endomicroscopy (pCLE) in clinical practice, along with the emergence of novel FBEμ architectures and molecularly targeted fluorescent probes, necessitates the development of highly sensitive imaging platforms, as well as a range of custom, purpose-specific image analysis and understanding methodologies that will assist the diagnostic process. This review provides a brief summary of the available endomicroscopic imaging platforms (Section 2), along with an overview of the state-of-the-art in fibre-bundle endomicroscopic (FBEμ) image computing methods, namely image reconstruction (Section 3), analysis (Section 4) and understanding/inference (Section 5). Owing to its more widespread dissemination, this review paper concentrates on FBEμ image computing. Yet, a small number of relevant image analysis and understanding techniques developed and assessed for other endomicroscopy platforms, offering viable solutions for FBEμ, have also been included. Current limitations in FBEμ image computing as well as future challenges and opportunities are also identified and discussed (Section 6).

2. Technology overview

To date, four endomicroscopic imaging platforms, all exploiting different fundamental optical imaging technologies, have been commercialised for clinical use (NinePoint, Olympus, Pentax, Mauna Kea, Zeiss). While this review paper concentrates on fibre bundle based systems, a brief description of the currently available endomicroscopic imaging platforms, both commercial and research based, is provided.

NinePoint Medical (Bedford, Massachusetts, USA) developed the NVisionVLE platform, a Volumetric Laser Endomicroscopy (VLE) (Bouma et al., 2009; Vakoc et al., 2007; Yun et al., 2006) device that can acquire in-vivo, high-resolution (7 μm), volumetric data of a cavity (e.g. the gastro-intestinal tract) through a flexible, narrow diameter catheter (<2.8 mm). VLE combines principles of endoscopic Optical Coherence Tomography (OCT) (Tearney et al., 1997), along with Optical Frequency-Domain Imaging (OFDI) (Yun et al., 2003) to acquire a sequence of cross-sectional images 100-fold faster than conventional OCT, while maintaining the same resolution and contrast. Similar non-commercial OFDI technology has been employed by Tethered Capsule Endoscopy (TCE) to provide an imaging alternative for the gastro-intestinal tract (Gora et al., 2013).

Olympus Medical Systems Co. (Tokyo, Japan) developed a range of prototype endocytoscopes (Hasegawa, 2007), white-light, flexible, contact endoscopes that can image at cellular resolution (up to >1000x magnification). These, now discontinued, prototypes incorporated a miniaturised Charge-Coupled Device (CCD) sensor, the associated objective lenses and an adjacent light source at the distal end of the endoscope. All imaging at the endoscope/tissue contact layer was achieved via light scattering. Olympus provided a variety of options, from (i) full endoscope integration, to (ii) standalone probes that could fit through a 3.7 mm endoscope working channel (Ohigashi et al., 2006; Singh et al., 2010). An alternative non-commercial implementation, replacing the miniature sensor with a flexible, coherent fibre bundle enabling imaging at the proximal end of the fibre (Hughes et al., 2013), as well as a range of adaptations to achieve true reflectance endomicroscopy while avoiding back-reflections (Hughes et al., 2014; Liu et al., 2011; Sun et al., 2010), have been proposed.

Confocal laser endomicroscopy (CLE) employs a miniaturised optical fibre to acquire 2D images, predominantly fluorescent, across the examined tissue structure. Inspired by benchtop confocal microscopy (Minsky, 1988), a low-power laser signal (typically at 488 nm), focused to a single, finite point within the specimen, is scanned across a two-dimensional imaging plane, generating a 2D image commonly referred to as an optical section. Optical fibres are typically used for relaying light and may act as bidirectional pinholes, rejecting light outside the focal point and reducing the associated image blurring. There are currently numerous experimental and two commercial CLE platforms with clinical utility, namely endoscope-based (eCLE) and probe-based (pCLE) endomicroscopy. A number of review papers (Jabbour et al., 2012; Oh et al., 2013) provide insight into current CLE instrumentation. In brief, eCLE integrates a miniaturised confocal scanner into the distal tip of a device using a single core fibre (Delaney et al., 1994; Harris, 1992, 2003). Piezoelectric or electromagnetic actuators can be used to generate the 2D scanning pattern. The tissue signal generated at each individual (scanning) location is transferred through the single-core fibre to a detector and associated processing unit at the proximal end, where the image is accumulated and reconstructed after each complete scan. A clinical eCLE platform was developed by Pentax Medical (Tokyo, Japan), integrating the confocal scanning facility into a conventional endoscope. This now discontinued device had a 12.8 mm diameter and enabled the acquisition of optical sections at a 500 × 500 μm field of view and 0.7 μm lateral resolution. Optiscan (Melbourne, Australia) has recently developed a pre-clinical eCLE platform, comprising a 4 mm diameter standalone micro-endoscope with a < 0.5 μm lateral resolution (highest commercially available resolution) and a 475 × 475 μm field of view.
The acquisition frame-rate in both devices is dependent on the associated acquisition aspect ratio and Z-stack depth (single vs multiple frames), with typically reported values ranging between 0.8 and 6 frames per second (for a single frame). Carl Zeiss Meditec (Jena, Germany) has recently developed a digital biopsy tool for neurosurgery (Leierseder, 2018) based on the underlying Optiscan eCLE technology (475 × 267 μm FOV at 488 nm excitation). A number of alternative, non-commercial experimental architectures utilising distal based scanning have been proposed, including (i) high speed imaging (Shi and Wang, 2010) via parallel distal scanning through a multi-core fibre; (ii) dual-axis imaging (Wang et al., 2003), separating the illumination and collection paths and enabling 2D (Liu et al., 2007), 3D (Ra et al., 2008) and multi-colour (Leigh and Liu, 2012) imaging capabilities with improved optical sectioning (axial resolution); and (iii) two-photon imaging (So et al., 2000; Wu and Li, 2010), employing multiple, less energetic photons to induce transition of the imaged fluorescent structure to the desired excitation state, improving the resulting imaging resolution and penetration depth while reducing potential tissue photodamage.

Probe-based confocal laser endomicroscopy (pCLE) utilises a multicore imaging fibre-bundle for the acquisition of 2D optical en face sections of a tissue structure. Confocal scanning takes place at the proximal end of the fibre and is relayed by the fibre bundle. Each individual core within the bundle, often combined with a pinhole, rejects light outside the focal plane. Compared to eCLE, the optical setup for pCLE results in a substantially smaller distal endomicroscope as well as higher acquisition frame rates. In contrast, the imaging depth is fixed (and smaller), being set by the distal optics, and the lateral resolution is limited by the inter-core distance of a particular multicore fibre bundle and the distal optics design. Gmitro and Aziz (1993), Rouse et al. (2004) and Sabharwal et al. (1999) proposed an early implementation of pCLE, with Dubaj et al. (2002) and Le Goualher et al. (2004b) introducing refinements for real-time confocal scanning in biomedical applications. Mauna Kea Technologies (Paris, France) developed the Cellvizio pCLE imaging platform along with a wide range of compatible multi-core fibre probes with diameters as small as 0.3 mm, fields of view between 300 and 600 μm, and, excluding magnification from distal optics, approximate lateral resolution of 3.3 μm. Additional distal optics can be used to improve the imaging resolution to approximately 1 μm, at the expense of field of view (240 μm) and diameter (<3 mm). The probes’ miniature sizes enable use through the working channel of most commercially available endoscopes as well as some needles/catheters, while the relevant high data acquisition rate (>12 fps) enables real-time imaging of moving structures, resulting in Cellvizio (pCLE) being the most widely used endomicroscopy platform approved for clinical use.
A rapidly growing volume of alternative, non-commercial experimental architectures is being developed, including (i) right-angle stage attachment for standard desktop confocal microscopes; (ii) line-scanning confocal endomicroscopy (Hughes and Yang, 2015, 2016), improving the acquisition frame rate without substantially compromising image quality; (iii) flexible and low-cost endomicroscopy architectures (Hong et al., 2016; Krstajić et al., 2016; Pierce et al., 2011; Shin et al., 2010), employing LED, widefield illumination; (iv) structured illumination endomicroscopy (Bozinovic et al., 2008; Ford et al., 2012b; Ford and Mertz, 2013), providing out-of-focus background rejection without beam scanning; (v) oblique back-illumination endomicroscopy (Ba et al., 2016; Ford et al., 2012a; Ford and Mertz, 2013), collecting phase-gradient images of thick scattering samples; and (vi) multi-spectral imaging (Bedard and Tkaczyk, 2012; Cha and Kang, 2013; Jean et al., 2007; Krstajić et al., 2016; Makhlouf et al., 2008; Rouse and Gmitro, 2000; Vercauteren et al., 2013; Waterhouse et al., 2016). These alternative architectures, along with pCLE, can be grouped under the term Fibre-Bundle Endomicroscopy (FBEμ), which, due to its more widespread dissemination, is the technology primarily discussed throughout this study.

3. Image reconstruction

The nature of image acquisition through coherent fibre bundles is a source of inherent limitations in FBEμ imaging. Coherent fibre bundles comprise multiple (>10,000) cores that (i) have variable size and shape, (ii) are irregularly distributed across the field of view, (iii) have variable light transition properties, including coupling efficiency and inter-core coupling spread, and (iv) have spatiotemporally variable auto-fluorescent (background) response at certain imaging wavelengths. Such properties directly limit the imaging capabilities of the technology. There has therefore been substantial interest in the development of effective and efficient approaches to reconstruct FBEμ images, attempting to compensate for these inherent limitations. Table 1 provides an overview of the most relevant image reconstruction studies applicable to FBEμ, while Fig. 1 provides characteristic examples of the associated imaging limitations.

Table 1. Overview of reconstruction approaches for fibred endoscopic imaging.

| Topic | References | Methodology | Comments |
|---|---|---|---|
| Honeycomb effect & Fourier domain filters | Dickens et al. (1997, 1998, 1999) | Manual band-reject filters with “high-boost” filter. | Simple to implement and computationally efficient approaches that suppress the honeycomb structure. However, inherently susceptible to blurring the imaged structures. |
| | Han et al. (2010) | Histogram equalisation with Gaussian low-pass filter. | |
| | Rupp et al. (2007) and Winter et al. (2006) | Low-pass filter using alternative (circular, star-shaped), rotationally invariant kernels. | |
| | Lee and Han (2013b) | Gaussian based, notch reject filter, eliminating periodic, high-frequency components. | |
| | Ford et al. (2012b) | Iteratively blurring (low pass) cladding regions while maintaining core intensities. | |
| | Dumripatanachod and Piyawattanametha (2015) | Efficient filtering with two 1D top-hat filters (equivalent to a square kernel). | |
| Honeycomb effect & core interpolation | Elter et al. (2006), Le Goualher et al. (2004a) and Rupp et al. (2007, 2009) | C0 to C2 continuous interpolation methods on irregular core lattice. | Simple and efficient approaches capable of maintaining the original core information. Successfully employed in clinical/commercial systems. |
| | Zheng et al. (2017) | Enhancement of interpolated (bilinear) images using rotationally invariant Non-Local Means. | |
| | Winter et al. (2007) | Correcting for variable core PSF overlap over a colour-sensor’s Bayer pattern, suppressing false colour moiré patterns. | |
| Honeycomb effect & image superimposition | Rupp et al. (2007) | Integrate the core locations of 4 shifted and aligned images, interpolate revised grid. | Capable of removing the honeycomb structure and increasing the effective resolution of the acquired data. Developing real-time elastic registration approaches is a major challenge. |
| | Kyrish et al. (2010), Lee and Han (2013a) and Lee et al. (2013) | Compounding images shifted (translation stage) with a range of predetermined patterns. | |
| | Cheon et al. (2014a), Cheon et al. (2014) and Vercauteren et al. (2005, 2006) | Aligning (compensating for random movements) and combining consecutive frames. | |
| Honeycomb effect & iterative reconstruction | Han and Yoon (2011) | Maximising the posterior probability in a Bayesian framework (Markov Random Fields). | Preliminary studies, successful at removing honeycomb structures. Do not necessarily improve reconstruction error relative to interpolation. Computationally costly due to iterative nature. |
| | Liu et al. (2016) | ℓ1-norm minimisation (using iterative shrinkage thresholding, IST) in the wavelet domain. | |
| | Han et al. (2015) | Efficient, non-parametric iterative compressive sensing for inpainting cladding regions. | |
| Variable coupling & background response | Ford et al. (2012b), Le Goualher et al. (2004a) and Zhong et al. (2009) | Affine intensity transform incorporating dark and bright-field information at each core. | Capable of suppressing (in real time) the effect of spatio-temporally variable coupling and background response. Successfully employed in clinical/commercial systems. |
| | Savoire et al. (2012) | Blind calibration exploring neighbouring core correlation to recursively (online) derive gain and offset coefficients in each core. | |
| | Vercauteren et al. (2013) | Multi-colour extension of Le Goualher et al. (2004a) dealing with geometric and chromatic distortions. | |
| Cross coupling | Perperidis et al. (2017a) | Quantifying (and integrating into a linear model) cross coupling within fibre bundles. | Effective in suppressing the effect of cross coupling. Computationally costly for real-time scenarios. |
| | Karam Eldaly et al. (2018) | Deconvolution and image reconstruction, reducing the effect of inter-core coupling. | |

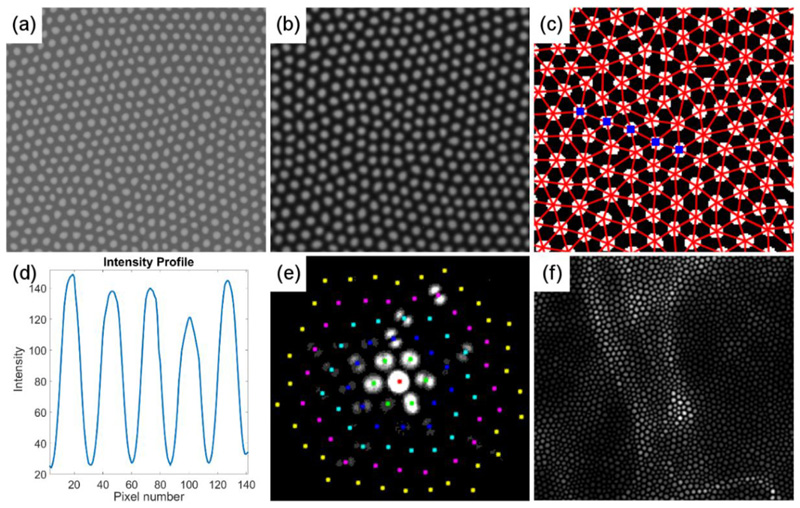

Fig. 1. Examples illustrating properties of coherent fibre bundles that limit the imaging capabilities of fibred endoscopy.

(a) Scanning Electron Microscopy (SEM) image of a commercial coherent fibre bundle (FIGH-30-650S, Fujikura), along with (b) a uniform, flood illumination (520 nm) image of the same fibre bundle, using widefield endomicroscopy. The variable size and shape, as well as the irregular distribution of the cores, is apparent in both (a) and (b). (c) Binary mask and associated Delaunay triangulation of cores, identified within a uniformly illuminated image, similar to (b). (d) Intensity profile across the five cores highlighted in (c), illustrating the variations of coupling efficiency amongst different neighbouring cores. (e) Example inter-core coupling spread at 520 nm, as measured by Perperidis et al. (2017b). (f) Example raw widefield endomicroscopy image of auto-fluorescent alveoli structures from an ex-vivo, human lung. The imaged structure is heavily corrupted by the intrinsic characteristics of the imaging fibre bundle, highlighting the need for effective image reconstruction approaches. Images (a-b), (c) and (e) have been reproduced (cropped) from Figures 6, 7 and 8 respectively of “Characterization and modelling of inter-core coupling in coherent fibre bundles” by Perperidis et al. (2017b) under the Creative Commons Attribution (CC BY) 4.0 International License (https://creativecommons.org/licenses/by/4.0).

3.1. Honeycomb effect

The most visually striking and limiting artefact arising from the transmission of the imaged scene through a coherent fibre bundle is the so-called honeycomb effect. The honeycomb effect, illustrated in Fig. 1, is a consequence of the light being guided from the distal to the proximal end of the individual cores comprising the fibre-bundle but not through the surrounding cladding. Each core, while usually imaged across multiple pixels, contains intensity information on a single, discrete position within the imaged scene. Consequently, the resulting raw image data is a high-resolution rectangular matrix representation of a low-resolution, irregularly-sampled scene. Several studies have attempted to suppress/remove the honeycomb effect in fibred endoscopy, generating continuous, high-resolution image sequences.

Over the years, a number of approaches employing band-pass filtering in the Fourier domain have been proposed (Dickens et al., 1997, 1998, 1999; Dumripatanachod and Piyawattanametha, 2015; Ford et al., 2012b; Han et al., 2010; Lee and Han, 2013b; Maneas et al., 2015; Rupp et al., 2007; Winter et al., 2006). Band-pass filters employing a range of different kernels, static and adaptive (derived from the core distribution across the bundle), were typically combined with a range of pre- and post-processing approaches to improve the suppression of the honeycomb pattern. Band-pass filtering provides a simple and efficient approach to suppress/remove the honeycomb structure from fibred endoscopic images. However, given the irregularly distributed cores in most modern miniaturised fibrescopes, identifying suitable thresholds in the frequency domain that would remove the honeycomb effect (usually a high frequency component) without blurring the underlying imaged structure (usually a lower frequency component) can be inherently challenging.
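The trade-off described above can be illustrated with a minimal, standard-library Python sketch. It is not any of the cited methods: it applies a Gaussian low-pass filter in the Fourier domain to a synthetic 1D intensity profile, and it assumes (for simplicity) that the honeycomb pattern is an additive, purely periodic component. The function names, the profile and the `cutoff` value are all hypothetical choices made for illustration.

```python
import cmath
import math


def dft(signal):
    """Naive O(N^2) discrete Fourier transform (adequate for a short profile)."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft(); returns a complex sequence."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def gaussian_low_pass(profile, cutoff):
    """Attenuate components above roughly `cutoff` cycles per profile length."""
    n = len(profile)
    filtered = []
    for k, coeff in enumerate(dft(profile)):
        freq = min(k, n - k)  # symmetric frequency index keeps the output real
        filtered.append(coeff * math.exp(-0.5 * (freq / cutoff) ** 2))
    return [value.real for value in idft(filtered)]


# Synthetic profile (assumption): slowly varying "tissue" signal plus a
# high-frequency periodic term standing in for the honeycomb pattern.
n = 64
tissue = [1.0 + 0.5 * math.sin(2 * math.pi * 2 * t / n) for t in range(n)]
honeycomb = [0.4 * math.cos(2 * math.pi * 16 * t / n) for t in range(n)]
raw = [a + b for a, b in zip(tissue, honeycomb)]

smoothed = gaussian_low_pass(raw, cutoff=4.0)
```

With these illustrative values the periodic term is almost entirely removed, but the low-frequency "tissue" oscillation is also slightly attenuated, which is the blurring risk noted in the text. Real bundles have irregular core lattices, so the honeycomb energy is spread across frequencies rather than concentrated in one component as assumed here.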

In contrast to band-pass filtering, interpolating amongst the irregular core lattice effectively removes the undesired honeycomb structure while retaining the original image content at the core locations. To accurately identify the locations of each individual core, a uniformly illuminated calibration image is required. Local maxima and the Circular Hough Transform (CHT) are amongst the wide range of off-the-shelf solutions for identifying core locations. Suggested interpolation methods (Elter et al., 2006; Le Goualher et al., 2004a; Rupp et al., 2007, 2009; Vercauteren et al., 2006) include (i) C0 continuous nearest neighbour, triangulation-based and natural-neighbour based linear interpolations, (ii) C1 continuous Clough-Tocher interpolation (Amidror, 2002) and a Bernstein-Bezier extension to natural neighbours (Farin, 1990), and (iii) C2 continuous Radial Basis Functions (Amidror, 2002), b-spline approximation (Lee et al., 1997) and recursive Gaussian filter (Deriche, 1993) adaptation of Shepard’s interpolation. Zheng et al. (2017) attempted to refine the results of a bilinear interpolation using a rotationally invariant adaptation of Non-Local Means (NLM) filters. Moreover, Winter et al. (2007) proposed an extension of the core interpolation approach for single chip colour cameras, suppressing any false colour moiré patterns. While higher order continuity generated smoother images, a property that can be desirable in particular applications, the associated reconstruction accuracy was shown (Rupp et al., 2009) to be only marginally superior to simple C0 algorithms. On the other hand, for simple Voronoi Tessellation based approaches, all calculations could be performed once, at the calibration stage, generating look up tables to be employed during the subsequent image reconstruction task.
Consequently, generating comparable results with less computational complexity makes such linear interpolation approaches more attractive candidates for real-time applications.
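The look-up-table idea for Voronoi (nearest-core) reconstruction can be sketched as follows. This is a minimal, standard-library Python illustration under stated assumptions, not the implementation used in any cited system: core centres are assumed to be already known from a calibration image, the brute-force nearest-core search is only practical for tiny examples, and the names `build_lookup` and `reconstruct` are hypothetical.

```python
def build_lookup(core_positions, height, width):
    """Calibration stage (run once): for every pixel, store the index of the
    nearest core centre, i.e. the Voronoi cell the pixel belongs to."""
    lookup = []
    for row in range(height):
        lookup_row = []
        for col in range(width):
            nearest = min(
                range(len(core_positions)),
                key=lambda i: (core_positions[i][0] - row) ** 2
                + (core_positions[i][1] - col) ** 2,
            )
            lookup_row.append(nearest)
        lookup.append(lookup_row)
    return lookup


def reconstruct(frame, core_positions, lookup):
    """Per-frame stage: sample the intensity at each core centre, then fill
    every pixel from the precomputed table (no distance computations)."""
    core_values = [frame[r][c] for r, c in core_positions]
    return [[core_values[i] for i in lookup_row] for lookup_row in lookup]


# Toy 6x6 frame (assumption): four cores, dark cladding everywhere else.
cores = [(1, 1), (1, 4), (4, 1), (4, 4)]
frame = [[0] * 6 for _ in range(6)]
for (r, c), value in zip(cores, [10, 20, 30, 40]):
    frame[r][c] = value

lookup = build_lookup(cores, height=6, width=6)
recon = reconstruct(frame, cores, lookup)
```

Because the table depends only on the core geometry, the per-frame cost reduces to one read per core and one table look-up per pixel, which is the property that makes such C0 schemes attractive for real-time use.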

Superposition or compounding of spatially misaligned and partially decorrelated images is an approach that has been effective in the enhancement of medical ultrasound images (Perperidis et al., 2015; Rajpoot et al., 2009). In fibred endoscopy, movement of the fibre tip in successive frames accommodates the acquisition of information from the regions previously masked by the fibre cladding. Hence, by effectively aligning the imaged structures and combining a sequence of shifted frames, (i) the honeycomb structure can be suppressed/eliminated, and (ii) the imaging resolution can be increased. Numerous studies have examined the effect on fibrescopic images of different shift patterns, altering the location of the core pattern with respect to the imaged structure, and of different superposition methods, such as deriving the average or maximum intensity of the aligned images (Kyrish et al., 2010; Lee and Han, 2013a; Lee et al., 2013; Rupp et al., 2007). Alternatively, Cheon et al. (2014a), Cheon et al. (2014) and Vercauteren et al. (2005, 2006) employed the random movements during data acquisition, as would be expected in a realistic clinical scenario, to create an enhanced composite image. The approach was first introduced as part of an image mosaicing framework (see Section 4.1), with the main effort being placed on devising an accurate and efficient approach for the alignment of consecutive images. While small translational movements can be efficiently and potentially accurately estimated in real-time, in a realistic scenario with image distortions as well as elastic and sometimes large structural deformations between consecutive frames, accurate real-time alignment and compounding can be an eminently challenging task. Increased acquisition frame rate can potentially reduce the deformations between consecutive frames, making their effective alignment more realisable.
However, increasing the acquisition frame rate can have a detrimental effect on the signal-to-noise ratio and the associated imaging limits of detection.
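The compounding step can be sketched in a few lines of standard-library Python. The sketch assumes the hard part, alignment, has already been solved: each frame comes with a known integer shift and a binary core mask. Averaging is used as the superposition rule; the function name `compound` and the toy data are hypothetical.

```python
def compound(frames, masks, shifts, height, width):
    """Average core samples from several frames after undoing known integer
    shifts. Pixels never covered by a core are returned as None."""
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for frame, mask, (dy, dx) in zip(frames, masks, shifts):
        for r in range(len(frame)):
            for c in range(len(frame[0])):
                if not mask[r][c]:
                    continue  # cladding pixel: carries no scene information
                rr, cc = r + dy, c + dx  # position in the common scene grid
                if 0 <= rr < height and 0 <= cc < width:
                    total[rr][cc] += frame[r][c]
                    count[rr][cc] += 1
    return [
        [total[r][c] / count[r][c] if count[r][c] else None for c in range(width)]
        for r in range(height)
    ]


# Toy example (assumption): a 1x5 scene [10, 20, 30, 40, 50] viewed through a
# 1x4 "bundle" whose cores sit at columns 0 and 2. The second frame is
# acquired after the bundle has moved one pixel to the right.
mask = [[True, False, True, False]]
frames = [[[10, 0, 30, 0]], [[20, 0, 40, 0]]]
shifts = [(0, 0), (0, 1)]

composite = compound(frames, [mask, mask], shifts, height=1, width=5)
```

In this toy case the two frames interleave, so the composite recovers scene samples at columns 0 to 3 that no single frame contains, while column 4 stays empty because no core ever covered it. With elastic deformation or sub-pixel motion, the shift model assumed here would no longer be sufficient.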

Recently, several more “sophisticated”, iterative methods have been proposed for the reconstruction of fibred endoscopic images and the removal of the associated fixed honeycomb pattern. Han and Yoon (2011) employed a Bayesian approximation algorithm to decouple the honeycomb effect. Liu et al. (2016), based on the empirical observation that natural images tend to be sparse in the wavelet domain, employed ℓ1-norm minimisation in the wavelet domain to remove the honeycomb pattern. Han et al. (2015) employed an efficient, non-parametric iterative compressive sensing technique for inpainting the cladding regions, without the need for any prior information with regard to the underlying core structure. Limited evaluation (on USAF resolution targets and some biological data) demonstrated the potential of such iterative approaches in image reconstruction, removing the honeycomb artefact as well as fibre bundle defects, while maintaining the spatial resolution and considerably increasing the image contrast and contrast to noise ratio (CNR). However, the current algorithm implementations are considered computationally expensive, making them unsuitable for real-time applications. Nevertheless, accelerated, parallel processing through state-of-the-art Graphics Processing Units (GPU) could potentially enable the real-time implementation of such iterative approaches.

3.2. Variable coupling and background response

Coherent fibre bundles comprise a large number of cores, commonly in excess of 5000. To reduce the effect of inter-core coupling, neighbouring cores tend to be of variable size and shape. A consequence of this core irregularity is the variable coupling efficiency observed across the fibre bundle. Furthermore, some imaging fibre bundles have exhibited an intrinsic, background auto-fluorescent response at certain imaging wavelengths (e.g. 488 nm). Auto-fluorescence, as with coupling efficiency, is also associated with the shape and size of each individual core. These innate fibre properties have a detrimental effect on imaging quality. Consequently, explicit calibration procedures have been developed in an attempt to suppress their effect on fibred endoscopic imaging. Le Goualher et al. (2004a) proposed an off-line calibration process, utilising (i) an image of the fibre auto-fluorescent background (dark-field), as well as (ii) an image of a uniformly fluorescent medium (bright-field). More specifically, for every frame during data acquisition, geometric distortions caused by the resonant scanning mirrors were compensated and the intensity at each core location was normalised using an affine intensity transformation combining the dark and bright-field information. Ford et al. (2012b) and Zhong et al. (2009) extended the Le Goualher et al. (2004a) approach, introducing additional normalisation terms to partially compensate for camera bias, ambient background light and occasional system realignment. Vercauteren et al. (2013) adapted the off-line calibration approach in Le Goualher et al. (2004a) to deal with the distortion compensations (geometric and chromatic) for multi-colour acquisition. In particular, chromatic distortions were estimated and compensated by a symmetric and robust version of the Iterative Closest Point algorithm relying on orthogonal linear regression.
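A per-core affine normalisation of this kind can be sketched as below. The exact transform used in the cited studies is not given in the text, so the common flat-field form (raw − dark) / (bright − dark) is assumed here; the function name `calibrate`, the `eps` guard and the sample values are hypothetical.

```python
def calibrate(raw, dark, bright, eps=1e-9):
    """Normalise per-core intensities with an affine transform built from
    dark-field (offset) and bright-field (gain) calibration values.

    Assumed form: (raw - dark) / (bright - dark), evaluated core by core.
    """
    normalised = []
    for r, d, b in zip(raw, dark, bright):
        span = b - d  # per-core gain estimate
        # Dead or saturated cores (no usable span) are mapped to 0.0.
        normalised.append((r - d) / span if abs(span) > eps else 0.0)
    return normalised


# Three cores with different offsets and gains (illustrative values), all
# observing the same true signal level of 0.5.
dark = [5.0, 8.0, 6.0]
bright = [105.0, 58.0, 206.0]
raw = [55.0, 33.0, 106.0]

corrected = calibrate(raw, dark, bright)
```

Although the raw core intensities differ by a factor of about three, the corrected values agree, which is the intended effect of compensating for variable coupling efficiency and background response.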

The aforementioned studies assumed constant gain (coupling efficiency) and offset (background auto-fluorescence) for each individual core. However, medium-dependent and slow time-varying coefficient deviations can introduce a static noise pattern on the acquired images. Savoire et al. (2012) explored the high correlation of signals between neighbouring cores to develop a blind on-line calibration process. For every core in the bundle, (i) linear regression on a temporal window estimated the relative gain and offset coefficients for the associated neighbouring core-pairs, (ii) regularised inversion derived the core’s actual gain and offset parameters. To compensate for slow time-varying coefficient changes, the process was performed recursively over temporal windows sufficiently large to enable a robust inversion process.
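Step (i) of such a blind calibration can be illustrated with an ordinary least-squares fit between the time series of two neighbouring cores. This standard-library Python sketch covers only the pairwise regression; the regularised inversion of step (ii) and the recursive update are not shown, and the function name and data are hypothetical rather than taken from Savoire et al. (2012).

```python
def relative_gain_offset(core_a, core_b):
    """Least-squares fit of core_b ~ gain * core_a + offset over a temporal
    window. Assumes core_a is not constant within the window."""
    n = len(core_a)
    mean_a = sum(core_a) / n
    mean_b = sum(core_b) / n
    var_a = sum((a - mean_a) ** 2 for a in core_a)
    cov_ab = sum((a - mean_a) * (b - mean_b) for a, b in zip(core_a, core_b))
    gain = cov_ab / var_a
    offset = mean_b - gain * mean_a
    return gain, offset


# Two neighbouring cores observing the same signal over five frames; the
# second core has twice the gain and an extra offset of 3 (illustrative).
core_a = [1.0, 2.0, 3.0, 4.0, 5.0]
core_b = [5.0, 7.0, 9.0, 11.0, 13.0]

gain, offset = relative_gain_offset(core_a, core_b)
```

The fit only yields coefficients relative to a neighbour, which is why a further inversion over all core pairs is needed to recover each core's own gain and offset.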

3.3. Inter-core coupling

Inter-core coupling is another limitation in coherent fibre bundles, resulting in blurring of the imaged structures and consequently a worsening in the associated limits of detection in FBEμ. Inter-core coupling has been studied both experimentally (Chen et al., 2008; Wood et al., 2017) and within the theoretical framework of coupled mode theory (Ortega-Quijano et al., 2010; Reichenbach and Xu, 2007; Wang and Nadkarni, 2014), providing (i) insights on the factors affecting cross talk, and (ii) solutions/recommendations for optimal design, selection and optimisation of fibre bundles. However, due to the trade-off between cross coupling and core density, cross coupling can be suppressed but not eliminated through optimal fibre design. In a recent study, Perperidis et al. (2017a) introduced a novel approach for measuring, analysing and quantifying cross coupling within coherent fibre bundles, in a format that can be integrated into a linear model of the form v = Hu + w, with v being the recorded image, u the original signal, H the convolution operator modelling the spread of light, and w an additive observation noise. Karam Eldaly et al. (2018) employed this linear model and demonstrated the potential of both optimisation-based and simulation-based approaches in reconstructing FBEμ data and reducing the effect of inter-core coupling. However, the computational requirements of the proposed methodology limit its current suitability for real-time clinical applications.
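To make the linear model v = Hu + w concrete, the following sketch simulates cross coupling between three cores and recovers u by gradient descent on a Tikhonov-regularised least-squares objective. This is a generic illustration and not the method of Karam Eldaly et al. (2018); the coupling matrix `H`, the step size and the helper names are all hypothetical.

```python
def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def reconstruct(H, v, lam=0.0, step=0.5, iters=500):
    """Gradient descent on 0.5*||v - H u||^2 + 0.5*lam*||u||^2."""
    Ht = transpose(H)
    u = [0.0] * len(H[0])
    for _ in range(iters):
        residual = [hu - vi for hu, vi in zip(matvec(H, u), v)]
        grad = [g + lam * ui for g, ui in zip(matvec(Ht, residual), u)]
        u = [ui - step * gi for ui, gi in zip(u, grad)]
    return u


# Hypothetical coupling: each core leaks 10% of its light to each neighbour.
H = [[0.8, 0.1, 0.0],
     [0.1, 0.8, 0.1],
     [0.0, 0.1, 0.8]]
u_true = [1.0, 0.5, 2.0]
v = matvec(H, u_true)  # noise-free observation (w = 0)
u_hat = reconstruct(H, v, lam=0.0)
print([round(x, 3) for x in u_hat])  # [1.0, 0.5, 2.0]
```

With noisy observations a positive `lam` trades fidelity for stability. For a full fibre bundle H is very large, which hints at why such reconstructions are computationally demanding.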

4. Image analysis

Analysis of the acquired data and quantification of the imaged structures and potential pathologies is an essential component in the development of Computer Aided Diagnosis (CAD) systems. Such systems can capitalise on the real-time, optical biopsy capabilities of the technology. Yet, the underlying imaging technology, along with the nature of the clinical data acquisition, generating a steady stream of high resolution images with constricted Field of View (FOV), imposes a series of inherent restrictions/challenges on the development of image analysis methodologies. To date, image analysis research for FBEμ can be broadly categorised into (i) mosaicing (Table 2), and (ii) quantification (Table 3) methods. However, the literature appears to be heavily unbalanced, concentrating predominantly on the task of mosaicing frame sequences to extend the associated FOV.

Table 2. Overview of mosaicing approaches for fibred endoscopic imaging.

| Topic | References | Methodology | Comments |
|---|---|---|---|
| Image based, real-time | Bedard et al. (2012) and Vercauteren et al. (2008) | Local rigid alignment through normalised cross correlation matching. | Certain assumptions and model simplifications required for achieving real time performance. |
| | Loewke et al. (2008) | Local rigid alignment through feature based optical flow refined via gradient descent on normalised cross correlation. | |
| Image based, post-procedural | Vercauteren et al. (2005, 2006) | Hierarchical framework of frame-to-reference transformations (on the original, sparsely sampled data) to derive a globally consistent rigid alignment, while compensating for motion distortions and elastic deformations. Global alignment seen as an estimation problem on a Lie group. | More complex models dealing with a range of local and global, rigid and elastic image transformations. Post-procedural approaches with real-time capacity compromised due to the underlying complex registration models. |
| | Loewke et al. (2011, 2007a) | Compensating for global (rigid) as well as local (elastic) transformations (including motion distortion). Fixed correspondences between images were replaced with a Gaussian Potential representing the amount of certainty in the registration. Global and local deformation potentials were maximised in an integrated optimisation problem. | |
| | Hu et al. (2010) | Elastic registration of consecutive frames based on optical flow of robust image features (RANSAC strategy on Lucas-Kanade tracker). A Maximum a Posteriori (MAP) estimation based image blending generated super-resolved images. | |
| Image based, dynamic imaging | Mahé et al. (2015) | Dynamic mosaic obtained by solving a 3D Markov Random Field. Two-stage approach, of static mosaicing followed by stitching of the associated video segments. | Generating mosaics that maintain temporal information in the form of infinite loops. |
| External input based | Loewke et al. (2007b) | Initial rigid alignment using feedback from a robotic arm determining the five degree-of-freedom position/orientation of the fibre tip. | Actuators/sensors provide feedback on the scanning path improving the efficiency and/or the robustness of mosaicing. Hardware additions are limiting their suitability for endoscopic applications. |
| | Vyas et al. (2015) | Initial rigid alignment using feedback from a six degree-of-freedom electromagnetic sensor positioned at the tip of the fibre-bundle. | |
| | Mahé et al. (2013) | Weak a-priori knowledge of the trajectory (spiral scan) used to derive spatio-temporal associations within the frame sequence. A hidden Markov model formulation and the Viterbi algorithm recovered the optimal frame associations, feeding a modification of the mosaicing algorithm by Vercauteren et al. (2006) to estimate the optimal transform. | |

Table 3. Overview of quantification approaches for fibred endoscopic imaging.

| Organ (System) | Quantifying | References | Methodology | Comments |
|---|---|---|---|---|
| Circulatory | Red blood cell velocity. | Savoire et al. (2004) | Thresholding and line-fitting (M-estimators) translated (through trigonometry) to RBC velocity. | Inventive use of a known and quantifiable artefact in raster scanning imaging systems for deriving physiological information. Preliminary results with uncertain clinical relevance. |
| | | Perchant et al. (2007) | ROI tracking and alignment through (i) scanning distortion compensation, and (ii) global affine registration, for blood velocity estimation through spatio-temporal correlation. | Feasibility study. Preliminary results with uncertain clinical relevance. |
| Oropharyngeal | Epithelial cells in vocal cords. | Mualla et al. (2014) | Watershed segmentation (borders) and local minima detection (location). | Empirical, ad-hoc approach employing off-the-shelf image analysis methods. Limited data can potentially lead to poor generalisation of the proposed methodology. |
| Gastro-intestinal | Intestinal crypts in Inflammatory Bowel Disease. (eCLE) | Couceiro et al. (2012) | Detecting (local maxima), segmenting (ellipse fitting on edge detection) and quantifying (number, connectivity). | Empirical, ad-hoc approaches employing off-the-shelf image analysis methods. Heuristic parameter estimation, hard thresholds and limited data can potentially lead to poor generalisation of the proposed methodologies. |
| | Intestinal crypts in colorectal polyps. | Prieto et al. (2016) | Contrast enhancement, thresholding (Otsu’s) and morphological filters (erosion, centre of mass, circularity). | |
| | Goblet cells in villi. (eCLE) | Boschetto et al. (2015a) | Detecting (matched filters), segmenting (Voronoi diagrams) cells and identifying (hard threshold) goblet cells within the villi. | |
| | Intestinal villi. (eCLE) | Boschetto et al. (2015b) | Detect via morphological filters (top-hat, morphological reconstruction and closing) and quad-tree decomposition. | |
| | | Boschetto et al. (2016b) | Subdivide to superpixels, extract features and classify through Random Forests to generate a binary segmentation map. | Employing established data driven approaches with a reasonable size of data, resulting in better generalisation potential. |
| Pulmonary | Alveolar sacs in mice distal lung. | Namati et al. (2008) | Segmenting (optimum separation thresholding) and quantifying (8-point connectivity) alveolar sacs. | Limited data and uncertain translatability to human alveolar sacs due to their large size relative to the limited field of view. |
| | Stained mesenchymal stem cells in rat lungs. | Perez et al. (2017) | Contrast stretch, denoise (opening), threshold and count (connected component analysis). | Empirical, ad-hoc approach employing off-the-shelf image analysis methods. |
| | Stained bacteria in distal lung. | Karam Eldaly et al. (2018) | Outlier detection using a hierarchical Bayesian model along with a MCMC algorithm based on a Gibbs sampler. | More elaborate approaches, adopting model-based and data-driven methodologies. They have potential for good generalisation and translation to clinical applications. |
| | Stained bacteria and cells in distal lung. | Seth et al. (2017, 2018) | Bacterial and cellular load using spatio-temporal template matching with a radial basis function network. | |

4.1. Mosaicing

The miniaturisation of imaging fibre bundles in FBEμ constrains the effective field of view (potentially <500 μm) and thus limits sampling diversity, which has implications for navigation, target tissue identification and scene interpretation. To address these inherent limitations, multiple, partially overlapping frames acquired over time can be aligned and combined (stitched) into a single frame with extended field of view. The process has been referred to as image mosaicing. Over the years, there has been considerable research in the development of image mosaicing approaches (Ghosh and Kaabouch, 2016), employed in a range of applications, including endoscopic imaging (Bergen and Wittenberg, 2016). Yet, generic mosaicing approaches do not deal with the inherent properties and limitations of endomicroscopy as described in Vercauteren et al. (2006). Notably, FBEμ is a direct contact imaging technique. The interaction of a moving rigid fibre-bundle tip with soft tissue may result in non-linear deformations of the imaged structures. A model of probe-tissue interaction for FBEμ was proposed in Erden et al. (2013). Furthermore, in laser scanning based FBEμ platforms, an input frame does not represent a single point in time. Instead, each sampling point is acquired at a slightly different point in time, resulting in potential deformations when imaging fast moving objects. Finally, the imaged tissue structures are sampled through a sparse, irregularly distributed fibre bundle. Hence, due to these non-linear deformations, motion artefacts and irregular sampling of the input frames, there has been substantial research interest in the development of custom mosaicing approaches optimised for endomicroscopic data. The proposed methodologies, some currently used in clinical practice, range from simple real-time, to more intricate post-procedural solutions, for free-hand and/or robotically driven mosaicing platforms. Table 2 and Fig. 2 provide an overview of FBEμ mosaicing techniques and characteristic examples of the derived mosaics.

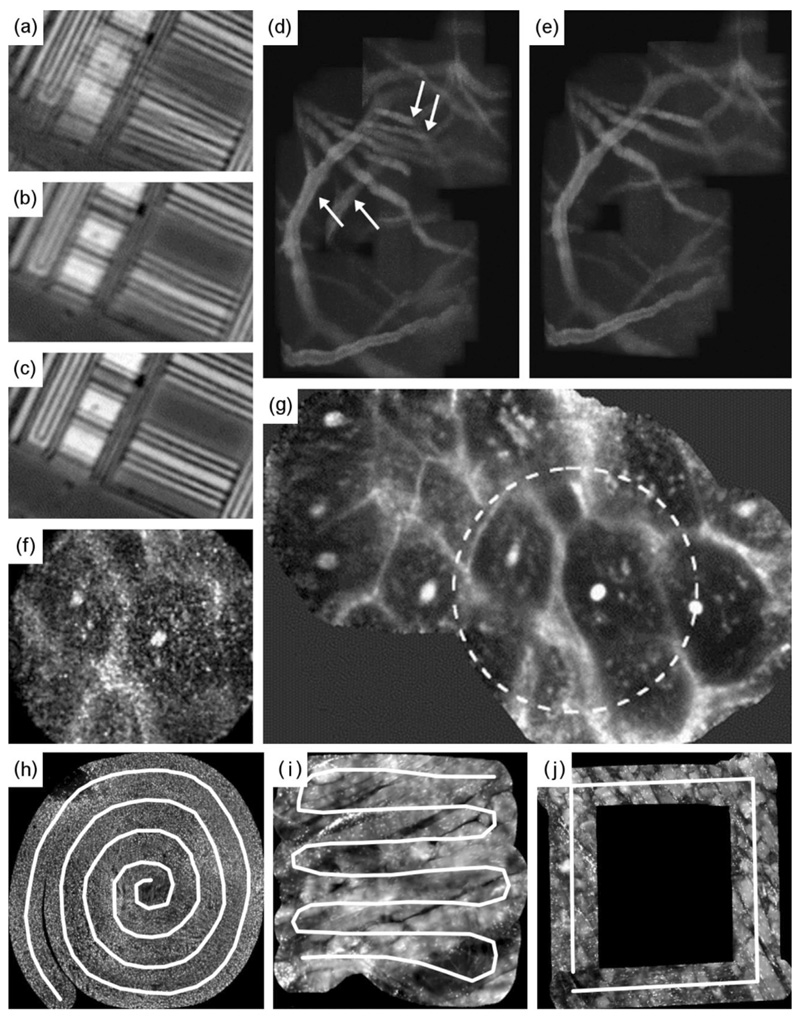

Fig. 2. Examples of mosaics employing a range of motion/deformation compensation algorithms as well as motorised acquisition path control.

(a-c) Mosaics of a silicon wafer using (a) local only, (b) local and motion distortion, and (c) global and motion distortion compensation; (d-e) Mosaics of mouse brain blood vessels using (d) local and (e) global motion and deformation compensation. While local, rigid frame alignment can generate mosaics in real-time, global and non-linear motion and deformation compensation is required for more accurate, continuous mosaics. (f-g) Global mosaic (circular ROI in (g) matching FOV in (f)) of human mouth mucosa where the accurate alignment and reconstruction can result in denoised and super-resolved images. (h-j) Customised and structured mosaic acquisition paths (such as spiral, raster and square scans). Images (a-c) and (f-g) reproduced and adapted (cropped/resized) with permission from Elsevier from Figures 18 and 22 respectively of the “Robust mosaicing with correction of motion distortions and tissue deformations for in vivo fibered microscopy” by Vercauteren et al. (2006). Images (d-e) are reproduced and adapted with permission from the Institute of Electrical and Electronics Engineers (IEEE) from Figure 9 of the “In Vivo Micro-Image Mosaicing” by Loewke et al. (2011). Image (h) is reproduced and adapted with permission from IEEE from Figure 3 of the “Conic-Spiraleur: A Miniature Distal Scanner for Confocal Microlaparoscope” by Erden et al. (2014). Image (i) is reproduced and adapted with permission from IEEE from Figure 13 of the “Building Large Mosaics of Confocal Endomicroscopic Images Using Visual Servoing” by Rosa et al. (2013). Image (j) is reproduced and adapted with permission from IEEE from Figure 6 of the “Understanding Soft-Tissue Behavior for Application to Microlaparoscopic Surface Scan” by Erden et al. (2013).

Early mosaicing approaches were post-procedural and addressed both rigid and elastic deformations (Fig. 2). Vercauteren et al. (2005, 2006) were the first to identify the necessity for custom mosaicing approaches in endomicroscopy. Vercauteren et al. (2006) provided a hierarchical framework of frame-to-reference transformations (on the original, sparsely sampled data) to iteratively derive a globally consistent rigid alignment, while compensating for motion induced distortions, as well as for non-rigid deformations. Scattered data approximation was employed to reconstruct a continuous, regularly sampled image from the sparsely sampled inputs merged into a common reference. The proposed method, currently used as part of Mauna Kea’s post-procedural analysis software, was tested on phantom and in vivo data producing smooth mosaics with extended field of view and enhanced resolution (due to image reconstruction on partially overlapping, irregularly sampled images - see Superposition in Section 3.1). Loewke et al. (2007a) decomposed the problem into similar components, compensating for global (rigid) as well as local (elastic) transformations (incorporating the effect of motion distortions), maximising the certainty of the registration, both global and local, as an integrated optimisation problem. Averaging of overlapping pixels as well as multi-resolution pyramid blending were tested on both simulated and in vivo data, producing mosaics with smooth image transitions and sharp edges across the imaged structures. Finally, Hu et al. (2010) adopted a different approach, employing elastic registration of consecutive frames based on optical flow of robust image features and blending the mosaiced frames into a super-resolved image through a Maximum a Posteriori (MAP) estimation technique. However, very limited FBEμ data (1 mosaic) were provided for the assessment of the technique.
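The idea of reconstructing a regularly sampled image from irregular samples can be sketched with a Gaussian-weighted (Shepard-style) average. This is one common form of scattered data approximation and is used here purely for illustration; the exact scheme of Vercauteren et al. (2006) is not reproduced, and the function name `reconstruct_grid` and the `sigma` parameter are assumptions.

```python
import math


def reconstruct_grid(samples, width, height, sigma=1.0):
    """Resample irregular (x, y, value) samples onto a regular pixel grid.

    Each grid node receives the Gaussian-weighted average of all samples,
    so nearby samples dominate and overlapping frames are averaged.
    """
    grid = []
    for gy in range(height):
        row = []
        for gx in range(width):
            num = 0.0
            den = 0.0
            for x, y, value in samples:
                dist2 = (x - gx) ** 2 + (y - gy) ** 2
                w = math.exp(-dist2 / (2.0 * sigma ** 2))
                num += w * value
                den += w
            row.append(num / den if den > 0.0 else 0.0)
        grid.append(row)
    return grid


# Hypothetical core samples (x, y, intensity) merged from overlapping frames.
samples = [(0.3, 0.2, 10.0), (1.7, 1.1, 20.0), (0.9, 2.4, 30.0)]
for row in reconstruct_grid(samples, width=3, height=3):
    print([round(v, 1) for v in row])
```

This brute-force version visits every sample for every pixel; practical implementations would restrict the sum to a local neighbourhood.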

The complex and descriptive models employed by the preceding methodologies have a direct effect on their computational requirements and consequently their real-time capability. Real-time mosaicing can provide much needed feedback, guiding the data acquisition process and ensuring a smooth continuous path over the desired region of interest (Fig. 2). Vercauteren et al. (2008) proposed an early real-time mosaicing algorithm, integrating translation and distortion (due to finite scanning speed) into a single rigid transformation (estimated through a fast, normalised cross correlation matching algorithm) followed by a simple “dead leaves” model for image blending. A very similar approach of aligning consecutive frames was employed by Bedard et al. (2012). Loewke et al. (2008) adopted a two-stage pair-wise registration between consecutive frames, (i) obtaining an initial translation estimate through optical flow on easily trackable features, and (ii) refining the estimate through gradient descent on the cross correlation. A multi-resolution pyramid blending algorithm was also employed, recombining overlapping regions into a composite image. To achieve real-time performance, these approaches needed to make certain assumptions and are hence subject to a number of inherent limitations, such as the inability to compensate for global, accumulative alignment errors as well as any elastic deformations. A potential solution to these limitations, which has been adopted by both Loewke et al. (2011) and by Mauna Kea Technologies, is employing a two-stage mosaicing strategy, including real-time mosaicing for live image acquisition, followed by a more accurate, post-procedural reconstruction.
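Pair-wise alignment through normalised cross correlation (NCC) can be sketched as an exhaustive search over small integer translations. This is a simplified illustration that ignores the scanning distortion handled by Vercauteren et al. (2008) and uses none of their speed optimisations; `estimate_shift`, `max_shift` and the synthetic scene are hypothetical.

```python
import math
import random


def ncc(a, b):
    """Normalised cross correlation of two equally long intensity lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den > 0.0 else 0.0


def estimate_shift(ref, mov, max_shift=2):
    """Find integer (dy, dx) so that mov[y][x] best matches ref[y + dy][x + dx]."""
    h, w = len(ref), len(ref[0])
    best_score, best_shift = -2.0, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            a, b = [], []
            for y in range(h):
                for x in range(w):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:  # overlapping region only
                        a.append(ref[yy][xx])
                        b.append(mov[y][x])
            if len(a) >= 4:
                score = ncc(a, b)
                if score > best_score:
                    best_score, best_shift = score, (dy, dx)
    return best_shift


# Hypothetical test scene: two 6x6 frames cropped from one random 8x8 image.
rng = random.Random(0)
scene = [[rng.randint(0, 255) for _ in range(8)] for _ in range(8)]
ref = [row[0:6] for row in scene[0:6]]
mov = [row[2:8] for row in scene[1:7]]  # field of view moved by (dy, dx) = (1, 2)
print(estimate_shift(ref, mov))  # (1, 2)
```

Because each frame is only aligned to its predecessor, small errors accumulate along the path, which is the global drift limitation noted above.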

Most mosaicing approaches for FBEμ have assumed a roughly static scene imaged with a moving field of view. However, this is not always the case in clinical applications, with mosaicing removing dynamic information that can be of potential clinical use. He et al. (2010) proposed a method compensating for a range of movements, both operator induced as well as due to respiratory and cardiac motions, stabilising the field of view for improved monitoring of dynamic structural changes. Mahé et al. (2015) integrated dynamic video sequences into static mosaics, enabling the FOV extension without the associated loss of dynamic structural changes throughout the acquisition. A two-stage approach, of static mosaicing followed by stitching of the associated video segments, was employed to reduce computational load. Visual artefacts at the seams across the mosaic were suppressed using a gradient-domain decomposition. Dynamic mosaics (infinite loops) from six organs (oesophagus, stomach, pancreas, bladder, biliary duct and colon) with various conditions were produced and clinically assessed by four experts. The produced visual summaries indicated a higher level of consistency with the original data compared to static mosaicing.

Mosaicing techniques for FBEμ have, for the most part, concentrated on aligning and blending images with no a priori information on the acquisition trajectory, based exclusively on topology inference through the changes in the imaged structures. Such approaches call for large overlap amongst adjacent frames and, for effective, smooth results, can be computationally expensive. There has therefore been interest in incorporating a priori trajectory information in the mosaicing process. Loewke et al. (2007b) utilised feedback from a robotic arm determining the five degree-of-freedom position and orientation of its end-effector (along with projective geometry) as an initial global rigid alignment amongst a frame sequence. Vyas et al. (2015) replaced the robotic arm with a six degree-of-freedom electromagnetic sensor positioned at the tip of the fibre-bundle in a proof-of-principle study. The positioning feedback from the sensor acted as a coarse global alignment followed by a fine tuning similar to Vercauteren et al. (2008). Mahé et al. (2013) used weak prior knowledge of the trajectory (spiral scan) to derive spatio-temporal associations within the frame sequence, linking overlapping frames from successive branches of the spiral scan and estimating optimal transforms similar to Vercauteren et al. (2006). While these approaches have been reported to improve the efficiency and/or the robustness of the mosaicing process, they require additional actuators/sensors at the tip of the fibre bundle, either to drive or to provide feedback on the scanning path. Such hardware additions are currently limiting their suitability for endoscopic applications, necessitating future miniaturisation of the relevant technologies.

The studies described above dealt predominantly with free-hand movements for producing an extended field of view through image mosaicing. While free-hand mosaicing is a very valuable tool, the ability to create customised scanning paths, ensuring a full imaging coverage of the region of interest, is also highly desirable. A number of robotised distal scanning tips have been proposed (Erden et al., 2014; Rosa et al., 2013, 2011; Zuo et al., 2015, 2017b) facilitating customised and structured mosaic acquisition paths (such as spiral and raster scans) for FBEμ (Fig. 2). While a diverse set of architectures has been proposed, most solutions catered for Minimally Invasive/Laparoscopic Surgery applications, with some prototypes suitable for endoscopic applications (Zuo et al., 2017a). Further miniaturisation is therefore imperative for facilitating robotically controlled scanning in endoscopy. In robotised scanning, the complex 3D surface of many of the examined structures, along with the lack of haptic feedback, may result in loss of contact, or excessive contact/pressure between the fibre bundle and the imaged structure. Giataganas et al. (2015) created an adaptive probe mount that could maintain constant (low force magnitude) contact between the tissue and the imaging probe. Furthermore, the direct contact of the hard tip of the fibre bundle with the soft tissue can lead to tissue deformations, resulting in accumulative deviation from the desired path throughout a scan. There have been studies attempting to understand this soft tissue behaviour and provide feedback to the robotised scanner in an attempt to compensate for the anticipated path deviations. The feedback can be (i) through determining the loading-distance prior to an automated scan and compensating by adjusting the scan path accordingly (Erden et al., 2013), or (ii) through visual servoing (Rosa et al., 2013), estimating the imaged path in real-time through the mosaiced image data and adjusting accordingly to meet the desired scan path. While further research and development, especially in their miniaturisation, is necessary, robotic scanning is anticipated to serve as a key milestone towards the adoption of image mosaicing in a wide range of clinical endoscopic and laparoscopic procedures. Yet, a detailed discussion of such robotised scanning approaches is beyond the scope of this paper. Zuo and Yang (2017) have produced a thorough review on endomicroscopy for robot assisted intervention, providing details and discussion on a wide range of relevant studies.

4.2. Quantification

Aside from image mosaicing, there have been a limited number of image analysis studies for FBEμ images. These studies have predominantly concentrated on the detection and quantification of particles and structures that can act as indicators of pathological or physiological processes in the circulatory system, oropharyngeal, gastrointestinal and pulmonary tracts. For the most part, empirical, ad hoc observations, combined with simple, off-the-shelf image analysis approaches, have been employed. This section, along with Table 3 and Fig. 3, provides a brief overview of the most relevant image analysis/quantification studies for fibred endomicroscopic data.

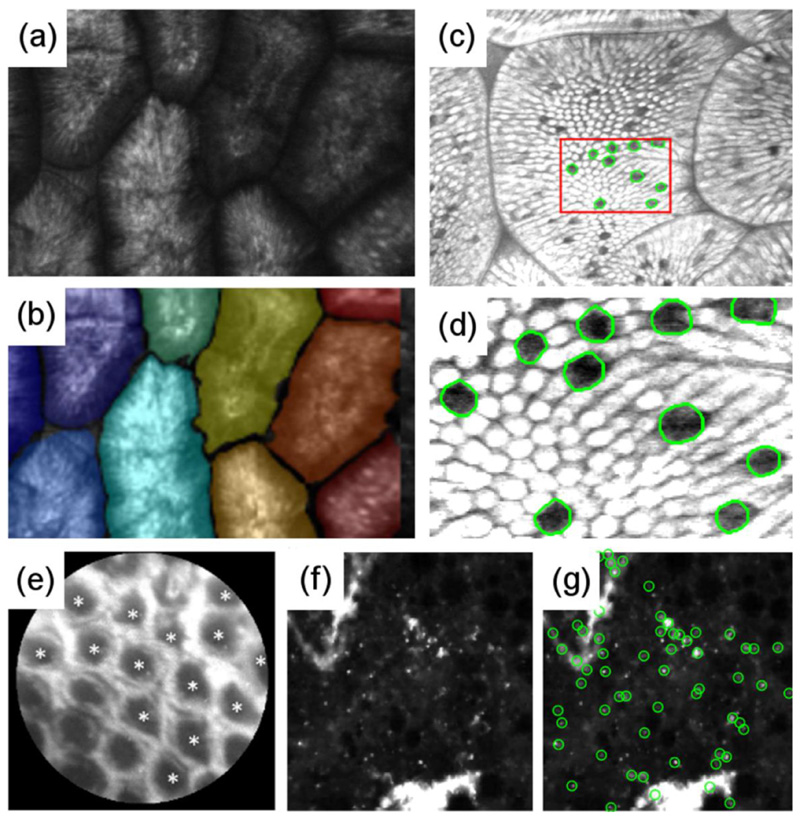

Fig. 3. Examples of image analysis performed on a range of organ systems.

(a-b) Segmentation of intestinal villi, and (c-d) detection of goblet cells within the villi. (e) Detection of intestinal crypts in colorectal polyps as a first step towards automated classification between benign and dysplastic epithelial tissue. (f-g) Detection of fluorescent stained bacteria, appearing as bright dots in (f), in the alveolar space of ovine distal lung. Images (a-b) are reproduced and adapted with permission from the Institute of Electrical and Electronics Engineers (IEEE) from Figures 1 and 4 respectively of the “Semiautomatic detection of villi in confocal endoscopy for the evaluation of celiac disease” by Boschetto et al. (2015b). Images (c-d) are reproduced and adapted with permission from IEEE from Figure 5 of the “Detection and density estimation of goblet cells in confocal endoscopy for the evaluation of celiac disease” by Boschetto et al. (2015a). Image (e) has been reproduced from Figure 8 of the “Quantitative analysis of ex vivo colorectal epithelium using an automated feature extraction algorithm for microendoscopy image data” by Prieto et al. (2016) under the Creative Commons Attribution (CC BY) 3.0 International License (https://creativecommons.org/licenses/by/3.0).

In the circulatory system, Savoire et al. (2004) proposed a method to estimate the velocity of Red Blood Cells (RBC) within micro-vessels from a single endomicroscopic frame, exploiting the skewing artefact introduced on fast moving RBCs due to the relatively slow scanning speed of the vertical axis component (resulting in circular RBCs appearing ellipsoidal). Perchant et al. (2007) developed algorithms to track and align a region of interest over consecutive frames for cell traffic analysis and blood velocity estimation. Huang et al. (2013) examined the variability in stained cardiac tissue structures imaged through FBEμ as a means for intraoperatively identifying nodal tissue in living rat hearts with potential application to neonatal open-heart surgery. In the oropharyngeal tract, Mualla et al. (2014) identified the borders and locations of epithelial cells in the mucosa layer of vocal cords as the first step to analysing and quantifying structural changes.

In the gastrointestinal tract, Couceiro et al. (2012) developed a methodology that employed off-the-shelf algorithms for segmenting and quantifying intestinal crypts in endomicroscopic images as a potential indicator for Inflammatory Bowel Disease. Similarly, Prieto et al. (2016) employed crypt detection as a first step towards automated classification between benign and dysplastic epithelial tissue in colorectal polyps. Boschetto et al. (2015a, 2015b, 2016b) attempted to semi-automatically analyse and quantify fluorescent endomicroscopic images of the gastro-intestinal mucosa, as a first step to assist diagnosis and monitoring of coeliac disease. Boschetto et al. (2016b, 2015b) proposed methodologies for segmenting intestinal villi, while Boschetto et al. (2015a) proceeded to detect and segment cells within the villi and to differentiate between columnar and goblet cells of the epithelium.
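Table 3 lists Otsu's thresholding as one of the off-the-shelf steps used for crypt detection. As a reminder of what that step computes, the sketch below selects the threshold maximising the between-class variance of an intensity histogram. It is a textbook formulation on integer intensities, not the pipeline of Prieto et al. (2016); the function name and example values are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t maximising the between-class variance.

    pixels: iterable of integer intensities in [0, levels).
    Pixels with value <= t form the background class.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in the background class
    sum0 = 0    # intensity sum of the background class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue            # background class still empty
        w1 = total - w0
        if w1 == 0:
            break               # foreground class empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


# Hypothetical bimodal data: dark background (10) and bright structures (200).
pixels = [10] * 5 + [200] * 5
t = otsu_threshold(pixels)
print(t, [p > t for p in pixels].count(True))  # 10 5
```

Any threshold between the two modes separates them equally well here; the strict comparison simply keeps the first such value.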

In the pulmonary tract, Namati et al. (2008) analysed mice distal lung images and automatically quantified the number and size of alveolar sacs. Perez et al. (2017) applied a sequence of off-the-shelf image processing operations to count fluorescently labelled Mesenchymal Stem Cells injected into rat lungs, as a potential indicator for lung repair in radiation induced lung injury. Karam Eldaly et al. (2018) employed a fully unsupervised, hierarchical Bayesian approach for detecting bacteria labelled with a (green) fluorescent smart-probe (Akram et al., 2015a) within the highly auto-fluorescent (also green) distal lung. The algorithm was an extension of McCool et al. (2016) for denoising along with outlier detection and removal in sparsely, irregularly sampled data. Such fully unsupervised approaches offer a flexible and consistent methodology to deal with uncertainty in inference when a limited amount of data or information is available. Seth et al. (2017, 2018) quantified bacterial and cellular load in the human lung, adopting and adapting a learning-to-count (Arteta et al., 2014) approach and employing a multi-resolution, spatio-temporal template matching scheme using a radial basis function network.
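The threshold-and-count style of quantification, as listed for Perez et al. (2017) in Table 3, can be sketched with a breadth-first connected component search. Contrast stretching and morphological opening are omitted, and the function name, threshold and toy image are hypothetical.

```python
from collections import deque


def count_components(image, threshold, connectivity=8):
    """Count connected groups of pixels whose intensity exceeds threshold."""
    if connectivity == 8:
        offsets = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                   if (dy, dx) != (0, 0)]
    elif connectivity == 4:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    else:
        raise ValueError("connectivity must be 4 or 8")
    h, w = len(image), len(image[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if image[y][x] <= threshold or seen[y][x]:
                continue
            count += 1                      # new, unvisited bright object
            seen[y][x] = True
            queue = deque([(y, x)])
            while queue:                    # flood the whole object
                cy, cx = queue.popleft()
                for dy, dx in offsets:
                    ny, nx = cy + dy, cx + dx
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


# Hypothetical 4x4 frame with bright pixels that touch only diagonally.
image = [[9, 9, 0, 0],
         [0, 0, 6, 7],
         [0, 8, 0, 0],
         [0, 0, 0, 0]]
print(count_components(image, threshold=5, connectivity=8))  # 1
print(count_components(image, threshold=5, connectivity=4))  # 3
```

The example shows how strongly the count depends on the chosen connectivity and threshold, one reason such heuristic pipelines may generalise poorly.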

5. Image understanding

Another component of the image computing pipeline is the higher-level understanding and exploitation of the acquired, reconstructed and sometimes processed data, in an attempt to extract clinically and biologically relevant information, and consequently guide the diagnostic process. Due to the nature of FBEμ data acquisition in a clinical setup, a large volume of continuous frame sequences is generated, sometimes surpassing 1000 frames per video. These video sequences include uninformative/corrupted frames, off-target frames outside the examined anatomic structure and/or region of interest, as well as a range of on-target frames from healthy and pathological structures. This large, and sometimes very diverse, data volume acts as a major bottleneck in the analysis and quantification of the data, increasing the required human/computational resources, and potentially diluting the objectivity of the associated clinical procedure. The main body of FBEμ image understanding research to date can be broadly categorised into frame (i) classification (Tables 4–5 and Fig. 4), and (ii) content-based retrieval methods (Table 6).

Table 4. Overview of classification approaches for fibred endoscopic imaging employing traditional machine learning.

| Organ (System) | Classifying | References | Methodology | Comments |
|---|---|---|---|---|
| Pulmonary | Distal lung alveolar abnormalities. | Desir et al. (2012a), Désir et al. (2010, 2012b), Hebert et al. (2012), Heutte et al. (2016), Koujan et al. (2018), Petitjean et al. (2009) and Saint-Réquier et al. (2009) | Features: First Order Statistics, GLCMs, LBPs, SIFT, Scattering Transform, FREAK, ORB, Homomorphic filters, Structural Information (Canny and Sobel Edge Detectors), Sparse - Irregular LBPs, LQPs, HOGs, LDPs, Homogeneity, Spatial Frequency, Fractal Texture, Intensity, Wavelet and CNN Features. Classifiers: K-NN, SVMs, SVM-RFE, Gaussian Mixture Models, LDA, QDA, Random Forests, Generalised Linear Model, Gaussian Processes, Boosted Cascade of Classifiers, Neural Networks. Multiclass: One-vs-all and one-vs-one ECOCs, binary tree classification, Recursive SVM tree and Naïve Bayes. Other: Pruning trees for non-detection; feature selection (i.e. SDA, FSS and PCA) for dimensionality reduction; visual coding (Bag of Words, Sparse Coding and Fisher Kernel Coding) and classification on mosaics for enhanced classification performance. | Simple and effective methodologies performing, for the most part, binary classification. Results are positive, indicating the potential strength of simple approaches in classifying endomicroscopic images. Primary limitations include (i) the limited scope of the classification, for example healthy vs. pathological, when endomicroscopic sequences contain a plethora of frame classes, and (ii) the limited number of images used for training, testing and evaluation, making the proposed methodologies susceptible to a range of biases. |
| | Informative frames within videos. | Leonovych et al. (2018) and Perperidis et al. (2016) | | |
| | Cancerous nodules in airways and distal lung. | He et al. (2012), Rakotomamonjy et al. (2014) and Seth et al. (2016) | | |
| Gastro-intestinal | Oesophagus epithelial changes. | Ghatwary et al. (2017), Veronese et al. (2013) and Wu et al. (2017) | | |
| | Intestinal adenocarcinoma. (eCLE) | Stefanescu et al. (2016) | | |
| | Colorectal polyps. | André et al. (2012b) and Zubiolo et al. (2014) | | |
| | Coeliac disease. (eCLE) | Boschetto et al. (2016a) | | |
| Oropharyngeal | Pathological epithelium. | Jaremenko et al. (2015) and Vo et al. (2017) | | |
| Brain | Brain tumours (glioma and meningioma). | Kamen et al. (2016) and Wan et al. (2015) | | |
| Ovaries | Epithelial changes. | Srivastava et al. (2005, 2008) | | |

Table 5. Overview of classification approaches for fibred endoscopic imaging going beyond traditional machine learning.

| Organ (System) | Classifying | References | Methodology | Comments |
|---|---|---|---|---|
| Pulmonary | Cancerous nodules in airways. | Gil et al. (2017) | Unsupervised classification (compensating for limited data availability) using graph representation and community detection algorithms. | Early FBEμ classification approaches going beyond the traditional machine learning pipeline, exploring methods such as Convolutional Neural Networks (off the shelf as well as custom), transfer learning, unsupervised learning and multi-modal learning in a latent space. The results are very promising. Yet, more data, both in terms of numbers as well as in terms of diversity, are necessary. Furthermore, custom solutions, taking into consideration the inherent FBEμ imaging properties, could further enhance the classification performance. |
| Gastro-intestinal | Oesophagus epithelial changes. | Hong et al. (2017) and Aubreville et al. (2017) | Custom CNN architecture for the multi-class frame classification. | |
| Oropharyngeal | Pathological epithelium. | Aubreville et al. (2017) | Full-training of LeNet-5 and shallow fine-tuning of Inception v3 (using the ImageNet database). | |
| Breast | Cancerous breast nodules. | Gu et al. (2017) | Multi-modal (FBEμ mosaics and histology) classification mapping the original features to a latent space for improved SVM performance. | |

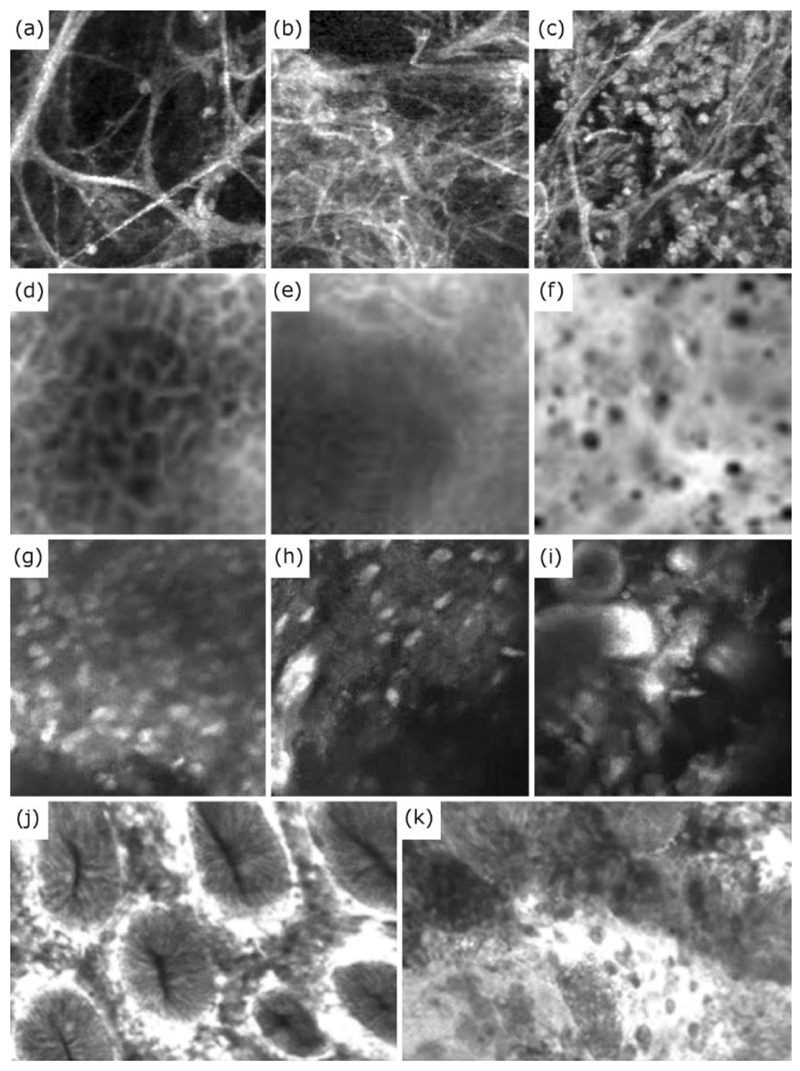

Fig. 4. Examples of structural changes observed in OEM images across a variety of organ systems and conditions. These structural changes have been used to classify/detect a range of clinically relevant pathologies.

(a-c) Differences in the alveolar structures of the distal lung, showing (a) healthy and (b) pathological elastin strands, as well as (c) alveolar sacs flooded with cells. (d-f) Difference between (d) healthy and (f) cancerous oral epithelium, along with (e) an example of oral epithelium with limited textural information where classification can be challenging. (g-i) Difference between (g-h) Glioblastoma and (i) Meningioma brain tumour images. (j-k) Difference between (j) healthy colon mucosa and (k) adenocarcinoma. Images (d-f) have been reproduced (cropped) from Figure 6 of “Automatic Classification of Cancerous Tissue in Laserendomicroscopy Images of the Oral Cavity using Deep Learning” by Aubreville et al. (2017) under the Creative Commons Attribution (CC BY) 4.0 International License. Images (g-i) have been reproduced from Figure 3 of “Automatic Tissue Differentiation Based on Confocal Endomicroscopic Images for Intraoperative Guidance in Neurosurgery” by Kamen et al. (2016) under CC BY 4.0. Images (j) and (k) have been reproduced from Figures 2 and 3, respectively, of “Computer Aided Diagnosis for Confocal Laser Endomicroscopy in Advanced Colorectal Adenocarcinoma” by Ştefănescu et al. (2016) under CC BY 4.0 (https://creativecommons.org/licenses/by/4.0).

Table 6. Overview of image retrieval approaches for fibred endoscopic imaging.

| Topic | References | Methodology | Comments |
|---|---|---|---|
| Image retrieval through low-level visual features | André et al. (2009a) | Bag of Visual Words (k-means clustering) of multi-scale SIFT descriptors extracted from regularly distributed circular regions. | Thorough methodologies for image and video retrieval based solely on low-level information extracted from images. Due to lack of relevant ground truth, methodologies were evaluated as binary classification tasks (instead of retrieval). |
| | André et al. (2009b) | Introduce (i) spatial information between local features by exploiting the co-occurrence matrix of their visual words, and (ii) temporal relationships across frames through mosaicing. | |
| | André et al. (2010) | Deriving visual words from individual frames and weighting the contributions of local regions through the relevant overlap rate derived during mosaicing. | |
| | André et al. (2012b,2011b) | Combining and clinically testing the above approaches as a binary classification (kNN) between neoplastic/benign colonic epithelium. | |
| | André et al. (2011a) | (i) Generate the “perceived similarity” ground truth (manual assessment, Likert scale), and (ii) learn an adjusted similarity/distance metric (linear transform) for optimal mapping of video signatures (histograms of visual words). | First attempt to directly evaluate the performance of endomicroscopic video retrieval, by generating a “perceived similarity” ground truth. |
| Image retrieval combining low-level visual features with high-level semantic context | André et al. (2012a,c) | Fisher-based approach transforming visual word histograms to 8 binary semantic concepts. Combined with the adjusted similarity distance to improve “perceived similarity”. | Bridging the semantic gap between low-level visual features, extracted from the images, and high-level clinical knowledge, generated through human perception. |
| | Watcharapichat (2012) | Gabor filter and Earth Mover’s Distance based retrieval enhanced through iterative “relevance feedback” and Isomap dimensionality reduction. | |
| | Tous et al. (2012) | Retrieval via (i) low-level, image-based features (LBPs & k-NN with Euclidean or Manhattan distances), (ii) high-level keyword semantic descriptions (Apache Lucene search engine), and (iii) third-party software compatibility through MPEG Query Format & JPEG Search standards. | |
| Other image retrieval approaches | Kohandani Tafresh et al. (2014) | Semi-automated query adaptation of André et al. (2011b) via (i) temporal segmentation based on kinetic stability (Euclidean distance of SIFT descriptors across consecutive frames), and (ii) manual selection of spatially stable segments. | Adaptations of André et al. (2011b) enhancing retrieval performance. |
| | Gu et al. (2017) | Unsupervised, multimodal graph mining (i) deriving similar (cycle consistency) and dissimilar (geodesic distance) FBEμ and histology frame pairs, (ii) learning discriminative features in the associated latent space. | |

5.1. Image classification

Classification of frames into pre-determined, clinically defined cohorts based on their content is currently the most investigated area of FBEμ image computing research. Numerous studies have applied binary as well as multi-class classification to endomicroscopic images of a range of organ systems in an attempt to identify cancer in ovarian epithelium (Srivastava et al., 2005,2008), abnormalities in distal lung alveolar structures (Desir et al., 2012a; Désir et al., 2010,2012b; Hebert et al., 2012; Heutte et al., 2016; Koujan et al., 2018; Petitjean et al., 2009; Saint-Réquier et al., 2009), informative frames in brain (Izadyyazdanabadi et al., 2017; Izadyyazdanabadi et al., 2018) and pulmonary videos (Leonovych et al., 2018; Perperidis et al., 2016), cancerous nodules in the airways (Gil et al., 2017; He et al., 2012; Rakotomamonjy et al., 2014) and distal lung (Seth et al., 2016), pathological epithelium in the oropharyngeal cavity (Aubreville et al., 2017; Jaremenko et al., 2015; Vo et al., 2017), changes in oesophageal epithelium in cases of Barrett’s oesophagus (Ghatwary et al., 2017; Hong et al., 2017; Veronese et al., 2013; Wu et al., 2017), adenocarcinoma (Stefanescu et al., 2016), colorectal polyps (André et al., 2012b; Zubiolo et al., 2014) and coeliac disease (Boschetto et al., 2016a) in intestinal epithelium, neoplastic tissue in breast nodules (Gu et al., 2017), as well as two types of common brain tumours, glioblastoma and meningioma (Kamen et al., 2016; Murthy et al., 2017; Wan et al., 2015). Methodologically, most of the aforementioned studies employed the same basic structure: defining a hand-crafted feature space descriptive of the underlying imaged structure, then training a range of classifiers to distinguish between pre-determined frame categories.
For organs/structures that do not exhibit any auto-fluorescence at the imaging wavelengths, fluorescence dyes such as methylene blue and fluorescein, and molecular probes (He et al., 2012) were employed to generate the necessary fluorescent signal.

Commonly used feature descriptors include (i) first order image statistics, (ii) structural information through Skeletonisation, Sobel and Canny Edge Detectors, etc., (iii) Haralick’s texture parameters derived through Grey Level Co-occurrence Matrices (GLCM), (iv) Local Binary Patterns (LBP) and their variation of Local Quinary Patterns (LQP), and (v) Scale Invariant Feature Transforms (SIFT). Other less adopted descriptors employed as discriminative features include (i) spatial frequency based features extracted in the Fourier domain (Srivastava et al., 2005,2008), (ii) fractal analysis (Stefanescu et al., 2016), (iii) Scattering transform (Rakotomamonjy et al., 2014; Seth et al., 2016), (iv) Fast Retina Keypoint (FREAK) (Wan et al., 2015), (v) Oriented FAST and Rotated BRIEF (ORB) (Wan et al., 2015), (vi) Histogram of Oriented Gradients (HOG) (Gu et al., 2016; Vo et al., 2017), (vii) textons (Gu et al., 2016), (viii) Local Derivative Patterns (LDP) (Vo et al., 2017), as well as (ix) features extracted from Convolutional Neural Networks (CNN) prior to the fully connected layer employed for computing each class score (Gil et al., 2017; Vo et al., 2017). Leonovych et al. (2018) introduced Sparse Irregular Local Binary Patterns (SILBP), an adaptation of LBPs taking into consideration the sparse, irregular sampling imposed by the imaging fibre bundle on FBEμ images. Feature spaces combining two or more of the above descriptors are also frequent. Descriptors are customarily extracted from the whole image, yet in some cases regularly or randomly distributed sub-windows/patches have been used, either on their own or in conjunction with the whole-image feature space.
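
To make the notion of a hand-crafted texture descriptor concrete, the following is a minimal sketch of the basic 8-neighbour Local Binary Pattern histogram, written in plain Python. It is an illustration only: the function name `lbp_histogram`, the list-of-rows image representation and the clockwise bit ordering are assumptions made here, and the sketch does not reproduce the LQP or SILBP variants, nor the exact configuration used in any of the cited studies.

```python
def lbp_histogram(image):
    """Normalised 256-bin histogram of basic 8-neighbour LBP codes.

    `image` is a list of equal-length rows of grey levels (an assumed,
    simplified representation). Border pixels are skipped because they
    lack a full 3x3 neighbourhood.
    """
    # Clockwise neighbour offsets, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    rows, cols = len(image), len(image[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            centre = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # Set the bit when the neighbour is at least as bright
                # as the centre pixel.
                if image[r + dr][c + dc] >= centre:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    # Normalise so that images of different sizes are comparable.
    return [h / total for h in hist] if total else hist
```

The resulting 256-element vector can be used directly as a feature vector, or concatenated with other descriptors, before being passed to a classifier.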

A number of well-established classifiers have been assessed, including (i) k-Nearest Neighbours (kNN) (André et al., 2012b; Désir et al., 2010; Hebert et al., 2012; Saint-Réquier et al., 2009; Srivastava et al., 2005,2008), (ii) Linear and Quadratic Discriminant Analysis (LDA and QDA) (Leonovych et al., 2018; Srivastava et al., 2005,2008), (iii) Support Vector Machines (SVM) and their adaptation with Recursive Feature Elimination (SVM-RFE) (Désir et al., 2010, 2012b; Jaremenko et al., 2015; Leonovych et al., 2018; Petitjean et al., 2009; Rakotomamonjy et al., 2014; Saint-Réquier et al., 2009; Vo et al., 2017; Wan et al., 2015; Zubiolo et al., 2014), (iv) Random Forests (RF) and variants such as Extremely Randomised Trees (ET) (Desir et al., 2012a; Heutte et al., 2016; Jaremenko et al., 2015; Leonovych et al., 2018; Seth et al., 2016; Vo et al., 2017), (v) Gaussian Mixture Models (GMM) (He et al., 2012; Perperidis et al., 2016), (vi) Boosted Cascade of Classifiers (Hebert et al., 2012), (vii) Neural Networks (NN) (Stefanescu et al., 2016), (viii) Gaussian Processes Classifiers (GPC), and (ix) Lasso Generalised Linear Models (GLM) (Seth et al., 2016). Most studies employed leave-k-out and k-fold cross validation to assess the predictive capacity of the proposed methodology on a limited number of pre-annotated frames. In an attempt to enhance the classification performance and/or reduce the computational workload required for training and testing, some studies incorporated additional steps in the classification pipeline. In particular, feature selection (dimensionality reduction in feature space) techniques, such as Stepwise Discriminant Analysis (SDA), Forward Sequential Search (FSS), and Principal Component Analysis (PCA), were applied (Perperidis et al., 2016; Srivastava et al., 2005,2008) prior to the classification process. 
Furthermore, visual coding schemes, such as Bag-of-Words, Fisher Kernel Coding and Sparse Coding (Kamen et al., 2016; Vo et al., 2017; Wan et al., 2015), as well as reduction of non-detection, minimising the incorrectly classified images through rejection mechanisms (Desir et al., 2012a; Heutte et al., 2016), have been investigated.
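
The evaluation protocol described above (a classifier assessed through k-fold cross validation on a small annotated set) can be sketched as follows. This is a simplified, standard-library illustration rather than the protocol of any cited study: the function names, the interleaved fold assignment and the choice of kNN with Euclidean distance are all assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Majority label among the k training vectors closest to `query`."""
    nearest = sorted(range(len(train_x)),
                     key=lambda i: math.dist(train_x[i], query))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def k_fold_accuracy(features, labels, n_folds=5, k=3):
    """Mean kNN accuracy over n_folds folds.

    Sample i is assigned to fold i % n_folds (an assumed, simplistic split).
    """
    accuracies = []
    for fold in range(n_folds):
        test_idx = [i for i in range(len(features)) if i % n_folds == fold]
        train_idx = [i for i in range(len(features)) if i % n_folds != fold]
        train_x = [features[i] for i in train_idx]
        train_y = [labels[i] for i in train_idx]
        # Count test samples whose predicted label matches the annotation.
        correct = sum(
            knn_predict(train_x, train_y, features[i], k) == labels[i]
            for i in test_idx)
        accuracies.append(correct / len(test_idx))
    return sum(accuracies) / n_folds
```

With frames drawn from video sequences, a per-frame split like the one above can place near-identical consecutive frames in both the training and test folds. Grouping folds by patient or by video is one way to limit that bias, although whether a given study did so has to be checked in the original paper.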

Classification of endomicroscopic images has predominantly concentrated on binary cases, with only a small number of studies having attempted multi-class classification (Boschetto et al., 2016a; Ghatwary et al., 2017; Hong et al., 2017; Koujan et al., 2018; Veronese et al., 2013; Wu et al., 2017; Zubiolo et al., 2014). To this end, Boschetto et al. (2016a) employed a multi-class Naïve Bayes classifier. Koujan et al. (2018) adopted the One-Versus-All (OVA) Error Correcting Output Codes (ECOC), a popular method (along with other ECOCs such as One-Versus-One and Ordinal) for multi-class classification using binary classifiers. Ghatwary et al. (2017) and Veronese et al. (2013) tackled the multi-class problem as a pre-determined sequence (tree) of binary classifications (through SVM), while Zubiolo et al. (2014) employed graph theory tools (minimum cut) to recursively estimate the optimal associated bipartitions (large SVM margin). Hierarchical (tree) binary classifications can potentially reduce the classification complexity, in terms of the number of classes, from linear for OVA to logarithmic. Wu et al. (2017) improved multi-class classification performance by incorporating unlabelled images through an adaptation of the semi-supervised Label Propagation method introduced by Zhou et al. (2003).
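
The difference in complexity between OVA and a hierarchical scheme can be illustrated by counting how many binary classifiers are evaluated for a single frame. The sketch below is a toy illustration under stated assumptions: `scorers` and `splitter` are hypothetical stand-ins for trained binary classifiers (for example SVMs), and the tree is assumed to be balanced, which is what yields the logarithmic count.

```python
def ova_predict(classes, scorers, x):
    """One-Versus-All: evaluate one binary scorer per class, keep the best.

    Returns the predicted class and the number of scorers evaluated.
    """
    scores = {c: scorers[c](x) for c in classes}  # len(classes) evaluations
    return max(scores, key=scores.get), len(classes)


def tree_predict(classes, splitter, x):
    """Balanced binary tree: halve the candidate classes at each node.

    `splitter(left, right, x)` is a hypothetical binary classifier that
    returns True when x belongs to the `left` group of classes.
    """
    candidates, evaluations = list(classes), 0
    while len(candidates) > 1:
        mid = len(candidates) // 2
        left, right = candidates[:mid], candidates[mid:]
        candidates = left if splitter(left, right, x) else right
        evaluations += 1
    return candidates[0], evaluations
```

For eight classes, OVA evaluates eight scorers per frame, whereas the balanced tree reaches a leaf after three binary decisions. An unbalanced tree, such as a fixed chain of binary decisions, loses this advantage in the worst case.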

Some recent studies do not follow the same basic structure of training a classifier on a hand-crafted feature space descriptive of the underlying imaged structures. Gil et al. (2017) proposed an unsupervised classification approach to compensate for the limited quantity of data available for training and testing decision support systems. The methodology used graph representation to codify feature space connectivity followed by community detection algorithms (Cazabet et al., 2010), representing space topology and detecting associated image communities. Gu et al. (2016) incorporated features extracted from endomicroscopy mosaics as well as associated histology images into a supervised framework, mapping the original features to a latent space by maximising their semantic correlation. The derived latent features outperformed mono-modal features in binary classification (SVM) of breast cancer images. Furthermore, recent advances in Deep Learning architectures, such as Convolutional Neural Networks (CNN), have resulted in numerous powerful tools for binary or multi-class image classification, without the need for explicit definition of feature descriptors. Hong et al. (2017) proposed a custom CNN architecture for the multi-class classification of epithelial changes in Barrett’s oesophagus. Aubreville et al. (2017) adopted and adapted two established CNN architectures for the detection of cancerous tissue in the oral cavity, (i) a patch-based classification based on full-training of LeNet-5 (Lecun et al., 1998), as well as (ii) a whole image classification based on shallow fine-tuning of the Inception v3 network (Szegedy et al., 2016) pre-trained on the ImageNet database (Deng et al., 2009). Similarly, Izadyyazdanabadi et al. (2017) fully-trained AlexNet (Krizhevsky et al., 2012) and GoogLeNet (Szegedy et al., 2015) for the detection of diagnostic frames in brain endomicroscopy. Murthy et al. 
(2017) presented a novel multi-stage CNN, discarding images classified with high confidence at early stages, concentrating on more challenging images at subsequent, expert shallow networks. The proposed network demonstrated substantial improvement over traditional feature/classifier as well as CNN architectures when classifying (binary) endomicroscopic brain tumour images. Izadyyazdanabadi et al. (2018) compared the classification performance of fully training CNNs from scratch against transfer learning through fine-tuning, shallow (fully connected layers) or deep (whole network), of networks pre-trained on conventional image databases such as ImageNet. Similar to Tajbakhsh et al. (2016), fine-tuning was found to provide better or at least similar classification performance to training from scratch on limited medical image databases.
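
The general idea of a multi-stage classifier with confidence-based early exit can be sketched in a few lines. This is not the network of Murthy et al. (2017): the stages below are arbitrary callables standing in for trained models, and the function name, the 0.9 threshold and the rule that the final stage always decides are assumptions made purely for illustration.

```python
def cascade_classify(image, stages, threshold=0.9):
    """Return (label, stage_index) from the first sufficiently confident stage.

    `stages` is a list of callables, each mapping an image to an estimated
    probability of the positive class (hypothetical stand-ins for trained
    networks). The last stage always produces a decision.
    """
    for index, stage in enumerate(stages):
        p = stage(image)
        confidence = max(p, 1.0 - p)  # confidence in the more likely class
        is_last = index == len(stages) - 1
        if confidence >= threshold or is_last:
            return (1 if p >= 0.5 else 0), index
```

In such a scheme, easy frames exit at the first stage, so that later stages only ever see, and can be trained specifically on, the harder frames.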

5.2. Image retrieval