Abstract

We developed an automatic method for synaptic partner identification in insect brains and used it to predict synaptic partners in a whole-brain electron microscopy dataset of the fruit fly. These predictions can be used to infer a connectivity graph with high accuracy, thus allowing fast identification of neural pathways. To facilitate circuit reconstruction using our results, we developed CircuitMap, a user interface add-on for the circuit annotation tool Catmaid.

A neuron-level wiring diagram (connectome) is a valuable resource for neuroscience [1]. Currently, high-resolution electron microscopy (EM) is the only scalable method to comprehensively resolve individual synaptic connections. EM at nanometer scale provides enough detail to identify pre-synaptic sites, synaptic clefts, and post-synaptic partners. A challenging and currently limiting step is the extraction of the connectome from EM volumes, as manual analysis is prohibitively slow and unfeasible for large datasets.

Connectome analysis of the fruit fly is of particular interest on a whole-brain scale. So far, the “full adult fly brain” (FAFB) EM volume [2] is the only available dataset comprising a complete adult fly brain. The automatic segmentation of neurons in this volume has recently sped up tracing efforts [3], but the reconstruction of synapses has remained a purely manual task. This step now represents a major bottleneck towards the whole-brain fruit fly connectome, as annotating all estimated 250 million synaptic partners by hand would take about 40 years of uninterrupted work, assuming an annotation time of 5 s per synaptic partner.
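
The annotation-time estimate above can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch; the variable names are illustrative, and the only inputs are the two numbers quoted in the text.

```python
# Back-of-the-envelope check of the manual annotation estimate.
SECONDS_PER_YEAR = 365.25 * 24 * 3600

n_partners = 250e6          # estimated synaptic partners in the whole brain (from the text)
seconds_per_partner = 5.0   # assumed annotation time per partner (from the text)

# Total time if a single annotator worked without any breaks.
annotation_years = n_partners * seconds_per_partner / SECONDS_PER_YEAR
print(f"{annotation_years:.1f} years of uninterrupted annotation")
```

The result is just under 40 years, which matches the figure quoted above.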

Several approaches have been developed for the automatic detection of synapses in EM volumes, tailored towards the model organism of interest. In vertebrates, recent methods have been shown to infer synaptic connectivity reliably [4, 5]. In insect brains, however, the task of identifying synaptic connections is more challenging, since the size of synapses is generally smaller than in vertebrates and many synapses are polyadic (i.e., one pre-synaptic site connects to several post-synaptic sites). Most methods for synaptic partner prediction deal with those challenges in two steps: The first step consists of identifying and localizing synaptic features such as T-bars or synaptic clefts, whereas in the second step neuron segments in physical contact are classified as synaptically “connected” or “not-connected”. For the first step, convolutional neural networks (CNNs) were used to detect synaptic clefts [6] or T-bars [7]. For the second step, a graphical model was developed to solve synaptic partner assignment given a neuron segmentation and candidate synapse detections [8]. More recently, CNNs have been used to infer synaptic connectivity directly, either to predict pre- and post-synaptic neuron masks from synaptic cleft segmentations [9] or to classify neuron pair candidates extracted from a neuron segmentation as “connected” or “not-connected” [10]. Similarly, multilayer perceptrons have been applied to local context features [7]. In contrast to these two-step approaches, our method jointly detects pre- and post-synaptic sites and infers their connectivity, thus building on previous work, which leveraged long-range affinities [11].

However, few methods have been applied so far to large-scale Drosophila datasets: synaptic clefts have been predicted densely in the whole FAFB dataset, albeit without extraction of synaptic partners [6]. To the best of our knowledge, only one method has been used to predict synaptic partners [7] in a large dataset of the central brain of Drosophila [12].

Here, we present a method for synaptic partner identification, which we used to process the FAFB dataset, and a user interface to make predicted synaptic partners accessible in current circuit mapping workflows.

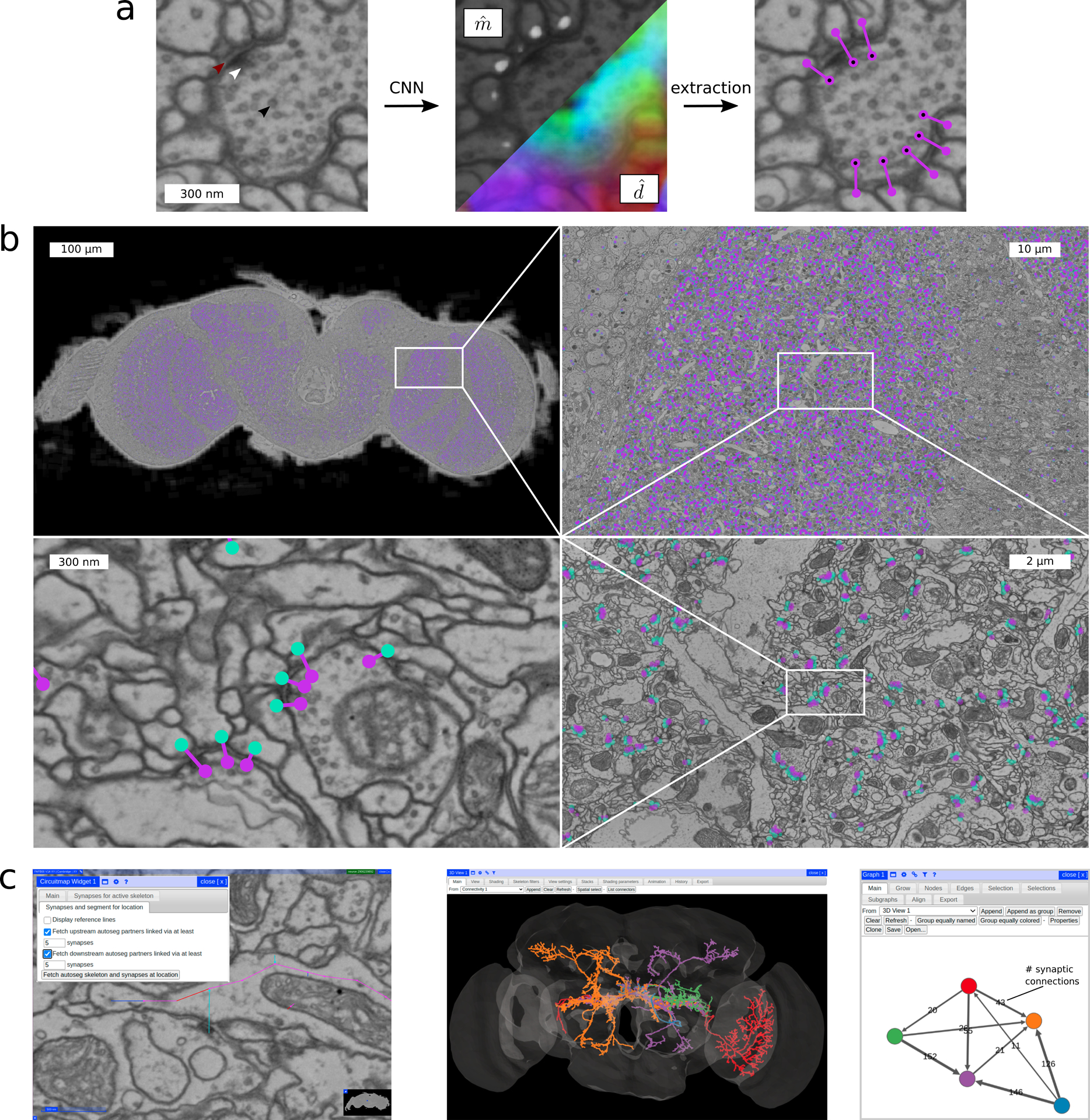

Our method uses a 3D U-Net CNN [13] to predict for each voxel in a volume whether it is part of a post-synaptic site and, if it is, an offset vector pointing from this voxel to the corresponding pre-synaptic site (Figure 1a and Methods). The method is similar to our previous work [11] in that it does not require explicit annotation of synapse features (such as T-bars or clefts) by a human annotator, but instead learns to make use of those features on its own, if they are helpful for predicting synaptic partners. The method presented here uses a different target representation (Methods), which eliminates potential blind spots for synaptic partner prediction and alleviates the need for a high-quality neuron segmentation. Furthermore, the post-processing is 15 times more efficient, which we consider crucial for the processing of large volumes.

Figure 1:

Method overview for synaptic partner prediction, application to the “full adult fly brain” (FAFB) dataset and usage of CircuitMap for circuit reconstruction and analysis in Catmaid. a CNN predictions of post-synaptic sites ($\hat{m}$) and direction vectors pointing to the pre-synaptic site ($\hat{d}$, 3D vectors shown as RGB-color) together with final detections after post-processing. Arrows show synaptic cleft (red), T-bar (white), and vesicles (black). b Sample section of the FAFB dataset with predicted synaptic partners (pre-synaptic site purple, post-synaptic site turquoise). c Using CircuitMap (left image), predicted synaptic partners are available in Catmaid to allow exploration of automatically reconstructed neural circuits. The example shows five automatically segmented neurons from [3] (middle image) together with their predicted number of synaptic connections (right image, node colors match the segmentation). See also Supplementary Video.

In particular, our method can be trained from pre- and post-synaptic point annotations alone, which reduces the effort needed to annotate future training datasets. Similarly, the prediction of synaptic partner sites does not rely on the availability of a neuron segmentation. Only the mapping of sites to neurons requires a segmentation, which facilitates replacing neuron segmentations and incorporating our results into proof-reading workflows where changes to the segmentation occur frequently: an updated neuron segmentation changes only the mapping of already found synaptic partners onto neurons; synaptic partner sites do not have to be detected again. Furthermore, errors in the neuron segmentation do not propagate to the synaptic partner detection (Supplementary Discussion).

We train and validate our method using the publicly available training dataset of the Cremi challenge (https://cremi.org). This dataset consists of three 5 × 5 × 5 μm cubes, cropped from the calyx (part of the mushroom body) of the FAFB volume, in which synaptic partners were fully annotated (1965 synapses in total, Supplementary Figure 1). We performed a grid search on the Cremi dataset to find the best-performing configuration amongst different architecture choices, network sizes, training losses and balancing strategies (Methods, Supplementary Figure 2). We further explore the possibility of improving accuracy by making use of a neuron segmentation during post-processing. A neuron segmentation allows us to filter two types of false positives: false positives connecting the same neurite and duplicate close-by detections of a single synaptic partner pair with the same connectivity. We find that when using a large network (192 million parameters) our model achieves good results even in the absence of a neuron segmentation (f-score 0.74 without neuron segmentation versus 0.76 with neuron segmentation). In the presence of an accurate neuron segmentation, smaller, more efficient architectures with ~90% fewer parameters are on par with larger ones: small and large networks both achieve f-scores of 0.76 (Supplementary Note 2).

Following the insights gained from validation on the Cremi datasets, we chose a small network (22 million parameters) with a cross-entropy loss to process the whole FAFB volume. To limit extraction to areas containing synapses, we ignore regions not part of the neuropil using a previously described mask [6]. The remaining volume consists of ~50 teravoxels or equivalently ~32 million μm³, which we process in 3 days on 80 GPUs, obtaining 244 million putative synaptic partners (Figure 1b, Methods).

Predicted synapses in FAFB can be analyzed with the query library Synful-Circuit [14]. Additionally, we implemented CircuitMap [15], an extension for the tracing tool Catmaid currently used for connectome annotation in FAFB (Supplementary Video). CircuitMap allows identification of partner neurons with a given number of synaptic connections and thus quick discovery of neural pathways in the Drosophila brain (Figure 1c).

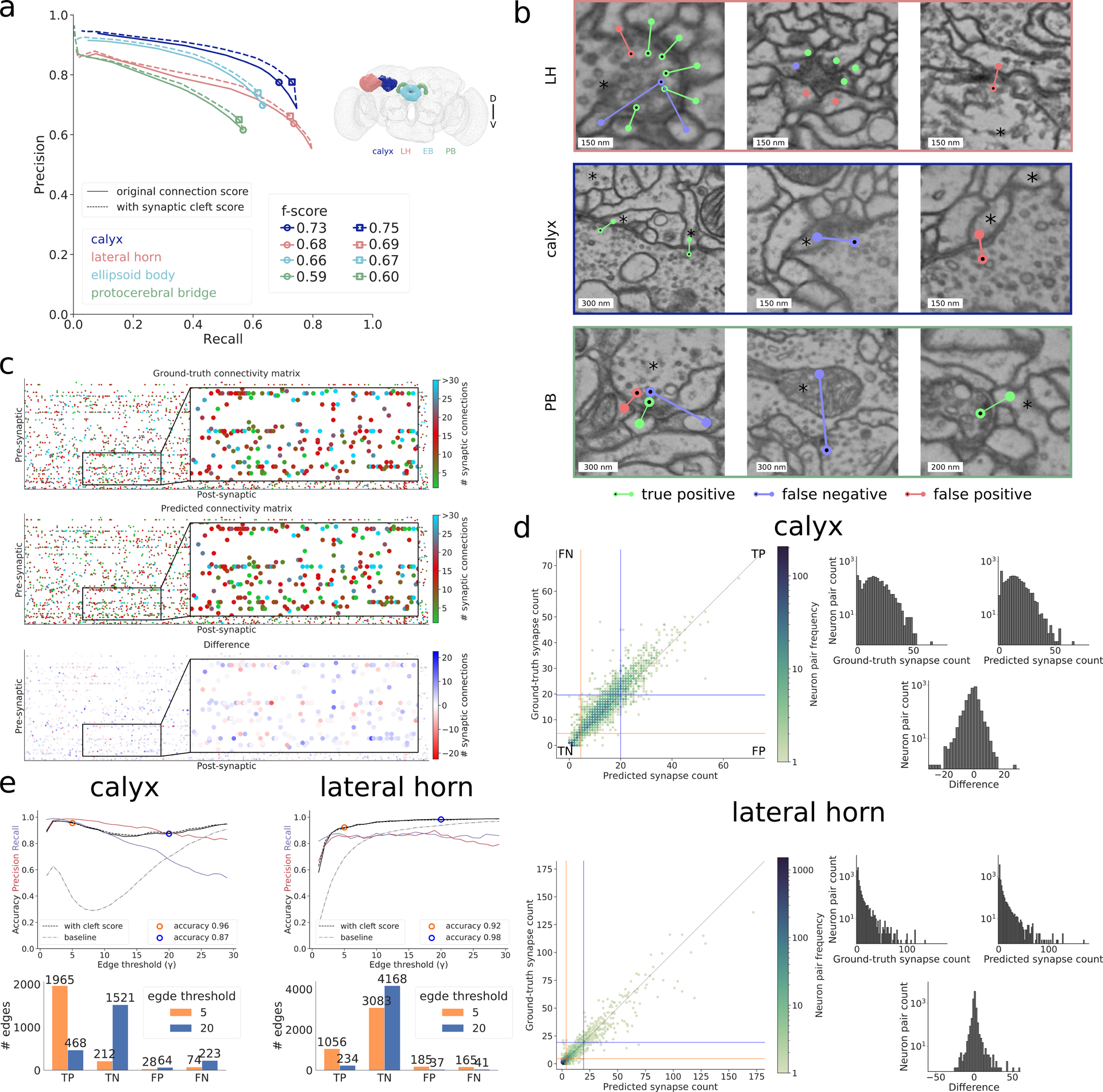

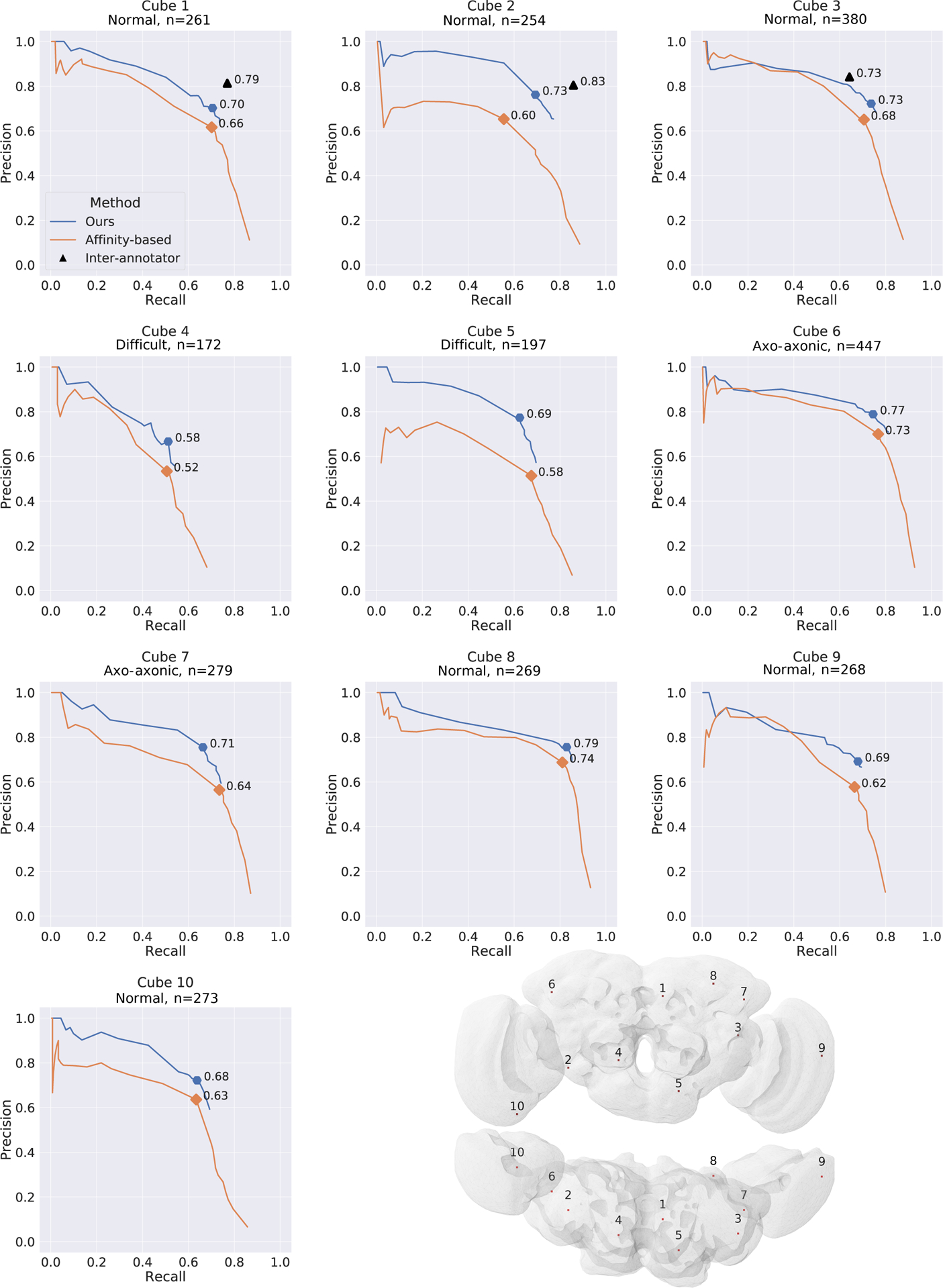

We evaluate the quality of the whole-brain predictions on six datasets with manually placed skeleton and synapse annotations created with Catmaid [16] in the four brain regions calyx (~60,000 connections), lateral horn (~25,000 connections), ellipsoid body (~60,000 connections), and protocerebral bridge (~15,000 connections) (Figure 2, Supplementary Figure 1, Supplementary Note 1). When measuring performance at an individual synaptic connection level using the Cremi metric (Supplementary Note 2), we obtain f-scores of 0.73, 0.68, 0.66 and 0.59 for the four different brain areas calyx, lateral horn, ellipsoid body, and protocerebral bridge, respectively (Figure 2a and Supplementary Note 2). Examples of identified synapses representing true positives, false positives and false negatives are shown in Figure 2b. We observe a decrease in f-score in regions farther away from the training datasets, suggesting that more diverse training datasets are needed to improve overall accuracy. Some errors in the reconstruction can be attributed to data ambiguities. On three densely reconstructed cubes (9 μm³ each), we observed inter-human differences that correspond to f-scores of 0.79, 0.83, and 0.73. On the same cubes, our predictions reach f-scores of 0.70, 0.73, and 0.73 (Extended Data Figure 1 and Supplementary Note 2).

Figure 2:

Results in whole-brain FAFB dataset. a Precision-recall curves using the Cremi metric for the four different brain regions calyx (dark blue), lateral horn (peach), ellipsoid body (light blue) and protocerebral bridge (green) over different prediction score thresholds, with (solid) and without (dashed) filtering via synaptic cleft predictions [6] (Supplementary Note 2). Markers highlight validation best score threshold (f-score with cleft predictions: 0.75, 0.69, 0.67, 0.60 for calyx, lateral horn, ellipsoid body, and protocerebral bridge, respectively). b Examples of identified true positives, false positives, false negatives in lateral horn (top row), calyx (middle row), and protocerebral bridge (bottom row) on ground-truth neurons (marked with an asterisk). False positive in top row left, false positives and false negative in top row middle are examples of ambiguous cases, false negative in bottom row middle is an example of a missed axo-axonic connection. c Ground-truth, predicted, and difference of number of synaptic connections between 138 projection neurons (pre-synaptic) and 528 Kenyon cells (post-synaptic) in calyx. d For calyx, same data as in (c), shown as ground-truth versus predicted count of number of synaptic connections between pairs of neurons and equivalent plot for lateral horn. Pairs with both zero connections in ground-truth and prediction are omitted. Orange/blue line quadrants highlight false negative (FN), true positive (TP), false positive (FP), and true negative (TN) edges for two connectivity criteria, i.e., number of synapses equal or greater than γ = 5 (orange) and γ = 20 (blue). Histograms on the right show the respective frequency distributions of number of synaptic connections per edge. e Edge accuracy, precision, and recall over different connectivity thresholds γ for calyx (left) and lateral horn (right). 
Accuracy results for thresholds γ = 5 and γ = 20 are highlighted and their absolute numbers of TPs, TNs, FPs, FNs are provided in the bar plots below. Connectivity derived from neuron-proximity is shown as “baseline” (Supplementary Note 2).

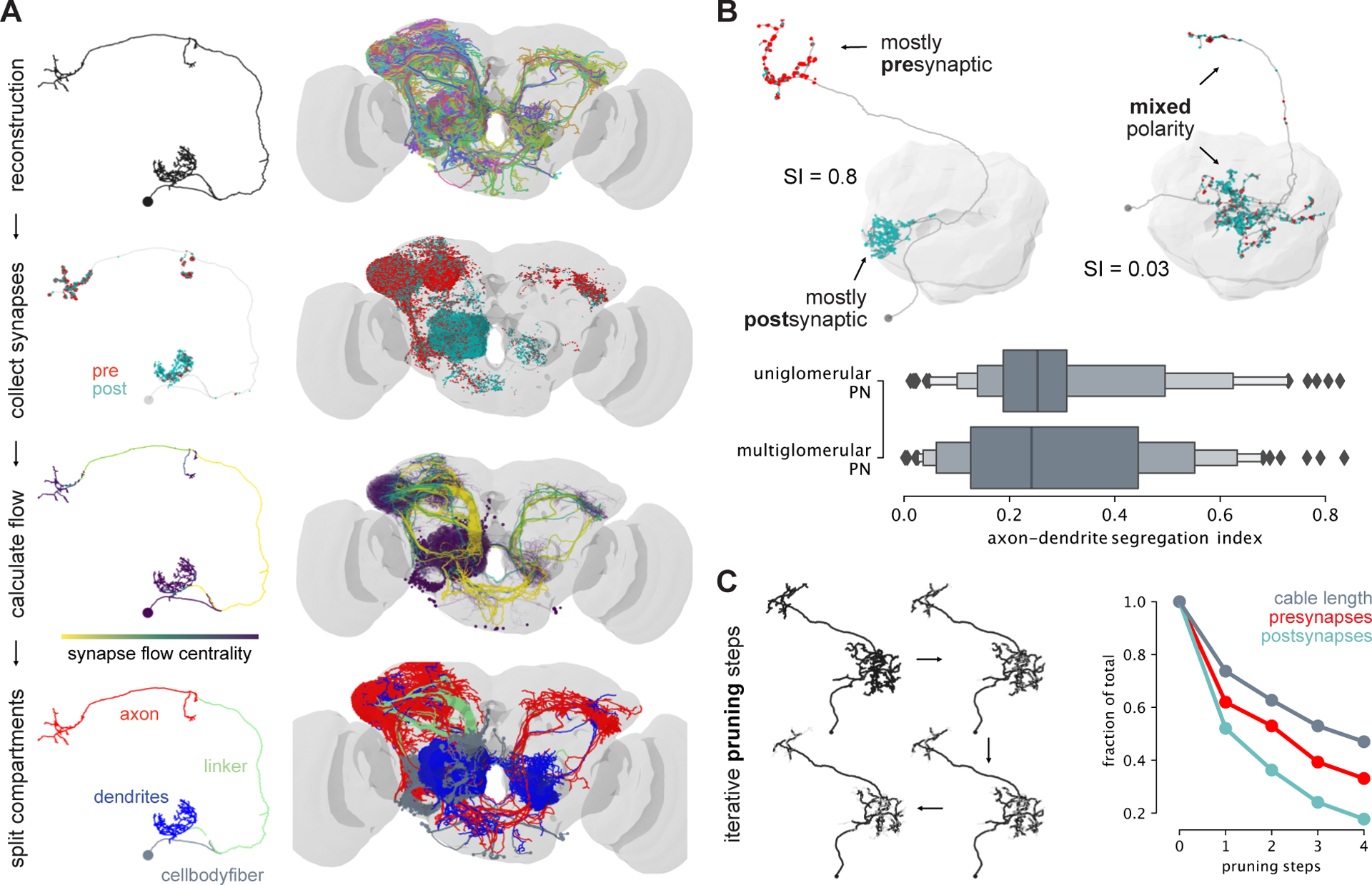

The predicted synaptic connections can be used to automatically infer the presence of a connectome edge with high accuracy (Figure 2c–e). We say that a pair of neurons is connected by an edge if and only if the true number of synaptic connections is greater than or equal to a given threshold γ (Supplementary Note 2). For γ = 5, between 92% and 96% of edges are correctly identified. We compare our method to a neuron-proximity baseline using neuron contact area as a proxy for synaptic weight and find substantial differences in accuracy: 39% and 69% for the baseline versus 96% and 92% for our method for calyx and lateral horn, respectively. We further demonstrate that the predictions can supplement morphological studies of neuron types. Concretely, we study the polarization (i.e., distribution of synaptic inputs and outputs) of uni- and multiglomerular olfactory projection neurons (Extended Data Figure 2 and Supplementary Note 3).
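
The edge criterion can be illustrated with a minimal sketch. The count matrices below are made-up toy values, the function name `edge_metrics` is hypothetical, and counting every neuron pair (including pairs with zero synapses in both matrices) in the accuracy is an assumption; the exact set of pairs used in the evaluation is described in Supplementary Note 2.

```python
import numpy as np

def edge_metrics(gt_counts, pred_counts, gamma):
    """Classify neuron pairs as edges (count >= gamma) and compare to ground truth."""
    gt_edge = gt_counts >= gamma
    pred_edge = pred_counts >= gamma
    tp = int(np.sum(gt_edge & pred_edge))
    fp = int(np.sum(~gt_edge & pred_edge))
    fn = int(np.sum(gt_edge & ~pred_edge))
    tn = int(np.sum(~gt_edge & ~pred_edge))
    return {
        "tp": tp, "fp": fp, "fn": fn, "tn": tn,
        "accuracy": (tp + tn) / gt_edge.size,
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
        "recall": tp / (tp + fn) if tp + fn else float("nan"),
    }

# Toy synapse counts: rows are pre-synaptic neurons, columns post-synaptic neurons.
gt_counts = np.array([[6, 0, 3], [20, 4, 0]])
pred_counts = np.array([[5, 1, 7], [18, 2, 0]])

metrics = edge_metrics(gt_counts, pred_counts, gamma=5)
print(metrics)
```

In this toy case, the pair with 3 true and 7 predicted synapses becomes a false-positive edge at γ = 5, while small count differences on either side of the threshold do not change the edge label.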

Although our model is well suited to detect the typical one-to-many synapse in Drosophila (one pre-synaptic site targeting many post-synaptic sites), we observe limitations with regard to rare synapse types. Conceptually, our model is less suited to detect many-to-one synapses, as we extract only a single synaptic connection for a detected post-synaptic site. We also observe problems with the detection of axo-axonic links (Figure 2b), indicating that more representative training datasets are needed.

Methods

Synapse Representation

Due to the polyadic nature of synapses in Drosophila, we represent synapses as sets of connections $C \subset \Omega^2$ between pairs of voxels $(s, t) \in \Omega^2$, where $s$ and $t$ denote pre- and post-synaptic locations, respectively, and $\Omega$ the set of all voxels in a volume. In this representation, pre-synaptic locations do not have to be shared for connections that involve the same T-bar or synaptic cleft (Supplementary Figure 1a). This is in contrast to the convention followed in annotation tools like Catmaid or NeuTu, where one pre-synaptic connector node serves as a hub for all post-synaptic partners (Supplementary Figure 1e). Although this convention might be beneficial for manual annotation, the representation proposed here is potentially better suited for automatic methods, as it does not require the localization of a unique pre-synaptic site per synapse. Instead, automatic methods can learn to identify synaptic partners independently from each other. Furthermore, this representation is also the one used by the Cremi challenge, which allows us to use the provided training data and evaluation metrics.

Connection Prediction

Given ground-truth annotations $C$ and raw intensity values, we train a neural network to produce two voxel-wise outputs: a mask $\hat{m}$ to indicate post-synaptic sites and a field of 3D vectors $\hat{d}$ that point from post-synaptic sites to their corresponding pre-synaptic site (see Supplementary Figure 3 for an illustration). Both outputs are trained on ground-truth $m$ and $d$, extracted from $C$ in the following way: $m(i)$ is equal to 1 if voxel $i$ is within a threshold distance $r_m$ to any post-synaptic site $t$ over all pairs $(s, t) \in C$, and 0 otherwise (resulting in a sphere). Similarly, $d(i) = s - i$ for every voxel $i$ within a threshold distance $r_d$ to the closest $t$ over all pairs $(s, t) \in C$, and left undefined otherwise (during training, gradients on $\hat{d}$ are only evaluated where $d$ is defined). To obtain a set of putative synaptic connections from predictions $\hat{m}$ and $\hat{d}$, we find connected components in $\hat{m} \geq \theta_{CC}$, where $\theta_{CC}$ is a parameter of our method to threshold the real-valued output $\hat{m}$. For each component, we sum up the underlying predicted values of $\hat{m}$, which we refer to as the connection score. Each component with a score larger than a threshold $\theta_{CS}$ is considered to represent a post-synaptic site, from which we extract a location $\hat{t}$ as the maximum of a Euclidean distance transform of the component. The corresponding pre-synaptic site location $\hat{s}$ is then found by querying the predicted direction vectors $\hat{d}$, i.e., $\hat{s} = \hat{t} + \hat{d}(\hat{t})$.
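
The target construction and the extraction step can be sketched end to end on a toy volume. This is a minimal illustration under simplifying assumptions, not the released implementation: distances are measured in isotropic voxel units (the real data are anisotropic), the function names `make_targets` and `extract_connections` are hypothetical, and the ground-truth targets are fed back in as if they were perfect network predictions.

```python
import numpy as np
from scipy import ndimage

def make_targets(shape, connections, r_m, r_d):
    """Build the mask m and direction field d from (pre, post) voxel pairs."""
    axes = [np.arange(n) for n in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1)  # voxel coordinates
    m = np.zeros(shape, dtype=np.float32)
    d = np.full(shape + (3,), np.nan, dtype=np.float32)  # NaN marks "undefined"
    closest = np.full(shape, np.inf)
    for s, t in connections:
        dist = np.linalg.norm(grid - np.asarray(t), axis=-1)
        m[dist <= r_m] = 1.0
        # Only overwrite voxels for which this post-synaptic site is the closest so far.
        update = (dist <= r_d) & (dist < closest)
        d[update] = np.asarray(s) - grid[update]
        closest[update] = dist[update]
    return m, d

def extract_connections(m_hat, d_hat, theta_cc, theta_cs):
    """Turn a predicted mask and direction field into (pre, post, score) triples."""
    labels, n_components = ndimage.label(m_hat >= theta_cc)
    found = []
    for component_id in range(1, n_components + 1):
        component = labels == component_id
        score = float(m_hat[component].sum())  # connection score
        if score <= theta_cs:
            continue
        # Post-synaptic location: voxel deepest inside the component.
        edt = ndimage.distance_transform_edt(component)
        t_hat = np.unravel_index(np.argmax(edt), edt.shape)
        # Pre-synaptic location: follow the direction vector stored at t_hat.
        s_hat = np.asarray(t_hat) + d_hat[t_hat]
        found.append((tuple(int(v) for v in np.round(s_hat)),
                      tuple(int(v) for v in t_hat), score))
    return found

# One polyadic synapse: a single pre-synaptic site with two post-synaptic partners.
pre_site = (5, 10, 13)
connections = [(pre_site, (10, 10, 10)), (pre_site, (10, 10, 16))]
m, d = make_targets((20, 20, 20), connections, r_m=2.0, r_d=2.0)
found = extract_connections(m, d, theta_cc=0.95, theta_cs=5.0)
for s_hat, t_hat, score in found:
    print(f"pre {s_hat} -> post {t_hat}, score {score:.0f}")
```

With perfect predictions the round trip recovers both annotated pairs. Because each component yields exactly one connection, two partners that share a post-synaptic component would collapse into one detection, which is the many-to-one limitation noted in the main text.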

Network Architectures

We use a 3D U-Net [17] as our core architecture, following the design used by [18] and [6], i.e., we use four resolution levels with downsample factors in xyz of (3, 3, 1), (3, 3, 1), and (3, 3, 3) and a five-fold feature map increase between levels. Convolutional passes consist of two convolutions with kernel sizes of (3, 3, 3) followed by a ReLU activation. Final activation functions for $\hat{m}$ and $\hat{d}$ are sigmoid and linear, respectively. The initial number of feature maps $f$ is left as a free hyper-parameter. In order to train the two outputs $\hat{m}$ and $\hat{d}$ required by our method, we explore three specific architectures: Architecture ST (single-task) refers to two separate U-Nets trained individually for either $\hat{m}$ or $\hat{d}$, and thus does not benefit from weight sharing. Architectures MT1 and MT2 refer to multi-task networks that use weight sharing to jointly predict $\hat{m}$ and $\hat{d}$. MT1 is a default U-Net with four output feature maps (one feature map for $\hat{m}$ and three feature maps for $\hat{d}$). MT2 is a U-Net with two independent upsampling paths (as independently proposed by [19]), each one predicting either $\hat{m}$ or $\hat{d}$, i.e., weights are only shared during the downsampling path between the two outputs. Supplementary Figure 3d summarizes the architecture choices.
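
To make the level layout concrete, the following sketch lists the number of feature maps and the effective voxel size at each of the four resolution levels. The helper `unet_levels` is hypothetical, and the base voxel size of (4, 4, 40) nm in xyz is an assumption that is consistent with the block sizes quoted later in the Methods.

```python
def unet_levels(f, downsample_factors, fmap_increase, voxel_size):
    """Return (feature maps, effective voxel size) for every resolution level."""
    levels = [(f, tuple(voxel_size))]
    for factor in downsample_factors:
        prev_fmaps, prev_size = levels[-1]
        next_size = tuple(v * k for v, k in zip(prev_size, factor))
        levels.append((prev_fmaps * fmap_increase, next_size))
    return levels

levels = unet_levels(
    f=4,                                   # small network; the big one uses f = 12
    downsample_factors=[(3, 3, 1), (3, 3, 1), (3, 3, 3)],
    fmap_increase=5,
    voxel_size=(4, 4, 40),                 # nm in xyz (assumed)
)
for level, (fmaps, size) in enumerate(levels):
    print(f"level {level}: {fmaps} feature maps, voxel size {size} nm")
```

Downsampling only in xy for the first two steps brings the voxels close to isotropic (36 × 36 × 40 nm) before the final step downsamples along all three axes.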

Training

Our training loss $L_d(d, \hat{d})$ is based on mean squared error (MSE). For the post-synaptic mask loss $L_m(m, \hat{m})$, we explore both error functions MSE and cross-entropy (CE). In the multi-task context (MT1, MT2), we simply sum the two losses to obtain the total loss: $L_{total} = L_d + L_m$.
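
A minimal NumPy sketch of the summed loss is given below. It is not the TensorFlow training code; the function names are hypothetical, undefined direction targets are encoded as NaN, and averaging the squared error over vector components is an assumption.

```python
import numpy as np

def direction_loss(d, d_hat):
    """MSE between direction vectors, restricted to voxels where d is defined."""
    defined = ~np.isnan(d).any(axis=-1)
    if not defined.any():
        return 0.0
    return float(np.mean((d[defined] - d_hat[defined]) ** 2))

def mask_loss_ce(m, m_hat, eps=1e-7):
    """Voxel-wise binary cross-entropy on the post-synaptic mask."""
    p = np.clip(m_hat, eps, 1.0 - eps)
    return float(np.mean(-(m * np.log(p) + (1.0 - m) * np.log(1.0 - p))))

def total_loss(m, m_hat, d, d_hat):
    """Multi-task loss: plain sum of the direction and mask terms."""
    return direction_loss(d, d_hat) + mask_loss_ce(m, m_hat)

# Two voxels: the first is post-synaptic, the second is background.
m = np.array([1.0, 0.0])
m_hat = np.array([0.5, 0.5])
d = np.array([[1.0, 0.0, 0.0], [np.nan, np.nan, np.nan]])
d_hat = np.array([[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]])

loss = total_loss(m, m_hat, d, d_hat)
print(f"total loss: {loss:.4f}")
```

The direction prediction at the background voxel does not contribute, since the target is undefined there.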

We train all investigated networks (ST, MT1, and MT2) on randomly sampled volumes of size (430, 430, 42) voxels, which we refer to as mini-batches. Each mini-batch is randomly augmented with xy-transpositions, xy-flips, continuous rotations around the z-axis, and section-wise elastic deformations and intensity changes. We use the Gunpowder library (https://github.com/funkey/gunpowder) for data loading, preprocessing, and all augmentations mentioned above. Training using TensorFlow on an NVIDIA Titan X GPU takes 6–11 days to reach 700,000 iterations.

Due to the sparseness of post-synaptic sites, the training signal $m$ is heavily unbalanced: ~65% of randomly sampled mini-batches do not contain post-synaptic sites at all; the remaining mini-batches have a ratio of foreground- to background-voxels of ~1:4000 on average. We mitigate this imbalance with two balancing strategies: First, we reject empty mini-batches during training with probability $p_{rej}$. Second, we scale the loss for $\hat{m}$ voxel-wise with the inverse class frequency ratio of foreground- and background-voxels, clipping at $0.0007^{-1}$. In practice, the loss for a foreground voxel is scaled on average with a factor of ~1400.
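
The loss scaling can be sketched as follows. This is one plausible reading of the description, in which each voxel is weighted by the inverse frequency of its own class within the mini-batch and the weight is capped at 1/0.0007; the function name `balancing_weights` is hypothetical.

```python
import numpy as np

def balancing_weights(m, max_weight=1.0 / 0.0007):
    """Per-voxel loss weights from inverse class frequencies, clipped at max_weight."""
    fg_fraction = float(m.mean())
    weights = np.ones_like(m, dtype=np.float64)
    if 0.0 < fg_fraction < 1.0:
        weights[m == 1] = min(1.0 / fg_fraction, max_weight)
        weights[m == 0] = min(1.0 / (1.0 - fg_fraction), max_weight)
    return weights

# A toy mini-batch with a foreground-to-background ratio of 1:4000.
m = np.zeros(4001)
m[0] = 1.0
weights = balancing_weights(m)
print(f"foreground weight: {weights[0]:.1f}, background weight: {weights[1]:.5f}")
```

At the average ratio of ~1:4000 the unclipped foreground weight would be about 4000, so the clip is active and the effective factor is 1/0.0007 ≈ 1429, in line with the ~1400 reported above.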

Model Validation

We realign and split the Cremi cubes into a training dataset (75% of the volume of each cube, resulting in a total of 1402 synapses) and a validation dataset (25%, 540 synapses).

We use the Cremi evaluation procedure to assess performance. In short, we match a predicted connection to a ground-truth connection if the underlying neuron segmentation IDs are the same and if the synaptic sites are close to each other (less than 400 nm apart). Matched connections are counted as true positives, unmatched ground-truth connections are considered false negatives, and unmatched predicted connections are considered false positives. We obtain precision and recall values by adding up true positives, false positives, and false negatives of all three Cremi samples before computing precision and recall. This is different from the originally proposed Cremi score, where precision and recall are computed for each sample individually and then averaged, which effectively weights samples based on the inverse of the number of synaptic connections they contain.
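
The difference between the two aggregation schemes can be seen in a small example. The per-sample counts below are invented for illustration only.

```python
def precision_recall(tp, fp, fn):
    return tp / (tp + fp), tp / (tp + fn)

def f_score(precision, recall):
    return 2 * precision * recall / (precision + recall)

# Hypothetical (TP, FP, FN) counts for three samples of very different size.
samples = [(90, 10, 10), (40, 10, 40), (5, 5, 0)]

# Pooled: add up the counts of all samples first, then compute the metrics.
tp, fp, fn = (sum(counts) for counts in zip(*samples))
pooled_precision, pooled_recall = precision_recall(tp, fp, fn)

# Per-sample average: compute the metrics per sample, then average them.
per_sample = [precision_recall(*counts) for counts in samples]
avg_precision = sum(p for p, _ in per_sample) / len(per_sample)
avg_recall = sum(r for _, r in per_sample) / len(per_sample)

print(f"pooled:   P={pooled_precision:.3f} R={pooled_recall:.3f} "
      f"F={f_score(pooled_precision, pooled_recall):.3f}")
print(f"averaged: P={avg_precision:.3f} R={avg_recall:.3f} "
      f"F={f_score(avg_precision, avg_recall):.3f}")
```

In the averaged variant, the third sample with only ten predicted connections influences the result as much as the first sample with a hundred, whereas pooling weights every connection equally.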

We performed a grid search on the validation dataset to find the best-performing configuration amongst different architecture choices (ST, MT1, and MT2), number of initial feature maps (small: $f = 4$, big: $f = 12$), training loss for $\hat{m}$ (CE: cross-entropy, MSE: mean squared error), and two sample balancing strategies (standard: $p_{rej} = 0.95$ throughout training, curriculum: $p_{rej} = 0.95$ until 90,000 training iterations, then $p_{rej} = 0$). The resulting numbers of trainable parameters for the investigated small networks are 22 million (ST), 11 million (MT1), 12 million (MT2) and for the big networks 192 million (ST), 96 million (MT1), 115 million (MT2).
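
The two sample balancing strategies amount to a simple schedule for the rejection probability. The sketch below uses hypothetical function names, and whether the switch happens exactly at iteration 90,000 or one step later is an assumption.

```python
import random

def rejection_probability(iteration, strategy):
    """Probability of rejecting a mini-batch without post-synaptic sites."""
    if strategy == "standard":
        return 0.95
    if strategy == "curriculum":
        return 0.95 if iteration < 90_000 else 0.0
    raise ValueError(f"unknown strategy: {strategy}")

def keep_batch(has_post_synaptic_site, iteration, strategy, rng=random):
    """Non-empty mini-batches are always kept; empty ones are rejected at random."""
    if has_post_synaptic_site:
        return True
    return rng.random() >= rejection_probability(iteration, strategy)

rng = random.Random(0)
kept_early = sum(keep_batch(False, 1_000, "curriculum", rng) for _ in range(10_000))
kept_late = sum(keep_batch(False, 100_000, "curriculum", rng) for _ in range(10_000))
print(f"empty batches kept early: {kept_early}, late: {kept_late}")
```

Under the curriculum schedule the network first sees mostly mini-batches that contain synapses and is exposed to the full share of empty background only later in training.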

Supplementary Figure 2 summarizes the grid search results in terms of best f-score obtained over all connection thresholds $\theta_{CS}$. Best validation results are obtained using big networks with curriculum learning (f-score 0.74, precision 0.72, recall 0.77). Differences between training losses (CE and MSE) as well as architectures ST and MT2 are marginal; however, architecture MT1 consistently failed to jointly predict $\hat{m}$ and $\hat{d}$, also when trained with a product loss ($L_{total} = L_d \cdot L_m$).

Synapse prediction on the complete FAFB dataset

We chose a small ST architecture with curriculum learning and a cross-entropy loss to process the whole FAFB volume. To limit prediction and synaptic partner extraction to areas containing synapses, we ignore regions not part of the neuropil using a previously described mask [6]. The remaining volume consists of ~50 teravoxels or equivalently ~32 million μm³, which we process in blocks. For each block, we read in raw data with size (1132, 1132, 90) voxels to produce predictions of size (864, 864, 48) voxels (size difference due to valid convolutions in the U-Net). From those predictions we extract synaptic partners on the fly with θCC ≥ 0.95 and store only their coordinates and associated connectivity scores if this score exceeds a very conservative threshold (θCS ≥ 5). To ensure sufficient context for partner extraction, we discard detections close (~100 nm) to the boundary of the prediction (≤28 voxels in xy, ≤2 sections in z). With a connected component radius of ~40 nm, this provides enough context to avoid duplicated connections at the boundary. A final block output size of (810, 810, 42) voxels per block or (3.2, 3.2, 1.6) μm results in a total of ~1.8 million blocks to cover the whole neuropil.
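
The volume and block counts quoted above can be reproduced with a short calculation. The voxel size of (4, 4, 40) nm in xyz is an assumption here; it is consistent with a block of (810, 810, 42) voxels measuring roughly (3.2, 3.2, 1.6) μm.

```python
import math

voxel_size_nm = (4, 4, 40)        # xyz voxel size (assumed)
n_voxels = 50e12                  # ~50 teravoxels inside the neuropil mask
block_shape = (810, 810, 42)      # final block output size in voxels

# 1 μm³ = 1e9 nm³
voxel_volume_um3 = math.prod(voxel_size_nm) / 1e9
volume_um3 = n_voxels * voxel_volume_um3

block_size_um = tuple(n * v / 1000 for n, v in zip(block_shape, voxel_size_nm))
block_volume_um3 = math.prod(block_size_um)
n_blocks = volume_um3 / block_volume_um3

print(f"neuropil volume: {volume_um3 / 1e6:.0f} million μm³")
print(f"block size: {block_size_um} μm")
print(f"number of blocks: {n_blocks / 1e6:.2f} million")
```

Both derived numbers agree with the ~32 million μm³ and ~1.8 million blocks stated in the text.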

Using Daisy (https://github.com/funkelab/daisy), we parallelized processing with 80 workers, each having access to one RTX 2080Ti GPU for continuous prediction and five CPUs for simultaneous, parallel data pre-fetching and synaptic partner extraction. Processing all ~1.8 million blocks took three days, with a raw throughput of 1.53 μm³/s per worker, yielding a total of 244 million putative connections.
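
As a consistency check, the reported per-worker throughput and worker count can be converted into a total processing time. The sketch assumes all 80 workers run continuously at the stated raw throughput.

```python
volume_um3 = 32e6            # neuropil volume to process (from the text)
n_workers = 80               # one GPU per worker
throughput_um3_per_s = 1.53  # raw throughput per worker

processing_seconds = volume_um3 / (n_workers * throughput_um3_per_s)
processing_days = processing_seconds / (24 * 3600)
print(f"estimated processing time: {processing_days:.2f} days")
```

The estimate comes out at about three days, matching the reported wall-clock time.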

Given a neuron segmentation l : Ω ↦ N, a straightforward mapping results from assigning each synaptic site to the neuron skeleton that shares the same segment. However, false merges in the segmentation and misplaced skeleton nodes can lead to ambiguous situations where the segment containing a synaptic site contains several skeletons. Therefore, we assign synaptic sites to the closest skeleton node in the same segment, limited to nodes that are within a distance of 2 μm. The latter threshold was found empirically to confine the influence of wrong merges in the segmentation that otherwise could spread into neurites without skeleton traces. Wrong splits in the segmentation can lead to situations where no neuron skeleton shares the same segment as a synaptic site. In these cases, we leave the synaptic site unmapped.
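
The mapping rule can be sketched as a nearest-neighbour lookup restricted to skeleton nodes in the same segment. The function name, the array layout, and the toy coordinates are hypothetical; positions are given in nanometres.

```python
import numpy as np

def map_site_to_skeleton(site_nm, site_segment, node_positions_nm,
                         node_segments, node_skeletons, max_distance_nm=2000.0):
    """Return the skeleton ID for a synaptic site, or None if it stays unmapped."""
    same_segment = node_segments == site_segment
    if not same_segment.any():
        return None  # e.g. a wrong split: no skeleton node shares the segment
    distances = np.linalg.norm(node_positions_nm[same_segment] - site_nm, axis=1)
    nearest = int(np.argmin(distances))
    if distances[nearest] > max_distance_nm:
        return None  # closest node is farther away than 2 μm
    return int(node_skeletons[same_segment][nearest])

# Toy skeleton nodes: segment 7 is falsely merged and contains skeletons 1 and 2.
node_positions_nm = np.array([[0, 0, 0], [1000, 0, 0], [5000, 0, 0], [100, 0, 0]], dtype=float)
node_segments = np.array([7, 7, 7, 8])
node_skeletons = np.array([1, 2, 2, 3])

nodes = (node_positions_nm, node_segments, node_skeletons)
print(map_site_to_skeleton(np.array([900.0, 0, 0]), 7, *nodes))   # nearest node belongs to skeleton 2
print(map_site_to_skeleton(np.array([100.0, 0, 0]), 7, *nodes))   # node of segment 8 is ignored
print(map_site_to_skeleton(np.array([9000.0, 0, 0]), 7, *nodes))  # too far from any node
print(map_site_to_skeleton(np.array([0.0, 0, 0]), 9, *nodes))     # no node in this segment
```

In the merged segment, the nearest-node rule decides between the two skeletons, and the distance limit keeps sites in untraced neurites from being attached to a distant skeleton.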

According to the validation results on the Cremi dataset (Supplementary Note 2), we further filter connections inside a neuron. Analogous to the Cremi dataset, where we filter connections inside the same segment, we filter connections mapped to the same neuron. Thus, we remove putative false positives, at the expense of potentially losing the ability to detect autapses (synapses connecting a neuron to itself). We further cluster synaptic partners that connect the same neurons within a distance threshold of 250 nm, in order to avoid counting a single synaptic connection between two neurons multiple times. We remove all connections except the one with the highest connection score in each identified cluster.
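
Both filtering steps can be sketched as follows. The text does not specify the clustering algorithm, so the greedy score-ordered suppression used here is an assumption, as are the function name, the dictionary layout, and the use of a single position per connection.

```python
import numpy as np

def filter_and_cluster(connections, radius_nm=250.0):
    """Drop same-neuron connections, then keep the best-scoring one per cluster."""
    candidates = sorted(
        (c for c in connections if c["pre_neuron"] != c["post_neuron"]),
        key=lambda c: c["score"], reverse=True,
    )
    kept = []
    for c in candidates:
        is_duplicate = any(
            k["pre_neuron"] == c["pre_neuron"]
            and k["post_neuron"] == c["post_neuron"]
            and np.linalg.norm(np.subtract(k["position_nm"], c["position_nm"])) < radius_nm
            for k in kept
        )
        if not is_duplicate:
            kept.append(c)
    return kept

connections = [
    {"pre_neuron": 1, "post_neuron": 2, "position_nm": (0, 0, 0), "score": 10},
    {"pre_neuron": 1, "post_neuron": 2, "position_nm": (100, 0, 0), "score": 20},   # duplicate of the first
    {"pre_neuron": 1, "post_neuron": 2, "position_nm": (1000, 0, 0), "score": 7},   # far away, kept
    {"pre_neuron": 3, "post_neuron": 3, "position_nm": (0, 0, 0), "score": 50},     # same neuron, removed
    {"pre_neuron": 1, "post_neuron": 4, "position_nm": (50, 0, 0), "score": 6},     # different partner, kept
]
kept = filter_and_cluster(connections)
print([c["score"] for c in kept])
```

Only detections that link the same pair of neurons compete with each other, so nearby connections to different partners of a polyadic synapse are preserved.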

Extended Data

Extended Data Fig. 1. Brain-wide prediction accuracy.

Precision and recall over 10 densely annotated cubes in different brain regions (dataset DenseCubes). Highlights show best f-score over the detection threshold. Top row: Black triangle marks show the inter-human variance between two annotations, with one annotation treated as ground-truth evaluated against the other one. Bottom right: Visualization of the cube locations within the FAFB dataset.

Extended Data Fig. 2. Polarity of olfactory projection neurons (PN).

a, Outline of axon-dendrite splitting procedure. b, Exemplary well segregated (left) and unsegregated (right) PN. Boxplot shows segregation index (SI) for all PNs separated into uni- and multiglomerular PNs. c, Impact of completeness of neuronal reconstruction on recovery of synapses.

Acknowledgements

We thank Scott Lauritzen (HHMI Janelia) for helping with data acquisition; Matthew Nichols for importing Catmaid annotations; William Patton for code contributions; Nils Eckstein for helpful discussions; Jeremy Maitin-Shepard for adding synapse visualization features to neuroglancer; Vivek Jayaraman (HHMI Janelia) for providing evaluation data; HHMI Janelia Connectome Annotation Team (Ruchi Parekh, Alia Suleiman, Tyler Paterson) for evaluation data. We would also like to thank Zhihao Zheng (HHMI Janelia), Feng Li (HHMI Janelia), Corey Fisher (HHMI Janelia), Nadiya Sharifi (HHMI Janelia), Steven Calle-Schuler (HHMI Janelia) and Davi Bock (University of Vermont, Burlington) for access to prepublication data used for evaluation.

Funding

This work was supported by Howard Hughes Medical Institute and Swiss National Science Foundation (SNF grant 205321L 160133).

Footnotes

Code Availability

The code used to train networks and predict synaptic partners is available in the “synful” repository, https://github.com/funkelab/synful [22]. Code used to evaluate the results is available in the “synful fafb” repository, https://github.com/funkelab/synful_fafb [20]. A python library to query circuits in the FAFB volume using the predicted synaptic partners is available in the “SynfulCircuit” repository, https://github.com/funkelab/synfulcircuit [14]. The Catmaid widget used to interactively query circuits is available in the “CircuitMap” repository, https://github.com/catmaid/circuitmap [15].

Competing Interests Statement

Stephan Gerhard is the founder and CEO of UniDesign Solutions GmbH, which provides IT services.

Data Availability

The datasets generated during and/or analysed during the current study are available in the “synful fafb” repository [20] and “synful experiments” [21]. The following Figures and Tables use this data:

| Figure | Data |
| --- | --- |
| Figure 1 | partner predictions (link to SQL dump in “synful_fafb”) |
| Figure 2 | evaluation results (datasets and code in “synful_fafb” and “synful_experiments”) |
| Extended Data Figure 1 | datasets and code in “synful_experiments” |
| Extended Data Figure 2 | polarity analysis based on partner predictions, code in “synful_experiments” |
| Supplementary Figure 2 | gridsearch code in “synful_experiments” |

References

- [1] Denk Winfried, Briggman Kevin L, and Helmstaedter Moritz. Structural neurobiology: missing link to a mechanistic understanding of neural computation. Nature Reviews Neuroscience, 13(5):351–358, 2012.

- [2] Zheng Zhihao, Lauritzen J Scott, Perlman Eric, Robinson Camenzind G, Nichols Matthew, Milkie Daniel, Torrens Omar, Price John, Fisher Corey B, Sharifi Nadiya, et al. A complete electron microscopy volume of the brain of adult drosophila melanogaster. Cell, 174(3):730–743, 2018.

- [3] Li Peter H., Lindsey Larry F., Januszewski Michal, Zheng Zhihao, Bates Alexander Shakeel, Taisz István, Tyka Mike, Nichols Matthew, Li Feng, Perlman Eric, Maitin-Shepard Jeremy, Blakely Tim, Leavitt Laramie, Jefferis Gregory S. X. E., Bock Davi, and Jain Viren. Automated Reconstruction of a Serial-Section EM Drosophila Brain with Flood-Filling Networks and Local Realignment. bioRxiv, April 2019. doi: 10.1101/605634.

- [4] Dorkenwald Sven, Schubert Philipp J, Killinger Marius F, Urban Gregor, Mikula Shawn, Svara Fabian, and Kornfeld Joergen. Automated synaptic connectivity inference for volume electron microscopy. Nature Methods, 14(4):435, 2017.

- [5] Motta Alessandro, Berning Manuel, Boergens Kevin M, Staffler Benedikt, Beining Marcel, Loomba Sahil, Hennig Philipp, Wissler Heiko, and Helmstaedter Moritz. Dense connectomic reconstruction in layer 4 of the somatosensory cortex. Science, 366(6469), 2019.

- [6] Heinrich Larissa, Funke Jan, Pape Constantin, Nunez-Iglesias Juan, and Saalfeld Stephan. Synaptic cleft segmentation in non-isotropic volume electron microscopy of the complete drosophila brain. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 317–325. Springer, 2018.

- [7] Huang Gary B., Scheffer Louis K., and Plaza Stephen M. Fully-Automatic Synapse Prediction and Validation on a Large Data Set. Frontiers in Neural Circuits, 12, October 2018. ISSN: 1662-5110. doi: 10.3389/fncir.2018.00087. URL https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6215860/.

- [8] Kreshuk Anna, Funke Jan, Cardona Albert, and Hamprecht Fred A. Who is talking to whom: synaptic partner detection in anisotropic volumes of insect brain. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 661–668. Springer, 2015.

- [9] Turner Nicholas L, Lee Kisuk, Lu Ran, Wu Jingpeng, Ih Dodam, and Seung H Sebastian. Synaptic partner assignment using attentional voxel association networks. In 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), pages 1–5. IEEE, 2020.

- [10] Parag Toufiq, Berger Daniel, Kamentsky Lee, Staffler Benedikt, Wei Donglai, Helmstaedter Moritz, Lichtman Jeff W, and Pfister Hanspeter. Detecting synapse location and connectivity by signed proximity estimation and pruning with deep nets. In Proceedings of the European Conference on Computer Vision (ECCV), 2018.

- [11] Buhmann Julia, Krause Renate, Ceballos Lentini Rodrigo, Eckstein Nils, Cook Matthew, Turaga Srinivas, and Funke Jan. Synaptic partner prediction from point annotations in insect brains. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 309–316. Springer, 2018.

- [12] Xu C Shan, Januszewski Michal, Lu Zhiyuan, Takemura Shin-ya, Hayworth Kenneth, Huang Gary, Shinomiya Kazunori, Maitin-Shepard Jeremy, Ackerman David, Berg Stuart, et al. A connectome of the adult drosophila central brain. bioRxiv, 2020.

- [13] Ronneberger Olaf, Fischer Philipp, and Brox Thomas. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 234–241. Springer, 2015.

- [14] Buhmann Julia and Funke Jan. funkelab/synfulcircuit: v1.0, March 2021. doi: 10.5281/zenodo.4633048.

- [15] Kazimiers Tom, Gerhard Stephan, and Funke Jan. catmaid/circuitmap, March 2021. doi: 10.5281/zenodo.4633884.

- [16] Schneider-Mizell Casey M, Gerhard Stephan, Longair Mark, Kazimiers Tom, Li Feng, Zwart Maarten F, Champion Andrew, Midgley Frank M, Fetter Richard D, Saalfeld Stephan, et al. Quantitative neuroanatomy for connectomics in drosophila. eLife, 5:e12059, 2016.

- [17] Falk Thorsten, Mai Dominic, Bensch Robert, Çiçek Özgün, Abdulkadir Ahmed, Marrakchi Yassine, Böhm Anton, Deubner Jan, Jäckel Zoe, Seiwald Katharina, et al. U-net: deep learning for cell counting, detection, and morphometry. Nature Methods, 16(1):67, 2019.

- [18] Funke Jan, David Tschopp Fabian, Grisaitis William, Sheridan Arlo, Singh Chandan, Saalfeld Stephan, and Turaga Srinivas. Large scale image segmentation with structured loss based deep learning for connectome reconstruction. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(7):1669–1680, 2018.

- [19] Wang Kaiqiang, Dou Jiazhen, Kemao Qian, Di Jianglei, and Zhao Jianlin. Y-net: a one-to-two deep learning framework for digital holographic reconstruction. Optics Letters, 44(19):4765–4768, 2019.

- [20] Buhmann Julia and Funke Jan. funkelab/synful fafb: v1.0, March 2021. doi: 10.5281/zenodo.4633135.

- [21] Funke Jan, Buhmann Julia, Sheridan Arlo, Nguyen Tri M., and Malin-Mayor Caroline. synful experiments, March 2021. doi: 10.5281/zenodo.4635362.

- [22] Buhmann Julia, Funke Jan, and Nguyen Tri M. funkelab/synful: v1.0, March 2021. doi: 10.5281/zenodo.4633044.
