Abstract

Corneal diseases, uncorrected refractive errors and cataract represent the major causes of blindness globally. The number of refractive surgeries, either cornea- or lens-based, is also on the rise as the demand for perfect vision continues to increase. With the recent advancement and potential promises of artificial intelligence (AI) technologies demonstrated in the realm of ophthalmology, particularly retinal diseases and glaucoma, AI researchers and clinicians are now channelling their focus towards the less explored ophthalmic areas related to the anterior segment of the eye. Conditions that rely on anterior segment imaging modalities, including slit-lamp photography, anterior segment optical coherence tomography, corneal tomography, in vivo confocal microscopy and/or optical biometers, are the most commonly explored areas. These include infectious keratitis, keratoconus, corneal grafts, ocular surface pathologies, preoperative screening before refractive surgery, intraocular lens calculation, and automated refraction, amongst others. In this review, we aimed to provide a comprehensive update on utilization of AI in anterior segment diseases, with particular emphasis on the recent advancement in the past few years. In addition, we demystify some of the basic principles and terminologies related to AI, particularly machine learning and deep learning, to help improve the understanding, research and clinical implementation of these AI technologies among the ophthalmologists and vision scientists. As we march towards the era of digital health, guidelines such as CONSORT-AI, SPIRIT-AI and STARD-AI will play crucial roles in guiding and standardizing the conduct and reporting of AI-related trials, ultimately promoting their potential for clinical translation.

Keywords: Anterior segment, Artificial Intelligence, Cataract, Cornea, Deep learning, Machine learning, IOL power calculation

Introduction

While the potential of artificial intelligence (AI) is still being uncovered, it is proving to be useful in the field of ophthalmology to revolutionize vision care.1 Although it is unlikely to replace an ophthalmologist’s role, it is likely to augment patient care by improving the diagnostic performance and predicting possible outcomes.2 Initially developed for retinal disorders and glaucoma,3, 4 AI has recently been explored in the field of anterior segment diseases.1, 5–8 As anterior segment diseases often involve some form of imaging, including slit-lamp photography, anterior segment optical coherence tomography (AS-OCT), specular microscopy, corneal tomography/topography, and in vivo confocal microscopy (IVCM), there is a huge potential to leverage the power of AI to enhance the clinical service provision in these fields.

In this review, we aimed to provide a comprehensive update on the utility of AI in anterior segment diseases and surgeries, encompassing cornea, refractive surgery and cataract, with particular emphasis on the recent advancement in the past few years. We also provide a succinct overview of the basic principles of AI, particularly machine learning (ML) and deep learning (DL), to aid the understanding, research and clinical deployment of AI technologies among the ophthalmologists and vision scientists.

Global Burden of Anterior Segment Diseases

The cornea and the lens are the two most important refractive structures of the eye. Damage to these structures can potentially result in visual impairment and blindness. According to the World Health Organization, cataract and uncorrected refractive errors are the top two leading causes (55%) of blindness worldwide, particularly in the low- and middle-income countries (LMICs).9, 10 Currently, the number of cataract surgeries performed globally is estimated at around 20 million cases per annum.11 As the proportion of the ageing population increases, the global burden of cataract, and consequently the demand for cataract surgery, is expected to rise significantly. In addition, the demand for perfect vision following cataract surgery is growing in view of the improvement in the techniques and technologies (e.g. lens and phacoemulsification technologies).12–14 Similarly, the socio-economic burden of refractive errors and corneal diseases cannot be ignored. Owing to intensive education and digital learning, the prevalence of myopia is estimated to be 80-90% in East and South-east Asian countries.15 In addition, the recent increase in digital screen time and reduction in outdoor activities during the COVID-19 pandemic have been shown to further increase the risk of myopia.16, 17

Corneal opacity is the 5th leading cause of blindness worldwide, with infectious keratitis (IK) being the main culprit, affecting both developed and developing countries.18–20 It has also been shown to place a significant burden on the healthcare system, with around $175 million being spent in the United States alone.21 Keratoconus is another common corneal ectatic disease that can progressively worsen and lead to visual impairment if left untreated.22 Timely detection and early stabilization with corneal cross-linking treatment are key to reducing the risk of visual loss, though corneal transplantation is required for severe cases.23–25 Therefore, AI-related research efforts are increasingly being invested in these areas to enable accurate and high-throughput disease screening and grading in clinical settings.

Basic Principles of Artificial Intelligence (AI)

In general, AI is designed to mimic human behaviour and make human-like decisions by programming a computer. Early AI systems were rule-based models, where conditions (e.g. if “A” then “B”) were pre-defined by human experts based on their experience for a particular task. These rule-based methods normally had limited application scenarios and were often not robust enough to handle different situations in real-world environments.

More recently, as a subset of AI, machine learning (ML) methods have become more popular due to their superior performance in comparison to rule-based systems. ML algorithms build a model (e.g. logistic regression, artificial neural network, decision trees) using sample training data, in order to make predictions or decisions without being explicitly programmed.26 Depending on the information available in the example dataset, supervised, semi-supervised and unsupervised methods can be applied. If the corresponding outcome events (i.e. labels or annotations) of all data samples are available, a fully supervised method can be used. If the outcome labels are not available, unsupervised methods need to be applied to explore the underlying data patterns automatically. If the labels are only partially available or are not accurate, semi-supervised methods need to be deployed. Besides the availability and quality of the labels, the representation of the characteristics of the data is crucial. In classical ML methods, these characteristics are manually defined by human experts, which is not a trivial task and has a significant impact on the performance of the method. The multi-layer perceptron neural network is one of the most promising ML methods. Each of the neurons in a hidden layer is connected to all the outputs from the previous layer, known as a fully connected layer, which enables complicated feature interactions to be learned. However, due to computational limits, it cannot train many hidden layers to handle input data with a high dimensionality, which makes it particularly challenging to apply to imaging data (i.e. millions of pixels as the input).

As a subfield of ML, DL has emerged as the most powerful approach, achieving superior performance in many areas. DL algorithms aim to learn hierarchical feature representations automatically, which allows a complex mapping to be established between the input data and the output decision. Since 2012, based on the idea of the convolutional neural network (CNN) proposed by LeCun et al.27 and followed by a technological breakthrough28 that allows deeper neural networks to be trained, deep CNNs have achieved state-of-the-art performance in many imaging-based applications (e.g. object recognition, image segmentation, disease classification, etc.). In principle, CNN-based methods are able to automatically learn multi-scale feature representations by applying many convolutional filters and non-linear activation functions at different image scales. The weights of the convolutional filters are automatically learned during the training process through iterative back propagation of the errors measured between the predicted outputs and the ground truth labels. Deep CNNs provide the advantage of learning the feature representation from data without human knowledge and the capability of processing large training datasets with high dimensionality.

Working principle of deep convolutional neural network (CNN)

A typical CNN model consists of multiple convolutional layers, pooling layers and activation units, which is trained using example images by minimizing a pre-defined loss function.

A convolutional layer applies a number of filters to the input image or the feature map calculated from the previous layer, which results in enhanced features at certain locations in the image. The weights in these filters are learned during the training process.
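As an illustration of the filtering step described above, the following is a minimal sketch in plain Python. The function name `conv2d`, the toy image and the hand-picked edge filter are all hypothetical; in a real CNN the filter weights would be learned during training rather than chosen by hand, and an optimized library would be used instead of nested loops.

```python
def conv2d(image, kernel):
    """Slide a filter over a 2D image (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            # Weighted sum of the local image patch under the filter.
            for a in range(kh):
                for b in range(kw):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output

# Toy 4x4 image with a vertical edge between columns 1 and 2 (illustrative values).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds to a left-to-right increase in intensity.
edge_filter = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, edge_filter)
# feature_map == [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map is non-zero only at the position of the edge, which is the sense in which a filter "enhances features at certain locations".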

The pooling layer acts as a dimensionality reduction operator that down-samples the input image or the feature map obtained from the previous layer. Average and max are the most popular pooling operators, which calculate the average and maximum value of a local region during the image down-sampling process, respectively.
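A minimal sketch of the two pooling operators, assuming non-overlapping 2×2 windows (a common but not universal choice). The function name `pool2d` and the example values are hypothetical.

```python
def pool2d(feature_map, size=2, mode="max"):
    """Down-sample a 2D feature map using non-overlapping size x size windows."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + a][j + b]
                      for a in range(size) for b in range(size)]
            if mode == "max":
                row.append(max(window))
            else:
                row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled

feature_map = [
    [1, 3, 2, 0],
    [5, 7, 4, 4],
    [0, 2, 9, 1],
    [2, 0, 3, 3],
]
max_pooled = pool2d(feature_map, mode="max")  # [[7, 4], [2, 9]]
avg_pooled = pool2d(feature_map, mode="avg")  # [[4.0, 2.5], [1.0, 4.0]]
```

In both cases the 4×4 input is reduced to 2×2, halving each spatial dimension.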

The activation unit enables the neural network to learn non-linear relationships between the input and output. Sigmoid and tanh functions are popular choices in classical neural network models, but they suffer from the vanishing gradient problem, which prevents deep neural networks (DNNs) from being trained efficiently. The rectified linear unit (ReLU) is one of the keys to the success of deep CNN models, as it overcomes the vanishing gradient issue to some extent.28
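The vanishing gradient issue can be illustrated with a simplified sketch. It assumes a chain of 10 layers that all receive the same pre-activation value and ignores the contribution of the weights, so it is a caricature of back propagation rather than a faithful simulation; the function names are illustrative.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # never larger than 0.25

def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2  # never larger than 1, small away from 0

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    return 1.0 if x > 0 else 0.0  # exactly 1 for any positive input

# Back propagation multiplies one such derivative per layer (simplified here).
depth, x = 10, 2.0
sigmoid_chain = sigmoid_grad(x) ** depth  # shrinks towards zero
tanh_chain = tanh_grad(x) ** depth        # shrinks towards zero
relu_chain = relu_grad(x) ** depth        # stays at 1.0
```

Because the sigmoid and tanh derivatives are below 1 for this input, their product decays rapidly with depth, whereas the ReLU derivative leaves the gradient unchanged for positive inputs.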

Dependent on the applications, different loss functions can be used to optimize the deep CNN models (i.e. learn the weights of the filters). Mean squared error, cross-entropy and soft-Dice measures are typically used for regression model, classification model and image segmentation model respectively. Numerous other loss functions have also been proposed to address particular issues in different applications, such as adversarial loss for generative models.29
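The three loss functions named above can be sketched as follows. This is a simplified illustration: the cross-entropy is shown for the binary case only, the images are flattened to lists, and the `smooth` and `eps` terms are common numerical-stability conventions assumed here rather than taken from the text.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Regression loss: average squared difference."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Classification loss for labels in {0, 1} and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

def soft_dice_loss(mask_true, mask_prob, smooth=1.0):
    """Segmentation loss: 1 - soft Dice overlap between flattened masks."""
    intersection = sum(t * p for t, p in zip(mask_true, mask_prob))
    denominator = sum(mask_true) + sum(mask_prob)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)

mse = mean_squared_error([1.0, 2.0], [1.0, 4.0])              # 2.0
bce = binary_cross_entropy([1, 0], [0.5, 0.5])                # ln(2), about 0.693
dice_perfect = soft_dice_loss([1, 1, 0, 0], [1, 1, 0, 0])     # 0.0
dice_disjoint = soft_dice_loss([1, 1, 0, 0], [0, 0, 1, 1])    # 0.8
```

Each loss is zero (or close to it) when the prediction matches the ground truth and grows as the two diverge, which is what the optimizer exploits when updating the filter weights.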

Several optimization methods, including Stochastic Gradient Descent, Momentum, Adam, RMSprop and others, have been proposed to minimize the loss function.30 These are all based on the gradient descent algorithm, which is only guaranteed to reach a local optimum. Hence, model initialization has been an active research area for improving model reliability and repeatability. Adam is currently the most favoured method in terms of robustness.31
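The dependence on initialization can be seen in a one-dimensional sketch. Plain gradient descent (not Adam or any of the other variants) is used here for brevity, and the non-convex function, learning rate and starting points are arbitrary illustrative choices.

```python
def loss(x):
    # Toy non-convex loss with two minima of different depth.
    return x ** 4 - 3 * x ** 2 + x

def grad(x):
    # Analytical derivative of the toy loss.
    return 4 * x ** 3 - 6 * x + 1

def gradient_descent(x0, learning_rate=0.01, steps=500):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x

x_left = gradient_descent(-2.0)   # settles near x = -1.30 (the deeper minimum)
x_right = gradient_descent(2.0)   # settles near x = 1.13 (a shallower local minimum)
```

The same algorithm, with the same settings, ends in different minima depending solely on where it starts, which is why initialization affects the reliability and repeatability of a trained model.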

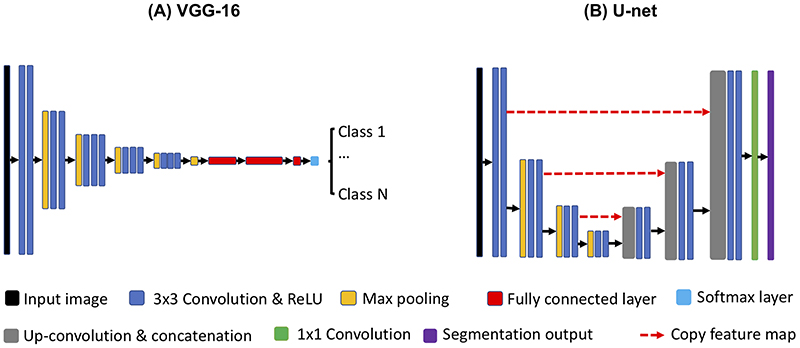

There are many different variants of deep CNN networks, such as Residual net,32 Inception net,33 and U-net34. However, the basic working principles are similar, which are based on the basic CNN components introduced above. Based on the aforementioned building blocks, popular deep CNN architectures, including VGG-1635 for classification and U-net for image segmentation are illustrated in Figure 1(A-B). Recently, generative adversarial network (GAN) has played an important role in many medical imaging applications due to its superior capability to synthesize realistic images by sampling the learned latent feature space.36 GAN consists of a generator and a discriminator, which are also based on the aforementioned CNN components. The generator learns to generate synthetic images from a random vector sampled from a low dimensional Gaussian distribution, while the discriminator tries to discriminate the synthetic images from a set of real images. Both the generator and discriminator are trained simultaneously using the minimax optimization strategy in an unsupervised manner.
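For reference, the minimax strategy mentioned above is commonly written in the following form, which is the objective of the original GAN formulation. The symbols are notation assumed here rather than taken from the text: $G$ is the generator, $D$ the discriminator, $x$ a real image, and $z$ the random vector sampled from the low-dimensional Gaussian distribution $p_z$.

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator is rewarded for assigning high probability to real images and low probability to synthetic ones, while the generator is rewarded when its synthetic images are mistaken for real ones. Many later GAN variants modify this objective.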

Figure 1. Examples of deep convolutional neural networks (CNNs).

(A) A classification model using the VGG-16 architecture; (B) an image segmentation model using U-net. Both models consist of convolution, rectified linear unit (ReLU) and max pooling operations for feature extraction in a multi-resolution manner. For the VGG-16, additional fully connected layers and a softmax layer are used for predicting the class labels based on the learned features. For the U-net, an up-convolutional operation is used to up-sample the learned feature maps from the previous layer, followed by a concatenation with the feature maps from the corresponding down-sampling layer. This up-sampling process is also performed in a multi-resolution manner. Finally, a 1x1 convolution is applied to predict the segmentation output based on the feature maps in the last layer.

A deep convolutional neural network (CNN) classification model using the VGG-16 architecture. The model uses an input image size of 224 × 224 as an example. Layer 1 to layer 6 consist of convolution (filter size of 3×3), ReLU and max pooling operations that enable feature extraction and dimensionality reduction. The numbers W×H×C (e.g. 224×224×64) in each layer represent the width of the image (W), the height of the image (H), and the number of filters (C), respectively. Layer 7 to layer 9 are fully connected layers that perform in the same way as a multi-layer perceptron neural network for learning complicated feature interactions for decision making. Finally, the softmax layer maps the output values of layer 9 to the range of [0, 1], indicating the probability of the input image being classified as each of the classes.
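The softmax mapping mentioned in the caption can be sketched as below. The three input values are hypothetical outputs of the last fully connected layer for a three-class problem; subtracting the maximum before exponentiation is a standard numerical-stability step.

```python
import math

def softmax(logits):
    """Map raw output values to probabilities in [0, 1] that sum to 1."""
    shift = max(logits)  # improves numerical stability; does not change the result
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # roughly [0.66, 0.24, 0.10]
predicted_class = probs.index(max(probs))
```

The class with the largest raw output receives the highest probability, and the ordering of the classes is preserved.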

Model training

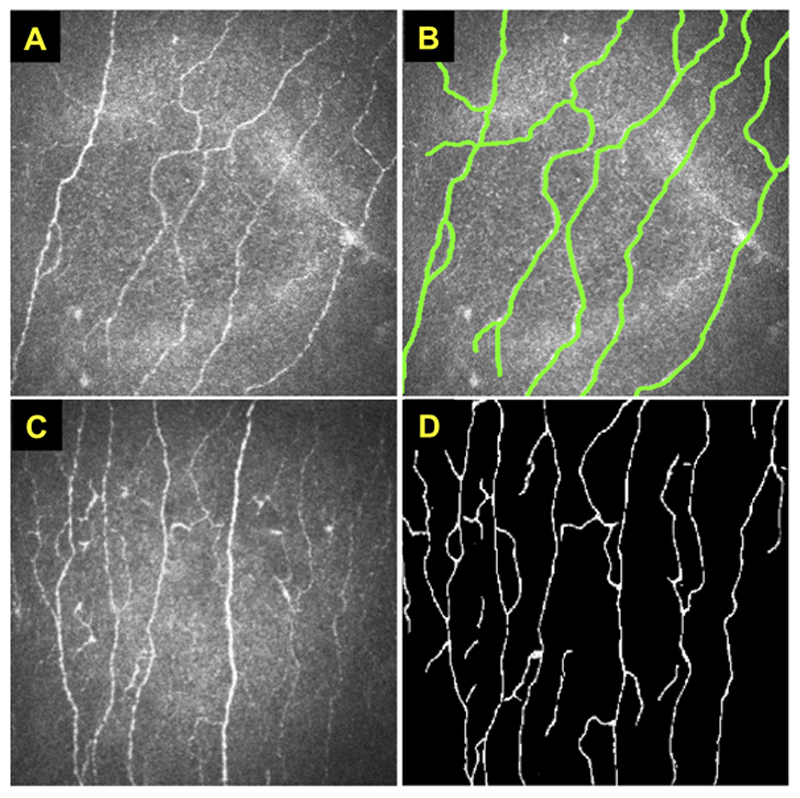

The quality of the training dataset is crucial to the performance of DL models. In medical applications, the image annotation provided by clinicians normally includes pixel-level annotation, image-level annotation, or both. For instance, Figure 2A-B shows a pair of example images: a corneal IVCM image and its corresponding manual annotation of nerve fibres, published by Zhang et al.37 A large set of such paired images (Figure 2A-B) is normally required to train a supervised deep CNN model. When an unseen image (e.g. Figure 2C) is input to the trained model, the nerve fibres can be automatically segmented as shown in Figure 2D. Subsequently, quantified features such as nerve fibre length, density and orientation can be measured and combined with other clinical information (e.g. age, body mass index, etc.) to achieve a disease diagnosis (e.g. healthy vs diseased).38 Alternatively, if the pixel-level nerve fibre annotation is not available, the image-level label (e.g. healthy or diseased) can be used to train a deep CNN classification model (e.g. the VGG model in Figure 1A) without defining these quantification features. However, these classification models are less interpretable and generalize poorly when only a small dataset is available.

Figure 2. Examples of in vivo confocal micrographs of the cornea.

These figures were adapted from the study published by Zhang et al.37 (A) An example of IVCM demonstrating normal corneal nerves (shown as hyper-reflective structures). (B) A corresponding IVCM image of (A) with manually annotated nerve fibres. For supervised model training, it requires a large number of these paired images (A) and (B). When an unseen IVCM (C) is input to the trained model, the nerve fibres can be automatically segmented as shown in (D).

Similar to all other ML methods, there are several aspects that need to be considered when training deep CNN models. Firstly, due to the large number of model parameters and the relatively small number of training examples (especially in medical applications), the model may suffer from over-fitting and fail to generalize well to new test data. Normally, a validation dataset is used to determine the training termination point to avoid model over-fitting. Drop-out,39 data augmentation,40 and transfer learning41 have been proposed to improve the generalizability of a trained model. It is vitally important to evaluate the trained ML model using an independent external test set to assess the generalizability of the method. A decrease in performance normally occurs when testing on an independent test set, which is mainly due to model over-fitting to the training dataset or a data distribution discrepancy between the training and testing datasets. Moreover, most medical applications have a limited amount of annotated data for training, or only coarse annotations can be provided due to the heavy workload and the requirement for highly skilled expertise. Many semi-supervised and weakly-supervised methods have been proposed to address the issue of limited and inaccurate annotations.37, 42 Many GAN-based methods43 have also been proposed to synthesize task-specific images to tackle the problem of limited data and distribution discrepancy between training and testing datasets.
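One common way of using a validation dataset to choose the training termination point is early stopping, sketched below. The `patience` rule and the validation loss values are illustrative assumptions, not settings taken from any of the cited studies.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch with the lowest validation loss seen before stopping.

    Training is halted once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # validation loss has stopped improving
    return best_epoch

# Hypothetical validation losses: they fall at first, then rise as the
# model starts to over-fit the training data.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_at = early_stopping_epoch(val_losses)  # 3
```

The model weights saved at the returned epoch would then be kept for evaluation on the independent test set.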

Common terminologies

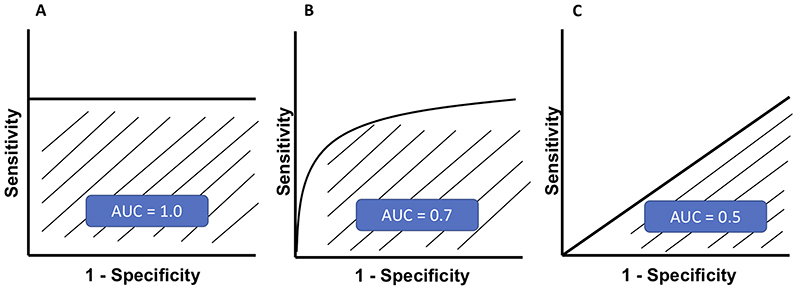

Some of the common terminologies used in AI studies, including sensitivity, specificity, positive predictive value (PPV) or precision, negative predictive value (NPV), and accuracy, are summarized and explained in Table 1. Sensitivity (Y-axis) is plotted against 1 − specificity, i.e. the false positive rate (X-axis), to produce the receiver operating characteristic (ROC) curve, and the area under the ROC curve (AUC) is often used to summarize the performance of a given model across all thresholds. Three examples of ROC curves with different AUCs are illustrated in Figure 3. An AUC of 1 may actually be a reflection of overfitting to training data.

Table 1. Common terminologies used in artificial intelligence studies.

| | Actual outcome: Disease | Actual outcome: No disease | |
|---|---|---|---|
| Predicted outcome: Disease | TP | FP | PPV or Precision = TP / (TP + FP) |
| Predicted outcome: No disease | FN | TN | NPV = TN / (TN + FN) |
| | Sensitivity = TP / (TP + FN) | Specificity = TN / (TN + FP) | Accuracy = (TP + TN) / (TP + TN + FP + FN) |

TP = True positive; FP = False positive; FN = False negative; TN = True negative; PPV = Positive predictive value; NPV = Negative predictive value

Definitions

Sensitivity = Proportion of actual positive cases predicted correctly by the model

Specificity = Proportion of actual negative cases predicted correctly by the model

PPV = Proportion of positively classified cases that were actually positive

NPV = Proportion of negatively classified cases that were actually negative

Accuracy = Proportion of all cases, both positive and negative, predicted correctly by the model
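The formulas in Table 1 can be applied directly to the four cells of the confusion matrix, as in the sketch below. The counts are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute the Table 1 metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

# Hypothetical test set: 100 diseased and 100 non-diseased eyes.
metrics = diagnostic_metrics(tp=80, fp=10, fn=20, tn=90)
# sensitivity 0.80, specificity 0.90, PPV ~0.889, NPV ~0.818, accuracy 0.85
```

Note that PPV and NPV depend on the disease prevalence in the test set, whereas sensitivity and specificity do not.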

Figure 3. Three examples of receiver operating characteristic (ROC) curve are illustrated.

(A) A “perfect” classifier with an area under the ROC curve (AUC) of 1.0; (B) a real-world classifier with an AUC between 0.5 and 1.0; and (C) a “poor” classifier with an AUC of 0.5, which is no better than a random guess.
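A ROC curve and its AUC can be computed from a set of model scores as sketched below. The curve is traced by sweeping the decision threshold and plotting sensitivity against the false positive rate (1 − specificity); the labels and scores are hypothetical, and the trapezoidal rule is one common way of estimating the area.

```python
def roc_curve(labels, scores):
    """Return (false positive rate, sensitivity) points, one per threshold."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area

# Hypothetical ground truth (1 = disease) and model scores for six eyes.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
model_auc = auc(roc_curve(labels, scores))  # 8/9, about 0.889

# A classifier that ranks every diseased eye above every healthy eye.
perfect_auc = auc(roc_curve([1, 1, 0, 0], [0.9, 0.8, 0.2, 0.1]))  # 1.0
```

The first example corresponds to panel (B), with one diseased eye scored below one healthy eye, and the second to panel (A).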

Artificial Intelligence in Cornea

AI has been successfully used in the prediction of diagnosis of various corneal disorders, including IK, keratoconus, pterygium, endothelial diseases, and corneal graft-related complications, amongst others.5, 7 An overview of the potential clinical deployment of AI using various imaging modalities is summarized in Table 2.

Table 2. Summary of the potential of artificial intelligence for various anterior segment diseases, based on data and various imaging modalities.

| | Cornea | Refractive Surgery | Cataract |
|---|---|---|---|
| Clinical data | | | |
| SLP | | | |
| AS-OCT | | | |
| CT | | | |
| IVCM | | | |

SLP = Slit-lamp photography; AS-OCT = Anterior segment optical coherence tomography; CT = Corneal tomography / topography; IVCM = In vivo confocal microscopy; DMEK = Descemet membrane endothelial keratoplasty; CRS = Corneal refractive surgery; PCO = Posterior capsular opacification

Infectious keratitis (IK)

One of the clinical challenges associated with IK is the difficulty in establishing an accurate diagnosis, due to low culture yield, lack of pathogen-specific features, and occurrence of polymicrobial infection (2-15%).44–46 Slit-lamp photographs are commonly used in clinical settings for documenting and monitoring the progress of IK and other ocular surface diseases.47, 48 In 2003, Saini and associates trained an artificial neural network (ANN) using forty input variables to classify corneal ulcers into fungal and bacterial categories.49 The specificity was 76.5% for bacterial and 100% for fungal ulcers, with an accuracy of 90.7%, which was significantly better than clinicians’ predictions. Using semi-automated segmentation of epithelial defects and stromal infiltrates visible on slit-lamp photographs, it was possible to reduce variability and maintain accuracy in measuring quantitative parameters in corneal ulcers.50 Over the past few years, DL algorithms have been developed to identify fungal hyphae on IVCM images.51, 52 Apart from differentiating normal from abnormal corneas, AI can also quantify the hyphal density to evaluate the severity of the infection.51

More recently, Li et al.53 demonstrated the potential of using slit-lamp photographs to accurately diagnose a range of anterior segment diseases, including IK, pterygium, conjunctivitis and cataract. These images were first annotated by clinicians using Visionome, which enables dense annotation of the pathological features. Subsequently, the annotated images were combined with DL frameworks using ResNet and faster region-based CNN for the detection and classification of disease, followed by automated recommendation of a further treatment plan (i.e. clinical observation vs. medical treatment vs. surgical treatment).

In addition, a novel DL-based framework was developed to automatically distinguish fungal keratitis from non-fungal keratitis on corneal photographs.54 A total of 288 photographs were analyzed retrospectively and the performance of the AI-assisted method was compared with that of non-cornea specialist ophthalmologists and cornea specialists. On average, the method achieved a sensitivity of 71%, a diagnostic accuracy of 70% and a PPV of 60%. Its accuracy was superior to that of the non-cornea specialist ophthalmologists but lower than that of the cornea specialists, suggesting that it could be a useful diagnostic tool in primary health care centres. Gu et al.55 also developed a DL-based algorithm using ocular surface photographs to differentiate among IK, non-infectious keratitis, corneal dystrophies and surface neoplasms. Using a dataset of 510 outpatients, the algorithm was tested against 10 ophthalmologists and was found to have an AUC of more than 0.910 for each category. Liu et al.56 have also recently developed a novel convolutional neural network (CNN) framework for automatic diagnosis of fungal keratitis using IVCM images. Using data augmentation and image fusion, the accuracy of the AlexNet-based and VGGNet-based models was 99.95% and 99.89%, respectively (based on histogram matching fusion).

Keratoconus

AI has also proven to be useful in detecting corneal ectasia such as keratoconus. Early diagnosis and detection of forme fruste keratoconus (FFKC) or suspect keratoconus, especially prior to refractive surgeries, remains a clinical challenge. These conditions are primarily diagnosed using various imaging modalities, particularly corneal topography, corneal tomography and AS-OCT. In the past few years, various AI approaches have been explored and proven to be successful. These include feedforward neural networks, CNNs, support vector machines (SVMs), and automated decision-tree classification.57–65 AI has also been used to predict the outcome of keratoconus management.66

More recently, AI-based algorithms using corneal topography, tomography and AS-OCT, such as KeratoDetect and the Ectasia Status Index (ESI), have been developed to detect early keratoconus and screen patients prior to refractive surgeries.67–69 Most of the algorithms use colour-coded tomography maps to detect features of ectasia. Recently, efforts have been made to develop CNN-based algorithms using numeric data matrices, which are more efficient and easier to generalize across different topographers.70 This new algorithm achieved an accuracy of 99.3% in distinguishing among different types of eyes, including healthy eyes, keratoconic eyes, and those that had previously undergone corneal refractive surgery. On the other hand, Cao et al.63 added other clinical measures such as demographic parameters, spherical equivalent, and axial length (AL) to the topographic data and compared the performance of eight different algorithms. Based on 11 different parameters, they reported that the random forest method had the highest AUC (0.97) for detecting subclinical keratoconus, the SVM had the highest sensitivity (94%) and the k-nearest neighbour model had the highest specificity (94%). It is noteworthy that corneal topography and tomography represent the current gold standard for diagnosing keratoconus and for preoperatively assessing the risk of ectasia after corneal refractive surgery; hence they are often used as the “ground truth” for training AI algorithms in these areas. However, early detection of subclinical keratoconus or forme fruste keratoconus remains a diagnostic challenge using this approach.71 Future studies that include longitudinal data on subclinical keratoconus (to monitor for progression to keratoconus) would be valuable, as these data can be utilized to train and develop more accurate AI-based algorithms for distinguishing subclinical keratoconus from normal eyes.

Apart from diagnosis, AI has recently been explored in identifying susceptibility genes for keratoconus. Hosoda et al.72 used IBM’s Watson for Drug Discovery (WDD) to identify keratoconus-susceptibility gene loci from a genome-wide association study (GWAS) on central corneal thickness (CCT). They identified STON2 rs2371597 as a locus for CCT and confirmed a significant association between STON2 rs2371597 and the development of keratoconus.

ANNs have been used to guide implantation of intracorneal ring segments (ICRS) in keratoconic corneas and to predict outcomes. Valdés-Mas et al.66 proposed an ANN based on a multilayer perceptron to predict the outcomes of ICRS implantation. They assessed vision gain by means of astigmatism and corneal curvature. Fariselli et al.73 compared corneas that received ICRS implants guided by an ANN (ANN group) with corneas that received ICRS implants based on the company nomogram (nomogram group). There was a statistically significant difference in the corrected distance visual acuity (CDVA) between the two groups, with the ANN group performing better than the nomogram group. In addition to visual gain, the aberration profile was also analyzed, and the coma-like aberrations were found to decrease significantly in the ANN group. No change in aberrations was noted in the nomogram group. The authors therefore suggested the use of a neural network approach to reduce higher order aberrations and improve optical quality and visual function. Both studies used KeraRings as the implanted ICRS. Given the limited number of studies, the AI approach needs to be further explored for ICRS implantation using different rings such as INTACS and Ferrara rings.

Corneal dystrophies and dysplasia

DL-based algorithms have been used to differentiate normal corneas from edematous corneas based on OCT images.74 Eleiwa et al.75 used AI to differentiate early-stage Fuchs endothelial corneal dystrophy (FECD, without corneal edema) from late-stage FECD (with corneal edema) based on high-definition OCT images. The model they developed was 99% sensitive and 98% specific in distinguishing normal corneas from FECD (early or late). The AUC achieved was 0.997, with 91% sensitivity and 97% specificity, in detecting early-stage FECD; and 0.974, with a specificity of 92% and a sensitivity of up to 100%, in detecting late-stage FECD.

Gu et al.55 reported an AUC of 0.939 for detecting corneal dystrophy or degeneration using a slit-lamp photograph-based DL model. They included ocular surface disorders such as limbal dermoid, papilloma, pterygium, conjunctival dermolipoma, conjunctival nevus and conjunctival melanocytic tumors to differentiate ocular surface neoplasms. Given the limited existing literature, the use of AI in ocular surface neoplasms needs to be explored further. On the other hand, Kessel et al.76 trained DL algorithms to detect and analyze amyloid deposition in corneal sections from patients with familial amyloidosis undergoing full-thickness keratoplasty.

Corneal nerves

There has been an increased clinical interest in the use of IVCM images to analyze sub-basal nerve plexus features and correlate them with ocular and systemic diseases. Manual and semi-automated analysis of the nerve fibre parameters is known to be tedious and time-consuming. Computer vision algorithms have made automated analysis of nerves possible.77 However, tracing of the corneal nerves in the presence of dendritic cells and other inflammatory cells poses a potential problem.78, 79

Scarpa et al.80 used a CNN-based method to find a correlation between corneal nerves and diabetic neuropathy. The proposed method analyzed 3 images from each eye to give a more complex analysis, similar to the human clinical process, without the need for montage creation and acquisition of multiple images, and categorized images into normal and pathological. Oakley et al.81 used IVCM images from macaque models and developed a DL approach for nerve segmentation that achieved a correlation score of 0.80 between readers and the CNN. Williams et al.82 developed a DL-based algorithm to analyze nerve fibre length, branching and tail points, fractal numbers and tortuosity, to diagnose diabetic neuropathy and its severity. In comparison with ACCmetrics, an automated nerve analysis software, this DL-based algorithm had superior correlation coefficients for all the nerve parameters measured. Another corneal nerve segmentation network, CNS-Net, was developed by Wei and co-workers and was found to have an AUC of 0.96.83 Although most algorithms have focused on normal corneal nerves and diabetic polyneuropathy, future directions include applying AI-based analysis of corneal nerves to conditions like dry eyes, migraine and post-LASIK ectasia.78, 79, 84

Corneal grafts

Apart from corneal topography, tomography and AS-OCT, attempts have been made to analyze specular microscopy images with AI algorithms. Significant work has been done on automated endothelial cell parameter estimation based on specular microscopy. Classic DL techniques worked by segmenting the entire image. The data thus generated could be used only if the cells were visible in the whole image or after manual selection of the region of interest (ROI).85–87 Daniel et al.88 used U-Net for the automated segmentation of specular microscopy images of variable quality and from different ocular diseases. There was good agreement between the automated image analysis from the U-Net and the manual annotation, with an R² from Pearson’s correlation of 0.96. The authors suggested that U-Net could be used to reliably distinguish between normal and pathological corneal endothelium, and to detect nascent immune reactions following keratoplasty. In addition, Treder et al.89 and Hayashi et al.90 used a DL approach to automatically detect graft detachments and predict the need for re-bubbling following Descemet membrane endothelial keratoplasty (DMEK), respectively.

More recently, studies have used DL algorithms for fully automated estimation of corneal endothelium parameters from specular microscopy in eyes that have undergone ultrathin Descemet stripping automated endothelial keratoplasty (DSAEK). The DL approach proposed by the authors automatically segments post-ultrathin DSAEK endothelial images and computes endothelial cell parameters by detecting cells in trustworthy areas. The use of three stages of processing (CNN-Edge, CNN-ROI, and postprocessing) helps reduce potential mistakes from the edges of the image.91 The method was significantly better than Topcon’s automated analysis and produced results similar to those obtained by manual analysis. The DL-based method provided an estimate for endothelial cell density (ECD), coefficient of variation (CV) and hexagonality (HEX) in 98.4% of the images, as compared to Topcon’s software, which provided ECD and CV in 71.5% of the images and HEX in 30.5% of the images.91

AI-assisted analysis of data obtained from Scheimpflug imaging has been shown to predict the need for penetrating and lamellar keratoplasty. Recently, Yousefi et al.68 used a comprehensive profile of corneal shape, thickness and elevation parameters in cases of keratoconus and corneal edema to predict the likelihood of future keratoplasty. Based on ESI (an index used to classify the severity of keratoconus) and various corneal parameters, the unsupervised ML algorithm predicted that ~30% of the eyes with moderate-to-severe keratoconus would require PKP or ALK. However, it is interesting to note that the authors also utilized ESI to predict the need for endothelial keratoplasty. A different study design will be required to evaluate the potential of AI in predicting the need for endothelial keratoplasty using different baseline parameters in the future.

Artificial Intelligence in Refractive Surgery

With the increasing demand for optimal visual and refractive outcomes and minimal risk of postoperative complications, there has been an increasing amount of AI-related research in the field of refractive surgery, particularly preoperative screening for risk of ectasia following corneal laser refractive surgery, guiding the selection of the type of refractive surgery, and automated refraction.

Post-LASIK ectasia

Iatrogenic ectasia following refractive surgery occurs in two scenarios: either the cornea is susceptible owing to pre-existing biomechanical weakening, such as in subclinical KC, or the surgical procedure itself induces biomechanical weakening in a previously normal cornea.92, 93 Screening before refractive surgery is extremely important to identify candidates at high risk of iatrogenic ectasia. Lopes et al. introduced the Pentacam Random Forest Index (PRFI), a random forest (RF) ML model trained using data from 3 different continents.94 The PRFI was significantly more sensitive and specific than the Belin-Ambrosio enhanced ectasia total deviation (BAD-D) in detecting keratectasia. When considering ectasias with normal topography, PRFI presented a sensitivity of 85.2% and a specificity of 96.6%. Using Orbscan II tomography, Saad and Gatinel described a linear discriminant model with a high sensitivity (93%) and specificity (92%) in detecting post-LASIK ectasia (PLE).95 A recent paper has shown the now named ‘SCORE’ Analyzer to have the highest AUC at 0.911.96 Using a larger dataset of 6465 corneal tomographic images, Xie and co-workers developed the Pentacam InceptionResNetV2 Screening System (PIRSS) for refractive surgery.97 The authors report a sensitivity of 80% in identifying ectasia suspects, 90% in diagnosing early KC, and an overall diagnostic accuracy of 95% with an AUC of 0.99. In identifying normal cornea, suspected irregular cornea and keratoconic cornea, PIRSS was more accurate than BAD (93.7% vs 86.2% accuracy). Moreover, the false positive rate in the suspect category was 10% using BAD as compared to 1.7% using PIRSS. Despite the high accuracy, longitudinal follow-up of the patients to see who actually developed ectasia, together with external validation, is required before the technology can be used effectively.98
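
The screening metrics quoted above (sensitivity, specificity, false positive rate and accuracy) are all derived from the same four counts of a confusion matrix. The following Python sketch shows the arithmetic; the counts in the example are hypothetical and do not correspond to any of the cited studies.

```python
def screening_metrics(tp, fp, tn, fn):
    """Compute standard diagnostic metrics from confusion-matrix counts."""
    if min(tp, fp, tn, fn) < 0 or (tp + fn) == 0 or (tn + fp) == 0:
        raise ValueError("counts must be non-negative with both classes present")
    sensitivity = tp / (tp + fn)          # diseased eyes correctly flagged
    specificity = tn / (tn + fp)          # normal eyes correctly cleared
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "false_positive_rate": 1.0 - specificity,
        "accuracy": accuracy,
    }


# Hypothetical cohort: 100 ectatic eyes and 100 normal eyes.
metrics = screening_metrics(tp=85, fp=5, tn=95, fn=15)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because sensitivity and specificity are computed within each class, they are unaffected by disease prevalence, whereas overall accuracy depends on the mix of normal and ectatic eyes in the test set; this is one reason why external validation on a differently composed cohort matters.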

Nomograms for prediction of vision correction method

Apart from screening for refractive surgery, AI has been used in recommending the appropriate refractive surgery and the nomograms used. Yoo et al.99 developed a multiclass ML model and classified patients into laser epithelial keratomileusis, laser in situ keratomileusis (LASIK), small incision lenticule extraction (SMILE) and contraindication groups. Using data from 18,840 subjects, the model was trained to select the laser surgery option appropriate for a candidate willing to undergo refractive surgery, with an accuracy of 81% and 78.9% in the internal and external validation datasets, respectively. Cui et al.100 developed an ML model to suggest a nomogram for SMILE surgery to achieve the desired visual outcome. They reported that 93% of eyes in the ML group and 83% of eyes in the surgeon group had a post-operative refractive error within ±0.50D. The safety and efficacy indices were found to be better in the ML group than in the surgeon group. Furthermore, Kamiya et al.101 developed an ML-based algorithm to predict the postoperative posterior chamber phakic IOL vault using pre-operative AS-OCT images of patients undergoing phakic IOL implantation. They observed higher predictability of the vault with their ML algorithm than with the manufacturer’s nomogram.

Prediction of subjective refraction

With the global burden of visual impairment from uncorrected refractive error estimated to be over 150 million, our ability to bypass subjective refraction and prescribe from an automated system has become an important goal.10 A new polynomial series, known as LD/HD (Low Degree/High Degree) has challenged the current use of Zernike Polynomials for decomposition of any given wavefront. Previously, DL has been utilized in predicting refractive errors from imaging analysis including fundus images.102 103 Zernike Polynomials were previously investigated as a tool for subjective refraction prediction using a dual hidden multilayer perceptron. ML using LD/HD polynomial decomposition beta-software installed on a wavefront aberrometer allowed accurate prediction of results achieved by subjective refraction, being superior to paraxial matching utilized by Thibos et al. previously.104
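
As background to wavefront-based refraction, the sketch below shows the widely used conversion from second-order Zernike coefficients to power vectors (M, J0, J45) and then to a conventional negative-cylinder prescription. It is a simplified illustration rather than the LD/HD method or the ML models discussed above: it uses only the second-order terms, whereas paraxial curvature matching additionally folds in higher-order coefficients. Coefficients are assumed to be in micrometres (OSA convention) and the pupil radius in millimetres; the function names and example values are hypothetical.

```python
import math


def zernike_to_power_vector(c20, c22, c2m2, pupil_radius_mm):
    """Second-order Zernike coefficients (um) to power vector (dioptres)."""
    r2 = pupil_radius_mm ** 2
    m = -4.0 * math.sqrt(3.0) * c20 / r2      # spherical equivalent
    j0 = -2.0 * math.sqrt(6.0) * c22 / r2     # with/against-the-rule astigmatism
    j45 = -2.0 * math.sqrt(6.0) * c2m2 / r2   # oblique astigmatism
    return m, j0, j45


def power_vector_to_spherocylinder(m, j0, j45):
    """Power vector to (sphere, cylinder, axis) in negative-cylinder form."""
    cylinder = -2.0 * math.hypot(j0, j45)
    sphere = m - cylinder / 2.0
    axis = math.degrees(0.5 * math.atan2(j45, j0)) % 180.0
    return sphere, cylinder, axis


# Hypothetical wavefront: 1 um of defocus over a 3 mm pupil radius.
m, j0, j45 = zernike_to_power_vector(c20=1.0, c22=0.0, c2m2=0.0, pupil_radius_mm=3.0)
print(f"M = {m:.2f} D")  # M = -0.77 D
```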

Wearable devices to monitor visual behaviour

The Internet of Things (IoT), especially the Internet of Medical Things (IoMT), is an expanding field with various wearable hardware designs.8 Visual Behaviour Monitor (Vivior AG, Zurich, Switzerland) is a novel wearable device used to objectively measure preoperative visual behaviours of the patient so that an appropriate refractive correction intervention plan can be made.105 The monitor utilized ML algorithms to assess data transmitted to the cloud and analyzed lifestyle patterns, including lighting used, reading distances, and duration of reading or other tasks. The integration of AI and cloud computing could potentially reduce chair time and allow patients to receive a highly personalized treatment plan. Further studies elucidating the potential of AI-integrated cloud computing are required.

Artificial Intelligence in Cataract and Cataract Surgery

Adult cataract

In the US alone, recent analysis showed that even under the most optimistic conditions, the cataract surgical case backlog due to the COVID-19 pandemic would be greater than 1 million at 2 years post-suspension of surgery. This was extrapolated in 2020, just before the second and third lockdowns.106 Over 20 million cataract surgeries were being performed globally per year before the COVID-19 pandemic, but this figure has drastically reduced since. In some European centres, a 97% reduction in surgical volume has been reported in 1 month compared with the corresponding period a year before.107 Our recent Nottingham study similarly observed a significant increase in the surgical backlog by 400-500% as a result of the COVID-19 pandemic, with cataract surgery (53%) forming the main bulk of the workload.108 The disruption of the healthcare service had also imposed a negative psychosocial impact on the affected eye patients, particularly those with moderate-to-severe visual impairment.109 All these issues highlight the need for radical and innovative measures to tackle this unprecedented burden on ophthalmology services.

Recently, there has been an increased interest in computer-aided analysis of cataract images, using slit-lamp photography and/or fundus photography, to enable automated detection and grading.110–112 Simultaneous diagnosis of anterior and posterior segment diseases would be preferable. However, such an approach would need to take into consideration potential confounding factors such as vitreous opacities or a small pupil. Xiong et al.113 explored a new approach that uses the blurriness of retinal images as a way to grade cataracts, though vitreous opacities reduced specificity.

In 2019, Zhang et al. used fundus imaging with a stacked multi-feature based technique to differentiate the severity of cataracts.114 ResNet18 (Residual Network) and GLCM (Gray level co-occurrence matrix) were utilized with two support vector machine (SVM) classifiers and a fully connected neural network (FCNN), leading to a six-level grading with a high accuracy of 92.7%. Subsequently, Zhou et al.115 highlighted the problems with deep neural networks (DNN), namely overfitting and increased network storage needs. They proposed a method using discrete state transition DNN, with DST-ResNet and EDST-MLP achieving high accuracy in the detection and grading of cataract, respectively. Xu and colleagues used a CNN-based model to learn features directly from input data, followed by a deconvolution network method to investigate how the cataract was characterized in layers. This forgoes the need for experienced ophthalmologists to label data, allowing for a new CNN model.114, 116 Moreover, AI-assisted telemedicine platforms have been proposed to screen, diagnose and grade cataracts, potentially serving as a model of care for global eye health.116, 117

So far, most AI models in cataract are based upon slit-lamp images and fundus photographs. Optical Quality Analysis System (OQAS, Visionmetrics, Spain), a new imaging technique based on a double-pass aberrometry, has been used to objectively evaluate the quality of vision. It measures several parameters, with OSI (Objective Scatter Index) being the most effective, assisting in preoperative decision making. These parameters could be potentially used as part of a referral algorithm with cloud computing to replace other less objective cataract detection techniques in the community.118

In addition, several studies have also reported the utility of AI in diagnosing posterior capsular opacification (PCO), one of the most common complications following cataract surgery.119 Mohammadi et al.120 developed an ANN-based algorithm for predicting the risk of significant PCO, with an accuracy of 87%. More recently Jiang et al.121 showed that TempSeq-Net (combination CL algorithm) predicted the progress of PCO at 24 months from slit lamp images with high accuracy of 92.2%.

Pediatric Cataract

Pediatric cataract is less uniform than adult cataract, and the decision to operate depends on the risk of amblyopia due to deprivation of visual stimuli. Pediatric examination is challenging, and attaining consistently high-quality slit-lamp images is difficult.122 Though few studies have been conducted into pediatric cataracts due to scarcity of resources, they have started to demonstrate the ability of AI algorithms to diagnose pediatric cataracts.123

Recently, Zhang et al.124 studied associations related to complications such as severe lens proliferation into the visual axis or raised intraocular pressure. The Apriori algorithm was used together with random forest (RF) and naïve Bayesian (NB) classifiers for prediction, with datasets processed by a synthetic minority oversampling technique (SMOTE). This yielded average accuracies of over 91% across three binary classification tasks, but accuracy dropped to 65% when tested on a new dataset. Information cannot currently be translated directly from adult to pediatric cases, though transfer learning or multi-task learning could allow adult models to be adapted.7
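
The core idea of SMOTE is to create new minority-class samples by interpolating between an existing minority sample and one of its nearest minority neighbours. The following pure-Python sketch illustrates that idea only; it is not the implementation used in the cited study, and the function name, the parameters `k` and `seed`, and the example feature vectors are hypothetical. In practice an established library implementation would normally be used, and oversampling is usually confined to the training data so that synthetic points do not leak into the evaluation set.

```python
import random


def smote_like_oversample(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        idx = rng.randrange(len(minority))
        base = minority[idx]
        # Rank the other minority samples by squared Euclidean distance to the base.
        others = [p for j, p in enumerate(minority) if j != idx]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbour)))
    return synthetic


# Hypothetical minority class: four cases described by two normalized features.
minority_cases = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_cases = smote_like_oversample(minority_cases, n_new=5)
print(len(new_cases))  # 5
```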

Lin et al.125 recently developed a model to identify those at high risk of congenital cataracts. The study used non-imaging information, including birth history, family medical history and other environmental factors, from 2005 individuals (1274 children with congenital cataracts and 731 healthy controls). Random forest (RF) and adaptive boosting methods were employed, achieving stable performance with an AUC of 0.94-0.96 in several subgroups. Long et al.126 used Bayesian and deep-learning algorithms to create CC-Guardian (Congenital Cataract), an AI agent that integrates individualized prediction and follow-up with a smartphone application and cloud computing. The CC-Guardian predicted visual axis opacification with an area under the curve (AUC) of 0.944 and raised IOP with an AUC of 0.961.
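
The AUC values reported in these studies can be read as the probability that a randomly chosen affected case receives a higher risk score than a randomly chosen control. The sketch below computes the AUC directly from that definition by comparing every case-control pair, counting ties as one half; the risk scores are hypothetical and serve only to illustrate the calculation.

```python
def auc_from_scores(scores_cases, scores_controls):
    """AUC as the fraction of case-control pairs ranked correctly (ties count 0.5)."""
    if not scores_cases or not scores_controls:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for case_score in scores_cases:
        for control_score in scores_controls:
            if case_score > control_score:
                wins += 1.0
            elif case_score == control_score:
                wins += 0.5
    return wins / (len(scores_cases) * len(scores_controls))


# Hypothetical model outputs for three cases and three controls.
auc = auc_from_scores([0.9, 0.8, 0.4], [0.7, 0.3, 0.2])
print(f"AUC = {auc:.3f}")  # AUC = 0.889
```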

IOL power calculation

Intraocular lens power calculations have moved beyond the first- and second-generation formulas (SRK, Hoffer and SRK II), with third-generation formulas such as SRK/T, Holladay, Haigis and Hoffer Q being used in clinical practice worldwide. Newer fourth-generation formulas such as the Olsen formula (based on ray tracing) and Barrett Universal II have demonstrated higher accuracy in IOL calculation when compared with Holladay 2 and other third-generation formulas.127 AI-based IOL formulas are demonstrating higher levels of accuracy and they include the Hill-Radial Basis Function (RBF) calculator, Kane formula, PEARL-DGS formula and Ladas formula.128–130 Ophthalmologists will need to keep pace to determine the best IOL selection method and must become familiar with these new options. A summary of AI-based IOL formulas is provided in Table 3.129–132

Table 3. Summary of the artificial intelligence (AI)-based formulas for intraocular lens (IOL) power calculation.

| Formula | Basis of AI | Constant | Main parameters |
|---|---|---|---|
| Clarke & Kapelner formula129 | BART | A-Constant derived, Surgeon Factor | AL, K, ACD, CCT, LT |
| PEARL-DGS (2019)130, 131 (www.iolsolver.com) | ML / output linearization | A-Constant derived | AL, K, ACD, CCT, WTW, LT |
| Hill-RBF Calculator 2.0 (2018)130–132 (www.rbfcalculator.com) | Regression / neural network | A-Constant derived | AL, K, ACD, CCT, WTW, LT |
| Kane Formula (2017)130, 131 (www.iolformula.com) | Theoretical optics / regression | A-Constant derived | AL, K, ACD, gender, CCT, LT |
| Ladas Super Formula AI (2015)132 (www.iolcalc.com) | DL based on various formulas | A-Constant derived | AL, K, ACD |
| FullMonte (2010)132 | Neural network | Markov Chain Monte Carlo Method | AL, K, STS width and perpendicular depth, Cornea shape factor Q |

PEARL = Prediction Enhanced by ARtificial intelligence and output Linearization; RBF = Radial basis function; BART = Bayesian additive regression trees; ML = Machine learning; DL = Deep learning; AL = Axial Length; K = Keratometry; ACD = Anterior chamber depth; CCT = Central corneal thickness; WTW = White-to-white; LT = Lens thickness; STS = Sulcus-to-sulcus

Li et al.133 looked at ways of integrating an ML algorithm for accurate prediction of the anterior chamber depth (ACD) to see if this improved the prediction error of an existing IOL calculation formula. A dataset of 4806 patients was collected and split into training (5761 eyes) and test (961 eyes) sets, and the approach improved the prediction accuracy of all four formulas tested. A recent study developed a new XGBoost ML-based calculator for highly myopic eyes that incorporated the Barrett Universal II (BUII) formula results and showed a significant improvement in the percentage of eyes achieving a prediction error within ±0.25D compared with the BUII alone.

Carmona-Gonzalez and Palomino-Bautista134 recently developed an ML-based model, named Karmona, using a range of parameters to predict IOL power. Various ML techniques, including k-Nearest Neighbor, ANN, SVM and Random Forest, were employed. The new method was able to predict 90% and 100% of eyes within ±0.5D and ±1.0D, respectively. Another study used a multilayer perceptron (MLP) to calculate IOL power using 15,728 eyes, resulting in a prediction error of less than 0.5D in more than 95% of cases.135 Savini et al.130 recently compared 13 formulas for IOL power calculations, including some AI-based formulas, with an inclination towards better outcomes with the newer AI-based formulas. With more comparative studies being published in recent years, IOL formulas are likely to improve at a fast pace, with the further aim of validation for special clinical cases.127, 136, 137 In addition, with the increased demand for “perfect unaided vision”, lens manufacturers would also need to look at providing lenses in dioptre steps smaller than 0.5D (e.g. in 0.25D steps).
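
The outcome measures used to compare IOL formulas, such as the percentage of eyes within ±0.5D of the predicted refraction, can be computed as follows. This is a minimal sketch with hypothetical data: the prediction error is taken as the achieved minus the predicted postoperative spherical equivalent, and the function and key names are illustrative choices rather than a standard interface.

```python
from statistics import mean


def refractive_outcome_summary(predicted_se, achieved_se, thresholds=(0.25, 0.5, 1.0)):
    """Summarize prediction errors (achieved - predicted spherical equivalent, in D)."""
    if not predicted_se or len(predicted_se) != len(achieved_se):
        raise ValueError("need one predicted and one achieved value per eye")
    errors = [a - p for p, a in zip(predicted_se, achieved_se)]
    abs_errors = [abs(e) for e in errors]
    summary = {
        "mean_error": mean(errors),              # systematic hyperopic/myopic shift
        "mean_absolute_error": mean(abs_errors),
    }
    for t in thresholds:
        within = sum(1 for e in abs_errors if e <= t)
        summary[f"pct_within_{t:.2f}D"] = 100.0 * within / len(abs_errors)
    return summary


# Hypothetical outcomes for four eyes (dioptres).
predicted = [-0.5, 0.0, -0.25, 0.0]
achieved = [-0.25, 0.75, -0.25, -1.5]
summary = refractive_outcome_summary(predicted, achieved)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

Reporting the mean error alongside the mean absolute error helps separate a systematic shift, which constant optimization can correct, from random scatter, which it cannot.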

Teaching and training

Harnessing artificial intelligence techniques to develop teaching or training methods has been explored in recent years.138 ML algorithms for automated phase classification of manually pre-segmented phases in videos of cataract surgery were evaluated.139 This allowed for automated skill assessment and feedback, using 100 cataract procedures performed by consultants and trainees from 2011-2017. The segments of each procedure were labelled and instruments were manually annotated to provide the ground truth, and five ML algorithms were used. The results suggested that developing these algorithms may provide tools for automated detection of phases in cataract surgery procedures to aid training. Another computer-assisted intervention (CAI) surgical phase recognition algorithm was aimed at improving surgical outcomes.140 Lanza et al.141 evaluated factors involved in cataract surgery complications using AI, analyzing 73 complications out of 1229 surgeries. It is hoped that the data obtained can be used to build a model that can help prevent complications and predict actual surgical time.

Future Directions

Recognition of the global burden of corneal diseases and cataracts has highlighted the unmet need in screening for these diseases, especially in resource poor nations. With advances finally being made in anterior segment disease, it is likely that both imaging and non-imaging based AI algorithms could allow timely diagnosis and treatment of corneal diseases and cataracts as well as advancing areas in refractive surgery.

Limitations of AI: Recognition before resolution

The utilization of AI for anterior segment diseases remains in its infancy, with multiple limitations that must be addressed before it can be implemented in real-world clinical settings.5, 7 Standardization of the imaging techniques and methods for the anterior segment is more difficult than for fundal imaging, primarily due to the variability in the magnification, contrast, angle and width of the light beam, and the transparent nature of the cornea. All these would need to be taken into account during the standardization process to assure the image quality and usability. Preparing the ground truth with high quality data that are annotated and segmented by experts is time consuming, and algorithms with a greater appetite for data, such as CNN, will need to be replaced by more self-supervised or unsupervised methods. Validation on large datasets obtained from heterogeneous cohorts that reflect the real-world setting is necessary, but medico-legal requirements, data security and set rules must be adhered to.

AI focuses on making connections between input and preferred output. Therefore, it is important to ensure that these AI models target the relevant factors instead of confounding factors to avoid the problem of ‘rubbish in, rubbish out’. An ideal input would have a high image resolution, high accuracy of data input (ground truth) and the least interobserver variability, coupled with new technology including hand-held retinal cameras, slit-lamp adapters, smartphones and cloud computing to improve the service workflow.

There is also a perception of a ‘black box’ effect, where the decision processes for giving more weighting to certain parameters or features are not apparent. Some of the hidden behaviors of DL models still require further research. For instance, editing a few pixels in an image, in a way that is barely noticeable to a human, can cause a DL model to fail completely to recognize a well-distinguishable object (known as an adversarial attack).142 A relatively open algorithm with examinable decision steps may justify and reassure clinicians looking to apply these in clinical care of their patients.122 There is also a deluge of new acronyms in AI as well as ever-increasing ways in which AI is used. Standardized nomenclature would augment reproducibility and allow generalizability of a given study to a new and different cohort.
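
The fragility behind adversarial attacks can be illustrated with a deliberately simple toy model. The sketch below uses a linear classifier over 100 hypothetical "pixels" and nudges every pixel by a small amount in the direction that lowers the score, in the spirit of gradient-sign attacks; it is not the few-pixel attack described above, and the weights, pixel values and step size are invented for illustration. Many tiny per-pixel changes add up across the image and flip the decision.

```python
def linear_score(weights, bias, pixels):
    """Score of a toy linear classifier; a positive score means 'object present'."""
    return sum(w * x for w, x in zip(weights, pixels)) + bias


def sign_perturbation(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    def sign(v):
        return 1.0 if v > 0 else -1.0 if v < 0 else 0.0
    # For a linear model the gradient of the score w.r.t. the pixels is the weights.
    return [x - epsilon * sign(w) for w, x in zip(weights, pixels)]


# Hypothetical 100-pixel image and classifier weights.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
image = [0.6 if i % 2 == 0 else 0.5 for i in range(100)]

original = linear_score(weights, 0.0, image)
attacked_image = sign_perturbation(weights, image, epsilon=0.06)
attacked = linear_score(weights, 0.0, attacked_image)
print(original > 0, attacked > 0)  # True False
```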

Clinical implementation and integration with big data and tele-medicine

Many areas of adult and pediatric anterior segment diseases have yet to be explored with AI, with potential for future applications. An automated detection system combined with telemedicine could enable wider access to healthcare services, especially if its performance matches or exceeds that of a trained specialist.116, 117 There is a definite gap at present between useful algorithms and real-world implementation, with a need for focus on translational research. Big data, particularly those derived from electronic health records, serve as valuable untapped resources for facilitating the training and development of robust AI systems, as large amounts of data are often required.

Before clinical deployment, several barriers need to be considered, including cost, expertise and regulatory barriers. Though the time and cost currently needed to train and develop these algorithms are high, this is likely to be a necessary investment in preventing a collapsing global healthcare system in the future. Using multi-tasking models will also allow for an improved cost-to-benefit ratio, where two diagnoses can be made with one examination, for example diagnosing cataract and corneal arcus at the same time.143

Standardization of the conduct and reporting of AI research

In addition, there exist considerable variations in the reporting and conduct of studies related to ophthalmic AI research standards.144 An agreed format in terms of reporting metrics, statistics and real-world clinical applicability is required. Recently, a number of AI guidelines, including CONSORT-AI, SPIRIT-AI, and STARD-AI, have been developed to ameliorate these issues.145–147 These are extensions of previous guidelines which have now been adapted for AI-related studies. SPIRIT-AI provides guidance on standard protocol items recommended for interventional trials related to AI whereas CONSORT-AI provides guidance on the consolidated standard of reporting trials related to AI. STARD-AI is an AI specific extension of the standards for reporting of diagnostic accuracy studies. These AI guidelines aim to improve the transparency, consistency and applicability of AI-based research, ultimately enhancing the potential of clinical translation. The apprehension of missed or wrong diagnosis, false reassurances and medicolegal implications remains. Careful implementation with a safety net system will be warranted to ensure utmost patient safety.

AI, telemedicine, 5G/6G networks, Quantum Communication Networks and IoMT are likely to form part of the new healthcare revolution.8, 148 With these digital technologies becoming available at our fingertips, a novel model of ophthalmic care can be developed, helping us to deal with the far-reaching after-effects of the current COVID-19 health crisis.8, 108, 148–151

Funding / support

D.S.J.T acknowledges support from the Medical Research Council / Fight for Sight Clinical Research Fellowship (MR/T001674/1) and the Fight for Sight / John Lee, Royal College of Ophthalmologists Primer Fellowship (24CO4).

Biographies

Author Biography

First author: Dr. Radhika Rampat MBChB FRCOphth


Affiliation: Moorfields Eye Hospital, London, UK.

Radhika recently attained her certificate of completion of training and is currently a Senior Cornea Fellow & Admin Lead at Moorfields Eye Hospital. She authored and edited the Cornea Section of the Moorfields Emergency Guidelines which can be found on the MicroGuide App. Prior to this she spent a year as a Research Fellow in Cornea and Refractive Surgery with Dr. Damien Gatinel at The Rothschild Foundation Hospital in Paris. She is also an editing consultant for www.defeatkeratoconus.com. Her list of publications can be found at https://www.researchgate.net/profile/RadhikaRampat.

Corresponding author: Dr. Darren S. J. Ting MBChB FRCOphth


Affiliations:

Academic Ophthalmology, School of Medicine, University of Nottingham, Nottingham, UK.

Singapore Eye Research Institute, Singapore

Dr. Ting is a UK fully qualified ophthalmologist (post-CCT) and Medical Research Council (MRC) / Fight for Sight (FFS) Fellow at the University of Nottingham, UK. After earning his medical degree at the University of Edinburgh at the age of 22, he went on to complete his 7-year Ophthalmology Specialist Training in the North of England, UK, where he served as the Chief Resident of Ophthalmology for 4 years. He has a strong interest in training, education and research, particularly in corneal infection, corneal transplants, cataract surgery, artificial intelligence, microbiology, antimicrobial therapy, and drug discovery/development. So far, he has earned >20 ophthalmology awards, including the prestigious MRC/FFS Fellowship, the FFS/John Lee, Royal College of Ophthalmologists (RCOphth) Primer Fellowship (first recipient), FRCOphth Crombie Medal (gold medal in FRCOphth part 1 exam 2010/11), and DRCOphth Cornwall Prize (gold medal in DRCOphth exam 2015/16), amongst others. He has also published ~80 peer-reviewed papers, including 5 book chapters and a book (with the Oxford University Press). He currently serves as an editorial/review board member of Frontiers in Medicine, Annals Eye Science and Microorganisms, and peer-review for BJO, PLoS One, Eye, Cornea, and Acta Ophthalmologica.

Footnotes

Conflict of interest: None

References

- 1.Bellemo V, Lim G, Rim TH, Tan GSW, Cheung CY, Sadda S, et al. Artificial Intelligence Screening for Diabetic Retinopathy: the Real-World Emerging Application. Curr Diab Rep. 2019;19(9):72. doi: 10.1007/s11892-019-1189-3.

- 2.Ahuja AS, Halperin LS. Understanding the advent of artificial intelligence in ophthalmology. J Curr Ophthalmol. 2019;31(2):115–7. doi: 10.1016/j.joco.2019.05.001.

- 3.Ting DSW, Cheung CY, Lim G, Tan GSW, Quang ND, Gan A, et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA. 2017;318(22):2211–23. doi: 10.1001/jama.2017.18152.

- 4.De Fauw J, Ledsam JR, Romera-Paredes B, Nikolov S, Tomasev N, Blackwell S, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. 2018;24(9):1342–50. doi: 10.1038/s41591-018-0107-6.

- 5.Ting DSJ, Foo VH, Yang LWY, Sia JT, Ang M, Lin H, et al. Artificial intelligence for anterior segment diseases: Emerging applications in ophthalmology. Br J Ophthalmol. 2021;105(2):158–68. doi: 10.1136/bjophthalmol-2019-315651.

- 6.Zheng C, Johnson TV, Garg A, Boland MV. Artificial intelligence in glaucoma. Curr Opin Ophthalmol. 2019;30(2):97–103. doi: 10.1097/ICU.0000000000000552.

- 7.Wu X, Liu L, Zhao L, Guo C, Li R, Wang T, et al. Application of artificial intelligence in anterior segment ophthalmic diseases: diversity and standardization. Ann Transl Med. 2020;8(11):714. doi: 10.21037/atm-20-976.

- 8.Li JO, Liu H, Ting DSJ, Jeon S, Chan RVP, Kim JE, et al. Digital technology, tele-medicine and artificial intelligence in ophthalmology: A global perspective. Prog Retin Eye Res. 2020 doi: 10.1016/j.preteyeres.2020.100900. 100900.

- 9. https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment

- 10.Flaxman SR, Bourne RRA, Resnikoff S, Ackland P, Braithwaite T, Cicinelli MV, et al. Global causes of blindness and distance vision impairment 1990-2020: a systematic review and meta-analysis. Lancet Glob Health. 2017;5(12):e1221–e34. doi: 10.1016/S2214-109X(17)30393-5.

- 11.Wang W, Yan W, Fotis K, Prasad NM, Lansingh VC, Taylor HR, et al. Cataract Surgical Rate and Socioeconomics: A Global Study. Invest Ophthalmol Vis Sci. 2016;57(14):5872–81. doi: 10.1167/iovs.16-19894.

- 12.Erie JC. Rising cataract surgery rates: demand and supply. Ophthalmology. 2014;121(1):2–4. doi: 10.1016/j.ophtha.2013.10.002.

- 13.Ting DSJ, Rees J, Ng JY, Allen D, Steel DHW. Effect of high-vacuum setting on phacoemulsification efficiency. J Cataract Refract Surg. 2017;43(9):1135–9. doi: 10.1016/j.jcrs.2017.09.001.

- 14.Sudhir RR, Dey A, Bhattacharrya S, Bahulayan A. AcrySof IQ PanOptix Intraocular Lens Versus Extended Depth of Focus Intraocular Lens and Trifocal Intraocular Lens: A Clinical Overview. Asia Pac J Ophthalmol (Phila) 2019;8(4):335–49. doi: 10.1097/APO.0000000000000253.

- 15.Morgan IG, French AN, Ashby RS, Guo X, Ding X, He M, et al. The epidemics of myopia: Aetiology and prevention. Prog Retin Eye Res. 2018;62:134–49. doi: 10.1016/j.preteyeres.2017.09.004.

- 16.Wong CW, Tsai A, Jonas JB, Ohno-Matsui K, Chen J, Ang M, et al. Digital Screen Time During the COVID-19 Pandemic: Risk for a Further Myopia Boom? Am J Ophthalmol. 2020;223:333–7. doi: 10.1016/j.ajo.2020.07.034.

- 17.Wang J, Li Y, Musch DC, Wei N, Qi X, Ding G, et al. Progression of Myopia in School-Aged Children After COVID-19 Home Confinement. JAMA Ophthalmol. 2021 doi: 10.1001/jamaophthalmol.2020.6239.

- 18.Ting DSJ, Ho CS, Deshmukh R, Said DG, Dua HS. Infectious keratitis: an update on epidemiology, causative microorganisms, risk factors, and antimicrobial resistance. Eye (Lond) 2021 doi: 10.1038/s41433-020-01339-3.

- 19.Ting DSJ, Ho CS, Cairns J, Elsahn A, Al-Aqaba M, Boswell T, et al. 12-year analysis of incidence, microbiological profiles and in vitro antimicrobial susceptibility of infectious keratitis: the Nottingham Infectious Keratitis Study. Br J Ophthalmol. 2020 doi: 10.1136/bjophthalmol-2020-316128.

- 20.Ung L, Bispo PJM, Shanbhag SS, Gilmore MS, Chodosh J. The persistent dilemma of microbial keratitis: Global burden, diagnosis, and antimicrobial resistance. Surv Ophthalmol. 2019;64(3):255–71. doi: 10.1016/j.survophthal.2018.12.003.

- 21.Collier SA, Gronostaj MP, MacGurn AK, Cope JR, Awsumb KL, Yoder JS, et al. Estimated burden of keratitis--United States, 2010. MMWR Morb Mortal Wkly Rep. 2014;63(45):1027–30.

- 22.Hashemi H, Heydarian S, Hooshmand E, Saatchi M, Yekta A, Aghamirsalim M, et al. The Prevalence and Risk Factors for Keratoconus: A Systematic Review and Meta-Analysis. Cornea. 2020;39(2):263–70. doi: 10.1097/ICO.0000000000002150.

- 23.Ting DSJ, Rana-Rahman R, Chen Y, Bell D, Danjoux JP, Morgan SJ, et al. Effectiveness and safety of accelerated (9mW/cm. Eye (Lond) 2019;33(5):812–8. doi: 10.1038/s41433-018-0323-9.

- 24.Vinciguerra R, Pagano L, Borgia A, Montericcio A, Legrottaglie EF, Piscopo R, et al. Corneal Cross-linking for Progressive Keratoconus: Up to 13 Years of Follow-up. J Refract Surg. 2020;36(12):838–43. doi: 10.3928/1081597X-20201021-01.

- 25.Ting DS, Sau CY, Srinivasan S, Ramaesh K, Mantry S, Roberts F. Changing trends in keratoplasty in the West of Scotland: a 10-year review. Br J Ophthalmol. 2012;96(3):405–8. doi: 10.1136/bjophthalmol-2011-300244.

- 26.Sidey-Gibbons JAM, Sidey-Gibbons CJ. Machine learning in medicine: a practical introduction. BMC Med Res Methodol. 2019;19(1):64. doi: 10.1186/s12874-019-0681-4.

- 27.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–44. doi: 10.1038/nature14539.

- 28.Krizhevsky A, Sutskever I, Hinton GE. ImageNet Classification with Deep Convolutional Neural Networks. Advances in neural information processing systems. 2012;25:1097–105.

- 29.Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative Adversarial Networks. arXiv. 2014 1406.2661.

- 30.Goodfellow IJ, Bengio Y, Courville A. In: Deep learning. Bach F, editor. MIT Press; Cambridge, MA: 2016.

- 31.Kingma DP, Ba JL. ADAM: A method for stochastic optimization. arXiv. 2015 1412.6980v9.

- 32.He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. arXiv. 2015 1512.03385.

- 33.Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the Inception Architecture for Computer Vision. arXiv. 2015 1512.00567.

- 34.Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. MICCAI; 2015. pp. 234–41.

- 35.Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv. 2015 1409.1556.

- 36.Radford A, Metz L, Chintala S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv. 2016 1511.06434v2.

- 37.Zhang N, Francis S, Malik RA, Chen X. A Spatially Constrained Deep Convolutional Neural Network for Nerve Fiber Segmentation in Corneal Confocal Microscopic Images Using Inaccurate Annotations. IEEE-ISBI. 2020:456–60.

- 38.Chen X, Graham J, Dabbah MA, Petropoulos IN, Ponirakis G, Asghar O, et al. Small nerve fiber quantification in the diagnosis of diabetic sensorimotor polyneuropathy: comparing corneal confocal microscopy with intraepidermal nerve fiber density. Diabetes Care. 2015;38(6):1138–44. doi: 10.2337/dc14-2422.

- 39.Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. Journal of Machine Learning Research. 2014;15(56):1929–58. [Google Scholar]

- 40.Shorten C, Khoshgoftaar TM. A survey on Image Data Augmentation for Deep Learning. Journal of Big Data. 2019;6(1):60. doi: 10.1186/s40537-019-0197-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Bird JJ, Faria DR, Ekart A, Ayrosa PPS, editors. From simulation to reality: CNN transfer learning for scene classification. IEEE 10th International Conference on Intelligent Systems; Varna, Bulgaria: IEEE. 2020. [Google Scholar]

- 42.Li R, Auer D, Wagner C, Chen X. A generic ensemble based deep convolutional neural network for semi-supervised medical image segmentation. IEEE-ISBI. 2020:1168–72. [Google Scholar]

- 43.Yi X, Walia E, Babyn P. Generative adversarial network in medical imaging: A review. Med Image Anal. 2019;58:101552. doi: 10.1016/j.media.2019.101552. [DOI] [PubMed] [Google Scholar]

- 44.Ting DSJ, Bignardi G, Koerner R, Irion LD, Johnson E, Morgan SJ, et al. Polymicrobial Keratitis With Cryptococcus curvatus, Candida parapsilosis, and Stenotrophomonas maltophilia After Penetrating Keratoplasty: A Rare Case Report With Literature Review. Eye Contact Lens. 2019;45(2):e5–e10. doi: 10.1097/ICL.0000000000000517. [DOI] [PubMed] [Google Scholar]

- 45.Ting DSJ, Settle C, Morgan SJ, Baylis O, Ghosh S. A 10-year analysis of microbiological profiles of microbial keratitis: the North East England Study. Eye (Lond) 2018;32(8):1416–7. doi: 10.1038/s41433-018-0085-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Khoo P, Cabrera-Aguas MP, Nguyen V, Lahra MM, Watson SL. Microbial keratitis in Sydney, Australia: risk factors, patient outcomes, and seasonal variation. Graefes Arch Clin Exp Ophthalmol. 2020;258(8):1745–55. doi: 10.1007/s00417-020-04681-0. [DOI] [PubMed] [Google Scholar]

- 47.Dahlgren MA, Lingappan A, Wilhelmus KR. The clinical diagnosis of microbial keratitis. American Journal of Ophthalmology. 2007;143(6):940–4. doi: 10.1016/j.ajo.2007.02.030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Dalmon C, Porco TC, Lietman TM, Prajna NV, Prajna L, Das MR, et al. The clinical differentiation of bacterial and fungal keratitis: a photographic survey. Invest Ophthalmol Vis Sci. 2012;53(4):1787–91. doi: 10.1167/iovs.11-8478. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Saini JS, Jain AK, Kumar S, Vikal S, Pankaj S, Singh S. Neural network approach to classify infective keratitis. Curr Eye Res. 2003;27(2):111–6. doi: 10.1076/ceyr.27.2.111.15949. [DOI] [PubMed] [Google Scholar]

- 50.Patel TP, Prajna NV, Farsiu S, Valikodath NG, Niziol LM, Dudeja L, et al. Novel Image-Based Analysis for Reduction of Clinician-Dependent Variability in Measurement of the Corneal Ulcer Size. Cornea. 2018;37(3):331–9. doi: 10.1097/ICO.0000000000001488. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Wu X, Qiu Q, Liu Z, Zhao Y, Zhang B, Zhang Y, et al. Hyphae Detection in Fungal Keratitis Images With Adaptive Robust Binary Pattern. IEEE Access. 2018. [Google Scholar]

- 52.Lv J, Zhang K, Chen Q, Chen Q, Huang W, Cui L, et al. Deep learning-based automated diagnosis of fungal keratitis with in vivo confocal microscopy images. Annals of Translational Medicine. 2020;8(11):706. doi: 10.21037/atm.2020.03.134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Li W, Yang Y, Zhang K, Long E, He L, Zhang L, et al. Dense anatomical annotation of slit-lamp images improves the performance of deep learning for the diagnosis of ophthalmic disorders. Nat Biomed Eng. 2020;4(8):767–77. doi: 10.1038/s41551-020-0577-y. [DOI] [PubMed] [Google Scholar]

- 54.Kuo M-T, Hsu BW-Y, Yin Y-K, Fang P-C, Lai H-Y, Chen A, et al. A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci Rep. 2020;10(1):14424. doi: 10.1038/s41598-020-71425-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Gu H, Guo Y, Gu L, Wei A, Xie S, Ye Z, et al. Deep learning for identifying corneal diseases from ocular surface slit-lamp photographs. Sci Rep. 2020;10(1):17851. doi: 10.1038/s41598-020-75027-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Liu Z, Cao Y, Li Y, Xiao X, Qiu Q, Yang M, et al. Automatic diagnosis of fungal keratitis using data augmentation and image fusion with deep convolutional neural network. Comput Methods Programs Biomed. 2020;187:105019. doi: 10.1016/j.cmpb.2019.105019. [DOI] [PubMed] [Google Scholar]

- 57.Issarti I, Consejo A, Jiménez-García M, Hershko S, Koppen C, Rozema JJ. Computer aided diagnosis for suspect keratoconus detection. Comput Biol Med. 2019;109:33–42. doi: 10.1016/j.compbiomed.2019.04.024. [DOI] [PubMed] [Google Scholar]

- 58.Smolek MK, Klyce SD. Current keratoconus detection methods compared with a neural network approach. Invest Ophthalmol Vis Sci. 1997;38(11):2290–9. [PubMed] [Google Scholar]

- 59.Silverman RH, Urs R, Roychoudhury A, Archer TJ, Gobbe M, Reinstein DZ. Epithelial remodeling as basis for machine-based identification of keratoconus. Invest Ophthalmol Vis Sci. 2014;55(3):1580–7. doi: 10.1167/iovs.13-12578. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Kovács I, Miháltz K, Kránitz K, Juhász É, Takács Á, Dienes L, et al. Accuracy of machine learning classifiers using bilateral data from a Scheimpflug camera for identifying eyes with preclinical signs of keratoconus. J Cataract Refract Surg. 2016;42(2):275–83. doi: 10.1016/j.jcrs.2015.09.020. [DOI] [PubMed] [Google Scholar]

- 61.Souza MB, Medeiros FW, Souza DB, Garcia R, Alves MR. Evaluation of machine learning classifiers in keratoconus detection from orbscan II examinations. Clinics (Sao Paulo) 2010;65(12):1223–8. doi: 10.1590/S1807-59322010001200002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Arbelaez MC, Versaci F, Vestri G, Barboni P, Savini G. Use of a support vector machine for keratoconus and subclinical keratoconus detection by topographic and tomographic data. Ophthalmology. 2012;119(11):2231–8. doi: 10.1016/j.ophtha.2012.06.005. [DOI] [PubMed] [Google Scholar]

- 63.Cao K, Verspoor K, Sahebjada S, Baird PN. Evaluating the Performance of Various Machine Learning Algorithms to Detect Subclinical Keratoconus. Transl Vis Sci Technol. 2020;9(2):24. doi: 10.1167/tvst.9.2.24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Twa MD, Parthasarathy S, Roberts C, Mahmoud AM, Raasch TW, Bullimore MA. Automated decision tree classification of corneal shape. Optom Vis Sci. 2005;82(12):1038–46. doi: 10.1097/01.opx.0000192350.01045.6f. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Smadja D, Touboul D, Cohen A, Doveh E, Santhiago MR, Mello GR, et al. Detection of subclinical keratoconus using an automated decision tree classification. Am J Ophthalmol. 2013;156(2):237–46.e1. doi: 10.1016/j.ajo.2013.03.034. [DOI] [PubMed] [Google Scholar]

- 66.Valdés-Mas MA, Martín-Guerrero JD, Rupérez MJ, Pastor F, Dualde C, Monserrat C, et al. A new approach based on Machine Learning for predicting corneal curvature (K1) and astigmatism in patients with keratoconus after intracorneal ring implantation. Comput Methods Programs Biomed. 2014;116(1):39–47. doi: 10.1016/j.cmpb.2014.04.003. [DOI] [PubMed] [Google Scholar]

- 67.Lavric A, Valentin P. KeratoDetect: Keratoconus Detection Algorithm Using Convolutional Neural Networks. Comput Intell Neurosci. 2019;2019:8162567. doi: 10.1155/2019/8162567. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Yousefi S, Takahashi H, Hayashi T, Tampo H, Inoda S, Arai Y, et al. Predicting the likelihood of need for future keratoplasty intervention using artificial intelligence. Ocul Surf. 2020;18(2):320–5. doi: 10.1016/j.jtos.2020.02.008. [DOI] [PubMed] [Google Scholar]

- 69.Yousefi S, Yousefi E, Takahashi H, Hayashi T, Tampo H, Inoda S, et al. Keratoconus severity identification using unsupervised machine learning. PLoS One. 2018;13(11):e0205998. doi: 10.1371/journal.pone.0205998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Zéboulon P, Debellemanière G, Bouvet M, Gatinel D. Corneal Topography Raw Data Classification Using a Convolutional Neural Network. Am J Ophthalmol. 2020;219:33–9. doi: 10.1016/j.ajo.2020.06.005. [DOI] [PubMed] [Google Scholar]

- 71.Hashemi H, Beiranvand A, Yekta A, Maleki A, Yazdani N, Khabazkhoob M. Pentacam top indices for diagnosing subclinical and definite keratoconus. J Curr Ophthalmol. 2016;28(1):21–6. doi: 10.1016/j.joco.2016.01.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Hosoda Y, Miyake M, Meguro A, Tabara Y, Iwai S, Ueda-Arakawa N, et al. Keratoconus-susceptibility gene identification by corneal thickness genome-wide association study and artificial intelligence IBM Watson. Commun Biol. 2020;3(1):410. doi: 10.1038/s42003-020-01137-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Fariselli C, Vega-Estrada A, Arnalich-Montiel F, Alio JL. Artificial neural network to guide intracorneal ring segments implantation for keratoconus treatment: a pilot study. Eye Vis (Lond) 2020;7:20. doi: 10.1186/s40662-020-00184-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Zéboulon P, Ghazal W, Gatinel D. Corneal Edema Visualization With Optical Coherence Tomography Using Deep Learning: Proof of Concept. Cornea. 2020 doi: 10.1097/ICO.0000000000002640. [DOI] [PubMed] [Google Scholar]

- 75.Eleiwa T, Elsawy A, Özcan E, Abou Shousha M. Automated diagnosis and staging of Fuchs’ endothelial cell corneal dystrophy using deep learning. Eye Vis (Lond) 2020;7:44. doi: 10.1186/s40662-020-00209-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Kessel K, Mattila J, Linder N, Kivelä T, Lundin J. Deep Learning Algorithms for Corneal Amyloid Deposition Quantitation in Familial Amyloidosis. Ocul Oncol Pathol. 2020;6(1):58–65. doi: 10.1159/000500896. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Dabbah MA, Graham J, Petropoulos IN, Tavakoli M, Malik RA. Automatic analysis of diabetic peripheral neuropathy using multi-scale quantitative morphology of nerve fibres in corneal confocal microscopy imaging. Med Image Anal. 2011;15(5):738–47. doi: 10.1016/j.media.2011.05.016. [DOI] [PubMed] [Google Scholar]

- 78.Shetty R, Sethu S, Deshmukh R, Deshpande K, Ghosh A, Agrawal A, et al. Corneal Dendritic Cell Density Is Associated with Subbasal Nerve Plexus Features, Ocular Surface Disease Index, and Serum Vitamin D in Evaporative Dry Eye Disease. Biomed Res Int. 2016;2016:4369750. doi: 10.1155/2016/4369750. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Shetty R, Deshmukh R, Shroff R, Dedhiya C, Jayadev C. Subbasal Nerve Plexus Changes in Chronic Migraine. Cornea. 2018;37(1):72–5. doi: 10.1097/ICO.0000000000001403. [DOI] [PubMed] [Google Scholar]

- 80.Scarpa F, Colonna A, Ruggeri A. Multiple-Image Deep Learning Analysis for Neuropathy Detection in Corneal Nerve Images. Cornea. 2020;39(3):342–7. doi: 10.1097/ICO.0000000000002181. [DOI] [PubMed] [Google Scholar]

- 81.Oakley JD, Russakoff DB, McCarron ME, Weinberg RL, Izzi JM, Misra SL, et al. Deep learning-based analysis of macaque corneal sub-basal nerve fibers in confocal microscopy images. Eye Vis (Lond) 2020;7:27. doi: 10.1186/s40662-020-00192-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Williams BM, Borroni D, Liu R, Zhao Y, Zhang J, Lim J, et al. An artificial intelligence-based deep learning algorithm for the diagnosis of diabetic neuropathy using corneal confocal microscopy: a development and validation study. Diabetologia. 2020;63(2):419–30. doi: 10.1007/s00125-019-05023-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Wei S, Shi F, Wang Y, Chou Y, Li X. A Deep Learning Model for Automated Sub-Basal Corneal Nerve Segmentation and Evaluation Using In Vivo Confocal Microscopy. Transl Vis Sci Technol. 2020;9(2):32. doi: 10.1167/tvst.9.2.32. [DOI] [PMC free article] [PubMed] [Google Scholar]