Summary

Any cognitive function is mediated by a network of many cortical sites whose activity is orchestrated through complex temporal dynamics. To understand cognition, we thus need to identify brain responses in both space and time simultaneously. Here we present a technique that does just that by linking multivariate response patterns of the human brain recorded with functional magnetic resonance imaging (fMRI) and with magneto- or electroencephalography (M/EEG) based on representational similarity. We present the rationale and current applications of this non-invasive analysis technique, termed M/EEG-fMRI fusion, and discuss its pros and cons. We highlight its wide applicability in cognitive neuroscience and how its openness to further development and extension gives it strong potential for a deeper understanding of cognition in the future.

Keywords: Neural dynamics, MEG, EEG, fMRI, fusion

3. Introduction: Identifying human brain responses in space and time

We take our cognition for granted: reading these words, recognizing a voice, or remembering where you left your phone are cognitive functions we engage in regularly and effortlessly. The apparent simplicity, however, belies the complexity of the underlying choreography of neural activity. Each cognitive function involves a network of cortical sites with specific temporal dynamics. Some sites exhibit transient signals, quickly transferring information across the network; other sites manifest lingering responses for hundreds of milliseconds. A key to characterizing how neural activity enables cognitive function is thus to identify neural activity in both space and time simultaneously and with high resolution.

This is a major challenge for contemporary cognitive neuroscience, because current non-invasive brain measurement techniques excel in either spatial or temporal resolution, but not in both. Magneto- and electroencephalography (M/EEG) have excellent temporal resolution at the millisecond level, but lack spatial resolution. In contrast, functional magnetic resonance imaging (fMRI) can resolve changes in blood properties related to brain activity even below the millimeter level, but is too sluggish to resolve brain activity at the rapid time scale at which it unfolds. Thus, if considered in isolation, each technique leaves the many-to-many mapping between brain regions and time points underspecified (Fig. 1A). However, the complementarity of the techniques inspires the idea that combining their measurement data might combine their virtues while overcoming their shortcomings (Dale and Halgren, 2001; Debener et al., 2006; Huster et al., 2012; Jorge et al., 2014; Rosa et al., 2010).

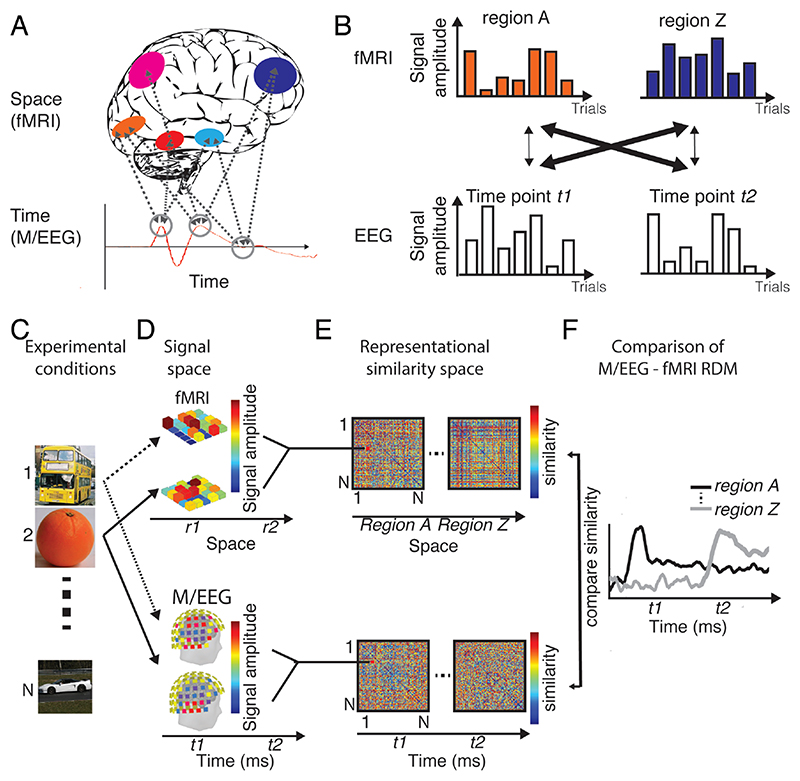

Figure 1. Identifying brain responses in space and time.

(A) Problem formulation. Any cognitive function is underpinned by complex spatiotemporal neural dynamics, with many brain regions contributing to it at many time points. M/EEG and fMRI resolve these dynamics well only in time or in space, respectively, leaving the many-to-many mapping between brain regions and time points underspecified. (B) Single-trial EEG-fMRI. This technique links time points in EEG to brain regions in fMRI by the constraint that signal amplitudes should covary across trials if they reflect the same neural responses. (C-F) Mechanics of basic M/EEG-fMRI fusion, exemplified for a visual experiment. M/EEG-fMRI fusion is best understood as a particular application of representational similarity analysis. (C) For a sufficiently large condition set (here: N object images), (D) multivariate brain responses to the stimuli are measured in their respective signal spaces. (E) We abstract away from the incommensurate signal spaces to a common similarity space by calculating pair-wise dissimilarities between the data for the experimental conditions. Results are stored in so-called representational dissimilarity matrices (RDMs), indexed in rows and columns by the N conditions compared. (F) In a final step, M/EEG and fMRI RDMs are compared for similarity, specifying the mapping between time points in M/EEG and regions in fMRI by the constraint of representational similarity.

Here we discuss one promising recent manifestation of this idea, based on representational similarity, that we termed M/EEG-fMRI fusion (Cichy and Teng, 2017; Cichy et al., 2014, 2016). We begin by introducing the technique in its basic formulation and comparing it to related approaches. We then highlight recent research using M/EEG-fMRI fusion to shed new light on the neural dynamics of sensory processing and higher-level cognitive functions. In doing so, we demonstrate the flexibility of the M/EEG-fMRI fusion framework for adaptation and further development. We close with a discussion of the future potential of M/EEG-fMRI fusion and its limitations. We argue that M/EEG-fMRI fusion will help to make the most of current developments in imaging methods that push towards new levels of theoretical description of the human brain.

4. The basic formulation of M/EEG-fMRI fusion

How can neural responses observed at different time points in M/EEG be mapped onto fMRI activations at different locations? One way to forge a link between spatial locations in fMRI and time points in M/EEG is to introduce additional experimental constraints. For example, researchers have used trial-by-trial variability in simultaneous EEG-fMRI for this purpose (Debener et al., 2006) (Fig. 1B). The idea is that measurements of neural activity by different imaging modalities reflect the same generators if they correlate on a trial-by-trial basis. In this way, brain regions activated in fMRI were linked to neural responses at particular time points measured with EEG.
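To make the trial-by-trial logic concrete, the following Python sketch (our own illustration, not the pipeline of Debener et al., 2006) assumes that single-trial EEG amplitudes (trials × time points) and single-trial BOLD amplitudes (trials × regions) have already been extracted. The function name `trialwise_coupling` and the simulated data are hypothetical: a shared trial-wise generator is planted at EEG time point 3 and fMRI region 1, and the correlation matrix should link the two.

```python
import numpy as np

def trialwise_coupling(eeg, bold):
    """Pearson correlation between single-trial EEG and BOLD amplitudes.

    eeg:  (n_trials, n_times)   one amplitude per trial and EEG time point
    bold: (n_trials, n_regions) one amplitude per trial and fMRI region
    Returns an (n_times, n_regions) correlation matrix.
    """
    # z-score each column across trials (population std, ddof=0) ...
    eeg_z = (eeg - eeg.mean(axis=0)) / eeg.std(axis=0)
    bold_z = (bold - bold.mean(axis=0)) / bold.std(axis=0)
    # ... so that the mean product of z-scores is exactly Pearson's r
    return eeg_z.T @ bold_z / eeg.shape[0]

# Simulated data: a hidden trial-wise generator drives EEG time point 3
# and fMRI region 1; everything else is independent noise.
rng = np.random.default_rng(0)
n_trials, n_times, n_regions = 200, 10, 3
generator = rng.normal(size=n_trials)
eeg = rng.normal(size=(n_trials, n_times))
eeg[:, 3] += 2 * generator
bold = rng.normal(size=(n_trials, n_regions))
bold[:, 1] += 2 * generator

r = trialwise_coupling(eeg, bold)
best_time, best_region = np.unravel_index(np.argmax(r), r.shape)
print(best_time, best_region, round(float(r[best_time, best_region]), 2))
```

The largest correlation links the EEG time point and the fMRI region driven by the same simulated generator. Because the coupling is computed across shared trials, this approach requires simultaneous recording.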

M/EEG-fMRI fusion is similar in that it also uses experimentally induced constraints to link M/EEG and fMRI data (Fig. 1C-F). However, it differs in two fundamental ways. First, it exploits condition-by-condition rather than trial-by-trial variability to link measurements of brain activity in different modalities. This allows combining brain measurements that cannot be taken simultaneously, such as fMRI and MEG. Second, it relates measurements to each other in a multivariate way rather than by relating univariate averages. This is motivated by the idea that signals relate to each other at the level of distributed population codes rather than mass averages (Averbeck et al., 2006; Jacobs et al., 2010; Panzeri et al., 2015; Stanley, 2013, 2011). Further, by pooling signal across measurement channels, it may provide higher sensitivity than univariate approaches (Haynes, 2015).

It is easy to see how these ideas can be practically implemented by considering M/EEG-fMRI fusion as a particular case of representational similarity analysis (RSA) (Kriegeskorte, 2008; Kriegeskorte and Kievit, 2013). The goal of RSA is to relate incommensurate multivariate measurement spaces (such as MEG sensor spaces, fMRI voxel spaces, or model unit spaces) by abstracting signals into a common similarity space.

We describe the process step by step and illustrate it with an experiment investigating visual object processing. First, a set of conditions is chosen that is believed to capture the diversity of neural processing underlying a cognitive function to a sufficient degree (here, a diverse set of objects) (Fig. 1C). Then M/EEG and fMRI measurements are made for these conditions (Fig. 1D). In each measurement space separately, we calculate for all pair-wise combinations of conditions the similarity (or, equivalently, the dissimilarity) between their multivariate measurements (Fig. 1E) in the respective signal space (i.e., voxel activation patterns in fMRI and sensor activation patterns in M/EEG). Note that a multitude of different similarity and dissimilarity measures are available (Guggenmos et al., 2018; Walther et al., 2016). For demonstration here, and often also in practice, simple correlation (or 1 minus correlation for dissimilarity) can be used. In any case, the resulting values are stored in matrices indexed in rows and columns by the conditions compared, called representational dissimilarity matrices (RDMs). RDMs summarize which conditions elicit similar or dissimilar patterns in regions in fMRI and at time points in M/EEG. Crucially, although RDMs originate from incommensurate source signals, they themselves have the same structure and dimensionality (N × N for N conditions). This makes them directly comparable. Thus, by determining the similarity of RDMs across fMRI and M/EEG measurement spaces, we can test the hypothesis that the same neural generators are measured at particular locations and at particular time points (Fig. 1F).
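The steps above can be sketched in a few lines of Python with NumPy. This is a minimal illustration that assumes 1 minus Pearson correlation as the dissimilarity measure and Spearman rank correlation between RDM upper triangles as the RDM comparison (one common choice, not the only one). The helper names and the simulated "MEG" and "fMRI" patterns are hypothetical: both are random projections of one shared latent condition structure into signal spaces of different size.

```python
import numpy as np

def compute_rdm(patterns):
    """RDM as 1 - Pearson correlation between condition patterns.

    patterns: (n_conditions, n_features), e.g. voxels (fMRI) or sensors (M/EEG)
    """
    return 1.0 - np.corrcoef(patterns)

def upper_triangle(rdm):
    """Off-diagonal upper triangle: each condition pair counted once."""
    i, j = np.triu_indices(rdm.shape[0], k=1)
    return rdm[i, j]

def ranks(x):
    """Ranks 0..n-1 (assumes no ties, which holds for continuous data)."""
    r = np.empty(len(x))
    r[np.argsort(x)] = np.arange(len(x))
    return r

def compare_rdms(rdm_a, rdm_b):
    """Spearman correlation between the upper triangles of two RDMs."""
    a, b = upper_triangle(rdm_a), upper_triangle(rdm_b)
    return float(np.corrcoef(ranks(a), ranks(b))[0, 1])

# Simulated data: 20 conditions share a latent structure that each modality
# expresses in its own, incommensurate signal space.
rng = np.random.default_rng(1)
n_conditions = 20
latent = rng.normal(size=(n_conditions, 5))
meg_sensors = latent @ rng.normal(size=(5, 50)) + 0.5 * rng.normal(size=(n_conditions, 50))
fmri_voxels = latent @ rng.normal(size=(5, 100)) + 0.5 * rng.normal(size=(n_conditions, 100))
unrelated = rng.normal(size=(n_conditions, 100))

rdm_meg, rdm_fmri = compute_rdm(meg_sensors), compute_rdm(fmri_voxels)
print(rdm_meg.shape == rdm_fmri.shape)                # True: same N x N format
print(compare_rdms(rdm_meg, rdm_fmri))                # high: shared structure
print(compare_rdms(rdm_meg, compute_rdm(unrelated)))  # near zero
```

Although the two simulated signal spaces differ in dimensionality (50 sensors vs. 100 voxels), both RDMs are 20 × 20 and can be compared directly.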

In sum, M/EEG and fMRI data can be related to each other – fused – on the basis of common representational structure in spite of the incommensurate signal source space.

In the following we detail how M/EEG-fMRI fusion was used to reveal spatiotemporal dynamics of cognitive functioning. We first focus on visual processing.

5. The spatiotemporal dynamics of visual processing

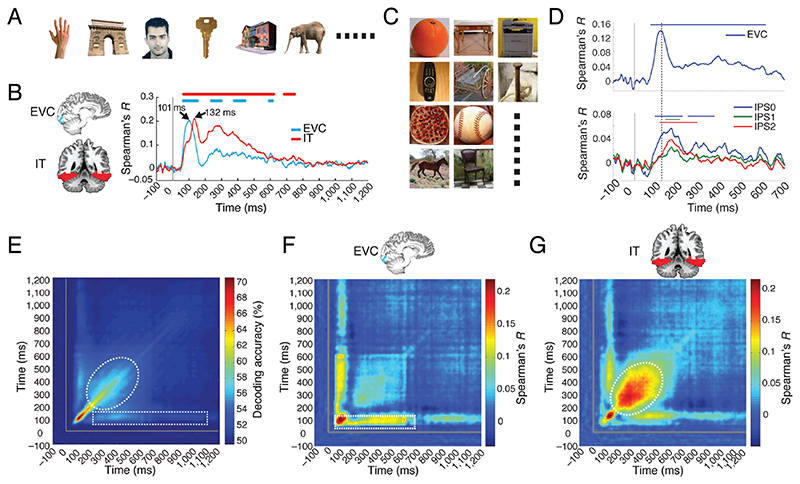

The first application of M/EEG-fMRI fusion investigated the cascade of spatiotemporal processing during visual object processing using a region-of-interest (ROI) approach (Cichy et al., 2014). Brain responses were recorded with MEG and fMRI while participants viewed a set of 92 images of everyday objects (Fig. 2A; stimuli from Kiani et al., 2007; Kriegeskorte et al., 2008). Focusing on the ventral visual stream, the authors fused MEG data with fMRI ROI data from early visual cortex (EVC) and inferior temporal (IT) cortex as the starting and end points of the cortical visual processing hierarchy. They found that neural responses in EVC emerged and peaked earlier than in IT (Fig. 2B). This result was consistent with the view of the ventral visual stream as a hierarchical processing cascade (DiCarlo and Cox, 2007; Felleman and Van Essen, 1991) and demonstrated the suitability of the M/EEG-fMRI fusion approach for evaluating patterns of human brain responses in space and time. An independent study using a new set of images of everyday objects (Fig. 2C) replicated the initial finding in the ventral stream and demonstrated a processing cascade in the dorsal stream, providing novel time stamps for brain responses in parietal cortex (Fig. 2D) (Cichy et al., 2016; see also Mohsenzadeh et al., 2019).
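An ROI-based fusion time course can be sketched as follows: the RDM of one fMRI region is compared with the MEG RDM at every time point, and the peak of the resulting curve gives a latency estimate. The code is a minimal Python illustration with simulated data. The Gaussian weighting that makes "EVC-like" structure peak at 100 ms and "IT-like" structure at 200 ms is an arbitrary assumption chosen only to mimic the qualitative result, not data from the cited studies.

```python
import numpy as np

def upper_triangle(rdm):
    i, j = np.triu_indices(rdm.shape[0], k=1)
    return rdm[i, j]

def spearman(x, y):
    """Spearman correlation as Pearson correlation of ranks (assumes no ties)."""
    rx, ry = np.argsort(np.argsort(x)), np.argsort(np.argsort(y))
    return float(np.corrcoef(rx, ry)[0, 1])

def roi_fusion(meg_rdms, roi_rdm):
    """Similarity of one fMRI ROI RDM to the MEG RDM at each time point.

    meg_rdms: (n_times, N, N); roi_rdm: (N, N). Returns (n_times,).
    """
    roi_vec = upper_triangle(roi_rdm)
    return np.array([spearman(upper_triangle(m), roi_vec) for m in meg_rdms])

# Simulated data (hypothetical): two unrelated ROI RDMs whose structure
# appears in the MEG RDMs with different, assumed time courses.
rng = np.random.default_rng(2)
n_cond, times = 30, np.arange(-100, 501, 10)  # ms relative to image onset

def random_rdm():
    return 1.0 - np.corrcoef(rng.normal(size=(n_cond, 50)))

rdm_evc, rdm_it = random_rdm(), random_rdm()
w_evc = np.exp(-((times - 100) / 30.0) ** 2 / 2)
w_it = np.exp(-((times - 200) / 30.0) ** 2 / 2)
meg_rdms = np.array([
    a * rdm_evc + b * rdm_it + 0.05 * rng.normal(size=(n_cond, n_cond))
    for a, b in zip(w_evc, w_it)
])

fusion_evc = roi_fusion(meg_rdms, rdm_evc)
fusion_it = roi_fusion(meg_rdms, rdm_it)
peak_evc, peak_it = times[np.argmax(fusion_evc)], times[np.argmax(fusion_it)]
print(peak_evc, peak_it)  # EVC peaks earlier than IT in this toy example
```

In practice, peak latencies would need to be assessed statistically (for example by resampling participants), which is omitted here.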

Figure 2. Methods, results and variants of ROI-based M/EEG-fMRI fusion for understanding visual processing.

(A,C) Participants viewed a set of 92 silhouette images while their brain activity was recorded with MEG and fMRI. (B,D) M/EEG-fMRI fusion between MEG sensor activation patterns and fMRI BOLD activation patterns in a region-of-interest approach revealed brain responses earlier in early visual cortex (EVC) than at later stages of the ventral stream (B; inferior temporal cortex, IT) and the dorsal stream (D; intraparietal sulcus, IPS0-2). (E) The time generalization method applied to MEG data recorded for the stimuli in A revealed a pattern suggesting both fast-changing (transient) and slow-changing (persistent) components (indicated by dashed white lines). (F,G) Fusion of the M/EEG data analyzed with the time generalization method with fMRI revealed that different aspects of the persistent neural responses are related to EVC and IT, respectively.

Subsequent research applied M/EEG-fMRI fusion to investigate the processing of visual contents other than objects. For example, one study investigated the spatiotemporal dynamics underlying face perception (Muukkonen et al., 2020). Fusion of EEG with fMRI data revealed a processing cascade starting in early visual cortex and progressing into cortical regions specialized for face processing (i.e., the face-selective occipital face area (OFA) and fusiform face area (FFA)) as well as parietal regions. Another study applied M/EEG-fMRI fusion to scene perception, presenting participants with images of scenes of different layout and texture (Henriksson et al., 2019). The authors found that the occipital place area (OPA), an area known to be selective for visual scenes, was involved in the processing of spatial layout within 100 ms after image presentation. This provided evidence that OPA is involved in the rapid encoding of spatial layout.

M/EEG-fMRI fusion is not a single fixed formulation of an algorithmic idea but is best understood as a multivariate analysis framework that can easily be combined with other multivariate data analysis approaches to yield a better description and further theoretical insights. We discuss two major examples that have developed M/EEG-fMRI fusion further in this spirit.

A first essential step was the localization of persistent versus transient dynamics by combining M/EEG-fMRI fusion with what is now commonly called time-generalization analysis (King and Dehaene, 2014). The idea of time generalization is to gain a deeper understanding of neural dynamics by assessing how similar multivariate M/EEG signals are over time. One key distinction is the longevity of representations: if representations change quickly over time, the dynamics are transient; if representations stay the same over time or recur, the dynamics are persistent. Time-generalization analysis typically produces two-dimensional matrices (indexed by time in both dimensions) indicating when multivariate representational spaces in M/EEG are similar or not. Algorithmically, this can for example be achieved by multivariate pattern classification: a machine learning classifier is trained to distinguish conditions based on data from one time point and is then tested on data from all other time points (Cichy et al., 2014; Isik et al., 2014; King et al., 2014; Meyers et al., 2008). Above-chance classification at time-point combinations where training and testing times differ indicates similar neural patterns across time, and thus suggests persistent dynamics. Conducted in this manner, time-generalization analysis on MEG data recorded during object perception showed a complex pattern of fast transient representations (e.g., high decoding accuracy early along the diagonal) and persistent representations (Cichy et al., 2014) (Fig. 2E, indicated by dashed white lines). The presence of several persistent components immediately raised the question of where in the brain they originate. M/EEG-fMRI fusion provided the answer by localizing these persistent representations to EVC and IT, respectively (Fig. 2F,G).
This result provided a description of the spatiotemporal neural dynamics during vision with respect to the theoretical distinction between transient and persistent brain responses.
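The time-generalization procedure can be sketched in Python as below. For brevity, the classifier is assumed to be a nearest-centroid rule (equivalent to a linear decision boundary halfway between the class means), trained on one half of the trials and tested on the other half; the cited studies used their own classifiers and cross-validation schemes. The simulated data contain a transient component (a new, orthogonal sensor pattern at each of time points 5 to 9) and a persistent component (one pattern held from time point 15 to 24).

```python
import numpy as np

def time_generalization(X, y, train_idx, test_idx):
    """Train a classifier at each time t and test it at every time t'.

    X: (n_trials, n_sensors, n_times); y: binary labels (0/1).
    Nearest-centroid (Euclidean) classification is linear: predict 1
    when x . w > w . (c0 + c1) / 2, with w = c1 - c0.
    Returns acc[t_train, t_test].
    """
    Xtr, ytr = X[train_idx], y[train_idx]
    Xte, yte = X[test_idx], y[test_idx]
    n_times = X.shape[2]
    acc = np.zeros((n_times, n_times))
    for t in range(n_times):
        c0 = Xtr[ytr == 0, :, t].mean(axis=0)
        c1 = Xtr[ytr == 1, :, t].mean(axis=0)
        w = c1 - c0
        threshold = w @ (c0 + c1) / 2
        for t_test in range(n_times):
            pred = (Xte[:, :, t_test] @ w > threshold).astype(int)
            acc[t, t_test] = np.mean(pred == yte)
    return acc

# Simulated two-condition data (hypothetical effect sizes and timing).
rng = np.random.default_rng(3)
n_trials, n_sensors, n_times = 200, 20, 30
y = np.repeat([0, 1], n_trials // 2)
sign = np.where(y == 1, 1.0, -1.0)[:, None]          # (n_trials, 1)
X = rng.normal(size=(n_trials, n_sensors, n_times))
patterns, _ = np.linalg.qr(rng.normal(size=(n_sensors, n_sensors)))  # orthonormal columns
for k, t in enumerate(range(5, 10)):                 # transient: new pattern each time point
    X[:, :, t] += 3 * sign * patterns[:, k]
for t in range(15, 25):                              # persistent: one pattern held over time
    X[:, :, t] += 3 * sign * patterns[:, 5]

order = rng.permutation(n_trials)
acc = time_generalization(X, y, order[:100], order[100:])
print(acc[7, 7], acc[5, 8], acc[16, 22])
```

In the resulting matrix, the transient component yields high accuracy only on the diagonal (e.g. `acc[7, 7]` but not `acc[5, 8]`), whereas the persistent component generalizes off the diagonal (e.g. `acc[16, 22]`).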

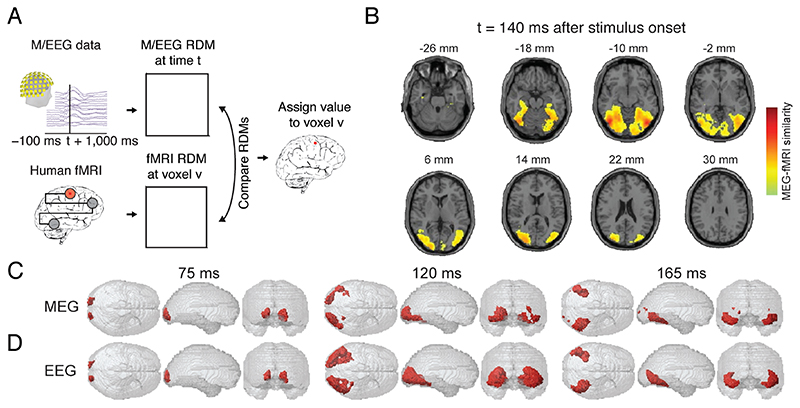

A second essential step was the extension to a spatially unbiased analysis using the searchlight approach (Fig. 3A). Instead of focusing on a predefined set of regions, representational correspondence to M/EEG is established for every fMRI voxel independently, based on the BOLD activation pattern in its local vicinity. Conducted for each M/EEG time point, this yields a spatially unbiased map of where in the brain fMRI activation patterns are representationally similar to the M/EEG data at that time point. Taking the maps for all time points together results in a temporally resolved movie of how neural responses evolve spatially. For object processing, searchlight-based fusion of fMRI and MEG data replicated the ROI-based result and extended it to a finer-grained view of how neural responses evolve during perception in the human brain (Fig. 3B,C) (Cichy et al., 2016).
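A minimal searchlight-fusion sketch in Python follows. For brevity it uses Pearson rather than Spearman correlation between RDMs and a toy 8 × 8 × 8 voxel volume. The radius, the volume size and the simulated "region" (a 4 × 4 × 4 block whose patterns share latent structure with the MEG RDM at time index 3) are hypothetical choices. Voxels near the volume border simply use the part of the sphere that lies inside the volume.

```python
import numpy as np

def rdm_vector(patterns):
    """Upper triangle of a 1 - Pearson correlation RDM; patterns: (N, n_features)."""
    i, j = np.triu_indices(patterns.shape[0], k=1)
    return (1.0 - np.corrcoef(patterns))[i, j]

def sphere_offsets(radius):
    """Integer voxel offsets within a sphere of the given radius (in voxels)."""
    r = int(np.ceil(radius))
    grid = np.mgrid[-r:r + 1, -r:r + 1, -r:r + 1].reshape(3, -1).T
    return grid[np.linalg.norm(grid, axis=1) <= radius]

def zscore_rows(a):
    a = np.atleast_2d(a)
    return (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)

def searchlight_fusion(fmri, meg_rdm_vecs, radius=2.0):
    """fmri: (N, X, Y, Z) activation volume per condition.
    meg_rdm_vecs: (n_times, n_pairs) MEG RDM upper triangles.
    Returns (n_times, X, Y, Z): one fusion map (Pearson r) per time point.
    """
    shape = np.array(fmri.shape[1:])
    offsets = sphere_offsets(radius)
    meg_z = zscore_rows(meg_rdm_vecs)
    maps = np.zeros((meg_rdm_vecs.shape[0], *fmri.shape[1:]))
    for center in np.ndindex(*fmri.shape[1:]):
        coords = offsets + np.array(center)
        coords = coords[np.all((coords >= 0) & (coords < shape), axis=1)]
        local = fmri[:, coords[:, 0], coords[:, 1], coords[:, 2]]  # (N, voxels in sphere)
        v_z = zscore_rows(rdm_vector(local))[0]
        maps[(slice(None),) + center] = meg_z @ v_z / v_z.size
    return maps

# Simulated data (hypothetical).
rng = np.random.default_rng(4)
n_cond, n_times = 20, 6
latent = rng.normal(size=(n_cond, 5))
fmri = rng.normal(size=(n_cond, 8, 8, 8))
fmri[:, 2:6, 2:6, 2:6] += (latent @ rng.normal(size=(5, 64))).reshape(n_cond, 4, 4, 4)
meg = np.array([rdm_vector(rng.normal(size=(n_cond, 100))) for _ in range(n_times)])
meg[3] = rdm_vector(latent @ rng.normal(size=(5, 100)) + 0.3 * rng.normal(size=(n_cond, 100)))

maps = searchlight_fusion(fmri, meg)
print(maps.shape)  # (6, 8, 8, 8)
peak = np.unravel_index(np.argmax(maps[3]), maps.shape[1:])
```

Stacking the maps over time gives the "movie" described above; with real data, each map would additionally be thresholded with a suitable correction for multiple comparisons.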

Figure 3. Methods, results and variants of searchlight-based M/EEG-fMRI fusion for understanding visual processing.

(A) M/EEG-fMRI fusion using the volumetric searchlight approach. An MEG RDM is calculated for every time point t, and an fMRI RDM is calculated for every voxel v in the brain based on the multivariate activation pattern in its vicinity. The MEG and fMRI RDMs are compared and the result is assigned to the position of voxel v. For each time point this results in a 3D map of representational similarity between M/EEG and fMRI data, as exemplified in (B) for 140 ms after the onset of an object image. (C) The searchlight analysis is repeated across time, yielding a movie of how human brain responses unfold over time. (D) Fusion of fMRI data with EEG data yields results comparable to those obtained with MEG data, shown in C. Red color in C,D indicates significant fusion results.

M/EEG-fMRI fusion can in principle be used with MEG, EEG or a combination of both (Fig. 3D) (Cichy and Pantazis, 2017). Its usability with EEG makes M/EEG-fMRI fusion a versatile analytical tool that is accessible at a large number of sites worldwide. However, MEG and EEG differ in their sensitivity to neural signals (Hämäläinen et al., 1998). This is mirrored in the finding that fusion of fMRI with MEG and fusion of fMRI with EEG each capture unique aspects of neural representations (Cichy and Pantazis, 2017). In practice, whether to acquire MEG or EEG for fusion might depend on the exact experimental goals. For example, for fusion with high-frequency oscillations, MEG might be better suited due to its relatively better ability to resolve these signals. In contrast, for fusion with very deep sources, EEG might be more appropriate, as the magnetic fields measured by MEG decay strongly with distance from the sensors. The differences in sensitivity of MEG and EEG to different aspects of neural activity also imply that fusing fMRI data with MEG and EEG combined has the best potential to yield the most refined view of human brain activity.
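When MEG and EEG are combined into one pattern, the two modalities are measured in very different units (tesla vs. volt), so some normalization is needed before their channels can be pooled. The Python sketch below assumes per-channel z-scoring followed by concatenation; this is one simple option and not necessarily the procedure used by Cichy and Pantazis (2017). Channel counts and signal magnitudes are illustrative.

```python
import numpy as np

def zscore_channels(x):
    """z-score every channel across conditions; x: (n_conditions, n_channels)."""
    return (x - x.mean(axis=0)) / x.std(axis=0)

def combine_meg_eeg(meg, eeg):
    """Concatenate MEG and EEG channels after per-channel z-scoring, so that
    channels recorded in different units become comparable in scale."""
    return np.hstack([zscore_channels(meg), zscore_channels(eeg)])

rng = np.random.default_rng(5)
meg = 1e-13 * rng.normal(size=(92, 306))  # raw values on the order of 100 fT
eeg = 1e-6 * rng.normal(size=(92, 64))    # raw values on the order of 1 µV
combined = combine_meg_eeg(meg, eeg)
print(combined.shape)  # (92, 370)
```

Without z-scoring, the EEG channels (here about seven orders of magnitude larger in raw units) would dominate any correlation- or distance-based RDM. After z-scoring, each channel carries equal weight, so the modality with more channels contributes more; reweighting by channel count would be a further design choice.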

Together, these studies in visual processing demonstrate the feasibility, versatility and potential of M/EEG-fMRI fusion to shed new light on the spatiotemporal processing underlying cognition. The fact that similar spatiotemporal processing cascades were observed in the visual system for different contents and in independent studies qualitatively supports the ability of the technique to yield reproducible and reliable results. This was also confirmed quantitatively by comparing the results of M/EEG-fMRI fusion across data and stimulus sets, suggesting good generalizability and reliability (Mohsenzadeh et al., 2019).

6. The spatiotemporal dynamics of higher-level cognition

While M/EEG-fMRI fusion was first established in the field of perception, it is in no way limited to this aspect of cognition. Here we highlight two studies that applied M/EEG-fMRI fusion to higher-level cognition and show how the technique was further developed in each case to reach the desired theoretical insights.

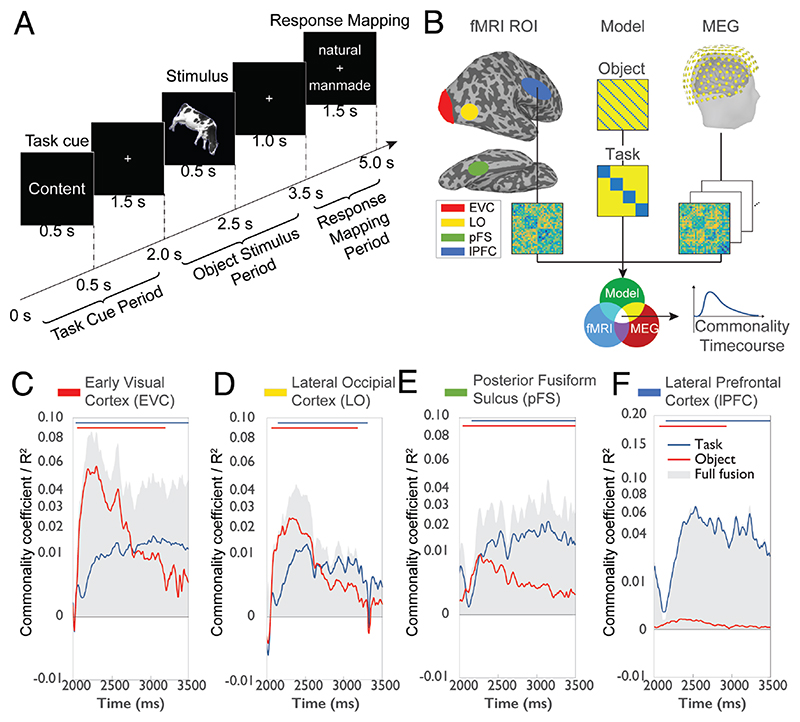

The first example is a study investigating how humans process the task contexts in which objects occur (Hebart et al., 2018) (Fig. 4A). Tasks and behavioral goals strongly influence how we perceive the world. Their large impact on perception is mirrored in the finding that tasks are processed in many different regions of the brain and themselves influence visual processing (Bracci et al., 2017; Erez and Duncan, 2015; Harel et al., 2014; Vaziri-Pashkam and Xu, 2017). This raises the question of how activity in these brain regions is modulated by task across time. Hebart et al. (2018) used M/EEG-fMRI fusion to shed light on this issue. On each trial, participants were first given one of four tasks (i.e., judge an object’s animacy, its size, the color of its outline, or its tilt). Then an object from a set of different categories (e.g., cows, flowers, trees) was presented, on which participants had to carry out the task, followed by a delayed response screen. The authors focused their analysis on the object presentation period and asked with which spatiotemporal dynamics the neural processing of task and of object emerged independently. This posed a challenge for the basic formulation of M/EEG-fMRI fusion: the processing of task and object is concurrent, while M/EEG-fMRI fusion in its basic formulation does not differentiate between different aspects of processing. As a solution, the authors used commonality analysis (also known as variance partitioning; Seibold and McPhee, 1979): they formulated model RDMs capturing the effects of task and of object category, and then determined, separately for each of the two model RDMs, the proportion of variance shared between MEG and fMRI that is related to that model (Fig. 4B). The results (Fig. 4C-F) revealed a parallel rise in task-related signals throughout cortex, with an increasing dominance of task over object representations from early to higher visual areas and further to frontal cortex. This nuanced spatiotemporal description has theoretical implications on several grounds.
For one, it demonstrated that task information is rapidly communicated between brain regions involved in task processing (Siegel et al., 2015). Further, it speaks against the idea that visual cortex plays a passive role in visual processing, with task-malleable frontal cortex reading out information from it (Freedman et al., 2003). Instead, it suggests that task biases visual processing in occipital cortex with increasing strength along the visual processing hierarchy.
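The commonality logic can be sketched with ordinary least squares. With the M/EEG RDM (at one time point) as the dependent variable and the fMRI RDM, the model of interest and the other model as predictors, the variance shared by M/EEG, fMRI and the model of interest, excluding the other model, follows the standard three-predictor commonality formula C = R²(fMRI, other) + R²(model, other) - R²(other) - R²(fMRI, model, other). Treating MEG as the dependent variable, and using independent Gaussian vectors in place of real RDM entries, are simplifying assumptions of this Python sketch.

```python
import numpy as np

def r_squared(y, *predictors):
    """R^2 of an ordinary least-squares fit of y on the predictors plus an intercept."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    return 1.0 - residuals.var() / y.var()

def commonality(meg, fmri, model, other_model):
    """Variance of the MEG RDM shared by the fMRI RDM and `model`, excluding
    variance explained by `other_model` (three-predictor commonality).
    All arguments are RDM upper-triangle vectors of equal length."""
    return (r_squared(meg, fmri, other_model)
            + r_squared(meg, model, other_model)
            - r_squared(meg, other_model)
            - r_squared(meg, fmri, model, other_model))

# Simulated RDM vectors (hypothetical): the fMRI "region" carries task
# structure only, the MEG "time point" carries task and object structure.
rng = np.random.default_rng(6)
n_pairs = 1000
task_model = rng.normal(size=n_pairs)
object_model = rng.normal(size=n_pairs)
fmri = task_model + 0.5 * rng.normal(size=n_pairs)
meg = task_model + object_model + rng.normal(size=n_pairs)

c_task = commonality(meg, fmri, task_model, object_model)
c_object = commonality(meg, fmri, object_model, task_model)
print(round(c_task, 3), round(c_object, 3))
```

Because the simulated fMRI region carries only task structure, the task-related commonality is clearly positive while the object-related commonality stays close to zero.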

Figure 4. Applications and extensions of M/EEG-fMRI fusion to processing task context.

(A) Experimental paradigm. On each trial, participants first receive a cue indicating which task to carry out (e.g., judge whether the upcoming object is natural or manmade). They are then presented with a stimulus probe. Last, they give their response (Hebart et al., 2018). (B) Content-specific formulation of M/EEG-fMRI fusion. In addition to constructing RDMs based on region-specific fMRI BOLD activation and time-resolved MEG data, model RDMs are created that capture the effect of different aspects of the data, such as the task or the object. For example, in the task model RDM, condition pairs sharing the same task were given the value 0 (coding similarity; here: blue color) and 1 otherwise (coding dissimilarity). The three types of RDM are then combined using commonality analysis to reveal the aspects of the data uniquely related to each model (Hebart et al., 2018). The results of content-specific fusion focusing on the object stimulus period from 2.0-3.5 s for (C) early visual cortex, (D) lateral occipital cortex, (E) posterior fusiform sulcus and (F) lateral prefrontal cortex revealed a parallel rise in task-related signals throughout cortex, with an increasing dominance of task over object representations along the processing hierarchy (Hebart et al., 2018). The gray shaded area in C-F indicates the total variance explainable by M/EEG-fMRI fusion.

While commonality analysis as used by Hebart et al. (2018) is an elegant way to add constraints to M/EEG-fMRI fusion and make it content-specific, it is not the only one. Another possibility is to use conjunction inference (Nichols et al., 2005). Instead of calculating shared variance, the idea is to use a logical AND operation, that is, to count locations and time points as related to the processing of a particular content only if they also relate significantly to a model RDM of that content (Khaligh-Razavi et al., 2018). The choice between different ways of making M/EEG-fMRI fusion content-specific is best guided by theoretical considerations about what kind of effect the investigator is interested in.
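A conjunction can be written as an elementwise logical AND over significance maps. The Python sketch below shows one hypothetical way to set this up: a time point and voxel are counted as content-related only if the fusion result is significant there and the model RDM is significantly related both to the M/EEG RDM at that time point and to the fMRI RDM at that voxel. The p-values are made up and assumed to be already corrected for multiple comparisons.

```python
import numpy as np

def conjunction_map(p_fusion, p_model_meg, p_model_fmri, alpha=0.05):
    """Logical AND of three significance tests (hypothetical inputs):
    p_fusion:     (n_times, n_voxels) M/EEG-fMRI fusion p-values
    p_model_meg:  (n_times,)          model RDM vs. M/EEG RDM p-values
    p_model_fmri: (n_voxels,)         model RDM vs. fMRI RDM p-values
    Returns a boolean (n_times, n_voxels) map.
    """
    return ((p_fusion < alpha)
            & (p_model_meg[:, None] < alpha)
            & (p_model_fmri[None, :] < alpha))

p_fusion = np.array([[0.01, 0.01, 0.20],
                     [0.01, 0.30, 0.01]])
p_model_meg = np.array([0.02, 0.50])
p_model_fmri = np.array([0.01, 0.01, 0.01])
result = conjunction_map(p_fusion, p_model_meg, p_model_fmri)
print(result)
```

Only time point 0 at voxels 0 and 1 survives: at time point 1 the model is not related to the M/EEG data, and at voxel 2 (time point 0) the fusion itself is not significant.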

The second example is a study investigating the spatiotemporal neural dynamics related to attentional processing (Salmela et al., 2018). A large body of work has identified brain networks that underlie different components of attention and has described the temporal dynamics with which attention is processed. However, the relationship between the spatially localized networks and the complex temporal dynamics is less well understood. Salmela et al. (2018) therefore used M/EEG-fMRI fusion to investigate the many-to-many mapping between brain regions and processing stages of attention. In the experiment, participants performed visual and auditory tasks (orientation or pitch one-back discrimination) separately or simultaneously, with or without distractors, while EEG and fMRI data were recorded. The different combinations of these experimental factors influencing attention defined the conditions used for further analysis.

In a first step, the authors fused the EEG with the fMRI data, revealing a processing cascade from sensory to parietal regions and further into frontal regions (Fig. 5A). In a second step, the authors took M/EEG-fMRI fusion one level further by making its results the starting point for further analysis. After conducting M/EEG-fMRI fusion based on cortical parcels (Fig. 5B), they compared the parcel-specific M/EEG-fMRI time courses themselves for similarity (Fig. 5C). Visual inspection of the similarity relations between the parcel-specific EEG-fMRI time courses suggested 8 clusters (Fig. 5D) that were used for a discriminant analysis. Four discriminant functions explained 95% of the variance across areas. Their coefficients are plotted in Fig. 5E across time and in Fig. 5F across space. The functions reveal large-scale patterns of spatiotemporal dynamics across networks that the authors interpret as four different spatiotemporal components of attention. These results provide new insight into the large-scale spatiotemporal dynamics of attentional processing and are a stepping stone towards a quantitative spatiotemporal model of attentional processing in the human brain.
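The second-level step, comparing parcel-specific fusion time courses with each other, can be sketched as follows. The Python code assumes the fusion time courses are already computed (one row per parcel); it computes the parcel-by-parcel similarity matrix and orders parcels by peak latency, which can aid visual inspection of clusters. The discriminant analysis of Salmela et al. (2018) is not reproduced here, and the six parcels with early or late peaks are simulated.

```python
import numpy as np

def timecourse_similarity(fusion_tc):
    """Pearson correlation between parcel-specific fusion time courses.
    fusion_tc: (n_parcels, n_times) -> (n_parcels, n_parcels)."""
    return np.corrcoef(fusion_tc)

def order_by_peak(fusion_tc):
    """Parcel indices sorted by the latency of their fusion peak."""
    return np.argsort(np.argmax(fusion_tc, axis=1), kind="stable")

# Simulated fusion time courses (hypothetical): three early, three late parcels.
times = np.arange(0, 600, 10)  # ms
rng = np.random.default_rng(7)
peaks = np.array([300, 120, 310, 110, 130, 290])
fusion_tc = np.exp(-((times[None, :] - peaks[:, None]) / 40.0) ** 2 / 2)
fusion_tc += 0.05 * rng.normal(size=fusion_tc.shape)

sim = timecourse_similarity(fusion_tc)
order = order_by_peak(fusion_tc)
print(order)
```

Parcels with similar peak latencies have highly correlated time courses and end up adjacent in the ordering, which is the kind of structure one would look for when identifying clusters.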

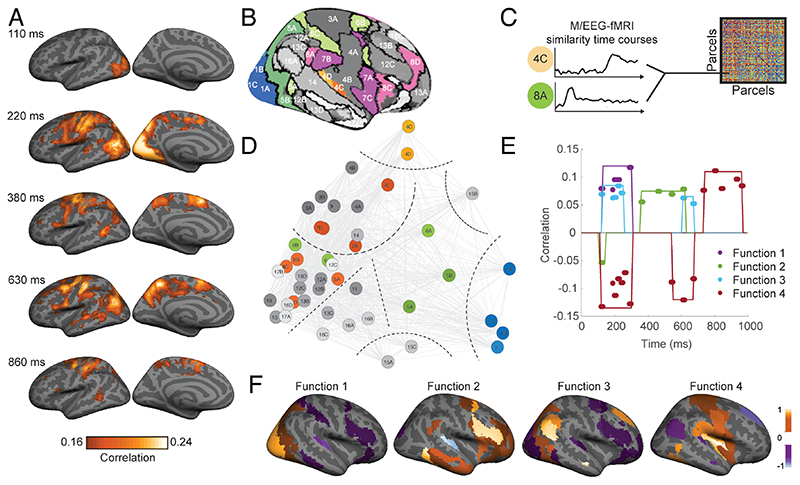

Figure 5. Applications and extensions of M/EEG-fMRI fusion to attentional processing.

(A) Results of M/EEG-fMRI fusion investigating the spatiotemporal dynamics of sensory processing under different states of attention (here for the left hemisphere) (Salmela et al., 2018). (B) Parcel-specific M/EEG-fMRI fusion time courses were defined based on a brain parcellation atlas. (C) The time courses themselves were compared for similarity, and (D) several clusters were identified on this basis. Discriminant analysis was carried out on the clustered data, and the coefficients of the four discriminant functions were plotted across (E) time and (F) space (Salmela et al., 2018). This revealed large-scale networks of brain responses across space and time reminiscent of sensory processing (function 1), top-down guided attentional control (function 2), brain state transitions (function 3) and response control (function 4). Colors in E,F indicate coefficient values for the discriminant functions.

In sum, these studies exemplify how M/EEG-fMRI fusion can be used to study complex cognitive phenomena such as task processing and attentional control. They further demonstrate how M/EEG-fMRI fusion can be methodologically extended for deeper theoretical insight by making it content-specific, or by making its results the subject of further analysis.

7. Future Potential of M/EEG-fMRI fusion

As demonstrated above, M/EEG-fMRI fusion is not a fixed, single-purpose algorithm, but rather a general and easily extendable analytical framework. This gives M/EEG-fMRI fusion strong potential for future studies of cognitive functions in a wide range of domains. We emphasize three general directions below and provide concrete examples for each.

First, M/EEG-fMRI fusion can immediately be used in any domain of investigation in which M/EEG and fMRI are currently employed. Its potential applications thus range from understanding basic sensory encoding such as touch or sound (Lowe et al., 2020), to complex cognitive processes (e.g., working memory, language or planning), to studies of how neural dynamics change in clinical contexts (e.g., stroke or mental disease) or during development (e.g., through longitudinal or age-comparative studies) (Fig. 6A). To make this more concrete, consider three examples. For one, M/EEG-fMRI fusion could contribute to a better understanding of how information exchange is coordinated in cognitive functions that have several stages potentially mapping onto different brain regions, such as encoding, maintenance and retrieval in working memory. Another example would be the study of the motor system, in particular the neural activity leading up to a movement. For this, M/EEG-fMRI fusion would be conducted not forward in time, e.g., after the presentation of a stimulus, but backward in time, locked to the onset of the movement. An exciting prospect is that combining the results of M/EEG-fMRI fusion time-locked to stimulus onset with those time-locked to the response could reveal the full cascade of neural processing between the presentation of a stimulus and the behavioral response. Finally, M/EEG-fMRI fusion could be used in clinical neuroscience to identify how brain damage or mental disease impacts information flow, revealing spatiotemporal functional biomarkers that aid in diagnosing disorders, or pinpointing impairments as a precursor to therapeutic interventions.
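Response-locked analysis mainly changes how the M/EEG data are epoched: windows are cut backward in time from movement onset rather than forward from stimulus onset, and RDMs are then computed per time point as usual. The Python sketch below illustrates the epoching step only; the function name, sampling rate and movement onsets are hypothetical.

```python
import numpy as np

def epoch(data, onsets, tmin, tmax, sfreq):
    """Cut epochs around event onsets.

    data:   (n_channels, n_samples) continuous recording
    onsets: event sample indices (e.g. movement onsets for response-locked analysis)
    tmin, tmax: window in seconds relative to each onset (tmin < 0 looks backward)
    Returns (epochs, times); epochs has shape (n_events, n_channels, n_window).
    """
    start, stop = int(round(tmin * sfreq)), int(round(tmax * sfreq))
    if min(onsets) + start < 0 or max(onsets) + stop > data.shape[1]:
        raise ValueError("epoch window exceeds the recording")
    epochs = np.stack([data[:, o + start:o + stop] for o in onsets])
    times = np.arange(start, stop) / sfreq
    return epochs, times

data = np.arange(2000, dtype=float).reshape(2, 1000)  # 2 channels, 10 s at 100 Hz
movement_onsets = [300, 600]                          # hypothetical, in samples
epochs, times = epoch(data, movement_onsets, tmin=-0.5, tmax=0.1, sfreq=100)
print(epochs.shape, times[0], times[-1])  # (2, 2, 60) -0.5 0.09
```

With `tmin=-0.5`, the first sample of each epoch lies 500 ms before movement onset, so fusion time courses computed from these epochs would describe the run-up to the movement.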

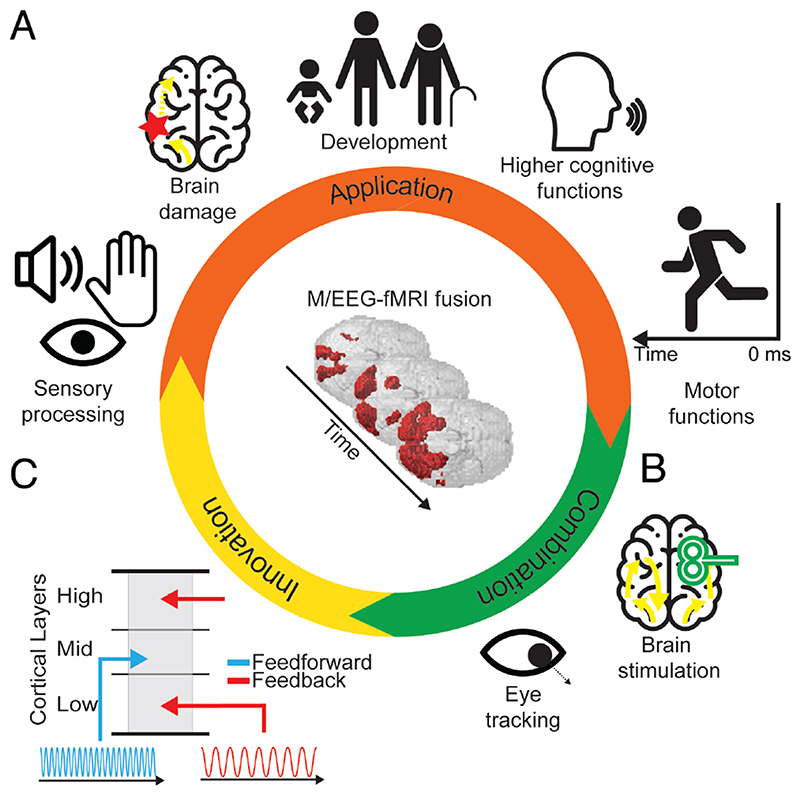

Figure 6. Future potential of M/EEG-fMRI fusion.

(A) M/EEG-fMRI fusion may be applied in any field in which M/EEG and fMRI are already applied in isolation. (B) M/EEG-fMRI fusion may be combined with brain stimulation to reveal its effects and to establish causal relationships between neural responses and behavior. (C) Innovation in M/EEG and fMRI research can be directly harvested by M/EEG-fMRI fusion to reveal novel aspects of neural processing. For example, feedforward and feedback information flow are associated with neural responses in distinct cortical layers and in distinct frequency bands. Thus, fusing M/EEG data resolved in different frequency bands with fMRI data resolved in cortical depth may reveal feedforward and feedback information flow in the brain.

Second, M/EEG-fMRI fusion could be combined with other techniques that already find their application in M/EEG and fMRI research separately. This includes invasive and non-invasive brain stimulation as well as the assessment of eye movements and physiological measures. For example, the effect of brain stimulation, such as transcranial magnetic stimulation (Hallett, 2007; Walsh and Cowey, 2000) to a particular brain region could be assessed in terms of its impact on spatiotemporal network dynamics (Fig. 6B). This might help to establish causal relationships between spatiotemporally identified neural responses and cognitive function.

Third, M/EEG-fMRI fusion can immediately benefit from innovation in the techniques involved to provide novel solutions for resolving brain responses. As an example, consider the challenge of dissociating the two fundamental streams of information flow: feedforward and feedback. This is a challenging problem for current human neuroimaging, as input and output signals overlap at the level at which we can measure them non-invasively. Current applications of M/EEG-fMRI fusion to this problem thus use well-proven experimental interventions such as masking to dissociate feedforward from feedback information flow (Mohsenzadeh et al., 2018). A further emerging possibility is to make use of methodological innovation (Fig. 6C). In space, ultra-high-field fMRI can resolve signals at different cortical depths (Huber et al., 2017; Kok et al., 2016; Muckli et al., 2015). Cortical layers at different depths are differentially targeted by feedforward and feedback anatomical connections (Gilbert, 1983; Larkum, 2013; Markov et al., 2014), and thus differentiating layer-specific activity may help dissociate feedforward and feedback signaling. In time, feedforward and feedback information flow are associated with different frequencies: lower frequencies are more strongly related to feedback and higher frequencies more strongly to feedforward activity (Bastos et al., 2015; Buffalo et al., 2011; Kerkoerle et al., 2014; Maier et al., 2010; Michalareas et al., 2016). Thus, by fusing layer-specific fMRI and frequency-resolved M/EEG data, it might be possible to directly track feedback and feedforward information flow in space and time simultaneously. This idea also receives support from previous studies that applied multivariate methods to time-frequency resolved data, revealing the kind of fine-grained information necessary for M/EEG-fMRI fusion (Pantazis et al., 2018), and from the fusion of time-frequency resolved MEG with fMRI at standard resolution (Reddy et al., 2017).
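Once frequency-resolved M/EEG RDMs and depth-resolved fMRI RDMs are available, fusing them amounts to filling a band × layer matrix of RDM similarities. The Python sketch below assumes such RDMs (as upper-triangle vectors) have already been computed for one time point and one region. The simulated data plant two arbitrary shared structures and make no claim about which band maps onto which layer.

```python
import numpy as np

def pearson_matrix(a, b):
    """Pearson r between every row of a and every row of b."""
    az = (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)
    bz = (b - b.mean(axis=1, keepdims=True)) / b.std(axis=1, keepdims=True)
    return az @ bz.T / a.shape[1]

def band_layer_fusion(band_rdms, layer_rdms):
    """band_rdms: (n_bands, n_pairs) RDMs from frequency-resolved M/EEG;
    layer_rdms: (n_layers, n_pairs) RDMs from depth-resolved fMRI.
    Returns an (n_bands, n_layers) fusion matrix."""
    return pearson_matrix(band_rdms, layer_rdms)

# Simulated RDM vectors (hypothetical): band 0 shares structure A with layer 1,
# band 1 shares structure B with layer 0, layer 2 is unrelated.
rng = np.random.default_rng(8)
n_pairs = 190
structure_a = rng.normal(size=n_pairs)
structure_b = rng.normal(size=n_pairs)
band_rdms = np.vstack([structure_a + 0.5 * rng.normal(size=n_pairs),
                       structure_b + 0.5 * rng.normal(size=n_pairs)])
layer_rdms = np.vstack([structure_b + 0.5 * rng.normal(size=n_pairs),
                        structure_a + 0.5 * rng.normal(size=n_pairs),
                        rng.normal(size=n_pairs)])

fusion = band_layer_fusion(band_rdms, layer_rdms)
best_layer = fusion.argmax(axis=1)
print(fusion.round(2))
```

Repeating this for every M/EEG time point would yield one band × layer matrix per time point.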

In sum, M/EEG-fMRI fusion may prove a valuable tool for understanding neural dynamics in a wide range of fields of inquiry: in its current formulation, in novel combinations with other techniques that manipulate brain activity or record complementary measures, and by capitalizing on methodological innovation for unprecedented analytical power.

8. Limitations of the technique

M/EEG-fMRI fusion is subject to limitations dictated either by principle or by its current implementation. We discuss the most important limitations of both kinds below.

A fundamental limitation of M/EEG-fMRI fusion is that it can only reveal those aspects of neural activity to which both imaging modalities are sensitive. Thus, inspecting M/EEG or fMRI signals alone will likely reveal more signal in the temporal and spatial domains than combining M/EEG and fMRI by fusion. Further, to yield significant results, M/EEG-fMRI fusion requires a higher signal-to-noise ratio than either technique considered in isolation, because it adds an analysis step that combines two noisy measurements and thus their noise. The flip side of the coin is that confidence in scientific findings increases with the number of reasonable constraints that the data fulfill. Because positive results of M/EEG-fMRI fusion require the representational structure to be similar in both M/EEG and fMRI, they arguably provide stronger grounds to believe that the results reflect neural activity than either technique considered in isolation. Thus, while alternative explanations can never be fully ruled out, M/EEG-fMRI fusion results provide good grounds for theoretical inference about neural activity.
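To make the point about combined noise concrete, the following is a minimal sketch of similarity-based fusion under stated assumptions, not the authors' implementation: representational dissimilarity matrices (RDMs) are computed as correlation distance (1 minus Pearson r between condition patterns), and the M/EEG RDM at each time point is compared to one fMRI region-of-interest RDM with Spearman's rho. The simulated data are hypothetical: both modalities are modelled as noisy linear read-outs of the same latent condition structure, and the array sizes, signal time course, and noise levels are arbitrary illustrative choices.

```python
import numpy as np

def rdm(patterns):
    """Correlation-distance RDM: 1 - Pearson r between rows (conditions)."""
    return 1.0 - np.corrcoef(patterns)

def upper(m):
    """Upper triangle of a square RDM (diagonal excluded) as a vector."""
    i, j = np.triu_indices(m.shape[0], k=1)
    return m[i, j]

def spearman(a, b):
    """Spearman's rho as Pearson r of ranks (assumes no ties, as for continuous data)."""
    ra, rb = np.argsort(np.argsort(a)), np.argsort(np.argsort(b))
    return np.corrcoef(ra, rb)[0, 1]

def fusion_timecourse(meg, fmri_roi):
    """Correlate the M/EEG RDM at every time point with one fMRI ROI RDM.
    meg: (n_conditions, n_sensors, n_times); fmri_roi: (n_conditions, n_voxels)."""
    target = upper(rdm(fmri_roi))
    return np.array([spearman(upper(rdm(meg[:, :, t])), target)
                     for t in range(meg.shape[2])])

# Hypothetical simulation: 20 conditions sharing a 5-dimensional latent structure.
rng = np.random.default_rng(0)
n_cond, n_lat, n_sens, n_vox, n_times = 20, 5, 64, 200, 50
latent = rng.standard_normal((n_cond, n_lat))
gain = np.exp(-0.5 * ((np.arange(n_times) - 25) / 4.0) ** 2)  # M/EEG signal peaks at sample 25

def simulate(noise):
    """Return simulated (meg, fmri) arrays with the same noise SD in both modalities."""
    mix_meg = rng.standard_normal((n_lat, n_sens))
    mix_fmri = rng.standard_normal((n_lat, n_vox))
    meg = np.stack([gain[t] * (latent @ mix_meg)
                    + noise * rng.standard_normal((n_cond, n_sens))
                    for t in range(n_times)], axis=2)
    fmri = latent @ mix_fmri + noise * rng.standard_normal((n_cond, n_vox))
    return meg, fmri

low = fusion_timecourse(*simulate(noise=1.0))
high = fusion_timecourse(*simulate(noise=5.0))
print(f"peak fusion, low noise:  {low[25]:.2f}")
print(f"peak fusion, high noise: {high[25]:.2f}")
print(f"baseline (no signal):    {low[0]:.2f}")
```

Raising the noise in both modalities lowers the peak fusion correlation, because noise in either RDM attenuates their agreement. Other dissimilarity measures (see Guggenmos et al., 2018; Walther et al., 2016) could be substituted in `rdm` without changing the rest of the sketch.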

A second general limitation is that the power of the M/EEG-fMRI fusion approach depends on the richness of the condition set. When only a few conditions can be defined, representational similarity imposes few constraints on the correspondence between M/EEG and fMRI data, and thus the link established between the spatial and temporal domains will be weak. In contrast, when many experimental conditions are used that capture the neural processing underlying a particular cognitive function in a sufficiently diverse manner, the link will be strong. However, the number of conditions required is not easily established, as it interacts with multiple factors, including the actual spatiotemporal dynamics to be resolved, the signal-to-noise ratio, and the exact theoretical question asked.
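One way to see why few conditions yield a weak link: an RDM over n conditions contributes only n(n - 1)/2 unique dissimilarities, so chance-level correlations between unrelated RDMs spread widely when n is small. The sketch below assumes random Gaussian condition patterns as a null model (an illustrative choice, not a procedure from the studies cited here) and estimates the standard deviation of Spearman correlations between two independent RDMs; the helper names and the number of features are hypothetical.

```python
import numpy as np

def n_pairs(n_conditions):
    """Number of unique off-diagonal entries in an n x n RDM."""
    return n_conditions * (n_conditions - 1) // 2

def upper_rdm(patterns):
    """Correlation-distance RDM (1 - Pearson r), upper triangle as a vector."""
    m = 1.0 - np.corrcoef(patterns)
    i, j = np.triu_indices(m.shape[0], k=1)
    return m[i, j]

def spearman(a, b):
    """Spearman's rho as Pearson r of ranks (no ties expected for continuous data)."""
    return np.corrcoef(np.argsort(np.argsort(a)), np.argsort(np.argsort(b)))[0, 1]

def null_spread(n_conditions, n_features=50, n_iter=500, seed=0):
    """SD of RDM correlations between two independent random pattern sets."""
    rng = np.random.default_rng(seed)
    rhos = [spearman(upper_rdm(rng.standard_normal((n_conditions, n_features))),
                     upper_rdm(rng.standard_normal((n_conditions, n_features))))
            for _ in range(n_iter)]
    return float(np.std(rhos))

for n in (4, 10, 40, 92):
    print(f"{n:3d} conditions: {n_pairs(n):5d} pairs, null SD of rho = {null_spread(n):.3f}")
```

The null spread only indicates how large an RDM correlation must be to stand out from chance; the number of conditions a particular study needs still depends on the factors listed above.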

A third fundamental limitation is that the results can be ambiguous: if two regions share a similar representational format but are in fact activated at different time points, we cannot ascribe unique representational dynamics to each. However, this case is unlikely, as information travelling between brain regions is transformed in a complex and non-linear manner, which in effect also changes its representational format. To further mitigate this concern, the stimulus set could be chosen so as to maximally disambiguate between regions of interest based on prior knowledge of their representational format.
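The ambiguity can be illustrated with a small simulation under hypothetical assumptions: region A drives the M/EEG signal early (sample 15) and region B late (sample 35), each through its own random sensor mixing matrix. When both regions share one representational format, their fusion time courses become nearly indistinguishable, so neither latency can be ascribed uniquely to either region; when the stimulus set gives them distinct formats, each region's fusion peak lands at its own latency. All sizes, latencies, and noise levels are illustrative only.

```python
import numpy as np

def upper_rdm(patterns):
    """Correlation-distance RDM (1 - Pearson r), upper triangle as a vector."""
    m = 1.0 - np.corrcoef(patterns)
    i, j = np.triu_indices(m.shape[0], k=1)
    return m[i, j]

def spearman(a, b):
    """Spearman's rho as Pearson r of ranks (assumes no ties)."""
    return np.corrcoef(np.argsort(np.argsort(a)), np.argsort(np.argsort(b)))[0, 1]

rng = np.random.default_rng(1)
n_cond, n_lat, n_sens, n_vox, n_times = 20, 5, 64, 200, 50
t = np.arange(n_times)
gain_a = np.exp(-0.5 * ((t - 15) / 3.0) ** 2)  # hypothetical: region A active early
gain_b = np.exp(-0.5 * ((t - 35) / 3.0) ** 2)  # hypothetical: region B active late

def fusion_pair(shared_format):
    """Fusion time courses for ROIs A and B; B reuses A's latent structure if shared_format."""
    lat_a = rng.standard_normal((n_cond, n_lat))
    lat_b = lat_a if shared_format else rng.standard_normal((n_cond, n_lat))
    # Random mixing matrices stand in for how each region projects onto the sensors.
    src_a = lat_a @ rng.standard_normal((n_lat, n_sens))
    src_b = lat_b @ rng.standard_normal((n_lat, n_sens))
    # Noisy fMRI patterns of each ROI reflect that region's latent structure.
    roi_a = upper_rdm(lat_a @ rng.standard_normal((n_lat, n_vox)) + rng.standard_normal((n_cond, n_vox)))
    roi_b = upper_rdm(lat_b @ rng.standard_normal((n_lat, n_vox)) + rng.standard_normal((n_cond, n_vox)))
    fa, fb = [], []
    for k in range(n_times):
        meg = gain_a[k] * src_a + gain_b[k] * src_b + rng.standard_normal((n_cond, n_sens))
        meg_rdm = upper_rdm(meg)
        fa.append(spearman(meg_rdm, roi_a))
        fb.append(spearman(meg_rdm, roi_b))
    return np.array(fa), np.array(fb)

fa_same, fb_same = fusion_pair(shared_format=True)
fa_diff, fb_diff = fusion_pair(shared_format=False)
print("shared format:   r(A, B time courses) =", round(np.corrcoef(fa_same, fb_same)[0, 1], 2))
print("distinct format: peak of A at", np.argmax(fa_diff), "| peak of B at", np.argmax(fb_diff))
```

In the distinct-format case the latent structures of A and B are drawn independently, which is an idealized version of choosing a stimulus set that maximally disambiguates the regions.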

A particular limitation of the current implementation of M/EEG-fMRI fusion is that it takes information about timing only from M/EEG, and information about space only from fMRI. This is motivated by the idea of combining, across techniques, only those aspects in which each technique excels. However, this might not be the optimal procedure in many experimental settings. For one, considering temporal information from fMRI might be beneficial when faster-than-usual acquisition is employed (Ekman et al., 2017; Lewis et al., 2016; Poser and Setsompop, 2018), when the cognitive processes in question have dynamics slow enough to be meaningfully captured with a sampling interval of seconds, or when experimental interventions are used to draw out in time cognitive processing that would otherwise be rapid. Similarly, spatial information from M/EEG can be considered based on any of the multiple source reconstruction techniques developed to project the sensor-space signals into the source space of the brain. fMRI data in a particular location would then be related to MEG activity localized to that location. This might, for example, help mitigate the ambiguity in MEG-fMRI fusion when two regions share a similar representational format, by assigning unique MEG signals to each based on the spatial information in the MEG data.

A limitation of applications of M/EEG-fMRI fusion to date is that the fused data came from separate rather than simultaneous recordings. While this is currently unavoidable when combining MEG and fMRI, nothing prevents recording EEG and fMRI data for fusion simultaneously. Whether EEG-fMRI fusion works better with simultaneously or separately recorded data is an open question that requires investigation, and the answer will likely also depend on how much weight the experimenter puts on the respective advantages and disadvantages of each approach. An argument for separate recordings is that the experimental design can be optimized to the specifics of each technique. For example, because EEG is not limited by the sluggishness of the BOLD response, experimental trials may be spaced much more tightly during EEG recordings, which increases the signal-to-noise ratio of the EEG data and thus helps fusion. Further, separate recordings are easier to carry out, because combining fMRI and EEG requires specialized setups and equipment, creates interactions between the measurements that may impact data quality, and requires additional preprocessing steps. However, simultaneous recordings have unique benefits, too. Brain data are acquired under exactly the same conditions (e.g. the same sensory stimulation, and the same psychological and physiological state of the participant). In contrast, data acquired in separate sessions are subject to variation (e.g. imperfectly matched stimulation conditions, different body postures, or a history of prior exposure to the same experimental conditions). Thus, simultaneous recordings arguably reduce unwanted variability compared to separate recordings and might therefore benefit fusion.
Simultaneous recordings also suggest a novel direction for method development: could combining univariate and advanced multivariate fusion techniques based on trial-by-trial variability (Calhoun and Eichele, 2010; Debener et al., 2006; Eichele and Calhoun, 2010) with fusion based on condition-by-condition variability, as discussed here, yield a more refined view of spatiotemporal dynamics in simultaneously recorded EEG and fMRI data than either approach would in isolation?

In sum, M/EEG-fMRI fusion is subject to several fundamental and practical constraints that define its general applicability. Like any technique, it sheds light on human brain function from one particular perspective only. However, from that perspective it contributes unique views of human brain function. This does not make any other analytical or measurement technique superfluous. Instead, we believe it is best viewed as an addition to the set of exciting tools of cognitive neuroscience, helping to accrue evidence for theories in the absence of access to ground truth.

9. Conclusions

To understand complex neural processing in the human brain, we need to resolve its component processes in space and time at the level at which they occur. In the absence of a single non-invasive technique that excels in both spatial and temporal resolution, analytical approaches that combine information from several techniques are key. M/EEG-fMRI fusion is such an approach. Its feasibility has been demonstrated in the study of visual perception, and it has been successfully transferred to the study of higher cognitive functions. It is readily extensible and well suited to benefit immediately from future developments in the imaging techniques that it combines. We thus believe that it has the potential to be fruitfully applied in a wide range of fields to reveal the spatiotemporal dynamics of human cognitive function.

Acknowledgments

This work was funded by the German Research Foundation (DFG, CI241/1-1, CI241/3-1 to R.M.C.), by the European Research Council (ERC, 803370 to R.M.C.), and by the Vannevar Bush Faculty Fellowship program of the ONR (N00014-16-1-3116 to A.O.). We thank N. Apurva Ratan Murty and Martin N. Hebart for helpful comments on the manuscript.

Footnotes

Author contributions

Both authors contributed equally to the manuscript.

Declaration of Interest

The authors declare no competing interests.

References

- Averbeck BB, Latham PE, Pouget A. Neural correlations, population coding and computation. Nat Rev Neurosci. 2006;7:358–366. doi: 10.1038/nrn1888.

- Bastos AM, Vezoli J, Bosman CA, Schoffelen J-M, Oostenveld R, Dowdall JR, De Weerd P, Kennedy H, Fries P. Visual Areas Exert Feedforward and Feedback Influences through Distinct Frequency Channels. Neuron. 2015;85:390–401. doi: 10.1016/j.neuron.2014.12.018.

- Bracci S, Daniels N, Op de Beeck H. Task Context Overrules Object- and Category-Related Representational Content in the Human Parietal Cortex. Cereb Cortex. 2017;27:310–321. doi: 10.1093/cercor/bhw419.

- Buffalo EA, Fries P, Landman R, Buschman TJ, Desimone R. Laminar differences in gamma and alpha coherence in the ventral stream. Proc Nat Acad Sci USA. 2011;108:11262–11267. doi: 10.1073/pnas.1011284108.

- Cichy RM, Pantazis D. Multivariate pattern analysis of MEG and EEG: A comparison of representational structure in time and space. Neuroimage. 2017;158:441–454. doi: 10.1016/j.neuroimage.2017.07.023.

- Cichy RM, Teng S. Resolving the neural dynamics of visual and auditory scene processing in the human brain: a methodological approach. Phil Trans R Soc B. 2017;372:20160108. doi: 10.1098/rstb.2016.0108.

- Cichy RM, Pantazis D, Oliva A. Resolving human object recognition in space and time. Nat Neurosci. 2014;17:455–462. doi: 10.1038/nn.3635.

- Cichy RM, Pantazis D, Oliva A. Similarity-Based Fusion of MEG and fMRI Reveals Spatio-Temporal Dynamics in Human Cortex During Visual Object Recognition. Cereb Cortex. 2016;26:3563–3579. doi: 10.1093/cercor/bhw135.

- Dale AM, Halgren E. Spatiotemporal mapping of brain activity by integration of multiple imaging modalities. Curr Opin Neurobiol. 2001;11:202–208. doi: 10.1016/s0959-4388(00)00197-5.

- Debener S, Ullsperger M, Siegel M, Engel AK. Single-trial EEG-fMRI reveals the dynamics of cognitive function. Trends Cogn Sci. 2006;10:558–563. doi: 10.1016/j.tics.2006.09.010.

- DiCarlo JJ, Cox DD. Untangling invariant object recognition. Trends Cogn Sci. 2007;11:333–341. doi: 10.1016/j.tics.2007.06.010.

- Eichele T, Calhoun VD. In: Simultaneous EEG, and fMRI: Recording, Analysis and Application. Ullsperger M, Debener S, editors. Oxford University Press; Oxford: 2010. Parallel EEG-fMRI ICA Decomposition; pp. 175–194.

- Ekman M, Kok P, de Lange FP. Time-compressed preplay of anticipated events in human primary visual cortex. Nat Comm. 2017;8:1–9. doi: 10.1038/ncomms15276.

- Erez Y, Duncan J. Discrimination of Visual Categories Based on Behavioral Relevance in Widespread Regions of Frontoparietal Cortex. J Neurosci. 2015;35:12383–12393. doi: 10.1523/JNEUROSCI.1134-15.2015.

- Felleman DJ, Van Essen DC. Distributed Hierarchical Processing in the Primate Cerebral Cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Gilbert CD. Microcircuitry of the Visual Cortex. Ann Rev Neurosci. 1983;6:217–247. doi: 10.1146/annurev.ne.06.030183.001245.

- Guggenmos M, Sterzer P, Cichy RM. Multivariate pattern analysis for MEG: a comprehensive comparison of dissimilarity measures. Neuroimage. 2018;173:434–447. doi: 10.1016/j.neuroimage.2018.02.044.

- Hallett M. Transcranial Magnetic Stimulation: A Primer. Neuron. 2007;55:187–199. doi: 10.1016/j.neuron.2007.06.026.

- Hämäläinen MS, Ilmoniemi RJ, Sarvas J. Theory and Applications of Inverse Problems. Longman; Essex, England: 1998. Interdependence of information conveyed by the magnetoencephalogram and the electroencephalogram; pp. 27–37.

- Harel A, Kravitz DJ, Baker CI. Task context impacts visual object processing differentially across the cortex. Proc Nat Acad Sci USA. 2014;111:E962–E971. doi: 10.1073/pnas.1312567111.

- Haynes J-D. A Primer on Pattern-Based Approaches to fMRI: Principles, Pitfalls, and Perspectives. Neuron. 2015;87:257–270. doi: 10.1016/j.neuron.2015.05.025.

- Hebart MN, Bankson BB, Harel A, Baker CI, Cichy RM. The representational dynamics of task and object processing in humans. eLife. 2018;7:e32816. doi: 10.7554/eLife.32816.

- Henriksson L, Mur M, Kriegeskorte N. Rapid Invariant Encoding of Scene Layout in Human OPA. Neuron. 2019;103:161–171.e3. doi: 10.1016/j.neuron.2019.04.014.

- Huber L, Handwerker DA, Jangraw DC, Chen G, Hall A, Stüber C, Gonzalez-Castillo J, Ivanov D, Marrett S, Guidi M, et al. High-Resolution CBV-fMRI Allows Mapping of Laminar Activity and Connectivity of Cortical Input and Output in Human M1. Neuron. 2017;96:1253–1263.e7. doi: 10.1016/j.neuron.2017.11.005.

- Huster RJ, Debener S, Eichele T, Herrmann CS. Methods for Simultaneous EEG-fMRI: An Introductory Review. J Neurosci. 2012;32:6053–6060. doi: 10.1523/JNEUROSCI.0447-12.2012.

- Isik L, Meyers EM, Leibo JZ, Poggio TA. The dynamics of invariant object recognition in the human visual system. J Neurophysiol. 2014;111:91–102. doi: 10.1152/jn.00394.2013.

- Jacobs J, Kahana MJ, Ekstrom AD, Mollison MV, Fried I. A Sense of Direction in Human Entorhinal Cortex. Proc Nat Acad Sci USA. 2010;107:6487–6492. doi: 10.1073/pnas.0911213107.

- Jorge J, van der Zwaag W, Figueiredo P. EEG-fMRI integration for the study of human brain function. Neuroimage. 2014;102(Part 1):24–34. doi: 10.1016/j.neuroimage.2013.05.114.

- van Kerkoerle T, Self MW, Dagnino B, Gariel-Mathis M-A, Poort J, van der Togt C, Roelfsema PR. Alpha and gamma oscillations characterize feedback and feedforward processing in monkey visual cortex. Proc Nat Acad Sci USA. 2014;111:14332–14341. doi: 10.1073/pnas.1402773111.

- Khaligh-Razavi S-M, Cichy RM, Pantazis D, Oliva A. Tracking the Spatiotemporal Neural Dynamics of Real-world Object Size and Animacy in the Human Brain. J Cogn Neurosci. 2018;30:1559–1576. doi: 10.1162/jocn_a_01290.

- Kiani R, Esteky H, Mirpour K, Tanaka K. Object Category Structure in Response Patterns of Neuronal Population in Monkey Inferior Temporal Cortex. J Neurophysiol. 2007;97:4296–4309. doi: 10.1152/jn.00024.2007.

- King J-R, Dehaene S. Characterizing the dynamics of mental representations: the temporal generalization method. Trends Cogn Sci. 2014;18:203–210. doi: 10.1016/j.tics.2014.01.002.

- King J-R, Gramfort A, Schurger A, Naccache L, Dehaene S. Two Distinct Dynamic Modes Subtend the Detection of Unexpected Sounds. PLoS ONE. 2014;9:e85791. doi: 10.1371/journal.pone.0085791.

- Kok P, Bains LJ, van Mourik T, Norris DG, de Lange FP. Selective Activation of the Deep Layers of the Human Primary Visual Cortex by Top-Down Feedback. Curr Biol. 2016;26:371–376. doi: 10.1016/j.cub.2015.12.038.

- Kriegeskorte N. Representational similarity analysis – connecting the branches of systems neuroscience. Front Sys Neurosci. 2008;2:4. doi: 10.3389/neuro.06.004.2008.

- Kriegeskorte N, Kreiman G. Visual Population Codes: Toward a Common Multivariate Framework for Cell Recording and Functional Imaging. The MIT Press; Cambridge, Mass: 2011.

- Kriegeskorte N, Kievit RA. Representational geometry: integrating cognition, computation, and the brain. Trends Cogn Sci. 2013;17:401–412. doi: 10.1016/j.tics.2013.06.007.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, Bandettini PA. Matching Categorical Object Representations in Inferior Temporal Cortex of Man and Monkey. Neuron. 2008;60:1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- Larkum M. A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends Neurosci. 2013;36:141–151. doi: 10.1016/j.tins.2012.11.006.

- Lewis LD, Setsompop K, Rosen BR, Polimeni JR. Fast fMRI can detect oscillatory neural activity in humans. Proc Nat Acad Sci USA. 2016;113:E6679–E6685. doi: 10.1073/pnas.1608117113.

- Lowe MX, Mohsenzadeh Y, Lahner B, Charest I, Oliva A, Teng S. Spatiotemporal Dynamics of Sound Representations reveal a Hierarchical Progression of Category Selectivity. BioRxiv. 2020:2020.06.12.149120.

- Maier A, Adams GK, Aura C, Leopold DA. Distinct Superficial and Deep Laminar Domains of Activity in the Visual Cortex during Rest and Stimulation. Front Syst Neurosci. 2010;4:31. doi: 10.3389/fnsys.2010.00031.

- Markov NT, Vezoli J, Chameau P, Falchier A, Quilodran R, Huissoud C, Lamy C, Misery P, Giroud P, Ullman S, et al. Anatomy of hierarchy: Feedforward and feedback pathways in macaque visual cortex. J Comp Neurol. 2014;522:225–259. doi: 10.1002/cne.23458.

- Meyers EM, Freedman DJ, Kreiman G, Miller EK, Poggio T. Dynamic Population Coding of Category Information in Inferior Temporal and Prefrontal Cortex. J Neurophysiol. 2008;100:1407–1419. doi: 10.1152/jn.90248.2008.

- Michalareas G, Vezoli J, van Pelt S, Schoffelen J-M, Kennedy H, Fries P. Alpha-Beta and Gamma Rhythms Subserve Feedback and Feedforward Influences among Human Visual Cortical Areas. Neuron. 2016;89:384–397. doi: 10.1016/j.neuron.2015.12.018.

- Mohsenzadeh Y, Qin S, Cichy RM, Pantazis D. Ultra-Rapid serial visual presentation reveals dynamics of feedforward and feedback processes in the ventral visual pathway. eLife. 2018;7:e36329. doi: 10.7554/eLife.36329.

- Mohsenzadeh Y, Mullin C, Lahner B, Cichy RM, Oliva A. Reliability and Generalizability of Similarity-Based Fusion of MEG and fMRI Data in Human Ventral and Dorsal Visual Streams. Vision. 2019;3:8. doi: 10.3390/vision3010008.

- Muckli L, De Martino F, Vizioli L, Petro LS, Smith FW, Ugurbil K, Goebel R, Yacoub E. Contextual Feedback to Superficial Layers of V1. Curr Biol. 2015;25:2690–2695. doi: 10.1016/j.cub.2015.08.057.

- Muukkonen I, Ölander K, Numminen J, Salmela VR. Spatio-temporal dynamics of face perception. Neuroimage. 2020;209:116531. doi: 10.1016/j.neuroimage.2020.116531.

- Nichols T, Brett M, Andersson J, Wager T, Poline J-B. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Pantazis D, Fang M, Qin S, Mohsenzadeh Y, Li Q, Cichy RM. Decoding the orientation of contrast edges from MEG evoked and induced responses. Neuroimage. 2018;180:267–279. doi: 10.1016/j.neuroimage.2017.07.022.

- Panzeri S, Macke JH, Gross J, Kayser C. Neural population coding: combining insights from microscopic and mass signals. Trends Cogn Sci. 2015;19:162–172. doi: 10.1016/j.tics.2015.01.002.

- Poser BA, Setsompop K. Pulse sequences and parallel imaging for high spatiotemporal resolution MRI at ultra-high field. Neuroimage. 2018;168:101–118. doi: 10.1016/j.neuroimage.2017.04.006.

- Reddy L, Cichy R, VanRullen R. Oscillatory signatures of object recognition across cortical space and time. J Vis. 2017;17:1346–1346.

- Rosa MJ, Daunizeau J, Friston KJ. EEG-fMRI Integration: A critical review of biophysical modeling and data analysis approaches. J Integr Neurosci. 2010;9:453–476. doi: 10.1142/s0219635210002512.

- Salmela V, Salo E, Salmi J, Alho K. Spatiotemporal Dynamics of Attention Networks Revealed by Representational Similarity Analysis of EEG and fMRI. Cereb Cortex. 2018;28:549–560. doi: 10.1093/cercor/bhw389.

- Seibold DR, McPhee RD. Commonality Analysis: A method for decomposing explained variance in multiple regression. Hum Comm Res. 1979;5:355–365.

- Siegel M, Buschman TJ, Miller EK. Cortical information flow during flexible sensorimotor decisions. Science. 2015;348:1352–1355. doi: 10.1126/science.aab0551.

- Stanley GB. Reading and writing the neural code. Nat Neurosci. 2013;16:259–263. doi: 10.1038/nn.3330.

- Walsh V, Cowey A. Transcranial magnetic stimulation and cognitive neuroscience. Nat Rev Neurosci. 2000;1:73–80. doi: 10.1038/35036239.

- Walther A, Nili H, Ejaz N, Alink A, Kriegeskorte N, Diedrichsen J. Reliability of dissimilarity measures for multi-voxel pattern analysis. Neuroimage. 2016;137:188–200. doi: 10.1016/j.neuroimage.2015.12.012.