Abstract

The aim of the present study was to investigate if the perception of time is affected by actively attending to different metrical levels in musical rhythmic patterns. In an experiment with a repeated-measures design, musicians and non-musicians were presented with musical rhythmic patterns played at three different tempi. They synchronised with multiple metrical levels (half notes, quarter notes, eighth notes) of these patterns using a finger-tapping paradigm and listened without tapping. After each trial, stimulus duration was judged using a verbal estimation paradigm. Results show that the metrical level participants synchronised with influenced perceived time: actively attending to a higher metrical level (half notes, longer inter-tap intervals) led to the shortest time estimations, hence time was experienced as passing more quickly. Listening without tapping led to the longest time estimations. The faster the tempo of the patterns, the longer the time estimation. While there were no differences between musicians and non-musicians, those participants who tapped more consistently and accurately (as analysed by circular statistics) estimated durations to be shorter. Thus, attending to different metrical levels in music, by deliberately directing attention and motor activity, affects time perception.

Keywords: Time Perception, Time Estimation, Entrainment, Tapping, Attention, Musical Tempo, Rhythm Patterns

Introduction

There is a growing body of work suggesting that music affects internal timing mechanisms and experiences of time. The tempo of a musical piece has been shown to be a main factor driving distortions of perceived time. However, previous studies on time perception and music have not addressed the fact that musical tempo per se is an ambiguous construct, since the tempo of a musical piece as notated and performed and the tempo actually perceived by listeners are not necessarily congruent. Furthermore, perception of beat salience may differ between individuals as they synchronise with different metrical levels in the music. This study aimed at investigating the effects of sensorimotor synchronisation (SMS) with different metrical levels in music, compared to listening-only without tapping, on time estimations. It is proposed that deliberately attending to a given metrical level may alter experiences of the music’s duration.

Time and timing mechanisms are essential components of the perception and performance of music (Epstein, 1995; London, 2012), yet the underlying mechanisms are still not entirely understood. Internal clock models of interval timing assume an oscillating mechanism that is constantly generating pulses (Gibbon, 1977; Gibbon, Church, & Meck, 1984; Treisman, 1963). These pulses are accumulated when time needs to be judged. Increased physiological activation (arousal) causes the rate of the pulse generator to increase; therefore, more pulses are accumulated in the same amount of time and, as a consequence, time seems to last longer. Several studies found this time-lengthening effect of arousal in different contexts such as hearing emotional versus neutral sounds (Noulhiane, Mella, Samson, Ragot, & Pouthas, 2007), looking at emotional compared to neutral pictures (Gil & Droit-Volet, 2012), and when listening to music (Droit-Volet, Bigand, Ramos, & Bueno, 2010; Droit-Volet, Ramos, Bueno, & Bigand, 2013).

According to the attentional gate model (Block & Zakay, 1996; Zakay & Block, 1995), temporal and non-temporal processes compete for the same attentional resources. The model is based on the internal clock models mentioned above, adding an attentional gate between the pulse generator and the accumulator, which ‘stores’ the ongoing pulses. When more attention is allocated to time itself, the gate opens wider, allowing for more pulses to accumulate, and time is subsequently perceived to be longer. Referring to empirical music research, studies found that individuals were willing to wait the least amount of time when no music was played in the background as a distractor, and that time appears to be shorter when the accompanying music is enjoyed (Cameron, Baker, Peterson, & Braunsberger, 2003; Kellaris & Kent, 1992; North & Hargreaves, 1999). Listening to music diverts more attention away from time or, in other words, ‘time flies’ with music (see also Droit-Volet et al., 2010). A meta-analysis by Block, Hancock, and Zakay (2010) suggests that the attentional gate mechanism is supported by a large number of studies, and that increased cognitive demands dedicated to non-temporal tasks (hence attention directed away from time) lead to shorter time estimations. It is important to note that time estimations strongly depend on whether or not participants are informed in advance that time needs to be judged (prospective time paradigm). If participants are not informed about a time estimation task (retrospective paradigm), effects on perceived time change since other cognitive processes such as memory are more involved (Block, Grondin, & Zakay, 2018).

Much of the literature on time perception proposes that the tempo of events and event density are the main factors in perceived time distortions, and that physiological activation and perceived (musical) tempo are closely linked. In a series of experiments (Droit-Volet et al., 2013), tempo and other musical parameters were manipulated. Musical tempo was the main factor influencing time perception such that fast music was judged to last longer than slow music, and participants also judged fast music as being more arousing than slow music. This finding is supported by an earlier study (Oakes, 2003), showing that perceived waiting time increases with faster music (i.e. higher event density). Similar results were found for controlled rhythmic sequences of simple clicks and visual flashes (Droit-Volet & Wearden, 2002; Ortega & López, 2008; Treisman, Faulkner, Naish, & Brogan, 1990). Furthermore, for audio-visual stimuli such as films and video clips, music may not only enhance perceived and felt arousal, but may also influence time perception. In a study of the effects of music in slow motion video scenes, music influenced perceived time, leading to more accurate estimations of scene durations compared to conditions without music (Wöllner, Hammerschmidt, & Albrecht, 2018).

Apart from musical factors, in the current study we argue that individuals’ motor activity may influence perceived time. Music often results in an urge to move in time, establishing a stable temporal relationship between body movements and the rhythm, a process called entrainment (e.g. Jones, 2004). When moving to music, different body parts synchronise differently to musical rhythms depending on the rhythmic structure and tempo (Burger, London, Thompson, & Toiviainen, 2018; London, Burger, Thompson, & Toiviainen, 2016). Although studies have found a preference for perceiving beats every 500–600 ms for regular rhythmic sequences (Moelants, 2002; van Noorden & Moelants, 1999), perceptions of beat salience (i.e. pulse) in music may differ between individuals. This is a crucial aspect of rhythmic entrainment and has not been addressed with regard to time perception. Thus, tempo in music is an ambiguous construct with no objectively correct period, and depends on individual perception and structural components of the music (Moelants & McKinney, 2004; Toiviainen & Snyder, 2003).

Concerning the latter, most music consists of multiple metrical levels that are hierarchically structured, and each of the levels corresponds to a different tempo (Cooper & Meyer, 1960; Lerdahl & Jackendoff, 1983). These hierarchical levels may span from low levels with ‘local’ temporal regularities to higher levels with more ‘global’ temporal regularities consisting of integer multiples of the lower levels. Temporal cues to meter perception include tempo (BPM), note duration, and event density (Drake, Gros, & Penel, 1999; London, 2011; Madison & Paulin, 2010; Parncutt, 1994). Non-temporal cues include pitch, harmonic progression, tonal movement, loudness changes (i.e. musical accents), timbre, and the listener’s motor activity (Boltz, 2011; Drake et al., 1999; Eitan & Granot, 2009; Epstein, 1995; Hannon, Snyder, Eerola, & Krumhansl, 2004; London, 2011; Snyder & Krumhansl, 2001). For example, a study by McKinney and Moelants (2006) suggests that the most salient beat level in a given piece of music may vary largely among individuals, such that in some pieces there may be up to four different metrical levels chosen for the most salient beat. Furthermore, beat salience is more ambiguous in certain genres (e.g. jazz) than in others (e.g. metal and punk).

In a finger tapping study using Western classical music (Martens, 2011), ambiguity of the perceived beat level between participants could not be explained by musical tempo. There were three types of listening strategies with regard to musical structure: ‘Surface’ listeners focused on the fastest consistent beat, the ‘deep’ listeners more on higher metrical levels, whereas a mixture of these two strategies was used by the ‘variable’ listeners. Less musical training was associated with ‘surface’ listening, while participants with more musical training more frequently employed ‘variable’ and ‘deep’ listening strategies. In a similar vein, a study by Drake, Penel, and Bigand (2000) reported that musicians, compared to non-musicians, had a tendency to tap along with higher metrical levels in classical piano pieces. Other studies did not find significant differences between musicians and non-musicians, gender or age groups (McKinney & Moelants, 2006; Moelants & McKinney, 2004; Snyder & Krumhansl, 2001). Regarding this divergence of findings, Snyder and Krumhansl (2001) suggested that “pulse-finding differences as a function of musical experience are not due to general motor control differences. Instead, style-dependent pulse-finding differences as a function of musical experience may indicate the presence of more perceptual difficulties (e.g., temporal periodicity extraction) with less musical experience” (p. 469).

Taken together, previous research suggests that music affects perceived time and that the tempo of music is the main musical factor, causing time to be perceived as lasting longer for faster tempi. However, musical tempo is not a single entity, since music features multiple metrical levels at which a listener may perceive the beat and thus synchronise to the signal. Once a stable relationship between the preferred rate and the tapping has been established, attention can be allocated to other accessible metrical levels, hence time can be structured in varying intervals (Drake, Jones, & Baruch, 2000).

The present study investigated how time estimations are affected by attending to different metrical levels when listening to music using a motor task (sensorimotor synchronisation). Based on the literature cited above, we hypothesised that (i) tapping at a faster rate by synchronising to lower metrical levels causes longer time estimations, and vice versa (e.g. Drake et al., 1999; London, 2011); (ii) faster musical tempi result in longer time estimations (e.g. Droit-Volet et al., 2013); and (iii) musical characteristics of rhythmic patterns also to some extent influence time estimations, since the non-temporal features should affect the perception of the most salient beat (e.g. Boltz, 2011). Furthermore, (iv) we assumed that time is perceived to last longer for musicians than non-musicians, since fewer cognitive resources are needed to tap to a specific metrical level. Thus, musicians should allocate more attention to the time estimation task, which in turn would cause durations to be perceived as lasting longer (e.g. Block et al., 2010; Drake, Penel et al., 2000).

Method

Participants

A total of 34 participants took part in the study. After careful inspection of the data, four participants had to be excluded for the following four reasons: insufficient tapping performance (35.19% of all trials were incorrect for one participant, see ‘Data analysis’), technical issues in the recording, same answers given throughout (this participant apparently fell asleep during parts of the experiment), and higher age in order to balance the range of the two groups. Subsequent analyses were based on 30 participants.

The musicians group consisted of 10 men and 5 women with a mean age of 26.53 years (SD = 8.76, range: 19–47 years). Participants played a musical instrument for M = 16.67 years (SD = 10.11) and studied an instrument or voice with a teacher for M = 9.80 years (SD = 5.16). The general importance of music in their lives was M = 5.77 (SD = 0.42), rated on a 7-point scale ranging from 1 (not important at all) to 7 (very important). The non-musicians group consisted of 12 women and 3 men with a mean age of 28.73 years (SD = 11.16, range: 20–54 years) who had played a musical instrument for M = 0.20 years (SD = 0.76). Participants in this group had music lessons for M = 0.23 years (SD = 0.77). Importance of music was rated to be M = 4.00 (SD = 1.41). The two groups differed in terms of instrumental experience (p < .001) and the importance of music (p < .001), but did not differ in age (p > .05). All participants took part in accordance with the guidelines of the local Ethics Committee and were compensated with 10 € for participation.

Stimuli

The stimuli used in this study consisted of three rhythmic patterns that were based on the common ‘PoumTchak’ rhythm (Zeiner-Henriksen, 2010b) typically used in electronic dance music (Snoman, 2009; Zeiner-Henriksen, 2010a). The patterns consisted of four instruments (kick drum, snare drum, hi-hat, and bass synthesiser) arranged in a 4/4 duple meter consisting of whole, half, quarter, and eighth notes and were presented in a loop. The bass synthesiser played the first four notes of a C minor scale in ascending direction (Figure 1).

Figure 1. Notations of the rhythmic patterns.

A pilot study several weeks before the current experiment tested these stimuli, including the ‘PoumTchak’ rhythm with the same instruments and 23 other patterns which were obtained by reassigning the four instruments to the four subdivisions in each possible combination. Thirty participants tapped freely to each of the patterns at their preferred rate, synchronising with a metrical level of their own choice. Based on the results of the pilot study, a subset of three patterns was then chosen for the current study that varied in participants’ agreement regarding the chosen tapping rate, as measured by the coefficient of unalikeability. The coefficient of unalikeability is a measure of consensus (Dror, Panda, May, Majumdar, & Koren, 2014), and here indicates the degree of participants’ agreement on the preferred tapping rate (i.e. most salient beat) for each pattern. In the current study, pattern #1 had the lowest coefficient, indicating high agreement among participants, while pattern #2 had the highest coefficient, indicating low agreement. Pattern #3 was the one closest to the median (Table 1). One interesting outcome of the pilot study was that the ‘PoumTchak’ pattern did not yield the lowest coefficient of unalikeability; therefore, it was not used in the current study.
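As an illustration of how such a consensus measure behaves, the following minimal sketch assumes the commonly used categorical definition of the coefficient of unalikeability, u = 1 − Σ pᵢ², where pᵢ is the proportion of participants choosing category i. The exact formula used in the pilot study is not stated in the text, and the response lists below are hypothetical, not pilot data.

```python
from collections import Counter


def unalikeability(choices):
    """Coefficient of unalikeability, assuming u = 1 - sum(p_i ** 2).

    `choices` is a list of categorical responses, e.g. the metrical
    level each participant chose to tap along with.
    """
    n = len(choices)
    if n == 0:
        raise ValueError("need at least one observation")
    counts = Counter(choices)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


# Hypothetical responses (not data from the study).
high_agreement = ["quarter"] * 9 + ["half"] * 1
low_agreement = ["quarter"] * 5 + ["half"] * 3 + ["eighth"] * 2

print(round(unalikeability(high_agreement), 2))  # 0.18
print(round(unalikeability(low_agreement), 2))   # 0.62
```

Under this definition, a value of 0 means that all participants chose the same tapping rate, and larger values indicate that choices were spread over several metrical levels.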

Table 1. Features of musical rhythmic patterns.

| Pattern | Event Density (onset/sec) | Mean Flux (100-200 Hz) | Mean Flux (6.4-12.8 kHz) | Spectral Centroid (Hz) | Coefficient of Unalikeability |
|---|---|---|---|---|---|
| #1 | 2.39 | 5.77 | 2.01 | 3053.47 | 0.24 |
| #2 | 4.25 | 6.79 | 2.45 | 2926.65 | 0.66 |
| #3 | 5.11 | 7.5 | 0.58 | 2112.26 | 0.43 |

Table 1 lists psychoacoustical features of the patterns that are particularly relevant for rhythm and tempo perception. Using the MIRToolbox for Matlab (Lartillot, Toiviainen, & Eerola, 2008), the degree of spectral flux for sub-bands 100–200 Hz and 6,400–12,800 Hz was calculated by taking the Euclidean distances of the power spectra for each two consecutive frames of the signal, using a frame length of 25 ms and an overlap of 50% between successive frames and then averaging the resulting time-series using the ‘mirflux’ function (Alluri & Toiviainen, 2010). These two sub-bands were shown to be particularly related to rhythmic features (Burger, Ahokas, Keipi, & Toiviainen, 2013). Event density was calculated by the salient note onsets per second since it can affect tempo perception (Drake et al., 1999). The spectral centroid was calculated using the ‘mircentroid’ function as a descriptor of perceptual brightness.

For each of these three patterns, different versions with tempi at 83, 120, and 150 BPM (quarter notes) were produced and each version consisted of eight bars, resulting in 3 × 3 stimuli. While 120 BPM corresponds to the preferred perceived tempo in music and to spontaneous motor tempo (Moelants, 2002), the other two tempi were chosen in order to produce distinct slow and fast versions of the rhythmic patterns. Furthermore, a larger difference between 120 BPM and the lower tempo at 83 BPM was chosen compared to the faster tempo at 150 BPM to get closer to, but still within, the lower rate limits of the corresponding inter-tap intervals (ITIs, see Table 2) for sensorimotor synchronisation (SMS) tasks and to take into account non-linear relations of movement timing and interval durations (Madison, 2014; Repp, 2006). Since the number of bars was fixed, the tempi determined stimulus durations (Table 2). Additional catch-trials based on the same rhythmic patterns consisted of either four bars (83 BPM = 11.57 sec, 120 BPM = 8.00 sec, 150 BPM = 6.40 sec) or 12 bars (83 BPM = 34.70 sec, 120 BPM = 24.00 sec, 150 BPM = 19.20 sec).

Table 2. Temporal details of the musical rhythmic patterns.

| | Listening Condition | 83 BPM | 120 BPM | 150 BPM |
|---|---|---|---|---|
| IOI (sec) | Half notes | 1.446 | 1 | 0.8 |
| IOI (sec) | Quarter notes | 0.723 | 0.5 | 0.4 |
| IOI (sec) | Eighth notes | 0.361 | 0.25 | 0.2 |
| Stimulus Duration (sec) | | 23.133 | 16 | 12.8 |
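The temporal values in Table 2 follow directly from the tempo and the number of bars. The sketch below reproduces them, assuming four quarter-note beats per bar (4/4) as described for the stimuli; the function names are illustrative only.

```python
BEATS_PER_BAR = 4  # 4/4 metre with a quarter-note beat

# Length of each metrical level relative to one quarter note.
LEVEL_FACTORS = {"half": 2.0, "quarter": 1.0, "eighth": 0.5}


def ioi(bpm, level):
    """Inter-onset interval (sec) of a metrical level at a given tempo."""
    return LEVEL_FACTORS[level] * 60.0 / bpm


def stimulus_duration(bpm, bars=8):
    """Duration (sec) of a stimulus with the given number of bars."""
    return bars * BEATS_PER_BAR * 60.0 / bpm


for bpm in (83, 120, 150):
    row = [round(ioi(bpm, lvl), 3) for lvl in ("half", "quarter", "eighth")]
    print(bpm, row, round(stimulus_duration(bpm), 3))
```

Calling `stimulus_duration(bpm, bars=4)` or `stimulus_duration(bpm, bars=12)` gives the catch-trial durations in the same way.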

Design and procedure

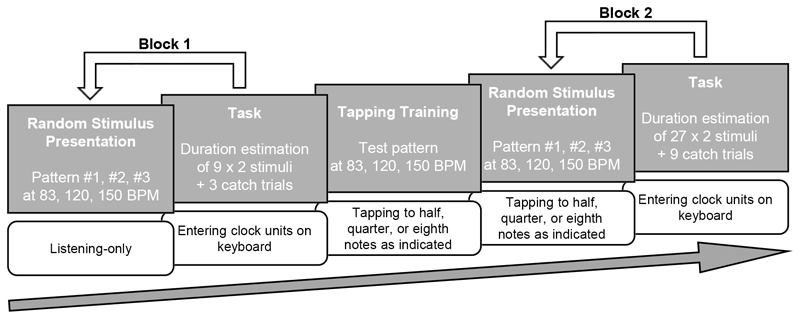

The study design consisted of three musical rhythmic patterns, presented at three different tempi in four listening conditions (listening-only without tapping, attending and tapping to half notes, to quarter notes, and to eighth notes). The main task of the experiment was to estimate the duration of each stimulus. Every participant was presented with all stimuli in all listening conditions, resulting in a 3 (Pattern) × 3 (Tempo) × 4 (Listening Condition, focussing on different metrical levels) repeated-measures design for the time estimation task, and a 3 × 3 × 3 repeated-measures design for the SMS task, since no tapping data was produced during the listening-only condition (Figure 2). Each condition was presented twice to account for response variability of time estimations (Grondin, 2010; Mioni, 2018). The listening-only block consisted of 18 trials and 3 ‘catch-trials’ with varying stimulus durations, and the tapping block consisted of 54 trials and 9 ‘catch-trials’ (one-seventh of all trials in each block). The additional ‘catch-trials’ varied in terms of stimulus conditions and were meant to test for participants’ attention to the time estimation task. Participants reliably recognised the shorter or longer durations of the ‘catch-trials’, such that their averaged time estimation ratios correlated with the actual trials as indicated by intraclass correlation [ICC = .96, CI(.92, .98)].

Figure 2. Experimental design. Participants first estimated the duration of each stimulus after listening without tapping (Block 1), then estimated the duration of each stimulus after tapping to a given metrical level (Block 2). Stimuli in both blocks were presented twice and in randomised order.

After providing informed consent, participants were seated in front of an LCD display connected to the experiment computer and entered demographic data into OpenSesame (Version 3.1.9; Mathôt, Schreij, & Theeuwes, 2012), which was used for the experimental protocol as well as the collection of time estimation data. Then, participants were introduced to the first task, which was to estimate the stimulus duration in seconds without a secondary task (prospective paradigm), meaning without tapping to a specific metrical level of the musical patterns. They were instructed before presentation to listen to the stimuli attentively without counting the time. Stimuli were played back via Live 9 (Version 9.7.7, Ableton) and Beyerdynamic DT-880 Pro headphones. After each stimulus, participants estimated the stimulus duration by entering the subjective time in seconds on a computer keyboard. They confirmed their estimation by pressing enter, and the next stimulus played back with a delay of four seconds. All stimuli were presented in individually randomised order.

In the second part of the experiment, participants tapped to the stimuli and subsequently estimated the presentation duration as in the first part. A BopPad touch pad (Keith McMillen Instruments) with a C411 condenser vibration pickup (AKG) placed at the edge of the touch pad was used for tapping. The pickup was connected to a Scarlett 2i2 audio interface (Focusrite), and tapping data was recorded in Live 9. Communication between the software environments was handled via a virtual MIDI port and the Mido library for Python. This set-up allowed for recordings of audio and MIDI signals simultaneously. Intrasystem latencies of the touch pad (6.80 ms) and audio hardware (7.89 ms) were compensated for.

Participants were instructed to tap with the index finger of their preferred hand on the touch pad to the half, quarter or eighth note level, respectively. The respective metrical level for each trial was shown for four seconds on the display before a stimulus was played back and further indicated by a cow bell sound implemented in the stimuli for the first two bars, since non-musicians were not expected to know the correct metrical level indicated by name immediately. All stimuli were presented in individually randomised orders (Figure 2). Before the second part of the experiment started, participants practised the tasks for at least three test trials including each metrical level once, and were able to repeat the practice trials as often as they needed using a test pattern not included in the actual experiment.

Data analysis

Tapping data

SMS performance is commonly assessed by tapping variability as a measure of SMS consistency, and SMS accuracy as a measure of asynchrony to a corresponding inter-onset interval (IOI) (Repp, 2005; Repp & Su, 2013). SMS is achieved when an action is in attunement with a predictable external rhythm. In order to analyse tapping performance, all taps before the onset of the third bar (minus 25% of the corresponding ITI) were excluded. If two successive taps were separated by less than 10% of the correct ITI, the second tap was counted as a rebound tap and removed from the data. Successful SMS was defined by two conditions: first, the number of taps per trial had to be within 25% of the correct number of taps (half notes: 12 taps, quarter notes: 24 taps, eighth notes: 48 taps). Second, the mean ITI of a trial had to be within 25% of the correct ITI. If one of these conditions was not met, that trial was counted as unsuccessful SMS and removed from analysis (3.76% of all trials). Of the remaining trials, the first 12 taps for each metrical level and tempo condition were considered for further analysis. Double or missing taps, with ITIs shorter or longer than two thirds of the correct ITI for the specific trial, were removed from the analysis (1.54% of the total number of taps).
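The two criteria for successful SMS can be sketched as follows. This is one possible reading of the rule, assuming that both the tap count and the mean ITI must lie within ±25% of their correct values; the function names and the example tap times are hypothetical and not taken from the study's analysis scripts.

```python
def mean_iti(tap_times):
    """Mean inter-tap interval (sec) of a sorted list of tap onsets."""
    itis = [b - a for a, b in zip(tap_times, tap_times[1:])]
    return sum(itis) / len(itis)


def is_successful_sms(tap_times, expected_taps, correct_iti, tolerance=0.25):
    """Check both criteria: tap count and mean ITI within +/- tolerance."""
    if len(tap_times) < 2:
        return False
    count_ok = abs(len(tap_times) - expected_taps) <= tolerance * expected_taps
    iti_ok = abs(mean_iti(tap_times) - correct_iti) <= tolerance * correct_iti
    return count_ok and iti_ok


# Hypothetical trial: quarter notes at 120 BPM (correct ITI = 0.5 sec),
# analysed from the onset of the third bar at 4.0 sec.
on_level = [4.0 + 0.5 * i for i in range(24)]
wrong_level = [4.0 + 0.25 * i for i in range(48)]  # tapped eighth notes instead

print(is_successful_sms(on_level, expected_taps=24, correct_iti=0.5))     # True
print(is_successful_sms(wrong_level, expected_taps=24, correct_iti=0.5))  # False
```

A trial in which a participant tapped at the wrong metrical level fails both criteria at once, since the tap count doubles and the mean ITI halves.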

Tapping performance was assessed by circular variance as a measure for tapping consistency, and mean asynchrony as a measure of tapping accuracy. Circular variance was computed based on asynchronies using the CircStat toolbox for Matlab (Berens, 2009). Asynchronies were computed by calculating the circular mean for each trial and then converted back to mean asynchrony (in ms), since statistical methodology of circular data is not available for multifactor repeated-measures analyses (e.g. Wöllner, Deconinck, Parkinson, Hove, & Keller, 2012). Before entering the data into separate repeated-measures Analyses of Variance (ANOVAs), trials with identical condition were averaged. If the data did not meet sphericity assumption (Mauchly’s W), a Greenhouse-Geisser correction was used. Post-hoc comparisons were calculated with a Bonferroni adjustment.
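The circular measures described above can be illustrated with a short sketch. It assumes that each asynchrony is mapped to a phase angle relative to the correct IOI, that circular variance is defined as 1 − R (with R the mean resultant length), and that the circular mean is converted back to milliseconds by the inverse mapping. The analysis in the study used the CircStat toolbox for Matlab; the Python functions below are illustrative stand-ins, not that toolbox's API.

```python
import cmath
import math


def to_phases(asynchronies_ms, ioi_ms):
    """Map asynchronies (ms) to phase angles (rad) relative to the IOI."""
    return [2.0 * math.pi * a / ioi_ms for a in asynchronies_ms]


def resultant(phases):
    """Mean resultant vector of a list of phase angles."""
    return sum(cmath.exp(1j * p) for p in phases) / len(phases)


def circular_variance(phases):
    """Circular variance, assumed here as 1 - R (0 = perfectly consistent)."""
    return 1.0 - abs(resultant(phases))


def mean_asynchrony_ms(asynchronies_ms, ioi_ms):
    """Circular mean of the asynchronies, converted back to ms."""
    mean_phase = cmath.phase(resultant(to_phases(asynchronies_ms, ioi_ms)))
    return mean_phase / (2.0 * math.pi) * ioi_ms


# Hypothetical trial: taps consistently 30 ms ahead of a 500 ms IOI.
early_taps = [-30.0] * 12
print(round(circular_variance(to_phases(early_taps, 500.0)), 6))  # 0.0
print(round(mean_asynchrony_ms(early_taps, 500.0), 6))            # -30.0
```

Negative mean asynchronies indicate taps that precede the beat, and a circular variance close to 1 indicates taps scattered across the whole interval.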

Time estimation data

Time estimations for trials not fitting the criteria for successful SMS were removed from analysis. Time estimations (subjective time) were divided by the target duration (objective time), and 1 was subtracted from the result. This ratio is a standard method in assessing time estimation, whereby 0 indexes perfect time estimation, positive values overestimations and negative values underestimations of time, allowing for comparisons of time estimations for different target durations. In a last step, time estimation ratios for identical stimuli were averaged before a repeated-measures ANOVA was performed on the data. If the sphericity assumption was not met (Mauchly’s W), a Greenhouse-Geisser correction was used. Bonferroni adjustments were employed for post-hoc comparisons.
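The time estimation ratio can be written as ratio = (subjective time / objective time) − 1. A minimal sketch, with hypothetical estimates rather than data from the study:

```python
def estimation_ratio(estimate_s, target_s):
    """Time estimation ratio: 0 = perfect, > 0 over-, < 0 underestimation."""
    if target_s <= 0:
        raise ValueError("target duration must be positive")
    return estimate_s / target_s - 1.0


def mean_ratio(estimates_s, target_s):
    """Average the ratios of repeated presentations of the same stimulus."""
    ratios = [estimation_ratio(e, target_s) for e in estimates_s]
    return sum(ratios) / len(ratios)


# Hypothetical estimates for the 16-second stimulus (120 BPM).
print(estimation_ratio(12.0, 16.0))    # -0.25
print(estimation_ratio(16.0, 16.0))    # 0.0
print(mean_ratio([12.0, 20.0], 16.0))  # 0.0
```

Because the ratio is expressed relative to the target duration, estimates for the 12.8, 16, and 23.133 sec stimuli can be compared on a common scale.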

Relationship between time estimation ratio and tapping performance

To assess the relationship between time estimations and tapping performance, repeated-measures correlations including the factors Listening Condition and Tempo were computed (Bakdash & Marusich, 2017). Two separate correlations were computed: one between time estimation ratios and tapping consistencies, and one between time estimation ratios and tapping accuracies. This statistical technique accounts for the within-participants study design. Using simple regression/correlation techniques would violate the assumption of independence or require averaging/aggregating the data.
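The idea behind a repeated-measures correlation can be sketched as follows: each participant's values are centred on that participant's own means, so that only within-participant covariation contributes to the coefficient. This is a simplified illustration under that assumption, not the implementation used in the study, and the data below are hypothetical.

```python
import math
from collections import defaultdict


def repeated_measures_corr(participants, x, y):
    """Common within-participant correlation and its degrees of freedom.

    Values are centred per participant before correlating, which removes
    between-participant differences in overall level.
    """
    groups = defaultdict(list)
    for pid, xi, yi in zip(participants, x, y):
        groups[pid].append((xi, yi))

    xc, yc = [], []
    for rows in groups.values():
        mx = sum(r[0] for r in rows) / len(rows)
        my = sum(r[1] for r in rows) / len(rows)
        xc.extend(r[0] - mx for r in rows)
        yc.extend(r[1] - my for r in rows)

    sxy = sum(a * b for a, b in zip(xc, yc))
    sxx = sum(a * a for a in xc)
    syy = sum(b * b for b in yc)
    r = sxy / math.sqrt(sxx * syy)
    dof = len(xc) - len(groups) - 1  # observations - participants - 1
    return r, dof


# Hypothetical data: both participants show a negative within-person
# relationship, although participant "B" scores higher overall.
pids = ["A", "A", "A", "B", "B", "B"]
x = [1.0, 2.0, 3.0, 11.0, 12.0, 13.0]
y = [3.0, 2.0, 1.0, 13.0, 12.0, 11.0]
r, dof = repeated_measures_corr(pids, x, y)
print(round(r, 3), dof)  # -1.0 3
```

In this example a simple correlation over all six observations would be strongly positive, whereas the within-participant relationship is negative, which is why pooling repeated measures without accounting for the participant can be misleading.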

Results

Results are presented in the following order: First, effects of the factors Listening Condition, Tempo, Patterns, and the two groups (musicians vs. non-musicians) on time estimation ratios are reported, followed by effects of the same factors on tapping consistency and tapping accuracy. Finally, the relationship between the two tapping performance measures and time estimation ratios are reported.

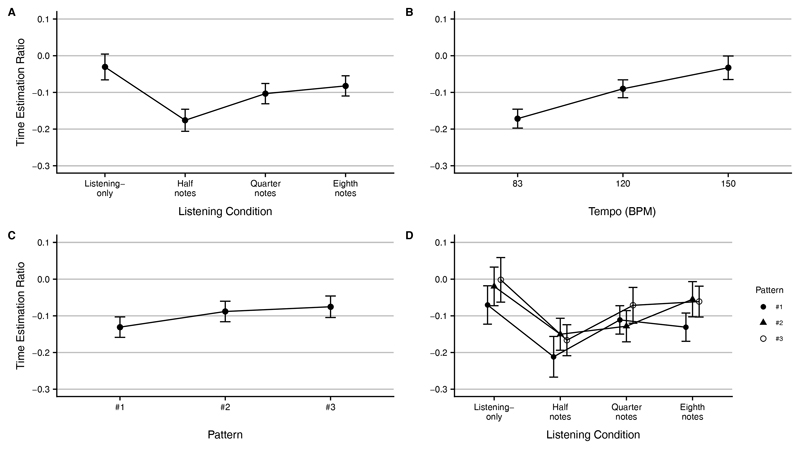

Time estimations

To test for effects on time estimations a repeated-measures ANOVA with the factors Listening Condition (listening-only without tapping, tapping to half notes, quarter notes, eighth notes), Tempo (83, 120, 150 BPM), and Pattern (pattern #1, #2, #3) was run on time estimation ratios with the two groups as a between-participant factor. The ANOVA yielded a significant main effect for factor Listening Condition [F(1.89, 47.16) = 11.08, p < .001, ηP2 = .31], suggesting that attending and tapping to a specific metrical level influenced the time estimations. Post-hoc comparisons indicate that tapping to half notes shortened perceived time, differing from all other metrical levels (p < .01). The other levels did not show a significant difference between them (p > .05) (Figure 3.A).

Figure 3. Results of the time estimation ratios for each main factor and significant interaction effects. Error bars indicate 95% confidence intervals.

Time estimations were also affected by factor Tempo [F(1.16, 28.93) = 13.61, p < .001, ηP2 = .35], suggesting that the faster the tempo, the longer stimuli seemed to last and the more accurately the time was estimated. Post-hoc comparisons showed that all tempi differed from each other (p < .05) (Figure 3.B).

Factor Pattern showed a main effect [F(1.39, 34.89) = 6.26, p < .05, ηP2 = .20], suggesting that the musical characteristics of the patterns affected time estimations. Pattern #1 led to the shortest time estimations differing from the others (p < .05). Pattern #2 and #3 did not differ (p > .05) (Figure 3.C).

Musicians and non-musicians did not estimate the stimulus durations differently [F(1, 25) = 0.02, p > .05, ηP2 = .01]. The group factor did not interact with any other factor either (p > .05).

Main factors Listening Condition and Pattern interacted with each other [F(6, 150) = 3.12, p < .01, ηP2 = .11], suggesting that the effect of Listening Condition differs as a function of Pattern (Figure 3.D). To further investigate this interaction, contrasts were performed comparing all three patterns with each listening condition. Results show that tapping to half notes compared to quarter notes was perceived to last longer for pattern #1 than pattern #2 (p < .01). Further significant contrasts were found between quarter notes compared to eighth notes, which were judged longer for pattern #2 than for pattern #1 (p < .001). Tapping to eighth notes compared to quarter notes also differed between pattern #2 and #3 (p < .01), showing that time estimations were not affected for pattern #3 at these two metrical levels. No other interactions between main factors were found (p > .05).

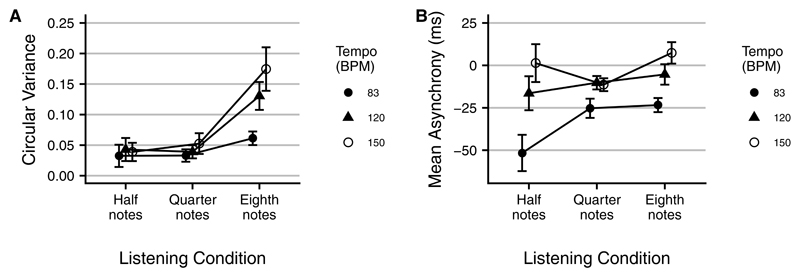

Tapping performance

To test for effects of the main factors Listening Condition, Tempo, and Pattern on tapping performance, two separate repeated-measures ANOVAs on circular variance as a measure for tapping consistency and mean asynchrony as a measure for tapping accuracy were run, with the two groups as a between-participant factor.

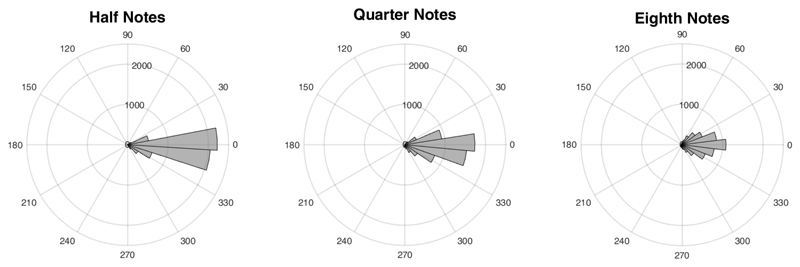

Tapping consistency

The ANOVA showed a main effect for Listening Condition on circular variance [F(2, 50) = 21.86, p < .001, ηP2 = .47], suggesting that tapping consistency decreased with lower metrical levels. Figure 4 illustrates the circular histograms for the three metrical levels participants tapped to for all patterns and tempi. Post-hoc comparisons indicate that tapping consistency was lowest for eighth notes compared to half notes and quarter notes (p < .001), while half notes and quarter notes did not differ (p > .05).

Figure 4. Circular histogram of participants’ tapping according to Listening Condition. The size of the bins represents the total number of taps; 0° represents the beat.

Factor Tempo yielded a main effect on circular variance as well [F(2, 50) = 13.77, p < .001, ηP2 = .36], suggesting that tapping consistency decreased with faster tempi. 83 BPM showed the highest tapping consistency compared to 120 BPM (p < .01) and 150 BPM (p < .001). 120 and 150 BPM did not differ (p > .05).

Factor Pattern did not show a main effect on circular variance [F(2, 50) = 1.30, p > .05, ηP2 = .05], indicating that the characteristics of the patterns did not influence tapping consistency. The between-participants factor showed no effect [F(1, 25) = 3.05, p > .05, ηP2 = .11], suggesting that musicians and non-musicians performed similarly in terms of tapping consistency.

Factors Listening Condition and Tempo interacted with each other [F(2.61, 65.34) = 8.96, p < .001, ηP2 = .26], indicating that tapping consistency was affected by tapping rate as both factors changed the respective ITIs (Figure 5.A). Contrast analysis showed that circular variance increased for eighth note level compared to half note level between 83 and 120 BPM (p < .01), 83 and 150 BPM (p < .001), as well as 120 and 150 BPM (p < .05). Circular variance was not affected by Tempo when tapping to half notes (p > .05). Furthermore, circular variance differed between quarter notes and eighth notes, between 83 and 120 BPM (p < .01), and between 83 and 150 BPM (p < .001), indicating that the tempo only affected tapping consistency on the eighth note level, showing an increase in circular variance. There were no further interaction effects (p > .05).

Figure 5. Significant interactions in tapping performance for (A) circular variance and (B) asynchrony. Error bars indicate 95% confidence intervals.

Tapping accuracy

The ANOVA on asynchronies yielded a main effect for factor Listening Condition [F(2, 50) = 13.10, p < .001, ηP2 = .34], suggesting that tapping to different metrical levels influenced accuracy. In particular, the lower the metrical level (higher tapping rate), the smaller the negative mean asynchrony was, meaning that the corresponding metrical level within the pattern was less anticipated. Post-hoc comparisons confirmed that all metrical levels differed from each other (p < .05).
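The direction of the asynchrony measure can be made concrete with a small sketch. It assumes a simple linear computation in which each tap is matched to its nearest beat onset; the study analysed tapping with circular statistics, so this is an illustrative simplification, and the data are hypothetical.

```python
def signed_asynchronies_ms(tap_times_ms, beat_times_ms):
    """Tap time minus the nearest beat time; negative values mean the tap came early."""
    return [
        min((tap - beat for beat in beat_times_ms), key=abs)
        for tap in tap_times_ms
    ]


def mean_asynchrony_ms(tap_times_ms, beat_times_ms):
    """Average signed asynchrony across all taps."""
    asynchronies = signed_asynchronies_ms(tap_times_ms, beat_times_ms)
    return sum(asynchronies) / len(asynchronies)


# Hypothetical taps that anticipate a 500 ms beat grid
beats = [500, 1000, 1500]
taps = [480, 970, 1490]
print(mean_asynchrony_ms(taps, beats))  # -20.0
```

A "smaller negative mean asynchrony" in the sense used here corresponds to a value closer to zero, that is, taps that precede the beat by a shorter interval.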

Tempo yielded a main effect [F(1.50, 37.47) = 52.46, p < .001, ηP2 = .68], suggesting that the faster the tempo, the less participants anticipated the corresponding metrical level (smaller negative mean asynchrony). Post-hoc comparisons confirmed that all tempi differed in terms of mean asynchrony (p < .01). Mean asynchrony was not influenced by factor Pattern [F(1.33, 33.31) = 2.15, p > .05, ηP2 = .08], thus the musical characteristics did not affect tapping accuracy.

Furthermore, the ANOVA yielded an interaction between factors Listening Condition and Tempo [F(2.51, 62.86) = 9.16, p < .001, ηP2 = .27] (Figure 5.B). Contrast analysis indicates that asynchronies differed when tapping to half notes and quarter notes between 83 and 120 BPM (p < .01), and between 83 and 150 BPM (p < .001). Asynchronies for half notes differed between 120 BPM and 150 BPM as well (p < .01). Comparing half and eighth notes, asynchronies differed at all tempi (p < .05), whereas asynchronies between 120 and 150 BPM were only affected for eighth notes compared to quarter notes (p < .01).

Musicians and non-musicians did not perform differently in terms of tapping accuracy [F(1, 25) = 0.41, p > .05, ηP2 = .03]. Yet, there was an interaction with factor Tempo [F(1.50, 37.47) = 3.94, p < .05, ηP2 = .14]. Contrast analysis indicates that non-musicians showed higher negative asynchronies at 83 BPM compared to 150 BPM than musicians (p < .05), suggesting that asynchronies were less affected by Tempo for musicians than non-musicians. Furthermore, factor Pattern interacted with the groups as well [F(1.33, 33.31) = 3.96, p < .05, ηP2 = .18]. Contrast analysis suggests that musicians’ tapping accuracy was less influenced by the patterns than non-musicians’ tapping accuracy, with non-musicians showing higher negative asynchronies for pattern #2 compared to the other two (p < .05). No other interactions between main factors were found (p > .05).

Repeated-measures correlation between time estimations and tapping measures

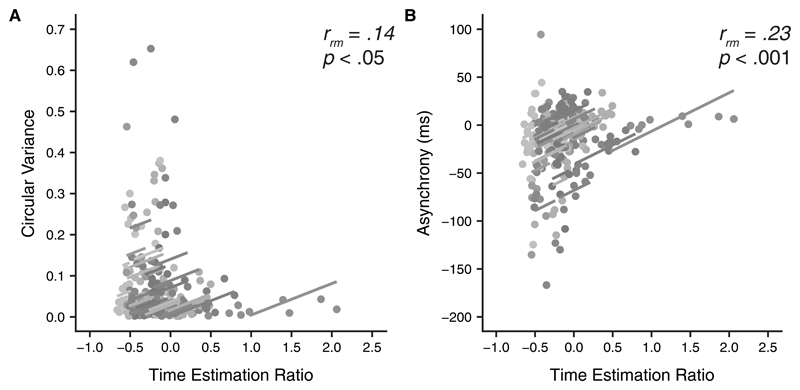

To assess the relationship between time estimations and tapping performance, two separate repeated-measures correlations were computed. Since factor Pattern did not show main effects on circular variance and mean asynchrony, data were averaged over the three patterns; the correlations therefore included the factors Listening Condition and Tempo. As Figure 6.A shows, tapping consistency was significantly correlated with time estimation ratios [rrm = .14, p < .05, CI(.01, .26)]. The positive correlation shows that the higher the time estimation ratio, the higher the circular variance. In other words, the less stably participants tapped, the longer they estimated the durations. Similarly, mean asynchrony as a measure of tapping accuracy yielded a significant correlation with time estimation ratios [rrm = .23, p < .001, CI(.11, .35)], indicating that the higher the negative mean asynchrony, the shorter participants estimated the durations (Figure 6.B).

Figure 6.

Repeated-measures correlation between (A) time estimation ratio and circular variance, and (B) between time estimation ratio and mean asynchrony, across Listening Condition and Tempo. Lines represent the fit for each individual participant.
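The logic of a repeated-measures correlation (Bakdash & Marusich, 2017) can be sketched as follows. The coefficient describes the common within-participant association after differences between participants' mean levels have been removed, which should correspond to the Pearson correlation of participant-centred values. The sketch covers the point estimate only, not p values or confidence intervals; the function name and data layout are assumptions made for illustration.

```python
import math


def repeated_measures_correlation(data):
    """data maps participant id -> (x_values, y_values) of equal length.

    Returns the correlation of participant-centred x and y, which removes
    between-participant differences in mean level.
    """
    x_centred, y_centred = [], []
    for x_values, y_values in data.values():
        x_mean = sum(x_values) / len(x_values)
        y_mean = sum(y_values) / len(y_values)
        x_centred += [x - x_mean for x in x_values]
        y_centred += [y - y_mean for y in y_values]
    sxy = sum(x * y for x, y in zip(x_centred, y_centred))
    sxx = sum(x * x for x in x_centred)
    syy = sum(y * y for y in y_centred)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: the within-participant trend is negative even though
# the participant with larger x values also has larger y values overall.
example = {
    "p1": ([1, 2, 3], [3, 2, 1]),
    "p2": ([11, 12, 13], [13, 12, 11]),
}
print(repeated_measures_correlation(example))  # -1.0
```

The example illustrates why this approach suits a design in which every participant contributes several condition means: pooling all observations would mix within-participant and between-participant variation.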

Discussion

Previous studies have shown that the tempo of music has an impact on its perceived duration. Yet what is perceived as the most salient beat in music, and hence the tempo, may differ between individuals. This study aimed at investigating the relationship between the metrical level of musical rhythmic patterns to which individuals actively attend (sensorimotor synchronisation, SMS) and the perception of time. While listening to the patterns without tapping led to the longest and most accurate time estimations, SMS resulted in stronger underestimations of stimulus durations. When synchronising to a high metrical level (half notes), participants estimated time to be the shortest, while time estimations increased with lower metrical levels (quarter and eighth notes). Therefore, results of this study suggest that focusing on higher metrical levels in music, by directing attention and motor activity, affects perceived time. In other words, time passes differently for listeners of the same music, depending on the metrical level attended to.

The first hypothesis stated that the metrical level chosen for SMS influences time estimations, because structuring attentional processes and motor activity affects internal timing mechanisms according to internal clock models. Results showed that tapping to the half note level led to the shortest time estimations, followed by the quarter note level and eighth note level. While time was underestimated in all conditions, in the listening-only condition without tapping time was estimated as lasting longer compared to the SMS conditions. This can be explained by attentional processes. According to the attentional gate model (Block & Zakay, 1996; Zakay & Block, 1995), participants may have allocated more attentional resources to the time estimation task, thus time seemed to last longer following the accumulation of more pulses. Accordingly, time estimations were most accurate without tapping. When participants tapped to the patterns, in contrast, durations were estimated to last longer at lower metrical levels (higher tapping rates) than at higher metrical levels. The interaction between factors Listening Condition and Pattern indicates that the characteristics of the patterns influenced time estimations (Drake et al., 1999; McKinney & Moelants, 2006; Moelants & McKinney, 2004), especially at the quarter note and eighth note level. The time-shortening effect of the half note level was particularly large for patterns #1 and #3, suggesting that SMS with a higher metrical level shortens perceived time, particularly for musical rhythms with higher beat salience (i.e. preferred tapping rate).

The second hypothesis stated that time estimations are longer with faster tempi, which was confirmed by the results showing that musical tempo affected time estimations. This is in line with findings from previous studies suggesting the same effect of musical tempo on time perception (Droit-Volet et al., 2013; Oakes, 2003; Treisman et al., 1990), as well as for visual rhythms (Droit-Volet & Wearden, 2002; Ortega & López, 2008); the tempo effect for rhythms thus seems to be robust across modalities. The current study adds empirical support for time perception theories suggesting that faster rhythms cause an internal clock or pulse generator to run faster and thus accumulate more pulses.
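The reasoning behind the internal clock account and the attentional gate model can be illustrated with a toy pacemaker-accumulator sketch. It is a deliberately simplified illustration and not a model fitted to the present data: the function name, the linear form, and all parameter values are hypothetical.

```python
def estimated_duration_s(actual_s, pacemaker_hz, reference_hz, attention_share=1.0):
    """Toy pacemaker-accumulator estimate of a duration in seconds.

    Pulses are emitted at pacemaker_hz, pass the gate in proportion to
    attention_share (0..1), and are read out against reference_hz.
    All parameter values are hypothetical.
    """
    accumulated_pulses = actual_s * pacemaker_hz * attention_share
    return accumulated_pulses / reference_hz


baseline = estimated_duration_s(30, 5.0, 5.0)                            # 30.0
faster_clock = estimated_duration_s(30, 6.0, 5.0)                        # 36.0
divided_attention = estimated_duration_s(30, 5.0, 5.0, attention_share=0.5)  # 15.0
print(baseline, faster_clock, divided_attention)
```

In this sketch a faster pacemaker lengthens the estimate, as proposed for faster tempi, whereas withdrawing attention from timing shortens it, as proposed for conditions with a concurrent tapping task.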

The third hypothesis proposed that the musical characteristics of the patterns should influence time estimations. This was supported by the results, yet of all factors in this study, the different patterns showed the smallest effect on time estimations. Only pattern #1, which was the one most similar to the common ‘PoumTchak’ pattern (Zeiner-Henriksen, 2010b), was different to patterns #2 and #3, leading to shorter perceptions of time. An explanation might be that pattern #1 showed the lowest event density and therefore was perceived as being slower or less physiologically arousing. The interaction between factors Pattern and Listening Condition, as discussed above, suggests that the perception of time is not independent of psychoacoustical characteristics of the patterns, especially for the quarter and eighth note level. Pattern #3, which had the lowest high-frequency spectral flux, showed no difference between quarter notes and eighth notes, and pattern #1 resulted in only a small time-lengthening trend for eighth notes. In pattern #2, durations were perceived to be longer when tapping to eighth notes compared to quarter notes. It should be noted that pattern #2 had the lowest degree of participants’ agreement on the preferred tapping rate (coefficient of unalikeability) in the pilot study. Nevertheless, patterns #1 and #2 had similar high-frequency flux values, and the snare drum was placed in both patterns at the corresponding metrical levels, suggesting that event density of percussive instruments with prominent timbral features might be of particular importance for the perception of time. Taken together, results show that musical characteristics may influence time perception differently for specific metrical levels, revealing a complex interaction between these factors. More research on psychoacoustic characteristics, beat salience, and time perception is needed to corroborate the current findings.

The fourth hypothesis stated that musicians would estimate time as lasting longer compared to non-musicians. Based on findings of the influence of cognitive load on time estimations and on the attentional gate model (Block et al., 2010; Block & Zakay, 1996; Zakay & Block, 1995), it was assumed that musicians would find it easier to perform the SMS tasks and could therefore allocate more attention to the time estimation task, which should have caused time to last longer. Nevertheless, there was no effect for time estimation, and also no main differences regarding tapping performance between these two groups of participants, suggesting that musicians and non-musicians were able to allocate attentional resources equally to both tasks. Effects of the groups were present in statistical interaction effects, indicating that musicians’ tapping accuracy (smaller negative mean asynchrony) was less affected by the three tempi than it was for the non-musicians group. Non-musicians tapped with higher negative mean asynchrony at the slow tempo and were also more affected by the patterns, resulting in a higher negative mean asynchrony for pattern #2, which showed the least consensus on a preferred tapping rate in the pilot study. While this interaction may partly support previous research into musical training and tapping performance (Repp, 2010; Repp & Doggett, 2007), the absence of main effects regarding tapping accuracy and consistency is more in line with Hove, Spivey, and Krumhansl (2010). Since both factors yielded no main effects and the interaction showed no crossover effect, this result should be interpreted with caution. It seems more likely that tapping performance is not simply enhanced by general musical training, but should rather improve with domain-specific instrumental training such as for percussion instruments (Repp, London, & Keller, 2013).

Tapping performance measures showed a small tendency towards positive correlations with time estimations, indicating that time is perceived as lasting longer when tapping becomes less stable and less anticipatory in terms of smaller negative asynchronies. This supports the assumption that the more difficult it is to synchronise with a musical rhythm, the fewer attentional resources are available for time estimation. Since tapping performance of the participants was generally successful (very few reactive taps), more extreme tempi could lead to more variability in tapping performance (see also Madison, 2014). In addition, future studies could use more complex tapping tasks such as presenting rhythms with varying metrical time signatures or polyrhythms in order to address this relationship in more detail.

In this study, self-produced musical rhythmic patterns were used, which allowed for the presentation at different tempi without the need for audio manipulation such as time-stretching or a posteriori modifications of other psychoacoustic parameters. Although some participants reported experiencing ‘ear worms’ after the experiment, anecdotally supporting the goal of creating a realistic music listening experience, future studies should use real pieces of music to investigate if the reported effects of SMS on time estimations can be replicated. As a starting point, we decided to use controlled stimuli that bore sufficient resemblance to electronic dance music. These patterns showed interactions between non-temporal musical features and time perception. As mentioned above, another possible approach would be to test the effect of different rhythmic complexities with other ratios such as triple and quadruple meters. This would allow for investigations of the relationship between tapping performance, various rhythmic structures and perceived time. Furthermore, future studies could use different methods to investigate psychological time by employing time estimation, time production and reproduction tasks, since each method has certain advantages and drawbacks (cf. Mioni, 2018). In the current study, the verbal estimation method was chosen since it offered a good balance between motor coordination (SMS) and time estimation responses.

To conclude, this study is the first to show that the metrical level chosen for sensorimotor synchronisation to music affects time estimations. If attention and motor activity are directed towards higher metrical levels, perceptions of time are shortened. Thus, when listening to music with an ambiguous musical tempo, individuals may have different temporal experiences that go beyond a simple beats per minute measure. Future research on time perception using musical stimuli should take the beat salience of the music into consideration. Complex musical structures may induce inter- and intraindividual ambiguity in perceived tempi, which correspondingly affects perceived time. The results might also be of particular interest for the choice of appropriate music for situations in which the perception of time is important, such as in waiting situations or commercial applications including song selection for certain background contexts. The findings offer new insights not only into the field of music psychology but also into the field of time perception more generally, supporting the assumption of a unified sensorimotor sense of time.

Acknowledgments

This research was supported by the European Research Council to the second author (grant agreement: 725319) for the project ‘Slow motion: Transformations of musical time in perception and performance’ (SloMo). We would like to acknowledge the contribution of Federico Visi in the pilot phase of this study, particularly regarding the rhythmic patterns.

References

- Alluri V, Toiviainen P. Exploring Perceptual and Acoustical Correlates of Polyphonic Timbre. Music Perception. 2010;27(3):223–242. doi: 10.1525/mp.2010.27.3.223.

- Bakdash JZ, Marusich LR. Repeated measures correlation. Frontiers in Psychology. 2017;8:456. doi: 10.3389/fpsyg.2017.00456.

- Berens P. CircStat: A Matlab toolbox for circular statistics. Journal of Statistical Software. 2009;31(10) doi: 10.18637/jss.v031.i10.

- Block RA, Grondin S, Zakay D. In: Timing and time perception: Procedures, measures, and applications. Vatakis A, Balci F, Di Luca M, Correa Á, editors. Leiden, SH: Brill; 2018. Prospective and retrospective timing processes: Theories, methods, and findings; pp. 32–51.

- Block RA, Hancock PA, Zakay D. How cognitive load affects duration judgments: A meta-analytic review. Acta Psychologica. 2010;134(3):330–343. doi: 10.1016/j.actpsy.2010.03.006.

- Block RA, Zakay D. In: Time and mind. Helfrich H, editor. Seattle, WA: Hogrefe & Huber; 1996. Models of psychological time revisited; pp. 171–195.

- Boltz MG. Illusory tempo changes due to musical characteristics. Music Perception. 2011;28(4):367–386. doi: 10.1525/mp.2011.28.4.367.

- Burger B, Ahokas R, Keipi A, Toiviainen P. In: Proceedings of the Sound and Music Computing Conference 2013. Bresin R, editor. Berlin: Logos; 2013. Relationships between spectral flux, perceived rhythmic strength, and the propensity to move; pp. 179–184.

- Burger B, London J, Thompson MR, Toiviainen P. Synchronization to metrical levels in music depends on low-frequency spectral components and tempo. Psychological Research. 2018;82(6):1195–1211. doi: 10.1007/s00426-017-0894-2.

- Cameron MA, Baker J, Peterson M, Braunsberger K. The effects of music, wait-length evaluation, and mood on a low-cost wait experience. Journal of Business Research. 2003;56(6):421–430. doi: 10.1016/S0148-2963(01)00244-2.

- Cooper GW, Meyer LB. The rhythmic structure of music. Chicago, IL: University of Chicago Press; 1960.

- Drake C, Gros L, Penel A. In: Music, Mind, and Science. Yi S-W, editor. Seoul: Seoul National University Press; 1999. How fast is the music? The relation between physical and perceived tempo; pp. 190–203.

- Drake C, Jones MR, Baruch C. The development of rhythmic attending in auditory sequences: Attunement, referent period, focal attending. Cognition. 2000;77(3):251–288. doi: 10.1016/S0010-0277(00)00106-2.

- Drake C, Penel A, Bigand E. Tapping in time with mechanically and expressively performed music. Music Perception. 2000;18(1):1–23. doi: 10.2307/40285899.

- Droit-Volet S, Bigand E, Ramos D, Bueno JLO. Time flies with music whatever its emotional valence. Acta Psychologica. 2010;135(2):226–232. doi: 10.1016/j.actpsy.2010.07.003.

- Droit-Volet S, Ramos D, Bueno JLO, Bigand E. Music, emotion, and time perception: The influence of subjective emotional valence and arousal? Frontiers in Psychology. 2013;4:417. doi: 10.3389/fpsyg.2013.00417.

- Droit-Volet S, Wearden J. Speeding up an internal clock in children? Effects of visual flicker on subjective duration. The Quarterly Journal of Experimental Psychology Section B. 2002;55(3b):193–211. doi: 10.1080/02724990143000252.

- Dror DM, Panda P, May C, Majumdar A, Koren R. “One for all and all for one”: Consensus-building within communities in rural India on their health microinsurance package. Risk Management and Healthcare Policy. 2014;7:139–153. doi: 10.2147/RMHP.S66011.

- Eitan Z, Granot RY. Primary versus secondary musical parameters and the classification of melodic motives. Musicae Scientiae. 2009;13(1_suppl):139–179. doi: 10.1177/102986490901300107.

- Epstein D. Shaping time: Music, the brain and performance. New York, NY: Schirmer Books; 1995.

- Gibbon J. Scalar expectancy theory and Weber’s law in animal timing. Psychological Review. 1977;84(3):279–325. doi: 10.1037/0033-295X.84.3.279.

- Gibbon J, Church RM, Meck WH. Scalar timing in memory. Annals of the New York Academy of Sciences. 1984;423(1):52–77. doi: 10.1111/j.1749-6632.1984.tb23417.x.

- Gil S, Droit-Volet S. Emotional time distortions: The fundamental role of arousal. Cognition & Emotion. 2012;26(5):847–862. doi: 10.1080/02699931.2011.625401.

- Grondin S. Timing and time perception: A review of recent behavioral and neuroscience findings and theoretical directions. Attention, Perception, & Psychophysics. 2010;72(3):561–582. doi: 10.3758/APP.72.3.561.

- Hannon EE, Snyder JS, Eerola T, Krumhansl CL. The role of melodic and temporal cues in perceiving musical meter. Journal of Experimental Psychology Human Perception and Performance. 2004;30(5):956–974. doi: 10.1037/0096-1523.30.5.956.

- Hove MJ, Spivey MJ, Krumhansl CL. Compatibility of motion facilitates visuomotor synchronization. Journal of Experimental Psychology Human Perception and Performance. 2010;36(6):1525–1534. doi: 10.1037/a0019059.

- Jones MR. In: Ecological psychoacoustics. Neuhoff JG, editor. Amsterdam, Boston: Elsevier Academic Press; 2004. Attention and timing; pp. 49–85.

- Kellaris JJ, Kent RJ. The influence of music on consumers’ temporal perceptions: Does time fly when you’re having fun? Journal of Consumer Psychology. 1992;1(4):365–376. doi: 10.1016/S1057-7408(08)80060-5.

- Lartillot O, Toiviainen P, Eerola T. In: Data Analysis, Machine Learning and Applications: Studies in Classification, Data Analysis, and Knowledge Organization. Preisach C, Burkhardt H, Schmidt-Thieme L, editors. Springer Berlin Heidelberg; 2008. A Matlab toolbox for music information retrieval; pp. 261–268.

- Lerdahl F, Jackendoff R. An overview of hierarchical structure in music. Music Perception. 1983;1(2):229–252. doi: 10.2307/40285257.

- London J. Tactus ≠ Tempo: Some dissociations between attentional focus, motor behavior, and tempo judgment. Empirical Musicology Review. 2011;6(1):43–55. doi: 10.18061/1811/49761.

- London J. Hearing in time: Psychological aspects of musical meter. 2 ed. Oxford, ON: Oxford University Press; 2012.

- London J, Burger B, Thompson M, Toiviainen P. Speed on the dance floor: Auditory and visual cues for musical tempo. Acta Psychologica. 2016;164:70–80. doi: 10.1016/j.actpsy.2015.12.005.

- Madison G. Sensori-motor synchronisation variability decreases as the number of metrical levels in the stimulus signal increases. Acta Psychologica. 2014;147:10–16. doi: 10.1016/j.actpsy.2013.10.002.

- Madison G, Paulin J. Ratings of speed in real music as a function of both original and manipulated beat tempo. The Journal of the Acoustical Society of America. 2010;128(5):3032–3040. doi: 10.1121/1.3493462.

- Martens PA. The ambiguous tactus: Tempo, subdivision benefit, and three listener strategies. Music Perception. 2011;28(5):433–448. doi: 10.1525/mp.2011.28.5.433.

- Mathôt S, Schreij D, Theeuwes J. OpenSesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods. 2012;44(2):314–324. doi: 10.3758/s13428-011-0168-7.

- McKinney MF, Moelants D. Ambiguity in tempo perception: What draws listeners to different metrical levels? Music Perception. 2006;24(2):155–166. doi: 10.1525/mp.2006.24.2.155.

- Mioni G. In: Timing and time perception: Procedures, measures, and applications. Vatakis A, Balci F, Di Luca M, Correa Á, editors. Leiden, SH: Brill; 2018. Methodological issues in the study of prospective timing; pp. 79–97.

- Moelants D. In: Stevens CJ, Burnham DK, McPherson G, Schubert E, Renwick J, editors. Preferred tempo reconsidered; Proceedings of the 7th Conference on Music Perception and Cognition; Sydney, NSW. 2002. pp. 580–583.

- Moelants D, McKinney MF. In: Lipscomb SD, Ashley R, Gjerdingen RO, Webster P, editors. Tempo perception and musical content: What makes a piece fast, slow or temporally ambiguous; Proceedings of the 8th Conference on Music Perception and Cognition; Evanston, IL. 2004. pp. 558–562.

- North AC, Hargreaves DJ. Can music move people? Environment and Behavior. 1999;31(1):136–149. doi: 10.1177/00139169921972038.

- Noulhiane M, Mella N, Samson S, Ragot R, Pouthas V. How emotional auditory stimuli modulate time perception. Emotion. 2007;7(4):697–704. doi: 10.1037/1528-3542.7.4.697.

- Oakes S. Musical tempo and waiting perceptions. Psychology and Marketing. 2003;20(8):685–705. doi: 10.1002/mar.10092.

- Ortega L, López F. Effects of visual flicker on subjective time in a temporal bisection task. Behavioural Processes. 2008;78(3):380–386. doi: 10.1016/j.beproc.2008.02.004.

- Parncutt R. A perceptual model of pulse salience and metrical accent in musical rhythms. Music Perception. 1994;11(4):409–464. doi: 10.2307/40285633.

- Repp BH. Sensorimotor synchronization: A review of the tapping literature. Psychonomic Bulletin & Review. 2005;12(6):969–992. doi: 10.3758/BF03206433.

- Repp BH. Rate limits of sensorimotor synchronization. Advances in Cognitive Psychology. 2006;2(2):163–181. doi: 10.2478/v10053-008-0053-9.

- Repp BH. Sensorimotor synchronization and perception of timing: Effects of music training and task experience. Human Movement Science. 2010;29(2):200–213. doi: 10.1016/j.humov.2009.08.002.

- Repp BH, Doggett R. Tapping to a very slow beat: A comparison of musicians and nonmusicians. Music Perception. 2007;24(4):367–376. doi: 10.1525/mp.2007.24.4.367.

- Repp BH, London J, Keller PE. Systematic distortions in musicians’ reproduction of cyclic three-interval rhythms. Music Perception. 2013;30(3):291–305. doi: 10.1525/mp.2012.30.3.291.

- Repp BH, Su Y-H. Sensorimotor synchronization: A review of recent research (2006-2012) Psychonomic Bulletin & Review. 2013;20(3):403–452. doi: 10.3758/s13423-012-0371-2.

- Snoman R. Dance music manual: Tools, toys and techniques. 2 ed. Amsterdam, NH: Focal; 2009.

- Snyder JS, Krumhansl CL. Tapping to ragtime: Cues to pulse finding. Music Perception. 2001;18(4):455–489. doi: 10.1525/mp.2001.18.4.455.

- Toiviainen P, Snyder JS. Tapping to Bach: Resonance-based modeling of pulse. Music Perception. 2003;21(1):43–80. doi: 10.1525/mp.2003.21.1.43.

- Treisman M. Temporal discrimination and the indifference interval: Implications for a model of the “internal clock”. Psychological Monographs: General and Applied. 1963;77(13):1–31. doi: 10.1037/h0093864.

- Treisman M, Faulkner A, Naish PLN, Brogan D. The internal clock: Evidence for a temporal oscillator underlying time perception with some estimates of its characteristic frequency. Perception. 1990;19(6):705–743. doi: 10.1068/p190705.

- Van Noorden L, Moelants D. Resonance in the perception of musical pulse. Journal of New Music Research. 1999;28(1):43–66. doi: 10.1076/jnmr.28.1.43.3122.

- Wöllner C, Deconinck FJA, Parkinson J, Hove MJ, Keller PE. The perception of prototypical motion: Synchronization is enhanced with quantitatively morphed gestures of musical conductors. Journal of Experimental Psychology Human Perception and Performance. 2012;38(6):1390–1403. doi: 10.1037/a0028130.

- Wöllner C, Hammerschmidt D, Albrecht H. Slow motion in films and video clips: Music influences perceived duration and emotion, autonomic physiological activation and pupillary responses. PloS One. 2018;13(6):e0199161. doi: 10.1371/journal.pone.0199161.

- Zakay D, Block RA. In: Time and the dynamic control of behavior. Richelle M, Keyser VD, d’Ydewalle G, Vandierendonck A, editors. Liège, LG: P.A.I; 1995. An attentional-gate model of prospective time estimation; pp. 167–178.

- Zeiner-Henriksen HT. In: Ashgate popular and folk music series Musical rhythm in the age of digital reproduction. Danielsen A, editor. Farnham, SU: Ashgate; 2010a. Moved by the groove: Bass drum sounds and body movements in electronic dance music; pp. 121–139.

- Zeiner-Henriksen HT. The “PoumTchak” pattern: Correspondences between rhythm, sound, and movement in electronic dance music. 2010b. Retrieved from https://www.duo.uio.no/handle/10852/56756.