Abstract

Surgical robots have been widely adopted, with over 4000 robots in daily clinical use. However, these are telerobots that are fully controlled by skilled human surgeons. Introducing “surgeon-assist” functions, i.e., some forms of autonomy, has the potential to reduce tedium and increase consistency, analogous to driver-assist functions for lane keeping, cruise control, and parking. This article examines the scientific and technical background of robotic autonomy in surgery and some of its ethical, social, and legal implications. We describe several surgical tasks that have been automated in laboratory settings, as well as current research concepts and trends.

Keywords: Autonomy, knowledge representation, machine learning (ML), machine perception, surgical actions, surgical robotics

I. Introduction

Robotic systems are increasingly incorporated into surgical procedures, such as hip replacement, spinal fusion, biopsy collection, and minimally invasive surgery (MIS) for operations involving the gallbladder, hernias, the prostate, and many other organs. Current surgical robots are fully teleoperated devices, i.e., the human surgeon/operator is responsible for all robot motions. The only elements of “automated” intervention are image processing and tremor reduction (low-pass filtering). As many surgical actions are repetitive and fatiguing, patients and surgeons alike could benefit from some degree of “supervised” autonomy, where specific subtasks are delegated to the robot and performed autonomously under close supervision by a human surgeon. Such supervised autonomy has the potential to shorten the learning curve and increase overall accuracy. Supervised autonomy is also essential for telesurgery, where communication time delays make direct teleoperation infeasible. Research in autonomous robots is advancing, and many new commercial surgical robot systems are emerging that could embed some semiautonomous features. This article considers the state of the art in autonomy for robot-assisted surgery and its prospects for adoption in practice.

An additional motivation for the use of automation in surgery is to compensate for human errors. Patient safety in medicine, and in surgery in particular, depends on many factors [1], [2]. Most medical errors are due to human shortcomings: missing information, poor decision-making, lack of dexterity, or fatigue and inattention. For example, intensive care patients are exposed to 1.7 errors per day on average [3], and the economic and ethical implications are enormous. Automated image and data analysis can help guide human operators and thereby reduce errors.

There are many definitions of “autonomy,” ranging from the operation of a home thermostat to that of a self-driving vehicle on the highway. Many forms of autonomy exist in operating rooms (ORs), for example, automated monitors of blood pressure and heart rate. In this article, we focus on autonomy in surgery, where a system performs specific physical subtasks based on inputs from cameras and sensors rather than from human operators (almost always under close observation from human surgeons to recover in case of error).

II. Autonomy in Robotic Surgery

Early attempts to automate surgical tasks included bone preparation in joint reconstruction surgery [4]–[6], transurethral resection of the prostate (TURP) [7], [8], and manipulation of laparoscopic cameras [9], [10]. Some of these tasks required only simple motions or the execution of preplanned tool paths relative to the patient’s anatomy. The enormous increase in computational power and in computer vision and machine learning (ML) capabilities in recent years is beginning to permit surgical robots to perform more complex tasks with greater degrees of autonomy.

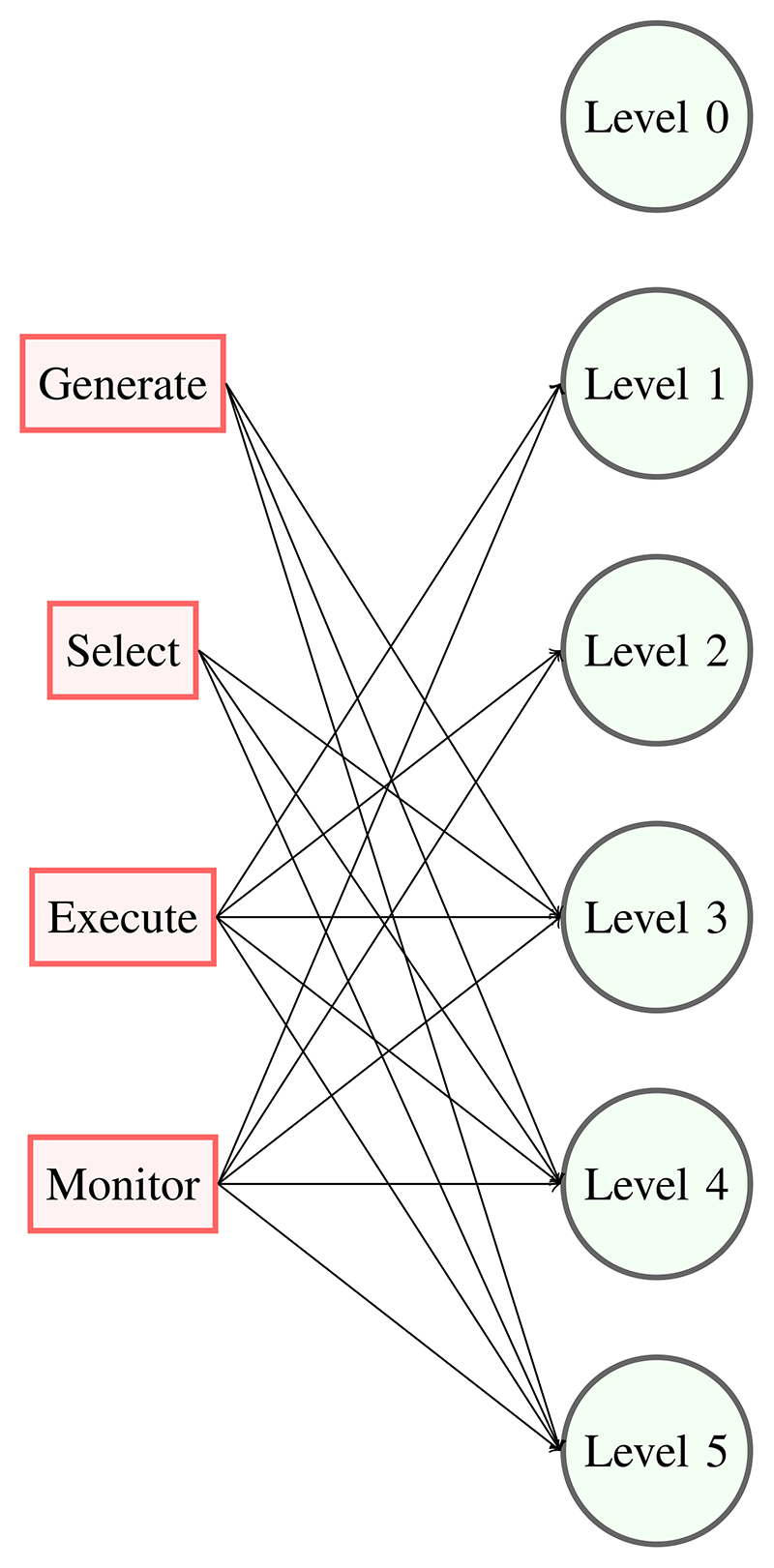

To better distinguish among the different automatic features, several classifications of autonomy have been proposed. The IEC/TR 60601-4-1 technical report (“Medical electrical equipment”) parameterizes autonomy along four cognitive functions:

Generate an option: to achieve the desired goals.

Select an option: among the possible options generated.

Execute an option: to carry out the selected option.

Monitor an option: to collect data about option execution.

Each function can be assigned to the human, to the system, or to both in cooperation. The different assignments of the functions give rise to ten degrees of autonomy.

Classifications of autonomous surgical robots are available in the literature. Yip and Das [11] indicate four levels of autonomy (direct control, shared control, supervised autonomy, and full autonomy) and show examples of systems with these characteristics. Dupont et al. [12] characterize active topics of medical robotics by the number of papers published on each topic, and autonomy is one of the topics with the strongest representation.

Recently proposed classification schemes for surgical robots [13], [14] follow those proposed for self-driving vehicles and similar systems and are based on human involvement in the task. At level 0, the robot does not provide any cognitive or manual assistance to the surgeon. At level 1, the robot informs the surgeon about task options and may provide manual assistance. In the intermediate levels 2 and 3, the interaction between the robot and the operator becomes discrete, e.g., the surgeon approves a course of action at the beginning of the task. Levels 4 and 5 are different since the robot may be practicing medicine, which will require a different testing and validation strategy. The relations between the IEC definitions and the levels of autonomy are shown schematically in Fig. 1: level 0 has no function related to autonomy; at level 1, the robot cooperates with the human and has functions related to execution and monitoring; level 2 is similar to level 1, but the robot executes and monitors surgical actions independently of human participation; finally, levels 3–5 have all functions related to autonomy. Levels 2 and 3, in which small tasks are delegated to the robot under close human supervision, are within reach of today’s technology. As mentioned in the Introduction, this approach will yield both immediate and longer-term benefits: in the short term, more consistent task execution, e.g., in tissue manipulation and blood suction; in the longer term, compensation for slow or missing human responses, e.g., when procedural cues are misinterpreted or missing because of communication interruptions. These classifications can be used to identify, for selected surgical procedures, the technologies that would enable the different autonomy levels, as in [15].

Fig. 1. Relation between cognitive functions and levels of autonomy.
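To make the mapping of Fig. 1 more concrete, the sketch below encodes which IEC/TR 60601-4-1 functions the robot takes part in at each level, as read from the description above, and returns the lowest level compatible with a given set of robot functions. The encoding (the names `FIG1_LEVELS` and `lowest_level`, and the `"with_human"`/`"independent"` modes) is a hypothetical illustration, not part of the technical report or of [13], [14]; in particular, it does not reproduce the report's ten degrees of autonomy.

```python
from enum import Enum


class Function(Enum):
    """The four cognitive functions of IEC/TR 60601-4-1."""
    GENERATE = "generate"
    SELECT = "select"
    EXECUTE = "execute"
    MONITOR = "monitor"


ALL_FUNCTIONS = frozenset(Function)

# Hypothetical encoding of Fig. 1: functions the robot takes part in at each
# level, plus the mode of participation when the description specifies one.
FIG1_LEVELS = {
    0: (frozenset(), None),
    1: (frozenset({Function.EXECUTE, Function.MONITOR}), "with_human"),
    2: (frozenset({Function.EXECUTE, Function.MONITOR}), "independent"),
    3: (ALL_FUNCTIONS, None),  # mode not specified in the description
    4: (ALL_FUNCTIONS, None),
    5: (ALL_FUNCTIONS, None),
}


def lowest_level(robot_functions, independent=False):
    """Lowest level whose robot functions cover `robot_functions`.

    `independent=True` means the robot performs these functions without human
    participation, which the cooperative level 1 does not provide.
    """
    needed = frozenset(robot_functions)
    for level in sorted(FIG1_LEVELS):
        functions, mode = FIG1_LEVELS[level]
        if needed <= functions and not (independent and mode == "with_human"):
            return level
    raise ValueError("no level covers the requested functions")
```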

Fundamentally, these classification schemes are based on differing answers to two basic requirements: 1) the task that the robot is to do must be clearly and unambiguously specified, and 2) the robot must be able to execute the specified task safely and reliably. Satisfying the first requirement necessitates human–machine interfaces that are appropriate for the level of autonomy involved. These may range from something as simple as a joystick or pointing device for basic (level 1) teleoperation to very sophisticated (and so far unrealized) voice commands, such as “remove the gallbladder.”

To satisfy the second requirement, the system must be able to physically do what it is asked to do. The robot needs sufficient dexterity, precision, sensors, strength, size, and so on to perform the necessary motions, and the robot controller needs to be able to use these capabilities to perform the specified task. Furthermore, robotic devices used in surgical systems need to meet stringent sterility, safety, and reliability requirements.

Crucially, both requirements necessitate that systems have sufficient computational capabilities to interpret the surgeon’s specification of the task, relate it to the actual patient anatomy and task environment, and control the robot motions to perform the task. The required level of situational awareness rises rapidly as one moves up the hierarchy. For teleoperated surgical robots, such as the da Vinci Surgical System [16], the control computer needs to relate the motion of the control handles and pedals to the motions of a surgical endoscope and surgical instruments manipulated by the robot. It must also perform very stringent internal safety checks to ensure that the robot’s motions are stable and consistent with the commands coming from the control handles. Higher levels of autonomy typically require much more sophisticated models of the patient and of the task to be performed.
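The mapping from control handles to instrument motion described above can be sketched as follows. This is a minimal illustration assuming a fixed motion-scaling factor, a first-order low-pass filter for tremor reduction (as mentioned in the Introduction), and a simple per-cycle displacement limit as the safety check; the class, parameter values, and `SafetyStop` exception are hypothetical and do not describe the da Vinci controller.

```python
from dataclasses import dataclass, field


class SafetyStop(RuntimeError):
    """Raised when a commanded instrument motion violates a safety limit."""


@dataclass
class TeleopMapper:
    scale: float = 0.2     # hypothetical 5:1 motion scaling (handle -> instrument)
    alpha: float = 0.3     # low-pass smoothing factor, 0 < alpha <= 1 (smaller = smoother)
    max_step: float = 0.5  # hypothetical max instrument displacement per cycle, mm
    _filtered: tuple = field(default=(0.0, 0.0, 0.0), init=False, repr=False)

    def step(self, handle_delta):
        """Map one cycle of handle displacement (mm) to an instrument displacement (mm)."""
        scaled = [self.scale * d for d in handle_delta]
        # First-order (exponential) low-pass filter: attenuates high-frequency tremor.
        candidate = tuple(
            self.alpha * s + (1.0 - self.alpha) * f
            for s, f in zip(scaled, self._filtered)
        )
        # Safety check before the command is accepted: reject implausibly large steps.
        magnitude = sum(c * c for c in candidate) ** 0.5
        if magnitude > self.max_step:
            raise SafetyStop(f"step of {magnitude:.3f} mm exceeds {self.max_step} mm")
        self._filtered = candidate
        return candidate
```

A rejected command leaves the filter state unchanged, so the next cycle starts from the last accepted motion; a real system would also halt the robot and alert the operator.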

Finally, it is important to realize that real-world systems generally do not operate only on a single level. For example, an orthopedic robot that automatically machines a bone to prepare it to receive an orthopedic implant may rely on some form of teleoperation or hand-over-hand guiding to position the robot where it needs to be in order to begin machining the bone. Similarly, a robotic system that is capable of automatically tying knots during suturing may rely on manual teleoperation for other portions of the procedure.

III. Clinical Applications and Systems Involving Autonomy

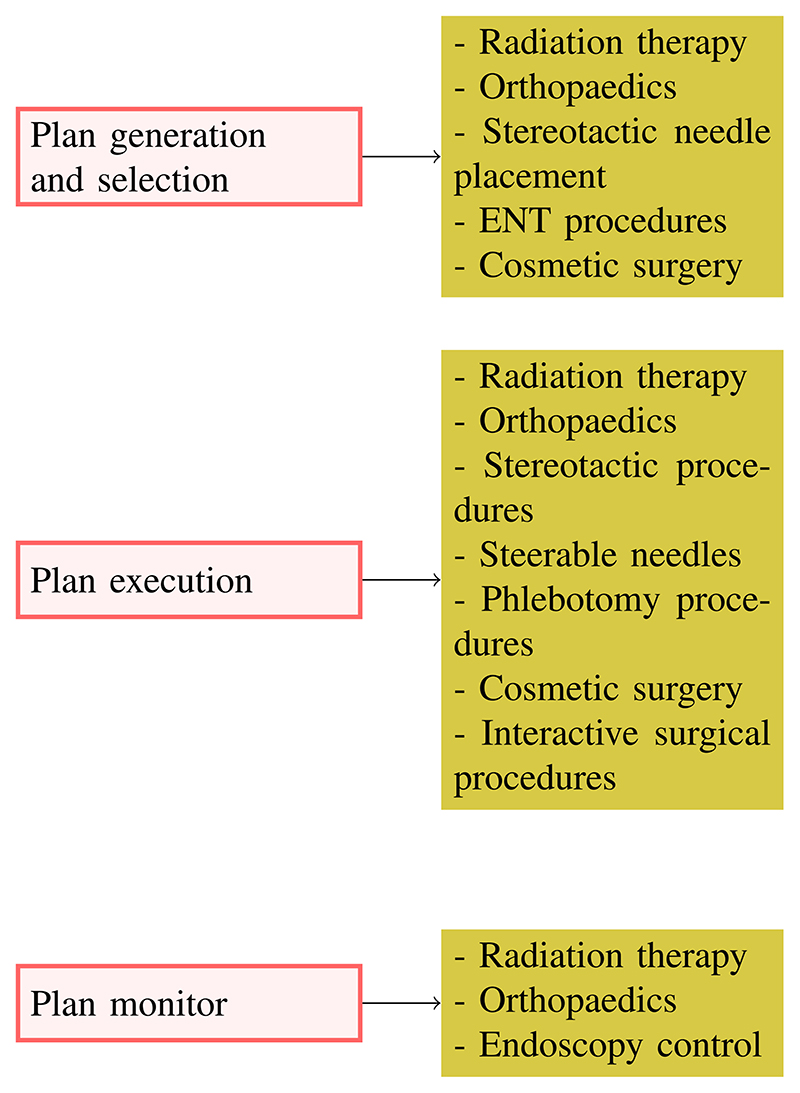

In this section, we list existing medical systems in which some aspect of autonomy is carried out by a computer without human intervention. Since the following descriptions refer to commercially available products, we can only estimate their level of autonomy from the operational characteristics that they exhibit. The relation between the IEC characteristics of autonomy and the products and applications exhibiting them is shown schematically in Fig. 2.

Fig. 2. Products and applications involving autonomy.

A. Plan Generation and Selection With “Surgical CAD/CAM” Systems

Typically, the intervention is planned offline, usually from medical images, and the autonomous part of the system is limited to the generation and, sometimes, the selection of the interventional plan. Before surgery, the images are registered to the real anatomy. Automatic plan generation is a well-established practice, and surgeons review and approve each step of the plan. In this case, system autonomy consists of providing alternative plans and carrying out complex calculations for the physician, e.g., to determine the best approach path to a lesion. Some examples of automatic plan generation include the following.

1) Radiation Therapy: The intervention is planned offline, the patient is placed in the machine, registration is performed, and then the machine delivers a pattern of radiation to the patient [17]. Typically, offline planning is done interactively based on a segmented CT image showing a tumor and surrounding anatomic structures. A medical physicist defines an optimization problem that a computer workstation solves to produce a proposed pattern of radiation beams; the workstation then presents a simulation of the resulting dose pattern to the user. The process proceeds iteratively until an acceptable plan is found. There have also been efforts to further automate this process (e.g., [18]–[20]).
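The optimization step performed by the planning workstation can be illustrated with a deliberately simplified inverse-planning sketch. It assumes a linear dose model (a matrix giving the dose each voxel receives per unit weight of each beam), a quadratic objective weighted by per-voxel importance, and nonnegative beam weights, solved by projected gradient descent. Clinical planning systems use richer objectives and constraints; the function name and formulation here are illustrative assumptions.

```python
def optimize_beam_weights(dose_matrix, prescribed, importance=None, iterations=500):
    """Projected-gradient solution of

        min_w 0.5 * sum_i q_i * ((A w)_i - d_i)**2   subject to  w >= 0

    where A = dose_matrix (voxels x beams), d = prescribed dose, q = importance.
    """
    m, n = len(dose_matrix), len(dose_matrix[0])
    q = importance if importance is not None else [1.0] * m
    # Step 1/L with L = max(q) * ||A||_F**2, an upper bound on the largest
    # eigenvalue of A^T Q A, which guarantees convergence of projected gradient.
    lipschitz = max(q) * sum(a * a for row in dose_matrix for a in row)
    step = 1.0 / lipschitz
    w = [0.0] * n
    for _ in range(iterations):
        residual = [
            q[i] * (sum(dose_matrix[i][j] * w[j] for j in range(n)) - prescribed[i])
            for i in range(m)
        ]
        gradient = [sum(dose_matrix[i][j] * residual[i] for i in range(m)) for j in range(n)]
        # Gradient step, then projection onto w >= 0 (beam weights cannot be negative).
        w = [max(0.0, w[j] - step * gradient[j]) for j in range(n)]
    return w
```

Healthy-tissue voxels can be given a prescribed dose of zero and a high importance, so that the solver trades tumor coverage against exposure of surrounding structures; the iterative review by the physicist then corresponds to adjusting prescriptions and importances and solving again.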

2) Orthopedics: Orthopedic surgery, especially joint replacement, follows the same plan generation paradigm as other interventions, with as much attention as possible to bone structure and consistency. Again, the key planning decisions are the selection of an appropriate implant and the determination of where the implant is to be placed relative to the patient’s anatomy. Planning is typically done interactively based on preoperative CT or X-ray images [5], [6], [21]. However, there have also been efforts to further automate planning based on statistical models and patient images (e.g., [22]).

3) Stereotactic Needle Placement: This includes brachytherapy [23], [24] and various biopsies. Here, the plan is chosen to spare healthy tissue (all tissues exposed to radiation are at risk of developing secondary cancers) and, in the most recent systems, can also be corrected in real time during execution to compensate for needle deflection and biological motion.
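Choosing a path that spares critical structures, like the computation of the best approach path to a lesion mentioned earlier in this subsection, can be sketched as a search over candidate straight-line trajectories. The sketch assumes that critical structures are modeled as spheres, that the usable needle depth is bounded, and that the criterion is to maximize the minimum clearance; these are illustrative assumptions, not the planning method of [23] or [24].

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def point_to_segment(p, a, b):
    """Shortest distance from point p to the segment a-b."""
    ab, ap = _sub(b, a), _sub(p, a)
    denom = _dot(ab, ab)
    t = 0.0 if denom == 0 else max(0.0, min(1.0, _dot(ap, ab) / denom))
    closest = tuple(ai + t * d for ai, d in zip(a, ab))
    return math.dist(p, closest)


def choose_trajectory(target, entry_points, critical_structures, max_depth):
    """Pick the straight entry->target path with the largest clearance.

    critical_structures: list of (center, radius) spheres (hypothetical model).
    Returns (entry_point, clearance), or (None, None) if no path is safe.
    """
    best, best_clearance = None, None
    for entry in entry_points:
        if math.dist(entry, target) > max_depth:
            continue  # needle too short for this path
        clearance = min(
            (point_to_segment(c, entry, target) - r for c, r in critical_structures),
            default=math.inf,
        )
        if clearance > 0 and (best_clearance is None or clearance > best_clearance):
            best, best_clearance = entry, clearance
    return best, best_clearance
```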

4) ENT Procedures: One example is the HEARO robot for cochlear implants [25], which plans, under surgeon supervision, the different steps of implant insertion. This is a typical example of autonomy level 1, providing both cognitive and manual support to the physician.

5) Cosmetic Surgery: In this case, automation is used to create realistic patterns that resemble natural structures, as in hair restoration procedures [26].

B. Plan Execution

In general, plan execution cannot be separated from plan generation; it is the logical next step, as noted in Section III-A. Often, the plan is adapted to changing anatomical conditions during execution. This adaptation rarely takes the form of a new plan computation; it is usually implemented with a control algorithm that tracks some predefined feature. This level of adaptation can be labeled as level 1 since it provides manual support to the operating surgeon.
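A feature-tracking controller of the kind described above can be as simple as a discrete proportional law. The 1-D sketch below is a hypothetical illustration (the function name and gain are assumptions) of how a tool can follow a moving feature without recomputing the plan.

```python
def track_feature(feature_positions, gain=0.5, start=0.0):
    """Discrete proportional tracking of a (1-D) moving feature.

    Each control cycle moves the tool by gain * (feature - tool). For a fixed
    feature, the error shrinks by a factor |1 - gain| per cycle, so
    0 < gain < 2 is needed for convergence.
    """
    tool, trajectory = start, []
    for feature in feature_positions:
        tool += gain * (feature - tool)
        trajectory.append(tool)
    return trajectory
```

With a periodic input, e.g., a sampled breathing trace, the tool follows the feature with a residual lag that decreases as the gain approaches 1.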

1) Radiation Therapy: Radiation systems are arguably the first “autonomous robotic” interventional systems since all steps are carried out with minimal human intervention. One well-known example is the CyberKnife system [27], which combines image guidance and a robotic positioner to deliver highly focused radiation to the patient following a painting approach, i.e., a trajectory that minimizes the exposure of healthy tissues to the radiation. X-ray cameras track patient movements and provide real-time feedback to the robot positioner. The system operator cannot verify the correctness of the compensation motions as the robot executes them because they are too fast. If an alarm condition is detected, the operator can only interrupt the motion with a stop button. This device is probably the most advanced on the market from the point of view of autonomy. The plan is generated by the machine and then approved by the physician, but, because of the limits of human reaction time and perception, the operator cannot approve the actions of the robot during the therapy. Only major faults can be detected, and, as with other surgical robots, system safety is ensured by a thorough risk analysis during the certification phase and by extensive testing and training.

2) Orthopedic Surgery: Once the plan has been defined through the “Surgical CAD/CAM” approach, execution is carried out with a cooperative approach in which the robot acts as a guide, i.e., a virtual fixture, to constrain surgeon-commanded motions. The Robodoc system (now marketed by Think Surgical as the Solution One Surgical System) [28] was the first system to carry out part of a hip replacement procedure without human intervention [6], though the autonomous parts of the intervention were typically only the bone preparation portions. The Robodoc system was subsequently also applied to knee replacement surgery [29] and revision hip surgery [30]–[32]. A number of other systems for joint replacement surgery have since appeared, though these typically rely on automatic positioning of cutting guides (e.g., the Zimmer Rosa system) [33], on constraining the motion of a hand-guided robot (e.g., the Stryker/Mako Rio system) [34], or on automatically enabling a surgical cutter when it is positioned within a volume of bone to be removed (e.g., the Smith & Nephew Navio system [35]). Robotic systems have also been used in spine surgery to position a drilling guide to assist in inserting screws down vertebral pedicles, which are the struts of bone connecting the back part of the spine to the vertebral bodies (e.g., [36] and [37]). All of these systems combine presurgical planning from CT images, to select an implant and determine its position on the patient’s bone, with intraoperative registration of the surgical plan to the patient’s anatomy, followed by robotically assisted execution. Of these approaches, the Robodoc system exhibits the highest level of “autonomous” execution, although it also uses hand-over-hand guiding of the robot during the setup and registration phases, with surgeon supervision during bone machining. This is again level 1 autonomy since the hand-guided robot gives manual support to the surgeon. From the point of view of the automation system, the same feature means that the robot can be considered a cooperative surgical robot, in the same fashion as industrial cooperative robots.
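A forbidden-region virtual fixture can be sketched with admittance control: the force the surgeon applies to the hand-guided robot is converted into a velocity, and any velocity component that would carry the tool across a boundary is removed. The planar boundary, the admittance gain, and the function name are hypothetical simplifications; commercial systems derive their boundaries from the surgical plan and use more elaborate control laws.

```python
def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fixture_velocity(hand_force, admittance, tip, plane_point, plane_normal):
    """Admittance control with a planar forbidden-region virtual fixture.

    hand_force: force the surgeon applies to the robot (N).
    admittance: velocity per unit force (hypothetical gain, (mm/s)/N).
    plane_normal: unit normal pointing into the allowed region.
    """
    velocity = [admittance * f for f in hand_force]
    # Signed distance of the tool tip from the boundary (> 0 means inside the allowed region).
    distance = _dot([t - p for t, p in zip(tip, plane_point)], plane_normal)
    normal_speed = _dot(velocity, plane_normal)
    if distance <= 0.0 and normal_speed < 0.0:
        # At (or past) the boundary and moving outward: remove the normal
        # component, keeping the tangential motion the surgeon commanded.
        velocity = [v - normal_speed * n for v, n in zip(velocity, plane_normal)]
    return velocity
```

This fixture only blocks further outward motion; a tool that is already outside the allowed region is not pushed back.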

3) Stereotactic Procedures: One of the first uses of surgical robots was the positioning of needle guides for brain biopsies and similar procedures (e.g., [38]), and these uses are now ubiquitous, with biopsies, injections, and similar interventions carried out throughout the body. When the procedure is done inside an MRI, X-ray, or CT imaging system, the robot may perform the actual needle puncture, although it is also common for the robot to position a needle guide [39]. An example of frameless stereotaxy is the NeuroMate system by Renishaw, which uses an ultrasound (US) probe to locate fiducial markers placed on the patient’s head [40]. As with orthopedic systems, these systems typically rely on human judgment to designate an anatomic target. The robot then moves autonomously to aim a needle guide or active device at the target, and either the surgeon or the system performs the actual needle placement. The latter case may occur when the robot is within an MRI bore, and it can be classified as level 2 autonomy, as in [41].
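Once the target and the entry point have been designated on the image and a rigid image-to-robot registration is available, aiming the needle guide reduces to a few geometric operations. The sketch assumes that the registration is already expressed as a rotation matrix `R` and a translation `t` (e.g., obtained from fiducial markers), and it introduces a hypothetical `standoff` parameter for backing the guide off the entry point along the needle axis.

```python
import math


def apply_rigid(R, t, p):
    """Map point p with rotation matrix R (3x3 nested lists) and translation t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def aim_needle_guide(entry_img, target_img, R, t, standoff=0.0):
    """Return (guide_tip, unit_axis, insertion_depth) in robot coordinates."""
    entry = apply_rigid(R, t, entry_img)
    target = apply_rigid(R, t, target_img)
    direction = tuple(b - a for a, b in zip(entry, target))
    depth = math.hypot(*direction)
    if depth == 0.0:
        raise ValueError("entry and target coincide")
    axis = tuple(d / depth for d in direction)
    # Place the guide `standoff` units before the entry point, aligned with the axis.
    guide_tip = tuple(e - standoff * a for e, a in zip(entry, axis))
    return guide_tip, axis, depth
```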

4) Steerable Needles: Flexible needles can be “steered” from outside the body during insertion to compensate for biological motions or planning errors. Steering can be achieved either by rotating the needle about its primary axis and exploiting the asymmetric forces that the tissue exerts on the asymmetric tip as the needle is pushed through it [42], or by applying torques to the proximal end of the needle [43]. The control inputs (translation speed and rotation angle) are computed based on nonholonomic kinematic models using image-based feedback, which may also allow the model to be refined as the needle propagates through the tissue. XACT Robotics in Israel now offers a commercial steerable needle system based on the second approach [44]. XACT Robotics has collected data from more than 200 successful preclinical and clinical procedures at leading centers worldwide, such as the Hadassah Medical Center (Jerusalem, Israel) and the Lahey-Beth Israel Medical Center (Burlington, MA, USA), and has recently initiated commercial use in a physician office-based setting at Sarasota Interventional Radiology (Sarasota, FL, USA). In this case, the surgeon cannot approve the robot’s actions because they are too fast for human reaction. The safety of this type of system is verified by extensive testing and by analytical proofs of the stability and convergence of the control algorithm.
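The nonholonomic kinematics mentioned above can be illustrated with a planar simplification of the constant-curvature model often used for bevel-tip needles (the first steering approach): the tip advances along circular arcs whose bending direction is flipped by rotating the needle 180° about its axis. The assumptions (planar motion, a constant curvature set by the tissue, bevel flips only) are for illustration; the sketch does not model the proximal-torque approach of the XACT system.

```python
import math


def insert_needle(segments, curvature, x=0.0, y=0.0, heading=0.0):
    """Planar constant-curvature bevel-tip needle model.

    segments: list of (arc_length, bevel) with bevel = +1 or -1; a 180-degree
    rotation of the needle about its axis flips the bevel and the bending side.
    Each segment is integrated exactly as a circular arc of curvature
    bevel * curvature (a straight line if the curvature is zero).
    """
    for length, bevel in segments:
        k = bevel * curvature
        new_heading = heading + k * length
        if k == 0:
            x += length * math.cos(heading)
            y += length * math.sin(heading)
        else:
            x += (math.sin(new_heading) - math.sin(heading)) / k
            y += (math.cos(heading) - math.cos(new_heading)) / k
        heading = new_heading
    return x, y, heading
```

Alternating bevel orientations over equal arc lengths returns the heading to its initial value while leaving a lateral offset, which is how a sequence of flips can produce an S-shaped path around an obstacle.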

5) Phlebotomy Procedures: Like other needle-based sampling procedures, phlebotomy uses a needle to puncture a vein and draw blood. Finding the vein is often difficult, and Veebot developed an autonomous blood sampling robot that finds a vein using IR images, places the needle using US images, computes an optimal insertion path, and inserts the needle after verification [45]. Since this system can potentially carry out all phases of the needle insertion autonomously, it achieves a high level of autonomy.

6) Cosmetic Surgery: Two systems have autonomous functions in the fields of hair restoration [26] and tattoo removal [46]. The hair transplant robot can autonomously identify hair follicles, harvest them, and reseed them on the scalp following a natural pattern computed by the planning software.

7) Interactive Surgical Procedures: In these cases, most surgical decisions and actions are made intraoperatively by the surgeons, and systems with significant autonomous functions have not yet reached the market. Some examples include the following.

1) In endoscopic MIS procedures, such as da Vinci procedures [47], the robot may be purely teleoperated or may perform “assistive” functions, such as autonomous endoscope aiming, retraction, or similar tasks. There is an increasing trend toward more overtly “surgical” tasks, such as suturing, debridement, and tissue resection. Tool placement in urology is carried out mostly by da Vinci systems [48], which have replaced dedicated systems, such as [49].

2) Robots for neurosurgery: These devices are mostly teleoperated, but they include some form of cognitive and manual support to help the surgeon improve accuracy and increase both patient and physician safety. Examples are the Micromate by Isys [50] and the Mazor X Stealth [51] for spinal surgery. These devices allow surgeons to plan and monitor the intervention on a workstation away from the patient, thus protecting them from the radiation generated by the intraoperative imaging system and reducing the patient’s exposure time. Visual guidance permits registration of the robotic arm with the anatomical markers and accurate positioning of a guide for the spinal intervention. The Renishaw Neuromate [52] is used for electrode implantation for deep brain stimulation and stereo electroencephalography, and for stereotactic applications in neuroendoscopy and biopsy. It serves as a guide for surgical tools and needles during robot-guided stereotactic implantation of multiple deep brain electrodes, providing a less invasive technique than open skull procedures and allowing the creation of a 3-D grid of electrodes [53].

3) Microsurgical robots: These are typically teleoperated or cooperatively controlled, with a microscope as the primary feedback to the surgeon. Typical applications include ophthalmology and otolaryngology; examples are the Steady Hand [54] cooperative robot and the teleoperated robot [55] for retinal microsurgery, and the Symani Surgical System for robot-assisted microsurgery [56].

5) Robotic pills: These untethered devices are commercial products, e.g., the PillCam [60], which can travel passively through the entire intestine and take pictures of areas of interest.

C. Plan Monitoring

Most of the sensors available in the robotics industry cannot be used in robot-assisted surgery because of design, size, and sterilization issues. Vision sensors are limited to monocular and stereo cameras, and the latter achieve poor 3-D (depth) resolution in MIS because of the short distance between the two cameras. Dedicated 3-D cameras, such as RGB-D devices, are too big to be used in MIS and open procedures. Force and touch sensors would be of great help in tissue manipulation and palpation, but, so far, surgical instruments are not sensorized because of the difficulty in meeting size and sterilization constraints. Thus, vision is the main sensing modality available to an autonomous surgical robot for monitoring the evolution of a task.
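The effect of the short distance between the two cameras of a stereo endoscope can be quantified with the standard depth-from-disparity relation for a rectified stereo pair. The working distance, focal length, disparity error, and the two baselines compared below are assumed values chosen only for illustration.

```python
def stereo_depth_resolution(depth_mm, baseline_mm, focal_px, disparity_error_px=1.0):
    """Approximate depth uncertainty of a rectified stereo pair.

    Depth from disparity: Z = f * B / d. Differentiating, |dZ| = Z**2 / (f * B) * |dd|,
    so the error grows with the square of the depth and shrinks with the baseline B.
    """
    return depth_mm ** 2 * disparity_error_px / (focal_px * baseline_mm)


# Hypothetical numbers: 100 mm working distance, 1000 px focal length, 1 px disparity error.
for baseline in (5.0, 60.0):  # assumed stereo-endoscope vs. external stereo-rig baselines
    print(baseline, stereo_depth_resolution(100.0, baseline, 1000.0))
```

Under these assumptions, a 5 mm baseline gives a depth uncertainty of 2 mm at 100 mm, whereas a 60 mm baseline gives about 0.17 mm, a twelvefold improvement that a stereo endoscope cannot obtain because its diameter limits the baseline.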

1) Radiation Therapy: The CyberKnife system [27] discussed earlier tracks the patient’s movements and adjusts the radiation pattern to compensate for small biological motions of the patient, e.g., breathing.

2) Orthopedic Surgery: In this area, plan monitoring is mostly carried out using IR markers positioned on the patient’s body and on the tools. For example, in total knee replacement (TKR) procedures performed with the Mako system [21], the robot supports the surgeon’s movements by switching to a cooperative mode during the approach to the cutting plane.

3) Endoscopy Control: Commercial products are available that move the endoscopic camera without human control, following specific features in the scene. For example, camera stabilization and target tracking are performed by SOLOASSIST [61], FreeHand [62], Viki [63], and AutoLap [64]. These devices were actually the first autonomous systems used to support MIS interventions. However, their autonomy is implemented by feature-tracking algorithms that keep the surgical instrument in the field of view of the endoscope.
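A minimal sketch of such feature tracking: a proportional pan/tilt rate command computed from the pixel position of the instrument tip, with a central deadband so that the camera stays still while the instrument is near the center of the image. Instrument detection is assumed to be available, and the function name, gain, and deadband are hypothetical; this does not describe the algorithms of the products listed.

```python
def endoscope_rates(tool_px, image_size, deadband=0.1, gain=0.002):
    """Pan/tilt rate command that keeps a tracked instrument tip near the image center.

    tool_px: (u, v) pixel position of the tracked instrument tip.
    image_size: (width, height) in pixels.
    deadband: half-width of the central zone, as a fraction of the image size,
              inside which the camera does not move (avoids constant jitter).
    gain: hypothetical rate per pixel of error (rad/s per px).
    """
    width, height = image_size
    du, dv = tool_px[0] - width / 2, tool_px[1] - height / 2
    if abs(du) <= deadband * width and abs(dv) <= deadband * height:
        return 0.0, 0.0
    return gain * du, gain * dv
```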

IV. Technical Challenges of Autonomy

There is no single path to endowing a robotic system with autonomy; in particular, autonomy in robotic surgery can be defined in different ways depending on the anatomical region of interest, the type of pathology, and the surgical procedure. Therefore, we give an overview of key research results demonstrated in laboratories worldwide. Thanks to the availability of high-precision laboratory platforms for robot-assisted surgery, such as the da Vinci Research Kit (dVRK), the KUKA LBR Med collaborative robot, and the RAVEN robot, many research groups are addressing the technical challenges of automating surgical procedures by developing realistic task demonstrations.

The dVRK research program started when Intuitive Surgical Inc. donated decommissioned first-generation (Classic) da Vinci hardware to academic research groups; the resulting systems are based on open-source software and electronic controllers developed at Johns Hopkins University and Worcester Polytechnic Institute [65], [66]. There are now over 40 such systems deployed around the world, and many of them have been used for research demonstrating varying levels of surgical autonomy. This ongoing dVRK program is summarized in a recent survey [67]. The basic dVRK system software has also been adapted to control multiple robots, and its open-source framework is being extended to create a collaborative robotics toolkit (CRTK) [68]. Many research areas are addressed using the dVRK system, e.g., augmented reality [69], simulation [70], sensing [71], and task execution [72]. The complete list of papers published on research using the dVRK system is available on GitHub [73].

The KUKA LBR Med [74] is one of the few commercial robots that are medically certifiable, and it has the same dimensions and capabilities as the equivalent industrial robot. It is the preferred choice when developing a medical system or a research demonstrator based on a medically certifiable robot, as illustrated by the many applications shown in the video in [75].

The RAVEN robot was developed at the University of Washington, and its main features are described in [76] and [77]. The robot is distributed by Applied Dexterity [78] and is currently used in research projects on task autonomy [79].

To support software and hardware interoperability, the users of the three research robots meet periodically at major events on surgical robotics to discuss progress and integration plans [80].

The use of “laboratory” surgical robots, as opposed to adapted industrial robots, is essential to carry out experiments that meet the constraints of a device that will operate in the OR and to be credible with surgeons who might be familiar with the clinical versions of the same robots.

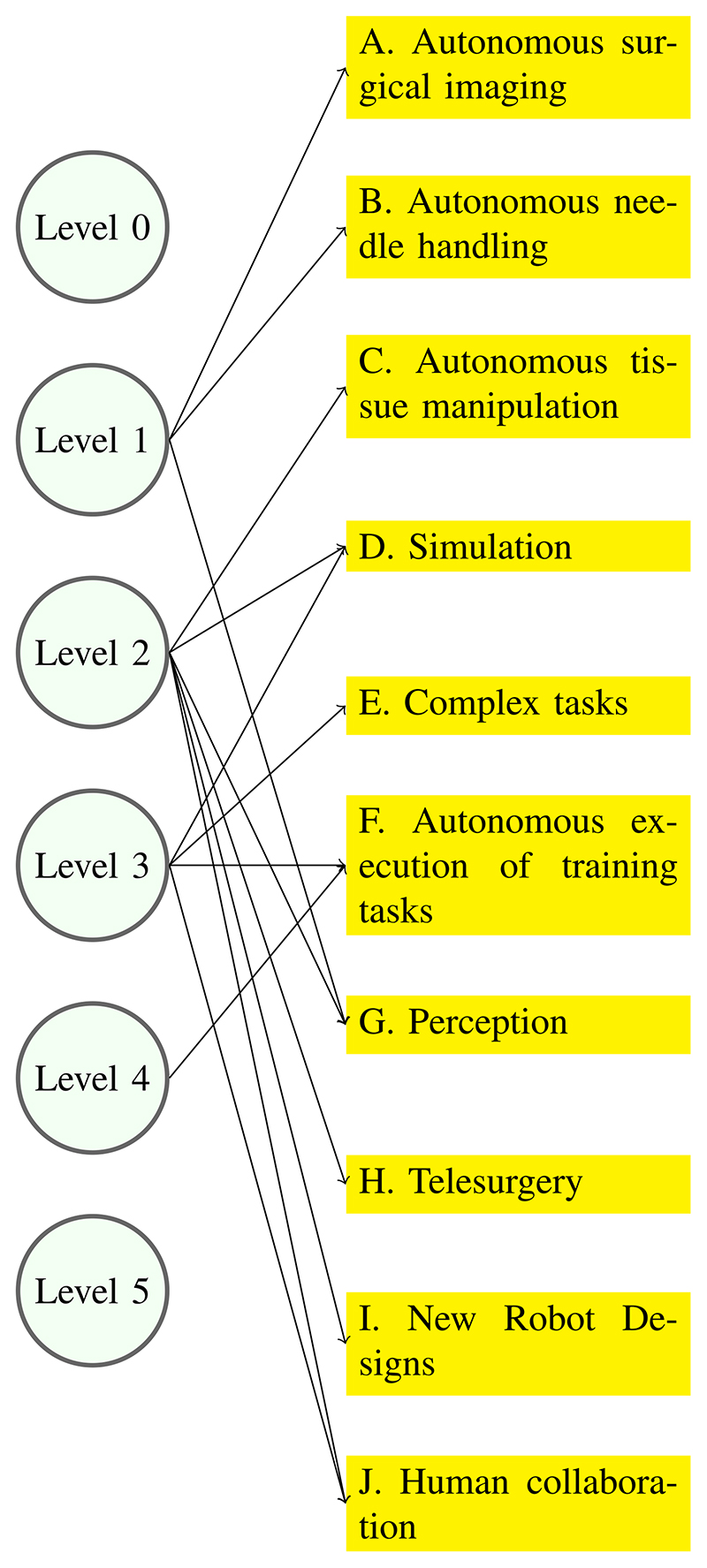

To showcase the main aspects of autonomy, researchers have focused on tasks that are highly relevant to surgical interventions, e.g., needle handling in suturing and biopsy; on technologies that are key for autonomy, e.g., perception and simulation; or on simple but complete tasks, such as those performed during surgical training and described in the Fundamentals of Laparoscopic Surgery [81]. Sections IV-A–IV-J focus on these single-technology demonstrations. In this context, it is difficult to isolate and discuss the individual technologies, such as a planning phase, that contribute to the success of a demonstration. Planning is an active research area, in particular when addressing the deformable environment of MIS. An example of MIS plan generation is presented in [82], which provides a tool for registering the instrument position in the abdominal cavity and a smooth, curvilinear path generator.
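The details of the path generator in [82] are not given here; as a stand-in, the sketch below produces a smooth curvilinear path through a list of waypoints with a uniform Catmull–Rom spline, which passes through every waypoint with continuous tangents. The waypoints and sampling density are assumptions for illustration, and neither collision checking nor tissue deformation is modeled.

```python
def catmull_rom_point(p0, p1, p2, p3, t):
    """Point at parameter t in [0, 1] on the uniform Catmull-Rom segment from p1 to p2."""
    return tuple(
        0.5 * (2 * b + (c - a) * t + (2 * a - 5 * b + 4 * c - d) * t ** 2
               + (3 * b - a - 3 * c + d) * t ** 3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def smooth_path(waypoints, samples_per_segment=10):
    """Densely sampled smooth path through all waypoints (at least two required)."""
    if len(waypoints) < 2:
        raise ValueError("need at least two waypoints")
    # Repeat the end points so that the first and last segments have neighbors.
    padded = [waypoints[0], *waypoints, waypoints[-1]]
    path = []
    for i in range(len(padded) - 3):
        for k in range(samples_per_segment):
            path.append(catmull_rom_point(*padded[i:i + 4], k / samples_per_segment))
    path.append(tuple(float(c) for c in waypoints[-1]))
    return path
```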

Fig. 3 attempts to connect the levels of autonomy described in Section II with the demonstrations described in this section. It is clear that each demonstrator exhibits a mixture of levels of autonomy, and a clear-cut correspondence is difficult to establish, at least for now.

Fig. 3. Technical challenges of autonomy.

A. Autonomous Surgical Imaging

Imaging is the most common sensing modality in surgery, whether vision (cameras, depth sensors, and machine vision), US (with its low cost and nonionizing nature), or X-ray and magnetic resonance imaging. Each modality addresses specific situations and is extensively used in clinical practice. Intelligent vision in robotic surgery is a research field in its own right, and reviews of the state of the art are available, e.g., [83]. Furthermore, the vision system must be able to assess the quality of its own images and change its parameters autonomously, as demonstrated by researchers at the Technical University of Munich in [84] for a US probe. In this case, the imaging system must recognize whether the probe has good contact with the organ surface and adjust its position and orientation to optimize contact. Computer vision now relies on artificial intelligence (AI) algorithms, such as deep learning (DL) and reinforcement learning (RL), but their integration with autonomous functions is still challenging; researchers at the Centre Hospitalier Intercommunal, Poissy/Saint-Germain-en-Laye (France), discuss the application of AI techniques in autonomous surgery [85]. Researchers at Imperial College London (London, U.K.) have developed a simulator that generates large and accurate endoscopic datasets for studying surgical vision and learning algorithms [86]. The need to autonomously provide better images of patients is particularly important in orthopedic surgery, where, besides the obvious need for good medical outcomes, it is necessary to reduce the exposure of both patients and medical staff to repeated acquisitions or even continuous fluoroscopy with a C-arm. Researchers at the University of Heidelberg (Germany) have developed a method to automate the image acquisition procedure, estimating the C-arm pose and updating it according to the anatomy-specific standard projections required for monitoring and evaluating the surgical result [84].
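The US contact check described above can be illustrated with a very simple heuristic: poor acoustic coupling under part of the transducer tends to produce dark scanlines, so the fraction of sufficiently bright scanlines serves as a crude contact score. This is a hypothetical sketch, much simpler than the method in [84]; the thresholds and the action labels returned are assumptions.

```python
def contact_fraction(scanlines, threshold=20.0):
    """Fraction of scanlines whose mean intensity exceeds `threshold`.

    Poor acoustic coupling (e.g., an air gap under part of the probe) tends to
    produce dark scanlines, so a low fraction suggests poor contact.
    """
    bright = [sum(line) / len(line) > threshold for line in scanlines]
    return sum(bright) / len(bright), bright


def probe_adjustment(scanlines, min_fraction=0.9, threshold=20.0):
    """Suggest a (hypothetical) corrective action for the probe holder."""
    fraction, bright = contact_fraction(scanlines, threshold)
    if fraction >= min_fraction:
        return "hold"
    half = len(bright) // 2
    dark_left = bright[:half].count(False)
    dark_right = bright[half:].count(False)
    if dark_left > dark_right:
        return "improve_contact_left"
    if dark_right > dark_left:
        return "improve_contact_right"
    return "increase_pressure"
```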

B. Autonomous Needle Handling

Needles are an essential surgical tool, and they are often used in repetitive tasks, such as suturing or tissue sampling, that have potential for automation. To explore this potential, needle handling has been divided into two main areas: curved needles used in suturing and straight needles used in percutaneous interventions. Suture needles carry the thread at their proximal end and are picked up by a specific robotic instrument. Straight needles are usually hollow, to deliver medications to the tissues or to collect biological samples, and they are mounted on the robot’s wrist. The development of autonomous suturing, which could lead to autonomy level 2 or 3, is clearly a complex endeavor because it involves the full spectrum of robotic capabilities, e.g., perception, grasping, and force and position control, and it has been addressed extensively by the robotics community. Before suturing can take place, an important step is needle grasping. The needle should be picked up by the robotic tool in such a way as to avoid, or minimize, the need to reposition it by passing it from one instrument to the other. This problem is addressed in [87], where researchers at the Politecnico di Milano propose a procedure consisting of a needle detection algorithm, an approach phase to the needle by the surgical instrument based on visual feedback, and a grasping phase that depends on the procedure to be executed. The specific case addressed in that work is radical prostatectomy, which requires a complex suture of the bladder to the urethra that could benefit from intelligent needle grasping.

1) Suture Demonstrations: Suturing is an attractive candidate for robotic execution because, in many cases, it takes up a large percentage of the overall intervention time, and many research groups have demonstrated some aspects of autonomous suturing. On the one hand, suturing is a challenging and interesting engineering problem; on the other hand, sutures may soon be replaced by other means of connecting tissues, e.g., glues, or be performed by new, faster devices. This is the direction taken by the following projects, which achieve Level 3 by developing an integrated robotic device.

a) SNAP: Researchers at UC Berkeley (UCB) developed a novel mechanical needle guide and a framework for optimizing needle size, trajectory, and control parameters using sequential convex programming. The Suture Needle Angular Positioner (SNAP) reduced the error in the needle pose estimate by a factor of three compared with the standard actuator. The algorithm and SNAP were evaluated on a dVRK using tissue phantoms, and completion times were compared with those of humans from the JIGSAWS dataset [88]. Initial results suggest that the dVRK can perform suturing at 30% of human speed while completing 86% of the attempted suture throws. Videos and data are available at [89].

b) STAR: Researchers at Johns Hopkins University (JHU) developed the Smart Tissue Autonomous Robot (STAR), which is able to perform an anastomosis with higher accuracy than human surgeons [90]. This prototype is based on the KUKA Med robot equipped with the Endo360 laparoscopic suturing tool and a vision system consisting of a plenoptic camera for 3-D acquisition and a near-infrared (NIR) camera for detecting targets. The latest prototype uses a force/torque sensor to measure suture tension, and it has reached Level of Autonomy 3 (supervised autonomy) according to IEC TR 60601-4-1 [90], [91].

2) Needle Insertion Demonstrations: The complexity of this task depends on whether the needle is rigid or flexible. With a rigid needle, the whole demonstration can be carried out autonomously, from planning to needle insertion and extraction, since the uncertainties are primarily due to tissue stiffness and can be handled by controlling the needle position. The insertion of a flexible needle is more complex because it must account for the deflection of the needle caused by its interaction with the tissues. Current research, however, exploits this difficulty: it computes a curvilinear trajectory, consisting of an appropriate sequence of needle deflections, that avoids critical structures. In both cases, needle insertion consists of a sequence of phases: reach the phantom surface, insert the needle, monitor the correctness of the insertion, and reach the target. With a flexible needle, however, real-time trajectory monitoring must be coupled with possible corrections. The level of autonomy of these procedures depends on the number of human interventions required. Both procedures can be considered to have achieved level of autonomy 4, i.e., the action sequence is planned by the system, approved by the physician, and executed by the robot. Flexible needle insertion in complex structures may require human intervention to approve or correct the deflection compensation, and in these cases the level of autonomy is lower.
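The phase sequence described above (reach the surface, insert, monitor, reach the target) maps naturally onto a finite state machine. The minimal Python sketch below is only an illustration under stated assumptions: the per-step readings (surface contact, insertion depth, deviation from the planned path), the 30-mm target depth, and the 1-mm tolerance are hypothetical, and exceeding the tolerance hands control back to the physician instead of correcting autonomously. It is not the implementation of any of the systems discussed here.

```python
from enum import Enum, auto


class Phase(Enum):
    REACH_SURFACE = auto()
    INSERT = auto()
    MONITOR = auto()         # check the correctness of the insertion
    TARGET_REACHED = auto()
    HUMAN_REVIEW = auto()    # hand control back to the physician


TERMINAL = {Phase.TARGET_REACHED, Phase.HUMAN_REVIEW}


def next_phase(phase, in_contact, depth_mm, target_depth_mm, deviation_mm, tol_mm=1.0):
    """Return the next phase given one set of (hypothetical) sensor readings."""
    if phase is Phase.REACH_SURFACE:
        return Phase.INSERT if in_contact else Phase.REACH_SURFACE
    if phase is Phase.INSERT:
        return Phase.MONITOR  # advance one increment, then evaluate it
    if phase is Phase.MONITOR:
        if deviation_mm > tol_mm:
            return Phase.HUMAN_REVIEW
        if depth_mm >= target_depth_mm:
            return Phase.TARGET_REACHED
        return Phase.INSERT
    return phase  # terminal phases are absorbing


def run(readings, target_depth_mm=30.0):
    """Drive the FSM with a sequence of (in_contact, depth_mm, deviation_mm) tuples."""
    phase = Phase.REACH_SURFACE
    trace = [phase]
    for in_contact, depth_mm, deviation_mm in readings:
        phase = next_phase(phase, in_contact, depth_mm, target_depth_mm, deviation_mm)
        trace.append(phase)
        if phase in TERMINAL:
            break
    return trace
```

For a flexible needle, the MONITOR state is where real-time trajectory corrections would be inserted before returning to INSERT.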


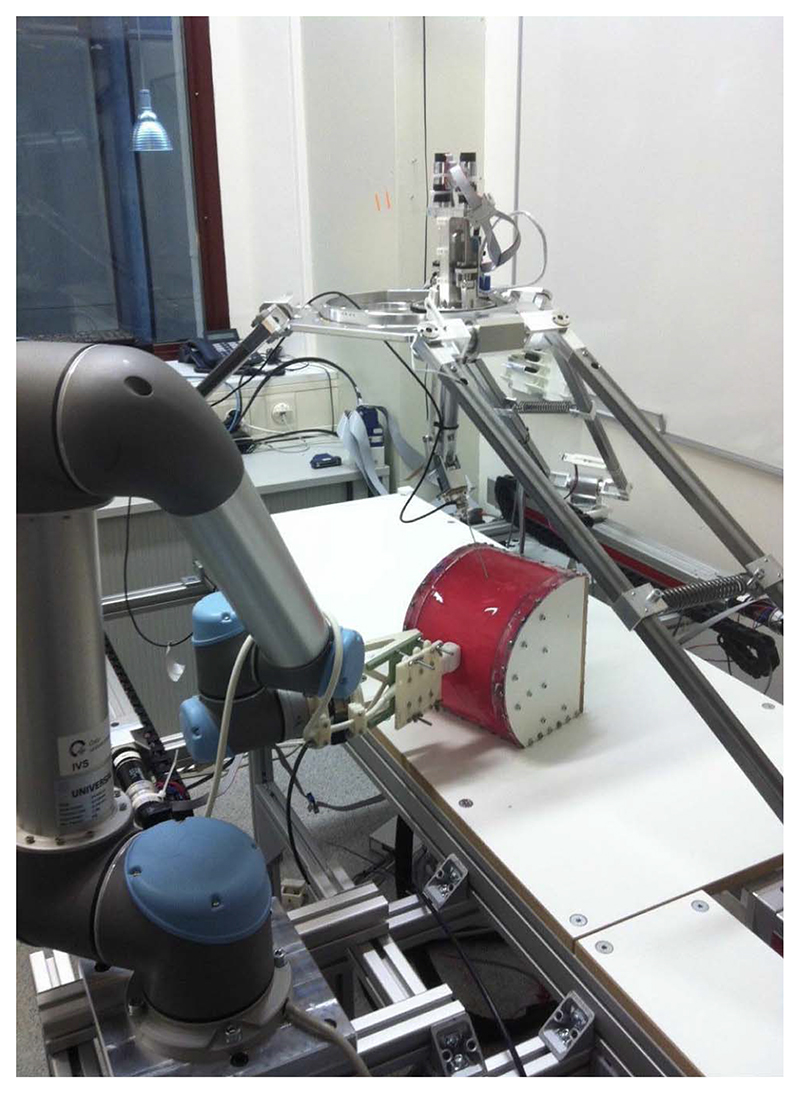

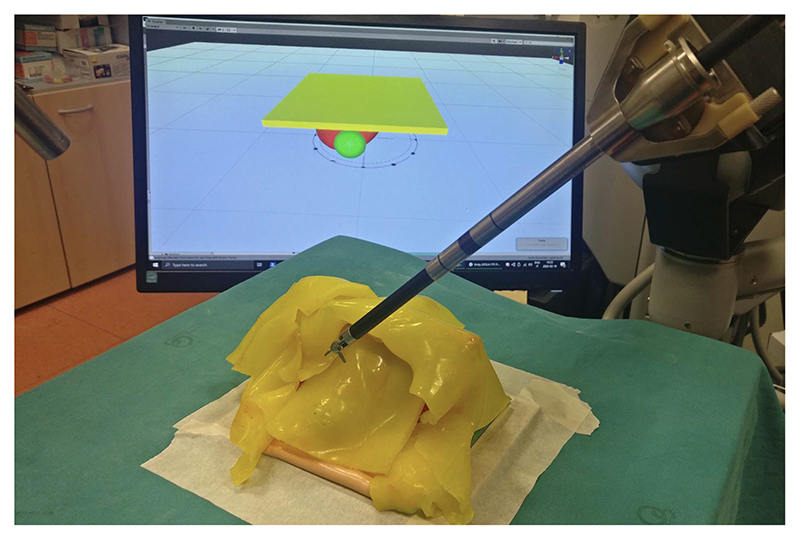

a) Rigid needle: Certain clinical procedures, e.g., brachytherapy or cryoablation, are carried out using a cannula that does not bend, which simplifies planning and control. Researchers of the European Consortium Intelligent Surgical Robotics (I-SUR) [92] simulated a complete cryoablation procedure for kidney tumors using the demonstrator shown in Fig. 4. It consisted of three robots: a delta robot for macro positioning and needle insertion, a serial robot for precise needle holding, and a UR5 robot carrying a US probe for real-time guidance [93]. The “patient” was an abdomen segment with a kidney and the relevant anatomical structures. The task was described using UML [94], and an executable finite state machine (FSM) was generated automatically from the task description. Human supervision was ensured by a smooth transition from autonomous to manual control [95] with haptic feedback [96]. This research is documented in [97], and a video is available at [98].
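How the smooth transition from autonomous to manual control in [95] is realized is not detailed here. One common shared-control scheme, shown purely as an assumption, blends the autonomous command with the surgeon's command through an authority weight alpha that ramps from 1 (fully autonomous) to 0 (fully manual), so that the commanded motion never changes abruptly. The function names, the linear ramp, and the example commands are hypothetical.

```python
def blend(u_auto, u_human, alpha):
    """Blend two velocity commands; alpha=1 is fully autonomous, alpha=0 fully manual."""
    alpha = min(max(alpha, 0.0), 1.0)  # clamp the authority weight to [0, 1]
    return [alpha * a + (1.0 - alpha) * h for a, h in zip(u_auto, u_human)]


def handover_alphas(n_steps):
    """Linear ramp of the authority weight from 1 to 0 over n_steps control cycles."""
    if n_steps < 2:
        return [0.0]
    return [1.0 - k / (n_steps - 1) for k in range(n_steps)]


# Example: hand over a 3-D velocity command over five control cycles.
u_robot = [1.0, 0.0, 0.0]    # hypothetical command from the autonomous planner
u_surgeon = [0.0, 1.0, 0.0]  # hypothetical command from the master console
for alpha in handover_alphas(5):
    print(alpha, blend(u_robot, u_surgeon, alpha))
```

A linear ramp is the simplest choice; the ramp duration, or an alpha that depends on the system state, would have to be tuned for the surgeon and the task.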

b) Flexible needle: In 2005, researchers at UCB and JHU were among the first to develop a planning algorithm for the insertion of highly flexible bevel-tip needles into soft tissues with obstacles in a 2-D imaging plane. Given an initial insertion plan specifying location, orientation, bevel rotation, and insertion distance, the planner combines soft-tissue modeling and numerical optimization to generate an insertion plan that compensates for simulated tissue deformations, locally avoids polygonal obstacles, and minimizes the insertion distance. The simulator computes soft-tissue deformations using a finite element model on a 2-D mesh that incorporates the effects of the needle tip and of frictional forces [99]. This work was greatly extended in subsequent years with a robot-assisted 3-D needle steering system that used three integrated controllers: a motion planner that guides the needle around obstacles to a target in the desired plane, a planar controller that keeps the needle in the desired plane, and a torsion compensator that controls the orientation of the needle tip about the axis of the needle shaft [100], [101]. Experimental results from steering an asymmetric-tip needle in artificial tissue and in ex vivo biological tissue demonstrated the effectiveness of the approach. A review of the scientific and patent literature on needle steering is presented in [102], and the technology has been applied in different areas, e.g., in neurosurgery [103], within the MRI bore [104], and with recurrent neural networks for roll estimation [105]. At this point, the obstacles to the clinical use of needle steering technology seem to be mostly related to regulatory and marketing issues. In fact, the ACE robot developed by XACT [44] has received regulatory clearance and can perform autonomous flexible needle insertion in complex anatomical structures under CT guidance [106].
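The steering principle can be illustrated with the widely used planar kinematic model of a bevel-tip needle: the tip moves along an arc of constant curvature kappa, and rotating the bevel by 180° flips the direction of bending. This is a swapped-in simplification, not the planner of [99], which additionally models tissue deformation and friction with finite elements; the curvature and segment lengths below are illustrative assumptions.

```python
import math


def bevel_tip_pose(segments, kappa):
    """Integrate the planar constant-curvature bevel-tip needle model.

    segments: list of (arc_length, bevel_sign); bevel_sign is +1 for one bevel
              orientation and -1 after a 180-degree rotation of the needle.
    kappa:    natural curvature of the needle path (1/length units).
    Returns the pose (x, y, theta) at the start and after each segment.
    """
    x, y, theta = 0.0, 0.0, 0.0
    poses = [(x, y, theta)]
    for s, sign in segments:
        k = sign * kappa
        if k == 0.0:                      # straight insertion
            x += s * math.cos(theta)
            y += s * math.sin(theta)
        else:                             # exact integration along a circular arc
            x += (math.sin(theta + k * s) - math.sin(theta)) / k
            y += (math.cos(theta) - math.cos(theta + k * s)) / k
        theta += k * s
        poses.append((x, y, theta))
    return poses


# Example: an S-shaped path obtained by flipping the bevel halfway (kappa = 0.01 /mm).
for pose in bevel_tip_pose([(50.0, 1), (50.0, -1)], 0.01):
    print(pose)
```

In this model, planning reduces to choosing the insertion lengths and the bevel-flip sequence so that the resulting arcs avoid obstacles and reach the target.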


Fig. 4. I-SUR cryoablation demonstrator.

C. Autonomous Tissue Manipulation

Most surgical targets in MIS are soft tissues that deform when manipulated by surgical instruments; the deformation can displace the target and thus reduce the accuracy of the surgical task. Robotic tissue manipulation is therefore a well-known, challenging robot control problem.

1) Lifting Policy Development: Tissue lifting is critical in many surgical procedures because it exposes the areas that the robot has to reach. The main challenge in automating robotic tissue manipulation is the dynamic behavior of soft tissues interacting with the surrounding anatomy. A critical element is knowing the points where the tissue to be lifted is attached to other anatomical structures. At the University of Verona (UVR), a method based on deep RL (DRL) was developed to perform this task; it has shown promising results without requiring ad hoc control strategies. Training an agent in simulation seems the most appropriate strategy for applying DRL to surgical subtasks since it avoids the technical and ethical limitations of clinical experiments. However, sim-to-real methods need realistic and fast simulation environments to develop and test the algorithms. The simulated task was grasping and pulling the fat tissue covering a kidney to expose a tumor, for which a synthetic kidney phantom covered with silicone fat tissue was used, as shown in Fig. 5. The portion of fat tissue lifted by the simulated and real robots was a square region rigidly anchored to the top part of the kidney, and the DRL algorithm learned the fixation points and the sequence of motions needed to lift the silicone patch. The simulation experiments, including RL training and dVRK policy computation, ran on a powerful GPU, while the real experiments were carried out with the laboratory dVRK robot [107].

2) Image-Guided Tissue Manipulation: As image feedback is the only sensing information available in MIS, image-guided tissue manipulation is a promising way to actively regulate tissue deformation. Several researchers are addressing the autonomous robotic manipulation of deformable objects. Researchers at The Chinese University of Hong Kong (CUHK) proposed different approaches to address this problem:

D. Simulation

Task simulations using virtual and physical anatomy models support many activities related to autonomy, from plan testing to deformation prediction and target localization in a moving organ. Current simulation trends combine finite element methods (FEMs), position-based dynamics (PBD), and ML. FEM is a popular numerical procedure capable of mechanically realistic simulations of anatomical structures [116], but it has a high computational cost. Excellent results in FEM applications are available, e.g., [117], and simplifications have been proposed to reduce its computational cost [118]. These simplifications include different solving processes, new formulations, or GPU implementations, but the capability to simulate sophisticated material laws and/or interactions among multiple organs is still not general enough. Experiments with a GPU implementation based on the results of [117] have shown that a high-performance simulation of liver tissue becomes unstable with different biomechanical parameters, e.g., those of breast tissue [119]. FEM limitations can be overcome by geometry-based approaches, e.g., PBD, which models objects as an ensemble of particles whose positions are updated directly, as the solution of a quasi-static problem subject to geometrical constraints [120]. Stability, robustness, and simplicity are among the main reasons for the increasing popularity of PBD, which can simulate different behaviors of elastic materials [121], [122]. PBD has been applied in the medical field to develop a training simulator involving dissection since it can handle topological changes in real time [123]–[125]. Modeling parameters can be learned with ML techniques, whose need for large amounts of training data can be met with FEM simulations.
A good balance between speed and accuracy is achieved by integrating all these methods in a single framework in which a deep network performs the real-time computation, FEM produces the training data for the ML, and PBD is used for rendering. This makes it possible to test alternative execution plans of autonomous tasks in real time.
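The core idea of PBD, projecting particle positions directly onto geometric constraints, can be sketched for the simplest case: a chain of particles connected by distance constraints and solved with Gauss-Seidel sweeps, as in the original PBD formulation. This is a generic 2-D illustration, not the simulators of [120]–[125] or the hybrid FEM/DL/PBD framework described above; the time step, iteration count, and gravity value are arbitrary assumptions. Particles with zero inverse mass are fixed, e.g., tissue attachment points.

```python
import math


def project_distance(p1, p2, w1, w2, rest, stiffness=1.0):
    """Move two particles so that their distance approaches the rest length.

    w1, w2 are inverse masses (0 means the particle is fixed).
    """
    dx, dy = p1[0] - p2[0], p1[1] - p2[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0 or w1 + w2 == 0.0:
        return p1, p2
    c = dist - rest                      # constraint violation C(p1, p2)
    nx, ny = dx / dist, dy / dist        # direction of the constraint gradient
    s = stiffness * c / (w1 + w2)
    p1 = (p1[0] - w1 * s * nx, p1[1] - w1 * s * ny)
    p2 = (p2[0] + w2 * s * nx, p2[1] + w2 * s * ny)
    return p1, p2


def pbd_step(pos, vel, inv_mass, rest, dt=0.01, gravity=-9.81, iters=20):
    """One PBD step for a particle chain: predict, project constraints, update velocities."""
    pred = []
    for (x, y), (vx, vy), w in zip(pos, vel, inv_mass):
        if w > 0.0:
            vy += gravity * dt           # external force acts only on free particles
        pred.append((x + vx * dt, y + vy * dt))
    for _ in range(iters):               # Gauss-Seidel sweeps over the constraints
        for i in range(len(pred) - 1):
            pred[i], pred[i + 1] = project_distance(
                pred[i], pred[i + 1], inv_mass[i], inv_mass[i + 1], rest)
    new_vel = [((px - x) / dt, (py - y) / dt) for (px, py), (x, y) in zip(pred, pos)]
    return pred, new_vel


# Example: a three-particle strand anchored at the origin, falling under gravity.
pos = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
vel = [(0.0, 0.0)] * 3
inv_mass = [0.0, 1.0, 1.0]
for _ in range(100):
    pos, vel = pbd_step(pos, vel, inv_mass, rest=1.0)
```

Because velocities are recomputed from the corrected positions, the constraints are always satisfied at the end of a step, which is the source of the stability mentioned above.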

E. Complex Tasks

Most surgical tasks cannot be described as a single action with a specific level of autonomy; rather, they combine different actions and perception capabilities. The demonstrators described below combine sensing, compensation of unexpected events, and motion control, and they show that an actual autonomous task requires the tight integration of many autonomous functions.

-

1)

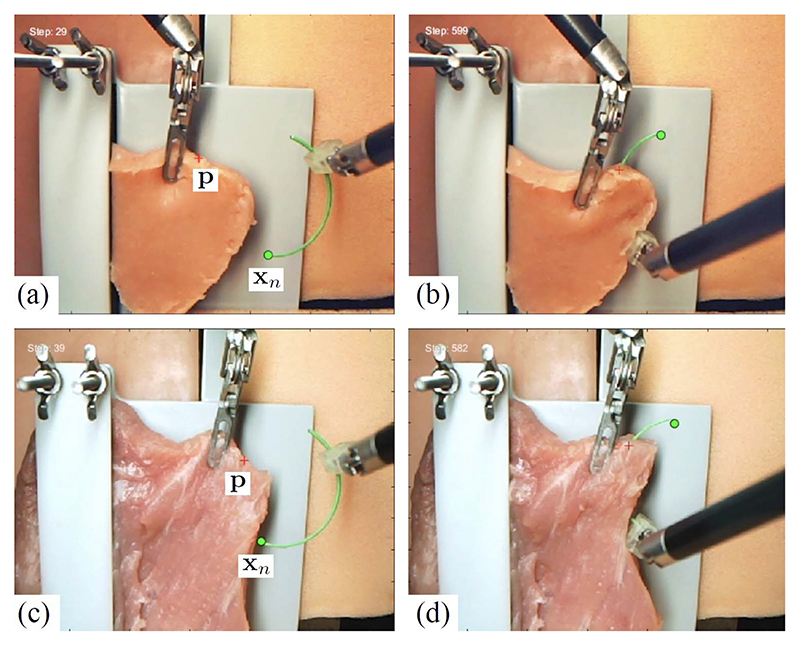

Suturing With Tissue Control: Tissue deformation induced by needle insertion can significantly reduce the insertion accuracy during suturing; thus, CUHK researchers proposed a dual-arm control strategy for active tissue control [126] (see Fig. 6 as the experiment snapshots). During suturing, the excessive needle-induced target deviation is minimized by using simultaneous control of the needle and the tissue [127]. This method led to high insertion accuracy (<1 mm) while executing the needle tip penetration to a superficial target. Needle pose during insertion is estimated using an adaptive method [115], [128]. This autonomous suturing framework allows a five-throw multithrow suturing on soft tissues with a task success rate of 80% (8/10) in ten consecutive trials, validated on both artificial and porcine tissues (see Fig. 7 for the pipeline illustration).

2) Knot Tying: In 2010, researchers at UCB proposed an apprenticeship learning approach with the potential to allow robotic surgical assistants to autonomously execute specific trajectories with superhuman performance in terms of speed and smoothness. They recorded a set of trajectories using human-guided backdriven motions of the robot. These were then analyzed to extract a smooth reference trajectory, which was executed at gradually increasing speeds using a variant of iterative learning control. The approach was evaluated on two representative tasks using an earlier laboratory prototype, the Berkeley Surgical Robots [129]: a figure-eight trajectory and a two-handed knot tie, a tedious suturing subtask required in many surgical procedures. Results suggest that the approach enables: 1) rapid learning of trajectories; 2) trajectories smoother than the human-guided ones; and 3) trajectories that are seven to ten times faster than the best human-guided trajectories [130]. Knot tying by direct force control [131] has also been superseded by learned policies, as demonstrated in [132].
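Iterative learning control refines a feedforward command from one repetition of a task to the next using the tracking error of the previous repetition. The sketch below shows the basic P-type update, u_{k+1}(t) = u_k(t) + gamma e_k(t+1), on a hypothetical first-order discrete plant. The plant coefficients, learning gain, and sinusoidal reference are assumptions chosen so that the update converges, and the code is not the specific ILC variant used in [130].

```python
import math


def simulate(u, a=0.5, b=0.5, y0=0.0):
    """Hypothetical first-order plant y[t+1] = a*y[t] + b*u[t]; returns len(u)+1 samples."""
    y = [y0]
    for ut in u:
        y.append(a * y[-1] + b * ut)
    return y


def ilc(reference, iterations=40, gamma=0.8, a=0.5, b=0.5):
    """P-type ILC: update the whole input sequence after each trial."""
    n = len(reference) - 1
    u = [0.0] * n                       # first trial: no feedforward command
    max_errors = []
    for _ in range(iterations):
        y = simulate(u, a, b, reference[0])
        e = [r - yt for r, yt in zip(reference, y)]
        max_errors.append(max(abs(v) for v in e[1:]))
        # u[t] first affects y[t+1], so it is corrected with the error one step later
        u = [u[t] + gamma * e[t + 1] for t in range(n)]
    return u, max_errors


reference = [math.sin(2 * math.pi * t / 50) for t in range(51)]
u, max_errors = ilc(reference)
print(max_errors[0], max_errors[-1])
```

With these values the error shrinks by a constant factor from trial to trial; a gain that is too large for the plant would instead make the learning diverge, which is why practical ILC variants add filtering or gain scheduling.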

3) Debridement: Debridement is the subtask of removing dead or damaged tissue fragments so that the remaining healthy tissue can heal. Researchers at UCB developed an autonomous multilateral surgical debridement system using the RAVEN, an open-architecture surgical robot with two cable-driven 7-DOF arms. The system combined stereo vision for 3-D perception with trajopt, an optimization-based motion planner, and model predictive control (MPC). Laboratory experiments involving sensing, grasping, and removal of 120 fragments suggest that an autonomous surgical robot can achieve robustness comparable to human performance; they also demonstrated the advantage of multilateral systems, as autonomous execution was 1.5× faster with two arms than with one, although it was two to three times slower than a human [79].

4) Pattern Cutting: In the Fundamentals of Laparoscopic Surgery [81] standard medical training regimen, the pattern cutting task requires residents to demonstrate proficiency by maneuvering two tools, surgical scissors and a tissue gripper, to accurately cut a circular pattern on surgical gauze suspended at the corners. Cutting accuracy depends on tensioning, wherein the gripper pinches a point on the gauze and pulls to induce and maintain tension in the material as cutting proceeds. Researchers at UCB developed an automated tensioning policy that analyzes the current state of the gauze and outputs a pulling direction as its action. The researchers used DRL with direct policy search methods to learn tensioning policies in a finite element simulator and then transferred them to the dVRK surgical robot [133].

5) Tumor Resection: In 2015, researchers at UCB demonstrated, with the dVRK, fully autonomous localization of a tumor phantom and its autonomous extraction using automated palpation, incision, debridement, and adhesive closure [134].

Fig. 6. Image-guided dual-arm needle insertion with active tissue deformation.

(a) and (b) The initial/final state of needle insertion into a phantom tissue. (c) and (d) The initial/final state of needle insertion into a porcine tissue.

Fig. 7. Pipeline illustration of dual-arm autonomous multithrow suturing on soft tissues.

F. Autonomous Execution of Training Tasks

As described above, autonomous tasks consist of a number of activities that alternate sensing and perception with planning and action execution, in a continuous loop driven by the evolving environmental conditions. Thus, another way of demonstrating autonomous behavior is to execute tasks that can be completely planned and executed by autonomous agents. These are not surgical tasks performed on phantoms but tasks taken from the Fundamentals of Laparoscopic Surgery [81], i.e., the training curriculum followed by surgeons in training. This choice has the added benefit of allowing a direct comparison between human and robot performance, identifying the skill level of an autonomous agent as if it were a student in training.

-

1)

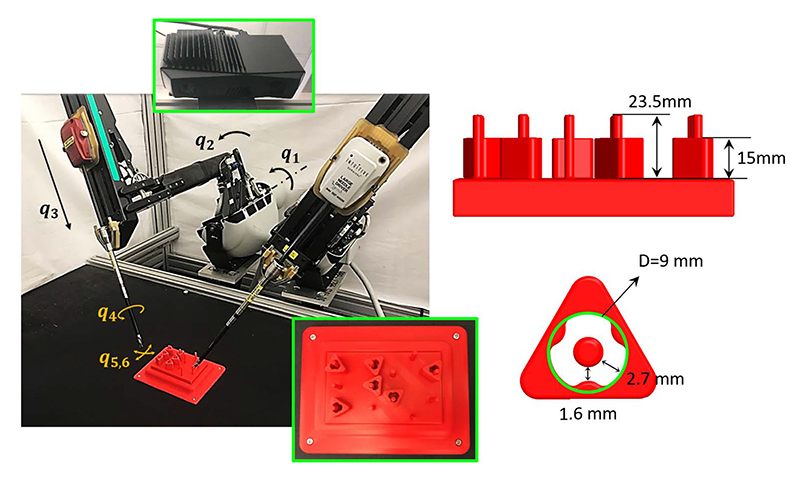

Peg Transfer: It is a typical medical training task in the Fundamentals of Laparoscopic Surgery [81]. Researchers from UCB used a dVRK surgical robot combined with a Zivid depth sensor to autonomously perform three variants of the peg-transfer task. As illustrated in Fig. 8, the system combined 3-D printing, depth sensing, and DL for calibration with a new analytic inverse kinematics model and a time-minimized motion controller. In a controlled study of 3384 peg-transfer trials performed by the system, an expert surgical resident, and nine volunteers, results suggest that the system achieves accuracy on par with the experienced surgical resident and is significantly faster and more consistent than the surgical resident and volunteers. Videos and data are available at [135].
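For a single joint with symmetric velocity and acceleration bounds, the minimum-time point-to-point motion follows a trapezoidal velocity profile (accelerate, cruise, decelerate), or a triangular one when the distance is too short to reach the velocity limit. The sketch below illustrates only this basic building block; the time-minimized controller of the UCB peg-transfer system is not described here, and the bounds in the example are arbitrary.

```python
import math


def min_time_profile(distance, v_max, a_max):
    """Return (acceleration time, total time) of the minimum-time profile."""
    d = abs(distance)
    if d * a_max >= v_max ** 2:          # long move: the velocity limit is reached
        t_acc = v_max / a_max
        return t_acc, d / v_max + t_acc
    t_acc = math.sqrt(d / a_max)         # short move: triangular profile
    return t_acc, 2.0 * t_acc


def position(t, distance, v_max, a_max):
    """Joint displacement at time t along the minimum-time profile."""
    sign = 1.0 if distance >= 0 else -1.0
    d = abs(distance)
    t_acc, total = min_time_profile(d, v_max, a_max)
    v_peak = a_max * t_acc
    t = min(max(t, 0.0), total)
    if t < t_acc:                        # accelerating
        s = 0.5 * a_max * t * t
    elif t <= total - t_acc:             # cruising at v_peak
        s = 0.5 * a_max * t_acc ** 2 + v_peak * (t - t_acc)
    else:                                # decelerating
        remaining = total - t
        s = d - 0.5 * a_max * remaining ** 2
    return sign * s


# Example: move a joint by 1 rad with |v| <= 1 rad/s and |a| <= 2 rad/s^2.
t_acc, total = min_time_profile(1.0, 1.0, 2.0)
samples = [position(k * total / 10, 1.0, 1.0, 2.0) for k in range(11)]
```

For a multi-joint arm, one common approach is to compute this time for every joint and stretch the faster joints to the slowest one so that all joints finish together.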

-

2)

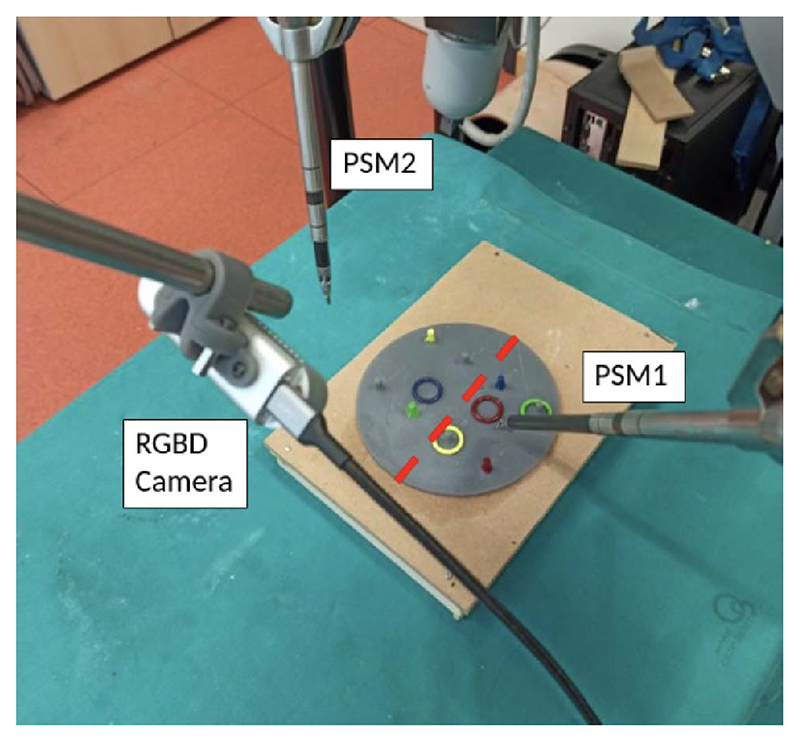

Peg and Ring: Another example training task performed by an autonomous robot is the “Peg and Ring” (P&R) task done by the dVRK research robot at UVR in the context of the autonomous robotic surgery (ARS) project [136]. The demonstrator setup is shown in Fig. 9, and an example of execution is available in this video [137]. The demonstrator integrates into a single task the technologies developed by the ARS project in terms of off-line and reactive planning [138], [139], situation awareness [140], motion representation and control [141], and simulation [142]. The task phases are represented by a hybrid automaton, which is implemented with an FSM written in the formalism of answer set programming (ASP) to represent actions, their preconditions and postconditions, task constraints, and the goal definition [143]. This framework supports the generation of multiple plans based on the values of environment variables called “fluents,” among which the best alternative can be chosen. When fluents change during a task, a replan request is issued, and the robot reacts to the unexpected event. The states of the FSM represent each action of the task, i.e., the discrete states of the automaton and, within each state, the robot actions are represented by smooth motions generated using the dynamic motion primitive (DMP) [141] formalism. Motion primitives can be learned from a single motion demonstration, thus greatly simplifying system tuning. Simulation is used to test the plan and to train a deep network to implement a specific action, e.g., tissue lifting,
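Learning from a single demonstration is a defining property of dynamic movement primitives: a stable second-order attractor toward the goal is modulated by a forcing term whose weights are fitted, by locally weighted regression, to one demonstrated trajectory. The sketch below follows the common discrete DMP formulation of Ijspeert, Schaal, and colleagues for one degree of freedom. The gains, the number of basis functions, the width heuristic, and the minimum-jerk demonstration are assumptions, and this is not the ARS implementation of [141].

```python
import numpy as np


class DMP1D:
    """Minimal discrete dynamic movement primitive for one degree of freedom."""

    def __init__(self, n_basis=30, alpha_z=25.0, alpha_x=3.0):
        self.alpha_z, self.beta_z, self.alpha_x = alpha_z, alpha_z / 4.0, alpha_x
        # Basis centres evenly spaced in time, expressed in the phase variable x.
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        self.h = n_basis ** 1.5 / self.c / alpha_x   # width heuristic (assumption)
        self.w = np.zeros(n_basis)

    def _forcing(self, x, g):
        psi = np.exp(-self.h * (x - self.c) ** 2)
        return psi @ self.w / (psi.sum() + 1e-10) * x * (g - self.y0)

    def fit(self, y_demo, dt):
        """Learn the forcing-term weights from a single demonstration."""
        y = np.asarray(y_demo, dtype=float)
        self.y0, self.g, self.tau = y[0], y[-1], (len(y) - 1) * dt
        yd = np.gradient(y, dt)
        ydd = np.gradient(yd, dt)
        x = np.exp(-self.alpha_x * np.arange(len(y)) * dt / self.tau)
        # Forcing term that would make the attractor reproduce the demonstration.
        f_target = self.tau ** 2 * ydd - self.alpha_z * (
            self.beta_z * (self.g - y) - self.tau * yd)
        s = x * (self.g - self.y0)
        for i in range(len(self.w)):      # locally weighted regression per basis
            psi = np.exp(-self.h[i] * (x - self.c[i]) ** 2)
            self.w[i] = np.sum(s * psi * f_target) / (np.sum(s * s * psi) + 1e-10)
        return self

    def rollout(self, dt, goal=None):
        """Integrate the DMP (semi-implicit Euler), optionally toward a new goal."""
        g = self.g if goal is None else goal
        y, z, x = self.y0, 0.0, 1.0
        out = [y]
        for _ in range(int(round(self.tau / dt))):
            zd = self.alpha_z * (self.beta_z * (g - y) - z) + self._forcing(x, g)
            z += zd * dt / self.tau
            y += z * dt / self.tau
            x += -self.alpha_x * x * dt / self.tau
            out.append(y)
        return np.array(out)


# Example: learn from a minimum-jerk demonstration from 0 to 1 over 1 s.
dt = 0.001
t = np.linspace(0.0, 1.0, 1001)
demo = 10 * t**3 - 15 * t**4 + 6 * t**5
dmp = DMP1D().fit(demo, dt)
reproduced = dmp.rollout(dt)
rescaled = dmp.rollout(dt, goal=2.0)   # same shape of motion, new goal
```

Because the forcing term vanishes as the phase variable decays, the primitive always converges to the goal, which is what makes it safe to reuse a single demonstration with different targets.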

Fig. 8. Automated peg-transfer task setup. The UCB group used the dVRK robot from Intuitive Surgical with two arms. The blocks, pegs, and peg board were monochrome red to simulate a surgical setting. The dimensions of the pegs and blocks are shown in the lower left, along with a top-down visualization of the peg board in the lower right. The robot takes actions based on images from a camera installed 0.5 m from the task space and inclined 50° from vertical. The six joints q1 to q6 are illustrated for one of the arms.

Fig. 9. ARS demonstrator.

G. Perception

Organ perception can be carried out by adding force and bioimpedance sensing to a surgical robot or by developing virtual sensors based on image analysis.

1) Sensor-Based: Adding sensors would make it possible to assess organ health by touch and to grasp and lift tissues more safely. Force sensing could be implemented by mounting sensing elements, e.g., strain gauges, on the instrument body to measure the contact force components. However, although many examples of robotic palpation have been demonstrated in laboratory setups [144], [145], the translation of even simple contact and force sensing to robotic surgical instruments has been hampered by many technical factors, cost, and sterilization. Electric impedance sensing is an alternative sensing modality that does not require modifications to the instruments and can be used in a standard robot-assisted setup. Researchers at the Italian Institute of Technology, in collaboration with UVR, have developed a prototype for electric impedance sensing in surgical applications (ELISA) that measures electric bioimpedance and identifies tissue type upon contact. The sensor is implemented by adding an impedance measurement to a standard dVRK electric cutter and using the cutter jaws to establish contact with the tissue. Repeatability can only be achieved by carefully controlling penetration depth and jaw opening, but ex vivo experiments have shown that this approach can identify four tissue types with an accuracy above 92.82%.

-

2)

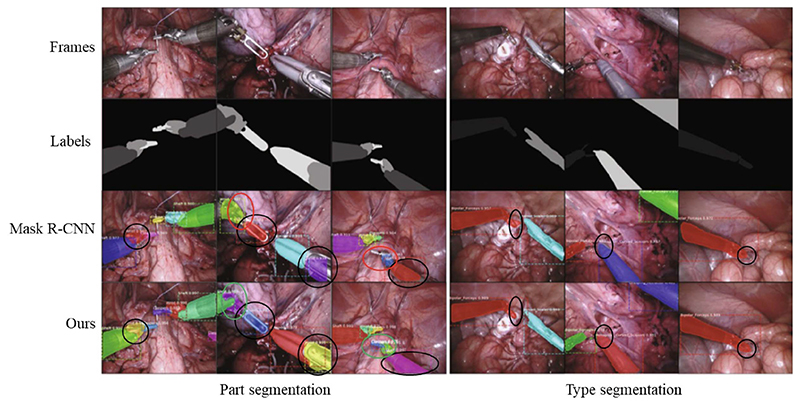

Image-Based: The critical challenges in perceiving a surgical procedure include segmentation of surgical instruments and surgical objects, and tracking of instruments. Instrument tracking and 3-D pose estimation are research areas, which produced significant results that are used, for example, to autonomously move the endoscopic camera or to interact with workspace boundaries [146]. However, to select the best approach for an autonomous system, performance and speed must match the overall system characteristics. Researchers at the Tianjin University of Technology summarize them in [147]. Specific approaches for autonomy are developed by researchers at CUHK, who demonstrated the feasibility and effectiveness of segmenting and tracking instruments in real scenarios using image-based DL methods. Segmentation can be performed in a supervised, semisupervised, or unsupervised manner depending on the ratio of labeled samples in the training set. In the supervised fashion, we reformulate the instrument segmentation as an instance segmentation task that combines both target detection with semantic segmentation task [128], and as a result, it is possible to detect and segment the instruments simultaneously with high accuracy and achieve new state-of-the-art performance on a publicly available EndoVis17 [148] dataset, as shown in Fig. 10. Unlike most previous methods using unlabeled frames individually, in [149] a dual motion-based method is proposed to wisely learn motion flows for segmentation enhancement by leveraging temporal dynamics, and in [150], MDAL is proposed, a learning scheme for adaptive instrument segmentation in robotic surgical videos trained with the EndoVis17 dataset [148], and achieved an average instrument segmentation result of 75.5% IoU and 84.9% Dice. The 3-D instrument tracking was demonstrated with monocular vision in [151]. 
The method used a modified U-Net model for the tool’s pixelwise position perception and a RobotDepth model for scale-aware depth recovery. Researchers at the University of California at San Diego developed SuPer [152], a framework for the effective interaction with soft tissues during MIS that continuously collects 3-D geometric information of the scenes and maps the organ deformations while tracking the rigid instruments.
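The two metrics quoted above compare a predicted binary mask P with a ground-truth mask G: IoU = |P ∩ G| / |P ∪ G| and Dice = 2|P ∩ G| / (|P| + |G|). A minimal NumPy sketch follows. How scores are averaged (per frame, per instrument class, or over the whole dataset) and how empty masks are handled differ between benchmarks; the convention used here for two empty masks is an assumption.

```python
import numpy as np


def iou_and_dice(pred, target):
    """IoU and Dice for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0, 1.0   # both masks empty: treated as a perfect match (assumption)
    dice = 2.0 * inter / (pred.sum() + target.sum())
    return float(inter / union), float(dice)


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
print(iou_and_dice(pred, target))   # IoU = 2/4, Dice = 4/6
```

For a single mask pair, Dice = 2·IoU/(1 + IoU), so Dice is never smaller than IoU; scores averaged over many frames need not satisfy this identity exactly.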

Fig. 10. Comparison of the results of instruments part and type segmentation.

H. Telesurgery

Long-distance telesurgery has been proposed as a way to address the shortage of surgeons, to operate from a safe distance in dangerous areas, and to support mentoring and expert advice. Early experiments using supervised autonomy were performed between NASA-JPL (Pasadena, CA, USA) and Milan (Italy) [153], and between New York (USA) and Strasbourg (France) [154], and they showed its feasibility in spite of the long communication delay and the high cost. Models of robot-tissue interaction [155] and algorithms for compensating communication time delay [156], [157] are active areas of research.

I. New Robot Designs

New types of surgical devices are being developed. For example, concentric-tube continuum robots consist of a set of concentric, flexible tubes that are prebent to fit a specific anatomical structure, typically skull cavities, into which they can be inserted thanks to their curvilinear shape. The extension of each tube and its rotation about the central axis determine the position of the tip, which holds a tool. An autonomous concentric-tube robot learns its configurations and builds an inverse map from the desired tool tip position to the robot’s degrees of freedom [158]. Soft surgical robots are surveyed in [159], which describes the pros and cons of this technology. A soft surgical instrument will need to be controlled locally by an autonomous system to reach the target [160]. Soft endoscopes require autonomous support to perform navigation tasks; e.g., endoscopes for gastrointestinal inspection have evolved from early prototypes [161] to magnetically powered autonomous devices that allow forward and backward views of the digestive tract [162].
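The idea of building an inverse map from sampled configurations can be illustrated with a deliberately simplified model: a single precurved tube of constant curvature that is extended and rotated about the insertion axis. Torsion and the elastic interaction between tubes, which real concentric-tube models must include, are ignored. The sketch tabulates the forward kinematics over a grid of configurations and inverts it by nearest-neighbour lookup; the curvature, grid resolution, and function names are hypothetical, and [158] may use a different learning method.

```python
import math

KAPPA = 0.02  # hypothetical precurvature of the tube, 1/mm


def forward(extension_mm, rotation_rad, kappa=KAPPA):
    """Tip position of a single precurved tube (constant-curvature toy model)."""
    bend = kappa * extension_mm
    r = (1.0 - math.cos(bend)) / kappa      # lateral offset from the insertion axis
    z = math.sin(bend) / kappa              # advance along the insertion axis
    return (r * math.cos(rotation_rad), r * math.sin(rotation_rad), z)


def build_table(max_ext_mm=40.0, ext_step_mm=0.5, n_rot=180):
    """Sample the configuration space and store (configuration, tip position) pairs."""
    table = []
    for i in range(int(round(max_ext_mm / ext_step_mm)) + 1):
        extension = i * ext_step_mm
        for j in range(n_rot):
            rotation = 2.0 * math.pi * j / n_rot
            table.append(((extension, rotation), forward(extension, rotation)))
    return table


def inverse(target_xyz, table):
    """Return the sampled configuration whose tip is closest to the target."""
    return min(table, key=lambda item: math.dist(item[1], target_xyz))[0]


table = build_table()
goal = forward(20.3, 1.01)            # a reachable tip position
ext, rot = inverse(goal, table)
print(ext, rot, math.dist(forward(ext, rot), goal))
```

The table grows exponentially with the number of tubes, so with more degrees of freedom a learned regressor, or a coarser table refined by local optimization, would take the place of exhaustive lookup.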

J. Human Collaboration

To address the work overload of OR personnel, several research groups have investigated adding robotic assistants to the OR. These robots could interact with the medical personnel and perform some of the intervention tasks. The most frequently addressed goal has been the development of a robotic scrub nurse, i.e., a robotic arm that hands over the surgical instruments. The application described in [163], developed by researchers of the INKA Laboratory at the Otto-von-Guericke Universität (Magdeburg, Germany), used the collaborative robot developed by Franka Emika [164] to validate the combination of voice commands and instruments marked with ArUco codes, letting the robot pick up the instruments and hand them to the surgeon. Researchers at the Politecnico di Milano added to voice interaction the robot’s capability of recognizing the context of the human command [165]. By combining a deep network with an ontology describing the relations among the instruments, the robot is able to model the surgical workflow and recognize its phases, i.e., it is context-aware during a robot-assisted partial nephrectomy [166]. Researchers at UVR pushed this concept further with the EU-funded project Smart Autonomous Robotic Assistant Surgeon (SARAS) [167], which explored the development of a bedside robotic assistant for robot-assisted and standard laparoscopic interventions. The bedside second surgeon in the OR is a significant cost, and his/her actions depend on the decisions of the primary surgeon and on the evolution of the intervention. Thus, most of these tasks can be carried out by a robot that recognizes the intervention phase and is able to contextualize the main surgeon’s instructions. Fig. 11 shows an implementation of this concept, in which two Franka Emika robots [164] were added to the dVRK setup to simulate a robot-assisted prostatectomy.
The system shown uses a multimodal neural network, trained on a cooperative task performed by human surgeons, to produce an action segmentation that provides the context needed to time the robot's actions [168]. Statecharts are used to model the surgical procedures; they are well suited to semiautonomous robotic surgery because they allow bottom-up knowledge acquisition, based on data-driven techniques (e.g., ML), to be merged with top-down, preexisting knowledge [169].

Fig. 11. SARAS bed-side robotic assistant.

V. Nontechnical Challenges of Autonomy

Society now seems ready for surgeon-assist robots in the same way that it is ready for driver-assist features in cars. Autonomous robots represent a multifaceted challenge: for scientists and engineers, who must develop technologies with high levels of safety, security, and privacy; for lawyers, who must apply the law to each phase of an autonomous robot’s operation; and for philosophers and sociologists, who have to address ethical and social issues. Robotics researchers, as well as lawyers and social scientists, are starting to address the implications of autonomy in the critical area of robot-assisted surgery [170].

It is questionable whether full autonomy in driving or surgery will be achievable in the foreseeable future. However, adding a small level of autonomy can benefit surgery, just as many driver-assist functions are useful when driving a car. The scientific community must clearly explain what can be expected from machines that can understand, act, learn, and adapt their behavior, albeit to a limited extent. The consequences of introducing autonomous machines into our society are being examined, and the robotics community is starting to articulate answers to these questions by addressing ethical, legal, and societal (ELS) issues in specific funded projects [171], [172].

In this section, we summarize some of the issues that make it more difficult to introduce systems with some level of autonomy into clinical practice.

A. Social Impact of Autonomous Robots

Recent advances in digitization and robotics, such as driverless cars, autonomous smart factories, and service robots, fuel public fears that technology may replace most workers. In the past, however, technological change has affected the structure of employment and has had, on average, positive effects on the level of employment. The introduction of computers in the workplace changed the general composition of the workforce, reducing clerical jobs and increasing the number of jobs requiring technical skills. However, although the workforce increased on average, not all workers who lost their jobs due to computerization could acquire the skills necessary for the new jobs.

Thus, the main social challenge of autonomy will be to address potential inequalities in education, as with the digital divide of the past. Fears of a “jobless future” [173] have also been ignited by reports, such as [174], predicting a sharp reduction in the workforce. It is too early to tell whether these predictions will become reality, but, in the medical field, automation and intelligent agents are perceived as welcome and necessary support. Medical personnel, especially in times of crisis, are overworked and in high demand and have no fear of being replaced by machines. Furthermore, the jobs at risk may be those consisting of routine tasks that can be codified and executed automatically, not those requiring adaptation and personal interaction, such as most health care jobs. The main challenges posed by intelligent machines will be related to interacting with them and to training in their use, since training will progress more slowly than technology. In the medical field, jobs will not be lost but will change; therefore, education and training must immediately follow the introduction of the potentially disruptive technology of autonomous robots.

A different type of social impact is the robotic divide, or accessibility gap [175], which is already occurring in the medical field, where only a few affluent institutions can afford the most advanced robotic technologies. This gap may widen with the introduction of autonomous technologies, dividing patients between those who can be admitted to certain hospitals and those who cannot.

B. Legal Impact of Autonomous Robots

Regulation and standardization are important during technology development to ensure safety, but they should not limit new developments. Regulation has to address the development of technologies for autonomous robots and ensure that victims of accidents caused by an autonomous agent can be properly protected and indemnified. Furthermore, the European Union Expert Group’s Report on Liability for AI has led to an EU proposal for regulating autonomous agents [176] that excludes full autonomy in critical tasks, such as medicine and surgery, admitting only “supervised autonomy” as the acceptable approach.

The chain of responsibility, from design to manufacture, installation, and maintenance, and on to the final user, should be clearly defined to assign the proper share of liability; it is actively studied and discussed by EU-based initiatives, such as the European Center of Excellence on the Regulation of Robotics and AI [177].

C. Ethical Impact of Autonomous Robots

There is widespread uneasiness about robotics and AI. Thus, there is a need for ethical governance of autonomous robots, which, in turn, could help build public confidence and trust in autonomous robotic systems. Governance is usually achieved by developing a roadmap connecting ethics, standards, and regulation. Ethical considerations for robotics were discussed in the EURON Roboethics Roadmap [178] and the Principles of Robotics [179].

Standards formalize ethical principles into a structure that could give designers guidelines on how to conduct an ethical risk assessment for a given robot. Ethics justifies standards, and regulations are needed to monitor whether systems comply with those standards [180], [181]. Autonomous robot research should be conducted ethically, measuring system capabilities transparently with standardized tests or benchmarks. Further key elements for systems that work in the real world are verification and validation, which assure safety and fitness for purpose.

Regulations require regulatory bodies that can provide transparency and confidence in the regulatory processes. Regulation should address robotics and autonomous systems, and not software AI alone, because robots are physical objects that are easier to identify and verify than distributed or cloud-based AI [182].

Autonomous robots may make decisions that have real consequences for human safety or well-being, such as those made by medical diagnosis or surgical systems. Systems that make such decisions are critical systems. Existing critical software systems are not AI systems, nor do they incorporate AI components, because AI systems (and, in particular, ML systems) are generally regarded as impossible to verify for safety-critical applications. The verification and validation of systems that learn is the subject of current research in explainable AI [183].

The robotics community is addressing public fears about robotics and AI through public engagement and consultation, jointly identifying and developing new standards for intelligent autonomous robots, together with benchmarks and verification and validation tests to assure compliance with these standards.

VI. Conclusion

This article summarizes some of the current efforts of the robotics community to give autonomous capabilities to surgical robots. In Section II, we summarize the definitions of the levels of autonomy that a surgical robot can have, and then we describe some current products and clinical applications that already have some autonomous functions. Many challenges must be overcome before autonomous functions can be used more widely in clinical practice. We describe how the robotics community is addressing the technical challenges by presenting laboratory demonstrations that solve some of them. However, nontechnical challenges may also affect the introduction of autonomous functions in robotic surgery, and we give a brief account of the ethical, legal, and social issues related to autonomous robots.

In the near future, the task of bringing some autonomy to robotic surgery may benefit from robotic surgery textbooks that describe each procedure in algorithmic form, i.e., as a specific sequence of surgical actions personalized according to patient and pathology characteristics. However, the true challenge of the future will be the ability to adapt the autonomous robotic procedure to real circumstances and to react to unexpected situations.

Fig. 5. Experimental setup for tissue lifting at UVR.

Acknowledgment

Under a license agreement between Galen Robotics, Inc., and the Johns Hopkins University (JHU), Russell H. Taylor and JHU are entitled to royalty distributions on technology that may possibly be related to that discussed in this publication. Russell H. Taylor is also a paid consultant to and owns equity in Galen Robotics, Inc. This arrangement has been reviewed and approved by JHU in accordance with its conflict-of-interest policies. Dr. Taylor’s patents on surgical robot technology have also been licensed to other commercial entities, and both Dr. Taylor and JHU may be entitled to royalty distributions on this technology. Also, Dr. Taylor receives salary support from separate research agreements between JHU and Galen Robotics and between JHU and Intuitive Surgical, from the Multi-Modal Medical Robotics Centre in Hong Kong, and from various U.S. Government agencies.

The work of Paolo Fiorini was supported in part by an equipment grant from Intuitive Surgical and in part by the European Union under Grant ERC-ADG 742671 and Grant ERC-PoC 875523. The work of Ken Y. Goldberg was supported in part by an equipment grant from Intuitive Surgical and in part by the Technology & Advanced Telemedicine Research Center (TATRC) under Project W81XWH-19-C-0096 through a medical Telerobotic Operative Network (TRON) project led by SRI International. The work of Yunhui Liu was supported in part by Hong Kong RGC under Grant TR42-409/18-R. The work of Russell H. Taylor was supported by NIH under Grant 5R44AI134500, Grant 5R01EB025883, Grant R01EB030511, Grant R01 AR080315, Grant 2R01 EB023943, and Grant R01EB031009.

Biographies

Paolo Fiorini (Life Fellow, IEEE) received the Laurea degree in electronic engineering from the University of Padua, Padua, Italy, in 1976, the M.S.E.E. degree from the University of California at Irvine, Irvine, CA, USA, in 1982, and the Ph.D. degree in mechanical engineering (ME) from the University of California at Los Angeles (UCLA), Los Angeles, CA, USA, in 1995.

From 1977 to 1985, he worked for companies in Italy and the USA, developing microprocessor-based controllers for domestic appliances, automotive systems, and hydraulic actuators. From 1985 to 2000, he was with the NASA Jet Propulsion Laboratory, California Institute of Technology, Pasadena, CA, USA, where he worked on autonomous and teleoperated systems for space experiments and exploration. In 2001, he returned to Italy, joining the School of Science and Engineering, University of Verona, Verona, Italy, where he is currently a Full Professor of computer science. In 2001, he founded the ALTAIR Robotics Laboratory, Verona, Italy, to develop innovative robotic systems for space, medicine, and logistics. Research in these areas has been funded by several national and international projects, including the European Framework Programs: FP6, FP7, H2020, and ERC.

Dr. Fiorini’s activities have been recognized by many awards, including the NASA Technical Awards.

Ken Y. Goldberg (Fellow, IEEE) is currently the William S. Floyd Distinguished Chair of Engineering with the University of California at Berkeley (UC Berkeley), Berkeley, CA, USA, and the Chief Scientist of Ambi Robotics, Berkeley, CA, USA. He leads research in robotics, machine learning, and artificial intelligence (AI). He is also a Professor of industrial engineering and operations research with UC Berkeley, with appointments in the Department of Electrical Engineering, the Department of Computer Science, the Department of Art Practice, the School of Information, and the UCSF Department of Radiation Oncology. He is also the Director of the CITRIS “People and Robots” Initiative (75 faculty across four UC campuses). He has published over 300 refereed papers and has been awarded nine U.S. patents.

Dr. Goldberg was awarded the NSF Presidential Faculty Fellowship (PECASE) in 1995, the Joseph Engelberger Award (top honor in robotics) in 2000, and the IEEE Major Educational Innovation Award in 2001. He is also the past Editor-in-Chief of the IEEE TRANSACTIONS ON AUTOMATION SCIENCE AND ENGINEERING (T-ASE).

Yunhui Liu (Fellow, IEEE) received the Ph.D. degree in applied mathematics and information physics from The University of Tokyo, Tokyo, Japan, in 1992.

After working at the Electrotechnical Laboratory, Tokyo, as a Research Scientist, he joined The Chinese University of Hong Kong (CUHK), Hong Kong, in 1995, where he is currently the Choh-Ming Li Professor of mechanical and automation engineering and the Director of the T Stone Robotics Institute. He also serves as the Director/CEO of the Hong Kong Centre for Logistics Robotics, Hong Kong, sponsored by the InnoHK Programme of the HKSAR Government. He is also an Adjunct Professor with the State Key Laboratory of Robotics Technology and System, Harbin Institute of Technology, Harbin, China. He has published more than 500 papers in refereed journals and refereed conference proceedings. He was listed in the Highly Cited Authors (Engineering) by Thomson Reuters in 2013. His research interests include visual servoing, logistics robotics, medical robotics, multifingered grasping, mobile robots, and machine intelligence.

Dr. Liu has received numerous research awards from international journals, international conferences in robotics and automation, and government agencies. He served as the General Chair of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems. He has served as an Associate Editor for the IEEE TRANSACTIONS ON ROBOTICS AND AUTOMATION. He was the Editor-in-Chief of Robotics and Biomimetics.

Russell H. Taylor (Life Fellow, IEEE) received the Bachelor of Engineering Science degree from Johns Hopkins University, Baltimore, MD, USA, in 1970, and the Ph.D. degree in computer science from Stanford University, Stanford, CA, USA, in 1976.

He has over 40 years of professional experience in the fields of computer science, robotics, and computer-integrated interventional medicine. He joined IBM Research, Yorktown Heights, NY, USA, in 1976, where he developed the AML (A Manufacturing Language) robot programming language and managed the Automation Technology Department and (later) the Computer-Assisted Surgery Group. In 1995, he moved to Johns Hopkins University, where he is currently the John C. Malone Professor of computer science with joint appointments in the Department of Mechanical Engineering, the Department of Radiology, and the Department of Surgery. He is also the Director of the Laboratory for Computational Sensing and Robotics (LCSR), Baltimore, MD, USA, and the (graduated) NSF Engineering Research Center for Computer-Integrated Surgical Systems and Technology (CISST ERC), Baltimore, MD, USA. His research interests include robotics, human-machine cooperative systems, medical imaging and modeling, and computer-integrated interventional systems.

Dr. Taylor was elected as a Fellow of IEEE in 1994 “for contributions in the theory and implementation of programmable sensor-based robot systems and their application to surgery and manufacturing.” He is also a Fellow of the National Academy of Inventors, the American Institute for Medical and Biological Engineering (AIMBE), the MICCAI Society, and the Engineering School of The University of Tokyo. In 2020, he was elected to the U.S. National Academy of Engineering “for contributions to the development of medical robotics and computer-integrated systems.” He was a recipient of numerous awards, including four IBM Outstanding Achievement Awards, four IBM Invention Awards, the Maurice Müller Award for Excellence in Computer-Assisted Orthopaedic Surgery, the IEEE Robotics Pioneer Award, the MICCAI Society Enduring Impact Award, the IEEE EMBS Technical Field Award, and the Honda Prize. He is also an Editor of IEEE TRANSACTIONS ON MEDICAL ROBOTICS AND BIONICS, an Associate Editor of IEEE TRANSACTIONS ON MEDICAL IMAGING, and the Editor-in-Chief Emeritus of IEEE TRANSACTIONS ON ROBOTICS AND AUTOMATION. He has served on numerous other editorial and scientific advisory boards.

Contributor Information

Paolo Fiorini, Department of Computer Science, University of Verona, 37134 Verona, Italy.

Ken Y. Goldberg, Email: goldberg@berkeley.edu, Department of Industrial Engineering and Operations Research and the Department of Electrical Engineering and Computer Science, University of California at Berkeley, Berkeley, CA 94720 USA.

Yunhui Liu, Email: yhliu@cuhk.edu.hk, Department of Mechanical and Automation Engineering, T Stone Robotics Institute, The Chinese University of Hong Kong, Hong Kong, China.

Russell H. Taylor, Email: rht@jhu.edu, Department of Computer Science, the Department of Mechanical Engineering, the Department of Radiology, the Department of Surgery, and the Department of Otolaryngology, Head-and-Neck Surgery, Johns Hopkins University, Baltimore, MD 21218 USA, and also with the Laboratory for Computational Sensing and Robotics, Johns Hopkins University, Baltimore, MD 21218 USA.

References

- [1] Kohn LT, Corrigan JM, Donaldson MS, editors. To Err is Human: Building a Safer Health System. National Academies Press, Institute of Medicine (US) Committee on Quality of Health Care in America; Washington, DC, USA: 2000.

- [2] Baker GR, et al. The Canadian adverse events study: The incidence of adverse events among hospital patients in Canada. Can Med Assoc J. 2004;170(11):1678–1685. doi: 10.1503/cmaj.1040498.