Abstract

Recovering the 3D motion of the heart from cine cardiac magnetic resonance (CMR) imaging enables the assessment of regional myocardial function and is important for understanding and analyzing cardiovascular disease. However, 3D cardiac motion estimation is challenging because the acquired cine CMR images are usually 2D slices which limit the accurate estimation of through-plane motion. To address this problem, we propose a novel multi-view motion estimation network (MulViMotion), which integrates 2D cine CMR images acquired in short-axis and long-axis planes to learn a consistent 3D motion field of the heart. In the proposed method, a hybrid 2D/3D network is built to generate dense 3D motion fields by learning fused representations from multi-view images. To ensure that the motion estimation is consistent in 3D, a shape regularization module is introduced during training, where shape information from multi-view images is exploited to provide weak supervision to 3D motion estimation. We extensively evaluate the proposed method on 2D cine CMR images from 580 subjects of the UK Biobank study for 3D motion tracking of the left ventricular myocardium. Experimental results show that the proposed method quantitatively and qualitatively outperforms competing methods.

Keywords: Multi-view, 3D motion tracking, shape regularization, cine CMR, deep neural networks

I. Introduction

The motion of the beating heart is a rhythmic pattern of non-linear trajectories regulated by the circulatory system and cardiac neuroautonomic control [1]–[3]. Estimating cardiac motion is an important step for the exploration of cardiac function and the diagnosis of cardiovascular diseases [1], [4], [5]. In particular, left ventricular (LV) myocardial motion tracking enables spatially and temporally localized assessment of LV function [6]. This is helpful for the early and accurate detection of LV dysfunction and myocardial diseases [7], [8].

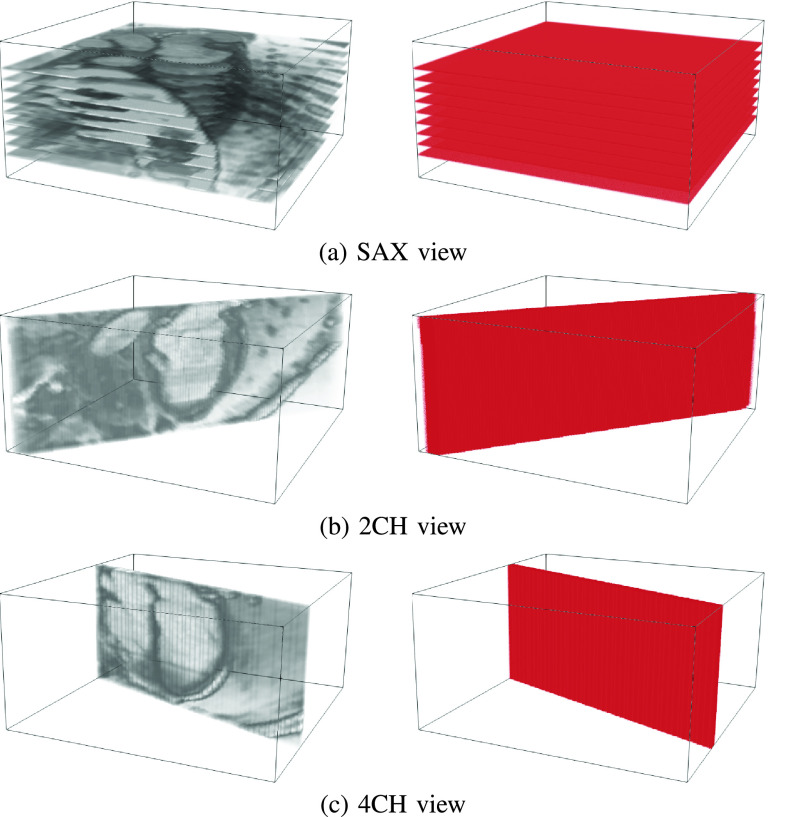

Cine cardiac magnetic resonance (CMR) imaging supports motion analysis by acquiring sequences of 2D images in different views. Each image sequence covers the complete cardiac cycle containing end-diastolic (ED) and end-systolic (ES) phases [10]. Two types of anatomical views are identified, including (1) short-axis (SAX) view and (2) long-axis (LAX) view such as 2-chamber (2CH) view and 4-chamber (4CH) view (Fig. 1). The SAX sequences typically contain a stack of 2D slices sampling from base to apex in each frame (e.g., 9-12 slices). The LAX sequences contain a single 2D slice that is approximately orthogonal to the SAX plane in each frame. These acquired images have high temporal resolution, high signal-to-noise ratio as well as high contrast between the blood pool and myocardium. With these properties, cine CMR imaging has been utilized in recent works for 2D myocardial motion estimation, e.g., [11]–[15].

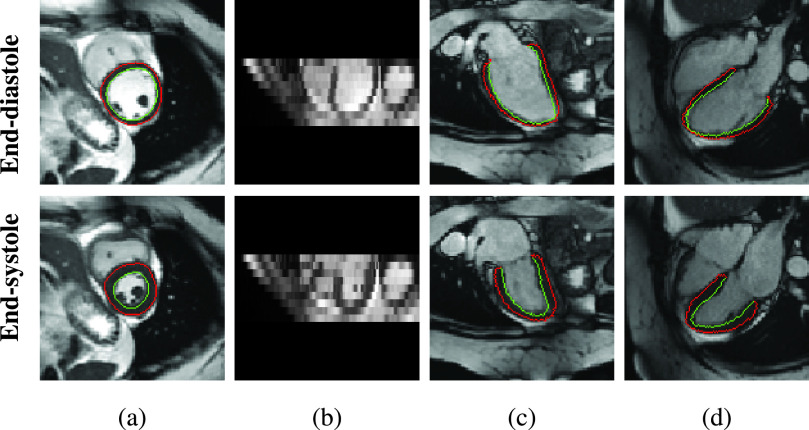

Fig. 1.

Examples of 2D cine CMR scans of a healthy subject. Cine CMR scans are acquired from the short-axis (SAX) view and two long-axis (LAX) views. The SAX view contains a stack of 2D images while each LAX view contains a single 2D image. (a) $XY$-plane of the SAX stack. (b) $YZ$-plane of the SAX stack. (c) LAX 2-chamber (2CH) view. (d) LAX 4-chamber (4CH) view. Red and green contours¹ show the epicardium and endocardium, respectively. The area between these contours is the myocardium of the left ventricle. We show the end-diastolic (ED) frame (top row) and the end-systolic (ES) frame (bottom row) of the cine CMR image sequence.

2D myocardial motion estimation only considers motion in either the SAX plane or the LAX plane and does not provide complete 3D motion information for the heart. This may lead to inaccurate assessment of cardiac function. Therefore, 3D motion estimation that recovers myocardial deformation in the $X$, $Y$ and $Z$ directions is important. However, estimating 3D motion fields from cine CMR images remains challenging because (1) SAX stacks have much lower through-plane resolution (typically 8 mm slice thickness) than in-plane resolution (typically $1.8 \times 1.8$ mm), (2) image quality can be negatively affected by slice misalignment in SAX stacks as only one or two slices are acquired during a single breath-hold, and (3) high-resolution 2CH and 4CH view images are too spatially sparse to estimate 3D motion fields on their own.

In this work, we take full advantage of both SAX and LAX (2CH and 4CH) view images, and propose a multi-view motion estimation network for 3D myocardial motion tracking from cine CMR images. In the proposed method, a hybrid 2D/3D network is developed for 3D motion estimation. This hybrid network learns combined representations from multi-view images to estimate a 3D motion field from the ED frame to any $t$-th frame in the cardiac cycle. To guarantee an accurate motion estimation, especially along the longitudinal direction (i.e., the $Z$ direction), a shape regularization module is introduced to leverage anatomical shape information for motion estimation during training. This module encourages the estimated 3D motion field to correctly transform the 3D shape of the myocardial wall from the ED frame to the $t$-th frame. Here anatomical shape is represented by edge maps that show the contour of the cardiac anatomy. During inference, the hybrid network generates a sequence of 3D motion fields between paired frames (ED and $t$-th frames), which represents the myocardial motion across the cardiac cycle. The main contributions of this paper are summarized as follows:

- We develop a solution to a challenging cardiac motion tracking problem: learning 3D motion fields from a set of 2D SAX and LAX cine CMR images. We propose an end-to-end trainable multi-view motion estimation network (MulViMotion) for 3D myocardial motion tracking.

- The proposed method enables accurate 3D motion tracking by combining multi-view images using both latent information and shape information: (1) the representations of multi-view images are combined in the latent space for the generation of 3D motion fields; (2) the complementary shape information from multi-view images is exploited in a shape regularization module to provide an explicit constraint on the estimated 3D motion fields.

- The proposed method is trained in a weakly supervised manner which only requires sparsely annotated data in different 2D SAX and LAX views and requires no ground truth 3D motion fields. The 2D edge maps from the corresponding SAX and LAX planes provide weak supervision to the estimated 3D edge maps for guiding 3D motion estimation in the shape regularization module.

- We perform extensive evaluations of the proposed method on 580 subjects from the UK Biobank study. We further present a qualitative analysis on CMR images with severe slice misalignment and we explore the applicability of our method for wall thickening measurement.

II. Related Work

1). Conventional Motion Estimation Methods:

A common method for quantifying cardiac motion is to track noninvasive markers. CMR myocardial tagging provides tissue markers (stripe-like darker tags) in the myocardium which deform with myocardial motion [16]. By tracking the deformation of markers, dense displacement fields can be retrieved in the imaging plane. The harmonic phase (HARP) technique is the most representative approach for motion tracking in tagged images [17]–[19]. Several other methods have been proposed to compute dense displacement fields from dynamic myocardial contours or surfaces using geometrical and biomechanical modeling [20], [21]. For example, Papademetris et al. [21] proposed a Bayesian estimation framework for myocardial motion tracking from 3D echocardiography. In addition, image registration has been applied to cardiac motion estimation in previous works. Craene et al. [22] introduced continuous spatio-temporal B-spline kernels for computing a 4D velocity field, which enforced temporal consistency in motion recovery. Rueckert et al. [23] proposed a free form deformation (FFD) method for general non-rigid image registration. This method has been used for cardiac motion estimation in many recent works, e.g., [1], [4], [6], [14], [24]–[27]. Thirion [28] built a demons algorithm which utilizes diffusing models for image matching and further cardiac motion tracking. Based on this work, Vercauteren et al. [29] adapted the demons algorithm to provide non-parametric diffeomorphic transformations and McLeod et al. [30] introduced an elastic-like regularizer to improve the incompressibility of deformation recovery.

2). Deep Learning-Based Motion Estimation Methods:

In recent years, deep convolutional neural networks (CNNs) have been successfully applied to medical image analysis, which has inspired the exploration of deep learning-based cardiac motion estimation approaches. Qin et al. [11] proposed a multi-task framework for joint estimation of segmentation and motion. This multi-task framework contains a shared feature encoder which enables weakly supervised segmentation. Zheng et al. [12] proposed a method for cardiac pathology classification based on cardiac motion. Their method utilizes a modified U-Net [31] to generate flow maps between the ED frame and any other frame. For cardiac motion tracking in multiple datasets, Yu et al. [15] considered the distribution mismatch problem and proposed a meta-learning-based online model adaptation framework. Different from these methods which estimate motion in cine CMR, Ye et al. [32] proposed a deep learning model for tagged image motion tracking. In their work, the motion field between any two consecutive frames is first computed, followed by estimating the Lagrangian motion field between the ED frame and any other frame. Most of these existing deep learning-based methods aim at 2D motion tracking by only using SAX stacks. In contrast, our method focuses on 3D motion tracking by fully combining multiple anatomical views (i.e., SAX, 2CH and 4CH), which makes it possible to estimate both in-plane and through-plane myocardial motion.

3). Multi-View Based Cardiac Analysis:

Different anatomical scan views usually contain complementary information and the combined multiple views can be more descriptive than a single view. Chen et al. [33] utilized both SAX and LAX views for 2D cardiac segmentation, where the features of multi-view images are combined in the bottleneck of 2D U-Net. Puyol-Antón et al. [27] introduced a framework that separately uses multi-view images for myocardial strain analysis. In their method, the SAX view is used for radial and circumferential strain estimation while the LAX view is used for longitudinal strain estimation. Abdelkhalek et al. [34] proposed a 3D myocardial strain estimation framework, where the point clouds from SAX and LAX views are aligned for surface reconstruction. Attar et al. [35] proposed a framework for 3D cardiac shape prediction, in which the features of multi-view images are concatenated in CNNs to predict the 3D shape parameters. In this work, we focus on using multi-view images for 3D motion estimation. Compared to most of these existing works which only combine the features of multi-view images in the latent space (e.g., [33], [35]), our method additionally combines complementary shape information from multiple views to predict anatomically plausible 3D edge map of myocardial wall on different time frames, which provides guidance for 3D motion estimation.

III. Method

Our goal is to estimate 3D motion fields of the LV myocardium from multi-view 2D cine CMR images. We formulate our task as follows: Let $I^{sa} = \{I_t^{sa} \in \mathbb{R}^{H \times W \times D} \mid 0 \le t \le T-1\}$ be a SAX sequence which contains stacks of 2D images ($D$ slices) and $I^{i} = \{I_t^{i} \in \mathbb{R}^{H \times W} \mid 0 \le t \le T-1\}$, $i \in \{2ch, 4ch\}$, be LAX sequences which contain 2D images in the 2CH and 4CH views. $H$ and $W$ are the height and width of each image and $T$ is the number of frames. We want to train a network to estimate a 3D motion field $\Phi_t \in \mathbb{R}^{H \times W \times D \times 3}$ by using the multi-view images of the ED frame ($\{I_0^{sa}, I_0^{2ch}, I_0^{4ch}\}$) and of any $t$-th frame ($\{I_t^{sa}, I_t^{2ch}, I_t^{4ch}\}$). $\Phi_t$ describes the motion of the LV myocardium from the ED frame to the $t$-th frame. For each voxel in $\Phi_t$, we estimate its displacement in the $X$, $Y$, $Z$ directions.

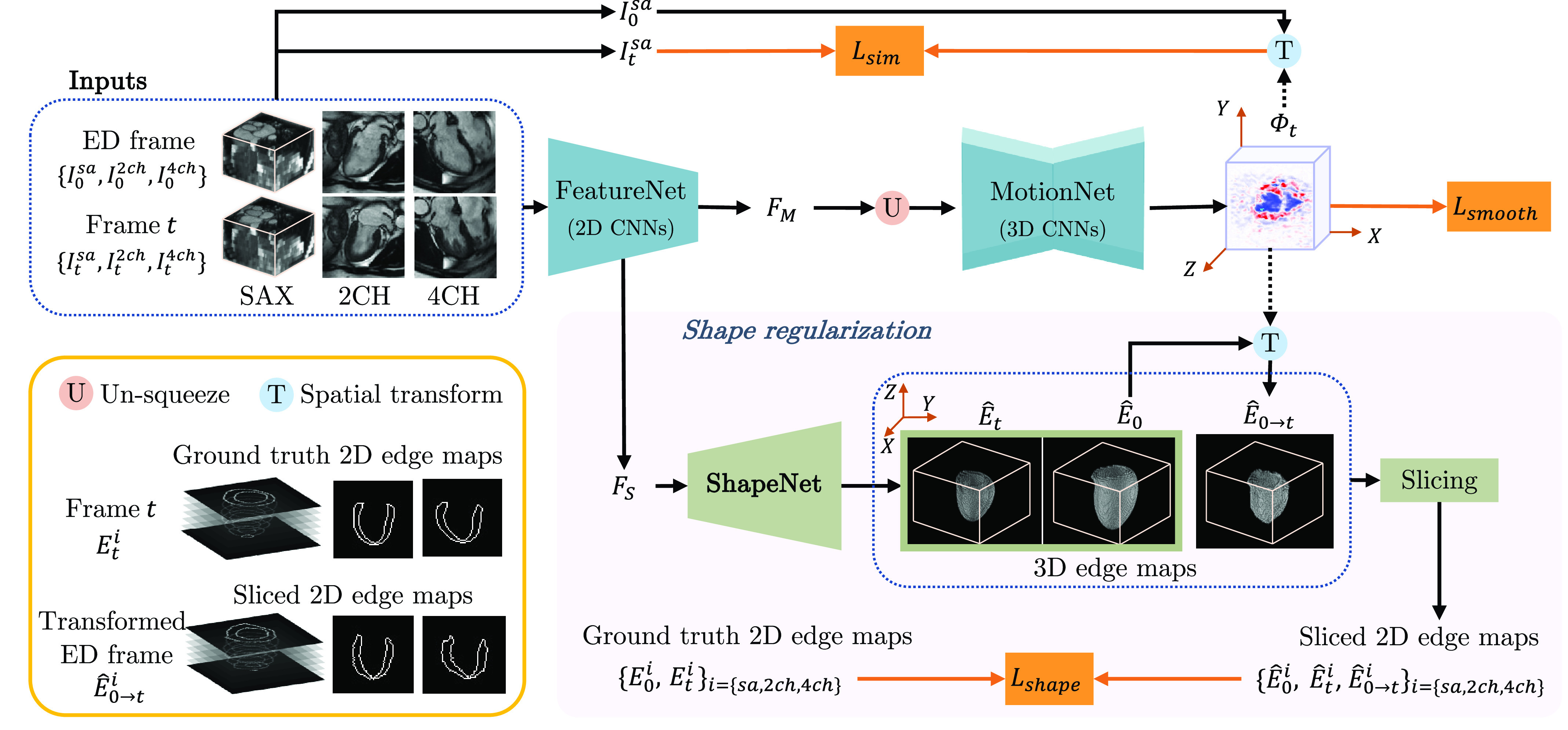

To solve this task, we propose MulViMotion, which estimates 3D motion fields from multi-view images with shape regularization. The schematic architecture of our method is shown in Fig. 2. A hybrid 2D/3D network that contains FeatureNet (2D CNNs) and MotionNet (3D CNNs) is used to predict $\Phi_t$ from the input multi-view images. FeatureNet learns multi-view multi-scale features and is used to extract the multi-view motion feature $F_M$ and the multi-view shape feature $F_S$ from the input. MotionNet generates $\Phi_t$ based on $F_M$. A shape regularization module is used to leverage anatomical shape information for 3D motion estimation during training. In this module, 3D edge maps of the myocardial wall are predicted from $F_S$ using ShapeNet and warped from the ED frame to the $t$-th frame by $\Phi_t$. The sparse ground truth 2D edge maps derived from the multi-view images provide weak supervision to the predicted and warped 3D edge maps, and thus encourage an accurate estimation of $\Phi_t$, especially in the $Z$ direction. Here, a slicing step is used to extract corresponding multi-view planes from the 3D edge maps in order to compare 3D edge maps with 2D ground truth. During inference, a 3D motion field is directly generated from the input multi-view images by the hybrid network, without using shape regularization.

Fig. 2.

An overview of MulViMotion. We use a hybrid 2D/3D network to estimate a 3D motion field $\Phi_t$ from the input multi-view images. In the hybrid network, FeatureNet learns the multi-view motion feature $F_M$ and the multi-view shape feature $F_S$ from the input, followed by MotionNet which generates $\Phi_t$ based on $F_M$. A shape regularization module leverages anatomical shape information for 3D motion estimation. It encourages the predicted 3D edge maps of the myocardial wall $\hat{E}_0$, $\hat{E}_t$ (predicted from $F_S$ using ShapeNet) and the warped 3D edge map $\hat{E}_{0 \to t}$ (warped from the ED frame to the $t$-th frame by $\Phi_t$) to be consistent with the ground truth 2D edge maps defined on multi-view images. Shape regularization is only used during training.

A. 3D Motion Estimation

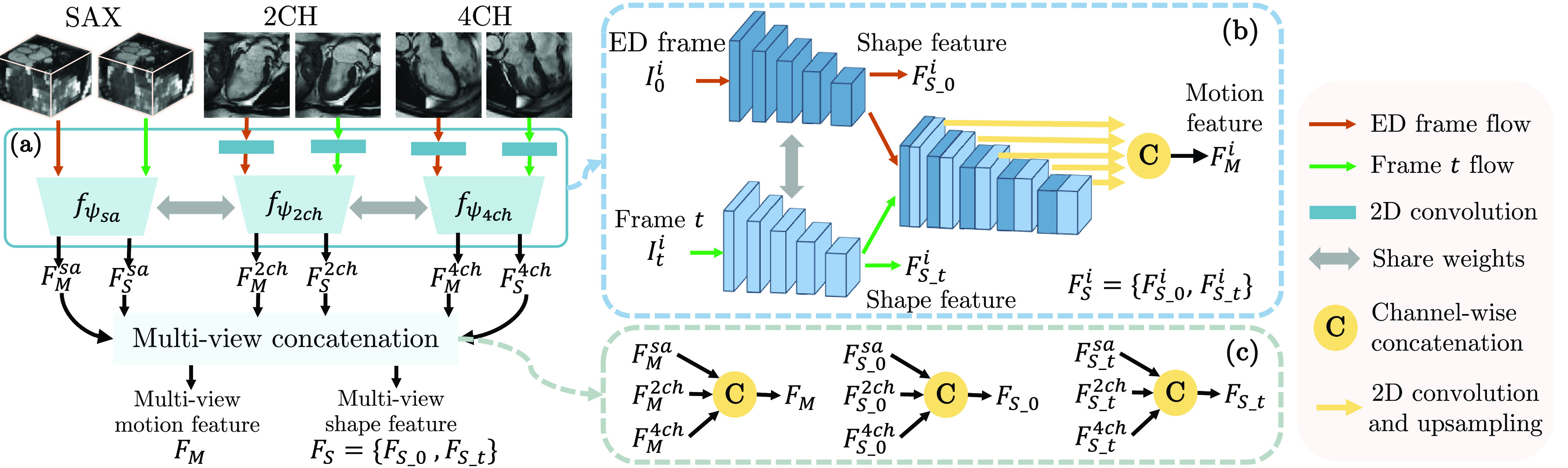

1). Multi-View Multi-Scale Feature Extraction (FeatureNet):

The first step of 3D motion estimation is to extract internal representations from the input 2D multi-view images $\{I_0^i, I_t^i \mid i \in \{sa, 2ch, 4ch\}\}$. We build FeatureNet to simultaneously learn motion and shape features from the input because the motion and shape of the myocardial wall are closely related and can provide complementary information to each other [11], [36], [37]. FeatureNet consists of (1) multi-scale feature fusion and (2) multi-view concatenation (see Fig. 3).

Fig. 3.

An overview of FeatureNet. FeatureNet takes multi-view images as input and extracts the multi-view motion feature $F_M$ and the multi-view shape feature $F_S$. Panel (a) describes multi-scale feature fusion. Panel (b) shows the 2D encoder $f_{\psi_i}$, where $i$ refers to SAX, 2CH and 4CH views. Panel (c) describes the combination of multi-view features.

In the multi-scale feature fusion (Fig. 3 (a)), the input multi-view images are unified to $D$-channel 2D feature maps by applying 2D convolution on 2CH and 4CH view images. Then three 2D encoders $\{f_{\psi_i} \mid i \in \{sa, 2ch, 4ch\}\}$ are built to extract motion and shape features from each anatomical view,

$$\{F_M^i, F_S^i\} = f_{\psi_i}(I_0^i, I_t^i), \quad i \in \{sa, 2ch, 4ch\}. \tag{1}$$

Here, $i$ represents anatomical views and $\psi_i$ refers to the network parameters of $f_{\psi_i}$. $F_M^i$ and $F_S^i$ are the learned motion feature and shape feature, respectively. As these encoders aim to extract the same type of information (i.e., shape and motion information), the three encoders share weights to learn representations that are useful and related to different views.

In each encoder, representations at different scales are fully exploited for feature extraction. $f_{\psi_i}$ consists of (1) a Siamese network that extracts features from both the ED frame and the $t$-th frame, and (2) feature-fusion layers that concatenate multi-scale features from pairs of frames (Fig. 3 (b)). From the Siamese network, the last feature maps of the two streams are used as the shape feature of the ED frame ($F_{S_0}^i$) and the $t$-th frame ($F_{S_t}^i$), respectively, and $F_S^i = \{F_{S_0}^i, F_{S_t}^i\}$. All features across different scales from both streams are combined by feature-fusion layers to generate the motion feature $F_M^i$. In detail, these multi-scale features are upsampled to the original resolution by a convolution and upsampling operation and then combined using a concatenation layer.

With the obtained $\{F_M^i, F_S^i\}$, a multi-view concatenation generates the multi-view motion feature $F_M$ and the multi-view shape feature $F_S$ via channel-wise concatenation $C(\cdot, \cdot, \cdot)$ (see Fig. 3 (c)),

$$F_M = C(F_M^{sa}, F_M^{2ch}, F_M^{4ch}), \quad F_S = C(F_S^{sa}, F_S^{2ch}, F_S^{4ch}). \tag{2}$$

Here $F_S = \{F_{S_0}, F_{S_t}\}$ and $F_{S_j} = C(F_{S_j}^{sa}, F_{S_j}^{2ch}, F_{S_j}^{4ch})$ for $j \in \{0, t\}$.

The FeatureNet model is composed of 2D CNNs which learn 2D features from the multi-view images and inter-slice correlation from SAX stacks. The obtained $F_M$ is first unified to $D$ channels using 2D convolution and is then used to predict $\Phi_t$ in the next step. The obtained $F_S$ is used for shape regularization in Sec. III-B.

2). Motion Estimation (MotionNet):

In this step, we introduce MotionNet to predict the 3D motion field $\Phi_t$ by learning 3D representations from the multi-view motion feature $F_M$. MotionNet is built with a 3D encoder-decoder architecture. $\Phi_t$ is predicted by MotionNet with

$$\Phi_t = g_\theta(\mathcal{U}(F_M)), \tag{3}$$

where $g_\theta$ represents MotionNet and $\theta$ refers to the network parameters of $g_\theta$. The function $\mathcal{U}(\cdot)$ denotes an un-squeeze operation which changes $F_M$ from a stack of 2D feature maps to a 3D feature map by adding an extra dimension.
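The shape bookkeeping behind the multi-view concatenation and the un-squeeze step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the channel counts and the use of a 1×1 convolution (written as an `einsum`) in place of the unspecified 2D convolution are all assumptions made for illustration.

```python
import numpy as np

def concat_views(f_sa, f_2ch, f_4ch):
    """Channel-wise concatenation of per-view 2D feature maps, each of shape (C_i, H, W)."""
    return np.concatenate([f_sa, f_2ch, f_4ch], axis=0)

def unify_channels(feat, weight):
    """Map C channels to D channels with a 1x1 convolution (an assumed stand-in
    for the 2D convolution in the text). feat: (C, H, W), weight: (D, C)."""
    return np.einsum("dc,chw->dhw", weight, feat)

def unsqueeze(feat):
    """Reinterpret a stack of D 2D maps (D, H, W) as a one-channel 3D volume (1, D, H, W)."""
    return feat[np.newaxis, ...]

rng = np.random.default_rng(0)
H, W, D = 8, 8, 4  # toy sizes, not the sizes used in the paper
f_m = concat_views(rng.normal(size=(6, H, W)),
                   rng.normal(size=(6, H, W)),
                   rng.normal(size=(6, H, W)))
vol = unsqueeze(unify_channels(f_m, rng.normal(size=(D, f_m.shape[0]))))
print(f_m.shape, vol.shape)  # (18, 8, 8) (1, 4, 8, 8)
```

After the un-squeeze, the former channel axis plays the role of the through-plane axis, which is what allows a 3D encoder-decoder to operate on features produced by 2D CNNs.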

3). Spatial Transform (Warping):

Inspired by the successful application of spatial transformer networks [38], [39], the SAX stack of the ED frame ($I_0^{sa}$) can be transformed to the $t$-th frame using the motion field $\Phi_t$. For a voxel with location $p$ in the transformed SAX stack ($I_{0 \to t}^{sa}$), we compute the corresponding location $p'$ in $I_0^{sa}$ by $p' = p + \Phi_t(p)$. As image values are only defined at discrete locations, the value at $p$ in $I_{0 \to t}^{sa}$ is computed from $p'$ in $I_0^{sa}$ using trilinear interpolation.²
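The warping step can be sketched in NumPy as a backward warp: every output voxel looks up the source volume at its displaced location and blends the eight surrounding voxels. This is a hedged illustration rather than the authors' code; the function name `warp_trilinear`, the displacement units (voxels) and the clamping of out-of-range locations to the border are assumptions.

```python
import numpy as np

def warp_trilinear(volume, flow):
    """Backward-warp a 3D volume: out(p) = volume(p + flow(p)).

    volume: array of shape (X, Y, Z); flow: array of shape (X, Y, Z, 3) in voxel units.
    """
    shape = np.array(volume.shape)
    axes = [np.arange(s) for s in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1)
    # Sampling locations p' = p + flow(p); clamping to the border is an assumption.
    loc = np.clip(grid + flow, 0, shape - 1)
    lo = np.floor(loc).astype(int)
    hi = np.minimum(lo + 1, shape - 1)
    frac = loc - lo  # fractional offset along each axis

    out = np.zeros(volume.shape, dtype=float)
    # Accumulate the 8 corner values, each weighted by its trilinear coefficient.
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                picks = (dx, dy, dz)
                corner = [hi[..., a] if d else lo[..., a] for a, d in enumerate(picks)]
                weight = np.ones(volume.shape)
                for a, d in enumerate(picks):
                    weight = weight * (frac[..., a] if d else 1.0 - frac[..., a])
                out += weight * volume[corner[0], corner[1], corner[2]]
    return out

# Toy check: a ramp along the first axis, shifted by half a voxel.
ramp = np.arange(4, dtype=float)[:, None, None] * np.ones((4, 3, 2))
flow = np.zeros((4, 3, 2, 3))
flow[..., 0] = 0.5
warped = warp_trilinear(ramp, flow)
print(warped[:, 0, 0])  # [0.5 1.5 2.5 3. ]
```

Because the interpolation weights are piecewise linear in the displacement, the same operation written in an automatic differentiation framework lets gradients flow from the warped image back to the motion field.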

4). Motion Loss:

As true dense motion fields of paired frames are usually unavailable in practice, we propose an unsupervised motion loss $\mathcal{L}_{mov}$ to evaluate the 3D motion estimation model using only the input SAX stacks ($I_0^{sa}$, $I_t^{sa}$) and the generated 3D motion field ($\Phi_t$). $\mathcal{L}_{mov}$ consists of two components: (1) an image similarity loss $\mathcal{L}_{sim}$ that penalizes the appearance difference between $I_t^{sa}$ and $I_{0 \to t}^{sa}$, and (2) a local smoothness loss $\mathcal{L}_{smooth}$ that penalizes the gradients of $\Phi_t$,

$$\mathcal{L}_{mov} = \mathcal{L}_{sim} + \lambda \mathcal{L}_{smooth}. \tag{4}$$

Here $\lambda$ is a hyper-parameter, $\mathcal{L}_{sim}$ is defined by the voxel-wise mean squared error and $\mathcal{L}_{smooth}$ is the Huber loss used in [11], [39] which encourages a smooth $\Phi_t$,

$$\mathcal{L}_{sim} = \frac{1}{N} \sum_{i=1}^{N} \left( I_t^{sa}(p_i) - I_{0 \to t}^{sa}(p_i) \right)^2, \tag{5}$$

$$\mathcal{L}_{smooth} = \sqrt{\epsilon + \sum_{i=1}^{N} \left( \left\| \frac{\partial \Phi_t(p_i)}{\partial x} \right\|^2 + \left\| \frac{\partial \Phi_t(p_i)}{\partial y} \right\|^2 + \left\| \frac{\partial \Phi_t(p_i)}{\partial z} \right\|^2 \right)}. \tag{6}$$

Here $\frac{\partial \Phi_t(p_i)}{\partial x} \approx \Phi_t(p_{i_x} + 1, p_{i_y}, p_{i_z}) - \Phi_t(p_{i_x}, p_{i_y}, p_{i_z})$ and we use the same approximation for $\frac{\partial \Phi_t(p_i)}{\partial y}$ and $\frac{\partial \Phi_t(p_i)}{\partial z}$. As in [11], [39], $\epsilon$ is set to 0.01. In Eq. 5 and Eq. 6, $p_i$ is the $i$-th voxel and $N$ denotes the number of voxels.

Note that $\mathcal{L}_{mov}$ is only applied to SAX stacks because 2D images in 2CH and 4CH views typically consist of only one slice and cannot be directly warped by a 3D motion field.
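The two components of the motion loss can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the smoothness term uses forward differences with the square root taken over the summed squared differences, its exact normalization is an assumption, and the weight `lam` is an arbitrary illustrative value.

```python
import numpy as np

def similarity_loss(target, warped):
    """Voxel-wise mean squared error between the target and the warped stack."""
    return float(np.mean((target - warped) ** 2))

def smoothness_loss(flow, eps=0.01):
    """Huber-style penalty on the spatial gradients of a flow field (X, Y, Z, 3).

    Gradients are approximated by forward differences along each spatial axis.
    """
    total = 0.0
    for axis in range(3):
        diff = np.diff(flow, axis=axis)  # flow(p + 1) - flow(p) along this axis
        total += float(np.sum(diff ** 2))
    return float(np.sqrt(eps + total))

def motion_loss(target, warped, flow, lam):
    """Weighted sum of the similarity and smoothness terms."""
    return similarity_loss(target, warped) + lam * smoothness_loss(flow)

rng = np.random.default_rng(0)
frame = rng.normal(size=(4, 4, 4))
zero_flow = np.zeros((4, 4, 4, 3))
# With a perfect match and a constant flow, only sqrt(eps) remains.
print(motion_loss(frame, frame, zero_flow, lam=2.0))
```

The `eps` term keeps the square root differentiable at zero gradient, which is the practical reason for using this form instead of a plain L1 or L2 norm of the flow gradients.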

B. Shape Regularization

The motion loss ($\mathcal{L}_{mov}$) on its own is not sufficient to guarantee motion estimation in the $Z$ direction due to the low through-plane resolution in SAX stacks. To address this problem, we introduce a shape regularization module which ensures that the 3D edge map of the myocardial wall is correct before and after warping by $\Phi_t$, and thus enables an accurate estimation of $\Phi_t$. Here, the ground truth 2D edge maps derived from the multi-view images provide weak supervision to the predicted and warped 3D edge maps.

1). Shape Estimation (ShapeNet):

ShapeNet is built to generate the 3D edge map of the myocardial wall in the ED frame ($\hat{E}_0$) and the $t$-th frame ($\hat{E}_t$) from $F_S$,

$$\hat{E}_0 = h_1(F_{S_0}), \quad \hat{E}_t = h_2(F_{S_t}). \tag{7}$$

Here $h_1$ and $h_2$ are the two branches in ShapeNet which contain shared 2D decoders and 3D convolutional layers in order to learn 3D edge maps from 2D features for all frames (Fig. 4). The dimensions of $\hat{E}_0$ and $\hat{E}_t$ are $H \times W \times D$. With the spatial transform in Sec. III-A.3, $\hat{E}_0$ is warped to the $t$-th frame by $\Phi_t$, which generates the transformed 3D edge map $\hat{E}_{0 \to t}$. Then $\hat{E}_0$, $\hat{E}_t$ and $\hat{E}_{0 \to t}$ are weakly supervised by ground truth 2D edge maps.

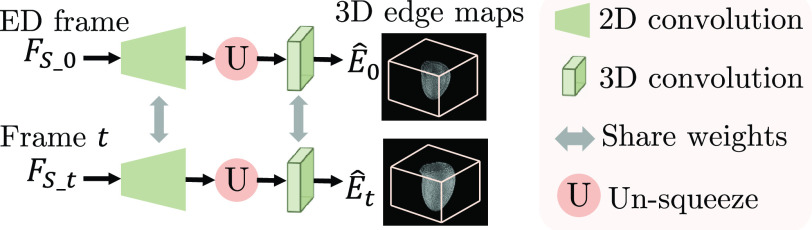

Fig. 4.

An overview of ShapeNet. ShapeNet predicts the 3D edge maps of the LV myocardial wall in the ED frame and the $t$-th frame from the corresponding shape features $F_{S_0}$ and $F_{S_t}$.

2). Slicing:

To compare the 3D edge maps with 2D ground truth, we use 3D masks $\{M^{sa}, M^{2ch}, M^{4ch}\}$ to extract SAX, 2CH and 4CH view planes from $\hat{E}_0$, $\hat{E}_t$ and $\hat{E}_{0 \to t}$ with

$$\hat{E}_0^i = M^i \odot \hat{E}_0, \quad \hat{E}_t^i = M^i \odot \hat{E}_t, \quad \hat{E}_{0 \to t}^i = M^i \odot \hat{E}_{0 \to t}, \quad i \in \{sa, 2ch, 4ch\}, \tag{8}$$

where $i$ represents anatomical views and $\odot$ refers to element-wise multiplication. These 3D masks describe the locations of multi-view images in SAX stacks and are generated based on the input during image preprocessing.

3). Shape Loss:

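The sliced 2D edge maps $\{\hat{E}_0^i, \hat{E}_t^i, \hat{E}_{0 \to t}^i\}$ are compared to the 2D ground truth $\{E_0^i, E_t^i\}$ by a shape loss $\mathcal{L}_{shape}$,

$$\mathcal{L}_{shape} = \mathcal{L}_{S_0} + \mathcal{L}_{S_t} + \mathcal{L}_{S_{0 \to t}}. \tag{9}$$

For each component in $\mathcal{L}_{shape}$, we utilize the cross-entropy loss ($CE(\cdot, \cdot)$) to measure the similarity of edge maps, e.g.,

$$\mathcal{L}_{S_{0 \to t}} = \sum_{i \in \{sa, 2ch, 4ch\}} CE(\hat{E}_{0 \to t}^i, E_t^i). \tag{10}$$

In the same way as Eq. 10, $\mathcal{L}_{S_0}$ is computed from $\{\hat{E}_0^i, E_0^i\}$ and $\mathcal{L}_{S_t}$ is computed from $\{\hat{E}_t^i, E_t^i\}$.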
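The slicing step and one component of the shape loss can be sketched in NumPy as below. This is an illustration only: the function names are hypothetical, the ground-truth 2D edge maps are assumed to be stored sparsely inside 3D arrays at the mask locations, the cross-entropy is assumed to be binary and averaged over the masked voxels, and the toy LAX masks are axis-aligned planes, whereas real 2CH and 4CH planes are generally oblique to the SAX grid.

```python
import numpy as np

def slice_with_mask(edge_3d, mask):
    """Keep only the voxels of a 3D edge map that lie on one view's imaging planes."""
    return mask * edge_3d

def masked_cross_entropy(pred, target, mask, tiny=1e-7):
    """Binary cross-entropy averaged over the voxels where mask == 1."""
    p = np.clip(pred, tiny, 1.0 - tiny)
    ce = -(target * np.log(p) + (1.0 - target) * np.log(1.0 - p))
    return float(np.sum(mask * ce) / np.sum(mask))

def shape_term(pred_3d, gt_by_view, mask_by_view):
    """One component of the shape loss: sum of per-view cross-entropies."""
    return sum(masked_cross_entropy(pred_3d, gt_by_view[v], mask_by_view[v])
               for v in mask_by_view)

# Toy geometry (hypothetical): sparse SAX slices and two orthogonal LAX planes.
shape = (8, 8, 6)
masks = {v: np.zeros(shape) for v in ("sa", "2ch", "4ch")}
masks["sa"][:, :, ::2] = 1.0  # every second slice belongs to the SAX view
masks["2ch"][4, :, :] = 1.0   # one LAX plane
masks["4ch"][:, 4, :] = 1.0   # a second LAX plane
gt = {v: np.zeros(shape) for v in masks}
pred = np.full(shape, 0.5)    # maximally uncertain prediction

sliced_2ch = slice_with_mask(pred, masks["2ch"])
loss = shape_term(pred, gt, masks)  # equals 3 * ln(2) for this prediction
```

Only voxels on acquired planes contribute to the loss, so the predicted 3D edge map is constrained where annotations exist and left free elsewhere, which is what makes the supervision weak.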

C. Optimization

Our model is an end-to-end trainable framework and the overall objective is a linear combination of all loss functions

$$\mathcal{L} = \mathcal{L}_{sim} + \lambda \mathcal{L}_{smooth} + \beta \mathcal{L}_{shape}, \tag{11}$$

where $\lambda$ and $\beta$ are hyper-parameters chosen experimentally depending on the dataset. We use the Adam optimizer (learning rate $= 10^{-4}$) to update the parameters of MulViMotion. Our model is implemented in PyTorch and is trained on an NVIDIA Tesla T4 GPU with 16 GB of memory.

IV. Experiments

We demonstrate our method on the task of 3D myocardial motion tracking. We evaluate the proposed method using quantitative metrics such as Dice, Hausdorff distance, volume difference and Jacobian determinant. Geometric meshes are used to provide qualitative results with 3D visualization. We compare the proposed method with other state-of-the-art motion estimation methods and perform an extensive ablation study. In addition, we show the effectiveness of the proposed method on subjects with severe slice misalignment. We further explore the applicability of the proposed method for wall thickening measurement. We show the key results in the main paper. More results (e.g., dynamic videos) are shown in the Appendix.³

A. Experiment Setups

1). Data:

We performed experiments on randomly selected 580 subjects from the UK Biobank study.4 All participants gave written informed consent [40]. The participant characteristics are shown in Table I. The CMR images of all subjects are acquired by a 1.5 Tesla scanner (MAGNETOM Aera, Syngo Platform VD13A, Siemens Healthcare, Erlangen, Germany). Each subject contains SAX, 2CH and 4CH view cine CMR sequences and each sequence contains 50 frames. More CMR acquisition details for UK Biobank study can be found in [41]. For image preprocessing, (1) SAX view images were resampled by linear interpolation from a spacing of

to a spacing of

to a spacing of

while 2CH and 4CH view images were resampled from

while 2CH and 4CH view images were resampled from

to

to

, (2) by keeping the middle slice of the resampled SAX stacks in the center, zero-padding was used on top or bottom if necessary to reshape the resampled SAX stacks to 64 slices, (3) to cover the whole LV as the ROI, based on the center of the LV in the middle slice, the resampled SAX stacks were cropped to a size of

(note that we computed the center of the LV based on the LV myocardium segmentation of the middle slice of the SAX stack), (4) 2CH and 4CH view images were cropped to

based on the center of the intersecting line between the middle slice of the cropped SAX stack and the 2CH/4CH view image, (5) each frame was independently normalized to zero mean and unit standard deviation, and (6) 3D masks (Eq. 8) were computed by a coordinate transformation using DICOM image header information of SAX, 2CH and 4CH view images. Note that the 2D SAX slices used in the shape regularization module were unified to 9 adjacent slices for all subjects: the middle slice plus the 4 slices above and the 4 slices below it. With this image preprocessing, the input SAX, 2CH and 4CH view images cover the whole LV in the center.
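
Steps (2), (3) and (5) of the preprocessing can be sketched in NumPy as follows. This is only an illustrative sketch, not the authors' implementation: the function names are hypothetical, the crop size is left as a parameter, taking the LV center as the centroid of the myocardium mask is an assumption, and center-cropping stacks that already exceed 64 slices is an assumption (the text only describes padding).

```python
import numpy as np


def pad_slices_centered(stack, target_slices=64):
    """Zero-pad a (H, W, Z) stack along Z so that the middle slice stays centered.

    Center-cropping stacks with more than `target_slices` slices is an
    assumption; the text only describes zero-padding.
    """
    z = stack.shape[2]
    if z >= target_slices:
        start = (z - target_slices) // 2
        return stack[:, :, start:start + target_slices]
    before = (target_slices - z) // 2
    after = target_slices - z - before
    return np.pad(stack, ((0, 0), (0, 0), (before, after)), mode="constant")


def lv_center(myo_mask_2d):
    """LV center taken as the centroid of a 2D myocardium mask (assumption)."""
    return np.argwhere(myo_mask_2d > 0).mean(axis=0)


def crop_in_plane(stack, center_rc, size):
    """Crop a (H, W, Z) stack to size x size around (row, col), zero-padding at borders."""
    half = size // 2
    padded = np.pad(stack, ((half, half), (half, half), (0, 0)), mode="constant")
    r, c = int(round(center_rc[0])), int(round(center_rc[1]))
    # Index r in `stack` is index r + half in `padded`, so the window starts at r.
    return padded[r:r + size, c:c + size, :]


def normalize_frame(frame, eps=1e-8):
    """Normalize one frame to zero mean and unit standard deviation."""
    return (frame - frame.mean()) / (frame.std() + eps)
```

In this sketch each frame would be passed through `pad_slices_centered`, then `crop_in_plane` with the center returned by `lv_center` on the middle slice, and finally `normalize_frame`.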

TABLE I. Participant Characteristics. Data are Mean±Standard Deviation for Continuous Variables and Number of Participants for Categorical Variables.

| Parameter | Value (580 subjects) |
|---|---|
| Age (years) | 64±8 |
| Sex (Female/Male) | 325 / 255 |
| Ejection fraction (%) | 60±6 |
| Weight (kg) | 74±15 |
| Height (cm) | 169±9 |
| Body mass index (kg/m²) | 26±4 |
| Diastolic blood pressure (mm Hg) | 79±10 |
| Systolic blood pressure (mm Hg) | 138±19 |

3D high-resolution segmentations of these subjects were automatically generated using the 4Dsegment tool [9] based on the resampled SAX stacks, followed by manual quality control. The obtained segmentations have been shown to be useful in clinical applications (e.g., [1]), and thus we use them to generate ground truth 2D edge maps (Fig. 1) in this work. In detail, we utilize the obtained 3D masks to extract SAX, 2CH and 4CH view planes from these 3D segmentations and then use contour extraction to obtain

used in Sec. III-B.2. Note that we use 3D segmentation(s) to refer to the 3D segmentations obtained by [9] in this section.

We split the dataset into 450/50/80 for train/validation/test and train MulViMotion for 300 epochs. The hyper-parameters in Eq. 11 are selected as

.

2). Evaluation Metrics:

We use segmentations to provide a quantitative evaluation of the estimated 3D motion fields. This is the same evaluation as performed in other cardiac motion tracking literature [11], [12], [15]. Here, 3D segmentations obtained by [9] are used in the evaluation metrics. The framework in [9] performs learning-based segmentation, followed by an atlas-based refinement step to ensure robustness towards potential imaging artifacts. The generated segmentations are anatomically meaningful and spatially consistent. As our work aims to estimate the real 3D motion of the heart from the acquired CMR images, such segmentations, which approximate the real shape of the heart, can provide a reasonable evaluation. Specifically, on test data, we estimate the 3D motion field

from ED frame to ES frame, which shows large deformation. Then we warp the 3D segmentation of the ED frame (

) to ES frame by

. Finally, we compare the transformed 3D segmentation (

) with the ground truth 3D segmentation of the ES frame (

) using the following metrics. Note that the ES frame is identified as the frame with the least image intensity similarity to the ED frame.

Dice score and Hausdorff distance (HD) are utilized to respectively quantify the volume overlap and contour distance between

and

. A high value of Dice and a low value of HD represent an accurate 3D motion estimation.

Volume difference (VD) is computed to evaluate the volume preservation, as incompressible motion is desired within the myocardium [13], [19], [25], [30].

, where

computes the number of voxels in the segmentation volume. A low value of VD means a good volume preservation ability of

.

The Jacobian determinant

(

) is employed to evaluate the local behavior of

: A negative Jacobian determinant

indicates that the motion field at position

results in folding and leads to non-diffeomorphic transformations. Therefore, a low number of points with

corresponds to an anatomically plausible deformation from ED frame to ES frame and thus indicates a good

. We count the percentage of voxels in the myocardial wall with

in the evaluation.
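
The four metrics described above can be sketched in NumPy as follows. This is a hedged illustration rather than the evaluation code used in the paper: all function names are hypothetical, the volume difference is assumed to be the relative change in voxel count between the ED segmentation and its warped version, and the motion field is assumed to be a displacement field in voxel units so that the transformation is the identity plus the displacement.

```python
import numpy as np


def dice_score(seg_a, seg_b):
    """Volume overlap between two binary segmentations."""
    a, b = seg_a.astype(bool), seg_b.astype(bool)
    denom = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / denom if denom > 0 else 1.0


def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance between two (N, 3) contour point sets.

    Brute force: memory grows with len(points_a) * len(points_b).
    """
    d = np.linalg.norm(points_a[:, None, :] - points_b[None, :, :], axis=-1)
    return max(d.min(axis=1).max(), d.min(axis=0).max())


def volume_difference(seg_warped, seg_ed):
    """Assumed definition: |V(warped) - V(ED)| / V(ED) * 100, V = voxel count."""
    v_ed = np.count_nonzero(seg_ed)
    return abs(np.count_nonzero(seg_warped) - v_ed) / v_ed * 100.0


def negative_jacobian_percentage(disp, mask):
    """Percentage of voxels inside `mask` with a negative Jacobian determinant.

    disp: (3, X, Y, Z) displacement field in voxel units (assumption).
    """
    grads = [np.gradient(disp[i]) for i in range(3)]  # grads[i][j] = d disp_i / d x_j
    jac = np.empty(disp.shape[1:] + (3, 3))
    for i in range(3):
        for j in range(3):
            # Jacobian of the transformation x + disp(x) is I + grad(disp).
            jac[..., i, j] = grads[i][j] + (1.0 if i == j else 0.0)
    det = np.linalg.det(jac)
    m = mask.astype(bool)
    return 100.0 * np.count_nonzero(det[m] < 0) / np.count_nonzero(m)
```

An identity transformation (zero displacement) gives a determinant of 1 everywhere and therefore 0% folding, whereas a field that mirrors one axis gives a determinant of -1 everywhere.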

3). Baseline Methods:

We compare the proposed method with three cardiac motion tracking methods: two conventional methods and one deep learning method. The first baseline is a B-spline free form deformation (FFD) algorithm [23], which has been used in many recent cardiac motion tracking works [1], [6], [14], [26], [27]. We use the FFD approach implemented in the MIRTK toolkit.5 The second baseline is a diffeomorphic Demons (dDemons) algorithm [29], which has been used in [13] for cardiac motion tracking. We use the dDemons implementation in the SimpleITK software package.6 In addition, the UNet architecture has been used in many recent works for image registration [37], [42], [43], and thus our third baseline is a deep learning method with 3D-UNet [44]. The input of the 3D-UNet baseline is a pair of frames (

) and the output is a 3D motion field. Eq. 4 is used as the loss function for this baseline. We implemented 3D-UNet based on its online code.7 For the baseline methods with hyper-parameters, we evaluated several sets of parameter values and selected the hyper-parameters that achieve the best Dice score on the validation set.

B. 3D Myocardial Motion Tracking

1). Motion Tracking Performance:

For each test subject, MulViMotion is utilized to estimate 3D motion fields in the full cardiac cycle. With the obtained

, we warp the 3D segmentation of ED frame (

) to the

-th frame. Fig. 5 (a) shows that the estimated

enables the warped 3D segmentation to match the myocardial area in images from different anatomical views. In addition, we warp the SAX stack of the ED frame (

) to the

-th frame. Fig. 5 (b) shows the effectiveness of

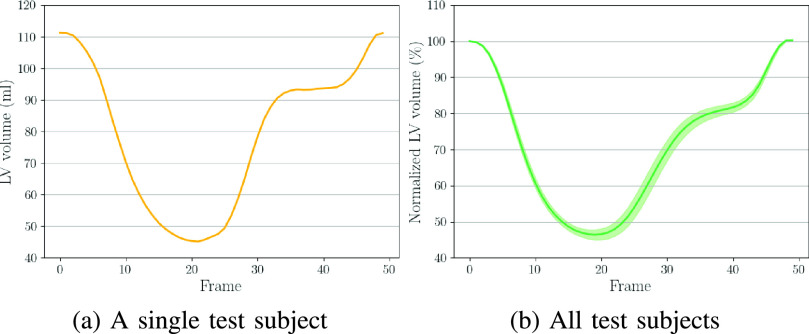

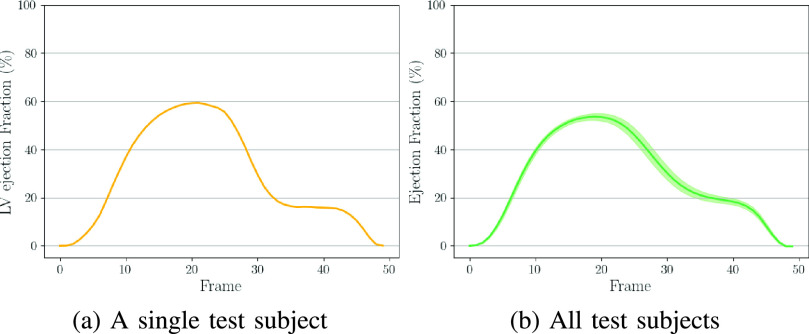

by comparing the warped and the ground truth SAX view images. By utilizing the warped 3D segmentation, we further compute established clinical biomarkers. Fig. 6 shows the curve of LV volume over time. The shape of the curve is consistent with reported results in the literature [11], [45].
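
The warping of a 3D segmentation by an estimated motion field, as used in this subsection, can be sketched as below. This is an assumption-laden illustration, not the authors' code: the function name is hypothetical, the field is assumed to be a dense displacement in voxel units defined on the output grid (backward warping, as is common in spatial-transformer-based registration), and nearest-neighbor sampling is chosen so that the warped labels stay binary.

```python
import numpy as np


def warp_nearest(volume, disp):
    """Backward-warp a 3D label volume with a (3, X, Y, Z) displacement field.

    Each output voxel x takes the value of the input voxel closest to
    x + disp(x); sampling positions outside the volume are clamped to the border.
    """
    grid = np.indices(volume.shape).astype(float)  # voxel coordinates, (3, X, Y, Z)
    coords = np.rint(grid + disp).astype(int)
    for axis, size in enumerate(volume.shape):
        np.clip(coords[axis], 0, size - 1, out=coords[axis])
    return volume[coords[0], coords[1], coords[2]]
```

With this convention, a displacement of -1 along the first axis makes every output voxel read from its neighbor at index x - 1, so the labeled structure appears shifted by +1 along that axis.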

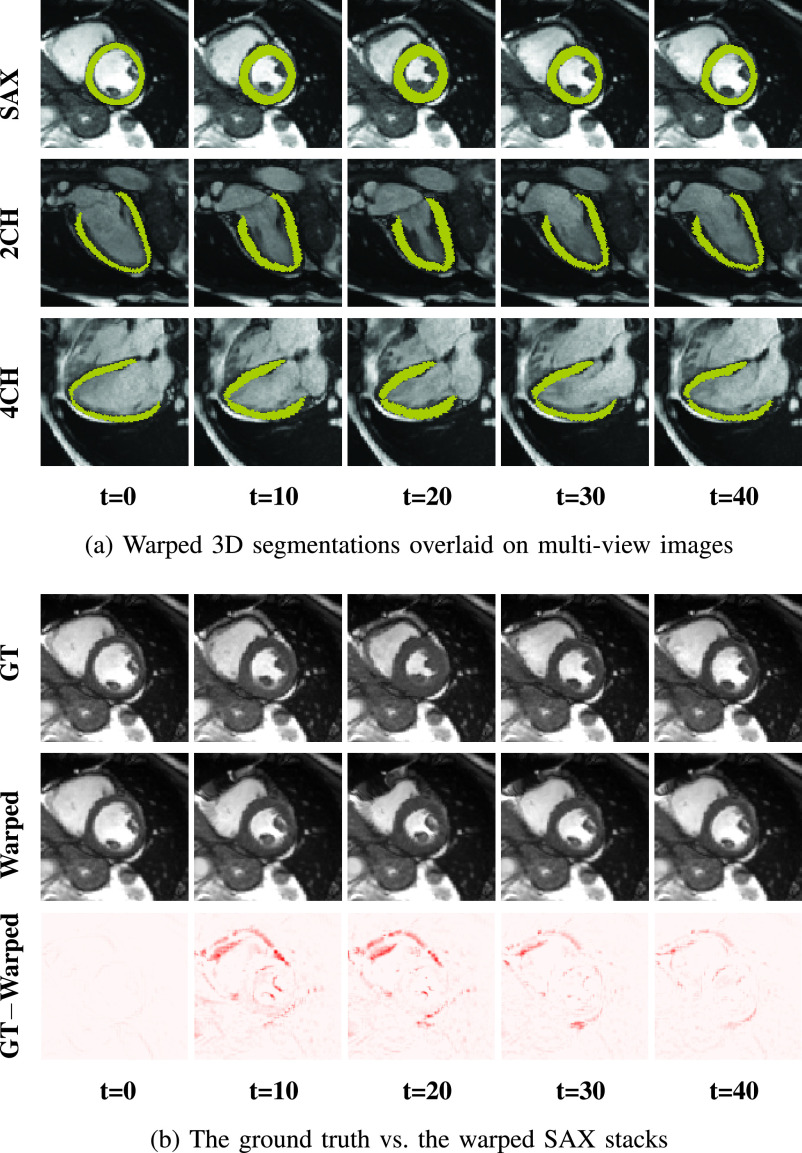

Fig. 5.

Examples of motion tracking results. 3D motion fields generated by MulViMotion are used to warp 3D segmentations and SAX stacks from ED frame to the

-th frame. (a) The warped segmentations overlaid on SAX, 2CH and 4CH view images. (b) The ground truth (GT) and the warped SAX stacks as well as their difference maps (i.e., GT–Warped).

Fig. 6.

The results of LV volume across the cardiac cycle. (a) Results on a randomly selected test subject. (b) Results on all test subjects (mean values and confidence interval are presented). Note that, for each subject in (b), we normalized LV volume (dividing LV volume in all time frames by that in the ED frame) and show the average results of all test subjects.
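
The normalization described for Fig. 6 (b) can be sketched as follows. The function names are hypothetical, and the 95% normal-approximation confidence interval is an assumption, since the caption does not state how the interval was computed.

```python
import numpy as np


def normalized_volume_curve(volumes, ed_index=0):
    """Divide the LV volume in every frame by the volume in the ED frame."""
    volumes = np.asarray(volumes, dtype=float)
    return volumes / volumes[ed_index]


def mean_and_ci(curves, z=1.96):
    """Mean curve and an assumed normal-approximation confidence interval.

    curves: array of shape (subjects, frames) with normalized volumes.
    """
    curves = np.asarray(curves, dtype=float)
    mean = curves.mean(axis=0)
    sem = curves.std(axis=0, ddof=1) / np.sqrt(curves.shape[0])
    return mean, mean - z * sem, mean + z * sem
```

Because every curve equals 1 at the ED frame after normalization, the interval collapses to a single point there and widens where subjects differ most.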

We quantitatively compare MulViMotion with the baseline methods in Table II. With the 3D motion fields generated by the different methods, the 3D segmentations of the ED frame are warped to the ES frame and compared with the ground truth 3D segmentations of the ES frame using the metrics introduced in Sec. IV-A.2. From this table, we observe that MulViMotion outperforms all baseline methods in Dice and Hausdorff distance, demonstrating the effectiveness of the proposed method in estimating 3D motion fields. MulViMotion also achieves the lowest volume difference, indicating that the proposed method is more capable of preserving the volume of the myocardial wall during cardiac motion tracking. Compared to a diffeomorphic motion tracking method (dDemons [29]), the proposed method yields a comparably small percentage of voxels with a negative Jacobian determinant (0.93% versus 0.13%). This illustrates that the learned motion field is smooth and largely preserves topology.

TABLE II. Comparison With Other Cardiac Motion Tracking Methods. ↑ Indicates the Higher Value the Better While ↓ Indicates the Lower Value the Better. Results are Reported as “Mean (Standard Deviation)” for Dice, Hausdorff Distance (HD), Volume Difference (VD) and Negative Jacobian Determinant (Percentage of Myocardial Voxels With a Negative Jacobian Determinant). CPU and GPU Runtimes are Reported as the Average Inference Time for a Single Subject. Best Results in Bold.

| Methods | Anatomical views | Dice ↑ | HD (mm) ↓ | VD (%) ↓ | Negative Jacobian (%) ↓ | CPU time (s) ↓ | GPU time (s) ↓ |
|---|---|---|---|---|---|---|---|
| FFD [23] | SAX | 0.7250 (0.0511) | 20.1138 (5.1130) | 14.45 (6.87) | 11.94 (5.01) | 15.91 | – |
| dDemons [29] | SAX | 0.7219 (0.0422) | 18.3945 (3.5650) | 14.46 (6.38) | **0.13 (0.17)** | 28.32 | – |
| 3D-UNet [44] | SAX | 0.7382 (0.0293) | 17.4785 (3.1030) | 30.97 (9.89) | 0.95 (1.05) | 16.88 | **1.09** |
| MulViMotion | SAX, 2CH, 4CH | **0.8200 (0.0348)** | **14.5937 (4.2449)** | **8.62 (4.85)** | 0.93 (0.94) | **3.55** | 1.15 |

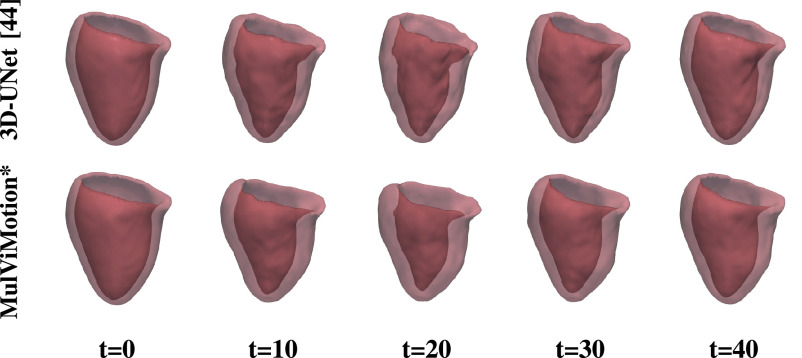

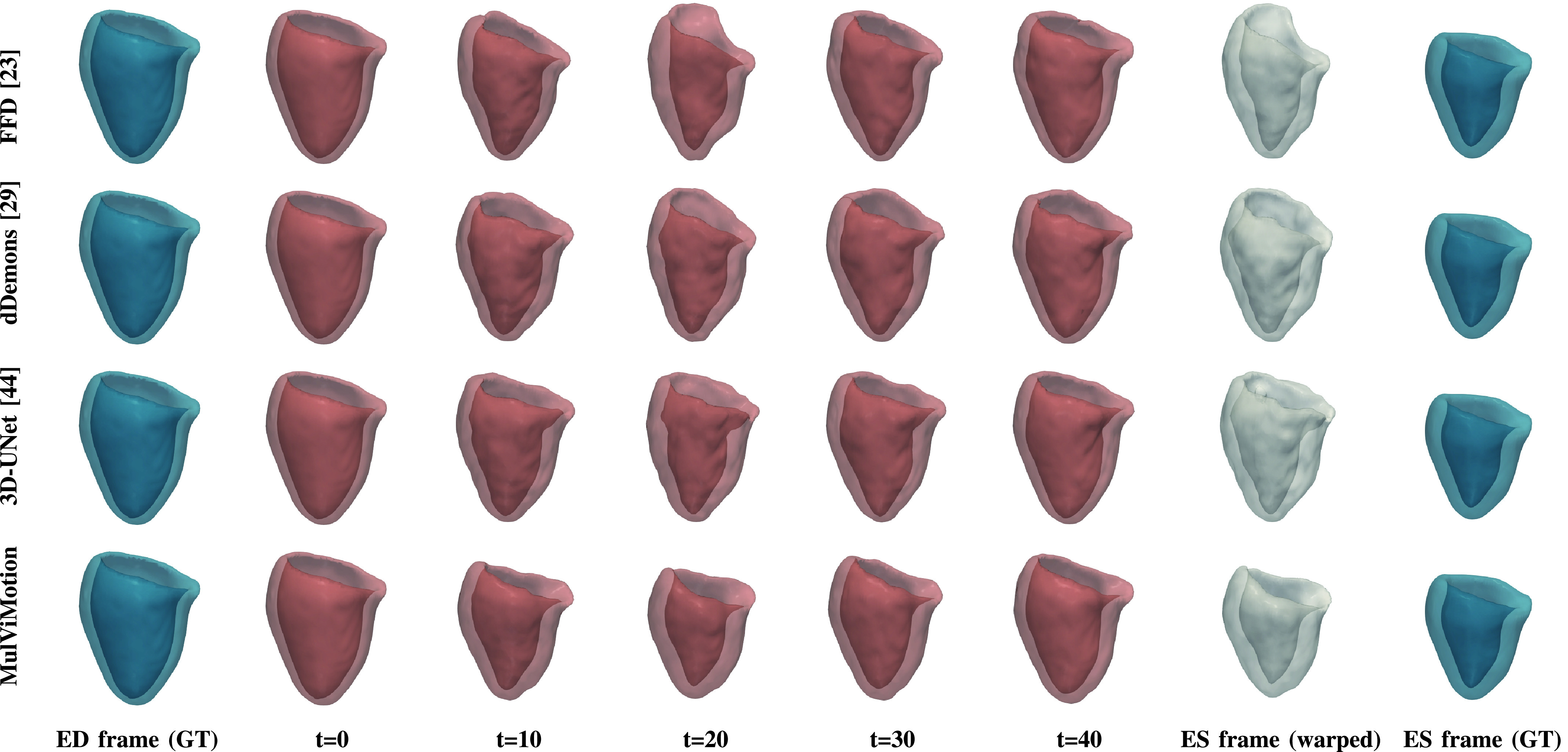

We further qualitatively compared MulViMotion with baseline methods in Fig. 7. A geometric mesh is used to provide 3D visualization of the myocardial wall. Specifically, 3D segmentations of ED frame are warped to any

-th frame in the cardiac cycle and geometric meshes are reconstructed from these warped 3D segmentations. Red meshes in Fig. 7 demonstrate that in contrast to all baseline methods which only show motion within SAX plane (i.e., along the

and

directions), MulViMotion is able to estimate through-plane motion along the longitudinal direction (i.e., the

direction) in the cardiac cycle, e.g., the reconstructed mesh of

frame is deformed in the

,

,

directions compared to

and

frames. In addition, white meshes in Fig. 7 illustrate that compared to all baseline methods, the 3D motion field generated by MulViMotion performs best in warping ED frame to ES frame and obtains the reconstructed mesh of ES frame which is most similar to the ground truth (GT) ES frame mesh (blue meshes). These results demonstrate the effectiveness of MulViMotion for 3D motion tracking, especially for estimating through-plane motion.

Fig. 7.

3D visualization of motion tracking results using the baseline methods and MulViMotion. Column 1 (blue) shows the ground truth (GT) meshes of ED frame. Columns 2-6 (red) show 3D motion tracking results across the cardiac cycle. These meshes are reconstructed from the warped 3D segmentations (warped from ED frame to different time frames). Column 7 (white) additionally shows the reconstructed meshes of ES frame from the motion tracking results and Column 8 (blue) shows the ground truth meshes of ES frame.

2). Runtime:

Table II shows the runtime of MulViMotion and the baseline methods using an Intel Xeon E5-2643 CPU and an NVIDIA Tesla T4 GPU. The average inference time for a single subject is reported. FFD [23] and dDemons [29] are only available on CPU, while 3D-UNet [44] and MulViMotion are available on both CPU and GPU. The results show that our method achieves a similar runtime to 3D-UNet [44] on GPU (1.15 s versus 1.09 s) and is roughly 4.5 to 8 times faster than the baseline methods on CPU (3.55 s versus 15.91 to 28.32 s).

3). Ablation Study:

For the proposed method, we explore the effects of using different anatomical views and the importance of the shape regularization module. We use evaluation metrics in Sec. IV-A.2 to show quantitative results.

Table III shows the motion tracking results using different anatomical views. In particular, M1 only uses images and 2D edge maps from the SAX view to train the proposed method, M2 uses those from both SAX and 2CH views and M3 uses those from both SAX and 4CH views. M2 and M3 outperform M1 in Dice and volume difference (although M3 has a higher Hausdorff distance than M1), illustrating the importance of LAX view images. In addition, MulViMotion (M) outperforms the other variant models. This might be because more LAX views can introduce more high-resolution 3D anatomical information for 3D motion tracking.

TABLE III. 3D Motion Tracking With Different Anatomical Views. M1, M2 and M3 are Variants of the Proposed Method and M Refers to MulViMotion. Results are Reported the Same Way as Table II. Best Results in Bold.

| Model | SAX | 2CH | 4CH | Dice ↑ | HD (mm) ↓ | VD (%) ↓ |
|---|---|---|---|---|---|---|
| M1 | ✓ | | | 0.7780 (0.0275) | 18.2564 (3.4031) | 30.66 (7.73) |
| M2 | ✓ | ✓ | | 0.7964 (0.0273) | 18.1014 (3.7146) | 24.05 (5.24) |
| M3 | ✓ | | ✓ | 0.7904 (0.0305) | 19.2265 (3.2441) | 17.50 (4.55) |
| M | ✓ | ✓ | ✓ | **0.8200 (0.0348)** | **14.5937 (4.2449)** | **8.62 (4.85)** |

In Table IV, the proposed method is trained using all three anatomical views but optimized with different combinations of losses. A1 optimizes the proposed method without shape regularization (i.e., without

in Eq. 11). A2 introduces basic shape regularization on top of A1, which adds

and

for

. MulViMotion (M) outperforms A1, illustrating the importance of shape regularization. MulViMotion also outperforms A2. This is likely because

and

are both needed to guarantee the generation of distinct and correct 3D edge maps for all frames in the cardiac cycle. These results show the effectiveness of all proposed components in

.

TABLE IV. 3D Motion Tracking With Different Combinations of Loss Functions. A1 Optimizes the Proposed Method Without Shape Regularization (Without the Shape Regularization Term in Eq. 11). A2 Adds Basic Shape Regularization on Top of A1. M Refers to MulViMotion. All Models are Trained With Three Anatomical Views. Results are Reported the Same Way as Table II. Best Results in Bold.

| Model | Dice ↑ | HD (mm) ↓ | VD (%) ↓ |
|---|---|---|---|
| A1 | 0.7134 (0.0316) | 18.9555 (3.1054) | 33.93 (10.27) |
| A2 | 0.7294 (0.0295) | 17.5047 (3.7485) | 12.51 (4.28) |
| M | **0.8200 (0.0348)** | **14.5937 (4.2449)** | **8.62 (4.85)** |

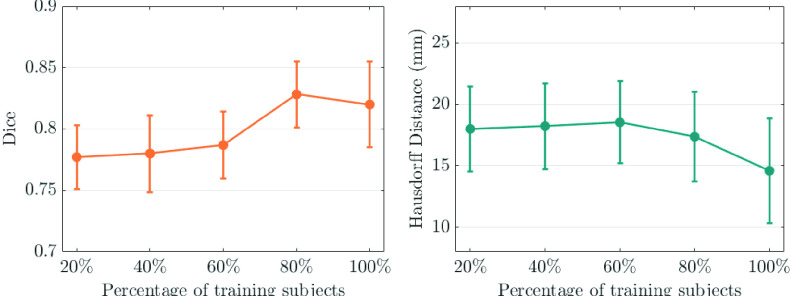

Fig. 8 shows motion estimation performance using different strengths of shape regularization. In detail, the proposed method is trained with three anatomical views and all loss components, but the shape loss (

) is computed on different percentages of training subjects (20%, 40%, 60%, 80%, 100%). From Fig. 8, we observe that motion estimation performance improves as the percentage of subjects increases.

Fig. 8.

3D motion tracking with different strengths of shape regularization, where the shape loss (

) is computed on different percentages of training subjects (20%, 40%, 60%, 80%, 100%). The left column is Dice score and the right column is Hausdorff distance.

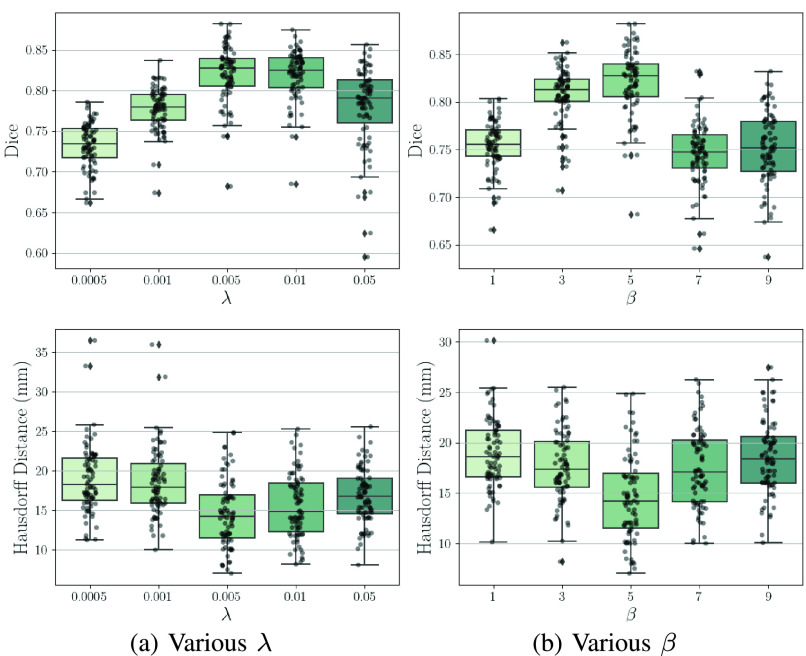

4). The Influence of Hyper-Parameters:

Fig. 9 presents Dice and Hausdorff distance (HD) on the test data for various values of the smoothness loss weight

and the shape regularization weight

(Eq. 11). The Dice scores and HDs are computed according to Sec. IV-A.2. We observe that a strong constraint on motion field smoothness may sacrifice registration accuracy (see Fig. 9 (a)). Moreover, registration performance improves as

increases from 1 to 5 and then deteriorates with a further increased

(from 5 to 9). This might be because a strong shape regularization forces motion estimation to focus mainly on the few 2D planes that contain sparse labels.

Fig. 9.

Effects of varied hyper-parameters on Dice and Hausdorff distance. (a) shows the results of using various

under

. (b) shows the results of using various

under

.

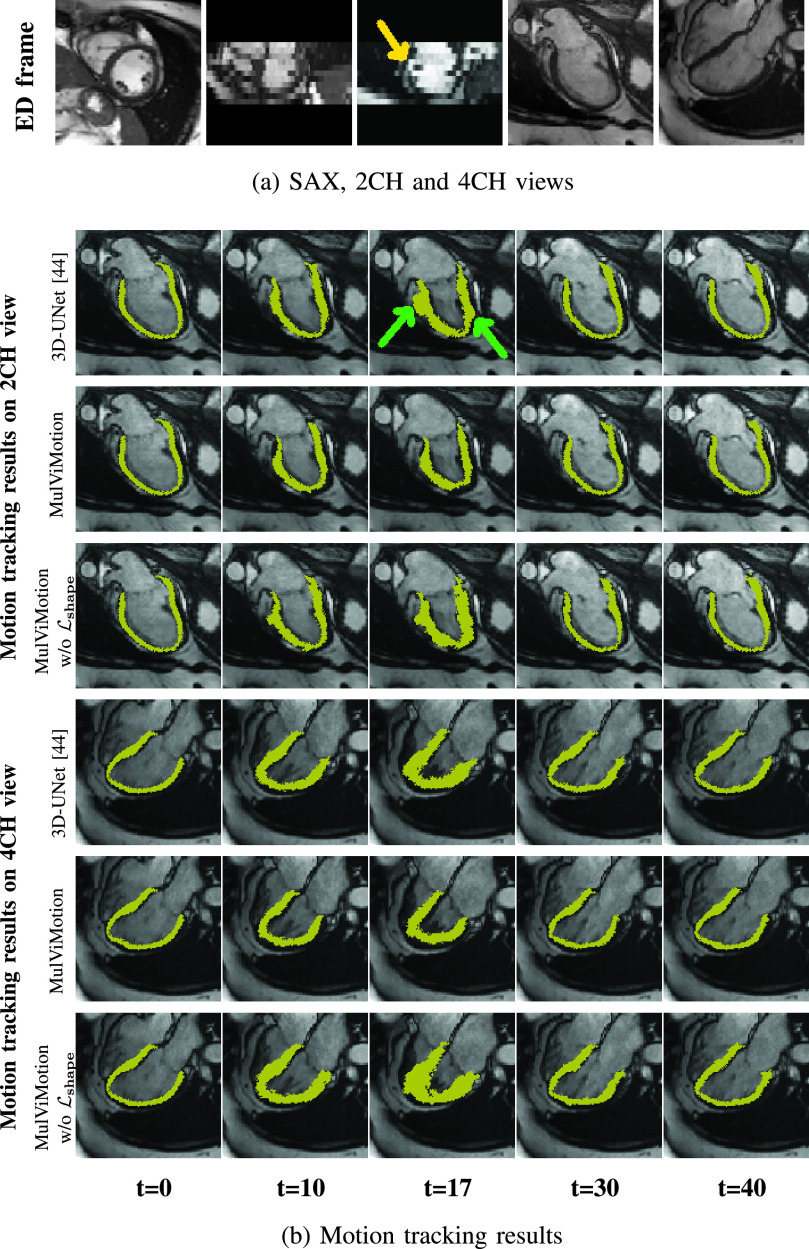

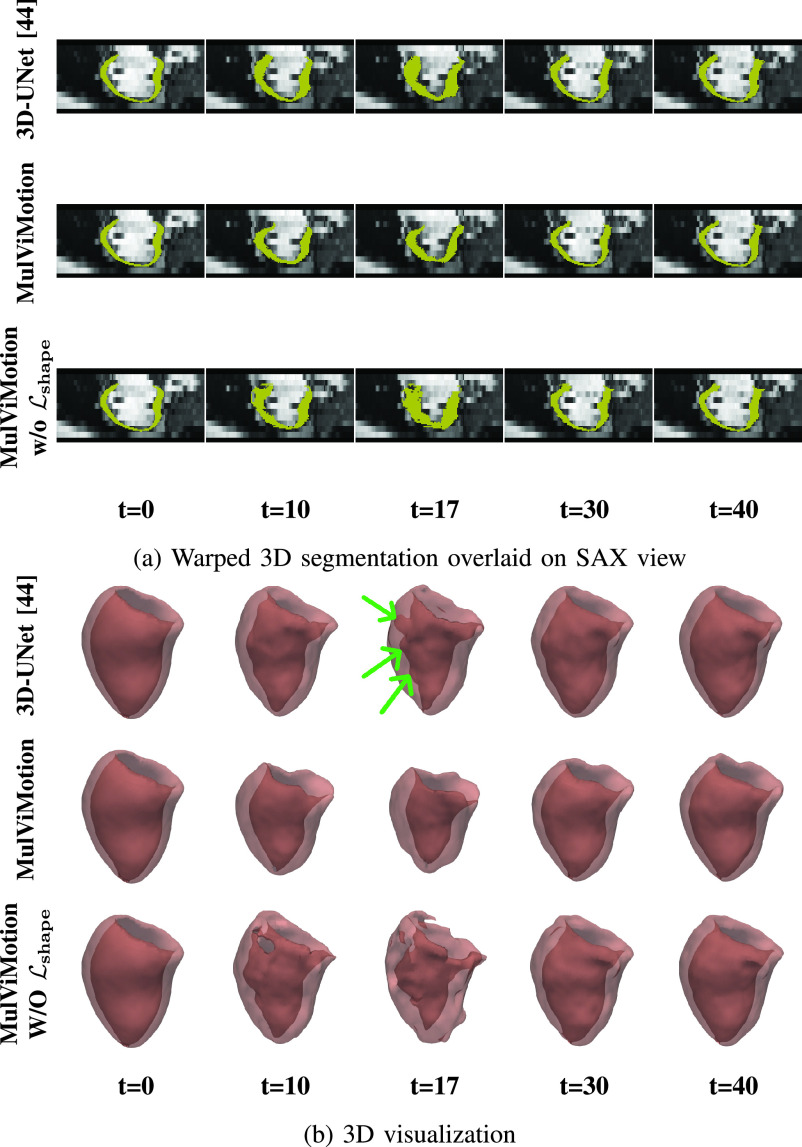

5). The Performance on Subjects With Slice Misalignment:

Acquired SAX stacks may contain slice misalignment due to poor compliance with breath holding instructions or a change of position during breath-holding acquisitions [46]. This leads to an incorrect representation of cardiac volume and results in difficulties for accurate 3D motion tracking. Fig. 10 compares the motion tracking results of 3D-UNet [44], MulViMotion and MulViMotion without

for a test subject with severe slice misalignment (e.g., Fig. 10 (a), middle column). Fig. 10 (b) shows that, in contrast to 3D-UNet, the motion fields generated by MulViMotion enable topology preservation of the myocardial wall (e.g., mesh of

). MulViMotion outperforms MulViMotion without

, which indicates the importance of the shape regularization module for reducing the negative effect of slice misalignment. These results demonstrate the advantage of integrating shape information from multiple views and show the effectiveness of the proposed method on special cases.

Fig. 10.

Motion tracking results on the test subject with slice misalignment. The first three columns in (a) are the three orthogonal planes of the SAX stack and the last two columns are 2CH and 4CH view images, respectively. (b) presents examples of motion tracking results using 3D-UNet [44], MulViMotion and MulViMotion without

. The yellow arrow shows an example of slice misalignment while green arrows show examples of motion tracking failures using 3D-UNet. Note that we show the results in frame

for a more distinct comparison.

6). Wall Thickening Measurement:

We have computed regional and global myocardial wall thickness at ED frame and ES frame based on ED frame segmentation and warped ES frame segmentation,8 respectively. The global wall thickness at ED frame is

, which is consistent with results obtained by [14] (

). The wall thickness at the ES frame for the 16 American Heart Association segments is shown in Table V. In addition, we have computed the fractional wall thickening between ED frame and ES frame by

. The results in Table V show that the regional and global fractional wall thickening are comparable with results reported in the literature [47], [48].
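
As a small worked example of fractional wall thickening, the sketch below uses the definition that is standard in the cardiac imaging literature, namely the change in wall thickness from ED to ES relative to the ED thickness, expressed as a percentage. Treating this as the formula used here is an assumption, and the function name is hypothetical.

```python
import numpy as np


def fractional_wall_thickening(wt_ed, wt_es):
    """(WT_ES - WT_ED) / WT_ED * 100, per segment; thickness in mm."""
    wt_ed = np.asarray(wt_ed, dtype=float)
    wt_es = np.asarray(wt_es, dtype=float)
    return (wt_es - wt_ed) / wt_ed * 100.0
```

Under this definition, a wall that thickens from 8 mm at ED to 12 mm at ES has a fractional wall thickening of 50%, and a negative value means that the measured wall is thinner at ES than at ED.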

TABLE V. Wall Thickness at the ES Frame and Fractional Wall Thickening Between ED and ES Frames. Results are Reported as “Mean (Standard Deviation)”.

| Level | Segment | Wall thickness (mm) | Fractional wall thickening (%) |
|---|---|---|---|
| Basal | Anterior (1) | 9.7 (2.7) | 34.0 (39.5) |
| Basal | Anteroseptal (2) | 5.7 (2.9) | −24.4 (38.7) |
| Basal | Inferoseptal (3) | 5.5 (2.0) | −17.3 (30.2) |
| Basal | Inferior (4) | 9.0 (1.7) | 47.8 (28.5) |
| Basal | Inferolateral (5) | 11.0 (2.0) | 72.8 (25.9) |
| Basal | Anterolateral (6) | 10.9 (1.8) | 62.0 (23.8) |
| Mid-ventricle | Anterior (7) | 10.9 (1.5) | 79.9 (21.0) |
| Mid-ventricle | Anteroseptal (8) | 11.9 (1.6) | 76.2 (21.4) |
| Mid-ventricle | Inferoseptal (9) | 10.8 (1.4) | 39.8 (12.3) |
| Mid-ventricle | Inferior (10) | 10.9 (1.3) | 62.5 (15.5) |
| Mid-ventricle | Inferolateral (11) | 11.2 (1.5) | 73.3 (17.1) |
| Mid-ventricle | Anterolateral (12) | 10.5 (1.2) | 63.9 (15.6) |
| Apical | Anterior (13) | 10.8 (1.1) | 86.3 (23.2) |
| Apical | Septal (14) | 10.9 (1.4) | 76.7 (20.5) |
| Apical | Inferior (15) | 10.6 (1.4) | 76.2 (15.1) |
| Apical | Lateral (16) | 11.1 (1.4) | 84.3 (18.9) |
| Global | | 10.1 (2.5) | 55.9 (40.6) |

V. Discussion

In this paper, we propose a deep learning-based method for estimating 3D myocardial motion from 2D multi-view cine CMR images. A naïve alternative to our method would be to train a fully unsupervised motion estimation network using high-resolution 3D cine CMR images. However, such 3D images are rarely available because (1) 3D cine imaging requires long breath holds during acquisition and is not commonly used in clinical practice, and (2) recovering high-resolution 3D volumes purely from 2D multi-view images is challenging due to the sparsity of multi-view planes.

Our focus has been on LV myocardial motion tracking because it is important for clinical assessment of cardiac function. Our model can be easily adapted to 3D right ventricular myocardial motion tracking by using the corresponding 2D edge maps in the shape regularization module during training.

In shape regularization, we use edge maps to represent anatomical shape, i.e., we predict 3D edge maps of the myocardial wall and we use 2D edge maps defined in the multi-view images to provide shape information. This is because (1) the contour of the myocardial wall is more representative of anatomical shape than the content, (2) compared to 3D dense segmentation, 3D edge maps with sparse labels are more likely to be estimated by images from sparse multi-view planes, and (3) using edge maps offers the potential of using automatic contour detection algorithms to obtain shape information directly from images.

An automated algorithm is utilized to obtain 2D edge maps for providing shape information in the shape regularization module. This is because manual data labeling is time-consuming, costly and usually unavailable. The proposed method can be robust to these automatically obtained 2D edge maps since the 2D edge maps only constrain the estimated 3D edge maps on spatially sparse planes.

We use the aligned 2D edge maps of SAX stacks to train MulViMotion. This is reasonable because aligned SAX ground truth edge maps can introduce correct shape information of the heart, and thus can explicitly constrain the estimated 3D motion field to reflect the real motion of the heart. Nevertheless, we further test the effectiveness of the proposed method by utilizing unaligned SAX edge maps during training. Specifically, MulViMotion* uses the algorithm in [49] to predict the 2D segmentation of the myocardium for each SAX slice independently without accounting for the inter-slice misalignment. The contour of this 2D segmentation is used as the SAX ground truth edge map during training. LAX ground truth edge maps are still generated based on [9]. Table VI and Fig. 11 (e.g.,

) show that the proposed method is capable of estimating 3D motion even if it is trained with unaligned SAX edge maps. This indicates that the LAX 2CH and 4CH view images, which provide correct longitudinal anatomical shape information, can compensate for the slice misalignment in the SAX stacks and thus make a major contribution to the improved estimation accuracy of through-plane motion.

TABLE VII. Quantitative Comparison Between VoxelMorph (VM) [42] and MulViMotion on Test Set. VM Follows the Optimal Architecture and Hyper-Parameters Suggested by the Authors. VM† Uses a Bigger Architecture10. Results are Reported the Same Way as Table II. Best Results in Bold.

TABLE VI. Quantitative Comparison Between 3D-UNet and MulViMotion* on Test Set. MulViMotion* Uses Unaligned SAX Ground Truth Edge Maps During Training. Results are Reported the Same Way as Table II. Best Results in Bold.

| Methods | Dice ↑ | HD (mm) ↓ | VD (%) ↓ |
|---|---|---|---|
| 3D-UNet [44] | 0.7382 (0.0293) | 17.4785 (3.1030) | 30.97 (9.89) |
| MulViMotion* | **0.7856 (0.0295)** | **16.0028 (3.9749)** | **21.35 (5.32)** |

Fig. 11.

3D visualization of motion tracking results using 3D-UNet and MulViMotion*. MulViMotion* uses unaligned SAX ground truth edge maps during training.

In the proposed method, a hybrid 2D/3D network is built to estimate 3D motion fields, where the 2D CNNs combine multi-view features and the 3D CNNs learn 3D representations from the combined features. Such a hybrid network can occupy less GPU memory than a pure 3D network. In particular, the number of parameters in this hybrid network is 21.7 million, far fewer than in 3D-UNet (41.5 million). Moreover, this hybrid network is able to take full advantage of 2D multi-view images because it enables learning 2D features from each anatomical view before learning 3D representations.

In the experiment, we use 580 subjects for model training and evaluation. This is mainly because our work tackles 3D data and the number of training subjects is limited by the cost of model training. Specifically, we used 500 subjects (450 for training and 50 for validation) to train our model for 300 epochs with an NVIDIA Tesla T4 GPU, which requires ~ 60 hours of training for each model. In addition, this work focuses on developing the methodology for multi-view motion tracking and this sample size aligns with previous cardiac analysis work for method development [11], [15], [32], [33]. A population-based clinical study for the whole UK Biobank (currently ~ 50,000 subjects) still requires future investigation.

With the view planning step in standard cardiac MRI acquisition, the acquired multi-view images are aligned and thus are able to describe a heart from different views [50]. In order to preserve this spatial connection between multiple separate anatomical views, data augmentations (e.g., rotation and scaling) that are used in some 2D motion estimation works are excluded in this multi-view 3D motion tracking task.

We use two LAX views (2CH and 4CH) in this work for 3D motion estimation, but the number of anatomical views is not a limitation of the proposed method. More LAX views (e.g., 3-chamber view) can be integrated into MulViMotion by adding extra encoders in FeatureNet and extra views in the loss term used for shape regularization. However, each additional anatomical view increases GPU memory usage and requires additional image annotation (i.e., 2D edge maps).

The data used in the experiment were acquired with a 1.5 Tesla (1.5T) scanner, but the proposed method can also be applied to 3T CMR images. Possible dark band artifacts in 3T CMR images may affect the image similarity loss. However, the high image quality of 3T CMR and the use of high weights for the regularization terms (e.g., shape regularization and the local smoothness loss) may reduce the negative effect of these artifacts.

We use the ED frame and the $t$-th frame ($t$ ranges over the frames of the sequence and $T$ is the number of frames) as paired frames to estimate the 3D motion field. This is mainly because the motion estimated from such frame pairing is needed for downstream tasks such as strain estimation [27], [51], [52]. In the cardiac motion tracking task, the reference frame is commonly chosen as the ED or ES frame [15]. Such frame pairing is common in other cardiac motion tracking literature, e.g., [11], [12], [15].

In this work, apart from two typical and widely used conventional algorithms, we also compared the proposed method with a learning-based method [31], which is representative of most recent image registration works. Specifically, the architecture of [31] has been used in many recent works, e.g., [37], [42], [43], and many other recent works, e.g., [42], [53], [54], are similar to [31] in that only single-view images are used for image registration. In addition, we thoroughly compared the proposed method with another recent and widely used learning-based image registration method [42] (VoxelMorph⁹). We train VoxelMorph following the optimal architecture and hyper-parameters suggested by the authors (VM) and we also train VoxelMorph with a bigger architecture¹⁰ (VM†). For fair comparison, the 2D ground truth edge maps of Eq. 8 are used to generate the segmentation of SAX stacks, which serves as auxiliary information for VoxelMorph. Table VI shows that the proposed method outperforms VoxelMorph for 3D cardiac motion tracking. This is expected because the SAX segmentation used in VoxelMorph has low through-plane resolution and thus can hardly help improve 3D motion estimation. Moreover, VoxelMorph only uses single-view images, while the proposed method uses information from multiple views.

VI. Conclusion

In this paper, we propose a multi-view motion estimation network (MulViMotion) for 3D myocardial motion tracking. The proposed method takes full advantage of routinely acquired multi-view 2D cine CMR images to accurately estimate 3D motion fields. Experiments on the UK Biobank dataset demonstrate the effectiveness and practical applicability of our method compared with other competing methods.

Supplementary Materials

Appendix

A. Examples of 3D Masks

Fig. 12 shows examples of the 3D masks used in the shape regularization module of MulViMotion. These 3D masks identify the locations of the multi-view images in the SAX stack. We generate these 3D masks in the image preprocessing step by a coordinate transformation using DICOM image header information.
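The coordinate transformation can be sketched as follows. This is a minimal illustration under stated assumptions, not the preprocessing code used for the paper: each 2D slice and the SAX stack are given an index-to-patient affine built from the standard DICOM attributes ImagePositionPatient, ImageOrientationPatient and PixelSpacing, and the pixels of one 2D slice are mapped to their nearest SAX voxels. The function names, the nearest-voxel rounding and the toy geometry are all hypothetical.

```python
import numpy as np


def index_to_patient_affine(position, row_cosine, col_cosine, spacing_rc, slice_step):
    """4x4 matrix mapping (col, row, slice, 1) indices to patient coordinates in mm.

    position:   ImagePositionPatient of the first slice.
    row_cosine: first three values of ImageOrientationPatient.
    col_cosine: last three values of ImageOrientationPatient.
    spacing_rc: PixelSpacing as (row spacing, column spacing).
    slice_step: vector between consecutive slices in mm.
    """
    A = np.eye(4)
    A[:3, 0] = np.asarray(row_cosine, dtype=float) * spacing_rc[1]  # step per column index
    A[:3, 1] = np.asarray(col_cosine, dtype=float) * spacing_rc[0]  # step per row index
    A[:3, 2] = np.asarray(slice_step, dtype=float)
    A[:3, 3] = np.asarray(position, dtype=float)
    return A


def plane_mask_in_stack(plane_affine, plane_shape_rc, stack_affine, stack_shape_crs):
    """Mark the stack voxels (indexed col, row, slice) hit by the pixels of one 2D slice."""
    rows, cols = plane_shape_rc
    c, r = np.meshgrid(np.arange(cols), np.arange(rows))
    idx = np.stack([c.ravel(), r.ravel(), np.zeros(c.size), np.ones(c.size)])
    world = plane_affine @ idx                       # pixel indices -> patient mm
    vox = np.linalg.solve(stack_affine, world)[:3]   # patient mm -> stack indices
    vox = np.rint(vox).astype(int)                   # nearest voxel (assumption)
    upper = np.asarray(stack_shape_crs)[:, None]
    inside = np.all((vox >= 0) & (vox < upper), axis=0)
    mask = np.zeros(stack_shape_crs, dtype=bool)
    mask[tuple(vox[:, inside])] = True
    return mask


# Toy geometry for illustration only: an axis-aligned stack and one orthogonal plane.
sax_affine = index_to_patient_affine([0, 0, 0], [1, 0, 0], [0, 1, 0], (1.0, 1.0), [0, 0, 1])
lax_affine = index_to_patient_affine([3, 0, 0], [0, 1, 0], [0, 0, 1], (1.0, 1.0), [1, 0, 0])
mask = plane_mask_in_stack(lax_affine, (4, 8), sax_affine, (8, 8, 4))
```

In this toy case the orthogonal plane fills exactly one sheet of the stack. With real data the slice spacing of the stack is much coarser than the in-plane spacing, so a practical implementation may need interpolation or slice-thickness handling rather than plain rounding.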

Fig. 12.

Examples of 3D masks used in the shape regularization module of MulViMotion. The top row shows the 2D images from different anatomical views in the space of the SAX stack. The bottom row shows the 3D masks which represent the locations of these 2D images in the SAX stack. (a) The 2D images from the SAX view (9 slices). (b) The single 2D image from the 2CH view. (c) The single 2D image from the 4CH view.

B. The Dynamic Videos of Motion Tracking Results

The dynamic videos of the motion tracking results of different motion estimation methods are attached as “Dynamic_videos.zip” in the supplementary material. This file contains four MPEG-4 movies, where “FFD.mp4”, “dDemons.mp4” and “3D-UNet.mp4” are the results of the corresponding baseline methods and “MulViMotion.mp4” is the result of the proposed method. All methods are applied to the same test subject. The videos use the H.264 codec. We have opened the uploaded videos on computers with (1) Windows 10 and the Movies & TV player, (2) Ubuntu 20.04 Linux and the Videos player, and (3) Mac OS and QuickTime Player. If there is any difficulty in opening the attached videos, the same dynamic videos can be found at https://github.com/qmeng99/dynamic_videos/blob/main/README.md

C. Additional 3D Motion Tracking Results

Fig. 13 shows additional 3D motion tracking results on a test subject with slice misalignment. This is the same test subject used in Fig. 10 in the main paper. These additional results further demonstrate that the proposed method is able to reduce the negative effect of slice misalignment on 3D motion tracking. In addition, we have computed more established clinical biomarkers. Fig. 14 shows the temporal ejection fraction across the cardiac cycle.
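The text does not spell out how the temporal ejection fraction is computed. One plausible reading, shown below as an assumption rather than the authors' definition, takes the volume at the ED frame as the reference and reports the fractional volume reduction at every frame. The function name and the volume values are hypothetical.

```python
import numpy as np


def temporal_ejection_fraction(volumes_ml):
    """EF_t = (V_ED - V_t) / V_ED * 100, with frame 0 taken as the ED frame (assumption)."""
    v = np.asarray(volumes_ml, dtype=float)
    return (v[0] - v) / v[0] * 100.0


# Hypothetical LV blood-pool volumes (ml) over a few frames, for illustration only.
volumes = [150.0, 120.0, 75.0, 60.0, 90.0, 140.0]
ef_curve = temporal_ejection_fraction(volumes)
```

Under this reading the curve starts at zero at ED and peaks at the frame with the smallest volume, where it equals the conventional ejection fraction.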

Fig. 13.

Motion tracking results on the test subject with slice misalignment using 3D-UNet [44], MulViMotion, and MulViMotion without shape regularization. (a) The warped 3D segmentation overlaid on the SAX view. (b) The 3D visualization of the motion tracking results. The green arrows show examples of motion tracking failures using 3D-UNet. Note that results are shown for a single selected frame for a more distinct comparison.

Fig. 14.

The results of temporal ejection fraction across the cardiac cycle. (a) Results on a randomly selected test subject. (b) Results on all test subjects (mean values and confidence intervals are presented).

D. Applications

1). Strain Estimation:

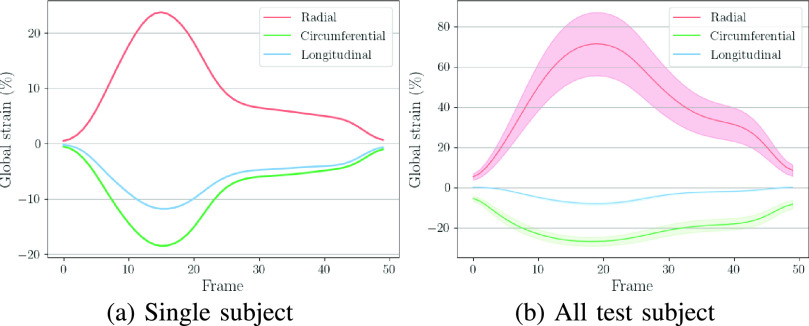

Myocardial strain provides a quantitative evaluation of the total deformation of a region of tissue during the heartbeat. It is typically evaluated along three orthogonal directions, namely radial, circumferential and longitudinal. Here, we evaluate the performance of the proposed method by estimating the three strains based on the estimated 3D motion field. The myocardial mesh at the ED frame is warped to the $t$-th frame using a numeric method, and vertex-wise strain is calculated using the Lagrangian strain tensor formula [55] (implemented by https://github.com/Marjola89/3Dstrain_analysis). Subsequently, global strain is computed by averaging across all the vertices of the myocardial wall.
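For reference, the Lagrangian (Green-Lagrange) strain tensor has the standard form $E = \frac{1}{2}(F^{\top}F - I)$, where $F$ is the deformation gradient. The sketch below evaluates it at a few vertices and averages a directional component to obtain a global value. It does not reproduce the referenced implementation: the function names, the deformation gradients and the choice of the z-axis as the longitudinal direction are hypothetical.

```python
import numpy as np


def green_lagrange_strain(F):
    """E = 0.5 * (F^T F - I) for a 3x3 deformation gradient F."""
    F = np.asarray(F, dtype=float)
    return 0.5 * (F.T @ F - np.eye(3))


def directional_strain(E, direction):
    """Normal strain component n^T E n along a unit direction n."""
    n = np.asarray(direction, dtype=float)
    n = n / np.linalg.norm(n)
    return float(n @ E @ n)


# Hypothetical deformation gradients at three vertices: 10% shortening along z.
F_vertices = [np.diag([1.0, 1.0, 0.9]) for _ in range(3)]
longitudinal = [
    directional_strain(green_lagrange_strain(F), [0.0, 0.0, 1.0]) for F in F_vertices
]
global_longitudinal = float(np.mean(longitudinal))  # average over vertices

# A rigid rotation about z should produce zero strain.
theta = np.pi / 6
R = np.array([
    [np.cos(theta), -np.sin(theta), 0.0],
    [np.sin(theta), np.cos(theta), 0.0],
    [0.0, 0.0, 1.0],
])
rigid_strain = green_lagrange_strain(R)
```

In practice the radial, circumferential and longitudinal directions vary across the myocardium and would be taken from a local cardiac coordinate system at each vertex rather than from a fixed axis.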

Fig. 15 shows the estimated global strain curves on test subjects. Both the shapes of the curves and the value ranges of peak strains are consistent with reported results in the literature [52], [56], [57], i.e., radial peak strain is ~ 20% to ~ 70%, circumferential peak strain is ~ −15% to ~ −22% and longitudinal peak strain is ~ −8% to ~ −20%.

Fig. 15.

Global strains across the cardiac cycle, estimated based on MulViMotion. (a) Results on a randomly selected test subject. (b) Results on all test subjects (mean values and confidence intervals are presented).

To obtain further reference strains, we separately computed global longitudinal and circumferential strains on the 2D LAX and SAX slices according to the algorithm in [14]. On the test set, the global longitudinal peak strain is −18.55%±2.74% (ours is −9.72%±2.49%), while the global circumferential peak strain is −22.76%±3.31% (ours is −27.38%±9.63%). Differences between our strains and these reference strains are expected because the strains in [14] are computed only on sparse 2D slices using 2D motion fields, whereas we compute global strains over the whole myocardial wall using 3D motion fields.

Compared with echocardiography, another widely used imaging modality for strain estimation, the average circumferential peak strain reported in our work (−27.38%) is consistent with values typically reported in echocardiography (~−22% to ~−32% [58]). The average longitudinal peak strain in our study (−9.72%) is smaller in magnitude than that reported in echocardiography (~−20% to ~−25% [58]). This difference is likely due to the higher spatial and temporal resolution of echocardiography (e.g., 40–60 frames/s for temporal resolution) compared with CMR (e.g., our data has a temporal resolution of 50 frames per heartbeat) [41], [58].

For strain estimation, our results are in general consistent with the value ranges reported in [52], [56], [57]. However, we calculate the strain based on 3D motion fields, whereas most existing strain analysis methods or software packages are based on 2D motion fields, i.e., they only account for in-plane motion within SAX or LAX views. This may result in differences between our estimated strain values and the strain values reported in the literature. In addition, there is still a lack of agreement on strain value ranges (in particular for radial strains), even among mainstream commercial software packages [57]. This is because strain value ranges can vary depending on the vendor, imaging modality, image quality and motion estimation technique [57], [58]. Further investigation is required to set up a reference standard for strain evaluation and to carry out clinical association studies using the reported strain values. Moreover, when manual segmentation is available, it could be used to provide a more accurate shape constraint, which may further improve 3D motion estimation and thus strain estimation.

Funding Statement

This work was supported in part by the British Heart Foundation under Grant RG/19/6/34387 and Grant RE/18/4/34215, in part by the Medical Research Council under Grant MC-A658-5QEB0, in part by the National Institute for Health Research (NIHR) Imperial College Biomedical Research Centre, and in part by Wellcome Trust Grant 102431. This research has been conducted using the UK Biobank resource under Application 40616.

Footnotes

The contours are generated based on [9] and a manual quality control. Detailed information is shown in Sec. IV-A.