Abstract

The rapid and accurate in silico prediction of protein-ligand binding free energies or binding affinities has the potential to transform drug discovery. In recent years, there has been a rapid growth of interest in deep learning methods for the prediction of protein-ligand binding affinities based on the structural information of protein-ligand complexes. These structure-based scoring functions often obtain better results than classical scoring functions when applied within their applicability domain. Here we review structure-based scoring functions for binding affinity prediction based on deep learning, focussing on different types of architectures, featurization strategies, data sets, methods for training and evaluation, and the role of explainable artificial intelligence in building useful models for real drug-discovery applications.

Keywords: drug discovery, scoring function, artificial intelligence, explainable AI, deep learning, neural network, affinity

1 Introduction

The discovery and development of new small-molecule drugs is a challenging and expensive process (Drews 2000; Dickson and Gagnon 2004; Schneider and Schneider 2016). Only a handful of new drugs are approved each year (Brown and Wobst 2021), which is minuscule compared to the vastness of chemical space (Reymond et al., 2010) and the billions of dollars poured into drug discovery campaigns (DiMasi et al., 2016). The discovery pipeline for small-molecule drugs usually starts with the identification of a protein target against which a hit compound is identified by high-throughput screening (HTS) (Mayr and Bojanic 2009; Macarron et al., 2011). The hit compound is subsequently optimized to obtain a lead compound with good potency and favorable pharmacodynamic and pharmacokinetic properties.

Thanks to significant methodological and hardware advances, computer-aided drug discovery (CADD) has played an important role in the development of new small-molecule drugs over the last decades (Sliwoski et al., 2013). CADD speeds up the early stages of the drug discovery process (hit identification and hit-to-lead optimization) and lowers the costs of these phases by reducing the time and experimental resources needed. CADD methods fall into two broad classes: (explicit) structure-based methods and ligand-based (or implicit structure-based) methods. For the latter, similarities to known active molecules play an important role, since the protein target is unknown or information about it is unavailable or not included. For structure-based methods, the target structure is known and this additional information is exploited in the modelling and optimization of drug-target interactions (DTIs).

One of the main goals in the computational elucidation of DTIs is the calculation of relative or absolute binding free energies to distinguish potent binders from weak binders (or non-binders) against a target of interest. A fast and accurate prediction of protein-ligand binding affinities would circumvent the need for many time-consuming and complex experiments. Rigorous computational methods based on all-atom molecular dynamics simulations in explicit solvent, such as free energy perturbation and thermodynamic integration (Adcock and McCammon 2006), can compute accurate relative and absolute binding free energies (Bash et al., 1987; Boresch et al., 2003; Mobley et al., 2007; Aldeghi et al., 2016, Aldeghi et al., 2018a; Cournia et al., 2017), predict ligand selectivity (Aldeghi et al., 2017) and mutation effects (Aldeghi et al., 2018b; Hauser et al., 2018), and guide fragment elaborations (Alibay et al., 2022). Unfortunately, such rigorous methods are computationally expensive and often require considerable domain expertise (Mey et al., 2020; Hahn et al., 2021). This remains true even for simpler methods such as linear interaction energy (LIE) (Åqvist et al., 1994; Jones-Hertzog and Jorgensen 1997). Methods treating the solvent implicitly, such as the Poisson-Boltzmann and generalized Born models (Genheden and Ryde 2015), can offer a significant speed increase, but sometimes at the expense of accuracy.

The great successes of deep learning (DL) in the fields of computer vision (Voulodimos et al., 2018), natural language processing (NLP) (Young et al., 2018), and other fields of computer science in recent years kick-started the research and application of deep learning in many scientific disciplines including physics, chemistry, biology, and medicine (Baldi 2021). In the field of drug discovery, machine learning (ML) has been in use for a long time, and the potential of deep learning in virtual screening was identified early on (Unterthiner et al., 2014). The application of modern deep learning architectures to all stages of the drug discovery pipeline is a very active area of research today (Jing et al., 2018; Brown, 2020; Muratov et al., 2020; Jiménez-Luna et al., 2021a; Gaudelet et al., 2021). The main applications in small-molecule drug design consist of the prediction of DTIs, the identification of binding sites (Jiménez et al., 2017; Pu et al., 2019; Aggarwal et al., 2021), the generation of novel molecular entities (Schneider and Clark 2019; Meyers et al., 2021), and the prediction of absorption, distribution, metabolism, excretion, and toxicity (ADMET) properties (Huang D. Z. et al., 2021).

Bioactivity prediction can be performed as a classification task—where binders/actives are distinguished from non-binders/inactives—or as a regression task. Machine learning and deep learning scoring functions (SFs) for the prediction of binding affinities (regression) are useful in lead optimization, in contrast with SFs that try to identify binders amongst a large pool of non-binders (classification) and are used in virtual screening to identify a hit. Another task where SFs are commonly used is pose prediction, where near-native poses are distinguished from incorrect poses (classification). Pose prediction and binding affinity prediction are complementary tasks in molecular docking, where a pose is generated and subsequently scored according to the predicted binding affinity.

In this review, we will focus on SFs for binding affinity prediction (inhibition constant $K_i$ or dissociation constant $K_d$) or binding free energy prediction, but we will inevitably mention related SFs used in pose prediction and virtual screening, which often share the same algorithms and ideas. Recent reviews of structure-based SFs and deep learning for virtual screening are given by Li et al. (2021b), Kimber et al. (2021), and Rifaioglu et al. (2019). Additionally, to narrow the scope of the review, we focus on structure-based deep-learning methods and we refer the reader interested in ligand-based methods to Tropsha (2010), Muratov et al. (2020), Baskin (2020), and Palazzesi and Pozzan (2022). More general and broad reviews about the application of machine learning and deep learning in drug discovery are provided by Chen H. et al. (2018), Vamathevan et al. (2019), and Schneider et al. (2019).
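Equilibrium constants and binding free energies are related by the standard thermodynamic expression ΔG° = RT ln(K/c°), with c° = 1 M, so the two prediction targets carry the same information. The short Python sketch below illustrates the conversion; the function names, the choice of kcal/mol, and the default temperature of 298.15 K are assumptions made for this example.

```python
import math

GAS_CONSTANT = 1.987204e-3  # kcal/(mol*K)


def pk(k_molar: float) -> float:
    """Return pK = -log10(K / 1 M) for a dissociation or inhibition constant in mol/L."""
    if k_molar <= 0:
        raise ValueError("equilibrium constant must be positive")
    return -math.log10(k_molar)


def binding_free_energy(k_molar: float, temperature: float = 298.15) -> float:
    """Return the standard binding free energy RT * ln(K / 1 M) in kcal/mol."""
    if k_molar <= 0:
        raise ValueError("equilibrium constant must be positive")
    return GAS_CONSTANT * temperature * math.log(k_molar)


if __name__ == "__main__":
    kd = 1e-9  # a 1 nM binder
    print(f"pKd = {pk(kd):.2f}, dG = {binding_free_energy(kd):.2f} kcal/mol")
```

At room temperature a 10-fold change in $K_d$ corresponds to roughly 1.4 kcal/mol, which gives a sense of the accuracy a scoring function needs to rank closely related ligands.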

2 Classical Scoring Functions

Historically, SFs for binding affinity prediction and virtual screening have been classified into three categories: force-field-based, empirical, and knowledge-based (Muegge and Rarey 2001; Böhm and Stahl 2002). However, recently Liu and Wang (2015) argued that this historical classification overlooks more recent developments in the field and thus proposed an updated classification scheme with four classes of scoring functions: force-field-based or physics-based, empirical or regression-based, knowledge-based or potential of mean force-based, and descriptor-based or machine learning-based.

This classification is useful to distinguish different methodologies and ideas appearing in the development of SFs. However, some SFs cannot be precisely assigned to only one category and the boundary between the four different classes remains rather fuzzy.

In this section we will briefly discuss the first three classes of SFs, often termed “classical” SFs. A good overview of the different SFs can be found in the paper of Liu and Wang (2015)—which proposed the current classification of SFs—and a more recent overview of different SFs used in protein-ligand docking is provided by Li et al. (2019a). While classical scoring functions are still actively developed and refined today, the research focus has certainly shifted to ML/DL based scoring functions.

2.1 Physics-Based (Force-Field Based) Scoring Functions

Physics-based (or force-field-based) SFs use energy terms of a molecular mechanics force-field—whose parameters are determined to reproduce experimental observables or ab initio quantum mechanical calculations (Monticelli and Tieleman 2012)—to evaluate protein-ligand interactions. The non-covalent interaction energy between protein and ligand atoms is usually expressed as the sum of van der Waals and electrostatic interaction terms. In their simplest form, such pairwise interactions are represented by a Lennard-Jones potential and Coulomb interaction between point charges.

Different physics-based scoring functions use different potentials to describe van der Waals and electrostatic interactions, depending on the design of the underlying force field. For example, the dielectric constant can be distance-dependent to take into account electrostatic screening due to the solvent and the lower dielectric constant in protein-ligand binding sites (Hingerty et al., 1985; Gilson and Honig 1986; Huang et al., 2010).
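As a minimal illustration of the simplest form described above, the following Python sketch sums Lennard-Jones and Coulomb terms over protein-ligand atom pairs. It is not taken from any of the programs cited in this section: the `Atom` record, the Lorentz-Berthelot combining rules, the Coulomb constant in kcal Å mol⁻¹ e⁻², the cutoff, and the distance-dependent dielectric ε(r) = 4r are all assumptions made for the example.

```python
import math
from dataclasses import dataclass

COULOMB_CONSTANT = 332.0637  # kcal*Angstrom/(mol*e^2)


@dataclass(frozen=True)
class Atom:
    x: float
    y: float
    z: float
    charge: float   # partial charge in units of e
    epsilon: float  # Lennard-Jones well depth in kcal/mol
    sigma: float    # Lennard-Jones diameter in Angstrom


def distance(a: Atom, b: Atom) -> float:
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def pair_energy(a: Atom, b: Atom, distance_dependent_dielectric: bool = True) -> float:
    """Lennard-Jones plus Coulomb energy of one atom pair, in kcal/mol."""
    r = distance(a, b)
    if r == 0.0:
        raise ValueError("atoms overlap exactly")
    # Lorentz-Berthelot combining rules (an assumption of this sketch).
    eps = math.sqrt(a.epsilon * b.epsilon)
    sig = 0.5 * (a.sigma + b.sigma)
    sr6 = (sig / r) ** 6
    lennard_jones = 4.0 * eps * (sr6 * sr6 - sr6)
    # eps(r) = 4r mimics solvent screening; 1.0 corresponds to vacuum.
    dielectric = 4.0 * r if distance_dependent_dielectric else 1.0
    coulomb = COULOMB_CONSTANT * a.charge * b.charge / (dielectric * r)
    return lennard_jones + coulomb


def interaction_energy(protein, ligand, cutoff: float = 12.0,
                       distance_dependent_dielectric: bool = True) -> float:
    """Sum pair energies over all protein-ligand atom pairs closer than the cutoff."""
    return sum(
        pair_energy(p, l, distance_dependent_dielectric)
        for p in protein
        for l in ligand
        if distance(p, l) < cutoff
    )
```

Only intermolecular pairs are summed, so the result is an interaction energy rather than a binding free energy; solvation and entropic contributions would have to be added separately.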

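Often, additional shorter-range (and sometimes directional) terms are added to account for hydrogen bonding as well as solvation energy, and therefore a physics-based scoring function can take the following form:

$$\Delta G_{\text{bind}} = \Delta E_{\text{vdW}} + \Delta E_{\text{elec}} + \Delta E_{\text{hbond}} + \Delta G_{\text{solv}} \tag{1}$$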

The solvation energy term can take into account both polar and non-polar contributions. The former accounts for the loss of polar interactions between charged groups and water, while the latter accounts for the desolvation of hydrophobic groups upon binding.

Finally, empirical terms accounting for the loss of torsional degrees of freedom upon complexation can also be included. Oftentimes, simple approximations based on the number of rotatable bonds are used (Böhm 1994; Chang et al., 2007; Huey et al., 2007; Huang and Zou 2010), although more advanced treatments have been suggested (Guedes et al., 2021b). The same corrections are applied to empirical and knowledge-based scoring functions, discussed below.

Force-field-based scoring functions are attractive because of their physical origin and because they can leverage advances in force-field developments, including the latest advances in ML force-fields (Unke et al., 2021). However, describing solvent effects in ligand binding remains an outstanding challenge (Limongelli et al., 2012; Ross et al., 2012; Darby et al., 2019).

Notable examples of physics-based (force field-based) scoring functions are DOCK (DesJarlais et al., 1988; Meng et al., 1992; Shoichet et al., 1992; Ewing et al., 2001; Moustakas et al., 2006; Allen et al., 2015), AutoDock (Goodsell and Olson 1990) and AutoDock 2 (Morris et al., 1996) (AutoDock 3 and AutoDock 4 use hybrid scoring functions (Morris et al., 1998; Huey et al., 2007; Morris et al., 2009)), GoldScore (Jones et al., 1995, Jones et al., 1997), and GalaxyDock (Shin and Seok 2012; Shin et al., 2013).

2.2 Empirical (Regression-Based) Scoring Functions

Empirical or regression-based scoring functions use regression analysis to determine the coefficients of different pre-defined terms from experimental data. This is also what machine learning (or descriptor-based) scoring functions do; however, in empirical or regression-based scoring functions the functional form of the scoring function is predetermined and often quite simple (such as a linear combination of different contributions) (Ain et al., 2015). As we mentioned previously, the line between the four different classes of scoring functions suggested by Liu and Wang (2015) is sometimes blurry.

Empirical scoring functions assuming a linear functional form take the following form (Guedes et al., 2018):

$$\Delta G_{\text{bind}} = \sum_{i} w_i \, \Delta G_i \tag{2}$$

The functional form of empirical scoring functions is similar to physics-based scoring functions. However, in empirical scoring functions the parameters w are determined by regression analysis—usually multivariate linear regression or partial least squares (Li et al., 2019a)—to reproduce experimentally determined values.
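The regression step can be sketched in a few lines of Python with NumPy. This is a generic ordinary least-squares fit and not the protocol of any specific scoring function; the function names and the layout of the term matrix (one row per complex, one column per pre-defined term) are assumptions made for this example.

```python
import numpy as np


def fit_weights(terms: np.ndarray, affinities: np.ndarray) -> np.ndarray:
    """Return the weights w that minimize ||terms @ w - affinities||^2.

    terms: array of shape (n_complexes, n_terms) holding the pre-computed
        contributions for each complex; a column of ones acts as an intercept.
    affinities: array of shape (n_complexes,) with the experimental values.
    """
    weights, *_ = np.linalg.lstsq(terms, affinities, rcond=None)
    return weights


def score(terms: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Evaluate the linear scoring function for each row of `terms`."""
    return terms @ weights
```

Because the functional form is fixed in advance, only a handful of weights are fitted, which is what separates this class from descriptor-based machine learning models that also learn the functional form.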

Often, the different terms in empirical scoring functions are simple reward or penalty scores. For example, the ChemScore (Eldridge et al., 1997; Verdonk et al., 2003) scoring function has the following functional form:

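$$\Delta G_{\text{bind}} = \Delta G_0 + \Delta G_{\text{hbond}} S_{\text{hbond}} + \Delta G_{\text{metal}} S_{\text{metal}} + \Delta G_{\text{lipo}} S_{\text{lipo}} + \Delta G_{\text{rot}} H_{\text{rot}} + E_{\text{int}} + E_{\text{cov}} + E_{\text{clash}} \tag{3}$$

where $S_{\text{hbond}}$ is the score assigned to hydrogen bonds, $S_{\text{metal}}$ scores acceptor-metal interactions, $S_{\text{lipo}}$ scores lipophilic interactions, $H_{\text{rot}}$ describes the loss in conformational entropy upon complexation, $E_{\text{int}}$ is the ligand’s internal energy, $E_{\text{cov}}$ is the covalent energy term, and $E_{\text{clash}}$ represents the energetic penalty of clashes between protein and ligand atoms. The $\Delta G$ coefficients are the regression parameters.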

One of the first empirical scoring functions was introduced by Böhm (1994) and notable examples include ChemScore (Eldridge et al., 1997; Verdonk et al., 2003), X-Score (Wang et al., 2002), Glide (Friesner et al., 2004, 2006), DockThor (de Magalhães et al., 2014), and SFCscore (Sotriffer et al., 2008). More recent scoring functions are Vinardo (Quiroga and Villarreal 2016), Lin_F9 (Yang and Zhang 2021), DockTScore (Guedes et al., 2021a) (combined with ML), and AA-Score (Pan et al., 2022).

A fairly recent review of empirical scoring functions for structure-based virtual screening is provided by Guedes et al. (2018).

2.3 Knowledge-Based (Potential-Based) Scoring Functions

Knowledge-based or potential-based scoring functions are based on pairwise statistical potentials of the form:

$$\text{Score} = \sum_{i} \sum_{j} \omega_{ij}(r) \tag{4}$$

where the sum runs over protein-ligand atom pairs of types $i$ and $j$ at distance $r$, and the distance-dependent pairwise potential $\omega_{ij}(r)$ is given by:

$$\omega_{ij}(r) = -k_B T \ln \left[ \frac{\rho_{ij}(r)}{\rho_{ij}^{*}} \right] \tag{5}$$

$\rho_{ij}(r)$ is the number density of pairs of type $i$-$j$ at distance $r$ while $\rho_{ij}^{*}$ is the same quantity for a reference state where there is no interaction between types $i$ and $j$ (Muegge and Martin 1999). Therefore, if $\rho_{ij}(r)$ is larger than the reference state it contributes favorably to the scoring function, while if $\rho_{ij}(r)$ is smaller than the reference state it contributes unfavorably. The pairwise potentials $\omega_{ij}(r)$ are obtained from the analysis of interactions in a large data set of protein-ligand complexes and usually only pairs of protein and ligand atoms within a certain cutoff are considered ($r < r_{\text{cutoff}}$).
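The construction of such a potential can be sketched in Python, assuming the usual inverse-Boltzmann form in which the potential is the negative logarithm of the ratio between observed and reference densities, scaled by the thermal energy. The binning scheme, the neutral treatment of empty bins, and all function names are assumptions made for this example; published scoring functions differ in how they define the reference state and handle sparse statistics.

```python
import math

K_B = 1.987204e-3  # Boltzmann constant in molar units, kcal/(mol*K)


def pair_potential(observed_density, reference_density, temperature=298.15):
    """Return omega(r) = -k_B T ln(rho / rho_ref) for each distance bin."""
    potential = []
    for rho, rho_ref in zip(observed_density, reference_density):
        if rho <= 0 or rho_ref <= 0:
            # No statistics for this bin: treat it as neutral (assumption).
            potential.append(0.0)
        else:
            potential.append(-K_B * temperature * math.log(rho / rho_ref))
    return potential


def score_complex(pair_distances, potentials, bin_width=0.5, cutoff=6.0):
    """Sum the binned potentials over protein-ligand atom pairs within the cutoff.

    pair_distances: iterable of ((ligand_type, protein_type), distance) tuples.
    potentials: dict mapping a type pair to its list of omega values per bin.
    """
    total = 0.0
    for pair_type, r in pair_distances:
        if r >= cutoff or pair_type not in potentials:
            continue
        bins = potentials[pair_type]
        index = min(int(r / bin_width), len(bins) - 1)
        total += bins[index]
    return total
```

Bins where a pair type is observed more often than in the reference state receive a negative (favorable) value, and under-represented bins receive a positive one.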

One of the advantages of knowledge-based scoring functions is that entropic and solvation contributions are taken into account implicitly (Muegge and Martin 1999). However, some knowledge-based scoring functions include solvation and entropy effects explicitly (Huang and Zou 2010).

Notable examples of knowledge-based (potential-based) scoring functions are SMoG (DeWitte and Shakhnovich 1996; DeWitte et al., 1997) (later extended to a hybrid knowledge-based and empirical scoring function (Debroise et al., 2017a)), the PMF scoring function developed by Muegge and co-workers (Muegge and Martin 1999; Muegge 2000, Muegge 2001), DrugScore (Gohlke et al., 2000; Velec et al., 2005; Neudert and Klebe 2011; Dittrich et al., 2018), ITScore (Huang and Zou 2006a; Huang and Zou 2006b, Huang and Zou 2010), KECSA (Zheng and Merz 2013), and M-score (Yang et al., 2005). More recent knowledge-based scoring functions are SMoG 2016 (Debroise et al., 2017b), Convex-PL (Kadukova and Grudinin 2017), DLIGAND2 (Chen P. et al., 2019), and KORP-PL (Kadukova et al., 2021).

3 Data Sets

To train ML and DL SFs, high-quality and reasonably large data sets are essential. The success of supervised machine learning and deep learning algorithms strongly depends on the quality and the size of the data set used for training. Thanks to the advances in high-throughput X-ray crystallography and cryo-electron microscopy (cryoEM), the number of available high-resolution structures in the Protein Data Bank (PDB) is constantly increasing (Goodsell et al., 2019b).

In this section, we briefly discuss some of the most common data sets encountered in the training and evaluation of machine learning and deep learning structure-based SFs for binding affinity prediction. The main data sets providing both co-crystal structures and experimental binding affinities are listed in Table 1.

TABLE 1.

Main data sets providing protein-ligand complexes (crystal structures) and corresponding binding affinities. N is the number of protein-ligand complexes (co-crystal structures) with associated binding affinities.

| Data Set | N | Superset | Website |
|---|---|---|---|
| PDBbind 2020 | 19 443 | - | pdbbind.org.cn |
| CASF-2016 | 285 | PDBbind 2016 | pdbbind.org.cn |
| CASF-2013 | 195 | PDBbind 2013 | pdbbind.org.cn |
| CASF-2007 | 195 | PDBbind 2007 | pdbbind.org.cn |
| Binding MOAD 2020 | 15 223 | - | bindingmoad.org |
| CSAR-NCS HiQ | 343 | Binding MOAD + PDBbind | csardock.org |
| CSAR-NCS HiQ Update | 123 | Binding MOAD + PDBbind | csardock.org |
| Astex Diverse Set | 74 | - | doi.org/10.1021/jm061277y |
| BindingDB | 11 442 | - | bindingdb.org |
| D3R GC 4 | 20 | - | drugdesigndata.org |
| D3R GC 3 | 24 | - | drugdesigndata.org |
| D3R GC 2 | 36 | - | drugdesigndata.org |
| D3R GC 2015 | 24 | - | drugdesigndata.org |

3.1 PDBbind

The PDBbind dataset (Wang et al., 2004) is a curated subset of the PDB and it is arguably one of the most common data sets used to train ML and DL SFs for protein-ligand binding affinity prediction. The dataset also contains protein-protein and ligand-nucleic acid complexes.

The origin of the database can be traced back to 2004, when Wang et al. (2004) collected protein-ligand complexes from the PDB (release 103, January 2003) and screened the primary references of the identified complexes to extract binding affinity data ($K_d$, $K_i$, IC50).

To train ML and DL SFs, high-quality data is essential, although it has been demonstrated that including lower quality data can improve performance (Li et al., 2015; Francoeur et al., 2020). The PDBbind database is therefore split into a “refined” set and a “general” set (Wang et al., 2004, Wang et al., 2005). The “refined” set is a selection of protein-ligand crystal structures with a resolution of 2.5 Å or better, where there is a single ligand that is non-covalently bound without significant steric clashes (Wang et al., 2005). Only systems with associated equilibrium constants $K_i$ and $K_d$ are included in the refined set, because IC50 values depend on the design of the binding assay, and complexes are filtered to only contain common organic elements.

The same approach was used to build the PDBbind refined set version 2007 (Cheng et al., 2009), but it was improved to produce the PDBbind refined set 2013 and subsequent versions (Li et al., 2014b; Liu et al., 2014, 2017). In addition to the previous criteria used to compile the PDBbind refined set 2007, the complexes added to the PDBbind refined set 2013 satisfy the following additional criteria (Li et al., 2014b): no missing backbone or side chain fragments within 8 Å from the ligand, no extreme values of binding affinity (1 pM < $K$ < 10 mM, where $K$ is either $K_i$ or $K_d$), no multiple binding sites with significantly different binding affinities, no non-standard amino acids within 5 Å from the ligand, and no shallow binders (ligands with too small a fraction of buried surface). The rules for selecting protein-ligand complexes into the PDBbind refined set 2013, together with their rationale, the exact thresholds, and the differences from the rules used for the PDBbind refined set 2007, are very clearly summarized by Li et al. (2014b).
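Some of these selection rules translate directly into a record filter. The Python sketch below encodes only the criteria that can be checked from simple metadata (resolution, affinity type, affinity range, covalent binding, and number of ligands), interpreting the affinity bounds as 1 pM and 10 mM; the `ComplexRecord` fields and function names are hypothetical, and the structural checks (missing residues, buried surface, non-standard amino acids) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComplexRecord:
    pdb_id: str
    resolution: float      # Angstrom
    affinity_type: str     # "Kd", "Ki" or "IC50"
    affinity_molar: float  # mol/L
    covalent: bool         # True if the ligand is covalently bound
    n_ligands: int         # number of ligands in the binding site


def passes_refined_filters(record: ComplexRecord,
                           max_resolution: float = 2.5,
                           k_min: float = 1e-12,
                           k_max: float = 1e-2) -> bool:
    """Check the metadata-level criteria for inclusion in a refined set."""
    return (
        record.resolution <= max_resolution
        and record.affinity_type in {"Kd", "Ki"}
        and k_min < record.affinity_molar < k_max
        and not record.covalent
        and record.n_ligands == 1
    )


def refined_set(records):
    """Return the records that pass all metadata-level filters."""
    return [r for r in records if passes_refined_filters(r)]
```

Keeping the thresholds as keyword arguments makes it easy to study how relaxing a single criterion changes the size of the resulting set.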

The PDBbind dataset can be downloaded from pdbbind.org.cn. The current release (PDBbind 2020) collects binding affinities and structural data for 23 496 biomolecular complexes, 19 443 of which are protein-ligand complexes.

3.1.1 CASF

The CASF benchmarks are a series of comparative assessments of scoring functions originally introduced by Cheng et al. (2009). They evaluate different scoring functions for their performance on scoring, ranking, docking, and screening on a diverse and high-quality set of protein-ligand complexes. Originally employed to compare mostly classical SFs, it has become the de facto standard for an initial evaluation of ML and DL SFs (especially for protein-ligand binding affinity prediction).

To test different scoring functions on a diverse and high-quality data set of protein-ligand complexes, a data set is extracted from the PDBbind refined set (where high-quality complexes have already been identified). The PDBbind refined set is clustered according to sequence similarity using BLAST (Altschul et al., 1990), with a similarity threshold of 90% (Cheng et al., 2009). This means that proteins with a sequence similarity higher than 90% are collected in the same cluster since they are likely to represent the same protein or the same protein family.
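Grouping proteins by a similarity threshold can be sketched in Python with a union-find structure. This is a generic single-linkage clustering over precomputed pairwise similarities, not the BLAST-based procedure used for CASF; the input format and function name are assumptions made for this example.

```python
def cluster_by_similarity(ids, similarity, threshold=0.9):
    """Single-linkage clustering of identifiers by pairwise similarity.

    ids: iterable of hashable, sortable identifiers.
    similarity: dict mapping (id_a, id_b) pairs to a similarity in [0, 1].
    Two identifiers are linked when their similarity exceeds the threshold.
    """
    parent = {i: i for i in ids}

    def find(i):
        # Follow parent links to the cluster root, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for (a, b), value in similarity.items():
        if value > threshold:
            parent[find(a)] = find(b)

    clusters = {}
    for i in parent:
        clusters.setdefault(find(i), []).append(i)
    return sorted((sorted(members) for members in clusters.values()),
                  key=lambda members: members[0])
```

Single linkage is transitive: two proteins can end up in the same cluster through a chain of similar intermediates even if their direct similarity is below the threshold.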

Once proteins from the PDBbind refined set are clustered by sequence similarity, clusters containing at least four complexes are retained (Cheng et al., 2009). This results in a total of 65 clusters, from each of which three complexes are sampled: the complex with the lowest binding affinity, the complex with the highest binding affinity, and the complex with the binding affinity closest to the mean of the highest and lowest binding affinities (Cheng et al., 2009). This clustered sub-sampling of the PDBbind refined set (called PDBbind core set) results in a total of 65 × 3 = 195 protein-ligand complexes used for the first comparative assessment of scoring functions (CASF-2007).
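The sampling rule can be sketched in Python as follows. The sketch assumes that clusters are given as lists of complex identifiers and that affinities are stored on a logarithmic scale (for example as pK values); both are assumptions made for this example, as are the function and argument names.

```python
def select_core_set(clusters, affinity, min_size=4):
    """Pick three representatives from every sufficiently large cluster.

    clusters: list of lists of complex identifiers.
    affinity: dict mapping each identifier to its binding affinity (e.g. pK).
    Returns a list of (lowest, middle, highest) tuples, one per retained cluster.
    """
    core = []
    for members in clusters:
        if len(members) < min_size:
            continue
        ranked = sorted(members, key=lambda m: affinity[m])
        lowest, highest = ranked[0], ranked[-1]
        midpoint = 0.5 * (affinity[lowest] + affinity[highest])
        # The middle representative is chosen among the remaining members.
        middle = min(ranked[1:-1], key=lambda m: abs(affinity[m] - midpoint))
        core.append((lowest, middle, highest))
    return core
```

With 65 retained clusters this rule yields 65 × 3 = 195 complexes, matching the size of the CASF-2007 benchmark set.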

For the CASF-2013 comparative assessment of scoring functions (Li et al., 2014a), the construction of the PDBbind core set was improved by using the same sequence similarity program used by the PDB, and only clusters with at least five (rather than four) complexes were retained (Li et al., 2014b). Additionally, the best binding affinity has to differ by at least 10-fold from the median binding affinity, and the median binding affinity has to differ by at least 10-fold from the poorest binding affinity (Li et al., 2014b). The electron density maps of the remaining complexes were visually assessed; if a complex failed at this step, the next best candidate was selected from the same cluster (Li et al., 2014b). The final PDBbind core set 2013 still consists of 195 protein-ligand complexes from 65 protein clusters (Li et al., 2014b).

The core set for CASF-2016 (Su et al., 2018) brought additional refinements and more data. As usual, the systems within the high-quality benchmark set are selected from the 4057 protein-ligand complexes in the PDBbind refined set (version 2016). The clustering of complexes based on protein sequence similarity remains the same. However, for CASF-2016, five representatives of each cluster were selected instead of the three selected for CASF-2007 and CASF-2013 (Su et al., 2018). The representative complexes were selected according to their binding affinity: the complex with the lowest binding affinity, the complex with the highest binding affinity, and three complexes distributed as evenly as possible between the lowest and highest binding affinity (Su et al., 2018). The lowest and highest binding affinities differ by at least 100-fold and the difference between consecutive binding affinities is at least 1-fold. All ligands were inspected to ensure that there are no identical ligands or stereoisomers (Su et al., 2018). The final PDBbind core set (CASF-2016 benchmark set) consists of 57 × 5 = 285 protein-ligand complexes and it is arguably one of the most frequently encountered test sets in the development of ML and DL SFs.

Unlike the PDBbind data set, the CASF benchmark is not updated annually and therefore the latest release to date remains CASF-2016. The CASF benchmark packages can be downloaded from pdbbind.org.cn/casf.php.

It is very common for ML and DL SFs to be trained on the PDBbind refined or general set and subsequently tested on the CASF benchmark set. Recently, non-redundant subsets of the PDBbind refined set were introduced by Boyles et al. (2019) and Su et al. (2020) to evaluate how well ML and DL SFs generalize when training examples that are similar to those in the CASF benchmark set are removed from the training set using increasingly strict similarity thresholds.
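The idea behind such non-redundant training sets can be sketched in Python. This is a generic similarity filter and not the specific procedure of either cited study; the `similarity` callable (which could return, for example, a protein sequence identity or a ligand fingerprint similarity) and the function name are assumptions made for this example.

```python
def prune_training_set(train_ids, test_ids, similarity, threshold):
    """Keep training complexes that are not too similar to any test complex.

    similarity: callable taking (train_id, test_id) and returning a value
        in [0, 1]; a training complex is kept only if its maximum similarity
        to the test set does not exceed the threshold.
    """
    kept = []
    for train_id in train_ids:
        max_similarity = max(
            (similarity(train_id, test_id) for test_id in test_ids),
            default=0.0,
        )
        if max_similarity <= threshold:
            kept.append(train_id)
    return kept
```

Lowering the threshold step by step produces nested training sets that are increasingly dissimilar to the benchmark, so the drop in test performance across thresholds gives an indication of how much a model relies on close analogues.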

3.2 Binding MOAD

The Binding MOAD (Mother Of All Databases) (Hu et al., 2005; Benson et al., 2007; Ahmed et al., 2014; Smith et al., 2019) is a subset of the PDB that collects high-quality and biologically relevant crystal structures of protein-ligand complexes together with experimentally determined binding affinities. Ligands available in the Binding MOAD include small peptides (ten amino acids or fewer), small oligonucleotides (four nucleotides or fewer), small and drug-like organic molecules, and enzymatic cofactors. Crystal structures have a resolution better than 2.5 Å. As for the PDBbind data set, experimental binding affinities are collected from the primary reference of the deposited PDB structure and consist only of $K_i$, $K_d$, or IC50 values.

The Binding MOAD was first introduced in 2005, containing 5331 protein-ligand complexes from 1780 unique protein families and 2630 unique ligands (Hu et al., 2005). 1375 protein-ligand complexes were associated with binding affinity data spanning 13 orders of magnitude (Hu et al., 2005). The 1780 unique protein families were used to create a non-redundant subset for which 475 complexes have binding affinity data (Hu et al., 2005).

The Binding MOAD was extracted from the PDB as follows (Hu et al., 2005). The full PDB was screened for high-resolution structures (better than 2.5 Å), excluding theoretical models and NMR structures. Structures containing nucleic acids larger than four nucleotides and peptides longer than ten amino acids were also discarded. Subsequently, complexes with covalently bound ligands as well as invalid ligand structures were filtered out. This reduced database of protein-ligand complexes was then hand-curated: the primary citation associated with each structure was screened for binding affinity data, while some “suspect ligands” were flagged for visual inspection, resulting in the final database of 5331 protein-ligand complexes.

The Binding MOAD has been expanded annually over the years by adding new protein-ligand complexes deposited on the PDB (together with binding affinity data), resulting in 23 269 total entries and 8156 entries with associated binding affinities in 2015 (Ahmed et al., 2014). In 2019, the Binding MOAD contained 32 747 structures comprising 9117 unique protein families and 16 044 unique ligands.

The Binding MOAD and the PDBbind databases are curated in a similar fashion, to the point that the two data sets could be compared to find and fix disagreements in overlapping systems (Hu et al., 2005). However, the Binding MOAD includes complexes with only binding cofactors, complexes with both a ligand and a cofactor present, and also includes high-quality complexes without binding affinity data (Hu et al., 2005).

Given that the development of the PDBbind was mostly driven by the development of scoring functions (Cheng et al., 2009; Li et al., 2014b,a; Su et al., 2018) while the development of the Binding MOAD was primarily driven by research on protein binding site prediction (Clark et al., 2020) and protein flexibility (Clark et al., 2019), it is more common to encounter the former in the development of ML and DL SFs. However, the Binding MOAD can certainly be used for assessing the performance of scoring functions in binding affinity prediction (Xavier et al., 2016) and has been used to build the CSAR data set discussed below.

3.2.1 CSAR

The CSAR data set is associated with the Community Structure-Activity Resource (CSAR), which has the goal of collecting high-quality data from both academia and industry to improve docking scoring functions and to organize community-wide assessments of current methods (Dunbar et al., 2011).

The first CSAR data set consisted of protein-ligand complexes from the PDB for which experimental binding affinities ($K_i$ or $K_d$ values) were available in the Binding MOAD database, augmented with data from the PDBbind; Dunbar et al. (2011) describe the CSAR data set as “the best of the PDB […] augmented with binding data from Binding MOAD and PDBbind”. The data set consists of 343 protein-ligand complexes which span binding affinities over several orders of magnitude.

The CSAR data set is subdivided into two subsets: Set 1 and Set 2. Initially, 2916 protein-ligand complexes were identified in the Binding MOAD database (version 2006) and filtered down to 1241 entries according to the quality of the crystal structures. Further processing removed ligands for which hybridization states and bond orders could not be automatically inferred, as well as complexes for which the experimental binding affinity was expressed in terms of IC50 values. This resulted in a total of 309 complexes with associated $K_a$, $K_d$, and $K_i$ values. Later on, an additional 1228 complexes from Binding MOAD (versions 2007 and 2008) were processed to obtain an additional 230 complexes with associated binding affinity data. After moving some complexes between the two groups to balance physicochemical properties, the final data set representing the initial release consisted of Set 1 (242 entries) and Set 2 (297 entries). Following community feedback, a more stringent quality assessment of the crystal structures was applied, thus reducing the size of the two sets, and errors concerning binding affinities were corrected. Following the CSAR benchmark exercise (Smith et al., 2011), the two sets were further processed, resulting in the CSAR-NCS HiQ data set (September 2010), subdivided into Set 1 (176 entries) and Set 2 (167 entries). The CSAR-NCS HiQ data set consists of 52 protein targets with two or more structures and 191 targets with a single structure.

The CSAR-NCS HiQ data set was subsequently updated with an additional 123 structures (Set 3) by applying the same criteria used for the CSAR-NCS HiQ data set to structures added to Binding MOAD between 1/1/2009 and 12/31/2011.

The CSAR-NCS HiQ data set (Dunbar et al., 2011), its update, and other data sets associated with the CSAR benchmark exercises (Damm-Ganamet et al., 2013; Dunbar et al., 2013; Smith et al., 2015; Carlson et al., 2016) can be downloaded from csardock.org or bindingmoad.org.

3.3 Astex Diverse Set

The Astex Diverse Set is another common data set encountered in the validation of protein-ligand scoring functions (Hartshorn et al., 2007), alongside the CASF and CSAR benchmarks. This data set contains 85 protein-ligand complexes, most of which are associated with experimentally measured binding potency.

The diverse set was obtained as follows. First, proteins from the PDB were clustered based on sequence similarity, leading to 9188 clusters of distinct proteins. Then, ligands bound to the clustered proteins were screened to select high-quality structures of pharmaceutical or agrochemical interest and were filtered according to drug-likeness criteria. The selected protein-ligand complexes were further assessed in terms of ligand clashes with the binding site residues, possible problems related to spurious interactions, and quality of the ligand electron density. This automated filtering procedure resulted in 427 clusters with high-quality protein-ligand complexes.

The final Astex Diverse Set was manually curated from the 427 clusters resulting in 85 complexes. Potency data for 74 of the 85 complexes was finally extracted from the literature.

3.4 Other Data Sets

The data sets described above are curated collections of binding affinities and structures and are therefore useful for the development and assessment of structure-based SFs for protein-ligand binding affinity predictions, both using classical and ML/DL scoring functions. However, there are several other data sets that might be useful to build and assess scoring functions, and some are briefly described below.

ChEMBL (Gaulton et al., 2011; Bento et al., 2013; Mendez et al., 2018) is a manually curated database of bioactive molecules, where data about drug-like molecules are collected together with results from bioactivity assays and genomic information. ChEMBL version 29 (10.6019/CHEMBL.database.29) contains data about 2,105,464 compounds and 14,554 targets. While ChEMBL is an extremely valuable resource and provides a large amount of binding affinity data, it does not contain structural data and it is, therefore, more commonly encountered in the development and assessment of ligand-based models (see, for example, Riniker and Landrum, 2013a).

The bioactivity data in ChEMBL is also exchanged with PubChem Bioassay (Wang et al., 2009, 2011) and BindingDB (Chen et al., 2001; Liu et al., 2007). The PubChem Bioassay database is a public repository containing bioactivity data for small molecules collecting more than 130 million assay results together with their protocols, while the BindingDB is a public database of experimental binding affinities between proteins (8,644 as of 8 November 2021) and drug-like molecules (1,023,385 as of 8 November 2021) which is accessible via a web interface. The BindingDB also contains 5988 protein-ligand crystal structures with associated binding affinity measurements.

Data sets released as part of the Drug Design Data Resources (D3R) Grand Challenges also constitute important datasets on which several ML and DL scoring functions have been designed or tested. D3R Grand Challenges promote the development and benchmarking of computational methods for binding pose and binding affinity prediction, by organizing blinded community challenges using high-quality data sets of pharmaceutical interest. The first D3R Grand Challenge was based on two targets (Gathiaka et al., 2016) using data from industrial drug discovery programs. Subsequent challenges (Gaieb et al., 2017, 2019; Parks et al., 2020) introduced novel targets and associated data for the blind prediction of binding poses, affinity rankings, and relative binding free energies. All the data sets are now easily accessible on the D3R website (drugdesigndata.org) as additional test sets for the development and evaluation of ML and DL scoring functions. Interestingly, in the D3R Grand Challenge 3 an increased number of ML methods was observed but overall they did not seem to perform any better than standard methods (Gaieb et al., 2019).

The databases that do not contain target structures are often employed to build ligand-based models or are used to put together new data sets with three-dimensional structures by generating different conformers for the ligand and collecting target structures from the PDB (Bernstein et al., 1977; Berman et al., 2000) to subsequently build structure-based models. For example, Boyles et al. (2021) recently released a new dataset—called the Updated DUD-E Diverse Subset—which combines data from the Directory of Useful Decoys Enhanced (DUD-E) data set (Mysinger et al., 2012) and ChEMBL.

Some of the data sets for binding affinity prediction discussed above are collected into benchmark suites such as MoleculeNet (Wu et al., 2018) and Therapeutic Data Commons (Huang K. et al., 2021), which provide much-needed collections for the evaluation of different machine learning and deep learning methods for molecular property prediction as well as drug discovery and development.

4 Machine Learning and Deep Learning Scoring Functions

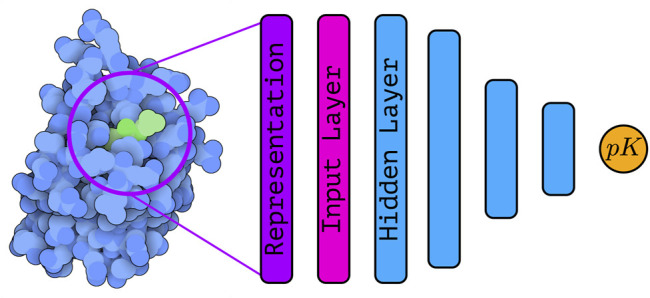

Machine learning (or descriptor-based) scoring functions have been developed and used for decades (Brown 2020). The simplest “scoring functions”, more commonly known as QSAR (quantitative structure-activity relationship) models, were based on a small set of handcrafted descriptors and simple models such as multiple linear regression (Böhm 1992; Morris et al., 1998), and were typically ligand-based. Later, other machine learning (ML) algorithms, such as support vector machines (SVMs) (Boser et al., 1992; Cortes and Vapnik 1995), random forests (RFs) (Ho 1995, Ho 1998; Breiman 2001), and gradient boosting (Mason et al., 1999; Friedman 2002), were applied in an attempt to learn non-linear relationships between descriptors and the binding affinity. Figure 1 shows a schematic representation of a structure-based deep learning architecture for binding affinity prediction.

FIGURE 1 | Schematic representation of a structure-based deep learning architecture (with several hidden layers) for binding affinity prediction. The protein-ligand complex (PDB ID: 5S9H, rendered with Illustrate (Goodsell et al., 2019a)) is encoded into a suitable representation that is used as input to the deep learning architecture. Using a series of stacked hidden layers, a prediction of the binding affinity is finally obtained. The exact nature of the input layer as well as the hidden layers depends on the type of architecture under consideration.

For an in-depth and rigorous introduction to the deep learning (DL) architectures described below the reader should consult Goodfellow et al. (2016), while classical ML methods are described thoroughly in Bishop (2006), to which we refer the interested reader. For a more hands-on introduction to both ML and DL the reader should consult Géron (2019).

4.1 Descriptors

Descriptors for ML and DL SFs can encode information about the ligand, about the protein, or about intermolecular interactions in the protein-ligand complex. Ligand descriptors are commonly used in cheminformatics applications, quantitative structure-activity relationship (QSAR) modelling, and ligand-based virtual screening (Lo et al., 2018). Ligand-based descriptors can be combined with descriptors for the protein commonly employed in ML-based protein engineering (Xu et al., 2020) to obtain a SF that combines separate information about the ligand and the protein. However, here we focus on structure-based descriptors that encode the protein-ligand complex as a whole and form the basis for structure-based SFs. Methods that combine separate ligand and protein descriptors (van Westen et al., 2011), known as “pair methods” or “proteochemometric models”, have been reviewed by Qiu et al. (2016) and more recently by Kimber et al. (2021).

One common distinction between ML and DL models is that the latter are usually based on a simpler representation and learn descriptors directly from the data. This distinction is however somewhat arbitrary, and most DL models still require some pre-processing to convert atom types and coordinates into the correct format for the architecture being used. Here we briefly review structure-based descriptors commonly employed with ML algorithms as well as the input representation used in DL architectures.

One common type of descriptor employed with ML models is an interaction fingerprint (IFP). Structural interaction FPs (SIFts) encode the 3D structure of a protein-ligand complex into a one-dimensional binary vector (Deng et al., 2003). Seven different interaction types involving the ligand and binding site residues are identified and a FP for the whole protein-ligand complex is obtained by concatenating the binding bit string of each binding site residue. Simple ligand-receptor interaction descriptors (SILIRID) are instead obtained from binary IFPs by summing the bits corresponding to the same amino-acids (Chupakhin et al., 2014), thus resulting in a FP with 168 elements (20 amino acids and one cofactor, and 8 interaction types per amino acid). Da and Kireev (2014) developed structural protein-ligand interaction fingerprints (SPLIF), where protein-ligand atom pairs within 4.5 Å are identified and expanded into circular fragments described by extended connectivity fingerprints (ECFPs) (Rogers and Hahn 2010). In this way, protein-ligand interactions are encoded implicitly instead of needing explicit ad-hoc interaction classes and therefore can encode all local interactions (Da and Kireev 2014). Similarly, Wójcikowski et al. (2018) developed the protein-ligand extended connectivity (PLEC) FP which combines the ECFP environments of the protein and the ligand atoms in contact to describe protein-ligand interactions. The atomic features employed to construct PLEC FPs—atomic number, isotope, number of neighboring heavy atoms, number of hydrogens, formal charge, ring membership, and aromaticity—are the same used to construct ECFP, but only pairs of interacting atoms within 4.5 Å are considered. The FPs computed for ligand and protein atoms are hashed together to a final bit position (Wójcikowski et al., 2018). 
The PLEC FP implementation is available as part of the Open Drug Discovery Toolkit (Wójcikowski et al., 2015) and has been successfully used in combination with different ML models for binding affinity prediction (Wójcikowski et al., 2018). There are several other IFPs such as APIF (Pérez-Nueno et al., 2009), PADIF (Jasper et al., 2018), and PyLIF (Radifar et al., 2013).
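The idea of hashing interacting protein-ligand atom pairs into a fixed-length bit vector can be illustrated with a deliberately simplified sketch. This is not the SPLIF or PLEC algorithm: real implementations hash circular ECFP environments rather than bare element symbols. The function name `contact_fingerprint`, the `(element, xyz)` input format, and the choice of CRC32 as the hash are assumptions made only for illustration.

```python
import math
import zlib


def contact_fingerprint(ligand, protein, cutoff=4.5, n_bits=1024):
    """Toy hashed interaction fingerprint (hypothetical helper, not PLEC/SPLIF).

    ligand, protein: lists of (element, (x, y, z)) tuples.
    Every ligand-protein atom pair within `cutoff` angstrom sets one bit,
    chosen by hashing the pair of element symbols.
    """
    bits = [0] * n_bits
    for l_elem, l_xyz in ligand:
        for p_elem, p_xyz in protein:
            if math.dist(l_xyz, p_xyz) <= cutoff:
                key = f"{l_elem}|{p_elem}".encode()
                # CRC32 is deterministic across runs, unlike Python's str hash
                bits[zlib.crc32(key) % n_bits] = 1
    return bits


ligand = [("C", (0.0, 0.0, 0.0))]
protein = [("O", (3.0, 0.0, 0.0)), ("N", (10.0, 0.0, 0.0))]
fp = contact_fingerprint(ligand, protein)
print(sum(fp))  # number of bits set
```

Replacing the element symbols with hashed atomic environments of increasing radius would move this sketch closer to the fingerprints described above.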

Ballester et al. (2014) evaluated the impact of the choice of chemical descriptors on ML scoring functions. They showed that more complex descriptors do not necessarily lead to more accurate scoring functions and they identify and discuss the factors that might be contributing to this observation: modelling assumptions, co-dependence of representation and regression method, and data set features.

In structure-based methods, the goal is to exploit the 3D information of protein-ligand complexes. One natural representation of the 3D structure of protein-ligand complexes is the electron density, which is used in X-ray crystallography to model the structure of both the protein and the bound ligand. To encode the information about the nuclei that is available from resolved structures, one representation that clearly captures the spatial relationship between the protein and the ligand is a three-dimensional (3D) grid, which discretizes volumetric data. The voxel occupancy is often defined as the sum (or maximum) of decaying density functions centered at the different atoms, while atoms of different types are represented in different grids, which can be thought of as a generalization of the RGB channels used in 2D images to represent the different colors. Different representations have been proposed, but they are mostly based on atom-centered density functions $g(\mathbf{r} - \mathbf{R}_i; t_i)$ for atom $i$ of type $t_i$, whose contributions are aggregated together:

$$G(\mathbf{r}; t, \mathbf{R}) = \bigoplus_{i} \delta_{t, t_i}\, g(\mathbf{r} - \mathbf{R}_i; t_i) \tag{6}$$

Here $\mathbf{r}$ represents the coordinates of the voxel, $\mathbf{R}_i$ represents the coordinates of atom $i$, and $\delta_{i,j}$ is the Kronecker delta, so that only atoms of type $t$ contribute to $G(\mathbf{r}; t, \mathbf{R})$. $\bigoplus$ is an aggregation function such as sum or maximum.

In Jiménez et al. (2018) the density depends only on the distance $d = \lVert \mathbf{r} - \mathbf{R}_i \rVert$ between the voxel and the atom:

$$g(d; t) = 1 - \exp\left[-\left(\frac{R_t}{d}\right)^{12}\right] \tag{7}$$

and the different channels represent hydrophobic, hydrogen-bond donor/acceptor, aromatic, ionizable, metallic, and excluded volume properties. $R_t$ represents an atom type-dependent characteristic length, often set to the van der Waals radius. The properties are duplicated to represent protein and ligand atoms in different channels, and the density for different atoms in the same channel is aggregated by taking the maximum. In Ragoza et al. (2017a) and subsequent publications (Sunseri et al., 2018; Francoeur et al., 2020) the following functional form is used:

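$$g(d; t) = \begin{cases} \exp\left(-\dfrac{2 d^2}{R_t^2}\right) & 0 \le d < R_t \\[2ex] \dfrac{4}{e^2 R_t^2}\, d^2 - \dfrac{12}{e^2 R_t}\, d + \dfrac{9}{e^2} & R_t \le d < 1.5\, R_t \\[2ex] 0 & d \ge 1.5\, R_t \end{cases} \tag{8}$$

where $d$ is the distance between the voxel and the atom, and the different channels represent the different atom types from the Smina docking software (Koes et al., 2013), resulting in 16 channels for the receptor and 18 channels for the ligand (Ragoza et al., 2017a). Contributions from different atoms on the same channel are summed together.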
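To make the grid construction concrete, the following NumPy sketch voxelizes a set of typed atoms into one channel per atom type. It assumes the piecewise Gaussian-quadratic density reported by Ragoza et al. (2017a) and sum aggregation within a channel; the function names (`density`, `voxelize`), the argument layout, and the default grid size and resolution are illustrative assumptions and do not reproduce the optimized implementation used in practice.

```python
import numpy as np


def density(d, radius):
    """Atomic density vs. distance d: Gaussian up to `radius`,
    then a quadratic tail that reaches zero at 1.5 * radius."""
    d = np.asarray(d, dtype=float)
    gauss = np.exp(-2.0 * d**2 / radius**2)
    quad = (4.0 * d**2 / radius**2 - 12.0 * d / radius + 9.0) / np.e**2
    return np.where(d < radius, gauss, np.where(d < 1.5 * radius, quad, 0.0))


def voxelize(coords, types, radii, n_types, center, size=24.0, resolution=0.5):
    """Return a grid of shape (n_types, n, n, n) centered at `center`.

    coords: (n_atoms, 3) positions; types: integer channel index per atom;
    radii: characteristic length per atom (e.g. van der Waals radius).
    """
    n = int(round(size / resolution))
    # Voxel centers along one axis, symmetric around the origin
    axis = (np.arange(n) + 0.5) * resolution - size / 2.0
    gx, gy, gz = np.meshgrid(axis, axis, axis, indexing="ij")
    points = np.stack([gx, gy, gz], axis=-1) + np.asarray(center, dtype=float)
    grid = np.zeros((n_types, n, n, n))
    for xyz, t, r in zip(coords, types, radii):
        d = np.linalg.norm(points - np.asarray(xyz, dtype=float), axis=-1)
        grid[t] += density(d, r)  # sum aggregation within a channel
    return grid


grid = voxelize([[0.0, 0.0, 0.0]], [0], [1.5], n_types=2,
                center=[0.0, 0.0, 0.0], size=4.0, resolution=1.0)
print(grid.shape)
```

Replacing `+=` with `np.maximum` would give maximum aggregation instead of the sum.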

The advantage of using 3D grid representations is that they encode clear spatial relationships between the different atom types and the computation can be performed very efficiently (Sunseri and Koes 2020), thus allowing on-the-fly data augmentation during training. However, grid representations also have several limitations. While $G(\mathbf{r}; t, \mathbf{R})$ can be computed very efficiently, its dependence on the coordinate frame requires extensive data augmentation (Ragoza et al., 2017a) at increased computational cost, and the sparsity of some channels (such as the ones representing halogens or metals) implies wasteful computations. Additionally, the memory footprint of grid-based representations increases with the number of atom types. Despite these limitations, the close connection to the field of computer vision has led to the successful development of several SFs based on this representation, as discussed below.

For graph-based models such as graph neural networks (GNNs), descriptors are associated with atoms (the nodes of the graph) and bonds (the edges of the graph). A node descriptor is a vector containing information about the atom. An edge descriptor is a vector describing the chemical bond, for example its bond order. There are several descriptors employed in the literature, and they depend on the task at hand. For protein-ligand binding affinities, simple quantities related to an atom or a bond are commonly employed since higher-level features are learned by intermediate GNN layers (Feinberg et al., 2018). Such simple features for the nodes can include one-hot-encoded elements or atom types, formal charges, hybridization states, aromaticity, and other atomic properties (Jiang et al., 2021). Edge features can include both 2D and 3D information such as bond order, conjugation, bond length, and other bond properties (Jiang et al., 2021).
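As a minimal illustration of such node and edge descriptors, the sketch below builds a node feature matrix (one-hot element, formal charge, aromaticity flag) and an edge list with bond order and bond length. The element vocabulary, the dictionary-based atom format, and the function name `featurize` are assumptions chosen for brevity; real pipelines typically obtain these properties from a cheminformatics toolkit.

```python
import numpy as np

ELEMENTS = ["C", "N", "O", "S"]  # illustrative vocabulary, not exhaustive


def featurize(atoms, bonds):
    """Build simple graph inputs (hypothetical helper).

    atoms: list of dicts with keys "element", "charge", "aromatic", "xyz".
    bonds: list of (i, j, bond_order) tuples.
    Returns node features (n_atoms, n_elements + 2), edge indices (2, n_edges),
    and edge features (n_edges, 2) holding bond order and bond length.
    """
    x = np.zeros((len(atoms), len(ELEMENTS) + 2))
    for i, atom in enumerate(atoms):
        x[i, ELEMENTS.index(atom["element"])] = 1.0  # one-hot element
        x[i, -2] = atom["charge"]                    # formal charge
        x[i, -1] = float(atom["aromatic"])           # aromaticity flag
    edge_index, edge_attr = [], []
    for i, j, order in bonds:
        length = np.linalg.norm(np.subtract(atoms[i]["xyz"], atoms[j]["xyz"]))
        for src, dst in ((i, j), (j, i)):  # store both directions
            edge_index.append((src, dst))
            edge_attr.append((order, length))
    return x, np.array(edge_index).T, np.array(edge_attr)


atoms = [
    {"element": "C", "charge": 0, "aromatic": False, "xyz": (0.0, 0.0, 0.0)},
    {"element": "O", "charge": 0, "aromatic": False, "xyz": (1.2, 0.0, 0.0)},
]
x, edge_index, edge_attr = featurize(atoms, [(0, 1, 2.0)])
print(x.shape, edge_index.shape, edge_attr.shape)
```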

Descriptors commonly used in ML/DL for quantum chemistry have been successfully applied to the classification of actives and decoys against different protein families (Bartók et al., 2017). Recently, the smooth overlap of atomic positions (SOAP) descriptor (Bartók et al., 2013), which allows comparing molecules across structural and chemical space (De et al., 2016), has been used together with Gaussian process models to predict pIC50 values (McCorkindale et al., 2020). At the same time, atomic environment vectors developed for the ANI family of neural network potentials (Smith et al., 2017; Gao et al., 2020) and based on Behler-Parrinello symmetry functions (Behler and Parrinello 2007) have been used as descriptors of protein-ligand complexes for binding affinity predictions (Meli et al., 2021). Behler-Parrinello symmetry functions have also been employed as node features in GNNs for binding affinity prediction (Karlov et al., 2020) and inspired the atomic convolution architecture from Gomes et al. (2017). Both descriptors are strongly related (Musil et al., 2021) and provide a local description of the structural and chemical environment of atoms in a way that is translationally and rotationally invariant.

Learned molecular representations also play an important role as descriptors (Chuang et al., 2020; Menke and Koch 2021). A defining characteristic of deep learning architectures is that useful and efficient internal representations are learned directly from the input data. Therefore, the fixed and ad-hoc descriptors or fingerprints described above can be substituted with learned representations. Yang et al. (2019) performed an extensive analysis of learned molecular representations for property prediction, showing that they achieve similar or better performance than fixed descriptors. While many learned representations for computational chemistry include only 2D information, learned representations for three-dimensional structures have also been developed (Kuzminykh et al., 2018), but their application in structure-based drug discovery is still under-explored. The appeal of DL architectures is that they can leverage the simple inputs described above (such as 3D atomic densities or coordinates and atom types) to automatically learn complex internal representations that can be used for molecular property prediction.

Some authors have extracted descriptors from molecular dynamics (MD) trajectories instead of using crystal structures or docked poses, although the use of trajectory data remains rare (Wang and Riniker 2020). Yakovenko and Jones (2017) used atomic densities but trained their model on both docked poses and MD trajectory frames to obtain learned representations that were later used to predict LIE. Berishvili et al. (2019) developed 1D descriptors based on GROMACS (Berendsen et al., 1995; Abraham et al., 2015), AutoDock Vina (Trott and Olson 2009), and smina (Koes et al., 2013) terms to describe frames from MD trajectories. The descriptors for each frame were stacked together into a matrix of size $n_\mathrm{descriptors} \times n_\mathrm{frames}$, representing the whole MD trajectory.

A more in-depth overview of featurization strategies for protein-ligand interactions that are commonly employed in the development of ML and DL SFs is given by Xiong et al. (2021), while an overview of common molecular representations used in AI-driven drug discovery is provided by David et al. (2020).

4.2 Overview of Classical Machine Learning Scoring Functions

Classical ML algorithms such as SVMs and RFs have long been used in quantitative structure-activity relationship (QSAR) modelling and in the development of structure-based scoring functions (Ain et al., 2015; Muratov et al., 2020).

One of the earliest ML SFs for binding affinity prediction was developed by Deng et al. (2004). The model combines protein-ligand atom pair occurrence and distance-dependent atom pair features with a kernel partial least squares method (K-PLS) (Rännar et al., 1994, Rännar et al., 1995) to predict $pK_d$, demonstrating that structure-based descriptors combined with ML regression can be effective for protein-ligand binding affinity prediction on different complexes. Das et al. (2010) introduced property-encoded shape distribution signatures (descriptors encoding molecular shapes and property distributions on protein and ligand surfaces), which were used in combination with an SVM to build a regression model. SVM-based regression was also used by Li et al. (2011) to develop two SFs, one based on knowledge-based potentials (SVR-KB) and another based on physicochemical properties of the protein-ligand complex (SVR-EP). Both SFs show very good performance on the CASF benchmark when compared to classical SFs.

RF models have been quite successful in the development of structure-based ML SFs. Ballester and Mitchell (2010) introduced a novel SF based on RF, called RF-Score. Protein-ligand complexes are described by a 36-dimensional feature vector storing the occurrence count of different protein-ligand atom pairs within a cutoff of 12 Å. The feature vector is used as input of a RF regression model predicting the binding affinity. Thanks to the use of the PDBbind benchmark (Cheng et al., 2009), RF-Score could be easily compared to 16 other SFs, showing that RF-Score is a very competitive scoring function. SFCScoreRF improved the performance of RF-Score by using a different and larger feature vector including ligand-based features (such as the number of rotatable bonds) and interaction-specific descriptors (Zilian and Sotriffer 2013).
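The 36-dimensional occurrence-count descriptor can be sketched in a few lines. The element lists below (four protein elements and nine ligand elements, giving 4 × 9 = 36 pairs) are an assumption about how the 36 features are composed, and the function name `rf_score_features` and the `(element, xyz)` input format are hypothetical. The resulting vector would then be passed to a random forest regressor, which is not shown here.

```python
import math
from itertools import product

# Assumed element vocabularies: 4 protein x 9 ligand elements = 36 features
PROTEIN_ELEMENTS = ["C", "N", "O", "S"]
LIGAND_ELEMENTS = ["C", "N", "O", "F", "P", "S", "Cl", "Br", "I"]
PAIRS = list(product(PROTEIN_ELEMENTS, LIGAND_ELEMENTS))


def rf_score_features(protein, ligand, cutoff=12.0):
    """Count protein-ligand element pairs closer than `cutoff` angstrom.

    protein, ligand: lists of (element, (x, y, z)) tuples.
    Returns a list of 36 counts ordered as in PAIRS.
    """
    counts = dict.fromkeys(PAIRS, 0)
    for (p_el, p_xyz), (l_el, l_xyz) in product(protein, ligand):
        # Pairs involving elements outside the vocabulary are ignored
        if (p_el, l_el) in counts and math.dist(p_xyz, l_xyz) < cutoff:
            counts[(p_el, l_el)] += 1
    return [counts[pair] for pair in PAIRS]


protein = [("C", (0.0, 0.0, 0.0)), ("N", (20.0, 0.0, 0.0))]
ligand = [("O", (1.0, 0.0, 0.0)), ("C", (2.0, 0.0, 0.0))]
features = rf_score_features(protein, ligand)
print(len(features), sum(features))
```

Because the features are simple counts, they are invariant to rotations and translations of the complex, in contrast to the grid representations discussed in Section 4.1.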

Gradient boosting (Mason et al., 1999; Friedman 2002)—often combined with decision trees—is another popular ML technique used in the development of SFs, also thanks to the availability of high-quality open-source implementations such as XGBoost (Chen and Guestrin 2016) and LightGBM (Ke et al., 2017). Notable scoring functions based on gradient boosting are XGB-Score (Li et al., 2019b), AGL-Score (Nguyen and Wei 2019), and OPRC-GBT (Wee and Xia 2021). Shen et al. (2021) recently developed several XGBoost-based classifiers to assess the impact of cross-docked poses on the performance on pose-prediction, highlighting the importance of cross-docked poses for training of ML SFs with a broad applicability domain and increased robustness for pose-prediction.

Instead of learning the experimental protein-ligand binding affinity directly, Wang and Zhang (2016) used a RF model that learns a correction to the AutoDock Vina scoring function (Trott and Olson 2009), which represents a reasonable baseline, especially for docking and virtual screening. The resulting scoring function, called ΔVinaRF, retains the very good performance of other ML SFs on scoring and ranking while also working well for docking and virtual screening. ΔVinaRF showed great performance in the CASF-2016 benchmark (with a Pearson’s r of 0.82 in the scoring task), but this superior performance can be partially attributed to the overlap between the training set and the CASF-2016 test set (Su et al., 2018).
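The general idea of learning a correction to a baseline score, rather than the affinity itself, can be sketched as follows. To keep the example dependency-free, a ridge regression is swapped in for the random forest; this is a stand-in for illustration only and is not the ΔVinaRF method. The function names and the synthetic data are assumptions.

```python
import numpy as np


def fit_delta_model(features, baseline, target, ridge=1e-3):
    """Fit a linear model (stand-in for a RF) to the residual target - baseline."""
    X = np.hstack([features, np.ones((len(features), 1))])  # add intercept column
    residual = target - baseline
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ residual)


def predict(features, baseline, weights):
    """Corrected score = baseline score + learned correction."""
    X = np.hstack([features, np.ones((len(features), 1))])
    return baseline + X @ weights


# Synthetic example: the baseline has a systematic, feature-dependent error
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 3))
target = rng.normal(size=200)
baseline = target - (0.5 * features[:, 0] - 0.2)

weights = fit_delta_model(features, baseline, target)
corrected = predict(features, baseline, weights)
rmse_baseline = np.sqrt(np.mean((baseline - target) ** 2))
rmse_corrected = np.sqrt(np.mean((corrected - target) ** 2))
print(rmse_baseline, rmse_corrected)
```

One consequence of this design is that the corrected model falls back to the baseline wherever the learned correction is close to zero.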

ML-based scoring functions are still under active development both in terms of methodology and training data. For example, Boyles et al. (2019) showed that including ligand features obtained with RDKit into structure-based ML scoring functions consistently improves the performance in protein-ligand binding affinity prediction. Combining features from RF-Score (v3) with RDKit molecular descriptors improves Pearson’s correlation for the CASF-2016 scoring benchmark from 0.79 to 0.84 (Boyles et al., 2019). Another example of a recently developed scoring function using classical machine learning regression models for binding affinity prediction is RASPD+ (Holderbach et al., 2020).

Several other classical machine learning algorithms such as kernel ridge regression, Gaussian processes (Williams and Rasmussen 1996; Rasmussen 2003), and other methods have been used in the development of structure-based scoring functions but they are not the focus of this review. The interested reader can consult Ain et al. (2015) and Li H. et al. (2020) for a more in-depth review of machine learning scoring functions.

4.3 Feed-Forward Neural Networks

Feed-forward neural networks (also known as multilayer perceptrons (MLPs), fully-connected neural networks, artificial neural networks (ANNs), or simply neural networks (NNs)) consist of a series of linear layers combined with point-wise non-linearities called activation functions (Bishop 2006). Originally, feed-forward neural networks were inspired by the way neurons in the brain work (McCulloch and Pitts 1943; Widrow and Hoff 1960; Rosenblatt 1962).

The basic unit of a neural network is a “neuron” (perceptron, or node), and the neurons in a neural network are grouped into layers that are stacked. The neuron $j$ in layer $k$ takes an input vector $\mathbf{x} \in \mathbb{R}^N$ and returns an output

$$y_j^{(k)} = g\left(\mathbf{w}_j^{(k)} \cdot \mathbf{x} + b_j^{(k)}\right) \tag{9}$$

where $\mathbf{w}_j^{(k)} \in \mathbb{R}^N$ (weights) and $b_j^{(k)} \in \mathbb{R}$ (biases) for neuron $j$ in layer $k$ are learnable parameters to be determined during training and where $g(\cdot)$ is a non-linear function, called the activation function. Neural networks are very expressive and can be regarded as universal approximators (Hornik et al., 1989), provided a large enough number of hidden neurons and some classes of activation functions (Bishop 2006).
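Stacking such neurons layer by layer gives the forward pass of a feed-forward network, which can be sketched in NumPy as below. Each layer applies a weight matrix (whose rows are the weight vectors of the individual neurons) and a bias vector, followed by the activation function. The ReLU activation, the linear output layer, and the hand-picked weights are assumptions made for illustration; in practice the parameters are learned by backpropagation.

```python
import numpy as np


def relu(z):
    """Point-wise non-linearity (one common choice of activation function)."""
    return np.maximum(z, 0.0)


def mlp_forward(x, layers, activation=relu):
    """Forward pass through a list of (W, b) layers.

    Row j of W holds the weights of neuron j; b[j] is its bias.
    The last layer is left linear, as is common for regression outputs.
    """
    h = np.asarray(x, dtype=float)
    for k, (W, b) in enumerate(layers):
        z = W @ h + b
        h = activation(z) if k < len(layers) - 1 else z
    return h


layers = [
    (np.array([[1.0, -1.0], [0.5, 0.5]]), np.array([0.0, -1.0])),  # hidden layer
    (np.array([[2.0, 1.0]]), np.array([0.1])),                     # output layer
]
print(mlp_forward([1.0, 2.0], layers))
```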

Initially, neural networks were composed of only a small number of neurons with a single (hidden) layer between the input layer and the output layer. Thanks to the development of algorithms able to train neural networks with multiple layers in a simple and efficient way (Rumelhart et al., 1986), neural networks became deeper and deeper by stacking together multiple hidden layers, and are now called deep neural networks (DNNs).

The use of simple and deep NNs for the determination of quantitative structure-activity relationships (QSAR) is not new (Salt et al., 1992; Dahl et al., 2014; Ma et al., 2015). One of the first uses of NNs in binding affinity prediction was published by Artemenko (2008), where a subset of physicochemical descriptors and quasi-fragmental descriptors (describing pairwise statistics of interatomic distances) were selected using multiple linear regression and used as input of a feed-forward NN. NNs have also been successfully used for classification of actives and decoys. Durrant and McCammon (2010) introduced a NN-based SF, NNScore, to distinguish between well and poorly docked ligands as well as actives from decoys. NNScore was later extended to regression of binding affinities in NNScore 2.0 (Durrant and McCammon 2011b), thus providing a direct estimation of $pK_d$. NNScore 2.0 uses terms from the Vina scoring function (Trott and Olson 2009), which encode steric, hydrophobic, and hydrogen-bonding interactions, as well as BINANA features (Durrant and McCammon 2011a) as input and returns an estimate of $pK_d$ as output.

Ashtawy and Mahapatra (2015) used a collection of NNs whose predictions are combined with either bagging, i.e. bootstrap aggregation (Breiman 1996), or boosting (Freund and Schapire 1997; Friedman 2002) ensemble methods. The input features were obtained as a combination of classical scoring function terms, together with features from RF-Score. Their BgN-Score and BsN-Score SFs perform significantly better on the PDBbind core set 2007 than classical SFs and surpass SFs based on RFs.

Wójcikowski et al. (2018) showed that a MLP combined with their PLEC fingerprint can achieve very good performance on the CASF-2016 benchmark. However, they also show that the PLEC FPs perform equally well when using a simpler linear model instead of a neural network, confirming that well-crafted descriptors can be extremely powerful.

More recently, Zhu et al. (2020) developed a model for $pK_d$ prediction where pairwise contributions are computed with a fully connected NN. Trained on PDBbind 2018, their model achieves a Pearson’s correlation coefficient of 0.75 and an RMSE of 1.44 on the CASF-2016 benchmark, but the authors point out that there is a significant overlap between the test and training sets which might be boosting the performance of their model. Meli et al. (2021) used a collection of MLPs combined with a local representation of the atomic environment to predict protein-ligand binding affinities, reaching good performance on the CASF-2016 benchmark.

4.4 Convolutional Neural Networks

Convolutional neural networks (CNNs) (Fukushima 1980; Le Cun et al., 1989; Lecun et al., 1998; Krizhevsky et al., 2017) are a class of neural networks that try to overcome some of the limitations of feed-forward neural networks by using convolution operations instead of matrix multiplication in some of their layers (Goodfellow et al., 2016). Feed-forward neural networks use a one-dimensional vector as input, which prevents the encoding of spatial relationships, and use many parameters. CNNs are based on three main concepts (Bishop 2006): local receptive fields (inspired by the structure of the visual cortex (Hubel 1959; Hubel and Wiesel 1959)), weight sharing, and subsampling.

Local receptive fields are implemented in convolutional layers, where neurons in a layer do not receive the output of all neurons in the previous layer (as in fully-connected NNs) but only the ones in their local receptive field (Géron 2019). For two-dimensional grid-based inputs (such as images), the output of the neuron at location $(i, j)$ of feature map $k$ of the convolutional layer $l$ is given by (Géron 2019)

$$z_{i,j,k}^{(l)} = b_k^{(l)} + \sum_{u=0}^{f_h - 1} \sum_{v=0}^{f_w - 1} \sum_{k'=0}^{f_n^{(l-1)} - 1} x_{i',j',k'}^{(l-1)}\, w_{u,v,k',k}^{(l)} \tag{10}$$

with

$$i' = i \times s_h + u, \qquad j' = j \times s_w + v \tag{11}$$

$f_h$ and $f_w$ are the height and the width of the receptive field (i.e. the size of the 2D convolutional kernel) while $s_h$ and $s_w$ represent the strides (i.e. the size of the displacement of the receptive field). $f_n^{(l-1)}$ denotes the number of feature maps in the previous layer $(l - 1)$, and $x_{i',j',k'}^{(l-1)}$ is the output of that layer at location $(i', j')$ of feature map $k'$. $b_k^{(l)}$ is a bias term associated to feature map $k$ while $w_{u,v,k',k}^{(l)}$ denotes the weight term associated to the connection between the input located at $(u, v)$ in feature map $k'$ of layer $l - 1$ (relative to the neuron’s receptive field) and the neuron in feature map $k$ of layer $l$. Both $b_k^{(l)}$ and $w_{u,v,k',k}^{(l)}$ are learnable parameters to be determined during training. For clear depictions of the main building blocks of 2D CNNs we refer the reader to Dumoulin and Visin (2016).
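The sum over the receptive field described here can be written out directly as a naive NumPy loop. The sketch below assumes no padding (so the output shrinks with the kernel size) and a channels-last array layout; both are illustrative choices, and deep learning frameworks use far more efficient implementations.

```python
import numpy as np


def conv2d(x, w, b, stride=(1, 1)):
    """Naive 2D convolutional layer without padding or activation.

    x: input of shape (height, width, n_in) from the previous layer.
    w: kernel of shape (f_h, f_w, n_in, n_out); b: bias of shape (n_out,).
    Returns the output feature maps with shape (out_h, out_w, n_out).
    """
    f_h, f_w, _, n_out = w.shape
    s_h, s_w = stride
    out_h = (x.shape[0] - f_h) // s_h + 1
    out_w = (x.shape[1] - f_w) // s_w + 1
    z = np.zeros((out_h, out_w, n_out))
    for i in range(out_h):
        for j in range(out_w):
            # Receptive field of the neuron at (i, j): rows i*s_h .. i*s_h + f_h - 1
            patch = x[i * s_h:i * s_h + f_h, j * s_w:j * s_w + f_w, :]
            # Sum over u, v and input feature maps for every output feature map
            z[i, j] = b + np.tensordot(patch, w, axes=([0, 1, 2], [0, 1, 2]))
    return z


x = np.arange(16, dtype=float).reshape(4, 4, 1)
w = np.ones((2, 2, 1, 1))
z = conv2d(x, w, np.zeros(1), stride=(2, 2))
print(z[..., 0])
```

The same kernel `w` is applied at every location, which is the weight sharing that distinguishes convolutional layers from fully-connected ones.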

Parameter sharing in a convolutional network comes from the fact that each weight of the kernel is used at every position of the input, avoiding the need to learn a parameter for each input element as is the case in MLPs. Parameter sharing does not reduce the computational complexity of the forward pass, but significantly reduces the number of parameters in the network (when the size of the convolutional kernel is much smaller than the size of the input) and therefore the associated memory footprint (Goodfellow et al., 2016).
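A short calculation makes the saving tangible. The sketch below compares the parameter count of a fully-connected layer and a 3D convolutional layer acting on the same voxel grid. The grid size (48 voxels per side), the 34 input channels (16 receptor plus 18 ligand channels, as in the representation of Ragoza et al. (2017a) described in Section 4.1), the 128 dense units, and the 32 filters with a 3 × 3 × 3 kernel are all assumptions chosen for illustration.

```python
def dense_params(n_inputs, n_units):
    """One weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units


def conv3d_params(kernel_size, n_in, n_out):
    """One cubic kernel per (input channel, filter) pair plus one bias per filter."""
    return kernel_size**3 * n_in * n_out + n_out


n_voxels = 48**3       # assumed grid: 48 voxels per side
n_channels = 16 + 18   # receptor + ligand channels

dense = dense_params(n_voxels * n_channels, 128)
conv = conv3d_params(3, n_channels, 32)
print(f"dense: {dense:,} parameters")
print(f"conv:  {conv:,} parameters")
print(f"ratio: {dense // conv:,}x")
```

The convolutional count is independent of the grid size, whereas the dense count grows with the number of voxels.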

Pooling layers—such as maximum pooling (Zhou et al., 1988), and average pooling—are often inserted after (activated) convolutional layers to make the representation approximately invariant to small translations (Goodfellow et al., 2016). Additionally, they reduce the size of the input of the next layer thus increasing the computational efficiency of the CNN, and are essential for dealing with inputs of varying size (Goodfellow et al., 2016).

Convolutional neural networks have been very successfully applied to different tasks in computer vision such as image classification (Krizhevsky et al., 2017) in the ImageNet challenge (Deng et al., 2009; Russakovsky et al., 2015).

Wallach et al. (2015) introduced a structure-based deep convolutional network for bioactivity prediction (classification into two activity classes) of small drug-like molecules against a target of interest. In their architecture, denoted AtomNet, the protein-ligand binding site was converted into a 3D grid (20 Å per side at 1 Å resolution) containing values related to structural features such as the number of atom types or protein-ligand descriptors such as SPLIF (Da and Kireev 2014), SIFt (Deng et al., 2003), or APIF (Pérez-Nueno et al., 2009). They showed improved ROC-AUC performance compared to their baseline, provided by the Smina docking software (Koes et al., 2013). Ragoza et al. (2017a) introduced a similar approach to distinguish good (low RMSD) from bad (high RMSD) docking poses using CNNs based on an atomic density representation of the binding site (see Eq. 8). This approach was later extended to include binding affinity predictions in a multitask learning framework (Sunseri et al., 2018), in which both the binding affinity and the pose quality are predicted at the same time, and it was shown to provide a good correlation between experimental and predicted binding affinities for the CASF-2016 benchmark (Francoeur et al., 2020). The various pre-trained CNN scoring functions are integrated and readily available in the Gnina docking software (McNutt et al., 2021). Jiménez et al. (2018) took a similar approach for binding affinity prediction with their Kdeep architecture, using a slightly different density representation first introduced in DeepSite (Jiménez et al., 2017), and achieved a very good correlation and low RMSE on the CASF-2016 benchmark. Interestingly, they analyzed the accuracy separately for the 58 different target classes of the CASF-2016 benchmark, revealing that accuracy is very sensitive to the specific protein used. Indeed, protein family-specific CNN models have been developed for virtual screening using a transfer-learning approach (Imrie et al., 2018).

Many other architectures for binding affinity predictions based on CNNs have been developed in recent years. Notable examples are Pafnucy (Stepniewska-Dziubinska et al., 2018), DeepAtom (Li Y. et al., 2019), OnionNet (Zheng et al., 2019; Wang S. et al., 2021) and OnionNet-2 (Wang Z. et al., 2021), and RoseNet (Hassan-Harrirou et al., 2020).

Pafnucy discretizes the binding site on a three-dimensional grid of 20 Å per side at 1 Å resolution and employs a set of 19 features including one-hot encoding of atom types (including selenium, halogens, and metals), hybridization state, number of bonds with heavy atoms, number of bonds with heteroatoms, and a flag distinguishing protein and ligand atoms. DeepAtom uses a grid of 1 Å resolution to voxelize the binding site, with the same density representation as Jiménez et al. (2018) and using Arpeggio atom types (Jubb et al., 2017), but the architecture is inspired by ShuffleNet V2 (Ma et al., 2018). OnionNet (Zheng et al., 2019) also uses a deep convolutional neural network but the input features are based on intermolecular element-pair-specific contacts between ligand and protein atoms, which are grouped in different distance shells. Each shell is described by 64 features representing the intermolecular interactions (within the shell boundaries) between the protein and the ligand for the eight atom types considered, and a total of 60 shells (of thickness 0.5 Å) is employed (Zheng et al., 2019). This idea was later extended in OnionNet-2 (Wang Z. et al., 2021), which uses protein residue types instead of protein atom types (increasing the number of features from 64 to 8 × 21 = 168). RoseNet (Hassan-Harrirou et al., 2020) uses an ensemble of CNNs based on the ResNet architecture (He et al., 2016). It combines molecular mechanics energies from the Rosetta force field (Alford et al., 2017), voxelized onto a 3D grid (25 Å each side, at 1 Å resolution), with molecular descriptors, using an approach similar to Kdeep with descriptors from AutoDock Vina (Trott and Olson 2009), to predict absolute binding affinities.
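The shell-based contact features described for OnionNet can be sketched as a histogram over distance shells and element pairs. The sketch assumes uniform shells starting at zero distance and an eight-symbol type vocabulary given as integer indices; the published method may define the first shell and the atom types differently, so the names and shell boundaries here are illustrative only.

```python
import numpy as np

N_TYPES = 8  # eight atom types for both protein and ligand (indices 0..7)


def shell_features(p_types, p_xyz, l_types, l_xyz, n_shells=60, thickness=0.5):
    """Count protein-ligand contacts per distance shell and element-type pair.

    p_types, l_types: integer type indices; p_xyz, l_xyz: (n, 3) coordinates.
    Returns a flat vector of n_shells * N_TYPES * N_TYPES counts.
    """
    p_types, l_types = np.asarray(p_types), np.asarray(l_types)
    p_xyz, l_xyz = np.asarray(p_xyz, dtype=float), np.asarray(l_xyz, dtype=float)
    # Pairwise distances, shape (n_protein, n_ligand)
    d = np.linalg.norm(p_xyz[:, None, :] - l_xyz[None, :, :], axis=-1)
    shell = np.floor(d / thickness).astype(int)
    inside = shell < n_shells  # drop pairs beyond the outermost shell
    p_idx = np.broadcast_to(p_types[:, None], d.shape)[inside]
    l_idx = np.broadcast_to(l_types[None, :], d.shape)[inside]
    feats = np.zeros((n_shells, N_TYPES, N_TYPES))
    np.add.at(feats, (shell[inside], p_idx, l_idx), 1.0)  # unbuffered accumulation
    return feats.reshape(-1)


feats = shell_features([1, 2], [[0, 0, 0], [0, 0, 40]], [3], [[0, 0, 1.2]])
print(feats.shape, feats.sum())
```

With 60 shells and 8 × 8 = 64 element-pair counts per shell, the flat vector has 3840 entries, which can be reshaped into a 2D array and passed to a CNN.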

CNNs can also be employed with lower-dimensional descriptors. For example, TopologyNet (Cang and Wei 2017) encodes the three-dimensional protein-ligand complex structure into one-dimensional element-specific fingerprints based on topological invariants. Such element-specific topological fingerprints, stacked together over multiple channels—like a one-dimensional image representation—are then used as input of a CNN, and achieve good performance on the CASF-2016 benchmark. The work was later extended to explore additional algebraic topology approaches (Cang et al., 2018).

CNNs have also been successfully applied to the related task of pose prediction. The CNN developed by Ragoza et al. (2017a) was initially developed for pose prediction and was extended to binding affinity prediction at a later stage (Francoeur et al., 2020). Other notable examples are DeepBSP (Bao et al., 2021), which uses a 3D voxel representation of protein-ligand complexes to predict the RMSD between a docked ligand and its native pose (an idea previously explored by Aggarwal and Koes (2020)), and MedusaNet (Jiang H. et al., 2020), which uses CNNs to predict whether a pose generated by docking is a good pose, so that docking can be stopped early once k good poses are found, thus reducing computational costs.

The application of CNNs in the prediction of protein-ligand binding affinities has been quite successful, as demonstrated by the methods discussed above. However, while CNNs are translationally invariant they are not rotationally invariant, and therefore require extensive data augmentation in which the protein-ligand complex is randomly rotated before computing its associated grid representation. Data augmentation with CNNs has proven to be essential to prevent overfitting in pose prediction (Ragoza et al., 2017a), and predictions can be averaged over multiple random rotations during inference, thus reducing their variance (Jiménez et al., 2018). Many concepts from geometric deep learning (Atz et al., 2021; Bronstein et al., 2021), such as CNNs that are equivariant to rigid body motions (Weiler et al., 2018), are likely to be increasingly adopted in protein-ligand binding affinity prediction and virtual screening, overcoming some of the limitations of standard CNNs by encoding relevant symmetries directly into the model.
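Averaging predictions over random rotations at inference time can be sketched as follows. This is a minimal illustration under stated assumptions: `model` is a hypothetical callable that maps a list of atomic coordinates to a scalar prediction (in practice it would voxelize the rotated complex and run the CNN), and all function names are introduced here for illustration only.

```python
import math
import random

def random_rotation(rng):
    """Draw a rotation matrix from a random unit quaternion (uniform over rotations)."""
    q = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(c * c for c in q))
    w, x, y, z = (c / norm for c in q)
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]

def rotate(coords, rot, center):
    """Rotate a list of (x, y, z) coordinates about a center point."""
    rotated = []
    for xyz in coords:
        shifted = [a - c for a, c in zip(xyz, center)]
        rotated.append(tuple(
            sum(rot[i][j] * shifted[j] for j in range(3)) + center[i]
            for i in range(3)
        ))
    return rotated

def predict_with_rotation_averaging(model, coords, center, n_rotations=8, seed=0):
    """Average the model output over several randomly rotated copies of the input."""
    rng = random.Random(seed)
    predictions = [
        model(rotate(coords, random_rotation(rng), center))
        for _ in range(n_rotations)
    ]
    return sum(predictions) / len(predictions)
```

The same `rotate` helper could be used during training to generate augmented samples. For a model that is already rotationally invariant the average coincides with a single prediction, which is the property that equivariant architectures aim to provide by construction.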

4.5 Graph Neural Networks

Graph neural networks (GNNs) are a collection of DL architectures designed to work with data that can be represented as a graph (Bronstein et al., 2021). The vast majority of GNNs fall into three categories (Bronstein et al., 2021): convolutional (Defferrard et al., 2016; Kipf and Welling 2016), attentional (Monti et al., 2017; Veličković et al., 2017; Zhang et al., 2018), and message-passing (Gilmer et al., 2017; Battaglia et al., 2018). A graph is composed of a set of vertices v_i ∈ V and a set of edges e_ij ∈ E connecting the vertices. Features x are associated with vertices (and, optionally, edges) and such features are subsequently updated as follows:

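$$\mathbf{h}_u = \phi\left(\mathbf{x}_u, \bigoplus_{v \in \mathcal{N}_u} \psi\left(\mathbf{x}_u, \mathbf{x}_v\right)\right) \tag{12}$$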

where ϕ and ψ are learnable functions (often learnable affine transformations with activation functions (Bronstein et al., 2021)) and where ⊕ represents a permutation-invariant function allowing the aggregation of features (such as sum, mean, and maximum (Bronstein et al., 2021)) over the neighborhood of node u. ψ is a message-passing function (which can be generalized to include edge features as well), while ϕ is a vertex update function. It is possible to learn edge features as well by introducing a hidden representation h_uv for the edges (Kearnes et al., 2016; Gilmer et al., 2017).
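A single message-passing update of this form can be written down directly. The sketch below is illustrative only: in a real GNN ϕ and ψ are learnable, whereas here they are fixed stand-ins (`psi` copies the neighbor features and `phi` adds the aggregated messages to the node features), and the sum is used as the permutation-invariant aggregation. All names are assumptions made for this example.

```python
def sum_aggregate(messages, dim):
    """Permutation-invariant aggregation: element-wise sum of message vectors."""
    total = [0.0] * dim
    for message in messages:
        total = [t + m for t, m in zip(total, message)]
    return total

def message_passing_step(x, neighbors, psi, phi):
    """Apply h_u = phi(x_u, sum over v in N(u) of psi(x_u, x_v)) to every node u."""
    h = {}
    for u, x_u in x.items():
        messages = [psi(x_u, x[v]) for v in neighbors[u]]
        h[u] = phi(x_u, sum_aggregate(messages, len(x_u)))
    return h

# Fixed stand-ins for the learnable functions (assumptions for illustration).
def psi(x_u, x_v):
    return list(x_v)  # the message is simply the neighbor's feature vector

def phi(x_u, aggregated):
    return [a + b for a, b in zip(x_u, aggregated)]  # residual-style update

# Path graph 0 - 1 - 2 with one scalar feature per node.
x = {0: [1.0], 1: [2.0], 2: [4.0]}
neighbors = {0: [1], 1: [0, 2], 2: [1]}
h = message_passing_step(x, neighbors, psi, phi)
print(h)  # {0: [3.0], 1: [7.0], 2: [6.0]}
```

Because the aggregation is a sum, reordering the neighbor lists leaves the result unchanged, which is the permutation invariance required of ⊕.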

Since molecules can be naturally represented as graphs—with nodes in the graphs representing different atoms and edges in the graph representing the chemical bonds between such atoms—GNNs are well suited to be applied in the field of chemistry (Atz et al., 2021). Message-passing GNNs, which are the most general flavor, have been successfully applied in quantum chemistry applications (Schütt et al., 2018; Qiao et al., 2020; Schäfer et al., 2020; Christensen et al., 2021). GNNs have also been applied to several molecular property predictions (Gaudelet et al., 2021), including bioactivity and protein-ligand binding affinity.

Gomes et al. (2017), inspired by the work of Behler and Parrinello (2007), developed an atom type convolution that uses a neighbor-listed distance matrix to automatically extract features about local chemical environments and combine this information with radial pooling to downsample the output of the atom type convolution. Essentially, the atom type convolution performs a graph convolution on the nearest neighbors graph in three-dimensional space. The resulting features are then passed to a collection of fully connected layers (all with the same weights and biases) to predict atomic contributions to the energy, which are summed together to obtain the total Gibbs free energy. To predict the binding free energy, three weight-sharing networks are used (one each for G_complex, G_protein and G_ligand) and the results are then combined as

$$\Delta G = G_{\text{complex}} - G_{\text{protein}} - G_{\text{ligand}} \tag{13}$$

so that the whole architecture directly incorporates the thermodynamic cycle.
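The way weight sharing and the thermodynamic cycle fit together can be illustrated with a short sketch. Here `atomic_net` is a hypothetical callable standing in for the shared fully connected layers that map one atom's features to its energy contribution; the function names are assumptions for illustration.

```python
def total_energy(atom_features, atomic_net):
    """Sum the atomic energy contributions predicted by the shared network."""
    return sum(atomic_net(features) for features in atom_features)

def binding_free_energy(complex_feats, protein_feats, ligand_feats, atomic_net):
    """Combine three energies from the same network: complex - protein - ligand."""
    return (
        total_energy(complex_feats, atomic_net)
        - total_energy(protein_feats, atomic_net)
        - total_energy(ligand_feats, atomic_net)
    )
```

Since the same `atomic_net` is used for all three systems, atoms whose local environment is unchanged upon binding contribute equally to the complex and to the isolated partner, so their contributions cancel in the difference.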

In PotentialNet (Feinberg et al., 2018) the node updates are of the form

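$$h_i^{(k+1)} = \mathrm{GRU}\left(h_i^{(k)}, \sum_{e} \sum_{j \in \mathcal{N}^{(e)}(v_i)} \mathrm{NN}^{(e)}\left(h_j^{(k)}\right)\right) \tag{14}$$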

where GRU is a gated recurrent unit (Hochreiter and Schmidhuber 1997; Cho et al., 2014; Chung et al., 2014), NN^(e) is a trainable NN for edge type e, and N^(e)(v_i) denotes the neighbors of edge type e for atom i. The updates are organized into three stages: in the first stage information is propagated only between nodes linked by a covalent bond, in the second stage information is propagated across both covalent bonds and non-covalent interactions, and finally everything is aggregated by a ligand-based graph gather. The first stage essentially produces learned (bond-based) atom types, while the second stage includes both bond and spatial information between the atoms (Feinberg et al., 2018). In the third stage, all learned features for the ligand atoms are summed together and the resulting vector is used as input of a fully-connected neural network to produce the final prediction.
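The staged propagation can be sketched with a deliberately simplified model. The following is an illustration under strong assumptions, not the PotentialNet implementation: node features are scalars, each edge-type network is reduced to a single scalar weight, the GRU uses one common gate convention, and the final fully-connected network is omitted. All names and parameter keys are hypothetical.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gru_cell(h, m, p):
    """Scalar GRU: update the hidden state h given the aggregated message m."""
    z = sigmoid(p["wz"] * m + p["uz"] * h)            # update gate
    r = sigmoid(p["wr"] * m + p["ur"] * h)            # reset gate
    h_new = math.tanh(p["wh"] * m + p["uh"] * r * h)  # candidate state
    return (1.0 - z) * h + z * h_new

def propagate(h, edges, edge_weights, gru_params, edge_types, n_steps=1):
    """Gated updates that use only the edge types listed in edge_types.

    edges maps an edge type to a list of undirected (i, j) index pairs.
    """
    for _ in range(n_steps):
        messages = [0.0] * len(h)
        for e in edge_types:
            for i, j in edges[e]:
                messages[i] += edge_weights[e] * h[j]
                messages[j] += edge_weights[e] * h[i]
        h = [gru_cell(h_i, m_i, gru_params) for h_i, m_i in zip(h, messages)]
    return h

def staged_gather(h0, edges, edge_weights, gru_params, ligand_atoms):
    """Stage 1: covalent only. Stage 2: covalent and non-covalent. Stage 3: ligand sum."""
    h1 = propagate(h0, edges, edge_weights, gru_params, ["covalent"])
    h2 = propagate(h1, edges, edge_weights, gru_params, ["covalent", "noncovalent"])
    return sum(h2[i] for i in ligand_atoms)
```

In this sketch a ligand atom only receives information from the protein during the second stage, through the non-covalent edges, which mirrors the separation between bond-based and spatial information described above.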

The graphDelta architecture uses a graph-based representation for the ligand and incorporates information about the target in the node features (Karlov et al., 2020). The node features are radial and angular Behler-Parrinello atom-centered symmetry functions (ACSFs) (Behler and Parrinello 2007), which are processed by a message-passing neural network. With enough training epochs, the model achieves a Pearson’s correlation coefficient of 0.87 and an RMSE of 1.05 on the CASF-2016 benchmark for binding affinity prediction.

Li et al. (2021) developed a structure-aware interactive GNN which combines polar coordinate-inspired graph attention layers and pairwise interactive pooling. The graph attention layers leverage distances between nodes and angles between edges to iteratively update node and edge embeddings while preserving distance and angle information among atoms. The pairwise atomic type-aware pooling layer is then used to gather interactive edges to capture long-range interactions. Their model, called SIGN, achieves good results on the CASF-2016 benchmark for binding affinity prediction as well as the CSAR-NRC HiQ set.

Son and Kim (2021) developed GraphBAR, where a graph is constructed from all ligand atoms and protein atoms within 4 Å from the ligand (limited to a maximum of 200 nodes, with zero-padding of the adjacency matrix for smaller graphs). Node features consist of one-hot encoded atom types, atom hybridization states, number of neighboring atoms (heavy atoms and heteroatoms), as well as partial charges, stored in a 200 × 13 feature matrix. Multiple binary adjacency matrices are used to encode different interaction shells with fixed distance intervals. A graph convolution block is applied to each adjacency matrix together with the feature matrix pre-processed by a fully-connected layer. The outputs of the graph convolutional blocks are concatenated and a fully connected layer produces the final prediction. The model shows similar performance to Pafnucy (Stepniewska-Dziubinska et al., 2018), but the training time appears to be considerably shorter (Son and Kim 2021).
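The construction of several zero-padded binary adjacency matrices, one per distance interval, can be sketched as follows. This is an illustration only: the function name and the interval boundaries used in the example are assumptions, not the values used in GraphBAR.

```python
import math

MAX_NODES = 200  # graphs with fewer atoms are zero-padded to this size

def shell_adjacency_matrices(coords, boundaries, max_nodes=MAX_NODES):
    """Build one binary adjacency matrix per distance interval (lo, hi].

    coords is a list of (x, y, z) tuples; boundaries is an increasing list of
    distances in Å, so that consecutive values delimit the interaction shells.
    """
    n = len(coords)
    if n > max_nodes:
        raise ValueError("graph exceeds the maximum number of nodes")
    matrices = []
    for lo, hi in zip(boundaries[:-1], boundaries[1:]):
        adjacency = [[0] * max_nodes for _ in range(max_nodes)]
        for i in range(n):
            for j in range(i + 1, n):
                if lo < math.dist(coords[i], coords[j]) <= hi:
                    adjacency[i][j] = adjacency[j][i] = 1
        matrices.append(adjacency)
    return matrices
```

For example, `shell_adjacency_matrices(coords, [0.0, 2.0, 4.0])` would return two 200 × 200 matrices, one for pairs closer than 2 Å and one for pairs between 2 Å and 4 Å (hypothetical thresholds), each of which would be fed to its own graph convolution block.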

Jiang et al. (2021) developed InteractionGraphNet, where two independent graph convolution modules are stacked to sequentially learn intramolecular and intermolecular interactions using three molecular graphs (one for the ligand, one for the protein, and one for the protein-ligand complex). The protein-ligand bipartite graph is built using protein and ligand atoms within 8 Å of each other. At first, a series of message passing iterations is employed to update the node features in the protein and ligand graphs. Then, these learned node features are used as initial node features for the protein-ligand graph on which edge features representing non-covalent interactions are updated. The learned edge features on the protein-ligand graph, representing the non-covalent interactions between the protein and the ligand, are finally pooled together and used for downstream prediction tasks: binding affinity prediction, virtual screening and pose prediction. For binding affinity prediction, InteractionGraphNet shows good results on the CASF-2016 benchmark, although several systems were removed from the test set.

Moesser et al. (2022) recently developed a simple but effective way to include protein-ligand interactions into ligand-based graphs. Their protein-ligand interaction graph (PLIG) representation featurizes each atom node in the molecular graph by including both atom properties and atom-atom contacts with protein atoms. Combined with the GAT architecture (Veličković et al., 2017), their model reaches a very good performance on the CASF-2016 benchmark.

Moon et al. (2022) took a different approach. Instead of using standard, general-purpose architectures, they included parametrized physics-based equations in the model architecture, to incorporate the appropriate inductive bias with the goal of improving model generalization by forcing the model to learn the underlying chemical interactions. A GNN is used to update node features across covalent bonds and intermolecular interactions, which are then used, together with pairwise distances, as input of physics-based parametrized equations describing intermolecular interactions as well as entropy loss. The parameters of the physics-informed equations are learned during training and contribute to model generalization.

GNNs have also been successfully applied to structure-based virtual screening (classification of actives and decoys) as well as pose prediction (classification of binding poses), as demonstrated by Lim et al. (2019), Morrone et al. (2020), and Stafford et al. (2022). The use of GNNs (and, more generally, geometric deep learning) in drug discovery and drug development is a very active area of research, and a recent overview of several different applications beyond the narrow scope of this review is given by Gaudelet et al. (2021).

4.6 Other Methods

Above we briefly described widely used families of deep learning architectures (MLPs, CNNs, and GNNs) and their application to the development of structure-based scoring functions. One important omission is recurrent neural networks (RNNs) (Rumelhart et al., 1986; Hochreiter and Schmidhuber 1997; Graves 2012), which are suited to learning from sequential data (such as language or time series). RNNs have also been applied to protein-ligand binding affinity prediction (Karimi et al., 2019), but they usually employ unrelated representations for the protein (often the sequence of amino acids) and the ligand (SMILES strings or related representations). As mentioned above, proteochemometric or pair models (Lenselink et al., 2017; Feng et al., 2018; Öztürk et al., 2018; Shin et al., 2019; Jiang M. et al., 2020; Nguyen et al., 2020; Yang et al., 2022) are outside the scope of this review and the reader can find more information in Kimber et al. (2021).

Similarly to proteochemometric models, which combine different—often learned—representations for the protein and the ligand, protein-ligand binding affinity predictions can also benefit from the use of complementary representations of the complex. Jones et al. (2021) combine learned representations of the protein-ligand complex obtained with CNNs and GNNs using mid-level or late deep fusion (Roitberg et al., 2019).

Seo et al. (2021) recently developed BAPA, an architecture based on 1D CNNs combined with an attention layer. The protein-ligand complex is encoded into a 1D descriptor of contacts between the protein and ligand atoms and processed using a 1D CNN to obtain learned features, which are then concatenated with terms from the AutoDock Vina scoring function. The learned features are then encoded into a latent representation using an MLP. The encoded vector is then passed to an attention layer. As described by Chen et al. (2018), an attention layer computes a weighted sum of input values, where the weights are determined based on the relevance of the different input components. In BAPA, the goal of the attention layer is to extract the components of the input important for binding affinity prediction in a context vector. The encoded and context vectors are then concatenated and used by an MLP to obtain the final prediction. Wang Y. et al. (2021) also used self-attention in their PointTransformer architecture. The use of the attention mechanism (Bahdanau et al., 2014; Luong et al., 2015) in binding affinity prediction is also found in proteochemometric models (Karimi et al., 2019; Zhao et al., 2019).
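The weighted sum at the core of an attention layer can be illustrated with a minimal sketch. It is generic rather than specific to BAPA: the relevance scores are passed in directly, whereas in a trained model they would be computed from the inputs by learned parameters, and the function names are assumptions for this example.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into positive weights that sum to one."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_context(values, scores):
    """Return the context vector (weighted sum of values) and the weights."""
    weights = softmax(scores)
    dim = len(values[0])
    context = [
        sum(w * v[k] for w, v in zip(weights, values))
        for k in range(dim)
    ]
    return context, weights
```

With equal scores the context vector is the plain average of the inputs, while a single dominant score makes the context vector approach the corresponding input, which is how the layer emphasizes the most relevant components.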