Abstract

This work explores the potential utility of neural network classifiers for real-time classification of field-potential-based biomarkers in next-generation responsive neuromodulation systems. Compared to classical filter-based classifiers, neural networks make patient-specific parameter tuning easier, promising to reduce the programming burden on clinicians. The paper explores a compact, feed-forward neural network architecture of only dozens of units for seizure-state classification in refractory epilepsy. The proposed classifier offers accuracy comparable to filter-based classifiers on clinician-labeled data, while reducing detection latency. To offset the higher computational cost of neural networks relative to classical methods, the paper focuses on keeping the complexity of the architecture minimal, to accommodate the on-board computational constraints of implantable pulse generator systems.

Clinical relevance—A neural network-based classifier is presented for responsive neurostimulation, with comparable accuracy to classical methods at reduced latency.

I. Introduction

Deep brain stimulation (DBS) first received approval for the symptomatic treatment of Parkinson’s disease in 1997. While similar in design to cardiac pacemakers, the implantable pulse generators (IPGs) of the time offered only open-loop therapy, typically with a single stimulation pattern set by a clinician for each patient. Although real-time seizure detection and responsive neurostimulation (RNS) were first attempted by Gotman et al. in 1976 using a computer-in-the-loop system [1], it was Osorio et al. in 1998 [2], [3], [4] who introduced the more widely studied filter-based spectral biomarker detectors to the field of epilepsy research. With the continued development of IPGs and the maturation of low-power microprocessor technology, the first RNS system for epilepsy received approval for pre-clinical use in 2014. This system, from NeuroPace, could sense bioelectric signals and select stimulation programs based on a clinician-configured classification state [5].

Filter-based spectral-feature detectors have since been used successfully in other conditions, most notably for tremor suppression in Parkinson’s disease, following the discovery of beta oscillations as a correlate of disease state [6]. However, the smaller signal size of beta oscillations (1 μVrms) compared to epileptiform activity (10 μVrms) made deploying the detector algorithm in IPGs challenging, owing to stimulation and other artifacts. Contemporary work focuses on improving the robustness of the signal chains to enable simultaneous sensing and stimulation, and thus true closed-loop operation across targeted diseases [7], [8]. Examples include the Medtronic Percept [9] and the Picostim-DyNeuMo research systems [10], [11]. A complementary avenue of refinement is the use of feedforward predictors that adapt stimulation to periodicities of disease state and patient needs, such as the circadian scheduling of the SenTiva system from LivaNova or of the Picostim-DyNeuMo [12]. Longer-term rhythms, on weekly or even monthly scales, are also being investigated for epilepsy management [13].

Patient-specific filter design, even when aided by software, can be a complex problem that is likely to limit both clinician capacity and patient throughput. Establishing and validating a neural network (NN) training pipeline based on clinician-labeled data could offer a systematic classifier tuning process. Networks could be pre-trained on aggregate data from multiple patients and refined on individual labeled data at the point of deployment [14]. Of course, due to the black-box nature of neural network classifiers, extensive validation work will be required to establish safety before first-in-human studies. Advances in interpretable deep learning could help build trust in NN classifiers for medical use [15].

Liu et al. [16] demonstrated the feasibility of deploying high-accuracy classifiers for seizure detection on modern microprocessors (ARM® Cortex-M4) through model compression and quantization techniques, showcasing several advanced NN topologies.

This paper is intended as an initial study that focuses on a fundamental challenge of NN classifiers: computational cost. As state-of-the-art deep neural networks reach ever-increasing model sizes [14], [17], we aim to explore whether lean NNs of only dozens of units can in fact compete in accuracy with classical, filter-based systems for bioelectric signal classification.

II. Design

A. Baseline Method

To establish a baseline for both performance and computational cost, we used a classical band-power estimation filter chain to detect epileptiform discharges [3], [6], which we have previously deployed successfully in the Picostim-DyNeuMo experimental IPG system [11]. The processing steps of this method are shown in the top panel of Fig. 1. While this algorithm is computationally efficient and has a very favorable memory footprint (see Table I), the demodulated envelope signal, and thus the detector output, will always lag the input signal, irrespective of processing speed, because the envelope is smoothed to reduce output ripple. This trade-off arises from the very nature of causal filtering, and is necessary to prevent rapid switching of the detector output for input signals near the classification threshold. The reference classifier was configured as follows. The band-pass stage was an 8–22 Hz, 4th-order Butterworth filter with a Direct Form I IIR implementation (16-bit coefficients, 32-bit accumulators). Envelope demodulation was achieved using an exponential moving average filter with a decay coefficient of 32 samples. The filter chain and all other classifiers were designed to operate at a sampling rate of 256 Hz.
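The baseline chain can be sketched in a few lines. This is a minimal floating-point sketch, not the deployed fixed-point implementation: it assumes a single 2nd-order band-pass biquad (designed with the RBJ audio-EQ-cookbook formulas and centered on the geometric mean of 8 and 22 Hz) as a stand-in for the 4th-order Butterworth, full-wave rectification before smoothing, and a hypothetical detection threshold. The EMA uses the smoothing factor 1/32, so its time constant is roughly 32 samples, or about 125 ms at 256 Hz, which illustrates the inherent output lag described above.

```python
import math

FS = 256.0  # sampling rate used by all classifiers in the paper (Hz)


class Biquad:
    """Floating-point Direct Form I second-order IIR section (a0 normalized to 1)."""

    def __init__(self, b, a):
        self.b0, self.b1, self.b2 = b
        self.a1, self.a2 = a
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def step(self, x):
        y = (self.b0 * x + self.b1 * self.x1 + self.b2 * self.x2
             - self.a1 * self.y1 - self.a2 * self.y2)
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y


def bandpass_biquad(f_lo, f_hi, fs=FS):
    """2nd-order RBJ-cookbook band-pass (0 dB peak) centered on the geometric
    mean of the band edges; a stand-in for the paper's 4th-order Butterworth."""
    f0 = math.sqrt(f_lo * f_hi)
    q = f0 / (f_hi - f_lo)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    return Biquad((alpha / a0, 0.0, -alpha / a0),
                  (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0))


class BandPowerDetector:
    """Band-pass -> rectify -> exponential moving average -> threshold."""

    def __init__(self, f_lo=8.0, f_hi=22.0, ema_len=32, threshold=0.3):
        self.bp = bandpass_biquad(f_lo, f_hi)
        self.k = 1.0 / ema_len       # EMA smoothing factor (1/32)
        self.threshold = threshold   # hypothetical value, tuned per patient
        self.env = 0.0

    def step(self, x):
        rectified = abs(self.bp.step(x))
        self.env += self.k * (rectified - self.env)
        return self.env, self.env > self.threshold


def envelope_after(freq_hz, seconds=2.0, amp=1.0):
    """Envelope value after feeding a pure tone through a fresh detector."""
    det = BandPowerDetector()
    env = 0.0
    for i in range(int(seconds * FS)):
        env, _ = det.step(amp * math.sin(2.0 * math.pi * freq_hz * i / FS))
    return env
```

An in-band 13 Hz tone settles to an envelope near 2/π ≈ 0.64 of its amplitude, while tones at 2 Hz or 60 Hz are strongly attenuated. A fixed-point port would quantize the coefficients to 16 bits and accumulate in 32 bits, as in the reference classifier.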

Fig. 1. Classifier architectures.

Top: classical filter-based spectral power detector [6]. Bottom: the multi-layer perceptron architecture evaluated in this paper. Through training on labeled data, the neural network is expected to assume an overall transfer function similar to the hand-crafted filter topology.

Table I. RESOURCE USAGE.

| Classifier | Execution time (cycles/sample) | Code (bytes) | Memory (bytes) |
|---|---|---|---|
| Filter (custom) | 750 | 675 | 100 |
| MLP (TF Lite) | 2250 | 31k | 8k |
| CNN (TF Lite) | 3200 | 31k | 13k |
| MLP (custom) | 2250* | 2.0k* | 300* |

*Predicted values.
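To put the execution times of Table I in perspective, the cycles per sample can be converted into a CPU load at the 256 Hz sampling rate. This sketch assumes the nRF52840 core runs at its 64 MHz maximum clock and ignores sampling, interrupt and stimulation-control overhead.

```python
FS_HZ = 256              # sampling rate used by all classifiers
F_CLK_HZ = 64_000_000    # nRF52840 core clock, assumed to run at full speed

# Execution times taken from Table I (cycles per input sample).
CYCLES_PER_SAMPLE = {
    "Filter (custom)": 750,
    "MLP (TF Lite)": 2250,
    "CNN (TF Lite)": 3200,
}


def cpu_load(cycles_per_sample, fs=FS_HZ, f_clk=F_CLK_HZ):
    """Fraction of core time spent running the classifier."""
    return cycles_per_sample * fs / f_clk


for name, cycles in CYCLES_PER_SAMPLE.items():
    print(f"{name:<16} {100 * cpu_load(cycles):.2f} % of CPU")
```

Under these assumptions the filter, TF Lite MLP and TF Lite CNN occupy roughly 0.3 %, 0.9 % and 1.3 % of the core, respectively.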

B. New neural-based methods

In our search for a low-complexity classifier for time-series input, we explored two main NN families. (1) Multi-Layer Perceptrons (MLPs) are the simplest and oldest family of artificial neural networks [18]: the input vector is connected to ‘hidden’ layers of feedforward units, which condense information into an output unit. This architecture is shown in the bottom panel of Fig. 1. (2) As a step up in complexity, Convolutional Neural Networks (CNNs) introduce a convolution layer, also known as a filter bank, as an initial step between the input vector and the neural layers [14]. The input to our networks is a window of past time samples of the local field potential (LFP) signal. The output, calculated once for each complete window of samples, is thresholded into a binary label. We denote this classifier the ‘standalone MLP’ model.
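The ‘standalone MLP’ inference step can be sketched as follows. The activation functions (ReLU in the hidden layer, sigmoid at the output), the 0.5 decision threshold and the use of non-overlapping 20-sample windows are assumptions for illustration, and the random weights are hypothetical placeholders for trained ones.

```python
import math
import random


def relu(v):
    return v if v > 0.0 else 0.0


def sigmoid(v):
    # Numerically stable logistic function.
    if v >= 0.0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def mlp_forward(window, w_hidden, b_hidden, w_out, b_out):
    """Single-hidden-layer MLP: window (n_in) -> hidden (n_hid) -> 1 output."""
    hidden = [relu(sum(w * x for w, x in zip(row, window)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


def classify_stream(samples, params, n_in=20, threshold=0.5):
    """Evaluate the MLP once per complete, non-overlapping window of n_in
    samples and threshold each output into a binary label."""
    labels = []
    for start in range(0, len(samples) - n_in + 1, n_in):
        p = mlp_forward(samples[start:start + n_in], *params)
        labels.append(p > threshold)
    return labels


# Hypothetical random weights, only to exercise the code path.
rng = random.Random(0)
N_IN, N_HID = 20, 8
params = (
    [[rng.gauss(0.0, 0.3) for _ in range(N_IN)] for _ in range(N_HID)],
    [0.0] * N_HID,
    [rng.gauss(0.0, 0.3) for _ in range(N_HID)],
    0.0,
)
labels = classify_stream([math.sin(0.3 * i) for i in range(256)], params)
```

With 256 samples, the stream yields 12 complete windows and therefore 12 binary labels; the 16 trailing samples wait for the next window.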

Recurrent neural networks, an otherwise natural choice for processing time-series data, were excluded from consideration because recurrence necessitates dynamic state variables, which significantly increase the memory footprint [14]. In the absence of recurrence, we introduced temporal coherence into our classifier in a different way: we required a consensus of three successive NN outputs to define the final output label, yielding the ‘adjusted MLP’ model.
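The consensus step of the ‘adjusted MLP’ can be expressed as a small post-processing filter over successive window outputs. The text does not specify whether consensus means unanimity or averaging (the caption of Fig. 5 mentions an average of 3 windows), so this hypothetical `ConsensusFilter` offers both: averaging the last three outputs before thresholding, or requiring all three to exceed the threshold.

```python
from collections import deque


class ConsensusFilter:
    """Combine the last k per-window MLP outputs into one final label.

    mode="mean": average the last k probabilities, then threshold.
    mode="all":  positive only if all of the last k outputs exceed the threshold.
    """

    def __init__(self, k=3, threshold=0.5, mode="mean"):
        if mode not in ("mean", "all"):
            raise ValueError("mode must be 'mean' or 'all'")
        self.k = k
        self.threshold = threshold
        self.mode = mode
        self.history = deque(maxlen=k)

    def step(self, p):
        """Feed one window output p in [0, 1]; return the final binary label."""
        self.history.append(p)
        if len(self.history) < self.k:
            return False  # not enough history yet: stay negative
        if self.mode == "mean":
            return sum(self.history) / self.k > self.threshold
        return all(q > self.threshold for q in self.history)


outputs = [0.9, 0.1, 0.9, 0.9, 0.9]
unanimous = ConsensusFilter(mode="all")
averaged = ConsensusFilter(mode="mean")
labels_all = [unanimous.step(p) for p in outputs]
labels_mean = [averaged.step(p) for p in outputs]
```

In the unanimous mode, a positive label requires three consecutive positive windows, so detection is delayed by at least two further windows (40 samples, about 156 ms at 256 Hz) after the first positive output; the averaging mode tolerates a single outlier window.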

C. Training and data

Our raw dataset consisted of LFP signals recorded from two patients, for a combined 24 hours, with 30 professionally labeled events of clinical significance. The recordings were resampled to a 256 Hz sampling frequency for uniformity.

As seizures are comparatively rare events scattered among very long periods of normal activity, we decided to introduce class imbalance into our training sets to best prepare the NNs for real-life use. The training set was biased towards negative samples in a 3:1 ratio, based on clinician annotations. The dataset was split in the common 70:30 ratio between a training and a validation set. Network weights and biases were quantized to 8-bit integers.
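Assembling the class-imbalanced data can be sketched as below. The function name and the window-level representation are hypothetical; the sketch keeps every positive (clinician-labeled) window, randomly subsamples negatives to a 3:1 negative-to-positive ratio, shuffles, and splits 70:30 into training and validation sets.

```python
import random


def build_splits(windows, labels, neg_per_pos=3, train_frac=0.7, seed=0):
    """Keep all positive windows, subsample negatives to neg_per_pos:1,
    shuffle, and split into (train, validation) lists of (window, label)."""
    rng = random.Random(seed)
    pos = [(w, 1) for w, y in zip(windows, labels) if y == 1]
    neg = [(w, 0) for w, y in zip(windows, labels) if y == 0]
    n_neg = min(len(neg), neg_per_pos * len(pos))
    data = pos + rng.sample(neg, n_neg)
    rng.shuffle(data)
    n_train = round(train_frac * len(data))
    return data[:n_train], data[n_train:]


# Toy example: 1000 windows, of which the first 50 are labeled seizure.
windows = [[float(i)] for i in range(1000)]
labels = [1 if i < 50 else 0 for i in range(1000)]
train, val = build_splits(windows, labels)
```

Splitting at the window level keeps the sketch short; splitting by event or recording segment would prevent near-identical neighboring windows from appearing in both sets.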

D. Technical equipment used

Neural networks were modeled and trained in TensorFlow Lite version 2.7.0 on an Intel Core i7 CPU with 16 GB of RAM. Embedded performance was tested on an Arduino Nano 33 BLE Sense evaluation board, which is built around the nRF52840 ARM Cortex-M4F microcontroller.

E. Comparison of CNN and MLP models

Fig. 2 shows the performance of our CNN and MLP classifiers. While the CNN outperforms the standalone MLP, its accuracy is similar to that of the adjusted MLP model above an 80% true positive rate (TPR). For safe use, the operating point of a seizure detection system should be biased towards high TPR: missed seizures (false negatives, FNs) pose significantly more risk to the patient than false positives (FPs), which merely result in unnecessary stimulation. Overall, in targeting resource-constrained IPGs, we judged the minor edge of the CNN insufficient to justify the added computational burden of the convolutional layer.
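Biasing the operating point towards high TPR can be made explicit: sweep the decision threshold, then choose the threshold with the lowest FPR among those meeting a minimum TPR. The 80% floor and the toy scores below are illustrative assumptions, not values from the study.

```python
def roc_points(scores, labels, thresholds):
    """Return (threshold, TPR, FPR) for each candidate threshold.
    Assumes labels contain at least one positive and one negative."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s > t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s > t and y == 0)
        points.append((t, tp / pos, fp / neg))
    return points


def pick_operating_point(points, min_tpr=0.8):
    """Among thresholds meeting the TPR floor, choose the lowest FPR
    (ties broken in favor of higher TPR)."""
    ok = [p for p in points if p[1] >= min_tpr]
    if not ok:
        raise ValueError("no threshold reaches the requested TPR")
    return min(ok, key=lambda p: (p[2], -p[1]))


scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
thresholds = [0.05, 0.25, 0.35, 0.5, 0.65, 0.75, 0.85]
best = pick_operating_point(roc_points(scores, labels, thresholds))
```

Here the 0.35 threshold is chosen: it still catches every positive (TPR 1.0) while admitting one false positive (FPR 0.25); the stricter 0.65 threshold would only qualify if the TPR floor were lowered to 75%.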

Fig. 2. ROCs for different classifiers.

Note that performance converges at high TPR and FPR, the desirable operating region for seizure detectors, as FNs pose a significantly greater risk of harm to patients than FPs.

F. Tuning the MLP classifier

The performance of the MLP model, when trained on a given dataset, is primarily determined by two hyperparameters: the number of time points in the input window and the size of the hidden layer. We found that varying the number of hidden layers had very modest effects on accuracy (not shown in this paper). Fig. 3 systematically explores the effect of the two key hyperparameters on the classification error of a single-output, single-hidden-layer MLP model. As expected, the network requires a certain size and complexity to encode a feature space sufficient for reliable classification, though increasing the number of units in either layer beyond a certain point yields diminishing returns. To select a model from the error surface, one could define a scoring scheme combining network size, computation time and the loss itself; however, this is beyond the scope of this paper. Favoring low complexity, we settled on a 20-point input window and 8 hidden neurons, in the ‘transition zone’ of the error surface.

Fig. 3. Tuning the MLP classifier.

Grid search on the two main model hyperparameters: the input vector length and the number of hidden layer units. Loss is represented as binary cross entropy across all samples.
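The loss plotted in Fig. 3 is the binary cross entropy averaged over samples: for predicted probability p and label y ∈ {0, 1}, each sample contributes −[y log p + (1 − y) log(1 − p)]. A minimal sketch, with probabilities clipped (an implementation choice assumed here) to avoid log(0):

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-7):
    """Mean binary cross entropy over paired probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)
```

An uninformative classifier that always outputs 0.5 scores log 2 ≈ 0.693 regardless of the labels, which gives a useful reference level when reading the error surface.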

III. Model Performance and Interpretation

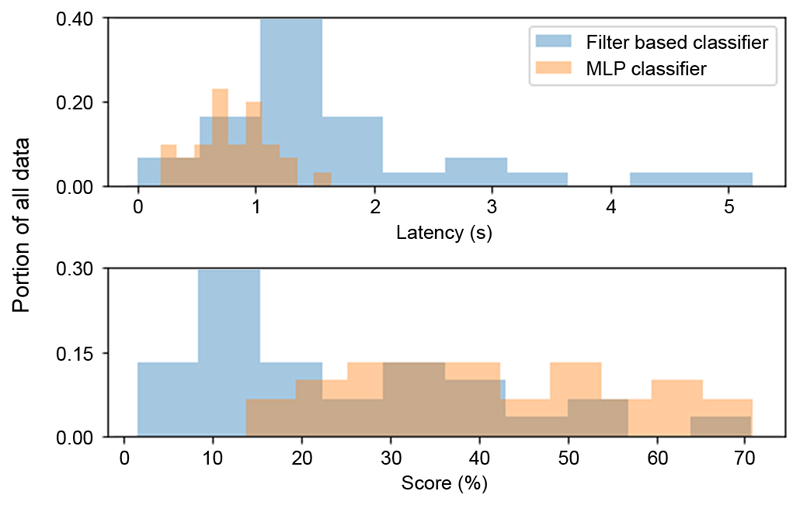

The next step is to compare our best MLP result to the baseline classifier. The ROC of Fig. 2 reveals that a well-tuned filter chain outperforms the small MLP model below a 60% false positive rate, beyond which they converge in accuracy. Detection accuracy alone, however, does not give a holistic view of performance. In Fig. 4 we highlight two additional characteristics to consider in classifier evaluation: (1) latency at event onset, and (2) the overlap between classifier and clinician labels. As shown, the MLP responds on average more rapidly to a commencing seizure (mean latency of 0.6 s vs 1.7 s) and tracks the clinician label more closely overall than the baseline method.
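The two metrics of Fig. 4 can be computed from sample-wise label streams. The exact definitions used in the study are not stated, so this sketch assumes that latency is measured from the clinician-marked onset to the first positive classifier sample at or after it, and that overlap is the percentage of clinician-labeled event samples also labeled positive by the classifier.

```python
def onset_latency(pred, onset, fs=256.0):
    """Seconds from the clinician-marked onset sample to the first positive
    classifier sample at or after it; None if nothing is detected."""
    for i in range(onset, len(pred)):
        if pred[i]:
            return (i - onset) / fs
    return None


def overlap_percent(pred, truth):
    """Percentage of clinician-labeled event samples that the classifier
    also labels positive."""
    event = sum(1 for t in truth if t)
    both = sum(1 for p, t in zip(pred, truth) if p and t)
    return 100.0 * both / event if event else 0.0


# Toy event: clinician label on samples 100..355, detection from sample 250.
truth = [100 <= i < 356 for i in range(600)]
pred = [250 <= i < 401 for i in range(600)]
latency_s = onset_latency(pred, onset=100)
overlap = overlap_percent(pred, truth)
```

In this toy case the latency is 150 / 256 ≈ 0.59 s and the overlap is 106 / 256 ≈ 41.4 %.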

Fig. 4. Detailed performance of classifiers.

Top: histogram of classification latency. Bottom: histogram showing the percentage of overlap between positive classifier output and clinician-labeled event.

To explore the MLP classifier’s internal representation of a seizure, we present a small interpretation experiment in Fig. 5. We presented the classifiers with 1-second sinusoidal bursts of activity, sweeping both test frequency and test amplitude. As seen, the MLP model (right) internalized a notion of the spectral characteristics of epileptiform activity (low-frequency lobe) that encompasses the pass-band of the filter classifier (left). The greater effective bandwidth of the MLP could explain the difference in false positive rates seen on the ROC of Fig. 2. The activation lobes at higher frequencies likely represent a process analogous to aliasing; we expect their periodicity to depend on the input window size, which should be investigated further.
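The probing experiment amounts to a sweep over a frequency and amplitude grid. In this sketch, `classify` is a hypothetical callable returning one 0/1 output per step, each repeat uses a random starting phase (an assumption; the source only states that 10 repeats were averaged), and a toy amplitude detector stands in for the real classifiers.

```python
import math
import random


def tone_burst(freq_hz, amp, seconds=1.0, fs=256.0, phase=0.0):
    """Sinusoidal test burst sampled at fs."""
    n = int(seconds * fs)
    return [amp * math.sin(2.0 * math.pi * freq_hz * i / fs + phase)
            for i in range(n)]


def response_map(classify, freqs, amps, repeats=10, seconds=1.0, fs=256.0, seed=0):
    """Mean classifier output for every (frequency, amplitude) test tone."""
    rng = random.Random(seed)
    grid = {}
    for f in freqs:
        for a in amps:
            total = 0.0
            for _ in range(repeats):
                burst = tone_burst(f, a, seconds, fs,
                                   phase=rng.uniform(0.0, 2.0 * math.pi))
                out = classify(burst)
                total += sum(out) / len(out)
            grid[(f, a)] = total / repeats
    return grid


def toy_amplitude_classifier(samples):
    """Hypothetical stand-in: flags any sample whose magnitude exceeds 0.5."""
    return [1 if abs(v) > 0.5 else 0 for v in samples]


grid = response_map(toy_amplitude_classifier,
                    freqs=[5.0, 10.0, 20.0], amps=[0.1, 1.0, 10.0])
```

Plotting `grid` as a heat map over frequency and amplitude gives the same kind of view as Fig. 5; with a real classifier plugged in, frequency-selective lobes would appear instead of the purely amplitude-driven response of the toy detector.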

Fig. 5. Frequency response of classifiers to input signals of different magnitudes.

Left: filter chain classifier. Right: MLP classifier averaged over 3 windows. Accuracy is presented as the mean classifier output for a 1-second sinusoidal test tone over 10 repeats.

IV. Discussion

The example design of the MLP classifier demonstrates that even tiny neural networks can be effective at simple signal processing tasks. Finally, we reflect on the embedded resource usage achieved, summarized in Table I. Importantly, the network achieved sufficiently low complexity for real-time use. Note that NN execution times are reported per sample, although the output only changes at the end of each 20-sample window. The true memory footprint of the classifier could not be determined with this evaluation system: TensorFlow Lite does not generate network code, but instead provides a network description file that is run by a relatively large, general-purpose interpreter library on the embedded system. For a more realistic, yet conservative, outlook, we present estimates of the memory usage of the same network deployed using a customized library trimmed down to the features used in our design. In summary, the MLP could provide an alternative to the tuned filter methods used in existing commercial devices.
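The 8-bit weight quantization mentioned under Training and data, and the resulting weight storage, can be illustrated with a common symmetric per-tensor scheme: scale all weights by max|w| / 127 and round to the nearest integer. TF Lite's converter applies its own quantization scheme, which this sketch does not reproduce; the random weights are hypothetical stand-ins for the 177 trained parameters of the 20-8-1 network.

```python
import random


def quantize_int8(weights):
    """Symmetric per-tensor quantization of a list of floats to int8 values."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floating-point weights."""
    return [v * scale for v in q]


# 177 parameters of the 20-8-1 network; random values stand in for trained ones.
rng = random.Random(1)
weights = [rng.uniform(-1.0, 1.0) for _ in range(177)]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
storage_bytes = len(q) * 1 + 4  # one byte per int8 value plus one float32 scale
```

Each weight is reconstructed to within half a quantization step, and the parameters of this network occupy 181 bytes including the scale factor.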

ACKNOWLEDGMENT

The authors would like to thank Bence Mark Halpern for reviewing the manuscript, and Tom Gillbe at Bioinduction Ltd. for feedback on the filter-classifier design.

Footnotes

DISCLOSURES

The University of Oxford has research agreements with Bioinduction Ltd. Tim Denison also has business relationships with Bioinduction for research tool design and deployment, and stock ownership (< 1 %).

This work was supported by the John Fell Fund of the University of Oxford, the UK Medical Research Council (MC_UU_00003/3, MC_UU_00003/6) and the Royal Academy of Engineering.

References

- [1] Gotman J, Gloor P. Automatic recognition and quantification of interictal epileptic activity in the human scalp EEG. Electroencephalogr Clin Neurophysiol. 1976 Nov;41(5):513–529. doi: 10.1016/0013-4694(76)90063-8.

- [2] Osorio I, Frei MG, Wilkinson SB. Real-time automated detection and quantitative analysis of seizures and short-term prediction of clinical onset. Epilepsia. 1998 Jun;39(6):615–627. doi: 10.1111/j.1528-1157.1998.tb01430.x.

- [3] Osorio I, et al. Performance reassessment of a real-time seizure-detection algorithm on long ECoG series. Epilepsia. 2002 Dec;43(12):1522–1535. doi: 10.1046/j.1528-1157.2002.11102.x.

- [4] Bhavaraju N, Frei M, Osorio I. Analog seizure detection and performance evaluation. IEEE Trans Biomed Eng. 2006 Feb;53(2):238–245. doi: 10.1109/TBME.2005.862532.

- [5] Sun FT, Morrell MJ. The RNS system: responsive cortical stimulation for the treatment of refractory partial epilepsy. Expert Rev Med Devices. 2014 Nov;11(6):563–572. doi: 10.1586/17434440.2014.947274.

- [6] Little S, et al. Adaptive deep brain stimulation in advanced Parkinson disease. Ann Neurol. 2013 Sep;74(3):449–457. doi: 10.1002/ana.23951.

- [7] Sorkhabi MM, Benjaber M, Brown P, Denison T. Physiological artifacts and the implications for brain-machine-interface design; Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC); 2020. pp. 1498–1498.

- [8] Ansó J, et al. Concurrent stimulation and sensing in bi-directional brain interfaces: a multi-site translational experience. J Neural Eng. 2022 Mar;19(2):026025. doi: 10.1088/1741-2552/ac59a3.

- [9] Neumann W-J, et al. The sensitivity of ECG contamination to surgical implantation site in brain computer interfaces. Brain Stimul. 2021 Sep;14(5):1301–1306. doi: 10.1016/j.brs.2021.08.016.

- [10] Zamora M, et al. DyNeuMo Mk-1: Design and pilot validation of an investigational motion-adaptive neurostimulator with integrated chronotherapy. Exp Neurol. 2022 May;351:113977. doi: 10.1016/j.expneurol.2022.113977.

- [11] Toth R, et al. DyNeuMo Mk-2: an investigational circadian-locked neuromodulator with responsive stimulation for applied chronobiology; Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC); 2020. pp. 3433–3440.

- [12] Zamora M, et al. Case report: Embedding “digital chronotherapy” into medical devices: a canine validation for controlling status epilepticus through multi-scale rhythmic brain stimulation. Front Neurosci. 2021;15:734265. doi: 10.3389/fnins.2021.734265.

- [13] Baud MO, et al. Multi-day rhythms modulate seizure risk in epilepsy. Nat Commun. 2018 Jan;9(1):88. doi: 10.1038/s41467-017-02577-y.

- [14] LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015 May;521(7553):436–444. doi: 10.1038/nature14539.

- [15] Lapuschkin S, et al. Unmasking Clever Hans predictors and assessing what machines really learn. Nat Commun. 2019 Dec;10(1):1096. doi: 10.1038/s41467-019-08987-4.

- [16] Liu X, Richardson AG. Edge deep learning for neural implants: a case study of seizure detection and prediction. J Neural Eng. 2021 Apr;18(4):046034. doi: 10.1088/1741-2552/abf473.

- [17] Bender EM, Gebru T, McMillan-Major A, Shmitchell S. On the dangers of stochastic parrots: Can language models be too big?; Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT); 2021 Mar. pp. 610–623.

- [18] Rosenblatt F. The Perceptron: A probabilistic model for information storage and organization in the brain. Psychol Rev. 1958;65(6):386–408. doi: 10.1037/h0042519.