Abstract

A common goal of fluorescence microscopy is to collect data on specific biological events. Yet, the event-specific content that can be collected from a sample is limited, especially for rare or stochastic processes. This is due in part to photobleaching and phototoxicity, which constrain imaging speed and duration. We developed an event-driven acquisition (EDA) framework, in which neural network-based recognition of specific biological events triggers real-time control in an instant structured illumination microscope (iSIM). Our setup adapts acquisitions on-the-fly by switching between a slow imaging rate while detecting the onset of events, and a fast imaging rate during their progression. Thus, we capture mitochondrial and bacterial divisions at imaging rates that match their dynamic timescales, while extending overall imaging durations. Because EDA allows the microscope to respond specifically to complex biological events, it acquires data enriched in relevant content.

Introduction

Live-cell fluorescence microscopy is an indispensable tool for studying the dynamics of biological systems. Yet, fluorescence microscopy also comes with limitations: as fluorophores go through excitation and emission cycles, they photobleach and produce reactive oxygen species, which interact with cellular components, causing phototoxicity. Thus, photobleaching and phototoxicity restrict the photon budget. Practically, this manifests as a trade-off between spatial resolution, temporal resolution, measurement duration, and signal-to-noise ratio (SNR), such that improving one parameter comes at a cost to the others1,2. For example, higher imaging rates degrade photon budget and sample health more rapidly, reducing measurement duration and SNR. Super-resolution microscopies resolve features below the diffraction limit, but also require higher light doses.

Methodological developments have addressed the limited photon budget with several complementary approaches. Brighter and more photostable fluorescent probes increase the photon budget3,4, while computational denoising or reconstruction5–8 restores low SNR or sparse images to use photons more efficiently, and adaptive microscopies improve how measurements allocate photons. These approaches may be combined synergistically, to permit access to longer, gentler measurements.

Adaptive microscopies implement feedback control systems that spatially modulate the excitation and reduce the overall light dose. Modulated scanning can adjust laser dwell times or intensities to amplify pixels with higher fluorescence signals, to enable faster and gentler confocal9,10 and super-resolution imaging11,12. Alternatively, modulation can maintain pixel-wise fluorescence intensities, and prioritize increased dynamic range while maintaining light exposure13. Additional inputs, such as prior knowledge of sample organization or shape have been used to optimize local excitation intensity in multi-photon measurements14,15. In widefield, digital micro-mirror devices can illuminate selectively where fluorescent structures reside, and reduce the overall light dose16. These adaptive approaches generally take into account the spatial heterogeneity of biological samples, and use their fluorescence signal to decide where, and how much to illuminate.

Biological processes, however, also occur over a broad range of temporal scales and with complex dynamic signatures. For example, within eukaryotic cells, mitochondrial fissions are sporadic events which typically only occur once every few minutes in an entire mammalian cell, and persist less than a minute from start to finish17. The constriction rate accelerates towards full scission – the final stages of which contain the richest information about membrane deformation and its mechanisms. By contrast, the bacterial cell cycle is deterministic, but also intermittent; cells elongate and partially constrict, before popping apart into two separate daughter cells during a small fraction of the cell cycle duration (0.1-0.0001%)18,19. As a result, such dynamic biological events are challenging to capture, especially under their native conditions without induction. Measurements taken at a fixed frame rate would risk missing events of interest, since a high frame rate limits measurement duration while a slow frame rate limits event-specific content. Thus, the optimal frame rate, set by the biological dynamics, changes during an acquisition.

Open-source control software enables users to define and execute their own automation settings, adapted to their biological samples and for their own instruments (MicroManager Intelligent Acquisitions plugin20,21,22). However, biological samples often undergo subtle changes in morphology and protein assembly patterns that herald the onset of events of interest. Under these conditions, adaptive control based on simple readouts like intensity23 or cell shape22 would lack the required sensitivity and specificity to detect them. The complexity of this challenge has prevented event-driven control from achieving its full potential to enable biological discovery through extension beyond spatial adaptation to include temporal adaptation, sculpting the measurement to enrich for events of interest.

We introduce an event-driven acquisition (EDA) framework, which responds to the distinct spatial signatures of biological events to adapt the microscope acquisition – as demonstrated here, by modulating the frame rate. Image analysis powered by deep learning is capable of recognizing surprisingly subtle signatures of events of interest within the sample. We harness a computer vision approach by training a neural network to detect precursors to events of interest and tailor the acquisition on-the-fly. To aid implementation of EDA in different microscope setups, we provide a plugin that integrates the neural network analysis and actuation into Micro-Manager. As proof of principle, we integrate EDA into a large field-of-view instant structured illumination microscope (iSIM)24,25 and apply it to capture super-resolved time-lapse movies of mitochondrial and bacterial divisions. In response to neural network outputs, EDA prioritizes the imaging speed or measurement duration. Such acquisitions spend the sample’s photon budget more efficiently compared with a fixed frame rate. Controlled by EDA, the iSIM collects data enriched in event-specific content and captures more completely the dynamic progression from constriction through scission.

Results

EDA concept and implementation

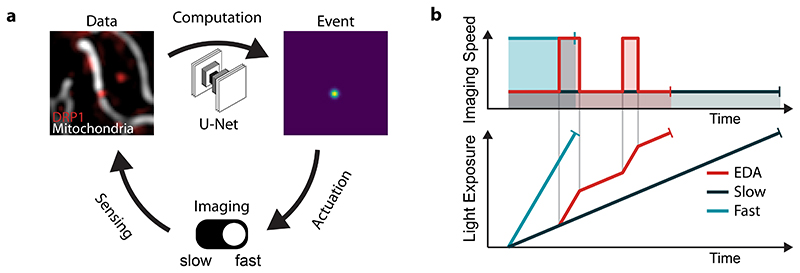

The EDA framework consists of a feedback loop between the live image stream and microscope controls, whose implementation requires: 1) sensing via images of the sample collected by the microscope, 2) computation to analyze images and detect events of interest and 3) actuation to adapt the measurement parameters (Figure 1a). In our implementation, the open-source software Micro-Manager20 captures images from the microscope. As new frames are received, a neural network trained on labeled bio-image data to recognize specific events of interest performs the computation step. For each image, the network generates a spatial relative probability map, or ‘event score’, for those events of interest. The event score acts as a decision parameter for the control loop, and actuates changes in the measurement. We offer this framework as a Micro-Manager plugin – capable of multithreading to parallelize image analysis – that allows EDA-enabled experiments on a wide variety of microscopes (Supplementary Notes 1 and 2). We demonstrate its utility here for measurements of mitochondrial and bacterial division events taken with our custom-built iSIM (Methods).

Figure 1. Event driven acquisition (EDA) concept.

a, The feedback control loop for EDA is composed of three main parts: 1) sensing by image capture to gather data, 2) computation by a neural network to detect events of interest and generate a heat map of event score, and 3) adaptation of the acquisition parameters in response to events in the sample. b, Schema of experiment duration and cumulative light exposure for different fixed imaging speeds or with EDA to switch between the two. The total excitation light dose (shaded areas in upper panel, final light exposure value in lower panel) is constant for all experimental configurations to represent the fixed photon budget.

Since our events of interest are intermittent, we use the event score to actuate a toggle between slow and fast imaging speeds as the measurement proceeds (top, Figure 1b). We rely on the global maximum event score found in the image, reflecting the likelihood of event-relevant content. When it exceeds a user-defined value, the microscope begins to acquire data at a high frame rate. Once in this fast mode, the microscope switches back to the slow mode only when the event score diminishes below a second, slightly lower, threshold. This hysteresis in the triggering mechanism makes the actuation less susceptible to noise from the readout and ensures that events are followed for their full duration, even if the event score peaks early during the event. Given a limited photon budget and fixed frame rate, the measurement will last longest for the slow imaging speed and shortest for the fast imaging speed. The EDA measurement has an intermediate duration, but spends more of its photon budget during events of interest, when the sample will accumulate more exposure to light (bottom, Figure 1b).
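The two-threshold toggle described above can be sketched in a few lines of Python. This is a minimal illustration and not the plugin's code: the class name `HysteresisTrigger`, the threshold values (90 and 70) and the example scores are all hypothetical, chosen only to show how the gap between the thresholds keeps the microscope in fast mode while the score fluctuates.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class HysteresisTrigger:
    """Two-threshold toggle between slow and fast imaging (illustrative only)."""

    upper: float  # switch to fast when the maximum event score exceeds this
    lower: float  # return to slow when the maximum event score drops below this
    fast: bool = False  # current mode; acquisitions start in slow mode

    def update(self, event_score_map: np.ndarray) -> bool:
        """Update the mode from one event score map; return True if fast."""
        peak = float(np.max(event_score_map))  # global maximum of the map
        if not self.fast and peak > self.upper:
            self.fast = True
        elif self.fast and peak < self.lower:
            self.fast = False
        return self.fast


# Made-up per-frame maxima on the 0-255 event score scale.
trigger = HysteresisTrigger(upper=90.0, lower=70.0)
peaks = [40, 95, 80, 75, 65, 85]
modes = [trigger.update(np.array([[p]])) for p in peaks]
print(modes)  # [False, True, True, True, False, False]
```

In this toy trace, the scores of 80 and 75 lie between the two thresholds, so they keep the acquisition in fast mode once it has been triggered. The final score of 85 does not re-trigger fast imaging, because it stays below the upper threshold.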

Detecting division using a neural network

Division underlies mitochondrial proliferation and maintenance26, similar to its role in free-living single-celled organisms. The structural and dynamic precursors to mitochondrial division are challenging to detect. They can occur infrequently, and at any location within the mitochondrial network, at any time. Mitochondria are also constantly in motion, and undergo shape changes unrelated to division. Once initiated, constrictions typically proceed to division within less than a minute17. Finally, mitochondrial width lies near the diffraction limit; thus, changes in their shape preceding fission are difficult to measure. Although numerous software packages propose automated segmentation27,28 and tracking of mitochondria as well as network morphology classification29–32, they rarely attempt to detect divisions33 or active constrictions preceding them. For these reasons, microscopists typically rely on manual analysis to identify mitochondrial divisions in their data, playing videos forward and backward and visually inspecting local changes in the network. Overall, detecting division events in real-time during imaging presents a major bottleneck.

The dynamin-related protein 1 (DRP1) drives mitochondrial membrane constriction and scission, and is required for spontaneous divisions34–36. Thus, its enrichment at division sites can help to identify them. But DRP1 also accumulates at non-dividing sites, since it can bind and oligomerize on both curved and flat membranes37. So, its presence alone cannot reliably predict future divisions, precluding a simple intensity threshold-based event detection.

To address these challenges and detect mitochondrial division sites, we rely on the combined information from mitochondrial shape and DRP1 enrichment. We trained a neural network with a U-net architecture38 on input images of mitochondria and DRP1. The shape of a constriction site is a saddle point, which is characterized by two principal curvatures of opposite signs. Those curvatures are represented by the eigenvalues of the Hessian matrix at that point in the image. Thus, as a putative ground truth, we used the intensity in the DRP1 channel, multiplied by the negative determinant of the Hessian matrix representing the Gaussian curvature of the mitochondrial outline (Supplementary Note 3, Methods). Importantly, to generate the final ground truth data, we manually curated the training set to increase the accuracy for real constriction events by removing false positives.

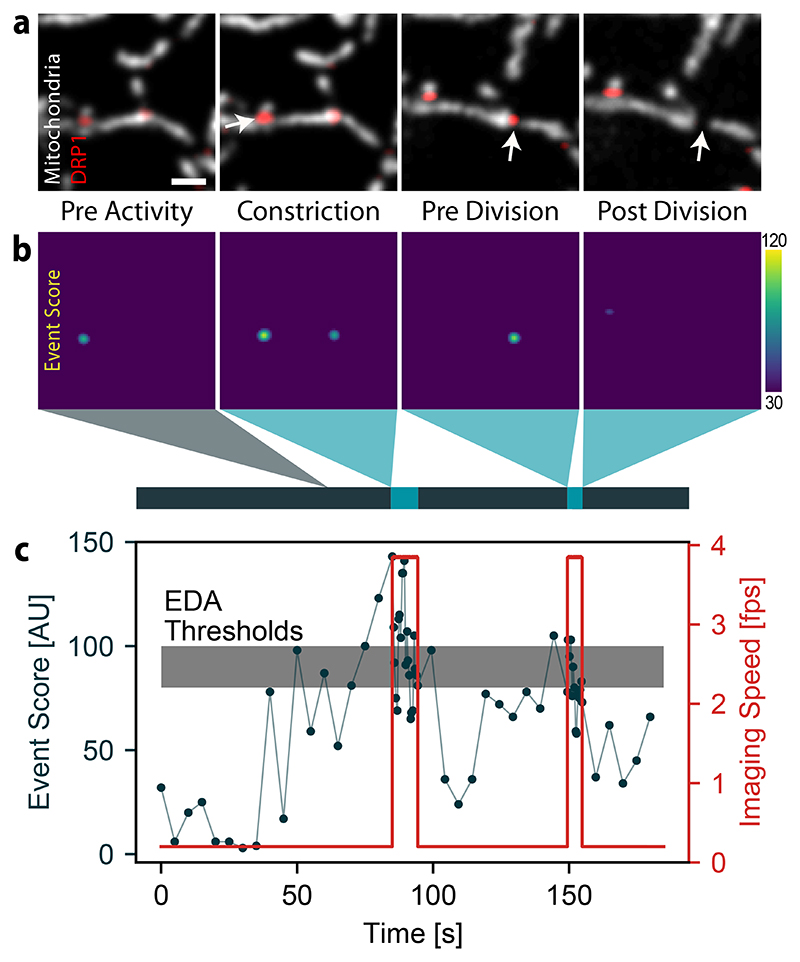

Our network, trained to detect mitochondrial constrictions in the presence of DRP1 (Figure 2a), produces a heat map of event scores: higher values mark locations within the image where division is more likely to occur (Figure 2b, Extended Data Figure 1). The maximum event score represents the most pronounced constriction site highly enriched in DRP1, and hence the most relevant content in a given frame. This network output acts as a decision-making parameter for the actuation step in the EDA feedback control loop (Figure 2c). Once the maximum event score exceeds a threshold value, the imaging speed increases after the subsequent frame, and remains high until the event score dips below a second value. In the example shown, the neural network detects two events of interest, which trigger fast imaging in the iSIM; the first constriction event relaxes without dividing, while the second one ends in division.

Figure 2. Event recognition of mitochondrial divisions during an iSIM acquisition.

a, Images of a COS-7 cell expressing mitochondrion-targeted Mito-TagRFP and Emerald-DRP1, including those containing events of interest (white arrow) that triggered a change in the imaging speed. (Scale bar: 1 μm; image intensities were corrected for photobleaching, N = 33 events in n = 4 independent experiments.) b, Corresponding event score maps and measurement timeline (grey, slow speed; blue, fast speed). c, Time course of the maximum event score computed for each frame of the acquisition (black), and the adaptive imaging speed actuated by the event score (red). The upper boundary of the grey band denotes the maximum threshold above which the iSIM switches to fast imaging, and the lower boundary the minimum threshold below which it returns to slow imaging.

Temporally adaptive imaging of mitochondrial division

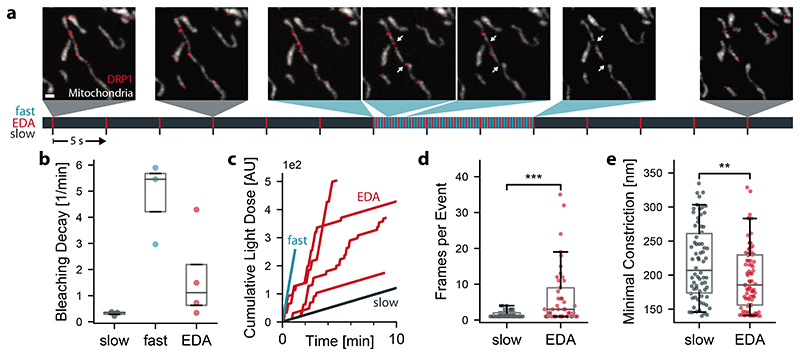

We performed EDA with our iSIM on COS-7 cells expressing mitochondrion-targeted Mito-TagRFP (Cox-8 presequence) and Emerald-DRP1. Typically, our FOV included one entire cell, and we focused in a plane near the coverslip, where many mitochondria were visible. During each measurement, the network recognized events of interest which triggered a switch from slow to fast imaging, followed by a return to slow imaging. Many sites that accumulated DRP1 did not lead to division, and also failed to trigger the network (Figure 3a), demonstrating the network’s ability to discriminate events of interest.

Figure 3. EDA versus fixed-rate imaging of mitochondrial divisions.

a, Mitochondrial dynamics (TagRFP-Mito, grey; Emerald-DRP1, red) captured by EDA (Scale bar: 1 μm, time first frame to last frame: 114 s). Below, measurement timeline indicating the approximate capture time of each image (grey, slow speed; blue, fast speed; red, frame capture, N = 33, n = 4). b, Decay constants obtained from exponential fits to the time-dependent mean intensity in the mitochondria (Extended Data Figure 2). Each point corresponds to a separate FOV. c, Cumulative light dose, defined as the laser power multiplied by the exposure time summed over all previous frames. EDA sometimes achieves a higher total light dose than fast imaging alone, due to recovery during slow imaging (Extended Data Figure 2). d, Number of frames with a constriction diameter below 200 nm during the 20 seconds after the event score exceeded the threshold value (P < 0.0003, Welch’s test). e, Minimal constriction width measured during the frames in d (P < 0.005, independent two-sample t-test). Slow: N = 360 frames in n = 3; fast: N = 765, n = 2; EDA: N = 1516, n = 4. Box plots mark the first quartile, median and third quartile with the whiskers spanning the 5th and 95th percentile.

To compare the duration of EDA to traditional imaging acquisitions using fixed parameters, we also collected data at a constant imaging speed of either 0.2 frames/sec (slow) or 3.8 frames/sec (fast). EDA showed less photobleaching decay relative to fast imaging, with an average 3-fold decrease in the exponential bleaching decay constant (Figure 3b). While the bleaching decay constant was still on average 5 times higher than for slow imaging alone, it was possible to reach 10-minute imaging durations for many EDA experiments without excessive photobleaching or sample health degradation. EDA measurements sometimes showed photobleaching recovery while imaging at a slow speed (Extended Data Figure 2), enabling a higher cumulative light dose compared with fixed-rate fast imaging (Figure 3c). During EDA measurements, events of interest toggled the actuation from slow to fast on average 9 times, for an average of 10 seconds each, resulting in EDA imaging fast for 18% of the frames.
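As an illustration of how a bleaching decay constant of this kind can be extracted, the sketch below fits a single exponential to a mean-intensity trace by linear regression on the logarithm of the intensity. The fitting procedure actually used for Figure 3b is not detailed in this section, so the model (no offset term, no recovery) and the function name `bleaching_decay_constant` are assumptions, and the data are synthetic. Passing frame timestamps rather than frame indices matters for EDA, where the frame spacing changes during the acquisition.

```python
import numpy as np


def bleaching_decay_constant(t_s, mean_intensity):
    """Fit I(t) = I0 * exp(-k * t) via a line fit to log(I); return k in 1/s.

    Assumes a single exponential without offset (an illustrative choice).
    """
    t = np.asarray(t_s, dtype=float)
    log_i = np.log(np.asarray(mean_intensity, dtype=float))
    slope, _intercept = np.polyfit(t, log_i, 1)  # log(I) = log(I0) - k * t
    return -slope


# Synthetic, noise-free trace sampled every 5 s for 10 minutes.
t = np.arange(0.0, 600.0, 5.0)
k_true = 2e-3  # made-up decay constant in 1/s
intensity = 1000.0 * np.exp(-k_true * t)
k_fit = bleaching_decay_constant(t, intensity)
print(f"fitted decay constant: {k_fit:.4e} 1/s")
```

With real data, noise at low intensities biases a log-linear fit, so a nonlinear least-squares fit may be preferable.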

The potential of intelligent microscopy includes measuring what standard acquisitions would miss – beyond measuring for longer overall durations. Ideally, EDA should collect constriction states leading up to division that would be inaccessible to slow imaging. We therefore use constriction diameters to quantify fission intermediates as a measure of event-relevant content, independent of the event score. During division events (Figure 2c), we asked how many frames EDA collected with highly constricted diameters (below 200 nm) compared to slow fixed-rate imaging (Figure 3d). The resolution of raw iSIM images is 210 nm prior to deconvolution. Therefore, information about constriction diameters below 200 nm is unavailable during on-the-fly processing, and reflects the ability of EDA to collect event-relevant content distinct from the ground truth for the neural network. We found the average number of such frames (constriction < 200 nm) during the first 20 seconds of an event was 5.4-fold higher for EDA compared with fixed slow imaging (7.5 versus 1.4 frames). This content enrichment is also reflected in the distribution of minimal constriction diameters (Figure 3e), both in terms of its lower mean value and its skew. For fixed fast imaging, a similar amount of data only showed three constriction events (and thus is not included in the figure panel), further highlighting the limitations of fixed-rate imaging compared with EDA. Thus, EDA better resolves the constricted states preceding division, as well as the progression of molecular and membrane states leading to fission, captured by each burst of fast images (Extended Data Figure 3).

Extending EDA to bacterial cell division

An endosymbiotic alphaproteobacterium is believed to be the ancestor to mitochondria39. Over time, the proteins required for division were replaced by homologues encoded in the host genome, which assemble on the mitochondrial exterior as opposed to the bacterial interior. A superficial resemblance remains between the sub-micrometric diameters of mitochondria and many bacteria, and the shape transitions they both undergo en route to division. But, the bacterial cell cycle occurs on the timescale of tens of minutes – over which the cells elongate and partially constrict, before popping apart into two separate daughter cells during a small fraction of the cell cycle duration (0.1-0.0001%)18,19 – in contrast with the tens of seconds for mitochondrial division. Thus, it poses a distinct set of challenges to live-cell microscopy.

We extended EDA to study the final stages of division of the alphaproteobacterium Caulobacter crescentus. We imaged cells expressing the cytoplasmic fluorescent protein mScarlet-I, and the bacterial divisome protein FtsZ-sfGFP (Figure 4a, Supplementary Note 4) with the iSIM. In newborn C. crescentus, FtsZ forms a punctum associated with one cell pole. As cells elongate, FtsZ depolymerizes and appears diffuse, before assembling into a narrow band of filaments that coordinates constriction at mid-cell. The band shrinks as the cell constricts, and finishes as a punctum at the division site. We found that our event detection network developed for mitochondrial constrictions could recognize the final stages of C. crescentus division without any additional training (Supplementary Note 3). This transferability is likely due to morphological similarities in constriction shape and the presence of a functionally similar molecular marker. High event scores remained specific to states close to division, preventing actuation from being triggered by the punctate polar arrangement of FtsZ at the beginning of the cell cycle (G0).

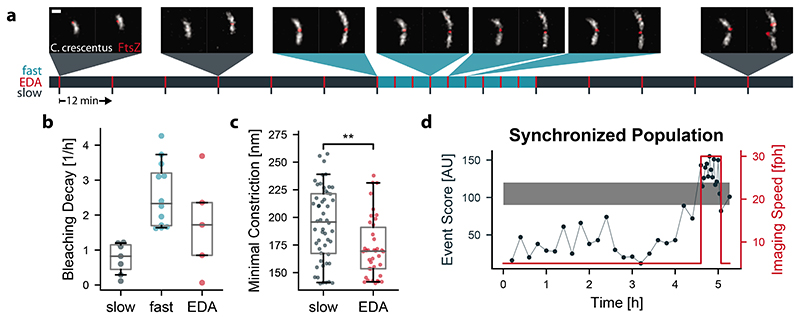

Figure 4. EDA versus fixed-rate imaging of bacterial divisions.

a, Representative images of C. crescentus (cytosolic mScarlet-I in grey and FtsZ-sfGFP in red) division events captured at different imaging speeds using an event-driven acquisition (Scale bar: 1 μm, time first frame to last frame: 5.8 h). b, Decay constants obtained from exponential fitting of the mean intensity in the bacteria over time. c, Minimal constriction width measured when following a constriction event over time (P < 0.001, independent two-sample t-test). d, Event score and imaging speed for an EDA-triggered experiment on cell-cycle synchronized bacteria. A long lag phase is followed by many simultaneous divisions, which EDA images at high temporal resolution. (slow: N = 228, n = 2; fast: N = 296, n = 3; EDA: N = 182, n = 5). Box plots mark the first quartile, median and third quartile with the whiskers spanning the 5th and 95th percentile.

C. crescentus cells at all stages of the cell cycle were plated onto an agarose pad (Methods), with several hundred in the iSIM FOV. We collected data on cells at a fixed slow imaging speed of 6.7 frames/hour, a fast imaging speed of 20 frames/hour, or a variable speed switched by EDA. With EDA, the bleaching decay rate was a factor of 1.7 lower than at the fixed fast imaging rate (Figure 4b). As for mitochondria, we found that constrictions measured with EDA had significantly smaller diameters on average compared with fixed slow imaging (Figure 4c), with the overall distribution shifted to lower values. This confirms that EDA improves access to details of bacterial cell division that are difficult to capture using a fixed imaging speed, and uses the photon budget more efficiently.

We also used EDA to image bacterial populations that were first synchronized into the beginning of the swarmer (G0) phase of the cell cycle through density centrifugation (Methods). Initially, EDA maintained a slow imaging speed during the lag phase while bacteria increased in length without dividing (Figure 4d). This kept the light dose low, so that cells could develop without the division arrest and filamentation that occurred at faster imaging speeds. After several hours, the first wave of divisions in the population occurred, and EDA triggered high-speed imaging. In this manner, EDA captured many divisions simultaneously, as a consequence of a population-wide high and stable event score. After the population fully divided, imaging went back to the slow imaging speed, preserving the photon budget for the next wave of divisions (Extended Data Figure 4). Synchrony allowed for a further boost in events of interest, with EDA sensing and responding with the suitable timing based on cell-cycle progression.

Discussion

Overall, our results highlight the advantages of the EDA framework for biological systems with intermittent events of interest, even those operating at dramatically different timescales (seconds versus hours). Imaging of mitochondrial divisions benefits from extended imaging duration to increase the odds of capturing such rare events, and fast imaging speed once constrictions form to capture the structural intermediates of membrane remodelling. For C. crescentus, EDA allows us to follow the entire cell-cycle and image the final states of division at high temporal resolution. In both systems, as observed from the measured constriction diameters, EDA data gathered from individual cells contained smaller constriction sizes, difficult to capture by traditional imaging approaches due to their transience. Beyond that, EDA generates data with higher event-specific content density: more frames are dedicated to active time periods enriched in events of interest.

The EDA framework is generalizable to other microscopes capable of on-the-fly adaptation of acquisition parameters. For users seeking to implement it, we provide a Micro-Manager plugin (Supplementary Note 1), to assist in its adaptation to different imaging methods and biological systems. The plugin is compatible with multithread processing based on a user-provided neural network; thus, with sufficient computational power the response time of the adaptive feedback is limited by the time to process a single frame. While our implementation adapts the imaging speed in a discrete manner, EDA could evoke more complex responses such as multi-level or continuous actuation of the frame rate, as well as controlling other acquisition parameters such as the excitation power or exposure time. The benefits of applying EDA will depend on the specific dynamics of the observed events - the greatest advantages are expected if the sample and microscope are amenable to a large difference in imaging speeds. Brief, dynamic and rare events that can be predicted in advance are therefore best suited for observation using EDA.

As with all adaptive microscopies, care should be taken to decouple variations in experimental parameters from real biological changes. For example, phototoxicity varies non-linearly with the light dose40, and individual experiments using EDA will differ in the cumulative light dose based on the event-specific content of the sample. Consider the case of altered genetic backgrounds that change the frequency of events of interest: EDA would result in a different mean cumulative light dose for measurements of the same duration. Therefore, events collected under similar doses should be compared, and large sample sizes and randomization will be beneficial when pooling datasets acquired using EDA.

Our work contributes to the developing field of ‘smart’ or ‘self-driving’ microscopes2,41. By adapting acquisitions on-the-fly, microscopes can protect a sample from unnecessary illumination9–16, generate faster42,43 or better quality data44–46, optically highlight cells for genetic screening23, pinpoint single molecules with vanishingly small numbers of photons47, or manipulate nanoscale objects48. On the other hand, machine learning has proven useful for many image analysis tasks, allowing the detection of increasingly complex sample features. As event recognition networks become increasingly accessible, EDA can enhance the relevance of gathered data by repurposing such networks to actuate changes in acquisition routines. The synergy between nuanced detection and multifaceted adaptation offers a powerful means to boost biological discovery.

Methods

Sample preparation

COS-7 cells for mitochondrial imaging

African green monkey kidney (COS-7) cells were obtained from HPA culture collections (COS7-ECACC-87021302) and were cultured in Dulbecco’s modified Eagle medium (DMEM, ThermoFisher Scientific, 31966021) supplemented with 10% fetal bovine serum (FBS) at 37°C and 5% CO2. For imaging, cells were plated on 25 mm, #1.5 glass coverslips (Menzel) 16-24 h prior to transfection at a density of ~1×10⁵ cells per well. Lipofectamine 2000 (ThermoFisher Scientific, 11668030) was used for dual transfections of Cox8-TagRFP and Emerald-DRP1. Transfections were performed 16-24 h before imaging, using 150 ng of plasmid and 1.5 μl of Lipofectamine 2000 per 100 μl Opti-MEM (ThermoFisher Scientific, 31985062). Imaging was performed at 37°C in pre-warmed Leibovitz medium (ThermoFisher Scientific, 21083027).

Caulobacter crescentus cells for bacterial imaging

Liquid C. crescentus cultures were grown overnight at 30 °C in 3 ml of 2x PYE1 (Peptone, Merck, #82303; Yeast Extract, Merck, #Y1626) medium under mechanical agitation (200 rpm). Liquid cultures were re-inoculated into fresh 2x PYE medium to grow cells until log-phase (OD660=0.2-0.4). Antibiotics (5 μg ml−1 kanamycin and 1 μg ml−1 gentamicin) were added to liquid cultures to select cells containing fluorescent protein fusions. To induce the expression of FtsZ-sfGFP and mScarlet-I under the Pxyl and Pvan promoters respectively, 0.2% weight/volume xylose and 0.5 mM vanillate were added to the culture 2 hours before imaging or synchronization2.

C. crescentus cell cultures were spotted onto a 2x PYE agarose pad for imaging. To make the agarose pad, a gasket (Invitrogen™, Secure-Seal™ Spacer, S24736) was placed on a rectangular glass slide, and filled with 1.5% 2x PYE agarose (Invitrogen™, UltraPure™ Agarose, 16500100) containing 0.5 mM vanillate and 0.2% xylose. No antibiotics were added. Another glass slide was placed on top of the silicone gasket, and the sandwich-like pad was placed at 4 °C until the agarose solidified. After 20 min, the top cover slide was removed, and a 1-2 μl drop of cell suspension was placed on the pad. After full absorption of the droplet, the pad was sealed with a plasma-cleaned #1.5 round coverslip with a diameter of 25 mm (Menzel). For imaging the synchronized cells, a 1x PYE agarose pad was used so that cells grew more slowly.

iSIM imaging

Imaging was performed on a custom-built instant structured illumination microscope (iSIM), previously described in detail3. Emerald/sfGFP and TagRFP/mScarlet-I fluorescence was excited using 488 and 561 nm lasers respectively. A system of microlens and pinhole arrays together with a galvo-actuated mirror allows for instant super-resolution imaging on the camera chip (Photometrics Prime95B sCMOS). The implementation of a mfFIFI setup allows for homogeneous excitation over the full field of view3.

Event detection

Construction of input and output datasets

Detection of potential division events was performed using a neural network with U-net architecture4. An input dataset was constructed using 3700 dual-color images labeling mitochondria and DRP1, 1000 of which contain states close to division. This dataset was enhanced 10-fold by rotating the images, to make up the final dataset (37000 images). This data set was recorded on a fast dual-color SIM setup at Janelia Research Campus5 and published previously6, but processed via Gaussian filtering to imitate the expected resolution of raw iSIM data before deconvolution. The output ground truth dataset was generated from the fluorescence channels, followed by manual curation. Briefly, to identify division sites in the presence of a molecular marker, we identified constriction sites by looking for local saddle points. Saddle points are characterized by opposing principal curvatures, or eigenvalues of the local Hessian matrix. The product of the principal curvatures, or the Gaussian curvature, will therefore be negative - as will be reflected by the determinant of the local Hessian matrix. Therefore, computing the Hessian matrix for both the mitochondrial and the DRP1 channel highlights saddle-points in the mitochondrial channel overlapping with high-intensity DRP1 spots.

| (1) |

where Hchannel,i represents the largest or smallest eigenvalue of the Hessian matrix for i = 1, 2 respectively, Ichannel represents the raw fluorescence channel and × element-wise multiplication. The final GT matrix is normalized so that the full range is rescaled to 0-255 to make 8-bit heat-maps, with higher values representing more likely constriction sites.
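A rough numerical sketch of the putative ground truth is given below. It follows the description in the main text (DRP1 intensity multiplied by the negative determinant of the Hessian of the mitochondrial channel, rescaled to 8 bits) and is not claimed to reproduce Equation 1 term by term. The function names, the finite-difference Hessian without prior smoothing, and the clipping of non-saddle pixels to zero are all assumptions made for illustration; manual curation is not represented.

```python
import numpy as np


def hessian_determinant(img):
    """Determinant of the 2x2 image Hessian at every pixel (finite differences).

    The determinant equals the product of the two Hessian eigenvalues, so it
    is negative at saddle points, where the principal curvatures have
    opposite signs.
    """
    gy, gx = np.gradient(img.astype(float))  # first derivatives along y, x
    gyy, gyx = np.gradient(gy)               # second derivatives of gy
    gxy, gxx = np.gradient(gx)               # second derivatives of gx
    return gxx * gyy - gxy * gyx


def putative_ground_truth(mito, drp1):
    """Saddle-point strength in `mito` weighted by `drp1`, rescaled to 0-255."""
    neg_det = np.clip(-hessian_determinant(mito), 0.0, None)  # keep saddles only (assumption)
    gt = neg_det * drp1.astype(float)                         # element-wise product
    peak = gt.max()
    if peak > 0:
        gt = gt / peak * 255.0
    return gt.astype(np.uint8)


# Toy example: an ideal saddle with a DRP1-like spot at its centre.
yy, xx = np.mgrid[-8:9, -8:9].astype(float)
mito = xx**2 - yy**2                      # principal curvatures +2 and -2
drp1 = np.exp(-(xx**2 + yy**2) / 4.0)     # Gaussian spot centred on the saddle
gt = putative_ground_truth(mito, drp1)
print(gt[8, 8], gt.dtype)  # 255 uint8
```

In practice, the Hessian of noisy microscopy data would normally be computed after Gaussian smoothing at a scale matched to the mitochondrial width.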

Since mitochondrial division sites represent a large fraction, but not all events with DRP1 overlapping with mitochondrial saddle points, the processed frames were then curated manually to increase accuracy for real constriction events by visually removing false positives or ensuring consistent detection of active constriction sites. False positives most frequently corresponded to close mitochondrial contacts with nearby DRP1 bound to the outer membrane (but not constricting), very bright DRP1 spots and mitochondria with bi-concave disk-like shapes where thinning of the mitochondrion gets mistaken for a constriction site. False negatives were most often due to very late stages of fission or moments after fission as well as the onset of constriction which is nuanced and develops progressively. This ensured that the event detection network was able to discriminate events of interest with high accuracy. The dataset was then split 80:20 into training (29600 images) and testing (7400 images) datasets.

Network architecture and training

The network was trained on 29600 dual-color images (128x128x2) of mitochondria and DRP1 as input, using the ground-truth images (128x128) marking the respective locations of divisions as output (Supplementary Note 3). We used the pixel-wise mean-squared error as loss function, and trained the model using the Adam optimizer for 20 epochs with a batch size of 256. As neural network architecture we used a U-Net4 of depth 2 and kernel size 7x7, with the number of initial feature channels set to 16 and doubled after every pooling layer. Training was performed using TensorFlow/Keras and Python 3.9.7.
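A minimal Keras sketch of a network with these hyperparameters is given below for orientation. It is an assumption-laden illustration, not the published model: "depth 2" is interpreted here as two pooling steps, and the single convolution per level, ReLU activations, up-sampling layers and linear output are all hypothetical choices. The helper names `filters_per_level` and `build_unet` are introduced only for this example.

```python
def filters_per_level(initial=16, depth=2):
    """Feature channels per level, doubled after every pooling step."""
    return [initial * 2 ** level for level in range(depth + 1)]


def build_unet(input_shape=(128, 128, 2), depth=2, initial=16, kernel=7):
    """Build a small U-Net-style model (assumed layout, see lead-in)."""
    # Imported lazily so the helper above works without TensorFlow installed.
    from tensorflow import keras
    from tensorflow.keras import layers

    filters = filters_per_level(initial, depth)
    inputs = keras.Input(shape=input_shape)
    x = inputs
    skips = []
    # Contracting path: convolution, keep skip connection, then pool.
    for n_filters in filters[:-1]:
        x = layers.Conv2D(n_filters, kernel, padding="same", activation="relu")(x)
        skips.append(x)
        x = layers.MaxPooling2D(2)(x)
    # Bottleneck.
    x = layers.Conv2D(filters[-1], kernel, padding="same", activation="relu")(x)
    # Expanding path: upsample, concatenate skip, convolve.
    for n_filters, skip in zip(reversed(filters[:-1]), reversed(skips)):
        x = layers.UpSampling2D(2)(x)
        x = layers.Concatenate()([x, skip])
        x = layers.Conv2D(n_filters, kernel, padding="same", activation="relu")(x)
    outputs = layers.Conv2D(1, 1, activation="linear")(x)
    model = keras.Model(inputs, outputs)
    # Pixel-wise mean-squared error with the Adam optimizer, as in the text.
    model.compile(optimizer="adam", loss="mse")
    return model
```

Training with the reported settings would then correspond to a call such as `model.fit(inputs, targets, epochs=20, batch_size=256)`, where `inputs` and `targets` stand for the training arrays.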

Assessment of network prediction accuracy

To assess the accuracy of the network, individual event scores were first isolated from the heat maps using an intensity threshold of 80, since weak predictions and weak ground truth signals represent a low relative event probability and, as such, do not contribute to the decision-making step of the acquisition. Then, instead of counting discrete events, each event was weighted by its ground truth or predicted intensity: true positives were weighted by normalized ground truth intensity (representing the true value of the event that was detected), false positives were weighted by normalized predicted intensity (representing how wrong the prediction was, given that no event was present) and false negatives were weighted by normalized ground truth intensity (representing the cost of missing the ground truth event). In this way, the strength of the prediction and of the true underlying signal is taken into account when evaluating the performance of the network. The total score for true positives was then divided by the sum of the total scores for true positives, false positives and false negatives to produce an accuracy metric.
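The weighting scheme can be written compactly as follows. This is a sketch under stated assumptions: events are taken to be already matched between prediction and ground truth (the matching step is not shown), intensities are normalized by 255, and the function name `weighted_accuracy` is hypothetical.

```python
def weighted_accuracy(tp_gt, fp_pred, fn_gt, max_value=255.0):
    """Return (accuracy, false-positive share, false-negative share).

    tp_gt   : ground truth intensities of detected events (true positives)
    fp_pred : predicted intensities where no event was present
    fn_gt   : ground truth intensities of missed events
    """
    tp = sum(value / max_value for value in tp_gt)
    fp = sum(value / max_value for value in fp_pred)
    fn = sum(value / max_value for value in fn_gt)
    total = tp + fp + fn
    if total == 0:
        raise ValueError("no events above threshold")
    return tp / total, fp / total, fn / total
```

By construction the three returned shares sum to one, which matches the way the accuracy and the false positive and false negative percentages are reported together.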

With this metric, the network reaches an accuracy of 86.7% on test data that were not used during training, with 5.4% false positives and 7.9% false negatives. The output of inference is a two-dimensional map of relative division probabilities in the range 0 to 255. Inspection showed that the events that most frequently contributed to false positives were close mitochondrial contacts with nearby DRP1 bound to the outer membrane (but not constricting), very bright DRP1 spots, and mitochondria with bi-concave disk-like shapes, where thinning of the mitochondrion is mistaken for a constriction site. False negatives were most often due to very late stages of fission or moments after fission, as well as the onset of constriction, which is nuanced and develops progressively.

Event-driven acquisition for adaptive temporal sampling

The different parts of the EDA framework were implemented in separate modules, which allowed for continuous and independent testing of the components.

Data handling

The EDA framework is distributed over Micro-Manager8 for general microscope handling, MATLAB (MathWorks) for controlling the timing of the microscope components, and Python for network inference. The Python module was run on a separate machine in the network due to hardware restrictions on the local computer used for microscope control. A network attached storage (NAS) unit was used to allow the different components of the system to communicate over the local 10 Gbit network. Recorded frames were stored as single .tif files on the NAS by Micro-Manager. A server implemented using the watchdog module (Python) on the remote machine detected new files for inference. After calculation of the decision parameter, the value was saved to a binary file that was read by MATLAB to calculate the values for the next imaging sequence.
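The file-based hand-off of the decision parameter can be sketched with the Python standard library alone. The layout of the binary file is not specified in the text, so the single little-endian 64-bit float used here is an assumption, as are the function names and the write-then-rename step that keeps a reader from seeing a half-written file.

```python
import os
import struct
import tempfile


def write_decision(path, value):
    """Write one float to a binary file (assumed format: little-endian double)."""
    temporary = path + ".tmp"
    with open(temporary, "wb") as handle:
        handle.write(struct.pack("<d", float(value)))
    # Rename in one step so the reader never sees a partial file.
    os.replace(temporary, path)


def read_decision(path):
    """Read back the latest decision parameter."""
    with open(path, "rb") as handle:
        return struct.unpack("<d", handle.read(8))[0]


# Usage example in a temporary folder standing in for the NAS share.
with tempfile.TemporaryDirectory() as folder:
    decision_file = os.path.join(folder, "decision.bin")
    write_decision(decision_file, 87.0)
    latest = read_decision(decision_file)
```

A file of this form could be read on the acquisition side with any routine that reads a single double, which keeps the coupling between the inference and control modules minimal.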

Event Server

When a new file was detected on the NAS for each channel, the event server, implemented in Python and running on a remote machine, first performed data preparation on the recorded frames. The frames were resized by a factor of 0.7 to match the pixel size of the training data. Both frames were smoothed using a Gaussian with σ = 1.5 px, with an additional background subtraction in the DRP1 channel (Gaussian with σ = 7.5 px). The frames were tiled into overlapping tiles of 128x128 px to match the size of the training data. The individual tiles of the structure (mitochondria/C. crescentus) channel were again normalized individually.

Inference was performed on pairs of structure/foci tiles and the output was stitched together. The maximum value from the stitched frame was recorded as the highest relative probability in the frame for a division event and saved to the binary file on the NAS.
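A minimal sketch of tiled inference and stitching is shown below. The amount of overlap and the rule for merging overlapping predictions are not stated in the text, so the stride of 96 px and the pixel-wise maximum used here are assumptions. The function names are hypothetical, the preprocessing steps are omitted, and `predict` stands for any function that maps a 128x128x2 tile to a 128x128 non-negative score map.

```python
import numpy as np


def tile_starts(length, tile=128, stride=96):
    """Start indices of overlapping tiles that cover an axis of given length."""
    if length < tile:
        raise ValueError("frame is smaller than one tile")
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last tile is flush with the border
    return starts


def infer_tiled(frame_pair, predict, tile=128, stride=96):
    """Run predict on every tile and stitch with a pixel-wise maximum.

    Returns the stitched map and its maximum, which serves as the
    decision parameter for the frame.
    """
    height, width = frame_pair.shape[:2]
    stitched = np.zeros((height, width), dtype=np.float32)
    for row in tile_starts(height, tile, stride):
        for col in tile_starts(width, tile, stride):
            patch = frame_pair[row:row + tile, col:col + tile]
            region = stitched[row:row + tile, col:col + tile]
            stitched[row:row + tile, col:col + tile] = np.maximum(region, predict(patch))
    return stitched, float(stitched.max())
```

With a trained model, `predict` could wrap a call to the network on a single tile; any function with the same input and output shapes can be substituted for testing.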

Hardware control

The timing of the microscope hardware is controlled using a PCI 6733 analog output device (National Instruments). The device is used in background mode, requesting data when the existing sequence buffer has 1 s remaining. For mitochondria imaging, the slow mode sends a sequence containing one frame over five seconds (0.2 Hz), and the fast mode sends a sequence containing five frames in one second (5 Hz). For bacterial imaging, sequences with one frame every 3/9 or 2/12 minutes are provided for the fast/slow modes in normal or synchronized colonies, respectively. The parameters are chosen depending on the value read from the binary file on the NAS, which contains the latest event information written by the event server. In addition to a threshold, a hysteresis band is implemented by defining an upper and a lower threshold: fast acquisition starts when the event score surpasses the upper threshold and stops only when the event score falls below the lower threshold. For C. crescentus imaging, the number of fast frames was further set to a minimum of three.
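The hysteresis logic can be expressed as a small state machine. The sketch below is illustrative only: the class name `HysteresisSwitch`, the threshold values in the usage example and the way the minimum number of fast frames is counted are assumptions, not taken from the published code.

```python
class HysteresisSwitch:
    """Switch between slow and fast imaging with an upper/lower threshold."""

    def __init__(self, upper, lower, min_fast_frames=0):
        if lower > upper:
            raise ValueError("lower threshold must not exceed upper threshold")
        self.upper = upper
        self.lower = lower
        self.min_fast_frames = min_fast_frames
        self.fast = False
        self.fast_count = 0

    def update(self, score):
        """Return the mode ('slow' or 'fast') for the next sequence."""
        if not self.fast:
            # Enter fast mode only when the upper threshold is surpassed.
            if score > self.upper:
                self.fast = True
                self.fast_count = 0
        elif score < self.lower and self.fast_count >= self.min_fast_frames:
            # Leave fast mode only below the lower threshold, and only
            # after the minimum number of fast sequences has been issued.
            self.fast = False
        if self.fast:
            self.fast_count += 1
        return "fast" if self.fast else "slow"


# Usage example with hypothetical thresholds of 90 (upper) and 80 (lower).
switch = HysteresisSwitch(upper=90, lower=80)
modes = [switch.update(score) for score in [50, 95, 85, 85, 70, 85]]
```

Scores that fluctuate inside the band (here 85) keep the current mode, which avoids rapid toggling between imaging rates when the event score hovers near a single threshold.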

Due to the fast imaging rates used for mitochondria, the new sequence is calculated before the event server has calculated the relative probability map for the last frames, leading to a delay in the reaction of the EDA framework. For C. crescentus imaging, this is overcome by delaying the calculation of the new sequences by 10 seconds, so that mode switching is based on the newest data available.

Data Analysis

Statistical significance of differences in averages, indicated by asterisks, was calculated using the independent two-sample t-test for minimal constriction widths (Figures 3 & 4) and Welch’s t-test for event scores (Supplementary Note 3) and number of events (Figure 3). The Levene test was used to test for equality of variances in the data sets and thereby to decide between the two tests. All implementations were used as provided by the scipy.stats Python package.
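The test selection described above can be sketched with `scipy.stats` as follows. The significance level of 0.05 for the Levene test and the function name `compare_means` are assumptions made for this illustration.

```python
import numpy as np
from scipy import stats


def compare_means(sample_a, sample_b, alpha=0.05):
    """Choose between Student's and Welch's t-test via the Levene test.

    Returns the name of the test that was used and its p-value.
    """
    _, p_levene = stats.levene(sample_a, sample_b)
    equal_var = p_levene >= alpha  # no evidence against equal variances
    _, p_value = stats.ttest_ind(sample_a, sample_b, equal_var=equal_var)
    return ("student" if equal_var else "welch"), float(p_value)
```

Passing `equal_var=False` to `scipy.stats.ttest_ind` performs Welch's t-test, so a single call covers both cases.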

Photobleaching Decay

The different photobleaching kinetics of the modes were characterized by the intensity contrast of the samples. The channel of the structural feature (mitochondria or C. crescentus outline) was segmented using an Otsu thresholding method after a median and a Gaussian filter were applied (kernel size of 5 px each). The intensity contrast was then calculated as the mean intensity in the segmented region divided by the mean intensity in the rest of the image. Variation in the starting contrast between series was accounted for by normalizing to the starting intensity contrast values. The decay constant was then obtained by fitting an exponential decay function (y = a exp(−bx) + c) to the intensity contrast over time and extracting the b term. The reported imaging time ratios were calculated for each series from the time between the start of imaging and the point at which the intensity contrast reached 90% of its starting value.
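The contrast calculation and the exponential fit can be sketched as follows. The segmentation step is assumed to have been done already and is passed in as a boolean mask. The function names and the initial guess for the fit are assumptions made for this example.

```python
import numpy as np
from scipy.optimize import curve_fit


def intensity_contrast(image, mask):
    """Mean intensity inside the mask divided by the mean intensity outside."""
    image = np.asarray(image, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    return image[mask].mean() / image[~mask].mean()


def decay_model(x, a, b, c):
    """Exponential decay y = a exp(-b x) + c."""
    return a * np.exp(-b * x) + c


def fit_decay_constant(times, contrast):
    """Fit the decay model to normalized contrast and return the b term."""
    times = np.asarray(times, dtype=float)
    contrast = np.asarray(contrast, dtype=float)
    contrast = contrast / contrast[0]  # normalize to the starting contrast
    guess = (contrast[0] - contrast[-1],
             1.0 / (times[-1] - times[0]),
             contrast[-1])
    (a, b, c), _ = curve_fit(decay_model, times, contrast, p0=guess)
    return b
```

For noisy series the initial guess may need adjusting, since the fit can be sensitive to the starting value of b when the decay is far from complete within the recorded time.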

Cumulative Light Dose

The cumulative light dose over time was taken to be proportional to the number of previously recorded frames. This is appropriate here, since the iSIM has a constant scan speed, and we did not modulate the laser intensity. Therefore, each sample received a fixed light dose per frame. Experiments were truncated after a user-defined minimum intensity contrast (SNR) was reached (1.1 for mitochondria and 1.02 for C. crescentus).

EDA Event Evaluation

The number of frames per event was calculated by counting the number of frames recorded for a fixed time after EDA triggered fast imaging or would have triggered fast imaging in slow mode. Events of interest were defined by a minimum value of the neural network output of 80 (90 for C. crescentus). Frames were analyzed until a maximal observation time of 20 seconds (1 hour for C. crescentus) was reached.
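As a small illustration of this counting, the sketch below tallies the frames whose timestamps fall within a fixed window after a trigger. The function name `frames_in_window` and the half-open window convention are assumptions.

```python
def frames_in_window(frame_times, trigger_time, window):
    """Count frames recorded in [trigger_time, trigger_time + window)."""
    end = trigger_time + window
    return sum(1 for t in frame_times if trigger_time <= t < end)


# Usage example with the mitochondria imaging rates and a 20 s window.
slow_times = [5 * i for i in range(10)]    # 0.2 Hz
fast_times = [i / 5 for i in range(200)]   # 5 Hz
slow_frames = frames_in_window(slow_times, 0, 20)
fast_frames = frames_in_window(fast_times, 0, 20)
```

With these idealized timestamps, a 20 s window contains 4 frames at the slow rate and 100 frames at the fast rate.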

Constriction Width

The slow and EDA imaging modes were compared by calculating the minimal constriction width measured during an event as described above. The measurement method was similar to that described in ref. 6. Deconvolution of the images was performed using the Richardson-Lucy algorithm as implemented in the flowdec Python package, with 30 iterations9. Segmentation, skeletonization and spline fitting of the resulting points yielded a backbone of the mitochondrion in a frame of 20 x 20 pixels around the position of the detected event. 100 perpendicular lines were generated around the point of the backbone closest to the event position. The intensity profile along each of those lines was fitted with a Gaussian profile. The full width at half maximum (FWHM) of the Gaussian profile with the smallest σ was recorded as the measured width for the frame. The minimal width measured for an event was calculated as the minimal FWHM over the observation time, multiplied by the pixel size of the iSIM setup (56 nm).
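The final step, from fitted Gaussian profiles to a width in nanometers, can be sketched as below. The relation FWHM = 2√(2 ln 2) σ is standard for a Gaussian, while the function names, the initial guess and the offset term in the model are assumptions. Backbone extraction and profile sampling are not shown.

```python
import numpy as np
from scipy.optimize import curve_fit

PIXEL_SIZE_NM = 56.0                               # iSIM pixel size from the text
FWHM_FACTOR = 2.0 * np.sqrt(2.0 * np.log(2.0))     # FWHM = factor * sigma


def gaussian(x, amplitude, center, sigma, offset):
    """Gaussian profile with a constant offset (offset is an assumption)."""
    return amplitude * np.exp(-((x - center) ** 2) / (2.0 * sigma ** 2)) + offset


def profile_fwhm_px(profile):
    """Fit a Gaussian to a 1D intensity profile and return its FWHM in pixels."""
    profile = np.asarray(profile, dtype=float)
    x = np.arange(profile.size, dtype=float)
    guess = (profile.max() - profile.min(), float(np.argmax(profile)),
             profile.size / 8.0, profile.min())
    params, _ = curve_fit(gaussian, x, profile, p0=guess)
    return FWHM_FACTOR * abs(params[2])


def minimal_width_nm(profiles):
    """Smallest FWHM over a collection of profiles, converted to nanometers."""
    return min(profile_fwhm_px(p) for p in profiles) * PIXEL_SIZE_NM
```

Taking the minimum over all profiles of all frames of an event gives the same result as first taking the narrowest profile per frame and then the minimum over time.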

Extended Data

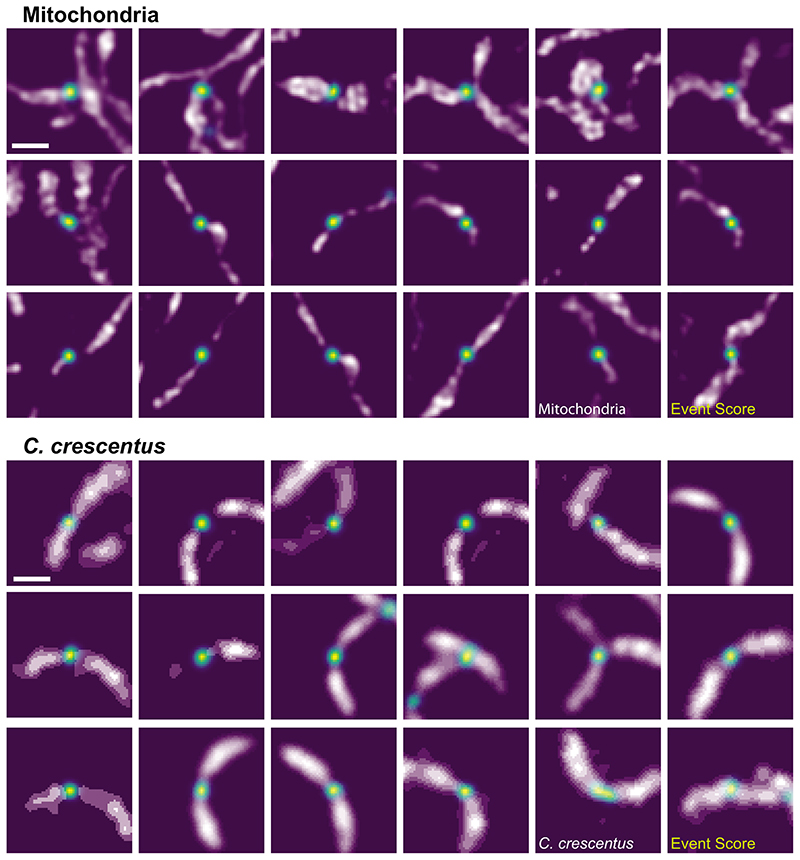

Extended Data Fig. 1. Example maximum event score regions which triggered EDA.

The event score output by the neural network can also be used to extract events of high interest from the datasets, after the acquisition is complete. Here, events that triggered EDA in different datasets are shown. The highest event score was used to define a region of interest around the event, representing a time and location of highest interest in the sample. Some regions appear twice, when the neural network event score was high enough to trigger EDA multiple times. Frames are shown in no specific order. Scale bars: 1 μm

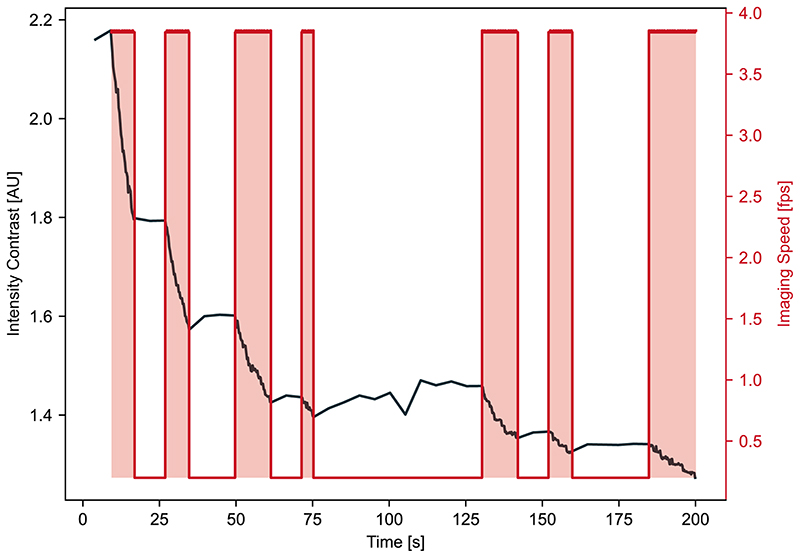

Extended Data Fig. 2. Bleaching behavior of a mitochondria sample during EDA imaging.

The different modes of imaging can clearly be seen in the bleaching curve, represented by the signal-to-noise ratio calculated from the intensity inside the mitochondria compared to the signal outside of the mitochondria. For some parts with low frame rate, even a slight recovery of signal can be observed (representative of n = 4 independent experiments).

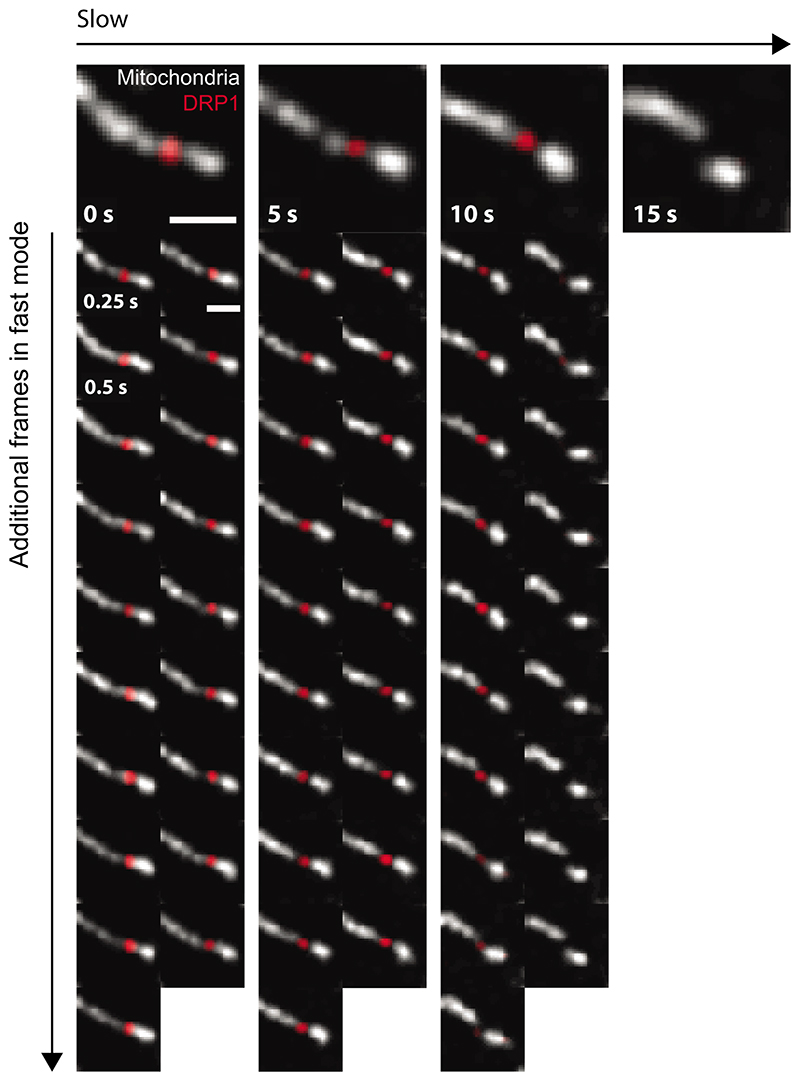

Extended Data Fig. 3. EDA delivers additional frames during events of interest.

Top row: mitochondrial division as it would have been recorded with the slow fixed imaging rate without EDA. Vertical frames: additional frames captured thanks to EDA switching to the fast imaging speed showing more detail of the dynamics of the event. Both the final constriction state and the fade of the DRP1 peak can be observed with higher temporal resolution, enhancing the relevant content of the dataset. This division event can also be seen in Supplementary Video 3.0. (Scale bars: 1 μm, representative of N = 33 events in n = 4 independent experiments)

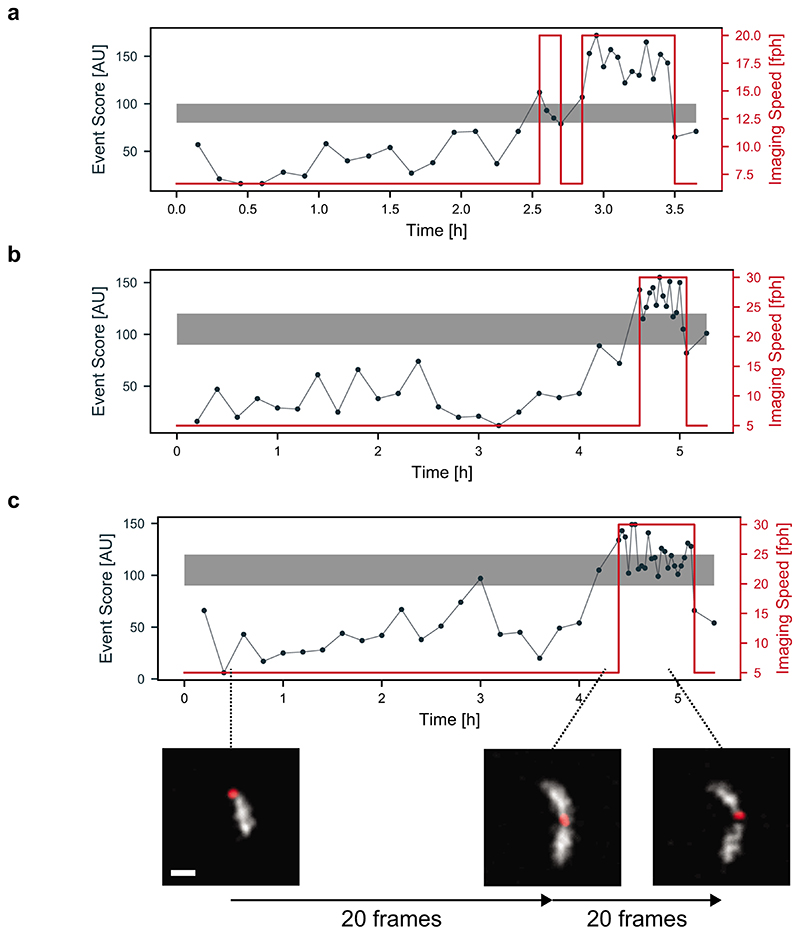

Extended Data Fig. 4. EDA imaging of synchronized bacteria populations.

C. crescentus, the strain used in this study, were synchronized via density centrifugation to obtain a population of cells that are all at the beginning of their cell cycle (G0, swarmer). This leads to a time lag before the next divisions take place. As they are synchronized, many bacteria in the sample will then divide at the same time. We used EDA to sense the onset of divisions in the sample and increase imaging speed during the divisions for high SNR and temporal resolution. We tested different times between images for fast and slow speeds, as well as different threshold event scores (grey band). a, slow: 9 min, fast 3 min. b and c, slow: 12 min, fast 2 min. (Scale bar: 1 μm, n = 4 independent synchronizations)

Acknowledgements

We thank Hélène Perreten for technical support with cell culture and plasmid construction, and Laurent Casini and Justine Collier (University of Lausanne) for sharing plasmids and protocols for the bacterial experiments. Imaging data used for training the neural network in this publication were produced in collaboration with the Advanced Imaging Center (AIC), a facility jointly supported by the Gordon and Betty Moore Foundation and HHMI at HHMI’s Janelia Research Campus. We thank Lin Shao and Teng-Leong Chew at Janelia AIC for their help with SIM imaging.

This work was supported by the Swiss National Science Foundation project grant (SNSF; 182429 to S.M., D.M. and W.L.S.), and National Centre for Competence in Research (NCCR Chemical Biology; S.M. and D.M.); and the European Union’s H2020 programme under the European Research Council (ERC; CoG 819823 Piko, S.M. and C.Z.), and the Marie Skłodowska-Curie Fellowships (890169 BALTIC, J.G.). MW is supported by the EPFL School of Life Sciences and a generous foundation represented by CARIGEST SA.

Author contributions

D.M., J.G. and S.M. conceived and designed the project. D.M., M.W. and S.M. supervised the project. D.M. collected the training data and performed the experiments on mitochondrial division. D.M. and M.W. implemented the neural network for event detection. W.L.S. and D.M. implemented the EDA framework and performed data analysis. W.L.S. developed the Python plugin for Micro-Manager and its documentation. W.L.S. performed the experiments on C. crescentus. C.Z. cultivated the C. crescentus strains and prepared samples for imaging. W.L.S. prepared the figures. D.M., W.L.S. and S.M. wrote the manuscript with contributions from all authors.

Competing Interests Statement

The authors declare that they have no conflict of interest.

Data Availability

The data contained in this manuscript and the training data for the model used can be found at 10.5281/zenodo.5548354.

Code Availability

All code used in this project is available at https://github.com/LEB-EPFL/EDA. The Python plugin described can be found at https://github.com/wl-stepp/eda_plugin.

References

- 1. Laissue PP, Alghamdi RA, Tomancak P, Reynaud EG, Shroff H. Assessing Phototoxicity in Live Fluorescence Imaging. Nat Methods. 2017 July;14:657–661. doi: 10.1038/nmeth.4344. ISSN: 1548-7091, 1548-7105.

- 2. Scherf N, Huisken J. The Smart and Gentle Microscope. Nat Biotechnol. 2015 Aug;33:815–818. doi: 10.1038/nbt.3310. ISSN: 1087-0156, 1546-1696.

- 3. Grimm JB, Lavis LD. Caveat Fluorophore: An Insiders’ Guide to Small-Molecule Fluorescent Labels. Nat Methods. 2022 Feb;19:149–158. doi: 10.1038/s41592-021-01338-6. ISSN: 1548-7091, 1548-7105.

- 4. Rodriguez EA, et al. The Growing and Glowing Toolbox of Fluorescent and Photoactive Proteins. Trends Biochem Sci. 2017 Feb;42:111–129. doi: 10.1016/j.tibs.2016.09.010. ISSN: 0968-0004.

- 5. Weigert M, et al. Content-Aware Image Restoration: Pushing the Limits of Fluorescence Microscopy. Nat Methods. 2018 Dec;15:1090–1097. doi: 10.1038/s41592-018-0216-7. ISSN: 1548-7105.

- 6. Jin L, et al. Deep Learning Enables Structured Illumination Microscopy with Low Light Levels and Enhanced Speed. Nat Commun. 2020 Apr;11:1934. doi: 10.1038/s41467-020-15784-x. ISSN: 2041-1723.

- 7. Ouyang W, Aristov A, Lelek M, Hao X, Zimmer C. Deep Learning Massively Accelerates Super-Resolution Localization Microscopy. Nat Biotechnol. 2018 May;36:460–468. doi: 10.1038/nbt.4106. ISSN: 1546-1696.

- 8. Chen J, et al. Three-Dimensional Residual Channel Attention Networks Denoise and Sharpen Fluorescence Microscopy Image Volumes. Nat Methods. 2021 June;18:678–687. doi: 10.1038/s41592-021-01155-x. ISSN: 1548-7105.

- 9. Hoebe RA, et al. Controlled Light-Exposure Microscopy Reduces Photobleaching and Phototoxicity in Fluorescence Live-Cell Imaging. Nat Biotechnol. 2007 Feb;25:249–253. doi: 10.1038/nbt1278. ISSN: 1546-1696.

- 10. Hoebe RA, Van der Voort HTM, Stap J, Van Noorden CJF, Manders EMM. Quantitative Determination of the Reduction of Phototoxicity and Photobleaching by Controlled Light Exposure Microscopy. J Microsc. 2008 July;231:9–20. doi: 10.1111/j.1365-2818.2008.02009.x. ISSN: 1365-2818.

- 11. Heine J, et al. Adaptive-Illumination STED Nanoscopy. Proc Natl Acad Sci USA. 2017 Sept;114:9797–9802. doi: 10.1073/pnas.1708304114. ISSN: 0027-8424, 1091-6490.

- 12. Dreier J, et al. Smart Scanning for Low-Illumination and Fast RESOLFT Nanoscopy in Vivo. Nat Commun. 2019 Dec;10:556. doi: 10.1038/s41467-019-08442-4. ISSN: 2041-1723.

- 13. Chu KK, Lim D, Mertz J. Enhanced Weak-Signal Sensitivity in Two-Photon Microscopy by Adaptive Illumination. Opt Lett. 2007 Oct;32:2846–2848. doi: 10.1364/ol.32.002846. ISSN: 0146-9592.

- 14. Li B, Wu C, Wang M, Charan K, Xu C. An Adaptive Excitation Source for High-Speed Multiphoton Microscopy. Nat Methods. 2020 Feb;17:163–166. doi: 10.1038/s41592-019-0663-9. ISSN: 1548-7105.

- 15. Pinkard H, et al. Learned Adaptive Multiphoton Illumination Microscopy for Large-Scale Immune Response Imaging. Nat Commun. 2021 Mar;12:1916. doi: 10.1038/s41467-021-22246-5. ISSN: 2041-1723.

- 16. Chakrova N, Canton AS, Danelon C, Stallinga S, Rieger B. Adaptive Illumination Reduces Photobleaching in Structured Illumination Microscopy. Biomed Opt Express. 2016 Oct;7:4263. doi: 10.1364/BOE.7.004263. ISSN: 2156-7085, 2156-7085.

- 17. Mahecic D, et al. Mitochondrial Membrane Tension Governs Fission. Cell Reports. 2021 Apr;35:108947. doi: 10.1016/j.celrep.2021.108947. ISSN: 2211-1247.

- 18. Lambert A, et al. Constriction Rate Modulation Can Drive Cell Size Control and Homeostasis in C. Crescentus. iScience. 2018 June;4:180–189. doi: 10.1016/j.isci.2018.05.020. ISSN: 2589-0042.

- 19. Zhou X, et al. Mechanical Crack Propagation Drives Millisecond Daughter Cell Separation in Staphylococcus Aureus. Science. 2015 May;348:574–578. doi: 10.1126/science.aaa1511. ISSN: 0036-8075.

- 20. Edelstein AD, et al. Advanced Methods of Microscope Control Using μManager Software. J Biol Methods. 2014 Nov;1:e10. doi: 10.14440/jbm.2014.36. ISSN: 2326-9901.

- 21. Pinkard H, Stuurman N, Corbin K, Vale R, Krummel MF. Micro-Magellan: Open-Source, Sample-Adaptive, Acquisition Software for Optical Microscopy. Nat Methods. 2016 Oct;13:807–809. doi: 10.1038/nmeth.3991. ISSN: 1548-7091, 1548-7105.

- 22. Almada P, et al. Automating Multimodal Microscopy with NanoJ-Fluidics. Nat Commun. 2019 Dec;10:1223. doi: 10.1038/s41467-019-09231-9. ISSN: 2041-1723.

- 23. Yan X, et al. High-Content Imaging-Based Pooled CRISPR Screens in Mammalian Cells. Journal of Cell Biology. 2021 Jan;220:e202008158. doi: 10.1083/jcb.202008158. ISSN: 0021-9525.

- 24. York AG, et al. Instant Super-Resolution Imaging in Live Cells and Embryos via Analog Image Processing. Nat Methods. 2013 Nov;10:1122–1126. doi: 10.1038/nmeth.2687. ISSN: 1548-7091, 1548-7105.

- 25. Mahecic D, et al. Homogeneous Multifocal Excitation for High-Throughput Super-Resolution Imaging. Nat Methods. 2020 July;17:726–733. doi: 10.1038/s41592-020-0859-z. ISSN: 1548-7091, 1548-7105.

- 26. Twig G, et al. Fission and Selective Fusion Govern Mitochondrial Segregation and Elimination by Autophagy. EMBO J. 2008 Jan;27:433–446. doi: 10.1038/sj.emboj.7601963. ISSN: 1460-2075.

- 27. Sekh AA, et al. Physics-Based Machine Learning for Subcellular Segmentation in Living Cells. 2021 Dec; ISSN: 2522-5839.

- 28. Fischer CA, et al. MitoSegNet: Easy-to-use Deep Learning Segmentation for Analyzing Mitochondrial Morphology. iScience. 2020 Sept;23:101601. doi: 10.1016/j.isci.2020.101601. ISSN: 2589-0042.

- 29. Lihavainen E, Mäkelä J, Spelbrink JN, Ribeiro AS. Mytoe: Automatic Analysis of Mitochondrial Dynamics. Bioinformatics. 2012 Apr;28:1050–1051. doi: 10.1093/bioinformatics/bts073. ISSN: 1367-4811.

- 30. Peng J-Y, et al. Automatic Morphological Subtyping Reveals New Roles of Caspases in Mitochondrial Dynamics. PLoS Computational Biology. 2011;7:14. doi: 10.1371/journal.pcbi.1002212.

- 31. Valente AJ, Maddalena LA, Robb EL, Moradi F, Stuart JA. A Simple ImageJ Macro Tool for Analyzing Mitochondrial Network Morphology in Mammalian Cell Culture. Acta Histochem. 2017 Apr;119:315–326. doi: 10.1016/j.acthis.2017.03.001. ISSN: 1618-0372.

- 32. Leonard AP, et al. Quantitative Analysis of Mitochondrial Morphology and Membrane Potential in Living Cells Using High-Content Imaging, Machine Learning, and Morphological Binning. Biochim Biophys Acta. 2015 Feb;1853:348–360. doi: 10.1016/j.bbamcr.2014.11.002. ISSN: 0006-3002.

- 33. Lefebvre AEYT, Ma D, Kessenbrock K, Lawson DA, Digman MA. Automated Segmentation and Tracking of Mitochondria in Live-Cell Time-Lapse Images. Nat Methods. 2021 Sept;18:1091–1102. doi: 10.1038/s41592-021-01234-z. ISSN: 1548-7105.

- 34. Smirnova E, Griparic L, Shurland D-L, van der Bliek AM. Dynamin-Related Protein Drp1 Is Required for Mitochondrial Division in Mammalian Cells. Mol Biol Cell. 2001 Aug;12:2245–2256. doi: 10.1091/mbc.12.8.2245. ISSN: 1059-1524.

- 35. Kamerkar SC, Kraus F, Sharpe AJ, Pucadyil TJ, Ryan MT. Dynamin-Related Protein 1 Has Membrane Constricting and Severing Abilities Sufficient for Mitochondrial and Peroxisomal Fission. Nat Commun. 2018 Dec;9:5239. doi: 10.1038/s41467-018-07543-w. ISSN: 2041-1723.

- 36. Kleele T, et al. Distinct Fission Signatures Predict Mitochondrial Degradation or Biogenesis. Nature. 2021 May;593:435–439. doi: 10.1038/s41586-021-03510-6. ISSN: 1476-4687.

- 37. Ugarte-Uribe B, Müller H-M, Otsuki M, Nickel W, García-Sáez AJ. Dynamin-Related Protein 1 (Drp1) Promotes Structural Intermediates of Membrane Division. J Biol Chem. 2014 Oct;289:30645–30656. doi: 10.1074/jbc.M114.575779. ISSN: 1083-351X.

- 38. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv. 2015 May; arXiv:1505.04597 [cs].

- 39. Andersson SG, et al. The Genome Sequence of Rickettsia Prowazekii and the Origin of Mitochondria. Nature. 1998 Nov;396:133–140. doi: 10.1038/24094. ISSN: 0028-0836.

- 40. Kilian N, et al. Assessing Photodamage in Live-Cell STED Microscopy. Nat Methods. 2018 Oct;15:755–756. doi: 10.1038/s41592-018-0145-5. ISSN: 1548-7091.

- 41. Eisenstein M. Smart Solutions for Automated Imaging. Nat Methods. 2020 Nov;17:1075–1079. doi: 10.1038/s41592-020-00988-2. ISSN: 1548-7091, 1548-7105.

- 42. Waithe D, et al. Object Detection Networks and Augmented Reality for Cellular Detection in Fluorescence Microscopy. J Cell Biol. 2020 Oct;219:e201903166. doi: 10.1083/jcb.201903166. ISSN: 1540-8140.

- 43. Kechkar A, Nair D, Heilemann M, Choquet D, Sibarita J-B. Real-Time Analysis and Visualization for Single-Molecule Based Super-Resolution Microscopy. PLOS ONE. 2013 Apr;8:e62918. doi: 10.1371/journal.pone.0062918. ISSN: 1932-6203.

- 44. Royer LA, et al. Adaptive Light-Sheet Microscopy for Long-Term, High-Resolution Imaging in Living Organisms. Nat Biotechnol. 2016 Dec;34:1267–1278. doi: 10.1038/nbt.3708. ISSN: 1087-0156, 1546-1696.

- 45. Štefko M, Ottino B, Douglass KM, Manley S. Autonomous Illumination Control for Localization Microscopy. Opt Express. 2018 Nov;26:30882. doi: 10.1364/OE.26.030882. ISSN: 1094-4087.

- 46. Durand A, et al. A Machine Learning Approach for Online Automated Optimization of Super-Resolution Optical Microscopy. Nat Commun. 2018 Dec;9:5247. doi: 10.1038/s41467-018-07668-y. ISSN: 2041-1723.

- 47. Balzarotti F, et al. Nanometer Resolution Imaging and Tracking of Fluorescent Molecules with Minimal Photon Fluxes. Science. 2017 Feb;355:606–612. doi: 10.1126/science.aak9913. ISSN: 1095-9203.

- 48. Cohen AE, Moerner WE. Method for Trapping and Manipulating Nanoscale Objects in Solution. Appl Phys Lett. 2005 Feb;86:093109. ISSN: 0003-6951, 1077-3118.

References

- 1. Ely B. Methods in Enzymology. Academic Press; 1991. Jan, pp. 372–384.

- 2. Schrader JM, Shapiro L. Synchronization of Caulobacter Crescentus for Investigation of the Bacterial Cell Cycle. J Vis Exp. 2015 Apr;52633. doi: 10.3791/52633. ISSN: 1940-087X.

- 3. Mahecic D, et al. Homogeneous Multifocal Excitation for High-Throughput Super-Resolution Imaging. Nat Methods. 2020 July;17:726–733. doi: 10.1038/s41592-020-0859-z. ISSN: 1548-7091, 1548-7105.

- 4. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv. 2015 May; arXiv:1505.04597 [cs].

- 5. Fiolka R, Shao L, Rego EH, Davidson MW, Gustafsson MGL. Time-Lapse Two-Color 3D Imaging of Live Cells with Doubled Resolution Using Structured Illumination. PNAS. 2012 Apr;109:5311–5315. doi: 10.1073/pnas.1119262109. ISSN: 0027-8424, 1091-6490.

- 6. Mahecic D, et al. Mitochondrial Membrane Tension Governs Fission. Cell Reports. 2021 Apr;35:108947. doi: 10.1016/j.celrep.2021.108947. ISSN: 2211-1247.

- 7. Chollet F. Keras. 2015.

- 8. Edelstein AD, et al. Advanced Methods of Microscope Control Using μManager Software. J Biol Methods. 2014 Nov;1:e10. doi: 10.14440/jbm.2014.36. ISSN: 2326-9901.

- 9. Czech E, Aksoy BA, Aksoy P, Hammerbacher J. Cytokit: A Single-Cell Analysis Toolkit for High Dimensional Fluorescent Microscopy Imaging. BMC Bioinformatics. 2019 Sept;20:448. doi: 10.1186/s12859-019-3055-3. ISSN: 1471-2105.
