Abstract

Corrected misinformation can continue to influence inferential reasoning. It has been suggested that such continued influence is partially driven by misinformation familiarity, and that corrections should therefore avoid repeating misinformation to avoid inadvertent strengthening of misconceptions. However, evidence for such familiarity-backfire effects is scarce. We tested whether familiarity backfire may occur if corrections are processed under cognitive load. Although misinformation repetition may boost familiarity, load may impede integration of the correction, reducing its effectiveness and therefore allowing a backfire effect to emerge. Participants listened to corrections that repeated misinformation while in a driving simulator. Misinformation familiarity was manipulated through the number of corrections. Load was manipulated through a math task administered selectively during correction encoding. Multiple corrections were more effective than a single correction; cognitive load reduced correction effectiveness, with a single correction entirely ineffective under load. This provides further evidence against familiarity-backfire effects and has implications for real-world debunking.

Keywords: Misinformation, continued influence effect, cognitive load, familiarity, familiarity backfire effect

General Audience Summary

Misinformation can continue to influence an individual’s reasoning even after a correction. This is known as the continued-influence effect. It has previously been suggested that this effect occurs (at least partially) due to the familiarity of the misinformation. This has led to recommendations to avoid repeating the misinformation within a correction, as this may increase misinformation familiarity and thus, ironically, false beliefs. However, it has proven difficult to find strong evidence for such familiarity-backfire effects. One situation that may produce familiarity-driven backfire is if misinformation is repeated within a correction while participants are distracted: misinformation repetition may automatically boost its familiarity, while the distraction may impede proper processing and integration of the correction. In this study, we investigated how misinformation repetition during distraction affected the continued-influence effect. The current study extends the generalizability of traditional misinformation research by asking participants to listen to misinformation and corrections while in a driving simulator. Misinformation familiarity was manipulated through the number of corrections provided that contained the misinformation. Distraction arose not only from the background task of driving in the simulator but was also manipulated through a secondary math task, which was administered selectively during the correction-encoding phase and required manual responses on a cockpit-mounted tablet. As hypothesized, cognitive load reduced the effectiveness of corrections. Furthermore, we found no evidence of familiarity-backfire effects, with multiple corrections being more effective in reducing misinformation reliance than a single correction. When participants were distracted, a single correction was entirely ineffective and multiple corrections were required to achieve a reduction in misinformation reliance.
This provides further evidence against familiarity-backfire effects under conditions maximally favourable to their emergence and implies that practitioners can debunk misinformation without fear of inducing ironic backfire effects.

Listening to Misinformation while Driving: Cognitive Load and the Effectiveness of (Repeated) Corrections

Misinformation—false information initially presented as true—can continue to influence people’s reasoning even after a retraction. This is known as the continued-influence effect (CIE; Chan et al., 2017; Ecker et al., 2022; Johnson & Seifert, 1994; Paynter et al., 2019; Rich & Zaragoza, 2016; Walter & Tukachinsky, 2020). Two complementary theoretical explanations have been put forth to explain the CIE: the mental-model and the selective-retrieval accounts.

The mental-model account proposes that people create mental models of events that are continuously updated. This account suggests that a retraction must be integrated into the model to be effective (Brydges et al., 2018; Gordon et al., 2017; Kendeou et al., 2014; Rapp & Kendeou, 2007; Richter & Singer, 2017). However, if a retraction invalidates a critical piece of information, such as an event’s cause, this can threaten model coherence and may lead to poor retraction integration (Gordon et al., 2017). Thus, continued misinformation reliance occurs when retraction integration and model updating fail. This is supported by findings that retraction effectiveness is enhanced when a causal alternative is provided, presumably because it can replace the misinformation in the model, removing the threat to model coherence (e.g., Chan et al., 2017; Ecker et al., 2019; Walter & Murphy, 2018).

By contrast, the selective-retrieval account assumes that the CIE occurs because, at test, misinformation is retrieved but the corrective information is not (Ayers & Reder, 1998; Lewandowsky et al., 2012). An extension of this account assumes dual processes (see Yonelinas, 2002), specifically that misinformation reliance occurs when misinformation is automatically retrieved due to its familiarity, while there is concurrent failure of strategic processes necessary to recollect the retraction (Ecker et al., 2010). This is supported by findings that misinformation familiarity and factors that impair strategic memory processes can increase the CIE (Rich & Zaragoza, 2020; Swire et al., 2017; but see Brydges et al., 2020). In light of this, the repetition of misinformation within a correction is often considered detrimental (Peter & Koch, 2016; Schwarz et al., 2007; Schwarz et al., 2016); this is because the familiarity of the misinformation is boosted, which increases the likelihood of later automatic retrieval. For example, the correction “the blood-clotting was not caused by the vaccination” repeats the key words “blood-clotting” and “vaccination”, which makes the association more familiar and may in turn increase later misinformation reliance notwithstanding the negation (e.g., Nyhan et al., 2014; Paynter et al., 2019).

Some studies have reported that familiarity can ironically even increase belief in the corrected information (Pluviano et al., 2017; Skurnik et al., 2007); this has been termed the familiarity-backfire effect (Lewandowsky et al., 2012). However, there have been several failures to replicate this finding, and it has not been produced even in theoretically favourable circumstances (Cameron et al., 2013; Ecker, Lewandowsky et al., 2020; Ecker et al., 2011; Ecker, O’Reilly et al., 2020; Rich & Zaragoza, 2016; Swire et al., 2017; also see Swire-Thompson et al., 2020, 2022; Walter & Tukachinsky, 2020). For example, in the study by Ecker et al. (2011), if a piece of misinformation was presented three times, multiple retractions (each repeating the misinformation) were more effective than a single retraction. This is the opposite of what would be expected if familiarity were detrimental to belief updating. Some studies have even reported a beneficial effect of repeating the misinformation within a single correction (Carnahan & Garrett, 2019; Ecker et al., 2017; Wahlheim et al., 2020), presumably because the co-activation of misinformation and correction aids in conflict detection and knowledge revision, in line with the mental-model account (Ecker et al., 2017; Kendeou et al., 2014, 2019).

Thus, overall, there is no strong evidence for familiarity-backfire effects. However, it remains theoretically possible that a backfire effect could occur if a correction is processed under cognitive load. Cognitive load refers to the division of cognitive resources between multiple tasks. Load can impair memory processes, reducing depth of encoding and disrupting strategic retrieval (Craik et al., 1996; Fernandes & Moscovitch, 2000; Hicks & Marsh, 2000). Previous research has examined the effect of cognitive load during misinformation and retraction encoding (Ecker, Lewandowsky et al., 2020; Ecker et al., 2011; Szumowska et al., 2021). Ecker et al. (2011) found that a retraction reduced misinformation reliance only if the retraction was encoded without load. This suggests that retractions require full cognitive resources at encoding to be effective. At the same time, misinformation repetition might boost misinformation familiarity even under load, thus potentially facilitating familiarity-backfire effects. This possibility is supported by research on negations showing that people sometimes misremember negated information as true, especially when under load (Gilbert et al., 1990, 1993).

The Present Study

The current study investigated the effects of cognitive load and familiarity on the CIE under naturalistic conditions. Cognitive load applied during encoding of a retraction should increase the CIE in the retraction conditions inasmuch as load impairs integration and/or later retrieval of the correction. Furthermore, the repetition of misinformation may boost its subsequent automatic activation and familiarity-based retrieval even under load, leading to a greater CIE and potentially a familiarity-backfire effect. From an applied perspective, understanding the impact of cognitive load is important because in today’s media landscape, people are exposed to an abundance of (mis)information, often while other tasks compete for their cognitive resources (e.g., listening to the radio while driving, or scrolling through social media while having a conversation; Chotpitayasunondh & Douglas, 2018).

To this end, participants were presented auditorily with misinformation and retractions under the guise of a radio news segment while in a driving simulator. A driving simulator was used to enhance ecological validity; it was not intended to be the primary load task. A more specific manipulation of cognitive load was achieved through a secondary math task applied during retraction encoding and integration; this task allowed tight control over the onset of load and was known to be sufficiently taxing (Bowden et al., 2019). Familiarity was manipulated through repetition of retractions containing the misinformation (i.e., no-retraction control, 1-retraction, 3-retractions). Participants’ inferential reasoning—their reliance on the critical information when reasoning about the event—was measured with a series of inference questions probing the misinformation.

It was hypothesized that (1) cognitive load would hinder retraction encoding and/or integration and thus later retrieval of the retraction, resulting in less effective correction (i.e., a larger CIE compared to no-load retraction conditions). We expected load to only interfere with correction processing, which is challenging due to the need for conflict resolution and model updating. We did not expect load to interfere with basic information processing generally; load of a secondary task should only matter if load across tasks exceeds capacity limits. We therefore did not predict a load effect in the no-retraction condition. It was also hypothesized that (2) correction repetition would facilitate its encoding and integration, resulting in more effective correction (i.e., a smaller CIE) relative to a single retraction. Previous research has found that multiple corrections or counterarguments can increase correction effectiveness (Ecker et al., 2019; Vraga & Bode, 2017; Walter & Tukachinsky, 2020), although this is sometimes only observed when the misinformation is encoded particularly strongly (Ecker et al., 2011). Finally, (3) if load hinders retraction encoding/integration, we expected the effect of repetition to be smaller under load (i.e., a load-by-retraction interaction). Although we did not predict a familiarity-backfire effect, it was deemed theoretically most likely with multiple retractions under load.

Method

This study used a 2 × 3 within-subjects design crossing load (no-load vs. load) and retraction (no-retraction, 1-retraction, 3-retractions) conditions. The dependent variable was participants’ inference score, reflecting their reliance on the critical information when reasoning about the event, derived from their responses to the post-manipulation inference questions. Compared with memory questions, inference questions rely more on reasoning and judgement about the event.

Participants

We tested N = 259 undergraduate students from The University of Western Australia, who participated in exchange for course credit. While the data were analysed with a linear mixed-effects model, an a-priori power analysis assuming a 2 × 3 repeated measures ANOVA served as a rough guide. Using MorePower 6.0.4 (Campbell & Thompson, 2012), this indicated a minimum sample size of 192 to detect a small (f = .20) main effect of the two-level factor with α = .05 and 1 - β = .80. The sample comprised 166 females, 92 males and one participant of undisclosed gender; mean age was M = 20.24 years (SD = 4.73), ranging from 17 to 50 years. Participants were required to have at least a probationary driver’s license. Participants received a bonus of up to AU$3 at the end of the experiment as a driving-performance incentive (see below for details).

Apparatus

Driving Simulator

Driving simulators have been shown to be effective in mimicking real driving experiences (Reed & Green, 1999; Underwood et al., 2011). The driving simulator used Oktal’s SCANeR Studio software (Version 1.4) and comprised three parallel 27-inch monitors, providing a 135° wide-field video display, housed in an Obutto cockpit. The central monitor represented the front windscreen and included a central rear-vision mirror and digital speedometer; the two side monitors provided side views with mirrors (see Figure 1). Participants were seated approximately 85 cm from the central monitor and controlled the simulated automatic-transmission vehicle using a Logitech gaming steering wheel and pedal set. The simulated vehicle and environment were configured for right-hand drive conditions. Participants wore a set of headphones, through which the news reports were presented. An 8-inch Samsung Galaxy tablet (for the secondary math task) was mounted to the right of the steering wheel and within participants’ reach.

Figure 1. Driving Simulator Set-Up.

Note. The left panel shows the driving simulator with the tablet device positioned next to the steering wheel. The right panel shows the central monitor view of the driving environment with the rear-view mirror and digital speedometer displayed.¹

Materials

Auditory Event Reports

Six auditory event reports detailing fictitious newsworthy events (e.g., an emergency airplane landing) were used in the present study. The reports were presented as a radio news broadcast with host and guest-reporter segments. The first segment of each report was presented by the host and contained a critical piece of information about the cause of the event (e.g., extreme weather conditions). The second segment was presented by a guest reporter and either retracted the critical information (e.g., the airplane’s difficulties were not due to the weather) or did not. All reports existed in no-retraction, 1-retraction, and 3-retraction versions. In the 3-retraction version, the retraction was repeated three times, as opposed to only once in the 1-retraction version (see Table 1); the no-retraction version contained no retraction at all. For each participant, four of the reports contained a retraction (either 1-retraction or 3-retractions), while the remaining two reports contained no retraction. The scenario order and the assignment of scenarios to load and retraction conditions were counterbalanced across participants using a Graeco-Latin square (see Table 2; each participant received one of six survey versions). As no complete Graeco-Latin square of order 6 exists, some aspects had to be prioritized over others when designing the experiment. We prioritized that each participant received each scenario once, each retraction condition twice, and each load condition three times, and that load and no-load conditions alternated to achieve temporally distinct periods of load. Prioritizing these aspects meant that not all participants received every combination of retraction and load conditions. We therefore used mixed-effects modelling, which can accommodate such unbalanced designs. See the Supplement for transcripts of all reports. Audio files are available at: https://osf.io/qma2g/.

Table 1. Retraction Statements across Retraction Conditions and Event Report Scenarios.

| Event Report Scenario | 1-Retraction | 3-Retraction (boldface for misinformation repetitions added for emphasis) |
|---|---|---|
| A – Bushfire | After further investigation, we now believe the fire was not caused by arson. | Our initial thoughts that the fire was deliberately lit were unfounded. We have now had some time to look at the evidence, so after further investigation, we now believe the fire was not caused by arson. We are lucky that no one was harmed by the fire, and some of the residents really feel that they have avoided disaster. … I think they are also reassured that this was not the work of an arsonist. |
| B – Emergency Landing | Investigators have found that previous attributions to extreme weather were incorrect. | Investigators have found that previous attributions to extreme weather were incorrect; there was a press conference earlier where a statement was issued that extreme weather did not play any role in the emergency landing. The airport has provided passengers with accommodation and complimentary food on behalf of the airline. … The airline has been informed that weather was not the cause of the issue and are still investigating. |
| C – Woman’s collapse | The doctors have now ruled out drink-spiking as the cause of her symptoms. | The doctors have now ruled out drink-spiking as the cause of her symptoms. Further tests are being conducted on Sophia, but there is no evidence that her drink was spiked, and she is due to be released from hospital later today. … While drink spiking didn’t cause Sophia’s collapse, she urged party-goers to execute caution at nightclubs. |
| D – Train Derailment | The transport authorities have concluded that speed was not a factor in the derailment. | The transport authorities have concluded that speed was not a factor in the derailment. … The passenger described that there was a moment of silence and weightlessness before a horrible bang and the sound of screeching metal, but there is no evidence that the train was going excessively fast. It seems like it was a terrifying event for all involved, but ultimately, speed is not considered to have played a role. |
| E – Fish kill | Tests by the local water department have now confirmed that a chemical spill was not the cause. | Tests by the local water department have now confirmed that a chemical spill was not the cause. … While there is no evidence that chemicals contributed to the fish deaths, the water-intake shutdown was a critical issue for the region, as recent drought periods have resulted in record low water levels. … Residents have been reassured that a chemical spill was not the cause of the fish kill, and most importantly, that the local drinking water is as safe as it has ever been. |
| F – Death of a drug dealer | Authorities have revealed that an autopsy report has found the original suspicions of a death by assault were false. | Authorities have revealed that the original suspicions of a death by assault were false. An autopsy report has been handed down, which found no evidence to suggest the dealer was assaulted. On behalf of the family, the deceased man’s sister said they were extremely upset by their family member’s death but were relieved that it was not due to an assault. |

Table 2. Survey Versions Used.

| SV/Position | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| V1 | noL-1R-A | L-3R-B | noL-0R-C | L-1R-D | noL-0R-E | L-3R-F |
| V2 | noL-0R-F | L-1R-E | noL-3R-D | L-3R-C | noL-1R-B | L-0R-A |
| V3 | noL-3R-E | L-0R-D | noL-1R-F | L-0R-B | noL-3R-A | L-1R-C |
| V4 | L-0R-E | noL-1R-D | L-1R-A | noL-3R-F | L-0R-C | noL-3R-B |
| V5 | L-1R-B | noL-3R-C | L-0R-F | noL-0R-A | L-3R-D | noL-1R-E |
| V6 | L-3R-A | noL-0R-B | L-3R-E | noL-1R-C | L-1R-F | noL-0R-D |

Note. SV, survey version; noL, no load; L, load; 0R, no retraction; 1R, one retraction; 3R, three retractions; A-F, event-report scenarios.
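The prioritized counterbalancing constraints described under Auditory Event Reports can be checked mechanically against Table 2. The following Python sketch is an illustrative check constructed from the table, not part of the authors' materials; the names `TABLE_2`, `parse`, `check_version`, and `scenario_cell_counts` are hypothetical. It verifies that each version presents every scenario once, each retraction condition twice, and each load condition three times with load alternating, and it counts which load × retraction cells each version covers.

```python
from collections import Counter

# Cell codes copied from Table 2 (load-retraction-scenario), in presentation order.
TABLE_2 = {
    "V1": "noL-1R-A L-3R-B noL-0R-C L-1R-D noL-0R-E L-3R-F",
    "V2": "noL-0R-F L-1R-E noL-3R-D L-3R-C noL-1R-B L-0R-A",
    "V3": "noL-3R-E L-0R-D noL-1R-F L-0R-B noL-3R-A L-1R-C",
    "V4": "L-0R-E noL-1R-D L-1R-A noL-3R-F L-0R-C noL-3R-B",
    "V5": "L-1R-B noL-3R-C L-0R-F noL-0R-A L-3R-D noL-1R-E",
    "V6": "L-3R-A noL-0R-B L-3R-E noL-1R-C L-1R-F noL-0R-D",
}


def parse(version):
    """Split one survey version into (load, retraction, scenario) tuples."""
    return [tuple(cell.split("-")) for cell in TABLE_2[version].split()]


def check_version(cells):
    """True if a version meets the prioritized constraints."""
    loads = [load for load, _, _ in cells]
    return (
        sorted(scenario for _, _, scenario in cells) == list("ABCDEF")  # each scenario once
        and Counter(r for _, r, _ in cells) == Counter({"0R": 2, "1R": 2, "3R": 2})
        and Counter(loads) == Counter({"noL": 3, "L": 3})
        and all(a != b for a, b in zip(loads, loads[1:]))  # load and no-load alternate
    )


def scenario_cell_counts():
    """Count each (scenario, load, retraction) cell across all six versions."""
    return Counter((s, load, r) for v in TABLE_2 for load, r, s in parse(v))


if __name__ == "__main__":
    for version in TABLE_2:
        cells = parse(version)
        covered = len({(load, r) for load, r, _ in cells})
        print(f"{version}: constraints met = {check_version(cells)}, "
              f"load x retraction cells covered = {covered} of 6")
    counts = scenario_cell_counts()
    print("every scenario in every cell exactly once:",
          len(counts) == 36 and set(counts.values()) == {1})
```

Run as a script, this reports that all six versions satisfy the constraints, that only V2 and V5 cover all six load × retraction cells (the others cover four), and that across versions each scenario appears exactly once in each load × retraction cell.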

Inferential Reasoning Questions

For each event, four inference questions were used to assess participants’ reliance on the critical information. Three of the inference questions involved statements (e.g., “Bad weather contributed to the emergency landing”) that participants rated on an 11-point Likert scale (0 “completely disagree” to 10 “completely agree”). The final inference question was a direct choice as to what caused the event (e.g., “What do you think was the main cause of the incident?” – a. Bad weather; b. Rudder deterioration; c. Foul play; d. Pilot error; e. None of the above); a recognition format (instead of recall) was used to encourage the use of familiarity-based judgements rather than recollection. See the Supplement for all inference questions.

Memory Questions

Memory for each report was assessed with four multiple-choice memory questions (e.g., “Where was the airplane flying to?” – a. Washington; b. Los Angeles; c. Boston; d. San Francisco). See the Supplement for all memory questions.

Driving Task

Participants drove at 50 km/h along a continuous four-lane road and were instructed to keep to the outside lane of the road. Participants did not turn off the main road at any point and no other vehicles appeared in their lane. The other three lanes had light traffic density (approx. 5 vehicles per minute). Participants were informed that they would receive a bonus of up to AU$3, which would be reduced gradually (eventually down to $0) if they drove either too slowly or too quickly. This was done to incentivise participants to travel close to the speed limit, as they would in real-world driving.

Load Task

The load task consisted of single-digit addition problems (e.g., 3 + 7; Harbluk et al., 2007). The problems were presented using a custom-built Android application on the tablet. The problems appeared on the tablet screen and participants responded by manually typing their answers using a number pad displayed on the tablet. At the end of each load period, the current addition problem disappeared and was replaced by a message instructing participants that the task had ended. An auditory chime provided accuracy feedback after each question was answered. This task was designed to mimic real-world distractor tasks such as replying to a text message on a phone or entering a new route on a navigation system while driving. This task has been demonstrated to have a detrimental effect on driving performance (see Bowden et al., 2019).

Participants were presented with a total of three load periods in the experiment. The load task targeted retraction encoding and integration: it began approx. 3 s before the (first) retraction sentence and lasted until approx. 10 s after the (last) retraction sentence. In the no-retraction condition, load was timed relative to a filler sentence placed at the same position in the narrative as the retraction sentence in the 1-retraction condition. The load duration was on average 18 s in the no-retraction and 1-retraction conditions, and 37 s in the 3-retraction condition.

Procedure

Participants first completed a 6-min practice phase to familiarize them with the driving simulation and the addition task. Participants were then informed about the bonus, and started the driving task. The news audio started 30 s after participants started driving. Participants listened to the six event reports while completing the load task at certain points depending on condition. Participants then responded to the inference and memory questions for each report on a separate computer outside the simulator via a survey (following precedent; e.g., Ecker et al., 2014). Finally, participants were asked if they had put in a reasonable effort and whether their data should be used for analysis (with response options “Yes, I put in reasonable effort”; “Maybe, I was a little distracted”; or “No, I really wasn’t paying any attention”), before being debriefed. The entire experiment took approx. 35 min.

Scoring

Memory Scores

Memory scores were calculated from responses to the four multiple-choice memory questions for each scenario. Correct responses were given a score of 1 and incorrect responses received a score of 0. The maximum score that could be obtained was 24 (i.e., a maximum score of 4 for each event report).

Inference Scores

For each report, an inference score was calculated as the mean response to the three rating-scale inference questions. Higher inference scores indicated greater misinformation reliance, with a maximum possible score of 10. This was the main dependent variable. For the multiple-choice inference question, the response option associated with the critical information was scored 1 and the lure options were scored 0.²
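The per-report scoring rules above are simple enough to state as code. A minimal Python sketch, assuming responses for one report are stored as plain lists; the function names and example responses are hypothetical and are not participant data.

```python
from statistics import mean


def rating_inference_score(ratings):
    """Mean of the three 0-10 rating-scale inference items for one report.

    Higher scores indicate greater reliance on the critical information.
    """
    if len(ratings) != 3 or not all(0 <= r <= 10 for r in ratings):
        raise ValueError("expected three ratings on the 0-10 scale")
    return mean(ratings)


def choice_inference_score(choice, critical_option):
    """Score 1 if the option naming the critical information was chosen, else 0."""
    return int(choice == critical_option)


def memory_score(answers, answer_key):
    """Number of correct multiple-choice memory answers for one report (0-4)."""
    if len(answers) != len(answer_key):
        raise ValueError("answers and answer key differ in length")
    return sum(a == k for a, k in zip(answers, answer_key))


if __name__ == "__main__":
    # Hypothetical responses for one report.
    print(rating_inference_score([7, 6, 8]))
    print(choice_inference_score("a", "a"))  # 1 ("a. Bad weather" in the example item)
    print(memory_score(list("bcad"), list("bcdd")))  # 3
```

Summing `memory_score` over the six reports gives the 0–24 total used for the exclusion criterion.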

Results

Using a-priori criteria, data were excluded if participants (1) reported poor English skills (n = 0), (2) reported putting in no effort (via the self-reported effort question; n = 2), or (3) answered fewer than six of the 24 memory questions correctly (n = 2; cumulative probability of at least six correct responses when guessing: p = .578). Additionally, n = 22 participants were excluded due to technical issues with the tablet or failure to follow instructions regarding the math task. Overall, n = 26 participants met at least one of these exclusion criteria and were removed, leaving a final sample size of N = 233.
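The guessing probability for the memory criterion follows from a binomial model. The sketch below assumes, based on the example memory item, that every memory question had four response options, so a guessing participant answers each question correctly with probability 1/4, independently across the 24 questions.

```python
from math import comb


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more correct by guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# 24 memory questions; four response options per question is an assumption
# based on the example item, giving a guessing rate of 1/4.
p_guess = prob_at_least(6, 24, 0.25)
print(f"{p_guess:.3f}")  # 0.578
```

Because the expected number correct under guessing is 24 × 1/4 = 6, the criterion removes only participants scoring below the level expected from guessing alone.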

Distraction Task

As expected, distraction task accuracy was at ceiling, M = .98 (SD = .14), indicating that participants were appropriately engaged in the simple addition task.

Driving Performance

Three measures were used to assess participants’ driving performance (based on Bowden et al., 2019): average speed (km/h); speed variability, the standard deviation of a participant’s speed (km/h); and positional variability, that is, participants’ ability to maintain a consistent position within their lane, measured as the standard deviation of the car’s position with respect to the center of the lane (m). Participants’ average speed was M = 49.54 km/h (SD = 1.08) and their speed variability was M = 2.20 km/h (SD = 0.73), indicating that participants generally followed the instruction to maintain a speed close to 50 km/h. Participants’ average positional variability was M = 0.25 m (SD = 0.06).
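The three driving measures are summary statistics over the samples recorded in one driving period. A minimal Python sketch, assuming per-sample speed (km/h) and signed lateral offset from the lane center (m) are available as lists; the function name and input layout are hypothetical and do not describe SCANeR Studio's export format. The source does not state whether sample or population standard deviations were used; the sketch assumes the sample SD.

```python
from statistics import mean, stdev


def driving_measures(speed_kmh, lateral_offset_m):
    """Summarize one driving period (hypothetical input layout).

    speed_kmh: vehicle speed samples in km/h.
    lateral_offset_m: signed distance of the car from the lane center in m.
    """
    if len(speed_kmh) < 2 or len(lateral_offset_m) < 2:
        raise ValueError("need at least two samples to compute a standard deviation")
    return {
        "average_speed_kmh": mean(speed_kmh),
        # Speed variability: standard deviation of speed (sample SD assumed).
        "speed_variability_kmh": stdev(speed_kmh),
        # Positional variability: standard deviation of lateral position (sample SD assumed).
        "positional_variability_m": stdev(lateral_offset_m),
    }


if __name__ == "__main__":
    # Toy samples for illustration only, not data from the study.
    print(driving_measures([48.0, 50.0, 52.0, 50.0], [0.1, -0.1, 0.2, -0.2]))
```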

We then assessed the impact of the distractor task on these driving-performance indicators. Note that increases in speed and positional variability may reflect potential safety costs associated with distraction, whereas a reduction in average speed may reflect compensatory strategic behavior. Periods of load were compared with two no-load baselines: either an equivalent time period from a corresponding no-load event report (i.e., an across-scenario comparison) or a time period from the same event report immediately preceding the onset of load (i.e., a within-scenario comparison). Comparisons used averages across the three no-load and three load periods. Load affected all three measures (each t value below is a bound that holds for both baseline comparisons): average speed, t(232) ≥ 6.58, p < .001, indicating that participants drove more slowly under load; speed variability, t(232) ≤ -12.65, p < .001, indicating poorer speed control under load; and positional variability, t(232) ≤ -15.06, p < .001, indicating poorer lane-keeping under load. See Table S1 in the Supplement for descriptive statistics.
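Each load-versus-baseline comparison amounts to a paired-samples t-test on per-participant averages, with df = N − 1 = 232. The sketch below computes such a test in pure Python. It assumes differences are taken as baseline minus load, which is our inference from the reported signs (positive for average speed, negative for the two variability measures); the function name and toy values are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(baseline, load):
    """Paired-samples t-test on per-participant averages.

    baseline, load: one value per participant, in the same participant order.
    Differences are computed as baseline minus load (an assumed convention).
    Returns (t, df).
    """
    if len(baseline) != len(load) or len(baseline) < 2:
        raise ValueError("need two equal-length lists with at least two participants")
    diffs = [b - ld for b, ld in zip(baseline, load)]
    standard_error = stdev(diffs) / sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


if __name__ == "__main__":
    # Toy average speeds (km/h) for three hypothetical participants.
    t, df = paired_t([50.0, 49.0, 51.0], [49.0, 47.0, 50.0])
    print(f"t({df}) = {t:.2f}")  # t(2) = 4.00
```

With this convention, slower driving under load yields a positive t, and greater variability under load yields a negative t.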

Memory Scores

The sole purpose of the memory questions was to identify individuals who did not sufficiently encode the reports (i.e., participants who answered fewer than 6 of the 24 memory questions correctly). The mean memory score was M = 13.46 (SD = 3.02) out of 24.

Inference Scores

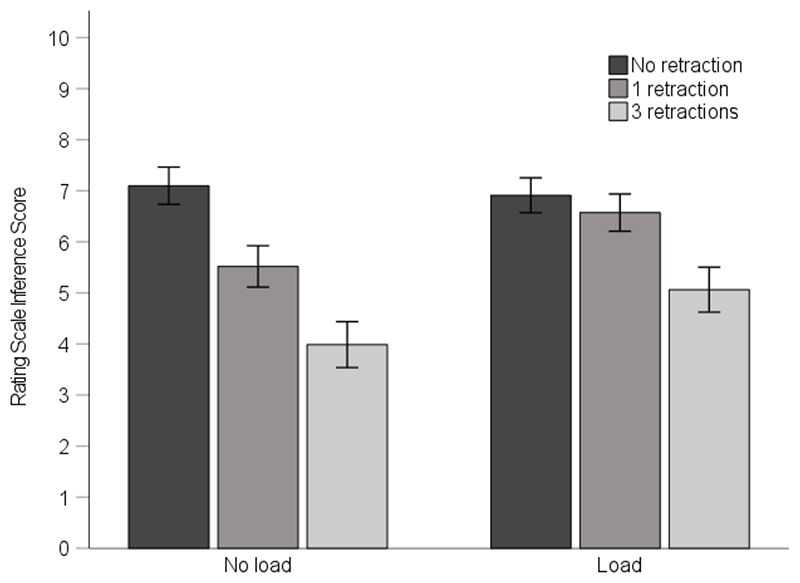

The mean rating-scale inference scores across load and retraction conditions are shown in Figure 2. The inference-score data were analysed using a linear mixed-effects model in RStudio (RStudio Team, 2020) with the lme4 (Bates et al., 2015) and lmerTest (Kuznetsova et al., 2017) packages. The data and R script are available on the Open Science Framework (https://osf.io/qma2g/). The initial model, which specified random intercepts and random slopes for the retraction × load interaction for each event-report scenario, did not converge. We therefore suppressed estimation of the random-effect correlation parameters to reduce model complexity, after which the model converged.³

Figure 2. Mean Rating Scale Inference Scores across Conditions.

Note. Error bars denote 95% confidence intervals.

Fitting this model (function lmer) yielded a significant main effect of retraction, showing that corrections facilitated belief updating (β = -1.24, SE = 0.09, t = -14.01, p < .001), as well as a significant main effect of load, indicating that load hindered belief updating (β = 0.64, SE = 0.20, t = 3.15, p = .029). There was also a significant retraction × load interaction (β = 0.57, SE = 0.19, t = 2.94, p = .003), indicating that the effect of load on belief updating depended on the retraction condition.⁴

We followed this up with simple-effects analyses⁵ to test our specific hypotheses (see Table 3). These demonstrated that, when encoded without load, a retraction reduced inference scores, and thus misinformation reliance, compared to no retraction (Contrast 1). Three retractions reduced inference scores further (Contrast 2). By contrast, one retraction processed under load did not significantly reduce inference scores relative to no retraction (Contrast 3), although three retractions under load did reduce inference scores relative to one retraction (Contrast 4), yielding a reduction of comparable magnitude to that associated with one retraction processed without load. Load had no significant effect when no retraction was provided (Contrast 5) but impaired the effectiveness of both single and multiple retractions (Contrasts 6 and 7).

Table 3. Simple Effects Analyses on Inference Scores.

| Contrast # | Contrast | β | SE | t | df | p |
|---|---|---|---|---|---|---|
| 1 | noL-0R vs. noL-1R | -1.61 | 0.25 | -6.39 | 342 | < .001 |
| 2 | noL-1R vs. noL-3R | -0.73 | 0.13 | -5.55 | 340 | < .001 |
| 3 | L-0R vs. L-1R | -0.37 | 0.22 | -1.68 | 327 | .094 |
| 4 | L-1R vs. L-3R | -0.74 | 0.13 | -5.67 | 352 | < .001 |
| 5 | noL-0R vs. L-0R | -0.24 | 0.23 | -1.05 | 451 | .294 |
| 6 | noL-1R vs. L-1R | 1.05 | 0.24 | 4.42 | 229 | < .001 |
| 7 | noL-3R vs. L-3R | 0.88 | 0.30 | 2.94 | 459 | .003 |

Note. noL, no load; L, load; 0R, no retraction; 1R, one retraction; 3R, three retractions.
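Each row of Table 3 can be sanity-checked: t should be close to β/SE (up to rounding of the printed β and SE), and p should equal the two-sided tail probability of Student's t at the listed df. The pure-Python sketch below computes that tail probability through the regularized incomplete beta function, using the standard continued-fraction evaluation. It is an illustrative consistency check we wrote, not the authors' analysis code (which used R), and the df are taken as printed without assuming which approximation produced them.

```python
import math

# Rows copied from Table 3: (contrast, beta, SE, t, df, reported p); None means "< .001".
TABLE_3 = [
    ("noL-0R vs. noL-1R", -1.61, 0.25, -6.39, 342, None),
    ("noL-1R vs. noL-3R", -0.73, 0.13, -5.55, 340, None),
    ("L-0R vs. L-1R", -0.37, 0.22, -1.68, 327, 0.094),
    ("L-1R vs. L-3R", -0.74, 0.13, -5.67, 352, None),
    ("noL-0R vs. L-0R", -0.24, 0.23, -1.05, 451, 0.294),
    ("noL-1R vs. L-1R", 1.05, 0.24, 4.42, 229, None),
    ("noL-3R vs. L-3R", 0.88, 0.30, 2.94, 459, 0.003),
]


def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    tiny = 1e-300
    c, d = 1.0, 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for this m.
        for aa in (m * (b - m) * x / ((a - 1.0 + m2) * (a + m2)),
                   -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc_regularized(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    # Evaluate directly where the fraction converges quickly; otherwise use
    # the symmetry I_x(a, b) = 1 - I_{1-x}(b, a).
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """Two-sided p of Student's t: P(|T| >= |t|) = I_{df/(df+t^2)}(df/2, 1/2)."""
    return betainc_regularized(df / 2.0, 0.5, df / (df + t * t))


if __name__ == "__main__":
    for name, beta, se, t, df, p_reported in TABLE_3:
        shown = "< .001" if p_reported is None else f"{p_reported:.3f}"
        print(f"{name:18s} beta/SE = {beta / se:6.2f}  t = {t:6.2f}  "
              f"p = {t_two_sided_p(t, df):.4f} (reported {shown})")
```

The small gaps between β/SE and t reflect rounding of the printed β and SE; the recomputed p values agree with the reported ones to three decimals.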

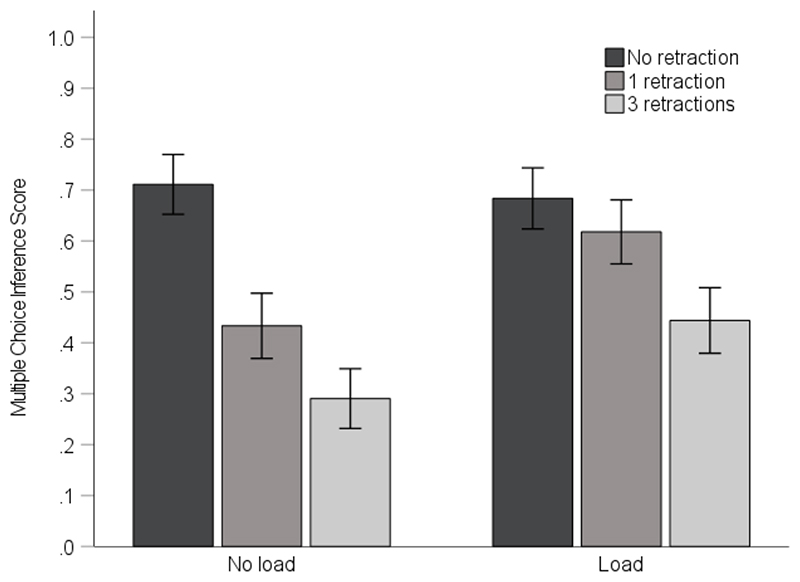

The mean multiple-choice inference scores across load and retraction conditions are shown in Figure 3. The multiple-choice scores were analysed with a binomial generalized linear mixed-effects model (function glmer). In line with the rating-scale analysis, this returned significant main effects of retraction (β = -0.88, SE = 0.09, z = -10.04, p < .001) and load (β = 0.55, SE = 0.18, z = 2.98, p = .003), as well as a significant retraction × load interaction (β = 0.42, SE = 0.17, z = 2.48, p = .013).

Figure 3. Mean Multiple Choice Inference Scores across Conditions.

Note. Error bars denote 95% confidence intervals.

Discussion

This study manipulated the strength of retraction encoding and integration through cognitive load, and tested for familiarity-backfire effects by manipulating the number of retractions repeating a piece of misinformation. It was hypothesized that (1) cognitive load during retraction processing would result in less effective correction; (2) correction repetition would increase correction effectiveness; and (3) correction repetition would be especially impactful without load. Results supported the first two hypotheses but not the third. Moreover, as expected, we found no evidence of a familiarity-driven backfire effect, even under conditions designed to be maximally conducive to one.

Retraction Effects

This study was one of the first to use auditory materials in a continued-influence paradigm (also see Gordon et al., 2017). Consistent with previous research, retractions encoded without load were effective in reducing misinformation reliance compared to a no-retraction condition (Ecker, O’Reilly et al., 2020; Johnson & Seifert, 1994; Lewandowsky et al., 2012; Paynter et al., 2019), and multiple corrections were more effective in reducing misinformation reliance than a single correction (Ecker et al., 2011, 2019; Vraga & Bode, 2017; Walter & Murphy, 2018).

Cognitive Load Effects

As predicted, retractions encoded under load were less effective than those encoded without load. Furthermore, load impaired a single retraction to the point that it was entirely ineffective: under load, reliance on the critical information did not differ significantly between the single-retraction and no-retraction conditions. Multiple retractions were able to overcome this, although they too were less effective than multiple retractions encoded without load. This is consistent with previous research showing that load impairs the effectiveness of a retraction, especially if the misinformation is encoded without load (Ecker et al., 2011; Szumowska et al., 2021). Therefore, when correcting misinformation, retractions should ideally be processed with full cognitive resources; if retractions are encoded while a person is distracted, multiple retractions are needed to reduce misinformation reliance.

Theoretically, this implies that load applied during retraction processing could disrupt integration of the retraction into the mental event model, or that poorer encoding could result in failure to subsequently retrieve the corrective information. However, we note that the predicted load-by-retraction interaction was only significant when including the no-retraction condition, indicating that the benefit of repetition was independent of load. This suggests that load nullified one retraction, or led to participants missing one or two retractions, while leaving the benefit associated with additional retractions intact.
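One way to see this pattern is to take differences between the simple effects in Table 3. The Python sketch below does this for the point estimates only. It assumes, from the signs in the table, that each β is the second condition of a contrast minus the first (e.g., contrast 1 is noL-1R minus noL-0R); that sign convention and the names in the code are illustrative assumptions. Because the footnotes indicate that each simple-effects model contained only the one relevant fixed effect, the contrasts come from separate models with no shared covariance, so these differences carry no standard errors and are descriptive only.

```python
# Simple-effect estimates (beta) for inference scores, from Table 3.
# Assumed sign convention: second condition minus first condition.
SIMPLE_EFFECTS = {
    ("noL", "0R->1R"): -1.61,  # contrast 1: noL-0R vs. noL-1R
    ("noL", "1R->3R"): -0.73,  # contrast 2: noL-1R vs. noL-3R
    ("L", "0R->1R"): -0.37,    # contrast 3: L-0R vs. L-1R
    ("L", "1R->3R"): -0.74,    # contrast 4: L-1R vs. L-3R
}


def load_modulation(step: str) -> float:
    """Effect of a retraction step under load minus its effect without load.

    Positive values mean the step reduces reliance less under load.
    """
    return SIMPLE_EFFECTS[("L", step)] - SIMPLE_EFFECTS[("noL", step)]


if __name__ == "__main__":
    for step in ("0R->1R", "1R->3R"):
        print(f"{step}: load modulation = {load_modulation(step):+.2f}")
```

Under these assumptions, the reduction produced by the first retraction is about 1.24 units smaller under load, whereas the additional benefit of three over one retraction is essentially unchanged (a difference of about -0.01), mirroring the point that load affected retractions per se rather than the benefit of repetition.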

Familiarity Effect

This study failed to find evidence of a familiarity-backfire effect. Multiple retractions, each containing the misinformation, resulted in lower misinformation reliance compared to a single retraction, even under load. This further allays concerns that repeating misinformation when correcting it can enhance continued-influence effects (CIEs) by inadvertently boosting misinformation familiarity (Lewandowsky et al., 2012; Schwarz et al., 2007; Skurnik et al., 2005). Our results are in line with a growing number of studies yielding no evidence of such ironic familiarity-driven effects (Cameron et al., 2013; Ecker, Lewandowsky et al., 2020; Ecker et al., 2011; Ecker, O’Reilly et al., 2020; Rich & Zaragoza, 2016; Swire et al., 2017).

Theoretical and Practical Implications

The implications of this research are clear: If an individual is distracted during misinformation correction (e.g., listening to the radio while driving), then multiple retractions are needed to reduce misinformation reliance. Repeated retractions seem to produce no harm in terms of people’s event-related inferences, even if the retractions repeat the to-be-corrected misinformation. In fact, repetition seems necessary if the recipient is not paying full attention. This may be because repetition facilitates the co-activation of the misinformation and its correction, as well as subsequent conflict-detection and information-integration processes, which have been identified as important for memory updating and knowledge revision (Ecker et al., 2017; Kendeou et al., 2014, 2019). From the perspective of the selective-retrieval account, multiple retractions will promote stronger encoding and representation not only of the misinformation but also of the retraction, facilitating subsequent retraction recall at test. This suggests that recommendations to avoid corrections that repeat the misinformation (e.g., Lewandowsky et al., 2012; Peter & Koch, 2016; Schwarz et al., 2007, 2016) should not be heeded, with the possible exception of situations where the misinformation is entirely novel, such that a retraction might spread the misinformation to new audiences (Autry & Duarte, 2021; but see Ecker, Lewandowsky et al., 2020).

Limitations and Future Research

There are several limitations of the current research. Firstly, the convenience sample comprised relatively inexperienced drivers. Although this may enhance the validity of the load manipulation (Klauer et al., 2014), future research might use a more heterogeneous sample to improve generalizability. Secondly, while this study attempted to be ecologically valid, a driving simulator is not directly equivalent to real-world driving, and drivers rarely engage in math tasks while driving. Although the math task provided a high level of experimental control and impacted driving performance (also see Bowden et al., 2019), future research could use real text messages. Future research could also extend generalizability by using other real-world media such as video, by using real-world misinformation to explore the impact on relevant beliefs and behaviours, or by extending the interval between retractions. Finally, the design was unbalanced and lacked a no-misinformation control condition, which would provide the baseline required to formally establish the presence of continued influence. Future replication work could include such a baseline condition and use a fully balanced design to allow for a more traditional analysis approach.

Conclusion

Continued reliance on corrected misinformation can have negative implications for individuals and society (e.g., Lazer et al., 2018). In this study, we found that cognitive load impaired retraction effectiveness, such that multiple retractions are needed when recipients are distracted. In addition, this study provides further evidence against the notion of familiarity backfire and the associated recommendation to avoid repeating misinformation when correcting it.

Supplementary Material

Acknowledgements

This research was supported by a Bruce and Betty Green Postgraduate Research Scholarship and an Australian Government Research Training Program Scholarship to the first author. UE was supported by Australian Research Council grant FT190100708. SL acknowledges financial support from the European Research Council (ERC Advanced Grant 101020961 PRODEMINFO), and the Humboldt Foundation through a research award. SL and BST were partly supported by the Volkswagen Foundation (grant “Reclaiming individual autonomy and democratic discourse online: How to rebalance human and algorithmic decision making”). We thank Troy Visser for initial discussions about the driving simulator, James Howard for programming of the distractor task, Charles Hanich for research assistance, and Nic Fay for assistance with the modelling. We thank Alex Mladenovic, Cathryn McKenzie, Matthew Hyett, Rachael Mumme, Sara Donaldson, and Tharen Kander for lending their voices to the event-report recordings. We also thank Paul McIlhiney, even though his Scottish accent prevented us from using his segment.

Footnotes

Reprinted with permission from Accident Analysis and Prevention, 124, Bowden, V. K., Loft, S., Wilson, M. D., Howard, J., & Visser, T. A. W., The long road home from distraction: Investigating the time-course of distraction recovery in driving, 23-32, Copyright Elsevier (2019).

We note that the original analysis plan was to include the multiple-choice item in the computation of the inference score. The analysis approach was changed due to reviewer feedback. The original analysis yielded identical results and is provided in the supplement.

Simplifying the model by removing terms yielded comparable results. Adding indicators of participants’ event memory, math performance, and driving performance as predictors did not change the outcome.

This interaction was no longer significant when the no-retraction conditions were excluded, which suggests that load affects the effectiveness of retractions per se but does not reduce the differential benefit of three over one retraction.

These used just the one relevant fixed effect and random intercepts for participant and scenario.

Author Contributions: JS, VB, BS, SL, UE contributed to conception and design; JS and UE contributed to analysis and interpretation of data; JS contributed to the acquisition of data and drafted the article; VB, BS, SL, UE contributed to article revisions; JS, VB, BS, SL, UE approved the submitted version for publication.

Competing interests: The authors declare no conflict of interest.

Data accessibility statement

Materials are provided in the Supplement. Recordings and data are available on the Open Science Framework website at https://osf.io/qma2g/ (DOI: 10.17605/OSF.IO/QMA2g)

References

- Autry KS, Duarte SE. Correcting the unknown: Negated corrections may increase belief in misinformation. Applied Cognitive Psychology. 2021;35(4):960–975. doi: 10.1002/acp.3823.

- Ayers MS, Reder LM. A theoretical review of the misinformation effect: Predictions from an activation-based memory model. Psychonomic Bulletin & Review. 1998;5(1):1–21. doi: 10.3758/BF03209454.

- Barr DJ, Levy R, Scheepers C, Tily HJ. Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language. 2013;68(3):255–278. doi: 10.1016/j.jml.2012.11.001.

- Bates D, Maechler M, Bolker B, Walker S. Fitting Linear Mixed-Effects Models Using lme4. Journal of Statistical Software. 2015;67(1):1–48. doi: 10.18637/jss.v067.i01.

- Bowden VK, Loft S, Wilson MD, Howard J, Visser TA. The long road home from distraction: Investigating the time-course of distraction recovery in driving. Accident Analysis & Prevention. 2019;124:23–32. doi: 10.1016/j.aap.2018.12.012.

- Brydges CR, Gignac GE, Ecker UKH. Working memory capacity, short-term memory capacity, and the continued influence effect: A latent-variable analysis. Intelligence. 2018;69:117–122. doi: 10.1016/j.intell.2018.03.009.

- Brydges CR, Gordon A, Ecker UKH. Electrophysiological correlates of the continued influence effect of misinformation: an exploratory study. Journal of Cognitive Psychology. 2020;32(8):771–784. doi: 10.1080/20445911.2020.1849226.

- Cameron KA, Roloff ME, Friesema EM, Brown T, Jovanovic BD, Hauber S, Baker DW. Patient knowledge and recall of health information following exposure to “facts and myths” message format variations. Patient Education and Counseling. 2013;92(3):381–387. doi: 10.1016/j.pec.2013.06.017.

- Campbell JI, Thompson VA. MorePower 6.0 for ANOVA with relational confidence intervals and Bayesian analysis. Behavior Research Methods. 2012;44(4):1255–1265. doi: 10.3758/s13428-012-0186-0.

- Carnahan D, Garrett RK. Processing style and responsiveness to corrective information. International Journal of Public Opinion Research. 2019;32(3):530–546. doi: 10.1093/ijpor/edz037.

- Chan MS, Jones CR, Hall Jamieson K, Albarracin D. Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychological Science. 2017;28:1531–1546. doi: 10.1177/0956797617714579.

- Chotpitayasunondh V, Douglas KM. The effects of “phubbing” on social interaction. Journal of Applied Social Psychology. 2018;48(6):304–316. doi: 10.1111/jasp.12506.

- Craik FI, Govoni R, Naveh-Benjamin M, Anderson ND. The effects of divided attention on encoding and retrieval processes in human memory. Journal of Experimental Psychology: General. 1996;125(2):159–180. doi: 10.1037/0096-3445.125.2.159.

- Ecker UKH, Hogan JL, Lewandowsky S. Reminders and repetition of misinformation: Helping or hindering its retraction? Journal of Applied Research in Memory and Cognition. 2017;6(2):185–192. doi: 10.1016/j.jarmac.2017.01.014.

- Ecker UKH, Lewandowsky S, Chadwick M. Can corrections spread misinformation to new audiences? Testing for the elusive familiarity backfire effect. Cognitive Research: Principles and Implications. 2020;5(41):1–25. doi: 10.1186/s41235-020-00241-6.

- Ecker UKH, Lewandowsky S, Chang EP, Pillai R. The effects of subtle misinformation in news headlines. Journal of Experimental Psychology: Applied. 2014;20(4):323–335. doi: 10.1037/xap0000028.

- Ecker UKH, Lewandowsky S, Cook J, Schmid P, Fazio LK, Brashier N, Kendeou P, Vraga EK, Amazeen MA. The psychological drivers of misinformation belief and its resistance to correction. Nature Reviews Psychology. 2022;1:13–29. doi: 10.1038/s44159-021-00006-y.

- Ecker UKH, Lewandowsky S, Jayawardana K, Mladenovic A. Refutations of equivocal claims: No evidence for an ironic effect of counterargument number. Journal of Applied Research in Memory and Cognition. 2019;8(1):98–107. doi: 10.1016/j.jarmac.2018.07.005.

- Ecker UKH, Lewandowsky S, Swire B, Chang D. Correcting false information in memory: Manipulating the strength of misinformation encoding and its retraction. Psychonomic Bulletin & Review. 2011;18:570–578. doi: 10.3758/s13423-011-0065-1.

- Ecker UKH, Lewandowsky S, Tang DTW. Explicit warnings reduce but do not eliminate the continued influence of misinformation. Memory & Cognition. 2010;38:1087–1100. doi: 10.3758/MC.38.8.1087.

- Ecker UKH, O’Reilly Z, Reid JS, Chang EP. The effectiveness of short-format refutational fact-checks. British Journal of Psychology. 2020;111(1):36–54. doi: 10.1111/bjop.12383.

- Fernandes MA, Moscovitch M. Divided attention and memory: evidence of substantial interference effects at retrieval and encoding. Journal of Experimental Psychology: General. 2000;129(2):155–176. doi: 10.1037/0096-3445.129.2.155.

- Gilbert DT, Krull DS, Malone PS. Unbelieving the unbelievable: Some problems in the rejection of false information. Journal of Personality and Social Psychology. 1990;59(4):601–613. doi: 10.1037/0022-3514.59.4.601.

- Gilbert DT, Tafarodi RW, Malone PS. You can’t not believe everything you read. Journal of Personality and Social Psychology. 1993;65(2):221–233. doi: 10.1037/0022-3514.65.2.221.

- Gordon A, Brooks JCW, Quadflieg S, Ecker UKH, Lewandowsky S. Exploring the neural substrates of misinformation processing. Neuropsychologia. 2017;106:216–224. doi: 10.1016/j.neuropsychologia.2017.10.003.

- Harbluk JL, Noy YI, Trbovich PL, Eizenman M. An on-road assessment of cognitive distraction: Impacts on drivers’ visual behavior and braking performance. Accident Analysis & Prevention. 2007;39(2):372–379. doi: 10.1016/j.aap.2006.08.013.

- Hicks JL, Marsh RL. Toward specifying the attentional demands of recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2000;26(6):1483–1498. doi: 10.1037/0278-7393.26.6.1483.

- Johnson HM, Seifert CM. Sources of the continued influence effect: When misinformation in memory affects later inferences. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1994;20(6):1420–1436. doi: 10.1037/0278-7393.20.6.1420.

- Kendeou P, Butterfuss R, Kim J, Van Boekel M. Knowledge revision through the lenses of the three-pronged approach. Memory & Cognition. 2019;47:33–46. doi: 10.3758/s13421-018-0848-y.

- Kendeou P, Walsh EK, Smith ER, O'Brien EJ. Knowledge revision processes in refutation texts. Discourse Processes. 2014;51(5–6):374–397. doi: 10.1080/0163853X.2014.913961.

- Klauer SG, Guo F, Simons-Morton BG, Ouimet MC, Lee SE, Dingus TA. Distracted driving and risk of road crashes among novice and experienced drivers. New England Journal of Medicine. 2014;370(1):54–59. doi: 10.1056/NEJMsa1204142.

- Kuznetsova A, Brockhoff PB, Christensen RHB. lmerTest Package: Tests in Linear Mixed Effects Models. Journal of Statistical Software. 2017;82(13):1–26. doi: 10.18637/jss.v082.i13.

- Lazer DM, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F, Metzger MJ, Nyhan B, Pennycook G, Rothschild D, Schudson M, et al. The science of fake news. Science. 2018;359(6380):1094–1096. doi: 10.1126/science.aao2998.

- Lewandowsky S, Ecker UKH, Seifert CM, Schwarz N, Cook J. Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest. 2012;13(3):106–131. doi: 10.1177/1529100612451018.

- Nyhan B, Reifler J, Richey S, Freed GL. Effective messages in vaccine promotion: a randomized trial. Pediatrics. 2014;133(4):e835–e842. doi: 10.1542/peds.2013-2365.

- Paynter JM, Luskin-Saxby S, Keen D, Fordyce K, Frost G, Imms C, Miller S, Trembath D, Tucker M, Ecker UKH. Evaluation of a template for countering misinformation: Real-world autism treatment myth debunking. PLoS ONE. 2019;14(1):e0210746. doi: 10.1371/journal.pone.0210746.

- Peter C, Koch T. When debunking scientific myths fails (and when it does not): The backfire effect in the context of journalistic coverage and immediate judgments as prevention strategy. Science Communication. 2016;38(1):3–25. doi: 10.1177/1075547015613523.

- Pluviano S, Watt C, Della Sala S. Misinformation lingers in memory: failure of three pro-vaccination strategies. PLoS ONE. 2017;12(7):e0181640. doi: 10.1371/journal.pone.0181640.

- Rapp DN, Kendeou P. Revising what readers know: Updating text representations during narrative comprehension. Memory & Cognition. 2007;35:2019–2032. doi: 10.3758/BF03192934.

- Reed MP, Green PA. Comparison of driving performance on-road and in a low-cost simulator using a concurrent telephone dialling task. Ergonomics. 1999;42(8):1015–1037. doi: 10.1080/001401399185117.

- Rich PR, Zaragoza MS. The continued influence of implied and explicitly stated misinformation in news reports. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2016;42(1):62–74. doi: 10.1037/xlm0000155.

- Rich PR, Zaragoza MS. Correcting misinformation in news stories: An investigation of correction timing and correction durability. Journal of Applied Research in Memory and Cognition. 2020;9(3):310–322. doi: 10.1016/j.jarmac.2020.04.001.

- Richter T, Singer M. Discourse updating: Acquiring and revising knowledge through discourse. In: Rapp D, Britt A, Schober M, editors. Handbook of Discourse Processes. 2nd ed. Taylor & Francis/Routledge; 2017.

- RStudio Team. RStudio: Integrated Development for R. RStudio, PBC; Boston, MA: 2020. http://www.rstudio.com/

- Sanderson JA, Bowden V, Swire-Thompson B, Lewandowsky S, Ecker UKH. Listening to Misinformation while Driving: Cognitive Load and the Effectiveness of (Repeated) Corrections. 2022. Retrieved from https://osf.io/qma2g/

- Schwarz N, Newman E, Leach W. Making the truth stick & the myths fade: Lessons from cognitive psychology. Behavioral Science & Policy. 2016;2:85–95. doi: 10.1353/bsp.2016.0009.

- Schwarz N, Sanna LJ, Skurnik I, Yoon C. Metacognitive experiences and the intricacies of setting people straight: Implications for debiasing and public information campaigns. Advances in Experimental Social Psychology. 2007;39:127–161. doi: 10.1016/S0065-2601(06)39003-X.

- Skurnik I, Yoon C, Park DC, Schwarz N. How warnings about false claims become recommendations. Journal of Consumer Research. 2005;31(4):713–724. doi: 10.1086/426605.

- Skurnik I, Yoon C, Schwarz N. Myths and facts about the flu: Health education campaigns can reduce vaccination intentions. 2007. Unpublished manuscript available from http://webuser.bus.umich.edu/yoonc/research/Papers/Skurnik_Yoon_Schwarz_2005_Myths_Facts_Flu_Health_Education_Campaigns_JAMA.pdf.

- Swire B, Ecker UKH, Lewandowsky S. The role of familiarity in correcting inaccurate information. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2017;43(12):1948–1961. doi: 10.1037/xlm0000422.

- Swire-Thompson B, DeGutis J, Lazer D. Searching for the backfire effect: Measurement and design considerations. Journal of Applied Research in Memory and Cognition. 2020;9(3):286–299. doi: 10.1016/j.jarmac.2020.06.006.

- Swire-Thompson B, Miklaucic N, Wihbey JP, Lazer D, DeGutis J. The backfire effect after correcting misinformation is strongly associated with reliability. Journal of Experimental Psychology: General. 2022 (in press). doi: 10.1037/xge0001131.

- Szumowska E, Czarnek G, Dragon P, De Keersmaecker J. Correction after misinformation: Does engagement in media multitasking affect attitude adjustment? Comprehensive Results in Social Psychology. 2021;4(2):199–226. doi: 10.1080/23743603.2021.1884495.

- Underwood G, Crundall D, Chapman P. Driving simulator validation with hazard perception. Transportation Research Part F: Traffic Psychology and Behaviour. 2011;14(6):435–446. doi: 10.1016/j.trf.2011.04.008.

- Vraga EK, Bode L. Using expert sources to correct health misinformation in social media. Science Communication. 2017;39(5):621–645. doi: 10.1177/1075547017731776.

- Wahlheim CN, Alexander TR, Peske CD. Reminders of everyday misinformation statements can enhance memory for and beliefs in corrections of those statements in the short term. Psychological Science. 2020;31(10):1325–1339. doi: 10.1177/0956797620952797.

- Walter N, Murphy ST. How to unring the bell: A meta-analytic approach to correction of misinformation. Communication Monographs. 2018;85(3):423–441. doi: 10.1080/03637751.2018.1467564.

- Walter N, Tukachinsky R. A meta-analytic examination of the continued influence of misinformation in the face of correction: How powerful is it, why does it happen, and how to stop it? Communication Research. 2020;47(2):155–177. doi: 10.1177/0093650219854600.

- Yonelinas AP. The nature of recollection and familiarity: A review of 30 years of research. Journal of Memory and Language. 2002;46(3):441–517. doi: 10.1006/jmla.2002.2864.
