Abstract

Individuals with autism are impaired in the recognition of fear, which may be due to their reduced tendency to look at the eyes. Here we investigated another potential perceptual and social consequence of reduced eye fixation. The eye region of the face is critical for identifying genuine, or sincere, smiles. We therefore investigated this ability in adults with autism. We used eye-tracking to measure gaze behaviour to faces displaying posed and genuine smiles. Adults with autism were impaired on the posed/genuine smile task and looked at the eyes significantly less than did controls. Also, within the autism group, task performance correlated with social interaction ability. We conclude that reduced eye contact in autism leads to reduced ability to discriminate genuine from posed smiles with downstream effects on social interaction.

Keywords: Asperger syndrome, social cognition, face processing, smile, eye-tracking

Introduction

Autism is a pervasive developmental disorder, characterised by verbal and non-verbal communication problems, difficulties with reciprocal social interactions, and unusual patterns of repetitive behaviour (DSM-IV; American Psychiatric Association (APA), 1994). Many of the deficits present in autism are social in nature, and a number of experimental studies have demonstrated abnormal processing of social information, including the recognition of faces and facial expression. For example, normal subjects find faces more difficult to recognise if they are inverted (Yin, 1969; Yovel & Kanwisher, 2005), whereas individuals with autism do not (Langdell, 1978). These processing differences translate into performance deficits on face-related tasks, such as impaired recognition memory for faces (Langdell, 1978; Klin et al., 1999), and abnormal judgements of trustworthiness from faces (Adolphs, Sears & Piven, 2001).

Another documented deficit is in the recognition of facial expressions of emotion, in particular the detection of fear (Howard et al., 2000; Pelphrey et al., 2002). It has been suggested that this is because the identification of fear relies more heavily on the eyes than other emotions (Adolphs et al., 2005). Individuals with autism do not look at the eye region as often as normal controls when viewing images of faces (Pelphrey et al., 2002; Dalton et al., 2005; Dalton, Nacewicz, Alexander, & Davidson, 2006) or video clips of social scenes (Klin, Jones, Schultz, Volkmar, & Cohen, 2002). This suggests that poor performance of individuals with autism on tasks of face perception, such as recognising a fearful facial expression, might be linked to a reduced tendency to look at the eyes.

The eye region is also important when identifying genuine, or sincere, smiles. In 1862 the French neurologist, Duchenne de Boulogne, showed that the critical factor in distinguishing a posed from a genuine smile is contraction of the orbicularis oculi muscle which surrounds the eye. Genuine (or “Duchenne”) smiles are accompanied by contraction of these muscles, causing wrinkles of the skin in the outer corners of the eyes, known as ‘crow’s feet’ wrinkles (Hager & Ekman, 1985; Ekman, 1989). Hager and Ekman (1985) showed that the outer part of the orbicularis oculi muscle, which causes the crow’s feet wrinkles, is not under voluntary control, and therefore this region cannot contract in a posed smile. There is evidence that people make more and longer fixations to the crow’s feet region of happy faces than of sad or neutral faces, and thus it has been suggested that happy faces are automatically checked for the presence of the crow’s feet marker (Williams, Senior, David, Loughland, & Gordon, 2001).

The aim of the current study was to investigate the ability of adults with autism to distinguish genuine and posed smiles. At the same time, gaze behaviour in relation to the eye region and, for comparison, the mouth region of the face was monitored using an eye-tracker. We hypothesised that individuals with autism would, firstly, be impaired at discriminating genuine and posed smiles, and secondly, show a reduced tendency to look at the eye region of the face when making judgements about smiles. Furthermore, we investigated whether the ability to discriminate genuine and posed smiles was associated with social interaction impairment, as measured by the reciprocal social interaction score of the ADOS (Lord et al., 2000), in the autism group.

Method

Participants

We tested 18 individuals with autism (15 males) and 18 control subjects (15 males). The groups were matched for age and for verbal and performance IQ, as measured by the Wechsler Abbreviated Scale of Intelligence (WASI). Full details of the groups are given in table 1.

Table 1. Details of participants included in the analysis of data from the behavioural and eye-tracking components of the study (ns = not significant).

| | Behavioural experiment: autism group | Behavioural experiment: control group | Behavioural experiment: group comparison | Eye-tracking experiment: autism group | Eye-tracking experiment: control group | Eye-tracking experiment: group comparison |
|---|---|---|---|---|---|---|
| N | 18 | 18 | | 11 | 11 | |
| Gender (M:F) | 15:3 | 15:3 | | 9:2 | 8:3 | |
| Age in years | 35.4 (±12.3) | 36.7 (±14.0) | t(34) = 0.316, p = 0.754, ns | 34.6 (±9.01) | 39.6 (±11.1) | t(20) = 0.319, p = 0.753, ns |
| Verbal IQ | 117 (±11) | 112 (±11) | t(34) = 1.215, p = 0.233, ns | 118 (±11) | 111 (±12) | t(20) = 1.412, p = 0.173, ns |
| Performance IQ | 119 (±15) | 114 (±11) | t(34) = 1.165, p = 0.252, ns | 120 (±9) | 113 (±9) | t(20) = 1.718, p = 0.101, ns |
| ADOS score (RSI subscale) | 6.94 (±2.51) | N/A | N/A | 7.00 (±2.76) | N/A | N/A |
| ADOS score (communication subscale) | 3.72 (±1.23) | N/A | N/A | 3.82 (±1.08) | N/A | N/A |
| ADOS score (total) | 10.7 (±3.40) | N/A | N/A | 10.8 (±3.63) | N/A | N/A |

All participants in the autism group had a diagnosis of autism, Asperger syndrome or autism spectrum disorder from a GP or psychiatrist. The Autism Diagnostic Observation Schedule (ADOS) (Lord et al., 2000) was administered by a researcher trained and experienced in the use of this interview, to confirm the diagnosis. In total, 10 participants met the criteria for autism, and the remaining 8 for autism spectrum disorder. We were unable to distinguish between participants with Asperger syndrome and autism, as we did not have information about early development of language and other skills in our participants. All participants were screened for exclusion criteria (dyslexia, epilepsy, and any other neurological or psychiatric conditions) by self-report prior to taking part in the study. All participants gave informed consent to take part in the study, which was approved by the local ethics committee.

Stimuli

Stimuli were colour photographs taken from a set of male and female faces. Three photographs of each individual were used: a neutral facial expression, a genuine smile and a posed smile. Full details of the creation of the stimuli can be found in an earlier paper (Miles & Johnston, 2006). In the current study, photographs of 10 female faces were used. Six of these were from a set used in previous studies (Miles & Johnston, 2006; Peace, Miles & Johnston, 2006) and the remaining four were created at a later date using the same procedure. For the purpose of this study, the photographs were close-cropped to show only the face (see figure 1).

Figure 1. Sample images of a genuine smile (left), and a posed smile (right) from the stimulus set. Gaze points that fell within the boxes were used to calculate gaze time to the eye region or the mouth region. From Miles & Johnston (2006). Adapted with permission.

Procedure

This experiment comprised two conditions. In the Smiles condition, participants were presented with a total of 60 faces: each of the 10 individuals in the stimulus set was shown three times with a genuine smile and three times with a posed smile. Participants were asked to make a decision about whether the smile in each photograph was real or posed. This task was described in detail and the experimenter checked that participants understood what was meant by the task.

In the Control condition, participants again saw 60 faces: each individual in the stimulus set was shown three times with a smiling expression and three times with a neutral expression. Half of the smiling pictures in the control task were taken from the genuine smile set, and the other half from the posed set. Participants were asked whether the facial expression in each photograph was smiling or neutral. This control task was chosen because it involved making judgements about the same set of faces as in the Smiles condition.

The order of presentation of the faces in both conditions was random, and the order in which the Smiles and Control conditions occurred was counterbalanced across participants.

Participants viewed each face on a computer monitor at a distance of approximately 800 mm. The faces were scaled to the full height of the computer monitor, subtending approximately 17 degrees of visual angle. Faces were displayed for 2.5 seconds each, preceded by a central fixation cross of duration 1.5 seconds. Participants were instructed to look directly at the fixation cross while it was on the screen. In both conditions, after each face had disappeared, participants were required to make a response. Participants pressed one of two keys, corresponding to the labels ‘real’ and ‘posed’ (Smiles condition), or ‘smiling’ and ‘neutral’ (Control condition), which appeared on the computer screen. Participants had unlimited time in which to make a response. Prior to the experiment participants practised the task with a different set of faces.
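The viewing geometry above can be checked with a short calculation. The sketch below assumes the face is centred on the line of sight and uses the standard visual-angle relation; the function names are illustrative and are not taken from the study's software.

```python
import math


def stimulus_size_mm(angle_deg: float, distance_mm: float) -> float:
    """Physical extent of a centred stimulus that subtends angle_deg at distance_mm."""
    half_angle = math.radians(angle_deg) / 2.0
    return 2.0 * distance_mm * math.tan(half_angle)


def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Visual angle subtended by a centred stimulus of size_mm at distance_mm."""
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * distance_mm)))


# Values reported in the Procedure: about 17 degrees at about 800 mm.
face_height_mm = stimulus_size_mm(17.0, 800.0)
# Screen extent of the 1-degree window used in the fixation criterion.
one_degree_mm = stimulus_size_mm(1.0, 800.0)
print(f"face height: {face_height_mm:.0f} mm, 1 degree: {one_degree_mm:.1f} mm")
```

Under these assumptions the displayed face would be roughly 24 cm tall, and 1 degree of visual angle corresponds to roughly 14 mm on the screen.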

Collection of eye-tracking data

During the task, participants’ eye movements were recorded using an ASL6000 series remote eyetracker, in conjunction with a video head tracker. The positions of the participant’s pupil and corneal reflection were recorded at a rate of 50 Hz, and used to calculate the coordinates of the participant’s point of regard on the screen. To maintain the accuracy of this calculation, the eyetracker was calibrated before each condition by asking the participant to look at 9 predefined points on the screen. To allow for disruptions to this calibration caused by head movements, eye position was also recorded during the display of the central fixation cross prior to each trial. Any offset of the point of regard from the fixation cross was applied to correct the data collected on the subsequent trial.
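The per-trial correction described above can be sketched as follows. How the offset was summarised over the fixation-cross period is not stated, so the use of the median here is an assumption, as are the function names and the pixel coordinates in the example.

```python
from statistics import median


def fixation_cross_offset(cross_samples, cross_xy):
    """Offset of the recorded gaze from the known fixation-cross position.

    Assumption: the offset is summarised as the median over the cross period.
    """
    dx = median(x for x, _ in cross_samples) - cross_xy[0]
    dy = median(y for _, y in cross_samples) - cross_xy[1]
    return dx, dy


def correct_trial(trial_samples, offset):
    """Subtract the pre-trial offset from every gaze sample of the following trial."""
    dx, dy = offset
    return [(x - dx, y - dy) for x, y in trial_samples]


# Hypothetical example: cross at the centre of a 1024 x 768 display.
cross = (512, 384)
recorded_during_cross = [(522, 380), (521, 379), (523, 381)]
offset = fixation_cross_offset(recorded_during_cross, cross)
corrected = correct_trial([(300, 200), (310, 205)], offset)
print(offset, corrected)
```

A constant shift of this kind compensates for drift in the calibration but not for changes in scale or rotation, which would need a full recalibration.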

Data from each trial comprised 125 coordinate pairs, detailing the position of the eye every 20 ms. First, measurements corresponding to points of regard outside the computer monitor were removed. For each face, the eye region was defined prior to data collection, by drawing a rectangular box around each eye, to include the entire eye, plus the region lateral to the eye in which ‘crow’s feet’ wrinkles would be found in a genuine smile. The percentage of time for which participants looked at the mouth region was calculated in the same way, by drawing a rectangular box around the mouth. Examples of these boxes are shown in figure 1. For each individual in the photographs, boxes were the same size in the genuine smile photograph and the posed smile photograph.
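The region-of-interest calculation can be illustrated with a minimal sketch. The box coordinates, screen size and names below are hypothetical, and dividing by the full 125 samples of the display period (rather than by the number of on-screen samples) is an assumption about how the percentage was normalised.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned rectangle in screen coordinates (y increases downwards)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, point) -> bool:
        x, y = point
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def on_screen(samples, width, height):
    """Drop points of regard that fall outside the monitor."""
    return [(x, y) for x, y in samples if 0 <= x <= width and 0 <= y <= height]


def gaze_time_percent(samples, boxes, samples_per_trial=125):
    """Percentage of the display period spent inside any of the given boxes."""
    hits = sum(1 for p in samples if any(b.contains(p) for b in boxes))
    return 100.0 * hits / samples_per_trial


# Hypothetical regions for one face: one box per eye, one for the mouth.
eye_boxes = [Box(300, 250, 460, 330), Box(560, 250, 720, 330)]
mouth_boxes = [Box(400, 520, 620, 600)]

# Toy trial of four samples; the last one is off screen and is discarded.
raw = [(350, 300), (600, 290), (500, 560), (-20, 100)]
valid = on_screen(raw, width=1024, height=768)
eye_pct = gaze_time_percent(valid, eye_boxes, samples_per_trial=4)
mouth_pct = gaze_time_percent(valid, mouth_boxes, samples_per_trial=4)
print(eye_pct, mouth_pct)
```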

Two measures of gaze behaviour were calculated: (a) Gaze time. Data from each trial were analysed to determine how many of the gaze coordinate pairs fell within the eye region of the face, indicating the percentage of the display time for which the point of regard was within this region. The same calculation was performed for the mouth region. (b) Fixations. Data from each trial were analysed to compute the number of fixations the participant made during the trial. A fixation was assumed if the eye remained within 1 degree of visual angle for at least 100 ms. The coordinates of every fixation made were recorded for each trial. The fixation points for each trial were then analysed to determine how many were to the eye region and to the mouth region of the face.
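One way to implement the fixation criterion is a dispersion-threshold pass over the samples, sketched below. This is an illustrative reading rather than the authors' algorithm: it assumes contiguous samples at 20 ms intervals, treats "within 1 degree" as a limit on the horizontal and vertical spread of a window, and expects the caller to convert 1 degree into screen units.

```python
def _dispersion(window):
    """Larger of the horizontal and vertical spread of a run of samples."""
    xs = [x for x, _ in window]
    ys = [y for _, y in window]
    return max(max(xs) - min(xs), max(ys) - min(ys))


def detect_fixations(samples, max_dispersion, min_samples=5, sample_ms=20):
    """Return (centre_x, centre_y, duration_ms) for each detected fixation.

    min_samples=5 corresponds to 100 ms at 50 Hz (assumed contiguous samples).
    """
    fixations = []
    start, n = 0, len(samples)
    while start + min_samples <= n:
        end = start + min_samples
        if _dispersion(samples[start:end]) > max_dispersion:
            start += 1  # window too spread out: slide forward by one sample
            continue
        # Grow the window while the samples stay within the dispersion limit.
        while end < n and _dispersion(samples[start:end + 1]) <= max_dispersion:
            end += 1
        window = samples[start:end]
        cx = sum(x for x, _ in window) / len(window)
        cy = sum(y for _, y in window) / len(window)
        fixations.append((cx, cy, (end - start) * sample_ms))
        start = end
    return fixations


# Toy trial: a 120 ms fixation, two samples in transit, then a 100 ms fixation.
trial = [(100.0, 100.0)] * 6 + [(200.0, 200.0), (250.0, 250.0)] + [(300.0, 300.0)] * 5
found = detect_fixations(trial, max_dispersion=14.0)
print(found)
```

Each fixation centre can then be tested against the eye and mouth boxes to obtain the percentage of fixations made to each region.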

Data analysis

For both the Smiles condition and the Control condition, scores from the two participant groups were compared using a univariate ANOVA with verbal IQ as a covariate. For the Smiles condition we measured 1-tailed significance, as our a priori hypothesis was that any difference between the two groups would arise from a deficit in performance in the autism group. Pearson’s correlation coefficient (Pearson’s r) was calculated as a measure of effect size. The two measures of gaze behaviour (gaze time and fixations) were each analysed using a mixed design repeated measures 2x2 ANOVA with the factors group (autism vs control) and facial region (eye vs mouth), using verbal IQ as a covariate. Again, Pearson’s r was calculated as a measure of effect size. Post-hoc simple-effects analyses were performed on individual contrasts for both of these measures. Throughout the analysis, verbal IQ was used as the covariate rather than full-scale IQ, because the large discrepancy between verbal and performance scores for some participants with autism prevented a meaningful estimation of full-scale IQ.
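The text does not say how Pearson’s r was derived from each ANOVA. A common conversion for contrasts with one numerator degree of freedom is r = sqrt(F / (F + df_error)); the sketch below assumes that conversion, and the function name is illustrative.

```python
import math


def r_from_f(f_value: float, df_error: int) -> float:
    """Effect size r for an F test with one numerator degree of freedom.

    Assumption: the conventional conversion r = sqrt(F / (F + df_error)).
    """
    return math.sqrt(f_value / (f_value + df_error))


# F values and error degrees of freedom as reported in the Results.
r_gaze_interaction = r_from_f(8.071, 19)      # group x region, gaze time
r_fixation_interaction = r_from_f(6.920, 19)  # group x region, fixations
r_smiles_group = r_from_f(3.537, 33)          # group effect, Smiles condition
print(round(r_gaze_interaction, 2), round(r_fixation_interaction, 2),
      round(r_smiles_group, 2))
```

Under this assumption the conversion gives 0.55 and 0.52 for the two interaction terms, matching the reported values, and about 0.31 for the Smiles group effect, close to the reported 0.30.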

Results

Behavioural data

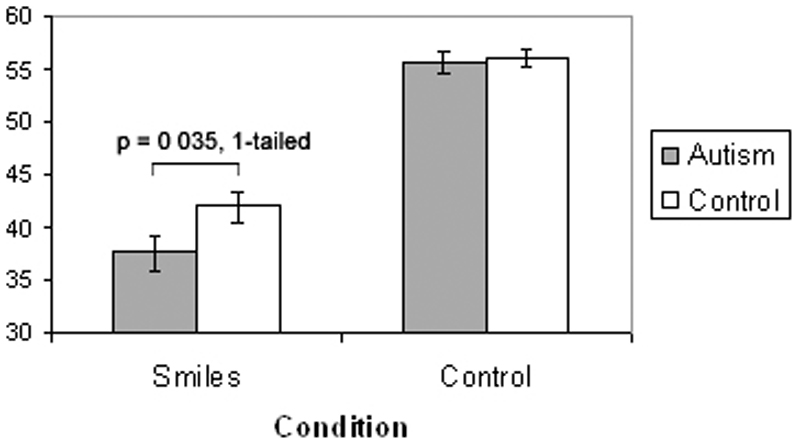

For both the Smiles and Control conditions, the number of faces correctly identified out of a possible 60 was totalled for each participant. A univariate ANOVA using verbal IQ as a covariate revealed that the autism group scored significantly lower than the control group in the Smiles condition (F(1,33) = 3.537, p = 0.035, 1-tailed given our directional hypothesis). This contrast yielded a medium effect size (r = 0.30). In contrast, scores in the Control condition were not significantly different between the two groups (F(1,35) = 0.872, p = 0.357). See Figure 2. On both tasks, scores were similar for participants with autism and those with autism spectrum disorder (mean smiles task scores: 36.7 for autism participants, 38.8 for autism spectrum participants; mean control task scores: 57.0 for autism, 53.9 for autism spectrum).

Figure 2. Mean scores of the autism group and the control group in the Smiles condition (discriminating a genuine from a posed smile), and in the Control condition (discriminating a happy from a neutral face). Participants with autism scored lower than controls in the Smiles condition (p = 0.035, 1-tailed).

Correlation between task performance and autism symptoms

For the participants with autism, we correlated performance in the Smiles condition with the participants’ social interaction skills as assessed by the reciprocal social interaction (RSI) measure of the ADOS (figure 3). A Pearson test revealed a significant negative correlation between each participant’s score on the RSI scale, and his or her score in the Smiles condition (r = -0.469, p = 0.049). As a comparison, we assessed the correlation between the RSI scores and the score in the Control condition. This correlation was not significant (r = 0.087, p = 0.732).
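The significance of a Pearson correlation follows from the t statistic t = r·sqrt(n − 2) / sqrt(1 − r²) with n − 2 degrees of freedom. The sketch below applies this to the two reported coefficients, assuming that all 18 participants in the autism group contributed to each correlation; the constant is the tabulated two-tailed 5% critical value of t for 16 degrees of freedom.

```python
import math


def t_from_r(r: float, n: int) -> float:
    """t statistic (n - 2 degrees of freedom) for a Pearson correlation r."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


T_CRIT_DF16 = 2.120  # two-tailed 5% critical value of t with 16 df

N_AUTISM = 18  # assumption: every participant in the autism group was included
t_smiles = t_from_r(-0.469, N_AUTISM)
t_control = t_from_r(0.087, N_AUTISM)
print(round(t_smiles, 2), abs(t_smiles) > T_CRIT_DF16)
print(round(t_control, 2), abs(t_control) > T_CRIT_DF16)
```

With these assumptions the Smiles correlation only just exceeds the critical value, in line with the reported p = 0.049, whereas the Control correlation falls well short of it.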

Figure 3. Mean score in the Smiles and Control conditions for each participant with autism plotted against his or her RSI score (a higher RSI score indicates greater social impairment). For the Smiles condition, r = -0.469, p = 0.049.

Eye-tracking data from the autism and control groups

For technical reasons, eye-tracking data were only available from a subset of these participants: 11 autistic adults and 11 control adults. Full details of the groups are given in table 1.

Gaze time

For the percentage of gaze time directed at the eyes or the mouth, there was no significant main effect of facial region (F(1,19) = 2.113, p = 0.162) or participant group (F(1,19) = 0.865, p = 0.364). However, there was a significant interaction between group and facial region, and the associated value of Pearson’s r indicated a large effect size (F(1,19) = 8.071, p = 0.010, r = 0.55). An analysis of simple effects revealed that this was driven primarily by the autism group spending significantly less time than the control group looking at the eye region (p = 0.033, figure 4). There was a trend towards individuals with autism looking more than controls at the mouth region (p = 0.065).

Figure 4. Eye-gaze data from the autism and control groups, showing the percentage of gaze time within the eye and mouth regions in the Smiles condition. There was a significant interaction between participant group and facial region (p = 0.010). An analysis of simple effects showed the autism group spent less time than controls looking at the eye region (p = 0.033). The difference for the mouth region approached significance (p = 0.065).

Fixations

For the autism and control groups, we looked at the percentage of fixations that were made to the eyes, or the mouth. A 2x2 ANOVA showed no significant main effect of facial region (F(1,19) = 1.302, p = 0.268) or participant group (F(1,19) = 0.922, p = 0.349). However, there was a significant interaction between region and participant group, again associated with a large effect size (F(1,19) = 6.920, p = 0.016, r = 0.52). Simple effects analysis demonstrated that the interaction was driven by the autism group making significantly fewer fixations than the control group to the eye region (p = 0.048, figure 5). There was also a trend towards individuals with autism making more fixations than the controls to the mouth (if an a priori direction of difference is assumed: p = 0.055, 1-tailed).

Figure 5. Eye-gaze data from the autism and control groups, showing the percentage of fixations made to the eye and mouth regions in the Smiles condition. There was a significant interaction between participant group and facial region (p = 0.016). An analysis of simple effects showed the autism group made fewer fixations than controls to the eye region (p = 0.048). There was a trend towards a difference for the mouth region (p = 0.055, 1-tailed).

Correlation between task performance and gaze behaviour

For both participant groups, we correlated performance in the Smiles condition with the percentage of gaze time spent looking at the eye region, and the percentage of fixations that were to the eye region. A Pearson test revealed that none of these correlations was significant, for the autism group (with gaze time: r = 0.21, p > 0.05; with fixations: r = 0.33, p > 0.05) or for the control group (with gaze time: r = -0.25, p > 0.05; with fixations: r = -0.21, p > 0.05).

Discussion

The first aim of this study was to investigate the ability of adults with high functioning autism to distinguish genuine and posed smiles from photographs of faces. The second aim was to investigate gaze to the eye and mouth regions of the faces during the task. The results suggest that, compared with matched controls, individuals with autism show an impairment in the discrimination of posed from genuine smiles, but no impairment on a control facial expression discrimination task (figure 2). In addition, we found that the ability to discriminate genuine and posed smiles was inversely related to the degree of social interaction impairment in the autism group (figure 3). Finally, the results demonstrated a different gaze and fixation pattern in the autism and control groups, with individuals with autism looking significantly less at the eye region compared to the control group (figures 4 and 5).

The eye region is known to convey information about whether a smile is genuine or posed (Hager & Ekman, 1985; Williams et al., 2001). Our eye-tracking data suggest that the impaired discrimination of smiles in the autism group might be due to their reduced tendency to look at the eyes. We found a trend towards increased fixation of the mouth region in the autism group, but the differences between the groups are not as clear as for the eyes, perhaps due to greater variability in mouth fixation behaviour in the autistic population. This variability might explain the inconsistent results of previous studies: some studies have reported increased fixation of the mouth in autism (Klin et al., 2002; Spezio et al., 2006), others have found reduced fixation (Pelphrey et al., 2002) and others no difference (Dalton et al., 2005).

There are some alternative explanations for the findings reported here. First, ceiling effects in the control task might have obscured significant group differences. It proved difficult to find an ideal control task, as we wanted a task which was still social-perceptual in nature, but which did not require attention to the eyes. Second, poor performance of the autism group in the Smiles task could be due in part to factors other than a failure to look at the eyes. For example, poor performance could arise from impaired processing of subtle social information in general, rather than an impairment specific to this task. Alternatively, the difference could have been due to general difficulties with fine perceptual discrimination, as the differences between faces in this task may have been more subtle perceptually than between faces in the control task.

A lack of expertise in using information from the eyes would also hinder performance, regardless of a person’s gaze behaviour. Whether this is true of individuals with autism is unclear: there is some evidence that adults with autism fail to make use of information from the eyes when identifying facial expressions (Spezio et al., 2006), but a recent study of children with autism found that they were able to use this information (Back, Ropar & Mitchell, 2007). Neither of these studies looked specifically at the use of this information for identifying posed smiles. Alternatively, the key factor may not be expertise, but the strategy that individuals with autism use to recognise facial expressions. A rule-bound approach, perhaps explicitly learned, such as looking for upturned corners of the mouth to identify happiness, would aid performance in the control task of this study. A rule for identifying a posed smile is less likely to have been explicitly taught. An individual with autism who adopted a rule-bound approach would therefore have difficulty with unusual or subtle face-perception tasks such as the Smiles task.

We did not find a direct correlation between an individual’s score on the Smiles test and his or her tendency to look at the eye region. Such a correlation would have lent weight to our proposal that this difference in gaze behaviour between the groups was responsible for the reduced task performance by the autism group. However, it is possible that the important factor is a person’s tendency to look at the eyes in general, thus gaining expertise in using information from this region. We would hypothesise that this longer-term tendency to look at the eyes would be more closely correlated with task performance, but this is not something that can be measured. The tendency to look at the eyes during this experiment is clearly related to this more general trait, but there may have been individual differences, which reduced the strength of the correlation measured. For example, someone who avoided eye contact in daily life might in contrast be amenable to looking at the eyes of a photograph, as employed in this study.

It has been proposed that social information processing, including the interpretation of facial expressions, is linked to social interaction deficits in autism (Joseph & Tager-Flusberg, 2004). For this reason, we evaluated the correlation between an autistic participant’s score on the test, and his or her degree of reciprocal social interaction (RSI) impairment. Indeed, the results showed that those individuals who were most impaired in the recognition of genuine smiles had more deficits in the RSI domain (figure 3). The ability to distinguish a real from a posed smile has an obvious significance in everyday social interaction, as it is linked to the understanding of another’s mental state, perhaps even a higher-order mental state, as a posed smile can indicate the pretence of happiness or pleasure. Failure to identify these subtle facial cues could conceivably lead to difficulties in social interaction – for example, impaired judgement in social situations, or an inability to ‘take the hint’ or ‘read between the lines,’ similar to reported deficits in the interpretation of non-literal language, including irony, in autism (Happe, 1993; Martin & McDonald, 2004). We did not measure social interaction skills in the control group, so it is unclear whether an inability to recognise a posed smile would also impact on social interaction in individuals without autism.

Sensitive measures of face-processing such as this task could be useful in the testing of relatives of autistic individuals who are often described as fitting a ‘broad autism phenotype’ (BAP) - a mild predisposition towards autistic traits, which when combined with environmental influences might develop into autism in some cases (for a review see Piven, 2001). A recent study has shown that relatives of individuals with autism show unusual gaze patterns when viewing faces (Dalton, Nacewicz, Alexander, & Davidson, 2006), indicating that abnormal fixation patterns might form part of this BAP. An extension to the current study would be to investigate performance on this task in relatives of autistic individuals, to see if this particular impairment present in autism could form part of the BAP.

In summary, the results of this study demonstrated an impaired ability in the autism group to discriminate genuine from posed smiles. Second, we found that individuals who were most impaired in the recognition of genuine smiles had more severe social interaction deficits. Finally, we found that the autism group showed reduced fixation of the eye region. We suggest that this reduced fixation to the eyes could account for the problems discriminating genuine from posed smiles in the autism group.

Acknowledgments

This study was funded by the Wellcome Trust and the Royal Society. ZB is funded by the Wellcome Trust Four Year PhD Programme in Neuroscience at UCL. SJB is funded by the Royal Society UK. We would like to thank Rebecca Chilvers for conducting the ADOS interviews for this study.

References

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Adolphs R, Sears L, Piven J. Abnormal processing of social information from faces in autism. J Cogn Neurosci. 2001;13:232–240. doi: 10.1162/089892901564289.

- Back E, Ropar D, Mitchell P. Do the eyes have it? Inferring mental states from animated faces in autism. Child Development. 2007;78:397–411. doi: 10.1111/j.1467-8624.2007.01005.x.

- Dalton KM, Nacewicz BM, Alexander AL, Davidson RJ. Gaze-Fixation, Brain Activation, and Amygdala Volume in Unaffected Siblings of Individuals with Autism. Biological Psychiatry. 2006. doi: 10.1016/j.biopsych.2006.05.019.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, et al. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–526. doi: 10.1038/nn1421.

- Ekman P, Wagner H. In: The biological psychology of emotions and social processes. Manstead A, editor. Wiley; New York: 1989. The argument and evidence about universals in facial expressions of emotion; pp. 143–164.

- Hager JC, Ekman P. The asymmetry of facial actions is inconsistent with models of hemispheric specialization. Psychophysiology. 1985;22:307–318. doi: 10.1111/j.1469-8986.1985.tb01605.x.

- Happe FGE. Communicative competence and theory of mind in autism: A test of Relevance theory. Cognition. 1993;48:101–119. doi: 10.1016/0010-0277(93)90026-r.

- Howard MA, Cowell PE, Boucher J, Broks P, Mayes A, Farrant A, et al. Convergent neuroanatomical and behavioural evidence of an amygdala hypothesis of autism. Neuroreport. 2000;11:2931–2935. doi: 10.1097/00001756-200009110-00020.

- Joseph RM, Tager-Flusberg H. The relationship of theory of mind and executive functions to symptom type and severity in children with autism. Development and Psychopathology. 2004;16:137–155. doi: 10.1017/S095457940404444X.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Klin A, Sparrow SS, de Bildt A, Cicchetti DV, Cohen DJ, Volkmar FR. A normed study of face recognition in autism and related disorders. Journal of Autism and Developmental Disorders. 1999;29:499–508. doi: 10.1023/a:1022299920240.

- Langdell T. Recognition of faces: an approach to the study of autism. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1978;19:255–268. doi: 10.1111/j.1469-7610.1978.tb00468.x.

- Lord C, Risi S, Lambrecht L, Cook EH, Jr, Leventhal BL, DiLavore PC, et al. The autism diagnostic observation schedule-generic: a standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;30:205–223.

- Martin I, McDonald S. An exploration of causes of non-literal language problems in individuals with Asperger Syndrome. Journal of Autism and Developmental Disorders. 2004;34:311–328. doi: 10.1023/b:jadd.0000029553.52889.15.

- Miles L, Johnston L. In: Cognition and language: Perspectives from New Zealand. Haberman G, Fletcher-Flinn C, editors. Australian Academic Press; Bowen Hills (Queensland, Australia): 2006. Not all smiles are created equal: Perceiver sensitivity to the differences between posed and genuine smiles.

- Peace V, Miles LK, Johnston L. It doesn’t matter what you wear: the impact of posed and genuine expressions of happiness on product evaluation. Social Cognition. 2006;24:137–168.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Piven J. The broad autism phenotype: a complementary strategy for molecular genetic studies of autism. American Journal of Medical Genetics. 2001;105:34–35.

- Schultz RT, Gauthier I, Klin A, Fulbright RK, Anderson AW, Volkmar F, et al. Abnormal ventral temporal cortical activity during face discrimination among individuals with autism and Asperger syndrome. Archives of General Psychiatry. 2000;57:331–340. doi: 10.1001/archpsyc.57.4.331.

- Spezio ML, Adolphs R, Hurley RS, Piven J. Abnormal Use of Facial Information in High-Functioning Autism. Journal of Autism and Developmental Disorders. 2006. doi: 10.1007/s10803-006-0232-9.

- Williams LM, Senior C, David A, Loughland CM, Gordon E. In search of the “Duchenne Smile”? Evidence from Eye movements. Journal of Psychophysiology. 2001;15:122–127.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–145.

- Yovel G, Kanwisher N. The neural basis of the behavioural face-inversion effect. Current Biology. 2005;15:2256–2262. doi: 10.1016/j.cub.2005.10.072.