Abstract

In this article, we review eye-tracking studies with dogs (Canis familiaris) with a threefold goal: We highlight the achievements in the field of canine perception and cognition using eye tracking, then discuss the challenges that arise in the application of a technology that has been developed in human psychophysics, and finally propose new avenues in dog eye-tracking research. For the first goal, we present studies that investigated dogs’ perception of humans, mainly faces, but also hands, gaze, emotions, communicative signals, goal-directed movements, and social interactions, as well as the perception of animations representing possible and impossible physical processes and animacy cues. We then discuss the present challenges of eye tracking with dogs, such as doubtful picture-object equivalence, extensive training, small sample sizes, difficult calibration, and artificial stimuli and settings. We suggest possible improvements and solutions for these problems in order to achieve better stimulus and data quality. Finally, we propose the use of dynamic stimuli, pupillometry, arrival time analyses, mobile eye tracking, and combinations with behavioral and neuroimaging methods to further advance canine research and open up new scientific fields in this highly dynamic branch of comparative cognition.

Keywords: eye tracking, dog, gaze, face perception, pupillometry

Introduction

In the rapidly growing field of canine (mainly dogs and wolves) cognition (Aria et al., 2021), researchers have investigated the visual perception of dogs for two main reasons: to understand how dogs perceive objects in their environment and how they interpret dynamic changes and events related to these objects. Concerning the first goal, researchers have developed a strong focus on the dogs’ perception of humans (Huber, 2016), mainly because dogs show impressive abilities for interacting and communicating with us (e.g., Bensky et al., 2013; Kaminski & Marshall-Pescini, 2014; Miklósi, 2015). From an evolutionary point of view, such understanding of humans is especially interesting because the decoding of social signals from heterospecifics, or across the species boundary, is challenging. The relative contribution of the two major sources of information, the phylogenetic (i.e., during domestication) and the ontogenetic (i.e., during a pet dog’s life in the human environment), is one of the main questions in canine science. What is hardly disputed, however, is that the success of dogs within human societies, including their adoption of the numerous roles humans give to them, depends on an interaction of nature and nurture.

Studying how dogs perceive humans and use this information to solve their everyday problems is important for understanding why they fit so well into the human environment (Huber, 2016). Head movements are performed to direct attention to the objects of interest in a scene and therefore are behavioral proxies of ongoing cognitive processing (Henderson, 2003). The assessment of the dogs’ head orientations can be used to investigate a dog’s perception of human pointing gestures (Kaminski & Nitzschner, 2013; ManyDogs et al., 2021), gaze following (Wallis et al., 2015), referential communication (Merola et al., 2012), and perspective taking (Catala et al., 2017; Maginnity & Grace, 2014). However, the dogs’ perception of the human face alone requires the collection of data on a much finer scale. Although operant procedures have been used to investigate whether dogs can discriminate between the owner’s face and the face of another familiar (Huber et al., 2013) or unfamiliar person (Mongillo et al., 2010), between happy and angry faces (Albuquerque et al., 2016; Müller et al., 2015; Nagasawa et al., 2011), or between human and dog faces (Racca et al., 2010), it is often not clear which (facial) features dogs use to accomplish these tasks.

Therefore, in the early 2010s, dog researchers began to make use of a nonintrusive technology that had been developed in human psychology and psychophysics: eye tracking. Compared with traditional behavior coding, eye tracking allows for measuring overt visual attention at a much finer spatial and temporal resolution (for recent evidence comparing the performance of behavior coding from videos and eye tracking in dog research, see Pelgrim et al., 2022). Indeed, whereas head movements can be scored from videos recorded with normal cameras, the inferences that can be drawn about the dogs’ focus of attention are limited to a macro-scale (such as left–right), which might be suitable for preferential looking paradigms but not for more detailed questions. With eye tracking, by contrast, researchers can estimate the location of the dogs’ central focus of attention in a scene, with an accuracy ranging from less than 1° of visual field (for stationary eye trackers; Park et al., 2022) to 5.4° of visual field (for mobile eye trackers outdoors; Pelgrim & Buchsbaum, 2022).
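To give a sense of scale for these accuracy figures, a visual angle θ can be converted into a linear extent s at viewing distance d with s = 2d·tan(θ/2). The following sketch is purely illustrative: the 60 cm distance and the function name are assumptions made here and are not taken from any of the cited studies.

```python
import math


def angle_to_extent_cm(angle_deg: float, distance_cm: float) -> float:
    """Linear extent subtended by a visual angle centred on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Hypothetical viewing distance, chosen only for illustration.
VIEWING_DISTANCE_CM = 60.0

for accuracy_deg in (1.0, 5.4):
    extent = angle_to_extent_cm(accuracy_deg, VIEWING_DISTANCE_CM)
    print(f"{accuracy_deg} deg at {VIEWING_DISTANCE_CM:.0f} cm -> {extent:.2f} cm")
```

At this assumed distance, an error of 1° corresponds to roughly 1 cm and an error of 5.4° to a little under 6 cm, which indicates how small an area of interest can be before gaze can no longer be assigned to it reliably.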

The temporal resolution is usually also greater than that of traditional cameras, given that eye trackers can have a sampling frequency of 1000 Hz. For these reasons, eye tracking allows researchers to investigate novel dependent variables, such as gaze arrival times into an area of interest (AOI), that would simply be inaccessible without this methodology.
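How the sampling frequency bounds the precision of an arrival-time measure can be sketched as follows. This is a minimal illustration under simplifying assumptions (a rectangular area of interest, no missing samples, raw samples rather than parsed fixations); the function name and the example coordinates are hypothetical and do not reproduce the analysis of any cited study.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # left, top, right, bottom


def arrival_time_ms(samples: Sequence[Point], aoi: Rect,
                    sampling_hz: float) -> Optional[float]:
    """Time of the first gaze sample inside the AOI, or None if gaze never enters."""
    left, top, right, bottom = aoi
    for index, (x, y) in enumerate(samples):
        if left <= x <= right and top <= y <= bottom:
            # Each sample is 1000 / sampling_hz milliseconds after the previous one.
            return index * 1000.0 / sampling_hz
    return None


# Hypothetical gaze samples (pixels) and AOI.
gaze = [(10.0, 10.0), (50.0, 50.0), (120.0, 80.0), (130.0, 85.0)]
target = (100.0, 50.0, 200.0, 150.0)

print(arrival_time_ms(gaze, target, sampling_hz=1000.0))
print(arrival_time_ms(gaze, target, sampling_hz=30.0))
```

The same sample index maps to 2 ms at 1000 Hz but to about 67 ms at an assumed 30 frames per second, so the step size of the measure is set directly by the sampling interval.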

Eye Tracking: Background and Terminology

Eye tracking is a method widely used in human psychology to investigate various perceptual-cognitive phenomena by tracking gaze coordinates and pupil size. Fixations, saccades, and blinks are events determined by an event-parsing algorithm, and they depend on information derived from these basic measurements (gaze coordinates and pupil size). The method was developed more than a century ago to study the relation between (a) eye movements and our spatial judgments and (b) various geometrical optical illusions (Delabarre, 1898). Later, the method was firmly established as an accurate way of investigating how humans look at pictures (Buswell, 1935) and then applied in many different research fields such as perception, attention, memory, reading, psychopathology, ophthalmology, neuroscience, human–computer interaction, marketing, consumer behavior, and optometry (see Duchowski, 2007; Holmqvist & Andersson, 2017, for overviews).

Eye movements can be monitored in different ways, using (a) surface electrodes, (b) infrared corneal reflections, (c) video-based pupil monitoring, (d) infrared Purkinje image tracking, and (e) search coils attached like contact lenses to the surface of the eyes (Holmqvist, Örbom, Hooge, et al., 2022). The most commonly used method is video-based P–CR eye tracking, which estimates the gaze direction as a function of the relative positions of two landmarks in the eye: the center of the pupil (P) in the camera image and the center of the reflection on the cornea (CR) from infrared illuminators. The CR coordinate is subtracted from the P coordinate in the pixel coordinate system of the video image (Holmqvist, Örbom, Hooge, et al., 2022). This subtraction serves to account for small head movements that can still arise when the participant’s head is restrained (we discuss restraint-free methods in the section The Use of Mobile Eye Trackers).
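The logic of this subtraction can be sketched in a few lines. The example idealizes a small head movement as an equal shift of both landmarks in the camera image, which is an assumption made here for illustration; the coordinates are hypothetical, and the calibration step that maps the resulting vector onto screen coordinates is not shown.

```python
from typing import Tuple

Point = Tuple[float, float]


def pcr_vector(pupil_center: Point, corneal_reflection: Point) -> Point:
    """Pupil-minus-CR difference vector in camera-image pixel coordinates."""
    return (pupil_center[0] - corneal_reflection[0],
            pupil_center[1] - corneal_reflection[1])


# Hypothetical landmark positions (pixels) before a small head movement.
pupil, cr = (312.0, 240.5), (305.0, 244.0)

# Idealized head translation: both landmarks shift by the same amount.
shift = (4.0, -2.0)
pupil_moved = (pupil[0] + shift[0], pupil[1] + shift[1])
cr_moved = (cr[0] + shift[0], cr[1] + shift[1])

print(pcr_vector(pupil, cr))
print(pcr_vector(pupil_moved, cr_moved))
```

Both calls yield the same vector, which is why the difference signal is less affected by small head translations than the pupil position alone. An eye rotation, by contrast, moves the pupil center relative to the corneal reflection and therefore changes the vector.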

Buswell (1935) concluded from his observations that an important relationship exists between eye movements and visual attention. Indeed, eye movements are an overt behavioral manifestation of the allocation of attention in a scene, and therefore they serve as a window into the operation of the attentional system. Moreover, they provide an unobtrusive, sensitive, real-time behavioral index of ongoing visual and cognitive processing (Henderson, 2003). The reason for this relationship is that in humans, but also in other species with developed visual systems, high-quality visual information is acquired only from a limited spatial region on the retina (in primates the fovea)—the visual quality falls off rapidly from the center of gaze into a low-resolution visual periphery—and only during periods of relative gaze stability (fixations).

When we view natural scenes with many objects of interest, we need to move the center of gaze, resulting in gaze shifts, to acquire information about these objects. This process of directing fixation through a scene in real time is called gaze control, which is a central element in the service of ongoing perceptual, cognitive, and behavioral activity. Visual perception is an active process, and gaze is controlled by moving not only the eyes but also the head and, in some insects, the whole body to make high-quality visual information available when needed (Land, 1999). However, when we scan a picture or scene, our eyes do not wander but jump around (Yarbus, 1967). Indeed, the majority of vertebrates show a pattern of stable fixations and rapid eye movements. The latter are called saccades. We humans move our eyes about three times each second to reorient the fovea through the scene. The fixations, periods of nearly stationary viewing, last for about 300 ms or longer if our attention is caught. Park et al. (2020) found that dog saccades follow the systematic relationships between saccade metrics previously shown in humans (e.g., an increase of peak velocity and duration with increasing amplitude); however, dog saccades seem to be slower, and dog fixations longer, than those of humans.
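One generic way of separating fixations from saccades in a stream of gaze samples is a dispersion threshold: a run of samples counts as a fixation if it lasts long enough and stays within a small spatial window. The sketch below illustrates this idea only. It is not the event-parsing algorithm of any particular eye tracker or cited study, and the threshold values are hypothetical placeholders that would need to be chosen for the species and setup at hand.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def dispersion(window: Sequence[Point]) -> float:
    """Horizontal plus vertical extent of a set of gaze samples."""
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: Sequence[Point], sampling_hz: float,
                     max_dispersion: float,
                     min_duration_ms: float) -> List[Tuple[int, int]]:
    """Return (first_index, last_index) for each detected fixation."""
    min_len = max(1, int(round(min_duration_ms * sampling_hz / 1000.0)))
    fixations = []
    start, n = 0, len(samples)
    while start + min_len <= n:
        end = start + min_len
        if dispersion(samples[start:end]) <= max_dispersion:
            # Grow the window while the samples stay within the threshold.
            while end < n and dispersion(samples[start:end + 1]) <= max_dispersion:
                end += 1
            fixations.append((start, end - 1))
            start = end
        else:
            start += 1
    return fixations


# Hypothetical data: two stable periods separated by a rapid gaze shift.
gaze = [(100, 100), (101, 100), (100, 101), (101, 101), (100, 100),
        (200, 150),
        (300, 200), (301, 200), (300, 201), (301, 201), (300, 200)]
print(detect_fixations(gaze, sampling_hz=100.0,
                       max_dispersion=10.0, min_duration_ms=40.0))
```

Because the outcome depends directly on the chosen duration and dispersion thresholds, parameter values tuned for human eye movements may not carry over to dogs.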

For humans, fixations are defined as periods in which the gaze coordinates remain relatively stable; they can span from some tens of milliseconds to several seconds (Holmqvist et al., 2011). In the dog literature, there is no clear consensus on how to quantify a fixation (Table S1, the Operationalization of Fixation column, discussed in the Calibration and Data Quality section), because of the species-specific eye movements and the scarcity of research on how they compare with human eye movements (with the notable exception of Park et al., 2020, 2022). Because most information is acquired during fixations, the most important eye-tracking measures are related to fixations. Often, visual scenes are complex and contain many parts and stimuli, and subjects change the focus of their attention while processing the scene. AOIs are tools of analysis that are employed when the researcher’s interest is in what parts of a scene or stimulus attract gaze most effectively, and in what order (Buswell, 1935). AOI measures such as absolute or relative time spent (sum of fixations) in an AOI, the number of transitions between AOIs, or the number of revisits may be used for such questions. Such looking patterns reveal the work of two mutually affecting processes: The visual-cognitive system directs the gaze toward important and informative objects and, vice versa, gaze direction affects several cognitive processes (Henderson, 2003). In general, eye fixation patterns may be regarded as an objective basis for inferring ongoing cognitive and emotional processing of visual information (Loftus, 1972), such as visual-spatial attention and semantic information processing (Henderson, 2003; Kano & Tomonaga, 2009).
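The AOI measures named above can be computed from a time-ordered list of fixations that have already been assigned to AOIs. The sketch below is one possible operationalization, not a standard: it assumes that a visit is a run of consecutive fixations in the same AOI, that a revisit is any visit after the first, and that a transition is counted only between directly consecutive fixations in different AOIs. The AOI labels and durations are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Fixation = Tuple[Optional[str], float]  # (AOI label or None, duration in ms)


def aoi_measures(fixations: List[Fixation]) -> Dict[str, dict]:
    """Dwell time, relative dwell time, revisits, and transitions per AOI."""
    dwell = defaultdict(float)
    visits = defaultdict(int)
    transitions = defaultdict(int)
    total = sum(duration for _, duration in fixations)
    previous = None
    for label, duration in fixations:
        if label is not None:
            dwell[label] += duration
            if label != previous:
                visits[label] += 1  # a new visit starts
                if previous is not None:
                    transitions[(previous, label)] += 1
        previous = label  # None marks a fixation outside all AOIs
    return {
        "dwell_ms": dict(dwell),
        "relative_dwell": {a: d / total for a, d in dwell.items()},
        "revisits": {a: v - 1 for a, v in visits.items()},
        "transitions": dict(transitions),
    }


# Hypothetical scan of a face stimulus.
scan = [("eyes", 300.0), ("eyes", 200.0), ("mouth", 250.0),
        (None, 150.0), ("eyes", 400.0), ("forehead", 100.0)]
measures = aoi_measures(scan)
print(measures["dwell_ms"])
print(measures["revisits"])
print(measures["transitions"])
```

Different definitions (for example, ignoring intervening fixations outside all AOIs when counting transitions) would yield different counts from the same data, so the operationalization should be reported alongside the results.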

Applying the Eye-Tracking Technology in the Dog Lab

By and large, the advantages of eye tracking also exist if the method is applied for investigating visual processes in dogs. However, as we discuss in this article, these benefits do not come without costs. There is not a one-to-one correspondence between the visual systems of humans and dogs in terms of anatomy and physiology of the eye, nor can dogs be tested in the same way as humans. For instance, dogs cannot be verbally instructed what to do and therefore need training (to obtain high-quality data); the screen-based stimuli are also not as natural as for humans who are raised in an environment full of pictures and televisions. These drawbacks need to be taken into account when using the eye-tracking method in the dog laboratory. Still, since its introduction, 24 studies have been published (see Table 1 and Table S1 at https://osf.io/xbqkt), altogether proving the usability of the method and producing a decent number of results about dogs’ perception and cognition that otherwise would not have been achieved.

Table 1. Eye-Tracking Studies with Dogs Published Until October 2022.

| Reference (Stationary ET) | Exp. No. | N | No. Excluded Dogs | No. Targets | Targets | Restriction | Stimuli | No. Trials | No. Sessions | Tracking | Pretraining Head | Pretraining Calibration |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Correia-Caeiro et al. (2021) | 1 | 92 | 8 | 3 | Real-life treats | No – remote mode | Dynamic - projected | 20 | 1 | Monocular | No | No |
| Correia-Caeiro et al. (2020) | 1 | 27 | 1 | 3 | Real-life treats | No – remote mode | Dynamic - projected | 20 | 1 | Not reported | No | No |
| Törnqvist et al. (2020) | 1 | 24 | 0 | 5 | Real-life treats | Chin rest | Static - screen | 72 | 2 | Binocular | Yes | No |
| Törnqvist et al. (2015) | 1 | 40 | 6 | 5 | Real-life treats | Chin rest | Static - screen | 60 | 2 | Binocular | Yes | No |
| Somppi et al. (2017) | 1 | 43 | 3 | 5 | Not reported | Chin rest | Static - screen | 2 | 2 | Binocular | Yes | No |
| Somppi et al. (2016) | 1 | 31 | 2 | 5 | Not reported | Chin rest | Static - screen | 60 | 2 | Binocular | Yes | No |
| Somppi et al. (2014) | 1 | 31 | 2 | 5 | Real-life treats | Chin rest | Static - screen | 6 | 2 | Binocular | Yes | No |
| Somppi et al. (2012) | 1 | 6 | 0 | 5 | Real-life treats | Chin rest | Static - screen | 3 (× 6 pictures) | 4-8 | Binocular | Yes | No |
| Barber et al. (2016) | 1 | 25 | 2 | 3 | Projected - static | Chin rest | Projected - static | 16 | 5 | Monocular | Yes | Noᵃ |
| Barber et al. (2017) | 1 | 12 | 0 | 3 | Projected - static | Chin rest | Projected - static | 16 | 5 | Eyes not tracked | Yes | Noᵃ |
| Gergely et al. (2019) | 1 | 27 | Not reported | 5 | Screen - dynamic | No | Screen - static | 1 | 1 | Binocular | No | No |
| Karl et al. (2020) | 1 | 15 | 0 | 3 | Screen - static | Chin rest | Screen - dynamic | 4 | 1 | Monocular | Yes | Yes |
| Karl et al. (2020) | 2 | 15 | 0 | 3 | Screen - static | Chin rest | Screen - dynamic | 4 | 1 | Monocular | Yes | Yes |
| Kis et al. (2017) | 1 | 31 | 27 | 5 | Screen - dynamic | No, but body was restrained by owner | Screen - static | 6 | 1 | Binocular | No | No |
| Kis et al. (2017) | 2 | 46 | 79 | 5 | Screen - dynamic | | Screen - static | 2 | 1 | Binocular | No | No |
| Ogura et al. (2020) | 1 | 3 | 4 | 5 | Real-life treats | Chin rest | Screen - static | 6 | 6 | Monocular | Yes | No |
| Park et al. (2020) | 1 | 9 | 24 | 3 | Screen - static | Chin rest | Projected - static | Not reported | 2 | Monocular | Yes | Yes |
| Park et al. (2022) | 1 | 14 | 18 | Not reported | Screen - static | Chin rest | Screen - static | 24 | Not reported | Monocular | Yes | Yes |
| Téglás et al. (2012) | 1 | 14 | 32 | 5 | Screen - dynamic | No | Screen - dynamic | 12 | 2 | Not reported | No | No |
| Téglás et al. (2012) | 2 | 13 | 32 | 5 | Screen - dynamic | No | Screen - dynamic | 6 | 1 | Not reported | No | No |
| Völter et al. (2020) | 1 | 11 | 3 | 3-5 | Screen - dynamic | Chin rest | Screen - dynamic | 1 | 1 | Monocular | Yes | Yes |
| Völter et al. (2020) | 2 | 9 | 3 | 3-5 | Screen - dynamic | Chin rest | Screen - dynamic | 1 | 1 | Monocular | Yes | Yes |
| Völter & Huber (2021a) | 1 | 14 | 0 | 5 | Screen - dynamic | Chin rest | Screen - dynamic | 2 | 2 | Monocular | Yes | Yes |
| Völter & Huber (2021b) | 1 | 15 | 0 | 5 | Screen - dynamic | Chin rest | Screen - dynamic | 2 | 2 | Monocular | Yes | Yes |
| Völter & Huber (2021b) | 2 | 14 | 0 | 5 | Screen - dynamic | Chin rest | Screen - dynamic | 2 | 2 | Monocular | Yes | Yes |
| Völter & Huber (2022) | 1 | 14 | 0 | 5 | Screen - dynamic | Chin rest | Screen - dynamic | 24 | 2 | Monocular | Yes | Yes |
| Völter & Huber (2022) | 2 | 17 | 0 | 5 | Screen - dynamic | Chin rest | Screen - dynamic | 12 | 4 | Monocular | Yes | Yes |
| Pelgrim et al. (2022) | 1 | 5 | 3 | 5 | Real-life treats | Owner during calibration | Real-life | 10 | 1 | Monocular | No | No |
| Rossi et al. (2014) | 1 | 5 | 1 | 9 | Real-life treats | No | Real-life | 16 | 2 | Monocular | No | No |
| Williams et al. (2011) | 1 | 1 | Not applicable | 5 | Real-life treats | No | Real-life | 19 | Not applicable | Monocular | No | Yes |

Table 1. Note: Exp. No. = whether the information refers to the first or second experiment reported in the publication; No. Targets = number of calibration targets; Targets = type of calibration targets; Restriction = head movement restriction; Pretraining Head = pretraining to maintain a stable head position; Pretraining Calibration = pretraining to perform a calibration.

ᵃ Although, to increase the dogs’ attention to the screen, chin-rest-trained dogs were confronted with a two-choice conditional discrimination task between geometric figures.

In these studies, researchers examined dogs’ fixation patterns when the dogs were presented with static images such as faces (e.g., Barber et al., 2016), changes in looking behavior as the result of expectancy violation (Völter & Huber, 2021a, 2021b) or cross-modal matching (Gergely et al., 2019), and most recently anticipatory looking (Völter et al., 2020) and changes in pupil size (Karl, Boch, Zamansky, et al., 2020; Karl et al., 2021; Somppi et al., 2017; Völter & Huber, 2021a). Eye tracking in dogs has also been used to diagnose ocular motor abnormalities such as nystagmus (e.g., Dell’Osso et al., 1998), but for the purpose of this article we concentrate on perceptuo-cognitive tasks.

A major issue for the evaluation of the usage of eye tracking in dogs is the difference between the visual systems of humans and dogs (Miller & Murphy, 1995). As just described, the technology has been developed for the sake of measuring human eye movements on the basis of knowledge of the anatomy and physiology of the human visual system. But the canine visual system and its performance deviate to varying degrees from the human counterpart, and these deviations differ in their relevance for eye-tracking measurements (Byosiere et al., 2018). For instance, it has been argued that dogs, as running predators, could be expected to be tuned to motion detection rather than visual acuity (McGreevy et al., 2004). In contrast, however, dogs might have been selected for increased visual performance during domestication, especially in the service of cooperation with humans (Barber et al., 2020).

The most recent review of the functional performances of the visual systems of dogs and humans by Barber and colleagues (2020) ended with the conclusion that the apparent limitations in visual perception of dogs compared with humans, such as lower visual acuity, reduced color perception, and increased sensitivity to bright light (because of adaptation to function in dim light), come with compensations that allow them to outperform humans in other related ways. They include better discrimination of certain colors (blue hues), increased motion sensitivity, and superior night vision. The earlier statement of Somppi and colleagues (2012) that the deficiencies of the visual system of the dog do not impose crucial limitations on the use of the eye movement tracking method seems therefore justified. Moreover, several studies have provided convergent evidence that dogs can detect details even in small photographs presented on computer screens (Aust et al., 2008; Müller et al., 2015; Pitteri et al., 2014; Range et al., 2008).

These favorable arguments with regard to the application of eye tracking in canine science should not be taken as carte blanche for research but rather as a proof of principle. Caution is necessary if one is recruiting breeds that have been selected for services with low or no engagement in visual tasks, like scent hounds and sniffer dogs (Barber et al., 2020). In general, it is important to be aware that varying facial morphologies exist in dogs (for instance, between brachycephalic and dolichocephalic breeds) that may complicate the interpretation of even the most basic physiologic measures assessing perception (Miller & Murphy, 1995). Not only are the size of the eye and the total number of retinal ganglion cells highly variable, but the retinal ganglion cell distribution varies as well. This variation seems to covary with skull measurements, in particular nose length. Whereas in dogs with long noses the retinal ganglion cells are concentrated in a horizontal visual streak across the retina with a less pronounced area centralis (similar to the fovea in primates), in dogs with short noses they are more centralized in a strong area centralis (McGreevy et al., 2004). Indeed, it seems that brachycephalic dogs, with flat faces and more forward-facing eyes, outperform dolichocephalic dogs in using the human pointing gesture (Gácsi et al., 2009), even though the causal role of the organization of the visual system remains unclear. Several researchers have therefore warned against overlooking breed differences, drawing careless conclusions, or making bold assumptions from small sample sizes (Byosiere et al., 2018; Gácsi et al., 2009; McGreevy et al., 2013). Rather, these differences need to be taken into account when devising experimental designs that aim at investigating perceptuo-cognitive abilities of dogs in the laboratory.

In this article, we first review the eye-tracking studies with dogs published so far, classified according to the research questions and the respective answers; then we discuss the challenges and limitations of eye tracking in dogs (some of the challenges and limitations are applicable to eye tracking with humans as well, and others are more specific to eye tracking with dogs); and finally we provide an outlook into the future with a focus on the possible improvements and extensions.

Eye-Tracking Studies With Dogs

The Dog’s Perception of Faces

About one third of all eye-tracking studies with dogs published so far have investigated how dogs perceive faces. Faces are an important visual category for many taxa because they differ in subtle ways and possess many idiosyncratic features, thus providing a rich source of information (Leopold & Rhodes, 2010). The human face allows dogs to interact and communicate with their caregiver, for instance, by obtaining information signaled through communicative gestures (Téglás et al., 2012) as well as attentive states (Gácsi et al., 2004; Schwab & Huber, 2006) and emotional states (Müller et al., 2015).

In the first published study on stimulus perception measured with the aid of an eye tracker, dogs exhibited a looking preference for faces over children’s toys and alphabetic characters (Somppi et al., 2012). Within the category of faces, the dogs preferred to look at the faces of conspecifics (dogs) over heterospecifics (humans) in terms of both the number of fixations and the total duration of fixations. Dogs looked less frequently at the images of children’s toys, and the letters received the lowest number of fixations. This finding suggests that dogs are able to discriminate images of different categories. Moreover, the preference for faces over meaningless, inanimate objects and letters provides evidence that they somehow recognized the content of the images.

The same group of researchers compared dogs’ viewing patterns of dog and human faces in different conditions (Somppi et al., 2014): presented upright or inverted and representing familiar or unfamiliar individuals. The results revealed striking similarities in the way humans and dogs view faces: As in the previous study, dogs preferred conspecific faces over heterospecific faces, showed more interest in the eye area than other areas in the case of upright faces but not inverted faces, showed deficits in face processing when the image was turned upside down, and fixated more on familiar than unfamiliar faces. The inversion effect could be explained by the reliance on global configural rather than elemental or part-based processing. Somppi and colleagues (2014) also found that dogs targeted nearly half of the relative fixation duration at the region around the eyes, indicating an eye primacy effect. This result, however, could be only partially confirmed in another eye-tracking study that investigated the possible differences between pet and laboratory dogs (Barber et al., 2016). Whereas lab dogs fixated first on the eye region and later on the mouth region, pet dogs allocated their first fixation equally to the eye and mouth regions.

Another interesting result of the Barber et al. (2016) study was the interaction of facial expression and face region preference. Across dogs, the number of fixations was higher for the forehead if a positive expression (happy or neutral face) was displayed but higher for the mouth and eye region if a negative expression (angry or sad face) was displayed. This finding deviates from what is known from the human literature; in humans, the mouth receives the most attention in positive emotions and the eyes in negative emotions (Schyns et al., 2007; Smith et al., 2005). Of interest, Somppi et al. (2016) found that eyes were more interesting for dogs than the mouth region, if measured in terms of the targets of the first fixations and looking durations, regardless of the viewed expression. This was true for both human and dog faces. Dogs scanned the facial features of conspecifics and humans in a similar manner. Only when examining the looking patterns at faces of conspecifics separately did the authors find identifiable emotion effects; dogs looked longer at the faces of threatening dogs than at those of pleasant or neutral dogs. Such heightened attention toward the threatening signals of conspecifics could be explained by higher arousal when viewing aggressive dogs than when viewing angry humans.

How dogs perceive human faces is likely influenced by the emotional state of the dog subject when viewing them. When dogs were confronted with pictures of unfamiliar male human faces after receiving oxytocin (nasal spray), they gazed more toward the eyes of smiling (happy) faces, and less toward the eyes of angry faces, than after the placebo treatment (saline; Somppi et al., 2017). However, this finding could not be replicated. Other researchers found quite the opposite effect of oxytocin treatment, namely, that dogs’ preferential gaze toward the eye region when processing happy human facial expressions disappears (Kis et al., 2017).

Whatever the exact effects, the two studies suggest at least that the allocation of attention toward human emotional faces is somehow related to the dog’s own emotional state. This conclusion was supported by the changes of pupil size, which can be considered as an indicator of emotional arousal (Somppi et al., 2017). In the nontreatment (placebo) control condition, the pupil size was larger when looking at angry faces, a finding confirmed in a later study with faces of the dog’s caregiver, familiar and unfamiliar people (Karl, Boch, Zamansky, et al., 2020). But oxytocin reversed this effect (Somppi et al., 2017). A study that examined the cardiac responses of domestic dogs upon seeing faces of humans with different emotional expressions (angry, happy, sad, neutral) provided further evidence for the influence of human facial expression on the emotional state of the dog observers (Barber et al., 2017).

Support for the claim that dogs react to the emotions shown by human facial expressions comes from another finding of the study by Barber and colleagues (2016); the data from the eye-tracking measurements revealed a strong left gaze bias, that is, dogs were looking preferentially into the right face hemisphere in the left visual field regardless of which face (positive or negative emotion) was shown. A preference for the left visual field is associated with the engagement of the opposite, that is, right brain hemisphere. This finding corresponds not only to findings of previous studies with dogs (Guo et al., 2009; Racca et al., 2012; Siniscalchi et al., 2010) but also to findings from a variety of species that show a lateralization toward emotive stimuli regardless of the valence (see review in Salva et al., 2012). And the result is in line with the human eye-tracking literature (Butler et al., 2005; Guo et al., 2009).

In conclusion, taking all eye-tracking studies about the dog’s perception of human faces together, we are confronted with considerable ambiguity. Some studies found modest to strong effects of human facial expressions on the dogs’ looking patterns, although not a consistent allocation of attention toward the various face regions (Barber et al., 2016; Somppi et al., 2016, 2017), whereas two other studies found no such effects. In those two studies, the dogs’ face-viewing gaze allocation varied between the various face regions but not between faces with different expressions (Correia-Caeiro et al., 2020; Kis et al., 2017). Still, these studies deviated from the others in one important aspect: Dogs were not trained to maintain attention to stimuli, and they were not or only weakly restrained; no chin/head rest was used, and thus the dog’s head could freely move. Although this procedure likely produces more spontaneous looking behavior in the dog observers, it is not known whether it reduces data quality.

The Dog’s Perception of Gaze and Human Communicative Signals

To test whether dogs, like human infants (Senju & Csibra, 2008), would interpret the ostensive-communicative cues of humans as the expression of communicative intent, and therefore more readily follow the subsequent referential signal with their gaze, Téglás et al. (2012) presented dogs with a video sequence showing a female actor behind a table on which two pots were placed, one on each side. The actor addressed the dogs in either an ostensive manner (looking straight at the dog and saying “Hi dog!” in a high-pitched voice) or a nonostensive manner (remaining with her head facing down) before emitting a referential signal by turning her head toward one of the two containers and looking at it for 5 seconds. Using the eye tracker, the researchers found that the dogs looked longer at the cued pot in the ostensive condition than in the nonostensive condition. The striking similarity of the effect to the one found in a study of 6.5-month-old human infants (Senju & Csibra, 2008) suggests that the dogs’ gaze following of the human’s head turn was triggered by the expression of communicative intent.

Following human-given signals is also relevant for the question of why dogs communicate and interact so well with us humans. Perhaps the best-studied phenomenon in this respect is the dog’s following of the human pointing gesture. Research on it was catalyzed by the finding that dogs perform more accurately than other species (e.g., Hare et al., 2002; Kaminski & Nitzschner, 2013; Miklósi et al., 1998; Soproni et al., 2001), even great apes (Bräuer et al., 2006), and, unlike wolves, do so from a very young age (Bray et al., 2021). But the question of why dogs do so remains unresolved to this day. Finding an answer is difficult if one must rely on the choices and overt behavior of dogs. Eye tracking may provide a solution, because analyzing the looking patterns of dogs while they observe the human informant allows a more thorough investigation of what drives the dogs’ decisions after the cueing. In a study using this method, dogs could use the momentary distal pointing of the human informant to find hidden food but without reference to the target object (Delay, 2016). Dogs rarely looked at the indicated food container but spent most of the time looking at the experimenter’s head area and the pointing arm both during and after the signal (but see Rossi et al., 2014, for some evidence of gazing at the correct cup). The conclusion was, therefore, that dogs perceive the human pointing signal as direction related rather than object related (Tauzin et al., 2015).

The Dog’s Perception of Bodies

Recent studies have investigated how dogs look at full-body videos of humans and conspecifics expressing emotions (Correia-Caeiro et al., 2021) and at full-body photographs of humans (with and without communicative hand signals), conspecifics, cats, and wild animals (Ogura et al., 2020; Törnqvist et al., 2020). In the study by Correia-Caeiro et al. (2021), when presented with actors expressing emotions (happiness, fear, positive anticipation, frustration, and neutral), dogs looked longer at stimuli with human than dog actors. They looked longer at human bodies than human heads and slightly longer (29% vs. 26%) at dog bodies than dog heads. To compare these findings across studies, however, it is important to note that, contrary to previous dog eye-tracking studies (Somppi et al., 2014; Törnqvist et al., 2020), the proportion of viewing time to each AOI was not standardized by the size of the AOI. The authors maintain that standardizing the proportion of viewing time by the AOI size would disrupt the ecological validity of the stimuli and overestimate the looking time to smaller areas by altering the natural head/body proportion. However, this choice is also open to the critique that larger proportions of viewing time are expected to fall in larger AOIs (in this case, the body) based on random scanning of the videos alone.
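The difference between raw and size-standardized viewing-time proportions can be made concrete with a minimal sketch. It assumes one simple form of standardization (looking time per unit of AOI area, rescaled to sum to 1); the cited studies may have used a different normalization, and all function names and values below are hypothetical.

```python
def raw_proportions(looking_ms):
    """Share of total looking time that fell into each AOI."""
    total = sum(looking_ms.values())
    return {aoi: t / total for aoi, t in looking_ms.items()}


def area_standardized_proportions(looking_ms, area_px):
    """Looking time per unit of AOI area, rescaled so the values sum to 1.

    This is only one possible way to standardize by AOI size.
    """
    density = {aoi: looking_ms[aoi] / area_px[aoi] for aoi in looking_ms}
    total = sum(density.values())
    return {aoi: d / total for aoi, d in density.items()}


def chance_proportions(area_px):
    """Proportions expected if gaze were spread evenly over the AOIs."""
    total = sum(area_px.values())
    return {aoi: a / total for aoi, a in area_px.items()}


# Hypothetical values: the body AOI is four times larger than the head AOI.
looking_ms = {"head": 600.0, "body": 900.0}
area_px = {"head": 10_000.0, "body": 40_000.0}

raw = raw_proportions(looking_ms)
standardized = area_standardized_proportions(looking_ms, area_px)
chance = chance_proportions(area_px)
```

In this made-up example the body receives the larger raw share of looking time, yet that share is below what even scanning of the two AOIs would produce, and the standardized values favor the head. Reporting raw proportions alongside the chance expectation is one way to keep both perspectives visible.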

Because the dogs’ heads were unrestrained (eye tracker remote mode), it was possible to code dogs’ facial expressions and head movements. Dogs tended to turn their head left more often when seeing humans than conspecifics and brought their ears closer together when seeing conspecifics rather than humans. Using the eye tracker remote mode and untrained dogs, the authors were also able to test the largest sample of dogs in an eye-tracking study so far (92 dogs). However, collecting data with untrained dogs using the remote mode also led to substantial data loss in dogs: During data acquisition, the dogs received a treat from an experimenter after every trial. Each trial lasted between approximately five and eight seconds (the duration of one video), plus the time it took each dog to fixate for 1 second on the drift point before the presentation of the video. The high frequency of the reward in this procedure might have drawn dogs’ attention away from the screen (in anticipation of the reward) for most of the trial duration. Indeed, Table S9 of Correia-Caeiro et al. (2021) shows that although, on average, humans participating in the same study watched the stimuli for 83% (SD ± 17%) of the time, the dogs rarely looked at the stimuli for longer than 30% of the trial time and on average looked at the stimuli for 22% of the time (SD ± 28%).

In the study by Ogura et al. (2020), three dogs were presented with color photographs of humans, dogs, and cats. In the case of pictures of humans, dogs made more fixations to the limbs AOIs (which included feet, part of the lower legs, and arms) and were also more likely than expected by chance to fixate first the limbs AOIs. In contrast, they made more fixations to the head AOI and were more likely than expected by chance to fixate first the head AOI when the depicted animals were dogs. When presented with pictures of cats, dogs made more fixations to the head and body AOIs and were more likely to fixate first the body AOI. Contrary to the number of fixations and first fixation analyses, the looking duration did not differ depending on the presented species.

The AOI that was fixated first could have been dependent on the dogs’ scanning of the screen prior to stimulus presentation and during interstimuli intervals. Indeed, the presentation of the stimuli was not contingent on the dogs’ gaze being in a certain position on the screen. Likewise, differences in body postures and sizes of the same AOI between species were not considered.

Similarly to Correia-Caeiro et al. (2021), Ogura et al. (2020) did not standardize the response variables by the (differing) sizes of the AOIs. The authors argue that dogs’ gaze allocation to the AOIs in this study was not random, because dogs made more fixations in smaller AOIs (e.g., more fixations to the dog head than to the dog limbs).

Törnqvist et al. (2020) investigated how pet and kennel dogs look at pictures of dogs, humans, and wild animals. They found that both types of dogs showed spontaneous object/background and head/rest of the body differentiations, irrespective of the depicted (mammalian) species. Specifically, both pet and kennel dogs looked longer at heads than bodies. Moreover, heads and bodies elicited longer looking times than background. Both types of dogs gazed longer at the heads of wild animals than at the heads of dogs or humans but longer at the bodies of humans and dogs than at the bodies of wild animals.

The two dog types did not always converge in their viewing patterns. Whereas pet dogs looked longer at bodies in pictures showing two animals than in pictures showing only one animal, kennel dogs did not differentiate with their looking times between these two types of images. This is interesting, because the extent to which breed can influence dogs’ viewing patterns is understudied (but see Abdai & Miklósi, 2022, for an exception). It is unfortunate, because usually the breeds are not matched between the two samples, and the differences found between kennel and companion dogs (see also Törnqvist et al., 2015) might not (only) reflect differences in the social environment but also breed differences.

The Dog’s Perception of Social Interactions

Törnqvist et al. (2015) compared how pet and kennel dogs scan pictures of social interactions. Overall, pets looked longer at scenes containing two individuals than did kennel dogs, irrespective of whether the images depicted pairs of humans or dogs, facing toward each other or away from each other. However, the two groups of dogs did not differ in their gazing times to scrambled images or in the proportions of gazing time allocated to the actors relative to the entire scene. Moreover, the proportion of gazing time to interacting actors was larger than the proportion of gaze time allocated to the two actors when these faced away from each other, irrespective of the actor species. Both pets and kennel dogs looked longer at humans than conspecifics, but only when the actors faced toward each other. Both groups of dogs exhibited more saccades between actors when the actors were humans facing each other than when they were humans facing away from each other.

In studies using pictures of faces (Somppi et al., 2012, 2014), dogs looked longer at faces of conspecifics than at human faces, whereas in the experiment of Törnqvist et al. (2015) they looked longer at interacting (whole body) humans than at interacting dogs. The authors speculate that the presentation of whole-body figures might have driven this difference.

The Dog’s Perception of Animacy Cues

Most eye-tracking studies to date used static stimuli. However, dogs are very attuned to motion, which is also reflected in the organization of their visual system as mentioned before. Motion detection and perception are important, especially for a social carnivore, for multiple reasons including the detection and tracking of social partners, competitors, threats, and prey. The application of eye tracking might allow for identifying motion-related cues that capture dogs’ attention. In the psychophysics literature, such motion-related cues have been labelled animacy cues (Scholl & Tremoulet, 2000; Tremoulet & Feldman, 2000). They include self-propulsion, direction and speed changes, and nonlinear trajectories.

We recently applied eye tracking to study dogs’ sensitivity to animacy cues, here specifically self-propulsion, speed changes, and the stimulus appearance (the presence of fur; Völter & Huber, 2022). The prediction was that videos showing object movements with such animacy cues would elicit a pupil dilation response as part of the dogs’ orientation toward this stimulus (similar to the human psychosensory response; Mathôt, 2018). In a first experiment, the dogs watched three different videos repeatedly, with the playback direction either forward (i.e., normal) or reversed. The videos depicted a ball rolling down a ramp and dog toys that were dropped on the floor. When the videos were playing with reversed playback direction, it appeared as if the object moved in a self-propelled way upward. The dogs’ pupil size was indeed more variable when they watched the videos that were presented with a reversed playback direction.

In the second experiment, the dogs watched videos depicting an animation of a ball rolling back and forth between two walls. The ball either changed speed while moving (sometimes stopping and starting to move again without any external cause that would explain this speed change) or moved at constant speed. Moreover, the ball either had a smooth surface or was covered by fur. Dogs reacted in a similar way as in the first experiment: Their pupil size was more variable when the ball moved with varying speed, and there was some indication that the presence of fur had a similar effect. These findings suggest that cues such as self-propulsion, speed changes, and fur can lead to an orienting response in dogs, which might facilitate their detection of animate beings. This study also complemented previous looking time studies that provided evidence for dogs’ sensitivity to another animacy cue, dependent, chasing-like motion patterns (Abdai et al., 2017, 2021).

The Dog’s Expectations About the Physical Environment

Eye tracking, particularly pupillometry, can also be used to study expectations about the physical environment. Such expectations concern, for instance, how objects move when unsupported, what happens when two objects collide, or when an object moving past another should be visible and when it should not. Showing events that violate certain physical regularities (e.g., concerning support, solidity, contact causality, and occlusion events) can provide evidence for such expectations if appropriate controls are administered. This “expectancy violation paradigm” has been applied extensively with human infants and in the past few decades increasingly with dogs (Bräuer & Call, 2011; Müller et al., 2011; Pattison et al., 2010, 2013).

In research on human infants, pupillometry has been highlighted as a superior method in the context of the expectancy violation paradigm because changes in the pupil size are time sensitive and can be linked to specific events (Jackson & Sirois, 2009). We recently applied this method in a series of eye-tracking studies with dogs (Völter & Huber, 2021a, 2021b, 2022). Dogs were presented with animations showing occlusion, support, and launching events that were either consistent or inconsistent with the corresponding physical regularity. In the launching event, one billiard ball rolled toward another one. In the control condition, the balls collided and the launching ball stopped moving while the other ball was set into motion. In the test condition, the two balls moved exactly in the same way (same kinematic properties and same timing), but a gap remained between the two balls and there was no collision. If the dogs had an expectation about contact causality (i.e., that contact is necessary for the transfer of momentum) we predicted that their pupils would dilate more in the test condition than in the control condition. Indeed, this prediction was confirmed by the dogs’ pupil size response. Additionally, the dogs looked significantly longer at the launching ball in the test condition than in the control condition after it had stopped moving. In the occlusion event, the dogs saw a ball rolling past a narrow pole and either it reappeared on the other side (as it should; control condition) or it did not (test condition). In line with the prediction, the dogs again had larger pupils following the implausible disappearance of the ball.

Finally, we also presented the dogs with support events: The dogs watched a ball rolling along a surface toward a gap in the surface. The ball either fell down into the gap (control condition) or hovered over the gap by continuing rolling as if no gap was present (test condition). In this case, the dogs’ pupils dilated more when presented with the falling-down event than when they saw the hovering event. We concluded that the dogs were more surprised to see the ball suddenly changing direction (which, when considered in isolation, can be seen as an animacy cue; see previous section) than when it started hovering. However, this finding might also be an artifact of the screen-based nature of the stimulus. It remains to be seen whether this finding holds with real-world demonstrations (but there is some indication from other studies that dogs indeed have no clear expectations concerning support events or a gravity bias; Osthaus et al., 2003; Tecwyn & Buchsbaum, 2019).

This series of studies confirms that eye tracking and pupillometry can be useful tools to study not only how dogs perceive the social environment but also what expectations they have concerning the physical world.

Challenges and Limitations

The Challenges of Dog Training and Small Sample Sizes

The sample sizes of all experiments (N = 32) of all published eye-tracking studies (N = 24) conducted with dogs so far range from 1 to 92 (M = 20.8, SD = 17.9) dogs (see Table 1). Limited sample sizes might reduce power, force researchers to adopt designs that might not be directly comparable to previous behavioral studies, and prevent investigations of breed differences. As in any other field, the appropriate sample size for each study should be dictated by the effect of interest, the experimental design, and the desired statistical power, as well as by practical concerns regarding the duration of the training, the number of available dogs, and the presence of suitable resources and facilities. A limiting factor of sample sizes in dog eye-tracking studies is the training administered in some labs prior to data collection to improve data quality (see the Calibration and Data Quality section).

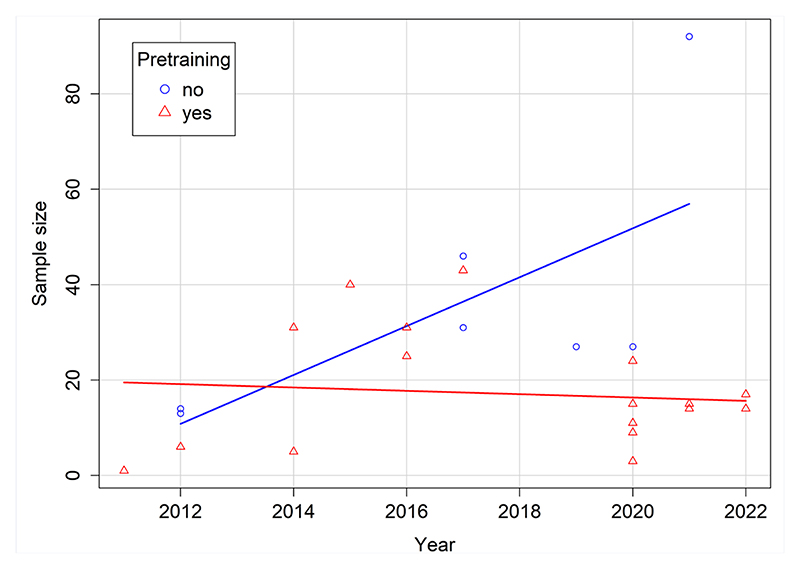

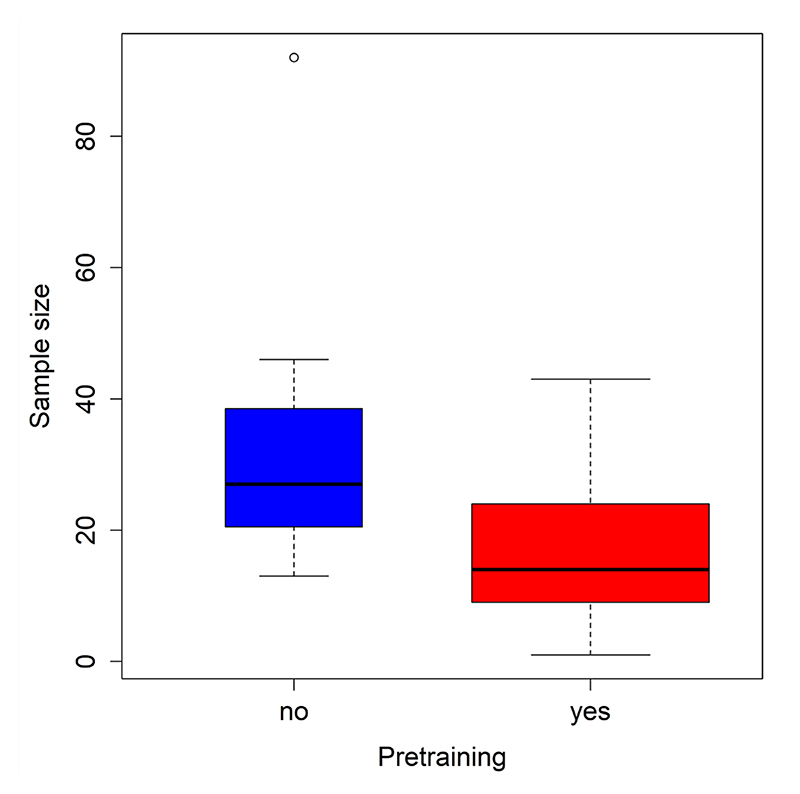

The experiments involving pretrained dogs had an average sample size of 17 dogs, and those using dogs that were not trained to keep their head immobile had, on average, 36 subjects (medians are shown in Figure 1). Figure 2 shows the trend of sample sizes over the years separately for studies that included a pretraining and those that did not.

Figure 1. The sample sizes of studies with pretrained (red) and task-naive dogs (blue). The width of the boxes is proportional to the square root of the number of studies in each group.

Calibration and Data Quality

The calibration is the most important step in eye tracking with respect to data accuracy (i.e., the deviation between actual and measured gaze location). It serves to map the dogs’ eye position (measured in camera coordinates) onto screen or real-world coordinates. Put differently, the calibration allows for determining where (usually on the screen) the subject is looking.

During the calibration, a fixation target is shown at different locations of the screen and the subject is supposed to follow the target with the gaze. The quality of the calibration depends on various factors; some of the most important are the number of calibration targets, the size of the calibration targets, and the subjects’ ability and willingness to look at the center of the calibration target (for more details, see, e.g., Holmqvist, Örbom, Hooge, et al., 2022). Especially with nonverbal participants, either some kind of pretraining might be necessary (for dogs, this has been described by Karl, Boch, Virányi, et al., 2020) or animated calibration targets might be used that increase the likelihood that the participants look at them (a method also commonly used with preverbal human infants and nonhuman primates). Given its importance, it is surprising how little information is provided about the calibration in comparative eye-tracking studies (Hopper et al., 2021). Often the only information provided is the number of targets that were used and whether the calibration targets were static or animated. In canine eye tracking, typically three (5 of 19 canine eye-tracking studies that reported the number of calibration targets) or more commonly five (14 of 19 studies) calibration targets have been used.

The calibration can be validated by repeating the calibration procedure. In this validation, the deviation between the first and second calibration can be used to quantify the accuracy. However, validation results are only rarely reported in canine cognition studies (only 7 of 21 stationary eye-tracking articles reported quantitative validation results, though occasionally previous publications from the same lab are cited that describe training criteria, including validation thresholds, in a more detailed manner). Sometimes the accuracy of the eye-tracking device (provided by the manufacturer) is reported instead (which merely shows which accuracies can be obtained with human participants) but not the results of the actual validation with dogs. Park et al. (2020) found accuracy in chin-rest trained dogs (0.88°) to be lower than in humans (0.51°; using an Eyelink 1000 system).
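To illustrate how a validation result expressed in degrees of visual angle can be derived, the following minimal sketch converts on-screen offsets from pixels to degrees and averages them over validation targets. It assumes that the validation yields one target position and one measured gaze position per target, that the screen is viewed roughly perpendicularly, and that pixel density and viewing distance are known. The function names and all numbers are hypothetical and do not reproduce any manufacturer's procedure.

```python
import math


def px_to_deg(offset_px, px_per_cm, viewing_distance_cm):
    """Convert an on-screen extent in pixels to degrees of visual angle.

    Uses the standard formula 2 * atan(size / (2 * distance)), which assumes
    the extent is centered on the line of sight and the screen is flat.
    """
    offset_cm = offset_px / px_per_cm
    return math.degrees(2 * math.atan(offset_cm / (2 * viewing_distance_cm)))


def validation_accuracy_deg(targets_px, gaze_px, px_per_cm, viewing_distance_cm):
    """Mean offset between target and measured gaze positions, in degrees."""
    errors = [
        px_to_deg(math.dist(target, gaze), px_per_cm, viewing_distance_cm)
        for target, gaze in zip(targets_px, gaze_px)
    ]
    return sum(errors) / len(errors)


# Hypothetical setup: 40 px per cm, dog's eyes 60 cm from the screen.
targets = [(200, 200), (960, 540), (1720, 880)]
measured = [(215, 220), (960, 540), (1700, 880)]
accuracy = validation_accuracy_deg(targets, measured, 40, 60)
```

The same conversion can be used to report AOI sizes in degrees of visual angle, which makes values comparable across setups with different screens and viewing distances.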

Data quality in eye tracking refers not only to accuracy but also to precision and data loss. The precision is defined as the extent to which repeated measurements of the same gaze position lead to the same measured values (i.e., the reproducibility of measurements). Data loss refers to missing data because the eye tracker cannot reliably identify the center of the pupil and/or corneal reflection. Data loss seems to be an important issue in canine eye-tracking studies, as some studies report data loss of more than 50% (reviewed in Park et al., 2022). Unfortunately, not all studies explicitly report data loss (i.e., the proportion of missing samples overall and per subject).
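Both measures can be computed directly from raw gaze samples. The sketch below assumes that missing samples are coded as `None` and uses the root mean square of sample-to-sample distances (RMS-S2S) as the precision measure, which is one common operationalization among several. It is meant for samples recorded while the subject fixates a stationary target; the function names and data are hypothetical.

```python
import math


def data_loss(samples):
    """Proportion of samples without a valid gaze position (coded as None)."""
    return sum(sample is None for sample in samples) / len(samples)


def rms_s2s(samples):
    """Root mean square of distances between successive valid samples.

    Pairs that include a missing sample are skipped. Returns NaN if no
    pair of successive valid samples exists.
    """
    squared = [
        (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
        for a, b in zip(samples, samples[1:])
        if a is not None and b is not None
    ]
    if not squared:
        return float("nan")
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical gaze samples (x, y) with one missing sample.
gaze = [(0.0, 0.0), (3.0, 4.0), None, (3.0, 4.0), (3.0, 4.0)]
loss = data_loss(gaze)
precision = rms_s2s(gaze)
```

Smaller RMS-S2S values indicate better precision. Reporting data loss both overall and per subject would only require applying `data_loss` to the corresponding subsets of samples.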

Additionally, researchers have applied various criteria for data exclusion. Across the reviewed studies, the criteria used to discard trials or participants because of excessive off-screen gaze, movement (e.g., the dog leaving the predefined viewing position), or technical problems varied considerably. For example, Correia-Caeiro et al. (2021) repeated data collection only with dogs that did not look at the stimuli in more than half of the trials. Törnqvist et al. (2015) excluded dogs with missing gaze data in more than 30% of the trials. Gergely et al. (2019) analyzed only trials containing at least 80 ms of on-screen gaze (out of a 17 s trial). Somppi et al. (2016) excluded trials in which the dog’s gaze was not detected for more than 50% of the time a stimulus was presented. Völter et al. (2020) excluded subjects with missing gaze data for more than 30% of the stimulus duration. Other adopted criteria focused on the AOIs. For example, Téglás et al. (2012) did not analyze trials in which the dogs had looked for less than 200 ms into the target AOIs.

Head movements can affect data quality: They can lead to inaccurate and imprecise results and data loss (Hessels et al., 2015; Holmqvist, Örbom, & Zemblys, 2022; Wass et al., 2014). Greater tracking accuracy was achieved with infants whose head movements were more restricted (when strapped to a baby seat compared with being placed in a high chair or in the parent’s lap; Hessels et al., 2015). To cope with this issue, the majority (16 of 21 of the reviewed studies) of canine eye-tracking studies used a chin rest to stabilize the dogs’ head during the recordings. Karl et al. (2020) described that the training that takes place prior to the experimental sessions, including the calibration and validation training, can take between 8 and 30 sessions. For some studies, pet dogs have been trained directly by their owners (Ogura et al., 2020; Somppi et al., 2012, 2014; Törnqvist et al., 2015, 2020), in other cases by experimenters or professional dog trainers (Karl, Boch, Zamansky, et al., 2020; Park et al., 2020, 2022; Völter et al., 2020; Völter & Huber, 2021a, 2021b, 2022) and only in some cases have they been explicitly trained for a calibration procedure (Barber et al., 2016; Binderlehner, 2017; Delay, 2016; Karl, Boch, Zamansky, et al., 2020; Ogura et al., 2020; Park et al., 2020; Park et al., 2022; Völter & Huber, 2021a, 2021b, 2022; Völter et al., 2020).

No study so far has tested whether the trainer’s background (professional, experimenter, or the dog’s owner) or the training for calibration has an influence on the dogs’ learning speed and the subsequent data quality. Based on the findings of the studies just mentioned, such an influence, if present, cannot be estimated, because relevant parameters, such as the dogs’ validation accuracy (in degrees of visual angle), are often not reported.

Although the influence of the trainer’s background and of the calibration pretraining on data quality remains unknown, stabilizing the dog’s head is likely to result in reduced data loss and greater accuracy (Hessels et al., 2015; Holmqvist, Örbom, Hooge, et al., 2022; Wass et al., 2014). Indeed, studies that did not use a chin rest used remote eye tracking (e.g., using the remote mode in Eyelink systems; for more details, see The Use of Mobile Eye Trackers section), restrained the dog’s movement in another way (e.g., the handler holding the dog by placing both hands on its chest), and/or had high attrition rates or data loss (e.g., Kis et al., 2017; Téglás et al., 2012). On the one hand, high attrition rates might reflect a selection bias for calm and/or obedient dogs. On the other hand, using specifically trained dogs may bias the sample in different ways, for instance, by selecting highly trainable dogs, with similar types of pre-experimental experiences such as participation in dog sports. Remote eye tracking without a chin rest also seems to increase the data loss in the sense of higher proportions of off-screen looks (e.g., Correia-Caeiro et al., 2021; Kis et al., 2017). For example, in the study by Kis et al. (2017), dogs looked at the screen on average 19.7% (2759.54 ms) of the total (2 × 7000 ms) time (range = 80–10,508 ms) when pictures of faces were presented. In the study by Correia-Caeiro et al. (2021), dogs rarely looked at the stimuli for longer than 30% of the trial time and on average looked at the stimuli for 22% of the time (SD ± 28%). Still, aside from potential selection biases and data loss, how exactly the data quality compares between studies that used a chin rest and those that did not remains unclear from the published dog eye-tracking literature and will require further investigation.

Dog eye-tracking data seem to be noisier than human data (Park et al., 2022). Apart from head movements, numerous other factors might affect data quality in canine eye tracking. Some of these factors are likely to be shared with other study populations (e.g., human infants) such as the color of the iris (Hessels et al., 2015) or the skill of the operator (Hessels & Hooge, 2019). Others are more specific to dogs, related to the morphological properties of their heads and eyes such as the color and length of the fur around the eyes, droopy eyelids, the size of the eye clefts, the shape of the iris (in our experience, dogs with an irregularly shaped iris are difficult to track), the visibility of the third eyelid, and the head shape (for an extensive discussion, see Park et al., 2022). The resulting noisy data can bias dependent variables that are used to analyze the experiments (Park et al., 2022; Wass et al., 2014). Apart from minimizing head movements, data quality might be improved by ensuring that nothing is obstructing the view of the eye tracker camera to the eye (e.g., by cutting facial hair covering the view), using bright stimuli and a well-lit recording environment to avoid too large pupils (that would not be entirely visible to the eye tracker), and recruiting dogs while considering the aforementioned morphological factors. In our experience, the most important criterion for high-quality data (evidenced by the validation accuracy) is a rather dark and regularly shaped iris that can be reliably detected by the eye-tracking software. High room temperatures pose another challenge, as dogs control their body temperature by panting (Park et al., 2022). The head movements caused by panting (which can also be a sign of stress) preclude the collection of high-quality data even when the dogs put their heads on a chin rest.

Additionally, the event detection algorithm used to parse the raw data into fixations and saccades affects the results and might bias their interpretation. Dogs’ fixations are on average longer and saccades slower than in humans (Park et al., 2020, 2022). Park and colleagues (2020, 2022) argued that using the default parsing thresholds optimized for eye tracking humans might lead to biased results, for example, because of higher proportions of artefactual fixations. They recommended using noise-adaptive event classification algorithms and post hoc filtering in future research. Although at the moment we cannot estimate how long a dog fixation should be for the content of the stimuli to be processed (and potentially retained), we do have initial evidence, from studies that directly compared the two species, that dogs’ fixations and blinks are longer and saccades are slower than those of human adults (Park et al., 2020, 2022). Other studies (e.g., Correia-Caeiro et al., 2021; Gergely et al., 2019) found that dogs’ average fixation duration was shorter than humans’; these differing findings are difficult to reconcile because of differences in the algorithms used and the varying data quality across studies. Contrary to the recent findings by Park and colleagues (2020, 2022), in dog eye-tracking research, common practice has been to classify dog fixations using the same (default) thresholds proposed for humans (Barber et al., 2016; Karl, Boch, Zamansky, et al., 2020) or even to lower the thresholds proposed for humans (Gergely et al., 2019a; Somppi et al., 2012, 2014, 2016, 2017; Törnqvist et al., 2015, 2020) or to analyze the raw samples directly (Kis et al., 2017; Völter et al., 2020). The latter approach makes the fewest assumptions but also includes samples that are part of saccades, potentially assuming visual intake when this is in fact unlikely.
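How much the parsing thresholds shape the resulting fixations can be seen in a minimal sketch of a fixed velocity-threshold classifier with a minimum-duration filter. This is a deliberately simple textbook-style scheme, not the noise-adaptive approach recommended by Park and colleagues and not the parser of any particular eye tracker. The function name, the threshold values, and the data are hypothetical.

```python
import math


def detect_fixations(samples, sample_rate_hz, velocity_threshold, min_duration_ms):
    """Group consecutive slow samples into fixations.

    samples: list of (x, y) gaze positions in degrees of visual angle.
    velocity_threshold: deg/s; samples moving slower count as fixation samples.
    Returns a list of (first_index, last_index, duration_ms) tuples.
    """
    dt = 1.0 / sample_rate_hz
    # The first sample has no predecessor and is treated as slow (a convention).
    slow = [True]
    for previous, current in zip(samples, samples[1:]):
        slow.append(math.dist(previous, current) / dt < velocity_threshold)

    fixations = []
    start = None
    # The trailing False acts as a sentinel that closes a run at the end.
    for i, is_slow in enumerate(slow + [False]):
        if is_slow and start is None:
            start = i
        elif not is_slow and start is not None:
            duration_ms = (i - start) * 1000 / sample_rate_hz
            if duration_ms >= min_duration_ms:
                fixations.append((start, i - 1, duration_ms))
            start = None
    return fixations


# Hypothetical 100 Hz recording: three stable periods separated by two jumps.
gaze = [(0.0, 0.0)] * 10 + [(10.0, 0.0)] * 3 + [(20.0, 0.0)] * 10
lenient = detect_fixations(gaze, 100, 30.0, 0)
strict = detect_fixations(gaze, 100, 30.0, 50)
```

With no minimum duration, the short stable period between the two jumps is counted as a fixation of its own; with a 50 ms minimum it is discarded. The same raw data therefore yield different fixation counts and average durations depending on the chosen thresholds.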

Given the high variability in the way a fixation was operationalized across studies and that the duration of fixations is considerably influenced by data quality (see Holmqvist et al., 2011), it should not come as a surprise that the average fixation durations vary across studies, even in response to similar stimuli (such as human faces). Aside from differing ways fixations were operationalized, these results hint at the possibility that context variables, such as the interest value (Somppi et al., 2012) or the biological relevance of the stimuli (Somppi et al., 2016; Törnqvist et al., 2020), fatigue (as suggested for humans; Schleicher et al., 2008), and possibly the length of the trial and the dogs’ training and life history (e.g., lab vs. pet dog) might influence their fixation durations (Barber et al., 2016; Törnqvist et al., 2015). Supporting the effect of trial number (and hence possibly fatigue or visual habituation) on dogs’ fixation durations, Somppi et al. (2012) found that dogs produced fewer but longer fixations with increasing trial number and that novel stimuli, presented after three to five repetitions of familiar stimuli, instead resulted in shorter fixation durations. Dogs tested with treats close to the screen area might be more distractible and show shorter on-screen fixation durations (Barber et al., 2016; Correia-Caeiro et al., 2020, 2021).

Perhaps more surprising, Park et al. (2022), who used the same setup, comparable stimuli, and partly the same data-parsing algorithm as Barber et al. (2016), report a much longer average fixation duration (1159 ms vs. 827 ms in Barber et al., 2016). However, unlike Barber et al. (2016), who used unthresholded data, Park et al. (2022) considered fixations shorter than 50 ms artefactual and therefore filtered them out of the analysis (based on the human literature; Hessels et al., 2018), a procedure that might explain the longer average fixation duration in their study.
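The arithmetic behind this explanation is straightforward: removing very short fixations necessarily raises the mean of the remaining ones. The following sketch uses invented fixation durations, not data from either study, and a hypothetical helper function.

```python
from statistics import mean


def mean_fixation_duration(durations_ms, min_duration_ms=0):
    """Mean duration of the fixations that reach the minimum duration."""
    kept = [d for d in durations_ms if d >= min_duration_ms]
    return mean(kept)


# Invented durations: three very short (possibly artefactual) and three long fixations.
durations_ms = [20, 30, 40, 800, 1200, 1500]

unfiltered_mean = mean_fixation_duration(durations_ms)
filtered_mean = mean_fixation_duration(durations_ms, min_duration_ms=50)
```

In this example the mean roughly doubles once fixations below 50 ms are excluded, although the underlying gaze behavior is identical. Differences of this kind between studies therefore need not reflect differences between samples of dogs.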

The current canine eye-tracking literature suffers from a lack of documentation standards. When reviewing the canine eye-tracking literature, we found that important information for evaluating the findings (e.g., the size of the AOIs or quantitative validation results) was often not reported. When such information was reported, it was presented in differing formats (AOI sizes were reported in screen pixels, degrees of visual angle, or centimeters). The situation is even worse for information concerning data exclusion criteria and attrition rates, both in terms of whether the information was provided at all and with respect to its format. Future research would benefit from adhering more closely to documentation recommendations in the field (Holmqvist, Örbom, Hooge, et al., 2022). This would help improve the reproducibility of the studies and facilitate the evaluation of the results. We, therefore, concur with Park et al. (2022) that it is crucial for the field of canine eye tracking moving forward to improve data quality and documentation standards.

Artificial Dog Behavior (Motionless Viewing)

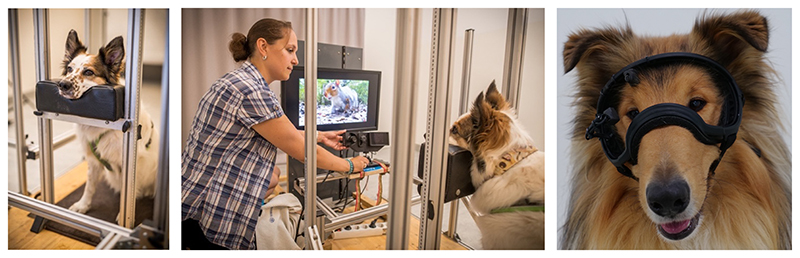

As described earlier, the majority of eye-tracking studies conducted with dogs so far used a chin rest to limit dogs’ head motion and consequently improve data quality (see Figure 3). Under natural conditions in which the head is free to move, eye movements also compensate for head movement to keep a stable retinal image (Kowler, 2011; Land, 1999). Instead, when testing dogs with a stabilized head, eye movements are assumed to indicate mainly dogs’ focus of attention. That is, they are assumed to be functionally equivalent to the head orientations that unrestrained dogs show toward relevant stimuli, although direct comparisons of dogs’ gazing behavior with and without head movement restriction are missing.

Figure 2. Sample sizes of canine eye-tracking studies as a function of time.

Moreover, future research should investigate whether inhibiting movement, as a result of the chin rest training, engages dogs’ motor system to an extent that can alter dogs’ looking behavior. For example, when observing others’ actions, humans’ predictive gaze shifts are drastically impaired if the participants’ hands are tied or occupied in a second task (Ambrosini et al., 2012; Cannon & Woodward, 2008). Such an interference might be effector-specific and hence might be apparent especially for actions that dogs would perform using their mouth, because dogs cannot open their mouth without lifting their head from the chin rest.

Although no study has directly addressed the influence of inhibiting dogs’ movement on their looking patterns, there is at least some evidence that dogs’ neural response to the perception of real-life objects is influenced by the effector used to interact with the object, at least when the effector is their mouth (Prichard et al., 2021a). Future studies comparing the looking patterns of dogs watching similar stimuli with and without head restriction are needed to test the effect of head restriction on the dogs’ looking patterns.

Fourteen of the studies conducted so far (that report this information) used monocular tracking (see Table 1 for the exact references), although very little information is available about how independently from each other dog eyes can move and blink (see the discussion in Williams et al., 2011). Especially when presented with two-dimensional images on a screen, dogs are confronted with impoverished stimuli that lack depth information and mainly stimulate their visual but no other sensory modalities.

Moreover, dogs are not allowed to interact with these stimuli at all. Future developments of the field could include giving dogs the possibility of controlling the stimulus presentation durations (as in self-paced tasks or in gaze-contingent tasks; e.g., a stimulus would be shown until the subject looks away for a certain amount of time). Another possibility could be embedding the viewing of the stimuli in a task, such as a two-way object choice task. For example, this approach has already been undertaken by researchers studying how dogs perceive video-projected directional cues from conspecifics and humans (Bálint et al., 2015; Binderlehner, 2017; Péter et al., 2013; Pongrácz et al., 2018), although the combination of a task requiring a behavioral response with eye tracking has rarely been undertaken (for rare exceptions, see Binderlehner, 2017; Pelgrim & Buchsbaum, 2022; Rossi et al., 2014). Mobile eye tracking with dogs has great potential in this respect (see the section The Use of Mobile Eye Trackers).
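The gaze-contingent rule mentioned above (show a stimulus until the subject has looked away for a certain amount of time) can be illustrated with a minimal sketch. The function name, the sample format, and the parameters look_away_ms and max_duration_ms are assumptions made for illustration; they do not come from any of the cited studies or from a particular eye-tracker API. Track loss is not handled separately here and would have to be coded by the caller as on or off the stimulus.

```python
from typing import Optional, Sequence, Tuple


def presentation_end_time(
    samples: Sequence[Tuple[float, bool]],
    look_away_ms: float,
    max_duration_ms: float,
) -> float:
    """Return the time (ms from stimulus onset) at which presentation stops.

    samples: (timestamp_ms, on_stimulus) pairs in chronological order.
    The stimulus stops once gaze has been off the stimulus continuously
    for look_away_ms, or at max_duration_ms, whichever comes first.
    """
    away_since: Optional[float] = None  # start of the current look-away
    for t, on_stimulus in samples:
        if t >= max_duration_ms:
            break
        if on_stimulus:
            away_since = None  # any look back resets the criterion
        else:
            if away_since is None:
                away_since = t
            if t - away_since >= look_away_ms:
                return t
    return max_duration_ms
```

In a real experiment the same logic would run online on the incoming sample stream; the offline version is shown only because it is easy to check by hand.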

Artificial Tasks, No Instruction What to Do

In all experiments that use a stationary eye tracker, dogs passively viewed the stimuli: They received no instructions and had no task to perform involving the stimuli. The downside of not being able to communicate instructions verbally is that, in the case of experiments where dogs have been pretrained to rest their head on a chin rest, they might consider not moving as their task. Especially for dogs that have not participated in many eye-tracking experiments, we have to consider the possibility that the recorded gaze data might simply reflect dogs’ involuntary eye movements, as a way of reflexively orienting toward moving stimuli. At the same time, however, the results of the experiments conducted so far have partly disconfirmed this hypothesis. The upside to testing dogs is that they can be trained for stabilized-head eye tracking while likely remaining unaware that their gaze is being recorded. Hence dog data are unlikely to be subject to social biases arising from the recognition of being observed, which can instead affect human data (Risko & Kingstone, 2011).

Only a few studies have directly compared dogs’ gaze behavior to that of humans with eye-tracking technology to infer whether similar cognitive processes take place in the two species (Correia-Caeiro et al., 2020, 2021; Park et al., 2020a, 2022a; Törnqvist et al., 2015). However, this approach can be biased if the humans receive instructions to perform a task (e.g., categorization) while viewing the stimuli or to focus their attention on certain aspects of the stimuli (e.g., the attitude of the depicted agents). Scan paths in humans are known to depend on the task instructions (Henderson, 2017); therefore, a comparison with humans can be valid only when both species are not given any instructions or preliminary information about the content of the stimuli, as, for example, in Gergely et al. (2019) and Park et al. (2020).

Artificial Stimuli

Although eye tracking is possible with real-life stimuli such as live demonstrations of human pointing (Delay, 2016), most studies with dogs have so far used artificial stimuli as substitutes for real ones, such as pictures or videos displayed on computer screens (e.g., Correia-Caeiro et al., 2020; Karl et al., 2021; Müller et al., 2015; Range et al., 2008; Somppi et al., 2012, 2014, 2016; Téglás et al., 2012) or projected onto screens using video projectors (Barber et al., 2016, 2017; Correia-Caeiro et al., 2021). There are, of course, big advantages of using artificial stimuli over natural ones, and this is the reason for their frequent use. Experimenters can better control the timing of presentation, they can control the content using modern image editing software, and they can present the identical stimulus repeatedly to the same or to different subject animals (D’Eath, 1998).

In this review, however, we concentrate more on the possible disadvantages. There are at least two possible problems with artificial stimuli: One is technical in nature, and the other is more conceptual. On one hand, pictures and videos are displayed by devices such as televisions, video monitors, and video projectors that are designed with human vision in mind, but dogs—and other nonhuman animals—differ in aspects of visual processing such as their color vision, critical flicker-fusion threshold, perception of depth, and visual acuity. Therefore, they may perceive the stimuli differently than we do (D’Eath, 1998). This may be less problematic if the experimenter’s aim is to examine the ability to learn, discriminate, generalize, or categorize the stimuli on the basis of a specific predefined rule or hypothesis and the subjects perform as expected. However, if they do not respond to the depicted stimuli as they would to the real counterparts, the challenge becomes to identify the reasons for this discrepancy.

The bigger problem arises when researchers aim at understanding how animals perceive real stimuli (e.g., human faces) and use artificial stimuli as substitutes. Pictures are always abstractions of their three-dimensional referents (Bovet & Vauclair, 2000; Fagot, 2000) and must therefore appear quite different from real objects to most animals. Of course, nonhuman animals may recognize the content of the real object in a picture or video without perceiving the two in the exact same manner. Picture–object recognition may therefore fall along a scale from partial picture–object correspondence up to full picture–object equivalence. The animals’ place on this scale in a given experiment depends on various factors, including picture quality, functional properties of the visual system, and the subject’s prior experience with pictures or videos. But only if researchers address the question of whether their subjects perceive the correspondence between the image and its depicted referent can they correctly interpret the results of their experiments (Spetch, 2010).

At a low level, picture–object correspondence requires discriminating one or more visual features of the picture and recognizing them in the real object (or vice versa). Such a mechanism that is mediated by simple invariant two-dimensional characteristics without recognition of the real three-dimensional object is qualitatively different from perceiving pictures as representations of the real world, which is based on an ability to recognize the correspondence between objects and their pictures on a level beyond that of mere feature discrimination (see Fagot, 2000). On a higher level, the subject confuses the image and its referent. At the highest representational level, representing true picture–object equivalence, the animal comprehends that the picture is not only an entity in itself but also a representation of the depicted object (Fagot et al., 2010). For instance, Java monkeys were able to identify novel views of a familiar conspecific presented on slides or match different body parts of the same familiar group members. After first being familiarized with slides of their conspecifics, they were able to identify mother–offspring pairs or to match views of offspring to their mother (Dasser, 1987). By using a similar but extended logic, called complementary information procedure, Aust and Huber (2006) trained pigeons to discriminate between pictures of incomplete human figures and then tested them with pictures of the previously missing body parts. The pigeons could sort these complementary pictures into the correct categories, even if the test parts did not come from the same individuals as those shown during training, which provided an additional control for transfer by means of recognized item-specific properties. This result provided some evidence that the pigeons did not simply process some basic invariant features of the stimuli but had actually gained representational insight, a kind of symbol-referent relationship (Beilin, 1999).

Given the widespread use of two-dimensional stimuli in dog cognition research, surprisingly little effort has been directed to the investigation of dogs’ ability to recognize the content of two-dimensional representations of three-dimensional objects. Much more information is available about dogs’ perception of real-life events (reviewed in Byosiere et al., 2018). Some studies investigated whether dogs can use video-projected human pointing gestures to locate hidden food (Bálint et al., 2015; Péter et al., 2013; Pongrácz et al., 2018). Other studies directly compared dogs’ behavioral responses to (Binderlehner, 2017; Eatherington et al., 2021; Huber et al., 2013; Kaminski et al., 2009; Pongrácz et al., 2003) and their neural processing of (Prichard et al., 2021b) two-dimensional stimuli and their real-life counterparts.

For example, the ability to transfer knowledge acquired in one domain into another has been tested in our Clever Dog Lab in Vienna by borrowing an idea established with chimpanzees. Infant chimpanzees were able, after limited experience, to match what they observed on a television screen to events occurring elsewhere to determine the location of a hidden goal object in a familiar outdoor field (Menzel et al., 1978). We tested whether dogs would find a hidden object when they first watched a video in the eye-tracker apparatus and then were asked to locate the object in the real room (Binderlehner, 2017). With the aid of the eye tracker, we could show that the dogs paid attention to the relevant parts of the video for most of the time. In the test, although the dogs did not provide unambiguous evidence for a transfer from the video to the real situation by going directly and without hesitation to the hiding place, they searched there much longer than dogs from a control group who had not seen the video.

Taken together, the results of the studies on dogs’ ability to generalize across two- and three-dimensional stimuli suggest that researchers should be cautious when assuming an equivalence between these two types of stimuli, especially when presenting dogs with static, novel pictures of inanimate objects. But there is no reason to be too pessimistic, because studies using cross-modal matching suggest that dogs have expectations about the vocalization a depicted dog or human should produce, on the basis of its species or emotional facial expression (Albuquerque et al., 2016; Gergely et al., 2019; Mongillo et al., 2021). Moreover, on the basis of two-dimensional information alone, dogs seem capable of recognizing different (dog and human) individuals (Racca et al., 2010), including discriminating their owner from a familiar or an unknown person (Adachi et al., 2007; Huber et al., 2013; Karl, Boch, Zamansky, et al., 2020), and they seem to prefer conspecific heads over human ones (Somppi et al., 2014). Finally, a study that projected a dog as informant in a two-choice task (Bálint et al., 2015) found that dogs reacted to the conspecific’s head directional cues by significantly avoiding the indicated bowl, contrary to dogs’ typical tendency to follow human-given directional cues in the same task (Miklósi et al., 1998; Soproni et al., 2001). Hence, the tentative conclusion that can be drawn from these studies is that dogs recognize the content of two-dimensional representations of at least dogs and humans. Still, more research on picture–object correspondence in the context of eye-tracking studies would be desirable.

In the next section, we turn to the potential future directions of the field, describing methodological alternatives and additions that might overcome some of the limitations outlined so far.

The Future of Eye Tracking in Dogs

The Use of Dynamic Stimuli, Applying Pupillometry and Arrival Time Analyses

Whereas the first canine eye-tracking studies mainly focused on static stimuli (except for Téglás et al., 2012) in combination with AoI looking time analyses, researchers have started to use more complex, dynamic stimuli (e.g., recorded videos and animations) in recent years to address new questions. This also entailed different types of data analyses, including pupillometry and arrival time analyses.

Dynamic stimuli bring about a number of challenges: If the object of interest is moving, dynamic AoIs might be necessary to quantify looking times. Additionally, when preparing stimuli, videos with a sufficient frame rate might be beneficial to increase the likelihood that they appear realistic to the dogs (e.g., Völter & Huber, 2021a, used videos with a frame rate of 100 Hz). Dogs’ visual perception appears to have a higher temporal resolution than that of humans, as evidenced by their higher flicker-fusion rates (Coile et al., 1989). The liquid-crystal displays (LCD) that are commonly used today, unlike cathode ray tube (CRT) displays, do not flicker between frames. Therefore, low monitor refresh rates should not result in a flickering effect (Byosiere et al., 2018). Nevertheless, low refresh and video frame rates might affect how realistic the stimuli appear to the dogs. Given the availability of (gaming) monitors with refresh rates of 100 Hz or higher and high-speed cameras, these potential confounds can be easily avoided.
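To make the idea of a dynamic AoI concrete, the following minimal sketch computes looking time for a rectangular AoI that moves with the object of interest. It rests on several assumptions made purely for illustration: the AoI is defined by hand-placed keyframes (with strictly increasing times) and linearly interpolated between them, gaze is sampled at a fixed interval, and all names (Keyframe, aoi_at, looking_time_ms) are hypothetical rather than taken from any eye-tracking software.

```python
from bisect import bisect_right
from typing import Sequence, Tuple

# One keyframe: (time_ms, left, top, width, height) in screen pixels.
Keyframe = Tuple[float, float, float, float, float]
Rect = Tuple[float, ...]  # (left, top, width, height)


def aoi_at(keyframes: Sequence[Keyframe], t: float) -> Rect:
    """Linearly interpolate the AoI rectangle at time t (ms)."""
    times = [k[0] for k in keyframes]
    i = bisect_right(times, t)
    if i == 0:  # before the first keyframe: hold the first rectangle
        return keyframes[0][1:]
    if i == len(keyframes):  # at or after the last keyframe: hold the last
        return keyframes[-1][1:]
    k0, k1 = keyframes[i - 1], keyframes[i]
    f = (t - k0[0]) / (k1[0] - k0[0])
    return tuple(a + f * (b - a) for a, b in zip(k0[1:], k1[1:]))


def looking_time_ms(
    gaze: Sequence[Tuple[float, float, float]],
    keyframes: Sequence[Keyframe],
    sample_interval_ms: float,
) -> float:
    """Sum the duration of gaze samples (t_ms, x, y) inside the moving AoI."""
    hits = 0
    for t, x, y in gaze:
        left, top, width, height = aoi_at(keyframes, t)
        if left <= x <= left + width and top <= y <= top + height:
            hits += 1
    return hits * sample_interval_ms
```

A static AoI is the special case of a single keyframe, so the same function can serve both kinds of analysis.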

Eye trackers provide information about the gaze location and the pupil size. With respect to pupil size, the pupil dilation response is a widely used measure of mental load, arousal, and the orienting response in humans (Mathôt, 2018). The involved neural pathways are similar in nonhuman primates (Wang & Munoz, 2015), and evidence shows that nonprimates such as cats display an arousal-related pupil dilation response (Hess & Polt, 1960). Pupil dilation analyses require luminance-controlled stimuli. Various other factors can influence the pupil size as well, such as the angle between eye tracker and eye (the so-called pupil foreshortening error; Hayes & Petrov, 2016). Moreover, pupil size data are typically preprocessed, including a baseline correction (Mathôt et al., 2018).
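As an illustration of the baseline correction step, the sketch below applies a subtractive correction: the median pupil size in a window before the event of interest is subtracted from every sample of the trial. This is one common choice and not necessarily the procedure used in the studies cited here; the function name, the window boundaries, and the use of None for missing samples (e.g., blinks) are assumptions.

```python
from statistics import median
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, Optional[float]]  # (time_ms relative to onset, pupil size)


def baseline_correct(
    trace: Sequence[Sample],
    baseline_start_ms: float,
    baseline_end_ms: float,
) -> List[Sample]:
    """Subtract the median pupil size in [baseline_start_ms, baseline_end_ms)."""
    baseline_values = [
        p
        for t, p in trace
        if baseline_start_ms <= t < baseline_end_ms and p is not None
    ]
    if not baseline_values:
        raise ValueError("no valid pupil samples in the baseline window")
    baseline = median(baseline_values)
    # Missing samples stay missing; valid samples become change from baseline.
    return [(t, None if p is None else p - baseline) for t, p in trace]
```

After this step, each trial is expressed as change from its own baseline, which makes trials with different starting pupil sizes easier to compare.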

As just reviewed, the first pupillometry studies with dogs emerged over the past few years. Whereas the first two studies (Karl, Boch, Zamansky, et al., 2020; Somppi et al., 2017) used only a summary statistic of the pupil size (the mean or the maximum) without conducting any baseline correction or time-course analysis, three recent studies focused specifically on the baseline-corrected pupil size as the primary response variable (Völter & Huber, 2021a, 2021b, 2022). An advantage of the time-course analysis is that the gaze coordinates can be accounted for in the analysis (van Rij et al., 2019), which might help to account for the pupil foreshortening error to some extent at least. Although these first studies provided promising results, future research, also from different labs, will reveal the sensitivity and reproducibility of pupillometry in different areas of canine cognition research.