Abstract

Model-based treatment planning for transcranial ultrasound therapy typically involves mapping the acoustic properties of the skull from an x-ray computed tomography (CT) image of the head. Here, three methods for generating pseudo-CT images from magnetic resonance (MR) images were compared as an alternative to CT. A convolutional neural network (U-Net) was trained on paired MR-CT images to generate pseudo-CT images from either T1-weighted or zero-echo time (ZTE) MR images (denoted tCT and zCT, respectively). A direct mapping from ZTE to pseudo-CT was also implemented (denoted cCT). When comparing the pseudo-CT and ground truth CT images for the test set, the mean absolute error was 133, 83, and 145 Hounsfield units (HU) across the whole head, and 398, 222, and 336 HU within the skull for the tCT, zCT, and cCT images, respectively. Ultrasound simulations were also performed using the generated pseudo-CT images and compared to simulations based on CT. An annular array transducer was used targeting the visual or motor cortex. The mean differences in the simulated focal pressure, focal position, and focal volume were 9.9%, 1.5 mm, and 15.1% for simulations based on the tCT images, 5.7%, 0.6 mm, and 5.7% for the zCT, and 6.7%, 0.9 mm, and 12.1% for the cCT. The improved results for images mapped from ZTE highlight the advantage of using imaging sequences which improve contrast of the skull bone. Overall, these results demonstrate that acoustic simulations based on MR images can give comparable accuracy to those based on CT.

Index Terms: deep learning, convolutional neural network, MRI, CT, pseudo-CT, transcranial ultrasound stimulation, acoustic simulation

I. Introduction

Transcranial ultrasound therapy is a class of non-invasive techniques that leverage ultrasound energy to modify the structure or function of the brain, for example, to ablate brain tissue [1], modulate brain activity [2], or deliver therapeutic agents through the blood-brain barrier [3]. The major challenge is the delivery of ultrasound through the intact skull bone, which can significantly aberrate and attenuate the transmitted ultrasound waves. To counter the effect of the skull, computer simulations are often used to predict the intracranial pressure field [4], or to adjust phase delays to ensure a coherent focus [5]. Conventionally, these simulations are based on acoustic material property maps extracted from x-ray computed tomography (CT) images [6]. For clinical treatments in a hospital environment, obtaining pre-treatment CT images does not necessarily pose significant challenges. However, for transcranial ultrasound stimulation (TUS), which is being widely explored as a neuroscientific tool in addition to its potential clinical applications [7], obtaining CT images for healthy volunteer studies can be more problematic. In the current work, the use of pseudo-CT (pCT) images for computer simulations of transcranial ultrasound propagation in a TUS setting is investigated. Three different methods of pCT generation are compared: (1) using a deep neural network based on T1-weighted (T1w) magnetic resonance (MR) images, (2) using a deep neural network based on zero-echo time (ZTE) MR images, and (3) directly mapping from ZTE MR images using classical image processing techniques following Wiesinger [8].

The image-to-image translation (I2IT) of MR to pCT images of the brain and skull has been widely explored in the imaging literature, particularly in the context of PET-MR, where the pCT images are used for PET attenuation correction [9], and radiotherapy planning [10]. In many cases, deep learning has been shown to outperform classical techniques [11]. A variety of models and techniques have been used, including generative adversarial networks (GANs) [12], [13], supervised learning [14], contrastive learning [15], [16], and denoising diffusion probabilistic models [17]. Often, the network architecture accounts for features at multiple scales using a U-Net [11], [18]. The inputs to the neural network can be the full 3D image volumes [19], 2D slices along one or multiple planes [20], or small 3D patches [21].

The performance of the trained network is heavily influenced by the quality of the training dataset and the choice of loss function. Ideally, the loss function should be directly related to the final performance of interest: in our case, the properties of the acoustic field simulated using the pCT (see Sec. II-E). However, in the absence of an efficient differentiable acoustic simulator, training against such a loss is impractical, and losses are therefore defined in image space. Simple voxel-based metrics, such as mean absolute error (MAE) and mean squared error (MSE), are often used when registered image pairs are available [22].

Unpaired images can also be used to train I2IT models successfully, often by relying on some form of cycle-consistency loss [23]–[25] combined with an adversarial loss provided by a discriminator neural network [26]. While theoretically effective and often capable of training complex models, such as ones that disentangle geometrical features and imaging modality [27], training a generator against a discriminator can be challenging and is often unstable [28].

Several previous works have investigated the use of learned pCTs for transcranial ultrasound simulation. In the context of high-intensity focused ultrasound (HIFU) ablation, Su et al. [29] used a 2D U-Net trained on transverse slices from a dataset of 41 paired dual-echo ultrashort echo time (UTE) MR images and segmented CT images (only the segmented skull bone was used for training). Within the skull, the pCT images generated from the test set had an MAE of 105 ± 21 HU, and showed good correlation with CT in terms of skull thickness and skull density ratio. Coupled acoustic-thermal simulations were performed for a 1024-element array and a deep brain target. Differences in the simulated acoustic field were not reported, but differences in the predicted peak temperature were less than 2 °C.

In a related study, Liu et al. [30] used a 3D conditional generative adversarial network (cGAN) trained on patches from a dataset of 86 paired T1w and segmented CT skull images. Within the skull, the pCT images generated from the test set had an MAE of 191 ± 22 HU, and similarly showed good correlation with CT in terms of skull thickness and skull density ratio. Acoustic simulations for a 1024-element array and a deep brain target showed an average difference of 23 ± 6.5 % in the simulated intracranial spatial-peak pressure when using pCT vs CT, and a difference of 0.35 ± 0.40 mm in the focal position.

In the context of TUS, Koh et al. [31] used a 3D cGAN trained on patches from a dataset of 33 paired T1w and CT images. The generated pCT images had an MAE of 86 ± 9 HU within the head, and 280 ± 24 HU within the skull. Acoustic simulations were performed using a single-element bowl transducer driven at 200 kHz targeted at three brain regions (motor cortex, visual cortex, dorsal anterior cingulate cortex). Across all targets for the test set, the mean difference in the simulated intracranial peak pressure was 3.11 ± 2.79 % and the mean difference in the focal position was 0.86 ± 0.52 mm. However, aberrations of the ultrasound waves are known to be significantly reduced at low transmit frequencies [32], [33], and thus the performance of learned pCTs at the higher frequencies more commonly used for TUS remains an open question.

Several studies have also explored directly mapping the skull acoustic properties from T1w [34], UTE [35]–[37], or ZTE [38] images. Wintermark et al. [34] generated virtual CTs from three different MR sequences using a Bayesian segmentation strategy with a skull mask (obtained by CT thresholding) as a prior. Linear regression was used to estimate skull thickness and density, with the mappings from T1w images performing best. Acoustic simulations using phase corrections calculated from the virtual CTs showed good agreement with those from real CT. Phantom HIFU experiments were also performed, in which the difference in maximum temperature was 1 °C when using MR-based correction compared to CT-based correction.

In a similar study, Miller et al. [35] investigated using UTE images instead of CT to apply aberration corrections for HIFU treatments. Three ex vivo skull phantoms were imaged by UTE and CT, and the UTE images were segmented into binary skull masks. Experimental transcranial sonications were performed on each skull using aberration corrections derived from MR or CT, or with no correction. The measured temperature rises with aberration correction were 45 % higher than for uncorrected sonications, while there was no significant difference between the results from MR- and CT-calculated corrections. UTE has also been shown to produce images with bone contrast highly correlated to that in CT images [36].

Guo et al. [37] also investigated the use of UTE images, applying a series of linear mappings and thresholding operations to derive pCT images. A linear regression model comparing skull density from UTE and CT showed they were highly correlated. Acoustic properties of the skull derived from the UTE and CT images differed by less than 5 % and were used to run acoustic and thermal simulations. The temperature rise was 1 °C higher in CT-based simulations compared to UTE-based simulations, and the focal location usually agreed to within 1 pixel (1.33 mm).

Finally, Caballero et al. [38] extracted the bone from ZTE and CT images with a series of thresholding and morphological operations, and used the bone maps to extract skull measures such as skull thickness and skull density ratio. Linear regression demonstrated that the skull measures derived from the two modalities are highly correlated with each other, and also correlate with treatment efficiency.

The recent studies outlined above clearly demonstrate the feasibility of using pCT images for treatment planning in transcranial ultrasound therapy. Here, the previous results are extended in two ways. First, the ability to generate pCT images from T1w and ZTE images is directly compared using a unique dataset with high-resolution multi-modality imaging data. While T1w images are widely available, they suffer from poor skull contrast, particularly compared to specialised bone imaging sequences which enable visualisation of the bony anatomy [8]. Second, acoustic simulations are performed in the context of TUS using a commonly-used transducer geometry and a 500 kHz transmit frequency for both motor [39], [40] and visual [41] targets.

II. Methods

A. Multi-modality data set

The dataset used for the study consisted of paired high-resolution CT and MR images. Subjects had previously been scheduled for transcranial MR-guided Focused Ultrasound Surgery (tcMRgFUS) thalamotomy. The study protocol was approved by the HM Hospitales Ethics Committee for Clinical Research and all participants provided written informed consent. Each subject had a CT and a T1-weighted (fast-spoiled gradient echo) MR image, and a subset of them also had a ZTE MR image, giving a total of 171 paired CT-T1w datasets and 90 paired CT-ZTE datasets. The CT images were reconstructed using a bone-edge enhancement filter (FC30) and had a slice thickness of 1 mm and an in-plane resolution of approximately 0.45 × 0.45 mm. The in-plane resolution varied slightly between subjects due to the selected field-of-view (the number of pixels was fixed at 512 × 512).

The MR images were acquired using a 3T GE Discovery 750 with an isotropic voxel size of 1 mm. Image acquisition parameters are described in detail in [38].

The images were processed as follows. First, bias-field correction was performed on the MR images using FAST [42] to reduce image intensity non-uniformities resulting from transmit RF inhomogeneities and receive coil sensitivities. The CT images were then registered to the corresponding MR images using FLIRT [43], [44], with an affine transformation (12 degrees of freedom) and a mutual-information cost function. As part of the registration step, CT images were resampled to match the resolution (1 mm isotropic) and field-of-view of the MR images. After registration, all volumes were padded to a cube with an edge length of 256 voxels. Following Han et al. [11], the MR image intensities were then processed using midway histogram equalisation [45]. The reference histogram was computed using images from the training set only (see Sec. II-B), separately for the ZTE and T1w images. The images were also normalised using the mean and standard deviation of the corresponding training set.
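The intensity standardisation step can be illustrated with a short NumPy sketch. Midway equalisation [45] is defined for a pair of images; the sketch below assumes a natural generalisation to a training set, where the reference quantile function is the average of the training images' quantile functions. Each image is then mapped through its own empirical CDF and the reference quantile function, followed by z-score normalisation with training-set statistics. The function names and the 1001 quantile levels are illustrative choices, not taken from the paper.

```python
import numpy as np

# Quantile levels at which each intensity distribution is sampled (illustrative choice).
LEVELS = np.linspace(0.0, 1.0, 1001)


def reference_quantiles(train_images):
    """Assumed midway reference: mean of the training images' quantile functions."""
    return np.mean([np.quantile(img.ravel(), LEVELS) for img in train_images], axis=0)


def match_to_reference(img, ref_q):
    """Histogram-specify `img` to the reference distribution.

    Each voxel is mapped through the image's own empirical CDF
    (intensity -> quantile level), then through the reference quantile
    function (quantile level -> intensity).
    """
    src_q = np.quantile(img.ravel(), LEVELS)
    levels = np.interp(img, src_q, LEVELS)
    return np.interp(levels, LEVELS, ref_q)


def normalise(img, train_mean, train_std):
    """Z-score normalisation using statistics from the training set only."""
    return (img - train_mean) / train_std
```

In practice the quantiles would likely be computed inside a head mask so that the large air background does not dominate the histogram; that restriction is omitted here for brevity.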

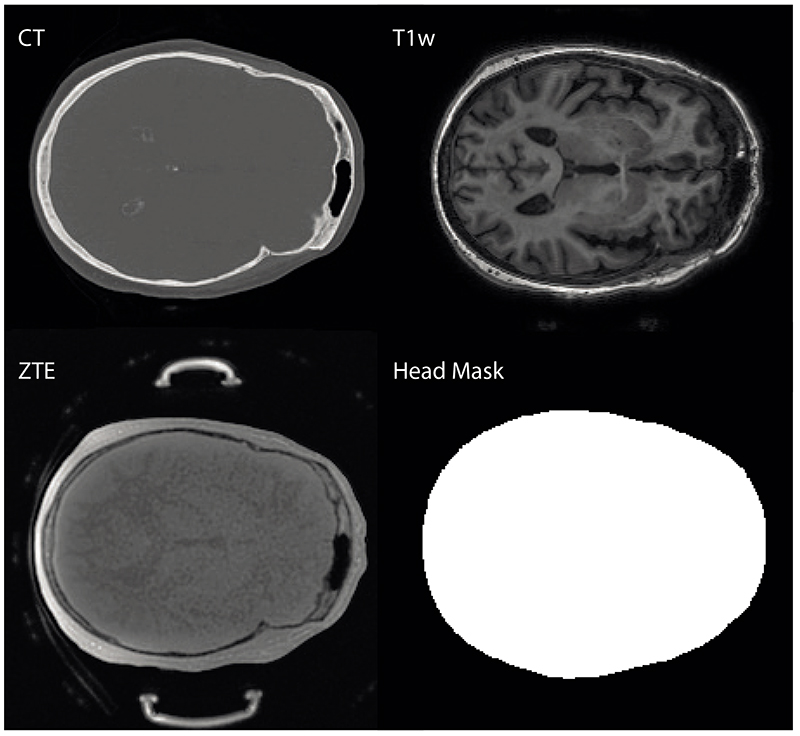

To remove image artefacts (e.g., dental implants, equipment, and headphones) and the bones outside the neurocranium (which are not relevant to TUS), a binary mask was manually annotated on the MNI152_T1_1mm template brain [46], [47]. The MNI mask excluded the nasal and oral cavities, as well as everything below the foramen magnum. The MNI mask was then mapped to subject space by registering the MR images to the MNI template using FLIRT. The registered MNI mask was combined with a head mask (obtained by thresholding and filling the MR) to give a subject-specific mask that was used during training as described below. An example is given in Fig. 1. Note that generating the subject-specific mask does not require a ground truth CT, so it can be applied to any new subject data during inference.
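The mask construction can be sketched with SciPy's `ndimage` module. This is an illustrative version under stated assumptions: the MNI mask is taken to be already resampled into subject space, the threshold is a free parameter, and keeping the largest connected component (to discard isolated noise voxels) is an added step that the text does not mention.

```python
import numpy as np
from scipy import ndimage


def head_mask(mr, threshold):
    """Threshold the MR volume, keep the largest connected component, fill enclosed holes."""
    fg = mr > threshold
    labels, n = ndimage.label(fg)
    if n == 0:
        return fg
    # Voxel count of each connected component (labels start at 1).
    sizes = ndimage.sum(fg, labels, index=np.arange(1, n + 1))
    largest = labels == (int(np.argmax(sizes)) + 1)
    return ndimage.binary_fill_holes(largest)


def subject_mask(mr, mni_mask_subject_space, threshold):
    """Intersect the head mask with the neurocranium mask warped from MNI space."""
    return head_mask(mr, threshold) & mni_mask_subject_space.astype(bool)
```

Because neither function uses the CT, the same code path can be run on new subjects at inference time, which is the property noted in the text.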

Figure 1. The input to the neural network is one or multiple adjacent transverse MR slices, acquired using either ZTE or T1w sequences. The output of the neural network is multiplied by a head mask and compared to the registered CT inside the mask.

B. Network architecture and training

For mapping MR images to pCT, a 5-level U-Net [48] was implemented using PyTorch [49], similar to the architecture reported by Han et al. [11]. Each level of the encoder consisted of either two (levels 1 and 2) or three (levels 3 to 5) convolutional layers, each with a rectified linear unit (ReLU) activation function followed by a batch normalisation layer. The convolutions used zero-padding, a 3 × 3 kernel, and a stride of 1. A max pooling layer was used between each level of the encoder, and skip connections between the encoder and decoder were used for the first four levels. The decoder was implemented as a mirrored version of the encoder, with transposed convolution (unpooling) layers [50] instead of max pooling layers. Both pooling and unpooling layers used a 2 × 2 kernel and a stride of 2, and were followed by a dropout layer. The dropout probability p_D was treated as a tunable hyperparameter and optimised using the validation set. The best models for T1w and ZTE inputs were obtained with p_D = 0.1 and p_D = 0, respectively.

The input to the network was an n × 256 × 256 block of n consecutive 2D transverse MR slices (see top-right and bottom-left of Fig. 1 for an example of one slice). Using a small stack of images gives some 3D structural information to the network, as suggested in the discussion section of [11]. The network output was always a single 2D transverse slice corresponding to the middle input slice, and 3D pCT volumes were reconstructed slice by slice. Input stack sizes of n = 1, 3, 5, 7, 9, 11, and 15 were used in preliminary testing. A stack size of 11, i.e. 5 additional image slices on either side of the primary input slice, gave the lowest MAE on the validation set. Notably, the use of multiple input slices significantly reduced the occurrence of skull discontinuities between slices in the 3D pCT images, particularly for the T1w inputs (this is discussed further in Sec. III-A). A stack size of 11 was thus used in all subsequent training.
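The slice-stacking scheme can be sketched in NumPy as follows. Assumptions not stated in the text: the transverse axis is axis 0 of the volume, and slices that fall outside the volume near its top or bottom are zero-filled. `predict_slice` is a hypothetical stand-in for a trained network that returns the pCT for the middle slice of a stack.

```python
import numpy as np


def slice_stack(volume, k, n=11):
    """Return the n x H x W stack of transverse slices centred on slice k.

    `volume` has shape (Z, H, W). Out-of-range neighbours are zero
    (assumed boundary handling).
    """
    half = n // 2
    stack = np.zeros((n,) + volume.shape[1:], dtype=volume.dtype)
    for j, kk in enumerate(range(k - half, k + half + 1)):
        if 0 <= kk < volume.shape[0]:
            stack[j] = volume[kk]
    return stack


def predict_volume(volume, predict_slice, n=11):
    """Rebuild a 3D pCT slice by slice; each call predicts only the middle slice."""
    return np.stack([predict_slice(slice_stack(volume, k, n)) for k in range(volume.shape[0])])
```

With n = 11, each prediction sees 5 neighbouring slices on either side of the target slice, matching the configuration selected on the validation set.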

The input was mapped to 64 channels in the first convolution. The number of channels was doubled at each level of the encoder and halved at each level of the decoder, except at the deepest level. A final 1 × 1 convolutional layer was implemented to map each 64-component feature vector from the previous layer to image voxels in Hounsfield units.
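A minimal PyTorch sketch of the encoder-decoder described above is given below. It is an illustration, not the authors' code. Assumptions: the channel schedule is 64, 128, 256, 512, 512 (reading "except at the deepest level" as no doubling into level 5), dropout follows every pooling and unpooling layer, and the decoder uses the same number of convolutions per level as the mirrored encoder level. The class name `PseudoCTUNet` is hypothetical.

```python
import torch
import torch.nn as nn


def conv_block(in_ch: int, out_ch: int, n_convs: int) -> nn.Sequential:
    """n_convs x (3x3 conv, zero padding, stride 1) -> ReLU -> BatchNorm."""
    layers = []
    for k in range(n_convs):
        layers += [
            nn.Conv2d(in_ch if k == 0 else out_ch, out_ch, kernel_size=3, stride=1, padding=1),
            nn.ReLU(inplace=True),
            nn.BatchNorm2d(out_ch),
        ]
    return nn.Sequential(*layers)


class PseudoCTUNet(nn.Module):
    """Hypothetical 5-level U-Net: n-slice MR stack in, one pCT slice (HU) out."""

    def __init__(self, n_slices: int = 11, base: int = 64, p_drop: float = 0.1):
        super().__init__()
        # Assumed schedule: doubled per level, not doubled at the deepest level.
        chans = [base, 2 * base, 4 * base, 8 * base, 8 * base]
        n_convs = [2, 2, 3, 3, 3]  # two convs in levels 1-2, three in levels 3-5
        self.enc = nn.ModuleList()
        in_ch = n_slices
        for c, n in zip(chans, n_convs):
            self.enc.append(conv_block(in_ch, c, n))
            in_ch = c
        # 2x2 max pooling with stride 2, followed by dropout.
        self.pool = nn.Sequential(nn.MaxPool2d(kernel_size=2, stride=2), nn.Dropout(p_drop))
        self.up = nn.ModuleList()
        self.dec = nn.ModuleList()
        for lvl in range(3, -1, -1):  # decoder mirrors levels 4, 3, 2, 1
            # 2x2 transposed convolution ("unpooling") with stride 2, followed by dropout.
            self.up.append(nn.Sequential(
                nn.ConvTranspose2d(chans[lvl + 1], chans[lvl], kernel_size=2, stride=2),
                nn.Dropout(p_drop)))
            # Input channels are doubled by concatenating the skip connection.
            self.dec.append(conv_block(2 * chans[lvl], chans[lvl], n_convs[lvl]))
        # Final 1x1 convolution: `base`-component (64 by default) features -> one HU value per pixel.
        self.head = nn.Conv2d(base, 1, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        skips = []
        for i, block in enumerate(self.enc):
            x = block(x)
            if i < len(self.enc) - 1:  # skip connections for the first four levels
                skips.append(x)
                x = self.pool(x)
        for up, dec, skip in zip(self.up, self.dec, reversed(skips)):
            x = dec(torch.cat([up(x), skip], dim=1))
        return self.head(x)
```

With the values reported in the text, the T1w model would correspond to `PseudoCTUNet(n_slices=11, p_drop=0.1)` and the ZTE model to `p_drop=0.0`. Input sizes must be divisible by 16 (four poolings), which holds for the 256 × 256 slices used here.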

The network was trained separately for T1w and ZTE inputs. The ZTE dataset consisted of 90 subjects, with 62 subjects used for the training set Ω_T, 14 for the validation set Ω_V, and 14 for the test set Ω_E. Subjects were randomly assigned to each set. The T1w dataset consisted of 171 subjects. The same 14 subjects were used for the test set, with 26 subjects used for the validation set and 131 for the training set. Data augmentation was also performed using random affine transformations with bilinear interpolation, with rotations in the range ±10°, translations of up to ±5 % of the image size, and shears parallel to the x axis of up to ±2.5°.
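The subject split can be sketched as follows. The sketch assumes that the shared test subjects are drawn from the ZTE cohort (the text implies this, since the ZTE subjects are a subset of the T1w subjects) and that the remaining T1w subjects are assigned at random. The function name and seed are hypothetical.

```python
import random


def split_subjects(zte_ids, t1w_ids, n_test=14, n_val_zte=14, n_val_t1w=26, seed=0):
    """Random ZTE split; the T1w split reuses the same test subjects."""
    rng = random.Random(seed)
    zte = sorted(zte_ids)
    rng.shuffle(zte)
    test = zte[:n_test]
    zte_split = {
        "train": zte[n_test + n_val_zte:],
        "val": zte[n_test:n_test + n_val_zte],
        "test": test,
    }
    # Remaining T1w subjects (everything except the shared test set).
    test_set = set(test)
    rest = [s for s in sorted(t1w_ids) if s not in test_set]
    rng.shuffle(rest)
    t1w_split = {"train": rest[n_val_t1w:], "val": rest[:n_val_t1w], "test": list(test)}
    return zte_split, t1w_split
```

Sharing the test set means the tCT, zCT, and cCT images are all evaluated on identical subjects, which is what makes the per-subject comparisons in Sec. III possible.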

The network f_θ was trained by minimising the average ℓ1 norm of the masked error over the training set Ω_T:

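$$\mathcal{L}(\theta) = \frac{1}{|\Omega_T|} \sum_{i \in \Omega_T} \frac{1}{N_i} \left\| m_i \odot \left( f_\theta(x_i) - y_i \right) \right\|_1 \qquad (1)$$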

where x_i is the stack of one or more adjacent input MR image slices, y_i is the corresponding slice from the ground truth CT, m_i is the corresponding mask (see Fig. 1), ⊙ denotes element-wise multiplication, and N_i is the number of pixels in the i-th image. The loss function was evaluated stochastically at each optimisation step by drawing a random mini-batch of size 32 from the training set. The Adam optimiser [51] was used with reduce-on-plateau learning rate scheduling (patience = 5, factor = 0.2), with the learning rate reducing from 10⁻⁴ to 10⁻⁶. The networks were trained for 300 epochs on an NVIDIA Tesla P40 GPU with 24 GB of memory.
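A mini-batch version of Eq. (1) and the optimiser configuration can be sketched in PyTorch as below. This is an illustrative reading of the text: the masked absolute error of each image is summed and divided by N_i (all pixels in the image, as defined above), then averaged over the batch. `min_lr=1e-6` is used to express the 10⁻⁶ floor; the helper names are hypothetical.

```python
import torch
import torch.nn as nn


def masked_l1_loss(pred, target, mask):
    """Mini-batch estimate of Eq. (1).

    pred, target, mask: tensors of shape (B, 1, H, W); mask holds 0/1 values.
    """
    per_image = (mask * (pred - target).abs()).flatten(start_dim=1).sum(dim=1)
    n_pixels = pred[0].numel()  # N_i: number of pixels in each image
    return (per_image / n_pixels).mean()


def make_optimiser(model):
    """Adam at 1e-4 with reduce-on-plateau (patience 5, factor 0.2, floor 1e-6)."""
    opt = torch.optim.Adam(model.parameters(), lr=1e-4)
    sched = torch.optim.lr_scheduler.ReduceLROnPlateau(
        opt, mode="min", factor=0.2, patience=5, min_lr=1e-6)
    return opt, sched


def train_step(model, opt, x, y, m):
    """One optimisation step on a mini-batch (the text uses batches of 32)."""
    model.train()
    opt.zero_grad()
    loss = masked_l1_loss(model(x), y, m)
    loss.backward()
    opt.step()
    return loss.item()
```

The scheduler would typically be stepped once per epoch with the validation loss, e.g. `sched.step(val_loss)`; that loop is omitted here.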

C. Classical ZTE mapping

For comparison with the learned pCT mappings, a direct conversion of the ZTE images to pCT was also implemented following [8], [52]. In this case, bias field correction was applied to the ZTE images using the N4ITK method within 3D Slicer (V4.11.20210226). Voxel intensities for each image were individually normalised based on the soft-tissue peak in the image histogram to give a soft-tissue intensity of 1. A skull mask was generated by thresholding the voxel intensities between 0.2 and 0.75 (bone/air and bone/soft-tissue), taking the largest connected component, and then filling the mask using morphological operations. Within the skull mask, ZTE values were mapped directly to CT HU using the linear relationship CT = –2085 ZTE + 2329. This relationship was calculated by taking the first principal component of the density plot of ZTE values vs CT values within the skull. Outside the skull mask, air and soft-tissue were assigned values of -1000 and 42 HU, respectively.
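The classical conversion can be sketched in NumPy/SciPy. The first function shows how a line such as CT = –2085 ZTE + 2329 follows from the first principal component of paired (ZTE, CT) samples. The remaining functions are an illustrative reading of the steps above, with these assumptions: the soft-tissue peak is taken as the mode of the non-zero voxel histogram; the unspecified "filling" step is approximated by a binary closing (a hole-filling operation would also fill the brain enclosed by the skull); and outside the skull, voxels below the bone/air threshold are treated as air.

```python
import numpy as np
from scipy import ndimage


def fit_principal_line(zte_vals, ct_vals):
    """Slope and intercept of the first principal component of paired (ZTE, CT) samples."""
    pts = np.column_stack([zte_vals, ct_vals]).astype(float)
    centre = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centre, full_matrices=False)
    dx, dy = vt[0]  # direction of largest variance
    slope = dy / dx
    return slope, centre[1] - slope * centre[0]


def soft_tissue_normalise(zte, bins=256):
    """Scale so the histogram peak (assumed to be soft tissue) equals 1."""
    hist, edges = np.histogram(zte[zte > 0], bins=bins)
    k = int(np.argmax(hist))
    return zte / (0.5 * (edges[k] + edges[k + 1]))


def classical_pct(zte_norm, slope=-2085.0, intercept=2329.0, lo=0.2, hi=0.75, closing_iters=2):
    """Direct ZTE -> pCT conversion on a soft-tissue-normalised ZTE volume."""
    bone = (zte_norm > lo) & (zte_norm < hi)
    labels, n = ndimage.label(bone)
    if n > 0:  # keep the largest connected component
        sizes = ndimage.sum(bone, labels, index=np.arange(1, n + 1))
        bone = labels == (int(np.argmax(sizes)) + 1)
    # Assumed stand-in for the "filling" step: close small gaps in the skull shell.
    skull = ndimage.binary_closing(bone, iterations=closing_iters)
    # Outside the skull: air below the bone/air threshold (assumption), soft tissue otherwise.
    pct = np.where(zte_norm < lo, -1000.0, 42.0)
    pct[skull] = slope * zte_norm[skull] + intercept
    return pct
```

Note the negative slope: in ZTE, denser bone gives lower signal, so a normalised value of 0.2 maps to about 1912 HU and 0.75 to about 765 HU.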

D. Evaluation of the pseudo-CT images

The three different methods for generating pCT images were evaluated by comparing the generated 3D pCT volumes for the 14 subjects in the test set against the corresponding ground truth CTs. Image intensities were compared using mean absolute error (MAE) and the root mean squared error (RMSE). Both metrics were evaluated across the whole head (comparing voxels within the subject-specific head mask) and in the skull only (using a skull mask derived by thresholding the ground truth CTs and combining with the head mask to exclude bones outside the neurocranium). For convenience, the different generated pCTs are referred to as tCT (for the learned pCT mapped from a T1w image), zCT (for the learned pCT mapped from a ZTE image), and cCT (for the pCT directly converted from a ZTE image using classical image processing techniques as explained in Sec. II-C).
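The two image metrics can be written compactly as below. This is a sketch: the CT threshold used to define the skull-only mask is not given in the text, so the 300 HU default is a hypothetical placeholder, and the per-subject values would then be summarised as mean ± standard deviation across the 14 test subjects.

```python
import numpy as np


def mae_rmse(pct, ct, mask):
    """MAE and RMSE (HU) between pCT and CT over the voxels selected by `mask`."""
    diff = (np.asarray(pct, float) - np.asarray(ct, float))[np.asarray(mask, bool)]
    return float(np.abs(diff).mean()), float(np.sqrt(np.mean(diff ** 2)))


def skull_mask_from_ct(ct, head_mask, threshold_hu=300.0):
    """Skull-only mask: CT threshold (hypothetical value) combined with the head mask."""
    return (np.asarray(ct) > threshold_hu) & np.asarray(head_mask, bool)
```

Because RMSE squares the errors, it weights large boundary misalignments more heavily than MAE, which is consistent with RMSE exceeding MAE by a wide margin in Table I.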

E. Ultrasound simulations using k-Wave

Acoustic simulations were performed using the open-source k-Wave toolbox [53], [54]. CT and pCT image pairs were converted to medium property maps as follows. First, the images were resampled to the simulation resolution (0.5 mm) using linear interpolation and then cropped. The images were then segmented into skull, skin, brain, and background regions using intensity-based thresholding along with morphological operations. Within the soft tissue, reference values were assigned for the acoustic properties. Within the skull, the sound speed and density were mapped directly from the image values in Hounsfield units. The density was calculated using the conversion curve from [55] (using the hounsfield2density function in k-Wave). The sound speed c within the skull was then calculated from the density values ρ using a linear relationship of c = 1.33ρ + 167 [56]. A constant value of attenuation within the skull was used. This general approach to mapping the acoustic properties from CT has been widely used in the literature, and generally compares well with experimental and clinical measurements [4], [57], [58]. Note that other mappings from CT images to acoustic properties are also possible (e.g., [59]). However, as the same mapping is used for all image sets, this choice is not expected to strongly influence the comparison between CT- and pCT-based simulations.
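The property-assignment step can be sketched in NumPy. The HU-to-density conversion itself (k-Wave's `hounsfield2density`, a MATLAB function) is not reproduced; the sketch takes the resulting density map as input and applies c = 1.33ρ + 167 in the skull. The label value for skull, the soft-tissue reference values, and the skull attenuation are placeholders supplied by the caller, not values from the paper.

```python
import numpy as np

SKULL_LABEL = 3  # hypothetical label value for skull in the segmentation


def skull_sound_speed(density):
    """Sound speed (m/s) from density (kg/m^3): c = 1.33 * rho + 167 [56]."""
    return 1.33 * np.asarray(density, dtype=float) + 167.0


def property_maps(labels, skull_density, soft_props, skull_alpha):
    """Assemble sound-speed, density, and attenuation maps from a label volume.

    labels        : integer segmentation (background, skin, brain, skull, ...)
    skull_density : density map (kg/m^3) already converted from HU; only skull voxels are read
    soft_props    : {label: (c, rho, alpha)} reference values for the non-skull classes
    skull_alpha   : constant attenuation assigned to every skull voxel
    """
    c = np.zeros(labels.shape)
    rho = np.zeros(labels.shape)
    alpha = np.zeros(labels.shape)
    for lab, (c0, rho0, a0) in soft_props.items():
        sel = labels == lab
        c[sel], rho[sel], alpha[sel] = c0, rho0, a0
    skull = labels == SKULL_LABEL
    rho[skull] = skull_density[skull]
    c[skull] = skull_sound_speed(rho[skull])
    alpha[skull] = skull_alpha
    return c, rho, alpha
```

As a worked example of the linear relation, a skull voxel with ρ = 1900 kg/m³ gets c = 1.33 × 1900 + 167 = 2694 m/s, while ρ = 1000 kg/m³ gives 1497 m/s, close to water.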

The simulations were based on the NeuroFUS CTX-500 4-element annular array transducer (Sonic Concepts, Bothell, WA). The transducer was modelled using a staircase-free formulation [60] using nominal values for the radius of curvature (63.2 mm) and element aperture diameters (32.8, 46, 55.9, 64 mm). Simulations were run at 6 points per wavelength (PPW) in water and 60 points per period (PPP), which was sufficient to reproduce the relevant benchmark results (PH1-BM7-SC1) reported in [61] with less than 0.2 % difference in the maximum pressure and no difference in the focal position. The transducer was driven using a continuous sinusoidal driving signal at 500 kHz until steady state was reached.
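The discretisation settings can be checked with a short calculation. Assuming a reference sound speed in water of 1500 m/s (a value not stated in the text), 6 PPW at 500 kHz gives a 0.5 mm grid spacing, matching the simulation resolution stated above, and 60 PPP gives a time step of about 33 ns. The resulting Courant number in water is PPW/PPP = 0.1; in the skull it increases in proportion to the local sound speed.

```python
def grid_parameters(freq_hz=500e3, c_ref=1500.0, ppw=6, ppp=60):
    """Grid spacing (m), time step (s), and CFL number from points per wavelength/period.

    c_ref is an assumed reference sound speed for water.
    """
    wavelength = c_ref / freq_hz      # 3 mm at 500 kHz
    dx = wavelength / ppw             # spatial step
    dt = 1.0 / (freq_hz * ppp)        # temporal step
    cfl = c_ref * dt / dx             # equals ppw / ppp
    return dx, dt, cfl


dx, dt, cfl = grid_parameters()
print(f"dx = {dx * 1e3:.2f} mm, dt = {dt * 1e9:.1f} ns, CFL = {cfl:.2f}")
```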

Simulations were performed for the 14 skulls that formed the test set. For each skull, 4 transducer positions were used, targeting the occipital pole of the primary visual cortex and the hand knob of the primary motor cortex in both hemispheres (giving a total of 56 comparisons for each pCT image type). These are two common targets in TUS studies. The variation in skull bone thickness for the V1 targets is typically greater due to the internal and external occipital protuberances. The target positions were first identified on the MNI152_T1_1mm magnetic resonance imaging template brain [46], [47]. Approximate positions of the targets on the individual skulls were then calculated by registering the MR images to the MNI template and using the transformation matrix to map the target positions back to the individual skulls. For comparison between the CT and pCT image pairs, all other simulation parameters were kept identical except the image used to derive the acoustic property maps.
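Mapping the MNI targets back to a subject amounts to applying the inverse of the subject-to-MNI affine to each target point. The sketch below assumes a 4 × 4 affine expressed in world (mm) coordinates. FLIRT matrices are written in FSL's own scaled-voxel coordinate convention, so in practice they would first need converting to world coordinates (or FSL's coordinate-conversion utilities could be used instead).

```python
import numpy as np


def map_targets_to_subject(targets_mni_mm, subj_to_mni):
    """Map MNI-space points (N x 3, mm) to subject space with the inverse affine.

    subj_to_mni: 4x4 homogeneous affine (world mm coordinates assumed).
    """
    pts = np.asarray(targets_mni_mm, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))])   # N x 4 homogeneous points
    mni_to_subj = np.linalg.inv(subj_to_mni)
    return (homog @ mni_to_subj.T)[:, :3]
```

Because a 12-DOF affine cannot capture local anatomical differences, these positions are only approximate, which is why the text describes them as "approximate positions of the targets".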

The calculated acoustic pressure fields for each CT/pCT pair were compared using the focal metrics outlined in [61], with the code available from [62]. Briefly, the magnitude and position of the spatial peak pressure within the brain were compared, along with the -6 dB focal volume.
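The three focal metrics can be illustrated with an independent NumPy sketch; it does not reproduce the code from [62]. Assumptions: `p` is the steady-state pressure amplitude on a uniform grid with spacing `dx`, the -6 dB threshold is applied in amplitude (10^(-6/20) ≈ 0.501 of the peak), and every brain voxel above the threshold is counted, whereas a reference implementation may restrict the count to the connected region containing the peak.

```python
import numpy as np


def focal_metrics(p, brain_mask, dx):
    """Spatial-peak pressure in the brain, its position (m), and -6 dB focal volume (m^3)."""
    masked = np.where(brain_mask, p, 0.0)
    idx = np.unravel_index(np.argmax(masked), p.shape)
    p_max = masked[idx]
    thresh = p_max * 10 ** (-6 / 20)            # -6 dB in amplitude
    volume = np.count_nonzero(masked >= thresh) * dx ** 3
    position = np.array(idx, dtype=float) * dx
    return p_max, position, volume


def percent_and_distance(ref, test):
    """Signed % differences in peak pressure and focal volume, and focal shift (m)."""
    (p0, x0, v0), (p1, x1, v1) = ref, test
    return (100.0 * (p1 - p0) / p0,
            float(np.linalg.norm(x1 - x0)),
            100.0 * (v1 - v0) / v0)
```

Keeping the pressure and volume differences signed preserves the over- and under-estimation trends visible in Fig. 5; their magnitudes can be taken afterwards for summary statistics.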

III. Results

A. Pseudo-CT Images

Results for the MAE and RMSE of the generated 3D pCT images against the ground truth CT images are given in Table I. The zCT images generated using the learned mapping from ZTE have markedly lower error values for both metrics and both regions (complete head and skull only) compared to the tCT and cCT images. This is not surprising given that (1) the ZTE image visually contains considerably more information about the morphology of the skull bone than the T1w image, and (2) the network has considerably more capacity to learn an adaptive mapping to pCT than the fixed linear mapping used for the cCT. In the skull, the cCT images slightly outperform the tCT images. This is possibly because the additional bone information available to the cCT (but not the tCT) outweighs the additional predictive power available to the tCT (but not the cCT). Overall, the error values are similar to those reported in the studies discussed in Sec. I.

Table I. Mean absolute error (MAE) and root mean squared error (RMSE) of the 3D pCT images generated for the 14 subjects in the test set, evaluated against the ground truth CT images.

| Mask | Image | MAE (HU) | RMSE (HU) |
|---|---|---|---|
| Head | tCT | 133 ± 46 | 288 ± 83 |
| Head | zCT | 83 ± 26 | 186 ± 55 |
| Head | cCT | 145 ± 35 | 350 ± 62 |
| Skull | tCT | 398 ± 116 | 528 ± 144 |
| Skull | zCT | 222 ± 78 | 305 ± 102 |
| Skull | cCT | 336 ± 80 | 465 ± 101 |

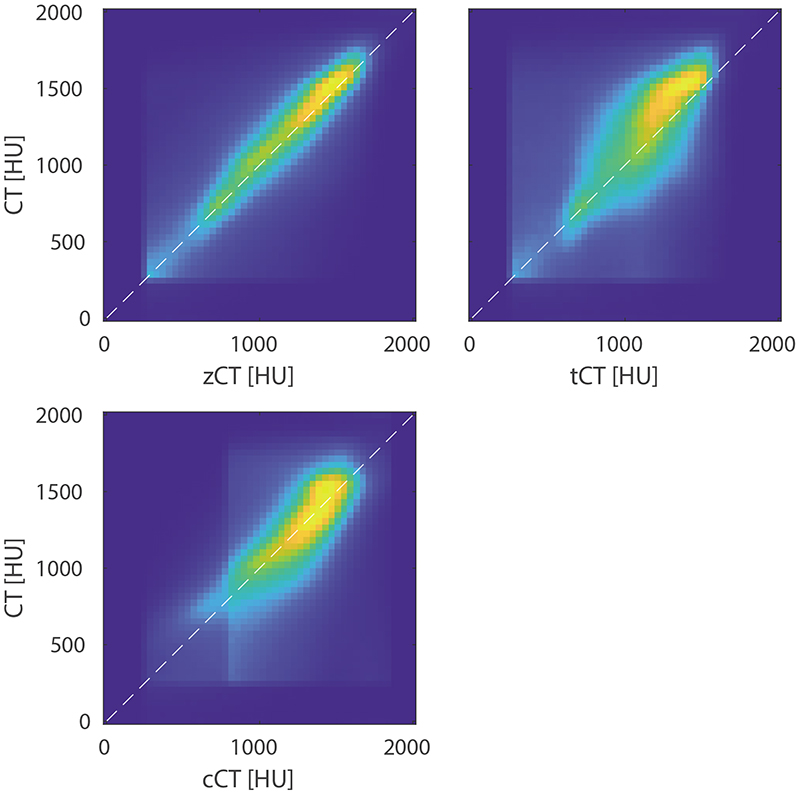

Figure 2 shows the correlation between the CT and pCT values within the skull mask (as identified from the ground truth CT). There is a good correlation observed for all pCT images, with the lowest spread for the zCT matching the results given in Table I. The good fit observed for the cCT values demonstrates that a linear mapping from ZTE is a reasonable choice. The robustness of these correlations to variations in the specific MR sequence parameters (along with processing parameters such as the debiasing settings) still needs to be explored further.

Figure 2. Density plot showing the correlation between the pCT and ground truth CT images for the test set. The white lines show y = x.

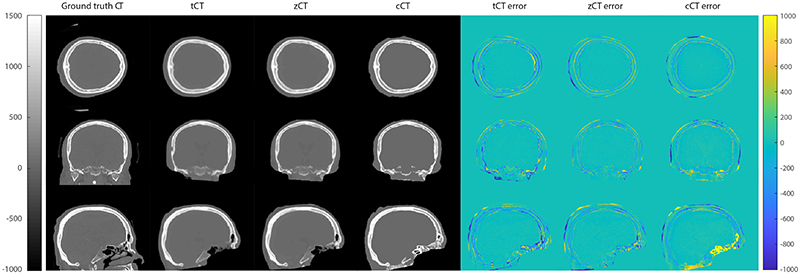

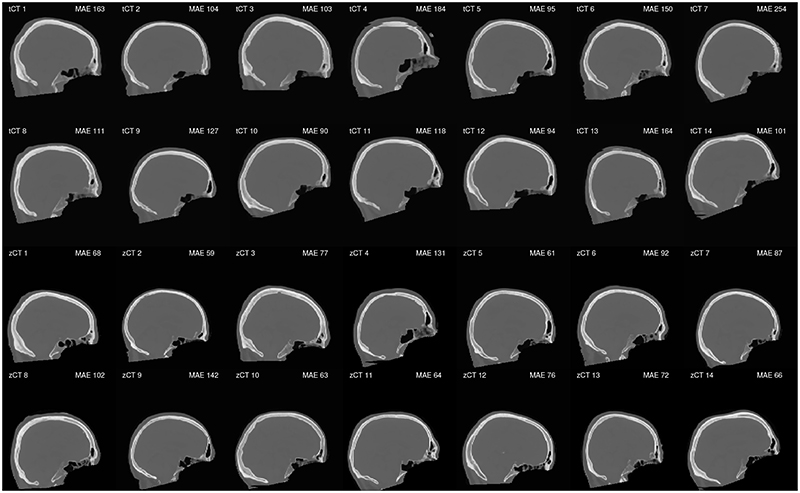

Examples of the generated pCT images and corresponding error maps against CT for one subject from the test set are shown in Fig. 3. The central sagittal slice for all 14 subjects, along with individual MAE values, is shown in Fig. 4. Overall, there is good quantitative agreement between the pCT and ground truth CT images. The largest differences occur at the brain-skull and skull-skin boundaries, consistent with previous studies [11]. This is primarily due to the imperfect registration between the MR and CT images, to which two factors contribute. First, the skin-air interfaces are usually in slightly different places physically, e.g., due to the mobility of the skin and differences in subject positioning during the MR and CT scans. Second, there are differences in the rigid brain-skull boundaries due to geometric distortions in the images that are not corrected by the affine transformation used in the registration step. This is evident in the difference plots for the cCT images, which use a simple thresholding of the ZTE image to obtain the skull boundaries. The same misalignments are also apparent in the training and validation images. Improving the registration step, for example by learning the registration as part of training the network [63] or by iterating between training and re-registration of the generated pCT images, will likely improve the predictive capabilities of the learned mappings, and will be the subject of future work.

Figure 3. Example of pCT and error maps for a single subject in the test set. The pseudo-CTs are registered to the ground truth CT before comparison.

Figure 4. Middle sagittal slice of the learned pseudo-CT images for the 14 test subjects. The mean absolute error (MAE) value reported is calculated for the volume inside the head mask. A small number of the learned images mapped from T1w MR images have discontinuous skull boundaries.

As discussed in Sec. II-B, the input to the network was 11 consecutive 2D slices in the transverse direction. For the tCT images, this significantly reduced the occurrence of discontinuities in the skull between slices in the out-of-plane (e.g., sagittal) direction. The discontinuities occur because of the difficulty in consistently identifying the outer skull boundary in the 2D T1w image slices, which is improved by providing the network with local 3D information. However, even with 11 slices, visible discontinuities were still observed for a small number of subjects in the test set (tCT 4, 6, and 13 in Fig. 4). The equivalent network trained with a single input slice had similar MAE and RMSE values to those given in Table I, while local discontinuities in the skull were much more frequent. Thus, this feature does not seem to be captured by the error metrics used during training. This motivates using 3D networks in the future, following related work [19] which has shown that training on the full 3D skull volumes can produce better results, albeit at a higher computational cost. The same discontinuities were not observed for the zCT images, even when using a single slice as input to the network.

Although not formally investigated in this study, it is interesting to note that many of the subjects had small calcifications within the deep structures of the brain visible on the CT images (e.g., due to calcification of the choroid plexus). Some, but not all, of these were visible on the zCT images in the test set, and none were visible on the tCT images. Further work is needed to quantify the ability of the learned mappings to correctly reconstruct calcifications.

B. Acoustic Simulations

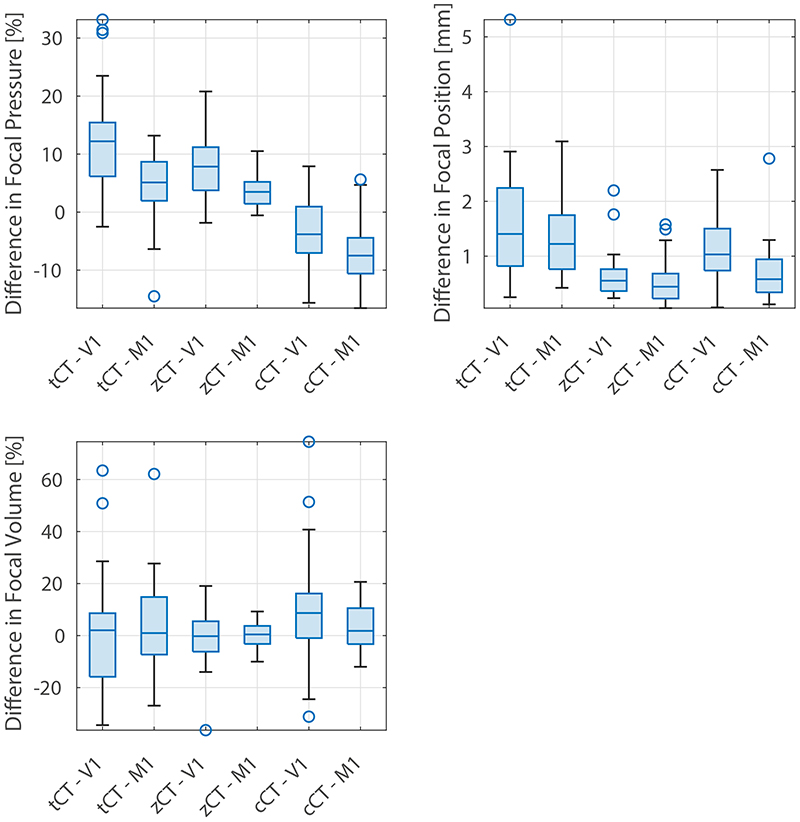

Results for the acoustic simulations are summarised in Table II and Fig. 5. Subjects 4 and 6 from the tCT dataset were excluded from the simulation evaluation, as they displayed discontinuities at the top of the skull which made them unsuitable for acoustic simulations. Similarly, the cCT for subject 10 was excluded, as the predicted skull was not continuous due to the threshold value not being suitable for this subject. Across all pCT images and target locations, the mean differences in the simulated focal pressure, focal position, and focal volume were 7.3 ± 5.7 %, 0.99 ± 0.79 mm, and 11 ± 12 % (to two significant figures). The best results were obtained when using the zCT images for motor targets, where the equivalent differences were 3.7 %, 0.5 mm, and 3.9 %. These values compare well with the differences observed in experimental repeatability [64] and numerical intercomparison [61] studies. For reference, the focal volume simulated in water is 3.9 mm wide and 24 mm long.

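Table II. Differences in the acoustic metrics between pCT- and CT-based simulations, rounded to one decimal place.

| Image | Target | Focal pressure mean (%) | Focal pressure std (%) | Focal position mean (mm) | Focal position std (mm) | Focal volume mean (%) | Focal volume std (%) |
|---|---|---|---|---|---|---|---|
| cCT | V1 | 5.7 | 4.0 | 1.1 | 0.6 | 16.8 | 17.6 |
| cCT | M1 | 7.8 | 3.8 | 0.7 | 0.5 | 7.4 | 5.7 |
| cCT | All | 6.7 | 4.0 | 0.9 | 0.6 | 12.1 | 13.8 |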

Figure 5. Differences in the focal pressure, focal position, and focal volume for acoustic simulations using different pseudo-CT images against simulations using the ground truth CT. Results are divided into two sets, targeting the visual cortex (V1) and motor cortex (M1).

Between the different pCT images, the simulations based on the zCT images generally had the lowest average errors, as well as the smallest variation (see the interquartile range shown using the blue boxes in Fig. 5). The simulations based on both the zCT and cCT images consistently outperformed those based on the tCT images, demonstrating that skull-specific imaging can improve the accuracy of the predictions. Interestingly, the simulations based on the learned mappings (tCT and zCT) generally overestimated the focal pressure, while simulations based on the direct mapping (cCT) generally underestimated it. This may be related to the sharper skull boundaries in the cCT images compared to the learned images, resulting in a larger reflection coefficient.

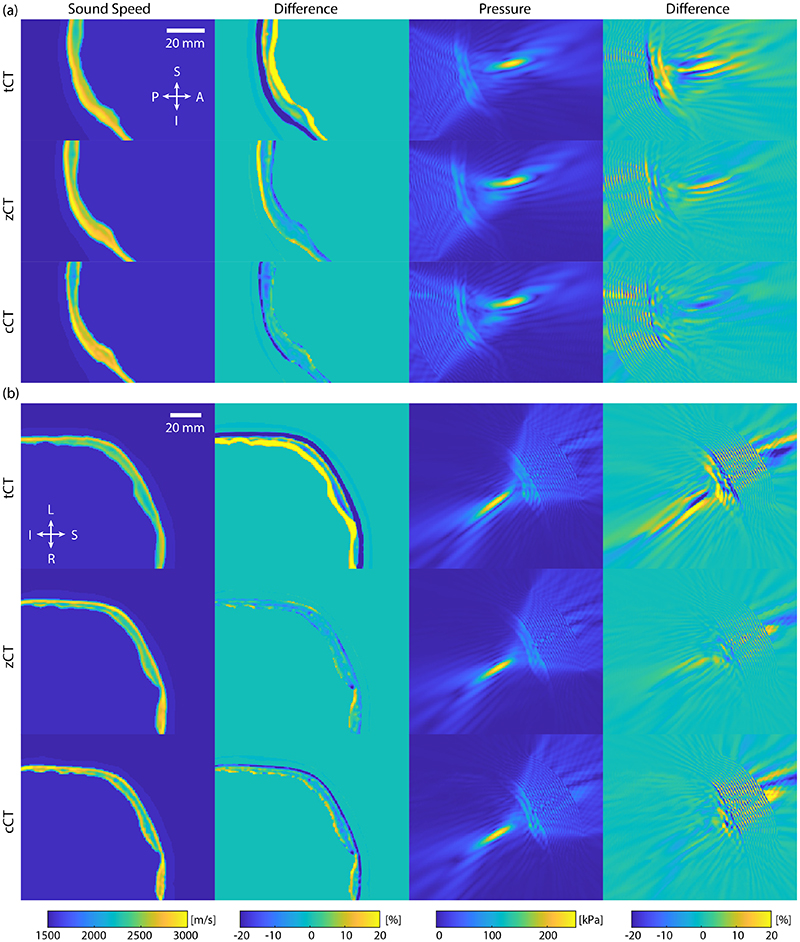

Between the two targets, the errors for the motor cortex were lower than the visual cortex, except for the focal pressure metric for the cCT images. This is expected, as the shape of the skull is generally more variable close to the visual cortex due to the internal and external occipital protuberance, which can result in stronger aberrations to the acoustic field. Examples from one test subject of the generated sound speed maps and acoustic field distributions are given in Fig. 6.

Figure 6. Sound speed maps and simulated acoustic fields for a subject from the test set for targets in the (a) visual cortex (V1) and (b) motor cortex (M1). The difference plots show the difference against the ground truth generated using the real CT images. The V1 and M1 results show sagittal and coronal slices through the spatial peak pressure, respectively.

IV. Summary And Discussion

Three different approaches for generating pCT images from MR images were investigated in the context of treatment planning simulations for transcranial ultrasound stimulation. A convolutional neural network (U-Net) was trained to generate pCT images using paired MR-CT data with either T1w or ZTE MR images as input. A direct mapping from ZTE to pCT was also implemented based on [8]. For the image-based metrics, the learned mapping from ZTE gave the lowest errors, with MAE values of 83 and 222 HU in the whole head and skull-only, respectively. The significant improvement compared to the mapping from T1w images demonstrates the advantage of using skull-specific MR imaging sequences.

Acoustic simulations were performed using the generated pCT images for an annular array transducer geometry operating at 500 kHz. The transducer targeted either the left or right motor cortex, or the left or right visual cortex. The choice of transducer geometry and brain targets was motivated by devices and targets used in the rapidly growing literature on human transcranial ultrasound stimulation (TUS).
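As background on how an annular array reaches a given target depth, the sketch below computes per-ring transmit delays that focus a flat annular array on its axis in a homogeneous medium. The flat geometry, the treatment of each ring as a single radius, the ring radii, and the function name are hypothetical assumptions; skull aberration is deliberately ignored, which is precisely why CT- or pCT-based simulations are needed in practice.

```python
import numpy as np

def annular_focus_delays(ring_radii_m, focal_depth_m, c=1500.0):
    """Per-ring transmit delays (s) focusing a flat annular array on its axis
    in a homogeneous medium with sound speed c. Each ring is represented by a
    single radius (e.g. its mean radius); skull aberration is ignored."""
    r = np.asarray(ring_radii_m, dtype=float)
    path = np.sqrt(focal_depth_m ** 2 + r ** 2)  # ring-to-focus distance
    # The ring with the longest path fires first (zero delay); the others wait
    # so that all wavefronts arrive at the focus simultaneously.
    return (path.max() - path) / c

# Hypothetical ring radii (m) and a 50 mm focal depth
radii = np.array([5e-3, 10e-3, 15e-3, 20e-3])
delays = annular_focus_delays(radii, 50e-3)
```

For a continuous-wave drive at frequency f, the equivalent phase offsets are 2πf multiplied by these delays.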

Simulations using the pCT images based on ZTE showed close agreement with ground truth simulations based on CT, with mean errors in the focal pressure and focal volume of less than 7% and 9%, respectively, and mean errors in the focal position of less than 0.8 mm (see Table II). Errors in simulations using the learned pCT images mapped from T1w images were higher, but may still be acceptable depending on the required accuracy. These results demonstrate that acoustic simulations based on pCT images mapped from MR can give results comparable to simulations based on ground truth CT.
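A minimal sketch of how the three acoustic error metrics could be computed from a pair of simulated pressure-amplitude fields is given below. It assumes both fields are sampled on the same uniform 3D grid with spacing `dx_mm`, takes the focal position as the location of the spatial peak, and defines the focal volume as the volume above -6 dB of the peak. The -6 dB threshold, the use of absolute (unsigned) errors, and the names `focal_metrics` and `focal_errors` are illustrative assumptions rather than the study's stated definitions.

```python
import numpy as np

def focal_metrics(p, dx_mm, db_threshold=-6.0):
    """Peak pressure, peak position (mm) and focal volume (mm^3) of a 3D
    pressure-amplitude field on a uniform grid with spacing dx_mm.
    Focal volume counts all voxels above db_threshold relative to the peak
    (an assumed definition; disconnected side lobes are also counted)."""
    p = np.asarray(p, dtype=float)
    idx = np.unravel_index(np.argmax(p), p.shape)
    peak = p[idx]
    threshold = peak * 10 ** (db_threshold / 20)
    volume = np.count_nonzero(p >= threshold) * dx_mm ** 3
    return peak, np.array(idx) * dx_mm, volume

def focal_errors(p_pct, p_ct, dx_mm):
    """Errors of a pCT-based simulation relative to the CT-based ground truth."""
    peak_a, pos_a, vol_a = focal_metrics(p_pct, dx_mm)
    peak_b, pos_b, vol_b = focal_metrics(p_ct, dx_mm)
    return {
        "pressure_pct": 100 * abs(peak_a - peak_b) / peak_b,
        "position_mm": float(np.linalg.norm(pos_a - pos_b)),
        "volume_pct": 100 * abs(vol_a - vol_b) / vol_b,
    }
```

Keeping the signed pressure difference instead of its absolute value would reveal the systematic over- and underestimation noted earlier for the learned and direct mappings.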

For context, in the motor cortex, the smallest relevant stimulation target could reasonably be considered the cortical representation of a single hand muscle. A recent transcranial magnetic stimulation (TMS) study suggests that the functional area of the first dorsal interosseous (FDI), a muscle commonly targeted in motor studies, is approximately 26 mm² [65]. In the primary visual cortex, the mean distance between the approximate centres of TMS-distinct visual regions (V1 and V2d) is 11 mm [66], suggesting a similar required resolution to M1. The focal position errors obtained using the pCT images are substantially smaller than these length scales, which should allow accurate targeting of the intended structures.
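To put the 26 mm² FDI area on the same footing as a positional error, it can be converted to a length scale by taking the diameter of a circle of equal area (a simplifying assumption, since the functional area need not be circular):

$$
d_{\mathrm{eq}} = 2\sqrt{\frac{A}{\pi}} = 2\sqrt{\frac{26\ \mathrm{mm}^2}{\pi}} \approx 5.8\ \mathrm{mm}
$$

This is roughly half the 11 mm spacing between V1 and V2d, and the mean focal position errors reported in the abstract (0.6 mm for zCT, 0.9 mm for cCT, and 1.5 mm for tCT) correspond to roughly 10% to 26% of it.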

Interestingly, there was no strong correlation between the pixel-based image errors (MAE and RMSE) and the errors in the predicted acoustic field. This highlights the importance of running acoustic simulations when developing image-to-image translation methods for TUS, and suggests that acoustic-based loss functions may be beneficial. The use of MR images for treatment planning is particularly important for neuroscience studies in healthy populations, where obtaining ethical approval to acquire CT images is usually problematic. Even in a clinical setting, there may be benefits to using MR-based acquisitions when the therapy is performed under MR guidance (for example, multi-modal image registration errors are reduced [67]). These results may also be of interest in other areas where pseudo-CTs are used, for example, PET-MR attenuation correction and radiotherapy planning.
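Checking whether image errors track acoustic errors amounts to correlating two per-subject samples, for example skull MAE against focal position error across the test set. The sketch below computes Pearson and Spearman coefficients with NumPy only. The function names are hypothetical, and the rank-based Spearman implementation assumes no tied values; `scipy.stats.spearmanr` handles ties with average ranks and also returns a p-value.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson correlation coefficient between two 1D samples."""
    return float(np.corrcoef(np.asarray(x, dtype=float), np.asarray(y, dtype=float))[0, 1])

def spearman_rho(x, y):
    """Spearman rank correlation, assuming no tied values in x or y."""
    rank_x = np.argsort(np.argsort(x))  # 0-based rank of each element
    rank_y = np.argsort(np.argsort(y))
    return pearson_r(rank_x, rank_y)
```

Spearman's coefficient is the more forgiving choice when the relationship between image and acoustic errors is monotonic but not linear, which is plausible given that a few skull voxels can dominate the acoustic field.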

Note that, in a clinical setting, regulatory approval of learned mappings remains an important open question. Nevertheless, these results demonstrate that learned pseudo-CTs from T1w and ZTE images can be used for acoustic simulations with accuracy comparable to those based on CT.

Acknowledgments

This work was supported by the Engineering and Physical Sciences Research Council (EPSRC), UK, grant number EP/S026371/1 and the UKRI CDT in AI-enabled Healthcare Systems, grant number EP/S021612/1.

Contributor Information

Maria Miscouridou, Department of Medical Physics and Biomedical Engineering, University College London, London, UK.

José A. Pineda-Pardo, HM CINAC, Fundación HM Hospitales de Madrid, University Hospital HM Puerta del Sur, CEU-San Pablo University, Móstoles, Madrid, Spain.

Charlotte J. Stagg, Wellcome Centre for Integrative Neuroimaging, FMRIB, Nuffield Department of Clinical Neuroscience, University of Oxford, Oxford, UK.

Bradley E. Treeby, Department of Medical Physics and Biomedical Engineering, University College London, London, UK.

Antonio Stanziola, Department of Medical Physics and Biomedical Engineering, University College London, London, UK.

References

- [1].Elias WJ, Lipsman N, Ondo WG, Ghanouni P, Kim YG, Lee W, Schwartz M, Hynynen K, Lozano AM, Shah BB, et al. A randomized trial of focused ultrasound thalamotomy for essential tremor. New England Journal of Medicine. 2016;375(8):730–739. doi: 10.1056/NEJMoa1600159.

- [2].Legon W, Sato TF, Opitz A, Mueller J, Barbour A, Williams A, Tyler WJ. Transcranial focused ultrasound modulates the activity of primary somatosensory cortex in humans. Nature Neuroscience. 2014;17(2):322–329. doi: 10.1038/nn.3620.

- [3].Gasca-Salas C, Fernández-Rodríguez B, Pineda-Pardo JA, Rodriguez-Rojas R, Obeso I, Hernandez-Fernandez F, Del Alamo M, Mata D, Guida P, Ordas-Bandera C, et al. Blood-brain barrier opening with focused ultrasound in Parkinson’s disease dementia. Nature communications. 2021;12(1):1–7. doi: 10.1038/s41467-021-21022-9.

- [4].Deffieux T, Konofagou EE. Numerical study of a simple transcranial focused ultrasound system applied to blood-brain barrier opening. IEEE transactions on ultrasonics, ferroelectrics, and frequency control. 2010;57(12):2637–2653. doi: 10.1109/TUFFC.2010.1738.

- [5].Sun J, Hynynen K. Focusing of therapeutic ultrasound through a human skull: a numerical study. The Journal of the Acoustical Society of America. 1998;104(3):1705–1715. doi: 10.1121/1.424383.

- [6].Aubry J-F, Tanter M, Pernot M, Thomas J-L, Fink M. Experimental demonstration of noninvasive transskull adaptive focusing based on prior computed tomography scans. The Journal of the Acoustical Society of America. 2003;113(1):84–93. doi: 10.1121/1.1529663.

- [7].Darmani G, Bergmann T, Pauly KB, Caskey C, de Lecea L, Fomenko A, Fouragnan E, Legon W, Murphy K, Nandi T, et al. Non-invasive transcranial ultrasound stimulation for neuromodulation. Clinical Neurophysiology. 2022;135:51–73. doi: 10.1016/j.clinph.2021.12.010.

- [8].Wiesinger F, Bylund M, Yang J, Kaushik S, Shanbhag D, Ahn S, Jonsson JH, Lundman JA, Hope T, Nyholm T, et al. Zero TE-based pseudo-CT image conversion in the head and its application in PET/MR attenuation correction and MR-guided radiation therapy planning. Magnetic resonance in medicine. 2018;80(4):1440–1451. doi: 10.1002/mrm.27134.

- [9].Burgos N, Cardoso MJ, Thielemans K, Modat M, Pedemonte S, Dickson J, Barnes A, Ahmed R, Mahoney CJ, Schott JM, et al. Attenuation correction synthesis for hybrid PET-MR scanners: application to brain studies. IEEE transactions on medical imaging. 2014;33(12):2332–2341. doi: 10.1109/TMI.2014.2340135.

- [10].Boulanger M, Nunes J-C, Chourak H, Largent A, Tahri S, Acosta O, De Crevoisier R, Lafond C, Barateau A. Deep learning methods to generate synthetic CT from MRI in radiotherapy: A literature review. Physica Medica. 2021;89:265–281. doi: 10.1016/j.ejmp.2021.07.027.

- [11].Han X. MR-based synthetic CT generation using a deep convolutional neural network method. Medical physics. 2017;44(4):1408–1419. doi: 10.1002/mp.12155.

- [12].Zhu J-Y, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks; Proceedings of the IEEE international conference on computer vision; 2017. pp. 2223–2232.

- [13].Isola P, Zhu J-Y, Zhou T, Efros AA. Image-to-image translation with conditional adversarial networks; Proceedings of the IEEE conference on computer vision and pattern recognition; 2017. pp. 1125–1134.

- [14].Zhang P, Zhang B, Chen D, Yuan L, Wen F. Cross-domain correspondence learning for exemplar-based image translation; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2020. pp. 5143–5153.

- [15].Park T, Efros AA, Zhang R, Zhu J-Y. Contrastive learning for unpaired image-to-image translation; European Conference on Computer Vision; Springer; 2020. pp. 319–345.

- [16].Jiangtao W, Xinhong W, Xiao J, Bing Y, Lei Z, Yidong Y. MRI to CT Synthesis Using Contrastive Learning; 2021 IEEE International Conference on Medical Imaging Physics and Engineering (ICMIPE); 2021. pp. 1–5.

- [17].Sasaki H, Willcocks CG, Breckon TP. UNIT-DDPM: Unpaired image translation with denoising diffusion probabilistic models. arXiv preprint. 2021. Available: https://arxiv.org/abs/2104.05358.

- [18].Presotto L, Bettinardi V, Bagnalasta M, Scifo P, Savi A, Vanoli EG, Fallanca F, Picchio M, Perani D, Gianolli L, et al. Evaluation of a 2D UNet-Based Attenuation Correction Methodology for PET/MR Brain Studies. Journal of Digital Imaging. 2022:1–14. doi: 10.1007/s10278-021-00551-1.

- [19].Fu J, Yang Y, Singhrao K, Ruan D, Chu F, Low D, Lewis J. Deep learning approaches using 2D and 3D convolutional neural networks for generating male pelvic synthetic computed tomography from magnetic resonance imaging. Med Phys. 2019;46(9):3788–3798. doi: 10.1002/mp.13672.

- [20].Spadea MF, Pileggi G, Zaffino P, Salome P, Catana C, Izquierdo-Garcia D, Amato F, Seco J. Deep convolution neural network (DCNN) multiplane approach to synthetic CT generation from MR images—application in brain proton therapy. International Journal of Radiation Oncology, Biology, Physics. 2019;105(3):495–503. doi: 10.1016/j.ijrobp.2019.06.2535.

- [21].Roy S, Butman JA, Pham DL. Synthesizing CT from ultrashort echo-time MR images via convolutional neural networks; International Workshop on Simulation and Synthesis in Medical Imaging; Springer; 2017. pp. 24–32.

- [22].Martinez-Girones PM, Vera-Olmos J, Gil-Correa M, Ramos A, Garcia-Cañamaque L, Izquierdo-Garcia D, Malpica N, Torrado-Carvajal A. Franken-CT: Head and neck MR-based pseudo-CT synthesis using diverse anatomical overlapping MR-CT scans. Applied Sciences. 2021;11(8):3508.

- [23].Jabbarpour A, Mahdavi SR, Sadr AV, Esmaili G, Shiri I, Zaidi H. Unsupervised pseudo CT generation using heterogenous multicentric CT/MR images and CycleGAN: Dosimetric assessment for 3D conformal radiotherapy. Computers in biology and medicine. 2022;143:105277. doi: 10.1016/j.compbiomed.2022.105277.

- [24].Liu Y, Chen A, Shi H, Huang S, Zheng W, Liu Z, Zhang Q, Yang X. CT synthesis from MRI using multi-cycle GAN for head-and-neck radiation therapy. Computerized Medical Imaging and Graphics. 2021;91:101953. doi: 10.1016/j.compmedimag.2021.101953.

- [25].Arabi H, Zeng G, Zheng G, Zaidi H. Novel adversarial semantic structure deep learning for MRI-guided attenuation correction in brain PET/MRI. European journal of nuclear medicine and molecular imaging. 2019;46(13):2746–2759. doi: 10.1007/s00259-019-04380-x.

- [26].Creswell A, White T, Dumoulin V, Arulkumaran K, Sengupta B, Bharath AA. Generative adversarial networks: An overview. IEEE Signal Processing Magazine. 2018;35(1):53–65.

- [27].Wang R, Zheng G. Disentangled Representation Learning For Deep MR To CT Synthesis Using Unpaired Data; 2021 IEEE International Conference on Image Processing (ICIP); IEEE; 2021. pp. 274–278.

- [28].Wiatrak M, Albrecht SV, Nystrom A. Stabilizing generative adversarial networks: A survey. arXiv preprint. 2019:arXiv:1910.00927.

- [29].Su P, Guo S, Roys S, Maier F, Bhat H, Melhem E, Gandhi D, Gullapalli R, Zhuo J. Transcranial MR imaging-guided focused ultrasound interventions using deep learning synthesized CT. American Journal of Neuroradiology. 2020;41(10):1841–1848. doi: 10.3174/ajnr.A6758.

- [30].Liu H, Sigona MK, Manuel TJ, Chen LM, Caskey CF, Dawant BM. Synthetic CT Skull Generation for Transcranial MR Imaging-Guided Focused Ultrasound Interventions with Conditional Adversarial Networks. Medical Imaging 2022: Image-Guided Procedures, Robotic Interventions, and Modeling. 2022;12034:135–143.

- [31].Koh H, Park TY, Chung YA, Lee J-H, Kim H. Acoustic simulation for transcranial focused ultrasound using GAN-based synthetic CT. IEEE Journal of Biomedical and Health Informatics. 2021;26(1):161–171. doi: 10.1109/JBHI.2021.3103387.

- [32].Hynynen K, Jolesz FA. Demonstration of potential noninvasive ultrasound brain therapy through an intact skull. Ultrasound in Medicine & Biology. 1998;24(2):275–283. doi: 10.1016/s0301-5629(97)00269-x.

- [33].Gimeno LA, Martin E, Wright O, Treeby BE. Experimental assessment of skull aberration and transmission loss at 270 kHz for focused ultrasound stimulation of the primary visual cortex; 2019 IEEE International Ultrasonics Symposium (IUS); IEEE; 2019. pp. 556–559.

- [34].Wintermark M, Tustison NJ, Elias WJ, Patrie JT, Xin W, Demartini N, Eames M, Sumer S, Lau B, Cupino A, et al. T1-weighted MRI as a substitute to CT for refocusing planning in MR-guided focused ultrasound. Physics in Medicine & Biology. 2014;59(13):3599. doi: 10.1088/0031-9155/59/13/3599.

- [35].Miller GW, Eames M, Snell J, Aubry J-F. Ultrashort echo-time MRI versus CT for skull aberration correction in MR-guided transcranial focused ultrasound: In vitro comparison on human calvaria. Medical physics. 2015;42(5):2223–2233. doi: 10.1118/1.4916656.

- [36].Johnson EM, Vyas U, Ghanouni P, Pauly KB, Pauly JM. Improved cortical bone specificity in UTE MR imaging. Magnetic resonance in medicine. 2017;77(2):684–695. doi: 10.1002/mrm.26160.

- [37].Guo S, Zhuo J, Li G, Gandhi D, Dayan M, Fishman P, Eisenberg H, Melhem ER, Gullapalli RP. Feasibility of ultrashort echo time images using full-wave acoustic and thermal modeling for transcranial MRI-guided focused ultrasound (tcMRgFUS) planning. Physics in Medicine & Biology. 2019;64(9):095008. doi: 10.1088/1361-6560/ab12f7.

- [38].Caballero-Insaurriaga J, Rodriguez-Rojas R, Martinez-Fernandez R, Del-Alamo M, Diaz-Jimenez L, Avila M, Martinez-Rodrigo M, Garcia-Polo P, Pineda-Pardo JA. Zero TE MRI applications to transcranial MR-guided focused ultrasound: Patient screening and treatment efficiency estimation. Journal of Magnetic Resonance Imaging. 2019;50(5):1583–1592. doi: 10.1002/jmri.26746.

- [39].Legon W, Bansal P, Tyshynsky R, Ai L, Mueller JK. Transcranial focused ultrasound neuromodulation of the human primary motor cortex. Scientific reports. 2018;8(1):1–14. doi: 10.1038/s41598-018-28320-1.

- [40].Zeng K, Darmani G, Fomenko A, Xia X, Tran S, Nankoo J-L, Shamli Oghli Y, Wang Y, Lozano AM, Chen R. Induction of human motor cortex plasticity by theta burst transcranial ultrasound stimulation. Annals of neurology. 2022;91(2):238–252. doi: 10.1002/ana.26294.

- [41].Lee W, Kim H-C, Jung Y, Chung YA, Song I-U, Lee J-H, Yoo S-S. Transcranial focused ultrasound stimulation of human primary visual cortex. Scientific reports. 2016;6(1):1–12. doi: 10.1038/srep34026.

- [42].Zhang Y, Brady M, Smith S. Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE transactions on medical imaging. 2001;20(1):45–57. doi: 10.1109/42.906424.

- [43].Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Medical image analysis. 2001;5(2):143–156. doi: 10.1016/s1361-8415(01)00036-6.

- [44].Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17(2):825–841. doi: 10.1016/s1053-8119(02)91132-8.

- [45].Guillemot T, Delon J. Implementation of the midway image equalization. Image Processing On Line. 2016;6:114–129.

- [46].Fonov VS, Evans AC, McKinstry RC, Almli C, Collins D. Unbiased nonlinear average age-appropriate brain templates from birth to adulthood. NeuroImage. 2009;47:S102.

- [47].Fonov V, Evans AC, Botteron K, Almli CR, McKinstry RC, Collins DL; Brain Development Cooperative Group. Unbiased average age-appropriate atlases for pediatric studies. NeuroImage. 2011;54(1):313–327. doi: 10.1016/j.neuroimage.2010.07.033.

- [48].Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention - MICCAI. 2015;2015:234–241. doi: 10.1007/978-3-319-24574-4_28.

- [49].Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, Desmaison A, et al. Advances in Neural Information Processing Systems 32. Curran Associates, Inc; 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library; pp. 8024–8035. Available: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

- [50].Zeiler MD, Krishnan D, Taylor GW, Fergus R. Deconvolutional networks; 2010 IEEE Computer Society Conference on computer vision and pattern recognition; 2010. pp. 2528–2535.

- [51].Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv preprint. 2014:arXiv:1412.6980.

- [52].Wiesinger F, Sacolick LI, Menini A, Kaushik SS, Ahn S, Veit-Haibach P, Delso G, Shanbhag DD. Zero TE MR bone imaging in the head. Magnetic resonance in medicine. 2016;75(1):107–114. doi: 10.1002/mrm.25545.

- [53].Treeby BE, Cox BT. k-Wave: MATLAB toolbox for the simulation and reconstruction of photoacoustic wave fields. Journal of biomedical optics. 2010;15(2):021314. doi: 10.1117/1.3360308.

- [54].Treeby BE, Jaros J, Rendell AP, Cox B. Modeling nonlinear ultrasound propagation in heterogeneous media with power law absorption using a k-space pseudospectral method. The Journal of the Acoustical Society of America. 2012;131(6):4324–4336. doi: 10.1121/1.4712021.

- [55].Schneider U, Pedroni E, Lomax A. The calibration of CT Hounsfield units for radiotherapy treatment planning. Physics in Medicine & Biology. 1996;41(1):111–124. doi: 10.1088/0031-9155/41/1/009.

- [56].Marquet F, Pernot M, Aubry J-F, Montaldo G, Marsac L, Tanter M, Fink M. Non-invasive transcranial ultrasound therapy based on a 3D CT scan: protocol validation and in vitro results. Physics in Medicine & Biology. 2009;54(9):2597. doi: 10.1088/0031-9155/54/9/001.

- [57].McDannold N, White PJ, Cosgrove R. Predicting bone marrow damage in the skull after clinical transcranial MRI-guided focused ultrasound with acoustic and thermal simulations. IEEE transactions on medical imaging. 2020;39(10):3231–3239. doi: 10.1109/TMI.2020.2989121.

- [58].Chauvet D, Marsac L, Pernot M, Boch A-L, Guillevin R, Salameh N, Souris L, Darrasse L, Fink M, Tanter M, et al. Targeting accuracy of transcranial magnetic resonance-guided high-intensity focused ultrasound brain therapy: a fresh cadaver model. Journal of neurosurgery. 2013;118(5):1046–1052. doi: 10.3171/2013.1.JNS12559.

- [59].Pichardo S, Sin VW, Hynynen K. Multi-frequency characterization of the speed of sound and attenuation coefficient for longitudinal transmission of freshly excised human skulls. Physics in Medicine & Biology. 2010;56(1):219–250. doi: 10.1088/0031-9155/56/1/014.

- [60].Wise ES, Cox B, Jaros J, Treeby BE. Representing arbitrary acoustic source and sensor distributions in Fourier collocation methods. The Journal of the Acoustical Society of America. 2019;146(1):278–288. doi: 10.1121/1.5116132.

- [61].Aubry J-F, Bates O, Boehm C, Butts Pauly K, Christensen D, Cueto C, Gelat P, Guasch L, Jaros J, Jing Y, Jones R, et al. Benchmark problems for transcranial ultrasound simulation: Intercomparison of compressional wave models. arXiv preprint. 2022:arXiv:2202.04552. doi: 10.1121/10.0013426.

- [62].Treeby B. “Benchmark problems for transcranial ultrasound simulation: Intercomparison library”, (v1.0) [Code] GitHub. 2022. Available: https://github.com/ucl-bug/transcranial-ultrasound-benchmarks.

- [63].Kong L, Lian C, Huang D, Hu Y, Zhou Q, et al. Breaking the Dilemma of Medical Image-to-image Translation. Advances in Neural Information Processing Systems. 2021;34.

- [64].Martin E, Treeby B. Investigation of the repeatability and reproducibility of hydrophone measurements of medical ultrasound fields. The Journal of the Acoustical Society of America. 2019;145(3):1270–1282. doi: 10.1121/1.5093306.

- [65].Reijonen J, Pitkanen M, Kallioniemi E, Mohammadi A, Ilmoniemi RJ, Julkunen P. Spatial extent of cortical motor hotspot in navigated transcranial magnetic stimulation. Journal of Neuroscience Methods. 2020;346:108893. doi: 10.1016/j.jneumeth.2020.108893.

- [66].Salminen-Vaparanta N, Noreika V, Revonsuo A, Koivisto M, Vanni S. Is selective primary visual cortex stimulation achievable with TMS? Human brain mapping. 2012;33(3):652–665. doi: 10.1002/hbm.21237.

- [67].Edmund JM, Nyholm T. A review of substitute CT generation for MRI-only radiation therapy. Radiation Oncology. 2017;12(1):1–15. doi: 10.1186/s13014-016-0747-y.