Abstract

Background

The complexity of health care data and workflow presents challenges to the study of usability in electronic health records (EHRs). Display fragmentation refers to the distribution of relevant data across different screens or otherwise distant locations, which requires complex navigation within the user’s workflow. Task and information fragmentation also contribute to cognitive burden.

Objective

This study aims to define and analyze some of the main sources of fragmentation in EHR user interfaces (UIs); discuss relevant theoretical, historical, and practical considerations; and use granular microanalytic methods and visualization techniques to help us understand the nature of fragmentation and opportunities for EHR optimization or redesign.

Methods

Sunburst visualizations capture the EHR navigation structure, showing the levels and sublevels of the navigation tree and allowing calculation of a new measure, the Display Fragmentation Index. Time belt visualizations present the sequences of subtasks and allow calculation of the proportion per instance, a measure that quantifies task fragmentation. These measures can be used separately or in conjunction to compare EHRs, as well as tasks and subtasks in workflows, and to identify opportunities to reduce steps and fragmentation. We present an example use of the methods to compare 2 different EHR interfaces (commercial and composable) in which participants review the same patient case.

Results

Screen transitions were substantially reduced for the composable interface (from 43 to 14), whereas clicks (including scrolling) remained similar.

Conclusions

These methods can improve our understanding of UI needs under complex conditions and tasks and can inform the optimization and redesign of EHR workflows.

Keywords: electronic health record, electronic medical record, medical informatics, information technology, data visualization, user computer interface

Introduction

Background

The ubiquity of electronic health record (EHR) systems has transformed the health care landscape over the past several decades. Yet, even as improved patient care and cost savings have begun to emerge, significant usability impediments have been well documented [1]. One such usability issue for EHRs is display fragmentation, which can be defined as the location of clinical elements or other care-related information on different screens or in different parts of the EHR, or in ways that require searching, scrolling, or other navigation actions to access [2]. Display fragmentation can affect EHR-mediated workflow and the clinician’s ability to analyze patient health information and provide optimal patient care [3].

Research on EHR system usability, including display fragmentation, has often characterized problems at a rather high level of abstraction (eg, violations of usability principles) or in terms of users’ expressions of dissatisfaction. Researchers are beginning to develop new approaches that document problems with increasing granularity and specificity as an extension of usability studies [4]. However, few granular methods have been applied to display fragmentation. Thus, researchers’ ability to understand the impact of display fragmentation on usability, develop potential solutions, and evaluate these solutions is limited.

The work presented in this paper addresses this gap by describing the theoretical background of display fragmentation and its impact on a clinician’s ability to provide safe, high-quality patient care. It also introduces 2 methods for granularly assessing display fragmentation in health information technology (HIT) systems so that this challenge can be diagnosed and system redesigns can be proposed.

Display Fragmentation and Task Fragmentation

Display fragmentation occurs in EHR systems when a user must click through and view many different screens or parts of screens to see all relevant clinical information [2]. This requires sequential viewing and calls for retaining information in memory while other information is sought. Display fragmentation may also occur on a densely populated or cluttered screen, where locating information demands considerable cognitive resources. Display fragmentation is closely related to, and overlaps with, 2 other types of fragmentation involved in clinical care. One is information fragmentation: the location of important information sources outside the EHR, often in several different modalities such as paper records, faxes that have been scanned to a repository, messages from staff, and even Post-it notes [5]. This type of fragmentation is extremely common in health care, as it is often neither possible nor desirable for all patient information to be contained solely within the EHR [5]. Processes that predate EHRs and remain operative can determine where information is located and how health professionals use patient information. Information fragmentation can compound the deleterious effects of display fragmentation: information may not be available at the point of care, which may impair information seeking, clinical reasoning, and the subsequent quality of decision making by health professionals [6,7]. Information and display fragmentation share a core problem: both make it difficult for the health care provider to access needed patient data or pertinent EHR functions.

Although display fragmentation and information fragmentation both involve challenges in accessing needed information, they differ in emphasis. Display fragmentation emphasizes how features of an interface lead a user to devote cognitive resources to interacting with system complexity (eg, unnecessary actions) rather than to thoughtful completion of the patient care task. Information fragmentation emphasizes the difficulty of assembling needed information, some of which may be available outside the system or application and some of which may depend on the robustness of clinical communication, as in patient handoffs.

Another form of fragmentation is task fragmentation, in which the parts of a task are separated in undesirable ways [6]. For example, the task may be broken into too many steps, or the steps may be redundant. This usually slows the overall process of performing tasks with a system such as an EHR while increasing the cognitive load for the user (eg, physician or nurse) performing the task. Undesirable task fragmentation often results from display fragmentation and information fragmentation, which force the user to take additional actions to view related material in support of information seeking and decision making. It also fragments the user’s optimal workflow and can lead to workarounds for completing tasks [8]. This is especially the case when new systems introduce new ways of performing cognitive and physical work (eg, to support a therapeutic decision) [9]. Task fragmentation may also arise from other circumstances, including interruptions and the need to reprioritize clinical activities.

HIT systems such as EHRs often create new workflows or disrupt existing ones, increasing cognitive and physical burdens [3,8,10]. For example, researchers found that the use of a computerized physician order entry system introduced additional steps to view the patient overview compared with work practices before implementation [11]. Systems that are not coextensive with clinical workflow may increase the frequency of task switching and multitasking, thereby contributing to a fragmented experience [10]. A recent review of the EHR usability and safety literature concluded that navigation is a crucial component of usability [12]. The review’s authors argue that further usability research is necessary to identify and categorize navigation actions with greater precision [12]. Such mapping efforts can provide a uniform approach to EHR usability research and enable systematic comparison between different systems [13].

Research has also shown that reasoning and decision making by clinicians can be highly sensitive to the structure and organization of information and information categories in menus and lists as they are displayed in the EHR itself [14,15]. The fragmentation of clinical information can create inefficiencies and lead to suboptimal diagnostic reasoning [14,15]. This suggests a need to scrutinize more closely the impact of display fragmentation on clinical cognition. We do this by developing a new method for characterizing fragmentation, guided by a cognitive engineering framework. Our approach is interdisciplinary and focuses on developing methods and tools to assess and guide the design of computerized systems that support human performance [16,17].

Cognitive Engineering: Characterizing and Visualizing Fragmentation

User interaction can be analyzed as a combination of elementary cognitive, perceptual, and motoric behaviors [18]. All 3 elements are necessary for any task, and specific task-system combinations may be of a more memory-intensive nature or require more in the way of perceptual and motor behavior [19]. Users divide their cognitive resources between navigating through the system interface and performing specific tasks at hand (eg, documenting vital signs) [20]. Seamless navigation is characterized by a fluid interaction in which the effort expended while interacting with the system interface is minimal. Systems of greater navigational complexity necessitate that more effort be devoted to interacting with the system and less to thoughtful task completion [21].

Specific interface elements such as screen layout, pull-down menus, and dialog boxes can affect how optimal or complex system interaction is [22]. Optimizing the form in which information is displayed, accessed, and documented depends on identifying and understanding the flow of specific tasks [21]. Understanding the levels of fragmentation and navigational architecture by mapping specific vendor EHRs has many applications, including creating new navigation tools, streamlining workflows, improving system usability, and supporting decision making. Navigational complexity can be operationalized and measured in terms of the flow or level of interactivity for a given task [21].

This paper describes the methodology behind 2 new approaches for visualizing and quantifying display fragmentation and task fragmentation as they apply to clinician use of EHRs. In the Methods section, we describe the approaches in detail, including their methodology and examples of their application (titled Illustrations). In the Results section, we present the results of the illustrations to provide insights into display fragmentation and task fragmentation. The short-term goal of this research, as reflected in this paper, is to show how these methods can provide valuable insight into HIT interface challenges related to display, information, and task fragmentation. The long-term goal is to improve the design of HIT interfaces such as EHRs so that they have better fit-to-task and lower cognitive burden and can enhance clinical decision making, thereby improving the quality and safety of patient care.

Methods

Overview

We used 2 methods to visualize and characterize fragmentation: sunburst diagrams for display fragmentation and time belt visualizations for task fragmentation. We first present each method in detail and then 3 illustrations that exemplify how the methods can be applied to analyze display fragmentation and task fragmentation.

Method One: Sunburst Diagrams for Describing Display Fragmentation

To understand display fragmentation, we developed sunburst diagrams as a method for visualizing system navigation and, subsequently, display fragmentation. Using these diagrams, we developed a measure to quantify display fragmentation and allow easy comparison between systems.

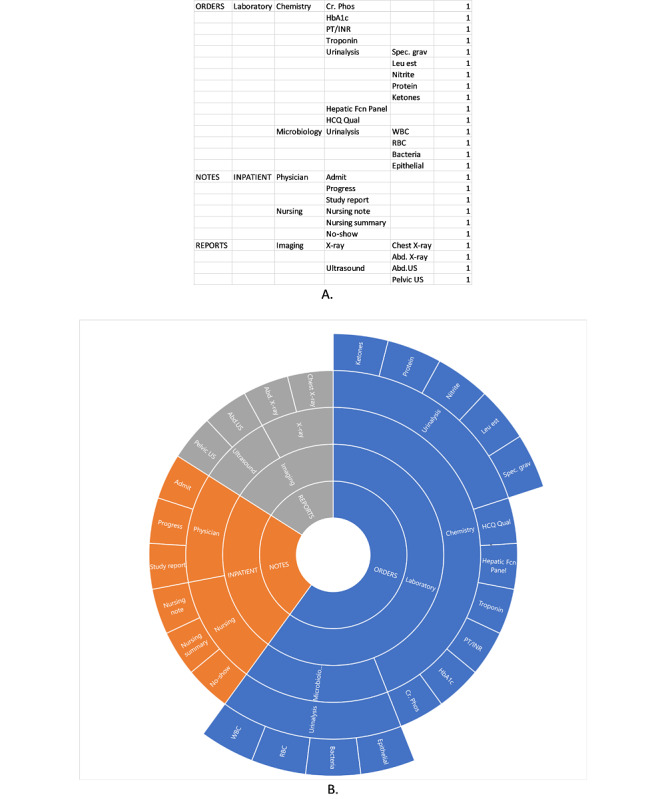

Navigation in menu-based systems is typically represented in the form of a tree arrangement; on the top-level screen, menus are usually displayed in a left-hand column or as tabs across the top or both. The root of the tree represents the highest-level screen, and each level of the tree and its leaves represent subsequent menu and submenu choices and varying levels of branching downward. The sunburst diagram presents an alternative, more concise representation of system navigation. The visualization shows the highest level of the system or tree as the first, innermost wrapped circle, and successive levels in the system or tree as successive concentric circles (Figure 1). Screens or levels of navigation that are in the same hierarchical level appear as segments within the same circle. The screens at different hierarchical levels appear as different circles. Therefore, a diagram with a greater number of circles indicates more system levels and screen transitions.
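The mapping from a navigation tree to concentric circles can be sketched in a few lines of Python. The nested dictionary below is a hypothetical navigation tree (the screen names are illustrative and are not taken from any specific EHR); the function groups items by depth, so depth 0 corresponds to the innermost circle and each deeper level to the next ring outward.

```python
from collections import defaultdict

# Hypothetical navigation tree: each key is a screen or menu item and each
# value holds its child items. Names are illustrative only.
NAV_TREE = {
    "Provider View": {
        "Results Review": {
            "Labs": {"CBC": {}, "Urine Studies": {}},
            "Imaging": {"Chest X-Ray": {}},
        },
        "Notes": {"Progress Notes": {}, "Discharge Summary": {}},
        "Orders": {},
    }
}


def rings(tree, depth=0, out=None):
    """Group items by hierarchical level; level 0 is the innermost circle."""
    if out is None:
        out = defaultdict(list)
    for name, children in tree.items():
        out[depth].append(name)          # one segment in this level's circle
        rings(children, depth + 1, out)  # children go in the next ring outward
    return dict(out)


if __name__ == "__main__":
    for level, segments in sorted(rings(NAV_TREE).items()):
        print(f"circle {level}: {segments}")
```

For this toy tree the sketch yields 4 circles, one per level of the hierarchy, which is why a diagram with more circles points to more levels that a user may have to traverse.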

Figure 1.

An example of sunburst chart data in Excel describing the system architecture (A) and the resulting sunburst diagram (B).

To begin building a sunburst diagram, a modified cognitive walkthrough is performed, in which the researcher steps through all levels of the system’s navigation systematically, recording the menu structures and substructures and how they lead to different clinical data elements or other affordances [15]. This differs from usual cognitive walkthrough methods in that the aim is to create a map of the navigation structure of the EHR, rather than to elucidate the steps needed in the performance of specific tasks. We term this a modified cognitive walkthrough to make this distinction. After the modified walkthrough, the recorded information serves as the data that populate the sunburst diagram (Figure 1). Excel (Microsoft), for example, has a built-in sunburst diagram function that automatically creates the diagram based on the data. Figure 2 provides another example of a sunburst diagram that highlights a specific pathway.
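The step from walkthrough records to spreadsheet data can be sketched as follows. This assumes a table layout of one column per navigation level plus a value column, similar in spirit to Figure 1 (A); the tree, the column names, and the choice to give each leaf a value of 1 are illustrative assumptions. The resulting CSV text could then be loaded into a spreadsheet that supports sunburst charts.

```python
import csv
import io

# Hypothetical walkthrough record: screens and the items reachable from them.
WALKTHROUGH = {
    "Provider View": {
        "Results Review": {"Labs": {}, "Imaging": {}},
        "Notes": {"Progress Notes": {}},
        "Orders": {},
    }
}


def leaf_paths(tree, path=()):
    """Yield the root-to-leaf path for every recorded element."""
    for name, children in tree.items():
        if children:
            yield from leaf_paths(children, path + (name,))
        else:
            yield path + (name,)


def to_sunburst_csv(tree):
    """Write one row per leaf: one column per level, then a size value."""
    rows = list(leaf_paths(tree))
    depth = max(len(r) for r in rows)
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow([f"Level {i + 1}" for i in range(depth)] + ["Value"])
    for r in rows:
        # Pad shallow paths so every row has the same number of level columns.
        writer.writerow(list(r) + [""] * (depth - len(r)) + [1])
    return buf.getvalue()


if __name__ == "__main__":
    print(to_sunburst_csv(WALKTHROUGH))
```

Giving every leaf the same value sizes each segment by the number of elements beneath it; a different weighting (eg, by time spent) would be an equally valid design choice.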

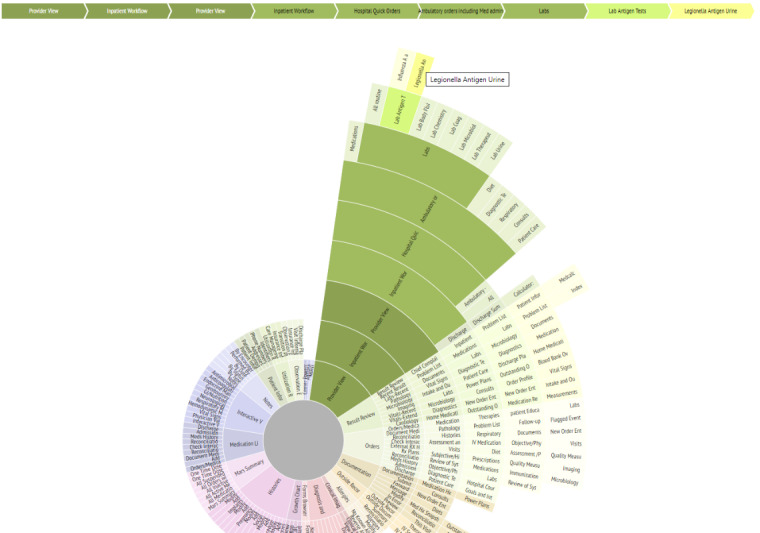

Figure 2.

Interactive sunburst highlighting one pathway (in green) from a root screen (Provider View) to a specific element (Legionella Antigen Urine lab results). The traced pathway is also described in the linear flow above the sunburst diagram. This diagram shows that 9 different screens must be navigated to access the desired element from the main screen.

Sunburst diagrams are advantageous because, in addition to visualizing the structure and tracing pathways, they allow us to calculate the number of clicks, screen transitions, and other navigation actions needed, such as scrolling or filtering. For example, we can first shade the segments of the diagram that represent target information or screens in a specific color (as in Figure 2). A transition from one circle in the diagram to another represents a change of screens, and a transition from one segment to another within the same circle represents a click or perhaps a screen scroll. Using these rules, we can systematically count the transitions and navigational actions needed to move from one target piece of information to another. We used this property of the sunburst diagram to create a measure that quantifies display fragmentation and navigational complexity and provides a basis for comparison between systems or between tasks. We termed this measure the Display Fragmentation Index (DFI).
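The counting rule described above can be sketched as a small function. Each element is identified by its path from the root; moving between rings counts as a screen transition, and moving sideways between segments in the same ring counts as a click. This is a simplified rule assumed for illustration (it assumes sibling items are reachable from one another without returning to the root, and it ignores scrolling and filtering), not the exact procedure used to produce the figures.

```python
def shared_depth(a, b):
    """Length of the common prefix of two root-to-element paths."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def navigation_cost(src, dst):
    """Return (ring_changes, same_ring_clicks) to move from src to dst."""
    k = shared_depth(src, dst)
    if k == min(len(src), len(dst)):
        # One path contains the other: move straight up or down the rings.
        return abs(len(src) - len(dst)), 0
    # Climb to the ring where the paths diverge, step sideways once
    # within that ring, then descend to the target.
    up = len(src) - k - 1
    down = len(dst) - k - 1
    return up + down, 1


def route_cost(targets):
    """Total cost of visiting target elements in the given order."""
    ring_changes = clicks = 0
    for src, dst in zip(targets, targets[1:]):
        r, c = navigation_cost(src, dst)
        ring_changes += r
        clicks += c
    return ring_changes, clicks


if __name__ == "__main__":
    # Hypothetical element paths.
    cbc = ("Provider View", "Results Review", "Labs", "CBC")
    note = ("Provider View", "Notes", "Progress Notes")
    print(navigation_cost(cbc, note))  # (3, 1)
```

Summing the cost over an ordered list of target elements, as `route_cost` does, gives a rough count of the navigation needed for a whole review task under these assumptions.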

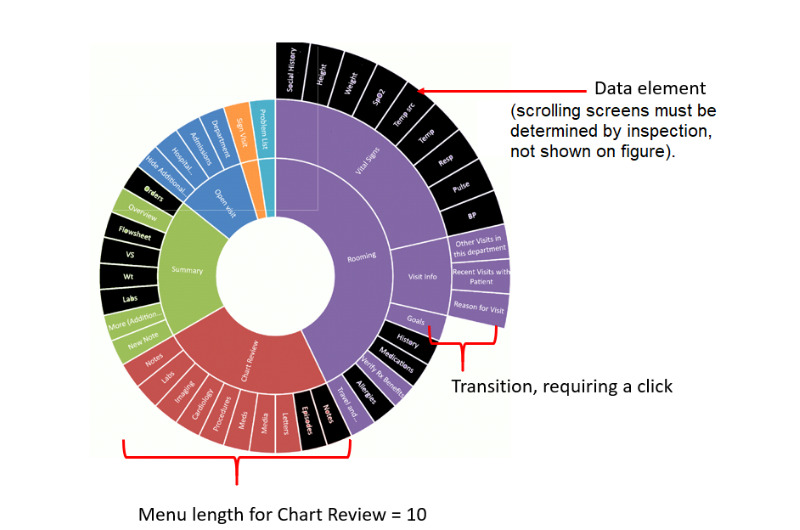

The DFI captures the following components (as in Figure 3): the overall number of different content categories into which the required information is split; the different levels of the tree structure, with each level requiring additional clicks; navigation to elements at the same level, which also requires at least one click or scroll action (multiple scroll actions are counted as 2, an average chosen because such screens vary across cases); and the menu length (the number of parallel items, which appear adjacently) at each stage, because it reflects the complexity of choosing among menu items (a cognitively taxing task) and increases visual search. Menu length is also highly indicative of navigation time [23].

Figure 3.

Display Fragmentation Index element calculations. DFI: Display Fragmentation Index.

Thus, we can calculate DFI using the following equation:

$$\mathrm{DFI} = E + \mathit{IS} \cdot X_{\mathit{IS}} + C \cdot X_{C} + \mathit{SS} \cdot X_{\mathit{SS}} + \mathit{ML}$$

where E is the number of data elements, IS the number of intermediate screens (transitions), C the number of clicks (navigation actions), SS the number of scrolling screens (navigation actions), ML the menu length, and X a multiplier applied to the corresponding navigation term (X_IS, X_C, or X_SS) based on the number of levels traversed.

E does not have a multiplier because it simply represents the number of information elements that need to be accessed, regardless of their location.
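The DFI equation can be written as a short function. Because the text does not specify how each multiplier X is derived from the number of levels traversed, the sketch takes the multipliers as inputs (defaulting to 1); the class and field names are illustrative. As a consistency check, passing the already-weighted terms reported in the Results section for the 2 commercial EHRs (multipliers of 1, no scrolling term) reproduces the published totals.

```python
from dataclasses import dataclass


@dataclass
class DFIInputs:
    elements: float              # E: data elements that must be accessed
    intermediate_screens: float  # IS: screen transitions
    clicks: float                # C: click navigation actions
    scroll_screens: float        # SS: scrolling screens
    menu_length: float           # ML: menu length term
    x_is: float = 1.0            # multiplier for IS (assumed input)
    x_c: float = 1.0             # multiplier for C (assumed input)
    x_ss: float = 1.0            # multiplier for SS (assumed input)


def display_fragmentation_index(p: DFIInputs) -> float:
    """DFI = E + IS*X_IS + C*X_C + SS*X_SS + ML; E and ML are unweighted."""
    return (
        p.elements
        + p.intermediate_screens * p.x_is
        + p.clicks * p.x_c
        + p.scroll_screens * p.x_ss
        + p.menu_length
    )


if __name__ == "__main__":
    # Weighted terms as reported for the two commercial EHRs.
    first = display_fragmentation_index(DFIInputs(36, 136, 136, 0, 39))
    second = display_fragmentation_index(DFIInputs(19, 47, 47, 0, 19))
    print(first, second, round(second / first, 2))
```

Run as a script, this prints 347.0 and 132.0 and a ratio of 0.38, so the second system’s index is a little over one-third of the first’s.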

To calculate this measure, we take the main, most obvious navigation paths as the measured pathways. Because many EHRs offer several routes to an item, the fragmentation measure is a reasonable maximum; for some tasks, the user may not have to go up all levels to reach the next item, as only the lower levels may be involved. The actual trajectory may vary with the user’s goals and preferences; the one presented is the longest reasonable pathway.

In the following illustration, we show how sunburst diagrams allow easy comparison of fragmentation and navigational complexity between systems and how they can be used to calculate the DFI.

Illustration Overview: Using Sunburst Diagrams to Describe Display Fragmentation in EHRs and Developing a Measure to Quantify Display Fragmentation

To show how sunburst diagrams can be used to describe and quantify display fragmentation, we illustrate how we used sunburst diagrams to visualize display fragmentation for 2 different, widely used, commercial EHRs. The context of this illustration is that the research team sought to understand the extent of display fragmentation in commercial EHRs, including differences in navigational architecture. Thus, the team conducted a modified cognitive walkthrough and used the sunburst diagram to display the results.

The team then conducted a more traditional, task-oriented cognitive walkthrough emulating clinicians conducting general case reviews. This is the second step of the method, following creation of the general navigation map described in the Method One section above. Data and information types for this task included admission notes, laboratory results, orders, medications, allergies, study reports (eg, of imaging or other studies), images (if available), discharge summaries, primary care and specialist provider notes, demographic and insurance data, nursing notes (if available), and automated data from devices or mobile apps (if applicable). Most current EHRs group data of the same type together, so seeing all relevant types while evaluating a patient case requires complex navigation.

Once the diagram was created, the number of screen transitions and levels of navigation were counted using the visualization, and DFI was calculated.

Method Two: Time Belts for Mapping User Workflow and Task Fragmentation

We also developed a method for visually characterizing user workflow and task fragmentation. Visualization methods provide a systematic way of representing information graphically in a form that supports understanding of work and cognitive processes. Cognitive visualization methods use visual metaphors to gain insight into users’ mental steps and to map user workflow. Many of these methods are linear, but there are nonlinear metaphors as well, such as the desktop, tree, and swimlane metaphors. Understanding how EHR navigational structure affects workflow can be aided by additionally mapping the user’s actions while performing a task.

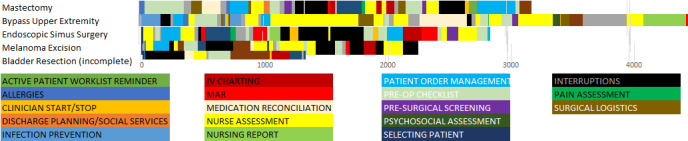

Time belt visualizations depict the different phases and actions of a user linearly (Figure 4). The different information types viewed, their durations, and repeated navigation to the same elements or element types can all be conveyed succinctly to reveal a user’s work patterns. In the diagrams, a key identifies each section of the system, so the share of time spent in each section is easy to see, and the user’s time sequence is shown from the start of interaction with the system to the completion of a task. Such an approach can be used to graphically depict an individual user’s patterns in accessing components of an EHR over time for comparison purposes (eg, comparing resident vs attending physician interactions with cases of differing complexity). Zheng et al [24] investigated variation in preoperative workflow across 2 hospitals. Suboptimal patterns were identified, and the reasons for the variation were explored. Although both settings used the same EHR system, the authors observed marked differences in workflow patterns, with consequences for patient care. Figure 4 shows an example of a time belt that represents workflow as a sequence of discrete tasks, each color-coded by task type, with its duration shown by the width of the colored segment. This simple representation can be used to compare clinicians, EHRs, patient conditions, and visit types. The time belt reflects the overall flow across the patient’s encounter. We can also drill down to examine the navigational complexity of specific tasks, such as medication reconciliation.
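A minimal sketch of how a time belt can be assembled from a coded session log follows. The log format, task names, and timings are hypothetical (in practice they would come from annotated screen recordings). The code merges back-to-back segments of the same task into one instance, reports the percentage of time per task (the information conveyed by the key in Figure 4), counts repeated instances, and draws a text-only belt whose width is proportional to duration.

```python
# Hypothetical session log: (task, start_s, end_s), in chronological order.
SESSION = [
    ("Notes", 0, 40),
    ("Labs", 40, 70),
    ("Labs", 70, 100),   # same task continues: merged into one instance
    ("Notes", 100, 130),
    ("Labs", 130, 160),
    ("X-Ray", 160, 200),
]


def merge_contiguous(segments):
    """Merge back-to-back segments of the same task into one instance."""
    belt = []
    for task, start, end in segments:
        if belt and belt[-1][0] == task and belt[-1][2] == start:
            belt[-1] = (task, belt[-1][1], end)
        else:
            belt.append((task, start, end))
    return belt


def belt_summary(segments):
    """Return total time, percent of time per task, and instances per task."""
    belt = merge_contiguous(segments)
    time_by_task, instances = {}, {}
    for task, start, end in belt:
        time_by_task[task] = time_by_task.get(task, 0) + (end - start)
        instances[task] = instances.get(task, 0) + 1
    total = sum(time_by_task.values())
    share = {t: 100 * s / total for t, s in time_by_task.items()}
    return total, share, instances


def render_belt(segments, seconds_per_char=10):
    """Draw the belt as text: one letter per task, width proportional to time."""
    belt = merge_contiguous(segments)
    key = {}
    for task, _, _ in belt:
        key.setdefault(task, chr(ord("A") + len(key)))
    chars = "".join(
        key[task] * max(1, round((end - start) / seconds_per_char))
        for task, start, end in belt
    )
    return chars, key


if __name__ == "__main__":
    print(belt_summary(SESSION))
    print(render_belt(SESSION))
```

With this toy log, the belt reads `AAAABBBBBBAAABBBCCCC`: the repeated A and B bands are the visual signature of returning to the same task, which the instance counts make explicit.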

Figure 4.

An example of time belt visualization for 5 patient cases in preoperative care at a large tertiary care hospital. Each horizontal belt or row represents 1 patient case. The length of the belt indicates the case duration in seconds. Each belt comprises a sequence of tasks performed by the nurse, represented as color-coded segments. For example, Allergies refers to the task of checking allergies.

Time belts can be used to compare time on tasks across different systems, as well as the time spent on different tasks. This representation enables us to scrutinize task performance at a granular level, including time spent on different tasks, fragmentation in terms of repeated tasks, and sequential ordering of tasks. We can also examine each segment and determine the degree of interactivity. Importantly, we can break down a task and characterize clinicians’ clinical reasoning and, specifically, how diagnostic and therapeutic reasoning evolves over the course of time.

We also calculated the proportion per instance. Zheng et al [24] derived this measure of task fragmentation, which relates the time spent on a subtask to the number of times it is performed, normalized by total EHR time. Their measure, time proportion per instance, can be used to compare EHR tasks in different settings:

$$\text{Proportion per instance} = \frac{\text{Task time}}{\text{Number of task instances} \times \text{Total EHR time}}$$

This measure is based on average continuous time (ACT), the average uninterrupted time spent on each instance of a subtask; increased task fragmentation results in decreased continuous time for a subtask [3]. The longer the ACT, the lower the task fragmentation. The proportion per instance normalizes the ACT by total EHR time so that longer sessions do not inflate the measure.
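ACT and proportion per instance can be computed together from the same kind of coded log. This sketch reads the formula as task time divided by the product of the number of instances and total EHR time (equivalently, ACT divided by total EHR time). The session data are hypothetical, and total EHR time is assumed to be the summed duration of the logged session.

```python
# Hypothetical coded session: (task, start_s, end_s), chronological.
SESSION = [
    ("Notes", 0, 40),
    ("Labs", 40, 100),
    ("Notes", 100, 130),
    ("Labs", 130, 160),
    ("X-Ray", 160, 200),
]


def instances(segments):
    """Collapse back-to-back segments of the same task into instances."""
    out = []
    for task, start, end in segments:
        if out and out[-1][0] == task and out[-1][2] == start:
            out[-1] = (task, out[-1][1], end)
        else:
            out.append((task, start, end))
    return out


def fragmentation_metrics(segments):
    """Per task: (task time, instances, ACT, proportion per instance)."""
    belt = instances(segments)
    total_ehr_time = sum(end - start for _, start, end in belt)
    time_by_task, count = {}, {}
    for task, start, end in belt:
        time_by_task[task] = time_by_task.get(task, 0) + (end - start)
        count[task] = count.get(task, 0) + 1
    metrics = {}
    for task, t in time_by_task.items():
        n = count[task]
        act = t / n                     # average continuous time
        ppi = t / (n * total_ehr_time)  # same as act / total_ehr_time
        metrics[task] = (t, n, act, ppi)
    return metrics


if __name__ == "__main__":
    for task, values in fragmentation_metrics(SESSION).items():
        print(task, values)
```

Here Labs takes 90 seconds in 2 instances of a 200-second session, giving 0.225; if the same 90 seconds were split into 3 instances, the value would drop to 0.15, reflecting greater fragmentation.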

Illustration Overview: Using Time Belts to Compare Task Fragmentation and Workflow for a Conventional EHR and a Composable EHR

We developed an experimental EHR interface to address some of the issues of fragmentation and cognitive load [25-27]. The following illustration details how the described visualization techniques were used to compare a commercial EHR with the experimental system.

The context for this illustration was a larger study in which medical residents were recruited and presented with a series of cases using real patient data in a conventional EHR or the experimental system. The patient data were collected at a large health care site as part of a larger study examining EHR-mediated nursing workflow. For each case, the patient had previously been seen by other clinicians, and the study participants were asked to review the documentation, determine the reason for the patient’s problem, and present a therapeutic and management plan of action. The participants’ interactions with the systems were captured with Morae (TechSmith) [28], a video recording and analytics tool widely used in human-computer interaction research. Participants were also asked to think aloud while completing the tasks, and their verbalizations were recorded and transcribed.

After the study, the recordings were analyzed, and time belt visualizations were created to compare time on tasks across the conventional and experimental systems. Note that this illustration is meant to show how time belt visualizations can be used to surface different dimensions of clinical cognition. It is not intended to compare the efficacy of the conventional and experimental systems but rather to exemplify how their interfaces yielded different patterns of interaction.

For the purpose of this illustration, we present the results for one resident participant who used the conventional EHR system to examine a patient case, John Smith, and a second participant who used the experimental system to examine the same patient. In the scenario, John Smith has an extensive medical history and presents with an array of cardiac and other clinical problems. He is in the emergency department (ED) with exertional chest pain that began 2 hours earlier (severe, 10/10, sharp or stabbing, substernal, radiating to the back). The clinician is an ED physician treating the patient, with access to some past EHR records, including 2 prior progress notes and medical or surgical history, laboratory values, allergies, social history, and medications.

Illustration Overview: Putting It All Together—Sunburst and Time Belt Visualizations

We present a third and final illustration in which we show the 2 visualization methods in tandem. The context for this illustration is similar to the one for the time belts: a larger study where medical residents were recruited and presented with a series of patient cases that used anonymized but real patient data in the conventional EHR or experimental system.

Participant interaction with the system was recorded and then used to create a time belt showing the time spent on each screen or element. Similarly, the EHR was mapped using a sunburst diagram, and the resulting Excel sheet was used to show how the participant navigated across the system.

Results

Illustration Results: Using Sunburst Diagrams to Describe Display Fragmentation for an EHR System and Developing a Method to Quantify Display Fragmentation

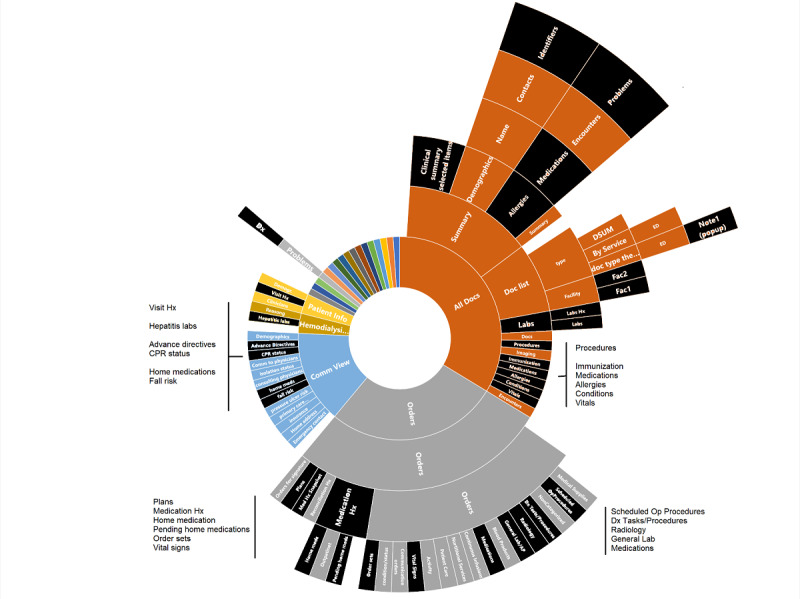

The sunburst diagram for the cognitive walkthrough of the first conventional EHR is presented in Figure 5. The elements colored in black represent the information relevant to the clinical task used for the cognitive walkthrough—general case review. One can see how the relevant information elements are scattered across different paths, levels, and main sections.

Figure 5.

Sunburst diagram representing display fragmentation of clinical data in a conventional, commercial electronic health record. Elements colored in black are those relevant for handling the clinical problem (general review of patient information). EHR: electronic health record; UI: user interface.

Using the DFI measure described above, the DFI for the sunburst diagram presented in Figure 5 was calculated as follows:

$$\mathrm{DFI} = 36 + 136 + 136 + 39 = 347$$

Note that no scrolling-screen term is incorporated into the above equation.

This suggests that the degree of fragmentation in this conventional commercial EHR is rather high and is likely to lead clinicians to spend considerable time and cognitive resources navigating, viewing, and retaining information.

The researchers also analyzed a second commercial EHR user interface (UI); the resulting sunburst diagram is presented in Figure 6. For this system, the DFI was calculated as follows:

Figure 6.

Sunburst diagram representing display fragmentation of clinical data in a second conventional, commercial electronic health record. Elements colored in black are those relevant for handling the clinical problem (general review of patient information). EHR: electronic health record.

$$\mathrm{DFI} = 19 + 47 + 47 + 19 = 132$$

Again, no scrolling-screen term is incorporated.

Thus, this system has a lower DFI (approximately 38% of the previous system’s value, a little over one-third), representing less fragmentation and fewer navigation levels.

Illustration Results: Using Time Belts to Compare Task Fragmentation and Workflow for a Conventional EHR and a Composable EHR

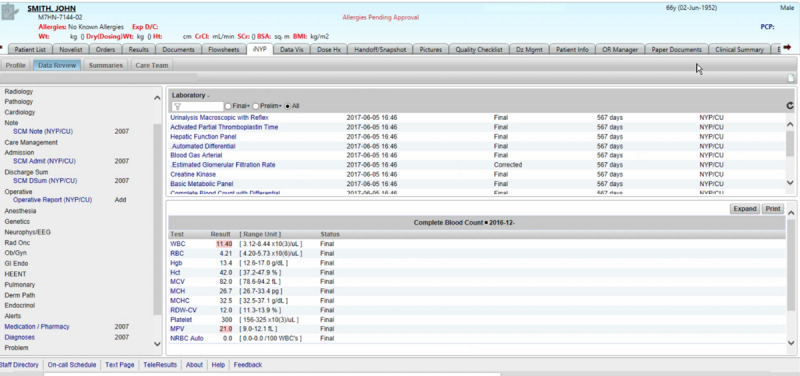

Figure 7 presents a captured screen from the conventional EHR system presenting laboratory results for the patient case, John Smith. In the conventional EHR UI, information is accessible through a hierarchical set of tabs, menus, and side panels. To its credit, the conventional EHR UI is well segregated and organized. Much of the patient information can be accessed through the display. On the other hand, the interaction space is immensely complex, and there are multiple ways to access the same information.

Figure 7.

Conventional electronic health record (EHR) system user interface (UI).

The participant used 346 mouse clicks, including just under 200 left-mouse clicks. During the session, the resident visited 43 display screens, including repeat visits to several displays (eg, blood gas arterial panel). She had some difficulty locating an appropriate index document, such as a progress note or discharge summary, and as a consequence devoted considerable time to searching for information. She focused largely on laboratory values, some of which seemed anomalous or contradictory, and later in the session came across 2 ambulatory care text documents (at the 200-second mark) that helped her develop a complete representation of the patient’s problem.

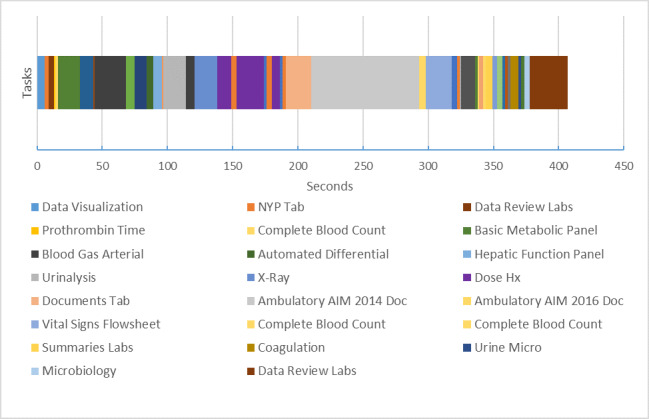

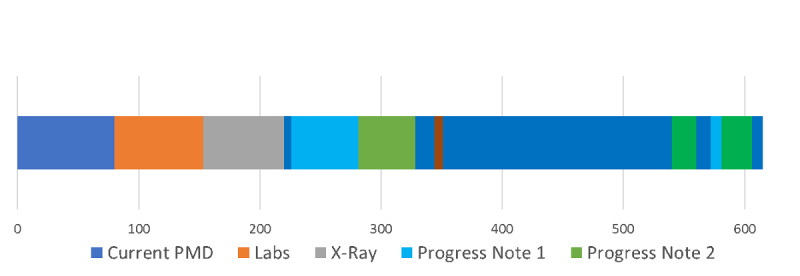

Figure 8 presents the time belt that illustrates the workflow or time on task for the single resident participant performing the task on the conventional EHR system. The participant required 6 min and 35 seconds to complete the task. The time belt is divided into task segments of variable durations.

Figure 8.

Time belt visualization of clinical task using commercial electronic health record. Note that the labels for the tasks have been abbreviated for readability. Each item represents a task, primarily searching and reviewing tasks. For example, “X-Ray” is short for “Reviewing X-Ray,” a task the clinician completed.

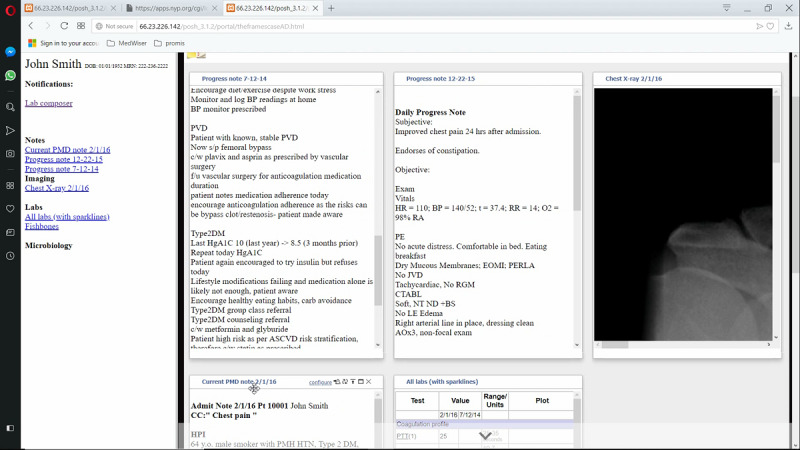

Figure 9 presents the experimental system interface for the same case. The interface is entirely configurable. The left-hand panel contains the set of documents available for the case. There are only 7 documents, including the contemporary (current) note, 2 older progress notes, a chest x-ray, labs, and Fishbones. Users can drag and drop documents to rearrange them as needed. The screen shown includes 2 rows of documents in the form of widgets: the top row contains 2 older progress notes and an x-ray, and the bottom row contains the current document and all laboratory values.

Figure 9.

Experimental system screen with user placement of data elements for the same case as in Figure 8.

Figure 10 illustrates the workflow or time on task for the resident performing the task with the experimental system. The task required 10 min and 48 seconds to complete. The user employed 389 mouse clicks, including only 20 left-mouse clicks; the remaining clicks reflect extensive use of the scroll wheel. During the session, the resident visited 14 display screens, including repeat visits to the current note and older progress notes. The current note served as the index document for understanding the patient’s problem. As these results show, the time belt and other visualizations can be used to characterize the current state of navigational complexity and fragmentation. They can also serve as guideposts for design at a granular level of interactive behavior.

Figure 10.

Time belt visualization of clinical tasks using experimental electronic health record (EHR) system.

As noted, participants’ think-aloud statements were recorded. Multimedia Appendix 1 presents a summary of the participants’ think-aloud statements as they relate to the time belt presented in Figure 10.

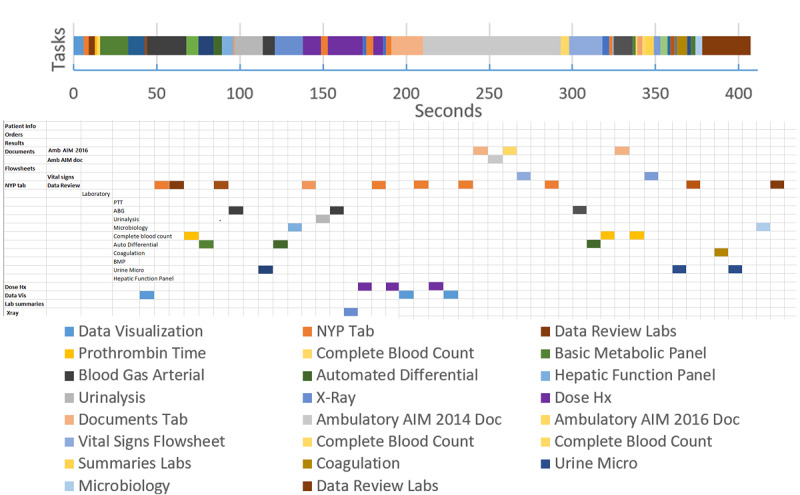

Illustration Results: Putting It All Together—Sunburst and Time Belt Visualizations

Figure 11 shows the time belt and sunburst diagram data for a user completing a patient review using a conventional, commercial EHR. The time belt shows the time spent on each task or screen. The Excel sheet shows the data for the sunburst diagram; in this instance, however, cells have been shaded to show the order and pathway the user took to navigate the system. For example, the user started on the Data Visualization Screen (light blue), then navigated to the NYP Tab (orange), then to Data Review Labs (brown) within that tab, then to Complete Blood Count (yellow), and so on.
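The spreadsheet behind a sunburst diagram can be read as a set of paths from the top level down. Below is a minimal Python sketch, assuming one row per path with one column per level and screen names that are unique across the whole tree. The function names and the rows marked hypothetical are illustrative; only the screen names Data Visualization Screen, NYP Tab, Data Review Labs, and Complete Blood Count come from Figure 11. The sketch recovers each screen's parent and its ring (level) in the sunburst, which is the information a reader traces when following the shaded cells.

```python
from typing import Optional


def build_tree(rows: list[list[str]]) -> tuple[dict, dict]:
    """Build a navigation tree from spreadsheet rows.

    Each row lists one path from a top-level screen down to a sub-screen, one level
    per column; blank cells are ignored. Returns (parent, ring): parent maps each node
    to its parent (None for top-level screens), and ring gives its sunburst level.
    ASSUMPTION: screen names are unique across the whole tree.
    """
    parent: dict[str, Optional[str]] = {}
    ring: dict[str, int] = {}
    for row in rows:
        prev = None
        for level, node in enumerate((c for c in row if c), start=1):
            parent.setdefault(node, prev)
            ring.setdefault(node, level)
            prev = node
    return parent, ring


def path_from_top(node: str, parent: dict) -> list[str]:
    """Return the chain of screens from the top level down to `node`."""
    path = [node]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path[::-1]


rows = [
    ["Data Visualization Screen"],
    ["NYP Tab", "Data Review Labs", "Complete Blood Count"],
    ["NYP Tab", "Data Review Labs", "Chemistry"],  # hypothetical row
    ["NYP Tab", "Notes"],  # hypothetical row
]
parent, ring = build_tree(rows)
print(ring["Complete Blood Count"], path_from_top("Complete Blood Count", parent))
```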

Figure 11.

The color of each cell or segment represents the task or screen the user was working on, in sequential order. The time belt visualization shows the time taken for each task, whereas the Excel data scheme shows the screen or part of the system needed for each task.

From this combination of the time belt visualization and the sunburst diagram data scheme, we can see how task fragmentation corresponds with display fragmentation. Researchers can readily identify where the user becomes stuck in repeated back-and-forth navigation between 2 screens and in viewing sequences that involve items far apart (see the number of orange-shaded cells indicating visits to the NYP Tab).
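Linking the two views amounts to joining each time belt segment to its position in the navigation tree. The sketch below is one possible way to do this in Python, assuming the belt is coded as (screen, seconds) pairs and the tree is stored as a parent map; the function names, tree, and durations are hypothetical. It totals time and counts separate instances per top-level branch, so a branch that is entered many times for short stays, like the repeatedly visited NYP Tab, stands out.

```python
from collections import defaultdict


def top_level(screen: str, parent: dict) -> str:
    """Walk up the navigation tree to the top-level branch (innermost sunburst ring)."""
    while parent.get(screen) is not None:
        screen = parent[screen]
    return screen


def time_by_branch(belt: list[tuple[str, float]], parent: dict) -> dict[str, tuple[float, int]]:
    """Map each top-level branch to (seconds spent, number of separate instances).

    `belt` is the time belt as an ordered list of (screen, seconds). An instance is a
    maximal run of consecutive segments that stay inside the same branch.
    """
    seconds: dict[str, float] = defaultdict(float)
    instances: dict[str, int] = defaultdict(int)
    previous = None
    for screen, secs in belt:
        branch = top_level(screen, parent)
        seconds[branch] += secs
        if branch != previous:
            instances[branch] += 1
        previous = branch
    return {b: (seconds[b], instances[b]) for b in seconds}


# Hypothetical tree and durations, loosely following the path described for Figure 11.
parent = {
    "Data Visualization Screen": None,
    "NYP Tab": None,
    "Data Review Labs": "NYP Tab",
    "Complete Blood Count": "Data Review Labs",
}
belt = [
    ("Data Visualization Screen", 30), ("NYP Tab", 10), ("Data Review Labs", 20),
    ("Complete Blood Count", 40), ("Data Visualization Screen", 15), ("NYP Tab", 5),
]
print(time_by_branch(belt, parent))
```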

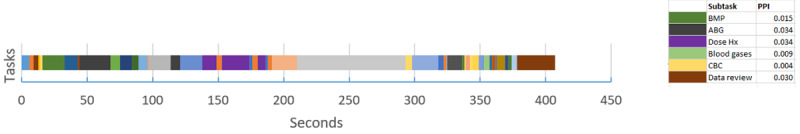

An example of proportion per instance, calculated from the Figure 11 time belt, is provided in Figure 12. Note that a lower proportion per instance value denotes more fragmented subtasks per unit time. In the time belt, this appears as a larger number of bands of the color corresponding to the task instances in a single patient encounter.
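As a minimal Python sketch, assume that proportion per instance is a task's share of total session time divided by the number of separate instances (bands) of that task in the time belt. This reading matches the behavior described above, where more bands give a lower value, but it is our assumption for illustration; the definition given earlier in this paper should be used for actual analyses. The function name and the belt below are hypothetical.

```python
from collections import defaultdict


def proportion_per_instance(belt: list[tuple[str, float]]) -> dict[str, float]:
    """ASSUMED formula: PPI(task) = (time on task / total time) / instances of task.

    `belt` is an ordered list of (task, seconds); an instance is a maximal run of
    consecutive segments carrying the same task label.
    """
    total = sum(seconds for _, seconds in belt)
    time_on: dict[str, float] = defaultdict(float)
    instances: dict[str, int] = defaultdict(int)
    previous = None
    for task, seconds in belt:
        time_on[task] += seconds
        if task != previous:
            instances[task] += 1
        previous = task
    return {t: (time_on[t] / total) / instances[t] for t in time_on}


belt = [("Note", 60), ("Labs", 20), ("Note", 40), ("Labs", 20), ("Note", 20), ("X-Ray", 40)]
for task, ppi in proportion_per_instance(belt).items():
    print(f"{task}: {ppi:.2f}")
```

In this example, Labs, viewed in 2 short bands, receives the lowest value (0.10). Note also shows that a heavily fragmented task can still score as high as a single-band task (0.20 each) if it occupies a large share of the session, so values are best compared alongside the time belt itself.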

The measures can also be used to optimize displays by reducing fragmentation. In the time belt of Figure 12, the period from 140 to 200 seconds was spent navigating back and forth to view the same 2 elements 3 times each, with a navigation action screen in between. Optimization could consist of juxtaposing these 2 elements, reducing the need for back-and-forth navigation. The proportion per instance for the same task would then be improved.
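This what-if analysis can be sketched by rewriting the coded belt. In the hypothetical Python example below, the 140 to 200 second window is modeled as 2 elements (labeled Note and Labs purely for illustration) viewed 3 times each, for 5 seconds per view, with a 6-second navigation screen between views. The element names, the durations, and the rule that juxtaposition makes the intervening navigation unnecessary are all assumptions. After juxtaposition, the 6 separate views collapse into a single instance; because fewer instances mean less fragmentation, this corresponds to a higher proportion per instance.

```python
def count_instances(belt: list[tuple[str, float]], task: str) -> int:
    """Number of maximal runs of consecutive segments labeled `task`."""
    return sum(1 for i, (t, _) in enumerate(belt) if t == task and (i == 0 or belt[i - 1][0] != t))


def juxtapose(belt: list[tuple[str, float]], a: str, b: str, nav: str = "Navigation") -> list[tuple[str, float]]:
    """What-if: show elements `a` and `b` side by side on one screen.

    ASSUMPTIONS: segments for a or b become one combined view; a navigation segment
    sitting between two combined segments is no longer needed and is dropped; adjacent
    segments with the same label are then merged.
    """
    combined = f"{a}+{b}"
    relabeled = [(combined if t in (a, b) else t, s) for t, s in belt]
    result: list[tuple[str, float]] = []
    for i, (t, s) in enumerate(relabeled):
        sandwiched = 0 < i < len(relabeled) - 1 and relabeled[i - 1][0] == combined == relabeled[i + 1][0]
        if t == nav and sandwiched:
            continue
        if result and result[-1][0] == t:
            result[-1] = (t, result[-1][1] + s)
        else:
            result.append((t, s))
    return result


# Hypothetical 60-second window: 2 elements viewed 3 times each, navigation in between.
window = [
    ("Note", 5), ("Navigation", 6), ("Labs", 5), ("Navigation", 6), ("Note", 5), ("Navigation", 6),
    ("Labs", 5), ("Navigation", 6), ("Note", 5), ("Navigation", 6), ("Labs", 5),
]
after = juxtapose(window, "Note", "Labs")
print(count_instances(window, "Note"), count_instances(window, "Labs"), after)
```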

Figure 12.

An example of proportion per instance calculated on the basis of the time belt from Figure 11. Proportion per instance values for selected subtasks have been calculated in the small table on the right as examples. PPI: proportion per instance.

Discussion

Principal Findings

This paper illustrates a set of methods and visualizations to characterize navigation complexity. It is based on analytic work and video capture of users. A range of other methodologies can further inform our understanding of the problem. For example, eye movement studies have the potential to elucidate the relationship between the visual apprehension of information and clinical reasoning [4,29-31]. It should also be noted that EHR-mediated workflow extends beyond navigational complexity to a host of other issues that necessitate convergent methodologies [32-36]. The work presented in this paper is formative and is part of a growing body of theoretically motivated research that seeks to expand the vision of usability, better situate it in the context of clinical workflow, and quantify complexity [20,21,24,37].

The sunburst diagram can serve many cognitive science, usability, and workflow analysis purposes. First, a static sunburst diagram is useful in simply providing a visual representation of the current system state regarding navigational complexity—it shows the structure of the system. The diagram can also be used dynamically and interactively to show the influence of redesign decisions on navigational complexity or screen fragmentation. Interactive versions permit clicking on a segment, which will then be shown as the top (the inner circle) of the resulting subtree, with subsections as concentric circles (Figure 2). This can then permit extensive drill-down and visualization of the entire tree even if it is very complex.
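Drill-down in an interactive sunburst can be modeled as re-rooting the tree at the clicked segment. This is a minimal Python sketch, assuming the navigation tree is stored as a mapping from each node to its children. The function name and the tree contents other than NYP Tab, Data Review Labs, and Complete Blood Count are hypothetical. The sketch lists the nodes of the subtree breadth-first, with the clicked segment as ring 0 (the new inner circle) and each level of descendants on the next ring outward.

```python
def drill_down(children: dict[str, list[str]], clicked: str) -> list[tuple[str, int]]:
    """Return (node, ring) pairs for the subtree under `clicked`, breadth-first.

    The clicked segment becomes the new centre (ring 0); each further level of
    descendants occupies the next concentric ring outward.
    """
    rings: list[tuple[str, int]] = []
    frontier, ring = [clicked], 0
    while frontier:
        rings.extend((node, ring) for node in frontier)
        frontier = [child for node in frontier for child in children.get(node, [])]
        ring += 1
    return rings


children = {
    "EHR": ["Chart Review", "NYP Tab"],
    "NYP Tab": ["Data Review Labs", "Notes"],
    "Data Review Labs": ["Complete Blood Count", "Chemistry"],
}
for node, ring in drill_down(children, "NYP Tab"):
    print("  " * ring + node)
```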

Second, the sunburst diagram permits viewing of the relationships between different parts of the system and facilitates the tracing of navigation pathways through the system (Figure 2). Once the diagram is created, viewers can easily trace the pathway to a given system element or screen and thus further characterize display fragmentation. Pathway tracing and navigation map building are completed during a cognitive walkthrough [38], as described above. For web-based systems, tools exist that can automate this process (eg, PowerMapper [39] and Edraw [40]), but many major vendor systems are not web-based. Furthermore, differentially coloring the segments of the diagram can yield greater insight by further visualizing the degree of fragmentation. For example, one color can denote the data of interest, and another can denote data irrelevant to the task. A reviewer can then readily see the proportion of useful to extraneous information and redesign the system to remove the extraneous information.
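The coloring idea can be quantified by counting leaf segments. This is a minimal Python sketch, assuming the tree is stored as a children mapping and that the analyst supplies the set of leaves relevant to the task; the tree, the relevance labels, and the function names are hypothetical. For any segment, it reports the fraction of leaves beneath it that would be colored as relevant, which points to the branches where extraneous information concentrates.

```python
def leaves_under(children: dict[str, list[str]], node: str) -> list[str]:
    """All leaf segments (outermost ring) in the subtree rooted at `node`."""
    kids = children.get(node, [])
    if not kids:
        return [node]
    return [leaf for kid in kids for leaf in leaves_under(children, kid)]


def relevant_share(children: dict[str, list[str]], node: str, relevant: set[str]) -> float:
    """Fraction of leaves under `node` that the analyst colored as relevant to the task."""
    leaves = leaves_under(children, node)
    return sum(leaf in relevant for leaf in leaves) / len(leaves)


children = {
    "EHR": ["NYP Tab", "Billing"],
    "NYP Tab": ["Data Review Labs", "Notes"],
    "Data Review Labs": ["Complete Blood Count", "Chemistry", "Coagulation"],
    "Billing": ["Charges", "Insurance"],
}
relevant = {"Complete Blood Count", "Chemistry", "Notes"}
for node in ("EHR", "NYP Tab", "Billing"):
    print(node, relevant_share(children, node, relevant))
```

Here the whole tree is half relevant, but the share is 0.75 under NYP Tab and 0.0 under Billing, so the extraneous material is concentrated in one branch.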

The sunburst and time belt diagrams are complementary visualizations. The time belt is a succinct and easily understood representation in which the distribution of tasks can be seen more readily. Because it has a more explicit temporal dimension, it also makes it easier to draw inferences about the distribution of time.

Clinical care presents many pressures to the clinician, and these increase in emergency settings and in complex cases. Current EHR systems interrupt clinical reasoning and workflow, adding to these pressures. The ideal system would rapidly present salient information and data critical to decision making and mitigate clinician cognitive load. In our studies of composed displays, which approach this ideal, subjects found that the absence of the usual interruptions caused by excessive navigation was cognitively supportive and helped their thought process. The methods described here can help to build such systems.

Ultimately, our aim is to make the arduous work of clinical care smoother, more accurate, less cognitively demanding, and more pleasant. Ideally, information tools should be transparent and enjoyable to use, enable user control and freedom, and permit focus on the tasks at hand rather than on the tools themselves. Findings from our formative experiments suggest that composed displays that minimize the need for navigation can have this effect (prelim work, Y. Senathirajah et al, unpublished data, 2021). In some other domains, tool use approaches an almost artistic integration between users and tools in accomplishing the most difficult of tasks. Although HIT is far from this, diligent further work may bring it closer to that aim. Consumer tools (such as some Apple products) have been studied for this quality of pleasure in use. By contrast, many clinicians view EHR use as unpleasant and unsatisfactory [41]. Reducing navigational complexity and facilitating task performance may free clinicians to be more creative, resulting in a more productive and pleasurable experience.

Although EHR usability has been much criticized, there is a very large installed base of current EHR software. In conducting EHR mapping and task microanalysis, we aim to move beyond static, conventional usability testing, to combine usability information with specific design guideposts, and to identify opportunities for EHR optimization and, more generally, HIT redesign. A distinguishing feature of the composable EHR approach is its ability to juxtapose elements to decrease navigational complexity (thus increasing display integration). Understanding how the current EHR structure imposes fragmentation on both information access and task performance opens the way for focused redesign that could shorten navigational pathways and thus reduce the time and effort required. Decreasing screen fragmentation decreases the load on working memory. It could also permit specialized displays with low cognitive burden and the machine delivery of UIs optimized for specific tasks.

We have derived useful measures that address different needs in comparing EHR structure and its effect on navigation and task performance fragmentation. Together, DFI and Zheng’s proportion per instance make it possible to distinguish between different EHRs, or their subsections, for the same task and between different tasks carried out in the same EHR, as well as across locations, clinician roles, and other factors. DFI can be used to distinguish EHR structure and navigation, subtasks for different EHRs, subtask time efficiency, and EHR interface redesign. Proportion per instance can be used to distinguish tasks, subtasks for different EHRs, subtasks for the same EHR but different clinician groups, and subtask time efficiency.

Subtask time efficiency is an important measure for finding areas in which EHRs can be optimized. Although DFI has no time element, by identifying areas of fragmentation in the navigation structure (and therefore likely in task performance), it can help locate where pogo-sticking, that is, navigating back and forth between elements or sections of the EHR, occurs or is likely to occur. When this happens repeatedly (eg, when a clinician reading a note switches back and forth between the note and a lab values section to check current laboratory values against those listed in the previous note), it constitutes a subtask. Juxtaposing the two elements (note and laboratory test results) would avoid this repetitious navigation, shortening the subtask time and removing the excess navigation and clicks. Juxtaposition is known to reduce cognitive load (because data shown together on screen need not be retained in working memory) and to foster reflection, the association of data elements, and the identification of patterns. In the note-and-labs example, the user can more easily see changes in laboratory values and their implications. Providing both better cognitive support and shorter times or less navigation would help reduce the burden on clinicians, particularly in high-stress settings. EHRs have been heavily implicated in physician burnout, primarily because of the mismatch between task and system, which leads to poor efficiency and frustrating navigational complexity.
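Pogo-sticking of this kind can be flagged automatically in a coded sequence of screen visits. The following Python sketch is one simple heuristic, not a published algorithm. It drops screens the analyst marks as pure navigation, collapses immediate repeats, and reports runs that alternate between the same two screens. The screen names, the `ignore` set, and the `min_switches` threshold of 3 are hypothetical choices.

```python
def find_pogo_sticking(
    visits: list[str], ignore: frozenset = frozenset(), min_switches: int = 3
) -> list[tuple[str, str, int]]:
    """Return (screen_a, screen_b, switches) for runs alternating between two screens.

    Screens in `ignore` (eg, intermediate navigation screens) are skipped first, and
    consecutive repeats are collapsed. `switches` counts transitions within the run.
    """
    kept = [v for v in visits if v not in ignore]
    seq = [v for i, v in enumerate(kept) if i == 0 or v != kept[i - 1]]
    runs = []
    i = 0
    while i < len(seq) - 1:
        j = i + 1
        # Extend the run while each screen repeats the one two steps back (a-b-a-b...).
        while j + 1 < len(seq) and seq[j + 1] == seq[j - 1]:
            j += 1
        if j - i >= min_switches:
            runs.append((seq[i], seq[i + 1], j - i))
        i = j  # the last screen of this run may start the next one
    return runs


visits = [
    "Note", "Navigation", "Labs", "Navigation", "Note", "Navigation",
    "Labs", "Navigation", "Note", "Navigation", "Labs", "X-Ray",
]
print(find_pogo_sticking(visits, ignore=frozenset({"Navigation"})))
print(find_pogo_sticking(visits))
```

The first call finds one note-and-labs run with 5 switches; the second finds nothing, because the intervening navigation screens hide the alternation. This illustrates why analysts need to decide which screens count as navigation before counting.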

Finally, we address the experimental system used in our illustrations. MedWISER is a system in which elements are easily arranged by drag and drop. We can therefore use MedWISER to design novel UIs from the same elements used in conventional system tasks (eg, lab panels) to represent data, and experiment with different configurations intended to simplify navigation. In the subtask example above, the clinician user can juxtapose the note and lab results by drag and drop without programmer intervention; this is a normal way the system functions. Thus, the end user, or others such as researchers or system administrators, can easily rearrange data elements to shorten navigation paths, juxtapose related elements, and create screens that maximize support for clinical reasoning while minimizing excess navigation. The user’s arrangements are stored, and patient-specific or specialty-specific displays can be shared (eg, with colleagues taking the next shift for that patient, who can further modify the patient-specific display as new data come in), further reducing excess navigation and multiplying time savings. Thus, a set of displays with minimal fragmentation for the tasks being done could eventually be created, and the degree of optimization of new displays can be evaluated with our measures.
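Storing and sharing arrangements requires only that a layout be serializable. The sketch below is hypothetical and does not describe MedWISER's storage format. It assumes a layout is a list of rows of widget titles, tagged with a patient identifier and an author and saved as JSON, and it shows a colleague on the next shift adapting a copy as new data arrive. The field names, the patient identifier, and the function names are all invented for illustration.

```python
import json


def save_layout(patient_id: str, author: str, rows: list[list[str]]) -> str:
    """Serialize a display arrangement so it can be stored or shared."""
    return json.dumps({"patient_id": patient_id, "author": author, "rows": rows}, sort_keys=True)


def adapt_shared_layout(saved: str, new_author: str, add_widget: str, row: int) -> str:
    """A colleague loads a shared layout, adds a widget to one row, and saves a new copy.

    The original serialized layout is left untouched.
    """
    layout = json.loads(saved)
    layout["author"] = new_author
    layout["rows"][row].append(add_widget)
    return json.dumps(layout, sort_keys=True)


day_shift = save_layout("demo-001", "day resident", [["Current note", "Labs"], ["Chest x-ray"]])
night_shift = adapt_shared_layout(day_shift, "night resident", "New culture results", row=0)
print(json.loads(night_shift)["rows"])
```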

Limitations

There are several limitations to this study. Our presentation of cases is illustrative of the methods and is not representative of a larger set, which should perhaps be a next step in validating the work and formulae. Although the representations surface important dimensions of workflow, the comparative illustration of different systems cannot be used to infer that one system is better than another. There are many ways to represent data, and we have chosen ones that enable us to draw inferences about fragmentation based on a set of observations. The goal is also to convey the information visually and reliably so that readers can readily draw the same inferences or alternatively draw their own conclusions. However, there are other visualizations that may convey the same information. It is difficult to definitively prove that sunbursts or time belts are superior to other forms of representation. Expanding the space of potential visualizations can help advance the study of EHR-mediated workflow and, perhaps, its communication to stakeholders beyond academia.

Conclusions

In this paper, we described a methodological approach to addressing display fragmentation. Novel visualizations provide a suite of tools for communicating the exact nature of a navigation problem and creating the potential for precise and measurable design solutions. EHRs still present formidable usability challenges, but the potential for small tractable changes rather than large-scale prohibitively expensive ones is increasingly realizable. When combined with platforms such as MedWISER, which reduce the work involved in reconfiguration, these tools could provide pathways for rapid usability improvements and significantly improve EHR-mediated workflow.

Acknowledgments

This project was funded by the Agency for Healthcare Research and Quality under grant 1-R01-HS023708-01A1.

Abbreviations

- ACT: average continuous time

- DFI: Display Fragmentation Index

- ED: emergency department

- EHR: electronic health record

- HIT: health information technology

- UI: user interface

Appendix

Multimedia Appendix 1. Participant think-aloud process.

Footnotes

Authors' Contributions: YS, DK, KC, EB, and AK contributed to the overall study design and manuscript writing. JF contributed to the overall manuscript writing.

Conflicts of Interest: AK is the Editor-in-Chief of JMIR Human Factors. He had no influence on the decision to publish this article. The review and decision to publish were managed by a different editor at the journal.

References

- 1. Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, Payne TH, Rosenbloom ST, Weaver C, Zhang J; American Medical Informatics Association. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013 Jun;20(e1):e2–8. doi: 10.1136/amiajnl-2012-001458. http://europepmc.org/abstract/MED/23355463.

- 2. Senathirajah Y, Wang J, Borycki E, Kushniruk A. Mapping the electronic health record: a method to study display fragmentation. Stud Health Technol Inform. 2017;245:1138–42.

- 3. Zheng K, Haftel HM, Hirschl RB, O'Reilly M, Hanauer DA. Quantifying the impact of health IT implementations on clinical workflow: a new methodological perspective. J Am Med Inform Assoc. 2010;17(4):454–61. doi: 10.1136/jamia.2010.004440. http://europepmc.org/abstract/MED/20595314.

- 4. Zheng K, Padman R, Johnson MP, Diamond HS. An interface-driven analysis of user interactions with an electronic health records system. J Am Med Inform Assoc. 2009;16(2):228–37. doi: 10.1197/jamia.M2852. http://europepmc.org/abstract/MED/19074301.

- 5. Bourgeois FC, Olson KL, Mandl KD. Patients treated at multiple acute health care facilities: quantifying information fragmentation. Arch Intern Med. 2010 Dec 13;170(22):1989–95. doi: 10.1001/archinternmed.2010.439.

- 6. Borycki EM, Lemieux-Charles L, Nagle L, Eysenbach G. Evaluating the impact of hybrid electronic-paper environments upon novice nurse information seeking. Methods Inf Med. 2009;48(2):137–43. doi: 10.3414/ME9222.

- 7. Borycki EM, Lemieux-Charles L, Nagle L, Eysenbach G. Novice nurse information needs in paper and hybrid electronic-paper environments: a qualitative analysis. Stud Health Technol Inform. 2009;150:913–7.

- 8. Kushniruk A, Borycki E, Kuwata S, Kannry J. Predicting changes in workflow resulting from healthcare information systems: ensuring the safety of healthcare. Healthc Q. 2006;9 Spec No:114–8. doi: 10.12927/hcq..18469. http://www.longwoods.com/product.php?productid=18469.

- 9. Kushniruk A, Borycki E, Kuwata S, Kannry J. Predicting changes in workflow resulting from healthcare information systems: ensuring the safety of healthcare. Healthc Q. 2006;9 Spec No:114–8. doi: 10.12927/hcq..18469. http://www.longwoods.com/product.php?productid=18469.

- 10. Yen P, Kelley M, Lopetegui M, Rosado AL, Migliore EM, Chipps EM, Buck J. Understanding and visualizing multitasking and task switching activities: a time motion study to capture nursing workflow. AMIA Annu Symp Proc. 2016;2016:1264–73. http://europepmc.org/abstract/MED/28269924.

- 11. Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13(5):547–56. doi: 10.1197/jamia.M2042. http://europepmc.org/abstract/MED/16799128.

- 12. Roman LC, Ancker JS, Johnson SB, Senathirajah Y. Navigation in the electronic health record: a review of the safety and usability literature. J Biomed Inform. 2017 Mar;67:69–79. doi: 10.1016/j.jbi.2017.01.005. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(17)30005-9.

- 13. Ellsworth MA, Dziadzko M, O'Horo JC, Farrell AM, Zhang J, Herasevich V. An appraisal of published usability evaluations of electronic health records via systematic review. J Am Med Inform Assoc. 2017 Jan;24(1):218–26. doi: 10.1093/jamia/ocw046.

- 14. Patel VL, Kushniruk AW, Yang S, Yale JF. Impact of a computer-based patient record system on data collection, knowledge organization, and reasoning. J Am Med Inform Assoc. 2000;7(6):569–85. doi: 10.1136/jamia.2000.0070569. http://europepmc.org/abstract/MED/11062231.

- 15. Kushniruk AW, Kaufman DR, Patel VL, Lévesque Y, Lottin P. Assessment of a computerized patient record system: a cognitive approach to evaluating medical technology. MD Comput. 1996;13(5):406–15.

- 16. Roth E. Encyclopedia of Software Engineering: 2 Volume Set. New York, USA: Routledge; 1994.

- 17. Hettinger AZ, Roth EM, Bisantz AM. Cognitive engineering and health informatics: applications and intersections. J Biomed Inform. 2017 Mar;67:21–33. doi: 10.1016/j.jbi.2017.01.010. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(17)30010-2.

- 18. Gray WD, Boehm-Davis DA. Milliseconds matter: an introduction to microstrategies and to their use in describing and predicting interactive behavior. J Exp Psychol Appl. 2000 Dec;6(4):322–35. doi: 10.1037//1076-898x.6.4.322.

- 19. Gray WD, Sims CR, Fu W, Schoelles MJ. The soft constraints hypothesis: a rational analysis approach to resource allocation for interactive behavior. Psychol Rev. 2006 Jul;113(3):461–82. doi: 10.1037/0033-295X.113.3.461.

- 20. Duncan BJ, Zheng L, Furniss SK, Solomon AJ, Doebbeling BN, Grando G, Burton MM, Poterack KA, Miksch TA, Helmers RA, Kaufman DR. In search of vital signs: a comparative study of EHR documentation. AMIA Annu Symp Proc. 2018;2018:1233–42. http://europepmc.org/abstract/MED/30815165.

- 21. Duncan B. A microanalytic approach to understanding EHR navigation paths. J Biomed Inform. 2020. doi: 10.1016/j.jbi.2020.103566. Epub ahead of print.

- 22. Sharp H, Rogers Y, Preece J. Interaction Design: Beyond Human-Computer Interaction. New York, USA: John Wiley and Sons; 2007.

- 23. Roberts RD, Beh HC, Stankov L. Hick's law, competing-task performance, and intelligence. Intelligence. 1988 Apr;12(2):111–30. doi: 10.1016/0160-2896(88)90011-6.

- 24. Zheng L, Kaufman DR, Duncan BJ, Furniss SK, Grando A, Poterack KA, Miksch TA, Helmers RA, Doebbeling BN. A task-analytic framework comparing preoperative electronic health record-mediated nursing workflow in different settings. Comput Inform Nurs. 2020 Jun;38(6):294–302. doi: 10.1097/CIN.0000000000000588.

- 25. Senathirajah Y, Bakken S, Kaufman D. The clinician in the driver's seat: part 1 - a drag/drop user-composable electronic health record platform. J Biomed Inform. 2014 Dec;52:165–76. doi: 10.1016/j.jbi.2014.09.002. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(14)00199-3.

- 26. Senathirajah Y, Kaufman D, Bakken S. The clinician in the driver's seat: part 2 - intelligent uses of space in a drag/drop user-composable electronic health record. J Biomed Inform. 2014 Dec;52:177–88. doi: 10.1016/j.jbi.2014.09.008. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(14)00222-6.

- 27. Senathirajah Y, Kaufman D, Bakken S. Cognitive analysis of a highly configurable web 2.0 EHR interface. AMIA Annu Symp Proc. 2010 Nov 13;2010:732–6. http://europepmc.org/abstract/MED/21347075.

- 28. Morae Tutorials. TechSmith: Global Leader in Screen Recording and Screen. [2020-09-21]. https://www.techsmith.com/tutorial-morae.html.

- 29. Calvitti A, Hochheiser H, Ashfaq S, Bell K, Chen Y, El Kareh R, Gabuzda MT, Liu L, Mortensen S, Pandey B, Rick S, Street RL, Weibel N, Weir C, Agha Z. Physician activity during outpatient visits and subjective workload. J Biomed Inform. 2017 May;69:135–49. doi: 10.1016/j.jbi.2017.03.011. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(17)30061-8.

- 30. Senathirajah Y, Borycki EM, Kushniruk A, Cato K, Wang J. Use of eye-tracking in studies of EHR usability - the current state: a scoping review. Stud Health Technol Inform. 2019 Aug 21;264:1976–7. doi: 10.3233/SHTI190742.

- 31. Mosaly PR, Mazur LM, Yu F, Guo H, Derek M, Laidlaw DH, Moore C, Marks LB, Mostafa J. Relating task demand, mental effort and task difficulty with physicians’ performance during interactions with electronic health records (EHRs). Int J Hum–Comput Interact. 2017 Sep 25;34(5):467–75. doi: 10.1080/10447318.2017.1365459.

- 32. Unertl KM, Novak LL, Johnson KB, Lorenzi NM. Traversing the many paths of workflow research: developing a conceptual framework of workflow terminology through a systematic literature review. J Am Med Inform Assoc. 2010;17(3):265–73. doi: 10.1136/jamia.2010.004333. http://europepmc.org/abstract/MED/20442143.

- 33. Unertl KM, Weinger MB, Johnson KB, Lorenzi NM. Describing and modeling workflow and information flow in chronic disease care. J Am Med Inform Assoc. 2009;16(6):826–36. doi: 10.1197/jamia.M3000. http://europepmc.org/abstract/MED/19717802.

- 34. Carayon P, Wetterneck TB, Alyousef B, Brown RL, Cartmill RS, McGuire K, Hoonakker PL, Slagle J, van Roy KS, Walker JM, Weinger MB, Xie A, Wood KE. Impact of electronic health record technology on the work and workflow of physicians in the intensive care unit. Int J Med Inform. 2015 Aug;84(8):578–94. doi: 10.1016/j.ijmedinf.2015.04.002. http://europepmc.org/abstract/MED/25910685.

- 35. Helmers R, Doebbeling BN, Kaufman D, Grando A, Poterack K, Furniss S, Burton M, Miksch T. Mayo clinic registry of operational tasks (ROOT): a paradigm shift in electronic health record implementation evaluation. Mayo Clin Proc Innov Qual Outcomes. 2019 Sep;3(3):319–26. doi: 10.1016/j.mayocpiqo.2019.06.004. https://linkinghub.elsevier.com/retrieve/pii/S2542-4548(19)30077-3.

- 36. Kushniruk A, Senathirajah Y, Borycki E. Towards a usability and error 'safety net': a multi-phased multi-method approach to ensuring system usability and safety. Stud Health Technol Inform. 2017;245:763–7.

- 37. Borycki E. Quality and safety in eHealth: the need to build the evidence base. J Med Internet Res. 2019 Dec 19;21(12):e16689. doi: 10.2196/16689. https://www.jmir.org/2019/12/e16689/.

- 38. Polson PG, Lewis C, Rieman J, Wharton C. Cognitive walkthroughs: a method for theory-based evaluation of user interfaces. Int J Man-Mach Stud. 1992 May;36(5):741–73. doi: 10.1016/0020-7373(92)90039-n.

- 39. PowerMapper - Website Testing and Site Mapping Tools. [2020-09-21]. https://www.powermapper.com/.

- 40. Easy Website Mapping Software. Edraw. [2020-09-21]. https://www.edrawsoft.com/website-mapping-software.html.

- 41. Shanafelt TD, Dyrbye LN, Sinsky C, Hasan O, Satele D, Sloan J, West CP. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin Proc. 2016 Jul;91(7):836–48. doi: 10.1016/j.mayocp.2016.05.007.
