Summary.

We consider integrating a non-probability sample with a probability sample which provides high dimensional representative covariate information of the target population. We propose a two-step approach for variable selection and finite population inference. In the first step, we use penalized estimating equations with folded concave penalties to select important variables and show selection consistency for general samples. In the second step, we focus on a doubly robust estimator of the finite population mean and re-estimate the nuisance model parameters by minimizing the asymptotic squared bias of the doubly robust estimator. This estimating strategy mitigates the possible first-step selection error and renders the doubly robust estimator root n consistent if either the sampling probability or the outcome model is correctly specified.

Keywords: Data integration, Double robustness, Generalizability, Penalized estimating equation, Variable selection

1. Introduction

Probability sampling is regarded as the gold standard in survey statistics for finite population inference. Fundamentally, probability samples are selected under known sampling designs and therefore are representative of the target population. However, many practical challenges arise in collecting and analysing probability sample data such as data collection costs and increasing non-response rates (Keiding and Louis, 2016). With advances of technology, non-probability samples become increasingly available for research purposes, such as remote sensing data and web-based volunteer samples. Non-probability samples provide rich information about the target population and can be potentially helpful for finite population inference. These complementary features of probability samples and non-probability samples raise the question of whether it is possible to develop data integration methods that leverage the advantages of both sources of data.

Existing methods for data integration can be categorized into three types. The first type is the so-called propensity score adjustment (Rosenbaum and Rubin, 1983). In this approach, the probability of a unit being selected into the non-probability sample, which is referred to as the propensity or sampling score, is modelled and estimated for all units in the non-probability sample. The subsequent adjustments, such as propensity score weighting or stratification, can then be used to adjust for selection biases; see, for example, Lee and Valliant (2009), Valliant and Dever (2011), Elliott and Valliant (2017) and Chen, Li and Wu (2018). Stuart et al. (2011, 2015) and Buchanan et al. (2018) used propensity score weighting to generalize results from randomized trials to a target population. O’Muircheartaigh and Hedges (2014) proposed propensity score stratification for analysing a non-randomized social experiment. One notable disadvantage of propensity score methods is that they rely on an explicit propensity score model and may be biased and highly variable if the model is misspecified (Kang and Schafer, 2007). The second type uses calibration weighting (Deville and Särndal, 1992; Kott, 2006; Chen, Valliant and Elliott, 2018; Chen et al., 2019). This technique forces the moments or the empirical distribution of auxiliary variables to be the same between the probability sample and the non-probability sample, so that after calibration the weighted distribution in the non-probability sample appears similar to that in the target population (DiSogra et al., 2011). The third type is mass imputation, which imputes the missing values for all units in the probability sample. In the usual imputation for missing data analysis, the respondents in the sample constitute a training data set for developing an imputation model. In the mass imputation, the non-probability sample is used as a training data set, and imputation is applied to all units in the probability sample; see, for example, Breidt et al. (1996), Rivers (2007), Kim and Rao (2012), Chipperfield et al. (2012) and Yang and Kim (2018).

Let be a vector of auxiliary variables (including an intercept) that are available from two sources of data, and let Y be a general-type study variable of interest. We consider combining a probability sample observing X, referred to as sample A, and a non-probability sample observing (X, Y), referred to as sample B, to estimate μ the population mean of Y. Because the sampling mechanism of a non-probability sample is unknown, the target population quantity is not identifiable in general. Researchers rely on an identification strategy that uses the non-informative sampling assumption imposed on the non-probability sample. To ensure that this assumption holds, researchers try to control for all covariates that are predictors of both sampling and the outcome variable. In practice, subject matter experts recommend a rich set of potentially useful variables but typically will not identify the set of variables to adjust for. In the presence of a large number of auxiliary variables, variable selection is important, because existing methods may become unstable or even infeasible, and irrelevant auxiliary variables can introduce a large variability in estimation. There is a large literature on variable-selection methods for prediction, but little work on variable selection for data integration that can successfully recognize the strengths and the limitations of each source of data and utilize all information captured for finite population inference. Gao and Carroll (2017) proposed a pseudolikelihood approach to combining multiple non-survey data with high dimensionality; this approach requires that all likelihoods are correctly specified and therefore is sensitive to model misspecification. Chen, Valliant and Elliott (2018) proposed a model-based calibration approach using lasso regression; this approach relies on a correctly specified outcome model. To our knowledge, robust inference has not been addressed in the context of data integration with high dimensional data.

We propose a doubly robust variable-selection and estimation strategy that harnesses the representativeness of the probability sample and the outcome information in the non-probability sample. The double robustness entails that the final estimator is consistent for the true value if either the probability of selection into the non-probability sample, which is referred to as the sampling score, or the outcome model is correctly specified, but not necessarily both (a double-robustness condition); see, for example, Bang and Robins (2005), Tsiatis (2006), Cao et al. (2009) and Han and Wang (2013). To handle high dimensional covariates, our strategy separates the variable selection step and the estimation step for the finite population mean to achieve two different goals.

In the first step, we select a set of variables that are important predictors of either the sampling score or the outcome model using penalized estimating equations. We assume that the sampling score follows a logistic regression model with unknown parameter and the outcome follows a generalized linear model with unknown parameter . Importantly, we separate the estimating equations for α and β to achieve stability in variable selection under the double-robustness condition. Specifically, we construct the estimating equation for α by calibrating the weighted average of X from sample B, weighted by the inverse of the sampling score, to the weighted average of X from sample A (i.e. a design-unbiased estimate of population mean of X). We construct the estimating equation for β by minimizing the standard least squared error loss under the outcome model. To establish the selection properties, we consider the ‘large n, diverging p’ framework. The major technical challenge is that, under the finite population framework, the selection indicators of sample A are not independent in general. To overcome this challenge, we construct martingale random variables with a weak dependence that enables the application of the Bernstein inequality. This construction is used in establishing our selection consistency result.

In the second step, we re-estimate (α, β) on the basis of the joint set of covariates selected from the first step and consider a doubly robust estimator of μ, .We propose to use different estimating equations for (α, β), derived by minimizing the asymptotic squared bias of . This estimation strategy is not new; see, for example, Kim and Haziza (2014) for missing data analyses in low dimensional data; here, we demonstrate its new role in high dimensional data to mitigate the possible selection error in the first step. In essence, our strategy for estimating (α, β) renders the first-order term in the Taylor series expansion of with respect to (α, β) to be exactly zero, and the remaining terms are negligible under regularity conditions. This estimating strategy makes the doubly robust estimator root n consistent if either the sampling probability or the outcome model is correctly specified. This also enables us to construct a simple and consistent variance estimator allowing for doubly robust inferences. Importantly, the estimator proposed enables model misspecification of either the sampling score or the outcome model. In the existing high dimensional causal inference literature, the doubly robust estimators have been shown to be robust to selection errors by using penalization (Farrell, 2015) or approximation errors by using machine learning (Chernozhukov et al., 2018). However, this double-robustness feature requires both nuisance models to be correctly specified. We relax this requirement by allowing one of the nuisance models to be misspecified. We clarify that, even though the set of variables for estimation may include the variables that are solely related to the sampling score but not the outcome and therefore may harm efficiency of estimating μ (De Luna et al., 2011; Patrick et al., 2011), it is important to include these variables for to achieve consistency in the case when the outcome model is misspecified and the sampling score model is correctly specified; see Section 6.

The paper proceeds as follows. Section 2 provides the basic set-up of the problem. Section 3 presents the proposed two-step procedure for variable selection and doubly robust estimation of the finite population mean. Section 4 describes the computation algorithm for solving penalized estimating equations. Section 5 presents the theoretical properties for variable selection and doubly robust estimation. Section 6 reports simulation results that illustrate the finite sample performance of the method. In Section 7, we present an application to analyse a non-probability sample collected by the Pew Research Center (PRC). We relegate all proofs to the on-line supplementary material.

2. Basic set-up

2.1. Notation: two samples

Let be the index set of N units for the finite population with N known. The finite population consists of . The parameter of interest is the finite population mean . We consider two sources of data: a probability sample, referred to as sample A, and a non-probability sample, referred to as sample B. Table 1 illustrates the observed data structure. Sample A consists of observations with sample size nA, where is known in sample A. Sample B consists of observations with sample size nB. We define IA,i and IB,i to be the selection indicators corresponding to sample A and sample B respectively. Although the non-probability sample contains rich information on (X, Y), the sampling mechanism is unknown, and therefore we cannot compute the first-order inclusion probability for Horvitz-Thompson estimation. The naive estimators applied to sample B without adjusting for the sampling process are subject to selection biases (Meng, 2018).

Table 1.

Two sources of data†

| Sample | Sampling weight π−1 | Covariate X | Study variable Y | |

|---|---|---|---|---|

| Probability sample | 1 | ✓ | ✓ | ? |

| ⋮ | ⋮ | ⋮ | ⋮ | |

| nA | ✓ | ✓ | ? | |

| Non-probability sample | nA + 1 | ? | ✓ | ✓ |

| ⋮ | ⋮ | ⋮ | ⋮ | |

| nA + nB | ? | ✓ | ✓ |

Sample A is a probability sample, and sample B is a non-probability sample. ‘✓’ and ‘?’ indicate observed and unobserved data respectively.

2.2. An identification assumption

Before presenting the proposed methodology for integrating the two sources of data, we first discuss the identification assumption. Let f(Y|X) be the conditional distribution of Y given X in the superpopulation model ζ that generates the finite population. We make the following assumption.

Assumption 1.

The selection indicator IB of sample B and the response variable Y are independent given X, i.e. P(IB = 1|X, Y) = P(IB = 1|X), which is referred to as the sampling score πB(X), and

πB(X) > Nγ−1δB > 0 for all X, where .

Assumption 1(a) implies that m(X) = E(Y|X) = E(Y|X, IB = 1) can be estimated solely on the basis of sample B. Assumption 1(b) specifies a lower bound of πB(X). A standard condition in the literature imposes a strict positivity in the sense that πB(X) > δB > 0; however, it implies that , which may be restrictive in survey practice. Here, we relax this condition and allow , where γ can be strictly less than 1.

Assumption 1 is a key assumption for identification. Under assumption 1, E(μ) is identifiable on the basis of sample A by E{IAm(X)} or sample B by E{IBY/πB(X)}. However, this assumption is not verifiable from the observed data. To ensure that this assumption holds, researchers often consider many possible predictors for the selection indicator IB or the outcome Y, resulting in a rich set of variables in X.

2.3. Existing estimators

In practice, the sampling score function πB(X) and the outcome mean function m(X) are unknown and need to be estimated from the data. Let πB(XTα) and m(XTβ) be the postulated models for πB(X) and m(X) respectively, where α and β are unknown parameters. Various estimators of μ have been proposed in the literature, each requiring different model assumptions and estimation strategies. We provide examples below and discuss their properties and limitations.

2.3.1. Example 1 (inverse probability of sampling score weighting)

Given an estimator , the inverse probability of sampling score weighting estimator is

| (1) |

The justification for relies on a correct specification of πB(X) and the consistency of . There are different approaches to obtain . Following Valliant and Dever (2011), we can obtain by fitting the sampling score model on the basis of the combined data , weighted by ωi. The resulting estimator is valid nB is relatively small (Valliant and Dever, 2011). Elliott and Valliant (2017) proposed an alternative strategy based on the Bayes rule: πB(X) ∝ P(IA = 1|X)OB(X), where is the odds of selection into sample B among the combined sample. This approach does not require nB to be small; however, if X does not correspond to the design variables for sample A, it requires postulating an additional model for P(IA = 1|X). Moreover, variable selection based on this approach is not straightforward with a high dimensional X. To obtain , we use the following estimating equation for α:

| (2) |

for some h(Xi; α) such that equation (2) has a unique solution. Kott and Liao (2017) advocated the use of h(X; α) = X and Chen, Li and Wu (2018) advocated the use of h(X; α) = π(XT; α)X.

2.3.2. Example 2 (outcome regression based on sample A)

The outcome regression estimator is

| (3) |

where is obtained by fitting the outcome model based solely on under assumption 1.

The justification for relies on a correct specification of m(XTβ) and the consistency of . If m(XTβ) is misspecified or is inconsistent, can be biased.

2.3.3. Example 3 (calibration weighting)

The calibration weighting estimator is

| (4) |

where satisfies constraint (i) or constraint (ii) (McConville et al., 2017; Chen, Valliant and Elliott, 2018; Chen et al., 2019).

The justification for subject to constraint (i) relies on the linearity of the outcome model, i.e. m(X) = XTβ* for some β*, or the linearity of the inverse probability of sampling weight, i.e. πB(X)−1 = XTα* for some α* (Fuller (2009), theorem 5.1). The linearity conditions are unlikely to hold for non-continuous variables. In these cases, may be biased. The justification for subject to constraint (ii) relies on a correct specification of m(X; β).

2.3.4. Example 4 (doubly robust estimator)

The doubly robust estimator is

| (5) |

The estimator is doubly robust with fixed dimensional X (Chen, Li and Wu, 2018), in the sense that it achieves consistency if either πB(XTα) or m(XTβ) is correctly specified, but not necessarily both. The double robustness is attractive; therefore, we shall investigate the potential of in a high dimensional set-up.

3. Methodology in high dimensional data

In the presence of a large number of covariates, not all of them are relevant for making inference of the population mean of the outcome. Including unnecessary covariates in the model makes the computation unstable and increases estimation errors. Variable selection is required to handle high dimensional covariates. For any vector , denote the number of non-zero elements in α as , the L1-norm as , the L2-norm as and the L∞-norm as . For any , let be the subvector of α formed by elements of α whose indices are in . Let be the complement of . For , and matrix , let be the submatrix of Σ formed by rows in and columns in . Following the literature on variable selection, we first standardize the covariates so that they have variances approximately equal to 1, which makes the variable-selection procedure more stable. We make the following modelling assumptions.

Assumption 2 (sampling score model).

The sampling mechanism of sample B, πB(X), follows a logistic regression model πB(XTα), i.e. logit{πB(XTα)} = XTα for .

Assumption 3 (outcome model).

The outcome mean function m(X) follows a generalized linear regression model, i.e. m(X) = m(XTβ) for , where m(·) denotes the link function.

Define α* to be the p-dimensional parameter that minimizes the Kullback-Leibler divergence,

and .

In assumption 2, we adopt the logistic regression model for the sampling score following most of the empirical literature; but our framework can be extended to the case of other models such as the probit model. The models πB(XTα) and m(XTβ) are working models, which may be misspecified. If the sampling score model is correctly specified, we have πB(X) = πB(XTα*). If the outcome model is correctly specified, we have m(X) = m(XTβ*).

The procedure proposed consists of two steps: the first step selects important variables in the sampling score model and the outcome model, and the second step focuses on doubly robust estimation of the population mean.

In the first step, we propose to solve penalized estimating equations for variable selection. Using equation (2) with h(X; α) = X, we define the estimating function for α as

To select important variables in m(XTβ), we define the estimating function for β as

Let U(θ) = (U1(α)T, U2(β)T)T be the joint estimating function for θ = (αT, βT)T. When p is large, following Johnson et al. (2008), we consider the penalized estimating function for (α, β) as

| (6) |

where and are some continuous functions, is the elementwise product of and sgn(α), and is the elementwise product of and sgn(β). We let qλ(x) = dpλ(x)/dx, where pλ(x) is some penalization function. Although the same discussion applies to different non-concave penalty functions, we specify pλ(x) to be a folded concave smoothly clipped absolute deviation penalty function (Fan and Lv, 2011). Accordingly, we have

| (7) |

for a > 0, where (·)+ is the truncated linear function, i.e., if x ⩾ 0, (x)+ = x and, if x < 0, (x)+ = 0. We use a = 3.7 following the suggestion of Fan and Li (2001). We select the variables if the corresponding estimates of coefficients are non-zero in either the sampling score or the outcome model, indexed by .

Remark 1.

To help to understand function (6) we discuss two scenarios. If |αj| is large, then is 0, and therefore U1,j(α) is not penalized. In contrast, if |αj| is small but non-zero, then is large, and U1,j(α) is penalized with a penalty term. The penalty term then forces to be 0 and excludes the jth element of X from the final selected set of variables. The same discussion applies to U2(β) and .

In the second step, we consider the doubly robust estimator in equation (5) with re-estimated on the basis of . As we shall show in Section 5, the set contains the true important variables in either the sampling score model or the outcome model with probability approaching 1 (the oracle property). Therefore, if either the sampling score model or the outcome model is correctly specified, the asymptotic bias of is 0; however, if both models are misspecified, the asymptotic bias of is

To minimize a.bias(α, β)2, we consider the estimating function

| (8) |

and the corresponding empirical estimating function

| (9) |

for estimating (α, β), constrained on .

To summarize, our two-step procedure is as follows.

Step 1: solve the penalized joint estimating equation Up(α, β) = 0, denoted by . Let , and .

-

Step 2: obtain the proposed estimator as

(10) where and are obtained by solving J(α, β) = 0 for α and β with and .

Remark 2.

The two steps use different estimating functions (6) and (9) for selection and estimation with the following advantages. First, function (6) separates the selection for α and β in U1(α) and U2(β), so it stabilizes the selection procedure if either the sampling score model or the outcome model is misspecified. Second, using equation (9) for estimation leads to an attractive feature for inference about μ. We point out that, although the joint estimating function (9) is motivated by minimizing the asymptotic bias of when both nuisance models are misspecified, we do not expect the proposed estimator for μ to be unbiased in this case. Instead, using function (9) has the advantage in the case when either the sampling score or the outcome model is correctly specified. It is well known that post-selection inference is notoriously difficult even when both models are correctly specified because the estimation step is based on a random set of variables being selected. We show that our estimation strategy based on function (9) mitigates the possible first-step selection error and makes root n consistent if either the sampling probability or the outcome model is correctly specified in high dimensional data. Heuristically this is achieved because the first Taylor series expansion term is set to be 0 because of function (8). We relegate the details to Section 5.

Remark 3.

Variable selection circumvents the instability or infeasibility of direct estimation of (α, β) with high dimensional X. Moreover, in step 2, we consider the union of covariates , where . It is worth comparing this choice with two other common choices in the literature. The first considers separate sets of variables for the two models, i.e. the sampling score is fitted on the basis of , and the outcome model is fitted on the basis of . However, we note that in the joint estimating equations J1(α, β) and J2(α, β) should have the same dimension; otherwise, a solution to J(α, β) = 0 may not exist. Moreover, Brookhart et al. (2006) and Shortreed and Ertefaie (2017) have shown that including outcome predictors in the propensity score model will increase the precision of the estimated average treatment effect without increasing bias. This implies that an efficient variable-selection and estimation method should take into account both sampling-covariate and outcome-covariate relationships. As a result, may have a better performance than the oracle estimator that uses the true important variables separately in the sampling score and the outcome model. Second, many researchers have suggested that including predictors that are solely related to the sampling score but not the outcome may harm estimation efficiency (De Luna et al., 2011; Patrick et al., 2011). However, this strategy is effective when both the sampling score and the outcome models are correctly specified. When the sampling score model is correctly specified but the outcome model is misspecified, restricting the variables to be the outcome predictors may make the sampling score misspecified by using the wrong set of variables. The simulation study suggests that restricted to the set of variables in is not doubly robust.

4. Computation

In this section, we discuss the computation for solving the penalized estimating equation (6). Following Johnson et al. (2008), we use an iterative algorithm that combines the Newton-Raphson algorithm for solving estimating equations and the minorization-maximization algorithm for the non-convex penalty of Hunter and Li (2005).

First, by the minorization-maximization algorithm, the penalized estimator solving equation (6) satisfies

| (11) |

where ϵ is a predefined small number. In our implementation, we choose ϵ to be 10−6.

Second, we solve equation (11) using the Newton-Raphson algorithm. It may be challenging to implement the Newton-Raphson algorithm directly, because it involves inverting a large matrix. For computational stability, we use a co-ordinate decent algorithm (Friedman et al., 2007) by cycling through and updating each of the co-ordinates. Define m(k)(t) = dkm(t)/dkt for k ⩾ 1:

| (12) |

and

Let θ start at an initial value . With the other co-ordinates fixed, the kth Newton-Raphson update for θj is

| (13) |

where ∇jj(θ) and Λjj(θ) are the jth diagonal elements in ∇(θ) and Λ(θ) respectively. The procedure cycles through all the 2p elements of θ and is repeated until convergence.

We use K-fold cross-validation to select tuning parameters (λα, λβ). More specifically, we partition both samples into approximately K equal sized subsets and pair subsets of sample A and subsets of sample B randomly. Of the K pairs, we retain one single pair as the validation data and the remaining K − 1 pairs as the training data. We fit the models on the basis of the training data and estimate the loss function on the basis of the validation data. We repeat the process K times, with each of the K pairs used exactly once as the validation data. Finally, we aggregate the K estimated loss function. We select the tuning parameter as the parameter that minimizes the aggregated loss function over a prespecified grid.

Because the weighting estimator uses the sampling score πB(X) to calibrate the distribution of between sample B and the target population, we use the following loss function for selecting λα:

where is the penalized estimator with tuning parameter λα.We use the prediction error loss function for selecting λβ:

where is the penalized estimator with tuning parameter λβ.

5. Asymptotic results for variable selection and estimation

We establish the asymptotic properties for the proposed double variable-selection and doubly robust estimation method. We assume that sample A is collected by simple random sampling or Poisson sampling with the following regularity conditions. Although it appears restrictive, our results extend to high entropy sampling designs; see remark 4.

Assumption 4.

For all 1 ⩽ i ⩽ N, πA,i ⩾ Nγ−1δA > 0, where .

Similarly to assumption 1(b), we relax the strict positivity on πA,i and assume nA = O(Nγ) for γ possibly strictly less than 1. Let n = min(nA, nB), which is O(Nγ) under assumptions 1 and 4.

Remark 4.

We discuss the applicability of our asymptotic framework to sample A with high entropy sampling designs. Examples of high entropy sampling designs include simple random sampling, correlated Poisson sampling designs, normalized conditional Poisson sampling designs, Rao-Sampford sampling, the Chao design and the stratified design; see Berger (1998a,b), Brewer and Donadio (2003) and Grafström (2010). The asymptotic properties for high entropy sampling designs are determined solely by their first-order inclusion probabilities. Therefore, for two high entropy sampling designs with the same first-order inclusion probabilities, their asymptotic behaviours are the same. In particular, we can consider the conditional Poisson sampling design (Hájek, 1964; Tillé, 2011), which appears when conditioning the Poisson design on a fixed sample size nA. Let pA = {pA,i: i = 1, … , N} with be the inclusion probabilities for the conditional Poisson sampling design. Given pA, it is possible to find πA = {πA,i: i = 1, … , N} with such that the conditional Poisson sampling design with pA is asymptotically equivalent to the Poisson sampling design with the inclusion probabilities πA (Hájek, 1964; Conti, 2014). Therefore, for a high entropy design, to apply our theoretical results, we check the conditions for the corresponding inclusion probability πA under Poisson sampling.

Let , and . Define sα = ∥α*∥0, sβ = ∥β*∥0, sθ = sα + sβ and λθ = min(λα, λβ).

Assumption 5.

The following regularity conditions hold.

Condition 1. The parameter θ belongs to a compact subset in , and θ* lies in the interior of the compact subset.

Condition 2. are fixed and uniformly bounded.

-

Condition 3. There are constants c1 and c2 such that

where λmin(·) and λmax(·) are the minimum and the maximum eigenvalue of a matrix respectively.

Condition 4. Let be the ith residual. There is a constant c3 such that E{|ϵi(β*)|2+δ} ⩽ c3 for all 1 ⩽ i ⩽ N and some δ > 0. There are constants c4 and c5 such that E[exp{c4|ϵi(β*)|}|Xi] ⩽ c5 for all 1 ⩽ i ⩽ N.

Condition 5. , and are uniformly bounded away from ∞ on for some τ > 0.

Condition 6. and , as n → ∞.

Condition 7. sθ = o(n1/3), λα, λβ → 0, , , and , as n → ∞.

These assumptions are typical in the literature on penalization methods. Condition 2 specifies a fixed design which is well suited under the finite population inference framework. Condition 4 holds for Gaussian distributions, sub-Gaussian distributions, and so on. Condition 5 holds for common models. Condition 7 specifies the restrictions on the dimension of covariates p and the dimension of the true non-zero coefficients sθ. To gain insight, when the true model size sθ is fixed, condition 7 holds for p = O(n), i.e. p can be the same size as n.

We establish the asymptotic properties of the penalized estimating equation procedure.

Theorem 1.

Under assumptions 1–5, there is an approximate penalized solution , which satisfies the selection consistency properties:

| (14) |

| (15) |

| (16) |

and

| (17) |

as n → ∞:

Results (14) and (15) imply that . Results (16) and (17) imply that, with probability approaching 1, the penalized estimating equation procedure would not overselect irrelevant variables and estimate the true non-zero coefficients at the ✓(sθ/n) convergence rate, which is the so-called oracle property of variable selection.

We now establish the asymptotic properties of . Define a sequence of events , where we emphasize that depends on n but we suppress the dependence of and on n. Following the same argument as for equation (17), given the event , we have . Combining with , we have

| (18) |

By Taylor series expansion,

| (19) |

| (20) |

where is defined in equation (10). Equation (19) follows because we solve equation (9) for (α, β). Equation (20) follows because of equation (18) and assumption 5. As a result, the way for estimating (α, β) leads to asymptotic equivalence between and .

We now show that is asymptotically unbiased for μ if either πB(XTα) or m(XTβ) is correctly specified. We note that

| (21) |

If πB(XTα) is correctly specified, then πB(XTα*) = πB(X) and therefore equation (21) is 0; if is correctly specified, then and therefore equation (21) is 0.

Following the variance decomposition of Shao and Steel (1999), the asymptotic variance of the linearized term is

where the conditional distribution in E(·|IB, X, Y) and V(·|IB, X, Y) is the sampling distribution for sample A. The first term V1 is the sampling variance of the Horvitz-Thompson estimator. Thus,

| (22) |

For the second term V2, note that

Thus,

| (23) |

Theorem 2 summarizes the asymptotic properties of .

Theorem 2.

Under assumptions 1–5, if either πB(XTα) or m(XTβ) is correctly specified,

as n→∞, where V = limn→∞(V1 + V2), and V1 and V2 are defined in equations (22)) and (23) respectively.

To estimate V1, we can use the design-based variance estimator applied to as

| (24) |

To estimate V2, we further express V2 as

| (25) |

Let , and let be a consistent estimator of . We can then estimate V2 by

By the law of large numbers, is consistent for V2 regardless of whether one of or is misspecificed, and therefore it is doubly robust.

Theorem 3 (double robustness of ).

Under assumptions 1–5, if either πB(XTα) or m(XTβ) is correctly specified, is consistent for V.

Remark 5.

It is worth discussing the relationship of our proposed method to existing variable-selection methods in the survey literature. On the basis of a single probability sample source, McConville et al. (2017) proposed a model-assisted survey regression estimator of finite population totals using the lasso (the least absolute shrinkage and selection operator) to improve the efficiency. Chen, Valliant and Elliott (2018) and Chen et al. (2019) proposed model-based calibration estimators using the lasso based on non-probability samples integrating with auxiliary known totals or probability samples respectively. However, their methods require that the working outcome model includes sufficient population information and therefore are not doubly robust. To the best of our knowledge, our paper is the first to propose doubly robust inference of finite population means after variable selection.

6. Simulation study

6.1. Set-up

In this section, we evaluate the finite sample performance of the procedure proposed. We first generate a finite population with N = 10000, where Yi is a continuous or binary outcome variable, and Xi = (1, X1,i, …, Xp−1,i)T is a p-dimensional vector of covariates with the first component being 1 and other components independently generated from the standard normal distribution. We set p = 50. From the finite population, we select a non-probability sample of size nB ≈ 2000, according to the selection indicator IB,i ~ Ber(πB,i). We select a probability sample of the average size nA = 500 under Poisson sampling with πA,i ∝ (0.25 + |X1i| + 0.03|Yi|). The parameter of interest is the population mean .

For the non-probability sampling probability, we consider both linear and non-linear sampling score models:

, where α0 = (−2, 1, 1, 1, 1, 0, 0, 0, … , 0) (model PSM I);

, where α0 = (0, 0, 0, 3, 3, 3, 3, 0, … , 0)T (model PSM II).

For generating a continuous outcome variable Yi, we consider both linear and non-linear outcome models with β0 = (1, 0, 0, 1, 1, 1, 1, 0, … , 0)T:

(model OM I);

(model OM II).

For generating a binary outcome variable Yi, we consider both linear and non-linear outcome models with β0 = (1, 0, 0, 3, 3, 3, 3, 0, … , 0)T,

Y ~ Ber{πY(X)} with (model OM III);

Y ~ Ber{πY(X)} with (model OM IV).

We consider the following estimators:

naive, , the naive estimator using the simple average of Yi from sample B, which provides the degree of the selection bias of sample B;

oracle, , the doubly robust estimator , where and are based on the joint estimator restricting to the known important covariates for comparison;

p-ipw, , the penalized inverse probability of sampling weighting estimator , where using a logistic regression model, and is obtained by a weighted penalized regression of IB,i on Xi based on the combined data from sample A and sample B, with the units in sample A weighted by the known sampling weights and the units in sample B weighted by 1.

p-reg, , the penalized regression estimator , where is obtained by a penalized regression of Yi on Xi based on sample B;

p-dr0, , the penalized double estimating equation estimator based on the set of outcome predictors ;

p-dr, , the proposed penalized double estimating equation estimator based on the union of sampling and outcome predictors .

We also note that without variable selection is severely biased and unstable and therefore is excluded for comparison.

6.2. Simulation results

All simulation results are based on 500 Monte Carlo runs. Table 2 reports the selection performance of the proposed penalization procedure in terms of the proportion of underselecting (‘Under’) or overselecting (‘Over’), the average false negative results, FN (the average number of selected covariates that have the true 0 coefficients), and the average false positive results, FP (the average number of selected covariates that have the true 0 coefficients). The procedure proposed selects all covariates with non-zero coefficients in both the outcome model and the sampling score model under the true model specification. Moreover, the number of false positive results is small under the true model specification.

Table 2.

Simulation results for selection performance for the proposed double-penalized estimating equation procedure under four scenarios†

| Scenario | β* | α* | ||||||

|---|---|---|---|---|---|---|---|---|

| Under (×102) | Over (×102) | FN | FP | Under (×102) | Over (×102) | FN | FP | |

| Continuous outcome | ||||||||

| (i) OM I and PSM I | 0.0 | 31.8 | 0.0 | 1.4 | 0.0 | 0.0 | 0.0 | 0.0 |

| (ii) OM II and PSM I | 70.6 | 15.0 | 0.9 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 |

| (iii) OM I and PSM II | 0.0 | 32.8 | 0.0 | 1.4 | 100.0 | 100.0 | 4.0 | 1.0 |

| (iv) OM II and PSM II | 0.0 | 0.4 | 0.0 | 0.4 | 100.0 | 100.0 | 3.5 | 4.3 |

| Binary outcome | ||||||||

| (i) OM III and PSM I | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| (ii) OM IV and PSM I | 100.0 | 0.0 | 2.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| (iii) OM III and PSM II | 0.0 | 0.0 | 0.0 | 0.0 | 100.0 | 100.0 | 4.0 | 1.0 |

| (iv) OM IV and PSM II | 100.0 | 0.0 | 4.0 | 0.0 | 100.0 | 96.0 | 4.0 | 1.0 |

Under OMI and OMII, or OMIII and OMIV, the outcome model is respectively correctly specified and misspecified, and, under PSM I and PSM II, the probability of sampling score model is respectively correctly specified or misspecified.

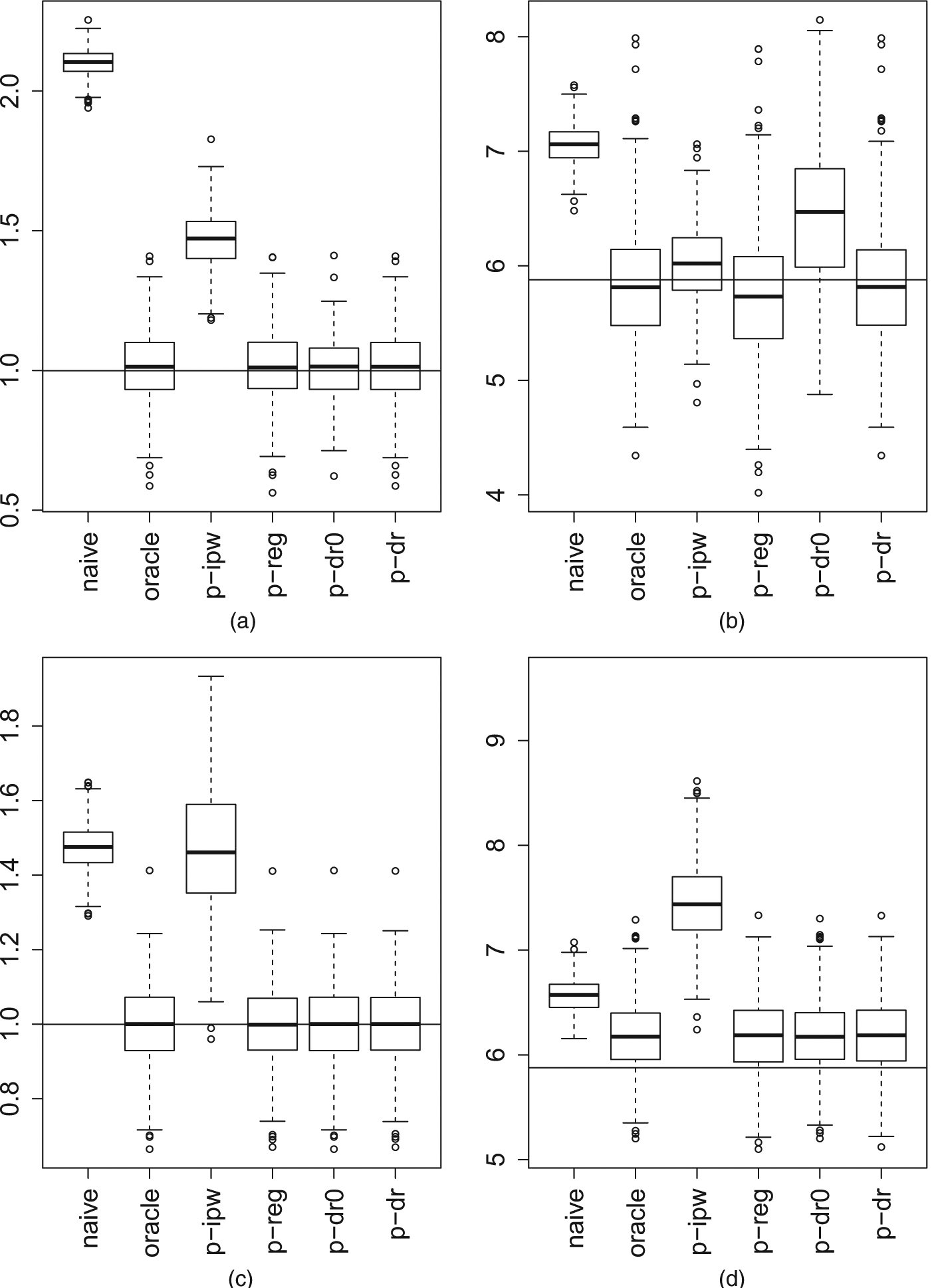

Fig. 1 displays the simulation results for the continuous outcome. The naive estimator shows large biases across all scenarios. The oracle estimator is doubly robust, in the sense that, if either the outcome or the sampling score is correctly specified, it is unbiased. The penalized inverse probability of sampling weighting estimator shows largest biases except for scenario (ii). This approach is justifiable only if the sampling rate of sample B is relatively small compared with the population size. The penalized regression estimator is only singly robust. When the outcome model is misspecified as in scenarios (ii) and (iv), it shows large biases. The proposed estimator based on is doubly robust, and its performance is comparable with the oracle estimator that requires knowing the true important variables. Moreover, is slightly more efficient than . This efficiency gain is due to using the union of covariates selected for the sampling score model and the outcome model. This is consistent with the findings in Brookhart et al. (2006) and Shortreed and Ertefaie (2017). The proposed penalized double estimating equation estimator based on is slightly more efficient than based on in scenario (i) when both the outcome and the sampling score models are correctly specified; however, has a large bias in scenario (ii) when the outcome model is misspecified and therefore is not doubly robust anymore; see remark 3.

Fig. 1.

Estimation results for the continuous outcome under four scenarios (under OM I and OM II, the outcome model is respectively correctly specified and misspecified, and, under PSM I and PSM II, the probability of sampling score model is respectively correctly specified and misspecified): (a) scenario (i),OMI and PSM I; (b) scenario (ii), OM II and PSM I; (c) scenario (iii), OM I and PSM II; (d) scenario (iv), OM II and PSM II

Fig. 2 displays the estimation results for the binary outcome. The same discussion above applies here. Moreover, when the outcome model is incorrectly specified, the oracle estimator has a large variability. In this case, the estimator proposed outperforms the oracle estimator, because the variable selection step helps to stabilize the estimation performance.

Fig. 2.

Estimation results for the binary outcome under four scenarios (under OMIII and OMIV, the outcome model is respectively correctly specified and misspecified, and, under PSM I and PSM II, the probability of sampling score model is respectively correctly specified and misspecified): (a) scenario (i), OM III and PSM I; (b) scenario (ii), OM IV and PSM I; (c) scenario (iii), OM III and PSM II; (d) scenario (iv), OM IV and PSM II

Table 3 reports the simulation results for the coverage properties for the continuous outcome and binary outcome. Under the double-robustness condition (i.e. if either the outcome model or the sampling score model is correctly specified), the coverage rates are close to the nominal coverage, whereas, if both models are misspecified, the coverage rates are off the nominal coverage.

Table 3.

Simulation results for the coverage properties for the continuous and binary outcomes: empirical coverage rate and empirical coverage rate ±2×Monte Carlo standard error

| Scenario | Results for continuous outcome | Results for binary outcome |

|---|---|---|

| (i) OM I or OM III and PSM I | 95.2 (93.3, 97.1) | 95.7 (93.9, 97.6) |

| (ii) OM II or OM IV and PSM I | 94.6 (92.6, 96.6) | 95.5 (93.6, 97.4) |

| (iii) OM I or OM III and PSM II | 96.2 (94.2, 97.8) | 95.6 (93.8, 97.5) |

| (iv) OM II or OM IV and PSM II | 88.2 (85.3, 91.1) | 42.9 (38.3, 47.6) |

7. An application

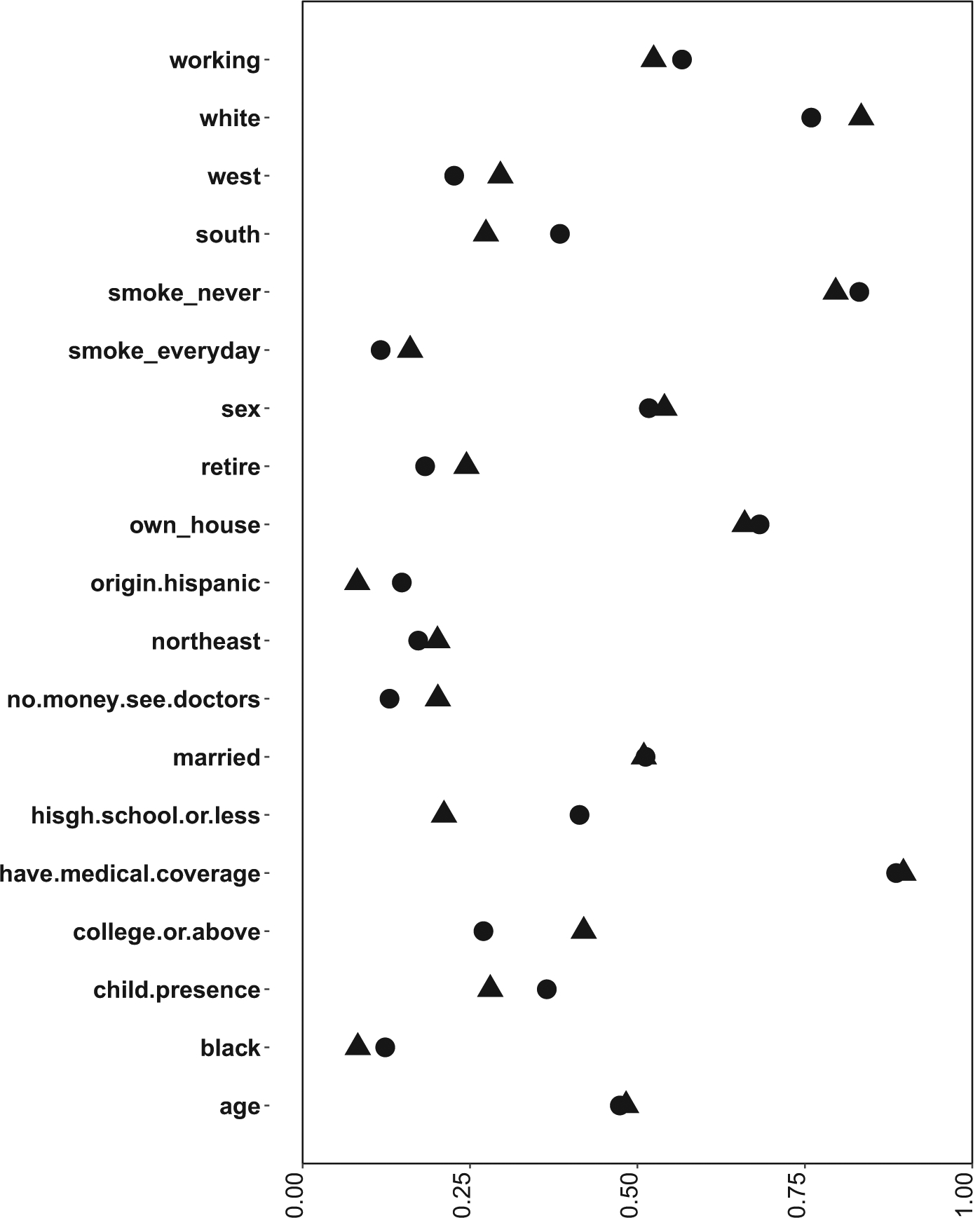

We analyse two data sets from the 2005 PRC (http://www.pewresearch.org/) and the 2005 behavioural risk factor surveillance system (BRFSS). The goal of the PRC study was to evaluate the relationship between individuals and community (Chen, Li and Wu, 2018; Kim et al., 2018). The 2005 PRC data set is from a non-probability sample provided by eight vendors, which consists of nB = 9301 subjects. We focus on two study variables: a continuous Y1 (days had at least one drink last month) and a binary Y2 (an indicator of voted in local elections). In contrast, the 2005 BRFSS sample is a probability sample, which consists of nA = 441456 subjects with survey weights. This data set does not have measurements on the study variables of interest; however, it contains a rich set of common covariates with the PRC data set listed in Fig. 3. To illustrate the heterogeneity in the study populations, Fig. 3 contrasts the covariate means from the PRC data and the design-weighted covariate means (i.e. the estimated population covariate means) from the BRFSS data set. The covariate distributions from the PRC sample and the BRFSS sample are considerably different, e.g. age, education (high school or less), financial status (no money to see doctors; own house), retirement rate and health (smoking). Therefore, the naive analyses of the study variables based on the PRC data set are subject to selection biases.

Fig. 3.

Covariate means by two samples (age is divided by 100): ● sample A; ▲ sample B

We compute the naive and proposed estimators. To apply the method proposed, we assume that the sampling score is a logistic regression model, the continuous outcome follows a linear regression model and that the binary outcome follows a logistic regression model. Using fivefold cross-validation, the double-selection procedure identifies 18 important covariates (all available covariates except for the north-east region) in the sampling score and the binary outcome model, and it identifies 15 important covariates (all available covariates except for black, an indicator of smoking every day, the north-east region and the south region) in the continuous outcome model.

Table 4 presents the point estimates, the standard errors and the 95% Wald confidence intervals. For estimating the standard error, because the second-order inclusion probabilities are unknown, following the survey literature, we compute the variance estimator in equation (24) by assuming that the survey design is single-stage Poisson sampling. We find significant differences in the results between the naive estimator and the proposed estimator. As demonstrated by the simulation study in Section 6, the naive estimator may be biased because of selection biases, and the estimator proposed utilizes a probability sample to correct for such biases. From the results, on average, the target population had at least one drink for 4.84 days over the last month, and 71.8% of the target population voted in local elections.

Table 4.

Point estimate, standard error and 95% Wald confidence interval CI

| Method | Y1 (days had at least 1 drink last month) | Y2 (whether voted in local elections) | ||||

|---|---|---|---|---|---|---|

| Estimate | Standard error | CI | Estimate ×102 | Standard error ×102 | CI×102 | |

| Naive | 5.36 | 0.90 | (5.17, 5.54) | 75.3 | 0.5 | (74.4, 76.3) |

| Proposed | 4.84 | 0.15 | (4.81, 4.87) | 71.8 | 0.2 | (71.3, 72.2) |

Supplementary Material

Acknowledgements

Dr Yang is partially supported by National Science Foundation grant DMS 1811245, National Cancer Institute grant P01 CA142538 and Oak Ridge Associated Universities. Dr Kim is partially supported by National Science Foundation grant MMS 1733572. Dr Song is partially supported by National Science Foundation grant DMS 1555244 and National Cancer Institute grant P01 CA142538. An R package IntegrativeFPM that implements the method proposed is available from https://github.com/shuyang1987/IntegrativeFPM.

Footnotes

Supplementary material

The supplementary material provides technical details and proofs.

Contributor Information

Shu Yang, North Carolina State University, Raleigh, USA.

Jae Kwang Kim, Iowa State University, Ames, USA.

Rui Song, North Carolina State University, Raleigh, USA.

References

- Bang H and Robins JM (2005) Doubly robust estimation in missing data and causal inference models. Bio-metrics, 61, 962–973. [DOI] [PubMed] [Google Scholar]

- Berger YG (1998a) Rate of convergence for asymptotic variance of the Horvitz-Thompson estimator. J. Statist. Planng Inf, 74, 149–168. [Google Scholar]

- Berger YG (1998b) Rate of convergence to normal distribution for the Horvitz-Thompson estimator. J. Statist. Planng Inf, 67, 209–226. [Google Scholar]

- Bethlehem J (2016) Solving the nonresponse problem with sample matching? Socl Sci. Comput. Rev, 34, 59–77. [Google Scholar]

- Breidt FJ, McVey A and Fuller WA (1996) Two-phase estimation by imputation. J. Ind. Soc. Agri. Statist, 49, 79–90. [Google Scholar]

- Brewer K and Donadio ME (2003) The high entropy variance of the Horvitz-Thompson estimator. Surv. Methodol, 29, 189–196. [Google Scholar]

- Brookhart MA, Schneeweiss S, Rothman KJ, Glynn RJ, Avorn J and Stürmer T (2006) Variable selection for propensity score models. Am. J. Epidem, 163, 1149–1156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buchanan AL, Hudgens MG, Cole SR, Mollan KR, Sax PE, Daar ES, Adimora AA, Eron JJ and Mugavero MJ (2018) Generalizing evidence from randomized trials using inverse probability of sampling weights. J. R. Statist. Soc A, 181, 1193–1209. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cao W, Tsiatis AA and Davidian M (2009) Improving efficiency and robustness of the doubly robust estimator for a population mean with incomplete data. Biometrika, 96, 723–734. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen Y, Li P and Wu C (2018) Doubly robust inference with non-probability survey samples. J. Am. Statist. Ass, to be published, doi 10.1080/01621459.2019.1677241. [DOI] [Google Scholar]

- Chen JKT, Valliant R and Elliott MR (2018) Model-assisted calibration of non-probability sample survey data using adaptive LASSO. Surv. Methodol, 44, 117–144. [Google Scholar]

- Chen JKT, Valliant RL and Elliott MR (2019) Calibrating non-probability surveys to estimated control totals using LASSO, with an application to political polling. Appl. Statist, 68, 657–681. [Google Scholar]

- Chernozhukov V, Chetverikov D, Demirer M, Duflo E, Hansen C, Newey W and Robins J (2018) Double/debiased machine learning for treatment and structural parameters. Econmetr. J, 21, C1–C68. [Google Scholar]

- Chipperfield J, Chessman J and Lim R (2012) Combining household surveys using mass imputation to estimate population totals. Aust. New Zeal. J. Statist, 54, 223–238. [Google Scholar]

- Conti PL (2014) On the estimation of the distribution function of a finite population under high entropy sampling designs, with applications. Sankhya B, 76, 234–259. [Google Scholar]

- De Luna X, Waernbaum I and Richardson TS (2011) Covariate selection for the nonparametric estimation of an average treatment effect. Biometrika, 98, 861–875. [Google Scholar]

- Deville J-C and Särndal C-E (1992) Calibration estimators in survey sampling. J. Am. Statist. Ass, 87, 376–382. [Google Scholar]

- DiSogra C, Cobb C, Chan E and Dennis JM (2011) Calibrating non-probability internet samples with probability samples using early adopter characteristics. Proc. Surv. Res. Meth. Sect. Am. Statist. Ass, 4501–4515. [Google Scholar]

- Elliott MR and Valliant R (2017) Inference for nonprobability samples. Statist. Sci, 32, 249–264. [Google Scholar]

- Fan J and Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Statist. Ass, 96, 1348–1360. [Google Scholar]

- Fan J and Lv J (2011) Nonconcave penalized likelihood with np-dimensionality. IEEE Trans. Inform. Theory, 57, 5467–5484. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farrell MH (2015) Robust inference on average treatment effects with possibly more covariates than observations. J. Econmetr, 189, 1–23. [Google Scholar]

- Friedman J, Hastie T, Höfling H and Tibshirani R (2007) Pathwise coordinate optimization. Ann. Appl. Statist, 1, 302–332. [Google Scholar]

- Fuller WA (2009) Sampling Statistics. Hoboken: Wiley. [Google Scholar]

- Gao X and Carroll RJ (2017) Data integration with high dimensionality. Biometrika, 104, 251–272. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grafström A (2010) Entropy of unequal probability sampling designs. Statist. Methodol, 7, 84–97. [Google Scholar]

- Hájek J (1964) Asymptotic theory of rejective sampling with varying probabilities from a finite population. Ann. Math. Statist, 35, 1491–1523. [Google Scholar]

- Han P and Wang L (2013) Estimation with missing data: beyond double robustness. Biometrika, 100, 417–430. [Google Scholar]

- Hunter DR and Li R (2005) Variable selection using MM algorithms. Ann. Statist, 33, 1617–1642 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson BA, Lin D and Zeng D (2008) Penalized estimating functions and variable selection in semiparametric regression models. J. Am. Statist. Ass, 103, 672–680. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kang JD and Schafer JL (2007) Demystifying double robustness: a comparison of alternative strategies for estimating a population mean from incomplete data. Statist. Sci, 22, 523–539. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keiding N and Louis TA (2016) Perils and potentials of self-selected entry to epidemiological studies and surveys (with discussion). J. R. Statist. Soc A, 179, 319–376. [Google Scholar]

- Kim JK and Haziza D (2014) Doubly robust inference with missing data in survey sampling. Statist. Sin, 24, 375–394. [Google Scholar]

- Kim JK, Park S, Chen Y and Wu C (2018) Combining non-probability and probability survey samples through mass imputation. Preprint. (Available from arxiv.org/abs/1812.10694.) [Google Scholar]

- Kim JK and Rao JNK (2012) Combining data from two independent surveys: a model-assisted approach. Biometrika, 99, 85–100. [Google Scholar]

- Kott PS (2006) Using calibration weighting to adjust for nonresponse and coverage errors. Surv. Methodol, 32, 133–142. [Google Scholar]

- Kott PS and Liao D (2017) Calibration weighting for nonresponse that is not missing at random: allowing more calibration than response-model variables. J. Surv. Statist. Methodol, 5, 159–174. [Google Scholar]

- Lee S and Valliant R (2009) Estimation for volunteer panel web surveys using propensity score adjustment and calibration adjustment. Sociol. Meth. Res, 37, 319–343. [Google Scholar]

- McConville KS, Breidt FJ, Lee TC and Moisen GG (2017) Model-assisted survey regression estimation with the LASSO. J. Surv. Statist. Methodol, 5, 131–158. [Google Scholar]

- Meng X-L (2018) Statistical paradises and paradoxes in big data (I): law of large populations, big data paradox, and the 2016 US presidential election. Ann. Appl. Statist, 12, 685–726. [Google Scholar]

- O’Muircheartaigh C and Hedges LV (2014) Generalizing from unrepresentative experiments: a stratified propensity score approach. Appl. Statist, 63, 195–210. [Google Scholar]

- Patrick AR, Schneeweiss S, Brookhart MA, Glynn RJ, Rothman KJ, Avorn J and Stürmer T (2011) The implications of propensity score variable selection strategies in pharmacoepidemiology: an empirical illustration. Pharmepidem. Drug Safty, 20, 551–559. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rivers D (2007) Sampling for web surveys Jt Statist. Meet, Salt Lake City. [Google Scholar]

- Rosenbaum PR and Rubin DB (1983) The central role of the propensity score in observational studies for causal effects. Biometrika, 70, 41–55. [Google Scholar]

- Shao J and Steel P (1999) Variance estimation for survey data with composite imputation and nonnegligible sampling fractions. J. Am. Statist. Ass, 94, 254–265. [Google Scholar]

- Shortreed SM and Ertefaie A (2017) Outcome-adaptive lasso: variable selection for causal inference. Biometrics, 73, 1111–1122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stuart EA, Bradshaw CP and Leaf PJ (2015) Assessing the generalizability of randomized trial results to target populations. Prev. Sci, 16, 475–485. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stuart EA, Cole SR, Bradshaw CP and Leaf PJ (2011) The use of propensity scores to assess the generalizability of results from randomized trials. J. R. Statist. Soc A, 174, 369–386. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tillé Y (2011) Sampling Algorithms. Berlin: Springer. [Google Scholar]

- Tsiatis A (2006) Semiparametric Theory and Missing Data. New York: Springer. [Google Scholar]

- Valliant R and Dever JA (2011) Estimating propensity adjustments for volunteer web surveys. Sociol. Meth. Res, 40, 105–137. [Google Scholar]

- Yang S and Kim JK (2018) Integration of survey data and big observational data for finite population inference using mass imputation. Preprint arXiv:1807.02817 [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.