Abstract

Objective

To study the association between provider price display in the Electronic Health Record (EHR)/Computerized Physician Order Entry (CPOE) and the domains of healthcare quality (efficiency, effective care, patient-centered care, patient safety, equitable care, and timeliness of care).

Methods

Randomized and non-randomized studies published between 1/1/1980 and 2/1/2018 that assessed the relationship between healthcare quality domains and EHR/CPOE provider price display were included. MEDLINE, Web of Science, and Embase were searched. The internal validity of the included studies was assessed with a modified Downs-Black checklist.

Results

Of 1118 abstracts screened, 41 manuscripts were selected for full-text review, and 13 studies were included in the final analysis. All 13 studies reported on the efficiency domain, one on effectiveness, and one on patient safety. No studies assessing the relationship between provider price display and the patient-centered, equitable, or timely care domains were retrieved. Study quality varied widely (range 6-12 out of a maximum possible score of 13). Provider price display in the electronic health record environment did not consistently influence the efficiency, effectiveness, or patient safety domains of healthcare quality.

Conclusions

Published evidence suggests that price display tools aimed at ordering providers in EHR/CPOE do not influence the efficiency domain of healthcare quality. Scant published evidence suggests that they do not influence the effectiveness and patient safety domains either. Future studies are needed to assess the relationship between provider price display and the unexplored domains of healthcare quality (patient-centered, equitable, and timely care).

Registration

PROSPERO registration: CRD42018082227

Keywords: computerized physician order entry system, data display, fees and charges, diagnostic techniques and procedures, physician practice patterns, attitude of health personnel, healthcare quality

Introduction

Gaps in physician price awareness are well recognized.1,2 Lack of price awareness has been associated with increased resource utilization,3 contributing to reduced efficiency, one of the domains of healthcare quality.4 Improved price awareness has the potential to help providers and patients use healthcare dollars more efficiently.

Physician education and feedback, used as strategies to increase price awareness, have however yielded equivocal cost-containment results.5 As a price awareness strategy, price display tools have been hypothesized to reduce inefficiency by improving knowledge about costs,6,7 thereby changing ordering behavior. Price display on paper in the pre-electronic health record era was associated with reduced costs.8,9 Coinciding with the introduction of electronic health records (1990-2000) and the diffusion of EHR adoption (2000-2015), various authors10–26 studied the impact of price display during computerized physician order entry on healthcare quality domains such as efficiency, effectiveness, and safety. These studies differed in setting, design, and conclusions.

Previous systematic reviews of the relationship between price display and costs concluded that price display is associated with improved efficiency (ie reduced costs of care) without affecting patient safety.27,28 However, these reviews pooled studies of price display in electronic and non-electronic (ie paper-based) health record environments in their analyses. Information processing and retention differ by mode of display: learning from a paper display is better than learning from an electronic display, a phenomenon termed “screen inferiority.”29,30 Learning from text under time pressure, a factor common to EHR order entry, is known to be less effective on an electronic screen than on paper.31 For this reason, findings from studies of price display in the paper era are likely not applicable to the current electronic health record era.

A systematic review focused on provider price display in the electronic health record during computerized physician order entry was undertaken to study the relationship between price display and the domains of healthcare quality4 (efficiency [costs], effectiveness, patient safety, timely care, patient-centered care, and equitable care).

Methods

Data sources and search

A systematic review of studies published between 1/1/1980 and 2/1/2018 was performed based on searches of MEDLINE (PubMed), CINAHL (EBSCOhost), Scopus, Web of Science, and Embase databases.

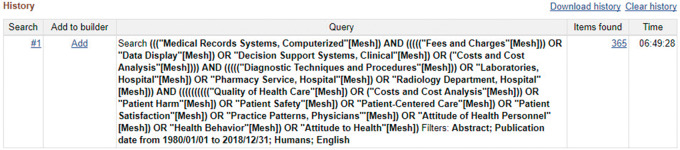

Results were restricted to the English language. The following keywords were used: Computerized Medical Records Systems, Fees and Charges, Data Display, Clinical Decision Support Systems, Diagnostic Techniques and Procedures, Hospital Laboratories, Hospital Pharmacy Service, Hospital Radiology Department, Quality of Health Care, Costs and Cost Analysis, Patient Harm, Patient Safety, Patient-Centered Care, Patient Satisfaction, Physician Practice Patterns, Attitude of Health Personnel, Health Behavior, Attitude to Health. Medical subject headings (MeSH) corresponding to these terms were used in MEDLINE searches, and the keywords listed above were used in the other databases. In the final phase of search execution, the terms were combined using Boolean operators. An example of a search execution is provided in Figure 1. The PRISMA32 checklist is supplied as Supplementary Appendix 3.

Figure 1.

An example of the search execution.
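The Boolean combination step can be illustrated with a short Python sketch. It assumes the common convention of joining synonyms for one concept with OR and joining different concepts with AND. The three concept groups below are a hypothetical selection from the keyword list above, not the search string the authors actually executed (Figure 1 shows that example); the helper names `or_group` and `build_query` are illustrative. `[MeSH Terms]` is PubMed's field tag for MeSH headings.

```python
def or_group(terms: list[str], tag: str = "MeSH Terms") -> str:
    """Join synonyms for one concept with OR, tagging each term with a PubMed field."""
    return "(" + " OR ".join(f'"{t}"[{tag}]' for t in terms) + ")"


def build_query(concepts: list[list[str]]) -> str:
    """Combine concept groups with AND so every hit matches each concept."""
    return " AND ".join(or_group(group) for group in concepts)


# Hypothetical grouping of the review's keywords into three concepts.
setting = ["Computerized Medical Records Systems", "Clinical Decision Support Systems"]
intervention = ["Fees and Charges", "Data Display"]
outcome = ["Quality of Health Care", "Costs and Cost Analysis", "Patient Safety"]

query = build_query([setting, intervention, outcome])
print(query)
```

Grouping synonyms with OR widens recall within a concept, while AND across concepts keeps only records that mention the setting, the intervention, and an outcome together.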

In addition, a “pearl-growing”33 strategy was applied to the reference lists of well-cited reviews and of the retrieved search results; studies identified in this way were included for analysis in the full-text review phase. Institutional Review Board approval was unnecessary because this was a systematic review of published literature and did not involve human subjects.

Study selection

Inclusion and exclusion criteria were framed before the search strategy was implemented and were registered with PROSPERO, the international prospective register of systematic reviews (https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=82227; #CRD42018082227).34 To evaluate the effect of price display in computerized physician order entry (CPOE) on healthcare quality, we included studies based on the following PICO(T) criteria:

Population: Physicians requesting or patients receiving care orders (laboratory, imaging, pharmacy and procedural) through computerized physician order entry.

Intervention: Group that was exposed to price display tools during laboratory, imaging, procedural and pharmaceutical orders in CPOE.

Comparator/Control: Concurrent or historical group that received care orders through CPOE and usual workflow of the ordering provider without price display.

Outcomes: Healthcare quality domains as defined by the National Academy of Medicine (formerly the Institute of Medicine)4: efficiency, measured by costs or total number of orders; effectiveness, measured by number of appropriate or inappropriate orders; patient safety/harm; patient-centered care markers; and timely care.

Timing and effect measures: Price display intervention performed for ≥ 6 months.

Non-English publications, case reports, studies with additional co-interventions during the study period (eg price display accompanied by radiation dose display, or by introduction of a computerized physician order entry system), studies without a historical or concurrent control group, and studies in which the price display intervention lasted less than 6 months were excluded. An internet-based platform (Covidence systematic review software, Veritas Health Innovation, Melbourne, Australia) was used to import search results from the databases, and Covidence automatically removed duplicates during import. Two authors [SM, RM] independently screened titles and abstracts in Covidence to select studies for full-text review. Covidence generated a record of votes sorted into “irrelevant,” “full text screening,” and “disagreement” categories. Disagreements were resolved by discussion.

Data extraction and outcome measures

One author (SM) extracted and rated the data from the selected full-length articles using a standardized form. From each study, the data abstracted included study name/year, setting, study design (prospective controlled, randomized controlled trial, retrospective etc.), type of computerized physician order entry (CPOE [imaging vs laboratory vs procedures etc.]), population, intervention group(s), design of the price display intervention, comparator group(s), outcomes, and the results.

The National Academy of Medicine’s definition of healthcare quality4 was used to assign each reported outcome to a domain (efficiency, effectiveness, timely care, patient-centered care, equitable care, and safe care). For example, a study assessing whether price display in CPOE resulted in lower charges to the patient would have been categorized into the efficiency domain. A study assessing whether price display in CPOE increased patient satisfaction because fewer invasive specimens were collected would have been categorized into the patient-centered care domain.

While extracting data from the full text articles, study results pertaining to overall analyses were prioritized over subgroup analyses. Results from exploratory analyses were not considered. Weighted and adjusted analyses were given priority over unweighted and unadjusted analyses.

Quality assessment criteria

Studies that met inclusion criteria were evaluated for risk of bias using a modified Downs-Black checklist.35 Thirteen questions from the internal validity (bias and confounding) sections of the original Downs-Black checklist35 were used in our quality assessment, for a maximum possible score of 13. The modified Downs-Black checklist with individual scoring for each study is supplied in Supplementary Appendix 1.

Results

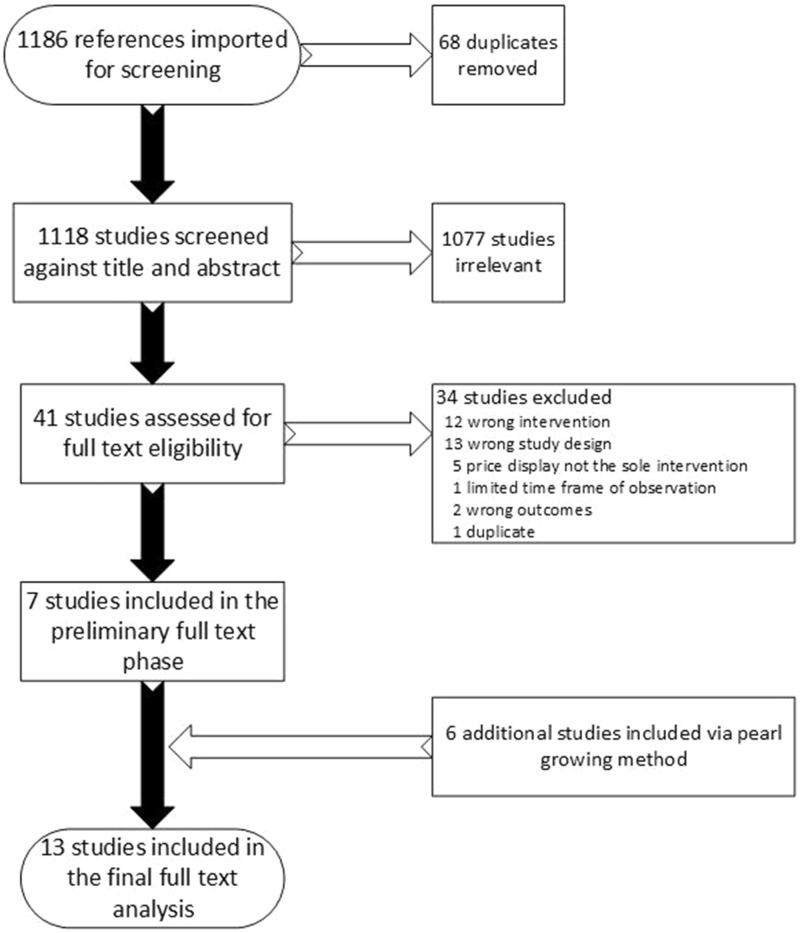

The initial search identified 1118 possible studies. Two reviewers assessed these titles and abstracts independently, with fair interrater reliability (Cohen’s κ = 0.33).36 After consensus was reached, 41 studies were selected for full-text review, and the complete articles were independently assessed by two authors (SM, RM). Applying the inclusion and exclusion criteria excluded 34 studies, with moderate interrater reliability (Cohen’s κ = 0.53).36 This left 7 studies in the preliminary inclusion pool. Another 6 studies identified by pearl growing from reference lists were added, for a total of 13 studies10–21,26 in the final analysis. These comprised 8 randomized controlled trials,10,12,15,16,18,20,21,26 2 interrupted time series studies,14,19 2 controlled clinical trials,11,13 and a prospective comparative study.17 The study selection process is shown in Figure 2.

Figure 2.

Flowsheet of study selection process.
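For readers unfamiliar with the κ statistic reported above, the following is a minimal Python sketch of Cohen's kappa for two raters: observed agreement corrected for the agreement expected by chance from each rater's marginal totals. The 2×2 vote table is hypothetical and is not the review's screening data. The verbal labels assume the widely used Landis and Koch bands, which match the "fair" (0.33) and "moderate" (0.53) descriptions in the text; the function names are illustrative.

```python
def cohens_kappa(table: list[list[int]]) -> float:
    """Cohen's kappa from a square agreement table.

    table[i][j] counts items that rater A placed in category i and rater B in category j.
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: share of items on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: product of the two raters' marginal proportions, summed.
    row_tot = [sum(table[i]) for i in range(k)]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2
    return (p_o - p_e) / (1 - p_e)


def landis_koch(kappa: float) -> str:
    """Map kappa to the Landis-Koch agreement bands (assumed interpretation scheme)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical include/exclude votes (rows: reviewer A, columns: reviewer B).
votes = [[20, 5],
         [10, 15]]
k = cohens_kappa(votes)
print(round(k, 2), landis_koch(k))           # 0.4 fair
print(landis_koch(0.33), landis_koch(0.53))  # fair moderate
```

In this example the reviewers agree on 70% of items, but half of that agreement would be expected by chance alone, so κ is 0.4, which illustrates why a modest κ can coexist with a fairly high raw agreement rate.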

All 13 studies examined the relationship between price display and the efficiency domain of healthcare quality. One study20 additionally assessed the effectiveness domain, and another10 the patient safety domain. None of the included studies assessed the relationship between price display and patient-centered, timely, or equitable care.

The quality (risk of bias) of the included studies varied widely and is reported in Table 1 (range 6-12, out of a maximum possible score of 13). Study designs varied as described above. Randomized studies differed in the level of randomization: 4 were randomized at the level of the test,15,16,21,26 2 at the level of the ordering provider,18,20 1 at the level of the patient,12 and 1 at the level of the physician’s computer session.10 Populations and settings also varied: 4 studies were conducted in community outpatient settings staffed by providers who had completed graduate medical education,11,14,18,20 and 9 in inpatient and outpatient settings of teaching hospitals.10,12,13,15–17,19,21,26 The design of the price display also varied: 2 studies displayed cost data,18,20 2 used hospital input cost,17,19 7 used charge data,10,12,14–16,21,26 and 2 displayed wholesale market price.11,13

Table 1.

Quality assessment of the included studies

| Study/Year | Study type | Modified Downs-Black score | Quality problems |
|---|---|---|---|
| Schmidt et al. 2017 (ref 26) | RCT | 8/13 | Unclear whether investigators and statisticians were blinded to the intervention group during analyses. Unclear whether randomization allocation was concealed from providers. Lack of a comprehensive set of adjustment variables, such as severity of illness. |
| Chien et al. 2017 (ref 18) | RCT | 10/13 | Unclear whether investigators and statisticians were blinded to the intervention group during analyses. Unclear whether randomization allocation was concealed from the providers. |
| Sedrak et al. 2017 (ref 21) | RCT | 12/13 | No randomization at the level of the clinician; this design was chosen to prevent contamination between groups. |
| Conway et al. 2017 (ref 19) | Retrospective interrupted time series | 8/13 | Retrospective, non-randomized design. Lack of a comprehensive set of adjustment variables, such as severity of illness. |
| Chien 2017 (ref 20) | RCT | 10/13 | Unclear whether investigators and statisticians were blinded to the intervention group during analyses. Unclear whether randomization allocation was concealed from the providers. |
| Fang et al. 2014 (ref 17) | Prospective comparative | 6/13 | Non-randomized design. Interrupted time series design not employed. Analyses compared two groups recruited over different periods of time. Control cohort differs from intervention cohort in baseline characteristics. |
| Durand et al. 2013 (ref 16) | RCT | 8/13 | Unclear whether investigators and statisticians were blinded to the intervention group during analyses. Lack of a comprehensive set of adjustment variables, such as severity of illness. No randomization at the level of the clinician; this design was chosen to prevent contamination between groups. |
| Feldman et al. 2013 (ref 15) | RCT | 10/13 | Unclear whether investigators and statisticians were blinded to the intervention group during analyses. Lack of a comprehensive set of adjustment variables, such as severity of illness. No randomization at the level of the clinician; this design was chosen to prevent contamination between groups. |
| Horn 2014 (ref 14) | Interrupted time series with a control group | 6/13 | Non-randomized design. Significant baseline differences in characteristics of patients seen by the intervention and control groups of providers, not controlled for. Chronic disease burden in the two groups not reported. |
| Ornstein et al. 1999 (ref 13) | Controlled clinical trial | 7/13 | Non-randomized design. No concurrent control (historical control used). No estimate of chronic illness burden in the intervention and control periods. |
| Bates et al. 1997 (ref 12) | RCT | 11/13 | Unclear whether investigators and statisticians were blinded to the intervention group during analyses. |
| Vedsted et al. 1997 (ref 11) | Controlled trial | 8/13 | Non-randomized design. Unclear whether investigators and statisticians were blinded to the intervention group during analyses. Lack of comprehensive multifactorial analyses. |
| Tierney et al. 1990 (ref 10) | RCT | 10/13 | Lack of comprehensive multifactorial analyses. Intervention period coincided with the arrival of new trainees, which was not controlled for in the analyses. |

Blinding study subjects (providers) to the intervention was not possible in any study due to the nature of the intervention.

RCT: randomized controlled trial.
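The scoring rule behind Table 1 can be made explicit with a small Python sketch. The text implies one point per item (13 internal-validity items, maximum score 13); the sketch assumes each item scores 1 for "yes" and 0 for "no" or "unable to determine," and the helper `downs_black_score` is a hypothetical name. The scores are transcribed from Table 1 and reproduce the 6-12 range reported in the Results.

```python
MAX_SCORE = 13  # one point per internal-validity item used in this review


def downs_black_score(answers: list[bool]) -> int:
    """Score the modified checklist: 1 point per item answered 'yes' (assumed rule)."""
    if len(answers) != MAX_SCORE:
        raise ValueError(f"expected {MAX_SCORE} item answers, got {len(answers)}")
    return sum(answers)


# Modified Downs-Black scores transcribed from Table 1.
table1_scores = {
    "Schmidt 2017": 8, "Chien 2017 (ref 18)": 10, "Sedrak 2017": 12,
    "Conway 2017": 8, "Chien 2017 (ref 20)": 10, "Fang 2014": 6,
    "Durand 2013": 8, "Feldman 2013": 10, "Horn 2014": 6,
    "Ornstein 1999": 7, "Bates 1997": 11, "Vedsted 1997": 8,
    "Tierney 1990": 10,
}

lo, hi = min(table1_scores.values()), max(table1_scores.values())
print(f"{len(table1_scores)} studies, range {lo}-{hi} of {MAX_SCORE}")
# -> 13 studies, range 6-12 of 13
```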

Impact on efficiency domain

Results based on data extraction are presented in Table 2. Of the 13 included studies, 10 did not find a relationship between price display and cost of care, while 3 reported that price display was associated with cost savings. The more recent randomized controlled trials (2016 and 2017) did not find any relationship between provider price display and efficiency. None of the 4 studies conducted in community settings staffed by physicians who had completed graduate training showed a relationship between price display and cost savings.11,14,18,20 Similarly, 6 studies conducted in inpatient and outpatient settings of teaching hospitals found no cost savings with price display.12,13,16,19,21,26 Two randomized controlled trials in teaching hospitals, 1 in an inpatient15 and 1 in an outpatient setting,10 showed cost savings with price display. One prospective non-randomized study restricted to reference laboratory tests (ie tests sent to an outside laboratory) showed significant cost savings.17
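As a bookkeeping aid, the citation groups reported in the Results can be checked against each other: each study characteristic, and the efficiency finding, should split the included studies into disjoint groups that together account for all of them. The Python sketch below transcribes reference numbers from the text (refs 10-21 and 26 are the 13 included studies; the randomization levels apply only to the 8 RCTs). The helper `is_partition` is a hypothetical name introduced for illustration.

```python
# Reference numbers of the 13 included studies and of the 8 RCTs.
INCLUDED = set(range(10, 22)) | {26}
RCTS = {10, 12, 15, 16, 18, 20, 21, 26}

# Citation groups transcribed from the Results text.
randomization_level = {
    "test": {15, 16, 21, 26},
    "ordering provider": {18, 20},
    "patient": {12},
    "computer session": {10},
}
setting = {
    "community outpatient": {11, 14, 18, 20},
    "teaching hospital": {10, 12, 13, 15, 16, 17, 19, 21, 26},
}
price_type = {
    "cost": {18, 20},
    "hospital input cost": {17, 19},
    "charge": {10, 12, 14, 15, 16, 21, 26},
    "wholesale market price": {11, 13},
}
efficiency_finding = {
    "no cost savings": {11, 14, 18, 20, 12, 13, 16, 19, 21, 26},
    "cost savings": {15, 10, 17},
}


def is_partition(groups: dict[str, set[int]], universe: set[int]) -> bool:
    """True if the groups are pairwise disjoint and together cover `universe`."""
    members = [ref for group in groups.values() for ref in group]
    return len(members) == len(set(members)) and set(members) == universe


for name, groups, universe in [
    ("randomization level", randomization_level, RCTS),
    ("setting", setting, INCLUDED),
    ("price type", price_type, INCLUDED),
    ("efficiency finding", efficiency_finding, INCLUDED),
]:
    sizes = {label: len(g) for label, g in groups.items()}
    print(name, sizes, is_partition(groups, universe))
```

Each line should print `True`, confirming that the counts in the text (for example, 10 studies without and 3 with cost savings) add up to the 13 included studies with no study counted twice.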

Table 2.

Summary of results in the efficiency domain of healthcare quality

| Study/Year | Setting | Study design & intervention duration | Orders studied | Population | Intervention group(s) | Design of the intervention | Comparator group(s) | Outcomes | Results |
|---|---|---|---|---|---|---|---|---|---|
| Schmidt et al. 2017 (ref 26) | Academic inpatient & outpatient services |  | Laboratory | 1200 physicians and trainees in a 527-bed tertiary care hospital | 228 laboratory tests assigned to the Medicare allowable fee display group | Display of Medicare allowable reimbursement |  |  |  |
| Chien et al. 2017 (ref 18) | Community ACO; outpatient |  |  | 506 general pediatricians, adult subspecialists, and advanced practitioners caring for >160 000 patients aged 0-21 years |  |  |  |  |  |
| Sedrak et al. 2017 (ref 21) | Academic inpatient services | RCT; randomized at the level of test; 1 year | Laboratory | Trainees, advanced practitioners, and faculty doctors involved in the care of 142 921 hospital admissions | 30 laboratory test groups, stratified by cost, assigned to the Medicare allowable fee display group | Display of Medicare allowable reimbursement |  |  |  |
| Conway et al. 2017 (ref 19) | Academic inpatient services | Retrospective interrupted time series; 6 months | Medications | Trainee and faculty doctors in a 1145-bed hospital | 9 intravenous medication orders |  |  | Change in number of orders per 10 000 patient days following intervention | No change in the number of orders or ordering trends following intervention |
| Chien 2017 (ref 20) | Community ACO; outpatient | RCT; randomized at the level of clinician; 11 months | Imaging and procedures | 1205 primary care physicians, specialists, and advanced practitioners caring for ∼400 000 patients aged ≥21 years |  |  |  |  |  |
| Fang et al. 2014 (ref 17) | Academic inpatient services | Prospective comparative study; 9 months | Reference laboratory tests (send-out tests) | Trainee and faculty doctors in a 613-bed hospital with ∼25 000 inpatient admissions/year | 12 506 reference laboratory orders that displayed cost and turnaround time | Display of cost and turnaround time for each send-out test |  |  |  |
| Durand et al. 2013 (ref 16) | Academic inpatient services | RCT; randomized at the level of test; 6 months | Imaging | Trainee and faculty doctors in a 1025-bed hospital | A group of 5 radiology tests with price display | Display of Medicare allowable charge |  | Mean relative utilization change in display and no-display groups between the baseline and intervention periods | No significant difference in mean relative utilization between the two groups |
| Feldman et al. 2013 (ref 15) | Academic inpatient services | RCT; randomized at the level of test; 6 months | Laboratory |  | A group of 30 laboratory tests with price display | Display of Medicare allowable charge |  |  |  |
| Horn 2014 (ref 14) | Community ACO; outpatient |  | Laboratory | Adult primary care practitioners |  | Display of Medicare reimbursement rate |  | Monthly physician ordering rate | No significant overall change in monthly physician ordering rate (modest decrease for 5 tests [0.4-5.6 orders/1000 visits/month]; no change for 22 tests) |
| Ornstein et al. 1999 (ref 13) | Academic outpatient | Controlled trial; 6 months | Medications | Trainee and faculty doctors in an academic family practice clinic providing care to 12 500 patients | All providers practicing in the center in the intervention period (6 months) |  |  | Mean prescription cost per patient visit | No difference in overall prescription drug costs to the patients |
| Vedsted et al. 1997 (ref 11) | Community outpatient service | Prospective controlled trial; 2 years | Medications | Outpatient family medicine physicians in Aarhus county serving 600 000 patients | 28 doctors using the APEX EMR and its price comparison module | Price comparison module shows the price of each prescription, indicates whether economical alternatives exist, and allows substitution | Doctors not using APEX EMR or any EMR (n=231) | Trend in prescribed defined daily doses (DDD) | No significant differences in the trend in prescribed DDD between the intervention and control groups |
| Bates et al. 1997 (ref 12) | Academic inpatient services |  | Imaging tests | Trainee and faculty doctors in a 720-bed hospital performing 240 000 tests/year | Inpatient medical and surgical patients randomized to charge display during the study period (n=8728) | Display of charges and a “cash register” window showing the sum of total charges for tests ordered | Inpatient medical and surgical patients randomized to no charge display during the study period (n=8653) |  |  |
| Tierney et al. 1990 (ref 10) | Academic outpatient internal medicine clinic |  | Laboratory and imaging orders | Trainee and faculty doctors in an academic internal medicine clinic serving 12 000 patients | A group of 16 sessions/week in which charges were displayed to physicians | Display of charges per test and the total charges for all tests ordered for the patient during the session | A group of 16 sessions/week in which charges were not displayed to physicians |  |  |

ACO: accountable care organization, a type of risk-bearing health care organization that benefits financially from lower overall spending.

RCT: randomized controlled trial.

Impact on other domains

The only study that examined effectiveness in relation to price display found that effectiveness did not improve with price display.20 Results are displayed in Table 3. The study that additionally examined patient safety did not find a relationship between price display and safety.10 As noted above, our search found no studies assessing the relationship between price display and the patient-centered, timely, or equitable care domains.

Table 3.

Summary of results in the effectiveness and safety domains of healthcare quality

| Study/Year | Setting | Study design & intervention duration | Orders & domain studied | Population | Intervention group(s) | Design of the intervention | Comparator group(s) | Outcomes | Results |
|---|---|---|---|---|---|---|---|---|---|
| Chien 2017 (ref 20) | Community ACO; outpatient | RCT; randomized at the level of clinician; 11 months | Imaging and procedure orders; effectiveness | 1205 primary care physicians, specialists, and advanced practitioners caring for ∼400 000 patients aged ≥21 years |  |  |  |  | No difference in rate of appropriate and inappropriate orders between the intervention and control groups |
| Tierney et al. 1990 (ref 10) | Academic outpatient internal medicine clinic |  | Imaging and laboratory orders; patient safety | Trainee and faculty doctors in an academic internal medicine clinic serving 12 000 patients | A group of 16 sessions/week in which charges were displayed to physicians | Display of charges per test and the total charges for all tests ordered for the patient during the session | A group of 16 sessions/week in which charges were not displayed to physicians |  |  |

ACO: accountable care organization, a type of risk-bearing health care organization that benefits financially from lower overall spending.

RCT: randomized controlled trial.

Emergency Room.

Due to the heterogeneity of the study designs, interventions and outcomes, a meta-analysis was not feasible. Additional quantitative details of significant and non-significant findings in each study are presented in the Supplementary Appendix 2.

Discussion

Many experts believe that price display in the electronic health record (EHR) during computerized physician order entry (CPOE) is quick to implement and easy to maintain. Price display was therefore hypothesized to be a feasible and powerful tool for reducing costs of care. However, this review concludes that provider price display in the EHR does not consistently reduce the costs of care related to laboratory, imaging, and procedural orders across settings (ie outpatient, inpatient, community, and teaching hospitals). This conclusion contrasts directly with the findings of previous systematic reviews in this field.27,28 Our review differs from previous reviews in that it includes 4 additional high-quality randomized controlled trials, each involving >140 000 patient days.18,20,21,26 This review also excluded studies of price display on paper,8,9,37 which have usually shown significant cost savings and were included in previous reviews. This exclusion is an important departure from existing reviews, because comprehension and learning, especially under time constraints, differ between paper and electronic screen environments (electronic screen-based learning is inferior, a phenomenon termed screen inferiority).29–31

Potential explanations for why provider price display in EHRs does not reduce costs of care include screen inferiority,29 reduced visibility of non-intrusive price display,26 price display not being accessible to patients, a perceived need for a diagnostic test that overrides cost concerns, and the fact that displayed prices do not convey complete information about the true costs of care. Two studies18,20 displayed prices close to the real costs of diagnostic tests and found no cost savings associated with displaying such information. In some cases, price display tools can even increase utilization of diagnostic tests. Sedrak et al.21 found a modest relative increase (2%) in tests performed per patient day in the group randomized to price display. Likewise, Chien et al.18 found increased resource utilization among adult subspecialists caring for children when they were randomized to price display. One possible explanation is a tendency to order tests when the displayed price is much lower than expected. Such unintended consequences should be weighed before routine EHR price display is advocated38 on a “no benefit, no harm” rationale despite its lack of demonstrated efficacy. In contrast to provider price display, giving patients access to price information has the potential for significant cost reductions, as shown by 2 recent studies focused on patient price awareness.39,40

An important distinction needs to be made between the types of orders studied (laboratory, imaging, and procedural orders). Characteristics of diagnostic tests and ordering circumstances likely influence whether their utilization can be reduced. For example, inpatient imaging tests are not usually ordered daily, except for the chest x-ray in the intensive care unit.16 Imaging is plausibly ordered in response to a new clinical event. This may explain why none of the studies that analyzed imaging orders and price display showed significant cost savings: a changing clinical context overrides cost concerns. Laboratory tests, however, are usually drawn daily because of the typical design of institutional or provider-customized EHR admission order sets executed on the day of admission. Design factors such as pre-checked daily laboratory orders (eg “complete blood count Q AM”) result in default daily laboratory draws and a potential loss of price display opportunities, since the price is presumably seen only once, when the recurring order is placed. This loss of repeated opportunities to see the price could have diminished any benefit of price display, especially in the inpatient studies. While price display did not consistently reduce laboratory test utilization, other equally simple design-based interventions, such as eliminating default daily laboratory draw frequencies in the EHR, resulted in significant reductions.24,41,42 Eliminating default daily laboratory orders is a particularly promising intervention, as the prevalence of patients receiving admission-day orders for daily recurring laboratory tests has been reported to be as high as 95% in a large urban teaching hospital.42

An argument can be made for improving the design of existing passive price display tools by creating interactive, second-generation tools built on sophisticated clinical decision support architecture. However, adding more visible information and requiring additional provider-computer interaction has potential negative consequences, such as physician dissatisfaction (increased time spent in CPOE) and the greater investment needed to design and maintain these tools. If such interactive second-generation tools are pursued, their design should incorporate accepted best practices43 to maximize real-world effectiveness.

While gaps in physician price awareness are well established, no single intervention aimed at improving physician price awareness is likely to achieve cost containment on its own. Current evidence suggests that bundles of interventions, combining redesign of electronic health record orders and order sets to eliminate routine daily inpatient ordering, provider and patient education, patient price awareness,39,40 and audit and feedback, are likely the most promising route to cost containment.5

Our review has limitations. We were not able to perform a quantitative synthesis of our findings because of significant heterogeneity among the included studies. Results were restricted to the English language, and we were unable to obtain any unpublished studies. However, because publication bias typically favors positive findings and the included studies were consistently negative, the effect of any publication bias on our conclusions is likely to be negligible. Strengths include a robust search strategy and comprehensive a priori inclusion and exclusion criteria.

Conclusion

Published evidence suggests that price display tools aimed at ordering providers in EHR/CPOE do not influence the efficiency domain of healthcare quality. Scant published evidence suggests that they do not influence the effectiveness and patient safety domains either. Future studies are needed to assess the relationship between provider price display and the unexplored domains of healthcare quality (patient-centered, equitable, and timely care).

FUNDING

This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests

None.

Contributors

Dr Srinivas R Mummadi has made substantial contributions to the conception or design of the work and in drafting the work and revising it critically for important intellectual content and approved the final version for publication. Dr Mummadi has agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. Dr Raghav Mishra has made substantial contributions to the conception or design of the work and in drafting the work and revising it critically for important intellectual content and approved the final version for publication. Dr Mishra has agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

ACKNOWLEDGMENTS

The authors wish to thank Dr Alyna Chien and Dr Annette Totten for their thoughtful review of the manuscript.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

REFERENCES

- 1. Allan GM, Lexchin J, Wiebe N. Physician awareness of drug cost: a systematic review. PLoS Med 2007; 4(9): e283.

- 2. Allan GM, Lexchin J. Physician awareness of diagnostic and nondrug therapeutic costs: a systematic review. Int J Technol Assess Health Care 2008; 24(2): 158–65.

- 3. Sedrak MS, Patel MS, Ziemba JB, et al. Residents’ self-report on why they order perceived unnecessary inpatient laboratory tests. J Hosp Med 2016; 11(12): 869–72.

- 4. Institute of Medicine Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press (US); 2001.

- 5. Eaton KP, Levy K, Soong C, et al. Evidence-based guidelines to eliminate repetitive laboratory testing. JAMA Intern Med 2017; 177(12): 1833–9.

- 6. Long T, Bongiovanni T, Dashevsky M, et al. Impact of laboratory cost display on resident attitudes and knowledge about costs. Postgrad Med J 2016; 92(1092): 592–6.

- 7. DeMarco SS, Paul R, Kilpatrick RJ. Information system technologies’ role in augmenting dermatologists’ knowledge of prescription medication costs. Int J Med Inform 2015; 84(12): 1076–84.

- 8. Hampers LC, Cha S, Gutglass DJ, Krug SE, Binns HJ. The effect of price information on test-ordering behavior and patient outcomes in a pediatric emergency department. Pediatrics 1999; 103(4 Pt 2): 877–82.

- 9. Sachdeva RC, Jefferson LS, Coss-Bu J, et al. Effects of availability of patient-related charges on practice patterns and cost containment in the pediatric intensive care unit. Crit Care Med 1996; 24(3): 501–6.

- 10. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests. N Engl J Med 1990; 322(21): 1499–504.

- 11. Vedsted P, Nielsen JN, Olesen F. Does a computerized price comparison module reduce prescribing costs in general practice? Fam Pract 1997; 14(3): 199–203.

- 12. Bates DW, Kuperman GJ, Jha A, et al. Does the computerized display of charges affect inpatient ancillary test utilization? Arch Intern Med 1997; 157(21): 2501–8.

- 13. Ornstein SM, MacFarlane LL, Jenkins RG, Pan Q, Wager KA. Medication cost information in a computer-based patient record system. Impact on prescribing in a family medicine clinical practice. Arch Fam Med 1999; 8(2): 118–21.

- 14. Horn DM, Koplan KE, Senese MD, Orav EJ, Sequist TD. The impact of cost displays on primary care physician laboratory test ordering. J Gen Intern Med 2014; 29(5): 708–14.

- 15. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med 2013; 173(10): 903–8.

- 16. Durand DJ, Feldman LS, Lewin JS, Brotman DJ. Provider cost transparency alone has no impact on inpatient imaging utilization. J Am Coll Radiol 2013; 10(2): 108–13.

- 17. Fang DZ, Sran G, Gessner D, et al. Cost and turn-around time display decreases inpatient ordering of reference laboratory tests: a time series. BMJ Qual Saf 2014; 23(12): 994–1000.

- 18. Chien AT, Ganeshan S, Schuster MA, et al. The effect of price information on the ordering of images and procedures. Pediatrics 2017; 139(2): e20161507.

- 19. Conway S, Brotman D, Pinto B, et al. Impact of displaying inpatient pharmaceutical costs at the time of order entry: lessons from a tertiary care center. J Hosp Med 2017; 12(8): 639–45.

- 20. Chien AT, Lehmann LS, Hatfield LA, et al. A randomized trial of displaying paid price information on imaging study and procedure ordering rates. J Gen Intern Med 2017; 32(4): 434–48.

- 21. Sedrak MS, Myers JS, Small DS, et al. Effect of a price transparency intervention in the electronic health record on clinician ordering of inpatient laboratory tests: The PRICE randomized clinical trial. JAMA Intern Med 2017; 177(7): 939–45.

- 22. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA 1993; 269(3): 379–83.

- 23. Freedberg DE, Salmasian H, Abrams JA, Green RA. Orders for intravenous proton pump inhibitors after implementation of an electronic alert. JAMA Intern Med 2015; 175(3): 452–4.

- 24. Sadowski BW, Lane AB, Wood SM, Robinson SL, Kim CH. High-value, cost-conscious care: iterative systems-based interventions to reduce unnecessary laboratory testing. Am J Med 2017; 130(9): 1112.e1–12.e7.

- 25. Kruger JF, Chen AH, Rybkin A, et al. Displaying radiation exposure and cost information at order entry for outpatient diagnostic imaging: a strategy to inform clinician ordering. BMJ Qual Saf 2016; 25(12): 977–85.

- 26. Schmidt RL, Colbert-Getz JM, Milne CK, et al. Impact of laboratory charge display within the electronic health record across an entire academic medical center: results of a randomized controlled trial. Am J Clin Pathol 2017; 148(6): 513–22.

- 27. Goetz C, Rotman SR, Hartoularos G, Bishop TF. The effect of charge display on cost of care and physician practice behaviors: a systematic review. J Gen Intern Med 2015; 30(6): 835–42.

- 28. Silvestri MT, Bongiovanni TR, Glover JG, Gross CP. Impact of price display on provider ordering: A systematic review. J Hosp Med 2016; 11(1): 65–76.

- 29. Mangen A, Walgermo BR, Brønnick K. Reading linear texts on paper versus computer screen: Effects on reading comprehension. Int J Educ Res 2013; 58: 61–8.

- 30. Lauterman T, Ackerman R. Overcoming screen inferiority in learning and calibration. Comput Human Behav 2014; 35: 455–63.

- 31. Ackerman R, Lauterman T. Taking reading comprehension exams on screen or on paper? A metacognitive analysis of learning texts under time pressure. Comput Human Behav 2012; 28(5): 1816–28.

- 32. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med 2009; 6(7): e1000097.

- 33. Schlosser RW, Wendt O, Bhavnani S, Nail-Chiwetalu B. Use of information-seeking strategies for developing systematic reviews and engaging in evidence-based practice: the application of traditional and comprehensive Pearl Growing. A review. Int J Lang Commun Disord 2006; 41(5): 567–82.

- 34. Mummadi SR. Effectiveness of price display in computerized physician order entry (CPOE) on health care quality: a systematic review. PROSPERO 2018: CRD42018082227. http://www.crd.york.ac.uk/PROSPERO/display_record.php?ID=CRD42018082227. Accessed January 4, 2018.

- 35. Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health 1998; 52(6): 377–84.

- 36. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb) 2012; 22(3): 276–82.

- 37. Nougon G, Muschart X, Gerard V, et al. Does offering pricing information to resident physicians in the emergency department potentially reduce laboratory and radiology costs? Eur J Emerg Med 2015; 22(4): 247–52.

- 38. Gorfinkel I, Lexchin J. We need to mandate drug cost transparency on electronic medical records. CMAJ 2017; 189(50): E1541–E42.

- 39. Robinson JC, Whaley C, Brown TT. Association of reference pricing for diagnostic laboratory testing with changes in patient choices, prices, and total spending for diagnostic tests. JAMA Intern Med 2016; 176(9): 1353–9.

- 40. Robinson JC, Whaley CM, Brown TT. Association of reference pricing with drug selection and spending. N Engl J Med 2017; 377(7): 658–65.

- 41. May TA, Clancy M, Critchfield J, et al. Reducing unnecessary inpatient laboratory testing in a teaching hospital. Am J Clin Pathol 2006; 126(2): 200–6.

- 42. Iturrate E, Jubelt L, Volpicelli F, Hochman K. Optimize your electronic medical record to increase value: reducing laboratory overutilization. Am J Med 2016; 129(2): 215–20.

- 43. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003; 10(6): 523–30.