Abstract

Objective

To assess information sources that may elucidate errors related to radiologic diagnostic imaging, quantify the incidence of potential safety events from each source, and quantify the number of diagnostic imaging chain steps and socio-technical factors that each source elucidates.

Materials and Methods

This retrospective, Institutional Review Board-approved study was conducted at the ambulatory healthcare facilities associated with a large academic hospital. Five information sources were evaluated: an electronic safety reporting system (ESRS), an alert notification of critical results (ANCR) system, a picture archiving and communication system (PACS)-based quality assurance (QA) tool, an imaging peer-review system, and an imaging computerized physician order entry (CPOE) and scheduling system. Data from these sources (January-December 2015 for ESRS, ANCR, QA tool, and the peer-review system; January-October 2016 for the imaging ordering system) were collected to quantify the incidence of potential safety events. Reviewers classified each source by the step(s) in the diagnostic process its events could elucidate and by the contributing socio-technical factors, per the Systems Engineering Initiative for Patient Safety (SEIPS) framework.

Results

Potential safety events ranged from 0.5% to 62.1% of events collected from each source. Each of the information sources contributed to elucidating diagnostic process errors in various steps of the diagnostic imaging chain and the contributing socio-technical factors, primarily Person, Task, and Tools and Technology.

Discussion

Various information sources can differentially inform our understanding of diagnostic process errors related to radiologic diagnostic imaging.

Conclusion

Information sources elucidate errors in various steps within the diagnostic imaging workflow and can provide insight into socio-technical factors that impact patient safety in the diagnostic process.

Keywords: patient safety, health information technology, data sources, diagnostic imaging

BACKGROUND AND SIGNIFICANCE

A safe healthcare system constantly strives to prevent and mitigate iatrogenic patient harm. The seminal 1999 report of the Institute of Medicine (IOM, now the National Academy of Medicine), To Err is Human: Building a Safer Health System, estimated that between 44 000 and 98 000 people die every year of preventable medical errors.1 In its 2012 report on health information technology (IT) and patient safety,2 the IOM called for wider application of human factors engineering (HFE) to assess quality and safety. HFE is increasingly applied in healthcare and is an important contributor to improving quality and patient safety.3

Despite abundant work on the human factors that affect patient safety,4–6 diagnostic errors have not been examined in detail, in part because these events are difficult to identify reliably. An “invisibility of diagnostic failure” has been described,7 in part because the principal method used to understand safety events is retrospective chart review, and diagnostic errors are neither readily visible in patient records nor typically discussed in patient notes. Thus, there is a need to identify and assess other information sources that can elucidate diagnostic process errors arising from failures at various steps in the diagnostic process.

Radiologic diagnostic imaging in the ambulatory setting typically consists of a chain of processes, ranging from care planning8 and test ordering9 to report communication.10,11 These steps are described further in the Methods; each is critical to completing the imaging diagnostic process, and each is vulnerable to system failures. Each step is influenced by a confluence of socio-technical factors that may contribute to the performance of inappropriate exams12 as well as to delayed or missed exams,13 all of which can lead to diagnostic failures.

Several factors that increase or mitigate such failures have been analyzed independently.5,14–19 These factors include cognitive factors related to lack of knowledge and provider fatigue,18 as well as systems factors related to imperfect systems for communication,20 institutional policies and technological innovations that add burden to providers,18,21 and recommendations and guidelines for testing that are not followed because of poor quality or decreased adoption.12,22

OBJECTIVE

In this study, we aim to elucidate process errors related to diagnostic imaging using multiple information sources across the radiologic diagnostic imaging chain, including quantifying the incidence of potential safety events in each source. We also aim to classify each information source by the steps of the diagnostic imaging chain it elucidates and by the socio-technical factors that contributed to its events, within the context of the Systems Engineering Initiative for Patient Safety (SEIPS) framework.6

MATERIALS AND METHODS

Study setting and human subjects protection

This retrospective, Institutional Review Board-approved, Health Insurance Portability and Accountability Act-compliant cohort study was conducted at a 777-bed university-affiliated tertiary care hospital with 44 000 inpatient admissions, 950 000 ambulatory visits, and 54 000 emergency department (ED) visits annually. The system’s outpatient network spans 183 practices, with 16 primary care ambulatory practices caring for 160 000 patients. Study sites included all ambulatory healthcare facilities affiliated with the study institution that utilize its radiologic imaging services; the study included inpatient, ED, and outpatient visits. Approximately 640 000 radiology reports are generated annually, corresponding to 102 000 inpatient, 380 000 outpatient, and 100 000 ED reports, using speech recognition software and structured reporting applications. These sites all utilize an enterprise-wide computerized physician order entry (CPOE) and scheduling system.

Study design overview

This study assessed potential safety events related to diagnostic imaging identified by 5 information sources: an electronic safety reporting system (ESRS), a radiology peer review program for image interpretation, a picture archiving and communication system (PACS)-based quality assurance (QA) system, an imaging CPOE and scheduling system, and an alert notification of critical results (ANCR) system. ESRS is institution-wide, but we limited the study to ESRS safety events concerning diagnostic imaging. All other information sources are radiology-specific and integrated into the radiology information system. We calculated the incidence of safety events identified in each information source, as well as the incidence of potential harm. Each information source was categorized by the diagnostic imaging chain steps affected by the events it captured and by the socio-technical factors that contributed to these events per the SEIPS framework. The study period for 4 information sources (ESRS, radiology peer review program, PACS-based QA system, and ANCR) was January 1, 2015, through December 31, 2015; data from the imaging CPOE and scheduling system were limited to January 1, 2016, through October 21, 2016, because of technical limitations in obtaining CPOE data for 2015.

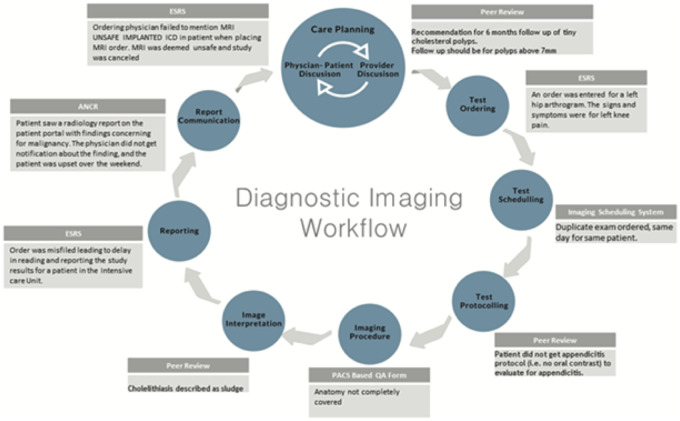

Diagnostic imaging chain workflow

The diagnostic imaging chain workflow (Figure 1) illustrates the steps of imaging within radiology departments. We describe 8 steps: care planning, test ordering, test scheduling, test protocoling, imaging procedure, image interpretation, reporting, and report communication (Table 1). Because these steps are drawn from multiple published sources, they provide a broad characterization of radiology workflow that extends to institutions beyond our own.

Figure 1.

Diagnostic imaging chain workflow.

Table 1.

Description of steps in the diagnostic imaging chain

| Imaging chain step | Description |
|---|---|
| Care planning8,9 | |
| Test ordering9,10,23,24 | Requires the ordering physician to generate a formal request specifying the imaging modality and body part to be imaged |
| Test scheduling23,24 | Communication between the patient and the scheduling department to set a date and time for imaging |
| Test protocoling23,24 | Radiologist review of the appropriateness of the test for the given indication and, if indicated, of contrast safety |
| Imaging procedure9,10,23,25,26 | Actual acquisition of the imaging exam in any given modality |
| Image interpretation9,10,23–25 | Radiologist reads the ordered images, correlating them with any clinical features provided and any diagnostic possibilities |
| Reporting25,26 | Dictation of the image interpretation into a written report, enumerating any findings |
| Report communication10,24 | Relay of relevant information to the ordering provider of the imaging test |

SEIPS 2.0 framework for classifying diagnostic imaging safety events

The SEIPS model is based on a macroergonomic systems model related to Donabedian’s Structure-Process-Outcome framework for understanding quality, and it provides a comprehensive conceptual framework for applying systems engineering to identified problems (Figure 2).3,27,28 SEIPS 2.0 further expands SEIPS by considering 3 novel concepts: configuration, engagement, and adaptation. The SEIPS model facilitates evaluation of causal pathways between team functioning and patient outcomes in team-based primary care, and it provides a framework for understanding the structures, processes, and outcomes of diagnostic follow-up testing.6 The structural component of SEIPS 2.0 is the Work System, which comprises the socio-technical factors described below:

Figure 2.

Systems Engineering Initiative for Patient Safety model (adapted from SEIPS).

Person factors – individual and collective characteristics of patients and healthcare professionals (eg, providers’ knowledge of follow-up recommendations, cooperation among providers regarding transfer of responsibility for follow-up)

Task factors – characteristics of individual actions within work processes (eg, multiple steps for communicating results, completing diagnostic testing)

Tools and Technology factors – objects that people use to work, or assist people in doing work (eg, ease of acknowledging follow-up recommendations, ease of transfer and acknowledgement of responsibility using health information technology [HIT])

Organization factors – structures external to a person put in place by people to assist them (eg, institutional mandate for communicating critical results to ordering provider instead of primary care provider [PCP])21

Environment factors (internal and external) – physical environment and external environment outside an organization (eg, Fleischner Society guidelines for pulmonary nodules)22

Information sources

The 5 existing systems that served as the information sources for the study are described here in more detail.

Electronic safety reporting system: The study institution has used ESRS, an electronic system for safety report submissions (RL Solutions, Cambridge, MA), since 2004. Reports of safety events, defined as events that harm or could harm a patient, can be submitted voluntarily. Approximately 12 000 reports are submitted annually. Filing a safety report takes about 3 to 10 minutes, depending on the complexity of the report and the user’s familiarity with the system. Users can report on 15 categories (eg, Imaging, Coordination of Care, Diagnosis/Treatment, Facilities/Environment, Healthcare IT, Medication/IV Fluid, Identification/Documentation/Consent, and Surgery/Procedure). Each category contains a list of specific event types that add granularity to the report. Safety reports typically include the person(s) involved (and their demographics), the setting (eg, outpatient), the event category, the location where the event occurred, and a short narrative describing event details. The ESRS also requires each reporter to assign a harm score from 0 to 4: 0 = no harm – did not reach patient, 1 = no harm – did reach patient, 2 = temporary or minor harm, 3 = permanent or major harm, 4 = death. Nursing staff are the most frequent reporters, filing about 90% of reports; however, all employees may file safety reports.

Radiology peer review program: This clinical workstation-embedded peer review program was implemented in January 2014 using the Radiological Society of North America Teaching File System (TFS) architecture.29 An institutional policy requires each radiology section to document peer review of a standard number of cases per week, in a modality distribution consistent with its normal workflow. Once the reviewing radiologist has selected a report for review, the tool is immediately accessible from the PACS workstation. The report identifiers, including patient name, Medical Record Number (MRN), exam accession number, and reviewer identification, are automatically populated into the tool. The reviewer then selects a score for the level of agreement with the original interpretation. The scoring system is modeled after the original 4-point scale in the American College of Radiology’s RADPEER™ program.30 Score options include: 1) concur with original interpretation; 2) minor discrepancy: unlikely to be clinically significant; 3) moderate discrepancy: may be clinically significant; and 4) major discrepancy: likely to be clinically significant. The reviewer may add comments for any agreement score. For reports scored 3 or 4, the reviewing radiologist is required to contact the original interpreting radiologist to discuss the details of the discrepancy. Both the reviewing and original interpreting radiologists have access to the case for personal review. Additionally, reports scored 3 or 4 are reviewed at a monthly divisional staff meeting for group learning. All cases are presented anonymously, and no punitive actions are taken based on the results. The original interpreting radiologist, reviewing radiologist, modality, peer review score, and relevant comments are stored in a SQL clinical database.

PACS-based QA system: This system incorporates 2 forms into the clinical workstation that the radiologist can invoke at their discretion when reading images, either for 1 or more randomly selected cases or for cases the radiologist considers to require QA. The form automatically fills in relevant data, including patient identification (MRN), exam accession number, and exam date. The radiologist fills out either a CT or an MRI QA form and rates image quality as excellent, average, or below average. Other structured elements in the form indicate whether the imaging protocol was not followed, anatomic coverage was incomplete, field of view (FOV) selection was inadequate, contrast enhancement was inadequate, contrast timing was poor, or the study was incomplete. Artifacts can also be recorded, including motion, metal, phase, and others. Coil placement and selection and poor signal-to-noise ratio (SNR) are included in the MRI form; insufficient technique is included in the CT form. Finally, radiologists can enter free text in a “general comments” section. The PACS QA system is used for <1% of all CT and MRI reports at the study institution.
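
To make the structure of the QA form concrete, the fields listed above can be sketched as a record type. This is a minimal illustration under stated assumptions: the class name `QAForm`, every field name, and the validation rules are hypothetical and do not describe the actual PACS form or its storage schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class ImageQuality(Enum):
    EXCELLENT = "excellent"
    AVERAGE = "average"
    BELOW_AVERAGE = "below average"


# Structured problem flags (hypothetical names); the last 3 are modality-specific.
PROBLEM_FLAGS = (
    "protocol_not_followed", "incomplete_anatomic_coverage", "inadequate_fov",
    "inadequate_contrast_enhancement", "poor_contrast_timing", "incomplete_study",
    "coil_problem", "poor_snr", "insufficient_technique",
)


@dataclass
class QAForm:
    """Hypothetical record for one PACS QA form (illustrative field names)."""
    mrn: str               # auto-filled patient identifier
    accession_number: str  # auto-filled exam accession number
    exam_date: str         # auto-filled exam date, eg "2015-03-02"
    modality: str          # "CT" or "MRI" selects which form is used
    quality: ImageQuality
    protocol_not_followed: bool = False
    incomplete_anatomic_coverage: bool = False
    inadequate_fov: bool = False
    inadequate_contrast_enhancement: bool = False
    poor_contrast_timing: bool = False
    incomplete_study: bool = False
    artifacts: List[str] = field(default_factory=list)  # eg "motion", "metal", "phase"
    coil_problem: bool = False            # MRI form only (coil placement/selection)
    poor_snr: bool = False                # MRI form only
    insufficient_technique: bool = False  # CT form only
    general_comments: Optional[str] = None

    def __post_init__(self) -> None:
        # Reject items that do not appear on the chosen form.
        if self.modality not in ("CT", "MRI"):
            raise ValueError("QA forms exist only for CT and MRI")
        if self.modality == "CT" and (self.coil_problem or self.poor_snr):
            raise ValueError("coil and SNR items appear only on the MRI form")
        if self.modality == "MRI" and self.insufficient_technique:
            raise ValueError("insufficient technique appears only on the CT form")

    def flagged_problems(self) -> List[str]:
        """Names of the structured problem flags that are set on this form."""
        return [name for name in PROBLEM_FLAGS if getattr(self, name)]
```

For example, a below-average CT form with `protocol_not_followed=True` would report `["protocol_not_followed"]` from `flagged_problems()`. Keeping the flags as booleans mirrors the checkbox-style structured elements, while free text stays confined to `general_comments`.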

Imaging CPOE and scheduling system: The system includes a web-enabled CPOE and scheduling system for imaging (Epic Systems Corporation, Madison, WI). After a password-protected login, a physician or proxy creates orders for a specific study from predetermined structured menus. Physicians are identified by unique provider identifiers, and patients by unique MRNs. Each CPOE session captures all information needed to specify a requested imaging procedure, including clinical indications for the examination. The CPOE system is integrated with an enterprise radiology resource-based scheduling module, which enables ordering providers or their proxies to schedule radiologic examinations online at any radiology facility within our network (without a phone call), irrespective of the radiology information system in use at the radiology practice.

Alert notification of critical results: The ANCR system was implemented at our institution to facilitate critical test result notification, documentation, management, and communication among providers.11,31 ANCR is embedded in the workflow of radiologists and referring providers through integration with multiple systems, including the PACS, paging and e-mail systems, and the electronic health record (EHR). ANCR enables radiologists reviewing images to communicate critical findings through synchronous mechanisms (eg, paging) or asynchronous mechanisms (eg, secure, HIPAA-compliant e-mail). The asynchronous mechanism was designed specifically for less urgent alerts, which account for the majority of critical results. In addition, ANCR allows ordering providers to acknowledge received alerts through a secure web interface. Finally, all alerts are tracked and auditable to ensure that findings are acknowledged within the time frame that departmental policy establishes for each alert type, so that the communication loop is closed. Three alert levels are specified (red, orange, and yellow), with acknowledgement required within 1 hour, 3 hours, and 15 days, respectively.
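
The acknowledgment rule can be expressed as a simple deadline check. The sketch below is hypothetical: `ACK_WINDOWS`, `ack_deadline`, and `is_overdue` are illustrative names, not part of ANCR. It assumes that an alert counts as late if it is acknowledged after its level's window, or if it is still unacknowledged once that window has passed.

```python
from datetime import datetime, timedelta
from typing import Optional

# Acknowledgment windows per alert level, as stated in the text above.
ACK_WINDOWS = {
    "red": timedelta(hours=1),
    "orange": timedelta(hours=3),
    "yellow": timedelta(days=15),
}


def ack_deadline(level: str, sent_at: datetime) -> datetime:
    """Latest time by which the ordering provider should acknowledge the alert."""
    return sent_at + ACK_WINDOWS[level]


def is_overdue(level: str, sent_at: datetime,
               acked_at: Optional[datetime], now: datetime) -> bool:
    """True if the alert was acknowledged late, or is unacknowledged past its deadline."""
    deadline = ack_deadline(level, sent_at)
    if acked_at is None:
        return now > deadline
    return acked_at > deadline
```

For example, a red alert sent at 09:00 and acknowledged at 10:30 is overdue, whereas an unacknowledged yellow alert is not yet overdue 9 days after it was sent.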

Safety event identification and data collection

Study data were collected from each of the 5 sources. From the ESRS, we collected safety reports with an event category of imaging or from an imaging facility setting. From ANCR, we collected all alerts during the study period. From the PACS-based QA form and the radiology peer review program, we collected all events during the study period. From the imaging CPOE system, we randomly selected 630 orders from among all radiology tests that had been ordered but not scheduled, which we treated as potential safety events. A sample of 600 orders yields a margin of error within ±4% at the 95% confidence level; 30 additional orders were reviewed to account for potentially missing reports. For each sampled order, the 3 provider notes preceding the ordered test were collected to determine whether the test was necessary.
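
The 600-order figure is consistent with the standard normal-approximation formula for estimating a proportion, n = z^2 p(1 - p) / e^2, with z = 1.96 for 95% confidence, the worst-case p = 0.5, and e = 0.04. The study does not state which formula was used, so the sketch below is an assumption; the optional finite population correction, which uses the 33 546 unscheduled orders reported in the Results, is our own addition.

```python
import math
from typing import Optional


def required_sample_size(margin: float, z: float = 1.96, p: float = 0.5) -> float:
    """Unrounded n needed to estimate a proportion within +/- margin (normal approximation)."""
    return z ** 2 * p * (1 - p) / margin ** 2


def margin_of_error(n: int, z: float = 1.96, p: float = 0.5,
                    population: Optional[int] = None) -> float:
    """Confidence-interval half-width for a sample of n; optional finite population correction."""
    moe = z * math.sqrt(p * (1 - p) / n)
    if population is not None:
        moe *= math.sqrt((population - n) / (population - 1))
    return moe


print(round(required_sample_size(0.04), 2))  # 600.25, ie about 600 orders
print(round(margin_of_error(600), 4))        # 0.04
print(round(margin_of_error(600, population=33_546), 4))  # slightly below 0.04
```

Using p = 0.5 maximizes p(1 - p), so the resulting margin is conservative for any true proportion of potential-harm orders.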

Safety events reported in the ESRS with a harm score of 2 to 4 were characterized as “potential harm” for this study, as were all reports given a score of 3 or 4 by the reviewing radiologist in the radiology peer review program. For the ANCR system, all critical imaging findings that were not communicated in a timely manner were classified as events that could potentially lead to harm.
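
The classification rules in this paragraph can be written as small predicates. The sketch assumes the score scales described earlier for the ESRS (0 to 4) and the peer review program (1 to 4); the function names are hypothetical, and the ANCR rule assumes that each alert's timeliness has already been determined (for example, against its acknowledgment window).

```python
def esrs_potential_harm(harm_score: int) -> bool:
    """ESRS harm score 0-4; scores 2 (temporary/minor harm) to 4 (death) count as potential harm."""
    if not 0 <= harm_score <= 4:
        raise ValueError("ESRS harm scores range from 0 to 4")
    return harm_score >= 2


def peer_review_potential_harm(score: int) -> bool:
    """RADPEER-style agreement score 1-4; moderate (3) and major (4) discrepancies count."""
    if score not in (1, 2, 3, 4):
        raise ValueError("peer review scores range from 1 to 4")
    return score >= 3


def ancr_potential_harm(communicated_in_time: bool) -> bool:
    """A critical finding that was not communicated in a timely manner counts (assumed input)."""
    return not communicated_in_time
```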

Manual review of safety events

Two independent investigators (initials redacted for peer review) reviewed the safety events identified from the imaging CPOE and scheduling system, with a randomly selected 20% overlap to assess inter-reviewer agreement. We classified each safety event by whether it could cause potential harm. Among orders placed but not scheduled in the CPOE system, those classified as clinically necessary for acute episodic care, clinically unnecessary (duplicate order), or technical error (protocoling duplicate) were characterized as “potential harm.” Orders in the last 2 categories are duplicates that can still be scheduled later, leading to duplicate exams. Two physicians (initials redacted for peer review) also reviewed safety events in the PACS-based QA information source. Events corresponding to imaging tests that needed to be redone, CT scans that covered more of the body than necessary, or delayed imaging for examinations needed in emergency cases were characterized as “potential harm.”
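
One way to implement a double review with a randomly selected 20% overlap is sketched below. It is an assumption, not the study's documented procedure: it draws a simple random sample without replacement, assigns the first 20% of the randomly ordered sample to both reviewers, and splits the remainder evenly. `assign_for_review` and the order identifiers are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def assign_for_review(order_ids: Sequence[str], n_sample: int,
                      overlap_fraction: float, seed: int = 0) -> Dict[str, List[str]]:
    """Sample orders, then split them between 2 reviewers with a shared overlap subset."""
    rng = random.Random(seed)                       # seeded for a reproducible draw
    sample = rng.sample(list(order_ids), n_sample)  # random order, no replacement
    n_overlap = round(overlap_fraction * n_sample)
    overlap = sample[:n_overlap]                    # reviewed by both reviewers
    rest = sample[n_overlap:]
    half = len(rest) // 2
    return {
        "overlap": overlap,
        "reviewer_a": overlap + rest[:half],
        "reviewer_b": overlap + rest[half:],
    }
```

With 630 sampled orders and a 0.2 overlap fraction, 126 orders are reviewed by both reviewers, and agreement statistics are computed on that shared subset only.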

The reviewers also classified each information source by: 1) the step(s) within the diagnostic imaging chain it elucidates, and 2) the relevant SEIPS socio-technical factors. The classification was non-exclusive: if events in an information source involved more than 1 step, the source was assigned to each of those steps. Similarly, a SEIPS socio-technical factor was considered relevant to an information source if any event in that source could be attributed to it, as previously described in several studies;32–34 a source whose events involved more than 1 factor was assigned to each of them.
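
Because the classification is non-exclusive, a source's steps and factors are the union of the labels assigned to its individual events, and the counts in Table 3 are the sizes of those unions. The sketch below illustrates this; the event list is toy data, not study data, and `classify_sources` is a hypothetical helper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# One reviewed event: (source, imaging chain steps, SEIPS factors) assigned by reviewers.
Event = Tuple[str, Set[str], Set[str]]


def classify_sources(events: Iterable[Event]) -> Dict[str, Dict[str, Set[str]]]:
    """A source covers a step or factor if any of its events was labeled with it."""
    result: Dict[str, Dict[str, Set[str]]] = defaultdict(
        lambda: {"steps": set(), "factors": set()})
    for source, steps, factors in events:
        result[source]["steps"] |= steps      # set union keeps labels non-exclusive
        result[source]["factors"] |= factors
    return dict(result)


toy_events = [
    ("CPOE", {"Test ordering"}, {"Person"}),
    ("CPOE", {"Test scheduling"}, {"Person"}),
    ("ANCR", {"Report communication"}, {"Tools and Technology"}),
]
summary = classify_sources(toy_events)
print(len(summary["CPOE"]["steps"]))  # 2
```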

Outcome measures

The primary outcome measure was the incidence of safety events, calculated as the proportion of “potential harm” events among the total number of events reviewed. Incidence was calculated separately for each information source (excluding the PACS-based QA forms).

As secondary outcome measures, we included the number of imaging chain steps elucidated in each information source, and the number of relevant socio-technical factors responsible for events in each information source. We enumerated contributory factors, classified into SEIPS socio-technical categories.

Statistical analysis

The primary outcome measure for each information source was reported as counts and percentages, and the secondary outcome measures as counts. Data were entered into Microsoft Excel (Microsoft Corp., Redmond, WA) for analysis. Kappa analysis was used to calculate inter-reviewer agreement, using SAS (SAS Institute, Cary, NC).
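
For readers reproducing the agreement statistics, unweighted Cohen's kappa for 2 reviewers is kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each reviewer's marginal label frequencies. The paper does not specify the kappa variant; the sketch below assumes simple (unweighted) Cohen's kappa, which SAS reports as the simple kappa coefficient from PROC FREQ with the AGREE option. The ratings are made-up examples.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for 2 raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal proportions, summed over categories
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


a = ["harm", "harm", "no", "no", "harm", "no", "no", "no", "harm", "no"]
b = ["harm", "no", "no", "no", "harm", "no", "harm", "no", "harm", "no"]
print(round(cohens_kappa(a, b), 3))  # 0.583: 80% raw agreement, 52% expected by chance
```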

RESULTS

Number of safety events by information source

The total numbers of safety events reported and reviewed are shown in Table 2. Among the 11 570 events reported in the ESRS during the study period, 854 (7.4%) were diagnostic imaging events; all were reviewed. In the radiology peer review program, 12 320 events were reported and all were reviewed, while 695 PACS-based QA forms were retrieved and all were reviewed. From the imaging ordering CPOE system, a total of 33 546 orders were retrieved and 630 were reviewed. In ANCR, 8536 alerts were retrieved and reviewed.

Table 2.

Number and incidence of safety events by information source

| Source | Total number of safety events reported in study period | Number of events reviewed | Number of potential patient harm events | Incidence of potential harm events |
|---|---|---|---|---|
| ESRS | 11 570/1 048 000 (1.1%) | 854\* | 190 | 22.2% |
| Radiology peer review program | 12 320/640 000 (1.9%) | 12 320 | 67 | 0.5% |
| PACS-based QA form | 695/640 000 (0.1%) | 695 | 40 | 5.8% |
| Imaging ordering CPOE system | 33 546/640 000 (5.2%) | 630\*\* | 391 | 62.1% |
| ANCR | 8536/640 000 (1.3%) | 8536 | 194 | 2.3% |

\*Diagnostic imaging events only.

\*\*Randomly selected for manual review.
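
The incidence column of Table 2 can be reproduced from the other columns: it equals the number of potential-harm events divided by the number of events reviewed (for the CPOE system, the 630-order sample). A short check, with counts copied from the table:

```python
# (source, potential-harm events, events reviewed), copied from Table 2
TABLE_2 = [
    ("ESRS", 190, 854),
    ("Radiology peer review program", 67, 12_320),
    ("PACS-based QA form", 40, 695),
    ("Imaging ordering CPOE system", 391, 630),
    ("ANCR", 194, 8_536),
]


def incidence_pct(harm_events: int, reviewed: int) -> float:
    """Potential-harm incidence as a percentage of events reviewed, to 1 decimal place."""
    return round(100 * harm_events / reviewed, 1)


for source, harm, reviewed in TABLE_2:
    print(f"{source}: {incidence_pct(harm, reviewed)}%")
```

Running it prints 22.2%, 0.5%, 5.8%, 62.1%, and 2.3%, matching the table.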

Incidence of potential safety events

All 5 information sources captured events that could potentially lead to patient harm (Table 2); the incidence of potential safety events ranged from 0.5% to 62.1%. Inter-reviewer agreement on potential harm in the manual review of the imaging CPOE and scheduling system was good (Kappa = 0.629; 95% CI, 0.447-0.810). Kappa agreement was between 0.76 and 1.0 for assessing steps in the diagnostic imaging chain and between 0.82 and 1.0 for SEIPS socio-technical categories across all information sources.

Each of the information sources contributed to elucidating diagnostic process errors in various steps of the diagnostic imaging chain (Table 3). ANCR, for instance, elucidates communication failures that may occur during provider discussions. While CPOE reveals ordering and scheduling failures, protocoling-related events are more clearly revealed in the PACS-based QA tool. The peer review system provides more detail regarding misdiagnosis or missed diagnosis when there is a discrepancy in image interpretation. Figure 3 illustrates events from the 5 information sources that may lead to “potential harm” and where they occur in the steps of the diagnostic imaging chain.

Table 3.

Classification of information sources by imaging chain steps and socio-technical factors elucidated

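| Source | No. of steps | Imaging chain steps | No. of socio-technical factors | Socio-technical factors |
|---|---|---|---|---|
| ESRS | 8 | All 8 steps (1. Care planning through 8. Report communication) | 5 | All 5 factors (Person, Task, Tools and Technology, Organization, Environment) |
| Radiology peer review program | 1 | 6. Image interpretation | 1 | 1. Person |
| PACS-based QA form | 1 | 5. Imaging procedure | 3 | |
| Imaging ordering CPOE system | 2 | 2. Test ordering; 3. Test scheduling | 2 | |
| ANCR | 1 | 8. Report communication | 2 | |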

Figure 3.

Event examples from various information sources and where they occur in the diagnostic imaging chain.

Each information source was also classified into socio-technical factors that impact diagnostic process errors, as shown in Table 3. Examples of events corresponding to the socio-technical factors are enumerated below:

PERSON - Duplicate exam ordered (CPOE)

Chest CT scan (indication lung nodule follow-up) – ordered by primary care provider

Chest CT (no indication) – ordered by pulmonologist 1 month later

TASK - Incorrectly performed procedure (ESRS)

Intravenous extravasation of contrast agent

TOOLS AND TECHNOLOGY - Communication failure (ANCR)

The patient has a renal cyst. The radiologist used the ANCR notification system to reach the ordering provider but did not receive a response.

ORGANIZATION - Deviation from standard operating procedure (PACS QA)

The chest CT scan extended too far inferiorly for the protocol and included structures not previously seen, resulting in unnecessary radiation.

ENVIRONMENT - Equipment malfunction (ESRS)

The patient had an outpatient MRI and was not given ear plugs; he experienced extreme discomfort from the noise of the machine.

Finally, Table 4 further lists contributory factors, classified into SEIPS socio-technical categories by information source. Contributory factors from each of the 5 information sources were identified in the reviewed sample of cases. The contributory factor nomenclature was obtained from an existing classification of diagnostic errors.35

Table 4.

Contributing socio-technical factors from various information sources

| Socio-technical factors | Diagnostic errors and information sources |
|---|---|
| Person | |
| Task | |
| Tools and Technology | |
| Organization | |
| Environment | |

Legend: Electronic Safety Reporting System (ESRS), PACS-Based Quality Assurance Form (PACS QA), Alert Notification of Critical Results (ANCR), Imaging Ordering System (CPOE).

Events that were added to the existing classification of diagnostic errors form.35

Events with examples provided in the text.

DISCUSSION

In previous publications from our institution, we recognized that diagnostic errors are common yet underreported in patient safety research.9,36 Further, we recognize that diagnosis errors are not synonymous with diagnostic process errors.37 Diagnostic errors are typically defined as a delayed or missed diagnosis or a misdiagnosis, but this definition should also encompass diagnostic process errors, such as failures in timely access to care, in eliciting or interpreting signs, symptoms, or laboratory results, in formulating or weighing the differential diagnosis, and in timely follow-up and specialty referral or evaluation. Schiff et al.37 therefore define diagnostic error more broadly as “any mistake or failure in the diagnostic process leading to a misdiagnosis, a missed diagnosis or a delayed diagnosis.”

The goal of this paper was to describe the incidence of diagnostic process errors in multiple information sources and to categorize the socio-technical factors that contribute to these events. Incidence rates varied greatly; each information source helped elucidate diagnostic process errors in various steps of the diagnostic imaging chain, and the contributing socio-technical factors were primarily Person, Task, and Tools and Technology. Although a substantial percentage of potentially harmful events is readily visible in the ESRS and CPOE, this percentage does not account for the magnitude of potential harm to patients or for variability in reporting. For example, the ESRS highlights potential harms such as ordering an imaging examination on the wrong side of the body, but such events are readily recognized and reported. By contrast, errors in image interpretation or failures in communicating critical results to another care provider are more difficult to recognize and are likely to be reported less frequently. We therefore did not compare the incidence of potential harm among information sources, which is expected to vary. Rather, assessing incidence may help inform future initiatives and allow evaluation of their success. The percentage of potential harms varies across information sources, reflecting variability in reporting, recognition, and magnitude of impact; these should be monitored more diligently in future studies.

Using our diagnostic imaging chain workflow, we were able to elucidate the diagnostic process in radiologic imaging further. We found that the majority of “potentially harmful” events occurred in the report communication and image interpretation steps of the diagnostic imaging chain. ANCR was identified as an information source that elucidates the report communication step and can provide details regarding untimely communication of critical imaging findings. However, we highlight the persistent need for more detailed analysis of provider discussions, which were not available in ANCR. More importantly, the patient-provider discussions surrounding care management and the diagnostic process are not documented.

In assessing diagnostic errors, we were able to review peer-review cases in which 2 radiologists had moderate to major discrepancies in the interpretation of radiologic images. Although this information source provides a good understanding of the prevalence of certain types of diagnosis errors, it does not fully capture physicians’ cognitive processes or other socio-technical factors that may have contributed to the discrepancies (eg, lighting, sleep deprivation).7,38

Our analysis of socio-technical factors that contributed to safety events in the diagnostic imaging process demonstrated that safety events were primarily attributed to person-related factors, with fewer attributed to tools and technology-related factors. Examples of person-related factors include communication, awareness gaps, and incorrect actions; a duplicate order for the same exam within 1 month, for instance, illustrates an “awareness gap” regarding a previously existing order. Further analysis of events that may lead to “potential harm” showed that socio-technical factors in the task and internal environment categories also play a role. Examples include task complexity leading to contrast-induced reactions and extravasation of contrast agent. Organizational factors include staffing limitations that affect imaging of ventilated patients.

Limitations of our study include the retrospective review of data from various information sources and the voluntary use of most of the reporting systems. In future work, we will acquire feedback from providers through surveys or interviews to further elucidate diagnostic errors. For the imaging ordering system, we were provided data only from 2016, but we did not anticipate any significant change in the data over the shifted study period. In addition, reporting systems generate numerators without meaningful denominators: although the number of patients who received incorrect interpretations of their imaging studies is reported, there is no indication of the total number of patients at risk for this event.39

In future studies, we plan to further elucidate diagnostic process errors from more information sources (eg, diagnostic test follow-up monitoring system). In addition, we will elucidate diagnostic errors by assessing individual contributions of each of the socio-technical factors.

Nevertheless, we identified several information sources that can inform understanding of diagnostic process errors related to diagnostic imaging. These sources provide insight into factors that affect patient safety in the diagnostic imaging process. Some of the factors identified were related to task complexity and the internal environment; these are modifiable factors that healthcare institutions can address systematically. More importantly, they can inform decisions to improve diagnostic imaging and, more broadly, other diagnostic processes.

FUNDING

This work was supported by the Agency for Healthcare Research and Quality (grant number R01HS02722).

Conflict of interest statement. The authors have no competing interests to declare.

CONTRIBUTORS

All authors contributed to the study design and data acquisition; LC, RL, AW, NK, and RK are responsible for data analysis and interpretation. All authors contributed significant intellectual content during the manuscript preparation and revisions, approved the final version, and accept accountability for the overall integrity of the research process and the manuscript.

ACKNOWLEDGMENTS

The authors would like to thank Ms. Laura Peterson for reviewing the manuscript.

REFERENCES

- 1. Institute of Medicine. To Err Is Human. Washington, DC: National Academies Press (US); 2000. http://www.ncbi.nlm.nih.gov/books/NBK225182/. Accessed July 31, 2018.

- 2. Committee on Patient Safety and Health Information Technology. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, DC: National Academies Press; 2011.

- 3. Carayon P, Karsh BT, Gurses AP, et al. Macroergonomics in healthcare quality and patient safety. Rev Hum Factors Ergon 2013; 8(1): 4–54.

- 4. Al-Jumaili AA, Doucette WR. Comprehensive literature review of factors influencing medication safety in nursing homes: using a systems model. J Am Med Dir Assoc 2017; 18(6): 470–88.

- 5. Gandhi TK, Kachalia A, Thomas EJ, et al. Missed and delayed diagnoses in the ambulatory setting: a study of closed malpractice claims. Ann Intern Med 2006; 145(7): 488–96.

- 6. Holden RJ, Carayon P, Gurses AP, et al. SEIPS 2.0: a human factors framework for studying and improving the work of healthcare professionals and patients. Ergonomics 2013; 56(11): 1669–86.

- 7. Croskerry P. Perspectives on diagnostic failure and patient safety. Healthc Q 2012; 15: 50–6.

- 8. Kripalani S, Jackson AT, Schnipper JL, Coleman EA. Promoting effective transitions of care at hospital discharge: a review of key issues for hospitalists. J Hosp Med 2007; 2(5): 314–23.

- 9. El-Kareh R, Hasan O, Schiff GD. Use of health information technology to reduce diagnostic errors. BMJ Qual Saf 2013; 22 (Suppl 2): ii40–51.

- 10. Towbin AJ, Perry LA, Larson DB. Improving efficiency in the radiology department. Pediatr Radiol 2017; 47(7): 783–92.

- 11. Lacson R, O'Connor SD, Sahni VA, et al. Impact of an electronic alert notification system embedded in radiologists’ workflow on closed-loop communication of critical results: a time series analysis. BMJ Qual Saf 2016; 25(7): 518–24.

- 12. Lacson R, Ip I, Hentel KD, et al. Medicare imaging demonstration: assessing attributes of appropriate use criteria and their influence on ordering behavior. AJR Am J Roentgenol 2017; 208(5): 1051–7.

- 13. Singh H, Daci K, Petersen LA, et al. Missed opportunities to initiate endoscopic evaluation for colorectal cancer diagnosis. Am J Gastroenterol 2009; 104(10): 2543–54.

- 14. Singh H, Naik AD, Rao R, Petersen LA. Reducing diagnostic errors through effective communication: harnessing the power of information technology. J Gen Intern Med 2008; 23(4): 489–94.

- 15. Singh H, Thomas EJ, Khan MM, Petersen LA. Identifying diagnostic errors in primary care using an electronic screening algorithm. Arch Intern Med 2007; 167(3): 302–8.

- 16. Singh H, Thomas EJ, Mani S, et al. Timely follow-up of abnormal diagnostic imaging test results in an outpatient setting: are electronic medical records achieving their potential? Arch Intern Med 2009; 169(17): 1578–86.

- 17. Newman-Toker DE, Pronovost PJ. Diagnostic errors–the next frontier for patient safety. JAMA 2009; 301(10): 1060–2.

- 18. Lee CS, Nagy PG, Weaver SJ, Newman-Toker DE. Cognitive and system factors contributing to diagnostic errors in radiology. AJR Am J Roentgenol 2013; 201(3): 611–7.

- 19. Morozov S, Guseva E, Ledikhova N, Vladzymyrskyy A, Safronov D. Telemedicine-based system for quality management and peer review in radiology. Insights Imaging 2018; 9(3): 337–41.

- 20. Lacson R, Prevedello LM, Andriole KP, et al. Four-year impact of an alert notification system on closed-loop communication of critical test results. AJR Am J Roentgenol 2014; 203(5): 933–8.

- 21. Anthony SG, Prevedello LM, Damiano MM, et al. Impact of a 4-year quality improvement initiative to improve communication of critical imaging test results. Radiology 2011; 259(3): 802–7.

- 22. MacMahon H. Compliance with Fleischner Society guidelines for management of lung nodules: lessons and opportunities. Radiology 2010; 255(1): 14–5.

- 23. Johnson CD, Swensen SJ, Applegate KE, et al. Quality improvement in radiology: white paper report of the Sun Valley Group meeting. J Am Coll Radiol 2006; 3(7): 544–9.

- 24. Rubin DL. Informatics in radiology: measuring and improving quality in radiology: meeting the challenge with informatics. Radiographics 2011; 31(6): 1511–27.

- 25. Enzmann DR. Radiology’s value chain. Radiology 2012; 263(1): 243–52.

- 26. Enzmann DR, Schomer DF. Analysis of radiology business models. J Am Coll Radiol 2013; 10(3): 175–80.

- 27. Carayon P, Schoofs Hundt A, Karsh BT, et al. Work system design for patient safety: the SEIPS model. Qual Saf Health Care 2006; 15 (Suppl 1): i50–8.

- 28. Carayon P, Wetterneck TB, Rivera-Rodriguez AJ, et al. Human factors systems approach to healthcare quality and patient safety. Appl Ergon 2014; 45(1): 14–25.

- 29. Tellis WM, Andriole KP. Implementing a MIRC interface for a database driven teaching file. In: AMIA Annual Symposium Proceedings. Bethesda, MD: American Medical Informatics Association; 2003: 1029.

- 30. Borgstede JP, Lewis RS, Bhargavan M, Sunshine JH. RADPEER quality assurance program: a multifacility study of interpretive disagreement rates. J Am Coll Radiol 2004; 1(1): 59–65.

- 31. Lacson R, O’Connor SD, Andriole KP, Prevedello LM, Khorasani R. Automated critical test result notification system: architecture, design, and assessment of provider satisfaction. AJR Am J Roentgenol 2014; 203(5): W491–6.

- 32. Abraham O, Myers MN, Brothers AL, Montgomery J, Norman BA, Fabian T. Assessing need for pharmacist involvement to improve care coordination for patients on LAI antipsychotics transitioning from hospital to home: a work system approach. Res Social Adm Pharm 2017; 13(5): 1004–13.

- 33. Hysong SJ, Sawhney MK, Wilson L, et al. Improving outpatient safety through effective electronic communication: a study protocol. Implement Sci 2009; 4(1): 62.

- 34. Khunlertkit A, Jantzi N. Using the SEIPS Framework to reveal hidden factors that can complicate a vaccine documentation process. Proc Hum Factors Ergon Soc Annu Meet 2016; 60(1): 541–5.

- 35. Rogith D, Iyengar MS, Singh H. Using fault trees to advance understanding of diagnostic errors. Jt Comm J Qual Patient Saf 2017; 43(11): 598–605.

- 36. Schiff GD, Hasan O, Kim S, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med 2009; 169(20): 1881–7.

- 37. Schiff GD, Kim S, Abrams R, et al. Diagnosing diagnosis errors: lessons from a multi-institutional collaborative project. In: Henriksen K, Battles JB, Marks ES, Lewin DI, eds. Advances in Patient Safety: From Research to Implementation (Volume 2: Concepts and Methodology). Advances in Patient Safety. Rockville, MD: Agency for Healthcare Research and Quality; 2005.

- 38. Croskerry P. Diagnostic failure: a cognitive and affective approach. In: Henriksen K, Battles JB, Marks ES, Lewin DI, eds. Advances in Patient Safety: From Research to Implementation (Volume 2: Concepts and Methodology). Advances in Patient Safety. Rockville, MD: Agency for Healthcare Research and Quality; 2005.

- 39. Jones DN, Benveniste KA, Schultz TJ, Mandel CJ, Runciman WB. Establishing national medical imaging incident reporting systems: issues and challenges. J Am Coll Radiol 2010; 7(8): 582–92.