Abstract

Objective

The Objective Structured Assessment of Debriefing (OSAD) is an evidence-based, 8-item tool that uses a behaviorally anchored rating scale in paper-based form to evaluate the quality of debriefing in medical education. The objective of this project was twofold: 1) to create an easy-to-use electronic format of the OSAD (eOSAD) in order to streamline data entry; and 2) to pilot its use on videoed debriefings.

Materials and Methods

The eOSAD was developed in collaboration with the LSU Health New Orleans Epidemiology Data Center using SurveyGizmo (Widgix Software, LLC, Boulder, CO, USA) software. It was then piloted by 2 trained evaluators who rated 37 videos of faculty teams conducting the pre-briefing and debriefing for a high-fidelity trauma simulation. Inter-rater reliability was assessed, and evaluators’ qualitative feedback was obtained.

Results

Inter-rater reliability was good [pre-brief intraclass correlation coefficient (ICC) = 0.955 (95% CI, 0.912–0.977), P < .001; debrief ICC = 0.853 (95% CI, 0.713–0.924), P < .001]. Qualitative feedback indicated that the eOSAD was easy to complete, simple to read, and easy to add comments to, and that it reliably stored data that were readily retrievable, enabling smooth dissemination of the information collected.

Discussion

The eOSAD features a secure login, a shareable internet link for remote evaluators, and immediate export of data into a secure database for later analysis. It was convenient for end-users, produced reliable assessments across independent evaluators, and eliminated multiple sources of potential data corruption.

Conclusion

The eOSAD format advances the post-debriefing evaluation of videoed inter-professional team training in high-fidelity simulation.

Keywords: debriefing, patient simulation, user-computing interface, electronic evaluation, OSAD, assessment

INTRODUCTION

High-fidelity simulation-based training provides a safe, controlled learning environment in which students who are not yet licensed encounter purposely designed, realistic clinical situations and learn from their mistakes.1 Numerous inter-professional education formats of simulation-based training have demonstrated improvements in students’ team-based attitudes,2–5 perceptions of collaboration,4–9 and team-based performance.10–12 The International Nursing Association for Clinical Simulation and Learning (INACSL) developed the INACSL Standards of Best Practice: Simulation℠.13 Under its simulation design standard, a well-developed and tested simulation-based experience begins with a pre-briefing (Criterion 7) and is followed by a debriefing (Criterion 8).13

A faculty-led pre-briefing that concludes immediately before the simulation-based experience should contain core components13,14 to maximize students’ learning. The facilitator is responsible for ensuring that the pre-briefing orients students to 1) the simulation environment (ie, available equipment and its functionality, mannequin capabilities, patient scenario), 2) goals and expectations for the simulation-based experience, 3) time allotted to learning activities, 4) simulation ground rules that ensure a safe learning environment (ie, treat the scenario as real, perform as a team exhibiting professional integrity and mutual respect, and keep the case scenario and students’ actions confidential), and 5) the students’ roles and responsibilities in the simulated patient scenario. During inter-professional team-based training, team members are encouraged to introduce themselves and describe the roles and responsibilities of their respective professions, particularly as they relate to the simulated patient scenario.

The most important component of the simulation-based learning experience is the debriefing,15,16 which should be led by faculty trained in debriefing techniques13 immediately after students complete the simulation-based experience.17,18 Debriefing is the process whereby students reflect on the simulation-based experience, identify gaps in their knowledge and skills related to the competencies required for clinical practice, and develop strategies for improvement.18 These competencies involve not only mastering technologies and evidence-based clinical practices but also ensuring safe delivery of patient care and demonstrating teamwork. Students may engage in reflective practice related to team-based competencies, which may include shared mental model, role clarity, situation awareness, cross monitoring, open communication, resource management, flattened hierarchy, anticipatory response, and mental rehearsal.19 Debriefing sessions often conclude with student reflection on strategies for translating skills to clinical practice.

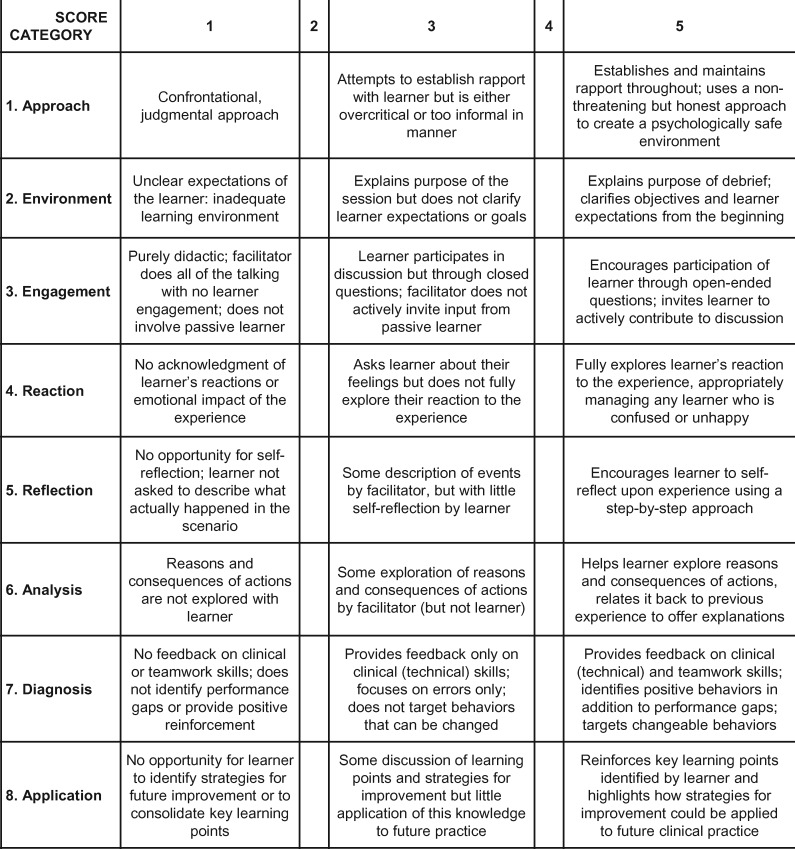

The provision of high-quality debriefing in inter-professional education is essential for optimizing learning among the participating students from different health professions. Until recently, assessing the effectiveness of a debriefing and providing appropriate feedback relied on individualized coaching and evaluation by peers or experts, which was more subjective than objective. Several observer-based assessment tools have since addressed this shortfall.20–22 One of the earliest of these debriefing assessment instruments was the Objective Structured Assessment of Debriefing (OSAD), developed in 2012.20 Originally designed as an evidence-based,20 end-user informed23 approach to debriefing in surgery to improve practice and optimize learning during simulation-based training, its usefulness in other areas of health professional education was quickly recognized.24,25 In its original form,20 the OSAD is a 40-point behaviorally anchored rating scale for estimating the quality of debriefing: each of 8 core categories of effective debriefing is assigned a numeric value from 1 to 5 according to how well the debriefing facilitator conducts it, so total scores range from 8 to 40. Descriptive anchors at the lowest point, mid-point, and highest point of the scale help guide ratings, and higher scores indicate higher-quality debriefings. The tool is typically applied as a paper-based form (Figure 1) completed by trained observers. In the original psychometric evaluation, the OSAD demonstrated very good content validity (global OSAD content validity index of 0.94), excellent inter-rater reliability [intraclass correlation coefficient (ICC) = 0.881], and excellent test-retest reliability (ICC = 0.898).20
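The arithmetic behind the 40-point description can be made concrete. The sketch below is a minimal illustration, not part of the published tool: it assumes the global OSAD score is the unweighted sum of the 8 category ratings, and the function name `osad_total` is hypothetical.

```python
from typing import Mapping

# The 8 OSAD categories, in the order this article lists them.
OSAD_CATEGORIES = (
    "approach", "environment", "engagement", "reaction",
    "reflection", "analysis", "diagnosis", "application",
)


def osad_total(ratings: Mapping[str, int]) -> int:
    """Sum eight 1-5 category ratings into a global OSAD score (range 8-40).

    Assumes the global score is the unweighted sum of the category ratings.
    """
    missing = [c for c in OSAD_CATEGORIES if c not in ratings]
    if missing:
        raise ValueError("missing ratings for: " + ", ".join(missing))
    total = 0
    for category in OSAD_CATEGORIES:
        score = ratings[category]
        if score not in (1, 2, 3, 4, 5):
            raise ValueError(f"{category}: rating {score!r} is not one of 1-5")
        total += score
    return total


# Lowest and highest possible totals.
print(osad_total({c: 1 for c in OSAD_CATEGORIES}))  # 8
print(osad_total({c: 5 for c in OSAD_CATEGORIES}))  # 40
```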

Figure 1.

Paper-based version of the Objective Structured Assessment of Debriefing (OSAD) tool.20

Paper-based data collection, however, can lead to data corruption through misread tallies, lost forms, and similar errors.26–28 To address these weaknesses, we set out to design an electronic version of the OSAD, the eOSAD. The objective of this project was twofold: 1) to create an easy-to-use electronic format of the OSAD in order to streamline data entry; and 2) to pilot its use on videoed debriefings.

METHODS

eOSAD design

Design of the eOSAD arose out of a collaboration between the authors (JP, JZ, RC) and the LSU Health New Orleans Epidemiology Data Center (MB) using SurveyGizmo (Widgix Software, LLC, Boulder, CO, USA) software. Key design features included a secure login procedure, the ability to share a link to the eOSAD with evaluators for online access anywhere, immediate exporting of data into a secure database for future analysis, and screen formatting to allow for ease of reading and evaluation by the online evaluator. In its final format, the eOSAD consisted of 2 main sections, each with its unique features and purpose.

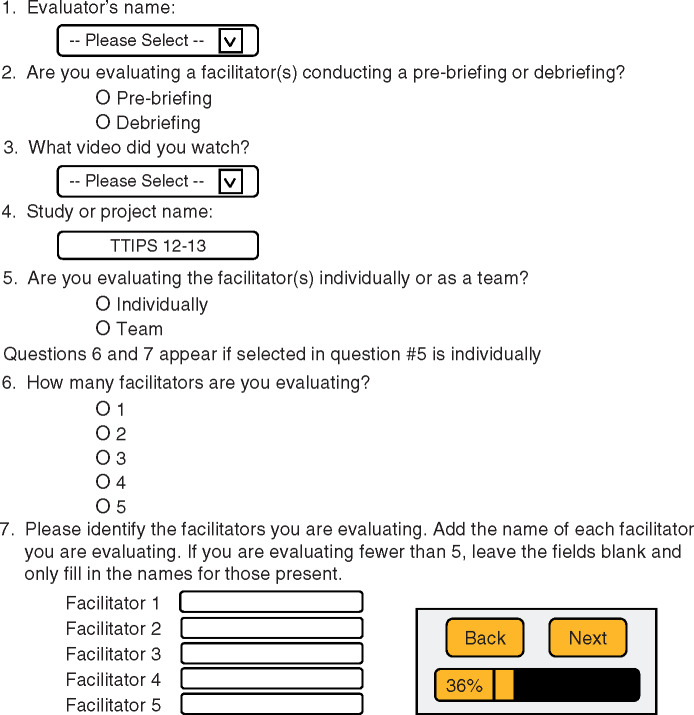

The first section of the eOSAD (Figure 2) collects descriptive information about the evaluator and the video of the briefing being evaluated. Before an evaluation or series of evaluations, evaluator names are uploaded into this section so that the evaluator can be selected from a dropdown menu. The evaluator then chooses the type of briefing being evaluated (ie, a pre-briefing given before a training intervention or a debriefing conducted immediately after training), identifies the video of the briefing, which has been linked to a user-defined study or project name, and designates whether the faculty conducting the pre-briefing or debriefing are being evaluated as a team or individually. If team members are being evaluated individually, the evaluator then chooses how many individuals to evaluate and enters their names. At all times, evaluators can see how much of the eOSAD has been completed and can move between pages using back and next buttons located above a completion bar at the bottom of every screen page.
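The information gathered in this first section can be pictured as a small record. The following Python sketch is hypothetical: the class, field, and method names are illustrative assumptions and do not reflect how SurveyGizmo actually stores eOSAD responses. It shows the one branching rule described above, namely that names are needed only when faculty are rated individually.

```python
from dataclasses import dataclass, field
from enum import Enum


class BriefingType(Enum):
    PRE_BRIEFING = "pre-briefing"  # given before the training intervention
    DEBRIEFING = "debriefing"      # conducted immediately after training


@dataclass
class EvaluationHeader:
    """First-section eOSAD data for one evaluation (all names hypothetical)."""
    evaluator: str                 # chosen from the uploaded list of evaluator names
    briefing_type: BriefingType
    project: str                   # user-defined study or project name
    video_id: str                  # video linked to that project
    rate_individually: bool        # False means the faculty are rated as a team
    faculty_names: list[str] = field(default_factory=list)

    def problems(self, uploaded_evaluators: set[str]) -> list[str]:
        """Return reasons this header cannot be accepted (empty list if valid)."""
        issues = []
        if self.evaluator not in uploaded_evaluators:
            issues.append("evaluator is not in the uploaded list")
        if self.rate_individually and not self.faculty_names:
            issues.append("names are required when faculty are rated individually")
        return issues

    def subjects(self) -> list[str]:
        """Who receives a set of OSAD ratings: the whole team, or each named individual."""
        return list(self.faculty_names) if self.rate_individually else ["team"]


header = EvaluationHeader(
    "Evaluator A", BriefingType.DEBRIEFING, "Trauma pilot", "video-01",
    rate_individually=True, faculty_names=["Faculty 1", "Faculty 2"],
)
print(header.problems({"Evaluator A"}))  # []
print(header.subjects())                 # ['Faculty 1', 'Faculty 2']
```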

Figure 2.

First section design of the electronic version of the OSAD tool, the eOSAD.

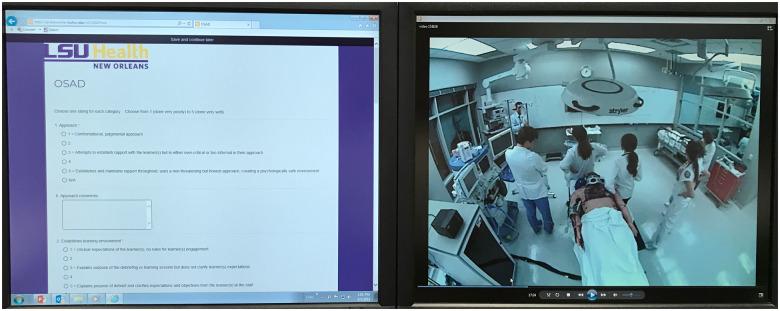

The second section of the eOSAD allows the evaluator to rate (score) how well an individual or team performed in each of the 8 OSAD categories during a pre-briefing or debriefing using a 5-point Likert scale (1 = “done very poorly” to 5 = “done very well”), as in the subsequently developed pediatric OSAD tool.25 Likewise, scores of 1, 3, and 5 were anchored with the specific behavioral descriptions used at the lowest, middle, and highest points of the 5-point OSAD scale. These anchors show evaluators what the facilitator would be expected to do to achieve that score.25 OSAD categories are presented to the evaluator sequentially within a single scrollable page. Unlike in the paper-based version, however, the scale for each category is oriented vertically rather than horizontally. Furthermore, a “not applicable” option was included so that users can submit an evaluation of some, but not all, OSAD categories, streamlining data input for a particular category or categories of debriefing. Finally, a comments section follows each category item so that evaluators can record their observations and the rationale for a given OSAD category rating (ie, providing material for qualitative analysis). Figure 3 shows an example screen page that an evaluator would see when rating how well an individual or team conducted the first OSAD category element, approach, during a debriefing. Note the 2 error codes shown at the bottom of the figure, which appear when the eOSAD is incompletely filled in. The first error code is triggered if the rating of any eOSAD category element is omitted (no rating selected) and “not applicable” was not selected; it includes a link that takes the evaluator to the first error needing correction. The second error code (ie, the statement “This question is required.”) appears when a compulsory question is skipped.
Upon completion of all 8 elements of the OSAD, the evaluator can either evaluate another video of a briefing or log out. If the evaluator chooses to continue with another evaluation, the program automatically returns to the beginning screen of the eOSAD to start a new evaluation (Figure 4).
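The hard-stop behavior described above can be sketched as a page-level check. This is an illustrative assumption about the logic, not SurveyGizmo’s implementation: here a category left blank without “not applicable” is treated as a skipped required question, and the page message points to the first question that needs correcting. The names `validate_page` and `NOT_APPLICABLE` are hypothetical.

```python
from typing import Optional, Union

NOT_APPLICABLE = "not applicable"
VALID_SCORES = (1, 2, 3, 4, 5)

# A 1-5 rating, NOT_APPLICABLE, or None (nothing selected).
Answer = Union[int, str, None]


def validate_page(answers: dict[str, Answer], order: list[str]) -> tuple[Optional[str], dict[str, str]]:
    """Hard-stop check for one eOSAD page before the evaluator may submit it.

    Returns (page_message, field_messages). page_message is None when the page
    is complete; otherwise it points the evaluator to the first question to fix.
    """
    field_messages: dict[str, str] = {}
    for question in order:
        value = answers.get(question)
        if value is None:
            # No rating and "not applicable" not chosen: block submission.
            field_messages[question] = "This question is required."
        elif value != NOT_APPLICABLE and value not in VALID_SCORES:
            field_messages[question] = "Choose a rating from 1 to 5 or 'not applicable'."
    if not field_messages:
        return None, {}
    first = next(q for q in order if q in field_messages)
    return f"Please correct the errors on this page, starting with '{first}'.", field_messages
```

Choosing “not applicable” satisfies the check, which is what lets an evaluator submit only some categories; it is also why an accidental “not applicable” cannot be caught at this stage.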

Figure 3.

Example eOSAD screen page design showing what an evaluator would see when assessing how well the debriefing team conducted an OSAD category. Shown is the first of 8 eOSAD category elements required to be evaluated and the error codes that may be displayed during eOSAD completion.

Figure 4.

Final section design of the eOSAD tool.

eOSAD piloting

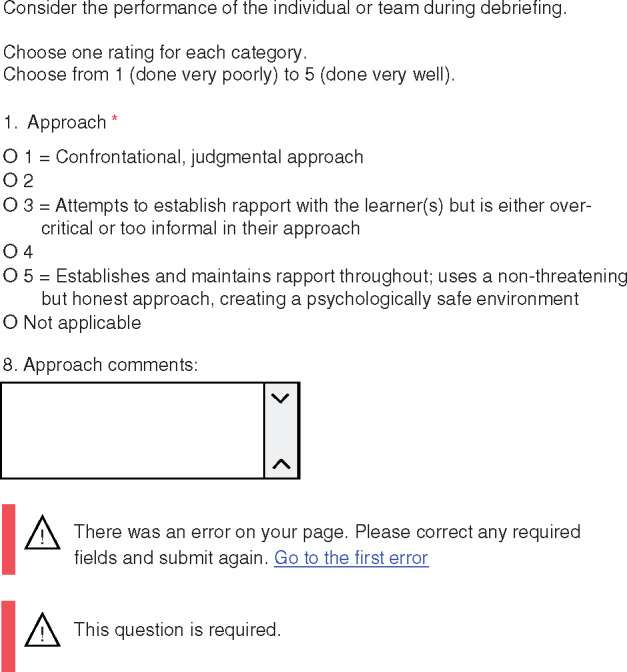

With the eOSAD format and design completed, 2 of the authors (JZ, RC) underwent training and calibration in rating pre-briefings and debriefings with the OSAD tool. Using the eOSAD, they then independently observed and evaluated 37 videos of faculty teams conducting pre-briefing and debriefing of inter-professional teams of third-year medical students and undergraduate senior nursing students participating in high-fidelity, simulation-based training sessions involving trauma resuscitation scenarios (Figure 5). Faculty for these sessions included a surgeon (JP), an internist, a nurse anesthetist, and a clinical nurse specialist (DG). For these inter-professional training sessions, faculty teams consisted of varying combinations of 1 to 3 members. Faculty teams provided a pre-briefing immediately before the simulation-based training session in which faculty introduced themselves, oriented learners to the simulated environment, and established the ground rules for the session. Following this pre-briefing, the learners underwent the trauma resuscitation scenario. Faculty then conducted an immediate post-action debriefing at the conclusion of the scenario. Consequently, the trained evaluators rated faculty teams for pre-briefing and debriefing sessions separately.

Figure 5.

Internet access to the eOSAD tool and to video recordings of faculty team debriefings was made available to raters through a secure login.

The opportunity to evaluate videoed faculty teams performing pre-briefings as well as debriefings allowed us to examine whether the OSAD tool, designed to assess the quality of debriefings, could also be used to assess the quality of pre-briefings. The first 3 OSAD categories (approach, environment, and engagement) relate to behaviors exemplified during both a pre-briefing and a debriefing, whereas the remaining 5 OSAD categories relate specifically to an after-exercise experience (ie, following the simulation-based scenario). Therefore, team pre-briefings were rated only on the first 3 OSAD categories. The behavioral anchors for these categories were unchanged, apart from substituting “pre-briefing” where “debriefing” was referenced. Each team debriefing session that followed a simulated scenario was rated on all 8 OSAD categories: approach, environment, engagement, reaction, reflection, analysis, diagnosis, and application.
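The category subset used for pre-briefings can be expressed as a simple lookup. This is a minimal sketch, assuming (as for debriefings) that a pre-briefing total is the unweighted sum of its applicable category ratings, which gives a range of 3–15 rather than 8–40; the function names are hypothetical.

```python
OSAD_CATEGORIES = (
    "approach", "environment", "engagement", "reaction",
    "reflection", "analysis", "diagnosis", "application",
)
# Only the first 3 categories apply before the scenario has taken place.
PREBRIEF_CATEGORIES = OSAD_CATEGORIES[:3]


def categories_for(briefing_type: str) -> tuple[str, ...]:
    """Categories rated for a briefing type ("pre-briefing" or "debriefing")."""
    if briefing_type == "pre-briefing":
        return PREBRIEF_CATEGORIES
    if briefing_type == "debriefing":
        return OSAD_CATEGORIES
    raise ValueError(f"unknown briefing type: {briefing_type!r}")


def briefing_total(briefing_type: str, ratings: dict[str, int]) -> int:
    """Unweighted total over the applicable categories (1-5 range checks omitted).

    Possible totals are 3-15 for pre-briefings and 8-40 for debriefings.
    """
    expected = categories_for(briefing_type)
    if set(ratings) != set(expected):
        raise ValueError("expected ratings for exactly: " + ", ".join(expected))
    return sum(ratings[c] for c in expected)


print(briefing_total("pre-briefing", {"approach": 4, "environment": 5, "engagement": 3}))  # 12
```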

Evaluators’ ratings were analyzed using IBM SPSS Statistics 25. Inter-rater reliability of the OSAD was assessed using intraclass correlation coefficients (ICCs) with absolute agreement; values of 0.70 or higher indicate good reliability.29 A P value less than .05 was considered significant. The frequencies of evaluators’ scores within and across pre-briefing and debriefing OSAD category elements were used to calculate the percentages of poor (OSAD score 1 or 2), average (OSAD score 3), and good (OSAD score 4 or 5) ratings. Evaluators provided qualitative feedback on each team’s facility with the OSAD category elements by typing their observations into the corresponding eOSAD comment sections (Figure 3). After completing the eOSAD pilot, evaluators were surveyed on their use of the eOSAD tool.
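For readers who wish to reproduce the reliability analysis outside SPSS, the following sketch computes two-way intraclass correlations for absolute agreement from a targets-by-raters table, following the McGraw and Wong formulas. It is an illustration under stated assumptions: the article does not say whether single-measure or average-measure ICCs were reported, so both are returned, and confidence intervals and P values are not computed.

```python
def icc_absolute_agreement(scores: list[list[float]]) -> tuple[float, float]:
    """Two-way intraclass correlation for absolute agreement (McGraw and Wong).

    scores[i][j] is rater j's score for target i (for example, one video).
    Returns (single-measure ICC(A,1), average-measure ICC(A,k)).
    """
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete table of at least 2 targets x 2 raters")

    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares: targets (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Systematic rater differences count against absolute agreement (ms_cols terms).
    denom_single = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    denom_average = ms_rows + (ms_cols - ms_error) / n
    if denom_single == 0 or denom_average == 0:
        raise ValueError("ICC is undefined when every score is identical")
    return (ms_rows - ms_error) / denom_single, (ms_rows - ms_error) / denom_average


print(icc_absolute_agreement([[1, 1], [2, 2], [3, 3]]))  # (1.0, 1.0)
```

Identical ratings from both raters give 1.0; a rater who scores every video consistently higher than the other lowers the absolute-agreement ICC even when the rank order of videos is preserved.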

RESULTS

The pre-brief inter-rater reliability for the OSAD total score between the 2 independent evaluators, as assessed by intraclass correlation, was 0.955 (95% CI, 0.912–0.977), P < .001, and the debrief ICC was 0.853 (95% CI, 0.713–0.924), P < .001. ICCs exceeded 0.90 (P < .001) for all 3 pre-brief OSAD dimensions: approach (0.917), environment (0.917), and engagement (0.905). ICCs exceeded 0.70 for 6 of the 8 debrief OSAD dimensions: approach (0.835), environment (0.882), engagement (0.719), reaction (0.713), diagnosis (0.770), and application (0.795). The remaining 2 debrief dimensions had lower ICCs: reflection (0.469, P = .033) and analysis (0.593, P < .01).

The overall ratings (n = 222) of combined pre-briefing category elements (Table 1) were 10% poor, 30% average, and 60% good. Approach had the lowest proportion of good ratings (47%), compared with environment (64%) and engagement (70%). Environment had the highest proportion of poor ratings (14%). The overall ratings (n = 592) of combined debriefing category elements (Table 2) were 1% poor, 12% average, and 87% good. Environment, reaction, and application each received 3% poor ratings. Environment, reaction, and approach had the lowest proportions of good ratings (74%, 80%, and 81%, respectively); all other OSAD categories were rated good in ≥ 88% of ratings (range, 88%–95%).
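The banding and percentage calculation described in the Methods can be checked against the tables. The sketch below assumes the 1–2 / 3 / 4–5 cut points stated in the Methods and rounds each percentage half up to a whole number; it rebuilds the overall pre-briefing counts from Table 1 using stand-in scores, since only the band counts are reported. The function names are hypothetical.

```python
import math
from collections import Counter

BANDS = ("poor", "average", "good")


def rating_band(score: int) -> str:
    """Map a 1-5 OSAD score to the band used in this article."""
    if score in (1, 2):
        return "poor"
    if score == 3:
        return "average"
    if score in (4, 5):
        return "good"
    raise ValueError(f"score {score!r} is outside 1-5")


def band_percentages(scores: list[int]) -> dict[str, int]:
    """Percentage of ratings in each band, rounded half up to whole numbers.

    Each band is rounded separately, so the three values need not sum to 100.
    """
    counts = Counter(rating_band(s) for s in scores)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no scores given")
    return {band: math.floor(100 * counts[band] / total + 0.5) for band in BANDS}


# Overall pre-briefing counts from Table 1: 22 poor, 66 average, 134 good.
# The individual scores are stand-ins; only the band counts matter here.
prebrief = [1] * 22 + [3] * 66 + [5] * 134
print(band_percentages(prebrief))  # {'poor': 10, 'average': 30, 'good': 60}
```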

Table 1.

Frequency of evaluators’ ratings on how well faculty teams conducted OSAD category elements during pre-briefings

| OSAD Category Element | Evaluator 1 Frequency | Evaluator 2 Frequency | Total Frequency (%)* |
|---|---|---|---|
| 1. Approach | n = 37 | n = 37 | n = 74 |
| Poor | 3 | 3 | 6 (8) |
| Average | 16 | 17 | 33 (45) |
| Good | 18 | 17 | 35 (47) |
| 2. Environment | n = 37 | n = 37 | n = 74 |
| Poor | 5 | 5 | 10 (14) |
| Average | 9 | 8 | 17 (23) |
| Good | 23 | 24 | 47 (64) |
| 3. Engagement | n = 37 | n = 37 | n = 74 |
| Poor | 3 | 3 | 6 (8) |
| Average | 8 | 8 | 16 (22) |
| Good | 26 | 26 | 52 (70) |
| Overall | n = 111 | n = 111 | n = 222 |
| Poor | 11 | 11 | 22 (10) |
| Average | 33 | 33 | 66 (30) |
| Good | 67 | 67 | 134 (60) |

*Percentages may not equal 100% due to rounding.

Table 2.

Frequency of evaluators’ ratings on how well faculty teams conducted OSAD category elements during debriefings

| OSAD Category Element | Evaluator 1 Frequency | Evaluator 2 Frequency | Total Frequency (%)* |
|---|---|---|---|
| 1. Approach | n = 37 | n = 37 | n = 74 |
| Poor | 0 | 0 | 0 (0) |
| Average | 7 | 7 | 14 (19) |
| Good | 30 | 30 | 60 (81) |
| 2. Environment | n = 37 | n = 37 | n = 74 |
| Poor | 1 | 1 | 2 (3) |
| Average | 9 | 8 | 17 (23) |
| Good | 27 | 28 | 55 (74) |
| 3. Engagement | n = 37 | n = 37 | n = 74 |
| Poor | 0 | 0 | 0 (0) |
| Average | 4 | 5 | 9 (12) |
| Good | 33 | 32 | 65 (88) |
| 4. Reaction | n = 37 | n = 37 | n = 74 |
| Poor | 1 | 1 | 2 (3) |
| Average | 6 | 7 | 13 (18) |
| Good | 30 | 29 | 59 (80) |
| 5. Reflection | n = 37 | n = 37 | n = 74 |
| Poor | 0 | 0 | 0 (0) |
| Average | 3 | 3 | 6 (8) |
| Good | 34 | 34 | 68 (92) |
| 6. Analysis | n = 37 | n = 37 | n = 74 |
| Poor | 0 | 0 | 0 (0) |
| Average | 2 | 2 | 4 (5) |
| Good | 35 | 35 | 70 (95) |
| 7. Diagnosis | n = 37 | n = 37 | n = 74 |
| Poor | 0 | 0 | 0 (0) |
| Average | 2 | 3 | 5 (7) |
| Good | 35 | 34 | 69 (93) |
| 8. Application | n = 37 | n = 37 | n = 74 |
| Poor | 1 | 1 | 2 (3) |
| Average | 3 | 2 | 5 (7) |
| Good | 33 | 34 | 67 (91) |
| Overall | n = 296 | n = 296 | n = 592 |
| Poor | 3 | 3 | 6 (1) |
| Average | 36 | 37 | 73 (12) |
| Good | 257 | 256 | 513 (87) |

*Percentages may not equal 100% due to rounding.

Qualitative feedback from both evaluators indicated that the eOSAD was easy to complete, simple to read, and easy to add comments to, and that it stored data that were easily retrievable and accessible. Evaluators’ comments for each eOSAD category identified common team pre-briefing and debriefing behaviors associated with low and high ratings; these could serve as focal points of discussion for formative feedback to the faculty teams.

DISCUSSION

For this project, we designed an electronic format of an evidence-based, end-user informed debriefing assessment tool, the eOSAD. Pilot testing of this version, in which trained evaluators separated by time and distance rated previously recorded faculty-team debriefings of inter-professional student simulation-based training in trauma resuscitation scenarios, revealed very good inter-rater reliability. Pilot testing of the eOSAD for rating pre-briefing quality, using the OSAD categories common to a pre-briefing and excluding those specific to a completed experience, also revealed very good inter-rater reliability. As such, the eOSAD has potential for widespread use in both formative and summative assessment of debriefings and pre-briefings conducted in health professional education. Furthermore, the eOSAD adapts the OSAD to a 5-point Likert scale for rating the quality of debriefings, in conformity with the subsequently developed and validated pediatric OSAD tool.24,25 This strengthens the original OSAD tool, as a continuous scale allows for more robust statistical analysis.

As with formats of any kind, both the paper-based and the electronic, web-based versions of the OSAD have advantages and disadvantages for data collection and evaluation. Advantages of the paper-based version include its ability to evaluate a debriefing conducted by an individual or a team and its independence from web access or electronic data entry, which allows its use anywhere. Furthermore, corrections are easy to make on the instrument when needed, and the evaluator can see all elements at once. Its disadvantages, however, are significant. They include a lack of space for comments and the need to print physical copies that must be carried, sorted, and stored. More importantly, opportunities for data corruption are manifold: 1) loss of data due to missing sheets; 2) missing data due to incomplete forms; 3) transformation of data due to misreading of values; and 4) incorrect entry of data due to mistyping of values.

The eOSAD design attempts to address these shortcomings of the paper-based version of the tool. The web-based format uploads inputted data immediately upon completion of the evaluation, bypassing multiple error-prone steps of data handling: 1) storage of completed forms; 2) reading of values from the forms; and 3) manual input of values into a database. Thus, data are likely stored more efficiently and securely. The eOSAD’s hard stops, which require input for each OSAD category, ensure complete data entry. These advantages alone make the eOSAD an attractive format by which to evaluate debriefings. The added benefits of a secure login procedure, access by evaluators anywhere in the world via an online link, and a user-friendly design for ease of reading and evaluation further increase its utility. To increase its utility further, the development of an eOSAD research app for iOS, Android, or both is being considered. A public link to the eOSAD has also been created to share with anyone who would like to see it in action.

Further advantages of the eOSAD include the ability to assess recorded debriefings at times convenient for the evaluator, the option for qualitative data collection using the comment sections, and, like the paper-based version, the ability to evaluate a debriefing conducted by an individual or a team. The “not applicable” option also allows evaluators to submit an evaluation of selected OSAD categories only. Although there are many advantages to the eOSAD, disadvantages do exist. The most apparent are the requirements for an electronic input device (eg, computer, cellular phone, or tablet) and for internet access. Accidental upload of data is also possible given the immediate transfer of data to the database; such an event would require data scrubbing. Ideally, the hard stops designed to ensure complete data input prevent troublesome missing data points. However, an evaluator could inadvertently select “not applicable” when intending to rate an OSAD category, resulting in missing data points.
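A simple screen of exported data can flag both of these problems before analysis. This is a hypothetical sketch: the row layout and column names are assumptions, not the eOSAD’s actual export format. It lists repeat submissions for the same evaluator, video, and briefing type (possible accidental uploads) and every “not applicable” answer, which a person must then review; for pre-briefings, “not applicable” on categories 4 to 8 may be expected rather than an error.

```python
from collections import defaultdict

NOT_APPLICABLE = "not applicable"


def scrub_report(rows: list[dict]) -> dict[str, list]:
    """Flag exported eOSAD rows that may need manual scrubbing.

    Each row is assumed (hypothetically) to hold "evaluator", "video_id",
    "briefing_type", and a "ratings" dict mapping category -> score or NOT_APPLICABLE.
    """
    by_key = defaultdict(list)
    for index, row in enumerate(rows):
        by_key[(row["evaluator"], row["video_id"], row["briefing_type"])].append(index)
    # More than one submission for the same evaluator, video, and briefing type
    # may indicate an accidental upload.
    duplicates = [indices for indices in by_key.values() if len(indices) > 1]
    # "Not applicable" answers become missing data points; list them for review.
    not_applicable = [
        (index, category)
        for index, row in enumerate(rows)
        for category, value in row["ratings"].items()
        if value == NOT_APPLICABLE
    ]
    return {"duplicates": duplicates, "not_applicable": not_applicable}
```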

This study has certain limitations. Although separated by time and distance, only 2 independent evaluators established the feasibility of the eOSAD tool. The eOSAD should therefore be tested at multiple other sites and with more evaluators to determine whether the high level of inter-rater reliability demonstrated here can be replicated on a wider scale, and to validate the tool’s ease of use and ability to streamline data collection beyond the positive qualitative feedback provided by the 2 evaluators in this study. In this study, very good overall inter-rater reliability was demonstrated among evaluators using the eOSAD to rate individuals and multi-facilitator teams. However, individuals within multi-facilitator teams were not evaluated separately, and inter-rater agreement for such individual ratings may differ.

CONCLUSION

Development of an easy-to-use, electronic version of the OSAD, the eOSAD, with good overall inter-rater agreement is feasible. Such a format has the benefit of cutting out multiple sources of data corruption due to its instantaneous export of data into a secure database. Stored quantitative and qualitative eOSAD data can be readily accessed and analyzed, thus providing timely feedback to debriefing facilitators. Next steps include using the eOSAD to assist in evaluating the quality of debriefing of faculty during simulation-based team training and correlating it with students’ learning.

Conflict of interest

JZ, RBC: no competing interests to declare. JP has the following disclosures: 1) royalties from Oxford University Press as co-editor of the book Simulation in Radiology; 2) a research grant from Acell, Inc., for work on wound healing; 3) research support as lead faculty for a grant from the Health Resources and Services Administration (No. D09HP26947); 4) research support as co-PI for a LIFT2 grant from the LSU Board of Regents; and 5) research support as PI for a grant from the Southern Group on Educational Affairs. DG received research support from the Health Resources and Services Administration grant (No. D09HP26947) as well as the Southern Group on Educational Affairs grant. MB is the Manager of the LSU Health New Orleans School of Public Health Epidemiology Data Center.

Funding

This work was supported, in part, by the LSU Health Sciences Center, New Orleans Academy for the Advancement of Educational Scholarship Educational Enhancement Grant 2011–2012.

Contributors

JZ, RBC, MB, DG, and JP made substantial contributions to the conception and design of the work, interpretation of the data, and drafting or critically revising the manuscript for important intellectual content. JZ, RBC, JP, and MB contributed to the design of the eOSAD model. MB constructed the eOSAD model. JZ, RBC, and MB made substantial contributions to the acquisition of data. JZ contributed to the analysis of data. All authors have given final approval of the version to be published and agree to be accountable for the accuracy and integrity of the work, ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Acknowledgments

We wish to thank Dr Vadym V. Rusnak and Dr Vladimir J. Kiselov for their expert technical assistance in video capturing and editing.

REFERENCES

- 1. Beaubien JM, Baker DP. The use of simulation for training teamwork skills in health care: how low can you go? Qual Saf Health Care 2004; 13(Suppl 1): i51–6.

- 2. Hobgood C, Sherwood G, Frush K, et al. Teamwork training with nursing and medical students: does method matter? Results of an interinstitutional, interdisciplinary collaboration. Qual Saf Health Care 2010; 19(6): e25.

- 3. Stewart M, Kennedy N, Cuene-Grandidier H. Undergraduate interprofessional education using high-fidelity paediatric simulation. Clin Teach 2010; 7(2): 90–6.

- 4. Sigalet E, Donnon T, Grant V. Undergraduate students’ perceptions of and attitudes toward a simulation-based interprofessional curriculum. Simul Healthc 2012; 7(6): 353–8.

- 5. Brock D, Abu-Rish E, Chiu C, et al. Interprofessional education in team communication: working together to improve patient safety. BMJ Qual Saf 2013; 22(5): 414–23.

- 6. Whelan JJ, Spencer JF, Rooney K. A ‘RIPPER’ project: advancing rural inter-professional health education at the University of Tasmania. Rural Remote Health 2008; 8(2): 1017.

- 7. Dillon PM, Noble KA, Kaplan L. Simulation as a means to foster collaborative interdisciplinary education. Nurs Educ Perspect 2007; 21: 72–90.

- 8. Reese CE, Jeffries PR, Engum SA. Learning together: simulation to develop nursing and medical student collaboration. Nurs Educ Perspect 2011; 31(1): 33–7.

- 9. Vyas D, McCulloh R, Dyer C, Gregory G, Higbee D. An interprofessional course using human patient simulation to teach patient safety and teamwork skills. Am J Pharm Educ 2012; 76(4): 71.

- 10. Jankouskas TS, Haidet KK, Hupcey JE, Kolanowski A, Murray WB. Targeted crisis resource management training improves performance among randomized nursing and medical students. Simul Healthc 2011; 6(6): 316–26.

- 11. Garbee DD, Paige JT, Bonanno LS, et al. Effectiveness of teamwork and communication education using an interprofessional high-fidelity human patient simulation critical care code. J Nurs Educ Pract 2012; 3(3): 1–12.

- 12. Sigalet E, Donnon T, Cheng A, et al. Development of a team performance scale to assess undergraduate health professionals. Acad Med 2013; 88(7): 989–96.

- 13. INACSL Standards Committee. INACSL standards of best practice: Simulation℠ simulation design. Clin Simul Nurs 2016; 12: S5–12.

- 14. Stephenson E, Poore J. Tips for conducting the pre-brief for a simulation. J Contin Educ Nurs 2016; 47(8): 353–5.

- 15. Shinnick MA, Woo M, Horwich TB, et al. Debriefing: the most important component in simulation? Clin Simul Nurs 2011; 7(3): e105–e111.

- 16. Decker S, Fey M, Sideras S, et al. Standards of best practice: simulation standard VI: the debriefing process. Clin Simul Nurs 2013; 9(6): S26–9.

- 17. Salas E, Klein C, King H, et al. Debriefing medical teams: 12 evidence-based best practices and tips. Jt Comm J Qual Patient Saf 2008; 34(9): 518–27.

- 18. INACSL Standards Committee. INACSL standards of best practice: Simulation℠ debriefing. Clin Simul Nurs 2016; 12: S21–5.

- 19. Paige JT, Garbee DD, Kozmenko V, et al. Getting a head start: high-fidelity, simulation-based operating room team training of interprofessional students. J Am Coll Surg 2014; 218(1): 140–9.

- 20. Arora S, Ahmed M, Paige J, et al. Objective structured assessment of debriefing: bringing science to the art of debriefing in surgery. Ann Surg 2012; 256(6): 982–8.

- 21. Brett-Fleegler M, Rudolph J, Eppich W, et al. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simul Healthc 2012; 7(5): 288–94.

- 22. Saylor JL, Wainwright SF, Herge EA, et al. Development of an instrument to assess the clinical effectiveness of the debriefer in simulation education. J Allied Health 2016; 45(3): 191–8.

- 23. Ahmed M, Sevdalis N, Paige J, et al. Identifying best practice guidelines for debriefing in surgery: a tri-continental study. Am J Surg 2012; 203(4): 523–9.

- 24. Runnacles J, Thomas L, Sevdalis N, et al. Development of a tool to improve performance debriefing and learning: the paediatric objective structured assessment of debriefing (OSAD) tool. Postgrad Med J 2014; 90(1069): 613–21.

- 25. Runnacles J, Thomas L, Korndorffer J, et al. Validation evidence of the paediatric objective structured assessment of debriefing (OSAD) tool. BMJ Simul Technol Enhanc Learn 2016; 2(3): 61–7.

- 26. Vergnaud AC, Touvier M, Méjean C, et al. Agreement between web-based and paper versions of a socio-demographic questionnaire in the NutriNet-Santé study. Int J Public Health 2011; 56(4): 407–17.

- 27. Touvier M, Méjean C, Kesse-Guyot E, et al. Comparison between web-based and paper versions of a self-administered anthropometric questionnaire. Eur J Epidemiol 2010; 25(5): 287–96.

- 28. Thriemer K, Ley B, Ame SM, et al. Replacing paper data collection forms with electronic data entry in the field: findings from a study of community-acquired bloodstream infections in Pemba, Zanzibar. BMC Res Notes 2012; 5(1): 113.

- 29. Abell N, Springer DW, Kamata A. Developing and Validating Rapid Assessment Instruments. Oxford, UK: Oxford University Press; 2009.